Significance

Many fundamental neural computations, from normalization to rhythm generation, emerge from the same cortical hardware, yet explaining each phenomenon often requires a dedicated model. Recently, the stabilized supralinear network (SSN) model has been used to explain a variety of nonlinear integration phenomena such as normalization, surround suppression, and contrast invariance. However, cortical circuits are also capable of implementing working memory and oscillations, which are often associated with distinct model classes. Here, we show that the SSN motif can serve as a universal circuit model that is sufficient to support not only stimulus integration phenomena but also persistent states, self-sustained network-wide oscillations, and two coexisting stable states that have been linked with working memory.

Keywords: recurrent neural networks, neural computation, neuronal dynamics

Abstract

A hallmark of cortical circuits is their versatility. They can perform multiple fundamental computations such as normalization, memory storage, and rhythm generation. Yet it is far from clear how such versatility can be achieved in a single circuit, given that specialized models are often needed to replicate each computation. Here, we show that the stabilized supralinear network (SSN) model, which was originally proposed for sensory integration phenomena such as contrast invariance, normalization, and surround suppression, can give rise to dynamic cortical features of working memory, persistent activity, and rhythm generation. We study the SSN model analytically and uncover regimes where it can provide a substrate for working memory by supporting two stable steady states. Furthermore, we prove that the SSN model can sustain finite firing rates following input withdrawal and present an exact connectivity condition for such persistent activity. In addition, we show that the SSN model can undergo a supercritical Hopf bifurcation and generate global oscillations. Based on the SSN model, we outline the synaptic and neuronal mechanisms underlying the computational versatility of cortical circuits. Our work shows that the SSN is an exactly solvable nonlinear recurrent neural network model that could pave the way for a unified theory of cortical function.

Understanding the mechanisms underlying cortical function is the main challenge facing theoretical neuroscience. Nonlinear responses such as normalization, contrast invariance, and surround suppression are often encountered in the cortex, particularly in the visual cortex (1, 2). At the same time, working memory about a transient stimulus, persistent activity in decision-making tasks, and generation of global rhythms represent fundamental computations which have been linked to cognitive functions (3–5). Whether these computations arise from common or different mechanisms is an open question.

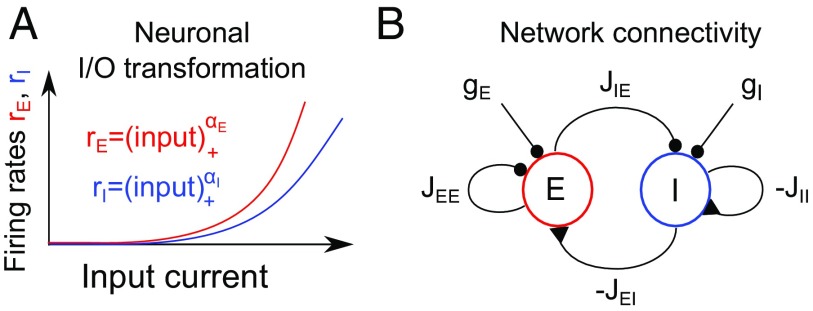

Previous work showed that the response of in vivo cortical neurons is well described by a power law (6) (Fig. 1). Experiments and theory indicate that the low firing rates and high variability reported in the cortex are associated with a power-law activation function and noise-driven firing (6–9). Furthermore, a power law is mathematically the only function that permits precise contrast invariance of tuning given input that has contrast-invariant tuning (8, 9). Recent work (1, 2, 10) proposed the stabilized supralinear network (SSN) as a mechanism for normalization, contrast invariance, and surround suppression. While the SSN model is powerful in the context of stimulus integration, its role in other cortical computations remains to be clarified (11).

Fig. 1.

Firing-rate transformation and network wiring. (A) Threshold power-law transfer function determines the relationship between input current and the firing rate of excitatory and inhibitory neurons. (B) Populations of power-law neurons are recurrently connected and receive excitatory external inputs. E, excitatory; I, inhibitory.

Working memory is the ability to remember a transient input long enough for it to be processed (3). It is often implemented as a stimulus-induced switch in a bistable system (12). Persistent activity is thought to be critical for the neural implementation of decision making (3, 4). It refers to firing that is observed in the absence of any stimulus, between the cue and the go signal in decision-making tasks. Persistent activity was first recorded in the early 1970s in the prefrontal cortex of behaving monkeys (13) and has since been found in other cortical areas (3). Finally, the generation of synchronous network-wide rhythms is also a fundamental cortical feature that has been suggested to mediate communication between cortical areas and may be needed for attention, feature integration, and motor control (5).

Here, we investigate whether the SSN motif is sufficient to give rise to network multistability, persistent activity, and global rhythms. Seminal articles have proposed that working memory results from network multistability (12). To explain multistability, mechanisms involving either an S-shaped neuronal activation function combined with suitably strong excitatory feedback or an S-shaped synaptic nonlinearity have been proposed (14–16). Likewise, the persistent state that occurs during decision making has been modeled using synaptic nonlinearities (17). The SSN framework, however, lacks both ingredients: it does not have an S-shaped neuronal activation function, and it lacks synaptic plasticity. It is also currently unclear whether the SSN motif alone supports the emergence of stable global oscillations. If oscillations can emerge in the SSN, it would be important to determine the connectivity and power-law exponents for which this occurs. If, on the other hand, the SSN motif is not sufficient for the emergence of oscillations, it would be necessary to understand what sets it apart from models that can support global rhythms (14, 18, 19).

To investigate whether and when the SSN model can support multistability and persistent and oscillatory activity, we need a systematic understanding of its dynamics. Because the SSN is a nonlinear model, obtaining exact solutions is mathematically challenging. Previous studies used the functional shape of nullclines to determine their possible crossings and derive the number of steady states in the Wilson–Cowan model with an S-shaped activation function (14, 15). Here, we aim to explain how the location and multiplicity of steady states are influenced by specific parameter choices for which information on the shape of nullclines is not always mathematically tractable. To this end, we developed an analytical method that maps the steady states of the 2D SSN model to the zero crossings of a 1D characteristic function. We determine the multiplicity and stability of the SSN steady states for all possible parameter regimes. In particular, we show that the SSN model supports the following three effects. First, we outline network configurations corresponding to a bistable regime that could serve as a substrate for working memory. Second, we show that the SSN model can give rise to dynamically stable persistent activity and derive the corresponding connectivity requirements. Third, we prove the occurrence of stable global oscillations in the SSN and show how to tune the oscillatory frequency to a desired value.

Our article is organized as follows. First, we analyze the multiplicity and stability of the SSN steady states and show the existence of a unique mapping between zero crossings of a characteristic function and steady states. Second, we prove for equal integer power-law exponents that the model has at most four coexisting steady states, of which at most two can be stable. Finally, we present parameter regimes for which a stable persistent state and global oscillations exist.

Results

We consider a recurrent network as illustrated in Fig. 1 and model the activity of the excitatory and inhibitory populations, $r_E$ and $r_I$, using the following equations, often referred to as the SSN model (2, 10):

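$$\tau_E \frac{dr_E}{dt} = -r_E + \left[W_{EE}\, r_E - W_{EI}\, r_I + I_E\right]_+^{n_E}, \qquad \tau_I \frac{dr_I}{dt} = -r_I + \left[W_{IE}\, r_E - W_{II}\, r_I + I_I\right]_+^{n_I}. \qquad [1]$$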

Here, $I_E$ and $I_I$ are the nonnegative constant inputs to the respective populations, and $\tau_E$ and $\tau_I$ denote the time constants. $W_{xy}$ is the effective synaptic strength from population $y$ to population $x$, where $x, y \in \{E, I\}$. By $W$ we denote the connectivity matrix whose first row is $(W_{EE}, -W_{EI})$ and whose second row is $(W_{IE}, -W_{II})$. The susceptibility of a neuronal population to input currents is expressed by the threshold power-law activation function $[u]_+^{n_x}$, where $[u]_+ = \max(u, 0)$ and $n_x$ is the exponent of population $x \in \{E, I\}$. The power-law dependence is based on experimental and theoretical studies (6, 8, 9). Here, let us briefly comment on our choice of units. Throughout the article, we use unitless quantities for all parameters, including firing rates, because the connectivities and inputs can be rescaled to match any desired firing-rate range. See Rescaling the Connectivity and Input Constants to Match a Desired Firing-Rate Range for details on the rescaling procedure.
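To make Eq. 1 and the remark on units concrete, here is a minimal sketch under stated assumptions: forward-Euler integration with a hand-picked step size, hypothetical parameter values (not those of Table 2), and an activation function $[u]_+^{n_x}$ without a gain prefactor. The helper names `simulate_ssn` and `rescale` are illustrative. The rescaling rule used here, $W'_{xy} = s^{1/n_x - 1} W_{xy}$ and $I'_x = s^{1/n_x} I_x$, follows from substituting $r' = s\,r$ into Eq. 1; it is one way to shift the firing-rate range and may differ from the procedure in Rescaling the Connectivity and Input Constants to Match a Desired Firing-Rate Range.

```python
# Sketch: forward-Euler integration of Eq. 1 and a numerical check of the
# firing-rate rescaling. All parameter values are hypothetical.


def simulate_ssn(W, I, n, tau, r0, dt=0.01, steps=20000):
    """Integrate tau_x dr_x/dt = -r_x + [sum_y W_xy r_y + I_x]_+^(n_x).

    W is the signed matrix [[W_EE, -W_EI], [W_IE, -W_II]], I = (I_E, I_I),
    n = (n_E, n_I), tau = (tau_E, tau_I). Returns the final (r_E, r_I).
    """
    rE, rI = r0
    for _ in range(steps):
        uE = W[0][0] * rE + W[0][1] * rI + I[0]  # net input current to E
        uI = W[1][0] * rE + W[1][1] * rI + I[1]  # net input current to I
        drE = (-rE + max(uE, 0.0) ** n[0]) / tau[0]
        drI = (-rI + max(uI, 0.0) ** n[1]) / tau[1]
        rE, rI = rE + dt * drE, rI + dt * drI
    return rE, rI


def rescale(W, I, n, s):
    """Return (W', I') whose rates are exactly s times those of (W, I).

    Substituting r' = s*r into Eq. 1 gives W'_xy = s**(1/n_x - 1) * W_xy and
    I'_x = s**(1/n_x) * I_x; the time constants are unchanged.
    """
    W_new = [[s ** (1.0 / n[x] - 1.0) * W[x][y] for y in range(2)] for x in range(2)]
    I_new = [s ** (1.0 / n[x]) * I[x] for x in range(2)]
    return W_new, I_new


W = [[1.0, -1.0], [1.0, -0.5]]  # W_EE = 1, W_EI = 1, W_IE = 1, W_II = 0.5
I = (0.5, 0.5)                  # I_E, I_I
n = (2, 2)                      # n_E, n_I
tau = (1.0, 1.0)                # tau_E, tau_I

r = simulate_ssn(W, I, n, tau, r0=(0.0, 0.0))
W10, I10 = rescale(W, I, n, s=10.0)
r10 = simulate_ssn(W10, I10, n, tau, r0=(0.0, 0.0))
print(r, r10)  # r10 should equal 10 * r up to rounding
```

Because the rescaled system follows the same Euler update multiplied by $s$, the two runs agree to rounding error, and the first run ends at a state that satisfies the steady-state equations.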

To characterize the SSN activity states, it is crucial to understand how the location, multiplicity, and dynamical stability of the SSN steady states depend on the connectivity, the inputs, and the activation functions of the neuronal populations. The steady-state firing rates $r_E^*$ and $r_I^*$ are described by

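$$r_E^* = \left[W_{EE}\, r_E^* - W_{EI}\, r_I^* + I_E\right]_+^{n_E}, \qquad r_I^* = \left[W_{IE}\, r_E^* - W_{II}\, r_I^* + I_I\right]_+^{n_I}. \qquad [2]$$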

While a steady state can easily be found in the linear case $n_E = n_I = 1$, the nonlinear case currently lacks analytical solution strategies. Here, we present a new approach for finding and analyzing the steady states of Eq. 1. We show that the 2D system of nonlinear equations in Eq. 2 can be reduced to a single 1D equation for a nonlinear characteristic function. Specifically, we have identified a one-to-one correspondence between the 2D steady states and the zero crossings of this 1D characteristic function, which reduces the steady-state search to a lower-dimensional problem.

Reducing the Dimensionality via a Characteristic Function.

To solve the two nonlinear equations presented in Eq. 2, we take the following steps. We first apply a variable substitution that replaces the firing rates with new variables, as detailed in Materials and Methods. We can then simplify the problem by eliminating one of the new variables, where the choice of which variable to eliminate depends on the connectivity regime. We show that the solutions of Eq. 2 are uniquely mapped to the zero crossings of a characteristic function of the remaining variable. In one of these regimes, this function is given by

| [3] |

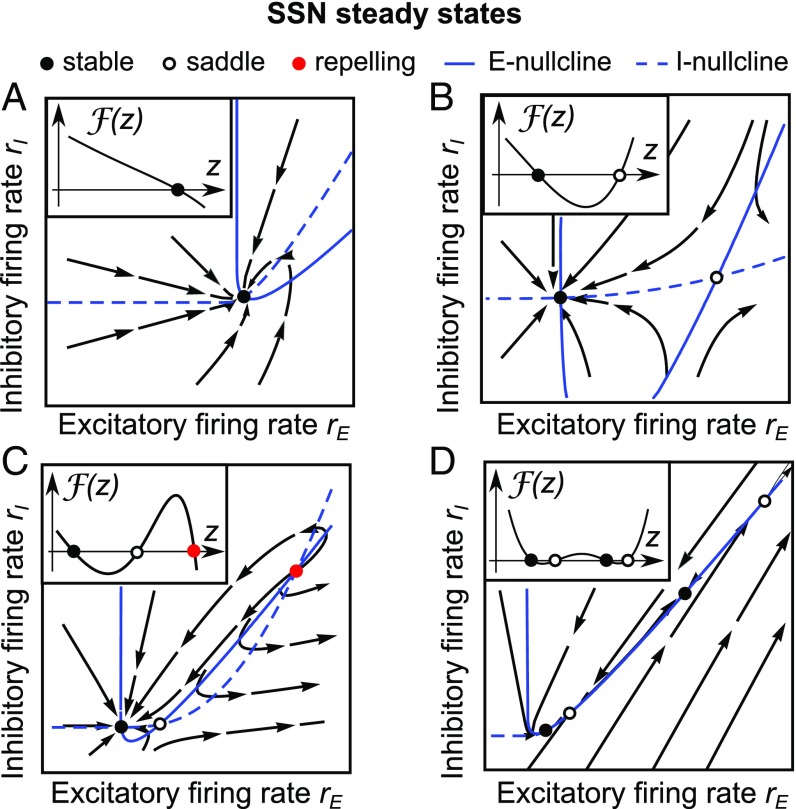

where . The steady-state , corresponding to the zero-crossing is given by and . A similar form of and exists for (Materials and Methods and Characteristic Function). In the following, we refer to the function as a characteristic function. Mathematically, the nullcline crossings in Eq. 2 that define the steady states are now mapped to the zero crossings of a 1D function . This transformation significantly enhances the mathematical tractability because steady states are now intersection points of a 1D function with a coordinate axis and are no longer intersections of two complex nonlinear nullclines. In Fig. 2, we depict the zero crossings of and the corresponding steady states for four representative classes of solutions. Additionally, in Location of SSN Steady States Is Preserved in the Presence of Firing-Rate Saturation and Fig. S2 we show that these steady states remain at the same location if activation functions saturate at high firing rates. Saturation of activation functions provides boundedness of all solution trajectories; however, it can introduce additional steady states.
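The idea of replacing an intersection of 2D nullclines by the zeros of a 1D function can be illustrated with a simpler reduction than the one used here. The sketch below is an assumption-laden stand-in, not Eq. 3: for each $r_E$ it solves the inhibitory steady-state equation for the unique $r_I = h(r_E)$ (unique because the right side is nonincreasing in $r_I$ when $W_{II} \ge 0$), and then looks for zeros of $F(r_E) = [W_{EE} r_E - W_{EI} h(r_E) + I_E]_+^{n_E} - r_E$. Every zero of $F$ yields a steady state of Eq. 2 and vice versa. The function names, grid resolution, and parameter values are hypothetical.

```python
# Sketch: locate steady states of Eq. 2 through a 1D function of r_E.
# Assumption: r_I is eliminated via the inhibitory nullcline; this is a
# simpler stand-in, not the characteristic function of Eq. 3.


def inhibitory_rate(rE, p, iters=100):
    """Unique r_I >= 0 with r_I = [W_IE*rE - W_II*r_I + I_I]_+^(n_I).

    r_I - rhs(r_I) is increasing in r_I (rhs is nonincreasing for W_II >= 0),
    nonpositive at 0 and nonnegative at rhs(0), so bisection brackets it.
    """
    def rhs(rI):
        return max(p["W_IE"] * rE - p["W_II"] * rI + p["I_I"], 0.0) ** p["n_I"]

    lo, hi = 0.0, rhs(0.0)
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mid - rhs(mid) < 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def reduced(rE, p):
    """F(rE) = [W_EE rE - W_EI h(rE) + I_E]_+^(n_E) - rE; zeros <-> steady states."""
    rI = inhibitory_rate(rE, p)
    return max(p["W_EE"] * rE - p["W_EI"] * rI + p["I_E"], 0.0) ** p["n_E"] - rE


def steady_states(p, r_max=2.0, grid=2000, iters=100):
    """Bracket sign changes of F on [0, r_max] and refine each by bisection."""
    step = r_max / grid
    xs = [k * step for k in range(grid + 1)]
    fs = [reduced(x, p) for x in xs]
    roots = []
    for k in range(grid):
        a, b, fa = xs[k], xs[k + 1], fs[k]
        if fa == 0.0:
            roots.append(a)
        elif fa * fs[k + 1] < 0.0:
            for _ in range(iters):
                m = 0.5 * (a + b)
                fm = reduced(m, p)
                if fa * fm <= 0.0:
                    b = m
                else:
                    a, fa = m, fm
            roots.append(0.5 * (a + b))
    return [(rE, inhibitory_rate(rE, p)) for rE in roots]


p = dict(W_EE=1.0, W_EI=1.0, W_IE=1.0, W_II=0.5, I_E=0.5, I_I=0.5, n_E=2, n_I=2)
print(steady_states(p))  # a single steady state for these hypothetical values
```

A grid scan finds zeros only where $F$ changes sign, so tangential zeros (the nongeneric cases excluded in Table 1) and states beyond `r_max` are missed; this is one reason an analytical characterization of the zero crossings is useful.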

Fig. 2.

Steady states and characteristic functions. Steady states are related to zero crossings of the characteristic function (A–D, Insets). Black flowlines represent trajectories starting from different initial points. (A) A single steady state can exist only for $\det W \ge 0$. (B) Two SSN steady states can coexist for $\det W \le 0$. Zero crossings of the characteristic function with a positive derivative correspond to saddles. (C) Three steady states are possible if $\det W \ge 0$. Depending on whether Eq. 5 holds, zero crossings with a negative derivative can correspond to either stable or repelling steady states. (D) Four steady states can coexist only for $\det W < 0$. Parameters are as in Table 2.

Stability Conditions.

The characteristic functions in Fig. 2 exhibit zero crossings with positive derivatives, which correspond to saddles, and zero crossings with negative derivatives, which correspond to either stable or repelling steady states. To support this finding mathematically, we consider the eigenvalues $\lambda_1$ and $\lambda_2$ of the Jacobian matrix at the steady state of interest. We find that the derivative of the characteristic function at the corresponding zero crossing is related to the negative product of the eigenvalues at that steady state:

| [4] |

Notably, Eq. 4 is similar to the relation derived in ref. 20, where a 2D system of equations corresponding to a conductance-based model was reduced to a 1D function whose derivative coincided with the determinant of the Jacobian. A steady state is a saddle if the eigenvalues are real and have opposite signs, that is, if their product is negative. By Eq. 4, this occurs if and only if the derivative of the characteristic function is positive at the zero crossing (Fig. 2). Stable steady states require that both this derivative and the sum of the eigenvalues are negative (18). The sum of the eigenvalues is negative only if

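$$\lambda_1 + \lambda_2 = \frac{W_{EE}\,\phi_E - 1}{\tau_E} - \frac{1 + W_{II}\,\phi_I}{\tau_I} < 0, \qquad [5]$$

where $\phi_E = n_E\,[W_{EE} r_E^* - W_{EI} r_I^* + I_E]_+^{n_E - 1}$ and $\phi_I = n_I\,[W_{IE} r_E^* - W_{II} r_I^* + I_I]_+^{n_I - 1}$ are the population gains at the steady state.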

Thus, a steady state is stable if and only if the derivative of the characteristic function is negative at the corresponding zero crossing and condition Eq. 5 is fulfilled. We note that if this derivative is negative, Eq. 5 can always be fulfilled by choosing $\tau_I/\tau_E$ sufficiently small, because the inhibitory contribution to the sum of the eigenvalues is always negative. Interestingly, we have found that under additional parameter conditions (Stability Conditions), the sign of the derivative alone determines the stability of the steady state: a negative derivative then automatically fulfills Eq. 5, so the steady state is stable, whereas a positive derivative corresponds to a saddle.
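The stability rules above can be checked numerically from the Jacobian of Eq. 1. The following sketch assumes the activation $[u]_+^{n_x}$ without a prefactor and classifies a steady state from $\det J = \lambda_1\lambda_2$ and $\operatorname{tr} J = \lambda_1 + \lambda_2$: a negative determinant (equivalently, by Eq. 4, a positive derivative of the characteristic function) gives a saddle, and a positive determinant gives a stable state if the trace is negative (Eq. 5) and a repelling state otherwise. The toy parameter set with $W_{EI} = 0$ is hypothetical; it is chosen because its steady states, $r_E = 1/16$ and $r_E = 9/16$, are known in closed form.

```python
import cmath


def gain(u, n):
    """Slope of the threshold power law [u]_+^n at u (taken as 0 for u <= 0)."""
    return n * u ** (n - 1) if u > 0.0 else 0.0


def jacobian(rE, rI, p):
    """Jacobian of Eq. 1 at (rE, rI) after dividing by the time constants."""
    gE = gain(p["W_EE"] * rE - p["W_EI"] * rI + p["I_E"], p["n_E"])
    gI = gain(p["W_IE"] * rE - p["W_II"] * rI + p["I_I"], p["n_I"])
    return [[(p["W_EE"] * gE - 1.0) / p["tau_E"], -p["W_EI"] * gE / p["tau_E"]],
            [p["W_IE"] * gI / p["tau_I"], -(1.0 + p["W_II"] * gI) / p["tau_I"]]]


def trace_det(J):
    return J[0][0] + J[1][1], J[0][0] * J[1][1] - J[0][1] * J[1][0]


def eigenvalues(J):
    tr, det = trace_det(J)
    root = cmath.sqrt(tr * tr - 4.0 * det)  # complex for spiraling dynamics
    return (tr + root) / 2.0, (tr - root) / 2.0


def classify(J):
    """det J < 0: saddle; det J > 0: stable if trace < 0 (cf. Eq. 5), else repelling."""
    tr, det = trace_det(J)
    if det < 0.0:
        return "saddle"
    if det == 0.0 or tr == 0.0:
        return "nongeneric"
    return "stable" if tr < 0.0 else "repelling"


# Hypothetical toy network (W_EI = 0); r_I values are rounded.
toy = dict(W_EE=1.0, W_EI=0.0, W_IE=1.0, W_II=1.0, I_E=0.1875, I_I=0.0,
           n_E=2, n_I=2, tau_E=1.0, tau_I=1.0)
print(classify(jacobian(0.0625, 0.0035, toy)))  # stable
print(classify(jacobian(0.5625, 0.1611, toy)))  # saddle
```

Working with the trace and determinant rather than with the eigenvalues directly mirrors the structure of Eqs. 4 and 5.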

Determining the Number of Coexisting Steady States.

We now determine how many steady states can coexist for given synaptic connectivity and inputs. First, let us note that previous work (14, 15) reported up to five steady states in the Wilson–Cowan model with an S-shaped activation function under sign constraints on its parameters, and up to nine when some of these parameters are allowed to be negative. Here, we ask how many steady states emerge from a power-law activation function and determine exactly the possible number of steady states for a given combination of inputs, exponents, and connectivity. To obtain exact results, we consider the situation in which the excitatory and inhibitory populations have the same integer exponent, $n_E = n_I = n$. In this case, it seems plausible that the characteristic function, which now contains a polynomial of degree up to $n^2$, could have up to $n^2$ zero crossings leading to steady states. If the number of steady states indeed depended on the steepness of the activation function and grew with $n^2$, then networks of neurons with steep activation functions (higher exponents) would likely have a fractured phase space with many coexisting steady states, and the steady state assumed by the network could depend strongly on the initial condition. Since such networks have not been observed biologically, it is likely that the number of steady states remains bounded even as the steepness of the activation function increases. We therefore tested our initial intuition that the number of steady states grows with $n$. Surprisingly, this turned out not to be the case.

To study the multiplicity of the SSN steady states, we partition the parameter space of the SSN model into nine classes. This partition makes the problem more accessible to mathematical analysis; for details, we refer to Materials and Methods. First, we identify three classes according to the sign of $\det W$ (Table 1). Second, we further separate each of these three classes into three parameter subsets according to the sign of an additional constant whose definition depends on the class (Table 1 and Materials and Methods). To determine the multiplicity of steady states for each of the nine parameter classes in Table 1, we follow the steps outlined in Materials and Methods and Multiplicity of Steady States. We find that there can be at most four steady states and that this number does not grow with $n$. For $\det W \ge 0$ there are at most three steady states (Multiplicity of Steady States). In all classes, at most two stable steady states can coexist (Fig. 2 and Table 1).
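A hypothetical toy case shows how the number of steady states can change with the input. If the excitatory population receives no inhibition ($W_{EI} = 0$, so that $\det W = -W_{EE}W_{II}$), each $r_E$ fixes a unique $r_I$, and with $n_E = 2$ the excitatory steady-state equation becomes the quadratic $W_{EE}^2 r^2 + (2W_{EE}I_E - 1)\,r + I_E^2 = 0$ with discriminant $1 - 4W_{EE}I_E$. This degenerate example is not the nine-class analysis itself; it only illustrates the counting.

```python
import math


def excitatory_only_states(W_EE, I_E):
    """Steady states r_E of r = (W_EE*r + I_E)^2, i.e., Eq. 2 with W_EI = 0, n_E = 2.

    Hypothetical toy: without inhibition onto E, r_I follows r_E uniquely, so
    counting r_E solutions counts network steady states. For W_EE > 0 and
    I_E >= 0, both real roots (if any) are nonnegative.
    """
    a, b, c = W_EE ** 2, 2.0 * W_EE * I_E - 1.0, I_E ** 2
    disc = b * b - 4.0 * a * c  # equals 1 - 4 * W_EE * I_E
    if disc < 0.0:
        return []
    s = math.sqrt(disc)
    return sorted({(-b - s) / (2.0 * a), (-b + s) / (2.0 * a)})


for I_E in (0.05, 0.1875, 0.3):
    print(I_E, excitatory_only_states(1.0, I_E))
# two states while W_EE * I_E < 1/4, none once it exceeds 1/4
```

The count jumps from two to zero as $W_{EE}I_E$ crosses $1/4$, where the two states merge in a nongeneric tangency.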

Table 1.

Multiplicity of SSN steady states for , whereby and

| , | 2(1)/0 | 2(1)/0 | 4(2)/2(1)/0 |

| , | 2(1)/0 | 2(1)/0 | 2(1)/0 |

| , | 3(2)/2(1)/1(1)/0 | 2(1)/1(1)/0 | 2(1)/1(1)/0 |

| , | 2(1)/1(1)/0 | 2(1)/1(1)/0 | 2(1)/1(1)/0 |

| , | 3(2)/1(1) | 3(2)/1(1) | 3(2)/1(1) |

For each class, we give the possible numbers of steady states (in parentheses, the maximal number of stable states, which can be attained, for example, by choosing the time constants such that Eq. 5 holds). Alternative steady-state configurations are separated by “/”. In cases where the results differ between exponent values, we show them on separate lines. Parameter sets supporting bistability are highlighted in boldface type. Ordered by distance from the origin, the first and third steady states always correspond to zero crossings of the characteristic function with a negative derivative and, depending on whether Eq. 5 holds, are either stable or repelling. The second and fourth steady states always correspond to zero crossings with a positive derivative and are saddles. For completeness, we note that we have ignored nongeneric cases (destroyed by any perturbation of the parameters) in which a steady state has a zero eigenvalue.

Taken together, our results indicate that power-law networks with equal integer exponents can support two coexisting stable states, but no more than two stable states can occur at a time. The ability of a circuit to support bistability has been suggested as a substrate for working memory, in which a brief stimulus switches the circuit from the lower to the upper state. The emergence of bistability has so far been confirmed in neural networks with S-shaped synaptic or neuronal nonlinearities (16). However, our results show that neither synaptic nonlinearities nor S-shaped activation functions are prerequisites for bistability in a network: two coexisting stable steady states can emerge in a network with a globally convex power-law activation function.
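The following sketch illustrates bistability without S-shaped nonlinearities in a hypothetical SSN at zero input: $W_{EE}=3$, $W_{EI}=2$, $W_{IE}=2$, $W_{II}=1$, $n_E=n_I=2$, $\tau_E=2$, $\tau_I=1$ (values chosen by hand, not taken from Table 2). Direct substitution shows that both $(0, 0)$ and $(1, 1)$ satisfy Eq. 2; at $(1, 1)$ the Jacobian has trace $-0.5$ and determinant $0.5$, so both states are stable, and a third steady state near $(0.315, 0.192)$ is a saddle. Forward-Euler runs started near each stable state should settle in different states.

```python
# Sketch: two coexisting stable states in a hypothetical SSN at zero input.


def simulate(r0, W=(3.0, 2.0, 2.0, 1.0), I=(0.0, 0.0), n=2,
             tau=(2.0, 1.0), dt=0.01, steps=40000):
    """Forward-Euler integration of Eq. 1; returns the final (r_E, r_I)."""
    W_EE, W_EI, W_IE, W_II = W
    rE, rI = r0
    for _ in range(steps):
        uE = W_EE * rE - W_EI * rI + I[0]  # net input to E
        uI = W_IE * rE - W_II * rI + I[1]  # net input to I
        rE, rI = (rE + dt * (-rE + max(uE, 0.0) ** n) / tau[0],
                  rI + dt * (-rI + max(uI, 0.0) ** n) / tau[1])
    return rE, rI


low = simulate((0.05, 0.05))  # starts near the silent state
high = simulate((1.05, 1.0))  # starts near the active state
print(low, high)
```

Whether a brief input pulse moves this network from the lower to the upper state depends on the pulse amplitude and duration, which the sketch does not explore.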

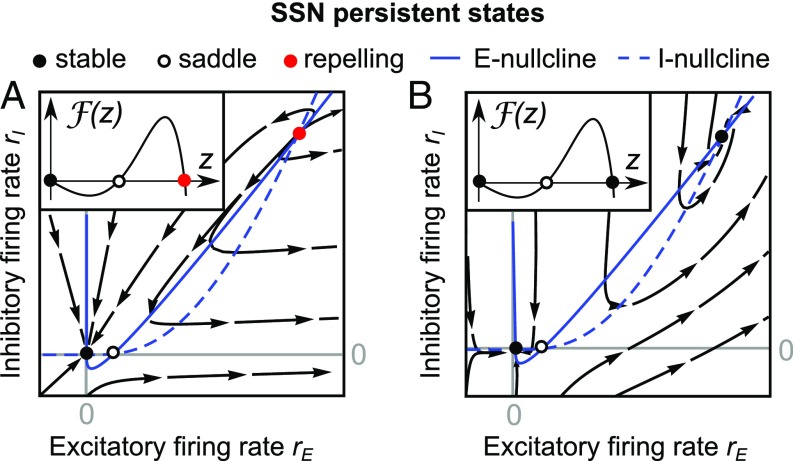

Persistent State.

One of the hallmarks of nonlinear systems is the ability to sustain a response after the input is switched off. Such a response is referred to as a persistent state. We ask whether a persistent state can be observed in the system described by Eq. 1, considering equal integer exponents for the excitatory and inhibitory populations. To approach this question, we examined the characteristic function for zero inputs, $I_E = I_I = 0$, and looked for its positive zero crossings with a negative derivative, because only such a zero crossing can lead to a stable steady state. In the absence of inputs, we derived the following necessary condition for the existence of such a zero crossing, which can be verified directly:

| [6] |

(Materials and Methods and Existence of Persistent States). The inequality on the left side of Eq. 6 implies that a persistent state in the network described by Eq. 1 is possible only when the cross-population connectivity dominates the connectivity within the individual populations. Moreover, the excitability of individual neurons has to fulfill the inequality on the right side of Eq. 6. We note that a stable persistent state does not exist outside the parameter set specified by Eq. 6 (see Materials and Methods for further details). To guarantee that a given persistent steady state is stable and can thus be reached dynamically, the time constants $\tau_E$ and $\tau_I$ need to meet the stability condition Eq. 5 presented above. Fig. 3 A and B shows examples of a repelling and a stable persistent state, respectively. Once the existence of a positive zero crossing with a negative derivative in the absence of inputs has been established, the ratio $\tau_I/\tau_E$ can be chosen sufficiently small that the persistent state fulfills the stability condition Eq. 5.
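For a given candidate persistent state, the checks described above can be scripted. The sketch below uses a hypothetical zero-input network ($W_{EE}=3$, $W_{EI}=2$, $W_{IE}=2$, $W_{II}=1$, $n=2$): it tests the cross-dominance condition, which we assume here takes the form $W_{EI}W_{IE} > W_{EE}W_{II}$, confirms that $(1, 1)$ solves Eq. 2 with $I_E = I_I = 0$, and classifies this state for two values of $\tau_E$. The right inequality of Eq. 6 is not reproduced, so the sketch only verifies a given candidate rather than establishing existence.

```python
# Sketch: checking a candidate persistent state (zero input) in a hypothetical SSN.


def cross_dominates(W_EE, W_EI, W_IE, W_II):
    """Assumed form of the cross-dominance condition: W_EI*W_IE > W_EE*W_II."""
    return W_EI * W_IE > W_EE * W_II


def zero_input_residual(rE, rI, W, n=2):
    """Residual of Eq. 2 with I_E = I_I = 0; (0, 0) means a steady state."""
    W_EE, W_EI, W_IE, W_II = W
    return (max(W_EE * rE - W_EI * rI, 0.0) ** n - rE,
            max(W_IE * rE - W_II * rI, 0.0) ** n - rI)


def stability(rE, rI, W, tau_E, tau_I, n=2):
    """Classify (rE, rI) from the trace and determinant of the Jacobian of Eq. 1."""
    W_EE, W_EI, W_IE, W_II = W
    uE, uI = W_EE * rE - W_EI * rI, W_IE * rE - W_II * rI
    gE = n * uE ** (n - 1) if uE > 0.0 else 0.0  # gain of E
    gI = n * uI ** (n - 1) if uI > 0.0 else 0.0  # gain of I
    a, b = (W_EE * gE - 1.0) / tau_E, -W_EI * gE / tau_E
    c, d = W_IE * gI / tau_I, -(1.0 + W_II * gI) / tau_I
    tr, det = a + d, a * d - b * c
    if det < 0.0:
        return "saddle"
    if det == 0.0 or tr == 0.0:
        return "nongeneric"
    return "stable" if tr < 0.0 else "repelling"


W = (3.0, 2.0, 2.0, 1.0)  # hypothetical W_EE, W_EI, W_IE, W_II
print(cross_dominates(*W))                           # True (4 > 3)
print(zero_input_residual(1.0, 1.0, W))              # (0.0, 0.0)
print(stability(1.0, 1.0, W, tau_E=1.0, tau_I=1.0))  # repelling
print(stability(1.0, 1.0, W, tau_E=2.0, tau_I=1.0))  # stable
```

This mirrors the situation in Fig. 3, where the same persistent state is repelling or stable depending on whether Eq. 5 holds; the location of the state does not depend on the time constants.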

Fig. 3.

Persistent state in the SSN model. The nonzero persistent state can be stable (black solid circle) or repelling (red solid circle). If Eq. 5 is not satisfied, the persistent state is repelling (A); otherwise it is stable (B). Parameters are as in Table 2.

Table 2.

| Parameters | Fig. 2A | Fig. 2B | Fig. 2C | Fig. 2D | Fig. 3A | Fig. 3B | Fig. 4A | Fig. 4B |
|---|---|---|---|---|---|---|---|---|
|  | 1.1 | 1.5 | 1.1 | 2.25 | 1.5 | 1.5 | 1.5 | 1.5 |
|  | 0.9 | 1 | 1 | 44.4 | 1 | 1 | 1 | 1 |
|  | 2 | 0.5 | 0.5 | 1 | 0.5 | 0.5 | 10 | 10 |
|  | 1 | 1 | 0.1 | 20 | 0.1 | 0.1 | 1 | 1 |
|  | 0.4 | 0.1 | 0.2 | 0.2808 | 0 | 0 | 0.7 | 5 |
|  | 0.3 | 0.1 | 0.01 | 0.015 | 0 | 0 | 0.01 | 0.01 |
|  | 1 | 1 | 1 | 1 | 1 | 15 | 0.1 | 0.1 |

For all figures, we use and .

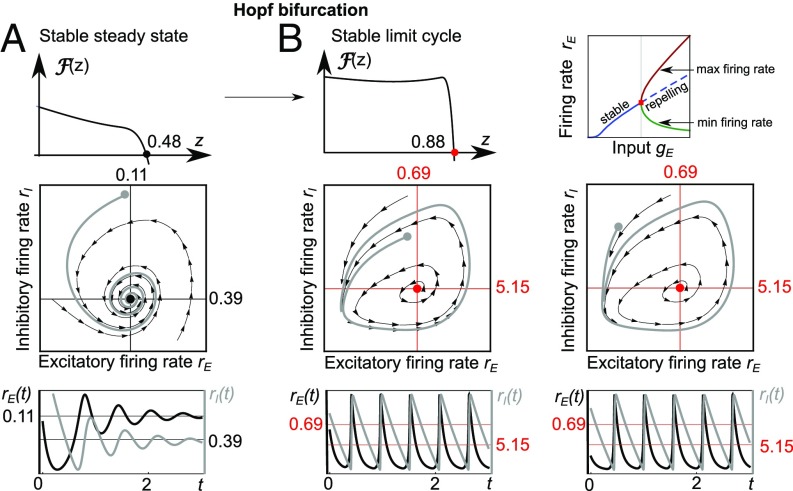

Emergence of Oscillations.

Periodic fluctuations of neural activity are common across the cortex and have been associated with many information-processing tasks (5). Some cognitive disorders are also reportedly accompanied by changes in oscillatory cortical activity (21). In some instances, oscillatory activity has been explained by the amplification of selected input frequencies (22), while in others it has been proposed to arise from intrinsic, self-sustained oscillations originating, for example, from a Hopf bifurcation (18). Here, we study whether the SSN can support global oscillations arising from a Hopf bifurcation and, if so, which synaptic or neuronal parameters can lead to oscillations and which ones control the oscillatory frequency. An extensive analysis of the Hopf bifurcation for the Wilson–Cowan model with an S-shaped activation function can be found in ref. 23. A Hopf bifurcation occurs when a pair of complex conjugate eigenvalues of a stable steady state crosses the imaginary axis as the input current or another parameter is varied. The initial frequency of the resulting global oscillation corresponds to the imaginary part of these eigenvalues (18). To determine when the steady-state eigenvalues in the SSN can become complex and when the limit cycle is stable, we followed the steps outlined in Existence of Global Oscillations and in ref. 24. If , then it is always possible to find inputs and time constants for which the eigenvalues become purely imaginary. Moreover, for a Hopf bifurcation in the SSN can occur only for , because our stability analysis implies that for eigenvalues are always real. When a Hopf bifurcation occurs, the eigenvalues are $\pm i\omega$ with $\omega = \sqrt{\det J}$, where $J$ is the Jacobian at the steady state, so the emergent oscillation has angular frequency $\omega$. We note that $\omega$ can be tuned to any value; e.g., decreasing the time constants $\tau_E$ or $\tau_I$ increases the oscillatory frequency.
The stability of the limit cycle can be determined using the Lyapunov coefficient; see Existence of Global Oscillations and the code accompanying this article. In Fig. 4 we present the example of a stable limit cycle emerging from a supercritical Hopf transition.
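At a Hopf point the trace of the Jacobian vanishes while its determinant stays positive, so the eigenvalues are $\pm i\sqrt{\det J}$. The sketch below uses hypothetical values (the zero-input steady state $(1, 1)$ with $W_{EE}=3$, $W_{EI}=2$, $W_{IE}=2$, $W_{II}=1$, $n=2$, where both gains equal 2), varies $\tau_E$ instead of the input, finds where the trace crosses zero, and shows that doubling both time constants halves the angular frequency. It does not compute the first Lyapunov coefficient, so it says nothing about whether the bifurcation is supercritical; that requires the analysis referenced above.

```python
import math

# Hypothetical example: zero-input steady state (1, 1) with the weights below.
W_EE, W_EI, W_IE, W_II = 3.0, 2.0, 2.0, 1.0
G_E, G_I = 2.0, 2.0  # gains n_x * u_x^(n_x - 1) at the steady state


def jacobian(tau_E, tau_I):
    return [[(W_EE * G_E - 1.0) / tau_E, -W_EI * G_E / tau_E],
            [W_IE * G_I / tau_I, -(1.0 + W_II * G_I) / tau_I]]


def critical_tau_E(tau_I):
    """tau_E at which trace J = 0 (requires W_EE*G_E > 1): a Hopf candidate."""
    return (W_EE * G_E - 1.0) * tau_I / (1.0 + W_II * G_I)


def angular_frequency(J):
    """At trace J = 0 and det J > 0 the eigenvalues are +-i*sqrt(det J)."""
    det = J[0][0] * J[1][1] - J[0][1] * J[1][0]
    return math.sqrt(det)


tau_c = critical_tau_E(1.0)                               # 5/3
omega = angular_frequency(jacobian(tau_c, 1.0))           # sqrt(0.6), about 0.775
omega_slow = angular_frequency(jacobian(2 * tau_c, 2.0))  # both time constants doubled
print(tau_c, omega, omega_slow)                           # omega_slow is about omega / 2
```

Because $\det J$ scales as $1/(\tau_E\tau_I)$ while the steady state itself does not depend on the time constants, scaling both time constants by a common factor $c$ divides $\omega$ by $c$.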

Fig. 4.

Emergence of oscillations in the SSN model. (A) A steady state corresponding to the zero crossing of at (Top) is located at , (Middle). Initializing the network at , leads to a converging spiral (Middle and Bottom). (B) If the input to the excitatory population is increased, the steady state becomes repelling (bifurcation diagram, Top Right). The zero crossing of is shifted to (Top Left). The corresponding steady-state , is repelling, and a stable limit cycle can be observed (Middle row). The initial conditions located inside (B, Left) and outside (B, Right) both converge to the limit cycle orbit. Parameters are as in Table 2.

Discussion

Multipurpose models that explain more than the functions they were designed for are currently in short supply. Here, we show that the SSN can serve as a universal circuit model that supports rhythm generation and working-memory features such as bistability and persistent states, in addition to stimulus integration phenomena such as contrast invariance, normalization, and surround suppression (2, 6, 8, 9). Importantly, we provide a new analytical method to obtain exact solutions of the SSN model that are valid for all parameter regimes. This makes the SSN model a nonlinear, yet exactly solvable, recurrent neural network model. Remarkably, the computational versatility of the SSN model emerges from the nonlinear activation function and does not require synaptic plasticity, delay tuning, or specific ion channel models. The characteristic function method we derived here allows us to show that the connectivity regime in which the product of cross-connectivities dominates the recurrent connections ($W_{EI}W_{IE} > W_{EE}W_{II}$, i.e., $\det W > 0$) is particularly efficient and supports all three fundamental activity types: bistability, persistent states, and oscillations. In our work, we derived exact conditions that describe how to control the frequency of global rhythms and determined when persistent activity emerges and when two coexisting states with finite firing rates can subserve working memory. For example, we found that persistent activity in the SSN model can occur only if $\det W > 0$ and if the power-law steepness is carefully matched to the connectivity matrix (Eq. 6). Our work thus provides an important step toward a unified theory of cortical function by identifying connectivity regimes supporting working memory and network oscillations and uniting these fundamental computations via the SSN.

Materials and Methods

Correspondence Between the Steady States and the Zero Crossings of a Characteristic Function.

We show how the SSN steady states can be unambiguously mapped to the zero crossings of a characteristic function , a correspondence which we illustrate in Fig. 2. Here, we derive for . Using similar arguments, we derive in Characteristic Function for and summarize both in Eq. 15.

As a first step toward for we substitute

| [7] |

Now the equilibrium equations in Eq. 2 can be written as

| [8] |

Since , we now express the old variables , using the new variables , by inverting Eq. 7. We obtain

| [9] |

where and . Our next goal is to eliminate and from the above equations. To this end, we substitute Eq. 8 into Eq. 9 and obtain

| [10] |

| [11] |

Now, the above equations contain only the new variables and . Our next goal is to eliminate the variable from the system Eqs. 10 and 11 and reduce the system to 1D. To this end, we introduce an abbreviation for the left side of Eq. 10:

| [12] |

Now, Eq. 10 can be written as . We substitute into Eq. 11 and obtain

| [13] |

For , we denote by the left side of Eq. 13,

| [14] |

with defined by Eq. 12. We thereby have shown that if , is a steady state for , then satisfies with the functions and given by Eqs. 12 and 14, respectively. Next, we show that no spurious zeros of exist that do not correspond to steady states. To this end, we consider a real number such that where is as defined by Eq. 14 for . We define , , in which is given by Eq. 12, and insert these variables into . Thereby we obtain the first steady-state relation from Eq. 2. Next, we use the relation to obtain the second relation in Eq. 2. This shows that our definition of establishes a bijective mapping between , , and the zero crossings of .

In summary, the steps outlined above for and those in Characteristic Function for lead to the following form of :

| [15] |

Here, the function is given by

with the constants and defined above.

Notably, we obtain a bijective mapping such that if , is a steady state of Eq. 1, then whereby

And vice versa, if , then the steady state of Eq. 1 is

We note that using different functions for and as in Eq. 15 is necessary for calculating the number of zero crossings of later (it is critical to the Descartes rule of signs and other statements) (Multiplicity of Steady States). When applying the same and for all values of one would also obtain a bijective mapping but multiplicity statements would no longer be readily available.
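Descartes' rule of signs, mentioned above, bounds the number of positive roots of a polynomial by the number of sign changes in its coefficient sequence. A minimal sketch follows. The coefficients of the characteristic function on each subinterval are not reproduced here, so the example uses a hypothetical quadratic, $(r + 3/16)^2 - r$, whose roots are $1/16$ and $9/16$.

```python
def sign_changes(coeffs):
    """Sign changes in a coefficient list (highest degree first), zeros skipped.

    Descartes' rule of signs: the number of positive real roots, counted with
    multiplicity, equals this count or is smaller by an even integer.
    """
    signs = [c > 0 for c in coeffs if c != 0]
    return sum(1 for s, t in zip(signs, signs[1:]) if s != t)


def negative_root_bound(coeffs):
    """Same rule applied to p(-x): flip the sign of odd-degree coefficients."""
    m = len(coeffs) - 1
    return sign_changes([c * (-1) ** (m - k) for k, c in enumerate(coeffs)])


# (r + 3/16)^2 - r = r^2 - 0.625 r + 0.03515625 (hypothetical example)
p = [1.0, -0.625, 0.03515625]
print(sign_changes(p), negative_root_bound(p))  # 2 0
```

The rule only gives an upper bound up to an even number, which is why it is combined with other statements in Multiplicity of Steady States.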

Stability of Steady States.

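In the previous paragraph, we presented the method of finding the steady states using the characteristic function in Eq. 15. Next, we derive exact stability conditions for the steady states. Our goal is to relate the stability of steady states to the behavior of the characteristic function in the vicinity of its zero crossings. To this end, we first move the time constants to the right side of Eq. 1 and consider the resulting dynamical system $\dot{\mathbf r} = \mathbf F(\mathbf r)$. Its Jacobian at a steady state $(r_E^*, r_I^*)$ is given by

$$J = \begin{pmatrix} (W_{EE}\,\phi_E - 1)/\tau_E & -W_{EI}\,\phi_E/\tau_E \\ W_{IE}\,\phi_I/\tau_I & -(1 + W_{II}\,\phi_I)/\tau_I \end{pmatrix},$$

where $\phi_E = n_E\,[W_{EE} r_E^* - W_{EI} r_I^* + I_E]_+^{n_E - 1}$ and $\phi_I = n_I\,[W_{IE} r_E^* - W_{II} r_I^* + I_I]_+^{n_I - 1}$. Remarkably, we find that the derivative of the characteristic function at the corresponding zero crossing and $\det J$ are related and always have opposite signs: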

| [16] |

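Here, the form of this relation depends on which of the two expressions for the characteristic function in Eq. 15 applies. Since the product of the eigenvalues $\lambda_1$ and $\lambda_2$ of the Jacobian equals $\det J$ and their sum coincides with the trace of $J$, we obtain Eqs. 4 and 5.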
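For a 2 × 2 Jacobian, the relations between eigenvalues, determinant, and trace used above follow from the characteristic polynomial; the short derivation below is standard and not specific to the SSN:

$$\det(J - \lambda \mathbb{1}) = \lambda^2 - (\operatorname{tr} J)\,\lambda + \det J = 0 \quad\Longrightarrow\quad \lambda_{1,2} = \frac{\operatorname{tr} J \pm \sqrt{(\operatorname{tr} J)^2 - 4 \det J}}{2},$$

$$\lambda_1 \lambda_2 = \det J, \qquad \lambda_1 + \lambda_2 = \operatorname{tr} J.$$

Consequently, $\det J < 0$ yields real eigenvalues of opposite sign (a saddle); $\det J > 0$ with $\operatorname{tr} J < 0$ yields a stable steady state; $\det J > 0$ with $\operatorname{tr} J > 0$ yields a repelling one; and $\operatorname{tr} J = 0$ with $\det J > 0$ yields the purely imaginary pair $\pm i\sqrt{\det J}$ that marks a Hopf bifurcation.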

For completeness, we note that a previous study (10) showed that if , is a stable state for and , then , is also stable for all that satisfy Eq. 5. Here, we significantly expanded this result and revealed the full SSN stability repertoire.

Multiplicity of Steady States.

In the previous two paragraphs, we demonstrated how to determine the location of steady states and to characterize their stability using the characteristic function. Here, we build on these findings and use the characteristic function to derive the multiplicity of possible steady states. To provide exact results, we limit our analysis to integer power-law exponents with . The numerical study in Fig. S1 indicates that integer exponents are a good starting point to understand the expected location and multiplicity of the SSN steady states. In Multiplicity of Steady States, we detailed why the maximal number of coexistent steady states with equal integer exponents is four and that at most two can be stable simultaneously. This proves that bistability is supported by the SSN model.

To determine the multiplicity of steady states, we need to find the number of zero crossings. To this end, we separate the parameter space into nine distinct classes as indicated in Table 1 and determine possible multiplicity configurations of zero crossings in each class. The goal of parameter separation is to simplify the equation for the characteristic function Eq. 15 in each class and make it more accessible for mathematical analysis. We distinguish three subsets of the parameter space according to the sign of , because they lead to different functions in Eq. 15. Further, we separate parameter space based on the sign of .

To understand why, consider the expressions and which enter the definition of the characteristic function . As long as is negative, the expression is zero. If is positive, then . The critical point for is . Similarly, since is a monotonically increasing function, there is a unique critical point for ; i.e., for and zero for . The parameter, which determines whether the critical point is above or below the critical point 0, is the sign of . Specifically, we have , , or , if , , or , respectively. Thus, different signs of lead to different separations of the axis into subintervals, where coincides with an usual polynomial. Taken together, we partition the parameter space into nine classes that correspond to different signs of and as presented in Table 1. Following the steps outlined in Multiplicity of Steady States, we determine possible stability and multiplicity configurations of steady states in each class. The parameter classes in which two stable steady states can coexist are indicated in boldface type in Table 1. The constants and are modifications of the constants and introduced in ref. 10.

Persistent Stable State.

A persistent state is a stable positive steady state for zero input. To guarantee its existence, it is necessary to show two features. First, we show the existence of a positive zero-crossing with for zero input because only such zero crossing can lead to a positive stable steady state. Second, we verify that this steady state is indeed stable (see Eq. 5). Using the steps outlined in Existence of Persistent States, we show that a required positive zero crossing of the characteristic function with the negative derivative exists in absence of inputs if and only if is constrained as

[17]

Here, is the unique solution of the equation

[18]

in the interval .
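The two checks described above (a positive steady state at zero input, then its stability) can be sketched numerically for a concrete, hypothetical parameter set. The sketch makes the following assumptions:

- It uses the standard SSN rate equations of refs. 2 and 10: τ_E dr_E/dt = -r_E + k[W_EE r_E - W_EI r_I + g_E]_+^n and τ_I dr_I/dt = -r_I + k[W_IE r_E - W_II r_I + g_I]_+^n.
- It tests stability with the usual Jacobian-eigenvalue criterion. That this matches Eq. 5 is an assumption.
- The weights, time constants, k = 1 and n = 2 are illustrative values, not taken from the paper. They were chosen so that r_E = r_I = 1 is a steady state at zero input.

```python
import numpy as np

# Hypothetical parameters (not from the paper).
K, N = 1.0, 2.0
W = np.array([[3.0, 2.0],      # W_EE, W_EI (magnitudes)
              [2.0, 1.0]])     # W_IE, W_II (magnitudes)
SIGN = np.array([[1.0, -1.0],  # E inputs excite, I inputs inhibit
                 [1.0, -1.0]])
TAU = np.array([1.0, 0.5])     # tau_E, tau_I in units of tau_E

def net_input(r, g):
    """Net inputs h = (SIGN * W) @ r + g to the E and I populations."""
    return (SIGN * W) @ r + g

def rhs(r, g):
    """dr/dt of the SSN rate equations."""
    return (-r + K * np.maximum(net_input(r, g), 0.0) ** N) / TAU

def jacobian(r, g):
    """Jacobian of rhs at r; row i is scaled by the local gain n*k*[h_i]_+^(n-1)."""
    gain = N * K * np.maximum(net_input(r, g), 0.0) ** (N - 1)
    return (-np.eye(2) + gain[:, None] * (SIGN * W)) / TAU[:, None]

g0 = np.zeros(2)                # zero input
r_star = np.array([1.0, 1.0])   # candidate persistent state
residual = rhs(r_star, g0)      # step 1: is r_star a steady state?
eigs = np.linalg.eigvals(jacobian(r_star, g0))  # step 2: is it stable?
is_persistent = np.allclose(residual, 0.0) and np.all(eigs.real < 0)
print(residual, eigs, is_persistent)
```

For these values, the Jacobian at r_E = r_I = 1 has trace -1 and determinant 2. Its eigenvalues are therefore (-1 ± i√7)/2, and the state is a stable focus.

At the silent state, the gain n k [h]_+^(n-1) vanishes, so the Jacobian reduces to -diag(1/τ_E, 1/τ_I). The silent state is therefore stable as well, consistent with a persistent state coexisting with a quiescent one.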

Based on this result, we derived the easy-to-verify necessary condition, Eq. 6, for the existence of a stable persistent state. For , the explicit solution of Eq. 18 can be inserted directly into inequality Eq. 17; see Existence of Persistent States for details.
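As a brute-force complement to the analytical condition, one can integrate the rate equations at zero input from two initial states. The check is that both a silent state and a persistent state survive. This is a numerical check only, not an implementation of Eq. 6 or Eq. 17. The sketch makes the following assumptions:

- It uses the standard SSN rate equations of refs. 2 and 10: τ_E dr_E/dt = -r_E + k[W_EE r_E - W_EI r_I + g_E]_+^n and τ_I dr_I/dt = -r_I + k[W_IE r_E - W_II r_I + g_I]_+^n.
- It uses hypothetical weights and time constants, with k = 1 and n = 2.
- Forward Euler integration and the names `simulate`, `r_low` and `r_high` are our own choices.

```python
import numpy as np

# Hypothetical parameters (not from the paper).
K, N = 1.0, 2.0
W_SIGNED = np.array([[3.0, -2.0],   # +W_EE, -W_EI
                     [2.0, -1.0]])  # +W_IE, -W_II
TAU = np.array([1.0, 0.5])          # tau_E, tau_I in units of tau_E

def simulate(r0, g, t_end=60.0, dt=0.01):
    """Forward-Euler integration of tau dr/dt = -r + k[W r + g]_+^n; returns final rates."""
    r = np.array(r0, dtype=float)
    for _ in range(int(round(t_end / dt))):
        h = W_SIGNED @ r + g
        r = r + dt * (-r + K * np.maximum(h, 0.0) ** N) / TAU
    return r

g0 = np.zeros(2)                     # input withdrawn
r_low = simulate([0.01, 0.01], g0)   # starts near silence
r_high = simulate([1.02, 0.98], g0)  # starts near the persistent state
print(r_low, r_high)
```

With these values, the run started near silence should decay to r_E = r_I = 0. The run started near r_E = r_I = 1 should settle back onto that persistent state.

The step dt = 0.01 (in units of τ_E) is small enough for forward Euler to be stable here. A stiffer parameter set would call for a smaller step or an adaptive solver.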

Acknowledgments

We thank K. Miller, H. Cuntz, B. Ermentrout, A. Nold, and members of our group for comments and discussions. We thank K. Miller and an anonymous reviewer for contributing to the persistent-state conditions Eq. S11 and Eq. 6. This work is supported by the Max Planck Society (to T.T.) and a grant from the German Research Foundation via Collaborative Research Center 1080 (to T.T.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: The code for Figs. 2–4 and for computing the Lyapunov coefficient has been deposited on GitHub, https://github.molgen.mpg.de/MPIBR/CodeSSN.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1700080115/-/DCSupplemental.

References

1. Persi E, Hansel D, Nowak L, Barone P, van Vreeswijk C. Power-law input-output transfer functions explain the contrast-response and tuning properties of neurons in visual cortex. PLoS Comput Biol. 2011;7:e1001078. doi: 10.1371/journal.pcbi.1001078.

2. Rubin DB, Van Hooser SD, Miller KD. The stabilized supralinear network: A unifying circuit motif underlying multi-input integration in sensory cortex. Neuron. 2015;85:402–417. doi: 10.1016/j.neuron.2014.12.026.

3. Curtis CE, Lee D. Beyond working memory: The role of persistent activity in decision making. Trends Cogn Sci. 2010;14:216–222. doi: 10.1016/j.tics.2010.03.006.

4. Wang XJ. Synaptic reverberation underlying mnemonic persistent activity. Trends Neurosci. 2001;24:455–463. doi: 10.1016/s0166-2236(00)01868-3.

5. Singer W. Synchronization of cortical activity and its putative role in information processing and learning. Annu Rev Physiol. 1993;55:349–374. doi: 10.1146/annurev.ph.55.030193.002025.

6. Priebe NJ, Ferster D. Inhibition, spike threshold, and stimulus selectivity in primary visual cortex. Neuron. 2008;57:482–497. doi: 10.1016/j.neuron.2008.02.005.

7. Margrie TW, Brecht M, Sakmann B. In vivo, low-resistance, whole-cell recordings from neurons in the anaesthetized and awake mammalian brain. Pflugers Arch. 2002;444:491–498. doi: 10.1007/s00424-002-0831-z.

8. Miller KD, Troyer TW. Neural noise can explain expansive, power-law nonlinearities in neural response functions. J Neurophysiol. 2002;87:653–659. doi: 10.1152/jn.00425.2001.

9. Hansel D, van Vreeswijk C. How noise contributes to contrast invariance of orientation tuning in cat visual cortex. J Neurosci. 2002;22:5118–5128. doi: 10.1523/JNEUROSCI.22-12-05118.2002.

10. Ahmadian Y, Rubin DB, Miller KD. Analysis of the stabilized supralinear network. Neural Comput. 2013;25:1994–2037. doi: 10.1162/NECO_a_00472.

11. Wolf F, Engelken R, Puelma-Touzel M, Weidinger JDF, Neef A. Dynamical models of cortical circuits. Curr Opin Neurobiol. 2014;25:228–236. doi: 10.1016/j.conb.2014.01.017.

12. Amit DJ. The Hebbian paradigm reintegrated: Local reverberations as internal representations. Behav Brain Sci. 1995;18:617–626.

13. Fuster JM, Alexander GE. Neuron activity related to short-term memory. Science. 1971;173:652–654. doi: 10.1126/science.173.3997.652.

14. Wilson HR, Cowan JD. Excitatory and inhibitory interactions in localized populations of model neurons. Biophys J. 1972;12:1–24. doi: 10.1016/S0006-3495(72)86068-5.

15. Ermentrout GB, Terman DH. Mathematical Foundations of Neuroscience. Springer; New York: 2010.

16. Mongillo G, Hansel D, van Vreeswijk C. Bistability and spatiotemporal irregularity in neuronal networks with nonlinear synaptic transmission. Phys Rev Lett. 2012;108:158101. doi: 10.1103/PhysRevLett.108.158101.

17. Amit DJ, Brunel N. Model of global spontaneous activity and local structured activity during delay periods in the cerebral cortex. Cereb Cortex. 1997;7:237–252. doi: 10.1093/cercor/7.3.237.

18. Izhikevich EM. Dynamical Systems in Neuroscience. MIT Press; Cambridge, MA: 2007.

19. Tiesinga P, Sejnowski TJ. Cortical enlightenment: Are attentional gamma oscillations driven by ING or PING? Neuron. 2009;63:727–732. doi: 10.1016/j.neuron.2009.09.009.

20. Rinzel J, Ermentrout B. Methods in Neuronal Modeling. MIT Press; Cambridge, MA: 1989.

21. Lewis DA, Hashimoto T, Volk DW. Cortical inhibitory neurons and schizophrenia. Nat Rev Neurosci. 2005;6:312–324. doi: 10.1038/nrn1648.

22. Burns SP, Xing D, Shapley RM. Is gamma-band activity in the local field potential of V1 cortex a “clock” or filtered noise? J Neurosci. 2011;31:9658–9664. doi: 10.1523/JNEUROSCI.0660-11.2011.

23. Hoppensteadt FC, Izhikevich EM. Weakly Connected Neural Networks. Springer; New York: 1997.

24. Kuznetsov Y. Elements of Applied Bifurcation Theory. Vol 112. Springer; New York: 2013.
