Abstract

Mobile animal groups provide some of the most compelling examples of self-organization in the natural world. While field observations of songbird flocks wheeling in the sky or anchovy schools fleeing from predators have inspired considerable interest in the mechanics of collective motion, the challenge of simultaneously monitoring multiple animals in the field has historically limited our capacity to study collective behaviour of wild animal groups with precision. However, recent technological advancements now present exciting opportunities to overcome many of these limitations. Here we review existing methods used to collect data on the movements and interactions of multiple animals in a natural setting. We then survey emerging technologies that are poised to revolutionize the study of collective animal behaviour by extending the spatial and temporal scales of inquiry, increasing data volume and quality, and expediting the post-processing of raw data.

This article is part of the theme issue ‘Collective movement ecology’.

Keywords: collective behaviour, collective motion, remote sensing, bio-logging, reality mining

1. Introduction

Group living is common in animals and directly influences important biological processes such as resource acquisition, predator avoidance and social learning [1]. In addition to the biological and ecological significance of collective behaviour, the spectacle of coordinated animal groups navigating the environment (e.g. flocking birds, marching locusts, schooling fish) continues to drive an intense interest in understanding the mechanics behind these impressive displays. The past several decades have marked a revolution in scientific understanding of the causes and consequences of collective behaviour. This is due, in large part, to a feedback between high-precision measurements of the behaviours of animal groups, and mathematical and computational models that seek to re-create these behaviours. In 1987, Reynolds [2] took an unlikely but germinal step in this direction when he showed, via computer simulations, that complex collective motion resembling the flocking, herding and schooling behaviours of animals could result from simple, local rules of interaction among individuals. In the following decades, researchers extended these early models to describe larger groups of individuals with more sophisticated and biologically justifiable interaction rules [3–5]. Simultaneously, advancements in videography and computer vision have made it possible to empirically test some of these models in the laboratory [6–9]. This feedback between mathematical and computational models and high-resolution data from laboratory experiments has defined an era of hypothesis-driven research and facilitated the development of a mechanistic understanding of collective decision-making in animal groups.

Extending this theoretical–empirical feedback to include group-living species in their natural environments is a critical step toward understanding how the dynamics of collective behaviour relate to broader ecological and evolutionary questions. Recent advances in field-deployable tracking technologies (e.g. stationary imaging techniques, bio-loggers and remote sensing; figure 1) present new opportunities for conducting field-based studies of collective behaviour at ecologically meaningful spatio-temporal scales. By studying social interactions in wild animal groups, researchers are starting to identify the social and ecological mechanisms that drive collective behaviours in a broader range of animal species, to quantitatively describe interaction rules at the individual level that drive movement decisions at the group level, and to empirically assess the ecological significance of collective movement in the wild [10–12]. In addition, we are poised to explore collective processes that cannot be studied in the laboratory, such as long-distance collective migration, predator–prey interactions in large, group-living species, and information transfer across the landscape.

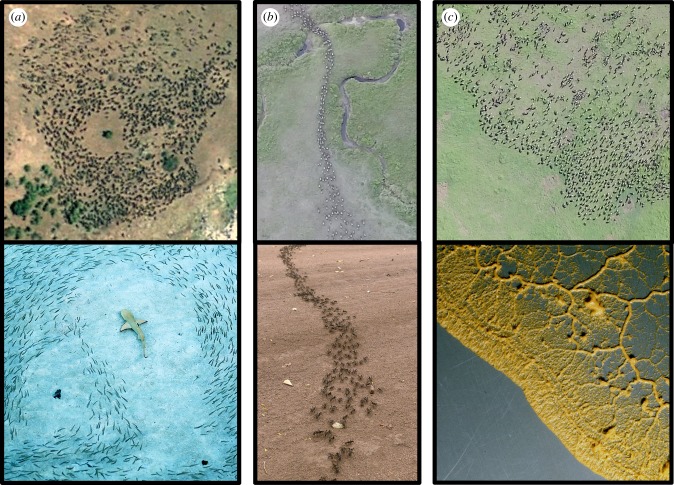

Figure 1.

Technology is changing our view of collective behaviour, offering a variety of different perspectives on animal movement and interactions. High-resolution satellite imaging, fixed-wing or multicopter photography allows imaging groups of animals as they move across the landscape or migrate great distances. Stationary or semi-stationary imaging techniques allow high-definition tracking of large groups, potentially in three dimensions, using standard cameras, imaging sonar or infrared cameras. Bio-logging tags that sample location, behaviour, activity, or interactions with conspecifics provide a continuous stream of data from tagged individuals, even in otherwise inaccessible locations or when moving across large distances.

This prospectus aims to provide an overview of existing and emerging technologies used to collect data on movements, behaviour and interactions within animal groups in the field, and to highlight the challenges and opportunities presented by each. We have omitted a discussion of the extensive literature on collective behaviour of wild social insects, as well as the literature on human groups, primarily because the techniques used in these systems often differ substantially from techniques used to study other social animals. Our aim is to survey current, state-of-the-art technologies used to study social animals in the wild, as well as to look towards the kinds of studies these technologies will make possible in the future.

2. Stationary field imaging techniques

High-resolution stationary imaging has been one of the most widely used methods for studying the collective behaviour of wild animals. Modern imaging methods include three-dimensional (3D) videography, high-speed single-camera and multi-camera videography, thermal infrared imaging, and imaging sonar. All of these methods are capable of recording high-resolution data on both animals and environmental features within the camera field of view, facilitating the study of social and ecological interactions on a fine spatial scale. In addition, many stationary cameras have the advantage of being compatible with a large, external power supply. This can extend the duration and frequency of data collection, making stationary cameras appropriate for a wide range of taxa, habitats and movement modes (i.e. from disparate individuals to large, cohesive groups). However, the inherent limitation of imaging from a fixed location may reduce the utility of stationary cameras in complex environments or areas of low animal density. In this section, we provide a selective review of some of these technologies and address challenges that arise when using stationary cameras to study collective behaviour of animals in the field.

(a). Imaging large groups

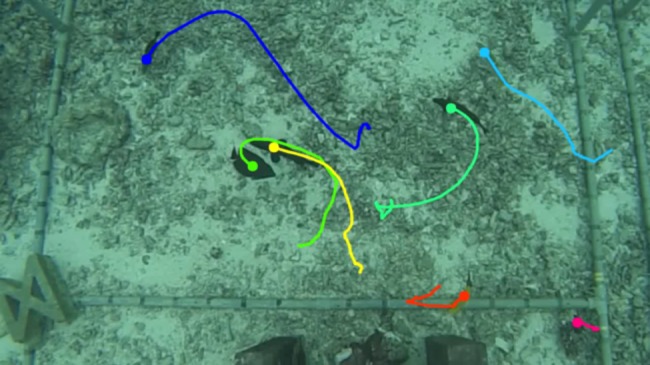

Stationary cameras have provided important opportunities to make precise measurements of collective behaviour in the wild. For example, Cavagna et al. [13] used carefully calibrated cameras placed atop a building to record individual positions and movements of starlings (Sturnus vulgaris) in large flocks. Similarly, Ginelli et al. [14] used digital cameras placed atop a tower to record the behaviours of large groups of domestic sheep (Ovis aries) in outdoor enclosures, and Theriault et al. [15] reconstructed flight paths of groups of wild Brazilian free-tailed bats (Tadarida brasiliensis) and cliff swallows (Petrochelidon pyrrhonota) flying through volumes of up to 7000 m³. In all of these studies, researchers chose imaging equipment and configurations to strike a balance between achieving a wide field of view and maintaining sufficient resolution to allow tracking of individual movements. When it is not possible to film animals from a distance, or high-resolution images are required, multiple synchronized cameras may be used to increase the total field of view (e.g. an array of downward looking cameras in shallow water [16]; figure 2).

Figure 2.

Still frame from a video sequence showing movement tracks of individual fish filmed from a stationary camera array in shallow water [16].

When designing a camera set-up, it is important to consider the speeds and spatial scale of the movements of the study animal, in addition to the method by which data will be analysed. Many studies of collective behaviour make inferences by studying covariance among positions, speed or accelerations of tracked animals. This type of analysis requires tracks that are long enough to encompass the behavioural sequences of interest, but also replicated enough to detect correlations in the presence of noise. Using stationary cameras positioned far from the group of interest might make it possible to observe animals for longer periods of time before they leave the camera frame, but this typically comes at the cost of lower resolution, which can lead to increased tracking noise, tracking errors and lower quality tracks. Therefore, it is worth performing power analyses on simulated data in advance of data collection to determine what kind of track resolution, track lengths and replication will be needed to detect phenomena of interest. In some cases, the best strategy may be to dispense with tracking individuals altogether, and instead to focus on studying the detailed behaviours of individuals when they are present at a particular site using fixed-location cameras (e.g. [16]) or other means (e.g. passive integrated transponder (PIT) tag readers sensu [17]).
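As an illustration of the kind of power analysis suggested above, the following minimal sketch simulates pairs of speed time series with a known underlying correlation, adds Gaussian tracking noise, and estimates how often the correlation is detected for a given track length. The simulation model, the function names (`simulate_pair`, `estimated_power`) and the Fisher z detection criterion are illustrative assumptions rather than a method taken from the studies cited here, and the test treats successive frames as independent, which real tracks rarely are.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate_pair(n_frames, coupling, noise_sd, rng):
    """Simulate measured speeds of two animals (hypothetical model).

    The second animal's true speed mixes the first animal's speed with its
    own independent variation; `coupling` is the true correlation.
    Gaussian tracking noise (standard deviation `noise_sd`) is added to both.
    """
    first, second = [], []
    for _ in range(n_frames):
        s = rng.gauss(0.0, 1.0)
        f = coupling * s + math.sqrt(1.0 - coupling ** 2) * rng.gauss(0.0, 1.0)
        first.append(s + rng.gauss(0.0, noise_sd))
        second.append(f + rng.gauss(0.0, noise_sd))
    return first, second


def estimated_power(n_frames, coupling, noise_sd, n_sims=500, seed=1):
    """Fraction of simulated track pairs in which a correlation is detected.

    Detection uses the Fisher z approximation with a two-sided 5% threshold
    (|z| > 1.96). Requires n_frames > 3.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_sims):
        x, y = simulate_pair(n_frames, coupling, noise_sd, rng)
        z = math.atanh(pearson(x, y)) * math.sqrt(n_frames - 3)
        if abs(z) > 1.96:
            hits += 1
    return hits / n_sims


if __name__ == "__main__":
    # Compare short versus long tracks under low and high tracking noise.
    for n_frames in (20, 50, 200):
        for noise_sd in (0.1, 1.0):
            print(n_frames, noise_sd, estimated_power(n_frames, 0.5, noise_sd))
```

Running the loop for a range of track lengths and noise levels gives a rough sense of how far cameras can be moved back (longer tracks, noisier positions) before the effect of interest is no longer detectable.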

(b). Tracking animal positions from field imagery

More often than not, image-based analyses of collective behaviour involve tracking animal positions from one image to the next. This has become a highly streamlined task in laboratory studies (but see Hong et al. [18] and Berman et al. [19] for more challenging extensions), where behavioural arenas can be configured to minimize occlusions (i.e. instances where one animal passes between another individual and the camera), and to facilitate the use of inexpensive recording equipment and off-the-shelf tracking software (see Dell et al. [20] for a review).
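The core step of tracking positions from one image to the next can be sketched as a data-association problem. The example below is a deliberately simple greedy nearest-neighbour linker, assuming that detections have already been extracted as (x, y) coordinates; the function name `link_detections` and the `max_step` gate are hypothetical, and the tracking software reviewed in [20] generally uses more robust assignment and motion models.

```python
import math


def link_detections(prev_positions, new_detections, max_step):
    """Greedily link detections in a new frame to existing tracks.

    prev_positions: dict mapping track id -> (x, y) in the previous frame.
    new_detections: list of (x, y) detections in the current frame.
    max_step: largest displacement accepted between consecutive frames.

    Returns (links, unmatched): links maps track id -> detection index, and
    unmatched lists detection indices not linked to any existing track.
    """
    candidates = []
    for track_id, (px, py) in prev_positions.items():
        for j, (x, y) in enumerate(new_detections):
            d = math.hypot(x - px, y - py)
            if d <= max_step:
                candidates.append((d, track_id, j))
    candidates.sort()  # closest candidate pairs are linked first

    links, used = {}, set()
    for _, track_id, j in candidates:
        if track_id in links or j in used:
            continue  # each track and each detection is used at most once
        links[track_id] = j
        used.add(j)
    unmatched = [j for j in range(len(new_detections)) if j not in used]
    return links, unmatched
```

Unmatched detections can seed new tracks, while tracks that receive no link in a frame correspond to the occlusions and missed detections discussed in this section.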

Tracking animals in field images with complex backgrounds and objects in the foreground is far more challenging. Moreover, the need to simultaneously track many individuals that may frequently occlude one another makes studying collective behaviour using field imagery particularly difficult. However, in some field settings, one or more of these complications can be avoided. For example, Attanasi et al. [21] achieved high-precision 3D reconstructions of the trajectories of individual midges (Cladotanytarsus atridorsum) by filming swarms in front of a suspended dark cloth background. In many cases, however, modifying the background will be either impossible or undesirable, and occlusions are almost inevitable when many animals interact in the same place at the same time. Fortunately, several technologies have made it possible to extract high-precision tracks from field imagery, even when conditions are far from optimal. The most common of these are 3D imaging and specialized filtering, detection and tracking algorithms.

Three-dimensional information can help resolve ambiguities introduced when an individual passes in front of an object with similar colour and texture. For example, in a laboratory study, Hong et al. [18] used 3D cameras to record pairs of laboratory mice interacting in an experimental chamber. The authors were able to use the camera's depth sensor to separate mice with low-contrast coat colours from the background and to resolve occlusion events in which mice passed over one another. Three-dimensional cameras remove some of the need for careful calibrations and multi-camera reconstructions; however, commercially available 3D cameras currently have a relatively narrow working range. Depending on the camera model, depth information is generally only reliable for objects that are located within a few metres of the camera lens [18], although stereo camera systems with larger apertures have been developed for tracking animals at longer ranges [18,22]. Moreover, the most common 3D technologies measure the depth of each pixel in an image by projecting an infrared beam and measuring the return time of that signal, limiting these tools to environments where emissions in the infrared range are not strongly attenuated. This limits the utility of 3D cameras in aquatic environments, although researchers have recently developed technologies that can improve the performance of 3D cameras for underwater use [23].

Heterogeneous, dynamic lighting is another challenge commonly encountered in field imagery, particularly in shallow water systems, where refraction of sunlight through surface waves results in rapidly changing illumination patterns on the substrate, known as ‘sunflicker’ [24]. Sunflicker makes object tracking challenging because features that are useful for detecting an individual in one image may yield poor performance in the next if local light conditions change. Dynamic lighting also renders background subtraction—a standard technique in which a background image is subtracted from recorded images to retain only moving objects—far less useful.
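For readers unfamiliar with background subtraction, the following minimal sketch shows the idea on greyscale frames stored as nested lists. It assumes a static background estimated as the per-pixel median over a set of frames, and a fixed intensity threshold; both choices, and the function names, are illustrative. It is precisely this static-background assumption that sunflicker violates.

```python
from statistics import median


def median_background(frames):
    """Per-pixel median across frames (each frame is a list of pixel rows)."""
    n_rows, n_cols = len(frames[0]), len(frames[0][0])
    return [
        [median(f[r][c] for f in frames) for c in range(n_cols)]
        for r in range(n_rows)
    ]


def foreground_mask(frame, background, threshold):
    """Flag pixels that differ from the background by more than threshold."""
    return [
        [abs(p - b) > threshold for p, b in zip(row, bg_row)]
        for row, bg_row in zip(frame, background)
    ]
```

When illumination changes rapidly between frames, many background pixels exceed any fixed threshold, so the mask no longer isolates moving animals.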

When it is not possible to avoid sunflicker altogether, it may still be possible to correct for dynamic lighting through video post-processing. Modern methods for correcting local dynamic light patterns in video were adapted from algorithms originally developed to produce smooth transitions between images in photo mosaics such as those created by cell phone apps [24]. De-flickering techniques apply similar methods to smooth the severe local gradients in pixel intensity produced when nearby regions of an image are illuminated to different degrees by sunflicker. Though these techniques have been applied to underwater imagery with promising results [24,25], in our experience, they can require significant tuning. More recent methods for automatically tuning de-flickering filters may dramatically reduce the need for manual tuning, making it more feasible to correct lighting in long sequences of images from field video [26].

Finally, cameras that record spectral bands outside of the visible range (e.g. thermal) or acoustic imaging systems such as imaging sonar can be useful as either primary or secondary imaging devices. For example, Wu and co-workers [27] used thermal imaging cameras to reconstruct large groups of free-ranging bats in nocturnal footage. Benoit-Bird & Gilly [28] used split-beam sonar to track movements of individual jumbo squid (Dosidicus gigas) in the Gulf of California, which allowed them to measure the trajectory, velocity, tortuosity, and depth of multiple individuals at once. Other studies have used sonar to observe synchronous diving and foraging behaviour of cetaceans [29,30], and collective hunting and evasion in fish shoals [12,31]. Thermal and sonar imaging techniques are particularly exciting because they extend the range of environmental conditions where collective behaviour can be studied to include low-light environments previously hidden from traditional videography techniques. However, both spatial and temporal resolution are currently limited for these methods.

(c). Postural tracking and fine-scale behaviours

Technological developments will undoubtedly continue to improve the usefulness of visual imagery for studying collective behaviour. Among the most exciting of these is the development of algorithms that automatically extract more detailed information about individuals than body or head centroid locations. These include segmentation schemes, which may be able to provide postural information about individuals. For example, fully convolutional networks—relatively new tools from deep learning—appear to be well suited to semantic segmentation of complex images in which objects of interest can have variable size and shape, and be partially occluded [32]. Algorithms that explicitly model body orientation, structure and limb orientation using multi-camera reconstructions [33] or 3D cameras [18,34] also appear promising. These and similar methods will allow researchers to access information about individuals that is not contained in the time series of positions typically collected from tracked field imagery. Access to features like body posture and gait could fundamentally deepen what we can learn from visual imagery. For example, in dense schools or swarms, postural tracking can allow one to reconstruct the visual information available to each individual within the group (see laboratory studies by Strandburg-Peshkin et al. [9] and Rosenthal et al. [35]). Information about body posture, limb motion, and morphology may make it possible to apply new quantitative methods for characterizing behavioural states of individuals [18,19,36,37] and to better understand how social interactions might influence these states [38].

3. Remote sensing

While stationary cameras have facilitated some of the earliest field-based studies of collective animal behaviour, remote imaging platforms now offer a promising opportunity to extend these investigations to organisms moving across increasingly large spatial scales ([39]; figure 3). In addition, the flexibility of remote operation makes it possible to track specific animals or entire groups of interest while executing experimental manipulations under natural conditions. Together, these capabilities afford an opportunity to expand the scope of theoretical and empirical insights to be gained from studying collective motion to a broad range of natural systems.

Figure 3.

Remotely sensed imagery affords a unique opportunity to empirically study the ecology of collective motion in large animal systems. For example, satellite (a) and aerial (b,c) imagery of wildebeest herds (top row) reveals aggregation patterns that are structurally similar to those previously described for smaller taxa (bottom row): (a) vacuole (fish), (b) cruise (insects), (c) wave front (slime mould). Remote sensing now enables hypotheses regarding the form and function of these repeated patterns to be experimentally tested under natural conditions and for a wider range of taxa than ever before. Images were reproduced with the following permissions. Top row: (a) Google Earth, © 2017 Digital Globe; (b) ‘River crossing’ by Colin J. Torney, Elaine Ferguson and Lacey Hughey; (c) ‘Wave front’ by Lacey Hughey. Bottom row: (a) iStock.com/Connah/, cropped from original; (b) ‘A column of Matabele ants streaming towards a termite mound’ by Piotr Naskrecki © 2013, cropped from original; (c) ‘Physarum polycephalum (Physaridae)’ by Norbert Hülsmann, used under CC BY-NC-SA 2.0 (https://creativecommons.org/licenses/by-nc-sa/2.0/), cropped and rotated from original.

(a). Unmanned aerial vehicles

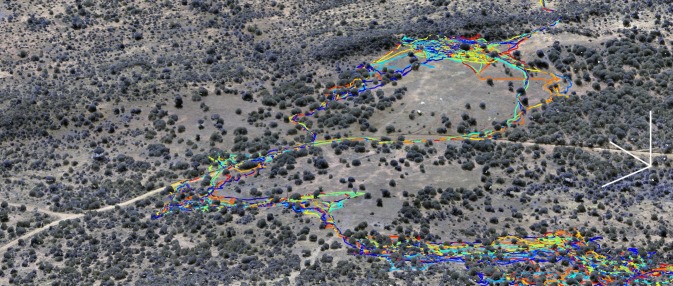

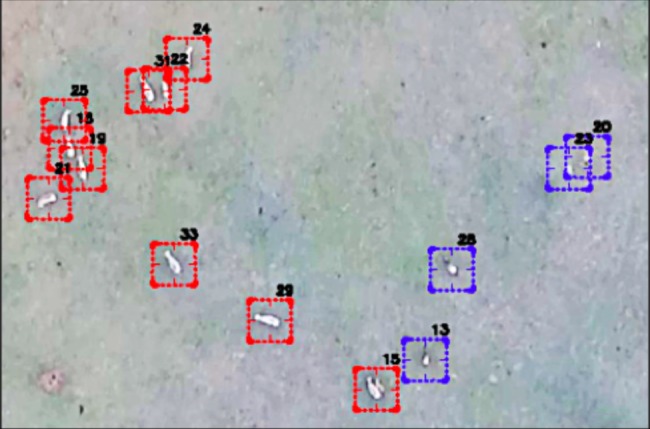

Unmanned aerial vehicles (UAVs) currently provide the most affordable and flexible imaging platforms for obtaining an aerial perspective in the field. In addition to greatly expanding the simultaneous field of view afforded by stationary cameras, UAVs provide the ability to adjust camera positioning on the fly and at distances up to several kilometres from the operator. This capability facilitates truly non-invasive filming of collective animal behaviour (when following 'best practices' outlined in [40,41]) and when combined with computer vision techniques (e.g. [20,39,42]; figure 4 and electronic supplementary material, ESM1 and ESM2) or bio-loggers (e.g. [10]; figure 5), can be used to track the fine-scale movements (e.g. individual positions, trajectories and turning angles) of entire groups over large distances and time scales. For example, Torney et al. [39] used UAV videography and computer vision to measure individual trajectories and quantify information transfer across large groups of migrating caribou.

Figure 4.

Still frame from a UAV video sequence demonstrating ability to automatically track unique individuals and species (e.g. zebra (15–19, 21, 22, 24, 25, 29, 31, 33) in red versus wildebeest (13, 20, 23, 28) in blue) across video frames (sensu [39,43]). Still frame was reproduced with permission from Colin J. Torney.

Figure 5.

Combining bio-logging with UAV imagery enables investigation of how the environment shapes collective movement in wild animal groups. Coloured lines show trajectories for the majority of baboons within a single troop (obtained using GPS collars), and background image shows 3D point cloud rendering of their habitat (obtained from UAV imagery). White lines show scale (each line extends 50 m). Adapted from [10].

In addition, a growing commercial market is continually increasing the utility and affordability of UAVs by offering a wide range of airframe designs, payload capacities, and technical configurations to suit the needs and budget of most academic research programmes [44,45]. Alternatively, a thriving DIY community offers limitless opportunities for researchers needing bespoke solutions at low cost. Given this range of equipment configurations and capabilities, specific recommendations will depend on the question of interest, focal species, budget and logistical constraints of the field site, and there are several technical and political considerations to be made before establishing any UAV-based research programme for wildlife (see Anderson & Gaston [44] for a more thorough treatment of these topics).

The inability to film animals through dense canopy or turbid water, or to resolve smaller species (less than about 30 kg) at appropriate altitude is currently the largest limitation of UAVs for studies of collective animal behaviour. However, thermal infrared and increasingly compact, high-resolution cameras are rapidly expanding future possibilities for filming under these conditions. Limited battery life presents an additional challenge, though significant gains stand to be made from utilizing alternative airframes. For example, fixed-wing UAVs afford significantly longer flight times than compact, multi-rotor designs (i.e. up to 2 days for the largest fixed-wing versus less than 1 h for most multi-rotor systems [44]). However, a multi-rotor system affords the advantage of hovering in place without the need to circle continuously as required by a fixed-wing aircraft. Regardless of design, all aerial platforms bring a suite of post-processing challenges such as image stabilization, correction for oblique filming angles, changing light and environmental conditions, plus many of the limitations outlined previously for processing footage from field cameras (see ‘Stationary field imaging techniques’ (section 2) above).
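Whether a species can be resolved from a given altitude depends largely on how many pixels span each animal. A rough planning calculation, assuming a nadir-pointing camera and the standard pinhole relation for ground sampling distance (GSD), is sketched below; the function names and any camera parameters passed to them are hypothetical, and the calculation ignores lens distortion, motion blur and oblique viewing angles.

```python
def ground_sampling_distance(altitude_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Ground distance (metres) covered by one pixel for a nadir-pointing camera.

    Pinhole relation: GSD = altitude * sensor_width / (focal_length * image_width).
    """
    return altitude_m * sensor_width_mm / (focal_length_mm * image_width_px)


def pixels_along_body(body_length_m, gsd_m):
    """Approximate number of pixels spanning an animal of the given body length."""
    return body_length_m / gsd_m


if __name__ == "__main__":
    # Hypothetical camera and flight altitude, for illustration only.
    gsd = ground_sampling_distance(100.0, 13.2, 8.8, 5472)
    print(gsd, pixels_along_body(1.5, gsd))
```

Comparing the resulting pixel count with the minimum needed by the chosen detection or tracking method gives a first estimate of the highest usable flight altitude for a focal species.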

In addition, many low-cost commercial systems can produce stimuli perceived to be threatening by many species (e.g. motor noise [46] or semblance of an aerial predator [47]), though impacts may be reduced by modifying equipment or methodology [40,41]. Furthermore, there is some evidence that UAVs may cause physiological changes in study animals (e.g. increased heart rate [46]), which may not manifest as behavioural changes, but could confound results if not properly accounted for. Though all of these issues are addressed with increasing efficiency in new versions of hardware and software, there is no replacement for thoughtfully developed ‘best practices’ for UAV use around wildlife [40,41]. Alternatively, non-motorized platforms (e.g. kites, aerostats and stratospheric balloons [48]) offer some advantages over traditional UAVs, including reduced noise, significantly longer flight times and increased payloads. Of course, these gains come at the cost of manoeuvrability, though this may be partially mitigated by use of a remote-controlled camera gimbal, or increased altitude.

Finally, depending on the study area, UAVs may present a multitude of legal challenges, which will generally require advance permitting and licensing at a minimum, and partial to total restriction of flights at a maximum. Thus, it is essential to work with local stakeholders and law enforcement agencies during the early phases of project planning to clarify procedures and ensure compliance prior to beginning work.

(b). Satellites

While UAVs offer unparalleled affordability, flexibility and resolution for imaging animal groups from an aerial perspective, there have been notable advances in satellite remote sensing technology that will facilitate truly ‘landscape-scale’ studies of collective behaviour in the very near future [49]. Commercial satellite companies maintain the largest collection of archived images with the resolution appropriate for identifying individual animals (30 cm [50] to 50 cm [50,51]), but the random and disparate temporal distribution of coverage generally limits the use of archived images for studies of collective movement. While there is some promise for using new, commissioned images to capture time series of large animal groups moving across the landscape, this will require future increases in satellite availability for civilian use coupled with a significant decrease in cost.

Alternatively, the advent of ‘CubeSats’ (i.e. miniaturized satellite constellations) has recently disrupted the traditional market for high-resolution satellite imagery by providing low-cost access to high-resolution still imagery (80 cm–5 m) and video (1 m, up to 90 s at 30 fps) collected at daily or near-daily intervals (e.g. [52–54]). Obtaining such high-resolution, high-frequency satellite imagery presents a first opportunity to study entire herds of large animals (e.g. migratory wildebeest, caribou, livestock) moving across hundreds of square kilometres without disturbance from observers on the ground. In addition, this truly multi-scale perspective will afford researchers the opportunity to better understand how social and environmental processes interact across environmentally relevant spatial scales and facilitate the study of collective behaviour in more natural systems than ever before (figure 3).

4. Bio-loggers

Animal-mounted sensors (or bio-loggers) present another promising and complementary approach to image-based studies of collective behaviour. Such on-board sensors—including GPS, accelerometers, magnetometers, pressure sensors and acoustic recorders, among others—are opening up new directions in a range of biological disciplines, as they allow data to be collected continuously and directly at the location of the study animal, irrespective of changes in accessibility or visibility of the animal, and without need for re-identifying the same individual repeatedly. For studying collective behaviour in particular, on-board sensors allow animal position, movement and behaviour to be monitored with increasing resolution and across a range of habitats and contexts [55,56]. In addition, many tags now include multiple types of sensors integrated with one another, making it possible to test how the movements, vocalizations, behaviours and social interactions of freely-moving animals influence one another [57].

However, the utility of bio-loggers is limited by the need to affix sensors to each monitored animal, a process that usually requires capture (for collars, backpacks or glue attachment) or close range physical interaction (for suction cup or dart attachments). Additionally, the need for animals to carry devices imposes strong weight and size restrictions, thereby limiting the sensor payload and battery size, and resulting in trade-offs between sensor sampling rate, duty cycling, and battery life. Retrieving data can also present challenges. In some cases, it may be possible to download data remotely from tags, while in others, tags must be retrieved (either through recapturing animals or by having a remote drop-off system) to offload data. Another complication that is especially relevant to studies of collective behaviour is the need to deploy many devices simultaneously. If instrumentation happens over an extended period of time, tags need a pre-programmed start time to maximize simultaneous recording time. Additionally, the internal clocks of independent tags will drift over time, and thus tags that do not include a GPS sensor will need a system for intermittently synchronizing tags. Lastly, on-board sensors are typically expensive, so deploying many tags may become cost-prohibitive for some research projects. Despite these challenges, continued advances in technology have reduced the size and cost of on-board sensors while also increasing their spatial and temporal resolution. Owing to these advances, their use in behavioural biology is rapidly growing, and they are becoming an increasingly powerful tool for studying collective animal behaviour. We explore these advances and associated challenges in greater detail below.

(a). Monitoring location

Modern GPS tags are capable of monitoring animal locations at sub-second rates, and with spatial resolution that can achieve sub-metre precision. These advances mean that data can now be collected at the temporal and spatial scales necessary for studying fine-scale social interactions within groups [55]. Several recent studies have deployed GPS tags on all or most individuals within animal groups to study collective movement dynamics, including work on pigeons (Columba livia domestica) [58], baboons (Papio anubis) [59], domestic sheep [60], African wild dogs (Lycaon pictus) [60,61] and domestic dogs (Canis lupus familiaris) [62] (see figure 5 for an example with baboons).

Collecting movement data via GPS tags has a number of advantages. First and foremost, it is possible to monitor animals in areas where visual observation is impossible. Moreover, animals can be tracked over multiple spatial scales (from local interactions within groups to long-range collective migrations [63]) and with an adjustable temporal rate. Such high-density data can allow estimation of individual interaction rules and leadership [64], differences in relative position within a group that are related to individual differences or personality traits [65,66], or tracking fine-scale interactions with the local environment [10,63]. GPS sensors require a relatively large amount of power, but recent low-power GPS tags now allow for multi-week continuous (1 Hz position updates) tracking of medium-sized animals such as baboons [59]. However, this increased spatial or temporal resolution may not be high enough to resolve fine-scale movements and social interactions for some systems and contexts. Therefore, these methods are most appropriate for groups that are dispersed over at least tens of metres, or for addressing interactions that take place over such distances. In contrast to overhead imaging, there are no limits to maximum separation distance so it is more feasible to study social dynamics of fluid groups on the move. For smaller animals or more compact group interactions, high-resolution imaging from either stationary cameras or UAVs is likely a better approach to differentiating interactions.
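One practical check on whether GPS resolution is adequate for a given system is to compare inter-individual distances, computed from simultaneous fixes, with the expected positional error of the tags. The sketch below computes nearest-neighbour distances using the haversine formula on a spherical Earth; the function names and the input layout (one latitude and longitude per individual at a single timestamp) are assumptions made for illustration.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius; spherical approximation


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def nearest_neighbour_distances(fixes):
    """Distance from each individual to its nearest group mate.

    fixes: dict mapping individual id -> (lat, lon) at one timestamp.
    Returns a dict mapping id -> distance in metres (None if alone).
    """
    result = {}
    for i, (lat_i, lon_i) in fixes.items():
        others = [
            haversine_m(lat_i, lon_i, lat_j, lon_j)
            for j, (lat_j, lon_j) in fixes.items()
            if j != i
        ]
        result[i] = min(others) if others else None
    return result
```

If typical nearest-neighbour distances are comparable to the positional error of the tags, relative positions within the group cannot be resolved reliably, and imaging-based approaches are likely to be more informative.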

For marine animals or other systems where a significant component of movement takes place vertically, cheap and power-efficient pressure sensors can monitor the depth of a tagged animal. Tags with pressure sensors generally store and transmit summary data or store raw depth measurements. This information can provide data on dive and foraging behaviour, and can be merged with Argos positions (i.e. locations estimated by the Argos satellite system) to provide detailed data on foraging ecology of deep-diving animals [67]. Although it is possible to use pressure sensors to quantify dive initiation and other characteristics of leadership, so far this technology has only been used to a limited extent for studies of collective behaviour [68]. This is in part due to problems with separating lack of coordination from lack of horizontal cohesion, and in part due to inevitable clock drift between independently sampling tags. Novel approaches to solve these two issues are therefore needed, such as synchronization pulses or incorporation of GPS or fast-lock GPS technology with accurate timing information.
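To illustrate how synchronization events could be used to correct clock drift after the fact, the sketch below maps tag timestamps onto a reference clock. It assumes that drift is linear between two events at which both the tag clock and a reference clock were recorded; the function name and this linear model are illustrative assumptions, and real tags may need temperature-dependent or piecewise corrections.

```python
def make_clock_correction(tag_t0, ref_t0, tag_t1, ref_t1):
    """Return a function mapping tag timestamps onto the reference clock.

    Each synchronization event is a pair (tag clock reading, reference clock
    reading) in seconds. Drift is assumed to be linear between the events.
    """
    if tag_t1 == tag_t0:
        raise ValueError("synchronization events must occur at different times")
    rate = (ref_t1 - ref_t0) / (tag_t1 - tag_t0)

    def correct(tag_time):
        return ref_t0 + (tag_time - tag_t0) * rate

    return correct
```

Applying one such correction per tag places all dive records on a common time base, which is a prerequisite for asking which individual initiated a dive.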

(b). Detecting presence, proximity and social networks

Even when precise positions are not known, information on the presence or proximity of animals to one another, or to fixed geographical locations, can still provide a useful quantification of social structure and interactions. Such methods can be particularly important for species whose size, environment or behaviour makes continuous monitoring impractical or impossible, or for processes that span longer time scales, such as social learning. A range of active and passive transponder systems have been used to obtain such data so far, and these are expected to become increasingly important in future work [69].

Passive integrated transponder (PIT) tags are extremely small, lightweight and inexpensive devices that carry a unique identification code and are typically implanted internally in animals. PIT tags do not require an internal power source, so they can usually remain with an animal for its entire lifetime and are well suited to automated set-ups. While PIT tag systems do not monitor position continuously, they are well suited to systems in which animals spend time at specific locations such as nests and foraging patches, or to monitoring movements through specific corridors such as rivers (e.g. during migration). Arrays of transponder readers can also give more detailed information on animal positions and movement directions [70], and co-occurrences at specific locations can be used to infer social structure [71]. A limitation of PIT tags is that their detection range is very short, typically on the order of a few metres or less. In the context of collective behaviour, PIT tags have been used to monitor decision-making, social network structure, and information transfer in populations of wild birds [17,72,73], bats (Myotis bechsteinii) [74] and house mice (Mus musculus) [75], among others.
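As an illustration of how co-occurrences at readers might be turned into a social network, the following minimal Python sketch bins detections into fixed time windows per reader and computes a simple ratio index for each pair of tags. The record layout, the reader names, the 60 s window and the choice of the simple ratio index are all assumptions made for this example; they are not the procedure used in the studies cited above.

```python
from collections import defaultdict
from itertools import combinations


def cooccurrence_counts(detections, window_s=60.0):
    """Count shared detection events for every pair of tags.

    detections: iterable of (timestamp_s, reader_id, tag_id) tuples
                (a hypothetical record layout).
    window_s:   width of the fixed time bins; an arbitrary example value.
    """
    # An "event" is the set of tags seen at one reader within one time bin.
    events = defaultdict(set)
    for t, reader, tag in detections:
        events[(reader, int(t // window_s))].add(tag)

    pair_counts = defaultdict(int)  # events shared by a pair of tags
    tag_counts = defaultdict(int)   # events in which each tag appeared
    for tags in events.values():
        for tag in tags:
            tag_counts[tag] += 1
        for a, b in combinations(sorted(tags), 2):
            pair_counts[(a, b)] += 1
    return dict(pair_counts), dict(tag_counts)


def simple_ratio_index(pair_counts, tag_counts):
    """Shared events divided by events containing either individual."""
    sri = {}
    for (a, b), together in pair_counts.items():
        either = tag_counts[a] + tag_counts[b] - together
        sri[(a, b)] = together / either
    return sri


# Made-up detections at two hypothetical feeders.
detections = [
    (5.0, "feeder_1", "A"), (20.0, "feeder_1", "B"),
    (70.0, "feeder_1", "A"), (75.0, "feeder_2", "C"),
    (130.0, "feeder_2", "B"), (140.0, "feeder_2", "C"),
]
pairs, tags = cooccurrence_counts(detections)
sri = simple_ratio_index(pairs, tags)
```

The resulting pairwise indices can be read as edge weights of an association network. Fixed time bins are the simplest way to define an event, and the width of the bin is a choice that would need to be justified for the study system.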

Active transponder tags, including VHF radio beacons or acoustic transponders that contain their own power source for signal generation, can provide a longer-range alternative, though these also require deployed receiving stations. Several lakes have recently been instrumented with relatively dense arrays of acoustic receivers to track active transponders implanted in multiple species of fish, allowing a detailed perspective into interactions both within and between species in an ecosystem [69,76].

Proximity sensors are active transponder tags that can themselves receive information from other transponders and store information on time and ID of encountered tags [77]. Tags can be tuned either to record signals above a certain threshold or to record signals and signal strength, where the latter can be used to infer encounter distance [78]. These tags have been used to automatically map association patterns and investigate social learning in free-ranging New Caledonian crows (Corvus moneduloides) [79] and to investigate social dynamics of zebras (Equus quagga) [80] and sharks (Carcharhinus galapagensis) [81,82].
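To make the link between signal strength and encounter distance concrete, the sketch below inverts a log-distance path-loss model, which is one standard way of relating received signal strength to range. The reference strength, reference distance and path-loss exponent are hypothetical values chosen for illustration; in practice they would have to be calibrated for the tags, the animals' bodies and the habitat, and nothing here is taken from the cited proximity-logger studies.

```python
def rssi_to_distance(rssi_dbm, rssi_ref_dbm=-50.0, ref_distance_m=1.0,
                     path_loss_exponent=2.0):
    """Estimate distance (m) from received signal strength (dBm).

    Inverts RSSI(d) = RSSI(d0) - 10 * n * log10(d / d0).
    All default parameters are assumed example values, not calibrated ones.
    """
    exponent = (rssi_ref_dbm - rssi_dbm) / (10.0 * path_loss_exponent)
    return ref_distance_m * 10.0 ** exponent


# Hypothetical encounter log: (time_s, encountered_tag_id, rssi_dbm).
encounter_log = [(0, "tag_07", -50.0), (60, "tag_07", -70.0), (120, "tag_12", -90.0)]

# Keep only encounters estimated to be within 15 m (an arbitrary threshold).
close_encounters = [
    (t, tag_id) for t, tag_id, rssi in encounter_log
    if rssi_to_distance(rssi) <= 15.0
]
```

Because signal strength also varies with antenna orientation, body shielding and vegetation, distances obtained this way are best treated as coarse estimates.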

(c). Estimating body orientation, activity and behaviour

A full understanding of how animal groups coordinate movement will require data, not just on where animals are, but on the sensory information they are taking in and the behaviours that they are engaging in. Recent laboratory studies of animal groups have begun to incorporate sensory information, such as the visual field of each individual in a school of fish [9,35,83], to build more predictive and biologically motivated models of collective motion [84]. Onboard inertial sensors such as accelerometers, magnetometers and gyroscopes provide an opportunity to obtain detailed behavioural information for animal groups in the wild, even when they cannot be directly observed by humans, and may also provide the means for tracking body orientation and gaze direction of animals within moving groups. Both accelerometers and magnetometers are commonly used in bio-logging tags since they are compact, cheap and power efficient [85,86]. Gyroscopes have some advantages when measuring energetics and body posture, but have seen only limited use in bio-logging tags owing to their higher power consumption, drift and complex data processing [87].

Tri-axial accelerometers measure both static acceleration (caused by the gravitational field of the Earth) and dynamic acceleration (caused by acceleration of the animal and thereby the sensor itself) along three dimensions. Depending on sensor placement, dynamic acceleration can be related to the movement of the animal itself, and various accelerometer-based proxies for energy expenditure or activity level have been developed as a result (overall dynamic body acceleration, ODBA [88]; vectorial dynamic body acceleration, VeDBA [89]; minimum specific acceleration, MSA [90]). Accelerometers may also be used to estimate body orientation, often quantified as the pitch, roll and heading of an animal. To measure all three axes of body orientation, both an accelerometer and a magnetometer are needed, and magnetic heading must be corrected for the magnetic inclination and declination at the study site. Magnetometers are seldom used by themselves because they cannot fully specify the orientation of the tag owing to rotational ambiguity around the magnetic field vector. However, with tri-axial accelerometers and magnetometers, time series of body orientation can be used to quantify the gait of an animal over time [91]. Packages combining accelerometers and magnetometers with gyroscopes provide a more robust quantification of both energetics and gait [87,92]. See Martín López et al. [87] for a comparison between these approaches.
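The separation of static and dynamic acceleration described above can be sketched in a few lines of Python. In this minimal example the static component is approximated by a centred running mean, the dynamic component is the remainder, and two common proxies (overall and vectorial dynamic body acceleration) are computed per sample. The window length, the axis convention (x forward, with a level stationary tag reading 0, 0, +1 g) and the sample values are assumptions for illustration only; smoothing windows and tag orientation differ between studies.

```python
import math


def running_mean(values, window):
    """Centred running mean; the window shrinks at the ends of the series."""
    half = window // 2
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - half): i + half + 1]
        out.append(sum(chunk) / len(chunk))
    return out


def dynamic_body_acceleration(ax, ay, az, window=5):
    """Return per-sample (ODBA, VeDBA) lists from tri-axial acceleration in g."""
    static = [running_mean(axis, window) for axis in (ax, ay, az)]
    odba, vedba = [], []
    for i in range(len(ax)):
        # Dynamic acceleration = raw signal minus the smoothed (static) part.
        dx = ax[i] - static[0][i]
        dy = ay[i] - static[1][i]
        dz = az[i] - static[2][i]
        odba.append(abs(dx) + abs(dy) + abs(dz))
        vedba.append(math.sqrt(dx * dx + dy * dy + dz * dz))
    return odba, vedba


def pitch_roll_deg(sx, sy, sz):
    """Pitch and roll in degrees from static acceleration (assumed axis convention)."""
    pitch = math.degrees(math.atan2(sx, math.sqrt(sy * sy + sz * sz)))
    roll = math.degrees(math.atan2(sy, sz))
    return pitch, roll


# Made-up samples: a brief burst of movement in an otherwise still record.
ax = [0.0, 0.0, 0.5, -0.5, 0.0, 0.0]
ay = [0.0] * 6
az = [1.0, 1.0, 1.2, 0.8, 1.0, 1.0]
odba, vedba = dynamic_body_acceleration(ax, ay, az, window=3)
pitch, roll = pitch_roll_deg(0.0, 0.0, 1.0)
```

Heading is not computed here because, as noted above, it additionally requires magnetometer data and a correction for local inclination and declination.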

Since accelerometers and magnetometers are more power efficient, they can generally be sampled much faster (typically tens to thousands of times per second) than GPS tracking systems, which are constrained by battery power. Thus, there is increasing potential for using time-series analysis of inertial sensor data to estimate movement influence and social interactions between simultaneously tagged animals at a higher temporal resolution than is possible using GPS sensors. Inertial sensors also offer the possibility of identifying specific behaviours (e.g. foraging events or prey capture success [93,94]) and behavioural states [95–97]. To do this, a ground-truthed dataset consisting of time-synchronized behavioural observations is typically collected during a subset of sensor recordings. Based on this training dataset, machine learning techniques can then be used to develop an automatic behavioural classifier, allowing behaviours to be identified in the absence of direct observation [93].
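The workflow of training a behavioural classifier on ground-truthed windows and then applying it to unobserved data can be illustrated with a deliberately simple Python sketch. It uses a nearest-centroid classifier on two summary features per window, which is a stand-in chosen for brevity rather than the method of any study cited here; the labels, feature choice and sample values are all hypothetical.

```python
import math
from statistics import mean, pstdev


def window_features(samples):
    """Summarize one window of dynamic-acceleration magnitudes as (mean, s.d.)."""
    return (mean(samples), pstdev(samples))


def train_centroids(labelled_windows):
    """Average the feature vectors of each observed behaviour.

    labelled_windows: list of (label, samples) pairs from the
                      ground-truthed subset of recordings.
    """
    by_label = {}
    for label, samples in labelled_windows:
        by_label.setdefault(label, []).append(window_features(samples))
    return {
        label: tuple(mean(f[i] for f in feats) for i in range(2))
        for label, feats in by_label.items()
    }


def classify(samples, centroids):
    """Assign the behaviour whose centroid is nearest in feature space."""
    feats = window_features(samples)
    return min(centroids, key=lambda label: math.dist(feats, centroids[label]))


# Made-up training windows labelled from direct observation.
training = [
    ("resting", [0.01, 0.02, 0.01, 0.02]),
    ("resting", [0.02, 0.01, 0.02, 0.02]),
    ("running", [0.8, 1.2, 0.9, 1.1]),
    ("running", [1.0, 1.3, 0.7, 1.1]),
]
centroids = train_centroids(training)
predicted_still = classify([0.02, 0.02, 0.01, 0.01], centroids)
predicted_active = classify([0.9, 1.0, 1.2, 0.8], centroids)
```

A real application would normally use richer features, a held-out validation set and a more flexible classifier, but the division into labelled training windows and unlabelled windows to be classified is the same.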

(d). Improving positional data using inertial sensors

Integrating data from sensors with different spatial or temporal resolutions can help improve tracking accuracy. For example, by merging high sample rate inertial data from accelerometers, magnetometers and/or gyroscopes with low sample rate, larger-error position data from GPS tags, it is possible to determine the orientation of an animal, then combine this information with estimates of speed and integrate across velocity vectors to reconstruct movement tracks [98]. Such ‘dead-reckoning’ methods (reviewed in [99]) can help establish movement tracks without directly measuring positions [100] and can also be combined with GPS, Argos or acoustic localization position data to improve the temporal resolution of movement tracks [101,102]. Dead-reckoning methods are also critical for species that live in areas where GPS reception is poor, such as marine environments and densely forested areas. However, it is important to note that errors in the inferred positions of animals will accumulate over the length of a track and rapidly limit the accuracy of dead-reckoned position estimates, whereas estimated orientation will keep the same accuracy throughout. Thus, it is better to base studies of movement influence between animals on orientation estimates rather than dead-reckoned tracks.
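A minimal Python sketch of dead-reckoning is given below: heading and speed estimates are integrated step by step into a track, and the mismatch with a later position fix is then spread linearly along the track. The function names, the flat east/north coordinate frame and the linear redistribution of error are assumptions made for illustration; published implementations differ in how speed is estimated and how drift is corrected.

```python
import math


def dead_reckon(start_xy, headings_deg, speeds_m_s, dt_s):
    """Integrate heading and speed into (east, north) positions in metres.

    Headings are in degrees clockwise from north; dt_s is the time step.
    """
    x, y = start_xy
    track = [(x, y)]
    for heading, speed in zip(headings_deg, speeds_m_s):
        theta = math.radians(heading)
        x += speed * dt_s * math.sin(theta)
        y += speed * dt_s * math.cos(theta)
        track.append((x, y))
    return track


def correct_to_fix(track, fix_xy):
    """Spread the end-point error relative to a position fix along the track."""
    n = len(track) - 1
    if n == 0:
        return [fix_xy]
    ex = fix_xy[0] - track[-1][0]
    ey = fix_xy[1] - track[-1][1]
    return [(x + ex * i / n, y + ey * i / n) for i, (x, y) in enumerate(track)]


# Made-up example: two steps north, then two steps east, at 1 m/s and 1 s steps.
track = dead_reckon((0.0, 0.0), [0.0, 0.0, 90.0, 90.0], [1.0, 1.0, 1.0, 1.0], 1.0)

# A hypothetical GPS fix at the end of the segment pulls the track into place.
corrected = correct_to_fix(track, (2.4, 2.0))
```

Because every step adds its own heading and speed error, the uncorrected end point drifts further from the truth the longer the segment between fixes, which is the accumulation of error described above.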

(e). Interactions beyond proximity

Collective behaviours are mediated by a variety of passive and active information flows between individuals in a group. Behaviours other than movement, such as vocalizations and gestures, are key to the coordination of movement in many species (primates [103,104]; meerkats (Suricata suricatta) [105]; birds [106]; elephants (Loxodonta africana) [107]; dolphins (Tursiops truncatus) [108]). Animal-mounted cameras, sound recorders or accelerometers provide a number of options for measuring interactions between individuals in the field, and linking these to individual-level movement decisions recorded simultaneously by GPS or other sensors.

Perhaps the most intuitive option is the use of still or video imaging from the perspective of the study animal itself [109,110]. Animal-borne video can be used to identify or validate behaviours, especially as recorded by other lower cost sensors (e.g. accelerometers), and has been used extensively to understand foraging ecology of many species; it also has great potential for contributing to our understanding of collective behaviour. Cameras can map encounters or social interactions with conspecifics that occur out of sight of observers [111–113]. While technology is continuously improving, video cameras consume more power than many other sensors, analysis is often labour intensive, and it may be difficult to get a field of view that can capture all interactions of interest.

The last 15 years have seen an increase in animal-borne sound recorders, especially for research on cetaceans [114–116], but also on terrestrial mammals [117], birds and bats (e.g. [57,118]). Since acoustic communication is a fundamental means of information transfer in many systems, acoustic recorders that can pick up these signals from tagged animals open a wide range of possibilities for understanding collective behaviours, from active mediation of group cohesion to negotiation of consensus decisions.

While manual processing of acoustic data can be time-consuming, automated detection and discrimination algorithms can speed up analysis dramatically [118–120]. One potential advantage over camera tags is that a single acoustic sensor can record sounds from the tagged animal, incoming sounds from other nearby conspecifics, and sounds from other sources in the environment [57]. However, for many species, it can be a significant challenge to correctly discriminate vocalizations of the tagged individual from nearby conspecifics, and accurate differentiation of tagged animal vocalizations can be difficult to demonstrate without a ground-truthed dataset. Stereo tags may help since one can use time differences between channels to estimate a bearing to an incoming sound [121], thereby more easily identifying sounds from the tagged animal [122,123]. Additionally, high sample rate accelerometers may be able to pick up on body vibrations associated with sound production in both marine [124] and terrestrial [125] systems.
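The use of inter-channel time differences to estimate a bearing can be sketched as follows. Under a far-field assumption, the angle of arrival relative to broadside of a two-sensor tag follows from the delay, the sensor spacing and the speed of sound. The 3 cm spacing and the sound speed of 1500 m/s (roughly that of seawater; about 343 m/s would apply in air) are example values, not specifications of any tag described in the cited work.

```python
import math


def bearing_from_delay(delay_s, sensor_spacing_m, sound_speed_m_s=1500.0):
    """Angle of arrival in degrees from broadside for a two-channel recorder.

    Assumes a distant source (plane-wave arrival). The ratio is clamped
    to [-1, 1] so that small timing errors cannot break asin().
    """
    ratio = sound_speed_m_s * delay_s / sensor_spacing_m
    ratio = max(-1.0, min(1.0, ratio))
    return math.degrees(math.asin(ratio))


spacing_m = 0.03  # hypothetical hydrophone separation

# Zero delay means the sound arrived from broadside.
broadside = bearing_from_delay(0.0, spacing_m)

# The maximum possible delay (spacing / sound speed) means end-fire arrival.
endfire = bearing_from_delay(spacing_m / 1500.0, spacing_m)

# Half the maximum delay corresponds to 30 degrees.
oblique = bearing_from_delay(0.5 * spacing_m / 1500.0, spacing_m)
```

A single sensor pair constrains the source only to a cone around the sensor axis, so the bearing remains ambiguous without additional sensors or prior knowledge of tag placement. Sounds produced by the tagged animal itself would be expected to arrive at a consistent angle, which is what makes this approach useful for separating them from the calls of neighbours.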

While bio-loggers that monitor the orientation and movement of animals are only beginning to be employed in studies of collective animal behaviour [95,126], their use offers great promise for achieving a deeper understanding of the mechanics governing collective motion. Such data will also provide valuable information about the context in which group coordination occurs, and will allow individual behaviours—not just locations—to be incorporated into models of collective movement. At the same time, the ability to collect such detailed data opens up a new set of challenges, as integrating multiple streams of raw sensor data to obtain biologically relevant information is a difficult analytical and computational task, though software to facilitate this process is gradually becoming available [127]. Furthermore, since instrumentation of animals is both costly and time intensive, future studies that combine animal bio-logging methods with other tools, such as visual tracking of group members from overhead cameras, may facilitate studies of collective behaviour while building on the strengths of each method.

5. Discussion

Deeper knowledge of the ecology and evolution of collective behaviour is important both for the advancement of basic scientific understanding and for the conservation of fundamental ecosystem processes that occur in communities around the world [1,128–130]. The technologies discussed above offer new and, in many cases, more efficient tools for studying the dynamics of these processes in the wild. Each of these approaches comes with its own advantages and caveats, and thus the choice of study approach will depend heavily on the problem, especially the spatio-temporal scale at which data are needed.

In general, both stationary and remotely sensed imagery afford the advantage of simultaneously capturing high-resolution data on environmental features and animal movement, but differ in the range of spatio-temporal scales that can be captured. For example, fixed cameras provide high-definition (and, in some cases, 3D) imaging at a local scale that is constrained by the field of view of the (often immobile) camera, and thus are most suitable for monitoring movement interactions of small, less mobile animals, or for monitoring interactions in specific areas (e.g. fish moving around a reef, birds foraging in a tree). For larger, group-living or highly mobile animals, UAVs offer a promising alternative. The choice of airframe design will depend on the scale of inquiry, with larger aggregations or longer time periods necessitating fixed-wing UAVs, which fly higher and cannot hover, but which reach extended flight times of hours to days compared with the tens of minutes of commercial multi-copters. For landscape-scale questions, high-resolution satellite imaging is becoming an increasingly accessible option that may allow tracking of mass movements of larger animals over time scales of weeks to months, albeit at a low temporal resolution that does not allow tracking of individual animals without the coordinated use of bio-loggers or stationary cameras.

In contrast to field imaging techniques, bio-logging tags offer the ability to track unique individuals over time scales of weeks to years, which can be a significant advantage when studying highly mobile [10,58] or highly fluid social groups. In addition, bio-loggers afford the advantage of incorporating environmental sensors such as cameras or microphones that can record social interactions in situ and allow researchers to test mechanistic hypotheses for the collective decision-making processes observed in a broad range of taxa. Finally, it may be advantageous to think about bridging these approaches, for example by combining fine-scale habitat mapping from UAVs with high-resolution individual-level tracking of animals [10] (figure 4).

While we have emphasized the new research opportunities these methods will facilitate, the methods themselves should not be viewed as a panacea, or as a replacement for more traditional techniques of field biology. As Hebblewhite & Hayden [131] point out, higher-resolution datasets do not necessarily lead to increased understanding of animal ecology. Additionally, one should critically evaluate the true costs of data collection (e.g. handling wildlife to apply sensors, or processing and analysing large amounts of data) before adopting any new techniques for research. It is also important to note that there is no replacement for the deep intuition and novel questions born from directly observing animal behaviour in the field. Thus, these new technologies should be viewed as complements to more traditional field methods, and as a means of encouraging deeper understanding of classic ecological theories through cross-discipline collaborations.

Moving forward, there are a number of promising avenues for extending collective behaviour research in both theoretical and applied directions through experimental, field-based enquiry. Much of what we currently know about collective animal behaviour, both in the laboratory and in the wild, comes from observational studies rather than experimental manipulations. With the aid of mathematical and computational models, these studies have shed considerable light on the interaction rules that generate phenomena such as coordinated motion (e.g. [6,8,11,132,133]) and collective predator evasion (e.g. [12,35]). However, it is becoming increasingly clear that hypotheses about the causes and consequences of collective behaviour should be tested further through manipulative experiments in a natural setting. Several field studies (e.g. [16,134]) have already begun to move in this direction, and recent technological advancements will enable researchers to build on these early efforts by combining the power of modern animal tracking technology with traditional methods for studying behaviour in the field. For example, acoustic playbacks (e.g. [105,134–136]), food manipulation (e.g. [73,137]) and predator threat stimuli [16] can be used in combination with any of the imaging or bio-logging technologies discussed above to experimentally test hypotheses about how information is transmitted among individuals and how that information affects collective dynamics across natural landscapes.

In addition to these new applications, the technologies reviewed here hold tremendous potential to extend the study of collective behaviour to contexts where it has seldom been studied in the past. Questions about what selects for and maintains collective migration, how collective foraging might influence nutrient dynamics and ecosystem processes, how individuals balance information they gather directly from the environment with information gleaned by watching neighbours, and how the demography and persistence of species might depend on social interactions have long fascinated biologists. The technological revolution that is currently taking place in the study of collective behaviour is bringing answers to these questions more rapidly than ever before, and should continue to strengthen the relationship between theoretical models, empirical observations and manipulative experiments in the years to come.

Acknowledgements

We thank Douglas J. McCauley, Benjamin Martin, Eric Danner, Máté Nagy and one anonymous referee for helpful comments on the manuscript. We thank the guest editors for the invitation to submit this paper to the theme issue.

Data accessibility

This article has no additional data.

Authors' contributions

L.F.H., A.M.H., A.S.-P. and F.H.J. wrote the manuscript.

Competing interests

We declare we have no competing interests.

Funding

This work was supported by the following: NSF grant IOS-1545888; NSF Graduate Research Fellowship (L.F.H.; 1650114); James S. McDonnell Foundation fellowship (A.M.H.); Max Planck Institute for Ornithology (A.S.-P.); the Human Frontier Science Program (A.S.-P.; LT000492/017); Gips-Schüle Foundation (A.S.-P.); Office of Naval Research (F.H.J.; N00014-1410410); Carlsberg Foundation (F.H.J.; CF15-0915); AIAS-COFUND fellowship from Aarhus Institute of Advanced Studies (F.H.J.).

References

- 1. Parrish JK, Viscido SV, Grünbaum D. 2002. Self-organized fish schools: an examination of emergent properties. Biol. Bull. 202, 296–305. (doi:10.2307/1543482)

- 2. Reynolds CW. 1987. Flocks, herds and schools: a distributed behavioral model. In Proc. 14th Annual Conference on Computer Graphics and Interactive Techniques – SIGGRAPH ’87, 27–31 July 1987, Anaheim, CA. ACM SIGGRAPH Comput. Graph. 21(4). (doi:10.1145/37401.37406)

- 3. Gordon DM. 2014. The ecology of collective behavior. PLoS Biol. 12, e1001805. (doi:10.1371/journal.pbio.1001805)

- 4. Couzin ID, Krause J. 2003. Self-organization and collective behavior in vertebrates. In Advances in the study of behavior (eds Slater P, Rosenblatt J, Snowdon C, Roper T, Naguib M), pp. 1–75. London: Elsevier Academic Press.

- 5. Axelsen BE, Anker-Nilssen T, Fossum P, Kvamme C, Nøttestad L. 2001. Pretty patterns but a simple strategy: predator-prey interactions between juvenile herring and Atlantic puffins observed with multibeam sonar. Can. J. Zool. 79, 1586–1596. (doi:10.1139/cjz-79-9-1586)

- 6. Katz Y, Tunstrom K, Ioannou CC, Huepe C, Couzin ID. 2011. Inferring the structure and dynamics of interactions in schooling fish. Proc. Natl Acad. Sci. USA 108, 18 720–18 725. (doi:10.1073/pnas.1107583108)

- 7. Buhl J, Sumpter DJT, Couzin ID, Hale JJ, Despland E, Miller ER, Simpson SJ. 2006. From disorder to order in marching locusts. Science 312, 1402–1406. (doi:10.1126/science.1125142)

- 8. Herbert-Read JE, Perna A, Mann RP, Schaerf TM, Sumpter DJT, Ward AJW. 2011. Inferring the rules of interaction of shoaling fish. Proc. Natl Acad. Sci. USA 108, 18 726–18 731. (doi:10.1073/pnas.1109355108)

- 9. Strandburg-Peshkin A, et al. 2013. Visual sensory networks and effective information transfer in animal groups. Curr. Biol. 23, R709–R711. (doi:10.1016/j.cub.2013.07.059)

- 10. Strandburg-Peshkin A, Farine DR, Crofoot MC, Couzin ID. 2017. Habitat and social factors shape individual decisions and emergent group structure during baboon collective movement. eLife 6, e19505. (doi:10.7554/eLife.19505)

- 11. Ballerini M, et al. 2008. Interaction ruling animal collective behavior depends on topological rather than metric distance: evidence from a field study. Proc. Natl Acad. Sci. USA 105, 1232–1237. (doi:10.1073/pnas.0711437105)

- 12. Handegard NO, Boswell KM, Ioannou CC, Leblanc SP, Tjøstheim DB, Couzin ID. 2012. The dynamics of coordinated group hunting and collective information transfer among schooling prey. Curr. Biol. 22, 1213–1217. (doi:10.1016/j.cub.2012.04.050)

- 13. Cavagna A, Giardina I, Orlandi A, Parisi G, Procaccini A, Viale M, Zdravkovic V. 2008. The STARFLAG handbook on collective animal behaviour: 1. Empirical methods. Anim. Behav. 76, 217–236. (doi:10.1016/j.anbehav.2008.02.002)

- 14. Ginelli F, Peruani F, Pillot M-H, Chaté H, Theraulaz G, Bon R. 2015. Intermittent collective dynamics emerge from conflicting imperatives in sheep herds. Proc. Natl Acad. Sci. USA 112, 12 729–12 734. (doi:10.1073/pnas.1503749112)

- 15. Theriault DH, Fuller NW, Jackson BE, Bluhm E, Evangelista D, Wu Z, Betke M, Hedrick TL. 2014. A protocol and calibration method for accurate multi-camera field videography. J. Exp. Biol. 217, 1843–1848. (doi:10.1242/jeb.100529)

- 16. Gil MA, Hein AM. 2017. Social interactions among grazing reef fish drive material flux in a coral reef ecosystem. Proc. Natl Acad. Sci. USA 114, 4703–4708. (doi:10.1073/pnas.1615652114)

- 17. Aplin LM, Farine DR, Mann RP, Sheldon BC. 2014. Individual-level personality influences social foraging and collective behaviour in wild birds. Proc. R. Soc. B 281, 20141016. (doi:10.1098/rspb.2014.1016)

- 18. Hong W, Kennedy A, Burgos-Artizzu XP, Zelikowsky M, Navonne SG, Perona P, Anderson DJ. 2015. Automated measurement of mouse social behaviors using depth sensing, video tracking, and machine learning. Proc. Natl Acad. Sci. USA 112, E5351–E5360. (doi:10.1073/pnas.1515982112)

- 19. Berman GJ, Choi DM, Bialek W, Shaevitz JW. 2014. Mapping the stereotyped behaviour of freely moving fruit flies. J. R. Soc. Interface 11, 20140672. (doi:10.1098/rsif.2014.0672)

- 20. Dell AI, et al. 2014. Automated image-based tracking and its application in ecology. Trends Ecol. Evol. 29, 417–428. (doi:10.1016/j.tree.2014.05.004)

- 21. Attanasi A, et al. 2014. Collective behaviour without collective order in wild swarms of midges. PLoS Comput. Biol. 10, e1003697. (doi:10.1371/journal.pcbi.1003697)

- 22. Macfarlane NBW, Howland JC, Jensen FH, Tyack PL. 2015. A 3D stereo camera system for precisely positioning animals in space and time. Behav. Ecol. Sociobiol. 69, 685–693. (doi:10.1007/s00265-015-1890-4)

- 23. Anwer A, Ali SSA, Khan A, Mériaudeau F. 2017. Underwater 3D scanning using Kinect v2 time of flight camera. In 13th Int. Conf. Quality Control by Artificial Vision, 14 May 2017, Tokyo, Japan. Proc. SPIE 10338, 103380C. (doi:10.1117/12.2266834)

- 24. Gracias N, Negahdaripour S, Neumann L, Prados R, Garcia R. 2009. A motion compensated filtering approach to remove sunlight flicker in shallow water images. In OCEANS 2008 MTS/IEEE OCEANS Conference, 15–18 September 2008, Quebec City, Canada. IEEE. (doi:10.1109/OCEANS.2008.5152111)

- 25. Shihavuddin ASM, Nuno G, Rafael G. 2012. Online sunflicker removal using dynamic texture prediction. In Proc. Int. Joint Conf. Computer Vision, Imaging and Computer Graphics, Theory and Applications (VISAPP), 24–26 February 2012, Rome, Italy, pp. 161–167. Setúbal, Portugal: SciTePress.

- 26. Trabes E, Jordan MA. 2015. Self-tuning of a sunlight-deflickering filter for moving scenes underwater. In Information Processing and Control (RPIC), 2015 XVI Workshop on Information Processing and Control (RPIC), 6–9 October 2015. IEEE.

- 27. Zheng W, Wu Z, Hristov NI, Hedrick TL, Kunz TH, Betke M. 2009. Tracking a large number of objects from multiple views. In Computer Vision, 2009 IEEE 12th Int. Conf. 29 Sep. 2009, Kyoto, Japan, pp. 1546–1553. IEEE. (doi:10.1109/ICCV.2009.5459274)

- 28. Benoit-Bird KJ, Gilly WF. 2012. Coordinated nocturnal behavior of foraging jumbo squid Dosidicus gigas. Mar. Ecol. Prog. Ser. 455, 211–228. (doi:10.3354/meps09664)

- 29. Godø OR, Sivle LD, Patel R, Torkelsen T. 2013. Synchronous behaviour of cetaceans observed with active acoustics. Deep Sea Res. Part 2 Top. Stud. Oceanogr. 98, 445–451. (doi:10.1016/j.dsr2.2013.06.013)

- 30. Benoit-Bird K, Au W. 2003. Hawaiian spinner dolphins aggregate midwater food resources through cooperative foraging. J. Acoust. Soc. Am. 114, 2300. (doi:10.1121/1.4780872)

- 31. Rieucau G, Sivle LD, Handegard NO. 2015. Herring perform stronger collective evasive reactions when previously exposed to killer whales calls. Behav. Ecol. 27, 538–544. (doi:10.1093/beheco/arv186)

- 32. Shelhamer E, Long J, Darrell T. 2017. Fully convolutional networks for semantic segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 39, 640–651. (doi:10.1109/TPAMI.2016.2572683)

- 33. Cheng XE, Wang SH, Chen YQ. 2016. Estimating orientation in tracking individuals of flying swarms. In 2016 IEEE Int. Conf. on Acoustics, Speech and Signal Processing (ICASSP), 20–25 March 2016, Shanghai, PR China, pp. 1496–1500. IEEE. (doi:10.1109/ICASSP.2016.7471926)

- 34. Barnard S, Calderara S, Pistocchi S, Cucchiara R, Podaliri-Vulpiani M, Messori S, Ferri N. 2016. Quick, accurate, smart: 3D computer vision technology helps assessing confined animals' behaviour. PLoS ONE 11, e0158748. (doi:10.1371/journal.pone.0158748)

- 35. Rosenthal SB, Twomey CR, Hartnett AT, Wu HS, Couzin ID. 2015. Revealing the hidden networks of interaction in mobile animal groups allows prediction of complex behavioral contagion. Proc. Natl Acad. Sci. USA 112, 4690–4695. (doi:10.1073/pnas.1420068112)

- 36. Berman GJ, Bialek W, Shaevitz JW. 2016. Predictability and hierarchy in Drosophila behavior. Proc. Natl Acad. Sci. USA 113, 11 943–11 948. (doi:10.1073/pnas.1607601113)

- 37. Stephens GJ, Johnson-Kerner B, Bialek W, Ryu WS. 2008. Dimensionality and dynamics in the behavior of C. elegans. PLoS Comput. Biol. 4, e1000028. (doi:10.1371/journal.pcbi.1000028)

- 38. Sumpter DJT, Szorkovszky A, Kotrschal A, Kolm N, Herbert-Read JE. 2018. Using activity and sociability to characterize collective motion. Phil. Trans. R. Soc. B 373, 20170015. (doi:10.1098/rstb.2017.0015)

- 39. Torney CJ, Lamont M, Debell L, Angohiatok RJ, Leclerc L-M, Berdahl AM. 2018. Inferring the rules of social interaction in migrating caribou. Phil. Trans. R. Soc. B 373, 20170385. (doi:10.1098/rstb.2017.0385)

- 40. Hodgson JC, Koh LP. 2016. Best practice for minimising unmanned aerial vehicle disturbance to wildlife in biological field research. Curr. Biol. 26, R404–R405. (doi:10.1016/j.cub.2016.04.001)

- 41. Mulero-Pázmány M, Jenni-Eiermann S, Strebel N, Sattler T, Negro JJ, Tablado Z. 2017. Unmanned aircraft systems as a new source of disturbance for wildlife: a systematic review. PLoS ONE 12, e0178448. (doi:10.1371/journal.pone.0178448)

- 42. Weinstein BG. In press. A computer vision for ecology. J. Anim. Ecol. (doi:10.1111/1365-2656.12780)

- 43. Torney CJ, et al. 2016. Assessing rotation-invariant feature classification for automated wildebeest population counts. PLoS ONE 11, e0156342. (doi:10.1371/journal.pone.0156342)

- 44. Anderson K, Gaston KJ. 2013. Lightweight unmanned aerial vehicles will revolutionize spatial ecology. Front. Ecol. Environ. 11, 138–146. (doi:10.1890/120150)

- 45. Lowman M, Voirin B. 2016. Drones—our eyes on the environment. Front. Ecol. Environ. 14, 231. (doi:10.1002/fee.1290)

- 46. Ditmer MA, Vincent JB, Werden LK, Tanner JC, Laske TG, Iaizzo PA, Garshelis DL, Fieberg JR. 2015. Bears show a physiological but limited behavioral response to unmanned aerial vehicles. Curr. Biol. 25, 2278–2283. (doi:10.1016/j.cub.2015.07.024)

- 47. Korczak-Abshire M, Kidawa A, Zmarz A, Storvold R, Karlsen SR, Rodzewicz M, Chwedorzewska K, Znój A. 2016. Preliminary study on nesting Adélie penguins disturbance by unmanned aerial vehicles. CCAMLR Sci. 23, 1–6.

- 48. Witze A. 2018. Scientific ballooning takes off. Nature 553, 135–136. (doi:10.1038/d41586-018-00017-5)

- 49. Torney CJ, Hopcraft JGC, Morrison TA, Couzin ID, Levin SA. 2018. From single steps to mass migration: the problem of scale in the movement ecology of the Serengeti wildebeest. Phil. Trans. R. Soc. B 373, 20170012. (doi:10.1098/rstb.2017.0012)

- 50. Digital Globe. About us/Our constellation. https://www.digitalglobe.com/about/our-constellation (accessed 1 July 2017).

- 51. Airbus Defence and Space. Pléiades Satellite Imagery. The very-high resolution constellation. http://www.intelligence-airbusds.com/pleiades/ (accessed 1 July 2017).

- 52. Urthecast. The UrtheDaily™ Constellation. https://www.urthecast.com/urthedaily/ (accessed 1 June 2017).

- 53. earth-i. The DMC3 Constellation. http://earthi.space/dmc3/ (accessed 1 June 2017).

- 54. Planet. Planet application program interface: in space for life on Earth. https://api.planet.com (accessed 1 June 2017).

- 55. Kays R, Crofoot MC, Jetz W, Wikelski M. 2015. Ecology. Terrestrial animal tracking as an eye on life and planet. Science 348, aaa2478. (doi:10.1126/science.aaa2478)

- 56. Fehlmann G, King AJ. 2016. Bio-logging. Curr. Biol. 26, R830–R831. (doi:10.1016/j.cub.2016.05.033)

- 57. Cvikel N, Berg KE, Levin E, Hurme E, Borissov I, Boonman A, Amichai E, Yovel Y. 2015. Bats aggregate to improve prey search but might be impaired when their density becomes too high. Curr. Biol. 25, 206–211. (doi:10.1016/j.cub.2014.11.010)

- 58. Nagy M, Akos Z, Biro D, Vicsek T. 2010. Hierarchical group dynamics in pigeon flocks. Nature 464, 890–893. (doi:10.1038/nature08891)

- 59. Strandburg-Peshkin A, Farine DR, Couzin ID, Crofoot MC. 2015. Shared decision-making drives collective movement in wild baboons. Science 348, 1358–1361. (doi:10.1126/science.aaa5099)

- 60. King AJ, Wilson AM, Wilshin SD, Lowe J, Haddadi H, Hailes S, Morton AJ. 2012. Selfish-herd behaviour of sheep under threat. Curr. Biol. 22, R561–R562. (doi:10.1016/j.cub.2012.05.008)

- 61.Hubel TY, Myatt JP, Jordan NR, Dewhirst OP, McNutt JW, Wilson AM. 2016. Additive opportunistic capture explains group hunting benefits in African wild dogs. Nat. Commun. 7, 11033 ( 10.1038/ncomms11033) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Ákos Z, Beck R, Nagy M, Vicsek T, Kubinyi E. 2014. Leadership and path characteristics during walks are linked to dominance order and individual traits in dogs. PLoS Comput. Biol. 10, e1003446 ( 10.1371/journal.pcbi.1003446) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Nagy M, Couzin ID, Fiedler W, Wikelski M, Flack A. 2018. Synchronization, coordination and collective sensing during thermalling flight of freely migrating white storks. Phil. Trans. R. Soc. B 373, 20170011 ( 10.1098/rstb.2017.0011) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 64.Strandburg-Peshkin A, Papageorgiou D, Crofoot MC, Farine DR. 2018. Inferring influence and leadership in moving animal groups. Phil. Trans. R. Soc. B 373, 20170006 ( 10.1098/rstb.2017.0006) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 65.Sasaki T, Mann RP, Warren KN, Herbert T, Wilson T, Biro D. 2018. Personality and the collective: bold homing pigeons occupy higher leadership ranks in flocks. Phil. Trans. R. Soc. B 373, 20170038 ( 10.1098/rstb.2017.0038) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 66.Farine DR, Strandburg-Peshkin A, Couzin ID, Berger-Wolf TY, Crofoot MC. 2017. Individual variation in local interaction rules can explain emergent patterns of spatial organization in wild baboons. Proc. R. Soc. B 284, 20162243 ( 10.1098/rspb.2016.2243) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 67.Robinson PW, et al. 2012. Foraging behavior and success of a mesopelagic predator in the northeast Pacific Ocean: insights from a data-rich species, the northern elephant seal. PLoS ONE 7, e36728 ( 10.1371/journal.pone.0036728) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 68.Akamatsu T, Sakai M, Wang D, Wang K, Li S. 2013. Acoustic time synchronization among tags on porpoises to observe their social relationships. J. Acoust. Soc. Am. 134, 4006 ( 10.1121/1.4830613) [DOI] [Google Scholar]

- 69.Krause J, Krause S, Arlinghaus R, Psorakis I, Roberts S, Rutz C. 2013. Reality mining of animal social systems. Trends Ecol. Evol. 28, 541–551. ( 10.1016/j.tree.2013.06.002) [DOI] [PubMed] [Google Scholar]

- 70.Lucas MC, Mercer T, Armstrong JD, McGinty S, Rycroft P. 1999. Use of a flat-bed passive integrated transponder antenna array to study the migration and behaviour of lowland river fishes at a fish pass. Fish. Res. 44, 183–191. ( 10.1016/s0165-7836(99)00061-2) [DOI] [Google Scholar]

- 71.Psorakis I, et al. 2015. Inferring social structure from temporal data. Behav. Ecol. Sociobiol. 69, 857–866. ( 10.1007/s00265-015-1906-0) [DOI] [Google Scholar]

- 72.Farine DR, Aplin LM, Garroway CJ, Mann RP, Sheldon BC. 2014. Collective decision making and social interaction rules in mixed-species flocks of songbirds. Anim. Behav. 95, 173–182. ( 10.1016/j.anbehav.2014.07.008) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73.Aplin LM, Farine DR, Morand-Ferron J, Cockburn A, Thornton A, Sheldon BC. 2015. Experimentally induced innovations lead to persistent culture via conformity in wild birds. Nature 518, 538–541. ( 10.1038/nature13998) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Kerth G, Ebert C, Schmidtke C. 2006. Group decision making in fission–fusion societies: evidence from two-field experiments in Bechstein's bats. Proc. R. Soc. B 273, 2785–2790. ( 10.1098/rspb.2006.3647) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 75.König B, Lindholm AK, Lopes PC, Dobay A, Steinert S, Buschmann FJ-U. 2015. A system for automatic recording of social behavior in a free-living wild house mouse population. Anim. Biotelem. 3, 541 ( 10.1186/s40317-015-0069-0) [DOI] [Google Scholar]

- 76.Arlinghaus R, Klefoth T, Cooke SJ, Gingerich A, Suski C. 2009. Physiological and behavioural consequences of catch-and-release angling on northern pike (Esox lucius L.). Fish. Res. 97, 223–233. ( 10.1016/j.fishres.2009.02.005) [DOI] [Google Scholar]

- 77.Ji W, White PCL, Clout MN. 2005. Contact rates between possums revealed by proximity data loggers. J. Appl. Ecol. 42, 595–604. ( 10.1111/j.1365-2664.2005.01026.x) [DOI] [Google Scholar]

- 78.Rutz C, Morrissey MB, Burns ZT, Burt J, Otis B, St Clair JJH, James R. 2015. Calibrating animal-borne proximity loggers. Methods Ecol. Evol. 6, 656–667. ( 10.1111/2041-210X.12370) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 79.Rutz C, Burns ZT, James R, Ismar SMH, Burt J, Otis B, Bowen J, St Clair JJH. 2012. Automated mapping of social networks in wild birds. Curr. Biol. 22, R669–R671. ( 10.1016/j.cub.2012.06.037) [DOI] [PubMed] [Google Scholar]

- 80.Zhang P, Sadler CM, Liu T, Fischhoff I, Martonosi M, Lyons SA, Rubenstein DI. 2005. Habitat monitoring with ZebraNet: design and experiences. In Wireless sensor networks: a systems perspective, pp. 235–257. Norwood, MA: Artech House. [Google Scholar]

- 81.Holland KN, Meyer CG, Dagorn LC. 2009. Inter-animal telemetry: results from first deployment of acoustic ‘business card’ tags. Endanger. Species Res. 10, 287–293. ( 10.3354/esr00226) [DOI] [Google Scholar]

- 82.Guttridge TL, Gruber SH, Krause J, Sims DW. 2010. Novel acoustic technology for studying free-ranging shark social behaviour by recording individuals' interactions. PLoS ONE 5, e9324 ( 10.1371/journal.pone.0009324) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 83.Harpaz R, Schneidman E. 2014. Receptive-field like models accurately predict individual zebrafish behavior in a group. J. Mol. Neurosci. 53, S61. [Google Scholar]

- 84.Collignon B, Séguret A, Halloy J. 2016. A stochastic vision-based model inspired by zebrafish collective behaviour in heterogeneous environments. R. Soc. Open Sci. 3, 150473 ( 10.1098/rsos.150473) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Ropert-Coudert Y, Wilson RP. 2005. Trends and perspectives in animal-attached remote sensing. Front. Ecol. Environ. 3, 437 ( 10.2307/3868660) [DOI] [Google Scholar]

- 86.Brown DD, Kays R, Wikelski M, Wilson R, Klimley A. 2013. Observing the unwatchable through acceleration logging of animal behavior. Anim. Biotelem. 1, 20 ( 10.1186/2050-3385-1-20) [DOI] [Google Scholar]

- 87.Martín López LM, Aguilar de Soto N, Miller P, Johnson M. 2016. Tracking the kinematics of caudal-oscillatory swimming: a comparison of two on-animal sensing methods. J. Exp. Biol. 219, 2103–2109. ( 10.1242/jeb.136242) [DOI] [PubMed] [Google Scholar]

- 88.Wilson RP, White CR, Quintana F, Halsey LG, Liebsch N, Martin GR, Butler PJ. 2006. Moving towards acceleration for estimates of activity-specific metabolic rate in free-living animals: the case of the cormorant. J. Anim. Ecol. 75, 1081–1090. ( 10.1111/j.1365-2656.2006.01127.x) [DOI] [PubMed] [Google Scholar]

- 89.Qasem L, Cardew A, Wilson A, Griffiths I, Halsey LG, Shepard ELC, Gleiss AC, Wilson R. 2012. Tri-axial dynamic acceleration as a proxy for animal energy expenditure; should we be summing values or calculating the vector? PLoS ONE 7, e31187 ( 10.1371/journal.pone.0031187) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Simon M, Johnson M, Madsen PT. 2012. Keeping momentum with a mouthful of water: behavior and kinematics of humpback whale lunge feeding. J. Exp. Biol. 215, 3786–3798. ( 10.1242/jeb.071092) [DOI] [PubMed] [Google Scholar]

- 91.Martín López LM, Miller PJO, Aguilar de Soto N, Johnson M. 2015. Gait switches in deep-diving beaked whales: biomechanical strategies for long-duration dives. J. Exp. Biol. 218, 1325–1338. ( 10.1242/jeb.106013) [DOI] [PubMed] [Google Scholar]

- 92.Ware C, Trites AW, Rosen DAS, Potvin J. 2016. Averaged propulsive body acceleration (APBA) can be calculated from biologging tags that incorporate gyroscopes and accelerometers to estimate swimming speed, hydrodynamic drag and energy expenditure for Steller sea lions. PLoS ONE 11, e0157326 ( 10.1371/journal.pone.0157326) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 93.Williams TM, Wolfe L, Davis T, Kendall T, Richter B, Wang Y, Bryce C, Elkaim GH, Wilmers CC. 2014. Mammalian energetics. Instantaneous energetics of puma kills reveal advantage of felid sneak attacks. Science 346, 81–85. ( 10.1126/science.1254885) [DOI] [PubMed] [Google Scholar]

- 94.Ydesen KS, Wisniewska DM, Hansen JD, Beedholm K, Johnson M, Madsen PT. 2014. What a jerk: prey engulfment revealed by high-rate, super-cranial accelerometry on a harbour seal (Phoca vitulina). J. Exp. Biol. 217, 2239–2243. ( 10.1242/jeb.100016) [DOI] [PubMed] [Google Scholar]

- 95.Fehlmann G, O'Riain MJ, Hopkins PW, O'Sullivan J, Holton MD, Shepard ELC, King AJ. 2017. Identification of behaviours from accelerometer data in a wild social primate. Anim. Biotelem. 5, 6 ( 10.1186/s40317-017-0121-3) [DOI] [Google Scholar]

- 96.Nathan R, Spiegel O, Fortmann-Roe S, Harel R, Wikelski M, Getz WM. 2012. Using tri-axial acceleration data to identify behavioral modes of free-ranging animals: general concepts and tools illustrated for griffon vultures. J. Exp. Biol. 215, 986–996. ( 10.1242/jeb.058602) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 97.Wilson RP, Shepard E, Liebsch N. 2008. Prying into the intimate details of animal lives: use of a daily diary on animals. Endanger. Species Res. 4, 123–137. ( 10.3354/esr00064) [DOI] [Google Scholar]

- 98.Wilson RP, Wilson M-P. 1988. Dead reckoning: a new technique for determining penguin movements at sea. https://books.google.com/books/about/Dead_reckoning.html?hl=&id=YzmAtwAACAAJ .

- 99.Bidder OR, et al. 2015. Step by step: reconstruction of terrestrial animal movement paths by dead-reckoning. Movement Ecol. 3, 23 ( 10.1186/s40462-015-0055-4) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 100.Ware C, Arsenault R, Plumlee M, Wiley D. 2006. Visualizing the underwater behavior of humpback whales. IEEE Comput. Graph. Appl. 26, 14–18. ( 10.1109/MCG.2006.93) [DOI] [PubMed] [Google Scholar]

- 101.Schmidt V, Weber TC, Wiley DN, Johnson MP. 2010. Underwater tracking of humpback whales (Megaptera novaeangliae) with high-frequency pingers and acoustic recording tags. IEEE J. Ocean. Eng. 35, 821–836. ( 10.1109/joe.2010.2068610) [DOI] [Google Scholar]

- 102.Wensveen PJ, Thomas L, Miller PJO. 2015. A path reconstruction method integrating dead-reckoning and position fixes applied to humpback whales. Movement Ecol. 3, 31 ( 10.1186/s40462-015-0061-6) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Fischer J, Zinner D. 2011. Communication and cognition in primate group movement. Int. J. Primatol. 32, 1279–1295. ( 10.1007/s10764-011-9542-7) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 104.King AJ, Sueur C. 2011. Where next? Group coordination and collective decision making by primates. Int. J. Primatol. 32, 1245–1267. ( 10.1007/s10764-011-9526-7) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 105.Gall GEC, Manser MB. 2017. Group cohesion in foraging meerkats: follow the moving ‘vocal hot spot’. R. Soc. Open Sci. 4, 170004 ( 10.1098/rsos.170004) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 106.Radford AN. 2004. Vocal coordination of group movement by green woodhoopoes (Phoeniculus purpureus). Ethology 110, 11–20. ( 10.1046/j.1439-0310.2003.00943.x) [DOI] [Google Scholar]

- 107.Leighty KA, Soltis J, Wesolek CM, Savage A. 2008. Rumble vocalizations mediate interpartner distance in African elephants, Loxodonta africana. Anim. Behav. 76, 1601–1608. ( 10.1016/j.anbehav.2008.06.022) [DOI] [Google Scholar]

- 108.Lusseau D, Conradt L. 2009. The emergence of unshared consensus decisions in bottlenose dolphins. Behav. Ecol. Sociobiol. 63, 1067–1077. ( 10.1007/s00265-009-0740-7) [DOI] [Google Scholar]

- 109.Marshall G. 2007. Advances in animal-borne imaging. Mar. Technol. Soc. J. 41, 4–5. ( 10.4031/002533207787441926) [DOI] [Google Scholar]

- 110.Marshall GJ. 1998. Crittercam: an animal-borne imaging and data logging system. Mar. Technol. Soc. J. 32, 11. [Google Scholar]

- 111.Troscianko J, Rutz C. 2015. Activity profiles and hook-tool use of New Caledonian crows recorded by bird-borne video cameras. Biol. Lett. 11, 20150777 ( 10.1098/rsbl.2015.0777) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 112.Rosen H, Gilly W, Bell L, Abernathy K, Marshall G. 2015. Chromogenic behaviors of the Humboldt squid (Dosidicus gigas) studied in situ with an animal-borne video package. J. Exp. Biol. 218, 265–275. ( 10.1242/jeb.114157) [DOI] [PubMed] [Google Scholar]

- 113.Yoda K, Murakoshi M, Tsutsui K, Kohno H. 2011. Social interactions of juvenile brown boobies at sea as observed with animal-borne video cameras. PLoS ONE 6, e19602 ( 10.1371/journal.pone.0019602) [DOI] [PMC free article] [PubMed] [Google Scholar]

- 114.Akamatsu T, Matsuda A, Suzuki S, Wang D, Wang K, Suzuki M, Muramoto H, Sugiyama N, Oota K. 2005. New stereo acoustic data logger for free-ranging dolphins and porpoises. Mar. Technol. Soc. J. 39, 3–9. ( 10.4031/002533205787443980) [DOI] [Google Scholar]

- 115.Johnson MP, Tyack PL. 2003. A digital acoustic recording tag for measuring the response of wild marine mammals to sound. IEEE J. Ocean. Eng. 28, 3–12. ( 10.1109/joe.2002.808212) [DOI] [Google Scholar]

- 116.Burgess WC. 2000. The bioacoustic probe: a general-purpose acoustic recording tag. J. Acoust. Soc. Am. 108, 2583 ( 10.1121/1.4743598) [DOI] [Google Scholar]

- 117.Lynch E, Angeloni L, Fristrup K, Joyce D, Wittemyer G. 2013. The use of on-animal acoustical recording devices for studying animal behavior. Ecol. Evol. 3, 2030–2037. ( 10.1002/ece3.608) [DOI] [PMC free article] [PubMed] [Google Scholar]