Version Changes

Revised. Amendments from Version 1

This revision addresses the concerns raised by the reviewers of this article. In detail: minor changes, including typos such as ‘gaussian’ -> ‘Gaussian’ and ‘prior masking’ -> ‘prior to masking’, have been made according to Prof. Norris’s comments. It is now explicitly mentioned that ‘axial’ slices were acquired. Moreover, the conversion of units from ‘FWHM’ to ‘cycles/mm’ is now properly referenced. The probable reason for auditory decoding performing better than visual decoding is also discussed in the Results and conclusions section. To address Dr. Pernet’s comments, the manuscript now explicitly mentions the inclusion of temporal derivatives in the design matrix. In addition, the appropriateness of applying repeated random sampling instead of leave-one-out cross-validation is also discussed in the manuscript.

Abstract

Spatial filtering strategies, combined with multivariate decoding analysis of BOLD images, have been used to investigate the nature of the neural signal underlying the discriminability of brain activity patterns evoked by sensory stimulation – primarily in the visual cortex. Previous research indicates that such signals are spatially broadband in nature, and do not primarily consist of fine-grained activation patterns. However, it is unclear whether this is a general property of the BOLD signal, or whether it is specific to the details of the employed analyses and stimuli. Here we applied an analysis strategy from a previous study on decoding visual orientation from V1 to publicly available, high-resolution 7T fMRI data on the BOLD response to musical genres in primary auditory cortex. The results show that the pattern of decoding accuracies with respect to different types and levels of spatial filtering is comparable to that obtained from V1, despite considerable differences in the respective cortical circuitry.

Keywords: musical-genre decoding, 7 Tesla fMRI, primary auditory cortex, spatial band-pass filtering

Introduction

We recently reported 1 that spatial band-pass filtering of 7 Tesla BOLD fMRI data boosts the accuracy of decoding visual orientations from human V1. We observed this result in comparison to data without any dedicated spatial filtering applied, and to spatially low-pass filtered data – a typical preprocessing strategy for BOLD fMRI. This effect was present across a range of tested spatial acquisition resolutions, from 0.8 mm to 2 mm isotropic voxel size (Figure 4 in 1). The band-pass spatial filtering procedure was performed by a difference-of-Gaussians (DoG) filter similar to Supplementary Figure 5 in 1. Decoding across frequency bands indicated the presence of orientation-related signal in a wide range of spatial frequencies, as shown by above-chance decoding performance for nearly all tested bands. Maximum decoding performance was observed for a band of 5–8 mm full width at half maximum (FWHM), indicating that low spatial frequency fMRI components also contribute to noise with respect to orientation discrimination.

This finding raises the question whether this reflects a specific property of early visual cortex and the particular stimuli used in 1, or whether it represents a more general aspect of BOLD fMRI data with implications for data preprocessing of decoding analyses. Here, we investigate this question by applying the identical analysis strategy from 1 to a different public 7 Tesla BOLD fMRI dataset 2, with the aim of decoding the musical genres of short audio clips from the early auditory cortex.

Methods

As this study aims to replicate previously reported findings, by employing a previously published analysis strategy on an existing dataset, the full methodological details are not repeated here. Instead the reader is kindly referred to 2, 3 for comprehensive descriptions of the data, and to 1 for details on the analysis strategy and previous findings. Only key information and differences are reported below.

Stimulus and fMRI data

Data were taken from a published dataset 2, which has been analyzed repeatedly before 4, 5 and is publicly available from the studyforrest.org project. In this dataset, 20 participants passively listened to five natural, stereo, high-quality music stimuli (6 s duration; 44.1 kHz sampling rate) for each of five different musical genres: 1) Ambient, 2) Roots Country, 3) Heavy Metal, 4) 50s Rock’n’Roll, and 5) Symphonic, while fMRI data were recorded in a 7 Tesla Siemens scanner (1.4 mm isotropic voxel size, TR=2 s, matrix size 160 × 160, 36 axial slices, 10% interslice gap). fMRI data were scanner-side corrected for spatial distortions 6. Stimulation timing and frequency were roughly comparable to 1: 25 vs. 30 trials per run, 10 s vs. 8 s minimum inter-trial stimulus onset asynchrony in a slow event-related design, 8 vs. 10 acquisition runs. Subject 20 was excluded from the analysis due to incomplete data.

Region of interest (ROI) localization

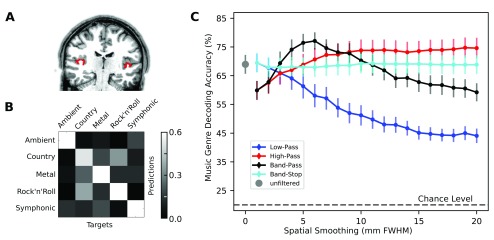

Analogous to 1, ROIs were localized separately for each individual brain. ROIs were the left and right transverse temporal gyri, as defined by the structural Desikan-Killiany atlas 7 from the previously published FreeSurfer-based cortex parcellations for all studyforrest.org participants 3. This ROI approximates the location of primary auditory cortex, including Brodmann areas 41 and 42 (Figure 1A). The average number of voxels in the ROI across participants was 1412 (std=357).
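As a minimal sketch of how such a bilateral ROI mask could be derived, the snippet below selects the two transverse temporal labels from a volumetric FreeSurfer parcellation. It assumes that the parcellation has already been resampled to the BOLD voxel grid and that it uses the label codes 1034 and 2034 (ctx-lh-transversetemporal and ctx-rh-transversetemporal in the standard FreeSurfer lookup table). The function name and the toy volume are hypothetical, and the published analysis code may proceed differently.

```python
import numpy as np

# Label codes of the left/right transverse temporal gyrus in FreeSurfer's
# standard colour lookup table (assumption: aparc+aseg-style label volume).
TRANSVERSE_TEMPORAL_LABELS = (1034, 2034)


def bilateral_roi_mask(parcellation, labels=TRANSVERSE_TEMPORAL_LABELS):
    """Return a boolean mask that is True wherever a voxel carries an ROI label."""
    return np.isin(parcellation, labels)


# Toy 4x4x4 label volume standing in for a real parcellation (hypothetical data).
toy = np.zeros((4, 4, 4), dtype=int)
toy[0, 0, :2] = 1034  # two left-hemisphere ROI voxels
toy[3, 3, :3] = 2034  # three right-hemisphere ROI voxels
toy[1, 1, 1] = 1030   # a different cortical label that must be excluded

mask = bilateral_roi_mask(toy)
print("ROI voxels:", int(mask.sum()))
```

Applying the resulting mask to a BOLD volume (for example `bold[mask]`) yields one value per ROI voxel, which is the form needed for voxelwise modelling.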

Figure 1.

(A) Localization of early auditory cortex (transverse temporal gyrus) as shown in a coronal slice of a participant (sub-16). (B) Confusion matrix showing the mean performance of the LinearCSVMC classifier across all participants in decoding musical genres from early auditory cortex in spatially unfiltered data. (C) Classification accuracy of decoding musical genres across different types and levels of spatial Gaussian filtering. The theoretical chance performance of 20% is shown by the dashed line. This figure has been generated from original analysis of the dataset made publicly available 2 under the terms of the Public Domain Dedication and License.

fMRI data analysis

Motion-corrected and distortion-corrected BOLD images from the publicly available dataset 2 were analyzed. Images for each participant, available from the dataset under the filename pattern sub*/BOLD/task002_run*/bold_dico_bold7Tp1_to_subjbold7Tp1.nii.gz, were already aligned across acquisition runs. Analogous to 1, BOLD images were masked to the defined bilateral ROI, and voxelwise BOLD responses were univariately modeled for each run using the GLM implementation in NiPy [v0.3; 8] while accounting for serial correlation with an autoregressive term (AR1). The GLM design matrix included hemodynamic response regressors, one for each genre, and their corresponding temporal derivatives for improved parameter estimation 9, as well as six nuisance regressors for motion (translation and rotation) and polynomial regressors (up to 2nd order) modeling temporal signal drift as regressors of no interest. The β weights thus computed for each run were Z-scored per voxel. Multivariate decoding was performed on these Z-scored β weights using linear support vector machines [SVM; PyMVPA’s LinearCSVMC implementation of the LIBSVM classification algorithm; 10, 11] in a within-subject leave-one-run-out cross-validation of 5-way multi-class classification of musical genres. Leave-one-run-out cross-validation was used in order to enable comparison with previous results, although it has recently been argued that repeated random splits are a superior validation scheme 12. The hyper-parameter C of the SVM classifier was scaled to the norm of the data. Decoding was performed using the entire bilateral ROI.
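The per-run Z-scoring and the leave-one-run-out scheme described above can be sketched as follows. This is an illustration under stated assumptions, not the published pipeline: the data are synthetic, all function names are hypothetical, and a simple nearest-centroid classifier stands in for the linear SVM so that the example depends on NumPy only.

```python
import numpy as np


def zscore_per_run(betas, runs):
    """Z-score each voxel (column) separately within each acquisition run."""
    out = np.empty_like(betas, dtype=float)
    for run in np.unique(runs):
        idx = runs == run
        block = betas[idx]
        sd = block.std(axis=0)
        sd[sd == 0] = 1.0  # guard against constant voxels
        out[idx] = (block - block.mean(axis=0)) / sd
    return out


def leave_one_run_out(runs):
    """Yield (train, test) boolean masks, holding out one run at a time."""
    for run in np.unique(runs):
        test = runs == run
        yield ~test, test


def nearest_centroid_predict(train_x, train_y, test_x):
    """Stand-in classifier (NOT the SVM of the study): pick the closest class mean."""
    labels = np.unique(train_y)
    centroids = np.stack([train_x[train_y == lab].mean(axis=0) for lab in labels])
    dist = ((test_x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return labels[dist.argmin(axis=1)]


def cross_validated_accuracy(betas, genres, runs):
    """Fraction of correctly classified samples over all held-out runs."""
    z = zscore_per_run(betas, runs)
    correct = 0
    for train, test in leave_one_run_out(runs):
        pred = nearest_centroid_predict(z[train], genres[train], z[test])
        correct += int((pred == genres[test]).sum())
    return correct / len(genres)


# Synthetic example: 8 runs x 5 genres, one beta pattern per genre and run.
rng = np.random.default_rng(0)
n_runs, n_genres, n_voxels = 8, 5, 50
runs = np.repeat(np.arange(n_runs), n_genres)
genres = np.tile(np.arange(n_genres), n_runs)
patterns = rng.normal(size=(n_genres, n_voxels))
betas = patterns[genres] + 0.1 * rng.normal(size=(len(genres), n_voxels))

print("accuracy:", cross_validated_accuracy(betas, genres, runs))
```

Holding out whole runs keeps training and test samples from sharing run-specific noise, which is the main reason for splitting by run rather than by individual sample.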

In line with 1, 13, complete BOLD images were spatially filtered prior to masking and GLM modeling, as prior results suggest a negligible impact of alternative filtering strategies (see Figure S4 in 1). The magnitude of spatial filtering is expressed in terms of the size of the Gaussian filter kernel(s), described by their FWHM in mm (a conversion of this unit to cycles/mm is shown in Supplementary Figure 5 in 1). The image_smooth() function in the nilearn package 14 was used to implement all spatial smoothing procedures. The implementations of Gaussian low-pass (LP) and high-pass (HP) filters, as well as the DoG filters for band-pass (BP) and band-stop (BS) filtering, are identical to those of 1 (1 mm FWHM filter size difference).
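The four filter types can be illustrated with a short NumPy sketch. It rests on assumptions that are not spelled out in this article: the HP image is taken as the original minus its LP-filtered version, the BP image as the difference of two LP-filtered images whose FWHM differ by 1 mm, and the BS image as the original minus the BP image. The separable Gaussian below replaces the nilearn smoothing call for the sake of a self-contained example, and all function names are hypothetical.

```python
import numpy as np

# sigma = FWHM / (2 * sqrt(2 * ln 2)), i.e. roughly FWHM / 2.355
FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))


def low_pass(volume, fwhm_mm, voxel_mm=1.4):
    """Gaussian low-pass filter of a 3D array; FWHM and voxel size in mm."""
    out = np.asarray(volume, dtype=float)
    if fwhm_mm <= 0:
        return out.copy()
    sigma_vox = fwhm_mm * FWHM_TO_SIGMA / voxel_mm
    radius = int(np.ceil(4 * sigma_vox))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-0.5 * (x / sigma_vox) ** 2)
    kernel /= kernel.sum()  # unit gain, so constant images are preserved

    def smooth_1d(v):
        # Edge padding keeps the output length equal to the input length.
        return np.convolve(np.pad(v, radius, mode="edge"), kernel, mode="valid")

    for axis in range(out.ndim):  # separable filter: one pass per axis
        out = np.apply_along_axis(smooth_1d, axis, out)
    return out


def high_pass(volume, fwhm_mm, voxel_mm=1.4):
    """Assumed HP definition: original minus its low-pass filtered version."""
    return np.asarray(volume, dtype=float) - low_pass(volume, fwhm_mm, voxel_mm)


def band_pass(volume, fwhm_mm, width_mm=1.0, voxel_mm=1.4):
    """Assumed DoG definition: LP(fwhm) minus LP(fwhm + width)."""
    return low_pass(volume, fwhm_mm, voxel_mm) - low_pass(
        volume, fwhm_mm + width_mm, voxel_mm
    )


def band_stop(volume, fwhm_mm, width_mm=1.0, voxel_mm=1.4):
    """Assumed BS definition: original minus the DoG band-pass component."""
    return np.asarray(volume, dtype=float) - band_pass(
        volume, fwhm_mm, width_mm, voxel_mm
    )


# Synthetic 3D volume standing in for one BOLD image (hypothetical data).
rng = np.random.default_rng(1)
volume = rng.normal(size=(10, 10, 10))
bp = band_pass(volume, fwhm_mm=5.0)
print("band-pass image shape:", bp.shape)
```

Under these definitions the LP and HP images sum to the original, as do the BP and BS images, so each pair splits the signal into complementary spatial frequency components.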

Results and conclusions

Figure 1 shows the mean accuracy across 19 participants for classifying the genre of music clips from BOLD response patterns of bilateral early auditory cortex. Compared to visual orientation decoding from V1 1, the mean accuracy of decoding musical genres without dedicated spatial filtering exhibits a substantially higher baseline (for 1.4 mm unfiltered data, mean orientation decoding accuracy was around 35%, whereas mean decoding of musical genres was at around 65%). However, the general pattern of accuracies across all filter sizes and filter types strongly resembles the results of orientation decoding from V1. The superior decoding performance here, in comparison to the oriented Gabor gratings used for visual decoding, could be the result of the richer naturalistic stimuli, whose features such as pitch, timbre, and speech lead to more separable fMRI activation patterns across genres. LP filtering led to a steady decline of performance with increasing filter size, but performance did not reach chance level even with a 20 mm smoothing kernel. In contrast to LP filtering, HP filtered data yielded superior decoding results for filter sizes of 4 mm and larger. Congruent with 1, BP filtering led to maximum decoding accuracy in the ≈5–8 mm FWHM band. The accuracy achieved on BP filtered data at 6 mm FWHM was significantly higher than that without any dedicated spatial filtering (McNemar test with continuity correction 15: χ²=33.22, p<10⁻⁶). BS filtering led to an approximately constant performance regardless of the base filter size, on the same level as with no dedicated spatial filtering.
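For readers unfamiliar with the test, the McNemar statistic with continuity correction compares two classifiers on the same samples using only the discordant counts: b (samples correct with the first method only) and c (samples correct with the second method only), giving χ² = (|b − c| − 1)² / (b + c) with one degree of freedom. The sketch below uses hypothetical counts, not the counts of this study, and a hypothetical function name.

```python
import math


def mcnemar_continuity(b, c):
    """McNemar chi-square (1 df) with continuity correction and its p-value.

    b, c: numbers of samples classified correctly by only one of the two
    methods being compared (the discordant pairs).
    """
    if b + c == 0:
        raise ValueError("McNemar test is undefined without discordant pairs")
    chi2 = (abs(b - c) - 1) ** 2 / (b + c)
    # Survival function of the chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Hypothetical discordant counts, for illustration only.
chi2, p_value = mcnemar_continuity(b=25, c=5)
print(f"chi2={chi2:.2f}, p={p_value:.2g}")
```

Evaluating the same survival function at the reported χ² of 33.22 gives a value below 10⁻⁶, in agreement with the p-value stated above.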

In line with Gardumi et al. 16, these results suggest that BOLD response patterns informative for decoding musical genre from early auditory cortex are spatially distributed and are represented at different spatial scales. However, despite their broadband nature, relevant information seems to be concentrated in the spatial frequency band corresponding to a ≈5–8 mm DoG filter. Most notably, the present findings show a striking similarity to the visual orientation decoding accuracy patterns in V1 1. The origin and spatial scale of signals beneficial for decoding BOLD response patterns are an intensely debated topic in the literature, and various studies have looked at this question in the context of the anatomical or topographical structure of visual cortex 13, 17–19. There are substantial differences between the auditory and visual cortex in terms of anatomy, synaptic physiology, and the circuitry of cortical layers and their connections with other cortical areas and subcortical nuclei 20. The present results indicate that these differences have little impact on the spatial characteristics of those BOLD signal components that are relevant for decoding visual orientation or genre of music. In summary, these findings call for further investigations of neural and physiological signals underlying decoding models that are common across sensory domains and individual cortical areas. The increasing availability of diverse open brain imaging data can aid the evaluation of the generality and validity of explanatory models.

Data and software availability

OpenFMRI.org: High-resolution 7-Tesla fMRI data on the perception of musical genres. Accession number: ds000113b.

Article sources for 7-Tesla fMRI data on the perception of musical genres are available: https://doi.org/10.5281/zenodo.18767 21

“Forrest Gump” data release source code is available: https://doi.org/10.5281/zenodo.18770 22

The code used for the analyses in this study is openly available: https://doi.org/10.5281/zenodo.1158836 23

Funding Statement

AS and SP were supported by a grant from the German Research Council (DFG) awarded to S. Pollmann (PO 548/15-1). MH was supported by funds from the German federal state of Saxony-Anhalt and the European Regional Development Fund (ERDF), Project: Center for Behavioral Brain Sciences. This research was, in part, also supported by the German Federal Ministry of Education and Research (BMBF) as part of a US-German collaboration in computational neuroscience (CRCNS; awarded to J.V. Haxby, P. Ramadge, and M. Hanke), co-funded by the BMBF and the US National Science Foundation (BMBF 01GQ1112; NSF 1129855).

The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

[version 2; referees: 2 approved]

References

- 1. Sengupta A, Yakupov R, Speck O, et al.: The effect of acquisition resolution on orientation decoding from V1 BOLD fMRI at 7T. Neuroimage. 2017;148:64–76. 10.1016/j.neuroimage.2016.12.040

- 2. Hanke M, Dinga R, Häusler C, et al.: High-resolution 7-Tesla fMRI data on the perception of musical genres – an extension to the studyforrest dataset [version 1; referees: 2 approved with reservations]. F1000Res. 2015;4:174. 10.12688/f1000research.6679.1

- 3. Hanke M, Baumgartner FJ, Ibe P, et al.: A high-resolution 7-Tesla fMRI dataset from complex natural stimulation with an audio movie. Sci Data. 2014;1:140003. 10.1038/sdata.2014.3

- 4. Güçlü U, Thielen J, Hanke M, et al.: Brains on beats. In: Advances in Neural Information Processing Systems. 2016;2101–2109.

- 5. Casey MA: Music of the 7Ts: Predicting and Decoding Multivoxel fMRI Responses with Acoustic, Schematic, and Categorical Music Features. Front Psychol. 2017;8:1179. 10.3389/fpsyg.2017.01179

- 6. In MH, Speck O: Highly accelerated PSF-mapping for EPI distortion correction with improved fidelity. MAGMA. 2012;25(3):183–192. 10.1007/s10334-011-0275-6

- 7. Desikan RS, Ségonne F, Fischl B, et al.: An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. Neuroimage. 2006;31(3):968–980. 10.1016/j.neuroimage.2006.01.021

- 8. Millman KJ, Brett M: Analysis of functional magnetic resonance imaging in Python. Comput Sci Eng. 2007;9(3):52–55. 10.1109/MCSE.2007.46

- 9. Pernet CR: Misconceptions in the use of the General Linear Model applied to functional MRI: a tutorial for junior neuro-imagers. Front Neurosci. 2014;8:1. 10.3389/fnins.2014.00001

- 10. Chang CC, Lin CJ: LIBSVM: A library for support vector machines. ACM Trans Intell Syst Technol. 2011;2(3):27. 10.1145/1961189.1961199

- 11. Hanke M, Halchenko YO, Sederberg PB, et al.: PyMVPA: A Unifying Approach to the Analysis of Neuroscientific Data. Front Neuroinform. 2009;3:3. 10.3389/neuro.11.003.2009

- 12. Varoquaux G, Raamana PR, Engemann DA, et al.: Assessing and tuning brain decoders: Cross-validation, caveats, and guidelines. Neuroimage. 2017;145(Pt B):166–179. 10.1016/j.neuroimage.2016.10.038

- 13. Swisher JD, Gatenby JC, Gore JC, et al.: Multiscale pattern analysis of orientation-selective activity in the primary visual cortex. J Neurosci. 2010;30(1):325–30. 10.1523/JNEUROSCI.4811-09.2010

- 14. Pedregosa F, Varoquaux G, Gramfort A, et al.: Scikit-learn: Machine learning in Python. J Mach Learn Res. 2011;12:2825–2830.

- 15. Edwards AL: Note on the correction for continuity in testing the significance of the difference between correlated proportions. Psychometrika. 1948;13(3):185–187. 10.1007/BF02289261

- 16. Gardumi A, Ivanov D, Hausfeld L, et al.: The effect of spatial resolution on decoding accuracy in fMRI multivariate pattern analysis. Neuroimage. 2016;132:32–42. 10.1016/j.neuroimage.2016.02.033

- 17. Freeman J, Brouwer GJ, Heeger DJ, et al.: Orientation decoding depends on maps, not columns. J Neurosci. 2011;31(13):4792–804. 10.1523/JNEUROSCI.5160-10.2011

- 18. Alink A, Krugliak A, Walther A, et al.: fMRI orientation decoding in V1 does not require global maps or globally coherent orientation stimuli. Front Psychol. 2013;4:493. 10.3389/fpsyg.2013.00493

- 19. Freeman J, Heeger DJ, Merriam EP: Coarse-scale biases for spirals and orientation in human visual cortex. J Neurosci. 2013;33(50):19695–703. 10.1523/JNEUROSCI.0889-13.2013

- 20. Linden JF, Schreiner CE: Columnar transformations in auditory cortex? A comparison to visual and somatosensory cortices. Cereb Cortex. 2003;13(1):83–89. 10.1093/cercor/13.1.83

- 21. Hanke M: paper-f1000_pandora_data: Initial submission (Version submit_v1). Zenodo. 2015.

- 22. Hanke M, v-iacovella, Häusler C: gumpdata: Matching release for pandora data paper publication (Version pandora_release1). Zenodo. 2015.

- 23. Sengupta A: psychoinformatics-de/studyforrest-paper-auditorydecoding: v1.0 (Version v1.0). Zenodo. 2018.