Abstract

When a meta-analysis consists of a few small trials that report zero events, accounting for heterogeneity in the (interval) estimation of the overall effect is challenging. Typically, we predefine meta-analytical methods to be employed. In practice, data poses restrictions that lead to deviations from the pre-planned analysis, such as the presence of zero events in at least one study arm. We aim to explore heterogeneity estimators behaviour in estimating the overall effect across different levels of sparsity of events. We performed a simulation study that consists of two evaluations. We considered an overall comparison of estimators unconditional on the number of observed zero cells and an additional one by conditioning on the number of observed zero cells. Estimators that performed modestly robust when (interval) estimating the overall treatment effect across a range of heterogeneity assumptions were the Sidik-Jonkman, Hartung-Makambi and improved Paul-Mandel. The relative performance of estimators did not materially differ between making a predefined or data-driven choice. Our investigations confirmed that heterogeneity in such settings cannot be estimated reliably. Estimators whose performance depends strongly on the presence of heterogeneity should be avoided. The choice of estimator does not need to depend on whether or not zero cells are observed.

Keywords: Meta-analysis, Zero events, Small populations, Rare diseases, Heterogeneity

1. Introduction

Meta-analyses (MAs) techniques are commonly employed in order to obtain a more precise and more general effect estimate of a treatment. Heterogeneity (τ) of treatment effects measured in multiple Randomized Controlled Trials (RCTs) is a crucial part of the estimation [1].

In MAs of RCTs, methodological challenges arise when the disease under examination is rare and only a few small RCTs are available [2,3]. This is mostly due to the large sample assumptions on which most MA methods are based. In the case of rare diseases with binary endpoints, zero cells are more likely to be observed in at least one of the treatment arms of at least one contributing trial [[4], [5], [6]]. Zero cells in MAs pose challenges as they induce bias in both the estimation of the overall effect and the between-study variance (heterogeneity) [[7], [8], [9], [10], [11], [12], [13], [14]].

When conducting a MA, the estimation method might be adjusted conditionally on observing zero cells. Corrections are typically introduced by adding a number to the zero cells observed; furthermore, the choice of the heterogeneity estimator could change. The latter choice is by itself a challenging task, given the large pool of options [[15], [16], [17], [18], [19], [20], [21], [22], [23], [24]]. Prospective choice of analysis strategies is a fundamental element of statistical inference. The extent to which conditional (on the observed zero cells) analysis choices can affect robustness is of obvious concern.

Especially for dealing with a MA of a few RCTs, there is no straightforward answer to which estimator would be robust across several heterogeneity assumptions [21]. Most estimators face difficulties in case of a limited number of trials; they induce bias in the estimation of τ [25,26] and may result in inappropriate interval estimation of the treatment effect. However, not much is known regarding their behaviour in the presence of zero cells and small populations.

The primary objective of this work is to assess the robustness of heterogeneity estimators in the (interval) estimation of treatment effect across ranges of sparsity of events and assumed heterogeneity. The starting point is the acknowledged poor estimation of heterogeneity in this setting. We evaluate the estimators in case they are predefined (unconditional), as well as when they are chosen depending on the observed zero cells in contributing trials (conditional on the observed data, in short: conditional), and explore whether such a retrospective analysis choice can substantially affect inference.

The paper is organized as follows. First we describe the standard random-effects (RE) model and introduce the heterogeneity estimators briefly. Subsequently, we present two motivating examples and their analysis. Then we describe the simulation study and evaluate the two distinct approaches. We conclude with recommendations on evidence synthesis for a sparse-events MA in small populations.

2. Methods

We consider a set of k trials with binary outcomes that compare an experimental treatment to a control. Patients are randomized between two groups: treatment (T) and control (C).

By we denote the log odds ratio (logOR) in the trial. Following standard theory (e.g. Ref. [1]), we assume:

| (1) |

The study-specific treatment effect estimates are , while their variances are , where and denote the number of responders and the total number of subjects in each trial, respectively.

Assuming a fixed-effects (FE) model, θ is common for all studies (). Assuming a RE model, the are considered exchangeable and follow a normal distribution, that is,

| (2) |

where θ is the overall effect and is the between-study variance. When , then the RE model reduces to the FE model. The pooled effect estimate is calculated as a weighted average . The inverse variance (IV) weights are then defined as for the RE model and as for the FE model.

A standard confidence interval is calculated as, , where is the () quantile of the standard normal distribution and .

To apply the RE model, estimation of heterogeneity is required. In the presence of zero cells, heterogeneity estimators entail the addition of a small continuity correction (CC) on zero cells in order to provide finite estimates. Several methods for estimating are proposed in the literature. Table 1 presents a summary of the 15 estimators that are included in this study. For a detailed overview of heterogeneity estimators, we refer the reader to two systematic reviews [27,28].

Table 1.

Summary of heterogeneity estimators, including their equation, abbreviation and source.

| Methods | Equation | Abbreviation | Source |

|---|---|---|---|

| DerSimonian Laird | dl | [15] | |

| Positive DerSimonian Laird | and | dlp | [17] |

| Two-step Der Simonian Laird | dl2 | [16] | |

| Hedges | he | [24] | |

| Two step Hedges | Similar to DL2 using the Hedges estimator | he2 | [16] |

| Positive Sidik-Jonkman | , | sj | [20] |

| Model error variance - vc | , | mvvc | [20] |

| Paul-Mandel | , | pm | [18] |

| Improved Paul-Mandel | , | Ipm | [19] |

| Hartung - Makambi | hm | [22] | |

| Hunter-Schmidt | hs | [23] | |

| Maximum Likelihood | ml | – | |

| Restricted Maximum likelihood | reml | – | |

| Rukhin Bayes zero estimator | rb0 | [21] | |

| Rukhin Bayesian positive | rbp | [21] |

, , , , : Observed control event rate, . The pm, ipm, ml and reml are iterative estimators.

3. Motivating examples

3.1. Intravenous immunoglobulin (IVIG) for Guillain-Barre syndrome (GBS)

GBS syndrome has a prevalence of 1–9/100.000 [29], the term is used to describe a number of rare post-infection neuropathies. Patients may recover completely, remain unable to walk 6 months after disease onset or have a fatal outcome. A recent Cochrane review and MA summarized four RCTs that compared IVIG to plasma exchange [4]. Treatment discontinuation was reported, as a secondary outcome. Trials which were relatively small either failed to report any event or they only had one in each arm. On the contrary, the largest of these trials reported a considerable number of events in both arms (Fig. 1). For the initial analysis the Mantel-Haenszel (MH) FE risk ratio 0.14 (95% 0.05–0.36) was used. By using the MH, the authors excluded information from trials with no reported event, which resulted in a significant overall effect with moderate estimated heterogeneity.

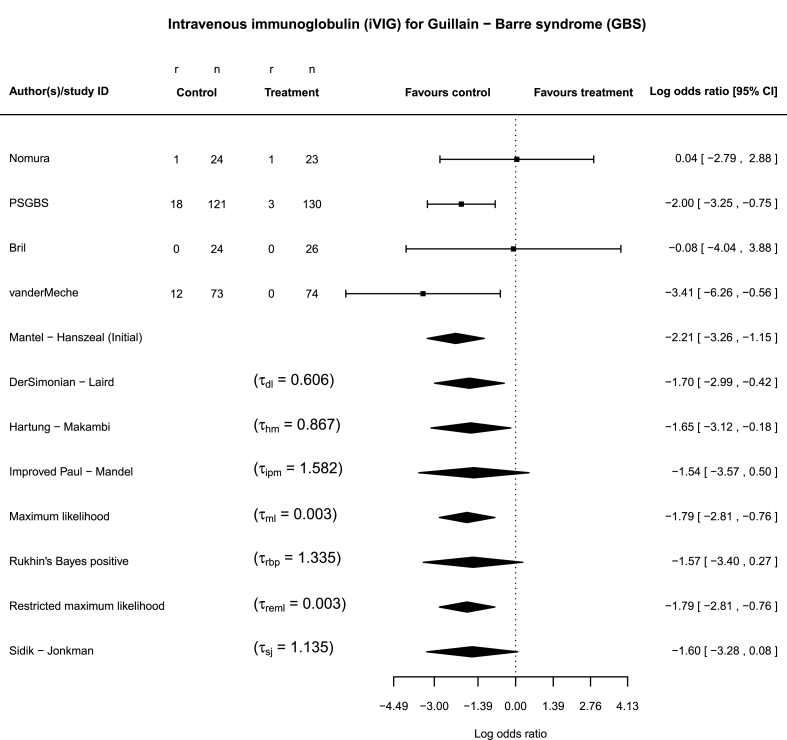

Fig. 1.

Forest plot of the overall treatment effect (log odds ratio) for the Guillain-Barre syndrome (GBS) example. The inverse-variance random-effects method is applied in combination with the seven heterogeneity estimators. The between-study standard deviations τ are presented alongside each estimator The confidence intervals are calculated as . The Mantel-Haenszel analysis is plotted as well.

3.2. Sapropterin dihydrochloride for phenylketonuria (PHK)

PHK is a common inborn error of amino acid metabolism that causes mental disability (mild to severe) to patients who are not treated properly. It is considered a rare child disorder with a prevalence of 1–5/10.000 [29]. A Cochrane review consisted of two studies on sapropterin dihydrochloride and reported on several adverse events, such as vomiting [5]. The two studies produced contradictory but not significant results overall (Supplementary material A - Table 1). Even though, the estimated heterogeneity was substantial, the studies were again pooled using a FE MH on the risk ratio 1.04 (95% 0.28–3.91) [5].

3.3. Analysis of motivating examples

In regards to our first example (GBS), the final conclusion is influenced considerably by the choice of the heterogeneity estimators. Estimators that lead to a larger estimate value of τ fail to reject the null hypothesis and therefore result in a more conservative conclusion (Fig. 1).

In the second example (PHK), the overall treatment effect changes direction, depending on the choice of estimator (Supplementary material A - Table 2). The overall treatment effect remains non-significant due to the contradictory results of the two available trials. When estimating the heterogeneity, we observe a behaviour similar to the first example.

4. Simulation study

In order to assess the performance of a predefined versus a data-driven choice of analysis in the aforementioned setting, we conducted a simulation study that is divided in two parts; (1) evaluating the operational characteristics for the whole simulation, which represents the ”unconditional approach” strategy and (2) evaluating the operational characteristics for subsets of the whole simulation that are defined by the number of observed zero cells in a simulated MA. The second part represents the ”conditional approach” strategy.

4.1. Unconditional approach

Following the strategy of Hartung and Knapp [30] we simulated logORs from the null and alternative hypothesis. We varied the overall treatment effect as and set the heterogeneity equal to . These four values correspond to and to levels of relative heterogeneity, which are calculated via simulation of , where j: number of simulations. The total number of trials was restricted within . Ten fixed values as of were used for the control group event rate of the outcome. By simulating a uniformly random draw between for each trial arm, we varied the samples sizes between trials, while we kept the allocation ratio within each trial equal to 1:1. The sample size and allocation ratio were kept fixed in order for similar number of zero events to be produced in each arm. In this way, the small sample sizes in combination with different levels of control event rate led to specific levels of expected zero-event arm percentages (Supplementary material A - Table 3).

4.2. Conditional approach

For the second approach we focused on the evaluation of a four (k = 4) trial MA, since the relative performance of the heterogeneity estimators was similar across k = 2,3,4 trials. The conditional simulation theoretically leads up to a maximum of 8 distinct subsets, since a four trial MA results from a minimum of 1 to a maximum of 8 zero-event arms. Of course, the latter ones are not useful to consider for a meta-analysis.

For the unconditional approach we based performance measures on 10,000 simulated MAs and evaluated all 15 τ estimators, while for the conditional approach we based performance measures on 1,000,000 simulated MAs and evaluated 7 selected τ estimators that we considered important from the unconditional analysis. A constant CC of 0.5 was added to all cells of a trial that reported at least one zero event. All computations were performed using R and the high performance cluster supported by Utrecht Bioinformatics Center. An overview of the varied parameters for each simulation approach is presented in Supplementary material A (Table 4).

4.3. Performance measures

We assessed the bias of heterogeneity and overall treatment effect estimates. We calculated the empirical type I error, the power and coverage of the 95% confidence intervals of the overall effect estimate. Finally, we computed the probability of each estimator to observe a non-zero heterogeneity estimate ().

5. Results

In our small population settings, many heterogeneity estimators performed similarly. More specifically, estimators can be grouped -based on their performance-in two groups. Estimators dl, dl2, dlp, he, he2, mvvc, pm and rb0 displayed similar behaviour in our study. Estimators ml and hs showed a similar insufficient performance in identifying heterogeneity (Supplementary material B). Based on this we selected a key set of 7 estimators for detailed evaluation; dl from the first group, ml from the second group and five estimators that displayed the most divergent behaviour sj, ipm, rbp, hm and reml. In the case of two studies, most heterogeneity estimators behaved similarly.

Regarding the unconditional approach, we summarize the results in two figures Fig. 2 () and Fig. 3 (). The same two scenarios are presented for the conditional approach in Fig. 4, Fig. 5. Interested readers can find the figures of the remaining scenarios in Supplementary material B.

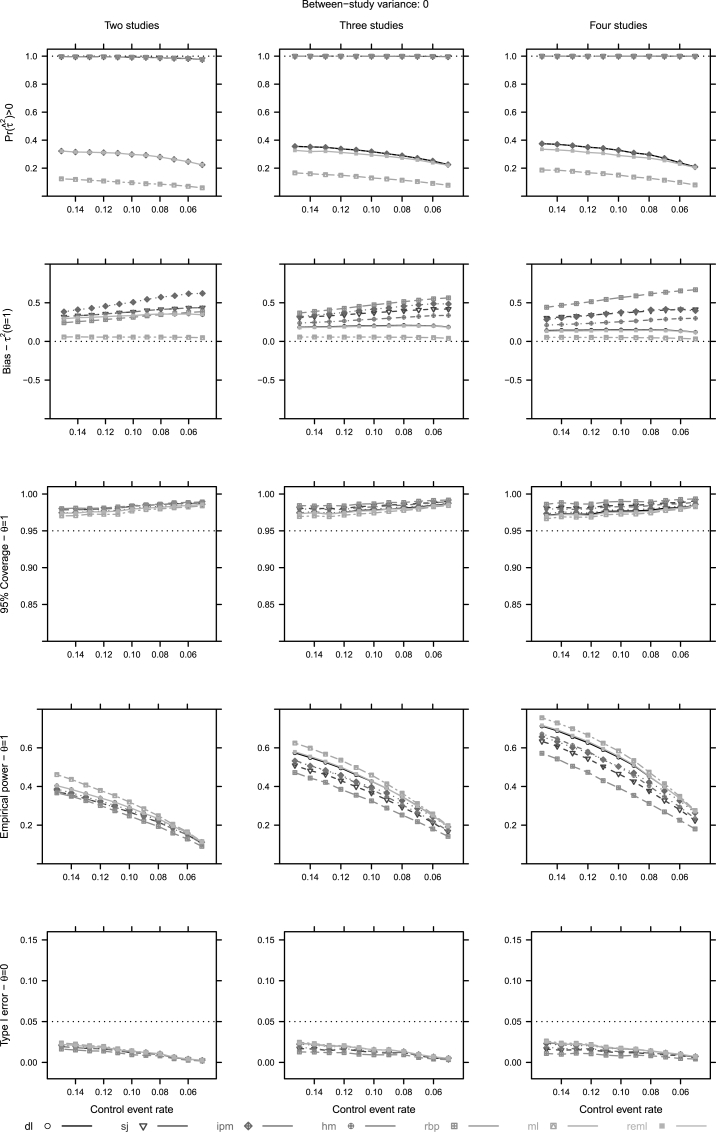

Fig. 2.

Unconditional approach operational characteristics (, mean bias of τ, coverage of the 95% confidence intervals, empirical power and type I error of θ) for two to four studies and . For abbreviations see Table 1.

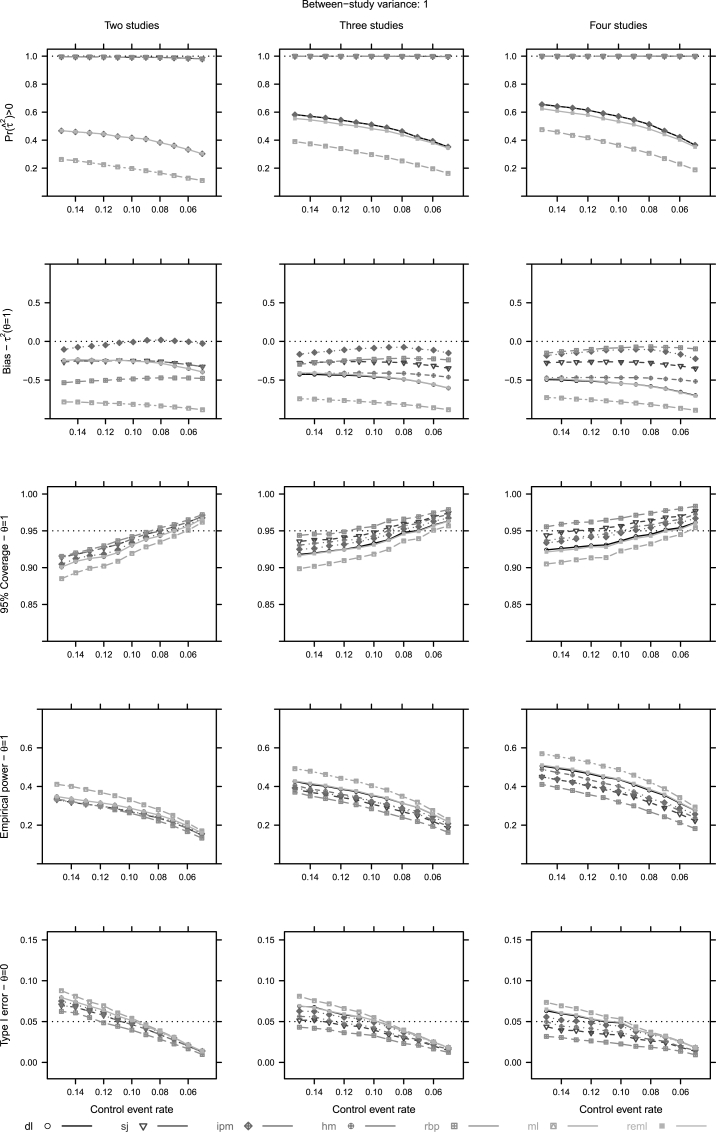

Fig. 3.

Unconditional approach operational characteristics (, mean bias of τ, coverage of the 95% confidence intervals, empirical power and type I error of θ) for two to four studies and . For abbreviations see Table 1.

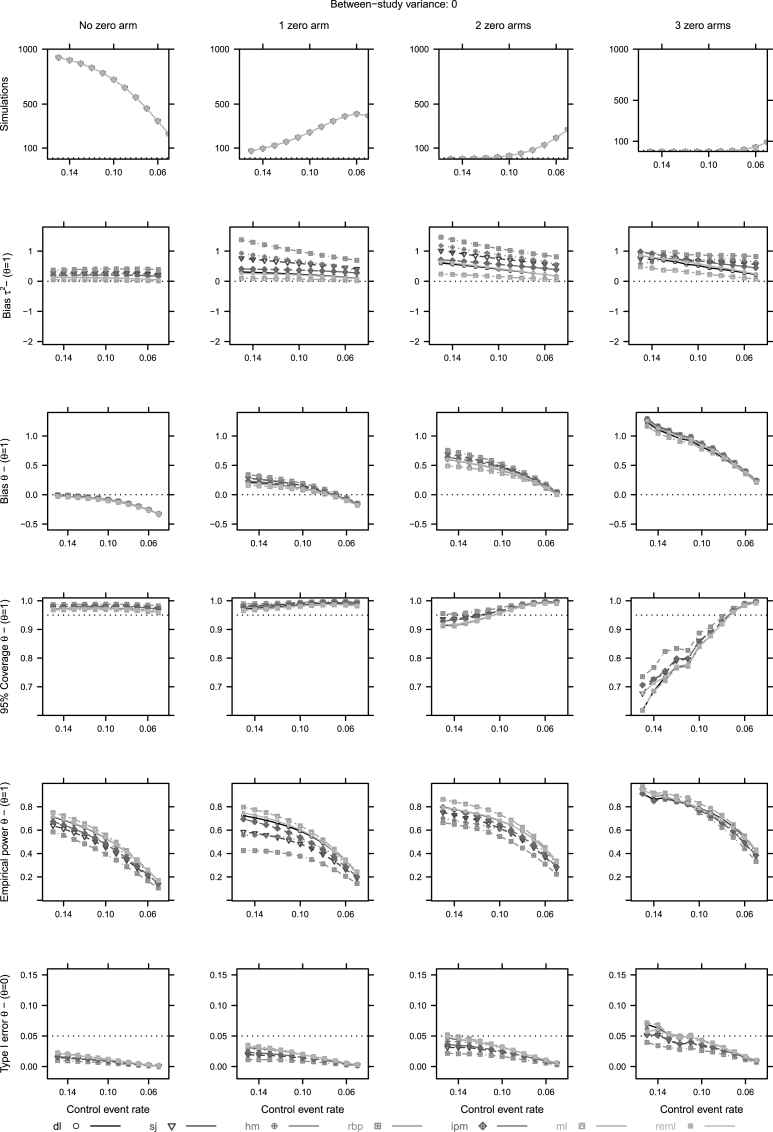

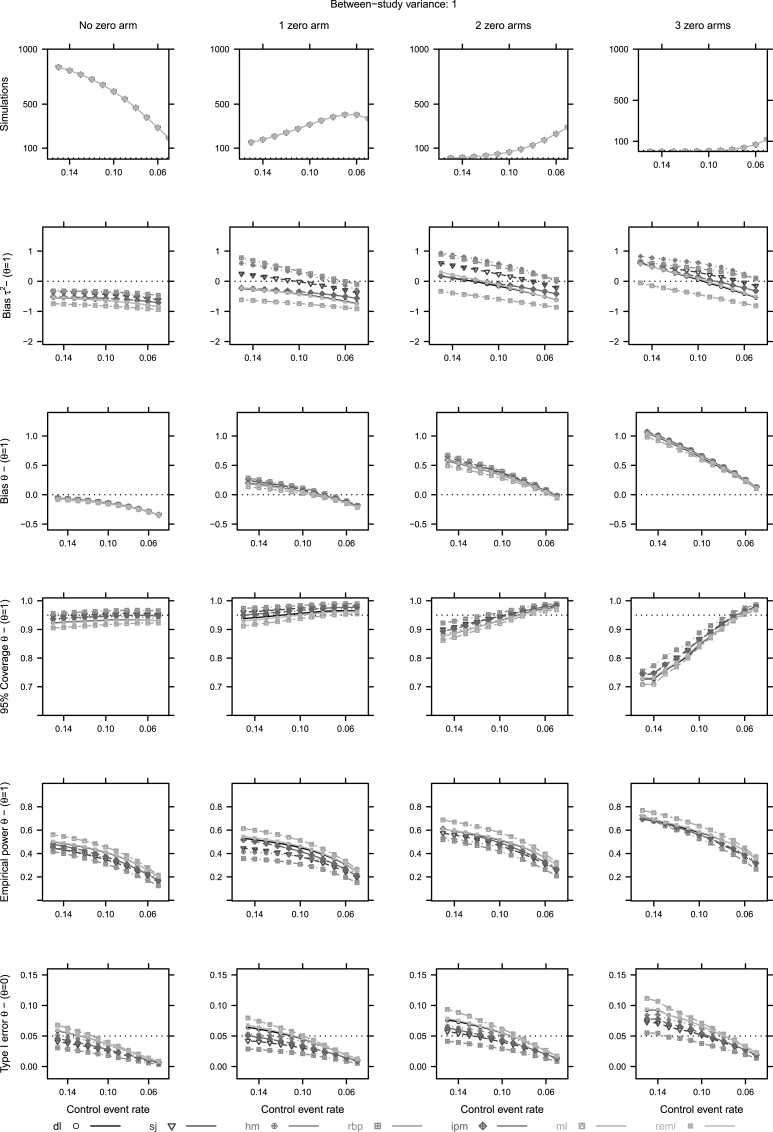

Fig. 4.

Conditional approach operational characteristics (Mean bias of τ, mean bias, coverage of the 95% confidence intervals, empirical power and type I error of θ) for four studies and . For abbreviations see Table 1. First row y-axis - 1000: 1,000,000, 500: 500,000, 100: 100,000 simulations.

Fig. 5.

Conditional approach operational characteristics (Mean bias of τ, mean bias, coverage of the 95% confidence intervals, empirical power and type I error of θ) for four studies and . For abbreviations see Table 1. First row y-axis - 1000: 1,000,000, 500: 500,000, 100: 100,000 simulations.

5.1. Unconditional approach

Alternative heterogeneity estimators had little impact on the bias of . As the control event rate () decreases, bias increases for all estimators. In addition, the point estimation of τ is problematic as well. Under homogeneity (), all estimators greatly overestimate τ, except for ml, while under heterogeneity () rbp, sj and ipm induce the least bias on τ (Fig. 2, Fig. 3).

The presence of heterogeneity impacts the type I error heavily. In non-sparse conditions, when , all estimators behave conservatively in interval estimating the overall effect, while in heterogeneous conditions () most of the estimators behave liberally. On the contrary, all estimators display conservative behaviour in very sparse conditions, regardless of the presence of heterogeneity (Fig. 2, Fig. 3). In addition, decreasing levels impact the 95% coverage. We also note that no estimator shows potential to control the coverage, when only two or three small trials are available (Fig. 2, Fig. 3).

The properties of the estimators' depend on the levels of true heterogeneity. As true heterogeneity will not be known, nor very reliably estimated we seek some robustness. And thus, we would prefer estimators that are less dependent on levels of true heterogeneity; for example, sj, hm and ipm (Fig. 2, Fig. 3).

5.2. Conditional approach

The first row in Fig. 4, Fig. 5 represents simulations that produce a specific number of zero cells. The first column refers to MAs with no observed zero cell. The rest refer to MAs with an exact number of observed zero cells.

In terms of bias of , we notice similar properties across conditional subsets; hence, an increase of negative bias, as the decreases (Fig. 4, Fig. 5). In the particular case of exactly no zero cell we observe an overall negative bias (Fig. 4, Fig. 5). The point estimation of τ is impacted by zero cells as well. When no zero cell trial is observed in a MA, all estimators produce values that are relatively close to each other. The increasing number of zero cells makes the estimation of heterogeneity unstable (Fig. 4, Fig. 5).

The performance of the estimators in terms of 95% coverage and type I error, depends again on the levels of true heterogeneity. In homogeneous cases (), independently of observed zero cells, all estimators lead to conservative inferences. When no zero cell trial is observed in a MA, and heterogeneity exists (), then most estimators result in liberal inferences. As the number of zero cells increases, estimators result in conservative inferences (Fig. 5). Again estimators whose performance is less dependent on levels of true heterogeneity are sj, hm and ipm. In addition, ipm produces relative higher power in comparison to sj and hm when one or two zero cells are observed in a MA (Fig. 4, Fig. 5).

In the case of no observed zero cells in a MA of heterogeneous settings (), all estimators induce negative bias on the estimation of θ and the estimation of τ (Fig. 5). When at least one zero trial is observed, inference becomes unstable. Such a behaviour could be explained by the impact of CCs on the study weights. When a zero cell trial is observed and a CC is applied, this trial's weight decreases. Therefore, RCTs with low event rates that probably point towards a small or no treatment effect would be down-weighted.

5.3. Revisiting the motivating examples

According to our simulation study, the conditional selection of heterogeneity estimator, which is based on the exact number of zero cells, would bring no added value, compared to the unconditional selection of an estimator when a sparse-events MA in small populations is expected. As heterogeneity cannot be reliably estimated in such sparse settings, the chosen estimator should be robust against the level of true heterogeneity. For example, if we had selected the sj, an estimator that was found to be less impacted by the levels of true heterogeneity, we would not have rejected the null hypothesis for the GBS example (Fig. 1).

Supplementary material A (Table 2) presents an extensive analysis that demonstrates the effect of applying alternative heterogeneity estimators on the overall treatment effect for the two motivating examples.

6. Discussion

In this paper we assess and discuss the problematic (interval) estimation of the overall treatment effect, in the presence of heterogeneity for a MA of a few small RCTs with zero events. In this context a truly robust estimation of heterogeneity appears not feasible. Neither can we recommend a single heterogeneity estimator which provides overall satisfactory performance in our small population sparse-event setting. In addition, the comparison between the two simulation approaches showed that the relative performance of heterogeneity estimators did not differ. Therefore, there is no material issue between making a predefined (unconditional) or a data-driven (conditional) choice. Further insights are provided by the conditional approach, which showed that even one observed zero cell has a considerable impact on the inference.

When performing a MA of rare diseases with anticipated or reported zero cells, regardless of a predefined or a data-driven analysis choice, one should avoid methods whose performance depends strongly on the presence of heterogeneity. Following this context, we identify and suggest estimators that perform modestly robust in (interval) estimating the overall treatment effect across a range of heterogeneity assumptions such as sj, hm and ipm. On the contrary, estimators whose performance depends heavily on the true level of heterogeneity, such as rbp and ml, should be avoided. In such a setting, one strategy might be to apply the key set of heterogeneity estimators. If this leads to treatment effect estimates and confidence intervals, which are not comfortably in the same direction, we should probably be cautious to draw firm conclusions.

With few events, the estimated study effects are biased, a bias which reveals itself in between-study variance. Few events also result in large within-study variance which masks between-study variance. Therefore, a trade-off exists; due to the biased effect estimates, heterogeneity increases but due to the large within-study variances, heterogeneity decreases. Hence, we conclude the following; (i) when no heterogeneity exists it can only be overestimated due to the biased estimates but (ii) when large heterogeneity exists, it is masked and underestimated.

The simulation study results pair with previous research. In our small population setting, a number of heterogeneity estimators showed small differences in performance [31]. In the particular case of two studies, most of the heterogeneity estimators behaved similarly as also was theoretically expected [31]. As noted already, a considerable difference was observed on the (interval) estimation of the overall treatment effect among heterogeneity estimators that are known to overestimate (rbp) or underestimate (ml) the true heterogeneity [17,25,28]. Such choices should be avoided in our setting as their performance is dependent on the level of true heterogeneity, which cannot be properly estimated. Furthermore, note that simulation studies results in such settings can significantly depend on the Data-Generating Model employed in the design of the simulation [32]. The relative behaviour of the compared heterogeneity estimators did not differ under alternative generating models applied. Relative behaviour also did not differ when applying a CC of 0.1 instead of 0.5, though the general performance of the inverse variance method was affected (see Supplementary Material B).

We only considered a simple Wald test for hypothesis testing via the IV method. We note the existence of an alternative test [30], which has the ability to control the type I error, in a more effective manner than the Wald test for a small number of trials. However, this test does not have sufficient power to detect a true effect [33,34]. In addition, the simple IV RE model might underperform in a few trials MA, thus sophisticated techniques that control type I error might be preferred. In this context a sensitivity analysis based on a variety of techniques was suggested [35].

We also restricted our comparison to the commonly employed RE model which assumes normally distributed effects across studies, with a common variance. This assumption and Data Generating Model has been challenged, and alternative methods for data synthesis, based on quasi-likelihood approaches have been proposed [36]. Such methods might be useful for robust interval estimation but their operational characteristics need to be further examined.

Simulation studies have evaluated several other meta-analytical methods regarding their ability to account for zero cells [9,[12], [13], [14]]. Among others, they include: (1) the evaluated IV method with alternative CCs [9], (2) the Peto method, which excludes trials with zero events in both arms internally from a MA [14], (3) the MH method for the OR [14], (4) methods that use alternative effect measures, such as the arcsine difference [13] and (5) multilevel models or with alternations in their likelihood [12]. The latter are prone to convergence issues when the number of levels (groups or trials) and the number of events or patients is limited [12,37]. These studies [9,[12], [13], [14]] focused on sparse-events MA, particularly in cases of relatively large sample sizes and large numbers of available studies. Hence, results could not be generalized directly to rare diseases, as the latter have both a limited number of trials and small sample sizes. Further research could focus on the aforementioned methods' behaviour, on the basis of the exact number of observed zero cells in a MA when only a few trials are available.

Further, by utilizing historical data, experts' opinions or priors that cover plausible heterogeneity values, Bayesian inference might provide a suitable alternative for cases of small populations [[38], [39], [40]]. Although it was not our primary focus, initial evaluations showed that a similar two-level normal Bayesian hierarchical model combined with informative priors [39] produces smaller biases on the estimation of heterogeneity but similarly problematic 95% coverage for very low control event rates.

In this study, we did not evaluate heterogeneity estimation within complex meta-analytical settings, such as a multiple outcome MA [41] or a network MA [42,43]. However, we expect that the impact of zero cells in small MAs could be relevant for this context as well, and a similar conditional examination could offer further insight.

Concluding, the choice of heterogeneity estimator does not need to depend on whether or not zero cells are observed in a MA of few small trials. Therefore, regardless of a predefined or data-driven analysis choice, when dealing with zero cells in a MA of rare diseases, we recommend methods with performance that does not strongly depend on the presence or absence of heterogeneity.

Author's contributions

KP, SN, DM and KR contributed to the conception and design of the study. KP performed the simulation study and produced the first draft. SN, KR and DM critically reviewed the article. All authors have read and accepted the final manuscript.

Acknowledgment

Konstantinos Pateras, Stavros Nikolakopulos and Kit Roes were supported by the EU FP7 HEALTH.2013.4.2-3 project Advances in Small Trials dEsign for Regulatory Innovation and eXcellence (Asterix): Grant 603160. The authors would like to thank Putri W. Novianti for early discussions on the heterogeneity estimators.

Footnotes

Supplementary data related to this article can be found at https://doi.org/10.1016/j.conctc.2017.11.012.

Appendix A. Supplementary data

The following are the supplementary data related to this article:

References

- 1.Higgins J.P.T., Thompson S.G., Spiegelhalter D.J. A re-evaluation of random-effects meta-analysis. J. R. Stat. Soc. Series A (Stat. Soc.) 2009;172(1):137–159. doi: 10.1111/j.1467-985X.2008.00552.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Pontes C., Fontanet J.M., Gomez-Valent M., Rios Guillermo J., Vives Vilagut R., Morros R. Milestones on orphan medicinal products development: the 100 first drugs for rare diseases approved throughout Europe. Clin. Therapeut. 2016;37(8):e132. [Google Scholar]

- 3.Edwards S.J.L., Lilford R.J., Braunholtz D., Jackson J. Why ’underpowered’ trials are not necessarily unethical. Lancet. 1997;350(9080):804–807. doi: 10.1016/s0140-6736(97)02290-3. [DOI] [PubMed] [Google Scholar]

- 4.Hughes R., Swan A., Van Doorn P. Intravenous immunoglobulin for Guillain-Barré syndrome ( review ) Cochrane Database Syst. Rev. 2014;(9):66. doi: 10.1002/14651858.CD002063.pub6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Somaraju U.R., Merrin M. Sapropterin dihydrochloride for phenylketonuria. Cochrane Database Syst. Rev. 2015;3(3) doi: 10.1002/14651858.CD008005.pub4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Lands L.C., Stanojevic S. Oral non-steroidal anti-inflammatory drug therapy for lung disease in cystic fibrosis. Cochrane Database Syst. Rev. 2013;(6) doi: 10.1002/14651858.CD001505.pub3. [DOI] [PubMed] [Google Scholar]

- 7.Cox D.R. The continuity correction. Biometrika. 1970;57(1):217–219. [Google Scholar]

- 8.Nissen S.E., Wolski K. Effect of rosiglitazone on the risk of myocardial infarction and death from cardiovascular causes. N. Engl. J. Med. 2007;356(24):2457–2471. doi: 10.1056/NEJMoa072761. [DOI] [PubMed] [Google Scholar]

- 9.Sweeting M.J., Sutton A.J., Lambert P.C. What to add to nothing? Use and avoidance of continuity corrections in meta-analysis of sparse data. Stat. Med. 2004;23(9):1351–1375. doi: 10.1002/sim.1761. [DOI] [PubMed] [Google Scholar]

- 10.Shuster J.J., Jones L.S., Salmon D.A. Fixed vs random effects meta-analysis in rare event studies: the Rosiglitazone link with myocardial infarction and cardiac death. Stat. Med. 2007;26(24):4375–4385. doi: 10.1002/sim.3060. [DOI] [PubMed] [Google Scholar]

- 11.Lane P.W. Meta-analysis of incidence of rare events. Stat. Meth. Med. Res. 2013;22(2):117–132. doi: 10.1177/0962280211432218. [DOI] [PubMed] [Google Scholar]

- 12.Kuss O. Statistical methods for meta-analyses including information from studies without any events-add nothing to nothing and succeed nevertheless. Stat. Med. 2015;34(7):1097–1116. doi: 10.1002/sim.6383. [DOI] [PubMed] [Google Scholar]

- 13.Rücker G., Schwarzer G., Carpenter J., Olkin I. Why add anything to nothing? The arcsine difference as a measure of treatment effect in meta-analysis with zero cells. Stat. Med. 2009;28(5):721–738. doi: 10.1002/sim.3511. [DOI] [PubMed] [Google Scholar]

- 14.Bradburn M.J., Deeks J.J., Berlin J.A., Localio A.R. Much ado about nothing: a comparison of the performance of meta-analytical methods with rare events. Stat. Med. 2007;26(1):53–77. doi: 10.1002/sim.2528. [DOI] [PubMed] [Google Scholar]

- 15.DerSimonian R., Laird N. Meta-analysis in clinical trials. Contr. Clin. Trials. 1986;7(3):177–188. doi: 10.1016/0197-2456(86)90046-2. [DOI] [PubMed] [Google Scholar]

- 16.DerSimonian R., Kacker R. Random-effects model for meta-analysis of clinical trials: an update. Contemp. Clin. Trials. 2007;28(2):105–114. doi: 10.1016/j.cct.2006.04.004. [DOI] [PubMed] [Google Scholar]

- 17.Kontopantelis E., Da Springate, Reeves D. A re-analysis of the cochrane library data: the dangers of unobserved heterogeneity in meta-analyses. PLoS One. 2013;8(7) doi: 10.1371/journal.pone.0069930. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Paule R.C., Mandel J. Consensus values and weighting factors. J. Res. Natl. Bur. Stand. 1982;87(5):377–385. doi: 10.6028/jres.087.022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Bhaumik D.K., Amatya A., Normand S.L.T., Greenhouse J., Kaizar E., Neelon B. Meta-analysis of rare binary adverse event data. J. Am. Stat. Assoc. 2012;107(498):555–567. doi: 10.1080/01621459.2012.664484. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Sidik K., Jonkman J.N. Simple heterogeneity variance estimation for meta-analysis. J. Roy. Stat. Soc. C Appl. Stat. 2005;54(2):367–384. [Google Scholar]

- 21.Rukhin A.L. Estimating heterogeneity variance in meta-analysis. J. Roy. Stat. Soc. B Stat. Meth. 2013;75(3):451–469. [Google Scholar]

- 22.Hartung J., Makambi K.H. Reducing the number of unjustified significant results in meta-analysis. Commun. Stat. Simulat. Comput. 2003;32(4):1179–1190. [Google Scholar]

- 23.Schmidt F.L., Hunter J.E. Sage Publications; 2004. Methods of Meta-analysis: Correcting Error and Bias in Research Findings. [Google Scholar]

- 24.Hedges L.V., Olkin I. Academic Press; 2014. Statistical Methods for Meta-analysis. [Google Scholar]

- 25.Sidik K., Jonkman J.N. A comparison of heterogeneity variance estimators in combining results of studies. Stat. Med. 2007;26(9):1964–1981. doi: 10.1002/sim.2688. [DOI] [PubMed] [Google Scholar]

- 26.Viechtbauer W. Bias and efficiency of meta-analytic variance estimators in the random-effects model. J. Educ. Behav. Stat. 2005;30(3):261–293. [Google Scholar]

- 27.Langan D., Higgins J.P.T., Simmonds M. An empirical comparison of heterogeneity variance estimators in 12894 meta-analyses. Res. Synth. Meth. 2015;6(2):195–205. doi: 10.1002/jrsm.1140. [DOI] [PubMed] [Google Scholar]

- 28.Veroniki A.A., Jackson D., Viechtbauer W., Bender R., Bowden J., Knapp G. Methods to estimate the between-study variance and its uncertainty in meta-analysis. Res. Synth. Meth. 2016;7(1):55–79. doi: 10.1002/jrsm.1164. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Orphanet [Internet] 2016. Accessed: 2016-11-14.http://www.orpha.net/consor/cgi-bin/index.php Available from. [Google Scholar]

- 30.Hartung J., Knapp G. On tests of the overall treatment effect in meta-analysis with normally distributed responses. Stat. Med. 2001;20(12):1771–1782. doi: 10.1002/sim.791. [DOI] [PubMed] [Google Scholar]

- 31.Rukhin A.L. Estimating common mean and heterogeneity variance in two study case. Stat. Probab. Lett. 2012;82(7):1318–1325. [Google Scholar]

- 32.Pateras K., Nikolakopoulos S., Roes K.C.B. Data generating models of dichotomous outcomes: heterogeneity in simulation studies for a random-effects meta-analysis. Stat. Med. 2017 doi: 10.1002/sim.7569. [epub] [DOI] [PubMed] [Google Scholar]

- 33.IntHout J., Ioannidis JPa, Borm G.F. The Hartung-Knapp-Sidik-Jonkman method for random effects meta-analysis is straightforward and considerably outperforms the standard DerSimonian-Laird method. BMC Med. Res. Meth. 2014;14(1):25. doi: 10.1186/1471-2288-14-25. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Gonnermann A., Framke T., Großhennig A., Koch A. No solution yet for combining two independent studies in the presence of heterogeneity. Stat. Med. 2015;34(16):2476–2480. doi: 10.1002/sim.6473. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Guolo A., Varin C. Random-effects meta-analysis: the number of studies matters. Stat. Meth. Med. Res. 2015;0(0):1–19. doi: 10.1177/0962280215583568. [DOI] [PubMed] [Google Scholar]

- 36.Doi S.A.R., Barendregt J.J., Khan S., Thalib L., Williams G.M.C.B. Advances in the meta-analysis of heterogeneous clinical trials I: the inverse variance heterogeneity model. Contemp. Clin. Trials. 2015;45:130–138. doi: 10.1016/j.cct.2015.05.009. [DOI] [PubMed] [Google Scholar]

- 37.Stijnen T., Hamza T.H., Ozdemir P. Random effects meta-analysis of event outcome in the framework of the generalized linear mixed model with applications in sparse data. Stat. Med. 2010;29(29):3046–3067. doi: 10.1002/sim.4040. [DOI] [PubMed] [Google Scholar]

- 38.Viele K., Berry S., Neuenschwander B., Amzal B., Chen F., Enas N. Use of historical control data for assessing treatment effects in clinical trials. Pharmaceut. Stat. 2014;13(1):41–54. doi: 10.1002/pst.1589. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Friede T., Röver C., Wandel S., Neuenschwander B. Meta-analysis of few small studies in orphan diseases. Res. Synth. Meth. 2016;0(0):1–14. doi: 10.1002/jrsm.1217. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Bai O., Chen M., Wang X. Bayesian estimation and testing in random effects meta-analysis of rare binary adverse events. Stat. Biopharm. Res. 2016;8(1):49–59. doi: 10.1080/19466315.2015.1096823. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Mavridis D., Salanti G. A practical introduction to multivariate meta-analysis. Stat. Meth. Med. Res. 2012;22(2):133–158. doi: 10.1177/0962280211432219. [DOI] [PubMed] [Google Scholar]

- 42.Salanti G. Indirect and mixed-treatment comparison, network, or multiple-treatments meta-analysis: many names, many benefits, many concerns for the next generation evidence synthesis tool. Res. Synth. Meth. 2012;3(2):80–97. doi: 10.1002/jrsm.1037. [DOI] [PubMed] [Google Scholar]

- 43.Warren F.C., Abrams K.R., Sutton A.J. Hierarchical network meta-analysis models to address sparsity of events and differing treatment classifications with regard to adverse outcomes. Stat. Med. 2014;33(14):2449–2466. doi: 10.1002/sim.6131. [DOI] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.