Abstract

Models of the representation of numerosity information used in discrimination tasks are integrated with a diffusion decision model. The representation models assume distributions of numerosity either with means and standard deviation that increase linearly with numerosity or with means that increase logarithmically with constant standard deviation. The models produce coefficients that are applied to differences between two numerosities to produce drift rates and these drive the decision process. The linear and log models make differential predictions about how response time (RT) distributions and accuracy change with numerosity and which model is successful depends on the task. When the task is to decide which of two side-by-side arrays of dots has more dots, the log model fits decreasing accuracy and increasing RT as numerosity increases. When the task is to decide, for dots of two colors mixed in a single array, which color has more dots, the linear model fits decreasing accuracy and decreasing RT as numerosity increases. For both tasks, variables such as the areas covered by the dots affect performance, but if the task is changed to one in which the subject has to decide whether the number of dots in a single array is more or less than a standard, the variables have little effect on performance. Model parameters correlate across tasks suggesting commonalities in the abilities to perform them. Overall, results show that the representation used depends on the task and no single representation can account for the data from the different paradigms.

Keywords: Diffusion model, approximate number system, response time and accuracy, integrated models, individual differences

What is the mental representation of numerosity? This is a classic question in psychophysics and also a topical one because it has been claimed that scores on simple, non-symbolic numerosity tasks are predictive of math development in childhood and math achievement later in life (Halberda et al., 2008; Park & Brannon, 2013). For instance, for a large internet sample, Halberda et al. (2012) found that performance on a nonsymbolic task was related to numeracy ability across the life span (to age 85). Currently, numerosity knowledge is said to be represented in an Approximate Number System (ANS) in which numerosities are represented by distributions around their central values (Dehaene, 2003), a system which might be present in animals as well as humans (Gallistel & Gelman, 1992). There is also a body of work in which research using animals and human neurophysiological measurements has been used to identify neural structures that are involved in numerosity judgments (e.g., Hyde & Spelke, 2008, Nieder & Miller, 2003; Piazza et al., 2004). We review these in the discussion.

It has also been asserted that the ability to perform non-symbolic tasks forms a scaffold on which symbolic mathematical skills are built (Gallistel & Gelman, 1992, 2000). This was expressed explicitly by Park and Brannon (2013): “Humans and nonhuman animals share an approximate number system (ANS) that permits estimation and rough calculation of quantities without symbols. Recent studies show a correlation between the acuity of the ANS and performance in symbolic math throughout development and into adulthood, which suggests that the ANS may serve as a cognitive foundation for the uniquely human capacity for symbolic math.” In accord with this, Park and Brannon (2013, 2014; also Hyde, Khanum, & Spelke, 2014) found that repeated training on nonsymbolic arithmetic improved symbolic arithmetic, but repeated training on other tasks (a visuo-spatial short-term memory task and a numerical ordering task) did not. However, it has also been argued that symbolic and nonsymbolic magnitude knowledge have separate effects on mathematics achievement (Fazio et al., 2014) and that the relation between nonsymbolic performance and achievement is currently not clear (De Smedt et al., 2013).

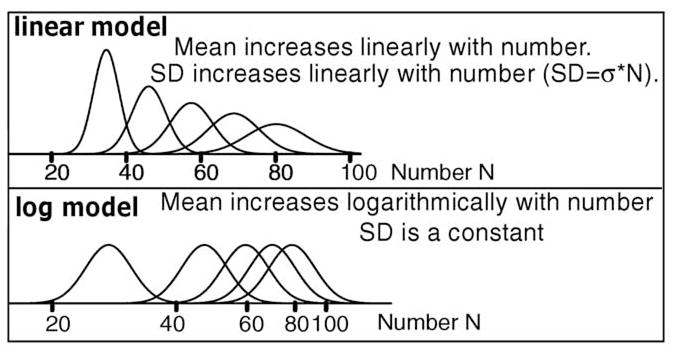

There are currently two, competing, ANS models that have roots in Weber and Fechner’s work in the 1800’s. In one, numerosity in the ANS is represented on a linear scale and variability around numerosities increases as numerosity increases. In the other, numerosity in the ANS is represented on a decreasing logarithmic scale with equal variability around all numerosities (Figure 1). In both, the distributions of variability are Gaussian (Dehaene & Changeux, 1993; Gallistel & Gelman, 1992; see Zorzi et al., 2005, for a review). Both models explain two standard findings (cf., Weber’s law) - why it is easier to discriminate 10 from 20 objects than 18 from 20 (accuracy decreases as the difference in two numerosities decreases, the distance effect) and why it is easier to discriminate 20 objects from 30 than 60 objects from 70 (accuracy decreases as numerosities increase; the size effect). It has been claimed that the two models are not discriminable (Dehaene, 2003) but that argument is based solely on the accuracy with which numerosity tasks are performed. Here we show that they are, in fact, discriminable when response times (RTs) are considered.

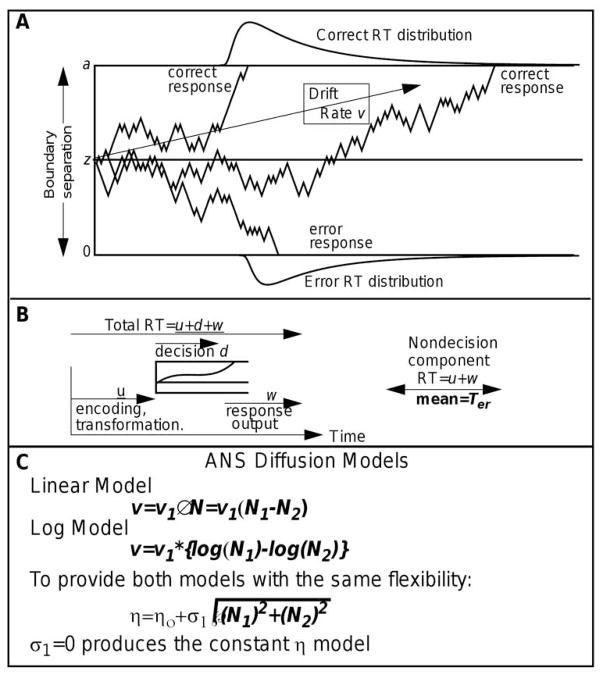

Figure 1.

Models of numerosity representation.

In this article, we present a model for numerosity discrimination, a fundamental numeracy skill. Typical tasks include deciding whether the number of blue dots in a display is greater or less than some specified number, deciding whether there are more blue dots in a display than yellow dots, and deciding whether there are more dots in one versus another array that are spatially separated. We model RTs and their full distributions for both correct responses and errors jointly with accuracy. We test the representations of numerosity that the ANS models predict by mapping them to accuracy and RT data via the diffusion decision-making model (Ratcliff, 1978; Ratcliff & McKoon, 2008). When the ANS models are integrated with the diffusion model, they make strong differential predictions because they must account for RTs as well as accuracy.

One reason an approach that explains the decision-making process is needed is that the field of numerical cognition has been unable to settle on empirical measures to be used in individual-difference analyses. Considerable controversy has arisen about the presence or absence of correlations among dependent variables and between them and individual differences such as IQ and math ability. In the diffusion model and other sequential-sampling models (Ratcliff & McKoon, 2008; Ratcliff & Smith, 2004), accuracy and RTs arise from the same underlying components of processing but in the numeracy literature, some hypotheses have been based on RTs, some on accuracy, and some on the slope of a function that relates accuracy or RTs to the difficulty of a test item. Many studies in numeracy have used RTs alone and many have used accuracy alone, and this has led to inconsistent findings about how individual differences affect performance. For example, sometimes correlations are found between symbolic tasks (“is 5 greater than 2”) and nonsymbolic tasks (“is the number of dots in one array greater than in another array”), and sometimes not (e.g., Price, et al., 2012; Sasanguie, et al., 2011; Maloney et al., 2010; Holloway & Ansari, 2009; De Smedt et al., 2009). Sometimes correlations are found between non-symbolic number tasks and math ability, and sometimes not (e.g., Lyons & Beilock, 2011; Libertus et al., 2011; Gilmore et al., 2010; Halberda et al., 2008, 2012; Inglis, et al., 2011; Holloway & Ansari, 2009; Mundy & Gilmore, 2009; Price, et al., 2012).

In a comprehensive study, Gilmore et al. (2011) found little correlation between all combinations of accuracy and RT across a range of symbolic and nonsymbolic tasks. A recent meta-analysis by Chen and Li (2014) further illustrated the extent of the problem. For 36 recent studies, they found 21 used overall accuracy, 9 used mean RT, 17 used the Weber fraction (an accuracy-based measure), and 8 used a numerical distance effect based on RT. Other analyses of individual differences confirm this diversity by reviewing studies that use a range of different dependent variables (De Smedt at al., 2013; Fazio et al., 2014). In the face of such inconsistencies, and their finding that RTs and Weber fractions were largely uncorrelated in an experiment they conducted, Halberda et al. (2012, p. 11116) suggested that the two dependent variables might index independent abilities. Price et al. (2012, p. 54) concurred, saying that “the relationship between RT slope and the Weber fraction is not very strong, which might be explained by the fact that one is a measure of RT while the other is a measure of accuracy.”

In many numeracy studies, including most of those just cited and the studies we present in this article, the response required of a subject is a decision between two alternatives. Whatever the quality of a subject’s numerosity information, a response must be chosen and the choice will take some amount of time. Accuracy and speed can trade off, and the trade-off is under a subject’s control. A subject might decide to respond as quickly as possible, sacrificing accuracy, or as accurately as possible, sacrificing speed. In consequence, the quality of the numeracy information on which an individual bases his or her decision can be obscured by the speed/accuracy setting he or she chooses. This means that neither accuracy by itself nor RTs by themselves can provide a direct measure of an individual’s numeracy knowledge.

In the diffusion model (and other sequential sampling models, Ratcliff & Smith, 2004), joint consideration of accuracy and RTs allows an individual’s speed/accuracy setting to be separated from the quality of the information upon which decisions are based. The central mechanism in the model (Ratcliff, 1978; Ratcliff & McKoon, 2008) is the noisy accumulation of information from a stimulus representation over time. A response is made when the amount of accumulated information reaches one or the other of two criteria, or boundaries, one for each of the two possible choices (e.g., deciding whether the number of dots in a display is larger or smaller than 25). The rate of accumulation, called drift rate, is determined by the quality of the information encoded from a stimulus. The distance between the two boundaries is determined by the speed/accuracy setting-- faster, less accurate responses if the distance is small, slower, more accurate responses if the distance is large. The independence of drift rate and the distances to the boundaries means that information quality is separated from speed/accuracy settings and so can be independently observed.

In the diffusion model, accuracy and RTs must be explained by the same mechanism. This is required in order to account for the locations of RT distributions (longer RTs for more difficult decisions than easier ones) and the characteristic, right-skewed, shape of the distributions. It is also required to account for the inverted U-shaped function that typically results when RTs are plotted against accuracy (a latency-probability function, Ratcliff, Smith, & McKoon, 2015, which is discussed in detail later.)

For all the experiments in this article we compared the two ANS models, each integrated with the diffusion model. Recently there has been concern about the lack of replicability of studies in psychology. Less prominently, there has been concern that models or empirical results apply only to the specific design of a single experiment. We addressed these concerns with 11 experiments and 5 tasks. Each major empirical and modeling result was replicated at least once. In three experiments, subjects were tested on more than one task to examine correlations among an individual’s numeracy abilities across tasks.

Three tasks used displays of dots. Two are common in numeracy research, one in which blue and yellow dots are intermingled in a single array and subjects decide whether there are more blue dots or more yellow dots, and one in which there are two side-by-side arrays of dots all of the same color and subjects decide which of them has more dots. For the third task, subjects decided whether the number of dots of one of two colors, intermingled in a single array, is larger or smaller than a criterion number (e.g., 25). The fourth task used X’s and O’s in a single array and subjects decided which had the greater number. The fifth task used asterisks in a single array and subjects decided whether the number of asterisks was larger or smaller than a criterion number.

There were five independent variables, all replicated in at least two experiments, and numerosity (number of dots, X’s and O’s, or asterisks) was manipulated in all 11 experiments. In most of the experiments with dots, either the summed areas of the two sets of dots (e.g., the blue and yellow ones) were the same or they were proportional to their number (i.e., a larger total area for a larger numerosity). In some experiments, the dots were all relatively large, averaging about 13 pixels in diameter, or small, averaging about 4.5 pixels in diameter. When subjects decided whether the number of dots of one color was larger or smaller than a criterion number, the number of dots of the other color was manipulated.

Preview

To preview the results, we summarize the most salient of them here. The first was highly counter-intuitive. As mentioned above, it is almost always found that as decisions become more difficult and accuracy goes down, responses become slower. This is the pattern that was obtained with two of the tasks we used, deciding which of two side by side arrays has the greater number of dots and deciding whether the number of dots in a single array is larger or smaller than a standard. However, when the task was to decide which of two colors of dots in a single array had the greater number, we found a highly unusual and counter-intuitive pattern: as difficulty increased and accuracy decreased, responses became faster.

The second result was that, when the linear and log ANS models were integrated with the diffusion model, they could be discriminated (because accuracy and RT data must be explained jointly), something that has not been possible in the past, as we pointed out above.

The third result was that which model could account for the data was different for different tasks. The linear ANS-diffusion model did well for the first pattern of data (the counter-intuitive one) but the log ANS-model failed in clear qualitative ways. The log ANS-diffusion model did well for the second pattern of data (the usual one) but the linear model failed in clear qualitative ways.

Fourth, whichever ANS-diffusion model was successful for a given task, it fit the data well. It captured the data for accuracy, mean RTs for correct responses and errors, the shapes and locations of the RT distributions, and the ways these all changed across experimental conditions that varied in difficulty.

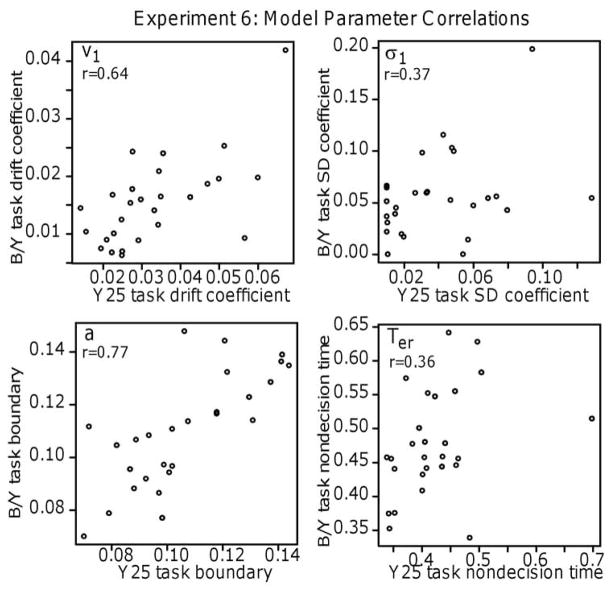

Fifth, we found large correlations among the tasks in drift rates, which suggests that individuals bring similar numeracy skills to all the tasks we used.

The sixth result was a solution for an issue that has bedeviled research on numerosity discrimination-- it has been difficult to divorce confounding variables from judgments of numerosity (DeWind et al., 2015; DeWind & Brannon, 2012; Feigenson et al., 2002; Gebuis & Gevers, 2011; Gebius & Reynvoet, 2012a, 2012b, 2013; Mix et al., 2002). For example, if all the dots in an array of dots of two colors have the same size, then the total area of the dots of the larger-numerosity color will be larger than the total area of the dots of the smaller-numerosity color. But if the totals are equated, then the totals of the circumferences of the dots will be larger for the larger-numerosity color. With any manipulations designed to control one variable, some other variable will be confounded with numerosity. With the ANS-diffusion models, the contributions of individual variables can be measured.

The seventh result was that, when we examined the effects of confounding variables on our tasks, we found variables that affected performance on some numerosity tasks but not others.

The Two-Choice Diffusion Model

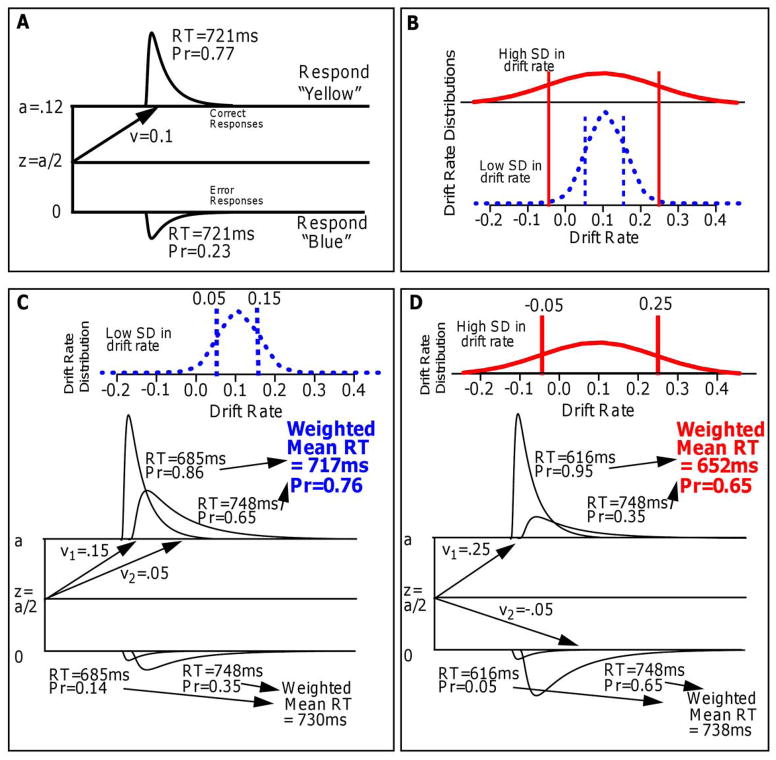

The model is designed to explain the cognitive processes that make simple two-choice decisions that take place in under a second or two. The model has been applied in a wide range of domains including clinical applications and applications in neuroeconomics and neuroscience in humans, monkeys, rodents, and even insect swarms (Forstmann, Ratcliff, & Wagenmakers, 2016; Ratcliff, Smith, Brown, & McKoon, 2016). Figure 2A illustrates the model. Information is accumulated from a starting point, z, toward one or the other of two boundaries, a or 0. The zig-zag lines indicate noise in the accumulation process. For the example in the figure, the mean rate of accumulation, drift rate (v), is positive. Drift rate is determined by the quality of the information extracted from the stimulus in perceptual tasks and the quality of the match between a test item and memory in, for example, lexical decision and memory tasks. Processes outside the decision process such as stimulus encoding and response execution are combined into one component of the model, nondecision time, with mean Ter. Total RT (Figure 2B) is the sum of the time to reach a boundary and nondecision time. The noise in the accumulation of information (Gaussian distributed) results in decision processes with the same mean drift rate terminating at different times, producing RT distributions, and sometimes at the wrong boundary, producing errors.

Figure 2.

A illustrates the diffusion decision model. B shows the additional components of the decision model that produce the total RT. C shows the standard assumption about drift rate and its variability across trials. D shows equations for drift rates and across trial SD in drift rate for the two ANS-diffusion models.

The values of the components of processing are assumed to vary from trial to trial, under the assumption that subjects cannot accurately set the same parameter values from one trial to another (e.g., Laming, 1968; Ratcliff, 1978). Across-trial variability in drift rate is normally distributed with SD η, across-trial variability in starting point (equivalent to across-trial variability in the boundaries) is uniformly distributed with range sz, and across-trial variability in the nondecision component is uniformly distributed with range st. In signal detection theory, which deals only with accuracy, all sources of across-trial variability are collapsed into one parameter, the variability in information across trials. In contrast, with the diffusion model, the separate sources of across-trial variability are identified (Ratcliff & Tuerlinckx, 2002; Ratcliff & Childers, 2015).

For experiments in which subjects compare a stimulus to a standard, there is one more component of processing, the drift-rate criterion (Ratcliff, 1985). For example, when asked to decide whether the number of dots in an array is more or less than 25, then drift rates should be such that their mean is toward the “large” boundary when there are more than 25 dots and toward the “small” value when there are fewer than 25. That is, the drift-rate criterion should be set at 25. However, subjects do not always behave in this way. They may set their criterion at 24 or 26 or some other number. It is to accommodate shifts like this that the drift-rate criterion is a free parameter when a discrimination task involves comparison to a standard.

Boundary settings, nondecision time, starting point, drift rates for each condition in an experiment that varies in difficulty, the drift-rate criterion, and the across-trial variabilities in drift rate, nondecision time, and starting point are all identifiable. When data are simulated from the model (with numbers of observations approximately equal to those that would be obtained in real experiments) and the model is fit to the simulated data, the parameters used to generate the data are well recovered (Ratcliff & Tuerlinckx, 2002). The success of parameter identifiability comes in part from the tight constraint that the model account for the full distributions of RTs for correct and error responses (Ratcliff, 2002).

Integrating the Diffusion Model and the ANS Models

When the diffusion model is combined with a model for how information is represented in cognitive structures, the representation model must produce a value, drift rate (and in some models, SD in drift rate across trials), that when taken through the decision process accounts for all the data. In other words, the diffusion model provides a meeting point between data and models of representation.

The ANS linear and log models (Figure 1) have their roots in research tracing back to Weber’s and Fechner’s research in the 1800’s (e.g., Woodworth, 1938). Weber’s law states that as stimulus intensity increases, the size of the just-noticeable difference between stimuli increases so that the ratio of the difference in intensity to intensity (ΔS/S) remains constant. Fechner derived a logarithmic representation from this: the intensity of a stimulus is proportional to the logarithm of the physical intensity and the psychological difference between two stimulus intensities is the difference in the logarithms of their intensities. Thus as intensity grows, the psychological difference between equally spaced stimuli decreases (e.g., log(10)-log(5) = 0.69 while log(20)-log(15) = 0.29). In this model, the SD around mean numerosity values has to be constant as intensity grows to explain Weber’s law. Weber’s law can also be explained by the linear model: the psychological difference between two intensities is linear with the intensity values and the SD in the psychological representation also increases linearly thus leading to decreasing discriminability as intensity grows. These alternatives have had extensive discussion in numerosity research (e.g., Gallistel & Gelman, 1992; Dehaene & Changeux, 1993) with the conclusion mentioned above, that they cannot be discriminated (Dehaene, 2003). ROGER

In the integrated models, drift rate and the SD in drift rate are both provided by the ANS representation model, and boundary settings, nondecision times, and the ranges in starting point and nondecision time come from the diffusion model. Figure 2C shows how drift rate for the two models is computed. For the linear model, drift rate (v) is the difference between the two numerosities multiplied by a coefficient (v1) and for the log model, drift rate is the difference in the logs of two numerosities multiplied by a coefficient (v1). It is the coefficient of drift rate that separates individuals; a larger coefficient gives better performance.

Figure 2C also shows how across-trial variability (the SD, η in the models) in drift rate is computed. For the linear model, (η) is a constant (η0) plus a coefficient (σ1) multiplied by the square root of the sum of squares of the two numerosities (the square root of the sum of squares is how standard deviations are combined - variances are added). For the log model, we might assume that η remains constant as numerosity increases, just as for traditional models based on accuracy measures. However, there is no guarantee that a diffusion model will behave in the same way and so we gave our log model the same flexibility in accounting for data as the linear model: η could either stay constant as numerosity increases or increase with numerosity with the same expression for η as for the linear model. This also has the advantage of giving the linear and log models the same number of parameters which makes model selection less ambiguous because different measures such as AIC and BIC give the same results. Thus, the only difference between the linear and log models was that the drift rate assumption was different: linear versus log.

The integrated models are severely constrained. Without a representation (i.e., ANS) model, drift rates are usually estimated separately for each condition of an experiment when the diffusion model is applied to data. Instead, for the integrated models, drift rates are set by the representation model and cannot be adjusted to, for example, produce a better fit for one data point without affecting predictions for all the other data. There is only one coefficient for drift rates for all values of numerosity for each condition (e.g., a condition with large dots or one with small dots) and only two coefficients for η for all conditions of the experiment. If the model failed to fit even one value of accuracy or one RT distribution from the numerosity conditions, modifying the parameters to accommodate that one miss would make the fit worse for all the other conditions.

When the linear and log models are integrated with the diffusion model, there are no more than eight free parameters plus one drift-rate coefficient for each independent variable (excluding numerosity). From the diffusion model, there are always the distance between the boundaries, nondecision time, and the ranges in the starting point and nondecision time. When the task is to compare stimuli against a standard there is also the drift-rate criterion. For some tasks, the starting point is a free parameter. For others, it can be set to half the distance between the boundaries and so is not a free parameter; this occurs when the RT distributions at one of the two boundaries are symmetric with those at the other. From the ANS models, there are the drift-rate coefficients for each independent variable except numerosity, the constant component of SD across-trials, and the coefficient for SD. (If the SD coefficient is close to zero, the model is one with constant SD in drift rate.)

For the experiments in which the task was to compare the number of dots of a color or the number of asterisks to a standard value, there was only one value of numerosity, so we set N1 in the computation of drift rate and its SD (Figure 2C) to that numerosity value and N2 to the standard (e.g., 25).

Fitting the Integrated Diffusion Models to Data

The values of all the parameters are estimated together by fitting the model to the data from all the conditions in an experiment simultaneously using a standard method of fitting. The data for each subject is fit individually and the model parameters presented in the tables are the means across subjects. RT distributions are represented by 5 quantiles, the .1, .3, .5, .7, and .9 quantiles. The quantiles and the response proportions for each condition are entered into a minimization routine and the diffusion model is used to generate the predicted cumulative probability of a response occurring by that quantile RT. Subtracting the cumulative probabilities for each successive quantile from the next higher quantile gives the proportion of responses between adjacent quantiles. For a G-square computation, these are the expected proportions, to be compared to the observed proportions of responses between the quantiles (i.e., the proportions between 0, .1, .3, .5, .7, .9, and 1.0, which are .1, .2, .2, .2, .2, and .1). The proportions for the observed (po) and expected (pe) frequencies and summing over 2Npolog(po/pe) for all conditions gives a single G-square (log multinomial likelihood) value to be minimized (where N is the number of observations for the condition).

The number of degrees of freedom in the data is computed as follows: there are 6 proportions (bins) between the quantiles and outside the .1 and .9 quantiles. These proportions are multiplied by the proportion of responses for that condition and across correct and error responses; these 12 proportions must add to 1 so there are 11 degrees of freedom in the data for each condition of the experiment. For example, if there were 10 numerosity conditions crossed with a variable that has two levels, then there would be 220 degrees of freedom in the data. When the models are fit to data, the number of degrees of freedom is the number in the data minus the number of the model’s free parameters.

Usually in fits of the diffusion model to data, there are no models of stimulus representation like those the ANS models provide and so there is a separate drift rate for each condition of an experiment. For Experiment 1, for example, this would lead to a model with 26 parameters whereas for the ANS-diffusion models, the number of parameters is greatly reduced, to only eight.

The model was fit to the data using the G-square statistic in the same way as fitting the chi-square method described by Ratcliff and Tuerlinckx (2002; see also Ratcliff & Childers, 2015; Ratcliff & Smith, 2004). G-square statistics are asymptotically chi-square and so critical chi-square values can be used to assess goodness of fit. In many applications we have found that if the value of the chi-square (or G-square) is below 2 times the critical value, the fit is good (Ratcliff, Thapar, Gomez, & McKoon, 2004; Ratcliff, Thapar, & McKoon, 2010) even in the less constrained case in which the diffusion model is applied without a representation model and so each condition has its own drift rate.

In the results sections for the experiments, the mean values of the model parameters and the G-square statistic across subjects are reported. For the plots in the figures for the experiments, the quantile RTs and response proportions in the data are averaged across subjects. The predictions from the models are generated from the best-fitting parameters for each subject and then these predictions are averaged across subjects in exactly the same way as the data are averaged.

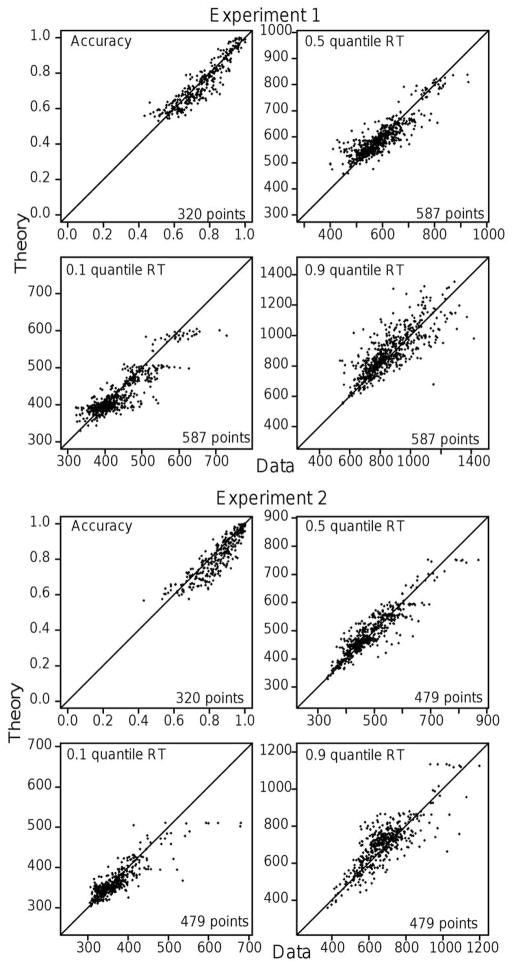

Because the fits are presented as averages over subjects, it may be that there are some very bad fits for some individuals. Appendix A shows plots of the experimental and predicted response proportions and 0.1, 0.5, and 0.9 quantile RTs plotted against each other for Experiments 1 and 2. These show a visual representation of the quality of the fits for each condition for each subject and so allow an assessment of how good or bad fits are for each condition and subject.

The difference in G-square values between the log and linear models provides a numerical goodness of fit measure from which the models can be compared. As noted above, because the number of parameters for the two models was the same, G-squares provide the same results for comparisons of models as do the AIC and BIC values (because these measures are G-square plus a penalty term based on the number of parameters - which is the same for the pairs of models). However, in our view, small numerical differences are not enough to be sure that one model should be preferred over another. We prefer to see qualitative differences in predictions between the models as well as numerical differences that are not small. Furthermore, for each experiment we report the number of subjects that favor each model from the G-square value. By a binomial test, if 22 (or more) out of 32 subjects or 12 (or more) out of 16 subjects support one model over the other model, then the result is significant. This provides another measure of support at an individual subject level for one model over the other model.

Displaying the match between data and model

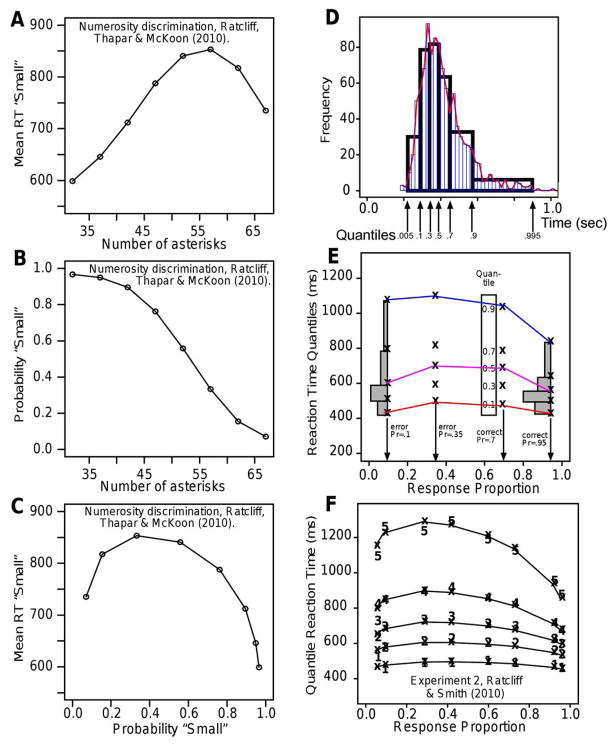

The match can be displayed in latency-probability functions and quantile-probability functions (Ratcliff, 2001; Ratcliff, Van Zandt, & McKoon, 1999). To illustrate latency probability functions (termed a parametric plot), data from a numerosity discrimination task (Ratcliff, Thapar, & McKoon, 2010) are plotted in Figures 3A–3C. The stimuli were arrays of asterisks mixed with empty spaces and subjects decided whether the number of asterisks was larger or small than 50. Figure 3A shows mean RTs (in ms) for “small” responses as a function of the number of asterisks for eight conditions that vary in difficulty (responses were grouped: 30–34, 35–39, 40–44, …, 65–69, for means 32, 37, 42, ..., 67). “Small” is the correct response for numbers smaller than 50 (on the left side of the function) and the incorrect response for numbers larger than 50 (on the right side of the function). RTs are shorter for the easier conditions for both correct and incorrect responses (the outer data points) and longer for the more difficult conditions (the nearer-center data points). Figure 3B shows the probabilities of “small” responses, fewer of them as the number of asterisks increases. The bottom panel shows the inverted U-shaped latency-probability function derived from plotting the RTs against the response probabilities. As the probability of a correct response decreases from right to left, RTs first increase and then decrease (and for “large” responses, the functions are similar). Predictions from the model can be plotted in the same way (e.g., Ratcliff & McKoon, 2008, Figure 6). Often in experiments with symmetric responses for the two choices, conditions are combined, for example, in Figure 3A, mean RT for “large” responses might be the left to right mirror image of those for “small” responses. Then correct “small” responses to the 32 asterisk condition would be combined with correct “large” responses to the 67 asterisk condition to produce one of four levels of difficulty from the eight conditions. Errors would be combined in the same way so that the four levels of difficulty would produce eight data points as in Figure 3F (the error RTs on the left correspond to the symmetric correct responses on the right).

Figure 3.

Construction of latency-probability functions and quantile probability functions.

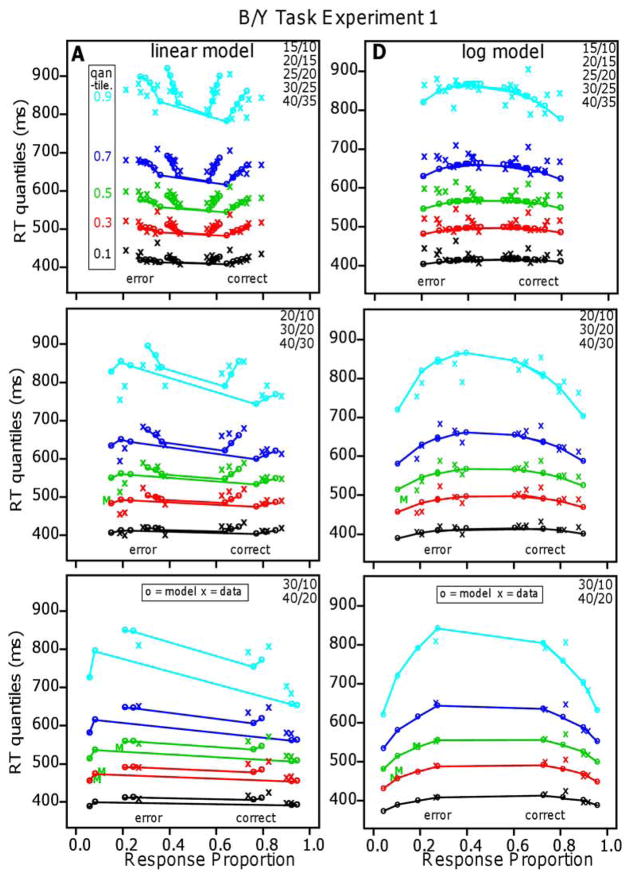

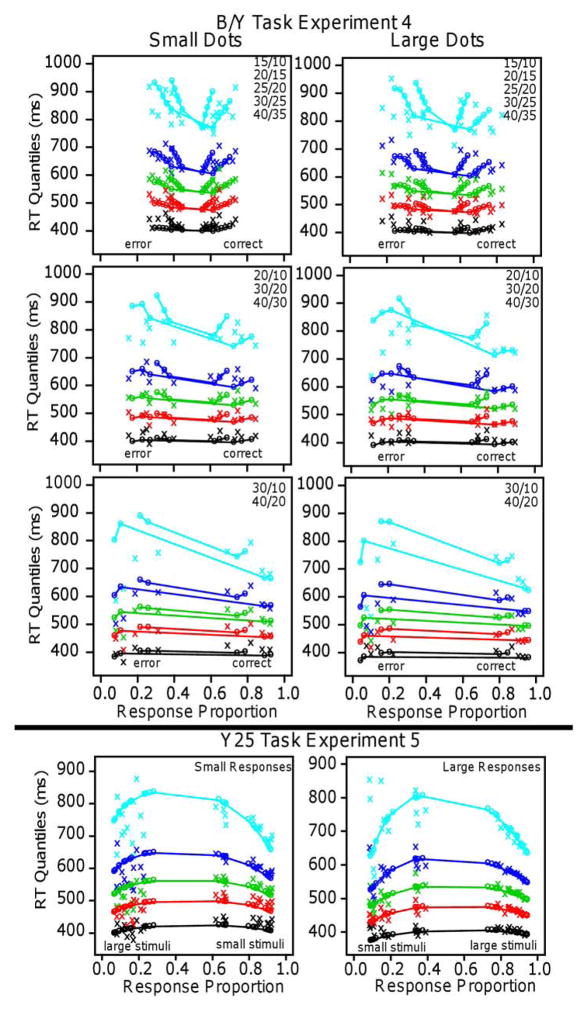

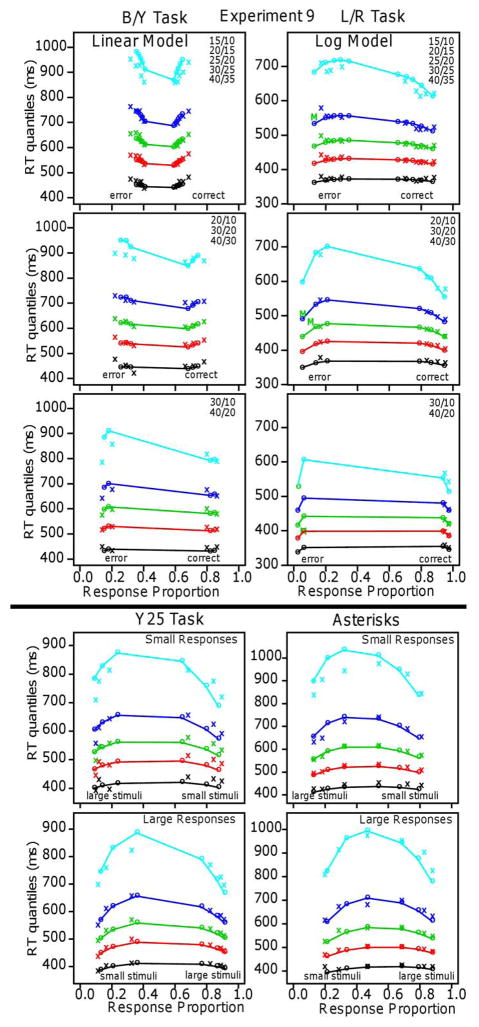

Figure 6.

Quantile-probability functions for Experiment 1 for the linear and log models. These plot RT quantiles against response proportions (correct responses to the right of 0.5 and errors to the left). The green/central lines are the median RTs. The number of dots in the conditions in the plots are shown in the top right corner and the more extreme functions are for proportional-area conditions and the less extreme for equal-area conditions.

For most of the experiments described in this article, we display the data and model predictions in quantile probability plots. Figure 3D shows how they are constructed. The top panel shows a histogram of the data (thin narrow bars and red line) overlaid with rectangles derived from the 0.1, 0.3, 0.5, 0.7, and 0.9 RT quantiles. The rectangles represent equal areas of 0.2 probability mass between each pair of middle quantiles and 0.1 probability mass outside of the 0.1 and 0.9 quantiles. The quantile rectangles capture the main features of the RT distribution (as can be seen in the figure) and therefore provide a reasonable summary of the overall distribution shape. Figure 3E shows a quantile-probability plot. Quantile RTs for the 0.1, 0.3, 0.5, 0.7, and 0.9 quantiles (stacked vertically) are plotted against the proportions of responses that were made for each condition for four experimental conditions different in difficulty. Correct responses for two conditions are on the right, errors for two conditions are on the left. For the more difficult condition, the proportion of correct responses is 0.7 and for the easier condition, the proportion of correct responses is 0.95. Errors for the other two conditions are plotted on the left with error probabilities 0.1 and 0.35. Figure 3F shows an example of the fit between model and data for an experiment with four conditions, with the numbers representing the data for the five quantiles and the x’s and lines representing the model predictions (from Ratcliff & Smith, 2010, Experiment 2). The latency-probability function for the median RT instead of the mean RT is the middle line in Figure 3F.

Quantile-probability plots make it easy to see changes in RT distribution locations and spread as a function of response probabilities and how model and data compare. In Figure 3F, as response probability changes from about 0.6 (the most difficult condition) to near 1.0 (the easiest condition), the 0.1 quantile (leading edge) changes little, but the 0.9 quantile changes by as much as 400 ms. Thus, the change in mean RT is mainly in the tail; the whole distribution does not shift. Also, error responses are slower than correct responses mainly because of their spread, not the location of the leading edge. In these ways, quantile-probability plots allow all the important aspects of both the accuracy and RT data to be read from a single plot.

Experiments: Stimuli, Subjects, and Procedures

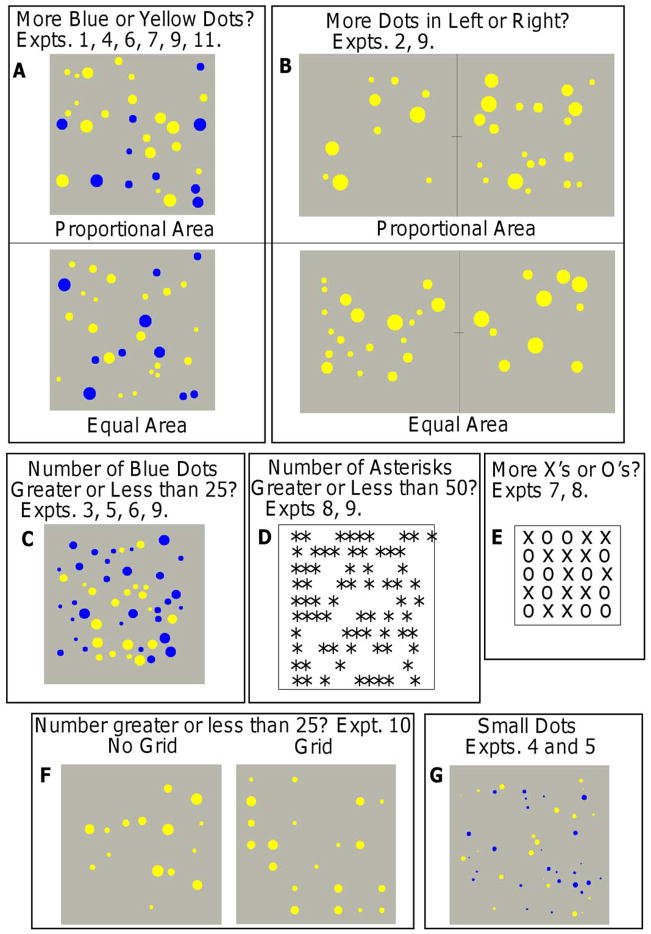

Figure 4 illustrates the displays that were used in the experiments. We list all of them here to provide a summary and then describe them again in the discussion of each experiment. In Figure 4A, blue and yellow dots are intermingled in a single array and the question was for which color is the number of dots larger. In Figure 4B, there are two arrays side by side with dots all of the same color and the question was which array has more dots.

Figure 4.

Examples of stimuli for the experiments.

In many of the experiments, there was an area manipulation, equal versus proportional. For blue and yellow dots mixed in a single array, the summed areas of the dots of the two colors were either equal or proportional (larger summed area for the larger numerosity color). At the same time, the summed areas of dots of different numerosities were either equal or proportional. For example, consider two conditions: 10 blue dots intermingled with 15 yellow dots and 35 blue dots intermingled with 40 yellow dots. For equal area, the sum of the areas of the 10 blue dots would be the same as the sum of the areas of the 15 yellow dots, the 35 blue dots, and the 40 yellow dots. For proportional area, the sums would be larger for larger numerosities than smaller ones. For dots of the same color in two arrays, the area manipulation is the same: the sums of the areas of the dots in the two arrays were equal or proportional and the sums were equal from one numerosity to another, or they were all proportional to their number. Figures 4A and 4B illustrate the area manipulation.

In Figure 4C, blue and yellow dots are intermingled in a single array and the question was whether the number of dots of one of the colors was greater or less than 25. In Figure 4D, asterisks are intermingled with white spaces and the question was whether the number of asterisks was greater or less than 50. In Figure 4E, X’s and O’s are intermingled and the question was whether there were more X’s or more O’s. In Figure 4F, there was one array of dots presented and for some of the displays, the dots were positioned randomly (the left example) or positioned on a grid (the right example); in both cases, the question was whether the number of dots was greater or less than 25. Finally, Figure 4G shows small dots which were tested along with regular-size dots in different stimulus arrays. These experiments used single arrays of intermingled blue and yellow dots; in Experiment 4, the question was for which color is the number of dots greater, and in Experiment 5, it was whether the number of dots of one of the colors is greater or less than 25.

For the single-array stimuli with dots (Figures 4A, 4C, 4G, and 4F), the dots were displayed on a 17-inch diagonal CRT monitor with a width of 32 cm and a height of 24 cm and with a 4×3 screen set to 1280×960 pixels (with 256 colors). The background was gray to control luminance (Halberda et al., 2008). The dots were presented in a 640×640 gray array in the middle of the screen that was 17.3 × 17.3 degrees of visual angle when viewed from a distance of 53 cm. For all but Experiments 4 and 5, the dots had radii of 6, 8, 10, 12, 14, or 16 pixels subtending angles of 0.324, 0.432, 0.540, 0.648, 0.756, and 0.864 degrees in diameter, respectively. For Experiments 4 and 5, the smaller dots’ radii were 2, 3, 4, 5, 6, or 7 pixels.

For each trial of a single-array experiment, either dot sizes were selected randomly but constrained so that the summed areas of the two colors of dots in an array (and the areas across all the numerosities, as described above) were equal, or they were selected randomly without any other constraint and so the areas were proportional to the number of dots. We constrained the positions of the dots so that the maximum horizontal/vertical distance dot centers could be separated by was 360 pixels (10.58 degrees) and the minimum spacing between dot edges was 5 pixels (0.135 degrees).

For the stimuli with two side-by-side arrays of dots, the same CRTs were used with the same settings as for the single-array experiments. The gray background within which the two arrays of dots were presented was 640 pixels high x 1160 pixels wide which is 17.3 by 31.3 degrees of visual angle. The minimum spacing between dot edges was 5 pixels and between the two arrays, there was an 80 pixel separation between dot centers. There was a thin vertical line between the two arrays (Figure 4B) within which stimulus arrays were presented. There was also a small fixation cross between the two arrays and subjects were instructed to look at that on the beginning of each trial. The radii of the dots were the same as the larger ones listed above.

Stimuli in most of the experiments with dots were presented for 250 or 300 ms and then the screen returned to the background color. This was done to reduce the possibility that subjects used slow strategic search processes to perform the task. Subjects were instructed to respond as quickly and accurately as possible. Responses were collected by key presses on a PC keyboard, usually the/and z keys, one for each choice. For all the tasks, there were several practice trials (e.g., 4) and for these, the correct response was given on each trial so that subjects would be certain to understand the instructions (e.g., it would say “an example of more blue dots” when the decision was about which color had the more dots). Subjects initiated each block by pressing the space bar on the keyboard.

For most of the experiments, the subjects were students in an introductory psychology class who participated for class credit. As is typical in our pool, some of them were not cooperative and began, from the beginning or in the middle of the experiment, to respond with fast guesses. For this reason, about 20% of the subjects were eliminated in each experiment. We identified the non-cooperative subjects by placing an upper cutoff at 300 ms and lower cutoff at 0 and examining the proportion of responses in this range and their accuracy. If there were more than 5% and accuracy was at or near chance for these responses, we eliminated the subject (based only on these aspects of the data, without examining other results). We also eliminated one or two subjects from a few of the experiments who were not fast guessing but responded with chance accuracy. For data analyses for all the experiments, we placed a lower RT cutoff at 300 ms and an upper cutoff at 2000 ms. This eliminated less than 5% of the responses in each experiment.

For experiments with one task, there were typically 20 blocks of 96 or 100 trials giving about 2000 observations per subject and for experiments with two or more tasks, two tasks were tested per session with about 1000 trials per session. We aimed for 16 subjects in the experiments with one task and 32 for the experiments with two or more tasks. Because of the fast-guessing subjects, we usually tested a few extra subjects and this led to larger numbers in some of the experiments. Experiments 1–5 had 16 subjects, Experiment 6 had 35, Experiments 7, 8, and 9 had 32, Experiment 10 had 15 (because classes ended before we could get the 16th), and Experiment 11 had 18.

Experiment 1

The stimuli in Experiment 1 were blue and yellow dots intermingled in a single array (Figure 4A) and subjects decided whether there were more blue or more yellow dots. We label this task the B/Y task. It is in this experiment that we first found the counter-intuitive result that as accuracy decreases, responses speed up.

To manipulate numerosity, the numbers of the blue and yellow dots differed in their numerosities and the differences between their numerosities. There were 10 combinations of the numbers of blue and yellow dots; 15/10, 20/15, 25/20, 30/25, and 40/35 for differences of 5; 20/10, 30/20, and 40/30 for differences of 10; and 30/10 and 40/20 for differences of 20. The sums of the dot areas were equal or proportional.

Accuracy and RT Results

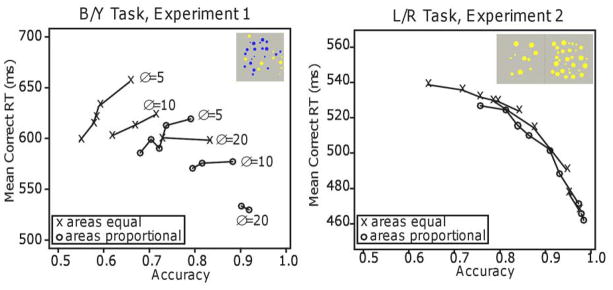

The data for “blue” and “yellow” responses were symmetric so correct responses for blue and yellow dots were combined and errors for blue and yellow dots were combined. Table 1 shows accuracy and mean correct RTs as a function of the area manipulation with the data averaged over the 10 proportional-area and 10 equal-area conditions. The left panel of Figure 5 shows mean RTs plotted against accuracy with the x’s for equal-area conditions and the o’s for proportional-area conditions. Lines were drawn between conditions with the same numerosity difference (5, 10, and 20) for the two area conditions separately.

Table 1.

Experiments 1 and 2: accuracy and correct mean RTs

| Experiment | measure | proportional area | equal area |

|---|---|---|---|

| 1 | accuracy | 0.817 | 0.675 |

| 1 | RT | 601 | 638 |

| 2 | accuracy | 0.900 | 0.824 |

| 2 | RT | 495 | 513 |

Figure 5.

Plots of mean RT against accuracy for Experiments 1 and 2. The x’s are for equal-area conditions and the o’s are for proportional-area conditions. Δ represents the difference in numerosity between the two stimuli.

As expected, accuracy decreased as the difficulty of the discrimination increased. Specifically, accuracy decreased both as the numerosity of the dots increased and as the difference between the numerosities of the dots of the two colors decreased, the standard result with these manipulations. Also as expected, equal-area discriminations were more difficult than proportional-area discriminations, with accuracy higher and RTs shorter with proportional areas.

The RT data show the unexpected finding and demonstrate why RTs must be considered in data analyses. For numerosity differences of 5 and 10, as accuracy decreased, RTs also decreased (for differences of 20, RTs changed little). For example, for the top function in the figure, as the probability of a correct response decreases from around 0.68 to around 0.55, RTs speed up from around 660 ms to around 600 ms. It is this joint consideration of accuracy and RTs that gives the counter-intuitive result.

Analyses of variance with two factors, the two area conditions and the 10 combinations of numbers of blue and yellow dots, showed significant effects on accuracy (F(1,15)=203.6, p<.05; F(9,135)=116.7, p<.05) and on mean RTs (F(1,15)=68.1, p<.05; F(9,135)=27.6, p<.05, respectively). The interaction was not significant for accuracy (F(9,135)=1.6, p>.05) but it was for RTs (F(9,135)=5.5, p<.05). We were not concerned with power in the statistical tests because it is qualitative patterns along with model fits to the sizes of the effects that are most relevant, not the size relative to the variability in the data. While a 2% effect on accuracy, for example, might be significant and have a high effect size, it might have no practical effect on performance in the context of the modeling.

Experiment 2

In Experiment 1, accuracy decreased as difficulty increased and RTs decreased. In Experiment 2, accuracy decreased as difficulty increased and RTs increased (as opposed to decreasing as occurred in Experiment 1). The stimuli were side-by-side arrays (Figure 4B) and the dots were always yellow for both arrays. Subjects decided which of the two arrays had more dots, the left or the right. We call this the L/R task. Summed areas were either equal or proportional. There were the same 10 combinations of numbers of dots as for Experiment 1.

Accuracy and RT Results

The data for “left” and “right” responses were symmetric so correct responses for left and right dots were grouped and errors for left and right dots were grouped as for Experiment 1. Table 1 shows the accuracy and mean correct RT results as a function of the area manipulation with the data averaged over the 10 proportional-area and 10 equal-area conditions. The right panel of Figure 5 shows plots constructed like those of Experiment 1. Accuracy decreased as the difference in numerosity between the two arrays decreased and as the numerosity of the two arrays increased, and it was lower for the equal-area conditions than the proportional-area ones. The result for RTs was the typical one, that RTs increased as accuracy decreased. Unlike Experiment 1, the data from all the equal-area conditions and all the proportional-area conditions fell on a single parametric plot.

Analyses of variance showed significant differences in accuracy among the 10 combinations of numerosity and the two area conditions, F(9,135)=151.7, p<.05, and F(1,15)=68.2, p<.05, respectively, and their interaction was significant, F(9,135)=8.0, p<.05. There were also significant differences in RTs among the numerosity conditions and the area conditions, F(9,135)=25.7, p<.05 and F(1,15)=38.7, p<.05, and their interaction was not significant, F(9,135)=1.3. These results show that both the area and numerosity manipulations affected performance on this task. In following analyses for later experiments, we average over the numerosity conditions (because the numerosity effect is always large) to simplify the ANOVAs and t-tests.

Fitting the Integrated Models to the Results of Experiments 1 and 2

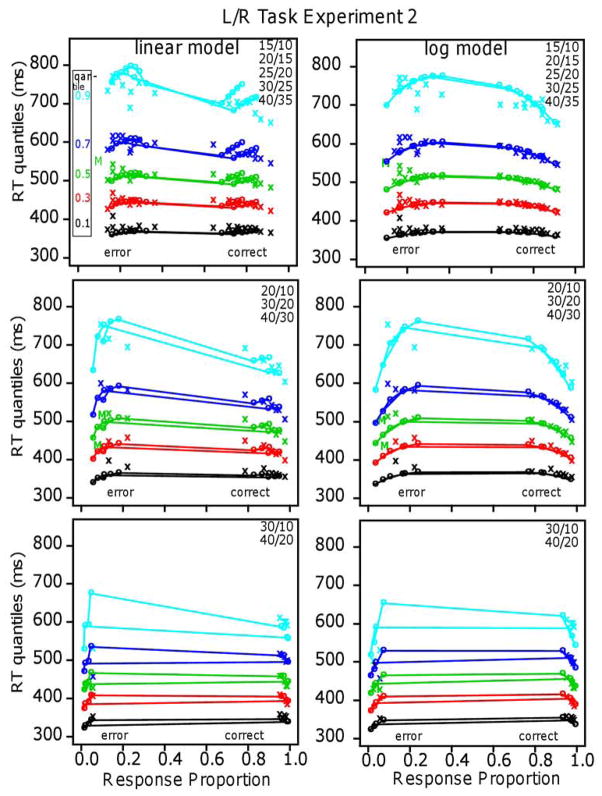

Quantile-probability plots (Figures 6 and 7) show accuracy and the full distributions of RTs for correct and error responses and how these change across conditions. As illustrated in Figure 3, the 0.1, 0.3, 0.5 (median), 0.7, and 0.9 quantiles of the RT distribution for each condition are plotted vertically on the y-axis and the proportions of responses are plotted on the x-axis. Because the probability of a correct response is larger than .5, quantiles for correct responses are on the right of .5 and quantiles for errors on the left (the two probabilities sum to 1.0). The difficulty of the stimuli in each condition determines the probabilities of correct and error responses, that is, the location of the stacks of quantiles on the x-axis.

Figure 7.

Quantile-probability functions for Experiment 2.

For the models, nondecision time determines the placement of the functions vertically. The shapes of the functions are determined by just three values (Ratcliff & McKoon, 2008): the distance between the boundaries, the range across trials in the starting point (which is equivalent to across-trial variability in the settings of the boundaries), and the SD across trials in drift rates (η). The drift rates for the different levels of difficulty (i.e., the different conditions) sweep out functions across response probabilities.

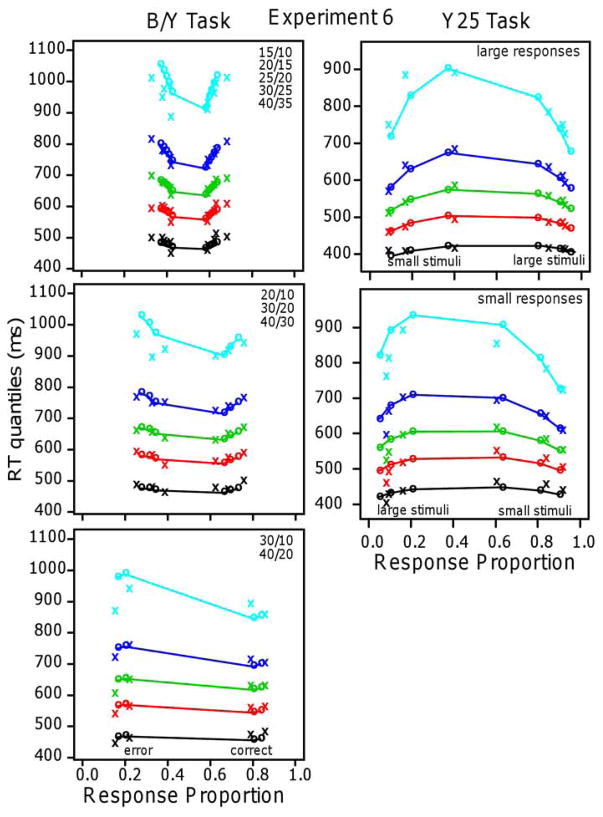

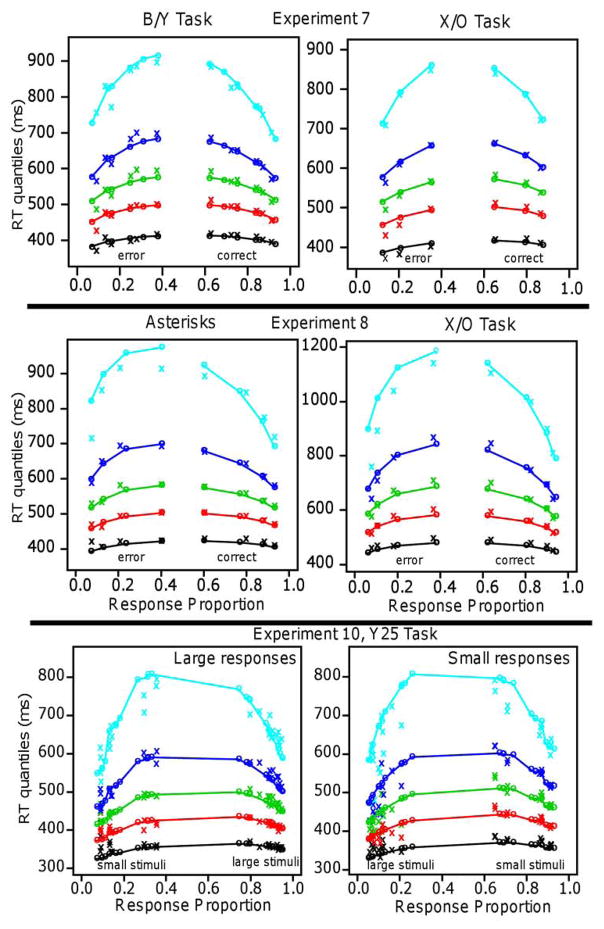

Figures 6 and 7 show the quantile probability functions for Experiments 1 and 2, respectively, and the fits of the models to them. The x’s are the data and the o’s and lines joining them are the predictions of the models. The proportional-area conditions are farther to the left and right because they have higher accuracy than the equal-area conditions, which are nearer the center. The horizontal lines that connect correct and error responses across 0.5 are not meaningful; they are there only to show which correct responses correspond to which error responses.

The quantile-probability functions for Experiment 1 (Figure 6) show the unexpected result for the five quantiles. They decrease sharply from their left and right ends (error responses and correct responses, respectively) toward the center, showing the decrease in RTs as accuracy decreases. This is true for the equal-area conditions and the proportional-area conditions. In contrast, the functions for Experiment 2 (Figure 7) show the typical inverted U shaped functions bending up from their left and right ends with RTs increasing as accuracy decreases.

To fit the models to the data, there were four parameters from the diffusion model: the distance between the boundaries, across-trial range in the starting point and across-trial range in nondecision time. There were four parameters from the ANS models: a drift-rate coefficient (v1) for the equal-area conditions, a drift-rate coefficient for the proportional-area conditions (v2), the SD coefficient (σ1), and the constant component of the across-trial SD in drift rate (η0). The number of degrees of freedom was 212: the number of conditions multiplied by the 11 degrees of freedom for the proportions of responses between and outside the .1, .3, .5, .7, and .9 bins for correct and error responses minus 1 because the proportions add to 1 and minus the number of parameters.

The results for the linear model for Experiment 1 show a remarkable qualitative and quantitative match between theory and data. The model produces the decreases in RT quantiles as accuracy decreases, the larger and sharper decreases for the equal-area conditions than the proportional-area conditions, and the larger decreases for the higher than the lower quantiles. It also produces the flattening of the functions as the difference in numerosities between the blue and yellow dots increases (from 5 to 10 to 20). (Accuracy for the easiest condition, 15/10 dots, was a little higher than the model’s predictions but this could be accommodated by allowing drift rate to increase a little more quickly than linearly as numerosities decrease.)

The critical difference in the predictions between the linear and log models is the counterintuitive result that for the linear model, for a constant numerosity difference, as the total number of dots increases, RT decreases. In our data, this effect is largest for differences in numerosity of 5. In order to provide another measure of which qualitative pattern of results was obtained for individual subjects, we fit median RTs as a function of the number of dots for differences of 5 with linear regression. We examined this qualitative effect in the data from the experiments with the B/Y task and the L/R task and we report how many subjects had a slope less than zero. For the counterintuitive result (decreasing RT with decreasing accuracy), the slope is less than zero, and for the standard result and log model, the slope is greater than zero.

Tables 2 and 3 show the parameter values of the linear model that best fit the data. The mean G-square value for the linear model was 261 and the critical value of the chi-square for 212 degrees of freedom is 246.0. The mean G-square over subjects is just above the critical value, which indicates a good fit of the model to data. For individuals, G-square values were lower for the linear model for 13 out of 16 subjects and the slope of the median RT versus overall numerosity function for differences of 5 was less than 0 for 28 out of 32 comparisons (equal and proportional area for 16 subjects). Both of these support the linear model for individual subject data.

Table 2.

Diffusion model parameters

| Experiment and task | Model | a | Ter | η0 | 10σ1 | sz | st | z |

|---|---|---|---|---|---|---|---|---|

| 1, B/Y | linear | 0.114 | 0.446 | 0.010 | 0.066 | 0.083 | 0.266 | a/2 |

| 1, B/Y | log | 0.103 | 0.425 | 0.038 | 0.021 | 0.043 | 0.254 | a/2 |

| 2, L/R | linear | 0.098 | 0.398 | 0.032 | 0.071 | 0.073 | 0.237 | a/2 |

| 2, L/R | log | 0.092 | 0.390 | 0.152 | 0.005 | 0.057 | 0.224 | a/2 |

| 3, Y25 | linear | 0.102 | 0.386 | 0.026 | 0.027 | 0.076 | 0.203 | 0.054 |

| 3, Y25 | log | 0.093 | 0.389 | 0.022 | 0.002 | 0.064 | 0.215 | 0.052 |

| 4, B/Y | linear | 0.113 | 0.421 | 0.027 | 0.078 | 0.087 | 0.235 | a/2 |

| 4, B/Y | log | 0.103 | 0.406 | 0.024 | 0.045 | 0.055 | 0.227 | a/2 |

| 5, Y25 | linear | 0.098 | 0.419 | 0.028 | 0.038 | 0.068 | 0.201 | 0.045 |

| 5, Y25 | log | 0.091 | 0.412 | 0.030 | 0.012 | 0.049 | 0.199 | 0.040 |

| 6, Y25 | linear | 0.109 | 0.433 | 0.027 | 0.038 | 0.066 | 0.222 | 0.059 |

| 6, Y25 | log | 0.102 | 0.427 | 0.037 | 0.017 | 0.051 | 0.220 | 0.058 |

| 6, B/Y | linear | 0.111 | 0.485 | 0.030 | 0.053 | 0.064 | 0.297 | a/2 |

| 6, B/Y | log | 0.108 | 0.481 | 0.053 | 0.020 | 0.058 | 0.291 | a/2 |

| 7, B/Y | linear | 0.107 | 0.403 | 0.019 | 0.063 | 0.061 | 0.220 | a/2 |

| 7, B/Y | log | 0.109 | 0.413 | 0.069 | 0.050 | 0.077 | 0.227 | a/2 |

| 7, X/O | linear | 0.099 | 0.425 | 0.037 | 0.035 | 0.072 | 0.235 | a/2 |

| 7, X/O | log | 0.099 | 0.427 | 0.033 | 0.036 | 0.073 | 0.236 | a/2 |

| 8, asterisks | linear | 0.121 | 0.409 | 0.068 | 0.019 | 0.090 | 0.185 | a/2 |

| 8, asterisks | log | 0.113 | 0.396 | 0.039 | 0.012 | 0.065 | 0.171 | a/2 |

| 8, X/O | linear | 0.129 | 0.431 | 0.015 | 0.052 | 0.059 | 0.203 | a/2 |

| 8, X/O | log | 0.129 | 0.429 | 0.009 | 0.052 | 0.069 | 0.199 | a/2 |

| 10, Y25 | linear | 0.105 | 0.364 | 0.036 | 0.024 | 0.080 | 0.177 | 0.054 |

| 10, Y25 | log | 0.096 | 0.357 | 0.033 | 0.005 | 0.047 | 0.191 | 0.051 |

Table 3.

Diffusion model drift rate coefficients and individual fit measures

| Experiment and task | Model | v1 | v2 | v3 | v4 | vc | Number preferred | Number slopes<0 | G2 | df |

|---|---|---|---|---|---|---|---|---|---|---|

| 1, B/Y | linear | 0.037 | 0.016 | 13/16 | 28/32 | 261 | 212 | |||

| 1, B/Y | log | 0.501 | 0.225 | 3/16 | 287 | 212 | ||||

| 2, L/R | linear | 0.066 | 0.048 | 6/16 | 11/32 | 303 | 212 | |||

| 2, L/R | log | 1.067 | 0.784 | 10/16 | 280 | 212 | ||||

| 3, Y25 | linear | 0.033 | 0.033 | 0.029 | 0.030 | −0.004 | 13/16 | 301 | 252 | |

| 3, Y25 | log | 0.634 | 0.629 | 0.581 | 0.571 | −0.029 | 3/16 | 312 | 252 | |

| 4, B/Y | linear | 0.030 | 0.016 | 0.040 | 0.020 | 11/16 | 56/64 | 475 | 430 | |

| 4, B/Y | log | 0.436 | 0.229 | 0.566 | 0.268 | 5/16 | 494 | 430 | ||

| 5, Y25 | linear | 0.032 | 0.030 | 0.037 | 0.035 | −0.032 | 12/16 | 328 | 252 | |

| 5, Y25 | log | 0.559 | 0.531 | 0.643 | 0.592 | −0.050 | 4/16 | 348 | 252 | |

| 6, Y25 | linear | 0.033 | −0.045 | 15/35 | 95 | 57 | ||||

| 6, Y25 | log | 0.607 | −0.071 | 20/35 | 98 | 57 | ||||

| 6, B/Y | linear | 0.015 | 21/35 | 32/35 | 136 | 103 | ||||

| 6, B/Y | log | 0.275 | 14/35 | 138 | 103 | |||||

| 7, B/Y | linear | 0.040 | 0.025 | 24/32 | 90.6 | 58 | ||||

| 7, B/Y | log | 0.534 | 0.321 | 8/32 | 95.0 | 58 | ||||

| 7, X/O | linear | 0.031 | 16/32 | 43.2 | 26 | |||||

| 7, X/O | log | 0.386 | 16/32 | 42.6 | 26 | |||||

| 8, asterisks | linear | 0.015 | 24/32 | 41.2 | 37 | |||||

| 8, asterisks | log | 0.557 | 8/32 | 41.7 | 37 | |||||

| 8, X/O | linear | 0.029 | 24/32 | 45.4 | 37 | |||||

| 8, X/O | log | 0.355 | 8/32 | 47.6 | 37 | |||||

| 10, Y25 | linear | 0.035 | 0.033 | 0.036 | 0.036 | −0.041 | 10/15 | 338 | 252 | |

| 10, Y25 | log | 0.627 | 0.608 | 0.638 | 0.628 | −0.069 | 5/15 | 342 | 252 |

Note: For Experiments 1, 2, and 7, v1 proportional area, v2 equal area. For Experiments 3 and 5, v1 small dots few distrac-tors, v2 small dots many distractors, v3 large dots few distractors, v4 large dots many distractors. For Experiment 4, v1 small dots proportional area, v2 small dots equal area, v3 large dots proportional area, v4 large dots equal area. For Experiment 10, v1 random arrangement proportional area, v2 random arrangement equal area, v3 grid arrangement proportional area, v4 grid arrangement equal area.

The proportional-area and equal-area drift-rate coefficients were significantly different, 0.037 and 0.016, a difference of over a factor of 2 (t(15)=8.1, p<.05). We discuss these coefficients below.

The fit of the linear model to the data is impressive for several reasons. First, there is only one drift-rate coefficient for the 10 equal-area conditions and only one for the 10 proportional-area conditions-- drift rate is determined by the coefficient and the two numerosities being compared. Second, the values of the four parameters from the diffusion model and the constant component of the across-trial SD in drift rate are fixed across all 20 conditions. There is no model freedom with which to alter a single parameter to accommodate, for example, a miss in one data point.

The fit is also impressive in relation to the number of parameters that would usually be used to fit the diffusion model to data, as mentioned above. For Experiments 1 and 2, there would be 20 drift-rate parameters and possibly 20 parameters for across-trial SD in drift rates (because the SD in drift rates increases with numerosity in order to fit the data). Integrating the linear model with the diffusion model reduces this to 2 drift-rate coefficients and 2 SD coefficients, the constant SD coefficient i(η0) and the one that specifies how the SD changes with numerosity (σ1).

The log model completely and qualitatively misses the decreases in RTs with decreasing accuracy. It does, however, produce predictions that go through the middles of the quantile-probability functions and so the G-square value is not markedly different from that for the linear model.

For Experiment 2, the results were the opposite: The log model fit the data well, the linear model did not, and the functions in Figure 7 show the result that would be expected intuitively: as accuracy decreased, RTs increased. The results are also different from Experiment 1 in that the quantile data from the equal-area and proportional-area conditions fall on the same function (if they were plotted together) as they do for the means in Figure 5. The fit of the log model to the data was good: It produced predicted values that match the quantile-probability functions with the mean G-square value a little above the critical value, 246.0. The number of parameters, the number of conditions, and the number of degrees of freedom were the same as for Experiment 1. There was one drift-rate coefficient for the 10 equal-area conditions, one for the 10 proportional-area conditions, the four diffusion-model parameters, the constant component of the SD in drift-rate across trials, and two parameters for the SD coefficients. The linear model missed the data qualitatively but its predictions go through the middles of the quantile-probability functions and so its G-square value is not a great deal larger than that of the log model. For individuals, G-square values were lower for the log model for 10 out of 16 subjects and the slope of the RT versus overall numerosity function for differences of 5 was greater than 0 for 21 out of 32 comparisons (equal and proportional area for 32 subjects). Both of these support the log model for individual subject data, but not as strongly as the linear model is supported for Experiment 1. The results for Experiment 2 are consistent with those obtained for a side-by-side task by Park and Starns (2015). They found that, for constant differences in numerosity, as overall numerosity increased (12/9 vs. 21/18 and 14/12 vs. 20/18), mean RT increased (12 ms and 5 ms effects, respectively, supporting the log model.

In fits of the standard model to other experimental paradigms, across-trial variability in drift rate has been a free parameter that is equated across conditions and its value typically varies between .08 and .3. We report SD coefficient values (σ1) and the constant values (η0). The values of across-trial SD in drift rate can be computed from these using the equation in Figure 2C. For comparison with other fits of the model to data in other articles, we present values of η for Experiments 1 and 2 below. (Note that the SD coefficients are labeled 10σ1 because the values in the table are multiplied by 10). For Experiment 1, the smallest and largest values of η are 0.13 and 0.36 (for the 15/10 and 40/35 numerosity conditions) and for Experiment 2, the smallest and largest values of η are 0.16 and 0.18. Thus, there are large differences in η for Experiment 1 across conditions while for Experiment 2, the values of η are almost constant across conditions.

Estimating the Contributions of Confounding Variables

As mentioned above, research on numeracy has been concerned with whether experimental results can be explained by numerosity alone, without some confounding variable such as area, the length of a line drawn around the dots, or their density (e.g., DeWind et al., 2015; DeWind & Brannon, 2012; Feigenson et al., 2002; Gebuis, Cohen Kadosh, & Gevers, 2016; Gebuis & Gevers, 2011; Gebius & Reynvoet, 2012a, 2012b, 2013; Leibovich, Katzin, Harel, Henik, 2016; Mix et al., 2002). Efforts to control for such variables face the problem that controlling for one leaves another confounded with numerosity.

Our results show that the ANS-diffusion models can provide a way of measuring the effects of these variables. As Experiments 1 and 2 versus Experiment 3 (presented next) demonstrate, some confounded variables affect performance for some tasks but not others. In Experiments 1 and 2, the summed areas of the dots were either equal or proportional to the number. To the extent that area contributed to decisions, the drift-rate coefficient should be larger for proportional-area conditions and, if it is, then the difference in the equal- and proportional-area coefficients provides an estimate of the relative contributions of area and numerosity. In Experiment 1, the difference in the drift-rate coefficients from the linear model was 0.21 (means 0.37 minus 0.16), i.e., the effect of area was over double that for the equal-area condition. In Experiment 2, the difference in the coefficients from the log model was 0.29 (1.07 minus 0.78) and so the effect of area was about 35% over the value for the equal-area condition.

Our results argue against the notion that effects that have been attributed to representations of numerosity can be explained instead completely by non-numerical cues (Gebius, Gevers, & Cohen-Kadosh, 2014; Tibber et al., 2013). For example, Gebius and Reynvoet (2013) argued that numerosity information is not extracted automatically from visual stimuli in either an active task (subjects had to monitor numerosity and occasionally make a judgment) or a passive task (subjects viewed sequences of arrays of dots and neurophysiological measures were collected).

DeWind et al. (2015) have recently proposed a different method for measuring the effects of confounding variables. They used a linear combination of the logs of the ratios of the differences in independent variables between two sets of stimuli (e.g., their areas, numerosities, etc.) to produce a decision variable (cf., signal strength in signal detection theory). The inverse z-transformation of this combination was used to predict accuracy and the coefficients of the linear combination were used as estimates of the contributions of the independent variables. However, this method is only about accuracy, not RTs. Ratcliff (2014) found that z-transforms of accuracy can sometimes match drift rates, but whether that applies with DeWind et al.’s method and for numerosity discrimination tasks will require further research.

Why Does the Linear Model Produce Shorter RTs as Accuracy Decreases?

To illustrate, we use the simple case for which the boundaries of the diffusion process are equidistant from the starting point (although the logic is the same if they are not equidistant). Incidentally, with equidistant boundaries (Figure 8A), the correct and error RT distributions for a single drift rate (i.e., no trial-to-trial variability in starting point or drift rate) are identical except that there is lower probability mass in the error distribution.

Figure 8.

An illustration of how the predictions of the linear model arise. A illustrates a single diffusion process with a single drift rate (with no across trial variability in drift rate). RT distributions for correct and error responses are equal if the starting point is equidistant from the boundaries. B shows distributions of drift rate (across trials) for high numerosity (wide red solid distribution) and low numerosity (narrow blue dashed distribution). To represent these distributions for illustration, two drift rates are chosen (v1 and v2 and accuracy is the average of the two accuracy values and mean RT is a weighted sum of the two RTs. C shows the averages for the low-SD condition and D shows the averages for the high-SD condition with the averages for correct responses shown in green. For completeness, error responses are also shown; note that for boundaries equidistant from the starting point, for a single drift rate, correct and error RTs are the same.

Figure 8B shows trial-to-trial variability in drift rate with two normal distributions both centered on a drift rate of 0.1. The red solid function represents a larger numerosity, which has a larger SD, and the blue dashed function represents a smaller numerosity, which has a smaller SD. For illustration, two values of drift rate were selected from each function, at about plus and minus one SD, −0.05 and 0.25 for the larger numerosity and 0.05 and 0.15 for the smaller.

Figures 8C and 8D show the RT distributions for correct and error responses from 8A, with the two values of v for the smaller numerosity (7C) and the two values for the larger numerosity (7D). For the smaller numerosity, the 0.15 and 0.05 drift rates produce accuracy values of 0.86 and 0.65, respectively, which average to 0.76, and they produce RTs of 685 ms and 748 ms for correct responses which, when weighted by their probabilities (0.86 and 0.65), average to 717 ms. For the larger numerosity, the 0.25 and −0.05 drift rates produce accuracy values of 0.95 and 0.35, which average to 0.65. They produce RTs of 616 ms and 748 ms for correct responses which, when weighted by their probabilities (0.95 and 0.35), average to 652 ms. Thus, accuracy is lower for the larger numerosity, 0.65, than the smaller, 0.76, and-- the counterintuitive result-- RTs are shorter, 652 ms and 717 ms. The computations for RTs for errors are shown at the bottom boundary in the figures.

To explain this more generally: when the distribution of drift rates has a large SD, then drift rates in the left tail are negative. They are slower than responses in the right tail but they have lower probabilities of correct responses (because their drift rate is toward the error boundary). This means that fast correct responses in the right tail are weighted more heavily (there are more of them) than slower responses in the left tail, which leads to overall faster responses. As numerosity increases, the SD increases which leads to the lower probability and faster responses.

There is an alternative hypothesis that has been suggested to explain counter-intuitive results similar to those obtained in Experiment 1 but in different perceptual tasks. The assumption is that within-trial variability increases with stimulus strength, or in our case, numerosity (e.g., Donkin, Brown, & Heathcote, 2009; Smith & Ratcliff, 2009; Teodorescu, Moran, & Usher, 2016; Teodorescu & Usher, 2013). In the diffusion model, usually the variability in the accumulation of information from the starting point to the boundaries is constant across levels of difficulty. If within-trial variability increases with numerosity, processes hit the boundaries faster because of increased variability, which leads to the decrease in RTs with decreasing accuracy. However, there is a major problem with this within-trial variability account: it cannot explain why the decrease in RT with decreasing accuracy only occurs for intermingled blue/yellow dot displays and not side-by-side displays (and in the experiments described below, not for single arrays matched against a standard). An increase in within-trial variability with numerosity would be expected to be a general property of numerosity decisions, not a property of a particular stimulus configuration and task.

Experiment 3

In Experiment 1, accuracy decreased as difficulty increased and RTs decreased. In this experiment, like Experiment 2, accuracy decreased as difficulty increased and RTs increased. In Experiments 1 and 2, the proportional-area conditions were easier than the equal-area ones; in this experiment, performance was about the same.

The stimuli were single arrays of intermingled blue and yellow dots (Figure 4A). Subjects decided whether the number of yellow dots (or the number of blue dots) was larger or smaller than 25; we call this the Y25 task. There were three variables: the number of dots for the target color (the dots to be compared to 25) was 10, 15, 20, 30, 35, or 40, there were either 15 or 35 dots of the other color, and areas were either equal or proportional, for a total of 24 conditions, collapsing over whether the target color was blue or yellow. The target color alternated from one block to the next.

Results

Table 4 shows accuracy and mean RTs. Correct responses for 10, 15, and 20 dots were combined with correct responses for 30, 35, and 40 dots and then averaged. The data show the standard result that RTs increase as accuracy decreases. The data were not collapsed over “larger” responses to larger-than-25 stimuli and “smaller” responses to smaller-than-25 stimuli because the two sets of data were not symmetric.

Table 4.

Experiment 3: accuracy and correct mean RTs collapsed over stimulus difficulty

| measure | 15 non-target dots | 35 non-target dots | ||

|---|---|---|---|---|

| proportional area | equal area | proportional area | equal area | |

| accuracy | 0.856 | 0.858 | 0.837 | 0.841 |

| mean RT | 537 | 528 | 537 | 534 |

Analyses of variance were conducted with two factors, area and the number of non-target dots. The data were averaged over the numerosity conditions for these analyses. The difference in accuracy between the equal- and proportional-area conditions was only 0.2% and the difference in correct mean RTs was only 1 ms and neither was significant, F(1,15)=1.0 for accuracy and F(1,15)=0.4 for RTs. The differences in accuracy and correct mean RTs between the two numbers of non-target dots were small, 1.8% in accuracy and 8 ms in RTs, but they were significant, F(1,15)=12.7, p<.05 for accuracy and F(1,15)=12.7, p<.05 for RTs. The interactions were not significant, F(1,15)=0.1 and F(1,15)=1.2 for accuracy and RTs, respectively.

To fit the models, drift rates were calculated with the drift-rate coefficient multiplying the difference between the number of target dots and 25 for the linear model and the difference between the logs of the number of target dots and 25 for the log model. The SD coefficient was also calculated using the number of target dots and 25.

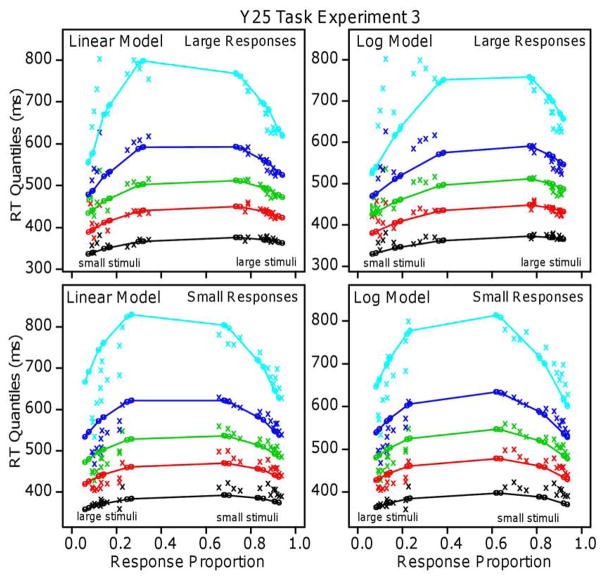

Figure 9 shows the quantile-probability plots for the data and the models’ predictions, with the x’s for the data and the o’s and lines that connect them for the predictions. The top two panels are for “larger” responses and the bottom two are for “smaller” responses. For each plot, there are 12 sets of quantile data points for correct responses and 12 for errors, where the 12 are made up of the combinations of the area variable, the number of non-target dots, and three numerosities, 10, 15, and 20 target dots for “smaller” stimuli or 30, 35, and 40 dots for “larger” stimuli. The best-fitting values of the parameters and mean G-square measures are shown in Tables 2 and 3.

Figure 9.

Quantile probability plots for Experiment 3 for the log and linear models for large and small responses separately.

There were six numerosity values, two area conditions, and two numbers of non-target dots, giving 264 (24 times 11) degrees of freedom in the data. There were twelve parameters, so the number of degrees of freedom for fitting the models was 252. The twelve parameters were the usual four for the diffusion model, the starting point of the diffusion process, a drift-rate criterion, a drift-rate coefficient for each of the four combinations of area and number of non-targets, and 2 SD coefficients (one constant and one specifying how the SD changes with numerosity). A drift-rate criterion (this was subtracted from all the drift rates produced from the expressions for drift rates in Figure 2C) was needed because some of the subjects did not set the zero point of drift rate exactly at 25.

The mean G-square value was a little lower for the linear model than for the log model and 13 out 16 G-square values for individual subjects were smaller for the linear as opposed to the log model, thus supporting the linear model. However, the linear and log models fit the data qualitatively about equally well. There were larger differences in accuracy for small stimuli than large stimuli and both models predict this. Both models miss slightly the leading edges of the RT distributions for “smaller” responses to small stimuli.

We conducted analyses of variance on the drift-rate coefficients. The effect of the area variable was not significant for either model, F(1,15)=1.5 and F(1,15)=1.2 for the linear and log models, respectively; the effect of the number of non-targets was significant, F(1,15)=27.1, p<.05, and F(1,15)=12.5, p<.05, respectively; and the interactions were not significant (F(1,15)=1.3 and F(1,15)=0.1). The area variable had less than a 2% effect and the difference between 15 and 35 non-target dots was only about 10%, notably small relative to the 100% and 30% effects of the area variable on the drift rate coefficient in Experiments 1 and 2, respectively.

Comparison of Parameter Values for Experiments 1, 2, and 3

There were no large or systematic differences across the experiments in the best-fitting values for the parameters of the diffusion model. The distance between the boundaries was about the same for the three experiments and nondecision time was a modest 50 ms longer for Experiment 1 than for Experiments 2 and 3. The across-trial ranges in nondecision time and starting point were similar across the experiments (note that they are estimated less well than the other parameters with larger SD’s in their estimates, Ratcliff & Tuerklinckx, 2002; Ratcliff & Chiilders, 2015).

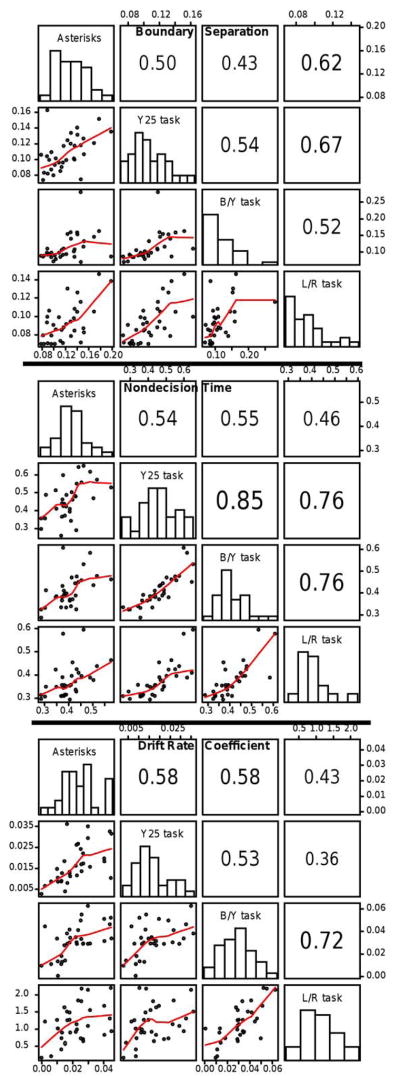

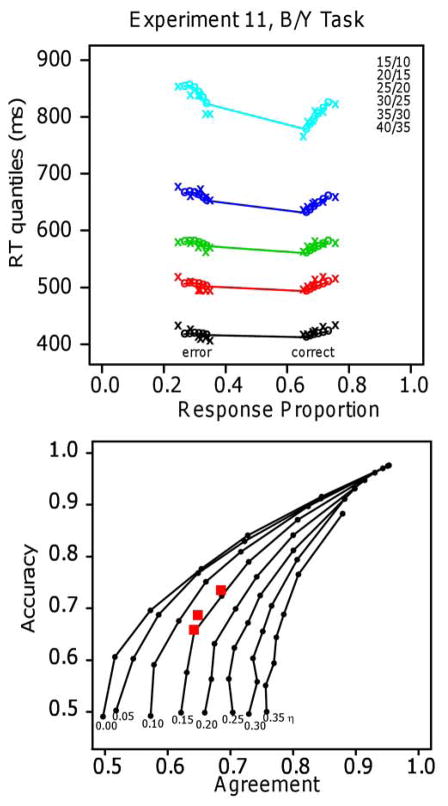

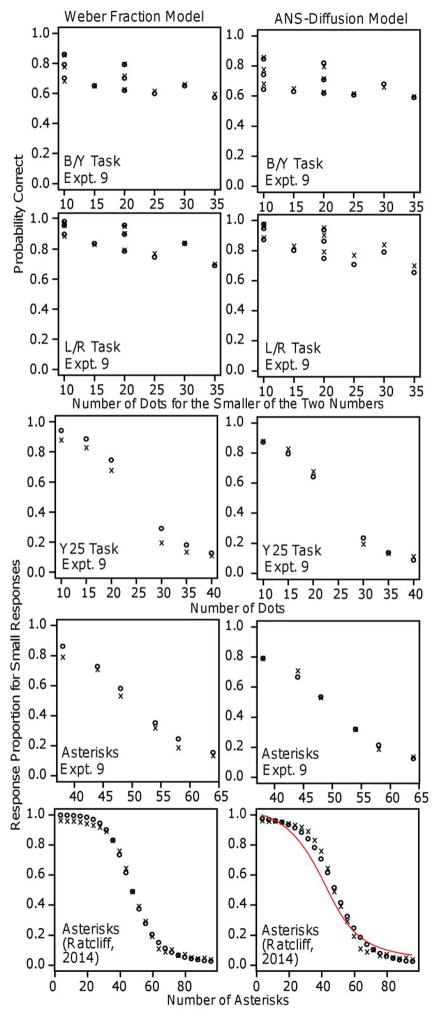

Drift-rate coefficients can be compared within the linear or within the log model across conditions of an experiment, but not between them because the log and linear models place numerosity on different scales. However, drift rates track difficulty in both models and the relative sizes of the differences among conditions can be used to understand how manipulations affected the quality of encoded representations of stimuli. The main results were that the area manipulation affected the drift-rate coefficients most in the B/Y task, next in the L/R task, and almost not at all in the Y25 task. Proportional-area stimuli increased drift-rate coefficients by 100% for the B/Y task, 30% for the L/R task, and less than 10% for the Y25 task.