Abstract

Tactile sensing is an essential component in human–robot interaction and object manipulation. Soft sensors allow for safe interaction and improved gripping performance. Here we present the TacTip family of sensors: a range of soft optical tactile sensors with various morphologies fabricated through dual-material 3D printing. All of these sensors are inspired by the same biomimetic design principle: transducing deformation of the sensing surface via the movement of pins, analogous to the function of intermediate ridges within the human fingertip. We evaluate the performance of the TacTip, TacTip-GR2, TacTip-M2, and TacCylinder sensors on a rolling cylinder task and show that all four attain submillimeter localization accuracy, representing greater than 10-fold super-resolved acuity. A version of the TacTip sensor has also been open-sourced, enabling other laboratories to adopt it as a platform for tactile sensing and manipulation research. These sensors are suitable for real-world applications in tactile perception, exploration, and manipulation, and will enable further research and innovation in the field of soft tactile sensing.

Keywords: tactile sensors, dexterous manipulation, soft sensors

Introduction

The sense of touch is essential for interacting physically with our environment,1 such as with other humans in social interactions.2 In robotics, tactile feedback is essential for complex precision manipulation tasks3 as well as for safe human–robot interaction. Developing robust, customizable tactile sensors is thus an important task that could drive advances in the safety, interactivity, and manipulation capabilities of robots.

A large variety of tactile sensors have been developed over the years,4 relying on various technologies such as capacitive taxels, resistive wires, and piezoelectric materials. However, there is a general lack of cheap customizable tactile sensors in the field, which is hampering the ease of researching applications of robot touch. We aim to develop these types of tactile sensors and make them available through open resources such as the soft robotics toolkit.5

Our sensors are soft, with a compliant modular tip that protects sensitive electronic parts from physical contact with objects. When integrated into robotic grippers, compliant sensors have also been shown to improve grasping.6 Developing soft sensors is also key for safe and comfortable human–robot interaction.3

Advances in multimaterial 3D printing allow researchers to manufacture rapidly prototyped robot hands and sensors with integrated soft surfaces for compliant, adaptable, and sensorized manipulation. Recent work in the application of soft, 3D-printed tactile sensors, for instance, in demonstrating tactile super-resolution,7 extends the scope of these devices from prototypes to useful, working versions of sensors whose materials and morphologies can be quickly, easily, and cheaply adapted to suit different practical applications.

The aim of this study is to present a suite of soft sensors of various morphologies fabricated by 3D printing, including the tactile fingertip (TacTip), which was developed through successive modifications of the original cast-tip version (Fig. 1). The TacTip8 is a low-cost, robust, 3D-printed optical tactile sensor based on a human fingertip and developed at Bristol Robotics Laboratory (BRL). We also introduce the TacTip-M2, TacTip-GR2, and TacCylinder (Fig. 2), derived sensors with more skin-like designs whose fabrication is made possible by multimaterial 3D printing. The TacTip-M2 and TacTip-GR2 are designed for integration in two-fingered grippers, and the TacCylinder for capsule endoscopy.

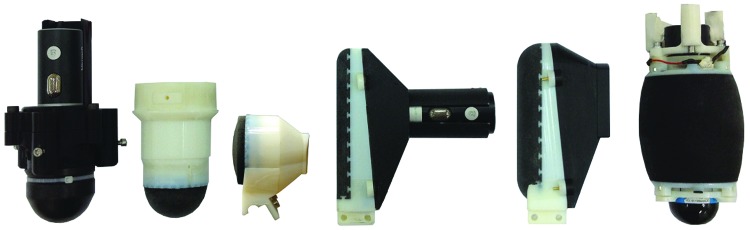

FIG. 1.

TacTip project sensors. From left to right: open-TacTip (original), TacTip (improved), TacTip-GR2, TacTip-M2 (flat), TacTip-M2 (round), and TacCylinder.

FIG. 2.

The TacTip-M2 integrated into the OpenHand model M2 gripper (left), the TacTip-GR2 mounted on the GR2 gripper (middle), and the TacCylinder in a simulated tumor detection experiment (right).

We have open-sourced the open-TacTip (www.softroboticstoolkit.com/tactip) to offer other research institutions the opportunity to adopt and adapt our sensor designs, for instance to create different morphologies, as we have done with the TacCylinder.9 The sensor design can also be modified to integrate with robot hands, as demonstrated with the TacTip-M2 (formerly TacThumb)10 and TacTip-GR2.

This article presents the first comparative study of our suite of soft sensors, all of which are highly accurate: each localizes objects to submillimeter accuracy, demonstrating super-resolved acuity. The high performance of the TacTip family of sensors suggests that analogous designs could yield a range of novel soft tactile sensors with complex morphologies, from regions of tactile skin to tactile feet and proboscises.

Background

Related technologies

Most tactile sensors are soft, comprising at least some compliant elements, and rely on a variety of underlying technologies (e.g., strain-gauge,11 barometric,12 capacitive,13 piezoresistive,14 piezoelectric15 …) to transmit and record tactile information. Here we review existing compliant optical tactile sensors, which relate most closely to our family of TacTip sensors.

At first glance, the design of the Optoforce force/torque sensor (www.optoforce.com) seems closely related to the TacTip, based on its overall shape and design. However, the Optoforce is designed to compute only the overall force and torque of the contact, rather than relaying tactile information that comprises an array of sensor readings across a sensing surface. Force/torque sensing is unlike human touch and inadequate for tasks requiring more information than a force vector, such as multicontact sensing.

An early precursor to the TacTip used a molded transparent dome with a black dotted pattern.16 However, the use of ambient light for imaging the dots has drawbacks, including lack of contrast on objects that obscure the ambient lighting and difficulties for automated tracking of the dots; moreover, the design presents difficulties for image recognition of tactile elements independent of lighting conditions.

A further tactile sensor based on optics is the GelSight sensor,17 which uses colored lights and photometric stereo to reconstruct highly accurate deformations of its surface. This sensor obtains high resolutions, uses inexpensive materials, and can be made into a portable device. While in many ways it represents an excellent, low-cost optical tactile sensor, it presently requires a flat surface and there would be challenges in adapting it to more complex morphologies such as domed fingertips. Similar considerations apply to the GelForce sensor,18 where the sensing surface is a flat elastomer pad.

Other examples include tactile sensors that use an optical waveguide approach,19–21 or patterns of dots22 or lines23 drawn on the inside surface of a flexible skin, tracked by a CCD camera. Some sensors also make use of fiber optics to relay light intensities to the camera,24 allowing the sensor's contact area to be made very small, ideal for medical applications.

All tactile sensors have their pros and cons, and ultimately, the best choice will depend on the application. That being said, an important distinction between the TacTip and the sensors described above is the presence of physical pins attached to the sensing surface; these structures mimic intermediate ridges within the human fingertip, giving a biomimetic basis for the sensor design, as described below.

Inspiration

When the human finger makes contact with an object or surface, deformation occurs in the epidermal layers of the skin and the change is detected and relayed by its mechanoreceptors.1 Chorley et al.8 were inspired to consider the behavior of the human glabrous (hairless) skin, as found on the palms of human hands and soles of our feet. They built on previous research showing that the Merkel cell complex of sensory receptors works in tandem with the morphology of the intermediate ridges (Fig. 3) to provide edge encoding of a contacted surface. The TacTip device seeks to replicate this response by substituting intermediate ridges with internal pins on the underside of its soft, skin-like membrane, with optical pin tracking via an internal camera taking the place of mechanosensory transduction of the sensing surface (Fig. 4).

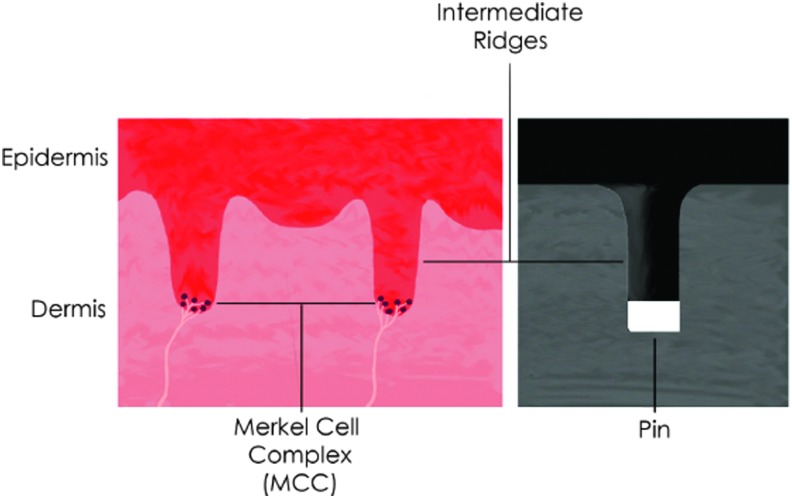

FIG. 3.

Intermediate ridges in the human skin (left) and a corresponding pin in the TacTip (right).

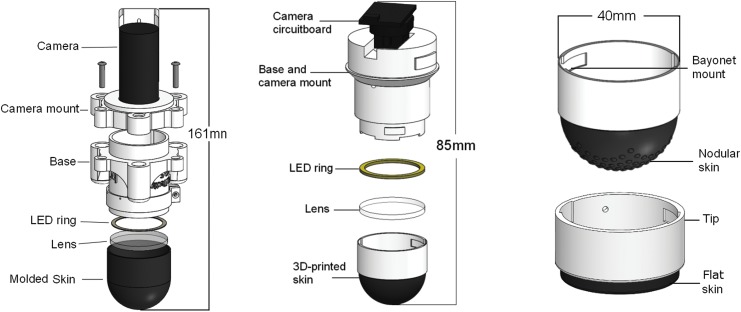

FIG. 4.

Open-TacTip (left): the original version of the sensor comprises a 3D-printed camera mount and base and a cast silicone skin. Improved TacTip (center): the redesigned base houses a reassembled webcam, and modular tips with 3D-printed rubber skin. Modular tips (right): separate modular tips with a nodular fingerprint (above) and flat tip (below).

This biomimetic inspiration was recently extended by exploring the role of an artificial fingerprint on tactile sensing with the TacTip.25 In that study, an artificial fingerprint consisting of a series of outer nodules on the TacTip's skin was shown to enhance high spatial frequency detection. This finding suggests that the inclusion of artificial fingerprints in biomimetic fingertips will improve their ability to perform tactile tasks such as edge perception, contour following, and fine feature classification, with potential implications for object perception and tactile manipulation.

Development

The focus of this section is a description of the developments leading to the TacTip sensors presented here (Fig. 4).

The original TacTip8 is a soft, robust, and high-sensitivity sensor making use of biomimetic methods for active perception. This sensor has been shown to achieve 40-fold localization super-resolution7 and successfully perform tactile manipulation on a cylinder rolling task.26

Further efforts to integrate the TacTip for use with 3D-printed robot hands and grippers have led to the development of the TacTip-M210 and TacTip-GR2 sensors, integrated on the M2 gripper26 and GR2 gripper,27 respectively. These open-source robot hands developed at Yale's GRAB Lab enable the investigation of in-hand tactile manipulation with the TacTip sensors.

The TacCylinder, designed for capsule endoscopy,28 is another adaptation of the TacTip design, expanding the range of tasks that can be tackled with TacTip sensors.

The TacTip is thus an extremely useful research tool, due to its low cost, robustness, and adaptability. It fills the current gap in the field for a cheap, compliant, customizable tactile sensor, and can be applied to a number of challenges in industrial robotics, medical robotics, and robotic manipulation.

Materials and Methods

Open-TacTip

In the original version of the open-TacTip,7,8,29 the base, camera mount, and rigid part of the tip are 3D printed, the skin is cast in VytaFlex 60 silicone rubber, and the pins' tips are (painstakingly) painted white by hand. The tip is filled with optically clear silicone gel (Techsil, RTV27905). In the fabrication of the open-TacTip, emphasis is placed on the low cost of the sensor, straightforward manufacture, and ease of assembly.

To enhance the functionality of the open-TacTip, a series of modifications are made to the original version that was described above. These modifications aim to further reduce cost, minimize the sensor form factor, optimize sensor accuracy, and make the TacTip easier to use and modify. The main modifications to the improved version of the TacTip are summarized in the following section.

TacTip (improved)

3D-printed skin

Rather than casting the TacTip's skin in silicone rubber, we use dual-material rapid prototyping with an Objet 3D printer to create the sensor's rigid base in hard plastic (Vero White) and its soft skin in a rubber-like material (Tango Black+). This lowers the cost and accelerates the creation of new prototype TacTip skins by avoiding the time-consuming mold creation and skin casting/painting stages. In particular, Vero White tips are now printed directly onto the ends of the pins, avoiding the need to paint them. Examples of different types of skins include tips with a fingerprint25 and a rotationally symmetric pin layout.30 Three-dimensional printing also increases the reproducibility of the design: variation in the dimensions of 3D-printed tips is limited only by the accuracy of the 3D printer, whereas molded skins are made by hand, introducing variability in skin thickness between tips. While one would expect 3D-printed skins to be less robust over the long term than their cast counterparts, we have used such skins daily over months with no obvious drop in performance; damage is usually due to human error.

The new printing method also provides the opportunity to add complex features to the sensor's skin. For instance, an exterior fingerprint of rubber nodules was included that mechanically coupled to the white pin tips through rigid internal plastic cores. The creation of this complex skin structure was made possible through the use of multimaterial 3D printing and has been shown to improve perceptual acuity at high spatial frequencies.31

Modularity

To facilitate skin testing and optimization, the skin is printed in a single structure attached to a hard plastic casing (Fig. 4, right panel), forming a tip that connects to the TacTip base with a bayonet mount. The tip (made up of the skin, gel, lens, and plastic casing) is thus a modular component of the sensor, which is easily replaceable or upgradable. By printing tips in this way, it is possible to produce and test different pin layouts and tip structures to optimize the sensor capabilities at a much lower cost than has been possible previously.

We are thus able to update the pin layout to a hexagonal projection of 127 pins with a regular spacing when imaged by the camera, an improvement over the uniform geodesic distribution that had been used in past molded TacTips (Fig. 5). This design gives better performance of the pin tracking algorithms during image processing. We also experimented with different skin thicknesses and pin lengths, eventually converging on a 1 mm thick skin with 2.0 mm long pins for the improved TacTip (3° taper) as a good balance between robustness and sensitivity of the pins to deflection. 3D printing was essential in enabling these trial-and-error experiments requiring extensive hardware testing and leading to an improved sensor design.
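The count of 127 pins is consistent with a centered hexagonal layout: a central pin surrounded by six hexagonal rings gives $1 + 3 \cdot 6 \cdot 7 = 127$ pins. As an illustration only, the sketch below generates such a regular hexagonal grid of pin centers in the image plane using axial coordinates. The function name `hex_pin_layout` and the unit spacing are assumptions, and the projection of this grid onto the domed skin is not modeled.

```python
import math


def hex_pin_layout(rings, spacing):
    """Return (x, y) centers of a centered hexagonal lattice (hypothetical helper).

    rings:   number of hexagonal rings around the central pin.
    spacing: distance between neighbouring pins (arbitrary units).
    """
    points = []
    for q in range(-rings, rings + 1):
        for r in range(-rings, rings + 1):
            s = -q - r
            # Keep axial coordinates that lie inside the hexagon of radius `rings`.
            if max(abs(q), abs(r), abs(s)) <= rings:
                x = spacing * (q + r / 2.0)
                y = spacing * (math.sqrt(3) / 2.0) * r
                points.append((x, y))
    return points


layout = hex_pin_layout(rings=6, spacing=1.0)
print(len(layout))  # 127 = 1 + 3 * 6 * 7
```

Every pin in this grid has its nearest neighbors at exactly one spacing, which is the regularity that eases pin tracking once the layout is imaged by the camera.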

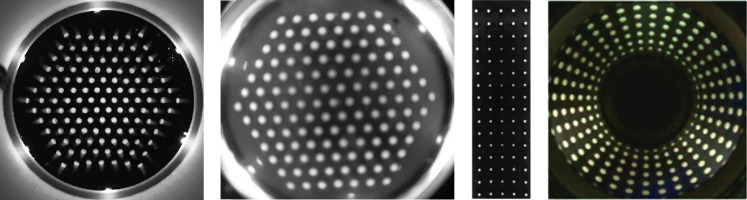

FIG. 5.

Raw images from the TacTip family of sensors. From left to right: improved TacTip, TacTip-GR2, TacTip-M2, and TacCylinder. The TacTip images pins with a Microsoft Cinema HD webcam, whereas the TacTip-GR2 uses a Raspberry Pi spycam (Adafruit) and a fisheye lens to reduce the sensor's form factor. The TacCylinder uses a catadioptric mirror to achieve 360° vision.

Shorter sensor

A more compact sensor is both easier to deploy in practical scenarios and easier to integrate with a wider range of robot hands and arms. Another important benefit is reduced torque on the base if the sensor is struck laterally, which would be an issue for a long sensor. Thus, we take apart the webcam, retaining only the essential components, and reconnect its circuit boards in a horizontal arrangement (Fig. 4). This shortens the sensor along its long axis, reducing the TacTip's height by approximately a factor of 2 (from 161 to 85 mm). The base and camera mount are also combined into a single piece to simplify the overall sensor design (Fig. 4, middle panel). This sensor design is ideal for mounting as an end-effector on an industrial robot arm, which has been the preferred platform for investigating tactile perception and control in our laboratory.7,31–33
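To make the lever-arm argument concrete: if a lateral force $F$ acts at the tip and the base is treated as the pivot, the torque on the base is $\tau = F h$, so reducing the height $h$ from 161 to 85 mm reduces the torque in the same proportion, to about 53% of its original value. The sketch below assumes this simple rigid-lever model, and the 5 N force is a hypothetical value chosen for illustration.

```python
def base_torque(force_n, height_mm):
    """Torque (N·m) about the base from a lateral force applied at the tip.

    Assumes a rigid sensor pivoting at its base (simplified lever model).
    """
    return force_n * height_mm / 1000.0  # convert mm to m


force = 5.0  # hypothetical lateral force in newtons
original = base_torque(force, 161)
shortened = base_torque(force, 85)
print(f"{original:.3f} N·m -> {shortened:.3f} N·m (ratio {shortened / original:.2f})")
```

Because the torque is linear in height under this model, the ratio does not depend on the assumed force.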

TacTip-GR2

A version of the TacTip created for integration onto the GR2 gripper,27 the TacTip-GR2 combines the design features of the TacTip sensor with a reduced overall form factor (44 mm; Table 1). A smaller camera (Adafruit spy camera for Raspberry Pi) and fisheye lens replace the Microsoft LifeCam HD webcam, to enable this reduction in size.

Table 1.

Details of Pin Properties for Each Sensor of the TacTip Family

| Sensor | Sensor dimensions (mm) | Number of pins | Pin dimensions (mm) |
|---|---|---|---|
| TacTip | 40 × 40 × 85 | 127 | 1.2 × 2.0 |
| TacTip-GR2 | 40 × 40 × 44 | 127 | 1.2 × 2.3 |
| TacTip-M2 | 32 × 102 × 95 | 80 | 1.5 × 2.1 |
| TacCylinder | 63 × 63 × 82 | 180 | 3.0 × 3.0 |

The pin layout of the modular tips is maintained for this version of the TacTip, but a flatter skin component creates more space between the gripper's fingers, allowing larger objects to be grasped by the gripper.

This flatter skin (Fig. 4) leads to a change in sensor dynamics, with a smaller volume tip reducing the pin deflections but increasing the contact surface area for flat objects.

TacTip-M2

Applying TacTip design principles to the OpenHand model M2 gripper,26 we created the TacTip-M2,10 an elongated tactile thumb (Fig. 6) for application to in-hand dexterous manipulation of an object using only tactile feedback as guidance. We believe tactile manipulation to be an essential component in allowing robots to effectively interact with objects in complex, dynamic environments. The M2 gripper was chosen for integration as it is 3D printed and open source, has good grasping capability, and provides an opportunity for simple tactile manipulation to be investigated along one dimension.

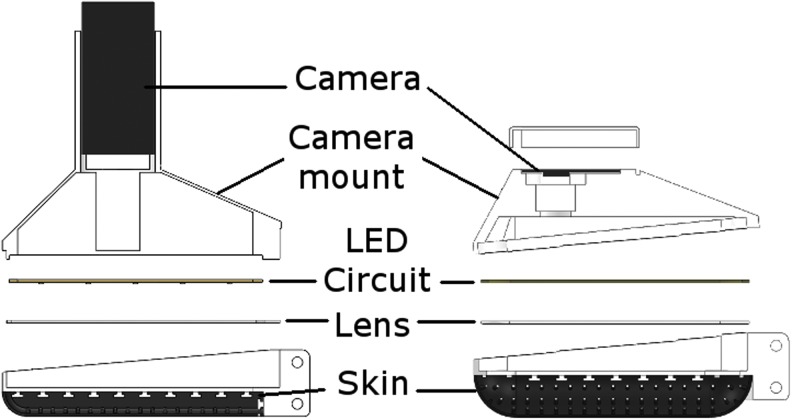

FIG. 6.

The original TacTip-M2 (left) is used when the form factor is nonessential and the improved TacTip-M2 (right), more compact, is designed for integration onto the OpenHand M2 gripper.

As with the TacTip, the TacTip-M2 is fabricated through multimaterial 3D printing and has regularly spaced rows of pins on the inside surface of its skin. The TacTip-M2 features both an original version for deployment where form-factor is not an issue (e.g., for mounting at the end of a robot arm) and an improved, more compact version for integration on the M2 gripper featuring a rearranged webcam and macro lens.

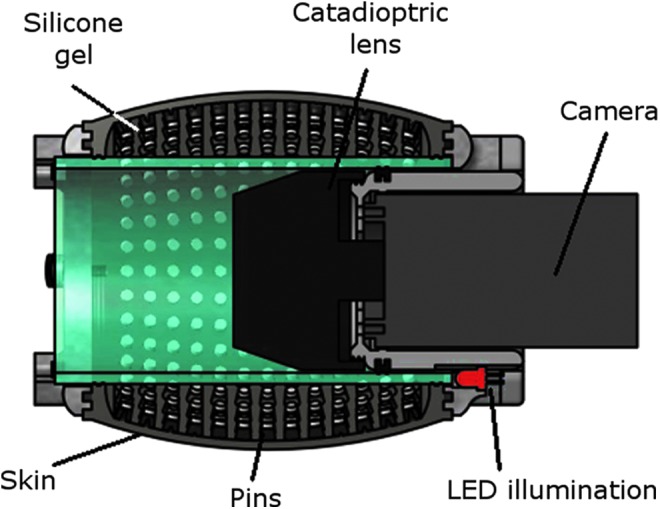

TacCylinder

The TacTip has been adapted with a catadioptric mirror system to provide a 360° cylindrical tactile sensing surface (Fig. 7), forming the TacCylinder sensor.

FIG. 7.

The TacCylinder is designed for capsule endoscopy. The cylindrical design comprises a 3D-printed cylindrical skin and catadioptric mirror system to achieve 360° tactile sensing.

The TacCylinder is designed for capsule endoscopy, providing remote tactile sensing capabilities within the gastrointestinal tract.28 In capsule endoscopy, the patient swallows a pill-sized device that travels through the intestines, visually surveying the lumen for suspicious indications of ill health.

The TacCylinder is a larger sensor than the TacTip and thus contains more pins of larger dimensions (Table 1). A tube through its center holds the camera and a 360° mirror system. Filling the inside cavity of the sensor with the optically clear silicone gel is further aided by integrated 3D-printed O-ring-type seals.

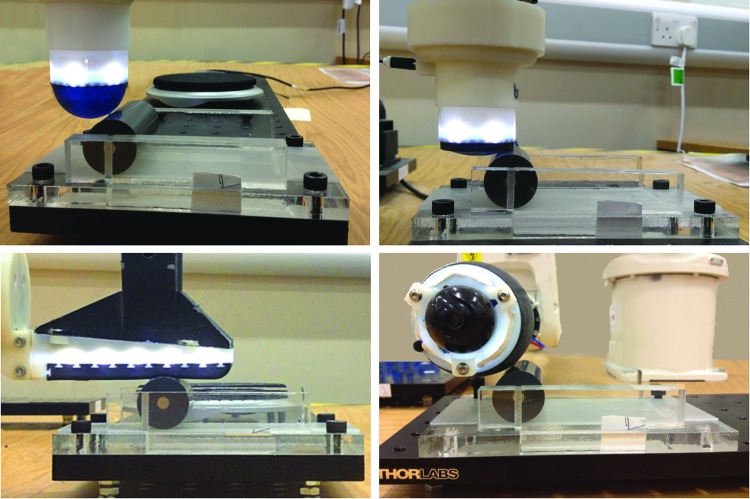

Experimental setup and data collection

For validation of perceptual performance, we test the TacTip, TacTip-GR2, TacTip-M2, and TacCylinder sensors on the same cylinder rolling task to evaluate the localization performance of each sensor. Note that the TacTip-GR2 is mounted on the standard TacTip body for convenience (Fig. 8). This experiment was chosen because it is simple to set up for all the tactile sensors and is compatible with the range of different designs, morphologies, and uses of the sensors, from manipulation tasks with robot hands to contacting objects with stand-alone sensors.

FIG. 8.

The TacTip, TacTip-GR2, TacTip-M2, and TacCylinder mounted on the ABB robot arm, with the 25 mm diameter cylinder being rolled over a 72 mm range.

The sensors are attached as end-effectors to a 6-DOF ABB IRB120 industrial robot arm (Fig. 8), and brought into contact with a 25 mm diameter cylinder, by lowering the sensor's tip to 3 mm below its first point of contact with the cylinder. The cylinder is then horizontally rolled, with a custom platform constraining movements to one dimension (defined as y). The platform consists of a flat Perspex bottom plate, with two Perspex walls that constrain the cylinder to move along them (Fig. 8). A rubber surface is added to the bottom plate to ensure the cylinder does not slip while rolling. Magnets mounted at the ends of the cylinder and one end of the roller provide a home position for the cylinder.

The cylinder is rolled forward in a nonslip motion in 0.1 mm increments over a 72 mm range, totaling 720 different locations along the y-axis.

At each location, 10 images are recorded (640 × 480 pixels [px], sampled at ∼20 fps). These images are then filtered and thresholded in OpenCV (www.opencv.org), and the pin center coordinates are detected using a contour detection algorithm. Each pin is identified based on its proximity to a default set of pin positions recorded when the sensor is not in contact with the cylinder; if no pin is detected within a radius of 20 px of its default position, the position from the previous frame is used instead. A time series of x- and y-deflections of the sensor's pins is then extracted, with each deflection treated as an individual taxel input. Several frames are collected to reduce noise arising from the pin detection algorithm and minor displacements of the sensor.
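The pin-identification rule above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the OpenCV filtering, thresholding, and contour-detection steps are omitted, and we assume the detected pin centroids for each frame are already available as an array. The function names `track_pins` and `deflections` are hypothetical. Each default pin takes its nearest detection if that detection lies within 20 px; otherwise the pin keeps its position from the previous frame.

```python
import numpy as np


def track_pins(default_xy, detections, prev_xy, radius=20.0):
    """Assign detected centroids to pins by proximity to their default positions.

    Hypothetical helper. default_xy: (P, 2) no-contact pin positions in px;
    detections: (D, 2) centroids found in the current frame (assumed already
    extracted, e.g. with OpenCV contour detection); prev_xy: (P, 2) positions
    from the previous frame, used when no detection lies within `radius` px.
    Note: this simple version does not stop two pins claiming one detection.
    """
    current = np.array(prev_xy, dtype=float)
    if len(detections) == 0:
        return current
    # Distance from every default pin position to every detection: (P, D).
    dists = np.linalg.norm(default_xy[:, None, :] - detections[None, :, :], axis=2)
    nearest = dists.argmin(axis=1)
    found = dists[np.arange(len(default_xy)), nearest] <= radius
    current[found] = detections[nearest[found]]
    return current


def deflections(frames_xy, default_xy):
    """Flatten per-frame pin positions (F, P, 2) into taxel vectors (F, 2P).

    The first P columns are x-deflections, the last P are y-deflections.
    """
    d = np.asarray(frames_xy, dtype=float) - default_xy
    return np.concatenate([d[..., 0], d[..., 1]], axis=1)


# Usage: two pins, only the first one detected in this frame.
default = np.array([[100.0, 100.0], [140.0, 100.0]])
pos = track_pins(default, np.array([[103.0, 98.0]]), prev_xy=default)
taxels = deflections(pos[None], default)
```

Stacking the 10 frames recorded at one location in this way yields one row per time sample and $2 \times$ (number of pins) columns, matching the time-series layout used in the Data Processing section.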

These data are collected twice for use as distinct training and test sets for offline cross-validation (see the Validation section), ensuring results are obtained from sampling on an independent set from the training data.

Data processing

Formally, data are in the form of contact data $z$ encoded as a multidimensional time-series of sensor values

$$z = \{\, s_k(j) : 1 \le j \le N_t,\ 1 \le k \le N_d \,\},$$

with indices $j$ and $k$ labeling the time sample and data dimension, respectively. In our case, 10 frames are gathered per location, thus $N_t = 10$, and we consider the x- and y-deflections of each of the pins as separate dimensions $k$, with $N_d = 2N_{\text{pins}}$. These contact data give evidence for the present location class $y_l$, $1 \le l \le N_{\text{loc}}$, considered one of a set of distinct punctual locations (here $N_{\text{loc}} = 720$ locations spanning 72 mm are used).

The location likelihoods $P(z \mid y_l)$ use a measurement model of the training data for each location class $y_l$:

$$\log P(z \mid y_l) = \frac{1}{N_t N_d} \sum_{j=1}^{N_t} \sum_{k=1}^{N_d} \log P_k\big(s_k(j) \mid y_l\big),$$

constructed by assuming that all data dimensions $k$ and samples $j$ within each contact are independent (so individual log likelihoods sum). Here this sum is normalized by the total number of data points $N_t N_d$ to ensure that the likelihoods do not scale with the number of samples in a contact.

As with previous work on robot tactile perception,34,35 the probabilities $P_k(s_k \mid y_l)$ are found with a histogram method applied to training data for each location class $y_l$. The sensor values $s_k$ for data dimension $k$ are binned into $N_{\text{bins}}$ equal intervals $I_b$, $1 \le b \le N_{\text{bins}}$, over their range. The sampling distribution is given by the normalized histogram counts $n_{kl}(b)$ for training class $y_l$:

$$P_k(s_k \mid y_l) = P_k(b \mid y_l) = \frac{n_{kl}(b)}{\sum_{b'=1}^{N_{\text{bins}}} n_{kl}(b')}, \qquad s_k \in I_b,$$

where $n_{kl}(b)$ is the sample count in bin $b$ for dimension $k$ over all training data in class $y_l$.

Technically, the likelihood is ill-defined if any histogram bin is empty, which is fixed by regularizing the bin counts with a small constant $\epsilon$.
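A minimal NumPy sketch of this histogram measurement model and the normalized log likelihood is given below. It is an illustration under stated assumptions rather than the code used in this study: bin edges span each dimension's range over all training data, test values outside that range are clipped into the end bins, the regularizer is added to every bin count, and the values `n_bins=20` and `eps=1e-3` in the usage lines are arbitrary choices. The function names are hypothetical.

```python
import numpy as np


def bin_index(values, edges):
    """Map sensor values (..., N_d) to bin indices b, clipping to the outer bins."""
    n_bins = edges.shape[1] - 1
    idx = np.empty(values.shape, dtype=int)
    for k in range(values.shape[-1]):
        idx[..., k] = np.clip(np.digitize(values[..., k], edges[k]) - 1, 0, n_bins - 1)
    return idx


def fit_histograms(train, n_bins, eps):
    """Estimate log P_k(b | y_l) from training data of shape (L, N_t, N_d)."""
    n_classes, _, n_dims = train.shape
    # Equal-width bins spanning each dimension's range over all training data.
    edges = np.linspace(train.min(axis=(0, 1)), train.max(axis=(0, 1)), n_bins + 1, axis=1)
    idx = bin_index(train, edges)
    log_p = np.empty((n_classes, n_dims, n_bins))
    for c in range(n_classes):
        for k in range(n_dims):
            counts = np.bincount(idx[c, :, k], minlength=n_bins) + eps  # regularize empty bins
            log_p[c, k] = np.log(counts / counts.sum())
    return edges, log_p


def log_likelihoods(z, edges, log_p):
    """Normalized log P(z | y_l) for every class; z has shape (N_t, N_d)."""
    n_t, n_d = z.shape
    idx = bin_index(z, edges)
    # Advanced indexing gives log P_k(s_k(j) | y_l) with shape (L, N_t, N_d).
    per_point = log_p[:, np.arange(n_d), idx]
    return per_point.sum(axis=(1, 2)) / (n_t * n_d)


# Usage on synthetic data: 3 location classes, 10 frames, 4 dimensions.
rng = np.random.default_rng(0)
train = np.stack([10.0 * c + rng.normal(0.0, 1.0, (10, 4)) for c in range(3)])
edges, log_p = fit_histograms(train, n_bins=20, eps=1e-3)
scores = log_likelihoods(10.0 + rng.normal(0.0, 1.0, (10, 4)), edges, log_p)
```

Storing log probabilities rather than raw counts turns the product over independent data points into a sum, and dividing by $N_t N_d$ implements the normalization described above.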

Validation

Sensor validation provides an analysis of localization accuracy and algorithm performance using cross-validation performed after data collection. Two sets of data, termed training and test, are gathered for cross-validation. Contact data $z_t$ are then sampled from the test set and classified according to a maximum likelihood approach, identifying the location $y_l$ with the maximal location likelihood $P(z_t \mid y_l)$ for that contact:

$$y_{\text{dec}} = \arg\max_{y_l} P(z_t \mid y_l).$$

The mean absolute error for each location class, $e_{\text{loc}}(y_l) = \langle |y_l - y_{\text{dec}}| \rangle$, is then evaluated over each test run at a given location, with the mean error $\bar{e}_{\text{loc}}$ the average over all locations.
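The decision rule and error measures above can be sketched as follows, assuming the log likelihoods of every test contact under every location class have already been computed into a matrix. The function name `localization_errors` is hypothetical, and the sketch assumes each location class has at least one test contact.

```python
import numpy as np


def localization_errors(log_lik, true_class, positions_mm):
    """Maximum-likelihood decisions and absolute localization errors.

    log_lik:      (T, L) log P(z_t | y_l) for T test contacts and L classes.
    true_class:   (T,) index of the class each test contact came from.
    positions_mm: (L,) location of each class along y in millimetres.
    Assumes every class appears at least once in `true_class`.
    """
    decided = log_lik.argmax(axis=1)  # y_dec for each test contact
    abs_err = np.abs(positions_mm[true_class] - positions_mm[decided])
    # e_loc(y_l): mean absolute error over the test contacts of each class.
    n_classes = len(positions_mm)
    e_loc = np.array([abs_err[true_class == c].mean() for c in range(n_classes)])
    return decided, e_loc, e_loc.mean()


# Usage: three classes spaced 0.1 mm apart; the second contact is misclassified.
positions = np.arange(3) * 0.1
log_lik = np.array([[0.0, -1.0, -2.0], [-2.0, -1.0, 0.0], [-1.0, -2.0, 0.0]])
decided, e_loc, mean_err = localization_errors(log_lik, np.array([0, 1, 2]), positions)
```

For the cylinder task, `positions` would be the 720 locations at 0.1 mm increments, and `mean_err` corresponds to $\bar{e}_{\text{loc}}$.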

Results

Inspection of data

TacTip

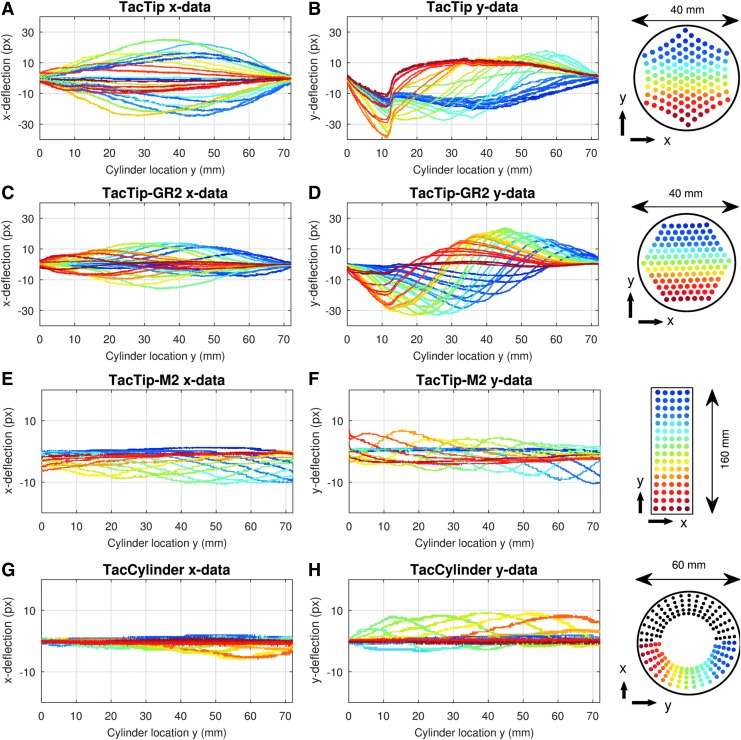

As the TacTip rolls the cylinder across a flat surface in the y direction (as described in the Experimental Setup and Data Collection section), we note the pin deflections in the x-direction (perpendicular to cylinder movement direction) have a regular pattern, with successive rows of pins deflecting outward (deflection reaching −30 to 30 px) and then returning to baseline (Fig. 9A, B). The y-deflections of pins (in the direction of cylinder motion) display an irregular pattern, however, with all pins initially dipping downward before recovering sequentially to baseline positions. This pattern is likely due to initial static friction between the cylinder and the sensor's skin.

FIG. 9.

Tactile data from all four sensors. Data were sampled at 0.1 mm intervals along the 72 mm range of cylinder motion (720 samples). (A, C, E, G) pin displacements along the x-axis and (B, D, F, H) along the y-axis (direction of the cylinder roll). The four right-most panels identify pins for each sensor and display the x- and y-directions.

TacTip-GR2

Data acquired from the TacTip-GR2 produce similar patterns of x- and y-deflections to the TacTip, although deflections are less pronounced (Fig. 9C, D). This is most visible in the x-direction, with an approximate deflection range of −12 to 12 px for the TacTip-GR2 (cf. −30 to 30 px for the TacTip).

We note that the pins that contact the cylinder first (red and orange in Fig. 9) have the largest y-deflections in the TacTip case, whereas in the TacTip-GR2 data, the pins in the middle of the sensor (yellow and green in Fig. 9) are the most deflected in the y direction. This difference is a consequence of the shape of the sensors, with the TacTip's dome-shaped tip creating large y-deflections close to the initial contact. The TacTip-GR2 is mostly flat with a slight bulge around its center, creating a central area in which internal dynamics enable larger deflections. Note that these dome-shaped morphologies also explain the greater deflections of central pins (yellow and green) relative to pins around the sensors' edges (dark red, dark blue).

TacTip-M2

Data from the TacTip-M2 have a regular, repeated sinusoidal pattern, with a deflection range of −9 to 4 px in the x-direction and more pronounced deflections (−14 to 6 px) in the y-direction (Fig. 9E, F). This makes sense as it is the direction of movement of the cylinder, and is also the direction with most freedom of movement for pins, since it corresponds to the sensor's long axis. The sinusoidal pattern arises from the synchronized movement of rows of pins on the TacTip-M2 as the cylinder moves across them.

An asymmetry is also noticeable in both the x- and y-directions, with the magnitude of x-deflections increasing as the cylinder is rolled forward, and peaks of the sinusoidal pattern in the y-deflections gradually migrating downward from +8 to +2 px. This is likely due to the intrinsic mechanical asymmetry of the TacTip-M2, arising from the way in which the skin and base connect at each end of the sensor (Fig. 6).

TacCylinder

Data for the TacCylinder show a regular pattern of deflections (−6 to 2 px in the x-direction), which is greater in the y-direction (−4 to 12 px) (Fig. 9G, H). We only consider pins from the lower half of the TacCylinder, as the pins on the top half are unaffected by contact with the cylinder. We note a slight initial increase and then decrease of the peak amplitudes of deflections in the y-direction, showing that lower pins with more pressure applied are deflected further.

Thus, the data gathered from all four sensors in this experiment show that sensor morphology strongly affects the character and quality of the collected data. The overall pattern of pin deflections, their relative and absolute amplitudes, and the order in which they deflect are all strongly dependent on sensor morphology. The next section explores how these differences affect performance on cylinder localization.

Validation

TacTip

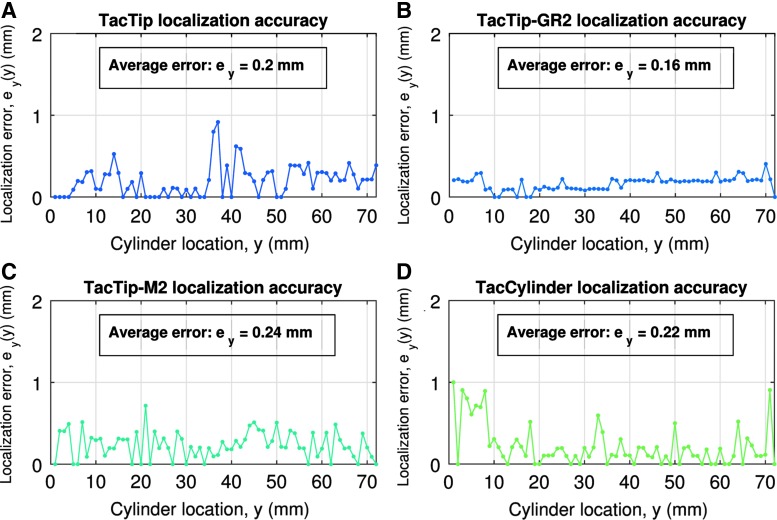

Localization performance of the TacTip is tested using the validation procedure detailed in Materials and Methods (Validation section), and results are summarized in Table 2. The localization accuracy averages $\bar{e}_{\text{loc}} = 0.20$ mm over all location classes (Fig. 10A) and is below 1 mm everywhere. Since the closest pins on the TacTip skin (in the center) are spaced 2.4 mm apart, and the x- and y-deflections of these pins act as taxels, we take the resolution of the sensor to be 2.4 mm. The TacTip therefore demonstrates ∼12-fold super-resolution7 over the cylinder's movement range.

Table 2.

Validation Results for Each Sensor from the TacTip Family

| Sensor | Mean localization error $\bar{e}_{\text{loc}}$ (mm) | Pin spacing (mm) | Super-resolved accuracy |
|---|---|---|---|
| TacTip | 0.20 | 2.4 | 12-fold |
| TacTip-GR2 | 0.16 | 2.4 | 15-fold |
| TacTip-M2 | 0.24 | 3.5 | 15-fold |
| TacCylinder | 0.22 | 4.3 | 19-fold |
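The super-resolution factors in Table 2 correspond to the ratio of pin spacing to mean localization error. The sketch below recomputes these ratios from the tabulated values; the small differences from the reported factors (e.g., about 19.5 rather than 19 for the TacCylinder) likely reflect rounding of the reported mean errors.

```python
# Values from Table 2: (mean localization error in mm, pin spacing in mm).
results = {
    "TacTip": (0.20, 2.4),
    "TacTip-GR2": (0.16, 2.4),
    "TacTip-M2": (0.24, 3.5),
    "TacCylinder": (0.22, 4.3),
}


def super_resolution_factor(mean_error_mm, pin_spacing_mm):
    """Factor by which localization accuracy exceeds the pin (taxel) spacing."""
    return pin_spacing_mm / mean_error_mm


for sensor, (err, spacing) in results.items():
    print(f"{sensor}: {super_resolution_factor(err, spacing):.1f}-fold")
```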

FIG. 10.

Localization accuracy of the four sensors: TacTip (A), TacTip-GR2 (B), TacTip-M2 (C), and TacCylinder (D). The results are shown for 72 location classes, each corresponding to a 1 mm range.

In a previous study,31 the TacTip with cast silicone skin was applied to the cylinder roll task as a demonstration of tactile manipulation along complex trajectories. That study found approximately eightfold super-resolved acuity; thus, our novel 3D-printed TacTip gives a similar order of super-resolved acuity.

TacTip-GR2

The TacTip-GR2's localization accuracy averages $\bar{e}_{\text{loc}} = 0.16$ mm and remains below 0.3 mm over the entire range (Fig. 10B), corresponding to 15-fold super-resolution over the pin spacing of 2.4 mm. We interpret this slight improvement in localization relative to the TacTip sensor as a consequence of the flat surface of the TacTip-GR2 creating a more consistent pattern of pin deflections over the cylinder location range (Fig. 9).

TacTip-M2

Localization accuracy for the TacTip-M2 averages  over all location classes (Fig. 10C), and submillimeter accuracy is evident over the full location range. Internal pins acting as taxels are spaced 3.5 mm apart on the sensor skin in the x- and y-directions. As such, the TacTip-M2 again demonstrates ∼15-fold super-resolution over the cylinder's movement range.

TacCylinder

The TacCylinder's localization error averages 0.22 mm over all location classes (Fig. 10D), with submillimeter accuracy over most of the range of locations (3–72 mm). The higher errors over the initial range (0–7 mm) arise because the TacCylinder is not yet fully in contact with the cylinder there. Pins on the TacCylinder are spaced at least 4.3 mm apart on the sensor skin, so the TacCylinder demonstrates ∼19-fold super-resolution over the cylinder's movement range.

Applications

Manufacturing

The TacTip has been applied to a quality control task with potential applications to car manufacturing. In this study, active touch algorithms were used to identify gap widths to 0.4 mm accuracy, and vertical depth above the gap to 0.1 mm accuracy.32

Thus, mounting the TacTip on an industrial robot arm could offer an accurate and reliable solution for automated quality control on the production line.

Another application of the TacTip sensor is in composite layup (Elkington et al. Using tactile sensors to detect defects during composite layup; unpublished data), where tactile sensing could provide real-time feedback to industrial robots, allowing them to detect defects and irregularities during layup. This is a step toward fully automated composite layup, eliminating the need for costly and time-consuming manual “hand” layup.

The TacTip sensor thus presents solutions to the manufacturing industry to automate and potentially improve on tasks currently carried out manually.

In-hand manipulation

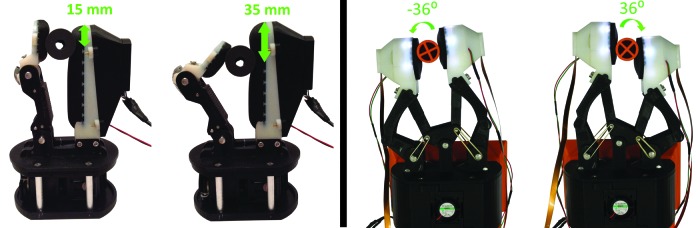

The TacTip-M2 is adapted for use on the M2 gripper,26 allowing objects to be rolled vertically up and down the sensorized gripper over a 20 mm range (Fig. 11, left panel). After training, trajectories can be followed based solely on tactile data, achieving one-dimensional in-hand tactile manipulation.10
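Tactile trajectory following of this kind can be pictured as a feedback loop: the sensor's estimate of the object's location is compared with the current target, and the gripper is driven to reduce the difference. The Python sketch below is a toy simulation under assumptions of our own: a proportional controller, Gaussian noise standing in for the tactile location estimate, and a hypothetical function `follow_trajectory`. The cited work instead relies on a perception model trained on tactile data.

```python
import random

RANGE_MM = (0.0, 20.0)  # rolling range along the M2 gripper, as stated in the text


def follow_trajectory(waypoints_mm, start_mm=0.0, gain=0.5,
                      noise_mm=0.2, steps_per_waypoint=20, seed=0):
    """Simulate tactile-feedback control of an object's position along the gripper.

    Each step estimates the position from a simulated noisy tactile reading,
    then commands a move proportional to the remaining error.
    Returns the true position reached at the end of each waypoint.
    """
    rng = random.Random(seed)
    lo, hi = RANGE_MM
    position = start_mm
    reached = []
    for target in waypoints_mm:
        for _ in range(steps_per_waypoint):
            estimate = position + rng.gauss(0.0, noise_mm)  # stand-in for tactile localization
            position += gain * (target - estimate)          # proportional correction
            position = min(max(position, lo), hi)           # object stays within the range
        reached.append(position)
    return reached
```

For example, `follow_trajectory([5.0, 15.0, 10.0])` moves the simulated object to each waypoint in turn; with a gain below 1 the residual error stays on the order of the estimation noise rather than growing.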

FIG. 11.

Range of movement for the M2 gripper (left) and the GR2 gripper (right) with integrated TacTips.

The TacTip-GR2 integrated in the GR2 gripper can perform in-hand tactile reorientation of objects, a different form of tactile manipulation. Here both mobile fingers are tactile-enabled, rotating objects along a curved trajectory36 (Fig. 11, right panel).

Tactile manipulation tasks allow for the complex and precise handling of objects in-hand. These capabilities will enhance the safety, interactivity, and overall potential of robots in human–robot interaction and in assistive and industrial robotics.

Object exploration

Exploratory tactile servoing has been demonstrated with the TacTip in experiments involving several two-dimensional objects: a circular disk, a volute laminar, and circular or spiral ridges.33 The approach resembled the one used here to validate the sensors, adapted with principles from biomimetic active perception to perceive and control the sensor's orientation and radial location relative to the edge. The control policy rotated the sensor to maintain its orientation and radial location as it moved tangentially along the edge, successfully following the contours of all the tested objects.
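The contour-following policy can be illustrated with a toy simulation. The Python sketch below assumes a circular disk of known radius and replaces tactile perception with exact geometry (`perceive_edge` is a hypothetical stand-in for a trained perception model); at each step the sensor is aligned with the perceived edge, moves a fixed distance tangentially, and corrects its radial offset with a proportional gain. The radius, step length, and gain are illustrative values, not those of the cited study.

```python
import math


def perceive_edge(x, y, radius):
    """Stand-in for tactile perception of a circular disk edge centred at the origin.

    Returns (edge_angle, radial_offset): the direction of the edge tangent
    nearest the sensor, and the sensor's signed distance outside the edge.
    """
    phi = math.atan2(y, x)
    return phi + math.pi / 2, math.hypot(x, y) - radius


def follow_contour(radius=30.0, start=(32.0, 0.0), step=2.0, gain=0.5, n_steps=200):
    """Rotate to the perceived edge, step along it, and correct the radial offset."""
    x, y = start
    path = []
    for _ in range(n_steps):
        edge_angle, offset = perceive_edge(x, y, radius)
        heading = edge_angle              # sensor rotated to align with the edge
        normal = heading - math.pi / 2    # outward normal at the nearest edge point
        # Tangential step along the edge plus a proportional radial correction.
        x += step * math.cos(heading) - gain * offset * math.cos(normal)
        y += step * math.sin(heading) - gain * offset * math.sin(normal)
        path.append((x, y))
    return path
```

With a fixed tangential step, the radial offset settles at a small positive bias, roughly step² / (2 · gain · radius), or about 0.13 mm here, because each straight step slightly overshoots the curved edge.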

This approach combines active perception and haptic exploration into a common active touch algorithm, with the potential to generalize to more complex, 3D tasks. It also relates to human exploratory procedures37 (contour following here), and the control policy could thus be extended to include more of these exploratory procedures (for instance, enclosure to detect volume in a robot hand).

Psychophysics

The TacTip was also used to investigate discrimination-based perception.38 In that study, the TacTip was trained to discriminate between two edges of different sharpness and obtained a just noticeable difference (JND) of 9.2°, close to a previously reported human JND of 8.6°.
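A JND is commonly estimated by measuring the proportion of correct choices at several stimulus differences and finding the difference at which performance reaches a criterion, often 75% correct in two-alternative tasks. The Python sketch below assumes that convention and uses linear interpolation between measured points; the cited study may have used a different criterion or a fitted psychometric function, and `jnd_at_criterion` is a hypothetical helper.

```python
def jnd_at_criterion(differences, proportion_correct, criterion=0.75):
    """Stimulus difference at which proportion correct rises through `criterion`.

    Measurements are sorted by stimulus difference and the first upward
    crossing is located by linear interpolation between neighbouring points.
    Raises ValueError if the data never cross the criterion from below.
    """
    points = sorted(zip(differences, proportion_correct))
    for (d0, p0), (d1, p1) in zip(points, points[1:]):
        if p0 < criterion <= p1:
            return d0 + (criterion - p0) * (d1 - d0) / (p1 - p0)
    raise ValueError("proportion correct never crosses the criterion")
```

For example, with illustrative (not measured) data of 55%, 65%, 85%, and 95% correct at differences of 2°, 4°, 8°, and 16°, the 75% point falls at 6°.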

Future work with the TacTip sensors could further this approach to explore the concept of robo-psychophysics,39 in which human psychophysics experimental approaches are used to evaluate artificial sensors.

Medical applications

Recent work by Winstone et al.28 has shown the ability of the TacCylinder to detect surface deformation of various lumps associated with suspect tissue that could reside within the gastrointestinal tract. These sensing data have been used to create a 3D rendering of the test environment. Current work focuses on discriminating between lump features and tissue density toward more accurate identification of submucosal tumors.9 In parallel, work has been carried out to create a self-contained pneumostatic palpating sensor.40

The TacTip has previously been used as part of a teletaction system for lump detection,41 in which tactile feedback is relayed to the surgeon. More recently, the TacTip's design principles were applied to a pillow used during magnetic resonance imaging (MRI) scans to detect subtle head movements.42 Thus, TacTip sensors hold promise for multiple medical applications, particularly in tumor detection, capsule endoscopy, and MRI scans.

Further applications

Future iterations of TacTip sensors could create novel solutions for known practical problems in robotics, bringing tactile sensing to new areas and applications. The 3D-printed nature of these sensors and the open availability of CAD files and fabrication methods (softroboticstoolkit.com/tactip) enable easy use, adaptation, and improvement of the TacTip sensors. As well as further exploration of the areas described above, novel applications include patches of tactile skin to cover a robot surface or tactile feet to improve walking in bipedal robots.

Discussion

We tested four 3D-printed sensors on a cylinder rolling task: the TacTip, TacTip-GR2, TacTip-M2, and TacCylinder. All four sensors localized the cylinder with submillimeter accuracy and demonstrated more than 10-fold super-resolution. The TacTip-GR2 attained the lowest mean localization error (0.16 mm), possibly because its morphology is the most suited to a rolling task, whereas the TacCylinder achieved the highest super-resolution factor (19-fold) because its pins are more widely spaced.

All the TacTip sensors share the same working principle, yet their different morphologies yield appreciable differences in behavior. These results reinforce the tight link between shape and function in a sensor and show the advantage of 3D-printing techniques, which allow morphological customization. In particular, multimaterial printing enables a full sensor to be 3D printed, including its soft skin, opening up further experimentation with different materials and morphologies. The sensor's compliance could be adjusted for different tasks by modifying the 3D-printed skin material and the Shore hardness of the silicone gel in the tip. Further experiments on parameters such as pin length, pin spacing, and pin width could also identify optimal TacTip designs for specific tasks, and the TacTip-M2 and TacCylinder sensors could be made modular to facilitate these experiments.

Directions for future work include accelerating the data processing algorithm and overall control loop to enable tasks with continuous, uninterrupted motion, and further miniaturizing the TacTip for integration into a wide range of robotic hands.

To encourage the use of the TacTip design principles for new tactile sensing applications, we have open-sourced the hardware on the soft robotics toolkit (www.softroboticstoolkit.com/tactip) along with fabrication instructions. This submission won the “2016 contributions to soft robotics research” prize and aims to provide open access to cheap, customizable tactile sensors, which the field currently lacks. It is our intention that research groups will use and develop TacTip sensors, and take advantage of 3D-printing technologies to apply our design principles to novel sensors and systems of their own devising.

Conclusion

Soft tactile sensors are essential for manipulation tasks and safe human–robot interaction. Our suite of soft biomimetic tactile sensors displays strong super-resolved performance on localization tasks. These sensors provide a basis for future research and innovation in the field of tactile sensing.

Acknowledgments

The authors thank Sam Coupland, Gareth Griffiths, and Samuel Forbes for their help with 3D printing and Jason Welsby for his assistance with electronics. N.L. was supported, in part, by a Leverhulme Trust Research Leadership Award on “A biomimetic forebrain for robot touch” (RL-2016-039), and N.L. and M.E.G. were supported, in part, by an EPSRC grant on Tactile Super-resolution Sensing (EP/M02993X/1). L.C. was supported by the EPSRC Centre for Doctoral Training in Future Autonomous and Robotic Systems (FARSCOPE).

The data used in this article can be accessed in the repositories at http://doi.org/ckcc.

Author Disclosure Statement

No competing financial interests exist.

References

1. Johansson RS, Flanagan JR. Coding and use of tactile signals from the fingertips in object manipulation tasks. Nat Rev Neurosci 2009;10:345–359

2. Dunbar RIM. The social role of touch in humans and primates: behavioural function and neurobiological mechanisms. Neurosci Biobehav Rev 2010;34:260–268

3. Dahiya R, Metta G, Valle M, Sandini G. Tactile sensing—from humans to humanoids. IEEE Trans Robot 2010;26:1–20

4. Kappassov Z, Corrales J, Perdereau V. Tactile sensing in dexterous robot hands—review. Rob Auton Syst 2015;74:195–220

5. Holland DP, Park EJ, Polygerinos P, Bennett GJ, Walsh CJ. The soft robotics toolkit: shared resources for research and design. Soft Robot 2014;1:224–230

6. Natale L, Torres-Jara E. A sensitive approach to grasping. In: Proceedings of the Sixth International Workshop on Epigenetic Robotics, Paris, 2006, pp. 87–94

7. Lepora N, Ward-Cherrier B. Superresolution with an optical tactile sensor. In: IEEE International Conference on Intelligent Robots and Systems (IROS), Hamburg, Germany, 2015, pp. 2686–2691

8. Chorley C, Melhuish C, Pipe T, Rossiter J. Development of a tactile sensor based on biologically inspired edge encoding. In: International Conference on Advanced Robotics (ICAR), Munich, 2009, pp. 1–6

9. Winstone B, Melhuish C, Pipe T, Callaway M, Dogramadzi S. Towards bio-inspired tactile sensing capsule endoscopy for detection of submucosal tumours. IEEE Sens J 2017;17:848–857

10. Ward-Cherrier B, Cramphorn L, Lepora N. Tactile manipulation with a TacThumb integrated on the Open-Hand M2 Gripper. IEEE Robot Autom Lett 2016;1:169–175

11. Engel J, Chen J, Liu C. Development of polyimide flexible tactile sensor skin. J Micromech Microeng 2003;13:359–366

12. Tenzer Y, Jentoft L, Howe R. The feel of MEMS barometers: inexpensive and easily customized tactile array sensors. IEEE Robot Autom Mag 2014;21:89–95

13. Lee H-K, Chung J, Chang S-I, Yoon E. Real-time measurement of the three-axis contact force distribution using a flexible capacitive polymer tactile sensor. J Micromech Microeng 2011;21:35010

14. Beccai L, Roccella S, Ascari L, et al. Development and experimental analysis of a soft compliant tactile microsensor for anthropomorphic artificial hand. IEEE/ASME Trans Mechatronics 2008;13:158–168

15. Seminara L, Capurro M, Cirillo P, Cannata G, Valle M. Electromechanical characterization of piezoelectric PVDF polymer films for tactile sensors in robotics applications. Sens Actuators A Phys 2011;169:49–58

16. Obinata G, Kurashima T, Moriyama N. Vision-based tactile sensor using transparent elastic fingertip for dexterous handling. In: International Symposium on Robotics, vol. 36, Tokyo, 2005, p. 32

17. Johnson M, Adelson E. Retrographic sensing for the measurement of surface texture and shape. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2009, pp. 1070–1077

18. Kamiyama K, Kajimoto H, Kawakami N, Tachi S. Evaluation of a vision-based tactile sensor. In: International Conference on Robotics and Automation (ICRA), vol. 2, New Orleans, LA, 2004, pp. 1542–1547

19. Maekawa H, Tanie K, Komoriya K, Kaneko M, Horiguchi C, Sugawara T. Development of a finger-shaped tactile sensor and its evaluation by active touch. In: Proceedings 1992 IEEE International Conference on Robotics and Automation. IEEE Computer Society Press, 1992, pp. 1327–1334. DOI: 10.1109/ROBOT.1992.220165

20. Ohka M, Kobayashi H, Mitsuya Y. Sensing characteristics of an optical three-axis tactile sensor mounted on a multi-fingered robotic hand. In: 2005 IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE, 2005, pp. 493–498. DOI: 10.1109/IROS.2005.1545264

21. Knoop E, Rossiter J. Dual-mode compliant optical tactile sensor. In: 2013 IEEE International Conference on Robotics and Automation. IEEE, 2013, pp. 1006–1011. DOI: 10.1109/ICRA.2013.6630696

22. Hristu D, Ferrier N, Brockett R. The performance of a deformable-membrane tactile sensor: basic results on geometrically-defined tasks. In: IEEE International Conference on Robotics and Automation, vol. 1, 2000, pp. 508–513

23. Lang P. Optical tactile sensors for medical palpation. Canada-Wide Sci Fair. 2004. Available at: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.93.3020&rep=rep1&type=pdf Accessed March 27, 2017

24. Xie H, Jiang A, Wurdemann HA, Liu H, Seneviratne LD, Althoefer K. Magnetic resonance-compatible tactile force sensor using fiber optics and vision sensor. IEEE Sens J 2014;14:829–838

25. Cramphorn L, Ward-Cherrier B, Lepora NF. Addition of a biomimetic fingerprint on an artificial fingertip enhances tactile spatial acuity. IEEE Robot Autom Lett 2017;2:1336–1343

26. Ma R, Spiers A, Dollar A. M2 Gripper: extending the dexterity of a simple, underactuated gripper. In: Advances in Reconfigurable Mechanisms and Robots II. Beijing: Springer, 2016, pp. 795–805

27. Rojas N, Ma R, Dollar A. The GR2 gripper: an underactuated hand for open-loop in-hand planar manipulation. IEEE Trans Robot 2016;32:763–770

28. Winstone B, Pipe T, Melhuish C, Dogramadzi S, Callaway M. Biomimetic tactile sensing capsule. In: Biomimetic and Biohybrid Systems. Barcelona: Springer, 2015, pp. 113–122

29. Winstone B, Griffiths G, Pipe T, Melhuish C, Rossiter J. TACTIP-tactile fingertip device, texture analysis through optical tracking of skin features. In: Biomimetic and Biohybrid Systems. London: Springer, 2013, pp. 323–334

30. Ward-Cherrier B, Cramphorn L, Lepora NF. Exploiting sensor symmetry for generalized tactile perception in biomimetic touch. IEEE Robot Autom Lett 2017;2:1218–1225

31. Cramphorn L, Ward-Cherrier B, Lepora N. Tactile manipulation with biomimetic active touch. In: International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 2016

32. Lepora N, Ward-Cherrier B. Tactile quality control with biomimetic active touch. IEEE Robot Autom Lett 2016;1:646–652

33. Lepora N, Aquilina K, Cramphorn L. Exploratory tactile servoing with active touch. IEEE Robot Autom Lett 2017;2:1156–1163

34. Lepora N, Martinez-Hernandez U, Prescott T. Active touch for robust perception under position uncertainty. In: IEEE International Conference on Robotics and Automation (ICRA), Karlsruhe, Germany, 2013, pp. 3020–3025

35. Lepora N, Martinez-Hernandez U, Evans M, Natale L, Metta G, Prescott T. Tactile superresolution and biomimetic hyperacuity. IEEE Trans Robot 2015;31:605–618

36. Ward-Cherrier B, Rojas N, Lepora N. Model-free precise in-hand manipulation with a 3D-printed tactile gripper. IEEE Robot Autom Lett 2017;2:2056–2063

37. Lederman SJ, Klatzky RL. Extracting object properties through haptic exploration. Acta Psychol (Amst) 1993;84:29–40

38. Roscow E, Kent C, Leonards U, Lepora NF. Discrimination-based perception for robot touch. In: Springer, Cham, 2016, pp. 498–502. DOI: 10.1007/978-3-319-42417-0_53

39. Delhaye BP, Schluter EW, Bensmaia SJ. Robo-psychophysics: extracting behaviorally relevant features from the output of sensors on a prosthetic finger. IEEE Trans Haptics 2016;9:499–507

40. Hinitt AD, Rossiter J, Conn AT. WormTIP: An invertebrate inspired active tactile imaging pneumostat. In: Springer, Cham, 2015, pp. 38–49. DOI: 10.1007/978-3-319-22979-9_4

41. Roke C, Melhuish C, Pipe T, Drury D, Chorley C. Lump localisation through a deformation-based tactile feedback system using a biologically inspired finger sensor. Rob Auton Syst 2012;60:1442–1448

42. Goldsworthy S, Dapper T, Griffiths G, Morgan A, Mccormack S, Dogramadzi S. Motion Capture Pillow shows potential to replace thermoplastic masks in H&N radiotherapy. Radiother Oncol 2017;123:S38–S39