Abstract

Painful events establish opponent memories: cues that precede pain are remembered negatively, whereas cues that follow pain, thus coinciding with relief, are recalled positively. How do individual reinforcement-signaling neurons contribute to this “timing-dependent valence-reversal”? We addressed this question using an optogenetic approach in the fruit fly. Two types of fly dopaminergic neuron, each comprising just one paired cell, indeed established learned avoidance of odors that preceded their photostimulation during training, and learned approach to odors that followed the photostimulation. This is in striking parallel to punishment versus relief memories reinforced by a real noxious event. For only one of these neuron types, both effects were strong enough for further analyses. Notably, interfering with dopamine biosynthesis in these neurons partially impaired the punishing effect, but not the relieving after-effect of their photostimulation. We discuss how this finding constrains existing computational models of punishment versus relief memories and introduce a new model, which also incorporates findings from mammals. Furthermore, whether using dopaminergic neuron photostimulation or a real noxious event, more prolonged punishment led to stronger relief. This parametric feature of relief may also apply to other animals and may explain particular aspects of related behavioral dysfunction in humans.

Behavior is largely tuned to reinforcers: animals often act to minimize exposure to punishment and maximize gain of reward. A fundamental property of reinforcers is that their effects are double-faced (Konorski 1948; Solomon and Corbit 1974; Solomon 1980; Wagner 1981): an event that is “punishing” triggers a positive affective state of “relief” at its offset. Those cues that coincide with or precede punishment are subsequently avoided (in fruit flies: Tanimoto et al. 2004; Yarali et al. 2008; Vogt et al. 2015); they potentiate startle behavior (in rats and man: Andreatta et al. 2010, 2012) and are verbally reported as having negative emotional valence (in man: Andreatta et al. 2010, 2013). In contrast, those cues or contexts that concur with the relieving cessation of punishment are subsequently approached; they attenuate startle and can—depending on the paradigm—be verbally rated as emotionally positive (Tanimoto et al. 2004; Seymour et al. 2005; Yarali et al. 2008; King et al. 2009; Andreatta et al. 2010, 2012, 2013, 2016; Vogt et al. 2015) (see Gerber et al. [2014] for a cross-species review; see Supplemental Fig. S1 for a meta-analysis of fly data). Likewise, cues that are learned as predictors for a reward versus its termination are, respectively, acted upon appetitively versus aversively (Hellstern et al. 1998; Felsenberg et al. 2013). Understanding this timing-dependent valence-reversal is crucial for comprehending how behavior is molded by reinforcers.

In the fruit fly Drosophila melanogaster, memories about odors that predict electric shock punishment versus relief at shock-offset have been compared to each other in terms of parametric features, genetic effectors and molecular mechanisms as well as neural circuits (Yarali et al. 2008, 2009; Yarali and Gerber 2010; Diegelmann et al. 2013b; Niewalda et al. 2015; Appel et al. 2016). So far, however, a detailed account of the neural circuit only exists for punishment memory (for reviews, see Heisenberg 2003; Gerber et al. 2004; Owald and Waddell 2015; Hige 2018). During odor → electric shock training, the odor evokes combinatorial patterns of activity, first across the olfactory sensory neuron and then the projection neuron layers (for reviews, see Fiala 2007; Gerber et al. 2009; Masse et al. 2009; Wilson 2013). Projection neurons, in addition to innervating the lateral horn, side-branch onto the mushroom bodies, where Kenyon cells (KC) sparsely code for odors (Turner et al. 2008; Honnegger et al. 2011; Campbell et al. 2013; Barth et al. 2014). Electric shock on the other hand, by as yet unidentified means, activates particular dopaminergic neurons, which target large sets of KC at defined compartments along their axons (Schwaerzel et al. 2003; Riemensperger et al. 2005; Tanaka et al. 2008; Mao and Davis 2009; Cohn et al. 2015). Only in those KC that respond to the trained odor, does the odor-evoked rise in intracellular Ca++ concentration coincide with the shock-induced activation of G-protein-coupled dopamine receptors (Han et al. 1996; Kim et al. 2007). This coincidence is detected by Type 1 adenylate cyclase (AC) (Connolly et al. 1996; Zars et al. 2000; McGuire et al. 2003), resulting in cAMP synthesis (Tomchik and Davis 2009) and downstream molecular events, including Protein kinase A activation (Gervasi et al. 2010) and possibly Synapsin phosphorylation (Diegelmann et al. 2013a; Niewalda et al. 2015). 
This eventually leads to a modification of the output synapses at the respective compartments (Hige et al. 2015). When the trained odor is encountered again, owing to these modified Kenyon cell output synapses, it activates the particular downstream mushroom body output neurons (MBONs) to a different extent than before, tipping the behavior with respect to this odor in favor of avoidance (Séjourné et al. 2011; Aso et al. 2014b; Bouzaiane et al. 2015; Hige et al. 2015; Owald et al. 2015). For fly relief memory, the requirement for dopaminergic or other monoaminergic signaling has remained unclear, probably as a result of the poor signal-to-noise ratio of the behavioral scores (Yarali and Gerber 2010). Importantly, however, just as for punishment memory, the site of relief memory formation also seems to be the mushroom body, as both kinds of memory impairment in flies lacking the presynaptic protein Synapsin are fully rescued by restoring this protein only to the KC (Niewalda et al. 2015; see also Supplemental Fig. S2). Common mechanisms for punishment and relief memories have been postulated by two different computational models, based, respectively, on spike-timing-dependent plasticity (STDP) (Drew and Abbott 2006) and coincidence detection by Type 1 AC (Yarali et al. 2012). In both of these models, a single cellular source of dopamine suffices to reinforce both punishment and relief memories. In line with this, Aso and Rubin (2016) (see also Saumweber et al. [2018]) made the striking observation that artificial stimulation of just one paired dopaminergic neuron in the fly brain can establish learned avoidance of odors that precede it and learned approach to those that follow it. Thus, these dopaminergic neurons, identified in various nomenclatures as PPL1-01, PPL1-γ1pedc, or MB-MP1 (Table 1), implement timing-dependent valence-reversal.

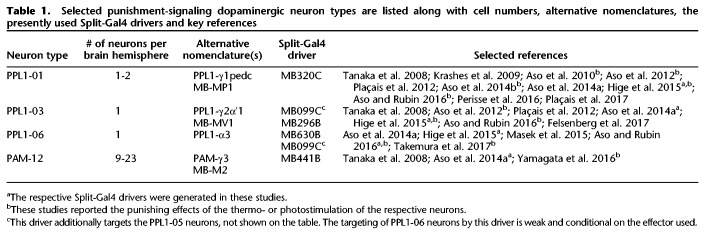

Table 1.

Selected punishment-signaling dopaminergic neuron types are listed along with cell numbers, alternative nomenclatures, the presently used Split-Gal4 drivers and key references

Here, we revisited timing-dependent valence-reversal by fly dopaminergic neurons. Of the four candidate dopaminergic neuron-types we looked at, photostimulation of the PPL1-01 neurons and to a lesser extent the PPL1-06 neurons led to learned avoidance and learned approach for odors that, respectively, preceded and followed photostimulation, in striking parallel to punishment and relief memories reinforced by electric shock. We characterized the PPL1-01-reinforced opponent memories in terms of their strength and stability over time, as well as dependence on intact dopamine biosynthesis in these very neurons. Furthermore, we found that, whether using PPL1-01 neuron photostimulation or electric shock, prolonged punishment led to a larger relief effect. Our results constrain fly circuit models of punishment versus relief memory, and may inspire research on reinforcement processing and its behavioral consequences in rodents and humans.

Results

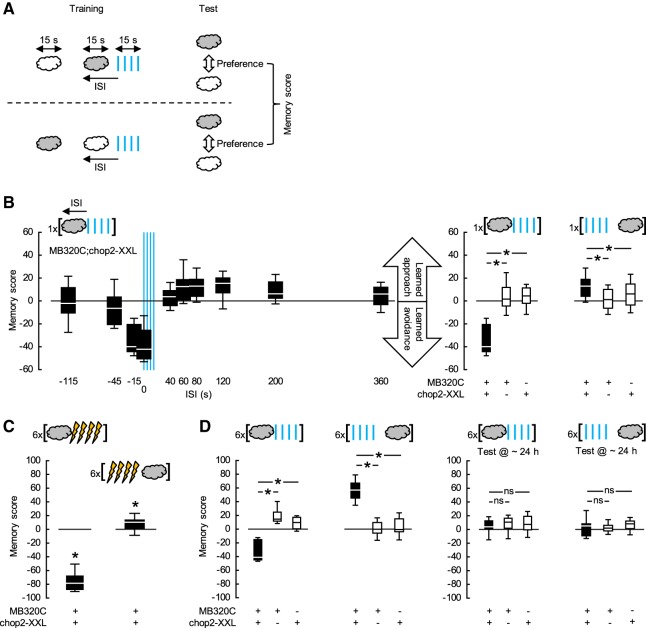

Using the Split-Gal4 driver MB320C (Hige et al. 2015), we expressed the blue-light-gated cation channel channelrhodopsin-2-XXL (chop2-XXL; Dawydow et al. 2014) in the dopaminergic PPL1-01 neurons, thus rendering them receptive to blue light. Flies were trained and tested “en masse” (Fig. 1A; Supplemental Fig. S3). During training, a control odor was presented alone, while a trained odor was paired with pulses of blue light with an onset-to-onset interstimulus interval (ISI) that was varied across groups. Negative ISIs meant that the trained odor started before the blue light; positive ISIs that the order was reversed. After a single episode of training, the flies were given a choice between the two odors. Associative memory scores were calculated on the basis of the preferences of two subgroups of flies trained and tested with swapped roles for two chemicals as the control and trained odor to reflect learned avoidance (<0) or approach (>0) cleared of any innate response bias toward either of the two odors. The memory scores of MB320C;chop2-XXL flies nonmonotonically depended on the ISI (Fig. 1B-left, statistical reports for all experiments are given in the figure legends). When the trained odor was presented long before the blue light (ISI = −115 sec), the flies showed neither learned avoidance nor approach. When the trained odor came shortly before the blue light or overlapped with it (ISI = −15 or 0 sec), it was subsequently strongly avoided. Learned behavior gradually turned from avoidance to approach as the trained odor followed the blue light (ISI = 60, 80, 120, or 200 sec). As the time interval from the blue light to the odor lengthened (ISI = 360 sec), this learned approach diminished. 
Since the blue light is visible to the flies and may in itself be reinforcing to them, it was important to ask whether after optimally timed odor → blue light or blue light → odor training the scores of the experimental genotype significantly differed from those of the genetic controls, as was indeed the case (Fig. 1B-right). This allowed us to conclude that photostimulation of the PPL1-01 neurons establishes learned avoidance versus approach for odors that precede versus follow it (Aso and Rubin 2016).
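
The score calculation and the ISI sign convention described above can be sketched as follows. This is a minimal illustration that assumes the conventional preference index (flies choosing the trained odor minus flies choosing the control odor, as a percentage of all flies counted), averaged over the two reciprocally trained subgroups. The exact formula is not stated in this section, so the formula, the function names and the fly counts are assumptions for illustration only.

```python
def preference(n_trained: int, n_control: int) -> float:
    """Assumed preference index in percent: >0 means the trained odor was chosen more often."""
    total = n_trained + n_control
    if total == 0:
        raise ValueError("no flies counted")
    return 100.0 * (n_trained - n_control) / total


def memory_score(subgroup_a: tuple[int, int], subgroup_b: tuple[int, int]) -> float:
    """Average the preferences of two subgroups trained with swapped odor roles.

    Each subgroup is (flies at trained odor, flies at control odor).
    Negative scores reflect learned avoidance, positive scores learned approach.
    """
    return (preference(*subgroup_a) + preference(*subgroup_b)) / 2.0


def training_order(isi_sec: float) -> str:
    """Onset-to-onset ISI: negative means the odor started before the blue light."""
    if isi_sec < 0:
        return "odor before light"
    if isi_sec > 0:
        return "light before odor"
    return "simultaneous onset"


# Hypothetical counts: chemical X is the trained odor in subgroup A,
# chemical Y is the trained odor in subgroup B.
score = memory_score((30, 70), (40, 60))
print(score, training_order(-15))
```

Averaging over the reciprocal subgroups is what removes innate bias: an innate preference for one chemical raises the preference index in the subgroup where that chemical is the trained odor and lowers it by the same amount in the subgroup where it is the control odor, so the two contributions cancel.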

Figure 1.

Timing-dependent valence-reversal by PPL1-01 neurons. (A) Two subgroups of flies were trained and tested in parallel (for details see Supplemental Fig. S3). For each subgroup, a control odor was presented alone, while a trained odor was paired with pulses of blue light with an onset-to-onset ISI. Negative ISIs meant that the trained odor started before blue light; positive values meant that the order was reversed. Between the subgroups, the roles of two chemicals (gray and white clouds) as the control and trained odor were swapped. Approximately 20 min after training, both subgroups were given the choice between the two odors, and an associative memory score was calculated based on their preferences to reflect learned avoidance (<0) or learned approach (>0). (B) The Split-Gal4 driver MB320C was used to express chop2-XXL in the PPL1-01 neurons and, as detailed in A, flies were trained only once and then tested. (Left) Memory scores of MB320C;chop2-XXL flies depended on the ISI (KW-test: H = 89.71, d.f. = 9, P < 0.0001, N = 15, 15, 12, 12, 13, 19, 69, 19, 24, 32), gradually turning from negative scores when the trained odor preceded the blue light to positive scores when the trained odor followed the blue light. (Right) After odor → blue light training (ISI = −15 sec), the memory scores of the experimental genotype MB320C;chop2-XXL were more negative than those of the genetic controls MB320C and chop2-XXL, indicating learned avoidance (U-tests: MB320C;chop2-XXL versus MB320C: U = 1.00, P = 0.0003; MB320C;chop2-XXL versus chop2-XXL: U = 1.00, P = 0.0003; N = 12, 8, 8). In contrast, after blue light → odor training (ISI = 80 sec), the experimental genotype had more positive scores as compared to the genetic controls, indicating learned approach (U-tests: MB320C;chop2-XXL versus MB320C: U = 384.00, P < 0.0001; MB320C;chop2-XXL versus chop2-XXL: U = 753.00, P = 0.0185; N = 69, 24, 31). 
(C) MB320C;chop2-XXL flies were trained and tested as detailed in A, except that electric shock was used instead of blue light and training was repeated six times with ∼15 min intervals. Approximately 20 min after this repetitive, spaced odor → electric shock training (ISI = −15 sec), memory scores were significantly negative, indicating learned avoidance (OSS-test: P < 0.0001, N = 19). In contrast, repetitive, spaced electric shock → odor training (ISI = 40 sec) led to significantly positive scores, reflecting learned approach (OSS-test: P = 0.0352, N = 16). For electric shock → odor training, ISI = 40 sec was chosen as an optimum based on previous studies (Supplemental Fig. S1). (D) Flies were trained and tested as detailed in A, except that the training was repeated six times, with ∼15 min intervals. (Left) Approximately 20 min after repetitive, spaced odor → blue light training (ISI = −15 sec), scores of MB320C;chop2-XXL flies were significantly more negative than those of the genetic controls, indicating learned avoidance (U-tests: MB320C;chop2-XXL versus MB320C: U = 0.00, P = 0.0002; MB320C;chop2-XXL versus chop2-XXL: U = 0.00; P = 0.0003; N = 11, 9, 8). This learned avoidance was about half as strong as that after parametrically equivalent training with odor → electric shock as presented in C. Approximately 20 min after repetitive, spaced blue light → odor training (ISI = 80 sec), MB320C;chop2-XXL flies had significantly more positive scores than the genetic controls, indicating learned approach (U-tests: MB320C;chop2-XXL versus MB320C: U = 0.00, P < 0.0001; MB320C;chop2-XXL versus chop2-XXL: U = 0.00, P < 0.0001; N = 12, 10, 10). This learned approach was about six times as strong as that after the parametrically equivalent electric shock → odor training as presented in C. 
(Right) Approximately 24 h after repetitive, spaced odor → blue light (ISI = −15 sec) or blue light → odor (ISI = 80 sec) training, the scores of MB320C;chop2-XXL flies did not differ from those of the genetic controls, suggesting complete decay of both kinds of memory established the day before (U-tests: ISI = −15 sec: MB320C;chop2-XXL versus MB320C: U = 27.00, P = 0.4134; MB320C;chop2-XXL versus chop2-XXL: U = 25.00, P = 0.5254; N = 9, 8, 7; ISI = 80 sec: MB320C;chop2-XXL versus MB320C: U = 48.00, P = 0.9097; MB320C;chop2-XXL versus chop2-XXL: U = 31.50, P = 0.4772; N = 10, 10, 8). See Supplemental Figure S4 for significant learned avoidance in MB320C;chop2-XXL flies ∼24 h after repetitive, spaced odor → electric shock training. Box plots show the median, the 25% and 75% quantiles, and the 10% and 90% quantiles as midline, box boundaries and whiskers, respectively. * and ns indicate significance and lack thereof in U- or OSS-tests, where α was adjusted with a Bonferroni–Holm correction to keep the experiment-wide type I error rate at 0.05.
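
The Bonferroni–Holm adjustment named in the legend can be sketched as a step-down procedure. The example below is a minimal illustration, not the authors' analysis code: treating the four U-tests of panel B-right as one family is an assumption, and the reported "P < 0.0001" is represented by its upper bound 0.0001.

```python
def holm_reject(p_values: list[float], alpha: float = 0.05) -> list[bool]:
    """Holm step-down procedure: returns, per test, whether H0 is rejected.

    P-values are visited from smallest to largest; the k-th smallest
    (k = 0, 1, ...) is compared with alpha / (m - k). Testing stops at the
    first non-rejection, and all remaining tests are retained.
    """
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            break
    return reject


# P-values as reported for Figure 1B-right (the family grouping is assumed).
fig_1b_right = [0.0003, 0.0003, 0.0001, 0.0185]
print(holm_reject(fig_1b_right))

# Hypothetical family in which the second-smallest p-value fails its threshold.
print(holm_reject([0.01, 0.04, 0.03]))
```

Under these assumptions all four comparisons of panel B-right remain significant, since the largest p-value (0.0185) is only compared with the unadjusted level of 0.05 once the three smaller ones have passed their stricter thresholds.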

Although the real-world meaning of PPL1-01 photostimulation to the flies is elusive, the timing-dependent opponent memories reinforced by it are in striking parallel to punishment versus relief memories reinforced by a real noxious event, electric shock (Tanimoto et al. 2004; Yarali et al. 2008; see also Supplemental Fig. S1). Nevertheless, one might argue that the offset of PPL1-01 photostimulation mimics a food reward, especially given that inhibition of certain other dopaminergic neurons can be rewarding (Yamagata et al. 2016). In the present set of experiments, this is unlikely, as fully satiated flies approached odors that followed PPL1-01 photostimulation. Reward memories reinforced by food on the other hand typically depend on starvation (Gruber et al. 2013). Also, the rewarding effects of dopaminergic neuron photostimulation or -suppression have been observed in starved flies (Ichinose et al. 2015; Yamagata et al. 2015, 2016) and to the extent tested do not apply to satiated flies (Liu et al. 2012). In the light of this reasoning, we considered the timing-dependent opponent memories reinforced by PPL1-01 neurons to be punishment versus relief memories.

When electric shock is used as a reinforcer, training must be repeated to establish even weak relief memory (Yarali et al. 2008). Nonetheless, MB320C;chop2-XXL flies showed small but significant learned approach toward the odor after only one blue light → odor pairing (Fig. 1B). This prompted us to compare the reinforcement potencies of electric shock versus PPL1-01 photostimulation using repetitive training. When MB320C;chop2-XXL flies were given repeated odor → blue light pairings, the resulting learned avoidance was only about half as strong as that after repeated odor → electric shock pairings (compare Fig. 1C versus Fig. 1D-left). Strikingly, however, after multiple blue light → odor training episodes, the learned approach of MB320C;chop2-XXL flies was about sixfold stronger than that after multiple pairings of electric shock → odor (compare Fig. 1C; Supplemental Fig. S1 versus Fig. 1D-left). In comparison to electric shock, photostimulation of PPL1-01 neurons thus acted as a moderate punishment, whereas the relieving after-effect of this stimulation was massive.

With electric shock as a reinforcer, even multiple training episodes spaced with pauses establish relatively short-lived relief memory, which decays within 24 h (Diegelmann et al. 2013b). We tested whether the strong learned approach in MB320C;chop2-XXL flies upon repetitive spaced blue light → odor training (Fig. 1D-left) could persist up to 24 h. This turned out not to be the case (Fig. 1D-right). Furthermore, learned avoidance in MB320C;chop2-XXL flies upon repetitive spaced odor → blue light training was also completely lost within 24 h (Fig. 1D-right). In contrast, matched experiments using electric shock as a reinforcer yielded weak but significant 24 h-punishment memory (Supplemental Fig. S4). Thus, photostimulation of PPL1-01 neurons in general reinforced short-term memories, but not longer-lasting ones.

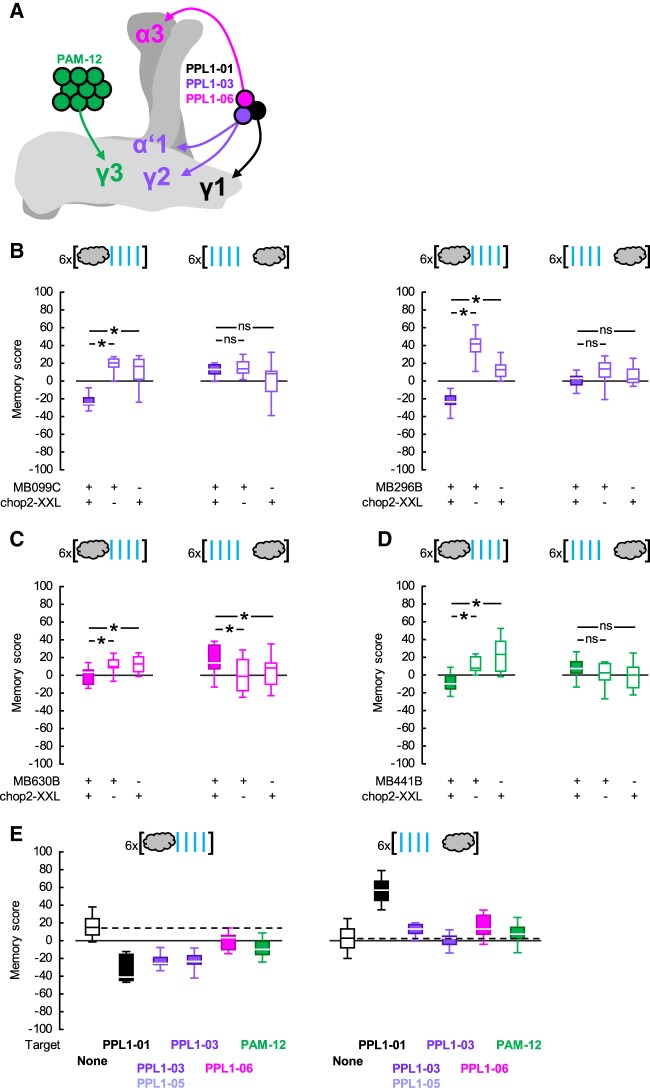

Several dopaminergic neuron types other than PPL1-01 have been reported to reinforce olfactory punishment memories when artificially stimulated (Aso et al. 2010, 2012; Aso and Rubin 2016; Yamagata et al. 2016; Takemura et al. 2017). This raises the question whether these neurons too implement timing-dependent valence-reversal. To address this we used appropriate Split-Gal4 drivers to express chop2-XXL, respectively, in the dopaminergic neurons PPL1-03 (alone or together with another dopaminergic neuron type, PPL1-05), PPL1-06 or PAM-12 (Table 1; Fig. 2A). In each experiment the experimental genotype-scores were compared to those of the respective genetic controls after either repetitive odor → blue light or blue light → odor training (Fig. 2B–D). Figure 2E provides an overview by comparing the memory scores of each experimental genotype to a common pooled data set for all genetic controls, including also the data from Figure 1D-left regarding PPL1-01. Repetitive odor → blue light training resulted in overall positive memory scores in control flies (Fig. 2B–E), which may reflect a mildly rewarding effect of the blue light per se for the flies. Importantly, similar to the case with PPL1-01 (Fig. 1D-left), when PPL1-03, -06 or PAM-12 neurons were targeted, repetitive odor → blue light training led to learned avoidance as compared to the control scores (Fig. 2B–E). Upon repetitive blue light → odor training, no consistent learned behavior was found in control flies (Fig. 2B–E), whereas targeting the PPL1-06 neurons resulted in learned approach (Fig. 2C,E) that was significant albeit markedly weaker than that found upon targeting PPL1-01 neurons (Figs. 1D-left, 2E). Thus, all candidate dopaminergic neuron types tested indeed signaled punishment. If their signals were to some extent additive, this could potentially explain why photostimulation of any individual neuron type was not as potent as electric shock in reinforcing punishment memory. 
Of these four punishment-signaling neuron types, only two, PPL1-01 and to a lesser extent PPL1-06, implemented a timing-dependent reversal to relief. Previously, Aso and Rubin (2016) observed clear timing-dependent valence-reversal only with respect to PPL1-01 neurons, whereas such an effect remained inconclusive for the PPL1-03 neurons and was not studied for PPL1-06 or PAM-12 neurons. In our experiments as well as in previous studies (Aso et al. 2010, 2012, 2014b; Hige et al. 2015; Aso and Rubin 2016; Yamagata et al. 2016; Takemura et al. 2017), the differences in the strength of punishment (or relief) memories reinforced by the photostimulation of various dopaminergic neuron types could well be due to different levels of effector expression supported by the drivers used. Alternatively, the temporal dynamics of punishment (or relief) signaling might differ between dopaminergic neuron types, calling for a full characterization of ISI-learned behavior functions for each dopaminergic neuron type.

Figure 2.

Probing for timing-dependent valence reversal by PPL1-03, -06 or PAM-12 neurons. (A) The dopaminergic neuron types PPL1-01, -03, -06, and PAM-12 are sketched, respectively, in black, purple, pink, and green to show their cell body positions and the regions they innervate in the mushroom body (see also Table 1). The γ, α′/β′, and α/β lobes of the right mushroom body are sketched in light, darker, and yet darker gray, respectively. Please note that PPL1-01 neurons in addition innervate the mushroom body peduncle, not shown on the sketch. (B) (Left) The Split-Gal4 driver MB099C was used to express chop2-XXL in the PPL1-03 and -05 neurons. Upon repetitive odor → blue light training, MB099C;chop2-XXL flies had more negative memory scores compared to genetic controls, indicating learned avoidance (U-tests: MB099C;chop2-XXL versus MB099C: U = 0.00, P = 0.0009; MB099C;chop2-XXL versus chop2-XXL: U = 4.00; P = 0.0039; N = 8, each). In contrast, upon repetitive blue light → odor training, the scores of the MB099C;chop2-XXL flies did not differ from those of the controls (U-tests: MB099C;chop2-XXL versus MB099C: U = 30.00, P = 0.8748; MB099C;chop2-XXL versus chop2-XXL: U = 18.00; P = 0.1562; N = 8, each). (Right) The Split-Gal4 driver MB296B was used to express chop2-XXL in the PPL1-03 neurons. Repetitive odor → blue light training led to more negative scores in the MB296B;chop2-XXL flies than in the genetic controls, indicating learned avoidance (U-tests: MB296B;chop2-XXL versus MB296B: U = 0.00, P = 0.0009; MB296B;chop2-XXL versus chop2-XXL: U = 0.00; P = 0.0015; N = 8, 8, 7). In contrast, upon repetitive blue light → odor training, the scores of the MB296B;chop2-XXL flies were not different from those of the controls (U-tests: MB296B;chop2-XXL versus MB296B: U = 14.00, P = 0.0661; MB296B;chop2-XXL versus chop2-XXL: U = 27.00; P = 0.6365; N = 8, each). (C) The Split-Gal4 driver MB630B was used to express chop2-XXL in the PPL1-06 neurons. 
Repetitive odor → blue light training led to more negative scores in the MB630B;chop2-XXL flies than in the genetic controls, indicating learned avoidance (U-tests: MB630B;chop2-XXL versus MB630B: U = 38.00, P = 0.0193; MB630B;chop2-XXL versus chop2-XXL: U = 49.00, P = 0.0440; N = 14, 12, 13). Upon repetitive blue light → odor training, the MB630B;chop2-XXL flies had more positive scores than the genetic controls, indicating learned approach (U-tests: MB630B;chop2-XXL versus MB630B: U = 121.00, P = 0.0128; MB630B;chop2-XXL versus chop2-XXL: U = 135.00, P = 0.0203; N = 21, 22, 22). (D) The Split-Gal4 driver MB441B was used to express chop2-XXL in the PAM-12 neurons. Repetitive odor → blue light training led to more negative scores in the MB441B;chop2-XXL flies than in the controls, indicating learned avoidance (U-tests: MB441B;chop2-XXL versus MB441B: U = 18.00, P = 0.0021; MB441B;chop2-XXL versus chop2-XXL: U = 14.00; P = 0.0010; N = 13, 11, 11). Upon repetitive blue light → odor training, the MB441B;chop2-XXL scores tended to be more positive than those of the controls; this effect, however, did not reach significance (U-tests: MB441B;chop2-XXL versus MB441B: U = 141.00, P = 0.3700; MB441B;chop2-XXL versus chop2-XXL: U = 116.00; P = 0.0977; N = 19, 18, 18). (E) To provide an overview of the data in Figures 1D-left, 2B–D, the memory scores of all experimental genotypes are shown in comparison to a data set for genetic control scores pooled across experiments. Box plots, * and ns as in Figure 1.

Interestingly, while relief memory scores obtained using electric shock as a reinforcer have a notoriously poor signal-to-noise ratio (Fig. 1C; Supplemental Fig. S1), hindering analysis of this type of memory (Yarali and Gerber 2010; Appel et al. 2016), those obtained by PPL1-01 photostimulation were massive (Fig. 1D-left). We reasoned that this may be due to the prolonged photostimulation of the PPL1-01 neurons in our experiments, since the optogenetic effector we used, chop2-XXL, is characterized by a long open-state (Dawydow et al. 2014). This prompted us to study the effect of punishment duration on the timing-dependent opponent memories, for which we used two complementary approaches.

In a first approach, we used real electric shock as punishment. Wild-type flies were repetitively trained with ISI varying across groups. Electric shock was delivered either as four pulses evenly distributed over ∼15 sec or as 12 pulses spread out over ∼60 sec. Thus the two conditions differed in terms of the number of shock pulses, the net duration of shock and the total period over which punishment was administered. Both 4- and 12-pulses of electric shock reinforced learned behavior that depended on the ISI (Fig. 3-top and -middle). Comparing the ISI-learned behavior functions between the two conditions (Fig. 3-bottom), we indeed observed that with more pulses of electric shock distributed over a longer period, conditioned approach was possible with a wider range of ISIs and was generally stronger. Two additional data sets provided further support for the conclusion that prolonged punishment led to stronger relief memories (Supplemental Fig. S5).

Figure 3.

More electric shock pulses delivered over a longer time lead to stronger relief. Wild-type flies were trained and tested as in Figure 1C, while the ISI was systematically varied across groups and the electric shock was delivered in four pulses spread across 15 sec or as 12 pulses within 1 min. Whether using 4 or 12 pulses of electric shock, the memory scores depended on the ISI (KW-tests: top, four pulses: H = 33.57, d.f. = 3, P < 0.0001, N = 12, 12, 36, 12; middle, 12 pulses: H = 61.42, d.f. = 5, P < 0.0001, N = 12, 11, 12, 24, 12, 12). The median memory scores under both conditions plotted on a common ISI-axis make a comparison possible (bottom): the number of electric shock pulses did not significantly influence the strength of learned avoidance (U-tests: ISI = −15 and 0 sec: U = 61.00 and 43.00, P = 0.5444 and 0.1661), although 12 electric shock pulses seemed to establish learned avoidance with a wider range of ISIs (U-test: ISI = 40 sec: U = 103.00, P = 0.0074). Learned approach, in contrast, was both more pronounced and was possible with a larger ISI-range when a higher number of electric shock pulses were spread across a longer time (U-test: ISI = 80 sec: U = 55.00, P = 0.0030). See Supplemental Figure S5 for two supporting data sets. Box plots as in Figure 1.

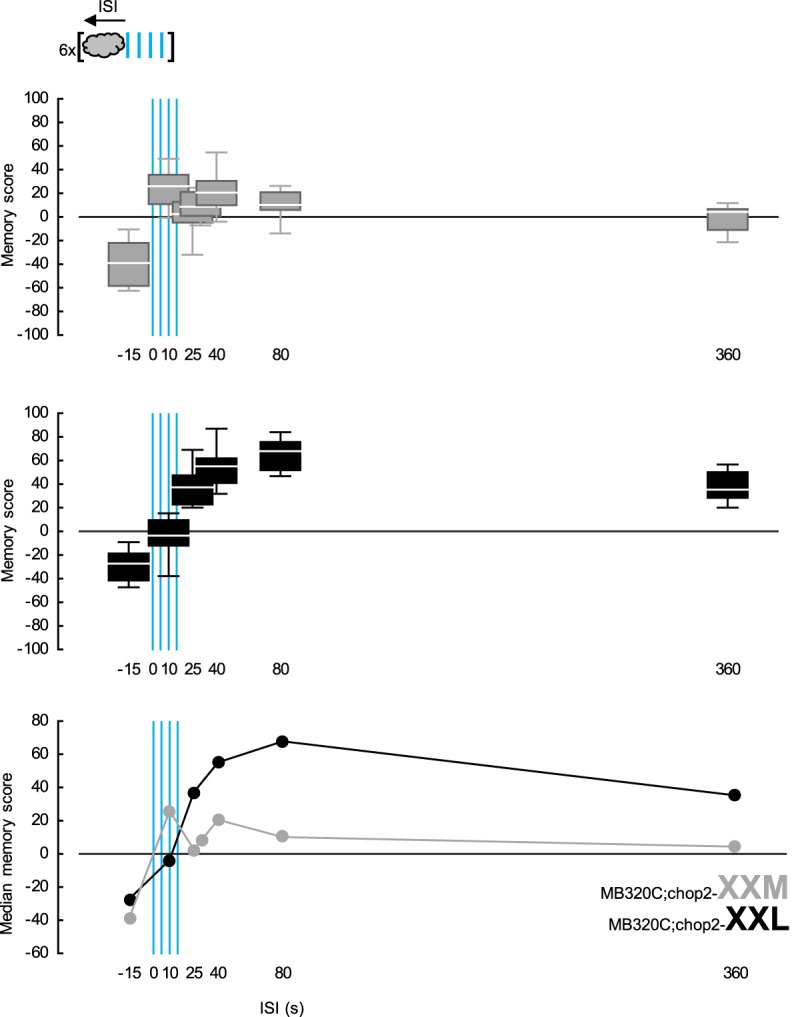

In a second approach, we used PPL1-01 photostimulation as punishment. The optogenetic effector chop2-XXL used in the previous experiments has a notoriously long open-state lifetime (Dawydow et al. 2014), whereas the recently introduced chop2-XXM is faster-closing (Scholz et al. 2017). Experiments in frog oocytes as well as various types of fruit fly neuron suggest that following a brief, seconds-long pulse of blue light, a chop2-XXL-expressing cell will remain depolarized for several tens of seconds, while a chop2-XXM-expressing cell will return to resting state after a few seconds (Dawydow et al. 2014; Scholz et al. 2017). Although these two chop2 variants are yet to be directly compared to each other in fly central nervous system neurons, we hypothesized that they may enable us to photostimulate PPL1-01 neurons for different durations with the same brief blue light presentation. Flies expressing either chop2-XXL or -XXM in the PPL1-01 neurons were thus given repetitive training, while the ISI between odor and the blue light was varied across groups. Regardless of the optogenetic effector used, the memory scores varied significantly with the ISI, highlighting the timing-dependent opponent memories (Fig. 4-top and -middle). Interestingly, when chop2-XXL was used, the range of ISIs supporting learned approach was much wider, and learned approach was generally much stronger (Fig. 4-bottom). This supported the hypothesis that even with a brief blue light application, chop2-XXL enabled a prolonged stimulation of the PPL1-01 neurons and that the offset of this prolonged stimulation acted as a potent relief signal, stronger than that induced by the offset of a real electric shock. More importantly, using either real electric shock or dopaminergic neuron photostimulation, the same rule applied: more prolonged punishment led to stronger relief.

Figure 4.

More prolonged punishing photostimulation of PPL1-01 neurons leads to stronger relief. The Split-Gal4 driver MB320C was used to express either the fast-closing, blue-light-gated cation channel chop2-XXM (gray) or its slow-closing variant chop2-XXL (black) in PPL1-01 neurons. Flies were trained and tested as in Figure 1D-left, while ISI was systematically varied across groups. In both MB320C;chop2-XXM and -XXL flies, memory scores depended on the ISI (KW-tests: top, using chop2-XXM: H = 38.13, d.f. = 6, P < 0.0001, N = 11, 10, 17, 12, 16, 10, 10; middle, using chop2-XXL: H = 52.10, d.f. = 5, P < 0.0001, N = 11, 9, 9, 9, 16, 16). The median memory scores of both genotypes plotted on a common ISI-axis make a comparison possible (bottom): the type of optogenetic effector used did not significantly affect the strength of learned avoidance (U-test: ISI = −15 sec: U = 44.00, P = 0.2934). Learned approach, however, was more pronounced and was possible with a larger ISI-range when chop2-XXL was used (U-tests: ISI = 40, 80 and 360 sec: U = 15.00, 0.00, and 0.00, P = 0.0014, <0.0001, and <0.0001). Box plots as in Figure 1.

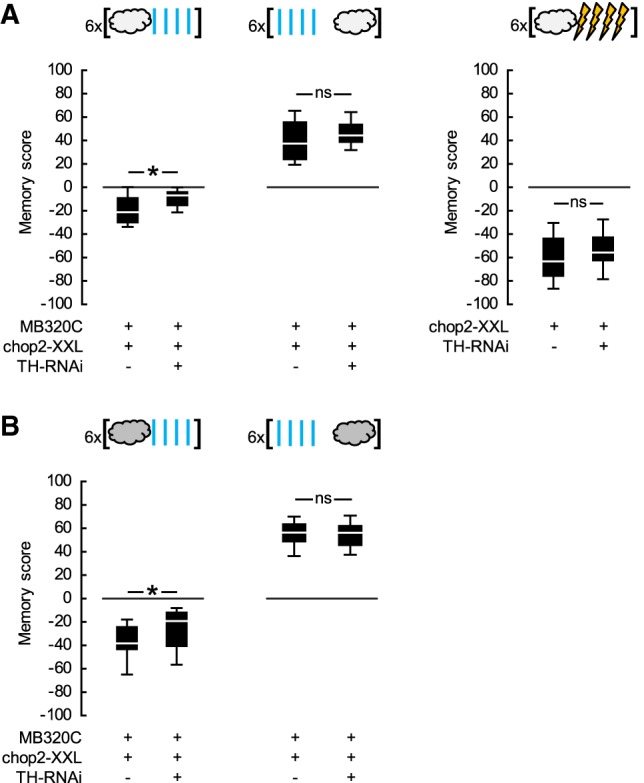

The next question we asked was whether the opponent reinforcement signals mediated by the PPL1-01 neurons rely equally on dopamine within these very neurons. To address this question, we used the Split-Gal4 driver MB320C to express chop2-XXL in the PPL1-01 neurons, either alone or together with an RNAi construct to knock down Tyrosine hydroxylase, the rate-limiting enzyme for dopamine biosynthesis (TH-RNAi; Riemensperger et al. 2013; Rohwedder et al. 2016). In two independent experiments using different odor concentrations, upon repetitive odor → blue light training, the MB320C;chop2-XXL,TH-RNAi flies showed learned avoidance that was about half as strong as that of the MB320C;chop2-XXL flies (Fig. 5A-left,B; see also Supplemental Fig. S6B). This difference in punishment memory scores between the two genotypes was unlikely to be due to differences in learning ability because of genetic background, leaky expression of TH-RNAi or the insertion of the TH-RNAi transgene per se, as effector control flies chop2-XXL versus chop2-XXL,TH-RNAi performed equally well when trained as above, but with real electric shock as reinforcer (Fig. 5A-right). Thus, intact dopamine biosynthesis in the PPL1-01 neurons was indeed necessary for full-fledged punishment memory reinforced by these very neurons. Interestingly, upon repetitive blue light → odor training, learned approach was equally strong in both genotypes used (Fig. 5A-left,B; see also Supplemental Fig. S6B), providing no evidence for a requirement for dopamine biosynthesis in the PPL1-01 neurons in order for them to reinforce relief memory and suggesting that these neurons may rely on another neurotransmitter for this function. If dopaminergic versus other neurotransmission from these neurons were differently sensitive to photostimulation, this may explain why PPL1-01 photostimulation was overly potent in reinforcing relief memories but only moderately so in reinforcing punishment memories.

Figure 5.

PPL1-01-reinforced opponent memories differ in their dopamine biosynthesis requirement in these neurons. (A) (Left) The Split-Gal4 driver MB320C was used to express chop2-XXL either alone or together with an RNAi construct targeting Tyrosine hydroxylase (TH-RNAi) in the PPL1-01 neurons. Flies were trained and tested as in Figure 1D-left, but using lower odor concentrations. Upon repetitive odor → blue light training, MB320C;chop2-XXL,TH-RNAi flies had significantly less negative scores as compared to MB320C;chop2-XXL flies, indicating an impairment in learned avoidance (U-test: U = 60.00, P = 0.0187, N = 15, 16). This impairment was not complete, as each genotype had significantly negative scores (OSS-tests: P = 0.0074 and 0.0005). Upon repetitive blue light → odor training, the memory scores were not different between genotypes (U-test: U = 95.00, P = 0.3328, N = 15, 16), and when pooled they were significantly positive, indicating learned approach (OSS-test: P < 0.0001). (Right) The chop2-XXL effector control flies, with or without the additional TH-RNAi effector, were trained and tested using electric shock instead of blue light. Upon repetitive odor → electric shock training, the scores did not differ between the genotypes (U-test: U = 92.00, P = 0.1809, N = 16, each) and were significantly negative when pooled, indicating learned avoidance (OSS-test: P < 0.0001). (B) Experiment in A-left was repeated but with higher odor concentrations. Upon repetitive odor → blue light training, the MB320C;chop2-XXL,TH-RNAi flies had significantly less negative scores compared to the MB320C;chop2-XXL flies, indicating an impairment in learned avoidance (U-test: U = 145.00, P = 0.0503, N = 18, 25). This impairment was not complete, however, as each genotype had significantly negative scores (OSS-tests: P = 0.0001, each). 
Upon repetitive blue light → odor training, the memory scores were not different between genotypes (U-test: U = 96.00, P = 0.9450, N = 14, 14), and when pooled they were significantly positive, indicating learned approach (OSS-test: P < 0.0001). See Supplemental Figure S6B for a supporting data set. Box plots, * and ns as in Figure 1.

Discussion

A painful event leaves behind two opponent memories: cues that precede pain or overlap with it are remembered negatively, whereas cues that follow pain and thus coincide with relief are recalled positively. Using the fruit fly, we asked whether and how this timing-dependent valence-reversal in associative memory may be rooted in the properties of the individual reinforcement-signaling dopaminergic neurons. We considered four candidate types of fly dopaminergic neuron. Photostimulation of each indeed established learned avoidance of odors that preceded it. Interestingly, just two of these neuron types, PPL1-01 and -06, each comprising only one neuron per brain hemisphere, established learned approach to odors that followed their photostimulation. Only in the case of the PPL1-01 neurons were both effects strong enough to allow further analysis. Most notably, intact dopamine biosynthesis in PPL1-01 neurons turned out to be required for their full-fledged punishing effect, whereas no evidence for such a requirement was found with respect to the relieving after-effect of their photostimulation. This finding cannot be trivially accommodated by the two computational models so far proposed for explaining punishment versus relief memories.

In Yarali et al.’s model (2012), during training, electric shock-induced dopamine transiently activates the Type 1 AC, resulting in a baseline amount of cAMP production in all KC, leading to modification of their output synapses to a downstream avoidance circuit. Odor-induced Ca++ accelerates either the activation or the deactivation of AC, depending on its timing relative to dopamine. Thus, odor → electric shock versus electric shock → odor training result in, respectively, above- versus below-baseline levels of cAMP production and plasticity in the trained odor-coding KC. In a choice test, this translates to learned avoidance versus approach. This model is compatible with existing data on punishment memory and explains relief memory at the same time. However, it relies on dopamine for both kinds of memory, and thus cannot account for our finding that, when reinforced by a single dopaminergic neuron, these memories differ in their requirements for dopamine signaling from that neuron.

In Drew and Abbott's model (2006), odors induce vigorous and persistent spiking activity in KC, while electric shock activates a postsynaptic MBON. Given a baseline synaptic strength and an STDP rule, the synapses from the trained odor-coding KC to the MBON are modified in opposite directions upon odor → electric shock versus electric shock → odor training, which pertain to opposite sequences of pre- and postsynaptic spiking. Since the MBON is assumed to drive olfactory behavior, this translates into opposite learned behaviors. As odor-induced spiking in KC is likely weak and brief (Turner et al. 2008; Honegger et al. 2011), and MBON-spiking is dispensable for the modification of KC-to-MBON synapses during punishment training (Hige et al. 2015), this model is weak in explaining punishment memory. It could explain relief memory, provided there is a strong and persistent MBON-response to electric shock. This in principle could be mediated by the recently discovered dopaminergic neuron-to-MBON connections (in larvae: Eichler et al. [2017]; in adults: Takemura et al. [2017]; see also Sitaraman et al. [2015]). Based on such connections, once an olfactory memory has been formed, dopaminergic neuron activity at test would be expected to impair learned behavior by driving the MBON to a ceiling and precluding its differential response to the trained versus control odors. Indeed, when we established punishment or relief memories using PPL1-01 photostimulation, additionally activating these neurons at test partially impaired the scores (Supplemental Fig. S6A). Interestingly, for this effect of PPL1-01 neurons at test, just like their ability to reinforce relief memory at training, we found no evidence for a requirement for dopamine biosynthesis in these very neurons (Supplemental Fig. S6B). 
This may suggest an additional neurotransmitter at work at the dopaminergic neuron-to-MBON connections, supported also by the multiple types of synaptic vesicle found in dopaminergic neurons (Eichler et al. 2017; Takemura et al. 2017).

The punishment versus relief dichotomy has been studied not only in flies, but also in mammals. When rats are given electric shock → light cue training, the cue subsequently attenuates a typical fear-behavior, the acoustic startle (Andreatta et al. 2012). The formation of relief memory requires intact neuronal activity, dopamine D1-, NMDA-, and endocannabinoid-receptor signaling in the nucleus accumbens (Mohammadi et al. 2014; Mohammadi and Fendt 2015; Bergado Acosta et al. 2017a,b), while its consolidation requires accumbal protein synthesis (Bruning et al. 2016), and its retrieval relies on neuronal activity and dopamine D1-receptor signaling in the same brain region (Andreatta et al. 2012; Bergado Acosta et al. 2017a). Interestingly, in a parallel human paradigm, retrieval of relief memory is accompanied by activity in the ventral striatum (Andreatta et al. 2012). Supporting the role of the mammalian mesolimbic dopamine system in relief memory, rats fail to associate a particular place with pharmacologically induced relief from ongoing pain when dopamine release in the nucleus accumbens is hindered (Navratilova et al. 2012). Learned place preference is in turn established by pairing a place with artificial inhibition of midbrain (dopaminergic) neurons (Schultz 2016). Thus, mammalian midbrain (dopaminergic) neurons, which are most notable for reward processing, seem also to process relief, although the way these neurons deal with punishment remains a matter of controversy (for reviews, see Schultz 2007, 2016; Bromberg-Martin et al. 2010; Navratilova et al. 2015). Both activations and inhibitions in the midbrain have been found in response to punishment. Some studies have related the activations to sensory impact rather than negative valence or have attributed them to nondopaminergic neurons, concluding that the “valence-related” response of midbrain “dopaminergic” neurons to punishment is inhibition only (Ungless et al. 2004; Fiorillo et al. 2013a,b). 
Others have assigned punishment-induced activations versus inhibitions to distinct subsets of midbrain (dopaminergic) neurons (Brischoux et al. 2009; Matsumoto and Hikosaka 2009). Notably, some of the (dopaminergic) neurons inhibited by punishment respond to punishment offset with a rebound activation (Brischoux et al. 2009; Wang and Tsien 2011), probably resulting in dopamine release and activation at target areas (Budygin et al. 2012; Navratilova et al. 2012; Becerra et al. 2013). This rebound dopaminergic neuron activity is indeed an attractive candidate reinforcement signal for relief memory formation in mammals. This concept can also be applied to the fruit fly, yielding a new working model.

In this model (Supplemental Fig. S7), electric shock acts on the interplay of two dopaminergic neurons, “X” and “Y,” each innervating all KC but at different subcellular compartments along their axons. Critically, these dopaminergic neurons mutually inhibit each other as recently suggested by physiological data (Cohn et al. 2015), most likely through indirect connections. During training, when electric shock is presented, this activates dopaminergic neuron X, which in turn inhibits dopaminergic neuron Y to below its baseline level. If the odor is presented at this time, as in odor → electric shock training, only in those KC that respond to this odor and only at the particular compartment that receives dopamine from X, the coincidence of the two signals triggers modification of the KC output synapses to a corresponding MBON (MBON I) in such a way as to enable future learned avoidance. Upon the cessation of electric shock, the activity of X drops back to its baseline level and thus its inhibition on Y is lifted. A critical assumption of the model is that this results in rebound activation in Y. If the odor is presented at this time, as in electric shock → odor training, only in those KC that respond to this odor, and only at the particular compartment that receives dopamine from Y, the coincidence of the two signals triggers modification of the KC output synapses to a different MBON from the one mentioned above (MBON II), in such a way as to enable future learned approach. This model not only agrees with the existing data on punishment memory, but can also accommodate our present key findings: artificial stimulation of X will indeed suffice to reinforce both punishment and relief memories, the former directly by X and the latter indirectly, through Y. 
Furthermore, if the inhibition between X and Y relies not on dopamine but on another transmitter, the punishment memory established through X will indeed require dopamine biosynthesis within X, whereas the relief memory will need dopamine not in X, but in Y.
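The logic of this working model can be illustrated with a small numerical toy. The sketch below is not the model of Supplemental Figure S7 itself: the function names, all parameter values, and in particular the slow "adaptation" variable used to produce the rebound in Y are hypothetical choices made only for illustration. It shows that driving X alone is enough to first suppress Y below its baseline and then, at offset, push Y above it.

```python
def simulate_xy(shock_on, shock_off, t_end, dt=0.1, w=1.0, tau=20.0, y_base=0.2):
    """Toy X/Y pair. Returns (times, x_activity, y_activity) as lists.

    All parameters are illustrative assumptions, in arbitrary units.
    """
    on_step = round(shock_on / dt)
    off_step = round(shock_off / dt)
    adaptation = 0.0  # hypothetical slow variable in Y that produces the rebound
    times, xs, ys = [], [], []
    for step in range(round(t_end / dt)):
        # X is active only while shock (or photostimulation of X) is on.
        x = 1.0 if on_step <= step < off_step else 0.0
        # Y slowly adapts to the inhibition it receives from X.
        adaptation += dt * (w * x - adaptation) / tau
        # Y activity: baseline minus inhibition plus adaptation, floored at zero.
        y = max(0.0, y_base - w * x + adaptation)
        times.append(step * dt)
        xs.append(x)
        ys.append(y)
    return times, xs, ys


def peak_rebound(shock_duration, y_base=0.2):
    """Largest excursion of Y above its baseline after shock offset."""
    shock_on = 10.0
    shock_off = shock_on + shock_duration
    times, _, ys = simulate_xy(shock_on, shock_off, t_end=shock_off + 60.0,
                               y_base=y_base)
    after = [y for t, y in zip(times, ys) if t >= shock_off]
    return max(after) - y_base


if __name__ == "__main__":
    for duration in (5.0, 20.0, 60.0):
        print(duration, round(peak_rebound(duration), 3))
```

In this toy, an odor presented while X is active would coincide with the "punishment" signal, and an odor presented during the rebound of Y would coincide with the "relief" signal. That the rebound grows with stimulation duration here follows from the assumed adaptation variable and should not be read as a prediction derived from the published model.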

The charm of this model is its applicability and testability in both fruit fly and mammalian systems. Comparative analyses of the punishment versus relief dichotomy across phyla can yield and refine overarching models like this one, particularly suited for technological application. Such analyses can also aid our understanding of each separate system. In the fruit fly, for example, we found that whether using a real noxious event such as electric shock or the artificial stimulation of an appropriate dopaminergic neuron such as PPL1-01, prolonged punishment leads to more pronounced relief. This parametric feature of relief may apply also to rodents or even humans and may explain certain aspects of related dysfunction, such as the typically drawn-out nature of self-cutting in nonsuicidal self-injury, which may aim at yielding a stronger state of relief (Franklin et al. 2013).

Materials and Methods

Drosophila melanogaster were kept in mass culture on standard cornmeal-molasses food at 60%–70% relative humidity and 25°C temperature under a 12 h: 12 h light: dark cycle. For the memory assays, 1- to 3-d-old adults were collected in fresh food bottles and kept under the same culture conditions except at 18°C temperature (25°C in Fig. 3), at least overnight and at most until they were 4-d-old. Experimental and control genotypes in Figures 1, 2, 4, 5; Supplemental Figures S4 and S6 were treated likewise but kept in darkness throughout.

The Split-Gal4 driver strains MB099C, MB296B, MB320C, MB441B, and MB630B were from the Fly Light Split-GAL4 Driver Collection, gift of G. Rubin, HHMI Janelia Research Campus. Detailed information on these can be found on the respective database (http://splitgal4.janelia.org/cgi-bin/splitgal4.cgi) as well as in Aso et al. (2014a); Hige et al. (2015); and Aso and Rubin (2016). The effector strains UAS-chop2-XXL and UAS-chop2-XXM were gifts from R. Kittel, University of Würzburg. Their properties were reported in Dawydow et al. (2014) and Scholz et al. (2017). The double-effector strain UAS-chop2-XXL,TH-RNAi was generated using UAS-chop2-XXL and UAS-TH-RNAi strains (Bloomington Stock Center #25796; Riemensperger et al. [2013]; Rohwedder et al. [2016]). In order to obtain experimental genotypes, females of the effector strains were mated to males of the driver strains. Driver controls were generated by mating females of a white null-mutant strain (w1118; Hazelrigg et al. 1984) to the males of the respective driver strains, whereas effector controls were the progeny of females of the respective effector strains and males of a strain bearing an enhancerless Gal4 insertion (Hampel et al. 2015; Hige et al. 2015; Aso and Rubin 2016).

Memory assays took place at 23°C–25°C temperature and 60%–80% humidity. Training took place in red light, test in darkness (for Fig. 3; Supplemental Fig. S5, white room light was used throughout). As odorants, 50 µL benzaldehyde (BA) and 250 µL 3-octanol (OCT) (CAS 100-52-7, 589-98-0; both from Fluka) were applied undiluted to 1 cm-deep Teflon containers of 5 and 14 mm diameter, respectively. In Figure 5A, the odors were diluted 100-fold in paraffin oil (AppliChem, CAS: 8042-47-5). The flies were trained and tested en masse, in cohorts of ∼100. We used either one or six repetitions of training as depicted in Supplemental Figure S3. At time 0:00 min, the flies were gently loaded into the experimental setup (CON-ELEKTRONIK). From 4:00 min on, the control odor was presented for 15 sec. Blue light or electric shock was applied from 7:30 min on (from 6:30 min on for the ISI = 360-sec group in Fig. 1B and throughout Fig. 4). In those experiments where electric shock duration was varied (Fig. 3; Supplemental Fig. S5), the offset of electric shock was kept constant across groups, while the onset was adjusted accordingly. To apply blue light, we used 2.5 cm-diameter and 4.5 cm-length hollow tubes, with 24 LEDs of 465 nm peak wavelength mounted on the inner surface. These tubes fitted around transparent training tubes harboring the flies. Blue light was applied as four pulses, each 1.2-sec long and followed by the next pulse with a 5 sec onset-to-onset interval. The absolute irradiance in the middle of the training tube during blue light pulses was 200 µW/cm² as measured with an STS-VIS Spectrometer (Ocean Optics). To apply electric shock, we used training tubes of 1.5 cm inner diameter and 9 cm length, coated inside with a copper wire coil harboring the flies. Either 4, 6, 12, or 24 pulses of 100 V were given; each pulse was 1.2 sec long and was followed by the next pulse with an onset-to-onset interval of 5 sec. 
A 15-sec long trained odor was paired with the blue light, or electric shock, with an onset-to-onset ISI. Negative ISI values indicated that the odor started before the blue light or electric shock; positive ISIs indicated the reverse order of events. At 12:00 min (13:30 min for the ISI = 360-sec group in Fig. 1B and throughout Fig. 4) the flies were transferred out of the setup into food vials, where they stayed for 16 min (14 min 30 sec for the ISI = 360-sec group in Fig. 1B and throughout Fig. 4). Then, either the next training episode or the test ensued. In Figure 1D-right and Supplemental Figure S4, the test took place ∼24 h after the end of training. For the test, the flies were given a 5 min accommodation period, after which they were transferred to the choice point between the two odors used during training. After 2 min (30 sec in Supplemental Fig. S6), the arms of the maze were closed and the flies on each side were counted to calculate a preference as:

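$$\text{Preference} = \frac{(\#_{\text{Trained odor}} - \#_{\text{Control odor}}) \times 100}{\#_{\text{Total}}} \tag{1}$$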

# indicates the number of flies found in the respective maze-arm. Two subgroups of flies were trained and tested in parallel. For one subgroup BA was the control odor and OCT was trained; for the second subgroup contingencies were reversed. An associative memory score was obtained based on the Preferences from the two subgroups as:

$$\text{Memory score} = \frac{\text{Preference}_{\text{BA}} + \text{Preference}_{\text{OCT}}}{2} \tag{2}$$

Subscripts of Preference indicate the respective trained odor. Positive memory scores reflected learned approach, negative values learned avoidance.
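As a worked illustration of the scoring, the following minimal sketch computes a Preference for each of the two reciprocally trained subgroups and averages them into a memory score. It assumes that Preference is the difference between the counts on the trained-odor and control-odor sides, expressed as a percentage of the total, and that the total is simply the sum of the two arms; the function names and the fly counts are hypothetical.

```python
def preference(n_trained, n_control):
    """Preference in percent; positive when more flies chose the trained odor.

    Assumption: the total is the sum of the flies counted in the two arms.
    """
    total = n_trained + n_control
    if total == 0:
        raise ValueError("no flies counted")
    return (n_trained - n_control) * 100.0 / total


def memory_score(pref_ba_trained, pref_oct_trained):
    """Average of the Preferences from the two reciprocally trained subgroups."""
    return (pref_ba_trained + pref_oct_trained) / 2.0


# Hypothetical counts for one pair of reciprocally trained subgroups.
pref_ba = preference(n_trained=30, n_control=70)    # BA trained, OCT control
pref_oct = preference(n_trained=40, n_control=60)   # OCT trained, BA control
score = memory_score(pref_ba, pref_oct)
print(pref_ba, pref_oct, score)  # -40.0 -20.0 -30.0
```

With these made-up counts the score is negative, which under the convention stated above would be read as learned avoidance. Averaging across the two subgroups cancels any innate bias toward one of the two odors.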

For innate odor preference assays, the flies were loaded into the setup at 0:00 min, then at 5:00 min transferred to the choice point of a T-maze between a scented and an unscented arm to distribute for 30 sec. A Preference was calculated as:

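$$\text{Preference} = \frac{(\#_{\text{Scented arm}} - \#_{\text{Unscented arm}}) \times 100}{\#_{\text{Total}}} \tag{3}$$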

Memory scores and Preferences were analysed using Statistica version 11.0 (StatSoft) and R version 2.15.1 (www.r-project.org) on a PC. We used Kruskal–Wallis tests (KW-test) to probe for differences across more than two groups, Mann–Whitney U-tests (U-test) for pair-wise comparisons, and one-sample sign tests (OSS-test) to compare scores of a group to zero. Experiment-wide type 1 error rates were limited to 0.05 by Bonferroni–Holm corrections.
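For readers who want to see the logic of two of these procedures, here is a minimal standard-library sketch of an exact two-sided one-sample sign test against zero and of the Bonferroni–Holm step-down correction. It is a stand-in written for illustration and is not the Statistica or R code used for the analyses; the function names are hypothetical, and the sign test is implemented in its exact binomial form, which may differ from the variant a given statistics package reports.

```python
from math import comb


def sign_test_p(scores):
    """Exact two-sided one-sample sign test against zero (zeros are dropped)."""
    pos = sum(1 for s in scores if s > 0)
    neg = sum(1 for s in scores if s < 0)
    n = pos + neg
    if n == 0:
        return 1.0
    k = min(pos, neg)
    # Probability of k or fewer of the rarer sign under a fair coin, doubled.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


def holm_reject(p_values, alpha=0.05):
    """Bonferroni-Holm step-down; returns reject decisions in input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, i in enumerate(order):
        # The smallest P is tested at alpha/m, the next at alpha/(m-1), etc.
        if p_values[i] <= alpha / (m - rank):
            reject[i] = True
        else:
            break  # once one test fails, all larger P values are retained
    return reject


# Ten hypothetical memory scores, all negative.
print(sign_test_p([-12, -30, -8, -25, -17, -40, -5, -22, -19, -11]))
print(holm_reject([0.01, 0.04, 0.03]))
```

In the second call only the smallest P value survives the correction, because 0.03 exceeds the second threshold of 0.05/2 and the procedure stops there.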

Supplementary Material

Acknowledgments

We thank R. Kittel and G. Rubin for fly strains; J. Felsenberg, M. Fendt, R. Khalil, A. Moustafa, and M. Schleyer for valuable discussions; R. Glasgow for professional language editing of the manuscript; H. Kaderschabek and K. Oechsner for excellent technical support. Institutional support: Center of Behavioral and Brain Sciences Magdeburg, HHMI Janelia Research Campus Ashburn, Leibniz Institut für Neurobiologie Magdeburg, Otto von Guericke Universität Magdeburg, Wissenschaftsgemeinschaft Gottfried Wilhelm Leibniz. Grant support: Schram-Stiftung (T287/25458/2013 to A.Y.), Deutsche Forschungsgemeinschaft (SFB779-Motiviertes Verhalten, TPB15 to A.Y. and TPB11 to B.G.; GE1091/4-1 to B.G.).

Footnotes

[Supplemental material is available for this article.]

Article is online at http://www.learnmem.org/cgi/doi/10.1101/lm.047308.118.

Freely available online through the Learning & Memory Open Access option.

References

- Andreatta M, Mühlberger A, Yarali A, Gerber B, Pauli P. 2010. A rift between implicit and explicit conditioned valence in human pain relief learning. Proc Biol Sci 277: 2411–2416.

- Andreatta M, Fendt M, Mühlberger A, Wieser MJ, Imobersteg S, Yarali A, Gerber B, Pauli P. 2012. Onset and offset of aversive events establish distinct memories requiring fear and reward networks. Learn Mem 19: 518–526.

- Andreatta M, Mühlberger A, Glotzbach-Schoon E, Pauli P. 2013. Pain predictability reverses valence ratings of a relief-associated stimulus. Front Syst Neurosci 7: 53.

- Andreatta M, Mühlberger A, Pauli P. 2016. When does pleasure start after the end of pain? The time course of relief. J Comp Neurol 524: 1653–1667.

- Appel M, Scholz CJ, Kocabey S, Savage S, König C, Yarali A. 2016. Independent natural genetic variation of punishment- versus relief-memory. Biol Lett 12: 20160657.

- Aso Y, Rubin GM. 2016. Dopaminergic neurons write and update memories with cell-type-specific rules. Elife 5: e16135.

- Aso Y, Siwanowicz I, Bräcker L, Ito K, Kitamoto T, Tanimoto H. 2010. Specific dopaminergic neurons for the formation of labile aversive memory. Curr Biol 20: 1445–1451.

- Aso Y, Herb A, Ogueta M, Siwanowicz I, Templier T, Friedrich AB, Ito K, Scholz H, Tanimoto H. 2012. Three dopamine pathways induce aversive odor memories with different stability. PLoS Genet 8: e1002768.

- Aso Y, Hattori D, Yu Y, Johnston RM, Iyer NA, Ngo TT, Dionne H, Abbott LF, Axel R, Tanimoto H, et al. 2014a. The neuronal architecture of the mushroom body provides a logic for associative learning. Elife 3: e04577.

- Aso Y, Sitaraman D, Ichinose T, Kaun KR, Vogt K, Belliart-Guérin G, Plaçais PY, Robie AA, Yamagata N, Schnaitmann C, et al. 2014b. Mushroom body output neurons encode valence and guide memory-based action selection in Drosophila. Elife 3: e04580.

- Barth J, Dipt S, Pech U, Hermann M, Riemensperger T, Fiala A. 2014. Differential associative training enhances olfactory acuity in Drosophila melanogaster. J Neurosci 34: 1819–1837.

- Becerra L, Navratilova E, Porreca F, Borsook D. 2013. Analogous responses in the nucleus accumbens and cingulate cortex to pain onset (aversion) and offset (relief) in rats and humans. J Neurophysiol 110: 1221–1226.

- Bergado Acosta JR, Kahl E, Kogias G, Uzuneser TC, Fendt M. 2017a. Relief learning requires a coincident activation of dopamine D1 and NMDA receptors within the nucleus accumbens. Neuropharmacology 114: 58–66.

- Bergado Acosta JR, Schneider M, Fendt M. 2017b. Intra-accumbal blockade of endocannabinoid CB1 receptors impairs learning but not retention of conditioned relief. Neurobiol Learn Mem 144: 48–52.

- Bouzaiane E, Trannoy S, Scheunemann L, Plaçais PY, Preat T. 2015. Two independent mushroom body output circuits retrieve the six discrete components of Drosophila aversive memory. Cell Rep 11: 1280–1292.

- Brischoux F, Chakraborty S, Brierley DI, Ungless MA. 2009. Phasic excitation of dopamine neurons in ventral VTA by noxious stimuli. Proc Natl Acad Sci 106: 4894–4899.

- Bromberg-Martin ES, Matsumoto M, Hikosaka O. 2010. Dopamine in motivational control: rewarding, aversive, and alerting. Neuron 68: 815–834.

- Bruning JEA, Breitfeld T, Kahl E, Bergado-Acosta JR, Fendt M. 2016. Relief memory consolidation requires protein synthesis within the nucleus accumbens. Neuropharmacology 105: 10–14.

- Budygin EA, Park J, Bass CE, Grinevich VP, Bonin KD, Wightman RM. 2012. Aversive stimulus differentially triggers subsecond dopamine release in reward regions. Neuroscience 201: 331–337.

- Campbell RA, Honegger KS, Qin H, Li W, Demir E, Turner GC. 2013. Imaging a population code for odor identity in the Drosophila mushroom body. J Neurosci 33: 10568–10581.

- Cohn R, Morantte I, Ruta V. 2015. Coordinated and compartmentalized neuromodulation shapes sensory processing in Drosophila. Cell 163: 1742–1755.

- Connolly JB, Roberts IJ, Armstrong JD, Kaiser K, Forte M, Tully T, O'Kane CJ. 1996. Associative learning disrupted by impaired Gs signaling in Drosophila mushroom bodies. Science 274: 2104–2107.

- Dawydow A, Gueta R, Ljaschenko D, Ullrich S, Hermann M, Ehmann N, Gao S, Fiala A, Langenhan T, Nagel G, et al. 2014. Channelrhodopsin-2-XXL, a powerful optogenetic tool for low-light applications. Proc Natl Acad Sci 111: 13972–13977.

- Diegelmann S, Klagges B, Michels B, Schleyer M, Gerber B. 2013a. Maggot learning and Synapsin function. J Exp Biol 216: 939–951.

- Diegelmann S, Preuschoff S, Appel M, Niewalda T, Gerber B, Yarali A. 2013b. Memory decay and susceptibility to amnesia dissociate punishment- from relief-learning. Biol Lett 9: 20121171.

- Drew PJ, Abbott LF. 2006. Extending the effects of spike-timing-dependent plasticity to behavioral timescales. Proc Natl Acad Sci 103: 8876–8881.

- Eichler K, Li F, Litwin-Kumar A, Park Y, Andrade I, Schneider-Mizell CM, Saumweber T, Huser A, Eschbach C, Gerber B, et al. 2017. The complete connectome of a learning and memory centre in an insect brain. Nature 548: 175–182.

- Felsenberg J, Plath JA, Lorang S, Morgenstern L, Eisenhardt D. 2013. Short- and long-term memories formed upon backward conditioning in honeybees (Apis mellifera). Learn Mem 21: 37–45.

- Felsenberg J, Barnstedt O, Cognigni P, Lin S, Waddell S. 2017. Re-evaluation of learned information in Drosophila. Nature 544: 240–244.

- Fiala A. 2007. Olfaction and olfactory learning in Drosophila: recent progress. Curr Opin Neurobiol 17: 720–726.

- Fiorillo CD, Song MR, Yun SR. 2013a. Multiphasic temporal dynamics in responses of midbrain dopamine neurons to appetitive and aversive stimuli. J Neurosci 33: 4710–4725.

- Fiorillo CD, Yun SR, Song MR. 2013b. Diversity and homogeneity in responses of midbrain dopamine neurons. J Neurosci 33: 4693–4709.

- Franklin JC, Lee KM, Hanna EK, Prinstein MJ. 2013. Feeling worse to feel better: pain-offset relief simultaneously stimulates positive affect and reduces negative affect. Psychol Sci 24: 521–529.

- Gerber B, Tanimoto H, Heisenberg M. 2004. An engram found? Evaluating the evidence from fruit flies. Curr Opin Neurobiol 14: 737–744.

- Gerber B, Stocker RF, Tanimura T, Thum AS. 2009. Smelling, tasting, learning: Drosophila as a study case. Results Probl Cell Differ 47: 139–185.

- Gerber B, Yarali A, Diegelmann S, Wotjak CT, Pauli P, Fendt M. 2014. Pain-relief learning in flies, rats, and man: basic research and applied perspectives. Learn Mem 21: 232–252.

- Gervasi N, Tchénio P, Preat T. 2010. PKA dynamics in a Drosophila learning center: coincidence detection by rutabaga adenylyl cyclase and spatial regulation by dunce phosphodiesterase. Neuron 65: 516–529.

- Gruber F, Knapek S, Fujita M, Matsuo K, Bräcker L, Shinzato N, Siwanowicz I, Tanimura T, Tanimoto H. 2013. Suppression of conditioned odor approach by feeding is independent of taste and nutritional value in Drosophila. Curr Biol 23: 507–514.

- Hampel S, Franconville R, Simpson JH, Seeds AM. 2015. A neural command circuit for grooming movement control. Elife 4: e08758.

- Han KA, Millar NS, Grotewiel MS, Davis RL. 1996. DAMB, a novel dopamine receptor expressed specifically in Drosophila mushroom bodies. Neuron 16: 1127–1135.

- Hazelrigg T, Levis R, Rubin GM. 1984. Transformation of white locus DNA in Drosophila: dosage compensation, zeste interaction, and position effects. Cell 36: 469–481.

- Heisenberg M. 2003. Mushroom body memoir: from maps to models. Nat Rev Neurosci 4: 266–275.

- Hellstern F, Malaka R, Hammer M. 1998. Backward inhibitory learning in honeybees: a behavioral analysis of reinforcement processing. Learn Mem 4: 429–444.

- Hige T. 2018. What can tiny mushrooms in fruit flies tell us about learning and memory? Neurosci Res 129: 8–16.

- Hige T, Aso Y, Modi MN, Rubin GM, Turner GC. 2015. Heterosynaptic plasticity underlies aversive olfactory learning in Drosophila. Neuron 88: 985–998.

- Honegger KS, Campbell RA, Turner GC. 2011. Cellular-resolution population imaging reveals robust sparse coding in the Drosophila mushroom body. J Neurosci 31: 11772–11785.

- Ichinose T, Aso Y, Yamagata N, Abe A, Rubin GM, Tanimoto H. 2015. Reward signal in a recurrent circuit drives appetitive long-term memory formation. Elife 4: e10719.

- Kim YC, Lee HG, Han KA. 2007. D1 dopamine receptor dDA1 is required in the mushroom body neurons for aversive and appetitive learning in Drosophila. J Neurosci 27: 7640–7647.

- King T, Vera-Portocarrero L, Gutierrez T, Vanderah TW, Dussor G, Lai J, Fields HL, Porreca F. 2009. Unmasking the tonic-aversive state in neuropathic pain. Nat Neurosci 12: 1364–1366.

- Konorski J. 1948. Conditioned reflexes and neuron organisation. Cambridge University Press, Cambridge.

- Krashes MJ, DasGupta S, Vreede A, White B, Armstrong JD, Waddell S. 2009. A neural circuit mechanism integrating motivational state with memory expression in Drosophila. Cell 139: 416–427. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Liu C, Plaçais PY, Yamagata N, Pfeiffer BD, Aso Y, Friedrich AB, Siwanowicz I, Rubin GM, Preat T, Tanimoto H. 2012. A subset of dopamine neurons signals reward for odour memory in Drosophila. Nature 488: 512–516. [DOI] [PubMed] [Google Scholar]

- Mao Z1, Davis RL. 2009. Eight different types of dopaminergic neurons innervate the Drosophila mushroom body neuropil: anatomical and physiological heterogeneity. Front Neural Circuits 3: 5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Masek P, Worden K, Aso Y, Rubin GM, Keene AC. 2015. A dopamine-modulated neural circuit regulating aversive taste memory in Drosophila. Curr Biol 25: 1535–1541. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Masse NY, Turner GC, Jefferis GS. 2009. Olfactory information processing in Drosophila. Curr Biol 19: R700–R713. [DOI] [PubMed] [Google Scholar]

- Matsumoto M, Hikosaka O. 2009. Two types of dopamine neuron distinctly convey positive and negative motivational signals. Nature 459: 837–841. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McGuire SE, Le PT, Osborn AJ, Matsumoto K, Davis RL. 2003. Spatiotemporal rescue of memory dysfunction in Drosophila. Science 302: 1765–1768. [DOI] [PubMed] [Google Scholar]

- Mohammadi M, Fendt M. 2015. Relief learning is dependent on NMDA receptor activation in the nucleus accumbens. Br J Pharmacol 172: 2419–2426. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mohammadi M, Bergado-Acosta JR, Fendt M. 2014. Relief learning is distinguished from safety learning by the requirement of the nucleus accumbens. Behav Brain Res 272: 40–45. [DOI] [PubMed] [Google Scholar]

- Navratilova E, Xie JY, Okun A, Qu C, Eyde N, Ci S, Ossipov MH, King T, Fields HL, Porreca F. 2012. Pain relief produces negative reinforcement through activation of mesolimbic reward-valuation circuitry. Proc Natl Acad Sci 109: 20709–20713. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Navratilova E, Atcherley CW, Porreca F. 2015. Brain circuits encoding reward from pain relief. Trends Neurosci 38: 741–750.

- Niewalda T, Michels B, Jungnickel R, Diegelmann S, Kleber J, Kähne T, Gerber B. 2015. Synapsin determines memory strength after punishment- and relief-learning. J Neurosci 35: 7487–7502.

- Owald D, Waddell S. 2015. Olfactory learning skews mushroom body output pathways to steer behavioral choice in Drosophila. Curr Opin Neurobiol 35: 178–184.

- Owald D, Felsenberg J, Talbot CB, Das G, Perisse E, Huetteroth W, Waddell S. 2015. Activity of defined mushroom body output neurons underlies learned olfactory behavior in Drosophila. Neuron 86: 417–427.

- Perisse E, Owald D, Barnstedt O, Talbot CB, Huetteroth W, Waddell S. 2016. Aversive learning and appetitive motivation toggle feed-forward inhibition in the Drosophila mushroom body. Neuron 90: 1086–1099.

- Plaçais PY, Trannoy S, Isabel G, Aso Y, Siwanowicz I, Belliart-Guérin G, Vernier P, Birman S, Tanimoto H, Preat T. 2012. Slow oscillations in two pairs of dopaminergic neurons gate long-term memory formation in Drosophila. Nat Neurosci 15: 592–599.

- Plaçais PY, de Tredern É, Scheunemann L, Trannoy S, Goguel V, Han KA, Isabel G, Preat T. 2017. Upregulated energy metabolism in the Drosophila mushroom body is the trigger for long-term memory. Nat Commun 8: 15510.

- Riemensperger T, Völler T, Stock P, Buchner E, Fiala A. 2005. Punishment prediction by dopaminergic neurons in Drosophila. Curr Biol 15: 1953–1960.

- Riemensperger T, Issa AR, Pech U, Coulom H, Nguyễn MV, Cassar M, Jacquet M, Fiala A, Birman S. 2013. A single dopamine pathway underlies progressive locomotor deficits in a Drosophila model of Parkinson disease. Cell Rep 5: 952–960.

- Rohwedder A, Wenz NL, Stehle B, Huser A, Yamagata N, Zlatic M, Truman JW, Tanimoto H, Saumweber T, Gerber B, et al. 2016. Four individually identified paired dopamine neurons signal reward in larval Drosophila. Curr Biol 26: 661–669.

- Saumweber T, Rohwedder A, Schleyer M, Eichler K, Chen YC, Aso Y, Cardona A, Eschbach C, Kobler O, Voigt A, et al. 2018. Functional architecture of reward learning in mushroom body extrinsic neurons of larval Drosophila. Nat Commun 9: 1104.

- Scholz N, Guan C, Nieberler M, Grotemeyer A, Maiellaro I, Gao S, Beck S, Pawlak M, Sauer M, Asan E, et al. 2017. Mechano-dependent signaling by Latrophilin/CIRL quenches cAMP in proprioceptive neurons. Elife 6: e28360.

- Schultz W. 2007. Multiple dopamine functions at different time courses. Annu Rev Neurosci 30: 259–288.

- Schultz W. 2016. Dopamine reward prediction-error signalling: a two-component response. Nat Rev Neurosci 17: 183–195.

- Schwaerzel M, Monastirioti M, Scholz H, Friggi-Grelin F, Birman S, Heisenberg M. 2003. Dopamine and octopamine differentiate between aversive and appetitive olfactory memories in Drosophila. J Neurosci 23: 10495–10502.

- Séjourné J, Plaçais PY, Aso Y, Siwanowicz I, Trannoy S, Thoma V, Tedjakumala SR, Rubin GM, Tchénio P, Ito K, et al. 2011. Mushroom body efferent neurons responsible for aversive olfactory memory retrieval in Drosophila. Nat Neurosci 14: 903–910.

- Seymour B, O'Doherty JP, Koltzenburg M, Wiech K, Frackowiak R, Friston K, Dolan R. 2005. Opponent appetitive-aversive neural processes underlie predictive learning of pain relief. Nat Neurosci 8: 1234–1240.

- Sitaraman D, Aso Y, Rubin GM, Nitabach MN. 2015. Control of sleep by dopaminergic inputs to the Drosophila mushroom body. Front Neural Circuits 9: 73.

- Solomon RL. 1980. The opponent-process theory of acquired motivation: the costs of pleasure and the benefits of pain. Am Psychol 35: 691–712.

- Solomon RL, Corbit JD. 1974. An opponent-process theory of motivation. I. Temporal dynamics of affect. Psychol Rev 81: 119–145.

- Takemura SY, Aso Y, Hige T, Wong A, Lu Z, Xu CS, Rivlin PK, Hess H, Zhao T, Parag T, et al. 2017. A connectome of a learning and memory center in the adult Drosophila brain. Elife 6: e26975.

- Tanaka NK, Tanimoto H, Ito K. 2008. Neuronal assemblies of the Drosophila mushroom body. J Comp Neurol 508: 711–755.

- Tanimoto H, Heisenberg M, Gerber B. 2004. Experimental psychology: event timing turns punishment to reward. Nature 430: 983.

- Tomchik SM, Davis RL. 2009. Dynamics of learning-related cAMP signaling and stimulus integration in the Drosophila olfactory pathway. Neuron 64: 510–521.

- Turner GC, Bazhenov M, Laurent G. 2008. Olfactory representations by Drosophila mushroom body neurons. J Neurophysiol 99: 734–746.

- Ungless MA, Magill PJ, Bolam JP. 2004. Uniform inhibition of dopamine neurons in the ventral tegmental area by aversive stimuli. Science 303: 2040–2042.

- Vogt K, Yarali A, Tanimoto H. 2015. Reversing stimulus timing in visual conditioning leads to memories with opposite valence in Drosophila. PLoS One 10: e0139797.

- Wagner AR. 1981. A model of automatic memory processing in animal behavior. In Information processing in animals: memory mechanisms (ed. Spear NE, Miller RR), pp. 5–47. Erlbaum, Hillsdale, NJ.

- Wang DV, Tsien JZ. 2011. Convergent processing of both positive and negative motivational signals by the VTA dopamine neuronal populations. PLoS One 6: e17047.

- Wilson RI. 2013. Early olfactory processing in Drosophila: mechanisms and principles. Annu Rev Neurosci 36: 217–241.

- Yamagata N, Ichinose T, Aso Y, Plaçais PY, Friedrich AB, Sima RJ, Preat T, Rubin GM, Tanimoto H. 2015. Distinct dopamine neurons mediate reward signals for short- and long-term memories. Proc Natl Acad Sci 112: 578–583.

- Yamagata N, Hiroi M, Kondo S, Abe A, Tanimoto H. 2016. Suppression of dopamine neurons mediates reward. PLoS Biol 14: e1002586.

- Yarali A, Gerber B. 2010. A neurogenetic dissociation between punishment-, reward-, and relief-learning in Drosophila. Front Behav Neurosci 4: 189.

- Yarali A, Niewalda T, Chen Y, Tanimoto H, Duerrnagel S, Gerber B. 2008. Pain relief-learning in fruit flies. Anim Behav 76: 1173–1185.

- Yarali A, Krischke M, Michels B, Saumweber T, Mueller MJ, Gerber B. 2009. Genetic distortion of the balance between punishment and relief learning in Drosophila. J Neurogenet 23: 235–247.

- Yarali A, Nehrkorn J, Tanimoto H, Herz AV. 2012. Event timing in associative learning: from biochemical reaction dynamics to behavioural observations. PLoS One 7: e32885.

- Zars T, Fischer M, Schulz R, Heisenberg M. 2000. Localization of a short-term memory in Drosophila. Science 288: 672–675.
