Abstract

Evaluating the marginal likelihood in Bayesian analysis is essential for model selection. Estimators based on a single Markov chain Monte Carlo sample from the posterior distribution include the harmonic mean estimator and the inflated density ratio estimator. We propose a new class of Monte Carlo estimators based on this single Markov chain Monte Carlo sample. This class can be thought of as a generalization of the harmonic mean and inflated density ratio estimators using a partition weighted kernel (likelihood times prior). We show that our estimator is consistent and has better theoretical properties than the harmonic mean and inflated density ratio estimators. In addition, we provide guidelines on choosing optimal weights. Simulation studies were conducted to examine the empirical performance of the proposed estimator. We further demonstrate the desirable features of the proposed estimator with two real data sets: one is from a prostate cancer study using an ordinal probit regression model with latent variables; the other concerns the power prior construction from two Eastern Cooperative Oncology Group phase III clinical trials with similar objectives, using the cure rate survival model.

Keywords: Bayesian model selection, cure rate model, harmonic mean estimator, inflated density ratio estimator, ordinal probit regression, power prior

1 Introduction

The Bayes factor, which quantifies the evidence for one model over a competing model, is commonly used for model comparison or variable selection in Bayesian inference. The Bayes factor is a ratio of two marginal likelihoods, where the marginal likelihood is essentially the average fit of the model to the data over the prior. However, the integral defining the marginal likelihood is often analytically intractable due to the complex structure of the kernel (the product of likelihood and prior). To deal with this computational problem, several Monte Carlo methods have been developed. They include the importance sampling (IS) of Geweke (1989), the harmonic mean (HM) of Newton and Raftery (1994) and its generalization (GHM) by Gelfand and Dey (1994), the serial approaches of Chib (1995) and Chib and Jeliazkov (2001), the inflated density ratio method (IDR) of Petris and Tardella (2003) and Petris and Tardella (2007), the thermodynamic integration (TI) of Lartillot and Philippe (2006) and Friel and Pettitt (2008), the constrained GHM estimator with the highest posterior density (HPD) region of Robert and Wraith (2009) and Marin and Robert (2010), and the stepping-stone sampling of Xie et al. (2011) and Fan et al. (2011). Under some mild conditions, they are all shown to converge to the marginal likelihood by the ergodic theorem. They differ in which Monte Carlo samples (for example, from the posterior, from an importance density, or from a sequence of intermediate distributions) and which kernel evaluations they require.

We assume only a single Markov chain Monte Carlo (MCMC) sample from the posterior distribution, which may be readily available from standard Bayesian software, and the known kernel function for computing the marginal likelihood. The HM and IDR estimators are the only existing methods that require only these two minimal assumptions. The main difference between them lies in the weights assigned to the inverse of the kernel function: the former uses the prior density as the weight, while the latter uses the difference between a perturbed version of the kernel and the kernel itself. Although the HM estimator has been used in practice because of its simplicity, it can be unstable when the prior has heavier tails than the likelihood function, and it is known to overestimate the marginal likelihood (Lartillot and Philippe, 2006; Xie et al., 2011).

While the IDR estimator has better control over the tails of the kernel than the HM estimator, it requires reparameterization, posterior mode calculation, and a careful selection of the radius. Under the aforementioned two minimal assumptions, we extend the HM and IDR methods to develop a new Monte Carlo method, namely, the partition weighted kernel (PWK) estimator. The PWK estimator is constructed by first partitioning the working parameter space, where the kernel is bounded away from zero, and then estimating the marginal likelihood by a weighted average of the kernel values evaluated at the MCMC sample, where weights are assigned locally using a representative kernel value in each subset. We show that the PWK estimator is consistent and has finite variance. When the partition is refined enough to make the kernel values in the same region similar, we can construct the best (minimum variance) PWK estimator. Our simulation studies empirically show that the proposed PWK estimator outperforms both the HM and IDR estimators with respect to root mean square error.

The rest of the article is organized as follows. Section 2 is a review of the HM, GHM and IDR methods that motivate the PWK estimator. In Section 3, we develop the PWK estimator and its theoretical properties. Additionally, in the class of the general PWK estimator, we find the best (minimum variance) PWK estimator and provide a spherical shell approach to realize it. In Section 4, an extended general PWK estimator defined on the full support of the kernel function is investigated. Besides the theoretical properties, we show that the HM and IDR estimators are special cases in this family. In Section 5, we conduct simulation studies of a bivariate normal case with the normal-inverse-Wishart prior and a mixture of two bivariate normal distributions to compare the performance and computing time of the HM, IDR and PWK estimators. In Section 6, we compare the results and performance of the PWK estimator to the methods by Chib (1995) and Chen (2005) for an ordinal probit regression model. Moreover, we apply the PWK estimator to the determination of the optimal power prior using two Eastern Cooperative Oncology Group (ECOG) clinical trial data sets. Finally, we conclude with a discussion in Section 7. The proofs of all theorems are given in the Supplementary Web Materials (Wang et al., 2017a).

2 Preliminary

We review several Monte Carlo methods that only require a known kernel function and an MCMC sample from the posterior distribution to compute the marginal likelihood. Suppose θ is a p-dimensional vector of parameters and D denotes the data. Then, the kernel function for the joint posterior density π(θ|D) is q(θ) = L(θ|D)π(θ), where L(θ|D) is the likelihood function and π(θ) is a proper prior density. Assume Θ ⊂ ℝ^p is the support of q(θ). The unknown marginal likelihood c is defined to be c = ∫_Θ q(θ)dθ. The integration is often analytically intractable due to the complicated kernel structure.

To estimate the normalizing constant c, Newton and Raftery (1994) suggest the following equation to motivate the HM method,

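$$\frac{1}{c} = \int_\Theta \frac{\pi(\theta)}{q(\theta)}\,\pi(\theta \mid D)\,d\theta = \int_\Theta \frac{1}{L(\theta \mid D)}\,\pi(\theta \mid D)\,d\theta. \tag{1}$$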

Let {θt, t = 1, …, T } be an MCMC sample from the posterior distribution π(θ|D) = q(θ)/c. The HM estimator is then given by

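$$\hat c_{\mathrm{HM}} = \left\{\frac{1}{T}\sum_{t=1}^{T} \frac{\pi(\theta_t)}{q(\theta_t)}\right\}^{-1} = \left\{\frac{1}{T}\sum_{t=1}^{T} \frac{1}{L(\theta_t \mid D)}\right\}^{-1}, \tag{2}$$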

where the prior π(θt) can be viewed as the weight assigned to 1/q(θt). Although the HM estimator is simple and converges asymptotically to the marginal likelihood, its variance is not guaranteed to be finite. Xie et al. (2011) also point out that the HM estimator tends to overestimate the marginal likelihood.
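A minimal Python sketch of (2), computed on the log scale to avoid overflow. It assumes only that the log-likelihood values log L(θt|D) at the posterior draws are available, since π(θt)/q(θt) = 1/L(θt|D); the function name `log_harmonic_mean` is hypothetical.

```python
import numpy as np


def log_harmonic_mean(log_lik):
    """Log of the HM estimate (2) of the marginal likelihood c.

    log_lik: values log L(theta_t | D) at posterior draws theta_t.
    Because pi(theta_t) / q(theta_t) = 1 / L(theta_t | D), the HM estimate
    is 1 / mean(exp(-log_lik)); the mean is computed with log-sum-exp.
    """
    neg = -np.asarray(log_lik, dtype=float)
    shift = neg.max()
    log_mean_inv_lik = shift + np.log(np.mean(np.exp(neg - shift)))
    return -log_mean_inv_lik
```

For example, two draws with likelihood values 1 and 0.5 give an HM estimate of 1/{(1 + 2)/2} = 2/3. The sketch does nothing to cure the possibly infinite variance noted above; it only keeps the arithmetic stable.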

Gelfand and Dey (1994) suggest the GHM estimator, where π(θ) in (1) is replaced by a density function f(θ) with lighter tails than q(θ):

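$$\hat c_{\mathrm{GHM}} = \left\{\frac{1}{T}\sum_{t=1}^{T} \frac{f(\theta_t)}{q(\theta_t)}\right\}^{-1}. \tag{3}$$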

By proposing a light-tailed density, the ratio f(θt)/q(θt) can be controlled. Consequently, the estimator has finite variance. However, in high dimensional problems, finding a suitable density f(θ) may be a challenge.

Petris and Tardella (2003) propose the IDR estimator. They use the difference between a perturbed distribution qr(θ), which is inflated in the center of the kernel, and the posterior kernel q(θ) as the weight. The perturbed density qr(θ) is defined as

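$$q_r(\theta) = \begin{cases} q(0), & \|\theta\| \le r, \\ q\{w(\theta)\}, & \|\theta\| > r, \end{cases} \tag{4}$$

where r is the chosen radius and $w(\theta) = \theta\,(1 - r^p/\|\theta\|^p)^{1/p}$. It follows that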

$$\int_{\mathbb{R}^p} q_r(\theta)\,d\theta = c + q(0)\,b_r, \tag{5}$$

where $b_r = \pi^{p/2} r^p / \Gamma(p/2 + 1)$ is the volume of the ball {θ: ||θ|| ≤ r}. This leads to the following equation,

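$$\frac{q(0)\,b_r}{c} = \int_{\mathbb{R}^p} \frac{q_r(\theta) - q(\theta)}{q(\theta)}\,\pi(\theta \mid D)\,d\theta, \tag{6}$$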

and the IDR estimator is given by

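$$\hat c_{\mathrm{IDR}} = \frac{q(0)\,b_r}{\frac{1}{T}\sum_{t=1}^{T} \{q_r(\theta_t) - q(\theta_t)\}/q(\theta_t)}. \tag{7}$$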

Under some mild conditions, Petris and Tardella (2007) show that the estimator has finite variance. However, the method requires a careful selection of the radius r and requires q(θ) to have unbounded support, so any bounded parameter must be reparameterized to the whole real line. Also, to obtain a more efficient estimator, the posterior mode must be found and the MCMC sample must be standardized with respect to the mode and the sample covariance matrix.
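The IDR recipe in (4) to (7) can be sketched in a few lines of Python. This is an illustrative sketch, not Petris and Tardella's code: it assumes the draws have already been centred at the posterior mode and standardized (the preprocessing described above), that the support is all of ℝ^p, and that `log_q` is a vectorized log-kernel; the names `ball_volume` and `log_idr` are hypothetical.

```python
import numpy as np
from math import gamma, log, pi


def ball_volume(r, p):
    # b_r = pi^{p/2} r^p / Gamma(p/2 + 1)
    return pi ** (p / 2) * r ** p / gamma(p / 2 + 1)


def log_idr(draws, log_q, r):
    """IDR estimate of log c following (4)-(7).

    draws: (T, p) posterior draws, assumed centred at the mode and standardized.
    log_q: maps an (n, p) array to the n log-kernel values.
    """
    T, p = draws.shape
    norms = np.linalg.norm(draws, axis=1)
    log_q_theta = log_q(draws)
    log_q0 = log_q(np.zeros((1, p)))[0]
    # perturbed kernel q_r: q(0) inside the ball, q(w(theta)) outside
    log_qr = np.full(T, log_q0)
    out = norms > r
    shrink = (1.0 - (r / norms[out]) ** p) ** (1.0 / p)
    log_qr[out] = log_q(draws[out] * shrink[:, None])
    # Monte Carlo estimate of q(0) b_r / c, the right-hand side of (6)
    ratio_mean = np.mean(np.exp(log_qr - log_q_theta) - 1.0)
    return log_q0 + log(ball_volume(r, p)) - log(ratio_mean)
```

As a sanity check, for the unnormalized standard normal kernel q(θ) = exp(−||θ||²/2) in p = 2 dimensions the expectation in (6) equals r²/2 exactly, so the estimate should be close to log(2π).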

3 A New Monte Carlo Estimator

We first modify (1) and (6) by imposing a working parameter space Ω ⊂ Θ, where Ω = {θ: q(θ) is bounded away from zero} to avoid regions with extremely low kernel values. Then we assume there is a function h(θ) such that ∫Ω h(θ)dθ = Δ can be evaluated. Consequently, we have the identity:

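$$d = \frac{1}{c} = \frac{1}{\Delta}\int_\Omega \frac{h(\theta)}{q(\theta)}\,\pi(\theta \mid D)\,d\theta. \tag{8}$$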

We next partition the working parameter space into K subsets, within each of which the ratio h(θ)/q(θ) takes similar values, in order to reduce the variance of the Monte Carlo estimator. The general form of the PWK estimator with unspecified local weights is then essentially a weighted harmonic-mean-type estimator for q(θ), in which all MCMC draws falling in the same subset receive the same weight.
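For completeness, identity (8) follows in one line from π(θ|D) = q(θ)/c and the definition of Δ, using only that q(θ) > 0 on Ω by construction:

$$\int_\Omega \frac{h(\theta)}{q(\theta)}\,\pi(\theta \mid D)\,d\theta = \int_\Omega \frac{h(\theta)}{q(\theta)}\,\frac{q(\theta)}{c}\,d\theta = \frac{1}{c}\int_\Omega h(\theta)\,d\theta = \frac{\Delta}{c}.$$

Replacing the left-hand side by the average of h(θt)1{θt ∈ Ω}/q(θt) over the MCMC sample and dividing by Δ then gives a Monte Carlo estimator of d = 1/c.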

The working parameter space is essentially the constrained support considered by Robert and Wraith (2009) and Marin and Robert (2010). However, we do not require h(θ) to be a density function as in GHM or constrained GHM. Consequently, we allow a larger class of estimators to be considered.

3.1 General Monte Carlo Estimator

For an integer K > 0, suppose {A1, …, AK} forms a partition of the working parameter space Ω, and let w1, …, wK be the weights assigned to these K subsets, respectively.

Let the weight function be the step function:

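$$h(\theta) = \sum_{k=1}^{K} w_k\,1\{\theta \in A_k\}, \qquad \theta \in \Omega. \tag{9}$$

Then Δ can be evaluated as

$$\Delta = \int_\Omega h(\theta)\,d\theta = \sum_{k=1}^{K} w_k\,V(A_k),$$

where V(Ak) is the volume of the kth subset in the partition, that is, V(Ak) = ∫_Ω 1{θ ∈ Ak}dθ.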

Using the step function h(.) in (9), the PWK estimator for d ≡ 1/c is given by

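$$\hat d = \frac{\frac{1}{T}\sum_{t=1}^{T}\sum_{k=1}^{K} w_k\,\frac{1\{\theta_t \in A_k\}}{q(\theta_t)}}{\sum_{k=1}^{K} w_k\,V(A_k)}. \tag{10}$$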

In order to establish consistency and finite variance of the PWK estimator, we introduce two assumptions.

Assumption 1

The volume of each region V (Ak) < ∞ for k = 1, 2, …, K.

Assumption 2

q(θ) is positive and continuous on Āk, where Āk is the closure of Ak for k = 1, …, K.

Theorem 1

Under Assumptions 1 and 2 and certain ergodic conditions (e.g., time-reversibility, invariance, and irreducibility of the Markov chain), d̂ in (10) is a consistent estimator of d. In addition, Var(d̂) < ∞.

Note that we consider the estimator for d rather than c because we can obtain an unbiased estimator with finite variance for d = 1/c.

Remark 1

Another property of d̂ in (10) is that when a certain full conditional density is available, the computational burden can be reduced. This is often the case in generalized linear models with latent variables or random effects, and in any Gibbs sampler or its hybrid. To be specific, let (ϑ1, ϑ2) be two blocks of parameters, ϑ1 = (θ1, …, θq)′ and ϑ2 = (θq+1, …, θp)′. Assume that a full conditional density, π(ϑ1|D, ϑ2), is available. Then, the p-dimensional estimation problem can be reduced to p − q dimensions:

$$c = \int_\Theta q(\theta)\,d\theta = \int_{\Theta_2} q(\vartheta_2)\,d\vartheta_2,$$

where $q(\vartheta_2) = \int_{\mathbb{R}^q} q(\theta)\,d\vartheta_1$ has the closed-form expression $q(\vartheta_2) = q(\theta)/\pi(\vartheta_1 \mid D, \vartheta_2)$, because π(ϑ1|D, ϑ2) = q(θ)/q(ϑ2). Therefore, instead of investigating the kernel q(θ), we can work on the kernel q(ϑ2). In this case, (10) becomes

$$\hat d = \frac{\frac{1}{T}\sum_{t=1}^{T}\sum_{k=1}^{K} w_k\,\frac{1\{\vartheta_{2t} \in B_k\}}{q(\vartheta_{2t})}}{\sum_{k=1}^{K} w_k\,V(B_k)},$$

where ϑ2t is the ϑ2 component of θt, {B1, …, BK} is a partition of the working parameter space Ω2 ⊂ Θ2, Θ2 is the support of q(ϑ2), and V(B1), …, V(BK) are the corresponding volumes.

3.2 The Optimal Monte Carlo Estimation

Our next step is to find the optimal weight wk in the class of PWK estimators (10), motivated by Chen and Shao (2002).

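Assume {θt, t = 1, …, T} is an MCMC sample from the posterior distribution π(θ|D). Let $\tilde w_k = w_k / \sum_{j=1}^{K} w_j V(A_j)$ and αk = E[(1/q²(θ))1{θ ∈ Ak}], where the expectation is taken with respect to π(θ|D). Write

$$\hat d = \hat d(\tilde w) = \frac{1}{T}\sum_{t=1}^{T}\sum_{k=1}^{K} \tilde w_k\,\frac{1\{\theta_t \in A_k\}}{q(\theta_t)}$$

such that $\sum_{k=1}^{K} \tilde w_k V(A_k) = 1$. Then, we have E{d̂(w̃)} = d.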

Theorem 2

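Letting $\tilde w_k^{*} = \dfrac{V(A_k)/\alpha_k}{\sum_{j=1}^{K} V(A_j)^2/\alpha_j}$ for k = 1, …, K, we have $\sum_{k=1}^{K} \tilde w_k^{*} V(A_k) = 1$, and Var{d̂(w̃*)} ≤ Var{d̂(w̃)} for any weight function w̃ defined on each Ak.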

Remark 2

In practice, it is quite difficult to estimate the second moment αk; a very large sample size is required to estimate αk accurately. However, the results in Theorem 2 shed light on the choices of A1, …, AK and wk. First, it is only required that wk be proportional to V(Ak)/αk. Second, if q(θ) is roughly constant over Ak, then αk ≈ V(Ak)/{c q(ak)}, where ak is any point in Ak, so that V(Ak)/αk ≈ c q(ak). Thus, in this case, we can simply choose wk = q(ak), and d̂ in (10) reduces to

$$\hat d = \frac{\frac{1}{T}\sum_{t=1}^{T}\sum_{k=1}^{K} q(a_k)\,\frac{1\{\theta_t \in A_k\}}{q(\theta_t)}}{\sum_{k=1}^{K} q(a_k)\,V(A_k)}. \tag{11}$$
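To make Theorem 2 concrete, the following Python sketch normalizes the optimal weights and shows the naive Monte Carlo estimate of αk. It is illustrative only: as noted above, αk is hard to estimate accurately, which is exactly why (11) uses wk = q(ak) instead. The function names and the convention that draws outside Ω carry subset index −1 are hypothetical.

```python
import numpy as np


def optimal_weights(volumes, alphas):
    """Theorem 2 weights: w_k* proportional to V(A_k)/alpha_k,
    scaled so that sum_k w_k* V(A_k) = 1."""
    volumes = np.asarray(volumes, dtype=float)
    raw = volumes / np.asarray(alphas, dtype=float)
    return raw / np.sum(raw * volumes)


def estimate_alphas(subset_index, q_values, K):
    """Naive MC estimate of alpha_k = E[1{theta in A_k} / q(theta)^2].

    subset_index: k(t) in {0, ..., K-1} for each draw, or -1 outside Omega.
    q_values:     kernel values q(theta_t).
    """
    idx = np.asarray(subset_index)
    inv_sq = 1.0 / np.asarray(q_values, dtype=float) ** 2
    inside = idx >= 0
    sums = np.bincount(idx[inside], weights=inv_sq[inside], minlength=K)
    return sums / idx.size
```

With volumes (1, 2) and α = (1, 4), the raw ratios V/α are (1, 0.5), and normalization gives w̃* = (0.5, 0.25), which indeed satisfies Σk w̃k* V(Ak) = 1.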

Remark 3

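Following Remark 1, when a full conditional density π(ϑ1|D, ϑ2) is available, the estimator d̂ in (11) reduces further to

$$\hat d = \frac{\frac{1}{T}\sum_{t=1}^{T}\sum_{k=1}^{K} q(b_k)\,\frac{1\{\vartheta_{2t} \in B_k\}}{q(\vartheta_{2t})}}{\sum_{k=1}^{K} q(b_k)\,V(B_k)},$$

where bk is a representative point in Bk.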

Remark 4

In practice, the marginal likelihood is often reported on the log scale. To account for the dependence within the MCMC sample, we use the Overlapping Batch Statistics (OBS) of Schmeiser et al. (1990) to estimate the Monte Carlo (MC) standard error of −log(d̂). Let η̂b = −log(d̂b) denote the estimate of the log marginal likelihood computed from the bth batch, {θt, t = b, b + 1, …, b + B − 1}, of the MCMC sample for b = 1, 2, …, T − B + 1, where B < T is the batch size. Then, the OBS estimated MC standard error of η̂ = −log(d̂) is given by

$$\widehat{\mathrm{se}}(\hat\eta) = \left\{\frac{B}{T-B}\,\frac{1}{T-B+1}\sum_{b=1}^{T-B+1} (\hat\eta_b - \bar\eta)^2\right\}^{1/2}, \tag{12}$$

where $\bar\eta = \frac{1}{T-B+1}\sum_{b=1}^{T-B+1} \hat\eta_b$, and Schmeiser et al. (1990) suggest a batch size B satisfying 10 ≤ T/B ≤ 20.
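A short Python sketch of the OBS standard error in (12). It assumes the caller supplies the positive per-draw summands g(θt) of d̂ (for example, q(ak(t))/q(θt) in (11), set to 0 outside Ω), so that each batch estimate is d̂b = mean of g over the batch divided by the fixed denominator. That denominator only shifts every η̂b by the same constant, so it drops out of (12) and is omitted. The function name is hypothetical.

```python
import numpy as np


def obs_standard_error(terms, B):
    """OBS estimate (12) of the MC standard error of eta_hat = -log(d_hat).

    terms: per-draw positive summands g(theta_t) of d_hat, in chain order.
    B:     batch size; Schmeiser et al. (1990) suggest 10 <= T/B <= 20.
    """
    terms = np.asarray(terms, dtype=float)
    T = terms.size
    if not 0 < B < T:
        raise ValueError("need 0 < B < T")
    csum = np.concatenate(([0.0], np.cumsum(terms)))
    batch_means = (csum[B:] - csum[:-B]) / B  # T - B + 1 overlapping batches
    eta_b = -np.log(batch_means)
    eta_bar = eta_b.mean()
    return float(np.sqrt(B / (T - B) * np.mean((eta_b - eta_bar) ** 2)))
```

For independent terms the result should be close to the delta-method value sd(g)/{mean(g)√T}, which is a convenient check before applying it to autocorrelated MCMC output.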

3.3 Construction of the Partition with Subsets A1,A2, …, AK

To make q(θ) roughly constant over Ak for each k, which is a sufficient condition for the PWK estimator in (11) to be optimal, we propose the following spherical shell (rings) approach:

Step 1: If Θ = ℝ^p, set ϕ = θ; otherwise, apply a transformation ϕ = G1(θ) so that the parameter space of ϕ is ℝ^p.

Step 2: Use the MCMC sample to compute the mean ϕ̄ and the covariance matrix Σ̂ of ϕ and then standardize ϕ by ψ = G2(ϕ) = Σ̂−1/2(ϕ − ϕ̄).

Step 3: Construct a working parameter space for ψ by choosing a reasonable radius r such that ||ψ|| < r for most of the standardized MCMC sample.

Step 4: Partition the working parameter space into a sequence of K spherical shells such that Ak = {ψ: r(k − 1)/K ≤ ||ψ|| < rk/K}, with k = 1, …, K.

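Step 5: Select ak ∈ Ak as a representative point, for example, ak such that ||ak|| = r(k − 1/2)/K.

Step 6: Compute the new kernel value $\tilde q(a_k) = q\{\theta(a_k)\}\,J$, where θ(ψ) = G1^{−1}(G2^{−1}(ψ)) and J = |∂θ/∂ϕ||∂ϕ/∂ψ| is the absolute value of the Jacobian determinant evaluated at ak. Also compute the new kernel value q̃(ψt) = q(θt)J, with J evaluated at ψt, for t = 1, …, T, for the standardized MCMC sample.

Step 7: Estimate d = 1/c by

$$\hat d = \frac{\frac{1}{T}\sum_{t=1}^{T}\sum_{k=1}^{K} \tilde q(a_k)\,\frac{1\{\psi_t \in A_k\}}{\tilde q(\psi_t)}}{\sum_{k=1}^{K} \tilde q(a_k)\,V(A_k)}, \tag{13}$$

where V(Ak) = {(rk/K)^p − [r(k − 1)/K]^p}π^{p/2}/Γ(p/2 + 1).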
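Steps 2 to 7 can be sketched in Python as follows. This is a sketch under stated assumptions rather than the authors' implementation: Step 1 is left to the caller (the supplied `log_q_phi` must already include log|∂θ/∂ϕ|), Step 2 uses the symmetric square root of the sample covariance, and for Step 5 we place each ak on the first coordinate axis at the mid-shell radius r(k − 1/2)/K, which is only one possible choice. The function names are hypothetical, and p ≥ 2 is assumed.

```python
import numpy as np
from math import gamma, pi


def shell_volumes(r, K, p):
    # V(A_k) = {(rk/K)^p - [r(k-1)/K]^p} pi^{p/2} / Gamma(p/2 + 1)
    edges = r * np.arange(K + 1) / K
    return (edges[1:] ** p - edges[:-1] ** p) * pi ** (p / 2) / gamma(p / 2 + 1)


def pwk_log_marginal(phi, log_q_phi, r, K):
    """Log marginal likelihood, -log(d_hat) from (13), via spherical shells.

    phi:       (T, p) draws already on R^p (Step 1 done by the caller).
    log_q_phi: maps an (n, p) array of phi values to log kernel values,
               including log|d theta / d phi| from Step 1.
    """
    T, p = phi.shape
    # Step 2: psi = Sigma^{-1/2} (phi - mean), symmetric square root
    mean = phi.mean(axis=0)
    evals, evecs = np.linalg.eigh(np.cov(phi, rowvar=False))
    half = evecs @ np.diag(np.sqrt(evals)) @ evecs.T
    inv_half = evecs @ np.diag(1.0 / np.sqrt(evals)) @ evecs.T
    log_jac = 0.5 * np.sum(np.log(evals))  # log|d phi / d psi|
    psi = (phi - mean) @ inv_half

    def log_q_tilde(x):
        # Step 6: kernel in psi-space, q(theta(psi)) times the Jacobian
        return log_q_phi(mean + x @ half) + log_jac

    # Steps 3-4: shell index in {0, ..., K-1}; draws with ||psi|| >= r drop out
    norms = np.linalg.norm(psi, axis=1)
    inside = norms < r
    k_idx = np.floor(norms[inside] / r * K).astype(int)
    # Step 5 (assumed choice): a_k on the first axis at mid-shell radius
    a = np.zeros((K, p))
    a[:, 0] = r * (np.arange(K) + 0.5) / K
    log_qa = log_q_tilde(a)
    # Step 7: numerator and denominator of (13) on the log scale
    log_num = np.logaddexp.reduce(log_qa[k_idx] - log_q_tilde(psi[inside])) - np.log(T)
    log_den = np.logaddexp.reduce(log_qa + np.log(shell_volumes(r, K, p)))
    return log_den - log_num
```

For a correlated bivariate normal kernel q(x) = exp{−(x − m)′S⁻¹(x − m)/2}, whose normalizing constant is 2π|S|^{1/2}, the sketch should recover log c to within a few thousandths with T = 20,000 draws, r = 2, and K = 20.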

Remark 5

When K is sufficiently large, q̃(ψ) will be roughly constant over each Ak, and the PWK estimate in (13) will be close to optimal. In addition, each kernel value q̃(ψt) is simply the original kernel value q(θt) multiplied by the absolute value of the Jacobian determinant J.

4 Extension of the General PWK Estimator

In this section, we generalize the PWK estimator from the working parameter space to the full support space and from the locally constant weight function to a general weight function of θ. We call this class extended PWK (ePWK) estimators.

Suppose {A1, …, AK*} is a partition of Θ, and wk(θ) is a weight function defined on Ak. We need the following assumption to define this ePWK class:

Assumption 3

The weight function wk is integrable, that is, ∫_{Ak} |wk(θ)|dθ < ∞ for k = 1, …, K*.

Under Assumption 3, the extended form of the general PWK in (10) is given by

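$$\hat d^{*} = \frac{\frac{1}{T}\sum_{t=1}^{T}\sum_{k=1}^{K^*} w_k(\theta_t)\,\frac{1\{\theta_t \in A_k\}}{q(\theta_t)}}{\sum_{k=1}^{K^*}\int_{A_k} w_k(\theta)\,d\theta}. \tag{14}$$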

Theorem 3

Under Assumption 3 and q(θ) > 0 on Θ, the ePWK estimator d̂* in (14) is a consistent estimator of d. In addition, if ∫_{Ak} [wk(θ)²/q(θ)]dθ < ∞ for k = 1, …, K*, then Var(d̂*) < ∞.

Remark 6

It is easy to see that d̂ in (10) is a special case of d̂* in (14): take K* = K + 1 with A_{K+1} = Θ ∖ Ω, let wk(θ) ≡ wk be constant on Ak for k = 1, …, K, and set w_{K*}(θ) ≡ 0. Then d̂* reduces to d̂.

Remark 7

The HM estimator is another special case of d̂* in (14). Taking K* = 1, A1 = Θ, and w1(θ) = π(θ), we have ∫_Θ π(θ)dθ = 1, so that d̂* = (1/T)Σt π(θt)/q(θt), and the inverse of d̂* is the HM estimator in (2).

Remark 8

In addition, d̂* in (14) includes the IDR estimator as a special case. Let K* = 2, A1 = {θ: ||θ|| ≤ r}, w1(θ) = q(0) − q(θ), A2 = {θ: ||θ|| > r}, and w2(θ) = qr(θ) − q(θ), so that wk(θ) = qr(θ) − q(θ) on each Ak. We can show that ∫_{A1} w1(θ)dθ = q(0)br − ∫_{A1} q(θ)dθ and ∫_{A2} w2(θ)dθ = c − ∫_{A2} q(θ)dθ, implying ∫_{A1} w1(θ)dθ + ∫_{A2} w2(θ)dθ = q(0)br. Thus, the inverse of d̂* reduces to the IDR estimator in (7). Note that w1(θt) and w2(θt) in IDR are allowed to be negative.
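A short sketch of why ∫_{A2} w2(θ)dθ = c − ∫_{A2} q(θ)dθ holds, written in polar coordinates θ = ρu with ||u|| = 1 (our own derivation, offered as a reading aid rather than a quotation from Petris and Tardella). The map w only shrinks the radius, and it does so in a volume-preserving way:

$$d\theta = \rho^{p-1}\,d\rho\,du = \tfrac{1}{p}\,d(\rho^p)\,du, \qquad w(\rho u) = (\rho^p - r^p)^{1/p}\,u, \qquad d\{(\rho^p - r^p)\} = d(\rho^p),$$

so w maps {θ: ||θ|| > r} one-to-one onto ℝ^p ∖ {0} with unit Jacobian. Hence

$$\int_{\|\theta\| > r} q_r(\theta)\,d\theta = \int_{\|\theta\| > r} q\{w(\theta)\}\,d\theta = \int_{\mathbb{R}^p} q(\tilde\theta)\,d\tilde\theta = c,$$

and adding ∫_{A1} w1(θ)dθ = q(0)br − ∫_{A1} q(θ)dθ gives q(0)br + c − ∫_{ℝ^p} q(θ)dθ = q(0)br. The same computation also yields (5).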

Remark 9

When the posterior kernel q(·) after the transformation is roughly symmetric, the constant weight wk assigned to the partition set Ak constructed using the rings approach discussed in Section 3.3 often leads to an efficient PWK estimator in (10), as empirically demonstrated in Section 5.1 and Section 6. However, when the posterior kernel q(·) is very skewed or multimodal, the constant weight wk may result in an inefficient PWK estimator. For such a complex case, we can apply the ePWK estimator in (14). The functional weight wk(θ) can be constructed as follows. We first divide the kth ring Ak into mk subsets A_{k1}, …, A_{kmk} based on mk slices such that $A_k = \bigcup_{\ell=1}^{m_k} A_{k\ell}$ and A_{k1}, …, A_{kmk} are disjoint, and then assign wk(θ) = q(a_{kℓ}) for θ ∈ A_{kℓ}, where a_{kℓ} is a representative point in A_{kℓ}, for ℓ = 1, …, mk. Then ∫_{Ak} wk(θ)dθ = Σℓ q(a_{kℓ})V(A_{kℓ}) is available in closed form. In Section 5.2, we apply this version of the ePWK estimator to an example involving a bimodal distribution to examine its empirical performance.

5 Simulation Studies

5.1 A Bivariate Normal Example

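We apply the PWK estimator to compute the normalizing constant of the posterior distribution of the parameters of a bivariate normal distribution under the normal-inverse-Wishart prior. We consider both the location and scale parameters to be unknown; including the scale parameters makes the computation challenging. Let y = (y1, y2, …, yn)′ be n observations from a bivariate normal distribution,

$$y_i \overset{\text{iid}}{\sim} N_2(\mu, \Sigma), \qquad i = 1, \dots, n,$$

where μ ∈ ℝ² and Σ are unknown parameters. The likelihood function is

$$L(\mu, \Sigma \mid y) = (2\pi)^{-n}\,|\Sigma|^{-n/2} \exp\left\{-\frac{1}{2}\sum_{i=1}^{n} (y_i - \mu)'\Sigma^{-1}(y_i - \mu)\right\}.$$

The prior for μ and Σ is specified as follows:

$$\mu \mid \Sigma \sim N_2(\mu_0, \Sigma/\kappa_0), \qquad \Sigma \sim \mathrm{IW}(\nu_0, \Lambda_0),$$

with hyperparameters μ0, κ0, ν0, and Λ0, where the inverse-Wishart density is proportional to |Σ|^{−(ν0+3)/2} exp{−tr(Λ0Σ^{−1})/2}. Then, the joint posterior kernel is given by

$$q(\mu, \Sigma) = C_0\,|\Sigma|^{-(n+\nu_0+4)/2} \exp\left[-\frac{1}{2}\left\{\sum_{i=1}^{n} (y_i - \mu)'\Sigma^{-1}(y_i - \mu) + \kappa_0(\mu - \mu_0)'\Sigma^{-1}(\mu - \mu_0) + \mathrm{tr}(\Lambda_0\Sigma^{-1})\right\}\right]$$

with $C_0 = \kappa_0\,|\Lambda_0|^{\nu_0/2} \big/ \{(2\pi)^{n+1}\,2^{\nu_0}\,\Gamma_2(\nu_0/2)\}$, where Γ2(ν0/2) = π^{1/2}Γ(ν0/2)Γ(ν0/2 − 1/2). Under this setting, the analytical form of the normalizing constant is available as follows: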

$$c = \frac{1}{\pi^{n}}\,\frac{\Gamma_2(\nu_n/2)}{\Gamma_2(\nu_0/2)}\,\frac{|\Lambda_0|^{\nu_0/2}}{|\Lambda_n|^{\nu_n/2}}\,\frac{\kappa_0}{\kappa_n}, \tag{15}$$

where $\Lambda_n = \Lambda_0 + \sum_{i=1}^{n}(y_i - \bar y)(y_i - \bar y)' + \frac{\kappa_0 n}{\kappa_n}(\bar y - \mu_0)(\bar y - \mu_0)'$, κn = κ0 + n, and νn = ν0 + n. We set the hyperparameters to μ0 = (0, 0)′, κ0 = 0.01, ν0 = 3, and a prior scale matrix Λ0. We generated a random sample y with n = 200 from a bivariate normal distribution with μ = (0, 0)′ and a fixed covariance matrix. The corresponding sample mean ȳ was (−0.029, 0.040)′. Using (15), the marginal likelihood on the log scale is −507.278. In this example, to apply the spherical shell approach in Section 3.3, a transformation of Σ was needed. Here, we used the log transformation for each variance parameter and the Fisher z-transformation for the correlation coefficient to give each of them unbounded support. Then, we standardized each transformed MCMC sample using its sample mean and standard deviation. In the new parameter space, we constructed the working parameter space and its partition by choosing r = 1.5, 2, or 2.5 and K = 10, 20, or 100. After selecting a representative point in each spherical shell, we estimated d = 1/c using (13). We compare our method with the HM and IDR methods based on 1,000 independent MCMC samples with T = 1,000 or T = 10,000 in Table 1. Let d̂ℓ be the estimate of d based on the ℓth MCMC sample for ℓ = 1, 2, …, 1,000. Then, the simulation estimate (Mean), the MC standard error (MCSE), and the root mean square error (RMSE) of the estimates on the log scale are defined as

$$\text{Mean} = \frac{1}{1000}\sum_{\ell=1}^{1000} (-\log \hat d_\ell), \quad \text{MCSE} = \left\{\frac{1}{1000}\sum_{\ell=1}^{1000} (-\log \hat d_\ell - \text{Mean})^2\right\}^{1/2}, \quad \text{RMSE} = \left\{\frac{1}{1000}\sum_{\ell=1}^{1000} (-\log \hat d_\ell - \log c)^2\right\}^{1/2},$$

respectively.
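The closed form (15) is easy to code, which is how the "true" log c in Table 1 can be obtained. A minimal Python sketch, assuming the inverse-Wishart parameterization stated above and the scatter matrix Σi(yi − ȳ)(yi − ȳ)′ inside Λn; the function names are hypothetical, and since Λ0 and the simulated data are not listed here, the sketch does not attempt to reproduce −507.278.

```python
import numpy as np
from math import lgamma, log, pi


def log_mvgamma2(a):
    # log Gamma_2(a) = (1/2) log(pi) + log Gamma(a) + log Gamma(a - 1/2)
    return 0.5 * log(pi) + lgamma(a) + lgamma(a - 0.5)


def niw_update(y, mu0, kappa0, nu0, Lambda0):
    """Posterior normal-inverse-Wishart hyperparameters after data y (n x 2)."""
    y = np.atleast_2d(y)
    n = y.shape[0]
    ybar = y.mean(axis=0)
    scatter = (y - ybar).T @ (y - ybar)
    kappan, nun = kappa0 + n, nu0 + n
    diff = (ybar - mu0).reshape(-1, 1)
    Lambdan = Lambda0 + scatter + (kappa0 * n / kappan) * (diff @ diff.T)
    mun = (kappa0 * mu0 + n * ybar) / kappan
    return mun, kappan, nun, Lambdan


def log_marginal_niw2(y, mu0, kappa0, nu0, Lambda0):
    """log c from (15) for bivariate normal data (n >= 1 rows)."""
    n = np.atleast_2d(y).shape[0]
    _, kappan, nun, Lambdan = niw_update(y, mu0, kappa0, nu0, Lambda0)
    logdet0 = np.linalg.slogdet(Lambda0)[1]
    logdetn = np.linalg.slogdet(Lambdan)[1]
    return (-n * log(pi) + log_mvgamma2(nun / 2) - log_mvgamma2(nu0 / 2)
            + 0.5 * nu0 * logdet0 - 0.5 * nun * logdetn
            + log(kappa0) - log(kappan))
```

Two internal checks are available: for n = 1, (15) should reduce to a bivariate t density with ν0 − 1 degrees of freedom, and splitting the data into two batches and updating the hyperparameters in between should give the same log c as processing all data at once.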

Table 1.

Simulation results for the bivariate normal case.

True value: log c = −507.2776.

| Method | K | r | Mean (T = 1,000) | MCSE (T = 1,000) | RMSE (T = 1,000) | Mean (T = 10,000) | MCSE (T = 10,000) | RMSE (T = 10,000) | Time (sec.) |
|---|---|---|---|---|---|---|---|---|---|
| HM | | | −494.671 | 0.908 | 12.639 | −495.142 | 0.762 | 12.159 | 0.644 |
| IDR | | 1.5 | −509.064 | 0.302 | 1.811 | −509.123 | 0.145 | 1.851 | 1.638 |
| IDR | | 2.0 | −509.095 | 0.537 | 1.895 | −509.284 | 0.387 | 2.043 | 1.634 |
| IDR | | 2.5 | −508.926 | 0.710 | 1.795 | −509.216 | 0.629 | 2.038 | 1.621 |
| PWK | 10 | 1.5 | −507.260 | 0.064 | 0.067 | −507.264 | 0.020 | 0.025 | 0.329 |
| PWK | 10 | 2.0 | −507.262 | 0.053 | 0.055 | −507.264 | 0.016 | 0.021 | 0.596 |
| PWK | 10 | 2.5 | −507.259 | 0.057 | 0.060 | −507.264 | 0.019 | 0.023 | 0.784 |
| PWK | 20 | 1.5 | −507.260 | 0.064 | 0.066 | −507.264 | 0.020 | 0.024 | 0.327 |
| PWK | 20 | 2.0 | −507.262 | 0.052 | 0.054 | −507.264 | 0.016 | 0.021 | 0.596 |
| PWK | 20 | 2.5 | −507.259 | 0.055 | 0.058 | −507.264 | 0.018 | 0.023 | 0.792 |
| PWK | 100 | 1.5 | −507.260 | 0.064 | 0.066 | −507.264 | 0.020 | 0.024 | 0.426 |
| PWK | 100 | 2.0 | −507.261 | 0.052 | 0.054 | −507.264 | 0.016 | 0.021 | 0.660 |
| PWK | 100 | 2.5 | −507.260 | 0.055 | 0.058 | −507.264 | 0.018 | 0.022 | 0.877 |

Table 1 shows the results, where the last column gives the average computing time (in seconds) per MCMC sample on an Intel i7 machine with 12 GB of RAM running Windows 8.1. From Table 1, we see that (i) PWK performs best, with much smaller MCSE and RMSE than HM and IDR under both T = 1,000 and T = 10,000; (ii) when T increases, the MCSE and the RMSE of the PWK estimator become smaller under all choices of r and K; (iii) the performance of the HM estimator slightly improves when T increases, but that of the IDR estimator does not; and (iv) the computing time of the PWK estimator is comparable to that of the HM estimator, while the IDR estimator requires the most computing time. Notably, the MCSE and the RMSE of the PWK estimator are very similar for all choices of r and K under each T, indicating that the PWK estimator is robust to the specification of the working parameter space and the number of partition subsets.

In this example, we also examine the performance of ePWK by adding a subset A_{K+1} = Θ ∩ Ω^c = {θ: ||θ|| > r}, so that K* = K + 1 and A1, …, A_{K+1} form a partition of Θ. On A_{K+1}, we further specify a weight function w_{K+1}(θ) constructed from the kernel value at a point on the boundary of A_{K+1}.

Holding the other subsets A1, …, AK and their corresponding weights the same as for PWK, the resulting values of MCSE and RMSE by ePWK are 0.06332 and 0.06579 when T = 1,000, K = 100, and r = 1.5; 0.05167 and 0.05420 when r = 2.0; and 0.05500 and 0.05772 when r = 2.5. Compared to the results of PWK (0.06375 and 0.06621 when r = 1.5; 0.05168 and 0.05420 when r = 2.0; and 0.05499 and 0.05772 when r = 2.5), ePWK performs very similarly to PWK, which is expected since the posterior kernel has light tails and very low values on A_{K+1}.

To evaluate the effect of a vague prior on the precision of the PWK estimator, we extend our simulation study by considering different values of hyperparameters κ0 and ν0. Note that the value of log c in Table 1 is computed under κ0 = 0.01 and ν0 = 3, which corresponds to a relatively vague prior for (μ, Σ). Table 2 shows the simulation results of the PWK estimators with r = 2 and K = 100 for (κ0, ν0) = (0.0001, 3), (1, 3), and (1, 10) in addition to (0.01, 3). From Table 2, we see that the MCSE values under these different values of (κ0, ν0) are almost the same while the RMSE values are comparable except the last one with (κ0, ν0) = (1, 10), in which the RMSE values are slightly larger.

Table 2.

Simulation results of PWK estimators for different hyperparameters κ0 and ν0.

| κ0 | ν0 | log c | Mean (T = 1,000) | MCSE (T = 1,000) | RMSE (T = 1,000) | Mean (T = 10,000) | MCSE (T = 10,000) | RMSE (T = 10,000) |
|---|---|---|---|---|---|---|---|---|
| 0.0001 | 3 | −511.883 | −511.866 | 0.052 | 0.054 | −511.869 | 0.016 | 0.021 |
| 0.01 | 3 | −507.278 | −507.261 | 0.052 | 0.054 | −507.264 | 0.016 | 0.021 |
| 1 | 3 | −502.682 | −502.665 | 0.052 | 0.054 | −502.669 | 0.016 | 0.021 |
| 1 | 10 | −512.773 | −512.721 | 0.053 | 0.074 | −512.725 | 0.016 | 0.050 |

5.2 A Mixture of Two Bivariate Normal Distributions Example

To evaluate the performance of ePWK, we consider the two-dimensional normal mixture in Chen et al. (2006)

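$$\pi(\mu) = \frac{1}{2}\sum_{j=1}^{2} (2\pi)^{-1}\,|\Sigma_j|^{-1/2} \exp\left\{-\frac{1}{2}(\mu - \mu_{0j})'\Sigma_j^{-1}(\mu - \mu_{0j})\right\}, \tag{16}$$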

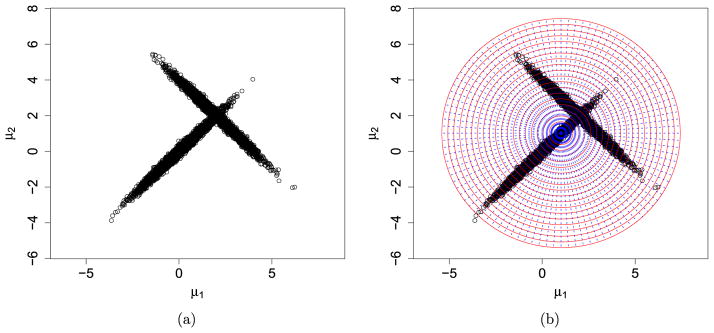

where μ = (μ1, μ2)′, μ01 = (0, 0)′, μ02 = (2, 2)′, and $\Sigma_j = \begin{pmatrix} \sigma_1^2 & \rho_j\sigma_1\sigma_2 \\ \rho_j\sigma_1\sigma_2 & \sigma_2^2 \end{pmatrix}$ for j = 1, 2, with σ1 = σ2 = 1, ρ1 = 0.99, and ρ2 = −0.99. Figure 1(a) is a scatter plot of a random sample with T = 10,000 generated from (16). Based on the random sample, we apply ePWK to estimate the normalizing constant in (16), which is known to be 1. Due to the high but opposite correlations (i.e., ρ1 = 0.99 and ρ2 = −0.99), π(μ) cannot be roughly constant over a partition ring formed by the spherical shell approach in Section 3.3. To circumvent this difficulty, following Remark 9, we additionally slice the existing partition rings into equal angles from 0 to 360 degrees, as shown by the dashed lines in Figure 1(b), where the center of the circle is the sample posterior mean (denoted as μ̂). This additional slicing step substantially reduces the heterogeneity of π(μ) within each partition subset. We note that this version of ePWK is the same as PWK except for the additional slicing of the partition rings.

Figure 1.

Forming the working parameter space and its partition for a mixture normal distribution with means (0,0) and (2,2).

Table 3 shows the results of the HM, IDR, and ePWK estimators based on 1,000 independent random samples with T = 1,000 or T = 10,000 from (16). For ePWK, we consider different values of K (the number of rings), the same number of slices mk = m for k = 1, …, K, and r = 0.75, 0.90, or 0.95 × max_{1≤t≤T} ||μt − μ̂||. We use the same values of r for both IDR and ePWK. From Table 3, we see that (i) the RMSE values of ePWK are considerably smaller than those of HM and IDR; (ii) the performance of ePWK improves when the sample size T or the number of rings K increases; and (iii) ePWK takes somewhat longer computing time than HM and IDR.
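The ring-and-slice version of ePWK used here can be sketched in Python for the two-dimensional case. The sketch makes several assumptions of our own: equal mixture weights 1/2 in (16), no rescaling (draws are only centred at μ̂, as described above), and a representative point a_{kℓ} at the mid-radius and mid-angle of each cell. The function names are hypothetical.

```python
import numpy as np


def bvn_logpdf(x, mean, cov):
    """Row-wise log density of N_2(mean, cov)."""
    d = np.asarray(x, dtype=float) - mean
    quad = np.einsum("ij,jk,ik->i", d, np.linalg.inv(cov), d)
    return -np.log(2 * np.pi) - 0.5 * np.log(np.linalg.det(cov)) - 0.5 * quad


def mixture_logpdf(x, mu02=(2.0, 2.0)):
    """Assumed equal-weight form of (16): sigma = 1, rho = 0.99 and -0.99."""
    cov1 = np.array([[1.0, 0.99], [0.99, 1.0]])
    cov2 = np.array([[1.0, -0.99], [-0.99, 1.0]])
    l1 = bvn_logpdf(x, np.zeros(2), cov1)
    l2 = bvn_logpdf(x, np.asarray(mu02, dtype=float), cov2)
    return np.log(0.5) + np.logaddexp(l1, l2)


def epwk_log_c(draws, log_q, r, K, m):
    """ePWK estimate of log c with K rings, each cut into m equal-angle slices
    around the sample mean; weight q(a_kl) on cell (k, l). 2-D only."""
    T = draws.shape[0]
    center = draws.mean(axis=0)
    rel = draws - center
    rad = np.linalg.norm(rel, axis=1)
    ang = np.mod(np.arctan2(rel[:, 1], rel[:, 0]), 2 * np.pi)
    inside = rad < r  # working parameter space
    k = np.floor(rad[inside] / r * K).astype(int)  # ring index
    s = np.minimum(np.floor(ang[inside] / (2 * np.pi) * m).astype(int), m - 1)  # slice index
    # representative point of each cell: mid radius, mid angle
    rk = r * (np.arange(K) + 0.5) / K
    th = 2 * np.pi * (np.arange(m) + 0.5) / m
    pts = np.stack([np.outer(rk, np.cos(th)), np.outer(rk, np.sin(th))], axis=-1)
    log_qa = log_q(center + pts.reshape(-1, 2)).reshape(K, m)
    edges = r * np.arange(K + 1) / K
    log_vol = np.log(np.pi * (edges[1:] ** 2 - edges[:-1] ** 2) / m)[:, None]
    log_num = np.logaddexp.reduce(log_qa[k, s] - log_q(draws[inside])) - np.log(T)
    log_den = np.logaddexp.reduce((log_qa + log_vol).ravel())
    return log_den - log_num  # -log(d_hat)
```

Because (16) is a normalized density, a correct implementation should return values close to log c = 0, mirroring the ePWK rows of Table 3.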

Table 3.

Simulation results for the mixture normal with means equal to (0,0) and (2,2).

True value: log c = 0.

| Method | K | m | r | Mean (T = 1,000) | MCSE (T = 1,000) | RMSE (T = 1,000) | Mean (T = 10,000) | MCSE (T = 10,000) | RMSE (T = 10,000) | Time (sec.) |
|---|---|---|---|---|---|---|---|---|---|---|
| HM | | | | −2.868 | 0.685 | 2.948 | −3.069 | 0.519 | 3.113 | 1.647 |
| IDR | | | 5.065 | 1.879 | 0.639 | 1.985 | 1.706 | 0.448 | 1.764 | 1.680 |
| IDR | | | 6.078 | 2.149 | 0.650 | 2.245 | 1.935 | 0.485 | 1.995 | 1.839 |
| IDR | | | 6.415 | 2.243 | 0.659 | 2.337 | 2.015 | 0.485 | 2.073 | 1.717 |
| ePWK | 20 | 100 | 5.065 | 0.001 | 0.020 | 0.020 | 0.000 | 0.006 | 0.006 | 2.167 |
| ePWK | 20 | 100 | 6.078 | 0.000 | 0.025 | 0.025 | 0.000 | 0.008 | 0.008 | 2.375 |
| ePWK | 20 | 100 | 6.415 | 0.000 | 0.025 | 0.025 | −0.001 | 0.008 | 0.008 | 2.187 |
| ePWK | 100 | 100 | 5.065 | 0.000 | 0.011 | 0.011 | 0.000 | 0.003 | 0.003 | 2.933 |
| ePWK | 100 | 100 | 6.078 | 0.000 | 0.011 | 0.011 | 0.000 | 0.004 | 0.004 | 3.037 |
| ePWK | 100 | 100 | 6.415 | 0.000 | 0.011 | 0.011 | 0.000 | 0.004 | 0.004 | 2.929 |

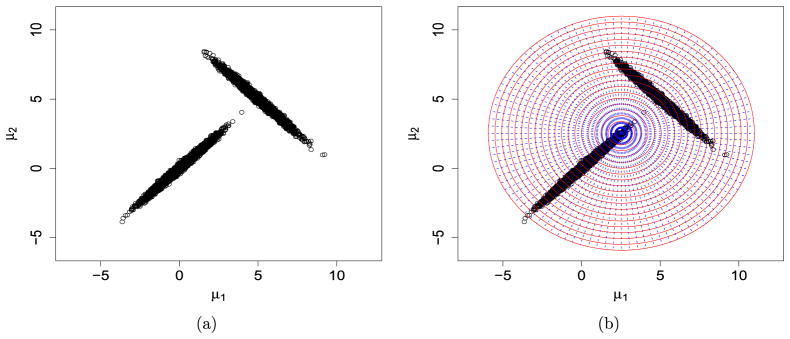

Next, we consider a more challenging case, where μ02 is replaced by (5, 5)′ so that the two modes are much further away from each other. Figure 2(a) is a scatter plot of a random sample with T = 10, 000 and Figure 2(b) shows the partition subsets of the chosen working parameter space.

Figure 2.

Forming the working parameter space and its partition for a mixture normal distribution with means (0,0) and (5,5).

Table 4 summarizes the simulation results with the same simulation setting as before. We see that ePWK outperforms both HM and IDR under this more challenging case. As expected, the RMSE values in Table 4 are larger than those in Table 3 for all three methods. However, the RMSE values of the ePWK estimator are still quite small when K and T are reasonably large.

Table 4.

Simulation results for the mixture normal with means equal to (0,0) and (5,5).

True value: log c = 0.

| Method | K | m | r | Mean (T = 1,000) | MCSE (T = 1,000) | RMSE (T = 1,000) | Mean (T = 10,000) | MCSE (T = 10,000) | RMSE (T = 10,000) | Time (sec.) |
|---|---|---|---|---|---|---|---|---|---|---|
| HM | | | | −2.915 | 0.681 | 2.993 | −3.107 | 0.500 | 3.147 | 1.728 |
| IDR | | | 6.675 | 2.340 | 1.586 | 2.825 | 2.791 | 1.695 | 3.263 | 1.763 |
| IDR | | | 8.011 | 1.658 | 1.780 | 2.429 | 2.409 | 1.350 | 2.760 | 1.730 |
| IDR | | | 8.456 | 1.568 | 1.985 | 2.524 | 2.216 | 1.430 | 2.636 | 1.822 |
| ePWK | 20 | 100 | 6.675 | −0.001 | 0.035 | 0.035 | 0.000 | 0.011 | 0.011 | 2.277 |
| ePWK | 20 | 100 | 8.011 | 0.003 | 0.060 | 0.060 | 0.000 | 0.019 | 0.019 | 2.253 |
| ePWK | 20 | 100 | 8.456 | 0.000 | 0.060 | 0.060 | 0.000 | 0.018 | 0.018 | 2.374 |
| ePWK | 100 | 100 | 6.675 | 0.000 | 0.018 | 0.018 | 0.000 | 0.006 | 0.006 | 3.022 |
| ePWK | 100 | 100 | 8.011 | 0.000 | 0.018 | 0.018 | 0.000 | 0.006 | 0.006 | 2.933 |
| ePWK | 100 | 100 | 8.456 | 0.000 | 0.019 | 0.019 | 0.000 | 0.006 | 0.006 | 3.114 |

6 Application of the PWK to Real Data Examples

6.1 The Ordinal Probit Regression Model

In the first example, we apply the PWK method to compute the marginal likelihood under the ordinal probit regression model. Let y = (y1, y2, …, yn)′ denote the vector of observed ordinal responses, each coded as one of the values 0, 1, …, J − 1; let X denote the n × p covariate matrix whose ith row is the covariate vector x_i′ of the ith subject; and let u = (u1, u2, …, un)′ denote the vector of latent random variables. We consider the following hierarchical model as in Albert and Chib (1993):

$$y_i = j \quad \text{if } \gamma_j \le u_i < \gamma_{j+1},$$

and

$$u_i = x_i'\beta + \epsilon_i, \qquad i = 1, \dots, n,$$

where j = 0, 1, …, J − 1, β is a p-dimensional vector of regression coefficients, and ε1, …, εn are i.i.d. N(0, σ²). Based on the reparameterization of Nandram and Chen (1996), the cutpoints for dividing the latent variable ui can be specified as −∞ = γ0 < γ1 = 0 ≤ γ2 ≤ ⋯ ≤ γ_{J−1} = 1 < γJ = ∞. Under this setting, the likelihood function is given in Chen (2005) as

$$L(\theta \mid D) = \prod_{i=1}^{n} \left\{\Phi\!\left(\frac{\gamma_{y_i+1} - x_i'\beta}{\sigma}\right) - \Phi\!\left(\frac{\gamma_{y_i} - x_i'\beta}{\sigma}\right)\right\},$$

where θ = (β′, σ, γ2, …, γ_{J−2})′ if J ≥ 4 and θ = (β′, σ)′ otherwise, and Φ(·) is the standard normal cumulative distribution function. We specify normal, inverse gamma, and uniform priors for the parameters β, σ², and γ, respectively.
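For reference, the log of the likelihood above can be evaluated with a few lines of Python. This sketch builds Φ from `math.erf` to stay dependency-light, which can lose precision in the far tails; a log-CDF routine would be preferable in production. The function name and the convention of passing the full cutpoint vector (γ0, …, γJ) are our own assumptions.

```python
import numpy as np
from math import erf, sqrt

_erf = np.vectorize(erf)


def std_normal_cdf(x):
    # Phi(x) = (1 + erf(x / sqrt(2))) / 2; erf(+/-inf) = +/-1
    return 0.5 * (1.0 + _erf(np.asarray(x, dtype=float) / sqrt(2.0)))


def ordinal_probit_loglik(beta, sigma, cutpoints, X, y):
    """log L(theta | D) for the ordinal probit model.

    cutpoints: full vector (gamma_0 = -inf, gamma_1 = 0, ..., gamma_J = inf).
    X: (n, p) covariates; y: integer responses in {0, ..., J-1}.
    """
    g = np.asarray(cutpoints, dtype=float)
    y = np.asarray(y, dtype=int)
    eta = X @ beta
    upper = std_normal_cdf((g[y + 1] - eta) / sigma)
    lower = std_normal_cdf((g[y] - eta) / sigma)
    return float(np.sum(np.log(upper - lower)))
```

With J = 3 and the fixed cutpoints (−∞, 0, 1, ∞) used below, the category probabilities for any single subject sum to one, and with x_i′β = 0 and σ = 1 the probability of yi = 0 is Φ(0) = 1/2.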

To examine the performance of the PWK estimator under this model, we consider the prostate cancer data of n = 713 patients as in Chen (2005). In this data set, Pathological Extracapsular Extension (PECE, y) is a clinical ordinal response variable, and Prostate Specific Antigen (PSA, x1), Clinical Gleason Score (GLEAS, x2), and Clinical Stage (CSTAGE, x3) are three covariates. PECE takes values of 0, 1, or 2, where 0 means that there is no cancer cell present in or near the capsule, 1 denotes that the cancer cells extend into but not through the capsule, and 2 indicates that cancer cells extend through the capsule. PSA and GLEAS are continuous variables, while CSTAGE is a binary covariate, coded 1 if the 1992 American Joint Committee on Cancer clinical stage T-category was 1 and 2 if the T-category was 2 or higher.

In this application, J = 3, so that all four cutpoints can be assigned fixed values: −∞ = γ0 < γ1 = 0 < γ2 = 1 < γ3 = ∞. Then, the prior distribution is specified as

$$\pi(\beta, \sigma^2) = \pi(\beta \mid \sigma^2)\,\pi(\sigma^2),$$

where β|σ² ~ N(0, 10σ²I4) and σ² ~ IG(a0 = 1, b0 = 0.1). The density function of an inverse gamma distribution IG(a0, b0) is proportional to (σ²)^{−(a0+1)} exp(−b0/σ²).

The marginal likelihood is not analytically available. Nevertheless, estimates of it are reported in Table 1 of Chen (2005) using the method proposed by Chen (called Chen’s method) and the method proposed by Chib (1995) (called Chib’s method). Chen’s method needs only a single MCMC sample from the joint posterior distribution π(β, σ²|D), whereas Chib’s method with two blocks requires an additional MCMC sample from the conditional posterior distribution π(σ²|β*, D), where β* is the posterior mean of β. We compare PWK with these two methods under the same MCMC sample sizes, T = 2,500 or 5,000, as in Chen (2005), except that Chib’s method uses twice these sample sizes.

For the PWK, we apply a log transformation to σ². Then, after standardizing the transformed MCMC sample, we consider K = 10, 20, and 100 together with three choices of the radius r to investigate the robustness of the PWK estimates with respect to these choices. One of these radii is $r = \sqrt{\chi^2_{5,0.95}} \approx 3.33$, the square root of the 95th percentile of the chi-square distribution with p = dim(θ) = 5 degrees of freedom; this choice is motivated by the fact that the squared norm of p independent standard normal variables follows a chi-square distribution with p degrees of freedom, as in Yu et al. (2015). Table 5 shows the PWK estimates and the corresponding estimated MCSE (eMCSE) for MCMC samples with T = 2,500 and 5,000, where eMCSE is computed using (12) with T/B = 10. We note that we use the same MCMC sample sizes as in Chen (2005). The results show that the PWK estimates are relatively robust to the choice of the radius r and the number K of partition subsets.

Table 5.

The PWK estimates of the marginal likelihood for the prostate cancer data. The three panels correspond to the three choices of the radius r; within each panel, −log d̂ and its eMCSE are reported for K = 10, 20, and 100.

First choice of r:

| T | −log d̂ (K=10) | eMCSE (K=10) | −log d̂ (K=20) | eMCSE (K=20) | −log d̂ (K=100) | eMCSE (K=100) |
|---|---|---|---|---|---|---|
| 2,500 | −758.73 | 0.026 | −758.73 | 0.025 | −758.73 | 0.025 |
| 5,000 | −758.70 | 0.021 | −758.70 | 0.020 | −758.70 | 0.020 |

Second choice of r:

| T | −log d̂ (K=10) | eMCSE (K=10) | −log d̂ (K=20) | eMCSE (K=20) | −log d̂ (K=100) | eMCSE (K=100) |
|---|---|---|---|---|---|---|
| 2,500 | −758.70 | 0.020 | −758.70 | 0.019 | −758.70 | 0.020 |
| 5,000 | −758.70 | 0.016 | −758.70 | 0.016 | −758.70 | 0.016 |

Third choice of r:

| T | −log d̂ (K=10) | eMCSE (K=10) | −log d̂ (K=20) | eMCSE (K=20) | −log d̂ (K=100) | eMCSE (K=100) |
|---|---|---|---|---|---|---|
| 2,500 | −758.69 | 0.020 | −758.69 | 0.019 | −758.69 | 0.017 |
| 5,000 | −758.70 | 0.018 | −758.70 | 0.015 | −758.69 | 0.014 |

From Table 1 of Chen (2005), the estimates of log c and their eMCSEs are −758.71 and 0.038 under Chen’s method and −758.67 and 0.037 under Chib’s method for T = 2,500, and −758.71 and 0.024 under Chen’s method and −758.70 and 0.023 under Chib’s method for T = 5,000. Table 5 shows that the PWK estimates of log c are similar to those from both Chen’s and Chib’s methods but have smaller eMCSEs for both T = 2,500 and T = 5,000. For instance, with the radius used in the second panel of Table 5 and K = 100, the PWK estimates of log c and the corresponding eMCSEs are −758.70 and 0.020 for T = 2,500 and −758.70 and 0.016 for T = 5,000. Thus, the PWK yields a slightly more precise estimate of log c than the other two methods.

6.2 Analysis of ECOG Data

In this subsection, we apply the PWK estimator to the problem of constructing a power prior from historical data for the current analysis. Suppose that two clinical trials with the same objective have been conducted. A natural way to combine them is the power prior, which borrows information from the historical data to construct the prior for the current analysis. We start from an initial prior for the unknown parameters, specified before the historical data are observed. To account for heterogeneity between the current and historical data, the power prior raises the historical likelihood function to a power a0, where 0 ≤ a0 ≤ 1, which controls how much of the historical likelihood is incorporated into the initial prior. Our objective is to find the a0 that maximizes the marginal likelihood of the current data. Ibrahim et al. (2015) point out that this maximizer is difficult to obtain except in normal linear regression models. Therefore, Ibrahim et al. (2012, 2015) instead use the deviance information criterion (DIC) and the logarithm of the pseudo-marginal likelihood (LPML) to choose a0. To evaluate DIC, we plug the MCMC sample into the log likelihood summed over all data points; to evaluate LPML, we sum the logarithms of the conditional predictive ordinates (CPOs), where the ith CPO is the harmonic mean of the ith observation's likelihood evaluated at the MCMC sample from the full-data posterior distribution. Both criteria are far less computationally demanding than the marginal likelihood. We show how the PWK estimator circumvents the computational burden of evaluating the marginal likelihood.
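For comparison with the marginal likelihood approach, the two cheaper criteria can be sketched in a few lines of stdlib-only Python, assuming a matrix loglik[t][i] = log f(y_i | θ_t) of per-observation log likelihoods saved from the MCMC run. DIC is written in the common form DIC = D̄ + pD with pD = D̄ − D(θ̄); this particular definition is our assumption and may differ in detail from the one used by Ibrahim et al. (2012). The function names are hypothetical.

```python
import math


def log_mean_exp(values):
    """Numerically stable log((1/n) * sum(exp(v)))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values) / len(values))


def lpml(loglik):
    """LPML = sum_i log CPO_i from loglik[t][i] = log f(y_i | theta_t).

    CPO_i is the harmonic mean over draws of f(y_i | theta_t), so
    log CPO_i = -log((1/T) * sum_t exp(-loglik[t][i])).
    """
    n = len(loglik[0])
    return sum(-log_mean_exp([-row[i] for row in loglik]) for i in range(n))


def dic(loglik, loglik_at_posterior_mean):
    """DIC = Dbar + pD, with deviance D(theta) = -2 log L(theta) and pD = Dbar - D(theta_bar)."""
    deviances = [-2.0 * sum(row) for row in loglik]
    dbar = sum(deviances) / len(deviances)
    d_at_mean = -2.0 * loglik_at_posterior_mean
    return dbar + (dbar - d_at_mean)
```

Both functions only touch quantities already in the MCMC output (plus one likelihood evaluation at the posterior mean for DIC), which is why these criteria are cheap relative to the marginal likelihood.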

The effectiveness of Interferon Alpha-2b (IFN) in immunotherapy for melanoma patients has been evaluated by two observation-controlled clinical trials: Eastern Cooperative Oncology Group (ECOG) phase III trial E1684, followed by E1690. The first trial, E1684, was conducted with 286 patients randomly assigned to either IFN or Observation. The IFN arm demonstrated a significantly better survival curve, but with substantial side effects due to the high-dose regimen. To confirm the results of E1684 and the benefit of IFN at a lower dosage, a later trial, E1690, was conducted with three arms: high-dose IFN, low-dose IFN, and Observation. We use the data in E1684 as the historical data and a subset (high-dose arm and Observation) of the E1690 trial as our current data. There are 427 patients in this subset.

For the n = 427 patients in the current trial (E1690), we follow the cure rate model of Chen et al. (1999). Let yi denote the relapse-free survival time for the ith patient, νi the censoring status, equal to 1 if yi is a failure time and 0 if it is right censored, and xi = (1, trti)′ the vector of covariates, where trti = 1 if the ith patient received IFN and trti = 0 if the ith patient was assigned to Observation. Then, the likelihood function is given by

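$$L(\beta, \lambda \mid D) = \prod_{i=1}^{n} \big[\exp(x_i'\beta)\, f(y_i \mid \lambda)\big]^{\nu_i} \exp\big\{-\exp(x_i'\beta)\, F(y_i \mid \lambda)\big\}, \tag{17}$$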

where D = (n, y, ν, X) is the observed current data, β = (β0, β1)′, and F(y|λ) is a cumulative distribution function with corresponding density f(y|λ). In (17), we use the same piecewise exponential model for F(y|λ) as Ibrahim et al. (2012), which is given by

$$F(y \mid \lambda) = 1 - \exp\Big\{-\lambda_j (y - s_{j-1}) - \sum_{g=1}^{j-1} \lambda_g (s_g - s_{g-1})\Big\},$$

where sj−1 ≤ y < sj, s0 = 0 < s1 < s2 < … < s5 = ∞, and λ = (λ1, …, λ5)′.
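A minimal stdlib-only Python sketch of the piecewise exponential distribution and the resulting log likelihood, under two assumptions of ours: (i) the cumulative hazard is H(y|λ) = Σj λj × (length of [sj−1, sj) ∩ [0, y)), so that F = 1 − exp(−H) and f = λj exp(−H) on [sj−1, sj); (ii) the likelihood (17) has the promotion-time cure rate form of Chen et al. (1999), ∏i [exp(xi′β) f(yi|λ)]^νi exp{−exp(xi′β) F(yi|λ)}. The cutpoints s1, …, s4 used by Ibrahim et al. (2012) are not given in this section, so the function names and any values passed to them are hypothetical.

```python
import math


def pe_interval(y, s):
    """0-based index of the interval containing y, i.e. the number of
    interior cutpoints s_1, ..., s_{J-1} (stored in the list s) that are <= y."""
    j = 0
    while j < len(s) and y >= s[j]:
        j += 1
    return j


def pe_cum_hazard(y, s, lam):
    """H(y) = sum_j lam_j * length([s_{j-1}, s_j) intersect [0, y)), with s_0 = 0, s_J = inf."""
    H, lower = 0.0, 0.0
    for j, rate in enumerate(lam):
        if y <= lower:
            break
        upper = s[j] if j < len(s) else math.inf
        H += rate * (min(y, upper) - lower)
        lower = upper
    return H


def cure_rate_loglik(beta, lam, s, y, nu, trt):
    """Assumed form of (17): sum_i nu_i [x_i'beta + log f(y_i)] - exp(x_i'beta) F(y_i)."""
    total = 0.0
    for yi, nui, ti in zip(y, nu, trt):
        eta = beta[0] + beta[1] * ti  # x_i' beta with x_i = (1, trt_i)'
        H = pe_cum_hazard(yi, s, lam)
        if nui == 1:  # observed failure: log f(y_i) = log lam_j - H(y_i)
            total += eta + math.log(lam[pe_interval(yi, s)]) - H
        total -= math.exp(eta) * (1.0 - math.exp(-H))  # F(y_i) = 1 - exp(-H(y_i))
    return total
```

For example, with a single rate λ = (0.5) and no interior cutpoints, the cumulative hazard at y = 2 is 1.0, as for an ordinary exponential distribution.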

For the n0 = 286 patients in the historical trial (E1684), we borrow information to set up the prior distribution for the current analysis. Similarly, let y0i denote the survival time for the ith patient, ν0i the censoring status, and x0i = (1, trt0i)′ the vector of covariates, so that D0 = (n0, y0, ν0, X0) is the observed historical data. Let π0(β, λ) be an initial prior. Here, we specify an initial proper prior N(0, 100I2) for β and an exponential prior Exp(1/100) (rate parameter 1/100) for each λj, j = 1, …, 5, to approximate the flat prior in Ibrahim et al. (2012). To update the initial prior with the historical data, the power prior is defined as the initial prior π0 multiplied by the historical likelihood function raised to the power a0:

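$$\pi(\beta, \lambda \mid D_0, a_0) \propto L(\beta, \lambda \mid D_0)^{a_0}\, \pi_0(\beta, \lambda), \tag{18}$$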

where π(β, λ|D0, a0) is called the power prior, 0 ≤ a0 ≤ 1, and L(β, λ|D0) is the likelihood (17) evaluated at the historical data D0. When a0 = 0, the power prior reduces to the initial prior, which integrates to 1; when a0 > 0, the power prior equals the kernel on the right-hand side of (18) divided by c0 = ∫ L(β, λ|D0)^a0 π0(β, λ) dβ dλ. Combining the likelihood in (17) with the power prior in (18), the posterior distribution of (β, λ) given (D, D0, a0) is

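$$\pi(\beta, \lambda \mid D, D_0, a_0) \propto L(\beta, \lambda \mid D)\, L(\beta, \lambda \mid D_0)^{a_0}\, \pi_0(\beta, \lambda). \tag{19}$$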

In this framework, we compare the marginal likelihoods ∫ L(β, λ|D)π(β, λ|D0, a0) dβ dλ for 0 ≤ a0 ≤ 1. The model with the highest marginal likelihood is selected, and its a0 determines the power prior.

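However, as pointed out above, for a0 > 0 the power prior π(β, λ|D0, a0) is known only up to the normalizing constant c0. Hence, the marginal likelihood must be evaluated in two steps:

$$c(a_0) = \int L(\beta, \lambda \mid D)\, \pi(\beta, \lambda \mid D_0, a_0)\, d\beta\, d\lambda = \frac{\int L(\beta, \lambda \mid D)\, L(\beta, \lambda \mid D_0)^{a_0}\, \pi_0(\beta, \lambda)\, d\beta\, d\lambda}{\int L(\beta, \lambda \mid D_0)^{a_0}\, \pi_0(\beta, \lambda)\, d\beta\, d\lambda}.$$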

We apply the PWK separately to the numerator kernel, L(β, λ|D)L(β, λ|D0)^a0 π0(β, λ), and to the denominator kernel, L(β, λ|D0)^a0 π0(β, λ).
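The two-step identity can be checked numerically in a toy model where every quantity has a closed form. The stdlib-only Python sketch below is hypothetical throughout: it replaces the cure rate model with a normal-mean model, yi ~ N(μ, 1), with initial prior μ ~ N(0, τ²), and it replaces PWK with trapezoidal quadrature on a grid. It therefore verifies only that the ratio of the numerator and denominator integrals equals the marginal likelihood under the power prior; it says nothing about the PWK estimator itself.

```python
import math


def log_norm_pdf(x, mean, var):
    return -0.5 * math.log(2.0 * math.pi * var) - 0.5 * (x - mean) ** 2 / var


def log_lik(mu, data):
    """log prod_i N(y_i; mu, 1): a toy stand-in for the cure rate likelihood."""
    return sum(log_norm_pdf(yi, mu, 1.0) for yi in data)


def log_integral(log_f, lo=-15.0, hi=15.0, n=6001):
    """log of the trapezoidal approximation to int_lo^hi exp(log_f(mu)) dmu, computed stably."""
    h = (hi - lo) / (n - 1)
    vals = [log_f(lo + i * h) for i in range(n)]
    m = max(vals)
    w = [math.exp(v - m) for v in vals]
    return m + math.log(h * (sum(w) - 0.5 * (w[0] + w[-1])))


def log_marginal_two_step(y, y0, a0, tau2):
    """log c(a0) = log(numerator integral) - log(denominator integral)."""
    log_pi0 = lambda mu: log_norm_pdf(mu, 0.0, tau2)
    log_num = log_integral(lambda mu: log_lik(mu, y) + a0 * log_lik(mu, y0) + log_pi0(mu))
    log_den = log_integral(lambda mu: a0 * log_lik(mu, y0) + log_pi0(mu))
    return log_num - log_den


def log_marginal_closed_form(y, y0, a0, tau2):
    """Exact answer: the power prior is N(m0, v0), so y ~ N(m0 * 1, I + v0 * 1 1')."""
    v0 = 1.0 / (1.0 / tau2 + a0 * len(y0))
    m0 = v0 * a0 * sum(y0)
    n = len(y)
    resid = [yi - m0 for yi in y]
    quad = sum(e * e for e in resid) - v0 * sum(resid) ** 2 / (1.0 + n * v0)
    return -0.5 * n * math.log(2.0 * math.pi) - 0.5 * math.log(1.0 + n * v0) - 0.5 * quad


y = [0.3, -0.1, 0.8, 0.5]        # toy "current" data
y0 = [0.2, 0.4, -0.3, 0.9, 0.1]  # toy "historical" data
for a0 in (0.0, 0.5, 1.0):
    print(a0, log_marginal_two_step(y, y0, a0, 4.0), log_marginal_closed_form(y, y0, a0, 4.0))
```

Note that the normalizing constants of L(D0)^a0 cancel in the ratio, which is why the denominator must use exactly the same historical kernel as the numerator.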

For each a0 ∈ {0, 0.1, …, 1}, the MCMC sample size is fixed at 10,000, and each λj is log-transformed. After standardizing the transformed MCMC sample, we choose the radius $r = \sqrt{\chi^2_{7,0.95}} \approx 3.75$, since p = 7, and K = 100 spherical shells. By (13) and (12), we obtain the marginal likelihood estimate and its eMCSE for each a0. The results are summarized in Table 6, which also includes PWK estimates under other choices of r and under K = 10 and 20 to investigate the robustness of the PWK method.
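Equation (12) is not reproduced in this section. As a generic, hedged illustration of how a batch-based eMCSE can be obtained from a single chain, here is a non-overlapping batch-means sketch in stdlib-only Python. It is not necessarily the formula (12) used in the paper, which may differ (for instance, by using overlapping batches as in Schmeiser et al. (1990)), and the function name is hypothetical.

```python
import math
import statistics


def batch_means_mcse(sample, estimator, n_batches=10):
    """Non-overlapping batch-means MCSE of estimator(sample).

    The chain is cut into n_batches consecutive batches of equal length (any
    remainder at the end is dropped), the estimator is recomputed on each batch,
    and the MCSE is the standard deviation of those batch estimates divided by
    sqrt(n_batches).
    """
    size = len(sample) // n_batches
    if size < 1:
        raise ValueError("need at least one draw per batch")
    estimates = [estimator(sample[b * size:(b + 1) * size]) for b in range(n_batches)]
    return statistics.stdev(estimates) / math.sqrt(n_batches)


# Example with a hypothetical chain of positive values and a log-scale estimator.
chain = [1.0 + 0.1 * ((7 * t) % 13) for t in range(1000)]
print(batch_means_mcse(chain, lambda b: math.log(sum(b) / len(b))))
```

Applying the estimator on the log scale inside each batch mirrors the fact that the eMCSEs in Tables 5 and 6 are reported for log-scale quantities.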

Table 6.

PWK estimates for marginal likelihood with different power priors under different choices of r and K. The three panels correspond to the three choices of the radius r.

First choice of r:

| a0 | ln(d̂0/d̂1) (K=10) | eMCSE (K=10) | ln(d̂0/d̂1) (K=20) | eMCSE (K=20) | ln(d̂0/d̂1) (K=100) | eMCSE (K=100) |
|---|---|---|---|---|---|---|
| 0.0 | −552.717 | 0.028 | −552.713 | 0.026 | −552.709 | 0.028 |
| 0.1 | −523.619 | 0.055 | −523.614 | 0.051 | −523.621 | 0.053 |
| 0.2 | −522.091 | 0.044 | −522.078 | 0.044 | −522.073 | 0.044 |
| 0.3 | −521.408 | 0.043 | −521.420 | 0.043 | −521.419 | 0.043 |
| 0.4 | −521.336 | 0.046 | −521.332 | 0.047 | −521.338 | 0.045 |
| 0.5 | −521.201 | 0.057 | −521.229 | 0.060 | −521.229 | 0.060 |
| 0.6 | −521.189 | 0.037 | −521.202 | 0.034 | −521.187 | 0.033 |
| 0.7 | −521.356 | 0.050 | −521.363 | 0.044 | −521.353 | 0.044 |
| 0.8 | −521.553 | 0.054 | −521.558 | 0.056 | −521.576 | 0.058 |
| 0.9 | −521.592 | 0.061 | −521.618 | 0.051 | −521.612 | 0.050 |
| 1.0 | −521.702 | 0.052 | −521.724 | 0.055 | −521.732 | 0.050 |

Second choice of r:

| a0 | ln(d̂0/d̂1) (K=10) | eMCSE (K=10) | ln(d̂0/d̂1) (K=20) | eMCSE (K=20) | ln(d̂0/d̂1) (K=100) | eMCSE (K=100) |
|---|---|---|---|---|---|---|
| 0.0 | −552.732 | 0.022 | −552.707 | 0.025 | −552.708 | 0.027 |
| 0.1 | −523.633 | 0.059 | −523.646 | 0.049 | −523.624 | 0.054 |
| 0.2 | −522.098 | 0.052 | −522.093 | 0.050 | −522.077 | 0.045 |
| 0.3 | −521.433 | 0.039 | −521.432 | 0.040 | −521.417 | 0.043 |
| 0.4 | −521.309 | 0.046 | −521.321 | 0.048 | −521.339 | 0.043 |
| 0.5 | −521.179 | 0.062 | −521.187 | 0.059 | −521.230 | 0.059 |
| 0.6 | −521.186 | 0.039 | −521.174 | 0.037 | −521.187 | 0.033 |
| 0.7 | −521.365 | 0.034 | −521.361 | 0.042 | −521.349 | 0.044 |
| 0.8 | −521.535 | 0.055 | −521.568 | 0.056 | −521.573 | 0.056 |
| 0.9 | −521.627 | 0.047 | −521.613 | 0.055 | −521.613 | 0.050 |
| 1.0 | −521.746 | 0.059 | −521.739 | 0.049 | −521.732 | 0.050 |

Third choice of r:

| a0 | ln(d̂0/d̂1) (K=10) | eMCSE (K=10) | ln(d̂0/d̂1) (K=20) | eMCSE (K=20) | ln(d̂0/d̂1) (K=100) | eMCSE (K=100) |
|---|---|---|---|---|---|---|
| 0.0 | −552.740 | 0.039 | −552.719 | 0.033 | −552.708 | 0.027 |
| 0.1 | −523.551 | 0.057 | −523.622 | 0.052 | −523.622 | 0.053 |
| 0.2 | −522.105 | 0.045 | −522.077 | 0.044 | −522.071 | 0.045 |
| 0.3 | −521.427 | 0.048 | −521.422 | 0.045 | −521.421 | 0.042 |
| 0.4 | −521.311 | 0.048 | −521.317 | 0.046 | −521.335 | 0.044 |
| 0.5 | −521.239 | 0.052 | −521.232 | 0.057 | −521.227 | 0.059 |
| 0.6 | −521.186 | 0.037 | −521.171 | 0.033 | −521.184 | 0.032 |
| 0.7 | −521.381 | 0.047 | −521.376 | 0.045 | −521.350 | 0.043 |
| 0.8 | −521.569 | 0.067 | −521.578 | 0.063 | −521.578 | 0.057 |
| 0.9 | −521.597 | 0.052 | −521.621 | 0.054 | −521.609 | 0.049 |
| 1.0 | −521.705 | 0.060 | −521.740 | 0.046 | −521.730 | 0.051 |

Note that the marginal likelihood c can be shown to be continuous in a0. Therefore, Table 6 indicates that, under the marginal likelihood criterion, the best choice of a0 lies between 0.5 and 0.6. This result is comparable to the choice a0 = 0.4 obtained by Ibrahim et al. (2012) under the DIC and LPML criteria, when a suitable marginal likelihood computation was not available. The results are also robust to the choice of r and K: all settings indicate that the best choice of a0 lies between 0.5 and 0.6.
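Because c(a0) is continuous, one simple way to locate its maximizer between grid points is a local quadratic fit through the three grid values around the largest estimate. This is our illustration, not a procedure from the paper; the sketch below applies it to the K = 100 column of the first panel of Table 6, and the variable and function names are hypothetical.

```python
# a0 grid and the K = 100 column of the first panel of Table 6.
a0_grid = [i / 10 for i in range(11)]
log_c_hat = [-552.709, -523.621, -522.073, -521.419, -521.338, -521.229,
             -521.187, -521.353, -521.576, -521.612, -521.732]


def quadratic_vertex(x1, h, f0, f1, f2):
    """Maximizer of the parabola through (x1 - h, f0), (x1, f1), (x1 + h, f2)."""
    return x1 + h * (f0 - f2) / (2.0 * (f0 - 2.0 * f1 + f2))


# The grid maximizer must be an interior point for the three-point fit.
best = max(range(len(log_c_hat)), key=lambda i: log_c_hat[i])
a0_star = quadratic_vertex(a0_grid[best], 0.1, *log_c_hat[best - 1:best + 2])
print(a0_grid[best], round(a0_star, 3))
```

With these values the grid maximizer is a0 = 0.6 and the fitted vertex is about 0.57, consistent with the interval stated above. Since the differences between neighboring estimates are of the same order as their eMCSEs (roughly 0.03 to 0.06), such an interpolated value should be read only as a rough guide.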

7 Discussion

The marginal likelihood is often analytically intractable due to a complicated kernel structure. Nevertheless, an MCMC sample from the posterior distribution is readily available from Bayesian computing software, and the likelihood values evaluated at the MCMC sample are typically written to an output file. Consequently, kernel values are easily obtained from this output and the prior density. In this paper, we propose a new algorithm, PWK, for estimating the marginal likelihood based on this single MCMC sample and its corresponding kernel values. Unlike some existing algorithms that require knowledge of the structure of the kernel, PWK only requires the kernel values evaluated at the MCMC sample. Therefore, our algorithm can be applied to Bayesian model selection, to assessing the sensitivity of conclusions to the prior distribution, and to Bayesian hypothesis testing. We implement our methodology in the R programming language (R Core Team, 2015). The R code, along with README files, is available as Online Supplementary Materials (Wang et al., 2017b).

We extend PWK to handle a parameter space with full support (ePWK) and show that HM and IDR are special cases of ePWK. In a simulation study based on a bivariate normal distribution with 5 parameters in a Bayesian conjugate prior inference problem, we compare our estimator with HM and IDR; PWK has the smallest empirical MCSE and RMSE. The computation time for our method is only slightly longer than that for HM, which indicates that our spherical shell partition approach is very efficient. In another simulation study, based on a mixture of two bivariate normal distributions, we illustrate the ePWK estimator, which additionally slices the partition rings in the partition step of the PWK method. The ePWK method substantially reduces the MCSE and RMSE compared with the HM and IDR methods, at the cost of slightly more computation time.

In the real data examples, we first consider an ordinal probit regression model and compare our method with those of Chib (1995) and Chen (2005), using the same MCMC sample size as Chen’s method (Chib’s method requires twice this sample size). The three methods produce comparable estimates of the marginal likelihood, and the PWK method yields the smallest eMCSE. In the second example, we consider a cure rate survival model with a piecewise constant baseline hazard function and construct a power prior based on two clinical trial data sets. We obtain the optimal power prior under the marginal likelihood criterion, as opposed to the DIC and LPML criteria used by Ibrahim et al. (2012). The results are similar, except that the PWK approach indicates more borrowing from the historical data.

In unimodal problems, we suggest using the square root of the 95th percentile of the Chi-square distribution with p degrees of freedom as a guide for choosing the radius r of the working parameter space of the standardized MCMC sample. This is because, after standardization, the marginal distribution of each parameter is approximately standard normal. Although the results are quite robust to the choice of r, as shown in the simulation and case studies, using the Chi-square distribution as a guide ensures that we use most of the MCMC sample while avoiding regions where the posterior density is close to 0. For multimodal problems, we suggest using 95% × max1≤t≤T ||μt − μ̂|| as a guide for constructing the working parameter space of the transformed MCMC sample. Because this choice may produce many partition subsets with extremely small posterior density within the working parameter space, the spherical rings approach demonstrated in Section 5.2 can be used to make the MCMC sample within each subset more homogeneous. This partition approach can also be extended to p-dimensional problems (p > 2) by introducing p − 2 additional angular coordinates, as in Lehnen and Wesenberg (2003), and slicing them as in Section 5.2.
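For the extension to p > 2, a minimal stdlib-only Python sketch of the standard hyperspherical coordinates (a radius plus p − 1 angles, of which p − 2 are the additional angles mentioned above) may be useful. The cell-labeling rule in ring_sector, which splits the radius into K equal-width shells and only the last angle into M equal sectors, is a hypothetical slicing chosen for illustration; the slicing of Section 5.2 is not reproduced here and may differ. All function names are ours.

```python
import math


def to_hyperspherical(x):
    """Cartesian x in R^p (p >= 2) -> (rho, [phi_1, ..., phi_{p-1}]).

    phi_1, ..., phi_{p-2} lie in [0, pi]; the last angle phi_{p-1} lies in (-pi, pi].
    """
    rho = math.sqrt(sum(v * v for v in x))
    angles = []
    for k in range(len(x) - 2):
        tail = math.sqrt(sum(v * v for v in x[k + 1:]))
        angles.append(math.atan2(tail, x[k]))
    angles.append(math.atan2(x[-1], x[-2]))
    return rho, angles


def from_hyperspherical(rho, angles):
    """Inverse map: x_k = rho * sin(phi_1) ... sin(phi_{k-1}) * cos(phi_k); x_p uses sines only."""
    x, scale = [], rho
    for phi in angles:
        x.append(scale * math.cos(phi))
        scale *= math.sin(phi)
    x.append(scale)
    return x


def ring_sector(x, r, K, M):
    """Hypothetical cell label (shell, sector): K equal-width shells of radius r and
    M equal sectors of the last angle; None outside the working parameter space."""
    rho, angles = to_hyperspherical(x)
    if rho >= r:
        return None
    shell = min(int(rho / (r / K)), K - 1)
    sector = min(int((angles[-1] + math.pi) / (2.0 * math.pi / M)), M - 1)
    return shell, sector


print(ring_sector([0.3, -1.2, 0.7, 2.0], 3.0, 10, 8))
```

The round trip from_hyperspherical(*to_hyperspherical(x)) recovers x up to floating-point error, so each draw can be labeled by its cell without losing information.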

Supplementary Material

Acknowledgments

We would like to thank the editor-in-chief, an editor, and an associate editor for their very helpful comments and suggestions, which have led to a much improved version of the paper. This material is based upon work supported by the National Science Foundation under grant number DEB-1354146 to POL, MHC, LK, and Louise Lewis. MHC’s research was also partially supported by NIH grant number GM 70335 and P01 CA142538.

Footnotes

Supplementary Web Materials for “A New Monte Carlo Method for Estimating Marginal Likelihoods” (DOI: 10.1214/17-BA1049SUPPA; .pdf).

Online Supplementary Materials for “A New Monte Carlo Method for Estimating Marginal Likelihoods” (DOI: 10.1214/17-BA1049SUPPB; .zip).

References

- Albert JH, Chib S. Bayesian Analysis of Binary and Polychotomous Response Data. Journal of the American Statistical Association. 1993;88:669–679.

- Chen MH. Computing Marginal Likelihoods from a Single MCMC Output. Statistica Neerlandica. 2005;59:16–29.

- Chen MH, Ibrahim JG, Sinha D. A New Bayesian Model for Survival Data With a Surviving Fraction. Journal of the American Statistical Association. 1999;94:909–919. doi: 10.2307/2670006.

- Chen MH, Kim S, et al. Discussion of Equi-Energy Sampler by Kou, Zhou and Wong. The Annals of Statistics. 2006;34(4):1629–1635. doi: 10.1214/009053606000000515.

- Chen MH, Shao QM. Partition-Weighted Monte Carlo Estimation. Annals of the Institute of Statistical Mathematics. 2002;54:338–354. doi: 10.1023/A:1022426103047.

- Chib S. Marginal Likelihood from the Gibbs Output. Journal of the American Statistical Association. 1995;90:1313–1321.

- Chib S, Jeliazkov I. Marginal Likelihood from the Metropolis–Hastings Output. Journal of the American Statistical Association. 2001;96:270–281.

- Fan Y, Wu R, Chen MH, Kuo L, Lewis PO. Choosing among Partition Models in Bayesian Phylogenetics. Molecular Biology and Evolution. 2011;28(1):523–532. doi: 10.1093/molbev/msq224.

- Friel N, Pettitt AN. Marginal Likelihood Estimation via Power Posteriors. Journal of the Royal Statistical Society, Series B. 2008;70:589–607.

- Gelfand AE, Dey DK. Bayesian Model Choice: Asymptotics and Exact Calculations. Journal of the Royal Statistical Society, Series B. 1994;56:501–514.

- Geweke J. Bayesian Inference in Econometric Models Using Monte Carlo Integration. Econometrica. 1989;57:1317–1339.

- Ibrahim JG, Chen MH, Chu H. Bayesian Methods in Clinical Trials: a Bayesian Analysis of ECOG Trials E1684 and E1690. BMC Medical Research Methodology. 2012;12:170–183. doi: 10.1186/1471-2288-12-183.

- Ibrahim JG, Chen M-H, Gwon Y, Chen F. The Power Prior: Theory and Applications. Statistics in Medicine. 2015. doi: 10.1002/sim.6728.

- Lartillot N, Philippe H. Computing Bayes Factors Using Thermodynamic Integration. Systematic Biology. 2006;55:195–207. doi: 10.1080/10635150500433722.

- Lehnen A, Wesenberg GE. The Sphere Game. The AMATYC Review. 2003;25. URL http://faculty.madisoncollege.edu/alehnen/sphere/hypers.htm.

- Marin JM, Robert CP. Importance Sampling Methods for Bayesian Discrimination between Embedded Models. In: Chen M-H, Dey DK, Muller P, Sun D, Ye K, editors. Frontiers of Statistical Decision Making and Bayesian Analysis. New York: Springer; 2010. pp. 513–527.

- Nandram B, Chen MH. Reparameterizing the Generalized Linear Model to Accelerate Gibbs Sampler Convergence. Journal of Statistical Computation and Simulation. 1996;54:129–144. doi: 10.1080/00949659608811724.

- Newton MA, Raftery AE. Approximate Bayesian Inference by the Weighted Likelihood Bootstrap. Journal of the Royal Statistical Society, Series B. 1994;56:3–48.

- Petris G, Tardella L. A Geometric Approach to Transdimensional Markov Chain Monte Carlo. The Canadian Journal of Statistics. 2003;31(4):469–482.

- Petris G, Tardella L. New Perspectives for Estimating Normalizing Constants via Posterior Simulation. Technical report, Università di Roma “La Sapienza”; 2007.

- R Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; Vienna, Austria: 2015. URL https://www.R-project.org/.

- Robert CP, Wraith D. Computational Methods for Bayesian Model Choice. MaxEnt 2009 Proceedings; 2009.

- Schmeiser BW, Avramidis TN, Hashem S. Overlapping Batch Statistics. Proceedings of the 22nd Conference on Winter Simulation; IEEE Press; 1990. pp. 395–398.

- Wang Y-B, Chen M-H, Kuo L, Lewis PO. Supplementary Web Materials for “A New Monte Carlo Method for Estimating Marginal Likelihoods”. Bayesian Analysis. 2017a. doi: 10.1214/17-BA1049; doi: 10.1214/17-BA1049SUPPA.

- Wang Y-B, Chen M-H, Kuo L, Lewis PO. Online Supplementary Materials for “A New Monte Carlo Method for Estimating Marginal Likelihoods”. Bayesian Analysis. 2017b. doi: 10.1214/17-BA1049; doi: 10.1214/17-BA1049SUPPB.

- Xie W, Lewis PO, Fan Y, Kuo L, Chen MH. Improving Marginal Likelihood Estimation for Bayesian Phylogenetic Model Selection. Systematic Biology. 2011;60(2):150–160. doi: 10.1093/sysbio/syq085.

- Yu F, Chen MH, Kuo L, Talbott H, Davis JS. Confident Difference Criterion: A New Bayesian Differentially Expressed Gene Selection Algorithm With Applications. BMC Bioinformatics. 2015;16(1):245. doi: 10.1186/s12859-015-0664-3.
