Abstract

A cornerstone of statistical inference, the maximum entropy framework is being increasingly applied to construct descriptive and predictive models of biological systems, especially complex biological networks, from large experimental data sets. Both its broad applicability and the success it obtained in different contexts hinge upon its conceptual simplicity and mathematical soundness. Here we try to concisely review the basic elements of the maximum entropy principle, starting from the notion of ‘entropy’, and describe its usefulness for the analysis of biological systems. As examples, we focus specifically on the problem of reconstructing gene interaction networks from expression data and on recent work attempting to expand our system-level understanding of bacterial metabolism. Finally, we highlight some extensions and potential limitations of the maximum entropy approach, and point to more recent developments that are likely to play a key role in the upcoming challenges of extracting structures and information from increasingly rich, high-throughput biological data.

Keywords: Systems biology, Molecular biology, Mathematical bioscience, Computational biology, Bioinformatics

1. Introduction

It is not unfair to say that the major drivers of biological discovery are currently found in increasingly accurate experimental techniques, which now make it possible to probe systems over scales ranging from the intracellular environment to single cells to multi-cellular populations, and in increasingly efficient bioinformatic tools, by which intracellular components and their putative interactions can be mapped at genome and metabolome resolution. Yet, at least to some degree, these approaches still appear hard to integrate into quantitative predictive models of cellular behaviour. In a sense this is not surprising. Even if we possessed detailed information about all sub-cellular parts and processes (including intracellular machines, their interaction partners, regulatory pathways, mechanisms controlling the exchange with the medium, etc.), it would be hard to build a comprehensive mechanistic model of a cell, and possibly even harder to infer deep organization principles from it. In large part, this is due to the fact that cells have an enormous number of degrees of freedom (e.g. protein levels, RNA levels, metabolite levels, reaction fluxes, etc.) which, collectively, can take on an intimidatingly large number of physico-chemically viable states. On the other hand, experiments necessarily probe only a tiny portion of these states. Therefore, understanding how all internal variables might coordinate so that certain “macroscopic” quantities, like the cell's growth rate, behave as observed in experiments is quite possibly a hopeless task. In addition, such mechanistic models would most likely require some tuning of the multitude of parameters that characterize intracellular affairs, rendering overfitting a very concrete prospect. At the same time, though, the deluge of data coming from both sides (experiments and bioinformatics) calls for the development of bridges connecting them, not just as descriptive frameworks and predictive tools but also as guides for novel targeted experiments and bioengineering applications.

The problem appears to be that of finding a reasonable ‘middle-ground’ between full-fledged mechanistic approaches and qualitative phenomenological descriptions based on coarse-grained quantities only. Perhaps following the lesson of thermodynamics (which, one might argue, has faced a similar question of bridging microscopic and macroscopic descriptions of physical systems), an increasing number of studies are taking a route that is different from, and in many ways the inverse of, the mechanistic one. The key issues to be faced along such a route are the following: To what degree do experimental results constrain the space of allowed states of a living system? Can we learn something about internal variables from experiments that probe a relatively small number of states? Is there a way to perform reliable statistical inference on the values of unobserved internal variables from empirical data? Ultimately, all of these questions boil down to the problem of inferring probability distributions (or, in other terms, statistical models) from limited data. This age-old challenge, dating back to the origin of probability theory (see e.g. Laplace's ‘principle of indifference’, Ref. [1], Ch. 2), has found a self-consistent answer, the only such answer under certain conditions, in the so-called principle of maximum entropy.

Over the past decade, entropy maximization or closely related ideas have been repeatedly employed for the analysis of large-scale biological data sets in contexts ranging from the determination of macromolecular structures and interactions [2], [3], [4], [5], [6], [7], [8], [9], [10], [11], [12], [13], [14] to the inference of regulatory [15], [16], [17], [18], [19] and signaling networks [20], [21], [22], [23] and of the organization of coding in neural populations [24], [25], [26], [27], [28], [29], [30], [31], [32], [33], [34], [35], [36], [37]; from the analysis of DNA sequences (e.g. for the identification of specific binding sites) [38], [39], [40] to the study of the HIV fitness landscape [41], [42], [43]; from the onset of collective behaviour in large animal groups [44], [45], [46] to the emergence of ecological relationships [47], [48], [49], [50], [51], [52], [53], [54], [55], [56], [57]. The type of insight derived from these models is remarkably diverse, from fundamental organization principles in structured populations to specific gene-gene interaction networks. Revealingly, the challenge of dealing with high dimensional and limited data posed by biology has in turn stimulated the search for novel efficient implementations of the maximum entropy principle at the interface between computational biology, statistical physics and information theory, leading to an impressive improvement of inference schemes and algorithms. Future ramifications of these studies are likely to explore new application areas, as more/better data become available, theoretical predictions get sharper, and computational methods improve. In many ways, the maximum entropy approach now appears to be the most promising provider of ‘middle grounds’ where empirical findings and bioinformatic knowledge can be effectively bridged.

Several excellent reviews, even very recent ones, cover the more technical aspects of maximum entropy inference from the viewpoint of statistical physics, computational biology or information theory (see e.g. [58], [59], [60], [61], [62]). Our goal here is to provide a compact, elementary and self-contained introduction to entropy maximization and its usefulness for inferring models from large-scale biological data sets, starting from the very basics (i.e. from the notion of ‘entropy’) and ending with a recent application (a maximum entropy view of cellular metabolism). We mainly hope to convey its broad applicability and potential to deliver new biological insight, and to stimulate further cross-talk while keeping mathematics to a minimum. A few basic mathematical details are nevertheless given in the Supplementary Material for the sake of completeness. Given the vastness of the subject, we shall take a shortcut through most of the subtleties that have accompanied the growth of the field since the 1940s, focusing instead on the aspects that (we believe) are of greater immediate relevance to our purposes. A partial and biased list of additional ingredients and new directions will be presented in the Discussion. The interested reader will however find more details and food for thought in the suggested literature.

For our purposes, the path leading to entropy maximization can start from the intuitively obvious idea that, when extracting a statistical model from data, one should avoid introducing biases other than those that are already present in the data, as they would be unwarranted and discretionary. For instance, if we had to model a process with E possible outcomes (like the throw of a die) and had no prior knowledge of it, our best guess for a probability law underlying this process would have to be the uniform distribution, where each outcome occurs with probability $1/E$. In essence, the framework of entropy maximization generalizes this intuition to more complex situations and provides a recipe to construct the ‘optimal’ (i.e. least biased) probability distribution compatible with a given set of data-derived constraints. Central to it is, of course, the concept of ‘entropy’.

2. Main text

2.1. Entropy and entropy maximization: a bird's eye view

The notion of ‘entropy’ as originated in thermodynamics is usually associated to that of ‘disorder’ by saying that the former can be regarded as a measure of the latter. The word ‘disorder’ here essentially means ‘randomness’, ‘absence of patterns’, or something similar. While not incorrect, these words clearly require a more precise specification to be useful at a quantitative level. As we shall see, once the stage is characterized more clearly, the entropy of a system (e.g. of a population of cells) with prescribed values for certain observables (e.g. the rate of growth of the population) quantifies the number of distinct arrangements of its basic degrees of freedom (e.g. the protein levels, RNA levels, metabolic reaction rates, etc. of each cell) that lead to the same values for the constrained observables. The larger this number, the larger the entropy. In this sense, the entropy of a system is really a measure of the microscopic multiplicity (the ‘degeneracy’) underlying its macroscopically observable state. What makes entropy a powerful inference tool is closely connected to this characterization.

To make things more precise, one can consider a classical, highly stylized example. Imagine having N identical balls distributed in K urns so that $n_i$ balls are placed in the i-th urn, with $0 \le n_i \le N$ and $\sum_{i=1}^{K} n_i = N$. (To fix ideas, one can think of the N balls as the N different cells and of the K urns as K distinct configurations for the basic degrees of freedom of each cell. An arrangement defined by specific values of $\mathbf{n} = (n_1, \ldots, n_K)$ therefore represents how cells are distributed over the allowed internal states, with $n_1$ cells in state 1, $n_2$ in state 2, and so on.) By simple combinatorics (see [63], Ch. 3), the number of ways in which the N balls can be placed in the K urns leading to the same values of $\mathbf{n}$ is given by the multinomial coefficient

$$\Omega = \frac{N!}{n_1!\, n_2! \cdots n_K!} \tag{1}$$

This number¹ describes the ‘microscopic’ degeneracy underlying the specific arrangement of balls described by the numbers $n_1, \ldots, n_K$ and grows fast with N. Actually, for sufficiently large N, (1) turns out to be well approximated (see Supplementary Material, Sec. S1) by

$$\Omega \simeq e^{N H} \tag{2}$$

where

$$H = -\sum_{i=1}^{K} \frac{n_i}{N} \ln \frac{n_i}{N} \tag{3}$$

The quantity H defined in (3) is the entropy of the arrangement described by the urn occupation numbers $n_1, \ldots, n_K$. Note (see Supplementary Material, Sec. S2) that $H \ge 0$. In a nutshell, Equations (2) and (3) say that, for large N, some arrangements can be realized in a huge number of ways (as a matter of fact, in a number of microscopic ways that is exponentially large in N), and that the entropy H ultimately quantifies this number. On the other hand, some specific arrangements can have a very small degeneracy. For instance, the arrangement with N balls in urn 1 and no balls elsewhere (i.e. with $n_1 = N$ and $n_i = 0$ for $i \neq 1$) can be realized in a unique way, having $\Omega = 1$ and $H = 0$.²
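The large-N approximation in (2) can be checked numerically. The following is a minimal sketch, not part of the original analysis: the function names and the occupation fractions (0.5, 0.3, 0.2) are illustrative assumptions. It compares the exact logarithm of the multinomial coefficient (1), divided by N, with the entropy (3), where $n_i$ is the number of balls in urn i.

```python
import math

def log_degeneracy(counts):
    """Natural log of the multinomial coefficient N! / (n_1! ... n_K!), Eq. (1)."""
    n_total = sum(counts)
    return math.lgamma(n_total + 1) - sum(math.lgamma(n + 1) for n in counts)

def entropy(counts):
    """Entropy H of Eq. (3), using the convention 0 * ln(0) = 0."""
    n_total = sum(counts)
    return -sum((n / n_total) * math.log(n / n_total) for n in counts if n > 0)

# Illustrative arrangement: fractions (0.5, 0.3, 0.2) of the balls in K = 3 urns.
for m in (1, 10, 100, 1000):
    counts = [5 * m, 3 * m, 2 * m]
    n_total = sum(counts)
    per_ball = log_degeneracy(counts) / n_total
    print(f"N = {n_total:6d}   ln(Omega)/N = {per_ball:.4f}   H = {entropy(counts):.4f}")

# Degenerate arrangement: all balls in urn 1, so Omega = 1 and H = 0.
print(log_degeneracy([10, 0, 0]), entropy([10, 0, 0]))
```

As N grows at fixed fractions, ln(Ω)/N approaches H from below, which is the content of (2) up to corrections that are sub-exponential in N.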

Note that the quantity $p_i = n_i/N$ represents the fraction of balls appearing in urn i in arrangement $\mathbf{n}$ or, equivalently for our purposes, the probability that a ball selected at random and uniformly comes from the i-th urn. Therefore H is a function of the probabilities $\mathbf{p} = (p_1, \ldots, p_K)$, i.e.

$$H(\mathbf{p}) = -\sum_{i=1}^{K} p_i \ln p_i \tag{4}$$

and the condition $\sum_{i=1}^{K} n_i = N$ simply corresponds to the fact that probabilities should sum to one, i.e. $\sum_{i=1}^{K} p_i = 1$.

It is rather intuitive that, if balls were ‘thrown’ into urns randomly (i.e. so that each of the N balls has equal probability of ending up in any of the K urns), the resulting arrangement would much more likely be one with large Ω (and entropy) than one with small Ω (and entropy). In particular, the most likely arrangement $\mathbf{n}$ (or, equivalently, the most likely distribution of probabilities $\mathbf{p}$) should coincide with that carrying the largest degeneracy, or maximum entropy, satisfying the constraint $\sum_{i=1}^{K} n_i = N$ (or, equivalently, $\sum_{i=1}^{K} p_i = 1$). In this sense, the safest bet on the outcome of an experiment in which N balls are randomly assigned to K urns would be to place money on the maximum entropy (MaxEnt) distribution.

This is the gist of the maximum entropy principle: if one is to infer a probability distribution given certain constraints, out of all distributions compatible with them, one should pick the distribution having the largest value of (4). The only constraint considered in the above example of balls and urns is the normalization of probabilities, i.e. the fractions $p_i$ should sum to one: $\sum_{i=1}^{K} p_i = 1$. In this case, the MaxEnt distribution is uniform, namely $p_i = 1/K$ for each $i$ (see Supplementary Material, Sec. S3). However, constraints can involve other quantities, leading to different MaxEnt distributions (see Supplementary Material, Sec. S4 for a few simple examples). This just reflects the diverse information that constraints inject into the inference problem in each case.
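To illustrate how an extra constraint changes the MaxEnt distribution, consider a six-sided die whose mean outcome is prescribed. The sketch below is an illustrative example that is not taken from the text: it relies on the standard result that, under a mean constraint, the maximizer of (4) has the exponential form $p_i \propto e^{\theta x_i}$, and it finds the multiplier θ by bisection. The function name, the bracketing interval for θ and the target means are assumptions made here.

```python
import math

def maxent_given_mean(values, target_mean, lo=-50.0, hi=50.0, tol=1e-12):
    """MaxEnt distribution over `values` with a prescribed mean.

    Assumes the known solution p_i proportional to exp(theta * x_i) and
    min(values) < target_mean < max(values); theta is found by bisection.
    """
    def dist(theta):
        exponents = [theta * x for x in values]
        shift = max(exponents)                  # avoids overflow in exp
        weights = [math.exp(e - shift) for e in exponents]
        z = sum(weights)
        return [w / z for w in weights]

    def mean(theta):
        return sum(p * x for p, x in zip(dist(theta), values))

    # The mean increases monotonically with theta, so bisection applies.
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if mean(mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return dist(0.5 * (lo + hi))

faces = [1, 2, 3, 4, 5, 6]
fair = maxent_given_mean(faces, 3.5)     # mean of a fair die: uniform is recovered
loaded = maxent_given_mean(faces, 4.5)   # larger mean: weight shifts to high faces
print([round(p, 4) for p in fair])
print([round(p, 4) for p in loaded])
```

When the prescribed mean equals that of the uniform distribution, the constraint carries no extra information and the uniform distribution is recovered; any other mean tilts the distribution exponentially towards one end.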

It is important to understand that, because they correspond to maximal underlying degeneracy, MaxEnt distributions are the least biased given the constraints: any other distribution compatible with the same constraints would have smaller degeneracy and therefore would artificially exclude some viable (i.e. constraint-satisfying) configurations of the underlying variables. In other terms, a MaxEnt distribution is completely undetermined by features that do not appear explicitly in the constraints subject to which it has been computed.

These ideas, which ultimately make the maximum entropy principle the central conceptual tool for inferring probability distributions subject to constraints, have been placed on firmer and firmer mathematical ground starting from the 1940s. In our view, three classical results are especially noteworthy in the present context.

Firstly, landmark work by Shannon [64] and Khinchin [65] formally characterized H, Equation (4), as the only function complying with a set of a priori requirements (known as Shannon–Khinchin axioms) to be satisfied by a measure of the ‘uncertainty’ or ‘lack of information’ associated with a probability distribution $\mathbf{p}$. Here, ‘uncertainty’ relates in essence to how (im)precisely one can identify the configuration of basic degrees of freedom from knowledge of the distribution $\mathbf{p}$. In this sense, a larger uncertainty corresponds to a larger underlying degeneracy Ω and hence to a larger entropy H. Therefore, MaxEnt distributions compatible with given constraints formally correspond to those that maximize the uncertainty on every feature except for those that are directly encoded in the constraints.

A strict characterization of MaxEnt distributions is instead encoded in a result known as the ‘entropy concentration theorem’ [61]. In short, and referring to the urn-and-balls example discussed above, it rigorously quantifies the observation that, when N is sufficiently large, the number of microscopic states underlying the MaxEnt distribution is exponentially larger (in N) than the number of microscopic states underlying any other distribution. This is also seen from (2), albeit at a heuristic level. Denoting respectively by $\Omega^\star$ and $H^\star$ the degeneracy and the entropy of the MaxEnt distribution, one sees that, for sufficiently large N,

$$\frac{\Omega^\star}{\Omega} \simeq e^{N (H^\star - H)} \tag{5}$$

for any Ω and H corresponding to a distribution different from the MaxEnt one. Because $H^\star$ is the maximum value attained by the entropy, $H^\star - H > 0$. Hence, (5) states that microscopic arrangements underlying the MaxEnt distribution are more numerous than those underlying any other distribution by an exponentially large (in N) factor. In turn, for large enough N, observing an arrangement of balls that corresponds to a distribution different from the MaxEnt one is exponentially (in N) less likely.
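The concentration of arrangements around the maximum entropy value can be observed in a small simulation. The following sketch is an illustration and not a proof of the theorem: it throws N balls uniformly at random into K urns and measures how far the entropy of the resulting arrangement falls below its maximum value ln K. The number of urns, the number of repetitions and the random seed are arbitrary choices made here.

```python
import math
import random

def empirical_entropy(n_balls, n_urns, rng):
    """Entropy, as in Eq. (3), of one random assignment of balls to urns."""
    counts = [0] * n_urns
    for _ in range(n_balls):
        counts[rng.randrange(n_urns)] += 1
    return -sum((c / n_balls) * math.log(c / n_balls) for c in counts if c > 0)

rng = random.Random(0)      # fixed seed for reproducibility
K = 4
h_max = math.log(K)         # entropy of the uniform (MaxEnt) distribution
n_trials = 50

mean_gap = {}
for N in (10, 100, 1000, 10000):
    gaps = [h_max - empirical_entropy(N, K, rng) for _ in range(n_trials)]
    mean_gap[N] = sum(gaps) / n_trials
    print(f"N = {N:6d}   mean gap to maximum entropy = {mean_gap[N]:.5f}")
```

The gap is never negative and shrinks as N grows, so randomly generated arrangements become harder and harder to distinguish from the MaxEnt one.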

Finally (and perhaps most importantly for our purposes), H has been shown to be the only quantity whose constrained maximization allows for least-biased inference satisfying certain generic logical requirements (known as Shore–Johnson axioms) [66]. This result ultimately provides a rigorous basis for using the maximum entropy principle as a general inference technique, independently of the meaning assigned to Eq. (4). In other words, by maximizing H one is not looking for a state of maximum indeterminacy (apart from constraints), but rather following the only recipe for self-consistent inference having certain desirable properties. In this sense, the maximum entropy principle ‘simply’ allows one to infer least-biased, constraint-satisfying probability distributions in a mathematically rigorous and logically sound manner.

It is clear at this point that the nature of the microscopic variables and the constraints one wants to impose are crucial in the business of using maximum entropy inference in general, and specifically to obtain information about biological systems from complex, high-dimensional data. In addition, the concrete usefulness of MaxEnt distributions besides their theoretical appeal is not a priori obvious. Mathematical arguments guarantee that they can provide a compact statistical description of a dataset that is least-biased and compatible with empirical observations. But can that description be employed e.g. for predictive purposes?

2.2. Maximum entropy inference in biology: the case of gene interaction networks

The answers to the questions posed above clearly depend on the specifics of the system under consideration and of the available data, and require a case-by-case discussion. Applications of the maximum entropy framework to biology have however become more numerous as the size and quality of data sets have increased, and currently range from protein science and neuroscience to collective animal behaviour and ecology. Such an impressive span suggests that at least some aspects must be recurrent across many if not all of these instances (see the discussion presented in [67] for a broader perspective). It is on these and on the lessons that can be drawn from them that we shall try to focus now. For the sake of clarity and simplicity, we shall phrase things in the context of the study of gene expression. Similar considerations can however be formulated in almost all of the cases listed above.

We begin by re-considering the urn-and-balls model described above in the gene expression scenario. The N balls would now represent N cellular samples whose complete expression profiles (e.g. RNA levels) have been experimentally characterized, while the K urns would represent all possible expression profiles. An expression profile of R genes is described by a vector $\mathbf{x} = (x_1, \ldots, x_R)$, where $x_i$ stands for the expression level of gene i, with $i = 1, \ldots, R$. Usually, the expression of a large set of genes is monitored ($R \gg 1$). A specification of an expression profile for each of the N cells corresponds to an assignment of the N balls to the K urns, and fully describes our experimental sample. Note that the number of possible vectors $\mathbf{x}$, corresponding to the number K of urns, is in principle huge. By contrast, the number of samples (i.e. N) is typically much smaller than K, so that experiments will vastly under-sample the space of possible expression profiles.

Given the data (i.e. the measured expression profiles), the problem is that of inferring a probability distribution $p(\mathbf{x})$ of expression profiles that is (i) least-biased with respect to unavailable information, and (ii) consistent with empirical constraints. According to the maximum entropy principle, we have to find the distribution $p(\mathbf{x})$ that maximizes the entropy

$$H = -\sum_{\mathbf{x}} p(\mathbf{x}) \ln p(\mathbf{x}) \tag{6}$$

subject to data-derived constraints, the above sum being formally carried out over all possible expression profiles. Whether a quantity should be constrained or not is ultimately determined by whether one can reliably estimate it from data or not. In the instances encountered most often and of greater practical relevance, constraints involve low-order moments of the underlying variables, especially averages (first moments) and correlations (second moments). This is because the statistically accurate computation of moments requires more and more experimental samples as the order of the moment increases, so that higher-order moments are generically harder to estimate than lower-order ones. We shall therefore consider only the simplest case in which the mean expression levels of each gene and the gene-gene correlations are required to match those derived from data, namely

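$$\langle x_i \rangle_{\mathrm{emp}} = \frac{1}{N} \sum_{a=1}^{N} x_i^{(a)}, \qquad \langle x_i x_j \rangle_{\mathrm{emp}} = \frac{1}{N} \sum_{a=1}^{N} x_i^{(a)} x_j^{(a)} \tag{7}$$

where $x_i^{(a)}$ denotes the expression level of gene i in sample a, the index a runs over samples from 1 to N, while i and j range over genes from 1 to R. (It should however be kept in mind that the choice of which moments to constrain is ultimately limited by data availability only.) Hence, we must look for the distribution $p(\mathbf{x})$ that maximizes (6) with the usual normalization condition

$$\sum_{\mathbf{x}} p(\mathbf{x}) = 1 \tag{8}$$

and such that the mean expression levels and the correlations match empirical ones (7), i.e.

$$\sum_{\mathbf{x}} x_i\, p(\mathbf{x}) = \langle x_i \rangle_{\mathrm{emp}} \tag{9}$$

$$\sum_{\mathbf{x}} x_i x_j\, p(\mathbf{x}) = \langle x_i x_j \rangle_{\mathrm{emp}} \tag{10}$$

Such a distribution can be computed as shown in Supplementary Material, Sec. S5 (see also [16]) and reads

$$p(\mathbf{x}) = \frac{1}{Z} \exp\Big( \sum_{i=1}^{R} h_i x_i + \sum_{i \le j} J_{ij}\, x_i x_j \Big) \tag{11}$$

where Z, $h_i$ ($i = 1, \ldots, R$) and $J_{ij}$ ($i, j = 1, \ldots, R$ with $i \le j$) are constants known as ‘Lagrange multipliers’ that are introduced to enforce the constraints [68]. Equation (11) is often referred to as the ‘pairwise MaxEnt probability distribution’ [24], [28], [40], [62], as it involves at most couplings between pairs of variables through the last term in the argument of the exponential. Clearly, this is due to the fact that only moments up to the second are constrained.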
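For readers who want the logic behind (11) without consulting the Supplementary Material, the following is a minimal sketch of the standard Lagrange-multiplier argument. The symbols $\mu$, $h_i$ and $J_{ij}$ for the multipliers and $\langle \cdot \rangle_{\mathrm{emp}}$ for empirical averages are labels chosen here for illustration. The sum over pairs is taken over $i \le j$ so that each distinct second moment is counted once, which is an assumption about the convention rather than a statement of the original derivation.

$$
\begin{aligned}
\mathcal{L}[p] &= -\sum_{\mathbf{x}} p(\mathbf{x}) \ln p(\mathbf{x}) + \mu \Big( \sum_{\mathbf{x}} p(\mathbf{x}) - 1 \Big) + \sum_{i} h_i \Big( \sum_{\mathbf{x}} x_i\, p(\mathbf{x}) - \langle x_i \rangle_{\mathrm{emp}} \Big) \\
&\quad + \sum_{i \le j} J_{ij} \Big( \sum_{\mathbf{x}} x_i x_j\, p(\mathbf{x}) - \langle x_i x_j \rangle_{\mathrm{emp}} \Big), \\
\frac{\partial \mathcal{L}}{\partial p(\mathbf{x})} &= -\ln p(\mathbf{x}) - 1 + \mu + \sum_{i} h_i x_i + \sum_{i \le j} J_{ij}\, x_i x_j = 0, \\
p(\mathbf{x}) &= \frac{1}{Z} \exp\Big( \sum_{i} h_i x_i + \sum_{i \le j} J_{ij}\, x_i x_j \Big), \qquad Z = e^{1 - \mu} = \sum_{\mathbf{x}} \exp\Big( \sum_{i} h_i x_i + \sum_{i \le j} J_{ij}\, x_i x_j \Big).
\end{aligned}
$$

Setting the derivative to zero fixes the exponential form, while the values of the multipliers follow from imposing normalization and the moment constraints. Since the entropy is a concave function of $p$ and the constraints are linear in $p$, the stationary point is a maximum.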

Eq. (11) provides a formal solution to our problem. To fully evaluate it, though, the values of the Lagrange multipliers Z, $h_i$ and $J_{ij}$ have to be computed self-consistently from (8), (9) and (10).³ As (11) represents, after all, the least-biased data-informed model for the expression profiles, solving this problem amounts to inferring the model's parameters from data. Performing this task in a realistic context with a large number R of genes, which is ultimately the key for the effective implementation of the maximum entropy framework with biological data sets, can be an extremely challenging computational problem. Luckily, a number of mathematically subtle but computationally effective methods have been developed for this goal over the past decade at the interface between statistical physics and computer science. A discussion of these techniques is however beyond our scope, and excellent and up-to-date overviews can be found e.g. in [62], [69]. We shall henceforth assume that the parameters $h_i$ and $J_{ij}$ have been computed, and focus on their physical and biological interpretation.

Based on the MaxEnt distribution (11), one sees that $h_i$ measures the intrinsic propensity of gene i to be expressed, as larger (resp. smaller) values of $h_i$ favor expression profiles $\mathbf{x}$ with larger (resp. smaller) values of $x_i$. On the other hand, the $J_{ij}$'s characterize the strength of pairwise gene-gene interactions as well as their character (via their signs: positive for positive interactions, negative for negative ones). Hence the $J_{ij}$'s can in principle yield regulatory information that may be scaled up to the reconstruction of an effective genome-resolution gene-gene interaction network. In [16], for instance, such coefficients were used to infer regulatory interactions in S. cerevisiae, after expression profiles were experimentally characterized in cultures at different time points (representing the different samples) via microarrays. Ultimately, knowledge of the $J_{ij}$'s made it possible to extract a putative, highly-interconnected gene-gene interaction network that emphasized a few hub regulators (including ribosomal and mitochondrial genes as well as genes involved in TOR signaling) implicating global mechanisms devoted to the coordination of growth and nutrient intake pathways.

The ability to bring to light interconnections between genes belonging to different functional categories is a major advantage of the maximum entropy method over alternatives based on the straightforward analysis of correlations, such as clustering techniques. While the latter naturally focus on the identification of genes having a similar expression profile (and therefore tend to group functionally related genes together), the $J_{ij}$'s point to a refined notion of correlation. The origin of this fact is especially transparent when the $x_i$'s are taken to be continuous unbounded variables ranging from −∞ to +∞ (a reasonable approximation whenever expression levels are quantified via centered log-fluorescence values). In this case, Eq. (11) describes a multivariate Gaussian distribution and it can be shown that the matrix of $J_{ij}$'s is related to the inverse of the matrix of Pearson correlation coefficients, rather than to the correlation matrix itself [16], [62]. This makes a substantial difference. Indeed, the covariance of the expression levels of two genes (say, A and B) can be large both when A and B are mutually dependent (e.g. when A codes for a transcription factor of B) and when, while mutually independent, they both separately correlate with a third gene C. In the latter case, though, the behaviour of C would explain the observed correlation between A and B. Specifically, by conditioning on the expression level of C, one would see that A and B are roughly uncorrelated. In other terms, the correlation matrix captures the unconditional correlation between variables and therefore contains effects due to both direct and indirect mechanisms. On the other hand, its inverse describes the correlations that remain once the indirect effects are removed [70], [71], and thereby provides a more robust and consistent characterization of the interactions between variables. (See Figure 1A for a summary of the scenario just discussed.)
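The three-gene scenario just described can be reproduced on synthetic data. The sketch below is purely illustrative: the Gaussian model, the coupling strengths 0.8 and 0.6, the sample size and all function names are assumptions made here, and no real expression data are involved. Gene C drives both A and B, which have no direct link. The correlation matrix reports a strong A–B correlation, whereas the inverse of the correlation matrix, normalized into partial correlations, shows that almost nothing is left between A and B once C is accounted for.

```python
import math
import random

def pearson_matrix(columns):
    """Matrix of Pearson correlation coefficients between the given columns."""
    n = len(columns[0])
    means = [sum(col) / n for col in columns]
    centered = [[x - m for x in col] for col, m in zip(columns, means)]
    norms = [math.sqrt(sum(x * x for x in col)) for col in centered]
    return [[sum(x * y for x, y in zip(ci, cj)) / (ni * nj)
             for cj, nj in zip(centered, norms)]
            for ci, ni in zip(centered, norms)]

def invert(matrix):
    """Inverse of a small square matrix (Gauss-Jordan with partial pivoting)."""
    n = len(matrix)
    aug = [list(row) + [float(i == j) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [x / scale for x in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]

rng = random.Random(0)
n_samples = 20000
# Synthetic expression levels: C drives A and B; A and B do not interact.
c = [rng.gauss(0, 1) for _ in range(n_samples)]
a = [0.8 * ci + 0.6 * rng.gauss(0, 1) for ci in c]
b = [0.8 * ci + 0.6 * rng.gauss(0, 1) for ci in c]

corr = pearson_matrix([a, b, c])
prec = invert(corr)
# Partial correlation of i and j given the remaining variable:
# -P_ij / sqrt(P_ii * P_jj), with P the inverse correlation matrix.
partial_ab = -prec[0][1] / math.sqrt(prec[0][0] * prec[1][1])
partial_ac = -prec[0][2] / math.sqrt(prec[0][0] * prec[2][2])

print(f"corr(A,B)      = {corr[0][1]:.3f}")
print(f"partial(A,B|C) = {partial_ab:.3f}")
print(f"partial(A,C|B) = {partial_ac:.3f}")
```

In the Gaussian case the off-diagonal couplings are proportional to minus the corresponding entries of the inverse matrix, so a vanishing entry between A and B translates into a vanishing inferred interaction, while the direct A–C link survives.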

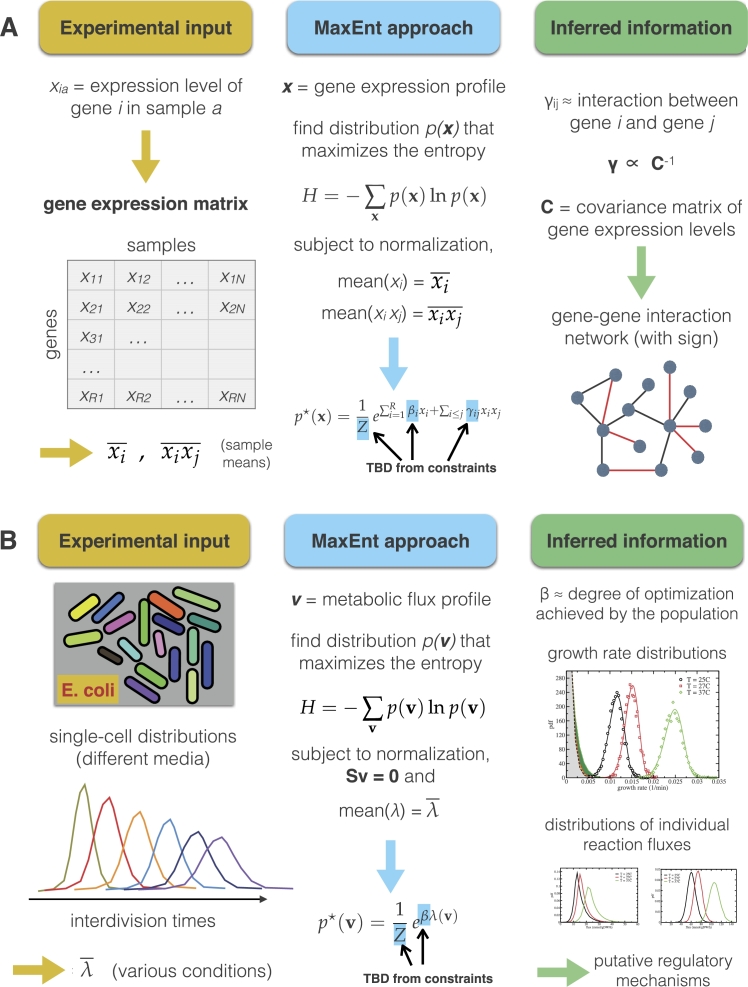

Figure 1.

Sketch of the two examples of applications of the maximum entropy principle to biological data analysis discussed in the text. (A) Inference of gene interaction networks from empirical expression data (see Sec. 2.2 for details). (B) Inference of genome-scale metabolic flux patterns from empirical growth rate distributions in bacteria (see Sec. 2.3 for details). For each case, we describe schematically the empirical input (left column), the formulation of the maximum entropy inference problem (middle column), and an example of the inferred biological insight (right column).

Naturally, some adjustments must come in place when one is to go beyond the Gaussian case. Yet, it can be argued that the above picture is quite generic. While the term ‘interaction’ can acquire different meanings in different cases, the MaxEnt distribution focuses on the most relevant part of the correlations and is therefore capable of extracting a more reliable interaction structure from data than that obtainable via a more standard correlation analysis. This property lies in our view at the heart of the success encountered by the maximum entropy method in several applications, in biology as well as in other fields [72], [73], [74]. The above example also shows the centrality of empirical data for maximum entropy inference. In some cases, though, constraints that are not derived from experiments can be employed, together with empirical ones, to guide the inference. An example of this is found in the maximum entropy approach to the analysis of metabolic networks.

2.3. Maximum entropy approach to cellular metabolism

Novel experimental techniques employing e.g. microfluidic devices are capable of probing growth in bacterial populations at single cell resolution, yielding detailed data that monitor growth in thousands of individual cells over many generations [75], [76]. These experiments have quantified a number of features linking gene expression and metabolism to overall control mechanisms in proliferating bacteria [77], [78], [79], [80], [81]. While the emergent picture is being increasingly refined, tracking its ‘microscopic’ origin, and particularly the causes of growth rate fluctuations, is largely an open problem. Given the time scales involved in these processes, it is reasonable to think that the regulatory layer controlling energy metabolism is crucially involved in establishing this scenario. Indeed, substantial empirical evidence is connecting growth physiology and heterogeneity to metabolic activity in bacteria [77]. Experimental approaches to characterize the fluxes of intracellular metabolic reactions can provide a population-level picture of the mean activity of central carbon pathways [82]. On the other hand, cellular metabolic networks have been mapped, for many organisms, at the scale of the whole genome [83], and a host of self-consistent computational frameworks exist that can connect metabolic phenotypes (i.e. patterns of material fluxes through the enzyme-catalyzed network of intracellular reactions that processes nutrients into macromolecular building blocks, free energy and biomass) to physiological observables such as the growth rate [84]. The question is whether the reverse problem of relating measured growth rates to flux states of genome-scale networks is feasible. In particular, given the distribution of growth rates found in experiments, can one infer the state of the underlying metabolic network, e.g. the rates of individual reactions? 
Such knowledge might provide important insight into the metabolic bottlenecks of growth in proliferating cells, which could be especially useful in view of the limited experimental accessibility of intracellular reactions.

To progress along this route, it is important to characterize the map between metabolic networks and growth rates in some more detail. The so-called Constraint-Based Models (CBMs) [85] represent highly effective in silico schemes to obtain information about cellular metabolic activity from minimal input ingredients known at genome resolution. To be more specific, the central assumption behind CBMs is that, because of the timescale separation between metabolic (fast) and regulatory (slow) processes, over a sufficiently long time (compared to the cell's cycle) metabolic reactions operate close to a non-equilibrium steady state (NESS) where metabolite and enzyme levels are stationary. Under this homeostatic scenario, a viable configuration of fluxes through enzyme-catalyzed reactions can be represented by a vector $\mathbf{v} = \{v_i\}$ (with i indexing reactions) that satisfies the linear system of equations taking the matrix form

$$\mathbf{S} \mathbf{v} = \mathbf{0} \tag{12}$$

where S denotes the reaction network's matrix of stoichiometric coefficients. From a physical viewpoint, if exchange fluxes with the surrounding medium are included in S (as is usually done), the above conditions simply express the fact that, at stationarity, the total mass of each chemical species should be conserved, and correspond to Kirchhoff-like laws: the overall production (including external supply) and consumption (including excretions) fluxes of every metabolite should balance. S is obtained from genome-scale reconstructions and has R columns (one per reaction, about 1,200 in an organism like E. coli) and C rows (one per chemical species, several hundred for E. coli). Therefore (12) compactly represents C linear equations in R unknowns (the individual fluxes $v_i$). Any flux vector v solving (12) corresponds in principle to a viable NESS flux pattern of the metabolic network specified by S, and, since reactions typically outnumber chemical species, the structure of stoichiometric matrices usually allows for an infinite number of solutions. In particular, when ranges of variability of the type $v_i^{\min} \le v_i \le v_i^{\max}$ are specified for each flux, reflecting empirical kinetic, thermodynamic or regulatory priors (see e.g. [86] for an overview of these data-driven factors), solutions of (12) form a particular kind of set called a ‘convex polytope’ in mathematical jargon [87]. In what follows we refer to this set of solutions simply as the polytope. On the other hand, to each of the solutions, i.e. to each point in the polytope, CBMs associate a unique value for the biomass output (the growth rate), which we denote as λ(v). Therefore, any rule to sample points from the polytope (i.e. to generate solutions of (12)) will in turn yield a distribution of values for the growth rate. Usually, though, convex polytopes corresponding to genome-scale stoichiometric matrices S have very high dimensionality (e.g. several hundred for E. coli), and therefore sadly tend to escape both imagination and computational analysis. We will not detail here how points from the polytope can be generated. For our purposes, it will suffice to say that efficient algorithms exist that allow one to extract solutions of (12) with any desired probability distribution for any metabolic network reconstruction, i.e. any S (see e.g. [84], [88], [89]).
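The algorithms cited above are not reproduced here. Purely as an illustration of what sampling the solutions of (12) means, the following Python sketch runs a basic hit-and-run random walk on a hypothetical three-reaction network (uptake, biomass production, excretion of a single metabolite). The network, the bounds, the hand-written null-space basis and all function names are assumptions made for this example; genome-scale samplers are considerably more refined.

```python
import random

# Hypothetical toy network: one metabolite M and three reactions,
#   v[0]: uptake (-> M), v[1]: biomass (M ->), v[2]: excretion (M ->).
S = [[1.0, -1.0, -1.0]]
LOWER = [0.0, 0.0, 0.0]
UPPER = [10.0, 10.0, 10.0]
# Basis of the null space of S, written by hand for this toy case.
NULL_BASIS = [[1.0, 1.0, 0.0], [1.0, 0.0, 1.0]]


def mass_balance_residual(v):
    """Entries of S v; all of them vanish for a steady-state flux vector."""
    return [sum(s * x for s, x in zip(row, v)) for row in S]


def growth_rate(v):
    """Biomass output of a flux vector (here simply the biomass flux)."""
    return v[1]


def hit_and_run(start, n_samples, rng, thin=10):
    """Random walk whose stationary distribution is uniform over
    the set {S v = 0, LOWER <= v <= UPPER}."""
    v = list(start)
    samples = []
    for step in range(n_samples * thin):
        # Random direction inside the null space, so that S v = 0 is preserved.
        coeffs = [rng.gauss(0.0, 1.0) for _ in NULL_BASIS]
        d = [sum(c * b[i] for c, b in zip(coeffs, NULL_BASIS))
             for i in range(len(v))]
        # Interval [t_lo, t_hi] of step sizes for which v + t d respects all bounds.
        t_lo, t_hi = float("-inf"), float("inf")
        for vi, di, lo, hi in zip(v, d, LOWER, UPPER):
            if abs(di) < 1e-12:
                continue
            a, b = (lo - vi) / di, (hi - vi) / di
            t_lo, t_hi = max(t_lo, min(a, b)), min(t_hi, max(a, b))
        t = rng.uniform(t_lo, t_hi)
        v = [vi + t * di for vi, di in zip(v, d)]
        if (step + 1) % thin == 0:
            samples.append(list(v))
    return samples


rng = random.Random(0)
flux_samples = hit_and_run([2.0, 1.0, 1.0], 5000, rng)
growth_samples = [growth_rate(v) for v in flux_samples]
mean_growth = sum(growth_samples) / len(growth_samples)
```

In this toy case the feasible set is a triangle, the maximum growth rate is 10 and the mean growth rate under uniform sampling is close to 10/3: most feasible points grow slowly, because the density of growth rates decreases linearly towards the maximum.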

Given this setup, which links metabolic phenotypes to their corresponding growth rates, we are interested in characterizing the inverse map. Specifically, what can we learn about flux configurations from empirical growth rate distributions? Following the maximum entropy idea, one can start by studying the least-biased distribution compatible with data. The simplest data-borne constraint one can impose concerns the mean growth rate. In addition, however, one can inject extra information by requiring that flux vectors are viable NESS of the metabolic network, i.e. that they belong to the polytope of solutions of (12) for the organism under study. This is a substantial change compared e.g. to the case of regulatory networks discussed in the previous section. There, no restriction on the vectors x of expression profiles applied, as no specific assumption on the ranges of variability and mutual dependence of individual expression levels was made. Here, instead, we are effectively adding a (reasonable and motivated) guess for the underlying model for flux profiles: they should satisfy (12) with pre-determined ranges of variability on each flux. The MaxEnt flux distribution for this case can be computed in full analogy with the previous ones (see Supplementary Material, Sec. S6), and it turns out to be given by

$$P(\mathbf{v}) = \frac{e^{\beta\,\lambda(\mathbf{v})}}{Z} \qquad (13)$$

where Z and β are the Lagrange multipliers respectively ensuring normalization (the probabilities of all viable flux vectors must sum to one) and the given mean growth rate. The MaxEnt distribution is only defined on the feasible space, i.e. for flux vectors v belonging to the polytope (flux vectors must be viable). In addition, the probability to observe a certain v depends on its growth rate λ(v), while the parameter β quantifies the inferred “degree of optimality”. In a nutshell, a sufficiently large value of β causes the distribution to concentrate around flux vectors yielding large values of the growth rate λ. On the other hand, as β decreases towards more and more negative values, the MaxEnt distribution selects metabolic phenotypes growing more and more slowly. For β = 0, it becomes the uniform distribution over the polytope, in which case each viable flux vector v is assumed to be equally probable.
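The role of β can be made concrete with a small numerical sketch. Given growth rates of flux vectors sampled uniformly from the feasible space, an exponential distribution of the kind described above can be emulated by assigning each sample a weight proportional to exp(βλ), and β can then be tuned so that the weighted mean matches a target value. The toy feasible space (a triangle), the target mean and the function names below are assumptions made for illustration; reweighting a uniform sample is only adequate for moderate values of β, and this is not the procedure used in the cited works.

```python
import math
import random


def tilted_mean(growth, beta):
    """Mean growth rate under weights proportional to exp(beta * lambda)."""
    shift = max(beta * g for g in growth)  # avoids overflow in exp
    weights = [math.exp(beta * g - shift) for g in growth]
    return sum(w * g for w, g in zip(weights, growth)) / sum(weights)


def fit_beta(growth, target_mean, lo=-50.0, hi=50.0, tol=1e-10):
    """Bisection on beta: the tilted mean increases monotonically with beta
    (its derivative with respect to beta is the variance of the growth rate)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if tilted_mean(growth, mid) < target_mean:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical uniform sample of a toy feasible space: the triangle
# b >= 0, e >= 0, b + e <= 10, where the growth rate is the coordinate b.
rng = random.Random(1)
growth = []
while len(growth) < 20000:
    b, e = rng.uniform(0.0, 10.0), rng.uniform(0.0, 10.0)
    if b + e <= 10.0:
        growth.append(b)

uniform_mean = tilted_mean(growth, 0.0)  # beta = 0: uniform distribution
beta_fit = fit_beta(growth, target_mean=6.0)
fitted_mean = tilted_mean(growth, beta_fit)
```

Because the target mean (6.0) exceeds the mean under uniform sampling (about 10/3 for this triangle), the fitted β is positive; a target below the uniform mean would return a negative β.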

When applied to data describing steady growth of E. coli populations using the genome-scale metabolic network reconstruction given in [90], Eq. (13) turned out to reproduce not just the mean growth rates obtained in different experiments for a number of growth medium/strain combinations, but the entire distributions [91]. The fact that the exponential form (13), which ultimately depends on the single parameter β (see Supplementary Material, Sec. S6), coincides with empirical distributions confirms the observation that growth rate distributions are one-parameter functions (i.e., the variance is a function of the mean, at odds with Gaussian distributions where the mean and the variance are separate parameters) [79]. Strikingly, recent work has shown that the maximum entropy approach, besides capturing the statistics of the growth rate, is also capable of describing the behaviour of intracellular fluxes belonging to the central carbon processing pathways, which can be estimated by mass spectrometry, without additional assumptions [92]. Equation (13) is likewise capable of describing bacterial growth distributions obtained in a fixed medium at different temperatures (see Figure 2A), while two examples of predictions for how metabolic flux distributions will be modulated as the growth temperature increases are displayed in Figures 2B and 2C. (See Figure 1B for an overview of this case.)

Figure 2.

Maximum entropy modeling of growth rate distributions describing E. coli growth at different temperatures. Data are taken from [76]. (A) Empirical distributions (markers) are shown together with the MaxEnt distributions obtained by fitting β to match the corresponding means (continuous lines), for three different temperatures. For comparison, the dashed lines and the corresponding shaded areas describe the growth rate distributions corresponding to uniform samplings of the solution spaces of the metabolic model, Eq. (12), in the three cases. In each case, such distributions are described by Eq. (14), with a = 0, b = 22 and different values of λmax. (B) and (C): inferred distributions of the ATP synthase flux (B) and of the flux through phosphofructokinase (PFK) (C) at different temperatures.

An especially important feature that the maximum entropy approach brings to light is the fact that the value of β that provides the best fit to experiments corresponds to mean growth rates that are significantly smaller (usually between 50% and 80%) than the maximum growth rate achievable in the same growth medium according to the CBM prediction, which we denote as λmax. In other terms, based on the experimental data sets considered in [76], [91], bacteria appear unable to strictly maximize their growth rates. On the other hand, the distribution of growth rates implied by (13) at β = 0 (i.e., when all flux patterns satisfying (12) are equally likely) is of the form

$$q(\lambda) = A\,\lambda^{a}\,(\lambda_{\max}-\lambda)^{b} \qquad (14)$$

where a and b are constants that depend on the metabolic network reconstruction one is employing (see footnote 4) and A is a normalization constant. The growth-rate landscape depicted by (14) is extremely heterogeneous, with a small set of states with growth rates close to λmax living in a huge sea of slow-growing flux patterns (see the dashed curves in Figure 2A). This suggests that any random noise added to a fast-growing flux pattern will, with overwhelming probability, cause a drastic growth rate reduction.
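To get a feeling for how lopsided this landscape is, one can estimate the fraction of uniformly sampled flux patterns whose growth rate exceeds a given fraction x of the maximum. The short calculation below takes the uniform-sampling density of growth rates to be a power law in the distance from the maximum, which is an assumption of this sketch, and uses the values a = 0 and b = 22 quoted in the caption of Figure 2. The symbols q and x are introduced here only for this example.

```latex
\begin{align*}
q(\lambda) &= A\,(\lambda_{\max}-\lambda)^{b}, \qquad
A = \frac{b+1}{\lambda_{\max}^{\,b+1}} \qquad (a = 0), \\
\mathrm{Prob}(\lambda > x\,\lambda_{\max})
  &= \int_{x\lambda_{\max}}^{\lambda_{\max}} q(\lambda)\,d\lambda
   = (1-x)^{b+1}, \\
b = 22: \quad & x = 0.5 \;\Rightarrow\; 2^{-23} \approx 1.2\times 10^{-7},
\qquad x = 0.8 \;\Rightarrow\; 0.2^{23} \approx 8.4\times 10^{-17}.
\end{align*}
```

Under these assumptions, fewer than about one in ten million uniformly drawn flux patterns would grow faster than half the maximum rate, which is why undirected noise almost always pushes a fast-growing state towards slower growth.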

Taking these observations together, one sees that the space of allowed flux patterns can affect, in a quantitatively measurable way, the growth rate distributions that a population of cells in a given medium will achieve. In particular, one could argue that empirical growth rate distributions found in experiments at single-cell resolution might be explained in terms of a trade-off between the higher fitness of fast-growing phenotypes and the higher entropy (numerosity) of slow-growing ones that is established due to the action of noise. This idea has been tested in a mathematical model of an E. coli population evolving in time in the CBM-based fitness landscape (14). Indeed, when bacterial growth rates, along with driving replication, were assumed to fluctuate in time according to a small diffusive noise in the feasible space (which counteracts, with high probability, the tendency of the population to concentrate around the fastest growth rates achievable), a scenario that is essentially identical to that described by the MaxEnt distribution was recovered despite starting from very different premises [91]. A more careful mathematical study of the same dynamical model has predicted, among other things, that, within such a scenario, response times to perturbations should be inversely correlated with the difference between the maximum achievable growth rate and the population average [93]. In other terms, populations growing sub-optimally may be more efficient in responding to stresses, a prediction that in principle can be tested experimentally.

Given that a single number, i.e. β, appears to provide a full description of empirical growth rate distributions via (13), it would be important to have a more thorough understanding of its physical and biological meaning. According to (13), a larger β implies a faster mean growth rate, but can one point to the physico-chemical and biological determinants of growth that contribute to establishing its value in a bacterial population? More specifically, can one identify the factors that limit β? In principle, one would expect the growth medium to play a central role in the answer to these questions. However, the picture emerging from a mathematical model in which β can be computed from first principles is more involved. In particular, Ref. [94] considered a population growing in a certain environment and characterized by a given growth rate distribution. Imagine sampling individuals from that population, each carrying its intrinsic growth rate sampled from this distribution, and subsequently planting them as the initial inoculum in a new growth medium with carrying capacity K. By assuming that growth follows the basic logistic model, it was found that the seeds evolve in time into a population whose growth rate distribution is proportional to the distribution from which the seeds were drawn multiplied by an exponential factor of the form $e^{\beta\lambda}$, where β is now a quantity that depends in a mathematically precise way on the capacity-to-inoculum ratio and on the growth rate distribution from which the seeds were sampled. The former dependence encodes, as expected, the growth medium via K. Interestingly, though, the presence of the inoculum size says that the population maintains a memory of initial conditions. On the other hand, the fact that β is also a function of the seed distribution points, perhaps unexpectedly, towards history-dependence. This theoretical picture, which characterizes the maximum entropy scenario at a deeper level and provides quantitative support to some possibly intuitive facts (such as history- and inoculum-dependence of growth properties), has been in part confirmed by empirical data on cancer growth rates [94]. Clearly, this oversimplified model does not account explicitly for factors like direct cell-cell interactions or feedbacks between growth and regulation or nutrient availability. Still, it is interesting that the parameter β, which in principle is introduced here only to enforce a constraint in the maximum entropy scenario, can be given a well-defined physical interpretation. The integration of further empirical data and possibly constraints (e.g. concerning individual fluxes) will hopefully provide new insight into this picture. Preliminary results obtained in this direction are encouraging [95].
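The mechanism behind this result can be illustrated with a minimal simulation. The sketch below assumes a specific form of logistic growth, namely that the abundance n_i of each seed lineage obeys dn_i/dt = λ_i n_i (1 − N/K), with N the total population size; this form, the parameter values and the function names are assumptions made for illustration and are not taken from Ref. [94]. Under this assumption every lineage is amplified by exp(λ_i τ), where τ is the time integral of (1 − N/K), so that at saturation the seed distribution ends up multiplied by exp(βλ) with a single β fixed by the condition that the total population equals K.

```python
import math
import random


def simulate_logistic(rates, n0, capacity, dt=0.002, t_max=40.0):
    """Euler integration of dn_i/dt = rate_i * n_i * (1 - N / K)."""
    n = list(n0)
    for _ in range(int(t_max / dt)):
        brake = 1.0 - sum(n) / capacity  # common slowdown as N approaches K
        n = [x + r * x * brake * dt for x, r in zip(n, rates)]
    return n


def solve_beta(rates, n0, capacity, lo=0.0, hi=100.0, tol=1e-12):
    """Root of sum_i n_i(0) * exp(beta * rate_i) = K, found by bisection."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        total = sum(x0 * math.exp(mid * r) for x0, r in zip(n0, rates))
        if total < capacity:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


rng = random.Random(2)
rates = [rng.uniform(0.5, 2.0) for _ in range(50)]  # intrinsic growth rates of the seeds
seeds = [1.0] * len(rates)                          # inoculum: one unit per seed
capacity = 1000.0 * sum(seeds)                      # capacity-to-inoculum ratio of 1000

final = simulate_logistic(rates, seeds, capacity)
# If n_i(T) = n_i(0) * exp(beta * rate_i), this ratio is the same for every lineage.
implied = [math.log(nf / x0) / r for nf, x0, r in zip(final, seeds, rates)]
beta_exact = solve_beta(rates, seeds, capacity)
```

The values in `implied` agree with each other and with `beta_exact` up to the discretization error of the Euler scheme. Changing the capacity, the inoculum size or the list of seed growth rates changes β, in line with the dependence on medium, initial conditions and history described above.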

While the maximum entropy idea has been employed within CBMs for specific purposes like objective function reconstruction, metabolic pathway analysis or to compute distributions of individual fluxes or chemical potentials over the polytope [96], [97], [98], [99], [100], [101], [102], [103], [104], [105], [106], [107], [108], [109], the approach just discussed presents an overall view of cellular metabolism that differs significantly from that of mechanistic CBMs such as Flux Balance Analysis [110] or related ideas [111], [112], [113], despite the fact that both rely on essentially the same physical NESS assumption via (12). In many ways, the two frameworks appear to be complementary. Flux Balance Analysis is capable of describing the optimal metabolic states of fastest growth achievable by a cell in a multitude of environmental and intracellular conditions. The maximum entropy approach, instead, can clarify in quantitative terms how far from the optimum an actual population is, and what population-level feature might be shaping the observed growth rate heterogeneity. These views might become more tightly linked upon further investigating the underlying regulatory mechanisms, molecular interactions or trade-offs limiting growth.

2.4. Beyond the basic framework

The major advantage of the maximum entropy principle lies perhaps in its ability to cope effectively with limited data. The space of states accessible to a living system is huge and always undersampled in experiments. Still, as long as the available data permit the estimation of basic statistical observables with sufficient accuracy, a variety of efficient computational methods exist that allow one to compute the parameters of the MaxEnt distribution reliably, leading to a compact, least-biased and mathematically sound representation of a complex, high-dimensional interacting system. We have attempted to describe the fundamentals of entropy maximization and its use for biological applications, opting to focus on two of perhaps the simplest instances involving the study of biological genome-scale networks. Different applications do not require conceptual changes to the approach we presented, but may rely on a more careful and/or involved definition of the state variables (expression profiles or metabolic flux profiles in the examples we considered). Yet some of the issues that we have chosen to leave aside so far now deserve a deeper (albeit brief) discussion.

In the first place, we have seen that computing the MaxEnt distribution is akin to constructing a statistical model of the system one is interested in. The inferred model will inevitably depend on the empirical information encoded in the constraints under which entropy is maximized. In turn, while MaxEnt models necessarily reproduce the information used to build them, their predictive power will depend on the encoded constraints as well. In many cases, maximum entropy models can correctly reproduce correlations of order higher than those included as constraints (see e.g. [16], [24] for examples, and [114] for a broader theoretical analysis). Still, predictions concerning other quantities may turn out to be incorrect. Assuming the imposed constraints are factual, this can happen essentially for a single reason: the constrained quantities do not, by themselves, localize the distribution over states where the new observations are matched. In other terms, more (or different) constraints are required. Clearly, as constraints map to physical or biological ingredients, missing constraints can point to useful insight about the system under study. An example of this is shown in [115], where some phenomenological aspects of the coexistence between fast-growing and persister phenotypes in bacterial populations are explained in terms of a maximum entropy approach with a constraint on growth rate fluctuations.

Secondly, in our presentation we have taken for granted that cells are indistinguishable (i.e., an arrangement is indistinguishable from the one obtained by exchanging the positions of two balls) and that the set of allowed states is discrete (e.g., K urns). As it turns out, these are the simplest and ideal conditions for applying the maximum entropy principle. In many of the biological applications discussed in the literature, it can be argued that both conditions are satisfied. However, this is not generally so. For instance, reaction fluxes are continuous variables and the space of allowed flux configurations is therefore continuous rather than discrete. While nothing invalidates the maximum entropy principle in such cases and the conclusions are unchanged, some more care is needed in setting the stage for it in the presence of continuous variables. The interested reader will find more details e.g. in [61], [116].

Thirdly, MaxEnt models like that described by (11) implicitly postulate, via the constraint (10), that the pairwise interaction coefficients are symmetric, i.e. that the coefficient coupling gene i to gene j equals the one coupling gene j to gene i. This amounts to assuming that the gene-gene interaction network is a priori undirected, which obviously is at odds with a host of biological evidence. To overcome this limitation, which evidently occurs in the maximum entropy approach whenever correlations are constrained, one has to abandon the framework discussed here and resort to methods that infer dynamical models (as opposed to static ones that can be fully described by the probability to observe a certain state). The reasons are rooted in the fundamental distinction between equilibrium and off-equilibrium processes, a nice discussion of which can be found in [60]. Efficient computational methods have been developed to deal with such situations as well, and we again refer the reader to [69] for a recent overview. A specific generalization of the maximum entropy idea that focuses on inferring distributions of dynamical trajectories in the configuration space is known as ‘Maximum Caliber’ [117] (see also [118]). Applications of these ideas are presented e.g. in [119], [120], [121], [122], [123] (see also [60] for a review).

Fourth, in our presentation we have tacitly assumed that (i) a MaxEnt distribution exists (i.e., that, once the mathematical problem is constructed, there is a distribution that actually maximizes the entropy subject to the imposed constraints), and that (ii) it is unique (as intuitively desirable to avoid ambiguities, and in compliance with one of the Shore–Johnson axioms). Whether this is the case ultimately depends on the imposed constraints. For our purposes, it should suffice to say that whenever the constraints are linear functions of the probability distribution, as are e.g. (8), (9) and (10), as well as in all instances discussed here, both (i) and (ii) are true. Yet, in certain situations this may not be the case [124]. The maximum entropy approach then loses some of its appeal and the problem of performing robust inference from limited data has to be treated on a case-by-case basis with extra care.
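The role of linearity can be made explicit with a short argument, sketched here for a finite state space and for the Shannon entropy, written as H[p] to avoid confusion with the stoichiometric matrix. The symbols f_k, F_k and the set C of admissible distributions are notation introduced for this sketch only. Linear constraints define a convex set of admissible distributions, and a strictly concave function cannot have two distinct maximizers on a convex set.

```latex
\begin{align*}
&H[p] = -\sum_{x} p(x)\ln p(x), \qquad
 \mathcal{C} = \Big\{ p \ge 0 :\ \sum_x p(x) = 1,\ \ \sum_x p(x)\,f_k(x) = F_k \ \ \forall k \Big\}, \\
&p, q \in \mathcal{C},\ \ 0 \le t \le 1 \ \Longrightarrow\ t\,p + (1-t)\,q \in \mathcal{C}
 \quad \text{(linear constraints are preserved by mixing)}, \\
&p \ne q,\ \ 0 < t < 1 \ \Longrightarrow\ H[t\,p + (1-t)\,q] > t\,H[p] + (1-t)\,H[q]
 \quad \text{(strict concavity of } H\text{)}.
\end{align*}
```

If two distinct admissible distributions both attained the maximal entropy, their equal-weight mixture would be admissible and would have strictly larger entropy, which is a contradiction; hence the maximizer is unique. In the finite case existence follows because the admissible set is closed and bounded and H is continuous, provided the constraints are mutually compatible so that the set is not empty.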

A final important point we have so far not addressed concerns the treatment of measurement errors affecting the features used to constrain entropy maximization. In practice, inference is always performed under uncertain constraints, which in turn leads to uncertain estimates of the inferred parameters (i.e., for instance, of the inferred interaction structure). The straightforward application of the maximum entropy idea discussed so far effectively ignores this aspect. In order to account for it, one can extend the MaxEnt framework presented here in the direction of Bayesian inference, which explicitly deals with probability distributions of parameters given the data [125]. The reconciliation of the maximum entropy and Bayesian approaches poses several challenges and has a long and intriguing history [126], [127], up to very recent applications [6], [11], [128], [129], [130]. A related aspect concerns MaxEnt inference with missing or incomplete data, a well-known example of which is discussed in [48], [131]. Such cases have long been considered in the inference literature [132] and specific methods have been developed to deal with them [133], [134], [135]. As more high-resolution data probing large-scale biological networks become available, these techniques are highly likely to be extended well beyond their current application domains.

3. Conclusions

The existence of reproducible quantitative relationships connecting the growth of a bacterial population to the composition of the underlying cells (e.g. in terms of the RNA/protein ratio) suggests that, when integrated over large enough cell populations, regulatory and metabolic mechanisms can generate stable outcomes in spite of the heterogeneity and noise that affect them all [136]. Significant deviations from the expected outcome are rare. From a statistical viewpoint, one might say that in such cases the law of large numbers (or more precisely the central limit theorem [137]) is at work, and population-level properties will be roughly independent of the way in which cells in the population distribute over allowed states (or, equivalently, that all viable distributions lead to the same population-level properties). On the other hand, this distribution encodes critical biological information related to robustness, selection and evolvability, and having access to it would be of paramount importance.

With detailed information about individual intracellular processes, one may hope to construct sufficiently comprehensive mechanistic models capable of mapping the behaviour of individual cells, in terms of their regulatory and metabolic activity, to population-level observables. It is however unclear whether such a ‘direct’ approach, whose concrete realization would be severely hampered by the huge number of variables and parameters to be accounted for, would allow one to fully uncover how cells are distributed over allowed regulatory states, as the space of states to be explored would be dauntingly large. The ‘inverse’ route consists in trying to infer the distribution from the observed population-level behaviour. As many distributions are likely to be compatible with empirical results, one would ideally want to be able to sample all of them. The space of such distributions can however be prohibitively large for systems as complex as cells. Therefore, the problem of selecting, out of this space, the most informative distribution has to be faced. The maximum entropy principle provides a constructive and mathematically controlled answer: the optimal choice is the distribution that maximizes the entropy (4) subject to empirical constraints.

Some of the issues raised above however suggest that, as much as the maximum entropy principle provides powerful means to extract models and/or useful low-dimensional representations from complex, high-dimensional and limited data, there is room to dissect its fundamentals [138], [139], re-analyze its use [140], [141], or search for alternatives [142]. In our view, besides providing essential theoretical insight, these contributions also highlight some of the main practical challenges that biological datasets pose to computational and theoretical scientists, whose ultimate goals are interpreting them and using them to build e.g. predictive models and de novo design protocols. These challenges have ultimately been the key driver behind the massive progress achieved in the study of the so-called ‘inverse problems’ in statistical physics over the past decade. As one can only envision that data will continue to get more and more abundant, of higher quality and increasingly diverse (as novel conceptual schemes emerge), the push for technical improvements and new schemes will likely escalate. In turn, maximum entropy methods may spread further in the coming years, as they are capable of extracting the simplest and least biased conclusions that one can reliably draw from limited data. Some of the new directions that are being probed have already shown promise for applications in biology [143], [144].

Author contribution statement

All authors listed have significantly contributed to the development and the writing of this article.

Funding statement

This work was supported by the European Union's Horizon 2020 Research and Innovation Staff Exchange program MSCA-RISE-2016 under grant agreement no. 734439 INFERNET, and by the People Programme (Marie Curie Actions) of the European Union's Seventh Framework Programme (FP7/2007-2013) under Research Executive Agency (REA) Grant Agreement No. 291734.

Competing interest statement

The authors declare no conflict of interest.

Additional information

Supplementary content related to this article has been published online at https://doi.org/10.1016/j.heliyon.2018.e00596.

No additional information is available for this paper.

Acknowledgements

We gratefully acknowledge Alfredo Braunstein, Anna Paola Muntoni and Andrea Pagnani for useful insight and suggestions.

Footnotes

To give an idea, for and , the arrangement with , and has . If , the arrangement with , and has instead (twice as large). If and , the arrangement with , and has instead .

Notice however that many arrangements have . In particular, any arrangement with N balls in one urn and none in the others has . Hence there are K ways to realize an arrangement, corresponding to the fact that there are K possible choices for the urn in which to place the N balls.

Note that, because , the number of constraints is (where the three addends correspond respectively to (8), (9) and (10)), and it matches the number of constants to be determined. This makes the problem of retrieving the values of the above parameters mathematically well defined.

For instance, one has and for E. coli's on the carbon catabolic core, but and for the full genome-scale network given in [90].

Supplementary material

The following Supplementary material is associated with this article:

Supplementary Text: basic derivations.

References

- 1. Jaynes E.T. Cambridge University Press; 2003. Probability Theory: The Logic of Science.

- 2. Lapedes A.S., Giraud B.G., Liu L., Stormo G.D. vol. 33. Institute of Mathematical Statistics; 1999. Statistics in Molecular Biology and Genetics; pp. 236–256. (Lect. Notes Monogr. Ser.).

- 3. Seno F., Trovato A., Banavar J.R., Maritan A. Maximum entropy approach for deducing amino acid interactions in proteins. Phys. Rev. Lett. 2008;100(7) doi: 10.1103/PhysRevLett.100.078102.

- 4. Weigt M., White R.A., Szurmant H., Hoch J.A., Hwa T. Identification of direct residue contacts in protein–protein interaction by message passing. Proc. Natl. Acad. Sci. 2009;106(1):67–72. doi: 10.1073/pnas.0805923106.

- 5. Morcos F., Pagnani A., Lunt B., Bertolino A., Marks D.S., Sander C., Zecchina R., Onuchic J.N., Hwa T., Weigt M. Direct-coupling analysis of residue coevolution captures native contacts across many protein families. Proc. Natl. Acad. Sci. 2011;108(49):E1293–E1301. doi: 10.1073/pnas.1111471108.

- 6. Pitera J.W., Chodera J.D. On the use of experimental observations to bias simulated ensembles. J. Chem. Theory Comput. 2012;8(10):3445–3451. doi: 10.1021/ct300112v.

- 7. Hopf T.A., Colwell L.J., Sheridan R., Rost B., Sander C., Marks D.S. Three-dimensional structures of membrane proteins from genomic sequencing. Cell. 2012;149(7):1607–1621. doi: 10.1016/j.cell.2012.04.012.

- 8. Roux B., Weare J. On the statistical equivalence of restrained-ensemble simulations with the maximum entropy method. J. Chem. Phys. 2013;138(8) doi: 10.1063/1.4792208.

- 9. Cavalli A., Camilloni C., Vendruscolo M. Molecular dynamics simulations with replica-averaged structural restraints generate structural ensembles according to the maximum entropy principle. J. Chem. Phys. 2013;138(9) doi: 10.1063/1.4793625.

- 10. Ekeberg M., Lövkvist C., Lan Y., Weigt M., Aurell E. Improved contact prediction in proteins: using pseudolikelihoods to infer Potts models. Phys. Rev. E. 2013;87(1) doi: 10.1103/PhysRevE.87.012707.

- 11. Boomsma W., Ferkinghoff-Borg J., Lindorff-Larsen K. Combining experiments and simulations using the maximum entropy principle. PLoS Comput. Biol. 2014;10(2) doi: 10.1371/journal.pcbi.1003406.

- 12. Zhang B., Wolynes P.G. Topology, structures, and energy landscapes of human chromosomes. Proc. Natl. Acad. Sci. 2015;112(19):6062–6067. doi: 10.1073/pnas.1506257112.

- 13. Cesari A., Reißer S., Bussi G. Using the maximum entropy principle to combine simulations and solution experiments. 2018. arXiv:1801.05247 preprint.

- 14. Farré P., Emberly E. A maximum-entropy model for predicting chromatin contacts. PLoS Comput. Biol. 2018;14(2) doi: 10.1371/journal.pcbi.1005956.

- 15. D'haeseleer P., Liang S., Somogyi R. Genetic network inference: from co-expression clustering to reverse engineering. Bioinformatics. 2000;16(8):707–726. doi: 10.1093/bioinformatics/16.8.707.

- 16. Lezon T.R., Banavar J.R., Cieplak M., Maritan A., Fedoroff N.V. Using the principle of entropy maximization to infer genetic interaction networks from gene expression patterns. Proc. Natl. Acad. Sci. 2006;103(50):19033–19038. doi: 10.1073/pnas.0609152103.

- 17. Dhadialla P.S., Ohiorhenuan I.E., Cohen A., Strickland S. Maximum-entropy network analysis reveals a role for tumor necrosis factor in peripheral nerve development and function. Proc. Natl. Acad. Sci. 2009;106(30):12494–12499. doi: 10.1073/pnas.0902237106.

- 18. Remacle F., Kravchenko-Balasha N., Levitzki A., Levine R.D. Information-theoretic analysis of phenotype changes in early stages of carcinogenesis. Proc. Natl. Acad. Sci. 2010;107(22):10324–10329. doi: 10.1073/pnas.1005283107.

- 19. Huynh-Thu V.A., Sanguinetti G. Gene regulatory network inference: an introductory survey. 2018. arXiv:1801.04087 preprint.

- 20. Locasale J.W., Wolf-Yadlin A. Maximum entropy reconstructions of dynamic signaling networks from quantitative proteomics data. PLoS ONE. 2009;4(8) doi: 10.1371/journal.pone.0006522.

- 21. Graeber T.G., Heath J.R., Skaggs B.J., Phelps M.E., Remacle F., Levine R.D. Maximal entropy inference of oncogenicity from phosphorylation signaling. Proc. Natl. Acad. Sci. 2010;107(13):6112–6117. doi: 10.1073/pnas.1001149107.

- 22. Shin Y.S., Remacle F., Fan R., Hwang K., Wei W., Ahmad H., Levine R.D., Heath J.R. Protein signaling networks from single cell fluctuations and information theory profiling. Biophys. J. 2011;100(10):2378–2386. doi: 10.1016/j.bpj.2011.04.025.

- 23. Sharan R., Karp R.M. Reconstructing Boolean models of signaling. J. Comput. Biol. 2013;20(3):249–257. doi: 10.1089/cmb.2012.0241.

- 24. Schneidman E., Berry M.J., Segev R., Bialek W. Weak pairwise correlations imply strongly correlated network states in a neural population. Nature. 2006;440(7087):1007–1012. doi: 10.1038/nature04701.

- 25. Shlens J., Field G.D., Gauthier J.L., Grivich M.I., Petrusca D., Sher A., Litke A.M., Chichilnisky E.J. The structure of multi-neuron firing patterns in primate retina. J. Neurosci. 2006;26(32):8254–8266. doi: 10.1523/JNEUROSCI.1282-06.2006.

- 26. Tang A., Jackson D., Hobbs J., Chen W., Smith J.L., Patel H., Prieto A., Petrusca D., Grivich M.I., Sher A., Hottowy P. A maximum entropy model applied to spatial and temporal correlations from cortical networks in vitro. J. Neurosci. 2008;28(2):505–518. doi: 10.1523/JNEUROSCI.3359-07.2008.

- 27. Cocco S., Leibler S., Monasson R. Neuronal couplings between retinal ganglion cells inferred by efficient inverse statistical physics methods. Proc. Natl. Acad. Sci. 2009;106(33):14058–14062. doi: 10.1073/pnas.0906705106.

- 28. Roudi Y., Nirenberg S., Latham P.E. Pairwise maximum entropy models for studying large biological systems: when they can work and when they can't. PLoS Comput. Biol. 2009;5(5) doi: 10.1371/journal.pcbi.1000380.

- 29. Tkacik G., Prentice J.S., Balasubramanian V., Schneidman E. Optimal population coding by noisy spiking neurons. Proc. Natl. Acad. Sci. 2010;107(32):14419–14424. doi: 10.1073/pnas.1004906107.

- 30. Ohiorhenuan I.E., Mechler F., Purpura K.P., Schmid A.M., Hu Q., Victor J.D. Sparse coding and high-order correlations in fine-scale cortical networks. Nature. 2010;466(7306):617–621. doi: 10.1038/nature09178.

- 31. Yeh F.C., Tang A., Hobbs J.P., Hottowy P., Dabrowski W., Sher A., Litke A., Beggs J.M. Maximum entropy approaches to living neural networks. Entropy. 2010;12(1):89–106.

- 32. Ganmor E., Segev R., Schneidman E. Sparse low-order interaction network underlies a highly correlated and learnable neural population code. Proc. Natl. Acad. Sci. 2011;108(23):9679–9684. doi: 10.1073/pnas.1019641108.

- 33. Granot-Atedgi E., Tkacik G., Segev R., Schneidman E. Stimulus-dependent maximum entropy models of neural population codes. PLoS Comput. Biol. 2013;9(3) doi: 10.1371/journal.pcbi.1002922.

- 34. Tkacik G., Marre O., Mora T., Amodei D., Berry M.J., II, Bialek W. The simplest maximum entropy model for collective behavior in a neural network. J. Stat. Mech. 2013;2013(03)

- 35. Ferrari U., Obuchi T., Mora T. Random versus maximum entropy models of neural population activity. Phys. Rev. E. 2017;95(4) doi: 10.1103/PhysRevE.95.042321.

- 36. Rostami V., Mana P.P., Grün S., Helias M. Bistability, non-ergodicity, and inhibition in pairwise maximum-entropy models. PLoS Comput. Biol. 2017;13(10) doi: 10.1371/journal.pcbi.1005762.

- 37. Nghiem T.A., Telenczuk B., Marre O., Destexhe A., Ferrari U. Maximum entropy models reveal the correlation structure in cortical neural activity during wakefulness and sleep. 2018. arXiv:1801.01853 preprint.

- 38. Yeo G., Burge C.B. Maximum entropy modeling of short sequence motifs with applications to RNA splicing signals. J. Comput. Biol. 2004;11(2–3):377–394. doi: 10.1089/1066527041410418.

- 39. Mora T., Walczak A.M., Bialek W., Callan C.G. Maximum entropy models for antibody diversity. Proc. Natl. Acad. Sci. 2010;107(12):5405–5410. doi: 10.1073/pnas.1001705107.

- 40. Santolini M., Mora T., Hakim V. A general pairwise interaction model provides an accurate description of in vivo transcription factor binding sites. PLoS ONE. 2014;9(6) doi: 10.1371/journal.pone.0099015.

- 41. Ferguson A.L., Mann J.K., Omarjee S., Ndungu T., Walker B.D., Chakraborty A.K. Translating HIV sequences into quantitative fitness landscapes predicts viral vulnerabilities for rational immunogen design. Immunity. 2013;38(3):606–617. doi: 10.1016/j.immuni.2012.11.022.

- 42. Shekhar K., Ruberman C.F., Ferguson A.L., Barton J.P., Kardar M., Chakraborty A.K. Spin models inferred from patient-derived viral sequence data faithfully describe HIV fitness landscapes. Phys. Rev. E. 2013;88(6) doi: 10.1103/PhysRevE.88.062705.

- 43.Mann J.K., Barton J.P., Ferguson A.L., Omarjee S., Walker B.D., Chakraborty A., Ndungu T. The fitness landscape of HIV-1 gag: advanced modeling approaches and validation of model predictions by in vitro testing. PLoS Comput. Biol. 2014;10(8) doi: 10.1371/journal.pcbi.1003776. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Bialek W., Cavagna A., Giardina I., Mora T., Silvestri E., Viale M., Walczak A.M. Statistical mechanics for natural flocks of birds. Proc. Natl. Acad. Sci. 2012;109(13):4786–4791. doi: 10.1073/pnas.1118633109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Bialek W., Cavagna A., Giardina I., Mora T., Pohl O., Silvestri E., Viale M., Walczak A.M. Social interactions dominate speed control in poising natural flocks near criticality. Proc. Natl. Acad. Sci. 2014;111(20):7212–7217. doi: 10.1073/pnas.1324045111. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Cavagna A., Giardina I., Ginelli F., Mora T., Piovani D., Tavarone R., Walczak A.M. Dynamical maximum entropy approach to flocking. Phys. Rev. E. 2014;89(4) doi: 10.1103/PhysRevE.89.042707. [DOI] [PubMed] [Google Scholar]

- 47.Shipley B., Vile D., Garnier É. From plant traits to plant communities: a statistical mechanistic approach to biodiversity. Science. 2006;314(5800):812–814. doi: 10.1126/science.1131344. [DOI] [PubMed] [Google Scholar]

- 48.Phillips S.J., Anderson R.P., Schapire R.E. Maximum entropy modeling of species geographic distributions. Ecol. Model. 2006;190(3):231–259. [Google Scholar]

- 49.Pueyo S., He F., Zillio T. The maximum entropy formalism and the idiosyncratic theory of biodiversity. Ecol. Lett. 2007;10(11):1017–1028. doi: 10.1111/j.1461-0248.2007.01096.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Dewar R.C., Porté A. Statistical mechanics unifies different ecological patterns. J. Theor. Biol. 2008;251(3):389–403. doi: 10.1016/j.jtbi.2007.12.007. [DOI] [PubMed] [Google Scholar]

- 51.Phillips S.J., Dudik M. Modeling of species distributions with Maxent: new extensions and a comprehensive evaluation. Ecography. 2008;31(2):161–175. [Google Scholar]

- 52.Harte J., Zillio T., Conlisk E., Smith A.B. Maximum entropy and the state-variable approach to macroecology. Ecology. 2008;89(10):2700–2711. doi: 10.1890/07-1369.1. [DOI] [PubMed] [Google Scholar]

- 53.Volkov I., Banavar J.R., Hubbell S.P., Maritan A. Inferring species interactions in tropical forests. Proc. Natl. Acad. Sci. 2009;106(33):13854–13859. doi: 10.1073/pnas.0903244106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.He F. Maximum entropy, logistic regression, and species abundance. Oikos. 2010;119(4):578–582. [Google Scholar]

- 55.White E.P., Thibault K.M., Xiao X. Characterizing species abundance distributions across taxa and ecosystems using a simple maximum entropy model. Ecology. 2012;93(8):1772–1778. doi: 10.1890/11-2177.1. [DOI] [PubMed] [Google Scholar]

- 56.Fisher C.K., Mora T., Walczak A.M. Variable habitat conditions drive species covariation in the human microbiota. PLoS Comput. Biol. 2017;13(4) doi: 10.1371/journal.pcbi.1005435. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Menon R., Ramanan V., Korolev K.S. Interactions between species introduce spurious associations in microbiome studies. PLoS Comput. Biol. 2018;14(1) doi: 10.1371/journal.pcbi.1005939. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Jaynes E.T. Information theory and statistical mechanics I. Phys. Rev. 1957;106:620–630. [Google Scholar]

- 59.Jaynes E.T. Information theory and statistical mechanics II. Phys. Rev. 1957;108:171–190. [Google Scholar]

- 60.Pressé S., Ghosh K., Lee J., Dill K.A. Principles of maximum entropy and maximum caliber in statistical physics. Rev. Mod. Phys. 2013;85(3):1115. [Google Scholar]

- 61.Lesne A. Shannon entropy: a rigorous notion at the crossroads between probability, information theory, dynamical systems and statistical physics. Math. Struct. Comput. Sci. 2014;24(3) [Google Scholar]

- 62.Stein R.R., Marks D.S., Sander C. Inferring pairwise interactions from biological data using maximum-entropy probability models. PLoS Comput. Biol. 2015;11(7) doi: 10.1371/journal.pcbi.1004182. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 63.Brualdi R.A. 5th ed. Prentice-Hall; 2010. Introductory Combinatorics. [Google Scholar]

- 64.Shannon C. A mathematical theory of communication. Bell Syst. Tech. J. 1948;27:379–423. and 623-656. [Google Scholar]

- 65.Khinchin A.Y. Courier Corporation; 2013. Mathematical Foundations of Information Theory. [Google Scholar]

- 66.Shore J., Johnson R. Axiomatic derivation of the principle of maximum entropy and the principle of minimum cross-entropy. IEEE Trans. Inf. Theory. 1980;26(1):26–37. [Google Scholar]

- 67.Bialek W. Princeton University Press; 2012. Biophysics: Searching for Principles. Chapter 6. [Google Scholar]

- 68.Bertsekas D.P. Academic Press; 2014. Constrained Optimization and Lagrange Multiplier Methods. [Google Scholar]

- 69.Nguyen H.C., Zecchina R., Berg J. Inverse statistical problems: from the inverse Ising problem to data science. Adv. Phys. 2017;66(3):197–261. [Google Scholar]

- 70.Cramér H. Princeton University Press; 2016. Mathematical Methods of Statistics. [Google Scholar]

- 71.Varoquaux G., Craddock R.C. Learning and comparing functional connectomes across subjects. NeuroImage. 2013;80:405–415. doi: 10.1016/j.neuroimage.2013.04.007. [DOI] [PubMed] [Google Scholar]

- 72.Berger A.L., Pietra V.J.D., Pietra S.A.D. A maximum entropy approach to natural language processing. Comput. Linguist. 1996;22(1):39–71. [Google Scholar]

- 73.Stephens G.J., Bialek W. Statistical mechanics of letters in words. Phys. Rev. E. 2010;81(6) doi: 10.1103/PhysRevE.81.066119. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 74.Muñoz-Cobo J.L., Mendizábal R., Miquel A., Berna C., Escrivá A. Use of the Principles of Maximum Entropy and Maximum Relative Entropy for the Determination of Uncertain Parameter Distributions in Engineering Applications. Entropy. 2017;19(9):486. [Google Scholar]

- 75.Wang P., Robert L., Pelletier J., Dang W.L., Taddei F., Wright A., Jun S. Robust growth of Escherichia coli. Curr. Biol. 2010;20(12):1099–1103. doi: 10.1016/j.cub.2010.04.045. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76.Tanouchi Y., Pai A., Park H., Huang S., Buchler N.E., You L. Long-term growth data of Escherichia coli at a single-cell level. Sci. Data. 2017;4 doi: 10.1038/sdata.2017.36. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77.Kiviet D.J., Nghe P., Walker N., Boulineau S., Sunderlikova V., Tans S.J. Stochasticity of metabolism and growth at the single-cell level. Nature. 2014;514(7522):376–379. doi: 10.1038/nature13582. [DOI] [PubMed] [Google Scholar]

- 78.Wallden M., Fange D., Lundius E.G., Baltekin Ö., Elf J. The synchronization of replication and division cycles in individual E. coli cells. Cell. 2016;166(3):729–739. doi: 10.1016/j.cell.2016.06.052. [DOI] [PubMed] [Google Scholar]

- 79.Kennard A.S., Osella M., Javer A., Grilli J., Nghe P., Tans S.J., Cicuta P., Lagomarsino M.C. Individuality and universality in the growth-division laws of single E. coli cells. Phys. Rev. E. 2016;93(1) doi: 10.1103/PhysRevE.93.012408. [DOI] [PubMed] [Google Scholar]

- 80.Si F., Li D., Cox S.E., Sauls J.T., Azizi O., Sou C., Schwartz A.B., Erickstad M.J., Jun Y., Li X., Jun S. Invariance of initiation mass and predictability of cell size in Escherichia coli. Curr. Biol. 2017;27(9):1278–1287. doi: 10.1016/j.cub.2017.03.022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 81.Jun S., Taheri-Araghi S. Cell-size maintenance: universal strategy revealed. Trends Microbiol. 2015;23(1):4–6. doi: 10.1016/j.tim.2014.12.001. [DOI] [PubMed] [Google Scholar]

- 82.Zampieri M., Sekar K., Zamboni N., Sauer U. Frontiers of high-throughput metabolomics. Curr. Opin. Chem. Biol. 2017;36:15–23. doi: 10.1016/j.cbpa.2016.12.006. [DOI] [PubMed] [Google Scholar]

- 83.Thiele I., Palsson B.O. A protocol for generating a high-quality genome-scale metabolic reconstruction. Nat. Protoc. 2010;5(1):93–121. doi: 10.1038/nprot.2009.203. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 84.Schellenberger J., Que R., Fleming R.M., Thiele I., Orth J.D., Feist A.M., Zielinski D.C., Bordbar A., Lewis N.E., Rahmanian S., Kang J. Quantitative prediction of cellular metabolism with constraint-based models: the COBRA Toolbox v2.0. Nat. Protoc. 2011;6(9):1290–1307. doi: 10.1038/nprot.2011.308. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 85.Bordbar A., Monk J.M., King Z.A., Palsson B.O. Constraint-based models predict metabolic and associated cellular functions. Nat. Rev. Genet. 2014;15(2):107–120. doi: 10.1038/nrg3643. [DOI] [PubMed] [Google Scholar]

- 86.Reed J.L. Shrinking the metabolic solution space using experimental datasets. PLoS Comput. Biol. 2012;8 doi: 10.1371/journal.pcbi.1002662. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 87.Schrijver A. John Wiley & Sons; 1998. Theory of Linear and Integer Programming. [Google Scholar]

- 88.De Martino D., Mori M., Parisi V. Uniform sampling of steady states in metabolic networks: heterogeneous scales and rounding. PLoS ONE. 2015;10(4) doi: 10.1371/journal.pone.0122670. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 89.Haraldsdottir H.S., Cousins B., Thiele I., Fleming R.M., Vempala S. CHRR: coordinate hit-and-run with rounding for uniform sampling of constraint-based models. Bioinformatics. 2017;33(11):1741–1743. doi: 10.1093/bioinformatics/btx052. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 90.Reed J.L., Vo T.D., Schilling C.H., Palsson B.O. An expanded genome-scale model of Escherichia coli K-12 (iJR904 GSM/GPR) Genome Biol. 2003;4(9):R54. doi: 10.1186/gb-2003-4-9-r54. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 91.De Martino D., Capuani F., De Martino A. Growth against entropy in bacterial metabolism: the phenotypic trade-off behind empirical growth rate distributions in E. coli. Phys. Biol. 2016;13 doi: 10.1088/1478-3975/13/3/036005. [DOI] [PubMed] [Google Scholar]

- 92.De Martino D., Andersson A., Bergmiller T., Guet C.C., Tkacik G. Statistical mechanics for metabolic networks during steady-state growth. 2017. arXiv:1703.01818 preprint. [DOI] [PMC free article] [PubMed]

- 93.De Martino D., Masoero D. Asymptotic analysis of noisy fitness maximization, applied to metabolism & growth. J. Stat. Mech. 2016;2016(12) [Google Scholar]

- 94.De Martino D., Capuani F., De Martino A. Quantifying the entropic cost of cellular growth control. Phys. Rev. E. 2017;96 doi: 10.1103/PhysRevE.96.010401. 010401(R) [DOI] [PubMed] [Google Scholar]

- 95.De Martino D., De Martino A. Constraint-based inverse modeling of metabolic networks: a proof of concept. 2017. arXiv:1704.08087 preprint.

- 96.Burgard A.P., Maranas C.D. Optimization-based framework for inferring and testing hypothesized metabolic objective functions. Biotechnol. Bioeng. 2003;82(6):670–677. doi: 10.1002/bit.10617. [DOI] [PubMed] [Google Scholar]

- 97.Knorr A.L., Jain R., Srivastava R. Bayesian-based selection of metabolic objective functions. Bioinformatics. 2006;23(3):351–357. doi: 10.1093/bioinformatics/btl619. [DOI] [PubMed] [Google Scholar]

- 98.Heino J., Tunyan K., Calvetti D., Somersalo E. Bayesian flux balance analysis applied to a skeletal muscle metabolic model. J. Theor. Biol. 2007;248(1):91–110. doi: 10.1016/j.jtbi.2007.04.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 99.Chiu H.C., Segrè D. Comparative determination of biomass composition in differentially active metabolic States. Genome Inform. Ser. 2008;20:171–182. doi: 10.1142/9781848163003_0015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 100.Gianchandani E.P., Oberhardt M.A., Burgard A.P., Maranas C.D., Papin J.A. Predicting biological system objectives de novo from internal state measurements. BMC Bioinform. 2008;9(1):43. doi: 10.1186/1471-2105-9-43. [DOI] [PMC free article] [PubMed] [Google Scholar]