Abstract

Purpose

Brain–computer interfaces (BCIs) have the potential to improve communication for people who require but are unable to use traditional augmentative and alternative communication (AAC) devices. As BCIs move toward clinical practice, speech-language pathologists (SLPs) will need to consider their appropriateness for AAC intervention.

Method

This tutorial provides background on BCI approaches to give AAC specialists the foundational knowledge necessary for clinical application of BCI. Tutorial descriptions were generated on the basis of a literature review of BCIs for restoring communication.

Results

The tutorial addresses four major areas of interest for SLPs who specialize in AAC: (a) the current state of BCI with emphasis on the SLP scope of practice (including the subareas of how individuals access AAC with BCI, the efficacy of BCI for AAC, and the effects of fatigue), (b) populations for whom BCI is best suited, (c) the future of BCI as an addition to AAC access strategies, and (d) limitations of BCI.

Conclusion

Current BCIs have been designed as access methods for AAC rather than as replacements for it; therefore, SLPs can use existing knowledge in AAC as a starting point for clinical application. Additional training is recommended to stay current with rapid advances in BCI.

Individuals with severe speech and physical impairments often rely on augmentative and alternative communication (AAC) and specialized access technologies to facilitate communication on the basis of the nature and severity of their speech, motor, and cognitive impairments. In some cases, people who use AAC are able to use specially modified computer peripherals (e.g., mouse, joystick, stylus, or button box) to access AAC devices, whereas in other, more severe cases, sophisticated methods are needed to detect the most subtle of movements (e.g., eye gaze tracking; Fager, Beukelman, Fried-Oken, Jakobs, & Baker, 2012). In the most serious cases of total paralysis with loss of speech (e.g., locked-in syndrome; Plum & Posner, 1972), even these advanced methods are not sufficient to provide access to language and literacy (Oken et al., 2014). Access to communication is critical for maintaining social interactions and autonomy of decision-making in this population (Beukelman & Mirenda, 2013); therefore, individuals with paralysis and akinetic mutism have been identified as potential candidates for brain–computer interface (BCI) access to AAC (Fager et al., 2012).

BCIs for communication extend AAC and access technology by detecting changes in brain activity and using them to control communication software, providing a method for selecting and constructing messages (Wolpaw, Birbaumer, McFarland, Pfurtscheller, & Vaughan, 2002). Specifically, they provide a direct link between an individual and a computer through brain activity alone, without requiring any overt movement or behavior. As an access technique, BCIs have the potential to reduce or eliminate some physical barriers to successful AAC intervention for individuals with severe speech and physical impairments. Like AAC and its associated access techniques, current BCI technology can take a variety of forms depending on the neural signal targeted and the method individuals use to interact with the communication interface. Each of these factors may place different demands on the cognitive and motor abilities of individuals who use BCI (Brumberg & Guenther, 2010). Although the field of BCI has grown over the past decade, many stakeholders, including speech-language pathologists (SLPs), other practitioners, individuals who use AAC and potentially BCI, and caregivers, are unfamiliar with the technology. SLPs are a particularly important stakeholder given their role as the primary service providers who assist clients with communicative challenges secondary to motor limitations through assessment and implementation of AAC interventions and strategies. A lack of core knowledge about the potential clinical use of BCI may hinder future BCI-based AAC intervention in line with established best practices. This tutorial offers basic explanations of BCI, including the benefits and limitations of this access technique and its different varieties. It also describes the individuals who may be best suited for using BCI to access AAC. This information is especially important for SLPs specializing in AAC, who are the most likely to encounter BCIs as the technology moves from research labs into real-world settings (e.g., classrooms, home, work).

Tutorial Descriptions by Topic Area

Topic 1: How Do People Who Use BCI Interact With the Computer?

BCIs are designed to allow individuals to control computers and communication systems using brain activity alone, and they are classified according to whether signals are recorded noninvasively through the scalp or invasively through electrodes implanted in or on the brain. Noninvasive BCIs, which are based on brain recordings made through the intact skull without a surgical procedure (e.g., electroencephalography [EEG], magnetoencephalography, functional magnetic resonance imaging, functional near-infrared spectroscopy), often use an indirect technique that maps brain signals unrelated to communication onto controls for a communication interface (Brumberg, Burnison, & Guenther, 2016). Although many signal acquisition modalities exist for noninvasive recording of brain activity, noninvasive BCIs typically use EEG, which is recorded through electrodes placed on the scalp according to a standard layout (Oostenveld & Praamstra, 2001) and reflects voltage changes resulting from the simultaneous activation of millions of neurons. EEG can be analyzed for its spontaneous activity or for its responses to a stimulus (e.g., event-related potentials), and both have been examined for indirect-access BCI applications. In contrast, another class of BCIs attempts to output speech directly from imagined or attempted productions (Blakely, Miller, Rao, Holmes, & Ojemann, 2008; Brumberg, Wright, Andreasen, Guenther, & Kennedy, 2011; Herff et al., 2015; Kellis et al., 2010; Leuthardt et al., 2011; Martin et al., 2014; Mugler et al., 2014; Pei, Barbour, Leuthardt, & Schalk, 2011; Tankus, Fried, & Shoham, 2012); however, these techniques typically rely on invasively recorded brain signals (via implanted microelectrodes or subdural electrodes) related to speech motor preparation and production. Though still in their infancy, direct BCIs for communication have the potential to completely replace the human vocal tract for individuals with severe speech and physical impairments (Brumberg, Burnison, & Guenther, 2016; Chakrabarti, Sandberg, Brumberg, & Krusienski, 2015); however, the technology does not yet provide a method to “read thoughts.” For the remainder of this tutorial, we focus on noninvasive, indirect methods for accessing AAC with BCIs, and we refer readers to other sources for descriptions of direct BCIs for speech (Brumberg, Burnison, & Guenther, 2016; Chakrabarti et al., 2015).

Indirect methods for BCI parallel other access methods for AAC devices, where nonspeech actions (e.g., button press, direct touch, eye gaze) are translated to a selection on a communication interface. The main difference between the two access methods is that BCIs rely on neurophysiological signals related to sensory stimulation, preparatory motor behaviors, and/or covert motor behaviors (e.g., imagined or attempted limb movements), rather than overt motor behavior used for conventional access. The way in which individuals control a BCI greatly depends on the neurological signal used by the device to make selections on the communication interface. For instance, in the case of an eye-tracking AAC device, one is required to gaze at a communication icon, and the system makes a selection on the basis of the screen coordinates of the eye gaze location. For a BCI, individuals may be required to (a) attend to visual stimuli to generate an appropriate visual–sensory neural response to select the intended communication icon (e.g., Donchin, Spencer, & Wijesinghe, 2000), (b) take part in an operant conditioning paradigm using biofeedback of EEG (e.g., Kübler et al., 1999), (c) listen to auditory stimuli to generate auditory–sensory neural responses related to the intended communication output (e.g., Halder et al., 2010), or (d) imagine movements of the limbs to alter the sensorimotor rhythm (SMR) to select communication items (e.g., Pfurtscheller & Neuper, 2001).

At present, indirect BCIs are more mature as a technology, and many have already begun user trials (Holz, Botrel, Kaufmann, & Kübler, 2015; Sellers, Vaughan, & Wolpaw, 2010). Therefore, SLPs are most likely to be involved with indirect BCIs first as they move from the research lab to the real world. Indirect BCI techniques are very similar to current access technologies for high-tech AAC; for example, the output of the BCI system can act as an input method for conventional AAC devices. Below, we review indirect BCI techniques and highlight their possible future in AAC.

The P300-Based BCI

The visual P300 grid speller (Donchin et al., 2000) is the best-known and most mature technology, with ongoing at-home user trials (Holz et al., 2015; Sellers et al., 2010). Visual P300 BCIs for communication use the P300 event-related potential, a neural response to novel, rare visual stimuli presented among many other visual stimuli, to select items on a communication interface. The traditional graphical layout for a visual P300 speller is a 6 × 6 grid that includes the 26 letters of the alphabet, space, backspace, and numbers (see Figure 1). Each row and column of the spelling grid is highlighted in random order, and a systematic variation in the EEG waveform is generated when one attends to a target item for selection, the “oddball stimulus,” which is highlighted infrequently compared with the remaining items (Donchin et al., 2000). The event-related potential in response to the target item contains a positive voltage deflection approximately 300 ms after the item is highlighted (Farwell & Donchin, 1988). The BCI decoding algorithm then selects the items associated with detected occurrences of the P300 for message creation (Donchin et al., 2000). The P300 grid speller has been operated by individuals with amyotrophic lateral sclerosis (ALS; Nijboer, Sellers, et al., 2008; Sellers & Donchin, 2006) and has been examined as part of at-home trials by individuals with neuromotor impairments (Holz et al., 2015; Sellers & Donchin, 2006), making it a likely candidate for future BCI-based access for AAC.
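The row/column selection logic described above can be illustrated with a small simulation. This is a minimal sketch, not the decoding algorithm of Donchin et al. (2000): it assumes a hypothetical grid layout (`GRID`), replaces real EEG with toy per-flash classifier scores (`simulate_scores`, a hypothetical helper), and simply averages the scores of each row and column over repeated flashes before choosing the row and column with the largest mean response.

```python
import random
from collections import defaultdict

# Hypothetical 6 x 6 layout: 26 letters, 8 digits, space ("_") and backspace ("<").
GRID = [
    list("ABCDEF"),
    list("GHIJKL"),
    list("MNOPQR"),
    list("STUVWX"),
    list("YZ1234"),
    list("5678_<"),
]

def flash_sequence(n_repetitions, rng):
    """Each repetition flashes all 6 rows and 6 columns once, in random order."""
    seq = []
    for _ in range(n_repetitions):
        block = [("row", i) for i in range(6)] + [("col", j) for j in range(6)]
        rng.shuffle(block)
        seq.extend(block)
    return seq

def simulate_scores(seq, target, rng, p300_gain=5.0):
    """Toy stand-in for a per-flash classifier score (hypothetical): noise, plus
    a large positive value when the flashed row/column contains the target."""
    t_row, t_col = target
    scores = []
    for kind, idx in seq:
        hit = (kind == "row" and idx == t_row) or (kind == "col" and idx == t_col)
        scores.append(rng.uniform(-1.0, 1.0) + (p300_gain if hit else 0.0))
    return scores

def decode_selection(seq, scores):
    """Average scores per row and per column; the row and column with the
    largest mean response intersect at the selected item."""
    totals = defaultdict(float)
    counts = defaultdict(int)
    for flash, score in zip(seq, scores):
        totals[flash] += score
        counts[flash] += 1
    means = {flash: totals[flash] / counts[flash] for flash in totals}
    best_row = max(range(6), key=lambda i: means[("row", i)])
    best_col = max(range(6), key=lambda j: means[("col", j)])
    return GRID[best_row][best_col]

rng = random.Random(0)
seq = flash_sequence(n_repetitions=5, rng=rng)
scores = simulate_scores(seq, target=(1, 2), rng=rng)  # row 1, column 2 is "I"
print(decode_selection(seq, scores))
```

Averaging over repetitions is what makes a weak single-trial P300 detectable; using fewer repetitions shortens each selection at the cost of reliability.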

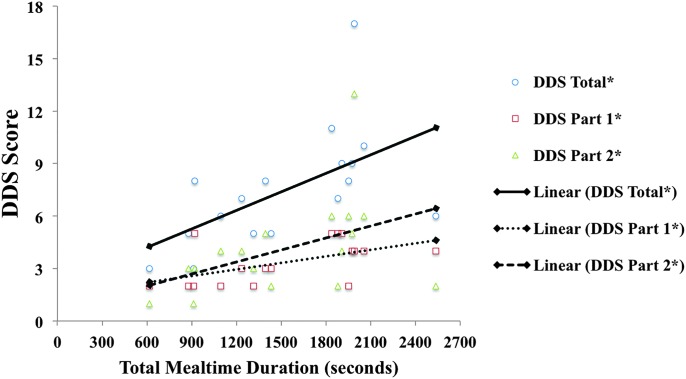

Figure 1.

From left to right, example visual displays for the following BCIs: P300 grid speller, RSVP P300, SSVEP, and motor-based (SMR with keyboard). For the P300 grid, each row and column is highlighted until a letter is selected. In the RSVP, letters are displayed one at a time, in random order, in the center of the screen. For the SSVEP, this example uses four flickering stimuli (at different frequencies) to represent the cardinal directions, which are used to select individual grid items. This can also be done with individual flicker frequencies for all 36 items, subject to certain technical considerations. For the motor-based BCI, this is an example of a binary-selection virtual keyboard; imagined right hand movements select the right set of letters. RSVP = rapid serial visual presentation; SSVEP = steady state visually evoked potential; SMR = sensorimotor rhythm; BCI = brain–computer interfaces. Copyright © Tobii Dynavox. Reprinted with permission.

In addition to the cognitive requirements for operating the P300 speller, successful operation depends in part on the degree of oculomotor control (Brunner et al., 2010). Past findings have shown that the P300 amplitude can be reduced if individuals are unable to use an overt attention strategy (gazing directly at the target) and must instead use a covert strategy (shifting attention without moving the eyes), which can degrade BCI performance (Brunner et al., 2010). An alternative P300 interface displays a single item at a time on the screen (typically in the center, as in Figure 1, second from left) to alleviate concerns for individuals with poor oculomotor control. This interface, known as the rapid serial visual presentation (RSVP) speller, has been successfully controlled by a cohort of individuals across the continuum of locked-in syndrome severity (Oken et al., 2014). All BCIs that use spelling interfaces require sufficient literacy, though many can be adapted to use icon- or symbol-based communication (e.g., Figure 2).

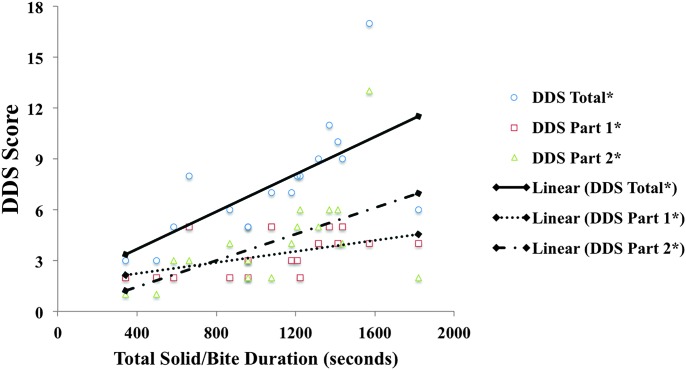

Figure 2.

From left to right, examples of how existing BCI paradigms can be applied to page sets from current AAC devices: P300 grid, SSVEP, motor based (with icon grid). For the P300 grid interface, a row or column is highlighted until a symbol is selected (here, it is yogurt). For the SSVEP, either directional (as shown here) or individual icons flicker at specified strobe rates to either move a cursor or directly select an item. For motor based, the example shown here uses attempted or imagined left hand movements to advance the cursor and right hand movements to choose the currently selected item. SSVEP = steady state visually evoked potential; SMR = sensorimotor rhythm; BCI = brain–computer interfaces; AAC = augmentative and alternative communication. Copyright © Tobii Dynavox. Reprinted with permission.

Auditory stimuli can also be used to elicit P300 responses for interacting with BCI devices, which benefits individuals with poor visual capability (McCane et al., 2014), such as those with severe visual impairment, impaired oculomotor control, or cortical blindness. Auditory interfaces can also be used in poor viewing environments, such as outdoors or in the presence of excessive lighting glare. Like its visual counterpart, the auditory P300 is elicited via an oddball paradigm and has typically been limited to binary (yes/no) selection, achieved by attending to one of two auditory tones presented monaurally, one to each ear (Halder et al., 2010), or to linguistic stimuli (e.g., attending to a “yep” target among “yes” presentations in the right ear vs. “nope” and “no” in the left; Hill et al., 2014). The binary control achieved with the auditory P300 interface has the potential to be used to navigate a spelling grid, similar to conventional auditory scanning techniques for accessing AAC systems, by attending to specific tones that correspond to rows and columns (Käthner et al., 2013; Kübler et al., 2009). There is evidence that auditory grid systems may require greater attention than their visual analogues (Klobassa et al., 2009; Kübler et al., 2009), which should be considered when matching clients to the most appropriate communication device.
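Binary (yes/no) control can drive row–column scanning in the same way a single switch does. The sketch below assumes a hypothetical 5 × 5 letter grid and treats each decoded auditory P300 decision as a boolean answer to "is the highlighted row (or item) the one I want?". For simplicity it performs a single scanning pass with no looping or error recovery; the function names are illustrative only.

```python
# Hypothetical 5 x 5 letter grid (Q omitted only to keep it square).
GRID = [
    list("ABCDE"),
    list("FGHIJ"),
    list("KLMNO"),
    list("PRSTU"),
    list("VWXYZ"),
]

def scan_select(grid, decisions):
    """Row-column scanning driven by binary decisions.

    Each decision answers "is the highlighted row/item the one I want?"
    (True = attended the 'yes' stream, False = attended the 'no' stream).
    Returns the selected item (or None) and the number of decisions used.
    """
    it = iter(decisions)
    used = 0
    row = None
    # Phase 1: rows are offered one at a time until a 'yes'.
    for r in range(len(grid)):
        used += 1
        if next(it):
            row = r
            break
    if row is None:
        return None, used
    # Phase 2: items in the chosen row are offered one at a time.
    for c in range(len(grid[row])):
        used += 1
        if next(it):
            return grid[row][c], used
    return None, used

def ideal_decisions(grid, letter):
    """Decision sequence an error-free user would produce for `letter`."""
    for r, row in enumerate(grid):
        if letter in row:
            c = row.index(letter)
            return [False] * r + [True] + [False] * c + [True]
    raise ValueError(f"{letter!r} is not on the grid")

print(scan_select(GRID, ideal_decisions(GRID, "M")))
```

Because every selection costs (row + 1) + (column + 1) binary decisions, placing frequent letters near the top left shortens the average path, as in conventional scanning layouts.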

Steady State Evoked Potentials

BCIs can be controlled using attention-modulated steady state brain rhythms, as opposed to event-related potentials, in both visual (steady state visually evoked potential [SSVEP]) and auditory (auditory steady state response [ASSR]) domains. Both the SSVEP and ASSR are physiological responses to a driving input stimulus that are amplified when an individual focuses his or her attention on the stimulus (Regan, 1989). Strobe stimuli are commonly used for SSVEP, whereas amplitude-modulated tones are often used for ASSR (Regan, 1989).

BCIs using SSVEP exploit the attention-modulated response to strobe stimuli by simultaneously presenting multiple communication items for selection, each flickering at a different frequency (Cheng, Gao, Gao, & Xu, 2002; Friman, Luth, Volosyak, & Graser, 2007; Müller-Putz, Scherer, Brauneis, & Pfurtscheller, 2005). As a result, all item flicker rates are observable in the EEG recordings, but the frequency of the attended stimulus has the largest amplitude (Lotte, Congedo, Lécuyer, Lamarche, & Arnaldi, 2007; Müller-Putz et al., 2005; Regan, 1989) and the greatest temporal correlation with the strobe stimulus (Chen, Wang, Gao, Jung, & Gao, 2015; Lin, Zhang, Wu, & Gao, 2007). The BCI then selects the stimulus with the greatest neurophysiological response to construct a message, typically via an alphanumeric keyboard (shown in Figure 1), though icons can be adapted for different uses and levels of literacy (e.g., Figure 2). The major advantages of this type of interface are (a) high accuracy rates, often reported above 90% with very little training (e.g., Cheng et al., 2002; Friman et al., 2007), and (b) the possibility of using overlapping, centrally located stimuli for individuals with impaired oculomotor control (Allison et al., 2008). A major concern with this technique, however, is an increased risk of seizures (Volosyak, Valbuena, Lüth, Malechka, & Gräser, 2011).
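The frequency-tagging principle can be sketched by estimating the EEG amplitude at each stimulus frequency and choosing the largest. This minimal example assumes a 250-Hz sampling rate, a 2-s window, and four hypothetical flicker frequencies mapped to the cardinal directions used in Figure 1, and it uses a synthetic signal in place of EEG. It implements only the amplitude criterion; the temporal-correlation approach cited above (e.g., canonical correlation analysis) is not shown.

```python
import math
import random

FS = 250          # sampling rate in Hz (assumed)
DURATION_S = 2.0  # analysis window (assumed); gives 0.5-Hz frequency resolution
# Hypothetical flicker frequencies (Hz) for four directional targets.
TARGETS = {8.0: "up", 10.0: "right", 12.0: "down", 15.0: "left"}

def amplitude_at(signal, fs, freq):
    """Amplitude of a single frequency component, computed from one DFT bin."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * k / fs) for k, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * k / fs) for k, x in enumerate(signal))
    return 2.0 * math.hypot(re, im) / n

def detect_ssvep(signal, fs, stim_freqs):
    """Return the stimulus frequency with the largest EEG amplitude."""
    amps = {f: amplitude_at(signal, fs, f) for f in stim_freqs}
    return max(amps, key=amps.get), amps

def synthetic_eeg(attended, rng, fs=FS, seconds=DURATION_S):
    """Toy signal: every flicker rate is present, the attended one is largest."""
    n = int(fs * seconds)
    return [
        sum((3.0 if f == attended else 1.0) * math.sin(2 * math.pi * f * k / fs)
            for f in TARGETS)
        + rng.gauss(0.0, 0.5)
        for k in range(n)
    ]

eeg = synthetic_eeg(attended=12.0, rng=random.Random(1))
freq, amps = detect_ssvep(eeg, FS, TARGETS)
print(TARGETS[freq])
```

The window length matters: a 2-s window resolves frequencies 0.5 Hz apart, so closely spaced flicker rates need longer windows, which slows each selection.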

BCIs that use the ASSR require one to shift his or her attention to a sound stream that contains a modulated stimulus (e.g., a 1000-Hz carrier tone with 38-Hz amplitude modulation presented monaurally to the right ear, together with a 2500-Hz carrier tone with 42-Hz modulation presented monaurally to the left ear; Lopez, Pomares, Pelayo, Urquiza, & Perez, 2009). As with the SSVEP, the modulation frequency of the attended sound stream is observable in the recorded EEG signal and is amplified relative to the competing stream. Therefore, in this example, if the BCI detects the greatest EEG amplitude at 38 Hz, it performs the binary action associated with the right-ear tone (e.g., yes or “select”), whereas detecting the greatest EEG amplitude at 42 Hz triggers the action associated with the left-ear tone (e.g., no or “advance”).
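The binary decision in this example can be sketched the same way. Here the amplitude at each modulation frequency is estimated with the Goertzel algorithm, a standard way to compute a single DFT bin. Only the 38/42-Hz pairing and the select/advance mapping come from the text; the 250-Hz sampling rate, 2-s window, and synthetic EEG (responses at both modulation rates plus noise) are assumptions for illustration.

```python
import math
import random

FS = 250  # sampling rate in Hz (assumed)

def goertzel_amplitude(signal, fs, freq):
    """Amplitude of `freq` in `signal` using the Goertzel recursion."""
    omega = 2.0 * math.pi * freq / fs
    coeff = 2.0 * math.cos(omega)
    s1 = s2 = 0.0
    for x in signal:
        s = x + coeff * s1 - s2
        s2, s1 = s1, s
    power = s1 * s1 + s2 * s2 - coeff * s1 * s2  # squared magnitude of the bin
    return 2.0 * math.sqrt(max(power, 0.0)) / len(signal)

def assr_decision(eeg, fs=FS, right_mod_hz=38.0, left_mod_hz=42.0):
    """Right-ear stream (38 Hz) -> 'select'; left-ear stream (42 Hz) -> 'advance'."""
    right = goertzel_amplitude(eeg, fs, right_mod_hz)
    left = goertzel_amplitude(eeg, fs, left_mod_hz)
    return "select" if right > left else "advance"

def synthetic_assr(right_amp, left_amp, rng, fs=FS, seconds=2.0):
    """Toy EEG: responses at both modulation rates, larger for the attended ear."""
    return [
        right_amp * math.sin(2 * math.pi * 38.0 * k / fs)
        + left_amp * math.sin(2 * math.pi * 42.0 * k / fs)
        + rng.gauss(0.0, 0.5)
        for k in range(int(fs * seconds))
    ]

rng = random.Random(2)
print(assr_decision(synthetic_assr(1.0, 0.5, rng)))  # attending the right ear
print(assr_decision(synthetic_assr(0.5, 1.0, rng)))  # attending the left ear
```

Goertzel is used here because only two frequencies matter; computing a full spectrum would give the same two values at more cost.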

Motor-Based BCIs

Another class of BCIs provides access to communication interfaces using changes in the SMR, a neurological signal related to motor production and motor imagery (Pfurtscheller & Neuper, 2001; Wolpaw et al., 2002), for individuals with and without neuromotor impairments (Neuper, Müller, Kübler, Birbaumer, & Pfurtscheller, 2003; Vaughan et al., 2006). The SMR is characterized by spontaneous EEG oscillations in the μ (8–12 Hz) and β (18–25 Hz) bands that are known to desynchronize, or decrease in amplitude, during covert and overt movement attempts (Pfurtscheller & Neuper, 2001; Wolpaw et al., 2002). Many motor-based BCIs use left and right limb movement imagery because SMR desynchronization occurs over the hemisphere contralateral to the imagined limb. These BCIs are most often used to control spelling interfaces (e.g., virtual keyboard: Scherer, Müller, Neuper, Graimann, & Pfurtscheller, 2004; DASHER: Wills & MacKay, 2006; hex-o-spell: Blankertz et al., 2006; see Figure 1, right, for an example), though they can also serve as inputs to commercial AAC devices (Brumberg, Burnison, & Pitt, 2016).
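Contralateral desynchronization is commonly quantified with the classic ERD percentage, ERD% = (A − R) / R × 100, where R is band power in a reference (rest) interval and A is band power during movement imagery; negative values indicate desynchronization. The sketch below is a toy illustration under stated assumptions: synthetic 10-Hz "mu" signals stand in for EEG at the standard C3 (left hemisphere) and C4 (right hemisphere) electrode sites, μ-band power is approximated from single DFT bins at 8–12 Hz, and the decision rule simply asks which hemisphere desynchronized more.

```python
import math

FS = 250  # sampling rate in Hz (assumed)

def amplitude_at(signal, fs, freq):
    """Amplitude of one frequency component from a single DFT bin."""
    n = len(signal)
    re = sum(x * math.cos(2 * math.pi * freq * k / fs) for k, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * k / fs) for k, x in enumerate(signal))
    return 2.0 * math.hypot(re, im) / n

def mu_power(signal, fs=FS, band=(8, 12)):
    """Mean squared amplitude over 1-Hz steps of the mu band (a simplification)."""
    freqs = range(band[0], band[1] + 1)
    return sum(amplitude_at(signal, fs, f) ** 2 for f in freqs) / len(freqs)

def erd_percent(activity_power, reference_power):
    """ERD% = (A - R) / R * 100; negative values mean the rhythm desynchronized."""
    return (activity_power - reference_power) / reference_power * 100.0

def classify_hand(erd_c3, erd_c4):
    """Contralateral rule: right-hand imagery -> stronger ERD at C3, and vice versa."""
    return "right hand" if erd_c3 < erd_c4 else "left hand"

def mu_wave(amplitude, seconds=2.0, freq=10.0, fs=FS):
    """Synthetic 10-Hz oscillation standing in for the mu rhythm."""
    return [amplitude * math.sin(2 * math.pi * freq * k / fs)
            for k in range(int(seconds * fs))]

# Toy data: during imagery the mu rhythm halves in amplitude over C3 only.
baseline = {"C3": mu_wave(2.0), "C4": mu_wave(2.0)}
imagery = {"C3": mu_wave(1.0), "C4": mu_wave(2.0)}
erd = {ch: erd_percent(mu_power(imagery[ch]), mu_power(baseline[ch]))
       for ch in ("C3", "C4")}
print(erd, classify_hand(erd["C3"], erd["C4"]))
```

Halving the amplitude quarters the power, which is why the toy C3 channel shows an ERD of about −75% while C4 stays near 0%.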

Two major varieties of motor-based BCIs have been developed for controlling computers: those that provide continuous cursor control (analogous to mouse/joystick and eye gaze) and those that use discrete selection (analogous to button presses). Example layouts of keyboard-based and symbol-based motor BCI interfaces are shown in Figures 1 and 2. Cursor-style BCIs continuously transform changes in the SMR over time into computer control signals (Wolpaw & McFarland, 2004). One example of a continuous, SMR-based BCI uses imagined movements of the hands and feet to move a cursor to select progressively refined groups of letters organized at different locations around a computer screen (Miner, McFarland, & Wolpaw, 1998; Vaughan et al., 2006). Another continuous-style BCI controls the “hex-o-spell” interface, in which imagined movements of the right hand rotate an arrow to point at one of six groups of letters, and imagined foot movements extend the arrow to select the current letter group (Blankertz et al., 2006).
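Continuous translation of SMR changes into cursor movement is commonly implemented as a linear mapping from a brain-signal feature to cursor velocity. The sketch below is a one-dimensional toy version under stated assumptions: `feature` is a hypothetical lateralization score that grows with right-hand imagery, `offset` is its resting mean estimated during calibration, and `gain` and the screen limits are arbitrary. It is not the specific algorithm of Wolpaw and McFarland (2004).

```python
def update_cursor(position, feature, offset, gain=0.5, limit=10.0):
    """One control step: cursor velocity is a linear function of the feature.

    `feature` is a hypothetical SMR lateralization score; `offset` is its
    resting mean from calibration, so rest produces no drift."""
    velocity = gain * (feature - offset)
    return max(-limit, min(limit, position + velocity))

def run_trial(features, offset, gain=0.5, limit=10.0):
    """Integrate cursor steps until it reaches the left or right edge target."""
    position = 0.0
    for feature in features:
        position = update_cursor(position, feature, offset, gain, limit)
        if position >= limit:
            return "right target"
        if position <= -limit:
            return "left target"
    return None  # time ran out before a target was reached

print(run_trial([3.0] * 20, offset=1.0))  # sustained right-hand imagery
print(run_trial([0.0] * 30, offset=1.0))  # feature below resting level
print(run_trial([1.0] * 50, offset=1.0))  # rest: no net movement
```

Subtracting the calibrated offset is the key design choice: without it, any resting bias in the feature would steadily drag the cursor toward one target.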

Discrete-style motor BCIs perform this transformation using the event-related desynchronization (Pfurtscheller & Neuper, 2001), a change in the SMR in response to an external stimulus, such as a row or column automatically highlighted by a scanning interface. One example of a discrete-style motor BCI uses the event-related desynchronization to control a virtual keyboard organized as a binary tree of letters, in which individuals repeatedly choose between two blocks of letters with (imagined) right or left hand movements until a single letter or item remains (Scherer et al., 2004). Most motor-based BCIs require many weeks or months of training for successful operation; they report accuracies greater than 75% for individuals without neuromotor impairments and, in one study, 69% accuracy for individuals with severe neuromotor impairments (Neuper et al., 2003).
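The binary-tree keyboard can be sketched as repeated halving of the candidate set, so an error-free user needs at most log2(N), rounded up, selections for N items (at most five for 27 items). The layout (26 letters plus space) and the simulated user `oracle` are hypothetical; in a real system each call to `choose_left` would be the decoded left- versus right-hand imagery.

```python
import math

def binary_select(items, choose_left):
    """Halve the candidate set on every binary decision until one item remains.

    `choose_left(left_half, right_half)` stands in for the decoded
    left- vs right-hand imagery (True = left hand -> keep the left block)."""
    candidates = list(items)
    steps = 0
    while len(candidates) > 1:
        mid = (len(candidates) + 1) // 2
        left, right = candidates[:mid], candidates[mid:]
        candidates = left if choose_left(left, right) else right
        steps += 1
    return candidates[0], steps

def oracle(target):
    """Hypothetical error-free user who always picks the block containing `target`."""
    return lambda left, right: target in left

letters = "ABCDEFGHIJKLMNOPQRSTUVWXYZ_"  # 26 letters plus space (hypothetical layout)
print(binary_select(letters, oracle("K")))
print(math.ceil(math.log2(len(letters))))
```

Each selection error sends the user down the wrong branch, so the logarithmic speed advantage depends heavily on decoding accuracy.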

Motor-based BCIs are inherently independent of the interface feedback modality because they rely only on an individual's ability to imagine his or her limbs moving, though users are often given auditory or visual feedback of BCI choices (e.g., Nijboer, Furdea, et al., 2008). A recent continuous motor BCI has been used to produce vowel sounds with instantaneous auditory feedback, using limb motor imagery to control a two-dimensional formant frequency speech synthesizer (Brumberg, Burnison, & Pitt, 2016). Other recent discrete motor BCIs have been developed for row–column scanning interfaces (Brumberg, Burnison, & Pitt, 2016; Scherer et al., 2015).

Operant Conditioning BCIs

Operant conditioning BCIs detect a stimulus-independent change in brain activity and use it to select options on a communication interface. The neural signals used to control the BCI are not directly related to motor function or sensation. Instead, these BCIs use EEG biofeedback in an operant conditioning paradigm to teach individuals to voluntarily change the amplitude and polarity of the slow cortical potential, a slow-wave (< 1 Hz) neurological signal that is mapped onto the movement of a one-dimensional cursor. In BCI applications, the vertical position of the cursor is used to make binary selections for communication interface control (Birbaumer et al., 2000; Kübler et al., 1999).
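The mapping from slow cortical potential shifts to a binary choice can be sketched as follows. This is a toy illustration, not the system used in the cited studies: the microvolt values are invented, and the convention that a negative shift relative to baseline moves the cursor up (toward the top target) is an assumption made for the example.

```python
from statistics import mean

def cursor_trajectory(baseline_uv, feedback_uv, gain=1.0):
    """Vertical cursor position during feedback, one value per sample.

    Position is the SCP shift relative to the baseline mean, sign-flipped
    so that (by assumption) negativity moves the cursor up."""
    reference = mean(baseline_uv)
    return [-gain * (v - reference) for v in feedback_uv]

def binary_selection(trajectory):
    """The cursor's final vertical position decides between two targets."""
    return "top target" if trajectory[-1] > 0 else "bottom target"

baseline = [0.5, -0.5, 0.0, 0.0]           # hypothetical microvolt samples
negative_shift = [-1.0, -2.0, -4.0, -6.0]  # user produces a negative SCP shift
positive_shift = [1.0, 2.5, 3.0, 4.0]      # user produces a positive SCP shift
print(binary_selection(cursor_trajectory(baseline, negative_shift)))
print(binary_selection(cursor_trajectory(baseline, positive_shift)))
```

Showing the trajectory, not just the final choice, is what turns this into biofeedback: the user sees the cursor respond and learns which mental strategy produces the desired shift.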

Topic 2: Who May Best Benefit From a BCI?

At present, BCIs are best suited for individuals with acquired neurological and neuromotor impairments that lead to paralysis and loss of speech with minimal cognitive involvement (Wolpaw et al., 2002), for example, after brainstem stroke or traumatic brain injury (Mussa-Ivaldi & Miller, 2003). Nearly all BCIs require some cognitive effort or selective attention, though the amount of each depends greatly on the style and modality of the specific device. Individuals with other neuromotor disorders, such as cerebral palsy, muscular dystrophies, multiple sclerosis, Parkinson's disease, and brain tumors, may require AAC (Fried-Oken, Mooney, Peters, & Oken, 2013; Wolpaw et al., 2002) but are not yet commonly considered for BCI studies and interventions (cf. Neuper et al., 2003; Scherer et al., 2015) because of concomitant impairments in cognition, attention, and memory. In other instances, elevated muscle tone and uncontrolled movements (e.g., spastic dysarthria, dystonia) limit the utility of BCI by introducing physical and electromyographic movement artifacts (i.e., muscle-based signals that are much stronger than EEG and can distort recordings of brain activity). BCI research is now beginning to consider the human factors involved in appropriate use of BCI for individuals (Fried-Oken et al., 2013) and to address difficulties in brain signal acquisition caused by muscular (Scherer et al., 2015) and environmental sources of artifacts. As BCI development moves closer to integration with existing AAC techniques, protocols that help identify the most appropriate BCI technique for each individual will need to be developed.

BCI Summary

BCIs use a wide range of techniques for mapping brain activity to communication device control through a combination of signals related to sensory, motor, and/or cognitive processes (see Table 1 for a summary of BCI types). The choice of BCI protocol and feedback method involves trade-offs with the cognitive abilities needed for successful device operation (e.g., Geronimo, Simmons, & Schiff, 2016; Kleih & Kübler, 2015; Kübler et al., 2009). Many BCIs require individuals to follow complex, multistep procedures and may demand high levels of attentional capacity, often as a function of the sensory or motor process used for BCI operation. For example, the P300 speller BCI (Donchin et al., 2000) requires the ability to attend to visual stimuli and make decisions about them (e.g., recognize the intended visual stimulus among many others). BCIs that use SSVEPs depend on the neurological response to flickering visual stimuli (Cheng et al., 2002), which is modulated by attention rather than by other cognitive tasks. Thus, although both systems use visual stimuli to elicit neural activity for controlling a BCI, they differ in their demands on cognitive and attentional processing. In contrast, motor-based BCI systems (e.g., Pfurtscheller & Neuper, 2001; Wolpaw et al., 2002) require individuals to have sufficient motivation and volition, as well as the ability to learn how changing mental tasks can control a communication device.

Table 1.

Summary of BCI varieties and their feedback modality.

| EEG signal type | Sensory/Motor modality | User requirements |
|---|---|---|
| Event-related potentials | Visual P300 (grid) | Visual oddball paradigm; requires selective attention around the screen |
| | Visual P300 (RSVP) | Visual oddball paradigm; requires selective attention to the center of the screen only (suitable for poor oculomotor control) |
| | Auditory P300 | Auditory oddball paradigm; requires selective auditory attention; no vision requirement |
| Steady state evoked potentials | Steady state visually evoked potential | Attention to frequency-tagged visual stimuli; may increase seizure risk |
| | Auditory steady state response | Attention to amplitude-modulated audio stimuli |
| Motor-based | Continuous sensorimotor rhythm | Continuous, smooth control of interface (e.g., cursors) using motor imagery (first person) |
| | Discrete event-related desynchronization | Binary (or multichoice) selection of interface items (# choices = # of imagined movements); requires motor imagery ability |
| | Motor preparatory signals, for example, contingent negative variation | Binary selection of communication interface items using imagined movements |
| Operant conditioning | Slow cortical potentials | Binary selection of communication interface items after biofeedback-based learning protocol |

Note. BCI = brain–computer interface; EEG = electroencephalography; RSVP = rapid serial visual presentation.

Sensory, Motor, and Cognitive Factors

Aligning the sensory, motor, and cognitive requirements for using BCI to access AAC devices with each individual's unique profile will help identify and narrow down the candidate BCI variants (e.g., feature matching; Beukelman & Mirenda, 2013; Light & McNaughton, 2013), which is important for improving user outcomes with the chosen device (Thistle & Wilkinson, 2015). Matching should also include overt and involuntary motor considerations, specifically the presence of spasticity or variable muscle tone/dystonia, which may produce electromyographic artifacts that interfere with proper BCI function (Goncharova, McFarland, Vaughan, & Wolpaw, 2003). In addition, the brain signals used for BCI decoding may decline as symptoms of progressive neuromotor diseases become more severe (Kübler, Holz, Sellers, & Vaughan, 2015; Silvoni et al., 2013), which may reduce BCI performance. The wide range of sensory, motor, and cognitive components in BCI designs points to a need for user-centered design frameworks (e.g., Lynn, Armstrong, & Martin, 2016) and feature matching/screening protocols (e.g., Fried-Oken et al., 2013; Kübler et al., 2015), like those used in current AAC intervention practice (Light & McNaughton, 2013; Thistle & Wilkinson, 2015).
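Feature matching against Table 1 can be sketched as a simple filter. The profile fields (`vision`, `oculomotor_control`, `hearing`, `seizure_risk`, `motor_imagery`, `biofeedback_training`) and the requirement sets are hypothetical simplifications of the table, not a validated screening protocol; real feature matching also weighs cognition, fatigue, artifacts from spasticity or dystonia, and individual preference.

```python
from dataclasses import dataclass

# Hypothetical requirement sets, simplified from Table 1 (not a validated protocol).
BCI_REQUIREMENTS = {
    "Visual P300 (grid)": {"vision", "oculomotor_control"},
    "Visual P300 (RSVP)": {"vision"},
    "Auditory P300": {"hearing"},
    "SSVEP": {"vision", "no_seizure_risk"},
    "ASSR": {"hearing"},
    "Continuous SMR": {"motor_imagery"},
    "Discrete ERD": {"motor_imagery"},
    "Motor preparatory (CNV)": {"motor_imagery"},
    "Slow cortical potentials": {"biofeedback_training"},
}

@dataclass
class ClientProfile:
    """Hypothetical screening results for one individual."""
    vision: bool
    oculomotor_control: bool
    hearing: bool
    seizure_risk: bool
    motor_imagery: bool
    biofeedback_training: bool  # able to complete a biofeedback learning protocol

    def abilities(self):
        have = {name for name in ("vision", "oculomotor_control", "hearing",
                                  "motor_imagery", "biofeedback_training")
                if getattr(self, name)}
        if not self.seizure_risk:
            have.add("no_seizure_risk")
        return have

def candidate_bcis(profile):
    """BCI variants whose (simplified) requirements the profile satisfies."""
    have = profile.abilities()
    return sorted(name for name, needs in BCI_REQUIREMENTS.items() if needs <= have)

client = ClientProfile(vision=True, oculomotor_control=False, hearing=True,
                       seizure_risk=True, motor_imagery=False,
                       biofeedback_training=False)
print(candidate_bcis(client))
```

For this hypothetical client, the filter keeps the RSVP and auditory options and drops the grid speller (oculomotor demand) and SSVEP (seizure risk), mirroring the reasoning in the text.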

Topic 3: Are BCIs Faster Than Other Access Methods for AAC?

Current AAC devices yield a range of communication rates that depend on access modality (e.g., direct selection, scanning), level of literacy, and information represented by each communication item (e.g., single-meaning icons or images, letters, icons representing complex phrases; Hill & Romich, 2002; Roark, Fried-Oken, & Gibbons, 2015), as well as word prediction software (Trnka, McCaw, Yarrington, McCoy, & Pennington, 2008). Communication rates using AAC are often less than 15 words per minute (Beukelman & Mirenda, 2013; Foulds, 1980), and slower speeds (two to five words per minute; Patel, 2011) are observed for letter spelling due to the need for multiple selections for spelling words (Hill & Romich, 2002). Word prediction and language modeling can increase both speed and typing efficiency (Koester & Levine, 1996; Roark et al., 2015; Trnka et al., 2008), but the benefits may be limited due to additional cognitive demands (Koester & Levine, 1996). Scan rate in auto-advancing row–column scanning access methods also affects communication rate, and though faster scan rates should lead to faster communication rates, slower scan rates can reduce selection errors (Roark et al., 2015). BCIs are similarly affected by scan rate (Sellers & Donchin, 2006); for example, a P300 speller can only operate as fast as each item is flashed. Increases in flash rate may also increase cognitive demands for locating desired grid items while ignoring others, similar to effects observed using commercial AAC visual displays (Thistle & Wilkinson, 2013).
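The dependence of communication rate on scan or flash rate is simple arithmetic. The sketch below assumes single-pass row–column scanning with no dwell or correction time, and a P300 speller in which every row and column flashes once per repetition at a fixed stimulus onset asynchrony (SOA); the function names and all parameter values are hypothetical.

```python
def row_column_scan_time(row, col, scan_interval_s):
    """Seconds to reach item (row, col), 0-indexed, in one auto-advancing pass:
    row + 1 row highlights, then col + 1 item highlights in the chosen row."""
    return (row + 1 + col + 1) * scan_interval_s

def mean_scan_time(n_rows, n_cols, scan_interval_s):
    """Average selection time if every grid item is equally likely."""
    times = [row_column_scan_time(r, c, scan_interval_s)
             for r in range(n_rows) for c in range(n_cols)]
    return sum(times) / len(times)

def p300_selection_time(n_rows, n_cols, n_repetitions, soa_s, pause_s=0.0):
    """Each repetition flashes every row and column once, one flash per SOA."""
    return n_repetitions * (n_rows + n_cols) * soa_s + pause_s

def selections_per_minute(seconds_per_selection):
    return 60.0 / seconds_per_selection

for interval in (1.0, 0.5):
    t = mean_scan_time(6, 6, interval)
    print(f"scan {interval} s: {t:.1f} s/selection, {selections_per_minute(t):.1f}/min")
t = p300_selection_time(6, 6, n_repetitions=10, soa_s=0.25)
print(f"P300: {t:.1f} s/selection, {selections_per_minute(t):.1f}/min")
```

Halving the scan interval halves the mean selection time for a 6 × 6 grid (7 s to 3.5 s), which is the speed gain that must be weighed against additional selection errors; likewise, 10 repetitions of 12 flashes at 0.25 s each take 30 s, or two error-free selections per minute.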

Current BCIs for communication generally yield selection rates that are slower than those of existing AAC methods, even with the incorporation of language prediction models (Oken et al., 2014). Table 2 summarizes selection rates from recent applications of conventional access techniques and BCI to communication interfaces. Individuals both with and without neuromotor impairments using motor-based BCIs have achieved selection rates under 10 selections (letters, numbers, symbols) per minute (Blankertz et al., 2006; Neuper et al., 2003; Scherer et al., 2004), and those using P300 methods commonly operate below five selections per minute (Acqualagna & Blankertz, 2013; Donchin et al., 2000; Nijboer, Sellers, et al., 2008; Oken et al., 2014). A recent P300 study using a novel presentation technique obtained much higher communication rates of 19.4 characters per minute, though the method has not been studied in detail with participants with neuromotor impairments (Townsend & Platsko, 2016). SSVEP-based BCIs have emerged as a promising technique, often yielding both high accuracy (> 90%) and communication rates as high as 33 characters per minute (Chen et al., 2015).
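Table 2 reports some rates in bits per minute as well as characters per minute. A common way to combine speed and accuracy into one number is the information transfer rate associated with Wolpaw and colleagues: B = log2 N + P log2 P + (1 − P) log2[(1 − P)/(N − 1)] bits per selection for N equally likely choices and accuracy P, multiplied by selections per minute. The sketch below implements that formula; the example values are hypothetical, treating at-or-below-chance accuracy as zero is a convention we assume here, and we do not assume that every study in Table 2 used this exact definition.

```python
import math

def wolpaw_bits_per_selection(n_choices, accuracy):
    """Bits per selection: log2 N + P log2 P + (1 - P) log2((1 - P) / (N - 1))."""
    if n_choices < 2:
        raise ValueError("need at least two choices")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    if accuracy <= 1.0 / n_choices:
        return 0.0  # at or below chance: treated as no information (assumption)
    bits = math.log2(n_choices) + accuracy * math.log2(accuracy)
    if accuracy < 1.0:
        bits += (1.0 - accuracy) * math.log2((1.0 - accuracy) / (n_choices - 1))
    return bits

def bits_per_minute(n_choices, accuracy, selections_per_minute):
    return wolpaw_bits_per_selection(n_choices, accuracy) * selections_per_minute

# Hypothetical examples: a 36-item speller and a binary (yes/no) interface.
print(round(wolpaw_bits_per_selection(36, 1.0), 3))
print(round(wolpaw_bits_per_selection(36, 0.9), 3))
print(round(bits_per_minute(2, 0.9, 10), 2))
```

The formula shows why large grids are attractive: a perfect 36-item selection carries about 5.17 bits, whereas a perfect binary choice carries only 1 bit.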

Table 2.

Communication rates from recent BCI and conventional access to communication interfaces.

| Access method | Population | Selection rate | Source |
|---|---|---|---|
| Berlin BCI (motor imagery) | Healthy | 2.3–7.6 letters/min | Blankertz et al. (2006) |
| Graz BCI (motor imagery) | Healthy | 2.0 letters/min | Scherer et al. (2004) |
| Graz BCI (motor imagery) | Impaired | 0.2–2.5 letters/min | Neuper et al. (2003) |
| P300 speller (visual) | Healthy | 4.3 letters/min | Donchin et al. (2000) |
| P300 speller (visual) | Healthy | 19.4 char/min (120.0 bits/min) | Townsend and Platsko (2016) |
| P300 speller (visual) | ALS | 1.5–4.1 char/min (4.8–19.2 bits/min) | Nijboer, Sellers, et al. (2008) |
| P300 speller (visual) | ALS | 3–7.5 char/min | Mainsah et al. (2015) |
| RSVP P300 | LIS | 0.4–2.3 char/min | Oken et al. (2014) |
| RSVP P300 | Healthy | 1.2–2.5 letters/min | Acqualagna and Blankertz (2013), Oken et al. (2014) |
| SSVEP | Healthy | 33.3 char/min | Chen et al. (2015) |
| SSVEP | Healthy | 10.6 selections/min (27.2 bits/min) | Friman et al. (2007) |
| AAC (row–column) | Healthy | 18–22 letters/min | Roark et al. (2015) |
| AAC (row–column) | LIS | 6.0 letters/min | Roark et al. (2015) |
| AAC (direct selection) | Healthy | 5.2 words/min | Trnka et al. (2008) |

Note. BCI = brain–computer interface; ALS = amyotrophic lateral sclerosis; RSVP = rapid serial visual presentation; LIS = locked-in syndrome; SSVEP = steady state visually evoked potential; AAC = augmentative and alternative communication; char = character.
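Some rows of Table 2 report bits per minute alongside characters per minute. A common way to relate the two in BCI research is the Wolpaw information transfer rate, which scores each selection by the number of possible choices and the selection accuracy. The sketch below assumes that formula, with equally likely targets and errors spread evenly across the wrong choices; the studies in Table 2 may have computed their figures differently, and the 36-target, 90%-accuracy, 4-selections-per-minute values in the example are hypothetical.

```python
import math


def bits_per_selection(n_targets: int, accuracy: float) -> float:
    """Wolpaw bits per selection for n_targets equally likely choices.

    Assumes errors are spread evenly over the n_targets - 1 wrong choices.
    The formula is intended for accuracies above chance (1 / n_targets).
    """
    if n_targets < 2:
        raise ValueError("need at least two targets")
    if not 0.0 <= accuracy <= 1.0:
        raise ValueError("accuracy must be between 0 and 1")
    n, p = n_targets, accuracy
    bits = math.log2(n)
    if p > 0.0:
        bits += p * math.log2(p)  # this term vanishes as p approaches 0
    if p < 1.0:
        bits += (1.0 - p) * math.log2((1.0 - p) / (n - 1))  # vanishes as p approaches 1
    return bits


def bits_per_minute(n_targets: int, accuracy: float, selections_per_min: float) -> float:
    """Information transfer rate: bits per selection times selections per minute."""
    return bits_per_selection(n_targets, accuracy) * selections_per_min


if __name__ == "__main__":
    # Hypothetical 6 x 6 speller grid (36 targets), 90% accuracy, 4 selections/min.
    print(f"{bits_per_selection(36, 0.9):.2f} bits/selection")
    print(f"{bits_per_minute(36, 0.9, 4.0):.1f} bits/min")
```

With these assumed values, each selection carries about 4.2 bits, or roughly 17 bits per minute. Because the measure rewards both speed and accuracy, two systems with similar characters per minute can differ in bits per minute if one has more targets or fewer errors.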

From these reports, BCI performance has started to approach levels associated with AAC devices using direct selection. The differences in communication rates between scanning AAC devices and BCIs (shown in Table 2) are also smaller when comparisons are made between individuals with neuromotor impairments rather than individuals without impairments (e.g., AAC: six characters per minute; Roark et al., 2015; BCI: one to eight characters per minute; Table 2). The type of BCI method can further narrow the difference in communication rate (e.g., 3–7.5 characters per minute with a P300 speller using dynamic stopping; Mainsah et al., 2015). These results suggest that BCI has become another clinical option for AAC intervention that should be considered during the clinical decision-making process. BCIs have particular utility in the most severe cases; the communication rates described in the literature are sufficient to provide access to language and communication for those who currently have neither. Recent improvements in BCI designs have shown promising results (e.g., Chen et al., 2015; Townsend & Platsko, 2016), which may start to push BCI communication efficacy past current benchmarks for AAC. Importantly, few BCIs have been evaluated over extended periods of time (Holz et al., 2015; Sellers et al., 2010); therefore, BCI selection rates may improve over time with training.
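To make the rate comparison for individuals with neuromotor impairments concrete, the rates in Table 2 can be converted into the time needed to compose a message. The sketch below is illustrative only: it treats letters and characters per minute as the same unit, assumes a constant rate with no errors or corrections, and uses a hypothetical 40-character message.

```python
# Communication rates (characters per minute) reported for participants with
# neuromotor impairments in Table 2, stored as (slowest, fastest).
# "Letters" and "characters" per minute are treated as the same unit here.
IMPAIRED_RATES = {
    "AAC row-column scanning, LIS (Roark et al., 2015)": (6.0, 6.0),
    "P300 speller, ALS (Nijboer, Sellers, et al., 2008)": (1.5, 4.1),
    "P300 speller, ALS (Mainsah et al., 2015)": (3.0, 7.5),
    "RSVP P300, LIS (Oken et al., 2014)": (0.4, 2.3),
    "Graz BCI, impaired (Neuper et al., 2003)": (0.2, 2.5),
}


def minutes_to_compose(n_chars: int, chars_per_min: float) -> float:
    """Minutes needed to produce n_chars at a constant, error-free rate."""
    if chars_per_min <= 0:
        raise ValueError("rate must be positive")
    return n_chars / chars_per_min


def time_range(n_chars: int, rates: tuple) -> tuple:
    """Return (shortest, longest) time for a (slowest, fastest) rate range."""
    slowest, fastest = rates
    return minutes_to_compose(n_chars, fastest), minutes_to_compose(n_chars, slowest)


if __name__ == "__main__":
    message_length = 40  # hypothetical short message, in characters
    for label, rates in IMPAIRED_RATES.items():
        shortest, longest = time_range(message_length, rates)
        print(f"{label}: {shortest:.1f} to {longest:.1f} min")
```

Under these assumptions, the 40-character message takes under 7 minutes with row–column scanning, while the P300 range reported by Mainsah et al. (2015) spans roughly 5 to 13 minutes, which illustrates how the ranges overlap for this population.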

Topic 4: Fatigue and Its Effects

BCIs, like conventional AAC access techniques, place varying demands on attention, working memory, and cognitive load, all of which affect the effort needed to operate the device and the resulting fatigue (Kaethner, Kübler, & Halder, 2015; Pasqualotto et al., 2015). There is evidence that scanning-type AAC devices are not overly tiring (Gibbons & Beneteau, 2010; Roark, Beckley, Gibbons, & Fried-Oken, 2013), but prolonged AAC use can have a cumulative effect and reduce communication effectiveness (Trnka et al., 2008). In these cases, language modeling and word prediction can reduce fatigue and maintain high communication performance with an AAC device (Trnka et al., 2008). Within BCI, reports of fatigue, effort, and cognitive load are mixed. Individuals with ALS have reported that visual P300 BCIs required more effort and time than eye gaze access (Pasqualotto et al., 2015), whereas others reported that a visual P300 speller was easier to use, and not overly exhausting compared with eye gaze, because it does not require precise eye movements (Holz et al., 2015; Kaethner et al., 2015). Other findings from these studies indicate that the visual P300 speller increased cognitive load and fatigue for some individuals (Kaethner et al., 2015), whereas others experienced less strain than with eye-tracking systems (Holz et al., 2015). Making several conventional and BCI-based AAC access techniques available to the same individual may permit an adaptive strategy in which the individual switches among access methods to suit his or her level of fatigue throughout the day.

Topic 5: BCI as an Addition to Conventional AAC Access Technology

At their current stage of development, BCIs are primarily an option for individuals with absent, severely impaired, or highly unreliable speech and motor control. As BCIs advance as an access modality for AAC, it is important that the goal of intervention remain selecting the most appropriate AAC method rather than the most technologically advanced access method (Light & McNaughton, 2013). Each of the BCI devices discussed has unique sensory, motor, and cognitive requirements that may best match specific profiles of individuals who may require BCI, and each differs in the training required for device proficiency. Whether a BCI should replace any form of AAC must therefore be determined according to the needs, wants, and abilities of the individual. These factors play a crucial role in motivation, which has a direct impact on BCI effectiveness (Nijboer, Birbaumer, & Kübler, 2010). Other assessment considerations include comorbid conditions, such as a history of seizures, which is a contraindication for some visual BCIs because of their rapidly flashing icons (Volosyak et al., 2011). Cognitive factors, such as differing levels of working memory (Sprague, McBee, & Sellers, 2015) and the ability to focus attention (Geronimo et al., 2016; Riccio et al., 2013), are also important considerations because they have been correlated with successful BCI operation.

There are additional considerations for motor-based BCIs, including (a) the well-known observation that the SMR, which is necessary for device control, cannot be adequately estimated in approximately 15%–30% of all individuals with or without impairment (Vidaurre & Blankertz, 2010) and (b) the possibility of performance decline or instability as a result of progressive neuromotor disorders, such as ALS (Silvoni et al., 2013). These concerns are currently being addressed with assessment techniques that predict motor-based BCI performance, including a questionnaire to estimate kinesthetic motor imagery performance (Vuckovic & Osuagwu, 2013). Kinesthetic motor imagery (i.e., first-person imagery, or imagining performing a movement and experiencing its associated sensations) is known to lead to better BCI performance than third-person motor imagery (e.g., watching yourself from across the room; Neuper, Scherer, Reiner, & Pfurtscheller, 2005). Overall, limited research is available on the inter- and intraindividual factors that may affect BCI performance (Kleih & Kübler, 2015); therefore, clinical assessment tools and guidelines must be developed to help determine the most appropriate method of accessing AAC (including both traditional and BCI-based technologies) for each individual. These efforts have already begun (e.g., Fried-Oken et al., 2013; Kübler et al., 2015), and more work is needed to ensure that existing AAC practices are well integrated with BCI-based assessment tools.
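The screening considerations above can be pictured as a filter that narrows the candidate BCI types before individualized feature matching begins. The sketch below is hypothetical and is not a validated clinical tool or the decision algorithm of Kübler et al. (2015). It encodes only the two exclusions named in this section (seizure history for flashing visual BCIs, and an SMR that cannot be estimated for motor imagery BCIs), and the attributes assigned to each method are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass(frozen=True)
class BCIMethod:
    name: str
    flashing_visual: bool  # presents rapidly flashing or flickering visual stimuli
    needs_smr: bool        # control depends on a detectable sensorimotor rhythm


@dataclass(frozen=True)
class ClientProfile:
    seizure_history: bool
    smr_detectable: bool


# Hypothetical attribute assignments, for illustration only.
METHODS = (
    BCIMethod("Motor imagery", flashing_visual=False, needs_smr=True),
    BCIMethod("Visual P300 speller", flashing_visual=True, needs_smr=False),
    BCIMethod("SSVEP", flashing_visual=True, needs_smr=False),
    BCIMethod("Auditory P300", flashing_visual=False, needs_smr=False),
)


def candidate_methods(profile: ClientProfile, methods: Sequence[BCIMethod] = METHODS) -> List[str]:
    """Names of methods not ruled out by the two screening questions."""
    candidates = []
    for method in methods:
        if profile.seizure_history and method.flashing_visual:
            continue  # flashing icons can be a contraindication (Volosyak et al., 2011)
        if method.needs_smr and not profile.smr_detectable:
            continue  # SMR cannot be estimated in roughly 15%-30% of people
        candidates.append(method.name)
    return candidates
```

For a hypothetical client with a seizure history and no detectable SMR, only "Auditory P300" remains. The remaining list is a starting point, not a recommendation: working memory, attention, motivation, and preference still have to be assessed individually, and this sketch does not model them.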

In summary, BCI access techniques should not be seen as competing with or replacing existing AAC methods that have a history of success. Rather, the purpose of BCI-based communication is to provide a feature-matched alternative or complementary method for accessing AAC for individuals who are suited to, prefer, and are motivated to use BCI, or for those who are unable to use current communication methods.

Topic 6: Limitations of BCI and Future Directions

Future applications of noninvasive BCIs will continue to focus on increasing accuracy and communication rate for use either as standalone AAC options or to access existing AAC devices. One major area of future work is improving the techniques for noninvasively recording the brain activity needed for BCI operation. Although a large majority of people who may potentially use BCI have reported that they are willing to wear an EEG cap (84%; Huggins, Wren, & Gruis, 2011), the application of EEG sensors and their stability over time remain obstacles to practical use. Most EEG-based BCI systems require electrolytic gel to bridge the contact between electrodes and the scalp for good signal acquisition. Unfortunately, individuals who currently use BCI have reported this type of application to be inconvenient and cumbersome, and it may also be difficult for a trained facilitator to set up and maintain (Blain-Moraes, Schaff, Gruis, Huggins, & Wren, 2012). Further, electrolytic gels dry out over time, gradually degrading EEG signal acquisition. Recent advances in dry electrode technology may help overcome this limitation (Blain-Moraes et al., 2012) by allowing EEG to be recorded without electrolytic solutions, which may make EEG sensors easier to apply and keep signal acquisition stable for longer.

To be used in all environments, EEG must be portable and robust to external sources of noise and artifacts. EEG is highly susceptible to electrical artifacts from the muscles, the environment, and other medical equipment (e.g., mechanical ventilation). Therefore, likely environments of use need to be assessed, and guidelines are needed for minimizing the effect of these artifacts. Simultaneous efforts should be made toward improving the tolerance of EEG recording equipment to these sources of electrical noise (Kübler et al., 2015).
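Part of minimizing the effect of artifacts is computational. One simple safeguard that is widely used in EEG research is to discard epochs (short segments of recording) whose peak-to-peak amplitude is too large to plausibly reflect brain activity, as can happen during a muscle burst or an equipment spike. The sketch below assumes single-channel epochs in microvolts and an illustrative 100 µV threshold; it is not how any particular BCI discussed in this tutorial handles artifacts, and practical systems may also rely on filtering or other methods.

```python
from typing import List, Sequence, Tuple


def peak_to_peak(epoch: Sequence[float]) -> float:
    """Difference between the largest and smallest sample in one epoch."""
    return max(epoch) - min(epoch)


def reject_artifacts(
    epochs: Sequence[Sequence[float]], threshold_uv: float = 100.0
) -> Tuple[List[int], List[int]]:
    """Return (kept, rejected) epoch indices using a peak-to-peak threshold.

    Each epoch is one channel of EEG samples in microvolts. The 100 microvolt
    default is an illustrative assumption, not a clinical recommendation.
    """
    kept: List[int] = []
    rejected: List[int] = []
    for index, epoch in enumerate(epochs):
        if peak_to_peak(epoch) > threshold_uv:
            rejected.append(index)  # e.g., a muscle burst or equipment spike
        else:
            kept.append(index)
    return kept, rejected


if __name__ == "__main__":
    # Three short, made-up epochs; the second contains a large spike.
    epochs = [[0.0, 12.0, -9.0], [0.0, 180.0, -40.0], [3.0, 4.0, 2.5]]
    print(reject_artifacts(epochs))
```

Rejecting epochs trades data for signal quality: in a noisy environment, many selections may be discarded, which slows communication. This is one reason why assessing the likely environments of use matters before choosing a BCI.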

The ultimate potential of BCI technology is a system that directly decodes brain activity into communication (e.g., written text or speech), rather than indirectly operating a communication device. This type of neural decoding is primarily being investigated with invasive methods, namely electrocorticography and intracortical microelectrodes, and has focused on decoding phonemes (Blakely et al., 2008; Brumberg et al., 2011; Herff et al., 2015; Mugler et al., 2014; Tankus et al., 2012), words (Kellis et al., 2010; Leuthardt et al., 2011; Pei et al., 2011), and time-frequency representations (Martin et al., 2014). Invasive methods offer higher signal quality and greater resistance to external noise but require surgery to implant recording electrodes either in or on the brain (Chakrabarti et al., 2015). The goal of these decoding studies and other invasive electrophysiological investigations of speech processing is to develop a neural prosthesis for fluent-like speech production (Brumberg, Burnison, & Guenther, 2016). Although invasive techniques come at a surgical cost, one study reported that 72% of individuals with ALS were willing to undergo outpatient surgery, and 41% were willing to undergo surgery with a short hospital stay, to access invasive BCI methods (Huggins et al., 2011). That said, very few invasive BCIs are available for clinical research or long-term at-home use (e.g., Vansteensel et al., 2016); therefore, noninvasive methods will likely be the first adopted for use in AAC interventions.

Conclusions

This tutorial has focused on a few important considerations for the future of BCIs as AAC: (a) Despite broad speech-language pathology expertise in AAC, there are few clinical guidelines and recommendations for the use of BCI as an AAC access technique; (b) the most mature BCI technologies have been designed as methods to access communication interfaces rather than directly accessing thoughts, utterances, and speech motor plans from the brain; and (c) BCI is an umbrella term for a variety of brain-to-computer techniques that require comprehensive assessment for matching people who may potentially use BCI with the most appropriate device. The purpose of this tutorial was to bridge the gaps in knowledge between AAC and BCI practices, describe BCIs in the context of current AAC conventions, and motivate interdisciplinary collaborations to pursue rigorous clinical research to adapt AAC feature matching protocols to include intervention with BCIs. A summary of take-home messages to help bridge the gap between knowledge of AAC and BCI was compiled from our interdisciplinary team and summarized in Table 3. Additional training and hands-on experience will improve acceptance of BCI approaches for interventionists targeted by this tutorial, as well as people who may use BCI in the future.

Table 3.

Take-home points collated from the interdisciplinary research team that highlight the major considerations for BCI as possible access methods for AAC.

| Take-home point |
|---|
| BCIs do not yet have the ability to translate thoughts or speech plans into fluent speech productions. Direct BCIs, usually involving surgery to implant recording electrodes, are currently being developed as speech neural prostheses. |
| Noninvasive BCIs are most often designed as an indirect method for accessing AAC, whether custom developed or commercial. |
| A variety of noninvasive BCIs can support clients with a range of sensory, motor, and cognitive abilities, and selecting the most appropriate BCI technique requires individualized assessment and feature matching procedures. |
| The potential population of individuals who may use BCIs is heterogeneous, though current work is focused on individuals with acquired neurological and neuromotor disorders (e.g., locked-in syndrome due to stroke, traumatic brain injury, and ALS); few studies have involved individuals with congenital disorders such as CP. |
| BCIs are currently not as efficient as existing AAC access methods for individuals who retain some movement, though the technology is progressing. For these individuals, BCIs provide an opportunity to augment or complement existing approaches. For individuals with progressive neurodegenerative diseases, learning to use BCI before speech and motor function worsen beyond the aid of existing access technologies may help maintain continuity of communication. For those who are unable to use current access methods, BCIs may provide the only form of access to communication. |
| Long-term BCI use is only just beginning; BCI performance may improve as the technology matures and as individuals who use BCI gain greater proficiency and familiarity with the device. |

Note. BCI = brain–computer interface; AAC = augmentative and alternative communication; ALS = amyotrophic lateral sclerosis; CP = cerebral palsy.

Improvements in communication rate and accuracy via BCI access methods are key to the clinical acceptance of BCI (Kageyama et al., 2014). Nevertheless, many people who may use BCIs understand the current limitations yet recognize the potential benefits of BCI, reporting that the technology offers “freedom,” “hope,” “connection,” and unlocking from their speech and motor impairments (Blain-Moraes et al., 2012). A significant component of future BCI research will focus on meeting the priorities of people who use BCIs. A recent study assessed the opinions and priorities of individuals with ALS regarding BCI design and reported that they prioritized accuracy of at least 90% and a rate of at least 15 to 19 letters per minute (Huggins et al., 2011). From our review, most BCI technologies have not yet reached these specifications, though some recent efforts have made considerable progress (e.g., Chen et al., 2015; Townsend & Platsko, 2016). A renewed emphasis on user-centered design and development is helping to move this technology forward by matching the wants and needs of individuals who may use BCI with realistic expectations of BCI function. It is imperative to include clinicians, individuals who use AAC and BCI, and other stakeholders in the BCI design process to improve usability and performance and to help find the optimal translation from the laboratory to the real world.
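The rate priority reported by Huggins et al. (2011) can be checked directly against Table 2. The sketch below compares only rate, because Table 2 does not report accuracy for every row. It assumes the lower end of the 15 to 19 letters per minute range as the threshold, uses the fastest value of each reported range (an optimistic choice), and omits Friman et al. (2007) because that row is reported in selections rather than characters per minute.

```python
from typing import Dict, List

# Lower end of the 15-19 letters/min rate prioritized by people with ALS
# (Huggins et al., 2011); using the lower bound is an assumption.
MIN_RATE = 15.0

# Fastest reported rate for each BCI row of Table 2 (letters or characters/min).
TABLE2_BCI_MAX_RATES: Dict[str, float] = {
    "Berlin BCI, healthy (Blankertz et al., 2006)": 7.6,
    "Graz BCI, healthy (Scherer et al., 2004)": 2.0,
    "Graz BCI, impaired (Neuper et al., 2003)": 2.5,
    "P300 speller, healthy (Donchin et al., 2000)": 4.3,
    "P300 speller, healthy (Townsend & Platsko, 2016)": 19.4,
    "P300 speller, ALS (Nijboer, Sellers, et al., 2008)": 4.1,
    "P300 speller, ALS (Mainsah et al., 2015)": 7.5,
    "RSVP P300, LIS (Oken et al., 2014)": 2.3,
    "RSVP P300, healthy (Acqualagna & Blankertz, 2013)": 2.5,
    "SSVEP, healthy (Chen et al., 2015)": 33.3,
}


def meets_rate_priority(rates: Dict[str, float], min_rate: float = MIN_RATE) -> List[str]:
    """Rows whose fastest reported rate reaches min_rate, fastest first."""
    passing = [name for name, rate in rates.items() if rate >= min_rate]
    return sorted(passing, key=lambda name: rates[name], reverse=True)


if __name__ == "__main__":
    for name in meets_rate_priority(TABLE2_BCI_MAX_RATES):
        print(name, TABLE2_BCI_MAX_RATES[name])
```

Only the SSVEP system of Chen et al. (2015) and the P300 system of Townsend and Platsko (2016) pass, and both rates were obtained with participants without impairments, which is consistent with the observation that most BCI technologies have not yet reached these specifications.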

Acknowledgments

This work was supported in part by the National Institutes of Health (National Institute on Deafness and Other Communication Disorders R03-DC011304), the University of Kansas New Faculty Research Fund, and the American Speech-Language-Hearing Foundation New Century Scholars Research Grant, all awarded to J. Brumberg.

Footnotes

Each individual item may also be highlighted, rather than rows and columns.

There are other variants that use a single flicker rate with a specific strobe pattern that is beyond the scope of this tutorial.

References

- Acqualagna L., & Blankertz B. (2013). Gaze-independent BCI-spelling using rapid serial visual presentation (RSVP). Clinical Neurophysiology, 124(5), 901–908.

- Allison B. Z., McFarland D. J., Schalk G., Zheng S. D., Jackson M. M., & Wolpaw J. R. (2008). Towards an independent brain–computer interface using steady state visual evoked potentials. Clinical Neurophysiology, 119(2), 399–408.

- Beukelman D., & Mirenda P. (2013). Augmentative and alternative communication: Supporting children and adults with complex communication needs (4th ed.). Baltimore, MD: Brookes.

- Birbaumer N., Kübler A., Ghanayim N., Hinterberger T., Perelmouter J., Kaiser J., … Flor H. (2000). The thought translation device (TTD) for completely paralyzed patients. IEEE Transactions on Rehabilitation Engineering, 8(2), 190–193.

- Blain-Moraes S., Schaff R., Gruis K. L., Huggins J. E., & Wren P. A. (2012). Barriers to and mediators of brain–computer interface user acceptance: Focus group findings. Ergonomics, 55(5), 516–525.

- Blakely T., Miller K. J., Rao R. P. N., Holmes M. D., & Ojemann J. G. (2008). Localization and classification of phonemes using high spatial resolution electrocorticography (ECoG) grids. In 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (pp. 4964–4967). https://doi.org/10.1109/IEMBS.2008.4650328

- Blankertz B., Dornhege G., Krauledat M., Müller K.-R., Kunzmann V., Losch F., & Curio G. (2006). The Berlin brain–computer interface: EEG-based communication without subject training. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 14(2), 147–152.

- Brumberg J. S., Burnison J. D., & Guenther F. H. (2016). Brain-machine interfaces for speech restoration. In Van Lieshout P., Maassen B., & Terband H. (Eds.), Speech motor control in normal and disordered speech: Future developments in theory and methodology (pp. 275–304). Rockville, MD: ASHA Press.

- Brumberg J. S., Burnison J. D., & Pitt K. M. (2016). Using motor imagery to control brain–computer interfaces for communication. In Foundations of Augmented Cognition: Neuroergonomics and Operational Neuroscience. Cham, Switzerland: Springer International Publishing.

- Brumberg J. S., & Guenther F. H. (2010). Development of speech prostheses: Current status and recent advances. Expert Review of Medical Devices, 7(5), 667–679.

- Brumberg J. S., Wright E. J., Andreasen D. S., Guenther F. H., & Kennedy P. R. (2011). Classification of intended phoneme production from chronic intracortical microelectrode recordings in speech-motor cortex. Frontiers in Neuroscience, 5, 65.

- Brunner P., Joshi S., Briskin S., Wolpaw J. R., Bischof H., & Schalk G. (2010). Does the “P300” speller depend on eye gaze? Journal of Neural Engineering, 7(5), 056013.

- Chakrabarti S., Sandberg H. M., Brumberg J. S., & Krusienski D. J. (2015). Progress in speech decoding from the electrocorticogram. Biomedical Engineering Letters, 5(1), 10–21.

- Chen X., Wang Y., Gao S., Jung T.-P., & Gao X. (2015). Filter bank canonical correlation analysis for implementing a high-speed SSVEP-based brain–computer interface. Journal of Neural Engineering, 12(4), 046008.

- Cheng M., Gao X., Gao S., & Xu D. (2002). Design and implementation of a brain–computer interface with high transfer rates. IEEE Transactions on Biomedical Engineering, 49(10), 1181–1186. [DOI] [PubMed] [Google Scholar]

- Donchin E., Spencer K. M., & Wijesinghe R. (2000). The mental prosthesis: Assessing the speed of a P300-based brain–computer interface. IEEE Transactions on Rehabilitation Engineering, 8(2), 174–179. [DOI] [PubMed] [Google Scholar]

- Fager S., Beukelman D., Fried-Oken M., Jakobs T., & Baker J. (2012). Access interface strategies. Assistive Technology, 24(1), 25–33. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Farwell L., & Donchin E. (1988). Talking off the top of your head: Toward a mental prosthesis utilizing event-related brain potentials. Electroencephalography and Clinical Neurophysiology, 70(6), 510–523. [DOI] [PubMed] [Google Scholar]

- Foulds R. (1980). Communication rates of nonspeech expression as a function in manual tasks and linguistic constraints. In International Conference on Rehabilitation Engineering (pp. 83–87). [Google Scholar]

- Fried-Oken M., Mooney A., Peters B., & Oken B. (2013). A clinical screening protocol for the RSVP keyboard brain–computer interface. Disability and Rehabilitation. Assistive Technology, 10(1), 11–18. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friman O., Luth T., Volosyak I., & Graser A. (2007). Spelling with steady-state visual evoked potentials. In 2007 3rd International IEEE/EMBS Conference on Neural Engineering (pp. 354–357). Kohala Coast, HI: IEEE. [Google Scholar]

- Geronimo A., Simmons Z., & Schiff S. J. (2016). Performance predictors of brain–computer interfaces in patients with amyotrophic lateral sclerosis. Journal of Neural Engineering, 13(2), 026002. [DOI] [PubMed] [Google Scholar]

- Gibbons C., & Beneteau E. (2010). Functional performance using eye control and single switch scanning by people with ALS. Perspectives on Augmentative and Alternative Communication, 19(3), 64–69. [Google Scholar]

- Goncharova I. I., McFarland D. J., Vaughan T. M., & Wolpaw J. R. (2003). EMG contamination of EEG: Spectral and topographical characteristics. Clinical Neurophysiology, 114(9), 1580–1593. [DOI] [PubMed] [Google Scholar]

- Halder S., Rea M., Andreoni R., Nijboer F., Hammer E. M., Kleih S. C., … Kübler A. (2010). An auditory oddball brain–computer interface for binary choices. Clinical Neurophysiology, 121(4), 516–523. [DOI] [PubMed] [Google Scholar]

- Herff C., Heger D., de Pesters A., Telaar D., Brunner P., Schalk G., & Schultz T. (2015). Brain-to-text: Decoding spoken phrases from phone representations in the brain. Frontiers in Neuroscience, 9, 217. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hill K., & Romich B. (2002). A rate index for augmentative and alternative communication. International Journal of Speech Technology, 5(1), 57–64. [Google Scholar]

- Hill N. J., Ricci E., Haider S., McCane L. M., Heckman S., Wolpaw J. R., & Vaughan T. M. (2014). A practical, intuitive brain–computer interface for communicating “yes” or “no” by listening. Journal of Neural Engineering, 11(3), 035003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Holz E. M., Botrel L., Kaufmann T., & Kübler A. (2015). Long-term independent brain–computer interface home use improves quality of life of a patient in the locked-in state: A case study. Archives of Physical Medicine and Rehabilitation, 96(3), S16–S26. [DOI] [PubMed] [Google Scholar]

- Huggins J. E., Wren P. A., & Gruis K. L. (2011). What would brain–computer interface users want? Opinions and priorities of potential users with amyotrophic lateral sclerosis. Amyotrophic Lateral Sclerosis, 12(5), 318–324. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kaethner I., Kübler A., & Halder S. (2015). Comparison of eye tracking, electrooculography and an auditory brain–computer interface for binary communication: A case study with a participant in the locked-in state. Journal of Neuroengineering and Rehabilitation, 12(1), 76. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kageyama Y., Hirata M., Yanagisawa T., Shimokawa T., Sawada J., Morris S., … Yoshimine T. (2014). Severely affected ALS patients have broad and high expectations for brain-machine interfaces. Amyotrophic Lateral Sclerosis and Frontotemporal Degeneration, 15(7–8), 513–519. [DOI] [PubMed] [Google Scholar]

- Käthner I., Ruf C. A., Pasqualotto E., Braun C., Birbaumer N., & Halder S. (2013). A portable auditory P300 brain–computer interface with directional cues. Clinical Neurophysiology, 124(2), 327–338. [DOI] [PubMed] [Google Scholar]

- Kellis S., Miller K., Thomson K., Brown R., House P., & Greger B. (2010). Decoding spoken words using local field potentials recorded from the cortical surface. Journal of Neural Engineering, 7(5), 056007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kleih S. C., & Kübler A. (2015). Psychological factors influencing brain–computer interface (BCI) performance. 2015 IEEE International Conference on Systems, Man, and Cybernetics, 3192–3196. [Google Scholar]

- Klobassa D. S., Vaughan T. M., Brunner P., Schwartz N. E., Wolpaw J. R., Neuper C., & Sellers E. W. (2009). Toward a high-throughput auditory P300-based brain–computer interface. Clinical Neurophysiology, 120(7), 1252–1261. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Koester H. H., & Levine S. (1996). Effect of a word prediction feature on user performance. Augmentative and Alternative Communication, 12(3), 155–168. [Google Scholar]

- Kübler A., Furdea A., Halder S., Hammer E. M., Nijboer F., & Kotchoubey B. (2009). A brain–computer interface controlled auditory event-related potential (p300) spelling system for locked-in patients. Annals of the New York Academy of Sciences, 1157, 90–100. [DOI] [PubMed] [Google Scholar]

- Kübler A., Holz E. M., Sellers E. W., & Vaughan T. M. (2015). Toward independent home use of brain–computer interfaces: A decision algorithm for selection of potential end-users. Archives of Physical Medicine and Rehabilitation, 96(3), S27–S32. [DOI] [PubMed] [Google Scholar]

- Kübler A., Kotchoubey B., Hinterberger T., Ghanayim N., Perelmouter J., Schauer M., … Birbaumer N. (1999). The thought translation device: A neurophysiological approach to communication in total motor paralysis. Experimental Brain Research, 124(2), 223–232. [DOI] [PubMed] [Google Scholar]

- Leuthardt E. C., Gaona C., Sharma M., Szrama N., Roland J., Freudenberg Z., … Schalk G. (2011). Using the electrocorticographic speech network to control a brain–computer interface in humans. Journal of Neural Engineering, 8(3), 036004. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Light J., & McNaughton D. (2013). Putting people first: Re-thinking the role of technology in augmentative and alternative communication intervention. Augmentative and Alternative Communication, 29(4), 299–309. [DOI] [PubMed] [Google Scholar]

- Lin Z., Zhang C., Wu W., & Gao X. (2007). Frequency recognition based on canonical correlation analysis for SSVEP-Based BCIs. IEEE Transactions on Biomedical Engineering, 54(6), 1172–1176. [DOI] [PubMed] [Google Scholar]

- Lopez M.-A., Pomares H., Pelayo F., Urquiza J., & Perez J. (2009). Evidences of cognitive effects over auditory steady-state responses by means of artificial neural networks and its use in brain–computer interfaces. Neurocomputing, 72(16–18), 3617–3623. [Google Scholar]

- Lotte F., Congedo M., Lécuyer A., Lamarche F., & Arnaldi B. (2007). A review of classification algorithms for EEG-based brain–computer interfaces. Journal of Neural Engineering, 4(2), R1–R13. [DOI] [PubMed] [Google Scholar]

- Lynn J. M. D., Armstrong E., & Martin S. (2016). User centered design and validation during the development of domestic brain computer interface applications for people with acquired brain injury and therapists: A multi-stakeholder approach. Journal of Assistive Technologies, 10(2), 67–78. [Google Scholar]

- Mainsah B. O., Collins L. M., Colwell K. A., Sellers E. W., Ryan D. B., Caves K., & Throckmorton C. S. (2015). Increasing BCI communication rates with dynamic stopping towards more practical use: An ALS study. Journal of Neural Engineering, 12(1), 016013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Martin S., Brunner P., Holdgraf C., Heinze H.-J., Crone N. E., Rieger J., … Pasley B. N. (2014). Decoding spectrotemporal features of overt and covert speech from the human cortex. Frontiers in Neuroengineering, 7, 14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- McCane L. M., Sellers E. W., McFarland D. J., Mak J. N., Carmack C. S., Zeitlin D., … Vaughan T. M. (2014). Brain–computer interface (BCI) evaluation in people with amyotrophic lateral sclerosis. Amyotrophic Lateral Sclerosis and Frontotemporal Degeneration, 15(3–4), 207–215. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Miner L. A., McFarland D. J., & Wolpaw J. R. (1998). Answering questions with an electroencephalogram-based brain–computer interface. Archives of Physical Medicine and Rehabilitation, 79(9), 1029–1033. [DOI] [PubMed] [Google Scholar]

- Mugler E. M., Patton J. L., Flint R. D., Wright Z. A., Schuele S. U., Rosenow J., … Slutzky M. W. (2014). Direct classification of all American English phonemes using signals from functional speech motor cortex. Journal of Neural Engineering, 11(3), 035015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Müller-Putz G. R., Scherer R., Brauneis C., & Pfurtscheller G. (2005). Steady-state visual evoked potential (SSVEP)-based communication: Impact of harmonic frequency components. Journal of Neural Engineering, 2(4), 123–130. [DOI] [PubMed] [Google Scholar]

- Mussa-Ivaldi F. A., & Miller L. E. (2003). Brain-machine interfaces: Computational demands and clinical needs meet basic neuroscience. Trends in Neurosciences, 26(6), 329–334. [DOI] [PubMed] [Google Scholar]

- Neuper C., Müller G. R., Kübler A., Birbaumer N., & Pfurtscheller G. (2003). Clinical application of an EEG-based brain–computer interface: A case study in a patient with severe motor impairment. Clinical Neurophysiology, 114(3), 399–409. [DOI] [PubMed] [Google Scholar]

- Neuper C., Scherer R., Reiner M., & Pfurtscheller G. (2005). Imagery of motor actions: Differential effects of kinesthetic and visual-motor mode of imagery in single-trial EEG. Cognitive Brain Research, 25(3), 668–677. [DOI] [PubMed] [Google Scholar]

- Nijboer F., Birbaumer N., & Kübler A. (2010). The influence of psychological state and motivation on brain–computer interface performance in patients with amyotrophic lateral sclerosis—A longitudinal study. Frontiers in Neuroscience, 4, 1–13. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nijboer F., Furdea A., Gunst I., Mellinger J., McFarland D. J., Birbaumer N., & Kübler A. (2008). An auditory brain–computer interface (BCI). Journal of Neuroscience Methods, 167(1), 43–50. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Nijboer F., Sellers E. W., Mellinger J., Jordan M. A., Matuz T., Furdea A., … Kübler A. (2008). A P300-based brain–computer interface for people with amyotrophic lateral sclerosis. Clinical Neurophysiology, 119(8), 1909–1916. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oken B. S., Orhan U., Roark B., Erdogmus D., Fowler A., Mooney A., … Fried-Oken M. B. (2014). Brain–computer interface with language model-electroencephalography fusion for locked-in syndrome. Neurorehabilitation and Neural Repair, 28(4), 387–394. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oostenveld R., & Praamstra P. (2001). The five percent electrode system for high-resolution EEG and ERP measurements. Clinical Neurophysiology, 112(4), 713–719. [DOI] [PubMed] [Google Scholar]

- Pasqualotto E., Matuz T., Federici S., Ruf C. A., Bartl M., Olivetti Belardinelli M., … Halder S. (2015). Usability and workload of access technology for people with severe motor impairment: A comparison of brain–computer interfacing and eye tracking. Neurorehabilitation and Neural Repair, 29(10), 950–957. [DOI] [PubMed] [Google Scholar]

- Patel R. (2011). Message formulation, organization, and navigation schemes for icon-based communication aids. In Proceedings of the 33rd Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC '11) (pp. 5364–5367). Boston, MA: IEEE. [DOI] [PubMed] [Google Scholar]

- Pei X., Barbour D. L., Leuthardt E. C., & Schalk G. (2011). Decoding vowels and consonants in spoken and imagined words using electrocorticographic signals in humans. Journal of Neural Engineering, 8(4), 046028.

- Pfurtscheller G., & Neuper C. (2001). Motor imagery and direct brain–computer communication. Proceedings of the IEEE, 89(7), 1123–1134.

- Plum F., & Posner J. B. (1972). The diagnosis of stupor and coma. Contemporary Neurology Series, 10, 1–286.

- Regan D. (1989). Human brain electrophysiology: Evoked potentials and evoked magnetic fields in science and medicine. New York, NY: Elsevier.

- Riccio A., Simione L., Schettini F., Pizzimenti A., Inghilleri M., Belardinelli M. O., … Cincotti F. (2013). Attention and P300-based BCI performance in people with amyotrophic lateral sclerosis. Frontiers in Human Neuroscience, 7, 732.

- Roark B., Beckley R., Gibbons C., & Fried-Oken M. (2013). Huffman scanning: Using language models within fixed-grid keyboard emulation. Computer Speech and Language, 27(6), 1212–1234.

- Roark B., Fried-Oken M., & Gibbons C. (2015). Huffman and linear scanning methods with statistical language models. Augmentative and Alternative Communication, 31(1), 37–50.

- Scherer R., Billinger M., Wagner J., Schwarz A., Hettich D. T., Bolinger E., … Müller-Putz G. (2015). Thought-based row–column scanning communication board for individuals with cerebral palsy. Annals of Physical and Rehabilitation Medicine, 58(1), 14–22.

- Scherer R., Müller G. R., Neuper C., Graimann B., & Pfurtscheller G. (2004). An asynchronously controlled EEG-based virtual keyboard: Improvement of the spelling rate. IEEE Transactions on Biomedical Engineering, 51(6), 979–984.

- Sellers E. W., & Donchin E. (2006). A P300-based brain–computer interface: Initial tests by ALS patients. Clinical Neurophysiology, 117(3), 538–548.

- Sellers E. W., Vaughan T. M., & Wolpaw J. R. (2010). A brain–computer interface for long-term independent home use. Amyotrophic Lateral Sclerosis, 11(5), 449–455.

- Silvoni S., Cavinato M., Volpato C., Ruf C. A., Birbaumer N., & Piccione F. (2013). Amyotrophic lateral sclerosis progression and stability of brain–computer interface communication. Amyotrophic Lateral Sclerosis and Frontotemporal Degeneration, 14(5–6), 390–396.

- Sprague S. A., McBee M. T., & Sellers E. W. (2015). The effects of working memory on brain–computer interface performance. Clinical Neurophysiology, 127(2), 1331–1341.

- Tankus A., Fried I., & Shoham S. (2012). Structured neuronal encoding and decoding of human speech features. Nature Communications, 3, 1015.

- Thistle J. J., & Wilkinson K. M. (2013). Working memory demands of aided augmentative and alternative communication for individuals with developmental disabilities. Augmentative and Alternative Communication, 29(3), 235–245.

- Thistle J. J., & Wilkinson K. M. (2015). Building evidence-based practice in AAC display design for young children: Current practices and future directions. Augmentative and Alternative Communication, 31(2), 124–136.

- Townsend G., & Platsko V. (2016). Pushing the P300-based brain–computer interface beyond 100 bpm: Extending performance guided constraints into the temporal domain. Journal of Neural Engineering, 13(2), 026024.

- Trnka K., McCaw J., Yarrington D., McCoy K. F., & Pennington C. (2008). Word prediction and communication rate in AAC. In Proceedings of the IASTED International Conference on Telehealth/Assistive Technologies (Telehealth/AT '08) (pp. 19–24). Baltimore, MD: ACTA Press.

- Vansteensel M. J., Pels E. G. M., Bleichner M. G., Branco M. P., Denison T., Freudenburg Z. V., … Ramsey N. F. (2016). Fully implanted brain–computer interface in a locked-in patient with ALS. New England Journal of Medicine, 375(21), 2060–2066.

- Vaughan T. M., McFarland D. J., Schalk G., Sarnacki W. A., Krusienski D. J., Sellers E. W., & Wolpaw J. R. (2006). The Wadsworth BCI Research and Development Program: At home with BCI. IEEE Transactions on Neural Systems and Rehabilitation Engineering, 14(2), 229–233.

- Vidaurre C., & Blankertz B. (2010). Towards a cure for BCI illiteracy. Brain Topography, 23(2), 194–198.

- Volosyak I., Valbuena D., Lüth T., Malechka T., & Gräser A. (2011). BCI demographics II: How many (and what kinds of) people can use a high-frequency SSVEP BCI? IEEE Transactions on Neural Systems and Rehabilitation Engineering, 19(3), 232–239.

- Vuckovic A., & Osuagwu B. A. (2013). Using a motor imagery questionnaire to estimate the performance of a brain–computer interface based on object oriented motor imagery. Clinical Neurophysiology, 124(8), 1586–1595.

- Wills S. A., & MacKay D. J. C. (2006). DASHER—An efficient writing system for brain–computer interfaces? IEEE Transactions on Neural Systems and Rehabilitation Engineering, 14(2), 244–246.

- Wolpaw J. R., Birbaumer N., McFarland D. J., Pfurtscheller G., & Vaughan T. M. (2002). Brain–computer interfaces for communication and control. Clinical Neurophysiology, 113(6), 767–791.

- Wolpaw J. R., & McFarland D. J. (2004). Control of a two-dimensional movement signal by a noninvasive brain–computer interface in humans. Proceedings of the National Academy of Sciences of the United States of America, 101(51), 17849–17854.