Abstract

Background

The effectiveness of community clinics and health centers’ efforts to improve the quality of care might be modified by clinics’ workplace climates. Several surveys to measure workplace climate exist, but their relationships to each other and to distinguishable dimensions of workplace climate are unknown.

Objective

To assess the psychometric properties of a survey instrument combining items from several existing surveys of workplace climate and to generate a shorter instrument for future use.

Methods

We fielded a 106-item survey, which included items from 9 existing instruments, to all clinicians and staff members (n=781) working in 30 California community clinics and health centers, receiving 628 responses (80% response rate). We performed exploratory factor analysis of survey responses, followed by confirmatory factor analysis of 200 reserved survey responses. We generated a new, shorter survey instrument of items with strong factor loadings.

Results

Six factors, including 44 survey items, emerged from the exploratory analysis. Two factors (Clinic Workload and Teamwork) were independent from the others. The remaining 4 factors (Staff Relationships, Quality Improvement Orientation, Managerial Readiness for Change, and Staff Readiness for Change) were highly correlated, indicating that these represented dimensions of a higher-order factor we called “Clinic Functionality.” This two-level, six-factor model fit the data well in the exploratory and confirmatory samples. For all but one factor, fewer than 20 survey responses were needed to achieve clinic-level reliability >0.7.

Conclusion

Survey instruments designed to measure workplace climate have substantial overlap. The relatively parsimonious item set we identified might help target and tailor clinics’ quality improvement efforts.

Keywords: organizational culture, surveys, community health centers, primary care

Introduction

Several primary care quality improvement (QI) strategies, such as medical home transformation, involve changes in workflow and responsibilities for clinicians and staff.1 Primary care practices’ workplace climates (e.g., team cohesiveness and readiness for change) can modify the effectiveness of such QI strategies.2–6 For example, practices with greater “adaptive reserve” have been better able to transform into medical homes and improve patient care,7,8 while those with climates less supportive of change can suffer from employee burnout, turnover, and lower-quality patient care.9 Therefore, understanding workplace climate might help identify primary care clinics that are well-positioned to participate in QI initiatives.

Many instruments designed to measure workplace climate exist. A recent review found 13 instruments of varying length, scope, and format.10 However, these instruments might assess a relatively limited number of underlying constructs. The extent of this hypothetical overlap is unknown, because such surveys have not been fielded simultaneously within primary care practices. By combining relevant items from multiple instruments, we sought to determine which dimensions of workplace climate are measurable using a relatively small number of items, potentially reducing response burden and redundancy in future surveys.

Methods

This study was approved by the Institutional Review Board of the University of California, Los Angeles (UCLA).

Development of the survey instrument

We developed the survey instrument to support the Innovative Care Approaches through Research and Education (iCARE) study, a randomized controlled trial comparing the effectiveness of 2 interventions designed to improve the quality of diabetes care in 18 community clinics and health centers (CCHCs) in California.11

We selected survey items based on their theoretical relevance to the iCARE interventions, following an extensive review of existing instruments designed to measure workplace climate. Based on this review and after obtaining author permission, we included 91 items culled from 9 instruments: Team Diagnostic Survey (TDS);12 Attitudes Toward Health Care Team Scale;13 Team Climate Inventory (TCI);14 Minimizing Error, Maximizing Outcome (MEMO);15 AHRQ TeamSTEPPS Teamwork Perceptions Questionnaire (AHRQ T-TPQ);16 TransforMed Clinician Staff Questionnaire (TransforMed CSQ);17 AHRQ Medical Office Survey on Patient Safety Culture;18 and Organizational Readiness to Change Assessment (ORCA).19 Appendix Table 1 lists the source instruments, constructs covered in each instrument, and number of items drawn from each. In addition, we created 14 original items to assess the composition of the respondent’s clinical team and 1 original item asking how frequently the respondent’s team met. The final fielded instrument included 106 items.

Some of the source instruments (e.g., TeamSTEPPS, Attitudes Toward Health Care Team Scale) were originally designed for use in hospital and long-term care settings. To understand how the measures would perform in primary care settings, we cognitively tested the draft instrument with 5 clinicians and staff from CCHCs not involved in the intervention study. Using results from the cognitive testing, we revised the wording of several items to enhance clarity, and we identified and removed items that were less relevant to primary care practice.

All but 1 of the survey items were scored on a 5-point Likert scale with response anchors (1) Strongly Disagree to (5) Strongly Agree. The remaining item assessed clinic atmosphere and was scored on a 5-point scale with anchors (1) Calm to (5) Hectic/chaotic.

Survey administration and sample

We fielded the survey in June-August 2011 via mail to the full census of 781 clinicians (e.g., physicians, nurses, allied health professionals) and other staff (e.g., receptionists, clerks) from 30 CCHCs (including those involved in the iCARE study). We enclosed Starbucks gift cards in the amount of $10 with the initial survey, and reminders to non-respondents referenced this gift card. We received a total of 628 completed surveys (80% overall response rate). Clinic-level response rates ranged from 44% to 100%.

Respondents in 1 clinic reported to the UCLA Institutional Review Board (whose contact information was included in survey recruitment materials) that their supervisors had attempted to influence or change their survey responses. We excluded all responses received from individuals in this clinic (n=27), leaving a final analytic sample of 601 completed surveys.

Data analysis

We randomly divided the 601 completed surveys into 2 samples, one to be used for exploratory factor analyses (EFA; n=401) and one to be used for confirmatory factor analyses (CFA; n=200). We then conducted a series of EFAs of the 401 survey responses, treating responses as categorical, including the TYPE=complex statement to control for clustering of staff within clinic, and using the WLSMV estimator (which employs implicit imputation via maximum likelihood for missing data) and a Geomin rotation in Mplus.20
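The random division into exploratory and confirmatory samples can be sketched as follows. This is only an illustration: the function name `split_responses`, the integer response IDs, and the fixed seed are assumptions, and the source does not describe the software or procedure actually used for the split.

```python
import random


def split_responses(response_ids, n_confirmatory, seed=0):
    """Randomly partition response IDs into exploratory and confirmatory sets.

    The seed is a hypothetical choice made only so the sketch is reproducible.
    """
    ids = list(response_ids)
    rng = random.Random(seed)
    rng.shuffle(ids)
    confirmatory = sorted(ids[:n_confirmatory])
    exploratory = sorted(ids[n_confirmatory:])
    return exploratory, confirmatory


# 601 completed surveys, 200 reserved for the confirmatory analysis
exploratory, confirmatory = split_responses(range(601), n_confirmatory=200)
print(len(exploratory), len(confirmatory))  # 401 200
```

Reserving the confirmatory responses before any exploratory modeling keeps the later fit assessment independent of the item-selection decisions.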

We assessed factor structures based on eigenvalue magnitude (greater than 1), shape of the scree plot, pattern of factor loadings, face validity (conceptually meaningful groupings), and goodness of fit for each exploratory solution. After determining the optimal number of factors, we dropped items with redundant content, loadings <0.4 across all identified factors, or high cross-loadings (i.e., loadings exceeding 0.3 on two or more factors).
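The two numeric screening rules above (no loading of at least 0.4 on any factor, or loadings above 0.3 on two or more factors) can be illustrated with a short sketch. The function `screen_items`, the example items, and the use of absolute loadings are assumptions made for illustration; the redundancy criterion is a content judgment and is not modeled here.

```python
def screen_items(loadings, primary_cutoff=0.4, cross_cutoff=0.3):
    """Split items into kept and dropped using the two numeric rules.

    `loadings` maps an item name to its loadings on each factor.
    Absolute values are used (an assumption) so that negatively keyed
    items are treated like positively keyed ones.
    """
    kept, dropped = [], {}
    for item, row in loadings.items():
        magnitudes = [abs(x) for x in row]
        if max(magnitudes) < primary_cutoff:
            dropped[item] = "no loading reaches the primary cutoff"
        elif sum(m > cross_cutoff for m in magnitudes) >= 2:
            dropped[item] = "cross-loading"
        else:
            kept.append(item)
    return kept, dropped


# Hypothetical loadings on three factors
example = {
    "item_a": [0.72, 0.10, 0.05],  # clean primary loading
    "item_b": [0.31, 0.22, 0.18],  # weak on every factor
    "item_c": [0.55, 0.41, 0.02],  # loads on two factors
}
kept, dropped = screen_items(example)
print(kept)     # ['item_a']
print(dropped)
```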

Using the best EFA model according to our criteria, we conducted a CFA, first with the exploratory sample and then with the confirmatory sample, to evaluate goodness of fit based on three indices: the root mean square error of approximation (RMSEA; <0.05 representing excellent fit and 0.05 to 0.08 representing reasonable or fair fit)21; the Tucker-Lewis index (TLI; ≥0.90 representing adequate fit and >0.95 representing excellent fit)22; and the comparative fit index (CFI; ≥0.90 representing adequate fit and >0.95 representing excellent fit).23–25

After completing the CFA, we combined the two samples and calculated scores for each clinic on each factor. To calculate scores for first-order factors, we took the means of the corresponding item-level Likert scores, using both equal item weighting and factor loading weights. To calculate higher-order factor scores, we took the mean of the corresponding first-order factors, again weighting each factor equally and by factor loading. For all factor scores (first- and higher-order), the correlation coefficients between these two weighting approaches exceeded 0.9. Therefore, we retained only the scores calculated using equal weighting.
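A minimal sketch of the two weighting approaches and their comparison follows. The item responses and loadings are hypothetical, and the sketch scores individual respondents rather than aggregating to clinics; it only shows why equal and loading-based weights tend to give nearly identical scores when loadings are similar in size.

```python
from math import sqrt


def weighted_mean(values, weights):
    """Mean of item scores under the given weights."""
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    var_x = sum((a - mx) ** 2 for a in x)
    var_y = sum((b - my) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical factor with three items and four respondents (1-5 Likert scores)
loadings = [0.9, 0.8, 0.7]
responses = [[4, 5, 3], [2, 2, 3], [5, 4, 4], [3, 3, 2]]

equal_scores = [weighted_mean(r, [1] * len(r)) for r in responses]
loading_scores = [weighted_mean(r, loadings) for r in responses]

r = pearson(equal_scores, loading_scores)
print(round(r, 3))
```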

Finally, for each factor we calculated means, standard deviations, internal consistency estimates (Cronbach’s alpha for higher-order factor scores, ordinal alpha for first-order factors), average clinic-level reliabilities (reliabilities calculated for each clinic and then averaged), and the minimum number of responses necessary to reach reliability ≥0.7 (using the Spearman-Brown prophecy formula).
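The Spearman-Brown prophecy formula gives the reliability of a mean of n responses as n·r / (1 + (n − 1)·r), where r is the reliability of a single response. Solving for n gives the minimum number of responses for a target reliability. The sketch below assumes r is a single-respondent clinic-level reliability (for example, an intraclass correlation); the value 0.3 is hypothetical and is not taken from the study.

```python
from math import ceil


def reliability_of_mean(single_r, n):
    """Spearman-Brown: reliability of the mean of n responses."""
    return n * single_r / (1 + (n - 1) * single_r)


def responses_needed(single_r, target=0.7):
    """Smallest n whose Spearman-Brown reliability reaches the target."""
    return ceil(target * (1 - single_r) / (single_r * (1 - target)))


single_r = 0.3  # hypothetical single-respondent reliability
n = responses_needed(single_r)
print(n)                                           # 6
print(round(reliability_of_mean(single_r, n), 2))  # 0.72
```

Because the required n grows quickly as single-respondent reliability falls, factors on which respondents within a clinic agree less need many more responses per clinic.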

Results

The median number of physicians per participating clinic was 4, and most of the patients they served were covered by Medicaid (42.9%) or were self-pay (i.e., uninsured; 21.4%). Among survey respondents, the median age was 40 years, 85% were female, 53% were of white race, 63% were of non-Hispanic ethnicity, and 68% had worked at their clinic for more than 2 years.

EFA of the survey items yielded 18 eigenvalues greater than 1. However, the scree plot elbowed sharply at 2 and then reduced steadily (eigenvalues 35.46, 4.16, 3.64, 2.84, 2.55, 2.33, 1.93, 1.86, 1.76, 1.58…). Because eigenvalue and scree plot criteria disagreed regarding the optimal number of factors, we examined loading patterns and model fit statistics to assess the exploratory models. Substantive considerations, including strength of factor loadings and total number of items loading on each factor, led us to consider solutions with 15 or fewer factors.
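The disagreement between the two criteria can be seen directly from the ten eigenvalues listed above. The sketch below counts eigenvalues greater than 1 and locates the largest successive drop; the "largest drop" rule is a simple heuristic used here for illustration and is not a formal scree test or the authors' stated procedure.

```python
# The first ten eigenvalues as reported in the text (the full list is elided there)
eigenvalues = [35.46, 4.16, 3.64, 2.84, 2.55, 2.33, 1.93, 1.86, 1.76, 1.58]

# Eigenvalue-greater-than-1 criterion, applied to the listed values only
above_one = sum(ev > 1 for ev in eigenvalues)

# Successive drops between neighbouring eigenvalues
drops = [round(a - b, 2) for a, b in zip(eigenvalues, eigenvalues[1:])]

# Number of eigenvalues before the largest drop
largest_drop_after = drops.index(max(drops)) + 1

print(above_one)           # 10
print(drops[:3])           # [31.3, 0.52, 0.8]
print(largest_drop_after)  # 1
```

All ten listed eigenvalues pass the greater-than-1 rule, while the only large drop occurs between the first and second eigenvalues, which is why the two criteria point to very different numbers of factors.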

Solutions specifying as few as 6 and as many as 9 factors yielded relatively interpretable patterns of loadings and reasonable fit. However, some of these factors had significant overlap in their substantive content and, therefore, were not sufficiently distinct. Before specifying the number of factors and re-running the EFA, we eliminated 12 items whose loadings were <0.4 on all factors.

Using the remaining 80 items, we conducted a second set of EFAs in which we specified up to 10 factors. Using this solution, we discarded another 36 items that met one or more of the following criteria: items that did not load >0.4 on any factor, items with substantial cross-loadings, and items with redundant content on a factor that already had several items. After these exclusions, 44 items remained for further modeling.

A final EFA with the reduced item set identified 6 clearly interpretable factors: clinic workload, teamwork, staff relationships, quality improvement (QI) orientation, manager readiness for change, and staff readiness for change. This 6-factor model also provided excellent fit (CFI = 0.982, TLI = 0.975, RMSEA = 0.031).

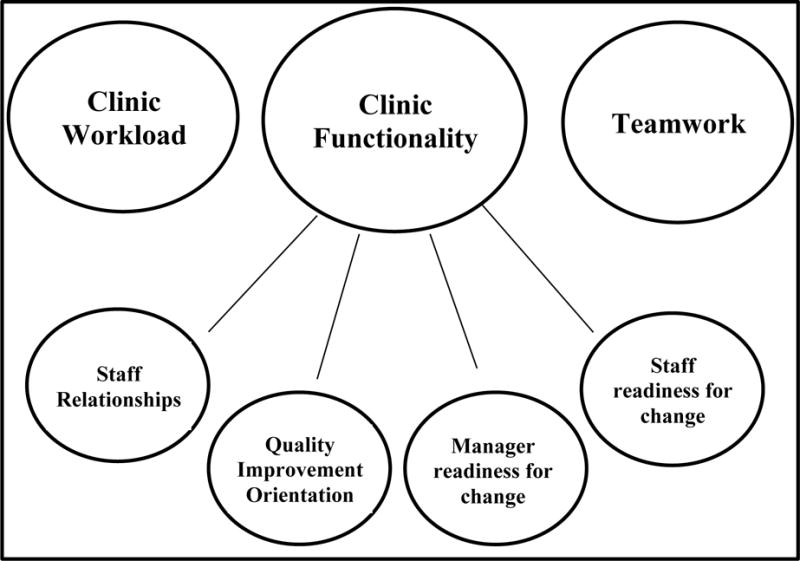

The pattern of model-based (i.e., disattenuated) correlations among the six factors indicated a possible higher-order dimension comprising 4 of the first-order factors (staff relationships, QI orientation, manager readiness for change, and staff readiness for change), which displayed relatively high intercorrelations [Table 2]. To account for the correlation of these factors, we conducted a CFA, still using the exploratory sample data, that specified a factor model in which these 4 factors loaded on a higher-order factor (termed “clinic functionality”), and the remaining 2 factors (clinic workload and teamwork) remained distinct from this higher-order factor [Figure 1]. The CFA model did not allow a given item to load on more than 1 factor.

Table 2.

Model-based factor correlation coefficients from 6-factor EFA solution.

| | Staff relationships | QI orientation | Manager readiness for change | Staff readiness for change | Clinic workload | Teamwork |
|---|---|---|---|---|---|---|
| Staff relationships | 1 | | | | | |
| QI orientation | 0.605* | 1 | | | | |
| Manager readiness for change | 0.475* | 0.548* | 1 | | | |
| Staff readiness for change | 0.383* | 0.477* | 0.354* | 1 | | |
| Clinic workload | 0.118 | 0.152* | 0.049 | 0.014 | 1 | |
| Teamwork | 0.081* | 0.074 | 0.054 | 0.020 | 0.121* | 1 |

\* Correlation coefficient significant at p<.05

Figure 1.

Final factor structure

This final model fit the data well in the exploratory sample (CFI = 0.975, TLI = 0.974, RMSEA = 0.034) and replicated well in the confirmatory sample of 200 reserved survey responses (CFI = 0.943, TLI = 0.939, RMSEA = 0.045). We then combined the two samples and reran the CFA. Table 3 shows the survey items and loadings for the final factor solution using the total sample (n=601), which had excellent fit (CFI = 0.968, TLI = 0.966, RMSEA = 0.034) with moderate model-based correlations among the factors (r=0.281 for clinic workload with teamwork, r=0.447 for clinic workload with clinic functionality, r=0.366 for teamwork with clinic functionality).
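The fit thresholds stated in the Methods can be applied mechanically to the indices reported above. The following sketch does so; the function names and label strings are illustrative assumptions, while the cutoffs and index values are those given in the text.

```python
def rmsea_label(value):
    """Label RMSEA using the cutoffs stated in the Methods."""
    if value < 0.05:
        return "excellent"
    if value <= 0.08:
        return "reasonable or fair"
    return "outside the stated ranges"


def incremental_label(value):
    """Label TLI or CFI using the cutoffs stated in the Methods."""
    if value > 0.95:
        return "excellent"
    if value >= 0.90:
        return "adequate"
    return "below adequate"


def classify_fit(cfi, tli, rmsea):
    """Return a label for each of the three fit indices."""
    return {
        "CFI": incremental_label(cfi),
        "TLI": incremental_label(tli),
        "RMSEA": rmsea_label(rmsea),
    }


# (CFI, TLI, RMSEA) as reported for each sample
reported = {
    "exploratory (n=401)": (0.975, 0.974, 0.034),
    "confirmatory (n=200)": (0.943, 0.939, 0.045),
    "full sample (n=601)": (0.968, 0.966, 0.034),
}
results = {sample: classify_fit(*indices) for sample, indices in reported.items()}
for sample, labels in results.items():
    print(sample, labels)
```

Under these cutoffs the exploratory and full-sample models are excellent on all three indices, while the confirmatory sample is adequate on CFI and TLI and excellent on RMSEA.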

Table 3.

Item factor loadings for the final confirmatory factor analysis model using the full sample

| Factor 1: Clinic Workload | Loading |
|---|---|
| Which best describes the atmosphere in your clinic? | 0.79 |
| In this clinic, we often feel rushed when taking care of patients. | 0.69 |
| This clinic has too many patients to be able to handle everything effectively. | 0.63 |
| We have too many patients for the number of providers in this clinic. | 0.63 |
| We have enough staff to handle our patient load. | 0.63 |
| Your team has too few members for what it has to accomplish. | 0.38 |
| Factor 2: Teamwork | |
| Some members of your team lack the knowledge and skills they need to do their parts of the team’s work. | 0.76 |
| Some members of your team do not carry their fair share of the overall workload. | 0.74 |
| Patients are less satisfied with their care when it is provided by a team. | 0.65 |
| In most instances, the time required for team meetings could better be spent in other ways. | 0.53 |
| Working in teams unnecessarily complicates things most of the time. | 0.53 |
| Different people are constantly joining and leaving your team. | 0.49 |
| Members of your team have their own individual jobs to do, with little need to work together. | 0.44 |
| Your team is larger than it needs to be. | 0.42 |
| Factor 3: Clinic Functionality | |
| Sub-factor: Staff relationships | 0.88* |
| Staff effectively anticipate each other’s needs | 0.85 |
| We have a “we are in it together” attitude | 0.81 |
| Staff treat each other with respect | 0.80 |
| We feel understood and accepted by each other | 0.79 |
| Staff skills overlap sufficiently so that work can be shared when necessary | 0.75 |
| Everyone on your team is motivated to have the team succeed | 0.70 |
| There is a good working relationship between staff and providers | 0.65 |
| Sub-factor: QI orientation | 0.95* |
| People in the practice cooperate to help develop and apply new ideas | 0.86 |
| The clinic is good at changing care processes to make sure the same problems don’t happen again | 0.85 |
| When there is a problem in the clinic, we see if we need to change the way we do things | 0.83 |
| People in the clinic are always searching for fresh, new ways of looking at problems | 0.83 |
| After the clinic makes changes to improve the patient care process, we check to see if the changes worked | 0.83 |
| When we experience a problem in the clinic, we make a serious effort to figure out what’s really going on | 0.79 |
| The clinic encourages everyone (front office staff, clinical staff, nurses, and clinicians) to share ideas | 0.75 |
| There is a high level of commitment to measuring clinical outcomes | 0.74 |
| The clinic makes efficient use of resources (e.g., staff supplies, equipment, information) | 0.73 |
| The clinic seeks ways to improve patient education and increase patient participation in treatment | 0.70 |
| The quality of each provider’s work is closely monitored | 0.67 |
| We have very good methods to assure providers change their practices to include new technologies and research findings | 0.63 |
| Sub-factor: Manager readiness for change | 0.81* |
| Your supervisor/manager provides opportunities to discuss the unit’s performance | 0.91 |
| Your supervisor/manager models appropriate team behavior | 0.90 |
| Your supervisor/manager resolves conflicts successfully | 0.88 |
| Your supervisor/manager ensures that staff are aware of any situations or changes that may affect patient care | 0.88 |
| Your supervisor/manager considers staff input when making decisions about patient care | 0.88 |
| Your supervisor/manager takes time to meet with staff to develop a plan for patient care | 0.86 |
| Your supervisor/manager ensures that adequate resources (e.g., staff, supplies, equipment, information) are available | 0.83 |
| Sub-factor: Staff readiness for change | 0.73* |
| Staff cooperate to maintain and improve effectiveness of patient care | 0.93 |
| Staff are willing to innovate and/or experiment to improve clinical processes | 0.92 |
| Staff have a sense of personal responsibility for improving patient care and outcomes | 0.88 |
| Staff are receptive to changes in clinical processes | 0.84 |

\* First-order factor loading on higher-order factor

Table 4 presents summary statistics for scores on the final 6 factors. Mean factor scores ranged from 2.69 to 3.79, standard deviations from 0.66 to 0.92, and internal consistency estimates from 0.78 to 0.96. The number of observations needed to reach a reliability of >0.7 ranged from 10 to 19 respondents for all but 1 factor (staff readiness for change, which required 48 respondents per clinic).

Table 4.

Summary statistics for factor composite scores

| Composite Measure | Number of items | Mean score | SD | Internal consistency# | Number of survey responses* needed for reliability >0.7 |
|---|---|---|---|---|---|
| Clinic Functionality | 30ˆ | 3.70 | 0.66 | 0.87 | 14 |
| Staff relationships | 7 | 3.67 | 0.76 | 0.91 | 10 |
| QI orientation | 12 | 3.64 | 0.71 | 0.94 | 16 |
| Manager readiness for change | 7 | 3.70 | 0.92 | 0.96 | 19 |
| Staff readiness for change | 4 | 3.79 | 0.69 | 0.92 | 48 |
| Clinic workload | 6 | 2.69 | 0.70 | 0.79 | 18 |
| Teamwork | 8 | 3.40 | 0.66 | 0.78 | 12 |

Abbreviations: SD, standard deviation; QI, quality improvement.

\# Internal consistency is calculated as ordinal alpha for first-order factors and Cronbach’s alpha for the higher-order factor.

\* Calculated using the Spearman-Brown prophecy formula.

ˆ Clinic Functionality factor includes four sub-factors: staff relationships, QI orientation, manager readiness for change, and staff readiness for change.

Discussion

We developed, fielded, and analyzed a survey of workplace climate, drawing from multiple source instruments. We found that, within the setting of CCHCs, only 3 higher-order factors could be distinguished (clinic functionality, clinic workload, and teamwork), along with 4 first-order factors within clinic functionality (staff relationships, QI orientation, manager readiness for change, and staff readiness for change). At 44 items, the final survey instrument is of reasonable length, limiting respondent burden.

Assessing workplace climates of primary care practices can be important. Such measurements might help health systems plan and tailor their QI interventions, thus increasing the likelihood that interventions will reach their goals, and allow stratified analyses by evaluators of QI initiatives, thus identifying workplace climates that modify intervention effects.26 However, the proliferation of practice survey instruments may tempt stakeholders to field multiple or lengthy surveys to ensure that they have captured all relevant aspects of organizational culture necessary for success in practice transformation and QI. For some stakeholders, comprehensive assessment using multiple instruments might not be practical.

Our survey instrument might be attractive to health innovation champions who understand the value of preparing for QI implementation but have limited time and resources to do so. We suggest computing the 3 higher-order factors as a general approach to tailoring interventions and stratifying analyses. The 4 first-order factors nesting in “clinic functionality” might also be of interest, depending on the logic model underlying a given QI intervention (e.g., one that seems likely to require especially high levels of staff readiness for change).

Limitations

Our study has limitations. First, the survey was fielded among CCHCs in California. The extent to which our findings are generalizable to larger practices in different settings is unknown. Second, the ability of the survey to predict the effects of QI initiatives on primary care practices has not been established. Third, factor analysis results might be influenced by common method bias, which can in theory mimic a higher-order cultural construct (such as the “clinic functionality” factor emerging from our analyses).27 Without a “marker variable” (i.e., a survey item or item set designed to measure response tendency exclusively, without being influenced by clinic climate) we cannot disentangle the extent to which covariance is driven by common method bias, as opposed to a true latent construct. However, if clinic functionality were actually a measure of response tendency alone, it would arguably emerge as a higher-order factor for all factors, rather than a subset of them. Finally, our procedure for protecting human subjects and data integrity identified and excluded from analysis 1 clinic in which study protocol was violated. Despite having no evidence to suggest similar protocol violations in other sites, we cannot be absolutely certain that none occurred.

Conclusion

By combining relevant items from multiple survey instruments, we found that practice climate could be measured by 3 higher-order factors: clinic workload, teamwork, and clinic functionality (encompassing 4 first-order factors: staff relationships, QI orientation, manager readiness for change, and staff readiness for change). The survey instrument emerging from our analyses might be useful for stakeholders who need a parsimonious item set to efficiently guide their implementation of QI initiatives.

Supplementary Material

Table 1.

Respondent characteristics

| | Median (IQR) |
|---|---|
| Age (years) | 40 (31-51) |
| Gender | Proportion of respondents |
| Male | 14.8% |
| Female | 85.2% |
| Race | Proportion of respondents |
| White | 52.7% |
| Asian | 21.0% |
| Black | 1.9% |
| American Indian or Native Alaskan | 3.1% |
| Other | 21.4% |
| Ethnicity | Proportion of respondents |
| Hispanic | 37.0% |
| Non-Hispanic | 63.0% |
| Length of time worked at the practice | Proportion of respondents |
| Less than 6 months | 5.9% |
| 6-12 months | 10.2% |
| 1-2 years | 15.9% |
| More than 2 years | 68.1% |

Abbreviation: IQR, interquartile range

Acknowledgments

Funding source: Agency for Healthcare Research and Quality (R18 HS20120-01)

References

- 1. O’Malley AS, Gourevitch R, Draper K, Bond A, Tirodkar MA. Overcoming challenges to teamwork in patient-centered medical homes: a qualitative study. J Gen Intern Med. 2015;30(2):183–192. doi: 10.1007/s11606-014-3065-9.

- 2. Bodenheimer T, Wang MC, Rundall TG, et al. What are the facilitators and barriers in physician organizations’ use of care management processes? Jt Comm J Qual Saf. 2004;30(9):505–514. doi: 10.1016/s1549-3741(04)30059-6.

- 3. Solberg LI, Hroscikoski MC, Sperl-Hillen JM, Harper PG, Crabtree BF. Transforming medical care: case study of an exemplary, small medical group. Ann Fam Med. 2006;4(2):109–116. doi: 10.1370/afm.424.

- 4. Yano EM, Soban LM, Parkerton PH, Etzioni DA. Primary care practice organization influences colorectal cancer screening performance. Health Serv Res. 2007;42(3 Pt 1):1130–1149. doi: 10.1111/j.1475-6773.2006.00643.x.

- 5. Benzer JK, Young G, Stolzmann K, et al. The relationship between organizational climate and quality of chronic disease management. Health Serv Res. 2011;46(3):691–711. doi: 10.1111/j.1475-6773.2010.01227.x.

- 6. Dugan DP, Mick SS, Scholle SH, Steidle EF, Goldberg DG. The relationship between organizational culture and practice systems in primary care. J Ambul Care Manage. 2011;34(1):47–56. doi: 10.1097/JAC.0b013e3181ff6ef2.

- 7. Miller WL, Crabtree BF, Nutting PA, Stange KC, Jaen CR. Primary care practice development: a relationship-centered approach. Ann Fam Med. 2010;8(Suppl 1):S68–79. doi: 10.1370/afm.1089.

- 8. Nutting PA, Crabtree BF, Stewart EE, et al. Effect of facilitation on practice outcomes in the National Demonstration Project model of the patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):S33–44. doi: 10.1370/afm.1119.

- 9. Graber JE, Huang ES, Drum ML, et al. Predicting changes in staff morale and burnout at community health centers participating in the health disparities collaboratives. Health Serv Res. 2008;43(4):1403–1423. doi: 10.1111/j.1475-6773.2007.00828.x.

- 10. Scott T, Mannion R, Davies H, Marshall M. The quantitative measurement of organizational culture in health care: a review of the available instruments. Health Serv Res. 2003;38(3):923–945. doi: 10.1111/1475-6773.00154.

- 11. Van der Wees PJ, Friedberg MW, Guzman EA, Ayanian JZ, Rodriguez HP. Comparing the implementation of team approaches for improving diabetes care in community health centers. BMC Health Serv Res. 2014;14:608. doi: 10.1186/s12913-014-0608-z.

- 12. Wageman R, Hackman JR, Lehman E. Team diagnostic survey: development of an instrument. J Appl Behav Sci. 2005;41:373–398.

- 13. Heinemann GD, Schmitt MH, Farrell MP, Brallier SA. Development of an Attitudes Toward Health Care Teams Scale. Eval Health Prof. 1999;22(1):123–142. doi: 10.1177/01632789922034202.

- 14. Anderson NR, West MA. Measuring climate for work group innovation: development and validation of the team climate inventory. J Organ Behav. 1998;19(3):235–258.

- 15. Linzer M, Baier Manwell L, Mundt M, et al. Organizational climate, stress, and error in primary care: the MEMO study. In: Henriksen K, Battles JB, Marks ES, Lewin DI, editors. Advances in Patient Safety: From Research to Implementation (Volume 1: Research Findings). Rockville (MD): 2005.

- 16. Baker DP, Krokos KJ, Amodeo AM. Team STEPPS Teamwork Attitudes Questionnaire Manual. Washington, DC: American Institutes for Research; 2008.

- 17. Jaen CR, Crabtree BF, Palmer RF, et al. Methods for evaluating practice change toward a patient-centered medical home. Ann Fam Med. 2010;8(Suppl 1):S9–20. doi: 10.1370/afm.1108.

- 18. Agency for Healthcare Research and Quality. Medical Office Survey on Patient Safety Culture. 2013. http://www.ahrq.gov/professionals/quality-patient-safety/patientsafetyculture/medical-office/index.html. Accessed July 11, 2013.

- 19. Helfrich CD, Li YF, Sharp ND, Sales AE. Organizational readiness to change assessment (ORCA): development of an instrument based on the Promoting Action on Research in Health Services (PARIHS) framework. Implement Sci. 2009;4:38. doi: 10.1186/1748-5908-4-38.

- 20. Muthen LK, Muthen BO. Mplus user’s guide. Los Angeles, CA: Muthen & Muthen; 1998–2004.

- 21. Steiger JH, Lind J. Statistically based tests for the number of common factors. Annual Meeting of the Psychometric Society; 1980; Iowa City, Iowa.

- 22. Tucker LR, Lewis C. A reliability coefficient for maximum likelihood factor analysis. Psychometrika. 1973;38:1–10.

- 23. Bentler PM. Comparative fit indexes in structural models. Psychol Bull. 1990;107(2):238–246. doi: 10.1037/0033-2909.107.2.238.

- 24. Browne MW, Cudeck R. Alternative ways of assessing model fit. In: Testing Structural Equation Models. Thousand Oaks (CA): Sage; 1993.

- 25. Hu LT, Bentler PM. Cutoff criteria for fit indices in covariance structure analysis: conventional criteria versus new alternatives. Structural Equation Modeling. 1999;6:1–55.

- 26. Mensing C, Boucher J, Cypress M, et al. National standards for diabetes self-management education. Diabetes Care. 2007;30(Suppl 1):S96–S103. doi: 10.2337/dc07-S096.

- 27. Podsakoff PM, MacKenzie SB, Lee JY, Podsakoff NP. Common method biases in behavioral research: a critical review of the literature and recommended remedies. J Appl Psychol. 2003;88(5):879–903. doi: 10.1037/0021-9010.88.5.879.
