Abstract

Objective

Improving health literacy at an early age is crucial to personal health and development. Although health literacy in children and adolescents has gained momentum in the past decade, it remains an under-researched area, particularly with respect to measurement. This study aimed to examine the quality of health literacy instruments used in children and adolescents and to identify the best instrument for field use.

Design

Systematic review.

Setting

A wide range of settings including schools, clinics and communities.

Participants

Children and/or adolescents aged 6–24 years.

Primary and secondary outcome measures

Measurement properties (reliability, validity and responsiveness) and other important characteristics (eg, health topics, components or scoring systems) of health literacy instruments.

Results

Twenty-nine health literacy instruments were identified through the screening process. When measuring health literacy in children and adolescents, researchers mainly focus on the functional domain (basic skills in reading and writing) and mostly consider the participant characteristics of developmental change (in cognitive ability), dependency (on parents) and demographic patterns (eg, racial/ethnic backgrounds), with less attention to differential epidemiology (of health and illness). The methodological quality of included studies, assessed per measurement property, varied from poor to excellent. More than half (62.9%) of measurement properties were unknown, owing either to the poor methodological quality of included studies or to a lack of reporting or assessment. The 8-item Health Literacy Assessment Tool (HLAT-8) showed the best evidence for construct validity, and the Health Literacy Measure for Adolescents showed the best evidence for reliability.

Conclusions

More rigorous and high-quality studies are needed to fill the knowledge gap in measurement properties of health literacy instruments. Although it is challenging to draw a robust conclusion about which instrument is the most reliable and the most valid, this review provides important evidence that supports the use of the HLAT-8 to measure childhood and adolescent health literacy in future school-based research.

Keywords: measurement properties, health literacy, children, adolescents, systematic review

Strengths and limitations of this study.

The COSMIN (COnsensus-based Standards for the selection of health Measurement INstruments) checklist was used as a methodological framework to rate the methodological quality of included studies.

This review updates three previous reviews of childhood and adolescent health literacy measurement tools and identifies 19 additional health literacy instruments.

Including only studies that aimed to develop or validate a health literacy instrument may eliminate studies that used a health literacy instrument for other purposes.

Subjective judgement by individual reviewers was involved in the screening and data synthesis stages.

Introduction

Health literacy is a personal resource that enables an individual to make decisions for healthcare, disease prevention and health promotion in everyday life.1 As defined by the WHO,2 health literacy refers to ‘the cognitive and social skills which determine the motivation and ability of individuals to gain access to, understand and use information in ways which promote and maintain good health’. The literature has shown that health literacy is an independent and more direct predictor of health outcomes than sociodemographic factors.3 4 People with low health literacy are more likely to engage in health-compromising behaviours, incur higher healthcare costs and have poorer health status.5 Given the close relationship between health literacy and health outcomes, many countries have adopted health literacy promotion as a key strategy to reduce health inequities.6

From a health promotion perspective, improving health literacy at an early age is crucial to childhood and adolescent health and development.7 As demonstrated by Diamond et al8 and Robinson et al,9 health literacy interventions for children and adolescents can bring about improvements in healthy behaviours and decreased use of emergency department services. Although health literacy in young people has gained increasing attention, with a rapidly growing number of publications in the past decade,10–13 childhood and adolescent health literacy is still under-researched. According to Forrest et al’s 4D model,14 15 health literacy in children and adolescents is mediated by four additional factors compared with adults: (1) developmental change: children and adolescents have less well-developed cognitive ability than adults; (2) dependency: children and adolescents depend more on their parents and peers than adults do; (3) differential epidemiology: children and adolescents experience a unique pattern of health, illness and disability; and (4) demographic patterns: many children and adolescents living in poverty or in single-parent families are neglected and so require additional care. These four differences pose significant challenges for researchers when measuring health literacy in children and adolescents.

Health literacy is a broad and multidimensional concept with varying definitions.16 This paper uses the definition by Nutbeam,17 who states that health literacy consists of three domains: functional, interactive and critical. The functional domain refers to basic skills in reading and writing health information, which are important for functioning effectively in everyday life. The interactive domain represents more advanced skills that allow individuals to extract health information and derive meaning from different forms of communication. The critical domain represents the most advanced skills, which can be used to critically evaluate health information and take control over health determinants.17 Although health literacy has been well described in terms of its definitions17–19 and theoretical models,4 7 its measurement remains contested. Two reasons may explain this: the large variety of health literacy definitions and conceptual models,12 16 and differences in the study aims, populations and contexts of researchers measuring health literacy.20 21

Currently, there are three systematic reviews describing and analysing the methodology and measurement of childhood and adolescent health literacy.10 11 13 In 2013, Ormshaw et al10 conducted a systematic review of child and adolescent health literacy measures. This review used four questions to explore health literacy measurement in children and adolescents: ‘What measurement tools were used? What health topics were involved? What components were identified? and did studies achieve their stated aims?’ The authors identified 16 empirical studies, only 6 of which evaluated health literacy measurement as their primary aim. The remaining studies used health literacy measures either as a comparison tool when developing other new instruments or as a dependent variable to examine the effect of an intervention programme. Subsequently, in 2014, Perry11 conducted an integrative review of health literacy instruments used in adolescents and, in accordance with the eligibility criteria, identified five instruments. More recently, Okan et al13 conducted another systematic review on generic health literacy instruments used for children and adolescents, with the aim of identifying and assessing relevant instruments for first-time use. They found 15 generic health literacy instruments used for this target group.

Although these three reviews provide general knowledge about the methodology and measurement of health literacy in young people, they all have limitations. Ormshaw et al10 did not evaluate the measurement properties of each health literacy instrument. Although Perry11 and Okan et al13 summarised the measurement properties of each instrument, the information provided was limited, mostly descriptive and lacked critical appraisal. Notably, none of the three reviews considered the methodological quality of included studies.10 11 13 This lack of quality assessment raises concerns about the utility of such reviews for evaluating and selecting health literacy instruments for children and adolescents. Therefore, it is still unclear which instrument is the best in terms of its validity, reliability and feasibility for field use. It is also unclear how Nutbeam’s17 three-domain health literacy model and Forrest et al’s14 15 4D model are reflected in existing health literacy instruments for children and adolescents.

To fill these knowledge gaps, this systematic review aimed to examine the quality of health literacy instruments used in the young population and to identify the best instrument for field use. We expect the findings will assist researchers in identifying and selecting the most appropriate instrument for different purposes when measuring childhood and adolescent health literacy.

Methods

Following the methods for conducting systematic reviews outlined in the Cochrane Handbook,22 we developed a review protocol (see online supplementary appendix 1, PROSPERO registration number: CRD42018013759) prior to commencing the study. The Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement23 (see Research Checklist) was used to ensure the reporting quality of this review.


Literature search

The review took place over two time periods. The initial systematic review covered the period between 1 January 1974 and 16 May 2014 (period 1). The start date of 1974 was chosen because this was the year in which the term ‘health literacy’ was first used.24 A second search was used to update the review in February 2018; it covered the period from 17 May 2014 to 31 January 2018 (period 2). The databases searched were Medline, PubMed, Embase, PsycINFO, Cumulative Index to Nursing and Allied Health Literature, Education Resources Information Center, and the Cochrane Library. The search strategy was designed on the basis of previous reviews5 10 25 26 and in consultation with two expert librarians. Three types of search terms were used: (1) construct-related terms: ‘health literacy’ OR ‘health and education and literacy’; (2) outcome-related terms: ‘health literacy assess*’ OR ‘health literacy measure*’ OR ‘health literacy evaluat*’ OR ‘health literacy instrument*’ OR ‘health literacy tool*’; and (3) age-related terms: ‘child*’ OR ‘adolescent*’ OR ‘student*’ OR ‘youth’ OR ‘young people’ OR ‘teen*’ OR ‘young adult’.
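To illustrate how the three concept blocks fit together, the sketch below assembles them into a single Boolean string. It assumes that terms within a block are joined with OR and that the three blocks are combined with AND; the helper names `or_block` and `build_query` are hypothetical, and the exact database-specific syntax actually used is given in online supplementary appendix 2.

```python
# Concept blocks transcribed from the search strategy described above.
CONSTRUCT_TERMS = ['"health literacy"', '"health and education and literacy"']
OUTCOME_TERMS = [
    '"health literacy assess*"',
    '"health literacy measure*"',
    '"health literacy evaluat*"',
    '"health literacy instrument*"',
    '"health literacy tool*"',
]
AGE_TERMS = [
    '"child*"', '"adolescent*"', '"student*"', '"youth"',
    '"young people"', '"teen*"', '"young adult"',
]


def or_block(terms: list[str]) -> str:
    """Join the synonyms of one concept block with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(*blocks: list[str]) -> str:
    """Combine concept blocks with AND (an assumption, see the lead-in)."""
    return " AND ".join(or_block(block) for block in blocks)


if __name__ == "__main__":
    print(build_query(CONSTRUCT_TERMS, OUTCOME_TERMS, AGE_TERMS))
```

Keeping each block as a separate list makes it easy to adapt one concept (for example, adding an age term) without touching the others.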

No language restriction was applied. The detailed search strategy for each database is available in online supplementary appendix 2. As per the PRISMA flow diagram,23 the references from included studies and from six previously published systematic reviews on health literacy5 10 25–28 were also included.

Eligibility criteria

Studies had to fulfil the following criteria to be included: (1) the stated aim of the study was to develop or validate a health literacy instrument; (2) participants were children or adolescents aged 6–24 (this broad age range was used because the age ranges for ‘children’ (under the age of 18) and ‘adolescents’ (aged 10–24) overlap,29 and because children aged over 6 are able to learn and develop their own health literacy30); (3) the term ‘health literacy’ was explicitly defined, although studies assessing health numeracy (the ability to understand and use numbers in healthcare settings) were also considered; and (4) at least one measurement property (reliability, validity or responsiveness) was reported in the outcomes.

Studies were excluded if (1) the full paper was not available (ie, only a conference abstract or protocol was available); (2) they were not peer-reviewed (eg, dissertations, government reports); or (3) they were qualitative studies.
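The inclusion and exclusion rules above can be expressed as a single screening predicate. This is a minimal sketch, not the authors' screening tool: the `Study` record and its field names are hypothetical, and treating criterion (2) as "the sample's age range overlaps 6–24" is an assumption made for illustration.

```python
from dataclasses import dataclass, field

# Measurement properties named in inclusion criterion (4).
MEASUREMENT_PROPERTIES = {"reliability", "validity", "responsiveness"}


@dataclass
class Study:
    """Hypothetical screening record for one candidate study."""
    aims_to_develop_or_validate: bool
    min_age: int
    max_age: int
    defines_health_literacy: bool
    assesses_health_numeracy: bool
    properties_reported: set[str] = field(default_factory=set)
    full_text_available: bool = True
    peer_reviewed: bool = True
    qualitative: bool = False


def is_eligible(s: Study) -> bool:
    included = (
        s.aims_to_develop_or_validate                                  # criterion 1
        and s.min_age <= 24 and s.max_age >= 6                         # criterion 2 (assumed: overlap)
        and (s.defines_health_literacy or s.assesses_health_numeracy)  # criterion 3
        and bool(s.properties_reported & MEASUREMENT_PROPERTIES)       # criterion 4
    )
    excluded = (
        not s.full_text_available   # eg, conference abstract or protocol only
        or not s.peer_reviewed      # eg, dissertations, government reports
        or s.qualitative
    )
    return included and not excluded
```

For example, a peer-reviewed validation study of 13–17-year-olds that defines health literacy and reports reliability passes, whereas the same study flagged as qualitative does not.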

Selection process

All references were imported into EndNote V.X7 software (Thomson Reuters, New York, New York), and duplicate records were removed before screening. Next, one author (SG) screened all records by title and abstract. Full-text papers of the remaining records were then obtained separately for each review round (period 1 and period 2), and all full-text papers were screened by two independent authors (SG and SMA). At each major step of this systematic review, discrepancies between authors were resolved through discussion.

Data extraction

The data extracted from papers were the characteristics of the included studies (eg, first author, year of publication and country), general characteristics of instruments (eg, health topics, components and scoring systems), the methodological quality of each study per measurement property (eg, internal consistency, reliability and measurement error) and the ratings of measurement properties of included instruments (eg, internal consistency, reliability and measurement error). Data extraction from full-text papers published during period 1 was performed by two independent authors (SG and TS), whereas data extraction from full-text papers published during period 2 was conducted by one author (SG) and then checked by a second author (TS).

Methodological quality assessment of included studies

The methodological quality of included studies was assessed using the COnsensus-based Standards for the selection of health Measurement INstruments (COSMIN) checklist.31 The COSMIN checklist is a critical appraisal tool containing standards for evaluating the methodological quality of studies on measurement properties of health measurement instruments.32 It covers nine measurement properties: internal consistency, reliability, measurement error, content validity, structural validity, hypotheses testing, cross-cultural validity, criterion validity and responsiveness.32 Because there is no agreed-upon ‘gold standard’ for health literacy measurement,33 34 criterion validity was not assessed in this review, so the remaining eight properties were assessed. Each measurement property section contains 5–18 evaluation items; for example, ‘internal consistency’ is evaluated against 11 items. Each item is scored on a 4-point scale (‘excellent’, ‘good’, ‘fair’ or ‘poor’). The overall methodological quality of a study is obtained separately for each measurement property by taking the lowest rating of any item in that section (ie, ‘worst score counts’). Two authors (SG and TS) independently assessed the methodological quality of included studies published during period 1, whereas the quality of included studies published during period 2 was assessed by one author (SG) and then checked by another (TS).
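The ‘worst score counts’ rule can be written down in a few lines. This is a minimal sketch: the 4-point scale and the per-property minimum come from the description above, while the names `Rating`, `box_quality` and `study_quality` and the example item ratings are hypothetical.

```python
from enum import IntEnum


class Rating(IntEnum):
    """COSMIN 4-point item rating; a higher value means better methodological quality."""
    POOR = 1
    FAIR = 2
    GOOD = 3
    EXCELLENT = 4


def box_quality(item_ratings: list[Rating]) -> Rating:
    """Overall quality for one measurement property: the lowest item rating wins."""
    if not item_ratings:
        raise ValueError("a measurement property section needs at least one rated item")
    return min(item_ratings)


def study_quality(sections: dict[str, list[Rating]]) -> dict[str, Rating]:
    """Apply 'worst score counts' separately to every measurement property assessed."""
    return {prop: box_quality(items) for prop, items in sections.items()}


if __name__ == "__main__":
    # Hypothetical study: 11 internal consistency items, one of them rated 'fair'.
    example = {
        "internal consistency": [Rating.EXCELLENT] * 10 + [Rating.FAIR],
        "reliability": [Rating.GOOD, Rating.EXCELLENT],
    }
    for prop, quality in study_quality(example).items():
        print(prop, quality.name)
```

Using an ordered enum makes the minimum well defined, so a single ‘fair’ item caps the whole internal consistency section at ‘fair’, however many items were rated ‘excellent’.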

Evaluation of measurement properties for included instruments

The quality of each measurement property of an instrument was evaluated using the quality criteria proposed by Terwee et al,35 who are members of the group that developed the COSMIN checklist (see online supplementary appendix 3). Each measurement property was given a rating result (‘+’ positive, ‘−’ negative, ‘?’ indeterminate and ‘na’ no information available).
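As one example of how a criterion produces a ‘+’, ‘−’, ‘?’ or ‘na’ rating, the sketch below applies an internal consistency rule. The full criteria are in online supplementary appendix 3 and are not reproduced here; this sketch assumes the criterion as it is commonly cited from Terwee et al (factor analysis on an adequate sample of at least 7 × the number of items and at least 100 participants, with Cronbach’s alpha between 0.70 and 0.95 for each dimension). The function name and its parameters are hypothetical.

```python
def rate_internal_consistency(
    alphas: list[float],
    factor_analysis_done: bool,
    sample_size: int,
    n_items: int,
) -> str:
    """Return '+', '-', '?' or 'na' under the assumed Terwee-style criterion."""
    if not alphas:
        return "na"  # no information available
    # Assumed adequacy rule: factor analysis on >= 7 x items and >= 100 participants.
    adequate_design = factor_analysis_done and sample_size >= max(7 * n_items, 100)
    if not adequate_design:
        return "?"   # indeterminate: design or method doubtful
    if all(0.70 <= a <= 0.95 for a in alphas):
        return "+"   # every dimension within the acceptable range
    return "-"       # at least one alpha < 0.70 or > 0.95 despite adequate design
```

For instance, two dimensions with alphas of 0.82 and 0.76 from an adequately sized factor analysis would be rated ‘+’, whereas the same alphas from a sample of 50 would be rated ‘?’.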

Best evidence synthesis: levels of evidence

As recommended by the COSMIN checklist developer group,32 ‘a best evidence synthesis’ was used to synthesise all the evidence on measurement properties of different instruments. The procedure used was similar to the Grading of Recommendations, Assessment, Development and Evaluation (GRADE) framework,36 a transparent approach to rating the quality of evidence that is often used in reviews of clinical trials.37 Given that this review did not target clinical trials, the GRADE framework adapted by the COSMIN group was used.38 Under this procedure, the possible overall rating for a measurement property is ‘positive’, ‘negative’, ‘conflicting’ or ‘unknown’, accompanied by a level of evidence (‘strong’, ‘moderate’ or ‘limited’) (see online supplementary appendix 4). Three steps were taken to obtain the overall rating for a measurement property. First, the methodological quality of a study on each measurement property was assessed using the COSMIN checklist; measurement properties from studies of ‘poor’ methodological quality did not contribute to the best evidence synthesis. Second, the quality of each measurement property of an instrument was evaluated using Terwee’s quality criteria.35 Third, the rating results for the same measurement property across different studies of the same instrument were examined for consistency. This best evidence synthesis was performed by one author (SG) and then checked by a second author (TS).
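The three steps can be combined into one function that returns an overall rating and a level of evidence for a single measurement property of a single instrument. The actual levels are defined in online supplementary appendix 4, which is not reproduced here, so this sketch assumes the rules commonly used in COSMIN-based reviews: strong for consistent findings in multiple studies of good quality or one study of excellent quality; moderate for consistent findings in multiple studies of fair quality or one study of good quality; limited for one study of fair quality; conflicting for inconsistent findings; and unknown when only poor-quality evidence exists. Treating indeterminate (‘?’) and ‘na’ ratings as non-contributing, and the handling of mixed-quality sets, are our assumptions; the function name `best_evidence` is hypothetical.

```python
from enum import IntEnum
from typing import Optional


class Quality(IntEnum):
    """COSMIN methodological quality of one study for one measurement property."""
    POOR = 1
    FAIR = 2
    GOOD = 3
    EXCELLENT = 4


def best_evidence(studies: list[tuple[Quality, str]]) -> tuple[str, Optional[str]]:
    """studies: (methodological quality, Terwee rating '+', '-', '?' or 'na') per study."""
    # Step 1: poor-quality studies (and, by assumption, '?'/'na' ratings) do not contribute.
    usable = [(q, r) for q, r in studies if q > Quality.POOR and r in ("+", "-")]
    if not usable:
        return ("unknown", None)
    # Step 3: check whether the remaining ratings are consistent.
    signs = {r for _, r in usable}
    if len(signs) > 1:
        return ("conflicting", None)
    overall = "positive" if signs == {"+"} else "negative"
    # Assign the level of evidence from the quality of the consistent studies.
    qualities = [q for q, _ in usable]
    n_good_or_better = sum(q >= Quality.GOOD for q in qualities)
    if Quality.EXCELLENT in qualities or n_good_or_better >= 2:
        level = "strong"
    elif n_good_or_better == 1 or len(qualities) >= 2:
        level = "moderate"
    else:
        level = "limited"  # a single fair-quality study
    return (overall, level)
```

Under these assumptions, two good-quality studies that both rate reliability ‘+’ yield (‘positive’, ‘strong’), while a single fair-quality study yields only (‘positive’, ‘limited’).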

Patient and public involvement

Children and adolescents were not involved in setting the research question, the outcome measures, or the design or implementation of this study.

Results

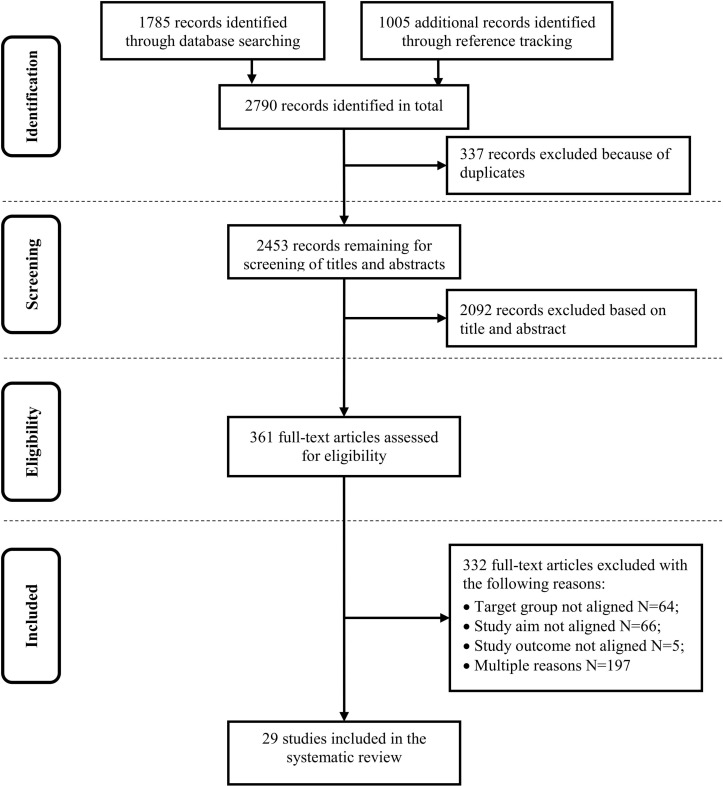

The initial search identified 2790 studies. After removal of duplicates and title/abstract screening, 361 full-text articles were obtained. In accordance with the eligibility criteria, 29 studies were included,39–67 yielding 29 unique health literacy instruments used in children and adolescents (see figure 1).

Figure 1.

Flow chart of search and selection process according to the Preferred Reporting Items for Systematic Reviews and Meta-Analyses flow diagram.

Characteristics of included studies

Of the 29 studies identified, 25 were published between 2010 and 2017 (see table 1). Most included studies were conducted in Western countries (n=20), with 11 carried out in the USA. The target population (aged 7–25) could be roughly classified into three subgroups: children aged 7–12 (n=5), adolescents aged 13–17 (n=20) and young adults aged 18–25 (n=4). Schools (n=17) were the most common recruitment setting, followed by clinical settings (n=8) and communities (n=4).

Table 1.

Characteristics of included studies

| Study no | Author (year) | Country | Target population | Health literacy instrument | Sample size (% male) | Sampling method | Recruitment setting |
|---|---|---|---|---|---|---|---|
| 1 | Davis et al (2006)41 | USA | Adolescents aged 10–19 years (mean age=14.8±1.9) | REALM-Teen | 1533 (47.4) | na | Middle schools, high schools, paediatric primary care clinic and summer programmes |
| 2 | Norman and Skinner (2006)43 | Canada | Adolescents aged 13–21 years (mean age=14.95±1.24) | eHEALS | 664 (55.7) | Sampling from one arm of a randomised controlled trial | Secondary schools |
| 3 | Chisolm and Buchanan (2007)48 | USA | Young people aged 13–17 years (mean age=14.7) | TOFHLA | 50 (48.0) | na | Children’s hospital |
| 4 | Steckelberg et al (2009)47 | Germany | Students in grades 10–11 and university | CHC Test | Sample 1: 322 (36.6); sample 2: 107 (32.7) | na | Secondary schools, university |
| 5 | Schmidt et al (2010)46 | Germany | Children aged 9–13 years (mean age=10.4) | HKACSS | 852 (52.9) | na | Primary school |
| 6 | Wu et al (2010)40 | Canada | Students in grades 8–12 | HLAB | 275 (48.0) | Convenience sampling | Secondary schools |
| 7 | Levin-Zamir et al (2011)49 | Israel | Adolescents in grades 7, 9 and 11 (approximately aged 13, 15 and 17) | MHL | 1316 (52.0) | Probability sampling and random cluster sampling | Public schools |
| 8 | Chang et al (2012)51 | Taiwan | Students in high school (mean age=16.01±1.02) | c-sTOFHLAd | 300 (52.6) | Multiple-stage stratified random sampling | High schools |
| 9 | Hoffman et al (2013)50 | USA | Youth aged 14–19 years (mean age=17) | REALM-Teen, NVS, s-TOFHLA | 229 (61.6) | na | Private high school |
| 10 | Massey et al (2013)44 | USA | Adolescents aged 13–17 years (mean age=14.8) | MMAHL | 1208 (37.6) | Sampling from a large health insurance network | Public health insurance network |
| 11 | Mulvaney et al (2013)53 | USA | Adolescents aged 12–17 years (sample 1: mean age=13.92; sample 2: mean age=15.10) | DNT-39 and DNT-14 | Sample 1: 61 (52.5); sample 2: 72 (55.6) | na | Diabetes clinics |
| 12 | Abel et al (2015)45 | Switzerland | Young adults aged 18–25 years (male mean age=19.6; female mean age=18.8) | HLAT-8 | 7428 (95.5) | Sampling from compulsory military service for men and two-stage random sampling for women | Compulsory military service, communities |
| 13 | Driessnack et al (2014)52 | USA | Children aged 7–12 years | NVS | 47 (53.0) | Convenience sampling | The science centre |
| 14 | Harper (2014)42 | New Zealand | Students aged 18–24 years | HLAT-51 | 144 (41.0) | Purposeful sampling | College |
| 15 | Warsh et al (2014)39 | USA | Children aged 7–17 years (median age=11) | NVS | 97 (46.0) | Convenience sampling | Paediatric clinics |
| 16 | Liu et al (2014)54 | Taiwan | Children in grade 6 | CHLT | 162 609 (51.1) | National sampling | Primary schools |
| 17 | Ueno et al (2014)55 | Japan | Students in high school grade 1 (age range: 15–16 years) | VOHL | 162 (46.3) | Convenience sampling | A senior high school |
| 18 | Manganello et al (2015)56 | USA | Youth aged 12–19 years (mean age=15.6) | HAS-A | 272 (37.0) | Convenience sampling | A paediatric clinic and the community |
| 19 | Guttersrud et al (2015)57 | Uganda | Pregnant adolescents aged 15–19 years | MaHeLi | 384 (0) | Random sampling | Health centres |
| 20 | de Jesus Loureiro (2015)64 | Portugal | Adolescents and young people aged 14–24 years (mean age=16.75±1.62) | QuALiSMental | 4938 (43.3) | Multistage cluster random sampling | Schools |
| 21 | McDonald et al (2016)65 | Australia | Adolescents and young adults diagnosed with cancer (age range: 12–24 years) | FCCHL-AYAC | 105 (33.3) | Sampling from a support organisation | An organisation for young people living with cancer |
| 22 | Smith and Samar (2016)58 | USA | Deaf/hard-of-hearing and hearing adolescents in high school (mean age=17.0±0.84 and 15.8±1.1) | ICHL | Sample 1: 154 (53.2); sample 2: 89 (33.0) | Convenience sampling | Medical centre summer programmes |
| 23 | Ghanbari et al (2016)59 | Iran | Adolescents aged 15–18 years (mean age=16.2±1.03) | HELMA | 582 (48.8) | Multistage sampling | High schools |
| 24 | Paakkari et al (2016)60 | Finland | Pupils (seventh graders aged 13 years: n=1918; ninth graders aged 15 years: n=1935) | HLSAC | 3853 (na) | Cluster sampling | Secondary schools |
| 25 | Manganello et al (2017)66 | USA | Adolescents aged 14–19 years (mean age=16.6) | REALM-TeenS | 174 (na) | na | Adolescent medicine clinics |
| 26 | Tsubakita et al (2017)61 | Japan | Young adults aged 18–26 years (mean age=19.65±1.34) | funHLS-YA | 1751 (76.8) | Convenience sampling | A private university |
| 27 | Intarakamhang and Intarakamhang (2017)62 | Thailand | Overweight children aged 9–14 years | HLS-TCO | 2000 (na) | Quota-stratified random sampling | Schools |
| 28 | Bradley-Klug et al (2017)63 | USA | Youth and young adults with chronic health conditions aged 13–21 years (mean age=17.6) | HLRS-Y | 204 (24.3) | National sampling | Community-based agencies and social media outlets |
| 29 | Quemelo et al (2017)67 | Brazil | University students (mean age=22.7±5.3) | p_HLAT-8 | 472 (33.9) | na | A university |

c-sTOFHLAd, Chinese version of short-form Test of Functional Health Literacy in Adolescents; CHC Test, Critical Health Competence Test; CHLT, Child Health Literacy Test; DNT, Diabetes Numeracy Test; eHEALS, eHealth Literacy Scale; FCCHL-AYAC, Functional, Communicative, and Critical Health Literacy-Adolescents and Young Adults Cancer; funHLS-YA, Functional Health Literacy Scale for Young Adults; HAS-A, Health Literacy Assessment Scale for Adolescents; HELMA, Health Literacy Measure for Adolescents; HKACSS, Health Knowledge, Attitudes, Communication and Self-efficacy Scale; HLAB, Health Literacy Assessment Booklet; HLAT-8, 8-item Health Literacy Assessment Tool; HLAT-51, 51-item Health Literacy Assessment Tool; HLRS-Y, Health Literacy and Resiliency Scale: Youth Version; HLS-TCO, Health Literacy Scale for Thai Childhood Overweight; HLSAC, Health Literacy for School-aged Children; ICHL, Interactive and Critical Health Literacy; MaHeLi, Maternal Health Literacy; MHL, Media Health Literacy; MMAHL, Multidimensional Measure of Adolescent Health Literacy; na, no information available; NVS, Newest Vital Sign; p_HLAT-8, Portuguese version of the 8-item Health Literacy Assessment Tool; QuALiSMental, Questionnaire for Assessment of Mental Health Literacy; REALM-Teen, Rapid Estimate of Adolescent Literacy in Medicine; REALM-TeenS, Rapid Estimate of Adolescent Literacy in Medicine Short Form; s-TOFHLA, short-form Test of Functional Health Literacy in Adults; TOFHLA, Test of Functional Health Literacy in Adults; VOHL, Visual Oral Health Literacy.

General characteristics of included instruments

Compared with previous systematic reviews,10 11 13 this review identified 19 additional health literacy instruments (eHealth Literacy Scale (eHEALS), short-form Test of Functional Health Literacy in Adults (s-TOFHLA), Diabetes Numeracy Test (DNT)-39, DNT-14, 51-item Health Literacy Assessment Tool (HLAT-51), 8-item Health Literacy Assessment Tool (HLAT-8), Child Health Literacy Test (CHLT), Visual Oral Health Literacy (VOHL), Health Literacy Assessment Scale for Adolescents (HAS-A), Questionnaire for Assessment of Mental Health Literacy (QuALiSMental), Functional, Communicative, and Critical Health Literacy-Adolescents and Young Adults Cancer (FCCHL-AYAC), Interactive and Critical Health Literacy (ICHL), Health Literacy Measure for Adolescents (HELMA), Health Literacy for School-aged Children (HLSAC), Rapid Estimate of Adolescent Literacy in Medicine Short Form (REALM-TeenS), Functional Health Literacy Scale for Young Adults (funHLS-YA), Health Literacy Scale for Thai Childhood Overweight (HLS-TCO), Health Literacy and Resiliency Scale: Youth Version (HLRS-Y), and the Portuguese version of the 8-item Health Literacy Assessment Tool (p_HLAT-8)). The 29 health literacy instruments were classified into three groups based on whether or not the instrument was developed specifically for the study (see table 2).10 The three groups were (1) newly developed instruments for childhood, adolescent and youth health literacy (n=20)40–47 49 50 54–63; (2) adapted instruments based on previous instruments for adult/adolescent health literacy (n=6)51 53 64–67; and (3) original instruments developed for adult health literacy (n=3).39 48 50 52

Table 2.

General and important characteristics of included instruments used in children and adolescents

| No | HL instrument | HL domain and component (item number) | Participant characteristics consideration | Health topic and content (readability level) | Response category | Scoring system | Burden | Administration form |

| 1 | NVS39 50 52 | Functional HL

|

Demographic patterns. | Nutrition-related information about the label of an ice cream container (na). | Open-ended. | Score range: 0–6; ordinal category: 0–1: high likelihood of limited literacy; 2–3: possibility of limited literacy; 4–6: adequate literacy. | No longer than 3 min. | Interviewer-administered and performance-based. |

| 2 | TOFHLA48 | Functional HL

|

Developmental change. Demographic patterns. |

Instruction for preparation for an upper gastrointestinal series (4.3 grade), a standard informed consent form (10.4 grade), patients’ rights and responsibilities section of a Medicaid application form (19.5 grade), actual hospital forms and labelled prescription vials (9.4 grade). | 4 response options. | Score range: 0–100; ordinal category: 0–59: inadequate health literacy; 60–74: marginal health literacy; 75–100: adequate health literacy. | 12.9 min (8.9–17.3 min). | Interviewer-administered and performance-based. |

| 3 | s-TOFHLA50 | Functional HL

|

Demographic patterns. | Instruction for preparation for an upper gastrointestinal series (fourth grade), patients’ rights and responsibilities section of a Medicaid application form (10th grade). | 4 response options | Score range: 0–36; ordinal category: 0–16: inadequate literacy; 17–22: marginal literacy; 23–36: adequate literacy. | na. | Interviewer-administered and performance-based. |

| 4 | c-sTOFHLAd51 | Functional HL

|

Developmental change. Demographic patterns. |

Instruction for preparation for an upper gastrointestinal series (fourth grade), patients’ rights and responsibilities section of a Medicaid application form (10th grade). | 4 response options. | Score range: 0–36; ordinal category: 0–16: inadequate literacy; 17–22: marginal literacy; 23–36: adequate literacy. | 20 min class period. | Self-administered and performance-based. |

| 5 | REALM-Teen41 50 | Functional HL

|

Developmental change. Demographic patterns. |

66 health-related words such as weight, prescription and tetanus (sixth grade). | Open-ended. | Score range: 0–66; ordinal category: 0–37: ≤3rd; 38–47: 4th–5th; 48–58: 6th–7th; 59–62: 8th–9th; 63–66: ≥10th | 2–3 min. | Interviewer-administered and performance-based. |

| 6 | HLAB40 | Functional and critical HL

|

Developmental change. Demographic patterns. |

A range of topics such as nutrition and sexual health (pilot-tested). | Open-ended. | Score range: 0–107; continuous score. | Two regular classroom sessions. | Self-administered and performance-based. |

| 7 | MMAHL44 | Functional, interactive and critical HL

|

Developmental change. Demographic patterns. Dependency. |

Experiences of how to access, navigate and manage one’s healthcare and preventive health needs (sixth grade). | 5-point Likert scale. | Score range: na; continuous score. | na. | Self-administered and self-reported. |

| 8 | MHL49 | Functional, interactive and critical HL

|

Dependency. Demographic patterns. |

Nutrition/dieting, physical activity, body image, sexual activity, cigarette smoking, alcohol consumption, violent behaviour, safety habits and/or friendship and family connectedness (pilot-tested). | Open-ended and multiple choice. | Score range: 0–24; continuous score. | na. | Video-assisted interviewer-administered and performance-based. |

| 9 | DNT-3953 | Functional health literacy

|

Differential epidemiology. Demographic patterns. |

Nutrition, exercise, blood glucose monitoring and insulin administration (na). | Open-ended. | Score range: 0–100; continuous score. | na. | Interviewer-administered and performance-based. |

| 10 | DNT-14 53 | Functional health literacy | Differential epidemiology. Demographic patterns. | Nutrition, exercise, blood glucose monitoring and insulin administration (na). | Open-ended. | Score range: 0–100; continuous score. | na. | Interviewer-administered and performance-based. |

| 11 | eHEALS43 | Functional, interactive and critical HL | Developmental change. Dependency. Demographic patterns. | General health topics about online health information (pilot-tested). | 5-point Likert scale. | Score range: na; continuous score. | na. | Self-administered and self-reported. |

| 12 | CHC Test47 | Critical HL | Developmental change. Demographic patterns. | Echinacea and common cold, MRI in knee injuries, treatment of acne, breast cancer screening (pilot-tested). | Open-ended and multiple choice. | na. | Less than 90 min. | Interviewer-administered and performance-based. |

| 13 | HKACSS46 | Functional and interactive HL | Developmental change. Dependency. Demographic patterns. | Physical activities, nutrition, smoking, vaccination, tooth health and general health (na). | 2 response options; 5-point Likert scale; 4-point Likert scale. | Score range: na; continuous score. | na. | Self-administered and performance-based and self-reported. |

| 14 | HLAT-51 42 | Functional, interactive and critical HL | Developmental change. Dependency. Demographic patterns. | Health topics such as gout and uric acid, high cholesterol and triglyceride levels, health information-seeking skills (na). | Yes/no; multiple choice. | na. | 30–45 min. | Self-administered and performance-based and self-reported. |

| 15 | HLAT-8 45 | Functional, interactive and critical HL | Dependency. Demographic patterns. | General health topics in people’s daily life (na). | 5-point Likert scale; 4-point Likert scale. | Score range: 0–37; continuous score. | na. | Self-administered and self-reported. |

| 16 | CHLT54 | Functional, interactive and critical HL | Developmental change. Dependency. Demographic patterns. | Personal hygiene, growth and ageing, sexual education and mental health, healthy eating, safety and first aid, medicine safety, substance abuse prevention, health promotion and disease prevention, consumer health, health and environment (pilot-tested). | Multiple choice. | Score range: 0–32; continuous score. | na. | Self-administered and performance-based. |

| 17 | VOHL55 | Functional HL | Developmental change. | Oral health for tooth and gingiva (na). | Visual drawing. | Score range: 0–6; continuous score. | na. | Self-administered and performance-based. |

| 18 | HAS-A56 | Functional, interactive and critical HL | Developmental change. Demographic patterns. Dependency. | General health topics in daily life (pilot-tested). | 5-point Likert scale. | Score range: 0–24 (understanding), 0–20 (communication), 0–16 (confusion); continuous score. | na. | Self-administered and self-reported. |

| 19 | MaHeLi57 | Functional, interactive and critical HL | Developmental change. Demographic patterns. Dependency. Differential epidemiology. | General health and maternal health topics (na). | 6-point Likert scale. | na. | na. | Interviewer-administered and self-reported. |

| 20 | QuALiSMental64 | Functional, interactive and critical HL | Developmental change. Demographic patterns. Dependency. | Mental health vignettes (na). | Yes/no; multiple choice. | na. | 40–50 min. | Self-administered and performance-based. |

| 21 | FCCHL-AYAC65 | Functional, interactive and critical HL | Developmental change. Dependency. Differential epidemiology. | Health topics regarding cancer in daily life (second grade). | 4-point Likert scale. | Score range: 13–52; continuous score. | na. | Self-administered and self-reported. |

| 22 | ICHL58 | Interactive and critical HL | Developmental change. Demographic patterns. Dependency. Differential epidemiology. | General health topics in daily life (pilot-tested). | 5-point Likert scale. | na. | 40–55 min (together with other measures). | Self-administered and self-reported. |

| 23 | HELMA59 | Functional, interactive and critical HL | Developmental change. Dependency. | General health topics in daily life (pilot-tested). | 5-point Likert scale. | Score range: 0–100; ordinal category: 0–50: inadequate; 50.1–66: problematic; 66.1–84: sufficient; 84.1–100: excellent. | 15 min. | Self-administered and self-reported. |

| 24 | HLSAC60 | Functional, interactive and critical HL | Developmental change. Dependency. | General health topics in daily life (seventh grade). | 4-point Likert scale. | na. | 45 min (together with the HBSC survey). | Self-administered and self-reported. |

| 25 | REALM-TeenS66 | Functional HL | Developmental change. Demographic patterns. | 10 health-related words such as diabetes (sixth grade). | Open-ended. | Score range: 0–10; ordinal category: 0–2: ≤3rd; 3–4: 4th–5th; 5–6: 6th–7th; 7–8: 8th–9th; 9–10: ≥10th. | 13.6 s (range: 7.8–23.0). | Interviewer-administered and performance-based. |

| 26 | funHLS-YA61 | Functional HL | Developmental change. | Diseases and symptoms, nutrition and diet, biology of the human body (na). | Multiple choice. | Score range: na; continuous score. | 5 min. | Self-administered and performance-based. |

| 27 | HLS-TCO62 | Functional, interactive and critical HL | Developmental change. Demographic patterns. Dependency. Differential epidemiology. | Health information for obesity preventive behaviours (pilot-tested). | Multiple choice; Likert scale. | Score range: 0–135; ordinal category: low: <21 for FHL, <33 for IHL, <27 for CHL; fair: 21–27.99 for FHL, 33–43.99 for IHL, 27–35.99 for CHL; high: 28–35 for FHL, 44–54.9 for IHL, 36–45 for CHL. | na. | Self-administered and performance-based and self-reported. |

| 28 | HLRS-Y63 | Functional, interactive and critical HL | Developmental change. Demographic patterns. Differential epidemiology. | Health information about chronic health conditions (pilot-tested). | 4-point Likert scale. | Score range: na; continuous score. | 15–20 min. | Self-administered and self-reported. |

| 29 | p_HLAT-8 67 | Functional, interactive and critical HL | Dependency. | General health topics in daily life (pilot-tested). | 5-point Likert scale; 4-point Likert scale. | Score range: 0–37; continuous score. | na. | Self-administered and self-reported. |

c-sTOFHLAd, Chinese version of short-form Test of Functional Health Literacy in Adolescents; CHC Test, Critical Health Competence Test; CHL, critical health literacy; CHLT, Child Health Literacy Test; DNT, Diabetes Numeracy Test; eHEALS, eHealth Literacy Scale; FCCHL-AYAC, Functional, Communicative, and Critical Health Literacy-Adolescents and Young Adults Cancer; FHL, functional health literacy; funHLS-YA, Functional Health Literacy Scale for Young Adults; HAS-A, Health Literacy Assessment Scale for Adolescents; HBSC, Health Behaviour in School-aged Children; HELMA, Health Literacy Measure for Adolescents; HKACSS, Health Knowledge, Attitudes, Communication and Self-efficacy Scale; HL, health literacy; HLAB, Health Literacy Assessment Booklet; HLAT-8, 8-item Health Literacy Assessment Tool; HLAT-51, 51-item Health Literacy Assessment Tool; HLRS-Y, Health Literacy and Resiliency Scale: Youth Version; HLS-TCO, Health Literacy Scale for Thai Childhood Overweight; HLSAC, Health Literacy for School-aged Children; ICHL, Interactive and Critical Health Literacy; IHL, interactive health literacy; MaHeLi, Maternal Health Literacy; MHL, Media Health Literacy; MMAHL, Multidimensional Measure of Adolescent Health Literacy; na, no information available; NVS, Newest Vital Sign; p_HLAT-8, Portuguese version of the 8-item Health Literacy Assessment Tool; QuALiSMental, Questionnaire for Assessment of Mental Health Literacy; REALM-Teen, Rapid Estimate of Adolescent Literacy in Medicine; REALM-TeenS, Rapid Estimate of Adolescent Literacy in Medicine Short Form; s-TOFHLA, short-form Test of Functional Health Literacy in Adults; TOFHLA, Test of Functional Health Literacy in Adults; VOHL, Visual Oral Health Literacy.
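The ordinal score bands listed above for the HELMA and the REALM-TeenS can be applied mechanically once a total score is available. The Python sketch below simply transcribes those cut-offs; the function names are hypothetical, and treating each band's upper bound as inclusive (so that a HELMA score such as 66.05, which falls in the gap between the published bands, is assigned to the higher band) is an assumption of this sketch rather than a rule stated by the instrument developers.

```python
from bisect import bisect_left

# HELMA (0-100): inclusive upper bound of each band, transcribed from Table 2.
HELMA_UPPER = [50.0, 66.0, 84.0, 100.0]
HELMA_LABELS = ["inadequate", "problematic", "sufficient", "excellent"]

# REALM-TeenS (0-10 words read correctly): upper bound of each band -> estimated reading grade.
REALM_TEENS_UPPER = [2, 4, 6, 8, 10]
REALM_TEENS_LABELS = ["≤3rd", "4th–5th", "6th–7th", "8th–9th", "≥10th"]


def _band(score, uppers, labels, max_score):
    """Return the label of the first band whose upper bound is >= score."""
    if not 0 <= score <= max_score:
        raise ValueError(f"score {score} outside 0-{max_score}")
    return labels[bisect_left(uppers, score)]


def helma_category(score):
    # Assumption: scores between published bands (eg, 66.05) go to the higher band.
    return _band(score, HELMA_UPPER, HELMA_LABELS, 100)


def realm_teens_grade(correct):
    return _band(correct, REALM_TEENS_UPPER, REALM_TEENS_LABELS, 10)


print(helma_category(72.5))   # sufficient
print(realm_teens_grade(6))   # 6th–7th
```

Using `bisect_left` on the sorted upper bounds keeps the lookup table separate from the logic, so other instruments' bands could be added the same way.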

Health literacy domains and components

Next, Nutbeam’s three-domain health literacy model17 was used to classify the 29 instruments according to which of the commonly used components of health literacy were included. Results showed that ten instruments measured only functional health literacy39 41 48 50–53 55 61 66 and one instrument measured only critical health literacy.47 There was one instrument measuring functional and interactive health literacy,46 one measuring functional and critical health literacy,40 and one measuring interactive and critical health literacy.58 Fifteen instruments measured health literacy by all three domains (functional, interactive and critical).42–45 49 54 56 57 59 60 62–65 67

Consideration of participants’ characteristics

Following Forrest et al’s 4D model,14 15 the 29 included instruments were examined for whether participant characteristics were considered when a new instrument was developed or an existing instrument was validated. Most of the health literacy instruments considered developmental change, dependency and demographic patterns. In contrast, only seven instruments considered differential epidemiology.53 57 58 62 63 65

Health topics, contents and readability levels

Health literacy instruments for children and adolescents covered a range of health topics such as nutrition and sexual health. Most instruments (n=26) measured health literacy in healthcare settings or health promotion contexts (eg, general health topics, oral health or mental health), while only three instruments measured health literacy in the specific context of eHealth or media health.42 43 49 In relation to the readability of tested materials, only eight health literacy instruments reported their readability levels, ranging from 2nd to 19.5th grade.

Burden and forms of administration

The time to administer was reported in seven instruments, ranging from 3 to 90 min. There were three forms of administration: self-administered instruments (n=19), interviewer-administered instruments (n=9), and video-assisted, interviewer-administered instruments (n=1). Regarding the method of assessment, 15 instruments were performance-based, 11 instruments were self-report and 3 included both performance-based and self-report items.

Evaluation of methodological quality of included studies

Table 3 presents the methodological quality of each study for each measurement property, as rated with the COSMIN checklist. Almost all studies (n=28 of 29) examined content validity; 24 studies assessed internal consistency and 24 assessed hypotheses testing; 17 examined structural validity; 8 assessed test–retest/inter-rater reliability; 2 assessed cross-cultural validity; and only 1 assessed responsiveness.

Table 3.

Methodological quality of each study for each measurement property according to the COSMIN checklist

| Health literacy instrument (author, year) | Internal consistency | Reliability | Measurement error | Content validity | Construct validity: structural validity | Construct validity: hypotheses testing | Construct validity: cross-cultural validity | Responsiveness |

| NVS (Hoffman et al, 2013)50 | Poor | na | na | Poor | na | Fair | na | na |

| NVS (Driessnack et al, 2014)52 | Poor | na | na | Poor | na | Poor | na | na |

| NVS (Warsh et al, 2014)39 | na | na | na | Poor | na | Fair | na | na |

| TOFHLA (Chisolm and Buchanan, 2007)48 | na | na | na | Poor | na | Fair | na | na |

| s-TOFHLA (Hoffman et al, 2013)50 | Poor | na | na | Poor | na | Fair | na | na |

| c-sTOFHLAd (Chang et al, 2012)51 | Fair | Fair | na | Good | Fair | Fair | Fair | na |

| REALM-Teen (Davis et al, 2006)41 | Poor | Fair | na | Good | na | Fair | na | na |

| REALM-Teen (Hoffman et al, 2013)50 | Poor | na | na | Poor | na | Poor | na | na |

| HLAB (Wu et al, 2010)40 | Fair | Poor | na | Good | na | Fair | na | na |

| MMAHL (Massey et al, 2013)44 | Good | na | na | Good | Good | na | na | na |

| MHL (Levin-Zamir et al, 2011)49 | Poor | na | na | Good | na | Good | na | na |

| DNT-39 (Mulvaney et al, 2013)53 | Fair | na | na | Poor | na | Fair | na | na |

| DNT-14 (Mulvaney et al, 2013)53 | Fair | na | na | Poor | na | Fair | na | na |

| eHEALS (Norman and Skinner, 2006)43 | Fair | Fair | na | Good | Fair | Fair | na | na |

| CHC Test (Steckelberg et al, 2009)47 | na | Poor | na | Good | Poor | na | na | na |

| HKACSS (Schmidt et al, 2010)46 | Excellent | na | na | Good | na | Good | na | na |

| HLAT-51 (Harper, 2014)42 | Poor | na | na | Good | Poor | na | na | na |

| HLAT-8 (Abel et al, 2015)45 | Excellent | na | na | Poor | Excellent | Good | na | na |

| CHLT (Liu et al, 2014)54 | Fair | na | na | Good | Fair | Fair | na | na |

| VOHL (Ueno et al, 2014)55 | na | Fair | na | na | na | Fair | na | Fair |

| HAS-A (Manganello et al, 2015)56 | Fair | na | na | Good | Fair | Fair | na | na |

| MaHeLi (Guttersrud et al, 2015)57 | Fair | na | na | Poor | Fair | na | na | na |

| QuALiSMental (de Jesus Loureiro, 2015)64 | Fair | na | na | Excellent | Fair | Fair | na | na |

| FCCHL-AYAC (McDonald et al, 2016)65 | Fair | na | na | Good | Fair | Fair | na | na |

| ICHL (Smith and Samar, 2016)58 | na | na | na | Good | na | Fair | na | na |

| HELMA (Ghanbari et al, 2016)59 | Good | Good | na | Good | Good | na | na | na |

| HLSAC (Paakkari et al, 2016)60 | Fair | Fair | na | Good | Fair | Fair | na | na |

| REALM-TeenS (Manganello et al, 2017)66 | Good | na | na | Good | na | Good | na | na |

| funHLS-YA (Tsubakita et al, 2017)61 | Fair | na | na | Poor | Fair | Fair | na | na |

| HLS-TCO (Intarakamhang and Intarakamhang, 2017)62 | Fair | na | na | Good | Fair | Fair | na | na |

| HLRS-Y (Bradley-Klug et al, 2017)63 | Fair | na | na | Excellent | Fair | Fair | na | na |

| p_HLAT-8 (Quemelo et al, 2017)67 | Fair | na | na | Good | Fair | Fair | Fair | na |

c-sTOFHLAd, Chinese version of short-form Test of Functional Health Literacy in Adolescents; CHC Test, Critical Health Competence Test; CHLT, Child Health Literacy Test; COSMIN, COnsensus-based Standards for the selection of health Measurement Instruments; DNT, Diabetes Numeracy Test; eHEALS, eHealth Literacy Scale; FCCHL-AYAC, Functional, Communicative, and Critical Health Literacy-Adolescents and Young Adults Cancer; funHLS-YA, Functional Health Literacy Scale for Young Adults; HAS-A, Health Literacy Assessment Scale for Adolescents; HELMA, Health Literacy Measure for Adolescents; HKACSS, Health Knowledge, Attitudes, Communication and Self-efficacy Scale; HLAB, Health Literacy Assessment Booklet; HLAT-8, 8-item Health Literacy Assessment Tool; HLAT-51, 51-item Health Literacy Assessment Tool; HLRS-Y, Health Literacy and Resiliency Scale: Youth Version; HLS-TCO, Health Literacy Scale for Thai Childhood Overweight; HLSAC, Health Literacy for School-aged Children; ICHL, Interactive and Critical Health Literacy; MaHeLi, Maternal Health Literacy; MHL, Media Health Literacy; MMAHL, Multidimensional Measure of Adolescent Health Literacy; na, no information available; NVS, Newest Vital Sign; p_HLAT-8, Portuguese version of the 8-item Health Literacy Assessment Tool; QuALiSMental, Questionnaire for Assessment of Mental Health Literacy; REALM-Teen, Rapid Estimate of Adolescent Literacy in Medicine; REALM-TeenS, Rapid Estimate of Adolescent Literacy in Medicine Short Form; s-TOFHLA, short-form Test of Functional Health Literacy in Adults; TOFHLA, Test of Functional Health Literacy in Adults; VOHL, Visual Oral Health Literacy.

Evaluation of instruments’ measurement properties

After the methodological quality assessment of included studies, the measurement properties of each health literacy instrument were examined according to Terwee’s quality criteria (see online supplementary appendix 5).35 The rating results of the measurement properties of each instrument are summarised in table 4.

Table 4.

Evaluation of measurement properties for included instruments according to Terwee’s quality criteria

| Health literacy instrument (author, year) | Internal consistency | Reliability | Measurement error | Content validity | Construct validity: structural validity | Construct validity: hypotheses testing | Construct validity: cross-cultural validity | Responsiveness |

| NVS (Hoffman et al, 2013)50 | − | na | na | ? | na | − | na | na |

| NVS (Driessnack et al, 2014)52 | + | na | na | ? | na | − | na | na |

| NVS (Warsh et al, 2014)39 | na | na | na | ? | na | + | na | na |

| TOFHLA (Chisolm and Buchanan, 2007)48 | na | na | na | ? | na | − | na | na |

| s-TOFHLA (Hoffman et al, 2013)50 | + | na | na | ? | na | − | na | na |

| c-sTOFHLAd (Chang et al, 2012)51 | + | + | na | + | ? | + | ? | na |

| REALM-Teen (Davis et al, 2006)41 | + | + | na | + | na | + | na | na |

| REALM-Teen (Hoffman et al, 2013)50 | + | na | na | ? | na | − | na | na |

| HLAB (Wu et al, 2010)40 | + | + | na | + | na | − | na | na |

| MMAHL (Massey et al, 2013)44 | + | na | na | + | − | na | na | na |

| MHL (Levin-Zamir et al, 2011)49 | + | na | na | + | na | + | na | na |

| DNT-39 (Mulvaney et al, 2013)53 | + | na | na | ? | na | − | na | na |

| DNT-14 (Mulvaney et al, 2013)53 | + | na | na | ? | na | − | na | na |

| eHEALS (Norman and Skinner, 2006)43 | + | − | na | + | + | − | na | na |

| CHC Test (Steckelberg et al, 2009)47 | na | + | na | + | + | na | na | na |

| HKACSS (Schmidt et al, 2010)46 | + (HC) − (HA) | na | na | + | na | + | na | na |

| HLAT-51 (Harper, 2014)42 | ? | na | na | + | ? | na | na | na |

| HLAT-8 (Abel et al, 2015)45 | − | na | na | ? | + | + | na | na |

| CHLT (Liu et al, 2014)54 | + | na | na | + | + | + | na | na |

| VOHL (Ueno et al, 2014)55 | na | − (TS) + (GS) | na | na | na | − | na | + |

| HAS-A (Manganello et al, 2015)56 | + | na | na | + | + | − | na | na |

| MaHeLi (Guttersrud et al, 2015)57 | + | na | na | ? | + | na | na | na |

| QuALiSMental (de Jesus Loureiro, 2015)64 | − | na | na | + | + | + | na | na |

| FCCHL-AYAC (McDonald et al, 2016)65 | + (FHL) − (IHL) + (CHL) | na | na | + | + | − | na | na |

| ICHL (Smith and Samar, 2016)58 | na | na | na | + | na | + | na | na |

| HELMA (Ghanbari et al, 2016)59 | + | + | na | + | + | na | na | na |

| HLSAC (Paakkari et al, 2016)60 | + | + | na | + | − | + | na | na |

| REALM-TeenS (Manganello et al, 2017)66 | + | na | na | + | na | + | na | na |

| funHLS-YA (Tsubakita et al, 2017)61 | + | na | na | ? | + | − | na | na |

| HLS-TCO (Intarakamhang and Intarakamhang, 2017)62 | + | na | na | + | + | + | na | na |

| HLRS-Y (Bradley-Klug et al, 2017)63 | + | na | na | + | + | + | na | na |

| p_HLAT-8 (Quemelo et al, 2017)67 | + | na | na | + | + | − | + | na |

+, positive rating; −, negative rating; ?, indeterminate rating; c-sTOFHLAd, Chinese version of short-form Test of Functional Health Literacy in Adolescents; CHC Test, Critical Health Competence Test; CHL, Critical Health Literacy; CHLT, Child Health Literacy Test; DNT, Diabetes Numeracy Test; eHEALS, eHealth Literacy Scale; FCCHL-AYAC, Functional, Communicative, and Critical Health Literacy-Adolescents and Young Adults Cancer; FHL, Functional Health Literacy; funHLS-YA, Functional Health Literacy Scale for Young Adults; GS, Gingiva Score; HA, health attitude; HAS-A, Health Literacy Assessment Scale for Adolescents; HC, health communication; HELMA, Health Literacy Measure for Adolescents; HKACSS, Health Knowledge, Attitudes, Communication and Self-efficacy Scale; HLAB, Health Literacy Assessment Booklet; HLAT-8, 8-item Health Literacy Assessment Tool; HLAT-51, 51-item Health Literacy Assessment Tool; HLRS-Y, Health Literacy and Resiliency Scale: Youth Version; HLS-TCO, Health Literacy Scale for Thai Childhood Overweight; HLSAC, Health Literacy for School-aged Children; ICHL, Interactive and Critical Health Literacy; IHL, interactive health literacy; MaHeLi, Maternal Health Literacy; MHL, Media Health Literacy; MMAHL, Multidimensional Measure of Adolescent Health Literacy; na, no information available; NVS, Newest Vital Sign; p_HLAT-8, Portuguese version of the 8-item Health Literacy Assessment Tool; QuALiSMental, Questionnaire for Assessment of Mental Health Literacy; REALM-Teen, Rapid Estimate of Adolescent Literacy in Medicine; REALM-TeenS, Rapid Estimate of Adolescent Literacy in Medicine Short Form; s-TOFHLA, short-form Test of Functional Health Literacy in Adults; TOFHLA, Test of Functional Health Literacy in Adults; TS, Tooth Score; VOHL, Visual Oral Health Literacy.

The synthesised evidence for the overall rating of measurement properties

Finally, the overall rating of each measurement property for each instrument was synthesised according to the ‘best evidence synthesis’ guidelines recommended by the COSMIN checklist developer group,32 drawing on the information presented in table 3 and table 4. The overall rating of each measurement property for each health literacy instrument is presented in table 5. In summary, most information on measurement properties (62.9%, ie, 146 of the 232 ratings from 29 instruments × 8 measurement properties) was unknown, due either to poor methodological quality of studies or to a lack of reporting or assessment.

Table 5.

The overall quality of measurement properties for each health literacy instrument used in children and adolescents

| Health literacy instrument | Internal consistency | Reliability | Measurement error | Content validity | Construct validity: structural validity | Construct validity: hypotheses testing | Construct validity: cross-cultural validity | Responsiveness |

| NVS39 50 52 | ? | na | na | ? | na | ± | na | na |

| TOFHLA48 | na | na | na | ? | na | − | na | na |

| s-TOFHLA50 | ? | na | na | ? | na | − | na | na |

| c-sTOFHLAd51 | + | + | na | ++ | ? | + | ? | na |

| REALM-Teen41 50 | ? | + | na | ++ | na | + | na | na |

| HLAB40 | + | ? | na | ++ | na | − | na | na |

| MMAHL44 | ++ | na | na | ++ | − − | na | na | na |

| MHL49 | ? | na | na | ++ | na | ++ | na | na |

| DNT-3953 | + | na | na | ? | na | − | na | na |

| DNT-1453 | + | na | na | ? | na | − | na | na |

| eHEALS43 | + | − | na | ++ | + | − | na | na |

| CHC Test47 | na | ? | na | ++ | ? | na | na | na |

| HKACSS46 | +++ (HC) − − − (HA) | na | na | ++ | na | ++ | na | na |

| HLAT-5142 | ? | na | na | ++ | ? | na | na | na |

| HLAT-845 | − − − | na | na | ? | +++ | ++ | na | na |

| CHLT54 | + | na | na | ++ | + | + | na | na |

| VOHL55 | na | − (TS) + (GS) | na | na | na | − | na | + |

| HAS-A56 | + | na | na | ++ | + | − | na | na |

| MaHeLi57 | + | na | na | ? | + | na | na | na |

| QuALiSMental64 | − | na | na | +++ | + | + | na | na |

| FCCHL-AYAC65 | + (FHL) − (IHL) + (CHL) | na | na | ++ | + | − | na | na |

| ICHL58 | na | na | na | ++ | na | + | na | na |

| HELMA59 | ++ | ++ | na | ++ | ++ | na | na | na |

| HLSAC60 | + | + | na | ++ | − | + | na | na |

| REALM-TeenS66 | ++ | na | na | ++ | na | ++ | na | na |

| funHLS-YA61 | + | na | na | ? | + | − | na | na |

| HLS-TCO62 | + | na | na | ++ | + | + | na | na |

| HLRS-Y63 | + | na | na | +++ | + | + | na | na |

| p_HLAT-867 | + | na | na | ++ | + | − | + | na |

+ or −, limited evidence and positive/negative result; ++ or − −, moderate evidence and positive/negative result; +++ or − − −, strong evidence and positive/negative result; ±, conflicting evidence; ?, unknown due to poor methodological quality or indeterminate rating of a measurement property; c-sTOFHLAd, Chinese version of short-form Test of Functional Health Literacy in Adolescents; CHC Test, Critical Health Competence Test; CHL, Critical Health Literacy; CHLT, Child Health Literacy Test; DNT, Diabetes Numeracy Test; eHEALS, eHealth Literacy Scale; FCCHL-AYAC, Functional, Communicative, and Critical Health Literacy-Adolescents and Young Adults Cancer; FHL, Functional Health Literacy; funHLS-YA, Functional Health Literacy Scale for Young Adults; GS, Gingiva Score; HA, health attitude; HAS-A, Health Literacy Assessment Scale for Adolescents; HC, health communication; HELMA, Health Literacy Measure for Adolescents; HKACSS, Health Knowledge, Attitudes, Communication and Self-efficacy Scale; HLAB, Health Literacy Assessment Booklet; HLAT-8, 8-item Health Literacy Assessment Tool; HLAT-51, 51-item Health Literacy Assessment Tool; HLRS-Y, Health Literacy and Resiliency Scale: Youth Version; HLS-TCO, Health Literacy Scale for Thai Childhood Overweight; HLSAC, Health Literacy for School-aged Children; ICHL, Interactive and Critical Health Literacy; IHL, interactive health literacy; MaHeLi, Maternal Health Literacy; MHL, Media Health Literacy; MMAHL, Multidimensional Measure of Adolescent Health Literacy; na, no information available; NVS, Newest Vital Sign; p_HLAT-8, Portuguese version of the 8-item Health Literacy Assessment Tool; QuALiSMental, Questionnaire for Assessment of Mental Health Literacy; REALM-Teen, Rapid Estimate of Adolescent Literacy in Medicine; REALM-TeenS, Rapid Estimate of Adolescent Literacy in Medicine Short Form; s-TOFHLA, short-form Test of Functional Health Literacy in Adults; TOFHLA, Test of Functional Health Literacy in Adults; TS, Tooth Score; VOHL, Visual Oral Health Literacy.
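The evidence levels defined in the footnote above come from combining, for each instrument and property, the study quality in table 3 with the rating in table 4. A minimal Python sketch of that combination step is given below. It assumes the commonly cited COSMIN best evidence synthesis rules (strong: consistent findings in several good-quality studies or in one excellent study; moderate: consistent findings in several fair-quality studies or in one good study; limited: one fair study; poor-quality studies and indeterminate ratings are set aside). The function name is hypothetical, symbols are returned without the spaces used in the table, and the review's own synthesis may have handled edge cases differently.

```python
from collections import Counter


def synthesise(studies):
    """Combine per-study (COSMIN quality, Terwee rating) pairs into one overall rating.

    quality: 'Poor' | 'Fair' | 'Good' | 'Excellent'; rating: '+', '-' or '?'.
    Returns '+', '++', '+++' (limited/moderate/strong positive), the minus-sign
    equivalents, '±' for conflicting evidence, or '?' for unknown.
    """
    # Poor-quality studies and indeterminate ratings provide no usable evidence.
    usable = [(q, r) for q, r in studies if q != "Poor" and r in ("+", "-")]
    if not usable:
        return "?"
    if len({r for _, r in usable}) > 1:
        return "±"                      # usable studies disagree
    counts = Counter(q for q, _ in usable)
    n_good_or_better = counts["Good"] + counts["Excellent"]
    if counts["Excellent"] >= 1 or n_good_or_better >= 2:
        level = 3                       # strong evidence
    elif n_good_or_better == 1 or len(usable) >= 2:
        level = 2                       # moderate evidence
    else:
        level = 1                       # limited: a single fair-quality study
    sign = "+" if usable[0][1] == "+" else "−"
    return sign * level


# REALM-Teen, hypotheses testing: Davis (Fair, +) and Hoffman (Poor, −)
print(synthesise([("Fair", "+"), ("Poor", "-")]))  # +
```

Applied to the NVS hypotheses-testing cells (Fair −, Poor −, Fair +), the sketch returns ±, and to the HLAT-8 structural validity cell (Excellent +) it returns +++, matching table 5.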

Discussion

Summary of the main results

This study identified and examined 29 health literacy instruments used in children and adolescents, illustrating the wide variety of methods in use. Compared with the three previous systematic reviews,10 11 13 this review identified 19 additional health literacy instruments and critically appraised the measurement properties of each instrument. It showed that, to date, only about half of the included health literacy instruments (15/29) measure all three domains (functional, interactive and critical) and that the functional domain remains the focus of attention when measuring health literacy in children and adolescents. Additionally, researchers mainly focus on the participant characteristics of developmental change (of cognitive ability), dependency (on parents) and demographic patterns (eg, racial/ethnic backgrounds), and less on differential epidemiology (of health and illness). The methodological quality of included studies, as assessed for each measurement property, varied from poor to excellent. Most information (62.9%) on measurement properties was unknown, owing either to the poor methodological quality of studies or to a lack of reporting or assessment. It is therefore difficult to draw a robust conclusion about which instrument is the best.

Health literacy measurement in children and adolescents

This review found that health literacy measurement in children and adolescents tends to include all three domains of Nutbeam’s health literacy construct (ie, functional, interactive and critical), especially in the past 5 years. However, about one-third of included instruments (n=10) focused only on the functional domain. Unlike health literacy research for patients in clinics, health literacy research for children and adolescents (a comparatively healthy population) should be approached from a health promotion perspective,68 rather than a healthcare or disease management perspective. Integrating the interactive and critical domains into health literacy measurement is consistent with the emphasis on empowerment in health promotion for children and adolescents.69 Health literacy for this population group should therefore encompass all three domains, and future research needs to integrate them within health literacy instruments.

Similar to previous findings by Ormshaw et al10 and Okan et al,13 this review also revealed that childhood and adolescent health literacy measurement varied in its dimensions, health topics, forms of administration and the extent to which participant characteristics were considered. There are likely four main reasons for these disparities. First, definitions of health literacy were inconsistent: some researchers measured general health literacy,40 45 while others measured eHealth literacy or media health literacy.43 49 Second, researchers had different research purposes: some used instruments originally developed for adults to measure adolescent health literacy,39 48 52 whereas others developed new or adapted instruments.40–42 53 Third, the research setting affected the measurement process. Because clinical settings are busy, short surveys were more appropriate there than long ones,39 41 44 whereas health literacy in school settings was often measured using long, comprehensive surveys.40 42 47 Fourth, researchers considered different participant characteristics when measuring health literacy in children and adolescents. For example, some researchers took students’ cognitive development into account,40 41 44 46 51 some focused on adolescents’ resources and environments (eg, friends and family contexts, eHealth contexts, media contexts),43 45 49 and others looked at the effect of different cultural backgrounds and socioeconomic status.40 41 43 44 46 47 49–52 Based on Forrest et al’s 4D model,14 15 this review showed that most health literacy instruments considered participants’ developmental change, dependency and demographic patterns, with only seven instruments considering differential epidemiology.53 57 58 62 63 65 Although the 4D model cannot itself reduce the disparities in health literacy measurement, it does help identify gaps in current research and can assist researchers in considering participants’ characteristics comprehensively in future research.

The methodological quality of included studies

This review included a methodological quality assessment of included studies, which was absent from previous reviews on this subject.10 11 Methodological quality assessment is important because strong conclusions about the measurement properties of instruments can only be drawn from high-quality studies. In this review, the COSMIN checklist proved a useful framework for critically appraising the methodological quality of studies for each measurement property. The methodological quality of studies varied widely across instruments. Poor methodological quality was most often seen in studies of original or adapted health literacy instruments (the Newest Vital Sign (NVS), the Test of Functional Health Literacy in Adults (TOFHLA), the s-TOFHLA, the DNT-39 and the DNT-14), for two main reasons. The first was a vague description of the target population, which suggests that researchers were less likely to consider an instrument’s content validity when applying an original adult instrument to children and/or adolescents. Because children and adolescents have less well-developed cognitive abilities, future studies need to assess whether all items within an instrument are understood by the target age group. The second reason was a lack of unidimensionality analysis for internal consistency. As explained by the COSMIN group,70 a set of items can be inter-related yet multidimensional, and unidimensionality is a prerequisite for a clear interpretation of internal consistency statistics (Cronbach’s alpha). Future research on the use of health literacy instruments therefore needs to assess and report both internal consistency statistics and unidimensionality analysis (eg, factor analysis).
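To make the last point concrete, the sketch below computes Cronbach’s alpha with the standard formula, α = k/(k − 1) × (1 − Σσᵢ²/σ_T²), where k is the number of items, σᵢ² the variance of item i and σ_T² the variance of the total score, and applies it separately to each subscale, the usual practice when factor analysis indicates that an instrument is multidimensional. It is a minimal Python illustration: the data layout (respondents as rows, items as columns) and the subscale mapping are assumptions, and the code does not itself test unidimensionality, which still requires a factor analysis.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a respondents x items matrix (list of equal-length lists)."""
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*rows))                          # one tuple of scores per item
    sum_item_var = sum(variance(col) for col in items)
    total_var = variance([sum(r) for r in rows])      # variance of respondents' totals
    return k / (k - 1) * (1 - sum_item_var / total_var)


def alpha_by_subscale(rows, subscales):
    """Alpha computed separately for each (assumed unidimensional) subscale.

    subscales maps a hypothetical subscale name to the column indices of its
    items, eg, the factors retained in a prior factor analysis.
    """
    return {
        name: cronbach_alpha([[r[i] for i in idx] for r in rows])
        for name, idx in subscales.items()
    }


# Hypothetical responses: 4 respondents, items 0-1 form subscale A, items 2-3 subscale B.
data = [[1, 2, 1, 1], [2, 1, 2, 2], [3, 4, 3, 3], [4, 3, 4, 4]]
print(alpha_by_subscale(data, {"A": [0, 1], "B": [2, 3]}))
```

Reporting one alpha per subscale, rather than a single alpha across all items, is what allows the value to be interpreted once each subscale has been shown to be unidimensional.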

Critical appraisal of measurement properties for included instruments

This review demonstrated that of all instruments reviewed, three instruments (the Chinese version of short-form Test of Functional Health Literacy in Adolescents (c-sTOFHLAd), the HELMA and the HLSAC) showed satisfactory evidence about internal consistency and test–retest reliability. Based on the synthesised evidence, the HELMA showed moderate evidence and positive results of internal consistency (α=0.93) and test–retest reliability (intraclass correlation coefficient (ICC)=0.93), whereas the HLSAC (α=0.93; standardised stability estimate=0.83) and the c-sTOFHLAd (α=0.85; ICC=0.95) showed limited evidence and positive results. Interestingly, compared with the overall reliability rating of the s-TOFHLA,50 the c-sTOFHLAd showed better results.51 The reason for this was probably the different methodological quality of the studies that examined the s-TOFHLA and the c-sTOFHLAd. The c-sTOFHLAd study had fair methodological quality in terms of internal consistency and test–retest reliability, whereas the original s-TOFHLA study had poor methodological quality for internal consistency and unknown information for test–retest reliability. Given the large disparity of rating results between the original and translated instrument, further evidence is needed to confirm whether the s-TOFHLA has the same or a different reliability within different cultures, thus assisting researchers to understand the generalisability of the s-TOFHLA’s reliability results.
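The text does not specify which ICC form the HELMA and c-sTOFHLAd studies used. As an illustration only, the sketch below computes one common choice for test–retest data, the two-way random-effects, absolute-agreement, single-measure ICC (often written ICC(2,1)), from the two-way ANOVA mean squares. The choice of this ICC form is an assumption, and the function name is hypothetical.

```python
def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measurement.

    scores: one list per participant, holding one value per occasion (eg, test, retest).
    """
    n, k = len(scores), len(scores[0])
    grand = sum(map(sum, scores)) / (n * k)
    row_means = [sum(r) / k for r in scores]
    col_means = [sum(r[j] for r in scores) / n for j in range(k)]

    ss_rows = k * sum((m - grand) ** 2 for m in row_means)   # between participants
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)   # between occasions
    ss_total = sum((x - grand) ** 2 for r in scores for x in r)
    ss_error = ss_total - ss_rows - ss_cols                  # residual

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# Hypothetical test and retest scores for three participants
print(icc_2_1([[1, 1], [2, 2], [3, 3]]))   # identical scores -> 1.0
```

A uniform one-point shift between test and retest, eg, `icc_2_1([[1, 2], [2, 3], [3, 4]])`, lowers this absolute-agreement ICC to about 0.67 even though participants keep the same rank order; a consistency-type ICC would ignore such a shift, which is why the ICC form should always be reported.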

Four instruments were found to show satisfactory evidence about both content validity and construct validity (structural validity and hypotheses testing). Construct validity is a fundamental aspect of psychometrics and was emphasised in this review for two reasons. First, it shows the extent to which operational variables adequately represent the underlying theoretical constructs.71 Second, the overall ratings of content validity were similar across all included instruments (ie, unknown, or moderate/strong evidence and positive result); the only difference was whether or not the target population had been involved. Given that the items of all instruments reflected the measured construct, construct validity was considered key to examining the overall validity of included instruments in this review. In this context, only the HLAT-8 showed strong evidence and a positive result for structural validity (comparative fit index (CFI)=0.99; Tucker-Lewis index (TLI)=0.97; root mean square error of approximation (RMSEA)=0.03; standardised root mean square residual (SRMR)=0.03) and moderate evidence on hypotheses testing (known-group validity results showed differences in health literacy by gender, educational status and health valuation). However, in the original paper,45 the HLAT-8 was tested only for known-group validity, not for convergent validity. Examining convergent validity is important because it helps researchers understand the extent to which the constructs of two measures are theoretically and practically related.72 Future research on the convergent validity of the HLAT-8 would therefore complement the existing evidence on its construct validity.
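The HLAT-8 fit statistics quoted above can be read against conventional cut-offs. The Python sketch below uses the thresholds commonly attributed to Hu and Bentler (CFI and TLI ≥ 0.95, RMSEA ≤ 0.06, SRMR ≤ 0.08); these thresholds are an assumption of this sketch, not criteria stated in this review, and they are rules of thumb rather than strict tests of model fit.

```python
# Assumed conventional cut-offs (Hu and Bentler-style rules of thumb).
CUTOFFS = {
    "CFI": (">=", 0.95),
    "TLI": (">=", 0.95),
    "RMSEA": ("<=", 0.06),
    "SRMR": ("<=", 0.08),
}


def check_fit(indices):
    """Return {index: True/False} for each reported fit index that has a cut-off."""
    result = {}
    for name, value in indices.items():
        if name not in CUTOFFS:
            continue                      # ignore indices without a stored cut-off
        op, threshold = CUTOFFS[name]
        result[name] = value >= threshold if op == ">=" else value <= threshold
    return result


hlat8 = {"CFI": 0.99, "TLI": 0.97, "RMSEA": 0.03, "SRMR": 0.03}
print(check_fit(hlat8))   # {'CFI': True, 'TLI': True, 'RMSEA': True, 'SRMR': True}
```

Meeting these cut-offs supports structural validity only; it says nothing about convergent validity, which requires comparison with a related measure.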

Consistent with a previous study by Jordan et al,26 this review found that only one included study provided evidence of responsiveness. Ueno et al55 developed a visual oral health literacy instrument and examined responsiveness by comparing students' health literacy before and after oral health education; scores increased significantly after the education. Responsiveness is the ability of an instrument to detect change over time in the construct being measured, and it is particularly important for longitudinal studies.31 However, most included studies were cross-sectional, and only one (on the Multidimensional Measure of Adolescent Health Literacy44) discussed the potential to measure health literacy over time. Studies that measure health literacy in populations over time are therefore needed, both because such repeated measurement is a prerequisite for longitudinal research and because it allows the responsiveness of instruments to be monitored and improved.
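One common way to quantify responsiveness in a pre/post design like Ueno et al's is the standardised response mean (SRM): the mean within-person change divided by the standard deviation of that change. The review reports only that scores increased significantly and does not say which statistic was used, so the Python sketch below is an illustration under that assumption; the function name and the example scores are hypothetical.

```python
"""Sketch: standardised response mean (SRM) for paired pre/post scores.

Assumption: SRM is one common responsiveness index; the review does not
state which statistic Ueno et al used.
"""
from statistics import mean, stdev


def standardised_response_mean(pre: list[float], post: list[float]) -> float:
    """Mean within-person change divided by the sample SD of that change."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need at least two paired pre/post scores")
    changes = [after - before for before, after in zip(pre, post)]
    return mean(changes) / stdev(changes)


# Hypothetical scores for four students, for illustration only.
pre_scores = [10, 12, 9, 14]
post_scores = [13, 14, 12, 15]
print(round(standardised_response_mean(pre_scores, post_scores), 2))
```

Unlike a significance test, the SRM does not grow with sample size, which makes it easier to compare responsiveness across instruments evaluated in studies of different sizes.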

Feasibility issues for included instruments

The feasibility of the included instruments varied markedly. In terms of administration, this review identified 19 self-administered and 10 interviewer-administered instruments, suggesting that self-administration is the more common mode in practice. However, both modes have limitations. Self-administered instruments are cost-effective and efficient but may introduce respondent bias, whereas interviewer-administered instruments can ensure high response rates but are resource-intensive and expensive to administer.73 Although studies have found no significant difference in scores between the two modes,74 75 these studies mostly concerned health-related quality of life instruments, and it is still unknown whether the same holds for health literacy instruments. Among children and adolescents, health literacy research is more likely to be conducted through large-scale surveys in school settings, so the more cost-effective self-administered mode appears to have great potential for future research. To support the wider use of self-administered instruments, future research should confirm that the two modes yield equivalent results for health literacy instruments.

With regard to the type of assessment, performance-based health literacy instruments (n=15) were used more often than self-report instruments (n=11). There are two likely reasons. The first relates to participant characteristics: compared with adults, children and adolescents are more dependent on their parents for health-related decisions,15 and measurement error is more likely to occur when they answer self-report items.76 Performance-based assessment is therefore often chosen to avoid such inaccuracy. The second is that performance-based instruments are objective, whereas self-report instruments are subjective and may overestimate health literacy.77 However, the frequent use of performance-based instruments does not mean that they are more appropriate than self-report instruments for measuring childhood and adolescent health literacy. Self-report instruments are generally more time-efficient and help to preserve respondents' dignity.21 The main challenge in using them is ensuring that the test materials are readable: if children and adolescents understand what a health literacy instrument measures, they are better able to assess their own health literacy skills accurately.69 The difference between self-report and performance-based health literacy instruments has been discussed in the literature,78 but evidence remains limited because few studies have been designed specifically to explore it. Further studies are needed to fill this knowledge gap.

Recommendations for future research

This review identified 18 instruments that were used to measure health literacy in school settings: the Rapid Estimate of Adolescent Literacy in Medicine (REALM-Teen), the NVS, the s-TOFHLA, the c-sTOFHLAd, the eHEALS, the Critical Health Competence Test (CHC Test), the Health Knowledge, Attitudes, Communication and Self-efficacy Scale (HKACSS), the Health Literacy Assessment Booklet (HLAB), the Media Health Literacy (MHL), the HLAT-51, the CHLT, the VOHL, the QuALiSMental, the HELMA, the HLSAC, the funHLS-YA, the HLS-TCO and the p_HLAT-8. Although it is difficult to state categorically which instrument is best, this review provides information that should help researchers identify the most suitable instrument for measuring health literacy in children and adolescents in school contexts.

Of these 18 instruments, 6 measured functional health literacy (the REALM-Teen, the NVS, the s-TOFHLA, the c-sTOFHLAd, the VOHL and the funHLS-YA), 1 measured critical health literacy (the CHC Test), 1 measured functional and interactive health literacy (the HKACSS), 1 measured functional and critical health literacy (the HLAB), and 9 measured health literacy comprehensively across the functional, interactive and critical domains (the eHEALS, the MHL, the HLAT-51, the CHLT, the QuALiSMental, the HELMA, the HLSAC, the HLS-TCO and the p_HLAT-8). However, only one of these three-domain instruments, the HLSAC, was considered appropriate for use in schools, because of its quick administration, satisfactory reliability and one-factor structural validity. The other eight three-domain instruments were excluded because they focused on specific rather than general health literacy (the eHEALS, the MHL, the QuALiSMental and the HLS-TCO), were burdensome to administer (the HLAT-51 and the HELMA-44) or were not published in English (the CHLT and the p_HLAT-8).

Compared with the HLSAC, the HLAT-8 examines health literacy through three domains rather than a one-factor structure, enabling a more comprehensive examination of the construct. Moreover, although the p_HLAT-8 (Portuguese version) is not available in English, the original HLAT-8 is. After comparing measurement domains and measurement properties, the HLAT-8 was judged more suitable for measuring health literacy in school settings for four reasons: (1) it measures health literacy in the context of family and friends,45 a highly important attribute because children and adolescents often need support from parents and peers for health decisions7 15; (2) it is a short but comprehensive tool that captures Nutbeam's three-domain model of health literacy17; (3) it showed satisfactory structural validity (RMSEA=0.03; CFI=0.99; TLI=0.97; SRMR=0.03)45; and (4) it has good feasibility in school-based studies (eg, the p_HLAT-8 is self-administered and time-efficient). However, two issues need to be addressed in future research. The first is its use in the target population: because the HLAT-8 has not been tested in children and adolescents under 18, its readability and measurement properties in this group need to be evaluated. The second is that its convergent validity (the strength of association between two measures of a similar construct, an essential part of construct validity) has not yet been examined; testing it would show the extent to which the HLAT-8 and other health literacy measures are theoretically and practically related.
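Convergent validity is often assessed by correlating total scores from two instruments completed by the same respondents. The Python sketch below computes Pearson's r for that purpose; it assumes complete, respondent-paired scores, and studies may instead use Spearman's rho or prespecified hypotheses about the expected strength of correlation. The review does not state a threshold for "high" convergent validity, so none is encoded here, and the function name is hypothetical.

```python
"""Sketch: convergent validity as the Pearson correlation between two
instruments' total scores from the same respondents.

Assumptions: complete scores, paired by respondent; Pearson's r rather than
another correlation coefficient.
"""
from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation between paired score lists x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two paired scores")
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("scores must vary in both instruments")
    return sxy / sqrt(sxx * syy)
```

A design note: Pearson's r reflects only linear association, so a strong correlation supports, but does not by itself establish, that the two instruments measure the same construct.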

Limitations

This review has several limitations. First, we restricted the search to studies that aimed to develop or validate a health literacy instrument, so we may have missed relevant instruments used in studies with other aims.79 80 Second, although the COSMIN checklist provided a rigorous assessment of a study's methodological quality for each measurement property, it cannot evaluate a study's overall methodological quality. Third, criterion validity was not examined because there is no 'gold standard' for health literacy measurement. However, we examined convergent validity under the domain of 'hypotheses testing', which assesses newly developed instruments against existing, commonly used ones. Finally, individual subjectivity inevitably played a part in the screening, data extraction and synthesis stages of the review. To reduce this subjectivity, two authors independently conducted each major stage.

Conclusion

This review updated previous reviews of childhood and adolescent health literacy measurement (cf Ormshaw et al, Perry and Okan et al)10 11 13 by incorporating a quality assessment framework. It showed that most information on measurement properties was unknown, owing either to the poor methodological quality of studies or to a lack of assessment and reporting. Rigorous, high-quality studies are needed to fill this knowledge gap in health literacy measurement in children and adolescents. Although it is challenging to draw a robust conclusion about which instrument is best, this review provides important evidence supporting the use of the HLAT-8 to measure childhood and adolescent health literacy in future research.

Supplementary Material

Acknowledgments

The authors appreciate the helpful comments received from the reviewers (Martha Driessnack and Debi Bhattacharya) at BMJ Open.

Footnotes

Contributors: SG conceived the review approach. RA and EW provided general guidance for the drafting of the protocol. SG and SMA screened the literature. SG and TS extracted the data. SG drafted the manuscript. SG, GRB, RA, EW, XY, SMA and TS reviewed and revised the manuscript. All authors contributed to the final manuscript.

Funding: This paper is part of SG’s PhD research project, which is supported by the Melbourne International Engagement Award. This research received no specific grant from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Patient consent: Not required.

Provenance and peer review: Not commissioned; externally peer reviewed.

Data sharing statement: There are no additional data available.

References

- 1. Nutbeam D. The evolving concept of health literacy. Soc Sci Med 2008;67:2072–8. doi:10.1016/j.socscimed.2008.09.050

- 2. Nutbeam D. Health Promotion Glossary. Health Promot Int 1998;13:349–64. doi:10.1093/heapro/13.4.349

- 3. Paasche-Orlow MK, Wolf MS. The causal pathways linking health literacy to health outcomes. Am J Health Behav 2007;31(Suppl 1):19–26. doi:10.5993/AJHB.31.s1.4

- 4. Squiers L, Peinado S, Berkman N, et al. The health literacy skills framework. J Health Commun 2012;17(Suppl 3):30–54. doi:10.1080/10810730.2012.713442

- 5. Berkman ND, Sheridan SL, Donahue KE, et al. Low health literacy and health outcomes: an updated systematic review. Ann Intern Med 2011;155:97–107. doi:10.7326/0003-4819-155-2-201107190-00005

- 6. Dodson S, Good S, Osborne R. Health literacy toolkit for low- and middle-income countries: a series of information sheets to empower communities and strengthen health systems. New Delhi: World Health Organization, Regional Office for South-East Asia, 2015.

- 7. Manganello JA. Health literacy and adolescents: a framework and agenda for future research. Health Educ Res 2008;23:840–7. doi:10.1093/her/cym069

- 8. Diamond C, Saintonge S, August P, et al. The development of building wellness, a youth health literacy program. J Health Commun 2011;16(Suppl 3):103–18. doi:10.1080/10810730.2011.604385

- 9. Robinson LD, Calmes DP, Bazargan M. The impact of literacy enhancement on asthma-related outcomes among underserved children. J Natl Med Assoc 2008;100:892–6. doi:10.1016/S0027-9684(15)31401-2

- 10. Ormshaw MJ, Paakkari LT, Kannas LK. Measuring child and adolescent health literacy: a systematic review of literature. Health Educ 2013;113:433–55. doi:10.1108/HE-07-2012-0039

- 11. Perry EL. Health literacy in adolescents: an integrative review. J Spec Pediatr Nurs 2014;19:210–8. doi:10.1111/jspn.12072

- 12. Bröder J, Okan O, Bauer U, et al. Health literacy in childhood and youth: a systematic review of definitions and models. BMC Public Health 2017;17:361. doi:10.1186/s12889-017-4267-y

- 13. Okan O, Lopes E, Bollweg TM, et al. Generic health literacy measurement instruments for children and adolescents: a systematic review of the literature. BMC Public Health 2018;18:166. doi:10.1186/s12889-018-5054-0

- 14. Forrest CB, Simpson L, Clancy C. Child health services research. Challenges and opportunities. JAMA 1997;277:1787–93.