Abstract

Prior neuroimaging and neuropsychological research indicates that the left inferior parietal lobule in the human brain is a critical substrate for representing object manipulation knowledge. In the present functional MRI study we used multivoxel pattern analyses to test whether action similarity among objects can be decoded in the inferior parietal lobule independent of the task applied to objects (identification or pantomime) and the format in which stimuli are presented (pictures or printed words). Participants pantomimed the use of objects, cued by printed words, or identified pictures of objects. Classifiers were trained and tested across task (e.g., training data: pantomime; testing data: identification), stimulus format (e.g., training data: word format; testing data: picture format) and specific objects (e.g., training data: scissors vs. corkscrew; testing data: pliers vs. screwdriver). The only brain region in which action relations among objects could be decoded across task, stimulus format and objects was the inferior parietal lobule. By contrast, medial aspects of the ventral surface of the left temporal lobe represented object function, albeit not at the same level of abstractness as actions in the inferior parietal lobule. These results suggest compulsory access to abstract action information in the inferior parietal lobule even when simply identifying objects.

Keywords: conceptual representation, fMRI, multivariate pattern classification, object-directed actions, tool use

Introduction

On a daily basis we are constantly identifying, grasping and manipulating objects in our environment (e.g., forks, scissors, and pens). The ability to recognize, grasp, and then manipulate tools according to their function requires the integration of high-level visual and attentional processes (this is the handle of the fork), action knowledge (this is how a fork is grasped and manipulated), and function knowledge (a fork is the appropriate object in order to eat spaghetti). Many tools and utensils share similarities in action or function. For example, scissors and pliers are similar in terms of their manner of manipulation (both involving a hand-squeeze movement), whereas scissors and knife, while not manipulated similarly, share a similar function (cutting). Here we capitalize on these similarity relations among tools to study the neural representation of object-directed action and function knowledge in the human brain.

The Tool Processing Network includes the left premotor cortex, the left inferior parietal lobule and superior parietal lobules bilaterally, the left posterior middle temporal gyrus, and the medial fusiform gyrus bilaterally (Chao et al. 1999; Chao and Martin 2000; Rumiati et al. 2004; Noppeney 2006; Mahon et al. 2007, 2013; Garcea and Mahon 2014; for reviews, see Lewis 2006; Mahon and Caramazza 2009; Martin 2007, 2009, 2016). The medial fusiform gyrus is an important neural substrate for high-level visual information about small manipulable objects (Chao et al. 1999; Mahon et al. 2007; Konkle and Oliva 2012), and is adjacent to representations of surface feature and texture information relevant to object-directed grasping (Cant and Goodale 2007; for neuropsychological data, see Bruffaerts et al. 2014). The left premotor cortex is believed to support the translation of high-level praxis information into specific motor actions, while the left posterior middle temporal gyrus is believed to interface high-level visual representations with high-level praxis knowledge, perhaps containing semantically interpreted information about object properties (Tranel et al. 2003; Buxbaum et al. 2014). Here we focus on the left inferior parietal lobule, which is thought to play an important role in representing action knowledge (Ochipa et al. 1989; Buxbaum et al. 2000; Mahon et al. 2007; Negri et al. 2007a, 2007b; Garcea et al. 2013; for discussion, see Rothi et al. 1991; Cubelli et al. 2000; Johnson-Frey 2004; Mahon and Caramazza 2005; Goldenberg 2009; Binkofski and Buxbaum 2013; Osiurak 2014). The specific question that we seek to answer is whether specific and abstract high-level praxis representations in the left inferior parietal lobule are automatically accessed regardless of whether participants are overtly pantomiming object-directed action or identifying the objects. The approach that we take is to use multivariate analyses over fMRI data to measure relations among objects in the way in which they are manipulated (action similarity), and to contrast that with multivariate analyses of similarity in function or purpose of use of the same objects (function similarity).

A growing literature has investigated the neural representation of action and function knowledge. Neuropsychological studies of brain-damaged patients (Sirigu et al. 1991; Buxbaum et al. 2000; Buxbaum and Saffran 2002; Mahon et al. 2007; Negri et al. 2007a, 2007b; Garcea et al. 2013; Martin et al. 2015), fMRI studies of healthy participants performing a range of different tasks (Kellenbach et al. 2003; Boronat et al. 2005; Canessa et al. 2008; Yee et al. 2010; Chen et al. 2016b), as well as brain stimulation studies (Pobric et al. 2010; Ishibashi et al. 2011; Pelgrims et al. 2011; Evans et al. 2016) suggest that frontoparietal regions are involved in representing action knowledge while the temporal lobe is engaged in retrieving function knowledge. Three prior studies, in particular, motivate the approach that we take in the current study. One study found that fMRI adaptation transferred between objects in a manner proportional to their semantic similarity (similarity in shape, or function, or both; Yee et al. 2010). Another prior study used multivoxel pattern analysis (MVPA) to disentangle representations of conceptual properties of the objects (knowledge related to action and location) from perceptual and low-level visual properties (Peelen and Caramazza 2012). A third prior study employed multivoxel pattern analyses to dissociate object-directed action and function during pantomime of tool use (Chen et al. 2016b).

Most prior studies have used only one task (e.g., semantic judgments or pantomime of tool use), or presented stimuli in only one stimulus format (i.e., either a word or a picture). Thus, it cannot be determined from prior work whether the “action representations” identified in the left inferior parietal lobule are accessed automatically and independently of task, or whether those representations are accessed in a manner that is invariant to the format in which the stimulus is presented. To clarify this, we employ 2 tasks: pantomime of tool use and picture identification. In the pantomime task, the printed name of an object was presented and was the cue to pantomime object use; in the identification task, participants were shown images of the same objects used in the pantomime task, and were required to silently name the pictures. Thus, these 2 tasks differed not only in task response (overt pantomime vs. identification), but also in stimulus format (word vs. picture). In order to correctly pantomime the use of objects, conceptual information, including information about object function, must be accessed. Thus, all extant theories would predict that function information should be engaged in both the pantomime and identification tasks. The key question is whether action information would be accessed during the identification task.

Prior research has found differential activity in the left inferior parietal lobule using univariate measures when participants identify images of tools compared with various nontool baseline categories (Chao et al. 1999; Chao and Martin 2000; Rumiati et al. 2004; Noppeney 2006; Mahon et al. 2007, 2013; Garcea and Mahon 2014). However, those prior univariate analyses do not decisively show that activity in the left inferior parietal lobule indexes access to the “specific” object manipulation information associated with the items being identified. Various other general action-related dimensions might explain that activity, such as the automatic computation of object-directed grasps, or even a general “arousal” of the praxis system. A definitive test would consist of demonstrating that the specific object pantomimes associated with the stimuli being identified are automatically elicited during the identification task. To test this, one would want to exclude that nonpraxis-related yet object-specific representations might be driving responses in the inferior parietal cortex. To jointly satisfy these constraints, we apply the stringent test of “cross-item multivariate pattern classification.” For example, we train a classifier to discriminate between scissors and corkscrew and then test the trained classifier with a new pair of objects that share the same actions, such as pliers and screwdriver. Because the action of using scissors is similar to that of using pliers, and likewise for corkscrew and screwdriver, accurate classification across objects indicates that the voxels being classified differentiate the action representations and in a way that generalizes across objects. With this approach, successful transfer of the classifier can only work if the classifier is sensitive to the specific actions themselves, over and above the objects used to engage subjects in those actions.

We were also interested in evaluating the hypothesis that the inferior parietal lobule represents actions in a manner that abstracts away from the specific task in which participants are engaged. To address that issue, we used multivoxel pattern analysis to test whether action relations among objects could be decoded both within tasks (identification and pantomime) and, critically, between tasks. For the within-task classification, the classifiers were trained and tested on data from the same task (but always still using separate object stimuli for training and testing). For example, classifiers were trained with “pantomime” data (for “scissors” vs. “corkscrew”) and then tested with “pantomime” data (for “pliers” vs. “screwdriver”). Successful within-task classification, even if observed for 2 different tasks, does not guarantee that the action representations being decoded abstract away from the specific task. It could be that a brain area uses a different underlying code to represent actions in an identification task and in an overt pantomime task. Therefore, the most stringent test of whether the inferior parietal lobule represents “abstract” action representations would be to carry out cross-task (and cross-item) classification. To that end, classifiers are trained with “pantomime” data and tested with “identification” data, and vice versa. For instance, we trained the classifier over data from the pantomime task over word stimuli on one pair of words (training on word stimuli: “scissors” vs. “corkscrew”), and then tested the classifier over data from the identification task over pictures (testing on picture stimuli: “pliers” vs. “screwdriver”). Thus, these analyses are particularly stringent as they are “cross-task”, “cross-item” and “cross-stimulus format”. Successful generalization (i.e., above chance classification) could occur only based on action representations that are tolerant to changes in tasks, items and stimulus formats, thus ruling out a range of alternative variables (e.g., structural similarity among objects with similar actions). Successful transfer of the classifier both within-task (for each task separately, and always across objects) and across tasks (and stimulus format) would constitute evidence for “abstract” representations of specific object-directed actions being compulsorily accessed independent of task context.

Methods

Participants

Sixteen students from the University of Rochester participated in the study in exchange for payment (8 females; mean age, 21.8 years, standard deviation, 2.4 years). All participants were right-hand dominant, as assessed with the Edinburgh Handedness Questionnaire (Oldfield 1971), had no history of neurological disorders, and had normal or corrected to normal vision. All participants gave written informed consent in accordance with the University of Rochester Research Subjects Review Board.

General Procedure

Stimulus presentation was controlled with “A Simple Framework” (Schwarzbach 2011) written in Matlab Psychtoolbox (Brainard 1997; Pelli 1997). Participants lay supine in the scanner, and stimuli were presented to the participants binocularly through a mirror attached to the MR headcoil, which allowed for foveal viewing of the stimuli via a back-projected monitor (spatial resolution: 1400 × 1050; temporal resolution: 120 Hz).

Each participant took part in 2 scanning sessions. The first scanning session began with a T1 anatomical scan, and then proceeded with (1) a 6-min resting state functional MRI scan, (2) eight 3-min functional runs of a category localizer experiment (see below for experimental details), (3) a second 6-min resting state functional MRI scan, and (4) a 15-min diffusion tensor imaging (DTI) scan. The resting state and DTI data are not analyzed herein. The second scanning session was completed 1–2 weeks later, and was composed of 2 experiments within a single session (Tool Pantomiming and Tool Identification). Participants completed 8 runs across the 2 experiments: (1) four 7-min runs in which participants pantomimed object use to printed object names, using their right hand (see below), and (2) four 7-min runs in which participants fixated upon visually presented images of manipulable objects and silently named them (see below).

Scanning Session I: Category Localizer

To localize tool-responsive regions of cortex, each participant took part in an independent functional localizer experiment. Participants were instructed to fixate upon intact and phase-shifted images of tools, animals, famous faces, and famous places (for details, see Fintzi and Mahon 2013; see also Chen et al. 2016a, 2016b). Twelve items from each category were presented in a miniblock design (6-s miniblocks; 2 Hz presentation rate; 0 ms ISI), interspersed by 6-s fixation periods. Within a run, 8 miniblocks of intact stimuli from the 4 categories (each category repeated twice) and 4 miniblocks of scrambled images from the 4 categories (one miniblock per phase-shifted category) were presented.

Scanning Session II: Tool Pantomiming and Tool Identification

We used 6 “target items” in both the pantomiming and identification experiments (see Chen et al. 2016b): scissors, pliers, knife, screwdriver, corkscrew, and bottle opener (Fig. 1A). For each experiment, each item (e.g., scissors) was presented 4 times per run, in random order, with the constraint that an item did not repeat on 2 successive presentations. The items were chosen so as to be analyzable in triads, in which 2 of the 3 items (of a triad) were similar by manner of manipulation or by function. For example, for the triad of corkscrew, screwdriver, and bottle opener, corkscrew and screwdriver are similar in terms of their manner of manipulation (both involving rotate movements), whereas corkscrew and bottle opener share a similar function (opening a bottle). The selection of these items was based on previous behavioral work from our lab (Garcea and Mahon 2012), which was in turn motivated by prior research by Buxbaum and colleagues (both neuropsychological, Buxbaum et al. 2000; Buxbaum and Saffran 2002, and neuroimaging work, see Boronat et al. 2005).
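The presentation constraint described above (each of the 6 items shown 4 times per run, in random order, with no item repeating on 2 successive presentations) can be illustrated with a minimal sketch. This is not the authors' presentation code, which was written in MATLAB; the function name `run_order`, the seed, and the use of rejection sampling are assumptions made purely for illustration.

```python
import random

# The 6 target items named in the text.
ITEMS = ["scissors", "pliers", "knife", "screwdriver", "corkscrew", "bottle opener"]


def run_order(items=ITEMS, repeats=4, rng=None):
    """Return a random trial order in which each item appears `repeats`
    times and no item occurs on 2 successive presentations.

    Hypothetical helper: reshuffles until the constraint is met
    (rejection sampling), which is an assumed strategy.
    """
    rng = rng or random.Random()
    trials = [item for item in items for _ in range(repeats)]
    while True:
        rng.shuffle(trials)
        # Accept the shuffle only if no two neighbours are the same item.
        if all(a != b for a, b in zip(trials, trials[1:])):
            return list(trials)


# Example: one run's order, seeded only so the sketch is reproducible.
order = run_order(rng=random.Random(0))
```

Rejection sampling is adequate at this scale (24 trials per run); a constructive algorithm would be preferable for much longer sequences.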

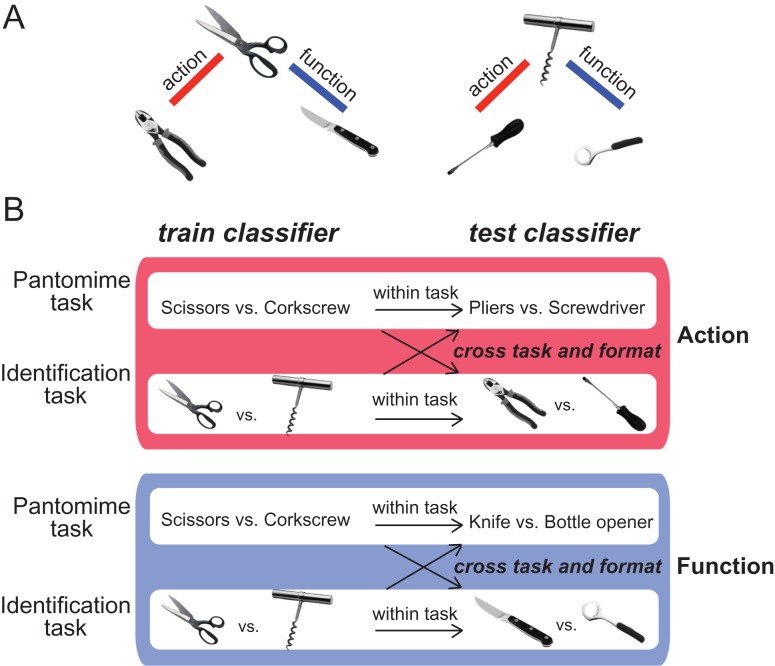

Figure 1.

Schematic of cross-item multivoxel pattern analysis. (A) From the perspective of the experimental design, the stimuli are organized into 2 triads, in which 2 of the 3 items (of a triad) were similar by manner of manipulation or function. (B) To decode action, the classifier was trained to discriminate, for example, “scissors” from “corkscrew” and the classifier was then tested on “pliers” versus “screwdriver”. Because the action of using scissors is similar to that of using pliers, and likewise for corkscrew and screwdriver, accurate classification across objects indicates that the voxels being classified differentiate the action representations over and above the specific objects themselves. The same procedure was used to decode object function.

In the Tool Pantomiming task, participants were presented with printed words corresponding to the 6 items: one word was presented for 8 s, and stimuli were separated by 8 s of fixation. When the word was presented, participants were instructed to pantomime the use of the object, using their dominant hand, and using primarily their hand and forearm (participants were trained on the object pantomimes prior to scanning). Participants generated the pantomime for as long as the word stimulus was on the screen.

In the Tool Identification task, the participants were instructed to deploy their attention to visually presented images of the 6 target items; they were told to think about “the features of the objects, including its name, its associated action/s, function, weight, context in which it is found, and material properties.” Within each miniblock, 8 exemplars of each item were presented at a frequency of 1 Hz (0 ms ISI, i.e., 8 different scissors were presented in 8 s). Stimulus blocks were interspersed by 8-s fixation periods.

The order of Tool Pantomiming and Tool Identification was counterbalanced across subjects in an AB/BA manner. Odd numbered participants took part in 4 runs of the Tool Identification experiment, followed by 4 runs of the Tool Pantomiming experiment; even numbered participants took part in 4 runs of the Tool Pantomiming experiment, followed by 4 runs of the Tool Identification Experiment.

MRI Parameters

Whole brain imaging was conducted on a 3-T Siemens MAGNETOM Trio scanner with a 32-channel head coil located at the Rochester Center for Brain Imaging. High-resolution structural T1 contrast images were acquired using a magnetization prepared rapid gradient echo (MP-RAGE) pulse sequence at the start of each participant’s first scanning session (TR = 2530 ms, TE = 3.44 ms, flip angle = 7°, FOV = 256 mm, matrix = 256 × 256, 1 × 1 × 1 mm³ sagittal left-to-right slices). An echo-planar imaging pulse sequence was used for T2* contrast (TR = 2000 ms, TE = 30 ms, flip angle = 90°, FOV = 256 × 256 mm², matrix = 64 × 64, 30 sagittal left-to-right slices, voxel size = 4 × 4 × 4 mm³). The first 6 volumes of each run were discarded to allow for signal equilibration (4 volumes at data acquisition and 2 volumes at preprocessing).

fMRI Data Analysis

fMRI data were analyzed with the BrainVoyager software package (Version 2.8) and in-house scripts drawing on the BVQX toolbox written in MATLAB. Preprocessing of the functional data included, in the following order, slice scan time correction (sinc interpolation), motion correction with respect to the first volume of the first functional run, and linear trend removal in the temporal domain (cutoff: 2 cycles within the run). Functional data were registered (after contrast inversion of the first volume) to high-resolution deskulled anatomy on a participant-by-participant basis in native space. For each participant, echo-planar and anatomical volumes were transformed into standardized space (Talairach and Tournoux 1988). Functional data were interpolated to 3 mm³. The data were not spatially smoothed.

For all experiments, the general linear model was used to fit beta estimates to the experimental events of interest. Experimental events were convolved with a standard 2-gamma hemodynamic response function. The first derivatives of 3D motion correction from each run were added to all models as regressors of no interest to attract variance attributable to head movement.
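As an illustration of how an experimental event becomes a GLM regressor, the following sketch convolves a boxcar with a 2-gamma hemodynamic response function and samples it once per volume. It is a simplified stand-in, not the BrainVoyager implementation. The shape parameters (6 and 16), the undershoot ratio (6), the event onsets, and the function names are assumptions; only the TR of 2 s and the 8-s stimulus duration come from the text.

```python
import math

TR = 2.0  # seconds per volume, from the EPI parameters in the text


def two_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=6.0):
    """Difference of two unit-scale gamma densities evaluated at time t (s).

    The parameter values are assumed, commonly used defaults; the text
    only states that a "standard 2-gamma" function was used.
    """
    if t <= 0:
        return 0.0

    def gamma_pdf(shape):
        return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

    return gamma_pdf(peak_shape) - gamma_pdf(under_shape) / ratio


def convolve_boxcar(onsets, duration, n_vols, tr=TR, hrf_len=32.0):
    """Predicted response: boxcar events convolved with the HRF, per volume."""
    box = [0.0] * n_vols
    for onset in onsets:
        for v in range(n_vols):
            if onset <= v * tr < onset + duration:
                box[v] = 1.0
    kernel = [two_gamma_hrf(k * tr) for k in range(int(hrf_len / tr))]
    # Discrete convolution, truncated to the length of the run.
    return [
        sum(box[v - k] * kernel[k] for k in range(len(kernel)) if v - k >= 0)
        for v in range(n_vols)
    ]


# Hypothetical example: two 8-s events in a 100-volume run.
regressor = convolve_boxcar(onsets=[8.0, 104.0], duration=8.0, n_vols=100)
```

One such regressor per item, together with the motion-derivative regressors of no interest, would form the columns of the design matrix from which beta estimates are fit.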

Statistical Analysis

MVPA was performed using a linear support vector machine (SVM) classifier. All MVPA analyses were performed over individual participants. Software written in MATLAB, utilizing the BVQX toolbox, was used to perform the analysis. The classifiers were trained and tested in a cross-item manner. Figure 1B illustrates how the cross-item SVM decodes actions and functions. For example, to decode action, the classifier was trained to discriminate “scissors” from “corkscrew” and then the classifier was tested with “pliers” versus “screwdriver.” To continue the example, to decode function, the classifier was trained to discriminate between “scissors” and “corkscrew.” The trained classifier was then tested using a new pair of objects that match in function (in this case, “knife” and “bottle opener”). The classification accuracies for action and function knowledge were computed by averaging together the 4 accuracies generated by using different pairs of objects for classifier training and testing, separately for each participant. We then averaged classification accuracies across participants, and tested whether the group mean was significantly greater than chance (50%) using a one-sample t-test (one-tailed). Furthermore, to test whether decoding generalizes across tasks, we conducted cross-task cross-item classification, in which classifiers were trained with “pantomime” data and tested with “identification” data, and vice versa. For example, in action decoding, we trained the classifier to discriminate the pantomimes of “scissors” versus “corkscrew,” and then tested it on data from the identification task with pictures (i.e., “pliers” vs. “screwdriver”).
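The cross-item scheme described above can be made concrete with a short sketch that enumerates the 4 train/test splits for each knowledge type and computes the group-level t statistic against chance. The classifier itself is deliberately left out. The group labels ("squeeze", "rotate", "cut", "open") paraphrase the text, and the function names and example accuracies are hypothetical.

```python
import math
from itertools import product
from statistics import mean, stdev

# Item pairings taken from the two triads described in the text.
ACTION = {"squeeze": ["scissors", "pliers"], "rotate": ["corkscrew", "screwdriver"]}
FUNCTION = {"cut": ["scissors", "knife"], "open": ["corkscrew", "bottle opener"]}


def cross_item_splits(groups):
    """Enumerate (train_pair, test_pair) splits with disjoint items.

    Each pair holds one item per group, so a classifier trained on the
    first pair can only succeed on the second by generalizing over the
    shared dimension (action or function) rather than object identity.
    """
    (a1, a2), (b1, b2) = groups.values()
    splits = []
    for train_a, train_b in product((a1, a2), (b1, b2)):
        test_a = a2 if train_a == a1 else a1
        test_b = b2 if train_b == b1 else b1
        splits.append(((train_a, train_b), (test_a, test_b)))
    return splits


def t_vs_chance(accuracies, chance=0.5):
    """One-sample t statistic for per-participant accuracies vs. chance."""
    n = len(accuracies)
    return (mean(accuracies) - chance) / (stdev(accuracies) / math.sqrt(n))


action_splits = cross_item_splits(ACTION)
function_splits = cross_item_splits(FUNCTION)

# Hypothetical per-participant mean accuracies (not data from the study).
example_t = t_vs_chance([0.6, 0.7, 0.5, 0.6])
```

For cross-task decoding the same splits apply, with the training pair drawn from one task's data and the test pair from the other's. With 16 participants (15 degrees of freedom), a one-tailed test at α = 0.05 requires a t statistic above roughly 1.75.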

Whole Brain Searchlight Analyses

Whole-brain multivoxel pattern analyses were performed in each individual using a searchlight approach (Kriegeskorte et al. 2006). In this analysis, a cross-item classifier moved through the brain voxel-by-voxel. At every voxel, the beta values for each of 6 object stimuli (scissors | pliers | knife | screwdriver | corkscrew | bottle opener) for the cube of surrounding voxels (n = 125) were extracted and passed through a classifier. Classifier performance for that set of voxels was then written to the central voxel. The whole-brain results used cluster-size corrected alpha levels, by thresholding individual voxels at P < 0.01 and applying a subsequent cluster-size threshold of P < 0.001 based on Monte-Carlo simulations (1000 iterations) on cluster size. If no cluster survived the threshold, a more lenient threshold (P < 0.05 at voxel level, cluster corrected at P < 0.01) was used. Recent research (Woo et al. 2014; Eklund et al. 2016) indicates that alpha levels of 0.05 or even 0.01 prior to cluster thresholding permit an unacceptably high number of false positive results (i.e., are not sufficiently conservative). However, the objective of the analyses herein was to test whether the intersection of 4 independent whole-brain searchlight analyses identifies the inferior parietal lobule (train pantomime, test pantomime; train identification, test identification; train pantomime, test identification; train identification, test pantomime). As described below, the precluster-corrected whole-brain alpha levels that were used were 0.01 (decoding within pantomime, within identification, and cross-task decoding training on pantomime and testing on identification) and 0.05 (train on identification and test pantomime). Thus, and bracketing for the moment that we also performed cluster correction, the effective likelihood of observing any isolated voxel in the brain as being identified in common across the 4 independent analyses is 0.01 × 0.01 × 0.01 × 0.05 (i.e., 5 × 10⁻⁸, or 1 in 20 million). The point here is that the core analysis derives its statistical rigor by testing for an intersection among 4 independent analyses in a specific brain region. These arguments are of course specific to the fact that we are testing a hypothesis via an intersection analysis across 4 maps, and are therefore not at odds with the valid arguments and demonstrations about the caution that is warranted regarding precluster-corrected alpha-levels (Woo et al. 2014; Eklund et al. 2016). It is also important to note that we complement this whole-brain intersection test with independent (functionally defined) regions of interest (ROIs) to further establish the core finding regarding the role of the inferior parietal lobule in coding abstract representations of actions.
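Three ingredients of this analysis lend themselves to a brief sketch: the 125-voxel searchlight cube (5 × 5 × 5 around a central voxel), the intersection of the 4 thresholded maps, and the joint probability quoted above. The function names are hypothetical, maps are represented simply as sets of voxel coordinates, and no handling of brain-mask edges is attempted. The probability calculation treats the 4 analyses as independent, as the text does.

```python
from fractions import Fraction
from itertools import product


def cube_neighborhood(center, half_width=2):
    """Voxel coordinates of the (2 * half_width + 1)^3 cube around `center`."""
    cx, cy, cz = center
    offsets = range(-half_width, half_width + 1)
    return [(cx + dx, cy + dy, cz + dz) for dx, dy, dz in product(offsets, repeat=3)]


def intersect_maps(*maps):
    """Voxels that survive thresholding in every map (each map is a set)."""
    return set.intersection(*maps)


# A 5 x 5 x 5 searchlight around an arbitrary example voxel.
neighborhood = cube_neighborhood((10, 10, 10))

# Voxel-level alpha for each of the 4 analyses, as stated in the text.
alphas = [Fraction(1, 100), Fraction(1, 100), Fraction(1, 100), Fraction(5, 100)]

# Chance that an isolated voxel passes all 4 thresholds, assuming independence.
joint = Fraction(1)
for alpha in alphas:
    joint *= alpha
```

Exact fractions are used so that the product reduces to 1/20 000 000 without floating-point rounding.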

Definition of ROIs

We conducted ROI analyses in order to test the hypothesis that the tool-preferring left inferior parietal lobule represents object-directed actions in a task-independent manner. For comparison, 3 other tool-preferring regions (the left premotor cortex, the left posterior middle temporal gyrus, and the left medial fusiform gyrus) were also tested. All subjects participated in a category localizer. Tool-preferring voxels were defined for the left inferior parietal lobule, the left premotor cortex, and the left posterior middle temporal gyrus in each participant, based on the contrast [Tools] > [Animals], thresholded at P < 0.05 (uncorrected). For the purposes of subsequent analyses, and in order to have the same number of voxels contributed from each ROI in each participant, a 6 mm-radius sphere centered on the peak voxel was defined on a participant-by-participant basis.
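A minimal sketch of the spherical ROI construction follows. It assumes 3 mm isotropic voxels (the resolution to which the functional data were interpolated) and an inclusive boundary, so that voxels whose centers lie exactly 6 mm from the peak are kept. Neither detail is stated explicitly in the text, and the function name `sphere_roi` is hypothetical.

```python
from itertools import product


def sphere_roi(peak, radius_mm=6.0, voxel_mm=3.0):
    """Voxel indices whose centers lie within `radius_mm` of the peak voxel.

    `peak` is given in voxel indices; distances are converted to mm using
    an assumed isotropic voxel size.
    """
    px, py, pz = peak
    reach = int(radius_mm // voxel_mm)  # maximum offset in voxels per axis
    offsets = range(-reach, reach + 1)
    return [
        (px + dx, py + dy, pz + dz)
        for dx, dy, dz in product(offsets, repeat=3)
        if (dx * dx + dy * dy + dz * dz) * voxel_mm ** 2 <= radius_mm ** 2
    ]


# Example: ROI around an arbitrary peak voxel.
roi = sphere_roi((20, 30, 15))
```

Under these assumptions every ROI contains the same number of voxels regardless of where the peak falls, which is the property the fixed-radius sphere is meant to guarantee.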

Results

Classification of Object-Directed Actions

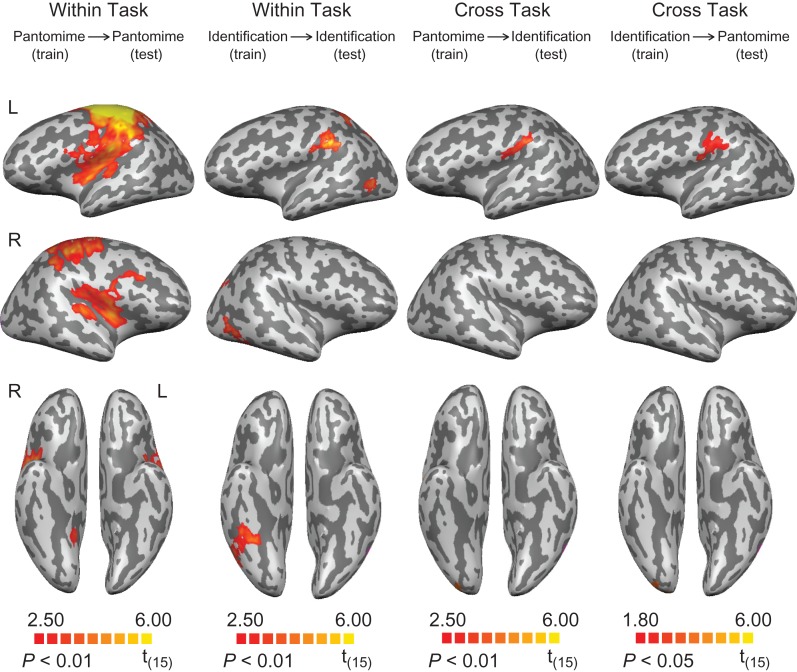

The searchlight analysis revealed successful classification of object-directed actions during the pantomime task in frontoparietal brain areas, including bilateral motor and premotor areas, the left inferior parietal lobule (principally within the supramarginal gyrus and the anterior intraparietal sulcus), and the left superior parietal lobule. The bilateral putamen, the right insula and the right cerebellum were also involved in decoding action (Fig. 2 and Table 1). For action classification during the identification task, we found significant clusters in the left inferior parietal lobule (the supramarginal gyrus at the junction with the anterior intraparietal sulcus), the left superior parietal lobule, the bilateral posterior parietal lobule, the bilateral posterior middle temporal gyrus, and the right inferior temporal gyrus (Fig. 2 and Table 1). For the cross-task classification of actions, above chance classification was restricted to the supramarginal gyrus when classifiers were trained on “pantomime” and tested on “identification”; a similar region also showed significant decoding of action when the classifiers were trained on “identification” and tested on “pantomime” (voxel level threshold, P < 0.05, cluster corrected at P < 0.01) (see Fig. 2 and Table 1, and discussion in “Methods”).

Figure 2.

Searchlight analyses of cross-item classification for action. First column: decoding of action during tool pantomiming. Second column: decoding of action during tool identification. Third and last column: cross-task decoding of action. All results are thresholded at P < 0.01 (cluster corrected), except for the cross-task decoding of action (trained on “identification” and tested on “pantomime,” thresholded at P < 0.05, cluster corrected).

Table 1.

Talairach coordinates, cluster sizes, significance levels, and anatomical regions for the cross-item classification searchlight results for action

| Region | Talairach x | Talairach y | Talairach z | Cluster size (mm²) | t-value | P-value |
|---|---|---|---|---|---|---|
| Action, within-task: pantomime (vs. 50% chance) | | | | | | |
| Dorsal premotor cortex LH | −33 | −20 | 63 | 94 160 | 10.37 | <0.001 |
| Ventral premotor cortex LH | −48 | −1 | 10 | – | 4.03 | <0.002 |
| Motor cortex LH | −36 | −25 | 58 | – | 12.82 | <0.001 |
| Postcentral gyrus LH | −51 | −25 | 40 | – | 7.07 | <0.001 |
| Inferior parietal lobule LH (SMG/aIPS) | −45 | −34 | 42 | – | 5.88 | <0.001 |
| Superior parietal lobule LH | −30 | −43 | 55 | – | 6.11 | <0.001 |
| Putamen LH | −27 | −1 | 1 | 7346 | 7.20 | <0.001 |
| Middle frontal gyrus RH | 33 | 14 | 28 | 56 639 | 5.39 | <0.001 |
| Motor cortex RH | 33 | −26 | 49 | – | 5.51 | <0.001 |
| Postcentral gyrus RH | 45 | −28 | 56 | – | 6.93 | <0.001 |
| Putamen RH | 21 | −4 | 2 | – | 4.25 | <0.001 |
| Insula RH | 39 | 2 | 7 | – | 6.92 | <0.001 |
| Cerebellum RH | 6 | −46 | −11 | 27 682 | 8.46 | <0.001 |
| Action, within-task: identification (vs. 50% chance) | | | | | | |
| Inferior parietal lobule LH (SMG/aIPS) | −60 | −37 | 25 | 4924 | 6.97 | <0.001 |
| Superior parietal lobule LH | −42 | −52 | 46 | 3433 | 4.57 | <0.001 |
| Posterior parietal lobe LH | −15 | −64 | 55 | 6681 | 7.03 | <0.001 |
| Posterior middle temporal gyrus LH | −45 | −55 | −2 | 2301 | 4.71 | <0.001 |
| Posterior middle temporal gyrus RH | 42 | −58 | 1 | 6108 | 4.83 | <0.001 |
| Inferior temporal gyrus RH | 39 | −46 | −23 | – | 5.31 | <0.001 |
| Precuneus RH | 24 | −55 | 49 | 3604 | 5.13 | <0.001 |
| Action, cross-task: pantomime to identification (vs. 50% chance) | | | | | | |
| Inferior parietal lobule LH (mainly SMG) | −57 | −28 | 28 | 4264 | 5.36 | <0.001 |
| Action, cross-task: identification to pantomime (vs. 50% chance) | | | | | | |
| Inferior parietal lobule LH (mainly SMG) | −48 | −13 | 16 | 6151 | 4.82 | <0.001 |

Note: All results are thresholded at P < 0.01 (cluster corrected) except for cross-task decoding of action from identification to pantomime (thresholded at P < 0.05, cluster corrected). Regions for which the cluster size (mm²) is indicated as “–” were contiguous with the region directly above, and hence included in the above volume calculation. SMG, supramarginal gyrus; aIPS, anterior intraparietal sulcus.

The critical hypothesis test was whether there is overlap (i.e., an intersection) in the searchlight maps across the 4 independent whole-brain analyses. As is shown in Figure 3, action representations could be decoded in the left supramarginal gyrus within the pantomime and the identification tasks, and across tasks (in both directions). This region overlapped with the tool-preferring left inferior parietal lobule. These findings constitute stringent evidence that (1) the left inferior parietal lobule represents object-directed actions independent of the task (overt action, silent naming), and (2) a common neural code is present in that region across tasks, objects, and stimulus format.

Figure 3.

Direct comparison of tool-preferring regions and searchlight analyses for action. The searchlight results for the within-task decoding of action (red and pink) overlapped tool-preferring regions in the left hemisphere. The overlap of voxels identified as representing actions in both tasks in the searchlight analysis is in yellow. The searchlight results for the cross-task decoding of action are outlined in dark blue and light blue. Tool-preferring regions, outlined in black, are shown based on group results, thresholded at P < 0.01 (cluster corrected). All results are overlaid on a representative brain.

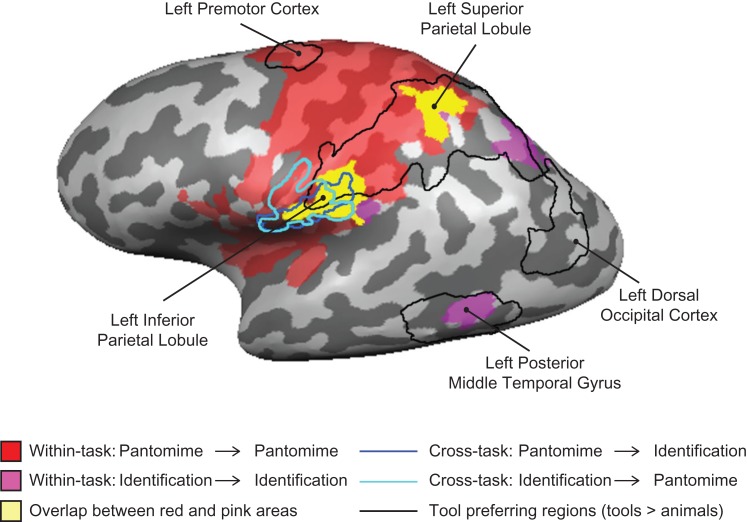

Classification of Object Function

During the tool use pantomime task, object-associated functions were classified above chance (voxel threshold P < 0.05, cluster corrected at P < 0.01) in medial aspects of left ventral temporal cortex (around parahippocampal cortex), and the middle and orbital frontal gyri bilaterally (Fig. 4 and Table 2). During the identification task, object-associated functions were classified above chance in medial aspects of ventral temporal cortex bilaterally (around parahippocampal cortex), the middle frontal gyrus bilaterally, the left precentral gyrus and the occipital pole bilaterally (Fig. 4 and Table 2). For the analyses of cross-task classification of function, a significant cluster was revealed in the medial aspect of ventral temporal cortex (posterior to parahippocampal cortex) regardless of whether decoding was from pantomiming to identification or identification to pantomiming (i.e., train on “pantomime,” test on “identification,” and vice versa; see Fig. 4 and Table 2).

Figure 4.

Searchlight analyses of cross-item classification for function. First column: decoding of function during tool pantomiming. Second column: decoding of function during tool identification. Third and last column: cross-task decoding of function. All results are thresholded at P < 0.01 (cluster corrected), except for the decoding of function during tool pantomiming (thresholded at P < 0.05, cluster corrected).

Table 2.

Talairach coordinates, cluster sizes, significance levels, and anatomical regions for the cross-item classification searchlight results for function

| Region | Talairach x | Talairach y | Talairach z | Cluster size (mm²) | t-value | P-value |
|---|---|---|---|---|---|---|
| Function, within-task: pantomime (vs. 50% chance) | | | | | | |
| Middle frontal gyrus LH | −27 | 17 | 40 | 45 821 | 6.36 | <0.001 |
| Medial frontal gyrus LH | −15 | 47 | 10 | – | 3.65 | <0.002 |
| Parahippocampal gyrus LH | −18 | −22 | −26 | 4469 | 4.18 | <0.001 |
| Retrosplenial cortex LH | −9 | −44 | 1 | 5643 | 3.17 | <0.006 |
| Middle frontal gyrus RH | 36 | 17 | 40 | 24 701 | 4.68 | <0.001 |
| Medial frontal gyrus RH | 9 | 50 | 4 | – | 6.32 | <0.001 |
| Function, within-task: identification (vs. 50% chance) | | | | | | |
| Middle frontal gyrus LH | −48 | 23 | 32 | 16 571 | 6.98 | <0.001 |
| Parahippocampal gyrus LH | −30 | −22 | −27 | 6656 | 5.60 | <0.001 |
| Occipital lobe LH | −21 | −82 | −17 | 13 151 | 9.63 | <0.001 |
| Precentral gyrus RH | 51 | −4 | 28 | 4089 | 5.89 | <0.001 |
| Middle frontal gyrus RH | 36 | 26 | 34 | 5643 | 7.89 | <0.001 |
| Parahippocampal gyrus RH | 33 | −43 | −5 | 4377 | 6.61 | <0.001 |
| Occipital lobe RH | 6 | −91 | −5 | 27 167 | 9.32 | <0.001 |
| Function, cross-task: pantomime to identification (vs. 50% chance) | | | | | | |
| Parahippocampal gyrus LH | −21 | −49 | −11 | 5505 | 6.37 | <0.001 |
| Medial frontal gyrus RH | 9 | 50 | 4 | 3725 | 6.32 | <0.001 |
| Function, cross-task: identification to pantomime (vs. 50% chance) | | | | | | |
| Medial fusiform gyrus LH | −21 | −58 | −8 | 8983 | 4.21 | <0.001 |
| Occipital lobe LH | −36 | −82 | −23 | – | 6.16 | <0.001 |

Note: All results are thresholded at P < 0.01 (cluster corrected) except for the decoding of function during the pantomime task (thresholded at P < 0.05, cluster corrected). Regions for which the cluster size (mm²) is indicated as “–” were contiguous with the region directly above, and hence are included in the cluster size reported for that region.

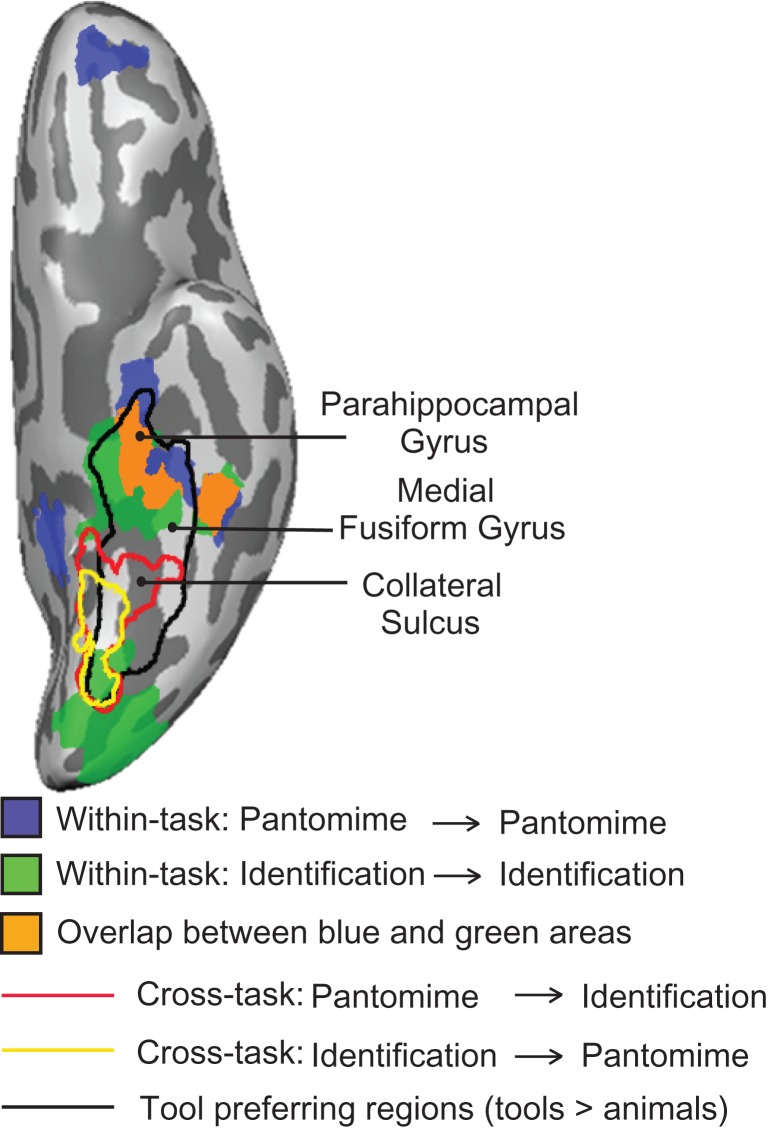

In Figure 5 we summarize the ventral stream regions with common above-chance classification of object function, both within and across tasks, and compare them directly to independently defined tool-preferring cortex (i.e., tools > animals). A region overlapping the anterior aspect of functionally defined tool-preferring ventral temporal cortex showed above-chance classification of object function within both identification and pantomiming. Just posterior, a region in ventral temporal cortex was observed to decode function between identification and pantomiming (in both directions). Thus, in the ventral stream, there was a common set of voxels that decoded object function within both the pantomime and the identification tasks, and a slightly posterior region that consistently decoded function between the tasks; we return to this distinction in the ventral stream in the General Discussion.

Figure 5.

Direct comparison of tool-preferring regions and searchlight analyses for function. The searchlight results for the within-task decoding of function (light blue and green) overlapped tool-preferring regions in the left hemisphere. The overlap of voxels identified as representing function in both tasks in the searchlight analysis is in dark blue. The searchlight results for the cross-task decoding of function are outlined in red and yellow. Tool-preferring regions outlined in black are shown based on group results, thresholded at P < 0.01 (cluster corrected). All results are overlaid on a representative brain.

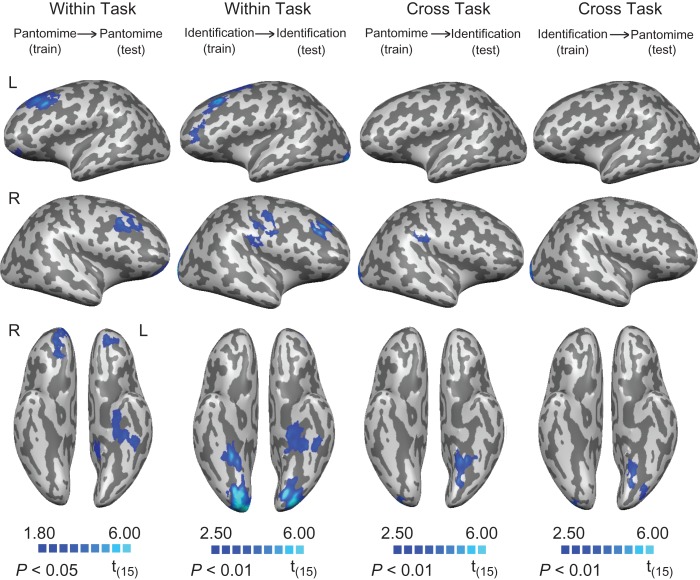

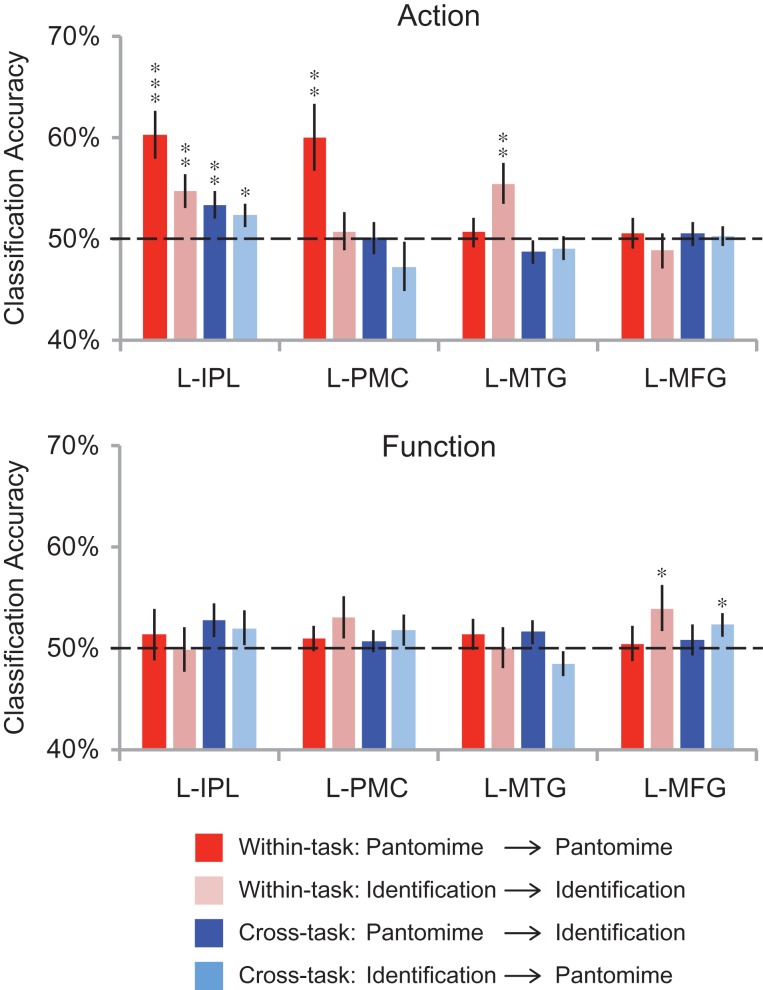

ROI Analyses

We also conducted ROI analyses in the inferior parietal lobule, left premotor cortex, the left posterior middle temporal gyrus, and the left medial fusiform gyrus. These ROIs were defined by contrasts over independent data sets (see Table 3 for Talairach coordinates). As shown in Figure 6, during the tool use pantomime task, we were able to decode actions in the left inferior parietal lobule (t(15) = 4.36, P = 0.0003) and left premotor cortex (t(15) = 3.05, P = 0.004). During the tool identification task, we were able to decode actions in the left inferior parietal lobule (t(15) = 2.97, P = 0.005) and the left posterior middle temporal gyrus (t(15) = 3.05, P = 0.004). Significant cross-task classification for actions was found only in the inferior parietal lobule (train on “pantomime”, test on “identification”: t(15) = 2.52, P = 0.01; train on “identification”, test on “pantomime”: t(15) = 1.78, P = 0.048). In contrast, while it was not possible to decode object function during tool pantomiming in any of the ROIs, object function was decoded above chance during tool identification in the left medial fusiform gyrus (t(15) = 2.00, P = 0.03; see Fig. 6 right). There was also significant cross-task classification for function in the left medial fusiform gyrus (train on “identification”, test on “pantomime”; t(15) = 2.15, P = 0.02).
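The group-level statistics above test whether mean classification accuracy across participants exceeds 50% chance, using one-tailed t tests with 15 degrees of freedom. The following is a minimal sketch of that kind of test, not the authors' analysis code: the function names are hypothetical, and the tail probability is obtained by numerically integrating the Student's t density with Simpson's rule so that only the Python standard library is needed.

```python
import math
from statistics import mean, stdev


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def one_tailed_p(t, df, steps=2000):
    """Upper-tail probability P(T >= t), via Simpson's rule on [0, |t|].

    steps must be even for Simpson's rule.
    """
    a = abs(t)
    h = a / steps
    total = t_pdf(0.0, df) + t_pdf(a, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    upper_tail = 0.5 - total * h / 3
    return upper_tail if t >= 0 else 1.0 - upper_tail


def t_against_chance(accuracies, chance=0.5):
    """One-sample t statistic, df, and one-tailed p for mean accuracy > chance."""
    n = len(accuracies)
    t = (mean(accuracies) - chance) / (stdev(accuracies) / math.sqrt(n))
    return t, n - 1, one_tailed_p(t, n - 1)


# Hypothetical per-subject accuracies (16 values, hence 15 degrees of freedom).
example_acc = [0.55, 0.60, 0.52, 0.48, 0.58, 0.61, 0.50, 0.57,
               0.53, 0.62, 0.49, 0.56, 0.59, 0.54, 0.51, 0.63]
t_stat, df, p_val = t_against_chance(example_acc)
```

Under these assumptions, `one_tailed_p(1.78, 15)` falls just below 0.05, in line with the value reported above for the identification-to-pantomime direction in the inferior parietal lobule.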

Table 3.

Talairach coordinates of peak activations for ROIs

| ROI | Talairach x | Talairach y | Talairach z | t-value |
|---|---|---|---|---|
| L-IPL | −38 ± 5.5 | −41 ± 6.7 | 41 ± 5.2 | 4.29 ± 2.04 |
| L-PMC | −24 ± 9.0 | −9 ± 12.2 | −52 ± 8.2 | 2.94 ± 1.03 |
| L-MTG | −47 ± 5.5 | −61 ± 6.8 | −6 ± 8.7 | 5.35 ± 1.91 |
| L-MFG | −28 ± 5.2 | −52 ± 10.9 | −12 ± 6.0 | 5.01 ± 1.94 |

Note: All subjects participated in a category localizer to functionally define tool areas within the left inferior parietal lobule (L-IPL), left premotor cortex (L-PMC), the left posterior middle temporal gyrus (L-MTG), and the left medial fusiform gyrus (L-MFG). These regions were defined as a 6 mm-radius sphere centered on the peak coordinate showing greater activation to tools compared with animals.
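As an illustration of the spherical ROI definition in the note above, the sketch below collects the voxel centres lying within 6 mm of a peak coordinate. It assumes an isotropic 3 mm voxel grid with the peak on a voxel centre; the voxel size and the function name `sphere_roi` are illustrative assumptions rather than details taken from the study, and the group-mean L-IPL coordinate from Table 3 stands in for an individual subject's peak.

```python
def sphere_roi(peak, radius_mm=6.0, voxel_mm=3.0):
    """Return voxel-centre coordinates (mm) within radius_mm of peak.

    Assumes the peak lies on a voxel centre of an isotropic grid;
    voxel_mm is an illustrative value, not taken from the paper.
    """
    reach = int(radius_mm // voxel_mm)  # grid steps needed in each direction
    px, py, pz = peak
    voxels = []
    for i in range(-reach, reach + 1):
        for j in range(-reach, reach + 1):
            for k in range(-reach, reach + 1):
                dx, dy, dz = i * voxel_mm, j * voxel_mm, k * voxel_mm
                # Keep the voxel if its centre is inside or on the sphere.
                if dx * dx + dy * dy + dz * dz <= radius_mm ** 2:
                    voxels.append((px + dx, py + dy, pz + dz))
    return voxels


# Group-mean L-IPL peak from Table 3, used here only as an example centre.
l_ipl_voxels = sphere_roi((-38.0, -41.0, 41.0))
```

With these assumed settings the sphere contains 33 voxel centres; a finer grid would yield proportionally more.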

Figure 6.

ROI analyses of cross-item classification. The bars represent the mean classification accuracy for within- and cross-task decoding (red: trained with “pantomime” data and tested with “pantomime” data; pink: trained with “identification” data and tested with “identification” data; dark blue: trained with “pantomime” data and tested with “identification” data; light blue: trained with “identification” data and tested with “pantomime” data). Black asterisks indicate statistical significance with one-tailed t tests across subjects with respect to 50% (*P < 0.05; **P < 0.01; ***P < 0.001). Solid black lines indicate chance accuracy level (50%). Error bars represent the standard error of the mean across subjects. L-IPL, left inferior parietal lobule; L-PMC, left premotor cortex; L-MTG, left posterior middle temporal gyrus; L-MFG, left medial fusiform gyrus.

Discussion

In the current study, we found that the left inferior parietal lobule represents object-directed actions in an abstract manner. There are 4 pieces of evidence that, collectively, support the proposal that action representations in the inferior parietal lobule are abstract. First, throughout this study, all SVM classification of object-directed action was carried out in a cross-object manner—for instance a classifier was trained to distinguish “scissors” from “corkscrew,” then tested on “pliers” versus “screwdriver.” Thus, successful classification means that the classifier was sensitive to action relations among objects over-and-above the objects themselves. Second, the left inferior parietal lobule represented object-directed actions both when participants were performing an overt pantomiming task (see Chen et al. 2016b for precedent), and when participants were identifying the pictures. Third, successful classification of object-directed actions was observed in a cross-task manner: thus, when the classifier was trained on “scissors” versus “corkscrew” during an overt pantomiming task, it then generalized to “pliers” versus “screwdriver” in an identification task (and vice versa—training on the identification task generalized to the overt pantomiming task). Fourth, when the classifier generalized across tasks it was also generalizing across stimulus format. This last point is important, because it implies that the action representations that are driving classification performance in the inferior parietal lobule are not simply the result of a bottom-up “read out” based on visual structural properties of the stimuli (since word stimuli were used for the pantomime task).
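The cross-object, cross-task logic described above can be sketched with synthetic data. In this simplified illustration a nearest-centroid classifier stands in for the SVM used in the study, and all voxel patterns are simulated: each object's pattern is an action-specific signal plus an object-specific offset, a task-specific shift, and trial noise. The voxel count, noise levels, and function names are hypothetical choices made for the example.

```python
import random

N_VOX = 30
ACTIONS = {"scissors": "squeeze", "pliers": "squeeze",
           "corkscrew": "twist", "screwdriver": "twist"}


def simulate(objects, rng, n_trials=20):
    """Synthetic patterns for one task: action signal + offsets + noise."""
    # One call represents one task, so the task shift is drawn once per call.
    task_shift = [rng.gauss(0, 0.3) for _ in range(N_VOX)]
    data = []
    for obj in objects:
        sign = 1.0 if ACTIONS[obj] == "squeeze" else -1.0
        # Fixed alternating pattern per action, plus an object-specific offset.
        base = [sign * (1.0 if v % 2 == 0 else -1.0) + rng.gauss(0, 0.3)
                for v in range(N_VOX)]
        for _ in range(n_trials):
            pattern = [b + s + rng.gauss(0, 0.5)
                       for b, s in zip(base, task_shift)]
            data.append((pattern, ACTIONS[obj]))
    return data


def fit_centroids(train):
    """Mean pattern per action label."""
    sums, counts = {}, {}
    for pattern, label in train:
        acc = sums.setdefault(label, [0.0] * N_VOX)
        for v, x in enumerate(pattern):
            acc[v] += x
        counts[label] = counts.get(label, 0) + 1
    return {lab: [s / counts[lab] for s in vec] for lab, vec in sums.items()}


def accuracy(centroids, test):
    """Fraction of test patterns assigned to the nearest (Euclidean) centroid."""
    hits = 0
    for pattern, label in test:
        guess = min(centroids, key=lambda lab: sum(
            (x - c) ** 2 for x, c in zip(pattern, centroids[lab])))
        hits += guess == label
    return hits / len(test)


rng = random.Random(0)
# Train on one object pair in one task, test on a different pair in the other.
pantomime_train = simulate(["scissors", "corkscrew"], rng)
identification_test = simulate(["pliers", "screwdriver"], rng)
cross_acc = accuracy(fit_centroids(pantomime_train), identification_test)
```

Because the training and test sets share neither objects nor task, accuracy above 50% in this toy setting can only come from the shared action signal, which is the inference drawn above for the inferior parietal lobule.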

In comparison to the pattern observed in the left inferior parietal lobule, action relations among objects could be decoded in the premotor cortex only during overt action execution, and in the left posterior middle temporal gyrus only during the object identification task. The fact that some regions (premotor, posterior middle temporal gyrus) represented object-directed actions in a task-specific manner serves as an important control because it means that participants were not simply “simulating” the object-directed actions during the identification task. If that were the case, then there would be no reason why above-chance classification for actions would not have been observed in premotor cortex for the identification task. Thus the dissociations observed in premotor cortex and in the left posterior middle temporal gyrus strengthen the pattern of findings in the left inferior parietal lobule, by indicating that cross-task decoding of action relations does not necessarily follow whenever within-task decoding of actions is observed.

The observation that premotor cortex encodes actions only during overt tool use pantomiming is consistent with the findings of a recent decoding study by Wurm and Lingnau (2015). Those authors found that when viewing videos of actions, premotor cortex codes actions only at a concrete level: classifiers were successfully trained on, and generalized to, the opening and closing of a specific bottle, but failed at a more abstract level that generalized across movement kinematics. The pattern observed in the left posterior middle temporal gyrus is also in line with previous MVPA findings. For instance, Gallivan et al. (2013) found that the posterior middle temporal gyrus tool area discriminated upcoming object-directed actions. It is also potentially important that the left posterior middle temporal gyrus is very close to, if not overlapping with, what is often referred to as lateral occipitotemporal cortex, which has been argued to be involved in the representation of abstract action concepts (Oosterhof et al. 2013; Watson et al. 2013; Lingnau and Downing 2015; Wurm and Lingnau 2015). Importantly, in studies in which the left posterior middle temporal gyrus has been observed to represent actions in an abstract manner (Wurm and Lingnau 2015), participants could see the tools and hands involved in the action performed by themselves or others. However, when tools and hands are not visible, as in the tool use pantomime task in the current study and our prior study (Chen et al. 2016b), the posterior middle temporal gyrus does not decode actions. Thus, the generalization emerges that decoding of actions in the posterior middle temporal gyrus is driven by perceptually relevant properties of tools and hand configurations (see also Beauchamp et al. 2002; 2003; Buxbaum et al. 2014). This raises the question of what our findings would have looked like had we used an actual tool use task in which subjects could see the tool and their hand. Goodale et al. (1994) proposed that pantomimed actions are qualitatively different from real actions and may engage different networks. It is likely that with an actual tool use task, with the tool and hand in view, decoding of action relations among objects would be observed in the left posterior middle temporal gyrus. It is an interesting open issue as to whether, under those task constraints, it would also be possible to decode action relations among objects in a cross-task manner; this would require not only that the posterior middle temporal gyrus processes perceptual characteristics of actions, but also that it does so with an underlying neural code that matches the pattern elicited by identifying objects. This question merits further study, ideally with a setup such as that used by Culham and colleagues, in which subjects’ heads are oriented forward so as to be able to see a small “workspace” within the bore of the magnet (Macdonald and Culham 2015; Fabbri et al. 2016). We also note that our current approach (pantomimed tool use) is modeled on a common neuropsychological test for apraxia, in which patients are cued to pantomime tool use from verbal command. In this regard, it is noteworthy that the area of cross-task overlap is restricted to the supramarginal gyrus, which has long been implicated in apraxic impairments (Liepmann 1905; Rumiati et al. 2001; Johnson-Frey 2004; Mahon et al. 2007). It is also important to note, perhaps as an impetus for follow-up work, that patients who struggle with pantomime to command can show a dramatic improvement in praxis abilities when the tool is “in hand.” It would thus be worthwhile to ask whether there is a relation between spared cortex in such patients and the additional regions that exhibit action decoding when healthy subjects perform actions over real tools with the tool and hand in view.

In addition to the inferior parietal lobe, the superior parietal lobe could also decode action information during each task, but not in a cross-task manner. Previous neuroimaging and patient studies suggest that this region plays a key role in planning and executing tool-use movements or hand gestures (Heilman et al. 1986; Fukui et al. 1996; Choi et al. 2001; Johnson-Frey et al. 2005). Our findings indicate that it represents action relations among objects during both pantomime and identification, but in a task-specific code. While our current findings do not permit a definitive interpretation of why a task-specific code may implement action relations among objects in this region, one possibility is that within-task decoding is succeeding for different reasons in the 2 tasks. For instance, it could be that while pantomime-related dimensions drive classification during the pantomime task, it is grasp-related information that drives classification during the identification task. If that were the case, it would be possible to observe within-task decoding (in each task individually) in the absence of cross-task classification.

There has been much discussion, at both an empirical and theoretical level, about whether object function is represented in the inferior parietal lobule. At an empirical level, prior findings have been somewhat mixed, with some studies indicating a lack of neural responses in the left inferior parietal lobule for object function (Canessa et al. 2008; Chen et al. 2016b) and some studies, notably the recent study by Leshinskaya and Caramazza (2015), reporting effects for object function in the left inferior parietal lobule. Our findings on this issue are clear: object function is not represented in parietal cortex, but rather in the temporal lobe, and specifically in medial aspects of its ventral surface. These data are in agreement with prior fMRI research using an adaptation paradigm (Yee et al. 2010), as well as with neuropsychological studies indicating that patients with atrophy of temporal areas can exhibit impairments for knowledge of object function (Sirigu et al. 1991; Negri et al. 2007a). It is also potentially relevant that the parahippocampal gyrus has been argued to play a role in representing contextual information about objects (Bar and Aminoff 2003; Aminoff et al. 2007); thus, one might speculate whether knowledge of object function is an example of such “contextual” knowledge (Leshinskaya and Caramazza 2015).

There were 2 unexpected aspects of our findings that deserve further comment. First, in 2 relatively early visual regions (visible in Figs 2 and 4), there was cross-task decoding (for action, Fig. 2; for function, Fig. 4) in voxels that did not exhibit within-task decoding. The question is: how could there be cross-task decoding if there is not also within-task decoding? While a definitive answer to this question will require further experimental work, one possibility is that there are distinct but “multiplexed” or “inter-digitated” codes for objects in early visual areas—some of which reflect bottom up visual information and some of which reflect top-down feedback from higher order areas. For instance, it could be that there is an underlying code for specific properties of the visual stimulus (bottom up), and overlaid, more general or “task non-specific” codes that reflect glimmers of inputs from higher order areas. If that were the case, then because stimulus format is preserved across training and test for within-task decoding, the classifiers may be biased toward the dominant (bottom-up driven) patterns, and hence fail (i.e., perform at chance) because of the cross-object nature of our classification approach. In the cross-task context, the classifier would have little attraction to bottom-up based patterns (since training and testing were across different stimulus formats), and classification would depend only on those patterns that reflect feedback from higher order areas. Of course, this interpretation must be regarded as speculative and as motivating additional empirical work.

A second set of findings that merits discussion is that there was a topographical dissociation within the medial temporal lobe for decoding object function. The following 3 findings emerged: (1) within-task decoding for pantomiming was present in the left anterior portion of the medial temporal lobe; (2) cross-task decoding (in both directions, from pantomime to identification and from identification to pantomime) was present in the left posterior portion of the medial temporal lobe; and (3) within-task decoding for identification was present in both the left anterior and bilateral posterior medial temporal areas (all on the ventral surface). Prior neuropsychological studies showing that anterior temporal lesions can lead to impaired function knowledge (Sirigu et al. 1991; Buxbaum et al. 1997; Lauro-Grotto et al. 1997; Magnié et al. 1998; Hodges et al. 1999) reinforce the inference that at the global level, function relations among objects drive these findings (for relevant neuroimaging work, see Canessa et al. 2008; Yee et al. 2010; Anzellotti et al. 2011). However, the observation of cross-task but not within-task decoding in posterior temporal areas could be due to properties such as object texture that may be correlated with function similarity. Cant and Goodale (2007) have shown that while object shape is represented in lateral occipital cortex, object texture is represented in medial ventral regions of posterior temporal-occipital cortex (see also Snow et al. 2015). For example, both scissors and knife have sharp blades for cutting and a smooth and shiny texture. Interestingly, all searchlight analyses for function show significant decoding in the posterior medial temporal lobe, with the exception of within-task decoding during pantomiming (Figs 4 and 5). 
Considering that picture stimuli were used in the identification and word stimuli in the pantomime task, one might speculate that successful generalization of texture information across objects depends on pictures having been used to generate the neural responses being decoded (either for testing or training data, or for both). While this interpretation must remain speculative with the current dataset, it nonetheless warrants direct empirical work to understand the boundary conditions (tasks, stimulus formats) that define when object texture information might be extracted and processed. This could be done most effectively by separating texture similarity from function and manipulation similarity.

Conclusion

Our findings indicate that neural activity in the left inferior parietal lobule when processing tools indexes abstract representations of specific object-directed actions that are compulsorily accessed independent of task context. Our conclusion that the left inferior parietal lobule represents “abstract” action representations for specific objects is in excellent agreement with previous neuroimaging studies (Kellenbach et al. 2003; Rumiati et al. 2004; Boronat et al. 2005; Mahon et al. 2007; Canessa et al. 2008; Gallivan et al. 2013; Garcea and Mahon 2014; Ogawa and Imai 2016) and with over a century of research with patients with acquired brain lesions to the left inferior parietal lobule. Lesions to the left inferior parietal lobule can result in limb apraxia, a neuropsychological impairment characterized by difficulty in using objects correctly according to their function (Liepmann 1905; Ochipa et al. 1989; Buxbaum et al. 2000; Negri et al. 2007b; Garcea et al. 2013). Critically, a diagnosis of limb apraxia requires, by definition, that elemental sensory and motor processing be spared, indicating that the action impairment is to relatively “abstract” representations. An interesting theoretical question to unpack with future neuropsychological and functional neuroimaging work is whether “abstract” implies an “amodal” representational format (for discussion, see Reilly et al. 2014, 2016; Barsalou 2016; Leshinskaya and Caramazza 2016; Mahon and Hickok 2016; Martin 2016).

Notes

Conflict of Interest: None declared.

Funding

National Institutes of Health (grant R01 NSO89069 to B.Z.M.)

References

- Aminoff E, Gronau N, Bar M. 2007. The parahippocampal cortex mediates spatial and nonspatial associations. Cereb Cortex. 17(7):1493–1503.

- Anzellotti S, Mahon BZ, Schwarzbach J, Caramazza A. 2011. Differential activity for animals and manipulable objects in the anterior temporal lobes. J Cogn Neurosci. 23:2059–2067.

- Bar M, Aminoff E. 2003. Cortical analysis of visual context. Neuron. 38(2):347–358.

- Barsalou LW. 2016. On staying grounded and avoiding quixotic dead ends. Psychon Bull Rev. 23:1122–1142.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. 2002. Parallel visual motion processing streams for manipulable objects and human movements. Neuron. 34(1):149–159.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. 2003. FMRI responses to video and point-light displays of moving humans and manipulable objects. J Cogn Neurosci. 15(7):991–1001.

- Binkofski F, Buxbaum LJ. 2013. Two action systems in the human brain. Brain Lang. 127(2):222–229.

- Boronat CB, Buxbaum LJ, Coslett HB, Tang K, Saffran EM, Kimberg DY, Detre JA. 2005. Distinctions between manipulation and function knowledge of objects: evidence from functional magnetic resonance imaging. Cogn Brain Res. 23(2–3):361–373.

- Brainard DH. 1997. The psychophysics toolbox. Spat Vis. 10(4):433–436.

- Bruffaerts R, De Weer AS, De Grauwe S, Thys M, Dries E, Thijs V, Sunaert S, Vandenbulcke M, De Deyne S, Storms G, et al. 2014. Noun and knowledge retrieval for biological and non-biological entities following right occipitotemporal lesions. Neuropsychologia. 62:163–174.

- Buxbaum LJ, Saffran EM. 2002. Knowledge of object manipulation and object function: dissociations in apraxic and nonapraxic subjects. Brain Lang. 82(2):179–199.

- Buxbaum LJ, Schwartz MF, Carew TG. 1997. The role of semantic memory in object use. Cogn Neuropsychol. 14(2):219–254.

- Buxbaum LJ, Shapiro AD, Coslett HB. 2014. Critical brain regions for tool-related and imitative actions: a componential analysis. Brain. 137(Pt 7):1971–1985.

- Buxbaum LJ, Veramontil T, Schwartz MF. 2000. Function and manipulation tool knowledge in apraxia: knowing “what for” but not “how”. Neurocase. 6(2):83–97.

- Canessa N, Borgo F, Cappa SF, Perani D, Falini A, Buccino G, Tettamanti M, Shallice T. 2008. The different neural correlates of action and functional knowledge in semantic memory: an FMRI study. Cereb Cortex. 18(4):740–751.

- Cant JS, Goodale MA. 2007. Attention to form or surface properties modulates different regions of human occipitotemporal cortex. Cereb Cortex. 17(3):713–731.

- Chao LL, Haxby JV, Martin A. 1999. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 2(10):913–919.

- Chao LL, Martin A. 2000. Representation of manipulable man-made objects in the dorsal stream. NeuroImage. 12(4):478–484.

- Chen Q, Garcea FE, Almeida J, Mahon BZ. 2016a. Connectivity-based constraints on category-specificity in the ventral object processing pathway. Neuropsychologia. (in press).

- Chen Q, Garcea FE, Mahon BZ. 2016b. The representation of object-directed action and function knowledge in the human brain. Cereb Cortex. 26(4):1609–1618.

- Choi SH, Na DL, Kang E, Lee KM, Lee SW, Na DG. 2001. Functional magnetic resonance imaging during pantomiming tool-use gestures. Exp Brain Res. 139(3):311–317.

- Cubelli R, Marchetti C, Boscolo G, Della Sala S. 2000. Cognition in action: testing a model of limb apraxia. Brain Cogn. 44(2):144–165.

- Eklund A, Nichols TE, Knutsson H. 2016. Cluster failure: why fMRI inferences for spatial extent have inflated false-positive rates. Proc Natl Acad Sci. 113(28):7900–7905.

- Evans C, Edwards MG, Taylor LJ, Ietswaart M. 2016. Perceptual decisions regarding object manipulation are selectively impaired in apraxia or when tDCS is applied over the left IPL. Neuropsychologia. 86:153–166.

- Fabbri S, Stubbs KM, Cusack R, Culham JC. 2016. Disentangling representations of object and grasp properties in the human brain. J Neurosci. 36(29):7648–7662.

- Fintzi AR, Mahon BZ. 2013. A bimodal tuning curve for spatial frequency across left and right human orbital frontal cortex during object recognition. Cereb Cortex. 24(5):1311–1318.

- Fukui T, Sugita K, Kawamura M, Shiota J, Nakano I. 1996. Primary progressive apraxia in Pick’s disease: a clinicopathologic study. Neurology. 47(2):467–473.

- Gallivan JP, Adam McLean D, Valyear KF, Culham JC. 2013. Decoding the neural mechanisms of human tool use. eLife. 2013(2):1–29.

- Garcea FE, Dombovy M, Mahon BZ. 2013. Preserved tool knowledge in the context of impaired action knowledge: implications for models of semantic memory. Front Hum Neurosci. 7:120.

- Garcea FE, Mahon BZ. 2012. What is in a tool concept? Dissociating manipulation knowledge from function knowledge. Mem Cognit. 40(8):1303–1313.

- Garcea FE, Mahon BZ. 2014. Parcellation of left parietal tool representations by functional connectivity. Neuropsychologia. 60(1):131–143.

- Goldenberg G. 2009. Apraxia and the parietal lobes. Neuropsychologia. 47(6):1449–1459.

- Goodale MA, Jakobson LS, Keillor JM. 1994. Differences in the visual control of pantomimed and natural grasping movements. Neuropsychologia. 32:1159–1178.

- Heilman KM, Rothi LG, Mack L, Feinberg T, Watson RT. 1986. Apraxia after a superior parietal lesion. Cortex. 22(1):141–150.

- Hodges JR, Spatt J, Patterson K. 1999. “What” and “how”: evidence for the dissociation of object knowledge and mechanical problem-solving skills in the human brain. Proc Natl Acad Sci. 96(16):9444–9448.

- Ishibashi R, Lambon Ralph MA, Saito S, Pobric G. 2011. Different roles of lateral anterior temporal lobe and inferior parietal lobule in coding function and manipulation tool knowledge: evidence from an rTMS study. Neuropsychologia. 49(5):1128–1135.

- Johnson-Frey S. 2004. The neural bases of complex tool use in humans. Trends Cogn Sci. 8:71–78.

- Johnson-Frey SH, Newman-Norlund R, Grafton ST. 2005. A distributed left hemisphere network active during planning of everyday tool use skills. Cereb Cortex. 15:681–695.

- Kellenbach ML, Brett M, Patterson K. 2003. Actions speak louder than functions: the importance of manipulability and action in tool representation. J Cogn Neurosci. 15(1):30–46.

- Konkle T, Oliva A. 2012. A real-world size organization of object responses in occipitotemporal cortex. Neuron. 74(6):1114–1124.

- Kriegeskorte N, Goebel R, Bandettini P. 2006. Information-based functional brain mapping. Proc Natl Acad Sci USA. 103(10):3863–3868.

- Lauro-Grotto R, Piccini C, Shallice T. 1997. Modality-specific operations in semantic dementia. Cortex. 33(4):593–622.

- Leshinskaya A, Caramazza A. 2015. Abstract categories of functions in anterior parietal lobe. Neuropsychologia. 76:27–40.

- Leshinskaya A, Caramazza A. 2016. For a cognitive neuroscience of concepts: moving beyond the grounding issue. Psychon Bull Rev. 23(4):991–1001.

- Lewis JW. 2006. Cortical networks related to human use of tools. Neuroscientist. 12(3):211–231.

- Liepmann H. (1905). The left hemisphere and action. (Translation from Munch. Med. Wschr. 48–49). (Translations from Liepmann’s essays on apraxia. In Research Bulletin (Vol. 506). London, Ontario: Department of Psychology, University of Western Ontario; 1980).

- Lingnau A, Downing PE. 2015. The lateral occipitotemporal cortex in action. Trends Cogn Sci. 19(5):268–277.

- Macdonald SN, Culham JC. 2015. Do human brain areas involved in visuomotor actions show a preference for real tools over visually similar non-tools? Neuropsychologia. 77:35–41.

- Magnié MN, Ferreira CT, Giusiano B, Poncet M. 1998. Category specificity in object agnosia: preservation of sensorimotor experiences related to objects. Neuropsychologia. 37(1):67–74.

- Mahon BZ, Caramazza A. 2005. The orchestration of the sensory-motor systems: clues from neuropsychology. Cogn Neuropsychol. 22(3–4):480–494.

- Mahon BZ, Caramazza A. 2009. Concepts and categories: a cognitive neuropsychological perspective. Annu Rev Psychol. 60:27–51.

- Mahon BZ, Hickok G. 2016. Arguments about the nature of concepts: symbols, embodiment, and beyond. Psychon Bull Rev. 23:941–958.

- Mahon BZ, Kumar N, Almeida J. 2013. Spatial frequency tuning reveals interactions between the dorsal and ventral visual systems. J Cogn Neurosci. 25(6):862–871.

- Mahon BZ, Milleville SC, Negri GAL, Rumiati RI, Caramazza A, Martin A. 2007. Action-related properties shape object representations in the ventral stream. Neuron. 55(3):507–520.

- Martin A. 2007. The representation of object concepts in the brain. Annu Rev Psychol. 58:25–45.

- Martin A. 2009. Circuits in mind: the neural foundations for object concepts. In: Gazzaniga M, editor. The cognitive neurosciences. 4th ed. Cambridge (MA): MIT Press, p. 1031–1045.

- Martin A. 2016. GRAPES—Grounding representations in action, perception, and emotion systems: how object properties and categories are represented in the human brain. Psychon Bull Rev. 23(4):979–990.

- Martin M, Beume L, Kümmerer D, Schmidt CS, Bormann T, Dressing A, Ludwig VM, Umarova RM, Mader I, Rijntjes M, et al. 2015. Differential roles of ventral and dorsal streams for conceptual and production-related components of tool use in acute stroke patients. Cereb Cortex. 26(9):3754–3771.

- Negri GA, Lunardelli A, Reverberi C, Gigli GL, Rumiati RI. 2007a. Degraded semantic knowledge and accurate object use. Cortex. 43(3):376–388.

- Negri GA, Rumiati RI, Zadini A, Ukmar M, Mahon BZ, Caramazza A. 2007b. What is the role of motor simulation in action and object recognition? Evidence from apraxia. Cogn Neuropsychol. 24(8):795–816.

- Noppeney U. 2006. Two distinct neural mechanisms for category-selective responses. Cereb Cortex. 16(3):437–445.

- Ochipa C, Rothi LJ, Heilman KM. 1989. Ideational apraxia: a deficit in tool selection and use. Ann Neurol. 25(2):190–193.

- Ogawa K, Imai F. 2016. Hand-independent representation of tool-use pantomimes in the left anterior intraparietal cortex. Exp Brain Res. 234(12):3677–3687.

- Oldfield RC. 1971. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 9(1):97–113.

- Oosterhof NN, Tipper SP, Downing PE. 2013. Crossmodal and action-specific: neuroimaging the human mirror neuron system. Trends Cogn Sci. 17(7):311–318.

- Osiurak F. 2014. What neuropsychology tells us about human tool use? The four constraints theory (4CT): mechanics, space, time, and effort. Neuropsychol Rev. 24(2):88–115.

- Peelen MV, Caramazza A. 2012. Conceptual object representations in human anterior temporal cortex. J Neurosci. 32:15728–15736.

- Pelgrims B, Olivier E, Andres M. 2011. Dissociation between manipulation and conceptual knowledge of object use in the supramarginalis gyrus. Hum Brain Mapp. 32(11):1802–1810.

- Pelli DG. 1997. Pixel independence: measuring spatial interactions on a CRT display. Spat Vis. 10(4):443–446.

- Pobric G, Jefferies E, Lambon Ralph MA. 2010. Amodal semantic representations depend on both anterior temporal lobes: evidence from repetitive transcranial magnetic stimulation. Neuropsychologia. 48(5):1336–1342.

- Reilly J, Harnish S, Garcia A, Hung J, Rodriguez A, Crosson B. 2014. Lesion correlates of generative naming of manipulable objects in aphasia: can a brain be both embodied and disembodied? Cogn Neuropsychol. 31(4):1–26.

- Reilly J, Peelle JE, Garcia A, Crutch SJ. 2016. Linking somatic and symbolic representation in semantic memory: the dynamic multilevel reactivation framework. Psychon Bull Rev. 23(4):1002–1004.

- Rothi LJG, Ochipa C, Heilman KM. 1991. A cognitive neuropsychological model of limb praxis. Cogn Neuropsychol. 8:443–458.

- Rumiati RI, Weiss PH, Shallice T, Ottoboni G, Noth J, Zilles K, Fink GR. 2004. Neural basis of pantomiming the use of visually presented objects. NeuroImage. 21(4):1224–1231.

- Rumiati RI, Zanini S, Vorano L, Shallice T. 2001. A form of ideational apraxia as a selective deficit of contention scheduling. Cogn Neuropsychol. 18(7):617–642.

- Schwarzbach J. 2011. A simple framework (ASF) for behavioral and neuroimaging experiments based on the psychophysics toolbox for MATLAB. Behav Res Methods. 43:1194–1201.

- Snow JC, Goodale MA, Culham JC. 2015. Preserved haptic shape processing after bilateral LOC lesions. J Neurosci. 35(40):13745–13760.

- Sirigu A, Duhamel JR, Poncet M. 1991. The role of sensorimotor experience in object recognition. A case of multimodal agnosia. Brain. 114(6):2555–2573.

- Talairach J, Tournoux P. 1988. Co-planar stereotaxic atlas of the human brain. Direct (Vol. 270).

- Tranel D, Kemmerer D, Adolphs R, Damasio H, Damasio AR. 2003. Neural correlates of conceptual knowledge for actions. Cogn Neuropsychol. 20(3–6):409–432.

- Watson CE, Cardillo ER, Ianni GR, Chatterjee A. 2013. Action concepts in the brain: an activation likelihood estimation meta-analysis. J Cogn Neurosci. 25(8):1191–1205.

- Woo CW, Krishnan A, Wager TD. 2014. Cluster-extent based thresholding in fMRI analyses: pitfalls and recommendations. NeuroImage. 91:412–419.

- Wurm MF, Lingnau A. 2015. Decoding actions at different levels of abstraction. J Neurosci. 35(20):7727–7735.

- Yee E, Drucker DM, Thompson-Schill SL. 2010. fMRI-adaptation evidence of overlapping neural representations for objects related in function or manipulation. NeuroImage. 50(2):753–763.