Abstract

Objective.

Evidence supports the utility of measurement-based care (MBC) to improve youth mental health outcomes, but clinicians rarely engage in MBC practices. Digital measurement feedback systems (MFS) may reflect a feasible strategy to support MBC adoption and sustainment. This pilot study was initiated to evaluate the impact of an MFS and brief consultation supports on MBC uptake and sustainment among mental health clinicians in the education sector, the most common mental health service delivery setting for youth.

Method.

Following an initial training in MBC, 14 clinicians were randomized to either: (1) a digital MFS and brief consultation supports, or (2) control. Baseline ratings of MBC attitudes, skill, and use were collected. In addition, daily assessment ratings tracked two core MBC practices (i.e., assessment tool administration, provision of feedback) over a six-month follow-up period.

Results.

Clinicians in the MFS condition demonstrated rapid increases in both MBC practices, while the control group did not significantly change. For clinicians in the MFS group, consultation effects were significant for feedback and approached significance for administration. Over the follow-up period, average decreases were moderate, with only one of the two outcome variables (administration) decreasing significantly. Inspection of individual clinician trajectories revealed substantial within-group variation in trends.

Conclusions.

MFS may represent an effective MBC implementation strategy beyond initial training, although individual clinician response is variable. Identifying feasible and impactful implementation strategies is critical given the ability of MBC to support precision healthcare.

Keywords: measurement-based care, routine outcome monitoring, measurement-feedback system, technology, implementation

Background

Precision or personalized medicine reflects a framework for improving health outcomes by tailoring health care to an individual’s specific and unique needs (e.g., biology, genetics, environment, and behavior) (Collins & Varmus, 2015; Precision Medicine Initiative, n.d.). Currently, there is increasing attention to precision medicine’s potential for improving mental and behavioral health services. As an extension of the concept, Bickman and colleagues (Bickman, Lyon, & Wolpert, 2016) recently defined precision mental health as “an approach to prevention and intervention that focuses on obtaining an accurate understanding of the needs, preferences, and prognostic possibilities for any given individual, based on close attention to initial assessment, ongoing monitoring, and individualized feedback information, and which tailors interventions and support accordingly in line with the most up-to-date scientific evidence” (p. 272).

Measurement-Based Care (MBC)

Measurement-based care (MBC) is a cornerstone of precision mental health. MBC is defined as the use of data collected throughout treatment to drive clinical decisions (Scott & Lewis, 2015), and the term is often used interchangeably with related terms such as routine outcome monitoring or patient-reported outcome monitoring (Bickman et al., 2016; Lyon, Lewis, Boyd, Hendrix, & Liu, 2016). A substantial body of evidence supports the utility of MBC and systematic assessment in enhancing both intervention processes (e.g., improved patient-clinician relationships, client-centered services, engagement in services) and outcomes for adult and youth service recipients (Bickman, Kelley, Breda, de Andrade, & Riemer, 2011; Carlier et al., 2012; Cashel, 2002; Douglas et al., 2015; Jewell, Handwerk, Almquist, & Lucas, 2004; Rettew, Lynch, Achenbach, Dumenci, & Ivanova, 2009). However, a recent systematic review of MBC with adults has questioned its impact on mental health symptoms, especially for clients other than those who are not-on-track (i.e., deteriorating or at risk for dropout) (Kendrick et al., 2016). Although its effects may be more attenuated than previously believed – at least among adults – the relative simplicity of MBC, its low burden on clinicians, and the existing evidence for its effectiveness have led MBC to be identified as a minimal intervention necessary for change (Glasgow et al., 2013; Scott & Lewis, 2015). Unfortunately, research has consistently demonstrated that mental health clinicians infrequently engage in MBC practices (e.g., regular administration of standardized assessment tools; data-driven decision making) (Gilbody, House, & Sheldon, 2002; Hatfield & Ogles, 2004; Lyon et al., 2015; Palmiter, 2004) and that factors such as clinician attitudes and previous training experiences may predict use (Connors, Arora, Curtis, & Stephan, 2015; Jensen-Doss & Hawley, 2010).
Clinicians who fail to use MBC are at risk of delivering services that are not properly individualized for patients’ presenting problems and that are potentially unresponsive to progress or stasis; such services may therefore be less effective.

Training and consultation to support MBC.

Collectively, results of a series of studies suggest that implementation of MBC in mental health may be facilitated by initial training – and can be sustained with additional consultation – but that a “decline effect” is apparent without continued contact (e.g., Close-Goedjen & Saunders, 2002; De Rond, De Wit, & Van Dam, 2001). A recent evaluation of a state-wide training and consultation program for child and family mental health clinicians found early increases in positive assessment attitudes, with more gradual increases in self-reported skill and use (Lyon, Dorsey, Pullmann, Silbaugh-Cowdin, & Berliner, 2014). Following the conclusion of consultation supports, assessment use decreased somewhat while still remaining above baseline levels. Another study found that use of an online progress monitoring tool increased after training but decreased significantly at one-year follow-up (Persons, Koerner, Eidelman, Thomas, & Liu, 2016). Furthermore, some studies have found variability in clinician responsivity to MBC implementation efforts, suggesting the importance of disaggregating data to better understand the impact of training and consultation (Persons et al., 2016). These findings indicate that MBC implementation supports require further optimization in order to have a sustained effect on target practices, and that examination of individual trends in practice change may also be warranted.

Measurement feedback systems (MFS).

Systems that can prompt or facilitate clinician engagement in MBC behaviors may reflect another strategy to improve implementation and enhance service system accountability (Lyon et al., 2014). Measurement feedback systems (MFS) – digital technologies with the ability to capture service recipient data from regular assessment of treatment progress (e.g., functional outcomes, symptom changes) or processes (e.g., therapeutic alliance, services delivered) and then deliver that information to clinicians (Bickman, 2008) – represent a leading implementation strategy to support the adoption and sustainment of MBC (Lyon & Lewis, 2016). A recent review of MFS technologies revealed that most systems support MBC by tracking outcomes with a library of instruments, delivering feedback to providers, and displaying outcomes graphically (Lyon, Lewis et al., 2016).

MBC and MFS in Schools

Schools are the most common service setting for youth mental health care (Farmer, Burns, Phillips, Angold, & Costello, 2003) and reduce service access barriers (Lyon, Ludwig, Vander Stoep, Gudmundsen, & McCauley, 2013). The implementation of MBC in schools therefore carries considerable potential for public health impact. MBC is also perceived by school-based clinicians and students to be feasible and acceptable and to facilitate progress toward treatment goals (Duong, Lyon, Ludwig, Wasse, & McCauley, 2016; Lyon et al., 2015). Nevertheless, school clinicians infrequently use MBC in their practice (Lyon et al., 2015), making it difficult for them to provide prompt and accurate clinical feedback. As a result of this sizable gap between typical and optimal practice, youth who present for treatment in schools are less likely to receive services that are properly individualized or adequately responsive to their treatment progress. Innovative approaches are needed to improve MBC implementation in education sector mental health services. When implementing MBC, the use of an MFS may allow fewer resources to be devoted to consultative support. Although some form of consultation has consistently been found to facilitate the use of evidence-based practices following training (Beidas & Kendall, 2010; Herschell, Kolko, Baumann, & Davis, 2010; Lyon, Stirman, Kerns, & Bruns, 2011), it is not at all clear how much follow-up is necessary for sustained practice change. In addition, consultation is expensive and can increase training costs by more than 50% (Olmstead, Carroll, Canning-Ball, & Martino, 2011), which may not be feasible in low-resource settings. It is therefore critical to examine methods that might reduce the investment required in post-training consultative supports from experts.

Study Aims

To address these gaps, a pilot study was designed to evaluate: (1) the impact of an MFS and brief consultation following initial training to support MBC practice change among school-based clinicians, (2) sustainment of MBC practice changes, (3) the impact of individual consultation calls on clinician practice, and (4) individual variation in clinician change trajectories. Specifically, we hypothesized that clinicians in the MFS condition would demonstrate behavior changes following the introduction of the MFS (H1) and that these changes would largely be sustained over time (H2). We also asked a series of research questions about the extent to which individual variation could be detected among clinicians in their behavior changes (RQ1), the extent to which each consultation call affected use of the identified MBC practices in the subsequent week (RQ2), and whether baseline scores on assessment attitudes, skill, and use would be related to changes in MBC behaviors (RQ3).

Method

The current study was conducted as part of a larger, ongoing initiative focused on enhancing the quality of the services provided by school-based mental health clinicians. Through a partnership involving the local university, public health department, public school districts, and community health service organizations, the university investigators had been providing some form of training and consultation in evidence-based practice to a subset of the participating clinicians for over 6 years at the time the current study was initiated. Using a randomized controlled design, the current study investigated implementation of MBC practices (i.e., administering standardized assessments and providing feedback to clients) following training among school-based health center mental health clinicians. Clinicians were randomized into two conditions in which they either: (1) received access to an MFS (MFS condition) and brief consultation supports, or (2) did not have access to the MFS or consultation supports (control condition).

Participants and Setting

Fourteen mental health clinicians working in middle and high school school-based health centers in one of two large urban school districts in the Pacific Northwest participated in the study. School-based health centers are an increasingly popular model for the delivery of health services in the education sector, with a proven track record of reducing service access disparities (Gance-Cleveland & Yousey, 2005; Walker, Kerns, Lyon, Bruns, & Cosgrove, 2010). Most clinicians had a master’s degree in social work, education, or counseling (one had a PsyD) and had been in their current positions from 3 months to 15 years (mean = 4.4 years). Clinicians were all female, between 28 and 60 years old (mean age = 42 years), and 79% Caucasian. Most functioned as the sole dedicated mental health provider at their respective schools. Two participants also worked as supervisors within their agencies. At baseline, clinician attitudes toward standardized assessments were comparable to those of national norming samples (i.e., average scores of 3–4 on a 5-point scale), indicating moderate to positive attitudes (Jensen-Doss & Hawley, 2010).

Selected Measurement Feedback System

The current project used an adapted version of an existing MFS, the Mental Health Integrated Tracking System (MHITS). MHITS was originally developed to support the delivery of an integrated care model for adult depression in primary care settings (Unützer et al., 2002, 2012; Williams et al., 2004). MHITS is a web-based MFS that supports MBC by facilitating administration of standardized measurement instruments for the purposes of screening and progress monitoring, providing graphical results and providing cues to clinicians based on severity or inadequate progress, among other functions. At the time of the current study, MHITS had been adapted to support MBC among clinicians working in school-based health centers (Lyon, Wasse, et al., 2016), yielding a School-Based version of MHITS (SB-MHITS).

Procedures

MBC training.

All participating clinicians in both conditions received training in MBC practices prior to the introduction of SB-MHITS. Training occurred in person and focused on (1) principles of MBC (e.g., rationale, introducing MBC to students), (2) use of assessment tools and data interpretation (e.g., Patient Health Questionnaire – 9 [PHQ-9], Generalized Anxiety Disorder – 7 [GAD-7], Strengths and Difficulties Questionnaire [SDQ; Goodman, 2001], idiographic/individualized assessments), and (3) effective feedback processes with students and families. Training occurred over a single day and involved active training strategies previously demonstrated to be critical to the uptake of new skills (Rakovshik & McManus, 2010), such as interactive didactic presentations, clinicians’ personal reflections on their current assessment practices, specific practice activities (e.g., case vignettes), and small group discussions.

MFS training and consultation.

Following initial training, clinicians were randomized to either the SB-MHITS condition or a control condition that did not provide structured post-training supports. In the SB-MHITS condition, clinicians completed an online training in the use of SB-MHITS, after which they were given access to the system. SB-MHITS clinicians also participated in a brief series of one-hour consultation calls that had two areas of focus: (1) technical assistance surrounding SB-MHITS (e.g., identifying and addressing system bugs; troubleshooting problems with system functionality and, when necessary, developing workarounds) and (2) assessment and feedback practices using the SB-MHITS technology. Six calls were scheduled over a period of three weeks. Clinicians were expected to attend three of the six calls and to make one case presentation that incorporated assessment data derived from SB-MHITS. This level was determined based on (a) a desire to limit the consultation sessions as much as possible for the sake of efficiency and (b) an understanding that key functions of consultation include problem-solving, trainee engagement, case support, and accountability, among others, which may require multiple contacts to achieve successfully (Nadeem, Gleacher, & Beidas, 2013). Consultation call attendance and case presentation dates were tracked for each clinician (see below).

Data collection procedures.

Data were collected from clinicians at multiple time points during the initiative using a secure, online assessment portal. Following initial MBC training, but one month prior to the introduction of SB-MHITS, all clinicians in both conditions completed measures of attitudes toward standardized assessments, a self-evaluation of their assessment skill, and self-reported use of MBC practices (see below). At this time, clinicians also began completing online daily ratings of their assessment and feedback practices, which continued for the next six months. During the baseline period, clinicians were incentivized with $10 gift cards to complete the daily assessments but received no additional incentive for completing the study measures. SB-MHITS was introduced after approximately one month of baseline data collection.

Measures

Attitudes toward Standardized Assessment Scale (ASA; Jensen-Doss & Hawley, 2010).

The ASA (Jensen-Doss & Hawley, 2010) is a 22-item measure of clinician attitudes about using standardized assessments (SA) in practice. Items are rated on a 1–5 scale (“Strongly Disagree” to “Strongly Agree”) and load onto three subscales: Benefit over Clinical Judgment, Psychometric Quality, and Practicality. The psychometric properties of the ASA were originally established using a national sample of 1,442 mental health professionals. All subscales demonstrated good psychometric properties (α = .72–.75), and higher ratings on all subscales have been associated with a greater likelihood of standardized assessment use. However, only the Practicality subscale was found to be independently predictive of use. In the current project, the ASA was administered at baseline in the month preceding the introduction of SB-MHITS.

Assessment skill.

Study clinicians reported on their understanding of and skill in using SA measures on a five-point Likert-style scale ranging from 1 (“Minimal”) to 5 (“Advanced”). Items address selection of clients for SA administration as well as tool selection, administration, scoring, interpretation, integration into treatment, feedback, and progress monitoring. The scale has demonstrated good internal consistency (α = 0.85) (Lyon et al., 2015). Items are averaged to create a total assessment skill score. This measure was also administered before SB-MHITS was introduced.
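The internal-consistency values reported for these measures (e.g., α = 0.85) are Cronbach's alpha coefficients. As a minimal illustration, the sketch below computes alpha from a complete respondents-by-items matrix using the standard formula, α = k/(k − 1) × (1 − sum of item variances / variance of total scores), and averages one clinician's item ratings into a total skill score. The function names and example ratings are hypothetical, and the sketch assumes no missing item responses.

```python
from statistics import mean, pvariance


def cronbach_alpha(responses: list[list[float]]) -> float:
    """Cronbach's alpha for a respondents-by-items matrix with no missing values."""
    k = len(responses[0])  # number of items
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need a rectangular matrix with at least two items")
    item_columns = list(zip(*responses))  # transpose: one tuple per item
    sum_item_var = sum(pvariance(col) for col in item_columns)
    total_var = pvariance([sum(row) for row in responses])
    # Population variances are used throughout; the n vs. n-1 choice cancels in the ratio
    return (k / (k - 1)) * (1 - sum_item_var / total_var)


def total_skill_score(item_ratings: list[float]) -> float:
    """Total assessment skill score: the mean of one clinician's 1-5 item ratings."""
    return mean(item_ratings)


# Hypothetical ratings from three clinicians on three skill items
ratings = [[2, 3, 2], [4, 4, 5], [3, 3, 3]]
print(round(cronbach_alpha(ratings), 3))
print([total_skill_score(r) for r in ratings])
```

In practice, alpha would be computed across all items of the scale and all responding clinicians; three items are used here only to keep the example readable.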

Current Assessment Practice Evaluation – Revised (CAPER).

The CAPER is a seven-item version of the original four-item CAPE measure (Lyon et al., 2014). The CAPER assesses clinician self-reported use of a variety of MBC practices, including administration of assessments at different points during treatment (e.g., at intake, on an ongoing basis), feedback to service recipients, and data-driven decision-making on the basis of assessment results. In the current study, an expanded nine-item version of the CAPER was used in which the two items focusing on the integration of assessment data into a single session or overall treatment planning were split so that each item asked separately about standardized (i.e., nomothetic) and individualized (i.e., idiographic) assessment data. All items are scored on a four-point scale (None, Some [1–39%], Half [40–60%], Most [61–100%]), and a total score is calculated. The original CAPE has demonstrated acceptable inter-item reliability (α = 0.72) and sensitivity to change in a larger study of community clinicians (Lyon et al., 2014) and has been applied successfully with school-based clinicians (Lyon et al., 2015). In the current study, the CAPER was also administered to clinicians prior to the introduction of SB-MHITS.
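To make the four-point response format concrete, the sketch below maps a clinician's estimated percentage of clients to a CAPER response category and averages the nine items into a total. The numeric coding (None = 1 through Most = 4) and the use of an item mean as the total score are assumptions, chosen because they are consistent with the 1.7–4.0 range of CAPER totals reported in the Results; the handling of non-integer percentages that fall between the published bands (e.g., 39.5%) is likewise a hypothetical choice.

```python
CATEGORY_LABELS = {1: "None", 2: "Some", 3: "Half", 4: "Most"}


def caper_item_code(percent_of_clients: float) -> int:
    """Map an estimated percentage of clients to an assumed 1-4 CAPER item code."""
    if not 0 <= percent_of_clients <= 100:
        raise ValueError("percentage must be between 0 and 100")
    if percent_of_clients == 0:
        return 1  # None
    if percent_of_clients < 40:
        return 2  # Some [1-39%]
    if percent_of_clients <= 60:
        return 3  # Half [40-60%]
    return 4      # Most [61-100%]


def caper_total(item_percents: list[float], n_items: int = 9) -> float:
    """Assumed total score: mean item code across the expanded nine-item CAPER."""
    if len(item_percents) != n_items:
        raise ValueError(f"expected {n_items} item responses")
    codes = [caper_item_code(p) for p in item_percents]
    return sum(codes) / len(codes)


# Hypothetical responses from one clinician
responses = [0, 20, 50, 75, 10, 45, 0, 90, 30]
print([CATEGORY_LABELS[caper_item_code(p)] for p in responses])
print(round(caper_total(responses), 2))
```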

Daily assessment and feedback reports.

Between December 2012 and June 2013, for each day school was in session in their respective districts, clinicians received emails with a link to a brief online survey. The survey asked clinicians (1) if they had been at work that day; (2) if so, how many clients they met with for 20 minutes or more; and (3) of the clients they had seen for at least 20 minutes, to how many had they (a) administered a standardized assessment measure and (b) delivered data-based feedback to service recipients. These variables were used to calculate two continuous variables for percent of clients to whom a measure was administered (i.e., administration) and percent of clients who received feedback (i.e., feedback).
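The derivation of the two daily outcome variables from the survey answers can be sketched as follows. The sketch assumes that a day on which the clinician was not at work, or saw no clients for 20 minutes or more, has no denominator and is treated as a missing timepoint; the class and function names are hypothetical and not part of the survey instrument.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DailyReport:
    at_work: bool
    clients_seen: int   # clients seen for 20 minutes or more
    administered: int   # of those, clients given a standardized measure
    feedback: int       # of those, clients given data-based feedback


def daily_percentages(r: DailyReport) -> Optional[tuple[float, float]]:
    """Return (administration %, feedback %), or None if the day cannot be scored."""
    if not r.at_work or r.clients_seen == 0:
        return None  # assumption: no denominator, so the day is a missing timepoint
    if not (0 <= r.administered <= r.clients_seen and 0 <= r.feedback <= r.clients_seen):
        raise ValueError("counts must lie between 0 and clients_seen")
    return (100 * r.administered / r.clients_seen, 100 * r.feedback / r.clients_seen)


print(daily_percentages(DailyReport(at_work=True, clients_seen=4, administered=1, feedback=2)))
```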

Consultation call attendance and case presentation.

Consultants tracked which of the six possible consultation calls were attended by which clinicians in the SB-MHITS condition, as well as when each participant presented a case using data from the system. As indicated above, clinicians were only asked to attend three of the six possible calls.

Analyses

The primary data for this study (daily assessment and feedback reports) consist of timepoints (days) nested within clinicians. We built separate models predicting two dependent variables (DVs): administration (i.e., percent of student clients who received a standardized assessment) and feedback (i.e., percent of student clients who received data-based feedback). Analyses were run as two-level multilevel growth models (MLMs) using HLM 7.0 (Raudenbush, Bryk, & Congdon, 2011). Missing data were handled using restricted maximum likelihood estimation, which is recommended when timepoints vary among cases (Singer & Willett, 2003). We tested models for significance and parsimony using −2 log likelihood deviance statistics, building models iteratively. Slopes and intercepts were permitted to vary randomly unless otherwise indicated below. We tested for time trends in overall levels and slopes, and for group differences in the initial level of the DV, slopes, and elevation shifts at SB-MHITS start. We also tested for relationships between participation in consultation calls and administration and feedback using similar model-building procedures, but restricting the analysis to clinicians using MHITS and to days on which MHITS was active. We tested whether the levels of the DVs increased over the seven days following a consultation call. Finally, we tested the hypothesis that baseline clinician scores on the ASA subscales, Assessment Skill, and CAPER would be positively related to levels and rates of change in administration and feedback. For these analyses, we ran a separate model for each predictor variable, did not include group, and grand-mean centered the predictor variables to aid interpretation.
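The deviance comparisons described above can be illustrated with a likelihood-ratio (chi-square difference) test: the drop in −2 log likelihood between a reduced model and a fuller nested model is referred to a chi-square distribution whose degrees of freedom equal the difference in the number of estimated parameters. The sketch below uses only the Python standard library and assumes integer degrees of freedom; the function names and deviance values are hypothetical and are not HLM 7.0 output. Two general caveats apply: under REML, deviances are strictly comparable only between models with identical fixed effects, and tests of whether a variance component is zero sit on a parameter boundary, where the naive chi-square p-value is conservative.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability P(X >= x) for a chi-square variable with integer df >= 1."""
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        # Even df: exp(-x/2) * sum over i < df/2 of (x/2)^i / i!
        term, total = 1.0, 1.0
        for i in range(1, df // 2):
            term *= half / i
            total += term
        return math.exp(-half) * total
    # Odd df: the df = 1 tail plus a finite correction series
    total = math.erfc(math.sqrt(half))
    density = math.exp(-half) / math.sqrt(2.0 * math.pi)  # normal pdf at sqrt(x)
    term = math.sqrt(x)
    for j in range(1, (df - 1) // 2 + 1):
        if j > 1:
            term *= x / (2 * j - 1)
        total += 2.0 * density * term
    return total


def deviance_test(dev_reduced: float, params_reduced: int,
                  dev_full: float, params_full: int) -> tuple[float, int, float]:
    """Chi-square difference test between two nested models' deviances (-2LL)."""
    diff = dev_reduced - dev_full
    ddf = params_full - params_reduced
    if ddf < 1:
        raise ValueError("the fuller model must estimate more parameters")
    return diff, ddf, chi2_sf(diff, ddf)


# Hypothetical deviances: adding two parameters lowers -2LL by 10
diff, ddf, p = deviance_test(1000.0, 5, 990.0, 7)
print(diff, ddf, round(p, 4))  # p = exp(-5), about 0.0067
```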

Results

Baseline ratings on ASA Benefit over Clinical Judgment ranged from 2.4 to 4.2, with a mean of 3.3 (SD = .63); ASA Psychometric Quality ranged from 3.3 to 4.7, with a mean of 3.9 (SD = .42); and ASA Practicality ranged from 2.7 to 3.5, with a mean of 3.2 (SD = .28). Assessment Skill ranged from 2.0 to 4.4, with a mean of 3.4 (SD = .61). The CAPER Total Score ranged from 1.7 to 4.0, with a mean of 2.5 (SD = .55). There were no missing data on any of these measures and no significant differences on these measures between MHITS and control clinicians, although the difference in Assessment Skill approached significance in favor of the MHITS group (M = 3.75 for MHITS vs. M = 3.13 for control, t(12) = −2.14, p = .054).

Administration and feedback data included 1,193 completed timepoints (rated days) across the 14 clinicians over a maximum of 28 weeks. The average number of completed timepoints per clinician was 91.0 (SD = 22.9), ranging from 43 to 114. This variation is attributable to two factors. First, three clinicians – representing both study conditions – submitted rated days for fewer weeks (19, 21, and 24 weeks). Reasons for fewer weeks of data included a late start to data collection (so that the number of weeks was truncated by the end of the school year) and taking a leave of absence from their position (e.g., maternity leave). Second, some clinicians worked full time, while others were employed part time. There were no significant differences in the number of completed timepoints between the groups.

Individual Clinician Variability in Administration and Feedback

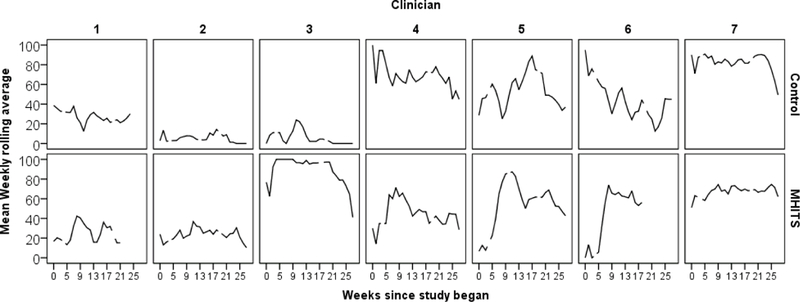

To address our research question about individual clinician variation (RQ1), we computed intraclass correlation coefficients (ICCs), which indicated that the proportion of variance accounted for between clinicians was 54.9% for administration and 52.3% for feedback. To examine this variance prior to modeling, we plotted individual clinician growth trends for administration and feedback, using three-week rolling averages to aid interpretation, in order to examine within- and between-group variability. Administration trends are displayed in Figure 1 and demonstrate considerable variability in the use of assessments over time (feedback trends are omitted here because they are nearly identical; the models below include feedback in statistical analyses). Individual behavior change for SB-MHITS and control clinicians was assessed using these graphs by examining shifts in trend, level, and latency (i.e., the number of points prior to a shift in trend or level) (Kazdin, 1982) across the baseline (first 3–5 weeks) and SB-MHITS phases.
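Both summaries in the preceding paragraph are straightforward to compute. In a two-level model, the ICC is the between-clinician variance divided by the sum of the between-clinician and within-clinician variances, typically taken from an unconditional (intercept-only) model. The rolling average below is a trailing three-week mean that skips missing weeks inside the window and leaves a gap wherever the current week has no data. The window alignment (trailing rather than centered) and the gap rule are assumptions, and the variance components and weekly values in the usage lines are hypothetical.

```python
from typing import Optional


def icc(between_var: float, within_var: float) -> float:
    """Two-level ICC: share of total variance that lies between clinicians."""
    return between_var / (between_var + within_var)


def rolling_mean(weekly: list[Optional[float]], window: int = 3) -> list[Optional[float]]:
    """Trailing moving average over `window` weeks; None marks a week without data."""
    smoothed: list[Optional[float]] = []
    for i, value in enumerate(weekly):
        if value is None:
            smoothed.append(None)  # keep the gap visible, as in the plotted trajectories
            continue
        in_window = [v for v in weekly[max(0, i - window + 1): i + 1] if v is not None]
        smoothed.append(sum(in_window) / len(in_window))
    return smoothed


# Hypothetical variance components and weekly administration percentages
print(round(icc(between_var=600.0, within_var=500.0), 3))
print(rolling_mean([10.0, 20.0, 30.0, None, 40.0]))
```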

Figure 1.

Individual growth trajectories for assessment administration by SB-MHITS status smoothed as 3-week averages; gaps indicate missing data. SB-MHITS became active around Week 4–5.

Overall trends during the baseline phase were relatively stable across participants, although one control clinician appeared to demonstrate an increasing trend (control clinician #5) and another a decreasing trend (control clinician #6). Following the baseline phase, however, relatively abrupt shifts were apparent for five of the seven clinicians in the SB-MHITS condition when the system was introduced in Week 5. Specifically, SB-MHITS clinicians #1, #3, #4, #5, and #6 appeared to demonstrate relatively rapid changes in their trajectories, with level increases ranging from approximately 20% to 65%. The only control clinician who showed a similar initial increase in assessment use during this period (#5) had already demonstrated an increasing trend during the baseline period. Few latency effects were observed in the SB-MHITS group; for clinicians who demonstrated change following the introduction of the system, those changes tended to occur almost immediately. Finally, a decreasing trend was apparent near the end of the academic year for multiple clinicians in both groups (e.g., control clinicians #4, #5, and #7, and SB-MHITS clinicians #3 and #5).

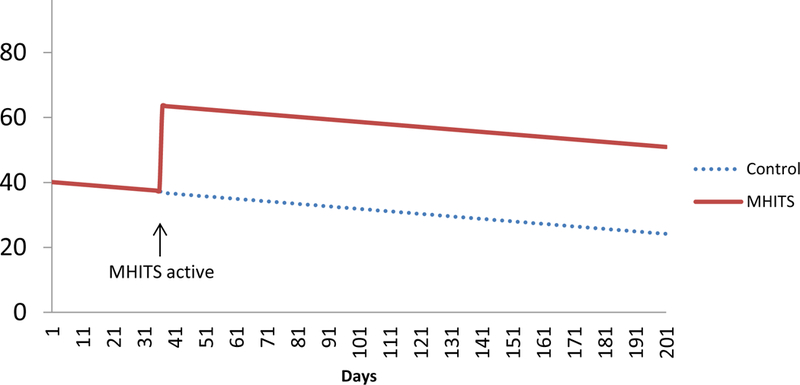

Changes in Administration and Feedback

To address our hypotheses that clinicians in the MFS condition would demonstrate behavior changes following MFS introduction (H1) and that these changes would be sustained (H2), we ran a series of two-level MLMs. The best-fitting models for predicting percent administration and feedback are presented in Table 1, and the model for feedback is plotted in Figure 2 (the model for administration is nearly identical). At baseline (i.e., following initial training but prior to the introduction of SB-MHITS), clinicians reported administering measures to a predicted average of 43.2% of their daily cases (SD = 34.4). This percentage decreased by .095 percentage points per day (SD = .05). Groups did not significantly differ at baseline or in their rate of change over time. When the study became active, those in the SB-MHITS group increased the percentage of cases to whom they administered measures by 24.5 percentage points (SD = 20.7). The control group did not demonstrate any significant change. At baseline, clinicians in both groups reported providing feedback to 40.1% of their cases (SD = 31.0), roughly equivalent to the percentage of cases to whom they reported administering assessment tools. This percentage decreased by .08 percentage points per day (SD = .09). When the study became active, those in the SB-MHITS group increased the percentage of cases to whom they provided feedback by 26.8 percentage points (SD = 18.6). The control group did not significantly change.
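The fixed effects in Table 1 combine into an average predicted trajectory for each group. The sketch below plugs in the reported coefficients; it assumes that time is counted in days from the start of baseline data collection, that the study-active and MHITS indicators are coded 0/1, and that the elevation shift applies from the day SB-MHITS became active. These codings are inferred rather than stated in the text, and the predictions ignore the random effects, so they describe an average clinician rather than any individual.

```python
# Fixed-effect coefficients as reported in Table 1
TABLE1 = {
    "administration": {"initial": 43.207, "per_day": -0.095, "shift": 0.826, "shift_mhits": 24.540},
    "feedback": {"initial": 40.109, "per_day": -0.077, "shift": -0.531, "shift_mhits": 26.779},
}


def predicted_percent(outcome: str, day: float, study_active: bool, mhits: bool) -> float:
    """Average predicted percent of daily cases (assumed 0/1 coding of indicators)."""
    b = TABLE1[outcome]
    elevation = 0.0
    if study_active:
        # Elevation shift at SB-MHITS start: intercept for everyone, plus the MHITS term
        elevation = b["shift"] + b["shift_mhits"] * int(mhits)
    return b["initial"] + b["per_day"] * day + elevation


# Hypothetical day 35, after SB-MHITS start, for each group
for in_mhits_group in (True, False):
    print(in_mhits_group, round(predicted_percent("administration", 35, True, in_mhits_group), 1))
```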

Table 1.

Multilevel growth models predicting administration and feedback by group assignment (level 2 n=14, level 1 n=1,193)

| Fixed effect | Percent administered: Coefficient | Percent administered: Standard error | Percent feedback: Coefficient | Percent feedback: Standard error |
|---|---|---|---|---|
| Initial status | 43.207*** | 9.418 | 40.109*** | 8.527 |
| Rate of change by days | -0.095*** | 0.023 | -0.077* | 0.029 |
| Study active elevation shift: Intercept | 0.826 | 7.613 | -0.531 | 7.177 |
| Study active elevation shift: MHITS | 24.540* | 8.763 | 26.779** | 8.651 |
| Random effect | Standard deviation | | Standard deviation | |
| Initial status | 34.400*** | | 30.968*** | |
| Rate of change | 0.050 | | 0.085** | |
| Elevation shift | 20.748*** | | 18.572*** | |
| Within person | 26.010 | | 26.150 | |
| Deviance | 11238, df = 7 | | 11253, df = 7 | |

~p<.10

*p <.05

**p<.01

***p<.001

Figure 2.

MLM estimated growth trajectories for percent of cases receiving feedback from SB-MHITS and control group

Table 2 displays the results of MLMs evaluating our second research question (RQ2), predicting changes in reported administration and feedback over the seven days following consultation call participation and restricting the sample to the SB-MHITS group and active study days. The estimate for the elevation shift during the week after a consultation call was fixed in both models because model building indicated that it did not vary randomly. Clinicians reported administering measures to an average of 60.1% of their daily cases at the point when SB-MHITS was introduced (SD = 26.9). This significantly declined at a modest rate of .10 percentage points per day (SD = .07, p < .05). During the week following a consultation call, the daily average percentage of clients who were administered a measure increased by 7.0 percentage points, a change of borderline significance (p = .09). Similarly, clinicians reported providing feedback on measures to an average of 59.6% of their daily cases at the start of the period in which SB-MHITS was introduced (SD = 26.7). This declined, with borderline significance, at a rate of .09 percentage points per day (SD = .08, p = .09). During the week after a consultation call, the daily average percentage of clients who received feedback on a measure increased significantly, by 8.2 percentage points (p < .05).
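The consultation-week term in Table 2 requires a day-level indicator. A minimal sketch, assuming days are numbered consecutively from SB-MHITS introduction and that the seven days after a call exclude the call day itself (the text specifies neither choice), builds that indicator from a clinician's call days and combines it with the reported fixed effects. The call days in the example are hypothetical, and random effects are again ignored.

```python
# Fixed-effect coefficients as reported in Table 2: (initial status, per day, consultation week)
TABLE2 = {
    "administration": (60.113, -0.103, 6.983),
    "feedback": (59.623, -0.091, 8.183),
}


def in_consultation_week(day: int, call_days: list[int], window: int = 7) -> bool:
    """True if `day` is one of the `window` days after any consultation call."""
    return any(0 < day - call <= window for call in call_days)


def predicted_after_call(outcome: str, day: int, call_days: list[int]) -> float:
    """Average predicted percent of daily cases, with the fixed consultation-week shift."""
    initial, per_day, shift = TABLE2[outcome]
    flag = int(in_consultation_week(day, call_days))
    return initial + per_day * day + shift * flag


calls = [5, 12]  # hypothetical days on which this clinician attended calls
print([d for d in range(0, 22) if in_consultation_week(d, calls)])
print(round(predicted_after_call("feedback", 14, calls), 1))
```

Overlapping windows (a second call within a week of the first) simply keep the indicator at 1 here; whether the original models treated overlaps differently is not stated.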

Table 2.

Multilevel growth models predicting change in administration and feedback during seven days after consultation call participation (level 2 n=7, level 1 n=507)

| Fixed effect | Percent administered: Coefficient | Percent administered: Standard error | Percent feedback: Coefficient | Percent feedback: Standard error |
|---|---|---|---|---|
| Initial status | 60.113*** | 10.520 | 59.623*** | 10.472 |
| Rate of change by days | -0.103* | 0.041 | -0.091~ | 0.044 |
| Consultation week elevation shift (fixed) | 6.983~ | 4.044 | 8.183* | 4.122 |
| Random effect | Standard deviation | | Standard deviation | |
| Initial status | 26.886*** | | 26.721*** | |
| Rate of change | 0.071~ | | 0.081* | |
| Within person | 27.670 | | 28.199 | |
| Deviance | 4827, df = 4 | | 4845, df = 4 | |

~p<.10

*p <.05

**p<.01

***p<.001

Predictors of Administration and Feedback Changes

Table 3 displays the results of MLMs evaluating RQ3 (the effects of baseline scores on MBC practice changes). These models indicated that the predicted percentage of administration was significantly related to several baseline self-report ratings. For every one-point increase on the predictor variables, the models predicted an increase in assessment administration of 33.4 percentage points (SE = 6.9) for ASA Psychometric Quality, 27.3 points (SE = 9.6) for Assessment Skill, and 33.1 points (SE = 10.1) for the CAPER. Results for models predicting the percentage of feedback were very similar: for every one-point increase on the predictor variables, the models predicted an increase in feedback of 33.1 points (SE = 11.2) for ASA Psychometric Quality, 28.5 points (SE = 8.6) for Assessment Skill, and 32.4 points (SE = 10.2) for the CAPER. The average percentages of clients receiving assessment and feedback were not significantly predicted by baseline scores on ASA Practicality and ASA Benefit over Clinical Judgment, and none of the baseline scores were significantly related to rate of change.
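Because the predictors were grand-mean centered, each coefficient in Table 3 can be read as the predicted percentage-point difference between a clinician and a hypothetical clinician at the sample mean on that measure. Table 3 does not report model intercepts, so the sketch below only computes these differences; the baseline scores are hypothetical, and the coefficient is the reported ASA Psychometric Quality estimate for administration.

```python
from statistics import mean


def grand_mean_center(scores: list[float]) -> list[float]:
    """Subtract the sample (grand) mean from each clinician's baseline score."""
    m = mean(scores)
    return [s - m for s in scores]


def predicted_difference(coefficient: float, centered_score: float) -> float:
    """Predicted percentage-point difference from a clinician at the grand mean."""
    return coefficient * centered_score


PSYCHOMETRIC_QUALITY_COEF = 33.4  # Table 3, percent administered

baseline = [3.5, 4.0, 4.5]  # hypothetical ASA Psychometric Quality scores
for raw, centered in zip(baseline, grand_mean_center(baseline)):
    print(raw, round(predicted_difference(PSYCHOMETRIC_QUALITY_COEF, centered), 1))
```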

Table 3.

Separate multilevel growth models predicting the effects of baseline scores on change in administration and feedback.

| Baseline predictor (fixed effect only) | Percent administered: Coefficient | Percent administered: Standard error | Percent feedback: Coefficient | Percent feedback: Standard error |
|---|---|---|---|---|
| ASA Psychometric Quality | 33.4*** | 6.9 | 33.1*** | 11.2 |
| Assessment Skill | 27.3*** | 9.6 | 28.5*** | 8.6 |
| ASA Practicality | 20.2 | 27.2 | 19.3 | 26.0 |
| ASA Benefit over Clinical Judgment | 18.8 | 11.1 | 16.1 | 10.8 |
| CAPER | 33.1*** | 10.1 | 32.4*** | 10.4 |

~p < .10; *p < .05; **p < .01; ***p < .001

ASA = Attitudes toward Standardized Assessment Scale

CAPER = Current Assessment Practice Evaluation, Revised

Discussion

The current study was designed to build upon prior research on the implementation of MBC in mental health service delivery and to determine whether a digital MFS, coupled with brief consultation, could support education sector clinicians in the uptake and sustainment of core MBC practices. Findings suggest that, following an initial training in MBC, use of the MFS (SB-MHITS) resulted in rapid increases and sustained use of MBC practices, including the administration of standardized assessments and the provision of feedback to clients. This result suggests that MFS may represent an effective strategy, above and beyond initial training, when included as part of a bundled MBC implementation approach. Additionally, a key objective of this study was to evaluate the extent to which the SB-MHITS system could help to reduce previously documented decreases in MBC practices following the removal of post-training consultation supports. Indeed, although some previous studies have reported mean decreases of 20% or more (De Rond et al., 2001), or even a complete return to baseline (Close-Goedjen & Saunders, 2002), the average decreases in the current study were more moderate, with only one of the two outcome variables (administration) decreasing significantly over the six-month follow-up period. Furthermore, while previous studies have varied widely in the length of follow-up evaluations, ranging from one month (Close-Goedjen & Saunders, 2002) to one year (Persons et al., 2016), the largest decreases in each study began immediately after the removal of supports and were documented by the three-month follow-up at the latest.
Given that the post-support follow-up period in the current study lasted approximately five months – during which MBC practices in our intervention group remained significantly higher than those in our control group – our finding suggests that, beyond its effects during an active implementation phase, the SB-MHITS technology may have additional value as a MBC sustainment strategy.

Furthermore, the overall similarity in results between assessment administration and assessment feedback indicates that, when clinicians administered assessments, they were very likely to provide feedback as well. This is perhaps unsurprising, given that assessment administration is required for the delivery of assessment-based feedback, but it also suggests that the training, consultation, and SB-MHITS technology were collectively able to support multiple core aspects of MBC. This is encouraging, given previous research indicating that it may be more difficult to change mental health clinicians’ incorporation of assessment results into services (e.g., use of feedback) than it is to change their administration of assessments (Garland, Kruse, & Aarons, 2003; Lyon et al., 2014). Future research may evaluate whether each of the MBC implementation strategies employed in the current project (i.e., active training, post-training consultation / technical assistance, MFS) differentially affects each component of MBC and whether these strategies may be combined with additional strategies (e.g., organizational change efforts; Aarons, Ehrhart, Farahnak, & Hurlburt, 2015; Glisson, Dukes, & Green, 2006) to further enhance effects on clinical practice.

Predictors of Change

Evaluation of predictors of MBC practice change identified three baseline variables that were related to change in assessment administration and feedback. First, the baseline ASA Psychometric Quality subscale predicted change over time in both behaviors. This finding is notable, given that prior work with the ASA (Jensen-Doss & Hawley, 2010) found that only the Practicality subscale independently predicted assessment use. However, this earlier work was not conducted in the context of any initiative designed to implement new practices or change clinician behavior. Review of the items contained within the Psychometric Quality subscale reveals that most items focus on the validity of information resulting from assessment instruments, especially for diagnostic purposes (e.g., “Standardized measures help with differential diagnosis,” “Standardized measures help with accurate diagnosis,” “Clinicians should use assessments with demonstrated reliability and validity.”). It may be that, while the Practicality subscale is more likely to be related to assessment use in usual care (Jensen-Doss & Hawley, 2010) and also likely to change in response to training (Lyon et al., 2014), clinicians who felt more positively about the extent to which assessments were reliable, valid, and diagnostically useful were best poised to increase their MBC behaviors.

In addition, clinicians’ self-rated baseline assessment skill and current assessment practices predicted MBC practice changes over the course of the study. These results suggest that clinicians who reported greater skill with, greater comfort with, and more frequent use of MBC practices were best positioned to capitalize on the MBC implementation initiative. This finding aligns with much of the training literature across disciplines and sectors (e.g., medicine, mental health, education), which has frequently underscored the importance of designing professional development efforts that strike a balance between familiarity and novelty for participating clinicians (Lyon et al., 2011). The current results suggest that, following initial training, assessments of MBC skill and practice may be important predictors of clinician responsiveness to post-training supports (e.g., consultation, MFS) and subsequent practice change. If so, such assessments could be used to guide the allocation of post-training resources (e.g., varying the frequency or duration of consultation based on post-training evaluation).

Incremental Effects of Consultation

In the current project, a brief and efficient telephone consultation model was employed. During calls, clinicians and expert consultants (a) troubleshot use of the SB-MHITS technology and (b) worked on incorporating MBC practices into clinicians’ routine services. Given uncertainty in the field about the dosage of consultation required to adequately support clinicians following training (Nadeem et al., 2013; Owens et al., 2014), and consistent with the current project’s emphasis on maximizing consultation efficiency, we evaluated the immediate (i.e., one-week) effects of consultation calls on clinician behavior. Results indicated that the effects of consultation calls on MBC practices in the subsequent week were significant for assessment feedback and approached significance for assessment administration. Given this relationship, additional calls might have produced greater practice change, although they would also have incurred additional costs. Alternatively, the existing call structure could be augmented, while still minimizing costs, by incorporating other types of digital, asynchronous post-training supports. For instance, emerging evidence suggests that online message boards may be an acceptable and useful method of providing this type of support (Stirman et al., 2014).

Individual Differences

Finally, evaluation of individual clinician change trajectories revealed notable within- and between-group differences among clinicians in the extent to which their use of MBC practices shifted over the course of the study (Figure 1). Overall, clinicians in the control condition appeared to demonstrate stability or gradual decreases over time in their assessment use. In contrast, many of the participants in the SB-MHITS condition displayed positive gains, which were often maintained over the subsequent follow-up period. Because it is unclear what factors contributed to these differences, additional research should investigate reasons for between-clinician variability. Furthermore, the clinicians in the SB-MHITS condition who demonstrated the most notable changes in response to the MFS did so almost immediately. This has implications for monitoring the effectiveness of MFS as an implementation strategy for MBC. Similar to the potential for evaluating post-training MBC skill and practice (discussed above), additional implementation supports may be needed if there is no relatively immediate, observable impact of MFS introduction. This proposition could be evaluated in future research using a sequential, multiple assignment, randomized trial (SMART) design (Lei, Nahum-Shani, Lynch, Oslin, & Murphy, 2012), in which adjunctive implementation strategies are used only when the application of a MFS appears to be ineffective.
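To make the proposed SMART logic concrete, the sketch below encodes one possible two-stage decision rule: clinicians whose early administration rates after MFS introduction rise sufficiently above baseline continue with the MFS alone, while the others are re-randomized to an adjunctive strategy. Everything here is hypothetical and not drawn from the study: the 10-point response threshold, the use of a simple mean over early daily ratings, the strategy labels in `ADJUNCTIVE_OPTIONS`, and the function names are placeholders that an actual trial would need to specify in advance.

```python
import random
from statistics import mean

# Hypothetical adjunctive strategies for early non-responders (placeholders).
ADJUNCTIVE_OPTIONS = ("additional consultation", "organizational support")


def is_early_responder(early_pct: list[float], baseline_pct: float,
                       min_gain: float = 10.0) -> bool:
    """True if mean early administration exceeds baseline by at least min_gain points."""
    if not early_pct:
        raise ValueError("need at least one post-introduction rating")
    return mean(early_pct) - baseline_pct >= min_gain


def second_stage(early_pct: list[float], baseline_pct: float,
                 rng: random.Random, min_gain: float = 10.0) -> str:
    """Assign the second-stage condition in a two-stage, SMART-style design."""
    if is_early_responder(early_pct, baseline_pct, min_gain):
        return "continue MFS only"
    # Non-responders are re-randomized among the adjunctive strategies.
    return rng.choice(ADJUNCTIVE_OPTIONS)


if __name__ == "__main__":
    rng = random.Random(2024)  # fixed seed so the example is reproducible
    print(second_stage([60.0, 70.0, 65.0], baseline_pct=40.0, rng=rng))
    print(second_stage([42.0, 45.0], baseline_pct=40.0, rng=rng))
```

Re-randomizing only the non-responders is what distinguishes a SMART from simply escalating support for everyone: it lets a trial compare adjunctive strategies among exactly the clinicians for whom the MFS alone appears insufficient.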

Limitations

This pilot study has a number of limitations. First, it relied on a relatively small sample of clinicians (n = 14), randomized to the two study conditions. Although this reduced the study’s power, the issue was partially mitigated by the number of level-1 longitudinal data points. Given the sample size, the findings should be generalized with caution. Second, MBC practices were evaluated using clinician self-report, which has been criticized as potentially biased relative to “gold standard” practice observation (Hurlburt, Garland, Nguyen, & Brookman-Frazee, 2009; Miller & Mount, 2001; Nakamura et al., 2014). Nevertheless, multiple studies have also found significant, positive relationships between clinician self-report and observational assessments, especially for specific practices that, like MBC, are less complicated than full treatment protocols (Chapman, McCart, Letourneau, & Sheidow, 2013; Hogue, Dauber, Henderson, & Liddle, 2013; Ward et al., 2013). Furthermore, the current study was primarily focused on documenting changes in MBC practice between groups and over time, rather than interpreting absolute rates of assessment administration and feedback. Clinicians in both conditions completed the daily assessment and feedback reports, but only those in the SB-MHITS condition demonstrated markedly different change from baseline, so a self-report bias shared by both conditions is unlikely to account for the between-group difference. Finally, although there were no significant differences on any baseline measures between conditions, suggesting that randomization was largely effective, differences in ratings of assessment skill approached significance, with somewhat higher values for the SB-MHITS group. Despite this, the clear shifts in the MBC practice trajectories of the SB-MHITS group when the MFS was introduced continue to support a causal interpretation.

Summary and Conclusion

Identification and evaluation of effective MBC implementation strategies are essential given the substantial body of literature supporting the utility of MBC in improving service recipient outcomes, alongside consistently low levels of use in routine mental health care settings. MBC is also a cornerstone of precision mental health, is a best practice to support individualized service delivery, and has demonstrated a high degree of contextual appropriateness in schools, the most common youth mental health service delivery sector. The current study evaluated the impact of a digital decision support tool (SB-MHITS) and brief telephone consultation on core MBC practices and found significant, sustained differences relative to a training-only control group. Given that SB-MHITS did not substantially change the practices of all clinicians in the experimental condition (see Figure 1), future research may use qualitative or mixed methods to explore reasons for within-group variability in response.

There is increasing recognition of the importance of identifying implementation strategies that are both feasible and impactful (Waltz et al., 2015). Digital implementation strategies such as computer-based reminders (Flanagan, Ramanujam, & Doebbeling, 2009), decision support systems (Roshanov et al., 2011), and alteration of computerized record systems (Nemeth, Feifer, Stuart, & Ornstein, 2008) are also becoming more common. These strategies may be more feasible in some settings than more traditional alternatives. Although feasibility was not directly assessed in this trial, the results suggest that the SB-MHITS MFS may offer a relatively inexpensive strategy to enhance the effects of initial MBC training and, ultimately, the quality of education sector mental health services by increasing the use of practices consistently found to improve outcomes in adult and youth mental health services.

Acknowledgments

FUNDING

This publication was supported in part by grant K08 MH095939 (Lyon) awarded by the National Institute of Mental Health. Dr. Lyon is also an investigator with the Implementation Research Institute, at the George Warren Brown School of Social Work, Washington University in St. Louis, through an award from the National Institute of Mental Health (R25 MH080916) and the Department of Veterans Affairs, Health Services Research & Development Service, Quality Enhancement Research Initiative.

References

- Aarons GA, Ehrhart MG, Farahnak LR, & Hurlburt MS (2015). Leadership and organizational change for implementation (LOCI): a randomized mixed method pilot study of a leadership and organization development intervention for evidence-based practice implementation. Implementation Science, 10, 11. https://doi.org/10.1186/s13012-014-0192-y

- Beidas RS, & Kendall PC (2010). Training therapists in evidence-based practice: a critical review of studies from a systems-contextual perspective. Clinical Psychology: Science and Practice, 17(1), 1–30. https://doi.org/10.1111/j.1468-2850.2009.01187.x

- Bickman L (2008). A measurement feedback system (MFS) is necessary to improve mental health outcomes. Journal of the American Academy of Child and Adolescent Psychiatry, 47(10), 1114–1119. https://doi.org/10.1097/CHI.0b013e3181825af8

- Bickman L, Kelley SD, Breda C, de Andrade AR, & Riemer M (2011). Effects of routine feedback to clinicians on mental health outcomes of youths: results of a randomized trial. Psychiatric Services, 62(12), 1423–1429. https://doi.org/10.1176/appi.ps.002052011

- Bickman L, Lyon AR, & Wolpert M (2016). Achieving Precision Mental Health through Effective Assessment, Monitoring, and Feedback Processes. Administration and Policy in Mental Health, 43, 271–276. https://doi.org/10.1007/s10488-016-0718-5

- Carlier IVE, Meuldijk D, Van Vliet IM, Van Fenema E, Van der Wee NJA, & Zitman FG (2012). Routine outcome monitoring and feedback on physical or mental health status: evidence and theory. Journal of Evaluation in Clinical Practice, 18(1), 104–110. https://doi.org/10.1111/j.1365-2753.2010.01543.x

- Cashel ML (2002). Child and adolescent psychological assessment: Current clinical practices and the impact of managed care. Professional Psychology: Research and Practice, 33(5), 446–453. https://doi.org/10.1037/0735-7028.33.5.446

- Chapman JE, McCart MR, Letourneau EJ, & Sheidow AJ (2013). Comparison of youth, caregiver, therapist, trained, and treatment expert raters of therapist adherence to a substance abuse treatment protocol. Journal of Consulting and Clinical Psychology, 81(4), 674–680. https://doi.org/10.1037/a0033021

- Close-Goedjen JL, & Saunders SM (2002). The effect of technical support on clinician attitudes toward an outcome assessment instrument. The Journal of Behavioral Health Services & Research, 29(1), 99–108. https://doi.org/10.1007/BF02287837

- Collins FS, & Varmus H (2015). A New Initiative on Precision Medicine. New England Journal of Medicine, 372(9), 793–795. https://doi.org/10.1056/NEJMp1500523

- Connors EH, Arora P, Curtis L, & Stephan SH (2015). Evidence-based assessment in school mental health. Cognitive and Behavioral Practice, 22(1), 60–73. https://doi.org/10.1016/j.cbpra.2014.03.008

- De Rond M, De Wit R, & Van Dam F (2001). The implementation of a Pain Monitoring Programme for nurses in daily clinical practice: results of a follow-up study in five hospitals. Journal of Advanced Nursing, 35(4), 590–598. https://doi.org/10.1046/j.1365-2648.2001.01875.x

- Douglas SR, Jonghyuk B, de Andrade ARV, Tomlinson MM, Hargraves RP, & Bickman L (2015). Feedback mechanisms of change: How problem alerts reported by youth clients and their caregivers impact clinician-reported session content. Psychotherapy Research, 25(6), 678–693. https://doi.org/10.1080/10503307.2015.1059966

- Duong MT, Lyon AR, Ludwig K, Wasse JK, & McCauley E (2016). Student perceptions of the acceptability and utility of standardized and idiographic assessment in school mental health. International Journal of Mental Health Promotion, 18(1), 49–63. https://doi.org/10.1080/14623730.2015.1079429

- Farmer EMZ, Burns BJ, Phillips SD, Angold A, & Costello EJ (2003). Pathways into and through mental health services for children and adolescents. Psychiatric Services, 54(1), 60–66. https://doi.org/10.1176/appi.ps.54.1.60

- Flanagan ME, Ramanujam R, & Doebbeling BN (2009). The effect of provider- and workflow-focused strategies for guideline implementation on provider acceptance. Implementation Science, 4, 71. https://doi.org/10.1186/1748-5908-4-71

- Foster S, Rollefson M, Doksum T, Noonan D, Robinson G, & Teich J (2005). School mental health services in the United States, 2002–2003. SAMHSA’s National Clearinghouse for Alcohol and Drug Information (NCADI). Retrieved from http://eric.ed.gov/?id=ED499056

- Gance-Cleveland B, & Yousey Y (2005). Benefits of a school-based health center in a preschool. Clinical Nursing Research, 14(4), 327–342. https://doi.org/10.1177/1054773805278188

- Garland AF, Kruse M, & Aarons GA (2003). Clinicians and outcome measurement: What’s the use? The Journal of Behavioral Health Services & Research, 30(4), 393–405. https://doi.org/10.1007/BF02287427

- Gilbody SM, House AO, & Sheldon TA (2002). Psychiatrists in the UK do not use outcomes measures. The British Journal of Psychiatry, 180(2), 101–103. https://doi.org/10.1192/bjp.180.2.101

- Glasgow RE, Fisher L, Strycker LA, Hessler D, Toobert DJ, King DK, & Jacobs T (2013). Minimal intervention needed for change: definition, use, and value for improving health and health research. Translational Behavioral Medicine, 4(1), 26–33. https://doi.org/10.1007/s13142-013-0232-1

- Glisson C, Dukes D, & Green P (2006). The effects of the ARC organizational intervention on caseworker turnover, climate, and culture in children’s service systems. Child Abuse & Neglect, 30(8), 855–880. https://doi.org/10.1016/j.chiabu.2005.12.010

- Goodman R (2001). Psychometric Properties of the Strengths and Difficulties Questionnaire. Journal of the American Academy of Child & Adolescent Psychiatry, 40(11), 1337–1345. https://doi.org/10.1097/00004583-200111000-00015

- Hatfield DR, & Ogles BM (2004). The use of outcome measures by psychologists in clinical practice. Professional Psychology: Research and Practice, 35(5), 485–491. https://doi.org/10.1037/0735-7028.35.5.485

- Herschell AD, Kolko DJ, Baumann BL, & Davis AC (2010). The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review, 30(4), 448–466. https://doi.org/10.1016/j.cpr.2010.02.005

- Hogue A, Dauber S, Henderson CE, & Liddle HA (2013). Reliability of therapist self-report on treatment targets and focus in family-based intervention. Administration and Policy in Mental Health and Mental Health Services Research, 41(5), 697–705. https://doi.org/10.1007/s10488-013-0520-6

- Hurlburt MS, Garland AF, Nguyen K, & Brookman-Frazee L (2009). Child and family therapy process: Concordance of therapist and observational perspectives. Administration and Policy in Mental Health and Mental Health Services Research, 37(3), 230–244. https://doi.org/10.1007/s10488-009-0251-x

- Jensen-Doss A, & Hawley KM (2010). Understanding barriers to evidence-based assessment: Clinician attitudes toward standardized assessment tools. Journal of Clinical Child & Adolescent Psychology, 39(6), 885–896. https://doi.org/10.1080/15374416.2010.517169

- Jewell J, Handwerk M, Almquist J, & Lucas C (2004). Comparing the validity of clinician-generated diagnosis of conduct disorder to the diagnostic interview schedule for children. Journal of Clinical Child & Adolescent Psychology, 33(3), 536–546. https://doi.org/10.1207/s15374424jccp3303_11

- Kazdin AE (1982). Single-case experimental designs: Strategies for studying behavior change. New York: Oxford University Press.

- Kendrick T, El-Gohary M, Stuart B, Gilbody S, Churchill R, Aiken L, … Moore M (2016). Routine use of patient reported outcome measures (PROMs) for improving treatment of common mental health disorders in adults. The Cochrane Library.

- Lei H, Nahum-Shani I, Lynch K, Oslin D, & Murphy SA (2012). A “SMART” design for building individualized treatment sequences. Annual Review of Clinical Psychology, 8. https://doi.org/10.1146/annurev-clinpsy-032511-143152

- Lyon AR, Dorsey S, Pullmann M, Silbaugh-Cowdin J, & Berliner L (2014). Clinician use of standardized assessments following a common elements psychotherapy training and consultation program. Administration and Policy in Mental Health and Mental Health Services Research, 42(1), 47–60. https://doi.org/10.1007/s10488-014-0543-7

- Lyon AR, & Lewis CC (2016). Designing health information technologies for uptake: development and implementation of measurement feedback systems in mental health service delivery. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 344–349. https://doi.org/10.1007/s10488-015-0704-3

- Lyon AR, Lewis CC, Boyd MR, Hendrix E, & Liu F (2016). Capabilities and characteristics of digital measurement feedback systems: results from a comprehensive review. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 441–466. https://doi.org/10.1007/s10488-016-0719-4

- Lyon AR, Ludwig KA, Vander Stoep A, Gudmundsen G, & McCauley E (2013). Patterns and predictors of mental healthcare utilization in schools and other service sectors among adolescents at risk for depression. School Mental Health, 5(3), 155–165. https://doi.org/10.1007/s12310-012-9097-6

- Lyon AR, Ludwig K, Wasse JK, Bergstrom A, Hendrix E, & McCauley E (2015). Determinants and functions of standardized assessment use among school mental health clinicians: a mixed methods evaluation. Administration and Policy in Mental Health and Mental Health Services Research, 43(1), 122–134. https://doi.org/10.1007/s10488-015-0626-0

- Lyon AR, Stirman SW, Kerns SEU, & Bruns EJ (2011). Developing the mental health workforce: Review and application of training approaches from multiple disciplines. Administration and Policy in Mental Health and Mental Health Services Research, 38(4), 238–253. https://doi.org/10.1007/s10488-010-0331-y

- Lyon AR, Wasse JK, Ludwig K, Zachry M, Bruns EJ, Unützer J, & McCauley E (2016). The Contextualized Technology Adaptation Process (CTAP): Optimizing health information technology to improve mental health systems. Administration and Policy in Mental Health and Mental Health Services Research, 43(3), 394–409. https://doi.org/10.1007/s10488-015-0637-x

- Miller WR, & Mount KA (2001). A small study of training in motivational interviewing: Does one workshop change clinician and client behavior? Behavioural and Cognitive Psychotherapy, 29(4), 457–471. https://doi.org/10.1017/S1352465801004064

- Nadeem E, Gleacher A, & Beidas RS (2013). Consultation as an implementation strategy for evidence-based practices across multiple contexts: Unpacking the black box. Administration and Policy in Mental Health and Mental Health Services Research, 40(6), 439–450. https://doi.org/10.1007/s10488-013-0502-8

- Nakamura BJ, Selbo-Bruns A, Okamura K, Chang J, Slavin L, & Shimabukuro S (2014). Developing a systematic evaluation approach for training programs within a train-the-trainer model for youth cognitive behavior therapy. Behaviour Research and Therapy, 53, 10–19. https://doi.org/10.1016/j.brat.2013.12.001

- Nemeth LS, Feifer C, Stuart GW, & Ornstein SM (2008). Implementing change in primary care practices using electronic medical records: a conceptual framework. Implementation Science, 3, 3. https://doi.org/10.1186/1748-5908-3-3

- Olmstead T, Carroll KM, Canning-Ball M, & Martino S (2011). Cost and cost-effectiveness of three strategies for training clinicians in motivational interviewing. Drug and Alcohol Dependence, 116(1–3), 195–202. https://doi.org/10.1016/j.drugalcdep.2010.12.015

- Owens JS, Lyon AR, Brandt NE, Warner CM, Nadeem E, Spiel C, & Wagner M (2014). Implementation science in school mental health: Key constructs in a developing research agenda. School Mental Health, 6(2), 99–111. https://doi.org/10.1007/s12310-013-9115-3

- Palmiter DJ Jr. (2004). A survey of the assessment practices of child and adolescent clinicians. American Journal of Orthopsychiatry, 74(2), 122–128. https://doi.org/10.1037/0002-9432.74.2.122

- Persons JB, Koerner K, Eidelman P, Thomas C, & Liu H (2016). Increasing psychotherapists’ adoption and implementation of the evidence-based practice of progress monitoring. Behaviour Research and Therapy, 76, 24–31. https://doi.org/10.1016/j.brat.2015.11.004

- Precision Medicine Initiative. (n.d.). Retrieved June 29, 2016, from https://www.nih.gov/precision-medicine-initiative-cohort-program

- Rakovshik SG, & McManus F (2010). Establishing evidence-based training in cognitive behavioral therapy: A review of current empirical findings and theoretical guidance. Clinical Psychology Review, 30(5), 496–516. https://doi.org/10.1016/j.cpr.2010.03.004

- Raudenbush SW, Bryk AS, & Congdon R (2011). HLM for Windows 7.0. Lincolnwood, IL: Scientific Software International.

- Rettew DC, Lynch AD, Achenbach TM, Dumenci L, & Ivanova MY (2009). Meta-analyses of agreement between diagnoses made from clinical evaluations and standardized diagnostic interviews. International Journal of Methods in Psychiatric Research, 18(3), 169–184. https://doi.org/10.1002/mpr.289

- Roshanov PS, You JJ, Dhaliwal J, Koff D, Mackay JA, Weise-Kelly L, … Haynes RB (2011). Can computerized clinical decision support systems improve practitioners’ diagnostic test ordering behavior? A decision-maker-researcher partnership systematic review. Implementation Science, 6, 88. https://doi.org/10.1186/1748-5908-6-88

- Scott K, & Lewis CC (2015). Using measurement-based care to enhance any treatment. Cognitive and Behavioral Practice, 22(1), 49–59. https://doi.org/10.1016/j.cbpra.2014.01.010

- Singer JD, & Willett JB (2003). Applied longitudinal data analysis: Modeling change and event occurrence. New York, NY: Oxford University Press.

- Stirman SW, Shields N, Deloriea J, Landy M, Belus JM, Maslej MM, & Monson C (2014, November). Using technology to support clinicians in their use of evidence-based therapies. In J. Harrison (Chair), Exploring the boundaries of training: Novel approaches to expanding the impact of evidence-based practice trainings. Symposium presented at the Association for Behavioral and Cognitive Therapies, Philadelphia, PA.

- Unützer J, Chan Y-F, Hafer E, Knaster J, Shields A, Powers D, & Veith RC (2012). Quality improvement with pay-for-performance incentives in integrated behavioral health care. American Journal of Public Health, 102(6), e41–e45. https://doi.org/10.2105/AJPH.2011.300555

- Unützer J, Katon W, Callahan CM, Williams JW Jr, Hunkeler E, Harpole L, et al. (2002). Collaborative care management of late-life depression in the primary care setting: A randomized controlled trial. JAMA, 288(22), 2836–2845. https://doi.org/10.1001/jama.288.22.2836

- Walker SC, Kerns SEU, Lyon AR, Bruns EJ, & Cosgrove TJ (2010). Impact of school-based health center use on academic outcomes. Journal of Adolescent Health, 46(3), 251–257. https://doi.org/10.1016/j.jadohealth.2009.07.002

- Waltz TJ, Powell BJ, Matthieu MM, Damschroder LJ, Chinman MJ, Smith JL, … Kirchner JE (2015). Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: results from the Expert Recommendations for Implementing Change (ERIC) study. Implementation Science, 10, 109. https://doi.org/10.1186/s13012-015-0295-0

- Ward AM, Regan J, Chorpita BF, Starace N, Rodriguez A, Okamura K, … the Research Network on Youth Mental Health (2013). Tracking evidence based practice with youth: Validity of the MATCH and Standard Manual Consultation Records. Journal of Clinical Child & Adolescent Psychology, 42(1), 44–55. https://doi.org/10.1080/15374416.2012.700505

- Williams JW Jr, Katon W, Lin EHB, Nöel PH, Worchel J, Cornell J, … Unützer J (2004). The effectiveness of depression care management on diabetes-related outcomes in older patients. Annals of Internal Medicine, 140(12), 1015–1024. https://doi.org/10.7326/0003-4819-140-12-200406150-00012