In the span of their professional lives, a radiologist will read over 10 million images, a dermatologist will analyze 200,000 skin lesions, and a pathologist will review nearly 100,000 specimens. Now imagine a computer doing this work in days rather than decades, learning from each new image and refining its diagnostic acumen as it goes. This is the capability that artificial intelligence (AI) will bring to medical care: the potential to interpret clinical data more accurately and more rapidly than medical specialists.

Computer-aided diagnostics are nothing new in medicine. What is different now is that with deep learning, a class of machine learning algorithms, the system learns and improves autonomously. Just as a radiologist, dermatologist, or pathologist learns to recognize abnormalities by comparing them with those encountered in past experience, deep learning can learn from every image in a given database, whether that database is pooled across hospitals, cities, or even the entire world. Interestingly, the process of recognition and diagnosis, both in the physician’s mind and in deep learning, is still not well understood, and which feature of a specific image triggers a diagnosis often remains unknown.

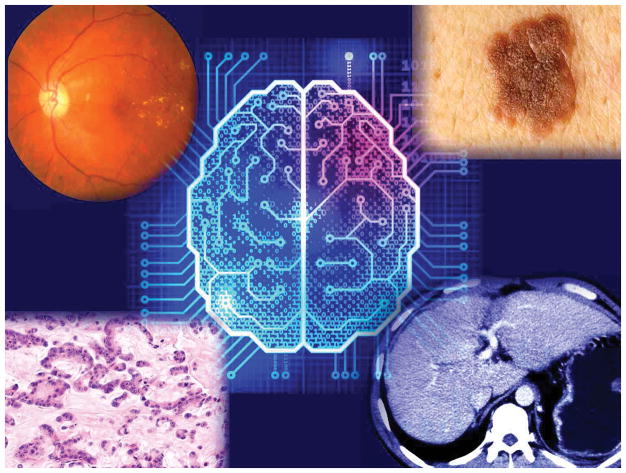

Deep learning has already demonstrated its skill at recognizing and interpreting patterns in clinical images. One study found that its performance in detecting diabetic retinopathy and macular edema from retinal fundus photographs was comparable to that of board-certified ophthalmologists [2]. Another study applied deep learning to the identification and classification of skin cancers and found its accuracy on a par with that of dermatologists [1].

Existing machine learning platforms can already be put to use: for example, IBM Watson can suggest the presence of pulmonary embolisms on CT scans, and Google DeepMind can support the analysis of retinal scans. Beyond improved diagnostic accuracy, the advantages are substantial. AI is faster and has negligible marginal cost, so it can extend screening to virtually any location in the world with cellular connectivity. AI also has the potential to reduce medical costs, both up front and downstream, by improving patient outcomes through more effective disease prevention and more appropriate treatment.

Adoption of AI in future clinical practice could be transformational. Consider an individual interacting with a virtual MD, perhaps through a voice interface on a smartphone (think Alexa, Siri, or Cortana), which prompts them to photograph a suspicious mole or skin rash, tracks it over time, possibly suggests care, and requests an in-person clinic visit only when necessary. New applications are also expected: AI might, for example, objectively quantify pain from facial expressions captured on video, a task that has so far proved difficult, providing additional insight to support diagnosis. Health providers will nonetheless remain at the core of medical care; AI will augment their diagnostic capabilities and free the time previously spent on tedious screening of images.

Will innovations in AI address the shortage of primary-care doctors and reduce the cost of care? Perhaps, but several obstacles are slowing its adoption. The first is the availability of labelled data to train deep learning algorithms, which requires collecting a huge set of images, validated by multiple medical specialists, for each specific disease. The digitization of medical information will accelerate this process. Second, the diagnostic efficacy of deep learning must be tested in reproducible clinical trials and compared against diagnoses made by independent clinical experts. Finally, for this revolution to succeed, the training of future care providers should include the basics of machine learning, which will ease the adoption of these technologies in clinical practice.

But AI will not replace physicians any time soon. An important unresolved challenge for medical AI is that it offers no explanatory power: it cannot search for the causes of what it observes. It can recognize and accurately classify a skin lesion, but it cannot explain what caused that lesion or what can be done to prevent or eliminate the disease. Other machine learning tools, such as probabilistic graphical models, have been developed to address this, but they remain far from the practical efficacy that deep learning has achieved in diagnostic support. In the years ahead, however, AI could become a tireless, irreplaceable, and cost-effective complement to physicians, giving doctors more time to focus on the complexities of each individual patient.

Fig. 1. Images: Shutterstock; composition: Scripps Translational Science Institute.

References

1. Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056.

2. Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316:2402–2410. doi: 10.1001/jama.2016.17216.

3. Gillies RJ, Kinahan PE, Hricak H. Radiomics: images are more than pictures, they are data. Radiology. 2016;278:563–577. doi: 10.1148/radiol.2015151169.