Abstract

Objective: Infobuttons appear as small icons adjacent to electronic health record (EHR) data (e.g., medications, diagnoses, or test results) that, when clicked, access online knowledge resources tailored to the patient, care setting, or task. Infobuttons are required for “Meaningful Use” certification of US EHRs. We sought to evaluate infobuttons’ impact on clinical practice and identify features associated with improved outcomes.

Methods: We conducted a systematic review, searching MEDLINE, EMBASE, and other databases from inception to July 6, 2015. We included and cataloged all original research in any language describing implementation of infobuttons or other context-sensitive links. Studies evaluating clinical implementations with outcomes of usage or impact were reviewed in greater detail. Reviewers worked in duplicate to select articles, evaluate quality, and abstract information.

Results: Of 599 potential articles, 77 described infobutton implementation. The 17 studies meriting detailed review, including 3 randomized trials, yielded the following findings. Infobutton usage frequency ranged from 0.3 to 7.4 uses per month per potential user. Usage appeared to be influenced by EHR task. Five studies found that infobuttons are used less often than non–context-sensitive links (proportionate usage 0.20–0.34). In 3 studies, users answered their clinical question in > 69% of infobutton sessions. Seven studies evaluated alternative approaches to infobutton design and implementation. No studies isolated the impact of infobuttons on objectively measured patient outcomes.

Conclusions: Weak evidence suggests that infobuttons can help providers answer clinical questions. Research on optimal infobutton design and implementation, and on the impact on patient outcomes and provider behaviors, is needed.

Keywords: infobutton, decision support systems, clinical, hospital information systems, medical informatics applications

INTRODUCTION

Clinicians routinely ask questions during their daily practice,1,2 and seeking and finding accurate answers is associated with improved quality of care.3–6 Efficiently finding answers requires consideration of issues related to the specific patient and clinical setting as well as access to robust knowledge resources.1,3,7,8 Electronic health records (EHRs) can support providers in answering questions at the point of care, for example by linking information about the patient, setting, and EHR task (e.g., lab result, medication list) with information contained in online knowledge resources. Context-sensitive knowledge links, commonly referred to as “infobuttons,”9 offer an omnipresent yet unobtrusive way to support point-of-care decisions. Effective September 2012, infobuttons are required for “Meaningful Use” certification of US EHR systems.10,11 However, many EHR functions have failed to improve clinical practice as intended, or have paradoxically increased workload and errors.12–15 It is therefore important to study the use of infobuttons in practice empirically.

The typical infobutton appears as a small icon adjacent to EHR data such as a medication, diagnosis, or laboratory test result (see Appendix Figure A1). When clicked, the infobutton opens a Web page with links to online knowledge resources relevant to the EHR task, such as drug dosing or side effects, disease treatments, or test interpretation. This information can be further tailored to the clinical context using characteristics of the patient (e.g., age, gender, comorbid conditions), provider (e.g., specialty), or care setting (e.g., outpatient, inpatient).

Systematic reviews have synthesized the evidence for various clinical information systems and component parts, including EHRs,16 clinical decision support,3,15,17–19 and clinical knowledge resources.3,20,21 However, no such review has focused on infobuttons and similar EHR tools supporting context-sensitive information access (hereafter referred to as infobuttons). Such a review would help clinicians, researchers, administrators, and medical informaticians in deciding when and how to implement, integrate, and use infobuttons to support clinical decisions. Our purpose is to synthesize the evidence on infobuttons, including their impact on clinical care and the factors that influence their use and effectiveness.

METHODS

The systematic review was planned, conducted, and reported using a protocol adhering to PRISMA standards.22

Study questions

We sought to answer the following questions:

What outcomes have been reported in infobutton evaluation studies?

What impact have infobuttons had on clinical practice in terms of patient outcomes and provider learning and behaviors (e.g., usage, influence on clinical questions or decisions)?

What infobutton features are associated with better or worse clinical practice outcomes or user experience?

Data sources and searches

To identify potentially relevant studies, we conducted a comprehensive search of MEDLINE, EMBASE, CINAHL, the Cochrane Registry, Scopus, Web of Science, and ProQuest Dissertations, from each database’s inception to July 6, 2015. We made no restrictions on language. An experienced research librarian (L.J.P.) and author M.T. worked iteratively to develop the search strategy. Keywords and subject headings included Medical Records Systems, Computerized; Decision Support Systems, Clinical; Point-of-Care Systems; context* adj aware*; and infobutton (see Appendix Table A1 for the full strategy). To identify omitted studies, we searched the reference lists of all included reports and asked experts for lists of relevant publications.

Study selection

We used broad inclusion criteria to present a comprehensive overview of infobutton implementation, use, and evaluation. We defined infobutton as: “a knowledge retrieval tool embedded in an EHR that automatically links to knowledge resources tailored to the specific EHR context.” We included all original research studies describing infobuttons for any consumer of medical content, including health care providers, researchers, and patients.

We planned in advance to conduct data abstraction at 2 levels of detail: a brief review of all studies describing infobutton implementation, including studies not reporting outcomes, to create a comprehensive list of studies for interested readers; and a detailed review of studies describing outcomes or comparisons of interest. Criteria for the detailed review were (1) implementation in a clinical setting and (2) evaluation that measured an outcome of usage frequency or clinical impact, or made any comparison using any outcome. We included comparisons with another intervention, with pre-intervention data, or across participant features (i.e., subgroup comparisons). We accepted both objective and user-reported measures of clinical impact, including any reported frequency of patient outcomes, provider behaviors, influence on clinical decisions, or support in answering clinical questions. Ordinal scales estimating the degree of clinical impact were not considered for inclusion purposes because we anticipated that such scales would be more susceptible to recall bias and acquiescence bias.

Two authors (M.T. and B.H.) worked independently and in duplicate to screen potential articles for inclusion, looking first at titles and abstracts (raw interrater agreement 84%; kappa 0.6) and then reviewing the full text (raw agreement 90%; kappa 0.7). Conflicts were resolved by consensus with the assistance of a third reviewer (G.D.F.) when needed.
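Cohen's kappa corrects raw agreement for the agreement expected by chance, κ = (p_o − p_e)/(1 − p_e), where p_o is observed agreement and p_e is chance agreement computed from each reviewer's marginal inclusion rate. The review reports only the summary statistics, not the underlying screening counts, so the minimal Python sketch below uses hypothetical 2 × 2 counts, chosen for illustration only, that happen to give values close to those reported for title and abstract screening.

```python
def cohens_kappa(both_include: int, only_r1: int, only_r2: int,
                 both_exclude: int) -> tuple[float, float]:
    """Return (raw agreement, Cohen's kappa) for two reviewers' include/exclude decisions.

    only_r1: records included by reviewer 1 but excluded by reviewer 2.
    only_r2: records included by reviewer 2 but excluded by reviewer 1.
    """
    n = both_include + only_r1 + only_r2 + both_exclude
    p_observed = (both_include + both_exclude) / n
    # Marginal inclusion proportions for each reviewer
    r1_include = (both_include + only_r1) / n
    r2_include = (both_include + only_r2) / n
    # Agreement expected by chance if the reviewers decided independently
    p_expected = r1_include * r2_include + (1 - r1_include) * (1 - r2_include)
    kappa = (p_observed - p_expected) / (1 - p_expected)
    return p_observed, kappa


# Hypothetical counts for 100 screened records (not the review's data)
agreement, kappa = cohens_kappa(both_include=20, only_r1=8, only_r2=8, both_exclude=64)
print(f"raw agreement {agreement:.2f}, kappa {kappa:.2f}")  # raw agreement 0.84, kappa 0.60
```

The example shows why kappa is lower than raw agreement: when most records are excluded by both reviewers, a large share of the observed agreement would be expected by chance alone.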

Data extraction and quality assessment

We initially planned as our primary outcome the frequency of clinical impact on objective measures of patient outcomes and provider behaviors. However, finding no such studies, we redefined our primary outcomes as usage frequency and user-perceived clinical impact (e.g., on clinical decisions or answering questions). Other outcomes of interest included cost (time, money, and other resources) and satisfaction.

An electronic data abstraction form was developed through iterative testing and revision by all the authors. Working independently and in duplicate, the authors used this form to abstract key information at 2 levels of detail. For all included articles, we abstracted information on setting, comparison, and outcomes. For studies meeting criteria for detailed review (defined above), we also abstracted information on study design and quality, institution, users, EHR task, infobutton features, and outcome results. We evaluated methodologic quality using an adaptation of the Newcastle-Ottawa Scale for cohort studies23,24 that evaluates the representativeness of the intervention group, selection of the comparison group and comparability of the cohorts, blinding of outcome assessment, and completeness of follow-up. We reconciled all disagreements by consensus.

Data synthesis

We looked at the prevalence of each abstracted element to identify themes regarding current infobutton implementation and usage. We further explored quantitative details for usage, impact, and satisfaction. To standardize usage across studies, we calculated usage frequency of an information-access resource (e.g., infobutton or Web page with non–context-sensitive links) as the average number of uses per month per potential user, with potential user defined as someone who used the resource at least once during the study period. We calculated the ratio of usage frequency for studies comparing infobuttons vs non–context-sensitive resources. We report quantitative information using forest plots. Cross-study variation in implementation, outcome measurement, and reporting precluded meaningful synthesis using meta-analysis.
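The standardized usage measure and the infobutton/non–context-sensitive ratio reduce to simple arithmetic on usage logs. The minimal Python sketch below assumes a hypothetical log format (one user ID per logged use) and applies the review's definition of a potential user as anyone who used the resource at least once; the logs and numbers are illustrative, not data from any included study.

```python
def usage_frequency(use_log: list[str], months: float) -> float:
    """Average uses per month per potential user.

    use_log holds one user ID per logged use of a resource (hypothetical format).
    A potential user is anyone who appears in the log at least once.
    """
    if months <= 0:
        raise ValueError("months must be positive")
    potential_users = len(set(use_log))
    if potential_users == 0:
        return 0.0
    return len(use_log) / potential_users / months


def proportionate_usage(infobutton_freq: float, other_freq: float) -> float:
    """Ratio of infobutton usage frequency to that of a non-context-sensitive resource."""
    return infobutton_freq / other_freq


# Hypothetical two-month logs for the same group of clinicians
infobutton_log = ["u1"] * 6 + ["u2"] * 3 + ["u3"] * 3        # 12 uses, 3 users
static_links_log = ["u1"] * 20 + ["u2"] * 10 + ["u4"] * 10   # 40 uses, 3 users

ib = usage_frequency(infobutton_log, months=2)        # 2.0 uses per user per month
other = usage_frequency(static_links_log, months=2)   # about 6.67 uses per user per month
print(round(proportionate_usage(ib, other), 2))       # 0.3
```

Because the denominator counts only people who used the resource at least once, this measure cannot distinguish low uptake from infrequent use by all eligible clinicians, which is why such samples are potentially biased.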

RESULTS

Trial flow

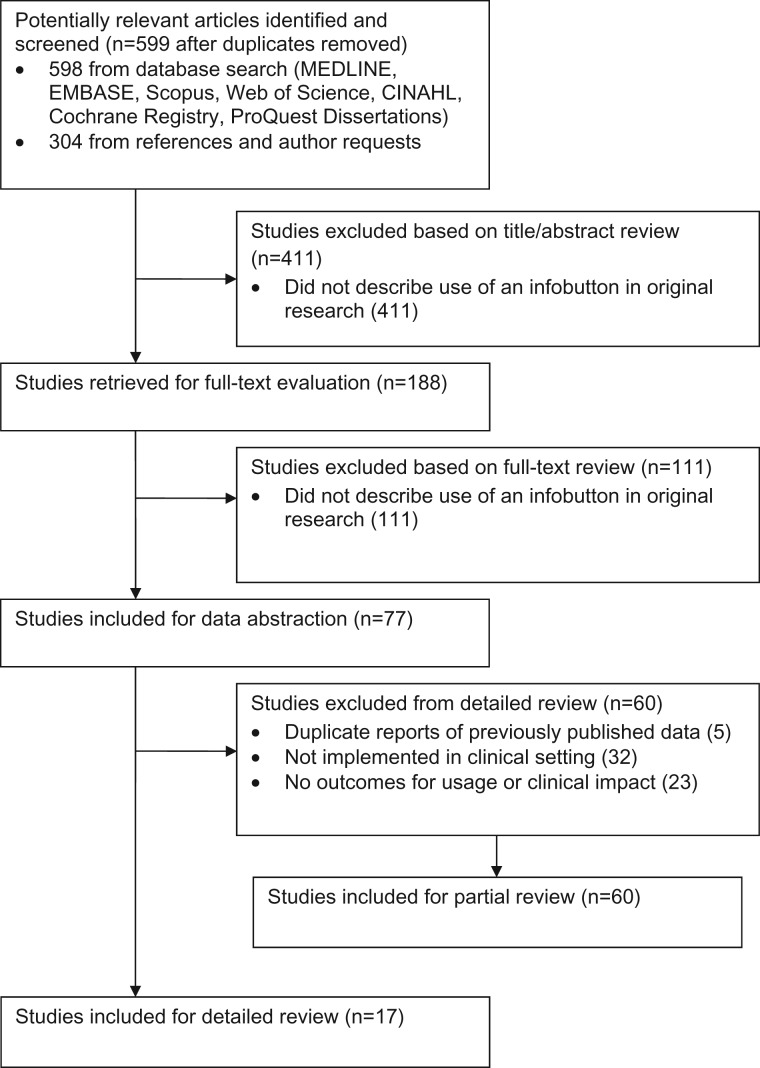

We identified 598 potentially eligible studies from the database search and 1 additional study from article reference lists and expert contacts. Of these 599 articles, 77 described the use of infobuttons and 17 met criteria for detailed review (see Table 1). These 17 studies25–41 reported data on over 24 000 users and 240 000 infobutton searches. We briefly reviewed the other 60 studies (see Appendix Table A2).

Table 1.

Characteristics of studies reviewed in detail (n = 17)

| Author, year | Users (No.: type) | Site | Study design | Duration (mo) | Infobutton features | Comparisons | Usage outcomes | Non-usage outcomes |
|---|---|---|---|---|---|---|---|---|
| No comparison | | | | | | | | |
| Reichert (2004)26 | 130: MD | IHC | Cross-sectional | NR | T: Dx, Meds, Test; R: DB, Paid | – | Y | Impact (answered question, improved care), Satisfaction, Time |
| Del Fiol et al. (2006)29 | 2611: MD, RN | IHC | Cross-sectional | 49 | T: Dx, Meds, Test; R: DB, Paid | – | Y | – |
| Oppenheim et al. (2009)36 | 272: PG, MD, RN, PA, NP, MS | NSLIJ | Cross-sectional | 1 | T: Clinical Note, Test; R: DB, Paid | – | Y | – |
| Comparison with non-IB resource | | | | | | | | |
| Cimino et al. (2003)25 | 2607: PG, MD, RN | Columbia | Cross-sectional, compare by resource | 6 | T: Meds, Test; R: Paid, DB, Int | Web page with static links | Y (a) | Satisfaction |
| Rosenbloom et al. (2005)27 | 147: PG | Vanderbilt | 2-group, randomized | 12 | T: Dx, Meds, Order, Test; R: Paid, DB, Int | Order entry screen with static links | Y | Impact (cost of care) |
| Chen et al. (2006)28 | 4621: MD, PG, RN | Columbia | Cross-sectional, compare by resource | 18 | T: Dx, Meds, Test; R: Int, DB, Paid | Web page with static links | Y (a) | – |
| Cimino (2007)32 | > 2901: MD, MS, PG, RN | Columbia | Cross-sectional, compare by resource | 31 | T: Dx, Meds, Test; R: Int, DB, Paid | Web page with static links | Y | Impact (answered question, improved care), Satisfaction |
| Hunt et al. (2013)39 | 1430: MD, PG, NP, RN | Columbia | Cross-sectional, compare by resource | 12 | T: Test; R: DB, Int, Paid | Web page with static links | Y | – |
| Hyun et al. (2013)40 | 156: RN | Columbia | Cross-sectional, compare by resource | 12 | T: Dx; R: Paid | Web page with static links | Y | Satisfaction |
| Borbolla et al. (2014)41 | N not specified: patients | Hospital Italiano, Buenos Aires | Cross-sectional, compare by resource | 14 | T: Dx, Test; R: DB | Other patient portal resources | Y (a) | – |
| Comparison with alternate IB implementation | | | | | | | | |
| Maviglia et al. (2006)30 | 590: MD, RN, NP | Partners | 2-group, randomized | 12 | T: Meds; R: Paid | IB linking to 1 of 2 alternative knowledge resources | Y | Impact (answered question, improved care), Satisfaction, Time |
| Cimino et al. (2007)31 | 4397: mixed “users” | Columbia | Historical control | 14 | T: Dx, Meds, Test; R: Int, DB, Paid | IB with enhanced navigation vs original | Y | Time |
| Cimino and Borovtsov (2008)33 | 896: MD, PG, RN, MS | Columbia | 2-group, non-randomized | 24 | T: Dx, Meds, Test; R: Int, DB, Paid | IB implemented with e-mail reminder vs no reminder | Y | – |
| Del Fiol et al. (2008)34 | 104: mixed “clinicians” | IHC | 2-group, randomized | 6 | T: Meds; R: DB, Paid | IB with topic-specific links vs nonspecific links | Y | Impact (answered question, improved care, learning), Time |
| Cimino (2009)35 | 3483: MD, PG, RN, MS | Columbia | Cross-sectional, compare by resource | 24 | T: Dx, Meds, Test; R: Int, DB, Paid | Alternate methods of IB topic/resource selection; IB vs non-IB resource (Web page with static links) | Y | – |
| Del Fiol et al. (2010)37 | N not specified: mixed “users” | Columbia, IHC, Partners | Prospective pre/post cross-site comparison | 24 (12 pre, 12 post) | T: Test; R: Paid | IB augmented to fill topical gaps vs original | (b) | Impact (answered question) |
| Cimino et al. (2013)38 | N not specified: mixed “users” | Columbia, IHC, Partners, VHA | Cross-site comparisons | 1–60 (varies by site) | T: Dx, Meds, Test; R: Int, DB, Paid | Site-specific IB implementation factors | Y (a) | – |

(a) Reports usage, but insufficient information to calculate frequency (uses per user per month).

(b) Analyzed log files to determine the proportion of infobutton uses that retrieved relevant content, but did not report usage rates.

Abbreviations: IB = infobutton; NR = not reported; Users: MD = practicing physician; MS = medical students; NP = nurse practitioner; PA = physician assistant; PG = postgraduate physician (i.e., resident); RN = nurse; Site: Columbia = Columbia University (New York Presbyterian Hospital); IHC = Intermountain Healthcare; NSLIJ = North Shore-Long Island Jewish Health System; Partners = Partners HealthCare System; Vanderbilt = Vanderbilt University Hospital; VHA = Veterans Health Administration; Infobutton features: T = EHR task/topic (Dx = diagnosis/problem list; Meds = medications; Order = order entry; Test = test results; other tasks are named in full), R = resources linked to (Int = internal resources; DB = literature database, e.g., MEDLINE, PubMed; Paid = paid/commercial resource, e.g., Micromedex, UpToDate).

Characteristics of included studies

Considering all 77 included studies (detailed and brief review) together, 44 (57%) employed infobuttons implemented in a clinical context; the others were conducted in a laboratory or other pilot setting. Twenty-five studies (32%) made some type of comparison (with another group, pre/post-intervention, or by subgroup), 22 (29%) reported usage, and 9 (12%) reported impact. Five (6%) reported data described more completely in another publication; we included only the most complete article for detailed review. Sixty-two studies (81%) took place in the United States.

The remainder of this review focuses only on the 17 studies reviewed in detail (i.e., clinical implementations with data on usage, impact, or a comparison). Of these, 14 (82%) originated from 1 of 3 institutions with locally developed EHR systems: Columbia University, Intermountain Healthcare, or Partners HealthCare. Sixteen (94%) took place in the United States. Sixteen studies reported infobuttons developed for use by clinicians; 1 study (from Buenos Aires) reported use of infobuttons embedded in a patient portal.41

Study quality

We evaluated study quality for the 17 studies reviewed in detail (see Table 2). We judged the study sample to be representative of the overall potential user base in only 2 studies; for the remainder, investigators included data only for people who had actually used the infobutton (i.e., a potentially biased sample). Three studies used a comparison group with randomized assignment, 1 used a comparison group with nonrandom assignment, 1 used a historical control, 2 contrasted results across institutions, and 7 compared the use of different information-access resources by a single group over a fixed period of time (i.e., cross-sectional study with comparison of resource usage). Three studies reported data from a single group and time point without comparison. For the 7 studies with a separate comparison group, the comparison group was selected from a similar community in 5 studies. Six studies reported outcomes beyond a single time point; among these, 4 had > 75% follow-up. All studies reported log file data on infobutton usage, which we coded as an objective, blinded measurement. Several studies also reported self-report measures of impact on patient care and satisfaction (see Table 1).

Table 2.

Study quality

| Author, year | Representativeness (sampling) | Selection of comparison group | Comparability of cohorts (a) | Outcome follow-up (b) |
|---|---|---|---|---|
| No comparison | | | | |
| Reichert (2004)26 | 0 | N/A | N/A | 0 |
| Del Fiol et al. (2006)29 | 0 | N/A | N/A | N/A |
| Oppenheim et al. (2009)36 | 0 | N/A | N/A | N/A |
| Comparison with non-IB resource | | | | |
| Cimino et al. (2003)25 | 0 | N/A (c) | N/A | N/A |
| Rosenbloom et al. (2005)27 | 1 | 1 | 1 (RCT) | 1 |
| Chen et al. (2006)28 | 0 | N/A (c) | N/A | N/A |
| Cimino (2007)32 | 0 | N/A (c) | N/A | N/A |
| Hunt et al. (2013)39 | 0 | N/A (c) | N/A | N/A |
| Hyun et al. (2013)40 | 0 | N/A (c) | N/A | N/A |
| Borbolla et al. (2014)41 | 0 | N/A (c) | N/A | N/A |
| Comparison with alternate IB implementation | | | | |
| Maviglia et al. (2006)30 | 0 | 1 | 1 (RCT) | 0 |
| Cimino et al. (2007)31 | 0 | 1 | 0 | 1 |
| Cimino and Borovtsov (2008)33 | 0 | 0 | 0 | 1 |
| Del Fiol et al. (2008)34 | 0 | 1 | 1 (RCT) | 1 |
| Cimino (2009)35 | 0 | N/A (c) | N/A | N/A |
| Del Fiol et al. (2010)37 | 0 | 1 | 0 | N/A |
| Cimino et al. (2013)38 | 0 | 0 | 0 | N/A |

N/A = not applicable (no separate comparison group or no follow-up period).

(a) Comparability of cohorts could be demonstrated through randomized group assignment or by statistical adjustment for baseline characteristics; no studies reported the latter approach.

(b) We also appraised the quality of outcome assessment. All studies reported log file data on infobutton usage, which we coded as an objective, blinded measurement. Several studies also reported self-report measures of impact on patient care and satisfaction (see Table 1).

(c) Compared usage of different resources among the same group of potential users, thus providing comparison results without a separate comparison group.

Historical evolution

The first report of infobutton-like context-sensitive decision support was the Hepatopix tool in 1989.42 Subsequent similar tools included Psychotopix,43 Interactive Query Workstation,44 and MedWeaver.45 Cimino coined the term “infobutton” in 1997,9 and this term has now been widely adopted to refer to context-sensitive EHR decision support.46 The infobutton manager47,48 was introduced in 2002 as a bridge between the infobutton itself (i.e., the EHR-embedded link) and the knowledge resource(s). Infobutton managers anticipate the clinical questions likely to emerge from an EHR task, prioritize potential knowledge resources, and provide links that automatically search target resources based on context. An “Infobutton Standard” has been developed by Health Level Seven International (HL7; http://www.hl7.org/) to standardize how the EHR context and the search request are sent to knowledge resources, thus facilitating infobutton implementation and system interoperability.49 In 2014, infobutton functionality compliant with the HL7 Infobutton Standard became a required criterion for EHR certification in the US EHR Meaningful Use program.11 Since then, the standard has been widely adopted by EHR products and knowledge resources (see https://www.healthit.gov/chpl).
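To illustrate what a standardized context-bearing search request looks like, the Python sketch below assembles a URL-based infobutton request. The parameter names reflect our reading of the HL7 URL-based implementation guide for the Infobutton Standard (main search concept, patient gender and age, task context, information recipient); the knowledge-resource base URL is hypothetical, and the example code values (LOINC 2345-7 and the task code LABRREV) should be verified against the specification and its vocabularies before any real use.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

LOINC_OID = "2.16.840.1.113883.6.1"  # HL7 code system OID for LOINC


def build_infobutton_url(base_url: str, code: str, code_system: str, display_name: str,
                         gender: str, age_years: int, task: str,
                         recipient: str = "PROV") -> str:
    """Encode EHR context as query parameters for a knowledge-resource request."""
    params = {
        # The concept the clinician is looking at (here, a lab test)
        "mainSearchCriteria.v.c": code,
        "mainSearchCriteria.v.cs": code_system,
        "mainSearchCriteria.v.dn": display_name,
        # Patient context used to tailor content
        "patientPerson.administrativeGenderCode.c": gender,
        "age.v.v": str(age_years),
        "age.v.u": "a",  # UCUM unit code for years
        # EHR task in which the infobutton was clicked
        "taskContext.c.c": task,
        # Intended reader of the content: PROV (provider) or PAT (patient)
        "informationRecipient": recipient,
    }
    return f"{base_url}?{urlencode(params)}"


url = build_infobutton_url(
    "https://kb.example.org/infobutton",  # hypothetical knowledge resource endpoint
    code="2345-7",
    code_system=LOINC_OID,
    display_name="Glucose [Mass/volume] in Serum or Plasma",
    gender="F",
    age_years=67,
    task="LABRREV",  # laboratory results review (verify against HL7 ActTaskCode)
)
print(url)

# A knowledge resource (or infobutton manager) can decode the same context:
query = parse_qs(urlsplit(url).query)
print(query["taskContext.c.c"])  # ['LABRREV']
```

Because the context travels as plain, agreed-upon query parameters, the same request can in principle be sent to any conforming knowledge resource, which is the interoperability benefit the standard is meant to provide.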

Usage

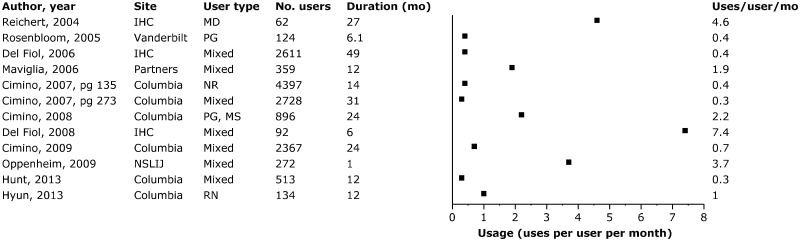

Usage frequency ranged from 0.3 to 7.4 uses per month per user (see Figure 2), with substantial variation within and between institutions. We found no clear pattern in usage rates over time, although 1 study found a steady rise in usage over a 4-year period.29 Subgroup analyses of usage frequency across health care provider type (e.g., practicing physicians, residents, nurses, or other allied health professionals) likewise revealed no consistent patterns (data not shown).25,28,30,35,36,39 Infobutton usage varied substantially, albeit inconsistently, by EHR task. Three studies25,29,38 (including 1 multi-institutional study38) found that searches while prescribing or reviewing medications accounted for 70–84% of infobutton uses, whereas another study found that > 80% of searches occurred while reviewing laboratory results.35 A fifth study found a roughly equal distribution of usage across tasks.27

Figure 1.

Trial flow.

Figure 2.

Frequency of infobutton usage. Abbreviations: NR = not reported; mo = month. See Table 1 footnotes for other abbreviations.

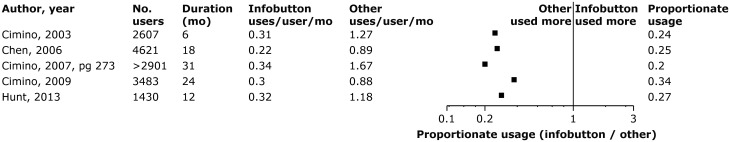

When compared with traditional information-access resources such as non–context-sensitive links on a library Web page or EHR resource tab, infobuttons are usually used less often than the alternative (see Figure 3). Five studies at the same institution over a 10-year period25,28,32,35,39 consistently found that infobuttons are used less often than non–context-sensitive links (proportionate usage [infobutton/non–context-sensitive] range 0.20–0.34). By contrast, another study at the same institution found that while infobuttons were used less often than non–context-sensitive links for outpatient questions, the opposite pattern held for inpatient questions.33 A randomized trial at another institution compared a computerized provider order-entry (CPOE) screen redesigned to include context-sensitive links (i.e., infobuttons) along with other information and alerts against the original CPOE screen, which presented non–context-sensitive links. This study found that the redesigned screen with infobuttons was used 9.2 times as often as the original screen.27

Figure 3.

Comparative usage for infobuttons vs non–context-sensitive information-access resources. All studies were conducted at Columbia University and all users were mixed providers. The “other” (non–context-sensitive) comparison was a “Health Resources” Web page with static links. Calculations accounted for different sample sizes for infobutton and other (non–context-sensitive links) groups, except that Cimino 2003 and Chen 2006 did not report users for infobutton and other links separately, and for these studies we used the total sample size. Abbreviations: mo = month.

Clinical impact

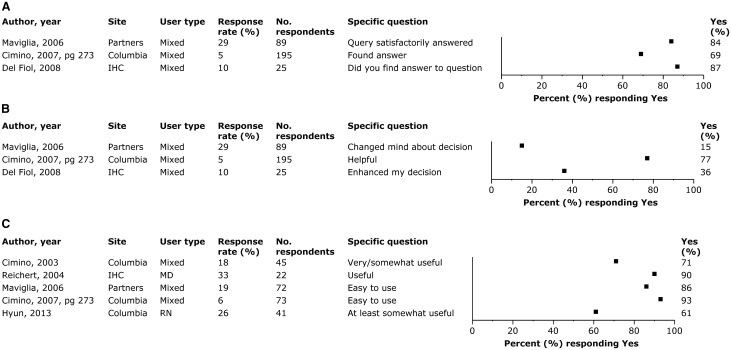

We found no studies evaluating impact on actual patient outcomes or objectively measuring provider behaviors beyond usage. Three studies asked users to complete a brief questionnaire regarding perceived impact immediately following infobutton use (see Figure 4).30,32,34 Although response rates were low (< 30% of uses), the available responses suggest that users found an answer to their clinical question at least 69% of the time; the information changed their clinical decision less often (range 15–77%). One study26 asked similar questions on a post-study survey, and found that 68% (of 22 respondents) agreed that infobuttons answered their questions more than half the time, and 91% agreed that infobuttons helped them make better decisions.

Figure 4.

Perceived clinical impact and satisfaction. See Table 1 footnotes for abbreviations. Panel A. Answered question. Panel B. Improved clinical decision. Panel C. Satisfaction.

One study27 hypothesized that a CPOE screen redesigned to include infobuttons along with information about medication and test costs might reduce the total cost of orders placed (i.e., health care resource utilization). However, it found no significant difference between the redesigned and original CPOE screens (average $403 vs $409 per order session, respectively).

Time

Three studies estimated the time required to find an answer with infobuttons, reporting average times of 25 seconds,30 39 seconds,34 and 97 seconds31 per question. We found no studies comparing time for searches using infobuttons vs other information-access resources.

User satisfaction

User satisfaction with infobuttons (i.e., usefulness, ease of use) was universally very favorable (see Figure 4). We found 1 study comparing satisfaction with infobuttons vs non–context-sensitive resources, in which a similar percentage of users perceived infobuttons (68%) and a Web page with static information links (69%) as useful.40

Influential features of infobutton design and implementation

Studies comparing different approaches to implementing or promoting infobuttons shed limited light on effective approaches. For example, 1 study found increased usage frequency following e-mail reminders to increase infobutton awareness.33 Another study found higher usage when the implementation was enhanced to improve coverage of the content domain.37 A change in the infobutton interface to reduce the number of words and improve cross-topic consistency resulted in users selecting resources more often and finding answers more quickly in a controlled (usability lab) setting, but had no impact on usage frequency in clinical settings.31

The choice of knowledge resources accessed by an infobutton matters. One study found that infobuttons linking directly to the subsection of a drug monograph specific to the question (e.g., drug dosing or contraindications) reduced the session time compared with infobuttons linking to the top of the monograph.34 Another found significant differences in usage between 2 commercial knowledge resources that differed in both content and presentation.30 A multi-institutional observational study found that 15% of infobutton topics accounted for 80% of infobutton uses.37 Another multi-institutional study identified important differences in information needs and available knowledge resources at each institution.38
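A concentration finding such as “15% of topics accounted for 80% of uses” comes from ranking topics by usage and accumulating counts until the target share is reached. A minimal Python sketch, assuming usage logs have already been summarized as uses per topic; the topic labels and counts are hypothetical and do not come from the cited study.

```python
def share_of_topics_for_uses(topic_counts: dict[str, int], target_share: float = 0.8) -> float:
    """Smallest fraction of topics that together account for target_share of all uses."""
    counts = sorted(topic_counts.values(), reverse=True)  # most-used topics first
    total = sum(counts)
    if total == 0:
        return 0.0
    cumulative = 0
    for i, count in enumerate(counts, start=1):
        cumulative += count
        if cumulative / total >= target_share:
            return i / len(counts)
    return 1.0


# Hypothetical log summary: uses per infobutton topic (not data from the review)
uses_by_topic = dict(zip("ABCDEFGHIJ", [500, 300, 50, 40, 30, 30, 20, 15, 10, 5]))
print(share_of_topics_for_uses(uses_by_topic))  # 0.2
```

Here the 2 most-used of 10 topics account for 800 of 1000 uses, so 20% of topics cover 80% of usage.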

DISCUSSION

We identified 77 studies describing infobutton implementation, 17 of which evaluated infobutton usage or user-reported impact and satisfaction. Usage ranged from 0.3 to 7.4 uses per potential user per month, with high variability within and between institutions. Usage appears to be influenced by the EHR task. Clinicians in practice appear to use infobuttons less often than non-infobutton approaches to access knowledge resources, although most of this evidence comes from 5 nonrandomized studies from a single institution; moreover, lower usage is not unexpected for reasons outlined below. Users report favorable impact on answering questions and making clinical decisions. We found no studies isolating the impact of infobuttons on patient outcomes.

Limitations and strengths

This review has several limitations. First, as with all reviews, our findings are constrained by the quantity and quality of published evidence. Although we identified 77 original research studies, only 17 reported outcomes of primary interest or useful comparisons. The quality of even these studies was generally low, and no studies reported objectively measured impact on patient care or provider behaviors. Rather, all study outcomes were limited to usage rates and self-reported measures of perceived impact and satisfaction. Moreover, incomplete and imprecise reporting in most studies made it difficult to determine key aspects of study methods and results.

Second, infobutton usage—the only outcome reported in most studies—is important, yet problematic, because of varying denominators and context-specific influences. Optimal usage depends on the incidence of user information needs; thus it is hard to know how often an information resource should have been used. Additionally, comparisons of usage between infobuttons and other information-access resources can be misleading since infobuttons are accessible only from a subset of possible EHR contexts, whereas context-free resources are typically available from a variety of locations. Finally, many reports omitted information needed to calculate standardized usage frequencies, or lacked details regarding the implementation and context that might explain cross-study differences in usage.

Third, most studies originated from 1 of 3 academic institutions (similar to the findings of a previous review of health information technology19); generalizability to nonacademic settings remains unknown. Moreover, authors J.J.C. and G.D.F. were involved in several of the included studies. While their expertise is an obvious strength, their close involvement also introduces a potential conflict of interest and lack of objectivity in this review. To mitigate this limitation, other authors (M.T., B.H., D.A.C.) independently synthesized the results to form impartial conclusions and then discussed these conclusions with all authors to incorporate their expert-derived insights. Fourth, heterogeneity in study designs and outcomes precluded formal meta-analysis; however, we did abstract and report quantitative data whenever possible. Fifth, although many “preliminary” research reports promised more robust reporting of detailed results and analyses, we could not find follow-up reports in most instances; this could reflect publication bias against nonsignificant or unfavorable findings.

Strengths include the systematic search of multiple databases guided by an expert research librarian, duplicate review at all stages of inclusion and data abstraction, involvement of experts in the field, consideration of study design and quality when synthesizing evidence, and reporting of quantitative results whenever possible.

Integration with prior work

Clinical questions arise frequently but usually go unanswered,1 due in large part to insufficient time.50,51 The ideal knowledge resource varies for different questions,37 and resources that are efficient, integrated with clinical workflows, and optimized for the question will facilitate finding answers.3,8 Infobuttons should, in theory, help providers find answers faster and with greater accuracy. We found no studies directly comparing the search time or accuracy for infobuttons vs other information-access resources in clinical practice. However, a systematic review of information-seeking behaviors1 identified 3 studies reporting search time, which provide an indirect comparison. The average search times for infobuttons (range 25–97 s)30,31,34 compare favorably with searches without infobuttons (range 118–241 s).2,52,53 While these data do not allow formal statistical analysis, they suggest that infobuttons could enhance information-seeking efficiency. Research is needed to confirm this, and to clarify how to further optimize the speed and accuracy of information searches.1

Previous reviews of health information technology research have called for improved reporting, greater attention to how implementation and context affect outcomes, and evaluations using commercially available EHRs to enhance generalizability.15,18,19 We identified similar gaps in the evidence for infobuttons, and affirm the suggested research agendas. In particular, we agree that “the most important improvement… is increased measurement of and reporting of context, implementation, and context-sensitivity of effectiveness.”15

Implications for practice and future research

The incorporation of infobuttons into modern EHRs as part of the Meaningful Use standard creates an imperative for evidence-based guidance in design and implementation. This is particularly important as commercial EHRs with built-in infobutton functionality replace locally developed systems, because most design features will be prespecified and inflexible. Unfortunately, we found only limited evidence to guide the optimal implementation of infobuttons to support clinical decisions. Authors have suggested that usability, topic availability, and relevance are associated with usage and clinical impact,27,30,31,34,35,39 but data are insufficient to confirm these propositions. In addition to research focusing on the clinical impact of infobutton use (e.g., provider behaviors and patient outcomes), we need research clarifying the barriers to, and solutions for, infobutton design, development, implementation, usage, and maintenance. For example, we need to understand the information needs and workflow of potential users, especially across different settings1,15,51,54; how to optimize the speed and accuracy of identifying trustworthy, concise, and relevant information8; how to focus clinicians’ attention on the most salient information; and how to encourage initial use so that clinicians gain familiarity with infobuttons and integrate them into their clinical routine. Studies in controlled (laboratory) settings and in authentic clinical contexts will likely yield complementary evidence. The rising use of commercial EHR systems with standards-based infobutton functionality will enhance the generalizability of research results and could enable multicenter projects.37

These research efforts will require robust outcome measures. Usage remains an important measure, as noted above. However, we note the absence of any studies reporting objective impact of infobuttons on patient care, and suggest this as an important, albeit challenging, aspiration in future research. In addition to patient outcomes such as diagnostic accuracy, treatment success, cost of care, and patient satisfaction, other important clinical outcomes include time to find an answer, adherence to guidelines, and adverse events (e.g., did the infobutton point the provider to misleading information?). Various data sources (EHR data, user self-report, patient survey), each with unique advantages and disadvantages, could be used to measure these outcomes. Validation of these outcome measures,55,56 including correlation between clinically important measures and “surrogate” measures,57 would be an important research direction. Creating standardized definitions for key variables such as usage, potential users, session success, and session time would also be helpful.
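Standardized definitions matter because the same usage log can yield different figures depending on how the numerator and denominator are chosen. As one possible illustration (not a definition drawn from the included studies), the Python sketch below computes three of the variables named above from a hypothetical session log: usage expressed as uses per month per potential user (the metric reported in the Abstract), session success as the share of sessions with a recorded outcome in which the user reported an answer, and median session time. The `InfobuttonSession` fields and all function names are assumptions made for illustration; real EHR logs differ, and deciding who counts as a potential user remains a study-design choice.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import List, Optional


@dataclass
class InfobuttonSession:
    """One logged infobutton session (hypothetical log schema, for illustration only)."""
    user_id: str
    start: datetime
    end: datetime
    answered: Optional[bool]  # self-reported success; None if the user was not asked


def usage_rate(sessions: List[InfobuttonSession], potential_users: int, months: float) -> float:
    """Uses per month per potential user; the denominator is supplied explicitly
    because who counts as a 'potential user' is a study-design decision."""
    if potential_users <= 0 or months <= 0:
        raise ValueError("potential_users and months must be positive")
    return len(sessions) / (potential_users * months)


def success_rate(sessions: List[InfobuttonSession]) -> Optional[float]:
    """Share of sessions with a recorded outcome in which the question was answered.
    Sessions without a recorded outcome are excluded from the denominator."""
    rated = [s for s in sessions if s.answered is not None]
    if not rated:
        return None
    return sum(1 for s in rated if s.answered) / len(rated)


def median_session_seconds(sessions: List[InfobuttonSession]) -> Optional[float]:
    """Median session time in seconds, measured as end minus start."""
    if not sessions:
        return None
    return median([(s.end - s.start).total_seconds() for s in sessions])


# Hypothetical example: 3 sessions from a unit with 4 potential users over 2 months.
log = [
    InfobuttonSession("u1", datetime(2015, 1, 5, 9, 0, 0), datetime(2015, 1, 5, 9, 0, 40), True),
    InfobuttonSession("u1", datetime(2015, 1, 20, 14, 0, 0), datetime(2015, 1, 20, 14, 1, 30), False),
    InfobuttonSession("u2", datetime(2015, 2, 3, 11, 0, 0), datetime(2015, 2, 3, 11, 0, 25), None),
]
print(usage_rate(log, potential_users=4, months=2))  # 0.375
print(success_rate(log))  # 0.5
print(median_session_seconds(log))  # 40.0
```

Excluding sessions with no recorded outcome from the success denominator is one defensible choice; counting them as failures would give a lower figure from the same log, which is exactly the kind of discrepancy that shared definitions would remove.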

In addition to facilitating timely access to information at the point of care, infobuttons may find use in continuing medical education, maintenance of certification, and patient education. For example, providers frequently use infobuttons to access patient education materials.30,40 Perhaps more importantly, with the rise of patient-directed information systems (e.g., patient portals),58,59 we anticipate a growing need for infobuttons directed at patients.41 Because this audience often lacks background medical knowledge, both the potential benefits and the potential risks (e.g., misinformation) of patient-directed infobuttons could be even greater than for health care professionals.

Finally, usage in many studies seems lower than anticipated, suggesting that the availability of even well-designed infobuttons does not guarantee their use. In addition, usage varies widely across clinical tasks and between institutions. We suspect this variability reflects the “context-sensitive effectiveness” of information technologies15; i.e., issues related to local culture, clinical task and topic (e.g., laboratory test interpretation vs medication prescription support), workflow (e.g., timing of the task51), infobutton availability (i.e., some infobuttons are available only in limited clinical or EHR contexts), and promotion (advertising and education). Research on infobuttons must focus not only on the technology and user experience, but also on the workflow, cultural, and organizational determinants of their long-term success.

ACKNOWLEDGMENTS

We thank Larry J. Prokop, MLS (Mayo Medical Libraries), for his contributions in planning and conducting the literature search, and Nathan C. Hulse, PhD, and Jie Long, PhD (Intermountain Healthcare), for providing the figure showing a representative infobutton.

Funding

B.H. was supported by a National Library of Medicine training grant (T15LM007124).

Competing interests

The authors are not aware of any competing interests.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

REFERENCES

- 1. Del Fiol G, Workman TE, Gorman PN. Clinical questions raised by clinicians at the point of care: a systematic review. JAMA Intern Med 2014;174:710–718.

- 2. Ely JW, Osheroff JA, Ebell MH, et al. Analysis of questions asked by family doctors regarding patient care. BMJ 1999;319:358–361.

- 3. Lobach D, Sanders GD, Bright TJ, et al. Enabling Health Care Decisionmaking Through Clinical Decision Support and Knowledge Management. Evidence Report No. 203. Rockville, MD: Agency for Healthcare Research and Quality; 2012.

- 4. Bonis PA, Pickens GT, Rind DM, Foster DA. Association of a clinical knowledge support system with improved patient safety, reduced complications and shorter length of stay among Medicare beneficiaries in acute care hospitals in the United States. Int J Med Inform 2008;77:745–753.

- 5. Isaac T, Zheng J, Jha A. Use of UpToDate and outcomes in US hospitals. J Hosp Med 2012;7:85–90.

- 6. Reed DA, West CP, Holmboe ES, et al. Relationship of electronic medical knowledge resource use and practice characteristics with Internal Medicine Maintenance of Certification Examination scores. J Gen Intern Med 2012;27:917–923.

- 7. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005;330(7494):765.

- 8. Cook DA, Sorensen KJ, Hersh W, Berger RA, Wilkinson JM. Features of effective medical knowledge resources to support point of care learning: a focus group study. PLoS ONE 2013;8(11):e80318.

- 9. Cimino JJ, Elhanan G, Zeng Q. Supporting infobuttons with terminological knowledge. Proc AMIA Annu Fall Symp 1997:528–532.

- 10. Blumenthal D, Tavenner M. The “meaningful use” regulation for electronic health records. N Engl J Med 2010;363:501–504.

- 11. Health and Human Services Department. 2015 Edition Health Information Technology (Health IT) Certification Criteria, 2015 Edition Base Electronic Health Record (EHR) Definition, and ONC Health IT Certification Program Modifications. https://federalregister.gov/a/2015-25597. Accessed November 18, 2015.

- 12. Koppel R, Metlay JP, Cohen A, et al. Role of computerized physician order entry systems in facilitating medication errors. JAMA 2005;293:1197–1203.

- 13. Rosenbaum L. Transitional chaos or enduring harm? The EHR and the disruption of medicine. N Engl J Med 2015;373(17):1585–1588.

- 14. Payne TH, Corley S, Cullen TA, et al. Report of the AMIA EHR-2020 Task Force on the status and future direction of EHRs. J Am Med Inform Assoc 2015;22:1102–1110.

- 15. Jones SS, Rudin RS, Perry T, Shekelle PG. Health information technology: an updated systematic review with a focus on meaningful use. Ann Intern Med 2014;160(1):48–54.

- 16. Campanella P, Lovato E, Marone C, et al. The impact of electronic health records on healthcare quality: a systematic review and meta-analysis. Eur J Public Health 2016;26:60–64.

- 17. Garg AX, Adhikari NKJ, McDonald H, et al. Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review. JAMA 2005;293:1223–1238.

- 18. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems: a systematic review. Ann Intern Med 2012;157:29–43.

- 19. Chaudhry B, Wang J, Wu S, et al. Systematic review: impact of health information technology on quality, efficiency, and costs of medical care. Ann Intern Med 2006;144:742–752.

- 20. Lau F, Kuziemsky C, Price M, et al. A review on systematic reviews of health information system studies. J Am Med Inform Assoc 2010;17:637–645.

- 21. Gagnon MP, Pluye P, Desmartis M, et al. A systematic review of interventions promoting clinical information retrieval technology (CIRT) adoption by healthcare professionals. Int J Med Inform 2010;79:669–680.

- 22. Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA Statement. Ann Intern Med 2009;151:264–269.

- 23. Wells GA, Shea B, O’Connell D, et al. The Newcastle-Ottawa Scale (NOS) for Assessing the Quality of Nonrandomised Studies in Meta-analyses. 2007. http://www.ohri.ca/programs/clinical_epidemiology/oxford.htm. Accessed July 6, 2016.

- 24. Cook DA, Reed DA. Appraising the quality of medical education research methods: the Medical Education Research Study Quality Instrument and the Newcastle-Ottawa Scale-Education. Acad Med 2015;90:1067–1076.

- 25. Cimino JJ, Li J, Graham M, et al. Use of online resources while using a clinical information system. AMIA Annu Symp Proc 2003:175–179.

- 26. Reichert JC. Measuring the Impact of Lowering the Barriers to Information Retrieval on the Use of a Clinical Reference Knowledge Base at the Point of Care [PhD dissertation]. Salt Lake City: University of Utah; 2004.

- 27. Rosenbloom ST, Geissbuhler AJ, Dupont WD, et al. Effect of CPOE user interface design on user-initiated access to educational and patient information during clinical care. J Am Med Inform Assoc 2005;12(4):458–473.

- 28. Chen ES, Bakken S, Currie LM, Patel VL, Cimino JJ. An automated approach to studying health resource and infobutton use. Stud Health Technol Inform 2006;122:273–278.

- 29. Del Fiol G, Rocha RA, Clayton PD. Infobuttons at Intermountain Healthcare: utilization and infrastructure. AMIA Annu Symp Proc 2006:180–184.

- 30. Maviglia SM, Yoon CS, Bates DW, Kuperman G. KnowledgeLink: impact of context-sensitive information retrieval on clinicians’ information needs. J Am Med Inform Assoc 2006;13(1):67–73.

- 31. Cimino JJ, Friedmann BE, Jackson KM, Li J, Pevzner J, Wrenn J. Redesign of the Columbia University Infobutton Manager. AMIA Annu Symp Proc 2007:135–139.

- 32. Cimino JJ. An integrated approach to computer-based decision support at the point of care. Trans Am Clin Climatol Assoc 2007;118:273–288.

- 33. Cimino JJ, Borovtsov DV. Leading a horse to water: using automated reminders to increase use of online decision support. AMIA Annu Symp Proc 2008:116–120.

- 34. Del Fiol G, Haug PJ, Cimino JJ, Narus SP, Norlin C, Mitchell JA. Effectiveness of topic-specific infobuttons: a randomized controlled trial. J Am Med Inform Assoc 2008;15(6):752–759.

- 35. Cimino JJ. The contribution of observational studies and clinical context information for guiding the integration of infobuttons into clinical information systems. AMIA Annu Symp Proc 2009:109–113.

- 36. Oppenheim MI, Rand D, Barone C, Hom R. ClinRefLink: implementation of infobutton-like functionality in a commercial clinical information system incorporating concepts from textual documents. AMIA Annu Symp Proc 2009:487–491.

- 37. Del Fiol G, Cimino JJ, Maviglia SM, Strasberg HR, Jackson BR, Hulse NC. A large-scale knowledge management method based on the analysis of the use of online knowledge resources. AMIA Annu Symp Proc 2010:142–146.

- 38. Cimino JJ, Overby CL, Devine EB, et al. Practical choices for infobutton customization: experience from four sites. AMIA Annu Symp Proc 2013:236–245.

- 39. Hunt S, Cimino JJ, Koziol DE. A comparison of clinicians’ access to online knowledge resources using two types of information retrieval applications in an academic hospital setting. J Med Libr Assoc 2013;101(1):26–31.

- 40. Hyun S, Hodorowski JK, Nirenberg A, et al. Mobile health-based approaches for smoking cessation resources. Oncol Nurs Forum 2013;40(4):E312–E319.

- 41. Borbolla D, Del Fiol G, Taliercio V, et al. Integrating personalized health information from MedlinePlus in a patient portal. Stud Health Technol Inform 2014;205:348–352.

- 42. Powsner SM, Riely CA, Barwick KW, Morrow JS, Miller PL. Automated bibliographic retrieval based on current topics in hepatology: hepatopix. Comput Biomed Res 1989;22(6):552–564.

- 43. Powsner SM, Miller PL. Automated online transition from the medical record to the psychiatric literature. Methods Inf Med 1992;31(3):169–174.

- 44. Cimino C, Barnett GO, Hassan L, Blewett DR, Piggins JL. Interactive Query Workstation: standardizing access to computer-based medical resources. Comput Methods Programs Biomed 1991;35(4):293–299.

- 45. Detmer WM, Barnett GO, Hersh WR. MedWeaver: integrating decision support, literature searching, and Web exploration using the UMLS Metathesaurus. Proc AMIA Annu Fall Symp 1997:490–494.

- 46. Baorto DM, Cimino JJ. An “infobutton” for enabling patients to interpret on-line Pap smear reports. Proc AMIA Symp 2000:47–50.

- 47. Cimino JJ, Li J, Bakken S, Patel VL. Theoretical, empirical and practical approaches to resolving the unmet information needs of clinical information system users. Proc AMIA Symp 2002:170–174.

- 48. Del Fiol G, Curtis C, Cimino JJ, et al. Disseminating context-specific access to online knowledge resources within electronic health record systems. Stud Health Technol Inform 2013;192:672–676.

- 49. Del Fiol G, Huser V, Strasberg HR, Maviglia SM, Curtis C, Cimino JJ. Implementations of the HL7 Context-Aware Knowledge Retrieval (“Infobutton”) Standard: challenges, strengths, limitations, and uptake. J Biomed Inform 2012;45:726–735.

- 50. Ely JW, Osheroff JA, Ebell MH, et al. Obstacles to answering doctors’ questions about patient care with evidence: qualitative study. BMJ 2002;324:710.

- 51. Cook DA, Sorensen KJ, Wilkinson JM, Berger RA. Barriers and decisions when answering clinical questions at the point of care: a grounded theory study. JAMA Intern Med 2013;173:1962–1969.

- 52. Graber MA, Randles BD, Ely JW, Monnahan J. Answering clinical questions in the ED. Am J Emerg Med 2008;26:144–147.

- 53. Hoogendam A, Stalenhoef AF, Robbe PF, Overbeke AJ. Answers to questions posed during daily patient care are more likely to be answered by UpToDate than PubMed. J Med Internet Res 2008;10(4):e29.

- 54. Wears RL, Berg M. Computer technology and clinical work: still waiting for Godot. JAMA 2005;293:1261–1263.

- 55. Cook DA, Beckman TJ. Current concepts in validity and reliability for psychometric instruments: theory and application. Am J Med 2006;119:166.e7–166.e16.

- 56. Cook DA, Brydges R, Ginsburg S, Hatala R. A contemporary approach to validity arguments: a practical guide to Kane’s framework. Med Educ 2015;49:560–575.

- 57. Fleming TR, DeMets DL. Surrogate end points in clinical trials: are we being misled? Ann Intern Med 1996;125:605–613.

- 58. Goldzweig CL, Orshansky G, Paige NM, et al. Electronic patient portals: evidence on health outcomes, satisfaction, efficiency, and attitudes: a systematic review. Ann Intern Med 2013;159:677–687.

- 59. Irizarry T, DeVito Dabbs A, Curran CR. Patient portals and patient engagement: a state of the science review. J Med Internet Res 2015;17(6):e148.