Abstract

Neuroscientific theories of ADHD alternately posit that cognitive aberrations in the disorder are due to acute attentional lapses, slowed neural processing, or reduced signal-to-noise ratios. However, they make similar predictions about behavioral summary statistics (response times and accuracy), hindering the field’s ability to produce strong and specific tests of these theories. The current study uses the linear ballistic accumulator (LBA: Brown & Heathcote, 2008), a mathematical model of choice response time (RT) tasks, to distinguish between competing theory predictions. Children with ADHD (80) and age-matched controls (32) completed a numerosity discrimination paradigm at two levels of difficulty, and RT data were fit to the LBA model to test theoretical predictions. Individuals with ADHD displayed slowed processing of evidence for correct responses (signal) relative to their peers, but comparable processing of evidence for error responses (noise) and between-trial variability in processing (performance lapses). The findings are inconsistent with accounts that posit an increased incidence of attentional lapses in the disorder, and provide partial support for those that posit slowed neural processing and lower signal-to-noise ratios. Results also highlight the utility of well-developed cognitive models for distinguishing between the predictions of etiological theories of psychopathology.

Keywords: ADHD, response times, LBA, model-based cognitive neuroscience

Introduction

The study of psychological dysfunction, like all sciences, advances through a process of conjectures and refutations. Scientific theories posit strong and specific predictions, and when those predictions disagree with empirical data, the theory is discarded in favor of new theories that explain these data and make novel predictions of their own (Popper, 1963). However, the challenges of realizing such a progression continue to impede the growth of cumulative knowledge that is essential for public health. Attention-Deficit/Hyperactivity Disorder (ADHD), one of the most prevalent mental health diagnoses in the United States (Fulton et al., 2015), is linked to a range of serious social and academic impairments (Loe & Feldman, 2007; Wehmeier, Schacht, & Barkley, 2010), but, due to the sparse knowledge of this disorder’s etiology, treatment is limited to interventions that work for a subset of children and fail to provide long-term gains (Molina et al., 2009). Coghill, Nigg, Rothenberger, Sonuga-Barke and Tannock (2005) asserted that, although multiple etiological theories of ADHD have been proposed, there are considerable challenges to testing them empirically, including causal heterogeneity, developmental change, and the need to integrate multiple levels of analysis (e.g., social and genetic). In the decade since, important work has addressed these challenges (e.g., Fair, Bathula, Nikolas, & Nigg, 2012), but theories still abound, and many, including those present a decade ago, have yet to be refuted.

In the current study, we focus on another challenge to forming and testing causal theories of ADHD that we believe runs parallel to those identified by Coghill et al. (2005): the fact that controlled cognitive processes, which play a central role in neuroscientific theories of ADHD, are difficult to define and measure mechanistically. Aberrations in working memory, response inhibition and decision making are ubiquitous in psychiatric populations (Moritz et al., 2002; Willcutt, Doyle, Nigg, Faraone, & Pennington, 2005; Wright, Lipszyc, Dupuis, Thayapararajah, & Schachar, 2014), form the basis of many leading etiological theories of ADHD and related disorders (Nigg, 2000; Pennington & Ozonoff, 1996; Robbins, Gillan, Smith, de Wit, & Ersche, 2012), and serve as a crucial bridge between clinical research and current cognitive neuroscience. Yet, this line of work is currently limited by two interrelated factors.

The first challenge for this general paradigm is the fact that “executive functions”, the broad category in which most cognitive processes relevant to psychopathology are placed (Halperin, 2016), are often difficult to define mechanistically. Critics have long pointed out that theories of these constructs tend to rely on a control “homunculus”, or an intelligent agent who carries out complex operations (e.g., making decisions, managing memories) without an explanation of the specific mechanisms used to accomplish them (Monsell & Driver, 2000; Verbruggen, McLaren, & Chambers, 2014). Without a model of how computational and neural mechanisms complete cognitive tasks, findings of a cognitive deficit in a clinical condition may provide information about how the construct contributes to behavior (e.g., impulsive behavior due to poor inhibition), but are limited in their utility to test theories about the neurocognitive etiology of the condition.

Theoretical uncertainty about the mechanistic processes that underlie complex cognitive functions is compounded by the fact that dependent measures of cognitive performance are multi-determined. The integrity of a cognitive function is typically probed with summary statistics of behavioral performance on a task: response times (RTs) and accuracy rates. Yet, reductions in accuracy and/or increases in the mean or variance of RT may occur for a multitude of reasons: inefficiency in the core cognitive process, changes in caution (i.e., speed/accuracy tradeoffs), momentary lapses of attention to the task, or difficulties initiating a motor response (McVay & Kane, 2012; Ratcliff & McKoon, 2008). This multi-determined nature is problematic because measurements of a process of interest may be contaminated by extraneous processes. Hence, competing etiological theories may posit that separate mechanisms are involved in a clinical condition, but the predictions they make about behavioral performance on a given task (e.g., slowed RT) may be identical.

Such is the case with several major neuroscientific theories of ADHD. Children and adults with ADHD display slower, less accurate and more variable performance on a wide array of cognitive tasks (Castellanos et al., 2005; Epstein et al., 2011; Willcutt et al., 2005), and these findings have thus played a central role in many etiological theories. One causal account, endorsed by at least two major theories, posits that cognitive aberrations in ADHD are primarily due to intermittent attentional lapses, which temporarily hinder performance on affected trials, but leave performance on other trials intact. The functional working memory model (Kofler et al., 2014; Rapport et al., 2008) holds that children with ADHD display incomplete neural maturation in the cortical regions that support the “central executive” (CE) component of working memory, causing increased mind-wandering and other variable processes that disrupt performance on an intermittent subset of trials. A similar proposal is made by a theory positing that spontaneous, low frequency (<0.1 Hz) fluctuations of the default mode network, a brain network that has been linked to resting and off-task states, produce periodic lapses of attention during task performance (Sonuga-Barke & Castellanos, 2007).

In contrast, a second causal account posits that cognitive deficits in ADHD are the result of metabolic constraints that limit the overall speed of neural computation. This account holds that children with ADHD exhibit insufficient production of lactate by astrocytes, which replenishes neural energy on repetitive tasks (Russell et al., 2006; Todd & Botteron, 2001). Insufficient production would be expected to delay the restoration of ionic gradients across neurons’ cell membranes, which would slow neuronal firing rates and thus compromise the general speed with which individuals with ADHD would be able to complete repetitive cognitive operations. The behavioral neuroenergetics theory proposed by Killeen, Russell and Sergeant (2013) refined this hypothesis by positing a model (detailed below) that describes the acute consequences of insufficient neuronal energy as slower and more variable RTs.

A third and distinct account is that cognitive deficits in ADHD are due to reductions in the ratio of task-relevant neural signal to task-irrelevant neural noise during cognitive processing. Karalunas et al. (2014) posit that state-regulation deficits in ADHD, mediated by impairments in phasic responses of, or top-down inputs to, the locus coeruleus-norepinephrine (LC-NE) system (Aston-Jones & Cohen, 2005), result in reduced signal-to-noise ratios. Similarly, the moderate brain arousal model (MBA: Sikström & Söderlund, 2007) references the concept of “stochastic resonance”, in which the addition of moderate amounts of noise to a system allows signals that would otherwise remain undetected to pass a detection threshold (Moss, Ward, & Sannita, 2004). The MBA model posits that individuals with ADHD require more noise than typically-developing individuals for stochastic resonance to occur, effectively reducing the signal-to-noise ratio.

Although these three general accounts, acute attentional lapses, slowed neural computation, and reduced signal-to-noise, provide plausible explanations for behavioral dysfunction, the causal mechanisms that they highlight have similar effects on behavioral summary statistics: increases in the mean and variance of RTs (Karalunas, Geurts, et al., 2014; Killeen et al., 2013; Kofler et al., 2014). As strong tests of theories require predictions to be unambiguous and distinct, analytic tools that extract additional information about the mechanisms that underlie task performance are essential to differentiate such theories.

Model-based cognitive neuroscience, an emerging field that integrates formal models of psychological processes with neuroscience methods and theory (Forstmann & Wagenmakers, 2015; Wiecki, Poland, & Frank, 2015), may provide a framework in which neuroscientific theories of ADHD, and other disorders, can be strongly distinguished. By formally describing how basic computational mechanisms combine to execute complex tasks, mathematical models of cognition have the potential to move the field beyond verbal descriptions of cognitive dysfunction. In turn, the problem of multi-determined measures can be addressed by fitting these models to behavioral data, which allows researchers to observe how model parameters that represent specific mechanistic processes differ between clinically-relevant conditions (White, Ratcliff, Vasey, & McKoon, 2010).

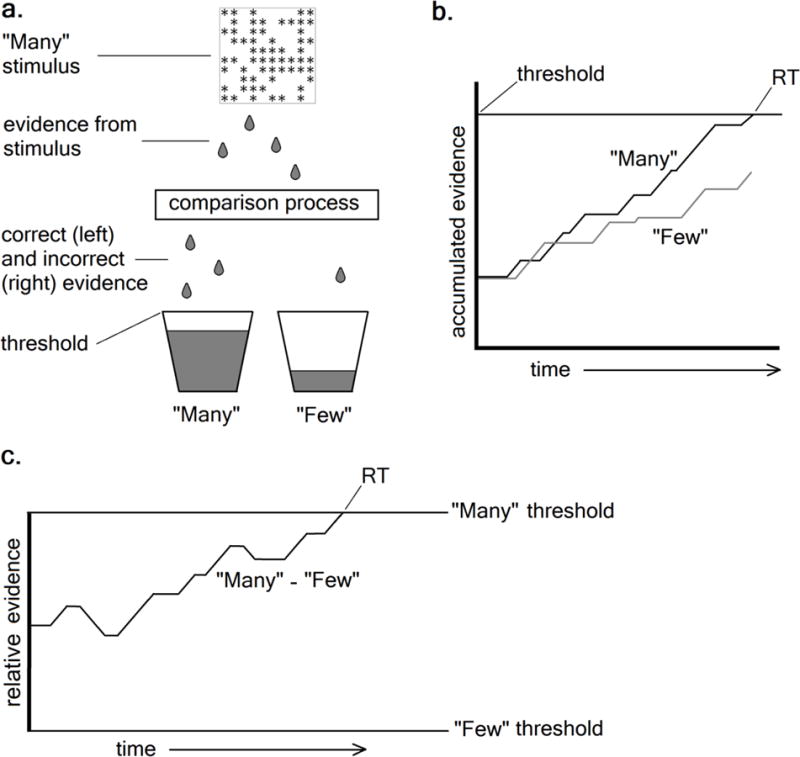

Sequential sampling models (Smith & Ratcliff, 2004), which explain decision making as the gradual, noisy accumulation of sensory evidence in favor of each possible response, have already shown great promise in applications to clinical questions (White et al., 2010; Wiecki et al., 2015). Although there are multiple models in this class, they share the assumption that decisions are the result of a stochastic comparison process (Smith & Ratcliff, 2004), which can be understood with the metaphor of buckets that gather correct and erroneous drops of evidence. In a standard “numerosity discrimination” paradigm (Ratcliff & McKoon, 2008) where participants decide whether a stimulus contains “many” (>50) or “few” (<50) asterisks (Figure 1a), evidence from the stimulus would enter a noisy process in which it is compared to the “many” and “few” categories. Correct drops of evidence (i.e., drops in the “many” bucket after the presentation of a “many” stimulus) are bits of information that are attributed to the matching category, while erroneous drops are attributed to the other category. Typically, the bucket matching the stimulus fills first, but errors occur when noise causes excessive evidence to accumulate in the non-matching bucket. “Accumulator” models (Figure 1b) describe this process as a race between separate evidence accumulators towards a common threshold, while “diffusion” models (Figure 1c) frame it as a single evidence total that moves between thresholds for each response (Smith & Ratcliff, 2004).

Figure 1.

Representation of the stochastic comparison process assumed by sequential sampling models (a) using the “bucket” metaphor, and illustrating how (b) “accumulator” and (c) “diffusion” models each describe the decision process on a given correct trial.

Initial work involving the Ratcliff diffusion decision model (DDM: Ratcliff, 1978), of the latter class, has already called the conventional interpretation of ADHD-related RT variability into question. Increased RT variability and positive skew are often assumed to reflect attentional lapses (Kofler et al., 2014). However, applications of the DDM to data from individuals with ADHD (Huang-Pollock, Karalunas, Tam, & Moore, 2012; Karalunas, Huang-Pollock, & Nigg, 2012; Metin et al., 2013) have consistently demonstrated that generally lower cognitive efficiency is sufficient to explain these RT features without reference to lapses. Despite these novel insights, no previous empirical studies have explicitly compared competing theories within the DDM framework. Furthermore, as the DDM does not estimate the accumulation speed of correct vs. erroneous evidence separately (Smith & Ratcliff, 2004), the predictions of the slower neural speed and lower signal-to-noise accounts described above cannot be easily distinguished.

The linear ballistic accumulator model (LBA: Brown & Heathcote, 2008), which frames decisions as a race between two or more accumulators of smoothly and linearly increasing evidence for each response, and provides a similar description of behavioral data to the DDM while simplifying several assumptions (Donkin, Brown, Heathcote, & Wagenmakers, 2011), allows the predictions of all three accounts to be made explicit. In the LBA, the rate of accumulation, or “drift rate”, for an accumulator at a given trial is sampled from a normal distribution with a mean of v and a standard deviation of sv. Typically, the speed of evidence accumulation for the correct response (vc) competes with the speed of evidence accumulation for the error response (ve). The starting point of both accumulators is drawn from a uniform distribution bounded at 0 and the parameter A, and when one of the accumulators in the race reaches a response threshold (b), the corresponding response occurs. The model also has a non-decision time parameter (t0), which indexes the amount of time in an RT that is taken up by processes which are not involved in the decision (e.g., encoding, motor response).
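To make the generative process just described concrete, the following is a minimal simulation sketch of a two-choice LBA trial. It is an illustration under stated assumptions, not the implementation used in this study: the function names and parameter values are hypothetical, the threshold b is treated as an absolute value greater than A, and drift rates are resampled until at least one is positive so that every trial produces a response.

```python
import random
import statistics


def simulate_lba_trial(v_c, v_e, sv, A, b, t0, rng):
    """Simulate one two-choice LBA trial and return (correct, rt)."""
    # Assumption: resample drift rates until at least one is positive,
    # so that some accumulator always reaches the threshold.
    while True:
        drifts = [rng.gauss(v_c, sv), rng.gauss(v_e, sv)]
        if max(drifts) > 0:
            break
    # Each accumulator starts at a point drawn uniformly from [0, A].
    starts = [rng.uniform(0, A), rng.uniform(0, A)]
    # Evidence rises linearly within a trial: time = distance / rate.
    times = [(b - s) / d if d > 0 else float("inf")
             for s, d in zip(starts, drifts)]
    winner = 0 if times[0] <= times[1] else 1  # index 0 = correct accumulator
    return winner == 0, t0 + times[winner]


def simulate_block(n_trials, seed=1, **params):
    """Return (accuracy, median RT) over n_trials simulated trials."""
    rng = random.Random(seed)
    trials = [simulate_lba_trial(rng=rng, **params) for _ in range(n_trials)]
    accuracy = sum(correct for correct, _ in trials) / n_trials
    median_rt = statistics.median(rt for _, rt in trials)
    return accuracy, median_rt


if __name__ == "__main__":
    # Hypothetical parameter values chosen only for illustration.
    print(simulate_block(2000, v_c=3.0, v_e=1.0, sv=1.0, A=0.5, b=1.0, t0=0.2))
```

Lowering v_c while holding the other parameters fixed reduces simulated accuracy, which is the sense in which vc indexes the quality of evidence for the correct response.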

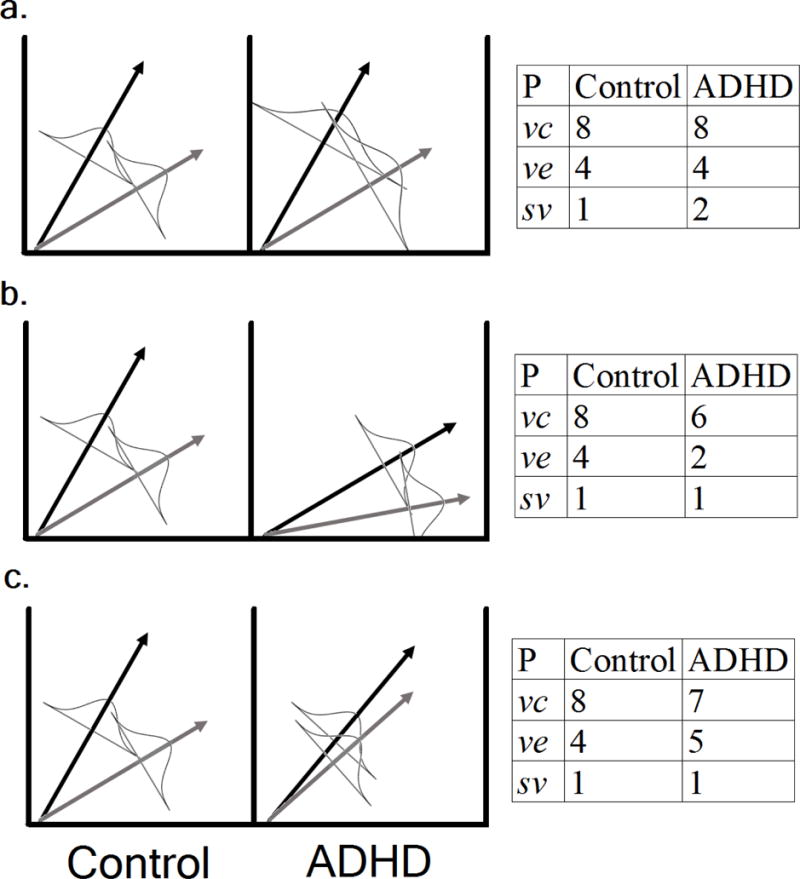

Relevant to the attentional lapses explanation, the LBA parameter indexing between-trial variability of drift rate (sv), which may intuitively be assumed to reflect variability in attentional state, has been both theoretically (Hawkins, Mittner, Boekel, Heathcote, & Forstmann, 2015) and empirically (McVay & Kane, 2012) linked to acute attentional lapses and mind-wandering. Therefore, attentional lapse theories predict that children with ADHD should show increases in sv relative to their typical peers (Figure 2a). The explicit formalization of the behavioral neuroenergetics model (Killeen et al., 2013) describes the consequences of slowed neural computation by assuming that RTs in any task are the result of a single, general accumulation process towards a boundary. The drift rate of the process is assumed to be determined by the amount of neuronal energy available, and, for simplicity, to be the same for correct and error RTs. Therefore, as the available energy decreases, the rate of accumulation slows. In the LBA framework, such slowing should be reflected by reductions in both the vc and ve parameters in the disorder (Figure 2b), as global slowing in firing rates would be expected to affect all neurons involved in the decision. Finally, the signal-to-noise account posits that reductions in this ratio would lower an individual’s ability to discriminate between stimuli, analogous to d’ from signal detection theory (Karalunas, Geurts, et al., 2014). In the LBA, reduced d’ can be reflected by reductions in the difference between the vc and ve parameters (Heathcote, Suraev, et al., 2015), as has been found in previous within-subjects manipulations of stimulus discriminability (Ester, Ho, Brown, & Serences, 2014; van Maanen, Forstmann, Keuken, Wagenmakers, & Heathcote, 2016). Thus, reduced signal-to-noise should result in a lower ratio of vc relative to ve because of reductions in the former and increases in the latter (Figure 2c), reflecting similar speed of neural computation, but lower discriminability.

Figure 2.

Demonstration of (left) and hypothetical parameter values for (right) three possible mechanistic causes for cognitive deficits in ADHD: a) increased attentional lapses, b) slowing in the general speed of neural computation, and c) a reduced signal-to-noise ratio. Black and gray arrows represent mean drift rates for correct (vc) and error (ve) information, respectively. Normal distributions centered about the arrows represent between-trial variance in drift.
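The three sets of predictions can be written down as a small lookup table, which makes explicit how a given pattern of group differences bears on each account. The sketch below is illustrative only: the scoring rule (fraction of predicted directions matched) and the example pattern are assumptions introduced here, not part of the analyses reported in this paper.

```python
# Predicted direction of the ADHD-minus-control difference for each account:
# +1 = larger in ADHD, -1 = smaller in ADHD. Parameters not listed are
# treated as unconstrained by that account in this sketch.
PREDICTIONS = {
    "attentional lapses": {"sv": +1},
    "slowed neural computation": {"vc": -1, "ve": -1},
    "reduced signal-to-noise": {"vc": -1, "ve": +1},
}


def support(observed):
    """Return, per account, the fraction of predicted directions matched.

    `observed` maps parameter names to +1, -1, or 0 (no clear difference).
    """
    result = {}
    for account, predicted in PREDICTIONS.items():
        hits = sum(observed.get(param, 0) == direction
                   for param, direction in predicted.items())
        result[account] = hits / len(predicted)
    return result


if __name__ == "__main__":
    # Hypothetical pattern: lower vc in ADHD, no clear difference in ve or sv.
    hypothetical = {"vc": -1, "ve": 0, "sv": 0}
    for account, score in support(hypothetical).items():
        print(f"{account}: {score:.2f}")
```

Under this hypothetical pattern, the lapse account receives no support, while the slowing and signal-to-noise accounts are each only partially matched, because both require a change in ve as well as vc.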

The current study seeks to explicitly test the predictions of the three causal accounts of ADHD-related performance deficits described above within the LBA model framework. We applied a hierarchical Bayesian implementation of the model (Turner, Sederberg, Brown, & Steyvers, 2013) to data from a perceptual decision task to produce accurate group-level estimates of the vc, ve, and sv parameters for children with ADHD and age-matched typically-developing peers. It should be noted that these accounts do not provide an exhaustive list of etiological theories of ADHD, and that findings of heterogeneity in the disorder (Fair et al., 2012; Karalunas, Fair, et al., 2014) suggest that hopes of identifying a single theory that explains impairment in all cases are likely unrealistic. However, we aimed to 1) provide initial steps toward using model-based analyses to narrow the field of proposed neuroscientific theories of ADHD, and 2) demonstrate the utility of well-developed formal models from cognitive science for distinguishing the predictions of etiological theories of psychiatric disorders, in general.

Methods

Participants

Children ages 8 through 12 with (N=80) and without (N=32) ADHD (Table 1) were recruited from a community sample as part of an ongoing study, which was approved by the Pennsylvania State University’s Institutional Review Board (IRB#32126). Children with ADHD met DSM-IV criteria, including parent-reported age of onset, duration, cross-situational severity, and impairment based on the Diagnostic Interview Schedule for Children (DISC-IV) (Shaffer, Fisher, & Lucas, 1997). In addition, at least one parent and one teacher report of behavior on the Attention, Hyperactivity, or ADHD subscales of the Behavioral Assessment Scale for Children (BASC-2: Reynolds & Kamphaus, 2004) or the Conners’ Rating Scales (Conners’: Conners, 2008) was required to exceed the 85th percentile (T-score>61). Children in the ADHD group who were prescribed a psychostimulant medication (N=25; 31%) ceased taking their medication at least 24-48 hours in advance of the day of testing (median=56 hours). Controls had never previously been diagnosed or treated for ADHD and did not meet diagnostic criteria on the DISC-IV. In addition, they were required to fall below the 80th percentile (T-score≤58) on all of the above listed rating scales. To equate IQ levels between groups, potential non-ADHD controls with an estimated IQ>115, as well as children in both groups with IQ<80, were excluded.

Table 1.

Comparison of group demographic and diagnostic variables.

| | Control | ADHD |
|---|---|---|
| N (Males:Females) | 32 (14:18) | 80 (52:28) |
| # Subtypes (H, I, C) | | 2, 36, 42 |
| Age | 9.03 (1.28) | 9.43 (1.24) |
| Estimated full-scale IQ | 105.31 (8.04) | 102.54 (13.37) |
| Hyperactivity/Impulsivity | | |
| Total symptoms | 0.28 (.58) | 5.66 (2.57)*** |
| Parent BASC-2 | 40.97 (4.82) | 67.38 (13.27)*** |
| Parent Conners | 45.03 (3.24) | 69.16 (14.42)*** |
| Teacher BASC-2 | 42.97 (2.61) | 58.82 (12.35)*** |
| Teacher Conners | 45.03 (2.51) | 58.26 (12.02)*** |
| Inattention | | |
| Total symptoms | 0.34 (.48) | 7.91 (1.59)*** |
| Parent BASC-2 | 42.72 (6.73) | 66.51 (7.66)*** |
| Parent Conners | 46.09 (3.91) | 70.03 (10.54)*** |
| Teacher BASC-2 | 43.16 (4.99) | 60.90 (7.22)*** |
| Teacher Conners | 46.59 (4.46) | 59.14 (11.80)*** |
| Comorbidity (DISC: past year) | | |
| MDD | 0 | 4 |
| GAD | 0 | 6 |
| ODD/CD | 2/0 | 34/8 |

Significant differences are noted: \* = p<.05, \*\* = p<.01, \*\*\* = p<.001

The presence of common childhood psychopathology, such as anxiety, depression, oppositional defiant disorder, and conduct disorder was assessed using the DISC-IV and standardized rating scales, but was not exclusionary. Sample demographics, which reflected those of the larger region, were as follows: 71.4% Caucasian/non-Hispanic, 8.0% Caucasian/Hispanic, 1.8% other Hispanic, 10.7% African American, 0.9% Asian, 5.4% mixed and 1.8% unknown/missing.

Experimental Procedure & Stimuli

The data described here were obtained during the second practice block of a task, which was designed to familiarize children with numerosity discrimination trials that would eventually be interleaved within a complex span working memory paradigm. Data from the first (simple spatial span) and third (complex spatial span, interleaved with numerosity trials) blocks are reported by Weigard and Huang-Pollock (2016). The majority of the control (N=27; 84%) and ADHD groups (N=71; 89%) in the current study were also part of the sample reported in this previous study. In the numerosity discrimination paradigm, children were asked to respond with a mouse click as to whether a randomly-distributed array of black asterisks presented on an invisible 10×10 grid within a square box had “a lot” (i.e. >50 asterisks, left mouse click) or “a little bit” (i.e. <50, right mouse click) of asterisks (called “candy”). 100 trials were presented in random order; half were relatively easy to discriminate (Low Difficulty) and contained either 31-35 or 66-70 asterisks, while the other half were more difficult to discriminate (High Difficulty), containing either 41-45 or 56-60 asterisks. Stimuli remained onscreen until a response was made. A feedback cue (“Correct”/“Incorrect”) was then displayed for 500ms above the stimulus, followed by a blank screen for 400ms. Children were asked to complete the task as quickly and accurately as possible.
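For readers who wish to reproduce the structure of the stimulus set, the following sketch generates a 100-trial block with the asterisk counts described above. It is a hedged illustration: the equal split of “many” and “few” stimuli within each difficulty level, the placement of asterisks in distinct grid cells, and all function and variable names are assumptions that are not specified in the task description.

```python
import random

# Inclusive asterisk-count ranges taken from the task description.
RANGES = {
    ("low", "few"): (31, 35),
    ("low", "many"): (66, 70),
    ("high", "few"): (41, 45),
    ("high", "many"): (56, 60),
}


def make_trials(n_trials=100, seed=0):
    """Return a shuffled list of trial dictionaries for one block."""
    rng = random.Random(seed)
    per_cell = n_trials // len(RANGES)  # assumption: equal trials per cell
    trials = []
    for (difficulty, category), (low, high) in RANGES.items():
        for _ in range(per_cell):
            n_asterisks = rng.randint(low, high)
            # Assumption: asterisks occupy distinct cells of the 10x10 grid.
            cells = rng.sample(range(100), n_asterisks)
            trials.append({
                "difficulty": difficulty,
                "category": category,
                "n_asterisks": n_asterisks,
                "cells": cells,
            })
    rng.shuffle(trials)  # trials are presented in random order
    return trials


if __name__ == "__main__":
    block = make_trials()
    print(len(block), block[0]["difficulty"], block[0]["n_asterisks"])
```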

Model-Based Analyses

RT data were fit to a standard LBA model in which b was parameterized as the distance above the top of the start point distribution (A). An optimization model selection analysis (Donkin, Brown, & Heathcote, 2011), a procedure used in previous work to determine the best-fitting sets of parameter constraints for the LBA (Heathcote, Loft, & Remington, 2015), was first used to select an optimal model to explain within-subjects effects of stimuli and difficulty. Models with all possible sets of constraints were fit using maximum likelihood procedures, and the Akaike Information Criterion (AIC: Akaike, 2011) was used as an index of relative fit. This procedure suggested that an ideal model allowed 1) b to vary by response type (“many”/“few”) to account for response bias, 2) v to vary by accuracy of the information (correct/error, or vc/ve, as expected to account for above-chance performance) and difficulty (high/low), 3) sv to vary by accuracy (svc/sve) and stimulus type (“many”/“few”), and 4) common estimates of t0 and A for all conditions and responses. To constrain the model (Donkin, Brown, & Heathcote, 2009), sve for “few” was fixed to 1 as a scaling parameter (the reasons for this constraint, and potential effects on group differences, are described in Supplemental Materials).
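The logic of AIC-based selection among candidate parameterizations can be sketched as follows. The AIC formula is standard (twice the number of free parameters minus twice the maximized log-likelihood), but the model labels, log-likelihoods, and parameter counts below are hypothetical values invented for illustration and do not correspond to the fits obtained in this study.

```python
def aic(log_likelihood, n_params):
    """Akaike Information Criterion: lower values indicate better relative fit."""
    return 2 * n_params - 2 * log_likelihood


def best_model(fits):
    """Pick the model with the lowest AIC.

    `fits` maps a model label to (maximized log-likelihood, free parameters).
    Returns the winning label and the full dictionary of AIC scores.
    """
    scores = {name: aic(ll, k) for name, (ll, k) in fits.items()}
    return min(scores, key=scores.get), scores


if __name__ == "__main__":
    # Hypothetical fits for three illustrative parameterizations.
    hypothetical_fits = {
        "v by accuracy": (-512.0, 6),
        "v by accuracy and difficulty": (-498.5, 8),
        "all parameters free": (-497.9, 14),
    }
    winner, scores = best_model(hypothetical_fits)
    print(winner, scores)
```

In this invented example the fully unconstrained model attains the highest likelihood but is penalized for its additional parameters, so the intermediate model is preferred.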

Following model selection, a hierarchical Bayesian version of the LBA (Turner et al., 2013) was fit to RT data from both groups to estimate posterior distributions over model parameter values. This method produces more stable parameter estimates than traditional person-by-person analyses because it uses group-level posterior distributions of parameter values as prior distributions for individual parameter estimates (parameter “shrinkage”). The model assumed that individual-level parameters followed truncated normal distributions defined by two group-level hyper-parameters, a mean (μ) and standard deviation (σ). Prior to estimation, RTs <200ms and >3000ms were removed as fast guesses and outliers, respectively (these exclusion procedures eliminated <3.5% of the raw RT data), to prevent contaminant trials from affecting parameter estimates (Luce, 1986; Ratcliff & Tuerlinckx, 2002). To further reduce the influence of outliers and stabilize estimates, a contaminant mixture (Ratcliff & Tuerlinckx, 2002) assumption was employed in which 5% of trials, uniformly distributed between 200ms and 3000ms and between correct and error responses, were assumed to be contaminants. Details of the priors, sampling procedure, and plots of model fit, which indicated that the model provided an adequate description of behavioral data, are available in Supplemental Materials.
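The RT exclusion rule and the contaminant mixture can be illustrated with a short sketch. This is one reading of the description above rather than the code used for estimation: RTs are expressed in seconds, the contaminant density is assumed to be uniform over the retained RT window and split evenly between the two responses, and `model_density` stands in for the LBA density of the observed response, which is not implemented here.

```python
RT_MIN, RT_MAX = 0.2, 3.0   # retained RT window, in seconds
P_CONTAMINANT = 0.05        # assumed proportion of contaminant trials


def trim_rts(rts):
    """Drop fast guesses (<200 ms) and slow outliers (>3000 ms)."""
    return [rt for rt in rts if RT_MIN <= rt <= RT_MAX]


def mixture_density(model_density):
    """Density of one (RT, response) observation under the mixture.

    `model_density` is the LBA density for the observed response at the
    observed RT (a placeholder input in this sketch).
    """
    # Assumption: contaminants are uniform over the RT window and are
    # equally likely to be recorded as either of the two responses.
    contaminant_density = 0.5 / (RT_MAX - RT_MIN)
    return ((1 - P_CONTAMINANT) * model_density
            + P_CONTAMINANT * contaminant_density)


if __name__ == "__main__":
    print(trim_rts([0.15, 0.45, 1.2, 3.4]))
    print(mixture_density(0.8))
```

Because the contaminant component assigns a small, non-zero density to every retained RT, a single extreme trial cannot drive the likelihood toward zero, which is what stabilizes the parameter estimates.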

Hypothesis Testing

Behavioral summary statistics were compared with traditional null-hypothesis significance tests (p-values) and Bayes Factors (BFs) using JASP (JASP Team, 2018). BFs quantify the likelihood of the data under the research hypothesis relative to the null hypothesis, with values above 1 favoring the research hypothesis and values below 1 favoring the null; a BF of 3, for example, indicates that the observed data are three times more likely under the research hypothesis than under the null hypothesis. Based on guidelines provided by Kass and Raftery (2012), BFs between 1 and 3 provide “anecdotal” or ambiguous evidence for the research hypothesis, BFs of 3 to 20 provide positive evidence, and BFs >20 provide strong evidence.
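These verbal categories amount to a simple mapping from a BF to a label, sketched below. The function name is hypothetical, and the handling of values that fall exactly on a boundary (3 or 20), as well as the label for BFs below 1, are assumptions, since the guidelines as summarized here do not specify them.

```python
def bf_label(bf):
    """Map a Bayes Factor (research vs. null hypothesis) to a verbal label."""
    if bf < 1:
        return "favors the null hypothesis"
    if bf < 3:
        return "anecdotal"
    if bf <= 20:  # assumption: exactly 20 is still "positive"
        return "positive"
    return "strong"


if __name__ == "__main__":
    for value in (0.02, 2.1, 8.0, 23.65):
        print(value, bf_label(value))
```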

For the estimated model parameters, we carried out inference at the group level using both a BF framework and a parameter estimation framework, which focuses on the location of model parameter posteriors. Each framework has individual limitations, and whether either provides an optimal method for Bayesian inference is the subject of current debate (Kruschke, 2013; Lee & Wagenmakers, 2014; Wagenmakers, Lodewyckx, Kuriyal, & Grasman, 2010). Inference in the parameter estimation framework was carried out by calculating “Bayesian p-values” (Bp), which quantify the degree to which the posterior difference distribution is consistent with the hypothesis that a difference exists (Matzke, Hughes, Badcock, Michie, & Heathcote, 2017); Bps close to 0 indicate a greater likelihood of a difference. Inference using the BF framework was carried out using Savage-Dickey density ratios (SDR), which approximate the BF as the ratio of the prior and posterior densities for a parameter at a value of interest (Wagenmakers et al., 2010). Procedures for the calculation of both values are available in Supplemental Materials. We chose to use both methods of inference and, based on the Kass and Raftery (2012) guidelines, adopted the following convention for interpreting results: if either SDR<3:1 or Bp>.25, evidence for the effect was considered ambiguous; if both SDR>3:1 and Bp<.25, evidence was considered moderate; and if both SDR>20:1 and Bp<.05, evidence was considered strong.
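The interpretive convention can be expressed as a small decision rule. In the sketch below, `evidence_label` follows the thresholds stated above, with values falling exactly on a threshold treated as not exceeding it. The `bayesian_p` function is a simplified assumption (the proportion of posterior difference samples at or below zero, for a difference hypothesized to be positive); the procedure actually used is described in Supplemental Materials and may differ.

```python
def bayesian_p(diff_samples):
    """Assumed one-sided Bp: share of difference samples at or below zero."""
    return sum(d <= 0 for d in diff_samples) / len(diff_samples)


def evidence_label(sdr, bp):
    """Combine a Savage-Dickey ratio and a Bayesian p-value into a label."""
    if sdr > 20 and bp < 0.05:
        return "strong"
    if sdr > 3 and bp < 0.25:
        return "moderate"
    return "ambiguous"


if __name__ == "__main__":
    # Illustrative (SDR, Bp) pairs.
    for sdr, bp in [(23.9, 0.01), (3.9, 0.05), (4.3, 0.66), (0.8, 0.20)]:
        print(sdr, bp, evidence_label(sdr, bp))
```

Requiring both criteria to agree means that a large SDR paired with a posterior difference distribution that straddles zero is still treated as ambiguous.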

Results

Behavioral Summary Statistics

Mean RT

As expected, children with ADHD had longer RTs than controls, F(1,110)=12.63,η²=.10,p<.001, BF=23.65 (Supplemental Table 1). There were also main effects of Difficulty, F(1,110)=95.25,η²=.46,p<.001, BF>10,000, and Stimulus, F(1,110)=31.15,η²=.22,p<.001, BF>10,000, such that RTs were generally faster for low-difficulty trials and stimuli in the “many” category. There were no significant interactions.

RT Variability

Consistent with previous literature, children with ADHD had more variable RTs than controls, F(1,110)=21.54,η²=.16,p<.001, BF=1015.67. Mirroring the mean RT effects, there were also main effects of Difficulty, F(1,110)=35.15,η²=.23,p<.001, BF>10,000, and Stimulus, F(1,110)=10.91,η²=.09,p=.001, BF=28.02, such that RTs were less variable for low-difficulty trials and “many” stimuli. There were no significant interactions.

Accuracy

Children with ADHD were less accurate than controls, F(1,110)=15.46,η²=.12,p<.001, BF=34.20, and accuracy was worse on high-difficulty trials, F(1,110)=224.21,η²=.67,p<.001, BF>10,000. A small Group x Difficulty x Stimulus interaction was also detected by p-values, but the BF indicated evidence against this interaction, F(1,110)=4.00,η²=.03,p=.048, BF=.02.

Model-Based Analysis

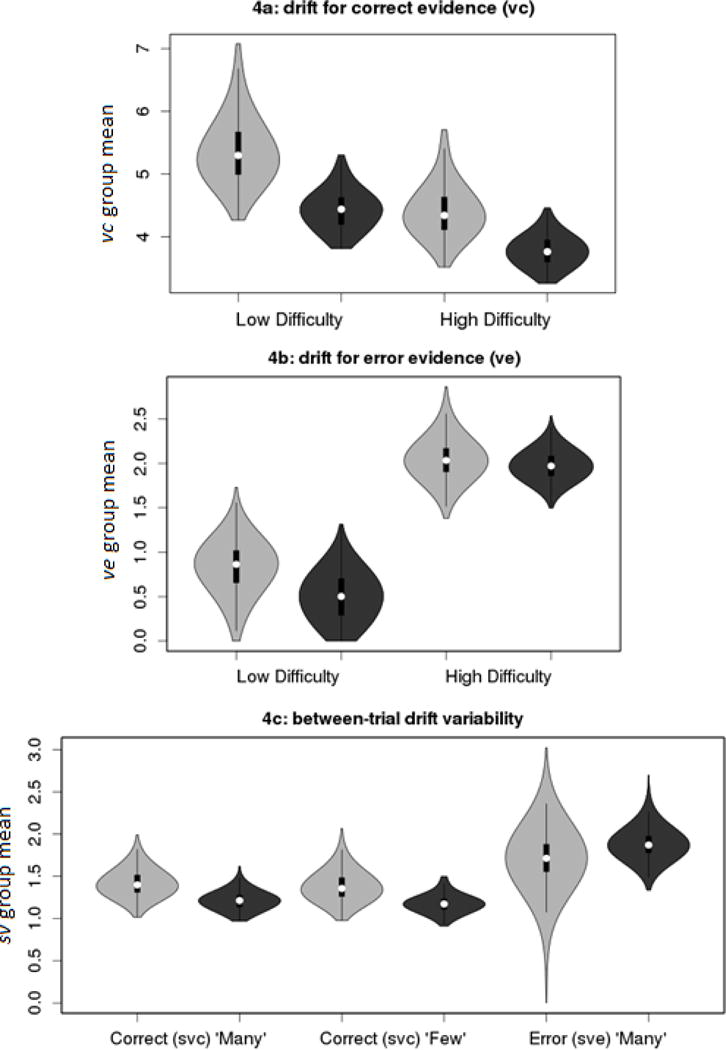

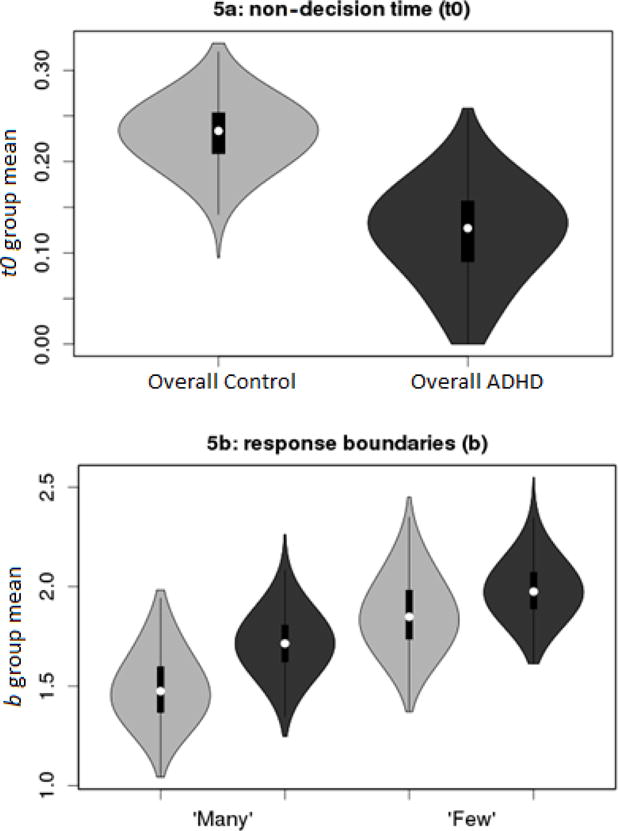

Posterior distributions for the group μ parameters are displayed as violin plots in Figures 3–4. Violin plots include both a standard boxplot that represents variability of the posterior samples and kernel density plots of the same samples. These plots represent uncertainty about the location of the μ parameter estimates, but do not represent between-subject variability. Between-subject variability is, instead, captured by the σ hyper-parameters and represented by population density plots that are reported in Supplemental Materials.

Figure 3.

Violin plots representing the posterior density of group μ parameters for the average drift rate for correct evidence (vc), average drift rate for error evidence (ve) and between-trial drift variability for correct (svc) and error (sve) evidence. Light gray = Control; Dark gray = ADHD.

Figure 4.

Violin plots representing the posterior density of group μ parameters for non-decision time (t0), and response boundaries (b). Light gray =Control; Dark gray =ADHD.

Correct Drift Rate

As expected, there was strong evidence for a main effect of Difficulty (Bp=.01,SDR=25.0:1), such that vc was slower for more difficult trials (Figure 3a). There was also strong evidence for a main effect of Group (Bp=.01,SDR=23.9:1), in which children with ADHD displayed lower vc than their peers. There was ambiguous evidence for a Group x Difficulty interaction (Bp=.66,SDR=4.3:1).

Error Drift Rate

There was strong evidence for a main effect of Difficulty (Bp<.01,SDR>10,000:1), such that ve was faster for the high-difficulty trials (Figure 3b). This effect was expected because the reduced discriminability of high-difficulty stimuli should, theoretically, cause more evidence to be incorrectly routed to the error accumulator, consistent with previous research involving discriminability manipulations (Ester et al., 2014; van Maanen et al., 2016). In contrast, there was ambiguous evidence for Group differences in ve (Bp =.20,SDR=0.8:1), and for an interaction (Bp=.29,SDR=0.5:1). Therefore, a reduction in vc and increase in ve explain the slower and more variable RTs, and higher error rates, observed in the high-difficulty condition, reflecting decreased discriminability. However, slow and variable RTs, and higher error rates, among children with ADHD can only be attributed to slower vc.

Drift Rate Variability

Evidence for Group differences in between-trial variability of drift rate was generally weak (Figure 3c). Because sv was estimated separately for “many” and “few” stimuli, based on the initial model selection analysis, and sve for “few” was fixed to 1, effects of Group, Stimulus, and their interaction were probed for svc, while Group effects in sve were probed only for “many” stimuli. There was moderate evidence that svc was greater in controls (Bp=.05,SDR=3.9:1), opposite to the hypothesized direction, and ambiguous evidence for an effect of Stimulus (Bp=.38,SDR=1.1:1) and for an interaction (Bp=.49,SDR=1.3:1). The sve of “many” stimuli (Bp=.28,SDR=0.5:1) displayed ambiguous evidence for an effect of Group. Thus, there was little evidence for group differences, and the effect with the most evidence suggested greater svc in controls.

Non-decision time

There was moderate evidence (Bp=.02,SDR=8.0:1) that non-decision time was shorter in ADHD (Figure 4a). However, as shorter t0 would be expected to decrease RT, and would not affect accuracy or RT variability, this difference does not explain the between-group differences in summary statistics.

Start Point Variability and Boundary

There was ambiguous evidence for Group differences in A (Bp = .25, SDR = 0.7:1). Likewise, in b (Figure 4b), there was ambiguous evidence for a main effect of Group (Bp = .14, SDR = 2.1:1). There was moderate evidence (Bp = .02, SDR = 10.4:1) that boundaries for the “many” response were lower than those for the “few” response, and little evidence for an interaction (Bp = .37, SDR = 1.3:1). This pattern suggests that children were biased to respond “many” over “few”, but that this bias does not contribute to group differences in RT and accuracy.

Between-subject variability

ADHD is a heterogeneous disorder (Fair et al., 2012; Karalunas, Fair, et al., 2014) and aspects of the above results led us to speculate that the ADHD group may contain distinct subgroups of children with different impairments (see Discussion). We therefore sought to test the hypothesis that this group displays greater between-subject variability in parameter estimates. To do so, we conducted a post-hoc analysis in which we used Bayesian p-values to assess whether group standard deviation parameters (σ) for vc, ve, svc, and sve (averaged across difficulty and “many”/“few” conditions) were greater in ADHD. We found weak evidence for greater between-subject variability in vc in ADHD (Bp = .24), but moderate evidence for the same effect in ve (Bp = .07). In contrast, we found moderate evidence that svc estimates displayed greater between-subject variability in the control group (Bp = .11), and little evidence for effects in sve (Bp = .35). Therefore, there is suggestive evidence that ve, and possibly vc, values are more heterogeneous in ADHD.
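
A directional comparison of this kind can be sketched as follows. The sketch assumes that a Bayesian p-value is the proportion of paired posterior draws in which the hypothesized ordering fails to hold, which matches the interpretation given in the footnotes; the function name and the synthetic "posterior" samples are illustrative only and do not reproduce the study's actual MCMC output.

```python
import numpy as np


def bayesian_p(samples_a, samples_b):
    """Proportion of paired posterior draws in which a is NOT greater than b.

    Assumes both arrays hold draws from the same MCMC iterations, so that
    element i of each array belongs to the same joint posterior sample.
    """
    diff = np.asarray(samples_a, dtype=float) - np.asarray(samples_b, dtype=float)
    return float(np.mean(diff <= 0))


# Synthetic stand-ins for posterior draws of a group-level SD parameter
# (hypothetical values, not estimates from the study).
rng = np.random.default_rng(0)
sigma_adhd = rng.normal(0.60, 0.10, size=10_000)
sigma_control = rng.normal(0.45, 0.10, size=10_000)

bp = bayesian_p(sigma_adhd, sigma_control)
print(f"Bp for sigma_ADHD > sigma_control: {bp:.3f}")
```

Smaller values indicate that more of the posterior mass is consistent with greater between-subject variability in the first group.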

Discussion

The goal of the current study was to demonstrate the utility of formal cognitive models for better operationalizing and testing predictions from etiological theories of psychiatric disorders, and to use this approach to provide a strong test of several neuroscientific accounts of cognitive deficits in ADHD. A hierarchical Bayesian implementation of the LBA model (Brown & Heathcote, 2008; Turner et al., 2013) was fit to behavioral data of children with ADHD and typically-developing controls from a numerosity discrimination paradigm. Crucially, behavioral summary statistics demonstrated that children with ADHD were less accurate and had slower and more variable RTs than their typically-developing peers, suggesting that this simple task effectively captures the characteristic features of cognitive performance in ADHD. LBA parameters were then used to test three competing accounts of cognitive deficits.

Several results were highly relevant to these accounts. Both groups showed comparable within-subjects effects of difficulty in which the rate of evidence accumulation for correct information (vc) decreased on high-difficulty trials, but the rate of evidence accumulation for erroneous information (ve) increased. These effects reflect a decreased signal-to-noise ratio in the high-difficulty condition; high-difficulty stimuli are more similar to stimuli in the opposite category than low-difficulty stimuli, which reduces their discriminability and causes more evidence to be accumulated in the incorrect accumulator, consistent with previous manipulations of stimulus discriminability (Ester et al., 2014; van Maanen et al., 2016). However, group differences in mean drift rate parameters followed a distinct pattern. Although vc was lower in ADHD, there was little evidence that ve differed between groups, suggesting that slower, more variable and more erroneous responding in the ADHD group was driven only by slower accumulation of correct evidence. Further, between-trial variability in drift rate (sv) was unlikely to explain poorer performance in ADHD; evidence for group sv differences was relatively weak, and the most substantial evidence suggested that svc was actually greater in controls. Taken together, this pattern has implications for all three theoretical accounts.

The main prediction of the first general account, which posits that children with ADHD have a greater incidence of acute attentional lapses, was not supported by the model-based analysis. This account is proposed by at least two major theories, which hold that intermittent performance lapses occur either due to oscillatory activity within the default mode network during task engagement (Sonuga-Barke & Castellanos, 2007) or due to a core deficit in the CE component of working memory (Kofler et al., 2014; Rapport et al., 2008). Evidence of group differences in the sv parameter, which has been empirically demonstrated to index such lapses (McVay & Kane, 2012), was weak, and suggested that children with ADHD may actually have less between-trial variability in the speed of correct evidence accumulation (svc) than controls.

Implications of the current results for the other two theoretical accounts are more complex. Because the slowing in neural computation account (Killeen et al., 2013; Russell et al., 2006) predicts that the speed of evidence accumulation would be globally slowed in ADHD, it does not explain why only vc was reduced. The lower signal-to-noise account (Karalunas, Geurts, et al., 2014; Sikström & Söderlund, 2007) is partially supported by the decrease in vc relative to ve, but it also predicts that ve would be greater in ADHD, similar to the effect produced by the within-subjects discriminability manipulation. Although neither account appears to adequately describe the empirical data, there are two ways in which one or both may be compatible with the results.

First, it is possible that either may explain the selective effect in vc if specific assumptions are considered. Of the signal-to-noise theories, the MBA model, which posits that individuals with ADHD require more noise for stochastic resonance to occur, may provide a framework that most easily accounts for the data. If state-regulation processes increase signal-to-noise ratios through stochastic resonance, but this mechanism is less efficient in ADHD, controls would be expected to exhibit greater signal (vc) at comparable levels of noise (ve), as in the current study. However, the neuroenergetic theory (Killeen et al., 2013) may also explain the current data if it is assumed, for instance, that neurons which are most relevant for selecting correct responses exhaust their energy more quickly than neurons that are less relevant to the task (and thus contribute to noise). To further explore the possibility that selective slowing in vc is congruent with one or both accounts, other predictions could be assessed in the LBA framework. The neuroenergetic theory predicts that neural speed is reduced as time on task increases and inter-stimulus interval decreases, and is disproportionately reduced in ADHD (Killeen et al., 2013). Although the latter prediction has been confirmed with the DDM (Huang-Pollock et al., 2017), exploration of both predictions with the LBA could establish selective reductions in vc as a more specific marker of lower neuronal energy. As the signal-to-noise accounts predict that arousal increases signal-to-noise ratios, experimental manipulations of reward or those that promote task engagement (e.g., game-like features) would be expected to selectively increase vc, but do so to a lesser extent in ADHD. Further, as phasic task-elicited pupil dilations may provide an index of arousal state (Gilzenrat, Nieuwenhuis, Jepma, & Cohen, 2010), a strong test of the signal-to-noise accounts could determine whether these pupillometric measures correlate with individual differences in, and mediate ADHD-related reductions in, vc.

Second, rather than explaining cognitive deficits across the broad population of individuals with ADHD, it is possible that one or both of these accounts may explain performance differences for distinct subsets of this population. Given recent evidence for heterogeneity in ADHD (Fair et al., 2012; Karalunas, Fair, et al., 2014), it is possible that a subgroup of children exhibits globally slowed processing, while a separate subgroup exhibits lower signal-to-noise ratios. As both mechanisms would slow vc, but each would have opposite effects on ve, this combination could explain the group-level results. Indeed, our post-hoc analysis provided tentative evidence that children with ADHD displayed greater between-subject variability in mean drift, but not drift variability, parameters, and in ve in particular, providing initial support for this notion. To further explore the possibility that discrete subgroups exist, individuals’ LBA parameter estimates could be entered into clustering algorithms, such as community detection (Fair et al., 2012). Individual parameter estimates from the current study are inappropriate for these analyses because they were estimated with relatively few per-participant trials, and are therefore unreliable, and because individual parameters from hierarchical models are non-independent. However, clustering analyses could be applied in the future to experimental data sets with larger numbers of trials per participant.

Several effects in LBA model parameters that were less relevant to explaining group differences in performance should also be noted, including the response bias effect in b, and the finding that t0 was shorter in ADHD. It is unclear why a bias toward “many” responses should exist in the numerosity task. However, as we called the asterisk stimuli “candy” in our instructions to children, this bias may relate to classic findings in which the subjective value of stimuli (e.g., “many” pieces of candy are better than “few”) affects perceptual estimation (Bruner & Goodman, 1947). The t0 finding is consistent with several previous DDM studies of ADHD (Karalunas & Huang-Pollock, 2013; Metin et al., 2013), although, as this parameter does not affect accuracy or RT variability, it does not explain the characteristic behavioral phenomena associated with ADHD. As has been previously noted (Karalunas, Geurts, et al., 2014), t0 is likely multi-determined, and the implications of findings in this parameter are currently unclear.

Perhaps the most puzzling finding was the moderate evidence that svc was greater in controls, in the opposite direction of effects hypothesized by attentional lapse accounts. If svc is interpreted strictly as indexing lapses, this finding suggests the surprising conclusion that children with ADHD have fewer lapses than their peers. However, several caveats to this finding, and to our broader assertion that the sv results refute attentional lapse accounts, are worth noting. First, the empirical evidence for sv as an index of lapses is preliminary; a single study (McVay & Kane, 2012) found links between this parameter and reports of task-unrelated thoughts, as well as other constructs theoretically linked to lapses. A second limitation is that lapses may not cause drift variability that is perfectly Gaussian; as lapses would presumably cause trials with very slow drift rates, but not fast drift rates, there would be a disproportionate increase in variability at the low end of the drift distribution (rather than both ends, as in Figure 2a). Although a hypothetical model that accounts for negative skew in the drift distribution may better describe this phenomenon than the LBA, with its assumption of Gaussian variability, the work of McVay and Kane (2012) suggests that sv provides a reasonable approximation of variability caused by lapses. Finally, drift variability parameters are often more difficult to estimate than other parameters in sequential sampling models (Voss, Nagler, & Lerche, 2013). Although our simulation/recovery study (Supplemental Materials) suggested that the hierarchical model was able to obtain relatively reliable group estimates of sv, the reliability and construct validity of this parameter must be further explored in future research.
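
The second caveat can be made concrete with a small sketch. It compares drift rates drawn from a single Gaussian, as the LBA assumes, with drift rates drawn from a two-component mixture in which a minority of "lapse" trials have a much lower mean drift. The mixture is only one hypothetical way to formalize lapses, and the lapse probability, means, and function names are assumptions chosen for illustration rather than quantities taken from this study.

```python
import numpy as np


def skewness(x):
    """Sample skewness: third central moment divided by variance to the power 1.5."""
    x = np.asarray(x, dtype=float)
    centered = x - x.mean()
    return float(np.mean(centered**3) / np.mean(centered**2) ** 1.5)


def sample_drifts(n, v=3.0, sv=1.0, lapse_prob=0.0, lapse_v=0.5, seed=0):
    """Draw trial-level drift rates; a fraction lapse_prob comes from a slow component."""
    rng = np.random.default_rng(seed)
    is_lapse = rng.random(n) < lapse_prob
    trial_means = np.where(is_lapse, lapse_v, v)  # lapse trials get the lower mean
    return rng.normal(trial_means, sv)


gaussian_skew = skewness(sample_drifts(200_000, lapse_prob=0.0))
lapse_skew = skewness(sample_drifts(200_000, lapse_prob=0.1))
print(f"skew without lapses: {gaussian_skew:.3f}")
print(f"skew with 10% lapses: {lapse_skew:.3f}")
```

With these assumed settings the lapse mixture yields a clearly negative skew, whereas the pure Gaussian case stays near zero. A single symmetric sv parameter fit to such data would absorb the extra low-end spread only approximately.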

The current study has several additional limitations. First, as common psychiatric disorders that are often comorbid with ADHD were not exclusionary, it is possible that children with these conditions contributed to either the mean differences or the observed heterogeneity in the ADHD group. A second caveat is that data from a single task were used. Replication of the current results in different cognitive paradigms will be instrumental for both corroborating our findings and clarifying how task parameters may modulate them. A related limitation is that the current study did not explore how broader developmental, contextual, or biological factors, discussed by Coghill et al. (2005) as essential to testing theories of ADHD, were related to the model-based predictions. The combination of model-based techniques with prospective longitudinal studies that include measurements from multiple levels of analysis would arguably provide the most powerful tests of causal theories. We hope that the current study provides an initial step towards the regular inclusion of cognitive modeling in these larger studies.

In addition to these limitations of the data set, results also highlight some broader limitations of the model-based approach used. As stated in the introduction, the LBA was used in place of the DDM, another sequential sampling model commonly applied in the ADHD literature, because the race framework of the LBA allows separate estimates of drift for correct and incorrect evidence, and thus allows the global slowing and lower signal-to-noise accounts to be explicitly distinguished. However, this raises a major conceptual issue with the approach of using well-developed models from cognitive science to test neuroscientific theories of clinical disorders: whether a model-based analysis provides evidence for a particular etiological theory is highly dependent on the assumptions of the specific model chosen. Furthermore, as the LBA analysis found partial support for both of these accounts, it could be argued that this approach was unable to tease them apart or to address the possibility that each account could explain etiology for a subset of the heterogeneous clinical group. The LBA analysis advanced the field by identifying a unique mechanism of performance deficits in ADHD that both accounts must describe (selective slowing in vc) and by suggesting future tests of each account and methods for breaking down heterogeneity. Nonetheless, we acknowledge that, as the nascent field of applying computational modeling methods to clinical questions continues to grow, it must wrestle with the major conceptual issues of the effects of model choice, how to interpret ambiguous results, and how to adequately describe heterogeneity in clinical populations.

Conclusions

The current study produced several key conclusions. First, the model-based analysis found that there was little evidence that children with ADHD exhibit greater between-trial variability in cognitive processing, casting doubt on etiological models that highlight intermittent performance lapses. Second, it demonstrated that children with ADHD display lower signal-to-noise ratios than their peers in decision tasks, but that this effect is distinct from those that occur in response to manipulations of stimulus discriminability; children with ADHD display slower accumulation of correct evidence (signal) but show similar accumulation rates of incorrect evidence (noise) to controls. Third, these results partially supported both the behavioral neuroenergetics theory (Killeen et al., 2013; Russell et al., 2006) and accounts highlighting lower neural signal-to-noise ratios (Karalunas, Geurts, et al., 2014; Sikström & Söderlund, 2007), and provide a roadmap for how these theories can be further tested, distinguished and refined in a model-based framework. Specifically, they suggest that mechanisms through which signal detection is improved without concurrent reductions in noise should be further explored.

Overall, this work demonstrates how formal models of cognition can both make the mechanisms of etiological theories of psychopathology more explicit and provide strong tests of their predictions. Although the complexity of psychological phenomena may not allow all proposed mechanisms in such theories to be formalized, taking a model-based approach to those that can serves to advance the science as a whole by providing stronger conjectures and more definitive refutations.

Supplementary Material

General Scientific Summary (GSS).

Children with ADHD display slower response times and less accurate choices when completing choice response time tasks. This study demonstrates how mathematical models that describe cognitive processes underlying these tasks can be used to test theories about the causes of ADHD.

Footnotes

Herein, we use the term “etiology” to mean any causal process that underlies the observed symptoms of ADHD, including distal factors, such as genes, as well as the neurocognitive processes that are the focus of this study.

All Bayesian tests used standard JASP priors, including the Cauchy prior for effect size (width = .707) for t-tests, prior effect size scale = .5 for fixed factors in ANOVAs, and prior effect size scale = 1 for random factors.

Conversions of BF criteria to Bp are based on the logic that if 3 to 1 odds in favor of the research hypothesis are considered “positive” evidence, a Bp of .25 (.25 = 1/4, i.e., 3 to 1 odds that the posterior is consistent with the hypothesis that a difference exists) should also be treated as positive evidence.
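
The arithmetic behind this conversion can be written out as a short sketch. It assumes that Bp is the share of the posterior that is inconsistent with the hypothesized difference, so that the odds in favor of a difference are (1 − Bp) / Bp; the function name is illustrative and not part of any package used in the study.

```python
def bp_to_odds(bp):
    """Posterior odds that a difference exists, given a Bayesian p-value bp."""
    if not 0.0 < bp < 1.0:
        raise ValueError("bp must lie strictly between 0 and 1")
    return (1.0 - bp) / bp


print(bp_to_odds(0.25))  # 3.0, i.e., 3 to 1 odds
```

By the same logic, smaller Bp values correspond to larger odds that a difference exists.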

References

- Akaike H. International Encyclopedia of Statistical Science. Springer; 2011. Akaike’s Information Criterion; pp. 25–25.

- Aston-Jones G, Cohen JD. An integrative theory of locus coeruleus-norepinephrine function: adaptive gain and optimal performance. Annu Rev Neurosci. 2005;28:403–450. doi: 10.1146/annurev.neuro.28.061604.135709.

- Brown SD, Heathcote A. The simplest complete model of choice response time: Linear ballistic accumulation. Cognitive psychology. 2008;57(3):153–178. doi: 10.1016/j.cogpsych.2007.12.002.

- Bruner J, Goodman C. Value and need as organizing factors in perception. The journal of abnormal and social psychology. 1947;42(1):33. doi: 10.1037/h0058484.

- Castellanos FX, Sonuga-Barke EJ, Scheres A, Di Martino A, Hyde C, Walters JR. Varieties of attention-deficit/hyperactivity disorder-related intra-individual variability. Biological psychiatry. 2005;57(11):1416–1423. doi: 10.1016/j.biopsych.2004.12.005.

- Coghill D, Nigg J, Rothenberger A, Sonuga-Barke E, Tannock R. Whither causal models in the neuroscience of ADHD? Developmental Science. 2005;8(2):105–114. doi: 10.1111/j.1467-7687.2005.00397.x.

- Conners CK. Conners’ Rating Scales— 3 Technical Manual. NY: Multi-Health Systems Inc; 2008.

- Donkin C, Brown S, Heathcote A. Drawing conclusions from choice response time models: A tutorial using the linear ballistic accumulator. Journal of Mathematical Psychology. 2011;55(2):140–151.

- Donkin C, Brown S, Heathcote A, Wagenmakers EJ. Diffusion versus linear ballistic accumulation: different models but the same conclusions about psychological processes? Psychonomic bulletin & review. 2011;18(1):61–69. doi: 10.3758/s13423-010-0022-4.

- Donkin C, Brown SD, Heathcote A. The overconstraint of response time models: Rethinking the scaling problem. Psychonomic Bulletin & Review. 2009;16(6):1129–1135. doi: 10.3758/PBR.16.6.1129.

- Epstein JN, Langberg JM, Rosen PJ, Graham A, Narad ME, Antonini TN, Altaye M. Evidence for higher reaction time variability for children with ADHD on a range of cognitive tasks including reward and event rate manipulations. Neuropsychology. 2011;25(4):427. doi: 10.1037/a0022155.

- Ester EF, Ho TC, Brown SD, Serences JT. Variability in visual working memory ability limits the efficiency of perceptual decision making. Journal of vision. 2014;14(4):2–2. doi: 10.1167/14.4.2.

- Fair DA, Bathula D, Nikolas MA, Nigg JT. Distinct neuropsychological subgroups in typically developing youth inform heterogeneity in children with ADHD. Proceedings of the National Academy of Sciences. 2012;109(17):6769–6774. doi: 10.1073/pnas.1115365109.

- Forstmann BU, Wagenmakers EJ. An Introduction to Model-Based Cognitive Neuroscience. Springer; 2015. Model-Based Cognitive Neuroscience: A Conceptual Introduction; pp. 139–156.

- Fulton B, Scheffler R, Hinshaw S, Levine P, Stone S, Brown T, Modrek S. National variation of ADHD diagnostic prevalence and medication use: health care providers and education policies. Psychiatric Services. 2015 doi: 10.1176/ps.2009.60.8.1075.

- Gilzenrat MS, Nieuwenhuis S, Jepma M, Cohen JD. Pupil diameter tracks changes in control state predicted by the adaptive gain theory of locus coeruleus function. Cognitive, Affective, & Behavioral Neuroscience. 2010;10(2):252–269. doi: 10.3758/CABN.10.2.252.

- Halperin JM. Executive functioning–a key construct for understanding developmental psychopathology or a ‘catch-all’ term in need of some rethinking? Journal of Child Psychology and Psychiatry. 2016;57(4):443–445. doi: 10.1111/jcpp.12551.

- Hawkins G, Mittner M, Boekel W, Heathcote A, Forstmann BU. Toward a model-based cognitive neuroscience of mind wandering. Neuroscience. 2015;310:290–305. doi: 10.1016/j.neuroscience.2015.09.053.

- Heathcote A, Lin Y, Gretton M. DMC: Dynamic Models of Choice. 2017 doi: 10.3758/s13428-018-1067-y. Retrieved from osf.io/pbwx8.

- Heathcote A, Loft S, Remington RW. Slow down and remember to remember! A delay theory of prospective memory costs. Psychological review. 2015;122(2):376. doi: 10.1037/a0038952.

- Heathcote A, Suraev A, Curley S, Gong Q, Love J, Michie PT. Decision processes and the slowing of simple choices in schizophrenia. American Psychological Association; 2015.

- Huang-Pollock CL, Karalunas SL, Tam H, Moore AN. Evaluating vigilance deficits in ADHD: a meta-analysis of CPT performance. Journal of abnormal psychology. 2012;121(2):360. doi: 10.1037/a0027205.

- Huang-Pollock CL, Ratcliff R, McKoon G, Shapiro Z, Weigard A, Galloway-Long H. Using the diffusion model to explain cognitive deficits in attention deficit hyperactivity disorder. Journal of abnormal child psychology. 2017;45(1):57–68. doi: 10.1007/s10802-016-0151-y.

- JASP Team. JASP. 2018 (Version 0.8.5)

- Karalunas SL, Fair D, Musser ED, Aykes K, Iyer SP, Nigg JT. Subtyping attention-deficit/hyperactivity disorder using temperament dimensions: toward biologically based nosologic criteria. JAMA psychiatry. 2014;71(9):1015–1024. doi: 10.1001/jamapsychiatry.2014.763. [Retracted]

- Karalunas SL, Geurts HM, Konrad K, Bender S, Nigg JT. Annual Research Review: Reaction time variability in ADHD and autism spectrum disorders: measurement and mechanisms of a proposed trans-diagnostic phenotype. Journal of Child Psychology and Psychiatry. 2014;55(6):685–710. doi: 10.1111/jcpp.12217.

- Karalunas SL, Huang-Pollock CL. Integrating impairments in reaction time and executive function using a diffusion model framework. Journal of abnormal child psychology. 2013;41(5):837–850. doi: 10.1007/s10802-013-9715-2.

- Karalunas SL, Huang-Pollock CL, Nigg JT. Decomposing attention-deficit/hyperactivity disorder (ADHD)-related effects in response speed and variability. Neuropsychology. 2012;26(6):684. doi: 10.1037/a0029936.

- Killeen PR, Russell VA, Sergeant JA. A behavioral neuroenergetics theory of ADHD. Neuroscience & Biobehavioral Reviews. 2013;37(4):625–657. doi: 10.1016/j.neubiorev.2013.02.011.

- Kofler MJ, Alderson RM, Raiker JS, Bolden J, Sarver DE, Rapport MD. Working memory and intraindividual variability as neurocognitive indicators in ADHD: examining competing model predictions. Neuropsychology. 2014;28(3):459. doi: 10.1037/neu0000050.

- Kruschke JK. Bayesian estimation supersedes the t test. Journal of Experimental Psychology: General. 2013;142(2):573. doi: 10.1037/a0029146.

- Lee MD, Wagenmakers EJ. Bayesian cognitive modeling: A practical course. Cambridge University Press; 2014.

- Loe IM, Feldman HM. Academic and educational outcomes of children with ADHD. Journal of pediatric psychology. 2007;32(6):643–654. doi: 10.1093/jpepsy/jsl054.

- Luce RD. Response times. Oxford University Press; 1986.

- Matzke D, Hughes M, Badcock JC, Michie P, Heathcote A. Failures of cognitive control or attention? The case of stop-signal deficits in schizophrenia. Attention, Perception, & Psychophysics. 2017;79(4):1078–1086. doi: 10.3758/s13414-017-1287-8.

- McVay JC, Kane MJ. Drifting from slow to “d’oh!”: Working memory capacity and mind wandering predict extreme reaction times and executive control errors. Journal of Experimental Psychology: Learning, Memory, and Cognition. 2012;38(3):525. doi: 10.1037/a0025896.

- Metin B, Roeyers H, Wiersema JR, van der Meere JJ, Thompson M, Sonuga-Barke E. ADHD performance reflects inefficient but not impulsive information processing: A diffusion model analysis. Neuropsychology. 2013;27(2):193. doi: 10.1037/a0031533.

- Molina BS, Hinshaw SP, Swanson JM, Arnold LE, Vitiello B, Jensen PS, Abikoff HB. The MTA at 8 years: prospective follow-up of children treated for combined-type ADHD in a multisite study. Journal of the American Academy of Child & Adolescent Psychiatry. 2009;48(5):484–500. doi: 10.1097/CHI.0b013e31819c23d0.

- Monsell S, Driver J. 1 Banishing the Control Homunculus. Control of cognitive processes. 2000:3.

- Moritz S, Birkner C, Kloss M, Jahn H, Hand I, Haasen C, Krausz M. Executive functioning in obsessive–compulsive disorder, unipolar depression, and schizophrenia. Archives of Clinical Neuropsychology. 2002;17(5):477–483.

- Moss F, Ward LM, Sannita WG. Stochastic resonance and sensory information processing: a tutorial and review of application. Clinical neurophysiology. 2004;115(2):267–281. doi: 10.1016/j.clinph.2003.09.014.

- Nigg JT. On inhibition/disinhibition in developmental psychopathology: views from cognitive and personality psychology and a working inhibition taxonomy. Psychological bulletin. 2000;126(2):220. doi: 10.1037/0033-2909.126.2.220.

- Pennington BF, Ozonoff S. Executive functions and developmental psychopathology. Journal of child psychology and psychiatry. 1996;37(1):51–87. doi: 10.1111/j.1469-7610.1996.tb01380.x.

- Popper K. Conjectures and refutations: the growth of scientific knowledge. Routledge & Kegan Paul; 1963.

- Rapport MD, Alderson RM, Kofler MJ, Sarver DE, Bolden J, Sims V. Working memory deficits in boys with attention-deficit/hyperactivity disorder (ADHD): the contribution of central executive and subsystem processes. Journal of Abnormal Child Psychology. 2008;36(6):825–837. doi: 10.1007/s10802-008-9215-y.

- Ratcliff R. A theory of memory retrieval. Psychological review. 1978;85(2):59.

- Ratcliff R, Childers R. Individual differences and fitting methods for the two-choice diffusion model of decision making. Decision. 2015;2(4):237. doi: 10.1037/dec0000030.

- Ratcliff R, McKoon G. The diffusion decision model: Theory and data for two-choice decision tasks. Neural Computation. 2008;20(4):873–922. doi: 10.1162/neco.2008.12-06-420.

- Ratcliff R, Tuerlinckx F. Estimating parameters of the diffusion model: Approaches to dealing with contaminant reaction times and parameter variability. Psychonomic bulletin & review. 2002;9(3):438–481. doi: 10.3758/bf03196302.

- Reynolds C, Kamphaus R. Circle Pines. MN: American Guidance Service; 2004. Behavior Assessment for Children, (BASC-2)

- Robbins TW, Gillan CM, Smith DG, de Wit S, Ersche KD. Neurocognitive endophenotypes of impulsivity and compulsivity: towards dimensional psychiatry. Trends in cognitive sciences. 2012;16(1):81–91. doi: 10.1016/j.tics.2011.11.009.

- Russell VA, Oades RD, Tannock R, Killeen PR, Auerbach JG, Johansen EB, Sagvolden T. Response variability in attention-deficit/hyperactivity disorder: a neuronal and glial energetics hypothesis. Behavioral and Brain Functions. 2006;2(1):30. doi: 10.1186/1744-9081-2-30.

- Shaffer D, Fisher P, Lucas R. NIMH Diagnostic Interview Schedule for Children-IV. New York: Ruane Center for Early Diagnosis, Division of Child Psychiatry, Columbia University; 1997.

- Sikström S, Söderlund G. Stimulus-dependent dopamine release in attention-deficit/hyperactivity disorder. Psychological review. 2007;114(4):1047. doi: 10.1037/0033-295X.114.4.1047.

- Smith PL, Ratcliff R. Psychology and neurobiology of simple decisions. Trends in Neurosciences. 2004;27(3):161–168. doi: 10.1016/j.tins.2004.01.006.

- Sonuga-Barke EJ, Castellanos FX. Spontaneous attentional fluctuations in impaired states and pathological conditions: a neurobiological hypothesis. Neuroscience & Biobehavioral Reviews. 2007;31(7):977–986. doi: 10.1016/j.neubiorev.2007.02.005.

- Todd RD, Botteron KN. Is attention-deficit/hyperactivity disorder an energy deficiency syndrome? Biological Psychiatry. 2001;50(3):151–158. doi: 10.1016/s0006-3223(01)01173-8.

- Turner BM, Sederberg PB, Brown SD, Steyvers M. A method for efficiently sampling from distributions with correlated dimensions. Psychological methods. 2013;18(3):368. doi: 10.1037/a0032222.

- van Maanen L, Forstmann BU, Keuken MC, Wagenmakers EJ, Heathcote A. The impact of MRI scanner environment on perceptual decision-making. Behavior research methods. 2016;48(1):184–200. doi: 10.3758/s13428-015-0563-6.

- Verbruggen F, McLaren IP, Chambers CD. Banishing the control homunculi in studies of action control and behavior change. Perspectives on Psychological Science. 2014;9(5):497–524. doi: 10.1177/1745691614526414.

- Voss A, Nagler M, Lerche V. Diffusion models in experimental psychology. Experimental psychology. 2013 doi: 10.1027/1618-3169/a000218.

- Wagenmakers EJ, Lodewyckx T, Kuriyal H, Grasman R. Bayesian hypothesis testing for psychologists: A tutorial on the Savage–Dickey method. Cognitive psychology. 2010;60(3):158–189. doi: 10.1016/j.cogpsych.2009.12.001.

- Wehmeier PM, Schacht A, Barkley RA. Social and emotional impairment in children and adolescents with ADHD and the impact on quality of life. Journal of Adolescent Health. 2010;46(3):209–217. doi: 10.1016/j.jadohealth.2009.09.009.

- Weigard A, Huang-Pollock CL. The role of speed in ADHD-related working memory deficits: a time-based resource-sharing and diffusion model account. Clinical Psychological Science. 2016 doi: 10.1177/2167702616668320.

- White CN, Ratcliff R, Vasey MW, McKoon G. Using diffusion models to understand clinical disorders. Journal of Mathematical Psychology. 2010;54(1):39–52. doi: 10.1016/j.jmp.2010.01.004.

- Wiecki TV, Poland J, Frank MJ. Model-Based Cognitive Neuroscience Approaches to Computational Psychiatry Clustering and Classification. Clinical Psychological Science. 2015;3(3):378–399.

- Willcutt EG, Doyle AE, Nigg JT, Faraone SV, Pennington BF. Validity of the executive function theory of attention-deficit/hyperactivity disorder: a meta-analytic review. Biological psychiatry. 2005;57(11):1336–1346. doi: 10.1016/j.biopsych.2005.02.006.

- Wright L, Lipszyc J, Dupuis A, Thayapararajah SW, Schachar R. Response inhibition and psychopathology: A meta-analysis of go/no-go task performance. Journal of Abnormal Psychology. 2014;123(2):429. doi: 10.1037/a0036295.
