Significance

Multilayer neural networks have proven extremely successful in a variety of tasks, from image classification to robotics. However, the reasons for this practical success and its precise domain of applicability are unknown. Learning a neural network from data requires solving a complex optimization problem with millions of variables. This is done by stochastic gradient descent (SGD) algorithms. We study the case of two-layer networks and derive a compact description of the SGD dynamics in terms of a limiting partial differential equation. Among other consequences, this shows that SGD dynamics does not become more complex when the network size increases.

Keywords: neural networks, stochastic gradient descent, gradient flow, Wasserstein space, partial differential equations

Abstract

Multilayer neural networks are among the most powerful models in machine learning, yet the fundamental reasons for this success defy mathematical understanding. Learning a neural network requires optimizing a nonconvex high-dimensional objective (risk function), a problem that is usually attacked using stochastic gradient descent (SGD). Does SGD converge to a global optimum of the risk or only to a local optimum? In the former case, does this happen because local minima are absent or because SGD somehow avoids them? In the latter, why do local minima reached by SGD have good generalization properties? In this paper, we consider a simple case, namely two-layer neural networks, and prove that—in a suitable scaling limit—SGD dynamics is captured by a certain nonlinear partial differential equation (PDE) that we call distributional dynamics (DD). We then consider several specific examples and show how DD can be used to prove convergence of SGD to networks with nearly ideal generalization error. This description allows for “averaging out” some of the complexities of the landscape of neural networks and can be used to prove a general convergence result for noisy SGD.

Multilayer neural networks are one of the oldest approaches to statistical machine learning, dating back at least to the 1960s (1). Over the last 10 years, under the impulse of increasing computer power and larger data availability, they have emerged as a powerful tool for a wide variety of learning tasks (2, 3).

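In this paper, we focus on the classical setting of supervised learning, whereby we are given data points $(x_i, y_i) \in \mathbb{R}^d \times \mathbb{R}$, indexed by $i \in \mathbb{N}$, which are assumed to be independent and identically distributed from an unknown distribution $\mathbb{P}$ on $\mathbb{R}^d \times \mathbb{R}$. Here $x_i \in \mathbb{R}^d$ is a feature vector (e.g., a set of descriptors of an image), and $y_i \in \mathbb{R}$ is a label (e.g., labeling the object in the image). Our objective is to model the dependence of the label $y_i$ on the feature vector $x_i$ to assign labels to previously unlabeled examples. In a two-layer neural network, this dependence is modeled as

$$\hat{y}(x; \theta) = \frac{1}{N} \sum_{i=1}^{N} \sigma_*(x; \theta_i). \tag{1}$$

Here, $N$ is the number of hidden units (neurons), $\sigma_*: \mathbb{R}^d \times \mathbb{R}^D \to \mathbb{R}$ is an activation function, and $\theta_i \in \mathbb{R}^D$ are parameters, which we collectively denote by $\theta = (\theta_1, \dots, \theta_N)$. The factor $1/N$ is introduced for convenience and can be eliminated by redefining the activation. Often $\theta_i = (a_i, b_i, w_i)$ and

$$\sigma_*(x; \theta_i) = a_i\, \sigma(\langle w_i, x \rangle + b_i) \tag{2}$$

for some $\sigma: \mathbb{R} \to \mathbb{R}$. Ideally, the parameters $\theta = (\theta_i)_{i \le N}$ should be chosen so as to minimize the risk (generalization error) $R_N(\theta) = \mathbb{E}\{\ell(y, \hat{y}(x; \theta))\}$, where $\ell: \mathbb{R} \times \mathbb{R} \to \mathbb{R}$ is a certain loss function. For the sake of simplicity, we will focus on the square loss $\ell(y, \hat{y}) = (y - \hat{y})^2$, but more general choices can be treated along the same lines.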
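As a concrete illustration of the model and risk just described, the following minimal Python sketch evaluates a two-layer prediction as an average over hidden units and estimates the square-loss risk on a sample. The parametrization by output weights `a`, input weights `W`, and offsets `b`, the default `tanh` nonlinearity, the toy labels, and all function names are assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

def predict(X, a, W, b, sigma=np.tanh):
    """Average over N hidden units, each computing a_i * sigma(<w_i, x> + b_i).

    X: (n, d) feature vectors; W: (N, d) input weights;
    a, b: (N,) output weights and offsets.
    """
    pre_activations = X @ W.T + b              # shape (n, N)
    return (a * sigma(pre_activations)).mean(axis=1)

def square_loss_risk(X, y, a, W, b, sigma=np.tanh):
    """Monte Carlo estimate of the risk under the square loss."""
    residuals = y - predict(X, a, W, b, sigma)
    return float(np.mean(residuals ** 2))

rng = np.random.default_rng(0)
n, d, N = 200, 5, 50
X = rng.standard_normal((n, d))
y = np.sign(X[:, 0])                           # hypothetical labels in {-1, +1}
a, W, b = np.zeros(N), rng.standard_normal((N, d)), np.zeros(N)

# With all output weights at zero the predictor is identically 0,
# so the estimated risk reduces to the sample mean of y**2.
trivial_risk = square_loss_risk(X, y, a, W, b)
```

Averaging over units (rather than summing) keeps the prediction on the same scale as the number of hidden units grows, which is the normalization the scaling limit relies on.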

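In practice, the parameters of neural networks are learned by stochastic gradient descent (SGD) (4) or its variants. In the present case, this amounts to the iteration

$$\theta_i^{k+1} = \theta_i^k + 2 s_k \big(y_k - \hat{y}(x_k; \theta^k)\big)\, \nabla_{\theta_i} \sigma_*(x_k; \theta_i^k). \tag{3}$$

Here $\theta^k = (\theta_i^k)_{i \le N}$ denotes the parameters after $k$ iterations, $s_k$ is a step size, and $(x_k, y_k)$ is the $k$th example. Throughout the paper, we make the following “One-Pass Assumption”: Training examples are never revisited. Equivalently, $\{(x_k, y_k)\}_{k \ge 1}$ are independent and identically distributed, $(x_k, y_k) \sim \mathbb{P}$.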

In large-scale applications, this is not far from the truth: The data are so large that each example is visited at most a few times (5). Further, theoretical guarantees suggest that there is limited advantage to be gained from multiple passes (6). For recent work deriving scaling limits under such an assumption (in different problems), see ref. 7.
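The one-pass iteration can be sketched as follows. This is a minimal illustration, assuming units of the form `a_i * sigma(<w_i, x> + b_i)`, the square loss, and an update in which the factor 1/N from averaging is absorbed into the step size; the function names, the `tanh` nonlinearity, and the toy target are hypothetical choices, not the paper's experimental setup.

```python
import numpy as np

def one_pass_sgd(examples, a, W, b, step_size, sigma=np.tanh,
                 dsigma=lambda t: 1.0 - np.tanh(t) ** 2):
    """Run SGD, consuming each (x, y) in `examples` exactly once.

    a, b: (N,) output weights and offsets; W: (N, d) input weights.
    step_size: callable k -> s_k.
    """
    a = np.array(a, dtype=float)
    W = np.array(W, dtype=float)
    b = np.array(b, dtype=float)
    for k, (x, y) in enumerate(examples):
        pre = W @ x + b                        # (N,) pre-activations
        act = sigma(pre)
        err = y - np.mean(a * act)             # residual y_k - y_hat(x_k)
        slope = a * dsigma(pre)                # shared factor in the w_i and b_i gradients
        step = 2.0 * step_size(k) * err
        # Gradients of a_i * sigma(<w_i, x> + b_i) with respect to (a_i, w_i, b_i).
        a += step * act
        W += step * slope[:, None] * x[None, :]
        b += step * slope
    return a, W, b

def fresh_examples(rng, n_steps, d):
    """Generator of new samples: no example is ever revisited."""
    for _ in range(n_steps):
        x = rng.standard_normal(d)
        yield x, float(np.tanh(x[0]))          # hypothetical target

rng = np.random.default_rng(0)
d, N = 3, 10
a, W, b = one_pass_sgd(fresh_examples(rng, 100, d),
                       rng.standard_normal(N), rng.standard_normal((N, d)),
                       np.zeros(N), step_size=lambda k: 0.05)
```

Feeding the loop from a generator makes the one-pass assumption explicit: the number of SGD steps equals the number of samples consumed.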

Understanding the optimization landscape of two-layer neural networks is largely an open problem even when we have access to an infinite number of examples, that is, to the population risk $R_N(\theta)$. Several studies have focused on special choices of the activation function $\sigma_*$ and of the data distribution $\mathbb{P}$, proving that the population risk has no bad local minima (8–10). This type of analysis requires delicate calculations that are somewhat sensitive to the specific choice of the model. Another line of work proposes new algorithms with theoretical guarantees (11–16), which use initializations based on tensor factorization.

In this paper, we prove that, in a suitable scaling limit, the SGD dynamics admits an asymptotic description in terms of a certain nonlinear partial differential equation (PDE). This PDE has a remarkable mathematical structure, in that it corresponds to a gradient flow in the metric space $(\mathscr{P}(\mathbb{R}^D), W_2)$: the space of probability measures on $\mathbb{R}^D$, endowed with the Wasserstein metric. This gradient flow minimizes an asymptotic version of the population risk, which is defined for $\rho \in \mathscr{P}(\mathbb{R}^D)$ and will be denoted by $R(\rho)$. This description simplifies the analysis of the landscape of two-layer neural networks, for instance by exploiting underlying symmetries. We illustrate this by obtaining results on several concrete examples as well as a general convergence result for “noisy SGD.” In the next section, we provide an informal outline, focusing on basic intuitions rather than on formal results. We then present the consequences of these ideas on a few specific examples and subsequently state our general results.

An Informal Overview

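A good starting point is to rewrite the population risk $R_N(\theta) = \mathbb{E}\{[y - \hat{y}(x; \theta)]^2\}$ as

$$R_N(\theta) = R_\# + \frac{2}{N} \sum_{i=1}^{N} V(\theta_i) + \frac{1}{N^2} \sum_{i,j=1}^{N} U(\theta_i, \theta_j), \tag{4}$$

where we defined the potentials $V(\theta) = -\mathbb{E}\{y\, \sigma_*(x; \theta)\}$, $U(\theta_1, \theta_2) = \mathbb{E}\{\sigma_*(x; \theta_1)\, \sigma_*(x; \theta_2)\}$. In particular, $U(\cdot, \cdot)$ is a symmetric positive semidefinite kernel. The constant $R_\# = \mathbb{E}\{y^2\}$ is the risk of the trivial predictor $\hat{y} = 0$.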
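For the square loss, this rewriting follows from expanding the square. The short derivation below is a sketch under the assumption that the predictor is the average $\frac{1}{N}\sum_i \sigma_*(x; \theta_i)$ of single-unit outputs; the explicit expressions used for the one-body potential $V$, the pairwise kernel $U$, and the constant $R_\#$ are the ones this expansion naturally produces.

```latex
\begin{aligned}
R_N(\theta)
 &= \mathbb{E}\Big\{\Big(y - \frac{1}{N}\sum_{i=1}^{N}\sigma_*(x;\theta_i)\Big)^{2}\Big\} \\
 &= \mathbb{E}\{y^{2}\}
    - \frac{2}{N}\sum_{i=1}^{N}\mathbb{E}\{y\,\sigma_*(x;\theta_i)\}
    + \frac{1}{N^{2}}\sum_{i,j=1}^{N}\mathbb{E}\{\sigma_*(x;\theta_i)\,\sigma_*(x;\theta_j)\} \\
 &= R_\# + \frac{2}{N}\sum_{i=1}^{N}V(\theta_i)
    + \frac{1}{N^{2}}\sum_{i,j=1}^{N}U(\theta_i,\theta_j),
\qquad
V(\theta) = -\mathbb{E}\{y\,\sigma_*(x;\theta)\},\quad
U(\theta_1,\theta_2) = \mathbb{E}\{\sigma_*(x;\theta_1)\,\sigma_*(x;\theta_2)\}. \\[4pt]
\sum_{i,j} c_i c_j\,U(\theta_i,\theta_j)
 &= \mathbb{E}\Big\{\Big(\sum_{i} c_i\,\sigma_*(x;\theta_i)\Big)^{2}\Big\} \;\ge\; 0
 \quad\text{for all } c_1,\dots,c_N \in \mathbb{R}.
\end{aligned}
```

The last line is the reason the kernel is positive semidefinite: any quadratic form in $U$ is the expectation of a square.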

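Notice that $R_N(\theta_1, \dots, \theta_N)$ only depends on $\theta_1, \dots, \theta_N$ through their empirical distribution $\hat{\rho}^{(N)} = N^{-1} \sum_{i=1}^{N} \delta_{\theta_i}$. This suggests considering a risk function defined for $\rho \in \mathscr{P}(\mathbb{R}^D)$ [we denote by $\mathscr{P}(\Omega)$ the space of probability distributions on $\Omega$]:

$$R(\rho) = R_\# + 2 \int V(\theta)\, \rho(d\theta) + \int U(\theta_1, \theta_2)\, \rho(d\theta_1)\, \rho(d\theta_2). \tag{5}$$

Formal relationships can be established between $R_N(\theta)$ and $R(\rho)$. For instance, under mild assumptions, $\inf_\theta R_N(\theta) = \inf_\rho R(\rho) + O(1/N)$. We refer to the next sections for mathematical statements of this type.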

Roughly speaking, $R(\rho)$ corresponds to the population risk when the number of hidden units goes to infinity, and the empirical distribution of parameters $\hat{\rho}^{(N)}$ converges to $\rho$. Since $U(\cdot, \cdot)$ is positive semidefinite, we obtain that the risk becomes convex in this limit. The fact that learning can be viewed as convex optimization in an infinite-dimensional space was indeed pointed out in the past (17, 18). Does this mean that the landscape of the population risk simplifies for large $N$ and descent algorithms will converge to a unique (or nearly unique) global optimum?

The answer to the latter question is generally negative, and a physics analogy can explain why. Think of $\theta_1, \dots, \theta_N$ as the positions of $N$ particles in a $D$-dimensional space. When $N$ is large, the behavior of such a “gas” of particles is effectively described by a density $\rho_t(\theta)$ (with $t$ indexing time). However, not all “small” changes of this density profile can be realized in the actual physical dynamics: The dynamics conserves mass locally because particles cannot move discontinuously. For instance, if $\mathrm{supp}(\rho_t) \subseteq S_1 \cup S_2$ for two disjoint compact sets $S_1, S_2 \subseteq \mathbb{R}^D$ and all $t \in [t_1, t_2]$, then the total mass in each of these regions cannot change over time; that is, $\rho_t(S_\ell)$ does not depend on $t \in [t_1, t_2]$.

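We will prove that SGD is well approximated (in a precise quantitative sense described below) by a continuum dynamics that enforces this local mass conservation principle. Namely, assume that the step size in SGD is given by $s_k = \varepsilon\, \xi(k \varepsilon)$, for $\xi: \mathbb{R}_{\ge 0} \to \mathbb{R}_{>0}$ a sufficiently regular function. Denoting by $\hat{\rho}^{(N)}_k = N^{-1} \sum_{i=1}^{N} \delta_{\theta_i^k}$ the empirical distribution of parameters after $k$ SGD steps, we prove that

$$\hat{\rho}^{(N)}_{t/\varepsilon} \Rightarrow \rho_t \tag{6}$$

when $N \to \infty$, $\varepsilon \to 0$ (here $\Rightarrow$ denotes weak convergence). The asymptotic dynamics of $\rho_t$ is defined by the following PDE, which we shall refer to as distributional dynamics (DD):

$$\partial_t \rho_t = 2 \xi(t)\, \nabla_\theta \cdot \big( \rho_t\, \nabla_\theta \Psi(\theta; \rho_t) \big), \tag{7}$$

and

$$\Psi(\theta; \rho) \equiv V(\theta) + \int U(\theta, \theta')\, \rho(d\theta'). \tag{8}$$

[Here, $\nabla_\theta \cdot v(\theta)$ denotes the divergence of the vector field $v(\theta)$.] This should be interpreted as an evolution equation in $\mathscr{P}(\mathbb{R}^D)$. While we described the convergence to this dynamics in asymptotic terms, the results in the next sections provide explicit nonasymptotic bounds. In particular, $\hat{\rho}^{(N)}_k$ is a good approximation of $\rho_t$, $t = k \varepsilon$, as soon as $\varepsilon \ll 1/D$ and $N \gg D$.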
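The local mass conservation behind the DD can be made concrete with a particle discretization: each particle moves continuously along the negative gradient of an effective potential that combines a one-body term with the average pairwise interaction. The sketch below assumes the continuity-equation form $\partial_t \rho_t = 2\xi(t)\nabla\cdot(\rho_t \nabla\Psi)$ with $\Psi(\theta;\rho) = V(\theta) + \int U(\theta,\theta')\rho(d\theta')$, and uses forward Euler in time. The toy potentials, the function name `particle_flow_step`, and all numerical values are hypothetical.

```python
import numpy as np

def particle_flow_step(theta, grad_V, grad1_U, xi_t, dt):
    """One forward-Euler step of the particle approximation.

    theta: (N, D) particle positions.
    grad_V: callable (N, D) -> (N, D), gradient of V at each particle.
    grad1_U: callable (theta, theta) -> (N, N, D); entry [i, j] is the
             gradient of U(theta_i, theta_j) in its first argument.
    """
    # Effective potential gradient: one-body term plus average interaction.
    grad_Psi = grad_V(theta) + grad1_U(theta, theta).mean(axis=1)
    return theta - 2.0 * xi_t * dt * grad_Psi

# Toy potentials (hypothetical): V(theta) = -<m, theta>, U(t1, t2) = <t1, t2>.
m = np.array([1.0, -2.0])
grad_V = lambda th: np.broadcast_to(-m, th.shape)
grad1_U = lambda th1, th2: np.broadcast_to(th2[None, :, :],
                                           (th1.shape[0],) + th2.shape)

rng = np.random.default_rng(0)
theta = rng.standard_normal((100, 2))
spread_before = theta - theta.mean(axis=0)
for _ in range(200):
    theta = particle_flow_step(theta, grad_V, grad1_U, xi_t=1.0, dt=0.05)
```

In this toy case every particle feels the same force, so the cloud translates rigidly until its mean reaches `m`, while the shape of the cloud is unchanged. Mass is transported, never created or destroyed at a distance.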

Using these results, analyzing learning in two-layer neural networks reduces to analyzing the PDE (Eq. 7). While this is far from being an easy task, the PDE formulation leads to several simplifications and insights. First, it factors out the invariance of the risk (Eq. 4) (and of the SGD dynamics; Eq. 3), with respect to permutations of the units $\{1, \dots, N\}$.

Second, it allows us to exploit symmetries in the data distribution $\mathbb{P}$. If $\mathbb{P}$ is left invariant under a group of transformations (e.g., rotations), we can look for a solution $\rho_t$ of the DD (Eq. 7) that enjoys the same symmetry, hence reducing the dimensionality of the problem. This is impossible for the finite-$N$ dynamics (Eq. 3), since no arrangement of the points $\{\theta_1, \dots, \theta_N\} \subseteq \mathbb{R}^D$ is left invariant, say, under rotations. We will provide examples of this approach in the next sections.

Third, there is a rich mathematical literature on the PDE (Eq. 7) that was motivated by the study of interacting particle systems in mathematical physics. As mentioned above, a key structure exploited in this line of work is that Eq. 7 can be viewed as a gradient flow for the cost function $R(\rho)$ in the space $(\mathscr{P}(\mathbb{R}^D), W_2)$ of probability measures on $\mathbb{R}^D$ endowed with the Wasserstein metric (19–21). Roughly speaking, this means that the trajectory $t \mapsto \rho_t$ attempts to minimize the risk $R(\rho)$ while maintaining the “local mass conservation” constraint. Recall that the Wasserstein distance is defined as

$$W_2(\rho_1, \rho_2) = \Big( \inf_{\gamma \in \mathcal{C}(\rho_1, \rho_2)} \int \|\theta_1 - \theta_2\|_2^2\, \gamma(d\theta_1, d\theta_2) \Big)^{1/2}, \tag{9}$$

where the infimum is taken over all couplings of $\rho_1$ and $\rho_2$. Informally, the fact that $\rho_t$ is a gradient flow means that Eq. 7 is equivalent, for small $\tau$, to

$$\rho_{t+\tau} \approx \arg\min_{\rho \in \mathscr{P}(\mathbb{R}^D)} \Big\{ R(\rho) + \frac{1}{2 \xi(t) \tau}\, W_2(\rho, \rho_t)^2 \Big\}. \tag{10}$$

Powerful tools from the mathematical literature on gradient flows in measure spaces (20) can be exploited to study the behavior of Eq. 7.
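To build intuition for the Wasserstein metric, it helps to compute it in the one case where it has a closed form. For two empirical distributions on the real line with the same number of equally weighted atoms, the optimal coupling matches the atoms in sorted order, so the distance is a root-mean-square difference of order statistics. The sketch below covers only this one-dimensional, equal-size case; the function name is hypothetical.

```python
import numpy as np

def w2_empirical_1d(u, v):
    """Wasserstein-2 distance between two 1D empirical distributions
    with the same number of equally weighted atoms."""
    u = np.sort(np.asarray(u, dtype=float))
    v = np.sort(np.asarray(v, dtype=float))
    if u.shape != v.shape or u.ndim != 1:
        raise ValueError("expected two 1D samples of equal length")
    # Sorted (monotone) matching is the optimal coupling in one dimension.
    return float(np.sqrt(np.mean((u - v) ** 2)))

shifted = w2_empirical_1d([0.0, 1.0], [1.0, 2.0])     # translation by 1
relabeled = w2_empirical_1d([0.0, 2.0], [2.0, 0.0])   # same atoms, different order
```

The distance depends only on the two distributions and not on how the atoms are labeled, mirroring the permutation invariance of the units. Moving all mass by a fixed displacement costs exactly that displacement, which is the sense in which the metric penalizes transport rather than pointwise differences in density.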

Most importantly, the scaling limit elucidates the dependence of the landscape of two-layer neural networks on the number of hidden units $N$.

A remarkable feature of neural networks is the observation that, while they might be dramatically overparametrized, this does not lead to performance degradation. In the case of bounded activation functions, this phenomenon was clarified in the 1990s for empirical risk minimization algorithms (see, e.g., ref. 22). The present work provides analogous insight for the SGD dynamics: Roughly speaking, our results imply that the landscape remains essentially unchanged as $N$ grows, provided $N \gg D$. In particular, assume that the PDE (Eq. 7) converges close to an optimum in time $t_*(D)$. This might depend on $D$ but does not depend on the number of hidden units $N$ (which does not appear in the DD PDE; Eq. 7). If $t_*(D) = O_D(1)$, we can then take $N$ arbitrarily (as long as $N \gg D$) and will achieve a population risk that is independent of $N$ (and corresponds to the optimum), using $k = t_*/\varepsilon = O(D)$ samples.

Our analysis can accommodate some important variants of SGD, a particularly interesting one being noisy SGD:

| [11] |

where and . (The term corresponds to an regularization and will be useful for our analysis below.) The resulting scaling limit differs from Eq. 7 by the addition of a diffusion term:

| [12] |

where , and denotes the usual Laplacian. This can be viewed as a gradient flow for the free-energy , where is the entropy of [by definition if is singular]. is an entropy-regularized risk, which penalizes strongly nonuniform .

We will prove below that, for , the evolution (Eq. 12) generically converges to the minimizer of , hence implying global convergence of noisy SGD in a number of steps independent of .
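A single noisy SGD update can be sketched as a regularized gradient step followed by the injection of isotropic Gaussian noise whose variance scales with the step size divided by the inverse temperature. The sketch below is illustrative only: the factors of 2 in the shrinkage, gradient, and noise terms, the argument names, and the convention that `drift` already contains the residual times the gradient of each unit's output are all assumptions.

```python
import numpy as np

def noisy_sgd_step(theta, drift, step, lam, beta, rng):
    """One regularized, noise-injected update for all units at once.

    theta: (N, D) parameters; drift: (N, D), assumed to hold
    (y - y_hat) * gradient of each unit's output with respect to its parameters.
    lam: regularization strength; beta: inverse temperature (np.inf for no noise).
    """
    noise = rng.standard_normal(theta.shape)
    shrink = 1.0 - 2.0 * lam * step            # weight-decay (ridge) factor
    return shrink * theta + 2.0 * step * drift + np.sqrt(2.0 * step / beta) * noise

rng = np.random.default_rng(0)
theta = np.ones((3, 2))
noiseless = noisy_sgd_step(theta, np.zeros((3, 2)), step=0.1, lam=0.5,
                           beta=np.inf, rng=rng)
```

Setting `beta=np.inf` switches the noise off and leaves a plain weight-decay step, which makes the two ingredients of the variant easy to test separately.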

Examples

In this section, we discuss some simple applications of the general approach outlined above. Let us emphasize that these examples are not realistic. First, the data distribution is extremely simple: We made this choice to be able to carry out explicit calculations. Second, the activation function is not necessarily optimal: We made this choice to illustrate some interesting phenomena.

Centered Isotropic Gaussians.

One-neuron neural networks perform well with (nearly) linearly separable data. The simplest classification problem that requires multilayer networks is, arguably, the one of distinguishing two Gaussians with the same mean. Assume the joint law of to be as follows:

with probability : , ; and

with probability : , .

(This example will be generalized later.) Of course, optimal classification in this model becomes entirely trivial if we compute the feature . However, it is nontrivial that an SGD-trained neural network will succeed.
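The remark that a norm feature trivializes this problem can be checked on synthetic data. The sketch below assumes a specific parametrization, namely equiprobable labels ±1 with the feature vector drawn from a centered isotropic Gaussian of standard deviation `1 + delta` for label +1 and `1 - delta` for label −1. The threshold, sample sizes, and function name are likewise hypothetical.

```python
import numpy as np

def sample_isotropic_gaussians(n, d, delta, rng):
    """Draw n labeled points from two centered isotropic Gaussians
    that differ only in their scale (assumed parametrization)."""
    y = rng.choice(np.array([-1.0, 1.0]), size=n)
    scale = 1.0 + y * delta                    # per-example standard deviation
    X = scale[:, None] * rng.standard_normal((n, d))
    return X, y

rng = np.random.default_rng(0)
X, y = sample_isotropic_gaussians(n=2000, d=50, delta=0.8, rng=rng)

# Normalized squared norm concentrates around the class variance.
norm_feature = np.sum(X ** 2, axis=1) / X.shape[1]
y_hat = np.where(norm_feature > 1.0, 1.0, -1.0)
accuracy = float(np.mean(y_hat == y))
```

Since both classes share the same mean, no linear function of the features separates them; the separation comes entirely from the quadratic feature, which a trained network has to discover on its own.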

We choose an activation function without offset or output weights, namely . While qualitatively similar results are obtained for other choices of , we will use a simple piecewise linear function as a running example: if , if , and interpolated linearly for . In simulations, we use , , , and .
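A piecewise linear activation of the kind described here (constant below one threshold, constant above another, and linearly interpolated in between) can be written compactly with `numpy.interp`, which clamps to the end values outside the interpolation range. The thresholds and plateau values used below are placeholders chosen for illustration, not the values used in the simulations.

```python
import numpy as np

def piecewise_linear_activation(t, t1, t2, s1, s2):
    """Equal to s1 for t <= t1, s2 for t >= t2, linear in between (requires t1 < t2)."""
    if not t1 < t2:
        raise ValueError("thresholds must satisfy t1 < t2")
    return np.interp(t, [t1, t2], [s1, s2])

# Placeholder parameters (hypothetical).
grid = np.linspace(-3.0, 3.0, 13)
values = piecewise_linear_activation(grid, t1=0.0, t2=1.0, s1=-1.0, s2=3.0)
```

With `s1 < s2` the function is monotone nondecreasing and bounded.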

We run SGD with initial weights , where is spherically symmetric. Fig. 1 reports the result of such an experiment. Due to the symmetry of the distribution , the distribution remains spherically symmetric for all and hence is completely determined by the distribution of the norm . This distribution satisfies a one-dimensional reduced DD:

| [13] |

where the form of can be derived from . This reduced PDE can be efficiently solved numerically; see SI Appendix for technical details. As illustrated by Fig. 1, the empirical results closely match the predictions produced by this PDE.

Fig. 1.

Evolution of the radial distribution for the isotropic Gaussian model, with . Histograms are obtained from SGD experiments with , , initial weight distribution , and step size and . Continuous lines correspond to a numerical solution of the DD (Eq. 13).

In Fig. 2, we compare the asymptotic risk achieved by SGD with the prediction obtained by minimizing (cf., Eq. 5) over spherically symmetric distributions. It turns out that, for certain values of , the minimum is achieved by the uniform distribution over a sphere of radius , to be denoted by . The value of is computed by minimizing

| [14] |

where expressions for , can be readily derived from , and are given in SI Appendix.

Fig. 2.

Population risk in the problem of separating two isotropic Gaussians, as a function of the separation parameter . We use a two-layer network with piecewise linear activation, no offset, and output weights equal to 1. Empirical results obtained by SGD (a single run per data point) are marked “+.” Continuous lines are theoretical predictions obtained by numerically minimizing (see SI Appendix for details). Dashed lines are theoretical predictions from the single-delta ansatz of Eq. 14. Notice that this ansatz is incorrect for , which is marked as a solid round dot. Here, .

Lemma 1:

Let be a global minimizer of . Then is a global minimizer of if and only if for all .

Checking numerically, this condition yields that is a global minimizer for in an interval , where and .

Fig. 2 shows good quantitative agreement between empirical results and theoretical predictions and suggests that SGD achieves a value of the risk that is close to optimum. Can we prove that this is indeed the case and that the SGD dynamics does not get stuck in local minima? It turns out that we can use our general theory (see next section) to prove that this is the case for large . To state this result, we need to introduce a class of good uninformative initializations for which convergence to the optimum takes place. For , we let . This risk has a well-defined limit as . We say that if is absolutely continuous with respect to the Lebesgue measure, with bounded density, .

Theorem 1:

For any and , there exists , , and , such that the following holds for the problem of classifying isotropic Gaussians. For any dimension , number of neurons , consider SGD initialized with and step size . Then we have

| [15] |

for any with probability at least .

In particular, if we set , then the number of SGD steps is : The number of samples used by SGD does not depend on the number of hidden units and is only linear in the dimension. Unfortunately, the proof does not provide the dependence of on , but Theorem 6 below suggests exponential local convergence.

While we stated Theorem 1 for the piecewise linear sigmoids, SI Appendix presents technical conditions under which it holds for a general monotone function .

Centered Anisotropic Gaussians.

We can generalize the previous result to a problem in which the network needs to select a subset of relevant nonlinear features out of many a priori equivalent ones. We assume the joint law of to be as follows:

with probability : , ; and

with probability : , .

Given a linear subspace of dimension , we assume that , differ uniquely along : , where and is the orthogonal projector onto . In other words, the projection of on the subspace is distributed according to an isotropic Gaussian with variance (if ) or (if ). The projection orthogonal to has instead the same variance in the two classes. A successful classifier must be able to learn the relevant subspace . We assume the same class of activations as for the isotropic case.

The distribution is invariant under a reduced symmetry group . As a consequence, letting and , it is sufficient to consider distributions that are uniform, conditional on the values of and . If we initialize to be uniform conditional on , this property is preserved by the evolution (Eq. 7). As in the isotropic case, we can use our general theory to prove convergence to a near-optimum if is large enough.

Theorem 2:

For any and , there exists , , and , such that the following holds for the problem of classifying anisotropic Gaussians with , fixed. For any dimension parameters , number of neurons , consider SGD initialized with initialization and step size . Then, we have for any with probability at least .

Even with a reduced degree of symmetry, SGD converges to a network with nearly optimal risk, after using a number of samples , which is independent of the number of hidden units .

A Better Activation Function.

Our previous examples use activation functions without output weights or offset to simplify the analysis and illustrate some interesting phenomena. Here we consider instead a standard rectified linear unit (ReLU) activation and fit both the output weight and the offset: , where . Hence, .

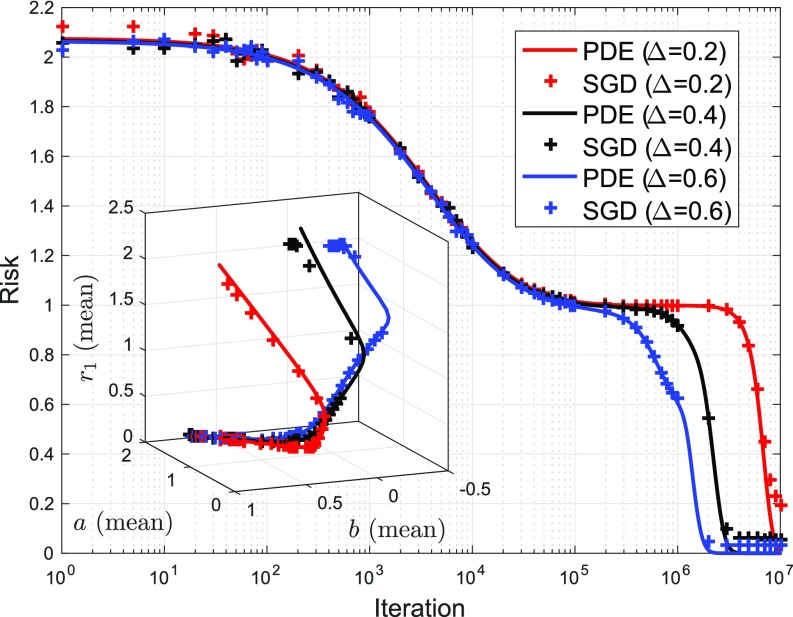

We consider the same data distribution introduced in the last section (anisotropic Gaussians). Fig. 3 reports the evolution of the risk for three experiments with , , and different values of . SGD is initialized by setting , , and for . We observe that SGD converges to a network with very small risk, but this convergence has a nontrivial structure and presents long flat regions.

Fig. 3.

Evolution of the population risk for the variable selection problem using a two-layer neural network with ReLU activations. Here , , and , and we used and to set the step size. Numerical simulations using SGD (one run per data point) are marked +, and curves are solutions of the reduced PDE with . (Inset) Evolution of three parameters of the reduced distribution (average output weights , average offsets , and average norm in the relevant subspace ) for the same setting.

The empirical results are well captured by our predictions based on the continuum limit. In this case, we obtain a reduced PDE for the joint distribution of the four quantities , denoted by . The reduced PDE is analogous to Eq. 13 albeit in 4 dimensions rather than 1 dimension. In Fig. 3, we consider the evolution of the risk, alongside three properties of the distribution —the means of the output weight , of the offset , and of .

Predicting Failure.

SGD does not always converge to a near-global optimum. Our analysis allows us to construct examples in which SGD fails. For instance, Fig. 4 reports results for the isotropic Gaussians problem. We violate the assumptions of Theorem 1 by using a nonmonotone activation function. Namely, we use , where for , for , and linearly interpolates from (0, –2.5) to (0.5, –4), and from (0.5, –4) to .

Fig. 4.

Separating two isotropic Gaussians, with a nonmonotone activation function (see Predicting Failure for details). Here , , and . The main frame presents the evolution of the population risk along the SGD trajectory, starting from two different initializations of for either or . In the Inset, we plot the evolution of the average of for the same conditions. Symbols are empirical results. Continuous lines are predictions obtained with the reduced PDE (Eq. 13).

Depending on the initialization, SGD converges to two different limits, one with a small risk and the second with high risk. Again, this behavior is well tracked by solving a one-dimensional PDE for the distribution of .

General Results

In this section, we return to the general supervised learning problem described in the Introduction and describe our general results. Proofs are deferred to SI Appendix.

First, we note that the minimum of the asymptotic risk of Eq. 5 provides a good approximation of the minimum of the finite- risk .

Proposition 1:

Assume that either one of the following conditions holds: (i) is achieved by a distribution such that ; (ii) there exists such that, for any such that , we have . Then

| [16] |

Further, assume that and are continuous, with bounded below. A probability measure is a global minimum of if and

| [17] |

We next consider the DDs (Eqs. 7 and 12). These should be interpreted to hold in a weak sense (cf. SI Appendix). To establish that these PDEs indeed describe the limit of the SGD dynamics, we make the following assumptions:

A1. is bounded Lipschitz: , with .

A2. The activation function is bounded, with sub-Gaussian gradient: , . Labels are bounded .

A3. The gradients , are bounded, Lipschitz continuous [namely , , , ].

We also introduce the following error term that quantifies in a nonasymptotic sense the accuracy of our PDE model:

| [18] |

The convergence of the SGD process to the PDE model is an example of a phenomenon that is known in probability theory as propagation of chaos (23).

Theorem 3:

Assume that conditions A1, A2, A3 hold. For , consider SGD with initialization and step size . For , let be the solution of PDE (Eq. 7). Then, for any fixed , almost surely along any sequence such that , . Further, there exists a constant (depending uniquely on the parameters of conditions A1–A3) such that, for any , with , ,

| [19] |

with probability . The same statements hold for noisy SGD (Eq. 11), provided Eq. 7 is replaced by Eq. 12, and if , , and is sub-Gaussian for some .

Notice that the dependence of the error terms in and is rather benign. On the other hand, the error grows exponentially with the time horizon , which limits its applicability to cases in which the DD converges rapidly to a good solution. We do not expect this behavior to be improvable within the general setting of Theorem 3, which a priori includes cases in which the dynamics is unstable.

We can regard as a current. The fixed points of the continuum dynamics are densities that correspond to zero current, as stated below.

Proposition 2:

Assume to be differentiable with bounded gradient. If is a solution of the PDE (Eq. 7), then is nonincreasing. Further, probability distribution is a fixed point of the PDE (Eq. 7) if and only if

| [20] |

Note that global optimizers of , defined by condition (Eq. 17), are fixed points, but the set of fixed points is, in general, larger than the set of optimizers. Our next proposition provides an analogous characterization of the fixed points of diffusion DD (Eq. 12) (see ref. 21 for related results).

Proposition 3:

Assume that conditions A1–A3 hold and that is absolutely continuous with respect to the Lebesgue measure, with . If is a solution of the diffusion PDE (Eq. 12), then is absolutely continuous. Further, there is at most one fixed point of Eq. 12 satisfying . This fixed point is absolutely continuous and its density satisfies

| [21] |

In the next sections, we state our results about convergence of the DD to its fixed point. In the case of noisy SGD [and for the diffusion PDE (Eq. 12)], a general convergence result can be established (although at the cost of an additional regularization). For noiseless SGD (and the continuity equation; Eq. 7), we do not have such a general result. However, we obtain a stability condition for a fixed point containing one point mass, which is useful to characterize possible limiting points (and is used in treating the examples in the previous section).

Convergence: Noisy SGD.

Remarkably, the diffusion PDE (Eq. 12) generically admits a unique fixed point, which is the global minimum of , and the evolution (Eq. 12) converges to it, if initialized so that . This statement requires some qualifications. First, we introduce sufficient regularity assumptions to guarantee the existence of sufficiently smooth solutions of Eq. 12:

A4. , , is uniformly bounded for .

Next, notice that the right-hand side of the fixed point equation (Eq. 21) is not necessarily normalizable [for instance, it is not when , are bounded]. To ensure the existence of a fixed point, we need .

Theorem 4:

Assume that conditions A1–A4 hold, and for some . Then has a unique minimizer, denoted by , which satisfies

| [22] |

where is a constant depending on . Further, letting be a solution of the diffusion PDE (Eq. 12) with initialization satisfying , we have, as ,

| [23] |

The proof of this theorem is based on the following formula that describes the free-energy decrease along the trajectories of the DD (Eq. 12):

| [24] |

(A key technical hurdle is of course proving that this expression makes sense, which we do by showing the existence of strong solutions.) It follows that the right-hand side must vanish as , from which we prove that (eventually taking subsequences) where must satisfy . This in turn means is a solution of the fixed point condition (Eq. 21) and is in fact a global minimum of by convexity.

This result can be used in conjunction with Theorem 3, to analyze the regularized noisy SGD algorithm (Eq. 11).

Theorem 5:

Assume that conditions A1–A4 hold. Let be absolutely continuous with and sub-Gaussian. Consider regularized noisy SGD (cf. Eq. 11) at inverse temperature , regularization with initialization . Then, for any , there exists , and setting , there exists and (independent of the dimension and temperature ) such that the following happens for , : For any , we have, with probability ,

| [25] |

Let us emphasize that the convergence time in the last theorem can depend on the dimension and on the data distribution but is independent of the number of hidden units . As illustrated by the examples in the previous section, understanding the dependence of on requires further analysis, but examining the proof of this theorem suggests quite generally [examples in which or can be constructed]. We expect that our techniques could be pushed to investigate the dependence of on (see SI Appendix, Discussion). In highly structured cases, the dimension can be of constant order and be much smaller than .

Convergence: Noiseless SGD.

The next theorems provide necessary and sufficient conditions for distributions containing a single point mass to be a stable fixed point of the evolution. This result is useful to characterize the large time asymptotics of the dynamics (Eq. 7). Here, we write for the gradient of with respect to its first argument and for the corresponding Hessian. Further, for a probability distribution , we define

| [26] |

Note that is nothing but the Hessian of at .

Theorem 6:

Assume to be twice differentiable with bounded gradient and bounded continuous Hessian. Let be given. Then is a fixed point of the evolution (Eq. 7) if and only if .

Define as per Eq. 26. If , then there exists such that, if , then as . In fact, convergence is exponentially fast, namely for some .

Theorem 7:

Under the same assumptions as Theorem 6, let be a fixed point of dynamics (Eq. 7), with and (which, in particular, is implied by the fixed point condition; Eq. 20). Define the level sets and make the following assumptions: (B1) The eigenvalues of are all different from 0, with ; (B2) as ; and (B3) there exists such that the sets are compact for all .

If has a bounded density with respect to the Lebesgue measure, then it cannot be that converges weakly to as .

Discussion and Future Directions

In this paper, we developed an approach to the analysis of two-layer neural networks. Using a propagation-of-chaos argument, we proved that—if the number of hidden units satisfies —SGD dynamics is well approximated by the PDE in Eq. 7, while noisy SGD is well approximated by Eq. 12. Both of these asymptotic descriptions correspond to Wasserstein gradient flows for certain energy (or free energy) functionals. While empirical risk minimization is known to be insensitive to overparametrization (22), the present work clarifies that the SGD behavior is also independent of the number of hidden units, as soon as this is large enough.

We illustrated our approach on several concrete examples, by proving convergence of SGD to a near-global optimum. This type of analysis provides a mechanism for avoiding the perils of nonconvexity. We do not prove that the finite- risk has a unique local minimum or that all local minima are close to each other. Such claims have often been the target of earlier work but might be too strong for the case of neural networks. We prove instead that the PDE (Eq. 7) converges to a near-global optimum, when initialized with a bounded density. This effectively gets rid of some exceptional stationary points of and merges multiple finite stationary points that result in similar distributions .

In the case of noisy SGD (Eq. 11), we prove that it converges generically to a near-global minimum of the regularized risk, in time independent of the number of hidden units.

We emphasize that while we focused here on the case of square loss, our approach should be generalizable to other loss functions as well (cf. SI Appendix).

The present work opens the way to several interesting research directions. We will mention two of them:

- The PDE (Eq. 7) corresponds to gradient flow in the Wasserstein metric for the risk (see ref. 20). Building on this remark, tools from optimal transportation theory can be used to prove convergence.

- Multiple finite- local minima can correspond to the same minimizer of in the limit . Ideas from glass theory (24) might be useful to investigate this structure.

Let us finally mention that, after a first version of this paper appeared as a preprint, several other groups obtained results that are closely related to Theorem 3 (25–27).

Acknowledgments

This work was partially supported by NSF Grants DMS-1613091, CCF-1714305, and IIS-1741162. S.M. was partially supported by an Office of Technology Licensing Stanford Graduate Fellowship. P.-M.N. was partially supported by a William R. Hewlett Stanford Graduate Fellowship.

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1806579115/-/DCSupplemental.

References

- 1. Rosenblatt F. Principles of Neurodynamics. Spartan Books; Washington, DC: 1962.

- 2. Krizhevsky A, Sutskever I, Hinton GE. Imagenet classification with deep convolutional neural networks. In: Vardi MY, editor. Advances in Neural Information Processing Systems. Association for Computing Machinery; New York: 2012. pp. 1097–1105.

- 3. Goodfellow I, Bengio Y, Courville A. Deep Learning. Vol 1. MIT Press; Cambridge, MA: 2016.

- 4. Robbins H, Monro S. A stochastic approximation method. Ann Math Stat. 1951;22:400–407.

- 5. Bottou L. Large-scale machine learning with stochastic gradient descent. In: Lechevallier Y, Saporta G, editors. Proceedings of COMPSTAT’2010. Physica; Heidelberg: 2010. pp. 177–186.

- 6. Shalev-Shwartz S, Ben-David S. Understanding Machine Learning: From Theory to Algorithms. Cambridge Univ Press; New York: 2014.

- 7. Wang C, Mattingly J, Lu YM. 2017. Scaling limit: Exact and tractable analysis of online learning algorithms with applications to regularized regression and PCA. arXiv:1712.04332.

- 8. Soltanolkotabi M, Javanmard A, Lee JD. 2017. Theoretical insights into the optimization landscape of over-parameterized shallow neural networks. arXiv:1707.04926.

- 9. Ge R, Lee JD, Ma T. 2017. Learning one-hidden-layer neural networks with landscape design. arXiv:1711.00501.

- 10. Brutzkus A, Globerson A. 2017. Globally optimal gradient descent for a convnet with Gaussian inputs. arXiv:1702.07966.

- 11. Arora S, Bhaskara A, Ge R, Ma T. 2014. Provable bounds for learning some deep representations. Proceedings of International Conference on Machine Learning (ICML). Available at https://arxiv.org/abs/1310.6343. Accessed July 18, 2018.

- 12. Sedghi H, Anandkumar A. 2015. Provable methods for training neural networks with sparse connectivity. Proceedings of International Conference on Learning Representations (ICLR). Available at https://arxiv.org/abs/1412.2693. Accessed July 18, 2018.

- 13. Janzamin M, Sedghi H, Anandkumar A. 2015. Beating the perils of non-convexity: Guaranteed training of neural networks using tensor methods. arXiv:1506.08473.

- 14. Zhang Y, Lee JD, Jordan MI. 2016. L1-regularized neural networks are improperly learnable in polynomial time. Proceedings of International Conference on Machine Learning (ICML). Available at https://arxiv.org/abs/1510.03528. Accessed July 18, 2018.

- 15. Tian Y. 2017. Symmetry-breaking convergence analysis of certain two-layered neural networks with ReLU nonlinearity. International Conference on Learning Representations (ICLR). Available at https://openreview.net/forum?id=Hk85q85ee. Accessed July 18, 2018.

- 16. Zhong K, Song Z, Jain P, Bartlett PL, Dhillon IS. 2017. Recovery guarantees for one-hidden-layer neural networks. arXiv:1706.03175.

- 17. Lee WS, Bartlett PL, Williamson RC. Efficient agnostic learning of neural networks with bounded fan-in. IEEE Trans Inf Theory. 1996;42:2118–2132.

- 18. Bengio Y, Roux NL, Vincent P, Delalleau O, Marcotte P. Convex neural networks. In: Weiss Y, Schölkopf B, Platt JC, editors. Advances in Neural Information Processing Systems. MIT Press; Cambridge, MA: 2006. pp. 123–130.

- 19. Jordan R, Kinderlehrer D, Otto F. The variational formulation of the Fokker–Planck equation. SIAM J Math Anal. 1998;29:1–17.

- 20. Ambrosio L, Gigli N, Savaré G. Gradient Flows: In Metric Spaces and in the Space of Probability Measures. Birkhäuser; Basel: 2008.

- 21. Carrillo JA, McCann RJ, Villani C. Kinetic equilibration rates for granular media and related equations: Entropy dissipation and mass transportation estimates. Rev Mat Iberoam. 2003;19:971–1018.

- 22. Bartlett PL. The sample complexity of pattern classification with neural networks: The size of the weights is more important than the size of the network. IEEE Trans Inf Theory. 1998;44:525–536.

- 23. Sznitman A-S. Topics in propagation of chaos. In: Hennequin PL, editor. Ecole d’été de probabilités de Saint-Flour XIX—1989. Springer; Berlin: 1991. pp. 165–251.

- 24. Mézard M, Parisi G. Thermodynamics of glasses: A first principles computation. J Phys Condens Matter. 1999;11:A157–A165.

- 25. Rotskoff GM, Vanden-Eijnden E. 2018. Neural networks as interacting particle systems: Asymptotic convexity of the loss landscape and universal scaling of the approximation error. arXiv:1805.00915.

- 26. Sirignano J, Spiliopoulos K. 2018. Mean field analysis of neural networks. arXiv:1805.01053.

- 27. Chizat L, Bach F. 2018. On the global convergence of gradient descent for over-parameterized models using optimal transport. arXiv:1805.09545.
