Abstract

Over the past few decades, neuroscience research has illuminated the neural mechanisms supporting learning from reward feedback. Learning paradigms are increasingly being extended to study mood and psychiatric disorders as well as addiction. However, one potentially critical characteristic that this research ignores is the effect of time on learning: human feedback learning paradigms are usually conducted in a single rapidly paced session, whereas learning experiences in ecologically relevant circumstances and in animal research are almost always separated by longer periods of time. In our experiments, we examined reward learning in short condensed sessions distributed across weeks versus learning completed in a single “massed” session in male and female participants. As expected, we found that after equal amounts of training, accuracy was matched between the spaced and massed conditions. However, in a 3-week follow-up, we found that participants exhibited significantly greater memory for the value of spaced-trained stimuli. Supporting a role for short-term memory in massed learning, we found a significant positive correlation between initial learning and working memory capacity. Neurally, we found that patterns of activity in the medial temporal lobe and prefrontal cortex showed stronger discrimination of spaced- versus massed-trained reward values. Further, patterns in the striatum discriminated between spaced- and massed-trained stimuli overall. Our results indicate that single-session learning tasks engage partially distinct learning mechanisms from distributed training. Our studies begin to address a large gap in our knowledge of human learning from reinforcement, with potential implications for our understanding of mood disorders and addiction.

SIGNIFICANCE STATEMENT Humans and animals learn to associate predictive value with stimuli and actions, and these values then guide future behavior. Such reinforcement-based learning often happens over long time periods, in contrast to most studies of reward-based learning in humans. In experiments that tested the effect of spacing on learning, we found that associations learned in a single massed session were correlated with short-term memory and significantly decayed over time, whereas associations learned in short massed sessions over weeks were well maintained. Additionally, patterns of activity in the medial temporal lobe and prefrontal cortex discriminated the values of stimuli learned over weeks but not minutes. These results highlight the importance of studying learning over time, with potential applications to drug addiction and psychiatry.

Keywords: hippocampus, reinforcement learning, reward, spacing, striatum

Introduction

When making a choice between an apple and a banana, our decision often relies on values shaped by countless previous experiences. By learning from the outcomes of these repeated experiences, we can make efficient and adaptive choices in the future. Over the past few decades, neuroscience research has revealed the neural mechanisms supporting this kind of learning from reward feedback, demonstrating a critical role for the striatum and the midbrain dopamine system (Houk et al., 1995; Schultz et al., 1997; Dolan and Dayan, 2013; Steinberg et al., 2013). However, research in humans has tended to focus on two different timescales: short-term learning from reward feedback across minutes, for example, in “bandit” or probabilistic selection tasks (Frank et al., 2004; Daw et al., 2006), or choices based on well-learned values, for example, over snack foods (Plassmann et al., 2007). There has been remarkably little research in humans that examines how value associations are acquired or maintained beyond a single session (Herbener, 2009; Tricomi et al., 2009; Grogan et al., 2017; de Wit et al., 2018), even though our preferences are often shaped across multiple days, months, or years of experience.

Recently, researchers have begun to use learning tasks in combination with reinforcement learning models to investigate behavioral dysfunctions in mood and psychiatric disorders as well as addiction in the growing area of “computational psychiatry” (Maia and Frank, 2011; Schultz, 2011; Montague et al., 2012; Whitton et al., 2015; Moutoussis et al., 2017). This translational work on human reward-based learning builds on research in animals where circuit functions can be extensively manipulated (Steinberg et al., 2013; Ferenczi et al., 2016). However, at the condensed timescale of most human paradigms, “massed” timing likely allows processes in addition to dopaminergic mechanisms of feedback-based learning, such as working memory, to support behavior (Collins and Frank, 2012; Collins et al., 2014).

Although no studies have directly compared values learned in massed versus spaced sessions, several recent neuroimaging studies have examined the neural representation of values learned across days (Tricomi et al., 2009; Wunderlich et al., 2012), supporting a role for the human posterior striatum in representing the value of well-learned stimuli. These findings align with neurophysiological recordings in the striatum of animals (Yin and Knowlton, 2006; Kim and Hikosaka, 2013). However, reward-related BOLD responses in the putamen are not selective to consolidated or “habitual” reward associations (O'Doherty et al., 2003; Dickerson et al., 2011; Wimmer et al., 2014); moreover, previous studies did not allow for a matched comparison between newly learned reward associations and consolidated associations.

In addition to the striatum, fMRI and neurophysiological studies have shown that responses in the medial temporal lobe (MTL) and hippocampus are correlated with reward and value (Lebreton et al., 2009; Wirth et al., 2009; Lee et al., 2012; Wimmer et al., 2012). Although these responses are not easily explained by a relational memory theory of MTL function (Eichenbaum and Cohen, 2001), they may fit within a more general view of the hippocampus in supporting some forms of statistical learning (including stimulus-stimulus associations; Schapiro et al., 2012, 2014). Memory mechanisms in the MTL may also play a role in representing previous episodes that can be sampled to make a reward-based decision (Murty et al., 2016; Wimmer and Büchel, 2016; Bornstein et al., 2017), a role that could be enhanced by consolidation.

To characterize the cognitive and neural mechanisms that support learning long-term reward associations, we used a simple reward-based learning task. Participants initially learned value associations for spaced stimuli in the laboratory and then online across 2 weeks in multiple short massed sessions. Associations for massed stimuli were acquired during a second in-lab session over ∼20 min (followed by fMRI scanning in one group), similar to the kind of training commonly used in reinforcement learning tasks. Finally, to examine maintenance of learning, a long-term test was administered 3 weeks after the completion of training.

Materials and Methods

Participants and overview.

Participants were recruited via advertising on the Stanford Department of Psychology paid participant pool web portal (https://stanfordpsychpaid.sona-systems.com). Informed consent was obtained in a manner approved by the Stanford University Institutional Review Board. In Study 1, behavioral and fMRI data acquisition proceeded until fMRI seed grant funding expired, leading to a total of 33 scanned participants in the reward learning task. To ensure that the fMRI sessions 2 weeks after the first in-lab session were fully subscribed, a total of 62 participants completed the first behavioral session. Of this group, a total of 29 participants did not complete the fMRI and behavioral experiment described below. The results of 33 participants (20 female) are included in the analyses and results, with a mean age of 22.8 years (range: 18–34). Participants were paid $10/h for the first in-lab session and $30/h for the second in-lab (fMRI) session, plus monetary rewards from the learning phase and choice test phase.

In Study 2, a total of 35 participants took part in the first session of the experiment, but four were excluded from the final dataset, as described below. Our sample size was designed to approximately match the size of Study 1. The final dataset included 31 participants (24 female), with a mean age of 23.3 years (range: 18–32). Two participants failed to complete the second in-lab session and all of their data were excluded; one other participant exhibited poor performance in the first session (<54% correct during learning and <40% correct in the choice test) and was therefore excluded from participation in the follow-up sessions. Of the 31 included participants, one participant failed to complete the third in-lab session, but data from the other sessions were included. Participants were paid $10/h for the two in-lab sessions plus monetary rewards from the learning phase and choice test phase, and received a $12 bonus for the 5 min third in-lab session.

Both Study 1 and Study 2 used the same reward-based learning task (adapted from Gerraty et al., 2014). Participants learned the best response for individual stimuli to maximize their payoff. Two different sets of stimuli were trained either in multiple massed sessions spaced across 2 weeks (“spaced-trained” stimuli) or in a single session (“massed-trained” stimuli; Fig. 1A). Initial spaced learning began in the first in-lab session and continued across three online training sessions spread across ∼2 weeks. Initial learning about massed stimuli began in the second in-lab session and continued until training was complete. Spaced training always preceded massed training so that, by the end of the second in-lab session, both sets of stimuli had been shown on an equal number of learning trials. This design was the same across Study 1 and Study 2, except that Study 1 included an fMRI portion at the time of the second in-lab session and that post-learning tests were conducted at different points during learning in the two studies. Additionally, the 3-week follow-up measurement was conducted online for Study 1 and in-lab for Study 2.

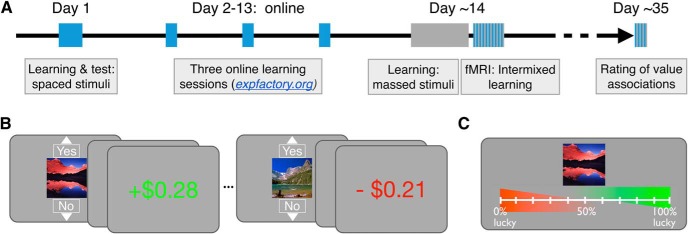

Figure 1.

A, Experimental timeline. Learning for the spaced-trained stimuli is indicated in blue and learning for the massed-trained stimuli is indicated in gray. The initial learning session for spaced-trained stimuli was completed on day 1. Learning for spaced stimuli was then completed in multiple short (massed) sessions, whereas learning for massed stimuli was completed in a single session ∼14 d later. Aside from the separation of spaced learning into multiple condensed sessions, inter-trial timing was matched across conditions. A forced-choice test was also administered after initial learning and after the completion of learning. A long-term follow-up measure of reward value using ratings was collected after ∼3 weeks. B, Reward learning task. Participants learned to select “Yes” for reward-associated stimuli and “No” for loss-associated stimuli. Choices were presented for 2 s and feedback followed after a 1 s delay. C, Reward association rating test. This rating phase followed the initial in-lab learning sessions and was also administered 3 weeks after the last learning session.

We chose to use a simple instrumental learning task (adapted from Gerraty et al., 2014) instead of a choice-based learning task for three reasons. First, the majority of the animal work that we are translating involves relatively simple instrumental or Pavlovian value learning designs with a single focal stimulus (Schultz et al., 1997; O'Doherty et al., 2003; Tricomi et al., 2009), including previous work on spacing effects in feedback learning (Spence and Norris, 1950; Teichner, 1952). Most directly, the present design was inspired by the work of Hikosaka and colleagues on long-term memory for value (Kim and Hikosaka, 2013; Kim et al., 2015; Ghazizadeh et al., 2018) and by the work of Collins and colleagues on short-term memory contributions to rapid feedback-based learning (Collins and Frank, 2012; Collins et al., 2014). Second, a single-stimulus design avoids differential attentional allocation toward the relatively more valuable stimulus in a set, which is inherent in multistimulus designs (Daw et al., 2006; Pessiglione et al., 2006). Third, our reward learning paradigm has been shown previously to effectively establish stimulus–value associations (Gerraty et al., 2014), as evidenced by the ability of newly learned stimulus–reward associations to transfer or generalize across previously established relational associations. Such transfer is related to striatal correlates of learned value (Wimmer and Shohamy, 2012). Although our in-task learning measures are related to “Yes”/“No” action value, this previous work and recent human fMRI research indicate that mechanisms supporting the learning of stimulus–action and stimulus–value associations operate at the same time (Colas et al., 2017).

Study 1 experimental design.

In Study 1, before the learning phase, participants rated a set of 38 landscape picture stimuli based on liking using a computer mouse, preceded by one practice trial. The same selection procedure and landscape stimuli were used previously (Wimmer and Shohamy, 2012). These ratings were used to select, for each participant, the 16 most neutrally rated stimuli to be used in Study 1. Stimuli were then randomly assigned to condition (spaced or massed) and value (reward or loss). In Study 2, we used the ratings collected across participants in Study 1 to find the stimuli that were most neutrally rated on average and then created two counterbalanced lists of stimuli from this set.

Next, in the reward game in both studies, participants' goal was to learn the best response (arbitrarily labeled “Yes” and “No”) for each stimulus. Participants used the up and down arrow keys to make “Yes” and “No” responses, respectively. Reward-associated stimuli led to a win of $0.35 on average when “Yes” was selected and a small loss of −$0.05 when “No” was selected. Loss-associated stimuli led to a neutral outcome of $0.00 when “No” was selected and a loss of −$0.25 when “Yes” was selected. These associations were probabilistic, such that the best response led to the best outcome 80% of the time during training. If no response was recorded, the warning “Too late or wrong key! −$0.50” was displayed at feedback and participants lost $0.50.

In a single reward learning trial, a stimulus was first presented with the options “Yes” and “No” above and below the image, respectively (Fig. 1B). Participants had 2 s to make a choice. After the full 2 s choice period, a 1 s blank-screen delay preceded feedback presentation. Feedback was presented in text for 1.5 s, leading to a total trial duration of 4.5 s (excluding the inter-trial interval). Reward feedback above +$0.10 was presented in green and feedback below $0.00 was presented in red; other values were presented in white. After the feedback, an inter-trial interval (ITI) with a mean duration of 2 s (min, 0.50 s; max, 3.5 s) preceded the next trial; in the last 0.25 s of the ITI, the fixation cross turned from white to black. The background for all parts of the experiment was gray (RGB value [111 111 111]). We specifically designed the timing of feedback (3 s from the onset of choice) to fall within the range of previous studies on feedback-based learning and the dopamine system, which show a strong decay in the fidelity of the dopamine reward prediction error response as feedback is delayed beyond several seconds (Fiorillo et al., 2008); beyond this point, other (e.g., hippocampal) mechanisms may support learning (Foerde et al., 2013).
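The trial timing above can be sketched as a small schedule builder. This is an illustrative Python sketch, not the authors' Psychtoolbox code; the uniform ITI distribution on [0.5, 3.5] s is an assumption, chosen only because it matches the reported mean, minimum, and maximum, and the function names are hypothetical.

```python
import random

# Event durations in seconds, taken from the text.
CHOICE_S = 2.0      # choice window
DELAY_S = 1.0       # blank screen between choice window and feedback
FEEDBACK_S = 1.5    # feedback text
CUE_S = 0.25        # fixation cross turns black before the next trial
# ASSUMPTION: ITI drawn uniformly on [0.5, 3.5] s (mean 2 s); the actual
# jitter distribution is not specified.
ITI_MIN_S, ITI_MAX_S = 0.5, 3.5


def feedback_color(amount: float) -> str:
    """Color rule: green above +$0.10, red below $0.00, white otherwise."""
    if amount > 0.10:
        return "green"
    if amount < 0.0:
        return "red"
    return "white"


def build_timeline(n_trials: int, seed: int = 0) -> list:
    """Return per-trial event onsets (s) measured from the start of the run."""
    rng = random.Random(seed)
    t = 0.0
    trials = []
    for _ in range(n_trials):
        feedback_on = t + CHOICE_S + DELAY_S      # 3 s after choice onset
        feedback_off = feedback_on + FEEDBACK_S   # 4.5 s after choice onset
        iti = rng.uniform(ITI_MIN_S, ITI_MAX_S)
        next_on = feedback_off + iti
        trials.append({
            "choice_on": t,
            "feedback_on": feedback_on,
            "feedback_off": feedback_off,
            "cue_on": next_on - CUE_S,            # fixation turns black
            "iti": iti,
        })
        t = next_on
    return trials
```

Keeping the choice-to-feedback interval fixed at 3 s while jittering only the ITI preserves the feedback delay that the text motivates with reference to dopamine prediction error signaling.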

To increase engagement and attention to the feedback, we introduced uncertainty into the feedback amounts in two ways: first, all feedback amounts were jittered ±$0.05 around the mean using a flat distribution. Second, for the reward-associated stimuli, half were associated with a high reward amount ($0.45) and half with a low reward amount ($0.25). We did not find that this second manipulation significantly affected learning performance at the end of the training phase, so our analyses and results collapse across the reward levels.
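The outcome rules described in the two preceding paragraphs can be summarized in a short sampling function. This is a hypothetical sketch: the text does not state which outcome is delivered on the 20% of trials where the contingency does not hold, so the code assumes that the chosen response then receives the mean outcome of the other response. `sample_outcome` and the dictionary names are illustrative.

```python
import random

# Mean outcomes in dollars, from the text. Reward stimuli come in a high
# ($0.45) and a low ($0.25) level, i.e. $0.35 on average for "Yes".
MEAN_OUTCOME = {
    "reward_high": {"yes": 0.45, "no": -0.05},
    "reward_low": {"yes": 0.25, "no": -0.05},
    "loss": {"yes": -0.25, "no": 0.00},
}
P_CONTINGENT = 0.8    # best response -> best outcome on 80% of trials
JITTER = 0.05         # flat (uniform) jitter of +/- $0.05 around the mean
MISS_PENALTY = -0.50  # "Too late or wrong key!" outcome


def sample_outcome(stim_type, response, rng):
    """Return the feedback amount for one trial.

    `response` is "yes", "no", or None when no valid key press was recorded.
    """
    if response is None:
        return MISS_PENALTY
    other = "no" if response == "yes" else "yes"
    # ASSUMPTION: on the remaining 20% of trials the chosen response is paid
    # the mean outcome associated with the other response.
    key = response if rng.random() < P_CONTINGENT else other
    return MEAN_OUTCOME[stim_type][key] + rng.uniform(-JITTER, JITTER)
```

Because the jitter ($0.05) is small relative to the gap between outcomes, the jittered amounts remain clearly separable by outcome type, which is consistent with the stated goal of adding uncertainty without obscuring the contingency.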

In the initial spaced learning session in-lab, participants learned associations for spaced-trained stimuli, which differed from the training for massed-trained stimuli only in that training for spaced stimuli was spread across four “massed” sessions, one in-lab and three online. Initial learning followed by completion of learning for massed-trained stimuli occurred in the subsequent second in-lab session. The spaced- and massed-trained conditions each included eight different stimuli, of which half were associated with reward and half were associated with loss. In the initial learning session for both conditions, each stimulus was repeated 10 times. The lists for the initial learning session were pseudorandomized, with constraints introduced to facilitate initial learning and to achieve ceiling performance before the end of training.

To more closely match the delay between repetitions commonly found in human studies of feedback-based learning (Pessiglione et al., 2006), where only several different trial types are included, we staged the introduction of the eight stimuli into two sets. In the initial learning session for both spaced- and massed-trained stimuli, four stimuli were introduced in the first 40 trials and the other four stimuli were introduced in the second 40 trials. Further, when a new stimulus was introduced, the first repetition followed immediately. The phase began with four practice trials, including one reward-associated practice stimulus and one loss-associated practice stimulus, followed by a question about task understanding. Three rest breaks were distributed throughout the rest of the phase.
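One way to generate lists that satisfy the two stated constraints (staged introduction of four stimuli per 40-trial half, and an immediate second presentation of each newly introduced stimulus) is sketched below. The additional pseudorandomization rules used in the study are not specified, so the 50% introduction probability and the helper names are assumptions, not the authors' procedure.

```python
import random


def staged_block(stimuli, reps, rng):
    """One block in which each stimulus appears `reps` times (reps >= 2)
    and every first presentation is immediately repeated."""
    remaining = {s: reps for s in stimuli}
    pending = list(stimuli)
    rng.shuffle(pending)              # random order of introduction
    introduced, seq = [], []
    while pending or any(remaining[s] > 0 for s in introduced):
        repeatable = [s for s in introduced if remaining[s] > 0]
        # ASSUMPTION: introduce a new stimulus with probability 0.5 (or when
        # nothing else is available); the paper's exact rules are unstated.
        if pending and (not repeatable or rng.random() < 0.5):
            s = pending.pop()
            seq += [s, s]             # first repetition follows immediately
            remaining[s] -= 2
            introduced.append(s)
        else:
            s = rng.choice(repeatable)
            seq.append(s)
            remaining[s] -= 1
    return seq


def initial_session(stimuli, reps=10, seed=0):
    """80-trial initial session: stimuli[:4] in trials 1-40, stimuli[4:] in 41-80."""
    rng = random.Random(seed)
    return staged_block(stimuli[:4], reps, rng) + staged_block(stimuli[4:], reps, rng)
```

With eight stimuli and 10 repetitions each, this yields the 80 trials of the initial session, split into two 40-trial halves.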

After the initial learning session in both conditions, participants completed a reward rating phase and an incentive-compatible choice phase. In the reward rating phase, participants tried to remember whether a stimulus was associated with reward or loss. They were instructed to indicate both their memory and their confidence in it on a graded rating scale, responding via computer mouse (Fig. 1C). Responses were self-paced; each trial ended 0.5 s after the response and was followed by a 3 s ITI. For analyses, responses (recorded as horizontal pixel locations) were transformed to a 0–100% scale.
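The pixel-to-percentage transformation amounts to a linear rescaling between the two ends of the scale. In this sketch the scale endpoints (`left_px`, `right_px`) are hypothetical values that would come from the display layout, the right end is assumed to correspond to reward, and clipping out-of-range clicks is an assumption.

```python
def pixel_to_percent(x_px, left_px, right_px):
    """Linearly rescale a horizontal click position to 0-100% of the scale."""
    pct = 100.0 * (x_px - left_px) / (right_px - left_px)
    # ASSUMPTION: clicks just beyond either end are clipped to the endpoint.
    return min(100.0, max(0.0, pct))


def confidence(pct):
    """Distance from the scale midpoint: 0 = guess, 50 = fully confident."""
    return abs(pct - 50.0)
```

For example, with a hypothetical scale spanning pixels 240 to 1040, a click at pixel 640 maps to 50% (no memory preference), and a rating of 90% corresponds to a confidence of 40.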

In the incentive-compatible choice phase, participants made a forced-choice response between two stimuli, only including spaced stimuli in the first in-lab session and only including massed stimuli in the second in-lab session. Stimuli were randomly presented on the left and right side of the screen. Participants made their choice using the 1–4 number keys in the top row of the keyboard, with a “1” or “4” response indicating a confident choice of the left or right option, respectively, and a “2” or “3” response indicating a guess choice of the left or right option, respectively. The trial terminated 0.25 s after a response was recorded, followed by a 2.5 s ITI. Responses were self-paced. Participants were informed that they would not receive feedback after each choice, but that the computer would keep track of the number of correct choices of the reward-associated stimuli that were made and pay a bonus based on their performance. Because the long-term follow-up only included ratings, choice analyses were limited to comparing how choices aligned with ratings.
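The four-key response mapping can be decoded into a chosen stimulus and a confidence flag as follows. The function names are hypothetical, and the rating-agreement check is only one possible way to compare choices with ratings; the exact analysis is not specified here.

```python
# Key -> (side, confident) for the 1-4 number keys described above.
KEY_MAP = {
    "1": ("left", True),
    "2": ("left", False),
    "3": ("right", False),
    "4": ("right", True),
}


def decode_choice(key, left_stim, right_stim):
    """Return (chosen stimulus, unchosen stimulus, confident flag)."""
    side, confident = KEY_MAP[key]
    if side == "left":
        return left_stim, right_stim, confident
    return right_stim, left_stim, confident


def choice_matches_ratings(chosen, unchosen, ratings):
    """Hypothetical check: did the participant choose the higher-rated stimulus?"""
    return ratings[chosen] > ratings[unchosen]
```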

At the end of the session, participants completed two additional measures. We collected the Beck Depression Inventory, but scores were too low and lacked enough variability to enable later analysis (median score = 2 of 69 possible; scores >13 indicate mild depression). The second measure was the operation-span task (OSPAN), which was used as an index of working memory capacity (Lewandowsky et al., 2010; Otto et al., 2013). In the OSPAN, participants made accuracy judgments about simple arithmetic equations (e.g., “2 + 2 = 5”). After a response, an unrelated letter appeared (e.g., “B”), followed by the next equation. After arithmetic-letter sequences ranging in length from 4 to 8, participants were asked to type in the letters that they had seen in order, with no time limit. Each sequence length was repeated three times. To ensure that participants understood the task before it began, it was described in depth in instruction slides, followed by five practice trials. Scores were calculated by summing the number of letters in perfectly recalled letter sequences across all 15 trials (mean, 49.9; range, 19–83) (Otto et al., 2013); mean accuracy on the arithmetic component was 81.9%.
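The OSPAN scoring rule described above (often called the absolute span score) reduces to a single sum over trials. This sketch assumes that typed responses are compared to the presented letters exactly, ignoring case and surrounding whitespace; the function name is hypothetical.

```python
def ospan_absolute_score(trials):
    """Sum the set sizes of letter sets recalled perfectly and in order.

    `trials` is a list of (presented_letters, typed_letters) strings.
    ASSUMPTION: comparison ignores case and surrounding whitespace.
    """
    return sum(
        len(shown)
        for shown, typed in trials
        if typed.strip().upper() == shown.upper()
    )


# 15 sets: lengths 4-8, each repeated three times -> maximum score 90.
SET_SIZES = [4, 5, 6, 7, 8] * 3
```

Under this rule the maximum possible score is 3 × (4 + 5 + 6 + 7 + 8) = 90, which puts the reported range (19–83) in context.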

Online training.

Subsequent to the first in-lab session where training on spaced stimuli began, participants completed three short online “massed” sessions with the spaced-trained stimuli (see also Eldar et al., 2018). Sessions were completed on a laptop or desktop computer (but not on mobile devices) using the expfactory.org platform (Sochat et al., 2016). Code for the online reward learning phase can be found at: https://github.com/gewimmer-neuro/reward_learning_js. Each online training session included five repetitions of the eight spaced-trained stimuli in a random order, leading to 15 additional repetitions per spaced-trained stimulus overall. The task and timing were the same as in the in-lab sessions, except that the screen background was white and the white feedback text was replaced with gray. Participants completed the online sessions across ∼2 weeks; each session was initiated with an E-mail from the experimenter that included login details for that session. If participants had not yet completed the preceding online session when the notification about the next session was received, they were instructed to complete the preceding session that day and the next session the following day. Therefore, at least one overnight period was required between sessions. Participants were instructed to complete each session when they were alert and not distracted. Data for two sessions were missing in one participant, and data for one online session were missing in an additional seven participants. Based on follow-up with a subset of participants, we conclude that missing data were due in some cases to technical failures and in others to noncompliance. Among participants with a missing online spaced training session, performance during scanning for spaced-trained stimuli was above the group mean (94.8% vs 91.0%). Note that, if a subset of participants did not complete some part of the spaced training, this would, if anything, weaken any differences between spaced and massed training.
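The repetition bookkeeping can be made explicit: initial learning contributes 10 repetitions and each completed online session contributes 5, so a missed session reduces the spaced-trained total below the 25 repetitions that massed-trained stimuli received by the end of training in the second in-lab session. The constant and function names below are illustrative.

```python
INITIAL_REPS = 10             # in-lab initial learning session
ONLINE_REPS_PER_SESSION = 5   # each online session
N_ONLINE_SESSIONS = 3
MASSED_REPS = 25              # massed-trained stimuli, second in-lab session


def spaced_reps(n_missing_sessions):
    """Repetitions per spaced-trained stimulus before the second in-lab session."""
    if not 0 <= n_missing_sessions <= N_ONLINE_SESSIONS:
        raise ValueError("n_missing_sessions out of range")
    completed = N_ONLINE_SESSIONS - n_missing_sessions
    return INITIAL_REPS + ONLINE_REPS_PER_SESSION * completed
```

A participant missing one online session therefore had 20 rather than 25 spaced repetitions, which can only reduce, not inflate, any advantage of spaced training.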

Second in-lab session.

Next, participants returned for a second in-lab session ∼2 weeks later (mean, 13.5 d; range, 10–20 d). Here, participants began and completed learning on the massed-trained stimuli. Initial training across the first 10 repetitions was conducted as described above for the first in-lab session. Next, participants completed a rating phase including both spaced- and massed-trained stimuli and a choice phase involving only the massed-trained stimuli. After this, participants finished training on the massed-trained stimuli, bringing total experience up to 25 repetitions, the same as for the spaced-trained stimuli to that point.

In Study 1, participants next entered the scanner for an intermixed learning session. Across two blocks, participants engaged in additional training on the spaced- and massed-trained stimuli, with six repetitions per stimulus. With four initial practice trials, there were 100 total trials (16 stimuli × 6 repetitions + 4 practice trials). During scanning, task event durations were as in the behavioral task above and ITI durations were on average 3.5 s (min, 1.45 s; max, 6.55 s). Responses were made using a cylindrical button box, positioned so that finger responses mirrored those made on the up and down arrow keys of the keyboard.

Following the intermixed learning session, participants engaged in a single no-feedback block, in which stimuli were presented with no response requirements. This block provided measures of responses to stimuli in the absence of feedback. Lists were designed to allow for tests of potential cross-stimulus repetition suppression (Barron et al., 2013; Klein-Flügge et al., 2013; Barron et al., 2016). Stimuli were presented for 1.5 s, followed by a 1.25 s ITI (range, 0.3–3.7 s). To provide a measure of attention and to promote recollection and processing of stimulus value, participants were instructed to remember whether a stimulus had been associated with reward or with no reward. On ∼10% of trials, 1 s after the stimulus had disappeared, participants were asked whether the best response to the stimulus was “Yes” or “No.” Participants had a 2 s window in which to respond; no feedback was provided unless a response was not recorded, in which case the warning “Too late or wrong key! −$0.50” was displayed. Each stimulus was repeated 10 times during the no-feedback phase, yielding 160 trials. Different stimuli of the same type (defined by training condition and reward value) were presented on sequential trials to allow for repetition suppression analyses. At least 18 such sequential events for each of these four critical comparisons were presented in a pseudorandom order.
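To check that a pseudorandom no-feedback list meets the repetition-suppression constraint, one can count consecutive trial pairs that show two different stimuli of the same type. The sketch assumes each stimulus is labeled by a (training condition, reward value) tuple; the function names and example labels are hypothetical, and the minimum of 18 is taken from the text.

```python
from collections import Counter


def count_same_type_transitions(seq, stim_type):
    """Count consecutive trial pairs showing two *different* stimuli of the
    same type, where a type is a (training condition, reward value) tuple."""
    counts = Counter()
    for prev, cur in zip(seq, seq[1:]):
        if prev != cur and stim_type[prev] == stim_type[cur]:
            counts[stim_type[cur]] += 1
    return counts


def meets_minimum(counts, types, minimum=18):
    """True if every type has at least `minimum` qualifying transitions."""
    return all(counts[t] >= minimum for t in types)
```

Immediate repeats of the same stimulus are excluded, because the design targets cross-stimulus (not within-stimulus) suppression.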

In Study 1, participants also engaged in an additional unrelated cognitive task during the scanning session (∼30 min) and a resting scan (8 min). The order of the cognitive task and the reward learning task was counterbalanced across participants. Results from the cognitive task will be reported separately.

After scanning, participants engaged in an exploratory block to study whether and how participants would reverse their behavior given a shift in feedback contingencies. Importantly, the “reversed” stimuli and control non-reversed stimuli (4 per condition per participant) were not included in the analyses of the 3-week follow-up data. One medium reward stimulus and one loss-associated stimulus each from the spaced and massed conditions were subject to reversal. These reversed stimuli were pseudorandomly interspersed with a non-reversed medium reward stimulus and a non-reversed loss stimulus from each condition, yielding eight stimuli total. In the reversal, the feedback for the first presentation of the reversed stimuli was as expected, whereas the remaining nine repetitions were reversed (at a 78% probability).

We did not find any reliable effect of spaced training on reversal of reward or loss associations. Massed-trained reward-associated stimulus performance across the repetitions following the reversal (repetitions 3–10) was 65.7% [58.7 76.9]; spaced-trained performance was 60.1% [49.1 71.1]. Although performance on the spaced-trained stimuli was lower, this effect was not significant (t(30) = 1.06, CI [−5.2 16.5]; p = 0.30; two one-sided test [TOST] equivalence test, t(30) = 1.89, p = 0.034). Massed-trained loss-associated stimulus performance across the repetitions following the reversal was 36.3% [23.6 49.0]; spaced-trained performance was 38.3% [23.9 52.8] (t(30) = −0.26, CI [−18.0 13.0]; p = 0.80; TOST equivalence test, t(30) = −2.69, p = 0.005). Because the reversal phase came after a long experiment before and during scanning, including an unrelated demanding cognitive control task, it is possible that the results were affected by general fatigue. With this caveat, the lack of an effect of spaced training on reversal performance suggests that alternative cognitive or short-term learning mechanisms can override well-learned reward associations.
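For readers unfamiliar with the two one-sided test (TOST) equivalence procedure reported above, the following is a minimal paired-samples sketch in pure Python. It assumes that the equivalence bounds (`low`, `high`) are supplied by the analyst, since the bounds used here are not stated in this section, and it computes the Student t CDF by numerical integration rather than with a statistics library; it is not the authors' analysis code.

```python
import math
from statistics import mean, stdev


def t_cdf(x, df, n=2000):
    """Student t CDF, integrating the density from 0 to |x| with Simpson's rule."""
    log_c = math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
    c = math.exp(log_c) / math.sqrt(df * math.pi)

    def pdf(u):
        return c * (1 + u * u / df) ** (-(df + 1) / 2)

    a, h = abs(x), abs(x) / n     # n must be even for Simpson's rule
    s = pdf(0.0) + pdf(a)
    s += sum((4 if i % 2 else 2) * pdf(i * h) for i in range(1, n))
    area = s * h / 3
    return 0.5 + area if x >= 0 else 0.5 - area


def paired_tost(x, y, low, high):
    """Two one-sided paired t tests against equivalence bounds (low, high).

    Equivalence is supported when both one-sided nulls (mean difference <= low,
    mean difference >= high) are rejected; the overall p is the larger p.
    """
    d = [a - b for a, b in zip(x, y)]
    n = len(d)
    se = stdev(d) / math.sqrt(n)
    df = n - 1
    t_low = (mean(d) - low) / se
    t_high = (mean(d) - high) / se
    p_low = 1.0 - t_cdf(t_low, df)   # H1: mean difference > low
    p_high = t_cdf(t_high, df)       # H1: mean difference < high
    return {"df": df, "t_low": t_low, "t_high": t_high,
            "p": max(p_low, p_high)}
```

Reporting the larger of the two one-sided p values is the standard TOST convention: equivalence is claimed only if the mean difference is shown to lie both above the lower and below the upper bound.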

Three-week follow-up.

We administered a follow-up test of memory for the value of conditioned stimuli ∼3 weeks later (mean, 24.5 d; range, 20–37 d). An online questionnaire was constructed with each participant's stimuli using Google Forms (https://docs.google.com/forms). Participants were instructed to try to remember whether a stimulus was associated with winning money or not winning money using an adapted version of the scale from the rating phase of the in-lab experiment. Responses were recorded using a 10-point radio button scale anchored by “0% lucky” on the left and “100% lucky” on the right. As for the in-lab ratings, participants were instructed to respond at the far right end of the scale if they were completely confident that a given stimulus was associated with reward and at the far left if they were completely confident that a given stimulus was associated with no reward. Therefore, distance from the center of the scale represented confidence in their memory. Note that no choice test measures were collected at the long-term follow-up.
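Mapping the 10-point radio-button scale onto the same 0–100% metric as the in-lab ratings could be done as below. Equal spacing of the buttons and the function names are assumptions. Note that a 10-point scale has no button at its exact center, so under this mapping the two middle buttons each sit roughly 5.6 percentage points from the midpoint.

```python
def radio_to_percent(button, n_buttons=10):
    """Map radio button 1..n (left = "0% lucky", right = "100% lucky") to 0-100%.

    ASSUMPTION: buttons are treated as equally spaced.
    """
    if not 1 <= button <= n_buttons:
        raise ValueError(f"button must be in 1..{n_buttons}")
    return 100.0 * (button - 1) / (n_buttons - 1)


def confidence(pct):
    """Distance from the scale center: larger values = more confident memory."""
    return abs(pct - 50.0)
```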

In-lab portions of the study were presented using Psychtoolbox 3.0 (Brainard, 1997), with the initial in-lab session conducted on 21.5-inch Apple iMacs. Online training was completed using expfactory.org (Sochat et al., 2016), with functions adapted from the jspsych library (de Leeuw, 2015). At the second in-lab session, before scanning, participants completed massed-stimulus training on a 15-inch MacBook Pro laptop. During scanning, stimuli were viewed via a mirror positioned above the participant's eyes that reflected an LCD screen placed at the rear of the magnet bore. Responses during the fMRI portion were made using a five-button cylindrical response box (Current Designs). Participants used the top button on the side of the cylinder for “Yes” responses and the next lower button for “No” responses. We positioned the response box in the participant's hand so that the arrangement mirrored the relative position of the up and down arrow keys on the keyboard from the training task sessions.

Study 2 experimental design.

The procedure for Study 2 was the same as for Study 1, with the important difference that the long-term follow-up was conducted in the laboratory rather than online. There were two smaller differences: learning for massed stimuli was conducted in full without interruption for intermediate ratings and choices, and fMRI data were not collected.

Stimuli for Study 2 were composed of the most neutrally rated landscape stimuli from the Study 1 pre-experiment ratings. Two counterbalanced stimulus lists were created and assigned randomly to participants. The initial learning session for the spaced-trained stimuli and the three online training sessions were completed as described above. Following the training and testing phases, participants completed the OSPAN to provide a measure of working memory capacity. Scores were calculated as in Study 1 (mean, 49.7; range, 17–83); mean accuracy on the arithmetic component was 93.1%.

During the 2 weeks between the in-lab sessions, participants completed three short “massed” online training sessions for the spaced-trained stimuli, as described above. Data were missing for three sessions in one participant, for two sessions in another participant, and for one session in each of five participants. Based on the information from Study 1, we infer that some data were missing for technical reasons and some because of noncompliance. Among participants with at least one missing online session, end-of-training performance for spaced-trained stimuli was near the group mean (84.8% vs 86.4%). Note that missing spaced training sessions in some participants would, if anything, weaken any differences between the spaced and massed conditions.

Second in-lab session.

The second in-lab session was completed ∼2 weeks after the first session (mean, 12.8 d; range, 10–17 d). Here, participants engaged in the initial massed learning session, which then continued through all 25 repetitions of each “massed” stimulus. Short rest breaks were included, but Study 2 omitted the intervening reward rating and choice test phases of Study 1. In the last part of the learning phase, to assess end-state performance on both spaced-trained and massed-trained stimuli, three repetitions of each stimulus were presented in a pseudorandom order. Rating and choice phase data were acquired after this learning block, with trial timing as described above.

After the choice phase, we administered an exploratory phase to assess potential biases in new learning cued by conditioned stimuli. This phase was conducted in a subset of 25 participants because the task was still under development when the data from the initial six participants were acquired. Participants learned about new stimuli (abstract characters) in the same paradigm as described above (Fig. 1B), while unrelated spaced- or massed-trained landscape stimuli were presented tiled in the background during the choice period. Across all trials, we found a positive influence of background prime reward value on the rate of “Yes” responding (reward prime mean 54.4%, CI [48.2 60.4]; loss prime mean 43.2%, CI [36.6 48.0]). This influence did not differ between the spaced and massed conditions (spaced difference, 13.0% CI [4.4 21.6]; massed difference, 11.0% CI [1.6 20.4]; t(24) = 0.71, CI [−3.8 7.8]; p = 0.49; TOST equivalence test, p = 0.017). One limitation of this exploratory phase was that learning about the new stimuli, similar to that reported below for the regular phases, was quite rapid, likely because of the sequential ordering of the first and second presentations of a new stimulus (performance reached 77.5% correct by the second repetition). Rapid learning about the new stimuli may have reduced our capacity to detect differences in priming due to spaced versus massed training.

Three-week follow-up.

Approximately 3 weeks after the second in-lab session (mean, 21.1 d; range, 16–26 d), participants returned to the laboratory for the third and final in-lab session. In the same testing rooms used in the previous sessions (including the full training session on massed stimuli), participants completed another rating phase. Participants were reminded of the reward rating instructions and told to “do their best” to remember whether individual stimuli had been associated with reward or loss during training. Trial timing was as described above, and the order of stimuli was pseudorandomized. As in Study 1, we did not collect any choice test data in the follow-up session.

fMRI data acquisition.

Whole-brain imaging was conducted on a GE Healthcare 3 T Discovery system equipped with a 32-channel head coil (Stanford Center for Cognitive and Neurobiological Imaging). Functional images were collected using a multiband (simultaneous multi-slice) acquisition sequence (TR = 680 ms, TE = 30 ms, flip angle = 53°, multiband factor = 8; 2.2 mm isotropic voxel size; 64 8 × 8 axial slices with no gap). For participant 290, the TR was changed because of an error, resulting in runs with TRs of 924, 874, and 720 ms. Slices were tilted ∼30° relative to the AC–PC line to improve the signal-to-noise ratio in the orbitofrontal cortex (OFC) (Deichmann et al., 2003). Head padding was used to minimize head motion.

During learning phase scanning, two participants were excluded for excessive head motion (five or more TR-to-TR framewise displacement translations of >1.5 mm). No other participant's motion exceeded 1.5 mm in displacement from one volume acquisition to the next. For seven other participants with one or more TR-to-TR displacements of >0.5 mm, any preceding trial within 5 TRs and any current or following trial within the 10 TRs after the motion event were excluded from multivariate analyses; for univariate analyses, these trials were removed from the regressors of interest. For participant 310, the display screen failed in the middle of the first learning phase scanning run; this run was restarted at the point of failure, and the functional data were concatenated. For four participants, data from the final no-feedback fMRI block were not collected because of time constraints. Additionally, three participants were excluded from the no-feedback block for excessive head motion, leaving 26 participants for the no-feedback phase analysis.
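The motion-based trial exclusion described above can be sketched in Python. This is a minimal illustration, not the code used in the study: the helper name `trials_to_exclude`, the use of a single per-volume displacement trace, and the reading of the exclusion window (trial onsets from 5 TRs before to 10 TRs after any supra-threshold volume) are assumptions.

```python
import numpy as np


def trials_to_exclude(displacement_mm, trial_onset_trs, threshold_mm=0.5,
                      trs_before=5, trs_after=10):
    """Flag trials near TR-to-TR motion events (hypothetical helper).

    displacement_mm: displacement from the previous volume, one value per volume.
    trial_onset_trs: trial onsets expressed as volume (TR) indices.
    A trial is flagged when its onset lies within `trs_before` TRs before or
    `trs_after` TRs after (inclusive) any volume exceeding `threshold_mm`.
    """
    disp = np.asarray(displacement_mm, dtype=float)
    onsets = np.asarray(trial_onset_trs)
    events = np.flatnonzero(disp > threshold_mm)
    flagged = np.zeros(onsets.shape, dtype=bool)
    for m in events:
        flagged |= (onsets >= m - trs_before) & (onsets <= m + trs_after)
    return flagged


# Example: a single 0.8 mm jump at volume 40.
disp = np.zeros(100)
disp[40] = 0.8
onsets = np.array([0, 10, 20, 30, 36, 40, 45, 50, 51, 60])
mask = trials_to_exclude(disp, onsets)
kept_onsets = onsets[~mask]  # trials retained for the multivariate analysis
```

In the univariate models, the flagged trials would instead be moved out of the regressors of interest rather than dropped from the data.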

For each functional scanning run, 16 discarded volumes were collected before the first trial, both to allow for magnetic field equilibration and to collect calibration scans for the multiband reconstruction. During the scanned learning phase, two functional runs of an average of 592 TRs (6 min and 42 s) were collected, each including 50 trials. During the no-feedback phase, one functional run of an average of 722 TRs (8 min and 11 s) was collected, including 160 trials. Structural images were collected either before or after the task, using a high-resolution T1-weighted magnetization-prepared rapid acquisition gradient echo (MPRAGE) pulse sequence (0.9 × 0.898 × 0.898 mm voxel size).

Behavioral analysis.

Behavioral analyses were conducted in MATLAB 2016a (The MathWorks). Results presented below are from the following analyses: t tests versus chance for learning performance, within-participant (paired) t tests comparing differences between reward- and loss-associated stimuli across conditions, Pearson correlations, and Fisher z-transformations of correlation values. We additionally tested whether nonsignificant results were significantly smaller than a moderate effect size using the two one-sided tests (TOST) procedure (Schuirmann, 1987; Lakens, 2017) and the TOSTER library in R (Lakens, 2017). We used equivalence bounds of Cohen's d = 0.51 (Study 1) or d = 0.53 and d = 0.54 (Study 2), the effect sizes for which power to detect an effect in the included group of participants is estimated to be 80%.
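A paired TOST can be sketched as follows, using plain Python and SciPy rather than the TOSTER library that was actually used. As we understand TOSTER's power calculation for paired designs, the bound follows the normal approximation d = (z₁₋α + z₁₋β/₂)/√n with α = 0.05 and power 0.80; with n = 33 and n = 31 (the participant counts given in the Results) this reproduces the reported bounds of 0.51 and 0.53. That attribution is an assumption, the sample size behind the d = 0.54 bound is not stated here, and the example data are simulated.

```python
import numpy as np
from scipy import stats


def equivalence_bound_dz(n, alpha=0.05, power=0.80):
    """Normal-approximation equivalence bound (Cohen's dz) giving the requested
    power for a paired TOST with n participants when the true effect is zero."""
    z_alpha = stats.norm.ppf(1 - alpha)
    z_beta = stats.norm.ppf(1 - (1 - power) / 2)
    return (z_alpha + z_beta) / np.sqrt(n)


def tost_paired(x, y, dz_bound, alpha=0.05):
    """Two one-sided paired t tests against -dz_bound and +dz_bound.

    Bounds are converted to raw units using the SD of the paired differences.
    Returns (t_lower, t_upper, p); equivalence is declared when p < alpha.
    """
    diff = np.asarray(x, float) - np.asarray(y, float)
    n = diff.size
    sd = diff.std(ddof=1)
    se = sd / np.sqrt(n)
    low, high = -dz_bound * sd, dz_bound * sd
    t_lower = (diff.mean() - low) / se    # tests H0: true difference <= low
    t_upper = (diff.mean() - high) / se   # tests H0: true difference >= high
    p_lower = stats.t.sf(t_lower, df=n - 1)
    p_upper = stats.t.cdf(t_upper, df=n - 1)
    return t_lower, t_upper, max(p_lower, p_upper)


# Simulated accuracies for 33 participants with zero mean difference.
massed = np.linspace(0.80, 0.96, 33)
offsets = np.array([0.03, -0.03] * 16 + [0.0])
spaced = massed + offsets
bound = equivalence_bound_dz(33)
t_lo, t_hi, p_equiv = tost_paired(spaced, massed, bound)
```

A result is reported as equivalent only when both one-sided tests are significant, which is why the larger of the two p values is returned.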

End-state learning accuracy in Study 1 was averaged across the last five of six repetitions in the scanned intermixed learning session. End-state learning accuracy for Study 2 was averaged across the last two of three repetitions in the final intermixed learning phase. For correlations with working memory, initial learning repetitions 2–10 were averaged (because repetition 1 cannot reflect learning). In Study 1, the post-learning ratings were taken from the ratings collected before the scan (after 25 repetitions of all massed- and spaced-trained stimuli). In Study 2, the post-learning ratings were collected after all learning repetitions were completed.

For the analysis of maintenance of learned values in Study 2, we computed a percentage maintenance measure. This was calculated by dividing the long-term reward rating difference (mean rating of reward-associated minus loss-associated stimuli) by the post-learning rating difference. The same analysis with values restricted to a minimum of 0 (eliminating any reversals in ratings) and a maximum of 100% yielded similar results, with lower variance and a correspondingly higher t value.
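A minimal sketch of the percentage maintenance measure, assuming one mean rating per participant for each stimulus type and session on a common scale (the function name and example values are hypothetical). The measure is undefined when a participant's post-learning difference is zero.

```python
import numpy as np


def percent_maintenance(post_reward, post_loss, follow_reward, follow_loss,
                        restrict=False):
    """Follow-up rating difference as a percentage of the post-learning difference.

    Each argument holds one mean rating per participant. With restrict=True the
    result is limited to 0-100% (removing reversals and apparent gains).
    """
    post_diff = np.asarray(post_reward, float) - np.asarray(post_loss, float)
    follow_diff = np.asarray(follow_reward, float) - np.asarray(follow_loss, float)
    pct = 100.0 * follow_diff / post_diff
    if restrict:
        pct = np.clip(pct, 0.0, 100.0)
    return pct


# Three hypothetical participants: partial retention, a reversal, and a gain.
raw = percent_maintenance([8, 8, 6], [2, 2, 3], [5, 1, 9], [2, 3, 3])
restricted = percent_maintenance([8, 8, 6], [2, 2, 3], [5, 1, 9], [2, 3, 3],
                                 restrict=True)
```

Spaced and massed maintenance values for the same participants could then be compared with a paired t test such as `scipy.stats.ttest_rel`; clipping shrinks extreme values, which is consistent with the lower variance noted above.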

fMRI data analysis.

Data from all participants were preprocessed several times to fine-tune the parameters. After each iteration, the decision to modify the preprocessing was based purely on visual evaluation of the preprocessed data and not on the results of model fitting. Results included in this manuscript come from a standard preprocessing pipeline, FMRIPREP version 1.0.0-rc2 (http://fmriprep.readthedocs.io), which is based on Nipype (Gorgolewski et al., 2011). Slice timing correction was disabled because of the short TR of the input data. Each T1-weighted volume was corrected for bias field using N4BiasFieldCorrection version 2.1.0 (Tustison et al., 2010), skull-stripped using antsBrainExtraction.sh version 2.1.0 (using the OASIS template), and coregistered to the skull-stripped ICBM 152 Nonlinear Asymmetrical template version 2009c (Fonov et al., 2009) using the nonlinear transformation implemented in ANTs version 2.1.0 (Avants et al., 2008). Cortical surfaces were estimated using FreeSurfer version 6.0.0 (Dale et al., 1999).

Functional data for each run were motion-corrected using MCFLIRT version 5.0.9 (Jenkinson et al., 2002). For most participants, distortion correction was performed using an implementation of the TOPUP technique (Andersson et al., 2003) with 3dQwarp version 16.2.07, distributed as part of AFNI (Cox, 1996). For participants 276, 278, 310, 328, and 388, spiral fieldmaps were used instead, because the TOPUP approach introduced artifacts in those participants' data. This decision was made based on visual inspection of the preprocessed data before fitting any models. The spiral fieldmaps were processed using FUGUE version 5.0.9 (Jenkinson, 2003). Functional data were coregistered to the corresponding T1-weighted volume using boundary-based registration with 9 degrees of freedom, as implemented in FreeSurfer version 6.0.0 (Greve and Fischl, 2009). The motion-correcting transformations, the field distortion-correcting warp, the T1-weighted transformation, and the MNI template warp were applied in a single step using antsApplyTransforms version 2.1.0 with Lanczos interpolation. Framewise displacement (Power et al., 2014) was calculated for each functional run using the Nipype implementation. For more details of the pipeline, see http://fmriprep.readthedocs.io/en/1.0.0-rc2/workflows.html.
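Framewise displacement, as defined by Power et al. (2014), sums the absolute volume-to-volume changes in the six realignment parameters after converting rotations to millimeters on a 50 mm sphere. The NumPy sketch below illustrates that definition; it is not the Nipype implementation used by FMRIPREP. The column order [translations, rotations] is an assumption (MCFLIRT `.par` files list the three rotations first, so they would need reordering).

```python
import numpy as np


def framewise_displacement(motion, radius_mm=50.0):
    """Framewise displacement as defined by Power et al. (2014).

    motion: (n_volumes, 6) array ordered [tx, ty, tz, rx, ry, rz], with
    translations in mm and rotations in radians. Rotations are converted to
    arc length on a sphere of radius `radius_mm`. FD of the first volume is 0.
    """
    params = np.asarray(motion, dtype=float)
    deltas = np.abs(np.diff(params, axis=0))
    deltas[:, 3:] *= radius_mm
    return np.concatenate([[0.0], deltas.sum(axis=1)])


motion = np.zeros((3, 6))
motion[1, 0] = 0.3    # 0.3 mm shift in x at volume 1
motion[2, 3] = 0.01   # x returns to 0; 0.01 rad rotation at volume 2
fd = framewise_displacement(motion)
```

Here the second step combines a 0.3 mm translation change with a 0.01 rad rotation (0.5 mm of arc), giving an FD of 0.8 mm.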

General linear model (GLM) analyses were conducted using SPM (SPM12; Wellcome Trust Centre for Neuroimaging). MRI model regressors were convolved with the canonical hemodynamic response function and entered into a GLM of each participant's fMRI data. Six scan-to-scan motion parameters (x, y, z dimensions as well as roll, pitch, and yaw) produced during realignment were included as additional regressors in the GLM to account for residual effects of participant movement.

We first conducted univariate analyses to identify main effects of value and reward in the learning phase, as well as effects of presentation without feedback in the final phase. The learning phase GLM included regressors for the stimulus onset (2 s duration) and feedback onset (2 s duration). The stimulus onset regressor was accompanied by a modulatory regressor for reward value (reward vs loss) separately for spaced- and massed-trained stimuli. The feedback regressor was accompanied by four modulatory regressors for reward value (reward vs loss) and spacing (spaced- vs massed-trained). The median performance in the scanner was 97.5% and, because learning was effectively no longer occurring during the scanning phase, we did not use a reinforcement learning model to create regressors.
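To illustrate how onset and modulatory regressors of this kind are formed, the sketch below convolves weighted boxcars with a double-gamma HRF. It is a NumPy/SciPy approximation, not SPM's implementation: SPM builds regressors internally at its own microtime resolution and mean-centers parametric modulators by default. The onsets, the ±1 value coding, and the placeholder motion columns are hypothetical.

```python
import numpy as np
from scipy.stats import gamma


def double_gamma_hrf(dt, length_s=32.0):
    """Double-gamma HRF resembling SPM's canonical shape: a gamma response
    (shape 6) minus an undershoot (shape 16) scaled by 1/6, sampled every dt s."""
    t = np.arange(0.0, length_s, dt)
    h = gamma.pdf(t, 6) - gamma.pdf(t, 16) / 6.0
    return h / h.sum()


def make_regressor(onsets_s, durations_s, weights, n_scans, tr, dt=0.01):
    """Weighted boxcars convolved with the HRF and sampled once per scan.

    Weights of 1 give a main-effect regressor; +1/-1 codes a modulator such
    as reward versus loss.
    """
    n_fine = int(round(n_scans * tr / dt))
    boxcar = np.zeros(n_fine)
    for onset, dur, w in zip(onsets_s, durations_s, weights):
        start, stop = int(round(onset / dt)), int(round((onset + dur) / dt))
        boxcar[start:stop] += w
    bold = np.convolve(boxcar, double_gamma_hrf(dt))[:n_fine]
    scan_idx = np.round(np.arange(n_scans) * tr / dt).astype(int)
    return bold[scan_idx]


tr = 0.68
onsets = [10.0, 20.0, 30.0, 40.0]           # stimulus onsets (s)
value = [1, -1, 1, -1]                      # reward = +1, loss = -1
stim = make_regressor(onsets, [2.0] * 4, [1] * 4, n_scans=100, tr=tr)
stim_x_value = make_regressor(onsets, [2.0] * 4, value, n_scans=100, tr=tr)
motion = np.zeros((100, 6))                 # placeholder for the six realignment parameters
X = np.column_stack([stim, stim_x_value, motion, np.ones(100)])
```

Each column of `X` corresponds to one regressor of the GLM; the motion columns enter as effects of no interest, as described above.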

The no-feedback phase GLM included regressors for the stimulus onset (1.5 s duration) and query onset (3.0 s duration). In the no-feedback phase, we conducted an exploratory cross-stimulus repetition suppression (XSS) analysis (Klein-Flügge et al., 2013). The logic of this analysis is that a nonperceptual feature associated with a stimulus, such as its learned value, should activate the same neural population that represents that feature for other stimuli. This shared feature coding is then predicted to lead to a suppressed response on subsequent activations, for example, when a different stimulus sharing that feature is presented immediately after the first stimulus (Barron et al., 2016). In the XSS model, we contrasted sequential presentations of stimuli that shared value association (reward or loss) and spacing (spaced or massed), yielding four regressors. For example, if two different reward-associated, spaced-trained stimuli were presented on successive trials, the first trial would receive a value of 1 and the second trial a value of −1. These regressors were entered into contrasts to yield reward versus loss XSS for spaced-trained stimuli and reward versus loss XSS for massed-trained stimuli.
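The +1/−1 coding of the four XSS regressors can be sketched as below. This is one plausible implementation under stated assumptions: weights are computed per trial (to modulate the stimulus onset regressor), and when three or more qualifying trials occur in a row their contributions are summed, so a middle trial nets to zero. That chaining rule and the helper name `xss_weights` are hypothetical.

```python
import numpy as np


def xss_weights(stim_ids, values, spacing):
    """Per-trial XSS weights for each (value, spacing) category.

    For each pair of successive trials showing different stimuli that share
    both value and spacing, the first trial gets +1 and the second -1 in that
    category's regressor. Contributions from overlapping pairs are summed.
    """
    n = len(stim_ids)
    regs = {(v, s): np.zeros(n) for v in ("reward", "loss")
            for s in ("spaced", "massed")}
    for i in range(n - 1):
        same_category = values[i] == values[i + 1] and spacing[i] == spacing[i + 1]
        different_stimulus = stim_ids[i] != stim_ids[i + 1]
        if same_category and different_stimulus:
            key = (values[i], spacing[i])
            regs[key][i] += 1.0
            regs[key][i + 1] -= 1.0
    return regs


stim_ids = ["a", "b", "c", "d", "e"]
values = ["reward", "reward", "loss", "loss", "loss"]
spacing = ["spaced", "spaced", "massed", "massed", "spaced"]
regs = xss_weights(stim_ids, values, spacing)
# Reward versus loss XSS for spaced-trained stimuli would then be the contrast
# +1 on the ("reward", "spaced") beta and -1 on the ("loss", "spaced") beta.
```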

For multivariate classification analyses, we estimated a mass-univariate GLM in which each trial was modeled with its own regressor, giving 100 regressors for the learning phase. The learning phase regressor duration modeled the 2-s-long initial stimulus presentation period. Models included the six motion regressors and block regressors as effects of no interest. Multivariate analyses were conducted using The Decoding Toolbox (Hebart et al., 2014). Classification used an L2-norm learning support vector machine (LIBSVM; Chang and Lin, 2011) with a fixed cost of c = 1. The classifier was trained on the full learning phase data, with the two scanning blocks subdivided into four runs (balancing the number of events within and across runs). We conducted four classification analyses: overall reward- versus loss-associated stimulus classification, spaced- versus massed-trained stimulus classification, and reward- versus loss-associated stimulus classification separately for spaced- and massed-trained stimuli. For the final two analyses, the results were compared to test for differences in value classification performance between spaced and massed stimuli. Leave-one-run-out cross-validation was used, with results reported in terms of percentage correct classification. Statistical comparisons were made using t tests versus chance (50%); for comparisons of two classifier results, paired t tests were used.
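Leave-one-run-out classification with a fixed-cost linear SVM can be sketched with scikit-learn, whose `SVC` class wraps LIBSVM. This is an illustration on simulated patterns, not The Decoding Toolbox pipeline used in the study; the trial counts mirror the description above, but the voxel count and signal strength are arbitrary assumptions.

```python
import numpy as np
from sklearn.model_selection import LeaveOneGroupOut, cross_val_score
from sklearn.svm import SVC


def loro_accuracy(patterns, labels, runs, cost=1.0):
    """Leave-one-run-out accuracy (%) of a linear SVM with fixed cost."""
    clf = SVC(kernel="linear", C=cost)   # scikit-learn's SVC wraps LIBSVM
    scores = cross_val_score(clf, patterns, labels, groups=runs,
                             cv=LeaveOneGroupOut())
    return 100.0 * scores.mean()


# Simulated single-trial patterns: 100 trials x 20 voxels in 4 runs.
rng = np.random.default_rng(1)
labels = np.tile([0, 1], 50)                # 0 = loss, 1 = reward
runs = np.repeat(np.arange(4), 25)
patterns = rng.normal(size=(100, 20))
patterns[labels == 1, :10] += 1.0           # reward signal in 10 voxels
accuracy = loro_accuracy(patterns, labels, runs)
```

Per-participant accuracies would then be tested against 50% with `scipy.stats.ttest_1samp`, and spaced versus massed value classification compared with `scipy.stats.ttest_rel`.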

In addition to the two region of interest (ROI) analyses, we conducted a searchlight analysis using The Decoding Toolbox (Hebart et al., 2014), with a 4-voxel-radius spherical searchlight. Classifier training and testing were conducted as described above for the ROI multivoxel pattern analysis. Individual participant classification accuracy maps were smoothed with a 4 mm FWHM kernel before group-level analysis. Value classification was compared between spaced- and massed-trained stimuli using a t test on the difference between each participant's spaced- and massed-trained classification maps (equivalent to a paired t test).
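The voxels entering each searchlight can be enumerated as integer offsets within a Euclidean radius. The sketch below is an assumption about how such a sphere is defined (boundary voxels at exactly the radius are included); The Decoding Toolbox uses its own definition. With 2.2 mm voxels, a 4-voxel radius corresponds to roughly 8.8 mm.

```python
import numpy as np


def sphere_offsets(radius_vox):
    """Integer voxel offsets within a sphere of the given radius (in voxels)."""
    r = int(np.floor(radius_vox))
    grid = np.arange(-r, r + 1)
    dx, dy, dz = np.meshgrid(grid, grid, grid, indexing="ij")
    offsets = np.stack([dx.ravel(), dy.ravel(), dz.ravel()], axis=1)
    inside = (offsets ** 2).sum(axis=1) <= radius_vox ** 2
    return offsets[inside]


def searchlight_indices(center, offsets, mask):
    """Voxels of the sphere around `center` that fall inside the brain mask."""
    coords = np.asarray(center) + offsets
    in_bounds = np.all((coords >= 0) & (coords < np.array(mask.shape)), axis=1)
    coords = coords[in_bounds]
    keep = mask[coords[:, 0], coords[:, 1], coords[:, 2]]
    return coords[keep]


offsets = sphere_offsets(4)                 # 257 voxels for a radius of 4
mask = np.ones((5, 5, 5), dtype=bool)
corner = searchlight_indices((0, 0, 0), sphere_offsets(1), mask)
```

Near the edge of the brain, fewer voxels survive the mask, so searchlights differ in size; the classifier is trained and tested on whatever voxels remain.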

For both univariate and searchlight results, linear contrasts of univariate SPMs were taken to a group-level (random-effects) analysis. We report results corrected for family-wise error (FWE) due to multiple comparisons (Friston et al., 1994). We conducted this correction at the peak level within small-volume ROIs for which we had an a priori hypothesis, or at the whole-brain cluster level (in each case using a cluster-forming threshold of p < 0.005 uncorrected). The striatum and medial temporal lobe (MTL; including hippocampus and parahippocampal cortex) ROIs were adapted from the AAL atlas (Tzourio-Mazoyer et al., 2002). The striatal mask included the caudate and putamen, as well as a hand-drawn nucleus accumbens mask (Wimmer et al., 2012). All voxel locations are reported in MNI coordinates, and results are displayed overlaid on the average of all participants' normalized high-resolution structural images using xjview and AFNI (Cox, 1996).

Data availability.

Complete behavioral data are publicly available on the Open Science Framework (www.osf.io/z2gwf/). Unthresholded whole-brain fMRI results are available on NeuroVault (https://neurovault.org/collections/3340/) and the full fMRI dataset is publicly available on OpenNeuro (https://openneuro.org/datasets/ds001393/versions/00001).

Results

Across two studies, we measured learning and maintenance of conditioned stimulus–value associations over time. In the first in-lab session, participants learned stimulus–value associations for a set of “spaced-trained” stimuli (the spaced initial learning session). Over the course of the next 2 weeks, participants engaged in three short “massed” training sessions online (the spaced online training sessions). Participants then returned to complete a second in-lab session, where they learned stimulus–value associations for a new set of “massed-trained” stimuli (the massed initial learning session and continued training). All learning for the massed-trained stimuli occurred consecutively in the same session. By the end of training on the massed-trained stimuli, experience was equated between the spaced- and massed-trained stimuli. Although the timing of trials was equivalent across the spaced-trained and massed-trained stimuli, the critical difference was that multiple days were inserted between the short training sessions for spaced-trained stimuli. Three weeks after the second in-lab session, participants completed a long-term follow-up reward rating measure.

Study 1

Learning of value associations

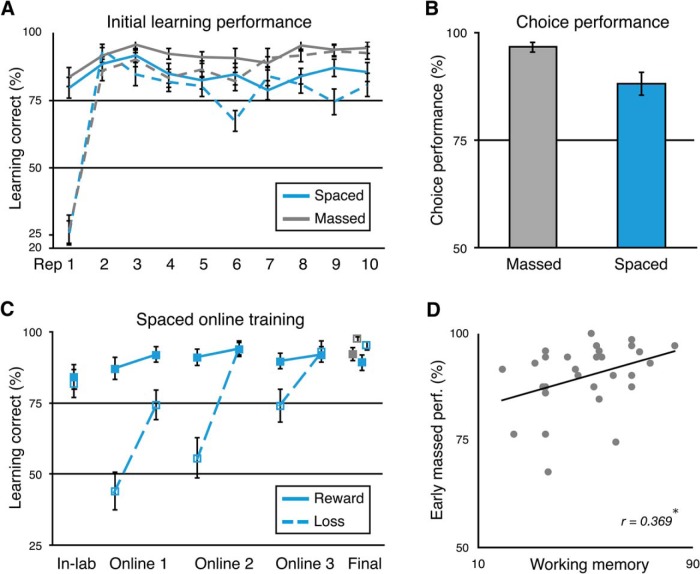

Participants rapidly acquired the best “Yes” or “No” response for the reward- or loss-associated stimuli during the initial spaced (lab session 1) and massed (lab session 2) learning sessions. By the second repetition of each stimulus, accuracy had increased to 89.1% (95% CI [84.7 93.5]) for spaced-trained stimuli and to 91.3% (95% CI [87.4 95.2]) for massed-trained stimuli (p < 0.001). Participants exhibited a marked bias (77.7%) toward “Yes” responses on the first trial of a given stimulus, when no previous information could be used to guide their response. By the end of the initial learning sessions (repetition 10), performance had increased to 83.3% (95% CI [76.8 89.8]) for the spaced-trained stimuli and 93.6% (95% CI [90.4 96.7]) for the massed-trained stimuli (Fig. 2A). Performance was higher by the end of the initial learning session for the massed-trained stimuli (t(32) = 3.13, 95% CI [3.6 17.0]; p = 0.0037). Note that the only difference between the spaced and massed learning sessions is that participants had more task exposure at the time of the massed learning session; both sessions had the same within-session trial timing and spacing. After the completion of the online learning sessions for spaced-trained stimuli and further in-lab learning for massed-trained stimuli, as expected, participants showed no significant difference in performance across conditions (repetitions 27–31; spaced-trained = 92.1%, 95% CI [88.3 96.0]; massed-trained = 94.7%, 95% CI [91.9 97.6]; t(32) = 1.59, 95% CI [−1.0 5.9]; p = 0.123; Fig. 2C). However, this null result was not statistically equivalent to zero: in an equivalence test using the TOST procedure (Lakens, 2017), the effect did not fall significantly within the bounds of a medium effect of interest (Cohen's d = ± 0.51, providing 80% power with 33 participants; t(32) = 1.34, p = 0.094), so we cannot reject the presence of a medium-size effect.

Figure 2.

Study 1 learning results. A, Performance in the initial learning sessions for the spaced- and massed-trained stimuli across the first 10 repetitions of each stimulus. Massed stimuli are shown in gray; spaced in blue; reward-associated stimuli in solid lines; loss-associated stimuli in dotted lines. B, Incentivized two-alternative forced-choice performance between reward- and loss-associated stimuli following the initial spaced and massed learning sessions. C, Spaced performance across online learning sessions and terminal performance for spaced and massed stimuli. Performance is depicted for the last in-lab repetition and the first and last (fifth) repetition of each stimulus per online session, followed by the average of repetitions 27–31 in the second in-lab session including fMRI. D, Positive correlation between early massed-trained stimulus learning phase performance and working memory capacity (O-SPAN). (*p < 0.05). Rep., Repetition. Error bars indicate 1 SEM.

Performance in the initial session showed that participants learned the reward value of the stimuli. First, participants showed higher learning accuracy for high-reward (mean $0.45 feedback) versus medium-reward (mean $0.25) spaced-trained stimuli in the second half of learning (high reward = 90.0%, 95% CI [83.2 96.8]; medium reward = 78.0%, 95% CI [70.7 85.4]; t(32) = 2.98, 95% CI [3.8 20.1]; p = 0.0054). After extensive training in the task, however, we did not observe a similar effect for initial learning of the massed-trained stimuli (high reward = 91.9%, 95% CI [85.8 97.9]; medium reward = 93.2%, 95% CI [88.5 98.0]; t(32) = −0.38, 95% CI [−8.6 5.9]; p > 0.70; TOST equivalence test, t(32) = 2.54, p = 0.008). Second, after the initial learning phase, participants completed an incentivized two-alternative forced-choice test phase. Here, no trial-by-trial feedback was given, but additional rewards were paid based on performance. Participants exhibited a strong preference for the reward- versus loss-associated stimuli in choices between both spaced- and massed-trained stimuli (spaced accuracy = 96.5%, 95% CI [94.4 98.8]; massed accuracy = 88.1%, 95% CI [82.6 93.6]; p-values < 0.00001; Fig. 2B).

After the first in-lab session, participants continued learning about the set of spaced-trained stimuli across three short “massed” online sessions. Across the 3 online sessions, mean performance increased for loss-associated stimuli (one-way ANOVA; F(2,72) = 9.26, p = 0.003; Fig. 2C), but not for reward-associated stimuli (F(2,72) = 0.53, p = 0.59). This increase in performance for loss-associated stimuli was accompanied by a significant decrease in performance between sessions (mean change from the end of one session to the beginning of the next: t(24) = 4.71, 95% CI [14.5 37.1]; p < 0.001), which was not present for reward-associated stimuli (t(24) = 0.38, 95% CI [−3.4 5.0]; p = 0.704). Performance at the beginning of the online sessions may have been influenced by a response bias toward “Yes,” as also shown in first responses to stimuli in initial learning (Fig. 2A). If forgetting leads participants to fall back on this bias under uncertainty, it would decrease performance for loss-associated stimuli. For reward-associated stimuli, however, the same bias would mask any forgetting, because defaulting to “Yes” would still produce correct responses. Therefore, we cannot rule out forgetting of reward-associated stimuli in the current design.
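The between-session change measure can be sketched as follows, assuming accuracy is arranged as participants × sessions × repetitions for one stimulus type, with NaN for missing sessions. Whether the transition from the first in-lab session into the first online session was also included is not stated here; the sketch uses only transitions between the sessions in the array. The data are hypothetical.

```python
import numpy as np
from scipy import stats


def between_session_change(acc):
    """Mean drop in accuracy across session boundaries, per participant.

    acc: array (participants, sessions, repetitions) for one stimulus type,
    with NaN for missing sessions. Positive values mean accuracy at the start
    of a session was lower than at the end of the preceding session.
    """
    acc = np.asarray(acc, dtype=float)
    drops = acc[:, :-1, -1] - acc[:, 1:, 0]
    return np.nanmean(drops, axis=1)


# Hypothetical data: 3 participants, 3 online sessions, 5 repetitions each.
acc = np.ones((3, 3, 5))
acc[:, :, 0] = [[0.50, 0.50, 0.50], [0.75, 0.75, 0.75], [1.00, 0.75, 0.50]]
change = between_session_change(acc)
result = stats.ttest_1samp(change, 0.0)   # group test against no change
```

A positive t statistic corresponds to the forgetting-like drop reported for loss-associated stimuli.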

During the second in-lab (fMRI) session, learning performance was >90% for both conditions, but massed-trained stimuli showed higher performance than spaced-trained stimuli (spaced choice performance, scan repetitions 2–6 = 92.1%, 95% CI [88.3 96.0]; massed = 94.7%, 95% CI [91.9 97.5]; t(32) = 2.74, 95% CI [3.0 20.6]; p = 0.01; Fig. 2C).

After sufficient general experience in the task, we expected to find a positive relationship between learning performance for new stimuli and working memory. We thus estimated the correlation between learning during the initial acquisition of massed-trained stimulus–value associations in the second in-lab session and the operation span measure of working memory. We found that learning performance on the massed-trained stimuli was positively related to working memory capacity (r = 0.369, p = 0.049; Fig. 2D). Initial performance for spaced-trained stimuli did not correlate with working memory (r = −0.097, p = 0.617); however, a TOST equivalence test providing 80% power in the range r ± 0.34 was not significant (p = 0.080), so we cannot reject the presence of a medium-size effect. The correlation between working memory and massed performance was significantly greater than the correlation with spaced performance (z = 2.16, p = 0.031). In contrast to the predicted effect for massed performance in the second session, we did not predict a relationship between first-session spaced performance and working memory. Although working memory likely contributed to spaced learning performance, given the rapid shift in responding to loss-associated stimuli after the first trial (Fig. 2A), in the absence of a prolonged practice session working memory is also likely to be used to maintain task instructions (Cole et al., 2013). Initial task performance is also likely to be affected by numerous other sources of noise, such as the acquisition of general task rules (“task set”) and adaptation to the testing environment. However, the lack of a correlation with working memory in the spaced condition may also indicate that working memory correlations in general are weak and hard to detect, even with >30 participants. Future studies are needed to further investigate the effects of working memory on initial learning and task acquisition.

When interpreting these working memory correlations with respect to previous studies on the contribution of working memory to feedback-based learning (Collins and Frank, 2012), it is important to note that the eight stimuli in the spaced and massed conditions were introduced in two sequential sets of four stimuli. Therefore, participants would only need to maintain four rather than eight stimulus–reward or stimulus–response associations in short-term memory, well within the range reported in previous studies.

Long-term maintenance

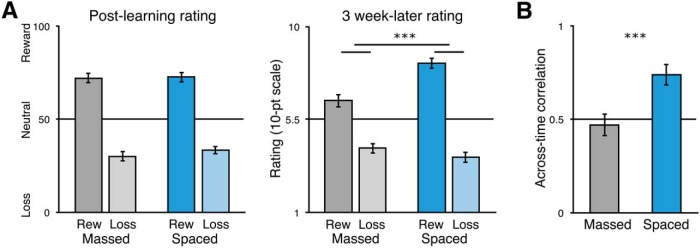

Next, we turned to the critical question of whether spaced training over weeks led to differences in long-term memory for conditioned reward associations. Baseline reward ratings were collected before fMRI scanning in the second in-lab session. Higher ratings indicate stronger confidence in a reward association, whereas lower ratings indicate stronger confidence in a neutral/loss association; ratings toward the middle of the scale indicate less confidence (Fig. 1C). After training but before fMRI scanning, when experience was matched across the spaced and massed conditions, ratings in both conditions clearly discriminated between reward- and loss-associated stimuli (spaced rating difference = 47.5%, 95% CI [39.2 55.7]; massed rating difference = 62.5%, 95% CI [56.5 68.4]; p-values < 0.00001; condition difference, t(32) = 2.73, 95% CI [3.0 20.6]; p = 0.01; Fig. 3A, left).

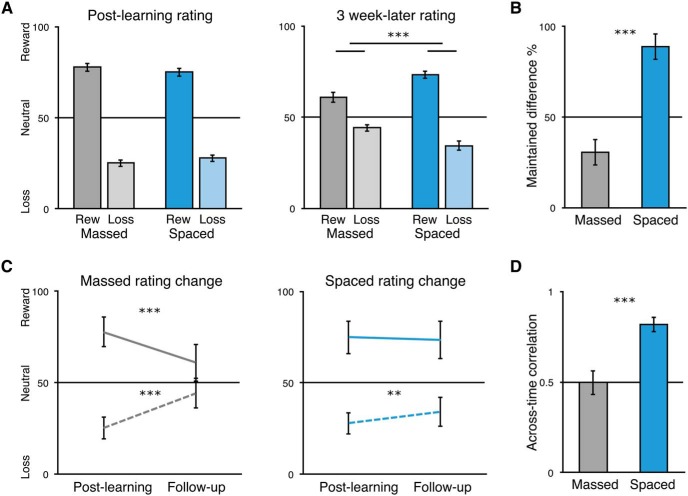

Figure 3.

Study 1 post-learning value association strength and long-term maintenance of value associations. A, Post-learning reward association ratings for the massed- and spaced-trained stimuli (left); 3-week-later reward association ratings (right). Reward-associated stimuli in darker colors; loss-associated stimuli in lighter colors. B, Average within-participant correlation (r) of massed-trained stimulus reward ratings and spaced-trained stimulus reward ratings (statistics were computed on z-transformed r values). ***p < 0.001. Error bars indicate SEM.

At the long-term follow-up, only rating data were collected. Importantly, to validate the use of the reward rating scale in the follow-up measures, we tested how strongly choices and ratings were related. We found that within-participants, massed-trained ratings were strongly correlated with preferences for stimuli in the separate choice test phase (mean r = 0.92, 95% CI [0.88 0.95]; range 0.68–1.00; t test on z-transformed r values, t(32) = 11.10, 95% CI [1.79 2.59]; p < 0.0001). This strong correlation indicates that the reward ratings capture the same underlying values learned via feedback learning as the forced-choice test measure commonly used as an assessment of learning.

To measure long-term maintenance of conditioning, after ∼3 weeks participants completed an online questionnaire on reward association strength using a 10-point scale. The instructions for ratings were the same as in the in-lab rating phase. Critically, we found that, although reward value discrimination was significant in both conditions (spaced difference = 4.55, 95% CI [3.75 5.34]; t(32) = 11.61, p < 0.001; massed difference = 2.24, 95% CI [1.59 3.01]; t(32) = 6.60, p < 0.001), it was significantly stronger in the spaced than in the massed condition (t(32) = 4.55, 95% CI [1.23 3.25]; p < 0.001; Fig. 3A, right). This effect was driven by greater maintenance of the values of reward-associated stimuli (reward, spaced vs massed, t(32) = 4.73, 95% CI [1.04 2.58]; p < 0.001; loss, spaced vs massed, t(32) = −1.37, 95% CI [−1.08 0.21]; p = 0.18; TOST equivalence test, t(32) = 1.56, p = 0.064, n.s.). Note that this benefit of spacing at the long-term follow-up contrasts with the baseline at the end of learning, where performance was marginally higher for massed-trained stimuli.

Next, we analyzed the consistency of ratings from the end of learning to the long-term follow-up. The post-learning ratings were collected on a graded scale and the 3-week follow-up ratings on a 10-point scale; this prevents a direct numeric comparison but allows for a correlation analysis. Such an analysis can test whether ratings in the massed condition were simply scaled down (preserving their ordering) or whether actual forgetting introduced noise (disrupting the across-time correlation). We predicted that the value association memory for massed-trained stimuli had actually decayed, which would yield a higher correlation across time for spaced-trained stimuli. Indeed, ratings were significantly more correlated across time in the spaced-trained condition (spaced r = 0.74, 95% CI [0.63 0.85]; massed r = 0.47, 95% CI [0.35 0.59]; t test on z-transformed values, t(32) = 4.13, 95% CI [0.44 1.28]; p < 0.001). Although the correlation for the spaced-trained stimuli was high (median r = 0.85), there was still variability in the group, with individual participant r values ranging from −0.36 to 1.0. Overall, these results indicate that memory for the conditioned associations of spaced-trained stimuli was significantly stronger and more stable over the long term than memory for massed-trained stimuli.
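The group tests on within-participant correlations described above (Fisher z-transformation followed by t tests) can be sketched with NumPy and SciPy. The r values are hypothetical, and clipping |r| = 1 before the transform (needed because arctanh(1) is infinite, and some participants reached r = 1.0) is an assumption about how such values were handled; the choice of clipping level affects how strongly those participants weigh in.

```python
import numpy as np
from scipy import stats


def fisher_z(r, eps=1e-6):
    """Fisher z-transform of correlation values, clipping |r| = 1 so the result stays finite."""
    r = np.clip(np.asarray(r, dtype=float), -1.0 + eps, 1.0 - eps)
    return np.arctanh(r)


def within_participant_r(x, y):
    """Pearson correlation between two rating vectors from one participant."""
    return np.corrcoef(x, y)[0, 1]


# Hypothetical per-participant correlations between post-learning and follow-up ratings.
r_spaced = np.array([0.90, 0.85, 1.00, 0.60, 0.75])
r_massed = np.array([0.50, 0.40, 0.70, 0.20, 0.55])
paired = stats.ttest_rel(fisher_z(r_spaced), fisher_z(r_massed))
versus_zero = stats.ttest_1samp(fisher_z(r_massed), 0.0)
```

The paired test corresponds to the spaced versus massed comparison of across-time stability, and the one-sample test to checks such as the rating–choice correlation being greater than zero.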

One limitation of these results is that, in the current design, cues in the learning environment may bias performance in favor of the spaced-trained stimuli: online training for spaced stimuli was conducted outside the laboratory, probably on the participant's own computer, which was likely also the environment for the 3-week follow-up measure. Although it seems unlikely that a testing environment effect would fully account for the large difference in long-term maintenance that we observed, we conducted a second study to replicate these results in a design in which the testing conditions would, if anything, bias performance in favor of the massed-trained stimuli.

Study 2

Learning of value associations

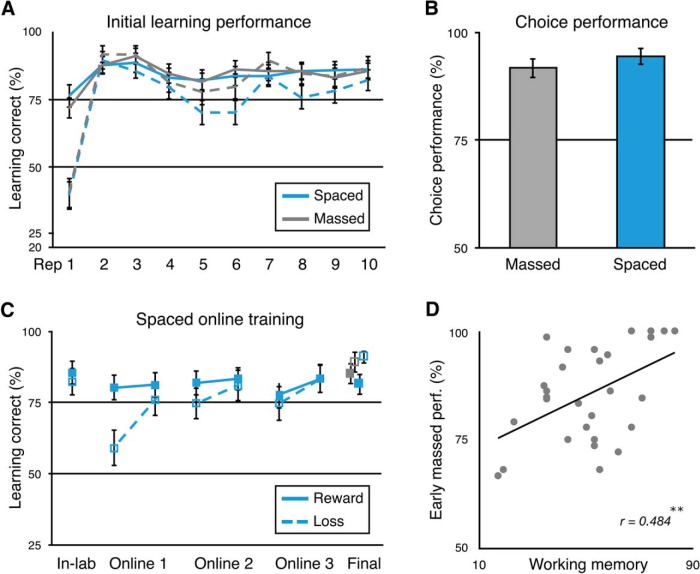

In Study 2, our aim was to replicate the findings of Study 1 and to extend them by conducting the 3-week follow-up session in the lab, allowing for a direct comparison with post-learning performance. Learning sessions for spaced- and massed-trained stimuli were the same as in Study 1, except that massed learning in Study 2 omitted the mid-learning assessment with ratings and choices. During the initial spaced and massed learning sessions, by the second repetition, accuracy had increased to 89.9% (95% CI [85.3 94.6]) for spaced-trained stimuli and to 87.2% (95% CI [84.1 93.3]) for massed-trained stimuli (p < 0.001). As before, participants exhibited a marked bias (67.2%) toward “Yes” responses on the first trial of a given stimulus, when no previous information could be used to guide their response. By the end of the initial learning sessions, performance reached 84.3% (95% CI [79.3 89.3]) for the spaced-trained stimuli and 86.2% (95% CI [81.2 91.1]) for the massed-trained stimuli (Fig. 4A) and was matched across conditions (10th repetition; t(30) = 0.59, 95% CI [−4.72 8.52]; p = 0.56; TOST equivalence test within a range of Cohen's d = ± 0.53, providing 80% power with 31 participants; t(30) = 2.37, p = 0.012). By the end of training, after the online sessions for spaced-trained stimuli and the completion of the in-lab learning for massed-trained stimuli, performance was equivalent across conditions (spaced-trained = 86.4%, 95% CI [82.2 90.6]; massed-trained = 87.1%, 95% CI [81.4 92.8]; t(30) = 0.248, 95% CI [−4.76 6.08]; p = 0.806; TOST equivalence test, t(30) = 2.70, p = 0.006; Fig. 4C).

Figure 4.

Study 2 learning results. A, Performance in the initial spaced and massed learning sessions across the first 10 repetitions of each stimulus. Massed stimuli are shown in gray; spaced in blue; reward-associated stimuli in solid lines; loss-associated stimuli in dotted lines. B, Incentivized two-alternative forced-choice performance between reward- and loss-associated stimuli following the completion of all learning repetitions. C, Spaced performance across training and terminal performance for spaced and massed stimuli. Performance is shown for the last in-lab repetition and the first and last (fifth) repetition of each stimulus per online session. Terminal performance is represented as the average of the final two repetitions of each stimulus in the last learning session. D, Positive correlation between early massed-trained stimulus learning performance and working memory capacity (OSPAN). **p < 0.01. Error bars indicate SEM.

As in Study 1, performance in the initial learning sessions showed that participants learned the reward value of the stimuli. First, during the second half of learning, participants tended to perform better for high-reward than for medium-reward spaced-trained stimuli (high reward = 90.3%, 95% CI [84.7 96.0]; medium reward = 79.9%, 95% CI [71.0 88.7]; t(30) = 2.03, 95% CI [0.0 20.9]; p = 0.051). However, for the massed-trained stimuli, which were learned later, after extensive experience with the task, we did not observe a similar effect during initial learning (high reward = 84.8%, 95% CI [75.5 94.2]; medium reward = 85.5%, 95% CI [77.7 93.3]; t(30) = −0.11, 95% CI [−12.5 11.2]; p > 0.91; TOST equivalence test, t(30) = 2.84, p = 0.004). Second, performance in the incentivized forced-choice test phase after the completion of learning showed a strong preference for reward- over loss-associated stimuli in choices between both spaced- and massed-trained stimuli (spaced-trained = 94.6%, 95% CI [87.2 96.3]; massed-trained = 91.7%, 95% CI [90.8 98.3]; difference between conditions, p > 0.26; TOST equivalence test, t(30) = 1.83, p = 0.04; Fig. 4B). Equivalent choice performance after learning for spaced- and massed-trained stimuli is important because it provides a matched baseline for the long-term follow-up measure.

After the initial spaced learning session in the first in-lab visit, participants continued learning about the set of spaced-trained stimuli across three short “massed” online sessions. As in Study 1, mean performance for reward-associated stimuli did not change across the three online sessions (one-way ANOVA; F(2,69) = 0.06, p = 0.94; Fig. 4C). In contrast to the previous study, we did not find an increase in performance across sessions for loss-associated stimuli (F(2,69) = 1.09, p = 0.34), although a post hoc comparison of the first with the third session showed an increase (t(23) = 2.43, 95% CI [1.1 3.4]; p = 0.024). However, we did replicate the finding that performance for loss-associated stimuli decreased significantly between sessions (mean change from the end of one session to the beginning of the next: t(23) = 2.69, 95% CI [2.4 18.2]; p = 0.013; reward-associated stimuli: t(23) = 1.40, 95% CI [−1.6 8.3]; p = 0.18). As discussed above, this decrease in performance for loss-associated stimuli could indicate forgetting of values and a return toward a default “Yes” response bias (as seen in first-exposure responses; Fig. 4A). Because “Yes” is also the correct response for reward-associated stimuli, such a bias would make it difficult in the current design to determine whether memories for the value of reward-associated stimuli also decayed.
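The between-session change can be computed per participant as the difference between accuracy at the end of one online session and at the start of the next, averaged over session transitions, and then tested against zero across participants. The sketch below assumes accuracy is summarized on the first and last repetition of each session (as plotted in Fig. 4C); the data layout and the function names are hypothetical.

```python
import statistics


def between_session_drop(first_rep: list[float], last_rep: list[float]) -> float:
    """Mean drop in accuracy from the end of one session to the start of the next.

    first_rep[k] / last_rep[k]: accuracy (%) on the first / last repetition of
    session k for one participant and one stimulus type (hypothetical layout).
    Positive values indicate lower performance after the between-session delay.
    """
    if len(first_rep) != len(last_rep) or len(first_rep) < 2:
        raise ValueError("need matched first/last values for at least two sessions")
    drops = [last_rep[k] - first_rep[k + 1] for k in range(len(last_rep) - 1)]
    return statistics.mean(drops)


def one_sample_t(values: list[float], mu: float = 0.0) -> float:
    """One-sample t statistic (df = n - 1) of per-participant values against mu."""
    n = len(values)
    return (statistics.mean(values) - mu) / (statistics.stdev(values) / n ** 0.5)


# Hypothetical participant: ends session 1 at 90% and starts session 2 at 75%, etc.
print(between_session_drop(first_rep=[60.0, 75.0, 80.0], last_rep=[90.0, 95.0, 95.0]))
```

For this hypothetical participant both transitions drop by 15 percentage points, so the sketch prints 15.0; the group test would then apply `one_sample_t` to one such value per participant.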

As in Study 1, we expected that once participants had gained sufficient general experience with the reward association learning task, performance on the task would be positively related to working memory. Indeed, we found a significant correlation between massed-stimulus performance and working memory capacity (r = 0.484, p = 0.0058; Fig. 4D). Initial learning performance was somewhat lower in Study 2 than in Study 1, which may have helped reveal a numerically stronger correlation between massed-trained stimulus performance and working memory. Meanwhile, the relationship between working memory and initial performance for spaced-trained stimuli was weak (r = 0.040, p = 0.83; TOST equivalence test, p = 0.043, providing 80% power in range r ± 0.35; difference between massed and spaced correlations, z = 1.40, p = 0.16), as expected given the other noise-introducing factors in initial learning performance discussed above. As in Study 1, however, working memory clearly also contributed to spaced learning performance, as demonstrated by the immediate shift in mean response to loss-associated stimuli from “Yes” to “No” after initial negative feedback (Fig. 4A).
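One standard way to run an equivalence test on a correlation is a TOST on Fisher z-transformed values, using the normal approximation with standard error 1/√(n − 3). With r = 0.040, n = 31, and bounds of r = ±0.35, this gives p ≈ 0.043, close to the value reported above, although the exact procedure used is an assumption here. A minimal standard-library sketch (the function name is hypothetical):

```python
import math
from statistics import NormalDist


def tost_correlation(r: float, n: int, bound: float) -> float:
    """TOST p value for H1: -bound < rho < bound, via Fisher's z (SE = 1/sqrt(n - 3))."""
    se = 1.0 / math.sqrt(n - 3)
    z_r = math.atanh(r)
    z_lower = (z_r - math.atanh(-bound)) / se  # tests H0: rho <= -bound
    z_upper = (z_r - math.atanh(bound)) / se   # tests H0: rho >= +bound
    p_lower = 1.0 - NormalDist().cdf(z_lower)
    p_upper = NormalDist().cdf(z_upper)
    # Equivalence is declared only if both one-sided tests reject.
    return max(p_lower, p_upper)


# Spaced-trained correlation with working memory (r = 0.040, n = 31), bounds r = +/-0.35.
print(round(tost_correlation(0.040, 31, 0.35), 3))
```

Here the upper-bound test is the limiting one, because the observed r lies much closer to +0.35 than to −0.35.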

Long-term maintenance

Next, we turned to the critical question of whether spaced training over weeks led to differences in long-term memory for conditioned reward associations. For the baseline post-learning measurement for spaced- and massed-trained stimuli, ratings were collected at the end of the complete massed-stimulus training session (Fig. 5A, left). Reward ratings showed strong discrimination of value (spaced-trained reward minus loss rating difference = 47.1%, 95% CI [40.9 53.2]; massed-trained rating difference = 52.5%, 95% CI [46.7 58.3]; condition difference, t(30) = −1.86, 95% CI [−0.5 11.4]; p = 0.073; Fig. 5A, left). Note that, as in the previous study, we collected ratings data but no choice test data in the long-term follow-up. To again validate the use of the reward rating scale in the follow-up measures, we tested whether within-participant reward ratings were related to choice test preferences. Again, we found that ratings positively correlated with choice preference across all stimuli (mean r = 0.87, 95% CI [0.82 0.91]; range 0.56–1.00; t test on z-transformed r values, t(30) = 14.08, 95% CI [1.33 1.78]; p < 0.0001). By replicating the strong correlation found in Study 1, these results indicate that reward ratings capture the essential underlying values revealed through forced-choice preferences.

Figure 5.

Study 2 post-learning reward association strength and maintenance of value associations. A, Reward association ratings for the massed- and spaced-trained stimuli after the second in-lab session (left) and at the final in-lab reward association rating session 3 weeks later (right). Reward-associated stimuli are shown in darker colors; loss-associated stimuli in lighter colors. B, Percentage of the post-learning reward association difference (reward- minus loss-associated rating), measured after the second in-lab session, that was maintained across the 3-week delay to the third in-lab session, shown separately for massed- and spaced-trained stimuli. C, Post-learning and 3-week follow-up ratings replotted within condition for reward-associated (solid line) and loss-associated stimuli (dotted line). D, Mean within-participant correlation (r) between post-learning and 3-week follow-up reward ratings, shown separately for massed- and spaced-trained stimuli (statistics were computed on Fisher z-transformed r values). **p < 0.01, ***p < 0.001. Error bars indicate SEM (A, B, D) or within-participants SEM (C).

To measure long-term maintenance of conditioning, participants returned after ∼3 weeks for a brief third in-lab session in which they gave reward ratings for all stimuli. Rating discrimination between reward- and loss-associated stimuli was significant in both conditions (spaced difference = 39.1%, 95% CI [32.4 45.8]; t(29) = 11.96, p < 0.001; massed difference = 16.7%, 95% CI [9.4 24.1]; t(29) = 4.65, p < 0.001). Importantly, reward value discrimination was significantly stronger in the spaced than in the massed condition (t(29) = 4.98, 95% CI [13.2 31.5]; p < 0.001; Fig. 5A, right). At follow-up, this stronger maintenance of learned value associations in the spaced condition was significant for both reward and loss stimuli (reward, t(29) = 3.43, 95% CI [5.0 20.0]; p = 0.0018; loss, t(29) = −4.11, 95% CI [−14.7 −5.0]; p < 0.001). The design of Study 2 allowed us to directly compare post-learning ratings and 3-week-later ratings to calculate the degree of maintenance of conditioning. As expected, maintenance of the reward association difference was significantly greater for spaced- than for massed-trained stimuli (spaced = 87.3%, 95% CI [73.2 101.5]; massed = 30.0%, 95% CI [16.2 43.9]; t(29) = 5.49, 95% CI [36.0 78.6]; Fig. 5B). Moreover, ratings significantly decayed toward neutral for both reward- and loss-associated massed-trained stimuli (massed reward, t(29) = −6.09, 95% CI [−21.7 −10.8]; p < 0.001; loss, t(29) = 9.95, 95% CI [15.3 23.3]; p < 0.001). For spaced-trained stimuli, we found no decay for reward-associated stimuli but some decay for loss-associated stimuli (spaced reward, t(29) = −1.21, 95% CI [−4.0 1.0]; p = 0.23; TOST equivalence test, t(29) = 1.74, p = 0.045; loss, t(29) = 3.00, 95% CI [2.1 11.4]; p = 0.0055).
Interestingly, for spaced-trained stimuli, ratings for loss-associated stimuli decayed significantly more than those for reward-associated stimuli (t(29) = −2.18, 95% CI [−10.20 −0.33]; p = 0.037), an effect consistent with the between-session drop in performance for loss-associated stimuli. We did not find a difference in rating decay for the massed-trained stimuli (t(29) = −1.05, 95% CI [−9.01 2.89]; p = 0.302); however, this null finding could reflect floor effects, because these ratings were already close to the neutral midpoint (50%).
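The maintenance measure can be expressed as the follow-up reward-minus-loss rating difference as a percentage of the post-learning difference. The sketch below computes it for one participant and condition, assuming ratings on a 0–100% scale averaged over the stimuli in each value category; the function name and example numbers are hypothetical. Averaging per-participant percentages need not equal the ratio of group-mean differences, and values above 100% are possible if discrimination increased.

```python
import statistics


def percent_maintained(post_reward, post_loss, follow_reward, follow_loss):
    """Follow-up reward-minus-loss rating difference as % of the post-learning difference.

    Each argument is a list of ratings (0-100%) for the stimuli in one value category.
    Values near 100 indicate full maintenance; values near 0 indicate decay to no
    discrimination between reward- and loss-associated stimuli.
    """
    post_diff = statistics.mean(post_reward) - statistics.mean(post_loss)
    follow_diff = statistics.mean(follow_reward) - statistics.mean(follow_loss)
    if post_diff == 0:
        raise ValueError("no post-learning discrimination to normalize by")
    return 100.0 * follow_diff / post_diff


# Hypothetical participant: 80 vs 30 after learning, 70 vs 40 three weeks later.
print(percent_maintained([80.0], [30.0], [70.0], [40.0]))
```

For this hypothetical participant the difference shrinks from 50 to 30 points, so 60.0% of the initial discrimination is maintained.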

Finally, as in Study 1, we predicted that the value association memory for massed-trained stimuli had not merely been rescaled (uniformly compressed toward neutral, which would preserve the relative ordering of ratings) but had actually decayed, which would lead to a lower across-time correlation in ratings. To test this, we correlated ratings in the second in-lab session with ratings in the third in-lab session separately for massed- and spaced-trained stimuli. We replicated the finding that ratings were significantly more correlated across time in the spaced-trained condition (spaced r = 0.82, 95% CI [0.74 0.90]; massed r = 0.50, 95% CI [0.37 0.63]; t test on z-transformed values, t(29) = 5.22, 95% CI [0.45 1.03]; p < 0.001).

By collecting the long-term follow-up ratings in the same laboratory environment as the massed training sessions, our design would, if anything, be biased to find stronger maintenance for massed-trained stimuli because the training and testing environments overlap. However, we found similar differences in long-term conditioning across Study 1 and Study 2, suggesting that testing environment was not a significant factor in our measure of conditioning maintenance. Although it will be important in the future to also replicate these results in a choice situation such as a stable bandit task, the replication and extension of the findings of Study 1 provide strong evidence that spaced training leads to more robust maintenance of conditioned value associations at a delay, whereas performance in short-term learning is partly explained by working memory.

fMRI results

In Study 1, after the completion of matched training for the massed-trained associations in the second in-lab session, we collected fMRI data during an additional learning phase in which massed- and spaced-trained stimuli were intermixed. As noted above, overall performance during fMRI scanning exceeded 90%, with a slight advantage for massed-trained stimuli (Fig. 2C).

Initial univariate analyses did not reveal any value- or reward-related differences in striatal or MTL responses due to spaced training (Table 1-1). At stimulus onset, across conditions, a contrast of reward- versus loss-associated stimuli revealed activation in the bilateral occipital cortex and right somatomotor cortex (whole-brain FWE-corrected p < 0.05; Table 1-1; unthresholded map available at https://neurovault.org/images/63125/), with no differences between spaced- and massed-trained stimuli. At feedback, we found the expected effects of reward (hit) versus nonreward (miss) feedback for reward-associated stimuli in the ventral striatum (x, y, z: −10, 9, −8; z = 4.48, p = 0.019 whole-brain FWE-corrected) and ventromedial prefrontal cortex (VMPFC) (−15, 51, −1; z = 4.93, p < 0.001 FWE; Fig. 6-1 and https://neurovault.org/images/59042/, Table 1-1). Across conditions, loss (miss) versus neutral (hit) feedback activated the bilateral anterior insula and anterior cingulate (Table 1-1; https://neurovault.org/images/63127/). In addition, miss versus hit feedback elicited greater responses in the anterior insula and anterior cingulate cortex for loss-associated stimuli than for reward-associated stimuli (Fig. 6-1 and https://neurovault.org/images/63126/, Table 1-1). Loss feedback led to greater activity for massed- versus spaced-trained stimuli in the bilateral dorsolateral PFC (DLPFC), parietal cortex, and ventral occipital cortex (Table 1-1). A second model contrasting spaced- versus massed-trained stimuli across value revealed no significant differences in subcortical regions of interest or in the whole brain. In a subsequent no-feedback scanning block, we examined cross-stimulus repetition suppression (XSS) for reward- versus loss-associated stimuli. Although we found no differences due to condition, several clusters showed overall repetition enhancement by value, including the right DLPFC and anterior insula (Table 1-1).
Although our univariate results showed no clear differences based on spacing condition, they align well with previous findings on reward-based learning in human fMRI studies (Bartra et al., 2013).

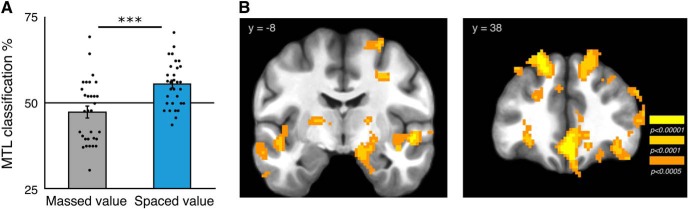

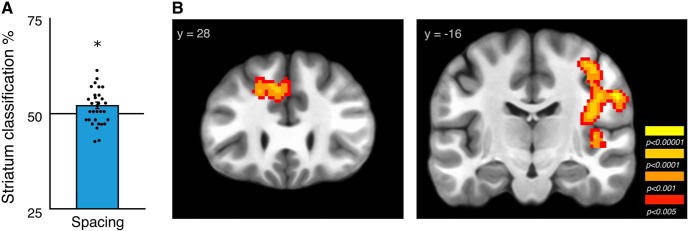

To gain greater insight into the neural response to massed- and spaced-trained stimuli, we leveraged multivariate analysis methods. Specifically, we tested whether distributed patterns of brain activity within regions of interest or in a whole-brain searchlight analysis were able to discriminate between reward value, spaced vs massed training condition, or their interaction. Our primary question was whether patterns of activity differentially discriminated the value of spaced- vs massed-trained stimuli.

Our first analysis tested for patterns that discriminated between reward- and loss-associated stimuli. In the striatal region of interest, classification accuracy did not differ significantly from chance (49.5%, 95% CI [47.5 51.6]; t(30) = −0.48, p = 0.63), and a similar null result was found in the MTL ROI comprising the hippocampus and parahippocampus (49.1%, 95% CI [47.1 51.2]; t(30) = −0.89, p = 0.38). In a whole-brain searchlight analysis, the standard cluster-forming threshold of p < 0.005 produced a single large cluster spanning much of the brain; we therefore used a more stringent cluster-forming threshold of p < 0.0005 to obtain more interpretable clusters. Several regions showed significant value discrimination, including the left precentral and postcentral gyrus and a large bilateral cluster in the posterior and ventral occipital cortex (p < 0.05 whole-brain FWE-corrected; Fig. 6, Table 1).

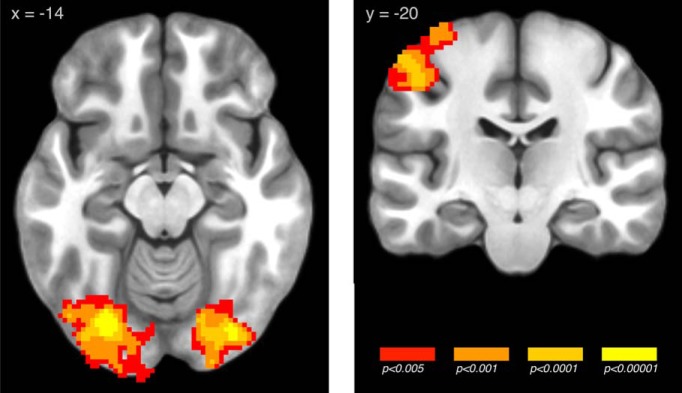

Figure 6.

Searchlight pattern classification of reward- versus loss-associated stimuli across the massed and spaced conditions (images thresholded at p < 0.05 whole-brain FWE-corrected; unthresholded map available at https://neurovault.org/images/59040/). For univariate results of the response to reward and loss feedback, see Figure 6-1.

Table 1.

Summary of multivariate whole-brain searchlight analysis results

| Contrast | Regions | Cluster size | x | y | z | Peak z statistic |
|---|---|---|---|---|---|---|
| Reward vs loss | Bilateral middle occipital gyrus; bilateral inferior occipital gyrus; bilateral fusiform gyrus | 4022 | −28 | −79 | −14 | 5.77 |
| | L postcentral gyrus; L precentral gyrus; L parietal cortex | 1013 | −48 | −20 | 47 | 4.24 |
| Massed reward vs loss | — | | | | | |
| Spaced reward vs loss | R middle frontal gyrus; R inferior frontal gyrus; R superior frontal gyrus; R superior temporal gyrus; R precentral gyrus; R middle temporal gyrus; R medial frontal gyrus | 4254 | 49 | 26 | 34 | 6.64 |
| | L middle occipital gyrus; L lingual gyrus; L inferior occipital gyrus; L cuneus | 4032 | −43 | −86 | 6 | 6.37 |