Abstract

In studies of human episodic memory, the phenomenon of reactivation has traditionally been observed in regions of occipitotemporal cortex (OTC) involved in visual perception. However, reactivation also occurs in lateral parietal cortex (LPC), and recent evidence suggests that stimulus-specific reactivation may be stronger in LPC than in OTC. These observations raise important questions about the nature of memory representations in LPC and their relationship to representations in OTC. Here, we report two fMRI experiments that quantified stimulus feature information (color and object category) within LPC and OTC, separately during perception and memory retrieval, in male and female human subjects. Across both experiments, we observed a clear dissociation between OTC and LPC: while feature information in OTC was relatively stronger during perception than memory, feature information in LPC was relatively stronger during memory than perception. Thus, while OTC and LPC represented common stimulus features in our experiments, they preferentially represented this information during different stages. In LPC, this bias toward mnemonic information co-occurred with stimulus-level reinstatement during memory retrieval. In Experiment 2, we considered whether mnemonic feature information in LPC was flexibly and dynamically shaped by top-down retrieval goals. Indeed, we found that dorsal LPC preferentially represented retrieved feature information that addressed the current goal. In contrast, ventral LPC represented retrieved features independent of the current goal. Collectively, these findings provide insight into the nature and significance of mnemonic representations in LPC and constitute an important bridge between putative mnemonic and control functions of parietal cortex.

SIGNIFICANCE STATEMENT When humans remember an event from the past, patterns of sensory activity that were present during the initial event are thought to be reactivated. Here, we investigated the role of lateral parietal cortex (LPC), a high-level region of association cortex, in representing prior visual experiences. We found that LPC contained stronger information about stimulus features during memory retrieval than during perception. We also found that current task goals influenced the strength of stimulus feature information in LPC during memory. These findings suggest that, in addition to early sensory areas, high-level areas of cortex, such as LPC, represent visual information during memory retrieval, and that these areas may play a special role in flexibly aligning memories with current goals.

Keywords: attention, cortical reinstatement, episodic memory, goal-directed cognition, memory reactivation, parietal cortex

Introduction

Traditional models of episodic memory propose that sensory activity evoked during perception is reactivated during recollection (Kosslyn, 1980; Damasio, 1989). There is considerable evidence for such reactivation in occipitotemporal cortex (OTC), where visual information measured during perception is observed during later memory retrieval, although degraded in strength (O'Craven and Kanwisher, 2000; Wheeler et al., 2000; Polyn et al., 2005). Recent human neuroimaging work has found that reactivation also occurs in higher-order regions, such as lateral parietal cortex (LPC) (Kuhl and Chun, 2014; Chen et al., 2016; Lee and Kuhl, 2016; Xiao et al., 2017). Although these findings are consistent with older observations of increased univariate activity in LPC during successful remembering (Wagner et al., 2005; Kuhl and Chun, 2014), they also raise new questions about whether and how representations of retrieved memories differ between LPC and OTC.

Univariate fMRI studies have consistently found that, in contrast to sensory regions, ventral LPC exhibits low activation when perceptual events are experienced but high activation when these events are successfully retrieved (Daselaar, 2009; Kim et al., 2010). The idea that LPC may be relatively more involved in memory retrieval than perception has also received support from recent pattern-based fMRI studies. Long et al. (2016) found that reactivation of previously learned visual category information was stronger in the default mode network (which includes ventral LPC) than in OTC (see also Chen et al., 2016), whereas the reverse was true of category information during perception. Similarly, Xiao et al. (2017) found that stimulus-specific representations of retrieved stimuli were relatively stronger in LPC than in high-level visual areas, whereas stimulus-specific representations of perceived stimuli showed the opposite pattern.

Collectively, these studies raise the intriguing idea that reactivation, defined as consistent activation patterns across perception and retrieval, may not fully capture how memories are represented during recollection. Rather, there may be a systematic transformation of stimulus information from sensory regions during perception to higher-order regions (including LPC) during retrieval. Critically, however, previous studies have not measured or compared OTC and LPC representations of stimulus features during perception and memory retrieval. This leaves open the important question of whether the same stimulus features represented in OTC during perception are represented in LPC during retrieval, or whether these regions represent different stimulus dimensions across processing stages (Xiao et al., 2017). Finally, consideration of feature-level representations in LPC is also important because subregions of LPC may play a role in flexibly aligning retrieved features of a stimulus with behavioral goals (Kuhl et al., 2013; Sestieri et al., 2017). Given the proposed role of dorsal frontoparietal cortex in top-down attention (Corbetta and Shulman, 2002), a bias toward goal-relevant stimulus features may be particularly likely to occur in dorsal LPC.

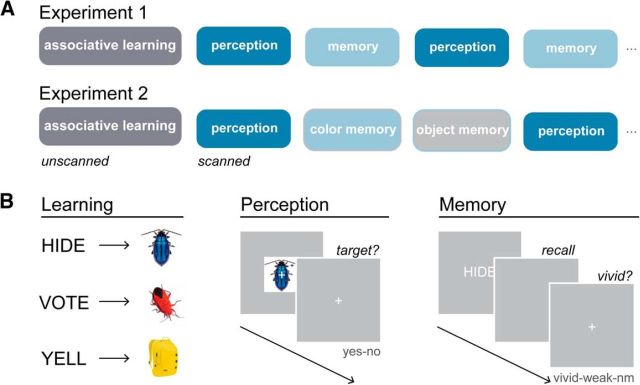

We conducted two fMRI experiments designed to directly compare visual stimulus representations during perception and memory in OTC and LPC. Stimuli were images of common objects with two visual features of interest: color category and object category (Fig. 1). In both experiments (Fig. 2A), human subjects learned word-image associations before a scan session. During scanning, subjects completed separate perception and memory retrieval tasks (Fig. 2B). During perception trials, subjects viewed the image stimuli. During memory trials, subjects were presented with word cues and recalled the associated images. The key difference between Experiments 1 and 2 occurred during scanned memory trials. In Experiment 1, subjects retrieved each image as vividly as possible, whereas in Experiment 2, subjects retrieved only the color feature or only the object feature of each image as vividly as possible. Using data from both experiments, we evaluated the relative strength of color and object feature information in OTC and LPC during stimulus perception and memory. We also compared the strength of feature-level and stimulus-level reinstatement in these regions. Using data from Experiment 2, we evaluated the influence of top-down goals on mnemonic feature representations, specifically testing for differences in goal sensitivity across LPC subregions.

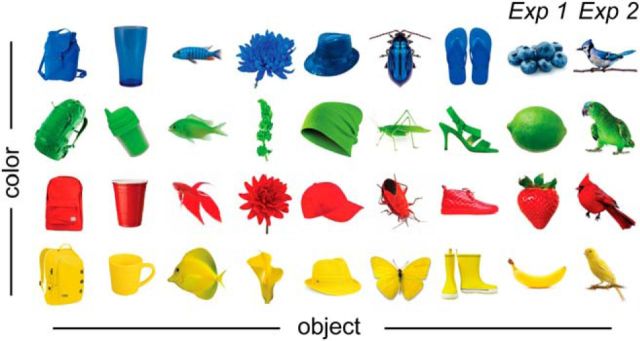

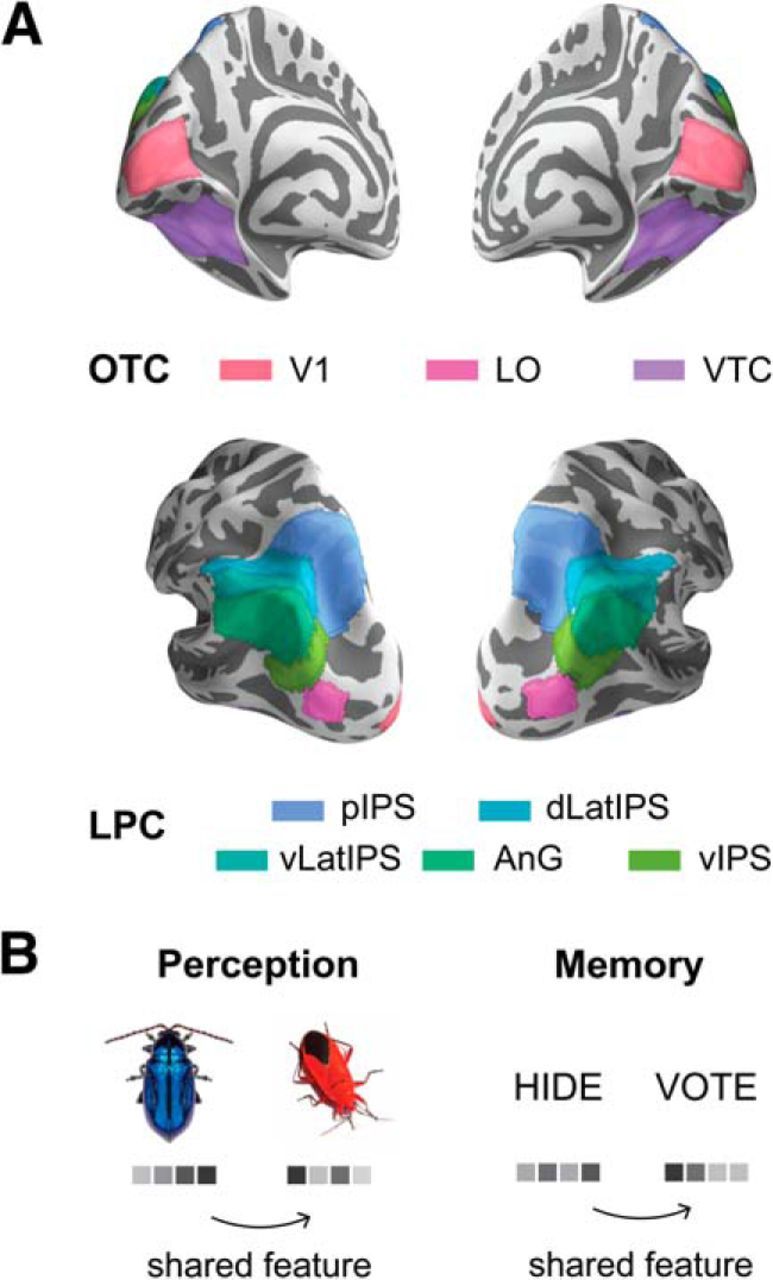

Figure 1.

Stimuli. In both experiments, stimuli were images of 32 common objects. Each object was a unique conjunction of one of four color features and one of eight object features. Color features were blue, green, red, and yellow. Object features were backpacks, cups, fish, flowers, hats, insects, shoes, fruit (Experiment 1 only), and birds (Experiment 2 only). See also Materials and Methods.

Figure 2.

Experimental design and task structure. A, In both experiments, human subjects learned word-image paired associates before scanning. In the scanner, subjects viewed and recalled the image stimuli in interleaved perception and memory runs. In Experiment 2, subjects performed two different goal-dependent memory tasks, during which they selectively recalled only the color feature or only the object feature of the associated image. B, Subjects learned 32 word-image pairs to a 100% criterion in the behavioral training session. During scanned perception trials, subjects were briefly presented with a stimulus. Subjects judged whether a small infrequent visual target was present or absent on the stimulus. During scanned memory trials, subjects were presented with a previously studied word cue, and recalled the associated stimulus (Experiment 1) or only the color or object feature of the associated stimulus (Experiment 2). After a brief recall period, subjects made a vividness judgment about the quality of their recollection (vivid, weak, no memory). See also Materials and Methods.

Materials and Methods

Subjects

Forty-seven male and female human subjects were recruited from the New York University (Experiment 1) and University of Oregon (Experiment 2) communities. All subjects were right-handed native English speakers between the ages of 18 and 35 who reported normal or corrected-to-normal visual acuity, normal color vision, and no history of neurological or psychiatric disorders. Subjects participated in the study after giving written informed consent to procedures approved by the New York University or University of Oregon Institutional Review Boards. Of the 24 subjects recruited for Experiment 1, 7 were excluded from data analysis because of poor data quality owing to excessive head motion (n = 3) or sleepiness during the scan (n = 2), or because of poor performance during memory scans (n = 2; <75% combined vivid memory and weak memory responses). This yielded a final dataset of 17 subjects for Experiment 1 (19–31 years old, 7 males). Of the 23 subjects recruited for Experiment 2, 2 withdrew from the study before completion because of either a scanner error (n = 1) or discomfort during the scan (n = 1). An additional 4 subjects were excluded from data analysis because of an abnormality detected in the acquired images (n = 1), poor data quality owing to excessive head motion (n = 2), or poor performance during memory scans (n = 1; <75% combined vivid memory and weak memory responses). This yielded a final dataset of 17 subjects for Experiment 2 (18–31 years old, 8 males).
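The memory-performance exclusion rule can be written as a simple check. This is a minimal sketch that assumes vividness responses are coded as the hypothetical strings "vivid", "weak", and "none" (missed responses as `None`) and that missed responses count toward the denominator; neither detail is specified above.

```python
from typing import Optional, Sequence

# Hypothetical response codes; the text only names the three vividness options.
REMEMBERED = {"vivid", "weak"}
CRITERION = 0.75  # minimum proportion of vivid + weak responses


def remembered_proportion(responses: Sequence[Optional[str]]) -> float:
    """Proportion of memory trials answered 'vivid' or 'weak'.

    Missing responses (None) stay in the denominator, which is an assumption.
    """
    if not responses:
        raise ValueError("no memory trials supplied")
    hits = sum(1 for r in responses if r in REMEMBERED)
    return hits / len(responses)


def exclude_for_performance(responses: Sequence[Optional[str]]) -> bool:
    """True if the subject falls below the 75% vivid + weak criterion."""
    return remembered_proportion(responses) < CRITERION


# Example: 32 trials with 7 'none' responses -> 25/32, so the subject is retained.
example = ["vivid"] * 20 + ["weak"] * 5 + ["none"] * 7
print(remembered_proportion(example), exclude_for_performance(example))  # prints 0.78125 False
```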

Stimuli

Stimuli for Experiment 1 consisted of 32 unique object images (Fig. 1). Each stimulus had two visual features of interest: object category (backpacks, cups, fish, flowers, fruit, hats, insects, or shoes) and color category (blue, green, red, or yellow). We chose object category as a feature dimension because there is long-standing evidence that object information can be robustly decoded from fMRI activity patterns (Haxby et al., 2001). We chose color category as a feature because it satisfied our requirement for a second feature that could be orthogonalized from object category and also be easily integrated with object category to generate unique stimulus identities. Finally, we were motivated to select color category as a feature because of prior evidence for color decoding in visual cortex (Brouwer and Heeger, 2009, 2013) and for flexible color representations in monkey parietal cortex (Toth and Assad, 2002).

Each of the 32 stimuli in our experiments represented a unique conjunction of one of the four color categories and one of the eight object categories. In addition, the specific color and object features of each stimulus were unique exemplars of that stimulus's assigned categories. For example, the blue, green, red, and yellow backpack stimuli were all different backpack exemplars. The rationale for using unique exemplars was to measure generalizable information about color and object categories rather than idiosyncratic differences between stimuli. That is, we wanted to measure a representation of “backpacks” as opposed to a representation of a specific backpack. Thirty-two closely matched foil images with the same color and object category conjunctions were also used in the behavioral learning session to test memory specificity. Stimuli for Experiment 2 were identical to those from Experiment 1, with the exception of the fruit object category, which was replaced with a bird object category. All images were 225 × 225 pixels, with the object rendered on a white background. Word cues consisted of 32 common verbs and were the same for both experiments.
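Because each stimulus is a unique conjunction of one of four colors and one of eight object categories, the stimulus set is a fully crossed 4 × 8 design. The sketch below builds that structure and a per-subject random cue assignment; the cue labels are hypothetical placeholders, since the 32 verbs are not listed here.

```python
import itertools
import random

COLORS = ["blue", "green", "red", "yellow"]
OBJECTS_EXP1 = ["backpack", "cup", "fish", "flower", "fruit", "hat", "insect", "shoe"]
# Experiment 2 replaced the fruit category with birds.
OBJECTS_EXP2 = [o if o != "fruit" else "bird" for o in OBJECTS_EXP1]


def make_stimuli(objects):
    """Fully crossed color x object design: 4 x 8 = 32 unique conjunctions."""
    return [(color, obj) for color, obj in itertools.product(COLORS, objects)]


def assign_cues(stimuli, cues, seed=None):
    """Randomly pair each word cue with exactly one stimulus (done per subject)."""
    if len(cues) != len(stimuli):
        raise ValueError("need one cue per stimulus")
    rng = random.Random(seed)
    shuffled = list(stimuli)
    rng.shuffle(shuffled)
    return dict(zip(cues, shuffled))


stimuli = make_stimuli(OBJECTS_EXP1)
cues = [f"verb{i:02d}" for i in range(32)]  # hypothetical stand-ins for the 32 verbs
pairs = assign_cues(stimuli, cues, seed=1)
```

Because color and object are fully crossed, any two distinct stimuli share at most one feature (same color, same object, or neither), which is what allows color and object information to be assessed separately.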

Tasks and procedure

Experiment 1.

The experiment began with a behavioral session, during which subjects learned 32 unique word-image associations to 100% criterion. A scan session immediately followed completion of the behavioral session. During the scan, subjects participated in two types of runs: (1) perception, where they viewed the object images without the corresponding word cues; and (2) memory, where they were presented with the word cues and recalled the associated object images (Fig. 2A,B). Details for each of these phases are described below.

Immediately before scanning, subjects learned 32 word-image associations through interleaved study and test blocks. For each subject, the 32 word cues were randomly assigned to the 32 images. During study blocks, subjects were presented with the 32 word-image associations in random order. On a given study trial, the word cue was presented for 2 s, followed by the associated image for 2 s. A fixation cross was presented centrally for 2 s before the start of the next trial. Subjects were instructed to learn the associations in preparation for a memory test, but no responses were required. During test blocks, subjects were presented with the 32 word cues in random order and tested on their memory for the associated image. On each test trial, the word cue was presented for 0.5 s, followed by a 3.5 s blank screen throughout which subjects were instructed to recall the associated image as vividly as possible. A test image was then presented. The test image was either the correct image (target), an image that had been associated with a different word cue (old), or a novel image that was highly similar to the target (same color and object category; lure). These trial types occurred with equal probability. For each test image, subjects had up to 5 s to make a yes/no response indicating whether the test image was the correct associate. After responding, subjects were shown the target image for 1 s as feedback, and a fixation cross was then presented centrally for 2 s before the start of the next trial. Lure trials were included to ensure that subjects formed memories detailed enough to discriminate the target image from another image with the same combination of features. Subjects alternated between study and test blocks until they had completed a minimum of 6 blocks of each type and achieved 100% accuracy on the test. The rationale for overtraining the word-image associations was to minimize variability in retrieval success and strength during subsequent scans.
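The learning phase combines two rules: test images are targets, old images, or lures with equal probability, and training stops only after at least six blocks of each type and a perfect test block. This is an illustrative sketch under stated assumptions: "equal probability" is implemented by sampling each trial type independently, and `run_test_block` is a hypothetical callback standing in for presenting and scoring a block.

```python
import random
from typing import Callable, List, Tuple

TRIAL_TYPES = ("target", "old", "lure")
MIN_BLOCKS = 6  # minimum number of study and test blocks


def make_test_block(cues, rng: random.Random) -> List[Tuple[str, str]]:
    """Random cue order; each test image is a target, old, or lure image with p = 1/3."""
    order = list(cues)
    rng.shuffle(order)
    return [(cue, rng.choice(TRIAL_TYPES)) for cue in order]


def train_to_criterion(cues, run_test_block: Callable[[list], float],
                       seed=None, max_blocks=50) -> int:
    """Alternate study and test blocks until >= 6 of each and a perfect test block.

    `run_test_block` is hypothetical: it should present a test block and return
    the proportion of correct yes/no responses. The study block that precedes
    each test block is not simulated here.
    """
    rng = random.Random(seed)
    for n_blocks in range(1, max_blocks + 1):
        accuracy = run_test_block(make_test_block(cues, rng))
        if n_blocks >= MIN_BLOCKS and accuracy == 1.0:
            return n_blocks
    raise RuntimeError("criterion not reached within max_blocks")


# Simulated learner: perfect from block 4 onward, so training stops at block 6.
scores = iter([0.50, 0.80, 0.94, 1.0, 1.0, 1.0, 1.0])
cues = [f"cue{i:02d}" for i in range(32)]  # hypothetical cue labels
print(train_to_criterion(cues, lambda block: next(scores), seed=0))  # prints 6
```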

Once in the scanner, subjects participated in two types of runs: perception and memory retrieval. During perception runs, subjects viewed the object images one at a time while performing a cover task of detecting black crosses that appeared infrequently on images. We purposefully avoided using a task that required subjects to make explicit judgments about the stimuli, because we wanted to measure the feedforward perceptual response to the stimuli without biasing representations toward task-relevant stimulus dimensions. On a given perception trial, the image was overlaid with a central white fixation cross and presented centrally on a gray background for 0.5 s. The central white fixation cross was then presented alone on a gray background for 3.5 s before the start of the next trial. Subjects were instructed to maintain fixation on the central fixation cross and to monitor for a black cross that appeared at a random location within the borders of a randomly selected 12.5% of images. Subjects were instructed to judge whether a target was present or absent on the image and to indicate their response with a button press. Each perception run consisted of 32 perception trials (1 trial per stimulus) and 8 null fixation trials in random order. Null trials consisted of a central white fixation cross on a gray background presented for 4 s and were randomly interleaved with the object trials, thereby creating jitter. Every run also contained 8 s of null lead-in and 8 s of null lead-out time, during which a central white fixation cross on a gray background was presented.
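Because every trial in an Experiment 1 perception run, stimulus or null, lasts 4 s, a run's timing follows directly from a shuffled trial order. This sketch assumes that "12.5% of images" means exactly 4 of the 32 images per run, drawn at random; the function name and tuple layout are illustrative.

```python
import random

TRIAL_S = 4.0            # 0.5 s image + 3.5 s fixation, or a 4 s null trial
LEAD_S = 8.0             # null lead-in and lead-out
N_STIM, N_NULL = 32, 8
TARGET_FRACTION = 0.125  # proportion of images carrying a black-cross target


def perception_run(seed=None):
    """Return (trials, run_duration_s); each trial is (onset_s, stimulus_or_None, has_target)."""
    rng = random.Random(seed)
    order = list(range(N_STIM)) + [None] * N_NULL
    rng.shuffle(order)  # randomly interleaved null trials create jitter
    n_targets = round(TARGET_FRACTION * N_STIM)  # 4 images per run (assumed exact)
    targets = set(rng.sample(range(N_STIM), n_targets))
    trials = [
        (LEAD_S + i * TRIAL_S, stim, stim is not None and stim in targets)
        for i, stim in enumerate(order)
    ]
    duration = LEAD_S + len(order) * TRIAL_S + LEAD_S
    return trials, duration


trials, duration = perception_run(seed=0)
print(duration, duration / 2.0)  # prints 176.0 88.0 (88 volumes at TR = 2 s)
```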

During memory runs, subjects were presented with the word cues one at a time, recalled the associated images, and evaluated the vividness of their recollections. In contrast to our task choice for the perception runs, here we chose a task that would maximize our ability to measure subjects' internal stimulus representations (i.e., the retrieved images) as opposed to feedforward perceptual responses. On each memory trial, the word cue was presented centrally in white characters on a gray background for 0.5 s. This was followed by a 2.5 s recall period where the screen was blank. Subjects were instructed to use this period to recall the associated image from memory and to hold it in mind as vividly as possible for the entire duration of the blank screen. At the end of the recall period, a white question mark on a gray background was presented for 1 s, prompting subjects to make one of three memory vividness responses via button box: “vividly remembered,” “weakly remembered,” or “not remembered.” The question mark was replaced by a central white fixation cross, which was presented for 2 s before the start of the next trial. Responses were recorded if they were made during the question mark or the ensuing fixation cross. As in perception runs, each memory run consisted of 32 memory trials (1 trial per stimulus) and 8 null fixation trials in random order. Null trials consisted of a central white fixation cross on a gray background presented for 6 s, and as in perception runs, provided jitter. Each run contained 8 s of null lead in and 8 s of null lead out time during which a central white fixation cross on a gray background was presented.
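Each Experiment 1 memory trial occupies a fixed 6 s window, so its phases, the valid response window, and the cue-plus-recall duration later used to model retrieval events can all be written as offsets from trial onset. A small sketch; representing a response as its time in seconds from trial onset is an assumption about how responses were logged.

```python
# Phase boundaries (s from trial onset) for an Experiment 1 memory trial.
PHASES = {
    "cue": (0.0, 0.5),
    "recall": (0.5, 3.0),
    "question_mark": (3.0, 4.0),
    "fixation": (4.0, 6.0),
}
TRIAL_S = PHASES["fixation"][1]  # 6.0 s, the same length as a null trial
# Boxcar duration for modeling retrieval events: cue plus recall period.
RETRIEVAL_BOXCAR_S = PHASES["recall"][1] - PHASES["cue"][0]


def phase_at(t):
    """Name of the trial phase containing time t (s from trial onset), or None."""
    for name, (start, end) in PHASES.items():
        if start <= t < end:
            return name
    return None


def response_recorded(rt):
    """Responses count only during the question mark or the ensuing fixation cross."""
    return phase_at(rt) in ("question_mark", "fixation")


# 32 memory + 8 null trials of 6 s each, plus 8 s lead-in and 8 s lead-out.
run_duration = 8.0 + 40 * TRIAL_S + 8.0
```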

For both perception and memory tasks, trial orders were randomly generated for each subject and run. Subjects alternated between perception and memory runs, performing as many runs of each task as could be completed during the scan session (range = 7–10, mean = 8.41). Thus, each stimulus was repeated 7–10 times across perception trials and 7–10 times across memory trials. All stimuli were displayed on a projector at the back of the scanner bore, which subjects viewed through a mirror attached to the head coil. Subjects made responses for both tasks on an MR-compatible button box.

Experiment 2.

As in Experiment 1, Experiment 2 began with a behavioral session, during which subjects learned 32 unique word-image associations to 100% criterion. A scan session immediately followed. During the scan, subjects participated in both perception and memory runs. In contrast to Experiment 1, subjects performed one of two goal-dependent memory tasks during memory runs: (1) color memory, where they selectively recalled the color feature of the associated image from the word cue; or (2) object memory, where they selectively recalled the object feature of the associated image from the word cue (Fig. 2A,B). Subjects were introduced to the goal-dependent color and object retrieval tasks immediately before the scan and did not perform these tasks during the associative learning session. Details of each phase of the experiment, in relation to Experiment 1, are described below.

Subjects learned 32 word-image associations following the same procedure as in Experiment 1. Once in the scanner, subjects participated in three types of runs: perception, color memory, and object memory. Procedures were the same as in Experiment 1 unless noted. During perception runs, subjects viewed the images one at a time while performing a cover task of detecting black crosses that infrequently appeared on images. On a given perception trial, the object image was overlaid with a central white fixation cross and presented centrally on a gray background for 0.75 s. The central white fixation cross was then presented alone on a gray background for 1.25, 3.25, 5.25, 7.25, or 9.25 s (25%, 37.5%, 18.75%, 12.5%, or 6.25% of trials per run, respectively) before the start of the next trial. These interstimulus intervals were randomly assigned to trials. Subjects performed the detection task as in Experiment 1. Each perception run consisted of 64 perception trials (2 trials per stimulus) in random order, with lead in and lead out time as in Experiment 1.
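The interstimulus intervals are specified as exact proportions of the 64 trials in a run. One way to realize them, assumed here rather than stated in the text, is to convert each proportion to a trial count (16, 24, 12, 8, and 4 trials for the perception intervals) and shuffle the resulting list. The same helper covers the color and object memory runs when given their 2.5 s cue-plus-recall duration and 1.5–9.5 s intervals.

```python
import random


def isi_schedule(n_trials, isis, proportions, seed=None):
    """Shuffled list of ISIs whose counts match the stated proportions exactly."""
    counts = [round(p * n_trials) for p in proportions]
    if sum(counts) != n_trials:
        raise ValueError("proportions do not yield a whole number of trials")
    schedule = [isi for isi, c in zip(isis, counts) for _ in range(c)]
    random.Random(seed).shuffle(schedule)
    return schedule


def run_duration(trial_s, schedule, lead_s=8.0):
    """Lead-in + sum of (trial + ISI) + lead-out, in seconds."""
    return lead_s + sum(trial_s + isi for isi in schedule) + lead_s


# Experiment 2 perception runs: 0.75 s images, 64 trials.
perc = isi_schedule(64, [1.25, 3.25, 5.25, 7.25, 9.25],
                    [0.25, 0.375, 0.1875, 0.125, 0.0625], seed=0)
# Experiment 2 color/object memory runs: 0.3 s cue + 2.2 s recall, 64 trials.
mem = isi_schedule(64, [1.5, 3.5, 5.5, 7.5, 9.5],
                   [0.375, 0.25, 0.1875, 0.125, 0.0625], seed=0)
print(run_duration(0.75, perc), run_duration(2.5, mem))  # prints 320.0 432.0
```

With these values every trial-plus-interval sum is a whole multiple of the 2 s repetition time (for example, 0.75 + 1.25 = 2 s and 2.5 + 1.5 = 4 s).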

During color and object memory runs, subjects were presented with the word cues one at a time, recalled only the color feature or only the object feature of the associated images, and evaluated the vividness of their recollections. We chose not to have subjects explicitly report information about the relevant feature during these runs to avoid conflating memory representations with decision- or motor-related information. On each memory trial, the word cue was presented centrally in white characters on a gray background for 0.3 s. This was followed by a 2.2 s recall period where the screen was blank. Subjects were instructed to use this period to recall only the relevant feature of the associated image from memory and to hold it in mind as vividly as possible for the entire duration of the blank screen. At the end of the recall period, a white fixation cross was presented centrally on a gray background for 1.5, 3.5, 5.5, 7.5, or 9.5 s (37.5%, 25%, 18.75%, 12.5%, or 6.25% of trials per run, respectively), prompting subjects to make one of three memory vividness decisions via button box as in Experiment 1. The interstimulus intervals were randomly assigned to trials. Color and object memory runs consisted of 64 memory trials (2 trials per stimulus) presented in random order, with lead in and lead out time as in Experiment 1.

All subjects completed 4 perception runs, 4 color memory runs, and 4 object memory runs, with each stimulus presented twice in every run. Thus, there were 8 repetitions of each stimulus for each run type. Runs were presented in four sequential triplets, with each triplet composed of one perception run followed by color and object memory runs in random order. As in Experiment 1, stimuli were displayed on a projector at the back of the scanner bore, which subjects viewed through a mirror attached to the head coil. Subjects made responses for all three tasks on an MR-compatible button box.
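The run structure (four triplets, each a perception run followed by the two memory runs in random order) can be sketched as below. Randomizing the color/object order independently within each triplet is an assumption about how "in random order" was implemented.

```python
import random


def run_order(n_triplets=4, seed=None):
    """Perception first in each triplet; color/object memory order randomized per triplet."""
    rng = random.Random(seed)
    order = []
    for _ in range(n_triplets):
        memory = ["color_memory", "object_memory"]
        rng.shuffle(memory)
        order += ["perception", *memory]
    return order


order = run_order(seed=3)
# Each stimulus appears twice per run, so 4 runs per type give 8 repetitions.
reps_per_type = {task: order.count(task) * 2 for task in set(order)}
```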

MRI acquisition

Experiment 1.

Images were acquired on a 3T Siemens Allegra head-only MRI system at the Center for Brain Imaging at New York University. Functional data were acquired with a T2*-weighted EPI sequence with partial coverage (repetition time = 2 s, echo time = 30 ms, flip angle = 82°, 34 slices, 2.5 × 2.5 × 2.5 mm voxels) and an 8-channel occipital surface coil. Slightly oblique coronal slices were aligned ∼120° with respect to the calcarine sulcus at the occipital pole and extended anteriorly covering the occipital lobe, ventral temporal cortex (VTC), and posterior parietal cortex. A whole-brain T1-weighted MPRAGE 3D anatomical volume (1 × 1 × 1 mm voxels) was also collected.

Experiment 2.

Images were acquired on a 3T Siemens Skyra MRI system at the Robert and Beverly Lewis Center for NeuroImaging at the University of Oregon. Functional data were acquired using a T2*-weighted multiband EPI sequence with whole-brain coverage (repetition time = 2 s, echo time = 25 ms, flip angle = 90°, multiband acceleration factor = 3, in-plane acceleration factor = 2, 72 slices, 2 × 2 × 2 mm voxels) and a 32-channel head coil. Oblique axial slices were aligned parallel to the plane defined by the anterior and posterior commissures. A whole-brain T1-weighted MPRAGE 3D anatomical volume (1 × 1 × 1 mm voxels) was also collected.

fMRI processing

FSL version 5.0 (Smith et al., 2004) was used for functional image preprocessing. The first four volumes of each functional run were discarded to allow for T1 stabilization. To correct for head motion, each run's time-series was realigned to its middle volume. Each time-series was spatially smoothed using a 4 mm FWHM Gaussian kernel and high-pass filtered using Gaussian-weighted least-squares straight line fitting with σ = 64.0 s. Volumes with motion relative to the previous volume >1.25 mm in Experiment 1 (half the width of a voxel) or >0.5 mm in Experiment 2 were excluded from subsequent analyses. A lower threshold was chosen for Experiment 2 because multiband sequences are highly susceptible to motion artifacts. Freesurfer version 5.3 (Fischl, 2012) was used to perform segmentation and cortical surface reconstruction on each subject's anatomical volume. Boundary-based registration was used to compute the alignment between each subject's functional data and their anatomical volume.
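The volume-exclusion rule can be sketched as a threshold on a per-volume relative displacement trace from the realignment step. Which displacement measure was used is not specified here, so the input format is an assumption. The block also converts the 4 mm FWHM smoothing kernel to the standard deviation of the equivalent Gaussian, σ = FWHM / (2√(2 ln 2)) ≈ 1.70 mm.

```python
import math

import numpy as np

N_DISCARD = 4  # initial volumes dropped for T1 stabilization
THRESH_MM = {"exp1": 1.25, "exp2": 0.5}  # exp1: half of a 2.5 mm voxel


def fwhm_to_sigma(fwhm_mm):
    """Gaussian standard deviation for a given full width at half maximum."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def keep_mask(rel_disp_mm, experiment):
    """Boolean mask over the volumes that remain after discarding the first four.

    rel_disp_mm[i] is assumed to be volume i's displacement (mm) relative to
    volume i - 1, for the full run before any volumes are discarded.
    """
    rel = np.asarray(rel_disp_mm, dtype=float)[N_DISCARD:]
    return rel <= THRESH_MM[experiment]


disp = [0.0, 0.1, 0.2, 0.1, 0.3, 0.6, 0.2, 1.4, 0.1]
print(keep_mask(disp, "exp1").tolist())  # prints [True, True, True, False, True]
print(keep_mask(disp, "exp2").tolist())  # prints [True, False, True, False, True]
print(round(fwhm_to_sigma(4.0), 2))      # prints 1.7
```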

All fMRI processing was performed in individual subject space. To estimate the neural pattern of activity evoked by the perception and memory of every stimulus, we conducted separate voxelwise GLM analyses of each subject's smoothed time-series data from the perception and memory runs in each experiment. Perception models included 32 regressors of interest corresponding to the presentation of each stimulus. Events within these regressors were constructed as boxcars matching the stimulus presentation duration and convolved with a canonical double-gamma hemodynamic response function. Six realignment parameters were included as nuisance regressors to control for motion confounds. First-level models were estimated for each run using Gaussian least-squares with local autocorrelation correction (“prewhitening”). Parameter estimates and variances for each regressor were then registered into the space of the first run and entered into a second-level fixed effects model. This produced t maps representing the activation elicited by viewing each stimulus for each subject. No normalization to a group template was performed. Memory models were estimated using the same procedure, with a regressor of interest corresponding to the recollection of each of the 32 stimuli. For the purposes of this model, the retrieval goal manipulation in Experiment 2 was ignored. All retrieval events were constructed as boxcars with a combined cue plus recall duration before convolution. This produced t maps representing the activation elicited by remembering each stimulus relative to baseline for each subject. The perception and memory GLMs described above were run in two ways: (1) by splitting the perception and memory runs into two halves (odd vs even runs) and running two independent GLMs per run type; and (2) by using all perception and memory runs in each GLM. The split-half models were used only for stimulus-level analyses conducted within run type, whereas models run on all of the data were used for feature-level analyses conducted within run type and for reinstatement analyses conducted across run type. Finally, for Experiment 2, two additional memory models were estimated. These models included only color memory trials or only object memory trials, which allowed us to estimate and compare patterns evoked during the two goal-dependent retrieval tasks.
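A single event regressor of the kind described above can be illustrated as follows: a boxcar of the event duration at each onset on a fine time grid, convolved with a double-gamma hemodynamic response and sampled every 2 s. The gamma parameters (peak shape 6, undershoot shape 16, undershoot ratio 1/6) follow the widely used SPM-style canonical form and are an assumption; FSL's own double-gamma kernel may differ in detail, and prewhitening, nuisance regressors, and the fixed-effects combination across runs are not shown.

```python
import math

import numpy as np


def gamma_pdf(t, shape, scale=1.0):
    """Gamma density evaluated on an array of times (zero for t <= 0)."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    x = t[pos] / scale
    out[pos] = x ** (shape - 1) * np.exp(-x) / (math.gamma(shape) * scale)
    return out


def double_gamma_hrf(dt, length_s=32.0, peak=6.0, undershoot=16.0, ratio=1 / 6):
    """Double-gamma HRF with SPM-style defaults (assumed), normalized to unit sum."""
    t = np.arange(0.0, length_s, dt)
    h = gamma_pdf(t, peak) - ratio * gamma_pdf(t, undershoot)
    return h / h.sum()


def event_regressor(onsets, duration, n_vols, tr=2.0, dt=0.1):
    """Boxcar of the given duration at each onset, convolved and sampled at each TR."""
    n_fine = int(round(n_vols * tr / dt))
    box = np.zeros(n_fine)
    for onset in onsets:
        start = int(round(onset / dt))
        stop = int(round((onset + duration) / dt))
        box[start:stop] = 1.0
    conv = np.convolve(box, double_gamma_hrf(dt))[:n_fine]
    return conv[::int(round(tr / dt))]


# Hypothetical onsets: one stimulus viewed at 8 s and 100 s in an 88-volume
# Experiment 1 perception run, with the 0.5 s image duration.
reg = event_regressor([8.0, 100.0], duration=0.5, n_vols=88)
```

For Experiment 1 memory events, the boxcar duration would instead be the 3.0 s cue-plus-recall period (0.5 s cue + 2.5 s recall).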

ROI definition

ROIs (Fig. 3A) were produced for each subject in native subject space using multiple group-defined atlases. Our choice of group atlas for each broader cortical ROI was based on our assessment of the best validated method for parcellating regions in that area. For retinotopic regions in OTC, we relied on a probabilistic atlas published by Wang et al. (2015). We combined bilateral V1v and V1d regions from this atlas to produce a V1 ROI and bilateral LO1 and LO2 regions to produce an LO ROI. For high-level OTC, we used the output of Freesurfer segmentation routines to combine bilateral fusiform gyrus, collateral sulcus, and lateral occipitotemporal sulcus cortical labels to create a VTC ROI. To subdivide LPC, we first selected the lateral parietal nodes of Networks 5, 12, 13, 15, 16, and 17 of the 17-network resting state atlas published by Yeo et al. (2011). We refer to the parietal nodes of Networks 12 and 13 (subcomponents of the frontoparietal control network) as dorsal lateral intraparietal sulcus (dLatIPS) and ventral lateral intraparietal sulcus (vLatIPS), respectively. We altered the parietal node of Network 5 (dorsal attention network) by eliminating vertices in lateral occipital cortex and by subdividing it along the intraparietal sulcus into a dorsal region we refer to as posterior intraparietal sulcus (pIPS) and a ventral region we call ventral IPS (vIPS), following Sestieri et al. (2017). The ventral region also corresponds closely to what others have called PGp (Caspers et al., 2013; Glasser et al., 2016). Finally, due to their small size, we combined the parietal nodes of Networks 15–17 (subcomponents of the default mode network) into a region we collectively refer to as angular gyrus (AnG). All regions were first defined on Freesurfer's average cortical surface (shown in Fig. 3A) and then reverse-normalized to each subject's native anatomical surface. They were then projected into the volume at the resolution of the functional data to produce binary masks.
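Once atlas regions are in a subject's functional space, building a combined ROI such as V1 (V1v plus V1d) and extracting a stimulus-evoked voxel pattern reduce to boolean operations on arrays sampled on the same grid. The label codes and toy arrays below are hypothetical; reading image files and the surface-to-volume projection are not shown.

```python
import numpy as np


def combine_labels(label_volume, label_ids):
    """Binary ROI mask: union of the listed integer labels in a label volume."""
    return np.isin(label_volume, list(label_ids))


def roi_pattern(t_map, roi_mask):
    """Voxel pattern (1-D vector) of a t map within a binary ROI mask."""
    if t_map.shape != roi_mask.shape:
        raise ValueError("t map and mask must share the functional grid")
    return t_map[roi_mask]


# Toy 3 x 3 x 1 label volume with hypothetical codes: 1 = V1v, 2 = V1d, 3 = LO1.
labels = np.array([[1, 2, 0], [2, 3, 0], [0, 0, 1]])[..., None]
v1 = combine_labels(labels, {1, 2})
t_map = np.arange(9, dtype=float).reshape(3, 3, 1)
pattern = roi_pattern(t_map, v1)
```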

Figure 3.

ROIs and pattern similarity analyses. A, Anatomical ROIs visualized on the Freesurfer average cortical surface. OTC ROIs included V1 and LO, defined using a group atlas of retinotopic regions (Wang et al., 2015); and VTC, defined using Freesurfer segmentation protocols. LPC ROIs included 5 ROIs that spanned dorsal and ventral LPC: pIPS, dLatIPS, vLatIPS, AnG, and vIPS. LPC ROIs were based on a group atlas of cortical regions estimated from spontaneous activity (Yeo et al., 2011). All ROIs were transformed to subjects' native anatomical surfaces and then into functional volume space before analysis. B, For each ROI, we estimated the multivoxel pattern of activity evoked by each stimulus during perception and memory. Patterns for stimuli that shared color or object features were compared. Analyses quantified feature information within perception trials, within memory trials, and across perception and memory trials (reinstatement). See also Materials and Methods.
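The precise similarity measure is not specified in this part of the text. As an illustration only, the sketch below assumes one common formulation: feature information is the mean Pearson correlation between patterns of stimuli that share only that feature, minus the mean correlation between stimuli that share neither feature. Because color and object are fully crossed, every pair of distinct stimuli falls into exactly one of these groups. The toy data are simulated, not results.

```python
import numpy as np


def pattern_correlations(a, b):
    """Pearson r between every row of a and every row of b (stimuli x voxels)."""
    za = (a - a.mean(axis=1, keepdims=True)) / a.std(axis=1, keepdims=True)
    zb = (b - b.mean(axis=1, keepdims=True)) / b.std(axis=1, keepdims=True)
    return za @ zb.T / a.shape[1]


def feature_information(a, b, colors, objects):
    """Mean r for pairs sharing only one feature minus mean r for pairs sharing none.

    This contrast is an assumed illustration, not necessarily the authors' metric.
    Pass the same matrix twice for within-task analyses; pass perception and
    memory patterns for across-task (reinstatement) analyses.
    """
    r = pattern_correlations(a, b)
    colors, objects = np.asarray(colors), np.asarray(objects)
    same_color = colors[:, None] == colors[None, :]
    same_object = objects[:, None] == objects[None, :]
    baseline = r[~same_color & ~same_object].mean()
    return {
        "color": r[same_color & ~same_object].mean() - baseline,
        "object": r[same_object & ~same_color].mean() - baseline,
        # Diagonal pairs = same stimulus; only meaningful when a and b differ.
        "stimulus": np.diag(r).mean() - baseline,
    }


# Toy data: each voxel pattern = color prototype + object prototype + noise.
rng = np.random.default_rng(0)
colors = np.repeat(np.arange(4), 8)  # stimulus s has color s // 8
objects = np.tile(np.arange(8), 4)   # and object s % 8
color_proto = rng.normal(size=(4, 200))
object_proto = rng.normal(size=(8, 200))
signal = color_proto[colors] + object_proto[objects]
perception = signal + rng.normal(size=(32, 200))
memory = signal + rng.normal(size=(32, 200))
within = feature_information(perception, perception, colors, objects)
across = feature_information(perception, memory, colors, objects)
```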

Experimental design and statistical analysis

Our experimental design for Experiment 1 included two types of cognitive tasks, which subjects performed in different fMRI runs: perception of visual stimuli and retrieval of the same stimuli from long-term memory. Each of the 32 stimuli had one of four color features and one of eight object features. Experiment 2 was performed on an independent sample of subjects and had a similar design to Experiment 1, except that subjects in Experiment 2 performed two goal-dependent versions of the memory retrieval task: color memory and object memory (see Tasks and procedure). Our sample size for each experiment was consistent with similar fMRI studies in the field and was determined before data collection. Our dependent variables of interest for both experiments were stimulus-evoked BOLD activity patterns. In each experiment, separate t maps were obtained for each stimulus from the perception and memory runs (see fMRI processing and Fig. 3B). Experiment 2 memory t maps were derived from a single model that collapsed across the two goal-dependent memory tasks, except when testing for goal-related effects. When testing for goal-related effects, we used t maps that were separately estimated from the color and object memory tasks. We intersected all t maps with binary ROI masks to produce stimulus-evoked voxel patterns for each ROI. Our ROIs included early and high-level visual areas in OTC that we believed would be responsive to the features of our stimuli, as well as regions spanning all of LPC (see ROI definition). Analyses focused on cortical regions at multiple levels of spatial granularity. To evaluate whether perceptually based and memory-based processing differed between LPC and OTC, we grouped data from individual ROIs according to this distinction and evaluated effects of ROI group (OTC, LPC). 
Given prior work implicating dorsal parietal cortex in top-down attention (Corbetta and Shulman, 2002), we also tested for differences in goal-modulated memory processing between dorsal and ventral LPC regions. To do this, we grouped individual LPC ROIs according to their position relative to the intraparietal sulcus and evaluated effects of LPC subregion (dorsal, ventral). We report follow-up statistical tests performed on data from individual ROIs in Tables 1, 2, and 3. All statistical tests performed on BOLD activity patterns (described below) were implemented in R version 3.4. All t tests were two-tailed. With the exception of tests performed at the individual ROI level, all tests were assessed at α = 0.05. For tests in the 8 individual ROIs, the tables report uncorrected p values and mark those that survive a conservative Bonferroni correction for multiple comparisons (0.05/8 = 0.00625).
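The correction rule above amounts to a single division. The Python sketch below illustrates how a threshold of 0.05/8 would be applied to uncorrected p values to produce the asterisks used in Tables 1, 2, and 3; the function name `mark_significant` is hypothetical and is not part of the authors' R analysis code.

```python
# Bonferroni threshold for the 8 individual-ROI tests (alpha / number of ROIs).
N_ROIS = 8
ALPHA = 0.05
BONFERRONI_ALPHA = ALPHA / N_ROIS  # 0.00625


def mark_significant(p_values, threshold=BONFERRONI_ALPHA):
    """Format uncorrected p values, appending '*' when p < threshold."""
    return [f"{p:.3f}*" if p < threshold else f"{p:.3f}" for p in p_values]


# Example: memory-phase object information p values from Table 1 (V1, LO, AnG).
print(mark_significant([0.246, 0.001, 0.001]))  # ['0.246', '0.001*', '0.001*']
```

Note that the comparison is strict (p < 0.00625), matching the table footnotes.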

Table 1.

Feature information during perception and memory in individual ROIs^a

| ROI | Perception, color t(33) | p | Perception, object t(33) | p | Memory, color t(33) | p | Memory, object t(33) | p | Perception > memory, color t(33) | p | Perception > memory, object t(33) | p |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| V1 | 2.32 | 0.027 | 3.29 | 0.002* | 2.55 | 0.015 | 1.18 | 0.246 | 0.42 | 0.677 | 1.66 | 0.106 |
| LO | 0.83 | 0.410 | 5.04 | <0.001* | −0.41 | 0.687 | 3.58 | 0.001* | 0.92 | 0.364 | 2.28 | 0.029 |
| VTC | −0.97 | 0.338 | 5.00 | <0.001* | 0.87 | 0.390 | 2.54 | 0.016 | −1.76 | 0.088 | 1.92 | 0.064 |
| pIPS | −2.24 | 0.032 | 0.18 | 0.858 | 1.82 | 0.078 | 2.72 | 0.010 | −3.05 | 0.005* | −1.99 | 0.054 |
| dLatIPS | −2.81 | 0.008 | 0.18 | 0.855 | 0.64 | 0.528 | 2.39 | 0.023 | −2.06 | 0.048 | −1.52 | 0.139 |
| vLatIPS | −1.81 | 0.080 | 0.66 | 0.513 | 1.76 | 0.087 | 3.15 | 0.003* | −2.69 | 0.011 | −1.47 | 0.151 |
| AnG | 0.10 | 0.919 | 0.36 | 0.718 | 3.48 | 0.001* | 3.48 | 0.001* | −2.87 | 0.007 | −2.31 | 0.027 |
| vIPS | 0.31 | 0.761 | 2.82 | 0.008 | 2.18 | 0.036 | 3.48 | 0.001* | −1.55 | 0.130 | −0.39 | 0.699 |

^a One-sample t tests comparing perceptual and mnemonic feature information with chance (zero), and paired t tests comparing perceptual with mnemonic feature information, for each feature dimension and ROI.

*p < 0.00625 following multiple comparisons correction for 8 ROIs.

Table 2.

Feature and stimulus reinstatement in individual ROIs^a

| ROI | Color t(33) | p | Object t(33) | p | Stimulus > color + object t(33) | p |
|---|---|---|---|---|---|---|
| V1 | 0.56 | 0.582 | 1.16 | 0.253 | 0.42 | 0.674 |
| LO | 3.11 | 0.004* | 2.27 | 0.030 | −0.87 | 0.389 |
| VTC | 0.53 | 0.597 | 2.10 | 0.044 | 2.30 | 0.028 |
| pIPS | 1.29 | 0.207 | 0.60 | 0.556 | 2.47 | 0.019 |
| dLatIPS | 2.10 | 0.043 | −0.64 | 0.524 | 1.61 | 0.118 |
| vLatIPS | 2.04 | 0.050 | −0.59 | 0.560 | 1.92 | 0.063 |
| AnG | 1.94 | 0.062 | 0.75 | 0.461 | 0.65 | 0.519 |
| vIPS | 1.20 | 0.239 | 0.91 | 0.368 | 3.12 | 0.004* |

^a One-sample t tests comparing color and object feature reinstatement with chance (zero), and paired-samples t tests comparing stimulus reinstatement with summed feature reinstatement, for each ROI.

*p < 0.00625 following multiple comparisons correction for 8 ROIs.

Table 3.

Feature information during memory by goal relevance in individual ROIs^a

| ROI | Relevant t(16) | p | Irrelevant t(16) | p | Relevant > irrelevant t(16) | p |
|---|---|---|---|---|---|---|
| V1 | −1.11 | 0.285 | 2.24 | 0.040 | −2.10 | 0.052 |
| LO | −0.28 | 0.780 | 0.53 | 0.602 | −0.58 | 0.568 |
| VTC | 0.54 | 0.595 | 0.99 | 0.336 | −0.31 | 0.759 |
| pIPS | 1.85 | 0.084 | −0.06 | 0.953 | 1.79 | 0.092 |
| dLatIPS | 1.80 | 0.092 | −0.76 | 0.458 | 2.38 | 0.030 |
| vLatIPS | 3.53 | 0.003* | 1.87 | 0.081 | 0.87 | 0.397 |
| AnG | 2.23 | 0.040 | 3.39 | 0.004* | 0.30 | 0.765 |
| vIPS | 1.33 | 0.204 | 1.06 | 0.304 | 0.54 | 0.600 |

^a One-sample t tests comparing goal-relevant and goal-irrelevant feature information during memory retrieval with chance (zero), and paired-samples t tests comparing goal-relevant with goal-irrelevant feature information, for each ROI.

*p < 0.00625 following multiple comparisons correction for 8 ROIs.

We first tested whether perception and memory activity patterns contained stimulus-level information. To do this, we computed the Fisher z-transformed Pearson correlation between t maps estimated from independent split-half GLMs, separately for perception and memory tasks. These correlations were computed separately for each subject and ROI. We then averaged values corresponding to correlations between patterns for the same stimulus (within-stimulus correlations; e.g., blue insect/blue insect) and values corresponding to correlations between stimuli that shared neither color nor object category (across-both correlations; e.g., red insect/yellow backpack). The average across-both correlation functioned as a baseline and was subtracted from the average within-stimulus correlation to produce a measure of stimulus information. This baseline was chosen to facilitate comparisons between stimulus and feature information metrics (see below). Stimulus information was computed for each subject, ROI, and run type (perception, memory). We used mixed-effects ANOVAs to test whether stimulus information varied as a function of region (within-subject factor), run type (within-subject factor), and/or experiment (across-subject factor).
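The stimulus information metric can be sketched in Python with NumPy as follows. This is an illustration under stated assumptions, not the authors' implementation (which was written in R): the function name, the stimuli × voxels array layout, and the choice to use every ordered cross-half pair that shares neither feature as the baseline are our assumptions, and the demo patterns are synthetic.

```python
import numpy as np


def stimulus_information(half1, half2, colors, objects):
    """Stimulus information from two independent split-half GLMs (hypothetical helper).

    half1, half2: (n_stimuli, n_voxels) t-map patterns for one subject and ROI.
    colors, objects: per-stimulus feature labels (length n_stimuli).
    Returns mean within-stimulus z minus mean across-both z.
    """
    colors, objects = np.asarray(colors), np.asarray(objects)
    n = len(half1)
    # Cross-correlation block: rows index half-1 patterns, columns index half-2 patterns.
    z = np.arctanh(np.corrcoef(half1, half2)[:n, n:])  # Fisher z-transform
    within = np.diag(z)  # same stimulus, independent halves
    # Assumed baseline: all cross-half pairs sharing neither color nor object.
    across_both = (colors[:, None] != colors[None, :]) & (objects[:, None] != objects[None, :])
    return within.mean() - z[across_both].mean()


# Synthetic demo: 32 stimuli = 4 colors x 8 objects, 200 voxels.
rng = np.random.default_rng(0)
colors = np.repeat(np.arange(4), 8)
objects = np.tile(np.arange(8), 4)
signal = rng.standard_normal((32, 200))  # stimulus-specific pattern
half1 = signal + rng.standard_normal((32, 200))
half2 = signal + rng.standard_normal((32, 200))
print(round(float(stimulus_information(half1, half2, colors, objects)), 2))
```

Because the same stimulus-specific signal is present in both halves, the within-stimulus correlations exceed the across-both baseline and the metric is positive.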

We next tested whether perception and memory activity patterns contained information about stimulus features (color, object). We computed the Fisher z-transformed Pearson correlation between every pair of t maps from a given subject and ROI, separately for perception and memory. Correlations of each pattern with itself (within-stimulus identity correlations) were excluded because they are trivially 1.0. We then averaged correlation values across stimulus pairs that shared a color feature (within-color correlations; e.g., blue bird/blue insect), stimulus pairs that shared an object category feature (within-object correlations; e.g., blue insect/red insect), and stimulus pairs that shared neither color nor object category (across-both correlations; e.g., red insect/yellow backpack). The average across-both correlation functioned as a baseline and was subtracted (1) from the average within-color correlation to produce a measure of color information, and (2) from the average within-object correlation to produce a measure of object information. Thus, positive values for these measures reflected the presence of stimulus feature information. Because the perception and memory tasks did not require subjects to report the features of the stimuli (in either Experiment 1 or 2), feature information values could not be explained in terms of planned motor responses. Color and object feature information measures were computed for each subject, ROI, and run type (perception, memory). We used mixed-effects ANOVAs to test whether feature information varied as a function of region (within-subject factor), run type (within-subject factor), feature dimension (within-subject factor), and/or experiment (across-subject factor). We also performed one-sample t tests to assess whether feature information was above chance (zero) during perception and memory.
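A minimal NumPy sketch of the color and object information metrics is shown below. It assumes patterns are stored as a stimuli × voxels array from a single model and that each unordered stimulus pair is counted once; the function name and the synthetic demo (patterns that carry a shared color component but no object component) are hypothetical illustrations rather than the authors' code.

```python
import numpy as np


def feature_information(patterns, colors, objects):
    """Color and object information within one run type (hypothetical helper).

    patterns: (n_stimuli, n_voxels) t-map patterns for one subject and ROI.
    Each unordered stimulus pair is used once; identity pairs (r = 1) are skipped.
    """
    colors, objects = np.asarray(colors), np.asarray(objects)
    r = np.corrcoef(patterns)                    # rows are stimuli
    i, j = np.triu_indices(len(patterns), k=1)   # all pairs with i < j
    z = np.arctanh(r[i, j])                      # Fisher z-transform
    same_color = colors[i] == colors[j]
    same_object = objects[i] == objects[j]
    baseline = z[~same_color & ~same_object].mean()  # across-both pairs
    return {"color": z[same_color].mean() - baseline,
            "object": z[same_object].mean() - baseline}


# Synthetic demo: a shared color component, no object component.
rng = np.random.default_rng(1)
colors = np.repeat(np.arange(4), 8)
objects = np.tile(np.arange(8), 4)
color_signal = rng.standard_normal((4, 300))
patterns = color_signal[colors] + rng.standard_normal((32, 300))
info = feature_information(patterns, colors, objects)
print({k: round(float(v), 2) for k, v in info.items()})
```

With every stimulus a unique color-object conjunction, no pair with i < j can share both features, so the three pair classes are mutually exclusive.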

We then tested whether feature-level information and stimulus-level information were preserved from perception to memory (reinstated). We computed the Fisher z-transformed Pearson correlation between perception and memory patterns for every pair of stimuli, separately for each subject and ROI. Excluding within-stimulus correlations, we then averaged correlation values across stimulus pairs that shared a color feature (within-color correlations; e.g., blue insect/blue bird), stimulus pairs that shared an object category feature (within-object correlations; e.g., blue insect/red insect), and stimulus pairs that shared neither color nor object category (across-both correlations; e.g., blue insect/yellow backpack). The average across-both correlation functioned as a baseline and was subtracted (1) from the average within-color correlation to produce a measure of color reinstatement, and (2) from the average within-object correlation to produce a measure of object reinstatement. These metrics are equivalent to those described in the prior analysis, but with correlations computed across perception and memory rather than within perception and memory. Thus, positive values for these measures reflected the preservation of feature information across perception and memory, or feature reinstatement. We used mixed-effects ANOVAs to test whether feature reinstatement varied as a function of region (within-subject factor), feature dimension (within-subject factor), and/or experiment (across-subject factor). We also performed one-sample t tests to assess whether feature reinstatement was above chance (zero). To produce a measure of stimulus reinstatement that was comparable with our measures of feature reinstatement, we averaged within-stimulus correlation values (e.g., blue insect/blue insect) and then subtracted the same baseline (the average of across-both correlations). 
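The reinstatement metrics differ from the within-run metrics only in correlating perception patterns with memory patterns. The sketch below assumes both ordered pairs (perception i/memory j and perception j/memory i) enter the averages, which the text does not specify; the function name and the synthetic data (a shared color component plus a stimulus-specific component) are hypothetical.

```python
import numpy as np


def reinstatement(perception, memory, colors, objects):
    """Color, object, and stimulus reinstatement for one subject and ROI (hypothetical helper).

    perception, memory: (n_stimuli, n_voxels) t-map patterns, rows in the same stimulus order.
    """
    colors, objects = np.asarray(colors), np.asarray(objects)
    n = len(perception)
    # Rows index perception patterns, columns index memory patterns.
    z = np.arctanh(np.corrcoef(perception, memory)[:n, n:])
    same_color = colors[:, None] == colors[None, :]
    same_object = objects[:, None] == objects[None, :]
    off_diag = ~np.eye(n, dtype=bool)  # exclude within-stimulus pairs from feature measures
    baseline = z[~same_color & ~same_object].mean()  # across-both pairs
    return {
        "color": z[same_color & off_diag].mean() - baseline,
        "object": z[same_object & off_diag].mean() - baseline,
        "stimulus": np.diag(z).mean() - baseline,  # same baseline as the feature measures
    }


# Synthetic demo: 32 stimuli = 4 colors x 8 objects, 300 voxels.
rng = np.random.default_rng(2)
colors = np.repeat(np.arange(4), 8)
objects = np.tile(np.arange(8), 4)
color_part = rng.standard_normal((4, 300))[colors]  # shared by same-color stimuli
unique_part = rng.standard_normal((32, 300))        # stimulus-specific component
perception = color_part + unique_part + rng.standard_normal((32, 300))
memory = color_part + unique_part + rng.standard_normal((32, 300))
res = reinstatement(perception, memory, colors, objects)
print({k: round(float(v), 2) for k, v in res.items()})
```

Using one baseline for all three measures keeps stimulus and feature reinstatement on a common scale, which is what allows them to be compared directly.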
We evaluated whether stimulus reinstatement could be accounted for by color and object feature reinstatement or whether it exceeded what would be expected by additive color and object feature reinstatement. To do this, we compared stimulus reinstatement to summed color and object feature reinstatement. We used mixed-effects ANOVAs to test whether reinstatement varied as a function of region (within-subject factor), reinstatement level (stimulus, summed features; within-subject factor), and/or experiment (across-subject factor).
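For a single ROI, the comparison between stimulus reinstatement and summed feature reinstatement reduces to a paired t test across subjects (the last column of Table 2). The NumPy sketch below computes that t statistic; it does not reproduce the mixed-effects ANOVA with the experiment factor, and the function names and per-subject values are hypothetical. `scipy.stats.ttest_rel` returns the same statistic together with a p value.

```python
import numpy as np


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom for x versus y."""
    d = np.asarray(x, dtype=float) - np.asarray(y, dtype=float)
    n = d.size
    t = d.mean() / (d.std(ddof=1) / np.sqrt(n))
    return float(t), n - 1


def stimulus_vs_summed_features(stimulus, color, obj):
    """Compare per-subject stimulus reinstatement with summed color + object reinstatement."""
    summed = np.asarray(color, dtype=float) + np.asarray(obj, dtype=float)
    return paired_t(stimulus, summed)


# Hypothetical per-subject reinstatement values for one ROI (4 subjects, for brevity).
t, df = stimulus_vs_summed_features(
    stimulus=[0.05, 0.04, 0.06, 0.03],
    color=[0.01, 0.01, 0.02, 0.00],
    obj=[0.01, 0.02, 0.01, 0.01],
)
print(f"t({df}) = {t:.2f}")
```

A positive t indicates stimulus reinstatement beyond what additive color and object reinstatement would predict.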

To test whether task goals influenced feature information during memory, we recomputed color and object feature information separately using t maps estimated from the color and object memory tasks in Experiment 2. We averaged these feature information values into two conditions: goal-relevant (color information for the color memory task; object information for the object memory task) and goal-irrelevant (color information during the object memory task; object information during the color memory task). We used repeated-measures ANOVAs to test whether feature information varied as a function of region and goal relevance (within-subject factors). We also performed one-sample t tests to assess whether goal-relevant feature information and goal-irrelevant feature information were above chance (zero) during memory.
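The recoding into goal-relevant and goal-irrelevant conditions can be sketched as below. The nested-dictionary layout, the equal-weight average of the two tasks, the function name, and the example values are assumptions made for illustration.

```python
def goal_relevance_split(info):
    """Recode task-specific feature information as goal-relevant vs goal-irrelevant.

    info[task][feature] holds feature information estimated from the t maps of one
    memory task; task and feature are each "color" or "object".
    """
    relevant = (info["color"]["color"] + info["object"]["object"]) / 2
    irrelevant = (info["object"]["color"] + info["color"]["object"]) / 2
    return relevant, irrelevant


# Hypothetical values for one subject and ROI.
info = {
    "color": {"color": 0.04, "object": 0.01},   # color memory task
    "object": {"color": 0.00, "object": 0.06},  # object memory task
}
relevant, irrelevant = goal_relevance_split(info)
print(round(relevant, 3), round(irrelevant, 3))  # 0.05 0.005
```

Averaging over both tasks means each condition includes one color and one object measurement, so a difference between conditions cannot arise from an overall difference between color and object information.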

Results

Behavior

Subjects in both experiments completed a minimum of 6 test blocks during the associative learning session before scanning (Experiment 1: 6.65 ± 0.79; Experiment 2: 6.91 ± 0.69, mean ± SD). During fMRI perception runs, subjects performed the target detection task with high accuracy (Experiment 1: 89.0 ± 6.8%; Experiment 2: 91.6 ± 2.7%). In Experiment 1, subjects reported that they experienced vivid memory on 86.4 ± 8.4% of fMRI memory trials, weak memory on 10.4 ± 7.1% of trials, no memory on 1.3 ± 1.8% of trials, and did not respond on the remaining 1.8 ± 2.3% of trials (mean ± SD). In Experiment 2, the mean percentage of vivid, weak, no memory, and no response trials was 86.1 ± 9.0%, 5.2 ± 6.1%, 3.4 ± 5.2%, and 5.4 ± 6.2%, respectively. The percentage of vivid memory responses did not significantly differ between Experiment 1 and Experiment 2 (t(32) = 0.13, p = 0.897, independent-samples t test). Within each experiment, there were no differences in the percentage of vivid memory responses across stimuli with different color features (Experiment 1: F(3,48) = 1.19, p = 0.323; Experiment 2: F(3,48) = 0.48, p = 0.697; repeated-measures ANOVAs) or different object features (Experiment 1: F(7,112) = 1.68, p = 0.121; Experiment 2: F(7,112) = 1.28, p = 0.266).

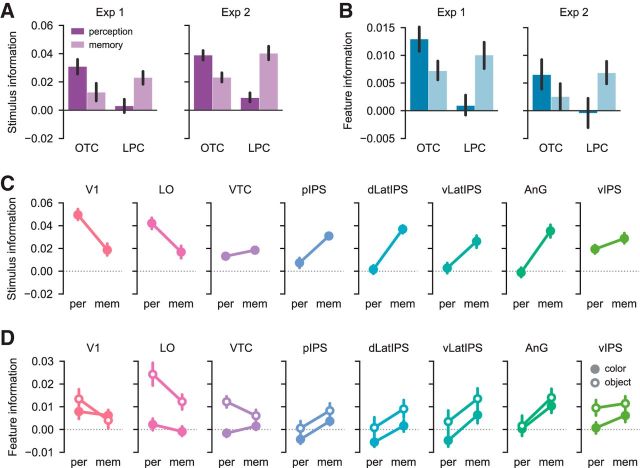

Stimulus information during perception versus memory retrieval

As a first step, we sought to replicate recent work from Xiao et al. (2017) that compared the strength of stimulus-level representations during perception and memory retrieval. Xiao et al. (2017) observed that ventral visual cortex contained stronger stimulus-level representations during perception than memory retrieval, whereas frontoparietal cortex showed the opposite pattern. To test for this pattern in our data, we quantified the strength of stimulus-level information in OTC and LPC, combining data across experiments (see Materials and Methods). We did this separately for patterns evoked during perception and memory retrieval. We then entered stimulus information values into an ANOVA with factors of ROI group (OTC, LPC), run type (perception, memory), and experiment (Experiment 1, Experiment 2). Consistent with Xiao et al. (2017), we observed a highly significant interaction between ROI group and run type (F(1,32) = 113.6, p < 0.001; Fig. 4A,C). In LPC, stimulus information was greater during memory than during perception (main effect of run type: F(1,32) = 40.8, p < 0.001); whereas in OTC, stimulus information was greater during perception than memory (main effect of run type: F(1,32) = 28.0, p < 0.001). These findings support the idea that stimulus-level information in LPC and OTC is differentially expressed depending on whether the stimulus is internally generated from memory or externally presented. This result motivates more targeted questions about the representation of stimulus features in OTC and LPC across perception and memory.

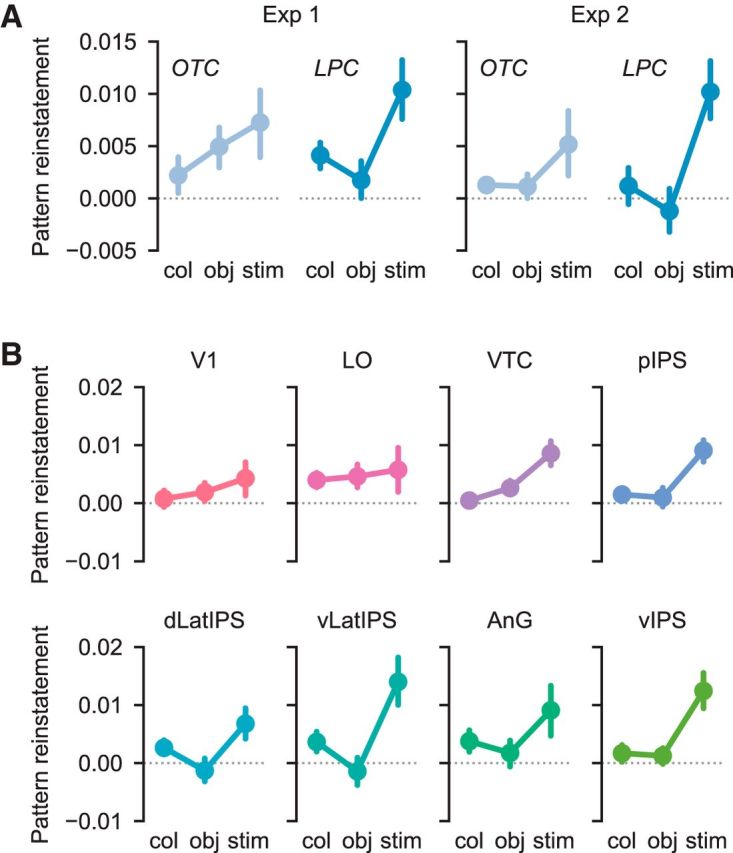

Figure 4.

Stimulus-level and feature-level information during perception versus memory. A, The relative strength of perceptual versus mnemonic stimulus information differed between OTC and LPC (F(1,32) = 113.6, p < 0.001). Across both experiments, OTC contained stronger stimulus information during perception than during memory (F(1,32) = 28.0, p < 0.001), whereas LPC contained stronger stimulus information during memory than during perception (F(1,32) = 40.8, p < 0.001). B, Across both experiments, the relative strength of perceptual versus mnemonic feature information also differed between OTC and LPC (F(1,32) = 29.27, p < 0.001). OTC contained marginally stronger feature information during perception than during memory (F(1,32) = 3.93, p = 0.056), whereas LPC contained stronger feature information during memory than during perception (F(1,32) = 11.65, p = 0.002). Legend is the same as in A. Error bars indicate mean ± SEM across 17 subjects. C, Stimulus information during perception and memory plotted separately for each ROI, collapsed across experiment. D, Color and object feature information during perception and memory plotted separately for each ROI, collapsed across experiment. Error bars indicate mean ± SEM across 34 subjects. For results of t tests assessing perceptual and mnemonic feature information for each ROI separately, see Table 1.

Feature information during perception versus memory retrieval

To assess feature information, we took advantage of the fact that our stimuli were designed to vary along two visual feature dimensions: color and object category. In both experiments, we quantified the strength of color and object feature information during perception and memory (see Materials and Methods). Of critical interest was whether the relative strength of perceptual and mnemonic feature information differed across LPC and OTC. We entered feature information values from all ROIs into an ANOVA with factors of ROI group (OTC, LPC), run type (perception, memory), feature dimension (color, object), and experiment (Experiment 1, Experiment 2). Critically, the relative strength of perception- and memory-based feature information differed across LPC and OTC, as reflected by a highly significant interaction between ROI group and run type (F(1,32) = 29.27, p < 0.001; Fig. 4B). This effect did not differ across experiments (ROI group × run type × experiment interaction: F(1,32) = 0.55, p = 0.462; Fig. 4B).

In LPC, feature information was reliably stronger during memory than during perception (main effect of run type: F(1,32) = 11.65, p = 0.002; Fig. 4B), with no difference in this effect across individual LPC ROIs (run type × ROI interaction: F(4,128) = 1.55, p = 0.192; Fig. 4D). Averaging across the color and object dimensions and also across experiments, feature information was above chance during memory (t(33) = 4.79, p < 0.001; one-sample t test), but not during perception (t(33) = 0.14, p = 0.892). In Table 1, we report the results of t tests assessing feature information separately for each LPC ROI. Unrelated to our main hypotheses, there was a marginally significant main effect of feature dimension in LPC (F(1,32) = 3.95, p = 0.056), with somewhat stronger object information than color information. This effect of feature dimension did not interact with run type (F(1,32) = 0.004, p = 0.952).

In OTC, we observed a pattern opposite to LPC: feature information was marginally stronger during perception than during memory (main effect of run type: F(1,32) = 3.93, p = 0.056; Fig. 4B). Again, this effect did not differ across individual OTC ROIs (run type × ROI interaction: F(2,64) = 1.72, p = 0.187; Fig. 4D). Averaging across the color and object dimensions and across experiments, feature information was above chance both during perception (t(33) = 4.68, p < 0.001) and during memory (t(33) = 3.01, p = 0.005). Table 1 includes assessments of feature information for each OTC ROI separately. Paralleling the marginal effect in LPC, there was a significant main effect of feature dimension in OTC (F(1,32) = 18.59, p < 0.001), with stronger object information than color information. This effect of feature dimension interacted with run type (F(1,32) = 4.90, p = 0.034), reflecting a relatively stronger difference between color and object information during perception than during memory. Together, these results establish that feature-level information was differentially expressed in OTC and LPC depending on whether stimuli were perceived or remembered.

Reinstatement during memory retrieval

We next quantified stimulus and feature reinstatement during memory retrieval. Whereas the prior analyses examined stimulus and feature information during perception and memory retrieval separately, here we examined whether stimulus-specific and feature-specific activity patterns were preserved from perception to memory retrieval (see Materials and Methods). Because perception and memory trials had no overlapping visual elements, any information preserved across stages must reflect memory retrieval.

To test whether feature information was preserved across perception and memory, we entered feature reinstatement values from all ROIs into an ANOVA with factors of ROI group (OTC, LPC), feature dimension (color, object), and experiment (Experiment 1, Experiment 2). There was no reliable difference in the strength of feature reinstatement between OTC and LPC (main effect of ROI group: F(1,32) = 0.90, p = 0.350). There was a marginal main effect of experiment on feature reinstatement (F(1,32) = 3.10, p = 0.088; Fig. 5A), with numerically lower feature reinstatement in Experiment 2 (where subjects recalled only one stimulus feature) than in Experiment 1 (where subjects recalled the entire stimulus). When collapsing across color and object dimensions, feature reinstatement in OTC was above chance in both Experiment 1 (t(16) = 2.37, p = 0.031; one-sample t test) and Experiment 2 (t(16) = 2.33, p = 0.033). In LPC, feature reinstatement was above chance in Experiment 1 (t(16) = 2.58, p = 0.020), but not in Experiment 2 (t(16) = −0.007, p = 0.995). Thus, the task demands in Experiment 2 may have had a particular influence on LPC feature representations, a point we examine in the next section. In Table 2, we assess feature reinstatement in individual OTC and LPC ROIs (see also Fig. 5B).

Figure 5.

Feature and stimulus reinstatement effects. A, Feature and stimulus reinstatement plotted separately for OTC and LPC and for each experiment. Across both experiments, stimulus reinstatement reliably exceeded summed levels of color and object feature reinstatement in LPC (F(1,32) = 5.46, p = 0.026). This effect was marginally greater than the effect observed in OTC (F(1,32) = 3.59, p = 0.067), where stimulus reinstatement was well-accounted for by summed color and object feature reinstatement (F(1,32) = 0.35, p = 0.560). Error bars indicate mean ± SEM across 17 subjects. B, Color reinstatement, object reinstatement, and stimulus reinstatement plotted separately for each ROI, collapsed across experiment. Error bars indicate mean ± SEM across 34 subjects. For results of t tests assessing feature and stimulus reinstatement for each ROI separately, see Table 2.

To test whether color and object feature reinstatement fully accounted for stimulus reinstatement, we compared summed color and object reinstatement values with stimulus reinstatement values. Reinstatement values from all ROIs were entered into an ANOVA with factors of ROI group (OTC, LPC), reinstatement level (stimulus, summed features), and experiment (Experiment 1, Experiment 2). There was a significant main effect of reinstatement level (F(1,32) = 4.31, p = 0.046), with stimulus reinstatement larger than summed feature reinstatement (Fig. 5A). There was a marginally significant difference in the magnitude of this effect between OTC and LPC (reinstatement level × ROI group interaction: F(1,32) = 3.59, p = 0.067). In LPC, stimulus reinstatement reliably exceeded summed feature reinstatement (main effect of reinstatement level: F(1,32) = 5.46, p = 0.026; Fig. 5A). This effect did not differ across experiments (reinstatement level × experiment interaction: F(1,32) = 0.81, p = 0.375; Fig. 5A) or across LPC ROIs (reinstatement level × ROI interaction: F(4,128) = 0.95, p = 0.438; Fig. 5B). In Table 2, we assess the difference between stimulus reinstatement and summed feature reinstatement for each LPC ROI. In OTC, stimulus reinstatement did not significantly differ from summed feature reinstatement (main effect of reinstatement level: F(1,32) = 0.35, p = 0.560; Fig. 5A), with no difference across experiments (reinstatement level × experiment interaction: F(1,32) = 0.30, p = 0.590) and a marginal difference across ROIs (reinstatement level × ROI interaction: F(2,64) = 2.58, p = 0.084). Tests in individual OTC ROIs (Table 2) showed that stimulus reinstatement exceeded summed feature reinstatement in VTC only (p = 0.028, uncorrected; this effect did not survive Bonferroni correction).
These results replicate prior evidence of stimulus-level reinstatement in LPC (Kuhl and Chun, 2014; Lee and Kuhl, 2016; Xiao et al., 2017) and VTC (Lee et al., 2012), but provide unique insight into the relative strength of feature- versus stimulus-level reinstatement in these regions.

Goal dependence of feature information during memory retrieval

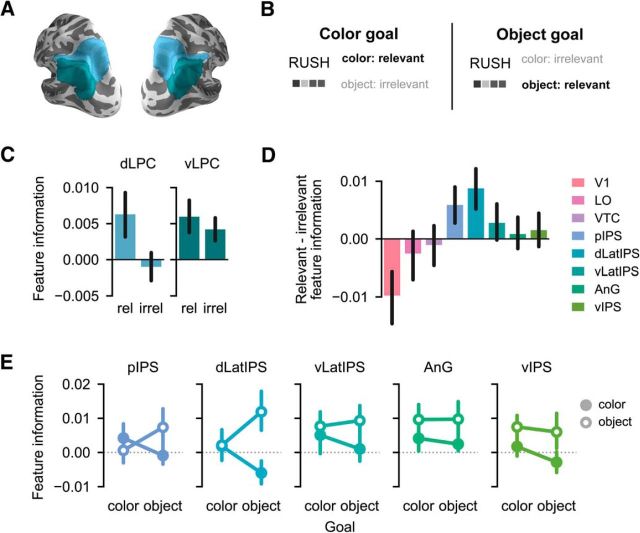

In a final set of analyses, we tested whether retrieval goals influenced feature information expressed in LPC during memory retrieval. Using data from Experiment 2 only, we recomputed color and object feature information separately for trials where the goal was recalling the color feature of the stimulus and trials where the goal was recalling the object feature of the stimulus (see Materials and Methods). Of interest was the comparison between goal-relevant feature information (e.g., color information on color memory trials) and goal-irrelevant feature information (e.g., color information on object memory trials; Fig. 6B). Because there is a strong body of evidence suggesting that dorsal and ventral parietal regions are differentially sensitive to top-down versus bottom-up visual attention (Corbetta and Shulman, 2002), we specifically tested whether sensitivity to retrieval goals varied across dorsal and ventral LPC subregions (Fig. 6A).

Figure 6.

Feature information during memory retrieval as a function of goal relevance. A, ROIs from Figure 3A grouped according to a dorsal/ventral division along the intraparietal sulcus (see Materials and Methods). B, Color and object features were coded as either goal-relevant or goal-irrelevant according to the current retrieval goal. C, The effect of goal relevance on mnemonic feature information differed significantly between dorsal and ventral LPC subregions (F(1,16) = 9.05, p = 0.008). In dorsal LPC, goal-relevant feature information was stronger than goal-irrelevant feature information (F(1,16) = 5.30, p = 0.035). In ventral LPC, there was no effect of goal relevance on feature information (F(1,16) = 0.61, p = 0.447), and both goal-relevant (t(16) = 2.48, p = 0.025) and goal-irrelevant (t(16) = 2.64, p = 0.018) feature information was represented above chance. D, The difference between goal-relevant and goal-irrelevant feature information plotted separately for each ROI. E, Color and object feature information plotted separately for color and object memory tasks and for each dorsal and ventral LPC ROI. Error bars indicate mean ± SEM across 17 subjects. For results of t tests assessing mnemonic feature information according to goal relevance for each ROI separately, see Table 3.

To test whether goal sensitivity varied between dorsal and ventral LPC subregions, we entered memory-based feature information values from LPC ROIs into an ANOVA with factors of LPC subregion (dorsal LPC, ventral LPC) and goal relevance (relevant, irrelevant). In line with our hypothesis, there was a robust interaction between LPC subregion and goal relevance (F(1,16) = 9.05, p = 0.008; Fig. 6C). Namely, there was reliably stronger goal-relevant than goal-irrelevant feature information in dorsal LPC (main effect of goal relevance: F(1,16) = 5.30, p = 0.035; Fig. 6C). This effect did not differ across individual dorsal LPC ROIs (goal relevance × ROI interaction: F(1,16) = 1.01, p = 0.330; Fig. 6E). In dorsal LPC, goal-relevant feature information marginally exceeded chance (goal-relevant: t(16) = 1.93, p = 0.072; one-sample t test), whereas goal-irrelevant feature information did not differ from chance (t(16) = −0.49, p = 0.628). In contrast to the pattern observed in dorsal LPC, feature information was not influenced by goals in ventral LPC (main effect of goal relevance: F(1,16) = 0.61, p = 0.447; Fig. 6C), nor did this effect vary across ventral LPC ROIs (goal relevance × ROI interaction: F(2,32) = 0.16, p = 0.855; Fig. 6E). Indeed, both goal-relevant and goal-irrelevant information were significantly above chance in ventral LPC (goal-relevant: t(16) = 2.48, p = 0.025; goal-irrelevant: t(16) = 2.64, p = 0.018; Fig. 6C). The interaction between dorsal versus ventral LPC and goal relevance was driven primarily by a difference in the strength of goal-irrelevant feature information. Goal-irrelevant feature information was significantly stronger in ventral LPC than in dorsal LPC (t(16) = 3.15, p = 0.006; paired sample t test), whereas the strength of goal-relevant feature information did not significantly differ across ventral and dorsal LPC (t(16) = −0.19, p = 0.850).
In Table 3, we assess the goal-relevant and goal-irrelevant feature information in individual ROIs (see also Fig. 6D). Collectively, these findings provide novel evidence for a functional distinction between memory representations in dorsal and ventral LPC, with top-down memory goals biasing feature representations toward relevant information in dorsal, but not ventral, LPC. Because there was no evidence for preferential representation of goal-relevant feature information during memory retrieval in OTC (main effect of goal relevance: F(1,16) = 1.51, p = 0.237; Fig. 6D), the bias observed in dorsal LPC does not appear to have been inherited from earlier visual regions.
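For a 2 × 2 within-subject design such as the LPC subregion × goal relevance ANOVA above (Experiment 2 only, F(1,16)), the interaction F equals the square of a one-sample t computed on each subject's difference-of-differences score. The sketch below assumes that each subject's dorsal and ventral values have already been averaged across the ROIs in each subregion; the function name and the four-subject demo values are hypothetical.

```python
import numpy as np


def interaction_2x2(dorsal_rel, dorsal_irr, ventral_rel, ventral_irr):
    """Subregion x goal-relevance interaction for a 2 x 2 within-subject design.

    Each argument holds one value per subject. Returns (t, F, (df1, df2)),
    where F = t**2 is the interaction F of the equivalent repeated-measures ANOVA.
    """
    dorsal_effect = np.asarray(dorsal_rel, float) - np.asarray(dorsal_irr, float)
    ventral_effect = np.asarray(ventral_rel, float) - np.asarray(ventral_irr, float)
    c = dorsal_effect - ventral_effect  # difference of differences, one per subject
    n = c.size
    t = c.mean() / (c.std(ddof=1) / np.sqrt(n))
    return float(t), float(t ** 2), (1, n - 1)


# Hypothetical subregion-averaged feature information for 4 subjects.
t, F, df = interaction_2x2(
    dorsal_rel=[0.03, 0.02, 0.04, 0.03],
    dorsal_irr=[0.00, 0.01, 0.00, -0.01],
    ventral_rel=[0.02, 0.03, 0.02, 0.03],
    ventral_irr=[0.03, 0.02, 0.03, 0.02],
)
print(f"F{df} = {F:.2f}")
```

A positive contrast means the goal-relevance effect is larger in dorsal than in ventral LPC, the direction reported above.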

Discussion

Here, across two fMRI experiments, we showed that OTC and LPC were differentially biased to represent stimulus features during either perception or memory retrieval. In OTC, color and object feature information was stronger during perception than during memory retrieval, whereas in LPC, feature information was stronger during memory retrieval than during perception. Despite these biases, we observed that stimulus-specific patterns evoked in LPC during perception were reinstated during memory retrieval. Finally, in Experiment 2, we found that retrieval goals biased dorsal LPC representations toward relevant stimulus features in memory, whereas ventral LPC represented both relevant and irrelevant features regardless of the goal.

Transformation of representations from OTC to LPC

Traditionally, cortical memory reactivation has been studied in sensory regions. Empirical studies focusing on these regions have provided ample evidence for the hypothesis that memory retrieval elicits a weak copy of earlier perceptual activity (O'Craven and Kanwisher, 2000; Wheeler et al., 2000; Slotnick et al., 2005; Pearson et al., 2015). While this idea accounts for our results in OTC, it does not explain our results in LPC, where both stimulus-level information and feature-level information were stronger during memory retrieval than perception. What accounts for this reversal in LPC? Given that our memory task was likely more attentionally demanding than our perception task, one possibility is that LPC is less sensitive to the source of a stimulus (perception vs memory) than to the amount of attention that a stimulus is afforded. While this would still point to an important dissociation between OTC and LPC, there are several reasons why we think that attentional demands do not fully explain the memory bias we observed in LPC, particularly in ventral LPC.

First, although top-down attention has been consistently associated with dorsal, but not ventral, LPC (Corbetta and Shulman, 2002), we observed a bias toward memory representations in both dorsal and ventral LPC. Moreover, in Experiment 2, where we specifically manipulated subjects' feature-based attention during memory retrieval, we found that feature information in ventral LPC was remarkably insensitive to task demands. Indeed, irrelevant feature information was significantly represented in ventral LPC and did not differ in strength from relevant feature information. Second, there is evidence that univariate BOLD responses in ventral LPC are higher during successful memory retrieval than during perception (Daselaar, 2009; Kim et al., 2010), paralleling our pattern-based findings. Third, there is direct evidence that primate ventral LPC receives strong anatomical (Cavada and Goldman-Rakic, 1989; Clower et al., 2001) and functional (Vincent et al., 2006; Kahn et al., 2008) drive from the medial temporal lobe regions that are critical for recollection. Finally, recent evidence from rodents indicates that parietal cortex (though not necessarily a homolog of human ventral LPC) is biased toward memory-based representations (Akrami et al., 2018). Namely, neurons in rat posterior parietal cortex were shown to carry more information about sensory stimuli from prior trials than from the current trial. Strikingly, this bias toward memory-based information was observed even though information from prior trials was not task-relevant. Thus, there is strong converging evidence that at least some regions of LPC are intrinsically biased toward memory-based representations and that this bias cannot be fully explained in terms of attention. That said, we do not think attention and memory are unrelated.
An alternative way of conceptualizing the present results with regard to attention is that perception and memory exist along an external versus internal axis of attention (Chun and Johnson, 2011). By this account, LPC, and ventral LPC in particular, is biased toward representing internally generated information, whereas OTC is biased toward representing external information (see Honey et al., 2017).

Another factor that potentially influenced our pattern of results is stimulus repetition. Namely, all stimuli and associations in our study were highly practiced, and retrieval was relatively automatic by the time subjects entered the scanner. While the use of overtrained associations was intended to reduce the probability of failed retrieval trials, it is possible that repeated retrieval “fast-tracked” memory consolidation (Antony et al., 2017), thereby strengthening cortical representation of memories (Tompary and Davachi, 2017). While a rapid consolidation account does not directly predict that memory-based representations would be stronger in LPC than OTC, future work should aim to test whether the bias toward memory-based representations in LPC increases as memories are consolidated. To be clear, however, we do not think that overtraining is necessary to observe a memory bias in LPC, as several prior studies have found complementary results with limited stimulus exposure (Long et al., 2016; Akrami et al., 2018).

More broadly, our findings demonstrate a situation in which the idea of memory reactivation fails to capture the relationship between neural activity patterns evoked during perception and memory retrieval. Instead, our findings are consistent with a model of memory in which stimulus representations are at least partially transformed from sensory regions to higher-order regions, including LPC (Xiao et al., 2017). Future experimental work will be necessary to establish how stimulus, task, and cognitive factors influence this transformation of information across regions.

Pattern reinstatement within regions

Consistent with prior studies, we observed stimulus-specific reinstatement of perceptual patterns during memory retrieval in LPC (Buchsbaum et al., 2012; Kuhl and Chun, 2014; Ester et al., 2015; Chen et al., 2016; Lee and Kuhl, 2016; Xiao et al., 2017) and VTC (Lee et al., 2012). Interestingly, we observed reinstatement in LPC and VTC even though each region was biased toward either mnemonic (LPC) or perceptual (VTC) information. Although these findings may seem contradictory, it is important to emphasize that the biases we observed were not absolute. Rather, OTC contained significant feature information during memory retrieval, and although we did not observe significant feature information in LPC during perception, other studies have reported LPC representations of visual stimuli (Bracci et al., 2017; Lee et al., 2017). Thus, we think it is likely that the reinstatement effects that we and others have observed co-occur with a large but incomplete transfer of stimulus representation from OTC during perception to LPC during retrieval.
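One common way to quantify stimulus-specific reinstatement is to correlate each stimulus's perception pattern with the retrieval pattern of every stimulus and ask whether same-stimulus correlations exceed different-stimulus correlations. The sketch below illustrates that logic in plain Python. It is a minimal illustration under that assumption, not necessarily the exact analysis used in these experiments; the function names (`pearson`, `reinstatement_index`) and the toy patterns are hypothetical.

```python
import math
from typing import Sequence


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equal-length activity patterns."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def reinstatement_index(perception, retrieval) -> float:
    """Mean same-stimulus minus mean different-stimulus perception-retrieval correlation.

    perception[i] and retrieval[i] are (hypothetical) voxel patterns for stimulus i.
    """
    same, diff = [], []
    for i, p in enumerate(perception):
        for j, r in enumerate(retrieval):
            # Diagonal pairs (i == j) index stimulus-specific reinstatement.
            (same if i == j else diff).append(pearson(p, r))
    return sum(same) / len(same) - sum(diff) / len(diff)


# Hypothetical toy patterns (not data from the study): retrieval identical to perception.
perception = [[1, 2, 3, 4], [4, 3, 2, 1], [1, 3, 2, 4]]
retrieval = [[1, 2, 3, 4], [4, 3, 2, 1], [1, 3, 2, 4]]
print(round(reinstatement_index(perception, retrieval), 3))  # → 1.333
```

A positive index means retrieval patterns resemble the perception pattern of the same stimulus more than those of other stimuli. Real analyses typically also Fisher-transform the correlations and test the index across subjects.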

Notably, the stimulus reinstatement effects that we observed in LPC could not be explained by additive reinstatement of color and object information. Because we tested subjects on lure images during the associative learning task, subjects were required to learn more than just color-object feature conjunctions in our experiments. Thus, LPC representations, like subjects' memories, likely reflected the conjunction of more than just color and object information. This proposal is consistent with theoretical arguments and empirical evidence suggesting that parietal cortex, and the angular gyrus in particular, serves as a multimodal hub that integrates event features in memory (Shimamura, 2011; Wagner et al., 2015; Bonnici et al., 2016; Yazar et al., 2017). Given that ventral LPC is frequently implicated in semantic processing (Binder and Desai, 2011), stimulus-specific representations in ventral LPC may reflect a combination of perceptual and semantic information. In contrast, stimulus-specific representations in dorsal LPC and VTC, which are components of two major visual pathways, are more likely to reflect combinations of high-level but fundamentally perceptual features.
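The logic of ruling out additive color and object reinstatement can be sketched as an interaction contrast: if same-stimulus similarity were simply a baseline plus a color effect plus an object effect, it should equal (color only) + (object only) - (neither). The sketch below computes how far observed same-stimulus similarity exceeds that additive prediction. It assumes that perception-retrieval pattern similarities have already been computed for stimulus pairs labeled by shared color, shared object category, and stimulus identity; the function name and toy values are hypothetical, and the authors' actual model may differ (for example, a regression across all pairs).

```python
from statistics import mean


def conjunctive_excess(sims, same_color, same_object, same_stimulus):
    """Observed same-stimulus similarity minus an additive color + object prediction.

    sims: perception-retrieval pattern similarity for each stimulus pair.
    same_color, same_object, same_stimulus: parallel lists of booleans per pair.
    Raises statistics.StatisticsError if a reference cell contains no pairs.
    """

    def cell(color, obj):
        # Mean similarity of different-stimulus pairs with the given feature overlap.
        return mean(
            s
            for s, c, o, st in zip(sims, same_color, same_object, same_stimulus)
            if c == color and o == obj and not st
        )

    neither = cell(False, False)
    color_only = cell(True, False)
    object_only = cell(False, True)
    # Additive model: baseline + color effect + object effect, no interaction term.
    predicted = neither + (color_only - neither) + (object_only - neither)
    observed = mean(s for s, st in zip(sims, same_stimulus) if st)
    return observed - predicted


# Hypothetical toy similarities (not data from the study).
sims = [0.00, 0.02, 0.05, 0.07, 0.10, 0.12, 0.30, 0.32]
same_color = [False, False, True, True, False, False, True, True]
same_object = [False, False, False, False, True, True, True, True]
same_stimulus = [False, False, False, False, False, False, True, True]
print(round(conjunctive_excess(sims, same_color, same_object, same_stimulus), 2))  # → 0.15
```

A reliably positive excess indicates stimulus-level information beyond the sum of the two feature effects, which is the pattern the paragraph above attributes to LPC.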

Influence of retrieval goals on LPC representations

Substantial evidence from electrophysiological (Toth and Assad, 2002; Freedman and Assad, 2006; Ibos and Freedman, 2014) and BOLD (Liu et al., 2011; Erez and Duncan, 2015; Bracci et al., 2017; Vaziri-Pashkam and Xu, 2017; Long and Kuhl, 2018) measurements indicates that LPC representations of perceptual events are influenced by top-down goals. Our results provide novel evidence that, in dorsal LPC, specific features of a remembered stimulus are dynamically strengthened or weakened according to the current goal. This finding provides a critical bridge between perception-based studies that have emphasized the role of LPC in goal-modulated stimulus coding and memory-based studies that have found representations of remembered stimuli in LPC. Importantly, because we did not require subjects to behaviorally report any remembered feature information, the mnemonic representations we observed cannot be explained in terms of action planning (Andersen and Cui, 2009). The fact that we observed goal-modulated feature coding in dorsal, but not ventral, LPC is consistent with theoretical accounts arguing that dorsal LPC is more involved in top-down attention, whereas ventral LPC is more involved in bottom-up attention (Corbetta and Shulman, 2002). Cabeza et al. (2008) argued that LPC's role in memory can similarly be explained in terms of top-down and bottom-up attentional processes segregated across dorsal and ventral LPC. However, under this account, LPC is not thought to actively represent mnemonic content. Thus, while our findings support the idea that dorsal and ventral LPC are differentially involved in top-down versus bottom-up memory processes, they provide critical evidence that these processes involve active representation of stimulus features.

Interestingly, although we observed no difference between goal-relevant and goal-irrelevant feature information in ventral LPC, both were represented above chance. This is consistent with the idea that ventral LPC represents information received from the medial temporal lobe, perhaps functioning as an initial mnemonic buffer (Baddeley, 2000; Vilberg and Rugg, 2008; Kuhl and Chun, 2014; Sestieri et al., 2017). Ventral LPC representations may then be selectively gated according to current behavioral goals, with goal-relevant information propagating to dorsal LPC. This proposal is largely consistent with a recent theoretical argument made by Sestieri et al. (2017). However, it differs in the specific assignment of functions to LPC subregions. Whereas Sestieri et al. (2017) argue that dorsal LPC contributes to goal-directed processing of perceptual information only, our results indicate that dorsal LPC also represents mnemonic information according to current goals. Given the paucity of experiments examining the influence of goals on mnemonic representations in LPC (though see Kuhl et al., 2013), additional work is needed. However, our findings provide important evidence, motivated by existing theoretical accounts, that retrieval goals differentially influence mnemonic feature representations across LPC subregions.
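The contrast drawn above between ventral LPC (goal-relevant and goal-irrelevant features both above chance, with no reliable difference) and dorsal LPC (goal-relevant features favored) maps onto three simple per-subject tests. The sketch below computes the corresponding t statistics in plain Python. It assumes per-subject decoding accuracies for each feature type and a known chance level; the function names and numbers are hypothetical, and it omits p-values and multiple-comparison handling, which the authors' actual analyses may treat differently.

```python
import math
from statistics import mean, stdev


def one_sample_t(values, null=0.0):
    """t statistic for the mean of `values` against a null value (e.g., chance accuracy)."""
    return (mean(values) - null) / (stdev(values) / math.sqrt(len(values)))


def goal_modulation(relevant, irrelevant, chance):
    """Per-subject decoding of goal-relevant vs goal-irrelevant features in one region."""
    diffs = [r - i for r, i in zip(relevant, irrelevant)]
    return {
        "relevant_vs_chance": one_sample_t(relevant, chance),
        "irrelevant_vs_chance": one_sample_t(irrelevant, chance),
        # Paired comparison: a goal-modulated region should show relevant > irrelevant.
        "relevant_minus_irrelevant": one_sample_t(diffs, 0.0),
    }


# Hypothetical per-subject accuracies for one region (chance = 0.5); not study data.
relevant = [0.56, 0.58, 0.55, 0.57]
irrelevant = [0.57, 0.56, 0.56, 0.56]
for name, t in goal_modulation(relevant, irrelevant, chance=0.5).items():
    print(f"{name}: t = {t:.2f}")
```

In this toy example both feature types exceed chance while the paired difference is near zero, the ventral-LPC-like pattern; a dorsal-LPC-like pattern would instead show a large positive paired t.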

In conclusion, we showed not only that LPC actively represented features of remembered stimuli, but also that these LPC feature representations were stronger during memory retrieval than during perception. Moreover, whereas ventral LPC automatically represented remembered stimulus features regardless of goals, dorsal LPC feature representations were flexibly and dynamically modulated to match top-down goals. Collectively, these findings provide novel insight into the functional significance of memory representations in LPC.

Footnotes

This work was made possible by generous support from the Lewis Family Endowment to the University of Oregon Robert and Beverly Lewis Center for Neuroimaging. S.E.F. was supported by the National Science Foundation Graduate Research Fellowship Program DGE-1342536, National Eye Institute Visual Neuroscience Training Program T32-EY007136, and National Institutes of Health Blueprint D-SPAN Award F99-NS105223. B.A.K. was supported by a National Science Foundation CAREER Award (BCS-1752921).

The authors declare no competing financial interests.

References

- Akrami A, Kopec CD, Diamond ME, Brody CD (2018) Posterior parietal cortex represents sensory stimulus history and mediates its effects on behaviour. Nature 554:368–372. 10.1038/nature25510

- Andersen RA, Cui H (2009) Intention, action planning, and decision making in parietal-frontal circuits. Neuron 63:568–583. 10.1016/j.neuron.2009.08.028

- Antony JW, Ferreira CS, Norman KA, Wimber M (2017) Retrieval as a fast route to memory consolidation. Trends Cogn Sci 21:573–576. 10.1016/j.tics.2017.05.001

- Baddeley A (2000) The episodic buffer: a new component of working memory? Trends Cogn Sci 4:417–423. 10.1016/S1364-6613(00)01538-2

- Binder JR, Desai RH (2011) The neurobiology of semantic memory. Trends Cogn Sci 15:527–536. 10.1016/j.tics.2011.10.001

- Bonnici HM, Richter FR, Yazar Y, Simons JS (2016) Multimodal feature integration in the angular gyrus during episodic and semantic retrieval. J Neurosci 36:5462–5471. 10.1523/JNEUROSCI.4310-15.2016

- Bracci S, Daniels N, Op de Beeck H (2017) Task context overrules object- and category-related representational content in the human parietal cortex. Cereb Cortex 27:310–321. 10.1093/cercor/bhw419

- Brouwer GJ, Heeger DJ (2009) Decoding and reconstructing color from responses in human visual cortex. J Neurosci 29:13992–14003. 10.1523/JNEUROSCI.3577-09.2009

- Brouwer GJ, Heeger DJ (2013) Categorical clustering of the neural representation of color. J Neurosci 33:15454–15465. 10.1523/JNEUROSCI.2472-13.2013

- Buchsbaum BR, Lemire-Rodger S, Fang C, Abdi H (2012) The neural basis of vivid memory is patterned on perception. J Cogn Neurosci 24:1867–1883. 10.1162/jocn_a_00253

- Cabeza R, Ciaramelli E, Olson IR, Moscovitch M (2008) The parietal cortex and episodic memory: an attentional account. Nat Rev Neurosci 9:613–625. 10.1038/nrn2459

- Caspers S, Schleicher A, Bacha-Trams M, Palomero-Gallagher N, Amunts K, Zilles K (2013) Organization of the human inferior parietal lobule based on receptor architectonics. Cereb Cortex 23:615–628. 10.1093/cercor/bhs048

- Cavada C, Goldman-Rakic PS (1989) Posterior parietal cortex in rhesus monkey: I. Parcellation of areas based on distinctive limbic and sensory corticocortical connections. J Comp Neurol 287:393–421. 10.1002/cne.902870402

- Chen J, Leong YC, Honey CJ, Yong CH, Norman KA, Hasson U (2016) Shared memories reveal shared structure in neural activity across individuals. Nat Neurosci 20:115–125. 10.1038/nn.4450

- Chun MM, Johnson MK (2011) Memory: enduring traces of perceptual and reflective attention. Neuron 72:520–535. 10.1016/j.neuron.2011.10.026

- Clower DM, West RA, Lynch JC, Strick PL (2001) The inferior parietal lobule is the target of output from the superior colliculus, hippocampus, and cerebellum. J Neurosci 21:6283–6291. 10.1523/JNEUROSCI.21-16-06283.2001

- Corbetta M, Shulman GL (2002) Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci 3:215–229. 10.1038/nrn755

- Damasio AR (1989) Time-locked multiregional retroactivation: a systems-level proposal for the neural substrates of recall and recognition. Cognition 33:25–62. 10.1016/0010-0277(89)90005-X

- Daselaar SM (2009) Posterior midline and ventral parietal activity is associated with retrieval success and encoding failure. Front Hum Neurosci 3:667–679. 10.3389/neuro.09.013.2009

- Erez Y, Duncan J (2015) Discrimination of visual categories based on behavioral relevance in widespread regions of frontoparietal cortex. J Neurosci 35:12383–12393. 10.1523/JNEUROSCI.1134-15.2015

- Ester EF, Sprague TC, Serences JT (2015) Parietal and frontal cortex encode stimulus-specific mnemonic representations during visual working memory. Neuron 87:893–905. 10.1016/j.neuron.2015.07.013

- Fischl B (2012) FreeSurfer. Neuroimage 62:774–781. 10.1016/j.neuroimage.2012.01.021

- Freedman DJ, Assad JA (2006) Experience-dependent representation of visual categories in parietal cortex. Nature 443:85–88. 10.1038/nature05078

- Glasser MF, Coalson TS, Robinson EC, Hacker CD, Harwell J, Yacoub E, Ugurbil K, Andersson J, Beckmann CF, Jenkinson M, Smith SM, Van Essen DC (2016) A multi-modal parcellation of human cerebral cortex. Nature 536:171–178. 10.1038/nature18933

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P (2001) Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science 293:2425–2430. 10.1126/science.1063736

- Honey CJ, Newman EL, Schapiro AC (2017) Switching between internal and external modes: a multiscale learning principle. Netw Neurosci 1:339–356. 10.1162/NETN_a_00024

- Ibos G, Freedman DJ (2014) Dynamic integration of task-relevant visual features in posterior parietal cortex. Neuron 83:1468–1480. 10.1016/j.neuron.2014.08.020

- Kahn I, Andrews-Hanna JR, Vincent JL, Snyder AZ, Buckner RL (2008) Distinct cortical anatomy linked to subregions of the medial temporal lobe revealed by intrinsic functional connectivity. J Neurophysiol 100:129–139. 10.1152/jn.00077.2008

- Kim H, Daselaar SM, Cabeza R (2010) Overlapping brain activity between episodic memory encoding and retrieval: roles of the task-positive and task-negative networks. Neuroimage 49:1045–1054. 10.1016/j.neuroimage.2009.07.058

- Kosslyn SM (1980) Image and mind. Cambridge, MA: Harvard University Press.