Highlights

- There is concern that the SCORE reporting standard for EEG takes too long.

- This study shows that a normal EEG can typically be reported in SCORE EEG in 8 min.

- Review time is longer for abnormal recordings, and declined by 25% during this study.

Keywords: EEG reporting, EEG review, EEG workload, EEG review time, SCORE EEG

Abstract

Objective

Visual analysis is the gold standard for clinical EEG interpretation, but there are no published data on how long it takes to review and report an EEG in clinical routine. Estimates of reporting times may inform workforce planning and automation initiatives for EEG. The SCORE standard has recently been adopted to standardize clinical EEG reporting, but concern has been expressed about the time spent reporting.

Methods

Elapsed times were extracted from 5889 standard and sleep-deprived EEGs reported with the SCORE EEG software between 2015 and 2017.

Results

The median review time was 12.5 min for standard EEG and 20.9 min for sleep-deprived EEG. A normal standard EEG had a median review time of 8.3 min. Abnormal EEGs took longer to review than normal EEGs and had more variable review times. Of all EEGs, 99% were reported within 24 h of the end of recording. Review times declined by 25% during the study period.

Conclusion

Standard and sleep-deprived EEG review and reporting times with SCORE EEG are reasonable, increasing with increasing EEG complexity and decreasing with experience. EEG reports can be provided within 24 h.

Significance

Clinical standard and sleep-deprived EEG reporting with SCORE EEG has acceptable reporting times.

1. Introduction

Clinical EEG reporting is based mainly on expert visual analysis (Tatum et al., 2016), but there have been few studies on how long it takes to review and report an EEG. There is significant interest in automating all or part of clinical EEG reporting (Nuwer, 1997, Lodder et al., 2014, Shibasaki et al., 2014). Accessibility of EEG is limited in low- and middle-income countries (McLane et al., 2015). Knowing how long an EEG takes to review and report is important for planning adequate EEG accessibility and for informing automation initiatives.

While we have anecdotal estimates of review time and workload, we could not find any published data on standard EEG review time. A survey of paediatric EEG practice in New Zealand gave EEG reporting times ranging from <24 h to three weeks (Keenan et al., 2015), but these are presumably total turnaround times from completion of the recording until the EEG report is sent out. One survey of EEG in the United Kingdom did not report EEG reporting time (Ganesan et al., 2006). A system for automated EEG reporting, presumably faster than visual interpretation, did not provide data on the time taken for either manual or automated interpretation and reporting (Shibasaki et al., 2014). EEG review time for intensive care EEG has been studied (Moura et al., 2014, Haider et al., 2016). Haider found a review time of 19 min per 6-h epoch, including detailed seizure annotation but not a clinical report (Haider, personal communication). Moura found a review time of 38 min for 24 h of continuous EEG (cEEG).

Literature searches using the terms “EEG interpretation time”, “EEG reporting time” and similar phrases did not provide further clarity. Data are available on the delay between an emergency EEG request and the start of recording, but not on the time to a completed report (Gururangan et al., 2016). Google searches for “how long does it take to read an EEG”, “how long does it take a neurologist to read an EEG” and similar phrases show that a number of patients and caregivers have asked this question. A web page from an Australian state government suggests that an EEG should usually be reported within 48 h (State of Victoria, 2014). In radiology, a workload study reported average review times of 3.4 min for X-ray, 15 min for CT and 18 min for MRI (MacDonald et al., 2013).

There are indications that EEG reviewers are under increasing time pressure (Ng et al., 2017). The SCORE standard has been adopted to standardize EEG reporting and facilitate research (Beniczky et al., 2017). One concern about adopting the SCORE standard is the time taken to report an EEG (Sperling, 2013, Tatum, 2017). No data are available on EEG reporting time, either for free-text reporting or for SCORE-based reporting.

Doctors at the Section for Clinical Neurophysiology, Department of Neurology, Haukeland University Hospital, Bergen, Norway have taken part in the development of the SCORE standard and software since 2009. The software has been used for most standard and sleep-deprived EEG reports since January 11th, 2012. Since 2015, the software has recorded the time at which each report is signed.

We analyzed the time spent interpreting and reporting clinical EEGs to estimate EEG interpretation workload and its correlates.

2. Methods

All standard and sleep-deprived EEGs from the SCORE EEG database at Haukeland University Hospital from November 1st, 2015 to October 30th, 2017 were included. All EEGs were recorded with the NicoletOne™ system from Natus. All EEGs included video, and provocation with hyperventilation and photic stimulation unless contraindicated. EEG recording duration was usually 20 min for standard EEG and one hour for sleep-deprived EEG. EEGs were recorded by technicians, but reviewed and reported by junior or senior doctors. It is local practice that standard and sleep-deprived EEGs usually be reported within 24 h, and that they be reported in the SCORE EEG software. One senior doctor did not use SCORE because of personal preference. No selection was performed to decide which EEGs were reported in SCORE and which were reported in free text. The completeness of the SCORE EEG database in the study period was estimated by comparing it with the local NicoletOne™ EEG recording database. The study was approved by the Regional Committee for Medical and Health Research Ethics (reference number REK 2017/1512).

Recording stop time was taken from NicoletOne™. Review times were calculated from the SCORE EEG log, with all time points based on the central server clock. The first opening of an EEG study was taken as the start of the review. A review period lasted until the report was signed or another study was opened in the software. If the study was re-opened before the final report was signed, this time was added to the study review time.

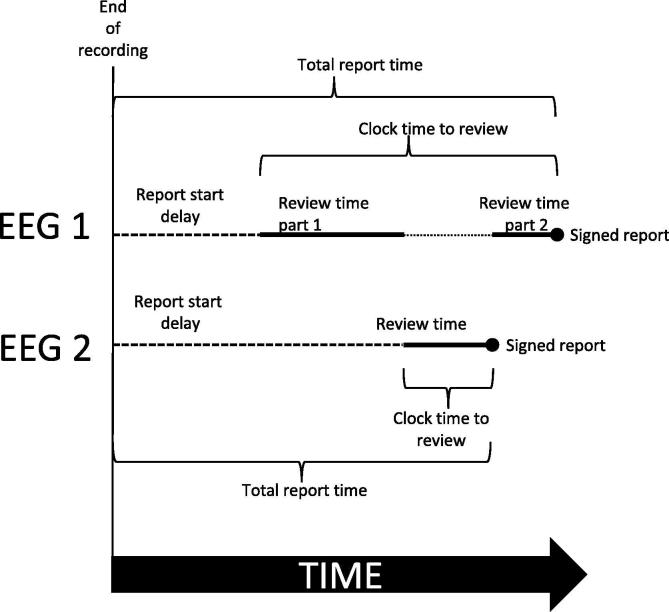

Time intervals were calculated as follows (schematic in Fig. 1):

- The report start delay was calculated as the time from the end of recording until review started.

- The review time was calculated as the total time spent with the study open in SCORE EEG.

- The clock time to review was calculated from the start of review to the time the report was finished.

- The total report time was calculated as the report start delay plus the clock time to review.
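The four interval definitions above can be made concrete with a short Python sketch. It assumes, hypothetically, that each EEG is represented by its recording stop time and a list of `(opened, closed)` review periods from the reporting-software log, with the last period ending when the report is signed; the function name `report_intervals` and this data layout are illustrative and are not part of SCORE EEG.

```python
from datetime import datetime, timedelta


def minutes(delta: timedelta) -> float:
    """Convert a timedelta to minutes."""
    return delta.total_seconds() / 60.0


def report_intervals(recording_stop, review_periods):
    """Return the four intervals (minutes) for one EEG.

    recording_stop: end of the recording (hypothetically from the acquisition system).
    review_periods: (opened, closed) pairs from the reporting log; the last
    period is assumed to end when the report is signed.
    """
    periods = sorted(review_periods)
    review_start = periods[0][0]    # first opening of the study
    report_signed = periods[-1][1]  # end of the last period = report signed
    delay = minutes(review_start - recording_stop)
    clock_time = minutes(report_signed - review_start)
    return {
        "report_start_delay": delay,
        # Only time with the study open counts towards review time.
        "review_time": sum(minutes(end - start) for start, end in periods),
        "clock_time_to_review": clock_time,
        "total_report_time": delay + clock_time,
    }


if __name__ == "__main__":
    stop = datetime(2016, 3, 1, 9, 0)
    periods = [
        (datetime(2016, 3, 1, 9, 40), datetime(2016, 3, 1, 9, 50)),  # interrupted
        (datetime(2016, 3, 1, 10, 5), datetime(2016, 3, 1, 10, 9)),  # re-opened, signed
    ]
    print(report_intervals(stop, periods))
    # {'report_start_delay': 40.0, 'review_time': 14.0,
    #  'clock_time_to_review': 29.0, 'total_report_time': 69.0}
```

As in Fig. 1, an interrupted review makes the clock time to review (29 min in this made-up example) longer than the review time (14 min).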

Fig. 1.

Schematic representation of the time intervals used in this paper, shown for two EEGs (1 and 2). The review of EEG 1 is interrupted by the review of EEG 2, so EEG 1 has two review periods and EEG 2 has one.

The extent of concurrent handling of several EEGs was analyzed by converting study-open periods to non-overlapping review spells using the Stata module SPLITIT (Erhardt et al., 2017). The speed of EEG review relative to EEG recording length was calculated as the ratio of EEG recording length to review time.
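The spell-splitting step can be illustrated with a small Python sweep over interval boundaries. This is not the Stata SPLITIT module itself but a sketch of the same idea, assuming each spell is a `(start, end)` pair for one study opened by one doctor; `split_spells` and `review_speed` are hypothetical names. The speed function assumes speed is recording length divided by review time, the direction consistent with the values in the Results (a 20-min standard EEG reviewed in 12.5 min gives 1.6, close to the reported median of 1.67).

```python
def split_spells(spells):
    """Split possibly overlapping (start, end) spells into consecutive,
    non-overlapping segments tagged with the number of spells open in each.

    Works for any orderable time values (numbers or datetimes).
    """
    # Every start or end point is a potential segment boundary.
    boundaries = sorted({t for spell in spells for t in spell})
    segments = []
    for seg_start, seg_end in zip(boundaries, boundaries[1:]):
        # A spell either covers a whole elementary segment or does not touch it.
        n_open = sum(1 for s, e in spells if s <= seg_start and e >= seg_end)
        if n_open:  # skip gaps where no study was open
            segments.append((seg_start, seg_end, n_open))
    return segments


def review_speed(recording_minutes, review_minutes):
    """Minutes of EEG recording reviewed per minute of review time."""
    return recording_minutes / review_minutes


# Illustrative study-open spells for one doctor, in minutes since first login.
spells = [(0, 10), (5, 15), (20, 25)]
print(split_spells(spells))    # [(0, 5, 1), (5, 10, 2), (10, 15, 1), (20, 25, 1)]
print(review_speed(20, 12.5))  # 1.6
```

The quadratic scan keeps the sketch short; a production version would sort start and end events once and keep a running count.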

Most of the EEGs were reviewed by one of two doctors in training, supervised by certified clinical neurophysiologists. After signing, reports were automatically uploaded into the hospital’s electronic medical record through an HL7-based integration.

The diagnostic significance of the EEG was categorized as normal, no definite abnormality, diffuse or focal cerebral dysfunction, epilepsy, status epilepticus, psychogenic non-epileptic seizures (PNES) and other non-epileptic episodes, or EEG abnormality of uncertain clinical significance. The epilepsy category was used when there was an epileptic seizure during the EEG, or interictal epileptiform discharges in the EEG together with a history of seizures in the referral. Diagnostic significance was further dichotomized as normal (normal and no definite abnormality) or abnormal. Patient age was categorized as <1 year, 1–9, 10–19, 20–39, 40–59 and 60+ years.
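The age bands and the normal/abnormal grouping described above amount to two small lookup rules. A minimal Python sketch, assuming age is given in years and the diagnostic-significance label is one of the category names listed above; the function names and the ASCII labels are hypothetical.

```python
# Lower bounds (years) of the age bands used in the analyses.
AGE_BANDS = [(0, "<1"), (1, "1-9"), (10, "10-19"), (20, "20-39"), (40, "40-59"), (60, "60+")]

# Categories grouped as "normal"; every other category counts as abnormal.
NORMAL_CATEGORIES = {"normal", "no definite abnormality"}


def age_category(age_years):
    """Return the label of the highest band whose lower bound is <= age."""
    label = AGE_BANDS[0][1]
    for lower, band in AGE_BANDS:
        if age_years >= lower:
            label = band
    return label


def is_abnormal(diagnostic_significance):
    """Dichotomize the detailed diagnostic significance into normal/abnormal."""
    return diagnostic_significance.strip().lower() not in NORMAL_CATEGORIES


print(age_category(0.5), age_category(15), age_category(72))  # <1 10-19 60+
print(is_abnormal("No definite abnormality"), is_abnormal("Epilepsy"))  # False True
```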

We first analyzed time intervals by EEG type. We then analyzed EEG review time by diagnostic significance, age, gender and study type. Finally, a regression model including all covariates was fitted. We also investigated time trends in review time over the study period, and the occurrence of simultaneous handling of several EEGs.

As all time intervals had long-tailed distributions, we used the median as the measure of central tendency and the 10th and 90th percentiles as measures of spread. For comparisons of time intervals between two groups, the two-sided non-parametric Wilcoxon rank-sum test was used to test for equality of distributions, and the non-parametric Mood’s median test was used to test for equality of medians. In most analyses, both methods gave highly significant p values, and only the larger of the two p values is reported. For comparisons between several groups, the two-sided non-parametric Kruskal-Wallis test was used. For multivariable modelling, median regression was used. Linear regression was also performed but de-emphasized, because the skewed distribution of time intervals makes its p values unreliable. Statistical analysis was performed in Stata version 15.1.
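The descriptive statistics and the two two-group tests can be sketched in pure Python with large-sample approximations. This is an illustration under stated assumptions, not the Stata procedures used in the study: the rank-sum test uses the normal approximation without a tie correction, Mood's median test uses a Pearson chi-square with one degree of freedom and no continuity correction, and percentile definitions differ slightly between packages. Kruskal-Wallis and median regression are not reproduced, and all function names are hypothetical.

```python
import math
import statistics


def summarize(times):
    """Median, 10th and 90th percentile of a list of times (minutes)."""
    deciles = statistics.quantiles(times, n=10, method="inclusive")
    return statistics.median(times), deciles[0], deciles[-1]


def midranks(values):
    """1-based ranks, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def rank_sum_p(x, y):
    """Two-sided Wilcoxon rank-sum p value (normal approximation, no tie correction)."""
    n1, n2 = len(x), len(y)
    w = sum(midranks(list(x) + list(y))[:n1])
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    return math.erfc(abs(z) / math.sqrt(2))


def median_test_p(x, y):
    """Mood's median test: Pearson chi-square (1 df) on counts above the pooled median."""
    pooled = statistics.median(list(x) + list(y))
    a, b = sum(v > pooled for v in x), sum(v > pooled for v in y)
    c, d = len(x) - a, len(y) - b
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 1.0  # degenerate table: no evidence of a difference
    chi2 = (a + b + c + d) * (a * d - b * c) ** 2 / denom
    return math.erfc(math.sqrt(chi2 / 2))  # upper tail of chi-square with 1 df


def larger_p(x, y):
    """Report the larger (more conservative) of the two p values."""
    return max(rank_sum_p(x, y), median_test_p(x, y))


# Made-up review times (minutes) for two small groups.
normal = [5, 7, 8, 8, 9, 11, 14]
abnormal = [12, 15, 18, 21, 25, 33, 40]
print(summarize(normal))
print(larger_p(normal, abnormal))
```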

3. Results

Estimates showed that 93% of EEGs in the study period were reported in SCORE EEG; the remainder were reported in free text by one senior doctor. We included 5302 standard EEGs and 576 sleep-deprived EEGs reported in SCORE EEG. Of these, 89% were reviewed primarily by junior doctors in training with supervision, and 11% by a certified clinical neurophysiologist alone.

3.1. Overall time intervals

Table 1 shows the median time and variability measures for the report start delay, review time, clock time to review, and total report time. All time intervals were longer for sleep-deprived EEG (p < 0.01).

Table 1.

Time intervals (report start delay, review time, clock time to review, total report time) in minutes for standard and sleep-deprived EEG using SCORE EEG.

| Interval (min) | Standard EEG (n = 5302) | | | Sleep-deprived EEG (n = 576) | | |
|---|---|---|---|---|---|---|
| | Median | 10th centile | 90th centile | Median | 10th centile | 90th centile |
| Report start delay | 46.5 | 14.8 | 166.1 | 55.5 | 14.2 | 245.0 |
| Review time | 12.5 | 5.0 | 36.4 | 20.9 | 9.9 | 56.0 |
| Clock time to review | 13.2 | 5.0 | 68.0 | 25.3 | 10.0 | 147.5 |
| Total report time | 71.3 | 29.0 | 215.4 | 110.8 | 34.7 | 356.1 |

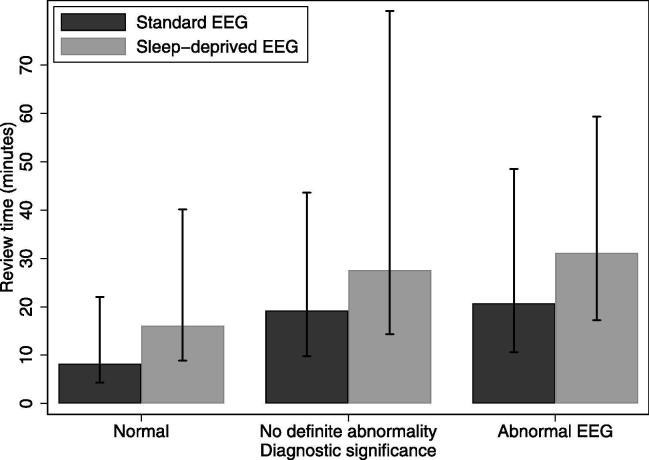

3.2. Review time by abnormality

Fig. 2 shows that the median review time was longer (p < 0.001) and more variable for abnormal EEGs. A normal standard EEG had a median review time of 8.3 min, an EEG classified as no definite abnormality took 19.2 min, while an abnormal standard EEG had a median review time of 20.7 min. Sleep-deprived EEGs had longer and more variable review times (p < 0.001).

Fig. 2.

Review time (median in bars, 10th and 90th centiles in vertical lines) by normal or abnormal diagnostic conclusion of the report, for standard and sleep-deprived EEG using SCORE EEG.

3.3. Review time by type of abnormality

Table 2 shows the distribution of review times for more detailed categories of diagnostic significance. Review time depended on diagnostic significance (p < 0.001). Standard EEGs with diffuse and focal cerebral dysfunction took about the same time to report, while EEGs with interictal epileptiform activity took significantly longer. EEGs with epileptic seizures and status epilepticus took the longest.

Table 2.

Review time (minutes) by categories of diagnostic significance for standard EEG and sleep-deprived EEG using SCORE EEG.

| Diagnostic significance | Standard EEG (n = 5302) | | | Sleep-deprived EEG (n = 576) | | |
|---|---|---|---|---|---|---|
| | Median | 10th centile | 90th centile | Median | 10th centile | 90th centile |
| Normal | 8.3 | 4.3 | 22.0 | 16.1 | 8.9 | 40.2 |
| No definite abnormality | 19.2 | 9.8 | 43.6 | 27.6 | 14.4 | 81.2 |
| Diffuse cerebral dysfunction | 18.0 | 9.1 | 40.2 | 14.4 | 10.9 | 98.8 |
| Focal cerebral dysfunction | 18.1 | 9.4 | 42.3 | 26.5 | 19.2 | 48.7 |
| Epilepsy interictal | 23.1 | 12.7 | 48.9 | 31.0 | 17.0 | 60.4 |
| Status epilepticus | 37.1 | 13.1 | 76.7 | n/a | | |
| Epileptic seizure | 38.6 | 19.2 | 77.2 | 41.0 | 33.7 | 64.4 |
| PNES seizure/other event | 25.0 | 14.7 | 67.1 | 42.3 | 29.3 | 63.5 |
| EEG abnormality of uncertain clinical significance | 22.4 | 13.2 | 57.8 | 29.7 | 19.9 | 47.4 |
| Total | 12.5 | 5.0 | 36.4 | 20.9 | 9.9 | 56.0 |

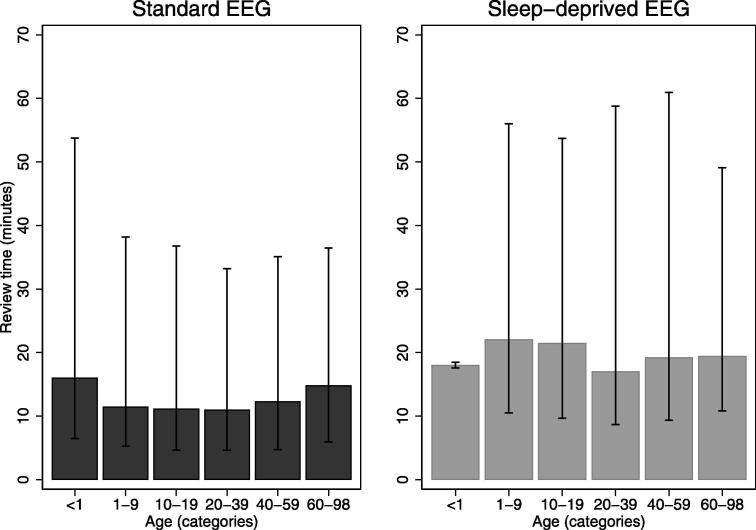

3.4. Review time by age

Fig. 3 shows that review time depended on patient age (p < 0.001). Standard EEGs of patients younger than one year and of those aged 60 years or older had longer review times. (The 10th–90th centile bar for sleep-deprived EEGs in patients younger than one year is short because few such EEGs were recorded.)

Fig. 3.

Review time (median in bars, 10th centile and 90th centile in vertical lines) by age in categories for standard and sleep-deprived EEG using SCORE EEG.

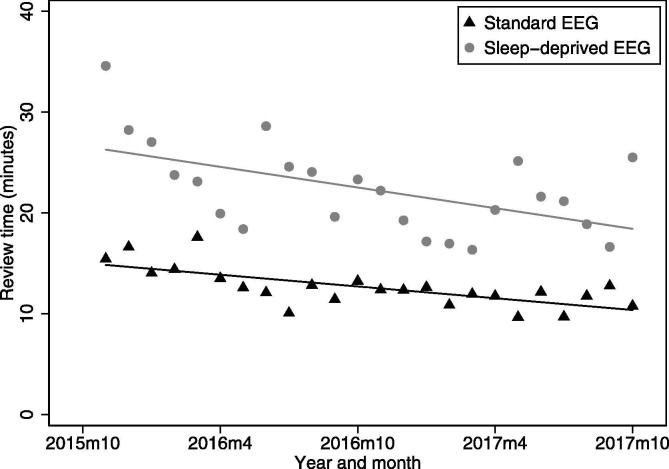

3.5. Trend in review time

Fig. 4 shows a reduction of around 25% in median review time over the two years of this study. The median review time for standard EEG declined from 15.4 min in November 2015 to 10.8 min in October 2017. The median review time for sleep-deprived EEG declined from 34.6 min in October 2015 to 25.5 min in October 2017. Linear trends fitted to the monthly medians showed a decline (r² values 0.29 and 0.49, p < 0.01).

Fig. 4.

Time trends in median EEG review time by calendar month for standard EEG and sleep-deprived EEG using SCORE EEG from October 2015 to October 2017, with a fitted linear trend.

3.6. Regression modelling

Table 3 shows the results of a median regression model for EEG review time with age in categories, normal or abnormal EEG, and study type as predictors. The reference case is a normal standard EEG from a patient aged 10–19 years, with a median review time of 8.6 min (the constant). Adding the coefficients for each variable to the constant should give a reasonable estimate of the median review time for an EEG of that type. The major correlate of a longer EEG review time is an abnormal EEG, followed by study type, with a smaller effect of patient age. This model had limited predictive power, with a pseudo-R² of 0.12. Linear regression of review time showed stronger effects of age and abnormal EEG but a similar effect of study type (data not shown). This probably reflects that review times are more skewed (i.e., include more difficult EEGs) in some categories of age and abnormality than in others.

Table 3.

Regression model for median EEG review time by age in categories, normal or abnormal EEG, and study type, in 5878 standard and sleep-deprived EEGs using SCORE.

| Variable | Category | Coefficient (min) | 95% C.I. | p-value |
|---|---|---|---|---|
| Age category (years) | <1 | 3.0 | 1.3 to 4.6 | <0.001 |
| | 1–9 | 0.1 | −0.7 to 1.0 | 0.72 |
| | 10–19 | (reference category) | | |
| | 20–39 | −0.4 | −1.2 to 0.5 | 0.39 |
| | 40–59 | −0.8 | −1.6 to 0.1 | 0.08 |
| | 60–98 | −2.0 | −2.8 to −1.2 | <0.001 |
| Diagnostic conclusion | Normal | (reference category) | | |
| | No definite abnormality | 11.8 | 10.8 to 12.8 | <0.001 |
| | Abnormal | 13.2 | 12.6 to 13.8 | <0.001 |
| Study type | Standard EEG | (reference category) | | |
| | Sleep-deprived EEG | 7.7 | 6.7 to 8.6 | <0.001 |
| Constant | | 8.6 | 8.0 to 9.2 | |
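As the text notes, a predicted median can be obtained by adding the relevant Table 3 coefficients to the constant. A minimal sketch using the published coefficients (the dictionary keys and the function name are illustrative); because the model is additive with a pseudo-R² of 0.12, the result describes the conditional median for a group of EEGs, not the review time of an individual EEG.

```python
# Median regression coefficients from Table 3 (minutes).
# Reference case: patient aged 10-19 years, normal conclusion, standard EEG.
CONSTANT = 8.6
AGE = {"<1": 3.0, "1-9": 0.1, "10-19": 0.0, "20-39": -0.4, "40-59": -0.8, "60-98": -2.0}
CONCLUSION = {"normal": 0.0, "no definite abnormality": 11.8, "abnormal": 13.2}
STUDY = {"standard": 0.0, "sleep-deprived": 7.7}


def predicted_median_review_time(age_category, conclusion, study_type):
    """Constant plus one coefficient per covariate (reference categories add 0)."""
    return CONSTANT + AGE[age_category] + CONCLUSION[conclusion] + STUDY[study_type]


# Abnormal sleep-deprived EEG in a patient aged 60-98: 8.6 - 2.0 + 13.2 + 7.7
print(round(predicted_median_review_time("60-98", "abnormal", "sleep-deprived"), 1))  # 27.5
# Normal standard EEG in an infant: 8.6 + 3.0
print(round(predicted_median_review_time("<1", "normal", "standard"), 1))  # 11.6
```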

Only one of the junior doctors involved in the study period regularly used the software feature for recording the name of the supervising physician (in 26% of this doctor's EEGs). The minority of EEGs reported by a certified clinical neurophysiologist alone did not have significantly shorter review times (data not shown, p > 0.36).

Patient gender had a small effect of less than one minute, both in univariate analysis (p = 0.02) and in a regression model (p = 0.03); because the effect was small, it was not studied further.

3.7. Total report time

Table 4 shows the total report time in categories. For standard EEG, 72% of EEGs were reported within 2 h (70% of all EEGs), and >99% of all EEGs were reported within 24 h. For standard EEG, the median report start delay was 46.5 min and the median total report time was 71.3 min.

Table 4.

Total report time for standard and sleep-deprived EEG using SCORE EEG.

| Total report time | Standard EEG (n = 5302), % | Sleep-deprived EEG (n = 576), % | Total (n = 5878), % |
|---|---|---|---|
| <1 h | 41.7 | 27.3 | 40.3 |
| 1–2 h | 30.3 | 26.4 | 29.9 |
| 2–4 h | 20.2 | 24.0 | 20.6 |
| 4–24 h | 7.1 | 19.8 | 8.3 |
| >24 h | 0.8 | 2.6 | 0.9 |
| Total | 100.0 | 100.0 | 100.0 |
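The categories in Table 4 can be reproduced by binning each EEG's total report time, and cumulating the rows gives the "within 2 h" figures: 41.7% + 30.3% = 72.0% for standard EEG and 40.3% + 29.9% = 70.2% for all EEGs. A small Python sketch, assuming (hypothetically, since the table does not say) that a value on a boundary belongs to the higher category, e.g., exactly 1 h counts as 1-2 h; all names are illustrative.

```python
from collections import Counter

# Upper bounds (hours, exclusive) of the Table 4 categories; ">24 h" is open-ended.
BOUNDS = [(1, "<1 h"), (2, "1-2 h"), (4, "2-4 h"), (24, "4-24 h")]
LABELS = [label for _, label in BOUNDS] + [">24 h"]


def report_time_category(hours):
    """Assign a total report time (hours) to its Table 4 category."""
    for upper, label in BOUNDS:
        if hours < upper:
            return label
    return ">24 h"  # assumption: exactly 24 h also falls here


def category_percentages(hours_list):
    """Percentage of EEGs in each category, in table order."""
    counts = Counter(report_time_category(h) for h in hours_list)
    return {label: 100.0 * counts[label] / len(hours_list) for label in LABELS}


def cumulative(percentages):
    """Running totals, e.g. the share of EEGs reported within 2 h."""
    running, out = 0.0, {}
    for label, pct in percentages.items():
        running += pct
        out[label] = running
    return out


standard = {"<1 h": 41.7, "1-2 h": 30.3, "2-4 h": 20.2, "4-24 h": 7.1, ">24 h": 0.8}
print(round(cumulative(standard)["1-2 h"], 1))  # 72.0
```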

We investigated how many EEGs were reported simultaneously by one doctor. The median number of concurrently open EEGs was 1, and the mean was 1.5. The median speed of EEG review relative to EEG recording length was 1.67 for standard EEG and 3.0 for sleep-deprived EEG (p < 0.001 for the difference).

4. Discussion

This is the first study to report clinical EEG review time with SCORE EEG. The median review time was 12.5 min for a standard EEG, including supervision. EEG review times were highly variable. A median of 8.3 min to score and report a completely normal standard EEG is reassuring with regard to the concerns expressed about time spent with SCORE EEG (Sperling, 2013, Tatum, 2017).

These results are not representative of the time a beginning resident spends reading EEGs. At the start of the study, both junior doctors had already read >2300 EEGs, and we still observed a reduction in review time, most pronounced for sleep-deprived recordings, possibly reflecting their continued learning and software improvements. Some hospitals use EEG technicians for scoring EEG (personal communication, Sandor Beniczky and Gerhard Visser). Hospitals using this model may find different review times, although these are likely to be of a similar magnitude.

As one of the early-adopter centers involved in the development of SCORE (Beniczky et al., 2013), and as previous users of a structured EEG reporting tool (Aurlien et al., 2004), we had substantial experience and institutional momentum for using SCORE EEG. A center just starting with SCORE EEG may initially find our numbers too optimistic.

The predictors of review time investigated here explain only a small fraction of the variability (13%). Since the main driver of review time is abnormality of the EEG, review time is most likely a function of the complexity of the EEG signal and its clinical context. It is perhaps surprising that the effect of age is small, and that, relative to recording length, a sleep-deprived EEG is reviewed nearly twice as fast as a standard EEG.

There is a substantial discrepancy between the review time and the total report time. One possible explanation is that EEG reviewers multitask, handling several other clinical problems at a time, although we have no data on tasks other than EEG. A substantial fraction of the EEGs reported here are from acutely admitted inpatients (estimated at around 30%, but we have no easily available data on this). In such cases, there is often a clinical need for a fast EEG report, and these semi-acute EEGs probably account for many of the EEGs with a total report time of <1 h. It is local policy that standard and sleep-deprived EEGs usually be reported within 24 h; other hospitals have more relaxed expectations for standard non-acute EEGs (personal communication, Sandor Beniczky). The total report time in other hospitals will reflect case mix, reporting policy, and the types of staff and supervision involved. These data can serve as a benchmark for automation efforts (Shibasaki et al., 2014) and for workforce scaling in low- and middle-income countries. Review time could be measured routinely in any neurodiagnostic service, such as clinical neurophysiology and radiology, to ensure optimal service delivery and balance between modalities. Optimizing working conditions and procedures to speed up delivery of EEG reports may enhance the clinical relevance of EEG.

We have no comparative data for non-SCORE-based EEG reporting. Review times were estimated from the time an EEG was open in the software, without subtracting time spent on non-EEG-related tasks, breaks or other interruptions. The reported review times also include supervision. Review times for some EEGs are probably overestimated because the EEG was left open in the software. Nevertheless, the present data probably reflect actual clinical practice better than a simulated stopwatch setting would.

Because of the long-tailed distribution of time intervals, we used the median for most analyses. Linear regression analysis of review time showed stronger effects for most covariates examined here (data not shown).

In conclusion, the EEG review time for standard and sleep-deprived EEG with SCORE EEG is highly variable, but reasonable. The most important predictor of longer review time is an abnormal EEG.

Acknowledgements

The idea for recording signing time in SCORE came from Dr. Gerhard Visser at Stichting Epilepsie Instellingen, the Netherlands.

Funding

This work was supported by Holberg EEG AS, provider of the SCORE EEG software, in the form of an unrestricted 20% research position at Haukeland University Hospital for the corresponding author from December 1st, 2016 to January 5th, 2018.

Conflict of Interest Statement

Jan Brøgger, Harald Aurlien, Tom Eichele, Henning Olberg, Eivind Aanestad, Ina Hjelland are minority shareholders in Holberg EEG AS. Harald Aurlien is employed by Holberg EEG in a 50% position as Chief Medical Officer. Harald Aurlien and Jan Brøgger were the founders of Holberg EEG AS.

Contributor Information

Jan Brogger, Email: jan.brogger@helse-bergen.no.

Tom Eichele, Email: tom.eichele@helse-bergen.no.

Eivind Aanestad, Email: eivind.aanestad@helse-bergen.no.

Henning Olberg, Email: henning.kristian.olberg@helse-bergen.no.

Ina Hjelland, Email: ina.hjelland@helse-bergen.no.

Harald Aurlien, Email: harald.aurlien@helse-bergen.no.

References

- Aurlien H., Gjerde I.O., Aarseth J.H., Eldoen G., Karlsen B., Skeidsvoll H. EEG background activity described by a large computerized database. Clin. Neurophysiol. 2004;115:665–673. doi: 10.1016/j.clinph.2003.10.019.

- Beniczky S., Aurlien H., Brogger J.C., Fuglsang-Frederiksen A., Martins-da-Silva A., Trinka E. Standardized computer-based organized reporting of EEG: SCORE. Epilepsia. 2013;54:1112–1124. doi: 10.1111/epi.12135.

- Beniczky S., Aurlien H., Brogger J.C., Hirsch L.J., Schomer D.L., Trinka E. Standardized computer-based organized reporting of EEG: SCORE - Second version. Clin. Neurophysiol. 2017;128:2334–2346. doi: 10.1016/j.clinph.2017.07.418.

- Erhardt K., Kuenster R. SPLITIT: Stata module to split chronological overlapping spells in spell data. Statistical Software Components; 2017. https://EconPapers.repec.org/RePEc:boc:bocode:s458022.

- Ganesan K., Appleton R., Tedman B. EEG departments in Great Britain: a survey of practice. Seizure. 2006;15:307–312. doi: 10.1016/j.seizure.2006.02.021.

- Gururangan K., Razavi B., Parvizi J. Utility of electroencephalography: Experience from a U.S. tertiary care medical center. Clin. Neurophysiol. 2016;127:3335–3340. doi: 10.1016/j.clinph.2016.08.013.

- Haider H.A., Esteller R., Hahn C.D., Westover M.B., Halford J.J., Lee J.W. Sensitivity of quantitative EEG for seizure identification in the intensive care unit. Neurology. 2016;87:935–944. doi: 10.1212/WNL.0000000000003034.

- Keenan N., Sadlier L.G. Paediatric EEG provision in New Zealand: a survey of practice. NZ Med. J. 2015;128:43–50.

- Lodder S.S., Askamp J., van Putten M.J. Computer-assisted interpretation of the EEG background pattern: a clinical evaluation. PLoS One. 2014;9:e85966. doi: 10.1371/journal.pone.0085966.

- MacDonald S.L., Cowan I.A., Floyd R.A., Graham R. Measuring and managing radiologist workload: a method for quantifying radiologist activities and calculating the full-time equivalents required to operate a service. J. Med. Imaging Radiat. Oncol. 2013;57:551–557. doi: 10.1111/1754-9485.12091.

- McLane H.C., Berkowitz A.L., Patenaude B.N., McKenzie E.D., Wolper E., Wahlster S. Availability, accessibility, and affordability of neurodiagnostic tests in 37 countries. Neurology. 2015;85:1614–1622. doi: 10.1212/WNL.0000000000002090.

- Moura L.M., Shafi M.M., Ng M., Pati S., Cash S.S., Cole A.J. Spectrogram screening of adult EEGs is sensitive and efficient. Neurology. 2014;83:56–64. doi: 10.1212/WNL.0000000000000537.

- Ng M.C., Gillis K. The state of everyday quantitative EEG use in Canada: a national technologist survey. Seizure. 2017;49:5–7. doi: 10.1016/j.seizure.2017.05.003.

- Nuwer M. Assessment of digital EEG, quantitative EEG, and EEG brain mapping: Report of the American Academy of Neurology and the American Clinical Neurophysiology Society. Neurology. 1997;49:277–292. doi: 10.1212/wnl.49.1.277.

- Shibasaki H., Nakamura M., Sugi T., Nishida S., Nagamine T., Ikeda A. Automatic interpretation and writing report of the adult waking electroencephalogram. Clin. Neurophysiol. 2014;125:1081–1094. doi: 10.1016/j.clinph.2013.12.114.

- Sperling M.R. Commentary on Standardized computer-based organized reporting of EEG: SCORE. Epilepsia. 2013;54:1135–1136. doi: 10.1111/epi.12210.

- State of Victoria. Better Health Channel: EEG Test. Victoria State Government, Australia and St Vincent's Hospital - Neurology Department; 2014. Accessed on 27.10.17. https://www.betterhealth.vic.gov.au/health/conditionsandtreatments/eeg-test

- Tatum W.O. Standard computerized EEG reporting - It's time to even the score. Clin. Neurophysiol. 2017;128:2330–2331. doi: 10.1016/j.clinph.2017.08.021.

- Tatum W.O., Olga S., Ochoa J.G., Munger Clary H., Cheek J., Drislane F. American Clinical Neurophysiology Society Guideline 7: Guidelines for EEG Reporting. J. Clin. Neurophysiol. 2016;33:328–332. doi: 10.1097/WNP.0000000000000319.