Abstract

Background Outpatient providers often do not receive discharge summaries from acute care providers prior to follow-up visits. These outpatient providers may use the after-visit summaries (AVS) that are given to patients to obtain clinical information. It is unclear how effectively AVS support care coordination between clinicians.

Objectives Goals for this effort include: (1) developing usability heuristics that may be applied both for assessment and to guide generation of medical documents in general, (2) conducting a heuristic evaluation to assess the use of AVS for communication between clinicians, and (3) providing recommendations for generating AVS that effectively support both patient/caregiver use and care coordination.

Methods We created a 17-item heuristic evaluation instrument for assessing usability of medical documents. Eight experts used the instrument to assess each of four simulated AVS. The simulations were created using examples from two hospitals and two pediatric patient cases developed by the National Institute of Standards and Technology.

Results Experts identified 224 unique usability problems ranging in severity from cosmetic to catastrophic. Content issues (e.g., missing medical history, marital status of a 2-year-old) were rated as most severe, but widespread formatting and structural problems (e.g., inconsistent indentation, fonts, and headings; confusing ordering of information) were so distracting that they significantly reduced readers' ability to use the documents efficiently. Overall, issues in the AVS from Hospital 2 were more severe than those in the AVS from Hospital 1.

Conclusion The new instrument allowed for quick, inexpensive evaluations of AVS. Usability issues such as unnecessary information, poor organization, missing information, and inconsistent formatting make it hard for patients, caregivers, and clinicians to use the AVS. The heuristics in the new instrument may be used as guidance to adapt electronic health record systems so that they generate more useful and usable medical documents.

Keywords: testing and evaluation, electronic health records and systems, communication barriers, clinical documentation and communications, workarounds and unanticipated consequences, interfaces and usability

CME/MOC-II*

Background and Significance

Sharing data during patient handoffs is particularly important, given the increased rate of medical errors related to care transitions. 1 2 3 Discharge summaries are the recommended mechanism for communicating relevant information from acute care visits to receiving outpatient clinicians. 4 However, several factors inhibit the delivery of discharge summaries to outpatient providers prior to follow-up visits, including lack of interoperability among electronic health record (EHR) systems, incomplete or missing outpatient provider address information, and absence of a deadline for the delivery of these documents. 5 6 7 8 9 10 11 12 13 This has led to a care coordination workaround: out-of-network outpatient clinicians may rely upon patient-facing documents for clinical information even though those documents were not designed for this purpose. 14 The documents handed to patients when they leave an acute care setting go by many names at different institutions, including discharge instructions, patient instructions, clinical summaries, and summary of care documents; here they are called after-visit summaries (AVS).

Having access to information from a recent hospitalization is often invaluable to the receiving clinician, particularly for patients who see multiple providers for complex conditions. 6 15 16 17 Numerous factors currently prevent outpatient providers from reliably receiving discharge summaries prior to patient follow-up visits. Traditional methods of sending discharge summaries, including postal mail and fax, can be slow, are subject to disruptions and delivery failures, and provide limited means of confirming that the documents have reached the intended recipient. Sending and/or receiving institutions may not support electronic transfer of discharge summaries. 10 18 Even when electronic transfer is supported, most EHR systems cannot verify that the correct outpatient clinician has received the message. Timing can also be a barrier: while most hospitals have a policy on timely completion of discharge summaries, they may not have policies on when these are delivered to outpatient providers. Finally, acute care providers often do not know exactly who needs to receive a patient's discharge summary, because patients may not have provided a complete list of treating providers. Seamless sharing of discharge summaries would require significant resources and a shift in policy.

Without access to discharge summaries, outpatient providers may rely upon AVS for clinical information, an “off-label” use that may impact patient safety. Hence, researchers have advised that health care systems adapt AVS so they can better support care coordination. 16 19 What remains unknown is how effectively, efficiently, and satisfactorily the current AVS can support care coordination between clinicians.

One way to find out is to conduct a heuristic evaluation, a process in which three to five experts independently apply a set of design best practices, called heuristics, to identify potential usability problems. 20 Here, usability is defined as “the effectiveness, efficiency, and satisfaction with which specified users achieve specified goals in particular environments.” 21 The expert participants also independently rate the severity of the issues they identify, and all issues and ratings are then analyzed collectively. 22 The experts are not expected to discover the same issues, or even every minor issue; as a group, however, they generally identify the majority of the important usability problems. 23

Proponents of heuristic evaluation, which has been demonstrated to be a viable alternative to more costly usability testing, recommend developing a set of heuristics relevant to the particular type of item being assessed. 20 The heuristics, which may be validated by having experts apply them in a heuristic evaluation, 24 serve both as guidance for developing usable products and as a tool for assessing usability.

Usability evaluation is one aspect of human factors, “the discipline that takes into account human strengths and limitations in the design of interactive systems that involve people, tools, and technology and work environments to ensure safety, effectiveness, and ease of use.” 25 While assessing the usability of printed documents may seem outside the established domain of clinical informatics, if documents generated by EHR systems are unusable by many patients and/or clinicians, the EHR system cannot be used to provide high-quality health care. Issues with EHR usability can result in patient safety events and adverse outcomes. 26 Solutions to these issues require attention to the interactions between clinicians and EHR systems. 27 28 29

Objectives

There are three primary goals for this effort. The first is developing medical document usability heuristics that can be used both to assess how well AVS support care coordination and as guidance to help generate documents that effectively communicate information to clinicians. The second is conducting a heuristic evaluation to determine how effectively AVS from two hospitals, which have EHR systems from two different vendors, support communication between clinicians. The third is developing general recommendations for producing AVS that can be used effectively by patients, caregivers, and any outpatient providers who need to rely upon them to obtain clinical information to support care coordination.

Methods

Development of Usability Heuristics

To identify a set of heuristics for creating and recognizing useful, usable medical documentation, we first reviewed heuristics commonly used to assess medical devices, 30 software user interfaces, 23 31 and online documentation, 32 and then extracted guidance from published literature on quality documentation 33 34 35 and understandable medical writing. 36 37 38 39 A few of the previously established heuristics were retained (e.g., consistency and standards, aesthetic and minimalist design), but the majority were not appropriate for evaluating the usability of medical documents because they were related to technology design (e.g., “always enable a user to undo an action” and “ensure [online] documents are indexed and searchable”).

The guidance extracted from the literature 33 34 35 36 37 38 39 was transformed into additional usability heuristics, after omitting items that were not relevant for assessing usability from an outpatient provider perspective (e.g., ensuring readability scores below an 8th grade level). The new heuristics were added to those that were retained from the initial analysis, yielding a list of 20 candidates. These were summarized in a table which presented each candidate heuristic as a short phrase (e.g., “Color and Contrast”) followed by a description in the form of a question (e.g., “Does the text have sufficient contrast?”) and an example (e.g., “Black or dark gray text on a white or cream background”). The use of questions was intended to make it easier for clinical experts who had not previously participated in heuristic evaluations to use the table. The summary table also grouped the candidate heuristics into five categories to facilitate completion of the heuristic evaluation and data analysis. These categories included:

Readability: The information is presented in a manner that is easy to read.

Comprehensibility: It is easy for the reader to make sense of the information that is presented.

Minimalism: Information is presented as simply and succinctly as possible.

Organization: Information is ordered logically and grouped into reasonably sized sections with prominent and meaningful headings and subheadings.

Content: All the information that is presented is relevant to either a clinical expert or the patient/caregiver and no information needed by either of these parties is missing.

Two clinicians, three human factors engineers, and three patient safety experts reviewed the summary table and provided feedback. Based upon their comments, some candidates were combined (i.e., layout and position; font and capitalization; and structure and format). The remaining 17 medical documentation usability heuristics were incorporated into a data collection instrument that lists the heuristic category, the heuristic name, and a descriptive question (see Table 1 ). This instrument was tested by a human factors engineer who used it to assess usability of two AVS unrelated to the ones used in this study.

Table 1. Medical document usability heuristics.

| Heuristic category | Heuristic name | Description |
|---|---|---|
| Readability | Color and Contrast | Does the text have sufficient contrast? |
| | Layout and Position | Is the layout appealing, clear, and consistent across the document? |
| | Font and Capitalization | Are the font and its size consistent and readable? |
| | Structure and Format | Are the structure and format of each section effective and uniform? |
| Minimalism | Simple and Direct | Are the language and sentence structure simple, direct, specific, concrete, and concise? |
| | Progressive Level of Detail | Does the document present the most important information first, following with increasing levels of detail? |
| Comprehensibility | Terminology | Are complex and technical terms used correctly and consistently? |
| | Clarity of Headings | Are the headings clear and understandable? |
| Content | Clarity of Content | Is the purpose of the material obvious? |
| | Emphasis | Are important points emphasized appropriately? Is it clear why certain text is emphasized? |
| | Context | Does the document include creation or printing date and contact information? |
| | Relevance | Is the content relevant to the patient's condition and context? Is there extraneous information? |
| | Absence/Lack of Information | Is any important content missing? |
| Organization | Grouping | Is the information grouped in a meaningful format? Are the groups reasonably sized? |
| | Order | Is the information ordered logically? |
| | Use of Subheadings | Does the document use prominent and meaningful headings and subheadings? |
| | Navigational Tools | Does the material have navigational tools to help orient the reader? |
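To make the structure of the instrument concrete, the sketch below encodes Table 1 as data and records a potential issue whenever a reviewer judges a heuristic not satisfied, mirroring the first step of the evaluation described under Heuristic Evaluation. This is an illustrative Python sketch, not the authors' tool: the `Heuristic` class, the `potential_issues` function, and the use of a `satisfied` flag are assumptions introduced here. A `satisfied` flag is used instead of the literal yes/no answer because a few questions, such as "Is any important content missing?", are worded so that "yes" signals a problem.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Heuristic:
    category: str  # one of the five heuristic categories
    name: str      # short heuristic name
    question: str  # descriptive question the reviewer answers


# The 17 medical document usability heuristics from Table 1.
HEURISTICS: List[Heuristic] = [
    Heuristic("Readability", "Color and Contrast", "Does the text have sufficient contrast?"),
    Heuristic("Readability", "Layout and Position", "Is the layout appealing, clear, and consistent across the document?"),
    Heuristic("Readability", "Font and Capitalization", "Are the font and its size consistent and readable?"),
    Heuristic("Readability", "Structure and Format", "Are the structure and format of each section effective and uniform?"),
    Heuristic("Minimalism", "Simple and Direct", "Are the language and sentence structure simple, direct, specific, concrete, and concise?"),
    Heuristic("Minimalism", "Progressive Level of Detail", "Does the document present the most important information first, following with increasing levels of detail?"),
    Heuristic("Comprehensibility", "Terminology", "Are complex and technical terms used correctly and consistently?"),
    Heuristic("Comprehensibility", "Clarity of Headings", "Are the headings clear and understandable?"),
    Heuristic("Content", "Clarity of Content", "Is the purpose of the material obvious?"),
    Heuristic("Content", "Emphasis", "Are important points emphasized appropriately? Is it clear why certain text is emphasized?"),
    Heuristic("Content", "Context", "Does the document include creation or printing date and contact information?"),
    Heuristic("Content", "Relevance", "Is the content relevant to the patient's condition and context? Is there extraneous information?"),
    Heuristic("Content", "Absence/Lack of Information", "Is any important content missing?"),
    Heuristic("Organization", "Grouping", "Is the information grouped in a meaningful format? Are the groups reasonably sized?"),
    Heuristic("Organization", "Order", "Is the information ordered logically?"),
    Heuristic("Organization", "Use of Subheadings", "Does the document use prominent and meaningful headings and subheadings?"),
    Heuristic("Organization", "Navigational Tools", "Does the material have navigational tools to help orient the reader?"),
]


def potential_issues(judgments: Dict[str, Tuple[bool, str]]) -> List[dict]:
    """Return one issue record per heuristic the reviewer judged NOT satisfied.

    `judgments` maps a heuristic name to (satisfied, reviewer_note).
    """
    by_name = {h.name: h for h in HEURISTICS}
    issues = []
    for name, (satisfied, note) in judgments.items():
        if not satisfied:
            h = by_name[name]  # a KeyError here flags a misspelled heuristic name
            issues.append({"category": h.category, "heuristic": h.name, "note": note})
    return issues


if __name__ == "__main__":
    print(len(HEURISTICS))  # 17
    print(Counter(h.category for h in HEURISTICS))
    print(potential_issues({
        "Color and Contrast": (True, ""),
        "Font and Capitalization": (False, "Font type and size vary from section to section"),
    }))
```

Keeping the heuristics as plain data makes it straightforward to reuse the same instrument for other document types, such as discharge summaries.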

Simulated AVS Development

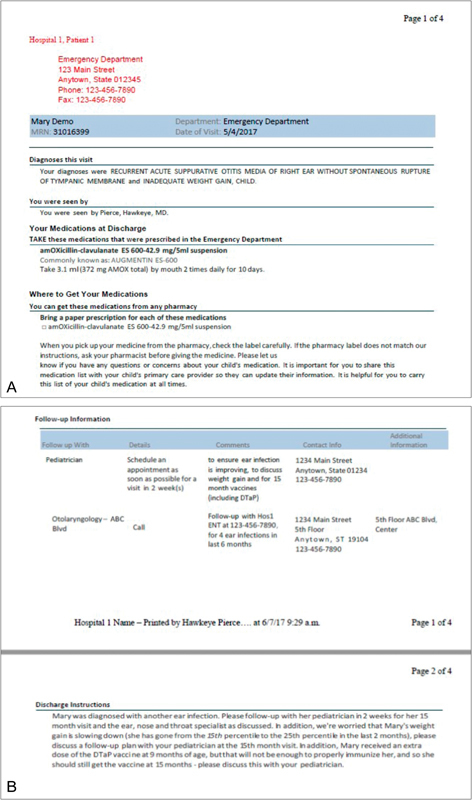

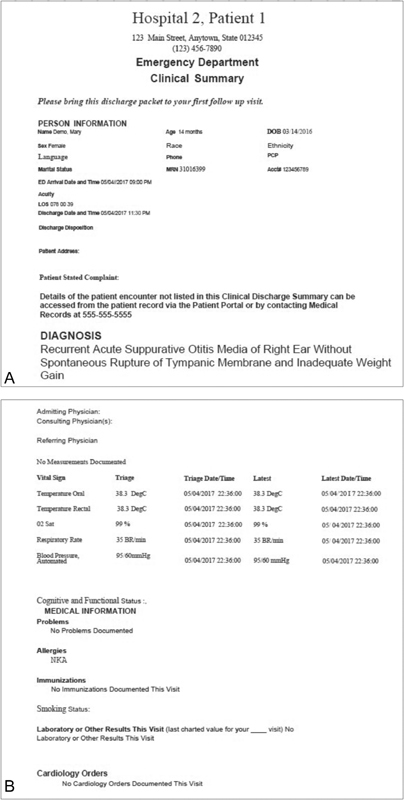

Simulated AVS were created to keep the experts who participated in the heuristic evaluation blinded to the two hospitals that provided examples of AVS generated by their EHR systems. The simulated AVS were populated with patient data from two use cases created by the National Institute of Standards and Technology (NIST). 40 A total of four simulated AVS were developed: each patient use case was used twice, to create simulated AVS based on examples from each hospital. The simulated AVS were reviewed for validity and then evaluated using the new heuristic evaluation instrument. Figs. 1 and 2 show portions of two of the simulated AVS. Complete copies of each of the four simulated AVS may be viewed in the Supplementary Material (available in the online version).

Fig. 1.

( A ) Screenshot of a portion of one of the simulated after-visit summaries (AVS) based upon an example from Hospital 1, showing data from Patient 1. ( B ) Second screenshot of the simulated AVS with Patient 1 data based on an example from Hospital 1.

Fig. 2.

( A ) Screenshot of a portion of one of the simulated after-visit summaries (AVS) based upon an example from Hospital 2, showing data from Patient 1. ( B ) Second screenshot of the simulated AVS with Patient 1 data based on an example from Hospital 2.

Heuristic Evaluation

Four teams, each composed of a human factors expert with experience participating in heuristic evaluations and a clinical expert with no heuristic evaluation experience, independently evaluated each of the four simulated AVS using a stepwise approach. Team-based heuristic evaluation, which reduces the time required of the clinical experts, has previously been used successfully to evaluate medical technology. 41 Each team was given the new heuristic evaluation instrument and instructions on how to apply it to AVS. First, the human factors expert identified potential usability issues based upon the heuristics (that is, instances where the answer to a heuristic description's question was “no”). Then, the human factors expert met with his/her clinical partner, introduced the heuristics, and walked the clinician through the AVS. The clinician identified additional issues and then assigned severity ratings to all issues based upon each issue's clinical significance and/or potential negative impact. The review teams used the 5-point (0–4) severity scale established by Nielsen, 22 which was subsequently used to assess how medical device usability impacts patient safety. 30 Table 2 provides examples of issues that were rated at each of the five severity levels.

Table 2. Examples of issues for each severity rating level.

| Severity rating | Example issue |
|---|---|
| 0 (not an issue) | On page 1, many bold lines separating sections create clutter |
| 1 (cosmetic only) | The terms medicine and medications are used interchangeably |
| 2 (minor) | Date of ED visit does not match date of school note and it is not clear if the school note is in reference to the same ED visit |
| 3 (major) | Discharge instructions are very unclear about whether weight gain or weight loss is a problem. Also, dTap is buried in the instructions and should be highlighted elsewhere because it's important |
| 4 (catastrophic) | Medication instructions list other medications and current medications. It is not clear if the patient is already on ibuprofen and is going to add an additional 200 mg of ibuprofen or if this is the same prescription |

Abbreviation: ED, emergency department.

All the issues reported by the four review teams were aggregated, grouped based upon how they impacted usability, and then paraphrased so duplicates could be removed. After this consolidation process, possible solutions for issues rated 3 (major) or 4 (catastrophic) were developed. In some cases, review teams suggested specific solutions (e.g., “Should address severity of the injury,” “Follow-up table could be adjusted to focus more upon the intended action (call, schedule, etc.),” “should clarify if [medication] was e-prescribed and [include] the pharmacy contact”). All solutions were summarized and grouped so that common themes could be extracted. The themes were transformed into recommendations on how to generate AVS that can effectively support care coordination.
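The severity scale and the selection of issues for solution development can be expressed as a small data model. The Python sketch below is hypothetical: the `Severity` enum, the `Issue` record, `needs_solution`, and the document labels are illustrative names, not part of the study. It assumes the issues have already been consolidated; the paraphrasing and duplicate removal described above are not modeled. The example issues and their ratings are taken from Tables 2 and 3.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import List


class Severity(IntEnum):
    """Nielsen's 0-4 severity scale, as applied by the review teams."""
    NOT_AN_ISSUE = 0
    COSMETIC = 1
    MINOR = 2
    MAJOR = 3
    CATASTROPHIC = 4


@dataclass
class Issue:
    document: str     # hypothetical document label, e.g. "AVS A"
    category: str     # heuristic category from Table 1
    description: str  # consolidated (paraphrased, deduplicated) wording
    severity: Severity


def needs_solution(issues: List[Issue]) -> List[Issue]:
    """Keep issues rated 3 (major) or 4 (catastrophic), most severe first."""
    selected = [issue for issue in issues if issue.severity >= Severity.MAJOR]
    # sorted() is stable even with reverse=True, so equal-severity issues keep their order.
    return sorted(selected, key=lambda issue: issue.severity, reverse=True)


if __name__ == "__main__":
    issues = [
        Issue("AVS A", "Comprehensibility",
              "The terms medicine and medications are used interchangeably", Severity.COSMETIC),
        Issue("AVS B", "Content",
              "Unclear whether weight gain or weight loss is a problem", Severity.MAJOR),
        Issue("AVS C", "Content",
              "Discharge instructions are missing", Severity.CATASTROPHIC),
    ]
    for issue in needs_solution(issues):
        print(issue.severity.name, "-", issue.description)
```

Using an `IntEnum` keeps the ratings comparable as numbers (so averages and thresholds work directly) while still printing readable level names.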

Results

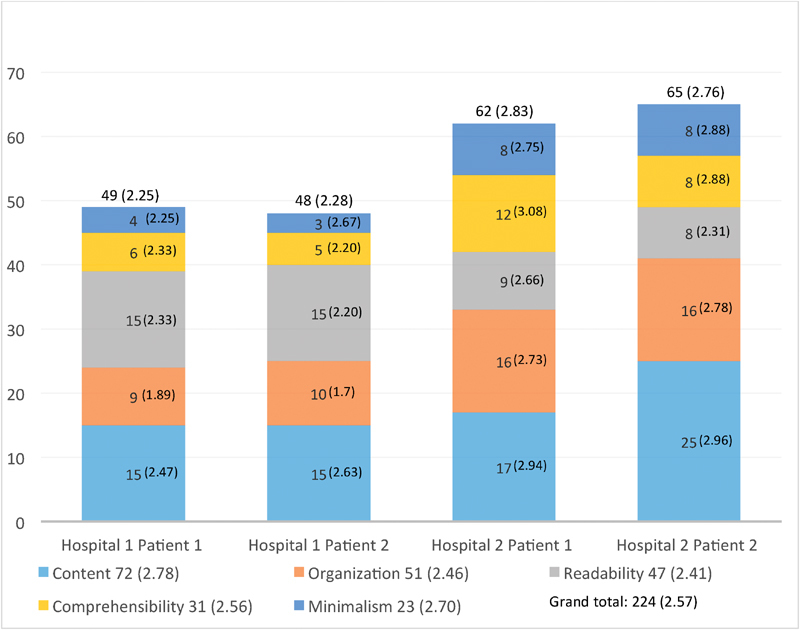

The expert reviewers identified 224 distinct usability problems across the four simulated AVS, ranging in severity from cosmetic (e.g., “Diagnoses are in super-big font but it's unclear why”) to catastrophic (e.g., “Discharge instructions are missing”). Using the 5-point (0–4) severity scale, the average severity rating across all identified issues was 2.57 (between minor and major). The numbers of issues and average severity ratings for each simulated document, broken down by heuristic category, are shown in Fig. 3 .

Fig. 3.

Results of heuristic evaluation. Number of usability issues and average severity ratings for each document, broken down by heuristic category. Total number of issues of each category across all documents and average severity ratings for these categories are shown in the legend. Average severity ratings for each category within each document are shown in parentheses in the relevant portion of each bar.

A two-way analysis of variance with Hospital and Patient as factors yielded a significant main effect of Hospital, F (1, 240) = 23.38, p < 0.001, indicating that the average severity ratings for Hospital 2's AVS (2.83 for Patient 1 and 2.76 for Patient 2) were significantly higher than those for Hospital 1's AVS (2.25 and 2.28, respectively). More issues were also identified in the documents from Hospital 2 (62 and 65) than in those from Hospital 1 (49 and 48). Neither the main effect of Patient, F (1, 240) = 0.047, p = 0.83, nor the Hospital–Patient interaction, F (1, 240) = 0.071, p = 0.79, was significant.
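As an arithmetic cross-check, weighting each document's average severity by its number of issues should approximately reproduce the overall figures reported above. The sketch below uses only the reported counts and averages; the `weighted_mean` helper is introduced here for illustration. Because the per-document averages are rounded to two decimals, the recovered overall mean (about 2.565) agrees with the reported 2.57 only to within rounding. The sketch does not reproduce the analysis of variance, which requires the individual ratings.

```python
# Issue counts and average severity ratings per simulated AVS, as reported above.
documents = {
    ("Hospital 1", "Patient 1"): (49, 2.25),
    ("Hospital 1", "Patient 2"): (48, 2.28),
    ("Hospital 2", "Patient 1"): (62, 2.83),
    ("Hospital 2", "Patient 2"): (65, 2.76),
}


def weighted_mean(rows):
    """Mean severity over all issues, weighting each document's mean by its issue count."""
    rows = list(rows)  # allow any iterable of (count, mean) pairs
    total = sum(count for count, _ in rows)
    return sum(count * mean for count, mean in rows) / total


total_issues = sum(count for count, _ in documents.values())
overall = weighted_mean(documents.values())
hospital_means = {
    hospital: weighted_mean(v for (h, _), v in documents.items() if h == hospital)
    for hospital in ("Hospital 1", "Hospital 2")
}

print(total_issues)                                          # 224
print(round(overall, 3))                                     # 2.565
print({h: round(m, 2) for h, m in hospital_means.items()})   # {'Hospital 1': 2.26, 'Hospital 2': 2.79}
```

The total of 224 matches the number of distinct issues reported in the Results, and the per-hospital means make the Hospital effect visible without any modeling.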

In all four documents, content issues were the most prevalent (accounting for 32% of problems overall). The majority of content issues were related to missing information, but reviewers also found unnecessary personal information (e.g., marital status of a 2-year-old) and vague language (e.g., lack of clarity about whether weight gain or weight loss is a problem). Content issues also had the highest average severity ratings for three of the four AVS. The exception was the AVS for Patient 1 from Hospital 2, for which comprehensibility issues, primarily related to complex technical terms or a lack of clear, understandable headings, were rated highest. Some content issues were consistent across hospitals: the AVS for Patient 1 were missing medical history and allergies, whereas those for Patient 2 did not include the severity of injury.

Meanwhile, most readability issues, including poor contrast, unclear layout, and haphazard changes in both font size and indentation, tended to be consistent across both AVS from the same hospital. For example, reviewer comments on the Hospital 1 AVS included “excessive and inconsistent use of horizontal lines instead of a clear heading and subheading structure adds visual clutter,” and comments on the Hospital 2 AVS included “The font type and size varies so much, it makes it hard to tell what is really important.” Similarly, most organization issues, such as nonintuitive ordering, lack of grouping, inconsistent heading styles, and poor use of subheadings, were consistent across both simulated AVS from a given institution. Although readability and organization issues had lower average severity ratings than content issues, reviewers' comments indicated that they significantly impaired readers' ability to extract relevant information effectively and efficiently (e.g., “very hard to figure out where the important information really is”).

At least one reviewer rated multiple content, comprehensibility, readability, and organization issues as catastrophic; no minimalism issue received this rating, as minimalism issues tended to involve unnecessarily duplicated information. The 12 catastrophic issues are shown in Table 3.

Table 3. Usability issues rated 4 (catastrophic) by at least 1 expert reviewer.

| Catastrophic usability issues |
|---|
| Discharge instructions are missing. Severity of condition is not conveyed |
| No information explaining inadequate weight gain, which seems clinically important |
| Diagnosis description is not clear. Initial encounter is written twice but it is not clear if the initial encounter is from a fall, a sports accident, or a fall during a sports accident |
| Medication instructions list other medications and current medications. It is not clear if the patient is already on ibuprofen and is going to add an additional 200 mg of ibuprofen or if this is the same prescription |
| Care Plan & Goals is highly confusing. Medications section is unnecessarily complex and redundant |
| Follow-up contact is listed under Patient Education Information |
| Order of information is confusing throughout |
| Lack of structure makes navigation within the document difficult |
| Highly inconsistent indentation, use of columns, font size and type, spacing, tables, and even footer text all contribute to a structure that is difficult to discern, hard to read, and very confusing |
| Lack of color/contrast and seemingly arbitrary use of large text and bold text make determining, let alone understanding, emphasis very difficult |
| Some subheaders/sections are in larger text than parent header(s) |
| Formatting and page-break issues contribute to problems grouping information |

Recommendations to Improve AVS to Better Support Care Coordination

1. Ensure that clinical information needed by the outpatient providers who will provide follow-up care is included in AVS. The scope of AVS needs to be expanded to support use by outpatient providers. AVS should contain any clinical information about inpatient visits that outpatient providers need to coordinate care for the patients, including the six items that the Joint Commission mandates be included in discharge summaries: reason for hospitalization, significant findings, procedures and treatments provided, patient's condition at discharge, patient/family instructions, and attending physician's signature. 4 Examples of other necessary clinical information that reviewers identified as missing from at least one of the AVS in our review are: patient medical, surgical, and family history, medication reconciliation information, contact information for the inpatient physician, medication side effects, vital signs, treatment and testing done during the visit, and pending test results.

2. Place information provided specifically for clinicians in a separate, clearly labeled section, or in clearly labeled subsections. Discharging clinicians should have the option to insert additional information into this section or these sections so that both patients and clinicians can easily recognize text directed toward clinicians.

3. Establish a standardized order and format for presenting information, with patient diagnoses near the beginning of the document. Inpatient provider organizations should work with EHR vendors to ensure that all documents produced by their EHRs feature consistent font size, font type, indents, and spacing throughout. The standardized format for AVS, which should be developed based upon input from outpatient providers as well as other stakeholders, should include standard, consistently formatted headers and subheaders, presented in a standard order. This will improve readability while allowing outpatient providers to easily find the information most relevant to them.

4. If multiple diagnoses are present, make sure that they are clearly defined and differentiated, and that the primary diagnoses are explained first. It is important that all diagnoses are documented and that it is clear which diagnoses were primarily responsible for the acute visit.

5. Ensure that the content matches the headings and subheadings within each section. During the heuristic evaluation, expert reviewers found that vital signs were buried under discharge instructions rather than appearing in the visit summary section, and that instructions on what issues to look for and on follow-up care were listed under general information rather than with the discharge instructions. Additionally, in some cases medical instructions were commingled with other medical information and could therefore be missed by patients and caregivers.

6. Make certain that information is clear and concise. Minimize or omit extraneous information (that is, information not needed by either the patient/caregiver or a clinician trying to coordinate care) to highlight the most important information. This is especially important when giving instructions on follow-up care and when describing the diagnosis and treatment plans. The expert review teams found that some descriptions were too wordy and did not convey the information clearly.

7. Ensure appropriate use of medical, nonmedical, and billing terminology. The diagnosis in one of the simulated AVS was “Recurrent Acute Suppurative Otitis Media of Right Ear Without Spontaneous Rupture of Tympanic Membrane.” This is billing terminology from the International Classification of Diseases, Tenth Revision. Since AVS may be used by different audiences, it is important that they include language that addresses the needs of each. For example, the diagnosis section should include both medical and nonmedical terminology to make it usable by both patients and outpatient health care providers.

Recommendations 3 and 5 are consistent with recent work describing how AVS provided by acute care and/or outpatient providers could be improved so they would be easier for patients and caregivers to use, 17 42 43 44 45 and 3, 4, and 5 are consistent with a recent compilation of practical recommendations for making hospital discharge summaries more usable for outpatient clinicians. 46 Recommendations 1, 2, and 7 are specifically aimed at ensuring that AVS can be used effectively both by clinicians using them to support care coordination and by patients and caregivers. Recommendation 6 must be satisfied by inpatient providers, but they will be able to fulfill it more easily if they understand how their EHR system pulls information into AVS.
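Recommendations 1 and 3 lend themselves to automated checks inside the module that generates AVS. The Python sketch below is a hypothetical illustration, not a feature of any EHR product: it reports which of the six Joint Commission elements are absent from a generated document and flags sections that violate a standard order. It assumes each generated section is tagged with the name of the element it covers. Apart from placing diagnoses first (Recommendation 3) and including a clinician-directed section (Recommendation 2), the section names and their order in `STANDARD_ORDER` are assumptions made for the example; the recommendations call for the real standard to be developed with input from outpatient providers and other stakeholders.

```python
from typing import List

# The six items the Joint Commission mandates for discharge summaries (Recommendation 1).
JOINT_COMMISSION_ELEMENTS = [
    "reason for hospitalization",
    "significant findings",
    "procedures and treatments provided",
    "patient's condition at discharge",
    "patient/family instructions",
    "attending physician's signature",
]

# HYPOTHETICAL standard section order. Only "diagnoses first" (Recommendation 3) and a
# clinician-directed section (Recommendation 2) come from the text; the rest is assumed.
STANDARD_ORDER = [
    "Diagnoses",
    "Visit Summary",
    "Medications",
    "Discharge Instructions",
    "Follow-Up Care",
    "Information for Clinicians",
]


def missing_elements(present: List[str]) -> List[str]:
    """Return required elements not found (case-insensitively) among the tagged sections."""
    have = {item.lower() for item in present}
    return [element for element in JOINT_COMMISSION_ELEMENTS if element not in have]


def out_of_order(sections: List[str]) -> List[str]:
    """Return sections that appear after a section the standard order places later.

    Section names not listed in STANDARD_ORDER are ignored by this check.
    """
    rank = {name: position for position, name in enumerate(STANDARD_ORDER)}
    flagged: List[str] = []
    furthest = -1  # highest standard position seen so far
    for section in sections:
        if section not in rank:
            continue
        if rank[section] < furthest:
            flagged.append(section)
        furthest = max(furthest, rank[section])
    return flagged


if __name__ == "__main__":
    generated = ["Visit Summary", "Medications", "Diagnoses", "Discharge Instructions"]
    print(out_of_order(generated))  # ['Diagnoses']
    print(missing_elements(["Reason for hospitalization", "Patient/family instructions"]))
```

Checks like these catch only structural omissions and ordering problems; whether content is clear, relevant, and correctly placed under its heading (Recommendations 5 through 7) still requires human review, for example with the heuristics in Table 1.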

Discussion

EHR systems have the potential to improve care coordination by facilitating knowledge transfer. However, difficulties in sharing information are frequent and there is not a consistent or effective way to manage communications to all the care providers that may need information on a patient's recent treatment. 9 10 11 12 13 As a result, in the near future, discharge summaries may continue to be widely unavailable to outpatient providers prior to follow-up visits. Until it is possible to efficiently and consistently transfer information across EHR systems, AVS will continue to bridge communication gaps between inpatient and outpatient providers. Given this situation, it is important to understand the “off-label” use of AVS by outpatient providers, and associated usability issues and patient safety risks.

The heuristic evaluation reported here contributes to this understanding. This study revealed several issues that make it difficult for outpatient providers to use current AVS to support care coordination effectively. In particular, missing, difficult-to-find, and hard-to-comprehend information puts patients at risk. Given that AVS were not originally intended to support clinical communication, it was not surprising that expert reviewers reported that some of the clinical information desired by outpatient providers was missing. However, the presence of unnecessary information, which introduces clutter and confusion, was surprising. Moreover, the fact that the documents were so hard to read because of stylistic and organizational problems was disquieting. The good news is that these issues should be relatively easy to fix, though fixing them will require collaboration between acute care providers and their EHR vendors. Content problems may be harder to address, particularly if inpatient providers are not aware of how information is pulled from EHRs to generate AVS; however, much of the information needed to support care coordination should already be available in the EHRs. It is in patients' best interests for acute care providers to work with EHR vendors to modify their systems so that the information outpatient providers need for care coordination is pulled into AVS. While making this modification, the modules that produce the AVS should also be changed to prevent the types of structural and formatting issues our expert review teams identified. This will help both the readers for whom AVS were originally intended (patients and caregivers) and the outpatient providers who must rely upon them for information about recent acute care visits.

There are some limitations to this study. The new heuristics were not assessed for reliability; however, four were extracted from commonly used sets of usability heuristics, and the remainder were developed based upon published guidance on creating useful medical documentation. This study itself provides some evidence of validity: the new set of heuristics was used successfully by our eight experts. Another limitation is that other possible severity rating scales were not considered. 47 48 49 50 Future work should explore whether another rating scale might be more useful for obtaining judgments from clinical experts about usability issues found in medical documents.

A larger sample of AVS and additional review teams would have improved the strength of this study and may have uncovered additional problems. Extended studies that include larger samples from more hospitals using different EHR vendors should be easier in the future given the heuristics that were created in this effort. Additionally, the use of simulated documents limits the generalizability of the recommendations. However, the use of NIST standard cases allows for testing under typical real-world conditions and supports replication of the process. Moreover, a concerted effort was made to transform document-specific suggestions for improvement into more broadly applicable recommendations for generating useful, usable AVS.

Continuing this research using actual AVS would provide additional insight into how clinicians use the EHR and add to the body of evidence. Finally, this study only addresses a single piece of the continuity of care puzzle. A logical next step is to apply the new heuristic evaluation instrument to evaluate the usability of discharge summaries, as these are the intended documents for coordination of care.

Conclusion

All three objectives for this study were met. First, a new set of medical documentation usability heuristics was developed by extracting relevant information from multiple sources, including literature on writing comprehensible medical documents and previously established medical device usability heuristics. The new heuristics were reviewed by multiple human factors, clinical and patient safety experts, and validated by having a human factors engineer apply them to evaluate usability of two AVS unrelated to those used in the study reported here.

Second, a heuristic evaluation instrument containing the new heuristics was used to quickly and inexpensively evaluate four simulated AVS. This evaluation, which included four clinical and four human factors experts, grouped into four two-person teams, identified many issues in the AVS that would make it hard for outpatient providers to use these AVS to coordinate care. In particular, some of the AVS were missing clinically relevant information such as family medical history. The AVS were not designed to support care coordination, so this result is not surprising. However, the AVS also had so many organizational and formatting issues that our expert reviewers concluded that it would be difficult for clinicians to locate the information they need for care coordination. Since acute care discharge summaries frequently do not reach outpatient providers prior to patient follow-up visits, AVS must be redesigned so they can also support care coordination. The results reported here indicate that relying upon current AVS to obtain clinical information about inpatient visits could negatively impact patient safety.

Third, by analyzing the usability issues identified by the expert reviewers, we produced several broad recommendations for generating AVS that effectively support care coordination and are useful to patients and caregivers. These recommendations included providing additional clinical information in sections or subsections that are clearly labeled as directed toward clinicians, establishing a logical, standardized order for presenting information, and ensuring that content is related to the section or subsection headings under which it is placed. Since outpatient providers will likely need to continue relying upon AVS to obtain information about recent acute care visits in the near future, acute care provider organizations are advised to use these recommendations to generate “dual-purpose” AVS. This would not only make it easier for clinicians to use AVS to support care coordination, but also make it easier for patients and caregivers to use the AVS to support home care. Finally, the 17 medical document usability heuristics developed under this effort may be used by care providers and vendors to assess any documents generated by their EHR systems to communicate clinical information, and/or to guide modifications to the portions of these systems that generate these documents.

Clinical Relevance Statement

Until it is possible to efficiently and consistently transfer information across EHR systems, AVS will continue to bridge communication gaps between inpatient and outpatient providers. Organizations providing acute care should adapt their EHR systems so they will produce AVS that better support care coordination. These organizations may use the recommendations and medical documentation usability heuristics described in this article to guide the adaptation of their EHR systems.

Multiple Choice Questions

1. Why do outpatient providers use AVS to learn about recent acute care visits?

a. EHR systems are not interoperable.

b. Inpatient provider organizations do not have accurate contact information for patients' outpatient providers.

c. There are no requirements that discharge summaries be sent to outpatient providers within a specific timeframe.

d. All of the above.

Correct Answer: The correct answer is option d. There are a variety of factors that can prevent outpatient providers from receiving discharge summaries about acute care visits in advance of patients arriving for follow-up visits, including lack of interoperability among EHR systems, incomplete or missing outpatient provider address information, and absence of a deadline for the delivery of these documents.

2. Which heuristic category is matched correctly with an explanation or with an example that represents the category?

a. Minimalism – Complex and technical terms are used correctly and consistently, and headers are clear and easy to understand.

b. Formatting – Multiple fonts and various headings are used throughout to demonstrate the versatility of the system.

c. Organization – The information in the document is ordered logically and is grouped into reasonably sized sections with prominent and meaningful headings and subheadings.

d. Readability – The AVS content is all relevant to the patient's condition and context; there is no extraneous information and important points are emphasized appropriately. No essential information is missing.

Correct Answer: The correct answer is option c. We define the five categories of heuristics used for our evaluation as follows:

Readability: The information is presented in a manner that is easy to read.

Comprehensibility: It is easy for the reader to make sense of the information that is presented.

Minimalism: Information is presented as simply and succinctly as possible.

Organization: Information is ordered logically and grouped into reasonably sized sections with prominent and meaningful headings and subheadings.

Content: All the information that is presented is relevant to either a clinical expert or the patient/caregiver and no information needed by either of these parties is missing.

Acknowledgments

The authors gratefully acknowledge the helpful contributions provided by the following: Dr. Susan Day, Dr. Elena Huang, Dr. Kate Kellog, Dr. Ellen Deutsch, Dr. Karen Schoelles, Dr. Thomasine Gorry, Dr. A. Zach Hettinger, Dr. Rollin J. Fairbanks, Raj Ratwani, PhD, Dr. Lorraine Possanza, Jeremy Suggs, PhD, Dr. Evan Orenstein, Dr. Levon Utidjian, Dr. Anthony Luberti, Dr. Eric Shelov, Dr. Joel Betesh, Dr. Kai Xu, and Dustin Fife, PhD.

Funding Statement

This effort was fully funded by ECRI Institute.

Conflict of Interest: None.

Protection of Human and Animal Subjects

This study was performed in compliance with the World Medical Association Declaration of Helsinki on Ethical Principles for Medical Research Involving Human Subjects and was reviewed by ECRI's Institutional Review Board.

Supplementary Material

References

1. Yeaman B, Ko K J, Alvarez del Castillo R. Care transitions in long-term care and acute care: health information exchange and readmission rates. Online J Issues Nurs. 2015;20(03):5.

2. Moore C, Wisnivesky J, Williams S, McGinn T. Medical errors related to discontinuity of care from an inpatient to an outpatient setting. J Gen Intern Med. 2003;18(08):646–651. doi: 10.1046/j.1525-1497.2003.20722.x.

3. Hoyer E H, Odonkor C A, Bhatia S N, Leung C, Deutschendorf A, Brotman D J. Association between days to complete inpatient discharge summaries with all-payer hospital readmissions in Maryland. J Hosp Med. 2016;11(06):393–400. doi: 10.1002/jhm.2556.

4. Kind A J, Smith M A. Documentation of mandated discharge summary components in transitions from acute to subacute care. In: AHRQ Patient Safety: New Directions and Alternative Approaches. Rockville, MD: Agency for Healthcare Research and Quality; 2008:179–188.

5. Jones C D, Cumbler E, Honigman B et al. Hospital to post-acute care facility transfers: identifying targets for information exchange quality improvement. J Am Med Dir Assoc. 2017;18(01):70–73. doi: 10.1016/j.jamda.2016.09.009.

6. Coghlin D T, Leyenaar J K, Shen M et al. Pediatric discharge content: a multisite assessment of physician preferences and experiences. Hosp Pediatr. 2014;4(01):9–15. doi: 10.1542/hpeds.2013-0022.

7. Solan L G, Sherman S N, DeBlasio D, Simmons J M. Communication challenges: a qualitative look at the relationship between pediatric hospitalists and primary care providers. Acad Pediatr. 2016;16(05):453–459. doi: 10.1016/j.acap.2016.03.003.

8. Shen M W, Hershey D, Bergert L, Mallory L, Fisher E S, Cooperberg D. Pediatric hospitalists collaborate to improve timeliness of discharge communication. Hosp Pediatr. 2013;3(03):258–265. doi: 10.1542/hpeds.2012-0080.

9. The Office of the National Coordinator for Health Information Technology. Report to Congress April 2015: Report on Health Information Blocking. Washington, DC: Health IT; 2015.

10. Adler-Milstein J, Jha A K. Health information exchange among U.S. hospitals: who's in, who's out, and why? Healthc (Amst). 2014;2(01):26–32. doi: 10.1016/j.hjdsi.2013.12.005.

11. Iroju O, Soriyan A, Gambo I, Olaleke J. Interoperability in healthcare: benefits, challenges and resolutions. Int J Innovat Appl Stud. 2013;3(01):262–270.

12. Khennou F, Khamlichi Y I, Chaoui N EH. Evaluating electronic health records interoperability. In: International Conference on Information and Software Technologies; 2017:106–118.

13. Marchibroda J. Health policy brief: interoperability. Health Aff. 2014. Available at: https://www.healthaffairs.org/do/10.1377/hpb20140811.761828/full/. Accessed July 22, 2018.

14. Gorry T. Harnessing the power of the EMR to improve written communications. Presentation at “Transforming Health IT by Embedding Safety”; 2017 Partnership for Health IT Patient Safety Meeting, Plymouth Meeting, PA.

15. ECRI Institute Patient Safety Organization deep dive: care coordination. Available at: https://www.ecri.org/components/PSOCore/Pages/DeepDive0915_CareCoordination.aspx?tab=2. Accessed April 28, 2018.

16. Bansard M, Clanet R, Raginel T. Proposal of standardised and logical templates for discharge letters and discharge summaries sent to general practitioners [in French]. Sante Publique. 2017;29(01):57–70.

17. Federman A D, Sanchez-Munoz A, Jandorf L, Salmon C, Wolf M S, Kannry J. Patient and clinician perspectives on the outpatient after-visit summary: a qualitative study to inform improvements in visit summary design. J Am Med Inform Assoc. 2017;24(e1):e61–e68.

18. Lim S Y, Jarvenpaa S L, Lanham H J. Barriers to interorganizational knowledge transfer in post-hospital care transitions: review and directions for information systems research. J Manage Inf Syst. 2015;32(03):48–74.

19. Newnham H, Barker A, Ritchie E, Hitchcock K, Gibbs H, Holton S. Discharge communication practices and healthcare provider and patient preferences, satisfaction and comprehension: a systematic review. Int J Qual Health Care. 2017;29(06):752–768. doi: 10.1093/intqhc/mzx121.

20. Nielsen J, Molich R. Heuristic evaluation of user interfaces. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems; 1990:249–256.

21. ISO 9241–11. Ergonomic requirements for office work with visual display terminals (VDTs), Part 11: guidelines for specifying and measuring usability. Geneva: International Organization for Standardization; 1997.

22. Nielsen J. Reliability of severity estimates for usability problems found by heuristic evaluation. Proceedings. 1992:129–130.

23. Nielsen J. 10 usability heuristics for user interface design. 1995. Available at: https://www.nngroup.com/articles/ten-usability-heuristics/. Accessed April 28, 2018.

24. Hermawati S, Lawson G. Establishing usability heuristics for heuristics evaluation in a specific domain: is there a consensus? Appl Ergon. 2016;56:34–51. doi: 10.1016/j.apergo.2015.11.016.

25. Human Factors Engineering. [updated June 2017]. Available at: https://psnet.ahrq.gov/primers/primer/20. Accessed April 28, 2018.

26. Howe J L, Adams K T, Hettinger A Z, Ratwani R M. Electronic health record usability issues and potential contribution to patient harm. JAMA. 2018;319(12):1276–1278. doi: 10.1001/jama.2018.1171.

27. Ratwani R M, Hettinger A Z, Fairbanks R J. Barriers to comparing the usability of electronic health records. J Am Med Inform Assoc. 2017;24(e1):e191–e193.

28. Lowry S Z, Ramaiah M, Patterson E S et al. Technical Evaluation, Testing, and Validation of the Usability of Electronic Health Records: Empirically Based Use Cases for Validating Safety-Enhanced Usability and Guidelines for Standardization. NIST Interagency/Internal Report (NISTIR) 7804–1; 2015.

29. Middleton B, Bloomrosen M, Dente M A et al. Enhancing patient safety and quality of care by improving the usability of electronic health record systems: recommendations from AMIA. J Am Med Inform Assoc. 2013;20(e1):e2–e8.

30. Zhang J, Johnson T R, Patel V L, Paige D L, Kubose T. Using usability heuristics to evaluate patient safety of medical devices. J Biomed Inform. 2003;36(1-2):23–30.

31. Shneiderman B. Designing the User Interface: Strategies for Effective Human-Computer Interaction. New Delhi, India: Pearson Education India; 2010.

32. Kantner L, Shroyer R, Rosenbaum S. Structured heuristic evaluation of online documentation. In: IEEE International Professional Communication Conference; 2002:331–342.

33. Simply put: a guide for creating easy-to-understand materials; 2009. Available at: https://www.cdc.gov/healthliteracy/pdf/Simply_Put.pdf. Accessed April 28, 2018.

34. Readability Formulas. How to improve the readability of anything you write. Available at: http://readabilityformulas.com/articles/how-to-improve-the-readability-of-anything-you-write.php. Accessed April 28, 2018.

35. Plain Language Action and Information Network. Federal plain language guidelines; 2011. Available at: http://www.plainlanguage.gov/howto/guidelines/FederalPLGuidelines/FederalPLGuidelines.pdf. Accessed April 28, 2018.

36. Centers for Medicare and Medicaid Services. Toolkit for making written material clear and effective. Part; 2010. Available at: https://www.cms.gov/Outreach-and-Education/Outreach/WrittenMaterialsToolkit/index.html. Accessed April 28, 2018.

37. Medline Plus. How to write easy-to-read health materials; 2013. Available at: https://medlineplus.gov/etr.html. Accessed April 28, 2018.

38. Badarudeen S, Sabharwal S. Assessing readability of patient education materials: current role in orthopaedics. Clin Orthop Relat Res. 2010;468(10):2572–2580. doi: 10.1007/s11999-010-1380-y.

39. Burke H B, Hoang A, Becher D et al. QNOTE: an instrument for measuring the quality of EHR clinical notes. J Am Med Inform Assoc. 2014;21(05):910–916. doi: 10.1136/amiajnl-2013-002321.

40. Lowry S Z, Quinn M T, Ramaiah M et al. A Human Factors Guide to Enhance EHR Usability of Critical User Interactions When Supporting Pediatric Patient Care. NIST Interagency/Internal Report (NISTIR) 7865; 2012.

41. Tremoulet P D, McManus M, Baranov D. Rendering ICU data useful via formative evaluations of Trajectory, Tracking, and Trigger (T3™) software. Proceedings. 2017;6(01):50–56.

42. Sarzynski E, Hashmi H, Subramanian J et al. Opportunities to improve clinical summaries for patients at hospital discharge. BMJ Qual Saf. 2017;26(05):372–380. doi: 10.1136/bmjqs-2015-005201.

43. Nguyen O K, Kruger J, Greysen S R, Lyndon A, Goldman L E. Understanding how to improve collaboration between hospitals and primary care in postdischarge care transitions: a qualitative study of primary care leaders' perspectives. J Hosp Med. 2014;9(11):700–706. doi: 10.1002/jhm.2257.

44. Ruth J L, Geskey J M, Shaffer M L, Bramley H P, Paul I M. Evaluating communication between pediatric primary care physicians and hospitalists. Clin Pediatr (Phila). 2011;50(10):923–928. doi: 10.1177/0009922811407179.

45. Unaka N I, Statile A, Haney J, Beck A F, Brady P W, Jerardi K E. Assessment of readability, understandability, and completeness of pediatric hospital medicine discharge instructions. J Hosp Med. 2017;12(02):98–101. doi: 10.12788/jhm.2688.

46. Unnewehr M, Schaaf B, Marev R, Fitch J, Friederichs H. Optimizing the quality of hospital discharge summaries--a systematic review and practical tools. Postgrad Med. 2015;127(06):630–639. doi: 10.1080/00325481.2015.1054256.

47. Albert W, Tullis T. Measuring the user experience: collecting, analyzing, and presenting usability metrics. Newnes Books; 2013.

48. Dumas J S, Fox J E. Usability testing: current practice and future directions. In: Jacko J, Sears A L (eds.). The Human-Computer Interaction Handbook. Boca Raton: CRC Press; 2012.

49. Molich R, Jeffries R. Comparative expert reviews. Extended Abstracts; 2003:1060–1061.

50. Rubin J, Chisnell D. Handbook of Usability Testing: How to Plan, Design, and Conduct Effective Tests. Hoboken, NJ: John Wiley & Sons; 2008.
