Abstract

Recent studies have highlighted the importance of assessing the robustness of putative biomarkers identified from experimental data. This has given rise to the concept of stable biomarkers, which are those that are consistently identified regardless of small perturbations to the data. Since stability is not by itself a useful objective, we present a number of strategies that combine assessments of stability and predictive performance in order to identify biomarkers that are both robust and diagnostically useful. Moreover, by wrapping these strategies around logistic regression classifiers regularised by the elastic net penalty, we are able to assess the effects of correlations between biomarkers upon their perceived stability.

We use a synthetic example to illustrate the properties of our proposed strategies. In this example, we find that: (i) assessments of stability can help to reduce the number of false positive biomarkers, although potentially at the cost of missing some true positives; (ii) combining assessments of stability with assessments of predictive performance can improve the true positive rate; and (iii) correlations between biomarkers can have adverse effects on their stability, and hence must be carefully taken into account when undertaking biomarker discovery. We then apply our strategies in a proteomics context, in order to identify a number of robust candidate biomarkers for the human disease HTLV1-associated myelopathy/tropical spastic paraparesis (HAM/TSP).

1. Introduction

Several recent articles have emphasised the importance of considering the stability of gene signatures and biomarkers of disease identified by feature selection algorithms (see, for example, Zucknick et al., 2008; Meinshausen and Bühlmann, 2010; Abeel et al., 2010; Alexander and Lange, 2011; Ahmed et al., 2011). The aim is to establish if the selected predictors are specific to the particular dataset that was observed, or if they are robust to the noise in the data. Although not a new concept (see, for example, Turney, 1995, for an early discussion), selection stability has received renewed interest in biological contexts due to concerns over the irreproducibility of results (Ein-Dor et al., 2005, 2006). Assessments of stability usually proceed by: (i) subsampling the original dataset; (ii) applying a feature selection algorithm to each subsample; and then (iii) quantifying stability using a method for assessing the agreement among the resulting sets of selections (e.g. Kalousis et al., 2007; Kuncheva, 2007; Jurman et al., 2008). There is an increasing body of literature on this subject, and we refer the reader to He and Yu (2010) for a comprehensive review.

One of the principal difficulties with stability is that it is not by itself a useful objective: a selection strategy that chooses an arbitrary fixed set of covariates regardless of the observed data will achieve perfect stability, but the predictive performance provided by the selected set is likely to be poor (Abeel et al., 2010). Since we ultimately seek biomarkers that are not only robust but which also allow us to discriminate between (for example) different disease states, it is desirable to try to optimise both stability and predictive performance simultaneously. The first contribution of this article is to present a number of strategies for doing this. We follow Meinshausen and Bühlmann (2010) in estimating selection probabilities for different sets of covariates, but diverge from their approach by combining these estimates with assessments of predictive performance. Given that our approach uses subsampling for both model structure estimation and performance assessment, it is somewhat related to double cross validation (see Stone, 1974, and also Smit et al., 2007 for an application similar to the one considered here); however, we do not employ a nested subsampling step.

Our second contribution is to provide a procedure for quantifying the effects of correlation upon selection stability. As discussed in Yu et al. (2008), correlations among covariates can have a serious impact upon stability. Since multivariate covariate selection strategies often seek a minimal set of covariates that yield the best predictive performance, a single representative from a group of correlated covariates is often selected in favour of the whole set. This can have a negative impact upon stability (Kirk et al., 2010), as the selected representative is liable to vary from dataset to dataset. We hence consider a covariate selection strategy based upon logistic regression with the elastic net likelihood penalty (see Zou and Hastie, 2005; Friedman et al., 2007, 2010, and Section 2.4), which allows us to control whether we tend to select single representatives or whole sets of correlated covariates. This allows us to investigate systematically how our treatment of correlation affects stability.

2. Methods

Let D = {(xi, yi) : i = 1, … , n} be a dataset comprising observations taken on n individuals. Each xi = [xi1, … , xip]⊤ ∈ ℝp is a vector of measurements taken upon p covariates v1, … , vp, and yi ∈ {0, 1} is a corresponding binary class label (e.g. case/control). A classification rule is a function, h, such that h(x) ∈ {0, 1} is the predicted class label for x ∈ ℝp. For the time being, we assume only that h was obtained by fitting some predictive model ℋθ to a training dataset (here, θ denotes the parameters of the model). We write ℋθ (D) to denote the fitted model obtained by training ℋθ on dataset D.

2.1. Assessing predictive performance

Given dataset D and classification rule h, we can calculate the correct classification rate when h is applied to D as the proportion of times the predicted and observed class labels are equal,

$$c(D; h) = \frac{1}{n} \sum_{i=1}^{n} \mathbb{I}\big(h(x_i) = y_i\big) \tag{1}$$

where 𝕀(Z) is the indicator function, which equals 1 if Z is true and 0 otherwise.

One approach for assessing the predictive performance of model ℋθ is random subsampling cross validation (Kohavi, 1995). We train our predictive model on a subsample, Dk, of the training dataset, and then calculate the correct classification rate, ck, when the resulting classifier is applied to the remaining (left-out) data, D\k = D\Dk. Repeating for k = 1, … , K, we may calculate the mean correct classification rate and take this as an estimate of the probability that our model classifies correctly,

$$\hat{P}(\text{correct classification}) = \frac{1}{K} \sum_{k=1}^{K} c_k \tag{2}$$
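The random subsampling procedure described above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in this article: the names `subsampling_cv` and `fit_midpoint_rule`, the 50% training fraction, and the toy threshold classifier are all assumptions introduced here, and any function that returns a rule mapping covariates to 0/1 labels could be substituted for the toy classifier.

```python
import numpy as np

def correct_classification_rate(h, X, y):
    """Proportion of observations whose predicted and observed labels agree."""
    return float(np.mean(h(X) == y))

def subsampling_cv(fit, X, y, K=100, train_fraction=0.5, seed=0):
    """Mean correct classification rate over K random subsamples.

    `fit(X_train, y_train)` is a hypothetical user-supplied function that
    returns a classification rule h, where h(X) is an array of 0/1 labels.
    The training fraction is an assumption, not a value from the article.
    """
    rng = np.random.default_rng(seed)
    n = len(y)
    n_train = int(round(train_fraction * n))
    rates = np.empty(K)
    for k in range(K):
        perm = rng.permutation(n)
        train, left_out = perm[:n_train], perm[n_train:]
        h_k = fit(X[train], y[train])          # train on subsample D_k
        rates[k] = correct_classification_rate(h_k, X[left_out], y[left_out])
    return rates.mean(), rates

def fit_midpoint_rule(X_train, y_train):
    """Toy classifier: threshold covariate 0 at the midpoint of the class means."""
    cut = 0.5 * (X_train[y_train == 0, 0].mean() + X_train[y_train == 1, 0].mean())
    return lambda X: (X[:, 0] > cut).astype(int)

# Small demonstration on synthetic data (class 1 is shifted in covariate 0).
rng = np.random.default_rng(1)
y_demo = np.repeat([0, 1], 50)
X_demo = rng.normal(size=(100, 3))
X_demo[:, 0] += 3.0 * y_demo
mean_rate, rates = subsampling_cv(fit_midpoint_rule, X_demo, y_demo, K=20)
print(f"estimated probability of correct classification: {mean_rate:.3f}")
```

Returning the individual rates alongside their mean keeps them available for the set-specific assessments considered later.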

2.2. Assessing stability

We suppose that – as well as a classification rule – we also obtain a set of selected covariates, sk, when we train ℋθ on subsample Dk. More precisely, we assume that only the covariates in sk appear with non-zero coefficients in the fitted predictive model ℋθ (Dk) (for example, this will be the case if we fit logistic regression models with lasso or elastic net likelihood penalties). For any subset of the covariates, V ⊆ {v1, … , vp}, we may then estimate the probability that the covariates in V are among those selected,

$$\hat{P}(V \subseteq s) = \frac{1}{K} \sum_{k=1}^{K} \mathbb{I}(V \subseteq s_k) \tag{3}$$

This quantifies the stability with which the covariate set V is selected (Meinshausen and Bühlmann, 2010).
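As a small illustration of this estimate, the following sketch computes the proportion of subsamples whose selected set contains a given set V. The function name `selection_probability` and the example covariate labels are hypothetical.

```python
def selection_probability(V, selected_sets):
    """Proportion of the selected sets s_k that contain every covariate in V."""
    V = frozenset(V)
    return sum(V <= set(s) for s in selected_sets) / len(selected_sets)

# Selected sets from K = 4 hypothetical subsamples.
selected_sets = [{"v1", "v3"}, {"v1", "v4"}, {"v1", "v3", "v7"}, {"v2", "v3"}]

print(selection_probability({"v1"}, selected_sets))        # v1 appears in 3 of 4 sets
print(selection_probability({"v1", "v3"}, selected_sets))  # both appear in 2 of 4 sets
```

Note that the estimate can only decrease as V grows, since every subsample that selects a set also selects each of its subsets.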

2.3. Combining stability and predictive performance

Equation (2) provides an assessment of predictive performance, but gives no information regarding whether or not there is any agreement among the selected sets sk. On the other hand, Equation (3) allows us to assess the stability of a covariate set V, but does not tell us if the covariates in V are predictive. Since these assessments of stability and predictive performance both require us to subsample the training data, it seems natural to combine them in order to try to resolve their limitations. We here provide a method for doing this.

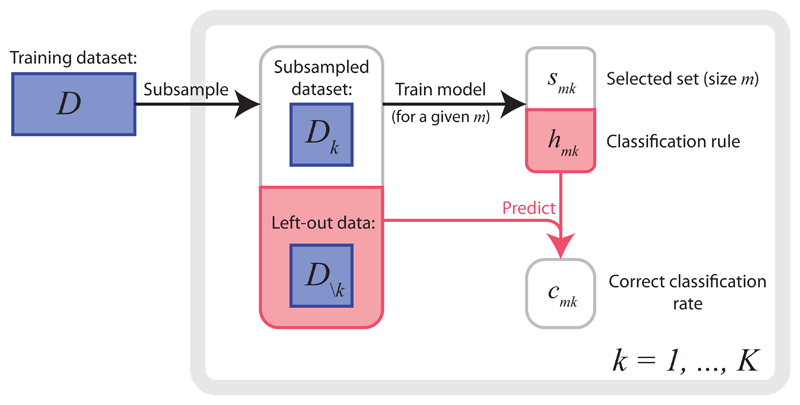

We shall henceforth assume that the parameters, θ, of ℋθ may be tuned in order to ensure that precisely m covariates are selected. We then write smk for the selected set of size m obtained when ℋθ is trained on Dk, and hmk for the corresponding classification rule. Similarly, we define cmk = c(D\k; hmk). Figure 1 provides a summary of this notation and the way in which we find smk and cmk. Having made these definitions, we may additionally condition on m in Equations (2) and (3) to obtain,

$$\hat{P}(\text{correct classification} \mid m) = \frac{1}{K} \sum_{k=1}^{K} c_{mk} \tag{4}$$

and

$$\hat{P}(V \subseteq s \mid m) = \frac{1}{K} \sum_{k=1}^{K} \mathbb{I}(V \subseteq s_{mk}) \tag{5}$$

Figure 1.

Summary of the notation and basic procedure used throughout this article. The training dataset, D, is repeatedly subsampled to obtain a collection of datasets, D1, … , DK, and left-out datasets, D\1, … , D\K. For k = 1, … , K, a predictive model is trained on Dk and then used to predict the class labels of the observations in D\k, yielding a selected set of size m, smk, and a correct classification rate, cmk.

Instead of estimating the probability of correct classification as in Equation (4), we may wish to restrict our attention to those subsamples for which a particular subset V of the covariates was among the selections. This allows us to quantify the predictive performance associated with a particular set of covariates, rather than averaging the predictive performance over all covariate selections. We therefore calculate the mean correct classification rate over the subsamples Dk for which V ⊆ smk, and identify this as an estimate of the conditional probability that our classifier classifies correctly given that it selects V,

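$$\hat{P}(\text{correct classification} \mid V \subseteq s, m) = \frac{\sum_{k=1}^{K} c_{mk}\, \mathbb{I}(V \subseteq s_{mk})}{\sum_{k=1}^{K} \mathbb{I}(V \subseteq s_{mk})} \tag{6}$$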

By multiplying together Equations (5) and (6), we obtain an estimate of the joint probability of our classifier both selecting V and classifying correctly,

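$$\hat{P}(\text{correct classification}, V \subseteq s \mid m) = \frac{1}{K} \sum_{k=1}^{K} c_{mk}\, \mathbb{I}(V \subseteq s_{mk}) \tag{7}$$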

Equation (7) provides a simple probabilistic score that combines assessments of predictive performance and stability.
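The three quantities discussed in this section (the selection probability, the conditional probability of correct classification, and their product) can be computed together from the K selected sets and correct classification rates. The sketch below is illustrative only; the function name and the example values are hypothetical.

```python
import numpy as np

def stability_and_performance(V, selected_sets, rates):
    """Return (P(select V), P(correct | select V), joint score) for a set V."""
    V = frozenset(V)
    contains_V = np.array([V <= set(s) for s in selected_sets])
    rates = np.asarray(rates, dtype=float)
    p_select = float(contains_V.mean())
    # Mean rate over only those subsamples whose selected set contains V.
    if contains_V.any():
        p_correct_given_select = float(rates[contains_V].mean())
    else:
        p_correct_given_select = float("nan")
    # Joint score: rates are counted only where V was selected, then averaged over K.
    p_joint = float(np.mean(rates * contains_V))
    return p_select, p_correct_given_select, p_joint

selected_sets = [{"v1", "v3"}, {"v1", "v4"}, {"v1", "v3", "v7"}, {"v2", "v3"}]
rates = [0.9, 0.8, 0.7, 0.6]

p_select, p_cond, p_joint = stability_and_performance({"v1"}, selected_sets, rates)
print(p_select, p_cond, p_joint)
# The joint score agrees with the product of the other two (up to rounding).
print(abs(p_joint - p_select * p_cond) < 1e-12)
```

Computing the joint score directly as an average over all K subsamples avoids the division by zero that the conditional estimate encounters when V is never selected.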

2.3.1. Covariate selection strategies

Adopting the procedure described in Figure 1 provides us with a collection of K covariate sets and corresponding correct classification rates, {(smk, cmk) : k = 1, … , K}. In general, the smk will not all be the same, so we must apply some strategy in order to decide which to return as our final set of putative biomarkers. We could, for example, return the set that is most frequently selected; i.e. choose the set V whose probability of selection (Equation (5)) is maximal. In Table 1, we present a number of probabilistic and heuristic strategies (S1 – S7) that exploit Equations (5) – (7) in order to optimise prediction performance, stability, or combinations of the two. All strategies are defined for a given model ℋθ and set size m.

Table 1.

Selection strategies considered in this article. In each case, we assume that we have a predictive model, ℋθ, and that we specify the number, m, of covariates that we wish to select. For j = 1, … , 6, strategy Sj returns selected set, V*, together with maximised score Pj (V*). S7 returns selected set V* together with the score P7.

| Strategy | Select set V to maximise: |
|---|---|
| Joint strategies | |
| S1 | Prediction only |
| S2 | Stability only |
| S3 | Joint prob. of selection & correct classification |
| S4 | Prediction with stability threshold, τ |
| Marginal strategies | |
| S5 | Stability only (marginal case) |
| S6 | Joint prob. of selection & correct classification (marginal case) |
| Other | |
| S7 | Average prediction |

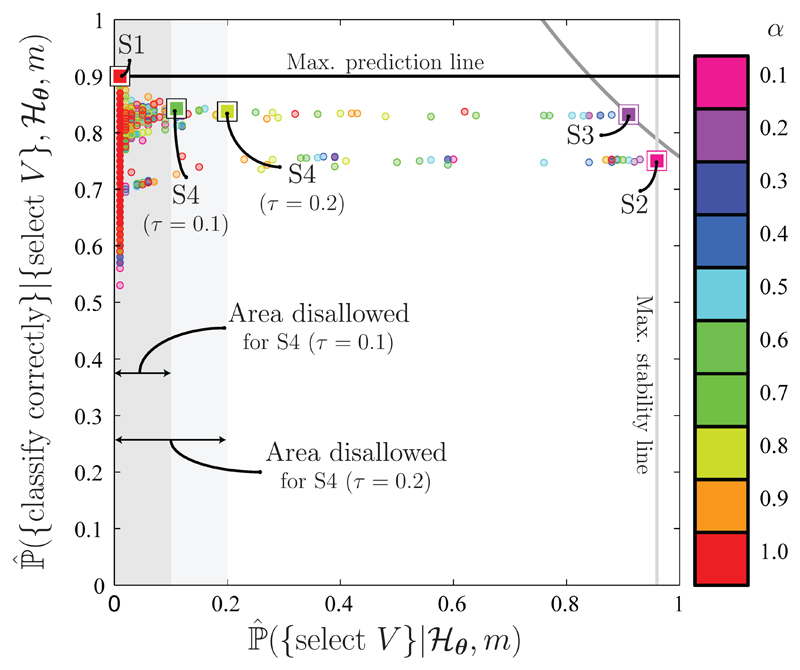

Strategies S1 – S4 of Table 1 are joint strategies, which consider the joint selection and correct classification probabilities associated with sets of covariates. The differences between these strategies are illustrated in Figure 2. In contrast, S5 and S6 make use of the marginal selection and correct classification probabilities associated with individual covariates. S7 is of a slightly different type, discussed further in Section 2.3.2.
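To make the distinction between the joint strategies concrete, the following sketch applies S1 to S4 to a small hypothetical collection of selected sets and correct classification rates. It rests on two assumptions that are not taken from the article: the candidate sets V are restricted to the distinct sets of size m that were actually selected, and the stability threshold is applied as "selection probability ≥ τ". The function name `joint_strategies` is likewise hypothetical.

```python
from collections import defaultdict

def joint_strategies(selected_sets, rates, tau=0.1):
    """Apply S1-S4 to the distinct selected sets (all assumed to have size m)."""
    K = len(selected_sets)
    grouped = defaultdict(list)
    for s, c in zip(selected_sets, rates):
        grouped[frozenset(s)].append(c)

    # For each candidate set: (P(select), P(correct | select), joint score).
    scores = {}
    for V, cs in grouped.items():
        p_select = len(cs) / K
        p_correct = sum(cs) / len(cs)
        scores[V] = (p_select, p_correct, p_select * p_correct)

    stable = [V for V in scores if scores[V][0] >= tau]
    chosen = {
        "S1": max(scores, key=lambda V: scores[V][1]),   # prediction only
        "S2": max(scores, key=lambda V: scores[V][0]),   # stability only
        "S3": max(scores, key=lambda V: scores[V][2]),   # joint score
        "S4": max(stable, key=lambda V: scores[V][1]) if stable else None,
    }
    return chosen, scores

# K = 10 hypothetical subsamples with m = 2.
selected_sets = [{"v1", "v2"}] * 5 + [{"v1", "v3"}] * 3 + [{"v4", "v9"}, {"v2", "v3"}]
rates = [0.80] * 5 + [0.88] * 3 + [0.95, 0.50]

chosen, scores = joint_strategies(selected_sets, rates, tau=0.2)
for name, V in chosen.items():
    print(name, sorted(V))
```

In this toy example S1 picks a set that was selected only once, S2 and S3 pick the most frequently selected set, and S4 picks the most predictive set among those that pass the threshold.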

Figure 2.

Illustration of the differences between the joint strategies (S1 – S4). The round markers correspond to different covariate sets (of various sizes) returned by 10 different models when applied to one of the simulated datasets of Section 3.1. Each model corresponds to a different value of α (see Section 2.4), hence the colours of the markers indicate the model that was used to select each covariate set. The larger, labelled markers correspond to the final sets of selections returned by strategies S1 – S4 (as indicated). S1 returns the set, V, that maximises predictive performance, regardless of how stably it is selected; S2 returns the most stably selected set, regardless of the predictive performance it offers; S3 seeks a compromise between stability and predictive performance; and S4 returns the most predictive covariate set, subject to a stability threshold, τ.

2.3.2. Choosing between different m and ℋθ

Each of the strategies in Table 1 returns a final selected set and an associated score (for each pair ℋθ, m). If we have a range of predictive models and values for m, then we can consider all of them and return as our final selected set the one that gives the highest score (over all m and ℋθ). Adopting this approach, strategy S7 can be viewed as finding the optimal pair of model and set size, m*, for which the estimated probability of correct classification (Equation (4)) is largest, and then returning the most predictive set of size m* selected by that model. This is analogous to the common practice of using predictive performance to determine an appropriate level of regularisation.

2.4. Implementation

We focus on selection procedures that use logistic regression models with elastic net likelihood penalties (Zou and Hastie, 2005). The standard logistic regression model for the binary classification problem is as follows,

$$P(y = 1 \mid x) = g(\beta_0 + \beta^\top x) \tag{8}$$

where β0 ∈ ℝ, β = [β1, … , βp]⊤ ∈ ℝp, x= [x1, … , xp]⊤ ∈ ℝp, and g is the logistic function. Estimates for the coefficients β0, β1, … , βp can be found by maximisation of the (log) likelihood function.

The elastic net introduces a penalty term λQα(β) comprising a mixture of 𝓁1 and 𝓁2 penalties, so that the estimates for the coefficients are given by,

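$$(\hat{\beta}_0, \hat{\beta}) = \underset{\beta_0,\, \beta}{\arg\max}\; \left\{ \ell(\beta_0, \beta) - \lambda Q_\alpha(\beta) \right\} \tag{9}$$

where ℓ(β0, β) denotes the log-likelihood and

$$Q_\alpha(\beta) = \sum_{j=1}^{p} \left[ \tfrac{1}{2}(1 - \alpha)\beta_j^2 + \alpha|\beta_j| \right] \tag{10}$$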

The estimated coefficients now depend upon the values taken by the parameters α and λ. When α = 1, we recover the lasso (𝓁1) penalty, and when α = 0 we recover the ridge (𝓁2) penalty. As α is decreased from 1 toward 0, the elastic net becomes increasingly tolerant of the selection of groups of correlated covariates. In the following, we consider a grid of α values (α = 0.1, 0.2, … , 1), and consider the order in which covariates are selected (acquire a non-zero β coefficient) as λ is decreased from λcrit (the smallest value of λ such that all of the estimated coefficients β1, … , βp are zero) toward 0. Each different value of α defines a different classification/selection procedure, with the j-th procedure (j = 1, … , 10) corresponding to α = j/10. Throughout, we use the glmnet package in R (Friedman et al., 2010) to fit our models.
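The behaviour of the penalty at the two ends of the α grid can be checked numerically. The sketch below assumes the parameterisation used by glmnet, in which the 𝓁2 term is weighted by (1 − α)/2 and the 𝓁1 term by α; the function name `elastic_net_penalty` and the example coefficients are hypothetical.

```python
import numpy as np

def elastic_net_penalty(beta, alpha):
    """Mixture of l2 and l1 penalties (glmnet-style weighting, assumed here)."""
    beta = np.asarray(beta, dtype=float)
    return float(np.sum(0.5 * (1.0 - alpha) * beta**2 + alpha * np.abs(beta)))

# Grid of alpha values considered in the text: 0.1, 0.2, ..., 1.
alpha_grid = [j / 10 for j in range(1, 11)]

beta = [1.0, -2.0, 0.0]
print(elastic_net_penalty(beta, 1.0))  # lasso: sum of absolute values
print(elastic_net_penalty(beta, 0.0))  # ridge: half the sum of squares
for alpha in alpha_grid:
    print(alpha, elastic_net_penalty(beta, alpha))
```

Because the squared term penalises large coefficients more heavily than several smaller ones, decreasing α makes it cheaper to share weight across a group of correlated covariates than to concentrate it on one representative.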

Although we use the elastic net penalty to select covariates, we use an unpenalised logistic regression model when making predictions. This two-step procedure of using the elastic net for variable selection and then obtaining unpenalised estimates of the coefficients in the predictive model is similar to the LARS-OLS hybrid (Efron et al., 2004) or the relaxed lasso (Meinshausen, 2007).
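The second step of this two-step procedure can be sketched as follows. The selection step itself is assumed to have been carried out elsewhere (for instance with glmnet), so the indices in `selected` are simply given; the function names are hypothetical, and the plain Newton-Raphson fit is a stand-in for any standard unpenalised logistic regression routine. It may fail to converge if the classes are perfectly separable.

```python
import numpy as np

def fit_unpenalised_logistic(X, y, n_iter=25):
    """Maximum-likelihood logistic regression via Newton-Raphson (no penalty)."""
    design = np.column_stack([np.ones(len(y)), X])
    coef = np.zeros(design.shape[1])
    for _ in range(n_iter):
        prob = 1.0 / (1.0 + np.exp(-design @ coef))
        gradient = design.T @ (y - prob)
        hessian = design.T @ (design * (prob * (1.0 - prob))[:, None])
        coef = coef + np.linalg.solve(hessian, gradient)
    return coef  # coef[0] is the intercept

def refit_on_selected(X, y, selected):
    """Refit without penalty using only the selected covariates; return (coef, h)."""
    coef = fit_unpenalised_logistic(X[:, selected], y)

    def h(X_new):
        eta = coef[0] + X_new[:, selected] @ coef[1:]
        return (eta > 0).astype(int)  # predict class 1 when P(y = 1 | x) > 0.5

    return coef, h

# Synthetic demonstration: only covariates 0 and 2 influence the response.
rng = np.random.default_rng(0)
X_demo = rng.normal(size=(500, 6))
eta_demo = -0.5 + 1.0 * X_demo[:, 0] - 1.5 * X_demo[:, 2]
y_demo = rng.binomial(1, 1.0 / (1.0 + np.exp(-eta_demo)))

coef, h = refit_on_selected(X_demo, y_demo, selected=[0, 2])
print("unpenalised estimates:", np.round(coef, 2))
print("training accuracy:", np.mean(h(X_demo) == y_demo))
```

Refitting without the penalty removes the shrinkage that the elastic net applies to the selected coefficients, so that predictive performance reflects the selected covariates rather than the strength of regularisation.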

3. Examples

3.1. Simulation example

Following a similar illustration from Meinshausen and Bühlmann (2010), we consider an example in which we have p = 500 predictors v1, … , v500 and n = 200 observations. The predictors v1, … , v500 are jointly distributed according to a multivariate normal whose mean µ is the zero vector and whose covariance matrix Σ is the identity, except that the elements Σ1,2 = Σ3,4 = Σ3,5 = Σ4,5 and their symmetric counterparts are equal to 0.9. Thus, there are two strongly correlated sets, C1 = {v1, v2} and C2 = {v3, v4, v5}, but otherwise the predictors are uncorrelated. Observed class labels y are either 0 or 1, according to the following logistic regression model:

| (11) |

Due to correlations among the covariates, it is also useful to consider the following approximation:

| (12) |

where vi1 ∈ C1 and vi2 ∈ C2.

Since v1, … , v5 are the only covariates that appear in the generative model given in Equation (11), we refer to these as relevant covariates, and to the remainder as noise covariates.

We simulate 1,000 datasets — each comprising 200 observations — by first sampling from a multivariate normal in order to obtain realisations of the covariates v1, … , v500, and then generating values for the response y according to Equation (11). We consider a range m = 1, … , 20 and use K = 100 subsamples.
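The simulation design can be sketched as below. The covariance structure follows the description in this section, but the coefficients used to generate the response are placeholders: `beta0` and `beta_relevant` are hypothetical values chosen for illustration and are not the coefficients of Equation (11). The function names are likewise assumptions.

```python
import numpy as np

def make_covariance(p=500, rho=0.9):
    """Identity covariance, except correlation rho within {v1, v2} and {v3, v4, v5}."""
    sigma = np.eye(p)
    for i, j in [(0, 1), (2, 3), (2, 4), (3, 4)]:  # zero-based covariate indices
        sigma[i, j] = sigma[j, i] = rho
    return sigma

def simulate_dataset(n=200, p=500, beta0=0.0, beta_relevant=(1.0,) * 5, seed=0):
    """Draw covariates from N(0, Sigma) and labels from a logistic model.

    beta0 and beta_relevant are hypothetical placeholder coefficients for the
    five relevant covariates v1, ..., v5; all other covariates are noise.
    """
    rng = np.random.default_rng(seed)
    X = rng.multivariate_normal(np.zeros(p), make_covariance(p), size=n)
    eta = beta0 + X[:, :5] @ np.asarray(beta_relevant, dtype=float)
    y = rng.binomial(1, 1.0 / (1.0 + np.exp(-eta)))
    return X, y

X_sim, y_sim = simulate_dataset()
print(X_sim.shape, y_sim.mean())
print("sample correlation of v1 and v2:", np.corrcoef(X_sim[:, 0], X_sim[:, 1])[0, 1])
```

Changing `seed` yields independent replicate datasets, which is how a collection of simulated datasets could be generated under this sketch.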

3.2. HTLV1 biomarker discovery

Human T-cell lymphotropic virus type 1 (HTLV1) is a widespread human virus associated with a number of diseases (Bangham, 2000a), including the inflammatory condition HTLV1-associated myelopathy/tropical spastic paraparesis (HAM/TSP). However, the vast majority (~95%) of individuals infected with HTLV1 remain lifelong asymptomatic carriers (ACs) of the disease (Bangham, 2000b). We seek to identify protein peak biomarkers from SELDI-TOF mass spectral data which allow us to discriminate between ACs and individuals with HAM/TSP.

We have blood plasma samples from a total of 68 HTLV1-seropositive individuals (34 HAM/TSP, 34 AC), processed as in Kirk et al. (2011). Here we analyse the combined dataset, DC, comprising measurements from all 68 patients. We consider m = 1, … , 12 and use K = 250 subsamples.

4. Results

4.1. Simulation example

We applied our selection strategies (Table 1) to each of our 1000 simulated datasets. For each simulation, each strategy returned a final set, V, containing the selected covariates. Each selected covariate must either be a noise or a relevant covariate. We can hence consider that V = R ∪ N, where R ⊆ V is a set containing only relevant covariates and N ⊆ V is a set containing only noise covariates. The case |R| = 5, |N| = 0 is the ideal, as this corresponds to selecting all 5 relevant covariates, but none of the noise covariates. To assess the quality of our strategies, we therefore calculated for each the proportion of simulated datasets for which this ideal case was achieved. This information is provided in Table 2, along with a summary of the proportion of times that other combinations of the covariates were selected.
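The bookkeeping behind this summary is straightforward, as the following sketch shows: each final set is split into its relevant and noise members, and the resulting (|R|, |N|) pairs are tabulated as percentages. The function names and the four example sets are hypothetical; covariates are represented by their indices 1, … , 500.

```python
from collections import Counter

RELEVANT = {1, 2, 3, 4, 5}  # indices of the relevant covariates v1, ..., v5

def count_relevant_and_noise(V, relevant=RELEVANT):
    """Return (|R|, |N|) for a final selected set V."""
    V = set(V)
    return len(V & relevant), len(V - relevant)

def tabulate(final_sets, relevant=RELEVANT):
    """Percentage of datasets yielding each (|R|, |N|) combination."""
    counts = Counter(count_relevant_and_noise(V, relevant) for V in final_sets)
    total = len(final_sets)
    return {pair: 100.0 * c / total for pair, c in counts.items()}

# Final selections from four hypothetical simulated datasets.
final_sets = [{1, 2, 3, 4, 5}, {1, 3, 4}, {1, 2, 3, 4, 5, 17}, {1, 2, 3, 4, 5}]
for (n_relevant, n_noise), pct in sorted(tabulate(final_sets).items(), reverse=True):
    print(f"|R| = {n_relevant}, |N| = {n_noise}: {pct:.1f}%")
```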

Table 2.

Summary of the final selections made using the strategies described in Table 1. The first two columns summarise the final selections in terms of the number of relevant covariates, |R|, and the number of noise covariates, |N|, that appear in the final selected set. The entries in the table indicate the percentage of simulated datasets for which each of the combinations of relevant and noise covariates was obtained. Any rows for which the percentage is < 1% for all strategies are omitted (hence columns need not sum to 100%).

| \|R\| | \|N\| | S1 | S2 | S3 | S4 (τ = 0.1) | S4 (τ = 0.2) | S5 | S6 | S7 |
|---|---|---|---|---|---|---|---|---|---|
| 5 | 0 | 0 | 36.3 | 50.5 | 5.2 | 10.6 | 42.5 | 66.9 | 38.5 |
| 4 | 0 | 0.6 | 2.4 | 8.5 | 32.4 | 40.3 | 3.4 | 16 | 50.2 |
| 3 | 0 | 1.8 | 57.4 | 39.1 | 39.6 | 36.9 | 52.9 | 13.6 | 2.9 |
| 2 | 0 | 0.2 | 1.4 | 0.5 | 7.5 | 7.8 | 0.6 | 0.1 | 0.4 |
| 1 | 0 | 0 | 2.5 | 1.4 | 0 | 0.1 | 0.6 | 0.1 | 0 |
| 5 | 1 | 10.5 | 0 | 0 | 11.6 | 4.1 | 0 | 0 | 0.5 |
| 4 | 1 | 9.2 | 0 | 0 | 2.8 | 0.2 | 0 | 1.3 | 4.8 |
| 3 | 1 | 2.8 | 0 | 0 | 0.6 | 0 | 0 | 0.4 | 2 |
| 5 | 2 | 19.7 | 0 | 0 | 0.2 | 0 | 0 | 0 | 0 |
| 4 | 2 | 5.1 | 0 | 0 | 0 | 0 | 0 | 0 | 0.1 |
| 3 | 2 | 1.8 | 0 | 0 | 0 | 0 | 0 | 0.5 | 0.2 |
| 5 | 3 | 12.5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | 3 | 3.5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | 4 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | 4 | 1.9 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | 5 | 4.7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | 6 | 2.6 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 4 | 6 | 1.1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | 7 | 1.5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | 8 | 1.8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | 9 | 1.8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 5 | 10 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

4.1.1. Fewer false positives for strategies involving stability selection

The selected sets returned by Strategies S2, S3 and S5 always contained at least one relevant covariate, and never any noise covariates. The lack of false positives for these three strategies contrasts with the strategy that uses predictive performance alone (S1), which returned a selected set containing at least 1 noise covariate for 97.4% of the simulated datasets. Additionally enforcing a stability threshold upon the final selected set (S4) decreases this percentage (to 15.3% when τ = 0.1 and 4.3% when τ = 0.2). One of the best performing strategies overall is S6 (the marginal analogue of Strategy S3), which selects all 5 relevant and 0 noise covariates for about two-thirds of the simulated datasets. In contrast to S2, S3 and S5, however, S6 does make some false positive selections, with noise covariates being included among the final selections in 3.3% of cases. S7 also performs well, selecting all 5 relevant and 0 noise covariates for 38.5% of the simulated datasets and making at least one false positive selection in only 8% of cases.

4.1.2. Smaller values of α yield more stable selections

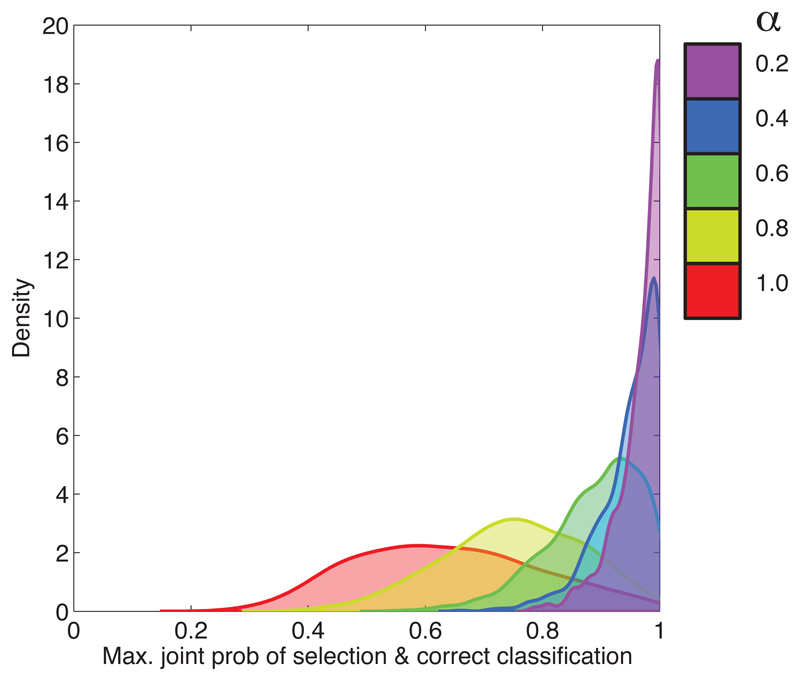

As well as looking at the final selection made for each dataset (chosen over all classification models), we can also consider the results for each of the classification models considered separately. We focus on Strategy S3. For each simulated dataset and for each classification model, we use S3 in order to select a final set. Associated with each of these selected sets is a score (the joint probability of selection and correct classification). In Figure 3, we illustrate the distributions of the scores obtained for five of these models (i.e. for α = 0.2, 0.4, 0.6, 0.8 and 1).

Figure 3.

Distributions of the scores returned by S3 which were obtained in the simulation example for 5 different values of α.

We can see from Figure 3 that smaller values of α tend to yield higher values of the score. Recall that there are two strongly correlated groups of relevant covariates (see Section 3.1), and smaller values of α will tend to allow all of the covariates in these two groups to be selected, while larger values of α will tend to result in a single representative from each of the two groups being selected. Although this does not have a significant impact in terms of predictive performance (since Equation (12) is a good approximation to Equation (11)), it does have a negative effect upon stability (since, for different subsamples of the data, different representatives can be selected).

4.2. HTLV1 biomarker discovery

We applied our selection strategies to the HTLV1 combined dataset, DC. The selected covariates (protein peaks) are summarised in Table 3.

Table 3.

Covariates selected by strategies S1–S7. Covariates correspond to protein peaks in the mass-spectrum, and are labelled according to the m/z value at which the peak was located (units: kDa).

| Covariate selections | Strategies |
|---|---|
| 11.7, 13.3 | S4 (τ = 0.2) |
| 11.7, 13.3, 17.5 | S4 (τ = 0.1) |
| 11.7, 13.3, 14.6 | S2, S3, S5, S6, S7 |
| 10.8, 11.7, 11.9, 13.3, 14.6, 25.1 | S1 |

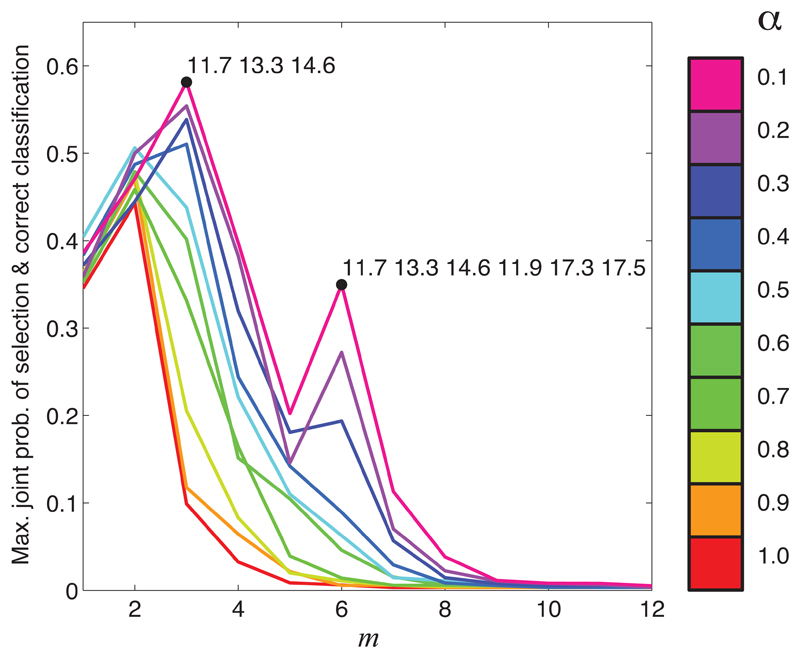

All strategies included the 11.7 and 13.3kDa peaks among their selections. As might be expected from the results of the previous section, Strategy S1 yields the largest selected set. The strategies that we found to provide the best performance in our simulation example (namely, S2, S3, S6 and S7) all selected the same 3 covariates. In Figure 4 we further illustrate the selections made using Strategy S3 by showing how the score returned by this strategy varies as a function of m for each of the classification models (i.e. for α = 0.1, 0.2, … , 1).

Figure 4.

Score returned by S3 considered as a function of m (when applied to the HTLV1 proteomics dataset).

We can see from Figure 4 that the highest joint scores are again achieved for smaller values of α. The second peak in the joint score curve at m = 6 (observed for α = 0.1, 0.2 and 0.3) is notable, and leads us to propose the proteins corresponding to the 13.3, 11.7 and 14.6kDa peaks as “high confidence” biomarkers, and the proteins corresponding to the 11.9, 17.3 and 17.5kDa peaks as potential biomarkers that might be worthy of further investigation. In Kirk et al. (2011) the 11.7 and 13.3kDa peaks were identified as β2-microglobulin and Calgranulin B, and the 17.3kDa peak as apolipoprotein A-II.

5. Discussion

We have considered a number of strategies for covariate selection that employ assessments of stability, predictive performance, and combinations of the two. We have conducted empirical assessments of these strategies using both simulated and real datasets. Our work indicates that including assessments of stability can help to reduce the number of false positive selections, although this might come at the cost of only making a conservative number of (high confidence) selections. In the context of biomarker discovery, where follow-up work to identify and validate putative biomarkers is likely to be expensive and time-consuming, assessments of stability would seem to provide a useful way in which to focus future study. However, for large-scale hypothesis generation, selection strategies that employ stability assessments might be too conservative. Our simulation results (Section 4.1) suggest that combining assessments of stability and predictive performance can yield selection strategies that have lower false positive rates than strategies based on prediction alone, and lower false negative rates than pure stability selection strategies. We also found that classification/selection models that do not select complete sets of correlated predictive covariates run the risk of appearing to make unstable selections (Section 4.1.2). This will have a detrimental effect on stability selection approaches, further increasing the number of false negatives. It would therefore seem that if we are concerned with the stability with which selections are made (which should always be the case if our main aim is covariate selection/biomarker discovery), then it might be counter-productive just to search for the sparsest classification model that yields the maximal predictive performance. In particular, in order to improve the stability of selections, it would seem sensible to favour mixtures of 𝓁1 and 𝓁2 likelihood penalties (i.e. the elastic net) over lasso (𝓁1 only) penalties.

Footnotes

Author Disclosure Statement

No competing financial interests exist.

References

- Abeel T, Helleputte T, Van de Peer Y, Dupont P, Saeys Y. Robust biomarker identification for cancer diagnosis with ensemble feature selection methods. Bioinformatics. 2010;26:392–8. doi: 10.1093/bioinformatics/btp630.

- Ahmed I, Hartikainen A-L, Järvelin M-R, Richardson S. False Discovery Rate Estimation for Stability Selection: Application to Genome-Wide Association Studies. Statistical applications in genetics and molecular biology. 2011;10. doi: 10.2202/1544-6115.1663.

- Alexander DH, Lange K. Stability selection for genome-wide association. Genetic epidemiology. 2011;35:722–728. doi: 10.1002/gepi.20623.

- Bangham CRM. HTLV-1 infections. J Clin Pathol. 2000a;53:581–6. doi: 10.1136/jcp.53.8.581.

- Bangham CRM. The immune response to HTLV-I. Curr Opin Immunol. 2000b;12:397–402. doi: 10.1016/s0952-7915(00)00107-2.

- Efron B, Hastie T, Johnstone I, Tibshirani R. Least angle regression. Ann Stat. 2004;32:407–451.

- Ein-Dor L, Kela I, Getz G, Givol D, Domany E. Outcome signature genes in breast cancer: is there a unique set? Bioinformatics. 2005;21:171–8. doi: 10.1093/bioinformatics/bth469.

- Ein-Dor L, Zuk O, Domany E. Thousands of samples are needed to generate a robust gene list for predicting outcome in cancer. Proc Natl Acad Sci USA. 2006;103:5923–8. doi: 10.1073/pnas.0601231103.

- Friedman J, Hastie T, Hoefling H, Tibshirani R. Pathwise coordinate optimization. Ann Appl Stat. 2007;1:302–332.

- Friedman J, Hastie T, Tibshirani R. Regularization paths for generalized linear models via coordinate descent. Journal of Statistical Software. 2010;33:1–22.

- He Z, Yu W. Stable feature selection for biomarker discovery. Computational biology and chemistry. 2010;34:215–225. doi: 10.1016/j.compbiolchem.2010.07.002.

- Jurman G, Merler S, Barla A, Paoli S, Galea A, Furlanello C. Algebraic stability indicators for ranked lists in molecular profiling. Bioinformatics. 2008;24:258–64. doi: 10.1093/bioinformatics/btm550.

- Kalousis A, Prados J, Hilario M. Stability of feature selection algorithms: a study on high-dimensional spaces. Knowledge and information systems. 2007.

- Kirk PDW, Lewin AM, Stumpf MPH. Discussion of “Stability Selection”. J Roy Stat Soc B. 2010;72.

- Kirk PDW, Witkover A, Courtney A, Lewin AM, Wait R, Stumpf MPH, Richardson S, Taylor GP, Bangham CRM. Plasma proteome analysis in HTLV-1-associated myelopathy/tropical spastic paraparesis. Retrovirology. 2011;8:81. doi: 10.1186/1742-4690-8-81.

- Kohavi R. A study of cross-validation and bootstrap for accuracy estimation and model selection. International Joint Conference on Artificial Intelligence; 1995.

- Kuncheva L. A stability index for feature selection. Proceedings of the 25th International Multi-Conference on Artificial Intelligence and Applications; 2007. pp. 390–395.

- Meinshausen N. Relaxed lasso. Comput Stat Data An. 2007;52:374–393.

- Meinshausen N, Bühlmann P. Stability Selection. J Roy Stat Soc B. 2010;72.

- Smit S, van Breemen MJ, Hoefsloot HCJ, Smilde AK, Aerts JMFG, de Koster CG. Assessing the statistical validity of proteomics based biomarkers. Anal Chim Acta. 2007;592:210–7. doi: 10.1016/j.aca.2007.04.043.

- Stone M. Cross-validatory choice and assessment of statistical predictions. Journal of the Royal Statistical Society. Series B (Methodological). 1974;36:111–147.

- Turney P. Technical note: Bias and the quantification of stability. Machine Learning. 1995;20:23–33.

- Yu L, Ding C, Loscalzo S. Stable feature selection via dense feature groups. Proceeding of the 14th ACM SIGKDD international conference on knowledge discovery and data mining (KDD’08); 2008. pp. 803–811.

- Zou H, Hastie T. Regularization and variable selection via the elastic net. J Roy Stat Soc B. 2005;67:301–320.

- Zucknick M, Richardson S, Stronach EA. Comparing the characteristics of gene expression profiles derived by univariate and multivariate classification methods. Statistical applications in genetics and molecular biology. 2008;7:7. doi: 10.2202/1544-6115.1307.