Abstract

Objective

To investigate whether the US News & World Report (USNWR) ranking of the medical school a physician attended is associated with patient outcomes and healthcare spending.

Design

Observational study.

Setting

Medicare, 2011-15.

Participants

20% random sample of Medicare fee-for-service beneficiaries aged 65 years or older (n=996 212), who were admitted as an emergency to hospital with a medical condition and treated by general internists.

Main outcome measures

Association between the USNWR ranking of the medical school a physician attended and the physician’s patient outcomes (30 day mortality and 30 day readmission rates) and Medicare Part B spending, adjusted for patient and physician characteristics and hospital fixed effects (which effectively compared physicians practicing within the same hospital). A sensitivity analysis employed a natural experiment by focusing on patients treated by hospitalists, because patients are plausibly randomly assigned to hospitalists based on their specific work schedules. Alternative rankings of medical schools based on social mission score or National Institutes of Health (NIH) funding were also investigated.

Results

996 212 admissions treated by 30 322 physicians were examined for the analysis of mortality. When using USNWR primary care rankings, physicians who graduated from higher ranked schools had slightly lower 30 day readmission rates (adjusted rate 15.7% for top 10 schools v 16.1% for schools ranked ≥50; adjusted risk difference 0.4%, 95% confidence interval 0.1% to 0.8%; P for trend=0.005) and lower spending (adjusted Part B spending $1029 (£790; €881) v $1066; adjusted difference $36, 95% confidence interval $20 to $52; P for trend <0.001) compared with graduates of lower ranked schools, but no difference in 30 day mortality. When using USNWR research rankings, physicians graduating from higher ranked schools had slightly lower healthcare spending than graduates from lower ranked schools, but no differences in patient mortality or readmissions. A sensitivity analysis restricted to patients treated by hospitalists yielded similar findings. Little or no relation was found between alternative rankings (based on social mission score or NIH funding) and patient outcomes or costs of care.

Conclusions

Overall, little or no relation was found between the USNWR ranking of the medical school from which a physician graduated and subsequent patient mortality or readmission rates. Physicians who graduated from highly ranked medical schools had slightly lower spending than graduates of lower ranked schools.

Introduction

Given extensive evidence that practice patterns vary widely across physicians,1 2 3 4 5 6 there is increasing interest in measuring the performance of individual physicians and understanding the determinants of physician level variation in patient outcomes and healthcare spending. Such knowledge may help design effective interventions to improve quality of care and reduce low value care.7 8 Education and training are potentially important determinants of a physician’s practice style. Research has found that physicians whose residency training occurred in regions with higher healthcare spending had higher subsequent costs of care after residency completion compared with physicians who trained in lower spending regions.9 A previous study also found that obstetricians who trained in residency programs with higher complication rates for childbirth had higher complication rates compared with obstetricians who trained in residency programs with lower complication rates.10 These findings shed light on the potential importance of physician training in determining the quality and costs of care delivered.

Surprisingly little is known about the association between where a physician completed medical school—in particular a medical school’s US News & World Report (USNWR) national ranking—and subsequent patient outcomes and costs of care. Patients and peer physicians may use a physician’s USNWR medical school ranking as a signal of provider quality,11 12 despite little evidence that the prestige of a medical school (which may correlate with both the quality of medical education and the strength of pre-medical academic records) is associated with the quality of care physicians deliver.13 14 However, it remains largely unknown whether subsequent patient outcomes and spending differ between physicians who graduate from top ranked versus lower ranked medical schools in USNWR rankings.

Using nationally representative data on Medicare beneficiaries admitted to hospital for a medical condition, we examined the association between USNWR rankings of medical schools attended by a cohort of general internists and their clinical performance: 30 day mortality rates, 30 day readmission rates, and costs of care. We focused on USNWR rankings in our main analyses because they are the most commonly applied rankings and have been used in previous studies.15 16 17 As secondary analyses, we also investigated alternative rankings based on social mission score and research funding.18

Methods

Data

We linked the 20% Medicare Inpatient Carrier and Medicare Beneficiary Summary Files from 2011 to 2015 to a comprehensive physician database from Doximity. Doximity is an online professional network for physicians that has assembled data on all US physicians—both those who are registered members of the service as well as those who are not—through multiple sources and data partnerships: the National Plan and Provider Enumeration System National Provider Identifier Registry, state medical boards, specialty societies such as the American Board of Medical Specialties, and collaborating hospitals and medical schools. The database includes information on physician age, sex, year of medical school completion, credentials (allopathic versus osteopathic training), specialty, and board certification.19 20 21 22 Prior studies have validated data for a random sample of physicians in the Doximity database using manual audits.19 20 We were able to match approximately 95% of physicians in the Medicare database to the Doximity database. Details of the Doximity database are described elsewhere.1 19 20 22 23 24

Patient population

We analysed Medicare fee-for-service beneficiaries aged 65 years or older admitted to hospital with a medical condition (as defined by the presence of a medical Medicare Severity Diagnosis Related Group (MS-DRG) on admission) between 1 January 2011 and 31 December 2015. To avoid comparing patient outcomes across physicians of different specialties, we focused on patients treated by general internists. We restricted our sample to patients treated in acute care hospitals, and excluded hospital admissions where a patient left against medical advice. To minimize the influence of patients selecting their physicians or physicians selecting their patients, we focused our analyses on emergency hospital admissions, defined as either emergency or urgent admissions identified in Claim Source Inpatient Admission Code of Medicare data (although we were not only interested in patients admitted as an emergency, this approach was necessary to reduce the impact of unmeasured confounding). To allow sufficient follow-up, we excluded patients admitted in December 2015 from 30 day mortality analyses and patients discharged in December 2015 from readmission analyses.

Medical school rankings

Data on medical school attended were available for approximately 80% of physicians in our data. We restricted analyses to those who graduated from medical schools in the US, excluding 259 (0.6%) who self reported as graduates of “other medical schools.” For those physicians for whom information on medical school was available, we matched schools to the rankings of the US News & World Report (USNWR) in research and primary care (see supplemental eTable 1). The USNWR has published research rankings of medical schools since 1983, and it added a primary care ranking in 1995.25 USNWR uses four attributes to rank medical schools: reputation, research activity, student selectivity, and faculty resources. The rankings are commonly used as a metric for assessing the quality of medical schools15 16 17 (although other less commonly used ranking schemas exist). Rankings are based on a weighted average of indicators, including peer assessment by school deans, evaluation by residency directors, selectivity of student admission (medical college admission test scores, student grade point averages, and acceptance rate), and faculty-student ratio.26 In addition, research rankings also take into account research activity of the faculty; primary care rankings include a measure of the proportion of graduates entering primary care specialties.

To allow for a non-linear relation we categorized medical schools into groups on the basis of USNWR ranking: 1-10, 11-20, 21-30, 31-40, 41-50, and ≥50 (only the top 50 medical schools are ranked, and therefore, we put unranked schools into the last category). We considered these six categories as the ranking categories. To measure the USNWR ranking of a physician’s medical school during the approximate period of school attendance, we used rankings published in 2002 as opposed to current rankings, and we examined patient outcomes of these physicians in 2011-15. Previous studies have found a high correlation between USNWR school rankings across years27 and relatively stable rankings over time for the top 20 medical schools in primary care rankings.28
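The grouping rule described above can be sketched in a few lines of Python. This is only an illustration, not the study's code: the function name `ranking_category` and the use of `None` for unranked schools are assumptions, as is the choice to place a school ranked exactly 50 in the 41-50 group (the text lists both "41-50" and "≥50", so the boundary is ambiguous).

```python
from typing import Optional

# Six ranking categories named in the text; the last one also holds unranked schools.
CATEGORIES = ["1-10", "11-20", "21-30", "31-40", "41-50", "≥50"]


def ranking_category(rank: Optional[int]) -> str:
    """Map a published rank to one of the six categories.

    `rank` is None for schools without a published rank (hypothetical convention).
    Assumption: a school ranked exactly 50 is placed in "41-50".
    """
    if rank is None or rank > 50:
        return CATEGORIES[-1]
    if rank < 1:
        raise ValueError("rank must be a positive integer")
    # Ranks 1-10 map to index 0, 11-20 to index 1, and so on.
    return CATEGORIES[(rank - 1) // 10]


if __name__ == "__main__":
    for example_rank in (1, 10, 11, 37, 50, None):
        print(example_rank, ranking_category(example_rank))
```

Treating the categories as ordered labels rather than raw ranks is what allows the regression to capture a non-linear relation across the ranking scale.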

Outcome variables

Our outcomes of interest included 30 day mortality, 30 day readmissions, and costs of care. Information on dates of death, including deaths out of hospital, was available in the Medicare Beneficiary Summary Files, which have been verified by death certificate.29 We excluded less than 1% of patients with non-validated death dates. We defined costs of care as total Medicare Part B spending (physician fee-for-service spending, including visits, procedures, and interpretations of tests or images) for each hospital admission, because Part A spending (hospital spending) is largely invariant as it is determined by MS-DRGs.

Attribution of patient outcomes to physicians

Based on prior studies,1 21 22 24 30 we defined the physician responsible for patient outcomes and spending as the physician who billed the most Medicare Part B spending costs during that hospital admission. On average, 51%, 22%, and 11% of total Part B spending was accounted for by the first, second, and third highest spending physicians, respectively. We restricted our analyses to hospital admissions in which the assigned physician was a general internist. For patients transferred to other acute care hospitals (1.2% of hospital admissions), we attributed the multi-hospital episode of care and associated outcomes to the assigned physician of the initial hospital admission.31 32
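As a rough illustration of this attribution rule, the sketch below sums Part B amounts per physician within each admission and assigns the admission to the physician with the largest total. The tuple layout `(admission_id, physician_id, amount)`, the function name, and the toy claims are all hypothetical; the real claims files have a different structure, and ties here are broken simply by first appearance.

```python
from collections import defaultdict


def attribute_admissions(claims):
    """Assign each admission to the physician with the highest total Part B billing.

    `claims` is an iterable of (admission_id, physician_id, amount) tuples,
    a simplified, hypothetical stand-in for Carrier file claim lines.
    """
    totals = defaultdict(lambda: defaultdict(float))
    for admission_id, physician_id, amount in claims:
        totals[admission_id][physician_id] += amount
    # max() returns the first physician encountered when totals are tied.
    return {
        admission_id: max(by_physician, key=by_physician.get)
        for admission_id, by_physician in totals.items()
    }


if __name__ == "__main__":
    toy_claims = [
        ("A1", "dr_x", 300.0),
        ("A1", "dr_y", 120.0),
        ("A1", "dr_x", 210.0),
        ("A1", "dr_z", 90.0),
        ("A2", "dr_y", 80.0),
        ("A2", "dr_z", 150.0),
    ]
    print(attribute_admissions(toy_claims))
```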

Adjustment variables

We adjusted for patient and physician characteristics and hospital fixed effects. Patient characteristics included age (as a continuous variable, with quadratic and cubic terms to allow for a non-linear relation), sex, race or ethnic group (non-Hispanic white American, non-Hispanic black American, Hispanic American, other), primary diagnosis (indicator variables for MS-DRG), indicators for 27 comorbid conditions (from the Chronic Conditions Data Warehouse developed by the Centers for Medicare and Medicaid Services33), median household income by zip code (in 10ths), an indicator for dual Medicare-Medicaid coverage, day of the week on which the admission occurred, and the indicator variable for year. Physician characteristics included age (as a continuous variable, plus quadratic and cubic terms), sex, credentials (allopathic versus osteopathic training), and patient volume (as a continuous variable, with quadratic and cubic terms). We also adjusted for hospital fixed effects—indicator variables for each hospital, which account for both measured and unmeasured characteristics of hospitals that do not vary over time, including unmeasured differences in patient populations. Therefore, our models effectively compared patient outcomes between physicians who graduated from medical schools of varying USNWR rank, practicing within the same hospital.34 35 36 This method allowed us to circumvent the potential concern that physicians from highly ranked medical school may appear to have better (or worse) patient outcomes because they are differentially employed by hospitals with better support systems or whose patients have, on average, lower severity of illness (or alternatively, worse support systems and patients with, on average, higher severity of illness).
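To make the idea of a within hospital comparison concrete: in a linear model, including an indicator for each hospital gives the same slope as subtracting each hospital's mean from the outcome and the exposure before fitting. The sketch below shows this for a single exposure with made-up numbers; the function name and data are illustrative assumptions, and the study's models include many more covariates.

```python
from collections import defaultdict


def within_hospital_slope(rows):
    """OLS slope of y on x after removing hospital-specific means.

    `rows` is an iterable of (hospital_id, x, y). With one regressor, this
    "within" estimate equals the coefficient on x from a regression that
    includes an indicator variable for every hospital.
    """
    groups = defaultdict(list)
    for hospital_id, x, y in rows:
        groups[hospital_id].append((x, y))

    sxy = 0.0
    sxx = 0.0
    for observations in groups.values():
        mean_x = sum(x for x, _ in observations) / len(observations)
        mean_y = sum(y for _, y in observations) / len(observations)
        for x, y in observations:
            sxy += (x - mean_x) * (y - mean_y)
            sxx += (x - mean_x) ** 2
    if sxx == 0:
        raise ValueError("no within-hospital variation in x")
    return sxy / sxx


if __name__ == "__main__":
    # Toy data: both hospitals share a slope of 2 but have very different baselines.
    toy_rows = [("A", 1, 12), ("A", 2, 14), ("A", 3, 16), ("B", 0, 50), ("B", 1, 52)]
    print(within_hospital_slope(toy_rows))
```

In the toy data the two hospitals differ greatly in baseline outcome, yet the within hospital slope recovers the shared relation because only variation inside each hospital contributes to the estimate.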

Statistical analysis

We examined the association between the USNWR ranking category of the medical school a physician attended and the physician’s 30 day patient mortality rate using a multivariable linear probability model, adjusting for patient and physician characteristics and hospital fixed effects. We used cluster robust standard errors to account for the possibility that outcomes among patients treated by the same physician may be correlated with each other.37 After fitting the regression model, we calculated adjusted 30 day mortality rates using marginal standardization (also known as predictive margins or margins of responses); for each hospital admission we calculated predicted probabilities of patient mortality with physician medical school ranking fixed at each category and then averaged over the distribution of covariates in our national sample.38 In addition, to test whether mortality rates changed monotonically across USNWR medical school ranking categories, we conducted a trend analysis (P for trend) by refitting the regression model using the ranking categories as a continuous variable.
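Marginal standardization, as described above, can be sketched as follows: for every admission, predict the outcome with the ranking category set to a given value, then average those predictions over the whole sample. The coefficients, the single covariate (age), and the function name below are purely hypothetical and are not the study's estimates; the actual models were fitted in Stata with many covariates.

```python
def standardized_rates(admissions, coef_category, coef_age, intercept):
    """Average predicted outcome with ranking category fixed at each level.

    admissions    : list of dicts with an "age" key (one covariate, for brevity)
    coef_category : {category: coefficient}; the reference category has 0.0
    coef_age      : coefficient on age from a (hypothetical) fitted linear model
    intercept     : model intercept
    """
    rates = {}
    for category, beta in coef_category.items():
        # Every admission is treated as if its physician belonged to `category`.
        predictions = [intercept + beta + coef_age * a["age"] for a in admissions]
        rates[category] = sum(predictions) / len(predictions)
    return rates


if __name__ == "__main__":
    toy_admissions = [{"age": 70}, {"age": 80}, {"age": 90}]
    toy_coefficients = {"1-10": 0.0, "≥50": 0.004}  # hypothetical values
    print(standardized_rates(toy_admissions, toy_coefficients, 0.005, -0.30))
```

Because the model is linear with no interactions, the difference between two standardized rates equals the difference between the corresponding category coefficients; the averaging step matters more for non-linear models such as the logistic regression used in the sensitivity analysis.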

We then evaluated the relation between the ranking of the medical school a physician attended and 30 day readmission rates and costs of care using a similar method to the analysis of mortality. To estimate these associations, we used multivariable linear probability models adjusting for patient and physician characteristics and hospital fixed effects.

Secondary analyses

We conducted several additional analyses. Firstly, to address the possibility that the USNWR ranking of a physician’s medical school in 2002 may not reflect the ranking at the time the physician attended medical school, we restricted our analysis to physicians who graduated from medical school within four years (from 1998 through 2006) of when the rankings were created (given that the typical duration of medical school education is four years). We also conducted an analysis that used USNWR medical school rankings from 2009 instead of 2002.

Secondly, to address the possibility that physicians who graduated from more highly ranked medical schools may treat patients with greater or lesser unmeasured severity of illness, we repeated our analyses focusing on hospitalists instead of general internists. Hospitalists typically work in scheduled shifts or blocks (eg, one week on and one week off) and in general do not treat patients in the outpatient setting. Therefore, within the same hospital, patients treated by hospitalists may be considered to be plausibly randomised to a particular hospitalist based only on the time of the patient’s admission and the hospitalist’s work schedule.21 22 39 We assessed the validity of this assumption by testing the balance of a broad range of patient characteristics between physicians who graduated from lower ranked versus higher ranked medical schools. We defined hospitalists as general internists who filed at least 90% of their total evaluation-and-management billings in an inpatient setting, a claims based approach that has been previously validated (sensitivity of 84.2%, specificity of 96.5%, and positive predictive value of 88.9%).40 To address the possibility that patients who are admitted multiple times may be assigned to the hospitalist who treated the patient previously, we restricted our analyses to patients’ first admission to a given hospital during the study period.
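The claims based hospitalist definition above reduces to a simple threshold rule. The sketch below assumes that "billings" are counted as numbers of evaluation-and-management claims rather than dollar amounts, which the text does not specify; the function and parameter names are hypothetical.

```python
def is_hospitalist(inpatient_em_claims: int, total_em_claims: int,
                   threshold: float = 0.90) -> bool:
    """Return True if at least `threshold` of E&M claims were filed in an inpatient setting.

    Assumption: claims are counted, not weighted by billed amount.
    Physicians with no E&M claims are classified as non-hospitalists.
    """
    if total_em_claims <= 0:
        return False
    return inpatient_em_claims / total_em_claims >= threshold


if __name__ == "__main__":
    print(is_hospitalist(95, 100))
    print(is_hospitalist(89, 100))
```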

Thirdly, to evaluate whether our findings were sensitive to how we attributed patients to physicians, we tested two alternative attribution methods: attributing patients to physicians with the largest number of evaluation-and-management claims, and attributing patients to physicians who billed the first evaluation-and-management claim for a given hospital admission (the “admitting physician”).

Fourthly, we used multivariable logistic regression models, instead of multivariable linear probability models, to test whether our findings were sensitive to the model specification for the analyses of binary outcomes (mortality and readmissions). To overcome complete or quasi-complete separation problems (ie, perfect or nearly perfect prediction of the outcome by the model), we combined medical diagnosis related group codes with no outcome event (30 day mortality or readmission) into clinically similar categories.41

Fifthly, because cost data were right skewed we conducted an additional sensitivity analysis using a generalised linear model with a log-link and normal distribution for our cost analyses.42

Sixthly, we investigated whether the association between a physician’s USNWR medical school ranking and subsequent patient outcomes and costs of care was modified by years of experience. We hypothesised that medical school may play a greater role, if any, as a signal of quality for physicians who recently completed residency training as opposed to older physicians for whom any signal of quality owing to medical school may dissipate over time as physician practice norms conform to those of other peers or hospital standards.

Seventhly, to evaluate the influence of hospitals where physicians practice, instead of comparing physicians who graduated from highly ranked versus lower ranked schools within the same hospital, we compared physicians across hospitals, by removing hospital fixed effects from our regression models.

Finally, to address important concerns about the methods used for the USNWR rankings, we repeated the analyses using alternative rankings. Alternative to the primary care ranking, we used the ranking based on social mission score developed by Mullan and colleagues, which is calculated based on the percentages of graduates who work in primary care, graduates who work in health professional shortage areas, and underrepresented minorities.43 Alternative to the research ranking, we used a ranking based on the amount of National Institutes of Health (NIH) funding to medical schools. Importantly, our baseline analysis focused on USNWR rankings, rather than these possibly more objective measures of medical school quality, because the key empirical question of interest in this study was whether the commonly used USNWR rankings bear any predictive signal for downstream patient outcomes and costs of care for physicians who graduated from highly ranked versus lower ranked schools in USNWR rankings.

Data preparation was conducted using SAS, version 9.4 (SAS Institute), and analyses were performed using Stata, version 14 (Stata Corp).

Patient and public involvement

No patients were involved in setting the research question or the outcome measures, nor were they involved in developing plans for design or implementation of the study. No patients were asked to advise on interpretation or writing up of results. There are no plans to disseminate the results of the research to study participants or the relevant patient community.

Results

Physician and patient characteristics

Among 30 322 physicians included in the study, 13.3% (4039/30 322) graduated from a medical school ranked in the top 20 for primary care in US News & World Report (USNWR) rankings, and 13.4% (4071/30 322) graduated from a school ranked in the top 20 for research. Only seven medical schools were in the top 20 for both primary care and research. Within the same hospital, physicians graduating from top 20 medical schools for primary care were slightly older and more likely to be graduates of allopathic schools (table 1; see eTable 2 for differences in physician and patient characteristics between top 20 research versus lower ranked research schools). Patient characteristics were similar between physicians of top 20 medical schools versus physicians graduating from lower ranked schools, with small differences in the prevalence of diabetes, hypertension, and chronic kidney disease.

Table 1.

Patient and physician characteristics, according to a physician’s medical school US News & World Report (USNWR) ranking for primary care in 2002. Values are numbers (percentages) unless stated otherwise

| Characteristics | USNWR ranking (primary care), 2002: top 20 | USNWR ranking (primary care), 2002: ≥21 | P value |
|---|---|---|---|
| Physicians | | | |
| No of physicians | 4039 | 26 283 | — |
| Mean (SE) physician’s age (years) | 46.9 (0.2) | 45.0 (0.08) | <0.001 |
| Women | 1329 (32.9) | 8121 (30.9) | 0.08 |
| Credentials: | | | |
| MD (allopathic) | 4013 (99.4) | 23 008 (87.5) | <0.001 |
| DO (osteopathic) | 26 (0.6) | 3275 (12.5) | |
| Patients | | | |
| No of Medicare hospital admissions, 2011-15 | 105 617 | 890 595 | — |
| Mean (SE) patient’s age (years) | 76.0 (0.08) | 76.0 (0.02) | 0.74 |
| Women | 63 477 (60.1) | 532 577 (59.8) | 0.08 |
| Race/ethnicity: | | | |
| White | 84 601 (80.1) | 713 368 (80.1) | 0.90 |
| Black | 12 991 (12.3) | 109 543 (12.3) | 0.96 |
| Hispanic | 4436 (4.2) | 39 186 (4.4) | 0.02 |
| Other | 3591 (3.4) | 28 449 (3.2) | 0.054 |
| Mean of median (SE) household income ($) | 56 349 (101) | 56 284 (29) | 0.55 |
| Medicaid status | 29 679 (28.1) | 252 039 (28.3) | 0.32 |
| Comorbidities: | | | |
| Congestive heart failure | 30 630 (29.0) | 255 601 (28.7) | 0.14 |
| COPD | 24 081 (22.8) | 203 056 (22.8) | 0.69 |
| Diabetes | 27 651 (26.2) | 229 418 (25.8) | 0.03 |
| Hypertension | 55 027 (52.1) | 459 876 (51.3) | 0.003 |
| Chronic kidney disease | 32 742 (31.0) | 269 851 (30.3) | 0.002 |
| Ischaemic heart disease | 35 699 (33.8) | 297 459 (33.4) | 0.09 |
| Cancer | 10 668 (10.1) | 89 060 (10.0) | 0.81 |
| Depression | 23 131 (21.9) | 194 150 (21.8) | 0.44 |

$1.00 (£0.77; €0.85).

COPD=chronic obstructive pulmonary disease.

Physicians within the same hospital were compared by fitting ordinary least squares regression models adjusting for hospital fixed effects, and then marginal standardization was calculated.

Standard errors were clustered at physician level.

USNWR primary care ranking and patient outcomes/healthcare spending

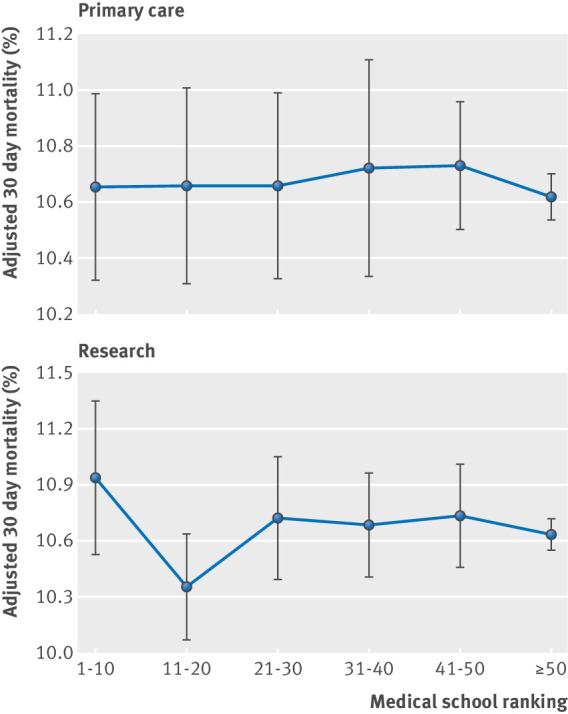

Among 996 212 hospital admissions of Medicare patients, 10.6% (106 003/996 212) died within 30 days of admission. After adjusting for patient and physician characteristics and hospital fixed effects, no systematic relation was observed between the USNWR primary care ranking of the medical school that a physician attended and the physician’s 30 day mortality rate for treated patients (table 2 and fig 1). A formal test for linearity found no association between USNWR medical school primary care ranking and patient mortality (P for trend=0.67).

Table 2.

Association between a physician’s medical school US News & World Report (USNWR) ranking and patient outcomes

| USNWR ranking | 30 day mortality: adjusted mortality (95% CI) (%) | 30 day mortality: adjusted risk difference (95% CI) (%) | 30 day mortality: P for trend | 30 day readmission: adjusted readmission (95% CI) (%) | 30 day readmission: adjusted risk difference (95% CI) (%) | 30 day readmission: P for trend | Part B spending: adjusted spending (95% CI) ($) | Part B spending: adjusted difference (95% CI) ($) | Part B spending: P for trend |
|---|---|---|---|---|---|---|---|---|---|
| Primary care: | | | | | | | | | |
| 1-10 | 10.7 (10.3 to 11.0) | Reference | 0.67 | 15.7 (15.3 to 16.0) | Reference | 0.005 | 1029 (1014 to 1045) | Reference | <0.001 |
| 11-20 | 10.7 (10.3 to 11.0) | 0.005 (−0.5 to 0.5) | | 16.0 (15.6 to 16.3) | 0.3 (−0.2 to 0.8) | | 1062 (1045 to 1079) | 33 (11 to 55) | |
| 21-30 | 10.7 (10.3 to 11.0) | 0.004 (−0.5 to 0.5) | | 15.8 (15.4 to 16.2) | 0.2 (−0.3 to 0.7) | | 1043 (1025 to 1060) | 13 (−10 to 36) | |
| 31-40 | 10.7 (10.3 to 11.1) | 0.07 (−0.5 to 0.6) | | 15.5 (15.1 to 15.9) | −0.2 (−0.7 to 0.3) | | 1054 (1037 to 1071) | 25 (2 to 48) | |
| 41-50 | 10.7 (10.5 to 11.0) | 0.08 (−0.3 to 0.5) | | 16.1 (15.9 to 16.4) | 0.5 (0.1 to 0.9) | | 1053 (1042 to 1064) | 24 (5 to 42) | |
| ≥50 | 10.6 (10.5 to 10.7) | −0.04 (−0.4 to 0.3) | | 16.1 (16.0 to 16.2) | 0.4 (0.1 to 0.8) | | 1066 (1061 to 1070) | 36 (20 to 52) | |
| Research: | | | | | | | | | |
| 1-10 | 10.9 (10.5 to 11.3) | Reference | 0.99 | 16.0 (15.6 to 16.5) | Reference | 0.27 | 1050 (1028 to 1071) | Reference | <0.001 |
| 11-20 | 10.4 (10.1 to 10.6) | −0.6 (−1.1 to −0.1) | | 15.9 (15.6 to 16.3) | −0.1 (−0.6 to 0.5) | | 1044 (1030 to 1058) | −6 (−31 to 19) | |
| 21-30 | 10.7 (10.4 to 11.0) | −0.2 (−0.7 to 0.3) | | 15.7 (15.4 to 16.0) | −0.3 (−0.8 to 0.2) | | 1037 (1022 to 1053) | −13 (−39 to 13) | |
| 31-40 | 10.7 (10.4 to 11.0) | −0.3 (−0.7 to 0.2) | | 16.1 (15.8 to 16.4) | 0.1 (−0.4 to 0.6) | | 1056 (1042 to 1070) | 6 (−19 to 31) | |
| 41-50 | 10.7 (10.5 to 11.0) | −0.2 (−0.7 to 0.3) | | 16.0 (15.7 to 16.3) | −0.04 (−0.6 to 0.5) | | 1050 (1037 to 1063) | 0 (−25 to 25) | |
| ≥50 | 10.6 (10.5 to 10.7) | −0.3 (−0.7 to 0.1) | | 16.1 (16.0 to 16.2) | 0.05 (−0.4 to 0.5) | | 1067 (1062 to 1071) | 17 (−5 to 39) | |

Analysis of 996 212 (30 322), 973 484 (30 310), and 1 047 103 (30 605) hospital admissions (number of physicians) for mortality, readmissions, and health spending, respectively.

Adjusted for patient characteristics, physician characteristics, and hospital fixed effects.

Standard errors were clustered at the physician level.

Fig 1.

Association between physicians’ US News & World Report medical school ranking for primary care and research and patient 30 day mortality. Adjusted for patient and physician characteristics and hospital fixed effects

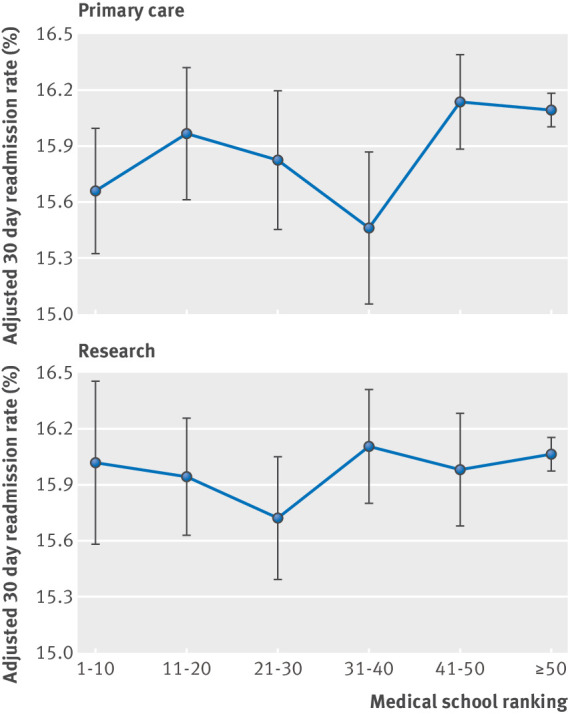

The overall 30 day readmission rate was 16.0% (156 057/973 484). After multivariable adjustment, patients treated by physicians who graduated from medical schools with lower USNWR primary care rankings had slightly higher readmissions compared with patients treated by physicians who graduated from higher ranked medical schools (adjusted 30 day readmission, 15.7% for top 10 schools versus 16.1% for schools ranked ≥50; adjusted risk difference 0.4%, 95% confidence interval 0.1% to 0.8%; P for trend=0.005) (table 2 and fig 2).

Fig 2.

Association between physician US News & World Report medical school ranking for primary care and research and patient 30 day readmission rate. Adjusted for patient and physician characteristics and hospital fixed effects

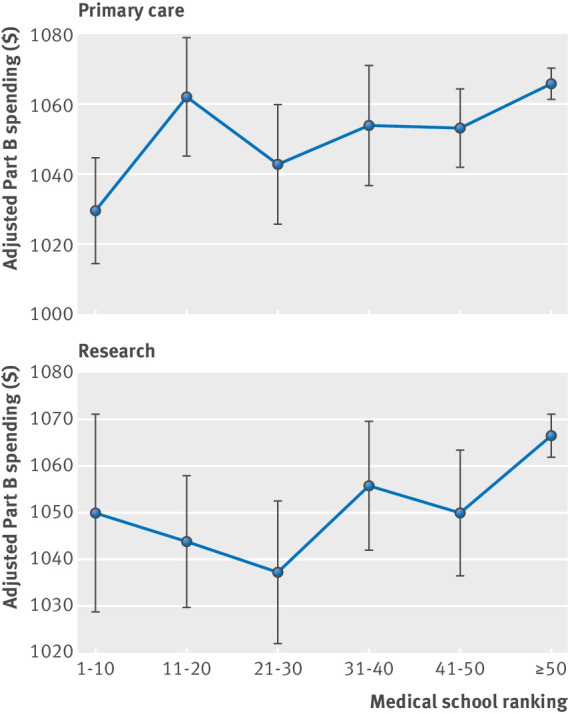

Physicians who graduated from schools highly ranked in primary care had slightly lower spending than physicians who graduated from lower ranked schools (P for trend <0.001). For example, physicians who graduated from top 10 ranked USNWR schools spent slightly less for each patient than physicians who graduated from schools with a ranking of 50 or more (adjusted Part B spending level $1029 (£790; €881) for top 10 schools v $1066 for schools ranked ≥50; adjusted difference $36, 95% confidence interval $20 to $52; P<0.001) (table 2 and fig 3).

Fig 3.

Association between physician US News & World Report medical school ranking for primary care and research and Part B spending for each hospital admission. Adjusted for patient and physician characteristics and hospital fixed effects

USNWR research ranking and patient outcomes/healthcare spending

No statistically significant association was observed between the USNWR research ranking of the medical school that a physician attended and patient 30 day mortality. No systematic (linear) association was found between USNWR medical school research ranking and patient mortality (P for trend=0.99) (table 2 and fig 1).

The USNWR research ranking of a physician’s medical school was not statistically significantly associated with patient 30 day readmission rates (table 2 and fig 2) (P for trend=0.27).

Physicians who graduated from highly ranked schools had slightly lower spending than graduates from lower ranked schools (adjusted Part B spending level $1050 for top 10 schools v $1067 for schools ranked ≥50; P for trend <0.001) (table 2 and fig 3).

Secondary analyses

The overall findings were qualitatively unaffected by restricting analyses to physicians who graduated from medical school within four years of when the USNWR rankings were created (eTable 3), or when 2009 USNWR rankings were used instead of 2002 rankings (eTable 4). Agreement between the 2002 and 2009 ranking categories, measured with a weighted κ, was 0.90 and 0.71 for research and primary care rankings, respectively. Patient characteristics did not differ between hospitalists who graduated from top ranked versus lower ranked USNWR schools (eTable 5), and findings were similar among hospitalists (eTable 6). Findings were not affected by using alternative physician attribution models (eTables 7 and 8), using logistic regression models (eTable 9), or using a generalised linear model with a log-link and normal distribution for the analysis of costs (eTable 10). A stratified analysis by years since completion of residency programs showed that the association of medical school with subsequent patient outcomes was strongest in the first 10 years of physicians’ careers (eTable 11). For example, physicians who attended top medical schools (either in terms of research or primary care rankings) exhibited statistically significantly lower patient mortality rates in the first 10 years of independent practice, whereas there was no association after 10 years. Comparison of physicians across hospitals (by removing hospital fixed effects from regression models) revealed that physicians who graduated from highly ranked USNWR medical schools (for both primary care and research rankings) had lower mortality rates, readmission rates, and costs of care compared with physicians who graduated from lower ranked schools (eTable 12). Finally, we found little or no association between medical school rankings and patient outcomes or costs of care when the ranking of the medical school that a physician attended was based on a social mission score (eTable 13). 
We also found no association between patient outcomes and ranking of the medical school that a physician attended when ranking was based on NIH funding; costs of care were only slightly lower for physicians who graduated from medical schools ranked highly for NIH funding compared with lower ranked schools (eTable 14).
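For readers unfamiliar with the weighted κ reported in the secondary analyses, the sketch below computes Cohen's weighted κ between two sets of ordered category assignments. The weighting scheme used in the study is not stated; linear disagreement weights are assumed here, and the function name and toy inputs are illustrative only.

```python
def weighted_kappa(ratings_a, ratings_b, n_categories):
    """Cohen's weighted kappa with linear disagreement weights (an assumption).

    ratings_a, ratings_b : equal-length lists of category indices in
                           0 .. n_categories - 1 (eg, 2002 and 2009 categories)
    """
    if n_categories < 2:
        raise ValueError("need at least two categories")
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("ratings must be non-empty and of equal length")

    n = len(ratings_a)
    k = n_categories
    # Observed joint proportions of (category in A, category in B).
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(ratings_a, ratings_b):
        observed[a][b] += 1.0 / n
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    weighted_observed = 0.0
    weighted_expected = 0.0
    for i in range(k):
        for j in range(k):
            weight = abs(i - j) / (k - 1)  # 0 on the diagonal, 1 at maximum distance
            weighted_observed += weight * observed[i][j]
            weighted_expected += weight * row[i] * col[j]
    if weighted_expected == 0:
        raise ValueError("kappa is undefined when all ratings fall in one category")
    return 1.0 - weighted_observed / weighted_expected


if __name__ == "__main__":
    categories_2002 = [0, 1, 2, 3, 4, 5]  # toy data, not the study's rankings
    categories_2009 = [0, 1, 2, 3, 5, 5]
    print(weighted_kappa(categories_2002, categories_2009, 6))
```

A value of 1 indicates identical category assignments in both years, and values near 0 indicate agreement no better than chance.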

Discussion

In a nationally representative cohort of Medicare patients aged 65 years and older who were admitted to hospital in 2011-15 and treated by a general internist, little or no association was found between the US News & World Report (USNWR) ranking of the medical school from which a physician graduated and patient 30 day mortality or readmission rates. Physicians who graduated from highly ranked medical schools had slightly lower spending compared with physicians who graduated from lower ranked schools. Overall these findings suggest that the USNWR ranking of the medical school from which a physician graduated bears only a weak relation with patient outcomes and costs of care. We also found that alternative ranking schema—based on social mission score for primary care ranking and NIH funding for research ranking—bore little relation with subsequent patient outcomes and costs of care.

There are several potential explanations for why physicians who graduate from USNWR highly ranked medical schools show little or no differences in their clinical outcomes and healthcare spending. Firstly, the medical school accreditation processes, medical school standards, and standardized testing required of all physicians may be sufficiently stringent to ensure that all medical students master the essential competencies necessary to practice as clinicians. In the US, MD (allopathic) granting medical schools are accredited by the Liaison Committee on Medical Education, and DO (osteopathic) granting schools are accredited by the American Osteopathic Association Commission on Osteopathic College Accreditation. That only 17 medical schools were awarded full accreditation by these bodies between 2007 and 2016 suggests that these accrediting bodies hold MD granting and DO granting medical schools to rigorous standards.44 However, it is possible that observed variation would be larger if there were no national standards for medical schools. Secondly, our findings indicate that although different medical schools may focus on training students with different interests and goals—for example, some institutions may focus on training physician-scientists, whereas others may have mandates to produce clinicians for their local communities—schools may have developed strategies for effectively ensuring that students learn the core knowledge and skills necessary to become competent physicians. Thirdly, the findings of our study may in part reflect the study’s design, which compared patient outcomes between physicians practicing in the same hospital. Our within hospital analysis helps address confounding arising from the possibility that physicians who graduate from higher USNWR ranked versus lower ranked medical schools may practice in areas with different patient populations. 
However, because hospitals perform quality assurance on the physicians they hire, within hospital differences in physician skill are likely to be smaller than between hospital differences. This hypothesis is supported by our secondary analysis, which found that differences in patient outcomes between physicians graduating from medical schools of varying USNWR rank were larger when we removed hospital specific fixed effects from our model (thereby comparing physicians across rather than within hospitals). Fourthly, although patients may view the USNWR ranking of a physician’s medical school as a signal of quality, it is likely that many factors at different stages of physicians’ careers, including postgraduate training and the systems in place at a physician’s current place of work, play an important role in determining the quality and costs of care that physicians provide.9 10 Future studies are warranted to understand whether other factors such as residency training have a measurable association with the performance of physicians after completion of training. Lastly, it is possible that the rankings we used in this study do not capture the quality of medical education in a valid and reliable way, and we may need better approaches for measuring the quality of medical schools. For example, in the USNWR primary care ranking, the largest weight is given to graduates selecting internal medicine, family practice, or paediatric residencies; however, only a limited proportion of those trainees entering internal medicine residency programs may remain in primary care. Our findings suggest that there is room for improvement within medical school rankings to ensure that they reflect the actual quality of medical education that students receive at individual medical schools.
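As a rough illustration of why removing hospital fixed effects can enlarge the estimated differences, the following minimal Python sketch contrasts a pooled (across hospital) comparison with a within hospital comparison. The data, variable names, and effect sizes are hypothetical assumptions made for illustration only; they are not the study data, and the simple demeaning shown here is a stand-in for, not a reproduction of, the regression models used in the study.

```python
from collections import defaultdict


def ols_slope(x, y):
    """Slope of a simple least-squares line of y on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    return sxy / sxx


def demean_within(groups, values):
    """Subtract each group's mean from its members (the 'within' transformation)."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for g, v in zip(groups, values):
        sums[g] += v
        counts[g] += 1
    return [v - sums[g] / counts[g] for g, v in zip(groups, values)]


# Hypothetical toy data, one row per physician (NOT the study data).
# Hospital A employs more graduates of top ranked schools and has a lower
# baseline mortality (10.0%) than hospital B (11.0%). Within either hospital,
# graduating from a top ranked school lowers mortality by only 0.1 points.
hospital = ["A", "A", "A", "B", "B", "B"]
top_school = [1, 1, 0, 1, 0, 0]
mortality = [9.9, 9.9, 10.0, 10.9, 11.0, 11.0]

# Pooled comparison: ignores which hospital each physician works in.
pooled_slope = ols_slope(top_school, mortality)

# Within hospital comparison: demean both variables by hospital first,
# which is equivalent to including a fixed effect for each hospital.
within_slope = ols_slope(
    demean_within(hospital, top_school),
    demean_within(hospital, mortality),
)

print(f"pooled slope: {pooled_slope:.3f}")
print(f"within hospital slope: {within_slope:.3f}")
```

In this toy example the pooled slope is about -0.43 percentage points, whereas the within hospital slope is -0.10, because the pooled comparison also picks up the baseline difference between the two hospitals.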

To identify whether the quality of medical education has an impact on downstream practice patterns of physicians, it is important to emphasize what this study does and does not attempt. Our main interest was to analyse whether the commonly used USNWR ranking is associated with subsequent patient outcomes and costs of care for physicians who graduated from medical schools with a high versus low USNWR ranking. We chose this question because the USNWR ranking of the medical school from which a physician graduated may be used by patients and clinicians as a signal for physician quality. We found no evidence that the USNWR ranking of the medical school from which a physician graduated bears any relation with subsequent patient outcomes, at least when considering physicians who practice within the same hospital. We also found no relation between two other ranking schema and subsequent patient outcomes of physicians who graduated from high ranked versus lower ranked medical schools (rankings based on social mission score and NIH funding); however, this does not imply that the quality of medical school training bears no relation with quality of downstream patient care, which is a distinct question. It may, but the main focus of this study was whether common perceptions of a medical school’s quality—based on widely used USNWR rankings—provide any predictive signal for subsequent patient outcomes and costs of care.

Comparison with other studies

The current study relates to prior research on the relation between residency training and subsequent costs and quality of care, which finds that practice patterns embedded in residency training are subsequently implemented into practice after physicians complete their residency.9 10 There is also a limited body of work evaluating the association between the medical school from which a physician graduated and subsequent practice patterns. Reid and colleagues examined physicians practicing in Massachusetts and found no association between graduating from a top 10 medical school, defined using USNWR rankings, and performance on process-of-care measures.13 Hartz and colleagues found no association between attendance at a prestigious medical school and coronary artery bypass graft surgery outcomes among cardiothoracic surgeons.45 Schnell and Currie recently reported that physicians who completed training at highly ranked medical schools write statistically significantly fewer opioid prescriptions than physicians from lower ranked schools.14

Strengths and limitations of this study

Our study has limitations. Firstly, USNWR rankings are, at best, imperfect measures of medical school quality. While no ranking system is perfect, the USNWR rankings system captures a wider array of factors that reflect medical school quality than any other ranking system—including peer assessment scores by school deans, evaluation by residency directors, students’ grades and test scores, and faculty-student ratio.26 Moreover, USNWR rankings have been reported to influence applicants’ medical school choices and are often used in scientific research as a proxy for medical school quality.12 13 14 45 46 Importantly, even if USNWR rankings are not accurate measures of medical school quality, to the extent that patient perceptions of doctor quality may partly depend on the ranking of the medical school at which a physician trained, this study suggests that little information about mortality, readmissions, and costs of care should be inferred by patients from that ranking information. Secondly, it is possible that the quality of a medical school’s research and primary care training may not correlate well with the quality of the school’s training for hospital based care, which could confound our assessment of the relation between medical school quality and patient outcomes. Thirdly, we relied on USNWR medical school rankings from a single year, whereas physicians in our data graduated from medical school across a wide range of years. It is possible that this single year estimate of quality failed to capture variations in medical school quality over time that had an important impact on physician quality, and, in turn, on patients’ clinical outcomes. However, previous studies have found a high correlation of USNWR rankings across years27 and relatively stable rankings over time for top 20 primary care medical schools.28 Our data also confirmed a high correlation of rankings across years.
Furthermore, using alternative approaches in sensitivity analyses did not affect the results, supporting the robustness of these findings. Finally, these findings may not apply to non-Medicare populations, outpatient care, or surgical patients. Additional studies are needed to determine if the lack of association between the ranking of the medical school a physician attended and subsequent patient outcomes is generalizable to other types of clinical care and different patient populations.

Conclusion

For physicians practicing within the same hospital, the USNWR ranking of the medical school from which they graduated bears little or no relation with patient mortality after hospital admission, readmissions, and costs of care.

What is already known on this topic

No national data exist on whether the US News & World Report (USNWR) ranking of the medical school from which an internist graduated is associated with hospital patient outcomes and costs of care

Patients may perceive the medical school from which a physician graduated as a signal of care quality

The predictive relation between the USNWR ranking of the medical school a physician attended and subsequent patient outcomes and spending is therefore important to understand

What this study adds

Physicians who graduated from highly USNWR ranked primary care medical schools had slightly lower patient readmission rates and spending compared with those who attended lower ranked schools, but no difference in patient 30 day mortality

Physicians who graduated from highly ranked research medical schools had slightly lower spending but no difference in patient 30 day mortality or readmission rates

Little or no association was found between other rankings—based on social mission score or National Institutes of Health funding—and patient outcomes and costs of care

Web extra.

Extra material supplied by authors

Supplementary information: eTables 1-14

Contributors: All authors contributed to the design and conduct of the study, data collection and management, analysis, and interpretation; and preparation, review, or approval of the manuscript. YT is the guarantor. The corresponding author attests that all listed authors meet authorship criteria and that no others meeting the criteria have been omitted.

Funding: ABJ was supported by the Office of the Director, National Institutes of Health (NIH Early Independence Award, grant 1DP5OD017897). ABJ reports receiving consulting fees unrelated to this work from Pfizer, Hill Rom Services, Bristol Myers Squibb, Novartis Pharmaceuticals, Amgen, Eli Lilly, Vertex Pharmaceuticals, Precision Health Economics, and Analysis Group. DMB has received consulting fees unrelated to this work from Precision Health Economics, Amgen, Novartis, and HLM Venture Partners, and is the associate chief medical officer of Devoted Health, which is a health insurance company. Study sponsors were not involved in study design, data interpretation, writing, or the decision to submit the article for publication.

Competing interests: All authors have completed the ICMJE uniform disclosure form at www.icmje.org/coi_disclosure.pdf and declare: ABJ has received consulting fees unrelated to this work from Pfizer, Hill Rom Services, Bristol Myers Squibb, Novartis Pharmaceuticals, Amgen, Eli Lilly, Vertex Pharmaceuticals, Precision Health Economics, and Analysis Group. DMB has received consulting fees unrelated to this work from Precision Health Economics, Amgen, Novartis, and HLM Venture Partners, and is the associate chief medical officer of Devoted Health, which is a health insurance company.

Ethical approval: This study was approved by the institutional review board at Harvard Medical School.

Data sharing: No additional data available.

Transparency: The lead author (YT) affirms that the manuscript is an honest, accurate, and transparent account of the study being reported; that no important aspects of the study have been omitted; and that any discrepancies are disclosed.

References

- 1. Tsugawa Y, Jha AK, Newhouse JP, Zaslavsky AM, Jena AB. Variation in Physician Spending and Association With Patient Outcomes. JAMA Intern Med 2017;177:675-82. doi:10.1001/jamainternmed.2017.0059.

- 2. Tisnado D, Malin J, Kahn K, et al. Variations in Oncologist Recommendations for Chemotherapy for Stage IV Lung Cancer: What Is the Role of Performance Status? J Oncol Pract 2016;12:653-62. doi:10.1200/JOP.2015.008425.

- 3. Wang X, Knight LS, Evans A, Wang J, Smith TJ. Variations Among Physicians in Hospice Referrals of Patients With Advanced Cancer. J Oncol Pract 2017;13:e496-504. doi:10.1200/JOP.2016.018093.

- 4. Hoffman KE, Niu J, Shen Y, et al. Physician variation in management of low-risk prostate cancer: a population-based cohort study. JAMA Intern Med 2014;174:1450-9. doi:10.1001/jamainternmed.2014.3021.

- 5. Lipitz-Snyderman A, Sima CS, Atoria CL, et al. Physician-Driven Variation in Nonrecommended Services Among Older Adults Diagnosed With Cancer. JAMA Intern Med 2016;176:1541-8. doi:10.1001/jamainternmed.2016.4426.

- 6. Green JB, Shapiro MF, Ettner SL, Malin J, Ang A, Wong MD. Physician variation in lung cancer treatment at the end of life. Am J Manag Care 2017;23:216-23.

- 7. Clough JD, McClellan M. Implementing MACRA: Implications for Physicians and for Physician Leadership. JAMA 2016;315:2397-8. doi:10.1001/jama.2016.7041.

- 8. McWilliams JM. MACRA: Big Fix or Big Problem? Ann Intern Med 2017;167:122-4. doi:10.7326/M17-0230.

- 9. Chen C, Petterson S, Phillips R, Bazemore A, Mullan F. Spending patterns in region of residency training and subsequent expenditures for care provided by practicing physicians for Medicare beneficiaries. JAMA 2014;312:2385-93. doi:10.1001/jama.2014.15973.

- 10. Asch DA, Nicholson S, Srinivas S, Herrin J, Epstein AJ. Evaluating obstetrical residency programs using patient outcomes. JAMA 2009;302:1277-83. doi:10.1001/jama.2009.1356.

- 11. Chen PW. Rethinking the Way We Rank Medical Schools. The New York Times, June 17, 2010.

- 12. Gao GG, McCullough JS, Agarwal R, Jha AK. A changing landscape of physician quality reporting: analysis of patients’ online ratings of their physicians over a 5-year period. J Med Internet Res 2012;14:e38. doi:10.2196/jmir.2003.

- 13. Reid RO, Friedberg MW, Adams JL, McGlynn EA, Mehrotra A. Associations between physician characteristics and quality of care. Arch Intern Med 2010;170:1442-9. doi:10.1001/archinternmed.2010.307.

- 14. Schnell M, Currie J. Addressing the opioid epidemic: Is there a role for physician education? Am J Health Econ 2018;4:383-410. https://www.mitpressjournals.org/doi/abs/10.1162/ajhe_a_00113.

- 15. Rosati CM, Koniaris LG, Molena D, et al. Characteristics of cardiothoracic surgeons practicing at the top-ranked US institutions. J Thorac Dis 2016;8:3232-44. doi:10.21037/jtd.2016.11.72.

- 16. Lascano D, Finkelstein JB, Barlow LJ, et al. The Correlation of Media Ranking’s “Best” Hospitals and Surgical Outcomes Following Radical Cystectomy for Urothelial Cancer. Urology 2015;86:1104-12. doi:10.1016/j.urology.2015.07.049.

- 17. Wilson AB, Torbeck LJ, Dunnington GL. Ranking Surgical Residency Programs: Reputation Survey or Outcomes Measures? J Surg Educ 2015;72:e243-50. doi:10.1016/j.jsurg.2015.03.021.

- 18. Mullan F, Chen C, Petterson S, Kolsky G, Spagnola M. The social mission of medical education: ranking the schools. Ann Intern Med 2010;152:804-11. doi:10.7326/0003-4819-152-12-201006150-00009.

- 19. Jena AB, Khullar D, Ho O, Olenski AR, Blumenthal DM. Sex Differences in Academic Rank in US Medical Schools in 2014. JAMA 2015;314:1149-58. doi:10.1001/jama.2015.10680.

- 20. Jena AB, Olenski AR, Blumenthal DM. Sex Differences in Physician Salary in US Public Medical Schools. JAMA Intern Med 2016;176:1294-304. doi:10.1001/jamainternmed.2016.3284.

- 21. Tsugawa Y, Jena AB, Figueroa JF, et al. Comparison of Hospital Mortality and Readmission Rates for Medicare Patients Treated by Male vs Female Physicians. JAMA Intern Med 2016;177:206-13. doi:10.1001/jamainternmed.2016.7875.

- 22. Tsugawa Y, Jena AB, Orav EJ, Jha AK. Quality of care delivered by general internists in US hospitals who graduated from foreign versus US medical schools: observational study. BMJ 2017;356:j273. doi:10.1136/bmj.j273.

- 23. Tsugawa Y, Jena AB, Figueroa JF, Orav EJ, Blumenthal DM, Jha AK. Comparison of Hospital Mortality and Readmission Rates for Medicare Patients Treated by Male vs Female Physicians. JAMA Intern Med 2017;177:206-13. doi:10.1001/jamainternmed.2016.7875.

- 24. Tsugawa Y, Newhouse JP, Zaslavsky AM, Blumenthal DM, Jena AB. Physician age and outcomes in elderly patients in hospital in the US: observational study. BMJ 2017;357:j1797. doi:10.1136/bmj.j1797.

- 25. McGaghie WC, Thompson JA. America’s best medical schools: a critique of the U.S. News & World Report rankings. Acad Med 2001;76:985-92. doi:10.1097/00001888-200110000-00005.

- 26. Morse R, Hines K. Methodology: 2018 Best Medical Schools Rankings. U.S. News; 2017. https://www.usnews.com/education/best-graduate-schools/articles/medical-schools-methodology (accessed October 10 2017).

- 27. Clarke M. What Can “US News & World Report” Rankings Tell Us about the Quality of Higher Education? Educ Policy Anal Arch 2002;10:n16. doi:10.14507/epaa.v10n16.2002.

- 28. Tancredi DJ, Bertakis KD, Jerant A. Short-term stability and spread of the U.S. News & World Report primary care medical school rankings. Acad Med 2013;88:1107-15. doi:10.1097/ACM.0b013e31829a249a.

- 29. Research Data Assistance Center (ResDAC). Death Information in the Research Identifiable Medicare Data. Minneapolis, MN: Centers for Medicare & Medicaid Services; 2016. https://www.resdac.org/resconnect/articles/117 (accessed November 2 2017).

- 30. McWilliams JM, Landon BE, Chernew ME, Zaslavsky AM. Changes in patients’ experiences in Medicare Accountable Care Organizations. N Engl J Med 2014;371:1715-24. doi:10.1056/NEJMsa1406552.

- 31. Ross JS, Normand SL, Wang Y, et al. Hospital volume and 30-day mortality for three common medical conditions. N Engl J Med 2010;362:1110-8. doi:10.1056/NEJMsa0907130.

- 32. Drye EE, Normand SL, Wang Y, et al. Comparison of hospital risk-standardized mortality rates calculated by using in-hospital and 30-day models: an observational study with implications for hospital profiling. Ann Intern Med 2012;156:19-26. doi:10.7326/0003-4819-156-1-201201030-00004.

- 33. Centers for Medicare & Medicaid Services (CMS). Chronic Conditions Data Warehouse–Condition Categories. Baltimore, MD: CMS. https://www.ccwdata.org/web/guest/condition-categories (accessed September 13 2017).

- 34. Fitzmaurice GM, Laird NM, Ware JH. Applied longitudinal analysis. John Wiley & Sons, 2012.

- 35. Gardiner JC, Luo Z, Roman LA. Fixed effects, random effects and GEE: what are the differences? Stat Med 2009;28:221-39. doi:10.1002/sim.3478.

- 36. Gunasekara FI, Richardson K, Carter K, Blakely T. Fixed effects analysis of repeated measures data. Int J Epidemiol 2014;43:264-9. doi:10.1093/ije/dyt221.

- 37. Arellano M. Practitioners’ Corner: Computing Robust Standard Errors for Within-groups Estimators. Oxf Bull Econ Stat 1987;49:431-4. doi:10.1111/j.1468-0084.1987.mp49004006.x.

- 38. Williams R. Using the margins command to estimate and interpret adjusted predictions and marginal effects. Stata J 2012;12:308.

- 39. Hinami K, Whelan CT, Miller JA, Wolosin RJ, Wetterneck TB, Society of Hospital Medicine Career Satisfaction Task Force. Job characteristics, satisfaction, and burnout across hospitalist practice models. J Hosp Med 2012;7:402-10. doi:10.1002/jhm.1907.

- 40. Kuo YF, Sharma G, Freeman JL, Goodwin JS. Growth in the care of older patients by hospitalists in the United States. N Engl J Med 2009;360:1102-12. doi:10.1056/NEJMsa0802381.

- 41. Heinze G, Schemper M. A solution to the problem of separation in logistic regression. Stat Med 2002;21:2409-19. doi:10.1002/sim.1047.

- 42. Buntin MB, Zaslavsky AM. Too much ado about two-part models and transformation? Comparing methods of modeling Medicare expenditures. J Health Econ 2004;23:525-42. doi:10.1016/j.jhealeco.2003.10.005.

- 43. Mullan F, Chen C, Petterson S, Kolsky G, Spagnola M. The social mission of medical education: ranking the schools [published Online First: 2010/06/16]. Ann Intern Med 2010;152:804-11. doi:10.7326/0003-4819-152-12-201006150-00009.

- 44. Smith-Barrow D. Map: Where to Find the Newest Medical Schools. U.S. News; 2016. https://www.usnews.com/education/best-graduate-schools/top-medical-schools/articles/2016-07-11/map-where-to-find-the-newest-medical-schools (accessed October 9 2017).

- 45. Hartz AJ, Kuhn EM, Pulido J. Prestige of training programs and experience of bypass surgeons as factors in adjusted patient mortality rates. Med Care 1999;37:93-103. doi:10.1097/00005650-199901000-00013.

- 46. Cua S, Moffatt-Bruce S, White S. Reputation and the Best Hospital Rankings: What Does It Really Mean? Am J Med Qual 2017;32:632-7. doi:10.1177/1062860617691843.
