Abstract

Automatic segmentation of esophageal layers in OCT images is crucial for studying esophageal diseases and for computer-assisted diagnosis. This work aims to improve the accuracy and robustness of esophageal OCT image segmentation. A two-step edge-enhanced graph search (EEGS) framework is proposed in this study. First, a preprocessing scheme is applied to suppress speckle noise and remove disturbances to the esophageal structure. Second, the image is formulated as a graph, and layer boundaries are located by graph search. In this process, we propose an edge-enhanced weight matrix for the graph that combines the vertical gradients with a Canny edge map. Experiments on esophageal OCT images from guinea pigs demonstrate that the EEGS framework is more robust and more accurate than the widely used graph theory and dynamic programming (GTDP) segmentation method. It could potentially be useful for the early detection of esophageal diseases.

OCIS codes: (100.0100) Image processing, (100.2960) Image analysis, (170.4500) Optical coherence tomography, (170.4580) Optical diagnostics for medicine

1. Introduction

Optical coherence tomography (OCT), first demonstrated by the MIT group in 1991 [1], is a powerful medical imaging technique. It can generate high-resolution, non-invasive, 3D images of biological tissues in real time. Initial applications of OCT were mainly in ophthalmology, where the microstructures revealed by OCT facilitated retinal disease diagnosis [2–4]. Endoscopic OCT is an important and rapidly growing branch of OCT technology [5]. Combined with flexible fiber-optic endoscopes, OCT can image the internal luminal organs of the human body with minimal invasiveness. It has been shown that gastrointestinal endoscopic OCT can visualize multiple esophageal tissue layers and the pathological changes of a variety of esophageal diseases, such as eosinophilic esophagitis (EoE), Barrett’s esophagus (BE) and even esophageal cancer [6–8]. Recently, the development of ultrahigh-resolution gastrointestinal endoscopic OCT has enabled imaging of the esophagus with much finer detail and improved contrast [9, 10]. Many esophageal diseases manifest as changes in tissue microstructure, such as altered esophageal layer thickness or disruption of the layers. Accurate quantification of the esophageal layered structures from gastrointestinal endoscopic OCT images could therefore be very valuable for objective diagnosis and assessment of disease severity, as well as for the exploration of structure-based biomarkers associated with disease progression [5, 11]. For instance, the OCT image of BE has an irregular mucosal surface and may lack the layered architecture [12]; the OCT image of EoE features increased basal zone thickness in the esophagus [11]. Such disease features can be easily detected provided that the esophageal OCT images are accurately segmented.

Traditional manual segmentation is time-consuming and subjective, so computer-aided automatic layer segmentation methods are urgently needed. In the past few years, research on OCT image segmentation has mostly targeted retinal OCT images, and various algorithms have been published [13–16]. Representative methods can be grouped into four categories: A-scan based methods [2, 3], active contour based methods [4, 17–19], machine learning based methods [20, 21] and graph based methods [22]. Among these, graph based methods are the most widely used for layer segmentation and have proven quite successful [13, 22, 23]. Representative frameworks are graph theory and dynamic programming (GTDP) [13] and 3-D graph based segmentation [22]. It is worth mentioning that newly developed deep learning algorithms have also been applied to retinal layer segmentation with great success [24–27]. Studies on the segmentation of endoscopic OCT images are not as extensive as those on retinal (macular) images. Representative studies can be found in the processing of cardiovascular [28–30] and esophageal OCT images [31–36]. As reported, graph based methods are also effective in segmenting cardiovascular [30] and esophageal tissue layers [36].

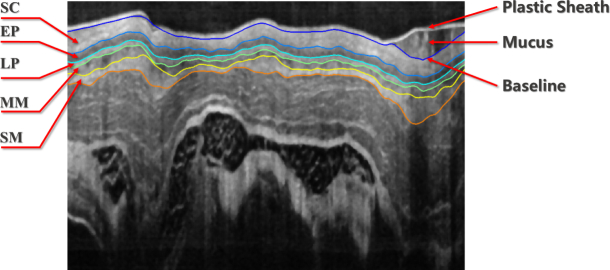

Segmentation of normal esophageal OCT images aims to detect the layered tissue structures. Taking the guinea pig as an example, the layered structure includes the epithelium stratum corneum (SC), epithelium (EP), lamina propria (LP), muscularis mucosae (MM) and submucosa (SM), as illustrated in Fig. 1, which shows the result of our proposed segmentation method. These tissues have a layered architecture similar to that of the retina. Consequently, automatic segmentation of esophageal OCT images has to address some challenges common in OCT image processing, such as speckle noise and motion artifacts [13, 36]. Moreover, esophageal OCT images pose some unique challenges resulting from the in vivo environment or the endoscopic setup, including disturbances from the plastic sheath and mucus, discontinuous boundaries due to non-uniform scanning speed, and irregular bending caused by sheath distortion.

Fig. 1.

Demonstration of a segmented esophageal OCT image from guinea pig.

Solutions to some of these common problems, such as speckle noise and irregular bending, have been reported in the literature. Representative speckle noise suppression algorithms include the median filter [3, 37], wavelet shrinkage [38], curvelet denoising [39] and the nonlinear anisotropic diffusion filter [4, 22]. Among these methods, the median filter is not the best performer, but its easy parameter setting, simple implementation and robust noise suppression have made it popular in OCT image denoising, and it was adopted in our framework. More advanced denoising methods exist, such as the sparse representation based framework proposed by Fang et al. [40, 41]; since such methods are not easy to implement and may take more computation time than the simple median filter, they were not adopted in this work. The negative effect of irregular tissue bending can be reduced by image flattening [20], which is realized using cross-correlation [20] or baseline search [42]. Generally, the baseline-based method performs better, but robust baseline extraction is difficult in esophageal OCT images due to disturbances from the plastic sheath and mucus. To improve image quality and remove such disturbances, our study designed a comprehensive preprocessing scheme tailored to the specific problems of esophageal OCT images, thus creating favorable conditions for the subsequent segmentation.

Considering the problems mentioned above, this study proposes an edge-enhanced graph search (EEGS) framework to automatically segment esophageal tissue layers. The main contributions lie in two aspects. First, a specifically designed preprocessing scheme is proposed to address the challenges in esophageal OCT images (e.g., speckle noise, plastic sheath and mucus disturbances, and boundary distortion). Second, an edge-enhanced weight matrix that combines a modified Canny map [43, 44] with vertical gradients is employed for graph search. In this way, local features are preserved while missing boundaries in shadow regions are interpolated. Different from Yang’s work [44], the Canny edge detector used in this study was modified to focus on horizontal features, which is consistent with the esophageal tissue orientation and thus makes it more suitable for esophageal layer boundary detection.

The paper is organized as follows. Section 2 introduces the detailed process of the proposed EEGS framework. Section 3 illustrates the advantages of the EEGS framework by segmentation experiments on esophageal OCT images of guinea pigs. Comparisons with the GTDP framework and the clinical potential of EEGS are also included in this section. Discussions and conclusions are presented in Sections 4 and 5, respectively.

2. Framework for robust esophageal layer segmentation using EEGS

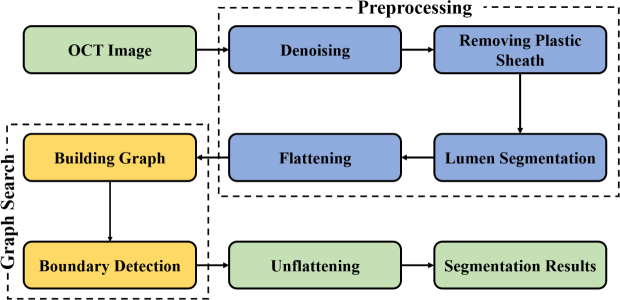

The proposed EEGS method is composed of two major steps: 1) preprocessing and 2) graph search using a weight matrix based on Canny edge detection. The flowchart of the proposed EEGS framework is illustrated in Fig. 2.

Fig. 2.

Flowchart of the proposed EEGS segmentation scheme.

2.1. Preprocessing

In order to calculate reliable weights that accurately indicate layer boundaries and improve the segmentation performance, we designed a novel preprocessing scheme to deal with the disturbance in esophageal OCT images.

2.1.1. Denoising

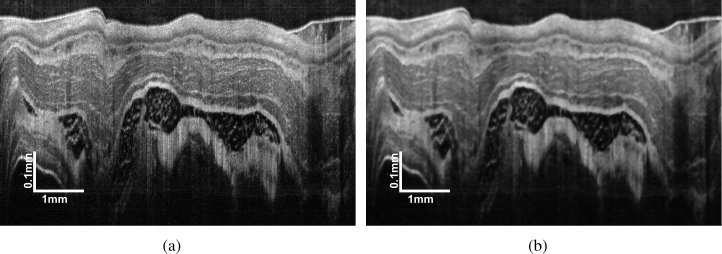

In this study, we chose the simple median filter to suppress speckle noise; its effectiveness in OCT image denoising has been demonstrated by numerous studies [3, 37]. In addition, the median filter has the advantages of high efficiency and easy parameter setting compared with other popular OCT denoising methods, such as wavelet and diffusion filters. A representative original esophageal image and the image denoised by a 7 × 7 median filter are presented in Fig. 3.

Fig. 3.

Demonstration of (a) a representative original esophageal OCT image and (b) the image denoised by a 7 × 7 median filter.
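A minimal sketch of the 7 × 7 median denoising step is given below in Python with NumPy. It is an illustration, not the authors' MATLAB code; the function name `median_denoise` and the reflective border handling are assumptions, since the text does not say how image borders are treated.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def median_denoise(image: np.ndarray, size: int = 7) -> np.ndarray:
    """Apply a size x size median filter to a 2-D OCT B-scan.

    Borders are handled by reflecting the image (an assumption; the
    boundary handling is not specified in the text).
    """
    if size % 2 == 0:
        raise ValueError("size must be odd")
    r = size // 2
    padded = np.pad(image, r, mode="reflect")
    # windows has shape (H, W, size, size): one neighbourhood per output pixel.
    windows = sliding_window_view(padded, (size, size))
    return np.median(windows, axis=(-2, -1))
```

An isolated bright speckle is removed while a sharp horizontal step survives unchanged, which is why the median filter suits layered tissue. For full 2048 × 2048 frames the window view makes `np.median` allocate a large temporary array; `scipy.ndimage.median_filter(image, size=7)` is a more memory-friendly alternative if SciPy is available.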

2.1.2. Removing plastic sheath

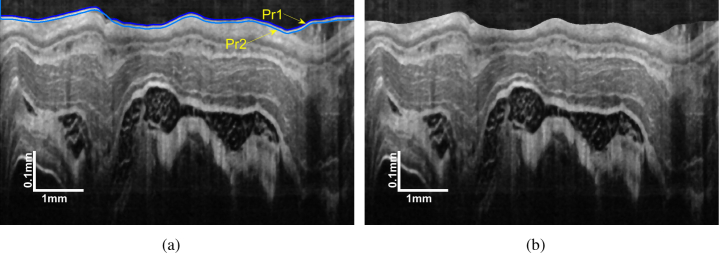

During endoscopic OCT imaging, the probe is protected from biofluid by a plastic sheath. The sheath boundary is so prominent that it strongly disturbs the search for esophageal tissue layers. To remove the plastic sheath from the OCT image, its upper bound Pr1 and lower bound Pr2 must be determined first.

In this study, the GTDP algorithm [13] was adopted to identify the boundaries of the plastic sheath. GTDP represents an image I as a graph G(V, E), where V denotes the graph nodes, which correspond to image pixels, and E denotes the edges connecting adjacent nodes. The weight of the edge connecting adjacent pixels a and b is set as

$$w_{ab} = 2 - (g_a + g_b) + w_{\min} \tag{1}$$

where ga and gb are the vertical intensity gradients normalized to [0, 1], and wmin is the minimum possible weight in the graph. The gradients are calculated by convolving the image with a mask k [36], which is defined by

| (2) |

The minimum-weight path across the graph corresponds to a candidate layer boundary and is found with Dijkstra's algorithm [45].
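The sketch below illustrates the GTDP idea on a small image, using the standard GTDP edge weight from [13], w_ab = 2 − (g_a + g_b) + w_min, and Dijkstra's algorithm via Python's `heapq`. It makes simplifying assumptions that are not from the paper: the mask k of Eq. (2) is replaced by a hypothetical forward difference in `vertical_gradient`, each pixel connects only to its three neighbours in the next column, and a virtual source lets the path start at any row of the first column. All function names are illustrative.

```python
import heapq
import numpy as np

W_MIN = 1e-5  # minimum possible edge weight w_min


def vertical_gradient(image):
    """Dark-to-bright vertical gradient normalised to [0, 1].

    Hypothetical stand-in for convolution with the mask k of Eq. (2).
    """
    img = image.astype(float)
    g = np.zeros_like(img)
    g[:-1, :] = img[1:, :] - img[:-1, :]  # intensity increase going down
    g = np.clip(g, 0.0, None)
    return g / g.max() if g.max() > 0 else g


def gtdp_boundary(g, w_min=W_MIN):
    """Return one row index per column along the minimum-weight path.

    Edge weight between pixels a and b: w_ab = 2 - (g_a + g_b) + w_min.
    """
    h, w = g.shape
    dist = np.full((h, w), np.inf)
    prev = np.full((h, w), -1, dtype=int)
    # Virtual source: every pixel in column 0 is reachable at cost w_min.
    heap = [(w_min, y, 0) for y in range(h)]
    dist[:, 0] = w_min
    heapq.heapify(heap)
    while heap:
        d, y, x = heapq.heappop(heap)
        if d > dist[y, x] or x == w - 1:
            continue  # stale queue entry, or already in the last column
        for ny in (y - 1, y, y + 1):
            if 0 <= ny < h:
                nd = d + 2.0 - (g[y, x] + g[ny, x + 1]) + w_min
                if nd < dist[ny, x + 1]:
                    dist[ny, x + 1] = nd
                    prev[ny, x + 1] = y
                    heapq.heappush(heap, (nd, ny, x + 1))
    # Trace back from the cheapest pixel in the last column.
    path = np.empty(w, dtype=int)
    path[-1] = int(np.argmin(dist[:, -1]))
    for x in range(w - 1, 0, -1):
        path[x - 1] = prev[path[x], x]
    return path
```

Because high gradients give low weights, the path locks onto the strongest dark-to-bright transition: for an image whose rows 6 and below are bright, it follows row 5. The pure-Python loop is for illustration only and would be slow on full-size frames.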

Pr1, the boundary that separates the plastic sheath from the background, has the highest intensity contrast. It therefore has the highest gradient and can be easily located by GTDP. Pr2 can also be determined by GTDP, with the search region limited to the band between Pr1 and 10 pixels below Pr1; ten pixels is the approximate sheath thickness in this study. The plastic sheath is then removed by shifting the pixels below Pr2 up to Pr1, and the resulting empty pixels are filled with a mirror image. The result is illustrated in Fig. 4.

Fig. 4.

Plastic sheath removal: (a) position of Pr1 and Pr2; (b) image with the plastic sheath removed.
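The column-wise sheath removal can be sketched as follows. `remove_sheath` is a hypothetical helper that assumes Pr1 and Pr2 are given as per-column row indices (for example, from a GTDP search). It interprets "filled with a mirror image" as reflecting the bottom rows of the remaining column into the freed rows, which is one plausible reading rather than the authors' stated implementation.

```python
import numpy as np


def remove_sheath(image, pr1, pr2):
    """Delete rows pr1[x]..pr2[x] (inclusive) in each column x, shift the
    tissue below upward, and mirror-fill the freed rows at the bottom.

    pr1, pr2: per-column row indices of the sheath's upper and lower bounds.
    """
    h, w = image.shape
    out = np.empty_like(image)
    for x in range(w):
        col = image[:, x]
        kept = np.concatenate([col[: pr1[x]], col[pr2[x] + 1:]])
        missing = h - kept.size
        if missing > kept.size:
            raise ValueError("sheath band is thicker than the remaining column")
        # Reflect the last `missing` rows about the bottom edge.
        filler = kept[::-1][:missing]
        out[:, x] = np.concatenate([kept, filler])
    return out
```

For a single column holding 0 to 11 with the sheath at rows 2 to 4, the output is 0, 1, 5, 6, ..., 11 followed by the mirrored rows 11, 10, 9, so the image size is preserved for the later steps.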

2.1.3. Lumen segmentation

The outer boundary of the esophageal lumen is defined as the baseline (Fig. 1). The baseline is important in this study because it is the foundation of the subsequent image flattening, and it also affects the search for the other layer boundaries.

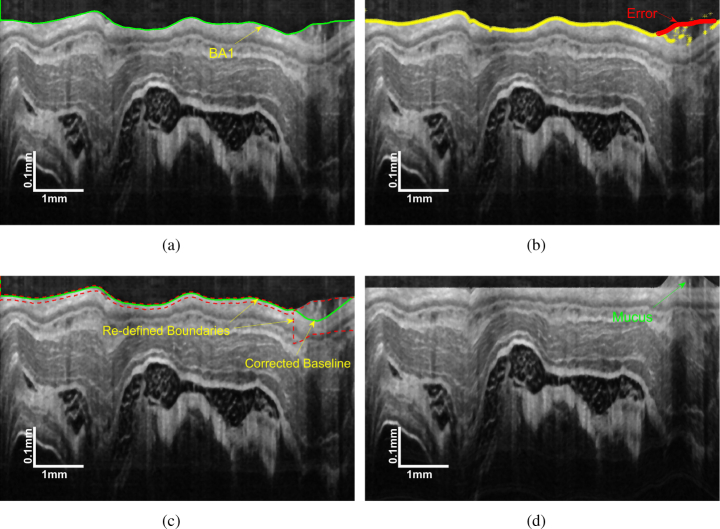

Extracting the baseline with GTDP should be straightforward, since it is the most prominent layer boundary in the image once the plastic sheath is removed, as illustrated in Fig. 4(b). Nevertheless, mucus may cause large errors in GTDP, as displayed in Fig. 5(a). Since the SC layer has the highest intensity in the image, this property can be used to correct the mucus-influenced baseline.

Fig. 5.

Demonstration of: (a) the initial baseline BA1; (b) the erroneous part of BA1; (c) the corrected baseline; and (d) the flattened image.

The detailed process is summarized below:

- (a) Extract a preliminary baseline BA1 by GTDP, as shown in Fig. 5(a).

- (b) In each column, find the uppermost point whose intensity exceeds a predefined threshold.

- (c) Determine whether a continuous segment of BA1 lies above the points obtained in step (b). If BA1 is consistent with these points, mark it as the valid baseline. Otherwise, mark the inconsistent segment as the erroneous region (Fig. 5(b)) and continue with the following steps.

- (d) Limit the graph search region for GTDP. As illustrated in Fig. 5(c), in the valid part of BA1 the search region is defined around BA1, while in the erroneous part the search is conducted beneath BA1, thus eliminating the negative effects of mucus.

- (e) Perform the graph search in the redefined region to obtain the final baseline (Fig. 5(c)).
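Steps (b) to (d) can be sketched with NumPy as below. This is a hedged illustration, not the authors' code: the tolerance `tol`, the band half-width `half_width` and all function names are hypothetical, and a column is treated as erroneous when BA1 lies more than `tol` pixels above the first bright (SC) pixel, which is our reading of step (c).

```python
import numpy as np


def first_bright_row(image, threshold):
    """Step (b): uppermost row per column with intensity above threshold.

    Columns without such a pixel get -1.
    """
    above = image > threshold
    rows = np.argmax(above, axis=0)
    rows[~above.any(axis=0)] = -1
    return rows


def erroneous_columns(ba1, bright_rows, tol=3):
    """Step (c): flag columns where BA1 sits more than tol pixels above
    the first bright pixel (i.e. BA1 has followed mucus, not tissue)."""
    ba1 = np.asarray(ba1)
    valid = bright_rows >= 0
    return valid & (bright_rows - ba1 > tol)


def search_mask(shape, ba1, bad, half_width=5):
    """Step (d): allowed region for the second graph search.

    Valid columns: a band of +/- half_width rows around BA1.
    Erroneous columns: everything strictly below BA1.
    """
    h, _ = shape
    rows = np.arange(h)[:, None]
    ba1 = np.asarray(ba1)[None, :]
    near = np.abs(rows - ba1) <= half_width
    below = rows > ba1
    return np.where(bad[None, :], below, near)
```

Step (e) would then rerun the graph search restricted to the `True` pixels of this mask.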

2.1.4. Flattening

In graph search, a layer boundary is identified as the minimum-weight path across the graph. When the weights are uniform, the graph search tends to find the shortest geometric path. However, in vivo esophageal OCT images often exhibit steep slopes and irregular bending due to tissue movement and sheath distortion, which cause the boundaries of interest to follow complex curves. Flattening is an effective solution to this problem.

The flattened image is created from the baseline obtained in the previous section: each column is shifted up or down so that the baseline becomes a straight horizontal line. Empty pixels resulting from the shift are filled with a mirror image. The flattened image, shown in Fig. 5(d), facilitates the subsequent segmentation.
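A minimal sketch of column-wise flattening follows. `flatten` is a hypothetical helper; it aligns the baseline to its uppermost row by default and uses NumPy's `symmetric` padding as the mirror fill, both of which are assumptions about details the text leaves open.

```python
import numpy as np


def flatten(image, baseline, target_row=None):
    """Shift each column so that the baseline lies on a single row.

    baseline: per-column row index of the baseline.
    target_row: row the baseline is moved to (default: its uppermost row).
    Returns the flattened image and the per-column shifts (negative = up).
    """
    h, w = image.shape
    baseline = np.asarray(baseline, dtype=int)
    if target_row is None:
        target_row = int(baseline.min())
    shifts = target_row - baseline
    pad = int(np.abs(shifts).max())
    # Mirror padding supplies the pixels that move in from outside the image.
    padded = np.pad(image, ((pad, pad), (0, 0)), mode="symmetric")
    out = np.empty_like(image)
    for x in range(w):
        start = pad - shifts[x]
        out[:, x] = padded[start:start + h, x]
    return out, shifts
```

Keeping the shifts makes it straightforward to map boundaries found in the flattened image back to the original geometry by subtracting the shift of each column.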

2.2. Esophageal layer segmentation by EEGS

EEGS consists of the following steps. First, a modified Canny edge detector is designed to create a map of the main local edges. Second, a gradient map in the axial direction is generated using a convolution mask. The gradient and Canny maps are then combined into an edge-enhanced graph, from which layer boundaries are extracted by dynamic programming. The detailed implementation is described as follows.

2.2.1. Modified Canny edge detection

The Canny edge detector [43] was modified to create an edge-enhanced weight matrix for the subsequent graph search. The process can be summarized in the following steps:

- (a) Apply a Gaussian filter to smooth the image.

- (b) Calculate the intensity gradients of the smoothed image. The gradient magnitude G and direction α can be determined by

| (3) |

where Gx and Gy are the first derivatives in the horizontal and vertical directions, respectively. The gradient magnitude is calculated along the vertical direction because the layers of the flattened esophageal tissue are oriented horizontally.

- (c) Apply non-maximum suppression to remove spurious edge responses. The matrix indicating edges can be described by

| (4) |

where p is a predefined threshold, and Ii and Ij denote the gradient magnitudes of the neighboring pixels in the positive and negative gradient directions, respectively.

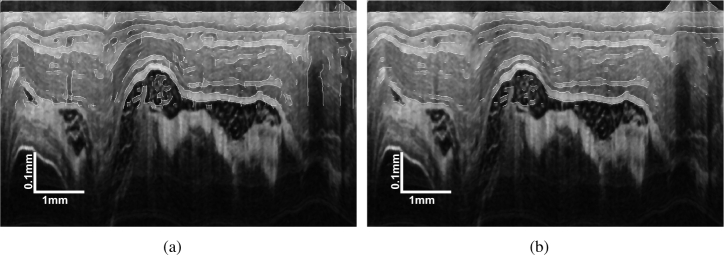

Consequently, a binary matrix Ie indicating image edges is generated. An example of the edge map Ie overlaid on the original image is shown in Fig. 6(b). By removing vertical edges, the modified Canny detector better describes esophageal tissue layers, thus creating an edge map more suitable for layer segmentation.

Fig. 6.

Edge maps overlaid on the original image: (a) traditional Canny edges and (b) modified Canny edges.
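The sketch below mimics the modified detector under stated assumptions: separable Gaussian smoothing (step (a)), a vertical-only central difference as the gradient, and non-maximum suppression against the pixels directly above and below, with threshold p. Because the exact forms of Eqs. (3) and (4) are not reproduced in this text, this is our reading of the description rather than the published implementation; `sigma`, `p` and the function names are illustrative.

```python
import numpy as np


def gaussian_kernel(sigma):
    r = max(1, int(round(3 * sigma)))
    x = np.arange(-r, r + 1, dtype=float)
    k = np.exp(-x**2 / (2 * sigma**2))
    return k / k.sum()


def smooth(image, sigma=1.0):
    """Step (a): separable Gaussian smoothing with edge-replicated borders."""
    k = gaussian_kernel(sigma)
    r = k.size // 2
    img = np.pad(image.astype(float), r, mode="edge")
    # Convolve along rows, then along columns; 'valid' trims the padding.
    img = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, img)
    img = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, img)
    return img


def horizontal_edge_map(image, p, sigma=1.0):
    """Steps (b)-(c) restricted to the vertical direction.

    Only the vertical derivative is used, so purely vertical edges give no
    response; a pixel is kept if its magnitude exceeds p and is not smaller
    than the magnitudes directly above and below it.
    """
    s = smooth(image, sigma)
    gy = np.zeros_like(s)
    gy[1:-1, :] = (s[2:, :] - s[:-2, :]) / 2.0  # central difference
    mag = np.abs(gy)
    up = np.zeros_like(mag)
    up[1:, :] = mag[:-1, :]
    down = np.zeros_like(mag)
    down[:-1, :] = mag[1:, :]
    edges = (mag > p) & (mag >= up) & (mag >= down)
    return edges.astype(np.uint8)
```

On a horizontal step the detector marks a single row at the transition, whereas an image containing only a vertical step produces no edges at all, which reflects the intended suppression of vertical edges.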

2.2.2. Construction of edge-enhanced gradient map

In this study, the edge-enhanced gradient map M is defined as

| (5) |

where Gr denotes the vertical intensity gradient calculated by mask k (Eq. (2)), Ie represents the modified Canny edge map and w is a weight parameter. The combination of Gr and Ie has the following advantages. By using neighboring information, Gr provides complementary search guidance where the Canny detector loses its efficacy. Meanwhile, Ie calculated by the Canny strategy compensates for the lack of local precision of Gr caused by the local smoothing effects of k. As a result, M is able to preserve local details while interpolating information into the shadow regions.
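Because the exact form of Eq. (5) is not reproduced in this text, the sketch below assumes a simple linear combination, M = norm(Gr) + w · Ie, rescaled to [0, 1]; this form, the default value of w and the helper names are hypothetical. It shows the intended behaviour: where the Canny map has an edge, M peaks sharply, and in shadow columns without Canny edges the gradient term still guides the search.

```python
import numpy as np


def normalise(a):
    """Linearly rescale an array to [0, 1] (all zeros if it is constant)."""
    a = a.astype(float)
    lo, hi = a.min(), a.max()
    return (a - lo) / (hi - lo) if hi > lo else np.zeros_like(a)


def edge_enhanced_map(gr, ie, w=0.5):
    """Hypothetical form of Eq. (5): M = norm(Gr) + w * Ie, rescaled to [0, 1].

    gr: vertical gradient map (computed with mask k in the paper).
    ie: binary modified-Canny edge map.
    w:  weight parameter; 0.5 is an illustrative value only.
    """
    return normalise(normalise(gr) + w * ie.astype(float))
```

In EEGS, the normalized values of M at adjacent pixels then take the place of the gradients in the GTDP edge weight, as described in Section 2.2.3.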

2.2.3. Segmentation by EEGS

The EEGS framework uses the GTDP for layer boundary identification. Instead of setting the weight by Eq. (1), the edge weight in EEGS is defined as:

$$w_{ab} = 2 - (M_a + M_b) + w_{\min} \tag{6}$$

where Ma and Mb are the normalized edge-enhanced map values at the adjacent pixels a and b, calculated by Eq. (5).

Each boundary is extracted by performing EEGS iteratively within a limited search area. The area is defined using the previously identified boundary and prior knowledge of the tissue layer thickness with a ±20% tolerance [44, 46], so that each search region ideally contains exactly one boundary. The prior knowledge can be obtained by manual segmentation. In this way, all six boundaries are acquired automatically.
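The limited search area can be sketched as a boolean band below the previous boundary. `search_band` and its arguments are hypothetical; the sketch assumes the prior layer thickness is given in pixels and applies the ±20% tolerance symmetrically around it.

```python
import numpy as np


def search_band(prev_boundary, prior_thickness_px, h, tol=0.2):
    """Rows allowed for the next boundary below prev_boundary.

    The band spans (1 - tol) to (1 + tol) times the prior layer thickness
    below the previously found boundary, column by column.
    Returns a boolean mask of shape (h, W).
    """
    prev = np.asarray(prev_boundary, dtype=float)[None, :]
    rows = np.arange(h, dtype=float)[:, None]
    lo = prev + (1 - tol) * prior_thickness_px
    hi = prev + (1 + tol) * prior_thickness_px
    return (rows >= lo) & (rows <= hi)
```

In a GTDP-style search, pixels outside the mask would be excluded from the graph (or given the maximum weight) before running Dijkstra's algorithm for the next boundary.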

3. Experiments

3.1. Experimental data

The proposed EEGS segmentation framework was tested on esophageal OCT images of guinea pigs acquired by an 800-nm ultrahigh-resolution gastrointestinal endoscopic OCT system [9, 10, 47]. A typical image is illustrated in Fig. 3(a). Some layer boundaries, such as those of SC, EP and LP, can be visually observed, while the MM and SM layer boundaries have low contrast and are difficult to identify. In addition, disturbances such as speckle noise, the plastic sheath and mucus are clearly visible in the image.

3.2. EEGS performance on OCT images with different challenges

In vivo esophageal OCT images present unique difficulties for layer segmentation, resulting from motion artifacts and intrinsic disturbances from the endoscopic equipment itself (such as the plastic sheath). Fig. 7 illustrates several typical ill-posed images. Specifically, Fig. 7(a) shows an image with irregular bending caused by sheath distortion; Fig. 7(c) has quite weak boundaries in some regions of the MM and SM layers; Fig. 7(e) presents discontinuous boundaries, which might be caused by the non-uniform rotation speed of the endoscope; and in Fig. 7(g), mucus separates the probe from the tissue surface. All of these problems are addressed in the EEGS scheme by procedures such as flattening, baseline correction and the Canny-based edge-enhanced strategy. The corresponding segmentation results are shown in Figs. 7(b), 7(d), 7(f) and 7(h). EEGS accurately identifies all the esophageal layers in these cases, which supports the robustness of the proposed method.

Fig. 7.

Representative esophageal OCT images with (a) irregular bending; (c) weak boundary; (e) discontinuous boundary and (g) mucus. (b), (d), (f) and (h) are the corresponding segmentation results with the proposed EEGS method.

3.3. Segmentation result analysis of the EEGS framework

To further evaluate the effectiveness of the EEGS framework, we compared the proposed method with the manual segmentations of three experienced observers. These observers have segmented numerous OCT images from different organs, such as the retina, esophagus and airway, using a freeform (drawing) method implemented in the open-source software ITK-SNAP [48]. EEGS was also compared with GTDP [13, 36] to assess the advantages of the proposed method. The dataset consists of 100 esophageal OCT images, each 2048 × 2048 pixels, acquired from one healthy guinea pig. For quantitative evaluation, we calculated the thickness of the five esophageal layers.
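Layer thickness follows directly from consecutive boundaries. The sketch assumes the six boundaries BD1 to BD6 are stored as a (6, W) array of row indices and uses a hypothetical axial pixel size `axial_res_um`; it averages the per-column thickness across the image, which may differ from how the authors aggregated their measurements.

```python
import numpy as np


def layer_thickness(boundaries, axial_res_um):
    """Mean thickness of the five layers (SC, EP, LP, MM, SM) in one image.

    boundaries: (6, W) array of row indices BD1..BD6, top to bottom.
    axial_res_um: axial pixel size in micrometres (hypothetical value).
    """
    b = np.asarray(boundaries, dtype=float)
    per_column = np.diff(b, axis=0) * axial_res_um  # shape (5, W)
    return per_column.mean(axis=1)
```

Applying this per image and then taking the mean and standard deviation over the 100 images gives values in the form reported in Table 2.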

An intuitive segmentation comparison among EEGS, GTDP and one of the observers (Obs. 1) is shown in Fig. 8. Both EEGS and GTDP are consistent with the manual segmentation in the right portion of the image, where the tissue layers are smooth and little disturbance exists. In contrast, in the left portion of the image, where distortion occurs, differences between automatic and manual segmentation are visible. There, the EEGS result is closer to Obs. 1 than GTDP because the modified Canny map in EEGS enhances edge details, compensating for the loss of precision of the vertical gradients used by GTDP. The unsigned border position differences between the automatic and manual segmentations are listed in Table 1, where borders BD1 to BD6 represent the layer boundaries from the top of the SC layer to the bottom of the SM layer, and the data are presented as mean ± standard deviation in micrometers. The EEGS result is closer to the manual segmentation in all cases except BD4 with Obs. 3 as the reference (2.71 µm for EEGS vs. 2.12 µm for GTDP), which indicates its better overall accuracy in layer boundary identification.

Fig. 8.

Comparison of segmentation results of EEGS, GTDP and Obs.1.
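The unsigned border position difference can be computed as below. The array layout, the hypothetical pixel size `axial_res_um` and the aggregation (average over A-scans within each image, then mean and sample SD across images) are assumptions; the text does not specify how the statistics in Table 1 were pooled.

```python
import numpy as np


def unsigned_border_difference(auto, manual, axial_res_um):
    """Mean and SD (across images) of the unsigned border position difference.

    auto, manual: arrays of shape (n_images, 6, W) with row indices of BD1..BD6.
    axial_res_um: hypothetical axial pixel size in micrometres.
    """
    diff = np.abs(np.asarray(auto, float) - np.asarray(manual, float)) * axial_res_um
    per_image = diff.mean(axis=2)  # average over A-scans: (n_images, 6)
    return per_image.mean(axis=0), per_image.std(axis=0, ddof=1)
```

Running it once per observer, with GTDP and EEGS boundaries as `auto`, would yield one column pair of Table 1 each.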

Table 1.

Unsigned border position differences of the automatic segmentation methods and manual segmentation.

| Border | GTDP (Obs. 1 as Ref.) | EEGS (Obs. 1 as Ref.) | GTDP (Obs. 2 as Ref.) | EEGS (Obs. 2 as Ref.) | GTDP (Obs. 3 as Ref.) | EEGS (Obs. 3 as Ref.) |
|---|---|---|---|---|---|---|
| BD1 (µm) | 2.41 ± 0.98 | 2.34 ± 0.97 | 2.86 ± 0.71 | 2.84 ± 0.79 | 3.18 ± 1.43 | 3.16 ± 1.42 |
| BD2 (µm) | 3.23 ± 2.56 | 2.15 ± 1.62 | 5.09 ± 2.40 | 3.14 ± 1.45 | 4.3 ± 2.17 | 3.61 ± 1.71 |
| BD3 (µm) | 1.13 ± 1.97 | 0.23 ± 0.81 | 1.14 ± 1.16 | 0.21 ± 1.03 | 1.89 ± 1.91 | 1.52 ± 1.54 |
| BD4 (µm) | 1.03 ± 1.15 | 0.31 ± 0.85 | 1.68 ± 1.82 | 1.23 ± 0.83 | 2.12 ± 2.09 | 2.71 ± 1.54 |
| BD5 (µm) | 5.58 ± 4.26 | 3.96 ± 2.73 | 5.65 ± 4.25 | 3.98 ± 2.82 | 4.87 ± 3.32 | 3.77 ± 2.33 |
| BD6 (µm) | 1.33 ± 2.33 | 1.15 ± 1.07 | 1.99 ± 2.90 | 1.72 ± 1.69 | 2.85 ± 2.56 | 2.70 ± 2.12 |

The average layer thickness over 100 esophageal OCT images and the corresponding standard deviations are listed in Table 2. Using each observer's manual segmentation as a separate reference, the differences in layer thickness between the automatic segmentation and the reference are listed in Table 3, where bold data indicate the automatic results closer to the manual segmentation. The EEGS results are closer to the manual reference values than the GTDP results in all cases, which indicates that EEGS segments the five esophageal layers more accurately than GTDP.

Table 2.

Layer thickness obtained by different methods for 100 esophageal OCT images of guinea pig.

| Layer | Obs. 1 | Obs. 2 | Obs. 3 | GTDP | EEGS |
|---|---|---|---|---|---|
| SC (µm) | 36.61 ± 2.60 | 41.74 ± 1.23 | 38.42 ± 1.59 | 35.06 ± 0.92 | 37.43 ± 0.83 |
| EP (µm) | 17.13 ± 2.21 | 14.28 ± 1.20 | 16.45 ± 1.39 | 19.09 ± 0.51 | 18.05 ± 0.50 |
| LP (µm) | 10.29 ± 0.74 | 8.21 ± 0.45 | 11.10 ± 0.48 | 10.56 ± 0.50 | 10.28 ± 0.34 |
| MM (µm) | 20.90 ± 1.70 | 23.12 ± 1.44 | 19.25 ± 1.31 | 16.21 ± 1.43 | 17.39 ± 1.30 |
| SM (µm) | 19.68 ± 1.65 | 19.79 ± 1.23 | 21.85 ± 2.10 | 25.21 ± 2.05 | 23.97 ± 1.42 |

Table 3.

Comparisons of esophageal layer thickness measurements between EEGS and GTDP using manual segmentation as references.

| Layer | GTDP (Obs. 1 as Ref.) | EEGS (Obs. 1 as Ref.) | GTDP (Obs. 2 as Ref.) | EEGS (Obs. 2 as Ref.) | GTDP (Obs. 3 as Ref.) | EEGS (Obs. 3 as Ref.) |
|---|---|---|---|---|---|---|
| SC (µm) | 2.66 ± 1.70 | **2.32 ± 1.73** | 6.67 ± 1.31 | **4.34 ± 1.27** | 3.39 ± 1.64 | **1.58 ± 1.02** |
| EP (µm) | 2.49 ± 1.53 | **2.09 ± 1.20** | 4.81 ± 1.22 | **3.82 ± 1.09** | 2.68 ± 1.42 | **1.81 ± 1.18** |
| LP (µm) | 0.76 ± 0.59 | **0.63 ± 0.48** | 2.36 ± 0.66 | **2.07 ± 0.58** | 0.85 ± 0.54 | **0.72 ± 0.53** |
| MM (µm) | 4.73 ± 2.13 | **3.68 ± 2.01** | 6.91 ± 1.91 | **5.75 ± 1.94** | 3.13 ± 1.86 | **2.14 ± 1.45** |
| SM (µm) | 5.55 ± 2.27 | **4.35 ± 2.06** | 5.42 ± 2.36 | **4.19 ± 1.72** | 3.76 ± 2.87 | **2.79 ± 1.79** |

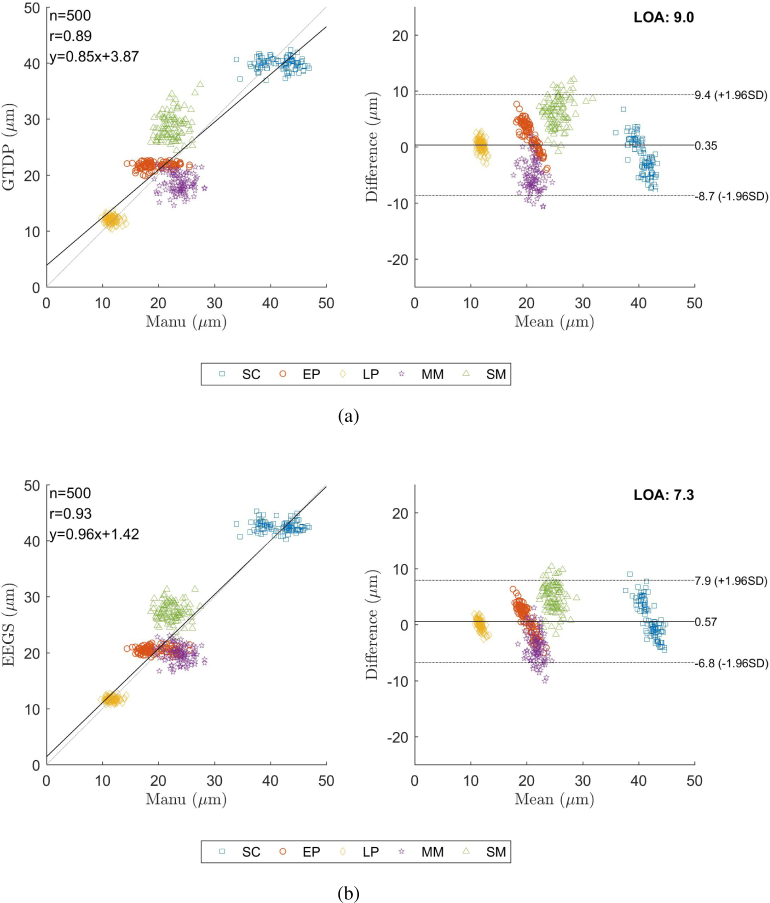

Fig. 9 shows scatter plots of the thickness measurements from GTDP and EEGS against the reference annotations of Obs. 1, together with the corresponding Bland-Altman plots. In Fig. 9, n is the number of points, r denotes the correlation coefficient, and LOA represents the 95% limits of agreement. The EEGS method yields a larger r and a narrower LOA, indicating that its results are closer to the reference annotations.

Fig. 9.

Correlation analysis and the corresponding Bland-Altman plot of (a) the GTDP method and (b) the EEGS framework, compared to the manual segmentation of Obs.1.
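For reference, the statistics in Fig. 9 can be computed as below: the Bland-Altman bias is the mean difference between two methods, the 95% limits of agreement are the bias ± 1.96 standard deviations of the differences, and r is the Pearson correlation coefficient. This is a generic sketch with hypothetical function names that assumes the sample standard deviation (ddof = 1); it is not the authors' plotting code.

```python
import numpy as np


def bland_altman(a, b):
    """Bias and 95% limits of agreement between measurements a and b."""
    d = np.asarray(a, float) - np.asarray(b, float)
    bias = d.mean()
    sd = d.std(ddof=1)
    return bias, (bias - 1.96 * sd, bias + 1.96 * sd)


def pearson_r(a, b):
    """Pearson correlation coefficient between two measurement series."""
    return float(np.corrcoef(a, b)[0, 1])
```

Here `a` would hold the automatic thickness values and `b` the corresponding Obs. 1 values; a narrower interval returned by `bland_altman` corresponds to the smaller LOA reported for EEGS.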

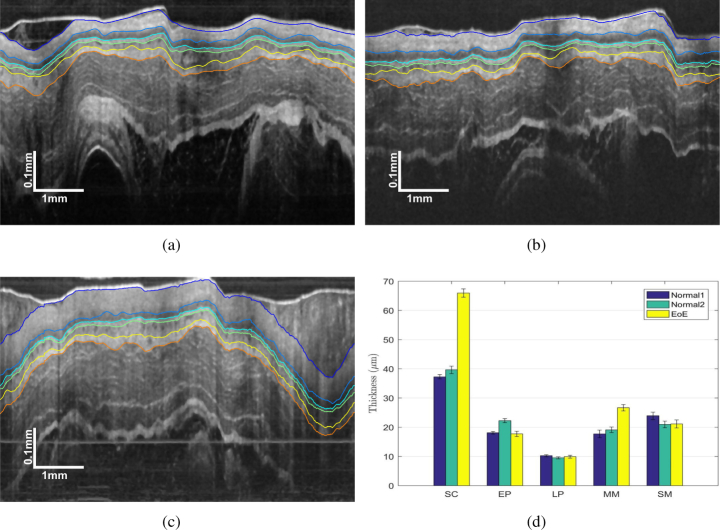

3.4. Clinical potential of EEGS

To demonstrate its clinical potential, the EEGS framework was employed to segment three sets of 30 guinea pig esophagus images, two from normal animals and one from an EoE model [49]. EoE is an esophageal disorder characterized by eosinophil-predominant allergic inflammation of the esophagus [11]. Representative OCT images of guinea pig esophagus segmented by EEGS are presented in Figs. 10(a) to 10(c), and the corresponding thicknesses of the five tissue layers are shown in Fig. 10(d). The SC layer of the EoE model is clearly thicker than in the normal cases, which indicates that the EEGS framework could potentially aid clinical diagnosis [11, 50].

Fig. 10.

Representative segmentation results of EEGS for (a) normal guinea pig 1; (b) normal guinea pig 2; (c) guinea pig EoE model and (d) comparison of the measured layer thicknesses.

4. Discussions

Our image analyses were performed on a personal computer with an Intel Core i7 2.20 GHz CPU and 16 GB RAM. In MATLAB, EEGS takes about 12 seconds to preprocess and segment an esophageal OCT image of 2048 × 2048 pixels, which is slower than GTDP (about 8 seconds) due to the additional Canny edge detection. This computation time is not yet suitable for real-time processing. To reduce the segmentation time, a more efficient GPU-based implementation in C will be adopted in future work.

Since the esophageal OCT images are collected successively by the endoscope, the overlapping information in adjacent frames could be used to correct outliers and further improve segmentation accuracy. In addition, the current algorithm requires some a priori knowledge, such as the number of layers to be segmented and the approximate layer thickness. Future work will explore adaptive parameter-setting methods to perform automatic segmentation with little or no user input.

The esophageal layer segmentation experiments on normal and EoE guinea pig models demonstrate the clinical potential of the EEGS framework. In the future, human esophageal OCT images will be collected and studied so that criteria for diagnosing different esophageal diseases can be determined, with the goal of developing an automatic diagnosis system for esophageal diseases.

5. Conclusions

The main contribution of this paper is the EEGS scheme for accurate segmentation of esophageal layers in OCT images. With appropriate preprocessing before segmentation, the negative effects of the OCT imaging system and in vivo motion artifacts are minimized. By introducing Canny edge detection into the construction of the edge-enhanced weight matrix, local edge information is preserved while the information lost in shadow regions is interpolated. Notably, the Canny method used in this study focuses on boundaries along the horizontal direction and thus better matches the esophageal layers. Experiments showed that the proposed EEGS method achieves better esophageal layer segmentation results, in both accuracy and stability, than GTDP, and that it has the potential to be used for diagnosing esophageal diseases.

Funding

Key Program for International S&T Cooperation Projects of China (2016YFE0107700); National Institutes of Health (NIH) (HL121788 and CA153023); Wallace H. Coulter Foundation.

Disclosures

The authors declare that there are no conflicts of interest related to this article.

References and links

- 1.Huang D., Swanson E. A., Lin C. P., Schuman J. S., Stinson W. G., Chang W., Hee M. R., Flotte T., Gregory K., Puliafito C. A., Fujimoto J. G., “Optical coherence tomography,” Science 254, 1178–1181 (1991). 10.1126/science.1957169 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Hee M. R., Izatt J. A., Swanson E. A., Huang D., Schuman J. S., Lin C. P., Puliafito C. A., Fujimoto J. G., “Optical coherence tomography of the human retina,” Arch. Ophthalmol. 113, 325–332 (1995). 10.1001/archopht.1995.01100030081025 [DOI] [PubMed] [Google Scholar]

- 3.Koozekanani D., Boyer K., Roberts C., “Retinal thickness measurements from optical coherence tomography using a Markov boundary model,” IEEE Transactions on Med. Imaging 20, 900–916 (2001). 10.1109/42.952728 [DOI] [PubMed] [Google Scholar]

- 4.Fernandez D. C., Salinas H. M., Puliafito C. A., “Automated detection of retinal layer structures on optical coherence tomography images,” Opt. Express 13, 10200–10216 (2005). 10.1364/OPEX.13.010200 [DOI] [PubMed] [Google Scholar]

- 5.Gora M. J., Suter M. J., Tearney G. J., Li X. D., “Endoscopic optical coherence tomography: technologies and clinical applications [invited],” Biomed. Opt. Express 8, 2405–2444 (2017). 10.1364/BOE.8.002405 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Li X. D., Boppart S. A., Van Dam J., Mashimo H., Mutinga M., Drexler W., Klein M., Pitris C., Krinsky M. L., Brezinski M. E., Fujimoto J. G., “Optical coherence tomography: Advanced technology for the endoscopic imaging of Barrett’s esophagus,” Endoscopy 32, 921–930 (2000). 10.1055/s-2000-9626 [DOI] [PubMed] [Google Scholar]

- 7.Hatta W., Uno K., Koike T., Yokosawa S., Iijima K., Imatani A., Shimosegawa T., “Optical coherence tomography for the staging of tumor infiltration in superficial esophageal squamous cell carcinoma,” Gastrointest. Endosc. 71, 899–906 (2010). 10.1016/j.gie.2009.11.052 [DOI] [PubMed] [Google Scholar]

- 8.Gora M. J., Sauk J. S., Carruth R. W., Gallagher K. A., Suter M. J., Nishioka N. S., Kava L. E., Rosenberg M., Bouma B. E., Tearney G. J., “Tethered capsule endomicroscopy enables less invasive imaging of gastrointestinal tract microstructure,” Nat. Medicine 19, 238–240 (2013). 10.1038/nm.3052 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Xi J. F., Zhang A. Q., Liu Z. Y., Liang W. X., Lin L. Y., Yu S. Y., Li X. D., “Diffractive catheter for ultrahigh-resolution spectral-domain volumetric OCT imaging,” Opt. Lett. 39, 2016–2019 (2014). 10.1364/OL.39.002016 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Yuan W., Brown R., Mitzner W., Yarmus L., Li X. D., “Super-achromatic monolithic microprobe for ultrahigh-resolution endoscopic optical coherence tomography at 800 nm,” Nat. Commun. 8, 1531 (2017). 10.1038/s41467-017-01494-4 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Liu Z. Y., Xi J. F., Tse M., Myers A. C., Li X. D., Pasricha P. J., Yu S. Y., “Allergic inflammation-induced structural and functional changes in esophageal epithelium in a guinea pig model of eosinophilic esophagitis,” Gastroenterology 146, S92 (2014). 10.1016/S0016-5085(14)60334-6 [DOI] [Google Scholar]

- 12.Poneros J. M., Brand S., Bouma B. E., Tearney G. J., Compton C. C., Nishioka N. S., “Diagnosis of specialized intestinal metaplasia by optical coherence tomography,” Gastroenterology 120, 7–12 (2001). 10.1053/gast.2001.20911 [DOI] [PubMed] [Google Scholar]

- 13.Chiu S. J., Li X. T., Nicholas P., Toth C. A., Izatt J. A., Farsiu S., “Automatic segmentation of seven retinal layers in SDOCT images congruent with expert manual segmentation,” Opt. Express 18, 19413–19428 (2010). 10.1364/OE.18.019413 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Vermeer K. A., van der Schoot J., Lemij H. G., de Boer J. F., “Automated segmentation by pixel classification of retinal layers in ophthalmic OCT images,” Biomed. Opt. Express 2, 1743–1756 (2011). 10.1364/BOE.2.001743 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Wu M. L., Chen Q., He X. J., Li P., Fan W., Yuan S. T., Park H. J., “Automatic subretinal fluid segmentation of retinal SD-OCT images with neurosensory retinal detachment guided by enface fundus imaging,” IEEE Transactions on Biomed. Eng. 65, 87–95 (2018). 10.1109/TBME.2017.2695461 [DOI] [PubMed] [Google Scholar]

- 16. Lang A., Carass A., Jedynak B. M., Solomon S. D., Calabresi P. A., Prince J. L., “Intensity inhomogeneity correction of SD-OCT data using macular flatspace,” Med. Image Analysis 43, 85–97 (2018). 10.1016/j.media.2017.09.008

- 17. Yazdanpanah A., Hamarneh G., Smith B., Sarunic M., “Intra-retinal layer segmentation in optical coherence tomography using an active contour approach,” Med. Image Comput. Comput.-Assist. Interv. – MICCAI 2009, Pt. II, Proc. 5762, 649 (2009).

- 18. Mayer M. A., Hornegger J., Mardin C. Y., Tornow R. P., “Retinal nerve fiber layer segmentation on FD-OCT scans of normal subjects and glaucoma patients,” Biomed. Opt. Express 1, 1358–1383 (2010). 10.1364/BOE.1.001358

- 19. Ghorbel I., Rossant F., Bloch I., Tick S., Paques M., “Automated segmentation of macular layers in OCT images and quantitative evaluation of performances,” Pattern Recognit. 44, 1590–1603 (2011). 10.1016/j.patcog.2011.01.012

- 20. Fuller A. R., Zawadzki R. J., Choi S., Wiley D. F., Werner J. S., Hamann B., “Segmentation of three-dimensional retinal image data,” IEEE Transactions on Vis. Comput. Graph. 13, 1719–1726 (2007). 10.1109/TVCG.2007.70590

- 21. Lang A., Carass A., Hauser M., Sotirchos E. S., Calabresi P. A., Ying H. S., Prince J. L., “Retinal layer segmentation of macular OCT images using boundary classification,” Biomed. Opt. Express 4, 1133–1152 (2013). 10.1364/BOE.4.001133

- 22. Garvin M. K., Abramoff M. D., Kardon R., Russell S. R., Wu X. D., Sonka M., “Intraretinal layer segmentation of macular optical coherence tomography images using optimal 3-D graph search,” IEEE Transactions on Med. Imaging 27, 1495–1505 (2008). 10.1109/TMI.2008.923966

- 23. Kafieh R., Rabbani H., Abramoff M. D., Sonka M., “Intra-retinal layer segmentation of 3D optical coherence tomography using coarse grained diffusion map,” Med. Image Analysis 17, 907–928 (2013). 10.1016/j.media.2013.05.006

- 24. Venhuizen F. G., van Ginneken B., Liefers B., van Grinsven M. J. J. P., Fauser S., Hoyng C., Theelen T., Sanchez C. I., “Robust total retina thickness segmentation in optical coherence tomography images using convolutional neural networks,” Biomed. Opt. Express 8, 3292–3316 (2017). 10.1364/BOE.8.003292

- 25. Lee C. S., Tyring A. J., Deruyter N. P., Wu Y., Rokem A., Lee A. Y., “Deep-learning based, automated segmentation of macular edema in optical coherence tomography,” Biomed. Opt. Express 8, 3440–3448 (2017). 10.1364/BOE.8.003440

- 26. Fang L. Y., Cunefare D., Wang C., Guymer R. H., Li S. T., Farsiu S., “Automatic segmentation of nine retinal layer boundaries in OCT images of non-exudative AMD patients using deep learning and graph search,” Biomed. Opt. Express 8, 2732–2744 (2017). 10.1364/BOE.8.002732

- 27. Loo J., Fang L. Y., Cunefare D., Jaffe G. J., Farsiu S., “Deep longitudinal transfer learning-based automatic segmentation of photoreceptor ellipsoid zone defects on optical coherence tomography images of macular telangiectasia type 2,” Biomed. Opt. Express 9, 2681–2698 (2018). 10.1364/BOE.9.002681

- 28. Ughi G. J., Adriaenssens T., Larsson M., Dubois C., Sinnaeve P. R., Coosemans M., Desmet W., D’hooge J., “Automatic three-dimensional registration of intravascular optical coherence tomography images,” J. Biomed. Opt. 17, 049803 (2012).

- 29. Gan Y., Tsay D., Amir S. B., Marboe C. C., Hendon C. P., “Automated classification of optical coherence tomography images of human atrial tissue,” J. Biomed. Opt. 21, 101407 (2016). 10.1117/1.JBO.21.10.101407

- 30. Zahnd G., Hoogendoorn A., Combaret N., Karanasos A., Pery E., Sarry L., Motreff P., Niessen W., Regar E., van Soest G., Gijsen F., van Walsum T., “Contour segmentation of the intima, media, and adventitia layers in intracoronary OCT images: application to fully automatic detection of healthy wall regions,” Int. J. Comput. Assist. Radiol. Surg. 12, 1923–1936 (2017). 10.1007/s11548-017-1657-7

- 31. Qi X., Sivak M. V., Isenberg G., Willis J. E., Rollins A. M., “Computer-aided diagnosis of dysplasia in Barrett’s esophagus using endoscopic optical coherence tomography,” J. Biomed. Opt. 11, 044010 (2006). 10.1117/1.2337314

- 32. Qi X., Pan Y. S., Sivak M. V., Willis J. E., Isenberg G., Rollins A. M., “Image analysis for classification of dysplasia in Barrett’s esophagus using endoscopic optical coherence tomography,” Biomed. Opt. Express 1, 825–847 (2010). 10.1364/BOE.1.000825

- 33. Garcia-Allende P. B., Amygdalos I., Dhanapala H., Goldin R. D., Hanna G. B., Elson D. S., “Morphological analysis of optical coherence tomography images for automated classification of gastrointestinal tissues,” Biomed. Opt. Express 2, 2821–2836 (2011). 10.1364/BOE.2.002821

- 34. Ughi G. J., Gora M. J., Swager A. F., Soomro A., Grant C., Tiernan A., Rosenberg M., Sauk J. S., Nishioka N. S., Tearney G. J., “Automated segmentation and characterization of esophageal wall in vivo by tethered capsule optical coherence tomography endomicroscopy,” Biomed. Opt. Express 7, 409–419 (2016). 10.1364/BOE.7.000409

- 35. Kassinopoulos M., Dong J., Tearney G. J., Pitris C., “Automated detection of esophageal dysplasia in in vivo optical coherence tomography images of the human esophagus,” Proc. SPIE 10483, 104830R (2018).

- 36. Zhang J. L., Yuan W., Liang W. X., Yu S. Y., Liang Y. M., Xu Z. Y., Wei Y. X., Li X. D., “Automatic and robust segmentation of endoscopic OCT images and optical staining,” Biomed. Opt. Express 8, 2697–2708 (2017). 10.1364/BOE.8.002697

- 37. Srinivasan V. J., Monson B. K., Wojtkowski M., Bilonick R. A., Gorczynska I., Chen R., Duker J. S., Schuman J. S., Fujimoto J. G., “Characterization of outer retinal morphology with high-speed, ultrahigh-resolution optical coherence tomography,” Investig. Ophthalmol. & Vis. Sci. 49, 1571–1579 (2008). 10.1167/iovs.07-0838

- 38. Quellec G., Lee K., Dolejsi M., Garvin M. K., Abramoff M. D., Sonka M., “Three-dimensional analysis of retinal layer texture: Identification of fluid-filled regions in SD-OCT of the macula,” IEEE Transactions on Med. Imaging 29, 1321–1330 (2010). 10.1109/TMI.2010.2047023

- 39. Jian Z. P., Yu L. F., Rao B., Tromberg B. J., Chen Z. P., “Three-dimensional speckle suppression in optical coherence tomography based on the curvelet transform,” Opt. Express 18, 1024–1032 (2010). 10.1364/OE.18.001024

- 40. Fang L. Y., Li S. T., McNabb R. P., Nie Q., Kuo A. N., Toth C. A., Izatt J. A., Farsiu S., “Fast acquisition and reconstruction of optical coherence tomography images via sparse representation,” IEEE Transactions on Med. Imaging 32, 2034–2049 (2013). 10.1109/TMI.2013.2271904

- 41. Fang L. Y., Li S. T., Cunefare D., Farsiu S., “Segmentation based sparse reconstruction of optical coherence tomography images,” IEEE Transactions on Med. Imaging 36, 407–421 (2017). 10.1109/TMI.2016.2611503

- 42. Garvin M. K., Abramoff M. D., Wu X. D., Russell S. R., Burns T. L., Sonka M., “Automated 3-D intraretinal layer segmentation of macular spectral-domain optical coherence tomography images,” IEEE Transactions on Med. Imaging 28, 1436–1447 (2009). 10.1109/TMI.2009.2016958

- 43. Canny J., “A computational approach to edge detection,” IEEE Transactions on Pattern Analysis Mach. Intell. 8, 679–698 (1986). 10.1109/TPAMI.1986.4767851

- 44. Yang Q., Reisman C. A., Wang Z. G., Fukuma Y., Hangai M., Yoshimura N., Tomidokoro A., Araie M., Raza A. S., Hood D. C., Chan K. P., “Automated layer segmentation of macular OCT images using dual-scale gradient information,” Opt. Express 18, 21293–21307 (2010). 10.1364/OE.18.021293

- 45. Dijkstra E. W., “A note on two problems in connexion with graphs,” Numer. Math. 1, 269–271 (1959). 10.1007/BF01386390

- 46. Yang Q., Reisman C. A., Chan K. P., Ramachandran R., Raza A., Hood D. C., “Automated segmentation of outer retinal layers in macular OCT images of patients with retinitis pigmentosa,” Biomed. Opt. Express 2, 2493–2503 (2011). 10.1364/BOE.2.002493

- 47. Yuan W., Mavadia-Shukla J., Xi J. F., Liang W. X., Yu X. Y., Yu S. Y., Li X. D., “Optimal operational conditions for supercontinuum-based ultrahigh-resolution endoscopic OCT imaging,” Opt. Lett. 41, 250–253 (2016). 10.1364/OL.41.000250

- 48. Yushkevich P. A., Piven J., Hazlett H. C., Smith R. G., Ho S., Gee J. C., Gerig G., “User-guided 3D active contour segmentation of anatomical structures: Significantly improved efficiency and reliability,” NeuroImage 31, 1116–1128 (2006). 10.1016/j.neuroimage.2006.01.015

- 49. Liu Z. Y., Hu Y. T., Yu X. Y., Xi J. F., Fan X. M., Tse C. M., Myers A. C., Pasricha P. J., Li X. D., Yu S. Y., “Allergen challenge sensitizes TRPA1 in vagal sensory neurons and afferent C-fiber subtypes in guinea pig esophagus,” Am. J. Physiol. Gastrointest. Liver Physiol. 308, G482–G488 (2015).

- 50. Baroni M., Fortunato P., La Torre A., “Towards quantitative analysis of retinal features in optical coherence tomography,” Med. Eng. & Phys. 29, 432–441 (2007). 10.1016/j.medengphy.2006.06.003