Abstract

Background

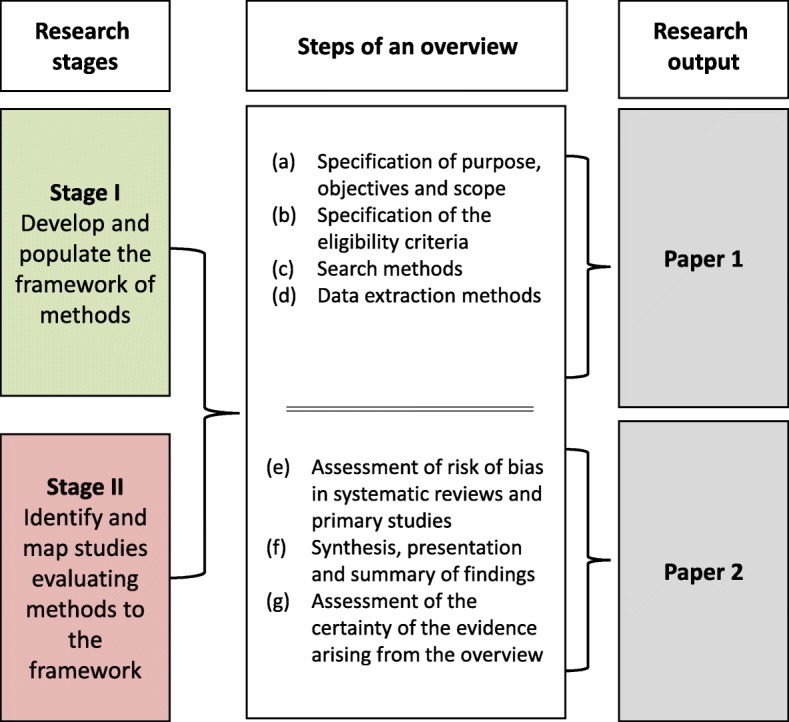

Overviews of systematic reviews (SRs) attempt to systematically retrieve and summarise the results of multiple systematic reviews. This is the second of two papers from a study aiming to develop a comprehensive evidence map of the methods used in overviews. Our objectives were to (a) develop a framework of methods for conducting, interpreting and reporting overviews (stage I)—the Methods for Overviews of Reviews (MOoR) framework—and (b) to create an evidence map by mapping studies that have evaluated overview methods to the framework (stage II). In the first paper, we reported findings for the four initial steps of an overview (specification of purpose, objectives and scope; eligibility criteria; search methods; data extraction). In this paper, we report the remaining steps: assessing risk of bias; synthesis, presentation and summary of the findings; and assessing certainty of the evidence arising from the overview.

Methods

In stage I, we identified cross-sectional studies, guidance documents and commentaries that described methods proposed for, or used in, overviews. Based on these studies, we developed a framework of possible methods for overviews, categorised by the steps in conducting an overview. Multiple iterations of the framework were discussed and refined by all authors. In stage II, we identified studies evaluating methods and mapped these evaluations to the framework.

Results

Forty-two stage I studies described methods relevant to one or more of the latter steps of an overview. Six studies evaluating methods were included in stage II. These mapped to steps involving (i) the assessment of risk of bias (RoB) in SRs (two SRs and three primary studies, all reporting evaluation of RoB tools) and (ii) the synthesis, presentation and summary of the findings (one primary study evaluating methods for measuring overlap).

Conclusion

Many methods have been described for use in the latter steps in conducting an overview; however, evaluation and guidance for applying these methods are sparse. The exception is RoB assessment, for which a multitude of tools exist, several with sufficient evaluation and guidance to recommend their use. Evaluation of other methods is required to provide a comprehensive evidence map.

Electronic supplementary material

The online version of this article (10.1186/s13643-018-0784-8) contains supplementary material, which is available to authorized users.

Keywords: Overview of systematic reviews, Overview, Meta-review, Umbrella review, Review of reviews, Systematic review methods, Evidence map, Evaluation of methods, Methodology, Assessment of risk of bias in systematic reviews

Background

Overviews of systematic reviews aim to systematically retrieve, critically appraise and synthesise the results of multiple systematic reviews (SRs) [1]. Overviews of reviews (also called umbrella reviews, meta-reviews, reviews of reviews; but referred to in this paper as ‘overviews’ [2]) have grown in number in recent years, largely in response to the increasing number of SRs [3]. Overviews have many purposes, including mapping the available evidence and identifying gaps in the literature, summarising the effects of the same intervention for different conditions or populations, or examining reasons for discordance of findings and conclusions across SRs [4–6]. A noted potential benefit of overviews is that they can address a broader research question than the constituent SRs, since overviews are able to capitalise on previous SR efforts [7].

The steps and many of the methods used in the conduct of SRs are directly transferable to overviews. However, overviews involve unique methodological challenges that primarily stem from a lack of alignment between the PICO (Population, Intervention, Comparison, Outcome) elements of the overview question and those of the included SRs, and overlap, where the same primary studies contribute data to multiple SRs [7]. For example, overlap can lead to challenging scenarios such as how to deal with discordant risk of bias assessments of the same primary studies across SRs (often further complicated by the use of different risk of bias/quality tools) or how to synthesise results from multiple meta-analyses where the same studies contribute to more than one pooled analysis. Authors need to plan for these scenarios, which may require the application of different or additional methods to those used in systematic reviews of primary studies.
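The second of these scenarios can be made concrete with a small numerical sketch. The study labels, effect estimates and standard errors below are hypothetical, and `pool_fixed` is an illustrative helper rather than a method taken from any of the cited sources. The sketch assumes inverse-variance fixed-effect pooling on the log scale, and shows that when two meta-analyses share a primary study, naively pooling the two meta-analytic estimates counts the shared study twice and so understates the standard error.

```python
import math


def pool_fixed(estimates):
    """Inverse-variance fixed-effect pooling of (log effect, standard error) pairs."""
    weights = [1.0 / se ** 2 for _, se in estimates]
    pooled = sum(w * est for w, (est, _) in zip(weights, estimates)) / sum(weights)
    return pooled, math.sqrt(1.0 / sum(weights))


# Hypothetical primary studies: (log risk ratio, standard error).
studies = {"A": (-0.20, 0.10), "B": (-0.10, 0.08), "C": (0.05, 0.12)}

# Two hypothetical meta-analyses that overlap on study B.
ma1 = pool_fixed([studies["A"], studies["B"]])
ma2 = pool_fixed([studies["B"], studies["C"]])

# First-order synthesis: each primary study contributes once.
first_order = pool_fixed(list(studies.values()))

# Naive second-order synthesis: study B contributes through both meta-analyses.
second_order = pool_fixed([ma1, ma2])

print(f"first-order SE:  {first_order[1]:.4f}")
print(f"second-order SE: {second_order[1]:.4f}")
```

Under these assumptions the second-order standard error is always smaller than the first-order one, because the weight of the shared study enters the total twice. This is the non-independence that adjustments for overlap are intended to address.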

Two recent reviews of methods guidance for conducting overviews found that there were important gaps in the guidance on the conduct of overviews [8, 9]. The results of our first paper, which identified methods for the initial steps in conducting an overview and collated the evidence on the performance of these methods [10], aligned with these findings. We further identified that there was a lack of studies evaluating the performance of overview methods and limited empirical evidence to inform methods decision-making in overviews [10].

This paper is the second of two papers that together aim to provide a comprehensive framework of overview methods and the evidence underpinning these methods, that is, an evidence map of overview methods. In doing so, we aim to help overview authors plan for common scenarios encountered when conducting an overview and enable prioritisation of methods development and evaluation.

Objectives

The objectives of this study were to (a) develop a comprehensive framework of methods that have been used, or may be used, in conducting, interpreting and reporting overviews of systematic reviews of interventions (stage I)—the Methods for Overviews of Reviews (MOoR) framework; (b) map studies that have evaluated these methods to the framework (creating an evidence map of overview methods) (stage II); and (c) identify unique methodological challenges of overviews and methods proposed to address these.

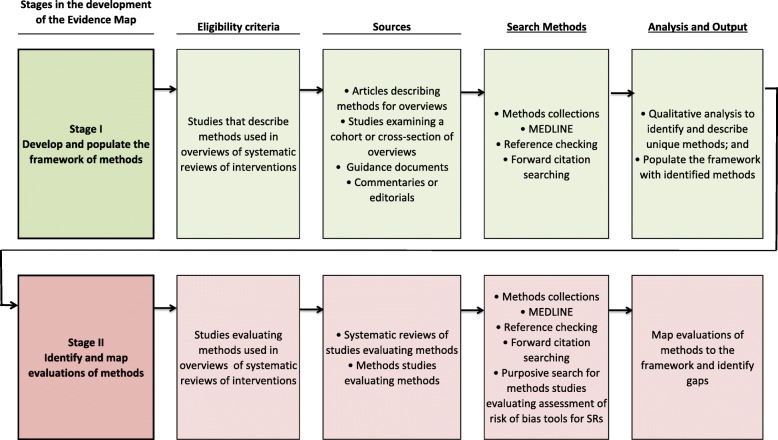

In the first paper, we presented the methods framework, along with the studies that had evaluated those methods mapped to the framework (the evidence map) for the four initial steps of conducting an overview: (a) specification of the purpose, objectives and scope of the overview; (b) specification of the eligibility criteria; (c) search methods and (d) data extraction methods [10]. In this second companion paper, we present the methods framework and evidence map for the subsequent steps in conducting an overview: (e) assessment of risk of bias in SRs and primary studies; (f) synthesis, presentation and summary of the findings and (g) assessment of the certainty of evidence arising from the overview (Fig. 1).

Fig. 1.

Summary of the research reported in each paper

We use the term ‘methods framework’ (or equivalently, ‘framework of methods’) to describe the organising structure we have developed to group related methods, and against which methods evaluations can be mapped. The highest level of this structure is the broad steps of conducting an overview (e.g. synthesis, presentation and summary of the findings). The methods framework, together with the studies that have evaluated these methods, forms the evidence map of overview methods.

Methods

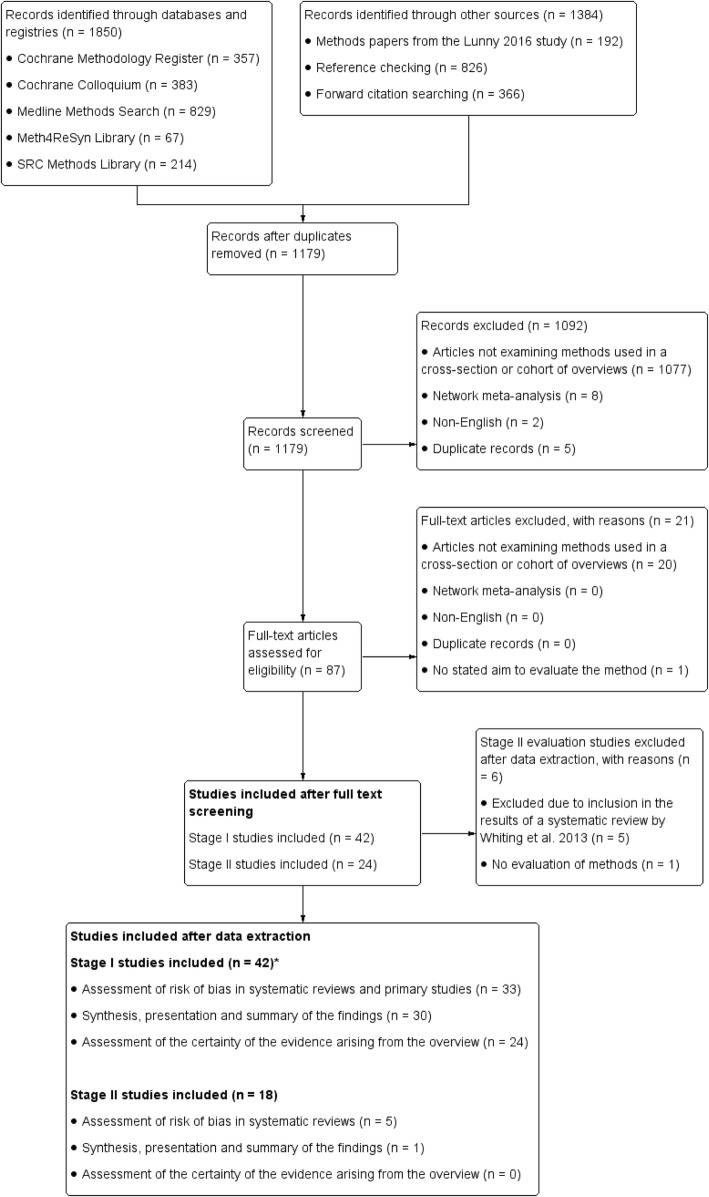

A protocol for this study has been published [11], and the methods have been described in detail in the first paper in the series [10]. The methods for the two research stages (Fig. 2) are now briefly described, along with deviations from the planned methods pertaining to this second paper. A notable deviation from our protocol is that we had planned to include the step ‘interpretation of findings and drawing conclusions’, but after reviewing the literature, felt that there was overlap between this step and the ‘assessment of certainty of the evidence arising from the overview’ step, and so consolidated the identified methods into the latter step.

Fig. 2.

Stages in the development of an evidence map of overview methods

Stage I: development and population of the framework of methods

Search methods

Our main search strategy included searching MEDLINE from 2000 onwards and the following methods collections: Cochrane Methodology Register, Meth4ReSyn library, Scientific Resource Center Methods library of the AHRQ Effective Health Care Program and Cochrane Colloquium abstracts. Searches were run on December 2, 2015 (see Additional file 1 for search strategies). These searches were supplemented by methods articles we had identified through a related research project, examination of reference lists of included studies, contact with authors of conference posters, and citation searches (see Paper 1 [10] for details).

Eligibility criteria

We identified articles describing methods used, or recommended for use, in overviews of systematic reviews of interventions.

Inclusion criteria:

- (i) Articles describing methods for overviews of systematic reviews of interventions
- (ii) Articles examining methods used in a cross-section or cohort of overviews
- (iii) Guidance (e.g. handbooks and guidelines) for undertaking overviews
- (iv) Commentaries or editorials that discuss methods for overviews

Exclusion criteria:

- (i) Articles published in languages other than English
- (ii) Articles describing methods for network meta-analysis
- (iii) Articles exclusively about methods for overviews of other review types (i.e. not of interventions)

We populated the framework with methods that are different or additional to those required to conduct a SR of primary research. Methods evaluated in the context of other ‘overview’ products, such as guidelines, which are of relevance to overviews, were included.

The eligibility criteria were piloted by three reviewers independently on a sample of articles retrieved from the search to ensure consistent application.

Study selection

Two reviewers independently reviewed titles, abstracts and full texts for potential inclusion against the eligibility criteria. Any disagreement was resolved by discussion with a third reviewer. In instances where there was limited or incomplete information regarding a study’s eligibility (e.g. when only an abstract was available), the study authors were contacted to request the full text or further details.

Data extraction, coding and analysis

One author collected data from all included articles using a pre-tested form; a second author collected data from a 50% sample of the articles.

Data collected on the characteristics of included studies

We collected data about the following: (i) the type of articles (coded as per our inclusion criteria), (ii) the main contribution(s) of the article (e.g. critique of methods), (iii) a precis of the methods or approaches described and (iv) the data on which the article was based (e.g. audit of methods used in a sample of overviews, author’s experience).

Coding and analysis to develop the framework of methods

We coded the extent to which each article described methods or approaches pertaining to each step of an overview (i.e. mentioned without description, described—insufficient detail to implement, described—implementable). The subset of articles coded as providing description were read by two authors (CL, SB or JM) who independently drafted the framework for that step to capture and categorise all available methods. We grouped conceptually similar approaches together and extracted examples to illustrate the options. Groups were labelled to delineate the unique decision points faced when planning each step of an overview (e.g. determine how to deal with discordance across systematic review (SR)/meta-analyses (MAs) and determine criteria for selecting SR/MAs, where SR/MAs include overlapping studies). To ensure comprehensiveness of the framework, methods were inferred when a clear alternative existed to a reported method (e.g. using tabular or graphical approaches to present discordance (6.2, Table 4)). The drafts and multiple iterations of the framework for each step were discussed and refined by all authors.
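To illustrate what one of these decision points looks like once a method is chosen, the sketch below encodes the two-step selection rule attributed to Cooper 2012 [6] in Table 4: prefer the meta-analysis with the most complete information and, if that is equivalent, the one with the largest number of primary studies. How ‘most complete information’ is measured is not specified here, so the `outcomes_reported` field is a hypothetical proxy, and the class, function and data are illustrative only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetaAnalysis:
    label: str
    outcomes_reported: int   # hypothetical proxy for "most complete information"
    n_primary_studies: int


def select_meta_analysis(candidates):
    """Pick one MA from a set of overlapping MAs.

    Rule (a): highest completeness; rule (b), used only to break ties on (a):
    largest number of primary studies.
    """
    if not candidates:
        raise ValueError("at least one candidate meta-analysis is required")
    return max(candidates, key=lambda ma: (ma.outcomes_reported, ma.n_primary_studies))


# Hypothetical overlapping meta-analyses addressing the same comparison and outcome.
candidates = [
    MetaAnalysis("SR-A", outcomes_reported=4, n_primary_studies=12),
    MetaAnalysis("SR-B", outcomes_reported=4, n_primary_studies=18),
    MetaAnalysis("SR-C", outcomes_reported=3, n_primary_studies=25),
]

chosen = select_meta_analysis(candidates)
print(chosen.label)  # SR-B: ties SR-A on completeness, but includes more studies
```

Ordering the criteria in a tuple keeps the rule lexicographic, so the number of primary studies only matters when completeness is equal; SR-C is not selected despite including the most studies.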

Table 4.

Synthesis, presentation and summary of the findings

| Step / Sub-step / Methods or approaches | Sources (first author, year) ▪ Examples |
|---|---|
| 1.0 Plan the approach to summarising the SR results | |
| 1.1 Determine criteria for selecting SR results/MAs, where SR/MAs include overlapping studies | |
| 1.1.1 Include all SR results/MAs | Caird 2015 [1]; Cooper 2012 [6] |
| 1.1.2 Use decision rules or tools (e.g. Jadad tool [29]) to select results from a subset of SR/MAs | Caird 2015 [1]; Cooper 2012 [6] ▪ Select one SR result/MA from overlapping SR/MAs based on (a) the MA with the most complete information, and if that was equivalent, (b) the MA with the largest number of primary studies (Cooper 2012 [6]) |
| 1.2 Determine the summary approach | |
| 1.2.1 Describe and/or tabulate the characteristics of the included SRs in terms of PICO elements | Becker 2008 [4]; Cooper 2012 [6]; JBI 2014 [39, 59]; Pieper 2014c [66]; Robinson 2015 [24, 69–72]; Ryan 2009 [25]; Smith 2011 [77]; Thomson 2010 [26] ▪ Matrix of studies by PICO elements to allow comparison and assess important sources of heterogeneity across the SRs (Caird 2015 [1]; Kramer 2009 [61]; Smith 2011 [77]; Thomson 2010 [26]) |
| 1.2.2 Describe and/or tabulate the results of the included SRs | Becker 2008 [4]; Caird 2015 [1]; Chen 2014 [46]; Cooper 2012 [6]; Hartling 2012 [53]; JBI 2014 [39, 59]; Pieper 2014c [66]; Robinson 2015 [24, 69–72]; Ryan 2009 [25]; Salanti 2012 [73]; Silva 2014 [75]; Singh 2012 [76]; Smith 2011 [77]; Thomson 2010 [26] ▪ Present pooled effect estimates and their confidence intervals (and associated statistics such as estimates of heterogeneity, I²), number and types of studies, number of participants, meta-analysis model and estimation method, authors’ conclusions ▪ Present the forest plots from the included SRs (Chen 2014 [46]; Pieper 2014c [66]) |
| 1.2.3 Describe and/or tabulate the results of the included primary studies, including new or additional primary studiesᵃ | Caird 2015 [1]; Cooper 2012 [6]; O’Mara 2011 [64]; Robinson 2015 [24, 69–72] ▪ For example, summary data, effect estimates and their confidence intervals, study design, number of study participants (O’Mara 2011 [64]) |
| 1.2.4 Summarise and/or tabulate RoB assessments of SRs and primary studies | Becker 2008 [4]; Caird 2015 [1]; Chen 2014 [46]; Hartling 2012 [53]; JBI 2014 [39, 59]; Li 2012 [62]; Ryan 2009 [25]; Robinson 2015 [24, 69–72]; Smith 2011 [77] ▪ For example, summarise the RoB/quality assessment methods used across the SRs |
| 1.2.5 Summarise and/or tabulate results from any investigations of statistical heterogeneity (e.g. results from subgroup analyses / meta-regression) within the included SRs | Cooper 2012 [6]; JBI 2014 [39, 59]; Smith 2011 [77] |
| 1.2.6 Summarise and/or tabulate results from any investigations of reporting biases (e.g. results from statistical tests for funnel plot asymmetry) within the included SRs | Singh 2012 [76]; Smith 2011 [77] ▪ Tabulate statistical tests of publication bias from the included MAs (Smith 2011 [77]) |
| 1.2.7 Determine the order of reporting the results in text and tables (e.g. by outcome domain, by effectiveness of interventions)ᵃ | Becker 2008 [4]; Bolland 2014 [5]; Salanti 2011 [73]; Smith 2011 [77] |
| 1.2.8 Determine methods for converting or standardising effect metrics (either from primary studies or meta-analyses) to the same scale (e.g. odds ratios to risk ratios)ᵃ | Becker 2008 [4]; Cooper 2012 [6]; Thomson 2010 [26] ▪ Where a variety of summary statistics, such as odds ratios and risk ratios, are reported across SR/MAs, convert the results into one summary statistic to facilitate interpretation and comparability among results (Thomson 2010 [26]) |
| 1.2.9 Determine methods to group results of specific outcomes (from either primary studies or MAs) into broader outcome domainsᵃ | Ryan 2009 [25]; Thomson 2010 [26] ▪ Use an existing outcome taxonomy (e.g. Cochrane Consumers and Communication Review Group’s taxonomy). For example, results of an intervention on the specific outcomes knowledge, accuracy, and risk of perception all map to the outcome domain ‘consumer knowledge and understanding’ (Ryan 2009 [25]) |
| 1.3 Determine graphical approaches to present the resultsᵃ | Becker 2008 [4]; Chen 2014 [46]; Crick 2015 [48]; Hartling 2014 [55]; JBI 2014 [39, 59]; Pieper 2014c [66]; Pieper 2014a [17] ▪ Use a forest plot to present MA effects (95% CI) from each SR, sometimes referred to as a ‘forest top plot’ (Becker 2008 [4]; Pieper 2014a [17]) ▪ Use a harvest plot to present the direction of effect for trials or MAs or both, also depicting study size and quality (Crick 2015 [48]) ▪ Use a bubble plot to display three dimensions of information, using colour to differentiate clinical indications: the x-axis (e.g. meta-analytic effect size), y-axis (e.g. SR quality), and the size of the bubble (e.g. number of included primary studies in a SR) ▪ Use a network plot to present the treatments that have been compared, with nodes representing treatments and links between nodes representing comparisons between treatments (Cooper 2012 [6]) |
| 2.0 Plan the approach to quantitatively synthesising the SR results | |
| 2.1 Do not conduct a new quantitative synthesis (e.g. because of lack of time or resources) | Salanti 2011 [73] |
| 2.2 Specify triggers for when to conduct a new quantitative synthesis | |
| 2.2.1 Need to combine results from multiple MAs (with non-overlapping studies) for the same comparison and outcome | Robinson 2015 [24, 69–72] |
| 2.2.2 Need to incorporate additional primary studies; or, incorporate these studies under certain circumstances | Robinson 2015 [24, 69–72]; Pieper 2014a [17] ▪ When the identified SRs are out of date and more recent primary studies have been published (Robinson 2015 [24, 69–72]) ▪ When inclusion of primary studies may change conclusions, strength of evidence judgements, or add new information (e.g. a trial undertaken in a population not currently included in the overview) |
| 2.2.3 Need to apply new meta-analysis methods, fitting a more appropriate meta-analysis method and model, or using a different effect metric | Robinson 2015 [24, 69–72] ▪ When a new meta-analysis method, such as prediction intervals, is required ▪ When a fixed effect model was fitted in a SR, but a random effects model was more appropriate ▪ When a risk ratio is used instead of an odds ratio |
| 2.2.4 Need to limit or expand the MAs into a new MA that meets the population, intervention and comparator elements of the overview | Thomson 2010 [26]; Whitlock 2008 [24, 69–72] ▪ Extracting the subset of trials that include only children and adolescents from a MA that includes trials with no restriction on age |
| 2.2.5 Need to undertake a new meta-analysis because of concerns regarding the trustworthiness of the SR/MA results | Robinson 2015 [24, 69–72] ▪ Concerns regarding data extraction errors |
| 2.2.6 Need to conduct a MA (if possible and makes sense to do so) because the SRs did not undertake MA | Inferred |
| 2.2.7 Need to conduct a MA to reconcile discordant findings of previous SRs | White 2009 [24, 69–72] ▪ If overview authors cannot determine reasons for the discordant findings among SRs, then they can regard this as an indication that they need to conduct a new MA (White 2009 [24, 69–72]) |
| 2.3 Determine the meta-analysis approach | |
| 2.3.1 Undertake a first-order meta-analysis of effect estimates (meta-analysis of the primary study effect estimates)ᵃ | Becker 2008 [4]; Chen 2014 [46]; Cooper 2012 [6]; Pieper 2014a [17]; Robinson 2015 [24, 69–72]; Schmidt 2013 [74]; Tang 2013 [78]; Thomson 2010 [26] ▪ May re-extract data from the primary studies, or use the data reported in the reviews (see ‘Data extraction’ table in [10]) |
| 2.3.2 Undertake a second-order meta-analysis of effect estimates (meta-analysis of meta-analyses) either ignoring the potential correlation across the meta-analysis estimates (arising from the same study included in more than one meta-analysis), or applying an adjustment to account for the potential correlation (e.g. inflating the variance of the meta-analysis) | Caird 2015 [1]; Chen 2014 [46]; Cooper 2012 [6]; Hemming 2012 [56]; Schmidt 2013 [74]; Tang 2013 [78] ▪ This issue of potential correlation (or non-independence) of the meta-analysis effect estimates may be more of a concern in overviews that seek to undertake a meta-analysis of the effects for the same intervention and same population, as compared with undertaking a meta-analysis of effects across populations (with the latter sometimes referred to as panoramic or multiple-indication reviews) (Chen 2014 [46]; Hemming 2012 [56]) ▪ Refer to 5.3.2 for statistical approaches to dealing with overlap |
| 2.3.3 Undertake vote counting (e.g. based on direction of effect)ᵃ | Becker 2008 [4]; Caird 2015 [1]; Flodgren 2011 [49]; Ryan 2009 [25]; Tang 2013 [78]; Thomson 2010 [26] |
| 2.4 Determine the method to convert effect metrics (either from primary studies or meta-analyses) to the same scaleᵃ | Cooper 2012 [6]; Tang 2013 [78]; Thomson 2010 [26] |
| 2.5 Determine the meta-analysis model and estimation methodsᵃ | Cooper 2012 [6]; Hemming 2012 [56]; Schmidt 2013 [74] ▪ For example, second-order meta-analysis: fixed or random effects model to combine meta-analytic effects (Schmidt 2013 [74]) ▪ For example, first-order meta-analysis across clinical conditions (multiple indication, panoramic review): three-level hierarchical model, mixed effects model (Chen 2014 [46]; Hemming 2012 [56]) ▪ For example, parametric or non-parametric methods (Cooper 2012 [6]) ▪ For example, DerSimonian and Laird between-study variance estimator (Robinson 2015 [24, 69–72]; Tang 2013 [78]) |
| 2.6 Determine graphical approachesᵃ | Becker 2008 [4]; Chen 2014 [46]; Crick 2015 [48]; Li 2012 [62]; Pieper 2014a [17]; Pieper 2014c [66] ▪ Use forest plots, either of meta-analysis results from each review or of results from individual studies (Becker 2008 [4]; Pieper 2014a [17]; Chen 2014 [46]; Pieper 2014c [66]) ▪ Use a harvest plot, which depicts results according to study size and quality, noting the direction of effect (Crick 2015 [48]) |
| 3.0 Plan to assess heterogeneity | |
| 3.1 Determine summary approaches | |
| 3.1.1 Tabulate results by modifying factors (e.g. study size, quality)ᵃ | Caird 2015 [1]; Chen 2014 [46]; Hartling 2012 [53]; JBI 2014 [39, 59]; Singh 2012 [76] ▪ Graph or tabulate results of SRs by modifying factors (e.g. group by the type of included study design [SRs of RCTs, SRs of observational studies]; group by methodological quality of the SRs, their completeness in evidence coverage, or how up-to-date they are) (Caird 2015 [1]; Chen 2014 [46]; Hartling 2012 [53]; JBI 2014 [39, 59]) |
| 3.1.2 Graph results by modifying factorsᵃ | Caird 2015 [1]; Chen 2014 [46]; Hartling 2012 [53]; JBI 2014 [39, 59] |
| 3.2 Determine approach to identifying and quantifying heterogeneityᵃ | Cooper 2012 [6] ▪ Visual examination of overlap of confidence intervals in the forest plot, I² statistic, chi-squared test for heterogeneity |
| 3.3 Determine approach to investigation of modifiers of effect in meta-analyses | |
| 3.3.1 Undertake a first-order subgroup analysis of primary study effect estimatesᵃ | Becker 2008 [4]; Chen 2014 [46]; Cooper 2012 [6]; Singh 2012 [76]; Robinson 2015 [24, 69–72]; Thomson 2010 [26] |
| 3.3.2 Undertake a second-order subgroup analysis of meta-analysis effect estimates with moderators categorised at the level of the meta-analysis (e.g. SR quality). Issues of correlation across the meta-analysis estimates may occur (see 2.3.2) | Cooper 2012 [6] |
| 3.4 Determine the meta-analysis model and estimation methodsᵃ | Refer to 2.5 ▪ For example, random effects meta-regression |
| 4.0 Plan the assessment of reporting biases | |
| 4.1 Determine non-statistical approaches to assess missing SRs | Pieper 2014d [68]; Singh 2012 [76] ▪ Search SR registers (e.g. PROSPERO) ▪ Search for SR protocols |
| 4.2 Determine non-statistical approaches to assess missing primary studies | Bolland 2014 [5] ▪ Identify non-overlapping primary studies across SRs and examine reasons for non-overlap (e.g. different SR inclusion / exclusion criteria, different search dates, different databases) as a method for discovering potentially missing primary studies from SRs (Bolland 2014 [5]) ▪ Conduct searches of trial registries to identify missing studies |
| 4.3 Determine statistical methods for detecting and examining potential reporting biases from missing primary studies or results within studies, or selectively reported resultsᵃ | Caird 2015 [1]; JBI 2014 [39, 59]; Singh 2012 [76]; Schmidt 2013 [74]; Smith 2011 [77] ▪ Visual assessment of funnel plot asymmetry of results from primary studies ▪ Statistical tests for funnel plot asymmetry using results from primary studies |
| 5.0 Plan how to deal with overlap of primary studies included in more than one SR | |
| 5.1 Determine methods for quantifying overlap | Cooper 2012 [6]; Pieper 2014b [35] ▪ Statistical measures to quantify the degree of overlap of primary studies across SRs (Pieper 2014b [35]) |
| 5.2 Determine how to visually examine and present overlap of the primary studies across SRs | Caird 2015 [1]; Chen 2014 [46]; Cooper 2012 [6]; JBI 2014 [39, 59]; O’Mara 2011 [64]; Robinson 2015 [24, 69–72]; Thomson 2010 [26] ▪ Display a matrix comparing which primary studies were included in which SRs; or other visual approaches demonstrating overlap (e.g. Venn diagrams as referenced in Patnode [82]) |
| 5.3 Determine methods for dealing with overlap | |
| 5.3.1 Use decision rules, or a tool, to select one (or a subset of) MAs with overlapping studies (see also 1.1.2 above) | Caird 2015 [1]; Chen 2014 [46]; Cooper 2012 [6]; O’Mara 2011 [64]; Pieper 2012 [3]; Robinson 2015 [24, 69–72]; Thomson 2010 [26] ▪ Choose meta-analyses based on completeness of information, methodological rigour, recentness of the meta-analysis, inclusion of certain study types (e.g. only randomised trials) or publication status ▪ Exclude SRs that do not contain any unique primary studies, when there are multiple SRs (Pieper 2014a [17]) ▪ Use a published algorithm or tool (Jadad 1997 [29]) |
| 5.3.2 Use statistical approaches to deal with overlap | Cooper 2012 [6]; Tang 2013 [78] ▪ Identify meta-analyses with 25% or more of their research in common and eliminate the one with the fewer studies in each comparison, except when multiple smaller meta-analyses (with little overlap) would include more studies if the largest meta-analysis was eliminated (Cooper 2012 [6]) ▪ Sensitivity analyses (e.g. second-order MA including all MAs irrespective of overlap compared with second-order MA including only MAs where there is no overlap in primary studies) (Cooper 2012 [6]) ▪ Inflate the variance of the meta-analysis estimate (Tang 2013 [78]) |
| 5.3.3 Ignore overlap among primary studies in the included SRs | Cooper 2012 [6]; Caird 2015 [1] |
| 5.3.4 Acknowledge overlap as a limitation | Caird 2015 [1] |
| 6.0 Plan how to deal with discordant results, interpretations and conclusions of SRs | |
| 6.1 Determine methods for dealing with or reporting discordance across SRs | |
| 6.1.1 Examine and record discordance among SRs addressing a similar question | Caird 2015 [1]; Chen 2014 [46]; Cooper 2012 [6]; Hartling 2012 [53]; JBI 2014 [39, 59]; Kramer 2009 [61]; Pieper 2014c [66]; Pieper 2012 [3]; Robinson 2015 [24, 69–72]; Smith 2011 [77]; Thomson 2010 [26] ▪ Discordance among SRs can arise from a lack of overlap in studies, or methodological differences |
| 6.1.2 Use decision rules or tools (e.g. Jadad 1997 [29]) to select one (or a subset of) SR/MAs | Bolland 2014 [5]; Caird 2015 [1]; Chen 2014 [46]; Cooper 2012 [6]; Hartling 2012 [53]; Jadad 1997 [29]; JBI 2014 [39, 59]; Kramer 2009 [61]; Moja 2012 [63]; Pieper 2012 [3]; Pieper 2014c [66]; Robinson 2015 [24, 69–72]; Smith 2011 [77]; Tang 2013 [78]; Thomson 2010 [26] ▪ Use a published algorithm based on whether the reviews address the same question, are of the same quality, have the same selection criteria (Jadad 1997 [29]) ▪ Use an adapted algorithm (pre-existing algorithm adapted for the overview) (Bolland 2014 [5]) |
| 6.2 Determine tabular or graphical approaches to present discordance | Inferred |

JBI Joanna Briggs Institute; MA meta-analyses; PICOs Population (P), intervention (I), comparison (C), outcome (O), and study design (s); PROSPERO International Prospective Register of Systematic Reviews; RCT randomised controlled trial; SRs systematic reviews

ᵃ Adaptation of the step from SRs to overviews. No methods evaluation required, but special consideration needs to be given to unique issues that arise in conducting overviews
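As an illustration of steps 5.1 and 5.2, the sketch below builds a matrix of which primary studies are included in which SRs and computes one overlap statistic from it, the corrected covered area (CCA), defined as (N − r) / (rc − r), where N is the number of inclusions summed over all SRs, r is the number of unique primary studies (rows) and c is the number of SRs (columns). That the CCA is among the measures referred to in 5.1 is an assumption here, and the SR labels, study identifiers and function names are hypothetical.

```python
def citation_matrix(inclusion):
    """Map each unique primary study to a list of booleans, one per SR.

    `inclusion` maps an SR label to the set of primary studies it includes.
    """
    studies = sorted(set().union(*inclusion.values()))
    return {s: [s in included for included in inclusion.values()] for s in studies}


def corrected_covered_area(inclusion):
    """CCA = (N - r) / (r * c - r); 0 means no overlap, 1 means complete overlap."""
    c = len(inclusion)
    if c < 2:
        raise ValueError("overlap is only defined for two or more SRs")
    matrix = citation_matrix(inclusion)
    r = len(matrix)
    if r == 0:
        raise ValueError("no primary studies supplied")
    n = sum(sum(row) for row in matrix.values())  # inclusions, counting repeats
    return (n - r) / (r * c - r)


# Hypothetical overview of three SRs covering five unique primary studies.
inclusion = {
    "SR1": {"A", "B", "C"},
    "SR2": {"B", "C", "D"},
    "SR3": {"C", "E"},
}

matrix = citation_matrix(inclusion)
for study, row in matrix.items():
    print(study, ["x" if hit else "." for hit in row])

cca = corrected_covered_area(inclusion)
print(f"CCA = {cca:.2f}")  # (8 - 5) / (5 * 3 - 5) = 0.30
```

The same matrix serves both purposes: printed as a grid it is the visual display described in 5.2, and its row and column totals supply the quantities needed for the statistic in 5.1.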

Stage II: identification and mapping of evaluations of methods

Search methods

In addition to the main searches outlined in the ‘Search methods’ section for Stage I, we planned to undertake purposive searches to locate ‘studies evaluating methods’ where the main searches were unlikely to have located these evaluations. For this second paper, we undertook a purposive search to locate studies evaluating assessment of risk of bias tools for SRs, since these studies may not have mentioned ‘overviews’ (or its synonyms) in their titles or abstracts and thus would not have been identified in the main searches. However, through our main search, we identified a SR that had examined quality assessment or critical appraisal tools for assessing SRs or meta-analyses [12]. We therefore did not develop a new purposive search strategy, but instead used the strategy in the SR, and ran it over the period January 2013 to August 2016 to locate studies published subsequent to the SR (Additional file 2). For the other steps, the identified methods were specific to overviews, so evaluations were judged likely to be retrieved by our main searches.

Eligibility criteria

To create the evidence map, we identified studies evaluating methods for overviews of systematic reviews of interventions.

Inclusion criteria:

- (i) SRs of methods studies that have evaluated methods for overviews
- (ii) Primary methods studies that have evaluated methods for overviews

Exclusion criteria:

- (i) Studies published in languages other than English
- (ii) Methods studies that have evaluated methods for network meta-analysis

We added the additional criterion that methods studies had to have a stated aim to evaluate methods, since our focus was on evaluation and not just application of a method.

Study selection

We used the same process, as outlined in the ‘Study selection’ section, for determining which studies located from the main search met the inclusion criteria. For studies located from the purposive search, one author reviewed titles, abstracts and full texts for potential inclusion against the eligibility criteria.

Data extraction

We extracted data from primary methods studies and SRs of methods studies that evaluated the measurement properties of tools for assessing the risk of bias in SRs, and from one study that developed measures to quantify overlap of primary studies in overviews. The data extracted from these studies were based on relevant domains of the COSMIN checklist (Table 1) [13, 14]. We had originally planned to extract quantitative results from the methods evaluations relating to the primary objectives; however, on reflection, we opted not to do this since we felt this lay outside the purpose of the evidence map. Data were extracted independently by three authors (CL, SM, SB, JM).

Table 1.

Data extracted from methods studies evaluating tools for assessing risk of bias in SRs

| Study design / Category | Data extracted |
|---|---|
| Primary methods studies | |
| Study characteristics | First author, year |
| | Title |
| | Primary objective |
| Description of primary methods studies | Name of the included tools or measures |
| | Type of assessment (e.g. assessment of reliability, content validity) |
| | Content validity: methods of item generation |
| | Content validity: comprehensiveness |
| | Reliability: description of reliability testing |
| | Tests of validity: description of correlation coefficient testing |
| | Other assessment (feasibility, acceptability, piloting) |
| Risk of bias criteria | Existence of a protocol |
| | Method to select the sample of SRs to which the tool/measure was applied |
| | Process for selecting the raters/assessors who applied the tool/measure |
| | Pre-specified hypotheses for testing of validity |
| Systematic reviews of methods studies | |
| Study characteristics | First author, year |
| | Title |
| Description of SRs of methods studies | Primary objective |
| | Number of included tools |
| | Number of studies reporting on the included tools |
| | Name of the included tools or measures (unnamed tools are identified by first author name and year of publication) |
| | Content validity: reported method of development (e.g. item generation, expert assessment of content) |
| | Reliability: description of reliability testing |
| | Construct validity: description of any hypothesis testing. For example, how assessments from two or more tools relate, whether assessments relate to other factors (e.g. effect estimates or findings) |
| | Other assessment (feasibility, acceptability, piloting) |
| Risk of bias criteria (using three domains from the ROBIS tool [15]) | Domain 1 (study eligibility criteria): concerns regarding specification of eligibility criteria (low, high or unclear concern) |
| | Domain 2 (identification and selection of studies): concerns regarding methods used to identify and/or select studies (low, high or unclear concern) |
| | Domain 3 (data collection and study appraisal): concerns regarding methods used to collect data and appraise studies (low, high or unclear concern) |
| | Overall judgment: interpretation addresses all concerns identified in Domains 1–3, relevance of studies was appropriately considered, reviewers avoided emphasising results based on statistical significance |

Assessment of the risk of bias

For primary methods studies, we extracted and tabulated study characteristics that may plausibly be associated with either bias or the generalisability of findings (external validity) (Table 1). For SRs of methods studies, we used the ROBIS tool to identify concerns with the review process in the specification of study eligibility (Domain 1), methods used to identify and/or select studies (Domain 2), and the methods used to collect data and appraise studies (Domain 3) (Table 1) [15]. We then made an overall judgement about the risk of bias arising from these concerns (low, high, or unclear). We did not assess Domain 4 of ROBIS, since this domain covers synthesis methods that are of limited applicability to the included reviews.
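As an illustration of how such domain-level judgements might be recorded and tabulated, the following is a minimal sketch only. The class and field names are our own, the two records are hypothetical rather than data from the included reviews, and the overall judgement is stored as assessed, not derived by any rule.

```python
from collections import Counter
from dataclasses import dataclass

# Concern levels named in the text for ROBIS Domains 1-3 and the overall judgement.
LEVELS = ("low", "high", "unclear")


@dataclass(frozen=True)
class RobisRecord:
    """One SR of methods studies; field names are illustrative, not part of ROBIS."""
    review: str
    eligibility_criteria: str      # Domain 1
    identification_selection: str  # Domain 2
    collection_appraisal: str      # Domain 3
    overall: str                   # judgement as recorded by the assessors

    def __post_init__(self):
        # Reject any judgement outside the three levels used in the text.
        for value in (self.eligibility_criteria, self.identification_selection,
                      self.collection_appraisal, self.overall):
            if value not in LEVELS:
                raise ValueError(f"unexpected judgement: {value!r}")


def tabulate(records, field):
    """Count how many reviews received each level for one field."""
    counts = Counter(getattr(r, field) for r in records)
    return {level: counts[level] for level in LEVELS}


# Hypothetical records for illustration only.
records = [
    RobisRecord("Review A", "low", "low", "unclear", "low"),
    RobisRecord("Review B", "low", "high", "high", "high"),
]

domain2_counts = tabulate(records, "identification_selection")
print(domain2_counts)
```

Keeping the overall judgement as a recorded field, and not computing it from the domains, reflects that the text describes it as a judgement made by the assessors.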

Analysis

We described the yield and characteristics of the studies evaluating methods and mapped these studies to the framework of methods.

Results

Results of the main search

Details of our search results are reported in our first companion paper [10]. Here, we note the results from the additional purposive search and changes in search results between the papers. Our main search strategy retrieved 1179 unique records through searching databases, methods collections and other sources (Fig. 3) [10]. After screening of abstracts and full texts, 66 studies remained, of which 42 were included in stage I and 24 in stage II (exclusions are listed in Additional file 3). Our purposive search to identify studies evaluating tools for assessing the risk of bias in SRs (rather than primary studies) found no further stage II studies (see Additional file 4 for flowchart).

Fig. 3.

Flowchart of the main search for stages I and II studies

Of the 24 included stage II studies, 12 evaluated search filters for SRs (reported in paper 1 [10]), 11 evaluated risk of bias assessment tools for SRs, and one evaluated a synthesis method. Of the 11 studies evaluating risk of bias assessment tools for SRs, four were SRs of methods studies [12, 16–18] and seven were primary evaluation studies [15, 17, 19–23].

Four of the seven primary evaluations of risk of bias assessment tools [20–23] and one SR [16] were included in the results of the 2013 SR by Whiting [12] and so were not considered individually in this paper. We excluded one of the SRs since, after close examination, it became clear that it reviewed studies that applied rather than evaluated AMSTAR (A Measurement Tool to Assess Systematic Reviews [22, 23]) and so did not meet our stage II inclusion criteria [18]. Therefore, of the 24 initially eligible stage II studies, 18 met the inclusion criteria, six of which are included in this second paper (Fig. 3).
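The study flow described above can be checked with simple arithmetic. The sketch below is illustrative only: the variable names are ours, and the counts are those reported in the text.

```python
# Counts reported in the text for stage II studies (variable names are illustrative).
initially_eligible = 24
search_filter_studies = 12     # reported in paper 1
rob_tool_studies = 11          # four SRs plus seven primary evaluations
synthesis_method_studies = 1

# The three groups should account for every initially eligible study.
assert (search_filter_studies + rob_tool_studies
        + synthesis_method_studies) == initially_eligible

# Studies already covered by the 2013 SR by Whiting, so not considered individually.
covered_by_earlier_sr = 4 + 1  # four primary evaluations and one SR
# SR excluded because it reviewed applications, not evaluations, of AMSTAR.
excluded_sr = 1

met_inclusion = initially_eligible - covered_by_earlier_sr - excluded_sr
in_this_paper = met_inclusion - search_filter_studies

print(met_inclusion, in_this_paper)  # 18 6
```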

Stage I: development and population of the framework of methods

We first describe the characteristics of the included stage I articles (see ‘Characteristics of stage I articles’; Table 2) followed by presentation of the developed framework. This presentation is organised into sections representing the main (latter) steps in conducting an overview—‘assessment of risk of bias in SRs and primary studies’, ‘synthesis, presentation and summary of findings’ and the ‘assessment of certainty of the evidence arising from the overview’. In each section, we orient readers to the structure of the methods framework, which includes a set of steps and sub-steps (which are numbered in the text and tables). Reporting considerations for all steps are reported in Additional file 5.

Table 2.

Characteristics of stage I studies and the extent to which each described (two ticks) or mentioned (one tick) methods pertaining to the latter steps in conducting an overview

| Citation | Type of study | Summary description of the article | Latter steps in the conduct of an overview | ||

|---|---|---|---|---|---|

| Assessment of RoB in SRs and primary studies | Synthesis, presentation and summary of the findings | Assessment of the certainty of the evidence arising from the overview | |||

| Baker [43] The benefits and challenges of conducting an overview of systematic reviews in public health: a focus on physical activity. |

Article describing methods for overviews | • Describes the usefulness of overviews for decision-makers and summarises some procedural steps to be undertaken • Provides a case study of an overview on public health interventions for increasing physical activity |

✓ | ✓ | |

| Becker [4] Overviews of reviews. |

Guidance for undertaking overviews | • Early guidance providing the structure and procedural steps for the conduct of an overview • Details the RoB/quality assessment of SRs, and describes how to present findings through tables and figures |

✓✓ | ✓ | ✓✓ |

| Bolland [5] A case study of discordant overlapping meta-analyses: vitamin D supplements and fracture. |

Article describing methods for overviews | • Describes criteria for explaining differences in SR/MAs addressing a similar question with discordant conclusions • Builds on the guide to interpret discordant SRs proposed by Jadad 1997 [29] • Suggests reporting items when an overview contains SR/MAs addressing a similar question |

✓ | ✓ | ✓✓ |

| Brunton [44] Putting the issues on the table: summarising outcomes from reviews of reviews to inform health policy. |

Study examining methods used in a cohort of overviews | • Presents a tabular method of vote counting (positive effect/negative effect/no change) for each reported outcome | ✓ | ||

| Büchter [45, 65] How do authors of Cochrane Overviews deal with conflicts of interest relating to their own systematic reviews? |

Study examining methods used in a cohort of overviews | • Describes potential conflicts arising from dual authorship (where an overview author includes one or more SRs they authored) and suggests ‘safeguards’ to protect against potential bias • Describes reporting items in relation to dual authorship |

✓ | ||

| Caird [1] Mediating policy-relevant evidence at speed: are systematic reviews of systematic reviews a useful approach? |

Article describing methods for overviews | • Describes the methodological challenges in the production of overviews aimed at translating the knowledge to policy makers • Describes the trade-offs between producing a rapid overview and its comprehensiveness and reliability |

✓✓ | ✓✓ | ✓✓ |

| Chen [46] Scientific hypotheses can be tested by comparing the effects of one treatment over many diseases in a systematic review. |

Study examining methods used in a cohort of overviews | • Identifies possible aims of an overview as being to detect unintended effects, improve the precision of effect estimates or explore heterogeneity of effect across disease groups • Describes the value and pitfalls of synthesis of MAs using three case studies |

✓✓ | ✓✓ | |

| CMIMG [47] Review type and methodological considerations. |

Guidance for undertaking overviews | • Builds on the Cochrane guidance for overviews by Becker 2008 [4] • Describes the factors in the decision to conduct an overview vs. an SR • Describes how to handle potential overlap in primary studies across SRs and RoB/quality of SRs |

✓ | ✓ | |

| Cooper [6] The overview of reviews: unique challenges and opportunities when research syntheses are the principal elements of new integrative scholarship. |

Article describing methods for overviews | • Describes steps in the conduct of an overview and methods to address challenges (e.g. dealing with overlap in primary studies across SRs) • Describes methods for second order meta-analysis |

✓✓ | ✓✓ | ✓✓ |

| Crick [48] An evaluation of harvest plots to display results of meta-analyses in overviews of reviews: a cross-sectional study |

Article describing methods for overviews | • Examines the feasibility of using harvest plots as compared to summary tables to depict results of MAs in overviews | ✓✓ | ✓ | |

| Flodgren [49] Challenges facing reviewers preparing overviews of reviews.a |

Article describing methods for overviews | • Mentions the issue of missing or inadequately reported data • Mentions the challenges in summarising and evaluating large amounts of heterogeneous data across SRs |

✓✓ | ✓ | |

| Foisy [50] Mixing with the ‘unclean’: Including non-Cochrane reviews alongside Cochrane reviews in overviews of reviews.a |

Article describing methods for overviews | • Mentions some challenges inherent in defining AMSTAR scoring as inclusion criteria, and inclusion of non-Cochrane reviews alongside Cochrane reviews • Mentions inclusion criteria to minimise overlap in primary studies across SRs |

✓ | ||

| Foisy [51] Grading the quality of evidence in existing systematic reviews: challenges and considerations. |

Study examining methods used in a cohort of overviews | • Mentions problems overview authors may encounter when applying GRADE to included SRs without going back to original data, and potential solutions | ✓ | ||

| Foisy [52] Challenges and considerations involved in using AMSTAR in overviews of reviews. |

Article describing methods for overviews | • Describes differences in AMSTAR scores across Cochrane and non-Cochrane SRs, challenges in using AMSTAR to assess RoB of included SRs, and using the AMSTAR score as inclusion criterion | ✓✓ | ||

| Hartling [53] A descriptive analysis of overviews of reviews published between 2000 and 2011. |

Study examining methods used in a cohort of overviews | • Describes methodological standards for SRs (PRISMA, MECIR) and their applicability to overviews • Describes methods related to RoB of primary studies extracted from the SRs, RoB of the SRs, quantitative analysis and certainty of the evidence |

✓✓ | ✓ | ✓✓ |

| Hartling [54] Generating empirical evidence to support methods for overviews of reviews.a |

Study examining methods used in a cohort of overviews | • Identifies methodological issues when conducting overviews • Mentions RoB of SRs, limitations of existing tools and challenges in assessing the certainty of the evidence |

✓ | ✓ | |

| Hartling [55] Systematic reviews, overviews of reviews and comparative effectiveness reviews: a discussion of approaches to knowledge synthesis. |

Article describing methods for overviews | • Briefly defines overviews, mentions the purposes in conducting an overview, and discusses some methodological challenges | ✓ | ||

| Hemming [56] Pooling systematic reviews of systematic reviews: a Bayesian panoramic meta-analysis. |

Article describing methods for overviews | • Proposes a Bayesian method for meta-analysis (hierarchical meta-analysis) which uses uninformative priors as a means of pooling effect estimates in an overview | ✓✓ | ||

| Ioannidis [57] Integration of evidence from multiple meta-analyses: a primer on umbrella reviews, treatment networks and multiple treatments meta-analyses. |

Article describing methods for overviews | • Defines ‘umbrella reviews’ as a pre-step to network meta-analysis • Describes challenges of overviews • Describes some reporting items for overviews |

✓ | ||

| Jadad [29] A guide to interpreting discordant systematic reviews. |

Article describing methods for overviews | • Seminal paper summarising the potential sources of discordance in results in a cohort of MAs, and types of discordance • Presents an algorithm for interpreting discordant SR results |

✓ | ✓✓ | ✓✓ |

| James [58] Informing the methods for public health overview reviews: a descriptive analysis of Cochrane and non-Cochrane public health overviews a |

Study examining methods used in a cohort of overviews | • Briefly describes several steps in the conduct of overviews • Compares Cochrane and non-Cochrane reviews in terms of tool used for assessing methodological quality, and certainty of the evidence |

✓ | ✓ | |

| Joanna Briggs Institute (JBI) [39, 59] Methodology for JBI umbrella reviews. |

Guidance for undertaking overviews | • Provides guidance as to what methods should be used at which step in the conduct of an overview • Provides stylistic conventions for overviews to meet publication and reporting criteria for the JBI Database of Systematic Reviews and Implementation Reports |

✓✓ | ✓✓ | ✓ |

| Kovacs [60] Overviews should meet the methodological standards of systematic reviews. |

Commentary that discusses methods for overviews | • Mentions methodological challenges of overviews | ✓ | ✓ | |

| Kramer [61] Preparing an overview of reviews: lessons learned. a |

Article describing methods for overviews | • Mentions the challenges encountered when the authors conducted three overviews including dealing with heterogeneity | ✓ | ✓ | |

| Li [62] Quality and transparency of overviews of systematic reviews. |

Article describing methods for overviews | • Presents a pilot reporting/quality checklist • Evaluates a cohort of overviews using the pilot tool, with the mean number of items but no details of the items |

✓ | ||

| Moja [63] Multiple systematic reviews: methods for assessing discordances of results. |

Article describing methods for overviews | • Describes methods to assess discordant finding among MAs based on the Jadad 1997 tool [29] | ✓ | ✓✓ | |

| O’Mara [64] Guidelines for conducting and reporting reviews of reviews: dealing with topic relevances and double-counting.a |

Article describing methods for overviews | • Presents a ‘utility’ rating based on the SRs PICO compared to the overviews’ PICO question • Mentions graphical and tabular approaches to establish the extent of overlap in primary studies across SRs |

✓ | ||

| Pieper [3, 65] Overviews of reviews often have limited rigor: a systematic review. |

Study examining methods used in a cohort of overviews | • Describes the methods used in overviews • Recommends using validated search filters for retrieval of SRs • Discusses whether to update the overview by including primary studies published after the most recent SR |

✓ | ✓ | ✓ |

| Pieper [66] Methodological approaches in conducting overviews: current state in HTA agencies. |

Article describing methods for overviews | • Describes the methods recommended in 8 HTA guideline documents related to overviews • Compares the Cochrane Handbook guidance [67] to guidance produced by HTA agencies |

✓✓ | ✓ | ✓ |

| Pieper [68] Up-to-dateness of reviews is often neglected in overviews: a systematic review. |

Study examining methods used in a cohort of overviews | • Describes the process of searching for additional primary studies in an overview • Presents decision rules for when to search for additional primary studies • Describes sequential searching versus parallel searching for retrieving SRs and primary studies in an overview |

✓ | ✓✓ | |

| Pollock [31] An algorithm was developed to assign GRADE levels of evidence to comparisons within systematic reviews. |

Article describing methods for overviews | • Adapts GRADE guidance to assess the certainty of the evidence for a specific overview • Presents an algorithm to assess certainty of the evidence |

✓ | ✓✓ | |

| Robinson [24, 69–72] Integrating bodies of evidence: existing systematic reviews and primary studies. |

Article describing methods for overviews | • Describes the steps and methods to undertake a complex review that includes multiple SRs, which is similar to methods used in overviews • Describes methods for assessing risk of bias of primary studies in SRs, and methods to assess the certainty of the evidence |

✓✓ | ✓✓ | ✓✓ |

| Ryan [25] Building blocks for meta-synthesis: data integration tables for summarising, mapping, and synthesising evidence on interventions for communicating with health consumers. |

Article describing methods for overviews | • Presents tabular methods to deal with the preparation of overview evidence • Discusses the data extraction process and organisation of data • Presents a table of taxonomy of outcomes from the included SRs and a data extraction table based on this taxonomy |

✓✓ | ✓✓ | ✓✓ |

| Salanti [73] Evolution of Cochrane intervention reviews and overviews of reviews to better accommodate comparisons among multiple interventions. |

Guidance for undertaking overviews | • Defines overviews as integrating or synthesising (rather than summarising) evidence from SRs • Describes methods for synthesising multiple-intervention SRs |

✓ | ||

| Schmidt [74] Methods for second order meta-analysis and illustrative applications. |

Article describing methods for overviews | • Describes statistical methods for second-order meta-analysis • Provides examples of modelling between-MA variation |

✓✓ | ||

| Silva [75] Overview of systematic reviews—a new type of study. Part II |

Study examining methods used in a cohort of overviews | • Examines a cohort of Cochrane reviews for methods used • Documented the sources and types of search strategies conducted |

✓ | ✓✓ | |

| Singh [76] Development of the Metareview Assessment of Reporting Quality (MARQ) Checklist. |

Article describing methods for overviews | • Presents a pilot reporting/quality checklist • Evaluates four case studies using the pilot tool, with the mean number of items but no details of the items |

✓✓ | ✓ | |

| Smith [77] Methodology in conducting a systematic review of systematic reviews of healthcare interventions. |

Article describing methods for overviews | • Describes some steps and challenges in undertaking an overview, namely eligibility criteria, search methods, and RoB/quality assessment • Presents tabular methods for presentation of results in an overview |

✓✓ | ✓✓ | ✓✓ |

| Tang [78] A statistical method for synthesizing meta-analyses. |

Article describing methods for overviews | • Describes statistical methods for second-order MA with examples | ✓✓ | ||

| Thomson [26] The evolution of a new publication type: steps and challenges of producing overviews of reviews. |

Article describing methods for overviews | • Describes some steps in undertaking an overview and some of the challenges in conducting an overview • Discusses that gaps or lack of currency in included evidence will weaken the overview findings |

✓✓ | ✓✓ | ✓✓ |

| Thomson [79] Overview of reviews in child health: evidence synthesis and the knowledge base for a specific population. |

Study examining methods used in a cohort of overviews | • Describes the process of including trials in overviews • Discusses the challenge of overview PICO differing from the PICO of the included SRs • Provides potential solutions as to what to do when mixed populations are reported in SRs and how to extract subgroup data |

✓ | ||

| Wagner [80] Assessing a systematic review of systematic reviews: developing a criteria. |

Article describing methods for overviews | • Presents a quality assessment tool for appraisal of overviews | ✓ | ✓ | |

AHRQ’s EPC Agency for Healthcare Research and Quality’s Evidence-based Practice Center; AMSTAR A Measurement Tool to Assess Systematic Reviews; CMIMG Comparing Multiple Interventions Methods Group; GRADE Grading of Recommendations Assessment, Development, and Evaluation; HTA health technology assessment; JBI Joanna Briggs Institute; MA meta-analysis; MECIR Methodological Expectations of Cochrane Intervention Reviews; PICO Population, Intervention, Comparison, Outcome; PRISMA Preferred Reporting Items for Systematic Reviews and Meta-Analyses; RoB risk of bias; R-AMSTAR revised AMSTAR; SR systematic review

a Indicates a poster presentation

✓✓ Indicates a study describing one or more methods

✓ Indicates a study mentioning one or more methods

We focus our description on methods/options that are distinct; have added complexity, compared with SRs of primary studies; or have been proposed to deal with major challenges in undertaking an overview. Importantly, the methods/approaches and options reflect the ideas presented in the literature and should not be interpreted as endorsement for the use of the methods. We also highlight methods that may be considered for dealing with commonly encountered scenarios for which overview authors need to plan (see ‘Addressing common scenarios unique to overviews’; Table 6).

Table 6.

Methods and approaches for addressing common scenarios unique to overviews

| Scenario for which authors need to plan | Methods/approaches proposed in the literaturea | |||

|---|---|---|---|---|

| Assessment of RoB in SRs and primary studies (Table 3) | Synthesis, presentation and summary of the findings (Table 4) | Assessment of certainty of the evidence (Table 5) | ||

| 1 | Reviews include overlapping information and data (e.g. arising from inclusion of the same primary studies) | 2.1.1 | 1.1.2, 5.0 | 1.1.1–1.1.5 |

| 2 | Reviews report discrepant information and data | 2.1.1, 2.1.2, 2.1.3 | 2.2.1, 2.2.5 | 1.1.1–1.1.5 |

| 3 | Data are missing or reviews report varying information (e.g. information on risk of bias is missing or varies across primary studies because reviews use different tools) | 2.1.1, 2.1.3 | 1.2.9, 2.2.1, 2.2.5 | 1.1.1–1.1.5 |

| 4 | Reviews provide incomplete coverage of the overview question (e.g. missing comparisons, populations) | 2.2.1, 2.2.4 | 1.1.1, 1.1.2, 1.1.5 | |

| 5 | Reviews are not up-to-date | 2.2.2 | 1.1.1, 1.1.2 | |

| 6 | Review methods raise concerns about bias or quality | 2.1.1, 2.1.2, 2.2.3 | 2.2.3, 2.2.5, 4.0 | 1.1.1–1.1.5 |

| 7 | Reviews report discordant results and conclusions | 2.2.7, 6.0 | 1.1.1–1.1.5 | |

a The methods/approaches could be used in combination and at several steps in the conduct of an overview. When one approach is taken, another approach may not apply

Characteristics of stage I articles

The characteristics and the extent to which articles (n = 42) described methods pertaining to the latter steps in conducting an overview are indicated in Table 2. The majority of articles were published as full reports (n = 34/42; 81%). The most common type of study was an article describing methods for overviews (n = 26/42; 62%), followed by studies examining methods used in a cohort of overviews (n = 11/42; 26%), guidance documents (n = 4/42; 10%) and commentaries and editorials (n = 1/42; 2%).
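The percentages reported above follow directly from the counts. As a minimal sketch (the dictionary keys and the helper name `percent` are our own labels), they can be reproduced as follows:

```python
TOTAL_ARTICLES = 42

# Counts by type of study, as reported in the text (keys are illustrative labels).
counts_by_type = {
    "article describing methods for overviews": 26,
    "study examining methods used in a cohort of overviews": 11,
    "guidance document": 4,
    "commentary or editorial": 1,
}


def percent(n, total):
    """Percentage rounded to the nearest whole number."""
    return round(100 * n / total)


# Every stage I article should fall into exactly one type.
assert sum(counts_by_type.values()) == TOTAL_ARTICLES

percentages = {label: percent(n, TOTAL_ARTICLES)
               for label, n in counts_by_type.items()}
full_reports_pct = percent(34, TOTAL_ARTICLES)

print(percentages)
print(full_reports_pct)
```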

Methods for the assessment of risk of bias in SRs and primary studies were most commonly mentioned or described (n = 33), followed by methods for synthesis, presentation and summary of the findings (n = 30), and methods for the assessment of certainty of the evidence in overviews (n = 24). Few articles described methods across all of the latter steps in conducting an overview (n = 6 [1, 4, 6, 24–26]).

Assessment of risk of bias in SRs and primary studies

The three steps in the framework under ‘assessment of risk of bias in SRs and primary studies’ were ‘plan to assess risk of bias (RoB) in the included SRs (1.0)’, ‘plan how the RoB of the primary studies will be assessed or re-assessed (2.0)’ and ‘plan the process for assessing RoB (3.0)’ (Table 3). Note that in the following we use the terminology ‘risk of bias’, rather than quality, since assessment of SR or primary study limitations should focus on the potential of those methods to bias findings. However, the terms quality assessment and critical appraisal are common, particularly when referring to the assessment of SR methods, and hence, our analysis includes all relevant literature irrespective of terminology. We now highlight methods/approaches and options for the first two steps since these involve decisions unique to overviews.

Table 3.

Assessment of risk of bias in SRs and primary studies

| Step Sub-step Methods/approaches | Sources (first author, year) ▪ Examples |

|---|---|

| 1.0 Plan to assess risk of bias (RoB) in the included SRs§ | |

| 1.1 Determine how to assess RoB in the included SRs | |

| 1.1.1 Select an existing RoB assessment tool for SRs | Baker 2014 [43]; Becker 2008 [4]; Bolland 2014 [5]; Büchter 2011 [45, 65]; Caird 2015 [1]; Chen 2014 [46]; CMIMG 2012 [47]; Cooper 2012 [6]; Flodgren 2011 [49]; Foisy 2011 [50]; Foisy 2014 [52]; Hartling 2012 [53]; Hartling 2013 [54]; Jadad 1997 [29]; James 2014 [58]; JBI 2014 [39, 59]; Kovacs 2014 [60]; Kramer 2009 [61]; Li 2012 [62]; Pieper 2012 [3]; Pieper 2014c [66]; Pieper 2014d [68]; Pieper 2014a [17]; Robinson 2015 [24, 69–72]; Ryan 2009 [25]; Silva 2014 [75]; Singh 2012 [76]; Smith 2011 [77]; Thomson 2010 [26]; Whiting 2013 [12] |

| 1.1.2 Adapt an existing RoB tool (e.g. selecting or modifying items for the overview) | CMIMG 2012 [47]; Hartling 2012 [53]; Jadad 1997 [29]; Pollock 2015 [31] ▪ Pollock 2015 assessed 4 (of 11) AMSTAR items thought to be the most important sources of bias, and developed sub-questions for each [31] ▪ Reporting selected items/domains modifies the tool, since some items/domains are ignored [53] |

| 1.1.3 Develop a RoB tool customised to the overview | CMIMG 2012 [47]; Cooper 2012 [6]; Pieper 2012 [3]; Pieper 2014a [17] |

| 1.1.4 Use existing RoB assessments | Baker 2014 [43] ▪ Use quality assessments of SRs published by Health EvidenceTM [27] or Health Systems Evidence [81] |

| 1.1.5 Describe characteristics of included SRs that may be associated with bias or quality without using or developing a tool | Pieper 2014a [17]; Robinson 2015 [24, 69–72] |

| 1.2 Determine how to summarise or score the RoB assessments for SRs | |

| 1.2.1 Report assessment for individual items or domains (with or without rationale for judgements) | Hartling 2012 [53] |

| 1.2.2 Summarise assessments across items or domains by using a scoring system§§ | JBI 2014 [39, 59]; Pieper 2014a [17]; Robinson 2015 [24, 69–72]; Whiting 2013 [12]; Ryan 2009 [25]; Silva 2014 [75] ▪ Sum items, assigning equal or unequal weight to each (JBI 2014 [39, 59]) ▪ Calculate the mean score across items (JBI 2014 [39, 59]) |

| 1.2.3 Summarise assessments across items or domains, then use cut-off scores or thresholds to categorise RoB using qualitative descriptors (e.g. low, moderate or high quality)§§ | Crick 2015 [48]; JBI 2014 [39, 59]; Robinson 2015 [24, 69–72]; Ryan 2009 [25]; Silva 2014 [75]; Singh 2012 [76] ▪ Pollock 2015 [31] set cut-offs for rating an SR as having no serious limitations (‘yes’ response to 4/4 AMSTAR items), serious limitations (‘yes’ to 3/4 items and 1 ‘unclear’), or very serious limitations (‘yes’ to < 3/4) ▪ SRs that score ≤ 3/10 on the AMSTAR scale might be considered low quality, 4–6/10 moderate quality, and 7–10/10 high quality (JBI 2014 [39, 59]) ▪ All domains/items required (i.e. every domain/item must be met for the SR to be deemed low RoB) |

| 1.3 Determine how to present the RoB assessments for SRs | |

| 1.3.1 Display assessments in table(s) (e.g. overall rating in summary of findings table, and another table with RoB items for each SR) | Aromataris 2015 [39, 59]; Becker 2008 [4]; Chen 2014 [46]; Hartling 2012 [53]; Smith 2011 [77] |

| 1.3.2 Display assessments graphically | Crick 2015 [48] ▪ ROBIS RoB graph depicting authors’ judgments about each domain presented as percentages across all included SRs [15] ▪ Harvest plot, which depicts results according to study size and quality ([48]) |

| 1.3.3 Report assessments in text | Aromataris 2015 [39, 59]; Chen 2014 [46]; Hartling 2012 [53]; Li 2012 [62] |

| 2.0 Plan how the RoB of primary studies will be assessed or re-assessed | |

| 2.1 Determine how to assess the RoB of the primary studies in the included SRs (and any additional primary studies) | |

| 2.1.1 Report RoB assessment of primary studies from the included SRs, using the approaches specified for data extraction to deal with missing or flawed assessments, or discrepant/discordant assessments of the same primary study (i.e. where two or more SRs assess the same study using different tools or report discordant judgements using the same tool) (See ‘Data extraction’ table in [10]). | Aromataris 2015 [39, 59]; Becker 2008 [4]; Caird 2015 [1]; CMIMG 2012 [47]; Cooper 2012 [6]; Hartling 2012 [53]; Hartling 2014 [55]; Jadad 1997 [29]; Ioannidis 2009 [57]; Kramer 2009 [61]; Ryan 2009 [25]; Singh 2012 [76]; Thomson 2010 [26] ▪ Report RoB assessments of primary studies from the included SR(s), noting missing data and discrepancies (Hartling 2012 [53]; JBI 2014 [39]; Robinson 2015 [24, 69–72]) ▪ Report RoB assessments from the highest quality SR (Jadad 1997 [29]) |

| 2.1.2 Report RoB assessment of primary studies from the included SRs after performing quality checks to verify that the assessment method has been applied appropriately and consistently across a sample of primary studies | Becker 2008 [4]; Hartling 2014 [55]; Ioannidis 2009 [57]; Jadad 1997 [29]; Kramer 2009 [61]; Moja 2012 [63]; Robinson 2015 [24, 69–72]; Thomson 2010 [26] ▪ Randomly sample a number of included RCTs, retrieve data from the original trial reports, and independently check 10% of RCT data from the included MAs to verify assessments were done without error and consistently ▪ Repeat RoB assessments on a sample of SRs to verify and check for consistency (Robinson 2015 [24, 69–72]) |

| 2.1.3 (Re)-assess RoB of some or all primary studiesa | CMIMG 2012 [47]; Cooper 2012 [6]; Hartling 2012 [53]; Jadad 1997 [29]; Moja 2012 [63]; Thomson 2010 [26] ▪ When two different tools are used, then assess the primary studies using one tool ▪ When two different tools are used (e.g. Cochrane RoB tool [67] and Jadad tool [29]), re-assess RoB by standardising the assessments based on the Cochrane RoB domains, and match data from assessments from other tools to these domains |

| 2.1.4 Don’t report or assess RoB of primary studies | Inferred |

| 2.2 Determine how to summarise the RoB assessments for primary studies | |

| 2.2.1 Report assessment for individual items or domains (with or without rationale for judgements)a | JBI 2014 [39, 59]; Pieper 2014c [66]; Robinson 2015 [24, 69–72]; Ryan 2009 [25]; Silva 2014 [75] |

| 2.2.2 Summarise assessments across items or domains by using a scoring system§§ | JBI 2014 [39, 59]; Pieper 2014c [66]; Robinson 2015 [24, 69–72]; Ryan 2009 [25]; Silva 2014 [75] ▪ Sum items, assigning equal or unequal weight to each (JBI 2014 [39, 59]) ▪ Calculate the mean score across items (JBI 2014 [39, 59]) |

| 2.2.3 Summarise assessments across items or domains, then use cut-off scores or thresholds to describe RoB (e.g. low, moderate and high quality)§§ | JBI 2014 [39, 59]; Robinson 2015 [24, 69–72]; Ryan 2009 [25]; Silva 2014 [75]; Singh 2012 [76] |

| 2.3 Determine how to present the RoB assessments for primary studies | |

| 2.3.1 Display assessments in table(s) (e.g. overall rating in summary of findings table, and another table with RoB items for each primary study)<sup>a</sup> | Aromataris 2015 [39, 59]; Becker 2008 [4]; Chen 2014 [46]; Hartling 2012 [53]; Smith 2011 [77]; JBI 2014 [39, 59] |

| 2.3.2 Display assessments graphically<sup>a</sup> | Crick 2015 [48] ▪ Cochrane RoB graph depicting authors’ judgments about each domain presented as percentages across all included SRs [67] ▪ Harvest plot, which depicts results according to study size and quality [48] |

| 2.3.3 Report assessments in text<sup>a</sup> | Aromataris 2015 [39, 59]; Chen 2014 [46]; Hartling 2012 [53]; Li 2012 [62]; Smith 2011 [77] |

| 3.0 Plan the process for assessing RoB | |

| 3.1 Determine the number of overview authors required to assess studies<sup>a</sup> | |

| 3.1.1 Independent assessment by 2 or more authors | Baker 2014 [43]; Becker 2008 [4]; Cooper 2012 [6]; JBI 2014 [39]; Li 2012 [62]; Ryan 2009 [25] |

| 3.1.2 One author assessment | Inferred |

| 3.1.3 Assessment by one author, confirmed by a second author | Cooper 2012 [6] |

| 3.1.4 Assessment by one author, confirmed by a second author only where there is uncertainty | Cooper 2012 [6] |

| 3.2 Determine if authors (co-)authored one or several of the SRs included in the overview, and if yes, plan safeguards to avoid bias in RoB assessment | Büchter 2011 [45, 65] ▪ Assessment of RoB of included SRs done by overview authors who were not authors of the SRs |

AMSTAR: A Measurement Tool to Assess Systematic Reviews; CMIMG: Comparing Multiple Interventions Methods Group; JBI: Joanna Briggs Institute; OQAQ: Overview Quality Assessment Questionnaire; RoB: risk of bias; ROBIS: Risk of Bias In Systematic reviews; SRs: systematic reviews

§We refer to ‘risk of bias’ assessment, since assessment of SR or primary study limitations should focus on the potential of those methods to bias findings. However, the terms quality assessment and critical appraisal are common, particularly when referring to the assessment of SR methods, and hence our analysis includes all relevant literature irrespective of terminology

§§As is the case with assessment of RoB in primary studies, concerns have been raised about the validity of presenting a summary score or qualitative descriptors based on scores (e.g. low, moderate, high quality) [12, 17]

<sup>a</sup> Adaptation of the step from SRs to overviews. No methods evaluation required, but special consideration needs to be given to unique issues that arise in conducting overviews

When determining how to assess the RoB in SRs (1.1), identified approaches included the following: selecting or adapting an existing RoB assessment tool for SRs (1.1.1, 1.1.2), developing a RoB tool customised to the overview (1.1.3), using an existing RoB assessment such as those published in Health Evidence™ [27] (1.1.4) or describing the characteristics of included SRs that may be associated with bias or quality without using or developing a tool (1.1.5). More than 40 tools have been identified for appraisal of SRs [12], only one of which is described as a risk of bias tool: ROBIS, the Risk of Bias In Systematic reviews tool [15]. Other tools are described as being for critical appraisal or quality assessment. Studies have identified AMSTAR [22, 23] and the OQAQ (Overview Quality Assessment Questionnaire [28]) as the most commonly used tools in overviews [3, 12]. Methods for summarising and presenting RoB assessments mirror those used in a SR of primary studies (1.2, 1.3).

Authors must also decide on how to assess the RoB of primary studies included within SRs (2.0). Two main approaches were identified: to either report the RoB assessments from the included SRs (2.1.1) or to independently assess RoB of the primary studies (2.1.3) (only the latter option applies when additional primary studies are retrieved to update or fill gaps in the coverage of existing SRs). When using the first approach, overview authors may also perform quality checks to verify that assessments were done consistently and without error (2.1.2). In attempting to report RoB assessments from included SRs, overview authors may encounter missing data (e.g. incomplete reporting of assessments) or assessments that are flawed (e.g. using problematic tools). In addition, discrepancies in RoB assessments may be found when two or more SRs report an assessment of the same primary study but use different RoB tools or report discordant judgements for items or domains using the same tool. We identified multiple methods for dealing with these scenarios, most of which are applied at the data extraction stage (covered in Paper 1 [10]). Options varied according to the specific scenario, but included the following: (a) extracting all assessments, recording discrepancies; (b) extracting from one SR based on a priori criteria; (c) extracting data elements from the SR that meets pre-specified decision rules and (d) retrieving primary studies to extract missing data or reconcile discrepancies [10].
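As an illustration of option (a), the following minimal Python sketch flags primary studies whose RoB assessments differ across SRs, either because the SRs used different tools or because they report different judgements for the same domain. The record layout, the example data and the function name `find_discrepancies` are hypothetical and are not drawn from any of the included studies.

```python
from collections import defaultdict

# Hypothetical extraction records:
# (SR id, primary study id, RoB tool, item or domain, judgement)
records = [
    ("SR1", "Trial A", "Cochrane RoB", "allocation concealment", "low"),
    ("SR2", "Trial A", "Cochrane RoB", "allocation concealment", "unclear"),
    ("SR1", "Trial B", "Cochrane RoB", "allocation concealment", "low"),
    ("SR2", "Trial B", "Jadad", "randomisation", "adequate"),
    ("SR3", "Trial C", "Cochrane RoB", "allocation concealment", "high"),
]


def find_discrepancies(records):
    """Return {primary study: reason} for studies assessed differently across SRs."""
    by_study = defaultdict(list)
    for sr, study, tool, domain, judgement in records:
        by_study[study].append((sr, tool, domain, judgement))

    flagged = {}
    for study, rows in by_study.items():
        srs = {sr for sr, _, _, _ in rows}
        if len(srs) < 2:
            continue  # assessed in a single SR, so nothing to compare

        tools = {tool for _, tool, _, _ in rows}
        if len(tools) > 1:
            flagged[study] = "different tools"
            continue

        # Same tool throughout: compare the judgements reported per domain.
        judgements_by_domain = defaultdict(set)
        for _, _, domain, judgement in rows:
            judgements_by_domain[domain].add(judgement)
        if any(len(j) > 1 for j in judgements_by_domain.values()):
            flagged[study] = "discordant judgements"
    return flagged


print(find_discrepancies(records))
```

A record of this kind only identifies the discrepancies; deciding how to resolve them (options (b) to (d)) remains a methodological judgement for the overview authors.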

Synthesis, presentation and summary of the findings

The six steps in the framework under ‘synthesis, presentation and summary of the findings’ were ‘plan the approach to summarising the SR results (1.0)’, ‘plan the approach to quantitatively synthesising the SR results (2.0)’, ‘plan to assess heterogeneity (3.0)’, ‘plan the assessment of reporting biases (4.0)’, ‘plan how to deal with overlap of primary studies included in more than one SR (5.0)’, and ‘plan how to deal with discordant results, interpretations and conclusions of SRs (6.0)’ (Table 4). As a note on terminology, we distinguish between discrepant data, meaning data from the same primary study that differ across SRs because of errors in data extraction, and discordant results, interpretations and conclusions of SRs, meaning differences in the results and conclusions of SRs that arise from the methodological decisions authors make or from different interpretations or judgements about the results.

An identified step of relevance to all overviews is determining the summary approach (1.2). This includes determining what data will be extracted and summarised from SRs and primary studies (e.g. characteristics of the included SRs (1.2.1), results of the included SRs (1.2.2), results of the included primary studies (1.2.3), RoB assessments of SRs and primary studies (1.2.4)) and what graphical approaches might be used to present the results (1.3). In overviews that include multiple SRs reporting results for the same population, comparison and outcome, criteria need to be determined as to whether all SR results/MAs are reported (1.1.1), or only a subset (1.1.2). When the former approach is chosen (1.1.1), methods for dealing with overlap of primary studies across SR results need to be considered (5.0), such as acknowledging (5.3.4), statistically quantifying (5.1) and visually examining and depicting the overlap (5.2). Choice of a subset of SR/MAs (1.1.2) may bring about simplicity in terms of summarising the SR results (since there will only be one or a few SRs included), but may lead to a loss of potentially important information through the exclusion of studies that are not overlapping with the selected SR result(s).
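To make the idea of statistically quantifying overlap (5.1) concrete, the sketch below computes the corrected covered area (CCA), one measure proposed in the overview-methods literature. The text above does not name a specific measure, so the choice of CCA is an assumption made for illustration, as are the function name and the example data. With N the total number of study inclusions across SRs (counting repeats), r the number of unique primary studies and c the number of SRs, CCA = (N − r) / (rc − r).

```python
def corrected_covered_area(inclusion):
    """Corrected covered area for a mapping of SR id -> set of primary study ids."""
    c = len(inclusion)                                # number of SRs
    unique_studies = set().union(*inclusion.values())
    r = len(unique_studies)                           # unique primary studies
    n = sum(len(studies) for studies in inclusion.values())  # inclusions, with repeats
    if c < 2 or r == 0:
        raise ValueError("need at least two SRs and at least one primary study")
    return (n - r) / (r * c - r)


# Hypothetical example: three SRs that share some primary studies.
example = {
    "SR1": {"Trial A", "Trial B", "Trial C"},
    "SR2": {"Trial B", "Trial C", "Trial D"},
    "SR3": {"Trial C", "Trial E"},
}

cca = corrected_covered_area(example)
print(f"CCA = {cca:.2f}")  # (8 - 5) / (5 * 3 - 5) = 0.30
```

A value of 0 indicates that no primary study appears in more than one SR, and a value of 1 indicates that every SR includes every primary study.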

A related issue is that of discordance (6.0). Some overviews aim to compare results, conclusions and interpretations across a set of SRs that address similar questions. These overviews typically address a focused clinical question (e.g. comparing only two interventions for a specific condition and population). Identified methods included approaches to examine and record discordance (6.1.1) and the use of tools (e.g. Jadad [29]) or decision rules to aid in the selection of one SR/MA (6.1.2).

In addition to determining the summary approach of SR results, consideration may also be given to undertaking a new quantitative synthesis of SR results (2.0). A range of triggers that may lead to a new quantitative synthesis were identified (2.2) (e.g. incorporation of additional primary studies (2.2.2), need to use new or more appropriate meta-analysis methods (2.2.3), concerns regarding the trustworthiness of the SR/MA results (2.2.5)). When undertaking a new meta-analysis in an overview, a decision that is unique to overviews is whether to undertake a first-order meta-analysis of effect estimates from primary studies (2.3.1), or a second-order meta-analysis of meta-analysis effect estimates from the SRs (2.3.2). If undertaking a second-order meta-analysis, methods may be required for dealing with primary studies contributing data to multiple meta-analyses (5.3.2). A second-order subgroup analysis was identified as a potential method for investigating whether characteristics at the level of the meta-analysis (e.g. SR quality) modify the magnitude of intervention effect (3.3.2). If new meta-analyses are undertaken, decisions regarding the model and estimation method are required (2.5, 3.4).
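The arithmetic of a second-order meta-analysis (2.3.2) can be sketched as follows, assuming a fixed-effect inverse-variance model applied to the pooled estimates reported by each SR. The model choice, the function name and the example values are assumptions made for illustration and are not taken from the included studies.

```python
import math


def inverse_variance_pool(estimates, standard_errors):
    """Fixed-effect inverse-variance pooling of effect estimates.

    In a second-order meta-analysis the inputs are the meta-analytic
    estimates (e.g. log risk ratios) and standard errors reported by each SR.
    """
    weights = [1.0 / se ** 2 for se in standard_errors]
    total_weight = sum(weights)
    pooled = sum(w * y for w, y in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical log risk ratios and standard errors from two meta-analyses.
pooled, pooled_se = inverse_variance_pool([-0.2, -0.4], [0.1, 0.2])
print(f"pooled = {pooled:.3f}, SE = {pooled_se:.3f}")  # pooled = -0.240, SE = 0.089
```

This calculation treats the meta-analyses as independent. When the same primary studies contribute to more than one meta-analysis, that assumption is violated and the pooled standard error will be too small, which is why methods for handling overlap (5.3.2) may be needed.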

Investigation of reporting biases may be done through summarising the reported investigations of reporting biases in the constituent SRs (1.2.6), or through new investigations (4.0). Overviews also provide an opportunity to identify missing primary studies through non-statistical approaches (4.2), such as comparing the included studies across SRs. An additional consideration in overviews is investigation of missing SRs. Identified non-statistical approaches to identify missing SRs included searching SR registries and protocols (4.1).

Assessment of the certainty of the evidence arising from the overview

The two steps in the framework under ‘assessment of the certainty of the evidence arising from the overview’ are as follows: ‘plan to assess certainty of the evidence (1.0)’ and ‘plan the process for assessing the certainty of the evidence (2.0)’ (Table 5). GRADE is the most widely used method for assessing the certainty of evidence in a systematic review of primary studies. The methods involve assessing study limitations (RoB, imprecision, inconsistency, indirectness, and publication bias) to provide an overall rating of the certainty of (or confidence in) results for each comparison [30]. In an overview, planning how to assess certainty (1.1) involves additional considerations. These include deciding how to account for limitations of the included SRs (e.g. bias arising from the SR process, whether SRs directly address the overview question) and how to deal with missing or discordant data needed to assess certainty (e.g. non-reporting of heterogeneity statistics needed to assess consistency, SRs that report conflicting RoB assessments for the same study). One approach is to assess certainty of the evidence using a method designed for overviews (1.1.1). However, GRADE methods (or equivalent) have not yet been adapted for overviews and guidance on addressing these issues is not available. In the absence of agreed guidance for overviews, another option is to assess the certainty of the evidence using an ad hoc method (1.1.2). For example, Pollock 2015 incorporated the limitations of included SRs in their GRADE assessment by rating down the certainty of evidence for SRs that did not meet criteria deemed to indicate important sources of bias [31, 32].

Table 5.

Assessment of the certainty of the evidence arising from the overview

| Step / Sub-step / Methods or approaches | Sources (first author, year) ▪ Examples |

|---|---|

| 1.0 Plan to assess certainty of the evidence | |

| 1.1 Determine how to assess the certainty of the evidence | |

| 1.1.1 Assess the certainty of the evidence using a method developed for use in overviews | Wagner 2012 [80] ▪ Wagner 2012 [80] report an approach to assigning levels of evidence in an overview based on the number and quality of included SRs (primary studies were not considered). |

| 1.1.2 Assess the certainty of the evidence using an ad hoc method developed for a specific overview | Bolland 2014 [5]; Cooper 2012 [6]; Crick 2015 [48]; Hartling 2012 [53]; Pollock 2015 [31]; Ryan 2009 [25]; Thomson 2010 [26]; Wagner 2012 [80] ▪ Pollock 2015 [31] adapted GRADE methods for their overview, incorporating an additional domain to account for potential bias arising from the methods used in included SRs. Decision rules were used to ensure consistent grading of domains deemed important to their overview question; these did not specifically address considerations unique to overviews |

| 1.1.3 Report assessments of certainty of the evidence from the included SRs, using the approaches specified for data extraction to deal with missing data, flawed or discordant assessments (e.g. where two SRs use different methods to assess certainty of the evidence or report discordant assessments using the same method) (see ‘Data extraction’ table in [10]). | Becker 2008 [4]; Cooper 2012 [6]; Hartling 2012 [53]; Hartling 2014 [55]; JBI 2014 [39, 59]; Kramer 2009 [61]; Pieper 2014c [66]; Robinson 2015 [24, 69–72]; Ryan 2009 [25]; Silva 2014 [75] ▪ Report assessments of the certainty of the evidence for each comparison and outcome directly from the included SRs, irrespective of the method used, noting missing data and discrepancies (Hartling 2012 [53]; JBI 2014 [39]; Robinson 2015 [24, 69–72]) ▪ Report the certainty of the evidence data from the Cochrane review with the most comprehensive assessment |