ABSTRACT

Background

The Accreditation Council for Graduate Medical Education Clinical Learning Environment Review recommends that quality improvement/patient safety (QI/PS) experts, program faculty, and trainees collectively develop QI/PS education.

Objective

Faculty, hospital leaders, and resident and fellow champions at the University of Chicago designed an interdepartmental curriculum to train postgraduate year 1 (PGY-1) residents on core QI/PS principles, measuring outcomes of knowledge, attitudes, and event reporting.

Methods

The curriculum consisted of 3 sessions: PS, quality assessment, and QI. Faculty and resident and fellow leaders taught foundational knowledge, and hospital leaders discussed institutional priorities. PGY-1 residents attended during protected conference times, and they completed in-class activities. Knowledge and attitudes were assessed using pretests and posttests; graduating residents (PGY-3–PGY-8) were controls. Event reporting was compared to a concurrent control group of nonparticipating PGY-1 residents.

Results

From 2015 to 2017, 140 interns in internal medicine (49%), pediatrics (33%), and surgery (13%) enrolled, with 112 (80%) participating and completing pretests and posttests. Overall, knowledge scores improved (44% versus 57%, P < .001), and 72% of residents demonstrated increased knowledge. Confidence comprehending quality dashboards increased (13% versus 49%, P < .001). PGY-1 posttest responses were similar to those of 252 graduate controls for accessibility of hospital leaders, filing event reports, and quality dashboards. PGY-1 residents in the QI/PS curriculum reported more patient safety events than PGY-1 residents not exposed to the curriculum (0.39 events per trainee versus 0.10, P < .001).

Conclusions

An interdepartmental curriculum was acceptable to residents and feasible across 3 specialties, and it was associated with increased event reporting by participating PGY-1 residents.

What was known and gap

Programs struggle with providing quality improvement (QI) and patient safety (PS) education to residents that also links them to institutional quality and safety priorities and initiatives.

What is new

An interdepartmental QI/PS curriculum for postgraduate year 1 (PGY-1) residents codesigned by faculty, hospital leaders, and resident and fellow champions, with the measurement of knowledge, attitudes, and adverse event reporting.

Limitations

Single institution study; assessment instrument lacks validity evidence.

Bottom line

The QI/PS curriculum was acceptable to residents, feasible in the 3 specialties, and associated with increased event reporting by PGY-1 residents.

Introduction

Training residents to provide safe and effective care is a priority for the Accreditation Council for Graduate Medical Education1,2 and a primary goal of the Clinical Learning Environment Review (CLER).3–6 Residency training programs across the country are implementing quality improvement/patient safety (QI/PS) curricula in keeping with CLER guidance that recommends this education be “developed collaboratively by QI/PS officers, trainees, faculty, and staff.”7

Although QI/PS educational content is relevant to trainees in all specialties, few institutions have described interdepartmental collaboration or graduate medical education (GME) resources in QI/PS.8,9 A systematic review of 41 published QI/PS curricula found that most are designed for residents in a single specialty program.10 The 2016 CLER National Report of Findings further highlighted that GME efforts are often independent of other areas of strategic planning and focus within organizations.7,11 Thus, an opportunity exists to expand QI/PS curricula across departments, simultaneously pooling institutional resources and meeting CLER requirements.

Most residents report having formal training in PS but limited knowledge of it, and few report having a working knowledge of QI, according to a comprehensive review of nearly 300 sponsoring institutions.12 This may reflect a lack of trainee involvement in the development of education programs. It also emphasizes the importance of creating curricula that are clinically relevant and aligned with institutional QI/PS goals.

At the University of Chicago, a group of educators from the internal medicine (IM), surgery, and pediatrics residency programs partnered with hospital leaders (chief medical officer, chief quality officer, vice president and director of patient safety and risk management), residents and fellows, and GME staff to design and deliver an interdepartmental GME quality and safety curriculum (QSC) for postgraduate year 1 (PGY-1) residents. The aims were to build residents' foundational knowledge of core principles of QI/PS and engage learners in institutional QI/PS priorities and goals.

Methods

Participants were categorical interns in the University of Chicago's IM, surgery, pediatrics, and IM-pediatrics residency programs from 2015 to 2017. Controls were PGY-3 to PGY-8 residents who completed training at the University of Chicago in June 2017 in all specialties.

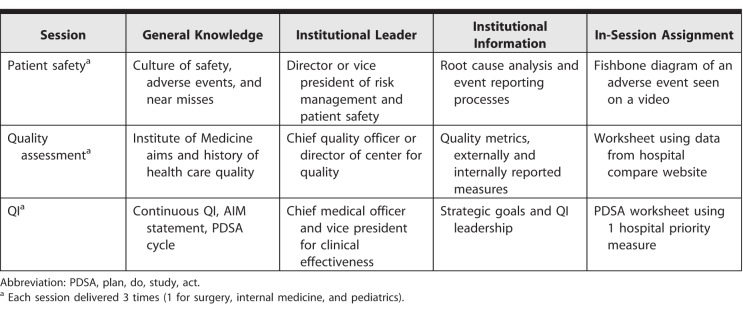

A core group of 5 QI/PS educators (core educators) designed the GME QSC (2 IM faculty, 1 surgery faculty, 1 surgery resident, 1 pediatrics fellow). Hospital leaders were invited to participate based on institutional roles. We focused on 2 CLER pathways: health quality education and patient safety education.8 We developed three 1-hour sessions in PS, quality assessment, and QI (Table 1). In each session, a core educator taught general QI/PS concepts, an institutional leader presented hospital data and initiatives tailored to the PGY-1 audience, and both facilitated the interactive group activity (syllabus provided as online supplemental material). Residency program directors approved the content and format. GME staff scheduled sessions, managed online content, administered tests, analyzed results, and provided ongoing communication.

Table 1.

Session Outline for Quality Improvement (QI) and Patient Safety Curriculum for Postgraduate Year 1 Residents

Lessons were scheduled during programs' protected conference time and were videotaped. A preexisting online portal (MedHub.com) was used to post videos and in-class assignments for residents who could not attend in person. Core educators worked with program directors and chief residents to ensure resident participation.

The study received Institutional Review Board exemption.

Program directors, core educators, and GME staff agreed on requirements for resident participation. Participation was monitored via attendance records and completion of in-class worksheets. Each resident completed 1 worksheet per session.

Participants were asked to complete an online assessment prior to the first lesson (pretest) and following the third lesson (posttest is provided as online supplemental material). The pretest included questions regarding demographics and prior QI/PS education. The posttest included an evaluation of the curriculum and could be filled out 1 day to 3 months after the last session.

Both tests included 23 questions on foundational and institution-specific QI/PS knowledge, grouped by topic and linked to learning objectives. Test questions were modified from QI/PS instruments with validity evidence for use with residents.13,14 We did not conduct further validity testing. Pretest and posttest knowledge scores were paired by resident, and a paired Wilcoxon signed rank test was used to quantify differences.
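The paired comparison described above can be illustrated with a minimal Python sketch of a Wilcoxon signed rank test. It is not the authors' analysis code (the study used R), the scores are hypothetical, and the function names are illustrative. The sketch uses a normal approximation with a tie correction and no continuity correction, so its P values may differ slightly from those produced by a statistical package.

```python
import math


def average_ranks(values):
    """Rank values in ascending order; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the full run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank(pre, post):
    """Return (w_plus, w_minus, z, two_sided_p) for paired scores."""
    # Pairs with no change carry no sign information and are dropped.
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    mean = n * (n + 1) / 4
    # Tie correction: subtract sum(t^3 - t) / 48 over groups of tied ranks.
    tie_counts = {}
    for r in ranks:
        tie_counts[r] = tie_counts.get(r, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_counts.values()) / 48
    variance = n * (n + 1) * (2 * n + 1) / 24 - tie_term
    z = (w_plus - mean) / math.sqrt(variance)
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability
    return w_plus, w_minus, z, p


# Hypothetical pretest and posttest scores (out of 23) for 8 residents.
pre_scores = [10, 9, 12, 8, 11, 10, 7, 13]
post_scores = [13, 12, 12, 11, 15, 9, 10, 14]
w_plus, w_minus, z, p = wilcoxon_signed_rank(pre_scores, post_scores)
print(w_plus, w_minus, round(z, 2), round(p, 3))
```

Pairing by resident matters here: each resident serves as his or her own control, so the test ranks within-person changes rather than comparing two independent groups.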

Both tests included Likert scale responses regarding attitudes and behaviors in QI/PS education. University of Chicago PGY-3 to PGY-8 residents in a range of specialties who completed training in June 2017 answered the same 5 attitude/behavior questions on their exit surveys. We compared PGY-1 pretest responses, PGY-1 posttest responses, and the responses of this graduate control group using Wilcoxon rank sum testing.

To assess behavior, the number of adverse events reported by PGY-1 residents prior to and after participation in the PS lessons was collected. Reports submitted by GME QSC participants were compared to those of nonparticipating PGY-1 residents. An event report index (number of event reports divided by number of trainees in program) and a trainee report index (number of unique trainees submitting reports divided by number of trainees in program) were calculated for residents in participating versus nonparticipating programs. Statistical analyses were performed using R version 3.2.4 (The R Foundation, Vienna, Austria), and statistical significance was determined at P < .05.
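The two indices defined above are simple ratios, and the following Python sketch shows one way to compute them. The function names and the reporter identifiers are hypothetical. The 55 event reports and 140 enrolled residents are the figures given in the Results; the number of unique reporting trainees is not stated in the text, so the second example uses made-up values.

```python
def event_report_index(num_event_reports, num_trainees):
    """Number of event reports divided by number of trainees in the program."""
    if num_trainees <= 0:
        raise ValueError("num_trainees must be positive")
    return num_event_reports / num_trainees


def trainee_report_index(reporter_ids, num_trainees):
    """Number of unique trainees submitting reports divided by number of trainees."""
    if num_trainees <= 0:
        raise ValueError("num_trainees must be positive")
    # A trainee who files several reports is counted once.
    return len(set(reporter_ids)) / num_trainees


# Figures from the Results: 55 event reports among 140 participating residents.
participant_index = event_report_index(55, 140)
print(round(participant_index, 2))

# Hypothetical illustration: 4 reports filed by 3 distinct trainees in a program of 10.
hypothetical_reporters = ["r01", "r02", "r01", "r03"]
print(trainee_report_index(hypothetical_reporters, 10))
```

Reporting both indices separates volume from breadth: a high event report index with a low trainee report index would indicate that a few residents file most of the reports.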

Each core educator spent an estimated 5 hours developing and 3 hours delivering content; hospital leaders spent 1 hour developing and 3 hours delivering content. Residents were not required to complete homework beyond the sessions. Residents spent 4 hours completing 3 sessions and the online pretest and posttest. Lesson materials were limited to preexisting audiovisual equipment, slide presentations, and paper worksheets. Sessions were incorporated into monthly conference schedules and used established meeting spaces. There was no incremental cost for MedHub. The GME office provided approximately 10 hours per week of administrative support.

Results

The GME QSC enrolled 140 residents over 2 years, with 112 (80%) residents completing both the pre- and posttest (“respondents”). Respondents were 61% (68 of 112) female, 47% (53 of 112) IM, 33% (37 of 112) pediatrics, 14% (16 of 112) surgery, and 5% (6 of 112) IM-pediatrics. In the pretest, 67% (75 of 112) of respondents reported brief quality training in medical school and 90% (101 of 112) reported brief safety training.

Knowledge Assessment

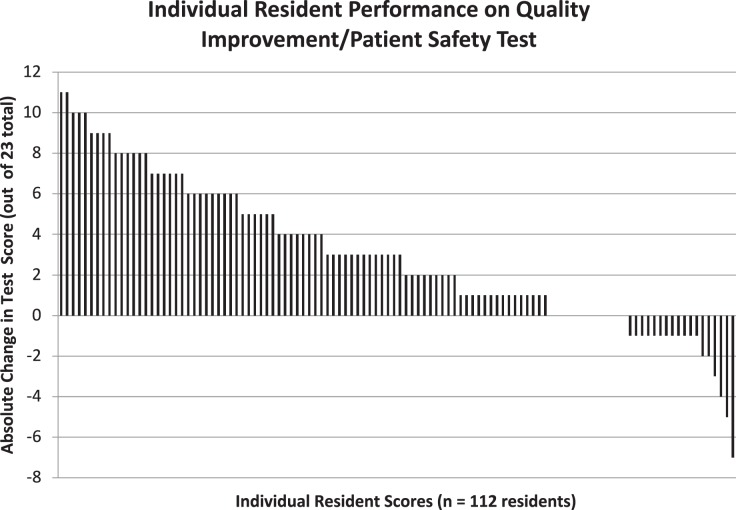

Total knowledge scores improved overall (44% versus 57%, P < .001). As demonstrated in waterfall plots for degree of change in each respondent, a majority of respondents (72%, 81 of 112; Figure 1) improved overall knowledge, with the largest gain in QI (36% [40 of 112] versus 60% [67 of 112], P < .001).

Figure 1.

Waterfall Plot on Individual Resident Performance in Quality Improvement and Patient Safety Curriculum

Note: Individual bars represent the performance of 112 residents on the quality improvement and patient safety curriculum pretest and posttest out of 23 possible points. A majority of residents showed an improvement in knowledge.
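As a rough illustration of how the data behind such a plot can be prepared, the Python sketch below computes each resident's score change and the share of residents who improved. The scores and function names are hypothetical, and ordering the bars from largest gain to largest loss is an assumption based on the usual waterfall plot convention rather than a detail stated in the text.

```python
def waterfall_changes(pre_scores, post_scores):
    """Per-resident change in score, ordered from largest gain to largest loss."""
    changes = [post - pre for pre, post in zip(pre_scores, post_scores)]
    return sorted(changes, reverse=True)


def share_improved(changes):
    """Fraction of residents whose score increased."""
    if not changes:
        raise ValueError("no score changes supplied")
    return sum(1 for c in changes if c > 0) / len(changes)


# Hypothetical scores (out of 23) for 5 residents.
pre = [10, 9, 12, 8, 11]
post = [13, 12, 12, 7, 15]
changes = waterfall_changes(pre, post)
print(changes)
print(share_improved(changes))

# The reported figure of 81 improving residents out of 112 corresponds to 72%.
print(round(81 / 112 * 100))
```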

Attitude and Behavior Assessment

The GME QSC was generally well received by residents. They appreciated its limited time commitment and interactive nature, and they gained a deeper understanding of the structure of hospital leadership and its role in QI/PS.

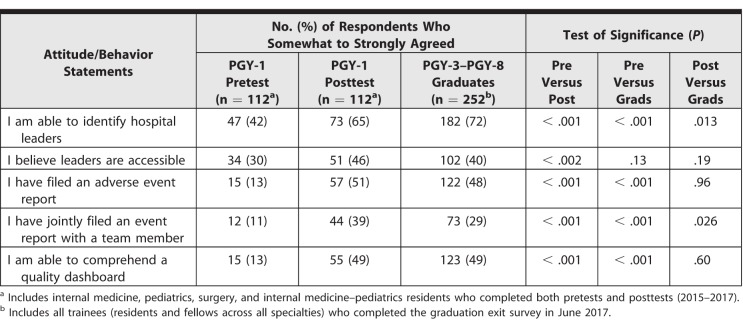

After participation in the curriculum, PGY-1 residents' QI/PS attitudes and behaviors improved significantly (Table 2). A higher percentage of respondents reported hospital leaders were accessible (30% [34 of 112] versus 46% [51 of 112], P < .002), and reported confidence in their ability to comprehend a quality dashboard (13% [15 of 112] versus 49% [55 of 112], P < .001). Notably, PGY-1 posttest responses were similar to those of 252 PGY-3 to PGY-8 graduate controls for accessibility of hospital leaders, filing adverse event reports, and quality dashboards. A higher percentage of PGY-1 participants (39% [44 of 112]) than PGY-3 to PGY-8 graduate controls (29% [73 of 252]) reported filing joint event reports (P = .026; Table 2).

Table 2.

Attitudes and Behaviors of Postgraduate Year 1 (PGY-1) Quality Improvement and Patient Safety Curriculum Residents Versus Graduating PGY-3 to PGY-8 Controls

Event Reporting

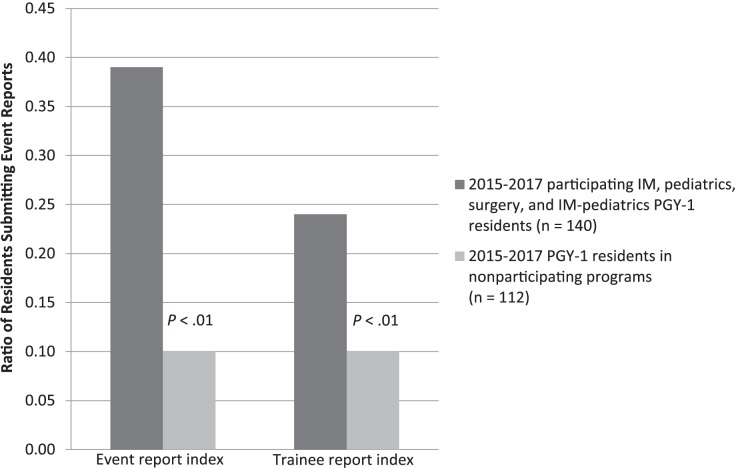

A comparison of the number of event reports submitted by the 140 residents participating in GME QSC with those from 120 residents in nonparticipating programs showed more events were reported by QI/PS participating residents (P < .001), and more unique residents in GME QSC submitted event reports compared with residents who did not participate in the curriculum (P < .001). Of the 55 events submitted by GME QSC program residents, 47% (26) were from general surgery, 33% (18) were from IM, and 13% (7) were from pediatrics (Figure 2).

Figure 2.

Comparison of Event Reporting Between Curriculum Participants and Nonparticipants

Note: Event report index is the number of event reports divided by the number of trainees in the program. Trainee report index is the number of unique trainees submitting reports divided by the number of trainees in the program.

Discussion

The GME QSC achieved formal QI/PS exposure recommended by CLER, increased resident event reporting, and addressed gaps identified in a national report. In completed posttests, respondents demonstrated improved knowledge in PS, quality assessment, and QI, topics that directly correlate with the CLER pathways of health quality education and patient safety education.6

The greatest gains were in QI knowledge (36% versus 60%, P < .001). The QI session focused on using a plan, do, study, act cycle to address current hospital quality measures. Engaging residents in real-time processes of meeting institutional goals and highlighting their clinical relevance likely contributed to successful knowledge gains, and simultaneously addressed CLER goals and objectives for resident involvement in institutional QI.

One gap identified by early CLER reports was in residents' working knowledge of QI/PS.13 The GME QSC led to improvements in knowledge, practical application, and trainee skill acquisition. PGY-1 residents' ability to identify hospital leaders and comprehend quality dashboards significantly improved as a result of meeting leaders and reviewing dashboards during the curriculum. For patient safety event reporting, residents who participated in GME QSC submitted event reports approximately 4 times as often as nonparticipants. However, resident event reporting was still low, with fewer than half of residents submitting events. The culture of each program also likely influenced event reporting, as shown by the higher share of reports from participating surgery (47%) versus pediatrics (13%) residents.

An innovative aspect of the curriculum was the collaboration of multidisciplinary faculty, institutional leadership, residents, and GME staff. This partnership included direct GME involvement, a characteristic not seen in many clinical learning environments at initial CLER visits.11 Past studies have found QI/PS curricula require significant time and resource investment from faculty and support from program leaders,15 and faculty often do not feel prepared to teach QI/PS, nor do they feel that they have the time to create these programs.10 The GME QSC featured a shared teaching model in which a small core group of educators disseminated the curriculum to trainees in multiple residency programs. This allowed a greater number of trainees to receive institutionally relevant QI/PS education, and it also addressed a concern raised in early CLER reports regarding variability in the coordination of educational resources across organizations.9

Our curriculum achieved improvements in PGY-1 QI/PS attitudes and behaviors compared with those of graduating PGY-3 to PGY-8 residents. While PGY-1 attitudes toward institutional leaders likely improved in part due to the direct interaction between leaders and residents during sessions, graduates still felt more confident identifying hospital leaders, which may be attributable to their cumulative exposure over time. Direct exposure to institutional QI/PS data dashboards early in residency helped PGY-1 participants appreciate what data are collected, and it helped them see opportunities for involvement in QI projects aligned with hospital priorities.16 Participating PGY-1 residents reported filing joint event reports significantly more often than graduates did. We anticipate that the attitudes of PGY-1 residents with early exposure to QI/PS through the GME QSC will continue to improve during the rest of training, and may exceed those of current graduates.

Limitations of this study include a single institution setting with graduate resident controls and restriction of the evaluation to residents who completed both the pretest and the posttest. Confounding education in QI/PS may have occurred during clinical work, bedside teaching, self-study, conferences, or department lectures. Increasing institutional attention to QI/PS in recent years may have altered residents' exposure to such programs, as well as their attitudes, and could be reflected in the PGY-3 to PGY-8 responses.

At the request of University of Chicago program directors, the GME QSC expanded to 5 additional residency programs. Interested faculty can earn continuing medical education credits after completing the GME QSC through online video-recorded modules. Next steps include measuring the QI/PS knowledge and attitudes of each resident cohort at graduation and tracking event reporting in newly participating residency programs.

Conclusion

An interdepartmental GME QI/PS curriculum created by hospital leaders, faculty educators, and trainees achieved formal QI/PS engagement recommended by CLER. The curriculum resulted in improved QI/PS knowledge and attitudes, and was associated with a significant increase in event reporting in the 3 participating residency programs.

References

- 1. Institute of Medicine. Graduate Medical Education That Meets the Nation's Health Needs. Washington, DC: National Academies Press; 2014.

- 2. Nasca TJ, Philibert I, Brigham T, et al. The next GME accreditation system—rationale and benefits. N Engl J Med. 2012;366(11):1051–1056. doi: 10.1056/NEJMsr1200117.

- 3. Weiss KB, Bagian JP, Wagner R. CLER Pathways to Excellence: expectations for an optimal clinical learning environment (executive summary). J Grad Med Educ. 2014;6(3):610–611. doi: 10.4300/JGME-D-14-00348.1.

- 4. Weiss KB, Bagian JP, Nasca TJ. The clinical learning environment: the foundation of graduate medical education. JAMA. 2013;309(16):1687–1688. doi: 10.1001/jama.2013.1931.

- 5. CLER Evaluation Committee. CLER Pathways to Excellence: Expectations for an Optimal Clinical Learning Environment to Achieve Safe and High Quality Patient Care, Version 1.0. Chicago, IL: Accreditation Council for Graduate Medical Education; 2014.

- 6. Accreditation Council for Graduate Medical Education. Clinical Learning Environment Review (CLER). http://www.acgme.org/What-We-Do/Initiatives/Clinical-Learning-Environment-Review-CLER Accessed August 13, 2018.

- 7. CLER Evaluation Committee. CLER Pathways to Excellence: Expectations for an Optimal Clinical Learning Environment to Achieve Safe and High Quality Patient Care, Version 1.1. Chicago, IL: Accreditation Council for Graduate Medical Education; 2017.

- 8. Vath RJ, Musso MW, Rabalais LS, et al. Graduate medical education as a lever for collaborative change: one institution's experience with a campuswide patient safety initiative. Ochs J. 2016;16(1):81–84.

- 9. Wagner R, Weiss KB. Lessons learned and future directions: CLER National Report of Findings 2016. J Grad Med Educ. 2016;8(2 suppl 1):55–56. doi: 10.4300/1949-8349.8.2s1.55.

- 10. Wong BM, Etchells EE, Kuper A, et al. Teaching quality improvement and patient safety to trainees: a systematic review. Acad Med. 2010;85(9):1425–1439. doi: 10.1097/ACM.0b013e3181e2d0c6.

- 11. Bagian JP, Weiss KB. The overarching themes from the CLER National Report of Findings 2016. J Grad Med Educ. 2016;8(2 suppl 1):21–23. doi: 10.4300/1949-8349.8.2s1.21.

- 12. Wagner R, Koh NJ, Patow C, et al. Detailed findings from the CLER National Report of Findings 2016. J Grad Med Educ. 2016;8(2 suppl 1):35–54. doi: 10.4300/1949-8349.8.2s1.35.

- 13. Kerfoot BP, Conlin PR, Travison T, et al. Web-based education in systems-based practice: a randomized trial. Arch Intern Med. 2007;167(4):361–366. doi: 10.1001/archinte.167.4.361.

- 14. Singh MK, Ogrinc G, Cox KR, et al. The quality improvement knowledge application tool revised (QIKAT-R). Acad Med. 2014;89(10):1386–1391. doi: 10.1097/ACM.0000000000000456.

- 15. Hughes M. Implementation. In: Kern D, Thomas P, Howard D, Bass E, editors. Curriculum Development for Medical Education: A Six-Step Approach. Baltimore, MD: Johns Hopkins University Press; 1998.

- 16. Schumacher DJ, Frohna JG. Patient safety and quality improvement: a 'CLER' time to move beyond peripheral participation. Med Educ Online. 2016;21(1):31993. doi: 10.3402/meo.v21.31993.
