Abstract

Nonverbal communication of emotion is essential to human interaction and relevant to many clinical applications, yet it is an understudied topic in social neuroscience. Drumming is an ancient nonverbal communication modality for the expression of emotion that has not previously been investigated in this context. We investigate the neural response to live, natural communication of emotion via drumming using a novel dual-brain neuroimaging paradigm. Hemodynamic signals were acquired using whole-head functional near-infrared spectroscopy (fNIRS). Thirty-six subjects (18 dyads) participated in two conditions, drumming and talking, alternating between ‘sending’ (drumming or talking to the partner) and ‘receiving’ (listening to the partner) in response to emotionally salient images from the International Affective Picture System. Increased frequency and amplitude of drum strikes were behaviorally correlated with higher arousal and lower valence ratings and neurally correlated with temporoparietal junction (TPJ) activation in the listener. Contrasts of drumming greater than talking also revealed neural activity in the right TPJ. Together, the findings suggest that emotional content communicated by drumming engages right TPJ mechanisms in an emotionally and behaviorally sensitive fashion. Drumming may provide novel, effective clinical approaches for treating social–emotional psychopathology.

Keywords: fNIRS, drumming, communication, emotion, arousal, valence

Introduction

Research on the communication of emotion has focused primarily on the neural mechanisms of communicating via speech, despite the fact that emotion is also communicated through nonverbal modalities ranging from music to body language. As these modalities are often used instead of or in addition to speech, we hypothesize that they offer something unique or supplemental that merits investigation and may have unique clinical application. Further, the communication of emotion is a bidirectional process, which includes both sensitivity to the emotional cues of others as well as the expression of internal emotional states to others. Generally, emotion research has focused on the unidirectional process of perception or induction (i.e. the brain’s reactivity to emotional stimuli), in large part due to the limitation of conventional neuroimaging modalities to single subjects. This study utilized a simultaneous, dual-brain neuroimaging paradigm to study bidirectional communication of emotion, including sending and receiving emotional content. In particular, we investigated the neural correlates of communicating emotion via drumming and listening, as compared to talking and listening.

Drumming is an ancient nonverbal form of communication that has been used across the world throughout history, with some of the earliest drums dating back to 5500–2350 BCE in China (Liu, 2005). Drums have typically played a communicative role in social settings, which may point to their evolutionary origin (Randall, 2001; Remedios et al., 2009). For example, slit drums and slit gongs, used from the Amazon to Nigeria to Indonesia, often use particular tonal patterns to convey messages over distance (Stern, 1957). Similarly, the renowned ‘talking drums’ of West Africa convey information with stereotyped stock phrases that mimic the sounds and patterns of speech (Stern, 1957; Carrington, 1971; Arhine, 2009; Oluga and Babalola, 2012). These drums have been used to communicate messages over thousands of kilometers by relaying them from village to village (Gleick, 2011). However, these drums are also used in other communal settings, including dancing, rituals, story-telling and other ceremonies (Carrington, 1971; Ong, 1977), suggesting that they carry not only semantic information but also emotional information.

Typically, however, drums are used in social or ceremonial settings without carrying semantic information and without any intention to mimic speech. Such drumming serves various functions of emotional communication, such as instilling motivation or fear (e.g. during war), synchronizing group activity (e.g. agricultural work or marching) or building social cohesion (e.g. recreational and ceremonial drum circles). For example, Wolf (2000) explores how drums are used among South Asian Shi’i Muslims in the mourning process that commemorates the killing of a political and spiritual leader at the Battle of Karbala in 680 CE. He points to how various qualities of drumming may facilitate the listener’s emotional relationship with the event (e.g. slow tempo for sadness, loud drum strikes for the intensity of grief).

The use of drums in situations where verbal communication is also used suggests that drumming provides added value to verbal communication, possibly deepening the emotional experience. In this study, we aim to understand the putative neural mechanisms that underlie bidirectional communication of emotion via drums.

Functional near-infrared spectroscopy (fNIRS) uses signals based on hemodynamic responses, similar to functional magnetic resonance imaging (fMRI), and with distinct features that are beneficial for the study of communication. For example, the signal detectors are head-mounted (i.e. non-invasive caps), participants can be seated directly across from each other while being simultaneously recorded, the system is virtually silent and data acquisition is tolerant of limited head motion (Eggebrecht et al., 2014). These features facilitate a more ecologically valid neuroscience of dual communicating brains.

The fNIRS hemodynamic signals are based on differential absorption of light at wavelengths sensitive to concentrations of oxygenated hemoglobin (oxyHb) and deoxygenated hemoglobin (deoxyHb) in the blood. As with fMRI, observed variation in blood oxygenation serves as a proxy for neural activity (Kato, 2004; Ferrari and Quaresima, 2012; Scholkmann et al., 2013; Gagnon et al., 2014). Although it is well documented that blood oxygen level–dependent (BOLD) signals acquired by fMRI and hemodynamic signals acquired by fNIRS are highly correlated (Strangman et al., 2002; Sato et al., 2013), the acquired signals are not identical. The fNIRS system acquires both oxyHb and deoxyHb signals; the deoxyHb signal most closely resembles the fMRI signal (Sato et al., 2013; Zhang et al., 2016) and is thus reported here. The fNIRS signals originate from larger volumes than fMRI signals, limiting spatial resolution (Eggebrecht et al., 2012). However, fNIRS signals are acquired at a higher temporal resolution than fMRI signals (20–30 ms vs 1.0–1.5 s), which benefits dynamic studies of functional connectivity (Cui et al., 2011). Because near-infrared light penetrates only a limited depth of tissue, the sensitivity of fNIRS is restricted to cortical structures within 2–3 cm of the skull surface. Although fNIRS is well established (particularly in child research, where fMRI approaches remain difficult or contraindicated), recent adaptations of fNIRS for hyperscanning enable significant advances in the neuroimaging of neural events that underlie interactive and social functions in adults (Funane et al., 2011; Dommer et al., 2012; Holper et al., 2012; Cheng et al., 2015; Jiang et al., 2015; Osaka et al., 2015; Vanutelli et al., 2015; Hirsch et al., 2017; Piva et al., 2017).
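The conversion from light attenuation to hemoglobin concentration can be illustrated with the modified Beer–Lambert law, under which the change in optical density at each wavelength is a weighted sum of the oxyHb and deoxyHb concentration changes, scaled by the optical path length (source–detector separation times a differential pathlength factor). The Python sketch below is a minimal illustration, not necessarily the conversion performed by the acquisition software. It assumes a three-wavelength recording, and the extinction coefficients and pathlength factors are placeholder numbers chosen only for the demonstration.

```python
import numpy as np


def mbll_concentrations(delta_od, extinction, distance_cm, dpf):
    """Estimate oxyHb/deoxyHb changes from optical-density changes (modified Beer-Lambert law).

    delta_od    : (n_wavelengths, n_samples) change in optical density
    extinction  : (n_wavelengths, 2) extinction coefficients; column 0 = oxyHb, column 1 = deoxyHb
    distance_cm : source-detector separation
    dpf         : (n_wavelengths,) differential pathlength factors
    Returns (2, n_samples): row 0 = delta oxyHb, row 1 = delta deoxyHb.
    """
    delta_od = np.asarray(delta_od, dtype=float)
    extinction = np.asarray(extinction, dtype=float)
    dpf = np.asarray(dpf, dtype=float)
    # Effective optical path length (separation x DPF) scales each wavelength's row.
    design = extinction * (distance_cm * dpf)[:, None]
    # Least squares: an exact solve for two wavelengths, a fit for three or more.
    conc, *_ = np.linalg.lstsq(design, delta_od, rcond=None)
    return conc


# Placeholder numbers for illustration only; these are NOT published extinction coefficients.
EXTINCTION = np.array([
    [0.7, 1.1],  # shortest wavelength: deoxyHb absorbs more than oxyHb
    [1.0, 0.8],  # middle wavelength
    [1.2, 0.7],  # longest wavelength: oxyHb absorbs more than deoxyHb
])
DPF = np.array([6.0, 6.0, 6.0])
SEPARATION_CM = 3.0

# Forward-simulate optical density from known concentration changes, then invert it.
true_conc = np.array([[0.5, 0.2],    # delta oxyHb at two time points
                      [-0.2, 0.1]])  # delta deoxyHb at the same time points
delta_od = (EXTINCTION * (SEPARATION_CM * DPF)[:, None]) @ true_conc
estimated = mbll_concentrations(delta_od, EXTINCTION, SEPARATION_CM, DPF)
```

The round trip recovers the simulated concentration changes; with real recordings, the published extinction spectra and an age-appropriate pathlength factor would replace the placeholders.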

Study Overview. We investigated the neural response to the communication of the emotional content of visual stimuli via drumming in a face-to-face paradigm, in order to elucidate the contribution of drumming to auditory emotion communication. We chose drumming as the communication modality given its accessibility to first-time users, which enhances the potential for clinical application. In our experimental paradigm, pairs of subjects (dyads) were presented with images from the International Affective Picture System (IAPS; Lang et al., 2008), which served as the topic for communication via either drumming or talking. We identified the relationship between drumming behavior and the arousal or valence of the IAPS images. We then evaluated the neural sensitivity to drum behavior in both drummer and listener in order to characterize the communication of emotion. Finally, we compared the neural correlates of drumming and talking to evaluate the possible unique neural contribution of drumming over talking as a communication modality.

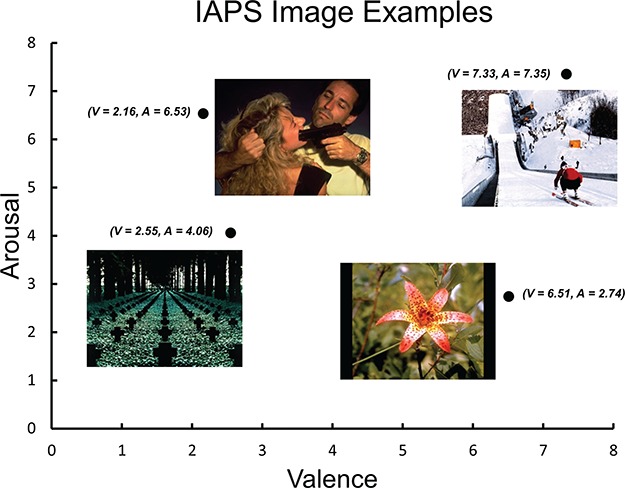

The IAPS images employ a two-dimensional emotion framework that distinguishes the emotional qualities of arousal and valence, as most classically represented in Russell’s circumplex model (1980). Arousal is a measure of the intensity or activating capacity of an emotion, ranging from low (calm) to high (excited); low arousal emotions may include sadness or contentedness, while high arousal emotions may include excitement or anger. Valence is a measure of the pleasure quality of the emotion, ranging from negative (unpleasant) to positive (pleasant); negative valence emotions may include sadness or anger, while positive valence emotions may include contentedness or excitement. Each of the IAPS images is indexed by valence and arousal ratings, allowing us to regress neural activity against these emotional qualities (Lang et al., 2008).

The hypothesis for this investigation was twofold. First, we hypothesized that strike-by-strike drum measures that communicate expressions of valence and arousal would elicit activity in brain systems associated with social and emotional functions, i.e. the right temporoparietal junction (TPJ). Second, we hypothesized that drumming in response to emotional stimuli would elicit greater neural activity in the TPJ than talking.

Methods

Participants

Thirty-six (36) healthy adults [18 pairs of subjects; mean age, 23.8 ± 3.2 years; 86% right-handed (Oldfield, 1971)] participated in the study. Sample size was based on power analyses of similar prior two-person experiments showing that a power of 0.8 is achieved with an n of 31 (Hirsch et al., 2017). All participants provided written informed consent in accordance with guidelines approved by the Yale University Human Investigation Committee (HIC #1501015178) and were reimbursed for participation. Dyads were assigned in order of recruitment; participants were not further stratified by affiliation or dyad gender mix.

Participants rated their familiarity with their partner, their general musical expertise and their drumming expertise (descriptive statistics in Table 1). To facilitate drumming as a method of communication for participants regardless of previous experience, a brief interactive video tutorial was shown to all participants to acquaint them with various ways of striking the drum using both hands.

Table 1.

Demographic Information

| Category | Subcategory | Total/Avg |
|---|---|---|
| N | | 36 |
| Age (years) | | 23.8 ± 3.2 |
| Gender | Male | 17 |
| | Female | 19 |
| | Other | 0 |
| Race | Asian/Pacific Islander | 17 |
| | Black/African American | 2 |
| | Latin/Hispanic | 0 |
| | Middle East/N African | 1 |
| | Native/indigenous | 0 |
| | White/European | 10 |
| | Biracial/multiracial | 7 |
| | Other | 2 |
| Dyad gender mix | Male/male | 5 |
| | Male/female | 8 |
| | Female/female | 5 |
| Handedness | Right | 31 |
| | Left | 5 |
| Music expertise* | | 3.14 ± 1.22 |
| Drum expertise* | | 1.64 ± 0.93 |
| Partner familiarity* | | 1.53 ± 1.23 |

*Based on Likert-scale responses ranging from 1 to 5 for musical expertise (from ‘never played’ to ‘plays professionally’), drumming expertise (from ‘never played’ to ‘plays professionally’) and partner familiarity (from ‘never seen or spoken to’ to ‘best friends’).

Table 1 includes demographic information for subjects and dyads, as well as participant characteristics regarding musical expertise, drum expertise and familiarity with experiment partner.

Experimental paradigm

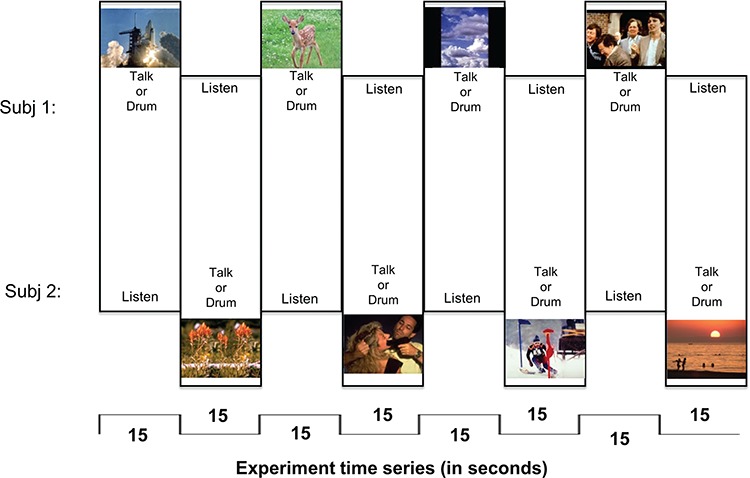

Dyads were positioned face to face across a table, 140 cm from each other (Figure 2). Image stimuli, presented in pseudo-randomized order, were selected from a subset of the IAPS (Lang et al., 2008). These images were presented to both participants via a monitor on each side of the table that did not obstruct the view of the partner. In each trial, one subject responded to the image stimulus by drumming or talking while the other listened.

Fig. 1.

Experimental paradigm. Each run is 3 min, 12 epochs (8 epochs shown here). Subjects alternate ‘sending’ (speaking or drumming) and ‘receiving’ when triggered by image change. Each image was selected from the IAPS library with established arousal and valence ratings.

In the drumming condition, participants were encouraged to respond to the image however they felt appropriate, including a direct response to the emotional content of the image, drumming as if they were acting within the image (e.g. with punches or strokes), or drumming as if creating the soundtrack to the image. In the talking condition, participants were encouraged to speak about what they saw, their experience with the elements of the image, their opinion about the image or elements within it, or what came to mind in response to the image.

The images changed and roles alternated between ‘sending’ (drumming or speaking) and ‘receiving’ (listening to the partner) every 15 s for 3 min (Figure 1). For example, as illustrated in Figure 1, Event 1, after Subject 1 had spoken about the space shuttle liftoff for 15 s while Subject 2 listened, an image of flowers (Figure 1, Event 2) replaced the space shuttle image on both subjects’ screens, cuing Subject 2 to speak about this new image while Subject 1 listened. Each 3-min run of alternating ‘sending’ and ‘receiving’ every 15 s thus comprises 12 epochs. This procedure was repeated for a total of two runs of drumming and two runs of talking for each pair of subjects.

Fig. 2.

Experimental set-up for two interacting partners in the drumming condition. The talking communication condition was identical, but without the drum apparatus.

For each dyad, the following conditions were pseudo-randomized: order of experiment runs (i.e. dialogue runs first or drumming runs first), order of subjects responding within runs and order of subjects responding across runs. The order of presentation of the series of 96 images for each experiment was also randomized.
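The timing and counterbalancing described above can be summarized as a small scheduling sketch in Python. The 15-s epoch, the 3-min run and the two runs per modality come from the text; the function names, the subject labels `S1`/`S2`, blocking the runs by modality and drawing 12 images per run from one shuffled pool are illustrative assumptions (the text does not specify how the 96 images map onto the four runs).

```python
import random

EPOCH_S = 15                 # seconds per image and role (from the text)
RUN_S = 180                  # one 3-min run
N_EPOCHS = RUN_S // EPOCH_S  # 12 epochs per run


def run_schedule(first_sender, images):
    """List (onset_s, sender, receiver, image) for one run; roles swap at every image change."""
    if len(images) != N_EPOCHS:
        raise ValueError(f"expected {N_EPOCHS} images, got {len(images)}")
    other = {"S1": "S2", "S2": "S1"}
    schedule = []
    sender = first_sender
    for k, image in enumerate(images):
        schedule.append((k * EPOCH_S, sender, other[sender], image))
        sender = other[sender]
    return schedule


def session_plan(image_pool, seed=None):
    """Hypothetical counterbalancing for one dyad: modality order, first sender per run, image order."""
    rng = random.Random(seed)
    modalities = ["talk", "drum"]
    rng.shuffle(modalities)                           # talking first or drumming first
    runs = [m for m in modalities for _ in range(2)]  # two runs of each, blocked by modality
    images = list(image_pool)
    rng.shuffle(images)                               # randomized image order
    plan = []
    for i, modality in enumerate(runs):
        block = images[i * N_EPOCHS:(i + 1) * N_EPOCHS]
        first = rng.choice(["S1", "S2"])              # which partner sends first in this run
        plan.append((modality, run_schedule(first, block)))
    return plan
```

For example, `session_plan(range(96), seed=1)` returns four runs, each with sending onsets at 0, 15, ..., 165 s and senders alternating between partners.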

Image stimuli

The stimuli used for each experiment were a set of 96 images selected from the IAPS (Lang et al., 2008). These images have established ratings for arousal (low to high) and valence (negative to positive) on a 1–9 Likert scale. Examples are given in Figure 3, and a scatterplot depicting the valence and arousal distributions of our image subset is included in Figure S1 (Supplementary Material). The library numbers of these images and their relevant statistics can be found in the appendix.

Fig. 3.

Examples of IAPS images with low/high arousal (A) and negative/positive valence (V). The figure illustrates the arousal/valence index system for emotional qualities of each image.

Quantified drumming response

The electronic drums utilized for this study use a Musical Instrument Digital Interface (MIDI) protocol to record quantity and force of drum strikes. For each run, we collected strike-by-strike information, including the average force of drum strikes, the total number of drum strikes and the product of these two values (providing a combined objective quantification of drumming response). This quantified drumming response was then correlated with the established arousal and valence ratings for image stimuli, serving as a behavioral measure of responses to IAPS images. Strike-by-strike measures were taken as the ‘sending’ variable.
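A minimal sketch of the quantified drumming response, assuming that strike events have already been parsed from the MIDI stream into (time, velocity) pairs and that MIDI note-on velocity serves as the measure of strike force. Windowing strikes by 15-s sending epoch is also an assumption made here, so that each response can be paired with one image's ratings; `NoteOn` and `quantified_drum_response` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class NoteOn:
    time_s: float  # strike time within the run, in seconds
    velocity: int  # MIDI note-on velocity (0-127), used here as a proxy for strike force


def quantified_drum_response(events, start_s, end_s):
    """Return (n_strikes, mean_velocity, product) for strikes in the window [start_s, end_s).

    By MIDI convention a note-on with velocity 0 acts as a note-off,
    so such messages are not counted as strikes.
    """
    strikes = [e.velocity for e in events
               if start_s <= e.time_s < end_s and e.velocity > 0]
    n = len(strikes)
    if n == 0:
        return 0, 0.0, 0.0
    mean_velocity = sum(strikes) / n
    return n, mean_velocity, n * mean_velocity


events = [NoteOn(0.5, 80), NoteOn(1.0, 100), NoteOn(2.0, 0), NoteOn(16.0, 60)]
first_epoch = quantified_drum_response(events, 0.0, 15.0)
```

Because the number of strikes multiplied by the mean force equals the summed force, the combined measure rises with either more strikes or harder strikes; in the example, the first epoch yields 2 strikes, a mean velocity of 90 and a combined response of 180.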

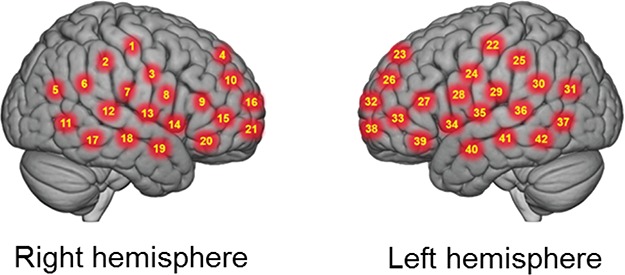

Signal acquisition and processing

NIRS signal acquisition, optode localization and signal processing, including global mean removal, were similar to standard methods described previously for the deoxyHb signal (Noah et al., 2015; Zhang et al., 2016; Dravida et al., 2017; Hirsch et al., 2017; Noah et al., 2017; Zhang et al., 2017). Hemodynamic signals were acquired using a 64-fiber (84-channel) continuous-wave fNIRS system (Shimadzu LABNIRS, Kyoto, Japan). The cap and optode layout provided extended head coverage for both participants, with 42 channels (3-cm optode separation) per participant distributed over both hemispheres of the scalp (Figure 4). Anatomical locations of optodes in relation to standard head landmarks were determined for each participant using a Patriot 3D Digitizer (Polhemus, Colchester, VT) (Okamoto and Dan, 2005; Singh et al., 2005; Eggebrecht et al., 2012; Ferradal et al., 2014). The Montreal Neurological Institute (MNI) coordinates (Mazziotta et al., 2001) for each channel were obtained using NIRS-SPM software (Ye et al., 2009), and the corresponding anatomical locations of each channel were determined by the provided atlas (Rorden and Brett, 2000). Table S1 (Supplementary Material) lists the median MNI coordinates and anatomical regions with probability estimates for each of the channels shown in Figure 4.

Fig. 4.

Right and left hemispheres of a single-rendered brain illustrate average locations (red circles) for channel centroids. See Table S1 (Supplementary Material) for average MNI coordinates and anatomical locations.

We applied pre-coloring through high-pass filtering. Pre-whitening was not applied to our data, a decision guided by a previous report showing that pre-whitening had a detrimental effect on the detection of neural responses during a finger-thumb-tapping task (Ye et al., 2009). Baseline drift was modeled from the time series and removed using the wavelet detrending provided in NIRS-SPM. Global components resulting from systemic effects such as blood pressure (Tachtsidis and Scholkmann, 2016) were removed using a principal component analysis spatial filter (Zhang et al., 2016) prior to general linear model (GLM) analysis. Comparisons between conditions were based on the GLM (Penny et al., 2011). Event epochs were convolved with a standard hemodynamic response function, and the contrast between ‘sending’ (drumming or talking) and ‘receiving’ (listening) provided individual beta values of the difference for each participant across conditions. Group results were rendered on a standardized MNI brain template.
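Two of the steps above, global-component removal and the sending-versus-receiving GLM contrast, can be sketched in Python with NumPy. This is a simplified illustration under stated assumptions, not the NIRS-SPM implementation: the global component is removed by projecting out the leading principal component(s) shared across channels (the spatial filter of Zhang et al. (2016) is more elaborate), the hemodynamic response function is an SPM-style double-gamma shape, and all function names are hypothetical.

```python
import math

import numpy as np


def canonical_hrf(tr, duration_s=32.0):
    """SPM-style double-gamma HRF sampled every `tr` seconds, normalized to unit sum."""
    t = np.arange(0.0, duration_s, tr)

    def gamma_pdf(x, shape):
        # Gamma density with unit scale: x**(shape-1) * exp(-x) / Gamma(shape).
        return np.power(x, shape - 1) * np.exp(-x) / math.gamma(shape)

    # Main response peaking near 5 s minus a smaller undershoot peaking near 15 s (ratio 1/6).
    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()


def remove_global_components(data, n_components=1):
    """Simplified global-signal removal for data shaped (n_samples, n_channels).

    Projects out the leading principal component(s) shared across channels.
    """
    centered = data - data.mean(axis=0)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    global_part = (u[:, :n_components] * s[:n_components]) @ vt[:n_components]
    return centered - global_part


def block_regressor(onsets_s, epoch_s, n_samples, tr):
    """Boxcar covering the given epochs, convolved with the HRF and trimmed to the run length."""
    box = np.zeros(n_samples)
    for onset in onsets_s:
        start = int(round(onset / tr))
        stop = int(round((onset + epoch_s) / tr))
        box[start:stop] = 1.0
    return np.convolve(box, canonical_hrf(tr))[:n_samples]


def send_minus_receive_beta(signal, send_onsets, receive_onsets, tr, epoch_s=15.0):
    """Fit [sending, receiving, constant] by least squares; return the sending - receiving contrast."""
    n = len(signal)
    design = np.column_stack([
        block_regressor(send_onsets, epoch_s, n, tr),
        block_regressor(receive_onsets, epoch_s, n, tr),
        np.ones(n),
    ])
    beta, *_ = np.linalg.lstsq(design, signal, rcond=None)
    return float(np.array([1.0, -1.0, 0.0]) @ beta)
```

In this sketch a channel-by-time matrix would pass through `remove_global_components` first, and `send_minus_receive_beta` would then be applied to each channel; for a participant who sends first in a 3-min run, the sending onsets would be 0, 30, ..., 150 s and the receiving onsets 15, 45, ..., 165 s.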

Results

Drumming related to arousal and valence

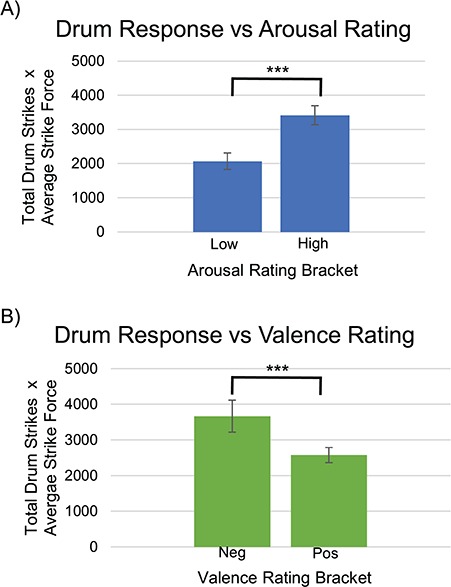

Pearson product–moment correlations were determined between the established arousal ratings for each image and the quantified behavioral measure of drumming response. We observed a positive correlation (r = 0.37), indicating that drumming responses increased with more arousing image stimuli (Figure 5A). Pearson product–moment correlations were also determined between the established valence ratings for each image and the quantified drumming response. We observed a negative correlation (r = −0.22), indicating that drumming responses decreased with more positively valenced image stimuli (Figure 5B).
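The correlation analysis and the two-bracket display in Figure 5 can be sketched as follows. `pearson_r` computes the standard product–moment coefficient; `two_bracket_means` splits the observed rating range at its midpoint (for arousal, (2.63 + 7.35)/2 = 4.99) and averages the drumming response within each half. Both names, and the rule that an image lying exactly at the midpoint falls into the upper bracket, are assumptions made for illustration.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation coefficient of two equal-length sequences."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length, at least 2")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def two_bracket_means(ratings, responses):
    """Split the observed rating range at its midpoint; return (midpoint, low mean, high mean)."""
    mid = (min(ratings) + max(ratings)) / 2
    low = [r for s, r in zip(ratings, responses) if s < mid]
    high = [r for s, r in zip(ratings, responses) if s >= mid]
    return mid, sum(low) / len(low), sum(high) / len(high)
```

Applied per image, `pearson_r(arousal_ratings, drum_responses)` would give the coefficient reported above, while `two_bracket_means` gives the two bar heights; the brackets summarize, but do not replace, the correlation.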

Fig. 5.

A, A positive correlation (r = 0.37) was observed between the quantified drumming response (number of drum strikes multiplied by average drum strike force) and the arousal ratings of IAPS image stimuli. The bars represent two brackets equally dividing our range of IAPS image stimuli arousal ratings (lowest arousal 2.63 to highest arousal 7.35), P < 0.001. B, A negative correlation (r = −0.22) was observed between the quantified drumming response (number of drum strikes multiplied by average drum strike force) and the valence ratings of IAPS image stimuli. The bars represent two brackets equally dividing our range of IAPS image stimuli valence ratings (lowest valence 2.16 to highest valence 8.34), P < 0.001.

Neural responses to drumming (sending) and listening (receiving)

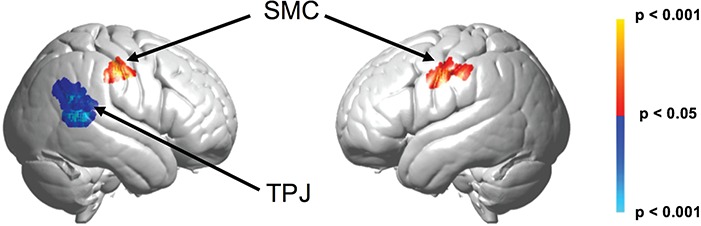

In this contrast comparison of drumming (‘sending’) and listening (‘receiving’), we convolved the strike-by-strike drum intensities with the hemodynamic response function of the block (Figure 6). Contrast comparisons of listening > drumming (blue) show activity in two right-hemisphere clusters that correlates with greater amplitude and frequency of drum response: one including the supramarginal gyrus (SMG; BA40), superior temporal gyrus (STG; BA22) and angular gyrus (BA39), and one including the STG (BA22) and middle temporal gyrus (BA21). These regions are part of the TPJ. In contrast, comparisons of drumming > listening (red) show activity in two clusters, one in each hemisphere, that correlates with greater amplitude and frequency of drum response. The right hemisphere cluster has a spatial distribution including pre-motor and supplementary motor cortex (BA6) and primary somatosensory cortex (BA1,2,3). The left hemisphere cluster has a spatial distribution including pre-motor and supplementary motor cortex (BA6). Together, these clusters are labeled Sensory Motor Cortex (SMC).
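Convolving strike-by-strike intensities with the hemodynamic response function amounts to building a parametrically modulated regressor. The sketch below places a stick at each strike, scaled by its intensity, and convolves the result with an SPM-style double-gamma HRF. Mean-centering the intensities, so that the regressor captures variation around the average strike rather than the response to striking itself, is an assumption, as are the function names; NIRS-SPM may construct the regressor differently.

```python
import math

import numpy as np


def canonical_hrf(tr, duration_s=32.0):
    """SPM-style double-gamma HRF sampled every `tr` seconds, normalized to unit sum."""
    t = np.arange(0.0, duration_s, tr)

    def gamma_pdf(x, shape):
        return np.power(x, shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()


def parametric_regressor(strike_times_s, strike_values, n_samples, tr, center=True):
    """Intensity-weighted stick functions at each strike, convolved with the HRF."""
    values = np.asarray(strike_values, dtype=float)
    if center and values.size:
        # Mean-centering separates modulation by intensity from the average strike response.
        values = values - values.mean()
    sticks = np.zeros(n_samples)
    for t, v in zip(strike_times_s, values):
        idx = int(round(t / tr))
        if 0 <= idx < n_samples:
            sticks[idx] += v  # strikes falling in the same sample add up
    return np.convolve(sticks, canonical_hrf(tr))[:n_samples]
```

A regressor built this way would enter the GLM alongside the block regressors; a channel whose signal tracks it positively responds more when strikes are louder and more frequent.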

Fig. 6.

With strike-by-strike drumming intensities convolved with the hemodynamic response function for the drumming (‘sending’) block, the listening condition shows greater activity than the drumming condition in two loci (blue), both in the right hemisphere. The first peak voxel was located at 64, −52, 24 (T = −3.49, P < 0.00078, P < 0.05 FDR corrected), and it included SMG (BA40) 49%, STG (BA22) 35% and angular gyrus (BA39) 16%. The second peak voxel was located at 64, −46, 6 (T = −3.84, P < 0.00031, P < 0.05 FDR corrected), and it included STG (BA22) 56% and middle temporal gyrus (BA21) 40%. In contrast, the drumming (‘sending’) condition shows greater activity than the listening (‘receiving’) condition in two loci (red), one in each hemisphere. The right hemisphere peak voxel was located at 60, −16, 42 (T = 3.58, P < 0.00061, P < 0.05 FDR corrected), and it included pre-motor and supplementary motor cortex (BA6) 43% and primary somatosensory cortex (BA 1, 2, 3) 18%, 12%, 17%. The left hemisphere peak voxel was located at −50, −6, 36 (T = 3.11, P < 0.00211), and it included pre-motor and supplementary motor cortex (BA6) 100%.
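The captions report thresholds corrected for the false discovery rate (FDR). The text does not name the procedure; the Benjamini–Hochberg step-up procedure is a common choice, and a minimal sketch of it over a list of channel-wise p-values is given below on that assumption.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return booleans marking which tests survive Benjamini-Hochberg FDR control at level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Step-up rule: find the largest rank k (1-based) with p_(k) <= k * q / m.
    k_max = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= rank * q / m:
            k_max = rank
    # Every test ranked at or below k_max is declared significant.
    significant = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= k_max:
            significant[idx] = True
    return significant
```

Because the rule steps up from the largest qualifying rank, a p-value can survive even when it exceeds its own rank-specific threshold, provided a larger-ranked p-value meets its threshold; for example, four p-values between 0.04 and 0.047 all survive at q = 0.05.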

Comparison of drumming and talking

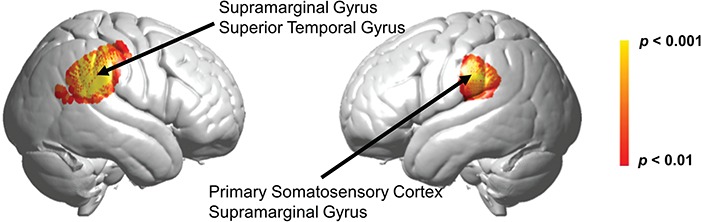

Contrast comparisons of drumming > talking show both left and right hemisphere activity (Figure 7). The spatial distribution of the right hemisphere cluster included the SMG (BA 40), the STG (BA 22) and the primary somatosensory cortex (BA 2). The spatial distribution of the left hemisphere cluster included the primary somatosensory cortex (BA 2) and the SMG (BA 40).

Fig. 7.

Collapsing across qualities of valence and arousal, the drumming condition shows greater activity than the talking condition in two loci, one in each hemisphere, mapped in accordance with the NIRS-SPM atlas (Mazziotta et al., 2001; Tak et al., 2016). The right hemisphere peak voxel was located at 62, −36, 28 (T = 5.32, P < 0.00001, P < 0.05 FDR corrected), and it included SMG (BA 40) 41%, STG (BA 22) 25% and primary somatosensory cortex (BA 2) 21%. The cluster in the left hemisphere had a peak voxel at −66, −30, 30 (T = 3.78, P = 0.00030, P < 0.05 FDR corrected), with a spatial distribution including primary somatosensory cortex (BA 2) 42% and SMG (BA 40) 20%.

Discussion

In this study, we aimed to understand the neural mechanisms that underlie the communication of emotional qualities through drumming, a nonverbal auditory mode of communication. Our neuroimaging system using fNIRS and natural interpersonal interaction between dyads enables the study of ecologically valid communication. We hypothesized that strike-by-strike drum measures that communicate expressions of valence and arousal would elicit activity in brain systems associated with social and emotional functions, i.e. the right TPJ. We also hypothesized that drumming in response to emotional stimuli would elicit neural activity that was distinct from or greater than talking in response to the same stimuli in the right TPJ.

Using behavioral measures, we identified that increased frequency and amplitude of strike-by-strike drum behavior was positively correlated with image arousal and negatively correlated with image valence. We also found that increased frequency and amplitude of strike-by-strike drum behavior was correlated with sensorimotor activity in the ‘sender’ but TPJ activity in the ‘listener’. Taken together, these findings support the conclusion that communication of emotion via drumming engages the right TPJ and that drumming may communicate both arousal and valence with some preference for arousal. Finally, we observed a greater cortical response in the drumming condition than in the talking condition at the right TPJ, including STG and SMG, suggesting that drumming not only activates this social–emotional brain region, but may have a distinct advantage in activating this area over talking.

Communicating arousal and valence

Specific features of drumming may explain its capacity to communicate arousal and valence, with some preference for arousal. In speech, various prosodic features are known to cue emotion, including loudness, rate, rate variability, pitch contours and pitch variability (Banse and Scherer, 1996; Koike et al., 1998; Juslin and Laukka, 2003; Ilie and Thompson, 2006). Similarly, such features in music include tempo, mode, melodic range, articulation, loudness and pitch (Gabrielsson and Lindström, 2001; Juslin and Laukka, 2003; Kim and André, 2008; Eerola et al., 2013). Prior studies across both speech and music suggest that cues like articulation, loudness, tempo and rhythm tend to influence arousal, while mode, pitch, harmony and melodic complexity influence valence (Husain et al., 2002; Ilie and Thompson, 2006; Kim and André, 2008; Gabrielsson and Lindström, 2010). Drumming has limited pitch or melodic capacity; in contrast, cues like tempo, loudness and articulation are easily enacted through drumming, and these have been shown to allow a listener to reliably identify particular emotions via drumming (Laukka and Gabrielsson, 2000).

The importance of the right TPJ

The right TPJ, including the STG and SMG, is well established in its social and emotional function (Carter and Huettel, 2013). In a recent example using dual-brain fNIRS, the right TPJ has been directly implicated in functional connectivity during human-to-human vs human-to-computer competitive interaction (Piva et al., 2017), consistent with dedicated human social function (Hirsch et al., 2018). The superior temporal sulcus and gyrus were an early hypothesized node in the social network (Brothers, 1990), and this was substantiated by later research (Allison et al., 2000; Frith, 2007; Pelphrey and Carter, 2008). For example, this region appears to play a role in interpreting biological motion to attribute intention and goals to others (Allison et al., 2000; Adolphs, 2003), consistent with the Theory of Mind model of the TPJ.

The social role of the STG has been further investigated within the context of emotion (Narumoto et al., 2001). Robins et al. (2009) observed increased right STG (rSTG) activation with emotional stimuli; this increase was especially pronounced for combined audio-visual stimuli as opposed to either audio or visual stimuli alone, highlighting the importance of the rSTG in processing emotional information in live, natural social interaction. In terms of specifically auditory stimuli, Leitman et al. (2010) also observed greater activity in the posterior STG with increased saliency of emotion-specific acoustic cues in speech, and Plichta et al. (2011) observed auditory cortex activation (within the STG) that was modulated by extremes of valence in emotionally salient soundbites. Still more relevant to our investigation, the emotional processing of pleasant and unpleasant music has been lateralized and localized to the rSTG (Zatorre, 1988; Zatorre et al., 1992; Blood et al., 1999). Although drumming does not have the same range of affective cues as other music, our findings are consistent with the known sensitivity of the rSTG to emotion in music, here conveyed through fewer cues such as tempo, loudness and rhythmic characteristics.

The SMG, the other region of the TPJ that plays a significant role in our findings, has also been implicated in social and emotional processing. Activity in the SMG has been associated with empathy and understanding the emotions held by others, suggesting a process of internal qualitative representation to facilitate empathy (Lawrence et al., 2006). Further, SMG activity increases, particularly on the right side, when one’s own mental state differs from the mental state of another person with whom one is empathizing (Silani et al., 2013).

The TPJ is relevant from a clinical perspective as well. In particular, the STG has been increasingly studied in patients on the autism spectrum given the deficits of both language and social interaction. Decreased capacity to attribute the mental states of animated objects in autism spectrum disorder has been linked to decreased activation of mentalizing networks, including the STG (Castelli et al., 2002). Many other autism studies have shown abnormalities in the rSTG, both functional (Boddaert and Zilbovicius, 2002) and anatomical (Zilbovicius et al., 1995; Casanova et al., 2002; Jou et al., 2010).

Volume loss in the rSTG has been noted in individuals with criminal psychopathy (Müller et al., 2008), perhaps underlying their abnormal emotional responsiveness. Volume increases in the rSTG, on the other hand, have been demonstrated in pediatric generalized anxiety disorder (De Bellis et al., 2002), in subjects exposed to parental verbal abuse (Tomoda et al., 2011) and in maltreated children and adolescents with post-traumatic stress disorder (PTSD; De Bellis et al., 2002).

Clinical application of drumming: future directions

Music and music therapy have been used in a number of clinical contexts, particularly emotional and behavioral disorders such as schizophrenia (Talwar et al., 2006; Peng et al., 2010), depression (Maratos et al., 2008; Erkkilä et al., 2011) and substance use disorders (Cevasco et al., 2005; Baker et al., 2007). Music therapy is perhaps best known for its utility in autism (Møller et al., 2002; Reschke-Hernández, 2011; Srinivasan and Bhat, 2013), where it has been used to improve emotional and social capacities (Kim et al., 2009; LaGasse, 2014). Given the aforementioned rSTG abnormalities in autism as well as our rSTG results, further research should explore the neural correlates and possible neuroplastic effects of music interventions for social and emotional development in autism. Such a link may also help explain the consistent inclusion of drumming in autism music therapy and the special attention paid to rhythmic and motor aspects of music in autism (Wan et al., 2011; Srinivasan and Bhat, 2013).

However, while drumming has a number of musical elements and is often a part of group music-making, drumming and music are not identical. While music has been well established to cue both arousal and valence, our results indicate that drumming communicates arousal more strongly than valence. This pattern suggests that drumming interventions may be more effective for psychopathology typically associated with arousal (e.g. anxiety disorders, like PTSD) than for psychopathology typically associated with valence (e.g. mood disorders, like depression).

Recent work that used drumming in clinical populations is consistent with this hypothesis. Bensimon et al. (2008) found drumming to be an effective intervention for PTSD patients by reducing symptoms, facilitating ‘non-intimidating access to traumatic memories’ and allowing for a regained sense of self-control and for release of anger. In another study, the effectiveness of drumming for substance use disorder was heavily linked to its ability to induce relaxation and ‘release’ emotional trauma (Winkelman, 2003). Interestingly, both of these studies highlighted the effect of drumming on an increased sense of belonging, intimacy and connectedness, perhaps a reflection of our own cross-brain coherence findings. Further investigation of drumming in high-arousal and high-anxiety disorders within a neuroscientific framework could improve the specificity and efficacy of treatment of these disorders, particularly within social contexts.

Limitations

The limitations of fNIRS are balanced by advantages that enable dual-brain imaging under live, natural, face-to-face conditions. To our knowledge, this study is the first to investigate the neural correlates of nonverbal auditory communication of emotion in an ecologically valid setting. One unavoidable limitation is that fNIRS acquisition is restricted to cortical activity, owing to the limited penetration of near-infrared light through the scalp and skull. This excludes important limbic and striatal structures known to be active in the musical induction and perception of emotion (Blood and Zatorre, 2001; Brown et al., 2004; Koelsch, 2010; Peretz et al., 2013).

Regarding our behavioral data, although drum response correlated with both arousal and valence, the negative correlation between drum response and valence may actually be driven by arousal. The arousal and valence distributions of our IAPS subset (Figure S1, Supplementary Materials) show a relative lack of images with both low valence and low arousal. Because of this bias, which mirrors a similar bias in the complete IAPS image set, low-valence images in our subset are predominantly high in arousal, so the observed increase in drum response with lower valence could reflect the accompanying increase in arousal.
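The confound described above can be illustrated with a small simulation. The Python sketch below generates hypothetical ratings (not the study's data) on a 1 to 9 scale, leaves the low-valence/low-arousal quadrant empty, and assumes that drum response depends on arousal alone. Under these assumptions the raw drum–valence correlation comes out negative, while the first-order partial correlation controlling for arousal falls close to zero. The sampling scheme, effect size, noise level and the use of partial correlation as a check are illustrative assumptions, not the analysis reported in this study.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x, y, z):
    """First-order partial correlation of x and y, controlling for z."""
    rxy, rxz, ryz = pearson(x, y), pearson(x, z), pearson(y, z)
    return (rxy - rxz * ryz) / math.sqrt((1 - rxz ** 2) * (1 - ryz ** 2))


def simulate(n=5000, seed=0):
    """Hypothetical ratings: valence and arousal uniform on [1, 9], except that
    the low-valence/low-arousal quadrant is left empty (assumed sampling gap).
    Drum response is assumed to depend on arousal only, plus Gaussian noise."""
    rng = random.Random(seed)
    valence, arousal, drum = [], [], []
    while len(valence) < n:
        v, a = rng.uniform(1, 9), rng.uniform(1, 9)
        if v < 5 and a < 5:
            continue  # mimic the scarcity of low-valence, low-arousal images
        valence.append(v)
        arousal.append(a)
        drum.append(2.0 * a + rng.gauss(0, 1))  # no direct valence effect
    return valence, arousal, drum


valence, arousal, drum = simulate()
r_raw = pearson(drum, valence)                      # negative, via arousal
r_partial = partial_corr(drum, valence, arousal)    # near zero
print(f"corr(drum, valence)           = {r_raw:.2f}")
print(f"corr(drum, valence | arousal) = {r_partial:.2f}")
```

Exact values vary with the seed and sample size; the point is only that an apparent valence effect can arise with no direct valence dependence when the stimulus set under-samples one quadrant of the valence–arousal space.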

In the comparison of drumming and talking conditions, we recognize that the region of greater TPJ activation during drumming likely includes some contribution from nearby SMC, as expected for a motor task such as drumming. Nevertheless, the higher probability assigned to TPJ regions by our digitization process, together with the breadth and significance of the activity observed in this area, gives us confidence that TPJ activation is a substantial component of the drumming greater than talking contrast. This tentative result invites further investigation of drumming, relative to talking, as a communication modality that elicits social–emotional engagement and may have clinical application.

Finally, our subject population of mostly college students may limit generalizability. In particular, although drumming experience was very low, average musical expertise was moderate and may have facilitated subjects’ communication of emotion through drumming (Methods, Table 1). Further research should replicate these results in drum-naïve and music-naïve populations.

Our study demonstrated a particular contribution of drumming to emotional communication that is associated with activity in the right TPJ. The sensitivity observed during listening in the STG and SMG of the right TPJ, a canonical center for social and emotional processing, has implications for social–emotional psychopathology. These findings can inform future research on nonverbal auditory communication in clinical contexts ranging from autism to PTSD.

Supplementary Material

Acknowledgements

We thank Marlon Sobol for offering his expertise to create the participant ‘training video’ on how to use the drums. This study was partially supported by the following grants: NIH NIMH R01 MH 107513 (J.H.); R01 MH111629 (J.H.); and R37 HD 090153. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

References

- Adolphs R. (2003). Cognitive neuroscience of human social behaviour. Nature Reviews Neuroscience, 4(3), 165.

- Allison T., Puce A., McCarthy G. (2000). Social perception from visual cues: role of the STS region. Trends in Cognitive Sciences, 4(7), 267–78.

- Arhine A. (2009). Speech surrogates of Africa: a study of the Fante mmensuon. Legon Journal of the Humanities, 20, 105–22.

- Baker F.A., Gleadhill L.M., Dingle G.A. (2007). Music therapy and emotional exploration: exposing substance abuse clients to the experiences of non-drug-induced emotions. The Arts in Psychotherapy, 34(4), 321–30.

- Banse R., Scherer K.R. (1996). Acoustic profiles in vocal emotion expression. Journal of Personality and Social Psychology, 70(3), 614.

- Bensimon M., Amir D., Wolf Y. (2008). Drumming through trauma: music therapy with post-traumatic soldiers. The Arts in Psychotherapy, 35(1), 34–48.

- Blood A.J., Zatorre R.J. (2001). Intensely pleasurable responses to music correlate with activity in brain regions implicated in reward and emotion. Proceedings of the National Academy of Sciences, 98(20), 11818–23.

- Blood A.J., Zatorre R.J., Bermudez P., Evans A.C. (1999). Emotional responses to pleasant and unpleasant music correlate with activity in paralimbic brain regions. Nature Neuroscience, 2(4).

- Boddaert N., Zilbovicius M. (2002). Functional neuroimaging and childhood autism. Pediatric Radiology, 32(1), 1–7.

- Brothers L. (1990). The social brain: a project for integrating primate behavior and neurophysiology in a new domain. Concepts in Neuroscience, 1, 27–51.

- Brown S., Martinez M.J., Parsons L.M. (2004). Passive music listening spontaneously engages limbic and paralimbic systems. Neuroreport, 15(13), 2033–7.

- Carrington J.F. (1971). The talking drums of Africa. Scientific American, 225(6), 90–5.

- Carter R.M., Huettel S.A. (2013). A nexus model of the temporal–parietal junction. Trends in Cognitive Sciences, 17(7), 328–36.

- Casanova M.F., Buxhoeveden D.P., Switala A.E., Roy E. (2002). Minicolumnar pathology in autism. Neurology, 58(3), 428–32.

- Castelli F., Frith C., Happé F., Frith U. (2002). Autism, Asperger syndrome and brain mechanisms for the attribution of mental states to animated shapes. Brain, 125(8), 1839–49.

- Cevasco A.M., Kennedy R., Generally N.R. (2005). Comparison of movement-to-music, rhythm activities, and competitive games on depression, stress, anxiety, and anger of females in substance abuse rehabilitation. Journal of Music Therapy, 42(1), 64–80.

- Cheng X., Li X., Hu Y. (2015). Synchronous brain activity during cooperative exchange depends on gender of partner: a fNIRS-based hyperscanning study. Human Brain Mapping, 36(6), 2039–48.

- Cui X., Bray S., Bryant D.M., Glover G.H., Reiss A.L. (2011). A quantitative comparison of NIRS and fMRI across multiple cognitive tasks. Neuroimage, 54(4), 2808–21.

- De Bellis M.D., Keshavan M.S., Frustaci K., et al. (2002). Superior temporal gyrus volumes in maltreated children and adolescents with PTSD. Biological Psychiatry, 51(7), 544–52.

- De Bellis M.D., Keshavan M.S., Shifflett H., et al. (2002). Superior temporal gyrus volumes in pediatric generalized anxiety disorder. Biological Psychiatry, 51(7), 553–62.

- Dommer L., Jäger N., Scholkmann F., Wolf M., Holper L. (2012). Between-brain coherence during joint n-back task performance: a two-person functional near-infrared spectroscopy study. Behavioural Brain Research, 234(2), 212–22.

- Dravida S., Noah J.A., Zhang X., Hirsch J. (2017). Comparison of oxyhemoglobin and deoxyhemoglobin signal reliability with and without global mean removal for digit manipulation motor tasks. Neurophotonics, 5(1), 011006.

- Eerola T., Friberg A., Bresin R. (2013). Emotional expression in music: contribution, linearity, and additivity of primary musical cues. Frontiers in Psychology, 4.

- Eggebrecht A.T., Ferradal S.L., Robichaux-Viehoever A., et al. (2014). Mapping distributed brain function and networks with diffuse optical tomography. Nature Photonics, 8(6), 448–54.

- Eggebrecht A.T., White B.R., Ferradal S.L., et al. (2012). A quantitative spatial comparison of high-density diffuse optical tomography and fMRI cortical mapping. Neuroimage, 61(4), 1120–8.

- Erkkilä J., Punkanen M., Fachner J., et al. (2011). Individual music therapy for depression: randomised controlled trial. The British Journal of Psychiatry, 199(2), 132–9.

- Ferradal S.L., Eggebrecht A.T., Hassanpour M., Snyder A.Z., Culver J.P. (2014). Atlas-based head modeling and spatial normalization for high-density diffuse optical tomography: in vivo validation against fMRI. Neuroimage, 85, 117–26.

- Ferrari M., Quaresima V. (2012). A brief review on the history of human functional near-infrared spectroscopy (fNIRS) development and fields of application. Neuroimage, 63(2), 921–35.

- Frith C.D. (2007). The social brain? Philosophical Transactions of the Royal Society of London B: Biological Sciences, 362(1480), 671–8.

- Funane T., Kiguchi M., Atsumori H., Sato H., Kubota K., Koizumi H. (2011). Synchronous activity of two people's prefrontal cortices during a cooperative task measured by simultaneous near-infrared spectroscopy. Journal of Biomedical Optics, 16(7), 077011.

- Gabrielsson A., Lindström E. (2001). The influence of musical structure on emotional expression. In P.N. Juslin & J.A. Sloboda (Eds.), Music and Emotion: Theory and Research (Series in Affective Science), pp. 223–48. New York, NY, USA: Oxford University Press.

- Gabrielsson A., Lindström E. (2010). The role of structure in the musical expression of emotions. Handbook of Music and Emotion: Theory, Research, Applications, 367–400.

- Gagnon L., Yücel M.A., Boas D.A., Cooper R.J. (2014). Further improvement in reducing superficial contamination in NIRS using double short separation measurements. Neuroimage, 85, 127–35.

- Gleick J. (2011). The Information: A History, A Theory, A Flood (Gleick, J.; 2011) [Book Review]. IEEE Transactions on Information Theory, 57(9), 6332–3.

- Hirsch J., Zhang X., Noah J.A., Ono Y. (2017). Frontal temporal and parietal systems synchronize within and across brains during live eye-to-eye contact. Neuroimage, 157, 314–30.

- Hirsch J., Noah J.A., Zhang X., Dravida S., Ono Y. (2018). A cross-brain neural mechanism for human-to-human verbal communication. Social Cognitive and Affective Neuroscience, in press.

- Holper L., Scholkmann F., Wolf M. (2012). Between-brain connectivity during imitation measured by fNIRS. Neuroimage, 63(1), 212–22.

- Husain G., Thompson W.F., Schellenberg E.G. (2002). Effects of musical tempo and mode on arousal, mood, and spatial abilities. Music Perception: An Interdisciplinary Journal, 20(2), 151–71.

- Ilie G., Thompson W.F. (2006). A comparison of acoustic cues in music and speech for three dimensions of affect. Music Perception: An Interdisciplinary Journal, 23(4), 319–30.

- Jiang J., Chen C., Dai B., et al. (2015). Leader emergence through interpersonal neural synchronization. Proceedings of the National Academy of Sciences, 112(14), 4274–9.

- Jou R.J., Minshew N.J., Keshavan M.S., Vitale M.P., Hardan A.Y. (2010). Enlarged right superior temporal gyrus in children and adolescents with autism. Brain Research, 1360, 205–12.

- Juslin P.N., Laukka P. (2003). Communication of emotions in vocal expression and music performance: different channels, same code? Psychological Bulletin, 129(5), 770.

- Kato T. (2004). Principle and technique of NIRS-imaging for human brain FORCE: fast-oxygen response in capillary event. Paper presented at the International Congress Series.

- Kim J., André E. (2008). Emotion recognition based on physiological changes in music listening. IEEE Transactions on Pattern Analysis and Machine Intelligence, 30(12), 2067–83.

- Kim J., Wigram T., Gold C. (2009). Emotional, motivational and interpersonal responsiveness of children with autism in improvisational music therapy. Autism, 13(4), 389–409.

- Koelsch S. (2010). Towards a neural basis of music-evoked emotions. Trends in Cognitive Sciences, 14(3), 131–7.

- Koike K., Suzuki H., Saito H. (1998). Prosodic parameters in emotional speech. Paper presented at the International Conference on Spoken Language Processing, Sydney.

- LaGasse A.B. (2014). Effects of a music therapy group intervention on enhancing social skills in children with autism. Journal of Music Therapy, 51(3), 250–75.

- Lang P., Bradley M., Cuthbert B. (2008). International affective picture system (IAPS): affective ratings of pictures and instruction manual. Technical report A-8, University of Florida, Gainesville, FL.

- Laukka P., Gabrielsson A. (2000). Emotional expression in drumming performance. Psychology of Music, 28(2), 181–9.

- Lawrence E., Shaw P., Giampietro V., Surguladze S., Brammer M., David A. (2006). The role of ‘shared representations’ in social perception and empathy: an fMRI study. Neuroimage, 29(4), 1173–84.

- Leitman D.I., Wolf D.H., Ragland J.D., et al. (2010). “It's not what you say, but how you say it”: a reciprocal temporo-frontal network for affective prosody. Frontiers in Human Neuroscience, 4.

- Liu L. (2005). The Chinese Neolithic: Trajectories to Early States. Cambridge University Press.

- Maratos A., Gold C., Wang X., Crawford M. (2008). Music therapy for depression. The Cochrane Library.

- Mazziotta J., Toga A., Evans A., et al. (2001). A probabilistic atlas and reference system for the human brain: International Consortium for Brain Mapping (ICBM). Philosophical Transactions of the Royal Society of London B: Biological Sciences, 356(1412), 1293–322.

- Møller A.S., Odell-Miller H., Wigram T. (2002). Indications in music therapy: evidence from assessment that can identify the expectations of music therapy as a treatment for autistic spectrum disorder (ASD); meeting the challenge of evidence based practice. British Journal of Music Therapy, 16(1), 11–28.

- Müller J.L., Gänßbauer S., Sommer M., et al. (2008). Gray matter changes in right superior temporal gyrus in criminal psychopaths. Evidence from voxel-based morphometry. Psychiatry Research: Neuroimaging, 163(3), 213–22.

- Narumoto J., Okada T., Sadato N., Fukui K., Yonekura Y. (2001). Attention to emotion modulates fMRI activity in human right superior temporal sulcus. Cognitive Brain Research, 12(2), 225–31.

- Noah J.A., Dravida S., Zhang X., Yahil S., Hirsch J. (2017). Neural correlates of conflict between gestures and words: a domain-specific role for a temporal-parietal complex. PLoS One, 12(3), e0173525.

- Noah J.A., Ono Y., Nomoto Y., et al. (2015). fMRI validation of fNIRS measurements during a naturalistic task. Journal of Visualized Experiments: JoVE, 100.

- Okamoto M., Dan I. (2005). Automated cortical projection of head-surface locations for transcranial functional brain mapping. Neuroimage, 26(1), 18–28.

- Oldfield R.C. (1971). The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia, 9(1), 97–113.

- Oluga S.O., Babalola H. (2012). Drummunication: the trado-indigenous art of communicating with talking drums in Yorubaland. Global Journal of Human Social Science, Arts and Humanities, 12(11).

- Ong W.J. (1977). African talking drums and oral noetics. New Literary History, 8(3), 411–29.

- Osaka N., Minamoto T., Yaoi K., Azuma M., Shimada Y.M., Osaka M. (2015). How two brains make one synchronized mind in the inferior frontal cortex: fNIRS-based hyperscanning during cooperative singing. Frontiers in Psychology, 6.

- Pelphrey K.A., Carter E.J. (2008). Charting the typical and atypical development of the social brain. Development and Psychopathology, 20(4), 1081–102.

- Peng S.-M., Koo M., Kuo J.-C. (2010). Effect of group music activity as an adjunctive therapy on psychotic symptoms in patients with acute schizophrenia. Archives of Psychiatric Nursing, 24(6), 429–34.

- Penny W.D., Friston K.J., Ashburner J.T., Kiebel S.J., Nichols T.E. (2011). Statistical Parametric Mapping: The Analysis of Functional Brain Images. Academic Press.

- Peretz I., Aubé W., Armony J.L. (2013). Towards a neurobiology of musical emotions. The Evolution of Emotional Communication: From Sounds in Nonhuman Mammals to Speech and Music in Man, 277.

- Piva M., Zhang X., Noah J.A., Chang S.W., Hirsch J. (2017). Distributed neural activity patterns during human-to-human competition. Frontiers in Human Neuroscience, 11, 571.

- Plichta M.M., Gerdes A.B., Alpers G.W., et al. (2011). Auditory cortex activation is modulated by emotion: a functional near-infrared spectroscopy (fNIRS) study. Neuroimage, 55(3), 1200–7.

- Randall J.A. (2001). Evolution and function of drumming as communication in mammals. American Zoologist, 41(5), 1143–56.

- Remedios R., Logothetis N.K., Kayser C. (2009). Monkey drumming reveals common networks for perceiving vocal and nonvocal communication sounds. Proceedings of the National Academy of Sciences, 106(42), 18010–5.

- Reschke-Hernández A.E. (2011). History of music therapy treatment interventions for children with autism. Journal of Music Therapy, 48(2), 169–207.

- Robins D.L., Hunyadi E., Schultz R.T. (2009). Superior temporal activation in response to dynamic audio-visual emotional cues. Brain and Cognition, 69(2), 269–78.

- Rorden C., Brett M. (2000). Stereotaxic display of brain lesions. Behavioural Neurology, 12(4), 191–200.

- Russell J.A. (1980). A circumplex model of affect. Journal of Personality and Social Psychology, 39(6), 1161–78. doi:10.1037/h0077714

- Sato H., Yahata N., Funane T., et al. (2013). A NIRS–fMRI investigation of prefrontal cortex activity during a working memory task. Neuroimage, 83, 158–73.

- Scholkmann F., Holper L., Wolf U., Wolf M. (2013). A new methodical approach in neuroscience: assessing inter-personal brain coupling using functional near-infrared imaging (fNIRI) hyperscanning. Frontiers in Human Neuroscience, 7.

- Silani G., Lamm C., Ruff C.C., Singer T. (2013). Right supramarginal gyrus is crucial to overcome emotional egocentricity bias in social judgments. Journal of Neuroscience, 33(39), 15466–76.

- Singh A.K., Okamoto M., Dan H., Jurcak V., Dan I. (2005). Spatial registration of multichannel multi-subject fNIRS data to MNI space without MRI. Neuroimage, 27(4), 842–51.

- Srinivasan S.M., Bhat A.N. (2013). A review of “music and movement” therapies for children with autism: embodied interventions for multisystem development. Frontiers in Integrative Neuroscience, 7.

- Stern T. (1957). Drum and whistle “languages”: an analysis of speech surrogates. American Anthropologist, 59(3), 487–506.

- Strangman G., Culver J.P., Thompson J.H., Boas D.A. (2002). A quantitative comparison of simultaneous BOLD fMRI and NIRS recordings during functional brain activation. Neuroimage, 17(2), 719–31.

- Tachtsidis I., Scholkmann F. (2016). False positives and false negatives in functional near-infrared spectroscopy: issues, challenges, and the way forward. Neurophotonics, 3(3), 031405.

- Tak S., Uga M., Flandin G., Dan I., Penny W. (2016). Sensor space group analysis for fNIRS data. Journal of Neuroscience Methods, 264, 103–12.

- Talwar N., Crawford M.J., Maratos A., Nur U., McDermott O., Procter S. (2006). Music therapy for in-patients with schizophrenia. The British Journal of Psychiatry, 189(5), 405–9.

- Tomoda A., Sheu Y.-S., Rabi K., et al. (2011). Exposure to parental verbal abuse is associated with increased gray matter volume in superior temporal gyrus. Neuroimage, 54, S280–6.

- Vanutelli M.E., Crivelli D., Balconi M. (2015). Two-in-one: inter-brain hyperconnectivity during cooperation by simultaneous EEG-fNIRS recording. Neuropsychological Trends, 18(Novembre), 156–6.

- Wan C.Y., Bazen L., Baars R., et al. (2011). Auditory-motor mapping training as an intervention to facilitate speech output in non-verbal children with autism: a proof of concept study. PLoS One, 6(9), e25505.

- Winkelman M. (2003). Complementary therapy for addiction: “drumming out drugs”. American Journal of Public Health, 93(4), 647–51.

- Wolf R.K. (2000). Embodiment and ambivalence: emotion in South Asian Muharram drumming. Yearbook for Traditional Music, 32, 81–116.

- Ye J.C., Tak S., Jang K.E., Jung J., Jang J. (2009). NIRS-SPM: statistical parametric mapping for near-infrared spectroscopy. Neuroimage, 44(2), 428–47.

- Zatorre R.J. (1988). Pitch perception of complex tones and human temporal-lobe function. The Journal of the Acoustical Society of America, 84(2), 566–72.

- Zatorre R.J., Evans A.C., Meyer E., Gjedde A. (1992). Lateralization of phonetic and pitch discrimination in speech processing. Science, 846–9.

- Zhang X., Noah J.A., Dravida S., Hirsch J. (2017). Signal processing of functional NIRS data acquired during overt speaking. Neurophotonics, 4(4), 041409.

- Zhang X., Noah J.A., Hirsch J. (2016). Separation of the global and local components in functional near-infrared spectroscopy signals using principal component spatial filtering. Neurophotonics, 3(1), 015004.

- Zilbovicius M., Garreau B., Samson Y., et al. (1995). Delayed maturation of the frontal cortex in childhood autism. American Journal of Psychiatry, 152(2), 248–52.
