Abstract

A major obstacle to understanding neural coding and computation is the fact that experimental recordings typically sample only a small fraction of the neurons in a circuit. Measured neural properties are skewed by interactions between recorded neurons and the “hidden” portion of the network. To properly interpret neural data and determine how biological structure gives rise to neural circuit function, we thus need a better understanding of the relationships between measured effective neural properties and the true underlying physiological properties. Here, we focus on how the effective spatiotemporal dynamics of the synaptic interactions between neurons are reshaped by coupling to unobserved neurons. We find that the effective interactions from a pre-synaptic neuron r′ to a post-synaptic neuron r can be decomposed into a sum of the true interaction from r′ to r plus corrections from every directed path from r′ to r through unobserved neurons. Importantly, the resulting formula reveals when the hidden units have, or do not have, major effects on reshaping the interactions among observed neurons. As a particular example of interest, we derive a formula for the impact of hidden units in random networks with “strong” coupling, that is, connection weights that scale with $1/\sqrt{N}$, where N is the network size, precisely the scaling observed in recent experiments. With this quantitative relationship between measured and true interactions, we can study how network properties shape effective interactions, which properties are relevant for neural computations, and how to manipulate effective interactions.

Author summary

No experiment in neuroscience can record from more than a tiny fraction of the total number of neurons present in a circuit. This severely complicates measurement of a network’s true properties, as unobserved neurons skew measurements away from what would be measured if all neurons were observed. For example, the measured post-synaptic response of a neuron to a spike from a particular pre-synaptic neuron incorporates direct connections between the two neurons as well as the effect of any number of indirect connections, including through unobserved neurons. To understand how measured quantities are distorted by unobserved neurons, we calculate a general relationship between measured “effective” synaptic interactions and the ground-truth interactions in the network. This allows us to identify conditions under which hidden neurons substantially alter measured interactions. Moreover, it provides a foundation for future work on manipulating effective interactions between neurons to better understand and potentially alter circuit function—or dysfunction.

Introduction

Establishing relationships between a network’s architecture and its function is a fundamental problem in neuroscience and network science in general. Not only is the architecture of a neural circuit intimately related to its function, but pathologies in wiring between neurons are believed to contribute significantly to circuit dysfunction [1–15].

A major obstacle to uncovering structure-function relationships is the fact that most experiments can only directly observe small fractions of an active network. State-of-the-art methods for determining connections between neurons in living networks infer them by fitting statistical models to neural spiking data [16–25]. However, the fact that we cannot observe all neurons in a network means that the statistically inferred connections are “effective” connections, representing some dynamical relationship between the activity of nodes but not necessarily a true physical connection [24–33]. Intuitively, reverberations through the network must contribute to these effective interactions; our goal in this work is to formalize this intuition and establish a quantitative relationship between measured effective interactions and the true synaptic interactions between neurons. With such a relationship in hand we can study the effective interactions generated by different choices of synaptic properties and circuit architectures, allowing us to not only improve interpretation of experimental measurements but also probe how circuit structure is tied to function.

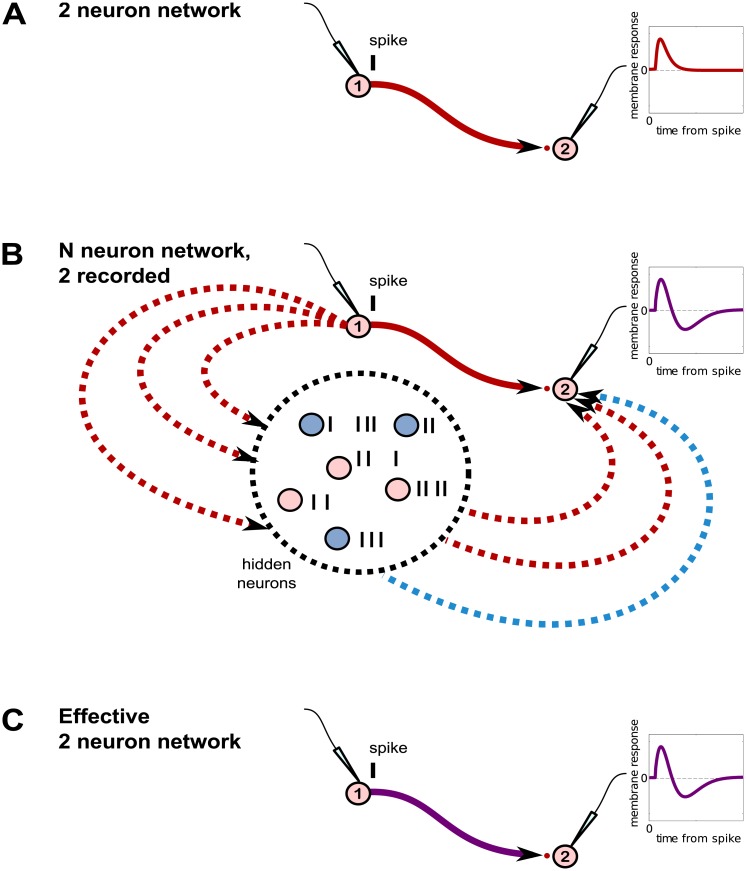

The intuitive relationship between true and measured (effective) interactions is demonstrated schematically in Fig 1. Fig 1A demonstrates that in a fully-sampled network the directed interactions between neurons (here, the change in membrane potential of the post-synaptic neuron after it receives a spike from the pre-synaptic neuron) can be measured directly, as observation of the complete population means different inputs to a neuron are not conflated. However, as shown in Fig 1B, the vastly more realistic scenario is that the recorded neurons are part of a larger network in which many neurons are unobserved or “hidden.” The response of the post-synaptic neuron 2 to a spike from pre-synaptic neuron 1 is a combination of both the direct response to neuron 1’s input and input from the hidden network driven by neuron 1’s spiking. Thus, the measured membrane response of neuron 2 due to a spike fired by neuron 1, which we term the “effective interaction” from neuron 1 to 2, may be quite different from the true interaction. It is well-known that circuit connections between recorded neurons, as drawn in Fig 1C, are at best effective circuits that encapsulate the effects of unobserved neurons, but are not necessarily indicative of the true circuit architecture. The formalized relationship we will establish in the Results is given in Fig 2.

Fig 1. The hidden unit problem.

A. In a hypothetical circuit consisting of just two recorded neurons (no hidden neurons), we can measure the strength and time course of the directed interactions between neurons by measuring the response of the post-synaptic neuron’s membrane potential to a spike from the pre-synaptic neuron. B. Realistically, there are many more neurons in the network that are unrecorded and hence “hidden.” In this schematic, only two neurons are observed. The hidden neurons are driven by input from the presynaptic neuron labeled 1, and provide input to the recorded post-synaptic neuron labeled 2. Because the activity of the hidden neurons is not controlled, the membrane response reflects a combination of neuron 1’s direct influence on neuron 2 and its indirect influence through the hidden network. C. The “effective” 2 neuron network observed experimentally.

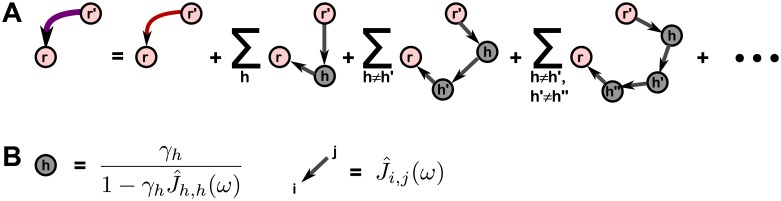

Fig 2. Expansion of effective interactions into contributions from hidden paths.

A. Graphical representation of Eq (2). The linear response of the hidden network, $\hat{\Gamma}_{h,h'}(\omega)$, has been expanded as a series (corresponding to the grey hidden nodes and links between them), such that each term in the overall series can be interpreted as a contribution from a path through which the pre-synaptic neuron r′ is able to send a signal to post-synaptic neuron r via 1, 2, etc. hidden neurons. This expression holds for any pair of neurons in the recorded subset. B. Quantitative expressions for each diagram in the series can be read off by assigning the shown factors for each hidden neuron node and each link between neurons, recorded or hidden, and multiplying them together. (No factor is assigned to the recorded neuron nodes). γh is the gain of neuron h and $\hat{J}_{i,j}(\omega)$ is the true interaction from j to i in the frequency domain.

Even once we establish a relationship between the effective and true connections, we will in general not be able to use measurements of effective interactions to extrapolate back to a unique set of true connections; at best, we may be able to characterize some of the statistical properties of the full network. The obstacle is that several different networks—different both in terms of architecture and intrinsic neural properties—may give rise to the same network behaviors, a theme of much focus in the neuroscience literature [34–39]. That is, inferring the connections and intrinsic neural properties in a full network from activity recordings from a subset of neurons is in general an ill-posed problem, possessing several degenerate solutions. Several statistical inference methods have been constructed to attempt to infer the presence of, and connections to, hidden neurons [28, 40–42]; the subset of the degenerate solutions that each of these methods finds will depend on the particular assumptions of the inference method (e.g., the regularization penalties applied). As an example, we demonstrate two small circuit motifs that give rise to nearly identical effective interactions, despite crucial differences between the circuits (Figs 3 and 4).

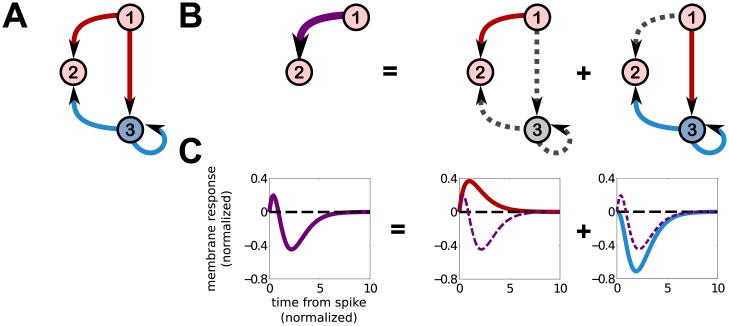

Fig 3. 3-neuron feedforward inhibition circuit.

A: A 3-neuron circuit displaying feedforward inhibition. Neuron 1 provides excitatory input to neurons 2 and 3, while neuron 3 provides inhibitory input to neuron 2. Neuron 3 also has a self-history coupling, denoted by an autaptic loop, which implements a refractory period in this circuit model. B: Leftmost, the effective interaction from neuron 1 to 2 when neuron 3 is unobserved. Subsequent plots decompose this interaction into contributions from neuron 1’s direct input to neuron 2, and its indirect input through neuron 3. The indirect input through neuron 3 also takes account of neuron 3’s self-history interaction. C. Leftmost, the effective interaction (membrane response) from neuron 1 to 2, subsequently decomposed into contributions from the direct interaction and the indirect interaction from 1 to 2.

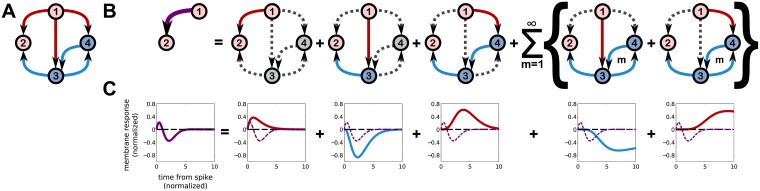

Fig 4. Different complete circuits may underlie similar effective circuits.

A: A circuit very similar to that in Fig 3, except that neuron 1 also provides excitatory input to neuron 4, which in turn provides inhibitory input to neuron 3. The self-history coupling of neuron 3 to itself has also been removed in this example. B: Leftmost, the effective interaction from neuron 1 to 2, which is qualitatively and quantitatively similar to the effective interaction shown in Fig 3. Subsequent plots indicate each path through the circuit that neuron 1 can send a signal to neuron 2 through the hidden neurons 3 and 4. C. Leftmost, the effective interaction from neuron 1 to 2. Subsequent plots decompose this interaction into contributions from the paths shown above in B.

Understanding the effect of hidden neurons on small circuit motifs is only a piece of the hidden neuron puzzle, and a full understanding necessitates scaling up to large circuits containing many different motifs. An analytic relationship between true and effective interactions greatly facilitates such analyses because it lets us study the structure of the relationship directly, rather than trying to extract insight indirectly through simulations. In particular, in going to large networks we focus on the degree to which hidden neurons skew measured interactions (Fig 5), and how we can predict the features of effective interactions we expect to measure when recording from only a subset of neurons in a network with hypothesized true interactions (Fig 6).

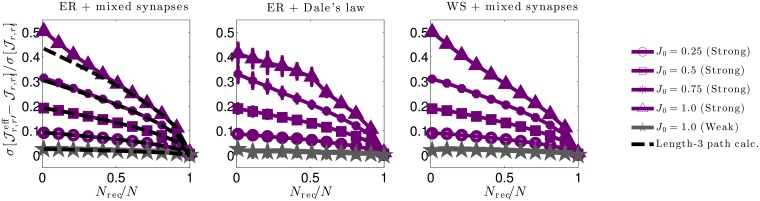

Fig 5. Relative changes in interaction strength due to hidden neurons for three network types.

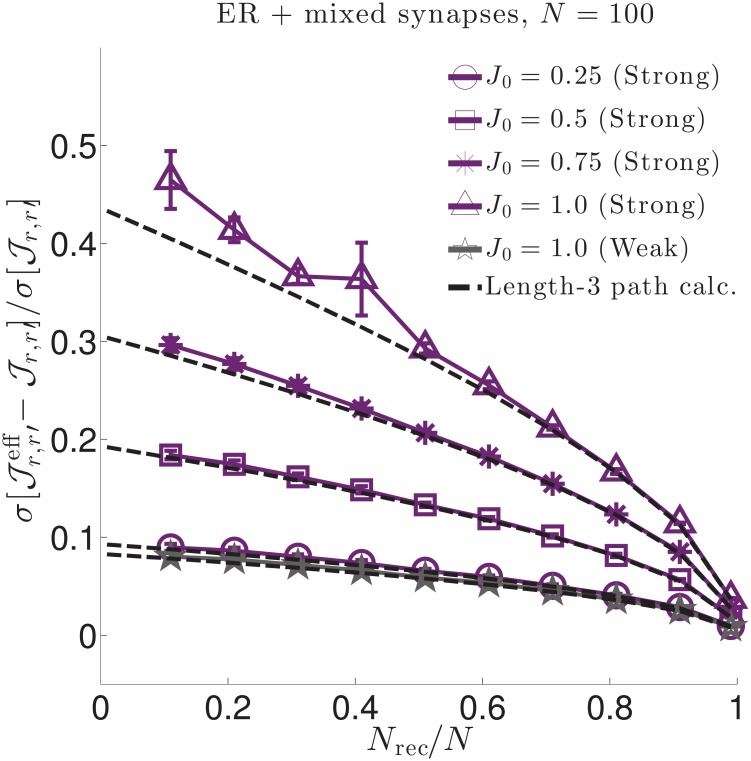

We quantify relative changes in interaction strength between effective ($\mathcal{J}^{\rm eff}_{r,r'}$) and true ($\mathcal{J}_{r,r'}$) interactions by the (sample) root-mean-square deviation, $\sigma[\mathcal{J}^{\rm eff}_{r,r'} - \mathcal{J}_{r,r'}]$, normalized by the true synaptic weight (sample) standard deviation $\sigma[\mathcal{J}_{r,r'}]$. We do so for three (sparse) network types: Left. An Erdős-Rényi (ER) network with “mixed synapses” (i.e., Dale’s law not imposed) with normally distributed synaptic weights. Middle. An ER network with Dale’s law imposed (i.e., each neuron’s outgoing synaptic weights all have the same sign). Right. A Watts-Strogatz (WS) small world network with 30% rewired connections and mixed synapses. All network types yield qualitatively similar results. In each plot solid lines are numerical estimates of the sample standard deviation of the difference between effective coupling weights and true coupling weights between neurons r ≠ r′, normalized by the standard deviation of $\mathcal{J}_{r,r'}$. These estimates account for all paths through hidden neurons. Purple lines correspond to synaptic weights with standard deviation $J_0/\sqrt{pN}$ (strong coupling), while grey lines correspond to synaptic weights with standard deviation $J_0/(pN)$ (weak coupling). For weak 1/N coupling (grey), the ratio of standard deviations is $\mathcal{O}(1/\sqrt{N})$. For strong $1/\sqrt{N}$ coupling (purple) the ratio is $\mathcal{O}(1)$ and grows in strength as the fraction of recorded neurons f = Nrec/N decreases or the typical synaptic strength J0 increases. The dashed black lines in the left plot show theoretical estimates accounting only for hidden paths of length-3 connecting recorded neurons (Eq (4)). Deviations from the length-3 prediction at small f and large J0 indicate that contributions from circuit paths involving many hidden neurons are significant in these regimes.

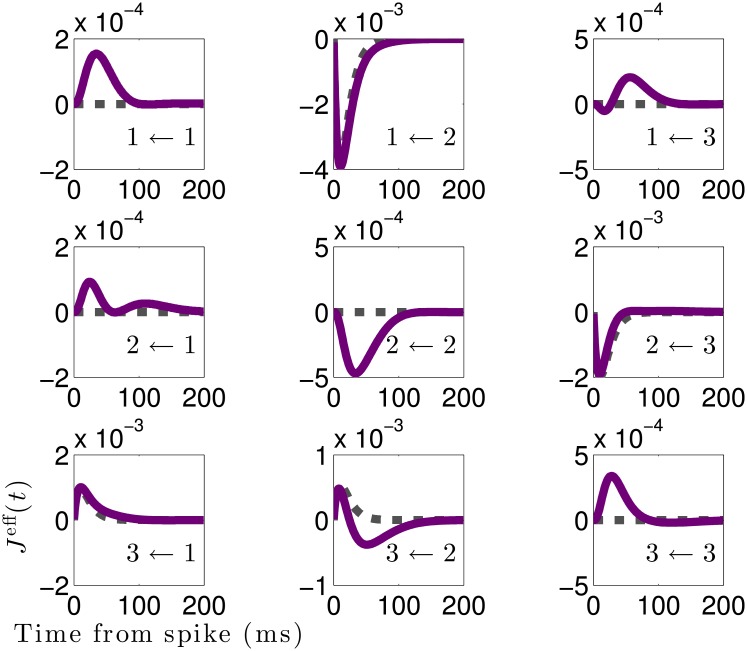

Fig 6. Effective interactions between recorded neurons differ qualitatively from true interactions.

Effective interactions (solid purple) versus true coupling filters (dashed black) for Nrec = 3 recorded neurons in a network of N = 1000 total neurons. Inset labels i ← j indicate the interaction is from neuron j to i, for i, j ∈ {1, 2, 3}. The simulated network has an Erdős-Rényi connectivity with sparsity p = 0.2 and normally distributed non-zero weights with zero mean and standard deviation $J_0/\sqrt{pN}$. Although the network is sparse, the effective interactions are not: non-zero effective interactions develop where no direct connection exists. The effective interactions can differ qualitatively from the true interactions, as evidenced by the biphasic 3 ← 2 effective interaction, whereas the true 3 ← 2 is purely excitatory.

Establishing a theoretical relationship between measured and “true” interactions will thus enable us to study how one can alter the network properties to reshape the effective interactions, and will be of immediate importance not only for interpreting experimental measurements of synaptic interactions, but for elucidating their role in neural coding. Moreover, understanding how to shape effective interactions between neurons may yield new avenues for altering, in a principled way, the computations performed by a network, which could have applications for treating neurological diseases caused in part by pathological synaptic interactions.

Results

Overview

Our goal is to derive a relationship between the effective synaptic interactions between recorded neurons and the true synaptic interactions that would be obtained if the network were fully observed. This makes explicit how the synaptic interactions between neurons are modified by unobserved neurons in the network, and under what conditions these modifications are—or are not—significant. We derive this result first, using a probabilistic model of network activity in which all properties are known. We then build intuition by applying our result to two simple networks: a 3-neuron feedforward-inhibition circuit in which we are able to qualitatively reproduce measurements by Pouille and Scanziani [43], and a 4-neuron circuit that demonstrates how degeneracies in hidden networks are handled within our framework.

To extend our intuition to larger networks, we then study the effective interactions that would be observed in sparse random networks with N cells and strong synaptic weights that scale as $1/\sqrt{N}$ [44–47], as has been recently observed experimentally [48]. We show how unobserved neurons significantly reshape the effective synaptic interactions away from the ground-truth interactions. This is not the case with “classical” synaptic scaling, in which synaptic strengths are inversely proportional to the number of inputs they receive (assumed proportional to N), as we will also show. (The case of classical scaling has also been studied previously using a different approach in [49–52]).

Model

We model the full network of N neurons as a nonlinear Hawkes process [53], often referred to as a “Generalized linear (point process) model” in neuroscience, and broadly used to fit neural activity data [16–23, 54]. Here we use it as a generative model for network activity, as it approximates common spiking models such as leaky integrate-and-fire systems driven by noisy inputs [55, 56], and is equivalent to current-based leaky integrate-and-fire models with soft-threshold (stochastic) spiking dynamics (see Methods).

To derive an approximate model for an observed subset of the network, we partition the network into recorded neurons (labeled by indices r) and hidden neurons (labeled by indices h). Each recorded neuron has an instantaneous firing rate λr(t) such that the probability that the neuron fires within a small time window [t, t + dt] is λr(t)dt, when conditioned on the inputs to the neuron. The instantaneous firing rate in our model is

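$$\lambda_r(t) = \lambda_0\,\phi\!\left(\mu_r + \sum_{r'} J_{r,r'} \ast \dot{n}_{r'}(t) + \sum_{h} J_{r,h} \ast \dot{n}_{h}(t)\right), \qquad (1)$$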

where λ0 is a characteristic firing rate, ϕ(x) is a non-negative, continuous function, μr is a tonic drive that sets the baseline firing rate of the neuron, and $J_{i,j} \ast \dot{n}_j(t) \equiv \int_{-\infty}^{t} dt'\, J_{i,j}(t-t')\,\dot{n}_j(t')$ is the convolution of the synaptic interaction (or “spike filter”) $J_{i,j}(t)$ with the spike train $\dot{n}_j(t)$ from pre-synaptic neuron j to post-synaptic neuron i, for neural indices i and j that may be either recorded or hidden. In this work we take the tonic drive to be constant in time, and focus on the steady-state network activity in response to this drive. We consider interactions of the form $J_{i,j}(t) = \mathcal{J}_{i,j}\, g_j(t)$, where the temporal waveforms $g_j(t)$ are normalized such that $\int_0^\infty dt\, g_j(t) = 1$ for all neurons j. Because of this normalization, the weight $\mathcal{J}_{i,j}$ carries units of time. We include self-couplings $J_{i,i}(t)$ not to represent autapses, but to account for intrinsic neural properties such as refractory periods ($\mathcal{J}_{i,i} < 0$) or burstiness ($\mathcal{J}_{i,i} > 0$). The firing rates for the hidden neurons follow the same expression with indices h and r interchanged.
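To make the generative model concrete, the following is a minimal discrete-time sketch of a nonlinear Hawkes network. It is an illustration under stated assumptions, not the simulation procedure used in this work: the function name `simulate_hawkes`, the exponential nonlinearity, the exponential waveform g(t) = exp(−t/τ)/τ shared by all neurons, and all parameter values are hypothetical choices.

```python
import numpy as np

def simulate_hawkes(weights, mu, tau=1.0, lam0=1.0, dt=0.01, n_steps=20000, seed=0):
    """Discrete-time sketch of lambda_i(t) = lam0 * exp(mu_i + sum_j (J_ij * n_j)(t)).

    weights[i, j] is the integrated weight from neuron j to neuron i.
    Assumes (hypothetically) an exponential nonlinearity and the waveform
    g(t) = exp(-t / tau) / tau for every neuron, which integrates to 1.
    Returns a boolean array of shape (n_steps, n_neurons).
    """
    rng = np.random.default_rng(seed)
    n = weights.shape[0]
    trace = np.zeros(n)                      # (g * spike train of j)(t) for each j
    spikes = np.zeros((n_steps, n), dtype=bool)
    decay = np.exp(-dt / tau)
    for k in range(n_steps):
        rate = lam0 * np.exp(mu + weights @ trace)
        # P(spike in [t, t + dt]) is approximately rate * dt for small dt
        p_spike = np.minimum(rate * dt, 1.0)
        spikes[k] = rng.random(n) < p_spike
        # filtered spike train decays, and each new spike adds a kick of 1 / tau
        trace = decay * trace + spikes[k] / tau
    return spikes

# Uncoupled neurons should fire at roughly lam0 * exp(mu).
uncoupled = simulate_hawkes(np.zeros((20, 20)), mu=-1.0 * np.ones(20))
mean_rate = uncoupled.mean() / 0.01
```

Marking a subset of the columns of `spikes` as "recorded" and discarding the rest reproduces the partial-observation setting discussed in the text.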

We seek to describe the dynamics of the recorded neurons entirely in terms of their own set of spiking histories, eliminating the dependence on the activity of the hidden neurons. This demands calculating the effective membrane response of the recorded neurons by averaging over the activity of the hidden neurons conditioned on the activity of the recorded neurons. In practice this is intractable to perform exactly [57–60]. Here, we use a mean field approximation to calculate the mean input from the hidden neurons (again, conditioned on the activity of the recorded neurons). The value of deriving such a relationship analytically, as opposed to simply numerically determining the effective interactions, is that the resulting expression will give us insight into how the effective interactions decompose into contributions of different network features, how tuning particular features shapes the effective interactions, and conditions under which we expect hidden units to skew our measurements of connectivity in large partially observed networks.

As shown in detail in the Methods, the instantaneous firing rates of the recorded neurons can then be approximated as

$$\lambda_r(t) \approx \lambda_0\,\phi\!\left(\mu_r^{\rm eff} + \sum_{r'} J^{\rm eff}_{r,r'} \ast \dot{n}_{r'}(t) + \xi_r(t)\right).$$

The effective baselines $\mu_r^{\rm eff}$ are simply modulated by the net tonic input to the neuron, so we do not focus on them here. The ξr(t) are effective noise sources arising from fluctuating input from the hidden network. At the level of our mean field approximation these fluctuations vanish; corrections to the mean field approximation are straightforward and yield non-zero noise correlations, but will not impact our calculation of the effective interactions (see the Methods and SI), so as with the effective baselines we will not focus on the effective noise here.

The effective coupling filters are given in the frequency domain by

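$$\hat{J}^{\rm eff}_{r,r'}(\omega) = \hat{J}_{r,r'}(\omega) + \sum_{h,h'} \hat{J}_{r,h}(\omega)\,\hat{\Gamma}_{h,h'}(\omega)\,\hat{J}_{h',r'}(\omega). \qquad (2)$$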

These results hold for any pair of recorded neurons r′ and r, and any choice of network parameters for which the mean field steady state of the hidden network exists. Here, the νh are the steady-state mean firing rates of the hidden neurons and $\hat{\Gamma}_{h,h'}(\omega)$ is the linear response function of the hidden network to perturbations in the input. That is, Γh,h′(t − t′) is the linear response of hidden neuron h at time t due to a perturbation to the input of neuron h′ at time t′, and incorporates the effects of h′ propagating its signal to h through other hidden neurons, as demonstrated graphically in Fig 2. Both νh and Γh,h′ are calculated in the absence of the recorded neurons. In deriving these results, we have neglected both fluctuations around the mean input from the hidden neurons and higher order filtering of the recorded neuron spikes. For details on the derivations and justification of approximations, see the Methods and Supporting Information (SI).

The effective coupling filters $J^{\rm eff}_{r,r'}(t)$ are what we would, in principle, measure experimentally if we observe only a subset of a network, for example by pairwise recordings shown schematically in Fig 1. For larger sets of recorded neurons, interactions between neurons are typically inferred using statistical methods, an extremely nontrivial task [16–23, 28, 40, 41], and details of the fitting procedure could potentially further skew the inferred interactions away from what would be measured by controlled pairwise recordings. We will put aside these complications here, and assume we have access to an inference procedure that allows us to measure $J^{\rm eff}_{r,r'}(t)$ without error, so that we may focus on their properties and relationship to the ground-truth coupling filters.

Structure of effective coupling filters

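The ground-truth coupling filters $\hat{J}_{r,r'}(\omega)$ (as defined in Eq (1)) are modified by a correction term $\sum_{h,h'} \hat{J}_{r,h}(\omega)\,\hat{\Gamma}_{h,h'}(\omega)\,\hat{J}_{h',r'}(\omega)$. The linear response function $\hat{\Gamma}_{h,h'}(\omega)$ admits a series representation in terms of paths through the network through which neuron r′ is able to send a signal to neuron r via hidden neurons only.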

We may write down a set of “Feynmanesque” graphical rules for explicitly calculating terms in this series [53]. First, we define the input-output gain of a hidden neuron h, $\gamma_h \equiv \lambda_0\,\phi'\!\left(\mu_h + \sum_{h'} \mathcal{J}_{h,h'}\nu_{h'}\right)$, calculated in the absence of recorded neurons. The contribution of each term can then be written down using the following rules, shown graphically in Fig 2: i) for the edge connecting recorded neuron r′ to a hidden neuron hi, assign a factor $\hat{J}_{h_i,r'}(\omega)$; ii) for each node corresponding to a hidden neuron hi, assign a factor $\gamma_{h_i}/\left(1 - \gamma_{h_i}\hat{J}_{h_i,h_i}(\omega)\right)$; iii) for each edge connecting hidden neurons hi ≠ hj, assign a factor $\hat{J}_{h_i,h_j}(\omega)$; and iv) for the edge connecting hidden neuron hj to recorded neuron r, assign a factor $\hat{J}_{r,h_j}(\omega)$. All factors for each path are multiplied together, and all paths are then summed over.

The graphical expansion is reminiscent of recent works expanding correlation functions of linear models of network spiking in terms of network “motifs” [61–63]. Computationally, this expression is practical for calculating the effective interactions in small networks involving only a few hidden neurons (as in the next section), but is generally unwieldy for large networks. In practice, for moderately large networks the linear response matrix can be calculated directly by numerical matrix inversion and an inverse Fourier transform back into the time domain. The utility of the path-length series is the intuitive understanding of the origin of contributions to the effective coupling filters and our ability to analytically analyze the strength of contributions from each path. For example, one immediate insight the path decomposition offers is that neurons only develop effective interactions between one another if there is a path by which one neuron can send a signal to the other.
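The two routes described above, direct matrix inversion and the path-length series, can be compared numerically at zero frequency, where each filter reduces to its integrated weight. The sketch below assumes the hidden-network linear response takes the mean-field form Γ = (I − G J_hh)⁻¹ G with G = diag(γ); this form is an assumption made for illustration and is not quoted from the text. The function names, the uniform gain, and the random test network are likewise hypothetical.

```python
import numpy as np

def effective_weights(J, gamma, recorded):
    """Zero-frequency effective weights among the recorded neurons.

    J[i, j] is the integrated weight from neuron j to neuron i and gamma[h]
    is the gain of neuron h. Assumes Gamma = (I - G J_hh)^{-1} G, G = diag(gamma).
    """
    rec = np.asarray(recorded)
    hid = np.setdiff1d(np.arange(J.shape[0]), rec)
    G = np.diag(gamma[hid])
    J_hh = J[np.ix_(hid, hid)]
    Gamma = np.linalg.solve(np.eye(hid.size) - G @ J_hh, G)
    return J[np.ix_(rec, rec)] + J[np.ix_(rec, hid)] @ Gamma @ J[np.ix_(hid, rec)]

def path_series(J, gamma, recorded, max_hidden):
    """Same quantity as a truncated sum over paths visiting 1..max_hidden hidden nodes.

    Self-couplings are treated here as ordinary steps of the series rather
    than being resummed into a node factor; the two conventions agree when
    the series converges.
    """
    rec = np.asarray(recorded)
    hid = np.setdiff1d(np.arange(J.shape[0]), rec)
    G = np.diag(gamma[hid])
    J_hh = J[np.ix_(hid, hid)]
    term = G.copy()                   # paths through exactly one hidden neuron
    total = np.zeros_like(G)
    for _ in range(max_hidden):
        total += term
        term = G @ J_hh @ term        # extend every path by one hidden step
    return J[np.ix_(rec, rec)] + J[np.ix_(rec, hid)] @ total @ J[np.ix_(hid, rec)]

# Hypothetical sparse random network with strong (1/sqrt(pN)) weights.
rng = np.random.default_rng(1)
n, p, j0 = 200, 0.2, 0.5
J = rng.normal(0.0, j0 / np.sqrt(p * n), (n, n)) * (rng.random((n, n)) < p)
np.fill_diagonal(J, 0.0)
gamma = np.full(n, np.exp(-1.0))      # uniform gain, chosen for illustration only
exact = effective_weights(J, gamma, recorded=[0, 1, 2])
truncated = path_series(J, gamma, recorded=[0, 1, 2], max_hidden=60)
```

Truncating `path_series` at `max_hidden=1` keeps only the shortest hidden paths, which gives a quick way to see how much of the correction comes from longer paths.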

Feedforward inhibition and degeneracy of hidden networks in small circuits

Effective interactions & emergent timescales in a small circuit

To build intuition for our result and compare to a well-known circuit phenomenon, we apply our Eq (2) to a 3-neuron circuit implementing feedforward inhibition, like that studied by Pouille and Scanziani [43]. Feedforward inhibition can sharpen the temporal precision of neural coding by narrowing the “window of opportunity” in which a neuron is likely to fire. For example, in the circuit shown in Fig 3A, excitatory neuron 1 projects to both neurons 2 and 3, and 3 projects to 2. Neuron 1 drives both 2 and 3 to fire more, while neuron 3 is inhibitory and will counteract the drive neuron 2 receives from 1. The window of opportunity can be understood by looking at the effective interaction between neurons 1 and 2, treating neuron 3 as hidden. We use our path expansion (Fig 2) to quickly write down the effective interaction we expect to measure in the frequency domain,

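$$\hat{J}^{\rm eff}_{2,1}(\omega) = \hat{J}_{2,1}(\omega) + \hat{J}_{2,3}(\omega)\,\frac{\gamma_3}{1 - \gamma_3 \hat{J}_{3,3}(\omega)}\,\hat{J}_{3,1}(\omega). \qquad (3)$$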

The corresponding true synaptic interactions and resulting effective interaction are shown in Fig 3B. The effective interaction matches qualitatively the observed changes measured by Pouille and Scanziani [43], and shows a narrow window after neuron 2 receives a spike in which the change in membrane potential is depolarized and neuron 2 is more likely to fire. Following this brief window, the membrane potential is hyperpolarized and the cell is less likely to fire until it receives more excitatory input.
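A numerical sketch of this feedforward-inhibition calculation is given below. It assumes the effective interaction is the direct filter plus the disynaptic term Ĵ₂,₃ · γ₃/(1 − γ₃Ĵ₃,₃) · Ĵ₃,₁, with one hidden neuron and a self-coupling loop. The exponential waveforms, weights, time constants, and gain are hypothetical values chosen only to produce a biphasic response; they are not the parameters used for Fig 3.

```python
import numpy as np

dt, n_steps = 0.01, 4000
t = np.arange(n_steps) * dt

def exp_filter(weight, tau):
    """J(t) = weight * exp(-t / tau) / tau; the waveform integrates to about 1."""
    return weight * np.exp(-t / tau) / tau

def ft(x):
    """Discrete approximation to the Fourier transform of a sampled causal filter."""
    return np.fft.rfft(x) * dt

# Hypothetical parameters (not taken from the paper).
J21 = exp_filter(+1.0, 1.0)   # 1 -> 2: excitatory, direct
J31 = exp_filter(+1.0, 1.0)   # 1 -> 3: excitatory
J23 = exp_filter(-2.0, 2.0)   # 3 -> 2: inhibitory and slower
J33 = exp_filter(-1.0, 1.0)   # 3 -> 3: refractory self-coupling
gamma3 = 0.5                  # gain of the hidden neuron 3

# Node factor for neuron 3, including its self-history loop.
node3 = gamma3 / (1.0 - gamma3 * ft(J33))
J_eff_hat = ft(J21) + ft(J23) * node3 * ft(J31)

# Back to the time domain (circular wrap-around is negligible for this window).
J_eff = np.fft.irfft(J_eff_hat, n=n_steps) / dt
J_eff_weight = J_eff.sum() * dt   # integrated effective weight
```

With these values the effective filter starts out positive, because the monosynaptic excitation arrives first, and later turns negative once the disynaptic inhibition dominates.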

The effective interaction from neuron 1 to 2 in this simple circuit also displays several features that emerge in more complex circuits. Firstly, although the true interactions are either excitatory (positive) or inhibitory (negative), the effective interaction has a mixed character, being initially excitatory (due to excitatory inputs from neuron 1 arriving first through the monosynaptic pathway), but then becoming inhibitory (due to inhibitory input arriving from the disynaptic pathway).

Secondly, emergent timescales develop due to reverberations between hidden neurons with bi-directional connections, represented as loops between neurons in our circuit schematics (e.g., between neurons 3 and 4 in Fig 4). This includes self-history interactions such as refractoriness (schematically represented by loops like the 3 → 3 loop shown in Fig 3, corresponding to the self-coupling $\hat{J}_{3,3}(\omega)$). In the particular example shown in Fig 3, the self-history interaction of neuron 3 causes a new timescale to develop. Other choices of interactions can generate more complicated emergent timescales and temporal dynamics, including oscillations. For example, in the 4-neuron circuit discussed below (Fig 4), the chosen interactions yield effective interactions with new decay and oscillatory timescales. In the larger networks we consider in the next section, inter-neuron interactions must scale with network size in order to maintain network stability. Because emergent timescales depend on the synaptic strengths of hidden neurons, we typically expect emergent timescales generated by loops between hidden neurons to be negligible in large random networks. However, because the magnitudes of the self-history interaction strengths need not scale with network size, they may generate emergent timescales large enough to be detected.

It is worth noting explicitly that only the interaction from neuron 1 to 2 has been modified by the presence of the hidden neuron 3, for the particular wiring diagram shown in Fig 3. The self-history interactions of both neurons 1 and 2, as well as the interaction from neuron 2 to 1 (zero in this case) are unmodified. The reason the hidden neuron did not modify these interactions is that the only link neuron 3 makes is from 1 to 2. There is no path by which neuron 1 can send a signal back to itself, hence its self-interaction is unmodified, nor is there a path by which neuron 2 can send signals to neuron 3 or on to neuron 1, and hence neuron 2’s self-history interaction and its interaction to neuron 1 are unmodified.

Degeneracy of hidden networks giving rise to effective interactions

It is well known that different networks may produce the same observed circuit phenomena [34–39]. To illustrate that our approach may be used to identify degenerate solutions in which more than one network underlies observed effective interactions, we construct a 4-neuron circuit that produces a quantitatively similar effective interaction between the recorded neurons 1 and 2, shown in Fig 4. Specifically, in this circuit we have removed neuron 3’s self-history interaction and introduced a second inhibitory hidden neuron that receives excitatory input from neuron 1 and provides inhibitory input to neuron 3. By tuning the interaction strengths we are able to produce the desired effective interaction. This demonstrates that intrinsic neural properties such as refractoriness can trade off against inputs from other hidden neurons, making it difficult to distinguish the two cases from one another (or from a potential infinity of other circuits that could have produced this interaction; for example, a qualitatively similar interaction is produced in the N = 1000 network in which only three neurons are recorded, shown below in Fig 6). Statistical inference methods may favor one of the possible underlying choices of complete network consistent with a measured set of effective interactions, suggesting there may be some sense of a “best” solution. However, the particular “best” network will depend on many factors, including the amount and fidelity of data recorded, regularization choices, and how well the fitted model generalizes to new data (i.e., how “close” the fitted model is to the generative model). Potentially, if these conditions were met, with enough data the slight quantitative differences between the effective interactions produced by different hidden networks (including higher order effective interactions, which we assume to be negligible here; see SI) could help distinguish different hidden networks. However, the amount of data required to perform this discrimination and validate the result may be impractically large [36, 64–66]. It is thus worth studying the structure of the observed effective interactions directly in search of possible signatures that elucidate the statistical properties of the complete network.

Strongly coupled large networks

Constructing networks that produce particular effective interactions is tractable for small circuits, but much more difficult for larger circuits composed of many circuit motifs. Not only can combinations of different circuit motifs interact in unexpected ways, one must also take care to ensure the resulting network is both active and stable, i.e., that firing will neither die out nor skyrocket to the maximum rate. Stability in networks is often implemented by either building networks with classical (or “weak”) synapses whose strength scales inversely with the number of inputs they receive, assumed here to be proportional to network size, and hence scales as 1/N, or by building balanced networks in which excitatory and inhibitory synaptic strengths balance out, on average, and scale as $1/\sqrt{N}$ [44, 48] (but note the distinction that we use a “soft threshold” firing model with nonlinearity that is fixed as N varies, whereas previous work has typically used hard threshold models). In both cases the synapses tend to be small in value in large networks, but are compensated for by large numbers of incoming connections. In the case of 1/N scaling, neurons are driven primarily by the mean of their inputs, while in “strong” balanced networks neurons are driven primarily by fluctuations in their inputs.

Our goal is to understand how the interplay between the presence of hidden neurons and different synaptic scaling or network architectures shapes effective interactions. Previous work has studied the hidden-neuron problem in the weak coupling limit [49–52] using a different approach to relate inferred synaptic parameters to true parameters; here we use our approach to study the strong coupling limit, theoretically predicted to be an important feature that supports computations in networks in a balanced regime [44–47]. Moreover, experiments in cultured neural tissue have been found to be more consistent with the $1/\sqrt{N}$ scaling than 1/N [48], indicating that it may have intrinsic physiological importance.

We analytically determine how significantly effective interaction strengths are skewed away from the true interaction strengths as a function of both the number of observed neurons and typical synaptic strength. We consider several simple networks ubiquitous in neural modeling: an Erdős-Rényi (ER) network with “mixed synapses” (i.e., a neuron may have both positive and negative synaptic weights), a balanced ER network with Dale’s law imposed (a neuron’s synapses are all the same sign), and a Watts-Strogatz (WS) small world network with mixed synapses. Each network has N neurons and connection sparsity p (only 100p% of connections are non-zero). Connections in ER networks are chosen randomly and independently, while connections in the WS network are determined by randomly rewiring a fraction β of the connections in a (pN)th-nearest-neighbor ring network, such that the overall network has a backbone of local synaptic connections with a web of sparse long-range connections. In each network Nrec neurons are recorded randomly.

For simplicity we take the baselines of all neurons to be equal, μi = μ0 (such that in the absence of synaptic input the probability that a neuron fires in a short time window Δt is λ0Δt exp(μ0)). We choose the rate nonlinearity to be exponential, ϕ(x) = e^x; this is the “canonical” choice of nonlinearity often used when fitting this model to data [16–18, 20, 67]. We will further assume exp(μ0) ≪ 1, so that we may use this as a small control parameter. For i ≠ j, the non-zero synaptic weights between neurons are independently drawn from a normal distribution with zero mean and standard deviation J0/(pN)^a, where J0 controls the overall strength of the weights and a = 1 or 1/2, corresponding to “weak” and “strong” coupling, respectively. For simplicity, we do not consider intrinsic self-coupling effects in this part of the analysis, i.e., we take the self-coupling weights to be zero for all neurons i. For the Dale’s law network, the overall distribution of synaptic weights follows the same normal distribution as the mixed synapse networks, but the signs of the weights correspond to whether the pre-synaptic neuron is excitatory or inhibitory. Neurons are randomly chosen to be excitatory or inhibitory, the average number of each type being equal so that the network is balanced. Numerical values of all parameters are given in Table 1.
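The sampling procedure for the two ER variants can be sketched in a few lines of NumPy. This is a minimal illustration rather than the code used for the figures: the function name `er_weights`, the convention that `W[i, j]` is the weight from neuron j onto neuron i, and the choice to take absolute values before assigning Dale's-law signs are all assumptions of this sketch.

```python
import numpy as np

def er_weights(N, p, J0, a, rng, dale=False):
    """Sample an N x N weight matrix; W[i, j] is the weight from neuron j onto neuron i."""
    mask = rng.random((N, N)) < p          # each connection is present with probability p
    np.fill_diagonal(mask, False)          # no self-coupling
    sigma = J0 / (p * N) ** a              # a = 1 (weak coupling) or 1/2 (strong coupling)
    W = rng.normal(0.0, sigma, size=(N, N))
    if dale:
        # Dale's law: the sign of every weight is set by the presynaptic neuron (column j),
        # with excitatory and inhibitory neurons equally likely.
        sign = np.where(rng.random(N) < 0.5, 1.0, -1.0)
        W = np.abs(W) * sign[None, :]
    return W * mask

rng = np.random.default_rng(0)
W_strong = er_weights(1000, 0.2, 1.0, 0.5, rng)            # mixed synapses, strong coupling
W_dale = er_weights(1000, 0.2, 1.0, 0.5, rng, dale=True)   # balanced network with Dale's law
```

Taking absolute values of normal draws and then assigning a sign per presynaptic neuron keeps the overall weight distribution normal, as described above, because the signs are symmetric and independent of the magnitudes.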

Table 1. Network connectivity parameter values for Figs 5–8 and S1–S3.

See individual captions for other figures.

| Parameter | Value |
|---|---|
| Number of neurons N | 1000 |
| Number of hidden neurons Nhid | {1, 90, 190, 290, 390, 490, 590, 690, 790, 890, 990} |
| Number of recorded neurons Nrec | N − Nhid |
| Baselines μi | −1.0, ∀i |
| Sparsity p | 0.2 |
| Coupling weights (non-zero entries, i ≠ j) | Normal, zero mean, standard deviation J0/(pN)^a |
| Self-coupling weights | 0 |
| Coupling regime a | 1 (weak coupling) or 1/2 (strong coupling) |
| Rewiring probability β (Watts-Strogatz only) | 0.3 |
| Characteristic synaptic weight J0 | {0.25, 0.5, 0.75, 1.0} |
| Firing frequency λ0 | 1.0 |

We seek to assess how the presence of hidden neurons can shape measured network interactions. We first focus on the typical strength of the effective interactions as a function of both the fraction of neurons recorded, f = Nrec/N, and the strength of the synaptic weights J0. We quantify the strength of the effective interactions by defining the effective synaptic weights $\mathcal{J}^{\rm eff}_{r,r'} \equiv \int_0^\infty d\tau\, J^{\rm eff}_{r,r'}(\tau)$; cf. $\mathcal{J}_{r,r'} \equiv \int_0^\infty d\tau\, J_{r,r'}(\tau)$ for the true synaptic weights. We then study the sample statistics of the difference, $\mathcal{J}^{\rm eff}_{r,r'} - \mathcal{J}_{r,r'}$, averaged across both subsets of recorded neurons and network instantiations, to estimate the typical contribution of hidden neurons to the measured interactions. The mean of the synaptic weights is near zero (because the weights are normally distributed with zero mean in the mixed synapse networks and due to balance of excitatory and inhibitory neurons in the Dale’s law network), so we focus on the root-mean-square of $\mathcal{J}^{\rm eff}_{r,r'} - \mathcal{J}_{r,r'}$. This measure is a conservative estimate of changes in strength, as $J^{\rm eff}_{r,r'}(\tau)$ may have both positive and negative components that partially cancel when integrated over time, unlike Jr,r′(τ). An alternative measure we could have chosen that avoids potential cancellations is $\int_0^\infty d\tau\, |J^{\rm eff}_{r,r'}(\tau) - J_{r,r'}(\tau)|$, i.e., the integrated absolute difference between effective and true interactions. However, this will depend on our specific choice of waveform g(τ) in our definition $J_{r,r'}(\tau) = \mathcal{J}_{r,r'}\, g(\tau)$, whereas $\mathcal{J}^{\rm eff}_{r,r'} - \mathcal{J}_{r,r'}$ does not, due to our normalization $\int_0^\infty d\tau\, g(\tau) = 1$. As |∫ dτ u(τ)| ≤ ∫ dτ |u(τ)| for any function u(τ), we can consider our choice of $|\mathcal{J}^{\rm eff}_{r,r'} - \mathcal{J}_{r,r'}|$ as a lower bound on the strength that would be quantified by the integrated absolute difference.

Numerical evaluations of the population statistics for all three network types are shown as solid curves in Fig 5, for both strong coupling and weak coupling. All three networks yield qualitatively similar results. The vertical axes measure the root-mean-square deviations between the statistically expected true synaptic weight $\mathcal{J}_{r,r'}$ and the corresponding effective synaptic weight $\mathcal{J}^{\rm eff}_{r,r'}$, normalized by the true root mean square of $\mathcal{J}_{r,r'}$. Thus, a ratio of 0.5 corresponds to a 50% root-mean-square difference in effective versus true synaptic strength. We measure these ratios as a function of both the fraction of neurons recorded (horizontal axis) and the parameter J0 (labeled curves).

There are two striking effects. First, deviations are nearly negligible for 1/N scaling of connections (gray traces in Fig 5). Thus, for large networks with synapses that scale inversely with the system size, vast numbers of hidden neurons combine to have negligible effect on effective couplings. This is in marked contrast to the case when coupling is strong (1/√N scaling), when hidden neurons have a pronounced impact (purple traces in Fig 5). This is particularly the case when f ≪ 1 (the typical experimental case, in which the hidden neurons outnumber observed ones by orders of magnitude) or when J0 ≲ 1.0, when typical deviations become half the magnitude of the true couplings themselves (upper blue line). For J0 ≳ 1.0, the network activity is unstable for an exponential nonlinearity.
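The contrast between the two scalings can be reproduced qualitatively with a short numerical sketch for the mixed-synapse ER network. It rests on several assumptions that are not spelled out in this section: the time-integrated correction from hidden neurons is taken to be W_rh (I − diag(γ) W_hh)^(-1) diag(γ) W_hr, following the inverse structure described for Γ in the Discussion; the hidden-neuron rates are obtained from a mean-field fixed point with the recorded neurons silent; and for the exponential nonlinearity the gain γ of each hidden neuron is set equal to its mean-field rate. The function name `rms_ratio` and the reduced network size are illustrative choices.

```python
import numpy as np

def rms_ratio(N, p, J0, a, f, mu0=-1.0, lam0=1.0, seed=0):
    """RMS of (effective - true) integrated weights, normalized by the RMS of the true weights."""
    rng = np.random.default_rng(seed)
    mask = rng.random((N, N)) < p
    np.fill_diagonal(mask, False)
    W = rng.normal(0.0, J0 / (p * N) ** a, size=(N, N)) * mask   # W[i, j]: j -> i

    n_rec = int(round(f * N))
    perm = rng.permutation(N)
    rec, hid = perm[:n_rec], perm[n_rec:]
    W_rr, W_rh = W[np.ix_(rec, rec)], W[np.ix_(rec, hid)]
    W_hr, W_hh = W[np.ix_(hid, rec)], W[np.ix_(hid, hid)]

    # Mean-field hidden rates with the recorded neurons silent: nu = lam0 * exp(mu0 + W_hh nu).
    nu = np.full(hid.size, lam0 * np.exp(mu0))
    for _ in range(500):
        nu = lam0 * np.exp(mu0 + W_hh @ nu)
    gamma = nu   # assumption: for phi = exp the gain equals the mean-field rate

    # Sum over all paths through hidden neurons (assumed form of the correction term).
    A = np.eye(hid.size) - gamma[:, None] * W_hh
    delta = W_rh @ np.linalg.solve(A, gamma[:, None] * W_hr)

    off = ~np.eye(n_rec, dtype=bool)   # compare off-diagonal pairs only
    return np.sqrt(np.mean(delta[off] ** 2) / np.mean(W_rr[off] ** 2))

strong = rms_ratio(N=400, p=0.2, J0=0.5, a=0.5, f=0.1)
weak = rms_ratio(N=400, p=0.2, J0=0.5, a=1.0, f=0.1)
print(f"strong coupling: {strong:.3f}, weak coupling: {weak:.3f}")
```

A single network instance and a single recorded subset are used here, so the numbers are noisy estimates; averaging over seeds would be needed for anything resembling the curves in Fig 5.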

To gain analytical insight into these numerical results, we calculate the standard deviation of the difference $\mathcal{J}^{\rm eff}_{r,r'} - \mathcal{J}_{r,r'}$, normalized by the standard deviation of $\mathcal{J}_{r,r'}$, for contributions from paths up to length 3, focusing on the case of the ER network with mixed synapses (the Dale’s law and WS networks are more complicated, as the moments of the synaptic weights depend on the identity of the neurons). For strong coupling we find

| (4) |

corresponding to the black dashed curves in Fig 5 left. Eq (4) is a truncation of a series in powers of , where f = Nrec/N is the fraction of recorded neurons. The most important feature of this series is the fact that it only depends on the fraction of recorded neurons f, not the absolute number, N. Contributions from long paths remain finite, even as N → ∞. In contrast, the corresponding expression for in the case of weak 1/N coupling is a series in powers of , so that contributions from long paths are negligible in large networks N ≫ 1. (See [67] for derivation and results for N = 100.) Deviations of Eq (4) from the numerical solutions in Fig 5 indicate that contributions from truncated terms are not negligible when f ≪ 1. As these terms correspond to paths of length-4 or more, this shows that long chains through the network contribute significantly to shaping effective interactions.
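The dependence on f alone can be motivated by a rough counting argument. The following is a heuristic sketch, not a reproduction of Eq (4): it assumes every hidden neuron has the same gain γ, that weights are independent with second moment s once sparsity is included, and that paths revisiting a neuron and correlations between different paths can be neglected. A path with ℓ synapses from r′ to r passes through ℓ − 1 hidden neurons, and there are roughly Nhid^(ℓ−1) such paths.

```latex
% s: second moment of a single weight, including the probability p that it is non-zero
s \equiv p\,\frac{J_0^2}{(pN)^{2a}}, \qquad
\frac{\mathrm{Var}\big[\text{paths with } \ell \text{ synapses}\big]}{\mathrm{Var}\big[\mathcal{J}_{r,r'}\big]}
\approx \frac{N_{\rm hid}^{\,\ell-1}\,\gamma^{2(\ell-1)}\,s^{\ell}}{s}
= \big(N_{\rm hid}\,\gamma^{2}\,s\big)^{\ell-1},

% with N_hid = (1 - f) N:
N_{\rm hid}\,\gamma^{2}\,s =
\begin{cases}
(1-f)\,\gamma^{2} J_0^{2}, & a = 1/2 \quad \text{(strong coupling)},\\[4pt]
\dfrac{(1-f)\,\gamma^{2} J_0^{2}}{pN}, & a = 1 \quad \text{(weak coupling)}.
\end{cases}
```

Under these assumptions each additional step through the hidden network costs a factor that is independent of N for strong coupling, but carries an extra 1/(pN) for weak coupling, which is consistent with long paths remaining relevant in the former case and becoming negligible in the latter.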

The above analysis demonstrates that the strength of the effective interactions can deviate from that of the true direct interactions by as much as 50%. However, changes in strength do not give us the full picture; we must also investigate how the temporal dynamics of the effective interactions change. To illustrate how hidden units can skew temporal dynamics, in Fig 6 we plot the effective vs. true interactions between Nrec = 3 neurons in an N = 1000 neuron network. Because the three network types considered in Fig 5 yield qualitatively similar results, we focus on the Erdős-Rényi network with mixed synapses.

Four of the true interactions between neurons shown in Fig 6 are non-zero (, , , and ). Of these, three exhibit only slight differences between the true and effective interactions: and have slightly longer decay timescales than their true counterparts, while has a slightly shorter timescale, indicating the contribution of the hidden network to these interactions was either small or cancelled out. However, the interaction differs significantly from the true interaction, becoming initially excitatory but switching to inhibitory after a short time, as in our earlier example case of feedforward inhibition. This indicates that neuron 2 must drive a cascade of neurons that ultimately provide inhibitory input to neuron 3.

Contrasting the true and effective interactions shown in Fig 6 highlights many of the ways in which hidden neurons skew the temporal properties of measured interactions. An immediately obvious difference is that although the true synaptic connections in the network are sparse, the effective interactions are not. This is a generic feature of the effective interaction matrix: for an effective interaction from a neuron r′ to r to be identically zero, there cannot be any path through the network by which r′ can send a signal to r. In a random network the probability that there are no paths connecting two nodes tends to zero as the network size N grows large. Note that this includes paths by which each neuron can send a signal back to itself; hence the neurons develop effective self-interactions, even though the true self-interactions are zero in these particular simulations.
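The loss of sparsity is purely a statement about path existence, so it can be checked on the connectivity graph alone. The sketch below is an illustration with hypothetical names (`reach_through_hidden`, `adj`) and a smaller network than in Fig 6; it marks a recorded pair as effectively connected if there is a direct connection or any directed path passing only through hidden neurons, ignoring the possibility of exact cancellations between paths.

```python
import numpy as np

def reach_through_hidden(adj, rec):
    """adj[i, j] is True if neuron j synapses onto neuron i.
    Returns R with R[a, b] True if rec[b] can signal rec[a] directly or via hidden neurons."""
    n = adj.shape[0]
    hid = np.setdiff1d(np.arange(n), rec)
    A_hh = adj[np.ix_(hid, hid)].astype(float)
    closure = np.eye(hid.size) + A_hh          # hidden-network paths of length 0 or 1
    for _ in range(int(np.ceil(np.log2(max(hid.size, 2))))):
        # Squaring doubles the maximum path length covered; clipping keeps entries in {0, 1}.
        closure = np.minimum(closure @ closure, 1.0)
    A_rh = adj[np.ix_(rec, hid)].astype(float)
    A_hr = adj[np.ix_(hid, rec)].astype(float)
    return adj[np.ix_(rec, rec)] | ((A_rh @ closure @ A_hr) > 0)

rng = np.random.default_rng(1)
N, p = 200, 0.2
adj = rng.random((N, N)) < p
np.fill_diagonal(adj, False)                   # no true self-connections
rec = rng.choice(N, size=3, replace=False)

R = reach_through_hidden(adj, rec)
true_density = adj[np.ix_(rec, rec)].mean()    # fraction of recorded pairs directly connected
effective_density = R.mean()                   # fraction connected once hidden paths are included
print(true_density, effective_density)
```

The diagonal of `R` is included in `effective_density`, so the comparison also captures the effective self-interactions generated by loops through hidden neurons.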

Discussion

We have derived a quantitative relationship between “ground-truth” synaptic interactions and the effective interactions (interpreted here as post-synaptic membrane responses) that unobserved neurons generate between subsets of observed neurons. This relationship, Eq (2) and Fig 2, provides a foundation for studying how different network architectures and neural properties shape the effective interactions between subsets of observed neurons. Our approach can also be used to study higher order effective interactions between 3 or more neurons, and can be systematically extended to account for corrections to our mean-field approximations and investigate effective noise generated by hidden neurons (using field theoretic techniques from [53], see SI), as well as time-dependent external drives or steady-states.

Here, as first explorations, we focused on the effective interactions corresponding to linear membrane responses. We first demonstrated that our approach applied to small feedforward inhibitory circuits yields effective interactions that capture the role of inhibition in shortening the time window for spiking, and are qualitatively similar to experimentally observed measurements [43]. Moreover, we used this example to demonstrate explicitly that different hidden networks can give rise to the same effective interactions between neurons. We then showed that the influence of hidden neurons can remain significant even in large networks in which the typical synaptic strengths scale with network size. In particular, when the synaptic weights scale as 1/√N, the relative influence of hidden neurons depends only on the fraction of neurons recorded. Together with theoretical and experimental evidence for this scaling in cortical slices [44–48], this suggests that neural interactions inferred from cortical activity data may differ markedly from the true interactions and connectivity.

Dealing with degeneracy

The issue of degeneracy in complex biological systems and networks has been discussed at length in the literature, in the context of both inherent degeneracies (multiple different network architectures can produce the same qualitative behaviors [34, 37–39]) and degeneracies in our model descriptions (many models may reproduce experimental observations, demanding sometimes arbitrary criteria for selecting one model over another). Both have implications for how successfully one can infer unobserved network properties. One kind of model degeneracy, “sloppiness” [35, 65], describes models whose behavior is sensitive to changes in only a relatively small number of directions in parameter space. Many models of biological systems have been shown to be sloppy [35]; this could account for experimentally observed networks that are quite different in composition but produce remarkably similar behaviors. Sloppiness suggests that rather than trying to infer all properties of a hidden network, there may be certain parameter combinations that are much more important to the overall network operation, and could potentially be inferred from subsampled observations.

Another perspective on model degeneracy comes from the concepts of “universality” that occur in random matrix theory [68, 69] and Renormalization Group methods of statistical physics [64]. Many bulk properties of matrices (e.g., the distribution of eigenvalues) whose entries are combinations of random variables, such as our effective interaction matrices, are universal in that they depend on only a few key details of the distribution that individual elements are drawn from [70]. Similarly, one of the central results of the Renormalization Group shows that models with drastically disparate features may yield the same coarse-grained model structure when many degrees of freedom are averaged out, as in our case of approximately averaging out hidden neurons. Different distributions (in the case of random matrix theory) or different models (in the case of the Renormalization Group) that yield the same bulk properties or coarse-grained models are said to be in the same “universality class.” Measuring particular quantities under a range of experimental conditions (e.g., different stimuli) may be able to reveal which universality class an experimental system belongs to and eliminate models belonging to other universality classes as candidate generating models of the data, but these measurements cannot distinguish between models within a universality class.

Purely feedforward networks and recurrent networks are simple examples of broad universality classes in this context. In any randomly sampled feedforward network, only the feedforward interactions are modified or generated; no lateral or feedback connections develop because there is no path through hidden neurons by which a recorded neuron can send signals to recorded neurons in the same or previous layers. Thus, the feedforward structure, a topological property of the network, is preserved. However, adding even a single feedback connection can destroy this topological structure if it joins two neurons connected by a feedforward path; such a link creates a cycle within the network, and it is no longer feedforward. If this network is heavily subsampled (f ≪ 1) the resulting effective interactions can even be fully recurrent. The majority of the effective interactions may be very weak, but nonetheless from a topological perspective the network has been fundamentally altered. Accordingly, any interactions that represent a purely feedforward network could not have come from a network with recurrent interactions. In practice, we expect few, if any, cortical networks to be purely feedforward, so most networks will be recurrent if we consider only the network connections and not the connection strengths. Thus, a more interesting question is how the statistics and dynamics of synaptic weights further partition topologically-defined universality classes; for example, whether the distribution of synaptic weights can split the sets of effective interactions that arise from recurrent networks and from predominantly feedforward networks with sparse feedback and lateral interactions into different universality classes. A thorough investigation of such phenomena will be the focus of future work.
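The topological argument can be illustrated on a toy graph. In this sketch, which uses hypothetical names and an arbitrary ordered (rather than layered) feedforward network, neurons are indexed so that connections only run from lower to higher indices. The effective connectivity among recorded neurons then stays strictly feedforward, while a single feedback link from the last neuron to the first allows feedback and self-interactions to appear.

```python
import numpy as np

def effective_topology(adj, rec):
    """adj[i, j] is True if neuron j synapses onto neuron i.
    Returns R with R[a, b] True if rec[b] reaches rec[a] directly or via hidden neurons only."""
    hid = np.setdiff1d(np.arange(adj.shape[0]), rec)
    closure = np.eye(hid.size) + adj[np.ix_(hid, hid)].astype(float)
    for _ in range(int(np.ceil(np.log2(max(hid.size, 2))))):
        closure = np.minimum(closure @ closure, 1.0)   # boolean transitive closure by squaring
    via = adj[np.ix_(rec, hid)].astype(float) @ closure @ adj[np.ix_(hid, rec)].astype(float)
    return adj[np.ix_(rec, rec)] | (via > 0)

rng = np.random.default_rng(2)
N, p = 60, 0.3
ff = np.tril(rng.random((N, N)) < p, k=-1)   # j -> i only if i > j: purely feedforward
rec = np.array([10, 20, 30, 40, 50])         # recorded neurons, listed in feedforward order

R_ff = effective_topology(ff, rec)           # strictly lower-triangular: still feedforward

fb = ff.copy()
fb[0, N - 1] = True                          # one feedback link: last neuron onto the first
R_fb = effective_topology(fb, rec)           # upper triangle and diagonal can now fill in

print(np.triu(R_ff).any(), np.triu(R_fb).any())
```

Entries on or above the diagonal of `R_fb` correspond to effective feedback and self-interactions that are generated entirely by the loop closed through the hidden neurons.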

Inference of hidden network features

Despite the many possible confounds network degeneracy produces, much of the work on inference of hidden network properties has focused on inferring the individual interactions between neurons, with varying degrees of success. Both Dunn and Roudi [40] as well as Tyrcha and Hertz [41] studied inference of hidden activity in kinetic Ising models, sometimes used as simple minimal models of neuronal network activity. They found that the synaptic weights between pairs of observed neurons and observed-hidden pairs could be recovered to within reasonably small mean-squared-error when the number of hidden neurons was less than the number of observed neurons. However, both methods also found it difficult to infer connections between pairs of hidden neurons, resorting to setting such connections to zero in order to stabilize their algorithms. Tyrcha and Hertz also note their method recovers only an equivalence class of connections due to degeneracy in the possible assignment of signs of the synaptic weights and hidden neuron labels. This suggests inferring hidden network structure will be nearly impossible in the realistic limit Nrec ≪ Nhid.

A series of papers by Bravi and Sollich perform theoretical analyses of hidden dynamics inference in chemical reaction networks, modeled by a system of Langevin equations [57–60]. Although the applications the authors have in mind are signaling pathways such as epidermal growth factor reaction networks, one could imagine re-interpreting or adapting these equations to describe rate models. The authors develop a variety of approaches, including Plefka expansions [57–59] and variational Gaussian approximations [60], to study how observations constrain the inferred hidden dynamics, assuming particular properties of the network structure. Ref. [60] in particular takes an approach most similar to ours, deriving an effective system of Langevin equations for the subsampled dynamics of the chemical reaction network. The effective system of equations contains a memory kernel that plays a role analogous to the correction to the interactions between neurons in our work (second term in our Eq (2)). However, the structure of the memory kernel in [60] has a rather different form, being exponentially dependent on the integral of the hidden-hidden interactions, in contrast to our Γh,h′(t − t′), which depends on the inverse of δh,h′δ(t − t′) − γhJh,h′(t − t′) (see Methods). Though Bravi and Sollich do not expand their memory kernel in a series as we do, it would admit a similar series and interpretation in terms of paths through the hidden network, as in our Fig 2A. However, due to the exponential dependence on the hidden-hidden interactions, long paths of length ℓ through hidden networks are suppressed by factors of 1/ℓ!, suggesting the hidden network may have less influence in such networks compared to the network dynamics we study here.

Closest to our choice of model, Pillow and Latham [28] and Soudry et al. [25] both use modifications of nonlinear Hawkes models to fit neural data with unobserved neurons. Pillow and Latham outline a statistical approach for inferring not just interactions with and between hidden neurons, but also the spike trains of hidden neurons, testing the method on a network of two neurons (one hidden). To properly infer the spike train of the hidden neurons, the model must allow for acausal synaptic interactions. This is acceptable if the goal is inferring hidden spike trains: for example, if the hidden neuron were to make a strong excitatory synapse onto the observed neuron, then a spike from the observed neuron increases the probability that the hidden neuron fired a spike in the recent past. An acausal synaptic interaction captures this effect, but is of course an unphysical feature in a mechanistic model, precluding physiological interpretation of such an interaction.

Soudry et al. are concerned with the fact that common input from hidden neurons will skew estimates of network connectivity. To get around this issue, they present a different take on the hidden unit problem: rather than attempt to infer connectivity in a fixed subsample of a network, they propose a shotgun sampling method, in which a sequence of overlapping random subsets of the network are sampled over a long experiment. Under this procedure, a large fraction of the network can be sampled, just not contiguously in time, and reconstruction of the entire network could in principle be accomplished. Soudry et al. show this strategy works in their simulated networks (even when the generative model is a hard-threshold leaky-integrate-and-fire rather than the nonlinear Hawkes model, which can be interpreted as a soft-threshold leaky-integrate-and-fire model; see SI). However, sampling the entire network may only be feasible in vitro; sampling of neurons in vivo, such as in wide-field calcium imaging studies, will still necessarily miss neurons not in the field of view or too deep in the tissue. In such cases our work provides the means to properly interpret the inferred effective interactions obtained with such a method.

Although a thorough treatment of statistical inference of hidden network properties is beyond the scope of our present work, we may make some general remarks on future work in these directions. The nonlinear Hawkes model we use here is commonly used to fit neural population activity data, and one could infer the effective baselines, the effective interactions, and the noise ξr(t) using existing techniques. In particular, Vidne et al. [22] explicitly fit the noise, which is likely important for proper inference, as otherwise effects of the noise could be artificially inherited by the effective interactions. Once such estimates are obtained, one could then in principle infer certain hidden network properties by combining a statistical model for these properties with the relationships between effective and true interactions derived in this work, such as Eq (2). (Detailed physiological measurements of ground-truth synaptic interactions in small volumes of neural tissue can be used to refine estimates.) As we have stressed throughout this paper, inferring the exact connections between hidden neurons may be impossible due to a large number of degenerate solutions consistent with observations. However, one may be able to infer bulk properties of the network, such as the parameters governing the distribution of hidden-network connections, or even more exotic properties such as the eigenvalue distribution of the hidden network connection weight matrix. We leave these ideas as interesting directions for future work.

Hidden neurons and dimensionality reduction

Given the challenges that hidden network inference poses, one might wonder if there are network properties that can be reliably measured even with subsampled neural activity. Collective, low-dimensional dynamics have emerged as a possible candidate: recent work has investigated the effect that subsampled measurements have on estimating collective low-dimensional dynamics of trial-averaged network activity (using, e.g., principal components analysis). During a task, the effective dimensionality of a network’s dynamics is constrained [71, 72], opening the possibility that the subsampled population may be sufficient to accurately represent these task-constrained low-dimensional dynamics. Indeed, under certain assumptions—in particular that the collective dynamical modes are approximately random superpositions of neural activity and that sampled neurons are statistically representative of the hidden population—Gao et al. [72] calculate a conservative upper bound on the number of sampled neurons necessary to reconstruct the collective dynamics, finding it is often less than the effective dimensionality of the network.

The assumption that the collective dynamics are random superpositions of neural activity is crucial, because it means that each neuron’s trial-averaged dynamics are in turn a superposition of the collective modes. Hence, every neuron’s activity contains some information about the collective modes, and if only a few of these modes are important, then they can be extracted from any sufficiently large subset of neurons.
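The logic of this argument can be illustrated with synthetic data. The sketch below is not the analysis of Gao et al. [72]; the sinusoidal modes, Gaussian loadings, noise level, and all variable names are assumptions chosen only to show that, when every neuron is a random mixture of a few collective modes, principal components computed from a small random subset of neurons span nearly the same modes as the full population.

```python
import numpy as np

rng = np.random.default_rng(3)
T, N, K, M = 500, 400, 3, 40     # time points, neurons, collective modes, sampled neurons
t = np.linspace(0.0, 1.0, T)

# K collective modes (T x K) and random loadings, so every neuron mixes all modes.
Z = np.stack([np.sin(2 * np.pi * (k + 1) * t) for k in range(K)], axis=1)
W = rng.normal(size=(N, K))
X = Z @ W.T + 0.1 * rng.normal(size=(T, N))   # trial-averaged activity plus small noise

# PCA on a random subset of M neurons only.
sub = rng.choice(N, size=M, replace=False)
Xs = X[:, sub] - X[:, sub].mean(axis=0)
U, S, Vt = np.linalg.svd(Xs, full_matrices=False)
pcs = U[:, :K] * S[:K]                        # time courses of the top-K principal components

# Fraction of variance in the true modes explained by a linear readout of the subsampled PCs.
Zc = Z - Z.mean(axis=0)
coef, *_ = np.linalg.lstsq(pcs, Zc, rcond=None)
r2 = 1.0 - np.sum((Zc - pcs @ coef) ** 2) / np.sum(Zc ** 2)
print(f"variance of collective modes captured from {M} of {N} neurons: {r2:.3f}")
```

If the loadings were instead sparse or structured, so that some modes lived only on neurons outside the sampled subset, the same readout would miss those modes, which is why the random-superposition assumption matters.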

While modes of collective activity alone may be sufficient for answering certain questions, such as decoding task parameters or elucidating circuit function, explaining the structure of these modes (and in particular how the dynamical patterns that emerge under different task conditions or sensory environments are related) will ultimately require an understanding of the distribution of possible underlying network properties, which remain difficult to estimate from subsampled populations. We may be able to establish such structure-function relationships using our theory of effective interactions presented in this work: if we can relate the collective dynamics extracted from subsampled neurons to the properties of the effective interactions, then we can link them to the true interactions through our Eq (2). With an understanding of how network properties shape such collective dynamics, we can begin to understand what network manipulations achieve desired patterns of activity, and therefore circuit function.

Implications beyond experimental limitations

The fact that many different hidden networks may yield the same set of effective interactions or low-dimensional dynamics suggests that the effective interactions themselves may yield direct insight into a circuit’s functions. For instance, many circuits consist of principal neurons that transmit the results of circuit computation to downstream circuitry, but often do not make direct connections with one another, instead interacting through (predominantly inhibitory) intermediaries called interneurons. From the point of view of a downstream circuit, the principal neurons are “recorded” and the interneurons are “hidden.” A potential reason for this general arrangement is that direct synaptic interactions alone are insufficient to produce the membrane responses required to perform the circuit’s computations, and the network of interneurons reshapes the membrane responses of projection neurons into effective interactions that can perform the desired computations—it may thus be that the effective interactions should be of primary interest, not necessarily the (possibly degenerate choices of) physiological synaptic interactions. For example, in the feedforward inhibitory circuits of Figs 3 and 4, the roles of the hidden inhibitory neurons may simply be to act as interneurons that reshape the interaction between the excitatory projection neurons 1 and 2, and the choice of which particular circuit motif is implemented in a real network is determined by other physiological constraints, not only computational requirements.

One of the greatest achievements in systems neuroscience would be the ability to perform targeted modifications to a large neural circuit and selectively alter its suite of computations. This would have powerful applications both for studying a circuit’s native computations and for repurposing circuits or repairing damaged circuitry (due to, e.g., disease). If the computational roles of circuits are indeed most sensitive to the effective interactions between principal neurons, this suggests we can exploit potential degeneracies in the interneuron architecture and intrinsic properties to find some circuit that achieves a desired computation, even if it is not a physiologically natural circuit. Our main result relating effective and true interactions, Eq (2), provides a foundation for future work investigating how to identify sets of circuits that perform a desired set of computations. We have shown in this work that it can be done for small circuits (Figs 3 and 4), and that the effective interactions in large random networks can be significantly skewed away from the true interactions when synaptic weights scale as 1/√N, as observed in experiments [48]. This holds promise for identifying principled approaches to tuning or controlling neural interactions, such as by using neuromodulators to adjust interneuron properties or inserting artificial or synthetic circuit implants into neural tissue to act as “hidden” neurons. If successful, this could contribute to the long term goal of using such interventions to aid in reshaping the effective synaptic interactions between diseased neurons, and thereby restore healthy circuit behaviors.

Methods

Model definition and details

The firing rate of a neuron i in the full network is given by

| (5) |

where λ0 is a characteristic rate, ϕ(x) ≥ 0 is a nonlinear function, μi (potentially a function of some external stimulus θ) is a time-independent tonic drive that sets the baseline firing rate of the neuron in the absence of input from other neurons, is an external input current, and Jij(t − t′) is a coupling filter that filters spikes fired by presynaptic neuron j at time t′, incident on post-synaptic neuron i. We will take for simplicity in this work, focusing on the activity of the network due to the tonic drives μi (which could still be interpreted as external tonic inputs, so the activity of the network need not be interpreted as spontaneous activity).

While we need not attach a mechanistic interpretation to these filters, a convenient interpretation is that the nonlinear Hawkes model approximates the stochastic dynamics of a leaky integrate-and-fire network model driven by noisy inputs [55, 56]. In fact, the nonlinear Hawkes model is equivalent to a current-based integrate-and-fire model in which the deterministic spiking rule (a spike fires when a neuron’s membrane potential reaches a threshold value Vth) is replaced by a stochastic spiking rule (the higher a neuron’s membrane potential, the higher the probability a neuron will fire a spike). (It can also be mapped directly to a conductance-based model in special cases [73].) For completeness, we present the mapping from a leaky integrate-and-fire model with stochastic spiking to Eq (5) in the Supporting Information (SI).
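For concreteness, a discrete-time simulation of a model of this kind might look like the following. This is a hedged sketch under several assumptions that go beyond the text: the exponential nonlinearity used in the Results, no external input current, coupling filters of the form weight × g(τ) with an exponential waveform g(τ) = exp(−τ/τ_s)/τ_s that integrates to one, and a Bernoulli approximation to spiking in bins of width dt. The function name `simulate_hawkes` and the parameter `tau_s` are illustrative.

```python
import numpy as np

def simulate_hawkes(W, mu, lam0=1.0, tau_s=0.1, dt=0.01, n_steps=5000, seed=0):
    """Neuron i spikes in a bin with probability lam0 * dt * exp(mu_i + filtered input_i)."""
    rng = np.random.default_rng(seed)
    N = W.shape[0]
    x = np.zeros(N)                        # spike trains filtered by g(tau) = exp(-tau/tau_s)/tau_s
    spikes = np.zeros((n_steps, N), dtype=bool)
    decay = np.exp(-dt / tau_s)
    for k in range(n_steps):
        rate = lam0 * np.exp(mu + W @ x)   # W[i, j] weights the filtered spikes of neuron j
        spikes[k] = rng.random(N) < np.minimum(rate * dt, 1.0)
        x = decay * x + spikes[k] / tau_s  # each spike adds a kernel with (approximately) unit area
    return spikes

# Sanity check with no coupling: the mean rate should be close to lam0 * exp(mu0).
N = 100
spikes = simulate_hawkes(np.zeros((N, N)), mu=np.full(N, -1.0))
mean_rate = spikes.mean() / 0.01           # spikes per unit time, averaged over neurons
print(mean_rate, np.exp(-1.0))
```

The Bernoulli approximation is only reasonable when rate × dt is small; with strong excitatory coupling the exponential nonlinearity can drive the rate up quickly, in which case the clipping at probability 1 would mask an instability rather than model it.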

Derivation of effective baselines and coupling filters

To study how hidden neurons affect the inferred properties of recorded neurons, we partition the network into “recorded” neurons, labeled by indices r (with sub- or superscripts to differentiate different recorded neurons, e.g., r and r′ or r1 and r2) and “hidden” neurons labeled by indices h (with sub- or superscripts). The rates of these two groups are thus

To simplify notation, we write . If we seek to describe the firing of the recorded neurons only in terms of their own spiking history, input from hidden neurons effectively acts like noise with some mean amount of input. We thus begin by splitting the hidden input to the recorded neurons up into two terms, the mean plus fluctuations around the mean:

where denotes the mean activity of the hidden neurons conditioned on the activity of the recorded units, and ξr(t) are the fluctuations, i.e., . Note that ξr(t) is also conditional on the activity of the recorded units.

By construction, the mean of the fluctuations is identically zero, while the cross-correlations can be expressed as

where is the cross-covariance between hidden neurons h1 and h2 (conditioned on the spiking of recorded neurons). If the autocorrelation of the fluctuations (r = r′) is small compared to the mean input to the recorded neurons (), or if Jr,h is small, then we may neglect these fluctuations and focus only on the effects that the mean input has on the recorded subnetwork. At the level of the mean field theory approximation we make in this work, the spike-train correlations are zero. One can calculate corrections to mean field theory (see SI) to estimate the size of this noise. Even when this noise is not strictly negligible, it can simply be treated as a separate input to the recorded neurons, as shown in the main text, and hence will not alter the form of the effective couplings between neurons. Averaging out the effective noise, however, would generate new interactions between neurons; we leave investigation of this issue for future work.

In order to calculate how hidden input shapes the activity of recorded neurons, we need to calculate the mean . This mean input is difficult to calculate in general, especially when conditioned on the activity of the recorded neurons. In principle, the mean can be calculated as

This is not a tractable calculation. Taylor series expanding the nonlinearity ϕ(x) reveals that the mean will depend on all higher cumulants of the hidden unit spike trains, which cannot in general be calculated explicitly. Instead, we again appeal to the fact that in a large, sufficiently connected network, we expect fluctuations to be small, as long as the network is not near a critical point. In this case, we may make a mean field approximation, which amounts to solving the self-consistent equation

| (6) |

In general, this equation must be solved numerically. Unfortunately, the conditional dependence on the activity of the recorded neurons presents a problem, as in principle we must solve this equation for all possible patterns of recorded unit activity. Instead, we note that the mean hidden neuron firing rate is a functional of the filtered recorded input, so we can expand it as a functional Taylor series around some reference filtered activity,

Within our mean field approximation, the Taylor coefficients are simply the response functions of the network; i.e., the zeroth order coefficient is the mean firing rate of the neurons in the reference state, the first order coefficient is the linear response function of the network, the second order coefficient is a nonlinear response function, and so on.

There are two natural choices for the reference state. The first is simply the state of zero recorded unit activity, while the second is the mean activity of the recorded neurons. The zero-activity case conforms to the choice of nonlinear Hawkes models used in practice. Choosing the mean activity as the reference state may be more appropriate if the recorded neurons have high firing rates, but requires adjusting the form of the nonlinear Hawkes model so that firing rates are modulated by filtering the deviations of spikes from the mean firing rate, rather than filtering the spikes themselves. Here, we focus on the zero-activity reference state. We present the formulation for the mean field reference state in the SI.

For the zero-activity reference state, the conditional mean is

The mean inputs are the mean field approximations to the firing rates of the hidden neurons in the absence of the recorded neurons. Defining , these firing rates are given by

In writing this equation we have assumed that the steady-state mean field firing rates will be time-independent, and hence the convolution (Jh,h′ ∗ νh′)(t) = 𝒥h,h′ νh′, where 𝒥h,h′ = ∫ dt Jh,h′(t) is the time-integrated coupling filter. This assumption will generally be valid for at least some parameter regime of the network, but there can be cases where it breaks down, such as if the nonlinearity ϕ(x) is bounded, in which case a transition to chaotic firing rates νh(t) may exist (cf. [74]). The mean field equations for the νh are a system of transcendental equations that in general cannot be solved exactly. In practice we will solve the equations numerically, but we can develop a series expansion for the solutions (see below).
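As an illustration of the numerical solution, the following is a minimal sketch of a damped fixed-point iteration. It assumes the time-independent mean field equations take the form ν = λ0 ϕ(μ + 𝒥ν), with 𝒥 the matrix of time-integrated hidden-to-hidden couplings. The function name `mean_field_rates`, the damping scheme, and all parameter values are hypothetical choices made for this example, not the procedure used to generate the results in the main text.

```python
import numpy as np

def mean_field_rates(J_int, mu, phi=np.exp, lam0=1.0, damping=0.5,
                     tol=1e-10, max_iter=10_000):
    """Damped fixed-point iteration for nu = lam0 * phi(mu + J_int @ nu).

    J_int : (H, H) array of time-integrated hidden-to-hidden couplings
            (assumed form; J_int[h, h2] is the coupling from h2 to h).
    mu    : (H,) array of baseline drives.
    Raises RuntimeError if the rates diverge or the iteration does not converge.
    """
    nu = lam0 * phi(mu)  # start from the uncoupled rates
    for _ in range(max_iter):
        nu_new = (1 - damping) * nu + damping * lam0 * phi(mu + J_int @ nu)
        if not np.all(np.isfinite(nu_new)):
            raise RuntimeError("mean field rates diverged")
        if np.max(np.abs(nu_new - nu)) < tol:
            return nu_new
        nu = nu_new
    raise RuntimeError("fixed-point iteration did not converge")

# Hypothetical example: 200 hidden neurons, Gaussian weights, exponential nonlinearity.
rng = np.random.default_rng(0)
H = 200
J_int = rng.normal(0.0, 0.5 / np.sqrt(H), size=(H, H))
mu = np.full(H, -1.0)
nu = mean_field_rates(J_int, mu)

# Self-consistency check: the residual of the fixed-point equation should be tiny.
residual = np.max(np.abs(nu - np.exp(mu + J_int @ nu)))
```

Damping is used here only to make the plain iteration more robust; a root finder applied to the same residual would serve equally well.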

The next term in the series expansion is the linear response function of the hidden unit network, given by the solution to the integral equation

The “gain” γh is defined by

where ϕ′(x) is the derivative of the nonlinearity with respect to its argument.

For time-independent drives μr and steady states νh (and hence γh), we may solve for Γh,h′(t − t′) by first converting to the frequency domain and then performing a matrix inverse:

where .

If the zero and first order Taylor series coefficients in our expansion of the mean hidden neuron firing rates are the dominant terms, i.e., if we may neglect higher order terms in this expansion, then we may approximate the instantaneous firing rates of the recorded neurons by

where

are the effective baselines of the recorded neurons and

are the effective coupling filters in the frequency domain, as given in the main text. In addition to neglecting the higher order spike filtering terms here, we have also neglected fluctuations around the mean input from the hidden network. These fluctuations are zero within our mean field approximation, but we could in principle calculate corrections to the mean field predictions using the techniques of [53]; we do so to estimate the size of the effective noise correlations in the SI.
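The frequency-domain calculation can be sketched numerically at a single frequency. The sketch below assumes the linear response takes the form Γ(ω) = [I − V(ω)]⁻¹ diag(γ) with Vh,h′(ω) = γh Jh,h′(ω), which follows from linearizing the mean field equation, and that the effective coupling is the direct coupling plus the hidden-network contribution Jr,h Γh,h′ Jh′,r′. The function name `effective_coupling` and the three-neuron example are hypothetical.

```python
import numpy as np

def effective_coupling(J, gamma_hid, rec, hid):
    """Effective recorded-to-recorded couplings at one fixed frequency.

    J         : (N, N) array of couplings J[i, j] (from neuron j to neuron i)
                evaluated at the chosen frequency (may be complex).
    gamma_hid : (H,) array of gains of the hidden neurons.
    rec, hid  : lists of indices of recorded and hidden neurons.
    """
    J_rr = J[np.ix_(rec, rec)]
    J_rh = J[np.ix_(rec, hid)]
    J_hr = J[np.ix_(hid, rec)]
    J_hh = J[np.ix_(hid, hid)]
    V = gamma_hid[:, None] * J_hh  # V[h, h2] = gamma_h * J[h, h2]
    # Gamma = (I - V)^{-1} diag(gamma); use a linear solve instead of an explicit inverse.
    Gamma = np.linalg.solve(np.eye(len(hid)) - V, np.diag(gamma_hid))
    return J_rr + J_rh @ Gamma @ J_hr

# Hypothetical chain: recorded neuron 0 -> hidden neuron 2 -> recorded neuron 1,
# with a self-coupling s on the hidden neuron and no direct 0 -> 1 connection.
a, b, s, g = 0.5, 0.4, -1.0, 2.0
J = np.zeros((3, 3))
J[2, 0] = a   # neuron 0 to hidden neuron 2
J[1, 2] = b   # hidden neuron 2 to neuron 1
J[2, 2] = s   # self-coupling of the hidden neuron
J_eff = effective_coupling(J, np.array([g]), rec=[0, 1], hid=[2])
```

In this example the only route from neuron 0 to neuron 1 runs through the hidden neuron, so `J_eff[1, 0]` equals b · g/(1 − g s) · a, while `J_eff[0, 1]` remains zero.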

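In the main text, we decompose our expression for the effective coupling filters into contributions from all paths that a signal can travel from neuron r′ to r. To arrive at this interpretation, we note that we can expand the linear response function of the hidden network in a series over paths through the hidden network. To start, we note that if the matrix with elements γh Jh,h′(ω) has norm less than 1 for some matrix norm ||⋅||, then the matrix inverse in the linear response function admits a convergent series expansion [75]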

where is a matrix product and . We can write an element of the matrix product out as

inserting yields

This expression can be interpreted in terms of summing over paths through the network of hidden neurons that join two observed neurons: the Jhi,hj(ω) are represented by edges from neuron hj to hi, and the γh are represented by the nodes. In this expansion, we allow edges from one neuron back to itself, meaning we include paths in which signals loop back around to the same neuron arbitrarily many times before the signal is propagated further. However, such loops can be easily factored out: summing the geometric series over repeated self-loops at neuron h contributes a factor 1/(1 − γh Jh,h(ω)). We thus remove the need to consider self-loops in our rules for calculating effective coupling filters by assigning a factor γh/(1 − γh Jh,h(ω)) to each node, as discussed in the main text and depicted in Fig 2. (The contribution of the self-feedback loops can be derived rigorously; see the SI for the full derivation.)
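The convergence of the path expansion can be checked numerically. The following sketch compares partial sums of the series Σℓ Vℓ with the exact inverse [I − V]⁻¹, where each term Vℓ collects all paths of length ℓ through the hidden network. The random matrix, gains, and sizes are hypothetical and chosen so that the spectral norm of V is below 1.

```python
import numpy as np

rng = np.random.default_rng(1)
H = 50                                                   # number of hidden neurons (hypothetical)
gamma = rng.uniform(0.5, 1.5, size=H)                    # hypothetical gains
J_hh = rng.normal(0.0, 0.2 / np.sqrt(H), size=(H, H))    # couplings at one frequency
V = gamma[:, None] * J_hh                                # V[h, h2] = gamma_h * J[h, h2]

norm_V = np.linalg.norm(V, 2)                            # spectral norm
if norm_V >= 1:
    raise ValueError("series is not guaranteed to converge for this V")

exact = np.linalg.inv(np.eye(H) - V)

partial = np.zeros((H, H))
term = np.eye(H)            # V^0: the zero-length path
errors = []
for ell in range(30):
    partial += term         # add all paths of length ell
    errors.append(np.max(np.abs(partial - exact)))
    term = term @ V         # paths one step longer
```

The entries of `errors` shrink roughly geometrically at a rate set by the norm of V, which is why long paths through the hidden network contribute little when that norm is well below 1.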

Although we have worked here in the frequency domain, our formalism does adapt straightforwardly to handle time-dependent inputs; however, among the consequences of this explicit time-dependence are that the mean field rates νh(t) are not only time-dependent, but solutions of a system of nonlinear integral equations, and hence more challenging to solve. Furthermore, quantities like the linear response of the hidden network, Γh,h′(t, t′), will depend on both absolute times t and t′, rather than just their difference, t − t′, and hence we must also (numerically) solve for Γh,h′(t, t′) directly in the time domain. We leave these challenges for future work.

Model network architectures

Our main result, Eq (2), is valid for general network architectures with arbitrary weighted synaptic connections, so long as the hidden subset of the network has stable dynamics when the recorded neurons are removed. An example for which our method must be modified would be a network in which all or the majority of the hidden neurons are excitatory, as the hidden network is unlikely to be stable when the inhibitory recorded neurons are disconnected. Similarly, we find that synaptic weight distributions with undefined moments will generally cause the network activity to be unstable. For example, weights drawn from a Cauchy distribution generally yield unstable network dynamics unless the weights are scaled inversely with a large power of the network size N.

Specific networks—Common features

The specific network architectures we study in the main text share several features in common: all are sparse networks with sparsity p (i.e., only a fraction p of connections are non-zero) and non-zero synaptic weight strengths drawn independently from a random distribution with zero population mean and population standard deviation J0/(pN)^a; the overall standard deviation of weights, accounting for the expected 1 − p fraction of zero weights, is √p J0/(pN)^a. The parameter a determines whether the synaptic strengths are “strong” (a = 1/2) or “weak” (a = 1). In most of our analytical results we only need the mean and variances of the weights, so we do not need to specify the exact distribution. In simulations, we use a normal distribution. The reason for scaling the weights as 1/(pN)^a, as opposed to just 1/N^a, is that the mean incoming degree of connections is p(N − 1) ≈ pN for large networks; this scaling thus controls for the typical magnitude of incoming synaptic currents.

For strongly coupled networks, the combined effect of sparsity and synaptic weight distribution yields an overall standard deviation of √p J0/√(pN) = J0/√N. Because the sparsity parameter p cancels out, it does not matter if we consider p to be fixed or k0 = pN to be fixed; both cases are equivalent. However, this is not the case if we scale by 1/k0, as the overall standard deviation would then be √p J0/(pN) = J0/(√p N), which only corresponds to the weak-coupling limit if p is fixed. If k0 is fixed, the standard deviation would be J0/√(k0 N), which scales as 1/√N.
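The scaling of the overall standard deviation can be checked with a short numerical sketch. The function `sparse_weights` and the parameter values below are hypothetical. For simplicity the sketch excludes self-connections, which is an assumption of this example, and uses normally distributed non-zero weights.

```python
import numpy as np

def sparse_weights(N, p, J0, a, rng):
    """Sparse random weights: each off-diagonal entry is non-zero with
    probability p, and non-zero entries are N(0, (J0 / (p*N)**a)**2)."""
    mask = rng.random((N, N)) < p
    np.fill_diagonal(mask, False)  # assumption: no self-connections in this sketch
    W = rng.normal(0.0, J0 / (p * N) ** a, size=(N, N))
    return np.where(mask, W, 0.0)

rng = np.random.default_rng(2)
N, p, J0 = 500, 0.2, 1.0
off_diag = ~np.eye(N, dtype=bool)

# Strong coupling (a = 1/2): the overall standard deviation should be J0 / sqrt(N).
W_strong = sparse_weights(N, p, J0, 0.5, rng)
std_strong = W_strong[off_diag].std()
pred_strong = J0 / np.sqrt(N)

# Weak coupling (a = 1): the overall standard deviation should be J0 / (sqrt(p) * N).
W_weak = sparse_weights(N, p, J0, 1.0, rng)
std_weak = W_weak[off_diag].std()
pred_weak = J0 / (np.sqrt(p) * N)
```

The empirical values `std_strong` and `std_weak` should agree with the predictions to within sampling error, and only the weak-coupling prediction retains a dependence on p.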

It is worth noting that the determination of “weak” versus “strong” coupling depends not only on the power of N with which synaptic weights scale, but also on the network architecture and the correlation structure of the weights. For example, for an all-to-all connected matrix with symmetric rank-1 synaptic weights of the form ζiζj, where the ζi are independently distributed normal random variates, the standard deviation of each ζ must scale as 1/√N in order for hidden paths to generate contributions to effective interactions, such that the product ζiζj scales as 1/N but the coupling is still strong.

Specific networks—Differences in architecture and synaptic constraints

Beyond the common features outlined above, we perform our analysis of the distribution of effective synaptic interaction strengths for three network architectures commonly studied in network models. These architectures are not intended to be realistic representations of neuronal network structures, but to capture basic features of network architecture and therefore give insight into the basic features of the effective interaction networks.

Erdős-Rényi + mixed synapses. The first network we consider (and the one we perform most of our later analyses on as well) is an Erdős-Rényi random network architecture with “mixed synapses.” That is, each connection between neurons is chosen randomly with probability p. By “mixed synapses” we mean that each neuron’s outgoing synaptic weights are chosen completely independently; i.e., in this network there are no excitatory or inhibitory neurons, and each neuron may make both excitatory and inhibitory connections. The corresponding analysis is shown in Fig 5A.

Erdős-Rényi + Dale’s law imposed. Real neurons appear to split into separate excitatory and inhibitory classes, a dichotomy known as “Dale’s law” (or alternatively, “Dale’s principle” to highlight that it is not really a law of nature). Neurons in a network that obeys this law will have coupling filters Ji,j(t) that are strictly positive for excitatory neurons and strictly negative for inhibitory neurons. This constraint complicates analytic calculations slightly, as the moments of the synaptic weights now depend on the identity of the neuron, and more care must be taken in calculating expected values or population averages. We instead impose this constraint numerically to generate the results shown in Fig 5B. The trends are the same as in the network with mixed synapses, with the resulting ratios being slightly reduced.

As a technical point, because our analysis requires calculation of the mean field firing rates of the hidden network in the absence of the recorded neurons, random sampling of the network may, by chance, yield hidden networks with an imbalance of excitatory neurons, for which the mean field firing rates of the hidden network may diverge for our choice of exponential nonlinearity. This is the origin of the relatively larger error bars in Fig 5B: fewer random samplings for which the hidden network was stable were available to perform the computation. One way this artifact can be prevented is by choosing a nonlinearity that saturates, such as ϕ(x) = c/(1 + exp(−x)), which prevents the mean-field firing rates from diverging and yields stable network activity (see Fig 8). Another is to choose a different reference state of network activity around which we perform our expansion, such as the mean field state discussed in the SI.

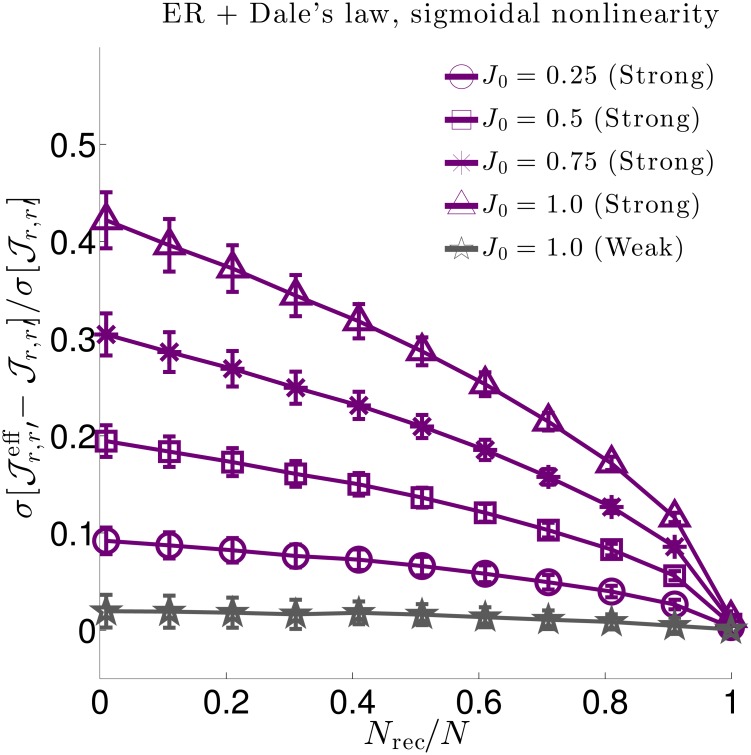

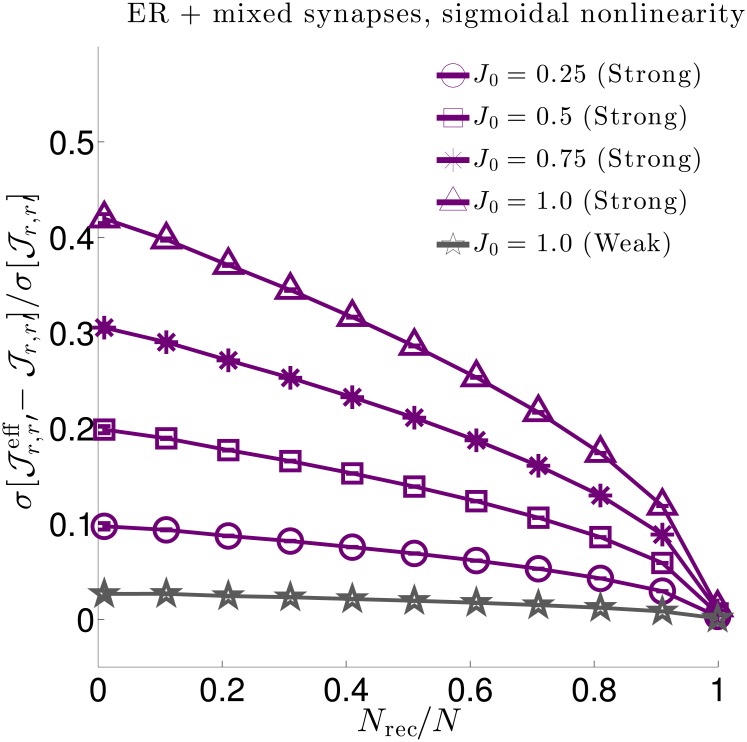

Fig 8. Same as Fig 5B in the main text, but for a sigmoidal nonlinearity ϕ(x) = 2/(1 + e^(−x)).

Because the sigmoid is bounded, the mean field solution cannot diverge, yielding better results.

Watts-Strogatz network + mixed synapses. Finally, although Erdős-Rényi networks are relatively easy to analyze analytically, and are ubiquitous in many influential computational and theoretical studies, real world networks typically have more structure. Therefore, we also consider a network architecture with more structure, a Watts-Strogatz (small world) network. A Watts-Strogatz network is generated by starting with a K-nearest neighbor network (such that the fraction of non-zero connections each neuron makes is p = K/(N − 1)) and rewiring a fraction β of those connections. The limit β = 0 remains a K-nearest neighbor network, while β → 1 yields an Erdős-Rényi network. We generated the adjacency matrices of the Watts-Strogatz networks using code available in [76]. Here we consider only a Watts-Strogatz network with mixed synapses; a network with spatial structure and Dale’s law would become sensitive to both the spatial distribution of excitatory and inhibitory neurons in the network as well as the way in which the neurons are sampled, an investigation we leave for future work. The results for the Watts-Strogatz network with mixed synapses are shown in Fig 5C, and are qualitatively similar to the Erdős-Rényi network with mixed synapses.
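To make the construction concrete, the following is a minimal sketch of a Watts-Strogatz-style generator. It is not the code of [76]: the function `watts_strogatz_directed` is hypothetical, and it assumes a directed variant in which each neuron's K outgoing connections start on a ring lattice and each is rewired to a new random target with probability β, so that every neuron keeps exactly K outgoing connections.

```python
import numpy as np

def watts_strogatz_directed(N, K, beta, rng):
    """Boolean adjacency matrix A with A[i, j] = True for a connection j -> i.

    Each neuron starts connected to its K nearest ring neighbors (K/2 per side);
    each connection is then rewired with probability beta to a uniformly chosen
    target that is neither the source itself nor an existing target.
    """
    if K % 2 != 0 or not (0 < K < N - 1):
        raise ValueError("K must be even and satisfy 0 < K < N - 1")
    A = np.zeros((N, N), dtype=bool)
    for j in range(N):
        for k in range(1, K // 2 + 1):
            A[(j + k) % N, j] = True
            A[(j - k) % N, j] = True
    for j in range(N):
        for i in np.flatnonzero(A[:, j]):      # original lattice targets of neuron j
            if rng.random() < beta:
                free = np.flatnonzero(~A[:, j])
                free = free[free != j]          # exclude self-connections
                new_i = rng.choice(free)
                A[i, j] = False
                A[new_i, j] = True
    return A

rng = np.random.default_rng(3)
N, K = 100, 10
A_lattice = watts_strogatz_directed(N, K, beta=0.0, rng=rng)   # pure K-nearest-neighbor ring
A_small_world = watts_strogatz_directed(N, K, beta=0.2, rng=rng)
out_degree = A_small_world.sum(axis=0)
```

Because rewiring only moves the target of an existing connection, the fraction of non-zero connections each neuron makes stays at p = K/(N − 1) for every value of β in this sketch.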

Because all three network types we considered yield qualitatively similar results, for the remainder of our analyses, we focus on the Erdős-Rényi + mixed synapses network for simplicity in both simulations and analytical calculations.

Parameter values used to generate our networks are given in Table 1.

Choice of nonlinearity ϕ(x)

The nonlinear function ϕ(x) sets the instantaneous firing rate for the neurons in our model. Our main analytical results (e.g., Eq (2)) hold for an arbitrary choice of ϕ(x). Where specific choices are required in order to perform simulations, we used ϕ(x) = max(x, 0) for the results presented in Figs 3 and 4 and ϕ(x) = exp(x) otherwise. The rectified linear choice is convenient for small networks, as high-order derivatives are zero, which eliminates corresponding high-order “loop corrections” to mean field theory [53]. The exponential function is the “canonical” choice of nonlinearity for the nonlinear Hawkes process [16–18, 20]. The exponential has particularly nice theoretical properties, but is also convenient for fitting the nonlinear Hawkes model to data, as the log-likelihood function of the model simplifies considerably and is convex (though some similar families of nonlinearities also yield convex log-likelihood functions).

An important property that both choices of nonlinearity possess is that they are unbounded. This property is necessary to guarantee that a neuron spikes given enough input. A bounded nonlinearity imposes a maximum firing rate, and neurons cannot be forced to spike reliably by providing a large bolus of input. The downside of an unbounded nonlinearity is that it is possible for the average firing rates to diverge, so that the network never reaches a steady state. For example, in a purely excitatory network with an exponential nonlinearity, neural firing will run away without a sufficiently strong self-refractory coupling to suppress the firing rate. This will not occur with a bounded nonlinearity, as excitation can only drive neurons to fire at some maximum but finite rate.
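The contrast between bounded and unbounded nonlinearities can be illustrated at the level of the mean field rate equations. The sketch below iterates the map ν ← ϕ(μ + 𝒥ν) for a purely excitatory, all-to-all network with no self-refractory coupling. The network size, coupling strength, and the helper `iterate_rates` are hypothetical, and the iteration is a rate-level caricature rather than a simulation of the spiking model.

```python
import numpy as np

def iterate_rates(phi, J_int, mu, n_steps=200):
    """Iterate nu <- phi(mu + J_int @ nu); return None if the rates blow up."""
    nu = phi(mu)
    for _ in range(n_steps):
        with np.errstate(over="ignore"):   # silence overflow warnings on runaway
            nu = phi(mu + J_int @ nu)
        if not np.all(np.isfinite(nu)):
            return None                    # rates diverged
    return nu

def sigmoid(x):
    """Bounded nonlinearity with maximum rate 2, as in Fig 8."""
    return 2.0 / (1.0 + np.exp(-x))

H = 100
J_exc = np.full((H, H), 2.0 / H)   # purely excitatory couplings (hypothetical strength)
mu = np.zeros(H)

rates_exp = iterate_rates(np.exp, J_exc, mu)       # unbounded: runs away
rates_sigmoid = iterate_rates(sigmoid, J_exc, mu)  # bounded: stays below 2
exp_diverged = rates_exp is None
```

For these parameters the exponential map has no fixed point, so the rates overflow within a few iterations, whereas the sigmoidal rates remain below the maximum rate of 2.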