Abstract

Image-guided surgery can enhance cancer treatment by decreasing, and ideally eliminating, positive tumor margins and iatrogenic damage to healthy tissue. Current state-of-the-art near-infrared fluorescence imaging systems are bulky and costly, lack sensitivity under surgical illumination, and lack co-registration accuracy between multimodal images. As a result, an overwhelming majority of physicians still rely on their unaided eyes and palpation as the primary sensing modalities for distinguishing cancerous from healthy tissue. Here we introduce an innovative design, comprising an artificial multispectral sensor inspired by the Morpho butterfly’s compound eye, which can significantly improve image-guided surgery. By monolithically integrating spectral tapetal filters with photodetectors, we have realized a single-chip multispectral imager with 1000× higher sensitivity and 7× better spatial co-registration accuracy compared to clinical imaging systems in current use. Preclinical and clinical data demonstrate that this technology seamlessly integrates into the surgical workflow while providing surgeons with real-time information on the location of cancerous tissue and sentinel lymph nodes. Due to its low manufacturing cost, our bio-inspired sensor will provide resource-limited hospitals with much-needed technology to enable more accurate value-based health care.

1. INTRODUCTION

Surgery is the primary curative option for patients with cancer, with the overall objective of complete resection of all cancerous tissue while avoiding iatrogenic damage to healthy tissue. In addition, sentinel lymph node (SLN) mapping and resection is an essential step in staging and managing the disease [1]. Even with the latest advancements in imaging technology, incomplete tumor resection in patients with breast cancer occurs at an alarming rate of 20 to 25 percent, with recurrence rates of up to 27 percent [2]. The clinical need for imaging instruments that provide real-time feedback in the operating room remains unmet, largely because current imaging systems, which are built on conventional advances in the semiconductor and optical fields, are bulky and costly and offer suboptimal sensitivity and co-registration accuracy between multimodal images [3–7].

Here, we demonstrate that image-guided surgery can be dramatically improved by shifting the design paradigm away from conventional advancements in the semiconductor and optical technology fields and instead adapting the elegant 500-million-year-old design of the Morpho butterfly’s compound eye [8,9]: a condensed biological system optimized for high-acuity detection of multispectral information. Nature has served as the inspiration for many engineering sensory designs, with performances exceeding state-of-the-art sensory technology and enabling new engineering paradigms such as achromatic circular polarization sensors [10], artificial vision sensors [11–15], silicon cochlea [16,17], and silicon neurons [18]. Our artificial compound eye, inspired by the Morpho butterfly’s photonic crystals, monolithically integrates pixelated spectral filters with an array of silicon-based photodetectors. Our bio-inspired image sensor has the prominent advantages of (1) capturing both color and near-infrared fluorescence (NIRF) with high co-registration accuracy and sensitivity under surgical light illumination, allowing simultaneous identification of anatomical features and tumor-targeted molecular markers; (2) streamlined design—at 20 g including optics, our bio-inspired image sensor does not impede surgical workflow; and (3) low manufacturing cost of ~$20, which will provide resource-limited hospitals with much-needed technology to enable more accurate value-based health care.

A. Nature-Inspired Design

Light has imposed significant selection pressure for perfecting, optimizing, and miniaturizing animal visual systems since the Cambrian period some 500 million years ago [19]. Sophisticated visual systems emerged in a tight race with prey coloration during that time, resulting in a proliferation of photonic crystals in the animal kingdom used for both signaling and sensing [20,21]. For example, not only are the tree-shaped photonic crystals of the Morpho butterfly the source of its wings’ magnificent iridescent colors [Fig. 1(a)], which can be sensed by conspecifics a mile away, but these crystals have also inspired the design of photonic structures that can sense vapors [22] and infrared photons [23] with sensitivity that surpasses state-of-the-art manmade sensors.

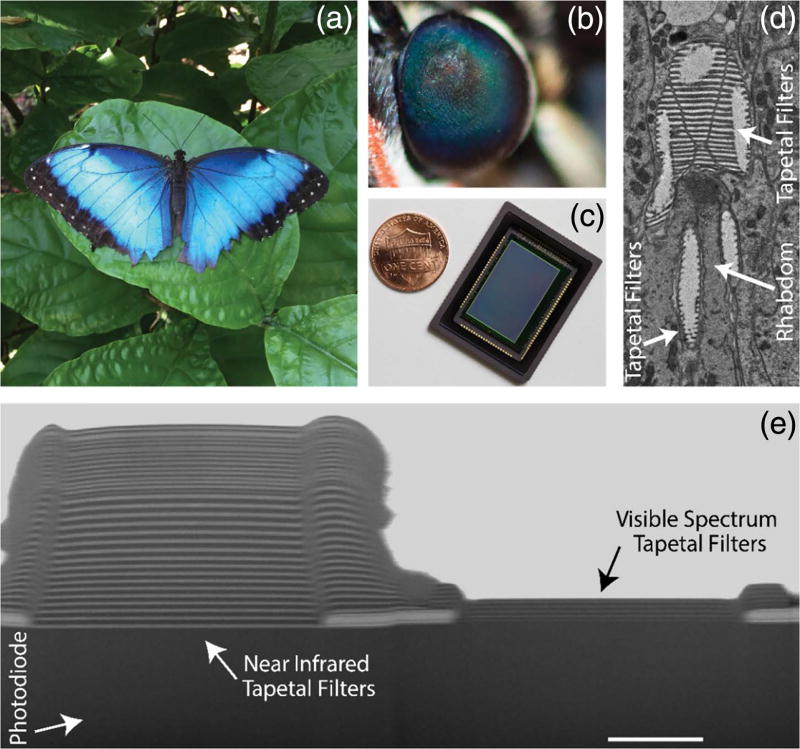

Fig. 1.

Our bio-inspired imaging sensor uses a new design paradigm to capture color and near-infrared fluorescence information. (a) Morpho butterfly (Morpho peleides) blue color is due to tree-shaped photonic crystals in its wings. Similar photonic crystals can be found in the butterfly’s ommatidia. (b) Close-up of the Morpho ommatidia indicating “eye-shine” due to tapetal filters together with screening and visual pigments in the rhabdom, which enable multispectral target detection. (c) Our compact bio-inspired multispectral imaging sensor combines an array of imaging elements with pixelated tapetal spectral filters. (d) Transmission electron microscope image of an individual Morpho ommatidium, which monolithically integrates tapetal filters with light-sensitive rhabdom. (e) Cross-sectional scanning electron microscope image of our bio-inspired imaging sensor. Tapetal filters combined with silicon photodetectors allow for high co-registration accuracy in the detected spectral information. Scale bar, 2 µm.

Similar photonic crystals are also present in the compound eye of the Morpho butterfly. These photonic crystals, known as tapetal filters, are realized by stacks of alternating layers of air and cytoplasm, which act as interference filters at the proximal end of the rhabdom within each ommatidium [Fig. 1(d)]. The light that enters an individual ommatidium and is not absorbed by the visual and screening pigments in the rhabdom will be selectively reflected by the tapetal filters and will have another chance of being absorbed before exiting the eye. The spectral responses of the tapetal filters, together with screening and visual pigments in the rhabdom, determine the eye shine of the ommatidia [8] and the inherent multispectral sensitivity of the butterfly’s visual system [Fig. 1(b)]. Individual ommatidia have different combinations of visual pigments and tapetal filter stacks, enabling selective spectral sensitivity across the ultraviolet, visible, and near-infrared (NIR) spectra.

By imitating the compound eye of the Morpho butterfly using dielectric materials and silicon-based photosensitive elements, we developed a multispectral imaging sensor that operates radically differently from the current state-of-the-art multispectral imaging technology [Fig. 1(c); Table 1]. The tapetal spectral filters are constructed using alternating nanometric layers of SiO2 and TiO2, which are pixelated with a 7.8 µm pitch and deposited onto the surface of a custom-designed silicon-based complementary metal-oxide semiconductor (CMOS) imaging array (see Methods). The alternating stack of dielectrics acts as an interference filter, allowing certain light spectra to be transmitted while reflecting others [Fig. 1(e)]. Four distinct pixelated spectral filters are replicated throughout the imaging sensor in a two-by-two pattern by modulating the thickness and periodicity of the dielectric layers in individual pixels. Three of the four pixels are designed to sense the red, green, and blue (RGB) spectra, respectively, and the fourth pixel captures NIR photons with wavelengths greater than 780 nm. An additional stack of interference filters is deposited across all pixels to block fluorescence excitation light between 770 and 800 nm. The proximity of the imaging array’s four base pixels inherently co-registers the captured multispectral information, similar to its biological counterpart.
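To illustrate how an alternating SiO2/TiO2 stack can act as an interference filter, the following minimal sketch applies the standard transfer-matrix method at normal incidence to an idealized quarter-wave stack. It is not the filter design used in this work: the refractive indices, the substrate index, the number of layer pairs, and the 785 nm design wavelength are all assumptions chosen for illustration, and material dispersion and absorption are ignored.

```python
import cmath
import math

# Assumed, dispersion-free refractive indices (illustrative values only).
N_SIO2 = 1.46
N_TIO2 = 2.40
N_SUBSTRATE = 1.46   # assumed oxide layer beneath the stack
CENTER_NM = 785.0    # assumed stop-band center near the excitation laser


def layer_matrix(n, thickness_nm, wavelength_nm):
    """Characteristic matrix of one lossless layer at normal incidence."""
    delta = 2.0 * math.pi * n * thickness_nm / wavelength_nm  # phase thickness
    c, s = cmath.cos(delta), cmath.sin(delta)
    return ((c, 1j * s / n), (1j * n * s, c))


def matmul(a, b):
    """Product of two 2x2 matrices stored as nested tuples."""
    return (
        (a[0][0] * b[0][0] + a[0][1] * b[1][0], a[0][0] * b[0][1] + a[0][1] * b[1][1]),
        (a[1][0] * b[0][0] + a[1][1] * b[1][0], a[1][0] * b[0][1] + a[1][1] * b[1][1]),
    )


def reflectance(layers, wavelength_nm, n_in=1.0, n_sub=N_SUBSTRATE):
    """Power reflectance of a stack; layers are listed from the incident side."""
    m = ((1.0, 0.0), (0.0, 1.0))
    for n, thickness_nm in layers:
        m = matmul(m, layer_matrix(n, thickness_nm, wavelength_nm))
    b = m[0][0] + m[0][1] * n_sub
    c = m[1][0] + m[1][1] * n_sub
    r = (n_in * b - c) / (n_in * b + c)
    return abs(r) ** 2


def quarter_wave_stack(pairs, center_nm=CENTER_NM):
    """Alternating TiO2/SiO2 layers, each a quarter wave thick at center_nm."""
    d_high = center_nm / (4.0 * N_TIO2)
    d_low = center_nm / (4.0 * N_SIO2)
    return [(N_TIO2, d_high), (N_SIO2, d_low)] * pairs


stack = quarter_wave_stack(pairs=10)
r_center = reflectance(stack, CENTER_NM)
# For a lossless stack, transmittance is 1 - R, so optical density is -log10(1 - R).
od_center = -math.log10(1.0 - r_center)
```

In this idealized model, light near the design wavelength is strongly reflected while wavelengths outside the stop band are largely transmitted. Changing the layer thicknesses and the number of periods moves and reshapes the stop band, which mirrors the text's description of tuning thickness and periodicity per pixel.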

Table 1.

Optical Performance of Various State-of-the-Art Near-Infrared Fluorescence (NIRF) Imaging Systems and Our Bio-Inspired NIRF Imager

| Device | Fluobeam 800 | PDE | SPY Elite | LAB-Flare | Iridium | Spectrum | Solaris | Bio-inspired |
|---|---|---|---|---|---|---|---|---|
| Company/university | Fluoptics | Hamamatsu | Novadaq | Curadel | Vision Sense | Quest | Perkin Elmer | UIUC & Washington Univ. |
| References | [26] | [26] | [26] | [26] | [26] | [26] | [26] | This paper |
| FDA/EMA approved | Yes | Yes | Yes | No | Yes | Yes | No | No |
| Instrument type | Single camera | Single camera | Two adjacent cameras | Beam splitter | Beam splitter | Optical prism | Beam splitter | Single camera |
| Real-time color and fluorescence overlay | No | No | No | Yes | Yes | Yes | Yes | Yes |
| Surgical light | Dim | Dim | Dim | Dim | Dim | On | On | On |
| Sensor type | CCD | CCD | CCD | CCD | CCD | CCD | sCMOS | CMOS |
| Sensor bit depth | 8 | 8 | 8 | 10 | 12 | 12 | 16 | 14 |
| Adjustable gain | No | No | No | Yes | Yes | NS | No | Yes |
| Fluorescence detection limit | ~5 nM (b) | ~15 nM (b) | ~5 nM (b) | NA | ~50 pM (b) | ~10 nM (b) | ~1 nM (b) | 100 pM (a) |
| Excitation light source | 750-nm laser | 760-nm LEDs | 805-nm laser | 665 ± 3 nm; 760 ± 3 nm | 805-nm laser | LEDs | Filtered LEDs | 780-nm laser |
| Exposure time | 1 ms to 1 s | NS | NS | 66 µs to 3 s | NS | NS | 1 ms | 0.5 ms (RGB), 40 ms (NIR) |
| Maximum FPS | 25 | 20 | 20 | 30 | NS | 20 | 100 | 40 |
| Sensor resolution | 720 × 576 | 640 × 480 | 1024 × 768 | 1024 × 1024 | 960 × 720 | NS | 1024 × 1024 | 1280 × 720 |
| Cost | $$$$ (c) | $$$$ (c) | $$$$ (c) | $$$$ (c) | $$$$ (c) | $$$$ (c) | $$$$ (c) | $ (c) |

Abbreviations: CCD, charge-coupled device; CMOS, complementary metal-oxide semiconductor; EMA, European Medicines Agency; FDA, U.S. Food and Drug Administration; FPS, frames per second; LED, light-emitting diode; NA, not applicable; NIR, near-infrared; NS, not stated; RGB, red-green-blue; sCMOS, scientific complementary metal-oxide semiconductor; UIUC, University of Illinois at Urbana-Champaign.

(a) The detection limit was measured under visible light illumination, using indocyanine green (ICG) as the fluorescence marker.

(b) The detection limit was measured with no surgical light illumination, using IRDye800CW as the fluorescence marker.

(c) The cost of commercially available NIRF systems ranges from $20,000 to more than $200,000, depending on instrument capabilities and options. The estimated cost to manufacture our sensor is less than $20.

2. RESULTS

A. Optoelectronic Performance of the Bio-Inspired Sensor

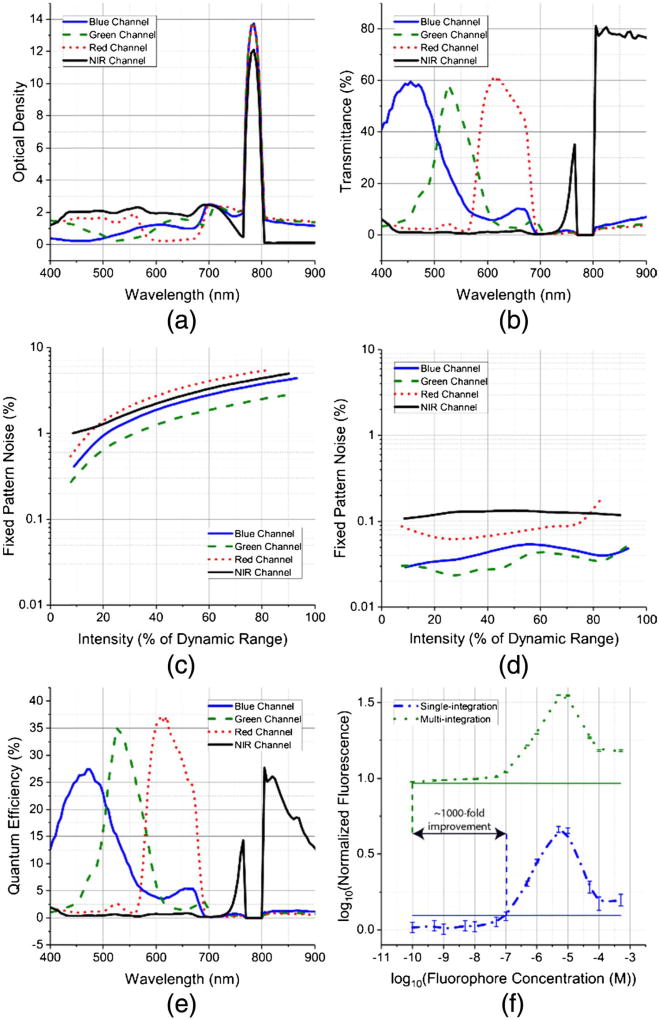

The optical density and transmission spectrum of the four base pixels from the artificial compound eye are presented in Figs. 2(a) and 2(b), respectively. The sensor was evaluated with uniform monochromatic light impinging normal to the surface of the imaging plane. The individual tapetal filters are optimized to achieve transmission of 60% in the visible spectrum and 80% in the NIR spectrum. The high optical density of ~12 ensures effective suppression of fluorescence excitation light between 770 and 800 nm. The spatial uniformity or fixed pattern noise (FPN) before calibration for the RGB and NIR filters is 6.5%, 1.9%, 4.5%, and 5%, respectively [Fig. 2(c)]. After first-order gain and offset calibration, the FPN is around 0.1% across different illumination intensities [Fig. 2(d)]. The FPN for all four channels is evaluated under 30 ms exposure and different illumination intensities, ranging from dark conditions to intensities that almost saturate the pixel’s output signal. Hence, the x axes in Figs. 2(c) and 2(d) represent the mean output signal from a pixel expressed as a percentage of the dynamic range. The spatial variations in the optical response of the tapetal filters are primarily due to variations in the underlying transistors and photodiodes within individual pixels, which can be mitigated via calibration, improving spatial uniformity under various illumination conditions. The peak quantum efficiencies for the blue, green, red, and NIR pixels are 28%, 35%, 38%, and 28%, respectively [Fig. 2(e); Table 2].
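The first-order gain and offset calibration mentioned above can be sketched as a two-point correction: a dark frame supplies each pixel's offset and a uniformly illuminated frame supplies its gain. The example below is illustrative only. The pixel model is simulated, the gain and offset spreads are assumed values, and FPN is computed here as the spatial standard deviation relative to a 14-bit full scale, which may differ from the exact definition used for Fig. 2.

```python
import random
import statistics

FULL_SCALE = 2 ** 14 - 1  # 14-bit output, per Table 1


def simulate_frame(illumination, gains, offsets):
    """Linear pixel model: output = gain * illumination + offset (assumed)."""
    return [g * illumination + o for g, o in zip(gains, offsets)]


def fpn_percent(frame):
    """Spatial standard deviation as a percentage of full scale."""
    return 100.0 * statistics.pstdev(frame) / FULL_SCALE


def two_point_calibration(dark_frame, flat_frame):
    """Return a function that applies per-pixel offset and gain correction."""
    dark_mean = statistics.fmean(dark_frame)
    span = statistics.fmean(flat_frame) - dark_mean
    gain_corr = [span / (f - d) for d, f in zip(dark_frame, flat_frame)]

    def correct(frame):
        return [(p - d) * g + dark_mean
                for p, d, g in zip(frame, dark_frame, gain_corr)]

    return correct


random.seed(0)
num_pixels = 1000
gains = [random.gauss(1.0, 0.05) for _ in range(num_pixels)]      # assumed 5% gain spread
offsets = [random.gauss(200.0, 20.0) for _ in range(num_pixels)]  # assumed offset spread

dark = simulate_frame(0.0, gains, offsets)
flat = simulate_frame(12000.0, gains, offsets)
scene = simulate_frame(6000.0, gains, offsets)  # uniform target at mid-range

correct = two_point_calibration(dark, flat)
fpn_before = fpn_percent(scene)
fpn_after = fpn_percent(correct(scene))
```

Because the simulated pixels are perfectly linear and noise-free, the correction removes essentially all spatial variation. A real sensor retains residual FPN from nonlinearity and temporal noise, consistent with the nonzero value reported after calibration.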

Fig. 2.

(a) Measured optical density and (b) transmittance of the four tapetal spectral filters in our bio-inspired multispectral imaging sensor. (c) Spatial uniformity or fixed pattern noise (FPN) before calibration as a function of light intensity. (d) The calibrated FPN shows that the bio-inspired camera captures data with ~0.1% spatial variations for uniform intensity targets. (e) Quantum efficiency of our bio-inspired image sensor. (f) Fluorescence detection limits under surgical light illumination for our bio-inspired sensor, which utilizes a multi-exposure method (green) compared with single-exposure method (blue) used in state-of-the-art pixelated color-NIR sensors. The dashed vertical lines are the individual detection thresholds for both sensors estimated as the mean value plus three standard deviations of the control vial. The bio-inspired sensor’s 1000× improvement in detection limit is achieved due to multi-exposure (P < 0.0001), high NIR optical density, and high NIR quantum efficiency. Data are presented as mean ± SD.
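The detection threshold described in the caption (mean plus three standard deviations of the control vial) can be sketched as follows. All readings below are synthetic numbers invented for illustration and are not measurements from this work. The use of the sample standard deviation and the function names are likewise assumptions.

```python
import statistics


def detection_threshold(control_readings, k=3.0):
    """Mean of the control vial plus k sample standard deviations."""
    return statistics.mean(control_readings) + k * statistics.stdev(control_readings)


def detection_limit(control_readings, readings_by_concentration):
    """Lowest concentration whose mean signal exceeds the control threshold."""
    threshold = detection_threshold(control_readings)
    detectable = [conc for conc, values in readings_by_concentration.items()
                  if statistics.mean(values) > threshold]
    return min(detectable) if detectable else None


# Synthetic signal values in arbitrary units (hypothetical, for illustration).
control = [100, 102, 98, 101, 99]
dilution_series_pm = {
    50: [101, 103, 100],
    500: [110, 112, 108],
    5000: [200, 205, 195],
}

threshold = detection_threshold(control)
limit_pm = detection_limit(control, dilution_series_pm)
```

With these synthetic values, the lowest dilution stays below the threshold and the next one clears it, so the function reports the middle concentration as the detection limit.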

Table 2.

Summary of the Optoelectronic Performance of Our Bio-Inspired Imaging Sensor

| Parameter | Value |
|---|---|
| Number of pixels | 1280 × 720 |
| Pixel pitch | 7.8 µm × 7.8 µm |
| Frames per second | 40 |
| Full well capacity | 72,000 e− |
| Sensitivity | 9 µV/e− |
| Maximum quantum efficiency, blue | 28% at 470 nm |
| Maximum quantum efficiency, green | 35% at 525 nm |
| Maximum quantum efficiency, red | 38% at 610 nm |
| Maximum quantum efficiency, NIR | 28% at 805 nm |
| Dynamic range | 62 dB |
| Maximum signal-to-noise ratio | 48 dB (signal close to saturation) |
| Fixed-pattern noise after calibration | 0.1% of saturated level |
| PRNU for NIR channel | 1.1% of saturation level |
| DSNU for NIR channel | 3.4% of saturation level |
| Number of spectral bands | 4 |
| Detection limit | 100 pM indocyanine green with 20 mW/cm2 laser excitation, 60 kLux surgical illumination at 25 frames/sec |
| Power consumption | 250 mW |
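Several entries in Table 2 are related through standard image-sensor relations, and a short calculation can serve as a consistency check. The sketch below assumes that dynamic range is defined as full well capacity over the noise floor and that the maximum signal-to-noise ratio is shot-noise limited near saturation. Neither definition is stated in the table, so the derived noise floor is an estimate rather than a reported value.

```python
import math

FULL_WELL_E = 72_000          # electrons, from Table 2
CONVERSION_GAIN_UV = 9.0      # microvolts per electron, from Table 2
DYNAMIC_RANGE_DB = 62.0       # from Table 2

# Shot-noise-limited SNR at saturation: signal / sqrt(signal) = sqrt(full well).
max_snr_db = 20.0 * math.log10(math.sqrt(FULL_WELL_E))

# Noise floor implied by DR = 20 * log10(full well / noise floor) (assumed definition).
noise_floor_e = FULL_WELL_E / 10 ** (DYNAMIC_RANGE_DB / 20.0)

# Output voltage swing at saturation.
saturation_v = FULL_WELL_E * CONVERSION_GAIN_UV * 1e-6
```

Under these assumptions the shot-noise-limited estimate comes out at roughly 48.6 dB, in line with the 48 dB listed in Table 2, and the implied noise floor is on the order of tens of electrons.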

B. Acquiring NIR Fluorescence and Color Under Surgical Light Illumination

Simultaneous and real-time imaging of both NIR fluorescence and RGB information is essential in surgical settings, as these will enable the surgeon to identify the location of the tumor on the correct anatomical features. U.S. Food and Drug Administration (FDA) regulations require the optical power of visible-spectrum surgical illumination to be between 40 and 160 kLux. The optical power for NIR laser-based excitation sources typically does not exceed 10 mW/cm2. Hence, the intensity of the NIRF molecular probe, which could be emitted from tumors several centimeters deep in the tissue, is at least 5 orders of magnitude weaker than the intensity of the reflected visible-spectrum light [24,25]. To enable simultaneous color and NIR imaging in the operating room, most FDA-approved instruments work with dimmed surgical illumination, which significantly impedes the surgical workflow: physicians stop the resection, dim the surgical lights, evaluate the surgical margins with NIRF instrumentation, and then continue the surgery under either dim or normal illumination but without NIRF guidance [26]. This significant drawback short-circuits the intrinsic benefits of NIRF, preventing wide acceptance of this technology in the operating room and leading to positive margins and iatrogenic damage.

When imaging weak NIR fluorescence alongside high visible-spectrum photon flux during intraoperative procedures, the dynamic range of the scene can span ~40 to ~140 dB [24,25]. The imaged tissue is illuminated with a bright visible light (40 to 160 kLux) to highlight anatomical features in the wound site, as well as by NIR laser light with 10 mW/cm2 optical power to excite fluorophores. The NIR fluorescent photon flux depends on the concentration and depth of the fluorescent dye, which will change during the surgical procedure as the tumor is located and resected. At the beginning of the surgery, the tumor might be located several millimeters beneath the surface of the tissue. As NIR photons travel to and from the skin and the tumor, the NIR light will be significantly attenuated and the dynamic range of the imaged scene (visible and NIR photons) can approach ~140 dB. The NIR photons will be less attenuated as the surrounding tissue is resected and the tumor is directly exposed and imaged. Depending on the fluorescent concentration, the dynamic range of the imaged scene can be between 40 and 80 dB when the tumor is at the surface [25]. To address the wide-dynamic-scene imaging demands in the operating room, our custom CMOS imager has programmable readout circuitry that enables independent exposure control and programmable gains for both visible and NIR pixels within a single frame (see Methods). A sensor limited to a single exposure time per frame could instead collect two consecutive images with different exposure times (i.e., one frame with long and one with short exposure time), but the resulting reduction in frame rate would be a major shortcoming for intraoperative applications where real-time imaging is critical.

In our intraoperative experiments, due to the high visible-spectrum illumination in the operating room, the exposure time is typically set to ~0.1 ms or lower for the RGB pixels to ensure that non-saturated and high-contrast color images are recorded. Since the NIRF emission is weaker than the visible light reflected from tissue, the exposure time for the NIR pixels is set to 40 ms to ensure imaging rates of 25 frames/s and acquisition of high-contrast NIR images. This contrasts with current state-of-the-art pixelated NIRF systems that either utilize polymer-based [27] or Fabry–Perot absorptive pixelated spectral filters with low optical density (<1.5) coupled with CMOS sensors (see Tables 1 and 3), allowing only a single exposure time for all pixels, or utilize a multicamera approach, which lacks co-registration accuracy between multimodal images [26]. The multi-exposure capabilities of our CMOS imager, coupled with the high optical density and quantum efficiency of the NIR pixels, enable detection of 100 pM fluorescence concentrations of indocyanine green (ICG) under 60 kLux surgical light illumination (P < 0.0001), which is a thousand-fold improvement over current state-of-the-art single-exposure pixelated sensors [27] [Fig. 2(f)].
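As a rough worked example of why per-channel exposure control matters, the sketch below converts the exposure settings quoted above into decibels using the 20·log10 convention common for image sensors. Treating the exposure ratio as an additive extension of the range between channels is a simplifying assumption, not an analysis from this work.

```python
import math


def ratio_to_db(ratio):
    """Express a signal ratio in decibels using the 20*log10 convention."""
    return 20.0 * math.log10(ratio)


RGB_EXPOSURE_S = 0.1e-3   # color pixel exposure quoted in the text
NIR_EXPOSURE_S = 40e-3    # NIR pixel exposure quoted in the text

exposure_ratio = NIR_EXPOSURE_S / RGB_EXPOSURE_S
exposure_gain_db = ratio_to_db(exposure_ratio)

# The text states the NIRF signal is at least 5 orders of magnitude weaker
# than reflected visible light; in the same convention that is 100 dB.
scene_gap_db = ratio_to_db(1e5)

# A 40 ms exposure bounds the frame rate at 25 frames/s.
max_frame_rate = 1.0 / NIR_EXPOSURE_S
```

The 400:1 exposure ratio contributes roughly 52 dB toward bridging the gap between the visible and NIR signal levels. The remainder must come from the sensor's native dynamic range, the programmable gain, and the rejection provided by the spectral filters.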

Table 3.

Optical Performance Comparison Between Pixelated NIRF Imaging Systems and Our Bio-Inspired NIRF Imager

| Device | Piranha4 | OV4682 | RGB-NIR | Bio-inspired |
|---|---|---|---|---|
| Company/university | Teledyne DALSA | Omni Vision | Univ. of Arizona | UIUC & Wash. Univ. |
| Reference | Company website | Company website | [18] | This article |
| Material for pixelated filter | NS | Polymer | Polymer | Dielectric interference filters |
| Resolution | 2048 × 4 | 2688 × 1520 | 2304 × 1296 | 1280 × 720 |
| Multi-integration | Yes | No | No | Yes |
| Maximum optical density | NS | NS | <1 | 14 |
| Maximum optical density NIR pixels in visible spectrum | NS | NS | 0.7 | ~2.1 |
| Maximum optical density RGB pixels in NIR spectrum | <1 | <1 | <1 | ~2.2 |
| NIR excitation light | NS | NS | 6 | 12 |
| ICG fluorescence detection limit | NS | NS | 130 nM | 100 pM |
| Sensor type | CMOS | CMOS | CMOS | CMOS |
| Sensor bit depth | 12 | 10 | 12 | 14 |
| Exposure time | NA | NS | 10 ms | 85 µs to 2 s |
| Maximum FPS | NA | 40 | 60 | 40 |
| Background correction | No | No | Yes | No |

Abbreviations: CMOS, complementary metal-oxide semiconductor; FPS, frames per second; ICG, indocyanine green; NA, not applicable; NIR, near-infrared; NIRF, near-infrared fluorescence; NS, not stated; UIUC, University of Illinois at Urbana-Champaign.

C. Multi-Exposure Imaging Under Surgical Light Illumination

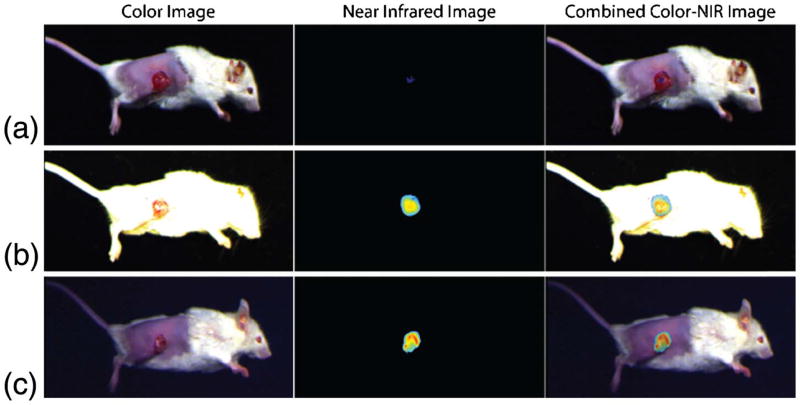

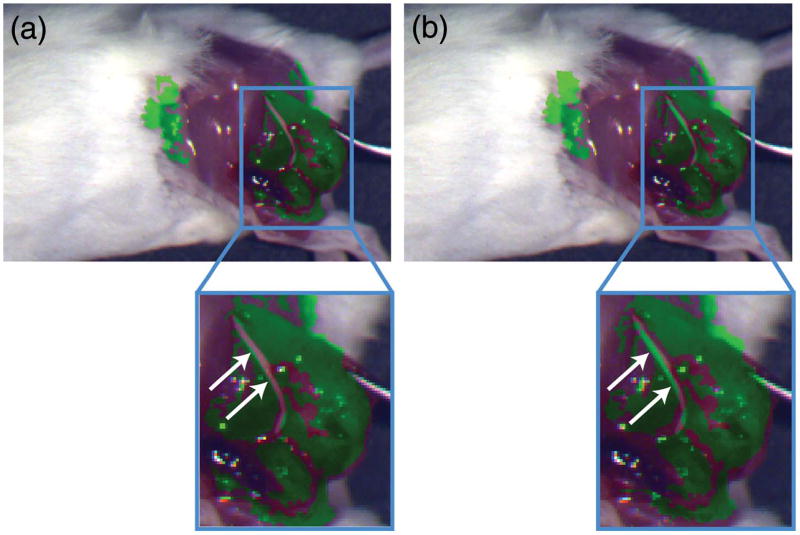

We demonstrate the preclinical relevance of the multi-exposure capabilities of our bio-inspired sensor by imaging a 4T1 breast cancer model under 60 kLux surgical light illumination and laser light excitation power of 5 mW/cm2 at 785 nm. The results are compared with a single-exposure pixelated CMOS camera (Fig. 3). Using the tumor-specific NIRF marker LS301 (a cypate-based contrast agent that typically accumulates in the periphery of tumors [28]), we obtained high target-to-background contrast images due to the tissue’s low auto-fluorescence, low scattering, and absorption in the 700 to 950 nm spectral bands. The images in Figs. 3(a) and 3(b) were obtained with a single-exposure CMOS camera with exposure times of 0.1 and 40 ms, respectively. When the animal was imaged with an exposure time of 0.1 ms, the color image was well illuminated, while the NIR image had very low contrast. The animal was then imaged with 40 ms exposure time, resulting in a well-illuminated NIR image but a saturated color image. This is due to the large difference between the visible and NIR photon flux in the operating room. Utilizing a single exposure time in a pixelated camera enables only one of the two imaging modalities to have satisfactory contrast and high signal-to-noise ratio, rendering this technology incompatible with the demands of intraoperative imaging applications.

Fig. 3.

Single-exposure cameras have limited capabilities for simultaneous imaging of color and near-infrared (NIR) images with high contrast under surgical light illumination. (a) Exposure time of 0.1 ms produces good color but poor NIR contrast images. (b) Exposure time of 40 ms produces oversaturated color image but good NIR contrast image. (c) Our bio-inspired camera captures color data with 0.1 ms exposure time and NIR data with 40 ms exposure time. This multi-exposure feature enables high-contrast images from both imaging modalities.

Figure 3(c) presents data collected with our bio-inspired imaging sensor. The exposure times for the color and NIR pixels were set to provide optimal contrast in both color and NIR channels: 0.1 ms for the color pixels and 40 ms for the NIR pixels. The combined images contain high signal-to-noise ratios and non-saturated information from both imaging modalities. Hence, the operator can clearly identify the anatomical features of the patient while accurately determining the location of the tumor as tagged by the molecular probe.
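The combination of color and NIR information from a two-by-two pixelated sensor can be sketched as two steps: splitting the raw mosaic into four co-registered channel planes and then overlaying thresholded NIR signal on the color image in green false color. The position of each filter within the two-by-two tile, the threshold, the blending weight, and the assumption of 8-bit display values are all hypothetical choices for illustration. The actual processing pipeline is not described in this section.

```python
# Assumed position (row offset, column offset) of each filter in the 2x2 tile.
LAYOUT = {"R": (0, 0), "G": (0, 1), "B": (1, 0), "NIR": (1, 1)}


def split_mosaic(raw):
    """Split a raw mosaic (list of rows) into four quarter-resolution planes."""
    return {name: [row[dx::2] for row in raw[dy::2]]
            for name, (dy, dx) in LAYOUT.items()}


def overlay(planes, nir_threshold, alpha=0.5):
    """Blend green false color into RGB wherever NIR exceeds the threshold."""
    composite = []
    for r_row, g_row, b_row, n_row in zip(planes["R"], planes["G"],
                                          planes["B"], planes["NIR"]):
        out_row = []
        for r, g, b, n in zip(r_row, g_row, b_row, n_row):
            if n > nir_threshold:
                out_row.append((int((1 - alpha) * r),
                                int((1 - alpha) * g + alpha * 255),
                                int((1 - alpha) * b)))
            else:
                out_row.append((r, g, b))
        composite.append(out_row)
    return composite


# Tiny synthetic 4x4 mosaic holding 8-bit display values (illustrative only).
raw = [
    [10, 20, 11, 21],
    [30, 200, 31, 5],
    [12, 22, 13, 23],
    [32, 6, 33, 250],
]
planes = split_mosaic(raw)
composite = overlay(planes, nir_threshold=100)
```

Because all four planes come from neighboring pixels of the same tile, the overlay needs no geometric alignment step, which is the practical consequence of the inherent co-registration described in the text.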

D. Multispectral Co-Registration Accuracy

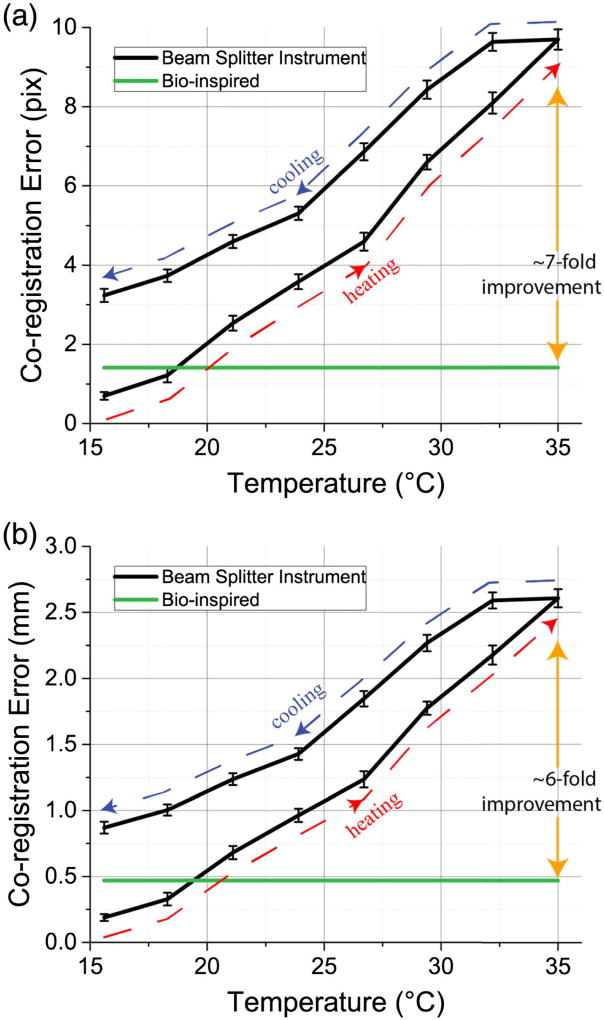

Co-registration accuracy between color and NIRF images is one of the most important attributes for an instrument to be clinically relevant. However, state-of-the-art NIRF instrumentation comprising a beam splitter and dichroic mirrors suffers from temperature-dependent co-registration inaccuracy due to thermal expansion and thermal shifts of individual optical components. These FDA-approved instruments are rated to function between 10°C and 35°C, though they fail to maintain co-registration accuracy in this range. In contrast, our bio-inspired sensor monolithically integrates filtering and imaging elements on the same substrate and is inherently immune to temperature-dependent co-registration errors.

We evaluated co-registration accuracy as a function of temperature for both our bio-inspired sensor and a state-of-the-art NIRF imaging system composed of a single lens, beam splitter, and two imaging sensors (Fig. 4). The sensors were placed 60 cm away from a calibrated checkerboard target to emulate the distance at which the sensor will be placed during preclinical and clinical trials. At the starting operating point, the beam-splitter NIRF system achieves subpixel co-registration accuracy using standard calibration methods. However, the disparity between the two images increases as the instrument’s operating temperature increases, leading to large co-registration errors. And, as the instrument is cooled, the trajectory of the co-registration error differs from that when the instrument is heated up. Hence, placing a temperature sensor on the instrument will not sufficiently correct for thermal expansion of the individual optical elements. In contrast, in our bio-inspired sensor, the worst-case co-registration error at the sensor’s plane is pixels due to the pixelated filter arrangement. Compared to the beam-splitter NIRF system, our bio-inspired sensor exhibits sevenfold improved spatial co-registration accuracy at the imaging plane when the sensors operate at 35°C.
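One common way to quantify co-registration from a checkerboard target is to detect the same corners in the color and NIR images and report the distance between matched corner positions. The sketch below assumes that approach. The corner coordinates are hypothetical, and the metric actually used for Fig. 4 may differ. The conversion to micrometers uses the 7.8 µm pixel pitch reported for the bio-inspired sensor.

```python
import math

PIXEL_PITCH_UM = 7.8  # pixel pitch of the bio-inspired sensor, from the text


def coregistration_error(corners_color, corners_nir):
    """Mean and worst-case distance (in pixels) between matched corners."""
    distances = [math.hypot(xc - xn, yc - yn)
                 for (xc, yc), (xn, yn) in zip(corners_color, corners_nir)]
    return sum(distances) / len(distances), max(distances)


# Hypothetical corner positions in pixels; the NIR image is shifted by (3, 4).
corners_color = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
corners_nir = [(x + 3.0, y + 4.0) for x, y in corners_color]

mean_error_px, worst_error_px = coregistration_error(corners_color, corners_nir)
mean_error_um = mean_error_px * PIXEL_PITCH_UM  # error at the sensor plane
```

Repeating this measurement at each operating temperature would trace out an error-versus-temperature curve of the kind described above, including any hysteresis between heating and cooling.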

Fig. 4.

Co-registration accuracy as a function of temperature: comparison between a beam-splitter NIRF system and our bio-inspired sensor at the (a) sensor plane and (b) imaging plane. Because of the monolithic integration of spectral filters with complementary metal-oxide semiconductor (CMOS) imaging elements, the co-registration between color and NIR images in our bio-inspired imaging system is independent of temperature.

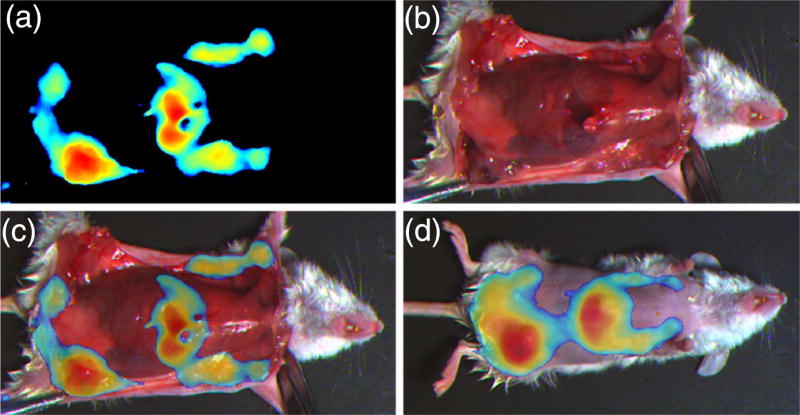

E. Implications on Co-Registration Accuracy in Murine Cancer Model

The implication of the temperature-dependent co-registration error between the NIR and RGB images in state-of-the-art NIRF systems is demonstrated in a murine model where 4T1 cancer cells are implanted next to a sciatic nerve. At ~2 weeks post-implantation, the tumor size is ~1 cm and is imaged with the tumor-targeted agent LS301. The animal is imaged with a beam-splitter NIRF imager placed inside a thermal chamber, which has a viewing port that allows imaging of the animal without perturbing the temperature of the instrument. The animal is kept on a heated thermal pad to maintain constant body temperature of ~37°C.

Figure 5(a) is a composite image taken with the NIRF system at 15°C operating temperature. The green false color indicates the NIRF signal from the tumor-targeted agent LS301. The fluorescence signal from the tumor tissue underneath the sciatic nerve is much weaker than the fluorescence signal from the surrounding tumor tissue. After thresholding the fluorescence signal, the location of the sciatic nerve is observed due to the absence of fluorescence signal [Fig. 5(a), arrow]. Since the image sensor is calibrated at 15°C operating temperature, the NIR image (i.e., location of the tumor) is correctly co-registered on the color image (i.e., anatomical features).

Fig. 5.

Color near-infrared (NIR) composite images recorded with a beam-splitter NIRF system while the instrument is at an operating temperature of (a) 15°C and (b) 32°C. The tumor-targeted probe LS301 is used to highlight the location of the tumor, which is under the sciatic nerve. The instrument is calibrated at 15°C, so the NIR and color images in (a) are correctly co-registered, and the location of the sciatic nerve is visible due to absence of fluorescence signal (arrow). In contrast, at 32°C (b), the NIR fluorescence image is spatially shifted and superimposed on the incorrect anatomic features (arrow) due to the thermal shift of individual optical elements comprising the instrument. Thus, the location of the sciatic nerve is incorrectly highlighted as cancerous tissue.

Figure 5(b) is another set of images recorded with the NIRF sensor at 32°C. Because of the thermally induced shift in the optical elements of the NIRF instrument, the fluorescence image is shifted with respect to the color image. The NIRF image incorrectly marks the sciatic nerve as cancerous tissue, while the cancerous tissue immediately next to the sciatic nerve has low fluorescence signal. This incorrect labeling of cancerous and nerve tissue can lead to iatrogenic damage to healthy tissue, which might not be visible to surgeons, while leaving behind cancerous tissue in the patient. In contrast, our bio-inspired sensor suffers no thermally induced co-registration error and accurately depicts the location of the tumor and sciatic nerve at both temperatures due to the monolithic integration of pixelated spectral filters and imaging elements.

F. Imaging Spontaneous Tumors Under Surgical Light Illumination

We used our bio-inspired sensor to identify spontaneous tumor development in a transgenic PyMT murine model for breast cancer (n = 5). All animals developed multifocal tumors throughout the mammary tissues by 5–6 weeks, and some of the small tumors blended in with surrounding healthy tissue due to their color and were difficult to differentiate with the unaided eye. However, because our bio-inspired sensor has high co-registration accuracy and NIRF sensitivity, we could easily locate the tumors, resect them, and ensure that the tumor margins were negative [Figs. 6(a)–6(c)]. When we compared results obtained with our bio-inspired imaging sensor against histology results, we found that our sensor together with the tumor-targeted probe LS301 had a sensitivity of 80%, specificity of 75%, and area under the receiver operating characteristic curve of 73.4% using parametric analysis. In addition, while visible-spectrum imaging picks up only surface information, fluorescence imaging in the NIR spectrum enables deep-tissue imaging, which helps identify the location of tumors before surgery [Fig. 6(d)]. Compared to the state-of-the-art, non-real-time, bulky Pearl imaging system, with an area under the receiver operating characteristic curve of 77.9% and standard error of 6.3% [29], our bio-inspired sensor provides similar accuracy in real time under surgical light illumination.
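For readers less familiar with these metrics, the sketch below shows how sensitivity and specificity follow from comparing thresholded fluorescence scores against histology labels. The scores, labels, and threshold are hypothetical values chosen only so that the output matches the percentages quoted above. The underlying per-sample data are not given in this section.

```python
def confusion_counts(scores, labels, threshold):
    """Count true/false positives and negatives against histology labels."""
    tp = fn = tn = fp = 0
    for score, is_tumor in zip(scores, labels):
        predicted_tumor = score > threshold
        if is_tumor and predicted_tumor:
            tp += 1
        elif is_tumor:
            fn += 1
        elif predicted_tumor:
            fp += 1
        else:
            tn += 1
    return tp, fn, tn, fp


def sensitivity_specificity(tp, fn, tn, fp):
    """Sensitivity = TP / (TP + FN); specificity = TN / (TN + FP)."""
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical normalized fluorescence scores (not data from this study).
tumor_scores = [0.9, 0.8, 0.85, 0.7, 0.95, 0.6, 0.75, 0.65, 0.3, 0.2]
healthy_scores = [0.1, 0.2, 0.3, 0.4, 0.15, 0.25, 0.7, 0.6]
scores = tumor_scores + healthy_scores
labels = [True] * len(tumor_scores) + [False] * len(healthy_scores)

counts = confusion_counts(scores, labels, threshold=0.5)
sensitivity, specificity = sensitivity_specificity(*counts)
```

Sweeping the threshold and plotting sensitivity against one minus specificity would yield an empirical receiver operating characteristic curve. The text reports a parametric estimate of the area under that curve, which this sketch does not reproduce.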

Fig. 6.

(a)–(c) Snapshot images obtained with our bio-inspired imaging sensor during surgery with a spontaneous breast cancer murine model. (a) Near-infrared (NIR) image highlights the locations of the tumors as well as the liver, where the tumor-targeted contrast agent LS301 is cleared. (b) Color image of the animal obtained by our bio-inspired sensor during tumor removal. (c) Combined image of both NIR and color information as it is presented to the surgeon to assist with tumor resection. (d) Combined image of both NIR and color information indicating the location of the tumors under the skin. (See Visualization 1 and Visualization 2.)

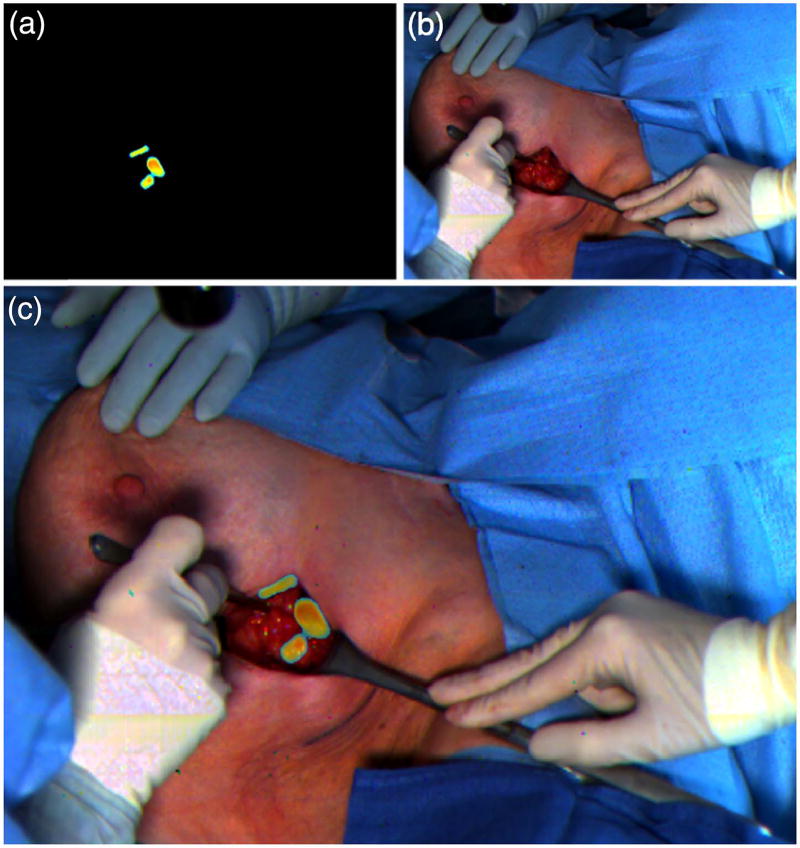

G. Clinical Translation of Our Bio-Inspired Technology

The current standard of care for tracking SLNs is to inject into the patient both a visible dye, such as ICG or methylene blue, and a radioactive 99mTc sulfur-colloid tracer. The SLNs are generally first identified by the unaided eye, due to the coloration of the accumulated dye at the tissue’s surface, followed by a gamma probe to check for radioactivity. In a pilot clinical trial, we investigated the utility of our bio-inspired imaging sensor for locating SLNs in human patients (n = 11) with breast cancer using the ICG lymphatic tracer. ICG naturally exhibits a green color due to its absorption spectrum, as well as NIRF at 800 nm. ICG passively accumulates in the SLNs and is cleared through the liver and bile ducts within 24–36 h post injection (Fig. 7).

Fig. 7.

Clinical use of our bio-inspired imaging sensor for mapping sentinel lymph nodes (SLNs) using indocyanine green (ICG) contrast agent. (a) Fluorescence image obtained with the bio-inspired camera highlights the location of the SLNs. (b) Color image obtained with the sensor captures anatomical features during the surgical procedure. (c) Synthetic image obtained by combining color and near-infrared images and presented to the surgeon in the operating room to assist in determining the location of the SLNs (see Visualization 3).

Our bio-inspired imaging system provided the surgeon with real-time intraoperative imaging of the tissue in color that was enhanced with NIRF information from the ICG marker under surgical light illumination (Fig. 7). The surgeon could identify 100% of the SLNs when using information registered with our bio-inspired imaging system alone. In contrast, the surgeon identified 90% of SLNs when using the green color from ICG with the unaided eye, and 87% when using information from the radioactive tracer.

Notably, during one of the procedures the surgeon identified and resected two uninvolved SLNs using the green color of the ICG probe; the gamma probe did not detect these nodes. With the assistance of the gamma probe, another two involved SLNs, which did not exhibit visible green color accumulation of ICG, were identified and resected. The bio-inspired imaging system, however, correctly identified all four SLNs during this operation. We attribute this to the fact that the green color of the ICG dye can be visually identified only at the tissue’s surface when viewed with the naked eye; in contrast, our bio-inspired sensor identifies NIRF signals several centimeters deep in the tissue [7]. The gamma ray detector failed to detect the radioactive tracer in two of the four SLNs because the limited space in the surgical cavity restricted insertion of the relatively large radioactivity detection probe. In contrast, since both surgical and excitation light could clearly illuminate the surgical cavity, the bio-inspired sensor provided accurate visualization of both anatomic features and the location of the ICG dye in the SLNs.

3. MATERIALS AND METHODS

A. Animal Study

Animal study protocols were reviewed and approved by the Animal Studies Committee of Washington University in St. Louis. Female PyMT mice (n = 5) were obtained from Washington University Medical Center Breeding Core. Mice developed multiple mammary tumors as early as 5–6 weeks and were injected with 100 µl of 60 µM LS301, a tumor-targeted NIRF contrast agent, via the lateral tail vein. Images were taken 24 h post injection for best contrast.

During the in vivo study, the bio-inspired multispectral imaging sensor was set up at 1 m working distance, and the illumination module was placed at a 1 m distance. The animals were imaged under simultaneous surgical light illumination (60 kLux) and laser light excitation power of 10 mW/cm² at 785 nm. Collimating lenses and diffusers were used to create a uniform circular excitation area with a 15 cm diameter. The RGB pixels’ exposure time was set to 0.1 ms, and the NIR pixels’ exposure time was set to 40 ms to ensure imaging rates of 25 frames/s.

A double-blind protocol was used for tissue specimen collection. Randomization was not used in this study, and all animals were included in the data analysis. During the imaging experiments, animals remained anesthetized through inhalation of isoflurane (2%–3% v/v in O₂). The surgeon used fluorescence information detected by our bio-inspired sensor to locate and resect all tumor tissues. After the removal of each tumor, the surgeon resected two additional samples: a tissue sample next to the tumor, identified as tumor margin, and a fluorescence-negative muscle tissue sample. Six to eight tumors per mouse were resected, and a total of 102 samples were collected from all five mice. All harvested tissue samples were also imaged using a LI-COR Pearl small animal imaging system. All tissue samples were then preserved for histology evaluation. Each sample was sectioned at 8 µm thickness, stained with hematoxylin and eosin, and examined by a clinical pathologist. The pathologist was blinded to the fluorescence results.

For the 4T1 studies, six-week-old Balb/C female mice were obtained from Jackson Laboratory and injected with 5 × 10⁵ 4T1 murine cancer cells. In the first study, the 4T1 cells were implanted into either the left or right inguinal mammary pad. In the second study, the 4T1 cells were implanted next to the sciatic nerve. At 5–7 mm tumor size (7–10 days post-implantation), these mice were injected with 100 µl of 60 µM LS301 agent via the lateral tail vein. The animals were imaged 24 h post injection.

B. Human Study

Human study protocols were approved by the Institutional Review Board of Washington University in St. Louis. The human procedure was carried out in accordance with approved guidelines. The inclusion criteria for patients in this study were newly diagnosed clinically node-negative breast cancer, negative nodal basin clinical exam, and at least 18 years of age. The exclusion criteria from this study were contraindication to surgery; receiving any investigational agents; history of allergic reaction to iodine, seafood, or ICG; presence of uncontrolled intercurrent illness; or pregnant or breastfeeding. All patients gave informed consent for this HIPAA-compliant study. The study was registered on the clinicaltrials.gov website (trial ID no. NCT02316795).

The mean ± SD age and body mass index of all patients were 64 ± 14 years and 32.7 ± 6.9 kg/m², respectively. Before the surgical procedure, 99mTc sulfur colloid (834 µCi) and ICG (500 µmol, 1.6 mL) were injected into the patient’s tumor area, followed by site massage for approximately 5 min. At 10–15 min post injection, surgeons proceeded with the surgery per standard of care. Once the surgeon had identified the SLNs using the visible properties of ICG (i.e., green color) and radioactivity using the gamma probe, the surgeon used our bio-inspired imaging system to locate the SLNs. The patients were imaged under simultaneous surgical light illumination (60 kLux) and laser light excitation power of 10 mW/cm² at 785 nm. The surgeon then proceeded with the resection of the SLNs. The imaging system was set up at a 1 m working distance, and the illumination module was placed at a 1 m distance. The RGB pixels’ exposure time was set to 0.1 ms to ensure non-saturated color images were recorded, and the NIR pixels’ exposure time was set to 40 ms to ensure imaging rates of 25 frames/s. The average imaging time with our bio-inspired sensor was 2.5 ± 0.6 min.

C. Cell Culture

4T1 breast cancer cells were used for in vivo tumor models. This cell line was obtained from American Type Culture Collection in Manassas, Virginia, USA. A Mycoplasma Detection Kit from Thermo Fisher Scientific was used to verify that the cell line was negative for mycoplasma contamination. Cells were cultured in Dulbecco’s modified Eagle medium from Thermo Fisher Scientific, with 10% fetal bovine serum and antibiotics.

D. Fluorescence Concentration Detection Limits Under Surgical Light Sources

Ten different ICG concentrations in plastic vials were imaged under surgical light illumination (60 kLux) and excited with laser light excitation power of 20 mW/cm² at 785 nm. Six different vials at each concentration, as well as a control vial with deionized water, were imaged with two different pixelated imaging sensors. The first sensor was our bio-inspired imager, which has two separate exposure times per frame: the RGB pixels’ exposure time was set to 0.1 ms to ensure non-saturated color images were recorded, and the NIR pixels’ exposure time was set to 40 ms to ensure imaging rates of 25 frames/s. The second sensor was a pixelated CMOS imaging sensor with a single exposure time for both RGB and NIR pixels in the array. The exposure time for the second sensor was set to 0.1 ms because longer exposure times would saturate the color image due to the high photon flux from the surgical light source illumination.

A region of interest within each vial was selected to avoid edge artifacts. The average intensity and standard deviation of the NIR pixels were computed over the region of interest after excluding outlier pixels (5% of the pixels). The detection threshold was determined as the average NIR signal plus three standard deviations of the control vial.
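The thresholding rule described above can be sketched in a few lines of Python. This is a minimal illustration, not the authors' analysis code (the paper reports using MATLAB). The function names are hypothetical, and the way outliers are selected is an assumption: the text states only that 5% of pixels were excluded, so the sketch trims the extremes evenly from both tails.

```python
from statistics import mean, stdev

def trimmed(values, outlier_fraction=0.05):
    """Drop the most extreme values, split evenly between both tails.
    ASSUMPTION: symmetric trimming; the source does not say how outliers were chosen."""
    ordered = sorted(values)
    k = int(len(ordered) * outlier_fraction / 2)  # pixels removed per tail
    return ordered[k:len(ordered) - k]

def roi_stats(pixels, outlier_fraction=0.05):
    """Mean and sample standard deviation of the NIR pixels in a region of interest."""
    kept = trimmed(pixels, outlier_fraction)
    return mean(kept), stdev(kept)

def detection_threshold(control_pixels, n_sigma=3.0):
    """Control-vial mean plus n_sigma standard deviations (three in the text)."""
    mu, sigma = roi_stats(control_pixels)
    return mu + n_sigma * sigma

def is_detectable(sample_pixels, control_pixels):
    """True when the sample's mean NIR signal exceeds the control-based threshold."""
    sample_mean, _ = roi_stats(sample_pixels)
    return sample_mean > detection_threshold(control_pixels)
```

A vial would then count as detected when `is_detectable(vial_roi, control_roi)` returns `True`, where both arguments are flat lists of NIR pixel values taken from the selected regions of interest.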

E. Temperature-Dependent Co-Registration Accuracy Measurement

The imaging instruments were individually placed in a custom-built thermal chamber with a transparent viewing port, where the operating temperature was accurately controlled using a proportional-integral-derivative controller. Each imaging instrument was held at 15°C for 24 h to reach thermal equilibrium. The disparity matrix between the NIR and color images for the beam splitter image was computed at 15°C. The temperature of the thermal chamber was then increased in increments of ~2.5°C, and the instrument was held at each new temperature for 15 min before an image of a calibrated checkerboard target was captured with both NIR and color sensors. These new images were co-registered using the disparity matrix computed at 15°C, and the co-registration error across the entire image was evaluated. The operating temperature of the instrument was increased to 35°C and then reduced back to 15°C. The co-registration error was computed at each temperature point.
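One way to picture this measurement is sketched below. It assumes the disparity matrix can be represented as a per-corner pixel offset between matching checkerboard corners in the NIR and color images; the source does not specify its form, so this representation, the function names, and the use of Euclidean distance as the error metric are all assumptions made for illustration.

```python
from math import hypot
from statistics import mean

def disparity(nir_pts, color_pts):
    """Per-corner offset (dx, dy) from NIR to color coordinates at the reference temperature."""
    return [(cx - nx, cy - ny) for (nx, ny), (cx, cy) in zip(nir_pts, color_pts)]

def coregistration_errors(nir_pts, color_pts, ref_disparity):
    """Distance (in pixels) between each color corner and the position predicted
    by shifting the matching NIR corner with the reference disparity."""
    errors = []
    for (nx, ny), (cx, cy), (dx, dy) in zip(nir_pts, color_pts, ref_disparity):
        errors.append(hypot(nx + dx - cx, ny + dy - cy))
    return errors

def summarize(errors):
    """Mean and worst-case co-registration error across the image."""
    return mean(errors), max(errors)
```

In this sketch, `disparity` would be evaluated once on the 15°C checkerboard images, and `coregistration_errors` would be evaluated again at every temperature step with the corners detected at that temperature.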

F. CMOS Imager with Pixel-Level Multi-Exposure Capabilities

The CMOS imager, which is used as a substrate for our NIRF sensor, is custom designed and fabricated in 180 nm CMOS image sensor technology. The imager is composed of an array of 1280 by 720 pixels, programmable scanning registers, and readout analog circuits comprising switched capacitors, amplifiers, bandpass filters, voltage reference circuits, and analog-to-digital converters (Fig. S1 of Supplement 1). An individual pixel comprises a pinned photodiode and four transistors that control the access of the pixel to the readout circuitry and the exposure time of the pixel.

The digital scanning registers interface with the individual pixels and control the transistors’ gates within each pixel. The scanning registers are designed to be programmable by inserting different digital patterns and altering the clocking sequence via an external field-programmable gate array. This enables pixel-level control of the exposure time for individual photodiodes and a specialized readout sequence for individual pixels. Hence, individual groups of pixels (color or NIR) can be read out at different times, and the exposure time for each type of pixel is optimized to ensure acquisition of high signal-to-noise ratio (SNR) color and NIR images, respectively.

During a single frame readout, NIR and color pixels can have different exposure times, and the frame rate is limited by the longest integration time of the two. For example, during intraoperative procedures, the light intensity from the surgical light sources that is reflected from the tissue is much higher than the NIRF signal from the molecular dye. In this scenario, to ensure high-SNR and non-saturated images, the integration time for the color pixels is set to ~0.1 ms and the exposure time for the NIR pixels is set to 40 ms to ensure imaging rates of 25 frames/s.
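The relationship between exposure time and frame rate stated above amounts to simple arithmetic, sketched here. The function names are hypothetical, and the sketch assumes that readout overhead is negligible compared with the longest exposure, which the source does not quantify.

```python
def frame_rate_hz(exposures_ms):
    """Upper bound on the frame rate when the longest exposure sets the frame time.
    ASSUMPTION: readout overhead is ignored."""
    return 1000.0 / max(exposures_ms)

def max_exposure_ms(target_fps):
    """Longest exposure that still allows the requested frame rate."""
    return 1000.0 / target_fps

color_ms, nir_ms = 0.1, 40.0                 # exposure times quoted in the text
print(frame_rate_hz([color_ms, nir_ms]))     # 25.0
print(max_exposure_ms(25))                   # 40.0
```

With the values from the text, the 40 ms NIR exposure alone determines the 25 frames/s rate; the 0.1 ms color exposure has no effect on it.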

The timing sequence for two neighboring pixels with different spectral filters is shown in Fig. S2 of Supplement 1. Both pixels are reset initially, and then they start to collect photons on the photodiodes’ intrinsic capacitance. Since the photon flux for the visible-spectrum photons in the operating room is typically much higher than that of the NIRF photons, the photodiode voltage in the color pixels drops faster than in the NIR pixels over time. At the end of the exposure period, the photodiode voltages from the color pixels are sampled first on the column-parallel readout capacitors. A readout control register scans through the column-parallel capacitors and digitizes the analog information after it has been amplified with a programmable gain amplifier. The same readout sequence is repeated with the NIR pixels at the end of the 40-ms exposure time.
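A toy model can make the timing argument concrete. The sketch below treats each photodiode as discharging linearly from its reset voltage and clips the result at zero to represent saturation. The voltage swing and the two discharge rates are hypothetical values chosen only for illustration; they are not measurements from the sensor.

```python
V_RESET = 1.0            # hypothetical usable voltage swing (V)
COLOR_RATE = 5.0         # hypothetical discharge rate under surgical light (V/ms)
NIR_RATE = 0.01          # hypothetical discharge rate from NIRF signal (V/ms)

def photodiode_voltage(rate_v_per_ms, t_ms, v_reset=V_RESET):
    """Linear discharge from the reset level, clipped at 0 V (saturation)."""
    return max(0.0, v_reset - rate_v_per_ms * t_ms)

def is_saturated(rate_v_per_ms, t_ms):
    """True when the photodiode has lost its entire voltage swing."""
    return photodiode_voltage(rate_v_per_ms, t_ms) == 0.0

def readout_order(exposures_ms):
    """Pixel groups sorted by when their exposure ends, i.e. the sampling order."""
    return sorted(exposures_ms, key=exposures_ms.get)

exposures = {"nir": 40.0, "color": 0.1}      # exposure times quoted in the text
print(readout_order(exposures))              # ['color', 'nir']
print(is_saturated(COLOR_RATE, 40.0))        # True: a shared 40 ms exposure clips color
print(is_saturated(COLOR_RATE, 0.1))         # False
```

Under these assumed rates, a single shared 40 ms exposure would saturate the color pixels, whereas separate 0.1 ms and 40 ms exposures keep both pixel types within their voltage swing, which is the motivation for per-pixel exposure control.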

G. Fabrication of Pixelated Spectral Filters

The pixelated spectral filters were fabricated via the following set of optimized microfabrication steps:

The carrier wafer was soaked for 30 min in isopropyl alcohol and rinsed with DI water.

The wafer was coated with 20 nm of chromium, which was used to block stray light between pixels [Fig. S3(a) of Supplement 1].

SU-8 2000 photoresist was spin coated at 500 rpm for 10 s and then at 3000 rpm for 50 s with 500 rpm per second acceleration.

The sample was baked at 65°C for 1 min and then at 95°C for 2 min on a hot plate [Fig. S3(b)].

The photoresist was exposed at 375 nm wavelength for 22 s at 5 mW/cm² intensity using a Karl Süss mask aligner.

The sample was post-baked at 65°C for 1 min and then at 95°C for 3 min. The sample was cooled down to 65°C for 1 min to gradually decrease the temperature and minimize stress and cracking in the photoresist. The photoresist was developed for 3 min in an SU-8 developer using an ultrasonic bath, and gently rinsed with isopropyl alcohol at the end of the procedure [Fig. S3(c)].

The exposed chromium was etched in an Oxford reactive ion etching, inductively coupled plasma instrument [Fig. S3(d)].

Omnicoat was spin coated at 4000 rpm and baked at 150°C for 1 min.

SU-8 2000 photoresist was spin coated at 500 rpm for 10 s and then at 3000 rpm for 50 s with 500 rpm per second acceleration.

The sample was baked at 65°C for 1 min and then at 95°C for 2 min on a hot plate.

The photoresist was exposed at 375 nm wavelength for 22 s at 5 mW/cm² intensity using a Karl Süss mask aligner.

The sample was post-baked at 65°C for 1 min and then at 95°C for 3 min. The photoresist was developed for 3 min in an SU-8 developer using an ultrasonic bath, and gently rinsed with isopropyl alcohol at the end of the procedure.

The exposed Omnicoat was etched using chlorine in an Oxford reactive ion etching inductively coupled plasma instrument [Fig. S3(e)].

Alternating layers of silicon dioxide and silicon nitride were deposited across the entire sample using physical vapor deposition. The thickness of the dielectric layers was optimized for transmitting NIR light with high transmission ratio [Fig. S3(f)].

The sample was immersed in Remover PG in an ultrasonic bath for 30 min to lift off the unwanted structures [Fig. S3(g)].

Steps 2 through 13 were repeated three times to fabricate red, green, and blue pixels [Figs. S3(h) and S3(i)].

The final sample had all four different types of pixels: NIR and visible spectrum pixels [Fig. S3(j)].

H. Statistical Analysis

The sample size in our animal study of PyMT breast cancer was determined to ensure adequate power (>85%, at a significance level of 0.05) to detect predicted effects, which were estimated based on either preliminary data or previous experience with similar experiments. The confidence interval for the fluorescence detection accuracy was calculated using the exact method (one-sided). Differences between each ICG concentration and the control vial were assessed using one-sided Student’s t-tests for the calculation of P values. MATLAB was used for data analysis.
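For readers unfamiliar with exact binomial intervals, the sketch below computes a one-sided lower confidence bound on a proportion. It assumes that "the exact method" refers to the Clopper-Pearson construction, which the source does not state explicitly, and the counts in the usage line are purely illustrative rather than study data.

```python
from math import comb

def binom_tail_ge(k, n, p):
    """P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p)**(n - i) for i in range(k, n + 1))

def exact_lower_bound(k, n, alpha=0.05, tol=1e-10):
    """One-sided Clopper-Pearson lower bound for k successes in n trials.
    Bisection finds the p at which P(X >= k) equals alpha."""
    if k == 0:
        return 0.0
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if binom_tail_ge(k, n, mid) < alpha:
            lo = mid     # tail probability too small, so the bound lies higher
        else:
            hi = mid
    return (lo + hi) / 2

# Illustrative counts only: 10 correct classifications out of 10 samples.
print(round(exact_lower_bound(10, 10), 4))
```

When every trial succeeds (k = n), the bound has the closed form alpha^(1/n), which provides a convenient check on the bisection.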

4. CONCLUSION

We have designed, fabricated, tested, and translated into a clinical setting a bio-inspired multispectral imaging system that provides critical information to health care providers in a space-, time-, and illumination-constrained operating room. Because of its compact size and excellent multispectral sensitivity (see Table 2), this paradigm-shifting sensor can assist physicians without impacting normal surgical workflow. The high co-registration accuracy between NIR and visible-spectrum images and the high NIR imaging sensitivity under surgical light illumination are key advantages over current FDA-approved instruments used in the operating room. The initial clinical results corroborate results from previous studies [30] and indicate the benefits of using fluorescence properties of ICG for mapping SLNs in patients with cancer.

Supplementary Material

Acknowledgments

Funding. Air Force Office of Scientific Research (AFOSR) (FA9550-12-1-0321); National Institutes of Health (NIH) (NCI R01 CA171651); National Science Foundation (NSF) (1724615, 1740737).

V. G. conceived the sensor idea and oversaw the entire project. M. G. and V. G. designed the imager and designed the animal studies. Optical evaluation of the imager was performed by M. G., C. E., T. Y., R. M., and V. G. Animal studies were performed by M. G., S. M., G. S., W. A., S. A., M. Y. P., and V. G. Surgical light source was designed by N. Z. and R. L. Human study was performed by J. M. Co-registration study was performed by M. G., V. G., and M. Z. Paper was written by V. G., M. Y. P., and M. G. All authors proofread the paper. The authors would like to thank James Hutchinson and Patricia J. Watson for paper editing.

Footnotes

The authors declare no competing financial interests.

Data Availability. All data supporting the findings of this study can be located on the Dryad website at http://datadryad.org/resource/doi:10.5061/dryad.q6188pm.

See Supplement 1 for supporting content.

References

- 1. Morton DL, Thompson JF, Cochran AJ, Mozzillo N, Nieweg OE, Roses DF, Hoekstra HJ, Karakousis CP, Puleo CA, Coventry BJ, Kashani-Sabet M, Smithers BM, Paul E, Kraybill WG, McKinnon JG, Wang H-J, Elashoff R, Faries MB. Final trial report of sentinel-node biopsy versus nodal observation in melanoma. N. Engl. J. Med. 2014;370:599–609. doi: 10.1056/NEJMoa1310460.

- 2. McLaughlin SA. Surgical management of the breast: breast conservation therapy and mastectomy. Surg. Clin. N. Am. 2013;93:411–428. doi: 10.1016/j.suc.2012.12.006.

- 3. Gao L, Wang LV. A review of snapshot multidimensional optical imaging: measuring photon tags in parallel. Phys. Rep. 2016;616:1–37. doi: 10.1016/j.physrep.2015.12.004.

- 4. Yun SH, Kwok SJJ. Light in diagnosis, therapy and surgery. Nat. Biomed. Eng. 2017;1:0008. doi: 10.1038/s41551-016-0008.

- 5. van Dam GM, Themelis G, Crane LMA, Harlaar NJ, Pleijhuis RG, Kelder W, Sarantopoulos A, de Jong JS, Arts HJG, van der Zee AGJ, Bart J, Low PS, Ntziachristos V. Intraoperative tumor-specific fluorescence imaging in ovarian cancer by folate receptor-[alpha] targeting: first in-human results. Nat. Med. 2011;17:1315–1319. doi: 10.1038/nm.2472.

- 6. Vahrmeijer AL, Hutteman M, van der Vorst JR, van de Velde CJH, Frangioni JV. Image-guided cancer surgery using near-infrared fluorescence. Nat. Rev. Clin. Oncol. 2013;10:507–518. doi: 10.1038/nrclinonc.2013.123.

- 7. Hong G, Antaris AL, Dai H. Near-infrared fluorophores for biomedical imaging. Nat. Biomed. Eng. 2017;1:0010.

- 8. Miller WH, Bernard GD. Butterfly glow. J. Ultrastruct. Res. 1968;24:286–294. doi: 10.1016/s0022-5320(68)90065-8.

- 9. Stavenga DG. Visual adaptation in butterflies. Nature. 1975;254:435–437. doi: 10.1038/254435a0.

- 10. Zhao Y, Belkin MA, Alù A. Twisted optical metamaterials for planarized ultrathin broadband circular polarizers. Nat. Commun. 2012;3:870. doi: 10.1038/ncomms1877.

- 11. Song YM, Xie Y, Malyarchuk V, Xiao J, Jung I, Choi K-J, Liu Z, Park H, Lu C, Kim R-H, Li R, Crozier KB, Huang Y, Rogers JA. Digital cameras with designs inspired by the arthropod eye. Nature. 2013;497:95–99. doi: 10.1038/nature12083.

- 12. Liu H, Huang Y, Jiang H. Artificial eye for scotopic vision with bio-inspired all-optical photosensitivity enhancer. Proc. Natl. Acad. Sci. USA. 2016;113:3982–3985. doi: 10.1073/pnas.1517953113.

- 13. Posch C, Serrano-Gotarredona T, Linares-Barranco B, Delbruck T. Retinomorphic event-based vision sensors: bioinspired cameras with spiking output. Proc. IEEE. 2014;102:1470–1484.

- 14. York T, Powell SB, Gao S, Kahan L, Charanya T, Saha D, Roberts NW, Cronin TW, Marshall J, Achilefu S, Lake SP, Raman B, Gruev V. Bioinspired polarization imaging sensors: from circuits and optics to signal processing algorithms and biomedical applications. Proc. IEEE. 2014;102:1450–1469. doi: 10.1109/JPROC.2014.2342537.

- 15. Garcia M, Edmiston C, Marinov R, Vail A, Gruev V. Bio-inspired color-polarization imager for real-time in situ imaging. Optica. 2017;4:1263–1271.

- 16. Liu S-C, Delbruck T. Neuromorphic sensory systems. Curr. Opin. Neurobiol. 2010;20:288–295. doi: 10.1016/j.conb.2010.03.007.

- 17. Wen B, Boahen K. A silicon cochlea with active coupling. IEEE Trans. Biomed. Circuits Syst. 2009;3:444–455. doi: 10.1109/TBCAS.2009.2027127.

- 18. Indiveri G, Linares-Barranco B, Hamilton T, van Schaik A, Etienne-Cummings R, Delbruck T, Liu S-C, Dudek P, Häfliger P, Renaud S, Schemmel J, Cauwenberghs G, Arthur J, Hynna K, Folowosele F, Saïghi S, Serrano-Gotarredona T, Wijekoon J, Wang Y, Boahen K. Neuromorphic silicon neuron circuits. Front. Neurosci. 2011;5:73. doi: 10.3389/fnins.2011.00073.

- 19. Land MF, Nilsson D-E. Animal Eyes. Oxford University; 2012.

- 20. Parker AR, McPhedran RC, McKenzie DR, Botten LC, Nicorovici N. Photonic engineering. Aphrodite’s iridescence. Nature. 2001;409:36–37. doi: 10.1038/35051168.

- 21. Vukusic P, Sambles JR. Photonic structures in biology. Nature. 2003;424:852–855. doi: 10.1038/nature01941.

- 22. Potyrailo RA, Ghiradella H, Vertiatchikh A, Dovidenko K, Cournoyer JR, Olson E. Morpho butterfly wing scales demonstrate highly selective vapour response. Nat. Photonics. 2007;1:123–128.

- 23. Pris AD, Utturkar Y, Surman C, Morris WG, Vert A, Zalyubovskiy S, Deng T, Ghiradella HT, Potyrailo RA. Towards high-speed imaging of infrared photons with bio-inspired nanoarchitectures. Nat. Photonics. 2012;6:195–200.

- 24. Wang LV, Wu H-I. Biomedical Optics: Principles and Imaging. Wiley; 2009.

- 25. Jacques SL. Optical properties of biological tissues: a review. Phys. Med. Biol. 2013;58:R37–R61. doi: 10.1088/0031-9155/58/11/R37.

- 26. Dsouza AV, Lin H, Henderson ER, Samkoe KS, Pogue BW. Review of fluorescence guided surgery systems: identification of key performance capabilities beyond indocyanine green imaging. J. Biomed. Opt. 2016;21:080901. doi: 10.1117/1.JBO.21.8.080901.

- 27. Chen Z, Zhu N, Pacheco S, Wang X, Liang R. Single camera imaging system for color and near-infrared fluorescence image guided surgery. Biomed. Opt. Express. 2014;5:2791–2797. doi: 10.1364/BOE.5.002791.

- 28. Mondal S, Gao S, Zhu N, Sudlow G, Som A, Akers W, Fields R, Margenthaler J, Liang R, Gruev V, Achilefu S. Binocular goggle augmented imaging and navigation system provides real-time fluorescence image guidance for tumor resection and sentinel lymph node mapping. Sci. Rep. 2015;5:12117. doi: 10.1038/srep12117.

- 29. Hanley JA, McNeil BJ. A method of comparing the areas under receiver operating characteristic curves derived from the same cases. Radiology. 1983;148:839–843. doi: 10.1148/radiology.148.3.6878708.

- 30. Niebling MG, Pleijhuis RG, Bastiaannet E, Brouwers AH, van Dam GM, Hoekstra HJ. A systematic review and meta-analyses of sentinel lymph node identification in breast cancer and melanoma, a plea for tracer mapping. Eur. J. Surg. Oncol. 2016;42:466–473. doi: 10.1016/j.ejso.2015.12.007.
