Abstract

Methods of estimating the local false discovery rate (LFDR) have been applied to different types of datasets such as high-throughput biological data, diffusion tensor imaging (DTI), and genome-wide association (GWA) studies. We present a model for LFDR estimation that incorporates a covariate into each test. Incorporating the covariates may improve the performance of testing procedures, because they contain additional information based on the biological context of the corresponding tests. This method provides different estimates depending on a tuning parameter. We estimate the optimal value of that parameter by choosing the one that minimizes the estimated error of the LFDR estimate, which combines the bias and variance, in a bootstrap approach. This estimation method is called the adaptive reference class (ARC) method. In this study, we consider the performance of the ARC method under certain assumptions on the prior probability of each hypothesis test as a function of the covariate. We prove that, under these assumptions and a large covariate effect, the ARC method has a mean squared error asymptotically no greater than that of the method that uses the entire set of hypotheses. In addition, we conduct a simulation study to evaluate the performance of the estimator associated with the ARC method for a finite number of hypotheses. Finally, we apply the proposed method to coronary artery disease (CAD) data taken from a GWA study and to DTI data.

1 Introduction

Methods of estimating the local false discovery rate (LFDR) [1], which do not suffer from the bias inherent in estimating other false discovery rates [2], have been applied to various datasets such as high-throughput biological data (e.g., gene expression, proteomics, and metabolomics), diffusion tensor imaging (DTI), and genome-wide association (GWA) studies [3–5]. For example, in a GWA study, LFDR estimation methods are used to estimate the probability that a single nucleotide polymorphism (SNP) is associated with a disease [6–10]. In addition, in DTI brain scans, LFDR estimates have been used to estimate the proportion of dyslexic-non-dyslexic differences [5, 11].

In many situations, the considered hypotheses are connected by a scientific context. Ignoring this context in a data analysis can be misleading, because doing so may introduce bias into the LFDR estimates [11]. For example, each test in a GWA study corresponds to a specific genetic marker for which previous biological information may be available. Moreover, in a DTI study, each test corresponds to a voxel, where the voxel location can be incorporated as a scientific context.

1.1 Motivating example

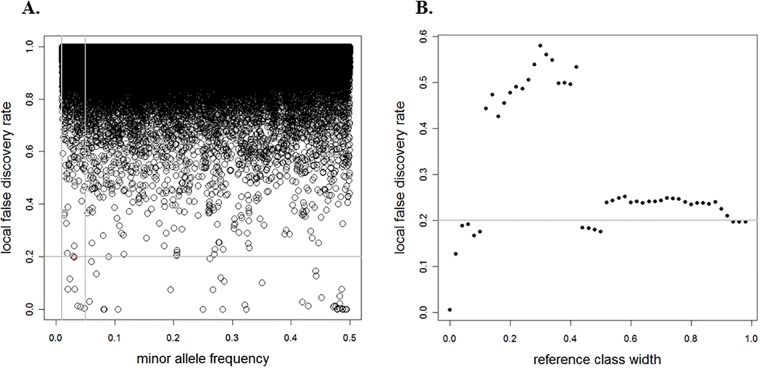

We consider coronary artery disease (CAD) data [12], where N = 394,839 SNPs passed the quality control (QC) filtering methods explained in Section 3.2.1. The aim of this study is to identify whether each SNP is associated with the disease. We have two components (zi, xi) associated with each hypothesis for i = 1, …, N, where zi is an observed test statistic and xi represents the minor allele frequency (MAF). For our data, all observed test statistics are used to identify the disease-associated SNPs. Fig 1(A) shows the LFDR estimates obtained from all N = 394,839 SNPs (Section 2). A total of 44 disease-associated SNPs with LFDR estimates lower than 0.2 are identified.

Fig 1. CAD data for LFDR estimates under the HB model versus the MAF xi (A) and reference class width (B) for a fixed MAF xi = 0.0306.

The horizontal line represents the threshold of 0.2. The vertical lines in (A) indicate the symmetry around xi = 0.0306 with Δ = 0.04.

A set of hypotheses or features used to determine the posterior probability of a null hypothesis is called a reference class, and the problem of finding such a set is an example of the reference class problem (e.g., Bickel [13]). For example, considering all SNPs when estimating the LFDR for a specific MAF, instead of considering the different subsets of SNPs, is called the combined reference class (CRC) method (Section 2). From Fig 1(A), consider the example of xi = 0.0306 and zi = −4.3971, with an estimated LFDR of 0.1973 that is close to the threshold of 0.2. For this MAF, we define a reference class of SNPs in such a way that the MAFs are within a symmetric window around xi = 0.0306, with width 2Δ. Different window widths yield different reference classes. Again, each subset of SNPs is used to estimate the LFDR. Fig 1(B) illustrates the LFDR estimates versus the reference class width. This figure shows that changing the reference class width provides different LFDR estimates, raising the important question of how we can estimate the optimal reference class in order to estimate the LFDR. For the considered CAD data, the reference class problem consists of deciding which SNPs should be used to determine whether a SNP is associated with a disease.

The hypotheses can be divided into groups based on the characteristics of the problem. For example, the CAD data can be divided into two distinct groups according to MAFs, low-frequency SNPs (1% ≤ xi ≤ 5%) and common SNPs (xi > 5%). Thus, we need to determine which reference class should be used to determine the posterior probability that the SNP is not associated with the disease occurring at the MAF xi = 0.0306. Should we use the entire set of SNPs, or the low-frequency SNPs [14]? In addition, SNPs can be divided into different classes, such as non-synonymous SNPs, genic SNPs, SNPs in highly conserved regions, SNPs in linkage disequilibrium with many (or few) other SNPs, or categorized SNPs based on their MAFs [12].

1.2 Previous research on the reference class problem

Many methods have been proposed for incorporating covariates into statistical techniques for testing multiple hypotheses. Bickel [15] considered the effect of selecting test statistics in estimators of the weighted and unweighted FDR, and found that smaller reference classes of null hypotheses yield lower estimated expected losses than larger reference classes do. Several researchers have applied the idea of incorporating a group structure and weights to improve the statistical power of tests. This group structure can be used when testing multiple hypotheses by assigning weights for the hypotheses or the p-values in each group. Benjamini and Hochberg [16] used a p-value weighting method to evaluate different procedures. Genovese et al. [17] demonstrated that a p-value weighting procedure can be employed to control the family-wise error rate (FWER) and the FDR while increasing the statistical power of the test. Subsequently, Wasserman and Roeder [18] introduced an optimal p-value weighting procedure to control the FWER. Sun et al. [19] proposed a stratified false discovery control approach for genetic studies, in which a large number of hypotheses include inherent stratification. In addition, Efron [11] argued that analyzing separate reference classes can be legitimate from a frequentist viewpoint, and Hu et al. [20] proposed a weighting scheme based on a simple Bayesian framework that employs the proportion of null hypotheses that are true within each group. Such an approach can control the FDR for p-values with certain dependence structures. The unknown proportion of true null hypotheses is estimated within each group. Moreover, Zablocki et al. [8] used a hierarchical Bayesian approach to incorporate a set of covariates, where the prior probability that the null hypothesis is true and the alternative distribution of the test statistic are both modulated by covariates. In contrast to Zablocki et al. [8], instead of specifying the hyperprior distributions required for a hierarchical Bayesian approach, we follow Karimnezhad and Bickel [21] and use an empirical Bayes approach to estimate an optimal reference class to improve the LFDR estimate.

In this study, we assume the prior probability to be a function of covariates (Section 2.1). Then, we propose an adaptive reference class (ARC) method for estimating the LFDR, using a bootstrap approach to estimate the optimal reference class (Section 2.2). We compare the performance of the proposed ARC method and the CRC method using the mean squared error (MSE) as the performance criterion. We prove that, under certain assumptions, the ARC method has an MSE that is asymptotically no greater than that of the CRC method (see S1 File). In addition to the asymptotic results, we conduct a simulation study to investigate the finite dataset performances of the LFDR estimators for each method in Section 3.1. Both the asymptotic and simulation results show that, under certain assumptions, the ARC method performs well compared with the CRC method. We present an application of the ARC method on both CAD data and DTI data in Section 3.2 in order to demonstrate the practical importance of deciding between the ARC and CRC methods. Finally, we conclude the paper with a brief discussion in Section 4. The proof for the main theorem is included in S1 File.

2 Materials and methods

Suppose N null hypotheses H01,…, H0N are considered simultaneously. For example, in a GWA study, let H0i denote the null hypothesis that the ith SNP is not associated with the disease. Under the genetic additive model [22], each SNP yields a Wald χ2 test statistic Wi. Under the ith null hypothesis, it holds that $W_i \sim \chi^2_1$, while under the ith alternative hypothesis, we have $W_i \sim \chi^2_{1,\delta}$, where δ ∈ (0, ∞) is an unknown noncentrality parameter, following the models employed in [23] and [24]. Under the ith null hypothesis, assume that Zi ∼ N(0, 1), where Zi represents the z-transform that converts the Wald χ2 statistic into a standard normal statistic. In addition, for DTI data, let H0i denote the null hypothesis that there is no dyslexic-non-dyslexic difference for the ith voxel. Under the ith null hypothesis, assume that Zi ∼ N(0, 1), where Zi represents the z-transform that converts the two-sample t-test statistic into a standard normal statistic.

The observed statistics z = (z1,…, zN)T are considered realizations of Z = (Z1,…, ZN)T. Let Ai be an indicator variable for the event that the ith alternative hypothesis Hai is true. Assume that Ai’s are independent and identically distributed (i.i.d.) Bernoulli(1 − π0) variables, where π0 is the prior probability that the ith null hypothesis is true. Let f0(zi) and f1(zi) be the null and alternative density, respectively.

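The posterior probability that the ith null hypothesis is true, given Zi = zi, is the LFDR [1]. It is denoted by Ψ(zi), where

$$\Psi(z_i) = P(A_i = 0 \mid Z_i = z_i) = \frac{\pi_0 f_0(z_i)}{f(z_i; \pi_0)}, \tag{1}$$

and f(zi; π0) denotes the mixture density of Zi given by

$$f(z_i; \pi_0) = \pi_0 f_0(z_i) + (1 - \pi_0) f_1(z_i). \tag{2}$$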

If the null hypothesis is true, then the null density f0(zi) of the statistic Zi is the standard normal, and is called the theoretical null hypothesis [1]. The model defined in Eq (2) with the following method of estimation is called the histogram-based (HB) model [25]. By assuming the theoretical null hypothesis and applying Poisson regression, the mixture density f(zi;π0) is estimated by fitting a high-degree polynomial to the histogram counts. The resulting estimate is denoted by $\hat{f}(z_i)$, and the estimate of the proportion of true null hypotheses is denoted by $\hat{\pi}_0$. The LFDR estimate is computed by substituting $\hat{f}(z_i)$ and $\hat{\pi}_0$ into Eq (1). This model and estimation method are an example of the CRC method.
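As a minimal illustration of how the LFDR in Eq (1) follows from the mixture density in Eq (2), the Python sketch below evaluates it for a two-group model. Purely for illustration, it assumes that the alternative density is known and normal with mean `mu1`; the HB model instead estimates the mixture density from histogram counts. The function names and the values `pi0 = 0.9` and `mu1 = 3.0` are hypothetical and are not taken from the study.

```python
import math


def normal_pdf(z, mean=0.0, sd=1.0):
    """Density of N(mean, sd^2) evaluated at z."""
    u = (z - mean) / sd
    return math.exp(-0.5 * u * u) / (sd * math.sqrt(2.0 * math.pi))


def lfdr(z, pi0, mu1):
    """LFDR = pi0 * f0(z) / f(z), with the theoretical null f0 = N(0, 1).

    Assumption for illustration only: the alternative density f1 is N(mu1, 1).
    """
    f0 = normal_pdf(z)
    f1 = normal_pdf(z, mean=mu1)
    f = pi0 * f0 + (1.0 - pi0) * f1  # two-group mixture density
    return pi0 * f0 / f


if __name__ == "__main__":
    # The LFDR falls as the statistic moves toward the assumed alternative mean.
    for z in (0.0, 2.0, 4.0):
        print(z, lfdr(z, pi0=0.9, mu1=3.0))
```

In practice neither `pi0` nor the alternative density is known, which is why the text replaces both by estimates.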

2.1 Proposed model

The described model in Eq (1) extends to the situation that incorporates a covariate related to the scientific context of each hypothesis test. For the CAD dataset, the covariate represents the MAF in the CAD data, while for the DTI data, the location is incorporated as a covariate. Let X = (X1,…, XN)T be i.i.d. random variables. Any test statistics are transformed to the standard normal statistic Zi, for i = 1,…, N. The observed statistics vector z = (z1,…, zN)T is considered a realization of Z = (Z1,…, ZN)T. Let Ai be the event that the ith alternative hypothesis Hai is true. Assume that Ai|Xi = xi ∼ Bernoulli(1 − π0(xi)), where π0(xi), the prior probability that the ith null hypothesis is true, is an unknown function of the given covariate Xi = xi. We denote the posterior probability that the ith null hypothesis is true, given Zi = zi and Xi = xi by

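$$\Psi(z_i; x_i) = P(A_i = 0 \mid Z_i = z_i, X_i = x_i) = \frac{\pi_0(x_i) f_0(z_i)}{f(z_i; x_i)}, \tag{3}$$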

where the mixture density of Zi conditional on the covariate Xi = xi is given by

$$f(z_i; x_i) = \pi_0(x_i) f_0(z_i) + \left(1 - \pi_0(x_i)\right) f_1(z_i; x_i), \tag{4}$$

where f0(zi) denotes the null density of Zi and f1(zi;xi) is the alternative density of Zi. The mixture density in Eq (2) is a special form of Eq (4), where the effect of the covariates is ignored. The quantities π0(xi) and f1(zi;xi) are unknown. The ARC method is applied to estimate the LFDR in Eq (3). Under the CRC method, the effects of the covariates defined in Eq (1) are ignored, while under the proposed ARC method, some assumptions are considered locally in order to estimate the LFDR Ψ(zi; xi), defined in Eq (3).

2.2 Adaptive reference class (ARC) method

Under the ARC method, certain assumptions only hold locally within a symmetric window for each covariate. Let a symmetric window of width 2Δ be centered at the given covariate Xi = xi. The reference class defined by this window is denoted by $\mathbf{z}_\Delta(x_i)$, where

$$\mathbf{z}_\Delta(x_i) = \{ z_j : |x_j - x_i| \le \Delta,\ j = 1, \ldots, N \}. \tag{5}$$

Let Δ0 denote the smallest considered value of the tuning parameter Δ. The reference class $\mathbf{z}_\Delta(x_i)$ contains the components zj whose covariates are within a distance Δ of xi. Denoting the expected dimension of the reference class by $N_\Delta(x_i)$, we have

$$N_\Delta(x_i) = N \, P(|X_j - x_i| \le \Delta). \tag{6}$$

Here, $N_\Delta(x_i)$ increases with the number of null hypothesis tests N, provided that the probability $P(|X_j - x_i| \le \Delta)$ is positive. For each reference class $\mathbf{z}_\Delta(x_i)$, we may apply any LFDR estimation approach such as the HB model in Section 2. In contrast to the CRC method, instead of using the entire collection of observed statistics z, only the reference class $\mathbf{z}_\Delta(x_i)$ is used to obtain the LFDR estimate $\hat{\Psi}_\Delta(z_i; x_i)$. The choice of tuning parameter Δ influences the LFDR estimate. Here, we choose the one that results in the lowest error when estimating the LFDR, which is called the optimal tuning parameter.
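The construction of a reference class can be sketched in a few lines of Python. The function name `reference_class` and the toy values are hypothetical, and treating the window boundary as inclusive is an assumption of this sketch.

```python
def reference_class(z, x, x_i, delta):
    """Return the statistics z_j whose covariates x_j lie within delta of x_i.

    Assumption: the window is closed, i.e. |x_j - x_i| <= delta.
    """
    return [z_j for z_j, x_j in zip(z, x) if abs(x_j - x_i) <= delta]


if __name__ == "__main__":
    z = [1.0, 2.0, 3.0, 4.0]
    x = [0.01, 0.03, 0.05, 0.20]
    # A narrow window around x_i = 0.03 drops the statistic with covariate 0.20.
    print(reference_class(z, x, x_i=0.03, delta=0.025))
    # A wide window recovers the combined reference class (all statistics).
    print(reference_class(z, x, x_i=0.03, delta=1.0))
```

Any LFDR estimator can then be applied to the returned subset instead of to the full vector of statistics.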

2.3 Optimal tuning parameter

The optimal tuning parameter Δ specifies the symmetric window width of a given reference class, and is determined by minimizing the errors resulting from the bias and the variance. In the following, we introduce several notational conventions.

Let the mean and variance of the estimator be defined as

| (7) |

respectively. When Xi = xi, the prediction bias for the estimator is denoted by , with

| (8) |

The optimal choice of Δ depends on the loss function used to measure the errors in the estimation of the LFDR. A good estimator is accurate in the sense that its estimates are as close to the true values as possible. Accuracy measures typically take into account the difference between the estimated value and the true value. The MSE is a commonly used accuracy measure that indicates how close the estimator is to the true value by incorporating both the bias and the variance [26]. Hence, the MSE provides our criterion for defining the optimal tuning parameter Δ. The MSE for the estimator conditional on Xi = xi is defined as

| (9) |

It can be shown that the portion of MSE that depends on Δ is given by

| (10) |

Here, we employ the errors resulting from the bias and the variance in Eq (10) to determine the optimal Δ. Denoting the optimal Δ by , we have

| (11) |

To estimate , it is necessary to estimate the variance and the prediction bias of the LFDR estimator, which we do using the bootstrap approach.

2.4 Bootstrap estimation of the optimal tuning parameter

We re-sample N pairs from {(z1, x1),…, (zN, xN)} until B bootstrap samples are obtained that contain the specific pair (zi, xi), where zi ∈ z and xi ∈ x. These samples are denoted by . The bth bootstrap sample contains pairs , for j = 1,…, N and b = 1,…, B. From Eq (5), the bth bootstrap reference class is defined as

| (12) |

The estimate of Ψ(zi; xi) based on the bth bootstrap reference class is denoted by . The random variables provide the estimators and , which we use to estimate μΔ(xi) and , respectively, where

| (13) |

In order to estimate the prediction bias in Eq (10), we need to estimate π0(xi). We propose using a reference class , which contains the observed statistics zjs the covariates of which are within a distance Δ0 of xi. Thus, the estimator from Eq (13) can be used to estimate π0(xi). Denoting the bootstrap estimator of the prediction bias by , we have that

| (14) |

The estimator of in Eq (10) is denoted by , and is computed by simply summing the bootstrap variance in Eq (13) and the squared bootstrap prediction bias in Eq (14). Let the optimal be denoted as , which is given by

| (15) |

After estimating the optimal tuning parameter (see Algorithm 1), the optimal reference class is estimated from Eq (5). The class contains zjs, the covariates of which are within a distance of xi. Then, the optimal reference class is used to estimate the LFDR in Eq (3). This LFDR estimate is denoted as . The estimation methods detailed above yield two estimators. The estimator is related to the CRC method and is computed using the ARC method. We compare the performance of two estimators using the MSE.

Algorithm 1. Pseudo-code for estimating the optimal tuning parameter for one simulated dataset.

Input: Test statistics and covariates (z1, x1),…, (zN, xN); number of bootstrap samples B; smallest value of the tuning parameter Δ0; tuning parameter Δ ≥ Δ0

For i = 1,…, N

- Build B bootstrap samples of N pairs each (b = 1,…, B and j = 1,…, N), every sample including the pair (zi, xi)

- For b = 1,…, B

  - Determine the bth bootstrap reference classes for Δ and for Δ0 from Eq (12)

  - Estimate the LFDR on each of the two reference classes using the HB method

- Minimize the estimated error in Eq (15) over Δ ≥ Δ0

Output: Estimated optimal tuning parameter.
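The steps of Algorithm 1 for a single pair (zi, xi) can be sketched in Python as follows. This is a rough sketch under stated assumptions, not the authors' implementation: `toy_lfdr` is a crude stand-in for the HB estimator, the candidate values of Δ are searched on a finite grid whose first entry plays the role of Δ0, and the prediction bias is taken relative to the bootstrap mean at Δ0, following the proposal in the text to use that mean as the estimate of π0(xi). All function names, the grid, and the simulated data are hypothetical.

```python
import math
import random


def normal_pdf(u):
    return math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)


def toy_lfdr(z_i, z_ref):
    """Crude stand-in for the HB estimator (an assumption of this sketch).

    pi0 is estimated from the share of |z| <= 1 (null probability 0.6827),
    and the mixture density at z_i by a Gaussian kernel estimate.
    """
    n = len(z_ref)
    pi0_hat = min(1.0, sum(abs(v) <= 1.0 for v in z_ref) / (0.6827 * n))
    h = 1.06 * n ** (-0.2)  # rule-of-thumb bandwidth for unit-scale data
    f_hat = sum(normal_pdf((z_i - v) / h) for v in z_ref) / (n * h)
    return min(1.0, pi0_hat * normal_pdf(z_i) / f_hat)


def select_delta(z, x, i, deltas, B=50, estimator=toy_lfdr, seed=0):
    """Pick the half-width minimising bootstrap variance + squared bias.

    deltas[0] plays the role of Delta_0; the bootstrap mean at Delta_0
    stands in for pi0(x_i) when the prediction bias is estimated.
    """
    rng = random.Random(seed)
    n = len(z)
    estimates = {d: [] for d in deltas}
    kept = 0
    while kept < B:
        idx = [rng.randrange(n) for _ in range(n)]
        if i not in idx:  # keep only resamples that contain the pair (z_i, x_i)
            continue
        for d in deltas:
            ref = [z[j] for j in idx if abs(x[j] - x[i]) <= d]
            estimates[d].append(estimator(z[i], ref))
        kept += 1
    mean = {d: sum(estimates[d]) / B for d in deltas}
    var = {d: sum((e - mean[d]) ** 2 for e in estimates[d]) / (B - 1)
           for d in deltas}
    score = {d: var[d] + (mean[d] - mean[deltas[0]]) ** 2 for d in deltas}
    return min(deltas, key=lambda d: score[d]), score


if __name__ == "__main__":
    rng = random.Random(1)
    x = [rng.random() for _ in range(400)]
    # Hypothetical data: non-null statistics occur only for small covariates.
    z = [rng.gauss(0.0, 1.0) + (3.0 if xi < 0.2 and rng.random() < 0.3 else 0.0)
         for xi in x]
    best, score = select_delta(z, x, i=0, deltas=[0.05, 0.1, 0.2, 0.5], B=30)
    print(best, score)
```

By construction the estimated bias is zero at Δ0, so a wider window is selected only when its reduction in bootstrap variance outweighs the squared shift of its bootstrap mean away from the Δ0 value.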

Let π0(xi) denote the true prior probability that the ith null hypothesis is true. In GWA study, the null hypothesis means no disease association, and in the DTI study, it means no differences between dyslexic and non-dyslexic children. For a given x0, we suppose that the unknown prior probability π0(Xi) is a step function of the covariate Xi, given by

| (16) |

where the prior probabilities π01 and π02 are both unknown, and π01 ≤ π02. This function splits the N tests into two distinct groups, such that in each group, the test statistics are i.i.d. Moreover, the simplified function in Eq (16) will have a biologically meaningful interpretation. As an example, in CAD data, the N SNPs can be divided into two distinct groups; disease-associated and not disease-associated. The two groups may have different choices of prior probabilities. In addition, under the assigned values of x0 and Δ0, the observed vector of covariates x may be partitioned into three regions

| (17) |

for j = 1,…, N. The following theorem ensures that, under the assumptions explained above on π0(Xi) in Eq (16) and on the regions of the covariates in Eq (17), the proposed ARC method has an MSE that is asymptotically no greater than that of the CRC method. The proof of the theorem is given as a series of lemmas (see S1 File).

Theorem 1 Let be a weakly consistent estimator of Ψ(zi) when N becomes large. If , then

where B is the number of bootstrap samples.

Remark 1 The step function of the covariates in Eq (16) and the partitioning of the covariates in Eq (17) affect the weak consistency of Eq (13) as an estimator of π0(Xi) in Eq (16) (see S1 File, Lemma 2). Then, for , the bootstrap mean is not a weakly consistent estimator of π0(Xi).

Remark 2 For , similar results to Theorem 1 can be derived.

3 Results

3.1 Simulation study

The aim of the simulation analysis presented here is to compare the finite dataset performances of the CRC and ARC methods when estimating the LFDR in Eq (3). In this section, each test statistic is assigned a prior probability that is a function of the covariate.

We assume that the proportion of disease-associated SNPs tends to be very small. We present several simulation studies, each with a different value of x0 ∈ [0.05, 0.40]. The datasets are simulated as follows. In each simulation, we randomly generate 1000 datasets, each corresponding to an artificial case-control study. For each dataset, we simultaneously generate both the auxiliary information and the observed Wald χ2 test statistics, denoted by xi and wi, respectively. Each observed covariate xi is generated randomly from the uniform distribution between 0 and 1.

In each simulation, the true prior probability π0(xi) is determined according to the given value of x0 as a function of the observed covariate in Eq (16). From (16), let for i = 1,…, N, where . To generate the observed statistics, we generate each Ai ∼ Bernoulli(1 − π0(xi)) independently. If Ai = 1, the observed χ2 test statistic is sampled from $\chi^2_{1,\delta}$ with a noncentrality parameter δ. For each given value of x0, a different value of δ ∈ [1.5, 7] is assigned. If Ai = 0, the Wald χ2 test statistic is sampled from $\chi^2_1$. The Wald χ2 test statistics are then transformed into z-values.
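The data-generating steps above can be sketched in Python as follows. Several details are assumptions of this sketch rather than statements from the study: the step function is taken to equal `pi01` for covariates up to `x0` and `pi02` beyond it, the values of `pi01`, `pi02`, and `n` in the usage example are hypothetical, and the z-transform shown (mapping the χ2 distribution function value to a standard normal quantile) is only one possible choice.

```python
import math
import random
from statistics import NormalDist


def simulate_dataset(n, x0, pi01, pi02, delta, seed=0):
    """Simulate n triples (z_i, x_i, a_i) from a two-group model with a covariate."""
    rng = random.Random(seed)
    std_normal = NormalDist()
    data = []
    for _ in range(n):
        x = rng.random()                  # covariate ~ Uniform(0, 1)
        pi0 = pi01 if x <= x0 else pi02   # assumed orientation of the step
        a = 1 if rng.random() < 1.0 - pi0 else 0  # A_i ~ Bernoulli(1 - pi0(x_i))
        shift = math.sqrt(delta) if a == 1 else 0.0
        # Chi-square with 1 df; noncentral with parameter delta when a == 1.
        w = (rng.gauss(0.0, 1.0) + shift) ** 2
        # One possible z-transform: chi-square(1) CDF value -> normal quantile.
        p = math.erf(math.sqrt(w / 2.0))          # P(chi2_1 <= w)
        p = min(max(p, 1e-15), 1.0 - 1e-15)       # keep inv_cdf away from 0 and 1
        z = std_normal.inv_cdf(p)
        data.append((z, x, a))
    return data


if __name__ == "__main__":
    sample = simulate_dataset(n=5000, x0=0.2, pi01=0.80, pi02=0.99, delta=7.0)
    print(sum(a for _, _, a in sample), "non-null tests out of", len(sample))
```

Under this transform the null statistics are standard normal, which matches the theoretical null assumed for Zi.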

Each dataset has N = 300,000 pairs (zi, xi). In each simulation, a pair (zi, xi) is selected randomly from each dataset to estimate Ψ(zi; xi). For a given covariate xi, the estimators of the LFDR are computed using the two methods. Under the ARC method, Δ0 has to be specified in advance in order to determine . We consider the range Δ0 ∈ (0, x0), and set B = 1000. Thus, under the HB model described in Section 2, we compute the estimators and . The conditional MSE approximations used to measure the performances of the estimators are given by

and the marginal MSE approximations are computed as follows:

where for the CRC method and for the ARC method. The relative MSE of the two estimators is a convenient measure for comparing the MSEs. The conditional and marginal relative MSEs are denoted by

| (18) |

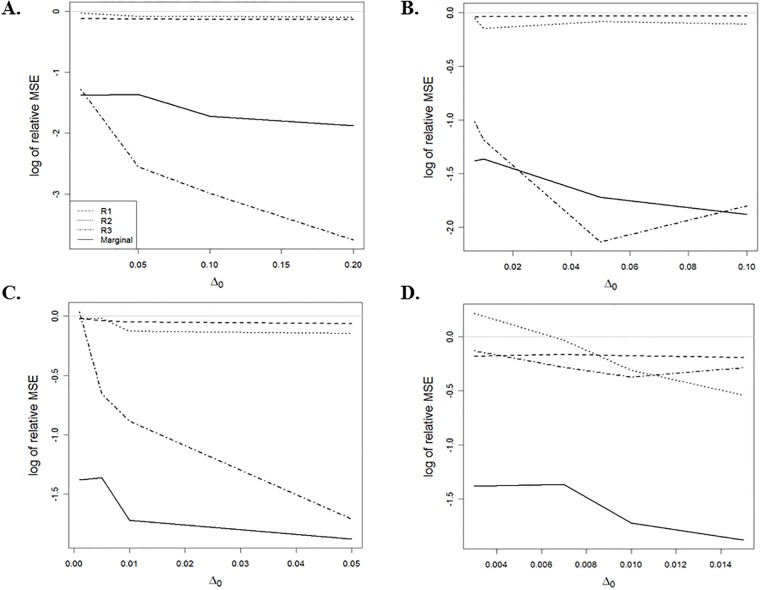

respectively. From Fig 2, we observe that the performance of the ARC method depends on the Δ0 values and the region of the covariates. When , increasing the value of Δ0 results in a smaller MSE approximation for the ARC method in the regions and that follow the results in Theorem 1. Then, increasing , Fig 2(D) shows that the MSE approximation for the proposed ARC method is greater than that of the CRC method in the region , for some Δ0.

Fig 2. The log2 value of the relative MSE conditional on regions and marginal versus different values of Δ0; for (A) , (B) , (C) and (D) , where for i = 1, …, N.

Fig 2 shows that the ARC method has a smaller marginal MSE approximation than that of the CRC method. From Table 1, if we consider the true prior probabilities for all SNPs to be independent of the covariate, that is, π0(xi) = π0 for i = 1,…, N, then the CRC method has a smaller MSE than that of the ARC method. In such cases, the CRC method should be used instead of the ARC method to analyze the data.

Table 1. MSE of the ARC method relative to the CRC method when there is no covariate effect.

The true prior probabilities are constant, π0(xi) = π0. The log2 value is given for the marginal relative MSE. Under the ARC method, Δ0 is 0.01.

| π0 | 0.60 | 0.80 | 0.90 | 0.95 |
| --- | --- | --- | --- | --- |
| ReMSEmarg (log2) | 0.9030 | 0.8156 | 1.3729 | 2.0908 |
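The relative MSE comparison used in this section can be sketched in Python as below. The orientation of the ratio (ARC over CRC) is an assumption of this sketch, chosen to agree with the caption of Table 1, where positive log2 values accompany a smaller MSE for the CRC method. The function names and the toy numbers are hypothetical.

```python
import math


def mse(estimates, truths):
    """Monte Carlo MSE approximation: mean squared difference over datasets."""
    return sum((e - t) ** 2 for e, t in zip(estimates, truths)) / len(estimates)


def log2_relative_mse(arc_estimates, crc_estimates, truths):
    """log2 of MSE(ARC) / MSE(CRC); negative values favour the ARC estimator."""
    return math.log2(mse(arc_estimates, truths) / mse(crc_estimates, truths))


if __name__ == "__main__":
    truths = [0.5, 0.5]   # true LFDR values, one per simulated dataset
    arc = [0.6, 0.4]      # hypothetical ARC estimates
    crc = [0.7, 0.3]      # hypothetical CRC estimates
    print(log2_relative_mse(arc, crc, truths))
```

The conditional version is obtained by restricting the three lists to the datasets whose selected covariate falls in a given region.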

3.2 Real data analysis

We apply both the ARC and CRC methods to the CAD and DTI datasets, and compare the disease-associated SNPs and dyslexic-non-dyslexic difference voxels under each method, respectively. The purpose of this comparison is to demonstrate the practical difference between the methods rather than to determine which method performs better.

3.2.1 CAD data analysis

The CAD dataset originating from the United Kingdom includes 500,568 SNPs genotyped for 2,000 cases and 3,000 combined controls on 22 autosomal chromosomes. The control individuals come from two groups: 1,500 individuals from the 1958 British Birth Cohort (58C) and 1,500 individuals selected from UK Blood Services (UKBS) controls. Following [12], we use quality control filtering methods to exclude SNPs based on the exact Hardy-Weinberg equilibrium (HWE) test as well as individuals or SNPs with many missing genotypes. The following three filters are applied sequentially. For the first filter, an SNP fails when the proportion of missing data is greater than 0.05, or when the minor allele frequency (MAF) is smaller than 0.05 and the proportion of missing data is greater than 0.01. For the second filter, SNPs with p-values smaller than 0.05 using the exact HWE test in the combined controls are rejected. Finally, for the third filter, we reject SNPs with p-values smaller than 5 × 10−7 using trend tests and general genotype tests between each case and the combined controls. We also exclude SNPs with MAFs smaller than 0.01. A total of N = 394,839 SNPs passed these quality control filtering methods and are used to identify disease-associated SNPs. We apply both the ARC and the CRC methods to the CAD data and compare the disease-associated SNPs under each method.
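The sequential filters described above can be written as a single predicate, sketched here in Python. The thresholds are those stated in the text; the record type `SnpQc` and its field names are hypothetical, and the sketch assumes that the per-SNP summaries (missingness, MAF, and the two p-values) have already been computed elsewhere.

```python
from dataclasses import dataclass


@dataclass
class SnpQc:
    """Per-SNP quality-control summaries (hypothetical field names)."""
    missing: float   # proportion of missing genotypes
    maf: float       # minor allele frequency
    hwe_p: float     # exact HWE test p-value in the combined controls
    assoc_p: float   # smallest p-value from the third filter's trend/genotype tests


def passes_qc(s: SnpQc) -> bool:
    # Filter 1: too much missing data, with a stricter limit for low-MAF SNPs.
    if s.missing > 0.05 or (s.maf < 0.05 and s.missing > 0.01):
        return False
    # Filter 2: departure from Hardy-Weinberg equilibrium.
    if s.hwe_p < 0.05:
        return False
    # Filter 3: trend and general genotype tests.
    if s.assoc_p < 5e-7:
        return False
    # Final exclusion of SNPs with MAF below 0.01.
    return s.maf >= 0.01


if __name__ == "__main__":
    snps = [SnpQc(0.00, 0.30, 0.50, 0.20), SnpQc(0.02, 0.03, 0.50, 0.20)]
    print([passes_qc(s) for s in snps])
```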

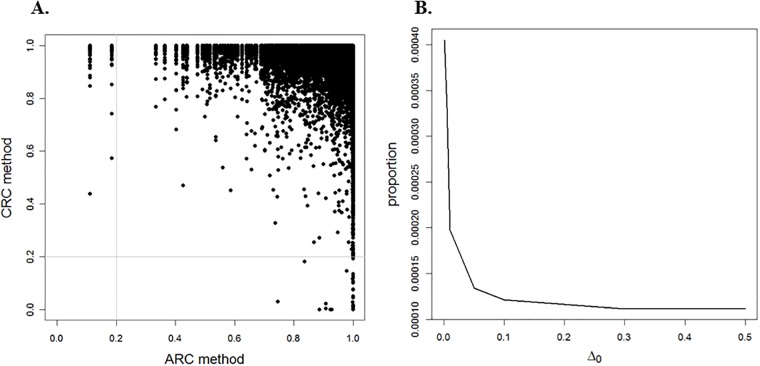

The CAD data introduced in Section 1.1, with N = 394,839 SNPs, are employed in the following statistical analysis. Under the CRC method, all observed statistics z are used to estimate the LFDR, and 44 disease-associated SNPs are identified with LFDR estimates lower than 0.2. The MAF is incorporated as a covariate. Under the ARC method, the optimal reference class is estimated for each MAF, which depends on the choice of Δ0. Fig 3(A) presents the LFDR estimates under the CRC method versus the ARC method when Δ0 = 0.001. The results show that 160 SNPs are disease-associated based on the ARC method, while the CRC method detects 44 disease-associated SNPs. From Fig 3(B), we find that changing Δ0 has a direct effect on the number of disease-associated SNPs. Under the ARC method, increasing the value of Δ0 brings the proportion of disease-associated SNPs closer to the corresponding proportion under the CRC method.

Fig 3. CAD data analysis.

(A) presents the LFDR estimate under the ARC method for Δ0 = 0.001 versus that for the CRC method and (B) illustrates the proportion of disease-associated SNPs under the ARC method when the LFDR estimate is less than 0.2 versus Δ0 ∈ (0, 0.50).

3.2.2 DTI data analysis

Schwartzman et al. [5] used DTI, an advanced MRI technology, to measure water diffusion in the human brain. DTI maps and characterizes the three-dimensional diffusion of water molecules moving randomly in brain tissue, providing information on the direction of diffusion. The measured diffusivity, that is, the diffusion coefficient, relates the diffusive flux to a concentration gradient [27] and has units of mm2/s. In this study, 12 children were tested, divided equally between the dyslexic and non-dyslexic groups. Each child received DTI brain scans at N = 15,443 locations, each represented by its own voxel’s response. The aim is to determine the dyslexic-non-dyslexic difference at the ith voxel (location), in relation to reading development in children aged 7–13 [28]. Each test corresponds to a specific voxel. We have two components (zi, xi) associated with each hypothesis for i = 1,…, N, where zi is an observed test statistic that compares the dyslexic children with the non-dyslexic children (see Section 2), and xi is the location (i.e., the distance from the back of the brain to the front). We apply both the ARC and CRC methods to the DTI data and compare the dyslexic-non-dyslexic difference voxels under each method.

Let . The observed statistics w = (w1, w2,…, wN)T are considered as a realization of W = (W1, W2,…, WN)T. Under the ith null hypothesis, it holds that Wi ∼ χ1, while under the ith alternative hypothesis Wi ∼ χ1,δ, where δ ∈ (0, inf) is an unknown noncentrality parameter, according to the models employed in [23, 24]. Therefore, the LFDR in Eq (1) is defined as follows

$$\Psi(w_i) = \frac{\pi_0 g_0(w_i)}{g(w_i; \pi_0, \delta)}, \tag{19}$$

where g0(wi) ∼ χ1 (i.e., null density), and g(wi; π0, δ) denotes the mixture density given by

$$g(w_i; \pi_0, \delta) = \pi_0 g_0(w_i) + (1 - \pi_0) g_1(w_i; \delta), \tag{20}$$

where g1(wi; δ) represents the unknown alternative density. Under the CRC method, all observed statistics w are considered to estimate the LFDR using the type II maximum likelihood estimation (MLE) model [24]

| (21) |

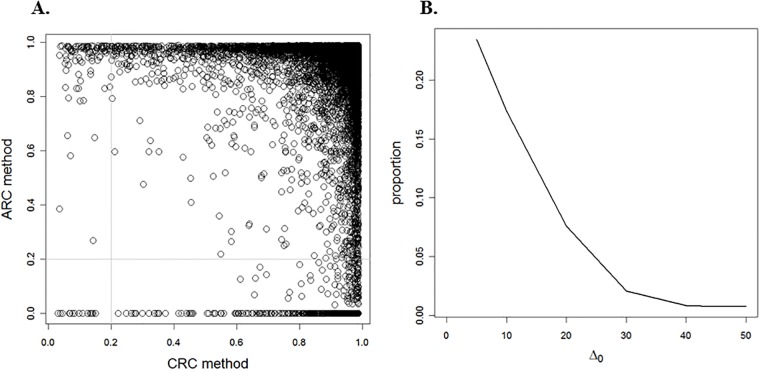

where and , and 119 dyslexic-non-dyslexic difference voxels are identified with LFDR lower than 0.2. Then, the brain location is incorporated as a covariate. Under the ARC method, the optimal reference class is estimated for each location, which depends on a choice of Δ0. Fig 4(A) presents the LFDR estimates under the CRC method versus the ARC method when Δ0 = 20. We observe from Fig 4(B), that changing Δ0 has a direct effect on the number of dyslexic-non-dyslexic difference voxels. Under the ARC method, increasing the value of Δ0 brings the proportion of dyslexic-non-dyslexic difference voxels closer to the corresponding proportion under the CRC method.
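The maximum likelihood step for the χ2 mixture can be illustrated with the Python sketch below. It is not the estimation procedure of [24]: for simplicity it maximizes the mixture log-likelihood by a plain grid search over (π0, δ), and the grids, function names, and simulated statistics are all hypothetical. The noncentral density uses the standard closed form for one degree of freedom.

```python
import math
import random


def chi2_1_pdf(w, delta=0.0):
    """Density at w > 0 of a chi-square with 1 df and noncentrality delta."""
    r, s = math.sqrt(w), math.sqrt(delta)
    phi = lambda u: math.exp(-0.5 * u * u) / math.sqrt(2.0 * math.pi)
    return (phi(r - s) + phi(r + s)) / (2.0 * r)


def log_lik(ws, pi0, delta):
    """Log-likelihood of the two-component mixture pi0 * g0 + (1 - pi0) * g1."""
    return sum(math.log(pi0 * chi2_1_pdf(w) + (1.0 - pi0) * chi2_1_pdf(w, delta))
               for w in ws)


def fit_mle(ws, pi0_grid, delta_grid):
    """Grid-search maximiser of the mixture log-likelihood over (pi0, delta)."""
    pairs = [(p, d) for p in pi0_grid for d in delta_grid]
    return max(pairs, key=lambda pd: log_lik(ws, pd[0], pd[1]))


def lfdr_chi2(w, pi0, delta):
    """Plug-in LFDR: pi0 * g0(w) / g(w; pi0, delta)."""
    g0 = chi2_1_pdf(w)
    return pi0 * g0 / (pi0 * g0 + (1.0 - pi0) * chi2_1_pdf(w, delta))


if __name__ == "__main__":
    rng = random.Random(2)
    # Hypothetical statistics: 20% non-null with noncentrality 9.
    ws = [(rng.gauss(0.0, 1.0) + (3.0 if rng.random() < 0.2 else 0.0)) ** 2
          for _ in range(2000)]
    pi0_hat, delta_hat = fit_mle(ws, [0.5, 0.6, 0.7, 0.8, 0.9], [4.0, 9.0, 16.0])
    print(pi0_hat, delta_hat, lfdr_chi2(12.0, pi0_hat, delta_hat))
```

A numerical optimizer would normally replace the grid search; the grid keeps the sketch free of external dependencies.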

Fig 4. Brain data analysis.

(A) presents the LFDR estimate under the ARC method for Δ0 = 20 versus that for the CRC method and (B) illustrates the proportion of dyslexic-non-dyslexic difference voxels under the ARC method when the LFDR estimate is less than 0.2 versus Δ0 ∈ (0, 50).

4 Discussion and conclusion

In this study, we employ a novel approach that incorporates a covariate (i.e., a scientific context corresponding to each hypothesis test) to improve the LFDR estimate when identifying alternative hypotheses. Using this approach, both the test statistic distribution under the alternative hypothesis and the prior probability that the null hypothesis is true are modulated by the covariate. In the case where the prior probability π0(Xi) is the step function given in Eq (16), Theorem 1 states that the ARC method has an MSE asymptotically no greater than that of the CRC method. It would be interesting to investigate whether this result holds for a general prior probability; we leave this topic for future research. In addition, the simulation indicates that the ARC method performs well in comparison to the CRC method for a finite number of hypotheses. Our simulation results confirm that for regions and , the LFDR estimator associated with the ARC method has a smaller MSE approximation than that of the CRC method (see Fig 2). Moreover, we could not prove the weak consistency of as an estimator of π0(xi) in region (see S1 File, Lemma 2). The ARC method was applied to both the CAD and DTI datasets, as illustrated in Figs 3 and 4. Regardless of the LFDR estimation method (i.e., HB or MLE), increasing the value of the tuning parameter Δ0 decreases the proportion of null hypotheses found significant, which approaches the proportion based on the CRC method. This suggests that further investigation may be necessary on how the tuning parameter Δ0 can be controlled to improve results.

Supporting information

S1 File. (PDF)

Acknowledgments

The R packages Biobase [29] and locfdr [3] facilitated the computational work. We thank two anonymous reviewers for comments leading to the improvement of the paper. This study makes use of the data generated by the Wellcome Trust Case-Control Consortium. A full list of the investigators who contributed to the generation of this dataset is available from www.wtccc.org.uk. Funding for the project was provided by the Wellcome Trust under award 076113.

Data Availability

This study makes use of DTI data that are publicly available here: http://statweb.stanford.edu/~ckirby/brad/LSI/datasets-and-programs/datasets.html. This study also makes use of third party data generated by the Wellcome Trust Case-Control Consortium. The authors are not affiliated with the consortium, and the data are publicly available under a managed access mechanism. Researchers can apply to use the data for research purposes from https://www.sanger.ac.uk/legal/DAA/MasterController. The data are stored at the European Genome phenome Archive (EGA) and access is managed by the Wellcome Sanger Institute. The DAC can be contacted at datasharing@sanger.ac.uk.

Funding Statement

This research was partially supported by Canadian Institutes of Health Research, by two Discovery Grants from the Natural Sciences and Engineering Research Council of Canada (OGP0009068, Grant No. 123508), by the Canada Foundation for Innovation, by the Ministry of Research and Innovation of Ontario, by the Faculty of Science and Department of Mathematics and Statistics of the University of Ottawa, and by the Faculty of Medicine of the University of Ottawa. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Efron B, Tibshirani R, Storey JD, Tusher V. Empirical Bayes analysis of a microarray experiment. Journal of the American Statistical Association. 2001;96(456):1151–1160. doi:10.1198/016214501753382129

- 2. Bickel DR. Correcting false discovery rates for their bias toward false positives. Working Paper, University of Ottawa, deposited in uO Research at http://hdl.handle.net/10393/34277. 2016.

- 3. Efron B. Size, power and false discovery rates. The Annals of Statistics. 2007; p. 1351–1377. doi:10.1214/009053606000001460

- 4. Ploner A, Calza S, Gusnanto A, Pawitan Y. Multidimensional local false discovery rate for microarray studies. Bioinformatics. 2005;22(5):556–565. doi:10.1093/bioinformatics/btk013

- 5. Schwartzman A, Dougherty RF, Taylor JE. Cross-subject comparison of principal diffusion direction maps. Magnetic Resonance in Medicine. 2005;53(6):1423–1431. doi:10.1002/mrm.20503

- 6. Noble WS. How does multiple testing correction work? Nature Biotechnology. 2009;27(12):1135–1137. doi:10.1038/nbt1209-1135

- 7. Adkins DE, Åberg K, McClay JL, Bukszár J, Zhao Z, Jia P, et al. Genomewide pharmacogenomic study of metabolic side effects to antipsychotic drugs. Molecular Psychiatry. 2011;16(3):321–332. doi:10.1038/mp.2010.14

- 8. Zablocki RW, Schork AJ, Levine RA, Andreassen OA, Dale AM, Thompson WK. Covariate-modulated local false discovery rate for genome-wide association studies. Bioinformatics. 2014;30(15):2098–2104. doi:10.1093/bioinformatics/btu145

- 9. van den Oord EJ. Controlling false discoveries in genetic studies. American Journal of Medical Genetics Part B: Neuropsychiatric Genetics. 2008;147(5):637–644. doi:10.1002/ajmg.b.30650

- 10. Liao J, Lin Y, Selvanayagam ZE, Shih WJ. A mixture model for estimating the local false discovery rate in DNA microarray analysis. Bioinformatics. 2004;20(16):2694–2701. doi:10.1093/bioinformatics/bth310

- 11. Efron B. Simultaneous inference: When should hypothesis testing problems be combined? The Annals of Applied Statistics. 2008; p. 197–223. doi:10.1214/07-AOAS141

- 12. Wellcome Trust Case Control Consortium. Genome-wide association study of 14,000 cases of seven common diseases and 3,000 shared controls. Nature. 2007;447:661–678. doi:10.1038/nature05911

- 13. Bickel DR. Minimax-Optimal Strength of Statistical Evidence for a Composite Alternative Hypothesis. International Statistical Review. 2013;81(2):188–206. doi:10.1111/insr.12008

- 14. Mei S, Karimnezhad A, Forest M, Bickel DR, Greenwood C. The performance of a new local false discovery rate method on tests of association between coronary artery disease (CAD) and genome-wide genetic variants. PLoS ONE. 2017;12:e0185174. doi:10.1371/journal.pone.0185174

- 15. Bickel DR. Error-rate and decision-theoretic methods of multiple testing: which genes have high objective probabilities of differential expression? Statistical Applications in Genetics and Molecular Biology. 2004;3(1):1–20. doi:10.2202/1544-6115.1043

- 16. Benjamini Y, Hochberg Y. Multiple hypotheses testing with weights. Scandinavian Journal of Statistics. 1997;24(3):407–418. doi:10.1111/1467-9469.00072

- 17. Genovese CR, Roeder K, Wasserman L. False discovery control with p-value weighting. Biometrika. 2006;93(3):509–524. doi:10.1093/biomet/93.3.509

- 18. Wasserman L, Roeder K. Weighted hypothesis testing. arXiv preprint math/0604172. 2006.

- 19. Sun L, Craiu RV, Paterson AD, Bull SB. Stratified false discovery control for large-scale hypothesis testing with application to genome-wide association studies. Genetic Epidemiology. 2006;30(6):519–530. doi:10.1002/gepi.20164

- 20. Hu JX, Zhao H, Zhou HH. False discovery rate control with groups. Journal of the American Statistical Association. 2010;105(491). doi:10.1198/jasa.2010.tm09329

- 21. Karimnezhad A, Bickel DR. Incorporating prior knowledge about genetic variants into the analysis of genetic association data: An empirical Bayes approach. IEEE/ACM Transactions on Computational Biology and Bioinformatics. 2018.

- 22. Lewis CM. Genetic association studies: design, analysis and interpretation. Briefings in Bioinformatics. 2002;3(2):146–153. doi:10.1093/bib/3.2.146

- 23. Bukszár J, McClay JL, van den Oord EJCG. Estimating the posterior probability that genome-wide association findings are true or false. Bioinformatics. 2009;25:1807–1813. doi:10.1093/bioinformatics/btp305

- 24. Yang Y, Aghababazadeh FA, Bickel DR. Parametric estimation of the local false discovery rate for identifying genetic associations. IEEE/ACM Transactions on Computational Biology and Bioinformatics (TCBB). 2013;10(1):98–108. doi:10.1109/TCBB.2012.140

- 25. Efron B. Large-scale simultaneous hypothesis testing: the choice of a null hypothesis. Journal of the American Statistical Association. 2004;99(465):96–104. doi:10.1198/016214504000000089

- 26. Walther BA, Moore JL. The concepts of bias, precision and accuracy, and their use in testing the performance of species richness estimators, with a literature review of estimator performance. Ecography. 2005;28(6):815–829. doi:10.1111/j.2005.0906-7590.04112.x

- 27. Sundgren P, Dong Q, Gomez-Hassan D, Mukherji S, Maly P, Welsh R. Diffusion tensor imaging of the brain: review of clinical applications. Neuroradiology. 2004;46(5):339–350. doi:10.1007/s00234-003-1114-x

- 28. Deutsch GK, Dougherty RF, Bammer R, Siok WT, Gabrieli JD, Wandell B. Children’s reading performance is correlated with white matter structure measured by diffusion tensor imaging. Cortex. 2005;41(3):354–363. doi:10.1016/S0010-9452(08)70272-7

- 29. Gentleman RC, Carey VJ, Bates DM, Bolstad B, Dettling M, Dudoit S, et al. Bioconductor: open software development for computational biology and bioinformatics. Genome Biology. 2004;5(10):R80. doi:10.1186/gb-2004-5-10-r80