Abstract

The field of epidemiology has been defined as the study of the spread and control of disease. However, epidemiology frequently focuses on studies of etiology and distribution of disease at the cost of understanding the best ways to control disease. Moreover, only a small fraction of scientific discoveries are translated into public health practice, and the process from discovery to translation is exceedingly slow. Given the importance of translational science, the future of epidemiologic training should include competency in implementation science, whose goal is to rapidly move evidence into practice. Our purpose in this paper is to provide epidemiologists with a primer in implementation science, which includes dissemination research and implementation research as defined by the National Institutes of Health. We describe the basic principles of implementation science, highlight key components for conducting research, provide examples of implementation studies that encompass epidemiology, and offer resources and opportunities for continued learning. There is a clear need for greater speed, relevance, and application of evidence into practice, programs, and policies and an opportunity to enable epidemiologists to conduct research that not only will inform practitioners and policy-makers of risk but also will enhance the likelihood that evidence will be implemented.

Keywords: dissemination research, epidemiologic methods, implementation research, implementation science, translational science

The field of epidemiology has been broadly defined as “the study of how disease spreads and can be controlled” (1) or “the branch of medicine which deals with the incidence, distribution, and possible control of diseases and other factors relating to health” (2). Using the latter part of this definition, Galea (3) made the case for what he called a consequentialist epidemiology, suggesting that our inordinate focus on the etiology and distribution of disease comes at a major cost to the field and to public health in general. He called for a “more rigorous engagement with the second part of our vision for ourselves—the intent for us to intervene” or control disease (3, p. 1185). Heeding this call, Brownson and numerous other leaders in the field published a paper entitled “Charting a Future for Epidemiologic Training” (4). They identified macro-level trends influencing the field of epidemiology and developed a set of recommended competencies for future epidemiologic training. Among these noted trends was the emergence of translational sciences, specifically dissemination and implementation research, also known collectively as implementation science (the term we use in this article), whose goal is to move evidence into practice as effectively and efficiently as possible.

One may ask why a distinct field of research is needed in order to more effectively translate research into practice. Numerous studies have estimated that the amount of time it takes for even a fraction of original research to be implemented into practice is approximately 17 years (5–7). Moreover, life-saving measures are not always implemented despite years of evidence, as has been shown for hospital-acquired infections (8), heart disease (9, 10), diabetes (11), asthma (12), and cancer (13). This consistent failure to translate research findings into practice has resulted in an estimated 30%–40% of patients not receiving treatments of proven effectiveness and 20%–25% of patients receiving care that is not needed or potentially harmful (14–16). It is increasingly clear that the processes of dissemination and implementation are not passive but require active strategies to ensure that the evidence is effectively understood, adopted, implemented, and maintained in practice settings (5, 17). Furthermore, epidemiologists are critical in making this happen; we are among the primary generators of evidence, and that evidence is only as useful as it is effectively generated and communicated to stakeholders, including public health practitioners, policy-makers, providers, and patients. Even when evidence is relevant and effectiveness is demonstrated, implementation of an effective intervention may not occur. The questions are: 1) Will it be adopted?, 2) Will practitioners be equipped to deliver it?, and 3) Of those who are equipped, will they choose to deliver it or receive institutional support to do so—and if so, what portion of the population will actually benefit?

Brownson et al. (4) recommended increasing the competency of epidemiologists in implementation science in order to contribute effectively to the integration of evidence into practice. Our purpose in this paper is to provide epidemiologists with a primer in implementation science. We describe the basic principles of implementation science, highlight key components for conducting research, articulate roles for epidemiologists in this field, provide brief examples of implementation studies that encompass epidemiologic principles, and offer additional resources and opportunities for continued learning.

WHAT IS IMPLEMENTATION SCIENCE?

“Implementation science” is one of several terms that have been used to describe the science of putting knowledge or evidence into action and of understanding what, why, and how evidence or evidence-based practices work in the real world (18, 19). “Implementation science” is the predominant term used in the United States and Europe (18), and it has been defined in various ways by different agencies and organizations. For the purpose of this article, we are using the definition described in the journal Implementation Science—the study of methods to promote the integration of research findings and evidence into health-care policy and practice (20). This includes the study of how best to spread or sustain evidence, as well as the testing of strategies that can best facilitate the adoption and integration of evidence-based interventions into practice. In response to the need for such research, the National Institutes of Health has issued funding announcements to build the knowledge base on how to improve upon these processes (21–23). In these funding announcements, implementation science is broken down into 2 components, dissemination research and implementation research, which are defined as follows:

Dissemination research is the scientific study of targeted distribution of information and intervention materials to a specific public health or clinical practice audience. The intent is to understand how best to spread and sustain knowledge and the associated evidence-based interventions.

Implementation research is the scientific study of the use of strategies to adopt and integrate evidence-based health interventions into clinical and community settings in order to improve patient outcomes and benefit population health.

While we recognize that there may be some overlap, the general distinction between these two subfields is that dissemination research is focused on spread across multiple settings, while implementation research is focused on specific local efforts to implement in targeted settings (e.g., schools, worksites, clinics).

Implementation science broadly focuses on the how questions: How do we get evidence to drive practice and get evidence-based interventions to become standard care so that everyone who can benefit from them has access? A critical part of understanding that process is to examine the intermediary dissemination and implementation outcomes that are requisite to achieve population health impact. These include the awareness, acceptability, reach, adoption, appropriateness, feasibility, fidelity, cost, and sustainability of efforts to disseminate and/or implement evidence in practice settings (24, 25). Otherwise, it can be difficult to discern whether, for example, an intervention does not work because it is not effective in a particular population or setting or because it was not disseminated and/or implemented properly in a given context. And if implementation works in a given case, what can we learn from those successful strategies to enhance implementation of other public health measures? For the purpose of this paper, we will use the term “implementation science” to refer to the overall field and the term “implementation studies” for investigations within this broad area of research.

KEY COMPONENTS OF IMPLEMENTATION SCIENCE

Implementation science seeks to address gaps in the provision of evidence-based practices and is rooted in theories and methods from a variety of fields; it hinges on transdisciplinary collaboration, with an emphasis on engaging stakeholders; it focuses on the strategies used to disseminate and implement evidence and evidence-based interventions, and understanding how and why they work; it uses rigorous and relevant methodologies; and it emphasizes the importance of external validity and scalability. Table 1 summarizes key components of an implementation science study. These components were adapted from previously published work that assessed the most critical aspects of successful grant proposals in implementation science at the National Institutes of Health (26) and the Canadian Institutes of Health Research (27), taking into account key elements defined by the Department of Veterans Affairs (28). To provide context for each of these components, we walk through the specific example of implementing the hepatitis B vaccine in order to prevent liver cancer.

Table 1.

Key Components of an Implementation Study<sup>a</sup>

| Study Component | Why It Matters | Selected Resources |
|---|---|---|
| Research objective | Research question addresses a gap in the provision of an evidence-based intervention, practice, or policy | |
| Evidence-based practice | Sufficient evidence of effectiveness and an appropriate fit for a given context | |
| Theoretical justification | Conceptual model and theoretical justification supports the choice of intervention and informs the design, the variables to be measured, and the analytical plan | |
| Stakeholder engagement | Clear engagement process and demonstrated support from relevant stakeholders to ensure the feasibility that implementation can be studied | Review of participatory research (72) |
| Implementation strategy | Implementation strategy or strategies for implementing the evidence-based practice are justified and well-described | |
| Team expertise | Appropriate multidisciplinary expertise on the study team is demonstrated, including qualitative and/or quantitative expertise, to ensure rigorous data collection and analysis | Networking communities |
| Study design | Study design is justified and feasible given the study context (e.g., feasibility of randomization) | |
| Measurement | Implementation outcome measures should be included, conceptually justified, well-defined, and informed by existing measurement instruments, and should cover concepts of both internal and external validity | Society for Implementation Research Collaboration: https://societyforimplementationresearchcollaboration.org/; Grid-Enabled Measures Database (NCI); Grid-Enabled Measures Database methods paper (75) |

Abbreviations: EPOC, Effective Practice and Organisation of Care; HIV, human immunodeficiency virus; NCI, National Cancer Institute; RE-AIM, Reach, Effectiveness, Adoption, Implementation, and Maintenance; SAMHSA, Substance Abuse and Mental Health Services Administration.

a The study components selected for this table were based on those described by Proctor et al. (26) as key components for an implementation science grant.

Research objective

The first component of any strong research study is a clear research objective or set of specific aims; what distinguishes an implementation study is that the objective and aims address a gap in the provision of care or quality. Rather than focus on an increase in disease-specific incidence or mortality, an implementation study might focus on a clear gap in the provision of evidence-based practices to address disease. For example, where an epidemiologic study may focus on understanding the etiology of the increase in incidence of liver cancer in the United States, an implementation study may focus on understanding the barriers to and facilitators of implementing widespread hepatitis B vaccination or hepatitis C treatment and seek to develop and test strategies to address those barriers to implementation. Epidemiologic investigation identifying not only the incidence and mortality trends but also the most vulnerable populations and settings where gaps in hepatitis vaccination or treatment may exist would be critical to shaping the research objective for an implementation study.

Evidence-based practice

The second component has to do with the practice or intervention to be implemented and its evidence base regarding efficacy or effectiveness. Beyond the strength of evidence, additional critical questions to consider when selecting an evidence-based intervention to address a care or quality gap include: 1) How well does the intervention fit with the study population?, 2) What are the available resources to implement the intervention in the study settings?, and 3) How feasible is the intervention’s use in the given context? While the nature of the evidence is important, implementation science further prioritizes the importance of the context for that evidence, including the population, setting, and political and policy environment. In our driving example of hepatitis B vaccination and liver cancer prevention, we might ask which population should be targeted (e.g., patient populations, clinics, public health departments, ministries of health), what resources are available to implement a vaccination program, and how feasible a vaccination program is in a given context (e.g., developing country, schools, clinics, pharmacies).

A useful tool to help guide researchers in thinking through relevant issues to consider when developing or selecting an intervention is the Pragmatic Explanatory Continuum Indicator Summary, called PRECIS-2 (https://www.precis-2.org/). PRECIS-2 was initially created to help trialists design trials that are fit for their purpose, whether that is to understand efficacy or the effectiveness of an intervention (29). The tool identifies 9 issues or domains for which design decisions can influence the degree to which a trial is explanatory (i.e., addresses efficacy) versus pragmatic (i.e., addresses effectiveness). The domains address features of the target of the intervention (e.g., participant eligibility, patient-centeredness of the primary outcome) as well as of the delivery of the intervention (e.g., setting, organization, delivery and adherence methods, follow-up protocol). Understanding the generalizability of the evidence for a given intervention, as well as the context in which it was proven effective, can help to inform decisions about selecting the appropriate practice or intervention to implement.

Theoretical justification

Just as epidemiologists may rely on causal directed acyclic graphs to determine which variables should be included in a regression model as possible confounders and which should be considered as potential mediators or effect modifiers (30, 31), researchers conducting implementation studies should be informed by theories, conceptual frameworks, and models, which serve to explain phenomena, organize conceptually distinct ideas, and help visualize relationships that cannot be observed directly. Although theories, conceptual frameworks, and models are distinct concepts with unique definitions and characteristics, they all serve a similar purpose, and thus we will henceforth refer to them collectively as “models” (32). In implementation science, models not only serve to inform which variables are relevant to measure and analyze but also can serve to inform the development or selection of an evidence-based practice or intervention, as well as the development or selection of a strategy for implementing that intervention. Theoretical models in implementation science have been grouped into 2 categories: Explanatory models describe whether and how implementation activities will affect a desired outcome, and process models inform which implementation strategies should be tested (33). We recognize that the selection of strategies will depend on the current stage of implementation for a given context. For example, if an intervention or practice has yet to be adopted, strategies may focus on influencing decision-makers by educating them about the value of the intervention. Alternatively, if an intervention has been adopted and implemented but the challenge is how best to sustain it, the strategies would focus on sustainment.

A review of models in implementation science identified 61 different models that were characterized by construct flexibility (how loosely or rigidly the concepts in the model are defined), socioecological level (e.g., individual, organization, community, system), and the degree to which they addressed dissemination versus implementation processes (32). While each model had distinguishing features, common elements among them included an emphasis on the importance of change and characterization of the nature of change, the significance of context at both the local and external levels, and the recognition that most change requires active and deliberate facilitation (e.g., local champions, tools, training). Furthermore, barriers to dissemination and implementation exist across settings and sites and could include factors relating to leadership, resources, technology, and inertia.

Some of the most widely used models in implementation science include Rogers’ Theory of Diffusion of Innovations (34), the RE-AIM (Reach, Effectiveness, Adoption, Implementation, and Maintenance) Framework (35), and the Consolidated Framework for Implementation Research (36). Table 1 lists useful resources that can help investigators select an appropriate model for their research. Some important factors to consider when selecting a model include the research question (whether it is addressing dissemination and/or implementation), the socioecological level of change (e.g., provider, clinic, organization, system), relevant characteristics of context, time frame, and availability of measures (37). Looking at the example of hepatitis B vaccination, important influences might exist at the national or state level (e.g., ministries of health, state health departments), the organizational level (e.g., integrated delivery systems), the clinic level, the provider level, and the patient level. For example, the culture of a health-care organization may not be open to adding an additional program, or a particular ministry of health may not prioritize prevention practices. Frameworks can help to identify these various key influences.

Stakeholder engagement

Many areas of science do not require engagement from stakeholders (e.g., patients, physicians, clinics, community members), particularly in basic and exploratory research, but when studying implementation, engagement is a necessity. In order to maximize the likelihood that stakeholders will implement an intervention, it is necessary to understand their needs and challenges to ensure that a given intervention and the approach to implementing it meet their contextual conditions, that they have the necessary resources to implement the intervention, and that they are able to sustain it (38). Review committees for implementation science proposals strongly weigh demonstrated support from relevant stakeholders for given projects. Reviewers assess the level of stakeholder engagement both by letters of support and by a clear record of collaboration between the researchers and stakeholders involved in implementing the evidence-based practice. As we think about key stakeholders in the example of hepatitis B vaccination and liver cancer prevention, we might focus on ministries of health, state health departments, schools, clinics, or pharmacies. For example, if the ministry of health in a given country of interest does not prioritize prevention, the ministry might not be the right locus for implementation; other stakeholders to consider in that situation might be local schools or pharmacies. The key is connecting to the appropriate stakeholders within a given setting who may be the lynchpins to widespread implementation. The more we work with these key stakeholders, the better we can identify the needs of and barriers to implementation, and thus develop feasible and sustainable solutions.

Implementation strategy

Implementation studies often focus on identifying, developing, testing, and/or evaluating strategies that enhance uptake of an intervention, program, or practice. Implementation strategies are the techniques used to ensure or enhance the adoption, implementation, and sustainability of an evidence-based practice. In a review of implementation strategies, Powell et al. (39) found 68 distinct strategies that could be grouped into 6 categories defined by key processes: planning, educating, financing, restructuring, managing quality, and attending to the policy context. Examples of implementation strategies for each of these categories include strategies with which to build stakeholder buy-in (planning), train practitioners to deliver an intervention (educating), modify incentives (financing), revise professional roles (restructuring), conduct audit and feedback (managing quality), and create credentialing or licensure standards (attending to the policy context). Implementation science focuses on understanding whether and/or how these strategies work to foster implementation and on understanding the mechanisms behind these strategies. In the example of hepatitis B vaccination, we might consider strategies related to educating patients about the value of the vaccine in cancer prevention, or strategies to help finance resource-strapped community health centers to facilitate both the implementation and sustainment of vaccination programs.

Team expertise

As with any research endeavor, having appropriate expertise on an implementation science team is critical and depends on the research questions at hand. Unlike in epidemiology, in implementation science it is common to see mixed-methods studies that require the expertise of both quantitative and qualitative researchers. The qualitative component of a study can help to inform the findings from quantitative analysis, providing valuable data that can explain how or why X strategy to implement Y intervention did or did not work. Conversely, qualitative data can help to shape the quantitative component of a study (e.g., qualitative analysis to understand barriers to adoption can inform which strategies are developed or selected to be quantitatively tested).

In addition, key implementation outcomes may require specific expertise to be represented on the team. For example, costs and the cost-effectiveness of implementation strategies are commonly measured outcomes that would require the inclusion of an economist or cost-effectiveness analyst for effective evaluation. And unlike many other scientific endeavors, stakeholders (e.g., patients, providers, community representatives) also can be a critical part of the study team, as they can inform both the strategies used and the most feasible research designs for a given study population.

Finally, having someone with previous experience in conducting implementation science on the study team or as a consultant or mentor is often seen as a critical asset by reviewers. In the example of hepatitis B, epidemiologists seeking to close the gap in vaccination rates globally may wish to team with implementation scientists or qualitative researchers to investigate existing barriers to implementation using focus groups, or to identify strategies to facilitate the adoption of vaccination programs, or to conduct a mixed-methods analysis to understand why a tested strategy did or did not work.

Study design

A variety of rigorous study designs have been developed and used in implementation science. These include experimental (e.g., randomized controlled trial, cluster-randomized controlled trial, pragmatic trial, stepped wedge trial, dynamic wait-listed control trial), quasi-experimental (e.g., nonequivalent groups, pre-/post-, regression discontinuity, interrupted time series), nonexperimental or observational (including designs from epidemiology), mixed-methods (i.e., the collection and integration of qualitative and quantitative data), qualitative (e.g., focus groups, semistructured interviews), and system science (e.g., system dynamics, agent-based modeling) approaches (40, 41). Additionally, study designs that simultaneously test intervention effectiveness and implementation are called hybrid designs (42). While epidemiologists may be familiar with many of these designs, mixed-methods approaches and qualitative analyses will likely be unfamiliar, and thus it is critical for epidemiologists to team with qualitative researchers in conducting implementation studies, which often rely on these methods. Notably, there is a wide range of acceptable study designs, and we have seen successful grant applications for all of these, not only randomized controlled trials.
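To make one of the less familiar experimental designs concrete, the following is a minimal sketch of how a stepped wedge rollout could be laid out: every cluster begins in the control condition, clusters cross over to the implementation strategy in a randomly determined order, and all clusters are exposed by the final period. The function name, the clinic labels, and the number of steps are hypothetical and chosen only for illustration; a real trial would also need to address sample size, stratification, and analysis.

```python
import random


def stepped_wedge_schedule(clusters, n_steps, seed=0):
    """Randomly order clusters and assign each to a crossover step.

    Returns {cluster: [0/1 per period]} over n_steps + 1 periods. Period 0 is an
    all-control baseline; by the last period every cluster has crossed over.
    """
    order = list(clusters)
    random.Random(seed).shuffle(order)  # random order of crossover
    schedule = {}
    for position, cluster in enumerate(order):
        # Spread clusters as evenly as possible over crossover periods 1..n_steps.
        step = 1 + position * n_steps // len(order)
        schedule[cluster] = [int(period >= step) for period in range(n_steps + 1)]
    return schedule


# Hypothetical example: 6 clinics crossing over in 3 steps (2 clinics per step).
clinics = [f"clinic_{i}" for i in range(1, 7)]
schedule = stepped_wedge_schedule(clinics, n_steps=3)
for clinic in clinics:
    print(clinic, schedule[clinic])
```

Each row reads left to right as control (0) followed by intervention (1), so the staggered start that defines the design is visible directly in the printed schedule.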

Selecting the appropriate study design for an implementation science study depends on the study question and the available evidence, as well as the study circumstances, such as whether randomization is possible. For example, if the study question addresses why or how dissemination or implementation occurs, a design that includes qualitative assessment might be required. If study participants will not accept randomization, then a quasi-experimental design might be indicated. A variety of resources exist to help researchers learn more about study designs for implementation science, including the Implementation Science Exchange hosted by the North Carolina Translational and Clinical Sciences Institute at the University of North Carolina at Chapel Hill (https://impsci.tracs.unc.edu) and webinars hosted by the Implementation Science Program in the Division of Cancer Control and Population Sciences at the National Cancer Institute, as referenced in Table 1. In the example of hepatitis B vaccination, the unit of analysis for implementation may be at the clinic level, and not all clinics may want to be randomized. Thus, a quasi-experimental design may be most appropriate.

Measurement

Implementation science requires specific measurement of constructs related to the key implementation outcomes described above (e.g., awareness, acceptability, reach, adoption, appropriateness, feasibility, fidelity, cost, and sustainability), which are informed by implementation science theories, models, or frameworks. There are online databases of existing measures and measurement tools for implementation science, including the National Cancer Institute’s Grid-Enabled Measures Database Dissemination and Implementation Initiative and the Society for Implementation Research Collaboration’s Instrument Review Project and Instrument Repository, both listed in Table 1. Additionally, several systematic reviews of implementation science measurement instruments have been published (43–48). In an implementation study of hepatitis B vaccination in a low-resource setting, for example, the question is not only whether vaccination is effective at reducing the risk of liver cancer but also whether the vaccination program was adopted (e.g., measuring the rate of uptake), implemented with fidelity (e.g., measuring the dose and completion rate), or sustained (e.g., measuring whether clinics continued to vaccinate 12 months after the program was initiated).
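As a rough illustration of how the outcomes in the hepatitis B example might be operationalized, the sketch below computes adoption, reach, series completion, and 12-month sustainment from clinic-level summaries. The record structure, field names, and numbers are all invented for this example, and the definitions are one plausible choice among several; in practice, measures should be drawn from the validated instruments described above.

```python
from dataclasses import dataclass


@dataclass
class ClinicRecord:
    """Hypothetical per-clinic summary; all field names are illustrative."""
    clinic_id: str
    adopted: bool                   # clinic began offering the vaccine
    eligible_patients: int          # patients eligible for vaccination
    started_series: int             # patients who received at least one dose
    completed_series: int           # patients who received every scheduled dose
    vaccinating_at_12_months: bool  # still vaccinating 12 months after launch


def implementation_outcomes(records):
    """Return adoption, reach, completion, and sustainment as proportions."""
    adopters = [r for r in records if r.adopted]
    eligible = sum(r.eligible_patients for r in adopters)
    started = sum(r.started_series for r in adopters)
    completed = sum(r.completed_series for r in adopters)
    sustained = sum(r.vaccinating_at_12_months for r in adopters)
    return {
        # Share of invited clinics that took up the program.
        "adoption": len(adopters) / len(records) if records else None,
        # Share of eligible patients in adopting clinics who started the series.
        "reach": started / eligible if eligible else None,
        # Share of starters who completed the series (one fidelity indicator).
        "completion": completed / started if started else None,
        # Share of adopting clinics still vaccinating at 12 months.
        "sustainment": sustained / len(adopters) if adopters else None,
    }


# Invented data for four clinics.
records = [
    ClinicRecord("A", True, 200, 120, 90, True),
    ClinicRecord("B", True, 100, 40, 30, False),
    ClinicRecord("C", False, 150, 0, 0, False),
    ClinicRecord("D", True, 100, 40, 30, True),
]
outcomes = implementation_outcomes(records)
print(outcomes)
```

Keeping the denominators explicit (all invited clinics for adoption, adopting clinics for sustainment) matters, because the same counts can yield very different proportions depending on which population is treated as eligible.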

WHAT ROLE CAN EPIDEMIOLOGISTS PLAY?

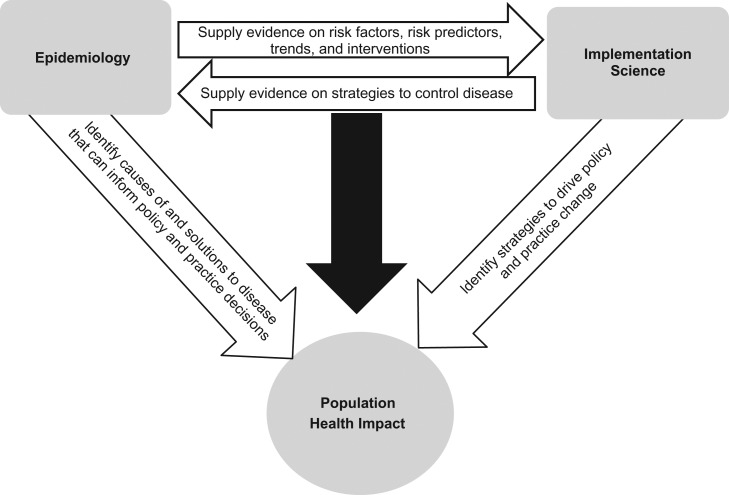

The interface between epidemiology and implementation science should be bidirectional, as illustrated in Figure 1. As a collaborative and interdisciplinary enterprise, implementation science relies on the work of epidemiologists to inform the evidence base, identify the gaps in health status, contribute to methods for design and measurement, and inform program and policy evaluation. On the other hand, the work of epidemiologists can be magnified through direct engagement in implementation science, enhancing the real-world relevance of epidemiologic research and increasing the likelihood that scientific findings will be useful or consequential (3).

Figure 1.

Interrelationship between epidemiology and implementation science for maximization of population health impact.

There are numerous ways in which epidemiologists contribute to and lead implementation studies, often as part of an interdisciplinary team (49). We provide several examples to illustrate the nexus between epidemiologic methods and implementation science.

Defining evidence

Evidence relevant to implementation science can come in multiple forms, including data on the size of a public health problem, the causes of the problem, and effective interventions. In particular, the notion of an evidence-based intervention is central to implementation science because the focus is often on the scale-up and spread of evidence-based programs and policies (50). As in many types of epidemiologic studies, both internal validity and external validity play key roles in an implementation study. Internal validity is threatened by multiple types of systematic error, and error rates are influenced by both study design and study execution. Too little attention has been paid to external validity (i.e., the degree to which findings from a study or set of studies can be generalized to and are relevant for populations, settings, and times other than those in which the original studies were conducted) (51). The epidemiologic skills (addressing systematic error) to describe the quality of intervention evidence related to internal validity and the potential for generalizability (external validity) are crucial for implementation research (52–55).

Contributing to systems approaches

Complex systems thinking is needed to address our most vexing public health issues (56) and is a central theme in implementation science (57). Systems thinking in implementation science may be operationalized in several ways. At a conceptual level, systems approaches take into account context involving multiple interacting agents and study processes that are nonlinear and iterative. Numerous systems methods (e.g., agent-based modeling, system dynamics modeling), often developed in other disciplines, are becoming more commonly used in implementation science to predict the future impact, or impact at full scale, of new interventions. Epidemiologists are often well-equipped for dealing with multiple interacting factors and are therefore valuable team members in systems studies.

Viewing causality in a new light

It has been argued that the classical framework for causal thinking articulated by Hill has been critical to understanding causation of chronic diseases but does not fully represent causation for complex public health issues (58). The classic Hill criteria (59) and those outlined in the 1964 US Surgeon General’s Report (60) have proven highly useful in etiological research but may be less useful for studies of the effectiveness of interventions or the scale-up of effective programs and policies in implementation studies, where factors occur across multiple levels (from biological to macrosocial) and often influence one another (61). Numerous epidemiologic skills contribute to an expanded view of causation (62–67), including those involving multilevel modeling, developing unbiased estimators of both cluster and individual-level effects over multiple time points, and developing causal diagrams to help understand complex relationships (30, 68).

Determining appropriate study designs

Designs for implementation science have greater variation than those used for more traditional epidemiologic research (often studying etiology, efficacy, or effectiveness) (69). The policy and political context for a given study may influence a researcher’s ability to randomize an intervention in practice settings, given potential concerns about cost, feasibility, or convenience. Therefore, while some study questions may allow for use of a randomized design, often implementation studies rely on a suite of alternative quasi-experimental designs, including interrupted time series, multiple-baseline (where the start of intervention conditions is staggered across settings/time), or regression discontinuity (where the intervention status is predetermined by an established cutoff or threshold) designs (40), which can allow for the estimation of causal effects. When randomization is possible in an implementation study, it is often at the group level rather than at the individual level (as in a clinical trial). Epidemiologists bring competencies that benefit implementation science in formulating research questions, determining the range of designs available, and assessing the trade-offs in various designs.
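To make one of these quasi-experimental designs concrete, the following minimal Python sketch estimates the change in level and the change in slope in an interrupted time series. It fits separate ordinary least squares lines to the pre-intervention and post-intervention observations, which yields the same point estimates as a segmented regression with level-change and slope-change terms. The function names, the data, and the interruption time are hypothetical; a real analysis would also need to address autocorrelation, seasonality, and the uncertainty of the estimates.

```python
from statistics import mean


def fit_line(times, values):
    """Ordinary least squares fit of value = intercept + slope * time."""
    t_bar, y_bar = mean(times), mean(values)
    sxx = sum((t - t_bar) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("need at least two distinct time points per segment")
    sxy = sum((t - t_bar) * (y - y_bar) for t, y in zip(times, values))
    slope = sxy / sxx
    return y_bar - slope * t_bar, slope


def interrupted_time_series(times, values, t0):
    """Return (level_change, slope_change) at interruption time t0.

    Observations with time < t0 are treated as pre-intervention and
    observations with time >= t0 as post-intervention.
    """
    pre = [(t, y) for t, y in zip(times, values) if t < t0]
    post = [(t, y) for t, y in zip(times, values) if t >= t0]
    if len(pre) < 2 or len(post) < 2:
        raise ValueError("need at least two observations in each segment")
    pre_intercept, pre_slope = fit_line(*zip(*pre))
    post_intercept, post_slope = fit_line(*zip(*post))
    # Level change: gap between the two fitted lines at the interruption.
    level_change = (post_intercept + post_slope * t0) - (pre_intercept + pre_slope * t0)
    slope_change = post_slope - pre_slope
    return level_change, slope_change


# Hypothetical monthly counts of an evidence-based practice being delivered,
# with an implementation strategy introduced at month 6.
months = list(range(12))
counts = [20, 21, 23, 22, 24, 25, 33, 36, 38, 41, 43, 46]
level, slope = interrupted_time_series(months, counts, t0=6)
print(f"Estimated level change: {level:.2f}; slope change: {slope:.2f}")
```

The multiple-baseline design mentioned above can be viewed as several such series with staggered interruption times, which helps rule out a concurrent event as the explanation for an observed change.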

Measuring intermediate outcomes

As we described previously, in many cases the outcomes in an implementation science study are different from those in a more traditional epidemiologic study, where one commonly measures clinical outcomes or changes in health status. Proximal measures of implementation processes and outcomes are often assessed (e.g., organizational climate or culture, the uptake of an evidence-based practice). Epidemiologists can play important roles in developing and testing new measures and in leading efforts to determine: which outcomes should be tracked and how long it will take to show progress; how to best determine criterion validity (how a measure compares with some “gold standard”); how to best measure effect modifiers across a range of settings (e.g., schools, worksites); and how common, practical measures can be developed and shared so researchers are not constantly reinventing measures.
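As a small illustration of assessing criterion validity, the Python sketch below compares a hypothetical brief, practical measure (for example, a self-reported indicator of whether a clinic has adopted a practice) against a hypothetical gold standard (for example, a chart audit) and reports sensitivity and specificity. The function name and the data are invented for illustration, and the sketch assumes both measures are binary; other summaries, such as agreement statistics or correlations for continuous measures, may be more appropriate in a given study.

```python
def criterion_validity(measure, gold_standard):
    """Sensitivity and specificity of a binary measure against a gold standard.

    Both inputs are equal-length sequences of truthy/falsy values, one entry
    per unit (e.g., per clinic); the gold standard is treated as the truth.
    """
    if len(measure) != len(gold_standard):
        raise ValueError("inputs must have the same length")
    pairs = list(zip(measure, gold_standard))
    true_pos = sum(1 for m, g in pairs if m and g)
    false_neg = sum(1 for m, g in pairs if not m and g)
    true_neg = sum(1 for m, g in pairs if not m and not g)
    false_pos = sum(1 for m, g in pairs if m and not g)
    if true_pos + false_neg == 0 or true_neg + false_pos == 0:
        raise ValueError("gold standard must include both positives and negatives")
    return {
        "sensitivity": true_pos / (true_pos + false_neg),
        "specificity": true_neg / (true_neg + false_pos),
    }


# Hypothetical data for 8 clinics: 1 = practice adopted, 0 = not adopted.
self_report = [1, 1, 0, 0, 1, 0, 1, 0]
chart_audit = [1, 1, 1, 0, 0, 0, 1, 0]
print(criterion_validity(self_report, chart_audit))
```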

We have enumerated 5 ways in which epidemiologists can and do contribute to implementation science. Ideally, all or most of these can contribute to a given study. In Table 2, based on a review of grant applications, we provide a selection of National Institutes of Health–funded implementation science studies to illustrate how epidemiology played a role for each.

Table 2.

Selected National Institutes of Health–Funded Grant Proposals in Implementation Science With Epidemiologic Contributions

| Project Title, Year (Funding Mechanism) | Principal Investigator (Department(s)/Center; Institution) | Study Setting/Design | Study Aims/Objectives | Example(s) of Epidemiologic Contributions |
|---|---|---|---|---|
| “Test and Treat TB: a Proof-of-Concept Trial in South Africa,” 2014 (R21<sup>a</sup> grant) | Ingrid Valerie Bassett, MD, MPH (Infectious Diseases, Medicine; Massachusetts General Hospital, Boston, Massachusetts) | | | Defining the evidence: identifying the scope of the problem to inform the study and intervention design |
| “Enhancing Evidence-Based Diabetes Control Among Local Health Departments,” 2017 (R01<sup>b</sup> grant) | Ross Brownson, PhD<sup>c</sup> (Epidemiology, Prevention Research Center; Washington University in St. Louis, St. Louis, Missouri) | | | |
| “SIngle-saMPLE Tuberculosis Evaluation to Facilitate Linkage to Care: The SIMPLE TB Trial,” 2016 (R01 grant) | Adithya Cattamanchi, MD, MAS (Medicine; University of California, San Francisco, San Francisco, California) | | | Viewing causality in a new light: intervening at a health systems level to impact the epidemiology of TB in low-resource settings |
| “Online Social Networks for Dissemination of Smoking Cessation Interventions,” 2011 (R01 grant) | Nathan Cobb, MD (Institute for Tobacco Research; Truth Initiative Foundation, Washington, DC) | Facebook (Facebook, Inc., Menlo Park, California); randomized trial (factorial design) | | |
| “A User-Friendly Epidemic-Economic Model of Diagnostic Tests for Tuberculosis,” 2012 (R21 grant) | David Dowdy, MD, PhD<sup>c</sup> (Epidemiology; Johns Hopkins University, Baltimore, Maryland) | Dynamic modeling | | |
| “Addressing Hepatitis C and Hepatocellular Carcinoma: Current and Future Epidemics,” 2013 (R01 grant) | Holly Hagan, PhD<sup>c</sup>, MPH (College of Nursing; New York University, New York, New York) | Agent-based modeling | | |
| “Translating Molecular Diagnostics for Cervical Cancer Prevention into Practice,” 2016 (R01 grant) | Patti Gravitt, PhD<sup>c</sup> (Global Health, Epidemiology; George Washington University, Washington, DC) | | | |
| “A Retail Policy Laboratory: Modeling Impact of Retailer Reduction on Tobacco Use,” 2013 (R21 grant) | Douglas Luke, PhD (Center for Public Health Systems Science; Washington University in St. Louis, St. Louis, Missouri) | Agent-based modeling | | Contributing to a systems approach: parameters of Tobacco Town informed by epidemiologic evidence of tobacco use and purchasing |
| “Dissemination and Implementation of a Corrective Intervention to Improve Mediastinal Lymph Node Examination in Resected Lung Cancer,” 2013 (R01 grant) | Raymond Osarogiagbon, MD (School of Public Health; University of Memphis, Memphis, Tennessee) | | | Defining evidence: data from the SEER Program on pathological lymph node staging informed the scope and magnitude of the problem |
| “Sustainable Financial Incentives to Improve Prescription Practices for Malaria,” 2012 (R21 grant) | Wendy Prudhomme-O’Meara, PhD (Medicine, Infectious Diseases; Duke University, Durham, North Carolina) | | Objective: test whether financial incentives offered at the facility level improve targeting of antimalarial medications to patients with parasitologically diagnosed malaria | Viewing causality in a new light |
| “Adapting Patient Navigation to Promote Cancer Screening in Chicago’s Chinatown,” 2012 (R01 grant) | Melissa Simon, MD (Obstetrics and Gynecology; Northwestern University at Chicago, Chicago, Illinois) | | | Viewing causality in a new light: identifying multilevel barriers and facilitators to screening, including social, economic, cultural, and psychosocial barriers and community facilitators |

Abbreviations: HCV, hepatitis C virus; HIV, human immunodeficiency virus; MAS, master of advanced studies; MD, medical doctor; MPH, master of public health; PhD, doctor of philosophy; RE-AIM, Reach, Effectiveness, Adoption, Implementation, and Maintenance; SEER, Surveillance, Epidemiology, and End Results; TB, tuberculosis.

a The R21 grant mechanism at the National Institutes of Health is for funding smaller exploratory or developmental research.

b The R01 grant mechanism at the National Institutes of Health is for funding larger research projects.

c Degree in epidemiology.

CONCLUSION

While it has been widely asserted that epidemiologists’ core function is to observe and analyze the distribution and control of diseases, we may disproportionately focus on the etiological questions at the cost of addressing the solutions. Given the emerging trend in translational sciences and the call by leaders in the field to take a more consequentialist approach (3, 4), we have provided a primer in implementation science as a means of catalyzing the attention of epidemiologists towards increasing the likelihood that research findings will be useful or consequential. We have enumerated several ways in which epidemiologists can engage in and drive implementation science, highlighting the critical role that epidemiology plays in informing the evidence base as well as evaluating the ultimate impact of health interventions.

In Figure 1, we summarize the mutually beneficial relationship between the fields of epidemiology and implementation science and show how together these fields can ultimately affect population health. Epidemiology is critical to identifying patterns of disease distribution that can pinpoint public health problems, as well as to understanding and measuring the associated causes of those problems and the potential solutions or interventions with which to address them. These findings can lead to actionable information that may inform policy and practice decisions. However, effective interventions are useful only to the extent that they are adopted, implemented, and sustained in practice. Implementation science is critical to identifying strategies that can drive adoption, implementation, and sustainability, ultimately leading to sustained practice change. In this way, the two fields contribute in parallel to improving population health. But these fields also can contribute to and collaborate with one another to the same end. Epidemiology can inform or drive implementation science by supplying evidence on causes of disease and effective interventions as well as informing study methods, measurement, and designs. Implementation science can enhance epidemiology by informing the research questions epidemiologists seek to answer as well as the measures and methods we use (70, 71). Together we can have a greater impact on improving population health.

ACKNOWLEDGMENTS

Author affiliations: Implementation Science, Division of Cancer Control and Population Sciences, National Cancer Institute, Bethesda, Maryland (Gila Neta, David A. Chambers); Prevention Research Center in St. Louis, Brown School, Washington University in St. Louis, St. Louis, Missouri (Ross C. Brownson); Division of Public Health Sciences, Department of Surgery, School of Medicine, Washington University in St. Louis, St. Louis, Missouri (Ross C. Brownson); and Alvin J. Siteman Cancer Center, School of Medicine, Washington University in St. Louis, St. Louis, Missouri (Ross C. Brownson).

Conflict of interest: none declared.

Abbreviations

- PRECIS-2: Pragmatic Explanatory Continuum Indicator Summary

REFERENCES

- 1. Merriam-Webster, Inc. Definition of epidemiology. Springfield, MA: Merriam-Webster, Inc.; 2017. https://www.merriam-webster.com/dictionary/epidemiology. Accessed September 19, 2017.

- 2. Oxford Living Dictionaries. Definition of epidemiology in English. New York, NY: Oxford University Press; 2017. https://en.oxforddictionaries.com/definition/epidemiology. Accessed September 19, 2017.

- 3. Galea S. An argument for a consequentialist epidemiology. Am J Epidemiol. 2013;178(8):1185–1191.

- 4. Brownson RC, Samet JM, Chavez GF, et al. Charting a future for epidemiologic training. Ann Epidemiol. 2015;25(6):458–465.

- 5. Balas EA, Boren SA. Managing clinical knowledge for health care improvement. Yearb Med Inform. 2000;(1):65–70.

- 6. Morris ZS, Wooding S, Grant J. The answer is 17 years, what is the question: understanding time lags in translational research. J R Soc Med. 2011;104(12):510–520.

- 7. Hanney SR, Castle-Clarke S, Grant J, et al. How long does biomedical research take? Studying the time taken between biomedical and health research and its translation into products, policy, and practice. Health Res Policy Syst. 2015;13:1.

- 8. Shekelle PG, Pronovost PJ, Wachter RM, et al. Advancing the science of patient safety. Ann Intern Med. 2011;154(10):693–696.

- 9. Freeman AC, Sweeney K. Why general practitioners do not implement evidence: qualitative study. BMJ. 2001;323(7321):1100–1102.

- 10. Howitt A, Armstrong D. Implementing evidence based medicine in general practice: audit and qualitative study of antithrombotic treatment for atrial fibrillation. BMJ. 1999;318(7194):1324–1327.

- 11. Titler MG. The evidence for evidence-based practice implementation. In: Hughes RG, ed. Patient Safety and Quality: An Evidence-Based Handbook for Nurses. Rockville, MD: Agency for Healthcare Research and Quality, US Department of Health and Human Services; 2008:113–161.

- 12. Massie J, Efron D, Cerritelli B, et al. Implementation of evidence based guidelines for paediatric asthma management in a teaching hospital. Arch Dis Child. 2004;89(7):660–664.

- 13. Bryant J, Boyes A, Jones K, et al. Examining and addressing evidence-practice gaps in cancer care: a systematic review. Implement Sci. 2014;9(1):37.

- 14. McGlynn EA, Asch SM, Adams J, et al. The quality of health care delivered to adults in the United States. N Engl J Med. 2003;348:2635–2645.

- 15. Institute of Medicine (US) Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

- 16. Levine DM, Linder JA, Landon BE. The quality of outpatient care delivered to adults in the United States, 2002 to 2013. JAMA Intern Med. 2016;176(12):1778–1790.

- 17. Proctor EK. Leverage points for the implementation of evidence-based practice. Brief Treat Crisis Interv. 2004;4(3):227–242.

- 18. Straus SE, Tetroe J, Graham I. Defining knowledge translation. CMAJ. 2009;181(3-4):165–168.

- 19. Peters DH, Adam T, Alonge O, et al. Implementation research: what it is and how to do it. BMJ. 2013;347:f6753.

- 20. Eccles MP, Mittman BS. Welcome to Implementation Science [editorial]. Implement Sci. 2006;1:1.

- 21. National Institutes of Health, Department of Health and Human Services. Part 1. Overview information. Dissemination and Implementation Research in Health (R01). https://grants.nih.gov/grants/guide/pa-files/PAR-16-238.html. Published May 10, 2016. Accessed April 11, 2017.

- 22. National Institutes of Health, Department of Health and Human Services. Part 1. Overview information. Dissemination and Implementation Research in Health (R03). https://grants.nih.gov/grants/guide/pa-files/PAR-16-237.html. Published May 10, 2016. Accessed April 11, 2017.

- 23. National Institutes of Health, Department of Health and Human Services. Part 1. Overview information. Dissemination and Implementation Research in Health (R21). https://grants.nih.gov/grants/guide/pa-files/PAR-16-236.html. Published May 10, 2016. Accessed April 11, 2017.

- 24. Proctor E, Silmere H, Raghavan R, et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(2):65–76.

- 25. Proctor EK, Brownson RC. Measurement issues in dissemination and implementation research. In: Brownson R, Colditz G, Proctor E, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. New York, NY: Oxford University Press; 2012:261–280.

- 26. Proctor EK, Powell BJ, Baumann AA, et al. Writing implementation research grant proposals: ten key ingredients. Implement Sci. 2012;7:96.

- 27. Graham ID. Guide to Knowledge Translation Planning at CIHR: Integrated and End-of-Grant Approaches. Ottawa, Ontario, Canada: Canadian Institutes of Health Research; 2015. http://www.cihr-irsc.gc.ca/e/45321.html#a4. Updated March 19, 2015. Accessed March 3, 2017.

- 28. Stetler CB, Mittman BS, Francis J. Overview of the VA Quality Enhancement Research Initiative (QUERI) and QUERI theme articles: QUERI Series. Implement Sci. 2008;3:8.

- 29. Loudon K, Treweek S, Sullivan F, et al. The PRECIS-2 tool: designing trials that are fit for purpose. BMJ. 2015;350:h2147.

- 30. Greenland S, Pearl J, Robins JM. Causal diagrams for epidemiologic research. Epidemiology. 1999;10(1):37–48.

- 31. Ahrens KA, Cole SR, Westreich D, et al. A cautionary note about estimating effects of secondary exposures in cohort studies. Am J Epidemiol. 2015;181(3):198–203.

- 32. Tabak RG, Khoong EC, Chambers DA, et al. Bridging research and practice: models for dissemination and implementation research. Am J Prev Med. 2012;43(3):337–350.

- 33. Sales A, Smith J, Curran G, et al. Models, strategies, and tools. Theory in implementing evidence-based findings into health care practice. J Gen Intern Med. 2006;21(suppl 2):S43–S49.

- 34. Rogers EM. Diffusion of Innovations. 4th ed. New York, NY: The Free Press; 1995.

- 35. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE-AIM framework. Am J Public Health. 1999;89(9):1322–1327.

- 36. Damschroder LJ, Aron DC, Keith RE, et al. Fostering implementation of health services research findings into practice: a consolidated framework for advancing implementation science. Implement Sci. 2009;4:50.

- 37. Chambers DA. Guiding theory for dissemination and implementation research: a reflection on models used in research and practice. In: Beidas RS, Kendall PC, eds. Dissemination and Implementation of Evidence-Based Practices in Child and Adolescent Mental Health. New York, NY: Oxford University Press; 2014:9–21.

- 38. Chambers DA, Azrin ST. Partnership: a fundamental component of dissemination and implementation research. Psychiatr Serv. 2013;64(6):509–511.

- 39. Powell BJ, McMillen JC, Proctor EK, et al. A compilation of strategies for implementing clinical innovations in health and mental health. Med Care Res Rev. 2012;69(2):123–157.

- 40. Landsverk J, Brown CH, Chamberlain P, et al. Design and analysis in dissemination and implementation research. In: Brownson R, Colditz G, Proctor E, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. New York, NY: Oxford University Press; 2012:225–260.

- 41. Palinkas LA, Aarons GA, Horwitz S, et al. Mixed method designs in implementation research. Adm Policy Ment Health. 2011;38(1):44–53.

- 42. Curran GM, Bauer M, Mittman B, et al. Effectiveness-implementation hybrid designs: combining elements of clinical effectiveness and implementation research to enhance public health impact. Med Care. 2012;50(3):217–226.

- 43. Brennan SE, Bosch M, Buchan H, et al. Measuring team factors thought to influence the success of quality improvement in primary care: a systematic review of instruments. Implement Sci. 2013;8:20.

- 44. Chaudoir SR, Dugan AG, Barr CH. Measuring factors affecting implementation of health innovations: a systematic review of structural, organizational, provider, patient, and innovation level measures. Implement Sci. 2013;8:22.

- 45. Chor KH, Wisdom JP, Olin SC, et al. Measures for predictors of innovation adoption. Adm Policy Ment Health. 2015;42(5):545–573.

- 46. Emmons KM, Weiner B, Fernandez ME, et al. Systems antecedents for dissemination and implementation: a review and analysis of measures. Health Educ Behav. 2012;39(1):87–105.

- 47. Gagnon MP, Attieh R, Ghandour EK, et al. A systematic review of instruments to assess organizational readiness for knowledge translation in health care. PLoS One. 2014;9(12):e114338.

- 48. Lewis CC, Fischer S, Weiner BJ, et al. Outcomes for implementation science: an enhanced systematic review of instruments using evidence-based rating criteria. Implement Sci. 2015;10:155.

- 49. Hall KL, Vogel AL, Stipelman B, et al. A four-phase model of transdisciplinary team-based research: goals, team processes, and strategies. Transl Behav Med. 2012;2(4):415–430.

- 50. Rabin BA, Brownson R. Developing the terminology for dissemination and implementation research. In: Brownson R, Colditz G, Proctor E, eds. Dissemination and Implementation Research in Health: Translating Science to Practice. New York, NY: Oxford University Press; 2012:23–51.

- 51. Green LW, Ottoson JM, García C, et al. Diffusion theory and knowledge dissemination, utilization, and integration in public health. Annu Rev Public Health. 2009;30:151–174.

- 52. Slack MK, Draugalis JR. Establishing the internal and external validity of experimental studies. Am J Health Syst Pharm. 2001;58(22):2173–2181.

- 53. Cole SR, Stuart EA. Generalizing evidence from randomized clinical trials to target populations: the ACTG 320 trial. Am J Epidemiol. 2010;172(1):107–115.

- 54. Persaud N, Mamdani MM. External validity: the neglected dimension in evidence ranking. J Eval Clin Pract. 2006;12(4):450–453.

- 55. Gordis L. More on causal inferences: bias, confounding, and interaction. In: Epidemiology. 5th ed. Philadelphia, PA: Saunders Elsevier; 2013:262–278.

- 56. Diez Roux AV. Complex systems thinking and current impasses in health disparities research. Am J Public Health. 2011;101(9):1627–1634.

- 57. Glasgow RE, Chambers D. Developing robust, sustainable, implementation systems using rigorous, rapid and relevant science. Clin Transl Sci. 2012;5(1):48–55.

- 58. Glass TA, Goodman SN, Hernán MA, et al. Causal inference in public health. Annu Rev Public Health. 2013;34:61–75.

- 59. Hill AB. The environment and disease: association or causation? Proc R Soc Med. 1965;58:295–300.

- 60. Surgeon General’s Advisory Committee on Smoking and Health. Smoking and Health: Report of the Advisory Committee to the Surgeon General of the Public Health Service. (PHS publication no. 1103). Washington, DC: Public Health Service, US Department of Health, Education, and Welfare; 1964.

- 61. Galea S, Riddle M, Kaplan GA. Causal thinking and complex system approaches in epidemiology. Int J Epidemiol. 2010;39(1):97–106.

- 62. Hernán MA. A definition of causal effect for epidemiological research. J Epidemiol Community Health. 2004;58(4):265–271.

- 63. Hernán MA, Robins JM. Instruments for causal inference: an epidemiologist’s dream? Epidemiology. 2006;17(4):360–372.

- 64. Pearl J. An introduction to causal inference. Int J Biostat. 2010;6(2):Article 7.

- 65. Parascandola M, Weed DL. Causation in epidemiology. J Epidemiol Community Health. 2001;55(12):905–912.

- 66. Rothman KJ, Greenland S. Causation and causal inference in epidemiology. Am J Public Health. 2005;95(suppl 1):S144–S150.

- 67. Greenland S. For and against methodologies: some perspectives on recent causal and statistical inference debates. Eur J Epidemiol. 2017;32(1):3–20.

- 68. Joffe M, Gambhir M, Chadeau-Hyam M, et al. Causal diagrams in systems epidemiology. Emerg Themes Epidemiol. 2012;9(1):1.

- 69. Brown CH, Curran G, Palinkas LA, et al. An overview of research and evaluation designs for dissemination and implementation. Annu Rev Public Health. 2017;38:1–22.

- 70. Westreich D. From exposures to population interventions: pregnancy and response to HIV therapy. Am J Epidemiol. 2014;179(7):797–806.

- 71. Westreich D, Edwards JK, Rogawski ET, et al. Causal impact: epidemiological approaches for a public health of consequence. Am J Public Health. 2016;106(6):1011–1012.

- 72. Cargo M, Mercer SL. The value and challenges of participatory research: strengthening its practice. Annu Rev Public Health. 2008;29:325–350.

- 73. Proctor EK, Powell BJ, McMillen JC. Implementation strategies: recommendations for specifying and reporting. Implement Sci. 2013;8:139.

- 74. Powell BJ, Waltz TJ, Chinman MJ, et al. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implement Sci. 2015;10:21.

- 75. Rabin BA, Purcell P, Naveed S, et al. Advancing the application, quality and harmonization of implementation science measures. Implement Sci. 2012;7:119.