Abstract

Purpose

Design of a preconditioner for fast and efficient parallel imaging (PI) and compressed sensing (CS) reconstructions for Cartesian trajectories.

Theory

PI and CS reconstructions become time consuming when the problem size or the number of coils is large, due to the large linear system of equations that has to be solved in and ‐norm based reconstruction algorithms. Such linear systems can be solved efficiently using effective preconditioning techniques.

Methods

In this article we construct such a preconditioner by approximating the system matrix of the linear system, which comprises the data fidelity and includes total variation and wavelet regularization, by a matrix that is block circulant with circulant blocks. Due to this structure, the preconditioner can be constructed quickly and its inverse can be evaluated fast using only two fast Fourier transformations. We test the performance of the preconditioner for the conjugate gradient method as the linear solver, integrated into the well‐established Split Bregman algorithm.

Results

The designed circulant preconditioner reduces the number of iterations required in the conjugate gradient method by almost a factor of 5. The speed up results in a total acceleration factor of approximately 2.5 for the entire reconstruction algorithm when implemented in MATLAB, while the initialization time of the preconditioner is negligible.

Conclusion

The proposed preconditioner reduces the reconstruction time for PI and CS in a Split Bregman implementation without compromising reconstruction stability and can easily handle large systems since it is Fourier‐based, allowing for efficient computations.

Keywords: compressed sensing, parallel imaging, preconditioning, split bregman

1. INTRODUCTION

The undersampling factor in parallel imaging (PI) is in theory limited by the number of coil channels.1, 2, 3, 4 Higher factors can be achieved by using compressed sensing (CS) which estimates missing information by adding a priori information.5, 6 The a priori knowledge relies on the sparsity of the image in a certain transform domain. It is possible to combine PI and CS, for example, Refs. 7 and 8 achieving almost an order of magnitude speed‐up factors in cardiac perfusion MRI and enabling free‐breathing MRI of the liver.9

CS allows reconstruction of an estimate of the true image even in the case of considerable undersampling factors, for which the data model generally describes an ill‐posed problem without a unique solution. This implies that the true image cannot be found by directly applying Fourier transforms. Instead, regularization is used to solve the ill‐posed problem by putting additional constraints on the solution. For CS, such a constraint enforces sparsity of the image in a certain domain, which is promoted by the ‐norm.6, 10, 11 However, practically the ‐norm is used instead as it is the closest representation that is numerically feasible to implement. The wavelet transform and derivative operators, integrated in total variation regularization, are examples of sparsifying transforms that can be used in the spatial direction8, 12, 13, 14, 15, 16 and temporal dimension,9 respectively.

Although CS has led to a considerable reduction in acquisition times either in PI applications or in single coil acquisitions, the benefit of the ‐norm regularization constraint comes with the additional burden of increased reconstruction times, because ‐norm minimization problems are in general difficult to solve. Many methods have been proposed that solve the problem iteratively.12, 14, 17, 18, 19, 20, 22, 23, 50 In this work, we focus on the Split Bregman (SB) approach because of its computational performance, and its well‐established track record.14, 24, 25, 26, 27, 28 SB transforms the initial minimization problem, containing both and ‐norm terms, into a set of subproblems that either require solving an ‐norm or an ‐norm minimization problem, each of which can be approached using standard methods.

The most expensive step in SB, which is also present in many other methods, is to solve an ‐norm minimization problem, which can be formulated as a linear least squares problem.29 The system matrix of the least squares problem remains constant throughout the SB iterations and this feature has shown to be convenient for finding an approximation of the inverse system matrix as is done in for example, Ref. 30. This approach eliminates the need for an iterative scheme to solve the ‐norm minimization problem, but for large problem sizes the initial computational costs are high, making it less profitable in practice.

An alternative approach for eliminating the iterative scheme to solve the ‐norm minimization problem was demonstrated in Ref. 31. In this approach, extra variable splitting is introduced to separate the coil sensitivity matrices from the Fourier transforms, such that all individual subproblems can be solved directly in the case of Cartesian sampling. This can lead to a considerable reduction in reconstruction time, provided that the reconstruction parameters are optimized. Simulations and in vivo experiments showed significant improvements in convergence compared to nonlinear conjugate gradient and a monotone fast iterative shrinkage‐thresholding algorithm. The extra variable splitting introduces penalty parameters, however, and unstable behavior can occur for certain parameter choices due to nontrivial null‐spaces of the operators.31, 32, 33 This can be seen as a drawback of this approach. Furthermore, determining the extra parameters is obviously nonunique. Considering the fact that each image slice would be reconstructed optimally with possibly different reconstruction parameters, we prefer the more straightforward SB scheme. Moreover, for non‐Cartesian trajectories, direct solutions are no longer possible and iterative schemes are needed.

Alternatively, to keep the number of reconstruction parameters to a minimum and to minimize possible stability issues, preconditioners can be used to reduce the number of iterations required for solving the least squares problem.34 The incomplete Cholesky factorization and hierarchically structured matrices are examples of preconditioners that reduce the number of iterations drastically in many applications.35, 36 The drawback of these type of preconditioners is that the full system matrix needs to be built before the reconstruction starts, which for larger problem sizes can only be done on a very powerful computer due to memory limitations. Although in Refs. 37, 38, 39 a penta‐diagonal matrix was constructed as a preconditioner, solving such a system is still relatively expensive. In addition, before constructing the preconditioner, patient‐specific coil sensitivity profiles need to be measured, which often leads to large initialization times. In Refs. 31 and 40, the extra variable splitting enables building a preconditioner independent of coil sensitivity maps, resulting in a preconditioner for non‐Cartesian reconstructions, but one that is not applicable for the more stable SB scheme.

In this work, we design a Fourier transform‐based preconditioner for PI–CS reconstructions and Cartesian trajectories in a stable SB framework, that takes the coil sensitivities on a patient‐specific basis into account, has negligible initialization time and which is highly scalable to a large number of unknowns, as often encountered in MRI.

2. THEORY

In this section we first describe the general PI and CS problems. Subsequently, the Split Bregman algorithm, which is used to solve these problems, is discussed. Hereafter, weintroduce the preconditioner that is used to speed up the PI–CS algorithm and elaborate on its implementation and complexity.

2.1. Parallel imaging reconstruction

In PI with full k‐space sampling the data, including noise, is described by the model

| (1) |

where the are the fully sampled k‐space data sets containing noise for , with the number of coil channels, and is the true image.3 Here, , where m and n define the image matrix size in the x‐ and y‐directions, respectively, for a 2D sampling case. Furthermore, are diagonal matrices representing complex coil sensitivity maps for each channel. Finally, is the discrete two‐dimensional Fourier transform matrix. In the case of undersampling, the data is described by the model

| (1) |

where are the under sampled k‐space data sets for with zeros at nonmeasured k‐space locations. The undersampling pattern is specified by the binary diagonal sampling matrix , so that the under sampled Fourier transform is given by . Here it is important to note that reduces the rank of , which means that solving for x in Equation 1 is in general an ill‐posed problem for each coil and a unique solution does not exist. However, if the individual coil data sets are combined and the undersampling factor does not exceed the number of coil channels, the image x can in theory be reconstructed by finding the least squares solution, that is, by minimizing

| (2) |

where is an estimate of the true image.

2.2. Parallel imaging reconstruction with compressed sensing

In the case of higher undersampling factors, the problem of solving Equation 2 becomes ill‐posed and additional regularization terms need to be introduced to transform the problem into a well‐posed problem. Since MR images are known to be sparse in some domains, ‐norm terms are a suitable choice for regularization. The techniques of PI and CS are then combined in the following minimization problem

| (3) |

with , and γ the regularization parameters for the data fidelity, the total variation, and the wavelet, respectively.8 A total variation regularization constraint is introduced by the first‐order derivative matrices , representing the numerical finite difference scheme

with periodic boundary conditions

so that and are circulant. A unitary wavelet transform further promotes sparsity of the image in the wavelet domain.

2.3. Split Bregman iterations

Solving Equation 3 is not straightforward as the partial derivatives of the ‐norm terms are not well‐defined around 0. Instead, the problem is transformed into one that can be solved easily. In this work, we use Split Bregman to convert Equation 3 into multiple minimization problems in which the ‐norm terms have been decoupled from the ‐norm term, as discussed in detail in Refs. 14 and 24. For convenience, the Split Bregman method is shown in Algorithm 1. The Bregman parameters are introduced by the Bregman scheme and auxiliary variables are introduced by writing the constrained problem as an unconstrained problem. The algorithm consists of two loops: an outer loop and an inner loop. In the inner loop (steps 4–11), we first compute the vector b that serves as a right‐hand side in the system of equations of step 5. Subsequently, the ‐norm subproblems are solved using the shrink function in steps 6–8. Hereafter, the residuals for the regularization terms are computed in steps 9–11 and are subsequently fed back into the system by updating the right‐hand side vector b in step 5. Steps 4–11 can be repeated several times, but one or two inner iterations are normally sufficient for convergence. Similarly, the outer loop feeds the residual encountered in the data fidelity term back into the system, after which the inner loop is executed again.

The system of linear equations,

| (4) |

in line 5 of the algorithm follows from a standard least squares problem, where the system matrix is given by

with right‐hand side

In this work we focus on solving Equation 4, which is computationally the most expensive part of Algorithm 1. It is important to note that the system matrix remains constant throughout the algorithm and only the right‐hand side vector b changes, which allows us to efficiently solve Equation 4 by using preconditioning techniques.

Algorithm 1. Split Bregman Iteration.

1: Initialize ,

Initialize

2: for j = 1 to nOuter do

3: for k = 1 to nInner do

4:

5: solve with as initial guess

6:

7:

8:

9:

10:

11:

12: end for

13: for i = 1 to do

14:

15: end for

16: end for

2.4. Structure of the system matrix

The orthogonal wavelet transform is unitary, so that . Furthermore, the derivative operators are constructed such that the matrices , and are block circulant with circulant blocks (BCCB). The product and sum of two BCCB matrices is again BCCB, showing that is also BCCB. These types of matrices are diagonalized by the two‐dimensional Fourier transformation, that is,

where is a BCCB matrix and and are diagonal matrices. This motivates us to write the system matrix in Equation 4 in the form

| (5) |

with given by

| (6) |

The term is BCCB, so that in becomes diagonal. If there is no sensitivity encoding, that is , the entire matrix becomes diagonal in which case the solution can be efficiently found by computing

| (7) |

for invertible . In practice, Fast Fourier Transforms (FFTs) are used for this step. With sensitivity encoding, and is not BCCB for any i, hence matrix is not diagonal. In that case we prefer to solve Equation 4 iteratively, since finding is now computationally too expensive. It can be observed that the system matrix is Hermitian and positive definite, which motivates the choice for the conjugate gradient (CG) method as an iterative solver.

2.5. Preconditioning

A preconditioner can be used to reduce the number of iterations required for CG convergence.41 It should satisfy the conditions

to cluster the eigenvalues of the matrix pair around 1, and

determination of and its evaluation on a vector should be computationally cheap.

Ideally, we would like to use a diagonal matrix as the preconditioner as this is computationally inexpensive. For this reason, the Jacobi preconditioner is used in many applications with the diagonal elements from matrix as the input. However, for the current application of PI and CS the Jacobi preconditioner is not efficient since it does not provide an accurate approximate inverse of the system matrix . In this work, we use a different approach and approximate the diagonal from in Equation 6 instead. The motivation behind this approach is that the Fourier matrices in matrix center a large part of the information contained in around the main diagonal of , so that neglecting off‐diagonal elements of has less effect than neglecting off‐diagonal elements of .

For the preconditioner used in this work we approximate by

| (8) |

where places the elements of its argument on the diagonal of a matrix. Furthermore, vector k is the diagonal of matrix and can be written as

| (9) |

where , and are the diagonals of , and , respectively. Note that and are diagonal matrices already, so that only will result in an approximation of the inverse for the final system matrix .

2.6. Efficient implementation of the preconditioner

The diagonal elements of for a certain i are found by noting that is in fact a BCCB matrix. The diagonal elements of can now be found on the diagonal of , so that

with being the jth row of matrix and the jth standard basis vector. Note that the scalar is the jth entry of vector . Since is a diagonal matrix which can be written as , we can also write

| (10) |

where denotes the element‐wise (Hadamard) product. Since the element‐wise product of two BCCB matrices is again a BCCB matrix, the circular convolution theorem tells us42, 43 that

The resulting matrix vector product in Equation 10 can now be efficiently computed as

| (11) |

Finally, the diagonal elements d of the diagonal matrix with structure can be computed efficiently by using , where is the first row of . Therefore, the first row of matrix is found as , with a row vector containing the diagonal elements of matrix . For multiple coils Equation 11 becomes

| (12) |

where the action of the Fourier matrix on a vector can be efficiently computed using the FFT.

Since is BCCB, the elements of can be quickly found by evaluating , where is the first row of . Finally, the elements of are all equal to one, since is the identity matrix.

Alternatively, in Equation 2 the summation over the coil sensitivity matrices can be removed by stacking the matrices. The derivation following this approach can be found online as supporting information.

2.7. Complexity

For every inner‐iteration of the Split Bregman algorithm we need to solve the linear system given in Equation 4, which is done iteratively using a Preconditioned Conjugate Gradient method (PCG). In this method, the preconditioner constructed above is used as a left preconditioner by solving the following system of equations:

| (13) |

where is the approximate solution constructed by the PCG algorithm. In PCG this implies that for every iteration the preconditioner should be applied once on the residual vector . The preconditioner can be constructed beforehand since it remains fixed for the entire Split Bregman algorithm as the regularization parameters μ, λ, and γ are constant. As can be seen in Table 1, is constructed in FLOPS. Evaluation of the diagonal preconditioner from Equation 8 on a vector amounts to two Fourier transforms and a single multiplication, and therefore requires FLOPS.

Table 1.

FLOPS required for construction of and for evaluation of on a vector.

| Operation | FLOPS | |||

|---|---|---|---|---|

| Construction of | , |

|

||

|

|

|

|||

|

|

|

|||

|

|

|

|||

|

|

2N | |||

|

|

N | |||

| Total |

|

|||

| Evaluation on vector |

|

|

||

|

|

5N | |||

|

|

0 | |||

| Summation of the three terms above | 2N | |||

| Total |

|

To put this into perspective, evaluation of matrix on a vector requires FLOPS, as shown in Table 1. The upper bound on the additional costs per iteration relative to the costs for evaluating on a vector is therefore

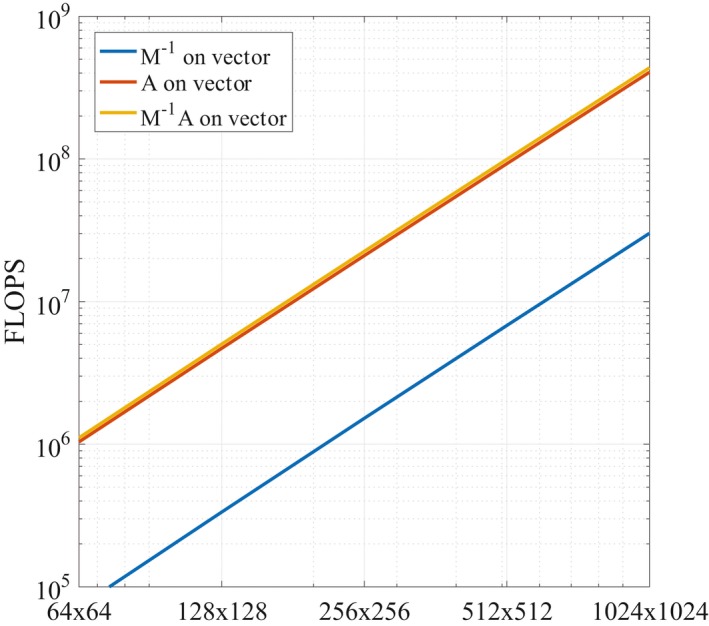

showing that the preconditioner evaluation step becomes relatively cheaper for an increasing number of coil elements. The scaling of the complexity with respect to the problem size is depicted in Figure 1 for a fixed number of coils .

Figure 1.

The complexity for different problem sizes. The number of FLOPS for the action of the preconditioner on a vector (blue), on a vector (red), and the combination of the two (yellow) are depicted for .

3. Methods

3.1. MR data acquisition

Experiments were performed on healthy volunteers after giving informed consent. The Leiden University Medical Center Committee for Medical Ethics approved the experiment. An Ingenia 3T dual transmit MR system (Philips Healthcare) was used to acquire the in vivo data. A 12‐element posterior receiver array, a 15‐channel head coil, a 16‐channel knee coil (also used for transmission) and a 16‐element anterior receiver array were used for reception in the spine, the brain, the knee and the lower legs, respectively. The body coil was used for RF transmission, except for the knee scan.

For the spine data set, T1‐weighted images were acquired using a turbo spin‐echo (TSE) sequence with the following parameters: field of view (FOV) = 340 × 340mm2; in‐plane resolution 0.66 × 0.66mm2; 4mm slice thickness; 15 slices; echo time (TE)/repetition time (TR)/TSE factor = 8 ms/648 ms/8; flip angle (FA) = 90°; refocusing angle = 120°; water–fat shift (WFS) = 1.5 pixels; and scan time = 2:12 minutes. T2‐weighted TSE scans had parameters: FOV = 340 × 340mm2; in‐plane resolution 0.66 × 0.66mm2; 4mm slice thickness; 15 slices; TE/TR/TSE factor = 113 ms/4008 ms/32; FA = 90°; WFS = 1.1 pixels; and scan time = 3:36 minutes.

For the brain data set, T1‐weighted images were acquired using an inversion recovery turbo spin‐echo (IR TSE) sequence with parameters: FOV = 230 × 230mm2; in‐plane resolution 0.90 × 0.90mm2; 4mm slice thickness; 24 slices; TE/TR/TSE factor = 20 ms/2000 ms/8; refocusing angle = 120°; IR delay: 800 ms; WFS = 2.6 pixels; and scan time = 05:50 minutes. T ‐weighted images were measured using a gradient echo (FFE) sequence with parameters: FOV = 230 × 230mm2; in‐plane resolution 0.90 × 0.90mm2; 4mm slice thickness; 28 slices; TE/TR = 16 ms/817 ms; FA = 18°; WFS = 2 pixels; and scan time = 3:33 minutes.

For the knee data set, T1‐weighted images were acquired using an FFE sequence with parameters: FOV = 160 × 160mm2; in‐plane resolution 1.25 × 1.25mm2; 3mm slice thickness; 32 slices; TE/TR = 10 ms/455 ms; FA = 90°; WFS = 1.4 pixels; and scan time = 1:01 minutes.

For the calf data set, T1‐weighted images were acquired using an FFE sequence with parameters: FOV = 300 × 300mm2; in‐plane resolution 1.17 × 1.17mm2; 7mm slice thickness; 24 slices; TE/TR = 16 ms/500 ms; FA = 90°; WFS = 1.5 pixels; and scan time = 2:11 minutes.

The different acquisitions techniques (TSE, FFE) were chosen to address different basic contrasts used in daily clinical practice. Undersampling in the case of nonstationary echo signals, such as during a T2‐decaying TSE train, can result in image quality degradation. This effect can be mitigated, for example, in TSE scans using variable refocusing angle schemes as outlined in Ref. 44.

To show the performance of the preconditioner also in the presence of these and similar effects, scans in the brain were acquired directly in undersampled mode employing a simple variable density sampling pattern, with acceleration factors R = 2 and R = 3. To validate the results, fully sampled data is acquired as well in a separate scan. Data for a T2‐weighted TSE scan (R = 2, FOV = 230 × 230mm2; in‐plane resolution 0.90 × 0.90mm2; 4mm slice thickness; 1 slice; TE/TR/TSE factor = 80 ms/3000 ms/16; refocusing angle = 120°; WFS = 2.5 pixels; and scan time = 00:30 minutes), a FLAIR scan (R = 2, FOV = 240 × 224mm2; in‐plane resolution 1.0 × 1.0mm2; 4mm slice thickness; 1 slice; TE/TR/TSE factor = 120 ms/9000 ms/24; IR delay = 2500 ms; refocusing angle = 110°; WFS = 2.7 pixels; and scan time = 01:30 minutes) and a 3D magnetization prepared T1‐weighted turbo field echo (TFE) scan (R = 3, FOV = 250 × 240 × 224mm2; 1.0mm3 isotropic resolution; TE/TR = 4.6 ms/9.9 ms; TFE factor = 112; TFE prepulse delay = 1050 ms; flip angle = 8°; WFS = 0.5 pixels; and scan time = 04:17 minutes).

3.2. Coil sensitivity maps

Unprocessed k‐space data was stored per channel and used to construct complex coil sensitivity maps for each channel.45 Note that the coil sensitivity maps are normalized such that

The normalized coil sensitivity maps were given zero intensity outside the subject, resulting in an improved SNR of the final reconstructed image. For the data model to be consistent, also the individual coil images were normalized according to

3.3. Coil compression

Reconstruction of the spine data set was performed with and without coil compression. A compression matrix was constructed as in Ref. 46 and multiplied by the normalized individual coil images and the coil sensitivity maps, to obtain virtual coil images and sensitivity maps. The six least dominant virtual coils were ignored to speed up the reconstruction.

3.4. Undersampling

Two variable density undersampling schemes were studied: a random line pattern in the foot‐head direction, referred to as structured sampling, and a fully random pattern, referred to as random sampling. Different undersampling factors were used for both schemes.

3.5. Reconstruction

The Split Bregman algorithm was implemented in MATLAB (The MathWorks, Inc.). All image reconstructions were performed in 2D on a Windows 64‐bit machine with an Intel i3–4160 CPU @ 3.6GHz and 8 GB internal memory.

Reconstructions were performed for reconstruction matrix sizes of 128 × 128, 256 × 256, and 512 × 512, and the largest reconstruction matrix was interpolated to obtain a simulated data set of size 1024 × 1024 for theoretical comparison. For prospectively undersampled scans, additional matrix sizes of 240 × 224 were acquired. For the 3D scan, an FFT was first performed along the readout direction, after which one slice was selected. To investigate the effect of the regularization parameters on the performance of the preconditioner, three different regularization parameter sets were chosen as:

set 1 , and

set 2 , and

set 3 , and

The Daubechies 4 wavelet transform was used for . Furthermore, the SB algorithm was performed with an inner loop of one iteration and an outer loop of 20 iterations. The tolerance (relative residual norm) in the PCG algorithm was set to .

4. RESULTS

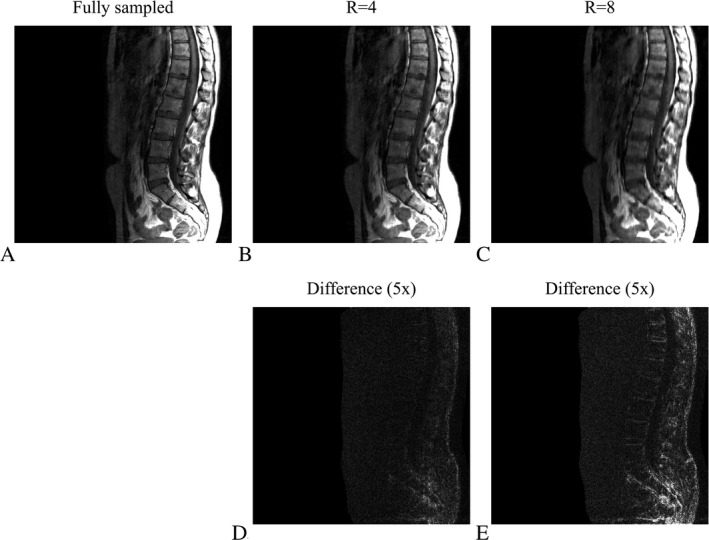

Figure 2 shows the T1‐weighted TSE spine images for a reconstruction matrix size of 512 × 512, reconstructed with the SB implementation for a fully sampled data set and for undersampling factors of four (R = 4) and eight (R = 8), where structured Cartesian sampling masks were used. The quality of the reconstructed images for R = 4 and R = 8 demonstrate the performance of the CS algorithm. The difference between the fully sampled and undersampled reconstructed images are shown (magnified five times) in Figure 2D,E for R = 4 and R = 8, respectively.

Figure 2.

Reconstruction results for different structured Cartesian undersampling factors. (A) shows the fully sampled scan as a reference, whereas (B) and (C) depict the reconstruction results for undersampling factors four (R = 4) and eight (R = 8), respectively. The absolute difference, magnified five times, is shown in (D) and (E) for R = 4 and R = 8, respectively. The reconstruction matrix has dimensions 512 × 512. Regularization parameters were set to , and .

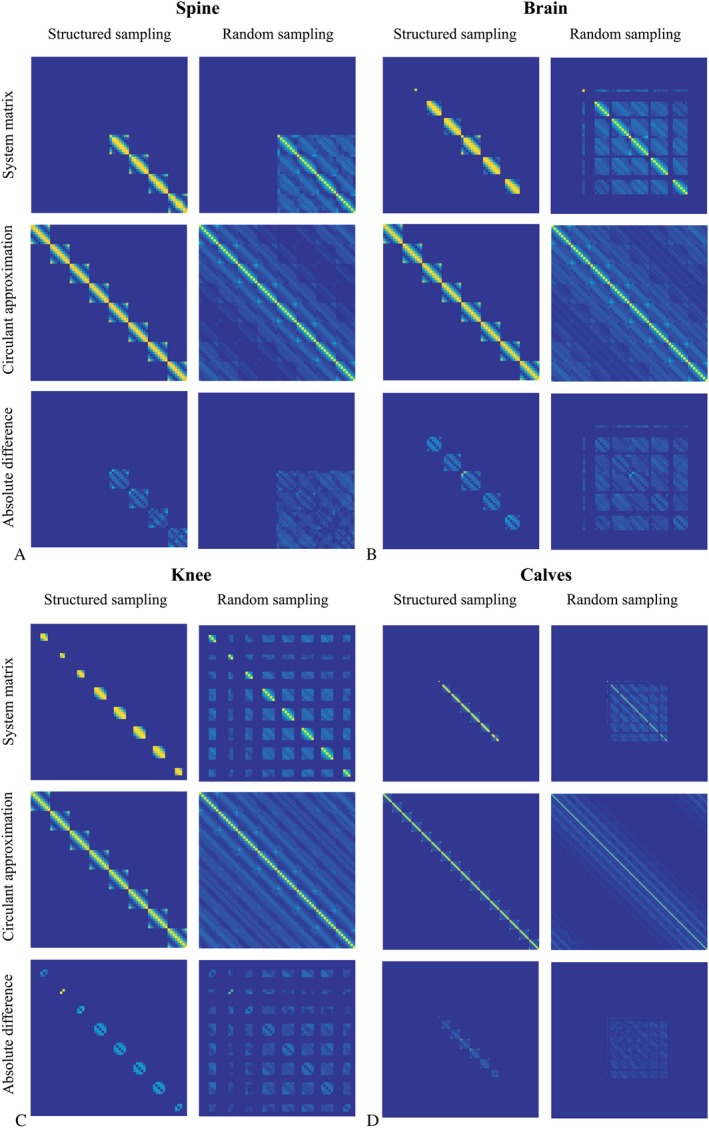

The fully built system matrix is compared with its circulant approximation in Figure 3A for both structured and random Cartesian undersampling in the spine, without regularization to focus on the approximated term containing the coil sensitivities. The elements of contain many zeros due to the lack of coil sensitivity in a large part of the image domain when using cropped coil sensitivity maps. These zeros are not present in the circulant approximation, since the circulant property is enforced by neglecting all off‐diagonal elements in . The entries introduced into the circulant approximation do not add relevant information to the system, because the image vector on which the system matrix acts contains zero signal in the region corresponding with the newly introduced entries. For the same reason, the absolute difference maps in the bottom row were masked by the coil‐sensitive region of , showing that the magnitude and phase are well approximated by assuming the circulant property. Figure 3B‐D show the same results for the brain, the knee and the calves, respectively, demonstrating the generalizability of this approach to different coil set‐ups and geometries.

Figure 3.

System matrix and its circulant approximation. The first and the second columns show the system matrix elements for structured and random undersampling and R = 4, respectively, for the spine (A), the brain (B), the knee (C), and the calves (D). The top row depicts the elementwise magnitude for the true system matrix , the second row depicts the elementwise magnitude for the circulant approximated system matrix and the bottom row shows the absolute difference between the true system matrix and the circulant approximation. The difference maps were masked by the nonzero‐region of , since only elements in the coil‐sensitive region of the preconditioner describe the final solution.

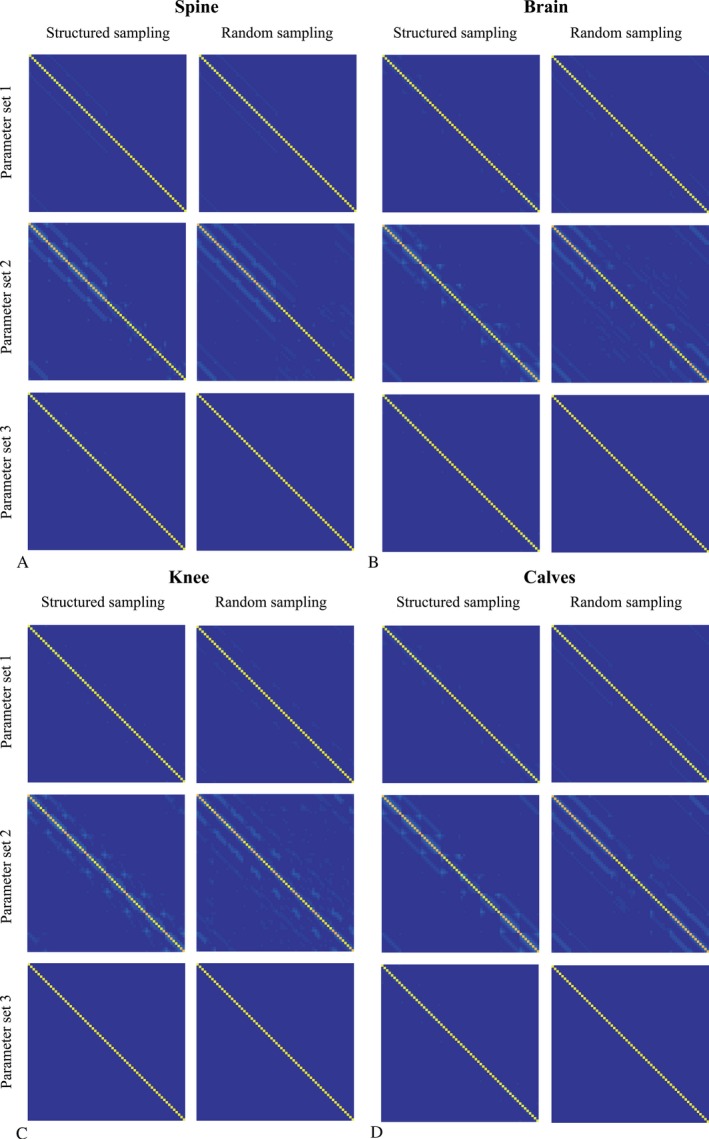

The product of the inverse of the preconditioner and the system matrix is shown for the spine, the brain, the knee and the calves in Figure 4A‐D, respectively. Different regularization parameter sets show that the preconditioner is a good approximate inverse, suggesting efficient convergence.

Figure 4.

The new system matrix. The first column and the second column show the elements of the effective new system matrix for structured and random undersampling and R = 4, respectively, for the spine (A), the brain (B), the knee (C), and the calves (D). The rows show this result for the three studied regularization parameter sets.

Table 2 reports the number of seconds needed to build the circulant preconditioner in MATLAB before the reconstruction starts, for different orders of the reconstruction matrix. Note that the actual number of unknowns in the corresponding systems is equal to the number of elements in the reconstruction matrix size, which leads to more than 1 million unknowns for the 1024 × 1024 case. For all matrix sizes the initialization time is negligible compared with the image reconstruction time.

Table 2.

Initialization times for constructing the preconditioner for different problem sizes.

| Problem size | 128 × 128 | 256 × 256 | 512 × 512 | 1024 × 1024 |

|---|---|---|---|---|

| Initialization time (s) | 0.0395 | 0.0951 | 0.3460 | 1.3371 |

| Additional costs (%) | 1.7 | 0.85 | 0.52 | 0.48 |

Even for very large problem sizes the initialization time does not exceed 2 seconds.

Additional costs are given as percentage of the total reconstruction time without preconditioning.

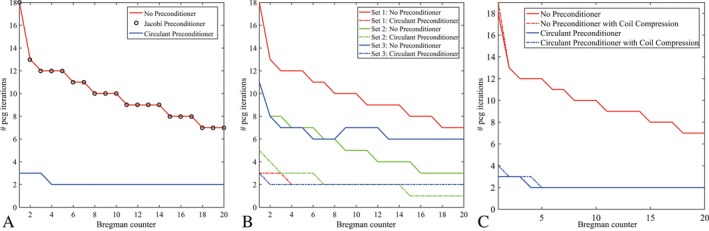

Figure 5A shows the number of iterations required for PCG to converge in each Bregman iteration without preconditioner, with the Jacobi preconditioner and with the circulant preconditioner for regularization parameters , and and a reconstruction matrix size of 256 × 256. The Jacobi preconditioner does not reduce the number of iterations, which shows that the diagonal of the system matrix does not contain enough information to result in a good approximation of . Moreover, it shows that the linear system is invariant under scaling. The circulant preconditioner, however, reduces the number of iterations considerably, leading to a total speed‐up factor of 4.65 in the PCG part.

Figure 5.

Number of iterations needed per Bregman iteration. The circulant preconditioner reduces the number of iterations considerably compared with the nonpreconditioned case. The Jacobi preconditioner does not reduce the number of iterations due to the poor approximation of the system matrix’ inverse. (A) depicts the iterations for Set 1: , whereas (B) depicts the iterations for Set 1, Set 2: , and Set 3: The preconditioner shows the largest speed up factor when the regularization parameters are well‐balanced. (C) Shown are the number of iterations needed per Bregman iteration with and without coil compression applied. The solid lines and the dashed lines depict the results with and without coil compression, respectively.

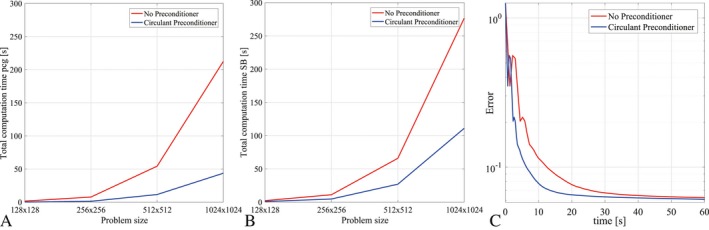

The effect of the reduced number of PCG iterations can directly be seen in the computation time for the reconstruction algorithm, plotted in Figure 6 for different problem sizes. Figure 6A shows the total PCG computation time when completing the total SB method, whereas Figure 6B shows the total computation time required to complete the entire reconstruction algorithm. A fivefold gain is achieved in the PCG part by reducing the number of PCG iterations, which directly relates to the results shown in Figure 5A. The overall gain of the complete algorithm, however, is a factor 2.5 instead of 5, which can be explained by the computational costs of the update steps outside the PCG iteration loop (see Algorithm 1). Figure 6C also shows the error, defined as the normalized 2‐norm difference with respect to the fully sampled image, as a function of time. The preconditioned SB scheme converges to the same accuracy as the original SB scheme, since the preconditioner only affects the required number of PCG iterations.

Figure 6.

Computation time for 20 Bregman iterations and different problem sizes. (A) Using the preconditioner, the total computation time for the PCG part in 20 Bregman iterations is reduced by more than a factor of 4.5 for all studied problem sizes. (B) The computation time for 20 Bregman iterations of the entire algorithm also includes the Bregman update steps, so that the total speedup factor is approximately 2.5 for the considered problem sizes. (C) The two methods converge to the same solution, plotted here for R = 4 and a reconstruction matrix size 256 × 256.

The number of iterations required by PCG for each Bregman iteration is shown in Figure 5B for the three parameter sets studied. The preconditioned case always outperforms the nonpreconditioned case, but the speed up factor depends on the regularization parameters. Parameter set 1 depicts the same result as shown in Figure 5A and results in the best reconstruction of the fully sampled reference image. In parameter set 2 more weight is given to the data fidelity term by increasing the parameter μ. Since the preconditioner relies on an approximation of the data fidelity term, it performs less optimally than for smaller μ (such as in set 1) for the first few Bregman iterations, but there is still a threefold gain in performance. This behavior was already predicted in Figure 4. Finally, there is very little change between parameter set 3 and parameter set 1, because the larger wavelet regularization parameter γ gives more weight to a term that was integrated in the preconditioner in an exact way, as for the total variation term, without any approximations.

Figure 5C illustrates the required iterations when half of the coils are taken into account by coil compression. Only a small discrepancy is encountered for the first few iterations, since the global structure and content of the system matrix A remain the same, which demonstrates that coil compression and preconditioning can be combined to optimally reduce the reconstruction time.

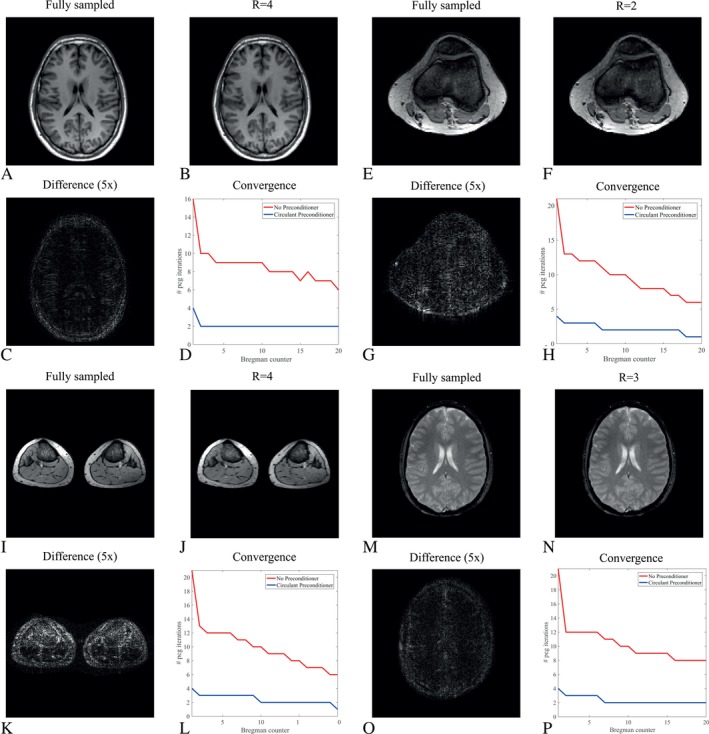

The method also works for different coil configurations. In Figure 7 the result is shown when using the 15‐channel head coil for the brain scans, the 16‐channel knee coil for a knee scan and the 16‐channel receive array for the calf scan. The circulant preconditioner clearly reduces the number of iterations, with an overall speed‐up factor of 4.1/4.4 and 4.5 in the PCG part for the brain (TSE/FFE) and the knee, respectively.

Figure 7.

Reconstruction results for different anatomies. (A) shows the fully sampled scan as a reference for the brain, whereas (B) depicts the reconstruction results for an undersampling factor of four (R = 4). The absolute difference, magnified five times, is shown in (C). The reconstruction matrix has dimensions 256 × 256 and regularization parameters were chosen as , and . The convergence results for the PCG part with and without preconditioner are plotted in (D), showing similar reduction factors as with the posterior coil. Results for the knee are shown in (E)‐(F) for a reconstruction matrix size 128 × 128 and an undersampling factor of 2 (R = 2). Regularization parameters were chosen as , and . Results for the calves are shown in (I)‐(L) for a reconstruction matrix size 256 × 256 and R = 4. Regularization parameters were chosen as , and . Results for the brain FFE scan are shown in (M)‐(P) for a reconstruction matrix size 256 × 256 and R = 3. Regularization parameters were chosen as , and .

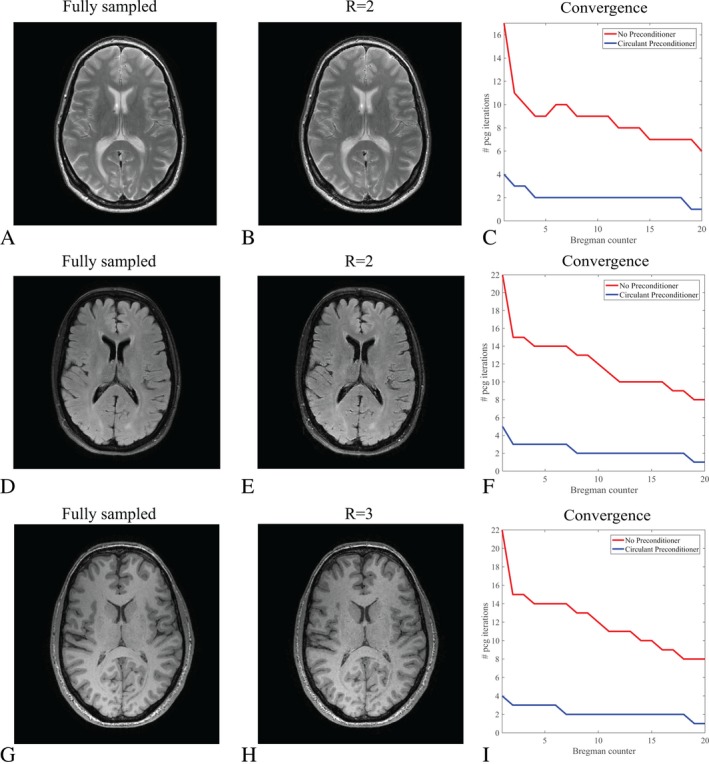

Figure 8 shows reconstruction results for scans where the data was directly acquired in under sampled mode instead of retrospectively undersampled, for a T2‐weighted TSE scan, a FLAIR scan and a 3D magnetization prepared T1‐weighted TFE scan, leading to PCG acceleration factors of 4.2, 5.1, and 5.4, respectively. The convergence behavior is similar to the one observed for the retrospectively undersampled data, demonstrating the robustness of the preconditioning approach in realistic scan setups.

Figure 8.

Reconstruction results for data acquired in fully and undersampled mode. (A) shows a fully sampled scan as a reference for a T2‐weighted TSE scan in the brain, whereas (B) depicts the reconstruction results for a prospectively undersampled scan with an acceleration factor of two (R = 2). The reconstruction matrix has dimensions 256 × 256 and regularization parameters were chosen as , and γ = 1. The convergence results for the PCG part with and without preconditioner are plotted in (C). Results for the FLAIR brain scan are shown in (D)‐(F) for a reconstruction matrix size 240 × 224 and R = 2. Regularization parameters were chosen as , and . Results for a 3D magnetization prepared T1‐weighted TFE scan in the brain are shown in (G)‐(I) for a reconstruction matrix size 240 × 224 and R = 3. Regularization parameters were chosen as , and . Note that the data in the left column stem from a different measurement as the data in the middle column

The performance of the preconditioner is also stable in the presence of different noise levels, as shown by experiments in which the excitation tip angle was varied from 10° to 90° in a TSE sequence, and results can be found online in Supporting Information Figure S1.

5. DISCUSSION AND CONCLUSIONS

In this work we have introduced a preconditioner that reduces the reconstruction times for CS and PI problems, without compromising the stability of the numerical SB scheme. Solving an ‐norm minimization problem is the most time‐consuming part of this algorithm. This ‐norm minimization problem is written as a linear system of equations characterized by the system matrix . The effectiveness of the introduced preconditioner comes from the fact that the system matrix is approximated as a BCCB matrix. Both the total variation and the wavelet regularization terms are BCCB, which means that only the data fidelity term, which is not BCCB due to the sensitivity profiles of the receive coils and the undersampling of k‐space, is approximated by assuming a BCCB structure in the construction of the preconditioner. This approximation has been shown to be accurate for CS–PI problem formulations. The efficiency of this approach comes from the fact that BCCB matrices are diagonalized by Fourier transformations, which means that the inverse of the preconditioner can simply be found by inverting a diagonal matrix and applying two additional FFTs.

With the designed preconditioner the most expensive ‐norm problem was solved almost five times faster than without preconditioning, resulting in an overall speed up factor of about 2.5. The discrepancy between the two speed up factors can be explained by the fact that apart from solving the linear problem, update steps also need to be performed. Step 4 and steps 13–15 of Algorithm 1 are especially time consuming since for each coil a 2D Fourier transform needs to be performed. Furthermore, the wavelet computation in steps 4, 8, and 11 are time consuming factors as well. Therefore, speed up factors higher than 2.5 are expected for an optimized Bregman algorithm. Further acceleration can be obtained through coil compression,46, 47 as the results in this study showed that it has negligible effect on the performance of the preconditioner.

The time required to construct the preconditioner is negligible compared with the reconstruction times as it involves only a few FFTs. The additional costs of applying the preconditioner on a vector is negligible as well, because it involves only two Fourier transformations and an inexpensive multiplication with a diagonal matrix. Therefore, the method is highly scalable and can handle large problem sizes.

The preconditioner works optimally when the regularization terms in the minimization problem are BCCB matrices in the final system matrix. This implies that the total variation operators should be chosen such that the final total variation matrix is BCCB, and that the wavelet transform should be unitary. Both the system matrix and the preconditioner can be easily adjusted to support single regularization instead of the combination of two regularization approaches.

The BCCB approximation for the data fidelity term supports both structured and random Cartesian undersampling patterns and works well for different undersampling factors. The performance of the preconditioner was experimentally validated using a variable density sampling scheme to prospectively undersample the data. The convergence behavior shows similar results as the retrospectively undersampled case.

The regularization parameters were shown to influence the performance of the preconditioner. Since the only approximation in the preconditioner comes from the approximation of the data fidelity term, the preconditioner results in poorer performance if the data fidelity term is very large compared with the regularization terms. In practice, such a situation is not likely to occur if the regularization parameters are chosen such that an optimal image quality is obtained in the reconstructed image. In this work, the regularization parameters were chosen empirically and were kept constant throughout the algorithm. For SB‐type methods, however, updating the regularization parameters during the algorithm makes the performance of the algorithm less dependent on the initial choice of the parameters.48 Moreover, it might result in improved convergence, from which our work can benefit.

This work focused on the linear part of the SB method, in which only the right‐hand side vector changes in each iteration and not the system matrix. Other ‐norm minimization algorithms exist that require a linear solver,49 such as IRLS or Second‐Order Cone Programming. For those type of algorithms linear preconditioning techniques can be applied as well. Although the actual choice for the preconditioner depends on the system matrix of the linear problem, which is in general different for different minimization algorithms, similar techniques as used in the current work can be exploited to construct a preconditioner for other minimization algorithms.

As outlined earlier in the introduction, there are alternative approaches to eliminating the iterative scheme to solve the ‐norm minimization problem. Although a detailed comparison of techniques is difficult due to the required choice of reconstruction parameters, it is worth noting that in Ref. 31 a comparison was made between the nonpreconditioned SB scheme that we also use as comparison in our work, and the authors’ extra variable splitting method. Their results suggest that the preconditioned SB scheme with an acceleration factor of 2.5 is very similar to the performance of the method adopting extra variable splitting. Moreover, variable splitting is not possible for non‐Cartesian data acquisition but is easily incorporated into the preconditioned SB approach. In this extension, the block circulant matrix with circulant blocks is replaced by the block Toeplitz matrix with Toeplitz blocks.40 Given the promising results for Cartesian trajectories, future work will therefore focus on including non‐Cartesian data trajectories into a single unified preconditioned SB framework.

Another large group of reconstruction algorithms involve gradient update steps; examples in this group are the Iterative Shrinkage‐Thresholding Algorithm (ISTA), FISTA, MFISTA, and BARISTA.50, 51, 52, 53 In Ref. 53 it was discussed that the performance of FISTA, for which convergence depends on the maximum sum of squared absolute coil sensitivity value, can be poor due to large variations in coil sensitivities. In our work, however, the coil sensitivity maps were normalized such that the corresponding sum‐of‐squares map is constant and equal to one in each spatial location within the object region. The normalization of these coil sensitivities might therefore lead to acceleration of FISTA‐type algorithms. Thus, it would be interesting to compare the performance of the preconditioned SB algorithm with the performance of FISTA when incorporating normalized coil sensitivities into both algorithms.

In conclusion, the designed FFT‐based preconditioner reduces the number of iterations required for solving the linear problem in the SB algorithm considerably, resulting in an overall acceleration factor of 2.5 for PI–CS reconstructions. The approach works for different coil‐array configurations, MR sequences, and nonpower of two acquisition matrices, and the time to construct the preconditioner is negligible. Therefore, it can be easily used and implemented, allowing for efficient computations.

Supporting information

FIGURE S1 The performance of the preconditioner for different SNR levels. Prospectively undersampled data sets were obtained for different SNR levels by varying the flip angle from 10 to 90 degrees in a TSE sequence. The difference in the number of CG iterations needed until convergence with and without preconditioner for different SNR levels is negligible.

ACKNOWLEDGMENT

We would like to thank Ad Moerland from Philips Healthcare Best (The Netherlands) and Mariya Doneva from Philips Research Hamburg (Germany) for helpful discussions on reconstruction. Furthermore, we express our gratitude toward the reviewers for their constructive criticism.

Koolstra K, van Gemert J, Börnert P, Webb A, Remis R. Accelerating compressed sensing in parallel imaging reconstructions using an efficient circulant preconditioner for cartesian trajectories. Magn Reson Med. 2019;81:670–685. 10.1002/mrm.27371

Funding information Jeroen van Gemert receives funding from STW 13375 and Kirsten Koolstra receives funding from ERC Advanced Grant 670629 NOMA MRI

REFERENCES

- 1. Blaimer M, Breuer F, Mueller M, Heidemann RM, Griswold MA, Jakob PM. SMASH, SENSE, PILS, GRAPPA: how to choose the optimal method. Top Magn Reson Imaging. 2004;15:223–236. [DOI] [PubMed] [Google Scholar]

- 2. Deshmane A, Gulani V, Griswold MA, Seiberlich N. Parallel MR imaging. JMagn Reson Imaging. 2012;36:305–317. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3. Pruessmann KP, Weiger M, Scheidegger MB, Boesiger P. SENSE: sensitivity encoding for fast MRI. Magn Reson Med. 1999;42:952–962. [PubMed] [Google Scholar]

- 4. Griswold MA, Jakob PM, Heidemann RM, etal. Generalized autocalibrating partially parallel acquisitions (GRAPPA). Magn Reson Med. 2002;47:1202–1210. [DOI] [PubMed] [Google Scholar]

- 5. Donoho DL, Elad M, Temlyakov VN. Stable recovery of sparse overcomplete representations in the presence of noise. IEEE Trans Inf Theory. 2006;52:6–18. [Google Scholar]

- 6. Lustig M, Donoho D, Pauly JM. Sparse MRI: the application of compressed sensing for rapid MR imaging. Magn Reson Med. 2007;58:1182–1195. [DOI] [PubMed] [Google Scholar]

- 7. Otazo R, Kim D, Axel L, Sodickson DK. Combination of compressed sensing and parallel imaging for highly accelerated first‐pass cardiac perfusion MRI. Magn Reson Med. 2010;64:767–776. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8. Liang D, Liu B, Wang J, Ying L. Accelerating SENSE using compressed sensing. Magn Reson Med. 2009;62:1574–1584. [DOI] [PubMed] [Google Scholar]

- 9. Chandarana H, Feng L, Block T K, etal. Free‐breathing contrast‐enhanced multiphase MRI of the liver using a combination of compressed sensing, parallel imaging, and golden‐angle radial sampling. Invest Radiol. 2013;48:10–16. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Boyd S, Vandenberghe L. Regularized approximation In: Convex Optimization. New York: Cambridge University Press;2004:305–317. [Google Scholar]

- 11. Candes EJ, Wakin MB, Boyd SP. Enhancing sparsity by reweighted 1 minimization. JFourier Anal Appl. 2008;14:877–905. [Google Scholar]

- 12. Block KT, Uecker M, Frahm J. Undersampled radial MRI with multiple coils. Iterative image reconstruction using a total variation constraint. Magn Reson Med. 2007;57:1086–1098. [DOI] [PubMed] [Google Scholar]

- 13. Vasanawala S, Murphy M, Alley MT. Practical parallel imaging compressed sensing MRI: summary of two years of experience in accelerating body MRI of pediatric patients. In Proceedings of IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Chicago, IL, 2011. pp. 1039–1043. [DOI] [PMC free article] [PubMed]

- 14. Goldstein T, Osher S. The split bregman method for 1‐regularized problems. SIAM J Imaging Sci. 2009;2:323–343. [Google Scholar]

- 15. Murphy M, Alley M, Demmel J, Keutzer K, Vasanawala S, Lustig M. Fast 1‐SPIRiT compressed sensing parallel imaging MRI: scalable parallel implementation and clinically feasible runtime. IEEE Trans Med Imaging. 2012;31:1250–1262. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Ma S, Yin W, Zhang Y, Chakraborty A. An efficient algorithm for compressed MR imaging using total variation and wavelets. In Proceedings of IEEE Conference on CVPR, Anchorage, AK, 2008. pp. 1–8.

- 17. Ramani S, Fessler JA. An accelerated iterative reweighted least squares algorithm for compressed sensing MRI. In Proceedings of IEEE International Symposium on Biomedical Imaging: From Nano to Macro, Rotterdam, Netherlands, 2010. pp. 257–260.

- 18. Liu B, King K, Steckner M, Xie J, Sheng J, Ying L. Regularized sensitivity encoding (SENSE) reconstruction using bregman iterations. Magn Reson Med. 2009;61:145–152. [DOI] [PubMed] [Google Scholar]

- 19. Kim SJ, Koh K, Lustig M, Boyd S. An efficient method for compressed sensing. In Proceedings of IEEE ICIP, San Antonio, TX, 2007. pp. III‐117‐III‐120.

- 20. Candès EJ, Romberg JK. Signal recovery from random projections. In Proceedings of SPIE, San Jose, CA, 2005. pp. 76–86.

- 21. Daubechies I, Defrise M, De Mol C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun Pure Appl Math. 2004;57:1413–1457. [Google Scholar]

- 22. Lingala SG, Hu Y, DiBella E, Jacob M. Accelerated dynamic MRI exploiting sparsity and low‐rank structure: k‐t SLR. IEEE Trans Med Imaging. 2011;30:1042–1054. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23. Donoho DL. Compressed sensing. IEEE Trans Inf Theory. 2006;52:1289–1306. [Google Scholar]

- 24. Brègman LM. A relaxation method of finding a common point of convex sets and its application to the solution of problems in convex programming. USSR Comp Math and Math Phys. 1967;7:620–631. [Google Scholar]

- 25. Jia RQ, Zhao H, Zhao W. Convergence analysis of the Bregman method for the variational model of image denoising. Appl Comput Harmon Anal. 2009;27:367–379. [Google Scholar]

- 26. Setzer S. Operator Splittings, Bregman methods and frame shrinkage in image processing. Int J Comput Vision. 2011;92:265–280. [Google Scholar]

- 27. Cai JF, Osher S, Shen Z. Split Bregman methods and frame based image restoration. Multiscale Model Simul. 2009;8:337–369. [Google Scholar]

- 28. Elvetun OL, Nielsen BF. The split Bregman algorithm applied to PDE‐constrained optimization problems with total variation regularization. Comput Optim Appl. 2016;64:699–724. [Google Scholar]

- 29. Zhou J, Li J, Gombaniro JC. Combining SENSE and compressed sensing MRI with a fast iterative contourlet thresholding algorithm. In Proceedings of the 12th International Conference on FSKD, Zhangjiajie, Chine, 2015. pp. 1123–1127.

- 30. Cauley SF, Xi Y, Bilgic B, etal. Fast reconstruction for multichannel compressed sensing using a hierarchically semiseparable solver. Magn Reson Med. 2015;73:1034–1040. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31. Ramani S, Fessler JA. Parallel MR image reconstruction using augmented lagrangian methods. IEEE Trans Med Imaging. 2011;30:694–706. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32. Cai N, Xie W, Su Z, Wang S, Liang D. Sparse parallel MRI based on accelerated operator splitting schemes. Comput Math Methods Med. 2016;2016:1–14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33. Afonso MV, Bioucas‐Dias JM, Figueiredo MAT. Fast image recovery using variable splitting and constrained optimization. IEEE Trans Image Process. 2010;19:2345–2356. [DOI] [PubMed] [Google Scholar]

- 34. Benzi M. Preconditioning techniques for large linear systems: a survey. JComput Phys. 2002;182:418–477. [Google Scholar]

- 35. Scott J, Tůma M, On signed incomplete Cholesky factorization preconditioners for saddle‐point systems. SIAM J Sci Comput. 2014;36:2984–3010. [Google Scholar]

- 36. Baumann M, Astudillo R, Qiu Y, Ang EY, Van Gijzen MB, Plessix RE. An MSSS‐preconditioned matrix equation approach for the time‐harmonic elastic wave equation at multiple frequencies. Comput Geosci. 2018;22:43–61. [Google Scholar]

- 37. Chen C, Li Y, Axel L, Huang J. Real time dynamic mri with dynamic total variation. In Proceedings of International Conference on MICCAI, Boston, MA, 2014. pp. 138–145. [DOI] [PubMed]

- 38. Li R, Li Y, Fang R, Zhang S, Pan H, Huang J. Fast preconditioning for accelerated multi‐contrast MRI reconstruction. In Proceedings of International Conference on MICCAI, Munich, Germany, 2015. pp. 700–707.

- 39. Xu Z, Li Y, Axel L, Huang J. Efficient preconditioning in joint total variation regularized parallel MRI reconstruction. In Proceedings of the International Conference on MICCAI, Munich, Germany, 2015. pp. 563–570.

- 40. Weller DS, Ramani S, Fessler JA. Augmented Lagrangian with variable splitting for faster non‐cartesian L1‐SPIRiT MR image reconstruction. IEEE Trans Med Imaging. 2014;33:351–361. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41. Saad Y. Iterative Methods for Sparse Linear Systems. Philadelphia: SIAM; 2003. [Google Scholar]

- 42. Bracewell RN. The Fourier Transform and its Applications. New York: McGraw‐Hill; 1986. [Google Scholar]

- 43. Gray RM. Toeplitz and circulant matrices: a review. Found Trends Commun Inf Theory. 2006;2:155–239. [Google Scholar]

- 44. Mugler JP. Optimized three‐dimensional fast‐spin‐echo MRI. JMagn Reson Imaging. 2014;39:745–767. [DOI] [PubMed] [Google Scholar]

- 45. Uecker M, Lai P, Murphy MJ, etal. ESPIRiT–an eigenvalue approach to autocalibrating parallel MRI: where SENSE meets GRAPPA. Magn Reson Med. 2014;71:990–1001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46. Huang F, Vijayakumar S, Li Y, Hertel S, Duensing GR. A software channel compression technique for faster reconstruction with many channels. Magn Reson Imaging. 2008;26:133–141. [DOI] [PubMed] [Google Scholar]

- 47. Zhang T, Pauly JM, Vasanawala SS, Lustig M. Coil compression for accelerated imaging with cartesian sampling. Magn Reson Med. 2013;69:571–582. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48. Boyd S, Parikh N, Chu E, Peleato B, Eckstein J. Distributed optimization and statistical learning via the alternating direction method of multipliers. Found Trends Mach Learn. 2011;3:1–122. [Google Scholar]

- 49. Rodriguez P, Wohlberg B. Efficient minimization method for a generalized total variation functional. IEEE Trans Image Process. 2009;18:322–332. [DOI] [PubMed] [Google Scholar]

- 50. Daubechies I, Defrise M, De Mol C. An iterative thresholding algorithm for linear inverse problems with a sparsity constraint. Commun Pur Appl Math. 2004;57:1413–1457. [Google Scholar]

- 51. Beck A, Teboulle M. A fast iterative shrinkage‐thresholding algorithm for linear inverse problems. SIAM J Imaging Sci. 2009;2:183–202. [Google Scholar]

- 52. Beck A, Teboulle M. Fast gradient‐based algorithms for constrained total variation image denoising and deblurring problems. IEEE Trans Im Proc. 2009;18:2419–2434. [DOI] [PubMed] [Google Scholar]

- 53. Muckley MJ, Noll DC, Fessler JA. Fast parallel MR image reconstruction via B1‐based, adaptive restart, iterative soft thresholding algorithms (BARISTA). IEEE Trans Med Imaging. 2015;34:578–588. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

FIGURE S1 The performance of the preconditioner for different SNR levels. Prospectively undersampled data sets were obtained for different SNR levels by varying the flip angle from 10 to 90 degrees in a TSE sequence. The difference in the number of CG iterations needed until convergence with and without preconditioner for different SNR levels is negligible.