Short abstract

Background

Many physicians enter data into the electronic medical record (EMR) as unstructured free text and not as discrete data, making it challenging to use for quality improvement or research initiatives.

Objectives

The objective of this research paper was to develop and implement a structured clinical documentation support (SCDS) toolkit within the EMR to facilitate quality initiatives and practice-based research in a multiple sclerosis (MS) practice.

Methods

We built customized EMR toolkits to capture standardized data at office visits. Content was determined through physician consensus on necessary elements to support best practices in treating patients with demyelinating disorders. We also developed clinical decision support (CDS) tools and best practice advisories within the toolkits to alert physicians when a quality improvement opportunity exists, including enrollment into our DNA biobanking study at the point of care.

Results

We have used the toolkit to evaluate 541 MS patients in our clinic and begun collecting longitudinal data on patients who return for annual visits. We provide a description and example screenshots of our toolkits, and a brief description of our cohort to date.

Conclusions

The EMR can be effectively structured to standardize MS clinic office visits, capture data, and support quality improvement and practice-based research initiatives at the point of care.

Keywords: Best practices, clinical decision support, clinically isolated syndrome, cohort studies, electronic health records, multiple sclerosis, structured clinical documentation support, quality improvement

Introduction

Multiple sclerosis (MS) is a common, chronic, disabling disease among young adults, affecting more than 400,000 people in the United States.1 It is associated with significant costs, disability, and decreased quality of life for patients and their families.1–4

The American Academy of Neurology (AAN) has published quality measures for the care of adults with neurologic diseases, including MS.5,6 These guidelines address several domains, including diagnosis, disease progression, and the impact of the disease on quality of life. For example, specific diagnostic recommendations include the appropriate use of magnetic resonance imaging (MRI) and the importance of a scored disability scale to assess functional capabilities. Guidelines related to potential disease correlates include assessment for depression, fall risk, risk of bladder infections, fatigue, and presence of cognitive impairment. Lastly, the guidelines support ongoing assessment of depression, anxiety, and changes in other quality-of-life measures. It is not clear, however, whether these quality measures are routinely and systematically implemented in clinical practice or how often they are being used.7,8 Further, whether compliance with these quality guidelines improves outcomes for patients with MS is unknown.

Early intervention with disease-modifying therapies has been shown to reduce progression and disability, and several such therapies have been approved.9 However, there is substantial variation in treatment response and it is likely that some of this variation can be predicted from clinical and demographic information. A recent study using the MSBase cohort identified and validated a prediction model for treatment response.10 Additional observational studies of comparative effectiveness in diverse patient cohorts are thus warranted.

Given the ever-increasing use of the electronic medical record (EMR), there exists an opportunity to standardize care to best practices and to use the EMR to facilitate quality improvement and practice-based research. A challenge to research based on the EMR is that clinical data are not consistently captured discretely, making it difficult to report performance and assess quality improvement opportunities. Structured clinical documentation support (SCDS) offers a solution to this problem and an opportunity to address potential quality gaps at the point of care. We have developed an SCDS toolkit for MS to document initial, interval, and annual follow-up encounters. These toolkits conform to clinical best practices, may be entered into progress notes, and support EMR-based, pragmatic research.

These toolkits are also the foundation of further applications that we are designing and implementing. In addition to standardizing data collection, we seek consent from patients at the point of care for enrollment in DNA biobanking (DodoNA project) to be used for future biomarker studies. Lastly, we are sharing these tools with partner sites as part of the Neurology Practice-Based Research Network (NPBRN). This work will provide the opportunity for novel and innovative quality improvement and practice-based research. Here we describe our toolkit and our vision for future applications to improve the quality of care for MS patients.

Methods

NorthShore University HealthSystem (NorthShore), located in the northern suburbs of Chicago, includes three MS specialists practicing at four outpatient centers. Our seven-stage process for quality improvement and practice-based research using the EMR has been previously described.11

We briefly review here the development of our highly customized MS SCDS toolkit that is used at each patient encounter. This toolkit was built into our EMR environment, which is supplied by Epic Systems Corporation (Epic). Our toolkits are therefore currently designed to be transferable to any practice using Epic. The content of the toolkit was determined through frequent physician meetings to reach consensus on essential elements that conform to best practices in treating patients with demyelinating disorders, primarily MS. We reviewed the pertinent medical literature, AAN guidelines, Consortium of Multiple Sclerosis Centers guidelines, and the Multiple Sclerosis Coalition principles.12–14

Once content was determined, we met every two weeks with programmers from NorthShore’s EMR Optimization team. They built an SCDS toolkit that reflected the selected content, including navigators (a sidebar index of processes to choose from), electronic forms (which had the ability to auto-score and auto-interpret), and summary flowsheets. We also included free-text fields to allow for additional optional information. We continue to meet to discuss ways to increase efficiency; for example, flowsheets carry forward, and we are able to prepopulate fields from a previous visit for data that do not change (e.g. initial symptom). We also developed a summary view that shows a snapshot of historical values, providing a quick reference of past measures. Specific score tests were chosen based on validity, accessibility, and cost: the selected tests were accessible (not proprietary) and had been tested against a general population. Potential cost was a major barrier to using outcome measures that are better validated in MS, as the project is expected to reach several thousand patient-years, and paying a royalty for each patient-year would be financially unsustainable. The Montreal Cognitive Assessment (MoCA) and Short Test of Mental Status (STMS) were also selected because patients across the whole DodoNA project (not just MS) need a cognitive assessment to determine their ability to sign consent. Another consideration was ease of use for patients and staff, as using multiple tests to measure the same outcome is time consuming and requires additional staff training. We thought that, despite these shortcomings, valid data would be obtained using these measures because they are monitored over time from a baseline.
Also, the ability to use and monitor components of the MoCA or STMS separately will be valuable and may help direct the design of more valid tests to evaluate cognition in MS, a field that still lacks an easy-to-use and highly sensitive measurement test.

Score tests included the Center for Epidemiologic Studies Depression (CESD) scale,15 Generalized Anxiety Disorder 7-Item (GAD-7) scale,16 Expanded Disability Status Scale (EDSS),17,18 Fatigue Severity Scale (FSS),19 9-Hole Peg Test (9-HPT),20–22 and Timed 25-Foot Walk (25FW) test.21,22 Quality of life was measured using the Veterans RAND 12-Item Health Survey (VR-12).23–25 For cognitive assessment, we initially used the MoCA.26,27 Owing to the administration burden to the nurses, concerns over potential false positives, and changes in licensing permission, however, we switched to the STMS.28 Because scores from MoCA and STMS can be converted to the Mini-Mental State Examination (MMSE),29 for comparability we present all scores as their MMSE-converted scores.30–32

We also included discrete data fields to capture detailed information related to results of MRI testing. This includes specifics related to the region of imaging (brain, thoracic, or cervical spine), dates, whether information is based on radiologist report or physician review of the film, whether contrast was used, the number of T1 and T2 lesions, presence and number of gadolinium-enhancing lesions, and whether there is brainstem involvement or evidence of cerebral atrophy. We collected results of any evoked potential testing (brainstem, somatosensory, or visual, recorded separately). Data were collected on optical coherence tomography, including dates performed, whether findings were from the radiology report or physician review of the film, and whether retinal fiber thinning was present. Lastly, we recorded whether cerebrospinal fluid analysis was performed and whether findings were positive or negative.
We designed workflows (the order and assignment of tasks to a care team that included a medical assistant, a nurse, and an MS neurologist) and mapped items to the progress notes (the order and layout in which the content would write). These progress notes were developed with the intent that they would be readable to other specialties (see screenshot in Supplemental File 1 for an example). The toolkit was thoroughly tested and revised in a development playground and, finally, put into production.
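
The auto-scoring and auto-interpretation of electronic forms described above can be illustrated with a minimal Python sketch for the GAD-7. This is not the toolkit's Epic build: the function name `score_gad7` is hypothetical, and the severity bands (5, 10, and 15 for mild, moderate, and severe) are the conventional cut points from the published scale, which we assume here for illustration.

```python
from typing import Sequence, Tuple

# Conventional GAD-7 severity bands (lower bound, label), checked from the top.
# Assumption: the production toolkit may apply different or additional bands.
GAD7_BANDS = [(15, "severe"), (10, "moderate"), (5, "mild"), (0, "minimal")]


def score_gad7(item_responses: Sequence[int]) -> Tuple[int, str]:
    """Sum seven item responses (each 0-3) and map the total to a severity band."""
    if len(item_responses) != 7:
        raise ValueError("GAD-7 requires exactly 7 item responses")
    if any(r not in (0, 1, 2, 3) for r in item_responses):
        raise ValueError("each GAD-7 item is scored 0, 1, 2, or 3")
    total = sum(item_responses)
    for lower_bound, label in GAD7_BANDS:
        if total >= lower_bound:
            return total, label
    raise AssertionError("unreachable: total is never negative")
```

Rejecting incomplete or out-of-range responses, rather than scoring them silently, mirrors the idea that a form should only auto-interpret a fully completed questionnaire.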

The toolkit was designed to support initial and follow-up visits (both annual and interval visits). Appointment lengths at our practices are 60 minutes for the initial visit, 30 minutes for interval visits, and 30–60 minutes for the annual visit. The toolkits were designed to be completed within these standard appointment lengths. These toolkits are the default Epic documentation screens used by all of our MS neurologists for all clinical encounters. All patient data collected at clinical encounters are charted using this toolkit. Quality improvement projects can be undertaken by evaluating any of the discrete information gathered at routine office visits. Those data are automatically extracted, transformed, and loaded from the EMR nightly into an enterprise data warehouse, making it possible to write reports using standard statistical and analytic software packages, such as R.11 Data quality is assessed at several points in the process. First, missing data reports are generated for each physician and are reviewed by research assistants, who work with the physician to remediate missing data points, if possible. Fields are not autopopulated; physicians must click both positive and negative answers. If nothing is clicked, the field will appear as “NA” in the data reports. Second, our statistical team reviews data for outliers and illogical values (for example, date of onset after date of diagnosis). Questionable values are sent to the research assistants and/or physicians for correction; if they cannot be corrected, the value is treated as missing.
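
The two data quality checks described above (missing fields and illogical values) can be sketched in Python as follows. This is an illustrative sketch only: the field names `onset_date`, `diagnosis_date`, and `edss` and the function `find_quality_issues` are hypothetical, and the real reports are generated from the enterprise data warehouse rather than from in-memory dictionaries.

```python
from datetime import date
from typing import Dict, List, Optional


def find_quality_issues(record: Dict[str, Optional[object]],
                        required_fields: List[str]) -> List[str]:
    """Return human-readable data quality issues for one visit record."""
    issues = []
    # A field nobody clicked is stored as None here (shown as "NA" in reports).
    for field in required_fields:
        if record.get(field) is None:
            issues.append(f"missing: {field}")
    # Logic check from the text: onset cannot come after diagnosis.
    onset = record.get("onset_date")
    diagnosis = record.get("diagnosis_date")
    if onset is not None and diagnosis is not None and onset > diagnosis:
        issues.append("illogical: onset_date after diagnosis_date")
    return issues


# Made-up example record, not study data.
example = {
    "onset_date": date(2015, 1, 1),
    "diagnosis_date": date(2012, 6, 1),
    "edss": None,
}
print(find_quality_issues(example, ["onset_date", "diagnosis_date", "edss"]))
```
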
The SCDS toolkit was also designed to support clinical practice-based research by prompting enrollment in a DNA biobanking study if the physician documents the following information: the patient’s final diagnosis as MS or a differential diagnosis of acute disseminated encephalomyelitis, clinically isolated syndrome (CIS), demyelinating disease of the central nervous system, neuromyelitis optica, optic neuritis, subacute necrotizing myelitis or myelitis, and the impression form; the patient is 18 years or older; and he or she resides in Cook or Lake County, Illinois. This DNA biobank will serve as a resource for future molecular prognostic studies.
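
The enrollment prompt criteria listed above amount to a simple rule, sketched here in Python. The function name, argument names, and the lowercase string encoding of diagnoses are assumptions made for illustration; in practice the rule is evaluated inside the EMR against discrete fields.

```python
# Diagnoses named in the text as qualifying for the biobanking prompt.
ELIGIBLE_DIAGNOSES = {
    "multiple sclerosis",
    "acute disseminated encephalomyelitis",
    "clinically isolated syndrome",
    "demyelinating disease of the central nervous system",
    "neuromyelitis optica",
    "optic neuritis",
    "subacute necrotizing myelitis",
    "myelitis",
}
ELIGIBLE_COUNTIES = {"cook", "lake"}  # Cook or Lake County, Illinois


def should_prompt_biobank_enrollment(diagnosis: str, age_years: int,
                                     county: str, state: str) -> bool:
    """Return True when all three documented criteria are met."""
    return (
        diagnosis.strip().lower() in ELIGIBLE_DIAGNOSES
        and age_years >= 18
        and state.strip().upper() == "IL"
        and county.strip().lower() in ELIGIBLE_COUNTIES
    )
```

All three conditions must hold together, so a patient with an eligible diagnosis who lives outside the two counties would not trigger the prompt.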

Statistical analyses

All statistical analyses were performed using R statistical software.11 We visually assessed the distribution of categorical variables using histograms to identify potential outliers for further review. For continuous variables, we assessed deviations from a normal distribution using quantile-quantile plots. We present medians and ranges for consistency, as many variables were not normally distributed. Correlation coefficients were calculated using Pearson R2. Principal component (PC) analysis was calculated on numerical variables both with and without varimax rotation. Tests of statistical significance were conducted using a one-way analysis of variance.
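
As a rough illustration of the descriptive statistics named above, the following Python sketch computes a median with range and a Pearson correlation coefficient (and its square) using only the standard library. The analyses in this study were run in R; the helper names and the small input lists below are made up for illustration and are not study data.

```python
import math
import statistics
from typing import Sequence, Tuple


def median_and_range(values: Sequence[float]) -> Tuple[float, float, float]:
    """Return (median, minimum, maximum) for a non-empty sequence."""
    return statistics.median(values), min(values), max(values)


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient for two equal-length sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x, mean_y = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return sxy / math.sqrt(sxx * syy)


# Made-up scores for two measures on four hypothetical patients.
scores_a = [1.0, 2.0, 3.0, 4.0]
scores_b = [2.0, 4.0, 6.0, 8.0]
r = pearson_r(scores_a, scores_b)
print(median_and_range(scores_a), r, r ** 2)
```
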

Results

Baseline descriptive data

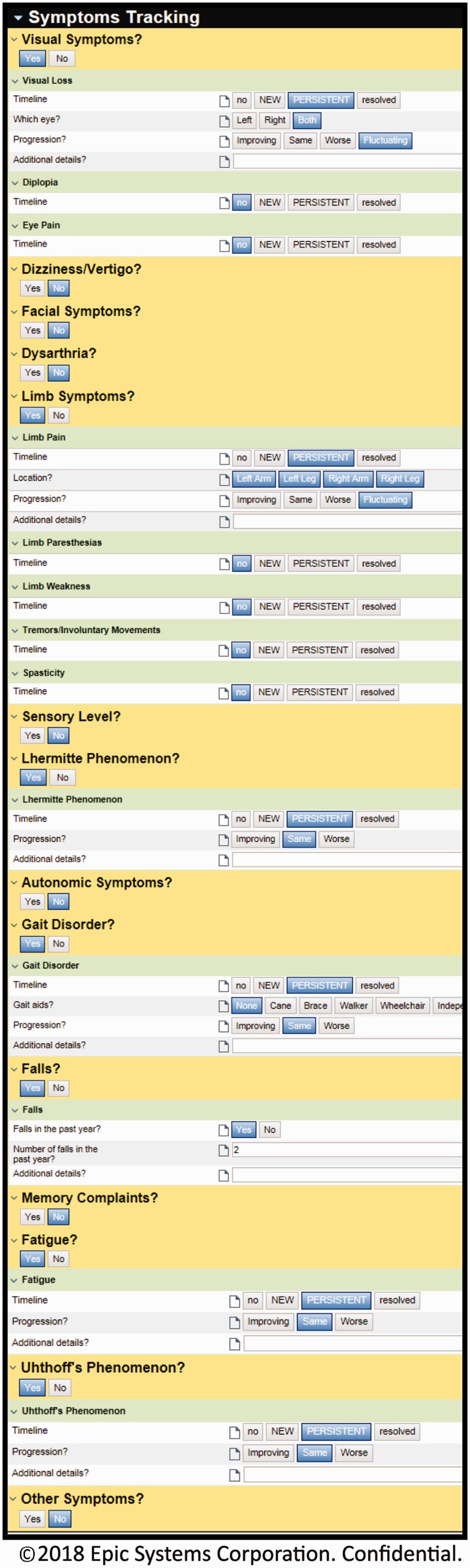

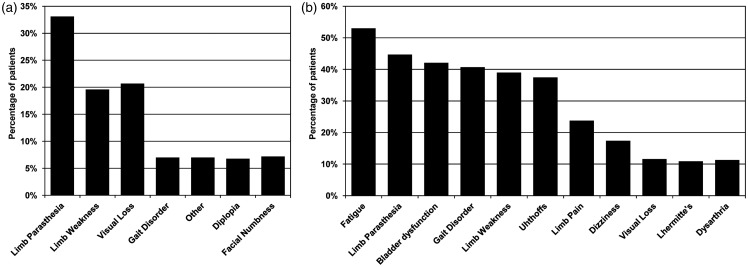

Toolkit use began on August 28, 2012. As of July 1, 2018, we had used the toolkit to evaluate patients with demyelinating disorders at 3195 visits, including 1039 initial visits. Of these patients, 541 were diagnosed with MS (530 individuals) or CIS (11 individuals). The toolkit includes up to 1370 fields of smart cascading data elements per office visit (example screenshots are shown in Figure 1; additional screenshots are shown in Supplemental File 1). The toolkit utilization rate was 100%. For the present descriptive study, we have restricted the findings to patients with MS/CIS, as this is the vast majority of patients. Select initial visit demographic and clinical characteristics are shown in Table 1. Consistent with population-based estimates,29 there was an approximate 3:1 female-to-male ratio. The median age of onset of MS was 34 years (range, 8–73 years) and the median duration of symptoms at initial visit was 11 years (range, 0–59 years). The most commonly reported initial symptom was limb paresthesia (33%), followed by visual loss (21%) and limb weakness (20%). The most commonly reported persistent symptom among MS patients was fatigue (53%), followed by limb paresthesia (45%), bladder dysfunction (42%), and gait disorder (41%). Details of reported symptoms are shown in Figure 2.

Figure 1.

Screenshot of the Multiple Sclerosis SCDS toolkit within the electronic medical record for symptom tracking, ©2017 Epic Systems Corporation, used with permission.

Table 1.

Descriptive characteristics of multiple sclerosis/clinically isolated syndrome patients evaluated at initial visit.

| Characteristic | Total (n = 541) |
|---|---|
| Age at encounter (years), median (range) | 51 (19–90) |
| Disease duration (years), median (range) | 11 (0–59) |
| Female, n (%) | 411 (76) |
| Body mass index (kg/m2), median (range) | 27 (16–50) |
| Race, n (%) | |
| Caucasian | 309 (82) |
| Hispanic/Latino | 16 (4) |
| African American | 34 (9) |
| Asian | 7 (2) |
| American Indian or Native Alaskan | 2 (<1) |
| Other | 8 (2) |
| Education (years), median (range) | 16 (5–27) |
| Tobacco, n (%) | |
| Never | 272 (50) |
| Former | 187 (35) |
| Current | 82 (15) |

Percentages calculated among nonmissing.

Missing data: race (n = 165, 31%), education (n = 26, 7%), body mass index (n = 34, 9%).

Figure 2.

(a) Reported initial symptoms and (b) reported persistent symptoms in multiple sclerosis patients. Shown for initial symptoms reported with greater than 5% frequency and persistent symptoms with greater than 10% frequency.

Score tests

The CESD, GAD-7, FSS, 25FW, 9-HPT, and EDSS scores are shown in Table 2 for the initial visit, first annual visit, and second annual visit. The median time between visits was 12.5 months in our cohort. At the initial visit, men tended to have worse disability (as measured by the EDSS), with about two-thirds scoring >2 (69% vs 61% in women). Men and women had similar GAD-7 scores, with 54% scoring consistent with anxiety (mild, moderate, or severe). Men were more likely to have CESD scores suggesting moderate or possible severe depression (45% in men vs 37% in women). The prevalence of possible cognitive impairment (as scored by the MoCA or STMS converted to MMSE) was low (10%). In general, men and women had similar scores for the measured functional tests.

Table 2.

Score test measures at initial and annual visits in multiple sclerosis/clinically isolated syndrome patients.

| Measure, median (min–max) | Baseline (n = 374a) | First annual (n = 235) | Second annual (n = 147) |
|---|---|---|---|
| GAD7 | 4 (0–21) | 3 (0–21) | 3 (0–21) |
| CESD | 11 (0–46) | 11 (0–50) | 10 (0–50) |
| FSS | 39 (9–63) | 39 (9–63) | 37 (9–63) |
| 25FW (seconds) | 5 (2–180) | 6 (3–45) | 5 (3–39) |
| 9-HPT Dominant (seconds) | 22 (14–138) | 21 (14–174) | 21 (14–93) |
| 9-HPT Nondominant (seconds) | 23 (14–163) | 22 (14–93) | 20 (15–59) |
| EDSS | 3 (0–9) | 2 (0–8) | 2.5 (0–8) |
| MMSE | 28 (15–30) | 28 (12–30) | 28 (14–30) |

a Total n is less than the total number evaluated with the toolkit at initial visit (n = 541) because only individuals enrolled in our DNA biobanking study (n = 376) are followed annually with the toolkit and thus included in longitudinal analysis. An additional two patients did not have score test measures recorded.

25FW: Timed 25-Foot Walk; CESD: Center for Epidemiologic Studies Depression scale; EDSS: Expanded Disability Status Scale; FSS: Fatigue Severity Scale; GAD7: Generalized Anxiety Disorder 7 item; 9-HPT: 9-Hole Peg Test; max: maximum; min: minimum; MMSE: Mini-Mental State Examination.

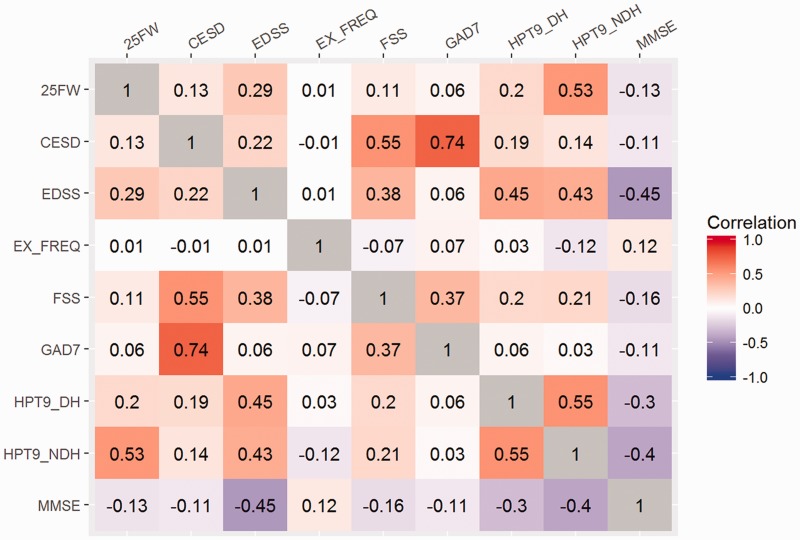

Correlation analysis

The pairwise correlations between the score test measures are shown in Figure 3. As expected, there was a strong correlation between GAD-7 and CESD. FSS showed a moderate correlation with CESD. EDSS was moderately correlated with 9-HPT and MMSE (inverse correlation).

Figure 3.

Pairwise correlations between score test measures.

25FW: Timed 25-Foot Walk; CESD: Center for Epidemiologic Studies Depression scale; EDSS: Expanded Disability Status Scale; FSS: Fatigue Severity Scale; GAD7: Generalized Anxiety Disorder 7 item; HPT9-DH: 9-Hole Peg Test dominant hand; HPT9-NDH: 9-Hole Peg Test nondominant hand; MMSE: Mini-Mental State Examination; EX_FREQ: Exercise Frequency.

Similarly, the PC analysis produced a three-factor solution with the 25FW, 9-HPT, MMSE, and EDSS loading on the first factor (PC1) while the FSS, GAD7, and CESD loaded on the second factor (PC2) and 25FW on the third factor (PC3) (data not shown). This suggests that the variation in these measures in our patient population is explained largely by the three domains, characterized by the score tests as described above.

Annual visits

Our clinical practice includes annual follow-up (in accordance with best practices) for MS patients. As such, we collect longitudinal data annually using the toolkits. Score test measures for patients enrolled in our DNA biobank at baseline and annual follow-up visits are shown in Table 2. Longitudinal data collection is ongoing, and we show here results for visits for which we have data on at least 100 patients (two annual visits after the initial visit). In general, little change was seen for the measured score tests over two years of annual follow-up visits.

Discussion

We have developed and implemented a customized EMR toolkit to evaluate MS patients in our neurology clinics. With simple mouse clicks, the toolkit writes progress notes and ensures that care conforms to best practices. The toolkits are also designed to support quality improvement projects and point-of-care research. To address potential quality gaps, we have designed and implemented best practice advisories (BPAs) that alert a physician when a quality improvement opportunity exists. BPAs to address depression, anxiety, and cognitive impairment are currently being implemented as a broad initiative in neurology. Specifically, if a patient screens positive for depression, anxiety, or mild cognitive impairment, a BPA fires and presents a mouse-click option for the physician to place a medication order, write a referral, or defer (which prompts a selection of a reason for deferral). We will track patient outcomes to assess the effectiveness of these measures. Importantly, we will also assess how frequently these BPAs are acted on to assess their utility in clinical practice.
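
The advisory logic described above (a positive screen fires an alert, and the physician must order a medication, write a referral, or defer with a reason) can be sketched in Python as follows. The class, function, and action names are hypothetical and serve only to illustrate the rule; the actual advisories are configured within the EMR.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# The three physician responses named in the text.
BPA_ACTIONS = ("medication_order", "referral", "defer")


@dataclass
class ScreeningResult:
    depression_positive: bool
    anxiety_positive: bool
    cognitive_impairment_positive: bool


def triggered_advisories(result: ScreeningResult) -> List[str]:
    """List the advisories that should fire for one set of screening results."""
    advisories = []
    if result.depression_positive:
        advisories.append("depression")
    if result.anxiety_positive:
        advisories.append("anxiety")
    if result.cognitive_impairment_positive:
        advisories.append("mild cognitive impairment")
    return advisories


def record_response(action: str,
                    deferral_reason: Optional[str] = None) -> Dict[str, Optional[str]]:
    """Validate a physician response; a deferral must carry a reason."""
    if action not in BPA_ACTIONS:
        raise ValueError(f"unknown action: {action}")
    if action == "defer" and not deferral_reason:
        raise ValueError("a deferral requires a reason")
    return {
        "action": action,
        "deferral_reason": deferral_reason if action == "defer" else None,
    }
```

Storing each response as a discrete record, including the deferral reason, is what would make it possible to measure how often advisories are acted on.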

We are also planning to implement additional MS-specific BPAs. As a first step, we are planning to assess adherence to specific measures. For example, if a patient is prescribed immunotherapy, we are evaluating how often baseline lab tests or consultations are ordered. Based on these findings, we may develop a BPA to alert physicians to order lab tests or a consultation when immunotherapy is prescribed. Similarly, given that physical therapy has been shown to be beneficial as it relates to fall risk and balance,30 we could develop a BPA to alert physicians when a patient is a fall risk or has a history of falls and has not been evaluated by a physical therapist. Again, we will then track patient outcomes following initiation of these BPAs to assess the success of these quality improvement initiatives.

We have evaluated more than 500 MS patients with the toolkits to date. Data collection is ongoing as data captured in the toolkits are used in routine clinical care. Historically, the structure of the EMR has been a hindrance to rigorous data collection and analysis because of lack of standardization. Our clinical data are of high quality and easy to extract for analysis because they are discretized at the point of care. This results in uniformly collected data and eliminates the need to translate free text to discrete, comparable entries. Because our toolkits were developed by physicians to conform to best practices, and because each month we provide physicians with missing value reports, the amount of missing data for any given patient (and overall) is very low.11 This reduces the chance of bias related to data acquired only on select patients or subgroups of patients.

We also have longitudinal data that are continuing to accrue and, for some patients, we now have data for up to six annual visits. We have a high rate of patient follow-up (78%), suggesting that those returning for annual visits are likely representative of the initial patient population. For future hypothesis-driven studies, however, we will conduct a rigorous assessment of the potential for bias related to loss to follow-up and adjust analyses as appropriate. Seeing how our patient outcomes change over time and what might predict them will provide valuable opportunities for quality improvement. We did not observe significant changes in our score test measures over time for the sample overall. These are primarily descriptive analyses, however, and further refined analyses are planned to determine whether we can identify factors that predict better or worse progression in individuals.

As previously mentioned, we are also enrolling eligible patients in our DNA biobanking project (N = 346). The toolkit automatically evaluates every patient for eligibility in our DNA biobanking study. If a patient is eligible, a BPA appears, alerting the physician to the patient’s eligibility. If the patient agrees to participate, he or she is asked for consent at the point of care. Aside from an initial blood draw, data for these patients are collected wholly within the standard office encounter, with no research-related visits. Blood samples are used to generate data on more than one million single nucleotide polymorphisms. This genetic information can be used to complement the clinical data and conduct novel studies of the influence of biomarkers on disease etiology, progression, and treatment response.

Another opportunity is conducting pragmatic trials of comparative effectiveness using the EMR. For example, we could conduct a point-of-care trial to compare equivalent medications by comparing survival-free rates of discontinuation, adjunctive therapy, relapses, or progression using the EMR. BPAs prompting random assignment of equivalent medications would be triggered to enroll patients at the point of care, when a patient meets eligibility criteria as determined from data captured in our structured toolkit. Based on prior observations in subgroups, treatments could later be assigned adaptively.11 We have already implemented this design successfully for other projects (e.g. prevention therapies for migraine, nootropic therapies for mild cognitive impairment) in the Department of Neurology.

Beyond our MS practice, our toolkits also support data sharing through the NPBRN. With funding support from the Agency for Healthcare Research and Quality, the NorthShore Neurological Institute created an NPBRN for the purposes of standardizing care, benchmarking performance, and conducting multisite research collaborations across diverse patient populations. The NPBRN includes 14 neurology departments that use the same Epic EMR and have agreed to implement the toolkits and share de-identified data.

Despite these innovations, there are limitations to this EMR-based approach. There is a learning curve to using the toolkits, though our physicians have found that after this initial period, they are able to complete the toolkit within the allotted appointment time and we are continually discussing ways to improve efficiency (for example, determining values that can be prepopulated from previous visits). We believe the project will ultimately lead to time saving because free texting is replaced by clicking in a specific field. Notably, MS is a complex neurologic disease and one of the advantages of the toolkits is the ability to display all parameters in a table format, allowing for a rapid evaluation of progression of the disease that would require a much longer time by traditional chart review. Additionally, the toolkit provides opportunities for scholarly activities and practice-based research in the context of routine clinical practice. And though the toolkits present standardized data fields, there are invariably differences among physicians as to how questions are asked and how equivocal responses are interpreted. Though there is space for comments in many fields, providers are generally “forced” to choose from a preset response list that is used for descriptive reporting and analysis. However, all physicians were involved in the development of the toolkits and agreed on the content and responses. Notably, modifications can always be undertaken by the Health Information Technology team and frequent meetings are conducted on an ongoing basis to address these and other concerns. Individuals may also interpret the same question differently with respect to completing patient-entered questionnaires. However, this is not an issue specific to our methods and the score test measures (clinical assessments and patient-entered questionnaires) used in our toolkits have been validated previously. 
Lastly, our toolkits were designed by a consensus of MS physicians at NorthShore with our given appointment lengths. Thus, other groups may not prioritize the same data elements or may not have appointment lengths sufficient to support complete use. We are currently sharing these toolkits with other neurology departments and continually solicit feedback on the use of the toolkit and on what constitutes a “minimal” dataset.

Conclusion

SCDS toolkits, as well as CDS features, can be used to standardize MS office visits. The toolkits can be built to enable physicians to provide care consistent with best practices, with the goal of optimizing patient outcomes. Additionally, the toolkits capture discrete data that can easily be translated into descriptive and analytic reports for the purpose of conducting quality improvement and practice-based research. We anticipate these toolkits will improve the care of patients with MS and provide future opportunities to engage in research to identify predictors of disease course, treatment response, and quality of life measures, moving closer to the reality of providing personalized medicine at the point of care.

Supplemental Material

Supplemental Material for Successful utilization of the EMR in a multiple sclerosis clinic to support quality improvement and research initiatives at the point of care by Kelly Claire Simon, Afif Hentati, Susan Rubin, Tiffani Franada, Darryck Maurer, Laura Hillman, Samuel Tideman, Monika Szela, Steven Meyers, Roberta Frigerio and Demetrius M Maraganore in Multiple Sclerosis Journal—Experimental, Translational and Clinical

Acknowledgments

The authors acknowledge the generous funding support of the Auxiliary of NorthShore University HealthSystem with respect to the initial building of the EMR toolkits, and thank the medical assistants, nurses, neurologists, EMR Optimization and Enterprise Data Warehouse programmers, administrators, and research personnel at NorthShore University HealthSystem who contributed to the quality improvement and practice-based research initiative using the EMRs. The authors thank Vimal Patel, PhD, for his assistance with editing, formatting, and submitting the manuscript for publication. Finally, the authors thank the neurology patients who inspire us to improve quality and to make our clinical practice innovative every day.

Conflict of Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This work was supported by the United States Department of Health and Human Services, Agency for Healthcare Research and Quality (R01HS024057).

References

- 1.Campbell JD, Ghushchyan V, Brett McQueen R, et al. Burden of multiple sclerosis on direct, indirect costs and quality of life: National US estimates. Mult Scler Relat Disord 2014; 3: 227–236. [DOI] [PubMed] [Google Scholar]

- 2.Hartung DM. Economics and cost-effectiveness of multiple sclerosis therapies in the USA. Neurotherapeutics 2017; 14: 1018–1026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Bassi M, Falautano M, Cilia S, et al. Illness perception and well-being among persons with multiple sclerosis and their caregivers. J Clin Psychol Med Settings 2016; 23: 33–52. [DOI] [PubMed] [Google Scholar]

- 4.Adelman G, Rane SG, Villa KF. The cost burden of multiple sclerosis in the United States: A systematic review of the literature. J Med Econ 2013; 16: 639–647. [DOI] [PubMed] [Google Scholar]

- 5.American Academy of Neurology. Quality measures, https://www.aan.com/practice/quality-measures/ (2018, accessed 24 January 2018).

- 6.Rae-Grant A, Bennett A, Sanders AE, et al. Quality improvement in neurology: Multiple sclerosis quality measures: Executive summary. Neurology 2015; 85: 1904–1908. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Yadav V, Bever C, Jr, Bowen J, et al. Summary of evidence-based guideline: Complementary and alternative medicine in multiple sclerosis: Report of the Guideline Development Subcommittee of the American Academy of Neurology. Neurology 2014; 82: 1083–1092. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Kaufman DI, Trobe JD, Eggenberger ER, et al. Practice parameter: The role of corticosteroids in the management of acute monosymptomatic optic neuritis. Report of the Quality Standards Subcommittee of the American Academy of Neurology. Neurology 2000; 54: 2039–2044. [DOI] [PubMed] [Google Scholar]

- 9.Tsivgoulis G, Katsanos AH, Grigoriadis N, et al. The effect of disease modifying therapies on disease progression in patients with relapsing–remitting multiple sclerosis: A systematic review and meta-analysis. PLoS One 2015; 10: e0144538. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Kalincik T, Manouchehrinia A, Sobisek L, et al. Towards personalized therapy for multiple sclerosis: Prediction of individual treatment response. Brain 2017; 140: 2426–2443.

- 11. Maraganore DM, Frigerio R, Kazmi N, et al. Quality improvement and practice-based research in neurology using the electronic medical record. Neurol Clin Pract 2015; 5: 419–429.

- 12. American Academy of Neurology. Multiple sclerosis quality measures, https://www.aan.com/policy-and-guidelines/quality/quality-measures2/quality-measures/multiple-sclerosis/ (2018, accessed 24 January 2018).

- 13. Simon JH, Li D, Traboulsee A, et al. Standardized MR imaging protocol for multiple sclerosis: Consortium of MS Centers consensus guidelines. AJNR Am J Neuroradiol 2006; 27: 455–461.

- 14. Multiple Sclerosis Coalition. The use of disease-modifying therapies in multiple sclerosis: Principles and current evidence (version as of March 2017), http://ms-coalition.org/cms/images/stories/dmt_consensus_ms_coalition042017.pdf (accessed 24 January 2018).

- 15. Radloff LS. The CES-D scale: A self-report depression scale for research in the general population. Appl Psychol Meas 1977; 1: 385–401.

- 16. Spitzer RL, Kroenke K, Williams JB, et al. A brief measure for assessing generalized anxiety disorder: The GAD-7. Arch Intern Med 2006; 166: 1092–1097.

- 17. Kurtzke JF. Rating neurologic impairment in multiple sclerosis: An Expanded Disability Status Scale (EDSS). Neurology 1983; 33: 1444–1452.

- 18. Haber A, LaRocca NG (eds). Minimal record of disability for multiple sclerosis. New York: National Multiple Sclerosis Society, 1985.

- 19. Krupp LB, LaRocca NG, Muir-Nash J, et al. The Fatigue Severity Scale. Application to patients with multiple sclerosis and systemic lupus erythematosus. Arch Neurol 1989; 46: 1121–1123.

- 20. Oxford Grice K, Vogel KA, Le V, et al. Adult norms for a commercially available Nine Hole Peg Test for finger dexterity. Am J Occup Ther 2003; 57: 570–573.

- 21. Polman CH, Rudick RA. The Multiple Sclerosis Functional Composite: A clinically meaningful measure of disability. Neurology 2010; 74 (Suppl 3): S8–S15.

- 22. Fischer JS, Rudick RA, Cutter GR, et al. The Multiple Sclerosis Functional Composite measure (MSFC): An integrated approach to MS clinical outcome assessment. National MS Society Clinical Outcomes Assessment Task Force. Mult Scler 1999; 5: 244–250.

- 23. Kazis LE, Selim A, Rogers W, et al. Dissemination of methods and results from the Veterans Health Study: Final comments and implications for future monitoring strategies within and outside the veterans healthcare system. J Ambul Care Manage 2006; 29: 310–319.

- 24. Kazis LE, Miller DR, Skinner KM, et al. Applications of methodologies of the Veterans Health Study in the VA healthcare system: Conclusions and summary. J Ambul Care Manage 2006; 29: 182–188.

- 25. Selim A, Rogers W, Qian S, et al. A new algorithm to build bridges between two patient-reported health outcome instruments: The MOS SF-36® and the VR-12 Health Survey. Qual Life Res 2018; 27: 2195–2206.

- 26. Nasreddine ZS, Phillips NA, Bédirian V, et al. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J Am Geriatr Soc 2005; 53: 695–699.

- 27. Freitas S, Batista S, Afonso AC, et al. The Montreal Cognitive Assessment (MoCA) as a screening test for cognitive dysfunction in multiple sclerosis. Appl Neuropsychol Adult 2018; 25: 57–70.

- 28. Kokmen E, Naessens JM, Offord KP. A short test of mental status: Description and preliminary results. Mayo Clin Proc 1987; 62: 281–288.

- 29. Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res 1975; 12: 189–198.

- 30. Roalf DR, Moberg PJ, Xie SX, et al. Comparative accuracies of two common screening instruments for classification of Alzheimer’s disease, mild cognitive impairment, and healthy aging. Alzheimers Dement 2013; 9: 529–537.

- 31. van Steenoven I, Aarsland D, Hurtig H, et al. Conversion between Mini-Mental State Examination, Montreal Cognitive Assessment, and Dementia Rating Scale-2 scores in Parkinson’s disease. Mov Disord 2014; 29: 1809–1815.

- 32. Solomon TM, deBros GB, Budson AE, et al. Correlational analysis of 5 commonly used measures of cognitive functioning and mental status: An update. Am J Alzheimers Dis Other Demen 2014; 29: 718–722.

Supplementary Materials

Supplemental Material for Successful utilization of the EMR in a multiple sclerosis clinic to support quality improvement and research initiatives at the point of care by Kelly Claire Simon, Afif Hentati, Susan Rubin, Tiffani Franada, Darryck Maurer, Laura Hillman, Samuel Tideman, Monika Szela, Steven Meyers, Roberta Frigerio and Demetrius M Maraganore in Multiple Sclerosis Journal—Experimental, Translational and Clinical