Abstract

Gravity is a defining force that governs the evolution of mechanical forms, shapes and anchors our perception of the environment, and imposes fundamental constraints on our interactions with the world. Within the animal kingdom, humans are relatively unique in having evolved a vertical, bipedal posture. Although a vertical posture confers numerous benefits, it also renders us less stable than quadrupeds, increasing susceptibility to falls. The ability to accurately and precisely estimate our orientation relative to gravity is therefore of utmost importance. Here we review sensory information and computational processes underlying gravity estimation and verticality perception. Central to gravity estimation and verticality perception is multisensory cue combination, which serves to improve the precision of perception and resolve ambiguities in sensory representations by combining information from across the visual, vestibular, and somatosensory systems. We additionally review experimental paradigms for evaluating verticality perception, and discuss how particular disorders affect the perception of upright. Together, the work reviewed here highlights the critical role of multisensory cue combination in gravity estimation, verticality perception, and creating stable gravity-centered representations of our environment.

Gravity is a defining force that governs the evolution of mechanical forms (Volkmann and Baluška, 2006), shapes and anchors our perception of the environment (Dichgans et al., 1972; Coppola et al., 1998a; Merfeld et al., 1999; Laurens and Angelaki, 2011; Rosenberg and Angelaki, 2014), and imposes fundamental constraints on how we interact with the world (Gaveau et al., 2011, 2014, 2016; Crevecoeur et al., 2014). Humans are unique in having evolved a vertical, bipedal posture that confers benefits such as freeing our hands to interact with objects, elevating our line of sight, and enabling metabolically efficient walking (Alexander, 2004). However, because our vertical posture has narrowed our base of support and raised our center of mass, it has also made us less stable than if we had a quadrupedal posture. Deviations from upright can thus have dire consequences for maintaining posture and safe locomotion (e.g., increasing the risk of falls), making the estimation of gravity and verticality critical steps in bipedalism.

In this chapter, we review sensory sources and computational processes underlying gravity estimation and verticality perception. Gravity estimation refers to the process of estimating the direction of the gravitational vector. In turn, verticality is defined relative to gravity: a vertical orientation is parallel to gravity, whereas a horizontal orientation is perpendicular to gravity. This chapter examines how the estimation of gravity and our spatial orientation are multisensory processes relying on visual, vestibular, and somatosensory signals. We distinguish between two forms of multisensory cue combination that contribute to gravity estimation and verticality perception (Seilheimer et al., 2014). First, cue integration improves the precision of gravity and self-orientation estimates. Second, cue disambiguation resolves ambiguities in sensory representations that exist at the level of a single modality by using sensory information from other modalities. For example, the visual orientation of objects in the world provides a valuable cue to gravity, but the orientation of the eyes in the world confounds visual orientation. To interpret visual cues to gravity properly, the contributions of object-in-world and eye-in-world orientations to the retinal images must be disambiguated (Sunkara et al., 2015). This is achieved through a reference frame transformation in which a multisensory estimate of gravity is used to re-express egocentrically (i.e., relative to the eyes) encoded visual signals relative to an allocentric, gravity-centered reference frame.

We begin by introducing concepts from cue integration theory, a framework that can be used to quantify the inference of verticality from multiple ambiguous and noisy sensory signals. We then review experimental paradigms for assessing verticality perception, and examine conditions under which the paradigms may yield different estimates of verticality. The discussion of different measures of verticality leads into a review of the contributions of the visual, vestibular, and somatosensory systems to verticality perception framed in terms of cue integration. Following the discussion of cue integration, we review two examples of cue disambiguation. First, we review the neural basis of reference frame transformations for achieving gravity-centered visual perception. Second, we review computations that can dissociate the contributions of gravitational and inertial forces to otolith signals, a classic example of cue disambiguation for estimating linear acceleration and head tilt. Together, the work reviewed here highlights the critical role of multisensory cue combination in estimating gravity, verticality perception, and creating gravity-centered representations of our environment.

MODELING VERTICALITY PERCEPTION USING CUE INTEGRATION THEORY

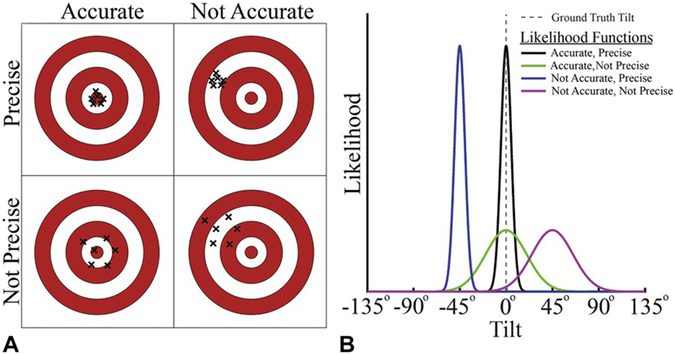

Maintaining verticality requires that we estimate our orientation relative to gravity (i.e., tilt) from noisy and ambiguous sensory signals. We can quantify the quality of our tilt inferences in terms of their accuracy and precision (Seilheimer et al., 2014). Inferences are accurate if they closely match the ground truth tilt (i.e., they are unbiased), and they are precise if they are reliable. As an analogy, consider a game of darts in which performance can vary independently in accuracy and precision (Fig. 3.1A). Performance is both accurate and precise if all the dart throws cluster tightly around the bullseye. However, if the throws cluster loosely around the bullseye, then performance is accurate but not precise. Conversely, performance may be precise but not accurate if the dart throws tightly cluster around a point other than the bullseye. Lastly, performance is neither accurate nor precise if the throws cluster loosely around a point other than the bullseye. Successful interactions with our environment require that we maintain an accurate and precise estimate of our orientation relative to gravity; that is, a tight cluster centered on the bullseye.

Fig. 3.1.

Accuracy and precision of sensory representations. (A) Dartboard schematic illustrating four accuracy–precision scenarios. Accuracy describes how close the representation is to the ground truth (the bullseye). Performance is accurate if, on average, the dart hits the bullseye (left column). Precision describes the reliability of the representation. Performance is precise if multiple dart throws result in a tight cluster (top row). As is illustrated here, accuracy and precision can vary independently. (B) Sensory representations can be described as likelihood functions. Given a ground truth tilt of 0° (vertical dotted gray line), each curve shows a Gaussian-shaped likelihood function with different levels of accuracy and precision. The horizontal axis shows the possible tilts, and the vertical axis indicates the likelihood of sensing each of those tilts given the ground truth tilt of 0°.

Tilt perception can be understood as a problem of statistical inference, in which we infer our true tilt from noisy and ambiguous sensory information (Knill and Richards, 1996). As such, tilt perception can be described by a probability density function, with a more concentrated and less biased distribution reflecting perception that is less uncertain (i.e., more precise) and closer to the truth (i.e., more accurate). Inferences about our true tilt start with sensory representations of our tilt. Those representations can be described by tilt likelihood functions representing the evidence that we are tilted by a particular amount given the current sensory information (Knill and Pouget, 2004; Ma et al., 2006; Landy et al., 2011). The accuracy of a likelihood function is the difference between the center of the function and the ground truth (i.e., the true tilt that should be inferred), and the precision is inversely related to the function’s width (Fig. 3.1B). For example, if you are upright (tilt = 0°), an accurate and precise sensory representation of your orientation relative to gravity is described by a narrow likelihood function centered on 0°.

Relying on a single sensory system to estimate tilt imposes fundamental limitations on the accuracy and precision of tilt perception because the ambiguities, biases, and noise impacting the estimates made by that system are not independent (Gingras et al., 2009). For example, pilots can mistake linear acceleration signals as tilt signals when a visual horizon cue is unavailable because the vestibular system’s otoliths ambiguously encode linear acceleration and head tilt. Visual presentation of the horizon, or an attitude indicator, can remedy the inaccurate perception of tilt by providing an orientation cue that is independent of the vestibular system. As a second example, fog adds noise to the visual scene, which decreases the precision of tilt estimates derived from visual orientation cues (which, as noted above, are also biased by the orientation of the eyes in the world). Because poor viewing conditions broadly affect vision (and the orientation of the eyes in the world biases all visual signals), the extent to which other visual cues to gravity, such as the motion of a falling object, can reduce uncertainty and bias, is limited. Instead, information provided by the vestibular and somatosensory systems is necessary to improve tilt estimates.

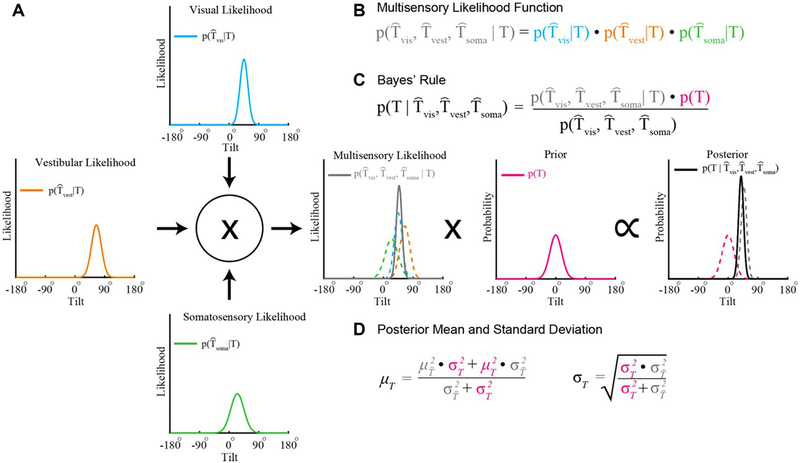

Cue integration theory provides a model for describing how the integration of information across sensory systems can yield statistically robust estimates in that the variance of the multisensory estimate is minimized (Ernst, 2006). Consider that the visual, vestibular, and somatosensory systems each provide their own tilt likelihood function. With optimal cue integration, the multiplication of these likelihood functions yields a multisensory likelihood whose precision is maximized given the unisensory likelihoods (Ernst and Banks, 2002; Ernst and Bülthoff, 2004). We illustrate this computation in Figure 3.2, where three hypothetical unisensory tilt likelihood functions differing in accuracy (each has a different mean) and precision (each has a different width) are integrated. The contribution of each unisensory likelihood function to the multisensory likelihood depends upon its precision, yielding statistically robust inferences (Landy et al., 2011).
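For concreteness, the product of likelihoods described above has a closed form under two common simplifying assumptions: each unisensory likelihood is Gaussian, and the noise is independent across modalities. The symbols $\mu_i$ and $\sigma_i$ (the mean and standard deviation of the likelihood for cue $i$) and the subscript "ms" (multisensory) are notation introduced here for illustration and may not match the labels used in Figure 3.2.

```latex
\begin{aligned}
L_i(T) &\propto \exp\!\left(-\frac{(T-\mu_i)^2}{2\sigma_i^2}\right),
  \qquad i \in \{\text{vis}, \text{vest}, \text{som}\} \\
\prod_i L_i(T) &\propto \exp\!\left(-\frac{(T-\mu_{\text{ms}})^2}{2\sigma_{\text{ms}}^2}\right) \\
\mu_{\text{ms}} &= \sum_i w_i\,\mu_i,
  \qquad w_i = \frac{1/\sigma_i^2}{\sum_j 1/\sigma_j^2} \\
\frac{1}{\sigma_{\text{ms}}^2} &= \sum_i \frac{1}{\sigma_i^2}
\end{aligned}
```

Because the precisions (inverse variances) add, the multisensory variance under these assumptions is always smaller than the smallest unisensory variance, and each cue's weight $w_i$ is proportional to its precision.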

Fig. 3.2.

Bayesian cue integration. (A) The visual, vestibular, and somatosensory systems each provide a representation of tilt modeled here as Gaussian likelihood functions. For example, the visual likelihood function describes the likelihood of visually sensing a particular tilt given the true tilt (T). The mean and standard deviation characterize the accuracy and precision of the representation, respectively. (B) The product of the individual sensory likelihoods produces a multisensory likelihood function (solid gray curve in the center plot; colored dashed curves show the unisensory likelihood functions). (C) According to Bayes’ rule, the posterior distribution describes the probability of a tilt given the sensory information (black curve, right-most plot). The posterior is equal to the product of the multisensory likelihood function and a prior describing the probability of each tilt (magenta curve, center-right plot), divided by a normalizing term (that can be safely ignored since it does not affect the shape of the posterior distribution). (D) The posterior has a mean that is equal to a weighted combination of the multisensory likelihood mean and the mean of the prior, and has minimal variance given the likelihood and prior. Colors in the equations correspond to the colors of the plotted functions.

Because sensory signals are noisy, tilt inferences can further benefit from integrating current sensory evidence with prior knowledge about the statistical regularities of the world (e.g., we are most likely upright when we are awake). More formally, prior experience can be described by a probability distribution, or prior. The product of a likelihood and a prior is proportional to a posterior distribution that describes the probability of each possible tilt (Fig. 3.2). In particular, the resulting tilt estimate is Bayes optimal (the precision of the posterior is maximized) given the current sensory evidence and prior experience. Thus, cue integration is referred to as Bayesian cue integration when a prior is included (Knill and Pouget, 2004). A single tilt inference can then be made by selecting the most probable tilt (i.e., the one corresponding to the maximum of the posterior function).
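The following is a minimal sketch of this computation, assuming Gaussian likelihoods, a Gaussian prior, and independent noise. The function name `integrate_gaussians` and all numerical values are hypothetical and chosen only for illustration; they are not taken from any study reviewed here.

```python
def integrate_gaussians(means, sds):
    """Precision-weighted product of independent Gaussian functions.

    Returns the mean and standard deviation of the (renormalized) product.
    """
    precisions = [1.0 / sd ** 2 for sd in sds]
    total_precision = sum(precisions)
    mean = sum(p * m for p, m in zip(precisions, means)) / total_precision
    sd = (1.0 / total_precision) ** 0.5
    return mean, sd


# Hypothetical unisensory tilt likelihoods (deg): visual, vestibular, somatosensory.
cue_means = [4.0, -2.0, 1.0]
cue_sds = [6.0, 3.0, 5.0]

# Step 1: multisensory likelihood (product of the unisensory likelihoods).
like_mean, like_sd = integrate_gaussians(cue_means, cue_sds)

# Step 2: posterior (multisensory likelihood times a hypothetical upright prior).
prior_mean, prior_sd = 0.0, 10.0
post_mean, post_sd = integrate_gaussians([like_mean, prior_mean],
                                         [like_sd, prior_sd])

# For a Gaussian posterior, the most probable tilt (the maximum) equals the mean.
tilt_estimate = post_mean
```

In this sketch the most precise cue (here the vestibular one) dominates the multisensory likelihood, and the prior pulls the final estimate slightly toward upright while further narrowing the posterior.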

MEASURING VERTICALITY PERCEPTION

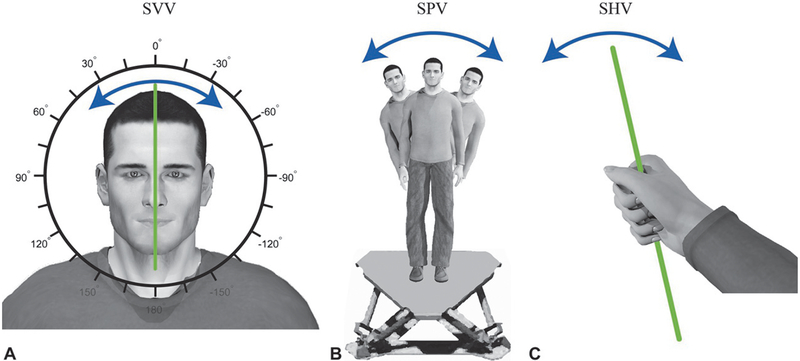

Subjective vertical tasks are a key experimental paradigm used to study verticality perception. In general, these tasks require participants to orient either themselves or an object with their perception of vertical. Because the sensory information available to estimate gravity differs across these tasks, each task provides insights into when and how particular sensory cues contribute to verticality perception. In the following subsections, we describe three variants of the subjective vertical task (visual, postural, and haptic) which are used to measure verticality perception, as well as conditions in which they yield either similar or differing verticality estimates.

Subjective visual vertical

In the subjective visual vertical (SVV) task, participants orient a visually presented bar with perceived vertical using an interfacing controller (Fig. 3.3A). After multiple trials of orienting the bar, the accuracy and precision of verticality perception are assessed using the average and variability of the participant-set bar orientations, respectively. Participants usually perform the SVV task in the dark, with the only visual information available arising from the bar used to indicate vertical. When the body is upright and in the dark, gravitational cues provided by the vestibular and somatosensory systems dominate SVV performance. Unilateral vestibular lesions systematically decrease the accuracy of SVV, biasing verticality perception in the direction of the lesion (Anastasopoulos et al., 1997). Likewise, in upright, head-fixed monkeys, electrical stimulation of vestibular afferents biases perceived vertical in a manner consistent with head tilt (Dakin et al., 2013; Lewis et al., 2013). In contrast, neither somatosensory loss nor water immersion (which reduces somesthetic orientation cues) affects the accuracy of perceived vertical. Instead, somatosensory loss decreases the precision of perceived vertical in upright participants (Barra et al., 2010).
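As a rough illustration of how accuracy and precision might be summarized from a set of SVV settings, the sketch below uses the arithmetic mean and sample standard deviation of the signed errors. The function name `summarize_svv` and the example settings are hypothetical, and ordinary (non-circular) statistics are assumed to be adequate because settings typically span only a few degrees.

```python
from statistics import mean, stdev


def summarize_svv(settings_deg, true_vertical_deg=0.0):
    """Return (bias, variability) of bar settings in degrees.

    bias        -> accuracy: mean signed error relative to true vertical
    variability -> precision: sample standard deviation of the errors
    """
    errors = [s - true_vertical_deg for s in settings_deg]
    return mean(errors), stdev(errors)


# Hypothetical bar settings (deg) from six trials; positive = clockwise.
settings = [1.5, -0.5, 2.0, 0.5, 1.0, 1.5]
bias, variability = summarize_svv(settings)
```

A nonzero `bias` would indicate reduced accuracy (e.g., a shift toward the side of a lesion), whereas a larger `variability` would indicate reduced precision.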

Fig. 3.3.

Three subjective vertical tasks. (A) During a subjective visual vertical (SVV) task, participants orient a visually displayed bar with perceived vertical using an interfacing controller. (B) During a subjective postural vertical (SPV) task, participants either orient themselves with perceived vertical or are passively rotated until they indicate that they perceive themselves to be vertical. Here, a participant is shown standing on a motion platform that allows side-to-side tilt. Starting from a tilted platform orientation, the participant or experimenter will adjust the participant’s orientation. The participant will then indicate when his/her body is aligned with vertical. (C) During a subjective haptic vertical (SHV) task, participants align a hand-held object with perceived vertical.

A biasing effect of somesthetic cues on SVV becomes more apparent when the body is statically tilted relative to gravity, resulting in a bias in perceived vertical towards the midline of the body, called the Aubert effect (Aubert, 1861). The Aubert effect is thought to arise from somesthetic cues because its magnitude covaries with the degree of somesthetic sensory loss observed in hypoesthetic patients (Yardley, 1990; Anastasopoulos et al., 1999; Barra et al., 2010). It is attributable to an internal signal called the idiotropic vector (Mittelstaedt, 1983, 1986, 1999), which biases verticality perception in the direction of the body’s midline.

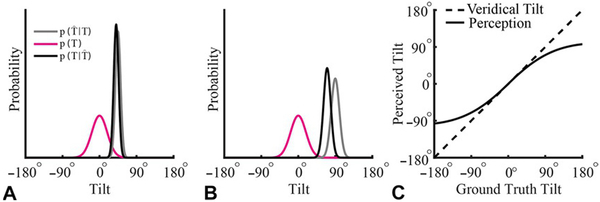

The idiotropic vector was first proposed as part of a computational strategy to overcome biases in verticality perception resulting from anisotropies in the number of hair cells in the utricle and saccule, and to help reduce the variability of verticality perception in the presence of small tilts (Rosenhall, 1972; Mittelstaedt, 1983). As the amount of tilt increases, the idiotropic vector more strongly biases SVV towards the midline of the body, reducing the accuracy of perceived visual vertical. This increasing bias in verticality perception with larger tilts can also be described by Bayesian cue integration. As tilt increases, the precision of the tilt representation is thought to decrease (i.e., the width of the likelihood function increases) due to a reduction in the precision of otolith-derived tilt signals (Quix, 1925; Graybiel and Patterson, 1955; Graybiel and Clark, 1962; Schöne and Udo De Haes, 1968). This decrease in precision reduces the weight of the likelihood function, resulting in the posterior distribution being pulled more strongly in the direction of a prior that assumes small tilts are most probable (MacNeilage et al., 2007; De Vrijer et al., 2008; Vingerhoets et al., 2009; Clemens et al., 2011) (Fig. 3.4).

Fig. 3.4.

Bayesian account of the Aubert effect. (A, B) Tilt estimation for ground truth tilts of 45° and 90° relative to the world, respectively. A prior for being upright relative to the world (tilt = 0°), p(T), is illustrated in magenta. The precision of sensory representations of tilt decreases the further an individual is from upright. Reflecting this change in precision, the sensory likelihood function representing a tilt of 45° is taller and narrower (i.e., more precise) than for a tilt of 90° (gray curves). With Bayesian cue integration, the prior has a larger effect on the posterior (black curves) at larger tilts because of the decreased precision of the likelihood function. Correspondingly, the posterior is “pulled” more towards the prior in (B) compared to (A). (C) Taking the tilt with the highest probability as the estimate of the ground truth tilt, this “pull” on the posterior introduces a bias in the perceived tilt that increases the further the individual is from upright. Specifically, the perceived tilt (solid curve) is underestimated at large tilts (dashed curve), resulting in the Aubert effect. (Adapted from De Vrijer M, Medendorp WP, Van Gisbergen JAM (2008) Shared computational mechanism for tilt compensation accounts for biased verticality percepts in motion and pattern vision. J Neurophysiol 99: 915–930.)
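The Bayesian account of the Aubert effect can be sketched numerically. The example below assumes an unbiased Gaussian tilt likelihood whose standard deviation grows linearly with tilt, combined with a Gaussian prior centered on upright. The function name `perceived_tilt` and the parameters `prior_sd`, `base_sd`, and `sd_gain` are hypothetical values chosen for illustration, not fitted values from the cited studies.

```python
def perceived_tilt(true_tilt_deg, prior_sd=12.0, base_sd=2.0, sd_gain=0.1):
    """Posterior-mean tilt estimate (deg) with tilt-dependent sensory noise.

    The likelihood is assumed to be centered on the true tilt, with a
    standard deviation that increases with distance from upright.
    The prior is a Gaussian centered on 0 deg (upright).
    """
    like_sd = base_sd + sd_gain * abs(true_tilt_deg)
    # Weight on the likelihood mean; the remaining weight goes to the prior mean (0).
    like_weight = prior_sd ** 2 / (prior_sd ** 2 + like_sd ** 2)
    return like_weight * true_tilt_deg


# Underestimation of tilt at the two tilts illustrated in Fig. 3.4.
under_45 = 45.0 - perceived_tilt(45.0)
under_90 = 90.0 - perceived_tilt(90.0)
```

Under these assumptions the tilt is underestimated at both orientations, and the underestimation is larger at 90° than at 45° because the noisier likelihood receives less weight relative to the prior.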

If visual cues are available during the SVV task, they can exert a substantial influence on perceived vertical, as revealed by the rod and frame test (Witkin and Asch, 1948). In the rod and frame test, the SVV task is performed with the bar presented inside a rectangular frame whose orientation is experimentally manipulated. The presence of a tilted frame causes a shift in perceived vertical in the direction of the frame’s tilt. This shift is predicted by cue integration theory, in which the frame “pulls” the perceived vertical towards the frame’s orientation (Vingerhoets et al., 2009; Alberts et al., 2016a).

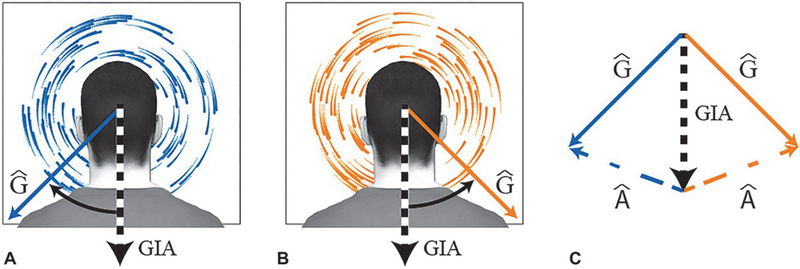

A variation of the SVV task is the dynamic SVV task, in which a vertically oriented participant performs the SVV task while viewing large-field visual motion (Dichgans et al., 1972; Hughes et al., 1972; Held et al., 1974; Brandt and Dieterich, 1987). The visual field motion is often presented as dots rotating about the participant’s line of sight (i.e., roll) (see Fig. 3.8A and B, later in this chapter, for illustration). Dynamic SVV tasks assess how visual motion cues contribute to head tilt estimates (Zupan and Merfeld, 2003). Such tasks have shown that visual field motion biases the perception of vertical (Dichgans et al., 1972; Held et al., 1974) and induces ocular torsion (Ibbotson et al., 2005). People become increasingly dependent on such visual cues with age (Over, 1966; Kobayashi et al., 2002). Furthermore, tilt estimates made by older adults who fall relatively frequently are biased more by visual signals than those made by older adults who fall less often (Lord and Webster, 1990).

Fig. 3.8.

Rotation of the visual scene biases estimates of the direction of gravity. Clockwise (A) and counterclockwise (B) rotation of the visual scene from the perspective of a static, upright observer. The black and white dotted arrows indicate the otolith signal (gravito-inertial acceleration: GIA). The solid blue and orange arrows indicate the estimated direction of gravity relative to the head (Ĝ), which is biased away from the otolith signal by the visual motion. (C) Clockwise (counterclockwise) visual rotation can be used to infer left (right) ear-down head tilt, and thus a rotation of the gravitational vector relative to the head in the clockwise (counterclockwise) direction away from the otolith signal (GIA). According to the gravito-inertial force resolution hypothesis, separation of the estimate of gravity relative to the head (Ĝ) from GIA results in the inference of an interaural and vertical acceleration (Â; dashed colored lines) whose magnitude and direction are given by the vector difference between GIA and Ĝ (Zupan and Merfeld, 2003). Variables with a circumflex are estimates of real-world variables.

Subjective postural vertical

In subjective postural vertical (SPV) tasks, participants indicate the orientation of their body relative to perceived vertical (Fig. 3.3B). SPV tasks are performed using a tilting table, chair, or motion platform in which participants either control their own orientation or indicate when they have been passively rotated into the vertical orientation. Typically, participants perform SPV tasks without visual feedback, so the sensory cues that most directly determine performance are vestibular and somatosensory in origin.

Performance is quantified by either comparing a participant’s orientation to earth vertical or by having participants indicate when they start to feel (or not feel) upright. The latter measure is parameterized using the entrance to and exit from verticality to define a cone of verticality when both roll and pitch are included (Bisdorff et al., 1996). The precision of a participant’s ability to orient is quantified by the angle of the sector between the entrance to and exit from verticality along the axis of interest, and the accuracy is given by the bias of the sector’s orientation relative to vertical. A loss in the precision of SPV increases the angle of the sector, whereas a decrease in accuracy results in a directional bias of the sector.
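A minimal sketch of the sector measure along a single axis is shown below. It assumes that the entrance to and exit from verticality are recorded as signed tilt angles relative to earth vertical, and that the sector's orientation can be summarized by its midpoint; the midpoint choice, the function name `summarize_sector`, and the example values are assumptions made for illustration.

```python
def summarize_sector(entrance_deg, exit_deg):
    """Summarize a sector of verticality along one axis (roll or pitch).

    entrance_deg, exit_deg: signed tilts (deg; 0 = earth vertical) at which
    the participant starts and stops feeling upright.
    Returns (width, bias): width relates to precision, bias to accuracy.
    """
    width = abs(exit_deg - entrance_deg)
    bias = (entrance_deg + exit_deg) / 2.0
    return width, bias


# Hypothetical trial: feels upright from -3 deg to +5 deg of roll.
width, bias = summarize_sector(-3.0, 5.0)
```

In this hypothetical example the sector spans 8° and is centered 1° away from earth vertical; a wider sector would indicate lower precision, and a larger offset would indicate lower accuracy.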

Impaired vestibular function, such as that arising from Ménière’s disease or benign paroxysmal positional vertigo, increases the angle of the sector, indicating a decrease in the precision of perceived vertical (Bisdorff et al., 1996). In many cases, SPV appears resilient to unilateral vestibular lesions (Bisdorff et al., 1996; Anastasopoulos et al., 1997), whereas unilateral somatosensory loss (Anastasopoulos et al., 1999; Mazibrada et al., 2008) can decrease the accuracy of SPV by biasing perception towards the impaired side. Lastly, age can also decrease the accuracy of SPV estimates, resulting in a backward leaning bias (Manckoundia et al., 2007; Barbieri et al., 2010).

Subjective haptic vertical

In subjective haptic vertical (SHV) tasks, participants orient a hand-held object to perceived vertical (Fig. 3.3C). Because the task is performed in the dark, haptic vertical is primarily dependent on somatosensory information. Performance on the SHV task may also depend on the contextual relevance of the task. For example, participants are significantly better at orienting a glass of water than a metal bar (Wright and Glasauer, 2003, 2006). This highlights that the context under which a subjective vertical task is performed, including the available verticality cues and consequences of failing to achieve verticality, may influence the accuracy and precision of verticality estimates.

Comparison of verticality estimates

In some cases, performance across different verticality measures is similar. For example, a backward bias in both SPV and SHV can occur with normal aging (Manckoundia et al., 2007). Indeed, one study investigating verticality perception following hemispheric stroke found that 18% of participants exhibited biases in verticality that were transmodal (Pérennou et al., 2008). However, verticality estimates made by the same participant for different subjective vertical tasks can also differ. For example, patients with Ménière’s disease or benign paroxysmal positional vertigo perform SVV and SPV tasks with similar accuracy before unilateral vestibular nerve sectioning. After nerve sectioning, the patients’ SVV is significantly biased in the direction of the lesion whereas the accuracy of SPV is unaffected (Bisdorff et al., 1996; Bronstein, 1999). Likewise, cortical lesions to the posterior insula result in a bias in SVV but do not create an appreciable bias in SPV (Brandt et al., 1994). Stroke patients with contraversive pushing also exhibit a dissociation between SVV and SPV (Karnath et al., 2000a, b; Pérennou et al., 2002; Lafosse et al., 2007). These patients tend to push toward the hemiparetic side, leading to postural imbalance and falls toward the paralyzed side. Correspondingly, during an SPV task, they perceive themselves as vertical when they are tilted toward the nonhemiparetic side, but their SVV is relatively normal.

Similar dissociations are observed between SVV and SHV. For example, in patients with vestibular nuclear lesions, SVV can be strongly biased following the lesion, whereas SHV is only marginally affected (Bronstein et al., 2003). Such dissociations between different measures of subjective vertical have led to the suggestion that verticality is not a single unified concept (Bronstein, 1999; Bronstein et al., 2003); however, such dissociations are also consistent with cue integration. Specifically, cue integration predicts dissociations between measures of perceived vertical since subjective vertical tasks rely differentially on multiple sensory representations of verticality. The accuracies and precisions of these representations depend on the specific task (e.g., if the participant is standing or sitting, if tilt is head only or whole body, or if the arm is actively working against gravity as can occur predominantly in SHV tasks), the experimental instructions (e.g., attention is drawn to specific cues), contextual aspects of the task (Wright and Glasauer, 2003, 2006), and the participant’s orientation in space (De Vrijer et al., 2008; Vingerhoets et al., 2009; Alberts et al., 2016b).

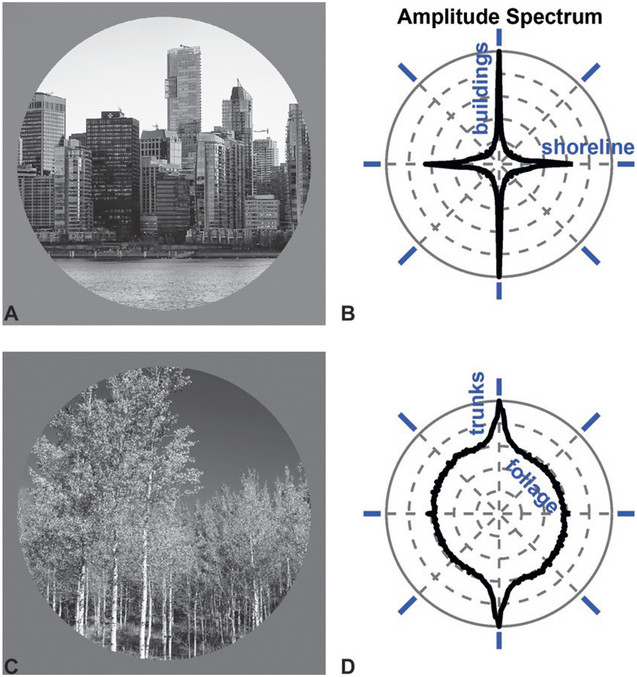

GRAVITY SHAPES THE VISUAL ENVIRONMENT

If you toss a handful of matchsticks into the air, the distribution of orientations that come to rest on the ground will be statistically uniform. In contrast, the distribution of oriented contours in the world is systematically biased, with a higher prevalence of horizontal and vertical orientations compared to oblique orientations (Coppola et al., 1998a). This cardinal bias is observed in both urban (Fig. 3.5A and B) and natural scenes (Fig. 3.5C and D). For instance, vertical orientations are prevalent in cities because of buildings and telephone poles, and in nature because taller plants receive greater exposure to sunlight. The prevalence of horizontal orientations is likewise associated with the horizon, a prominent feature of many scenes. As such, vertical and horizontal orientations provide potent static visual cues to the direction of gravity. Indeed, Koffka (1935) suggested that we define verticality by the contours in our visual environment.

Fig. 3.5.

The distribution of orientations in the environment provides a visual cue to the direction of gravity. (A) Urban scene: the Vancouver skyline. (B) Amplitude spectrum of the urban scene shows a prevalence of vertical orientations due to the columnar structure of the buildings, and horizontal orientations due to the roofs and floors of the buildings as well as the shoreline. The angular variable corresponds to the orientation of the contours in the scene, indicated by blue oriented bars. The radial variable shows the prevalence of each orientation in the scene. (C) Natural scene: Logan Canyon, UT. (D) Amplitude spectrum of the natural scene shows a prevalence of vertical orientations due to the tree trunks.

The cardinal bias is associated with greater perceptual sensitivity to the orientation or direction of motion of visual patterns at cardinal compared to oblique orientations (Westheimer and Beard, 1998; Dakin et al., 2005). Differences in behavioral sensitivity as a function of visual orientation (the oblique effect) are thought to arise as a consequence of Bayesian cue integration in which sensory evidence (i.e., a likelihood function reflecting visual orientation signals) is combined with a prior reflecting the overrepresentation of cardinal orientations in the environment (Girshick et al., 2011). This integration improves the precision of visual orientation estimates, but when the reliability of visual information is low (e.g., due to fog), the prior can “pull” the posterior function towards the closest cardinal orientation, resulting in biased orientation judgments. Analogous shifts in posterior functions are evident in Figures 3.2 and 3.4, which capture perception’s increased reliance on prior experience to interpret current sensory evidence when that evidence is noisier. Interestingly, a reduced oblique effect is observed in autism (Sysoeva et al., 2016), a disorder that is associated with a reduced reliance on priors to interpret current sensory information (Pellicano and Burr, 2012; Rosenberg et al., 2015).
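The pull of a cardinal prior on orientation estimates can be illustrated with a small grid-based sketch. It assumes a von Mises likelihood on the doubled angle (because orientation repeats every 180°) and a prior of the form 2 − |sin 2θ|, which peaks at the cardinal orientations. Both the prior shape and the concentration values (`kappa`) are assumptions chosen for illustration, and the function name `map_orientation` is hypothetical.

```python
import math


def map_orientation(sensed_deg, kappa, step_deg=0.1):
    """Grid-search the most probable orientation (deg, 0 to 180).

    Likelihood: von Mises on the doubled angle, centered on the sensed
    orientation; larger kappa means more reliable visual information.
    Prior: 2 - |sin(2*theta)|, an assumed shape peaking at 0 and 90 deg.
    """
    best_theta, best_post = 0.0, -1.0
    for k in range(int(round(180.0 / step_deg))):
        theta = k * step_deg
        like = math.exp(kappa * math.cos(2.0 * math.radians(theta - sensed_deg)))
        prior = 2.0 - abs(math.sin(2.0 * math.radians(theta)))
        post = like * prior  # unnormalized posterior
        if post > best_post:
            best_theta, best_post = theta, post
    return best_theta


# A contour sensed at 20 deg under reliable and unreliable viewing conditions.
est_clear = map_orientation(20.0, kappa=50.0)  # reliable signal
est_foggy = map_orientation(20.0, kappa=1.0)   # unreliable signal (e.g., fog)
```

Under these assumptions both estimates fall between the sensed orientation and the nearest cardinal orientation (0°), and the estimate made with the less reliable signal is pulled much further toward the cardinal.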

Neural correlates of the oblique effect are first observed in primary visual cortex (V1), where more neurons respond preferentially to cardinal than oblique visual orientations. An anisotropy in V1 orientation preferences is found in multiple species, including humans (Furmanski and Engel, 2000), ferrets (Coppola et al., 1998b), and cats (Li et al., 2003). This cardinal bias in the neural representation of the visual scene suggests that gravity fundamentally shapes visual processing.

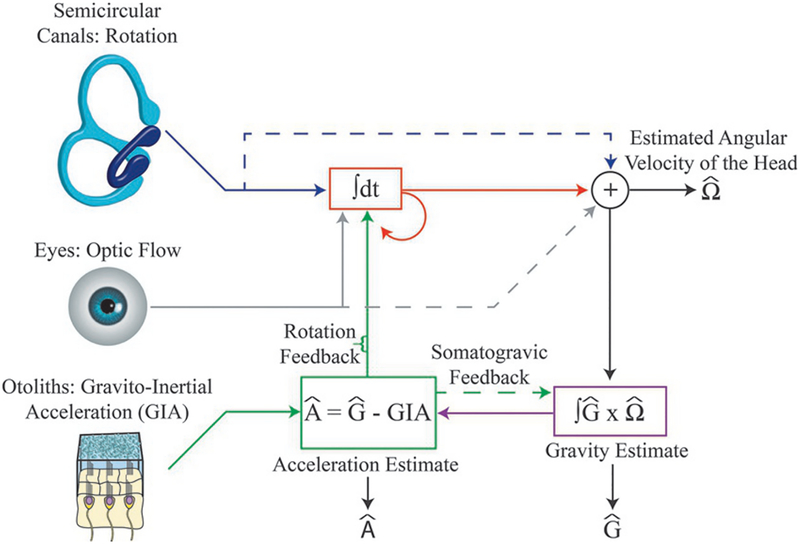

VISUAL MOTION CUES SIGNAL CHANGES IN HEAD TILT

Large-field visual motion in the form of angular velocity (as occurs during head tilt) provides a potent cue signaling the rate at which the orientation of the head is changing. Dynamic SVV tasks have shown that large-field angular visual motion can bias orientation perception as if the head were tilted relative to gravity (Dichgans et al., 1972; Held et al., 1974). The effect of large-field angular visual motion on SVV and postural reorienting increases with age, and is accentuated in some cerebellar disorders (Kobayashi et al., 2002; Bunn et al., 2015). For example, large-field angular visual motion heavily biases SVV and elicits abnormally large postural sway in patients with spinocerebellar ataxia type 6 (Bunn et al., 2015; Dakin et al., 2018). Neural representations of large-field angular visual motion, which may contribute to head orientation estimates, have been identified in multiple brain regions of monkeys, including the cerebellum (Kano et al., 1990, 1991), brainstem (Waespe and Henn, 1977), and ventral intraparietal area (Sunkara et al., 2016). The semicircular canals (Fig. 3.6, top) also provide information about changes in head tilt, and through cue integration, visual motion and vestibular signals can potentially improve estimates of changes in head tilt (Laurens and Angelaki, 2011). Consistent with this possibility, neurons in the vestibular nucleus integrate visual and vestibular rotation signals (Waespe and Henn, 1977). As discussed below, such head tilt signals are important for disambiguating the contributions of gravitational and inertial forces to otolith signals.

Fig. 3.6.

Framework incorporating visual and vestibular contributions to gravito-inertial force resolution based on changes in the head’s orientation in space, as summarized by Laurens and Angelaki (2011). Rotation signals originate from the semicircular canals and the visual system. Semicircular canal signals (blue lines) are combined with visual signals (gray lines) to improve the estimate of the angular velocity of the head (shown in red). Changes in the orientation of gravity relative to the head (i.e., changes in head tilt) are estimated by integrating the cross-product of the previous estimate of gravity (Ĝ) and the current estimated angular velocity of the head (shown in purple). Once the new estimate of gravity (Ĝ) is determined, the net gravito-inertial acceleration (GIA) signaled by the otoliths can be subtracted to estimate the linear acceleration of the head (Â). Somatogravic feedback (green-dashed arrow) slowly pulls the estimate of gravity towards the otolith signal to correct for drift in the gravity estimate. This feedback loop can also be formalized as a prior for zero acceleration. Rotation feedback (green vertical arrow) corrects for errant angular velocity signals by adjusting the internal estimate of the angular velocity of the head, thereby also reducing the difference between the internal estimate of gravity (Ĝ) and GIA. Variables with a circumflex are estimates of real-world variables.

THE GRAVICEPTIVE VESTIBULAR SYSTEM

The mechanisms of signal transduction and the computational functions of the vestibular system are inherently related to gravity, and have a fundamental role in verticality perception. Vestibular receptors are located bilaterally within the inner ear, and are segregated into two anatomically distinct groups of end organs: semicircular canals and otoliths. Semicircular canals are sensitive to angular motion, and signal rotation of the head. Otoliths are sensitive to linear acceleration, and therefore signal linear acceleration of the head as well as the head’s orientation relative to gravity (Fig. 3.6, bottom).

Vestibular-derived orientation cues are processed in the vestibular nuclei, cerebellum, and thalamic regions that project to the cerebral cortex (Akbarian et al., 1992; Angelaki et al., 2004; Shaikh et al., 2004; Dieterich, 2005; Green et al., 2005; Brandt and Dieterich, 2006; Yakusheva et al., 2007; Laurens et al., 2013, 2016). Studies conducted in the macaque monkey suggest that the cerebellum has an important role in disambiguating orientation signals from linear acceleration signals (Angelaki et al., 2004; Shaikh et al., 2004; Green et al., 2005; Yakusheva et al., 2007; Laurens et al., 2013). In the caudal intraparietal (CIP) area of macaque monkeys, vestibular/somatosensory-derived estimates of gravity underlie systematic changes in the visual orientation preferences of neurons that occur with changes in head–body orientation (Rosenberg and Angelaki, 2014). Within the human cerebral cortex, the processing of vestibular information occurs in regions in and around the temporoparietal junction, neighboring parietal cortex, and the posterior insula (Brandt et al., 1994; Perennou et al., 2008; Baier et al., 2012; Lopez et al., 2012). Interfering with activity in the human temporoparietal junction through repetitive transcranial magnetic stimulation can systematically bias SVV (Kheradmand et al., 2015; for review, see Kheradmand and Winnick, 2017). For detailed reviews of the contributions of specific brain regions to the processing of vestibular information, see Barmack (2003), Lopez and Blanke (2011), and Kheradmand and Winnick (2017).

Dysfunction along the vestibular pathways can lead to biases and/or loss of precision in the different measures of subjective vertical. For example, unilateral vestibular lesions cause biases in SVV towards the lesioned side, and bilateral vestibular deficits decrease the precision of SVV and SPV (Friedmann, 1970; Curthoys et al., 1991; Bisdorff et al., 1996; Bohmer et al., 1996; Anastasopoulos et al., 1997; Vibert, 2000; Lopez et al., 2007). Bilateral vestibular lesions also reduce or eliminate the vestibular contribution to verticality estimates, resulting in increased reliance on other sensory cues (Bronstein et al., 1996; Guerraz et al., 2001; Lopez et al., 2007). The behavioral consequences of vestibular deficits can be understood in terms of cue integration, in which unilateral deficits introduce a bias in the vestibular likelihood function, and bilateral deficits reduce the precision of the vestibular likelihood function.
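The cue integration account in the preceding paragraph can be illustrated with a minimal sketch. It assumes Gaussian, independent likelihoods combined by inverse-variance weighting, and every number in it (cue means and standard deviations, in degrees) is a hypothetical value chosen only for illustration, as is the function name `integrate_cues`.

```python
import math

def integrate_cues(means, sigmas):
    """Inverse-variance (reliability-weighted) combination of Gaussian cues.

    Returns the mean and standard deviation of the combined estimate.
    """
    weights = [1.0 / s ** 2 for s in sigmas]
    total = sum(weights)
    mean = sum(w * m for w, m in zip(weights, means)) / total
    sigma = math.sqrt(1.0 / total)
    return mean, sigma

# Hypothetical verticality cues in degrees: [vestibular, somatosensory].
healthy = integrate_cues([0.0, 0.0], [2.0, 4.0])
# Unilateral deficit modeled as a biased vestibular likelihood (assumed 5 deg shift).
unilateral = integrate_cues([5.0, 0.0], [2.0, 4.0])
# Bilateral deficit modeled as an imprecise vestibular likelihood (assumed sigma of 10 deg).
bilateral = integrate_cues([0.0, 0.0], [10.0, 4.0])

for label, (mean, sigma) in [("healthy", healthy),
                             ("unilateral", unilateral),
                             ("bilateral", bilateral)]:
    print(f"{label}: mean = {mean:.2f} deg, sd = {sigma:.2f} deg")
```

Under these assumed values, the biased vestibular cue shifts the combined estimate without changing its precision, whereas the imprecise vestibular cue leaves the estimate unbiased but less precise and dominated by the somatosensory cue.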

SOMESTHETIC INFLUENCES ON PERCEIVED VERTICAL

The use of postural cues for gravity estimation and verticality perception has long been recognized (Gibson, 1952; Gibson and Mowrer, 1938). More recently, the specific contribution of somatosensory information has been inferred from patients with broad somatosensory deficits such as those that occur with spinal cord injury, thalamic infarction, stroke, or Guillain–Barré syndrome. Data from patients with such deficits show that unilateral somatosensory loss can bias SPV towards the affected side, and bilateral somatosensory loss can decrease the precision of SPV (Anastasopoulos et al., 1999; Mazibrada et al., 2008). Somatosensory deficits can attenuate or abolish the Aubert effect, suggesting that somatosensory cues underlie the idiotropic vector and the associated bias of SVV towards the body’s midline (Yardley, 1990; Anastasopoulos et al., 1999; Barra et al., 2010). Perceived changes in orientation relative to gravity can also be induced using relatively focal stimuli such as muscle vibration, which is believed to target primarily muscle spindles (Roll and Vedel, 1982; Roll et al., 1989). For example, vibration of lower-limb muscles or the Achilles tendon can induce postural sway as well as bias SPV (Ceyte et al., 2007; Barbieri et al., 2008). Likewise, vibration of the neck muscles can alter perceived head-on-body orientation (Karnath, 1994; Karnath et al., 1994) and induce forward sway (Lekhel et al., 1997), suggesting that the vibration of specific combinations of neck muscles may bias verticality perception.

Several lines of evidence further suggest that the pull of gravity on our abdominal viscera has a graviceptive influence on perceived vertical (Mittelstaedt and Fricke, 1988; Mittelstaedt, 1992, 1996, 1997; von Gierke and Parker, 1993; Vaitl et al., 1997, 2002). In the vascular system, the weight of blood in the large vessels can stretch the surrounding connective tissues, stimulating nearby mechanoreceptors sensitive to tissue strain. The resulting mechanoreceptor signals provide a dynamic cue to gravity that may drive changes in perceived vertical (Mittelstaedt, 1992). Visceral mechanoreceptors, which may also contribute to verticality perception, are found in the pericardium and inferior vena cava as well (Kostreva and Pontus, 1993a, b; Goodman-Keiser et al., 2010). Similarly, mechanoreceptors in the kidneys are sensitive to the changes in intrarenal pressure that occur with shifts in orientation relative to gravity (Uchida and Kamisaka, 1971; Niijima, 1975). As such, the kidneys may also have a graviceptive influence on verticality perception (Mittelstaedt, 1996). Because of Einstein’s equivalence principle, discussed below, somesthetic and otolith cues to gravity’s orientation cannot be differentiated from inertial (acceleration) cues without additional sensory information. In the next sections, we discuss mechanisms of cue disambiguation that can resolve such sensory ambiguities.

ACHIEVING GRAVITY-CENTERED VISUAL PERCEPTION

Although our sensory systems encode information relative to different egocentric reference frames (e.g., the eyes, head, or body), we perceive an allocentric, gravity-centered representation of the world. For example, although the visual system encodes the environment in eye-centered coordinates, if you roll your head ear-down, you still perceive buildings as vertically oriented even though their images are obliquely oriented on your retinas. This perceptual constancy is the result of computational mechanisms that perform cue disambiguation, dissociating the contributions of eye-in-world and object-in-world orientations to retinal images. Specifically, visual information is transformed from an eye-centered reference frame into a gravity-centered reference frame to achieve a stable, upright visual representation of the world.
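The arithmetic behind this transformation can be sketched for the simplest case of a single line viewed under roll tilt. The sketch assumes that all angles are measured in degrees in the frontal plane with a common sign convention, that line orientation is defined modulo 180°, and that ocular counter-roll is ignored; the function names are hypothetical.

```python
def retinal_orientation(world_deg, eye_roll_deg):
    """Orientation of a line on the retina when the eye is rolled in the world."""
    return (world_deg - eye_roll_deg) % 180.0

def gravity_centered_orientation(retinal_deg, roll_estimate_deg):
    """Recover orientation relative to gravity by adding back the estimated roll."""
    return (retinal_deg + roll_estimate_deg) % 180.0

# A vertical building edge (90 deg) viewed with the eye rolled by 30 deg.
on_retina = retinal_orientation(90.0, 30.0)
# An accurate roll estimate restores the vertical percept.
accurate = gravity_centered_orientation(on_retina, 30.0)
# An underestimated roll (assumed 20 deg) leaves a 10 deg residual tilt.
biased = gravity_centered_orientation(on_retina, 20.0)
print(on_retina, accurate, biased)
```

In this simplified picture, any error in the estimate of eye-in-world roll carries over one-to-one into the perceived orientation of the object.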

Neural recordings in monkeys confirm that V1 neurons represent the visual scene in egocentric coordinates (Daddaoua et al., 2014). This finding conflicts with several earlier studies conducted in cats, which suggested that some V1 neurons might represent the visual scene relative to gravity (Denney and Adorjanti, 1972; Horn et al., 1972; Tomko et al., 1981). However, the earlier observations were likely artifactual, arising from methodologic limitations (see Rosenberg and Angelaki (2014) for discussion). Interestingly, human perceptual studies have shown that, under conditions of head roll, the oblique effect is allocentrically referenced (i.e., relative to gravity) when an observer is upright, but egocentrically referenced (i.e., relative to the eyes or head) when the observer is supine (Mitchell and Blakemore, 1972; Mikellidou et al., 2015). This finding implicates graviceptive signals, from sources such as the otoliths, in the neural basis of the oblique effect. Together, these results suggest that, although anisotropies in the orientation preferences of V1 neurons may correlate with the oblique effect, perceptual sensitivity to visual orientation arises at a later stage of multisensory processing, possibly in parietal cortex.

A more robust correlate of gravity-centered visual perception is found in the CIP area of macaque monkeys (Rosenberg and Angelaki, 2014). For about half of CIP neurons, changes in head–body orientation result in systematic changes in visual orientation preference and/or the magnitude of visually evoked responses. In particular, the visual orientation preferences rotate away from an egocentric representation (i.e., constant relative to the eyes or head) and towards a gravity-centered representation (i.e., constant relative to gravity). For most CIP neurons, these shifts are incomplete (resulting in intermediate reference frame representations), but about 10% have visual orientation preferences that are gravity-centered.

Although the shift towards a gravity-centered representation is generally incomplete at the level of single CIP neurons, this finding is consistent with a population-level representation of visual orientation relative to gravity. Specifically, theoretical work has shown that reference frame transformations implemented through multisensory cue combination, such as using an estimate of gravity to transform eye-centered visual representations into gravity-centered representations, will result in intermediate reference frames and modulated response amplitudes at the level of single neurons (Deneve et al., 2001; Avillac et al., 2005; Beck et al., 2011). Lastly, a parietal origin of gravity-centered visual perception is consistent with both clinical studies and transcranial magnetic stimulation studies in healthy subjects that link parietal cortex to verticality perception (Brandt et al., 1994; Funk et al., 2010; Kheradmand et al., 2015).

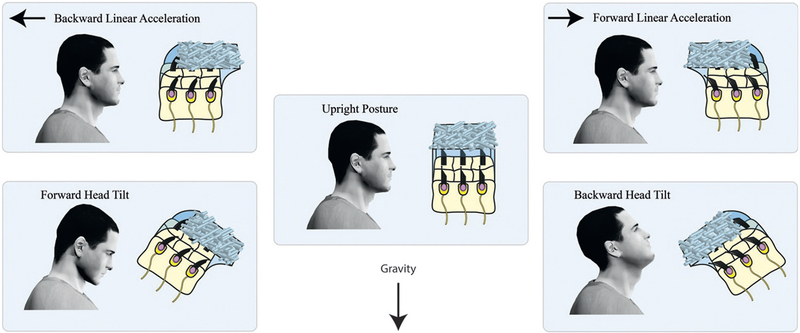

DISAMBIGUATING ORIENTATION RELATIVE TO GRAVITY FROM LINEAR ACCELERATION

Einstein’s equivalence principle states that inertial and gravitational forces are indistinguishable (Einstein, 1907). Consequently, the otoliths ambiguously signal a combination of the head’s linear acceleration and orientation relative to gravity. More specifically, the otoliths transduce gravito-inertial force, the sum of forces due to gravity and those caused by inertia, to provide an estimate of our net gravito-inertial acceleration (GIA). During linear acceleration, the “lag” of the otolithic membrane behind the skull applies an inertial force on vestibular hair cells such that the hair cells bend in the opposite direction of the head acceleration (Fig. 3.7, top left and right). The gravitational force acts on the hair cells through the “sag” of the otolithic membrane generated when the surface of one of the otolith organs (the saccule or utricle) is not perpendicular to gravity (Fig. 3.7, bottom left and right). Cue disambiguation is therefore necessary in order for otolith signals to be used in the processing of linear acceleration and/or estimating head tilt.

Fig. 3.7.

Einstein’s equivalence principle illustrated for the otoliths. During backward linear acceleration (top left), the inertial force acts in the direction opposite to the acceleration, causing the otolithic membrane to lag behind the skull and the sensory hair cells to bend. Similarly, tilting the head forward (bottom left) causes the otolithic membrane to sag and the sensory hair cells to bend as they do during backward linear acceleration. The otoliths therefore respond to both linear acceleration of the head and head tilt relative to gravity, and cannot distinguish between the two. The same is true for forward linear accelerations and backward head tilts (right column). Although illustrated here for head pitch, the ambiguity also exists between left–right translations and roll. Graviceptive signals arising from the abdominal viscera suffer from a similar ambiguity.

Head tilt estimates derived from non-otolith sensory signals can be used to disambiguate the contributions of inertial and gravitational forces to the otolith signals. In particular, changes in head tilt can be estimated independently of the otoliths by integrating angular velocity signals encoded by the semicircular canals and/or the visual system (Fig. 3.6, top center; also see the above section “Visual motion cues signal changes in head tilt”) (Benson et al., 1974; Merfeld et al., 1993, 1999; Merfeld, 1995; Merfeld and Young, 1995; Angelaki et al., 1999, 2004; Merfeld and Zupan, 2002; Zupan et al., 2002; Green and Angelaki, 2003, 2004, 2010a, b; MacNeilage et al., 2007). Neck somatosensory information resulting from head-on-body tilt (Lackner and Levine, 1979), as well as motor predictions arising during active motion, may further improve the accuracy and precision of head tilt estimates. With head tilt independently estimated (Fig. 3.6, bottom right), thereby providing an estimate of gravity’s orientation relative to the head (Ĝ), the linear acceleration experienced by the head (Â) can be estimated by subtracting the otolith signal (GIA) from Ĝ: Â = Ĝ − GIA (circumflexes indicate estimates of the true linear acceleration and orientation of gravity relative to the head) (Fig. 3.6, bottom center).

This computation is known as the gravito-inertial force resolution hypothesis (Merfeld, 1990), and it has received substantial theoretical and empirical support (Merfeld, 1990, 1995; Merfeld et al., 1993, 1999; Merfeld and Young, 1995; Angelaki et al., 1999; Merfeld and Zupan, 2002; Zupan et al., 2002; Green and Angelaki, 2004, 2010a, b; MacNeilage et al., 2007). Neural correlates of the gravito-inertial force resolution hypothesis have been observed in the brainstem and deep cerebellar nuclei of the macaque monkey, where neurons carry signals necessary to estimate linear acceleration (Angelaki et al., 2004; Green et al., 2005; Shaikh et al., 2004). Additionally, a separation of tilt and linear acceleration signals has been observed in the posterior cerebellar vermis (Yakusheva et al., 2007; Angelaki et al., 2010; Laurens et al., 2013), and neurons in the anterior thalamus encode pitch and roll relative to gravity (Laurens et al., 2016).
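A minimal numerical sketch of the gravito-inertial force resolution computation is given below. It is not the published model: it assumes a noise-free rotation signal, simple Euler integration of dĜ/dt = Ĝ × ω, and the relations GIA = G − A and Â = Ĝ − GIA. The head-fixed axis convention (x forward, y left, z up), the function names, and all numerical values are hypothetical choices made for illustration.

```python
import math

G0 = 9.81  # magnitude of gravity in m/s^2

def cross(a, b):
    """Cross product of two 3-vectors given as tuples."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def update_gravity(g_hat, omega, dt):
    """One Euler step of dG/dt = G x omega, rescaled so that |G| stays at G0."""
    dg = cross(g_hat, omega)
    stepped = tuple(g + dt * d for g, d in zip(g_hat, dg))
    scale = G0 / norm(stepped)
    return tuple(scale * c for c in stepped)

def estimate_acceleration(g_hat, gia):
    """A_hat = G_hat - GIA, the relation given in the text."""
    return tuple(g - f for g, f in zip(g_hat, gia))

def simulate(roll_rate, accel, duration, dt=0.001):
    """Head starts upright, rolls at roll_rate (rad/s, about x), and
    experiences a constant linear acceleration accel (head coordinates)."""
    g_hat = (0.0, 0.0, -G0)        # initial estimate: gravity along -z
    omega = (roll_rate, 0.0, 0.0)  # idealized, noise-free rotation signal
    for _ in range(int(round(duration / dt))):
        g_hat = update_gravity(g_hat, omega, dt)
    theta = roll_rate * duration   # true roll angle reached
    g_true = (0.0, -G0 * math.sin(theta), -G0 * math.cos(theta))
    gia = tuple(g - a for g, a in zip(g_true, accel))  # otolith signal
    return g_hat, estimate_acceleration(g_hat, gia)

# Pure roll tilt (10 deg/s for 2 s, no translation).
g_tilt, a_tilt = simulate(math.radians(10.0), (0.0, 0.0, 0.0), 2.0)
# Pure lateral translation (1 m/s^2, no rotation).
g_trans, a_trans = simulate(0.0, (0.0, 1.0, 0.0), 2.0)
print("tilt only, |A_hat| =", norm(a_tilt))
print("translation only, A_hat =", a_trans)
```

Under these assumptions, the otolith signal has a lateral component in both cases, yet the inferred linear acceleration is close to zero during the tilt and close to the imposed 1 m/s² during the translation, because only the tilt is accompanied by a rotation signal.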

Further support for the gravito-inertial force resolution hypothesis comes from its ability to predict motion and orientation illusions resulting from the coupling between Â and Ĝ (Merfeld, 1995; Merfeld and Young, 1995; Merfeld et al., 1999). For example, consider a situation in which the body is upright and motionless without visual cues. In this case, zero linear acceleration is inferred because the signals from the otoliths (GIA) and the estimated gravitational vector are aligned. If the gravito-inertial force resolution hypothesis is correct, and visual signals are integrated over time to estimate head tilt, the addition of clockwise large-field angular motion to this scenario should result in an inference of left-ear-down head tilt (clockwise rotation of the estimate of gravity relative to the head) (Fig. 3.6, bottom right and Fig. 3.8). This would occur because integration of the visual rotation signal would cause the estimate of gravity relative to the head (Ĝ) to become biased away from the otolith signal (GIA) and, consequently, an illusory linear acceleration equal to the difference between Ĝ and GIA would result. Consistent with this prediction, roll rotations of the visual scene elicit horizontal nystagmus as if the body were being translated to the side (Zupan and Merfeld, 2003), and visual rotation in pitch induces perceived linear fore-aft acceleration (MacNeilage et al., 2007). As discussed next, the gravito-inertial force resolution hypothesis also correctly predicts that periods of prolonged acceleration can produce changes in perceived orientation relative to gravity as a consequence of coupling between Â and Ĝ.

CONSTANT LINEAR ACCELERATION BIASES PERCEIVED ORIENTATION

If the orientation of the head is held constant relative to gravity, prolonged linear acceleration can result in an illusory perception of head tilt that increases over time (Graybiel et al., 1946; Graybiel, 1952; Paige and Seidman, 1999). This illusion, called the somatogravic effect, reflects a tendency to interpret constant or low-frequency gravito-inertial accelerations as being due to head tilt rather than linear acceleration. Although the behavioral consequences of this illusion are not generally obvious, it can affect orientation perception during flight. For example, a survey of US Air Force pilots showed that 27% of the sample reported experiencing the perception of upward tilt of the aircraft during prolonged linear acceleration (Sipes and Lessard, 2000).

One explanation for the somatogravic effect is that it derives from a feedback loop that “pulls” internal estimates of our orientation relative to gravity (Ĝ) in line with the otolith signal (GIA), thereby minimizing slowly changing discrepancies between Ĝ and GIA (Fig. 3.6, dashed green arrow). This mechanism would minimally affect moment-to-moment orientation estimates during actual head tilts since they are typically fast (i.e., involving high-frequency movements), while eliminating the slow accumulation of errors that may occur during the integration of visual and vestibular signals for estimating head tilt. The somatogravic mechanism can also be formulated as a Bayesian model in which it is assumed that prolonged periods of constant linear acceleration are rare. In this model, sensory estimates of Â are recursively integrated with a prior centered on zero acceleration, resulting in the inferred linear acceleration progressively moving towards zero over time. Because GIA remains constant over this period, and because Ĝ and Â are coupled according to the gravito-inertial force resolution hypothesis, a decrease in Â must be accompanied by a change in Ĝ (Fig. 3.6, bottom center). That change in Ĝ is then interpreted as a progressive tilting of the head (Laurens and Droulez, 2007; MacNeilage, 2007; MacNeilage et al., 2007; Laurens and Angelaki, 2011).
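The feedback account can be sketched as a first-order process in which Ĝ relaxes towards GIA with a time constant τ. This is a deliberate simplification rather than the published model: the linear update rule, the value of τ, the acceleration magnitude, and the head-fixed axis convention (x forward, z up) are all assumptions made for illustration.

```python
import math

G0 = 9.81  # magnitude of gravity in m/s^2

def somatogravic_drift(accel_forward, tau, duration, dt=0.01):
    """Simulate an upright, non-rotating head under constant forward acceleration.

    Returns a list of (time, inferred forward acceleration, inferred pitch in deg).
    """
    g_hat = [0.0, 0.0, -G0]           # initial gravity estimate (head upright)
    gia = [-accel_forward, 0.0, -G0]  # otolith signal, GIA = G - A
    history = []
    for step in range(int(round(duration / dt)) + 1):
        a_hat = [g - f for g, f in zip(g_hat, gia)]  # A_hat = G_hat - GIA
        tilt = math.degrees(math.atan2(-g_hat[0], -g_hat[2]))
        history.append((step * dt, a_hat[0], tilt))
        # Somatogravic feedback: pull G_hat towards GIA (assumed first-order form).
        g_hat = [g + (dt / tau) * (f - g) for g, f in zip(g_hat, gia)]
    return history

# Hypothetical values: 2 m/s^2 forward acceleration, tau = 1 s, 10 s duration.
history = somatogravic_drift(accel_forward=2.0, tau=1.0, duration=10.0)
for t, a_hat_x, tilt in history[::200]:
    print(f"t = {t:4.1f} s  A_hat = {a_hat_x:.3f} m/s^2  tilt = {tilt:.2f} deg")
```

In this sketch the inferred acceleration decays towards zero while the inferred tilt grows towards the angle between GIA and the head's vertical axis (atan(a/g), about 11.5° for the assumed values), which mirrors the trade-off between Â and Ĝ described above.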

CONCLUSIONS

Gravity estimation and verticality perception are essential for maintaining an upright, bipedal posture, as well as achieving stable, allocentric perceptions of our environment. As discussed in this chapter, individual sensory systems provide ambiguous and noisy estimates of the direction of gravity and our orientation in space. To overcome limitations inherent to any one sensory system, gravity estimation and verticality perception rely on two forms of multisensory processing.

First, cue integration improves the precision of gravity and verticality estimates by combining multiple sources of sensory information, weighted by their individual reliabilities. The precision of these estimates is further improved by integrating current sensory information with prior information, such as the probability of being at a particular tilt. Although priors improve the precision of estimates, they can also introduce perceptual biases under conditions of weak sensory evidence or unlikely scenarios. However, because these biases “pull” perception towards events that are statistically likely, they are generally beneficial for behavior. Furthermore, dissociations between different measures of subjective vertical may be a consequence of cue integration mechanisms, rather than a reflection of distinct representations of verticality. A cue integration explanation for these differences rests upon the observation that different subjective vertical tasks rely differentially on particular sources of sensory information, which vary in accuracy and precision in a task-dependent manner. Future work can potentially determine if differences in subjective vertical measures reflect cue integration or distinct verticality representations through experimental manipulation of cue reliabilities within and across senses.
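For reference, the textbook form of this computation can be written out under the assumption of independent Gaussian cues and a Gaussian prior. The symbols are introduced here for illustration only: $s_i$ and $\sigma_i$ are the estimate and standard deviation of cue $i$, and $\mu_p$ and $\sigma_p$ are the mean and standard deviation of the prior.

$$
\hat{s} = \frac{\sum_i s_i/\sigma_i^2 + \mu_p/\sigma_p^2}{\sum_i 1/\sigma_i^2 + 1/\sigma_p^2},
\qquad
\hat{\sigma}^2 = \left( \sum_i \frac{1}{\sigma_i^2} + \frac{1}{\sigma_p^2} \right)^{-1}
$$

Because $\hat{\sigma}^2$ is smaller than any individual $\sigma_i^2$, adding cues or a prior improves precision. As the sensory cues become less reliable (large $\sigma_i$), $\hat{s}$ approaches $\mu_p$, which is the prior-induced bias described above.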

Second, cue disambiguation refers to mechanisms that compensate for ambiguities inherent in unimodal sensory representations. For example, a multisensory estimate of gravity derived from vestibular, somatosensory, and visual information is used to anchor eye-centered visual information to gravity, thereby eliminating ambiguities in the interpretation of retinal images. Cue disambiguation is also critical for interpreting otolith signals, which reflect a combination of inertial and gravitational forces. Indeed, multisensory processing is essential to achieving stable, unified perceptions of our environment.

Moving forward, an important area of research will be to assess the role of sensorimotor processes and prediction in gravity estimation and verticality perception. For instance, how predictions of our future orientation modulate or gate the processing of tilt information remains uncertain, but such mechanisms are likely critical for estimating gravity and verticality during active movements. Investigations into such questions promise to yield insights into the neural basis and computational processes underlying gravity estimation and verticality perception in the years to come.

ACKNOWLEDGMENTS

We thank Andrea Green and Jean-Sebastien Blouin for valuable comments. This work was supported by National Institutes of Health grant DC014305 and the Alfred P. Sloan Foundation (AR).

REFERENCES

- Akbarian S, Grusser OJ, Guldin WO (1992). Thalamic connections of the vestibular cortical fields in the squirrel monkey. J Comp Neurol 326: 423–441.

- Alberts BBGT, de Brouwer AJ, Selen LPJ et al. (2016a). A Bayesian account of visuo-vestibular interactions in the rod-and-frame task. eNeuro 3 (5).

- Alberts BBGT, Selen LPJ, Bertolini G et al. (2016b). Dissociating vestibular and somatosensory contributions to spatial orientation. J Neurophysiol 116: 30–40.

- Alexander RM (2004). Bipedal animals, and their differences from humans. J Anat 204: 321–330.

- Anastasopoulos D, Haslwanter T, Bronstein A et al. (1997). Dissociation between the perception of body verticality and the visual vertical in acute peripheral vestibular disorder in humans. Neurosci Lett 233: 151–153.

- Anastasopoulos D, Bronstein AM, Haslwanter T et al. (1999). The role of somatosensory input for the perception of verticality. Ann NY Acad Sci 871: 379–383.

- Angelaki DE, McHenry MQ, Dickman JD et al. (1999). Computation of inertial motion: neural strategies to resolve ambiguous otolith information. J Neurosci 19: 316–327.

- Angelaki DE, Shaikh AG, Green AM et al. (2004). Neurons compute internal models of the physical laws of motion. Nature 430: 560–564.

- Angelaki DE, Yakusheva TA, Green AM et al. (2010). Computation of egomotion in the macaque cerebellar vermis. The Cerebellum 9: 174–182.

- Aubert H (1861). Eine scheinbare bedeutende Drehung von Objekten bei Neigung des Kopfes nach rechts oder links. Virchows Arch 20: 381–393.

- Avillac M, Denève S, Olivier E et al. (2005). Reference frames for representing visual and tactile locations in parietal cortex. Nat Neurosci 8: 941–949.

- Baier B, Suchan J, Karnath H-O et al. (2012). Neural correlates of disturbed perception of verticality. Neurology 78: 728–735.

- Barbieri G, Gissot A-S, Fouque F et al. (2008). Does proprioception contribute to the sense of verticality? Exp Brain Res 185: 545–552.

- Barbieri G, Gissot A-S, Pérennou D (2010). Ageing of the postural vertical. Age 32: 51–60.

- Barmack NH (2003). Central vestibular system: vestibular nuclei and posterior cerebellum. Brain Res Bull 60: 511–541.

- Barra J, Marquer A, Joassin R et al. (2010). Humans use internal models to construct and update a sense of verticality. Brain 133: 3552–3563.

- Beck JM, Latham PE, Pouget A (2011). Marginalization in neural circuits with divisive normalization. J Neurosci 31: 15310–15319.

- Benson AJ, Bischof N, Collins WE et al. (1974). Vestibular system part 2: psychophysics, applied aspects and general interpretations. In: Kornhuber HH (Ed.), Handbook of Sensory Physiology, vol. 6/2. Springer-Verlag, Berlin Heidelberg, p 684. https://doi.org/10.1007/978-3-642-65920-1.

- Bisdorff AR, Wolsley CJ, Anastasopoulos D et al. (1996). The perception of body verticality (subjective postural vertical) in peripheral and central vestibular disorders. Brain 119: 1523–1534.

- Bohmer A, Mast F, Jarchow T (1996). Can a unilateral loss of otolithic function be clinically detected by assessment of the subjective visual vertical? Brain Res Bull 40: 423–429.

- Brandt T, Dieterich M (1987). Pathological eye-head coordination in roll: tonic ocular tilt reaction in mesencephalic and medullary lesions. Brain 110: 649–666.

- Brandt T, Dieterich M (2006). The vestibular cortex, its locations, functions and disorders. Ann NY Acad Sci 871: 293–312.

- Brandt T, Dieterich M, Danek A (1994). Vestibular cortex lesions affect the perception of verticality. Ann Neurol 35: 403–412.

- Bronstein AM (1999). The interaction of otolith and proprioceptive information in the perception of verticality: the effects of labyrinthine and CNS disease. Ann NY Acad Sci 871: 324–333.

- Bronstein AM, Yardley L, Moore AP et al. (1996). Visually and posturally mediated tilt illusion in Parkinson’s disease and in labyrinthine defective subjects. Neurology 47: 651–656.

- Bronstein AM, Perennou DA, Guerraz M et al. (2003). Dissociation of visual and haptic vertical in two patients with vestibular nuclear lesions. Neurology 61: 1260–1262.

- Bunn LM, Marsden JF, Voyce DC et al. (2015). Sensorimotor processing for balance in spinocerebellar ataxia type 6: sensorimotor processing in SCA6. Mov Disord 30: 1259–1266.

- Ceyte H, Cian C, Zory R et al. (2007). Effect of Achilles tendon vibration on postural orientation. Neurosci Lett 416: 71–75.

- Clemens IAH, De Vrijer M, Selen LPJ et al. (2011). Multisensory processing in spatial orientation: an inverse probabilistic approach. J Neurosci 31: 5365–5377.

- Coppola DM, Purves HR, McCoy AN et al. (1998a). The distribution of oriented contours in the real world. Proc Natl Acad Sci 95: 4002–4006.

- Coppola DM, White LE, Fitzpatrick D et al. (1998b). Unequal representation of cardinal and oblique contours in ferret visual cortex. Proc Natl Acad Sci 95: 2621–2623.

- Crevecoeur F, McIntyre J, Thonnard J-L et al. (2014). Gravity-dependent estimates of object mass underlie the generation of motor commands for horizontal limb movements. J Neurophysiol 112: 384–392.

- Curthoys IS, Dai MJ, Halmagyi GM (1991). Human ocular torsional position before and after unilateral vestibular neurectomy. Exp Brain Res 85: 218–225.

- Daddaoua N, Dicke PW, Thier P (2014). Eye position information is used to compensate the consequences of ocular torsion on V1 receptive fields. Nat Commun 5: 1–9.

- Dakin SC, Mareschal I, Bex PJ (2005). An oblique effect for local motion: psychophysics and natural movie statistics. J Vis 5: 878–887.

- Dakin CJ, Elmore LC, Rosenberg A (2013). One step closer to a functional vestibular prosthesis. J Neurosci 33: 14978–14980.

- Dakin CJ, Peters A, Giunti P et al. (2018). Cerebellar degeneration increases visual influence on dynamic estimates of verticality. Curr Biol 28. https://doi.org/10.1016/j.cub.2018.09.049.

- De Vrijer M, Medendorp WP, Van Gisbergen JAM (2008). Shared computational mechanism for tilt compensation accounts for biased verticality percepts in motion and pattern vision. J Neurophysiol 99: 915–930.

- Deneve S, Latham PE, Pouget A (2001). Efficient computation and cue integration with noisy population codes. Nat Neurosci 4: 826–831.

- Denney D, Adorjanti C (1972). Orientation specificity of visual cortical neurons after head tilt. Exp Brain Res 14: 312–317.

- Dichgans J, Held R, Young L et al. (1972). Moving visual scenes influence the apparent direction of gravity. Science 178: 1217–1219.

- Dieterich M (2005). Thalamic infarctions cause side-specific suppression of vestibular cortex activations. Brain 128: 2052–2067.

- Einstein A (1907). Über das Relativitätsprinzip und die aus demselben gezogenen Folgerungen. Jahrb Radioakt Elektron 4: 411–462.

- Ernst MO (2006). A Bayesian view on multimodal cue integration. Hum Body Percept Out 131: 105–131.

- Ernst M, Banks M (2002). Humans integrate visual and haptic information in a statistically optimal fashion. Nature 415: 429–433.

- Ernst MO, Bülthoff HH (2004). Merging the senses into a robust percept. Trends Cogn Sci 8: 162–169.

- Friedmann G (1970). The judgement of the visual vertical and horizontal with peripheral and central vestibular lesions. Brain 93: 313–328.

- Funk J, Finke K, Müller HJ et al. (2010). Effects of lateral head inclination on multimodal spatial orientation judgments in neglect: Evidence for impaired spatial orientation constancy. Neuropsychologia 48: 1616–1627.

- Furmanski CS, Engel SA (2000). An oblique effect in human primary visual cortex. Nat Neurosci 3: 535–536.

- Gaveau J, Paizis C, Berret B et al. (2011). Sensorimotor adaptation of point-to-point arm movements after spaceflight: the role of internal representation of gravity force in trajectory planning. J Neurophysiol 106: 620–629.

- Gaveau J, Berret B, Demougeot L et al. (2014). Energy-related optimal control accounts for gravitational load: comparing shoulder, elbow, and wrist rotations. J Neurophysiol 111: 4–16.

- Gaveau J, Berret B, Angelaki DE et al. (2016). Direction-dependent arm kinematics reveal optimal integration of gravity cues. eLife 5: e16394.

- Gibson JJ (1952). The relation between visual and postural determinants of the phenomenal vertical. Psychol Rev 59: 370–375.

- Gibson JJ, Mowrer OH (1938). Determinants of the perceived vertical and horizontal. Psychol Rev 45: 300–323.

- Gingras G, Rowland BA, Stein BE (2009). The differing impact of multisensory and unisensory integration on behavior. J Neurosci 29: 4897–4902.

- Girshick AR, Landy MS, Simoncelli EP (2011). Cardinal rules: visual orientation perception reflects knowledge of environmental statistics. Nat Neurosci 14: 926–932.

- Goodman-Keiser MD, Qin C, Thompson AM et al. (2010). Upper thoracic postsynaptic dorsal column neurons conduct cardiac mechanoreceptive information, but not cardiac chemical nociception in rats. Brain Res 1366: 71–84.

- Graybiel A (1952). Oculogravic illusion. AMA Arch Ophthalmol 48: 605–615.

- Graybiel A, Clark B (1962). Perception of the horizontal or vertical with head upright, on the side, and inverted under static conditions, and during exposure to centripetal force. Aerosp Med 33: 147–155.

- Graybiel A, Patterson JL (1955). Thresholds of stimulation of the otolith organs as indicated by the oculogravic illusion. J Appl Physiol 7: 666–670.

- Graybiel A, Hupp DI, Patterson JL (1946). The law of the otolith organs. Fed Proc Am Soc Exp Biol 5: 35. [PubMed] [Google Scholar]

- Green AM, Angelaki DE (2003). Resolution of sensory ambiguities for gaze stabilization requires a second neural integrator. J Neurosci 23: 9265–9275. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Green AM, Angelaki DE (2004). An integrative neural network for detecting inertial motion and head orientation. J Neurophysiol 92: 905–925. [DOI] [PubMed] [Google Scholar]

- Green AM, Angelaki DE (2010a). Multisensory integration: resolving sensory ambiguities to build novel representations. Curr Opin Neurobiol 20: 353–360. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Green AM, Angelaki DE (2010b). Internal models and neural computation in the vestibular system. Exp Brain Res 200: 197–222. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Green AM, Shaikh AG, Angelaki DE (2005). Sensory vestibular contributions to constructing internal models of self-motion. J Neural Eng 2: S164–S179. [DOI] [PubMed] [Google Scholar]

- Guerraz M, Yardley L, Bertholon P et al. (2001). Visual vertigo: symptom assessment, spatial orientation and postural control. Brain 124: 1646–1656. [DOI] [PubMed] [Google Scholar]

- Held R, Dichgans J, Bauer J (1974). Characteristics of moving visual scenes influencing spatial orientation. Vision Res 15: 357–365. [DOI] [PubMed] [Google Scholar]

- Horn G, Stechler G, Hill RM (1972). Receptive fields of units in the visual cortex of the cat in the presence and absence of bodily tilt. Exp Brain Res 15: 113–132. [DOI] [PubMed] [Google Scholar]

- Hughes PC, Brecher GA, Fishkin SM (1972). Effects of rotating backgrounds upon the perception of verticality. Atten Percept Psychophys 11: 135–138. [Google Scholar]

- Ibbotson MR, Price NSC, Das VE et al. (2005). Torsional eye movements during psychophysical testing with rotating patterns. Exp Brain Res 160: 264–267. [DOI] [PubMed] [Google Scholar]

- Kano M, Kano M-S, Kusunoki M et al. (1990). Nature of opto-kinetic response and zonal organization of climbing fiber afferents in the vestibulocerebellum of the pigmented rabbit. Exp Brain Res 80: 238–251. [DOI] [PubMed] [Google Scholar]

- Kano M, Kano M-S, Maekawa K (1991). Optokinetic response of simple spikes of Purkinje cells in the cerebellar flocculus and nodulus of the pigmented rabbit. Exp Brain Res 87: 484–496. [DOI] [PubMed] [Google Scholar]

- Karnath H-O (1994). Subjective body orientation in neglect and the interactive contribution of neck muscle proprioception and vestibular stimulation. Brain 117: 1001–1012. [DOI] [PubMed] [Google Scholar]

- Karnath H-O, Sievering D, Fetter M (1994). The interactive contribution of neck muscle proprioception and vestibular stimulation to subjective “straight ahead” orientation in man. Exp Brain Res 101: 140–146. [DOI] [PubMed] [Google Scholar]

- Karnath H-O, Ferber S, Dichgans J (2000a). The origin of contraversive pushing: evidence for a second graviceptive system in humans. Neurology 55: 1298–1304. [DOI] [PubMed] [Google Scholar]

- Karnath H-O, Ferber S, Dichgans J (2000b). The neural representation of postural control in humans. Proc Natl Acad Sci 97: 13931–13936. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kheradmand A, Winnick A (2017). Perception of upright: multisensory convergence and the role of temporal-parietal cortex. Front Neurol 8: 552. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kheradmand A, Lasker A, Zee DS (2015). Transcranial magnetic stimulation (TMS) of the supramarginal gyrus: a window to perception of upright. Cereb Cortex 25: 765–771. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Knill DC, Pouget A (2004). The Bayesian brain: the role of uncertainty in neural coding and computation. Trends Neurosci 27: 712–719. [DOI] [PubMed] [Google Scholar]

- Knill DC, Richards W (1996). Perception as Bayesian inference, Cambridge University Press, Cambridge. [Google Scholar]

- Kobayashi H, Hayashi Y, Higashino K et al. (2002). Dynamic and static subjective visual vertical with aging. Auris Nasus Larynx 29: 325–328. [DOI] [PubMed] [Google Scholar]

- Koffka K (1935). Principles of gestalt psychology, Harcourt-Brace, New York. [Google Scholar]

- Kostreva DR, Pontus SP (1993a). Pericardial mechanoreceptors with phrenic afferents. Am J Physiol-Heart Circ Physiol 264: H1836–H1846. [DOI] [PubMed] [Google Scholar]

- Kostreva DR, Pontus SP (1993b). Hepatic vein, hepatic parenchymal, and inferior vena caval mechanoreceptors with phrenic afferents. Am J Physiol-Gastrointest Liver Physiol 265: G15–G20. [DOI] [PubMed] [Google Scholar]

- Lackner JR, Levine MS (1979). Changes in apparent body orientation and sensory localization induced by vibration of postural muscles: vibratory myesthetic illusions. Aviat Space Environ Med 50: 346–354. [PubMed] [Google Scholar]

- Lafosse C, Kerckhofs E, Vereeck L et al. (2007). Postural abnormalities and contraversive pushing following right hemisphere brain damage. Neuropsychol Rehabil 17: 374–396. [DOI] [PubMed] [Google Scholar]

- Landy MS, Banks MS, Knill DC (2011). Ideal-observer models of cue integration. In: Sensory cue integration, Computational neuroscience, Oxford University Press, Oxford. [Google Scholar]

- Laurens J, Angelaki DE (2011). The functional significance of velocity storage and its dependence on gravity. Exp Brain Res 210: 407–422. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Laurens J, Droulez J (2007). Bayesian processing of vestibular information. Biol Cybern 96: 389–404. [DOI] [PubMed] [Google Scholar]

- Laurens J, Meng H, Angelaki DE (2013). Neural representation of orientation relative to gravity in the macaque cerebellum. Neuron 80: 1508–1518. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Laurens J, Kim B, Dickman JD (2016). Gravity orientation tuning in macaque anterior thalamus. Nat Neurosci 19: 1566–1568. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lekhel H, Popov K, Anastasopoulos D (1997). Postural responses to vibration of neck muscles in patients with idiopathic torticollis. Brain 120: 583–591. [DOI] [PubMed] [Google Scholar]

- Lewis RF, Haburcakova C, Gong W et al. (2013). Electrical stimulation of semicircular canal afferents affects the perception of head orientation. J Neurosci 33: 9530–9535. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Li B, Peterson MR, Freeman RD (2003). Oblique effect: a neural basis in the visual cortex. J Neurophysiol 90: 204–217. [DOI] [PubMed] [Google Scholar]

- Lopez C, Blanke O (2011). The thalamocortical vestibular system in animals and humans. Brain Res Rev 67: 119–146. [DOI] [PubMed] [Google Scholar]

- Lopez C, Lacour M, Ahmadi A et al. (2007). Changes of visual vertical perception: a long-term sign of unilateral and bilateral vestibular loss. Neuropsychologia 45: 2025–2037. [DOI] [PubMed] [Google Scholar]

- Lopez C, Blanke O, Mast FW (2012). The human vestibular cortex revealed by coordinate-based activation likelihood estimation meta-analysis. Neuroscience 212: 159–179. [DOI] [PubMed] [Google Scholar]

- Lord SR, Webster IW (1990). Visual field dependence in elderly fallers and non-fallers. Int J Aging Hum Dev 31: 269–279. [DOI] [PubMed] [Google Scholar]

- Ma WJ, Beck JM, Latham PE (2006). Bayesian inference with probabilistic population codes. Nat Neurosci 9: 1432–1438. [DOI] [PubMed] [Google Scholar]

- MacNeilage PR (2007). Psychophysical investigations of visual-vestibular interactions in human spatial orientation, University of California, Berkeley, Berkeley, CA. [Google Scholar]

- MacNeilage PR, Banks MS, Berger DR (2007). A Bayesian model of the disambiguation of gravitoinertial force by visual cues. Exp Brain Res 179: 263–290. [DOI] [PubMed] [Google Scholar]

- Manckoundia P, Mourey F, Pfitzenmeyer P et al. (2007). Is backward disequilibrium in the elderly caused by an abnormal perception of verticality? A pilot study. Clin Neurophysiol 118: 786–793. [DOI] [PubMed] [Google Scholar]

- Mazibrada G, Tariq S, Pérennou D (2008). The peripheral nervous system and the perception of verticality. Gait Posture 27: 202–208. [DOI] [PubMed] [Google Scholar]

- Merfeld DM (1990). Spatial orientation in the squirrel monkey: an experimental and theoretical investigation, PhD thesis. MIT, Cambridge, MA. [Google Scholar]

- Merfeld DM (1995). Modeling the vestibulo-ocular reflex of the squirrel monkey during eccentric rotation and roll tilt. Exp Brain Res 106: 123–134. [DOI] [PubMed] [Google Scholar]

- Merfeld DM, Young LR (1995). The vestibulo-ocular reflex of the squirrel monkey during eccentric rotation and roll tilt. Exp Brain Res 106: 111–122. [DOI] [PubMed] [Google Scholar]

- Merfeld DM, Zupan LH (2002). Neural processing of gravitoinertial cues in humans. III. Modeling tilt and translation responses. J Neurophysiol 87: 819–833. [DOI] [PubMed] [Google Scholar]

- Merfeld DM, Young L, Oman CM (1993). A multidimensional model of the effect of gravity on the spatial orientation of the monkey. J Vestib Res 3: 141–161. [PubMed] [Google Scholar]

- Merfeld DM, Zupan LH, Peterka RJ (1999). Humans use internal models to estimate gravity and linear acceleration. Nature 398: 615–618. [DOI] [PubMed] [Google Scholar]

- Mikellidou K, Cicchini GM, Thompson PG et al. (2015). The oblique effect is both allocentric and egocentric. J Vis 15: 24. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mitchell DE, Blakemore C (1972). The site of orientational constancy. Perception 1: 315–320. [DOI] [PubMed] [Google Scholar]

- Mittelstaedt H (1983). A new solution to the problem of the subjective vertical. Naturwissenschaften 70: 272–281. [DOI] [PubMed] [Google Scholar]

- Mittelstaedt H (1986). The subjective vertical as a function of visual vertical and extra retinal cues. Acta Psychol (Amst.) 63: 63–85. [DOI] [PubMed] [Google Scholar]

- Mittelstaedt H (1992). Somatic versus vestibular gravity reception in man. Ann NY Acad Sci 656: 124–139. [DOI] [PubMed] [Google Scholar]

- Mittelstaedt H (1996). Somatic graviception. Biol Psychol 42: 53–74. [DOI] [PubMed] [Google Scholar]

- Mittelstaedt H (1997). Interaction of eye-, head-, and trunk-bound information in spatial perception and control. J Vestib Res 7: 283–302. [PubMed] [Google Scholar]

- Mittelstaedt H (1999). The role of the otoliths in perception of the vertical and in path integration. Ann NY Acad Sci 871: 334–344. [DOI] [PubMed] [Google Scholar]

- Mittelstaedt H, Fricke E (1988). The relative effect of saccular and somatosensory information on spatial perception and control. Adv Otorhinolaryngol 42: 24–30. [DOI] [PubMed] [Google Scholar]

- Niijima A (1975). Observation on the localization of mechanoreceptors in the kidney and afferent nerve fibres in the renal nerves in the rabbit. J Physiol 245: 81. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Over R (1966). Possible visual factors in falls by old people. Gerontologist 6: 212–214. [Google Scholar]

- Paige GD, Seidman SH (1999). Characteristics of the VOR in response to linear acceleration. Ann NY Acad Sci 871: 123–135. [DOI] [PubMed] [Google Scholar]

- Pellicano E, Burr D (2012). When the world becomes “too real”: a Bayesian explanation of autistic perception. Trends Cogn Sci 16: 504–510. [DOI] [PubMed] [Google Scholar]

- Pérennou DA, Amblard B, Laassel EM (2002). Understanding the pusher behavior of some stroke patients with spatial deficits: a pilot study. Arch Phys Med Rehabil 83: 570–575. [DOI] [PubMed] [Google Scholar]

- Pérennou DA, Mazibrada G, Chauvineau V (2008). Lateropulsion, pushing and verticality perception in hemisphere stroke: a causal relationship. Brain 131: 2401–2413. [DOI] [PubMed] [Google Scholar]

- Quix FH (1925). The function of the vestibular organ and the clinical examination of the otolithic apparatus. J Laryngol Otol 40: 425–443. [Google Scholar]

- Roll JP, Vedel JP (1982). Kinaesthetic role of muscle afferents in man, studied by tendon vibration and microneurography. Exp Brain Res 47: 177–190. [DOI] [PubMed] [Google Scholar]

- Roll JP, Vedel JP, Ribot E (1989). Alteration of proprioceptive messages induced by tendon vibration in man: a micro-neurographic study. Exp Brain Res 76: 213–222. [DOI] [PubMed] [Google Scholar]