Abstract

We explore the application of probability generating functions (PGFs) to invasive processes, focusing on infectious disease introduced into large populations. Our goal is to acquaint the reader with applications of PGFs, moreso than to derive new results. PGFs help predict a number of properties about early outbreak behavior while the population is still effectively infinite, including the probability of an epidemic, the size distribution after some number of generations, and the cumulative size distribution of non-epidemic outbreaks. We show how PGFs can be used in both discrete-time and continuous-time settings, and discuss how to use these results to infer disease parameters from observed outbreaks. In the large population limit for susceptible-infected-recovered (SIR) epidemics PGFs lead to survival-function based models that are equivalent to the usual mass-action SIR models but with fewer ODEs. We use these to explore properties such as the final size of epidemics or even the dynamics once stochastic effects are negligible. We target this primer at biologists and public health researchers with mathematical modeling experience who want to learn how to apply PGFs to invasive diseases, but it could also be used in an applications-based mathematics course on PGFs. We include many exercises to help demonstrate concepts and to give practice applying the results. We summarize our main results in a few tables. Additionally we provide a small python package which performs many of the relevant calculations.

1. Introduction

The spread of infectious diseases remains a public health challenge. Increased interaction between humans and wild animals leads to increased zoonotic introductions, and modern travel networks allows these diseases to spread quickly. Many mathematical approaches have been developed to give us insight into the early behavior of disease outbreaks. An important tool for understanding the stochastic behavior of an outbreak soon after introduction is the probability generating function (PGF) (Allen, 2010; Wilf, 2005; Yan, 2008).

Specifically, PGFs frequently give insight about the statistical behavior of outbreaks before they are large enough to be affected by the finite-size of the population. In these cases, both susceptible-infected-recovered (SIR) disease (for which nodes recover with immunity) and susceptible-infected-susceptible (SIS) disease (for which nodes recover and can be reinfected immediately) are equivalent. In the case of SIR disease PGFs can also be used to study the dynamics of disease once an epidemic is established in a large population.

We can investigate properties such as the early growth rate of the disease, the probability the disease becomes established, or the distribution of final sizes of outbreaks that fail to become established. Similar questions also arise in other settings where some introduced agent can reproduce or die, such as invasive species in ecological settings (Lewis, Petrovskii, & Potts, 2016), early within-host pathogen dynamics (Conway & Coombs, 2011), and the accumulation of mutations in precancerous and cancerous cells (Antal & Krapivsky, 2011; Durrett, 2015) or in pathogen evolution (Volz, Romero-Severson, & Leitner, 2017). These are all examples of branching processes, and PGFs are a central tool for the analysis of branching processes (Bartlett, 1949; Kendall, 1949; Kimmel & Axelrod, 2002). Except for Section 4 where we develop deterministic equations for later-time SIR epidemics, based on (Miller, 2011; Miller, Slim, & Volz, 2012; Volz, 2008), the approaches we describe here have direct application in these other branching processes as well.

Before proceeding, we define what a PGF is. Let denote the probability of drawing the value i from a given distribution of non-negative integers. Then is the PGF of this distribution. We should address a potential confusion caused by the name. A “generating function” is a function which is defined from (or “generated by”) a sequence of numbers and takes the form . So a “probability generating function” is a generating function defined from a probability distribution on integers. It is not a function that generates probabilities when values are plugged in for x. There are other generating functions, including the “moment generating function”, defined to be where (the moment and probability generating functions turn out to be closely related).

PGFs have a number of useful properties which we derive in Appendix A. We have structured this paper so that a reader can skip ahead now and read Appendix A in its entirety to get a self-contained introduction to PGFs, or wait until a particular property is referenced in the main text and then read that part of the appendix.

As we demonstrate in Table 1, for many important distributions the PGF takes a simple form. We derive this for the Poisson distribution.

Example 1.1

Consider the Poisson distribution with mean λ

For this we find

Table 1.

A few common probability distributions and their PGFs.

| Distribution | PGF |

|---|---|

| Poisson, mean λ: | |

| Uniform: | |

| Binomial: n trials, with success probability p: for | |

| Geometrica: for and | |

| Negative binomialb: for | |

Another definition of the geometric distribution with different indexing, for , gives a different PGF.

Typically the negative binomial is expressed in terms of a parameter r which is the number of failures at which the experiment stops, assuming each with success probability p. For us plays an important role, so to help distinguish these, we use rather than r. Then is the probability of i successes.

In this primer, we explore the application of PGFs to the study of disease spread. We will use PGFs to answer questions about the early-time behavior of an outbreak (neglecting depletion of susceptibles):

-

•

What is the probability an outbreak goes extinct within g generations (or by time t) in an arbitrarily large population?

-

•

What is the probability an index case causes an epidemic?

-

•

What is the final size distribution of small outbreaks?

-

•

What is the size distribution of outbreaks at generation g (or time t)?

-

•

How fast is the initial growth for those outbreaks that do not go extinct?

Although we present these early-time results in the context of SIR outbreaks they also apply to SIS outbreaks and many other invasive processes.

We can also use PGFs for some questions about the full behavior accounting for depletion of susceptibles. Specifically:

-

•

In a continuous-time Markovian SIR or SIS outbreak spreading in a finite population, what is the distribution of possible system states at time t?

-

•

In the large-population limit of an SIR epidemic, what fraction of the population is eventually infected?

-

•

In the large-population limit of an SIR epidemic, what fraction of the population is infected or recovered at time t?

We will consider both discrete-time and Markovian continuous-time models of disease. In the discrete-time case each infected individual transmits to some number of “offspring” before recovering. In the continuous-time case each infected individual transmits with a rate β and recovers with a rate γ.

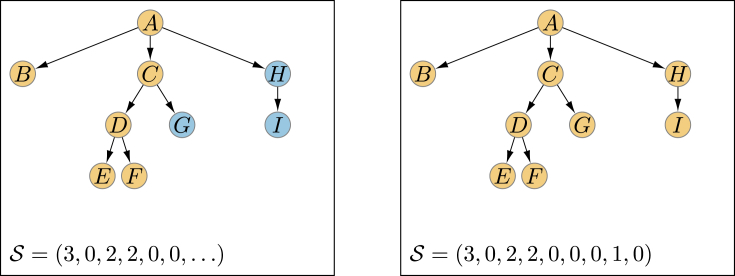

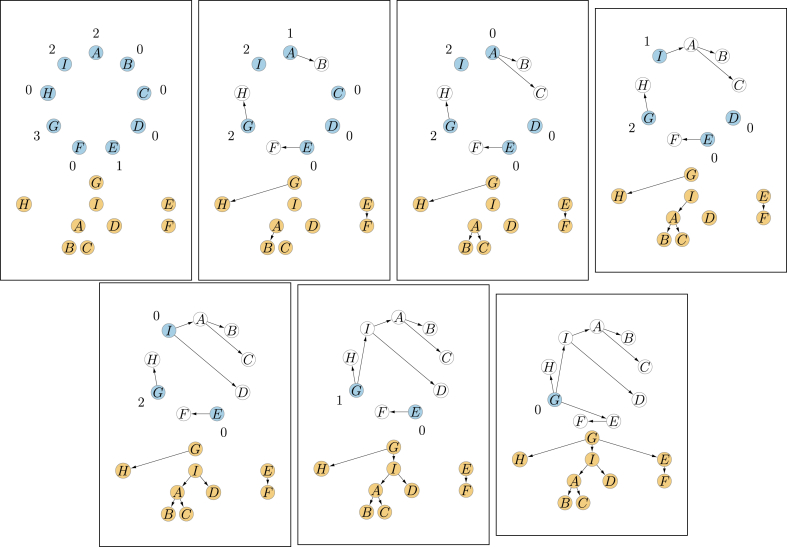

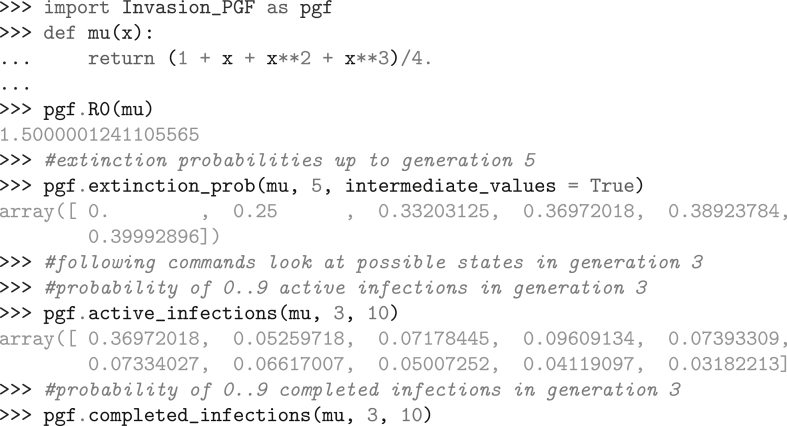

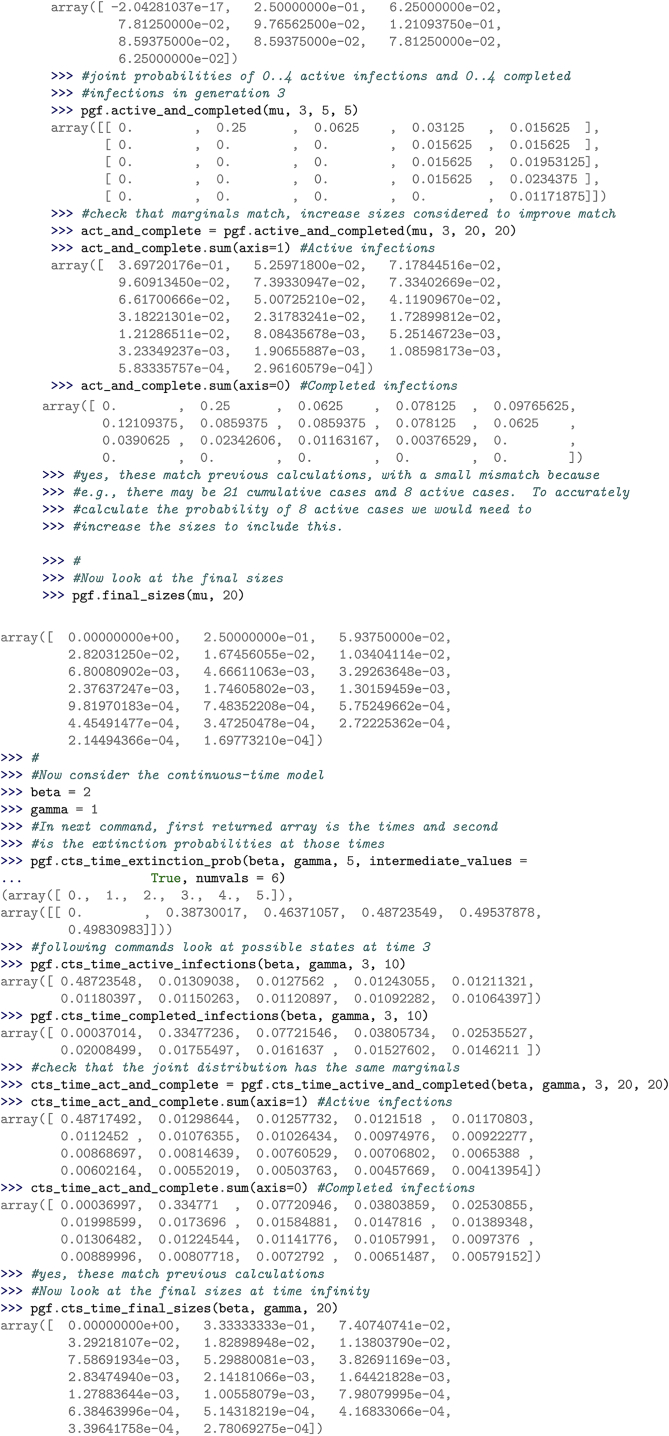

In Section 2 we begin our study investigating properties of epidemic emergence in a discrete-time, generation-based framework, focusing on the probability of extinction and the sizes of outbreaks assuming that the disease is invading a sufficiently large population with enough mixing that we can treat the infections caused by any one infected individual as independent of the others. We also briefly discuss how we might use our observations to infer disease parameters from observed small outbreaks. In Section 3, we repeat this analysis for a continuous-time case treating transmission and recovery as Poisson processes, and then adapt the analysis to a population with finite size N. Next in Section 4 we use PGFs to derive simple models of the large-time dynamics of SIR disease spread, once the infection has reached enough individuals that we can treat the dynamics as deterministic. Finally, in Section 5 we explore multitype populations in which there are different types of infected individuals, which may produce different distributions of infections. We provide three appendices. In Appendix A, we derive the relevant properties of PGFs, in Appendix B we provide elementary (i.e., not requiring Calculus) derivations of two important theorems, and in Appendix C we provide details of a Python package Invasion_PGF available at https://github.com/joelmiller/Invasion_PGF that implements most of the results described in this primer. Python code that uses this package to implement the figures of Section 2 is provided in the supplement.

Our primary goal here is to provide modelers with a useful PGF-based toolkit, with derivations that focus on developing intuition and insight into the application rather than on providing fully rigorous proofs. Throughout, there are exercises designed to increase understanding and help prepare the reader for applications. This primer (and Appendix A in particular) could serve as a resource for a mathematics course on PGFs. For readers wanting to take a deep dive into the underlying theory, there are resources that provide a more technical look into PGFs in general (Wilf, 2005) or specifically using PGFs for infectious disease (Yan, 2008).

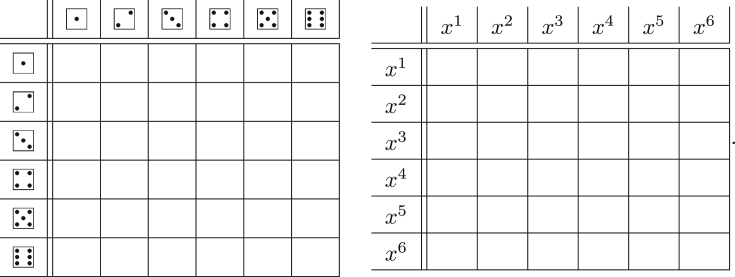

1.1. Summary

Before presenting the analysis, we provide a collection of tables that summarize our main results. Table 2 summarizes our notation. Table 3, Table 4 summarize our main results for the discrete-time and continuous-time models. Table 5 shows applications of PGFs to the continuous-time dynamics of SIR epidemics once the disease has infected a non-negligible proportion of a large population, effectively showing how PGFs can be used to replace most common mass-action models. Finally, Table 6 provides the probability of each finite final outbreak size assuming a sufficiently large population that susceptible depletion never plays a role.

Table 2.

Common function and variable names. When we use a PGF for the number of susceptible individuals, active infections, and/or completed infections x and s correspond to susceptible individuals, y and i to active infections, and z and r to completed infections.

| Function/variable name | Interpretation |

|---|---|

| Arbitrary PGFs. | |

|

Without hats: The PGF for the offspring distribution in discrete time. With hats: The PGF for the outcome of an unknown event in a continuous-time Markovian outbreak: y accounts for active infections and z accounts for completed infections. |

|

| α, , | Probability of either eventual extinction, extinction by generation g, or by time t in an infinite population. |

| PGF for the number of active infections in generation g or at time t in an infinite population. | |

| The PGF for the distribution of completed infections at the end of a small outbreak, in generation g, or at time t in an infinite population. If , then one of the terms in the expansion of is where is the probability of an epidemic. | |

| The PGF for the joint distribution of current infections and completed infections either at generation g or time t in an infinite population. | |

| The PGF for the joint distribution of susceptibles and current infections at time t in a finite population of size N (used for continuous time only). In the SIR case we can infer the number recovered from this and the total population size. | |

| PGF for the ‘‘ancestor distribution’’, analogous to the offspring distribution. | |

| PGF for the distribution of susceptibility for the continuous time model where rate of receiving transmission is proportional to κ. | |

| β, γ | The individual transmission and recovery rates for the Markovian continuous time model. |

Table 3.

A summary of our results for application of PGFs to discrete-time SIS and SIR disease processes in the infinite population limit. The function is the PGF for the offspring distribution. The notation in the exponent denotes function composition g times. For example, .

| Question | Section | Solution |

|---|---|---|

| Basic Reproductive Number [the average number of transmissions an infected individual causes early in an outbreak]. |

Intro to 2 | . |

| Probability of extinction, α, given a single introduced infection. | 2.1 | or, equivalently, the smallest x in for which . |

| Probability of extinction within g generations | 2.1.2 | . |

| PGF of the distribution of the number of infected individuals in the g-th generation. | 2.2 | where solves . |

| Average number of active infections in generation g and average number if the outbreak has not yet gone extinct. | 2.2 | , and . |

| PGF of the number of completed cases at generation g in an infinite population. | 2.3.1 | where solves with . |

| PGF of the joint distribution of the number of current and completed cases at generation g in an infinite population. | 2.3.2 | where solves with . |

| PGF of the final size distribution. | 2.4 | where solves . It also solves . This has a discontinuity at if epidemics are possible. |

| Probability an outbreak infects exactly j individuals | 2.4 | where is the coefficient of in the expansion of . |

| Probability a disease has a particular set of parameters given a set of observed independent outbreak sizes and a prior belief . | 2.4.1 | , which can be solved numerically using our prior knowledge and our knowledge of the probability of each given . |

Table 4.

A summary of our results for application of PGFs to the continuous-time disease process. We assume individuals transmit with rate β and recover with rate γ. The functions and are given in System (14).

| Question | Section | Solution |

|---|---|---|

| Probability of eventual extinction α given a single introduced infection. | 3.1 | |

| Probability of extinction by time t, . | 3.1.1 | where and . |

| PGF of the distribution of number of infected individuals at time t (assuming one infection at time 0). | 3.2 | where and solves either or |

| PGF of the number of completed cases at time t. | 3.4 | where and solves |

| PGF of the joint distribution of the number of current and completed cases at time t (assuming one infection at time 0). | 3.3 | where and solves either or |

| PGF of the final size distribution. | 3.4 | . This also solves . If epidemics are possible this has a discontinuity at . |

| Probability an outbreak infects exactly j individuals | 3.4 | . |

| PGF for the joint distribution of the number susceptible and infected at time t for SIS dynamics in a population of size N. | 3.5.1 | where solves |

| PGF for the joint distribution of the number susceptible and infected at time t for SIR dynamics in a population of size N. | 3.5.2 | where solves |

Table 5.

A summary of our results for application of PGFs to the final size and large-time dynamics of SIR disease. The PGFs χ and ψ encode the heterogeneity in susceptibility. The PGF χ is the PGF of the ancestor distribution (an ancestor of u is any individual who, if infected, would infect u). The PGF encodes the distribution of the contact rates.

| Question | Section | Solution |

|---|---|---|

| Final size relation for an SIR epidemic assuming a vanishingly small fraction ρ randomly infected initially with . | 4.2 | . [For standard assumptions, including the usual continuous-time assumptions, .] |

| Discrete-time number susceptible, infected, or recovered in a population with homogeneous susceptibility and given , assuming an initial fraction ρ is randomly infected with . | 4.3 | For : with the initial condition , , and . |

| Discrete-time number susceptible, infected, or recovered in a population with heterogeneous susceptibility for SIR disease after g generations with an initial fraction ρ randomly infected where . | 4.3 | For : with the initial condition , , and . |

| Continuous time number susceptible, infected, or recovered for SIR disease as a function of time with an initial fraction ρ randomly infected where . Assumes u receives infection at rate | 4.4 | For : with the initial condition . |

Table 6.

The probability of j total infections in an infinite population for different offspring distributions, derived using Theorem 2.7 and the corresponding log-likelihoods. For any one of these, if we sum the probability of j over (finite) j, we get the probability that the outbreak remains finite in an infinite population. This is particularly useful when inferring disease parameters from observed outbreak sizes (Section 2.4.1). The parameters' interpretations are given in Table 1.

| Distribution | PGF | Probability of j infections | Log-Likelihood of Parameters given j |

|---|---|---|---|

| Poisson | |||

| Uniform | |||

| Binomial | |||

| Geometric | |||

| Negative Binomial | |||

1.2. Exercises

We end each section with a collection of exercises. We have designed these exercises to give the reader more experience applying PGFs and to help clarify some of the more subtle points.

Exercise 1.1

Except for the Poisson distribution handled in Example 1.1, derive the PGFs shown in Table 1 directly from the definition .

For the negative binomial, it may be useful to use the binomial series:

using and .

Exercise 1.2

Consider the binomial distribution with n trials, each having success probability Using Table 1, show that the PGF for the binomial distribution converges to the PGF for the Poisson distribution in the limit , if is fixed.

2. Discrete-time spread of a simple disease: early time

We begin with a simple model of disease transmission using a discrete-time setting. In the time step after becoming infected, an infected individual causes some number of additional cases and then recovers. We let denote the probability of causing exactly i infections (referred to as “offspring”) before recovering. It will be useful to define the PGF for the offspring distribution

| (1) |

For results related to early extinction or early-time dynamics, we will assume that the population is large enough and sufficiently well-mixed that the transmissions in successive generations are all independent events and unaffected by depletion of susceptible individuals. Before deriving our results for the early-time behavior of our discrete-time model, we offer a summary in Table 3.

Often in disease spread we are interested in the expected number of infections caused by an infected individual early in an outbreak, which we define to be .

| (2) |

where . The value of is related to disease dynamics, but it is not the only important property of μ.

Example 2.1

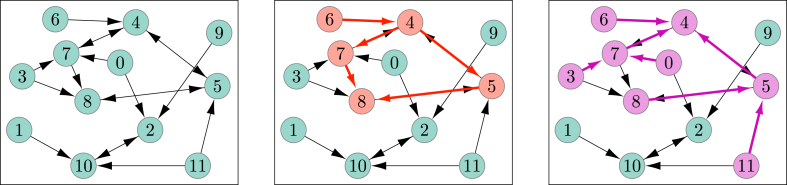

We demonstrate a few sample outbreaks in Fig. 1. Here we take a bimodal case with such that a proportion 0.3 of the population cause 3 infections and the remaining 0.7 cause none. Most of the outbreaks die out immediately, but some persist, surviving multiple generations before extinction.

Example 2.2

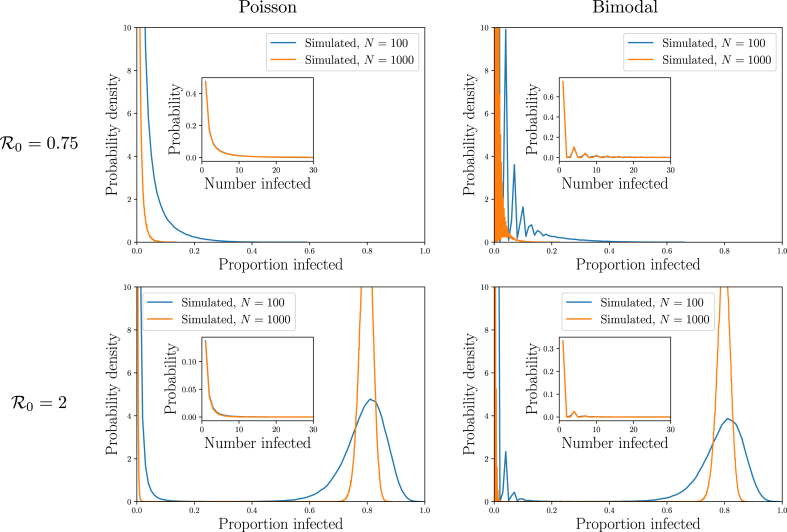

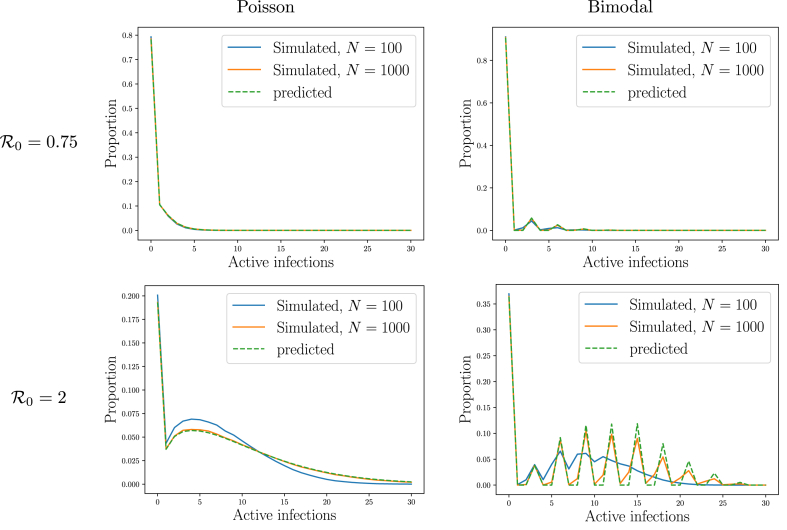

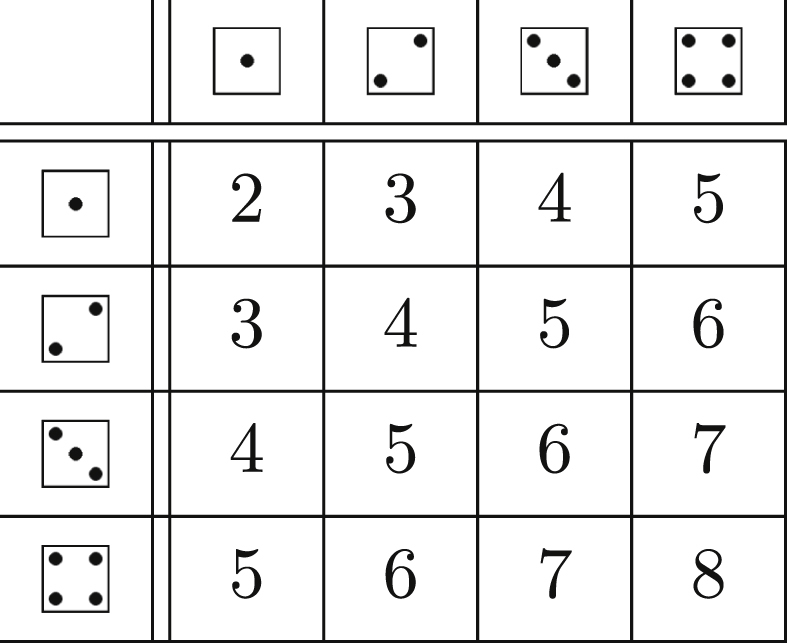

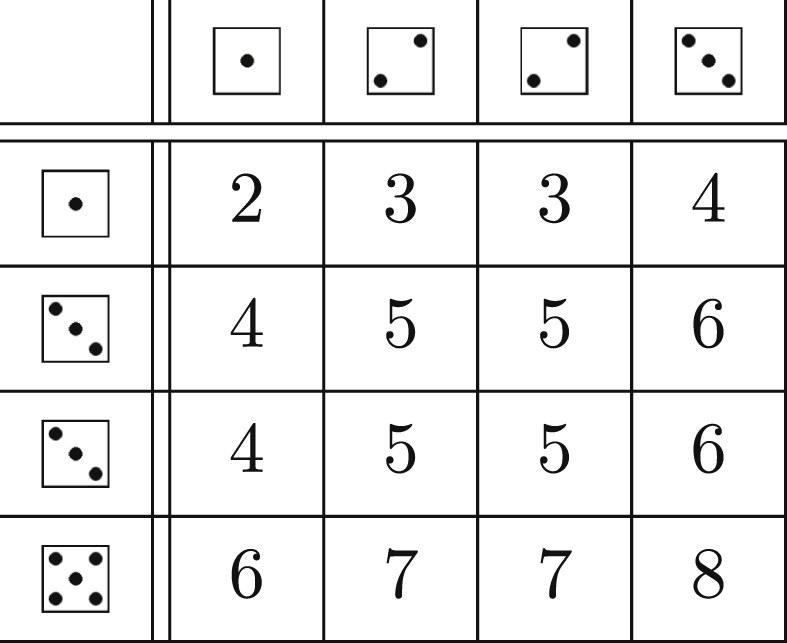

Throughout Section 2 we compare simulated SIR outbreaks with the theoretical predictions which we calculate using the Python package Invasion_PGF described in Appendix C. We assume that all individuals are equally likely to be infected by any transmission, and we focus on and . For each , we consider two distributions for the number of new infections an infected individual causes:

- •

a Poisson-distributed number of infections with mean , or

- •

a bimodal distribution with either 0 or 3 infections, with the proportion chosen to give a mean of . The probabilities are and ( is impossible).

The bimodal distribution is similar to that of Fig. 1, but with different probabilities of 0 or 3. After an individual chooses the number of infections to cause, the recipients are selected uniformly at random (with replacement) from the population. If they are susceptible, an infection occurs at the next time step, otherwise nothing happens. We use simulations for and .

Fig. 2 looks at the final size distribution. The distribution of the number infected in small outbreaks (insets) is not significantly affected by the total population size. This is because they do not grow large enough to “see” the system size. They would die out even in an infinite population. Large outbreaks, or epidemics, on the other hand would grow without bound in an infinite population, and their growth is limited by the finiteness of the population. We will see that (assuming homogeneous susceptibility and the large population limit), the proportion infected in an SIR epidemic depends only on .

Fig. 1.

A sample of 10 outbreaks starting with a bimodal distribution having in which of the population causes 3 infections and the rest cause none. The top row denotes the initial states, showing each of the 10 initial infections. An edge from one row to the next denotes an infection from the higher node to the lower node. Most outbreaks die out immediately.

Fig. 2.

Simulated outcomes of SIR outbreaks in populations as described in Example 2.2. Outbreaks tend to be either small or large. The typical number infected in small outbreaks (insets) is affected by the details of the offspring distribution, but not the population size. The typical proportion infected in large outbreaks (epidemics) appears to depend on the average number of transmissions an individual causes, but not the population size or the offspring distribution. These observations will be explained later. These simulations are reused throughout this section to show how PGFs capture different properties of the distributions.

2.1. Early extinction probability

A common misconception is that if an epidemic is inevitable. In fact, if we are lucky an outbreak can die out stochastically before the number infected is large. Conversely, if we are not lucky it may initially grow faster than our deterministic models predict.

In any finite population a disease will eventually go extinct because the disease interferes with its own spread. Our observations show that the typical final outcomes of an outbreak are either an “epidemic” which grows until the number infected is limited by the finiteness of the population or a small outbreak which dies out before it can see the system size. One of our first questions about a possible disease emergence is “what is the probability that an outbreak will grow into an epidemic?” We focus on the equivalent question, “what is the probability the outbreak goes extinct before causing an epidemic?”. We aim to calculate the probability that the disease would go extinct if it never interferes with its own spread, or in other words, if it were spreading through an unlimited population. Throughout we assume that disease is introduced with a single randomly chosen index case.

The theory for the extinction probability in an unbounded population has been developed extensively in the context of Galton–Watson processes (Watson & Galton, 1875). It has been applied to infectious disease many times, e.g. (Easley & Kleinberg, 2010, section 21.8), and (Getz & Lloyd-Smith, 2006; Lloyd-Smith, Schreiber, Kopp, & Getz, 2005).

2.1.1. Derivation as a fixed point equation

We present two derivations of the extinction probability. Our first is quicker, but gives less insight. We start with the a priori observation that the extinction probability takes some value between 0 and 1 inclusive. Our goal is to filter out the vast majority of these options by finding a property of the extinction probability that most values between 0 and 1 do not have.

Let α be the probability of extinction if the spread starts from a single infected individual. Then from Property A.1 of Appendix A we have where is the probability that, in isolation, an offspring of the initial infected individual would not cause an epidemic. Because we assume that the offspring distribution of later cases is the same as for the index case, we must have and so the extinction probability solves .

We have established:

Theorem 2.1

Assuming that each infected individual produces an independent number of offspring i chosen from a distribution having PGF , then α, the probability an outbreak starting from a single infected individual goes extinct, satisfies

(3) Not all solutions to must give the extinction probability.

There can be more than one x solving . In fact is always a solution, and from Property A.9 it follows that there is another solution if and only if . In this case, our derivation of Theorem 2.1 does not tell us which of the solutions is correct. However, Section 2.1.2 shows that the correct solution is the smaller solution when it exists. More specifically the extinction probability is where starting with . This gives a condition for a nonzero epidemic probability. Namely .

Example 2.3

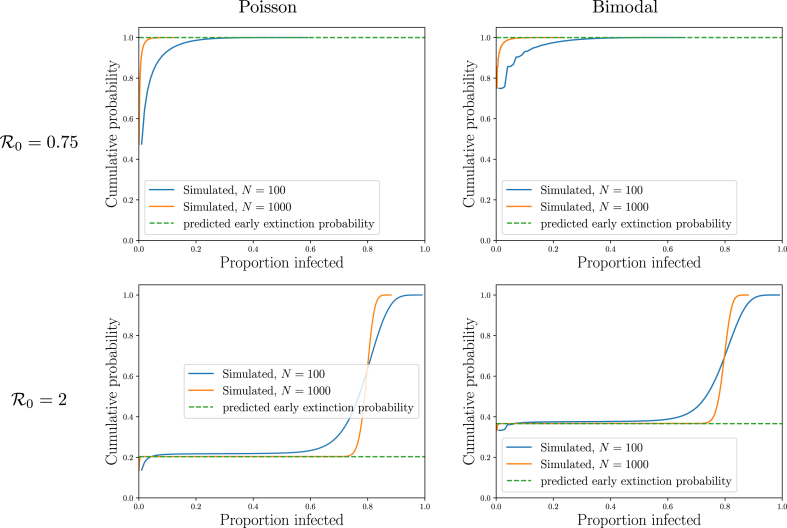

We now consider the Poisson and bimodal offspring distributions described in Example 2.2. We saw that typically an outbreak either affects a small proportion of the population (a vanishing fraction in the infinite population limit) or a large number (a nonzero fraction in the infinite population limit).

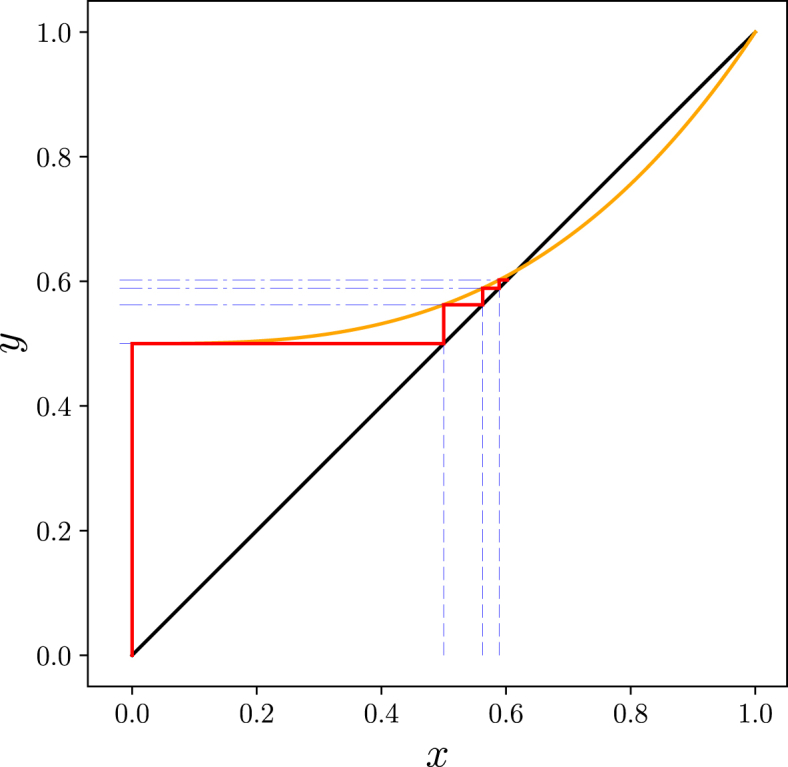

By plotting the cumulative density function (cdf) of proportion infected in Fig. 3, we extend our earlier observations. The cdf is steep near zero (becoming vertical in the infinite population limit). Then it is effectively flat for a while. Finally if it again grows steeply at some proportion infected well above 0 (the size of epidemic outbreaks).

The plateau’s height is the probability that an outbreak dies out while small. Fig. 3 shows that this is well-predicted by choosing the smaller of the solutions to .

For a fixed , the the plateau’s height (i.e., the early extinction probability) depends on the details of the offspring distribution and not simply . However, the critical value at which the cdf increases for the second time depends only on . This suggests that even though the probability of an epidemic depends on the details of the offspring distribution, the proportion infected in an SIR epidemic depends only on , the reproductive number. We explore this in more detail in Section 4.2.

Fig. 3.

Illustration ofTheorem 2.1. The cumulative density function (cdf) for the total proportion ever infected (effectively the integral of Fig. 2). For small , all outbreaks die out without affecting a sizable portion of the population. For larger , there are many small outbreaks and many large outbreaks, but very few outbreaks in between, so the cdf is flat in this range. The height of this plateau is the probability the outbreak dies out while small. This is approximately the predicted extinction probability for an infinite population (dashed). The probability of a small outbreak is different for the different offspring distributions, but the proportion infected corresponding to epidemics is the same (for given ).

2.1.2. Derivation from an iterative process

In our second derivation, we calculate the probability that the outbreak dies out within g “generations”. Then the probability the outbreak would die out after a finite number of steps in an infinite population is simply the limit of this as . In our counting of “generations”, we consider the index case to be generation 0. An individual's generation is equal to the number of transmissions occurring in the chain from the index case to that individual.

We define to be the probability that the longest chain an index case will initiate has fewer than g transmissions. So because there are always at least 0 transmissions, . The probability that there is no transmission is by definition . Recalling that the probability the index case causes zero infections is , we have

is the probability that the index case does not cause a chain of 1 or more transmissions. The probability that all chains die out after at most 1 transmission (that is, there are no second generation cases) is the probability that the index case causes i infections, , times the probability none of those i individuals causes further infections, , summed over all i. We introduce the notation to be the result of iterative applications of μ to x g times, so and for , . Then following Property A.1 we have

We generalize this by stating that the probability an initial infection fails to initiate any length g chains is equal to the probability that all of its i offspring fail to initiate a chain of length .

So the probability of not starting a chain of length at least g is found by iteratively applying the function μ g times to . Taking gives the extinction probability (Getz & Lloyd-Smith, 2006):

| (4) |

The fact that there is a biological interpretation of starting with is important. It effectively guarantees that the iterative process converges and that the speed of convergence reflects the typical speed of extinction. Iteration appears to be an efficient way to solve numerically and because of the biological interpretation, we can avoid questions that might arise about whether there are multiple solutions of and, if so, which of them corresponds to the biological problem. Instead we simply iterate starting from 0 and the result must converge to the probability that in an infinite population the outbreak would go extinct in finite time, regardless of what other solutions might have.

Exercise 2.1 shows that if then the limit of the sequence is 1 if and some satisfying if . This proves:

Theorem 2.2

Assume that each infected individual produces an independent number of offspring i chosen from a distribution having PGF . Then

- •

The probability an outbreak goes extinct within g generations is

(5)

- •

The probability of extinction in an infinite population is

- •

If and then . If extinction occurs with probability .

Example 2.4

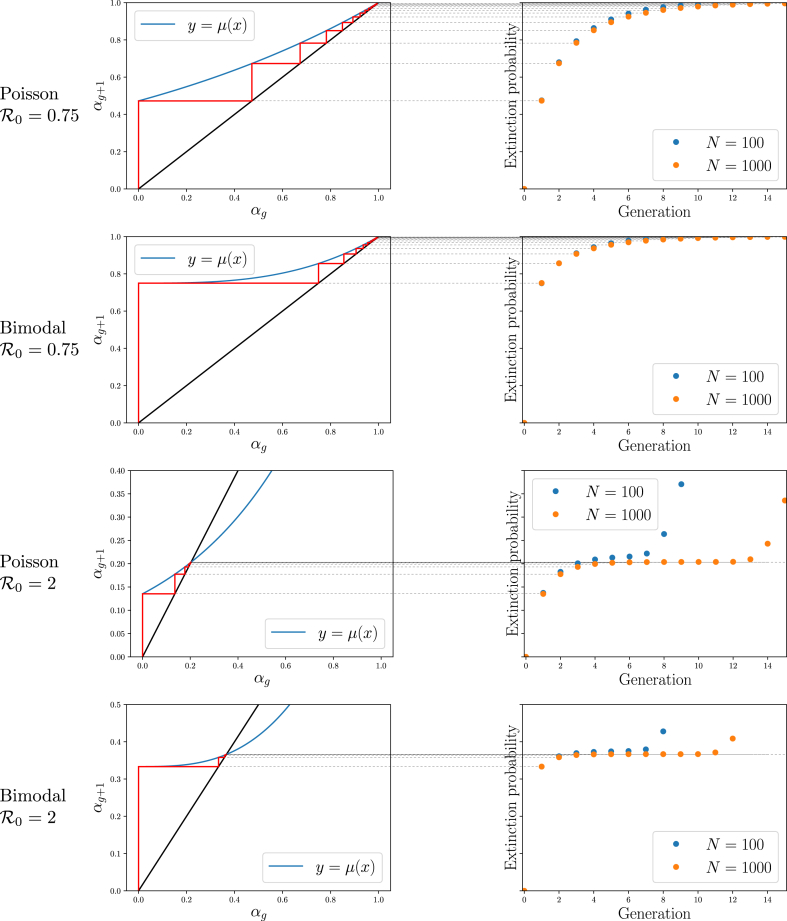

We now consider the Poisson and bimodal offspring distributions described in Example 2.2

Fig. 4 shows that starting with and defining , the values of emerging from the iterative process correspond to the observed probability that outbreaks have gone extinct by generation g for early values of g.

In the infinite population limit, this provides a match for all g. So this gives the probability the outbreak goes extinct by generation g assuming it has not grown large enough to see the finite-size of the population (i.e., assuming it has not become an epidemic). For SIR epidemics in the finite populations we use for simulations, the plateaus eventually give way to extinction because eventually there are not enough remaining susceptibles.

Fig. 4.

Illustration ofTheorem 2.2. Left: Cobweb diagrams showing convergence of iterations to the predicted outbreak extinction probability (see Fig. A.10). Right: Observed probabilities of no infections remaining after each generation for simulations of Fig. 2 showing the probability of extinction by generation g. Thin lines show the relation between the cobweb diagram and the extinction probabilities. The simulated probability initially rises quickly, representing outbreaks that die out early on, then it remains steady at a level representing the probability of outbreaks dying out while small. For it increases again because the epidemics burn through the finite population (and so the infinite population theory breaks down). The values match the corresponding iteration of the cobweb diagrams.

2.2. Early-time outbreak dynamics

We now explore the number of active infections present in generation g. We will need to use in this subsection, so to avoid confusion we use as our indexing variable rather than . Setting to be the probability active infections exist at generation g, we define the PGF . Assuming at generation 0 there is a single infection () then the initial condition is . From inductive application of Property A.8 for composition of PGFs (exercise 2.7) it is straightforward to conclude that for , where is the PGF for the offspring distribution.

Theorem 2.3

Assuming that each infected individual produces an independent number of offspring chosen from a distribution with PGF , the number infected in the g-th generation has PGF

(6) where is the probability there are active infections in generation g. This does not provide information about the cumulative number infected.

It is worth highlighting that for general distributions, the calculation of coefficients of may seem quite challenging. Luckily, it is not so difficult. Property A.3 states (taking )

for large M and any . For each we can calculate by numerically iterating μ g times. Then for large enough M, this gives a remarkably accurate and efficient approximation to the individual coefficients.

Example 2.5

We demonstrate Theorem 2.3 in Fig. 5, using the simulations from Example 2.2. Simulations and predictions are in excellent agreement.

There is a mismatch noticeable for the bimodal distribution with particularly with , which is a consequence of the fact that the population is finite. In stochastic simulations, occasionally an individual receives multiple transmissions even early in the outbreak, but in the PGF theory this does not happen.

We are often interested in the expected number of active infections in generation g, (however, as seen below this is not the most relevant measure to use if ). Property A.5 shows that this is given by . To calculate this we use for all g (Property A.4) and . Then through induction and the chain rule we show that :

we initialized the induction with the case which is the definition of . If , this shows that we expect decay.

Fig. 5.

Illustration ofTheorem 2.3. Comparison of predictions and the simulations from Fig. 2 for the number of active infections in the third generation. The bimodal case with shows a clear impact of population size as a sizable number of transmissions fail because the population is finite. The predictions were made numerically using the summation in Property A.3.

If , there is a more relevant measure. On average we see growth, but a sizable fraction of outbreaks may go extinct, and these zeros are included in the average, which alters our prediction. This is closely related to the “push of the past” effect observed in phylodynamics (Nee, Holmes, May, & Harvey, 1994). For policy purposes, we are more interested in the expected size if the outbreak is not yet extinct because a response that is scaled to deal with the average size (where the average includes those that are extinct) is either too big (if the disease has gone extinct) or too small (if the disease has become established) (Miller, Davoudi, Meza, Slim, & Pourbohloul, 2010). It is very unlikely to be just right. The expected number infected in generation g conditional on the outbreaks not dying out by generation g is . This has an important consequence. We can have different extinction probabilities for different offspring distributions with the same . The disease with a higher extinction probability tends to have considerably more infections in those outbreaks that do not go extinct.

We have

Corollary 2.1

In the infinite population limit, the expected number infected in generation g starting from a single infection is

(7) and the expected number starting from a single infection conditional on the disease persisting to generation g is

(8)

We can explore higher moments of the distribution of the number infected by taking more derivatives of and evaluating at .

2.3. Cumulative size distribution

We now look at the total number infected while the outbreak is small. There are multiple ways to calculate how the cumulative size of small outbreaks is distributed. We look at two of these. The first focuses just on the number of completed infections by generation g. The second calculates the joint distribution of the number of completed infections and the number of active infections at generation g. Later we address the distribution of final sizes of small outbreaks.

2.3.1. Focused approach to find the cumulative size distribution

We begin by calculating just the number of completed infections at generation g. We define to be the probability that there are j completed infections at generation g (by “completed” we only include individuals who are no longer infectious in generation g). We will use PGFs of the variable z when focusing on completed infections.

We define

to be the PGF for the number of completed infections j at generation g. Although we use j to represent recoveries, this model is still appropriate for SIS disease because we are interested in small outbreak sizes in a well-mixed infinite population for which we can assume no previously infected individuals have been reexposed. If the outbreak begins with a single infection, then

showing that the first individual (infectious during generation 0) completes his infection at the start of generation 1. For generation 2 we have the initial individual and his direct offspring, so .

More generally, to calculate for , the completed infections consist of

-

•

the initial infection

-

•

the active infections in generation 1.

-

•

any descendants of those active infections in generation 1 that will have recovered by generation g.

The distribution of the number of descendants of a generation 1 individual (including that individual) who have recovered by generation g is given by . That is each generation 1 individual and its descendants for the following infections have the same distribution as an initial infection and its descendants after generations.

From Property A.8 the number of descendants by generation g (not counting the initial infection) that have recovered is distributed like . Accounting for the initial individual requires that we increment the count by 1 which requires increasing the exponent of z by 1. So we multiply by z. This yields

To sustain an outbreak up to generation g there must be at least one infection in each generation from 0 to . So any outbreak with fewer than g completed infections at generation g must be extinct. So the coefficient of does not change once . Thus we have shown

Theorem 2.4

Assuming a single initial infection in an infinite population, the PGF for the distribution of the number of completed infections at generation is given by

(9) with . Once , the coefficient is constant.

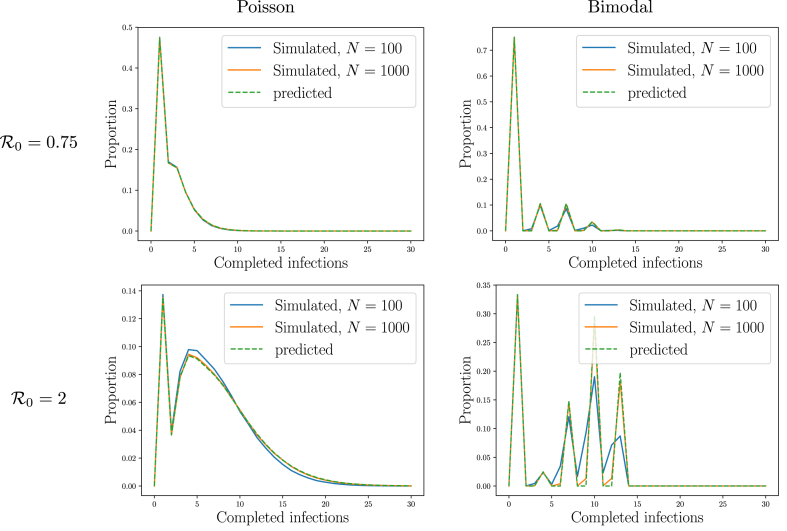

Example 2.6

We test Theorem 2.4 in Fig. 6, using the simulations from Example 2.2. Simulations and predictions are in excellent agreement.

Example 2.7

Expected cumulative size It is instructive to calculate the expected number of completed infections at generation . Note that , , and . We use induction to show that for the expected number of completed infections is :

This is in agreement with our earlier result that the expected number that are infected in generation j is .

This is

As with our previous results, the sum shows a threshold behavior at . If , then in the limit , the expected cumulative outbreak size converges to the finite value . If , it diverges.

Fig. 6.

Illustration ofTheorem 2.4 Comparison of predictions with the simulations from Fig. 2 for the number of completed infections at the start of the third generation. The predictions were calculated using Property A.3.

This example shows

Corollary 2.2

In the infinite population limit the expected number of completed infections at the start of generation g assuming a single randomly chosen initial infection is

(10a) For this diverges as . Otherwise it converges to .

2.3.2. Broader approach

An alternate approach calculates both the current and cumulative size at generation g. We let be the probability that there are i actively infected individuals and r completed infections in generation g. We define , so y represents the active infections and z the completed infections.

Assume we know the values and for generation . Then is simply and is distributed according to . So given those known and , the distribution for the next generation would be . Summing over all possible and yields

with the initial condition

The first few iterations are

and we can use induction on this to show that in general

Theorem 2.5

Given a single initial infection in an infinite population, the PGF for the joint distribution of the number of active i and completed infections r in generation g is given by

(11) with .

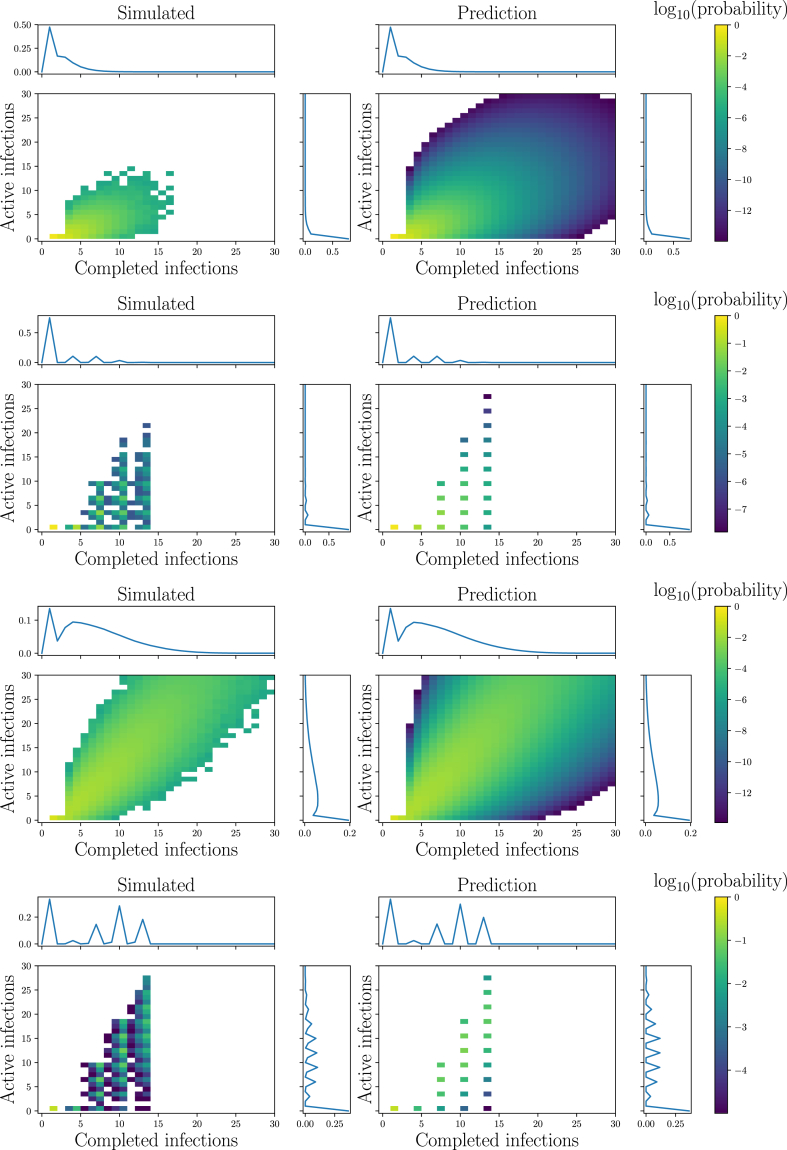

Example 2.8

We demonstrate Theorem 2.5 in Fig. 7, using the same simulations as in Example 2.2. Simulations and predictions are in excellent agreement.

Fig. 7.

Illustration ofTheorem 2.5. Comparison of predictions and simulations for the joint distribution of the number of current and completed infections at generation . The predictions were calculated using Property A.3. Left: simulations from Fig. 2 for and Right: predictions (note vertical scales on left and right are the same). Top to Bottom: Poisson , Bimodal , Poisson , and Bimodal . The predictions match our observations, with some difference for two reasons: 1) because simulations cannot resolve events with probabilities as small as , but the PGF approach can, and 2) due to finite-size effects as occasionally an individual receives multiple transmissions even early on. The plots also show the marginal distributions, matching Fig. 5, Fig. 6.

2.4. Small outbreak final size distribution

There are many diseases for which there have been multiple small outbreaks in recent years but no large-scale epidemics (such as Nipah, H5N1 avian influenza, Pneumonic Plague, Monkey pox, and — prior to 2013 — Ebola). A natural question emerges: what can we infer about the epidemic potential of these diseases? The size distribution may help us to infer properties of the disease and in particular to estimate the probability that (Blumberg & Lloyd-Smith, 2013; Kucharski & Edmunds, 2015; Nishiura, Yan, Sleeman, & Mode, 2012).

We have found that gives the PGF for the number of completed infections by generation g. We noted earlier that for a given r, once , the coefficient of in is fixed and equal to the probability that the outbreak goes extinct after exactly r infections. Motivated by this, we look for the limit as . We define

We expect this to be the PGF for the final size of the outbreaks.

We can express the pointwise limit1 as

where for the coefficient is the probability an outbreak causes exactly r infections in an infinite population. We use to denote the probability that the outbreak is infinite in an infinite population (i.e., that it is an epidemic), and we interpret as 1 when and 0 for . So if epidemics are possible, has a discontinuity at , and the limit as from below gives which is the extinction probability α.

We now look for a recurrence relation for in the infinite population limit. Each offspring of the initial infection independently causes a set of infections. The distribution of these new infections (including the original offspring) also has PGF . So the distribution of the number of descendants of the initial infection (but not including the initial infection) has PGF . To include the initial infection, we must increase the exponent of z by one, which we do by multiplying by z. We conclude that . Although we have shown that solves , we have not shown that there is only one function that solves this.

We may be interested in the outbreak size distribution conditional on the outbreak going extinct. For this we are looking at for any , and at , this is simply 1. Note that if then .

Summarizing this we have

Theorem 2.6

Given a single initial infection in an infinite population, consider , the PGF for the final size distribution: where if and 1 if .

- •

Then

(12)

- •

We have . If then is discontinuous at , with a jump discontinuity of , the probability of an epidemic.

- •

The PGF for outbreak size distribution conditional on the outbreak being finite is

Perhaps surprisingly we can often find the coefficients of analytically if is known. We use a remarkable result showing that the probability of infecting exactly n individuals is equal to the coefficient of in (Blumberg & Lloyd-Smith, 2013; Dwass, 1969; van der Hofstad & Keane, 2008; Wendel, 1975). The theorem is

Theorem 2.7

Given an offspring distribution with PGF , for the coefficient of in is where is defined by .

That is, for j < ∞ the probability of having exactly infections in an outbreak starting from a single infection is times the coefficient of in .

We prove this theorem in Appendix B. The proof is based on observing that if we draw a sequence of j numbers from the offspring distribution, the probability they sum to (corresponding to transmissions and hence j infected individuals including the index case) is the coefficient of in . A fraction of these satisfy additional constraints needed to correspond to a valid transmission tree2 and thus the probability of a valid transmission tree with exactly transmissions is times .

Because the coefficient of in is (by Property A.2), we have that the probability of an outbreak of size is

It is enticing to think there may be a similar theorem for coefficients of , but we are not aware of one. The theorem has been generalized to models having multiple types of individuals (Kucharski & Edmunds, 2015).

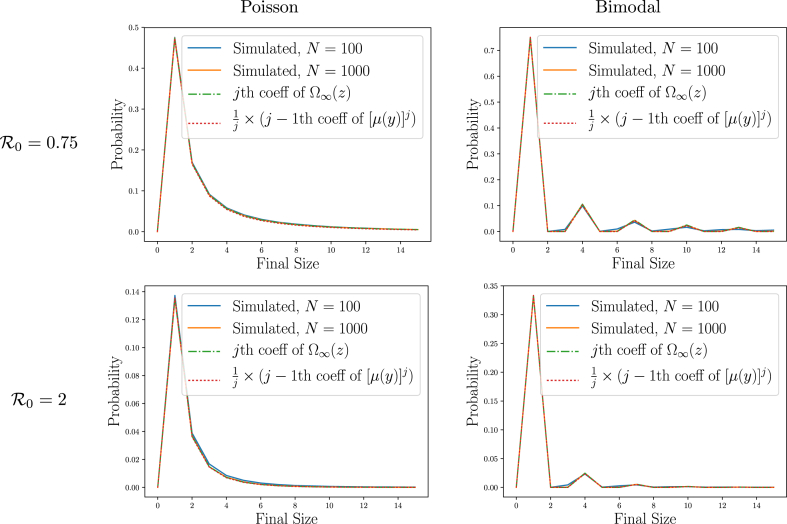

Example 2.9

We demonstrate Theorems 2.6 and 2.7 in Fig. 8, using the same simulations as in Example 2.2.

Example 2.10

The PGF for the negative binomial distribution with parameters p and (with ) is

We can rewrite this as

We will use this to find the final size distribution. We expand using the binomial series

which holds for integer or non-integer η. Then with , , and playing the role of δ, η, and i:

[the negatives all cancel]. So the coefficient of is (assuming is an integer). Looking at times this, we conclude that the probability an outbreak infects exactly j individuals is

A variation of this result for non-integer is commonly used in work estimating disease parameters (Blumberg & Lloyd-Smith, 2013; Nishiura et al., 2012). Exercise 2.12 generalizes the formula for this.

Fig. 8.

Illustration ofTheorems 2.6 and 2.7. The final size of small outbreaks predicted by Theorem 2.6 and by Theorem 2.7 as calculated using Property A.3 matches observations from the simulations in Fig. 2 (see also insets of Fig. 2).

Applying Theorem 2.7 to several different families of distributions yields Table 6 for the probability of a final size .

2.4.1. Inference based on outbreak sizes

A major challenge in infectious disease modeling is inferring the parameters of an infectious disease. In Section 2.4 we alluded to the use of PGFs to infer disease properties from observations of the size distribution of small outbreaks. In this section we describe how to do this using a Bayesian approach, using the probabilities given in Table 6. A number of researchers have used this approach to estimate disease parameters (Blumberg & Lloyd-Smith, 2013; Kucharski & Edmunds, 2015; Nishiura et al., 2012).

We assume that we know what type of distribution the offspring distribution, but that there are some unknown parameters (often it is assumed to be a negative binomial distribution). We also assume that we have some prior belief about the probability of various parameters. For practical purposes, we will assume that we have some finite number of possible parameter values, each with a probability.

We use Bayes' Theorem (Hoff, 2009):

| (13) |

Here we think of as the specific parameter values and X as the observed data (typically the observed size of an outbreak or sizes of multiple independent outbreaks, in which case comes from Theorem 2.7 or Table 6). In our calculations we can simply use the fact that with a normalization constant which can be dealt with at the end.

The prior for is the probability distribution we assume for the parameter values before observing the data, given by . We often simply assume that all parameter values are equally probable initially.

The likelihood of the parameters is defined to be , the probability that we would observe X for the given parameter values. If we are choosing between two sets of parameter values and and the observations have consistently higher likelihood for , then we intuitively expect that is the more probable parameter value.

In practice the likelihood may be very small which can lead to numerical error. It is often useful to instead look at log-likelihood,3 . For example, if we have many observed outbreak sizes, the likelihood under independence is the product of the probabilities of each individual outbreak size. The likelihood is thus quite small (perhaps less than machine precision), while the log-likelihood is simply the sum of the log-likelihoods of each individual observation.

We know that

where C is the logarithm of the proportionality constant in Equation (13). If we have a prior and the likelihood, the right hand side can be calculated. It is often possible (and advisable) to calculate the log likelihood directly rather than calculating and then taking the logarithm.

Exponentiating the right hand side and then finding the appropriate normalization constant will yield . Numerically the numbers may be very small when we exponentiate, so to prior to exponentiating it is advisable to add a constant value to all of the expressions. This constant is corrected for in the final normalization step.

We now provide the steps for a numerical calculation of given the prior , the observations X, and the log likelihood .

-

1.

For each , calculate .

-

2.

Find the maximum over all and subtract it to yield . Note that , and this brings all of our numbers closer to zero.

-

3.

Calculate . This will be proportional to . Note that by using rather than we have reduced the impact of roundoff error.

-

4.

Find the normalization constant . Then

Note that if comes from a continuous distribution rather than a discrete distribution, then the same approach works, except that P is a probability density and the summation in the final step becomes an integral.

Example 2.11

A frequent assumption is that the offspring distribution is negative binomial. Let us make this assumption with unknown p and .

To artificially simplify the problem, we assume that we know that there are only two possible pairs of , namely or , and that our a priori belief is that they are equally probable.

After observing 2 independent outbreaks, with total sizes and , we want to use our observations to update .

From Table 6, the likelihood of a given given the two independent observations is

In problems like this, we will often encounter logarithms of factorials. Many programming languages provide this, typically using Stirling's approximation. For example, Python, R, and C++ all have a special function lgamma which calculates the natural log of the absolute value of the gamma function.4 We find

So and . Exponentiating, we have

So now

So rather than the two parameter sets being equally probable, is now about half as likely as given the observed data.

2.5. Generality of discrete-time results

Thus far we have measured time in generations. However, many models measure time differently and different generations may overlap. For both SIS and SIR disease, our results above about final size distribution or extinction probability still apply. To see this, we note first that our results have been derived assuming that the population is infinite and well-mixed so no individuals receive multiple transmissions. Regardless of the clock time associated with transmission and recovery, there is still a clear definition of the length of the transmission chain to an infected individual. Once we group individuals by length of the transmission chain, we get the generation-based model used above. This equivalence is studied more in (Ludwig, 1975; Yan, 2008).

2.6. Exercises

Exercise 2.1

Monotonicity of

- a.

By considering the biological interpretation of , explain why the sequence of inequalities should hold. That is, explain why , why the form a monotonically increasing sequence, and why all of them are at most 1.

- b.

Show that therefore converges to some non-negative limit α that is at most 1 and that .

- c.

Use Property A.9 to show that if there exists a unique solving if and only if .

- d.

Assuming , use Property A.9 to show that if then converges to the unique solving , and otherwise converges to 1.

Exercise 2.2

Use Theorem 2.2 to prove Theorem 2.1.

Exercise 2.3

Show that if , then . By referring to the biological interpretation of , explain this result.

Exercise 2.4

Find all PGFs with and . Why were these excluded from Theorem 2.2?

Exercise 2.5

Larger initial conditions

Assume that disease is introduced with infections rather than just 1, or that it is not observed by surveillance until infections are present. Assume that the offspring distribution PGF is .

- a.

If m is known, find the extinction probability.

- b.

If m is unknown but its distribution has PGF , find the extinction probability.

Exercise 2.6

Extinction probability

Consider a disease in which , , , and with a single introduced infection.

- a.

Numerically approximate the probability of extinction within 0, 1, 2, 3, 4, or 5 generations up to five significant digits (assuming an infinite population).

- b.

Numerically approximate the probability of eventual extinction up to five significant digits (assuming an infinite population).

- c.

A surveillance program is being introduced, and detection will lead to a response. But it will not be soon enough to affect the transmissions from generations 0 and 1. From then on , , , and . Numerically approximate the new probability of eventual extinction after an introduction in an unbounded population [be careful that you do the function composition in the right order – review Properties A.1 and A.8].

Exercise 2.7

We look at two inductive derivations of . They are similar, but when adapted to the continuous-time dynamics we study later, they lead to two different models. We take as given that gives the distribution of the number of infections caused after generations starting from a single case. One argument is based on discussing the results of outcomes attributable to the infectious individuals of generation in the next generation. The other is based on the outcomes indirectly attributable to the infectious individuals of generation 1 through their descendants after another generations.

- a.

Explain why Property A.8 shows that .

- b.

(without reference to a) Explain why Property A.8 shows that .

Exercise 2.8

Use Theorem 2.3 to prove the first part of Theorem 2.2.

Exercise 2.9

How does Corollary 2.1 change if we start with k infections?

Exercise 2.10

Assume the PGF of the offspring size distribution is .

- a.

What offspring size distribution yields this PGF?

- b.

Find the PGF for the number of completed infections at 0, 1, 2, 3, and 4 generations [it may be helpful to use a symbolic math program once .].

- c.

Check that for these cases, once , the coefficient of does not change.

Exercise 2.11

By setting , use Theorem 2.5 to prove Theorem 2.4.

Exercise 2.12

Redo Example 2.10 if is a real number, rather than an integer. It may be useful to use the –function, which satisfies for any x and for integer n.

Exercise 2.13

Except for the negative binomial case done in Example 2.10, derive the probabilities in Table 6.

- a.

For the Poisson distribution, use Property A.2.

- b.

For the Uniform distribution, use Property A.2.

- c.

For the Binomial distribution, use the binomial theorem: .

- d.

For the Geometric distribution, follow Example 2.10 (noting that p and q interchange roles).

Exercise 2.14

To help model continuous-time epidemics, Section 3 will use a modified version of μ, which in some contexts will be written as . To help motivate the use of two variables, we reconsider the discrete case. We think of a recovery as an infected individual disappearing and giving birth to a recovered individual and a collection of infected individuals. Look back at the discrete-time calculation of and . Define a two-variable version of μ as .

- a.

What is the biological interpretation of ?

- b.

Rewrite the recursive relations for using rather than .

- c.

Rewrite the recursive relations for using rather than .

The choice to use versus is purely a matter of convenience.

Exercise 2.15

Consider Example 2.11. Assume that a third outbreak is observed with 4 infections. Calculate the probability of and given the data starting

- a.

with the assumption that and X consists of the three observations , , and .

- b.

with the assumption that and and X consists only of the single observation .

- c.

Compare the results and explain why they should have the relation they do.

Exercise 2.16

Assume that we know a priori that the offspring distribution for a disease has a negative binomial distribution with . Assume that our a priori knowledge of is that it is an integer uniformly distributed between 1 and 80 inclusive. Given observed outbreaks of sizes 1, 4, 5, 6, and 10:

- a.

For each , calculate where X is the observed outbreak sizes. Plot the result.

- b.

Find the probability that is greater than 1.

3. Continuous-time spread of a simple disease

We now develop PGF-based approaches adapting the results above to continuous-time processes. In the continuous-time framework, generations will overlap, so we need a new approach if we want to answer questions about the probability of being in a particular state at time t rather than at generation g. Questions about the final state of the population can be answered using the same techniques as for the discrete case, but the techniques introduced here also apply and yield the same predictions. Unlike Section 2, we do not do a detailed comparison with simulation.

In the continuous-time model, infected individuals have a constant rate of recovery γ and a constant rate of transmission β. Then is the probability that the first event is a recovery, while is the probability it is a transmission. If the event is a recovery, then the individual is removed from the infectious population. If the event is a transmission, then the individual is still available to transmit again, with the same rate. If the recipient of a transmission is susceptible, it becomes infectious.

Unlike the discrete-time case, we do not focus on the offspring distribution. Rather, we focus on the resulting number of infected individuals after an event. Early on we treat the process as if as if each infected individual were removed and replaced by either 2 or 0 new infections. Although this is not the true process (she either recovers or she creates one additional infection and remains present), it is equivalent as far as the number of infections at any early time is concerned. We focus on a PGF for the outcome of the next event.

We define and so

| (14a) |

When we are calculating the number of completed cases, it will be useful to have a two-variable version of :

| (14b) |

Most of the results in this section are the continuous-time analog of the discrete-time results above for the infinite population limit. In the discrete-time approach we did not attempt to address outbreaks in finite populations. However, we end this section by deriving the equations for , the PGF for the joint distribution of the number of susceptibles and active infections in a population of finite size N.

3.1. Extinction probability

For the extinction probability, we can apply the same methods derived in the discrete case to . Thus we can find the extinction probability iteratively starting from the initial guess and setting .

Exercises 3.1 and 3.2 each show that

Theorem 3.1

For the continuous-time Markovian model of disease spread in an infinite population, the probability of extinction given a single initial infection is

(15)

3.1.1. Extinction probability as a function of time

In the discrete-time case, we were interested in the probability of extinction after some number of generations. When we are using a continuous-time model, we are generally interested in “what is the probability of extinction by time t?”

To answer this, we set to be the probability of extinction within time t. We will calculate the derivative of α at time t by using some mathematical sleight of hand to find . Then dividing this by and taking will give the result. Our approach is closely related to backward Kolmogorov equations (described later below).

We choose the time step to be small enough that we can assume that at most one event happens between time 0 and . The probabilities of having 0, 1, or 2 infections are , and where the notation means that the error goes to zero fast enough that as . The probability of having 3 or more infections (that is, multiple transmission events in the interval) is as well.

If there are two infected individuals at time , then the probability of extinction by time is . Similarly, if there is one infected at time , the probability of extinction by time is ; and if there are no infections at time , then the probability of extinction by time is . So up to we have

| (16) |

Thus

and so

Theorem 3.2

Given an infinite population with constant transmission rate β and recovery rate γ, then , the probability of extinction by time t assuming a single initial infection at time 0 solves

(17) with and the initial condition .

We could solve this analytically (Exercise 3.4), but most results are easier to derive directly from the ODE formulation.

3.2. Early-time outbreak dynamics

We now explore the number of infections at time t. We define the PGF

where is the probability of i actively infected individuals at time t. We will derive equations for the evolution of . We assume that so a single infected individual exists at time 0.

Our goal is to derive equations telling us how changes in time. We will use two approaches which were hinted at in Exercise 2.7, yielding two different partial differential equations. Although their appearance is different, for the appropriate initial condition, their solutions are the same. These equations are called the forward and backward Kolmogorov equations.

We briefly describe the analogy between the forward and backward Kolmogorov equations and Exercise 2.7:

-

•

Our first approach finds the forward Kolmogorov equations. This is akin to Exercise 2.7 where we found by knowing the PGF for the number infected in generation and recognizing that since the PGF for the number of infections each of them causes is , we must have .

-

•

Our second approach finds the backward Kolmogorov equations which are more subtle and can be derived similarly to how we derived the ODE for extinction probability in Theorem 3.2. This is akin to Exercise 2.7 where we found by knowing that the PGF for the number infected in generation 1 is , and recognizing that after another generations each of those creates a number of infections whose PGF is and so .

For both approaches, we make use of the observation that for , we can write the PGF for the number of infections resulting from a single infected individual at time to be

This says that with probability approximately a transmission happens and we replace y by , and with probability approximately a recovery happens and we replace y by 1. With probability multiple events happen. We can rewrite this as

Note that and .

Both of our approaches rely on the observation that by Property A.8. This states that if we take the PGF at time , and then substitute for each y the PGF for the number of descendants of a single individual after units of time, the result is the PGF for the total number at time .

Forward equations. For this we use with playing the role of and playing the role of t.

So . For small (and taking to be the partial derivative of with respect to its first argument), we have

Then

More generally, we can directly apply Property A.10 to get this result. Exercise 3.6 provides an alternate direct derivation of these equations.

Backward equations. In the backward direction we have with playing the role of t and playing the role of .

So . Note that because , we have . Thus for small , we expand as a Taylor Series in its second argument t

To avoid ambiguity, we use above to denote the partial derivative of with respect to its second argument t. So

This result also follows directly from Property A.12.

So we have

Theorem 3.3

The PGF for the distribution of the number of current infections at time t assuming a single introduced infection at time 0 solves

(18) as well as

(19) both with the initial condition .

It is perhaps remarkable that such seemingly different equations yield the same solution for the given initial condition.

Example 3.1

The expected number of infections in the infinite population limit is given by . From this we have

We used to eliminate the term and replaced with . Using this and , we have

This example proves

Corollary 3.1

In the infinite population limit, if a disease starts with a single infection, then the expected number of active infections at time t solves

(20)

3.3. Cumulative and current outbreak size distribution

Let be the probability of having i currently infected individuals and r completed infections at time t. We define to be the PGF at time t. We have . As before we assume the population is large enough that the spread of the disease is not limited by the size of the population.

We give an abbreviated derivation of the Kolmogorov equations for . A full derivation is requested as an exercise.

Forward Kolmogorov formulation. To derive the forward Kolmogorov equations for the PGF , we use Property A.11, noting that all transition rates are proportional to i. The rate of transmission is and the rate of recovery is . There are no interactions to consider. So

Backward Kolmogorov formulation. To derive the backward Kolmogorov equations for the PGF , we use a modified version of Property A.12 to account for two types of individuals (Exercise A.14, with events proportional only to the infected individuals). We find

Combining our backward and forward Kolmogorov equation results, we get

Theorem 3.4

Assuming a single initial infection in an infinite population, the PGF for the joint distribution of the number of current and completed infections at time t solves

(21) as well as

(22) both with the initial condition .

It is again remarkable that these seemingly very different equations have the same solution.

Example 3.2

The expected number of completed infections at time t is

(although we use R, this approach is equally relevant for counting completed infections in the SIS model because of the infinite population assumption). Its evolution is given by

where we use the fact that , , and . Our result says that the rate of change of the expected number of completed infections is γ times the expected number of current infections.

This example proves

Corollary 3.2

In the infinite population limit the expected number of recovered individuals as a function of time solves

(23)

We will see that this holds even in finite populations.

3.4. Small outbreak final size distribution

We define

to be the PGF of the distribution of outbreak final sizes in an infinite population, with representing epidemics and for representing the probability that an outbreak infects exactly j individuals. We use the convention that for and 1 for . To calculate , we make observations that the outbreak size coming from a single infected individual is 1 if the first thing that individual does is a recovery or it is the sum of the outbreak sizes of two infected individuals if the first thing the individual does is to transmit (yielding herself and her offspring).

Thus we have

As for the discrete-time case we may solve this iteratively, starting with the guess . Once n iterations have occurred, the first n coefficients of remain constant. Note that unlike the discrete case, here . This yields

Theorem 3.5

The PGF for the final size distribution assuming a single initial infection in an infinite population solves

(24) with . This function is discontinuous at . For the final size distribution conditional on the outbreak being finite, the PGF is continuous and equals

As in the discrete-time case, we can find the coefficients of analytically.

Theorem 3.6

Consider continuous-time outbreaks with transmission rate β and recovery rate γ in an infinite population with a single initial infection. The probability the outbreak causes exactly j infections for [that is, the coefficient of in ] is

We prove this theorem in Appendix B. The proof is based on observing that if there are j total infected individuals, this requires transmissions and j recoveries. Of the sequences of events that have the right number of recoveries and transmissions, a fraction of these satisfy additional constraints required to be a valid sequence leading to j infections (the sequence cannot lead to 0 infections prior to the last step). Alternately, we can note that the offspring distribution is geometric and use Table 6.

3.5. Full dynamics in finite populations

We now derive the PGFs for continuous time SIS and SIR outbreaks in a finite population.

PGF-based techniques are easiest when we can treat events as independent. In the continuous-time model, when we look at the system in a given state, each event is independent of the others. Once the next event happens the possible events change, but conditional on the new state, they are still independent. Thus we can use the forward Kolmogorov approach.

The backward Kolmogorov approach will not work because in a finite population descendants of any individual are not independent. We do not look at the discrete-time version because in a single time step, multiple events can occur, some of which affect one another. So we would lose independence as we go from one time step to another. For these reasons we focus on the forward Kolmogorov formulations for the continuous-time models. Much of our approach here was derived previously in (Bailey, 1953; Bartlett, 1949). See also (Allen, 2008).

For a given population size N, we let s, i, and r be the number of susceptible, infected and immune (removed) individuals. For the SIS model and we have while for the SIR model we have .

3.5.1. SIS

We start with the SIS model. We set to be the probability of s susceptible and i actively infected individuals at time t. We define the PGF for the joint distribution of susceptible and infected individuals

At rate , successful transmissions occur, moving the system from the state to , which is equivalent to removing one susceptible individual and one infected individual, and replacing them with two infected individuals. Following Property A.11, this is represented by

At rate , recoveries occur, moving the system from the state to , which is equivalent to removing one infected individual and replacing it with a susceptible individual. This is represented by

So the PGF solves

It is sometimes useful to rewrite this as

We have

Theorem 3.7

For SIS dynamics in a finite population we have

(25)

We can use this to derive equations for the expected number of susceptible and infected individuals.

Example 3.3

We use and to denote the expected number of susceptible and infected individuals at time t. We have

We also define the expected value of the product ,

Then we have

In the final line, we eliminated the first term because is zero at . Similar steps show that

but the derivation is faster if we simply note is constant. This proves

Corollary 3.3

For SIS disease, the expected number infected and susceptible solves

(26)

(27) where is the expected value of the product .

3.5.2. SIR

Now we consider the SIR model. A review of various techniques (including PGF-based methods) to find the final size distribution of outbreaks in finite-size populations can be found in (House, Ross, & Sirl, 2013). Here we focus on the application of PGFs to find the full dynamics. To reduce the number of variables we track, we focus just on and and use to find the number recovered. So does not have any dependence on . For a given s and i, infection occurs at rate . It appears as a departure from the state and entry into . Following Property A.11, this is captured by

Recovery is captured by

[note the difference from the SIS case in the recovery term]. So we have

Theorem 3.8

For SIR dynamics in a finite population we have

(28)

We follow similar steps to Example 3.3 to derive equations for and in Exercise 3.16. The result of this exercise should show

Corollary 3.4

For SIR disease, the expected number of susceptible, infected, and recovered individuals solves

(29)

(30)

(31) where is the expected value of the product .

3.6. Exercises

Exercise 3.1

Extinction Probability. Let and be given with .

- a.

Analytically find solutions to .

- b.

Assume . Find all solutions in .

- c.

Assume . Find all solutions in .

Exercise 3.2

Consistency with discrete-time formulation. Although we have argued that a transmission in the continuous-time disease transmission case can be treated as if a single infected individual has two infected offspring and then disappears, this is not what actually happens. In this exercise we look at the true offspring distribution of an infected individual before recovery, and we show that the ultimate predictions of the two versions are equivalent. Consider a disease in which individuals transmit at rate and recover at rate . Let be the probability an infected individual will cause exactly new infections before recovering.

- a.

Explain why .

- b.

Explain why . So form a geometric distribution.

- c.

Show that can be expressed as . [This definition of μ without the hat corresponds to the discrete-time definition]

- d.

Show that the solutions to are the same as the solutions to . So the extinction probability can be calculated either way. (You do not have to find the solutions to do this, you can simply show that the two equations are equivalent).

Exercise 3.3

Relation with . Take as given in Exercise 3.2 and .

- a.

Show that in general.

- b.

Show that when , then . So both are still threshold parameters.

Exercise 3.4

Revisiting eventual extinction probability. We revisit the results of Exercise 3.1 using Eq. (17) (without solving it).

- a.

By substituting for , show that .

We have . Taking this initial condition and expression for , show that

- b.

as if (i.e., ) and

- c.

as if (i.e., ).

- d.

Set up (but do not solve) a partial fraction integration that would give analytically.

Exercise 3.5

Understanding the backward Kolmogorov equations. Let denote the probability of having i active infections at time t given that at time 0 there was a single infection []. We have . We extend the derivation of Eq. (16) to . Assume and are known.

- a.

Following the derivation of Eq. (16), approximate , , and for small .

- b.

From biological grounds explain why if there are 0 infections at time then there are also 0 infections at time .

- c.

If there is 1 infection at time , what is the probability of 1 infection at time ?

- d.

If there are 2 infections at time , what is the probability of 1 infection at time ?

- e.

Write in terms of , , , and .

- f.

Using the definition of the derivative, find an expression for in terms of and .

Exercise 3.6

Derivation of the forward Kolmogorov equations. In this exercise we derive the PGF version of the forward Kolmogorov equations by directly calculating the rate of change of the probabilities of the states. Define to be the probability that there are j active infections at time t.

We have the forward Kolmogorov equations:

- a.

Explain each term on the right hand side of the equation for .

- b.

By expanding , arrive at Equation (18).

Exercise 3.7

Derivation of the backward Kolmogorov equations. In this exercise we follow (Allen, 2017; Bailey, 1964) and derive the PGF version of the backward Kolmogorov equations by directly calculating the rate of change of the probabilities of the states. Define to be the probability of i infections at time t given that there were k infections at time 0. Although we assume that at time 0 there is a single infection, we will need to derive the equations for arbitrary k.

- a.

Explain why

for small .

- b.

By using the definition of the derivative , find

Define to be the PGF for the number of active infections assuming that there are k initial infections.

- c.

Show that

- d.

Explain why .

- e.

Complete the derivation of Equation (19).

Exercise 3.8

Define to be the PGF for the probability of having i infections at time t given k infections at time 0.

- a.

Explain why .

- b.

Show that if we substitute in place of in Eq. (18) the equation remains true with the initial condition .

- c.

Show that if we substitute in place of in equation (19) we do not get a true equation.

So Eq. (18) applies regardless of the initial condition, but Eq. (19) is only true for the specific initial condition of one infection.

Exercise 3.9