Significance

How do brain networks shape our listening behavior? We here develop and test the hypothesis that, during challenging listening situations, intrinsic brain networks are reconfigured to adapt to the listening demands and thus, to enable successful listening. We find that, relative to a task-free resting state, networks of the listening brain show higher segregation of temporal auditory, ventral attention, and frontal control regions known to be involved in speech processing, sound localization, and effortful listening. Importantly, the relative change in modularity of this auditory control network predicts individuals’ listening success. Our findings shed light on how cortical communication dynamics tune selection and comprehension of speech in challenging listening situations and suggest modularity as the network principle of auditory attention.

Keywords: functional connectome modularity, spatial attention, semantic prediction, auditory cortex, cingulo-opercular network

Abstract

Speech comprehension in noisy, multitalker situations poses a challenge. Successful behavioral adaptation to a listening challenge often requires stronger engagement of auditory spatial attention and context-dependent semantic predictions. Human listeners differ substantially in the degree to which they adapt behaviorally and can listen successfully under such circumstances. How cortical networks embody this adaptation, particularly at the individual level, is currently unknown. Here, we explain this adaptation in terms of the reconfiguration of brain networks during a challenging listening task (i.e., a linguistic variant of the Posner paradigm with concurrent speech) in an age-varying sample of n = 49 healthy adults undergoing resting-state and task fMRI. We provide evidence for the hypothesis that more successful listeners exhibit stronger task-specific reconfiguration (hence, better adaptation) of brain networks. From rest to task, brain networks become reconfigured toward more localized cortical processing characterized by higher topological segregation. This reconfiguration is dominated by the functional division of an auditory and a cingulo-opercular module and the emergence of a conjoined auditory and ventral attention module along bilateral middle and posterior temporal cortices. Supporting our hypothesis, the degree to which modularity of this frontotemporal auditory control network is increased relative to resting state predicts individuals’ listening success in states of divided and selective attention. Our findings elucidate how fine-tuned cortical communication dynamics shape selection and comprehension of speech. Our results highlight modularity of the auditory control network as a key organizational principle in cortical implementation of auditory spatial attention in challenging listening situations.

Speech comprehension requires the fidelity of the auditory system, but it also hinges on higher-order cognitive abilities. In noisy, multitalker listening situations, speech comprehension turns into a challenging cognitive task for our brain. Under such circumstances, listening is often facilitated by a stronger engagement of auditory spatial attention (“where” to expect speech) and context-dependent semantic predictions (“what” to expect) (1–4). It has long been recognized that individuals differ substantially in utilizing these cognitive strategies in adaptation to challenging listening situations (5–7). The cortical underpinning of this adaptation and the corresponding interindividual variability are poorly understood, hindering future attempts to aid hearing or to rehabilitate problems in speech comprehension.

Functional neuroimaging has demonstrated that understanding degraded speech draws on cortical resources far beyond traditional perisylvian regions, involving cingulo-opercular, inferior frontal, and premotor cortices (8–13). While listening is clearly a large-scale neural process distributed across cortical nodes and networks (14–18), we do not know whether and how challenging speech comprehension relies on large-scale cortical networks (i.e., the functional connectome) and their adaptive reconfiguration.

The functional connectome is described by measuring correlated spontaneous brain responses using blood oxygen level-dependent (BOLD) fMRI. When measured at rest, the result can be used to investigate whole-brain intrinsic networks (19–21), which are relatively stable within individuals and reflect correlation patterns primarily determined by structural connectivity (22–24). However, the makeup of resting-state networks varies across individuals and undergoes subtle reconfigurations during the performance of specific tasks (25–31).

Accordingly, the variation from resting-state to task-specific brain networks has been proposed to predict individuals’ behavioral states or performance (29, 32–36). Interestingly, most of the variance in the configuration of brain networks arises from interindividual variability rather than task contexts (37). Here, we ask whether this variance contributes to the interindividual variability in successful adaptation to a challenging listening situation.

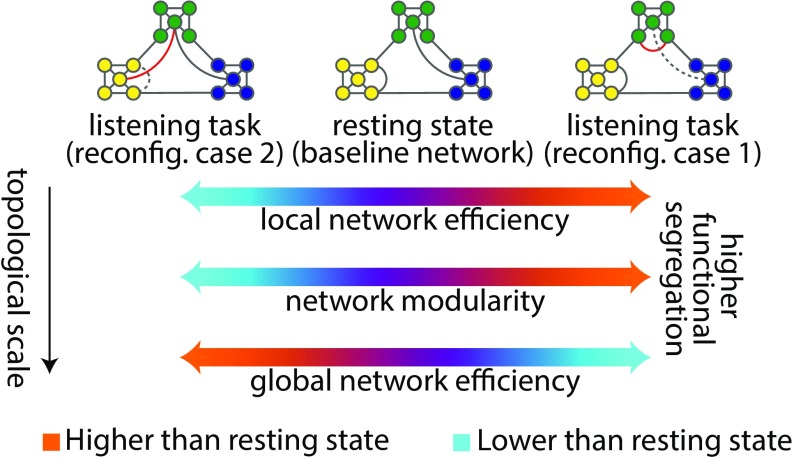

Knowing that cortical systems supporting speech processing show correlated activity during rest (38–40), we treat the whole-brain resting-state network as the putative task network at its “idling” baseline (Fig. 1). As a listening challenge arises, different functional specializations for speech comprehension are conceivable across cortex depending on the task demands (41).

Fig. 1.

Two possible network reconfigurations (case 1 and case 2) characterized by a shift of the functional connectome toward either higher segregation (more localized cortical processing) or lower segregation (more distributed cortical processing) during a listening challenge. Global efficiency is the average inverse shortest path length between all pairs of nodes (hence, shorter paths yield higher efficiency), indicating the capacity of a network for parallel processing. Modularity describes the segregation of nodes into relatively dense subsystems (here shown in distinct colors), which are sparsely interconnected. Mean local efficiency is the global efficiency computed on the subgraph formed by the direct neighbors of each node, averaged over all nodes. Toward higher functional segregation, the hypothetical baseline network loses the shortcut between the blue and green modules (dashed link). Instead, within the green module, a new connection emerges (red link). Together, these changes form a segregated configuration tuned for more localized cortical processing.

These functional specializations of speech comprehension can be studied at the system level within the framework of network segregation and integration using graph-theoretical connectomics (33, 42). Accordingly, it is plausible to expect that attending to and processing local elements in the speech stream (13, 43) would shift the topology of the functional connectome toward higher segregation (more localized cortical processing), while processing of larger units of speech (44, 45) would lead to a reconfiguration toward lower segregation (more distributed cortical processing) (Fig. 1).
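To make the segregation metrics of Fig. 1 concrete, here is a minimal Python sketch of global efficiency and mean local efficiency. It assumes a binary, undirected graph stored as a dictionary of neighbor sets; all function names are hypothetical, and the study's own metric definitions (SI Appendix) may differ, for example by operating on weighted graphs. The toy network mirrors the hypothetical baseline of Fig. 1 in miniature: two triangles joined by one shortcut edge, which is then removed.

```python
from collections import deque


def graph_from_edges(n_nodes, edges):
    """Build an undirected adjacency dict {node: set(neighbors)}."""
    adj = {i: set() for i in range(n_nodes)}
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj


def bfs_distances(adj, source):
    """Shortest path lengths (in edges) from source to every reachable node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def global_efficiency(adj):
    """Mean inverse shortest path length over ordered node pairs.

    Unreachable pairs contribute 0 (infinite distance).
    """
    n = len(adj)
    if n < 2:
        return 0.0
    total = 0.0
    for u in adj:
        total += sum(1.0 / d for v, d in bfs_distances(adj, u).items() if v != u)
    return total / (n * (n - 1))


def mean_local_efficiency(adj):
    """Global efficiency of each node's neighbor subgraph, averaged over nodes."""
    values = []
    for u, nbrs in adj.items():
        # Subgraph induced by u's neighbors (u itself is excluded).
        sub = {v: adj[v] & nbrs for v in nbrs}
        values.append(global_efficiency(sub))
    return sum(values) / len(values)


two_triangles = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5)]
baseline = graph_from_edges(6, two_triangles + [(2, 3)])  # with shortcut
segregated = graph_from_edges(6, two_triangles)           # shortcut removed

for name, g in [("baseline", baseline), ("segregated", segregated)]:
    print(name, round(global_efficiency(g), 3), round(mean_local_efficiency(g), 3))
```

In this toy case, removing the shortcut lowers global efficiency (from 62/90, about 0.69, to 0.4) and raises mean local efficiency (from 7/9, about 0.78, to 1.0), the signature of the shift toward higher segregation sketched as case 1.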

We therefore use the degree and direction of the functional connectome reconfiguration as a proxy for an individual’s successful adaptation and thus, for speech comprehension success in a challenging listening task (Fig. 2A).

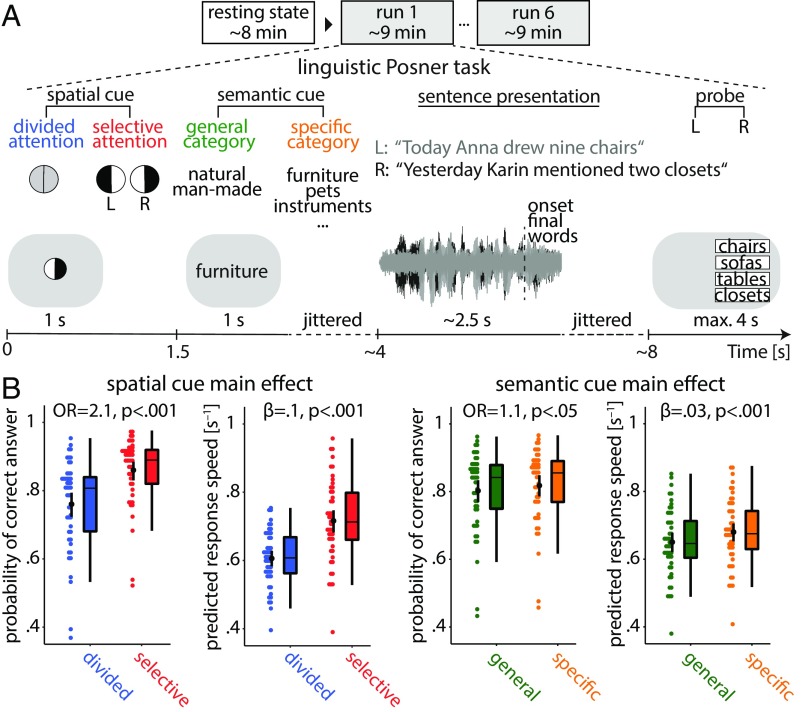

Fig. 2.

Listening task and individuals’ performance. (A) The linguistic Posner task with concurrent speech (i.e., cued speech comprehension). Participants listened to two competing, dichotically presented sentences. Each trial started with the visual presentation of a spatial cue. An informative cue provided information about the side (left ear vs. right ear) of the to-be-probed sentence-final word (invoking selective attention). An uninformative cue did not provide information about the side of the to-be-probed sentence-final word (invoking divided attention). A semantic cue was visually presented, indicating a general or a specific semantic category for both sentence-final words (allowing semantic predictions). At the end of each trial, a visual response array appeared on the left or right side of the screen with four word choices asking the participant to identify the final word of the sentence presented to the left or right ear, depending on the side of the response array. (B) Predictions from linear mixed effects models. Scattered data points (n = 49) represent trial-averaged predictions derived from the model. Black points and vertical lines show mean ± bootstrapped 95% CI. OR is the odds ratio parameter estimate resulting from generalized linear mixed effects models; β is the slope parameter estimate resulting from general linear mixed effects models.

Results

We measured brain activity using fMRI in an age-varying sample of healthy adults (n = 49; 19–69 y old) and constructed large-scale cortical network models (SI Appendix, Fig. S1) during rest and while individuals performed a linguistic Posner task with concurrent speech. The task presented participants with two competing dichotic sentences uttered by the same female speaker (Fig. 2A). Participants were probed on the last word (i.e., target) of one of these two sentences. Crucially, two visual cues were presented upfront. Either a spatial attention cue indicated the to-be-probed side, thus invoking selective attention, or it was uninformative, thus invoking divided attention. The second cue specified the semantic category of the sentence-final words either very generally or more specifically, which allowed for more or less precise semantic prediction of the upcoming target word. Using (generalized) linear mixed effects models, we examined the influence of the listening cues and brain network reconfiguration on listening success, controlling for individuals’ age, head motion, and the probed side.

Informative Spatial and Semantic Cues Facilitate Speech Comprehension.

The analysis of listening performance using linear mixed effects models revealed an overall behavioral benefit from more informative cues. As expected, participants performed more accurately and faster under selective attention compared with divided attention [accuracy: odds ratio (OR) = 2.1, P < 0.001; response speed: β = 0.1, P < 0.001] (Fig. 2B, Left). Moreover, participants performed more accurately and faster when they were cued to the specific semantic category of the target word compared with a general category (accuracy: OR = 1.1, P < 0.05; response speed: β = 0.03, P < 0.01) (Fig. 2B, Right). Participants benefitted notably more from the informative spatial cue than from the informative (i.e., specific) semantic cue. We did not find any evidence of an interactive effect of the two cues on behavior (likelihood ratio tests; accuracy: χ² = 0.005, P = 0.9; response speed: χ² = 2.5, P = 0.1). Lending validity to our results, younger participants performed more accurately and faster than older participants, as expected (main effect of age; accuracy: OR = 0.63, P < 0.0001; response speed: β = −0.03, P < 0.001). Furthermore, as expected from the well-established right ear advantage for linguistic materials (46), participants were faster when probed on the right compared with the left ear (main effect of probe: β = 0.018, P < 0.01) (SI Appendix, Table S1 has all model terms and estimates).
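Because odds ratios act multiplicatively on the odds, they do not translate into a fixed change in percent correct. The short Python sketch below is a generic conversion, not part of the study's analysis: it shows how an odds ratio such as the spatial-cue estimate (OR = 2.1) would shift a hypothetical baseline accuracy. The 70% baseline is an assumption chosen for illustration, not a value reported here.

```python
def prob_after_odds_ratio(p0, odds_ratio):
    """Probability obtained by multiplying the baseline odds p0 / (1 - p0) by odds_ratio."""
    if not 0.0 < p0 < 1.0:
        raise ValueError("baseline probability must lie strictly between 0 and 1")
    odds = p0 / (1.0 - p0) * odds_ratio
    return odds / (1.0 + odds)


# Hypothetical baseline accuracy of 70% under divided attention (assumption).
print(round(prob_after_odds_ratio(0.70, 2.1), 3))  # 0.831
# The same odds ratio yields a smaller absolute gain near ceiling.
print(round(prob_after_odds_ratio(0.95, 2.1), 3))
```

The second call illustrates why an effect expressed as an odds ratio corresponds to different percentage-point gains depending on how close a listener already is to ceiling.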

Higher Segregation of the Whole-Brain Network During Cued Speech Comprehension.

A main question in this study was whether and how resting-state brain networks are reconfigured when listening challenges arise. We compared functional connectivity and three key topological network metrics between resting state and the listening task (SI Appendix has the definitions of brain metrics). We expected that resting-state brain networks would functionally reshape and shift either toward higher segregation (more localized cortical processing) or lower segregation (more distributed cortical processing) during the listening task (Fig. 1). Our network comparison revealed how the whole-brain network reconfigured in adaptation to cued speech comprehension (Fig. 3).

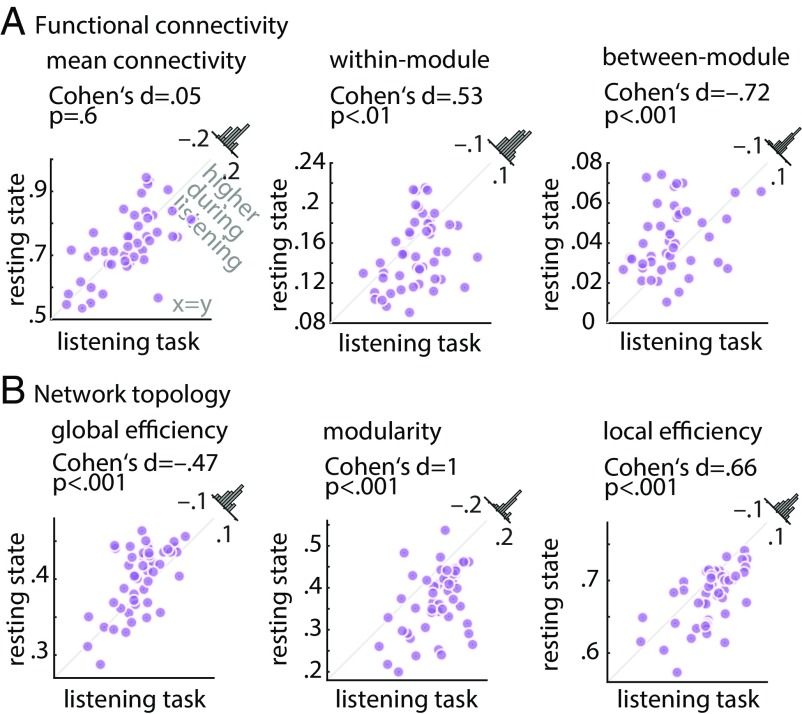

Fig. 3.

Alterations in whole-brain network metrics during the listening task relative to the resting state. Functional segregation was significantly increased from rest to task. This was manifested in (A) higher within-module connectivity but lower between-module connectivity, and (B) higher network modularity and local efficiency, but lower global network efficiency. Histograms show the distribution of the change (task minus rest) of the network metrics across all 49 participants.

First, while mean within-module functional connectivity of the whole-brain network showed a significant increase from rest to task (Cohen’s d = 0.53, P < 0.01) (Fig. 3A, Center), between-module connectivity showed the opposite effect (Cohen’s d = −0.72, P < 0.001) (Fig. 3A, Right). Importantly, resting-state and listening task networks did not differ in their overall mean functional connectivity (P = 0.6) (Fig. 3A, Left), which emphasizes the importance of modular reconfiguration (i.e., change in network topology) in brain network adaptation in contrast to a mere alteration in overall functional connectivity (change in correlation strength).
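The within- versus between-module distinction can be illustrated with a short Python sketch that averages the edge weights of a symmetric connectivity matrix according to a given module partition. The function name and the toy matrix are hypothetical; whether the study averaged all correlations or only the edges retained after thresholding is not stated here, so treat this as a schematic.

```python
def module_connectivity(conn, modules):
    """Mean edge weight within and between modules of a symmetric matrix.

    conn: square, symmetric connectivity matrix as a list of lists.
    modules: module label of each node; the diagonal is ignored.
    """
    within, between = [], []
    n = len(conn)
    for i in range(n):
        for j in range(i + 1, n):
            bucket = within if modules[i] == modules[j] else between
            bucket.append(conn[i][j])

    def mean(values):
        return sum(values) / len(values) if values else float("nan")

    return mean(within), mean(between)


# Toy 4-node network with two modules {0, 1} and {2, 3}.
conn = [
    [1.0, 0.8, 0.2, 0.2],
    [0.8, 1.0, 0.2, 0.2],
    [0.2, 0.2, 1.0, 0.8],
    [0.2, 0.2, 0.8, 1.0],
]
within, between = module_connectivity(conn, [0, 0, 1, 1])
print(within, between)
```

Since overall mean connectivity is a weighted average of the within- and between-module means, it can remain unchanged while within-module connectivity rises and between-module connectivity falls, which is the pattern in Fig. 3A.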

Second, during the listening task, functional segregation of the whole-brain network increased relative to resting state. This effect was consistently observed on the local, intermediate, and global scales of network topology, a reconfiguration regime in the direction of the hypothetical case 1 depicted in Fig. 1.

More specifically, global network efficiency—a metric that has been proposed to measure the capacity of brain networks for parallel processing (47)—decreased from rest to task (Cohen’s d = −0.47, P < 0.001) (Fig. 3B, Left). In addition, modularity of brain networks—a metric related to the decomposability of a network into subsystems (48)—increased under the listening task in contrast to resting state (Cohen’s d = 1, P < 0.001) (Fig. 3B, Center). Across participants, network modularity was higher in 43 of 49 individuals, with a considerable degree of interindividual variability in its magnitude. This result complements the significant changes in within- and between-module connectivity described earlier.
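For reference, the modularity index in graph-theoretical connectomics is commonly Newman's Q: the fraction of edge weight falling within modules minus the fraction expected under a degree-preserving null model. The Python sketch below evaluates Q for a given partition of a non-negatively weighted, undirected graph. It does not search for the optimal partition, and the study's own computation (consensus community detection, refs. 48 and 50) may use a variant of Q, for example one that handles negative correlations. Function names and the toy graph are hypothetical.

```python
def adjacency_matrix(n_nodes, edges):
    """Binary symmetric adjacency matrix from an undirected edge list."""
    a = [[0.0] * n_nodes for _ in range(n_nodes)]
    for u, v in edges:
        a[u][v] = a[v][u] = 1.0
    return a


def modularity(a, modules):
    """Newman's Q = (1/2m) * sum_ij [A_ij - k_i k_j / 2m] * delta(c_i, c_j)."""
    strength = [sum(row) for row in a]  # node degree (or strength if weighted)
    two_m = sum(strength)               # twice the total edge weight
    n = len(a)
    q = 0.0
    for i in range(n):
        for j in range(n):
            if modules[i] == modules[j]:
                q += a[i][j] - strength[i] * strength[j] / two_m
    return q / two_m


two_triangles = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5)]
partition = [0, 0, 0, 1, 1, 1]
q_with_shortcut = modularity(adjacency_matrix(6, two_triangles + [(2, 3)]), partition)
q_without = modularity(adjacency_matrix(6, two_triangles), partition)
print(round(q_with_shortcut, 3), round(q_without, 3))  # 0.357 0.5
```

Removing the single between-module shortcut raises Q from 5/14 to 0.5 in this toy example, consistent with higher modularity indexing a more segregated configuration.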

Moreover, the whole-brain network during the task was characterized by an increase in its local efficiency relative to resting state (Cohen’s d = 0.66, P < 0.001) (Fig. 3B, Right). Because local efficiency is inversely related to the topological distance between nodes within local cliques or clusters (48), this effect is another indication of a reconfiguration toward higher functional segregation, reflecting more localized cortical processing. The above results were robust with respect to the choice of network density (SI Appendix, Fig. S2). Similar results were found when brain graphs were constructed (and compared with rest) separately per task block (SI Appendix, Fig. S3). Because each task block lasts approximately as long as the resting-state scan, this argues against the differences between resting state and task being driven by a difference in scan duration.
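Network density refers to the fraction of possible edges retained when the correlation matrix is thresholded. A common approach is proportional thresholding, sketched below in Python under the assumption that the strongest edges keep their weights and all others are set to zero. The function name, the tie-breaking rule, and the handling of weights are illustrative choices, not a description of the study's pipeline (SI Appendix).

```python
def threshold_proportional(conn, density):
    """Keep the strongest `density` fraction of off-diagonal edges (weights kept).

    conn: symmetric connectivity matrix (list of lists); density in [0, 1].
    """
    n = len(conn)
    # Upper-triangle edges sorted from strongest to weakest.
    edges = sorted(
        ((conn[i][j], i, j) for i in range(n) for j in range(i + 1, n)),
        reverse=True,
    )
    n_keep = int(round(density * len(edges)))
    kept = [[0.0] * n for _ in range(n)]
    for weight, i, j in edges[:n_keep]:
        kept[i][j] = kept[j][i] = weight
    return kept


conn = [
    [1.0, 0.9, 0.1, 0.4],
    [0.9, 1.0, 0.3, 0.2],
    [0.1, 0.3, 1.0, 0.7],
    [0.4, 0.2, 0.7, 1.0],
]
for density in (0.1, 0.3, 0.5):
    sparse = threshold_proportional(conn, density)
    n_edges = sum(1 for i in range(4) for j in range(i + 1, 4) if sparse[i][j] != 0.0)
    print(density, n_edges)
```

Recomputing the network metrics over a range of densities, as in SI Appendix, Fig. S2, is one way to check that a rest-versus-task difference does not hinge on a single threshold.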

In an additional analysis, we investigated the correlation of brain network measures between resting state and listening task state. Reassuringly, the brain network measures were all positively correlated across participants (SI Appendix, Fig. S4), supporting the idea that state- and trait-like determinants both contribute to individuals’ brain network configurations (28).

We next investigated whether reconfiguration of the whole-brain networks from rest to task can account for the interindividual variability in listening success. To this end, using the same linear mixed effects model that revealed the beneficial effects of the listening cues on behavior, we tested whether the change in each whole-brain network metric (i.e., task minus rest) as well as its modulation by the different cue conditions could predict a listener’s speech comprehension success.
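Schematically, the accuracy model for trial t of participant i can be written as a logistic mixed model in which the change in a network metric M (task minus rest) enters as a participant-level predictor that may interact with the spatial cue. This is an assumption-laden sketch: the exact fixed- and random-effects structure is listed in SI Appendix, Tables S1 to S3, and the single random intercept shown here is an assumed, minimal structure.

```latex
\begin{aligned}
\Delta M_i &= M_i^{\text{task}} - M_i^{\text{rest}},\\
\operatorname{logit} P(\text{correct}_{it} = 1) &= \beta_0 + \beta_1\,\text{spatial}_{it} + \beta_2\,\text{semantic}_{it} + \beta_3\,\Delta M_i + \beta_4\,(\text{spatial}_{it} \times \Delta M_i)\\
&\quad + \beta_5\,\text{age}_i + \beta_6\,\text{motion}_i + \beta_7\,\text{probe}_{it} + u_i, \qquad u_i \sim \mathcal{N}(0, \sigma_u^2),\\
\mathrm{OR}_k &= e^{\beta_k}.
\end{aligned}
```

For response speed, the same linear predictor would be used with an identity link (a general linear mixed model), and β is reported directly. Under this parameterization, the slope of ΔM within a cue condition is β₃ + β₄ · spatial, which is how a single interaction term can yield different brain-behavior relationships for selective and divided attention.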

We found a significant interaction between the spatial cue and the change in individuals’ whole-brain network modularity in predicting accuracy (OR = 1.13, P < 0.01). This interaction was driven by a weak positive relationship of modularity and accuracy during selective attention trials (OR = 1.17, P = 0.13) and the absence of such a relationship during divided attention trials (OR = 1.02, P = 0.8) (SI Appendix, Tables S1 and S2). This result provides initial evidence that, at the whole-brain level, higher network modularity relative to resting state coincides with successful implementation of selective attention to speech. Notably, when changes in other network measures were included in the model (i.e., change in local or global network efficiency instead of change in network modularity), no significant relationships were found. Also, the effect of the semantic cue on behavior was not significantly modulated by change in whole-brain network topology. More precisely, adding an interaction term between the semantic cue and change in brain network modularity did not significantly improve the model fit (likelihood ratio tests; accuracy: χ² = 2.6, P = 0.1; response speed: χ² = 3.8, P = 0.06). There was also no significant interaction between age and change in network modularity in predicting behavior (likelihood ratio tests; accuracy: χ² = 1, P = 0.3; response speed: χ² = 3.1, P = 0.08).
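The likelihood ratio tests reported here compare nested models with and without a given term. As a generic sketch (Python standard library only, hypothetical log-likelihood values), the statistic is twice the log-likelihood difference, and for a single added parameter its P value comes from a χ² distribution with 1 degree of freedom, whose upper tail equals erfc(√(x/2)). That each tested term adds exactly one parameter is an assumption about the model coding.

```python
import math


def lrt_statistic(loglik_reduced, loglik_full):
    """Likelihood ratio statistic for nested models: 2 * (LL_full - LL_reduced)."""
    return 2.0 * (loglik_full - loglik_reduced)


def chi2_sf_df1(x):
    """Upper-tail probability of a chi-square variable with 1 degree of freedom.

    If Z ~ N(0, 1), then P(Z**2 > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    return math.erfc(math.sqrt(x / 2.0))


# Hypothetical log-likelihoods of a reduced and a full model (not study values).
stat = lrt_statistic(loglik_reduced=-1000.0, loglik_full=-998.7)
print(round(stat, 2), round(chi2_sf_df1(stat), 3))
```

For terms with more than one parameter, the degrees of freedom change and a general χ² survival function (e.g., from a statistics library) would be needed instead of this closed form.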

In the following sections, we will explore the cortical origin of the topological reconfiguration outlined above: first, at the whole-brain level and second, at a subnetwork level in more detail. In the final section of the results, we will illustrate the behavioral relevance of these reconfigurations at the individual level.

Reconfiguration of the Whole-Brain Network in Adaptation to Cued Speech Comprehension.

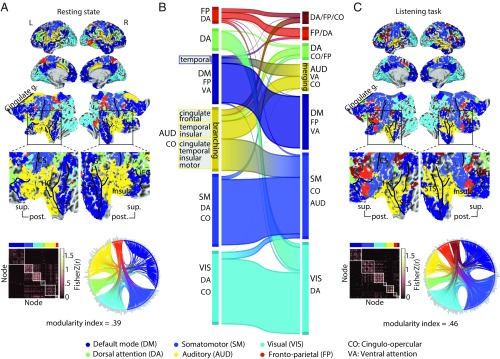

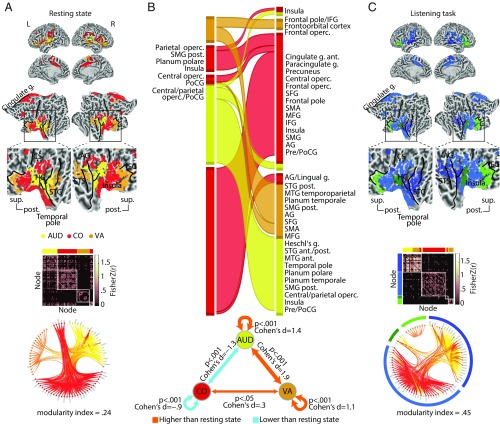

Fig. 4 provides a comprehensive overview of group-level brain networks, functional connectivity maps, and the corresponding connectograms (circular diagrams) during resting state (Fig. 4A) and during the listening task (Fig. 4C). In Fig. 4, cortical regions defined based on ref. 49 are grouped and color coded according to their module membership determined using the graph-theoretical consensus community detection algorithm (48, 50) (SI Appendix has details).
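Consensus community detection (refs. 48 and 50) combines many partitions obtained from repeated runs of a stochastic modularity-maximization algorithm. Its central ingredient is the agreement matrix: the fraction of runs in which two nodes share a module. The Python sketch below computes that matrix and then, as a deliberate simplification, reads off modules as connected groups of nodes whose agreement exceeds a threshold τ; the full procedure instead re-runs community detection on the thresholded agreement matrix until the partitions converge. Function names, τ, and the toy partitions are hypothetical.

```python
def agreement_matrix(partitions):
    """Fraction of partitions in which each pair of nodes shares a module."""
    n = len(partitions[0])
    counts = [[0] * n for _ in range(n)]
    for labels in partitions:
        for i in range(n):
            for j in range(n):
                if i != j and labels[i] == labels[j]:
                    counts[i][j] += 1
    runs = len(partitions)
    return [[c / runs for c in row] for row in counts]


def threshold_components(agree, tau):
    """Simplified final step: connected groups of nodes with agreement > tau."""
    n = len(agree)
    labels = [-1] * n
    next_label = 0
    for start in range(n):
        if labels[start] != -1:
            continue
        labels[start] = next_label
        stack = [start]
        while stack:
            u = stack.pop()
            for v in range(n):
                if labels[v] == -1 and agree[u][v] > tau:
                    labels[v] = next_label
                    stack.append(v)
        next_label += 1
    return labels


# Three hypothetical runs; module labels are arbitrary, so runs 1 and 2 agree fully.
runs = [
    [0, 0, 0, 1, 1, 1],
    [1, 1, 1, 0, 0, 0],
    [0, 0, 1, 1, 2, 2],
]
agree = agreement_matrix(runs)
print(threshold_components(agree, tau=0.5))  # [0, 0, 0, 1, 1, 1]
```

Working with co-assignment frequencies rather than raw labels makes the result insensitive to the arbitrary numbering of modules across runs.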

Fig. 4.

Modular reconfiguration of the whole-brain network in adaptation to the listening task. (A) The whole-brain resting-state network decomposed into six distinct modules shown in different colors. The network modules are visualized on the cortical surface, within the functional connectivity matrix, and on the connectogram using a consistent color scheme. Modules are functionally identified according to their node labels as in ref. 49. Group-level modularity partition and the corresponding modularity index were obtained using graph-theoretical consensus community detection. Gray peripheral bars around the connectograms indicate the number of connections per node. (B) Flow diagram illustrating the reconfiguration of brain network modules from resting state (Left) in adaptation to the listening task (Right). Modules shown in separate vertical boxes in Left and Right are sorted from bottom to top according to the total PageRank of the nodes that they contain, and their heights correspond to their connection densities. The streamlines illustrate how nodes belonging to a given module during resting state change their module membership during the listening task. (C) Whole-brain network modules during the listening task. The network construction and visualization scheme are identical to A. AG, angular gyrus; AUD, auditory; CO, cingulo-opercular; CS, central sulcus; DA, dorsal attention; DM, default mode; FP, frontoparietal; g., gyrus; HG, Heschl’s gyrus; post., posterior; SM, somatomotor; STG, superior temporal gyrus; sup., superior; VA, ventral attention; VIS, visual.

When applied to the resting-state data in our sample, the algorithm decomposed the group-averaged whole-brain network into six modules (Fig. 4A). The network module with the highest number of nodes largely overlapped with the known default mode network (Fig. 4A, dark blue). At the center of our investigation was the second largest module, comprising mostly auditory nodes and a number of cingulo-opercular nodes (Fig. 4A, yellow). For simplicity, we will refer to this module as the auditory module. Additional network modules included (in the order of size) a module made up of visual nodes (Fig. 4A, cyan) and one encompassing mostly somatomotor nodes (Fig. 4A, light blue) as well as a dorsal attention module (Fig. 4A, green) and a frontoparietal module (Fig. 4A, red).

Fig. 4B shows how the configuration of the whole-brain network changed from rest to task. This network flow diagram illustrates whether and how cortical nodes belonging to a given network module during resting state (Fig. 4B, Left) changed their module membership during the listening task (Fig. 4B, Right). The streamlines tell us how the nodal composition of network modules changed, indicating a functional reconfiguration. According to the streamlines, the auditory module showed the most prominent reconfiguration (Fig. 4B, yellow module), while the other modules underwent only moderate reconfigurations.
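The streamlines of such a flow diagram are, in essence, a cross-tabulation of each node's module at rest against its module during the task. A minimal Python sketch, using hypothetical node assignments and the module abbreviations of Fig. 4 purely as labels, counts these flows and summarizes, for each resting-state module, the share of its nodes that end up in its single largest task module (a simple, hypothetical index of how intact a module stays). Ordering modules by PageRank, as in the figure, is not reproduced here.

```python
from collections import Counter, defaultdict


def module_flows(rest_labels, task_labels):
    """Number of nodes moving from each rest module to each task module."""
    return Counter(zip(rest_labels, task_labels))


def largest_destination_share(flows):
    """Per rest module: share of its nodes that land in its largest task module."""
    totals = defaultdict(int)
    largest = defaultdict(int)
    for (rest, _task), count in flows.items():
        totals[rest] += count
        largest[rest] = max(largest[rest], count)
    return {rest: largest[rest] / totals[rest] for rest in totals}


# Hypothetical module assignments of six nodes at rest and during the task.
rest = ["AUD", "AUD", "AUD", "DM", "DM", "DM"]
task = ["AUD", "AUD", "CO", "AUD", "DM", "DM"]
flows = module_flows(rest, task)
print(dict(flows))
print(largest_destination_share(flows))
```

In this toy case, one auditory node "branches" into a cingulo-opercular module and one default mode node "merges" into the auditory module, the two kinds of alteration described in the next paragraph.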

This reconfiguration of the auditory module can be summarized by two dominant nodal alterations. On the one hand, a nodal “branching” arises: a number of cingulate, frontal, temporal, and insular nodes change their module membership in adaptation to the listening task. On the other hand, a group of temporal and insular nodes merges with other temporal nodes from the default mode network module (Fig. 4B, Left, dark blue) and forms a new common module during the listening task (Fig. 4B, Right, yellow). Note that nodal merging should not be mistaken for functional integration. In a graph-theoretical sense, higher functional integration would be reflected by stronger between-module connectivity, which is opposite to what is found in this study (Fig. 3).

Interestingly, this latter group of temporal nodes includes posterior temporal nodes that are functionally associated with the ventral attention network (21, 49, 51). Considering the cortical surface maps, these alterations occur in the vicinity of the middle and posterior portions of the superior temporal lobes [note the dark blue regions in the right superior temporal sulcus (STS)/superior temporal gyrus in resting-state maps, which turn yellow in the listening-state maps].

In line with the changes described above, the reconfiguration of the auditory module is also observed as a change in the connection patterns shown by the connectograms (Fig. 4 A and C, circular diagrams). Relative to rest, tuning in to the listening task condenses the auditory module, and its nodes have fewer connections to other modules. In addition, a group of cingulate and frontal nodes from the auditory module at rest merges with several nodes from the frontoparietal/dorsal attention module and forms a new module during the listening task (Fig. 4B, Right, dark brown, and Fig. 4C).

Taken together, we have observed a reconfiguration of the whole-brain network that is dominated by modular branching and merging across auditory, cingulo-opercular, and ventral attention nodes (Fig. 4B).

Reconfiguration of a Frontotemporal Auditory Control Network in Adaptation to Cued Speech Comprehension.

As outlined above, we observed two prominent reconfigurations in Fig. 4B, namely nodal branching and nodal merging. We identified the cortical origin of these nodal alterations based on the underlying functional parcellation (49). The identified nodes include auditory, ventral attention, and cingulo-opercular regions. We will refer to these frontotemporal cortical regions collectively as the auditory control network. This network encompasses 86 nodes (of 333 whole-brain nodes). According to Fig. 4B, this network is in fact a conjunction across all auditory, ventral attention, and cingulo-opercular nodes, irrespective of their module membership. For a more transparent illustration, the cortical map of the auditory control network is visualized in Fig. 5A.
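Defining the auditory control network amounts to selecting all nodes whose parcellation label is auditory, ventral attention, or cingulo-opercular, irrespective of module membership, and restricting the connectivity matrix to those nodes; community detection is then re-applied to that submatrix. The Python sketch below shows the selection step only. The label strings reuse the abbreviations of Fig. 5 and are hypothetical stand-ins for the labels of the parcellation in ref. 49.

```python
def select_subnetwork(conn, node_labels, keep_labels):
    """Indices of nodes with a label in keep_labels and the induced submatrix."""
    idx = [i for i, label in enumerate(node_labels) if label in keep_labels]
    sub = [[conn[i][j] for j in idx] for i in idx]
    return idx, sub


labels = ["AUD", "DM", "VA", "CO", "VIS"]
conn = [[1.0 if i == j else 0.1 * (i + j) for j in range(5)] for i in range(5)]
idx, sub = select_subnetwork(conn, labels, {"AUD", "VA", "CO"})
print(idx)       # [0, 2, 3]
print(len(sub))  # 3
```

Applied to the full parcellation, this selection is what yields the 86-node graph on which modules are then re-estimated.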

Fig. 5.

Modular reconfiguration of the frontotemporal auditory control network in adaptation to the listening task. (A) The auditory control network. Cortical regions across the resting-state frontotemporal map are functionally identified and color coded according to their node labels as in ref. 49. This network is decomposed into four distinct modules shown within the group-level functional connectivity matrix and the connectogram (circular diagram). Group-level modularity partition and the corresponding modularity index were obtained using graph-theoretical consensus community detection. Gray peripheral bars around the connectograms indicate the number of connections per node. (B, Upper) Flow diagram illustrating the reconfiguration of the auditory control network from resting state (Left) to the listening task (Right). Modules shown in separate vertical boxes in Left and Right are sorted from bottom to top according to the total PageRank of the nodes that they contain, and their heights correspond to their connection densities. The streamlines illustrate how nodes belonging to a given module during resting state change their module membership during the listening task. (B, Lower) Alteration in functional connectivity within the auditory control network complements the topological reconfiguration illustrated by the flow diagram. (C) Modules of the auditory control network during the listening task. The network construction and visualization scheme are identical to A. Since auditory and ventral attention nodes are merged (yellow and orange nodes), an additional green–blue color coding is used for a clearer illustration of modules.
AG, angular gyrus; ant., anterior; AUD, auditory; CO, cingulo-opercular; CS, central sulcus; g., gyrus; HG, Heschl’s gyrus; MFG, middle frontal gyrus; MTG, middle temporal gyrus; Operc., operculum; post., posterior; Pre/PoCG, pre-/postcentral gyrus; PoCG, postcentral gyrus; SFG, superior frontal gyrus; SMA, supplementary motor area; SMG, supramarginal gyrus; STG, superior temporal gyrus; sup., superior; VA, ventral attention.

Similar to the whole-brain analysis (Fig. 4), the auditory control network can be decomposed into modules by applying the consensus community detection algorithm again, but this time to the graph encompassing the 86 nodes. The result is visualized in Fig. 5A by grouping the nodes within the group-level functional connectivity matrix and the corresponding connectogram. During resting state, the consensus community detection algorithm decomposed the auditory control network into four distinct modules that correspond well with their functional differentiation as in ref. 49. That is, auditory, ventral attention, and cingulo-opercular nodes form their own distinct homogeneous modules.

During the listening task, however, the configuration of the auditory control network changed (Fig. 5B, Upper). According to the streamlines, the majority of auditory nodes (yellow) and posterior ventral attention nodes (orange) merged to form a common network module during the task (Fig. 5B, Right, first module from bottom). This reconfiguration co-occurred with an increase in functional connectivity within the auditory nodes and ventral attention nodes as well as between auditory and ventral attention nodes (Fig. 5B, Lower and SI Appendix, Fig. S5). The cortical and connectivity maps of the auditory–ventral attention module are shown in Fig. 5C, dark blue module.

This network module emerging during the listening task involves cortical regions mostly in the vicinity of the bilateral STS and Heschl’s gyrus as well as the right angular gyrus. Note also the increase in the functional connectivity across these nodes (Fig. 5C, connectivity matrix, dark blue module) and the increase in the number of connections within this module (Fig. 5C, connectogram, gray bars inside the dark blue module) (all relative to resting state). Collectively, this reconfiguration indicates a stronger functional cross-talk between auditory and posterior ventral attention regions during the listening task compared with resting state.

In contrast, frontal ventral attention nodes do not merge with other nodes and remain connected within a separate module during both resting state and task (Fig. 5B, Right, second module from top). These nodes overlap with cortical regions in the vicinity of the inferior frontal gyrus (IFG) (Fig. 5C, light green module). Moreover, the majority of cingulo-opercular nodes (Fig. 5C, red) are grouped together during the listening task (Fig. 5C, light blue module). This co-occurs with a decrease in functional connectivity within the cingulo-opercular nodes as well as between auditory and cingulo-opercular nodes (Fig. 5B, Lower and SI Appendix, Fig. S5). This indicates higher functional segregation within the cingulo-opercular regions during the listening challenge relative to resting state.

Higher Modularity of the Auditory Control Network Predicts Speech Comprehension Success.

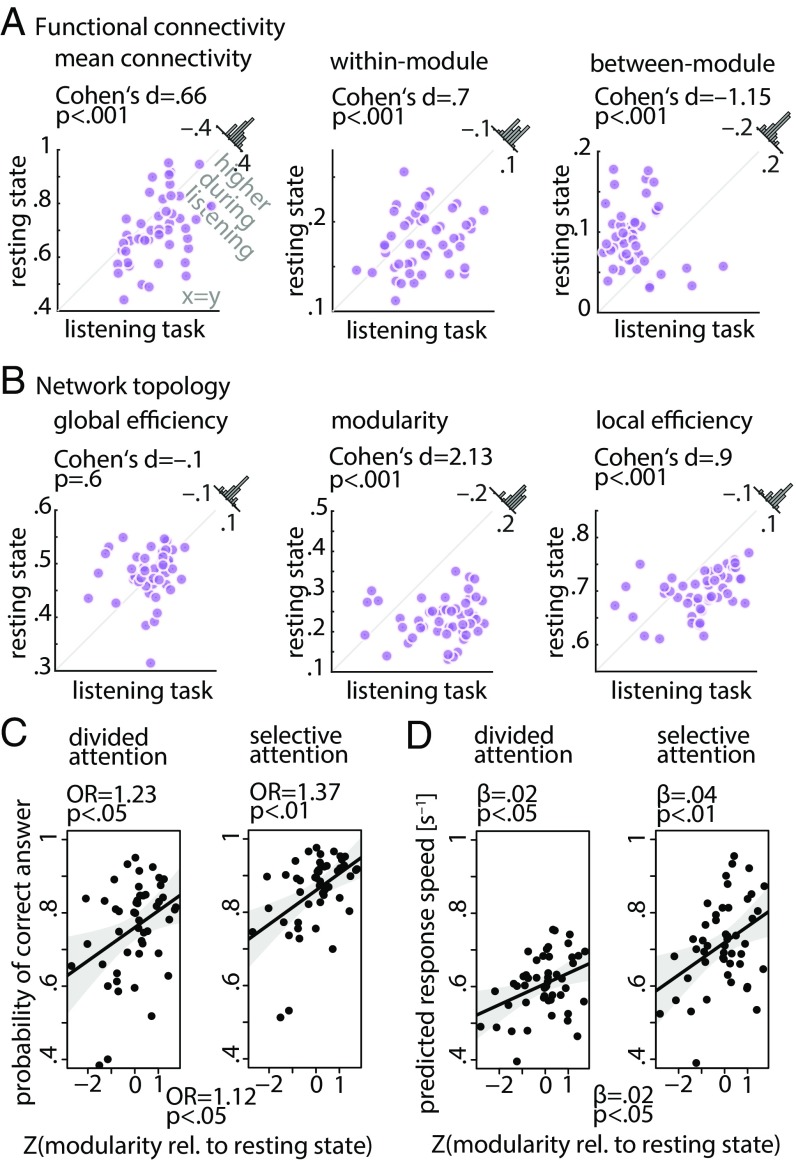

To quantitatively assess the reconfiguration of the auditory control network described above, we compared functional connectivity and topology of this network between resting state and the listening task (Fig. 6A).

Fig. 6.

Brain network metrics derived from the frontotemporal auditory control network and prediction of listening success from modularity of this network. (A and B) Functional segregation within the auditory control network was significantly increased during the listening task relative to resting state. This was manifested in higher network modularity, within-module connectivity, and local network efficiency but lower between-module connectivity. Histograms show the distribution of the change (task minus rest) of the network metrics across all 49 participants. (C and D) Interaction of spatial cue and change in modularity of the auditory control network. The data points represent trial-averaged predictions derived from the (generalized) linear mixed effects model. Solid lines indicate linear regression fit to the trial-averaged data. Shaded area shows two-sided parametric 95% CIs. OR is the odds ratio parameter estimate resulting from generalized linear mixed effects models; β is the slope parameter estimate resulting from general linear mixed effects models.

First, while mean overall connectivity and within-module connectivity of this network significantly increased from rest to task (mean connectivity: Cohen’s d = 0.66, P < 0.001; within-module connectivity: Cohen’s d = 0.7, P < 0.001), between-module connectivity showed the opposite effect (Cohen’s d = −1.15, P < 0.001) (Fig. 6A).

Second, functional segregation of the auditory control network was significantly increased from rest to task as manifested by a higher degree of modularity and mean local efficiency (modularity: Cohen’s d = 2.13, P < 0.001; local efficiency: Cohen’s d = 0.9, P < 0.001) (Fig. 6B).
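Effect sizes for the rest-versus-task comparisons are reported as Cohen’s d, but the text does not specify the variant. For paired designs a common choice is d_z: the mean of the per-participant differences (task minus rest) divided by their standard deviation. The Python sketch below computes d_z together with the number of participants showing an increase (analogous to the 43-of-49 count reported for whole-brain modularity); the choice of d_z, the function name, and the toy values are assumptions.

```python
import statistics


def paired_effect(rest, task):
    """Return (d_z, n_increased) for paired task-minus-rest differences."""
    diffs = [t - r for r, t in zip(rest, task)]
    d_z = statistics.mean(diffs) / statistics.stdev(diffs)
    n_increased = sum(1 for d in diffs if d > 0)
    return d_z, n_increased


# Hypothetical modularity values for four participants.
rest_q = [0.30, 0.32, 0.28, 0.35]
task_q = [0.40, 0.38, 0.36, 0.33]
print(paired_effect(rest_q, task_q))
```

Because d_z scales the mean change by the spread of individual changes, a consistent increase across participants (as for auditory control network modularity) produces a large d_z even when the absolute change is modest.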

Critically, (generalized) linear mixed effects analyses revealed significant interactions of the spatial cue and change in modularity of the auditory control network for both behavioral dependent measures. More precisely, the change in modularity of this network showed positive correlations with accuracy (Fig. 6C) and response speed (Fig. 6D) under both divided and selective attention trials. However, these brain–behavior correlations were stronger under selective (accuracy: OR = 1.37, P < 0.01; response speed: β = 0.04, P < 0.01) than under divided attention trials (accuracy: OR = 1.23, P < 0.05; response speed: β = 0.02, P < 0.05) (SI Appendix, Table S3 has all model terms and estimates). We did not find any evidence for an interaction between the semantic cue and change in modularity of the auditory control network in predicting behavior (likelihood ratio tests; accuracy: χ² = 0.3, P = 0.6; response speed: χ² = 0.4, P = 0.5). There was also no significant interaction between age and change in modularity of the auditory control network in predicting behavior (accuracy: χ² = 0.15, P = 0.7; response speed: χ² = 0.4, P = 0.5) (SI Appendix, Fig. S6).

Moreover, an additional (generalized) linear mixed effects model with resting-state modularity and task modularity as two separate regressors revealed a significant main effect of auditory control network modularity during the task (but not at rest) in predicting accuracy (OR = 1.35, P < 0.001). There was also a significant interaction between task modularity of the auditory control network and the spatial cue in predicting response speed (β = 0.01, P < 0.05) (SI Appendix, Table S4).

Apart from the nodal branching and merging in the auditory module, other reconfigurations, although less profound, could also be identified (Fig. 4B). These include modular reconfiguration of subnetworks encompassing (i) visual, dorsal attention, and cingulo-opercular nodes; (ii) somatomotor, auditory, and cingulo-opercular nodes; (iii) default mode, frontoparietal, and ventral attention nodes; and (iv) dorsal attention, frontoparietal, and cingulo-opercular nodes. To investigate whether these reconfigurations predict listening behavior, we performed analyses analogous to those undertaken for the auditory control network. That is, using four separate linear mixed effects models, we included the change (i.e., task minus rest) in network modularity of each of the four aforementioned subnetworks. None of these models were able to predict individuals’ listening behavior (accuracy or response speed). Thus, the prediction of speech comprehension success from modular reconfiguration of brain subnetworks was specific to the frontotemporal auditory control network (Fig. 6B).

Discussion

First, we have shown here how resting-state brain networks reshape in adaptation to a challenging listening task. This reconfiguration predominantly emerges from a frontotemporal auditory control network that shows higher modularity and local efficiency during the listening task, shifting the whole-brain connectome toward higher segregation (more localized cortical processing). Second, the degree to which modularity of this auditory control network increases relative to its resting-state baseline predicts an individual’s listening success. To illustrate, one SD change in modularity of the auditory control network increased a listener’s chance of successful performance in a cued trial by about 3% on average, and it made her response about 40 ms faster. While the effect of modularity on behavior was found in states of both divided and selective attention, it was stronger for selective attention trials.
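An odds ratio does not translate into a fixed change in probability. How much a given OR moves the chance of a correct response depends on the baseline accuracy, so a "percent" figure like the one above is necessarily an average over conditions. The sketch below illustrates this with the ORs reported in Results (1.23 and 1.37 per SD). The baseline accuracies it loops over are hypothetical values chosen only for illustration, not values taken from this study.

```python
def prob_after_or(p_baseline, odds_ratio):
    """Probability implied by multiplying the baseline odds by `odds_ratio`."""
    odds = p_baseline / (1.0 - p_baseline)
    new_odds = odds * odds_ratio
    return new_odds / (1.0 + new_odds)


def change_in_probability(p_baseline, odds_ratio):
    """Absolute change in success probability (multiply by 100 for percentage points)."""
    return prob_after_or(p_baseline, odds_ratio) - p_baseline


# Hypothetical baseline accuracies; ORs as reported for divided (1.23) and selective (1.37) trials.
for p0 in (0.70, 0.80, 0.90):
    print(p0, round(change_in_probability(p0, 1.23), 3), round(change_in_probability(p0, 1.37), 3))
```

The same OR yields smaller absolute gains as baseline accuracy approaches ceiling.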

Optimal Brain Network Configuration for Successful Listening.

The functional connectome was reconfigured toward more localized cortical processing during the listening task, as reflected by higher segregation of the whole-brain network. This functional specialization suggests that resolving the listening task mainly required attending to and processing the sentences as unconnected speech, arguably at the level of single words. Under adverse listening conditions, such processing is often associated with localized activity in auditory, cingulo-opercular, and inferior frontal regions (1, 13, 43).

However, segregated topology is not necessarily the optimal brain network configuration to resolve every difficult speech comprehension task. Instead, we argue that the most favorable configuration depends on the nature and instruction of the task, in particular on the level of linguistic and semantic information that needs to be integrated to resolve the task (cf. refs. 41, 44, and 45). Indeed, previous studies in the contexts of working memory and complex reasoning have found a shift of the functional connectome toward higher network integration (33, 36). Along these lines of research, our findings emphasize the importance of the degree (rather than the direction) of modular reconfiguration of brain networks in predicting individuals’ behavior.

Functional Segregation Within the Auditory Control Network Is Critical for Cued Speech Comprehension.

In adaptation to the listening task, a segregated module emerged along bilateral STS and superior temporal gyrus with a posterior extension (Fig. 4 A and C, cortical maps and Fig. 5C, dark blue module). This reconfiguration is in line with the proposal of a ventral stream in the functional neuroanatomy of speech processing (also referred to as the auditory what stream), which has been suggested to be involved in sound-to-meaning mapping during speech recognition tasks (52–56). In addition, the functional convergence of the auditory nodes with the posterior ventral attention nodes parallels the proposal that the posterior portion of the primary auditory cortex is involved in spatial separation of speech and sounds (57–61).

Our connectomic approach thus extends the existing evidence by demonstrating an increased functional cross-talk between neural systems involved in speech processing and sound localization during a cued speech comprehension task. Notably, the functional significance of this task-driven network reconfiguration was corroborated by its impact on listening success, in particular in states of selective attention. During selective attention trials, we assume that an active attentional filter mechanism is being invoked to “tune out” the distracting sentence presented to the opposite ear and to selectively amplify the sentence presented to the cued ear. Thus, the stronger brain–behavior correlation under selective attention trials suggests that this flexible filter is implemented by the formation of a more segregated and specialized cortical module composed of auditory and ventral attention nodes.

Another key observation was that, during the listening task, auditory regions in anterior and superior temporal cortices as well as anterior insula and cingulate cortex merged with the somatomotor module (Fig. 4C, light blue). In parallel, the frontal ventral attention nodes in the vicinity of the IFG remained within the default mode module (Fig. 4C, dark blue). Within the auditory control network, these reconfigurations appeared as the formation of highly segregated modules (Fig. 5C, modules except the dark blue one). Importantly, the degree of the modular reconfiguration of cingulo-opercular and ventral attention nodes was predictive of listening success only when their network interactions were modeled in combination with the auditory nodes (i.e., the auditory control network) and not with somatomotor or default mode nodes.

Our findings concur conceptually with the earlier work by Vaden et al. (13), who showed that, during a word recognition experiment, regions within the cingulo-opercular network displayed elevated activation in response to speech in noise, and regions along the ventral attention network showed this pattern during transitions between trials. Moreover, the authors found that cingulo-opercular regions exhibited higher functional connectivity relative to silence periods. Our results refine their findings and provide a clear distinction between cingulo-opercular and ventral attention nodes in their communication with auditory nodes during cued speech processing (Fig. 5B). Our findings support the hypothesis that, during a listening challenge, cingulo-opercular regions form an autonomous “core” control system that monitors effortful listening performance (62–64), while ventral attention nodes implement attentional filtering of the relevant speech (8).

Our connectomic approach can be distinguished from previous activation studies in two respects. First, we view the neural systems involved in challenging speech comprehension as a large-scale dynamic network whose configuration changes from an idling (resting-state) baseline to a high-duty listening task state. This allowed us to precisely delineate the cortical network embodiment of behavioral adaptation to a listening challenge.

Second, our graph-theoretical module identification unveiled a more complete picture (i.e., nodes, connections, and functional boundaries) of cortical subsystems supporting listening success. This allowed us to identify and characterize an auditory control network with modular organization that revealed how functional coordination within and between auditory, cingulo-opercular, and ventral attention regions controls the selection and processing of speech during a listening challenge.

Is Modularity the Network Principle of Auditory Attention?

The main finding of this study is that higher modularity of the auditory control network relative to resting state predicts individuals’ listening success in states of both divided and selective attention. Among the three network metrics, only modularity linked the reconfiguration of brain networks with this behavioral utilization of a spatial cue. This suggests that auditory spatial attention in adverse listening situations is implemented across cortex by a fine-tuned communication within and between neural assemblies across auditory, ventral attention, and cingulo-opercular regions. This is in line with recent studies showing that higher modularity of brain networks supports auditory perception or decision making (65, 66).

Inherent in the principle of hierarchy, modularity allows formation of complex architectures that facilitate functional specificity, robustness, and behavioral adaptation (67, 68). Our results suggest that individuals’ ability to modify intrinsic brain network modules is crucial for their attention to speech and, ultimately, for successful adaptation to a listening challenge. Notably, our results help us better understand individual differences in speech comprehension at the systems level, with implications for hearing assistive devices and rehabilitation strategies.

Limitations.

The logic of modular reconfiguration of an auditory control network in individual listeners assumes that reliable individual-specific estimates of network modularity can be obtained. Recently, Gordon et al. (69) demonstrated that the reliability of functional connectivity differs greatly across individuals, measures of interest, and anatomical locations. Importantly for this study, they showed that network modularity reached an average difference of <3% from the split data sample with only 10 min of data, whereas other network measures required longer scan durations. Nevertheless, the relatively short duration of the resting-state scan in this study may mask some meaningful individual variability. Future studies using dense sampling of individual brains (70, 71) will be needed to recover such variation.

Because fMRI provides an indirect measure of neural activity, it remains unknown how far our findings generalize to networks constructed from neurophysiological responses recorded during resting state and listening (72). In this respect, an important question is how network coupling between neural oscillations relates to listening behavior (73, 74). In addition, although the sentences used in our linguistic Posner task were carefully designed to experimentally control individuals’ use of spatial and semantic cues during listening, their trial-by-trial presentation makes the listening task less naturalistic. Future studies will be needed to investigate the brain network correlates of listening success during attention to continuous speech. Lastly, the brain networks in this study were constructed from correlations between regional time series, which disregard the directionality of the functional influences that regions may exert on one another. Investigating causal relationships across cortical regions within the auditory control network would therefore help us better understand the (bottom-up vs. top-down) functional cross-talk between auditory, ventral attention, and cingulo-opercular regions during challenging speech comprehension.

Conclusion.

In sum, our findings suggest that behavioral adaptation of a listener hinges on task-specific reconfiguration of the functional connectome at the level of network modules. We conclude that the communication dynamics within the frontotemporal auditory control network are closely linked with the successful deployment of auditory spatial attention in challenging listening situations.

Materials and Methods

Participants.

Seventy-one healthy adult participants were invited from a larger cohort in which we investigate the neurocognitive mechanisms underlying adaptive listening in middle-aged and older adults [a large-scale study entitled “The listening challenge: How ageing brain adapt (AUDADAPT)”; https://cordis.europa.eu/project/rcn/197855_en.html]. All participants were right-handed [assessed by a translated version of the Edinburgh Handedness Inventory (75)] and had normal or corrected-to-normal vision. None of the participants had any history of neurological or psychiatric disorders. However, three participants had to be excluded after an incidental diagnosis made by an in-house radiologist based on the acquired structural MRI. In addition, 15 participants were excluded because of incomplete measurements due to technical issues, claustrophobia, or task performance below chance level. Four additional participants completed the experiment but were removed from data analysis due to excessive head motion inside the scanner (i.e., scan-to-scan movement >1.5 mm of translation or 1.5° of rotation; rms of framewise displacement >0.5 mm). Accordingly, 49 participants were included in the main analysis (mean age 45.6 y; age range 19–69 y; 37 females). All procedures were in accordance with the Declaration of Helsinki and approved by the local ethics committee of the University of Lübeck. All participants gave written informed consent and were financially compensated (€8/h).
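The head-motion exclusion criteria above can be expressed as a check on the six realignment parameters of a run. The sketch below makes several assumptions. The parameters are taken to be in SPM order (three translations in mm, then three rotations in radians). Framewise displacement (FD) follows the common Power et al. definition, with rotations converted to arc length on a 50-mm sphere. "rms of framewise displacement" is read as the root mean square of the FD series. The study's exact FD formulation is not stated here and may differ, and the function names are hypothetical.

```python
import numpy as np


def framewise_displacement(params, radius_mm=50.0):
    """FD per volume (Power-style, assumed): sum of absolute parameter changes.

    params: (T, 6) array, columns = x/y/z translation (mm), then 3 rotations (radians).
    Rotations are converted to mm as arc length on a sphere of `radius_mm`.
    """
    params = np.asarray(params, dtype=float)
    deltas = np.abs(np.diff(params, axis=0))
    deltas[:, 3:] *= radius_mm
    return np.concatenate([[0.0], deltas.sum(axis=1)])  # first volume has no predecessor


def exceeds_motion_limits(params, max_trans_mm=1.5, max_rot_deg=1.5, max_rms_fd_mm=0.5):
    """True if any scan-to-scan step or the rms of FD exceeds the stated thresholds."""
    params = np.asarray(params, dtype=float)
    steps = np.abs(np.diff(params, axis=0))
    too_much_translation = bool((steps[:, :3] > max_trans_mm).any())
    too_much_rotation = bool((np.degrees(steps[:, 3:]) > max_rot_deg).any())
    fd = framewise_displacement(params)
    rms_fd = float(np.sqrt(np.mean(fd[1:] ** 2)))
    return too_much_translation or too_much_rotation or rms_fd > max_rms_fd_mm
```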

Procedure.

Each imaging session consisted of seven fMRI runs (Fig. 2A): eyes-open resting state (∼8 min) followed by six runs in which participants performed a challenging speech comprehension task (∼9 min each) (SI Appendix has a detailed description of the task). A structural scan (∼5 min) was acquired at the end of the imaging session (SI Appendix has MRI acquisition parameters).

Preprocessing.

During the resting state, 480 functional volumes were acquired, and during each run of the listening task, 540 volumes were acquired. To allow for signal equilibration, the first 10 volumes of the resting state and of each task run were removed. Preprocessing was performed in SPM12. First, the functional images were spatially realigned to correct for head motion using a least-squares approach and a six-parameter rigid-body transformation. Second, the functional volumes were corrected for slice timing to adjust for differences in image acquisition times between slices. Subsequently, functional images were coregistered to each individual’s structural image. Third, the normalization parameters from the subject’s native space to the standard space [Montreal Neurological Institute and Hospital (MNI) coordinate system] were obtained via unified segmentation of the T1 images (76). The normalization parameters were then applied to each of the echo-planar image (EPI) volumes. No spatial smoothing was applied to the volumes to avoid potential artificial distance-dependent correlations between voxels’ BOLD signals (77, 78).

Motion Artifact Removal.

In recent years, multiple studies have raised the concern that head motion or nonneural physiological trends can confound the temporal correlations between BOLD signals (79, 80). Because older participants often make larger within-scan movements than younger participants (28, 81), motion is a particular concern in this study, where participants’ ages span a wide range. We therefore accounted for motion artifacts at three stages of our analysis. First, we used the denoising method proposed in ref. 82, which decomposes brain signals in wavelet space, identifies and removes trains of spikes that likely reflect motion artifacts, and reconstitutes denoised signals from the remaining wavelet coefficients. Second, we implemented a nuisance regression to suppress nonneural and motion artifacts, as explained in Nuisance Regression. Third, we used the rms of framewise displacement as a regressor of no interest in the mixed effects analyses (Statistical Analysis).

Cortical Parcellation.

Cortical nodes were defined using a previously established functional parcellation (49), which has shown relatively higher accuracy compared with other parcellations (83). This parcellation is based on surface-based resting-state functional connectivity boundary maps and encompasses 333 cortical areas. We used this template to estimate mean BOLD signals across voxels within each cortical region per participant.
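Averaging BOLD signals within each parcel amounts to a masked mean over voxels for every label. The sketch below assumes a volumetric version of the 333-region parcellation, already resampled to the EPI grid, with 0 marking background and 1–333 marking regions. The original parcellation is surface based, so this is an assumption about the data layout. The function name is hypothetical.

```python
import numpy as np


def regional_mean_timeseries(bold, labels, n_regions=333):
    """Mean BOLD time series per parcel.

    bold:   (X, Y, Z, T) array of preprocessed volumes.
    labels: (X, Y, Z) integer array; 0 = background, 1..n_regions = cortical parcels.
    Returns a (T, n_regions) array; parcels with no voxels stay NaN.
    """
    bold = np.asarray(bold, dtype=float)
    labels = np.asarray(labels)
    voxels = bold.reshape(-1, bold.shape[-1])  # (n_voxels, T), same C-order as labels.ravel()
    flat_labels = labels.ravel()
    ts = np.full((bold.shape[-1], n_regions), np.nan)
    for region in range(1, n_regions + 1):
        mask = flat_labels == region
        if mask.any():
            ts[:, region - 1] = voxels[mask].mean(axis=0)
    return ts
```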

Nuisance Regression.

To minimize the effects of spurious temporal correlations induced by physiological and movement artifacts, a general linear model was constructed to regress out white matter and cerebrospinal fluid mean time series together with the six rigid body movement parameters and their first-order temporal derivatives. Subsequently, the residual time series obtained from this procedure were concatenated across the six runs of the listening task for each participant. The cortical time series of the resting state and listening task were further used for functional connectivity and network analysis (SI Appendix, Fig. S1). Note that global signal was not regressed out from the regional time series, as it is still an open question in the field what global signal regression in fact regresses out (84–86). In this study, wavelet despiking of motion artifacts allowed us to accommodate the spatial and temporal heterogeneity of motion artifacts. Importantly, our main results point to the reconfiguration of a frontotemporal auditory control network, which does not overlap with the mainly sensorimotor disruption map of the global signal (84).
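The nuisance model described above has 15 columns: an intercept, the white matter and CSF mean signals, six motion parameters, and their six first-order temporal derivatives. The regional time series are regressed on it by ordinary least squares, and the residuals from the task runs are then stacked. This is a minimal sketch. Three choices in it are assumptions: applying the regression to regional rather than voxel time series, computing the derivative as a backward difference with a zero first frame, and the helper names.

```python
import numpy as np


def nuisance_regress(ts, wm, csf, motion):
    """Residuals of ts after regressing out WM, CSF, 6 motion params, and their derivatives.

    ts: (T, R) regional time series; wm, csf: (T,) mean signals; motion: (T, 6).
    """
    ts = np.asarray(ts, dtype=float)
    motion = np.asarray(motion, dtype=float)
    # First-order temporal derivative as a backward difference (first frame set to 0; an assumption).
    d_motion = np.vstack([np.zeros((1, motion.shape[1])), np.diff(motion, axis=0)])
    design = np.column_stack([np.ones(ts.shape[0]), wm, csf, motion, d_motion])
    beta, *_ = np.linalg.lstsq(design, ts, rcond=None)
    return ts - design @ beta


def concatenate_runs(residual_runs):
    """Stack residual time series of several runs along time: list of (T_i, R) -> (sum T_i, R)."""
    return np.vstack(residual_runs)
```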

Functional Connectivity.

Mean residual time series were band-pass filtered by means of the maximum overlap discrete wavelet transform (Daubechies wavelet of length eight), and the wavelet coefficients within the 0.06–0.12 Hz range (wavelet scale three) were used for further analyses. It has been previously documented that the behavioral correlates of the functional connectome are best observed by analyzing low-frequency large-scale brain networks (87–90). The use of wavelet scale three was motivated by previous work showing that behavior during cognitive tasks predominantly correlated with changes in functional connectivity in the same frequency range (67, 90–92). To obtain a measure of association between each pair of cortical regions, Fisher’s Z-transformed Pearson correlations between wavelet coefficients were computed, resulting in one 333 × 333 correlation matrix per participant for the resting state and one for the listening task (SI Appendix, Fig. S1).
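Two pieces of this step can be checked with a few lines. The first is the frequency band of a wavelet scale. For a sampling interval TR, scale j of a dyadic wavelet decomposition nominally covers 1/(2^(j+1)·TR) to 1/(2^j·TR) Hz. The TR is not stated here, but 480 volumes in about 8 min implies a TR of roughly 1 s. That is an inference, and at that TR scale three gives 0.0625–0.125 Hz, matching the 0.06–0.12 Hz above. The second piece is the Fisher Z-transformed correlation matrix, computed here from coefficients that are assumed to be already available. The MODWT itself is not implemented in this sketch, and the function names are hypothetical.

```python
import numpy as np


def wavelet_scale_band(j, tr_s):
    """Nominal passband (Hz) of dyadic wavelet scale j for sampling interval tr_s (s)."""
    return 1.0 / (2 ** (j + 1) * tr_s), 1.0 / (2 ** j * tr_s)


def fisher_z_connectivity(coeffs):
    """(T, R) wavelet coefficients per region -> (R, R) Fisher Z-transformed Pearson correlations."""
    r = np.corrcoef(coeffs, rowvar=False)
    np.fill_diagonal(r, 0.0)  # avoid arctanh(1) = inf on the diagonal
    return np.arctanh(r)


print(wavelet_scale_band(3, tr_s=1.0))  # TR of ~1 s is inferred, not reported
```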

Connectomics.

Brain graphs were constructed from the functional connectivity matrices by retaining the top 10% of connections ranked by correlation strength (77, 93). This resulted in sparse, binary, undirected brain graphs at a fixed network density of 10% and ensured that the brain graphs were matched in density across participants, resting state, and listening task (94, 95). To investigate whether choosing a different (range of) graph density threshold(s) would affect the results (96), we examined the effect of graph thresholding using the cost integration approach (93) (SI Appendix, Fig. S2). Mean functional connectivity was calculated as the average of the upper-triangular elements of the sparse connectivity matrix for each participant. In addition, three key topological network metrics were estimated: mean local efficiency, modularity, and global efficiency. These graph-theoretical metrics quantify the configuration of large-scale brain networks at the local, intermediate, and global scales of topology, respectively (97) (Fig. 1). For each topological metric, we computed a whole-brain metric that collapses the network property into a single value [Brain Connectivity Toolbox (48)] (the mathematical formalization of these metrics is in SI Appendix).
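The graph construction and metrics can be sketched in plain NumPy. The sketch covers four steps:

- keep the strongest 10% of undirected edges as a binary graph;
- compute global efficiency (mean inverse shortest path length);
- compute local efficiency (global efficiency of each node's neighbor subgraph, Latora–Marchiori definition);
- compute Newman's modularity Q for a given node partition.

This is a stand-in for the Brain Connectivity Toolbox used in the study, not its code. BCT implementations may differ in details, and the module-detection step that produces the partition (e.g., a Louvain-type algorithm) is not shown. All function names are hypothetical.

```python
import numpy as np
from collections import deque


def threshold_density(conn, density=0.10):
    """Binary undirected adjacency keeping the strongest `density` fraction of edges."""
    conn = np.asarray(conn, dtype=float)
    n = conn.shape[0]
    iu = np.triu_indices(n, k=1)
    n_keep = int(round(density * iu[0].size))
    keep = np.argsort(conn[iu])[::-1][:n_keep]
    adj = np.zeros((n, n), dtype=int)
    adj[iu[0][keep], iu[1][keep]] = 1
    return adj + adj.T


def _distances_from(adj, source):
    """Breadth-first-search path lengths from `source` (inf if unreachable)."""
    dist = np.full(adj.shape[0], np.inf)
    dist[source] = 0.0
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in np.flatnonzero(adj[u]):
            if np.isinf(dist[v]):
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def global_efficiency(adj):
    """Mean of 1/d_ij over all ordered node pairs (disconnected pairs contribute 0)."""
    n = adj.shape[0]
    if n < 2:
        return 0.0
    total = sum(np.sum(1.0 / np.delete(_distances_from(adj, s), s)) for s in range(n))
    return float(total / (n * (n - 1)))


def local_efficiency(adj):
    """Average over nodes of the global efficiency of each node's neighbor subgraph."""
    effs = []
    for i in range(adj.shape[0]):
        nbrs = np.flatnonzero(adj[i])
        effs.append(global_efficiency(adj[np.ix_(nbrs, nbrs)]) if len(nbrs) > 1 else 0.0)
    return float(np.mean(effs))


def modularity(adj, communities):
    """Newman's Q = (1/2m) * sum_ij (A_ij - k_i k_j / 2m) * delta(c_i, c_j)."""
    adj = np.asarray(adj, dtype=float)
    k = adj.sum(axis=1)
    two_m = k.sum()
    same = np.equal.outer(communities, communities)
    return float(((adj - np.outer(k, k) / two_m) * same).sum() / two_m)
```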

Statistical Analysis.

Behavioral data.

Performance during the listening task was assessed based on (a binary measure of) correct identification of the probed sentence-final word (accuracy) and the inverse of reaction time on correct trials (response speed). Trials without a response within the response time window were counted as incorrect. The single-trial behavioral measures across all participants were treated as the dependent variable in the (generalized) linear mixed effects analysis (Generalized Linear Mixed Effects Models).
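The two single-trial measures can be derived from the trial log as follows. This sketch assumes reaction times in seconds, with NaN marking trials without a response. Response speed is therefore in 1/s and is defined only for correct trials. The input format and function name are hypothetical.

```python
import numpy as np


def behavioral_measures(correct, rt_s):
    """Per-trial accuracy (0/1) and response speed (1/RT, correct trials only).

    correct: booleans for whether the chosen word matched the probed word.
    rt_s:    reaction times in seconds, NaN when no response was given.
    Trials without a response count as incorrect.
    """
    correct = np.asarray(correct, dtype=bool)
    rt_s = np.asarray(rt_s, dtype=float)
    hit = correct & ~np.isnan(rt_s)
    speed = np.full(rt_s.shape, np.nan)
    speed[hit] = 1.0 / rt_s[hit]
    return hit.astype(int), speed
```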

Brain data.

Statistical comparisons of network metrics between resting state and listening task were based on permutation tests for paired samples (randomly permuting the rest and task labels 10,000 times). We used Cohen’s d for paired samples as the corresponding effect size.
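Swapping the rest and task labels within a participant is equivalent to flipping the sign of that participant's task-minus-rest difference. A paired permutation test can therefore be run by random sign flipping. The sketch below makes three assumptions that go beyond the text: the test is two-sided, the P value uses the common +1 correction, and Cohen's d for paired samples is computed as d_z (mean difference divided by the SD of the differences). The function names are hypothetical.

```python
import numpy as np


def paired_permutation_test(rest, task, n_perm=10_000, seed=0):
    """Two-sided permutation P value for the mean task-minus-rest difference."""
    diff = np.asarray(task, dtype=float) - np.asarray(rest, dtype=float)
    observed = diff.mean()
    rng = np.random.default_rng(seed)
    # Each row randomly swaps the rest/task labels of every participant (= sign flip).
    signs = rng.choice([-1.0, 1.0], size=(n_perm, diff.size))
    null = (signs * diff).mean(axis=1)
    p = (np.sum(np.abs(null) >= abs(observed)) + 1) / (n_perm + 1)
    return observed, p


def cohens_d_paired(rest, task):
    """Cohen's d_z: mean of the paired differences divided by their SD."""
    diff = np.asarray(task, dtype=float) - np.asarray(rest, dtype=float)
    return diff.mean() / diff.std(ddof=1)
```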

Generalized Linear Mixed Effects Models.

The brain–behavior relationship was investigated within a linear mixed effects analysis framework. To this end, either of the single-trial behavioral measures (accuracy or response speed) across all participants was treated as the dependent variable. The main predictors in the model were the spatial and semantic cues, each with two levels (divided vs. selective and general vs. specific, respectively). Since the main question of this study is whether reconfiguration of resting-state brain networks in adaptation to the listening challenge relates to listening success, we used the difference in a given network parameter between resting state and listening task (i.e., task minus rest) as the third main predictor. The linear mixed effects framework also allowed us to control for other variables that entered the model as regressors of no interest: age, head motion (i.e., difference in rms of framewise displacement between resting state and task), and side probed (left or right). Mixed effects analyses were performed in R (R Core Team) using the packages lme4 and sjPlot.
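The fixed-effects part of such a model can be pictured as a trial-level design matrix. It combines the two cue factors, the Δ-modularity predictor, their interactions, and the regressors of no interest, following the coding given under Model Selection. In this sketch, deviation coding is assumed to map the two levels to −0.5/+0.5, and z-scoring is applied across trial rows. The study's analyses were run in R with lme4, so this Python sketch does not reproduce them. It includes only the cue × Δ-modularity interactions discussed in Results and leaves out random effects entirely. Column and function names are hypothetical.

```python
import numpy as np


def deviation_code(levels, reference):
    """Two-level factor -> -0.5 for `reference`, +0.5 for the other level (assumed scheme)."""
    return np.where(np.asarray(levels) == reference, -0.5, 0.5)


def zscore(x):
    x = np.asarray(x, dtype=float)
    return (x - x.mean()) / x.std(ddof=1)


def fixed_effects_design(spatial, semantic, delta_modularity, age, delta_fd, side):
    """Trial-level fixed-effects design matrix and column names (random effects not included)."""
    s = deviation_code(spatial, "divided")    # divided vs. selective
    m = deviation_code(semantic, "general")   # general vs. specific
    dq = zscore(delta_modularity)             # task minus rest, repeated on each trial row
    columns = {
        "intercept": np.ones_like(dq),
        "spatial": s,
        "semantic": m,
        "delta_modularity": dq,
        "spatial:delta_modularity": s * dq,
        "semantic:delta_modularity": m * dq,
        "age": zscore(age),
        "delta_fd": zscore(delta_fd),
        "side": deviation_code(side, "left"),
    }
    return np.column_stack(list(columns.values())), list(columns)
```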

Model Selection.

To estimate the best-fitting linear mixed effects models, we followed an iterative model fitting procedure that started with an intercept-only null model (7). Fixed effects terms were added stepwise, and the change in model fit (estimated using maximum likelihood) was assessed using likelihood ratio tests. Deviation coding was used for categorical predictors, and all continuous variables were Z scored. Since accuracy is a binary variable, we used a generalized linear mixed effects model with a logit link function. For response speed, the distribution of the data did not significantly deviate from a normal distribution (Shapiro–Wilk test, P = 0.7); we therefore used linear mixed effects models with a Gaussian distribution. For both models (accuracy and response speed), we report P values for individual model terms derived using the Satterthwaite approximation for degrees of freedom. As a measure of effect size, we report the OR for the model predicting accuracy and the regression coefficient (β) for the model predicting response speed.
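A likelihood ratio test compares two nested models through the statistic 2·(log L_full − log L_reduced), which is referred to a χ² distribution. The degrees of freedom equal the number of added parameters. The sketch below assumes that each reported interaction test adds a single parameter (df = 1). In that case, the upper-tail P value has the closed form erfc(√(x/2)). It also shows the usual logit-link relation OR = exp(β). The function names are hypothetical.

```python
import math


def lrt_statistic(loglik_reduced, loglik_full):
    """Likelihood ratio statistic for nested models fitted by maximum likelihood."""
    return 2.0 * (loglik_full - loglik_reduced)


def lrt_pvalue_df1(chi2_stat):
    """Upper-tail P value of a chi-square statistic with 1 df: erfc(sqrt(x / 2))."""
    return math.erfc(math.sqrt(chi2_stat / 2.0))


def odds_ratio(beta_logit):
    """Odds ratio implied by a logit-scale coefficient."""
    return math.exp(beta_logit)


# Under the df = 1 assumption, the chi-square values in Results give P of about 0.58, 0.53, and 0.70.
for stat in (0.3, 0.4, 0.15):
    print(stat, round(lrt_pvalue_df1(stat), 2))
```

Rounded to one decimal, these P values agree with those reported for the semantic-cue and age interactions, which is consistent with one-parameter comparisons.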

Data Visualization.

Brain surfaces were visualized using the Connectome Workbench. Connectograms were visualized using the Brain Data Viewer. Network flow diagrams were visualized in MapEquation.

Data Availability.

The complete dataset associated with this work, including raw data and connectivity maps, and the code for the network and statistical analyses are publicly available at https://osf.io/28r57/.


Acknowledgments

Anne Herrmann, Susanne Schellbach, Franziska Scharata, and Leonhard Waschke helped acquire, manage, and archive the data. Research was supported by European Research Council Consolidator Grant AUDADAPT 646696 (to J.O.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

Data deposition: The complete dataset associated with this work, including raw data and connectivity maps, and the code for the network and statistical analyses are publicly available at https://osf.io/28r57/.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1815321116/-/DCSupplemental.

References

- 1.Obleser J, Wise RJ, Dresner MA, Scott SK. Functional integration across brain regions improves speech perception under adverse listening conditions. J Neurosci. 2007;27:2283–2289. doi: 10.1523/JNEUROSCI.4663-06.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Davis MH, Ford MA, Kherif F, Johnsrude IS. Does semantic context benefit speech understanding through “top-down” processes? Evidence from time-resolved sparse fMRI. J Cogn Neurosci. 2011;23:3914–3932. doi: 10.1162/jocn_a_00084. [DOI] [PubMed] [Google Scholar]

- 3.Wöstmann M, Herrmann B, Maess B, Obleser J. Spatiotemporal dynamics of auditory attention synchronize with speech. Proc Natl Acad Sci USA. 2016;113:3873–3878. doi: 10.1073/pnas.1523357113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Dai L, Best V, Shinn-Cunningham BG. Sensorineural hearing loss degrades behavioral and physiological measures of human spatial selective auditory attention. Proc Natl Acad Sci USA. 2018;115:E3286–E3295. doi: 10.1073/pnas.1721226115. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Colflesh GJ, Conway AR. Individual differences in working memory capacity and divided attention in dichotic listening. Psychon Bull Rev. 2007;14:699–703. doi: 10.3758/bf03196824. [DOI] [PubMed] [Google Scholar]

- 6.Kidd GR, Watson CS, Gygi B. Individual differences in auditory abilities. J Acoust Soc Am. 2007;122:418–435. doi: 10.1121/1.2743154. [DOI] [PubMed] [Google Scholar]

- 7.Tune S, Wöstmann M, Obleser J. Probing the limits of alpha power lateralisation as a neural marker of selective attention in middle-aged and older listeners. Eur J Neurosci. 2018;48:2537–2550. doi: 10.1111/ejn.13862. [DOI] [PubMed] [Google Scholar]

- 8.Eckert MA, et al. At the heart of the ventral attention system: The right anterior insula. Hum Brain Mapp. 2009;30:2530–2541. doi: 10.1002/hbm.20688. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Obleser J, Kotz SA. Expectancy constraints in degraded speech modulate the language comprehension network. Cereb Cortex. 2010;20:633–640. doi: 10.1093/cercor/bhp128. [DOI] [PubMed] [Google Scholar]

- 10.Adank P. The neural bases of difficult speech comprehension and speech production: Two Activation Likelihood Estimation (ALE) meta-analyses. Brain Lang. 2012;122:42–54. doi: 10.1016/j.bandl.2012.04.014. [DOI] [PubMed] [Google Scholar]

- 11.Wild CJ, et al. Effortful listening: The processing of degraded speech depends critically on attention. J Neurosci. 2012;32:14010–14021. doi: 10.1523/JNEUROSCI.1528-12.2012. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Erb J, Henry MJ, Eisner F, Obleser J. The brain dynamics of rapid perceptual adaptation to adverse listening conditions. J Neurosci. 2013;33:10688–10697. doi: 10.1523/JNEUROSCI.4596-12.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Vaden KI, Jr, et al. The cingulo-opercular network provides word-recognition benefit. J Neurosci. 2013;33:18979–18986. doi: 10.1523/JNEUROSCI.1417-13.2013. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Fedorenko E, Thompson-Schill SL. Reworking the language network. Trends Cogn Sci. 2014;18:120–126. doi: 10.1016/j.tics.2013.12.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Hagoort P. Nodes and networks in the neural architecture for language: Broca’s region and beyond. Curr Opin Neurobiol. 2014;28:136–141. doi: 10.1016/j.conb.2014.07.013. [DOI] [PubMed] [Google Scholar]

- 16.Fuertinger S, Horwitz B, Simonyan K. The functional connectome of speech control. PLoS Biol. 2015;13:e1002209. doi: 10.1371/journal.pbio.1002209. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Chai LR, Mattar MG, Blank IA, Fedorenko E, Bassett DS. Functional network dynamics of the language system. Cereb Cortex. 2016;26:4148–4159. doi: 10.1093/cercor/bhw238. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Price C, Thierry G, Griffiths T. Speech-specific auditory processing: Where is it? Trends Cogn Sci. 2005;9:271–276. doi: 10.1016/j.tics.2005.03.009. [DOI] [PubMed] [Google Scholar]

- 19.Raichle ME, et al. A default mode of brain function. Proc Natl Acad Sci USA. 2001;98:676–682. doi: 10.1073/pnas.98.2.676. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Dosenbach NU, et al. Distinct brain networks for adaptive and stable task control in humans. Proc Natl Acad Sci USA. 2007;104:11073–11078. doi: 10.1073/pnas.0704320104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Power JD, et al. Functional network organization of the human brain. Neuron. 2011;72:665–678. doi: 10.1016/j.neuron.2011.09.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Honey CJ, Kötter R, Breakspear M, Sporns O. Network structure of cerebral cortex shapes functional connectivity on multiple time scales. Proc Natl Acad Sci USA. 2007;104:10240–10245. doi: 10.1073/pnas.0701519104. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Shen K, Hutchison RM, Bezgin G, Everling S, McIntosh AR. Network structure shapes spontaneous functional connectivity dynamics. J Neurosci. 2015;35:5579–5588. doi: 10.1523/JNEUROSCI.4903-14.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Laumann TO, et al. On the stability of BOLD fMRI correlations. Cereb Cortex. 2017;27:4719–4732. doi: 10.1093/cercor/bhw265. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25.Mueller S, et al. Individual variability in functional connectivity architecture of the human brain. Neuron. 2013;77:586–595. doi: 10.1016/j.neuron.2012.12.028. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Cole MW, Bassett DS, Power JD, Braver TS, Petersen SE. Intrinsic and task-evoked network architectures of the human brain. Neuron. 2014;83:238–251. doi: 10.1016/j.neuron.2014.05.014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Finn ES, et al. Functional connectome fingerprinting: Identifying individuals using patterns of brain connectivity. Nat Neurosci. 2015;18:1664–1671. doi: 10.1038/nn.4135. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Geerligs L, Rubinov M, Cam-Can, Henson RN. State and trait components of functional connectivity: Individual differences vary with mental state. J Neurosci. 2015;35:13949–13961. doi: 10.1523/JNEUROSCI.1324-15.2015. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Gratton C, Laumann TO, Gordon EM, Adeyemo B, Petersen SE. Evidence for two independent factors that modify brain networks to meet task goals. Cell Rep. 2016;17:1276–1288. doi: 10.1016/j.celrep.2016.10.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Gordon EM, Laumann TO, Adeyemo B, Petersen SE. Individual variability of the system-level organization of the human brain. Cereb Cortex. 2017;27:386–399. doi: 10.1093/cercor/bhv239. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Gordon EM, et al. Individual-specific features of brain systems identified with resting state functional correlations. Neuroimage. 2017;146:918–939. doi: 10.1016/j.neuroimage.2016.08.032. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Spadone S, et al. Dynamic reorganization of human resting-state networks during visuospatial attention. Proc Natl Acad Sci USA. 2015;112:8112–8117. doi: 10.1073/pnas.1415439112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Cohen JR, D’Esposito M. The segregation and integration of distinct brain networks and their relationship to cognition. J Neurosci. 2016;36:12083–12094. doi: 10.1523/JNEUROSCI.2965-15.2016. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34. Schultz DH, Cole MW. Higher intelligence is associated with less task-related brain network reconfiguration. J Neurosci. 2016;36:8551–8561. doi: 10.1523/JNEUROSCI.0358-16.2016.

- 35. Bolt T, Nomi JS, Rubinov M, Uddin LQ. Correspondence between evoked and intrinsic functional brain network configurations. Hum Brain Mapp. 2017;38:1992–2007. doi: 10.1002/hbm.23500.

- 36. Hearne LJ, Cocchi L, Zalesky A, Mattingley JB. Reconfiguration of brain network architectures between resting-state and complexity-dependent cognitive reasoning. J Neurosci. 2017;37:8399–8411. doi: 10.1523/JNEUROSCI.0485-17.2017.

- 37. Gratton C, et al. Functional brain networks are dominated by stable group and individual factors, not cognitive or daily variation. Neuron. 2018;98:439–452.e5. doi: 10.1016/j.neuron.2018.03.035.

- 38. Tomasi D, Volkow ND. Laterality patterns of brain functional connectivity: Gender effects. Cereb Cortex. 2012;22:1455–1462. doi: 10.1093/cercor/bhr230.

- 39. Muller AM, Meyer M. Language in the brain at rest: New insights from resting state data and graph theoretical analysis. Front Hum Neurosci. 2014;8:228. doi: 10.3389/fnhum.2014.00228.

- 40. Tavor I, et al. Task-free MRI predicts individual differences in brain activity during task performance. Science. 2016;352:216–220. doi: 10.1126/science.aad8127.

- 41. Peelle JE. The hemispheric lateralization of speech processing depends on what “speech” is: A hierarchical perspective. Front Hum Neurosci. 2012;6:309. doi: 10.3389/fnhum.2012.00309.

- 42. Sporns O. Network attributes for segregation and integration in the human brain. Curr Opin Neurobiol. 2013;23:162–171. doi: 10.1016/j.conb.2012.11.015.

- 43. DeWitt I, Rauschecker JP. Phoneme and word recognition in the auditory ventral stream. Proc Natl Acad Sci USA. 2012;109:E505–E514. doi: 10.1073/pnas.1113427109.

- 44. Lerner Y, Honey CJ, Silbert LJ, Hasson U. Topographic mapping of a hierarchy of temporal receptive windows using a narrated story. J Neurosci. 2011;31:2906–2915. doi: 10.1523/JNEUROSCI.3684-10.2011.

- 45. de Heer WA, Huth AG, Griffiths TL, Gallant JL, Theunissen FE. The hierarchical cortical organization of human speech processing. J Neurosci. 2017;37:6539–6557. doi: 10.1523/JNEUROSCI.3267-16.2017.

- 46. Hugdahl K. In: The Asymmetrical Brain. Hugdahl K, Davidson RJ, editors. MIT Press; Cambridge, MA: 2003. pp. 441–475.

- 47. Bullmore E, Sporns O. Complex brain networks: Graph theoretical analysis of structural and functional systems. Nat Rev Neurosci. 2009;10:186–198. doi: 10.1038/nrn2575.

- 48. Rubinov M, Sporns O. Complex network measures of brain connectivity: Uses and interpretations. Neuroimage. 2010;52:1059–1069. doi: 10.1016/j.neuroimage.2009.10.003.

- 49. Gordon EM, et al. Generation and evaluation of a cortical area parcellation from resting-state correlations. Cereb Cortex. 2016;26:288–303. doi: 10.1093/cercor/bhu239.

- 50. Blondel VD, Guillaume J-L, Lambiotte R, Lefebvre E. Fast unfolding of communities in large networks. J Stat Mech Theory Exp. 2008;2008:P10008.

- 51. Corbetta M, Patel G, Shulman GL. The reorienting system of the human brain: From environment to theory of mind. Neuron. 2008;58:306–324. doi: 10.1016/j.neuron.2008.04.017.

- 52. Alain C, Arnott SR, Hevenor S, Graham S, Grady CL. “What” and “where” in the human auditory system. Proc Natl Acad Sci USA. 2001;98:12301–12306. doi: 10.1073/pnas.211209098.

- 53. Ahveninen J, et al. Task-modulated “what” and “where” pathways in human auditory cortex. Proc Natl Acad Sci USA. 2006;103:14608–14613. doi: 10.1073/pnas.0510480103.

- 54. Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci. 2007;8:393–402. doi: 10.1038/nrn2113.

- 55. Saur D, et al. Ventral and dorsal pathways for language. Proc Natl Acad Sci USA. 2008;105:18035–18040. doi: 10.1073/pnas.0805234105.

- 56. Rauschecker JP, Scott SK. Maps and streams in the auditory cortex: Nonhuman primates illuminate human speech processing. Nat Neurosci. 2009;12:718–724. doi: 10.1038/nn.2331.

- 57. Bushara KO, et al. Modality-specific frontal and parietal areas for auditory and visual spatial localization in humans. Nat Neurosci. 1999;2:759–766. doi: 10.1038/11239.

- 58. Zatorre RJ, Bouffard M, Ahad P, Belin P. Where is ‘where’ in the human auditory cortex? Nat Neurosci. 2002;5:905–909. doi: 10.1038/nn904.

- 59. Arnott SR, Binns MA, Grady CL, Alain C. Assessing the auditory dual-pathway model in humans. Neuroimage. 2004;22:401–408. doi: 10.1016/j.neuroimage.2004.01.014.

- 60. Hill KT, Miller LM. Auditory attentional control and selection during cocktail party listening. Cereb Cortex. 2010;20:583–590. doi: 10.1093/cercor/bhp124.

- 61. Puschmann S, et al. The right temporoparietal junction supports speech tracking during selective listening: Evidence from concurrent EEG-fMRI. J Neurosci. 2017;37:11505–11516. doi: 10.1523/JNEUROSCI.1007-17.2017.

- 62. Dosenbach NU, et al. A core system for the implementation of task sets. Neuron. 2006;50:799–812. doi: 10.1016/j.neuron.2006.04.031.

- 63. Vaden KI, Jr, Kuchinsky SE, Ahlstrom JB, Dubno JR, Eckert MA. Cortical activity predicts which older adults recognize speech in noise and when. J Neurosci. 2015;35:3929–3937. doi: 10.1523/JNEUROSCI.2908-14.2015.

- 64. Sadaghiani S, D’Esposito M. Functional characterization of the cingulo-opercular network in the maintenance of tonic alertness. Cereb Cortex. 2015;25:2763–2773. doi: 10.1093/cercor/bhu072.

- 65. Sadaghiani S, Poline JB, Kleinschmidt A, D’Esposito M. Ongoing dynamics in large-scale functional connectivity predict perception. Proc Natl Acad Sci USA. 2015;112:8463–8468. doi: 10.1073/pnas.1420687112.

- 66. Alavash M, Daube C, Wöstmann M, Brandmeyer A, Obleser J. Large-scale network dynamics of beta-band oscillations underlie auditory perceptual decision-making. Netw Neurosci. 2017;1:166–191. doi: 10.1162/NETN_a_00009.

- 67. Bassett DS, et al. Dynamic reconfiguration of human brain networks during learning. Proc Natl Acad Sci USA. 2011;108:7641–7646. doi: 10.1073/pnas.1018985108.

- 68. Bassett DS, Gazzaniga MS. Understanding complexity in the human brain. Trends Cogn Sci. 2011;15:200–209. doi: 10.1016/j.tics.2011.03.006.

- 69. Gordon EM, et al. Precision functional mapping of individual human brains. Neuron. 2017;95:791–807.e7. doi: 10.1016/j.neuron.2017.07.011.

- 70. Poldrack RA. Precision neuroscience: Dense sampling of individual brains. Neuron. 2017;95:727–729. doi: 10.1016/j.neuron.2017.08.002.

- 71. Satterthwaite TD, Xia CH, Bassett DS. Personalized neuroscience: Common and individual-specific features in functional brain networks. Neuron. 2018;98:243–245. doi: 10.1016/j.neuron.2018.04.007.

- 72. Keitel A, Gross J. Individual human brain areas can be identified from their characteristic spectral activation fingerprints. PLoS Biol. 2016;14:e1002498. doi: 10.1371/journal.pbio.1002498.

- 73. Peelle JE, Davis MH. Neural oscillations carry speech rhythm through to comprehension. Front Psychol. 2012;3:320. doi: 10.3389/fpsyg.2012.00320.

- 74. Giraud AL, Poeppel D. Cortical oscillations and speech processing: Emerging computational principles and operations. Nat Neurosci. 2012;15:511–517. doi: 10.1038/nn.3063.

- 75. Oldfield RC. The assessment and analysis of handedness: The Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- 76. Ashburner J, Friston KJ. Unified segmentation. Neuroimage. 2005;26:839–851. doi: 10.1016/j.neuroimage.2005.02.018.

- 77. Fornito A, Zalesky A, Breakspear M. Graph analysis of the human connectome: Promise, progress, and pitfalls. Neuroimage. 2013;80:426–444. doi: 10.1016/j.neuroimage.2013.04.087.

- 78. Stanley ML, et al. Defining nodes in complex brain networks. Front Comput Neurosci. 2013;7:169. doi: 10.3389/fncom.2013.00169.

- 79. Murphy K, Birn RM, Bandettini PA. Resting-state fMRI confounds and cleanup. Neuroimage. 2013;80:349–359. doi: 10.1016/j.neuroimage.2013.04.001.

- 80. Power JD, Schlaggar BL, Petersen SE. Recent progress and outstanding issues in motion correction in resting state fMRI. Neuroimage. 2015;105:536–551. doi: 10.1016/j.neuroimage.2014.10.044.

- 81. Van Dijk KR, Sabuncu MR, Buckner RL. The influence of head motion on intrinsic functional connectivity MRI. Neuroimage. 2012;59:431–438. doi: 10.1016/j.neuroimage.2011.07.044.

- 82. Patel AX, et al. A wavelet method for modeling and despiking motion artifacts from resting-state fMRI time series. Neuroimage. 2014;95:287–304. doi: 10.1016/j.neuroimage.2014.03.012.

- 83. Arslan S, et al. Human brain mapping: A systematic comparison of parcellation methods for the human cerebral cortex. Neuroimage. 2018;170:5–30. doi: 10.1016/j.neuroimage.2017.04.014.

- 84. Power JD, Plitt M, Laumann TO, Martin A. Sources and implications of whole-brain fMRI signals in humans. Neuroimage. 2017;146:609–625. doi: 10.1016/j.neuroimage.2016.09.038.

- 85. Schölvinck ML, Maier A, Ye FQ, Duyn JH, Leopold DA. Neural basis of global resting-state fMRI activity. Proc Natl Acad Sci USA. 2010;107:10238–10243. doi: 10.1073/pnas.0913110107.

- 86. Murphy K, Fox MD. Towards a consensus regarding global signal regression for resting state functional connectivity MRI. Neuroimage. 2017;154:169–173. doi: 10.1016/j.neuroimage.2016.11.052.

- 87. Achard S, Salvador R, Whitcher B, Suckling J, Bullmore E. A resilient, low-frequency, small-world human brain functional network with highly connected association cortical hubs. J Neurosci. 2006;26:63–72. doi: 10.1523/JNEUROSCI.3874-05.2006.

- 88. Achard S, Bassett DS, Meyer-Lindenberg A, Bullmore E. Fractal connectivity of long-memory networks. Phys Rev E Stat Nonlin Soft Matter Phys. 2008;77:036104. doi: 10.1103/PhysRevE.77.036104.

- 89. Giessing C, Thiel CM, Alexander-Bloch AF, Patel AX, Bullmore ET. Human brain functional network changes associated with enhanced and impaired attentional task performance. J Neurosci. 2013;33:5903–5914. doi: 10.1523/JNEUROSCI.4854-12.2013.

- 90. Alavash M, Hilgetag CC, Thiel CM, Gießing C. Persistency and flexibility of complex brain networks underlie dual-task interference. Hum Brain Mapp. 2015;36:3542–3562. doi: 10.1002/hbm.22861.

- 91. Alavash M, Thiel CM, Gießing C. Dynamic coupling of complex brain networks and dual-task behavior. Neuroimage. 2016;129:233–246. doi: 10.1016/j.neuroimage.2016.01.028.

- 92. Alavash M, et al. Dopaminergic modulation of hemodynamic signal variability and the functional connectome during cognitive performance. Neuroimage. 2018;172:341–356. doi: 10.1016/j.neuroimage.2018.01.048.

- 93. Ginestet CE, Nichols TE, Bullmore ET, Simmons A. Brain network analysis: Separating cost from topology using cost-integration. PLoS One. 2011;6:e21570. doi: 10.1371/journal.pone.0021570.

- 94. van Wijk BCM, Stam CJ, Daffertshofer A. Comparing brain networks of different size and connectivity density using graph theory. PLoS One. 2010;5:e13701. doi: 10.1371/journal.pone.0013701.

- 95. van den Heuvel MP, et al. Proportional thresholding in resting-state fMRI functional connectivity networks and consequences for patient-control connectome studies: Issues and recommendations. Neuroimage. 2017;152:437–449. doi: 10.1016/j.neuroimage.2017.02.005.

- 96. Garrison KA, Scheinost D, Finn ES, Shen X, Constable RT. The (in)stability of functional brain network measures across thresholds. Neuroimage. 2015;118:651–661. doi: 10.1016/j.neuroimage.2015.05.046.

- 97. Betzel RF, Bassett DS. Multi-scale brain networks. Neuroimage. 2017;160:73–83. doi: 10.1016/j.neuroimage.2016.11.006.

Supplementary Materials