Abstract

Modern bio-technologies have produced a vast amount of high-throughput data with the number of predictors far greater than the sample size. In order to identify more novel biomarkers and understand biological mechanisms, it is vital to detect signals weakly associated with outcomes among ultrahigh-dimensional predictors. However, existing screening methods, which typically ignore correlation information, are likely to miss weak signals. By incorporating the inter-feature dependence, a covariance-insured screening approach is proposed to identify predictors that are jointly informative but marginally weakly associated with outcomes. The validity of the method is examined via extensive simulations and a real data study for selecting potential genetic factors related to the onset of multiple myeloma.

Keywords: Covariance-insured screening, Dimensionality reduction, High-dimensional data, Variable selection

1. Introduction

Rapid biological advances have generated a vast amount of ultrahigh-dimensional genetic data. Extracting information from these data has become a major driving force for the development of modern statistics in the last decade.

A seminal paper by [1] proposed sure independence screening (SIS) for selecting variables from ultrahigh-dimensional data. The essence of this approach is to select variables with strong marginal correlations with the response. Much subsequent research has been inspired by this idea. [2] extended SIS to generalized linear models, and [3] studied variable screening under the Cox proportional hazards model and later proposed a score test-based screening method [4]. Additional research has focused on semiparametric and nonparametric screening: semiparametric marginal screening methods have been proposed for linear transformation models [5] and general single-index models [6], whereas nonparametric marginal screening methods have been proposed for linear additive models [7] and quantile regression [8].

Although they differ in context, these methods are all based on the marginal associations of individual predictors with the outcome; i.e., they assume that the true association between each predictor and the outcome can be inferred from its marginal association. This condition, however, is often violated in practice. Because marginal screening methods ignore inter-feature correlations, they tend to select irrelevant variables that are highly correlated with important variables (false positives) and to miss relevant variables that are marginally unimportant but jointly informative (false negatives).

Because of these limitations, there has been a surge of interest in multivariate screening methods that account for inter-feature dependence: [9] developed a partial correlation-based algorithm (PC-simple); [10] proposed a sequential approach (Tilting) that measures the contribution of each variable after controlling for other correlated variables; [11] introduced high-dimensional ordinary least squares projection (HOLP), which projects the response onto the row vectors of the design matrix and may preserve the ranking of the regression coefficients; and [12] proposed Graphlet Screening (GS), which uses the sample covariance matrix to construct a regularized graph and sequentially screens connected subgraphs.

Multivariate screening is conceptually appealing. However, its computational burden increases substantially with the number of covariates. Although simplifications such as PC-simple and Tilting have been introduced to improve computational efficiency in ultrahigh-dimensional settings, they may not adequately assess the true contribution of each covariate.

To adequately assess the association of each covariate with the response while maintaining computational feasibility, this paper presents a covariance-insured screening (CIS) method. By leveraging inter-feature dependence, the proposed approach can identify marginally unimportant but jointly informative features that are likely to be missed by conventional screening procedures. In our methodological development, we relax the marginal correlation conditions that are often assumed in the literature. Even without such restrictive assumptions, we establish consistency results for variable selection in ultrahigh-dimensional settings. Moreover, the proposed method is computationally efficient and suitable for the analysis of ultrahigh-dimensional data.

The remainder of the article is organized as follows. Section 2 provides the requisite preliminaries, and Section 3 describes the proposed method. Section 4 compares it with existing methods. Section 5 studies its theoretical properties and proposes a procedure for selecting tuning parameters. Finite-sample properties are examined in Section 6, and the proposed method is applied to multiple myeloma data in Section 7. Section 8 concludes with a discussion. All technical proofs are deferred to the Appendix.

2. Notation and Model

Consider a multiple linear regression model with n independent samples, y = Xβ + ϵ, where y = (Y1, …, Yn)T is the response vector, ϵ = (ϵ1, …, ϵn)T is a vector of independently and identically distributed random errors, X is an n × p design matrix, and β = (β1, …, βp)T is the coefficient vector. We write X = [X1, …, Xn]T = [x1, …, xp], where Xi is a p-dimensional covariate vector for the i-th subject and xj is the j-th column of the design matrix, 1 ≤ i ≤ n, 1 ≤ j ≤ p. Without loss of generality, we assume that each covariate xj is standardized to have sample mean 0 and sample standard deviation 1. For any set $\mathcal S \subseteq \{1, \dots, p\}$, we define the sub-vectors $X_{i,\mathcal S} = (X_{i,j})_{j \in \mathcal S}$ and $\beta_{\mathcal S} = (\beta_j)_{j \in \mathcal S}$, and write $X_{\mathcal S} = [x_j]_{j \in \mathcal S}$ for the corresponding n × |S| submatrix of X. Let Xi,−j = {Xi,1, …, Xi,p} \ {Xi,j} and denote Σ = Cov(Xi).

When p ≫ n, β is difficult to estimate without the common sparsity assumption that only a small number of variables are related to the response. For improved model interpretability and estimation accuracy, our overarching goal is to identify the active set

$$\mathcal M = \{1 \le j \le p : \beta_j \neq 0\}. \qquad (1)$$

2.1. Partial Correlation and PC-simple Algorithm

The direct linkage between β and the partial correlations has been well established in the literature; see [13] and [14], among many others. Recently there has been much interest [9, 10] in conducting variable screening via partial correlations, which are defined below.

Definition 1 The partial correlation, ρ*(Yi,Xi,j|Xi,−j), is the correlation between the residuals resulting from the linear regression of Xi,j on Xi,−j and Yi on Xi,−j

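$$\rho^*(Y_i, X_{i,j} \mid X_{i,-j}) = \operatorname{Corr}\bigl\{Y_i - \operatorname{E}^*(Y_i \mid X_{i,-j}),\; X_{i,j} - \operatorname{E}^*(X_{i,j} \mid X_{i,-j})\bigr\}, \qquad (2)$$

where $\operatorname{E}^*(\cdot \mid X_{i,-j})$ denotes the best linear predictor (population least-squares projection) given $X_{i,-j}$.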
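As a hedged illustration of Definition 1, the sketch below computes a sample analogue of (2): it regresses both Y and X_j on the remaining predictors and correlates the two residual vectors. It assumes centered data (so no intercept is fitted) and uses NumPy; the function names `residualize` and `partial_corr` and the simulated data are illustrative only.

```python
import numpy as np


def residualize(v, Z):
    """Residual of v after least-squares regression on the columns of Z (no intercept)."""
    coef, *_ = np.linalg.lstsq(Z, v, rcond=None)
    return v - Z @ coef


def partial_corr(y, X, j):
    """Sample partial correlation of y and X[:, j] given all other columns of X.

    Both y and X[:, j] are regressed on X_{-j}; the partial correlation is the
    correlation of the two residual vectors. Columns are assumed centered.
    """
    Z = np.delete(X, j, axis=1)      # X_{-j}: every predictor except the j-th
    r_x = residualize(X[:, j], Z)    # part of X_j not explained by X_{-j}
    r_y = residualize(y, Z)          # part of y not explained by X_{-j}
    return float(r_x @ r_y / np.sqrt((r_x @ r_x) * (r_y @ r_y)))


# Toy data: n = 200 observations, p = 5 centered predictors.
rng = np.random.default_rng(0)
X = rng.standard_normal((200, 5))
X -= X.mean(axis=0)
y = X @ np.array([1.0, -1.0, 0.0, 0.5, 0.0]) + rng.standard_normal(200)
y -= y.mean()

rho_star_0 = partial_corr(y, X, 0)
```

The result can be cross-checked against the identity ρ* = −P_{yj} / √(P_{yy} P_{jj}), where P is the inverse of the Gram matrix of the columns (y, X).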

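When p is large, estimating partial correlations is computationally cumbersome. [9] proposed the PC-simple algorithm, which sequentially computes lower-order partial correlations $\rho^*(Y_i, X_{i,j} \mid X_{i,\mathcal S})$ for subsets $\mathcal S \subseteq \{1, \dots, p\} \setminus \{j\}$ with cardinality $|\mathcal S| \le m$, where m is a pre-specified integer. When m = 0, so that $\mathcal S$ is empty, the partial correlation reduces to the marginal correlation and the PC-simple algorithm reduces to the SIS procedure.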

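The PC-simple algorithm reduces the computational burden of multivariate screening and provides a new approach to variable screening. Its validity hinges on the condition that a vanishing lower-order partial correlation, $\rho^*(Y_i, X_{i,j} \mid X_{i,\mathcal S}) = 0$ for some $\mathcal S$ with $|\mathcal S| \le m$, implies ρ*(Yi,Xi,j|Xi,−j) = 0. To further examine this condition, [10] considered a sample version of the partial correlation with a general conditioning set $\mathcal S$,

$$\hat\rho^*(Y, X_j \mid X_{\mathcal S}) = \frac{x_j^\top (I_n - P_{\mathcal S})\, y}{\sqrt{x_j^\top (I_n - P_{\mathcal S})\, x_j}\;\sqrt{y^\top (I_n - P_{\mathcal S})\, y}},$$

where $I_n$ is the n × n identity matrix and $P_{\mathcal S} = X_{\mathcal S}(X_{\mathcal S}^\top X_{\mathcal S})^{-1} X_{\mathcal S}^\top$ is the projection matrix onto the space spanned by the columns of $X_{\mathcal S}$. Let $\mathcal R = \{1, \dots, p\} \setminus (\mathcal S \cup \{j\})$. Because $(I_n - P_{\mathcal S}) X_{\mathcal S} = 0$, the numerator of $\hat\rho^*$ can be decomposed as

$$x_j^\top (I_n - P_{\mathcal S})\, y = \beta_j\, x_j^\top (I_n - P_{\mathcal S})\, x_j + x_j^\top (I_n - P_{\mathcal S})\, X_{\mathcal R}\beta_{\mathcal R} + x_j^\top (I_n - P_{\mathcal S})\, \epsilon. \qquad (3)$$

Equation (3) indicates that the PC-simple algorithm is valid, in the sense that the low-order partial correlation can be used in lieu of the full partial correlation ρ*(Yi,Xi,j|Xi,−j), only when the last two terms on the right-hand side of (3) are negligible compared with the first one. However, there is no guarantee that this condition holds for an arbitrary conditioning set $\mathcal S$.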
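Decomposition (3) is an exact algebraic identity, which the following NumPy sketch checks on simulated data. The target index j, the conditioning set S and the coefficients are arbitrary illustrative choices. The middle term carries the effect of predictors outside S ∪ {j}; it is this term that can mask or mimic a signal when S is chosen poorly.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 100
X = rng.standard_normal((n, 6))
X -= X.mean(axis=0)
beta = np.array([1.0, 0.8, -0.8, 0.0, 0.5, 0.0])
eps = rng.standard_normal(n)
y = X @ beta + eps

j, S = 0, [1, 2]                                        # target X_j, conditioning set S
R = [k for k in range(X.shape[1]) if k != j and k not in S]   # remaining predictors

X_S = X[:, S]
P_S = X_S @ np.linalg.solve(X_S.T @ X_S, X_S.T)         # projection onto span(X_S)
M = np.eye(n) - P_S                                     # I_n - P_S
x_j = X[:, j]

numerator = x_j @ M @ y
term_signal = beta[j] * (x_j @ M @ x_j)                 # first term of (3)
term_omitted = x_j @ M @ X[:, R] @ beta[R]              # predictors outside S and j
term_noise = x_j @ M @ eps                              # error term

rho_hat = numerator / np.sqrt((x_j @ M @ x_j) * (y @ M @ y))
```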

3. Proposed Method

As discussed previously, the choice of the conditioning set is critical for adequately assessing the true contribution of each covariate. We propose compartmentalizing covariates into blocks so that variables from distinct blocks are less correlated. This strategy may bypass the difficulty encountered by existing multivariate screening procedures, improve computational feasibility and screening efficiency, and require weaker theoretical conditions.

3.1. Preamble

First, to identify the active set (1), we consider the semi-partial correlation [15], a modified version of the partial correlation defined below.

Definition 2 The semi-partial correlation, ρ(Yi,Xi,j|Xi,−j), is the correlation between Yi and the residuals resulting from the linear regression of Xi,j on Xi,−j, i.e.

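$$\rho(Y_i, X_{i,j} \mid X_{i,-j}) = \operatorname{Corr}\bigl\{Y_i,\; X_{i,j} - \operatorname{E}^*(X_{i,j} \mid X_{i,-j})\bigr\}, \qquad (4)$$

where $\operatorname{E}^*(\cdot \mid X_{i,-j})$ denotes the best linear predictor given $X_{i,-j}$.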
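A minimal sample-level sketch of Definition 2, assuming centered data: only X_j is residualized on X_{−j}, while Y enters unadjusted. By the Frisch-Waugh-Lovell theorem, the full least-squares coefficient of X_j equals rᵀy / rᵀr for this residual r, so the sample semi-partial correlation equals that coefficient multiplied by ‖r‖/‖y‖. This is a sample analogue of the link between β and ρ formalized next. Names and data are hypothetical.

```python
import numpy as np


def semi_partial_corr(y, X, j):
    """Sample semi-partial correlation: corr(y, residual of X_j on X_{-j}).

    Only X_j is residualized; y is left untouched. Columns are assumed centered.
    Returns the correlation and the residual vector of X_j.
    """
    Z = np.delete(X, j, axis=1)
    coef, *_ = np.linalg.lstsq(Z, X[:, j], rcond=None)
    r_x = X[:, j] - Z @ coef
    return float(y @ r_x / np.sqrt((y @ y) * (r_x @ r_x))), r_x


rng = np.random.default_rng(2)
X = rng.standard_normal((150, 4))
X[:, 1] += 0.7 * X[:, 0]               # make two predictors correlated
X -= X.mean(axis=0)
y = X @ np.array([1.0, 0.0, -0.5, 0.0]) + rng.standard_normal(150)
y -= y.mean()

rho_0, r_x0 = semi_partial_corr(y, X, 0)
beta_ols, *_ = np.linalg.lstsq(X, y, rcond=None)   # full least-squares fit
```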

Indeed, the following lemma shows that ρ(Yi,Xi,j|Xi,−j) reflects the effect of Xi,j on Yi conditional on Xi,−j; hence, identifying the active set (1) is equivalent to finding

$$\mathcal M = \{1 \le j \le p : \rho(Y_i, X_{i,j} \mid X_{i,-j}) \neq 0\}. \qquad (5)$$

Lemma 1 Suppose that Σ is positive definite. Then, for each 1 ≤ j ≤ p, βj = 0 if and only if ρ(Yi,Xi,j|Xi,−j) = 0.
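One way to see the link between β and the semi-partial correlation is the short derivation below. It is a sketch assuming that ϵi is uncorrelated with Xi and that Var(Yi) > 0; the symbol $r_{ij} = X_{i,j} - \operatorname{E}^*(X_{i,j} \mid X_{i,-j})$ is notation introduced only for this sketch. The second equality uses that $r_{ij}$ is uncorrelated with every component of $X_{i,-j}$ and with ϵi; the third uses that $X_{i,j} - r_{ij}$ is a linear function of $X_{i,-j}$; and the variance formula is the Schur complement of Σ, which is positive when Σ is positive definite.

```latex
\begin{aligned}
\operatorname{Cov}(Y_i, r_{ij})
  &= \sum_{k=1}^{p} \beta_k \operatorname{Cov}(X_{i,k}, r_{ij}) + \operatorname{Cov}(\epsilon_i, r_{ij})
   = \beta_j \operatorname{Cov}(X_{i,j}, r_{ij})
   = \beta_j \operatorname{Var}(r_{ij}), \\
\rho(Y_i, X_{i,j} \mid X_{i,-j})
  &= \frac{\operatorname{Cov}(Y_i, r_{ij})}{\sqrt{\operatorname{Var}(Y_i)\,\operatorname{Var}(r_{ij})}}
   = \beta_j \sqrt{\frac{\operatorname{Var}(r_{ij})}{\operatorname{Var}(Y_i)}},
   \qquad
   \operatorname{Var}(r_{ij}) = \frac{1}{(\Sigma^{-1})_{jj}} > 0 .
\end{aligned}
```

Because the factor multiplying βj is strictly positive, βj = 0 exactly when the semi-partial correlation vanishes.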

The following lemma provides further intuition for the proposed CIS method.

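Lemma 2 Suppose that the predictors can be partitioned into independent blocks, $\mathcal B_1, \dots, \mathcal B_G$. For any j = 1, …, p and g such that $j \in \mathcal B_g$,

$$\rho(Y_i, X_{i,j} \mid X_{i,-j}) = \rho\bigl(Y_i, X_{i,j} \mid X_{i,\mathcal B_g \setminus \{j\}}\bigr).$$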

We first note that the equality in Lemma 2 does not hold for partial correlations, which motivates the use of semi-partial correlations instead. Second, Lemma 2 provides the intuition behind the proposed method. However, the independent-block assumption is not required for the proposed method, which remains valid in more general settings by thresholding the sample covariance matrix [16] and compartmentalizing covariates into blocks. Constructing covariance-based blocks is well understood in the genetics literature and is often of interest per se [17]. For example, in a cutaneous melanoma study [18], 2,339 single-nucleotide polymorphisms (SNPs) could be grouped into 15 blocks; see Figure 1.

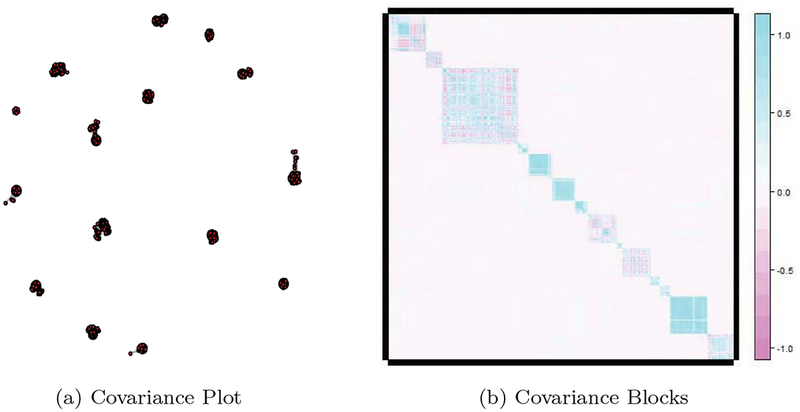

Figure 1:

(a) Graphical representation of 2,339 SNPs shown in He et al. (2016). The covariance plot clearly shows that the SNPs form 15 distinct pathways; specifically, SNPs are placed in the same pathway when their absolute sample correlation is ≥ 0.2. (b) The fifteen pathways in (a) can be represented by a block-diagonal covariance matrix.
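The sketch below illustrates Lemma 2, and the remark that the equality fails for partial correlations, with a sample analogue in which two blocks are made exactly orthogonal in-sample. This is an idealized stand-in for independent blocks, chosen so that the equality holds up to floating-point error. Conditioning X_j on its own block or on all other predictors gives the same semi-partial correlation, whereas the two partial correlations differ because the residual of Y depends on the conditioning set. Data and names are illustrative.

```python
import numpy as np


def residualize(v, Z):
    """Least-squares residual of v on the columns of Z (no intercept)."""
    coef, *_ = np.linalg.lstsq(Z, v, rcond=None)
    return v - Z @ coef


def corr(a, b):
    """Correlation of two already-centered vectors."""
    return float(a @ b / np.sqrt((a @ a) * (b @ b)))


rng = np.random.default_rng(3)
n = 300
A = rng.standard_normal((n, 3))                 # block B_1: columns 0-2
A[:, 1] += 0.8 * A[:, 0]                        # within-block correlation
A -= A.mean(axis=0)
B = rng.standard_normal((n, 3))                 # block B_2: columns 3-5
B -= B.mean(axis=0)
B -= A @ np.linalg.lstsq(A, B, rcond=None)[0]   # make B exactly orthogonal to A
X = np.hstack([A, B])
y = A @ np.array([1.0, -1.0, 0.0]) + B @ np.array([2.0, -2.0, 2.0]) + rng.standard_normal(n)
y -= y.mean()

j = 0
others_all = np.delete(X, j, axis=1)            # X_{-j}
others_block = np.delete(A, j, axis=1)          # X_{B_1 \ {j}}
r_all = residualize(X[:, j], others_all)
r_block = residualize(X[:, j], others_block)

semi_full, semi_block = corr(y, r_all), corr(y, r_block)
partial_full = corr(residualize(y, others_all), r_all)
partial_block = corr(residualize(y, others_block), r_block)
```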

3.2. Thresholding Sample Covariance Matrix

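To formalize the idea of thresholding, consider the sample covariance matrix $\hat\Sigma = (\hat\sigma_{jk})_{p \times p}$, the sample estimate of Σ. For a constant δ > 0, let $\hat\Sigma^{\delta}$ be the thresholded version of $\hat\Sigma$ with entries

$$\hat\sigma^{\delta}_{jk} = \hat\sigma_{jk}\, I(|\hat\sigma_{jk}| \ge \delta), \qquad 1 \le j, k \le p.$$

We then partition the predictors, and correspondingly the vector β, into blocks $\hat{\mathcal B}_1, \dots, \hat{\mathcal B}_G$ in such a way that all off-diagonal blocks of $\hat\Sigma^{\delta}$ are zero; i.e., after a suitable permutation of the variables,

$$\hat\Sigma^{\delta} = \operatorname{diag}\bigl(\hat\Sigma^{\delta}_{\hat{\mathcal B}_1}, \dots, \hat\Sigma^{\delta}_{\hat{\mathcal B}_G}\bigr).$$

Here G is the number of blocks and $\{\hat{\mathcal B}_1, \dots, \hat{\mathcal B}_G\}$ forms a partition of the p predictors:

$$\bigcup_{g=1}^{G} \hat{\mathcal B}_g = \{1, \dots, p\}, \qquad \hat{\mathcal B}_g \cap \hat{\mathcal B}_{g'} = \emptyset \ \text{ for } g \neq g'.$$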

To identify the partition, we use a simple correlation-based partition procedure along the lines of the breadth-first search algorithm for finding connected components in graph theory [19]. To illustrate the idea, consider a toy example. Suppose we have five variables (X1, X2, X3, X4, X5) with a sample correlation matrix,

and the thresholding parameter δ = 0.4. The corresponding adjacency matrix is

Further details for the partition are provided below:

Step 1: We begin by treating each of the variables as its own block: {1}, {2}, {3}, {4}, {5}.

Step 2: Two variables Xj and Xj′ are connected if and only if the (j, j′)-th element of the adjacency matrix is non-zero. Note that X3 is the only variable connected with X1; hence, these two variables are merged to form the block {1, 3}. Thus, four blocks remain: {1, 3}, {2}, {4}, {5}.

Step 3: Next, X1 and X5 are the only variables connected with X3. Therefore, X5 is merged with the block {1, 3} to form the new block {1, 3, 5}, leaving three blocks: {1, 3, 5}, {2}, {4}.

Step 4: Because no variable outside {1, 3, 5} is connected with X1, X3 or X5, no other variable can be merged into the block {1, 3, 5}. Moreover, because X2 and X4 are connected, they are merged to form the new block {2, 4}. The blocking process terminates because there are no unvisited variables. We obtain two blocks with respect to δ = 0.4: {1, 3, 5} and {2, 4}.
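The toy partition can be reproduced with a short breadth-first search over the thresholded adjacency graph. The pure-Python sketch below is a stand-in, not the authors' igraph-based implementation. It uses a made-up 5 × 5 correlation matrix (hypothetical values) whose entries with absolute value at least δ = 0.4 are exactly the pairs (X1, X3), (X3, X5) and (X2, X4), and it treats |r| ≥ δ as an edge.

```python
from collections import deque


def partition_blocks(corr, delta):
    """Connected components of the graph with an edge j -- k whenever
    |corr[j][k]| >= delta (j != k), found by breadth-first search.
    Returns a list of sorted blocks of 0-based indices."""
    p = len(corr)
    visited = [False] * p
    blocks = []
    for start in range(p):
        if visited[start]:
            continue
        visited[start] = True
        queue, block = deque([start]), []
        while queue:
            j = queue.popleft()
            block.append(j)
            for k in range(p):
                if k != j and not visited[k] and abs(corr[j][k]) >= delta:
                    visited[k] = True      # mark on enqueue so k is added once
                    queue.append(k)
        blocks.append(sorted(block))
    return blocks


# Hypothetical correlation matrix for (X1, ..., X5).
toy_corr = [
    [1.00, 0.05, 0.50, 0.10, 0.20],
    [0.05, 1.00, 0.05, 0.50, 0.00],
    [0.50, 0.05, 1.00, 0.05, 0.50],
    [0.10, 0.50, 0.05, 1.00, 0.10],
    [0.20, 0.00, 0.50, 0.10, 1.00],
]
blocks = [[k + 1 for k in b] for b in partition_blocks(toy_corr, 0.4)]
print(blocks)  # [[1, 3, 5], [2, 4]]
```

Indices are shifted by one so that the output matches the X1, …, X5 labels used in the steps above.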

To implement the above algorithm, we use the R package igraph [20], which is computationally efficient for large p (e.g. from 10,000 to 100,000). Further discussion on thresholding the sample covariance matrix and detecting the block-diagonal structure can be found in [21].

3.3. Covariance-Insured Screening

With the block-diagonal thresholded covariance matrix, variables in disconnected blocks are approximately uncorrelated, which motivates a block-wise procedure that computes the semi-partial correlation within each identified block. The proposed approach can be summarized as follows.

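Step 1: Identify the disconnected blocks by thresholding the sample covariance matrix.

Step 2: Compute the block-wise sample semi-partial correlations. For each $j \in \hat{\mathcal B}_g$, 1 ≤ g ≤ G, denote by $\hat P_{g,-j}$ the projection matrix onto the space spanned by the columns of $X_{\hat{\mathcal B}_g \setminus \{j\}}$ (with $\hat P_{g,-j} = 0$ when $\hat{\mathcal B}_g = \{j\}$). That is,

$$\hat P_{g,-j} = X_{\hat{\mathcal B}_g \setminus \{j\}}\bigl(X_{\hat{\mathcal B}_g \setminus \{j\}}^\top X_{\hat{\mathcal B}_g \setminus \{j\}}\bigr)^{-1} X_{\hat{\mathcal B}_g \setminus \{j\}}^\top.$$

Then, with y centered, the block-wise sample semi-partial correlation can be calculated as

$$\hat\rho\bigl(Y, X_j \mid X_{\hat{\mathcal B}_g \setminus \{j\}}\bigr) = \frac{x_j^\top (I_n - \hat P_{g,-j})\, y}{\sqrt{x_j^\top (I_n - \hat P_{g,-j})\, x_j}\;\sqrt{y^\top y}}.$$

Step 3: Compute

$$\hat{\mathcal M} = \Bigl\{1 \le j \le p : \bigl|\hat\rho\bigl(Y, X_j \mid X_{\hat{\mathcal B}_g \setminus \{j\}}\bigr)\bigr| \ge \nu, \ j \in \hat{\mathcal B}_g\Bigr\},$$

where ν is a pre-defined threshold.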
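Putting Steps 1-3 together, a compact NumPy sketch of CIS might look as follows. It is an illustration under stated assumptions, not the authors' implementation: blocks are the connected components of the graph with |sample correlation| ≥ δ, each X_j is residualized only on the other members of its block (a singleton block reduces to the marginal correlation), and δ and ν are supplied by the user. The toy data build in signal cancellation: X_0 is marginally uncorrelated with y but has a non-zero coefficient.

```python
import numpy as np
from collections import deque


def correlation_blocks(X, delta):
    """Step 1: connected components of the graph linking j and k when
    |sample corr(X_j, X_k)| >= delta, found by breadth-first search."""
    adj = np.abs(np.corrcoef(X, rowvar=False)) >= delta
    np.fill_diagonal(adj, False)
    p = X.shape[1]
    seen = np.zeros(p, dtype=bool)
    blocks = []
    for start in range(p):
        if seen[start]:
            continue
        seen[start] = True
        queue, block = deque([start]), []
        while queue:
            j = queue.popleft()
            block.append(j)
            for k in np.flatnonzero(adj[j] & ~seen):
                seen[k] = True
                queue.append(int(k))
        blocks.append(sorted(block))
    return blocks


def cis_screen(X, y, delta, nu):
    """Steps 2-3: semi-partial correlation of y with each X_j, residualizing X_j
    only on the other members of its own block, then keep |rho| >= nu."""
    X = X - X.mean(axis=0)
    y = y - y.mean()
    rho = np.zeros(X.shape[1])
    for block in correlation_blocks(X, delta):
        for j in block:
            rest = [k for k in block if k != j]
            r = X[:, j]
            if rest:  # r = (I - P_{g,-j}) x_j; singleton blocks keep r = x_j
                Z = X[:, rest]
                r = r - Z @ np.linalg.lstsq(Z, r, rcond=None)[0]
            rho[j] = (r @ y) / np.sqrt((r @ r) * (y @ y))
    return np.flatnonzero(np.abs(rho) >= nu), rho


# Toy data with signal cancellation: corr(X_0, X_1) = 0.9 and
# y = X_1 - 0.9 X_0 + noise, so X_0 is marginally uncorrelated with y.
rng = np.random.default_rng(4)
n, p = 400, 50
X = rng.standard_normal((n, p))
X[:, 1] = 0.9 * X[:, 0] + np.sqrt(1 - 0.81) * X[:, 1]
y = X[:, 1] - 0.9 * X[:, 0] + 0.5 * rng.standard_normal(n)

selected, rho = cis_screen(X, y, delta=0.5, nu=0.3)
marginal_0 = np.corrcoef(X[:, 0], y)[0, 1]
```

On such data, marginal screening at the same ν would typically miss X_0, whereas the block-wise semi-partial correlation recovers it. Blocks with more than n variables would need a regularized residualization, which this sketch does not attempt.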

As suggested by a referee, one may also fit an ordinary least squares (OLS) regression within each block and screen variables based on the OLS estimates. Empirically, we find that, when the within-block correlations are high, the proposed approach slightly outperforms the OLS-based approach. However, the OLS-based approach is easier to extend with penalized methods to allow a large number of predictors within blocks.

4. Related Works

4.1. Sure Independence Screening (SIS)

The sure independence screening (SIS) [1] is the simplest approach to ultrahigh-dimensional variable screening; it selects all variables whose marginal sample correlation with the response is sufficiently large in absolute value. For a threshold parameter ν > 0, let $\hat\omega_j$ be the sample correlation between Yi and Xi,j; the index set selected by SIS is

$$\hat{\mathcal M}_{\mathrm{SIS}} = \{1 \le j \le p : |\hat\omega_j| \ge \nu\}.$$
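For comparison with the block-wise procedure, a direct NumPy sketch of the SIS rule is given below; the function name `sis_select` and the simulated data are hypothetical.

```python
import numpy as np


def sis_select(X, y, nu):
    """Sure independence screening: keep j with |sample corr(Y, X_j)| >= nu."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    omega = (Xc.T @ yc) / (np.linalg.norm(Xc, axis=0) * np.linalg.norm(yc))
    return np.flatnonzero(np.abs(omega) >= nu), omega


rng = np.random.default_rng(5)
X = rng.standard_normal((300, 20))
y = 2.0 * X[:, 3] + rng.standard_normal(300)
selected, omega = sis_select(X, y, nu=0.5)
```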

4.2. Tilting Procedure

The marginal screening methods ignore the correlation between the predictors. As a remedy, the Tilting procedure [10] considers partial correlation. For a threshold parameter ν > 0, the selection index set by Tilting is

Specifically, for each variable under consideration, the corresponding conditioning set contains all variables that are highly correlated with it. While successful in some applications, this way of choosing the conditioning set may not adequately assess the true contribution of each covariate. As a result, important predictors that have weak marginal effects but strong joint effects can be missed (a simple example is provided in the Supplementary Materials). Moreover, the computational cost grows drastically with the number of predictors.

4.3. Proposed Procedure

These concerns motivate the proposed method, which leverages covariance information by compartmentalizing covariates into disconnected blocks. This approach may bypass the difficulty encountered by the Tilting procedure, better assess the true contribution of each covariate, and improve computational feasibility. However, the computational burden of the proposed method increases when the maximal number of variables in the disconnected blocks is large. Further investigation is needed to extend the proposed method to more general settings.

5. Asymptotic Property for CIS

5.1. Conditions and Assumptions

To make our results general, we allow the dimension of the covariates and the active set to grow with the sample size, i.e., p = pn, G = Gn, and $\mathcal M = \mathcal M_n$. Under the commonly assumed conditions below, we show that the CIS procedure identifies the true active set with probability tending to 1.

(A1) for some , where ‖ · ‖0 denotes the cardinality.

(A2) The dimension of the covariates is log(pn) = O(nc) for some c ∈ [0,1 – 2b), where .

(A3) Assume that the predictors can be partitioned into disconnected blocks, , in a way such that all off-diagonal blocks of Σ are zero; e.g.

where Gn is the number of blocks (depending on n). Moreover, assume that the maximal number of variables in the disconnected blocks is bounded

for some constant C1 > 0 and d ∈ [0, b − a).

(A4) For a threshold with K a positive constant, assume that all nonzero elements of Σ satisfy

where

(A5) For 1 ≤ j ≤ pn, let Fj be the cumulative distribution function of . Assume

for 0 < |λ| < λ0 with some λ0 > 0.

(A6) Let λmax(A) and λmin(A) represent the largest and smallest eigenvalues of a positive definite matrix A. There exist positive constants γ, τmin and τmax such that

for any with cardinality .

(A7) Assume that, with probability 1, max1≤j≤p ‖xj‖∞ ≤ Kx for a constant Kx > 0, where ‖xj‖∞ = max1≤i≤n |Xi,j|.

(A8) Assume non-zero coefficients βj satisfying for some M ∈ (0, ∞) and nκ for κ ∈ [0, b − a − d).

(A9) The random errors follow a sub-exponential distribution; i.e. ϵ1, …, ϵn are independent random variables with mean 0 and satisfy

where Kϵ is a constant depending on the distribution.

Condition (A1) allows the number of non-zero coefficients to grow with the sample size n. Condition (A2) allows for ultrahigh dimensionality. Conditions (A3)-(A5) guarantee the existence of the projection matrix and hence of the corresponding sample semi-partial correlations. In particular, Condition (A3) assumes a block-diagonal structure of the population covariance matrix. Conditions (A4) and (A5), which are also assumed in [21], imply the consistency of the covariance matrix partitioning procedure. Condition (A6) rules out strong collinearity between variables and is similar to Assumption 1 in [11] and Assumption 5 in [10]. Moreover, as shown in Lemma 1 of [22], Condition (A6) is connected to the strict positive definiteness of the population covariance matrix, which is commonly assumed in the variable selection literature [23, 24, 9]; for instance, when both X and ϵ follow normal distributions, the former is implied by the latter. Condition (A7) is usually satisfied in practice. Condition (A8) controls the magnitude of the non-zero coefficients and was also assumed in [1] and [11]. We note that, when the magnitude of the non-zero coefficients is small, a smaller bound on the maximum number of predictors within each correlated block is needed to correctly identify the true signals. The sub-exponential distribution in Condition (A9) is general and includes many commonly assumed distributions.

5.2. Main Theorem

We start with a lemma on the consistency of the thresholded covariance matrix, which implies that the partitioning algorithm identifies the true diagonal blocks with probability tending to 1.

Lemma 3 Let be the thresholded covariance matrix:

Assume conditions (A2)-(A5), with positive constants C2 and C3,

where we choose , with the constant K large enough such that C3K2 > 2. Applying the additional condition ,

Therefore,

We establish the important properties of CIS by presenting the following theorem.

Theorem 1 (screening consistency) Assume that (A1)-(A9) hold. Denote by the set of selected variables from the CIS procedure with the tuning parameters νn = O(n−κ) and . Then the following two statements are true:

for a positive constant η. These results imply the screening consistency property

5.3. Iterative CIS

Even though theoretical thresholds have been derived for various variable screening procedures, they remain challenging to implement in practice. Moreover, strong correlations among predictors may deteriorate the performance of screening procedures in finite samples. Iterative SIS (ISIS) [1] was proposed as a remedy for these problems in marginal screening procedures. Along the same lines, we design an iterative CIS algorithm (termed ICIS) and further build a thresholding procedure to control false discoveries.

Step 1: Resample the original data with replacement multiple (say B) times.

Step 2: For each resampled data set, first identify variables using the proposed CIS procedure, then apply the adaptive Lasso for variable selection and compute the associated regression residuals.

Step 3: Treating those residuals as new responses, we apply CIS to the remaining variables.

Step 4: We repeat the procedure until a pre-specified number of iterations is achieved or the selected variables do not change.

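Step 5: Denote the selected variable index set from the r-th resampled data set by $\hat{\mathcal M}^{(r)}$, r = 1, …, B. Let $\hat\Pi_j$ be the empirical probability that the j-th variable is selected:

$$\hat\Pi_j = \frac{1}{B} \sum_{r=1}^{B} I\bigl(j \in \hat{\mathcal M}^{(r)}\bigr).$$

For a threshold ψ ∈ (0, 1), the procedure selects the variables with

$$\hat\Pi_j \ge \psi. \qquad (6)$$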
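Step 5 amounts to counting how often each variable is selected across the B resampled fits. The sketch below assumes that the per-resample selections are already available as lists of indices (the example lists are made up) and uses the inclusive rule "frequency ≥ ψ". The permutation-based choice of ψ is not reproduced, and the function names are hypothetical.

```python
import numpy as np


def selection_frequency(selected_sets, p):
    """Empirical probability that each of p variables is selected across B resamples."""
    counts = np.zeros(p)
    for s in selected_sets:
        for j in set(s):          # count each variable at most once per resample
            counts[j] += 1
    return counts / len(selected_sets)


def stable_set(selected_sets, p, psi):
    """Indices whose selection frequency reaches the threshold psi."""
    return np.flatnonzero(selection_frequency(selected_sets, p) >= psi)


# Hypothetical selections from B = 4 resampled data sets with p = 6 variables.
runs = [[0, 2], [0, 2, 5], [0], [0, 2, 3]]
freq = selection_frequency(runs, 6)     # frequencies 1.0, 0.0, 0.75, 0.25, 0.0, 0.25
chosen = stable_set(runs, 6, psi=0.5)   # indices 0 and 2
```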

To determine data-driven thresholds for the selection frequency ψ, we further adopt a random permutation-based approach [18] to control the empirical Bayes false discovery rate [25]. For a pre-specified value q ∈ (0, 1), ψ will be chosen to ensure that at most q proportion of the selected variables would be false positives. Further technical details are provided in the Supplementary Materials.

6. Simulation Study

6.1. Performance of the CIS

We assess the performance of the proposed CIS method by comparing it with SIS and non-iterative versions of HOLP and Tilting under various simulation configurations. Block-wise semi-partial correlations are estimated using the R package corpcor. For each configuration, 100 independent data sets are generated.

- (Model A) Data are generated with n = 1,000 and p = 10,000 from a multivariate normal distribution with a block-diagonal covariance structure (m = 100 independent blocks, each with 100 predictors). Within each block, the variables follow an AR(1) model with auto-correlation ρ varying from 0.5 to 0.9. The variables with non-zero effects are

with the corresponding coefficients 1, −1, 1, −1, −1, 1, −1, 1, −1, 1.

- (Model B) This model is similar to Model A, but the variables with non-zero effects are

with the corresponding coefficients 1, 1, −1, 1, −1, 1, −1, 1, −1, 1.

- (Model C) This model is similar to Model A, but the covariance matrix is not block-diagonal (instead, the variables follow an AR(1) model with auto-correlation ρ varying from 0.5 to 0.9). The variables with non-zero effects are

with the corresponding coefficients 1, −1, 1, −1, −1, 1, −1, 1, −1, 1, where the indices j1, …, j8 are randomly drawn from {1, …, p}.
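A block-diagonal AR(1) design such as the one in Model A could be simulated along the following lines. This NumPy sketch uses hypothetical helper names and a small configuration to keep it fast.

```python
import numpy as np


def ar1_block(size, rho):
    """AR(1) correlation block with (j, k) entry rho ** |j - k|."""
    idx = np.arange(size)
    return rho ** np.abs(idx[:, None] - idx[None, :])


def block_ar1_design(n, n_blocks, block_size, rho, rng):
    """n rows from N(0, Sigma), Sigma block-diagonal with identical AR(1) blocks.

    Blocks are independent, so each one is drawn separately through the
    Cholesky factor of the small block rather than the full p x p matrix.
    """
    L = np.linalg.cholesky(ar1_block(block_size, rho))
    return np.hstack([rng.standard_normal((n, block_size)) @ L.T
                      for _ in range(n_blocks)])


rng = np.random.default_rng(6)
X = block_ar1_design(2000, 3, 5, 0.8, rng)   # small-scale version: p = 15
```

Model A's configuration would correspond to `block_ar1_design(1000, 100, 100, rho, rng)`.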

Table 1 compares the minimum model size (MMS) required to include the true model. For Models A and B, a correlation threshold δ is used to determine the blocks in the CIS procedure. Model C serves as a sensitivity analysis that assesses the proposed method when its assumed conditions are violated: instead of thresholding at δ, we partition the variables into blocks of at most 10 variables each (merging stops once a block reaches this limit). When the correlations are low, all methods perform well and the MMS is close to 10, the true model size. When the correlation is greater than 0.6, CIS outperforms SIS and Tilting in the presence of signal cancellation (Models A and C). The poor performance of SIS and Tilting can be explained in part by the violation of the strong marginal correlation condition. Interestingly, HOLP is competitive and performs well for Models B and C but not for Model A, possibly because the diagonal dominance of XT(XXT)−1X required by HOLP is violated.

Table 1:

The minimum model size (MMS) to include the true model (standard deviation in parentheses) for Models A-C.

| Model | ρ | SIS | HOLP | Tilting | CIS |
|---|---|---|---|---|---|
| A | 0.9 | 7103.2 (1937.4) | 3458.4 (2932.2) | 1041.1 (648.7) | 73.7 (141.7) |
| | 0.8 | 2835.0 (2334.0) | 660.7 (877.2) | 168.3 (131.2) | 11.4 (5.1) |
| | 0.7 | 505.9 (594.9) | 102.9 (253.2) | 31.0 (8.7) | 10.0 (0.0) |
| | 0.6 | 60.4 (75.3) | 21.9 (20.9) | 21 (2.9) | 10.0 (0.0) |
| | 0.5 | 18.1 (7.7) | 13.2 (2.2) | 19.5 (21.7) | 10.0 (0.0) |
| B | 0.9 | 21.2 (5.4) | 12.2 (1.9) | 154.2 (515.1) | 68.6 (80.4) |
| | 0.8 | 13.2 (2.5) | 10.7 (1.1) | 13.9 (4.4) | 10.9 (2.2) |
| | 0.7 | 10.7 (1.1) | 10.2 (0.5) | 10.7 (1.1) | 10.0 (0.0) |
| | 0.6 | 10.2 (0.5) | 10.0 (0.0) | 10.2 (0.5) | 10.0 (0.0) |
| | 0.5 | 10.0 (0.0) | 10.0 (0.0) | 10.0 (0.0) | 10.0 (0.0) |
| C | 0.9 | 3729.2 (944.3) | 220.7 (555.1) | 910.4 (1215.3) | 270.3 (453.4) |
| | 0.8 | 1570.4 (1343.2) | 47.2 (946.1) | 147.8 (86.2) | 14.8 (8.6) |
| | 0.7 | 350.6 (523.2) | 27.0 (11.6) | 47.4 (16.5) | 10.8 (0.8) |
| | 0.6 | 58.1 (54.5) | 17.8 (3.3) | 26.5 (5.7) | 10.2 (0.4) |
| | 0.5 | 21.9 (5.0) | 11.5 (1.7) | 18.3 (3.9) | 10.0 (0.1) |

6.2. Performance of the iterative CIS (ICIS)

We compare ICIS with Lasso, adaptive Lasso, ISIS, iterative HOLP and Tilting.

(Model D) This model is similar to Model A. Within each block, the variables follow an AR(1) model with parameter 0.9. The magnitude of the non-zero coefficients, |β|, is set to 0.5, 0.75 or 1 to cover a wide range of signal strengths.

Data are generated with p = 1,000 or 10,000. No results are reported for Tilting with p = 10,000 because of its intensive computation. As shown in Table 2, iterative CIS yields the smallest combined number of false positives (FP) and false negatives (FN) in most settings.

Table 2:

Numbers of false positives (FP) and numbers of false negatives (FN) for Model D.

| p | \|β\| | Measure | Lasso | Adaptive Lasso | ISIS | HOLP | Tilting | ICIS |
|---|---|---|---|---|---|---|---|---|
| 10,000 | 0.5 | FP | 45.47 | 0.01 | 0.02 | 0.13 | NA | 0.20 |
| | | FN | 3.47 | 4.00 | 4.00 | 4.00 | NA | 0.33 |
| | 0.75 | FP | 145.57 | 0.10 | 26.88 | 0.19 | NA | 0.22 |
| | | FN | 1.31 | 2.31 | 2.85 | 4.00 | NA | 0.00 |
| | 1 | FP | 220.87 | 0.06 | 23.62 | 0.21 | NA | 0.08 |
| | | FN | 0.00 | 0.00 | 0.14 | 4.00 | NA | 0.00 |
| 1,000 | 0.5 | FP | 85.35 | 7.64 | 0.80 | 0.27 | 0.21 | 0.73 |
| | | FN | 0.28 | 0.52 | 3.80 | 3.09 | 3.08 | 0.06 |
| | 0.75 | FP | 90.85 | 3.83 | 10.15 | 0.75 | 0.95 | 0.80 |
| | | FN | 0.00 | 0.00 | 0.12 | 0.32 | 0.90 | 0.00 |
| | 1 | FP | 92.49 | 2.28 | 2.15 | 0.13 | 0.78 | 0.22 |
| | | FN | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 | 0.00 |

We next compare the proposed method with iterative Graphlet Screening (GS). We consider Experiment 2b reported in [12], which is described as follows:

(Model E) Data are generated with p = 5,000 and $n = p^{\kappa}$ with κ = 0.975. We consider the following Asymptotic Rare and Weak (ARW) model [12]. The signal vector β is modeled by β = b ○ μ, where ○ denotes the Hadamard (entrywise) product. The vector μ consists of zj|μj|, j = 1, …, p, where zj = ±1 with equal probability and , where is the point mass at τp with . The h(x) is the density of τp(1 + V/6), . We choose the correlation matrix to be block diagonal, where each block is a 4 × 4 matrix satisfying Corr(Xi,j, Xi,j′) = I(j = j′) + 0.4I(|j − j′| = 1) × sign(6 − j − j′) + 0.05I(|j − j′| > 2) × sign(5.5 − j − j′), 1 ≤ j, j′ ≤ 4. The vector b consists of bj, j = 1, …, p, where bj = 0 or 1. Let k be the number of variables with bj ≠ 0 within each block. With π = 0.2 and ϑ = 0.35, we randomly choose a $(1 - 4p^{-\vartheta})$ fraction of the blocks for k = 0 (i.e. bj = 0 for all j in these blocks), a $4(1 - \pi)p^{-\vartheta}$ fraction of the blocks for k = 1, and a $4\pi p^{-\vartheta}$ fraction of the blocks for k ∈ {2, 3, 4}.
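The 4 × 4 within-block correlation matrix of Model E can be evaluated directly from the formula above. The short NumPy sketch below (hypothetical helper name) loops over 1-based indices j, j′ so that the sign terms match the text. The resulting matrix is symmetric and positive definite; every Gershgorin disc lies in [0.2, 1.8].

```python
import numpy as np


def model_e_block():
    """4 x 4 within-block correlation matrix of Model E (1-based j, j')."""
    C = np.zeros((4, 4))
    for j in range(1, 5):
        for k in range(1, 5):
            d = abs(j - k)
            C[j - 1, k - 1] = (
                (d == 0)
                + 0.4 * (d == 1) * np.sign(6 - j - k)
                + 0.05 * (d > 2) * np.sign(5.5 - j - k)
            )
    return C


C = model_e_block()
```

With p = 5,000, the full correlation matrix is block diagonal with 1,250 copies of this block, e.g. `np.kron(np.eye(1250), C)`, although sampling block by block avoids forming it.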

Table 3 compares Lasso, adaptive Lasso, ISIS, ICIS and GS. No results are reported for HOLP (intensive computation for large n) or Tilting (intensive computation for large p). Web Figure A1 in the Supplementary Materials compares ICIS and GS with various choices of tuning parameters. The results suggest that the perturbation of tuning parameters has relatively small effects on the proposed ICIS, which outperforms GS.

Table 3:

Numbers of false positives (FP) and false negatives (FN) for Model E.

| Measure | Lasso | Adaptive Lasso | ISIS | GS | ICIS |
|---|---|---|---|---|---|
| FP | 959.84 | 0.16 | 137.90 | 48.78 | 20.13 |
| FN | 2.99 | 21.66 | 10.90 | 26.04 | 2.55 |

7. Real Data Study

7.1. Multiple Myeloma Data

Multiple myeloma (MM) represents more than 10 percent of all hematologic cancers in the U.S. [26], resulting in more than 10,000 deaths each year. Developments in gene-expression profiling and sequencing of MM patients have offered effective ways of understanding the cancer genome [27]. Despite this promising outlook, analytic methods remain insufficient for achieving truly personalized medicine. The standard procedure is to evaluate one gene at a time, which results in low statistical power to identify the disease-associated genes [28]. Thus, more accurate models that leverage the large amounts of genomic data now available are in great demand.

Our goal is to identify genes that are relevant to the Beta-2-microglobulin (Beta-2-M), which is a continuous prognostic factor for multiple myeloma. We use gene expression and Beta-2-M from 340 multiple myeloma patients who were recruited into clinical trial UARK 98–026, which studied total therapy II (TT2). These data are described in [29], and can be obtained through the MicroArray Quality Control Consortium II study [30], available on GEO (GSE24080). Gene expression profiling was performed using Affymetrix U133Plus2.0 microarrays. Following the strategy in [4], we averaged the expression levels of probesets corresponding to the same gene, resulting in 20,502 covariates.

7.2. Analysis Methods

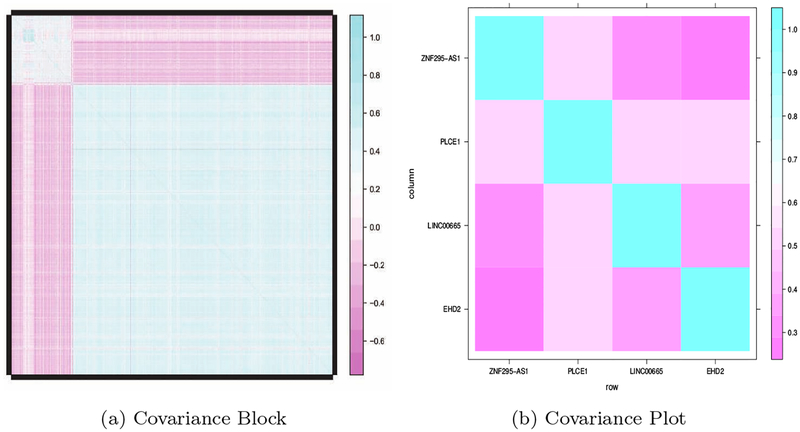

Genetic data often possess block covariance structures. In our motivating MM study, the estimated covariance matrix of gene expressions is nearly block diagonal under a suitable permutation of the variables. The predictors are strongly correlated within blocks and weakly correlated between blocks (a sample covariance plot is shown in Figure 2a), so many elements of the covariance matrix are small. A major challenge arising from such a covariance structure is that some genes can be jointly relevant but not marginally relevant to the disease outcome. In such a setting, popular screening methods struggle: marginal screening largely neglects correlations among predictors, whereas exhaustive multivariate screening is computationally infeasible.

Figure 2:

(a) The covariance block containing PLCE1, EHD2, LINC00665 and ZNF295-AS1. (b) Covariance plot

To select the informative genes, we apply the proposed ICIS to the MM data set with 340 subjects. The thresholding parameter δn is fixed at a value such that the maximal number of variables in the disconnected blocks satisfies qn ≤ n. The importance of the predictors is evaluated by their selection frequencies across 50 resampled data sets. The estimated false discovery rate is used to determine a data-driven threshold ψ (defined in Section 5.3) for the selection frequency such that at most a proportion q of the selected variables would be false positives. We compare ICIS with Lasso, adaptive Lasso, ISIS and iterative HOLP.

7.3. Results

Using our method, a total of 24 genes pass the threshold for q = 0.1. In comparison, the Lasso, adaptive Lasso, ISIS and HOLP procedures select 74, 0, 0 and 54 genes, respectively. These results are consistent with those in the simulation section: the Lasso tends to select many irrelevant variables, while the adaptive Lasso and ISIS have reduced power to identify informative predictors. The proposed method selects substantially fewer variables than HOLP and provides control of false discoveries. Some of the genes selected by the proposed method are also identified by Lasso and HOLP. One of the top common genes, MMSET (multiple myeloma SET domain containing protein), is known as a key molecular target in MM [31] and is involved in chromosomal translocation in MM. Another selected gene, FAM72A (Family With Sequence Similarity 72 Member A), has been reported to be associated with poor prognosis in multiple myeloma [32]. Moreover, the expression level of the gene ATF6 (Activating Transcription Factor 6) has been reported to predict the response of multiple myeloma to the proteasome inhibitor Bortezomib [33].

In addition, among the genes found by our method but not by the other methods, phospholipase C epsilon 1 (PLCE1), EH-domain containing 2 (EHD2), long intergenic non-protein coding RNA 665 (LINC00665) and ZNF295 antisense RNA 1 (ZNF295-AS1) are correlated with one another (see Figure 2b) and have effects of opposite signs (−0.51, 1.02, −0.23 and −0.43). These results suggest the existence of signal cancellation. The failure of other screening methods to identify these genes may be explained in part by the violation of the strong marginal correlation condition. In fact, as suggested by previous literature, these genes are likely to be associated with the prognosis of MM. For instance, PLCE1, located on chromosome 10q23, encodes a phospholipase that has been reported to be associated with intracellular signaling through the regulation of a variety of proteins such as the protein kinase C (PKC) isozymes and the proto-oncogene ras [34, 35]. EHD2 is a plasma membrane-associated member of the EHD family, which regulates internalization and is related to the actin cytoskeleton. Abnormal expression of EHD2 has been linked to metastasis of carcinoma [36]. In addition, [37] suggested that LINC00665 might act as a sponge that indirectly de-represses a series of mRNAs in nasopharyngeal nonkeratinizing carcinoma. It appears that the proposed approach is able to identify jointly informative variables that have only weak marginal associations with outcomes.

8. Discussion

We have developed a covariance-insured screening method for ultrahigh-dimensional variables. Unlike conventional variable screening methods, the proposed approach leverages the dependence structure among covariates and can identify jointly informative variables that have only weak marginal associations with outcomes. Moreover, the proposed method is computationally efficient and thus suitable for the analysis of ultrahigh-dimensional data.


ACKNOWLEDGEMENT

The work was partially supported by grants from the NSA (H9823-15-1-0260: Hong) and the National Natural Science Foundation of China (No.11528102: Lin and Li).

Appendix

Proof of Lemma 3

By the construction of , the set

Thus,

Assuming Condition (A5) and applying Lemma 1 of [21], we have

Assuming Condition (A2),

with $C_3 K_2 > 2$. Similarly,

Thus,

with $n^{1-c}(\tau_n - \delta_n)^2 \to \infty$. Therefore,

In fact, the first term dominates the second one. In summary,
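Lemma 3 concerns recovery of the covariate blocks. As a hedged illustration only, the sketch below assumes that blocks are formed by hard-thresholding the absolute sample covariance at a level `tau` and taking connected components of the resulting graph. The paper cites graph algorithms [19] and igraph [20], which suggests a construction of this type, but the exact rule is defined in the main text and is not reproduced here. The function `threshold_blocks`, the parameter `tau` and the toy covariance are all hypothetical.

```python
from collections import deque

import numpy as np


def threshold_blocks(X, tau):
    """Hypothetical block construction (an assumption, not the paper's definition):
    link covariates j and k when |sample cov(j, k)| > tau, then return the
    connected components of that graph as sorted lists of column indices."""
    S = np.cov(X, rowvar=False)
    p = S.shape[0]
    adj = np.abs(S) > tau
    np.fill_diagonal(adj, False)

    label = np.full(p, -1)  # block label of each covariate, -1 = unvisited
    blocks = []
    for start in range(p):
        if label[start] >= 0:
            continue
        # Breadth-first search from an unvisited covariate.
        label[start] = len(blocks)
        queue, comp = deque([start]), []
        while queue:
            v = queue.popleft()
            comp.append(int(v))
            for w in np.flatnonzero(adj[v]):
                if label[w] < 0:
                    label[w] = len(blocks)
                    queue.append(w)
        blocks.append(sorted(comp))
    return blocks


# Toy covariance: blocks {0, 1, 2} and {3, 4} with within-block correlation 0.6;
# covariate 5 is independent of the rest.
Sigma = np.eye(6)
for block in ([0, 1, 2], [3, 4]):
    for i in block:
        for j in block:
            if i != j:
                Sigma[i, j] = 0.6

rng = np.random.default_rng(0)
X = rng.multivariate_normal(np.zeros(6), Sigma, size=1000)
blocks = threshold_blocks(X, tau=0.3)
print(blocks)
```

With n = 1000 the sampling error of each covariance entry is roughly 0.03, so a threshold of 0.3 separates the zero entries from the 0.6 entries by a wide margin, and the true partition should be recovered. Recovery of this kind fails when the threshold is too close to either the noise level or the smallest nonzero covariance.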

Proof of Theorem 1

The block-wise sample semi-partial correlation can be calculated as

where for some g, a factor 1/n is applied to both numerator and denominator to facilitate asymptotic derivations, and is the projection matrix onto the space spanned by

Because for , the numerator can be decomposed as

(7)
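The block-wise sample semi-partial correlation can be sketched numerically. The code below assumes the standard definition in which only $X_j$ is residualized on the conditioning covariates (plus an intercept) and $Y$ is merely centred; the centring and scaling used in the main text may differ. The function name `semipartial_corr` and the toy design are hypothetical. When $Y = X\beta + \epsilon$ and the conditioning set contains every other covariate with a nonzero coefficient, the numerator $n^{-1}Y^\top(I-P)X_j$ reduces to $\beta_j\, n^{-1}X_j^\top(I-P)X_j$ plus a noise term, which mirrors, without claiming to reproduce, the decomposition in (7).

```python
import numpy as np


def semipartial_corr(y, xj, Z):
    """Sample semi-partial correlation of y with xj given the columns of Z.

    Only xj is residualized: r = (I - P) xj, where P projects onto
    span{1, Z}. The 1/n factors cancel but mirror the scaling in the text.
    """
    n = len(y)
    Zc = np.column_stack([np.ones(n), Z])
    P = Zc @ np.linalg.pinv(Zc)   # orthogonal projection onto span{1, Z}
    r = xj - P @ xj               # residual of xj given Z
    yc = y - y.mean()
    numerator = (yc @ r) / n
    denominator = np.sqrt((yc @ yc) / n) * np.sqrt((r @ r) / n)
    return numerator / denominator


# Hypothetical toy design with signal cancellation: corr(x1, x2) = 0.8 and
# y = -0.8 * x1 + x2 + noise, so x1 is marginally uncorrelated with y.
rng = np.random.default_rng(2)
n = 1000
X = rng.multivariate_normal(np.zeros(2), [[1.0, 0.8], [0.8, 1.0]], size=n)
y = -0.8 * X[:, 0] + X[:, 1] + 0.5 * rng.standard_normal(n)

marginal = np.corrcoef(X[:, 0], y)[0, 1]
semipartial = semipartial_corr(y, X[:, 0], X[:, [1]])
print(f"marginal: {marginal:.3f}, semi-partial given x2: {semipartial:.3f}")
```

In this design the population semi-partial correlation is about −0.62, whereas the marginal correlation is zero: conditioning on the correlated partner exposes the cancelled signal.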

To show the first and the second statements in Theorem 1, we consider the following two scenarios respectively: (1) and (2) .

Step 1:

Step 1.1 We first show that, for , the absolute value of the first term on the right-hand side of (7) can be bounded from below and that the last two terms on the right-hand side of (7) are negligible compared to the first term.

Specifically, for the first term we can show that for some g such that ,

where α > 0 is a given constant. By the property of the determinant of a partitioned matrix, when A is non-singular,

Then we have

By Condition (A6), there exists a constant α > 0 such that

Therefore,

where the second term dominates the first one. Thus,
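The partitioned-determinant identity invoked in Step 1.1 is, in its standard form, $\det\begin{pmatrix} A & B \\ C & D \end{pmatrix} = \det(A)\,\det(D - CA^{-1}B)$ for non-singular $A$; the block labels $B$, $C$ and $D$ are ours. The sketch below checks it numerically on a random symmetric positive-definite matrix (function name `schur_det_check` is hypothetical). When $D$ is $1\times 1$ and the matrix is a covariance matrix, the Schur complement $D - CA^{-1}B$ is the residual variance of the last variable after linear regression on the others.

```python
import numpy as np


def schur_det_check(p=3, q=2, seed=0):
    """Compare det(S) with det(A) * det(D - C A^{-1} B) for a random
    symmetric positive-definite S partitioned as [[A, B], [C, D]]."""
    rng = np.random.default_rng(seed)
    M = rng.standard_normal((p + q, p + q))
    S = M @ M.T + (p + q) * np.eye(p + q)   # positive definite, so A is non-singular
    A, B = S[:p, :p], S[:p, p:]
    C, D = S[p:, :p], S[p:, p:]
    schur = D - C @ np.linalg.solve(A, B)   # Schur complement of A in S
    return np.linalg.det(S), np.linalg.det(A) * np.linalg.det(schur)


lhs, rhs = schur_det_check()
print(lhs, rhs)  # equal up to floating-point rounding
```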

Step 1.2 We next consider the second term in (7) and show that for $j = 1, \ldots, p_n$ and some g such that ,

Indeed, by the triangle inequality, given ,

By Condition (A1), . By the construction of ,

for and , where . By Condition (A7), . Therefore, given ,

(8)

Moreover, given ,

where $\|u\|_2$ is the $\ell_2$-norm for . By Condition (A6),

and, applying Lemma 3,

Moreover, given ,

We have

(9)

Step 1.3 We now study the third term in (7) and show that, under Conditions (A7) and (A9), it is negligible compared to the first term. To proceed, we first restate Lemma 14.9 of [38] for readability.

Lemma 4 (Bernstein’s inequality) Assume Condition (A9). Let t > 0 be an arbitrary constant. Then

The following lemma provides the basis for the proof of Step 1.3.

Lemma 5 Assume Conditions (A7) and (A9). For t > 0,

where $K_0 = K_x K_\epsilon$ and $\sigma_0 = K_x \sigma$.
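The role of Bernstein's inequality in Lemmas 4 and 5 can be checked by simulation. The sketch assumes the standard moment form of the inequality, stated here from general knowledge rather than transcribed from [38], and Condition (A9) itself is not reproduced in this section: if $Z_1, \ldots, Z_n$ are independent with mean zero and $n^{-1}\sum_i E|Z_i|^m \le \tfrac{m!}{2} K^{m-2}\sigma^2$ for $m = 2, 3, \ldots$, then $P\big(|n^{-1}\sum_i Z_i| \ge \sigma\sqrt{2t/n} + Kt/n\big) \le 2e^{-t}$. For $Z_i = \epsilon_i X_{i,j}$ with $\epsilon_i$ and $X_{i,j}$ independent standard normals (a hypothetical special case), the absolute moments of the normal distribution show that the moment condition holds with $\sigma = K = 1$. The function name `bernstein_check` is ours.

```python
import numpy as np


def bernstein_check(n=50, t_values=(1.0, 2.0, 3.0), reps=20000, seed=0):
    """Monte Carlo check of the (assumed) Bernstein bound for Z_i = eps_i * X_i,
    where eps_i and X_i are independent standard normals (sigma = K = 1)."""
    rng = np.random.default_rng(seed)
    eps = rng.standard_normal((reps, n))
    x = rng.standard_normal((reps, n))
    means = (eps * x).mean(axis=1)  # one sample mean of Z_i per replicate
    rows = []
    for t in t_values:
        threshold = np.sqrt(2 * t / n) + t / n  # sigma*sqrt(2t/n) + K*t/n
        empirical = np.mean(np.abs(means) >= threshold)
        rows.append((t, float(empirical), 2 * np.exp(-t)))
    return rows


for t, empirical, bound in bernstein_check():
    print(f"t = {t:.0f}: empirical tail {empirical:.4f}, bound {bound:.4f}")
```

The bound is conservative for moderate t, but it decays exponentially in t, which is what allows a union (Bonferroni) bound over $p_n$ covariates to remain small.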

Proof of Lemma 5

We have

Therefore, $\epsilon_i X_{i,j}$ follows a sub-exponential distribution as well. Lemma 4 (Bernstein's inequality) implies that

Therefore,

Proof of Step 1.3

We move on to study the third term in (7) and show that the third term is negligible compared to the first term. We have

Therefore,

where the last inequality holds by Lemma 5. Similarly,

Step 1.4 We are now in a position to show the first statement in Theorem 1. Indeed, we have so far shown that for , the last two terms in (7) are negligible compared to the first term. Similarly, the two terms in the denominator of can be shown to be bounded from above. Combining these results and applying the Bonferroni inequality, we have

(10)

(11)

That is, the CIS procedure satisfies the sure screening property

Step 2:

We next prove the second statement in Theorem 1 by considering the scenario when . Since $\beta_j = 0$ for , the first term in (7) vanishes. We have also shown that the absolute values of the second and third terms can be bounded from above. Combined with the fact that the denominator of is bounded from below, an application of the Bonferroni inequality yields

(12)

(13)

Finally, the first and the second statements in Theorem 1 immediately imply the screening consistency property:

Footnotes

SUPPLEMENTARY MATERIAL

Example R code, technical details referenced in Sections 2–6, technical proofs of Lemmas 1–2 and Web Figures are available online. The complete data set can be downloaded from The Cancer Genome Atlas (https://cancergenome.nih.gov/).

References

- [1] Fan J, Lv J, Sure independence screening for ultrahigh dimensional feature space (with discussion), Journal of the Royal Statistical Society: Series B 70 (5) (2008) 849–911.

- [2] Fan J, Song R, Sure independence screening in generalized linear models with NP-dimensionality, Annals of Statistics 38 (6) (2010) 3567–3604.

- [3] Zhao SD, Li Y, Principled sure independence screening for Cox models with ultra-high-dimensional covariates, Journal of Multivariate Analysis 105 (1) (2012) 397–411.

- [4] Zhao SD, Li Y, Score test variable screening, Biometrics 70 (4) (2014) 862–871.

- [5] Zhu L, Li L, Li R, Zhu L, Model-free feature screening for ultrahigh-dimensional data, Journal of the American Statistical Association 106 (496) (2011) 1464–1475.

- [6] Li G, Peng H, Zhang J, Zhu L, Robust rank correlation based screening, Annals of Statistics 40 (2012) 1846–1877.

- [7] Fan J, Feng Y, Song R, Nonparametric independence screening in sparse ultra-high-dimensional additive models, Journal of the American Statistical Association 106 (494) (2011) 544–557.

- [8] He X, Wang L, Hong HG, Quantile-adaptive model-free variable screening for high-dimensional heterogeneous data, Annals of Statistics 41 (1) (2013) 342–369.

- [9] Bühlmann P, Kalisch M, Maathuis M, Variable selection in high-dimensional linear models: partially faithful distributions and the PC-simple algorithm, Biometrika 97 (2) (2010) 261–278.

- [10] Cho H, Fryzlewicz P, High dimensional variable selection via tilting, Journal of the Royal Statistical Society: Series B 74 (3) (2012) 593–622.

- [11] Wang X, Leng C, High dimensional ordinary least squares projection for screening variables, Journal of the Royal Statistical Society: Series B 78 (3) (2016) 589–611.

- [12] Jin J, Zhang CH, Zhang Q, Optimality of graphlet screening in high dimensional variable selection, Journal of Machine Learning Research 15 (2014) 2723–2772.

- [13] Whittaker J, Graphical Models in Applied Multivariate Statistics, Wiley Series in Probability and Mathematical Statistics, 1990.

- [14] Peng J, Wang P, Zhou N, Zhu J, Partial correlation estimation by joint sparse regression models, Journal of the American Statistical Association 104 (486) (2009) 735–746.

- [15] Kim S, ppcor: An R package for a fast calculation to semi-partial correlation coefficients, Communications for Statistical Applications and Methods 22 (6) (2015) 665–674.

- [16] Bickel P, Levina E, Covariance regularization by thresholding, Annals of Statistics 36 (6) (2008) 2577–2604.

- [17] Berisa T, Pickrell J, Approximately independent linkage disequilibrium blocks in human populations, Bioinformatics 32 (2) (2016) 283–285.

- [18] He K, Li Y, Zhu J, Liu H, Lee JE, Amos CI, Hyslop T, Jin J, Lin H, Wei Q, Li Y, Component-wise gradient boosting and false discovery control in survival analysis with high-dimensional covariates, Bioinformatics 32 (1) (2016) 50–57.

- [19] Even S, Graph Algorithms (Second Edition), Cambridge University Press, Cambridge, 2011.

- [20] Csardi G, Nepusz T, The igraph software package for complex network research, InterJournal, Complex Systems 1695 (6) (2006) 1–9.

- [21] Rothman A, Levina E, Zhu J, Generalized thresholding of large covariance matrices, Journal of the American Statistical Association 104 (485) (2009) 177–186.

- [22] Wang H, Forward regression for ultra-high dimensional variable screening, Journal of the American Statistical Association 104 (488) (2009) 1512–1524.

- [23] Fan J, Li R, Variable selection via nonconcave penalized likelihood and its oracle properties, Journal of the American Statistical Association 96 (456) (2001) 1348–1360.

- [24] Zou H, The adaptive Lasso and its oracle properties, Journal of the American Statistical Association 101 (476) (2006) 1418–1429.

- [25] Efron B, Large-Scale Inference: Empirical Bayes Methods for Estimation, Testing, and Prediction, Institute of Mathematical Statistics Monographs, Cambridge University Press, 2012.

- [26] Kyle R, Rajkumar S, Multiple myeloma, Blood 111 (2008) 2962–2972.

- [27] Chapman MA, Lawrence MS, Keats JJ, Cibulskis K, Sougnez C, Schinzel AC, Golub TR, Initial genome sequencing and analysis of multiple myeloma, Nature 471 (7339) (2011) 467–472.

- [28] Sun S, Hood M, Scott L, Peng Q, Mukherjee S, Tung J, Zhou X, Differential expression analysis for RNAseq using Poisson mixed models, Nucleic Acids Research 45 (11) (2017) e106.

- [29] Shaughnessy J, Zhan F, Burington B, Huang Y, Colla S, Hanamura I, Stewart J, Kordsmeier B, Randolph C, Williams D, Xiao Y, Xu H, Epstein J, Anaissie E, Krishna S, Cottler-Fox M, Hollmig K, Mohiuddin A, Pineda-Roman M, Tricot G, van Rhee F, Sawyer J, Alsayed Y, Walker R, Zangari M, Crowley J, Barlogie B, A validated gene expression model of high-risk multiple myeloma is defined by deregulated expression of genes mapping to chromosome 1, Blood 109 (2007) 2276–2284.

- [30] MAQC Consortium, The MAQC-II project: A comprehensive study of common practices for the development and validation of microarray-based predictive models, Nature Biotechnology 28 (2010) 827–838.

- [31] Mirabella F, Wu P, Wardell C, Kaiser M, Walker B, Johnson D, Morgan G, MMSET is the key molecular target in t(4;14) myeloma, Blood Cancer Journal 3 (2013) e114.

- [32] Noll J, Vandyke K, Hewett D, Mrozik K, Bala R, Williams S, Zannettino A, PTTG1 expression is associated with hyperproliferative disease and poor prognosis in multiple myeloma, Journal of Hematology and Oncology 8 (2015) 106.

- [33] Nikesitch N, Tao C, Lai K, Killingsworth M, Bae S, Wang M, Ling SCW, Predicting the response of multiple myeloma to the proteasome inhibitor bortezomib by evaluation of the unfolded protein response, Blood Cancer Journal 6 (2016) e432.

- [34] Rhee S, Regulation of phosphoinositide-specific phospholipase C, Annual Review of Biochemistry 70 (2001) 281–312.

- [35] Bunney T, Baxendale R, Katan M, Regulatory links between PLC enzymes and Ras superfamily GTPases: signalling via PLCepsilon, Advances in Enzyme Regulation 49 (2009) 54–58.

- [36] Li M, Yang X, Zhang J, Shi H, Hang Q, Huang X, Wang H, Effects of EHD2 interference on migration of esophageal squamous cell carcinoma, Medical Oncology 30 (1) (2013) 396.

- [37] Zhang B, Wang D, Wu J, Tang J, Chen W, Chen X, Zhang D, Deng Y, Guo M, Wang Y, Luo J, Chen R, Expression profiling and functional prediction of long noncoding RNAs in nasopharyngeal nonkeratinizing carcinoma, Discovery Medicine 21 (116) (2016) 239–250.

- [38] Bühlmann P, van de Geer S, Statistics for High-Dimensional Data: Methods, Theory and Applications, Springer-Verlag, Berlin Heidelberg, 2011.
