Key Points

Question

Can the networks of 2 validated deep learning models for referable diabetic retinopathy and glaucomatous optic neuropathy be reliably visualized?

Findings

In this cross-sectional study, lesions typically observed in cases of referable diabetic retinopathy (exudate, hemorrhage, or vessel abnormality) were identified as the most important prognostic regions in 96 of 100 true-positive diabetic retinopathy cases. All 100 true-positive glaucomatous optic neuropathy cases displayed heat map visualization within traditional disease regions.

Meaning

These findings substantiate the validity of deep learning models, verifying the reliability of a visualization method that may promote clinical adoption of these models.

Abstract

Importance

Convolutional neural networks have recently been applied to ophthalmic diseases; however, the rationale for the outputs generated by these systems is inscrutable to clinicians. A visualization tool is needed that would enable clinicians to understand important exposure variables in real time.

Objective

To systematically visualize the convolutional neural networks of 2 validated deep learning models for the detection of referable diabetic retinopathy (DR) and glaucomatous optic neuropathy (GON).

Design, Setting, and Participants

The GON and referable DR algorithms were previously developed and validated (holdout method) using 48 116 and 66 790 retinal photographs, respectively, derived from a third-party database (LabelMe) of deidentified photographs from various clinical settings in China. In the present cross-sectional study, a random sample of 100 true-positive photographs and all false-positive cases from each of the GON and DR validation data sets were selected. All data were collected from March to June 2017. The original color fundus images were processed using an adaptive kernel visualization technique. The images were preprocessed by applying a sliding window with a size of 28 × 28 pixels and a stride of 3 pixels to crop images into smaller subimages to produce a feature map. Threshold scales were adjusted to optimal levels for each model to generate heat maps highlighting localized landmarks on the input image. A single optometrist allocated each image to predefined categories based on the generated heat map.

Main Outcomes and Measures

Visualization regions of the fundus.

Results

In the GON data set, 90 of 100 true-positive cases (90%; 95% CI, 82%-95%) and 15 of 22 false-positive cases (68%; 95% CI, 45%-86%) displayed heat map visualization within regions of the optic nerve head only. Lesions typically seen in cases of referable DR (exudate, hemorrhage, or vessel abnormality) were identified as the most important prognostic regions in 96 of 100 true-positive DR cases (96%; 95% CI, 90%-99%). In 39 of 46 false-positive DR cases (85%; 95% CI, 71%-94%), the heat map displayed visualization of nontraditional fundus regions with or without retinal venules.

Conclusions and Relevance

These findings suggest that this visualization method can highlight traditional regions in disease diagnosis, substantiating the validity of the deep learning models investigated. This visualization technique may promote the clinical adoption of these models.

This cross-sectional study develops a method to visualize the convolutional neural networks of 2 validated deep learning models for detecting referable diabetic retinopathy and glaucomatous optic neuropathy to help promote clinical adoption of deep learning models for ophthalmic disease diagnosis.

Introduction

Convolutional neural networks (CNNs) have recently been applied to ophthalmic diseases, achieving excellent sensitivities and specificities for detecting diabetic retinopathy (DR)1,2,3,4,5 and glaucomatous optic neuropathy (GON)6,7 using standard color fundus photographs. Despite the accuracy of this artificial intelligence–based technology, a key challenge for clinical adoption is the major shift in mindset required for clinicians to entrust clinical care to machines. Deep learning models are often described as a "black box" because they classify disease using millions of image features rather than by explicitly detecting the clinical features with which physicians are familiar. Thus, the clinical adoption of these systems is unlikely to rest on accuracy alone. To ease clinicians' apprehension arising from the tension between accuracy and interpretability, we propose a method to visualize the CNNs of 2 validated deep learning models.4,7

Methods

The development of the GON and DR models has been described elsewhere.4,7 In brief, the GON and referable DR deep learning algorithms were previously developed and validated (holdout method) using 48 116 and 66 790 retinal photographs, respectively, derived from a third-party database (LabelMe) containing deidentified photographs from various clinical settings in China. Retinal photographs were graded by a panel of ophthalmologists, and a criterion standard grade was assigned to each image when at least 3 graders reached a consistent grading outcome. Using this ground truth grading, images were classified on a 2-level scale for GON (1, nonreferable GON; 2, referable GON [ie, suspect and certain cases]) and for DR (1, nonreferable DR; 2, referable DR [ie, preproliferative DR or worse or diabetic macular edema]). The present study was approved by the Zhongshan Ophthalmic Center Institutional Review Board (Guangzhou, Guangdong, China). Because of the study's retrospective design and fully anonymized use of images, the review board waived the requirement for informed patient consent.
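The consensus rule and the 2-level labeling described above can be sketched in Python. This is a hypothetical illustration, not the authors' code: the grade names, the separate macular edema flag, and the choice to report no consensus when two different grades both reach the agreement threshold are our assumptions.

```python
from collections import Counter
from typing import Optional, Sequence

# Hypothetical DR grade names; the actual grading scheme is described in the
# original model reports, not in this article.
REFERABLE_DR_GRADES = {"preproliferative", "proliferative"}


def consensus_grade(grades: Sequence[str], min_agree: int = 3) -> Optional[str]:
    """Return the grade given by at least `min_agree` graders, or None if no
    single grade reaches that level of agreement."""
    counts = Counter(grades)
    agreed = [grade for grade, n in counts.items() if n >= min_agree]
    # Assumption: if two different grades both reach the threshold, the image
    # is treated as lacking a criterion standard grade.
    return agreed[0] if len(agreed) == 1 else None


def referable_dr_label(grade: str, has_macular_edema: bool) -> int:
    """Map a consensus grade to the 2-level scheme: 1 = nonreferable DR,
    2 = referable DR (preproliferative DR or worse, or diabetic macular edema)."""
    return 2 if grade in REFERABLE_DR_GRADES or has_macular_edema else 1


panel = ["proliferative", "proliferative", "proliferative", "background"]
grade = consensus_grade(panel)
if grade is not None:
    print(grade, referable_dr_label(grade, has_macular_edema=False))
```

The same pattern would apply to the GON labels, with suspect and certain cases mapped to level 2.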

To visualize the learning procedure of the networks in the present study, we developed and applied a CNN-independent adaptive kernel visualization technique. The original fundus images were preprocessed using the standard methods proposed by Ebner.8 In brief, this involved applying a sliding window (28 × 28 pixels, with a stride of 3 pixels) to crop each image into smaller subimages and produce a 172 × 172 feature map (ie, [(544 − 28)/3] × [(544 − 28)/3]). These subimages were used as inputs for the trained deep learning models. The threshold scale was adjusted to achieve an optimal heat map for each model: it was set at 0.5 for the GON model and at 0.7 for the DR model, meaning that discriminative image regions were highlighted only when the model's output probability for the disease exceeded 50% for GON and 70% for DR. On the basis of the classification results of the overlapping subimages, a heat map was generated highlighting highly prognostic regions in the input image.
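A minimal NumPy sketch of the sliding-window step follows. It is not the authors' implementation: `predict` is a hypothetical stand-in for a trained model's disease probability on one subimage, and combining overlapping windows by averaging the above-threshold probabilities at each pixel is our assumption, since the article does not specify the aggregation rule. Note that counting every valid window start position along a 544-pixel axis gives (544 − 28)/3 + 1 = 173 positions, whereas the formula in the text gives 172.

```python
from typing import Callable

import numpy as np


def window_starts(size: int, window: int, stride: int) -> range:
    """Top-left offsets of every window that fits entirely inside one axis."""
    return range(0, size - window + 1, stride)


def sliding_window_heat_map(
    image: np.ndarray,
    predict: Callable[[np.ndarray], float],
    window: int = 28,
    stride: int = 3,
    threshold: float = 0.5,
) -> np.ndarray:
    """Per-pixel mean of window probabilities, counting only windows whose
    probability exceeds `threshold` (other windows contribute 0)."""
    height, width = image.shape[:2]
    score = np.zeros((height, width))
    coverage = np.zeros((height, width))
    for y in window_starts(height, window, stride):
        for x in window_starts(width, window, stride):
            p = predict(image[y:y + window, x:x + window])
            if p > threshold:  # e.g. 0.5 for the GON model, 0.7 for the DR model
                score[y:y + window, x:x + window] += p
            coverage[y:y + window, x:x + window] += 1
    # Pixels that no window covers (possible along the right/bottom edges) stay 0.
    return np.divide(score, coverage, out=np.zeros_like(score), where=coverage > 0)


# Toy example: a bright square on a dark 64 x 64 image, scored by mean intensity.
img = np.zeros((64, 64))
img[20:40, 20:40] = 1.0
heat = sliding_window_heat_map(img, lambda patch: float(patch.mean()), threshold=0.5)
```

In the toy example, only windows that contain most of the bright square exceed the threshold, so the heat map is nonzero around the square and zero in the dark corners.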

Using a simple random sampling method, we selected 100 true-positive photographs from each of the GON (n = 1967) and DR (n = 1468) validation data sets, which were collected between March and June 2017. In addition, all false-positive cases without other abnormal ocular findings were included in the analysis (ie, true false-positives). On the basis of the CNN visualization output, a single optometrist (P.Y.L.) allocated each image to one of the predefined categories outlined in the Table. Any uncertain cases were adjudicated by the study ophthalmologist (M.H.).
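The case selection can be sketched with Python's standard `random` module. The identifier lists and the `other_findings` set (images with additional abnormal ocular findings, which were excluded from the false-positive group) are hypothetical inputs, and seeding the generator is our addition so that a sample can be reproduced.

```python
import random
from typing import Iterable, List, Optional, Sequence, Set, Tuple


def select_cases(
    true_positive_ids: Sequence[str],
    false_positive_ids: Iterable[str],
    other_findings: Set[str],
    n_true: int = 100,
    seed: Optional[int] = None,
) -> Tuple[List[str], List[str]]:
    """Simple random sample of true positives plus every 'true' false positive."""
    rng = random.Random(seed)
    # Sampling without replacement: each true-positive image is equally likely.
    sampled = rng.sample(list(true_positive_ids), n_true)
    # Keep all false positives that have no other abnormal ocular findings.
    true_false_positives = [i for i in false_positive_ids if i not in other_findings]
    return sampled, true_false_positives


tp_ids = [f"tp{i}" for i in range(500)]  # hypothetical identifiers
fp_ids = ["fp1", "fp2", "fp3"]
sample, false_pos = select_cases(tp_ids, fp_ids, other_findings={"fp2"}, seed=0)
```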

Table. Summary of Visualization Regions for True-Positive and False-Positive Cases.

| Disease and Visualization Region | True-Positive Cases, No. (%) [95% CI] | False-Positive Cases, No. (%) [95% CI] |
|---|---|---|
| Glaucomatous optic neuropathy | | |
| ONH areas only | | |
| Superior or inferior rim | 48 (48) [38-58] | 4 (18) [5-40] |
| Superior or inferior rim and nontraditional ONH areasᵃ | 42 (42) [32-52] | 11 (50) [28-72] |
| Superior or inferior rim and RNFL | 6 (6) [2-13] | 4 (18) [5-40] |
| RNFL arcades and nontraditional ONH areasᵃ | 4 (4) [1-10] | 3 (14) [3-35] |
| Total, No. | 100 | 22 |
| Diabetic retinopathy | | |
| Predominant lesion | | |
| Exudate | 30 (30) [21-40] | |
| Hemorrhage | 34 (34) [25-44] | |
| Vesselᵇ | 22 (22) [14-31] | 7 (15) [6-29] |
| Combined lesions | 10 (10) [5-18] | |
| Retinal vein and nontraditional areas | 1 (1) [0-5] | 30 (65) [50-79] |
| Nontraditional areas only | 3 (3) [1-8] | 9 (20) [9-34] |
| Total, No. | 100 | 46 |

Abbreviations: ONH, optic nerve head; RNFL, retinal nerve fiber layer.

ᵃ Nontraditional ONH areas include the central cup and the temporal or nasal rim.

ᵇ Includes intraretinal microvascular abnormality, neovascularization, or venular changes.
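The article does not state how its 95% CIs were computed. The intervals we checked (for example, 90 of 100, 96 of 100, and 1 of 100) appear to match exact Clopper-Pearson binomial intervals, so the sketch below assumes that method. It finds each bound by bisection on the binomial distribution function using only the standard library, and `format_row` (a hypothetical helper) renders a count in the Table's No. (%) [95% CI] style.

```python
from math import comb
from typing import Callable, Tuple


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _bisect(f: Callable[[float], float], lo: float = 0.0, hi: float = 1.0,
            iters: int = 100) -> float:
    """Root of a monotone f on [lo, hi], assuming f(lo) and f(hi) differ in sign."""
    f_lo = f(lo)
    for _ in range(iters):
        mid = (lo + hi) / 2
        f_mid = f(mid)
        if (f_mid > 0) == (f_lo > 0):
            lo, f_lo = mid, f_mid
        else:
            hi = mid
    return (lo + hi) / 2


def clopper_pearson(k: int, n: int, alpha: float = 0.05) -> Tuple[float, float]:
    """Exact two-sided binomial CI for k successes in n trials."""
    # Lower bound: the p at which P(X >= k) equals alpha/2.
    lower = 0.0 if k == 0 else _bisect(lambda p: (1 - binom_cdf(k - 1, n, p)) - alpha / 2)
    # Upper bound: the p at which P(X <= k) equals alpha/2.
    upper = 1.0 if k == n else _bisect(lambda p: binom_cdf(k, n, p) - alpha / 2)
    return lower, upper


def format_row(k: int, n: int) -> str:
    """Format as 'No. (%) [95% CI]' with whole-number percentages."""
    lower, upper = clopper_pearson(k, n)
    return f"{k} ({round(100 * k / n)}) [{round(100 * lower)}-{round(100 * upper)}]"


print(format_row(90, 100))  # 90 (90) [82-95]
```

The printed value corresponds to the 90 of 100 true-positive GON cases with ONH-only visualization reported in the Results.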

Results

GON Visualization

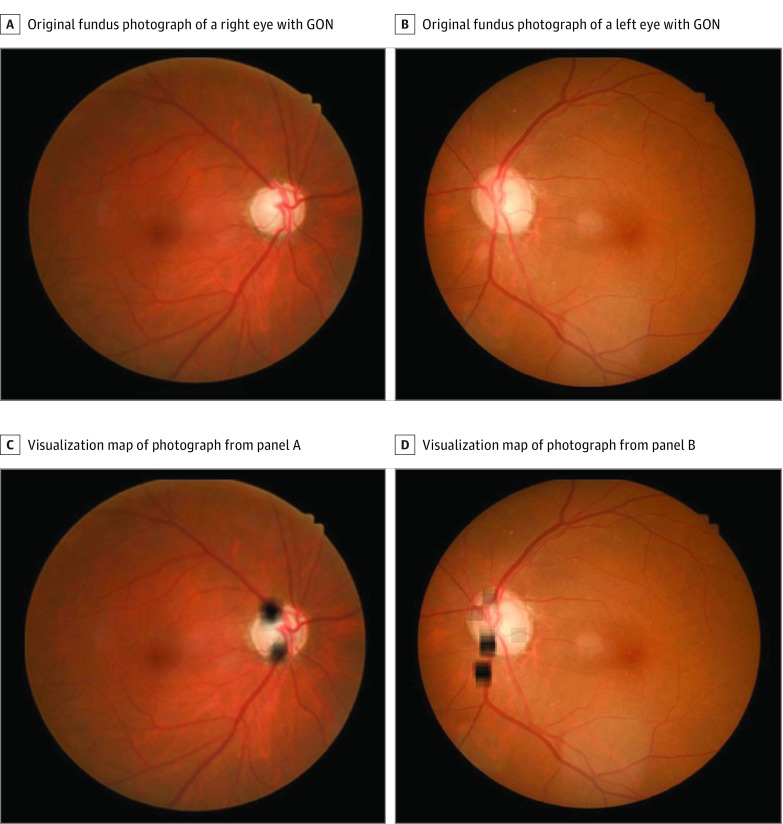

Of 100 true-positive GON cases, 90 (90%; 95% CI, 82%-95%) had highlighted regions within the optic nerve head (ONH) only, and 6 (6%; 95% CI, 2%-13%) displayed heat map visualization of the superior or inferior retinal nerve fiber layer (RNFL) arcades (Table and Figure 1). In the remaining 4 cases (4%; 95% CI, 1%-10%), the visualization region included the superior or inferior RNFL arcades in combination with nontraditional ONH areas (central cup or nasal or temporal neuroretinal rim). Of 22 false-positive GON cases, 15 (68%; 95% CI, 45%-86%) displayed highlighted regions within the ONH only, 4 (18%; 95% CI, 5%-40%) showed visualization of RNFL arcades only, and 3 (14%; 95% CI, 3%-35%) highlighted regions within the superior or inferior RNFL arcades in combination with nontraditional ONH areas (eFigure 1 in the Supplement).

Figure 1. Examples of Visualization Maps of True-Positive Cases of Glaucomatous Optic Neuropathy (GON).

A and B, Original color fundus photographs without heat maps. C, Heat map of image from panel A predominantly visualizing traditional areas of glaucomatous loss (ie, superior and inferior rim thinning). D, Heat map of image from panel B showing visualizations in the superior and inferior neuroretinal rim and the superior and inferior retinal nerve fiber layer.

Referable DR Visualization

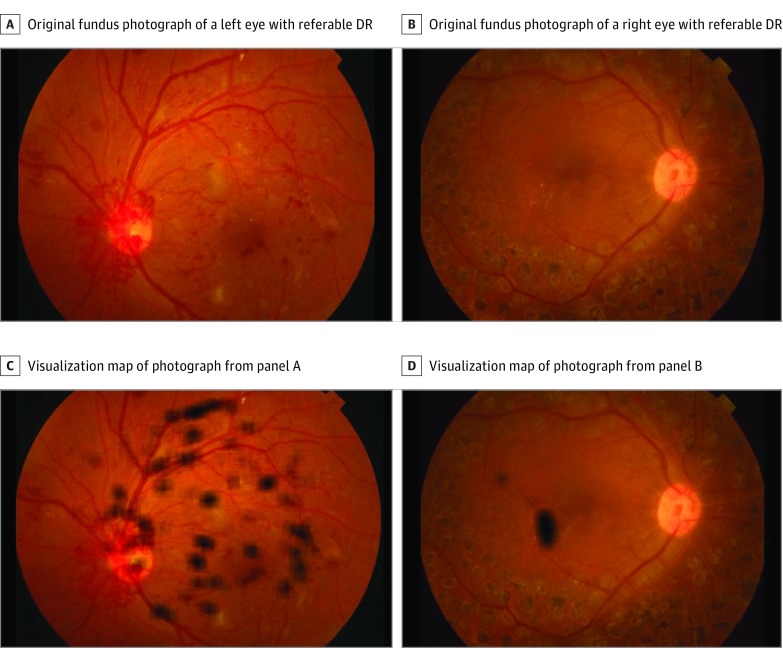

Typical referable DR lesions, such as exudates, hemorrhages, and vascular abnormalities (ie, intraretinal microvascular abnormality [IRMA], neovascularization, or venular changes), or a combination of these lesions, were identified as the most important prognostic regions in 96 of 100 true-positive DR cases (96%; 95% CI, 90%-99%) (Table and Figure 2). The remaining 4 cases (4%; 95% CI, 1%-10%) displayed visualization maps in nontraditional prognostic regions for referable DR (ie, optic disc or areas adjacent to vessels). Of 46 false-positive referable DR cases, 39 (85%; 95% CI, 71%-94%) displayed visualization of nontraditional fundus regions with or without retinal venules, whereas the remaining 7 cases (15%; 95% CI, 6%-29%) showed highlighted regions on retinal venules only (eFigure 2 in the Supplement).

Figure 2. Examples of Visualization Maps of True-Positive Cases of Diabetic Retinopathy (DR).

A and B, Original color fundus photographs without heat maps. C, Heat map of image from panel A predominantly visualizing retinal vascular changes in the superior arcade and neovascularization at the optic nerve head. D, Heat map of image from panel B predominantly visualizing exudates close to the macula.
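As a cross-check, the groupings reported in the Results can be recomputed from the Table rows. The counts are copied from the Table; the dictionary keys are our own shorthand for its row labels, and the assignment of rows to groups (for example, treating the first 2 GON rows as "ONH only") is our reading of the Results text.

```python
from typing import Dict, Iterable, Tuple

# Counts copied from the Table (keys are shorthand for its row labels).
GON_TP = {"rim": 48, "rim+nontrad_onh": 42, "rim+rnfl": 6, "rnfl+nontrad_onh": 4}
GON_FP = {"rim": 4, "rim+nontrad_onh": 11, "rim+rnfl": 4, "rnfl+nontrad_onh": 3}
DR_TP = {"exudate": 30, "hemorrhage": 34, "vessel": 22, "combined": 10,
         "vein+nontrad": 1, "nontrad_only": 3}
DR_FP = {"vessel": 7, "vein+nontrad": 30, "nontrad_only": 9}

ONH_ONLY = {"rim", "rim+nontrad_onh"}
ANY_NONTRAD_ONH = {"rim+nontrad_onh", "rnfl+nontrad_onh"}
TYPICAL_DR = {"exudate", "hemorrhage", "vessel", "combined"}
NONTRAD_DR = {"vein+nontrad", "nontrad_only"}


def grouped(counts: Dict[str, int], rows: Iterable[str]) -> Tuple[int, int]:
    """Return (cases in the selected rows, total cases) for one Table column."""
    selected = set(rows)
    return sum(n for key, n in counts.items() if key in selected), sum(counts.values())


for label, counts, rows in [
    ("GON TP, ONH only", GON_TP, ONH_ONLY),
    ("GON FP, ONH only", GON_FP, ONH_ONLY),
    ("GON TP, any nontraditional ONH area", GON_TP, ANY_NONTRAD_ONH),
    ("DR TP, typical lesions", DR_TP, TYPICAL_DR),
    ("DR FP, nontraditional regions", DR_FP, NONTRAD_DR),
]:
    k, n = grouped(counts, rows)
    print(f"{label}: {k} of {n} ({round(100 * k / n)}%)")
```

This prints 90 of 100, 15 of 22, 46 of 100, 96 of 100, and 39 of 46, reproducing the 90%, 68%, 96%, and 85% figures in the Results and the 46% figure in the Discussion.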

Discussion

The recent emergence of deep learning offers great potential to revolutionize practice patterns in the field of ophthalmology. However, the rationale for the outputs generated by these complex CNNs is inscrutable to clinicians, significantly hampering clinical adoption. Thus, in the present study, we developed a heat map visualization method that represents the CNN learning procedure.

Assessments of the optic disc and RNFL are the foundation of glaucoma diagnosis.9 In line with this notion, visualization of the CNN of true-positive GON cases revealed that the superior or inferior neuroretinal rim and the superior or inferior RNFL arcades were the most important features for prediction in our model. In a large proportion of cases (46%), nontraditional ONH features, including the central cup and the nasal or temporal neuroretinal rim, were also highlighted in conjunction with traditional GON signs. We hypothesize that heat map visualization of these features may represent the optic cup (color, depth, or shape) and neuroretinal rim sector changes associated with advanced GON.10,11

The most important regions for the DR model prediction were predominantly typical clinical findings associated with referable disease. That is, heat map visualization identified image regions of retinal hemorrhage, exudate, IRMA, venous beading or looping, neovascularization, or a combination thereof in 96% of true-positive referable DR cases. Nontraditional areas, including the optic disc and fundus areas adjacent to vessels, were identified in the remaining 4% of true-positive cases. It may be speculated that these nontraditional regions were recognized as landmarks for localizing adjacent lesions or that these image regions offer some additional prognostic value for DR beyond what is currently recognized. However, a more likely explanation is that these cases resulted from erroneous classification by the network, which may be associated with minor inconsistencies in the training data set.

Our finding that approximately 70% of false-positive cases in the DR model displayed localized heat maps on the retinal venules suggests that further optimization of the model with a more diverse range of image examples of venous looping or beading may improve its specificity. Analysis of the visualization output for GON false-positive cases revealed that traditional fundus features (ONH and RNFL, with or without nontraditional features) were identified as the most important image regions. Given the complex nature of glaucoma diagnosis, particularly from nonstereoscopic images, the addition of real-world clinical data (eg, optical coherence tomography and visual field data) would likely improve automated glaucoma classification.

Limitations

A potential limitation of the present study is that only a small, random sample of true-positive DR and GON images (100 images per group) was analyzed. Analysis of a larger data set may be required to substantiate the reliability and reproducibility of the visualization tool.

Conclusions

Our findings suggest that the presented visualization method can highlight traditional regions in ophthalmic disease diagnosis, substantiating the validity of the deep learning models investigated. To promote the clinical adoption of these models, future work will focus on designing a fully automated visualization tool to enable clinicians to understand important exposure variables in real time.

Supplement

eFigure 1. Visualization of GON False-Positive Examples

eFigure 2. Visualization of DR False-Positive Examples

References

1. Abràmoff MD, Lou Y, Erginay A, et al. Improved automated detection of diabetic retinopathy on a publicly available dataset through integration of deep learning. Invest Ophthalmol Vis Sci. 2016;57(13):5200-5206. doi: 10.1167/iovs.16-19964

2. Gargeya R, Leng T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology. 2017;124(7):962-969. doi: 10.1016/j.ophtha.2017.02.008

3. Gulshan V, Peng L, Coram M, et al. Development and validation of a deep learning algorithm for detection of diabetic retinopathy in retinal fundus photographs. JAMA. 2016;316(22):2402-2410. doi: 10.1001/jama.2016.17216

4. Keel S, Lee PY, Scheetz J, et al. Feasibility and patient acceptability of a novel artificial intelligence-based screening model for diabetic retinopathy at endocrinology outpatient services: a pilot study. Sci Rep. 2018;8(1):4330. doi: 10.1038/s41598-018-22612-2

5. Ting DSW, Cheung CY, Lim G, et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318(22):2211-2223. doi: 10.1001/jama.2017.18152

6. Li A, Cheng J, Wong DWK, Liu J. Integrating holistic and local deep features for glaucoma classification. Conf Proc IEEE Eng Med Biol Soc. 2016;2016:1328-1331. doi: 10.1109/EMBC.2016.7590952

7. Li Z, He Y, Keel S, Meng W, Chang RT, He M. Efficacy of a deep learning system for detecting glaucomatous optic neuropathy based on color fundus photographs. Ophthalmology. 2018;125(8):1199-1206. doi: 10.1016/j.ophtha.2018.01.023

8. Ebner M. Color constancy based on local space average color. Mach Vis Appl. 2009;20(5):283-301. doi: 10.1007/s00138-008-0126-2

9. Van Buskirk EM, Cioffi GA. Glaucomatous optic neuropathy. Am J Ophthalmol. 1992;113(4):447-452. doi: 10.1016/S0002-9394(14)76171-9

10. Hwang YH, Kim YY. Glaucoma diagnostic ability of quadrant and clock-hour neuroretinal rim assessment using cirrus HD optical coherence tomography. Invest Ophthalmol Vis Sci. 2012;53(4):2226-2234. doi: 10.1167/iovs.11-8689

11. Jonas JB, Fernández MC, Stürmer J. Pattern of glaucomatous neuroretinal rim loss. Ophthalmology. 1993;100(1):63-68. doi: 10.1016/S0161-6420(13)31694-7
