Abstract

In the present study we examined the neural correlates of facial emotion processing in the first year of life using ERP measures and cortical source analysis. EEG data were collected cross-sectionally from 5- (N = 49), 7- (N = 50), and 12-month-old (N = 51) infants while they viewed images of angry, fearful, and happy faces. The N290 component was larger in amplitude in response to fearful and happy than to angry faces in all posterior clusters, and it was larger in response to fear than to the other two emotions only over the right occipital area. The P400 (over posterior scalp) and Nc (over central and frontal scalp) components were larger in amplitude in response to angry than to happy and fearful faces. Cortical source analysis of the N290 component revealed greater cortical activation in the right fusiform face area in response to fearful faces. This effect began to emerge at 5 months and was well established at 7 months, but it was no longer present at 12 months. The P400 and Nc components were primarily localized to the PCC/Precuneus, where heightened responses to angry faces were observed. These results suggest that the infant brain can detect a fearful face shortly (~200–290 ms) after stimulus onset, that this process may rely on the face network, and that it develops substantially between 5 and 7 months of age. The findings also suggest that differential processing of angry faces occurs later, in the P400/Nc time window, recruits the PCC/Precuneus, and is associated with the allocation of infants’ attention.

Keywords: infant facial emotion processing, ERPs, cortical source analysis

Accurately decoding facial emotion is an essential skill in social communication, particularly before the onset of language. The ability to process and respond appropriately to facial emotion develops rapidly over the first year of life (for reviews, see Bayet & Nelson, in press; Leppänen & Nelson, 2009) and is important for developing the skills needed to navigate human social interactions throughout the lifespan. Research using behavioral and event-related potential (ERP) measures has advanced our understanding of infant facial emotion processing. However, findings have been inconsistent regarding both the effect of facial expressions on infant behavioral and ERP responses and the developmental course of infant emotion perception (e.g., Grossmann, Striano, & Friederici, 2007; Kobiella, Grossmann, Reid, & Striano, 2008). In addition, the neural networks involved in facial emotion processing during infancy remain unclear. The current study aims to systematically examine the ERP correlates of facial emotion processing in infants at 5, 7, and 12 months of age and to expand our knowledge of the neural bases of this process.

Behavioral and ERP measures of infant facial emotion processing

A well-documented shift in behavioral facial emotion processing occurs around 7 months of age. Multiple studies have demonstrated that infants younger than 7 months of age look preferentially at happy compared to negative (fearful or angry) faces (Farroni, Menon, Rigato, & Johnson, 2007; LaBarbera, Izard, Vietze, & Parisi, 1976). Between 5 and 7 months of age, however, there appears to be a shift toward prioritized processing of, or a preference for, fearful faces. Seven-month-old infants take longer to habituate to fearful than to happy faces (Nelson, Morse, & Leavitt, 1979) and spontaneously look longer at fearful than at happy faces when the two are presented side by side in a visual paired comparison (VPC) task (Kotsoni, de Haan, & Johnson, 2001). This attentional bias to fearful faces, in turn, results in slower disengagement of infants’ attention from fearful than from happy faces (Peltola, Leppänen, Vogel-Farley, Hietanen, & Nelson, 2009). In a cross-sectional study of 5-, 7-, 9-, and 11-month-olds, Peltola, Hietanen, Forssman, and Leppänen (2013) further showed that 7- and 9-month-olds, but not 5- and 11-month-olds, were less likely to shift their attention away from fearful faces. These findings provide evidence that the fear bias emerges between 5 and 7 months of age and suggest that it may begin to dissipate by the end of the first year. In contrast, Nakagawa and Sukigara (2012) showed that the attentional bias to fear is longitudinally stable between 12 and 36 months of age.

Event-related potentials (ERPs) have been a particularly useful tool for studying the neural correlates of infant emotion processing. Recent work has examined the effects of emotional valence on the face-sensitive N290 and P400 components and on the attention-related negative central (Nc) component, with varying results. Despite this variability, the N290 and P400 components have repeatedly been associated with viewing emotional facial expressions. In a study with 7-month-old infants, Leppänen, Moulson, Vogel-Farley, and Nelson (2007) observed that P400 amplitude was greater in response to fearful faces than to both happy and neutral expressions, although N290 amplitude and peak latency did not vary by emotion. Kobiella and colleagues (2008) reported similar findings in 7-month-olds, with a greater P400 in response to fearful than to angry faces; they additionally reported a greater N290 in response to angry than to fearful faces. Notably, results have varied considerably, particularly for the N290 component. Several additional studies have observed larger N290 responses to fearful than to angry faces in 7-month-olds (Hoehl & Striano, 2008) and to fearful than to happy faces in 9- to 10-month-old infants (van den Boomen, Munsters, & Kemner, 2017). Others, however, have observed larger N290 responses to happy faces (Jessen & Grossmann, 2015) and to non-fearful faces (happy and neutral; Yrttiaho, Forssman, Kaatiala, & Leppänen, 2014) than to fearful faces in 7-month-old infants. The N290 and P400 are thought to be developmental precursors of the adult face-sensitive N170, whose amplitude is also modulated by facial emotional expression. In particular, the N170 has consistently been shown to be larger in response to fearful than to happy or neutral faces in neurotypical adults (Batty & Taylor, 2006; Faja, Dawson, Aylward, Wijsman, & Webb, 2016; Leppänen et al., 2007; Rigato, Farroni, & Johnson, 2010).

The Nc component, an index of infant attention allocation, is also modulated by facial emotion. For example, 7-month-old infants display a larger Nc to fearful than to happy faces, indicating increased attention to fearful faces (de Haan, Belsky, Reid, Volein, & Johnson, 2004; Grossmann et al., 2011; Leppänen et al., 2007; Nelson & de Haan, 1996; Taylor-Colls & Pasco Fearon, 2015). However, not every electrophysiological study has replicated this fear bias, or even a general negative-emotion bias, at 7 months of age. Researchers have reported larger Nc responses to happy than to angry faces (Grossmann et al., 2007) and to angry than to fearful faces (Kobiella et al., 2008) in 7-month-old infants. In the same study, Grossmann and colleagues (2007) found the opposite pattern in 12-month-olds, who displayed a larger Nc to angry than to happy faces. Additionally, Vanderwert and colleagues (2015) observed no effect of emotion (happy, angry, and fearful) on the amplitude of the N290 or the P400 in 7-month-old infants. Taken together, these studies suggest that the N290, P400, and Nc components can serve as indices of emotional face processing in infancy, but their specific patterns of response to emotion require further investigation.

Although a sizeable literature documents both the behavioral and neural correlates of emotion processing at various ages, these studies have used many different paradigms, which may contribute to the varying results reported above. Addressing this issue requires large-scale studies that apply the same paradigm concurrently across ages. The current study provides this approach.

Neural basis of infant facial emotion processing

Research to date using ERPs to investigate the development of facial emotion processing suggests that an underlying brain network may emerge in the first year (Leppänen & Nelson, 2009). For example, Leppänen and Nelson (2009) posited that the fusiform gyrus (fusiform face area; FFA), superior temporal sulcus (STS), orbitofrontal cortex (OFC), and amygdala might already be online in the first year of life and play an important role in infants’ perception of facial emotions. These brain regions are also core components of the face and emotion networks that have been studied extensively in adults. For example, the core face network (Haxby, Hoffman, & Gobbini, 2000), including the FFA, the STS, and the inferior occipital gyrus (occipital face area; OFA), shows greater activation in response to fearful than to neutral expressions (Hadj-Bouziane, Bell, Knusten, Ungerleider, & Tootell, 2008; Hooker, Germine, Knight, & D’Esposito, 2006). The FFA, OFA, and STS have also been identified as neural generators of the N170 component, whose amplitude, as noted above, is larger in response to fearful faces (Deffke et al., 2007; Itier & Taylor, 2004; Rossion, Joyce, Cottrell, & Tarr, 2003).

The development of the neural bases of facial emotion processing has been difficult to characterize because infant behavior and scalp ERP components permit only limited inferences about network functioning. Cortical source analysis with age-appropriate MRI models may help identify the neural substrates of infant behaviors and provide evidence for the involvement of specific brain areas in infants’ cognitive processes that may not be obtainable from scalp measurements alone (Reynolds & Richards, 2009). This method may be especially useful for studying infant brain function, given the difficulty of testing infants in experimental tasks in an MRI scanner. A recent study conducted cortical source analysis of the infant N290, P400, and Nc components, localizing the N290 primarily to the FFA and the P400 and Nc to the posterior cingulate cortex (PCC), middle frontal areas, and posterior temporal and occipital areas (Guy, Zieber, & Richards, 2016). It remains unclear whether these cortical sources respond differently to negative (e.g., fearful and angry) than to positive (e.g., happy) emotions in infants. There is therefore a need to examine the developmental course of the neural bases of facial emotion processing in infancy using cortical source analysis of infant ERP components that are sensitive to facial emotional expressions, as the current study does.

Current study

The first objective of the current study was to systematically examine infants’ ERP responses to facial emotions and the development of these responses across the first year of life. Amplitudes of the N290, P400, and Nc ERP components were compared across facial emotion expressions (happy, angry, and fearful) in cross-sectional groups of 5-, 7-, and 12-month-old infants. We expected to see effects of emotion and age in all three ERP components. Some previous studies have found larger N290 responses to fearful than to angry and happy faces in the second half of the first year, although others have reported the reverse, as reviewed above. We therefore based our hypothesis not only on the infant literature but also on adult N170 findings and on the notion that the infant N290 may be a precursor of the N170. We hypothesized that 7- and 12-month-old infants (but not 5-month-old infants) would show greater N290 responses to fearful than to angry and happy faces. Further, in accordance with the extant literature (Kobiella et al., 2008; Leppänen et al., 2007), we hypothesized that 7- and 12-month-old infants would show greater P400 responses to fearful than to angry or happy faces. Although previous research has also been inconsistent regarding the effect of facial emotion on the Nc component, most prior studies have observed greater Nc responses to fearful (de Haan et al., 2004; Grossmann et al., 2011; Leppänen et al., 2007; Nelson & de Haan, 1996; Taylor-Colls & Pasco Fearon, 2015) and angry (Grossmann et al., 2007; Kobiella et al., 2008) faces than to other facial expressions (e.g., happy) during the second half of the first year of life. Thus, we expected greater Nc responses to fearful and angry than to happy faces in 7- and 12-month-old infants.

The second goal of the current study was to determine the cortical regions that support emotion processing in infancy, to shed light on the specific brain network that may underlie the development of emotion processing. Cortical source analysis of the N290, P400, and Nc components was conducted with realistic head models constructed from age-appropriate MRI templates (Richards, Sanchez, Phillips-Meek, & Xie, 2016; Richards & Xie, 2015). We used volumetric current density reconstruction (CDR) to measure activity in the source volumes and restricted our analyses to specific ROIs theoretically expected to be sensitive to facial emotions (Xie & Richards, 2017). For example, brain areas hypothesized to be key components of the infant emotion processing network or the face network (STS, FFA, OFA, and OFC) were selected as pre-defined ROIs. Infants were expected to show greater cortical activation in response to fearful faces in the ROIs of the face network and the infant emotion processing network (OFA, FFA, and STS) during the N290 time window. These three areas have also been identified as cortical sources of the N290 (Guy et al., 2016) and the N170 (Itier & Taylor, 2004) components. Infants were also expected to show greater cortical activation in response to the two negative emotions (angry and fearful) later, in the P400/Nc time window. This effect was expected in the ROIs that are the cortical sources of the P400 and Nc components (the PCC/Precuneus, MPFC, and inferior temporal and occipital regions; Guy et al., 2016), whose activation may also be modulated by infant attention (Xie, Mallin, & Richards, 2017).

Method

The Institutional Review Board approved all methods and procedures used in this study and all parents gave informed consent prior to testing.

Participants

Five-, 7-, and 12-month-old infants were recruited to participate in the current study (N = 337: 113 5-month-olds, 119 7-month-olds, and 105 12-month-olds). All infants were typically developing, born full-term, and had no pre- or perinatal complications. Participants were recruited from an existing database of families who had volunteered for research following the birth of their child. Demographic information (e.g., income, education, and ethnicity) is reported in the Supplemental Information. Parents were paid $20, and infants received a small toy for their participation.

The final sample for analysis consisted of 150 participants (49 5-month-olds, 23 females; 50 7-month-olds, 23 females; 51 12-month-olds, 26 females). The remaining 187 infants were excluded from the analysis because of excessive eye and/or body movements, artifacts, or fussiness that resulted in an insufficient number of trials (N = 161), refusal to wear the electroencephalogram (EEG) net (N = 15), poor EEG net placement (N = 1), computer/procedural error (N = 1), a later diagnosis of Autism Spectrum Disorder (N = 5), or prenatal medication use (N = 4). The resulting retention rate of 44.5% is similar to that of previous ERP studies with infants of similar ages (Hoehl, 2015; Jessen & Grossmann, 2015; Leppänen et al., 2007).
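The sample accounting above can be checked with a few lines of arithmetic. This sketch uses only the counts reported in the text; the category labels are shorthand, and it assumes, as the totals imply, that each excluded infant is counted under exactly one exclusion reason.

```python
# Counts reported in the Participants section.
recruited = {"5mo": 113, "7mo": 119, "12mo": 105}
retained = {"5mo": 49, "7mo": 50, "12mo": 51}
excluded_by_reason = {
    "insufficient artifact-free trials": 161,
    "EEG net refusal": 15,
    "poor EEG net placement": 1,
    "computer/procedural error": 1,
    "later ASD diagnosis": 5,
    "prenatal medication use": 4,
}

n_recruited = sum(recruited.values())           # 113 + 119 + 105
n_retained = sum(retained.values())             # 49 + 50 + 51
n_excluded = sum(excluded_by_reason.values())   # sum of the six reasons

# Each recruited infant is either retained or excluded for one listed reason.
assert n_recruited == n_retained + n_excluded

retention_rate = 100 * n_retained / n_recruited
print(f"{n_retained}/{n_recruited} retained ({retention_rate:.1f}%)")

# Per-age retention, for comparison across groups.
for age in recruited:
    print(f"{age}: {100 * retained[age] / recruited[age]:.1f}% retained")
```

The per-age loop is included only to show that retention varied somewhat between groups; the text reports the overall rate.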

Stimuli and Task Procedure

Stimuli were images of female faces expressing happy, angry, and fearful emotions from the NimStim Face Stimulus Set (Tottenham et al., 2009). A maximum of 150 trials was presented to each infant, 50 per emotion category (happy, angry, and fearful). Each category consisted of five individual exemplars, so each stimulus could be presented a maximum of 10 times. Stimuli were presented on a gray background and subtended 14.3° × 12.2° of visual angle.
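For readers who want the physical stimulus size, the reported visual angles can be converted with the standard relation size = 2 · d · tan(θ / 2). This sketch assumes the approximately 65 cm viewing distance reported in the next paragraph and a screen viewed head-on; the resulting centimetre values are implied by these assumptions, not measurements reported by the study.

```python
import math

def extent_from_visual_angle(angle_deg: float, distance_cm: float) -> float:
    """Physical extent (cm) that subtends `angle_deg` at `distance_cm`.

    Uses size = 2 * d * tan(theta / 2), which assumes the stimulus is centred
    on the line of sight and viewed head-on.
    """
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)

VIEWING_DISTANCE_CM = 65.0  # approximate infant-to-screen distance

# The two reported stimulus dimensions, in degrees of visual angle.
extents_cm = {
    angle: extent_from_visual_angle(angle, VIEWING_DISTANCE_CM)
    for angle in (14.3, 12.2)
}
for angle, cm in extents_cm.items():
    print(f"{angle} deg at {VIEWING_DISTANCE_CM:.0f} cm -> about {cm:.1f} cm")
```

Because infants sat on a caregiver’s lap, the true viewing distance probably varied from trial to trial, so these sizes are approximate.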

Testing occurred in an acoustically shielded room with minimal lighting. Continuous EEG was recorded while infants were seated on a caregiver’s lap approximately 65 cm from the presentation screen. During testing, an experimenter in an adjacent room controlled image presentation while the infant’s looking behavior was monitored via a video camera. The camera was connected to the computer and plugged into the same bus as the amplifier. EGI (Electrical Geodesics Inc., 2016) subsequently identified and verified that this configuration introduced a timing artifact (a 36-ms offset), which we corrected before any measurements and analyses were conducted (see Luyster, Bick, Westerlund, & Nelson, 2017 for more details).
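Correcting a fixed timing offset amounts to shifting every event marker by a constant number of samples. This minimal sketch converts the 36-ms offset to samples at the 500 Hz sampling rate reported under EEG Recording, Processing, and Analysis (18 samples). The function names are hypothetical, and the direction of the shift is an assumption exposed as a parameter: the correct sign for this hardware configuration should be taken from the EGI notice and Luyster et al. (2017), not from this example.

```python
SAMPLING_RATE_HZ = 500   # EEG sampling rate
TIMING_OFFSET_MS = 36    # fixed offset identified for this hardware configuration

def ms_to_samples(ms: float, fs_hz: float = SAMPLING_RATE_HZ) -> int:
    """Convert a duration in ms to a whole number of samples (no fractional shifts)."""
    samples = ms * fs_hz / 1000.0
    if abs(samples - round(samples)) > 1e-9:
        raise ValueError(f"{ms} ms is not a whole number of samples at {fs_hz} Hz")
    return int(round(samples))

def correct_event_samples(event_samples, offset_ms=TIMING_OFFSET_MS, direction=+1):
    """Shift event-marker sample indices by a fixed offset.

    `direction` (+1 = move markers later, -1 = earlier) is an assumption of this
    sketch; it must match how the offset actually arose in the recording.
    """
    if direction not in (+1, -1):
        raise ValueError("direction must be +1 or -1")
    shift = direction * ms_to_samples(offset_ms)
    return [s + shift for s in event_samples]

print(ms_to_samples(TIMING_OFFSET_MS))        # 18
print(correct_event_samples([1000, 1650]))    # [1018, 1668]
```

Refusing fractional sample shifts keeps the correction exact; at 500 Hz, 36 ms happens to be exactly 18 samples.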

Image presentation was controlled using E-Prime 2.0 (Psychology Software Tools, Pittsburgh, PA), and a stimulus was presented only when the infant was attending to the presentation screen. Each stimulus was presented for 1000 ms, followed by a fixation cross that remained on the screen until the experimenter presented the next trial; the minimum inter-stimulus interval was 700 ms. A second experimenter sat next to the infant throughout testing to redirect the infant’s attention to the presentation screen when necessary. Testing continued until the maximum number of trials had been reached or until the infant’s attention could no longer be maintained (trials completed: 5-month-olds, M = 132.43, SD = 24.67, range = 56–150; 7-month-olds, M = 138.22, SD = 16.66, range = 90–150; 12-month-olds, M = 139.57, SD = 14.65, range = 101–150).

EEG Recording, Processing, and Analysis

The EEG recording and analysis procedures were similar to those described in Vanderwert et al. (2015). Continuous scalp EEG was recorded from a 128-channel HydroCel Geodesic Sensor Net (HCGSN; Electrical Geodesics Inc., Eugene, OR) connected to a NetAmps 300 amplifier (Electrical Geodesics Inc., Eugene, OR) and referenced online to a single vertex electrode (Cz). Channel impedances were kept at or below 100 kΩ, and signals were sampled at 500 Hz. EEG data were preprocessed offline using NetStation 4.5 (Electrical Geodesics Inc., Eugene, OR).

The EEG signal was segmented from 100 ms before to 1200 ms after stimulus onset. Data segments were filtered with a 0.3–30 Hz bandpass filter and baseline-corrected using the mean voltage of the 100-ms pre-stimulus period. Automated artifact detection was applied to the segmented data to identify individual sensors showing voltage changes greater than 200 μV within the segment. Segments containing eye blinks, eye movements, or drift were also rejected based on visual inspection of each segmented trial. Segments identified as bad by either procedure were excluded from further analysis. Consistent with previous infant ERP studies (Luyster, Powell, Tager-Flusberg, & Nelson, 2014; Righi, Westerlund, Congdon, Troller-Renfree, & Nelson, 2014), an entire trial was excluded if more than 18 sensors (15%) had been rejected. In the remaining trials, individual channels containing artifact were replaced using spherical spline interpolation. The final data were re-referenced to the average reference. Each infant was required to contribute at least 9 artifact-free trials per condition to be included in the final analysis. The mean number of trials per condition (M = 21.96) did not differ between emotion categories or between ages (Supplemental Table 2).
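The trial-level rules above (baseline correction, the 200 μV criterion, the 18-sensor rejection rule, average re-referencing, and the 9-trial minimum) can be sketched with NumPy on an array of shape (trials, channels, samples) in μV. This is an illustration under stated assumptions, not the NetStation pipeline used in the study: it treats a "voltage change" as the peak-to-peak range within the segment, uses synthetic data, and omits filtering, visual inspection, and spherical spline interpolation (which needs electrode positions; MNE-Python’s `interpolate_bads` is one option).

```python
import numpy as np

FS_HZ = 500
ARTIFACT_THRESHOLD_UV = 200.0
MAX_BAD_SENSORS = 18
MIN_TRIALS_PER_CONDITION = 9

def baseline_correct(epochs, times_ms):
    """Subtract each channel's mean over the pre-stimulus interval (times < 0 ms)."""
    baseline = epochs[..., times_ms < 0].mean(axis=-1, keepdims=True)
    return epochs - baseline

def flag_bad_sensors(epochs, threshold_uv=ARTIFACT_THRESHOLD_UV):
    """(trials, channels) mask: True where the within-segment voltage range exceeds the threshold."""
    voltage_range = epochs.max(axis=-1) - epochs.min(axis=-1)
    return voltage_range > threshold_uv

def trials_to_keep(bad_mask, max_bad=MAX_BAD_SENSORS):
    """Keep a trial only if no more than `max_bad` sensors were flagged."""
    return bad_mask.sum(axis=1) <= max_bad

def average_reference(epochs):
    """Re-reference every sample to the mean across channels."""
    return epochs - epochs.mean(axis=1, keepdims=True)

def has_enough_trials(trials_per_condition, minimum=MIN_TRIALS_PER_CONDITION):
    """Inclusion rule: at least `minimum` artifact-free trials in every condition."""
    return all(n >= minimum for n in trials_per_condition.values())

# Synthetic example: 3 trials x 128 channels, -100 to 1198 ms at 500 Hz.
rng = np.random.default_rng(0)
times_ms = np.arange(-50, 600) * 1000.0 / FS_HZ
epochs = rng.normal(0.0, 10.0, size=(3, 128, times_ms.size))
epochs[1, :20, 300] += 500.0  # trial 1: a large transient on 20 sensors

epochs = baseline_correct(epochs, times_ms)
bad = flag_bad_sensors(epochs)
keep = trials_to_keep(bad)
# In the study, remaining bad channels were interpolated before re-referencing.
clean = average_reference(epochs[keep])
print(keep.tolist())  # [True, False, True]
```

Trial 1 is dropped because 20 sensors exceed the criterion, more than the 18 allowed; the other two trials contain only moderate noise.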

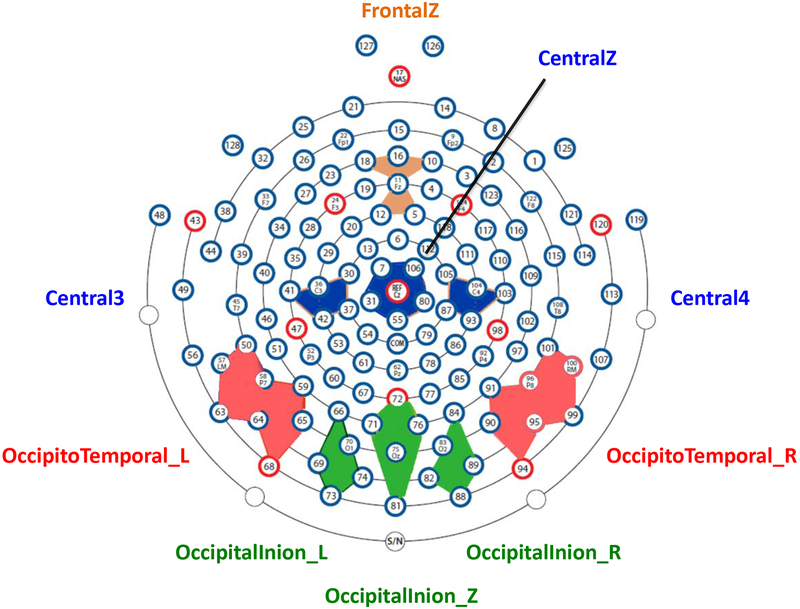

The HCGSN electrodes were combined into 9 electrode clusters covering the scalp regions used to examine the N290, P400, and Nc components in previous research (e.g., Leppänen et al., 2007; Peltola, Leppänen, Mäki, & Hietanen, 2009; Rigato et al., 2010; Vanderwert et al., 2015; Xie & Richards, 2016). The clusters were named either after the 10–10 electrode positions that their HCGSN electrodes surround (‘FrontalZ’, ‘CentralZ’, ‘Central3’, and ‘Central4’) or after the scalp regions they cover (‘Occipito-Temporal_L’, ‘Occipito-Temporal_R’, ‘Occipital-Inion_L’, ‘Occipital-Inion_R’, and ‘Occipital-Inion_Z’). The 4 frontal and central clusters were used for the Nc analysis, and the 5 posterior clusters were used for the N290 and P400 analyses. The 9 clusters and their corresponding HCGSN electrodes are listed in Supplemental Table 1, and their layout is shown in Figure 1. The N290 component was examined in the 190–290 ms time window, and the P400 and Nc components in the 300–600 ms time window. Time windows were chosen through visual inspection of the grand-average waveform across the three conditions and are comparable to those used in previous infant emotion processing research (Kobiella et al., 2008; Leppänen et al., 2007; Vanderwert et al., 2015).
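Cluster-level waveforms are typically obtained by averaging the ERP across the electrodes belonging to each cluster. In this sketch the channel indices are hypothetical placeholders (the actual HCGSN electrode lists are given in Supplemental Table 1), only two of the nine clusters are shown, and the data are a toy array.

```python
import numpy as np

# Hypothetical 0-based channel indices; substitute the lists from Supplemental Table 1.
CLUSTERS = {
    "Occipital-Inion_R": [0, 1, 2],
    "Occipito-Temporal_R": [3, 4],
}

def cluster_waveforms(erp, clusters=CLUSTERS):
    """Average an ERP of shape (channels, samples) over each cluster's channels."""
    n_channels = erp.shape[0]
    out = {}
    for name, channels in clusters.items():
        if max(channels) >= n_channels:
            raise IndexError(f"cluster {name!r} refers to a missing channel")
        out[name] = erp[channels].mean(axis=0)
    return out

erp = np.arange(5 * 4, dtype=float).reshape(5, 4)  # toy data: 5 channels x 4 samples
waves = cluster_waveforms(erp)
print(waves["Occipital-Inion_R"])     # mean of channels 0-2
print(waves["Occipito-Temporal_R"])   # mean of channels 3-4
```

Averaging within a cluster before measuring components reduces sensitivity to noise on any single electrode.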

Figure 1.

2D layout of the channel clusters used for the ERP analysis. This figure illustrates the location and name of each cluster, as well as the HCGSN electrodes used to create the clusters.

The N290 peak amplitude was corrected for the preceding positive peak by measuring the peak-to-peak amplitude: for each condition, the amplitude of the pre-N290 positive peak was subtracted from the N290 peak amplitude. Using the peak-to-peak amplitude controls for differences at the pre-N290 positive peak and reduces the effect of positive or negative trends in the ERP, both of which might otherwise drive differences between infant N290 amplitudes (Guy et al., 2016; Kuefner, de Heering, Jacques, Palmero-Soler, & Rossion, 2010; Peykarjou, Pauen, & Hoehl, 2014). An additional rationale is that infants’ N290 peaks can have positive amplitudes, which may confound the source reconstruction results (Guy et al., 2016). For example, an N290 peaking at +1 μV is a greater negative deflection than one peaking at +2 μV; however, the larger scalp N290 (+1 μV) would yield a smaller amplitude in source space, because source amplitude mostly reflects the absolute amplitude on the scalp. One solution is to use the difference in ERP amplitude between conditions for source analysis, but the source localization of a difference score is not the same as the difference between sources calculated separately (Richards, 2013). Therefore, a peak-to-peak N290 amplitude was measured to resolve this issue for the source analysis (Guy et al., 2016). A third motivation for using the peak-to-peak amplitude is to obtain a clearer scalp distribution of this component: positive N290 amplitudes make the component much less distinct in a topographical map (cf. Supplemental Figure 2 and Supplemental Figure 4). Although our analyses focused on the corrected N290 amplitude, results for the uncorrected N290 amplitude are included in the Supplemental Information for comparison, given that this way of measuring the N290 is also prevalent in the infant ERP literature.
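The peak-to-peak measure can be sketched as follows. The N290 window (190–290 ms) comes from the text; the window used to find the preceding positive peak is not stated, so the 100–190 ms range below is a hypothetical choice. The toy waveforms mirror the example above (raw N290 troughs at +1 μV and +2 μV); the preceding peak values of +12 μV and +8 μV are invented for illustration.

```python
import numpy as np

N290_WINDOW_MS = (190, 290)
PRE_PEAK_WINDOW_MS = (100, 190)  # hypothetical search window for the pre-N290 positive peak

def peak_to_peak_n290(waveform, times_ms,
                      pre_window=PRE_PEAK_WINDOW_MS, n290_window=N290_WINDOW_MS):
    """N290 trough minus the preceding positive peak (more negative = larger N290)."""
    pre = (times_ms >= pre_window[0]) & (times_ms < pre_window[1])
    n290 = (times_ms >= n290_window[0]) & (times_ms <= n290_window[1])
    positive_peak = waveform[pre].max()
    n290_peak = waveform[n290].min()
    return float(n290_peak - positive_peak)

times_ms = np.arange(-100, 1200, 2.0)  # 500 Hz sampling, -100 to 1198 ms

def toy_wave(pre_peak_uv, trough_uv):
    """Flat +5 uV waveform with one positive peak at 150 ms and one trough at 250 ms."""
    wave = np.full(times_ms.shape, 5.0)
    wave[times_ms == 150] = pre_peak_uv
    wave[times_ms == 250] = trough_uv
    return wave

wave_a = toy_wave(pre_peak_uv=12.0, trough_uv=1.0)  # raw N290 peak at +1 uV
wave_b = toy_wave(pre_peak_uv=8.0, trough_uv=2.0)   # raw N290 peak at +2 uV
print(peak_to_peak_n290(wave_a, times_ms))  # -11.0
print(peak_to_peak_n290(wave_b, times_ms))  # -6.0
```

Both corrected values are negative even though the raw troughs are positive, so the corrected amplitude expresses the N290 as a negative deflection relative to the preceding peak, which is the property the source analysis relies on.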

Mean amplitude was computed for the P400 and the Nc components to better quantify their prolonged activation.
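A minimal sketch of the mean-amplitude measure for the 300–600 ms P400/Nc window, assuming 500 Hz sampling and inclusive window bounds. The toy waveform (hypothetical values) combines a sustained +20 μV deflection with a brief +40 μV spike, showing that a window mean is much less affected than a single peak by momentary fluctuations in a prolonged component.

```python
import numpy as np

P400_NC_WINDOW_MS = (300, 600)

def mean_amplitude(waveform, times_ms, window=P400_NC_WINDOW_MS):
    """Average voltage over all samples within the (inclusive) time window."""
    mask = (times_ms >= window[0]) & (times_ms <= window[1])
    if not mask.any():
        raise ValueError("no samples fall inside the window")
    return float(waveform[mask].mean())

times_ms = np.arange(-100, 1200, 2.0)  # 500 Hz sampling
in_window = (times_ms >= 300) & (times_ms <= 600)
wave = np.where(in_window, 20.0, 0.0)   # sustained +20 uV deflection
wave[times_ms == 450] += 40.0           # brief single-sample spike

mean_uv = mean_amplitude(wave, times_ms)
peak_uv = float(wave[in_window].max())
print(f"mean = {mean_uv:.2f} uV, peak = {peak_uv:.1f} uV")
```

The spike triples the peak value but shifts the 151-sample mean by only about a quarter of a microvolt.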

Cortical Source Analysis

The cortical source analysis of the N290, P400, and Nc components was conducted with FieldTrip (FT; Oostenveld, Fries, Maris, & Schoffelen, 2011) and in-house custom MATLAB scripts. The major steps were the construction of realistic head models, the definition of regions of interest (ROIs), and cortical source reconstruction (i.e., current density reconstruction; CDR).

Realistic finite element method (FEM) models were created with age-appropriate infant MRI templates obtained from the Neurodevelopmental MRI Database (Richards et al., 2016; Richards & Xie, 2015). A 6-month-old template was used for the 5- and 7-month-olds, and a 12-month-old template was used for the 12-month-olds. The infant MRI templates were segmented into different materials, including scalp, skull, cerebrospinal fluid (CSF), white matter (WM), gray matter (GM), nasal cavity, and eyes. Only GM volumes were used as sources in the source analysis.

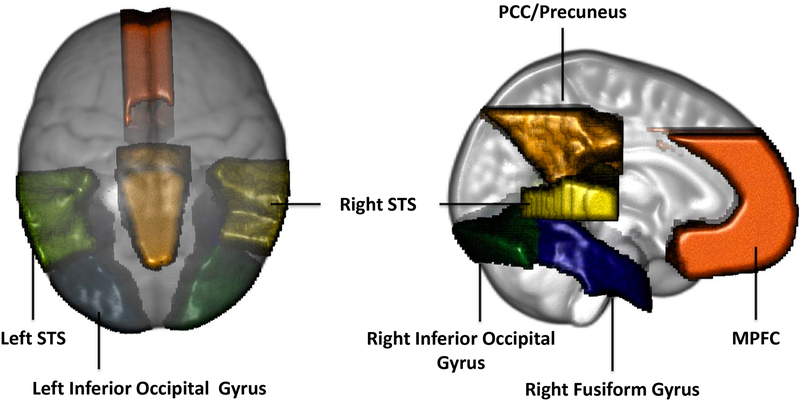

Ten brain ROIs were chosen based on previous research (Guy et al., 2016; Leppänen & Nelson, 2009; Xie et al., 2017) for the source analysis of the N290 (6 ROIs) and the P400 and Nc (4 ROIs) components. The ROIs used for the N290 source analysis were the left and right fusiform gyri, inferior occipital gyri, and STS. The ROIs for the P400 and Nc source analysis were the MPFC, the PCC/Precuneus, and the left and right fusiform gyri and inferior occipital gyri. Figure 2 displays these ROIs on the 6-month MRI template.

Figure 2.

Regions of interest (ROIs) used for cortical source analysis, shown on the 12-month-old average MRI atlas.

Cortical source reconstruction was conducted using the distributed CDR technique with exact-LORETA (eLORETA; Pascual-Marqui, 2007; Pascual-Marqui et al., 2011) as the localization constraint. The current density amplitude (i.e., CDR value) in each ROI was calculated from the ERP data at all electrodes, separately for the N290 (190–290 ms) and P400/Nc (300–600 ms) time windows. The peak CDR value in the N290 time window was measured, to be consistent with the peak-amplitude measure used in the N290 ERP analysis, and the mean CDR value in the P400/Nc time window was measured, to be consistent with the mean-amplitude measures used in the P400 and Nc ERP analyses. Additional information on the cortical source reconstruction and the CDR values can be found in the Supplemental Information.
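Once current density has been reconstructed, the ROI measures described above reduce to simple summaries of each ROI’s time course. This sketch assumes a (voxels, samples) array of non-negative current density amplitudes and a per-voxel ROI label; averaging amplitudes across an ROI’s voxels is an assumption of this example (the actual procedure is described in the Supplemental Information), the eLORETA inverse itself is not shown, and the ROI labels and values are hypothetical.

```python
import numpy as np

def roi_time_course(cdr, voxel_labels, roi):
    """Mean current density amplitude across the voxels assigned to `roi`."""
    mask = voxel_labels == roi
    if not mask.any():
        raise ValueError(f"no voxels labelled {roi!r}")
    return cdr[mask].mean(axis=0)

def roi_measures(cdr, voxel_labels, roi, times_ms):
    """Peak CDR in the N290 window and mean CDR in the P400/Nc window."""
    tc = roi_time_course(cdr, voxel_labels, roi)
    n290 = (times_ms >= 190) & (times_ms <= 290)
    late = (times_ms >= 300) & (times_ms <= 600)
    return {"N290_peak": float(tc[n290].max()),
            "P400_Nc_mean": float(tc[late].mean())}

times_ms = np.arange(-100, 1200, 2.0)
voxel_labels = np.array(["FFA_R", "FFA_R", "PCC", "PCC"])  # hypothetical labels
cdr = np.zeros((4, times_ms.size))
cdr[0, times_ms == 250] = 4.0           # brief early activity in one FFA voxel
cdr[1, times_ms == 250] = 2.0           # and a weaker response in the other
late_window = (times_ms >= 300) & (times_ms <= 600)
cdr[2:, late_window] = 1.5              # sustained later activity in the PCC voxels

print(roi_measures(cdr, voxel_labels, "FFA_R", times_ms))
print(roi_measures(cdr, voxel_labels, "PCC", times_ms))
```

Using a peak for the early window and a mean for the late window mirrors the choice made for the scalp measures, so source and scalp results are summarized in the same way.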

Design for Statistical Analysis

Statistical analyses were performed with mixed-design repeated-measures analyses of variance (ANOVAs) in IBM SPSS Statistics version 24 (IBM Corp., Armonk, NY). ERP amplitudes and cortical source activity (CDR values) were the dependent variables. The independent variables were age (3 levels: 5, 7, and 12 months) as a between-subjects factor and emotion (3 levels: angry, fearful, and happy), channel cluster (5 clusters for the N290 and P400; 4 clusters for the Nc), or brain ROI (6 for the N290 source analysis; 4 for the P400/Nc source analysis) as within-subjects factors. Greenhouse-Geisser corrections were applied when the assumption of sphericity was violated. When significant (p < .05) main or interaction effects emerged, post hoc comparisons were conducted with Bonferroni correction for multiple comparisons.
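SPSS computes the Greenhouse-Geisser correction automatically; this sketch only illustrates what the correction estimates. For a single within-subjects factor with k levels, epsilon = tr(S_c)² / ((k − 1) · tr(S_c · S_c)), where S_c is the double-centred covariance matrix of the k repeated measures. Epsilon equals 1 under sphericity, has a lower bound of 1/(k − 1), and multiplies both degrees of freedom. This is a simplification: it uses a single covariance matrix rather than the pooled within-group covariance of a mixed design and covers only one within-subjects factor.

```python
import numpy as np

def gg_epsilon_from_cov(cov):
    """Greenhouse-Geisser epsilon from a k x k covariance matrix of repeated measures."""
    cov = np.asarray(cov, dtype=float)
    k = cov.shape[0]
    centering = np.eye(k) - np.ones((k, k)) / k
    s_c = centering @ cov @ centering          # double-centred covariance
    return float(np.trace(s_c) ** 2 / ((k - 1) * np.trace(s_c @ s_c)))

def gg_epsilon(data):
    """data: (subjects, k conditions), e.g., amplitudes for angry, fearful, and happy faces."""
    return gg_epsilon_from_cov(np.cov(np.asarray(data, dtype=float), rowvar=False))

def corrected_df(df_effect, df_error, epsilon):
    """Greenhouse-Geisser-adjusted degrees of freedom."""
    return df_effect * epsilon, df_error * epsilon

# Spherical covariance: epsilon = 1, so no correction is needed.
print(gg_epsilon_from_cov(np.eye(3)))
# Maximal violation for k = 3 (all variance on one contrast): epsilon = 1 / (k - 1).
v = np.array([1.0, -1.0, 0.0])
print(gg_epsilon_from_cov(np.outer(v, v)))
# Adjusting F(2, 294) for a hypothetical epsilon of 0.9.
print(corrected_df(2, 294, 0.9))
```

Because epsilon scales both degrees of freedom, the same F value is evaluated against a more conservative distribution whenever sphericity is violated.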

Results

ERP analysis

N290

Emotion effects

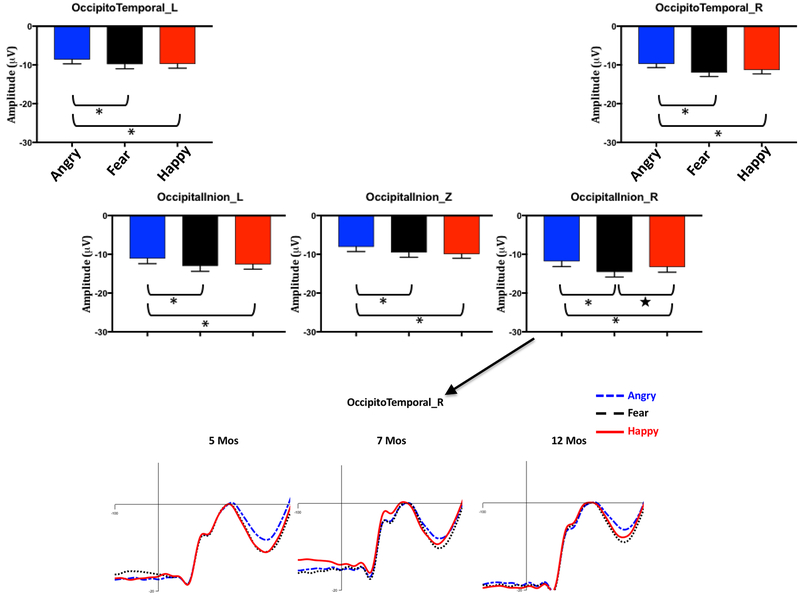

Analysis of the corrected N290 peak amplitude revealed a significant interaction of channel cluster and emotion, F(8, 1176) = 2.18, p = .041, ηp2 = .02. Bonferroni-corrected follow-up comparisons between the three conditions in the five clusters (15 comparisons) showed that in four clusters the N290 amplitude was significantly larger in response to fearful (left Occipital-Inion (OI): M = −12.99 μV, 95% CI [−14.39, −11.59]; middle OI: M = −9.52 μV, 95% CI [−10.77, −8.27]; left Occipito-Temporal (OT): M = −9.81 μV, 95% CI [−10.98, −8.63]; right OT: M = −11.95 μV, 95% CI [−12.92, −10.99]) and happy (left OI: M = −12.58 μV, 95% CI [−13.86, −11.31]; middle OI: M = −9.94 μV, 95% CI [−11.03, −8.85]; left OT: M = −9.71 μV, 95% CI [−10.77, −8.65]; right OT: M = −11.30 μV, 95% CI [−12.26, −10.33]) than to angry (left OI: M = −11.08 μV, 95% CI [−12.41, −9.75]; middle OI: M = −8.09 μV, 95% CI [−9.30, −6.88]; left OT: M = −8.58 μV, 95% CI [−9.69, −7.48]; right OT: M = −9.70 μV, 95% CI [−11.62, −8.78]) faces, ps < .05 for all comparisons after Bonferroni adjustment (Figure 3). The N290 responses in the right Occipital-Inion cluster showed a different pattern (Figure 3): the N290 amplitude in response to fearful faces (M = −14.55 μV, 95% CI [−15.88, −13.22]) was greater than that to angry faces (M = −11.83 μV, 95% CI [−13.17, −10.49], p < .001) and, without correction for multiple comparisons (LSD), also greater than that to happy faces (M = −13.28 μV, 95% CI [−14.62, −11.93], p = .021); however, the fear–happy difference did not survive Bonferroni correction.
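The discrepancy between the LSD and Bonferroni results for the fear–happy comparison in the right Occipital-Inion cluster follows directly from the Bonferroni rule, which multiplies each p value by the number of comparisons in the family and caps the result at 1. This sketch applies the rule to the reported LSD p = .021; whether the family is taken as the 3 comparisons within one cluster or the 15 (3 × 5) comparisons stated in the Figure 3 caption, the adjusted value exceeds .05.

```python
def bonferroni(p_values, n_comparisons=None):
    """Bonferroni-adjusted p values: p * m, capped at 1."""
    m = len(p_values) if n_comparisons is None else n_comparisons
    if m < 1:
        raise ValueError("need at least one comparison")
    return [min(1.0, p * m) for p in p_values]

ALPHA = 0.05
p_fear_vs_happy_lsd = 0.021  # right Occipital-Inion cluster, uncorrected (LSD)

for family_size in (3, 15):
    adjusted = bonferroni([p_fear_vs_happy_lsd], n_comparisons=family_size)[0]
    verdict = "significant" if adjusted < ALPHA else "not significant"
    print(f"m = {family_size:2d}: adjusted p = {adjusted:.3f} ({verdict})")
```

Equivalently, the uncorrected p value would have needed to fall below .05/15 ≈ .0033 to survive correction across all 15 comparisons.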

Figure 3.

The top panel shows the peak-to-peak N290 amplitude for the three emotion conditions, separately for the five posterior channel clusters and collapsed across ages (i.e., the interaction between emotion and cluster). Error bars represent standard errors of the mean. *p < 0.05 with Bonferroni correction for the 15 (3 × 5) comparisons. ★ p < 0.05, uncorrected (LSD). The bottom panel shows the N290 component, corrected for the pre-N290 positive peak, in the right Occipital-Inion cluster for the three emotion conditions, separately for the three ages.

Although the interaction between age, emotion, and channel cluster was not significant (p = .158), the N290 peak amplitudes in the right Occipital-Inion and Occipito-Temporal clusters are plotted separately for the three ages in Supplemental Figure 1. Descriptively, these right-hemisphere clusters appear to show a “fear bias” (i.e., a heightened response to fearful faces) in the N290 component that peaks at 7 months of age.

Age and channel effects

There was also a significant interaction of channel cluster and age, F(8, 588) = 5.09, p < .001, ηp2 = .07. At 5 months, the N290 amplitudes in the left (M = −12.00 μV) and right (M = −13.66 μV) Occipital-Inion clusters were greater than those in the left (M = −7.37 μV, p < .001) and right (M = −8.68 μV, p = .007) Occipito-Temporal clusters. At 7 months, the N290 amplitudes in the left (M = −12.83 μV) and right (M = −13.48 μV) Occipital-Inion clusters were greater than those in the left Occipito-Temporal (M = −9.98 μV, p = .002) and middle Occipital-Inion (M = −9.79 μV, p < .001) clusters. At 12 months, the N290 amplitude was significantly smaller in the middle Occipital-Inion cluster (M = −8.01 μV) than in the other four clusters: left Occipital-Inion (M = −11.82 μV, p < .001), right Occipital-Inion (M = −12.51 μV, p < .001), left Occipito-Temporal (M = −10.76 μV, p = .033), and right Occipito-Temporal (M = −12.81 μV, p < .001). In addition, at all three ages the N290 amplitudes in the right-hemisphere clusters (OI: M [μV] = −13.66, −13.48, and −12.51; OT: −8.68, −11.46, and −12.81 for 5, 7, and 12 months, respectively) were greater than those in the left-hemisphere clusters (OI: M [μV] = −12.00, −12.83, and −11.82; OT: −7.37, −9.98, and −10.76), ps < .05 (see Supplemental Figure 2 for topographical maps). Results of the analyses with uncorrected N290 peak amplitudes are reported in the Supplemental Information and illustrated in Figure 4 and Supplemental Figure 4.

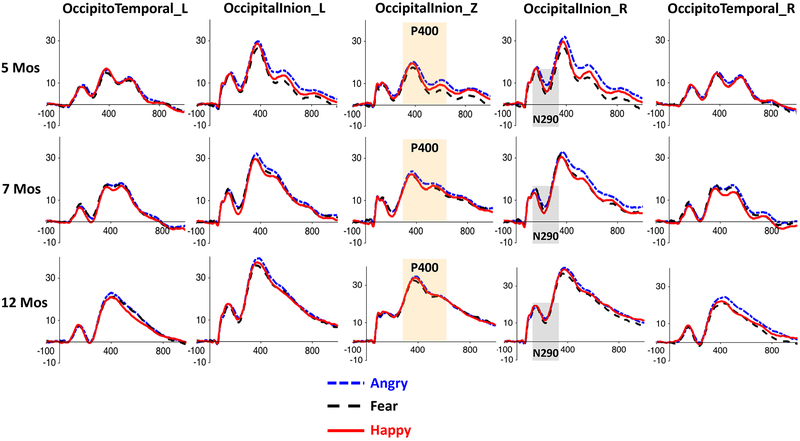

Figure 4.

ERP waveforms for the three emotion conditions in the five posterior channel clusters used for the N290 and P400 analyses, separately for the three age groups. The shaded areas indicate the time windows of the ERP components. The P400 effect (i.e., greater response to angry faces) can be easily seen in the Occipital-Inion clusters.

P400

Emotion effects

Analysis of the mean amplitude of the P400 revealed a significant interaction of emotion and channel cluster, F(8, 588) = 4.18, p < .001, ηp2 = .03. Bonferroni-corrected follow-up comparisons showed that the P400 amplitudes in the left and right Occipital-Inion and the right Occipito-Temporal clusters were greater in response to angry (left OI: M = 25.85 μV, 95% CI [24.18, 27.52]; right OI: M = 27.22 μV, 95% CI [25.56, 28.89]; right OT: M = 15.64 μV, 95% CI [14.40, 16.88]) than to fearful (left OI: M = 23.20 μV, 95% CI [21.65, 24.75]; right OI: M = 23.54 μV, 95% CI [22.04, 25.05]; right OT: M = 13.97 μV, 95% CI [12.80, 15.13], ps < .001) and happy (left OI: M = 23.75 μV, 95% CI [22.12, 25.38]; right OI: M = 24.53 μV, 95% CI [23.02, 26.04]; right OT: M = 13.70 μV, 95% CI [12.71, 14.69], ps < .001) faces (Figure 4). In contrast, no difference was found between the three conditions in the left Occipito-Temporal cluster (ps > .05), and only a difference between angry and fearful faces (angry > fearful, Mdiff = 2.33, p < .001) was found in the middle Occipital-Inion cluster. There was also a significant main effect of emotion, F(2, 294) = 14.97, p < .001, ηp2 = .09. Overall, the P400 amplitude was greater in response to angry (M = 20.94 μV, 95% CI [19.69, 22.18]) than to fearful (M = 18.70 μV, 95% CI [17.55, 19.86], p < .001) and happy (M = 19.12 μV, 95% CI [17.98, 20.27], p < .001) faces.

Age and channel effects

There was also a significant interaction of channel cluster and age, F(8,588) = 7.42, p < .001, ηp2 = .09. Follow-up analysis showed that for the 5- and 7-month-olds, the P400 amplitudes in the left (5mos: M = 18.63 μV; 7mos: M = 23.78 μV) and right (5mos: M = 19.51 μV; 7mos: M = 24.06 μV) Occipital-Inion clusters were greater than the amplitude in the middle Occipital-Inion cluster (5mos: M = 12.47 μV, p < .001; 7mos: M = 18.32 μV, p < .001), which in turn was greater than the amplitudes in the left (5mos: M = 11.92 μV, p < .001; 7mos: M = 14.42 μV, p < .001) and right (5mos: M = 12.22 μV, p < .001; 7mos: M = 13.58 μV, p = .003) Occipito-Temporal clusters (Figure 4). For the 12-month-olds, the P400 amplitudes in the three Occipital-Inion clusters (left OI: M = 30.40 μV; right OI: M = 31.73 μV; middle OI: M = 27.86 μV) were greater than those in the two Occipito-Temporal clusters (left OT: M = 17.40 μV; right OT: M = 18.52 μV), ps < .001 (Figure 4). There was also a main effect of age, F(2,147) = 31.24, p < .001, ηp2 = .30. Post-hoc comparisons showed that the P400 amplitude increased with age (5mos: M = 14.75 μV; 7mos: M = 18.83 μV; 12mos: M = 25.18 μV; 5mos vs 7mos, p = .008; 7mos vs 12mos, p < .001) (Figure 4; see Supplemental Figure 2 for topographical maps).
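Partial eta squared can be recovered from an F statistic and its degrees of freedom, because ηp2 = SS_effect / (SS_effect + SS_error) = (F × df_effect) / (F × df_effect + df_error). The section does not state how ηp2 was computed, so the snippet below is only a consistency check under this standard identity, applied to two statistics reported above.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F statistic and its effect and error degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Main effect of age on the P400, F(2,147) = 31.24
print(round(partial_eta_squared(31.24, 2, 147), 2))   # 0.3
# Channel cluster x age interaction on the P400, F(8,588) = 7.42
print(round(partial_eta_squared(7.42, 8, 588), 2))    # 0.09
```

Because the identity depends on the error degrees of freedom, it is also a quick way to check that reported F values, degrees of freedom, and effect sizes agree with one another.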

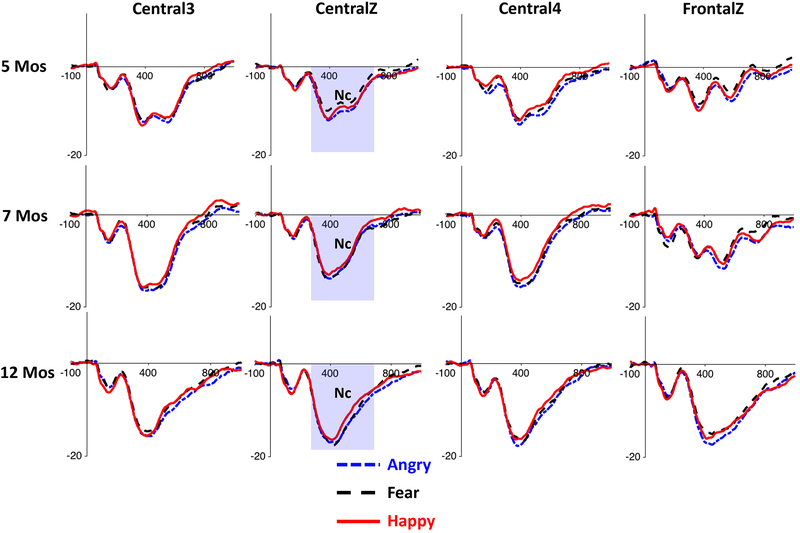

Nc

Emotion effects

Analysis of the mean amplitude of the Nc revealed a significant main effect of emotion, F(2,294) = 7.32, p = .001, ηp2 = .05. Post-hoc comparisons showed that the Nc amplitude for angry faces (M = −11.71 μV, 95%CI [−12.46, −10.97]) was larger than that for fearful (M = −10.88 μV, 95%CI [−11.62, −10.13], p = .008) and happy (M = −10.77 μV, 95%CI [−11.43, −10.11], p = .002) faces (Figure 5), which is similar to the P400 emotion effect. The Nc responses to fearful and happy faces did not differ significantly from each other, p = 1.00. The interaction between emotion and age on the Nc amplitude was not significant, F(4,294) = .946, p = .438, ηp2 = .013, nor was the interaction among emotion, channel cluster, and age, F(12, 284) = .514, p = .907, ηp2 = .007.

Figure 5.

ERP waveforms for the three emotion conditions in the four central and frontal channel clusters used for the Nc analysis, separately for the three age groups.

Age and channel effects

The analysis also revealed main effects of channel cluster, F(3,441) = 23.64, p < .001, ηp2 = .14, and age group, F(2,147) = 13.58, p < .001, ηp2 = .16. Post-hoc comparisons for the channel clusters showed that the Nc amplitude was larger in the CentralZ cluster (M = −12.71 μV) than in the CentralL (M = −11.60 μV), CentralR (M = −11.65 μV), and FrontalZ (M = −9.51 μV) clusters, ps < .01. The Nc amplitude also increased with age (5mos: M = −9.04 μV; 7mos: M = −11.08 μV; 12mos: M = −13.24 μV; 5mos vs 7mos, p = .040; 5mos vs 12mos, p < .001; 7mos vs 12mos, p = .023) (Figure 5; Supplemental Figure 2), which is similar to the age effect found for the P400.

Cortical source analysis

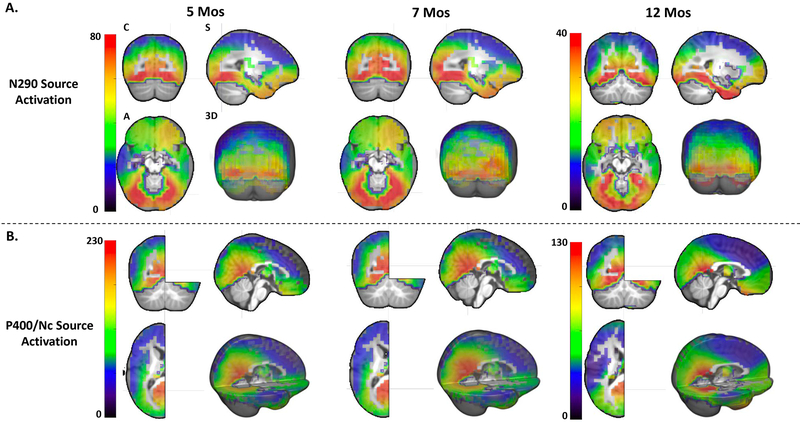

Figure 6 presents the source reconstruction of the ERPs in the time windows of 190 – 290 ms (the N290) and 300 – 600 ms (the P400 and the Nc), averaged across the three emotion conditions and shown separately for the three ages. We also examined the cortical sources of these ERP components separately for the three emotions and found no difference in source locations among them. Because the P400 and the Nc components occur in the same time window (Figures 4 and 5, and Supplemental Figure 3), we discuss their source analysis results together in the following paragraphs.

Figure 6.

Distributed source localization of (A) the N290 (190 – 290 ms) and (B) the P400/Nc (300 – 600 ms) components, shown separately for the three age groups. The source activation (or CDR amplitude) shown in the figure represents the average activation over the entire time window of each ERP component. The source activation is also averaged across the three emotion conditions.

As shown in Figure 6A, the cortical sources of the N290 are primarily located in the inferior temporal and the inferior and middle occipital lobes, especially the OFA and FFA regions. The temporal pole also appears to be a cortical source of the N290 component for the 12-month-olds. The cortical sources of the P400 and Nc components are primarily located in the PCC/Precuneus (Figure 6B), as well as in the inferior temporal lobe, which is visible in the coronal views (Figure 6). Figure 6 also demonstrates that the ROIs selected based on the literature overlap with the cortical sources reconstructed from the current data. The results of the statistical analyses of source activation as a function of emotion, ROI, and age are reported below, separately for the N290 and P400/Nc time windows.
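The source results are reported as CDR amplitudes summarized over ROIs and time windows. The CDR algorithm and head model are not specified in this section, so the sketch below substitutes a plain Tikhonov-regularized minimum-norm estimate, which is one standard distributed-source method and not necessarily the one used in this study. The random lead field, the regularization value, the "ROI" (source indices 10 to 14), and both function names are hypothetical; the second function mirrors the window-averaged measure used for the P400/Nc window.

```python
import numpy as np


def minimum_norm_estimate(leadfield, eeg, lam=0.1):
    """Tikhonov-regularized minimum-norm current density estimate.

    leadfield : (n_channels, n_sources) forward matrix from a head model
    eeg       : (n_channels, n_times) scalp voltages
    lam       : regularization parameter (illustrative value)
    Returns an (n_sources, n_times) array equal to L^T (L L^T + lam*I)^-1 V.
    """
    n_channels = leadfield.shape[0]
    gram = leadfield @ leadfield.T + lam * np.eye(n_channels)
    return leadfield.T @ np.linalg.solve(gram, eeg)


def roi_window_mean(sources, roi_indices, times, window):
    """Mean absolute source amplitude over the sources in an ROI and a time window."""
    times = np.asarray(times, dtype=float)
    mask = (times >= window[0]) & (times <= window[1])
    roi = sources[np.asarray(roi_indices)]      # (n_roi_sources, n_times)
    return float(np.abs(roi[:, mask]).mean())


# Toy problem: random 32-channel lead field over 200 sources, 5 active sources.
rng = np.random.default_rng(0)
leadfield = rng.standard_normal((32, 200))
times = np.arange(0.0, 800.0, 4.0)
true_sources = np.zeros((200, times.size))
true_sources[10:15] = np.sin(2 * np.pi * times / 800.0)
eeg = leadfield @ true_sources
estimate = minimum_norm_estimate(leadfield, eeg, lam=1e-8)
print(estimate.shape, roi_window_mean(estimate, range(10, 15), times, (300.0, 600.0)))
```

With a very small regularization value the estimate reproduces the scalp data almost exactly; larger values trade data fit for smoother, more stable source estimates, which matters for noisy infant recordings.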

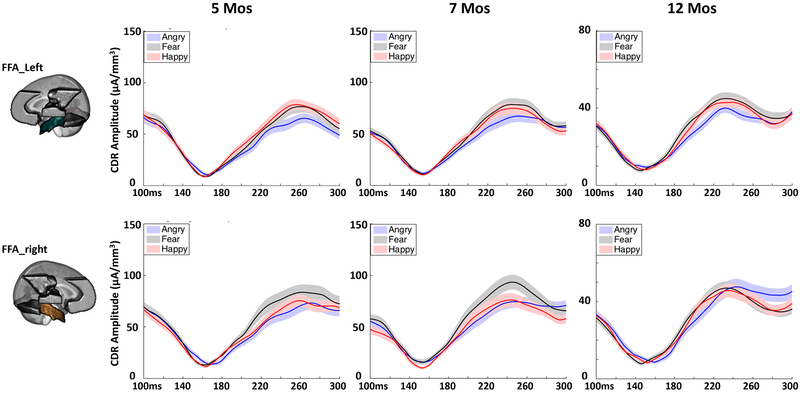

Source Activity during the Time Window of the N290

Analysis of the peak CDR amplitude in the N290 time window (190 – 290 ms) revealed a significant interaction of emotion and age, F(4,292) = 2.443, p = .049, ηp2 = .032. Follow-up analysis showed that at 5 months the CDR amplitudes for happy (M = 88.12 μA/mm3, 95%CI = [80.64, 95.60]) and fearful (M = 90.18 μA/mm3, 95%CI = [82.16, 98.21]) faces were both greater than that for angry faces, ps < .001. At 7 months, the CDR amplitude was greater for fearful (M = 95.02 μA/mm3, 95%CI = [87.06, 102.95]) than for angry (M = 85.32 μA/mm3, 95%CI = [78.67, 91.98], p = .001) and happy (M = 89.84 μA/mm3, 95%CI = [82.43, 97.24], p = .03) faces. No difference among the three conditions was found at 12 months, ps > .05. There was also an interaction of ROI and emotion, F(10,138) = 3.355, p = .002, ηp2 = .022. Follow-up comparisons showed that the CDR amplitude for fearful faces (M = 90.56 μA/mm3, 95%CI = [82.97, 98.16]) was greater than that for angry (M = 82.81 μA/mm3, 95%CI = [75.98, 89.64], p = .043) and happy (M = 82.18 μA/mm3, 95%CI = [76.05, 88.32], p = .005) faces only in the right FFA (Figure 7). The left and right OFA and the left FFA showed greater CDR amplitudes for fearful (OFA_L: M = 99.73 μA/mm3, 95%CI = [91.96, 107.50]; OFA_R: M = 102.97 μA/mm3, 95%CI = [93.87, 112.07]; FFA_L: M = 82.00 μA/mm3, 95%CI = [76.14, 87.87]) and happy (OFA_L: M = 100.79 μA/mm3, 95%CI = [92.26, 109.33]; OFA_R: M = 99.62 μA/mm3, 95%CI = [91.72, 112.07]; FFA_L: M = 82.70 μA/mm3, 95%CI = [75.23, 86.16]) than for angry (OFA_L: M = 91.43 μA/mm3, 95%CI = [83.84, 99.01]; OFA_R: M = 89.59 μA/mm3, 95%CI = [82.41, 96.77]; FFA_L: M = 70.23 μA/mm3, 95%CI = [67.01, 77.45]) faces, ps < .05 (Figure 7), whereas the left and right STS did not differ significantly among the three conditions, ps > .05. The three-way interaction of emotion, ROI, and age was not significant, p = .17.

Figure 7.

Cortical source activity (CDR amplitude) for the three emotion conditions in the left (top) and right (bottom) FFA across the N290 time window. The N290 ERP amplitudes used for the current cortical source reconstruction were corrected for the preceding positive peak (~160 ms).

The analysis of the N290 source activity also showed a significant main effect of ROI, F(5, 143) = 161.683, p < .001, ηp2 = .524. Post hoc comparisons showed that the CDR amplitude in the left (M = 97.32 μA/mm3) and right (M = 97.39 μA/mm3) OFA was greater than that in the left (M = 78.31 μA/mm3) and right (M = 85.19 μA/mm3) FFA, which in turn was greater than that in the left (M = 40.44 μA/mm3) and right (M = 38.42 μA/mm3) STS, ps < .001 (Figure 6). There was also a significant main effect of age, F(2, 147) = 60.284, p < .001, ηp2 = .451: the CDR amplitude was greater for the 7-month-olds than for the 12-month-olds, Mdiff = 47.34 μA/mm3, 95%CIdiff = [35.79, 58.89], p < .001 (Figure 6).

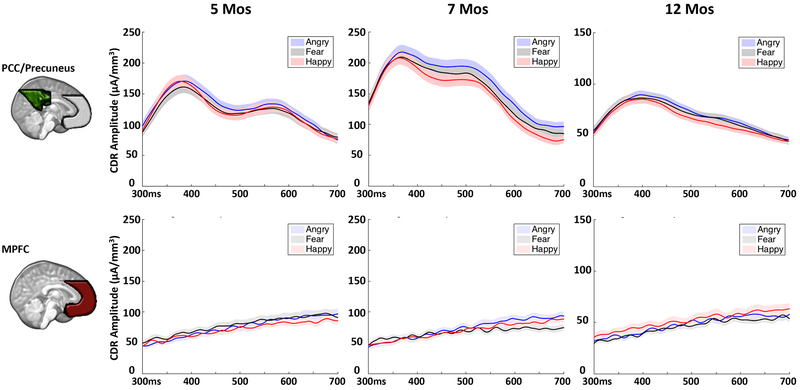

Source Activity during the Time Window of the P400 and Nc

Analysis of the mean CDR amplitude during the P400 and Nc time window (300 – 600 ms) revealed a significant main effect of emotion, F(2, 147) = 6.716, p = .001, ηp2 = .044. Post hoc comparisons showed that the CDR amplitude was greater for angry (M = 110.04 μA/mm3, 95%CI = [104.64, 115.42]) than for fearful (M = 102.95 μA/mm3, 95%CI = [98.51, 107.39], p = .004) and happy (M = 103.61 μA/mm3, 95%CI = [98.63, 108.59], p = .007) faces. Figure 8 and Supplemental Figure 5 illustrate the CDR amplitude across the P400/Nc time window for the three emotions, separately for different ROIs. The emotion effect appeared primarily in the PCC/Precuneus, OFA, and FFA, with a mild effect in the MPFC. However, no ROI-specific analyses were conducted because the interaction of emotion and ROI was not significant.

Figure 8.

Cortical source activity for the three emotion conditions in the PCC/Precuneus (top) and MPFC (bottom) across the P400/Nc time window.

There was also a significant interaction of ROI and age, F(10, 286) = 12.508, p < .001, ηp2 = .145. Follow-up comparisons showed that, for the 5- and 7-month-olds, the CDR amplitude was greatest in the PCC/Precuneus (5mos: M = 134.31 μA/mm3; 7mos: M = 177.88 μA/mm3) and smallest in the MPFC (5mos: M = 69.84 μA/mm3; 7mos: M = 45.63 μA/mm3), ps < .01. For the 12-month-olds, the CDR amplitude was similarly high in the PCC/Precuneus (M = 83.42 μA/mm3) and the FFA (L: M = 92.90 μA/mm3; R: M = 95.13 μA/mm3). The main effect of age was significant, F(2, 147) = 65.833, p < .001, ηp2 = .472. The CDR amplitude for the 5- (M = 117.40 μA/mm3, 95%CI = [109.85, 124.94]) and 7-month-olds (M = 128.31 μA/mm3, 95%CI = [120.84, 135.78]) was greater than that for the 12-month-olds (M = 70.89 μA/mm3, 95%CI = [63.49, 78.28]), ps < .001 (Figure 6).

Discussion

The current study examined the ERP correlates of facial emotion processing and their neural sources in 5-, 7-, and 12-month-old infants. Our first goal was to examine infants’ N290, P400, and Nc responses to facial expressions of happiness, fear, and anger. Infants showed greater N290 responses to fearful than to both happy and angry faces in the right Occipital-Inion cluster, and greater N290 responses to fearful and happy than to angry faces in the other four channel clusters. Infants also displayed greater P400 and Nc responses to angry than to happy and fearful faces. The amplitude of these three ERP components increased with age, but no emotion by age interactions were found in the scalp-level analyses. Our second goal was to investigate the cortical regions responsible for emotional face processing in infancy through cortical source analysis of the ERP components. The N290 component was primarily localized to the core areas (OFA and FFA) of the face network, and the P400 and Nc components were primarily localized to the PCC/Precuneus. Activation in these cortical sources was modulated by emotion and age. While the 7-month-old infants showed the greatest N290 cortical responses to fearful faces, the 5-month-olds showed greater responses to fearful and happy than to angry faces, and the 12-month-olds showed no difference among the three emotions. In addition, the right FFA, but not the other ROIs, showed a greater N290 cortical response to fearful faces than to the other two emotions, and this effect appears to peak at 7 months of age, although the three-way interaction of emotion, ROI, and age was not significant. The PCC/Precuneus, OFA, and FFA exhibited greater responses to angry than to happy and fearful faces in the P400/Nc time window for all three ages.

The ERP correlates of infant facial emotion processing

The increased N290 amplitude in response to fearful faces suggests that a threatening stimulus can be rapidly detected (within a few hundred milliseconds of its onset) and prioritized for processing in the infant brain. This early neural detection of a fearful face should, in theory, precede and may drive the attentional bias toward such stimuli that has been observed in infant behavior (Kotsoni et al., 2001). In the current study, a greater N290 response to fearful faces than to both angry and happy faces was shown only over the right Occipitotemporal scalp regions (Figure 3, Supplemental Figure 1), which is consistent with the view of the N290 as a developmental precursor of the adult N170 component, whose amplitude is enhanced in response to fearful faces mostly at right Occipitotemporal electrodes (e.g., Faja et al., 2016; Leppänen et al., 2007). One unexpected finding is that, although the N290 was larger in response to both fearful and happy faces than to angry faces in the other four channel clusters, responses to fearful and happy faces did not differ significantly in these clusters, contrary to previous research with adults (Batty & Taylor, 2006; Rigato, Farroni, & Johnson, 2010). It should also be noted that the current findings are inconsistent with two previous infant N290 studies, which found either no difference in N290 amplitude between fearful and other faces (Leppänen et al., 2007) or a greater N290 response to angry than to fearful faces (Kobiella et al., 2008). The use of the peak-to-peak N290 amplitude may contribute to the differences between the current study and some prior studies, although the analysis of the uncorrected N290 amplitude also showed a greater N290 amplitude in response to fearful than to angry faces.
The current study was also designed somewhat differently (three emotion conditions with five models displaying each emotion) and involved a larger sample than the prior studies, which may also have contributed to the novel N290 results. Research following these infants longitudinally will assess the stability of electrophysiological responses to facial emotion through early childhood.

The results from the analyses of the P400 and Nc components highlight the particular salience of angry faces within the first year of life. The angry faces displayed in the current study may have more striking or prominent facial features, e.g., more bared teeth and a stronger appearance of approaching the viewer than other expressions (Supplemental Figure 6), which are indicative of potential threat. These features are likely to make angry faces more salient to infants and to capture their attention easily, and thus to elicit larger P400 and Nc components, which have been found to be modulated by the allocation of attention (Guy et al., 2016; Richards, 2003; Xie & Richards, 2016) and by stimulus salience (Nelson & de Haan, 1996). However, the non-significant differences in P400 and Nc amplitudes between fearful and happy faces are inconsistent with our hypothesis and with previous findings (Leppänen et al., 2007; Nelson & de Haan, 1996). One possibility is that the facial features of angry expressions are more arousing and discomforting than those of fearful expressions for infants in the first year of life (Kobiella et al., 2008). A second explanation is that exposure to two categories of negative emotion diminishes the salience of either category. If so, the current study may have lacked the power to detect a potential difference between Nc responses to fearful and happy faces, although the Nc amplitude in response to fearful faces did appear to be larger than that to happy faces in some clusters at 7 and 12 months (Figure 5; Supplemental Figure 2B, C). This explanation rests on the assumption that infants at these ages can group fearful and angry expressions as negative emotions (Farroni et al., 2007; LaBarbera, et al., 1976), which has not been consistently supported in the literature and thus needs further investigation.

The neural networks of infant facial emotion processing

The results of the cortical source analysis of the N290 component suggest that the infant emotion processing network is already functional in the first year of life. The FFA is hypothesized to be a key component of the infant emotion processing network (Leppänen & Nelson, 2009). The current finding of heightened cortical activation (greater CDR amplitude) in the right FFA in response to fearful than to angry and happy faces (Figure 7) provides evidence for the important role this region plays in infant emotion processing. The current finding also sheds light on the developmental origin of the functional role of the face network (Haxby et al., 2000) in emotion processing (Hadj-Bouziane et al., 2008; Hooker et al., 2006). Electrophysiological and fMRI studies of adults have shown that facial emotion processing recruits several key components of the face network, such as the FFA and STS (e.g., Hooker et al., 2006; Pourtois et al., 2004), although activation in other brain areas (e.g., the amygdala) in response to emotions may be independent of face perception (Hadj-Bouziane et al., 2008). The current findings suggest that infant facial emotion processing also relies heavily on face-sensitive cortical areas and that the two networks develop in an intertwined way during the first year of life.

The function of the FFA in infant emotion processing might change with age during the first year of life. The “fear-bias” in the right FFA started to emerge at 5 months and became well established by 7 months, but the effect disappeared by 12 months (Figure 7). This non-linear developmental course is in line with a behavioral study showing the “fear-bias” at 7 and 9 but not at 5 and 11 months (Peltola et al., 2013), although when dynamic faces are presented, increased attention to fearful faces may be observed at even earlier ages (e.g., 5 months) (Heck, Hock, White, Jubran, & Bhatt, 2016). The current finding also supports the idea that the critical period for the development of the “fear-bias” is between 5 and 7 months of age (Leppänen & Nelson, 2009). The decreased neural and behavioral responses to fearful faces from 7 to 12 months of age might suggest the development of a top-down process that suppresses cortical activation during the initial perception of this type of stimulus and consequently facilitates the shift of infants’ attention away from it. In other words, the background knowledge of fearful faces that infants accumulate through experience with this type of stimulus may influence their perception. This top-down bias against fearful faces may reflect infants’ enhanced capacity to control their attention, and it may support infants’ emotion regulation and facilitate the exploration of other kinds of stimuli in the environment. Future work should use a longitudinal design to test this hypothesis further, including with older children.

The interaction between emotion and age found in the right FFA is consistent with prior ERP studies (e.g., Jessen & Grossmann, 2016; Peltola, Leppänen, Mäki, & Hietanen, 2009) but not with the findings from the scalp channel clusters. The location of the electrodes that show the interaction effect is likely to vary. Thus, averaging amplitudes across electrodes and the selection of electrodes for clusters might have reduced the power to detect a potential interaction between emotion and age (Supplemental Figure 1), and the effect might also have been smeared by volume conduction. Cortical source analysis, which uses the activity recorded at all electrodes to localize the sources of an effect in the brain, can mitigate these issues.

Another core system of the infant emotion processing network, the STS, did not show the expected differential responses to the three emotions. The STS is strongly involved in processing dynamic facial information (Polosecki et al., 2013) and emotional information in the human voice (Blasi et al., 2011). The current study presented static images of facial expressions, which might have failed to elicit high-level activation in the STS.

The PCC/Precuneus could be an additional component of the infant emotion processing network. This area may be dissociable from the general face processing network and instead support the allocation of attention during infant emotion processing. The P400 and Nc components were primarily localized to the PCC/Precuneus, which showed increased cortical activation in response to angry faces (Figures 6 and 8). The PCC/Precuneus has been associated with infant alpha desynchronization and sustained attention (Xie et al., 2017). These findings support the idea that expressions of anger are very likely to attract infants’ attention and result in enhanced processing in the infant brain. The MPFC did not show the expected heightened activation in response to the negative emotions, but a linear increase in activity was observed in the P400/Nc time window (Figure 8). Medial frontal areas, such as the OFC, are also hypothesized to be core areas of the infant emotion network, with reciprocal connections to the rest of the network and the amygdala (Leppänen & Nelson, 2009). It is possible that the frontal portion of the network is not recruited until a couple of seconds later and is responsible for the regulation of emotion and top-down control of the network.

It is plausible that infant facial emotion processing has an early (~100 to 300 ms) and a late (~300 to 1000 ms) stage, which rely on distinct neural sources. The early process might involve the initial, automatic detection of facial emotions and bottom-up orienting to facial signals of emotion, relying predominantly on “face areas” such as the FFA, whereas the later process might be associated with attention allocation and deeper processing of the stimulus, recruiting the PCC/Precuneus. This later process may also be accompanied by an overall increase in brain arousal following the presence of a salient stimulus, which in turn may have a broad impact on multiple brain networks. Consistent with this, increased activation in response to angry faces was also observed in the inferior occipital and temporal regions (Supplemental Figure 3).

Source localization of the N290, P400, and Nc components

It should be noted that the current cortical source localization of these components largely replicates the findings of previous infant source analysis studies (e.g., Guy et al., 2016; Reynolds & Richards, 2005). The N290 component is primarily localized to the inferior occipital and temporal regions, including the OFA and the FFA (Figure 6), which is consistent with the findings of Guy et al. (2016), who identified the cortical sources of the N290 component with individual MRIs for a subset of the participants. The localization of the P400/Nc component to the PCC/Precuneus and the temporal areas is also consistent with findings from Guy et al. (2016) but inconsistent with those from Reynolds and Richards (2005), who localized the Nc primarily to the frontal areas. Differences in head models and localization techniques (CDR vs. dipole fitting) between the current study and Reynolds and Richards (2005) might account for this discrepancy. Neither the current study nor Guy et al. (2016) found separate sources for the P400 and the Nc components. It is possible that the P400 is merely the positive deflection of the Nc, or vice versa (see Supplemental Figure 2). Future studies should test this possibility further.

Limitations

As a field, infant electrophysiological research tends to have high rates of data loss (Bell & Cuevas, 2012), and the current study is no exception. A large number of trials were eliminated during artifact detection and rejection and following visual inspection of individual trials. Future research, especially with small samples, may consider using independent component analysis (ICA) to clean infant EEG data by identifying and removing ocular and movement artifacts (Gabard-Durnam, Mendez Leal, Wilkinson, & Levin, 2018; Xie et al., 2017). Further, we suggest that future infant ERP research use paradigms that engage infants’ attention more effectively in order to obtain more usable trials before the infants become fussy (Xie & Richards, 2016). These practices will allow larger numbers of trials and participants to be included in data analysis.

There is also a great deal of variability in the results of infant emotional face processing studies. As stated in the introduction, while much of the current infant ERP literature finds larger neural responses to fearful faces than to angry or happy faces, other studies find the opposite effect, with larger neural responses to happy than to fearful faces. One potential reason for this is the wide variety of stimuli and paradigms used in infant emotional face processing studies. For example, much of the research in the field finds that infants show biased processing of fearful faces from 7 months of age, but a recent study using dynamic face stimuli suggests that this bias may be detected as early as 5 months of age (Heck et al., 2016). Further, while the current study used uncropped, open-mouth images from the NimStim Face Stimulus Set (Tottenham et al., 2009), other research has used the FACES database (Ebner, Riediger, & Lindenberger, 2010), the Pictures of Facial Affect (Ekman & Friesen, 1976), or in-laboratory stimulus sets, some of which are cropped to eliminate non-facial features such as the hair. Future research should carefully consider this issue and aim to use the most ecologically valid stimuli possible.

Conclusion

The current study provides insights into the neural correlates of infant facial emotion processing in the first year of life. The ERP findings suggest that the detection of fearful expressions in the infant brain can happen as early as 200 – 290 ms, and increased brain arousal and enhanced processing of angry expressions may continue through the P400 and Nc time window (e.g., 400–800 ms). Cortical source reconstruction of the ERP components for the different emotion conditions sheds light on the neural networks responsible for infant emotion processing and their developmental time courses. One neural process that might be responsible for the automatic detection of fear recruits the right FFA, with 5 to 7 months being the critical period for its development. Another brain area, the PCC/Precuneus, might be associated with the allocation of attention to negative emotions (e.g., anger) and is already established by 5 months of age. The current findings have the potential to serve as a reference for the typical development of the neural mechanisms underlying infant facial emotion processing and may allow us to better identify disruptions in the brain networks of children who display atypical emotion processing.

Supplementary Material

Research Highlights.

The current study concurrently examines 5-, 7-, and 12-month-old infants’ neural responses to happy, angry, and fearful facial emotion expressions.

Scalp level ERP analysis shows heightened N290 responses to fearful faces and greater P400 and Nc responses to angry faces.

Source analysis shows the right FFA underlies the distinct N290 responses to fearful faces; whereas the PCC/Precuneus generates the heightened P400/Nc responses to angry faces.

The current results have the potential to serve as a reference on the typical development of the brain processes underlying infant facial emotion processing.

Acknowledgements

The current work was supported by a grant from the National Institute of Mental Health, #MH078829. We would like to thank all the families for their participation in this study and in the entire Emotion Project. We would also like to acknowledge the Emotion Project staff, past and present, for their assistance in data acquisition, data processing, and relevant discussion.

Footnotes

Conflicts of Interest

The authors have approved the manuscript and agreed with its submission. The authors declare no conflict of interest.

References

- Batty M, & Taylor MJ (2006). The development of emotional face processing during childhood. Developmental Science, 9(2), 207–220. doi: 10.1111/j.1467-7687.2006.00480.x

- Bayet L, & Nelson CA (in press). The perception of facial emotion in typical and atypical development. To appear in: LoBue V, Perez-Edgar K, & Buss K (Eds.), Handbook of Emotional Development.

- Bell MA, & Cuevas K (2012). Using EEG to study cognitive development: Issues and practices. Journal of Cognition and Development, 13(3), 281–294. doi: 10.1080/15248372.2012.691143

- Blasi A, Mercure E, Lloyd-Fox S, Thomson A, Brammer M, Sauter D, … Murphy DG (2011). Early specialization for voice and emotion processing in the infant brain. Current Biology, 21(14), 1220–1224. doi: 10.1016/j.cub.2011.06.009

- de Haan M, Belsky J, Reid V, Volein A, & Johnson MH (2004). Maternal personality and infants’ neural and visual responsivity to facial expressions of emotion. Journal of Child Psychology and Psychiatry, 45(7), 1209–1218. doi: 10.1111/j.1469-7610.2004.00320.x

- Deffke I, Sander T, Heidenreich J, Sommer W, Curio G, Trahms L, & Lueschow A (2007). MEG/EEG sources of the 170-ms response to faces are co-localized in the fusiform gyrus. Neuroimage, 35(4), 1495–1501. doi: 10.1016/j.neuroimage.2007.01.034

- Ebner NC, Riediger M, & Lindenberger U (2010). FACES—A database of facial expressions in young, middle-aged, and older women and men: Development and validation. Behavior Research Methods, 42(1), 351–362. doi: 10.3758/BRM.42.1.351

- Ekman P, & Friesen WV (1976). Pictures of facial affect. Palo Alto, CA: Consulting Psychologists Press.

- Electrical Geodesics, Inc. (2016). Introduced Stimulus Presentation Change During Impedance. [Web based white paper]. Retrieved from https://www.egi.com/images/kb/downloads/EGI_White_Paper_-_Introduced_Stimulus_Presentation_Change_During_Impedance.pdf

- Faja S, Dawson G, Aylward E, Wijsman EM, & Webb SJ (2016). Early event-related potentials to emotional faces differ for adults with autism spectrum disorder and by serotonin transporter genotype. Clinical Neurophysiology, 127(6), 2436–2447. doi: 10.1016/j.clinph.2016.02.022

- Farroni T, Menon E, Rigato S, & Johnson MH (2007). The perception of facial expressions in newborns. European Journal of Developmental Psychology, 4(1), 2–13. doi: 10.1080/17405620601046832

- Gabard-Durnam LJ, Mendez Leal AS, Wilkinson CL, & Levin AR (2018). The Harvard Automated Processing Pipeline for Electroencephalography (HAPPE): standardized processing software for developmental and high-artifact data. Frontiers in Neuroscience, 12, 97. doi: 10.3389/fnins.2018.00097

- Grossmann T, Johnson MH, Vaish A, Hughes DA, Quinque D, Stoneking M, & Friederici AD (2011). Genetic and neural dissociation of individual responses to emotional expressions in human infants. Developmental Cognitive Neuroscience, 1(1), 57–66. doi: 10.1016/j.dcn.2010.07.001

- Grossmann T, Striano T, & Friederici AD (2007). Developmental changes in infants’ processing of happy and angry facial expressions: a neurobehavioral study. Brain and Cognition, 64(1), 30–41. doi: 10.1016/j.bandc.2006.10.002

- Guy MW, Zieber N, & Richards JE (2016). The cortical development of specialized face processing in infancy. Child Development. doi: 10.1111/cdev.12543

- Hadj-Bouziane F, Bell AH, Knusten TA, Ungerleider LG, & Tootell RB (2008). Perception of emotional expressions is independent of face selectivity in monkey inferior temporal cortex. Proceedings of the National Academy of Sciences of the United States of America, 105(14), 5591–5596. doi: 10.1073/pnas.0800489105

- Haxby JV, Hoffman EA, & Gobbini MI (2000). The distributed human neural system for face perception. Trends in Cognitive Sciences, 4(6), 223–233. doi: 10.1016/S1364-6613(00)01482-0

- Heck A, Hock A, White H, Jubran R, & Bhatt RS (2016). The development of attention to dynamic facial emotions. Journal of Experimental Child Psychology, 147, 100–110. doi: 10.1016/j.jecp.2016.03.005

- Hoehl S (2015). How do neural responses to eyes contribute to face-sensitive ERP components in young infants? A rapid repetition study. Brain and Cognition, 95, 1–6. doi: 10.1016/j.bandc.2015.01.010

- Hoehl S, & Striano T (2008). Neural processing of eye gaze and threat-related emotional facial expressions in infancy. Child Development, 79(6), 1752–1760. doi: 10.1111/j.1467-8624.2008.01223.x

- Hooker CI, Germine LT, Knight RT, & D’Esposito M (2006). Amygdala response to facial expressions reflects emotional learning. Journal of Neuroscience, 26(35), 8915–8922. doi: 10.1523/JNEUROSCI.3048-05.2006

- Itier RJ, & Taylor MJ (2004). N170 or N1? Spatiotemporal differences between object and face processing using ERPs. Cerebral Cortex, 14(2), 132–142. doi: 10.1093/cercor/bhg111

- Jessen S, & Grossmann T (2015). Neural signatures of conscious and unconscious emotional face processing in human infants. Cortex, 64, 260–270. doi: 10.1016/j.cortex.2014.11.007

- Jessen S, & Grossmann T (2016). The developmental emergence of unconscious fear processing from eyes during infancy. Journal of Experimental Child Psychology, 142, 334–343. doi: 10.1016/j.jecp.2015.09.009

- Kobiella A, Grossmann T, Reid VM, & Striano T (2008). The discrimination of angry and fearful facial expressions in 7-month-old infants: An event-related potential study. Cognition and Emotion, 22(1), 134–146. doi: 10.1080/02699930701394256

- Kotsoni E, de Haan M, & Johnson MH (2001). Categorical perception of facial expressions by 7-month-old infants. Perception, 30(9), 1115–1125. doi: 10.1068/p3155

- Kuefner D, de Heering A, Jacques C, Palmero-Soler E, & Rossion B (2010). Early visually evoked electrophysiological responses over the human brain (P1, N170) show stable patterns of face-sensitivity from 4 years to adulthood. Frontiers in Human Neuroscience, 3, 67. doi: 10.3389/neuro.09.067.2009

- LaBarbera JD, Izard CE, Vietze P, & Parisi SA (1976). Four- and six-month-old infants’ visual responses to joy, anger, and neutral expressions. Child Development, 47(2), 535–538. doi: 10.2307/1128816

- Leppänen JM, Moulson MC, Vogel-Farley VK, & Nelson CA (2007). An ERP study of emotional face processing in the adult and infant brain. Child Development, 78(1), 232–245. doi: 10.1111/j.1467-8624.2007.00994.x

- Leppänen JM, & Nelson CA (2009). Tuning the developing brain to social signals of emotions. Nature Reviews Neuroscience, 10(1), 37–47. doi: 10.1038/nrn2554

- Luyster RJ, Bick J, Westerlund A, & Nelson CA (2017). Testing the effects of expression, intensity and age on emotional face processing in ASD. Neuropsychologia. doi: 10.1016/j.neuropsychologia.2017.06.023

- Luyster RJ, Powell C, Tager-Flusberg H, & Nelson CA (2014). Neural measures of social attention across the first years of life: characterizing typical development and markers of autism risk. Developmental Cognitive Neuroscience, 8, 131–143. doi: 10.1016/j.dcn.2013.09.006

- Nakagawa A, & Sukigara M (2012). Difficulty in disengaging from threat and temperamental negative affectivity in early life: a longitudinal study of infants aged 12–36 months. Behavioral and Brain Functions, 8, 40. doi: 10.1186/1744-9081-8-40

- Nelson CA, & de Haan M (1996). Neural correlates of infants’ visual responsiveness to facial expressions of emotion. Developmental Psychobiology, 29(7), 577–595.

- Nelson CA, Morse PA, & Leavitt LA (1979). Recognition of facial expressions by seven-month-old infants. Child Development, 50(4), 1239–1242. doi: 10.2307/1129358

- Oostenveld R, Fries P, Maris E, & Schoffelen JM (2011). FieldTrip: Open source software for advanced analysis of MEG, EEG, and invasive electrophysiological data. Computational Intelligence and Neuroscience, 2011, 156869. doi: 10.1155/2011/156869

- Pascual-Marqui RD (2007). Discrete, 3D distributed, linear imaging methods of electric neuronal activity. Part 1: exact, zero error localization. arXiv:0710.3341 [math-ph].

- Pascual-Marqui RD, Lehmann D, Koukkou M, Kochi K, Anderer P, Saletu B, … Kinoshita T (2011). Assessing interactions in the brain with exact low-resolution electromagnetic tomography. Philosophical Transactions of the Royal Society A: Mathematical, Physical and Engineering Sciences, 369(1952), 3768–3784. doi: 10.1098/rsta.2011.0081

- Peltola MJ, Hietanen JK, Forssman L, & Leppänen JM (2013). The emergence and stability of the attentional bias to fearful faces in infancy. Infancy, 18(6), 905–926. doi: 10.1111/infa.12013

- Peltola MJ, Leppänen JM, Mäki S, & Hietanen JK (2009). Emergence of enhanced attention to fearful faces between 5 and 7 months of age. Social Cognitive and Affective Neuroscience, 4(2), 134–142. doi: 10.1093/scan/nsn046

- Peltola MJ, Leppänen JM, Vogel-Farley VK, Hietanen JK, & Nelson CA (2009). Fearful faces but not fearful eyes alone delay attention disengagement in 7-month-old infants. Emotion, 9(4), 560–565. doi: 10.1037/a0015806

- Peykarjou S, Pauen S, & Hoehl S (2014). How do 9‐month‐old infants categorize human and ape faces? A rapid repetition ERP study. Psychophysiology, 51(9), 866–878. doi: 10.1111/psyp.12238

- Polosecki P, Moeller S, Schweers N, Romanski LM, Tsao DY, & Freiwald WA (2013). Faces in motion: selectivity of macaque and human face processing areas for dynamic stimuli. Journal of Neuroscience, 33(29), 11768–11773. doi: 10.1523/JNEUROSCI.5402-11.2013

- Pourtois G, Grandjean D, Sander D, & Vuilleumier P (2004). Electrophysiological correlates of rapid spatial orienting towards fearful faces. Cerebral Cortex, 14(6), 619–633. doi: 10.1093/cercor/bhh023

- Reynolds GD, & Richards JE (2005). Familiarization, attention, and recognition memory in infancy: an event-related potential and cortical source localization study. Developmental Psychology, 41(4), 598. doi: 10.1037/0012-1649.41.4.598

- Reynolds GD, & Richards JE (2009). Cortical source localization of infant cognition. Developmental Neuropsychology, 34(3), 312–329. doi: 10.1080/87565640902801890

- Richards JE (2003). Attention affects the recognition of briefly presented visual stimuli in infants: An ERP study. Developmental Science, 6(3), 312–328. doi: 10.1111/1467-7687.00287

- Richards JE (2013). Cortical sources of ERP in prosaccade and antisaccade eye movements using realistic source models. Frontiers in Systems Neuroscience, 7, 27. doi: 10.3389/fnsys.2013.00027

- Richards JE, Sanchez C, Phillips-Meek M, & Xie W (2015). A database of age-appropriate average MRI templates. NeuroImage, 124(B), 1254–1259. doi: 10.1016/j.neuroimage.2015.04.055

- Richards JE, & Xie W (2015). Brains for all the ages: Structural neurodevelopment in infants and children from a life-span perspective. In Benson J (Ed.), Advances in Child Development and Behavior (Vol. 48, pp. 1–52). Philadelphia, PA: Elsevier.

- Rigato S, Farroni T, & Johnson MH (2010). The shared signal hypothesis and neural responses to expressions and gaze in infants and adults. Social Cognitive and Affective Neuroscience, 5(1), 88–97. doi: 10.1093/scan/nsp037

- Righi G, Westerlund A, Congdon EL, Troller-Renfree S, & Nelson CA (2014). Infants’ experience-dependent processing of male and female faces: insights from eye tracking and event-related potentials. Developmental Cognitive Neuroscience, 8, 144–152. doi: 10.1016/j.dcn.2013.09.005

- Rossion B, Joyce CA, Cottrell GW, & Tarr MJ (2003). Early lateralization and orientation tuning for face, word, and object processing in the visual cortex. NeuroImage, 20(3), 1609–1624. doi: 10.1016/j.neuroimage.2003.07.010

- Taylor-Colls S, & Pasco Fearon RM (2015). The effects of parental behavior on infants’ neural processing of emotion expressions. Child Development, 86(3), 877–888. doi: 10.1111/cdev.12348

- Tottenham N, Tanaka JW, Leon AC, McCarry T, Nurse M, Hare TA, … Nelson C (2009). The NimStim set of facial expressions: Judgments from untrained research participants. Psychiatry Research, 168(3), 242–249. doi: 10.1016/j.psychres.2008.05.006

- van den Boomen C, Munsters NM, & Kemner C (2017). Emotion processing in the infant brain: The importance of local information. Neuropsychologia. doi: 10.1016/j.neuropsychologia.2017.09.006

- Vanderwert RE, Westerlund A, Montoya L, McCormick SA, Miguel HO, & Nelson CA (2015). Looking to the eyes influences the processing of emotion on face-sensitive event-related potentials in 7-month-old infants. Developmental Neurobiology, 75(10), 1154–1163. doi: 10.1002/dneu.22204

- Xie W, Mallin BM, & Richards JE (2017). Development of infant sustained attention and its relation to EEG oscillations: an EEG and cortical source analysis study. Developmental Science, 21(3). doi: 10.1111/desc.12562

- Xie W, & Richards JE (2016). Effects of interstimulus intervals on behavioral, heart rate, and event-related potential indices of infant engagement and sustained attention. Psychophysiology, 53(8), 1128–1142. doi: 10.1111/psyp.12670

- Xie W, & Richards JE (2017). The Relation between Infant Covert Orienting, Sustained Attention and Brain Activity. Brain Topography, 30(2), 198–219. doi: 10.1007/s10548-016-0505-3

- Yrttiaho S, Forssman L, Kaatiala J, & Leppänen JM (2014). Developmental precursors of social brain networks: The emergence of attentional and cortical sensitivity to facial expressions in 5 to 7 months old infants. PLoS ONE, 9(6), e100811. doi: 10.1371/journal.pone.0100811
