Abstract

Objectives:

To offer practical guidance to nurse investigators interested in multidisciplinary research that includes assisting in the development of artificial intelligence (AI) algorithms for “smart” health management and aging-in-place.

Methods:

Ten health-assistive Smart Home systems were installed in the homes of chronically ill older adults from 2015 to 2018. Data were collected using five sensor types (infrared motion, contact, light, temperature, and humidity). Nurses used telehealth and home visits to collect health data and to provide ground truth annotation of raw sensor data containing health events, which was used to train intelligent algorithms.

Findings:

Nurses assisting with the development of health-assistive AI may encounter unique challenges and opportunities. We recommend: (a) using a practical and consistent method for collecting field data, (b) using nurse-driven measures for data analytics, and (c) conducting multidisciplinary communication on an engineering-preferred platform.

Conclusions:

Practical frameworks to guide nurse investigators integrating clinical data with sensor data for training machine learning algorithms may build capacity for nurses to make significant contributions to developing AI for health-assistive Smart Homes.

Keywords: smart home, sensors, artificial intelligence, ground truth, mixed methods, data processing, conceptual model, aging-in-place

The population of older adults in the United States (U.S.) is growing rapidly. By 2030 one in five U.S. residents will be age 65 or older (U.S. Census Bureau, 2018). Many of these older adults wish to age-in-place (National Institute on Aging, 2017b). A growing number are interested in using sensor technology to potentially extend their independence (Fritz, Corbett, Vandermause, & Cook, 2016; Demiris, Hensel, Skubic, & Rantz, 2008; National Institute on Aging, 2017a). Health-assistive smart homes (hereafter referred to as Smart Home; described below) may be an option that could foster the independence of older adults. Smart homes are an emerging technology designed to assist older adults with aging-in-place by monitoring their movement in the home and intervening on their behalf as needed (Cook, Crandall, Thomas, & Krishnan, 2012; Rantz et al., 2014).

Using smart technologies, such as the Smart Home, to meet older adults’ healthcare needs is gaining popularity, and nurses are increasingly called upon to be at the forefront of integrating new technology into the delivery of patient care. Nurses have historically claimed professional ownership of the space between technology and the patient (Risling, 2018) and are involved in deploying and managing these systems (Courtney, Alexander, & Demiris, 2008; Moen, 2003; Toromanovic, Hasanovic, & Masic, 2010). However, little is known about how nurses use their clinical knowledge to implement these emerging technologies, especially artificial intelligence (AI). Artificial intelligence refers to computer software algorithms capable of performing tasks that normally require human intelligence. Despite the call for rigorous research to support the integration of AI into complex systems such as modern healthcare (Campolo, Sanfilippo, Whittaker, & Crawford, 2017), a key gap exists in transferring clinical knowledge from nursing to engineering. This knowledge gap may limit the clinical relevance of AI and inadvertently lead to the development of deficient health-assistive smart technologies.

A paucity of nursing literature exists on how nurses can guide AI training for Smart Homes using their knowledge of how humans respond to illness. Specifically, the literature lacks methods describing how to infuse nursing clinical knowledge into the training of AI algorithms designed to assist with health maintenance. We conducted an extensive search across Google Scholar, Semantic Scholar, PubMed, CINAHL, Cochrane, Embase, and IEEE Xplore using various combinations of the following terms: nursing, nursing knowledge, artificial intelligence, machine learning, tools, techniques, methods, design, developing, medicine, healthcare, and consumer. A large body of knowledge exists on developing and applying AI tools for health assistance, including Smart Homes. Within this body of literature, however, nursing’s role in guiding AI training and development is largely absent. Several research teams in the U.S. use sensors and intelligent algorithms to monitor older adults as part of developing technologies that support aging-in-place: the University of Missouri-Columbia, TigerPlace; Washington State University, Center for Advanced Studies in Adaptive Systems (CASAS); the University of Texas-Arlington, SmartCare Technology Development Center; Oregon Health & Science University, Oregon Center for Aging and Technology (ORCATECH); Georgia Tech, Aware Home; Massachusetts Institute of Technology, AgeLab; Yale University, Y-Age; and Stanford University, Center on Longevity. No literature was located from these research centers on the role of nursing in training AI or on the transfer of clinical knowledge to engineering for training AI. A majority of the Smart Home literature that includes nursing comes from the work of Rantz (nursing), Skubic (engineering), and colleagues at the University of Missouri-Columbia.
Nurses are actively involved at Washington State University’s CASAS lab and at the SmartCare Technology Development Center at the University of Texas-Arlington. It is not clear how, or if, nurses are involved in Smart Home research at any of the other universities.

We attempt to fill this gap by introducing a nurse-driven method for guiding the development of AI for Smart Homes. The method was developed from frontline experience working with a multidisciplinary team of nurses, engineers, and computer scientists. This pragmatic experience informed the training of an intelligent algorithm that recognized three changes in the health states of chronically ill persons: side effects of radiation treatment, insomnia, and a fall (Sprint, Cook, Fritz, & Schmitter-Edgecombe, 2016a; Sprint, Cook, Fritz, & Schmitter-Edgecombe, 2016b). This nurse-driven method is useful for documenting clinically relevant ground truth. Ground truth refers to information collected in real-world settings that allows sensor data to be related to real features. Collecting ground-truth data enables calibration of remote-sensing data and aids in interpreting and analyzing what is being sensed in the context of the real world (Bhatta, 2013). Contextualizing Smart Home data includes coupling specific attributes in sensor data to behaviors that may be associated with a human response to illness.

The purpose of this methodological paper is to discuss our own techniques and experiences working as a nurse researcher on a multidisciplinary Smart Home research team of engineers, computer scientists, and psychologists. We provide a blueprint for a nurse-driven method (hereafter referred to as the Fritz Method) for integrating clinical knowledge into training AI for Smart Homes. The Fritz Method leads to an expert-guided approach to machine learning, resulting in clinician-based AI. Our work is value-laden, grounded in a post-positivist stance, and theoretically framed within critical realism and a pragmatic approach to frontline problem-solving (e.g., preventing falls) and clinical applications (e.g., relevant alerts, auto-prompting interventions). Critical realism is based on the writings of Bhaskar (1978, 1989). It is a meta-theoretical philosophical position acknowledging the value of contextualizing aspects of human existence. Pragmatism is based on the writings of Peirce (Peirce, 1905). Pragmatism is neither idealistic nor reductionist; rather, it offers a practical philosophical methodology for navigating Cartesian scientific approaches while acknowledging the influences of everyday living. Pragmatism guides our thinking as we seek to balance the quantitative approaches of computer scientists with a nursing translation of the Smart Home to clinical practice. We also embrace Ihde’s (1990) postphenomenological philosophy of technology: that technology affects the end-user in a real way, influencing their everyday life. Our approach is based on a participatory, mixed methods research design that places the clinician directly in the iterative design loop for developing AI (described below). See Dermody and Fritz (2018) for more information on the conceptual framework underpinning the Fritz Method.
The use of this method can guide nurse researchers to obtain consistent clinical ground truth that is auditable and coherent for engineering and computer science colleagues. We iteratively honed our processes while working with Smart Homes deployed in the field (i.e., to independent community-dwelling older adults) from 2015–2018. Research studies informing this work were approved by the Washington State University Institutional Review Board.

Artificial Intelligence

The idea of “thinking machines” dates back to the 1930s. Alan Turing is credited with foundational work on computing machines, including the electromechanical Bombe used during World War II to break messages encrypted by the German Enigma machine. However, John McCarthy is credited as the father of artificial intelligence, having coined the term in the mid-1950s (McCarthy, n.d.). In our Smart Home research, we define artificial intelligence as a computer algorithm that acts as a rational agent capable of assessing human movement over time and making decisions about that person’s movement, much as a human would.

Intelligent algorithms are capable of learning older adults’ normal movement patterns and, should abnormal patterns occur, facilitating clinically relevant interventions (e.g., alerting a nurse, prompting the resident to take a medication). Examples of abnormal patterns include a significant decrease in overall activity, slower walking speed, increased bathroom use, increased activity at night, and less frequent entering or exiting of the home, among others. (Abnormal patterns are defined relative to the individual’s normal baseline, not a population baseline.) To train AI algorithms, engineers must use training data sets representative of ground truth. These are produced by humans who review sensor data and relate it to real-world features by annotating the data. To generate ground truth, humans must verify that the data chosen to train the algorithm are a good representation of the real-world event the algorithm is being trained to address. ‘Good representation’ of data includes being able to identify clear boundaries of when an event started and ended, the degree of the event (e.g., a fall with a 2-hour duration versus a 2-minute duration), repetitive sub-events (i.e., minor activities or events associated with major events), and unique attributes essential to understanding event coupling (i.e., comparing commonalities in sensor data across similar events). Good representation of an event within the sensor data is also critical for coupling what is being measured (e.g., combinations of sequential sensor activations) with the event that actually occurred. For more information on training machines to identify changes in health states, see Sprint, Cook, Fritz, and Schmitter-Edgecombe (2016a, 2016b) and Fritz and Cook (2017).
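
The idea of an individual (rather than population) baseline can be sketched in a few lines of Python. This is a minimal illustration, not the team’s algorithm: it assumes daily totals of motion-sensor activations have already been computed, and it flags a day whose total lies more than a hypothetical threshold of 2 standard deviations from that person’s own baseline mean.

```python
from statistics import mean, stdev


def flag_abnormal_days(baseline_counts, new_counts, z_threshold=2.0):
    """Flag days whose activity deviates from this person's own baseline.

    baseline_counts: daily motion-activation totals from a period judged normal
                     for this individual (at least two days are required).
    new_counts: daily totals to screen.
    z_threshold: hypothetical cutoff in standard deviations, not a clinical standard.
    Returns a list of (day_index, z_score) pairs for flagged days.
    """
    mu = mean(baseline_counts)
    sigma = stdev(baseline_counts)  # sample standard deviation of the baseline
    if sigma == 0:
        raise ValueError("baseline shows no day-to-day variation")
    flagged = []
    for day, count in enumerate(new_counts):
        z = (count - mu) / sigma  # distance from the personal mean in SD units
        if abs(z) > z_threshold:
            flagged.append((day, round(z, 2)))
    return flagged


if __name__ == "__main__":
    baseline = [1000, 1050, 980, 1020, 1010]
    print(flag_abnormal_days(baseline, [1005, 700]))  # only day 1 (700) is flagged
```

With this baseline, a day with 1,005 activations stays within the person’s usual range while a day with 700 is flagged. In practice, the threshold and the choice of baseline period would be joint clinical and engineering decisions.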

Smart Homes

Smart Homes use hardware (i.e., sensors, relays, computers) and computer software that aim to assist with health maintenance and illness prevention. Software tools include algorithms, which are sophisticated probability and statistics programs that run on computers. These algorithms are referred to as activity-aware algorithms because they are capable of recognizing human behavior and daily activity patterns (Krishnan & Cook, 2014). To accomplish this, they rapidly sort and label data derived from sensors designed to detect the state of an environment.
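
As an illustration of how raw sensor events can be turned into input for such algorithms, the sketch below slides a fixed-size window over the event stream and counts how many times each sensor turned ON within each window. The window size and sensor names are hypothetical, and the activity-aware algorithms described by Krishnan and Cook (2014) use richer features than these simple counts.

```python
from collections import Counter


def window_features(events, window_size=5):
    """Convert a stream of (sensor, state) events into sliding-window count features.

    Each window holds `window_size` consecutive events and slides forward one event
    at a time. For each window, the feature vector counts how many times each sensor
    reported ON. Returns the sorted sensor list (the column order) and the vectors.
    """
    sensors = sorted({sensor for sensor, _ in events})
    features = []
    for end in range(window_size, len(events) + 1):
        window = events[end - window_size:end]
        on_counts = Counter(sensor for sensor, state in window if state == "ON")
        features.append([on_counts[s] for s in sensors])  # Counter returns 0 for absent keys
    return sensors, features


if __name__ == "__main__":
    stream = [("Kitchen", "ON"), ("Kitchen", "OFF"), ("Hall", "ON"),
              ("Hall", "OFF"), ("Bath", "ON"), ("Bath", "OFF")]
    print(window_features(stream, window_size=4))
    # (['Bath', 'Hall', 'Kitchen'], [[0, 1, 1], [1, 1, 0], [1, 1, 0]])
```

Each row of the output is one window that a classifier could then label with an activity, which is where human-annotated ground truth enters the process.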

Smart Homes use multiple sensor types to detect movement in the home’s environment. Smart Home researchers use a variety of sensors including infrared motion, door use (i.e., contact sensors), light, temperature, humidity, pressure, vibration, and more (Cook, Schmitter-Edgecombe, Jonsson, & Morant, 2018). Each sensor provides unique information important to understanding clinically relevant human behaviors and activities.

To demonstrate a nurse-driven method for training AI, we highlight the --- Smart Home, which uses five environmental sensor types: infrared motion, contact, light, temperature, and humidity. (The scope of this paper excludes wearable sensor monitoring.) These sensors are used because they are unobtrusive, can monitor continuously, are low cost, and produce low-fidelity data that are relatively easy to store and manage. This particular sensor combination is used because the compiled data provide a fairly comprehensive picture of an older adult’s in-home movements and behaviors without the need for cameras or microphones. Many older adults prefer not to be monitored with cameras and microphones (Fritz, Corbett, Vandermause, & Cook, 2016; Demiris et al., 2004; Mann, Belchior, Tomita, & Kemp, 2007).

Other sensors can be added to improve capturing specific movements (behaviors and activities). Floor pressure sensors can provide information about older adults’ gait and walking speed, as well as falls (Jideofor, Young, Zaruba, & Daniel, 2012; Muheidat, Tyrer, Popescu, & Rantz, 2017). Vibration sensors can enhance knowledge about ambient noise levels, equipment use, and may be useful in assisting with fall detection (Cook et al., 2018). Some research teams use silhouette cameras and microphones such as Microsoft’s Kinect® (Bian, Hou, Chau, & Magnenat-Thalmann, 2015; Stone & Skubic, 2015). Many researchers, including our research team, use wearable devices such as smart watches or Fitbits® for tracking movement outside the home.

Motion sensors.

Infrared motion sensors work much like the motion-activated porch lights found outside a home. In the Smart Home, these sensors detect when the resident is moving around within the home. Some motion sensors detect any movement occurring in an entire room, while others detect only motion occurring near the sensor and within its direct line of sight. These sensors send automated text messages over the internet reporting their current ON/OFF state (i.e., when motion is detected, an ON message is sent). Infrared motion sensors can provide information on the resident’s location, time spent in a single location, sequential movement, frequency of toilet use, sleeping, and overall activity levels (Cook & Das, 2004; Ghods, Caffrey, Lin, Fraga, Fritz, Schmitter-Edgecombe, Hundhausen, & Cook, 2018; Lê, Nguyen, & Barnett, 2012; Skubic, Guevara, & Rantz, 2015). Walking speed can also be calculated from these data. This information becomes knowledge when combined with AI tools such as activity-aware algorithms (Krishnan & Cook, 2014) that identify an older adult’s movement that does not fit the individual’s normal pattern. Knowledge of abnormal motion could inform efficacious care decision-making (Rantz et al., 2015; Skubic et al., 2015).
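
The following sketch shows one simple way walking speed could be estimated from motion-sensor data, assuming two direct sensors with a measured distance between them. The sensor names (`HallEntry`, `BedroomEntry`), the 4-meter distance in the example below, and the 30-second cutoff for discarding interrupted walks are hypothetical; this is not necessarily how the research team computes walking speed.

```python
from datetime import datetime
from typing import List, Tuple

Event = Tuple[datetime, str, str]  # (timestamp, sensor label, "ON"/"OFF")


def walking_speeds(events: List[Event], start_sensor: str, end_sensor: str,
                   distance_m: float, max_gap_s: float = 30.0) -> List[float]:
    """Estimate walking speed (m/s) for each walk from start_sensor to end_sensor.

    events must be sorted by time. A walk begins at the most recent ON from
    start_sensor and ends at the next ON from end_sensor; ON events from other
    sensors in between are ignored. Walks taking longer than max_gap_s seconds
    are discarded on the assumption that the person stopped along the way.
    """
    speeds = []
    start_time = None
    for ts, sensor, state in events:
        if state != "ON":
            continue
        if sensor == start_sensor:
            start_time = ts  # (re)start timing at the latest start-sensor activation
        elif sensor == end_sensor and start_time is not None:
            elapsed = (ts - start_time).total_seconds()
            if 0 < elapsed <= max_gap_s:
                speeds.append(distance_m / elapsed)
            start_time = None  # wait for the next walk
    return speeds
```

For example, if `HallEntry` turns ON at 10:00:00 and `BedroomEntry` at 10:00:05, a 4-meter distance gives 0.8 m/s. Tracking this value against the person’s own baseline is one way slower walking speed could surface as an abnormal pattern.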

Contact sensors.

Similar to chair and bed alarms, these sensors use magnetic relays (i.e., a switch) to detect when two magnets are in close proximity to each other, allowing current to flow. When the magnets are close together, current flows and the alarm is not activated. When a magnet is pulled away, current does not flow and the alarm is activated. Similarly, contact sensors (placed on doors in the Smart Home) indicate whether a door is OPEN or CLOSED: when the door is open, the magnets are not in close proximity, current cannot flow, and the text message indicates that the door is OPEN. Magnets in contact sensors are low power and do not interfere with other signals or electronics, such as implantable cardioverter defibrillators. Contact sensors provide information regarding door-use behavior patterns related to entering and exiting the home and opening or closing cabinet doors. Information on older adults’ door use can lead to knowledge about depression (Kaye et al., 2011), social isolation (Demiris et al., 2004), and medication use (Wagner, Basran, & Dal Bello-Haas, 2012).
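
Door-use patterns can be summarized from contact-sensor messages. The sketch below counts OPEN events for one door per calendar day; the sensor names (`FrontDoor`, `KitchenCabinet`) and the tuple format are assumptions. A count alone cannot distinguish the resident leaving from letting a visitor in, which is one reason clinical ground truth is needed to interpret it.

```python
from collections import Counter
from datetime import datetime


def daily_door_openings(events, door_sensor):
    """Count OPEN messages from one contact sensor per calendar day.

    events: (timestamp, sensor label, state) tuples, where state is "OPEN" or "CLOSED".
    Returns a dict mapping each date to the number of OPEN events on that date.
    """
    counts = Counter()
    for ts, sensor, state in events:
        if sensor == door_sensor and state == "OPEN":
            counts[ts.date()] += 1
    return dict(counts)


if __name__ == "__main__":
    log = [
        (datetime(2018, 2, 19, 8, 0), "FrontDoor", "OPEN"),
        (datetime(2018, 2, 19, 8, 1), "FrontDoor", "CLOSED"),
        (datetime(2018, 2, 19, 17, 0), "FrontDoor", "OPEN"),
        (datetime(2018, 2, 20, 9, 0), "FrontDoor", "OPEN"),
        (datetime(2018, 2, 20, 9, 5), "KitchenCabinet", "OPEN"),  # different sensor, ignored
    ]
    print(daily_door_openings(log, "FrontDoor"))  # 2 openings on Feb 19, 1 on Feb 20
```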

Light, temperature, humidity sensors.

A light sensor placed inside the refrigerator provides information about potential interest in food, eating patterns, and food related routines (e.g., retrieving creamer for coffee every morning at 5:30 A.M.). Light sensors located throughout the home (e.g., office, bedroom, living room) provide information on the ambient light level in that room by time of day. This information augments understandings of the resident’s activity levels during the day or night.
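
Routines such as the 5:30 A.M. creamer example could be summarized by finding the first refrigerator-light event each day. This minimal sketch assumes the light sensor reports a numeric level and that readings above a hypothetical threshold mean the refrigerator door is open; the sensor name `FridgeLight`, the threshold, and the 4:00 A.M. cutoff are illustrative.

```python
from datetime import datetime, time


def first_fridge_opening(events, light_sensor, threshold, earliest=time(4, 0)):
    """Return the clock time of the first refrigerator opening on each day.

    events: (timestamp, sensor label, light level) tuples sorted by time.
    threshold: hypothetical light level above which the door is assumed open.
    earliest: readings before this clock time are ignored (e.g., overnight visits).
    """
    first = {}
    for ts, sensor, level in events:
        if sensor != light_sensor or level <= threshold:
            continue
        if ts.time() < earliest:
            continue
        first.setdefault(ts.date(), ts.time())  # keeps only the earliest qualifying time
    return first


if __name__ == "__main__":
    readings = [
        (datetime(2018, 2, 19, 2, 10), "FridgeLight", 80),  # before 4:00, ignored
        (datetime(2018, 2, 19, 5, 30), "FridgeLight", 85),
        (datetime(2018, 2, 19, 12, 0), "FridgeLight", 90),
        (datetime(2018, 2, 20, 5, 35), "FridgeLight", 88),
    ]
    print(first_fridge_opening(readings, "FridgeLight", threshold=50))
```

A shift in this first-opening time over several days is the kind of change in routine that a nurse could then explore in a telehealth interview.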

Combining temperature, humidity, and motion sensor data provides information on activities involving use of the kitchen stove and bathroom shower. This information informs understanding of eating patterns, bathing, and grooming. It also allows the intelligent algorithm (AI agent) to assess when a stove may have been accidentally left on (e.g., the air temperature around the stove rises significantly and no motion has been detected in the kitchen in the last 30 minutes).
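
The stove example can be expressed as a simple rule. The sketch below is a hypothetical rule-based check, not the trained AI agent: the 30-minute quiet period comes from the text, while the 5 °C rise over a baseline temperature is an assumed value that would need tuning for each home.

```python
from datetime import datetime, timedelta


def stove_possibly_left_on(stove_temp_c, baseline_temp_c, last_kitchen_motion, now,
                           rise_threshold_c=5.0, quiet_period=timedelta(minutes=30)):
    """Rule-based check for a possibly unattended stove.

    Returns True when the air near the stove is at least rise_threshold_c degrees
    warmer than its usual (baseline) reading AND no kitchen motion has been
    detected for longer than quiet_period.
    """
    temperature_rose = (stove_temp_c - baseline_temp_c) >= rise_threshold_c
    kitchen_quiet = (now - last_kitchen_motion) > quiet_period
    return temperature_rose and kitchen_quiet


if __name__ == "__main__":
    now = datetime(2018, 2, 19, 18, 0)
    # 7 degrees above baseline and no kitchen motion for 40 minutes -> True
    print(stove_possibly_left_on(29.0, 22.0, datetime(2018, 2, 19, 17, 20), now))
```

A fixed rule like this is easy to audit but brittle; learning what counts as a "significant" rise for a particular kitchen is where trained models and annotated ground truth add value.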

Data, Ground Truth, and AI Tools

Data.

The goal for the Smart Home is to identify and/or predict a change in health state so a caregiver or nurse can proactively intervene and a negative health event can be avoided or mitigated. To accomplish this, large amounts of data of persons experiencing health events are needed. We define ‘health event’ as any change in health state with the potential to cause harm if not treated. We collect health event data by monitoring older adults with multiple chronic conditions. Exacerbations of chronic conditions have well-known symptoms that result in typical behaviors. These behaviors are associated with physical, observable movements that motion sensors can detect, and motion patterns that AI algorithms are capable of recognizing.

Both qualitative and quantitative data are needed to train the Smart Home to recognize changes in health states. We conduct semi-structured and unstructured health interviews and physical assessments, and we extract health information from medical records. These data are recorded as qualitative, text-based language. Examples include: the story of a fall (where, when, how, duration, associated injuries, outcome); a description of feeling sad or lonely during the first holiday without a spouse of 60 years (i.e., situational sadness); a description of weakening legs and increased dyskinesia in a person with Parkinson’s disease, and the effect on daily activities and routines; and the effects of medication changes. Such descriptions are recorded and organized on a spreadsheet by major body system (e.g., respiratory, cardiac, musculoskeletal). We also assess (and search the medical record for) functional status, use of assistive equipment, socialization, and multidisciplinary care planning. From the medical record, we extract information about previous surgeries or injuries, symptom complaints, medications, and symptom response to pharmacological treatment.

Quantitative data include ambient sensor data from the five types of sensors mentioned above (motion, contact, light, temperature, humidity) and quantifiable nursing assessment data such as vital signs and Timed Up and Go Test results (time in seconds). Copious amounts of ambient sensor data are collected; each sensor is capable of activating multiple times per second as the resident moves about within the home. Sensors are labeled, and their output appears as discrete text-based records of ON/OFF activations that include the date and time the sensor was activated (e.g., “2018-02-19 05:06:23.0955587 LivingRoomAArea ON”, representing movement in the living room on February 19, 2018 at 5:06 A.M.).
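
Records like the one above can be parsed into structured events before analysis. This sketch assumes each line contains a `YYYY-MM-DD HH:MM:SS[.fraction]` timestamp, a sensor label, and a state, separated by whitespace; because Python’s `%f` directive accepts at most six fractional digits, longer fractions are truncated to microseconds.

```python
from datetime import datetime
from typing import NamedTuple


class SensorEvent(NamedTuple):
    timestamp: datetime
    sensor: str
    state: str


def parse_event(line: str) -> SensorEvent:
    """Parse one whitespace-separated line: date, time, sensor label, state."""
    date_part, time_part, sensor, state = line.split()
    if "." in time_part:
        whole, frac = time_part.split(".", 1)
        time_part = f"{whole}.{frac[:6]}"  # keep at most microsecond precision
        fmt = "%Y-%m-%d %H:%M:%S.%f"
    else:
        fmt = "%Y-%m-%d %H:%M:%S"
    ts = datetime.strptime(f"{date_part} {time_part}", fmt)
    return SensorEvent(ts, sensor, state)


if __name__ == "__main__":
    print(parse_event("2018-02-19 05:06:23.0955587 LivingRoomAArea ON"))
```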

Ground truth.

Ground truth documentation of the human response to illness and the associated events is critical to training clinically efficacious AI (Liao, Hsu, Chu, & Chu, 2015; Pearl, Glymour, & Jewell, 2016; Risling, 2018). Choosing appropriate data to represent real-world events is also important (Brynjolfsson & Mitchell, 2017). Sensor data alone tell an incomplete story, leaving the AI agent at risk of unreliable decision-making; the “full story” can only be understood within the context of the older adult’s experience of the health event. Reliable ground truth is obtained by: (a) listening to the participant’s story; (b) recording reported symptoms; (c) conducting a physical nursing assessment; (d) reviewing medical records (as needed); and (e) asking probing questions designed to elicit event-specific information about changes in movement and motion patterns that can be measured using sensor data. (Table 4 below includes examples of probing questions.)

Table 4:

Semi-Structured Weekly Telehealth Interview Questions

| General Questions | Telehealth Interview Questions |
|---|---|
| Health & functional status in the previous 7 days | |
| Probing questions if changes have occurred | |
| Perceptions about sensors | |
| Targeted Questions (CHF) | Associated Detected Sensor Motion |
| Has there been a change in your medication this week? | Number of trips to bathroom, walking speed, sleep/wake times, sleep location |
| Have you gained more than 2 pounds since we last talked? | Walking speed, sleep location |
| Are you experiencing shortness of breath? | Walking speed, interrupted walking |
| Are you sleeping in your bed at night? | Sleep location |

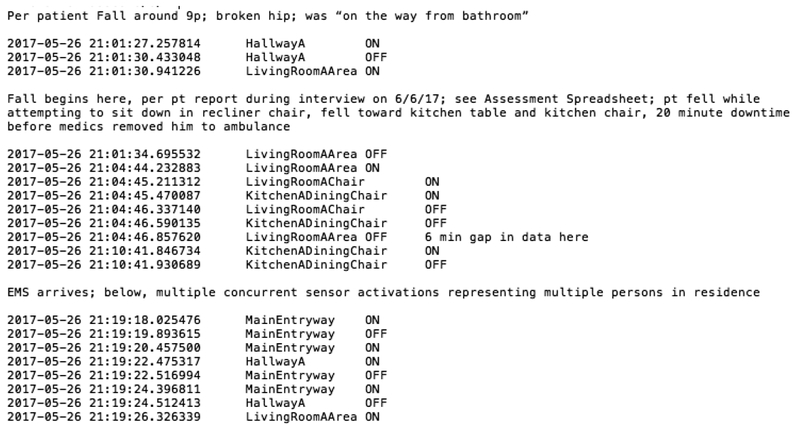

To communicate reliable clinical ground truth to engineers, our nurses keep a log of all health events (i.e., any significant change from the participant’s norm; Table 1) and annotate pre-labeled sensor data (Figure 1). Multiple characteristics of each health event are captured on a spreadsheet that is shared with the engineering team through a HIPAA-compliant cloud service.

Table 1.

Clinical Ground Truth for Health Events. Documentation recorded on a consolidated sortable form including all health events.

| Participant Code | Date | Health State | Anomaly Detection Category | Diagnosis | Start Time (24-h) | End Time (24-h) | Notes |
|---|---|---|---|---|---|---|---|
| P1* | 5/29/2017 | Fall | Fall | Parkinson’s | 2101 | 2119 | Gaps in data during fall while P1 on floor |
| P2 | 11/15–16/2017 | Leg cramps | Sleep | Parkinson’s | 2354 | 0537 | Interrupted sleep |
| P3 | 1/29–31/2018 | Med change; IM injection antibiotics | GI/GU | UTI | 1/29 @ 1039 | 1/31 @ 1247 | Home health nurse |
| P4 | 2/28/2018 | Fall | Fall | Parkinson’s | 2235 | 2254 | Normal routine described |

*This fall is represented in Figure 1.

Figure 1.

Segment of the sensor data of the fall of a person with Parkinson’s with clinical ground truth annotations made by a Registered Nurse. The fall occurred in the living room May 26, 2017 at approximately 9 P.M.

Nurses are uniquely positioned to provide an important clinical perspective and description of events for training the AI agent because of their holistic training, clinical knowledge, and frontline expertise. Annotated data sets and the health event log inform shared interdisciplinary decisions regarding the choice of training data sets (Figure 1).
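
One way the health event log (Table 1) could be turned into labels for training data is sketched below. It converts logged start and end times in 24-hour `HHMM` form into datetime windows, treating an end time earlier than the start time as crossing midnight (as in P2’s 2354 to 0537 entry), and then labels each sensor event that falls inside a window. The function names, the `"normal"` default label, and the tuple formats are hypothetical.

```python
from datetime import date, datetime, time, timedelta
from typing import Optional, Tuple


def _parse_hhmm(hhmm: str) -> time:
    """Convert a 24-hour 'HHMM' string such as '2354' into a time object."""
    return time(int(hhmm[:2]), int(hhmm[2:]))


def event_window(start_day: date, start_hhmm: str, end_hhmm: str,
                 end_day: Optional[date] = None) -> Tuple[datetime, datetime]:
    """Build a (start, end) datetime window from one health event log entry."""
    start = datetime.combine(start_day, _parse_hhmm(start_hhmm))
    if end_day is None:
        end = datetime.combine(start_day, _parse_hhmm(end_hhmm))
        if end < start:
            # e.g., 2354 to 0537: the event continues into the next day
            end += timedelta(days=1)
    else:
        end = datetime.combine(end_day, _parse_hhmm(end_hhmm))
    return start, end


def label_events(events, windows, default="normal"):
    """Attach a ground-truth label to each (timestamp, sensor, state) event.

    windows: list of (start, end, label) tuples built from the health event log.
    An event inside a window (inclusive) gets that window's label; otherwise the default.
    """
    labeled = []
    for ts, sensor, state in events:
        label = default
        for start, end, name in windows:
            if start <= ts <= end:
                label = name
                break
        labeled.append((ts, sensor, state, label))
    return labeled
```

Automating this step does not replace the nurse’s judgment about where an event truly began and ended; it only applies those boundaries consistently once they are recorded.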

AI tools.

Our engineers use a combination of AI tools to train the Smart Home AI agent (Table 2). Because the machine learns from sensor data that humans have labeled (annotated), these approaches are referred to as supervised learning techniques. These techniques use algorithms that are essentially sophisticated probability and statistics tools, capable of performing computations so large that a human would need months, even years, to complete them; computers can complete the same task in seconds or minutes.

Table 2.

Tools for Training Smart Home AI.

| Tool | Description |
|---|---|
| Hidden Markov Models | Compute the probability that an environmental state is occurring based on secondary (indirect) observations instead of direct observations. |
| Naive Bayes Classifiers | Use statistics to predict the likelihood that an observation belongs to a given class based on its attributes. Assume that all attributes are independent of one another given the class. |
| Gaussian Mixture Models | Use statistical modeling to capture the distribution of a cluster of data (a pattern), accounting for both mean and variance. |
| Conditional Random Fields | Probabilistic models used to analyze structured data and to discriminatively label and segment sequential data. Observations in a time series are not assumed to be independent. |
| Time-Series Forecasting Models | Use statistical modeling to predict future values based on previously observed data. |
| Decision Trees | A decision flow chart with possible outcomes for each branch of the chart, similar to the algorithms used in an Advanced Cardiac Life Support course. |
| Leave-one-home-out | Tests the generalizability of a learned model by training it on all but one home and testing it on the held-out home, then repeating for each home and averaging the results across all test homes. |

Techniques listed in Table 2 have detected statistically significant changes across multiple health events at the times the events reportedly occurred. For more information on this process, see Sprint, Cook, Fritz, and Schmitter-Edgecombe (2016a).
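
The leave-one-home-out procedure in Table 2 can be sketched generically: each home is held out once, a model is trained on the remaining homes, and the per-home scores are averaged. The `train_majority` and `accuracy` helpers below are hypothetical stand-ins (a majority-class baseline) used only to make the example runnable; in practice they would be replaced by one of the models in Table 2.

```python
from collections import Counter
from statistics import mean


def leave_one_home_out(data_by_home, train, evaluate):
    """Leave-one-home-out validation.

    data_by_home: dict mapping a home ID to a list of (features, label) examples.
    train: function that takes a list of examples and returns a fitted model.
    evaluate: function that takes (model, examples) and returns a score.
    Returns (per-home scores, mean score across held-out homes).
    """
    scores = {}
    for held_out in data_by_home:
        # Train only on examples from the other homes.
        training = [ex for home, examples in data_by_home.items()
                    if home != held_out for ex in examples]
        model = train(training)
        scores[held_out] = evaluate(model, data_by_home[held_out])
    return scores, mean(scores.values())


# Hypothetical stand-ins: a majority-class "model" and plain accuracy.
def train_majority(examples):
    return Counter(label for _, label in examples).most_common(1)[0][0]


def accuracy(model, examples):
    return sum(label == model for _, label in examples) / len(examples)
```

Holding out whole homes, rather than random slices of data, checks whether what the model learned transfers to a resident and floor plan it has never seen.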

Ambient Sensor Data Collection

In this section, we describe the Fritz Method, a nurse-driven method to support training a rational AI agent by identifying training data sets that accurately represent health events. We begin by providing a blueprint for efficient collection of both quantitative and qualitative data that maps our visits with participants and highlights important considerations at each step. Following this section, we discuss data analysis and annotation for ground truth. A pilot study including 10 Smart Homes and hundreds of sensors informed the development of the methodology nurse researchers need to interpret Smart Home data.

Recruiting Visit (Phone or Home Visit)

To obtain the health event data necessary for training the AI agent, we include participants aged 55 and over who have two or more chronic conditions, live independently, have internet access, and have no plans for extended vacations (longer than one month) while the Smart Home is installed in their home. We prefer participants who live alone, but no stipulations are specified regarding other people in the home (e.g., partners, caregivers, visitors). Most of our participants live alone, but some have spouses, partners, or live-in caregivers. We prefer a home visit for recruiting community-dwelling independent participants because it allows us to immediately observe the layout of the home. Additionally, we can assess the older adult’s internet connection and whether they live alone or have pets (how many and what kind). [Persons living alone without pets that move about the home (e.g., a bird versus a cat) provide “cleaner” data that are easier to analyze. Sensors activated by pets show more erratic patterns than those activated by humans, making the data difficult to interpret and use for training the AI agent. We make team decisions regarding the value of admitting a more data-complex case into the study.]

We involve community stakeholders (e.g., a continuing care retirement community organization [CCRC]). Many CCRC leaders are interested in the potential benefits of Smart Homes and we find they are often open to hosting the research. Utilizing CCRCs simplifies many aspects of the research. CCRCs may provide: (a) large sample populations available in one location; (b) advertising and recruiting of interested participants; (c) staff who are excellent resources on residents’ health and functional status; (d) introduction of researcher to participant; and (e) assistance with installation and maintenance technicalities (e.g., connecting to secure Wi-Fi, changing batteries).

Enrolling Participant Visit (Home Visit)

Here, we explain to the participant the level of sophistication and realistic capabilities of the Smart Home. Many smart homes used in research are not yet capable of alerting researchers to a health event (e.g., a fall). Advising participants on the Smart Home’s specific capabilities is important. We advise them to call the emergency help line or follow the CCRC’s protocol in the event of an emergency.

Environmental considerations for sensor placement.

In-home assessments provide baseline information on the participant’s routine activities and behaviors and use of space within the home. We look for health and safety risks such as lighting, throw rugs, clutter, shower mats, condition of food, heating and cooling, and more. Knowledge of these items informs data analysis and interpretation of events such as falls, and it guides our thinking about how and where events may occur within the home. We perform the full home assessment only when enrolling participants; thereafter, targeted home assessments are performed based on clinical findings.

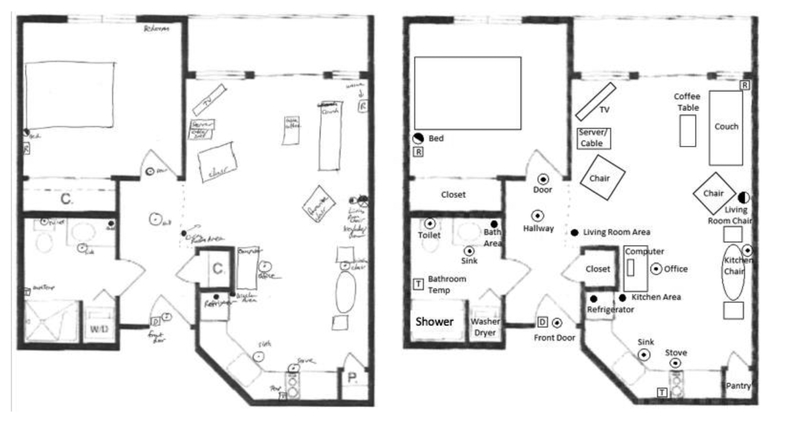

The number and type of sensors needed to construct the Smart Home are based on the floor plan, placement of furniture, and the resident’s use of the space. Furniture locations are marked on the floor plan. The participant’s favorite locations in each room are also recorded, including the favorite dining room chair, living room chair, side of bed, and bathroom and/or bathroom sink (Figure 2). Labeling participants’ favorite locations helps discriminate one person’s movements from another’s when multiple people are in the home (e.g., a spouse or visitors).

Figure 2.

Floor plan of residence with field notes of furniture locations and the plan for sensor location by type (Left) and a digital copy that is created after the visit (Right). Legend: ⊙ direct sensor, ceiling; ◑ direct sensor, wall; ● area sensor; separate symbols mark relays and temperature-humidity sensors.

We note the type and location of each sensor, as well as where the server will be located. We create a digital file of the labeled floorplan for the engineering team so the individualized Smart Home can be assembled. Typically, a two-bedroom two-bath home requires a total of 15–20 sensors (Table 3).

Table 3:

Sensor Locations and Types

| Location | Direct motion | Area motion | Contact | Light | Humidity | Temp. |
|---|---|---|---|---|---|---|
| Entry door | √ | | | | | |
| Hallway | √ | √ | | | | |
| Living room entrance | √ | √ | | | | |
| Favorite living room chair | √ | | | | | |
| Office entrance | √ | √ | | | | |
| Office chair | √ | | | | | |
| Dining room | √ | √ | | | | |
| Favorite dining room chair | √ | | | | | |
| Kitchen entrance | √ | √ | | | | |
| Kitchen sink | √ | | | | | |
| Kitchen stove | √ | √ | √ | | | |
| Refrigerator | √ | | | | | |
| Bathroom entrance | √ | | | | | |
| Bathroom sink | √ | | | | | |
| Toilet | √ | | | | | |
| Shower | √ | √ | | | | |
| Bedroom entrance | √ | √ | | | | |
| Favorite side of bed | √ | | | | | |

Sensors are placed on ceilings, walls, and doors. One area motion sensor and multiple direct motion sensors are placed in every room. Area motion sensors have a 360-degree field of view and activate any time there is motion in the room. Direct motion sensors require the resident to be in close proximity (< 2 meters) to the sensor. A direct sensor is placed at the entry of each interior room (to detect someone entering the room), and one or two direct sensors are placed near the resident’s favorite location in that room. Direct sensors are necessary for tracking sequential motion throughout the home. In the bathroom, direct sensors are placed just inside the door and above the sink and the toilet to monitor use of each. An area sensor and a temperature-humidity sensor are placed in the bathroom as well. The temperature-humidity sensor provides information about bathing (e.g., taking a shower increases the temperature and humidity in the bathroom). (There are no cameras or microphones in the Smart Home.) A light sensor is placed inside the refrigerator to inform understanding of eating patterns. A temperature-humidity sensor is placed on the wall by the kitchen stove. A contact sensor is placed on the main door entering the home. Sensors are attached to walls, ceilings, and doors with Command Strips™.

Sensor data are relayed from each sensor to an onsite server via a secure Wi-Fi network. The onsite server then sends the data over a secure internet connection to our main servers, which are kept in a temperature-controlled, secure room on the university campus. Our sensor network does not interfere with commercial home security systems because each system has its own unique digital identifiers that secure its data. Additionally, our infrared motion sensors are passive (they do not emit infrared), so they do not interfere with home security systems that detect infrared.

Nursing Assessment Visits

Nurses conduct interviews and complete targeted assessments during weekly telehealth visits and monthly in-home nursing visits. The first monthly home visit initiates a year-long monitoring process. During this visit, we conduct a comprehensive nursing interview and physical assessment, which establish a baseline for health and daily routines. We obtain a health history tailored to the participant’s physical conditions and diagnoses, using semi-structured and open-ended questions such as “Tell me about your health both recently and in the past;” “Have you been diagnosed with anything, or had any surgeries, and so forth?;” “Tell me about your medications and how long you have been taking them?” and “Tell me about your daily schedule or routines? Weekly?” We document: (a) medications, (b) functional status, (c) balance and gait speed, (d) use of assistive personnel and/or equipment, (e) usual daily routines (e.g., normal wake time), (f) reported sleep quality, (g) a head-to-toe physical assessment, and (h) the participant’s perception of their overall health. We also assess psychosocial well-being and record reported interactions and relationships with family, friends, and visitors; social engagements; and perceived stressors and levels of stress. We use reliable, standardized instruments so that comparisons between participants can be made if needed: the Timed-Up-and-Go, the Tinetti Balance Assessment Tool, the Hedva Barenholtz Levy (HbL) Medication Risk Questionnaire, the Geriatric Depression Scale, and the Katz Index of Independence in Activities of Daily Living. During routine monthly visits, we perform a physical assessment and ask about changes to health in the previous week. Weekly telehealth visits are shorter; they address recent changes (within the last 7 days) and record vital signs.

We keep detailed records of participants’ self-reported patterns (e.g., wake times, eating, napping, sleep) and their activities of daily living (e.g., exercise class, shopping, bookkeeping, arrival and departure of visitors). These activities and movement habits express how a person moves through space over time. Attributes associated with these activities can be measured and tracked, and regular patterns can be recognized in the sensor data by both humans and the AI agent. Each quarter, we conduct a new nursing interview focused specifically on routines.
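As one example of checking a self-reported routine against the sensor data, the Python sketch below finds, for each day, the earliest ON activation from a group of sensors (e.g., the kitchen) after an early-morning cutoff, which a nurse might compare with the participant's reported wake time. This is a hypothetical proxy under stated assumptions: the sensor names, the name-prefix grouping, the 04:00 cutoff, and the function name are illustrative and not part of the described method.

```python
from datetime import date, datetime, time

def first_activation_by_day(events, sensor_prefix, not_before=time(4, 0)):
    """Return {date: time} of the earliest ON event per day.

    events: (timestamp, sensor_name, state) tuples in any order.
    Only sensors whose names start with `sensor_prefix` are considered, and
    activations before `not_before` (e.g., a night-time snack) are ignored.
    The prefix grouping and cutoff are hypothetical choices.
    """
    firsts = {}
    for ts, sensor, state in events:
        if state != "ON" or not sensor.startswith(sensor_prefix):
            continue
        if ts.time() < not_before:
            continue
        day = ts.date()
        if day not in firsts or ts.time() < firsts[day]:
            firsts[day] = ts.time()
    return firsts

# Illustrative events; sensor names are hypothetical.
events = [
    (datetime(2017, 3, 6, 3, 10), "KitchenAArea", "ON"),   # before cutoff, ignored
    (datetime(2017, 3, 6, 5, 0), "BedroomAArea", "ON"),    # different room, ignored
    (datetime(2017, 3, 6, 7, 30), "KitchenAArea", "ON"),
    (datetime(2017, 3, 6, 6, 45), "KitchenSink", "ON"),
    (datetime(2017, 3, 7, 7, 5), "KitchenAArea", "ON"),
    (datetime(2017, 3, 7, 6, 50), "KitchenAArea", "OFF"),  # OFF messages ignored
]
kitchen_firsts = first_activation_by_day(events, "Kitchen")
```

A persistent gap between the sensor-derived time and the reported wake time would be a prompt for the quarterly routine interview, not a conclusion in itself.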

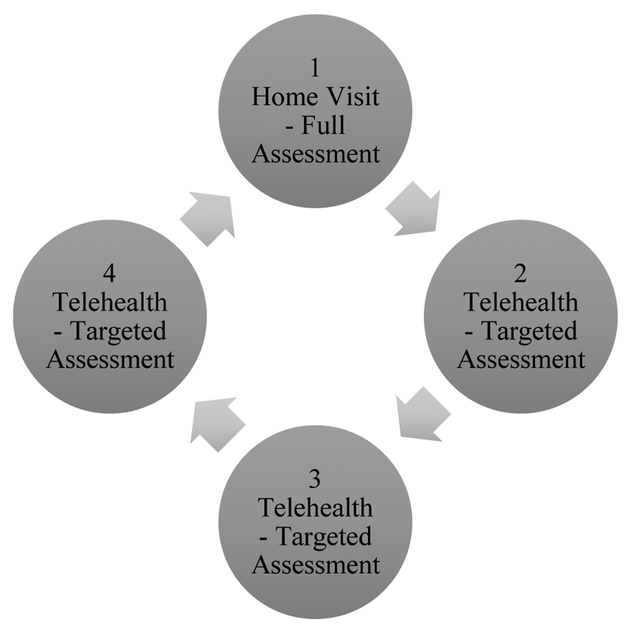

Telehealth Visits (Phone or Video Call)

We use telehealth equipment to collect vital signs and weight. A telehealth system allows the nurse researcher to obtain a direct report of objective measures, such as vital signs, without making weekly in-home visits, which makes this type of study more feasible. We ask participants to recall only one week at a time because details are easier to remember when less time has passed since an event. Weekly telehealth visits are made between monthly home visits (Figure 3). We schedule telehealth visits for the same day and time each week, and monthly home visits are scheduled a month in advance. This consistent scheduling results in few missed appointments, which matters because missed appointments often leave participants struggling to recall event details.

Figure 3.

Monthly Visit Cycle. Weekly phone or audio-visual telehealth visits are made between monthly home visits.

A semi-structured interview guides weekly telehealth visits. The guide is designed to elicit information from participants about recent changes in health and functional status, that is, changes occurring in the previous seven days (Table 4). If a change is reported, probing questions are asked, and participant responses are entered on a spreadsheet organized by body system.

At each visit, participants are asked how they feel about the sensors and being monitored. They are reminded that they can stop data collection immediately by unplugging the server should they become uncomfortable with the presence of the sensors. Unplugging the server ends participation in the study, and removal of the equipment is then scheduled. Weekly telehealth visits typically last less than 10 minutes; visits in which a major health event is reported take longer.

Data Processing and Analysis

We use investigative techniques to accurately determine which aspects of health (and associated movements) can be measured with environmental sensors. Understanding how sensors work and what they can detect is required to implement this method successfully; the nurse must keep in mind the type of data sensors produce and what can be measured. Key to the process is the need to couple quantifiable measurements from motion sensor data to an actual health event. To do this, the nurse elicits details from participants that illuminate changes in behaviors and activities, which are reflected in movement patterns.

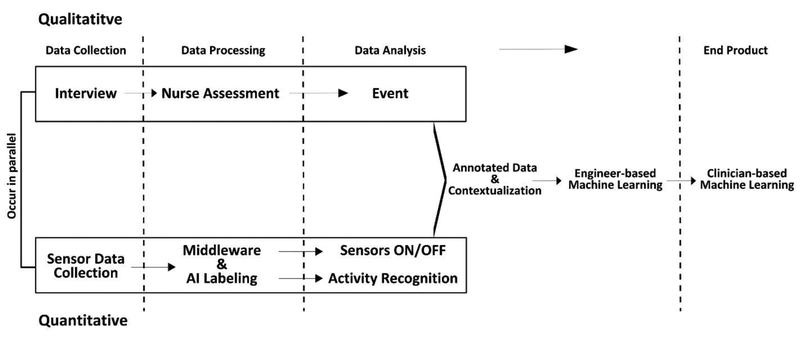

Two data types are analyzed in parallel: qualitative (nursing interviews, assessments, medical records) and quantitative (discrete ON/OFF sensor labels, health data such as vital signs). An interplay occurs between the two data types (Figure 4). Data derived from nursing assessments inform review of the sensor data. The nurse uses nurse-documented dates, times, locations, and durations of health events to navigate to specific locations within the sensor data and begin identifying the associated event. Once the data representing the event are located, the nurse looks for the beginning and ending boundaries of the event. These boundaries are annotated, and the event is labeled (e.g., fall begins here).

Figure 4.

Parallel processing of qualitative and quantitative data in the Fritz Method. The nurse collects qualitative data to contextualize quantitative sensor data for training the Smart Home AI agent. The middleware (software) receives ON/OFF text-based data and identifies individual sensors. Sophisticated activity-aware algorithms label incoming data (e.g., grooming, sleeping, leaving home). Nurse annotated data sets are used to train the machine to recognize a change in health state.
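The navigation and boundary-annotation steps described above can be sketched in Python. The sketch assumes a hypothetical raw format of one event per line, `YYYY-MM-DD HH:MM:SS[.ffffff] SensorName STATE`, and a hypothetical convention of appending `<Label> begin` and `<Label> end` to the first and last lines inside the nurse-chosen boundaries; the 30-minute search margin and all function names are likewise illustrative, not the project's actual tooling.

```python
from datetime import datetime, timedelta

def parse_event(line):
    """Split one raw line into (timestamp, sensor, state).

    Assumes 'YYYY-MM-DD HH:MM:SS[.ffffff] Sensor STATE'; any further fields
    (such as existing labels) are ignored.
    """
    date, clock, sensor, state = line.split()[:4]
    return datetime.fromisoformat(f"{date} {clock}"), sensor, state

def events_near(lines, reported, margin=timedelta(minutes=30)):
    """Keep only lines within +/- margin of the nurse-documented event time."""
    return [l for l in lines if abs(parse_event(l)[0] - reported) <= margin]

def annotate(lines, begin, end, label):
    """Append '<label> begin' and '<label> end' to the first and last lines
    whose timestamps fall inside the nurse-chosen [begin, end] boundaries."""
    inside = [i for i, l in enumerate(lines) if begin <= parse_event(l)[0] <= end]
    if not inside:
        raise ValueError("no sensor events fall inside the chosen boundaries")
    out = list(lines)
    out[inside[0]] += f" {label} begin"
    out[inside[-1]] += f" {label} end"
    return out

# Illustrative lines; only BedroomAArea appears in the source, other names are hypothetical.
raw = [
    "2017-03-07 15:10:02.000000 KitchenAArea ON",
    "2017-03-07 15:58:40.000000 BedroomAEntry ON",
    "2017-03-07 16:01:12.000000 BedroomAArea ON",
    "2017-03-07 16:03:30.000000 BedroomAArea ON",
    "2017-03-07 16:09:55.000000 BedroomAArea ON",
    "2017-03-07 17:20:00.000000 HallwayArea ON",
]
window = events_near(raw, datetime(2017, 3, 7, 16, 0))
labeled = annotate(window, datetime(2017, 3, 7, 16, 1), datetime(2017, 3, 7, 16, 10), "Fall")
```

The code only narrows the search and records the boundaries; choosing `begin` and `end` remains the nurse's clinical judgment, informed by the interview data.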

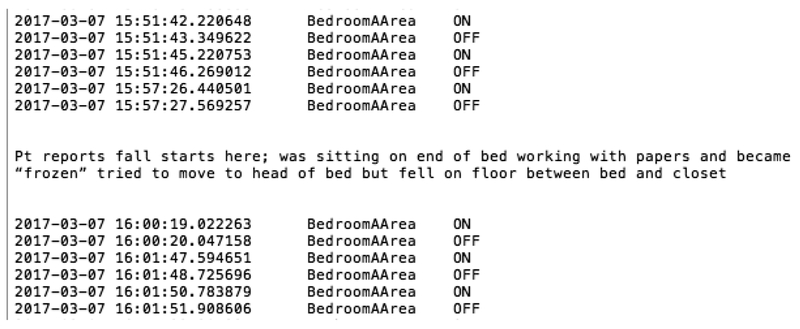

Additionally, the nurse labels the participant’s movement during the event (Figure 5). This provides detailed information regarding data clusters and attributes associated with the event (i.e., sensor activation combinations associated with certain events). Nurses also communicate any patterns or commonalities they note emerging across events. For example, in Figure 5 the repeated activation of a single sensor (BedroomAArea) is common across multiple fall data sets.

Figure 5.

Data showing sensor activations of a participant who fell in their bedroom on March 7, 2017, at about 4:00 P.M. In the lower section of data, the participant is moving after falling but before getting up.
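A pattern such as the repeated activation of a single sensor (BedroomAArea) across fall data sets can be searched for mechanically once a nurse has described it. The Python sketch below is a minimal, hypothetical run detector: it assumes time-ordered `(timestamp, sensor, state)` tuples, skips OFF messages so they do not break a run, and uses an arbitrary threshold of three consecutive ON activations; none of these choices come from the study.

```python
from datetime import datetime, timedelta

def repeated_runs(events, min_count=3):
    """Find runs of consecutive ON activations from a single sensor.

    events: time-ordered (timestamp, sensor, state) tuples.
    OFF messages are skipped, so ON/OFF cycling of one sensor still counts
    as one run. Returns (sensor, count, first_ts, last_ts) for each run with
    at least `min_count` activations (a hypothetical threshold).
    """
    runs = []
    current, count, first, last = None, 0, None, None
    for ts, sensor, state in events:
        if state != "ON":
            continue
        if sensor == current:
            count, last = count + 1, ts
        else:
            if current is not None and count >= min_count:
                runs.append((current, count, first, last))
            current, count, first, last = sensor, 1, ts, ts
    if current is not None and count >= min_count:
        runs.append((current, count, first, last))
    return runs

# Illustrative events; offsets are seconds after 16:00.
t0 = datetime(2017, 3, 7, 16, 0)
spec = [(0, "BedroomAEntry", "ON"), (20, "BedroomAArea", "ON"), (25, "BedroomAArea", "OFF"),
        (90, "BedroomAArea", "ON"), (95, "BedroomAArea", "OFF"), (200, "BedroomAArea", "ON"),
        (900, "HallwayArea", "ON")]
events = [(t0 + timedelta(seconds=s), name, state) for s, name, state in spec]
runs = repeated_runs(events)
```

For this input, `runs` holds one BedroomAArea run of three activations spanning 16:00:20 to 16:03:20. A run like this is a feature the nurse and engineers might examine together; on its own it does not distinguish a fall from, say, restless movement in bed.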

We provide further context on the spreadsheet where all events are recorded along with analytic notes (Table 1). This documentation includes how the event directly relates to the participant’s health and/or diagnosis, symptoms reported at the time of the event, and anomalies the nurse noted in the sensor data. If the data are unclear, we conduct a post-event interview with the participant during the following nursing visit to further clarify the event start and end times as well as movements during the event. The completed analysis (i.e., annotated data and spreadsheet) is then sent to the engineering team to be used for training the AI agent. This is a non-linear, interactive, iterative, and dynamic process driven by the health event and its situational context. The goal of co-processing the data (by nurses and engineers) is to build a ‘clinically’ intelligent smart home. Co-processing also enhances engineers’ knowledge of clinical aspects of chronic illness and its effect on movement and motion patterns.
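One way to keep the event spreadsheet consistent before it is shared with engineers is to give each row a fixed structure. The Python sketch below is a hypothetical layout: the column names simply mirror the content listed above (relation to diagnosis, reported symptoms, sensor anomalies, notes), and the record values are placeholders rather than study data.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import datetime

@dataclass
class EventRecord:
    """One row of the nurse's event spreadsheet (hypothetical column names)."""
    participant_id: str
    event_type: str           # e.g., "fall"
    start: datetime           # nurse-annotated beginning of the event
    end: datetime             # nurse-annotated end of the event
    location: str
    related_diagnosis: str
    reported_symptoms: str
    sensor_anomalies: str
    notes: str = ""

def to_csv(records):
    """Serialize records to CSV text, writing timestamps as 'YYYY-MM-DD HH:MM:SS'."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=[f.name for f in fields(EventRecord)])
    writer.writeheader()
    for rec in records:
        row = asdict(rec)
        row["start"] = rec.start.isoformat(sep=" ")
        row["end"] = rec.end.isoformat(sep=" ")
        writer.writerow(row)
    return buf.getvalue()

# Placeholder record; field values are illustrative only.
record = EventRecord(
    participant_id="P01",
    event_type="fall",
    start=datetime(2017, 3, 7, 16, 1),
    end=datetime(2017, 3, 7, 16, 10),
    location="bedroom",
    related_diagnosis="(from health history)",
    reported_symptoms="(from post-event interview)",
    sensor_anomalies="repeated BedroomAArea activations",
)
csv_text = to_csv([record])
```

A fixed schema like this is a design choice for easier hand-off; the clinical narrative the nurse writes in the notes column is still what gives the row its meaning.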

Understanding the details of an event (i.e., how it affected movement, location, and time in location) significantly increases the accuracy of the nurse’s ground truth annotations. In turn, that accuracy affects the choice of training data sets, which ultimately affects the efficacy of the AI agent. Nurses are well positioned to elicit key information about how an individual responded to illness and to ascertain how that response is revealed in the sensor data. Several components enhance nurses’ ability to provide ground truth. Knowing the individual’s health information and activity baseline is key. A holistic baseline includes a clear understanding of normal routines and use of space (based on the floorplan).
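Once a baseline is established, a simple statistical comparison can show which days depart from it. The Python sketch below uses a z-score against the mean and sample standard deviation of baseline days. The choice of measure (for example, a daily count of nighttime bathroom visits), the 2.0 threshold, and the function name are hypothetical; the sketch is one common way to express "deviation from baseline," not the method used to train the Smart Home AI agent.

```python
import math
from statistics import mean, stdev

def flag_deviations(baseline, observed, z_threshold=2.0):
    """Flag observed days whose value is far from the baseline.

    baseline: list of daily values from a period the nurse judged typical.
    observed: {day_label: value} for days to check.
    Returns {day_label: z_score} for days with |z| >= z_threshold
    (a hypothetical cutoff). If the baseline never varies, any different
    value is flagged with an infinite score of the matching sign.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline days")
    mu, sd = mean(baseline), stdev(baseline)
    flagged = {}
    for day, value in observed.items():
        if sd == 0:
            if value != mu:
                flagged[day] = math.copysign(math.inf, value - mu)
            continue
        z = (value - mu) / sd
        if abs(z) >= z_threshold:
            flagged[day] = z
    return flagged

# Illustrative counts of nighttime bathroom visits (made-up numbers).
baseline = [2, 3, 2, 3, 2, 3, 2]
flagged = flag_deviations(baseline, {"2017-03-06": 3, "2017-03-07": 6})
```

Only the day with six visits is flagged here. Whether such a change reflects illness, a new medication, or a visitor is exactly the context that the nursing interview supplies.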

Abnormal movement is identified after normal baselines are understood. We locate and use any measures already identified in the literature that sensors can detect for specific diagnoses (e.g., walking speed for cognitive decline). When visitors were present in the home near the time of the event, we annotate the data known to include their sensor activations. When two people reside in the smart home, we annotate a sample data set showing each resident’s discriminative data. We also verify that the sensor data are reliable, meaning that there was good connectivity and the sensor batteries were working at the time of the event. Finally, we conduct a scoping review of the sensor data to identify the event’s beginning and ending points and any changes from baseline in the pre-event data. A better understanding of patterns in pre-event data may be important for future work on predicting events. (For a more in-depth discussion of the annotation process, see Fritz & Cook, 2017.)
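Part of the reliability check can be automated by looking for long silences in the sensor stream around the event. The Python sketch below lists gaps between consecutive messages that exceed a threshold and reports whether any gap overlaps the annotated event window. The 2-hour threshold and the function names are hypothetical, and a gap is ambiguous: it may mean lost connectivity or dead batteries, but also that the resident was away or asleep, which is why it must be checked against the nurse's records.

```python
from datetime import datetime, timedelta

def find_gaps(timestamps, max_gap=timedelta(hours=2)):
    """Return (last_before, first_after) pairs where no sensor message
    arrived for longer than `max_gap` (a hypothetical threshold)."""
    ts = sorted(timestamps)
    return [(a, b) for a, b in zip(ts, ts[1:]) if b - a > max_gap]

def window_has_gap(gaps, begin, end):
    """True if any gap overlaps the [begin, end] event window."""
    return any(a < end and b > begin for a, b in gaps)

# Illustrative message times on the day of an event (made-up).
stamps = [datetime(2017, 3, 7, h, m) for h, m in [(12, 0), (12, 30), (13, 0), (16, 5), (16, 6)]]
gaps = find_gaps(stamps)
event_affected = window_has_gap(gaps, datetime(2017, 3, 7, 16, 0), datetime(2017, 3, 7, 16, 20))
```

In this example the silence from 13:00 to 16:05 overlaps an event window starting at 16:00, so the nurse would want to confirm whether the participant was out or the system was down before trusting that stretch of data.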

Engineers use the information provided by nurse researchers for training the AI agent. They use: (a) anomaly detection categories to classify events the AI agent is being trained on; (b) the event time-stamps to locate pre-event and event data; (c) time stamps to select time windows for the automatic detection of clinically relevant health events; (d) routine and change in routine comparisons to inform choice of juxtaposed training set examples (event versus routine data); and, (e) the measures (i.e., sensor activation combinations) to inform choice of tools and techniques.
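Items (b) through (d) describe how nurse-provided time stamps become labeled training windows. The Python sketch below shows one hypothetical way to do this: it builds a pre-event window, the annotated event window, and "routine" windows at the same time of day on days the nurse has confirmed were uneventful, then slices sensor events into each window. The one-hour pre-event length, the label strings, and the function names are assumptions for illustration, not the engineering team's actual pipeline.

```python
from datetime import date, datetime, timedelta

def training_windows(event_begin, event_end, routine_days, pre_event=timedelta(hours=1)):
    """Build labeled (label, start, end) windows for one annotated event.

    Produces a pre-event window, the event window itself, and one
    same-time-of-day 'routine' window per confirmed uneventful day, so that
    event and routine examples can be juxtaposed. `pre_event` is hypothetical.
    """
    windows = [
        ("pre_event", event_begin - pre_event, event_begin),
        ("event", event_begin, event_end),
    ]
    duration = event_end - event_begin
    for day in routine_days:
        start = datetime.combine(day, event_begin.time())
        windows.append(("routine", start, start + duration))
    return windows

def slice_events(events, start, end):
    """Select (timestamp, sensor, state) tuples with start <= timestamp < end."""
    return [e for e in events if start <= e[0] < end]

windows = training_windows(
    datetime(2017, 3, 7, 16, 0),
    datetime(2017, 3, 7, 16, 20),
    [date(2017, 3, 5), date(2017, 3, 6)],
)
```

Matching routine windows to the event's time of day is a simple way to control for daily rhythm; which days count as routine still depends on the nurse's weekly records.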

Discussion

Privacy is a concern for many older adults (Fritz, Corbett, Vandermause, & Cook, 2016; Boise et al., 2013; Courtney, 2008; Demiris, 2009). In-home monitoring with sensors could increase privacy concerns. Older adults are generally more open to sensor monitoring than to monitoring that includes cameras or microphones (Fritz, Corbett, Vandermause, & Cook, 2016; Boise et al., 2013; Courtney, 2008; Demiris, 2009; Demiris et al., 2004). Some older adults agree to cameras and microphones if they can choose where these are located in the home (University of Washington School of Nursing, 2017). Older adults who want cameras and microphones prefer them in the main living areas (i.e., kitchen, living room, entry) and not in the bedroom, bedroom closet, or bathroom (Demiris et al., 2004). Although we do not use cameras or microphones, we do place sensors in the bathroom. With data from bathroom sensors, we can determine when someone is showering or using the toilet and for how long. We cannot discriminate between voiding and defecating (additional sensors would need to be placed in the toilet to determine this), and we cannot see or hear bathroom activities. This level of privacy has been acceptable to our participants. Despite privacy concerns, older adults indicate they are open to Smart Home monitoring for extending independence if it is individualized and works as intended (Fritz, Corbett, Vandermause, & Cook, 2016), is secured against identity theft and other criminal intent (Seelye, Schmitter-Edgecombe, Das, & Cook, 2012; Wagner et al., 2015), and is not cost prohibitive (Steggell, Hooker, Bowman, Choun, & Kim, 2010).

Nursing implications.

The move to value-based care models (Center for Medicare and Medicaid, 2018) and the shortage of care providers (American Association of Colleges of Nursing, 2017) will continue to catalyze the use of smart environments and AI in delivering healthcare. Clinical nursing knowledge will need to be incorporated to optimize meaningful health-related features and functionality. The stakes are high when using AI to monitor and assist older adults in managing their health at home. However, without assistive technologies many older adults may face a premature transition to care facilities, resulting in greater cost to self and society.

Nursing research.

The Fritz Method is designed to guide nurse researchers who serve as the clinical experts in an expert-guided approach to training AI. Further development of this method should focus on: (a) increasing the number of changes in health states that algorithms can detect; (b) providing ground truth for predicting changes in health states (which requires annotating data in the hours, days, or weeks before an event occurs); (c) compiling a list from the literature of all sensors used to detect health states or symptoms by diagnosis or health condition; (d) expanding anomaly detection categories; (e) honing communication with engineers; and (f) validating clinical usefulness.

Limitations.

A major limitation is that this method has not been validated for clinical usefulness. Literature is scarce regarding: (a) anomaly detection categories, (b) the association between motion characteristics, specific diagnoses, and sensor activation combinations, and (c) AI algorithms capable of identifying changes in health states. Our anomaly detection categories were chosen based on logic and clinical expertise. Many of the motion characteristics (detectable by sensors) that relate to specific diagnoses were chosen based on clinical knowledge and knowledge of how sensors work, not on validated tools. Additionally, only a few nurses (n = 5) have been trained to use the method, and it has been applied in a small sample (n = 10).

Nursing education.

Nurses are the workforce most likely to be called upon to implement smart technologies, so their involvement in research and design is critical. Currently, nursing education provides limited exposure to using data beyond patient alarm systems and electronic health record (EHR) informatics (Fetter, 2009). This may leave nurses unprepared to imagine and explore uses for new types of data (derived outside of the EHR). The lack of exposure may also limit nurses’ ability to function at the design table, where they are desperately needed. To better position nurses for this role, nurse educators must expand coursework to include training on AI. Specifically, students should understand how AI uses sensor data and how AI training works. Future nurses need to comprehend AI’s potential, its impact on healthcare delivery, and how it fits within precision health paradigms. They should also understand that Smart Homes may be capable of ‘measuring’ patient outcomes and may therefore play a major role in the move toward value-based care. Nurses will need to combine knowledge of Smart Home capabilities and new care models to optimally advocate for patients’ rights to privacy, autonomy, and self-determination.

Undergraduate pre-licensure nurse educators should consider introducing the general concept of AI and facilitating discussion of its primary applications in healthcare (e.g., clinical decision support). Graduate nursing faculty should master and teach concepts related to turning information derived from sensor data into knowledge about health. Nursing instructors who feel unqualified to do this could consider inviting guest lecturers who are AI experts (e.g., engineering and computer science faculty) or showing free online videos created by academic researchers about managing sensor data for data-driven health. A good resource is the ‘SERC WSU’ YouTube channel. Optimal courses for integrating AI content are informatics, community health, gerontology, ethics, and research design. Evidence-based practice and theory courses may also be suitable places to integrate AI content. Nursing education will need to keep pace with technology to prepare future nurses (Risling, 2017).

Conclusion

Humans cannot analyze copious amounts of motion data, and they are not well suited to provide continuous in-home monitoring over weeks, months, and years. Intelligent machines trained in activity recognition, however, are capable of this. Conversely, machines are unable to intuitively understand and incorporate context; for this, humans are needed. Training a Smart Home AI agent therefore requires ground truth data sets that have been labeled by experienced nurse-clinicians.

Smart environments and Smart Homes will likely be part of the future of integrated models of care. Health-assistive Smart Homes using less sophisticated AI are already being marketed. The push to use Smart Homes in the delivery of in-home care will likely intensify as more people age and AI becomes more sophisticated. It is critical that expert nursing knowledge be integrated in AI training so accurate detection of risky changes in health can be achieved. The Fritz Method is a practical approach to integrating critical nursing knowledge into the Smart Home’s AI agent.

Highlights.

Nurses make significant contributions to artificial intelligence (AI) development

Nurse-driven AI may facilitate aging-in-place with smart homes

Nurses need practical methods for working with AI development for healthcare

Acknowledgments

This work was supported in part by grants from the National Institute of Nursing Research (R01NR0116732), the National Institutes of Health (R25EB024327), the Touchmark Foundation, and the -- -- University College of Nursing Foundation.

The authors would like to acknowledge their colleagues Drs. Diane Cook and Maureen Schmitter-Edgecombe for their leadership and foresight in inviting nurses to the design table of an innovative health-assistive “smart” home and Touchmark on South Hill in Spokane, Washington for collaborating with us in this research endeavour.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

No conflict of interest has been declared by the authors.

References

- Bhaskar R (1978). A realist theory of science (2nd ed.). Brighton, UK: Harvester.

- Bhaskar R (1989). Reclaiming reality: A critical introduction to contemporary philosophy. London: Verso.

- Bhatta B (2013). Research methods in remote sensing. London: Springer.

- Bian Z, Hou J, Chau L, & Magnenat-Thalmann N (2015). Fall detection based on body part tracking using a depth camera. IEEE Journal of Biomedical and Health Informatics, 19(2), 430–439. doi: 10.1109/JBHI.2014.2319372

- Boise L et al. (2013). Willingness of older adults to share data and privacy concerns after exposure to unobtrusive home monitoring. Gerontechnology, 11, 428–435. doi: 10.4017/gt.2013.11.3.001.00

- Brynjolfsson E, & Mitchell T (2017). What can machine learning do? Workforce implications. Science, 358(6370). doi: 10.1126/science.aap8062

- Campolo A, Sanfilippo M, Whittaker M, & Crawford K (2017). AI now 2017 report. New York University. https://ainowinstitute.org/AI_Now_2017_Report.pdf

- Cook DJ, Crandall A, Thomas B, & Krishnan N (2012). CASAS: A smart home in a box. IEEE Computer, 46, 62–69. doi: 10.1109/MC.2012.328

- Cook D, & Das S (2004). Smart environments: Technology, protocols and applications. Hoboken: John Wiley.

- Cook DJ, Schmitter-Edgecombe M, Jonsson L, & Morant AV (2018). Technology-enabled assessment of functional health. IEEE Reviews in Biomedical Engineering, to appear.

- Courtney KL (2008). Privacy and senior willingness to adopt smart home information technology in residential care facilities. Methods of Information in Medicine, 47, 76–81. doi: 10.3414/ME9104

- Courtney KL, Alexander GL, & Demiris G (2008). Information technology from novice to expert: Implementation implications. Journal of Nursing Management, 16(6), 692–699. doi: 10.1111/j.1365-2834.2007.00829.x

- Demiris G (2009). Privacy and social implications of distinct sensing approaches to implementing smart homes for older adults. In 31st Annual International Conference of the IEEE EMBS (pp. 4311–4314). Minneapolis, Minnesota, USA. doi: 10.1109/IEMBS.2009.5333800

- Demiris G, Hensel BK, Skubic M, & Rantz M (2008). Senior residents’ perceived need of and preferences for “smart home” sensor technologies. International Journal of Technology Assessment in Health Care, 24(1), 120–124. doi: 10.1017/S0266462307080154

- Demiris G, Rantz M, Aud M, Marek K, Tyrer H, Skubic M, & Hussam A (2004). Older adults’ attitudes towards and perceptions of “smart home” technologies: A pilot study. Medical Informatics and the Internet in Medicine, 29(2), 87–94. doi: 10.1080/14639230410001684387

- Dermody G, & Fritz R (2018). A conceptual framework for clinicians working with artificial intelligence and health-assistive smart homes. Nursing Inquiry, e12267. doi: 10.1111/nin.12267

- Fetter MS (2009). Improving information technology competencies: Implications for psychiatric mental health nursing. Issues in Mental Health Nursing, 30(1), 3–13. doi: 10.1080/01612840802555208

- Fritz RL, & Cook D (2017, July). Identifying varying health states in smart home sensor data: An expert-guided approach. World Multi-Conference of Systemics, Cybernetics and Informatics (WMSCI 2017). Conference paper.

- Fritz RL, Corbett CL, Vandermause R, & Cook D (2016). The influence of culture on older adults’ adoption of smart home monitoring. Gerontechnology, 14(3), 146–156. doi: 10.4017/gt.2016.14.3.010.00

- Ghods A, Caffrey K, Lin B, Fraga K, Fritz R, Schmitter-Edgecombe M, Hundhausen C, & Cook D (2018). Iterative design of visual analytics for a clinician-in-the-loop smart home. IEEE Journal of Biomedical and Health Informatics. doi: 10.1109/JBHI.2018.2864287

- Ihde D (1990). Technology and the lifeworld: From garden to earth. Bloomington, IN: Indiana University Press.

- Jideofor V, Young C, Zaruba G, & Daniel KM (2012). Intelligent sensor floor for fall prediction and gait analysis. Available on Google Scholar.

- Kaye J, Maxwell S, Mattek N, Hayes TL, Dodge H, Pavel M, … Zitzelberger T (2011). Intelligent systems for assessing aging changes: Home-based, unobtrusive, and continuous assessment of aging. The Journals of Gerontology, Series B, Psychological Sciences and Social Sciences, 66(Suppl. 1), i180–i190. doi: 10.1093/geronb/gbq095

- Krishnan N, & Cook DJ (2014). Activity recognition on streaming sensor data. Pervasive and Mobile Computing, 10, 138–154. doi: 10.1016/j.pmcj.2012.07.003

- Lê Q, Nguyen HB, & Barnett T (2012). Smart homes for older people: Positive aging in a digital world. Future Internet, 4(2), 607–617. doi: 10.3390/fi4020607

- Liao P-H, Hsu P-T, Chu W, & Chu W-C (2015). Applying artificial intelligence technology to support decision-making in nursing: A case study in Taiwan. Health Informatics Journal, 21(2), 137–148. doi: 10.1177/1460458213509806

- Mann W, Belchior P, Tomita M, & Kemp B (2007). Older adults’ perception and use of PDAs, home automation system, and home health monitoring system. Topics in Geriatric Rehabilitation, 23(1), 35–46.

- McCarthy J (n.d.). Programs with common sense. In Proceedings of the Teddington Conference on the Mechanization of Thought Processes (pp. 756–791). London: Her Majesty’s Stationery Office.

- Moen A (2003). A nursing perspective to design and implementation of electronic patient record systems. Journal of Biomedical Informatics, 36(4–5), 375–378. doi: 10.1016/j.jbi.2003.09.019

- Muheidat F, Tyrer HW, Popescu M, & Rantz M (2017, March). Estimating walking speed, stride length, and stride time using a passive floor based electronic scavenging system. In IEEE Sensors Applications Symposium (SAS) (pp. 1–5). doi: 10.1109/SAS.2017.7894112

- National Institute on Aging. (2017a, January). NIH initiative tests in-home technology to help older adults age in place. https://www.nia.nih.gov/news/nih-initiative-tests-home-technology-help-older-adults-age-place

- National Institute on Aging. (2017b, May). Aging in place: Growing old at home. https://www.nia.nih.gov/health/aging-place-growing-old-home

- Pearl J, Glymour M, & Jewell NP (2016). Causal inference in statistics: A primer. Chichester, West Sussex, UK: John Wiley & Sons.

- Peirce CS (1905). What pragmatism is. The Monist, 15(2), 161–181.

- Rantz M, Lane K, Phillips LJ, Despins LA, Galambos C, Alexander GL, … Miller SJ (2015). Enhanced registered nurse care coordination with sensor technology: Impact on length of stay and cost in aging in place housing. Nursing Outlook, 63(6), 650–655. doi: 10.1016/j.outlook.2015.08.004

- Rantz M, Popejoy LL, Galambos C, Phillips LJ, Lane KR, Marek KD, … Ge B (2014). The continued success of registered nurse care coordination in a state evaluation of aging in place in senior housing. Nursing Outlook, 62(4), 237–246. doi: 10.1016/j.outlook.2014.02.005

- Risling T (2017). Educating the nurses of 2025: Technology trends of the next decade. Nurse Education in Practice, 22, 89–92. doi: 10.1016/j.nepr.2016.12.007

- Risling T (2018). Why AI needs nursing. http://policyoptions.irpp.org/magazines/february-2018/why-ai-needs-nursing/

- Seelye AM, Schmitter-Edgecombe M, Das B, & Cook DJ (2012). Application of cognitive rehabilitation theory to the development of smart prompting technologies. IEEE Reviews in Biomedical Engineering, 5, 29–44. doi: 10.1109/RBME.2012.2196691

- Skubic M, Guevara RD, & Rantz M (2015, March). Automated health alerts using in-home sensor data for embedded health assessment. IEEE Journal of Translational Engineering in Health and Medicine. doi: 10.1109/JTEHM.2015.2421499

- Sprint G, Cook DJ, Fritz R, & Schmitter-Edgecombe M (2016a). Using smart homes to detect and analyze health events. Computer, 49(11), 29–37. doi: 10.1109/mc.2016.338

- Sprint G, Cook D, Fritz R, & Schmitter-Edgecombe M (2016b). Detecting health and behavior change by analyzing smart home sensor data. 2016 IEEE International Conference on Smart Computing (SMARTCOMP). Conference paper. doi: 10.1109/SMARTCOMP.2016.7501687

- Steggell CD, Hooker K, Bowman S, Choun S, & Kim S (2010). The role of technology for healthy aging among Korean and Hispanic women in the United States: A pilot study. Gerontechnology, 9(4), 433–449. doi: 10.4017/gt.2010.09.04.007.00

- Stone E, & Skubic M (2015). Fall detection in homes of older adults using the Microsoft Kinect. IEEE Journal of Biomedical and Health Informatics, 19(1), 290–301. doi: 10.1109/JBHI.2014.2312180

- Toromanovic S, Hasanovic E, & Masic I (2010). Nursing information systems. Materia Socio Medica, 22(3), 168. doi: 10.5455/msm.2010.22.168-171

- U.S. Census Bureau. (2018). Older people projected to outnumber children for first time in U.S. history. https://www.census.gov/newsroom/press-releases/2018/cb18-41-population-projections.html

- University of Washington (2017). Creating safer, smarter homes. https://www.washington.edu/boundless/nursing-smart-home/

- Wagner F, Basran J, & Dal Bello-Haas V (2012). A review of monitoring technology for use with older adults. Journal of Geriatric Physical Therapy, 35(1), 28–34. doi: 10.1519/JPT.0b013e318224aa23

- Wagner F, Basran J, Dal Bello-Haas V, Maxwell JA, Vurgun S, … Miller S (2015). The elderly’s independent living in smart homes: A characterization of activities and sensing infrastructure survey to facilitate services development. Workshop Proceedings of the 8th International Conference on Intelligent Environments, 3(5), 214–253. doi: 10.1016/j.pmcj.2006.12.001