Summary

Weighting methods offer an approach to estimating causal treatment effects in observational studies. However, if weights are estimated by maximum likelihood, misspecification of the treatment assignment model can lead to weighted estimators with substantial bias and variance. In this paper, we propose a unified framework for constructing weights such that a set of measured pretreatment covariates is unassociated with treatment assignment after weighting. We derive conditions for weight estimation by requiring that, after weighting, the associations between these covariates and treatment assignment, as characterized by a chosen treatment assignment model, are eliminated. The moment conditions in covariate balancing weight methods for binary, categorical and continuous treatments in cross-sectional settings are special cases of the conditions in our framework, which extends to longitudinal settings. Simulations show that our method gives treatment effect estimates with smaller biases and variances than the maximum likelihood approach under treatment assignment model misspecification. We illustrate our method with an application to systemic lupus erythematosus data.

Keywords: Causal inference, Confounding, Continuous treatment, Covariate balance, Inverse probability weighting, Propensity function

1. Introduction

Weighting methods are widely used to estimate causal treatment effects. The propensity function, the conditional probability of receiving treatment given a set of measured pretreatment covariates (Imai & van Dyk, 2004), features prominently in weighting methods. A natural choice of weights is a ratio of the marginal probability of treatment assignment and the propensity function, henceforth referred to as the stabilized inverse probability of treatment weights (Robins, 2000; Robins et al., 2000). In practice, however, the propensity function is unknown, so weights are usually constructed using the stabilized inverse probability of treatment weight structure with an estimated propensity function, often obtained by maximum likelihood (Imbens, 2000; Robins et al., 2000), although other methods have been proposed (Lee et al., 2010). Because these estimation procedures do not directly aim at the goal of weighting, which is to eliminate the association between a set of measured pretreatment covariates satisfying the conditions in § 2·1 and treatment assignment after weighting, a slightly misspecified propensity function model can result in badly biased treatment effect estimates (Kang & Schafer, 2007). Recently, this problem has motivated new weighting methods that optimize covariate balance, the covariate balancing weights (Graham et al., 2012; Hainmueller, 2012; McCaffrey et al., 2013; Imai & Ratkovic, 2014, 2015; Zhu et al., 2015; Zubizarreta, 2015; Chan et al., 2016; Fong et al., 2018). These weights can dramatically improve the performance of weighting methods, but there is no framework for generalizing them to complex treatment types, such as semicontinuous or multivariate treatments, or to longitudinal settings.

In this paper, we introduce covariate association eliminating weights, a unified framework for constructing weights with the goal being that a set of measured pretreatment covariates will be unassociated with treatment assignment after weighting. Our method can be used to estimate causal effects for semicontinuous, count, ordinal, or even multivariate treatments, and it extends to longitudinal settings. An example of estimating the direct effect of a time-varying treatment on a longitudinal outcome is provided in § 8. Utilizing the generality of the propensity function and its capacity to characterize covariate associations with treatment assignment, we derive conditions for weight estimation by eliminating the association between the set of measured pretreatment covariates and treatment assignment specified in a chosen propensity function model after weighting, i.e., by solving the weighted score equations of the propensity function model at parameter values which indicate that the covariates are unassociated with treatment assignment.

Our method has several attractive characteristics. First, it encompasses existing covariate balancing weight methods and provides a unified framework for weighting with treatments of any distribution; see § 4. By eliminating the associations between the covariates and treatment assignment after weighting, our method can provide some robustness against misspecification of the functional forms of the covariates in a propensity function model, particularly if they are predictive of the outcome; see § 6. Second, it is clear from our framework what type of covariate associations are eliminated after weighting. For example, the covariate balancing weight method proposed in Fong et al. (2018) will only eliminate the associations between the covariates and the mean of a continuous treatment; see § 4·2. Our method can also eliminate the associations between the covariates and the variance of the continuous treatment. Third, our method extends to longitudinal settings; see § 8. In particular, apart from handling treatments of any distribution, it can accommodate unbalanced observation schemes and can incorporate a variety of stabilized weight structures. In contrast, to the best of our knowledge, the only available covariate balancing weight method for longitudinal settings, proposed by Imai & Ratkovic (2015), focuses on binary treatments in a balanced observation scheme, and it is not clear how to incorporate arbitrary stabilized weight structures in their approach. Finally, our method can be implemented with standard statistical software by solving a convex optimization problem that identifies minimum-variance weights subject to our conditions (Zubizarreta, 2015). This is especially appealing for nonbinary treatments with outliers (Naimi et al., 2014), because it protects against extreme weights which often lead to unstable treatment effect estimates in practice.

2. The propensity function

2·1. Definition and assumptions

Let Xi, Ti and Yi be respectively a set of measured pretreatment covariates, the possibly multivariate treatment variable and the outcome for the ith unit (i = 1, … , n) in a simple random sample of size n. Following Imai & van Dyk (2004), we define the propensity function as the conditional probability of treatment given the set of measured pretreatment covariates, i.e., pr(Ti | Xi; βtrue), where βtrue parameterizes this distribution. The parameter βtrue is assumed to be unique and finite-dimensional, and is such that pr(Ti | Xi) depends on Xi only through a subset of βtrue; this is the uniquely parameterized propensity function assumption of Imai & van Dyk (2004). For example, if pr(Ti | Xi) follows a regression model, then βtrue would include regression coefficients that characterize the dependence of Ti on Xi and intercept terms that describe the baseline distribution of Ti. In addition, we make the strong ignorability of treatment assignment assumption (Rosenbaum & Rubin, 1983), also known as the unconfoundedness assumption, pr{Ti | Yi(tP), Xi} = pr(Ti | Xi), where Yi(tP) is a random variable that maps a potential treatment tP to a potential outcome, and the positivity assumption (Imai & van Dyk, 2004), pr(Ti ∈ A | Xi) > 0 for all Xi and any set A with positive measure. Finally, the distribution of potential outcomes for one unit is assumed to be independent of the potential treatment value of another unit given the set of pretreatment covariates; this is the stable unit treatment value assumption. Throughout the paper, we make the above assumptions; otherwise our method may result in severely biased causal effect estimates, even compared with an unadjusted analysis. For example, when unconfoundedness holds without conditioning on Xi, adjusting for Xi can induce M-bias (Ding & Miratrix, 2015).

2·2. Covariate selection

We briefly review some methods for covariate selection. When the causal structure is known and represented by a directed acyclic graph, Shpitser et al. (2010) gave a complete graphical criterion, the adjustment criterion, to determine whether adjusting for a set of covariates ensures unconfoundedness. The adjustment criterion generalizes the back-door criterion of Pearl (1995), which is sufficient but not necessary for unconfoundedness. In the absence of knowledge about how covariates are causally related to each other, VanderWeele & Shpitser (2011) proposed the disjunctive cause criterion. This says that if any subset of pretreatment covariates suffices to ensure unconfoundedness, then the subset of pretreatment covariates that are causes of the treatment assignment and/or the outcome will also suffice.

Given that an adjustment set that ensures unconfoundedness has been identified, many researchers have proposed dimension reduction procedures to increase efficiency while maintaining unconfoundedness (de Luna et al., 2011; VanderWeele & Shpitser, 2011), or to minimize mean squared error (Vansteelandt et al., 2012). Broadly, these methods tend to remove from the adjustment set covariates that are unassociated with the outcome.

2·3. Stabilized inverse probability of treatment weighting

A popular approach to causal effect estimation is to weight each unit’s data by stabilized inverse probability of treatment weights Wi = Wi(Ti, Xi) = pr(Ti)/pr(Ti | Xi) (Robins et al., 2000). The idea is that if the propensity function is known, the propensity function after weighting by Wi, pr*(Ti | Xi), will be equivalent to pr(Ti) and hence does not depend on Xi, as shown in the Supplementary Material. Here * denotes the pseudo-population after weighting. Under the assumptions in § 2·1, weighting by Wi also preserves the causal effect of tP on E{Yi(tP)} in the original population (Robins, 2000; Zhang et al., 2016), and so the causal effect can be consistently estimated without adjusting for Xi in the weighted data. For example, E{Yi(tP)} can be consistently estimated by modelling E(Yi | Ti) in the weighted data (Robins, 2000).
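The claim that weighting by Wi makes pr*(Ti | Xi) equal to pr(Ti) can be checked numerically. The sketch below assumes a binary treatment and a discrete covariate, and treats the within-stratum treated proportion as if it were the known propensity function (an illustrative assumption, not part of the method); the helper names are hypothetical. In every covariate stratum, the weighted share of treated units then equals the marginal treated proportion.

```python
import numpy as np


def stabilized_weights_binary(t, x):
    """Stabilized weights W_i = pr(T_i) / pr(T_i | X_i) for a binary treatment.

    Assumption for illustration: X_i is discrete and the within-stratum
    treated proportion plays the role of the known propensity function.
    """
    t = np.asarray(t, dtype=float)
    x = np.asarray(x)
    p_marg = t.mean()                      # pr(T_i = 1)
    prop = np.empty_like(t)
    for level in np.unique(x):
        mask = x == level
        prop[mask] = t[mask].mean()        # pr(T_i = 1 | X_i = level)
    numerator = np.where(t == 1, p_marg, 1.0 - p_marg)
    denominator = np.where(t == 1, prop, 1.0 - prop)
    return numerator / denominator


def weighted_treated_share(t, x, w):
    """pr*(T_i = 1 | X_i = level) in the pseudo-population, for each level."""
    return {lv: np.sum(w[x == lv] * t[x == lv]) / np.sum(w[x == lv])
            for lv in np.unique(x)}


x = np.array([0, 0, 0, 0, 1, 1, 1, 1, 1, 1])
t = np.array([1, 0, 0, 0, 1, 1, 1, 0, 1, 0], dtype=float)
w = stabilized_weights_binary(t, x)
shares = weighted_treated_share(t, x, w)   # every value equals t.mean() = 0.5
```

The stratum with one treated unit out of four and the stratum with four out of six both end up with a weighted treated share of 0.5, so treatment no longer depends on the covariate in the weighted data.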

2·4. Maximum likelihood estimation

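Estimating the weights by maximum likelihood involves specifying parametric models pr(Ti; α) and pr(Ti | X̃i; β), where X̃i are functionals of elements in Xi, and then estimating the unknown parameters α and β by solving the score equations

$$\sum_{i=1}^{n} \frac{\partial}{\partial \alpha} \log \mathrm{pr}(T_i; \alpha) = 0, \qquad \sum_{i=1}^{n} \frac{\partial}{\partial \beta} \log \mathrm{pr}(T_i \mid \tilde{X}_i; \beta) = 0.$$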

If pr(Ti | X̃i; β) and pr(Ti; α) are correctly specified, then the weights Ŵi = pr(Ti; α̂)/pr(Ti | X̃i; β̂), where α̂ and β̂ are the maximum likelihood estimates of α and β, are asymptotically equivalent to Wi. Thus weighting by Ŵi will result in pr*(Ti | X̃i) being asymptotically equivalent to pr(Ti), i.e., Ti does not depend on X̃i after weighting. Here pr*(Ti | X̃i) depends on α̂ and β̂ through the estimated weights. However, when pr(Ti | X̃i; β) is misspecified, this estimation procedure not only will result in pr*(Ti | X̃i) diverging from pr(Ti) but also does not even guarantee that the association between X̃i and Ti is reduced in the weighted data relative to the observed data. Researchers are therefore encouraged to check for the absence of this association in the weighted data before proceeding to causal effect estimation. For nonbinary treatments, correctly specifying the propensity function model will generally also entail correct specification of the distribution and of the dependence of higher-order moments of the treatment variable on the covariates. Model misspecification is therefore likely to be more severe for nonbinary treatments.
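As a concrete instance of the maximum likelihood approach, the sketch below assumes a binary treatment, a logistic model for pr(Ti | X̃i; β) fitted by Newton-Raphson, and a covariate-free Bernoulli model for pr(Ti; α), whose maximum likelihood estimate is the sample treated proportion. The function names and the simulated data are hypothetical. The final line computes the weighted score of the logistic model at the "no association" values; it is generally nonzero, which is the finite-sample imbalance that the proposed method removes exactly.

```python
import numpy as np


def expit(u):
    return 1.0 / (1.0 + np.exp(-u))


def fit_logistic(design, t, n_iter=50, tol=1e-10):
    """ML fit of logit pr(T_i = 1 | X~_i) = design_i @ beta by Newton-Raphson."""
    beta = np.zeros(design.shape[1])
    for _ in range(n_iter):
        mu = expit(design @ beta)
        score = design.T @ (t - mu)                              # score equations
        info = design.T @ (design * (mu * (1.0 - mu))[:, None])  # Fisher information
        step = np.linalg.solve(info, score)
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    return beta


def ml_stabilized_weights(design, t):
    """W^_i = pr(T_i; alpha^) / pr(T_i | X~_i; beta^), both models fitted by ML."""
    beta_hat = fit_logistic(design, t)
    ps = expit(design @ beta_hat)
    p_marg = t.mean()                  # ML estimate of pr(T_i = 1) without covariates
    w_hat = np.where(t == 1, p_marg / ps, (1.0 - p_marg) / (1.0 - ps))
    return w_hat, beta_hat


rng = np.random.default_rng(0)
n = 500
x = rng.normal(size=(n, 2))
design = np.column_stack([np.ones(n), x])           # X~*_i = (1, X_i^T)^T
t = (rng.random(n) < expit(design @ np.array([-0.3, 0.8, -0.5]))).astype(float)
w_hat, beta_hat = ml_stabilized_weights(design, t)

# Residual covariate imbalance after ML weighting; generally nonzero in finite samples
imbalance = design.T @ (w_hat * (t - t.mean()))
```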

3. Methodology

3·1. General framework

Maximum likelihood estimation indirectly aims to achieve the asymptotic equivalence of pr*(Ti | X̃i) and pr(Ti) by fitting a model for the propensity function. We instead propose to use weighting to directly eliminate, in the weighted data, the association between X̃i and Ti characterized by a chosen propensity function model pr(Ti | X̃i; β). When pr(Ti | X̃i; β) is misspecified, our method, in contrast to maximum likelihood estimation, will eliminate the association between X̃i and Ti as characterized by pr(Ti | X̃i; β) after weighting. This is necessary for Ti to be independent of X̃i after weighting. In the unlikely scenario that pr(Ti | X̃i; β) is correctly specified, maximum likelihood estimation will asymptotically eliminate the association between X̃i and Ti after weighting, while our method will eliminate their association in finite samples.

We now formalize our ideas. Given a set of known weights W = (W1, … , Wn), we can fit a parametric propensity function model pr{Ti | X̃i; β(W)} to the data weighted by W by solving the score equations

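$$\sum_{i=1}^{n} W_i \frac{\partial}{\partial \beta(W)} \log \mathrm{pr}\{T_i \mid \tilde{X}_i; \beta(W)\} = 0, \tag{1}$$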

where β(W) is a vector of parameters. Here we write β(W) as a function of W because the resulting maximum likelihood estimates, β̂(W), will depend on W. We use the uniquely parameterized propensity function assumption in § 2·1 to partition β(W) into {βb(W), βd(W)}, where βd(W) are the unique parameters that characterize the dependence of Ti on X̃i, e.g., regression coefficients, and βb(W) are parameters that characterize the baseline distribution, e.g., the intercept terms. Here the subscripts ‘d’ and ‘b’ stand for dependence and baseline, respectively. Without loss of generality, we assume pr{Ti | X̃i; βb(W) = α, βd(W) = 0} = pr(Ti; α). The conditions for covariate association eliminating weights are then derived by inverting (1) such that the weights W satisfy the equations

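$$\sum_{i=1}^{n} W_i \left[ \frac{\partial}{\partial \beta(W)} \log \mathrm{pr}\{T_i \mid \tilde{X}_i; \beta(W)\} \right]_{\beta_b(W) = \hat{\alpha},\ \beta_d(W) = 0} = 0, \tag{2}$$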

where α̂ is obtained by fitting pr(Ti; α) to the observed data. Our method therefore sets the goal of weighting as attaining pr*(Ti | X̃i) = pr(Ti; α̂), that is, after weighting by Wi, (i) Ti is unassociated with X̃i as described by the chosen propensity function model, and (ii) the marginal distribution of Ti is preserved from the observed data, as described by pr(Ti; α̂). Here (ii) is a statement concerning the projection function, the treatment assignment distribution in the weighted data. The choice of projection function, as long as it does not depend on X̃i, does not affect the consistency of weighted estimators for causal treatment effects, although it does affect their efficiency (Peterson et al., 2010). In our method, the projection function may be altered by fixing βb(W) in (2) at values other than α̂; an example is provided in § 4·1.

Our method is linked to the use of regression to assess covariate balance, for example in matched data (Lu, 2005). Specifically, if applying regression to matched data indicates small associations between the covariates and treatment assignment, it would be reasonable to assume that the covariate distributions are approximately balanced across treatment levels. Inspired by this, we propose to invert this covariate balance measure for weight estimation such that it implies no imbalances in covariate distributions across treatment levels, i.e., βd(W) = 0 after weighting.

3·2. Convex optimization for weight estimation

We consider a convex optimization approach in the spirit of Zubizarreta (2015), intended to estimate minimum-variance weights subject to the conditions (2) being satisfied. We formulate the estimation problem as a quadratic programming task and use the lsei function in the R (R Development Core Team, 2018) package limSolve (Soetaert et al., 2009) to obtain the optimal weights. Specifically, we solve the following quadratic programming problem for W:

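$$\min_{W} \; \|W - 1_n\|_2^2 = \sum_{i=1}^{n} (W_i - 1)^2 \tag{3}$$

subject to

$$\sum_{i=1}^{n} W_i \left[ \frac{\partial}{\partial \beta(W)} \log \mathrm{pr}\{T_i \mid \tilde{X}_i; \beta(W)\} \right]_{\beta_b(W) = \hat{\alpha},\ \beta_d(W) = 0} = 0, \tag{4}$$

$$\sum_{i=1}^{n} W_i = n, \qquad W_i \geq 0 \quad (i = 1, \ldots, n). \tag{5}$$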

The constraints (4) are the equations (2), and so should eliminate the associations between covariates and treatment assignment after weighting. They also preserve the marginal distribution of the treatment variable in the observed data. The first constraint in (5) ensures that the weighted and observed data contain the same number of units. Since this also ensures that the mean of W is unity, (3) minimizes the variance of W. Interestingly, since the weights in the observed data are all equal to one, (3) can also be interpreted as identifying the least extrapolated data as characterized by the L2-norm. The second constraint in (5) requires each element of W to be nonnegative (Hainmueller, 2012; Zubizarreta, 2015). Allowing some elements of W to be zero lets the estimation problem be formulated as a convex quadratic programming problem. The estimation procedure can then remove units that contribute greatly to the variability of the weights, while the constraints force the remaining units to allow unbiased estimation of causal treatment effects (Crump et al., 2009). Following Zubizarreta (2015), it is possible to relax the strict equality constraints (4) to inequalities; the R function lsei has this option. This allows for less extrapolation at the expense of possibly introducing bias. For simplicity, we consider only the case where the strict equality constraints (4) are enforced.
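Because the conditions (2) are linear in W once the score is evaluated at the fixed parameter values, the quadratic program can be sketched in a few lines. The sketch below is a NumPy stand-in for the lsei call, not a reimplementation of it, and rests on stated assumptions: the objective is taken to be Σi (Wi − 1)², which under Σi Wi = n differs from Σi Wi² only by the constant n; only the equality constraints are solved, via the closed-form Lagrangian solution W = 1 + Aᵀλ; and the nonnegativity constraint in (5) is checked afterwards rather than enforced. The function name `min_variance_weights` is hypothetical.

```python
import numpy as np


def min_variance_weights(constraints, targets=None):
    """Equality-constrained part of (3)-(5).

    Minimizes sum_i (W_i - 1)^2 subject to constraints @ W = targets and
    sum_i W_i = n. Each row of `constraints` is one linear condition on W,
    such as one component of the weighted score equations evaluated at the
    'no association' parameter values. W_i >= 0 is NOT enforced.
    """
    a = np.atleast_2d(np.asarray(constraints, dtype=float))
    n = a.shape[1]
    b = np.zeros(a.shape[0]) if targets is None else np.asarray(targets, dtype=float)
    a_full = np.vstack([a, np.ones(n)])       # append sum_i W_i = n
    b_full = np.append(b, float(n))
    ones = np.ones(n)
    # Stationarity gives W = 1 + A^T lam; feasibility gives (A A^T) lam = b - A 1.
    # lstsq also copes with redundant (linearly dependent) constraint rows.
    lam, *_ = np.linalg.lstsq(a_full @ a_full.T, b_full - a_full @ ones, rcond=None)
    return ones + a_full.T @ lam


# Usage: continuous treatment t, one covariate x. The two conditions keep the
# weighted mean of t at its sample mean and remove the weighted t-x covariance.
rng = np.random.default_rng(1)
n = 300
x = rng.normal(size=n)
t = 0.3 * x + rng.normal(size=n)
tc = t - t.mean()
a = np.vstack([tc, tc * x])
w = min_variance_weights(a)
if w.min() < 0:
    print("some weights are negative; a QP solver that enforces W_i >= 0 is needed")
```

When the closed-form solution already has no negative entries, it coincides with the solution of the full problem with the nonnegativity constraint, because adding inactive inequality constraints does not change the optimum. Otherwise a proper quadratic programming solver is required.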

4. Relation to previous methods

4·1. Binary treatment

The moment conditions specified in existing covariate balancing weight methods (Imai & Ratkovic, 2014; Fong et al., 2018) are special cases of the conditions (2) after slight modifications. In this section we focus on binary treatments. The details for more general categorical treatments can be found in the Supplementary Material.

Unless stated otherwise in our derivations, we implicitly take βd(W) and βb(W) in (2) to be, respectively, the vector of regression coefficients of X̃i and the vector of the remaining parameters, including intercept terms, in the chosen propensity function model.

Let X̃i* = (1, X̃iᵀ)ᵀ. When Ti is a binary treatment variable, i.e., Ti = 1 if the ith unit received treatment and Ti = 0 otherwise, the following covariate balancing conditions for the estimation of the propensity score have been proposed (Imai & Ratkovic, 2014):

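$$\sum_{i=1}^{n} T_i W_i \tilde{X}_i^{*} = \sum_{i=1}^{n} (1 - T_i) W_i \tilde{X}_i^{*}, \qquad W_i = \frac{T_i}{\pi(\tilde{X}_i; \beta)} + \frac{1 - T_i}{1 - \pi(\tilde{X}_i; \beta)}, \tag{6}$$

where π(X̃i; β) = pr(Ti = 1 | X̃i; β).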

Because X̃i* includes 1, these covariate balancing conditions constrain the number of units in the treated and control groups to be equal in the weighted data. The other conditions, for X̃i, constrain the weighted means of each element in X̃i in the treated and control groups to be equal.

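These conditions can be derived using our framework. Suppose that we specify a logistic regression model for the propensity function/score, logit pr{Ti = 1 | X̃i; β(W)} = β(W)ᵀX̃i*, in the weighted data induced by a set of known weights W. This model can be fitted to the weighted data by solving the score equations

$$\sum_{i=1}^{n} W_i \left[ T_i - \operatorname{expit}\{\beta(W)^{\mathrm{T}} \tilde{X}_i^{*}\} \right] \tilde{X}_i^{*} = 0,$$

where expit(u) = 1/{1 + exp(−u)}.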

Conditions can be derived by fixing β(W) = {logit(T̄), 0ᵀ}ᵀ, i.e., letting expit{β(W)ᵀX̃i*} = T̄ in these weighted score equations, where T̄ is the proportion of units that received treatment in the observed data, and then inverting these equations so that we are solving for W:

$$\sum_{i=1}^{n} W_i (T_i - \bar{T}) \tilde{X}_i^{*} = 0. \tag{7}$$

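The correspondence between (6) and (7) can then be established by changing the projection function, the treatment probability in the weighted data, from T̄ to 1/2: replacing T̄ by 1/2 in (7) gives Σi Wi(2Ti − 1)X̃i* = 0, which is (6).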
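The derivation can be verified numerically: after solving (7), refitting the logistic model to the weighted data should return an intercept of logit(T̄) and slopes of zero, and the weighted covariate means should coincide in the treated and control groups. The sketch below assumes toy data, the squared-distance objective Σi (Wi − 1)² with only the equality constraints (nonnegativity is not enforced), and hypothetical helper names.

```python
import numpy as np


def balancing_weights(constraints):
    """Minimize sum_i (W_i - 1)^2 s.t. constraints @ W = 0 and sum_i W_i = n (W_i >= 0 not enforced)."""
    n = constraints.shape[1]
    a = np.vstack([constraints, np.ones(n)])
    b = np.append(np.zeros(constraints.shape[0]), float(n))
    lam, *_ = np.linalg.lstsq(a @ a.T, b - a @ np.ones(n), rcond=None)
    return np.ones(n) + a.T @ lam


def weighted_logistic(design, t, w, n_iter=100, tol=1e-12):
    """Weighted ML fit of logit pr(T_i = 1 | X~_i) = design_i @ beta by Newton-Raphson."""
    beta = np.zeros(design.shape[1])
    for _ in range(n_iter):
        mu = 1.0 / (1.0 + np.exp(-design @ beta))
        score = design.T @ (w * (t - mu))
        info = design.T @ (design * (w * mu * (1.0 - mu))[:, None])
        step = np.linalg.solve(info, score)
        beta += step
        if np.max(np.abs(step)) < tol:
            break
    return beta


rng = np.random.default_rng(2)
n = 400
x = rng.normal(size=(n, 2))
t = rng.binomial(1, 0.4, size=n).astype(float)
design = np.column_stack([np.ones(n), x])      # X~*_i = (1, X~_i^T)^T
t_bar = t.mean()

# Conditions (7): sum_i W_i (T_i - t_bar) X~*_i = 0
w = balancing_weights(((t - t_bar)[:, None] * design).T)

# Weighted covariate means coincide in the treated and control groups
mean_treated = (w * t) @ x / np.sum(w * t)
mean_control = (w * (1 - t)) @ x / np.sum(w * (1 - t))

# Refitting the logistic model to the weighted data returns (logit(t_bar), 0, 0)
beta_w = weighted_logistic(design, t, w)
```

The intercept row of (7) gives Σi WiTi = T̄ Σi Wi, and each covariate row gives Σi WiTiX̃i = T̄ Σi WiX̃i, so both group means equal the overall weighted mean.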

4·2. Continuous treatment

When Ti is continuous on the real line, Fong et al. (2018) proposed the following covariate balancing conditions for weight estimation,

$$\sum_{i=1}^{n} W_i T_i^{c} \tilde{X}_i^{c} = 0, \tag{8}$$

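where the superscript c denotes the centred version of the variable. We now derive these covariate balancing conditions using the proposed framework. First, we assume a simple normal linear model for the propensity function, pr{Ti | X̃i; β(W)} = N{βμ(W)ᵀX̃i*, σ²(W)}, with β(W) = {βμ(W), σ²(W)}. After multiplying through by positive constants, the score equations for this model in the weighted data are

$$\sum_{i=1}^{n} W_i \{T_i - \beta_\mu(W)^{\mathrm{T}} \tilde{X}_i^{*}\} \tilde{X}_i^{*} = 0, \qquad \sum_{i=1}^{n} W_i \left[ \{T_i - \beta_\mu(W)^{\mathrm{T}} \tilde{X}_i^{*}\}^2 - \sigma^2(W) \right] = 0.$$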

By inverting these score equations, we find weights W that satisfy

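$$\sum_{i=1}^{n} W_i (T_i - \bar{T}) \tilde{X}_i^{*} = 0, \qquad \sum_{i=1}^{n} W_i \{(T_i - \bar{T})^2 - s_T^2\} = 0, \tag{9}$$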

where T̄ and sT² are the sample mean and variance of Ti. The first set of conditions in (9) is equivalent to the conditions (8), except that the X̃i are not necessarily centred. The usefulness of our framework is also illustrated by the insight it gives into how conditions can be specified for the variance of Ti. Specifically, suppose that we specify an alternative propensity function model pr{Ti | X̃i; β(W)} = N{βμ(W)ᵀX̃i*, σi²}, that is, we allow the variance of Ti, σi², to depend on X̃i, with log σi = βσ(W)ᵀX̃i*. For this model, the conditions for weight estimation are derived by setting the regression coefficient elements in βμ(W) and βσ(W) to zero, and the intercepts to T̄ and log sT, respectively, in the score equations. This corresponds to solving the equations

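$$\sum_{i=1}^{n} W_i (T_i - \bar{T}) \tilde{X}_i^{*} = 0, \qquad \sum_{i=1}^{n} W_i \{(T_i - \bar{T})^2 - s_T^2\} \tilde{X}_i^{*} = 0. \tag{10}$$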

Thus, the additional conditions in (10) are designed to remove the association between X̃i and the variance of Ti. More details can be found in § 6.
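The conditions (10) can be stacked into a linear system for W. The sketch below assumes toy heteroscedastic data, the squared-distance objective Σi (Wi − 1)² with only equality constraints (nonnegativity is not enforced), and the maximum likelihood variance estimate (divisor n) for sT². After weighting, the mean and variance of Ti match their sample values, and neither the treatment nor its squared deviation covaries with the covariate.

```python
import numpy as np


def min_variance_weights(constraints):
    """Minimize sum_i (W_i - 1)^2 s.t. constraints @ W = 0 and sum_i W_i = n (W_i >= 0 not enforced)."""
    n = constraints.shape[1]
    a = np.vstack([constraints, np.ones(n)])
    b = np.append(np.zeros(constraints.shape[0]), float(n))
    lam, *_ = np.linalg.lstsq(a @ a.T, b - a @ np.ones(n), rcond=None)
    return np.ones(n) + a.T @ lam


rng = np.random.default_rng(4)
n = 500
x = rng.normal(size=n)
# Toy data: both the mean and the spread of T_i depend on x
t = 0.3 * x + np.exp(0.2 * x) * rng.normal(size=n)
design = np.column_stack([np.ones(n), x])     # X~*_i = (1, x_i)
t_bar, s2 = t.mean(), t.var()                 # ddof=0: ML estimates without covariates
d = t - t_bar

rows = np.vstack([
    (d[:, None] * design).T,                  # mean conditions, as in (9)
    ((d ** 2 - s2)[:, None] * design).T,      # variance conditions added by (10)
])
w = min_variance_weights(rows)
```

Dropping the second block of rows recovers the conditions (9), which leave the association between x and the spread of the treatment untouched.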

5. Other treatment types

Having demonstrated that our framework encompasses previously proposed work, we now widen its applicability by considering semicontinuous treatments, motivated by our application in § 7. Details about count treatments can be found in the Supplementary Material.

Semicontinuous variables are characterized by a point mass at zero and a right-skewed continuous distribution with positive support (Olsen & Schafer, 2001). Semicontinuous treatments are common in clinical settings because only treated patients will be prescribed a continuous dose of treatment; otherwise their dose will be recorded as zero (Moodie & Stephens, 2010). A common approach to modelling semicontinuous data is by using a two-part model, such as that in Olsen & Schafer (2001):

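$$\mathrm{pr}(T_i \mid \tilde{X}_i) = (1 - \pi_i)^{I(T_i = 0)} \left[ \frac{\pi_i}{\sigma_i} \phi\left\{ \frac{g(T_i) - \mu_i}{\sigma_i} \right\} \right]^{I(T_i > 0)}, \tag{11}$$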

where logit(πi) = βπᵀX̃i*, μi = βμᵀX̃i* and log σi = βσᵀX̃i*, φ(·) is the standard normal density function, and I(·) is an indicator function. Here g(·) is a monotonic function included to make the normal assumption for the positive values of Ti more tenable. The likelihoods for the binary and continuous components of the two-part model are separable, so the results in § 4 imply that the conditions based on (11) are

$$\sum_{i=1}^{n} W_i \{I(T_i > 0) - \hat{\pi}\} \tilde{X}_i^{*} = 0, \tag{12}$$

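$$\sum_{i=1}^{n} W_i I(T_i > 0) \{g(T_i) - \hat{\mu}\} \tilde{X}_i^{*} = 0, \qquad \sum_{i=1}^{n} W_i I(T_i > 0) \left[ \{g(T_i) - \hat{\mu}\}^2 - \hat{\sigma}^2 \right] \tilde{X}_i^{*} = 0, \tag{13}$$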

where π̂, μ̂ and σ̂ are maximum likelihood estimates of πi, μi and σi obtained by fitting (11), but without covariates, to the observed Ti. The conditions (12) are derived from the score equations for the binary component and are equivalent to (7). The conditions (13) are derived from the score equations of the continuous component and are similar to (10). In our framework, the weights W are estimated by solving (12) and (13) simultaneously, whereas maximum likelihood estimation obtains weights Wbi and Wci separately from the binary and continuous components, respectively, and then uses their unit-specific product Wi = WbiWci as the final weight.
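Solving (12) and (13) simultaneously amounts to stacking three blocks of linear conditions into one system. The sketch below assumes toy data, g(x) = log(x + 1) as in § 7, the squared-distance objective Σi (Wi − 1)² with only equality constraints (nonnegativity is not enforced), and covariate-free maximum likelihood estimates: π̂ is the share of positive doses, and μ̂ and σ̂² are the mean and variance (divisor equal to the number of positive doses) of g(Ti) among them.

```python
import numpy as np


def min_variance_weights(constraints):
    """Minimize sum_i (W_i - 1)^2 s.t. constraints @ W = 0 and sum_i W_i = n (W_i >= 0 not enforced)."""
    n = constraints.shape[1]
    a = np.vstack([constraints, np.ones(n)])
    b = np.append(np.zeros(constraints.shape[0]), float(n))
    lam, *_ = np.linalg.lstsq(a @ a.T, b - a @ np.ones(n), rcond=None)
    return np.ones(n) + a.T @ lam


rng = np.random.default_rng(5)
n = 600
x = rng.normal(size=(n, 2))
design = np.column_stack([np.ones(n), x])                     # X~*_i
pos = rng.random(n) < 1 / (1 + np.exp(-(0.5 + x[:, 0] + 0.5 * x[:, 1])))
dose = np.where(pos, np.exp(rng.normal(1 + 0.3 * x[:, 0], 0.5)), 0.0)   # toy semicontinuous T_i

z = (dose > 0).astype(float)               # I(T_i > 0)
g = np.log1p(dose)                         # g(T_i) = log(T_i + 1)
pi_hat = z.mean()
mu_hat = g[z == 1].mean()
s2_hat = g[z == 1].var()                   # ML variance among positive doses

rows = np.vstack([
    ((z - pi_hat)[:, None] * design).T,                            # conditions (12)
    ((z * (g - mu_hat))[:, None] * design).T,                      # (13), mean part
    ((z * ((g - mu_hat) ** 2 - s2_hat))[:, None] * design).T,      # (13), variance part
])
w = min_variance_weights(rows)             # one joint solve, not a product of two weights
```

Because the indicator I(Ti > 0) multiplies the rows for the continuous part, unexposed units enter only through the binary-part conditions and the normalization Σi Wi = n.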

6. Simulation study

We consider the set-up where there are three independent standard normal pretreatment covariates X1i, X2i and X3i. The treatment Ti is semicontinuous. We first simulate a binary indicator for Ti > 0 with pr(Ti > 0) = 1/[1 + exp{−(0·5 + X1i + X2i + X3i)}]. Then if Ti > 0, Ti is drawn from a normal distribution with mean 1 + 0·5X1i + 0·2X2i + 0·4X3i and standard deviation exp(0·3 + 0·3X1i + 0·1X2i + 0·2X3i). The outcome Yi follows a negative binomial distribution

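$$\mathrm{pr}(Y_i = y; \lambda_i, \theta) = \frac{\Gamma(y + \theta)}{\Gamma(\theta)\, y!} \left( \frac{\theta}{\theta + \lambda_i} \right)^{\theta} \left( \frac{\lambda_i}{\theta + \lambda_i} \right)^{y} \quad (y = 0, 1, \ldots), \tag{14}$$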

where θ = 1 and λi = E(Yi | Ti, X1i, X2i, X3i) = exp[−1 + 0·5Ti + 2/{1 + exp(−3X1i)} + 0·2X2i − 0·2 exp(X3i)]. Using this set-up, we generate four sets of 2500 simulated datasets with n = 500, 1000, 2500 and 4000.

For each of the four sets of simulations, we use the proposed method for semicontinuous treatments in § 5, referred to as Approach 1, and maximum likelihood estimation, referred to as Approach 2, with the propensity function model (11) to obtain the weights. For both methods we consider two different model structures and two sets of covariates for the propensity function model. The correct model structure A allows the mean and variance of Ti conditional on Ti > 0 to depend on covariates, whereas the incorrect model structure B restricts σi to be a constant σ. The first set of covariates are the correct covariates, and the second set are transformed covariates of the form The covariates were chosen to be highly predictive of the outcome. In total, we fit eight models to each simulated dataset to estimate the weights, which are scaled by their averages so that they sum to n. Then, using the estimated weights, we fit a weighted negative binomial model (14) to the outcome with the marginal mean λi = exp(γ0 + γ1Ti), where γ0, γ1 and θ are parameters to be estimated. The true causal treatment effect of interest is γ1 = 0·5.

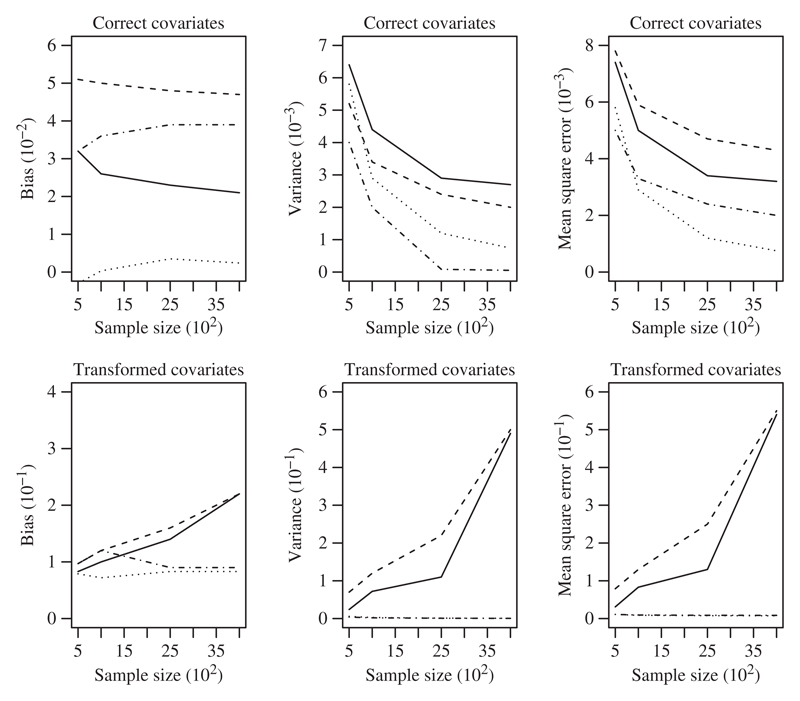

The left panels of Fig. 1 show that the estimates from Approach 1 have smaller biases than those from Approach 2, particularly when the covariates are transformed. In this case, bias increases with sample size in Approach 2 but not in Approach 1. When the correct model structure A is used with the correct covariates, Approach 1 has smaller bias than Approach 2, perhaps because Approach 2 requires the law of large numbers to work well before the associations between covariates and treatment assignment can be eliminated after weighting.

Fig. 1. Plots of the bias (left panels), empirical variance (middle panels) and mean squared error (right panels) of the treatment effect estimates as a function of sample size, for the correct (top panels) and transformed (bottom panels) covariate sets; in each panel the different line types represent Approach 1 with model structure A (dotted), Approach 1 with model structure B (dot-dash), Approach 2 with model structure A (solid), and Approach 2 with model structure B (dashed).

The middle panels of Fig. 1 present the empirical variances of the treatment effect estimates. When the correct covariates are used, both approaches have small variances that decrease with sample size, although Approach 1 is better. Within each approach, estimates from the incorrect model structure B are less variable than those from model structure A, perhaps because the weights are less variable under model structure B as it has fewer degrees of freedom. The variances under Approach 1, but not under Approach 2, decrease with sample size when the transformed covariates are used. This behaviour in Approach 2 with the transformed covariates is due to a few sets of estimated weights exacerbating the extremeness of the tails of the sampling distribution as the sample size increases; see Robins et al. (2007, pp. 553–4) for more details. Similar phenomena are observed with the mean squared error.

Because Approach 2 performs so poorly relative to Approach 1 when the transformed covariates are used, it is difficult to distinguish between the performances of Approach 1 under model structures A and B in Fig. 1, so we summarize the results here: within Approach 1, estimates from model structure A have smaller biases but larger variances than estimates from model structure B. Overall, estimates from model structure A have smaller mean squared errors for n ≥ 1000.

In summary, in all examined scenarios, estimates based on the proposed method have smaller biases and variances than the maximum likelihood estimates.

7. Application

Steroids are effective and low-cost treatments for relieving disease activity in patients with systemic lupus erythematosus, a chronic autoimmune disease that affects multiple organ systems. However, there is evidence that steroid exposure could be associated with permanent organ damage that might not be attributable to disease activity. Motivated by a recent study (Bruce et al., 2015), we aim to estimate the causal dose-response relationship between steroid exposure and damage accrual shortly after disease diagnosis using data from the Systemic Lupus International Collaborating Clinics inception cohort.

We focus on 1342 patients who were enrolled between 1999 and 2011 from 32 sites and had at least two yearly assessments of damage accrual after disease diagnosis. The outcome of interest Yi is the number of items accrued in the Systemic Lupus International Collaborating Clinics/American College of Rheumatology Damage Index during the period Oi, defined as the time interval between the two initial assessments in years. The semicontinuous treatment variable Ti is the average steroid dose per day in milligrams over the same time period. Based on clinical input, we consider the following pretreatment covariates Xi: the numerical scoring version of the British Isles Lupus Assessment Group disease activity index (Hay et al., 1993; Ehrenstein et al., 1995), which was developed according to the principle of physicians’ intentions to treat; age at diagnosis in years; disease duration in years; and the groups of race/ethnicity and geographic region.

We consider the model (11) for Ti with g(x) = log(x + 1) to reduce right skewness in the positive steroid dose. For X̃i, we include the main effects of Xi. To estimate the weights we use the same four model specifications as in the simulations, namely Approaches 1 and 2, each with model structures A and B. Further details can be found in the Supplementary Material.

We model Yi with a weighted negative binomial model (14), where for examining the effect of presence of steroid use on damage accrual we specify λi = E(Yi | Ti; γ0, γ1) = Oi exp{γ0 + γ1I(Ti > 0)}, and for examining the dose-response relationship we specify λi = E(Yi | Ti; ξ0, ξ1) = Oi exp{ξ0 + ξ1 log(Ti + 1)}, with γ0, γ1, ξ0, ξ1 and θ parameters to be estimated. We construct 95% bootstrap percentile confidence intervals for parameter estimates using 1000 nonparametric bootstrap samples.
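A nonparametric bootstrap percentile interval resamples units with replacement, recomputes the estimate on each resample and takes empirical quantiles. The sketch below is generic: `estimator` is a hypothetical user-supplied function, and it is an assumption of this sketch that, for the analysis above, it would run the whole pipeline on each resample (weight estimation followed by the weighted negative binomial fit). A simple mean is used in the usage line only to keep the example self-contained.

```python
import numpy as np


def percentile_bootstrap_ci(data, estimator, n_boot=1000, level=0.95, seed=0):
    """Point estimate and nonparametric bootstrap percentile interval.

    `data` has one row per unit (patient); each replicate resamples rows with
    replacement and re-applies `estimator`, which may return a scalar or a vector.
    """
    rng = np.random.default_rng(seed)
    n = len(data)
    stats = np.array([estimator(data[rng.integers(0, n, size=n)]) for _ in range(n_boot)])
    alpha = 1.0 - level
    lower, upper = np.quantile(stats, [alpha / 2, 1 - alpha / 2], axis=0)
    return estimator(data), lower, upper


# Usage with a placeholder estimator
data = np.arange(100.0)
est, lower, upper = percentile_bootstrap_ci(data, np.mean, n_boot=1000)
```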

The results of the outcome regression models based on four sets of estimated weights are reported in Table 1. Consistent with current clinical evidence (Bruce et al., 2015), estimates from all weighted outcome regression models indicate that steroid use and the average steroid dose are positively and significantly associated with damage accrual in the initial period following diagnosis, although the estimated effect sizes from Approach 1 are larger than those from Approach 2. Approach 1 also yields narrower confidence intervals than Approach 2. Within each approach, model structure B gives slightly smaller standard errors than model structure A.

Table 1. Parameter estimates and 95% confidence intervals from fitting the weighted outcome regression models to the systemic lupus erythematosus data.

| | Approach 1, model structure A | Approach 1, model structure B | Approach 2, model structure A | Approach 2, model structure B |
|---|---|---|---|---|
| Binary treatment | | | | |
| γ0 | −2·50 (−2·93, −2·19) | −2·50 (−2·93, −2·18) | −2·36 (−2·87, −1·98) | −2·36 (−2·87, −1·98) |
| γ1 | 0·76 (0·41, 1·21) | 0·73 (0·37, 1·19) | 0·57 (0·10, 1·10) | 0·57 (0·12, 1·10) |
| θ | 1·60 (0·77, 2·53) | 1·62 (0·83, 2·54) | 1·30 (0·38, 2·48) | 1·19 (0·23, 2·41) |
| Semicontinuous treatment | | | | |
| ξ0 | −2·57 (−2·93, −2·29) | −2·54 (−2·88, −2·27) | −2·47 (−2·89, −2·11) | −2·45 (−2·84, −2·12) |
| ξ1 | 0·40 (0·24, 0·56) | 0·37 (0·23, 0·52) | 0·33 (0·16, 0·52) | 0·32 (0·15, 0·49) |
| θ | 1·41 (0·70, 2·27) | 1·48 (0·75, 2·32) | 1·17 (0·34, 2·22) | 1·11 (0·28, 2·14) |

We also fitted models with I (Ti > 0) and I (Ti > 0) log(Ti + 1) included in the outcome regression. This provides the dose-response relationship after removing the patients unexposed to steroids (Greenland & Poole, 1995). The estimated dose-response functions were similar to those obtained from the models that include the semicontinuous treatment.

Overall, our results suggest that steroid dose is related to the damage accrual rate at the early disease stage of systemic lupus erythematosus. Clinicians might therefore need to seek steroid-sparing therapies even at the early disease stage in order to reduce damage accrual.

8. Longitudinal setting

Our framework can be extended to the longitudinal setting. Here we give an example using a setting similar to that in Moodie & Stephens (2010). Suppose that for the ith unit (i = 1, … , n), in each time interval [sij−1, sij) (j = 1, … , mi; si0 = 0) of a longitudinal study, we observe in chronological order a vector of covariates Xij, a time-varying treatment Tij that can be of any distribution, and a longitudinal outcome Yij. The units are not necessarily followed up at the same time-points. Let the random variable Yij(tPij) be the potential outcome that would have arisen had treatment tPij been administered in the time interval [sij−1, sij). We consider the setting where interest lies in estimating the direct causal effect of tPij on E{Yij(tPij)}, which may be confounded by histories of covariates, treatment assignment and response, X̄ij, T̄ij−1 and Ȳij−1. Here an overbar represents the history of a process; for example, X̄ij = (Xi1, … , Xij).

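In order to identify the direct causal effect of tPij on E{Yij(tPij)}, we make the sequential ignorability assumption, pr{Tij | Yij(tPij), X̄ij, T̄ij−1, Ȳij−1} = pr(Tij | X̄ij, T̄ij−1, Ȳij−1) for any time interval, and the positivity assumption, pr(Tij ∈ A | X̄ij, T̄ij−1, Ȳij−1) > 0 for all X̄ij, T̄ij−1 and Ȳij−1 and for any set A with positive measure. Under these assumptions, the effect of tPij on E{Yij(tPij)} can be consistently estimated by weighting the ith unit’s data in the interval [sij−1, sij) with Wij = pr(Tij)/pr(Tij | X̄ij, T̄ij−1, Ȳij−1) for all units and time intervals. The weights are typically estimated by Ŵij = pr(Tij; α̂)/pr(Tij | X̃ij; β̂), where X̃ij are functionals of X̄ij, T̄ij−1 and Ȳij−1, and α̂ and β̂ are the maximum likelihood estimates of α and β.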

An alternative approach to weight estimation is to generalize our proposed method. Following the same strategy as in § 3·1, we assume that given known weights Wij, the time-varying propensity function in the time interval [sij−1, sij) follows a model pr{Tij | X̃ij; β(W)}. As in § 3·1, we partition β(W) into {βb(W), βd(W)}, where βd(W) are regression coefficients that characterize the association between X̃ij and Tij over time, and βb(W) are parameters that characterize the baseline distribution, e.g., the intercept terms. As before, conditions for weight estimation can be derived by inverting the weighted score equations

| (15) |

where is obtained by fitting the model pr(Tij; α) to the observed data for the time-varying treatment Tij. Thus these conditions are designed to eliminate the association between and Tij and to preserve the observed marginal distribution of Tij after weighting. Other projection functions that can help to further stabilize the weights, such as those that depend on some of the covariates, can also be considered in the proposed framework with only minor modifications. This would be useful when the interactions between these covariates and treatment are of interest in the outcome regression model. Finally, the convex optimization approach in § 3·2 can be used for weight estimation by replacing the equations (4) with (15). For large sample sizes, a parametric approach to solving the conditions (15) would be useful.
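To see what the conditions (15) look like in a concrete case, suppose, as an assumption for illustration, that $\mathrm{pr}(T_{ij} \mid \tilde X_{ij}; \beta)$ is a normal linear model with intercept $\beta_0$, slope $\beta_1$ on a scalar functional $\tilde X_{ij}$ of the histories, and variance $\sigma^2$. Suppose also that $\mathrm{pr}(T_{ij}; \alpha)$ is normal with mean $\mu$ and variance $\sigma^2$, so that $\beta_b = (\beta_0, \sigma^2)$, $\beta_d = \beta_1$ and $\hat\alpha = (\hat\mu, \hat\sigma^2)$. Evaluating the score at $\beta_0 = \hat\mu$, $\sigma^2 = \hat\sigma^2$ and $\beta_1 = 0$, and dropping constant factors, gives three conditions that are linear in the weights: $\sum_{i,j} W_{ij}(T_{ij} - \hat\mu) = 0$, $\sum_{i,j} W_{ij}\tilde X_{ij}(T_{ij} - \hat\mu) = 0$ and $\sum_{i,j} W_{ij}\{(T_{ij} - \hat\mu)^2 - \hat\sigma^2\} = 0$. The Python sketch below stacks all $(i, j)$ rows, builds these conditions and solves them by minimizing $\sum_{i,j} (W_{ij} - 1)^2$ subject to the constraints. That least-squares objective is our assumption, chosen because it gives a closed-form convex program. It is not necessarily the objective used in § 3·2, and without a nonnegativity constraint it can return negative weights. The function names are hypothetical.

```python
from typing import List


def solve_linear(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve the small dense system m x = v by Gaussian elimination with partial pivoting."""
    k = len(v)
    a = [list(row) + [v[r]] for r, row in enumerate(m)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(a[r][c]))
        a[c], a[p] = a[p], a[c]
        for r in range(c + 1, k):
            f = a[r][c] / a[c][c]
            for cc in range(c, k + 1):
                a[r][cc] -= f * a[c][cc]
    x = [0.0] * k
    for r in range(k - 1, -1, -1):
        x[r] = (a[r][k] - sum(a[r][cc] * x[cc] for cc in range(r + 1, k))) / a[r][r]
    return x


def condition_rows(t: List[float], xt: List[float]) -> List[List[float]]:
    """Rows of the linear conditions (15) under the assumed normal models.

    t and xt hold T_ij and the scalar functional X~_ij for all (i, j), stacked.
    Constant factors (1/sigma^2, 1/(2 sigma^4)) are dropped; they do not change the solutions.
    """
    n = len(t)
    mu = sum(t) / n                           # alpha_hat: ML mean of the marginal model
    s2 = sum((ti - mu) ** 2 for ti in t) / n  # alpha_hat: ML variance of the marginal model
    return [
        [ti - mu for ti in t],                      # score for beta_0 at beta_0 = mu_hat
        [xi * (ti - mu) for xi, ti in zip(xt, t)],  # score for beta_1 at beta_1 = 0
        [(ti - mu) ** 2 - s2 for ti in t],          # score for sigma^2 at sigma^2 = s2_hat
    ]


def min_dispersion_weights(t: List[float], xt: List[float]) -> List[float]:
    """Minimize sum (W - 1)^2 subject to A W = 0, with A given by condition_rows.

    Lagrangian solution: W = 1 - A^T lam, where (A A^T) lam = A 1.
    """
    a = condition_rows(t, xt)
    n, q = len(t), len(a)
    aat = [[sum(a[r][k] * a[s][k] for k in range(n)) for s in range(q)] for r in range(q)]
    a1 = [sum(row) for row in a]
    lam = solve_linear(aat, a1)
    return [1.0 - sum(a[r][k] * lam[r] for r in range(q)) for k in range(n)]


# Toy data (invented), already stacked over units and intervals.
t = [0.2, 1.1, 1.9, 3.2, 3.8, 2.5]
xt = [0.0, 1.0, 2.0, 3.0, 4.0, 1.5]
w = min_dispersion_weights(t, xt)
```

With unit weights the first and third conditions already hold, because $\hat\mu$ and $\hat\sigma^2$ are the pooled maximum likelihood estimates. The optimization therefore mainly removes the weighted association between $\tilde X_{ij}$ and $T_{ij}$.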

9. Discussion

The proposed method has some limitations. First, both our method and existing covariate balancing weight methods treat all covariates equally and balance them simultaneously. This can lead to poor performance in high-dimensional settings, so it would be of interest to assign different weights to the covariates when estimating the weights for the units. Second, near violations of the positivity assumption can be hard to detect with our method, because exactly balancing the covariates generally yields causal effect estimates with small variance. In such circumstances, e.g., when there is strong confounding, the results from our method can hide the fact that the observed data alone carry little information about the target causal effect. The estimates can also have large bias under model misspecification, because the method then relies almost entirely on extrapolation. It is therefore important to assess the positivity assumption when applying our framework. Third, our method does not necessarily estimate the causal effect for the population of interest, such as the target population of a health policy. This can be remedied by supplementing the conditions (4) with the additional conditions $n^{-1}\sum_{i=1}^{n} W_i X_i = c$, where $c$ is the sample mean of $X_i$ in the target population (Stuart et al., 2011).
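For a binary treatment, the augmentation can be sketched as follows. The setup is assumed only for illustration: a logistic propensity model with one scalar covariate. Inverting its weighted score equations at $\beta_b = \mathrm{logit}(\hat p)$ and $\beta_d = 0$, where $\hat p$ is the observed proportion treated, gives $\sum_i W_i (T_i - \hat p) = 0$ and $\sum_i W_i X_i (T_i - \hat p) = 0$. The target-population condition $n^{-1}\sum_i W_i X_i = c$ is appended as a third linear constraint. The least-squares objective $\sum_i (W_i - 1)^2$, the function names and the toy data are our assumptions, and the resulting weights are not guaranteed to be nonnegative.

```python
from typing import List


def solve_linear(m: List[List[float]], v: List[float]) -> List[float]:
    """Solve the small dense system m x = v by Gaussian elimination with partial pivoting."""
    k = len(v)
    a = [list(row) + [v[r]] for r, row in enumerate(m)]
    for c in range(k):
        p = max(range(c, k), key=lambda r: abs(a[r][c]))
        a[c], a[p] = a[p], a[c]
        for r in range(c + 1, k):
            f = a[r][c] / a[c][c]
            for cc in range(c, k + 1):
                a[r][cc] -= f * a[c][cc]
    x = [0.0] * k
    for r in range(k - 1, -1, -1):
        x[r] = (a[r][k] - sum(a[r][cc] * x[cc] for cc in range(r + 1, k))) / a[r][r]
    return x


def target_population_weights(t: List[int], x: List[float], c: float) -> List[float]:
    """Weights meeting the (assumed logistic) balance conditions plus n^{-1} sum W_i X_i = c.

    t: binary treatments; x: one scalar covariate; c: covariate mean in the target population.
    Minimizes sum (W_i - 1)^2 subject to A W = b, so W = 1 + A^T lam with (A A^T) lam = b - A 1.
    """
    n = len(t)
    p = sum(t) / n  # ML estimate of pr(T = 1) under the marginal model
    a = [
        [ti - p for ti in t],                     # score for the intercept at logit(p_hat)
        [xi * (ti - p) for xi, ti in zip(x, t)],  # score for the slope at 0
        [xi / n for xi in x],                     # target-population condition
    ]
    b = [0.0, 0.0, c]
    q = len(a)
    aat = [[sum(a[r][k] * a[s][k] for k in range(n)) for s in range(q)] for r in range(q)]
    rhs = [b[r] - sum(a[r]) for r in range(q)]
    lam = solve_linear(aat, rhs)
    return [1.0 + sum(a[r][k] * lam[r] for r in range(q)) for k in range(n)]


# Toy data (invented): shift the weighted covariate mean to a target value of 1.0.
t = [1, 0, 1, 0, 1, 0, 0, 1]
x = [0.5, 1.2, 2.0, 0.3, 1.8, 0.9, 1.1, 2.4]
w = target_population_weights(t, x, 1.0)
```

The first two constraints together equate the weighted covariate means of the treated and untreated groups. The third constraint moves that common mean to $c$.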

Supplementary Material

Supplementary material available at Biometrika online includes properties of stabilized inverse probability of treatment weights, derivations of conditions for other treatment distributions, simulation results and details of the application.

Acknowledgement

We thank two referees, the associate editor, the editor and Dr Shaun Seaman for helpful comments and suggestions. This work was supported by the U.K. Medical Research Council. We thank the Systemic Lupus International Collaborating Clinics for providing the data.

References

- Bruce IN, O’Keeffe AG, Farewell V, Hanly JG, Manzi S, Su L, Gladman DD, Bae S-C, Sanchez-Guerrero J, Romero-Diaz J, et al. Factors associated with damage accrual in patients with systemic lupus erythematosus: Results from the Systemic Lupus International Collaborating Clinics (SLICC) inception cohort. Ann Rheum Dis. 2015;74:1706–13. doi: 10.1136/annrheumdis-2013-205171.

- Chan KCG, Yam SCP, Zhang Z. Globally efficient nonparametric inference of average treatment effects by empirical balancing calibration weighting. J R Statist Soc B. 2016;78:673–700. doi: 10.1111/rssb.12129.

- Crump RK, Hotz VJ, Imbens GW, Mitnik OA. Dealing with limited overlap in estimation of average treatment effects. Biometrika. 2009;96:187–99.

- de Luna X, Waernbaum I, Richardson TS. Covariate selection for the nonparametric estimation of an average treatment effect. Biometrika. 2011;98:861–75.

- Ding P, Miratrix LW. To adjust or not to adjust? Sensitivity analysis of M-bias and butterfly-bias. J Causal Infer. 2015;3:41–57.

- Ehrenstein MR, Conroy SE, Heath J, Latchman DS, Isenberg DA. The occurrence, nature and distribution of flares in a cohort of patients with systemic lupus erythematosus. Br J Rheumatol. 1995;34:257–60. doi: 10.1093/rheumatology/34.3.257.

- Fong C, Hazlett C, Imai K. Covariate balancing propensity score for a continuous treatment: Application to the efficacy of political advertisements. Ann Appl Statist. 2018;12:156–77.

- Graham BS, Campos de Xavier Pinto C, Egel D. Inverse probability tilting for moment condition models with missing data. Rev Econ Stud. 2012;79:1053–79.

- Greenland S, Poole C. Interpretation and analysis of differential exposure variability and zero-exposure categories for continuous exposures. Epidemiology. 1995;6:326–8. doi: 10.1097/00001648-199505000-00024.

- Hainmueller J. Entropy balancing for causal effects: Multivariate reweighting method to produce balanced samples in observational studies. Polit Anal. 2012;20:24–46.

- Hay E, Bacon P, Gordon C, Isenberg DA, Maddison P, Snaith ML, Symmons DP, Viner N, Zoma A. The BILAG index: A reliable and validated instrument for measuring clinical disease activity in systemic lupus erythematosus. Quart J Med. 1993;86:447–58.

- Imai K, Ratkovic M. Covariate balancing propensity score. J R Statist Soc B. 2014;76:243–63.

- Imai K, Ratkovic M. Robust estimation of inverse probability weights for marginal structural models. J Am Statist Assoc. 2015;110:1013–23.

- Imai K, van Dyk DA. Causal inference with general treatment regimes: Generalizing the propensity score. J Am Statist Assoc. 2004;99:854–66.

- Imbens GW. The role of the propensity score in estimating dose-response functions. Biometrika. 2000;87:706–10.

- Kang JD, Schafer JL. Demystifying double robustness: A comparison of alternative strategies for estimating a population mean from incomplete data (with Discussion). Statist Sci. 2007;22:523–39. doi: 10.1214/07-STS227.

- Lee BK, Lessler J, Stuart EA. Improving propensity score weighting using machine learning. Statist Med. 2010;29:337–46. doi: 10.1002/sim.3782.

- Lu B. Propensity score matching with time-dependent covariates. Biometrics. 2005;61:721–8. doi: 10.1111/j.1541-0420.2005.00356.x.

- McCaffrey DF, Griffin BA, Almirall D, Slaughter ME, Ramchand R, Burgette LF. A tutorial on propensity score estimation for multiple treatments using generalized boosted models. Statist Med. 2013;32:3388–414. doi: 10.1002/sim.5753.

- Moodie EM, Stephens DA. Estimation of dose-response functions for longitudinal data using the generalised propensity score. Statist Meth Med Res. 2010;21:149–66. doi: 10.1177/0962280209340213.

- Naimi AI, Moodie EE, Auger N, Kaufman JS. Constructing inverse probability weights for continuous exposures: A comparison of methods. Epidemiology. 2014;25:292–9. doi: 10.1097/EDE.0000000000000053.

- Olsen MK, Schafer JL. A two-part random effects model for semicontinuous longitudinal data. J Am Statist Assoc. 2001;96:730–45.

- Pearl J. Causal diagrams for empirical research. Biometrika. 1995;82:669–88.

- Petersen ML, Porter KE, Gruber SG, Wang Y, van der Laan MJ. Diagnosing and responding to violations in the positivity assumption. Statist Meth Med Res. 2010;21:31–54. doi: 10.1177/0962280210386207.

- R Development Core Team. R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing; Vienna, Austria: 2018. ISBN 3-900051-07-0. http://www.R-project.org.

- Robins J, Sued M, Lei-Gomez Q, Rotnitzky A. Comment: Performance of doubly-robust estimators when “inverse probability” weights are highly variable. Statist Sci. 2007;22:544–59.

- Robins JM. Marginal structural models versus structural nested models as tools for causal inference. In: Halloran ME, Berry D, editors. Statistical Models in Epidemiology, the Environment and Clinical Trials. New York: Springer; 2000. pp. 95–133.

- Robins JM, Hernán MA, Brumback B. Marginal structural models and causal inference in epidemiology. Epidemiology. 2000;11:550–60. doi: 10.1097/00001648-200009000-00011.

- Rosenbaum PR, Rubin DB. The central role of the propensity score in observational studies for causal effects. Biometrika. 1983;70:41–55.

- Shpitser I, VanderWeele TJ, Robins JM. On the validity of covariate adjustment for estimating causal effects. In: Grünwald P, Spirtes P, editors. Proceedings of the 26th Conference on Uncertainty in Artificial Intelligence; AUAI Press; 2010. pp. 527–36.

- Soetaert K, van den Meersche K, van Oevelen D. limSolve: Solving Linear Inverse Models. R-package version 1.5.1. 2009.

- Stuart EA, Cole SR, Bradshaw CP, Leaf PJ. The use of propensity scores to assess the generalizability of results from randomized trials. J R Statist Soc A. 2011;174:369–86. doi: 10.1111/j.1467-985X.2010.00673.x.

- VanderWeele TJ, Shpitser I. A new criterion for confounder selection. Biometrics. 2011;67:1406–13. doi: 10.1111/j.1541-0420.2011.01619.x.

- Vansteelandt S, Bekaert M, Claeskens G. On model selection and model misspecification in causal inference. Statist Meth Med Res. 2012;21:7–30. doi: 10.1177/0962280210387717.

- Zhang Z, Zhou J, Cao W, Zhang J. Causal inference with a quantitative exposure. Statist Meth Med Res. 2016;25:315–35. doi: 10.1177/0962280212452333.

- Zhu Y, Coffman DL, Ghosh D. A boosting algorithm for estimating generalized propensity scores with continuous treatments. J Causal Infer. 2015;3:25–40. doi: 10.1515/jci-2014-0022.

- Zubizarreta JR. Stable weights that balance covariates for estimation with incomplete outcome data. J Am Statist Assoc. 2015;110:910–22.
