Abstract

Predictive modeling based on machine learning with medical data has great potential to improve healthcare and reduce costs. However, two hurdles, among others, impede its widespread adoption in hdealthcare. First, medical data are by nature longitudinal. Pre-processing them, particularly for feature engineering, is labor intensive and often takes 50–80% of the model building effort. Predictive temporal features are the basis of building accurate models, but are difficult to identify. This is problematic. Healthcare systems have limited resources for model building, while inaccurate models produce sub-optimal outcomes and are often useless. Second, most machine learning models provide no explanation of their prediction results. However, offering such explanations is essential for a model to be used in usual clinical practice. To address these two hurdles, this paper outlines: 1) a data-driven method for semi-automatically extracting predictive and clinically meaningful temporal features from medical data for predictive modeling; and 2) a method of using these features to automatically explain machine learning prediction results and suggest tailored interventions. This provides a roadmap for future research.

Keywords: Temporal feature, Medical data, Machine learning, Recurrent neural network, Predictive modeling, Automatic explanation

1. Introduction

Machine learning studies computer algorithms that learn from data [1] and has won most data science competitions [2]. Examples of machine learning algorithms include deep neural network (a.k.a. deep learning) [3], support vector machine, random forest, and decision tree. By enabling tasks like identifying high-risk patients for preventive interventions, predictive modeling based on machine learning with medical data holds great potential to improve health-care and lower costs. Trials showed using machine learning helped: 1) reduce patient no-show rate by 19% and boost appointment rescheduling or cancel rate by 17% in outpatients at high risk of no-shows [4]; 2) cut 30-day mortality rate (odds ratio = 0.53) in emergency department patients with community-acquired pneumonia [5]; 3) trim cost by $1500 and ventilator use by 5.2 days per patient at a hospital respiratory care center [6]; 4) boost on- target hemoglobin values by 8.5–17% and reduce hospitalization days by 15%, cardiovascular events by 15%, hemoglobin fluctuation by 13%, expensive darbepoetin consumption by 25%, and blood transfusion events by 40–60% in end-stage renal disease patients on dialysis [7–10]; and 5) cut healthcare cost in Medicare patients’ last half year of life by 4.5% [11].

Despite its potential for many clinical activities, machine learning-based predictive modeling is used by only 15% of hospitals for limited purposes [12]. Two hurdles, among others, impede the widespread adoption of machine learning in healthcare.

1.1. Hurdle 1: predictive temporal features are essential for building accurate predictive models, but are difficult to identify

Most attributes in medical data are longitudinal. It is labor intensive and often takes 50–80% of the model building effort to pre-process medical data, particularly for feature engineering [13–15]. Predictive temporal features are the basis of building accurate predictive models, but are difficult to identify, even with many human resources. This is problematic. Healthcare systems have limited resources for model building, while inaccurate models produce suboptimal outcomes and are often useless.

At present, clinical predictive models are usually created in the following way. Given a modeling task and a long list of attributes in the medical data like those stored in the electronic health record, a clinician uses his/her judgment to choose from the long list a short list of attributes that are potentially relevant to the task. For each longitudinal attribute in the short list, the clinician uses his/her judgment to specify how to aggregate the attribute’s values over time into a temporal feature, e.g., by taking their average or maximum. Then a data scientist uses the features (a.k.a. independent variables) to build a model. If model accuracy is unsatisfactory, which is frequently the case, the process is repeated. From what we have seen at three institutions, it often takes the clinician several months and multiple iterations to finish the manual attribute and feature specification for each modeling task.

Besides being labor intensive, the above model building approach has two other drawbacks. First, many attributes could be useful for the modeling task, but are missing in the short list of attributes chosen by the clinician. Second, many temporal features could have additional predictive power, but are not included in those specified by the clinician [16]. Both drawbacks result from our limited understanding of diseases and lead to degraded model accuracy. Moreover, although the data mining community has done much work on mining and constructing temporal [17,18] and sequence features [19], often many temporal features useful for the modeling task are still waiting to be discovered.

As evidence of all of these issues, Google recently reported using all attributes in the electronic health record and long short-term memory (LSTM) [20,21], a type of deep neural network, to automatically learn temporal features from medical data [22]. For predicting each of three outcomes: in-hospital mortality, unexpected read-missions within 30 days, and long hospital stay, this resulted in a boost of the area under the receiver operating characteristic curve accuracy measure by almost 10% [22]. Several other studies [23–25] also showed that for various clinical prediction tasks and compared to using temporal features specified by experts, using LSTM to automatically learn temporal features from medical data improved prediction accuracy. This is consistent with what has happened in several areas like speech recognition, natural language processing, and video classification, where temporal features automatically learned from data by LSTM outperform those specified by experts or mined by other methods [3]. It is common that many temporal features have additional predictive power, but have not been identified before.

Without prelimiting to a small number of longitudinal attributes and possibly missing many other useful ones, LSTM can examine many attributes and automatically learn temporal features from irregularly sampled medical data of varying lengths in a data-driven way. However, the learned features are suboptimal and unsuitable for direct clinical use. When learning temporal features, the standard LSTM does not restrict the number of longitudinal attributes used in each feature. Consequently, a learned feature often involves lots of attributes, many of which have little or no relationship with each other. This results in three problems.

Problem 1.

The learned features tend to overfit the training data’s peculiarities and become less generalizable, leading to suboptimal model accuracy. As evidence of this, for several modeling tasks engaging longitudinal attributes that can be naturally partitioned into a small number (e.g., three) of modalities at a coarse granularity, researchers have improved LSTM model accuracy using multimodal LSTM [26,27]. A multimodal LSTM network includes several constituent LSTM networks, one per modality. Each feature learned by a constituent network involves only those attributes in the modality linking to the constituent network. Usually, the medical data set contains a lot of longitudinal attributes, many of which could be useful for the modeling task. If we could partition longitudinal attributes meaningfully at a finer granularity and let multimodal LSTM take advantage of this aspect, we would expect the learned features’ quality and consequently model accuracy to improve further. Intuitively, a clinically meaningful temporal feature should typically involve no more than a few attributes.

Problem 2.

Differing healthcare systems collect overlapping yet different attributes. The more attributes a feature involves, the less likely a predictive model built with the feature will be used by other healthcare systems beyond the one that originally developed the model.

Problem 3.

A feature involving many longitudinal attributes is difficult to understand. As reviewed in Section 2, in LSTM, each memory cell vector element depicts some learned feature(s). Karpathy et al. [28] showed that only ~10% of these elements could be interpreted [29]. In clinical practice, clinicians usually refuse to use what they do not understand.

1.2. Hurdle 2: most machine learning models are black boxes, but clinical practice requires transparency of model prediction results

This hurdle is related to Problem 3 mentioned above. Most machine learning models including LSTM provide no explanation of their prediction results. Yet, offering such explanations is essential for a model to be used in usual clinical practice. When lives are at risk, clinicians need to know the reasons to trust a model’s prediction results. Understanding the reasons for poor outcomes can help clinicians select tailored interventions that typically work better than non-specific ones. Explanations for prediction results can provide hints to help discover new knowledge. In addition, if sued for malpractice, clinicians will need to use their understanding of the prediction results to justify their decisions in court.

Previously, for tabular data whose columns have easy-to-understand meanings, we developed a method that can automatically explain any machine learning model’s prediction results with no accuracy loss [30]. This method cannot handle longitudinal data directly. Using the temporal features automatically learned by LSTM, one could convert longitudinal medical data to tabular data and then build machine learning models on the tabular data. But, if the automatically learned features have no easy-to-understand meanings, we still cannot use this method to automatically explain the models prediction results.

1.3. Our contributions

To address the two hurdles, this paper makes two contributions, offering a roadmap for future research.

First, we outline a data-driven method for semi-automatically extracting predictive and clinically meaningful temporal features from medical data for predictive modeling. Using this method can reduce the effort needed to build useable predictive models for the current modeling task. Complementing expert-engineered features, the extracted features can be used to build machine learning, statistical, or rule-based predictive models, improve model accuracy [31] and generalizability, and identify data quality issues. In addition, as shown by Gupta et al. [32], many extracted features reflect general properties of the medical attributes involved in the features, and can be useful for other modeling tasks. Using the extracted features to form a temporal feature library to facilitate feature reuse, we can reduce the effort needed to build predictive models for other modeling tasks.

Second, we outline a method of using the extracted features to automatically explain machine learning prediction results and suggest tailored interventions. This can enable machine learning models to be used in clinical practice, and help transform health-care to be more proactive. At present, healthcare is often reactive. Existing clinical predictive models rarely use trend features [16]. When a health risk is identified, e.g., with existing models, it is often at a relatively late stage of persisting deterioration of health. At that point, resolving it tends to be difficult and costly, and the patient is at increased risk of a poor outcome. Our feature extraction method can find many temporal features reflecting trends. By using these features and our automatic explanation method to identify risky trends early, we can proactively apply preventive interventions to stop further deterioration of health. The automatically generated explanations can help us identify new interventions, warn clinicians of risky patterns, and reduce the time clinicians need to review patient records to find the reasons why a specific patient is at high risk for a poor outcome. The automatically suggested interventions can reduce the likelihood of missing suitable interventions for a patient. All of these factors can help improve outcomes and cut costs.

1.4. Organization of the paper

The rest of the paper is organized as follows. Section 2 reviews the current approach of using LSTM to build predictive models with medical data. Section 3 sketches our data-driven method for semi-automatically extracting predictive and clinically meaningful temporal features from medical data for predictive modeling. Section 4 outlines our method of using the extracted features to automatically explain machine learning prediction results and suggest tailored interventions. Section 5 discusses related work. We conclude in Section 6.

In this paper, we refer to both clinical and administrative data as medical data. We focus on predicting one outcome per data instance (e.g., per patient) rather than per data instance per time step (e.g., per patient per day). When a data instance has one outcome per time step, one way to extract temporal features is to focus on the outcome at the last time step of each data instance.

2. The current approach of using LSTM to build predictive models with medical data

In this section, we review the current standard approach of using LSTM to build predictive models with medical data. In Section 3, we present our temporal feature extraction method based on this approach. Variations of this approach are used in many LSTM-based clinical predictive modeling papers [22–25,33–46]. With proper modifications, our temporal feature extraction method also applies to these variations.

A deep neural network is a neural network with many layers of computation. Ching et al. [47–50] reviewed existing work using deep neural networks on medical data. Deep neural networks have several types, such as recurrent neural network (RNN), convolutional neural network, and deep feedforward neural network. Among them, RNN handles irregularly sampled longitudinal medical data of varying lengths the most naturally. LSTM [20,21] is a specific kind of RNN that uses a gating mechanism to better model long-range dependencies. Much work has been done using LSTM to build predictive models with medical data [22–25,33–46]. Other kinds of RNN like gated recurrent unit have also been used for this purpose [32,51–63]. In this paper, we focus on LSTM having memory cells, from which we extract temporal features.

LSTM processes a sequence of input vectors from the same data instance, one input vector at a time. Each input vector is indexed by a time step t. After processing the entire sequence, LSTM obtains results that are used to predict the data instance’s outcome. Often, each data instance refers to a distinct patient. Each input vector includes one patient visit’s information, such as diagnoses and vital signs. The sequence length can vary across data instances. This helps boost model accuracy, as LSTM can use as much of the information of each patient as possible, without having to drop information to make each patient s history be of the same length. This also allows us to make predictions on new patients in a timely manner, without having to wait until each patient accumulates a certain length of history. With a single patient visit’s information available, LSTM can already start to make predictions on the patient.

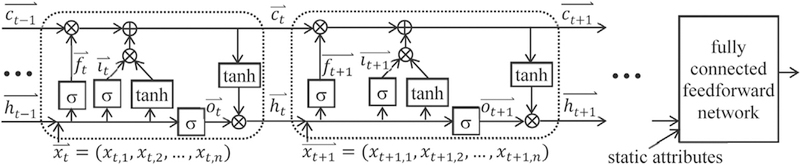

As shown in Fig. 1, an LSTM network contains a sequence of units, one per time step. In Fig. 1, each rounded rectangle denotes a unit. ⊕ is the element-wise sum. ⊗ is the element-wise multiplication. A unit has a memory cell ct, a hidden state ht, an input gate it, an output gate ot, and a forget gate ft. The memory cell keeps long-term memory and stores summary information from all previous inputs. It is known that LSTM can maintain memory over 1000 time steps [20]. The input gate regulates the input flowing into the memory cell. The forget gate adjusts the forgetting of the memory cell. The output gate controls the output flowing from the memory cell.

Fig. 1.

An LSTM network.

For a sequence with m time steps, LSTM works based on the following formulas:

Here, σ and tanh are the element-wise sigmoid and hyperbolic tangent functions, respectively. is the input vector at time step t (1 ≤ t ≤ m). Each has the same dimensionality n. and are the forget, input, and output gates’ activation vectors, respectively. is the memory cell vector. is the hidden state vector. and are bias vectors. All vectors except for have the same dimensionality. Wf, Wi, Wo, and Wc are the input vector weight matrices. Uf, Ui, Uo, and Uc are the hidden state vector weight matrices. The hidden state vector in the last time step summarizes the whole sequence. Along with the sequence, the data instance often contains some static attributes, such as gender and race. We concatenate with the static attributes, if any, into a vector. We input the vector to a fully connected feedforward network and compute the data instance’s predicted outcome [26].

The input vector contains information of all longitudinal attributes at time step t. We can make the i-th longitudinal attribute’s value at t. Alternatively, we can embed each categorical attribute value, such as diagnosis or procedure code, into a vector representation and merge all embedded vectors at t into [22]. In this case, each embedded xt,i becomes difficult to interpret.

In LSTM, each element of the memory cell vector depicts some learned temporal feature(s). Karpathy et al. [28] showed that only ~10% of these elements could be interpreted [29]. Our goal is to modify LSTM so that it can be used to extract predictive and clinically meaningful temporal features from medical data for predictive modeling.

3. Semi-automatically extracting predictive and clinically meaningful temporal features from medical data

In this section, we sketch our data-driven method for semi-automatically extracting predictive and clinically meaningful temporal features from medical data for predictive modeling. Our method is semi-automatic because its last step requires a human to extract features via visualization. Since temporal feature is one form of phenotype, our method belongs to computational pheno-typing [64–66]. Our method has a different focus than most existing phenotyping algorithms, which use medical data to detect whether a patient has a specific disease.

The standard LSTM imposes no limit on how many input vector elements can link to each memory cell vector element. All input vector elements could be used in each element of the forget and input gates’ activation vectors, and subsequently link to each memory cell vector element. As a result, even if each input vector element links to a distinct longitudinal attribute, no limit is placed on the number of attributes used in each learned temporal feature. A feature involving many attributes is difficult to understand. Our key idea for semi-automatically extracting temporal features from medical data is to restrict the number of longitudinal attributes linking to each memory cell vector element. In this way, more memory cell vector elements will represent clinically meaningful temporal features. The learned features are likely to be predictive, as LSTM frequently produces more accurate clinical predictive models than other machine learning algorithms [22–25].

The rest of Section 3 is organized as follows. Section 3.1 describes how to modify LSTM to limit the number of longitudinal attributes linking to each memory cell vector element. Section 3.2 shows how to visualize the memory cell vector elements in our trained LSTM network to extract predictive and clinically meaningful temporal features. Section 3.3 mentions several ways of using the extracted features and lists our feature extraction method’s advantages. Section 3.4 sketches a method for efficiently automating LSTM model selection. Section 3.5 provides some additional details.

3.1. Multi-component LSTM

To limit the number of longitudinal attributes linking to each memory cell vector element, we use a new type of LSTM termed multi-component LSTM (MCLSTM).

3.1.1. Overview

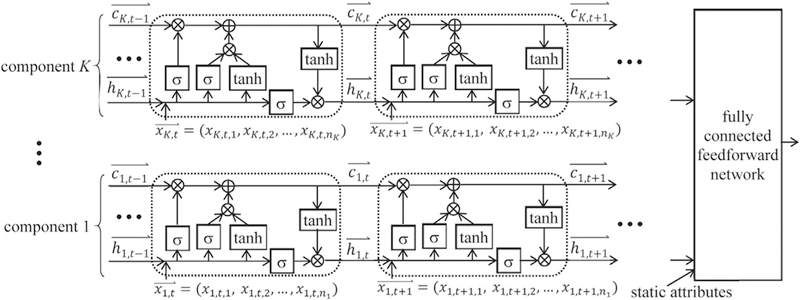

As shown in Fig. 2, an MCLSTM network contains multiple component LSTM networks. In a given component network and at any time step, each input vector element links to a distinct longitudinal attribute. Each component network uses only a subset of the longitudinal attributes rather than all of them. This is similar to the case of multimodal LSTM [26,27]. Yet, MCLSTM differs from multimodal LSTM in several ways. In multimodal LSTM, all longitudinal attributes are partitioned into a small number of sets, one per modality, based on existing knowledge of the modalities. A set can possibly contain many attributes. Each longitudinal attribute appears in exactly one of the sets. The multimodal LSTM model is trained after attribute partitioning is finalized. In comparison, in MCLSTM, we preselect an integer K that is not necessarily small. All longitudinal attributes are partitioned into K sets, one per component, in a data-driven way when the MCLSTM model is trained. Each set tends to contain one or a few attributes. The same attribute could appear in more than one set. Also, some longitudinal attributes may appear in none of the sets.

Fig. 2.

A multi-component LSTM network with K components.

In Fig. 2, nq denotes the number of longitudinal attributes used in the q-th (1 ≤ q ≤ K) component network. For each element xq,t,j (1 ≤ j ≤ ni) of the input vector at time step t, the first, second, and third subscripts indicate the component number, time step, and element number in the component, respectively. For both the memory cell vector and the hidden state vector , the first and second subscripts indicate the component number and time step, respectively.

Consider a data instance containing a sequence with m time steps and perhaps some static attributes. The MCLSTM network includes K component networks. We concatenate all K hidden state vectors (1 ≤ q ≤ K) at the last time step, one from each component network, and the static attributes, if any, into a vector [26]. We input to a fully connected feedforward network to compute the data instance’s predicted outcome.

In MCLSTM, by controlling the number of longitudinal attributes used in each component network, we limit the number of attributes linking to each memory cell vector element, and subsequently the number of attributes involved in each learned temporal feature. This offers several advantages. First, a larger portion of learned features will be understandable and clinically meaningful. Clinicians are more willing to use these features than those they do not understand. Second, the learned features become more generalizable and less likely to overfit the training data’s peculiarities. This helps improve the accuracy of predictive models built using these features [67]. Third, MCLSTM naturally has feature selection capability. Often, some longitudinal attributes appear in none of the component networks, and are regarded as having no predictive power. Only the other longitudinal attributes appearing in the MCLSTM network are deemed relevant and need to be collected for the modeling task. This reduces the number of attributes involved in the predictive model built using the learned features. Such a model is more likely to be used by other healthcare systems beyond the one that originally developed the model, as differing healthcare systems collect overlapping but different attributes.

3.1.2. Setting the network configuration hyper-parameters

Before training an MCLSTM network, we need to set a few hyper-parameters for its configuration. First, we need to select K, the number of component networks in it. Second, for each component network, we need to choose its memory cell vector dimensionality. Recall that except for the input vector, all vectors used in an LSTM unit have the same dimensionality. The memory cell vector is one of them.

We set the network configuration hyper-parameters based on two considerations. First, which component network uses which longitudinal attributes is generally determined in a data-driven way when the MCLSTM network is trained. Ideally, when training is completed, we want to achieve the effect that each component network uses one or a few attributes. That is, every nq (1 ≤ q ≤ K) is small. Each memory cell vector element of the component network represents some temporal feature(s) involving no more than these attributes. Such a feature is more likely to be understood and clinically meaningful than one involving many attributes. When the medical data set contains lots of longitudinal attributes, many of them could be useful for the modeling task. In this case, we use a large K to allow the useful attributes to appear in the MCLSTM network. Otherwise, when the medical data set contains only a few longitudinal attributes, we use a small K.

Second, for the one or a few longitudinal attributes used in a component network, intuitively no more than a few temporal features using these attributes would be clinically meaningful, predictive, and non-redundant for the modeling task. Hence, the memory cell vectors (1 ≤ q ≤ K) used in each component network should have a low dimensionality. We can use the same low dimensionality for the memory cell vectors in each component network. Alternatively, we can partition all K component networks into multiple groups, and choose a different low dimensionality for the memory cell vectors in each group.

The optimal hyper-parameter values vary by the modeling task and data set. Finding the optimal hyper-parameter values belongs to machine learning model selection, for which much work has been done [68]. We conduct this search by maximizing the MCLSTM network’s prediction accuracy.

3.1.3. Exclusive group Lasso regularization

After setting the network configuration hyper-parameters, the MCLSTM network’s configuration is only partly in place. To complete it, we need to figure out which component network uses which longitudinal attributes. We do this in a data-driven way when the MCLSTM network is trained.

The MCLSTM network contains K component networks. We have n longitudinal attributes. Initially, not knowing which component network will use which attributes, we give all n attributes to each component network. At time step t, all component networks receive the same input vector = (xt,1, xt,2,…,xt,n), with xt,i (1 ≤ i ≤ n) being the i-th longitudinal attribute’s value.

We want the data to tell us which component network should use which longitudinal attributes. The i-th (1 ≤ i ≤ n) longitudinal attribute links to the i-th column of each input vector weight matrix in every component network. An attribute is unused by a component network if and only if all columns of the input vector weight matrices in the component network linking to the attribute are all zeros. After the MCLSTM network is trained, we want to achieve the effect that each component network uses only one or a few attributes. That is, most columns of the input vector weight matrices in the component network are all zeros. Lasso (least absolute shrinkage and selection operator) regularization is widely used to make most weights in a machine learning model zero. Existing Lasso regularization methods cannot achieve our desired effect, as the weights used in the MCLSTM network have a special structure [67]. We design a new Lasso regularization method tailored to this structure to serve our purpose.

Our regularization method performs one type of structured regularization. It is related to, but different from multimodal group regularization, the type of structured regularization conducted in Lenz et al. [67]. Our regularization method is designed for MCLSTM to handle longitudinal data. The goal is to limit the number of longitudinal attributes used in each component network. In comparison, the multimodal group regularization method was developed for a deep feedforward neural network handling static data. There, all attributes are partitioned into a small number of groups, one per modality, based on existing knowledge of the modalities. The goal is to limit the number of modalities that each neuron on the first layer of the network links to. Lenz et al. [67] showed that standard L1 regularization cannot achieve this goal without degrading the quality of the features learned by the neurons on the first layer. Using multimodal group regularization improved both feature quality and model accuracy.

3.1.3.1. Notations.

Before describing our regularization method’s technical details, we first introduce a few notations. Consider the q-th (1 ≤ q ≤ K) component network. It works based on the following formulas at time step t:

Compared to those listed in Section 2, each vector except for the input vector and each weight matrix have an added subscript: q. Let dq denote the q-th component network’s memory cell vector dimensionality. Wf,q, Wi,q, Wo,q, and Wc,q are the dq × n input vector weight matrices for the forget gate, input gate, output gate, and memory cell, respectively. Wf,q,s,r, Wi,q,s,r, Wo,q,s,r, and Wc,q,s,r denote the element in the s-th (1 ≤ s ≤ dq) row and r-th (1 ≤ r ≤ n) column of Wf,q, Wi,q, Wo,q, and Wc,q, respectively. Uf,q, Ui,q, Uo,q, and Uc,q are the dq × dq hidden state vector weight matrices for the forget gate, input gate, output gate, and memory cell, respectively. Uf,q,s,r, Ui,q,s,r, Uo,q,s,r, and Uc,q,s,r denote the element in the s-th (1 ≤ s ≤ dq) row and r-th (1 ≤ r ≤ dq) column of Uf,q, Ui,q, Uo,q, and Uc,q, respectively.

3.1.4. Basic method

To obtain the desired effect that each component network uses only one or a few longitudinal attributes, our regularization method needs to achieve two goals simultaneously. First, in a component network, the n longitudinal attributes compete with each other. If one attribute is used, the other attributes are less likely to be used. In other words, if an input vector weight matrix element linking to an attribute is non-zero, the regularizer tends to assign zeros to the input vector weight matrix elements linking to the other attributes. Second, in a component network, all input vector weight matrix elements linking to the same attribute tend to be zero (or non-zero) concurrently. Non-zero means the component network uses this attribute.

We borrow ideas from exclusive Lasso [69,70] and group Lasso [71] to reach these two goals. Consider a set of weights wi,j (1 ≤ i ≤ G, 1 ≤ j ≤ gi) partitioned into G groups. The i-th group has gi weights. Exclusive Lasso [69,70] uses the regularizer to make the weights in the same group compete with each other. If one weight in a group is non-zero, the regularizer tends to assign zeros to the other weights in the same group. This can be used to reach our first goal. In comparison, group Lasso [71] uses the regularizer to make all weights in the same group tend to be zero (or non-zero) concurrently. This can be used to reach our second goal.

Our regularization method combines exclusive Lasso and group Lasso, and is thus called exclusive group Lasso. In the q-th (1 ≤ q ≤ K) component network, the input vector weight matrix elements linking to the r-th (1 ≤ r ≤ n) longitudinal attribute are Wf,q,s,r, Wi,q,s,r, Wo,q,s,r, and Wc,q,s,r for each s between 1 and dq. We treat these elements as a group, and use their L2 norm to make them tend to be zero (or non-zero) concurrently. If Rq,r = 0, all of them are zero. For each q (1 ≤ q ≤ K), the L2 norms linking to the n longitudinal attributes are Rq,r for every r between 1 and n. We treat these L2 norms as a group, and use the regularizer to make them compete with each other for being non-zero. Putting everything together, we use the exclusive group Lasso regularizer

to reach our two goals simultaneously. RW is a convex function of all input vector weight matrix elements.

For the hidden state vector weight matrices Uf q, Ui,q, Uo,q, and Uc,q, we do not need to make most of their elements zero. Instead, we use the L2 regularizer

for their elements Uf,q,s,r, Ui,q,s,r, Uo,q,s,r, and Uc,q,s,r. Let L denote the loss function measuring the discrepancy between the predicted and actual outcomes of the data instances. Rf denotes the L2 regularizer for the weights in the fully connected feedforward network used at the end of the MCLSTM network. To train the MCLSTM network, we use a standard subgradient optimization algorithm to minimize the overall loss function Lo = L+λ1RW+λ2RU+λ3Rf [3]. λ1, λ2, λ3 are the parameters controlling the importance of the regularizers RW, RU, and Rf, respectively.

3.1.5. Extension of the basic method

Sometimes, based on medical intuition, we know which longitudinal attribute by itself or which several longitudinal attributes combined are likely to form predictive and clinically meaningful temporal features, even if we do not know the exact features. In this case, before training the MCLSTM network, for each subset of longitudinal attributes with this property, we specify a separate component network to receive in its input vectors the values of the attributes in this subset rather than all attributes’ values. This can ease model training and help make more learned features represented by the memory cell vector elements clinically meaningful. This also expedites model training by reducing the number of weights that need to be handled.

By default, all component networks in an MCLSTM network use the same set of time steps. Sometimes, all longitudinal attributes fall into several groups, each collected at a distinct frequency. For instance, one group of longitudinal attributes like diagnosis codes is collected per patient visit. Another group of longitudinal attributes, such as air quality measurements and vital signs that a patient self-monitors at home, is collected every day. In this case, for each group of longitudinal attributes, we can specify a different subset of component networks, whose input vectors include only these attributes’ values. Each subset uses a distinct set of time steps based on the frequency at which the corresponding group of longitudinal attributes is collected.

Sometimes, based on medical knowledge or our prior experience with other modeling tasks, we know some temporal features that are clinically meaningful, formed by some of the longitudinal attributes, and likely to be predictive for the current modeling task. In this case, we compute these features, treat them as static attributes used near the end of the MCLSTM network, and can opt to not use the raw longitudinal attributes involved in them when training the network. This can ease model training and help the network form predictive and clinically meaningful temporal features from the other longitudinal attributes.

Section 3.2 outlines our method of visualizing the memory cell vector elements in a trained MCLSTM network to extract predictive and clinically meaningful temporal features. To increase the number of such extracted features, we can iteratively train the MCLSTM network and extract features in multiple rounds. After extracting some features via visualization in one round, we reduce the number of component networks in the MCLSTM network, compute these features, add them to the list of static attributes used near the end of the MCLSTM network, and no longer use the raw longitudinal attributes involved in them when training the MCLSTM network in the next round. This helps the MCLSTM network form predictive and clinically meaningful temporal features from the remaining longitudinal attributes.

Often, the input vector at each time step includes an element showing the elapsed time between the current and previous time steps [33,35,46,51]. For the first time step, the elapsed time is zero. Sometimes, a log transformation is applied to the elapsed time to reduce its skewed distribution [52]. The elapsed time attribute has a different property from the other longitudinal attributes. Intuitively, any other longitudinal attribute tends to be used by one or a few component networks in the MCLSTM network to form temporal features. In comparison, many component networks could use the elapsed time attribute to form temporal features. To reflect this difference, we use the L2 regularizer rather than the exclusive group Lasso regularizer for the input vector weight matrix elements linking to the elapsed time attribute in each component network.

The above discussion focuses on LSTM with one recurrent layer. Our method also applies to stacked LSTM with multiple recurrent hidden layers stacked on top of each other [72]. Having multiple recurrent hidden layers often helps an RNN learn better features [51]. Fig. 3 illustrates a multi-component stacked LSTM network. It has multiple component networks, each of which is a stacked LSTM network using a subset of longitudinal attributes. In each component network and at each recurrent layer above the first, the input vector at time step t incorporates the hidden state vector elements outputted by the layer below at t. If nothing else is included in the input vector, we use the same method mentioned above to figure out which component network uses which longitudinal attributes. Otherwise, if the input vector at each recurrent layer above the first one at t also includes the input vector elements at the first layer at t, we first use an MCLSTM network with one recurrent layer and the method mentioned above to figure out which component network uses which longitudinal attributes. Then we use this information to form the multi-component stacked LSTM network and train it. In this way, we ensure that in each component network, every recurrent layer links to the same subset of longitudinal attributes.

Fig. 3.

A multi-component stacked LSTM network with K components and two recurrent layers.

In Fig. 3, nq denotes the number of longitudinal attributes used in the q-th (1 ≤ q ≤ K) component network. For each element xq,t,j (1 ≤ j ≤ ni) of the input vector the first, second, and third subscripts indicate the component number, time step, and element number in the component, respectively. For both the memory cell vector and the hidden state vector , the first, second, and third subscripts indicate the component number, layer number, and time step, respectively.

3.2. Visualizing the memory cell vector elements in a trained MCLSTM network to extract predictive and clinically meaningful temporal features

In LSTM, each memory cell vector element depicts some learned temporal feature(s). After using the training instances to train the MCLSTM network, we visualize its memory cell vector elements to extract clinically meaningful temporal features. These features are likely to be predictive, as LSTM frequently produces more accurate clinical predictive models than other machine learning algorithms [22–25].

We design the visualization method based on three observations. First, LSTM has been shown to use high positive and low negative values of its memory cell vector elements to express information [73]. Second, Kale et al. [31,74–76] showed one can use training instances with the highest activations of a neuron in a deep neural network to identify clinically meaningful features. A memory cell vector element is a neuron. Third, intuitively, an informative sequence of input vectors in a training instance contains one or more segments, each depicting a temporal feature.

Taking these observations as insights, we proceed in four steps to extract zero or more clinically meaningful temporal features from each memory cell vector element at the last time step of the MCLSTM network. In Step 1, we find the top and bottom few training instances with the highest positive and lowest negative values in the memory cell vector element, respectively. These training instances are likely to contain information of useful temporal features. In Step 2, we identify one or more so-called effective segments of the input vector sequence in each of these training instances. Each effective segment tends to reflect a useful temporal feature. In Step 3, we partition all identified effective segments into several clusters. In Step 4, we visualize each cluster of effective segments in a separate figure to extract zero or more clinically meaningful temporal features. By reducing the number of effective segments in each figure and making the effective segments in the same figure more homogeneous, clustering eases visualization and temporal feature extraction. The temporal features extracted from the MCLSTM network include all features extracted from every memory cell vector element at the last time step of the MCLSTM network.

In the rest of Section 3.2, we describe each of the four steps one by one. Our description focuses on a single memory cell vector element at the last time step of the MCLSTM network. For this element, we find the corresponding component network and the longitudinal attributes used in it. Each temporal feature depicted by this element involves no more than these attributes. When mentioning an input vector, we always refer to an input vector of the component network containing only the values of these attributes. The component network usually uses one or a few longitudinal attributes. This is crucial for making our visualization method effective in identifying features describing temporal relationships [77]. Psychology studies have shown that humans can correctly analyze the relationship among up to four attributes [78]. The more complex the relationship among the attributes, the lower the upper limit on the number of attributes [79].

3.2.1. Step 1: finding the top and bottom few training instances with the highest positive and lowest negative values in the memory cell vector element, respectively

We preselect a number N as the maximum number of top/bottom training instances that will be obtained for each memory cell vector element at the last time step of the MCLSTM network. In Step 4, we conduct visualization to extract clinically meaningful temporal features. To avoid cluttering any given figure and creating difficulty with visualization, N should not be too large. To obtain enough signal for identifying clinically meaningful temporal features, N should not be too small. One possible good value of N is 50, as adopted in Che et al. [75].

Consider the given memory cell vector element at the last time step of the MCLSTM network. Let n+ denote the number of training instances with positive values in the element. n− denotes the number of training instances with negative values in the element. We sort all training instances in descending order of the element’s value. Multiple training instances with the same value in the element can be put in any order. We find the top N+ = min (N, n+) training instances with the highest positive values in the element [75], and record the lowest one of these values. In addition, we find the bottom N− = min (N, n−) training instances with the lowest negative values in the element [75], and record the highest one of these values. In Step 2, we will use and to identify the effective segments of the input vector sequences in the top N+ and bottom N− training instances, respectively.

Intuitively, the top N+ training instances include one set of temporal features. The bottom N− training instances include another set of temporal features. In Step 4, we will visualize the effective segments of the input vector sequences in the top N+ and bottom N- training instances to identify clinically meaningful features in the first and second sets, respectively.

Previously, for image data, researchers have used the activation maximization method to explain the meaning of each neuron in a deep neural network [80]. For each neuron in the network, that method creates a synthetic data instance maximizing the neuron’s output, and uses the data instance to explain the neuron’s meaning. That method does not serve our purpose of extracting temporal features from longitudinal data. For instance, consider a sequence of results of a specific lab test obtained over time. Suppose the actual temporal feature depicted by the memory cell vector element is whether the lab test result is above a fixed threshold value ≥40% of the time. The synthetic data instance maximizing the element’s value is a sequence of lab test results all above the threshold value. From this data instance, we cannot deduce the feature’s property of being ≥40% of the time. In comparison, training instances are real and usually do not push the element to have extreme values. After viewing multiple training instances satisfying this property in various ways, such as one being 40% of the time and another being 50% of the time, we are more likely to identify this property.

3.2.2. Step 2: identifying one or more effective segments of the input vector sequence in each training instance found in Step 1

Consider the given memory cell vector element at the last time step of the MCLSTM network and a training instance found in Step1. The training instance has a sequence of input vectors containing the information of some useful temporal features. Often, the sequence has one or more uninformative segments, which are unrelated to these features and do not contribute to making the element’s value high positive or low negative. Displaying these segments during visualization will clutter the figure and make it harder to identify these features. To address this issue, for each training instance found in Step 1, we identify one or more effective segments of its input vector sequence. Each effective segment tends to reflect a useful temporal feature. During visualization in Step 4, we display only the effective segments rather than the whole input vector sequence.

In the following, we show how to identify the effective segments for a top training instance found in Step 1. The case with identifying the effective segments for a bottom training instance found in Step 1 can be handled similarly.

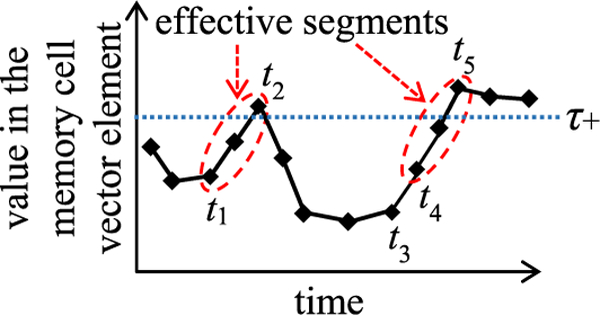

Recall that in Step 1, we find the top N+ training instances with the highest positive values in the memory cell vector element at the last time step of the component network, and record the lowest one of these values. As shown in Fig. 4, for each top training instance, the element’s value evolves over time and becomes at the last time step of the training instance’s input vector sequence. can be regarded as a threshold value found in a data-driven way. When the element’s value becomes at a specific time step, it indicates with high likelihood that a useful temporal feature appears there. We use this information to find the effective segment at or around the time step. In Fig. 4, each dashed ellipse denotes an effective segment. The horizontal dotted line depicts

Fig. 4.

Identifying the effective segments of the input vector sequence in a top training instance.

Consider a given top training instance found in Step 1. We define a segment of its input vector sequence to be effective if the segment satisfies two properties simultaneously.

-

1)

Property 1: If we input the segment into the component network, the memory cell vector element at the segment’s last time step will produce a value Typically, the segment and input vector sequence start at different time steps. If we input the segment vs. the input vector sequence into the component network, we get a different value in the memory cell vector element at the segment’s last time step.

-

2)

Property 2: The segment is as short as possible. This eases identifying temporal features via visualization in Step 4. It is easier to recognize a temporal feature from a short segment than from a long segment.

Both properties combined make an effective segment the shortest segment that holds the signal of a useful temporal feature.

The top training instance’s input vector sequence contains one or more effective segments. Each segment is a section of the sequence between a starting time step tstart and an ending time step tend. We use a sequential search algorithm to find the effective segments one by one. Our high-level idea is to start from the sequence’s last time step and keep going backwards. For each effective segment, we find first its ending and then its starting time step. Then we move on to pinpoint the next effective segment. To make our search algorithm easy to understand, we describe it using the case shown in Fig. 4 as an example.

We start from the last time step of the top training instance’s input vector sequence. Here, the memory cell vector element’s value is We go backwards, one time step at a time. If the element’s value increases, we go back one more time step. We keep going backwards until the element’s value will decrease if we go back one more time step. This is the first effective segment’s ending time step tend, at which the element’s value reaches a local maximum In Fig. 4, tend is t5. To avoid violating Property 2, the section between t5 and the last time step is excluded from the first effective segment. Then we continue to go backwards, one time step at a time. For each time step t that we reach, we check whether the segment between t and tend satisfies Property 1. If so, this segment also satisfies Property 2 and is the first effective one, with t being its starting time step tstart. Otherwise, if this segment violates Property 1, we keep going backwards until we find a time step, at which Property 1 is satisfied. Such a time step must exist. In the worst case, we reach the first time step of the training instance’s input vector sequence. The segment between the first time step and tend always satisfies Property 1. In Fig. 4, tstart is t4. The segment between time steps t3 and t5 satisfies Property 1, but not Property 2, and thus is not an effective one.

After finding the first effective segment’s starting time step, we go back one time step to start searching for the second effective segment. In Fig. 4, this refers to starting from time step t3. We keep going backwards until reaching a time step t’, at which the memory cell vector element’s value is In Fig. 4, this time step is t2. If we keep going backwards and still cannot find t’ when reaching the first time step of the training instance’s input vector sequence, the second effective segment does not exist. Otherwise, if we can find t’, we repeat the procedure mentioned in the above paragraph to find first the ending and then the starting time step of the second effective segment. For the same reason explained in the above paragraph, these two time steps must exist. In Fig. 4, the second effective segment is the section between time steps t1 and t2. After finding the second effective segment, we move on to pinpoint the third effective segment, and so on. We keep iterating until reaching the first time step of the training instance’s input vector sequence. Our search process ends there.

3.2.3. Step 3: partitioning all identified effective segments into several clusters

Consider the given memory cell vector element at the last time step of the MCLSTM network. In Step 1, we find its top N+ and bottom N− training instances. After identifying all effective segments in these training instances, we partition the segments into multiple clusters to ease visualization in Step 4.

We preselect a number k to set the number of clusters. There are two groups of effective segments, one obtained from the top N+ training instances and the other from the bottom N− training instances. These two groups tend to reflect different temporal features. For either group, we partition the effective segments in it into k clusters, hoping each will reflect a distinct set of temporal features. The memory cell vector element usually depicts no more than a few temporal features. Accordingly, k should be a small number like three. For each group of effective segments, we can test different k values to see which one works the best.

Many clustering algorithms for time series data exist [81]. Each relies on a distance measure for temporal sequences. In the following, we describe our distance measure first, and then present the clustering algorithm used to partition effective segments into clusters.

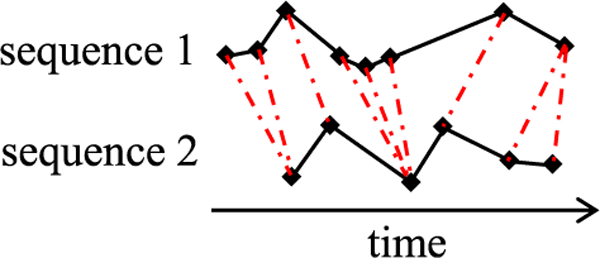

3.2.3.1. Distance measure for temporal sequences.

We use the multivariate dynamic time warping distance measure, which Kale et al. [82] proposed as an extension of the dynamic time warping technique [83]. Dynamic time warping is widely used for measuring similarity between two temporal sequences, which can be multi-dimensional and have different lengths and sampling intervals. As shown in Fig. 5, dynamic time warping allows time shifting and matches similar shapes even in the presence of a time-phase difference. In Fig. 5, each dash-dotted line links two aligned points, one from each temporal sequence.

Fig. 5.

Time alignment of two sequences.

Consider two temporal sequences We use a distance measure such as the Euclidean one, between each pair of elements and , one from each sequence. A warping path of length |p| aligns Y and Z via linking It satisfies two conditions:

-

(1)

r1 = s1 = 1, r|p| = m1, and s|p| = m2. This condition makes Y’s first element align with Z’s first element, and Y’s last element align with Z’s last element.

-

(2)

For each j between 1 and is (0,1), (1, 0), or (1, 1). Consequently, This condition makes each element of Y align with one element of Z, and vice versa. Also, only forward movements along Y and Z are allowed.

The total distance between Y and Z along p is the sum of the distance between each pair of elements aligned via p: The dynamic time warping distance between Y and Z is the minimum total distance across all possible warping paths P(Y, Z) between Y and Z:

Other things being equal, the dynamic time warping distance increases as temporal sequences become longer. To make the distance comparable across sequences of different lengths, Kale et al. [82] proposed using the multivariate dynamic time warping distance. This distance between sequences Y and Z is computed as their dynamic time warping distance divided by their optimal warping path’s length: MDTW (Y, Z) = DTW (Y, Z)/|p*| = dp* (Y, Z)/|p*|. Here, |p*| is the length of

Dynamic time warping is designed for temporal sequences sampled at equidistant points in time [84]. Yet, this is often not the case with medical data. For medical data that violate this property, we can compute the multivariate dynamic time warping distance in one of several ways. One way is to ignore the equidistance constraint and do the computation as presented above. Another way is to use the weighting mechanism in Siirtola et al. [84] to prevent areas of high point density from dominating the distance computation. This mechanism gives smaller and larger weights to points with near and distant neighbors in the temporal sequence, respectively.

Differing longitudinal attributes’ values can be on different orders of magnitude. If this occurs, one attribute could dominate the distance computation for multi-dimensional temporal sequences. This is undesirable. To address this issue, before computing distances, we first normalize each attribute’s values so that the values of different attributes become comparable with each other. More specifically, for each attribute, we compute its mean and standard deviation across all of its values in all training instances. For each value of the attribute, we compute its normalized value by subtracting the mean and then dividing by the standard deviation. During visualization in Step 4, we show the original rather than normalized values to make the presented values easier to understand.

Our distance computation approach considers not only shape, but also amplitude that matters. For instance, for making predictions, a lab test result above its normal range often gives a different signal from one within its normal range. Thus, we do not use the value normalization approach that Paparrizos et al. [85] adopted for computing shape-based distances for temporal sequences. That approach ignores amplitude and computes one mean and one standard deviation per temporal sequence to normalize the values in it.

3.2.3.2. Clustering algorithm.

We use the k-medoids clustering algorithm [86] based on the multivariate dynamic time warping distance measure to partition each group of effective segments into k clusters. A medoid is a representative object of a cluster with the highest average similarity to all objects in the cluster. The k-medoids algorithm is inefficient for clustering many objects [86]. Yet, this is not an issue in our case. For the given memory cell vector element, we find a modest number of top and bottom training instances, and need to cluster only a moderate number of effective segments.

We do not use the k-means clustering algorithm that requires computing the average of multiple objects. For multiple effective segments of different lengths, it is difficult to compute their average properly. Besides the k-medoids algorithm, other clustering algorithms based on dynamic time warping also exist [87] and could be used for our clustering purpose.

3.2.4. Step 4: visualizing each cluster of effective segments in a separate figure to extract zero or more clinically meaningful temporal features

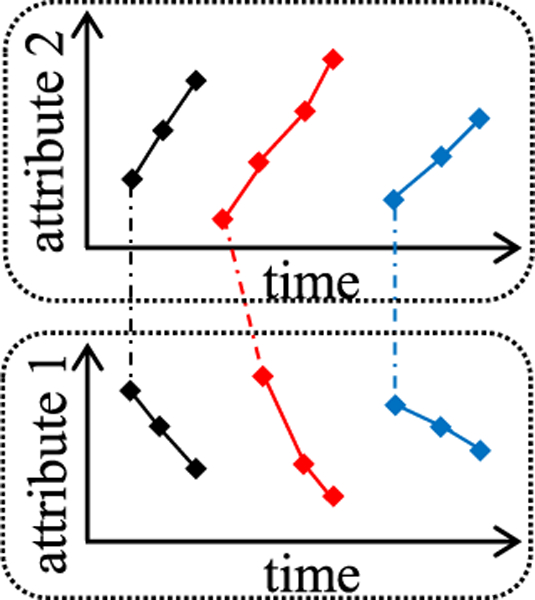

We visualize each cluster of effective segments obtained in Step 3 one by one. For each cluster, we show the effective segments in it in a figure to extract zero or more clinically meaningful temporal features. The figure includes one panel per longitudinal attribute used in the cluster. All panels are aligned by time and stacked on top of each other, as shown in Fig. 6, with each rounded rectangle denoting a panel.

Fig. 6.

Visualizing a cluster of three effective segments involving two longitudinal attributes.

Each panel shows the value sequence of its linked longitudinal attribute in every effective segment in the cluster. An effective segment has one value sequence per longitudinal attribute used in the cluster. If the cluster uses more than one attribute, for each effective segment, we use a dash-dotted polyline to link the first element of each of the segment’s attribute value sequences across all panels. In this way, one can easily know that these sequences belong to the same segment. Each effective segment comes from a training instance. To ease visualization, we use different colors to mark differing training instances in the figure.

Usually, a clinician and a data scientist collaborate to build a clinical predictive model. They view the figure to identify zero or more clinically meaningful temporal features. Each feature involves one or more longitudinal attributes used in the cluster, and is reflected by one or more attribute value sequences in the figure. It is easier to recognize the feature by viewing the sequences than to think of it on one’s own. For each identified feature, the clinician and the data scientist use their domain knowledge to jointly arrive at an exact mathematical definition of an extracted feature. Often, the extracted feature reflects the trend more precisely and performs better than the raw one learned by the MCLSTM network.

Marlin et al. [88] proposed identifying temporal patterns by grouping numeric physiologic time series into clusters. All time series start and end at the same time steps. For every cluster, a distinct panel shows each longitudinal attribute’s mean and standard deviation over time. That approach does not serve our purpose. In our case, each effective segment can start and end at different time steps. Non-numeric attributes like categorical ones can be part of temporal features and need to be shown along with numeric ones. Also, the same feature can appear at different time steps in differing effective segments. If we show each numeric attribute’s mean and standard deviation over time instead of individual effective segments, we are likely to miss such features.

Wanget al. [89] proposed identifying temporal patterns by visualizing multiple patients’ longitudinal medical data in the same figure. The figure includes one panel per patient. All panels are aligned by time and stacked on top of each other. Each panel shows multiple value sequences of a patient, one for each longitudinal attribute. For the same attribute, different patients’ value sequences appear in differing panels. This makes it harder to identify temporal patterns, particularly if the number of patients is not small [90]. In comparison, for the same attribute, our visualization approach puts multiple patients’ value sequences in the same panel.

3.2.4.1. Handling categorical attributes

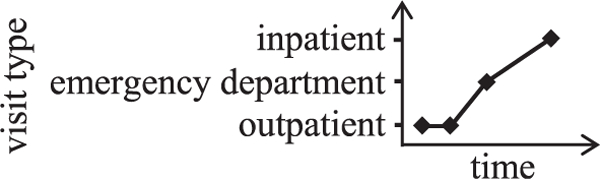

A neural network takes only numeric inputs. To use LSTM, one converts each categorical longitudinal attribute into one or more numeric attributes using one hot encoding. During visualization, we show the original categorical attribute values instead of the converted numeric ones to make the presentation more succinct and easier to understand. The figure includes a panel for each categorical attribute linking to the cluster of effective segments. In the panel, each distinct value of the attribute appears on a separate row, as illustrated in Fig. 7.

Fig. 7.

Displaying a sequence of values of the visit type attribute.

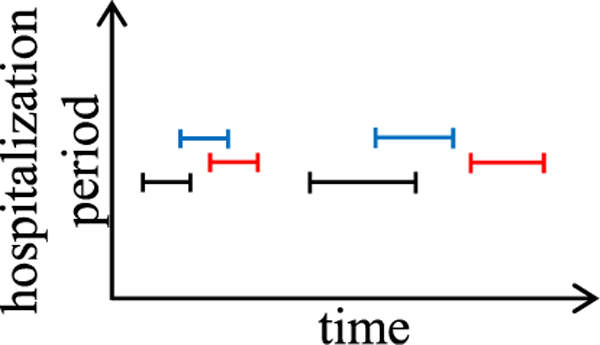

3.2.4.2. Handling interval attributes

Medical data often include interval attributes, such as the medication use period and hospitalization period. A common way to use interval attributes in LSTM is to convert each interval into two attribute values: its starting time step and its duration. During visualization, we show the original interval instead of the converted attribute values to make the presentation easier to understand. Recall that if the cluster of effective segments uses more than one attribute, for each effective segment, we use a dash-dotted polyline to link the first element of each of the segment’s attribute value sequences across all panels. For each interval attribute used in the segment, the dash-dotted polyline links to the starting point of the first interval in the attribute’s value sequence. To ease visualization, we put the intervals from distinct data instances on different and adjacent horizontal lines, with one line per data instance, as illustrated in Fig. 8.

Fig. 8.

Displaying the interval sequences from three patients’ hospitalization period attribute.

3.2.4.3. Handling missing values

Neural network does not take any missing input value. To use LSTM, one needs to fill in every missing value first. One way to do this is as follows. Consider a value sequence of an attribute. If the value sequence is completely missing, we impute a clinically normal value defined by the clinician [23,31]. Otherwise, for each missing value before the first occurrence or after the last occurrence of a non-missing one, we fill in the missing value with the non-missing one [91]. For each missing value between two non-missing ones, we linearly interpolate them to fill in the missing value. Another way to handle missing values for an attribute is to use a binary indicator for whether a value of it is missing, compute the amount of time since its last observation, and decay its value over time toward its empirical mean value rather than use its last observed value [57,92].

During visualization, no filled-in value is shown. This makes the figure consistent with the raw data to help ensure genuine temporal features are identified.

3.2.4.4. Avoiding using an excessive number of longitudinal attributes

In LSTM, we sometimes embed each categorical attribute value into a vector representation to reduce the input vector dimensionality. This makes model training more efficient and effective [22]. In MCLSTM, no value embedding is used. Instead, each input vector element is a longitudinal attribute’s value. This is essential for making the identified temporal features easy to understand. To make model training efficient and effective, we need to avoid using an excessive number of longitudinal attributes. This requires handling two cases.

First, consider three longitudinal attributes: disease, procedure, and drug. Each attribute is categorical with many possible values. If no value embedding is used, by default the attribute is converted into many numeric attributes, one per possible value, using one hot encoding. This explodes the input vector dimensionality and is undesirable. To address this issue, we can proceed in one or more of the following ways:

-

(1)

We use grouper models like the Diagnostic Cost Groups (DCG) system to group diseases, procedures, and drugs and reduce the numbers of their possible values [93,94].

-

(2)

For each of the three attributes, we use a few of its most common values and ignore the others.

-

(3)

For each of the three attributes, we use a few values of it deemed most relevant to the modeling problem based on medical knowledge, and ignore the others.

-

(4)

Rajkomar et al. [22] provided a method using LSTM with value embedding and an attribution mechanism to rank categorical attribute values. For each of the three attributes, we use the top few values ranked by this method in MCLSTM and ignore the others.

Second, many lab tests exist. We will have an excessive number of longitudinal attributes, if we use one for each lab test’s values. This is undesirable. To address this issue, we can proceed in one or more of the following ways:

-

(1)

Pivovarov et al. [95] identified 70 common lab tests of interest to primary care and internal medicine. We use these lab tests and ignore the others.

-

(2)

We use a few lab tests deemed most relevant to the modeling problem based on medical knowledge, and ignore the others.

-

(3)

Rajkomar et al. [22] converted numeric attributes to categorical ones via discretization, and provided a method using LSTM with value embedding and an attribution mechanism to compute a weight for each categorical attribute value. For a categorical attribute with multiple possible values, we compute its weight as these values’ maximum weight reflecting its importance. We use the top few lab tests with the highest weights in MCLSTM and ignore the others. This is a form of feature selection for longitudinal attributes.

3.3. Several ways of using the extracted temporal features and our feature extraction method’s advantages

The extracted temporal features are clinically meaningful and tend to be predictive. We combine them with expert-engineered features to build machine learning, statistical, or rule-based predictive models. For machine learning models, this can improve model accuracy [31], as many extracted features reflect trends more precisely and can perform better than the raw ones learned by the MCLSTM network. Also, we can use the method described in Section 4 to automatically explain the models’ prediction results.

Wang et al. [89] showed properly visualizing temporal sequences in medical data could help us spot data quality issues, such as an impossible order of events. When visualizing each cluster of effective segments, we could identify some temporal features that make no sense and reflect the underlying data quality issues. By fixing these issues and enhancing data quality, we can boost model accuracy and improve other applications using the same data set.

Using our feature extraction method can reduce the effort needed to build useable predictive models for the current modeling task. Moreover, Gupta et al. [32] showed that many features an RNN learns from a medical data set reflect general properties of the medical attributes involved in the features, and can be useful for other modeling tasks. Using the features extracted by our method to form a temporal feature library to facilitate feature reuse, we can reduce the effort needed to build predictive models for other modeling tasks.

3.4. Efficiently automating MCLSTM model selection

Each machine learning algorithm has two types of parameters: normal parameters automatically tuned during model training, and hyper-parameters that must be set before model training. Before training a MCLSTM network, we need to set the values of multiple hyper-parameters, such as the number of component networks in it and the learning rate. These values can affect model accuracy greatly, e.g., by two or more times [96]. The optimal hyperparameter value combination is found via an iterative model selection process. In each iteration, we use a combination to train a model. Its accuracy is used to guide the selection of the combination that will be tested in the next iteration.

3.4.1. The need for and the state of the art of automatic machine learning model selection

Machine learning model selection, if done manually, is labor intensive and time-consuming. Frequently, several hundred to several thousand iterations are needed to find a good hyperparameter value combination [96,97]. On a data set of non-trivial size and particularly for deep neural network, testing a combination in one iteration often takes several hours or longer [98]. To cut the human labor needed for model selection, researchers have developed multiple automatic machine learning model selection methods [68]. For certain machine learning algorithms including deep neural network, some of these methods can find better hyper-parameter value combinations than manual search by human experts [68,99].

Recently, Google set up an automatic model selection service called Google Vizier [99]. It has become the de facto model selection engine within Google. Using it to conduct model selection, Google researchers [22] built clinical LSTM models that greatly improved prediction accuracy for three outcomes. The medical data set used there is of moderate size and has 216,221 data instances. As mentioned in the paper posted at https://arxiv.org/pdf/1801.07860v1.pdf, using Google Vizier to perform automatic model selection on the data set consumed >201,000 GPU (graphics processing unit) hours. This is beyond the computational resources available to many healthcare systems and would exceed their budgets quickly. When standard techniques are used, the time needed for automatic model selection usually increases superlinearly with the data set size. On a medical data set larger than the above one, using Google Vizier to perform automatic model selection would consume more computational resources and a higher cost, and quickly reach a point that almost no healthcare system could afford. In fact, this could even become a problem for Google, which has a lot of resources. To run its business, Google regularly needs to build predictive models on large data sets. As mentioned in the Google Vizier paper [99], using Google Vizier to perform automatic model selection on a large data set often takes months or years. As a result, for some mission critical applications, Google has to deploy a model without fully tuning it, and then keep tuning it over several years. Using suboptimal models leads to degraded outcomes. In our case, the situation could become even worse, if we iteratively train the MCLSTM network and extract features in multiple rounds, as each round requires automatic MCLSTM model selection.

3.4.2. Our prior work on efficiently automating machine learning model selection

To expedite automatic machine learning model selection, we recently developed a progressive sampling-based Bayesian optimization method for it. We showed that depending on the data set, our method can speed up the search process by one to two orders of magnitude [97,100,101 ]. Our idea is to conduct progressive sampling [102], filtering, and fine-tuning to quickly shrink the search space. We use a random sample of the data set termed the training sample to train models. We do fast trials on a small training sample to drop unpromising hyper-parameter value combinations early, keeping resources to fine-tune promising ones. We test multiple combinations. For each combination, we test it by training a model using it and the training sample. A combination is promising if the trained model reaches accuracy above an initial threshold. We then raise the threshold, expand the training sample, test and adjust combinations on it, and reduce the search space several times. In the last round, we use the full data set to find a good combination.

For several reasons described below, if we directly apply our progressive sampling-based Bayesian optimization method to automate MCLSTM model selection, we may not obtain the desired search efficiency and search result quality. Instead, for it to better automate MCLSTM model selection, we use four techniques to improve our method. The first technique is specific to deep neural network. The second technique is specific to LSTM. The third and fourth techniques apply to general machine learning algorithms.

3.4.3. Technique 1: performing early stopping when testing a hyperparameter value combination

To train a machine learning model, we often need to process each training instance multiple times. Our progressive sampling-based Bayesian optimization method is designed for the case that satisfies two conditions concurrently. First, it is fast to process a training instance once during model training. This ensures a hyper-parameter value combination can be tested on a small training sample quickly. Second, using a relatively small training sample, we can estimate a combination’s quality with reasonable accuracy. This reduces the likelihood that a high-quality combination is identified as unpromising and dropped at an early stage of the search process.

Neither condition is satisfied on deep neural network. When training a deep neural network, it often takes a non-trivial amount of time to process a training instance once. As a result, quite some time is needed to test a hyper-parameter value combination on even a small training sample. This degrades search efficiency. Moreover, deep neural network is data hungry. To reasonably estimate a combination’s quality for a deep neural network, a large training set is needed. If we start from using a small training sample to identify unpromising combinations, we are likely to drop many high-quality combinations erroneously in the first few rounds of the search process. This can degrade search result quality.

To address these issues, we adopt an early stopping technique for automating deep neural network model selection. Instead of starting from a small training sample, the search process starts from a relatively large training sample. A neural network is trained in epochs. As a model is trained for more epochs, its accuracy generally improves. In the first few rounds of the search process, when testing a hyper-parameter value combination, we train the model for a few rather than for the full number of epochs. In this way, without spending too much time on the test, we can estimate the combination’s quality with reasonable accuracy. This type of early stopping technique has been used previously for expediting automatic machine learning model selection [98,99], but not in combination with progressive sampling.

3.4.4. Technique 2: tuning the learning rate hyper-parameter before tuning the other hyper-parameters in depth

Greff et al. [103] showed that LSTM’s learning rate hyperparameter has a special property. For each data set, there is a large interval, in which the learning rate offers good model accuracy with little variation. The LSTM model can be trained relatively quickly when the learning rate is at the high end of the interval. When searching for a good learning rate, we can start from a high value like one and keep dividing it by ten until model accuracy no longer improves.

Based on this insight, we expedite automatic LSTM model selection by tuning the learning rate before tuning the other hyperparameters in detail. We proceed in four steps. In step one, we use a relatively large training sample to test a few random hyperparameter combinations, and select the one reaching the highest model accuracy. Intuitively, this combination would have reasonable and neither optimal nor terrible performance. In step two, for all hyper-parameters excluding the learning rate, we fix their values according to this combination and use the training sample to tune the learning rate. We start from a high learning rate like one and keep dividing it by ten until model accuracy no longer improves. In step three, we fix the learning rate at the value found in step two, and use our progressive sampling-based Bayesian optimization method to tune all of the other hyper-parameters. In step four, if desired, we perform some final fine-tuning of all hyperparameters simultaneously without significantly changing the value of any of them.

3.4.5. Technique 3: conducting stable Bayesian optimization

Machine learning model selection aims to find an optimal hyper-parameter value combination in the hyper-parameter space. As mentioned in Nguyen et al. [104], when the training or validation set is small, spurious peaks often appear on the performance surface defined over all possible combinations. These peaks are narrow and scattered randomly in low-performance regions. In this case, the search process of automatic machine learning model selection frequently stops at a spurious peak instead of a more stable one. The final model built there has suboptimal accuracy when deployed in the real world.

To prevent the search process from stopping at a spurious peak, Nguyen et al. [104] proposed a stable Bayesian optimization method for automating machine learning model selection.Bayesian optimization uses a regression model to predict a machine learning model’s accuracy based on the hyper-parameter value combination, and an acquisition function to select the combination to test in the next iteration. The regression model is usually a random forest [96] or a Gaussian process [104]. The former has been shown to outperform the latter for making this prediction [105].

The main idea of the stable Bayesian optimization method [104] is to measure a hyper-parameter value combination’s performance stability and include the measure in the acquisition function. The method is designed for the case in which the regression model is a Gaussian process, and each step of the search process uses the whole data set. The technique used in that method does not directly apply to our progressive sampling-based Bayesian optimization method [97], which uses a random forest as the regression model, and a gradually expanded training sample over rounds of the search process.