Abstract

There is substantial evidence for individual differences in personality and cognitive abilities, but we lack clear intuitions about individual differences in visual abilities. Previous work on this topic has typically compared performance with only two categories, each measured with only one task. This approach is insufficient for demonstration of domain–general effects. Most previous work has used familiar object categories, for which experience may vary between participants and categories, thereby reducing correlations that would stem from a common factor. In Study 1, we adopted a latent variable approach to test for the first time whether there is a domain–general Object Recognition Ability, o. We assessed whether shared variance between latent factors representing performance for each of five novel object categories could be accounted for by a single higher–order factor. On average, 89% of the variance of lower–order factors denoting performance on novel object categories could be accounted for by a higher–order factor, providing strong evidence for o. Moreover, o also accounted for a moderate proportion of variance in tests of familiar object recognition. In Study 2, we assessed whether the strong association across categories in object recognition is due to third–variable influences. We find that o has weak to moderate associations with a host of cognitive, perceptual and personality constructs and that a clear majority of the variance in and covariance between performance on different categories is independent of fluid intelligence. This work provides the first demonstration of a reliable, specific and domain–general Object Recognition Ability, and suggest a rich framework for future work in this area.

Keywords: visual abilities, structural equation modeling, latent variable modeling, holistic processing, intelligence

Individual Differences in Object Recognition

There is substantial evidence for individual differences in personality and cognitive abilities. In the case of personality traits, although test–retest correlations across 1–3 years are in the .2–.5 range during childhood, they are in the .6–.8 range among adults across even longer time spans (e.g., Hampson & Goldberg, 2006; Roberts & DelVecchio, 2000). Cross–situational consistency of behaviors is not as high as we might intuitively believe (e.g., Mischel, 1968; Mischel & Peake, 1982), and r = .30 is the proverbial “personality coefficient” (Mischel, 1968). Nonetheless, personality traits: 1) often account for substantial variance in behaviors, thoughts, and moods averaged across situations, contexts, or measures (e.g., Rushton, Brainerd, & Pressley, 1983); 2) are generally associated with validity coefficients comparable to modal effect sizes found in experimental studies (Meyer, Finn, Eyde, et al. 2001); and 3) predict important life outcomes (e.g., mortality, occupational attainment, vulnerability to psychopathology), with effect sizes comparable to those of SES or cognitive abilities (e.g., Goodwin & Friedman, 2006; Roberts, Kuncel, Shiner, Caspi, & Goldberg, 2007). Similarly, psychometric intelligence is extremely stable over time (e.g., test–retest correlations generally in the .6–.8 range in the time span between childhood and old age, e.g., Deary, Whalley, Lemon, Crawford, & Starr, 2000; Deary, Whiteman, Starr, et al., 2004), and it predicts achievement and other important life outcomes (e.g., health and morbidity) independent of socio–demographic variables (e.g., Deary et al., 2004).

In contrast, we lack clear intuitions about individual differences in visual perception. We have little to no access to the quality of others’ perception and we are very poor at estimating our perceptual abilities, even in a specific domain, relative to other people (Barton et al., 2009; McGugin et al., 2012). Studies of perceptual expertise that reveal variability in ability with specific object categories, such as birds (e.g., Gauthier et al., 2000; Tanaka et al., 2005), fingerprints (Busey & Vanderwolk, 2005), and cars (e.g., Gauthier et al., 2000, 2003) do not address the stability or consistency of individual differences in object recognition performance—is ability in one domain stable over time and related to performance in another domain? Does someone’s ability to recognize birds predict how well they will be able to recognize fingerprints? Surprisingly, despite decades of research on object recognition, there has been almost no work seeking to find evidence of a common “object recognition” ability across domains. Here, we test whether object recognition ability (o) is a valid and reliable construct that can account for performance across categories. The o we are speculating about here would be at least general enough to predict the ability to learn how to discriminate items in any subordinate–level category, such as different dogs, birds or fingerprints1.

The hypothesis of a general object recognition ability parallels a number of models in the areas of personality, psychometric intelligence, and cognitive abilities. In these areas, hierarchical structures for individual differences have predominated for a number of years (e.g., Guilford, 1967; Markon, Krueger, & Watson, 2005; Reeve & Bonaccio, 2011; Rushton & Irwing, 2011; Spearman, 1927). Such models posit superordinate dimensions or factors (e.g., the general intelligence factor g, negative affectivity in the domain of emotion and temperament) that account for substantial variability in both lower–order factors and observed measures (e.g., Markon et al., 2005; Reeve & Bonaccio, 2011; Zinbarg & Barlow, 1996).

However, a great deal of vision research seems to suggest that visual abilities are more fractionated than common, with the visual system dividing things by the way they look. For instance, neuroimaging studies have identified different brain regions associated with the processing of basic visual properties such as symmetry (e.g., Sasaki et al., 2005), curvature (e.g., Yue et al., 2014), and rectangularity (e.g., Nasr et al., 2014), and with different object categories such as animals, tools (e.g., Chao et al., 2002), houses (e.g., Epstein & Kanwisher, 1998), and faces (e.g., Kanwisher et al., 1997). Measures of connectivity to face selective–areas are found to correlate with face, but not scene, recognition performance (Gomez et al., 2015). Such results raise the possibility that different brain networks support independent recognition abilities for different object categories, such that car recognition ability would not predict ability to match fingerprints or recognize faces.

Starting with the development of the Cambridge Face Memory Test (CFMT; Duchaine & Nakayama, 2006), which captures a wide range of face processing ability with high reliability (e.g., test–retest with 6 months delay =.70, Duchaine & Nakayama, 2006; Cronbach’s alpha = .91, Wilmer et al., 2012), the small body of work that speaks to this question has generally focused on the specificity of face recognition abilities; that is, is face recognitions an independent ability or a special case of a more global ability to learn and/or recognize objects? However, the conclusions that can be drawn from work in this area are limited because 1) the experimental designs typically only include one task (e.g., a single memory test) and two categories (e.g., faces and cars), and 2) only familiar object categories are used, so experience can vary between participants and categories. In what follows, we discuss each of these issues in turn and how they are addressed by the present study.

Importance of Using Multiple Tasks and Categories

Wilmer et al. found only 7% shared variance (r–squared) between the CFMT and performance on a similar task with unfamiliar abstract art (n = 3004, r = .26, Wilmer et al., 2010; n = 1469, r = .26, Wilmer et al., 2012), and studies comparing the CFMT with a similar task using cars have found 8% shared variance (n = 1042, r = .29; Shakeshaft & Plomin, 2015) and 14% (n = 142, r = .37; Dennett et al., 2012). These small but significant relationships are difficult to interpret because performance with different categories was measured using a single task for all categories. Performance with faces, abstract art, and cars could share a small amount of variance either due to the recruitment of a general object recognition system or due to task–specific processes such as working memory or sensitivity to proactive interference, and important to this task. Interpretation is further limited by the fact that only two categories of objects were compared (faces with either abstract art, cars, or houses; see Gauthier & Nelson, 2001).

When studies find only a moderate correlation between a face and a non–face object recognition task, authors often take it as evidence for a face recognition ability that is distinct from an object recognition ability (e.g., Dennett et al., 2012; Shakeshaft & Plomin, 2015; Wilmer et al., 2010). An untested assumption, however, is that abilities to recognize different non–face categories would be more strongly related to one another than each of them would be to face recognition ability. Only a few studies have used several object categories but all with the same task (e.g., Ćepulić et al., 2018). In some of these studies using the VET (McGugin et al., 2012; Van Gulick et al., 2015), the average pairwise correlation between any two non–face categories is no larger (r=.34) than what is typically found between face and non–face object recognition tests (e.g. r=0.37 in Dennett et al, 2012). In other words, when many categories are used, face recognition does not stand out as a particularly distinct ability. Testing with more than two categories shifts the question of whether face recognition ability is distinct to a more general question: given the domain–specificity of performance in high–level vision, is there evidence for a strong domain general object recognition ability, o?

Beyond problems for interpretation, using only two categories each assessed with one task may underestimate true dependence on a common factor. Consider research in the area of personality, where the cross–situational consistency of behavioral measures (particularly when assessed on only one occasion) is typically rather low (e.g., Mischel, 1968; Mischel & Peake, 1982). Importantly, correlations are much higher when behavioral measures are aggregated across a number of situations and correlated with other behavioral aggregates or personality measures (e.g., Jaccard, 1974; Rushton, Brainerd, & Pressley, 1983). Thus, the correlation between one task for cars and one task for faces likely does not provide sufficient aggregation to reveal the influence of a broader construct. Indeed, the few studies that used multiple tasks treated as indicators of a higher–order category–specific latent variable (i.e., factor) found moderate to substantial relationships between distinct face and house perception factors (44–69% shared variance, Hildebrandt et al., 2013; 24% shared variance, Wilhelm et al., 2010). These studies still suffer from the interpretative problems described above, as only two categories were compared. To circumvent these problems, participants in our study completed three tasks of visual object perception and recognition for each of five object categories.

Importance of Controlling for Experience

Although some studies have measured performance for several categories (e.g., Gauthier et al., 2014; McGugin et al., 2012; Van Gulick et al., 2015), most used familiar object categories. This makes it difficult to disentangle variability due to experience from variability in a domain–general ability. Furthermore, differences in experience between categories for the same individual might reduce correlations that would stem from a common factor (Gauthier et al., 2014; Ryan & Gauthier, 2016; Van Gulick et al., 2015). In recent work, object recognition ability was measured with three categories of novel objects (in three groups with ns >325, each tested on two of the three categories, with a single task). The average pairwise correlation (r=.48) was higher than the typical pairwise correlation for familiar object categories, consistent with the idea that differences in experience with familiar objects complicates the measurement of a common visual ability (Richler et al., 2017). To circumvent the problems associated with variability in experience, here we used five categories of novel, unfamiliar objects. Because these novel object categories vary on several perceptual dimensions shown to be associated with unique neural substrates (e.g., animate/inanimate appearance, symmetry, and curvature), their use in the present study will provide a rigorous test of the presence of a common ability.

It is possible, however, that some amount of experience with a category is important for individual differences in ability to be fully reflected in performance. After all, one important component of object recognition is the ability to learn object categories. In other domains, differences among individuals in genetic or other predispositions commonly require the appropriate environmental inputs to be expressed behaviorally (for reviews see Dick, 2011; Manuck & McCaffery, 2014). Therefore, we tested four object categories following a training phase that provided participants with the same amount of controlled experience for each category. We tested performance with the fifth category without any prior training, to assess whether experience affects the expression of a domain–general ability. If so, this design leaves us with four categories to use in the main analyses. We used a training protocol that is relatively short and limits the contribution of non–visual abilities (e.g., it did not require naming). In addition, learning in this task transfers to new exemplars of the category and results in training effects typical of the early stages of perceptual expertise (Bukach et al., 2012).

Latent Variable Modeling Approach

Self–reports of visual abilities are generally poor predictors of performance for most categories (McGugin et al., 2012; Richler et al., 2017; see also Barton et al., 2009). Thus, individual differences in object recognition ability can only be inferred from behavioral measures of task performance. Whatever the nature of the measure, research in the areas of personality and temperament has shown that broad individual differences constructs are optimally assessed using multiple measures (to allow for conclusions that are appropriately generalizable across different measures) and often best modeled as latent variables. Narrowly defined, latent variables are constructs (e.g., ‘object recognition ability’) that are not directly observable. Latent variables are useful whenever unobserved constructs are invoked, for instance in behavioral and social sciences (e.g., Bollen, 2002). The present study adopts a latent variable analytic approach to test the hypothesis that the shared variance in performance across several object categories can be accounted for by a single domain–general visual ability that is modeled as a higher–order latent variable (i.e., factor). This structural representation will be tested using confirmatory factor analysis (CFA, for reviews see Brown, 2015; Tomarken & Waller, 2005). CFA confers several distinct advantages in the present context relative to exploratory factor analysis (EFA) or principal components analysis (PCA). These include the ability to: specify and test the absolute and relative fit of competing models using a variety of indices; specify factor models that posit both lower–order factors that account for correlations among observed indicators and higher–order factors that account for the correlations among lower–order factors; estimate correlations among categories that are free from the attenuating effects of measurement error and category–irrelevant variance, and specify correlated error terms or task–specific method factors that can estimate the contribution of shared methods to correlations among measures (see, e.g., Brown, 2015; Hancock & Mueller, 2006; Tomarken & Waller, 2005).

In our study, participants completed three tasks of visual object perception and recognition for each of five novel object categories (four categories for which they received a fixed amount of training in a simple video game, and one for which they received no training). We then used CFA to estimate the correlations among the different categories in performance. Each category itself was represented as a first–order factor with task scores as observable indicators. Our primary interest was testing a related model that posited an overarching, second–order construct that we denote as ‘o’ representing individual difference in object recognition ability that influences performance on specific object categories. Our goal was to assess the fit of the second–order factor model and estimate the proportion of variance in performance on the lower–order, category–specific factors accounted for by the overarching factor. Finally, via CFA, we assessed the relation between individual differences on the object recognition latent factors and scores on measures of facial recognition and perceptual expertise with familiar objects.

Study 1

Methods

Participants

Two–hundred–and–eighty–five members of the Vanderbilt University Community were recruited for the experiment (123 male, 162 female; mean age = 21.5, age range = 18–38; Caucasian = 170, Asian = 70, African American = 35, Hispanic = 8, Other = 2). There were four at–home sessions of approximately 1.75 hours each, and six lab sessions of one hour each. Participants were compensated $26.25 for each at–home session, and $15.00 for each lab session, for a total of $195.00 for the entire experiment (13 hours). Payment was based on the sessions participants completed, and was not contingent on finishing the experiment. Both the original sample size (285) and the sample size ultimately used for analyses (n=246, see Data Analysis section for elaboration) are very large for studies in the area of perception but on the small side relative to typical confirmatory factor analyses and structural equation models. The target N reflected a tradeoff between practical considerations (i.e., each participant attended 5 laboratory sessions with home sessions intermixed) and the desire to maximize sample size for a statistical procedure that typically requires large sample sizes. In this regard, two considerations are particularly relevant: (1) A priori power analyses indicated excellent power to detect misspecifications under a range of reasonable parameter values with n’s in the range of 250 or so; and, (2) Published simulation studies that mirror various features of the present experiment (e.g., the method of treating missing data, as discussed below) have demonstrated good performance (e.g., empirical Type 1 error rates that correspond to nominal levels) when n’s are in the same range (e.g., Savalei & Falk, 2014).

Stimuli

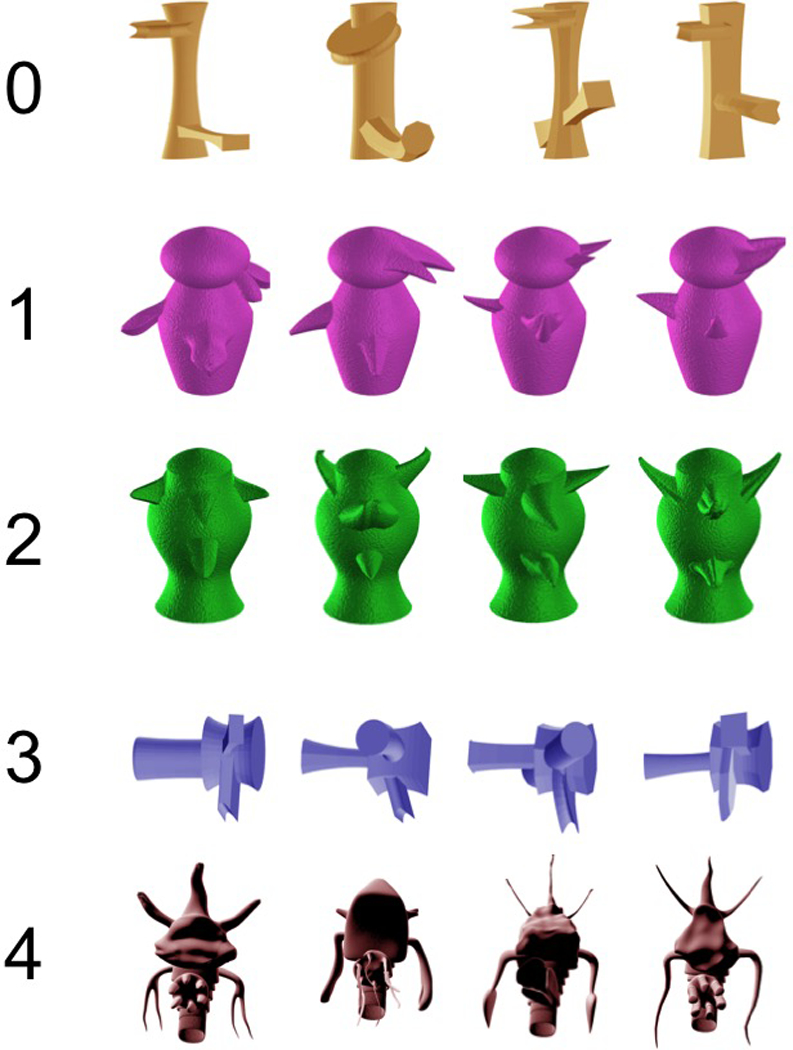

We used five novel object categories (vertical Ziggerins, asymmetrical Greebles, symmetrical Greebles, horizontal Ziggerins, and Sheinbugs; arbitrary numbers were assigned to categories to simplify data coding and presentation of results, see Figure 1) with 80 exemplars each, and two views per exemplar. Categories were defined by general configuration of parts and color. Thirty exemplars (approximately 1 × 1 degree of visual angle) were used in the training phase, and the remaining 50 exemplars (approximately 2 × 2 degrees of visual angle) were used for testing. A sixth novel object category (YUFOs, Gauthier et al., 2003) was used to practice the training task during an introductory lab session and in the instructions for all test tasks.

Figure 1.

Example stimuli from the five novel object categories used in this study. Numbers were arbitrarily assigned to categories to simplify data coding and presentation of results. Category 0 was the non–exposed category for all participants.

General Procedure

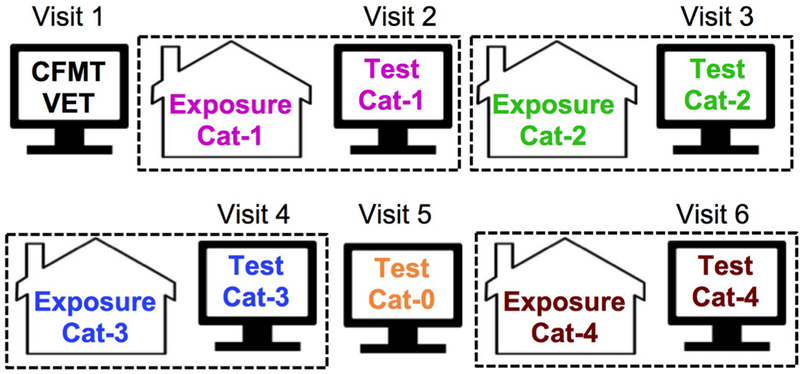

The general procedure is illustrated in Figure 2. First, participants came to the lab for an introductory session in which they completed measures of familiar object recognition (described below), and practiced the training game. At the end of the introductory session (and all subsequent lab sessions), participants scheduled their next lab session and were given a passcode to access the training game that had to be completed at home prior to that date. Participants were told that they should return to the lab within five days of completing the game, and this was taken into consideration during scheduling 2. Participants completed the assigned training game for a category at home, and then completed three test tasks (described below) with the same category in the subsequent lab test session (e.g., participants completed the training game with Cat–1 at home, then returned to the lab to complete the test tasks with Cat–1). Home–training and lab–test sessions were repeated until participants completed test sessions for all five categories, with the exception that there was no training session prior to the test session for Cat–0. Category order was randomized for each participant.

Figure 2.

General experiment procedure. Participants completed an introductory lab session on Visit 1, followed by home–training and lab–test sessions for four novel object categories (Cat–1–4), and a lab–test session only for one novel object category (Cat–0). Home sessions and lab visits were grouped for Cat–1–4, such that the home and test sessions for a given category were always consecutive and separated by no more than five days. Category order was randomized.

Training Game

The training game was modeled after the classic arcade game Space Invaders (see Bukach et al., 2012). In each wave, an array of nine objects moved laterally and downward toward participants’ avatar. Objects in the array and the avatar could appear in one of two viewpoints. The arrow keys moved the avatar left and right. Pressing “z” produced a laser that changed the invader’s identity on contact, and pressing “x” produced a laser that eliminated the invader if it matched the identity of the avatar. If an invader with a different identity from the avatar was shot with an “x,” the speed of array movement increased. Importantly, the target invader and avatar could be shown in the same or different viewpoint. Thus, successful task performance required matching on object identity, regardless of viewpoint. Participants had to successfully clear 250 waves (approximately 90 minutes). Because the training game was relatively easy across categories, on average participants initiated 276.41, 259.69, 271.20, and 272.22 waves for categories 1–4, respectively (overall mean = 269.88). A repeated measures linear mixed effects analysis of variance (ANOVA) and subsequent pairwise comparisons indicated that category 2 was associated with fewer waves than the three other categories (category 2 pairwise ps < .025; all other pairwise ps > .20; omnibus test F(3,219) = 7.52, p <.0001). Waves did not have to be completed during a single sitting. Avatar identity and array composition were randomized. During the introductory session participants had to clear 30 waves of YUFOs to familiarize themselves with the task.

Introductory Lab Session

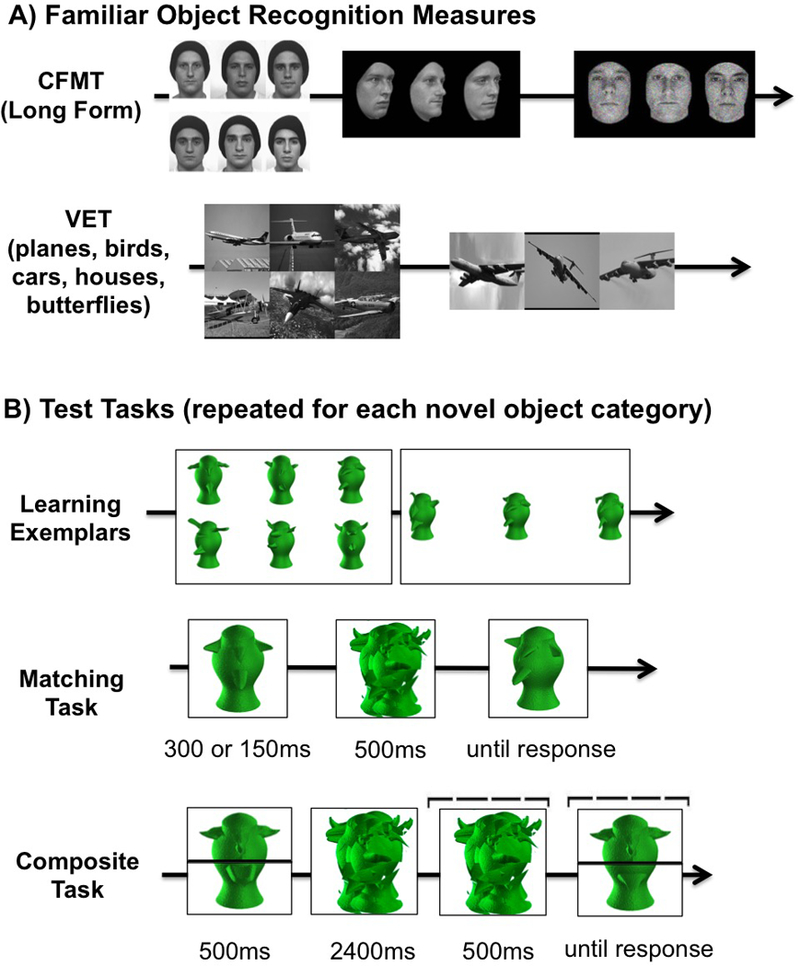

Example trials for the familiar object recognition measures (CFMT and VET) are shown in Figure 3. Task order and trial order within each task were the same for all participants.

Figure 3.

A) Example trials for familiar object recognition measures (CFMT and VET). B) Example trials for each of the three test tasks (Learning Exemplars, Matching Task, Composite Task, denoted by LE, MA and CO in Figure 1).

Cambridge Face Memory Test (CFMT)– Long Form.

In the CFMT (Duchaine & Nakayama, 2006), participants complete an 18–trial introductory learning phase, in which a target is presented in three views, followed by three forced–choice test displays containing the target face and two distractor faces. Then, participants study frontal views of all six target faces together for a total of 20 s, followed by 30 forced–choice test displays. Participants are told to select the face that matches one of the original six target faces. The matching faces vary from the studied versions in terms of lighting condition, pose, or both. Next, participants are given another opportunity to study the six target faces, followed by 24 test displays presented in Gaussian noise. Finally, the last block includes 30 “difficult” test displays where faces are shown as silhouettes, in extreme noise, or with varying expressions. The CFMT is scored as accuracy (percent correct) across all blocks, excluding the introductory learning trials, for a total of 84 trials. Previous work found that the CFMT produces measurements of high reliability in a normal adult population (e.g., test–retest with 6 months delay = .70, Duchaine & Nakayama, 2006; Cronbach’s alpha = .91, Wilmer et al., 2012).

Vanderbilt Expertise Test (VET).

The Vanderbilt Expertise Test (VET; McGugin et al., 2012) is similar in format to the CFMT. Participants study six target exemplars from a category, and are then presented with triplets and asked to indicate by key–press which object is the same identity (but different image) as any of the targets. Five categories were tested in the following order: houses, cars, birds, planes, and butterflies. There were 51 trials for each category. Three trials were catch trials that were not analyzed. Participants were also asked to rate their experience with each of the five categories (“interest in, years exposure to, knowledge of, and familiarity with” from 1 to 9). Accuracy (percent correct) was computed separately for each VET category. Previous work has produced good reliability in measurements with a normal adult population on the various VET subscales (e.g., Cronbach’s alpha = .64–.85 in McGugin et al., 2012; Cronbach’s alpha = .71–.93 in Van Gulick et al., 2015).

Test Sessions

Example test task trials are shown in Figure 3. Test task order was the same for all categories and participants. One trial order was generated for each test task for each category and was the same for all participants.

Learning Exemplars Task.

Thirty–six test objects (6 targets, 30 foils) from each category were used. The Learning Exemplars task was similar in format to the CFMT and VET. Participants studied an array of six target objects (three in view A, three in view B). On the subsequent test trials, three objects were shown in any combination of views A and B, and participants had to indicate by key–press which object matched the identity of any of the targets, regardless of changes in viewpoint. Chance was .33. There were two blocks of 24 trials. In the first block, targets were shown in the same view as during study. In the second block, targets were shown in the unstudied view. In the last six trials of block 1 and the last 12 trials of block 2 objects were presented in visual noise. All targets were shown with an equal frequency for each trial type (e.g., same/different identity x same/different viewpoint), and the same target was never presented on consecutive trials. Performance was scored as accuracy (percent correct) across all 48 trials. Cronbach alphas were .73–.89 (see Table 1). For cross–reference, this task is an earlier version of the Novel Object Memory Test developed later for 3 of the categories (Richler et al., 2017).

Table 1.

Reliability (Cronbach’s α), mean performance measures, and tests of normality for each test task and category (see Figure 2 for number–category mappings).

| Category | N | Cronbach’s α | Mean (SD) accuracy (% correct or d’) | Skewness | Kurtosis | Shapiro–Francia Normality Test (V’) |

|---|---|---|---|---|---|---|

| Learning Exemplars | ||||||

| 0 | 225 | .84 | 65.16 (15.04) | −0.44 | 3.37 | 2.59* |

| 1 | 199 | .78 | 49.82 (14.02) | 0.17 | 2.81 | 1.46 |

| 2 | 208 | .89 | 68.35 (17.69) | −0.58* | 3.16 | 4.45*** |

| 3 | 201 | .74 | 48.05 (12.68) | 0.62*** | 3.58 | 3.62** |

| 4 | 212 | .73 | 50.47 (12.36) | 0.14 | 2.68 | 0.81 |

| Matching Task | ||||||

| 0 | 225 | .95 | 1.62 (.62) | −1.07*** | 6.49*** | 10.82*** |

| 1 | 203 | .92 | 1.04 (.50) | −0.79*** | 5.77*** | 6.22*** |

| 2 | 212 | .96 | 1.83 (.71) | −1.26*** | 6.71*** | 12.10*** |

| 3 | 210 | .91 | 1.03 (.42) | −0.50 | 4.72 | 4.86*** |

| 4 | 218 | .88 | .82 (.40) | −0.55** | 3.83** | 3.57** |

| Composite Task | ||||||

| 0 | 225 | .91 | 1.72 (.92) | −0.29 | 3.38 | 1.83 |

| 1 | 208 | .95 | 1.25 (.71) | −0.10 | 3.34* | 0.87 |

| 2 | 213 | .97 | 1.69 (.97) | 0.17 | 3.23 | 1.11 |

| 3 | 208 | .95 | 1.23 (.73) | −0.29 | 3.03 | 1.99 |

| 4 | 215 | .95 | 1.12 (.69) | −0.42** | 3.56** | 2.30* |

Note. Accuracy measures are percent correct for Learning Exemplars and d’ for Matching and Composite tasks. Under normality, the expected values of measures of skewness and kurtosis are 0 and 3, respectively. Under normality, when scores are sampled from a normal distribution, the median value of the Shapiro− Francia V’ measure equals 1 (Royston, 1991).

p < .05

p < .01

p < .001

Matching Task.

All 50 test objects from each category were used. On each trial, a study object was presented (300 ms in block 1, 150 ms in block 2), followed by a category–specific random pattern mask (500 ms), then a second object was presented (until response or a maximum of 3 s; time–out trials accounted for less than 1% of the data and were excluded from the analyses). Participants had to indicate by key–press whether the two objects were the same or different identity, regardless of changes in viewpoint or size (on different–size trials the test object was approximately 1.3 × 1.3 degrees of visual angle). There were 45 trials for each combination of correct response, viewpoint (same or different), and size conditions (same or different) for a total of 360 trials. Due to a minor programming error, the number of same and different trials were not evenly divided between blocks (range = 84–96 trials per block). Sensitivity (d’) was calculated separately for each block. Sensitivity was computed using Zhit rate – Zfalse alarm rate, adjusting for hit rates of 1 or false alarm rates of 0 using 1 – 1/(2N) and 1/(2N), respectively where N is the number of same (or different) trials. These scores were correlated (rs = .57–.78, all ps < .001) and were averaged to create a single matching task score for each category with Cronbach alpha .88–.96 (see Table 1).

Composite Task.

Because prior work suggested that using a small number of stimuli improves the reliability of the composite task (Ross et al., 2015), the tops of five objects and the bottoms of a different five objects were used to make composites for each category. These ten objects were not used in the Learning Exemplars task. Trial timing was based on Wong et al. (2009). On each trial, a study composite (top of one object combined with the bottom of another object) was presented (500 ms), followed by a category–specific mask (2900 ms). A cue indicating whether the top or bottom was the target was presented during the last 500 ms of the mask presentation. Then, a test composite was presented with the cue (until response, maximum 3 s; time–out trials accounted for 1% of the data and were excluded from the analyses) and participants had to indicate by key–press whether the cued part was the same or different as the study composite, while ignoring the uncued half. On congruent trials, the cued and uncued parts were associated with the same response (i.e., both parts were the same or both parts were different); on incongruent trials, the cued and uncued parts were associated with different responses (i.e., one part was the same, the other part was different). There were 36 trials for each combination of correct response (same/different), cued part (top/bottom), and congruency (congruent/incongruent) for a total of 288 trials. Sensitivity (d’) was calculated separately for top–congruent, bottom–congruent, top–incongruent, and bottom–incongruent conditions. These scores were correlated (average rs = .41–.60, all ps < .001) and were averaged to create a single composite task score for each category with Cronbach’s alpha .91–.97 (see Table 1). This average composite score indexes overall performance on the task, which is the construct that is most similar to that measured by the other two tasks. It does not reflect congruency (the difference in performance between congruent and incongruent trials), which is an index of holistic processing (Richler & Gauthier, 2014). We did however compute congruency effects for use in an analysis comparing the 4 categories that received pre–training to the 5th, untrained, category.

Data Analysis

The data and software code for the primary analyses are available in the figshare repository (see supplemental online material). Due to experimenter or computer error, VET data for one or more subscales were missing for five participants and CFMT data were missing from one participant. Thirty–six participants withdrew from the study after the pre–test session (leaving 249 participants from the original 285). CFMT accuracy did not differ between participants who withdrew after the introductory session (M % correct = 61.77, SD = 10.85) and those who completed test sessions for at least one category (M % correct = 63.22, SD = 14.23; t282 = 0.59, p = .56, Cohen’s d = .11); however, VET accuracy (aggregated across all categories) was significantly lower for participants who withdrew (M % correct = 63.00, SD = 9.87) vs. those who completed any number of test sessions (M % correct = 66.96, SD = 9.60; t278 = 2.30, p = .022, Cohen’s d = .41). Data from three participants were excluded for not completing the exposure game for any category. Thus, data from 246 participants (86% of sample; 105 male, 140 female, 1 not disclosed; mean age = 21.4 years; Caucasian = 144, Asian = 64, African American = 30, Hispanic = 6, Other = 2) are included in the analyses.

Among the 246 participants included in analyses, data for some task–category combinations were not collected due to experimenter or computer error (2.68%) or because participants withdrew from the study after completing at least one test session (n = 30; 8.21% of expected data). Both the intraclass correlation analyses and the confirmatory factor analyses that we report below can accommodate such participants with incomplete data. Of the collected data, 96.95% was included in the analyses. The remaining 3.05% of observations were excluded because of: 1) Failure to finish the exposure game for a given category or excessive delay between home–exposure and lab–test sessions for that category (1.38%); and, 2) Median RTs less than 200 ms for individual Composite and Matching Task categories and median RTs less than 1000 ms for individual Learning Exemplars categories (1.67%).

Intraclass Correlations.

We computed intraclass correlation coefficients (ICCs) on a within–task basis to assess the consistency of individual differences in task performance across categories. ICCs indicate the proportion of the total variability in the data due to consistent differences among people. They are simultaneously a measure of between–subjects variability and within–subjects similarity (for reviews, see, e.g., Shrout & Fleiss, 1979; Strube & Newman, 2007). Here, ICCs assessed the proportion of the total variability in the data due to differences among subjects that are stable across categories.

We computed two different types of ICCs because we think that a case could be made for each. Because we did not equate categories on task difficulty, we calculated the consistency of individual differences (Shrout and Fleiss, 1979). Like a Pearson correlation, it rewards consistency in the relative ranks of a given participant across categories and does not penalize for overall shifts in category means due to variations in task difficulty or other factors that can produce absolute shifts in a participant’s scores across categories. Because categories were made of novel objects, one could argue that the specific categories we used are a random sample from a hypothetical universe of categories. This perspective would favor a second ICC model (categories as random effects) and so we computed a measure of agreement (Shrout and Fleiss, 1979). Within each ICC type, we computed two measures. The first (denoted ICC1 below) indicates the proportion of variance in performance on one category that is due to individual differences and is analogous to a test–retest correlation coefficient. The second (denoted ICC5 below) applied the Spearman–Brown formula to the ICC1 values and assesses the proportion of variance in composite scores averaged across the 5 categories that is due to individual differences.

To estimate ICCs including participants with incomplete data and compute confidence intervals, we adopted a Bayesian analytic approach previously implemented by Tomarken, Han, and Corbett (2015) (cf. Spielhalter, 2001; Turner, Omar, & Thompson, 2001) using SAS PROC MCMC, Version 9.4 of the SAS System for WindowsTM (Copyright © 2002–2014 SAS Institute Inc). We computed medians of the posterior distribution as our ICC estimates and formed 95% Bayesian Highest Posterior Density (HPD) intervals that represent the narrowest intervals with 95% probability (e.g., Christensen, Johnson, Brascum, & Hanson, 2011).

Confirmatory Factor Analyses.

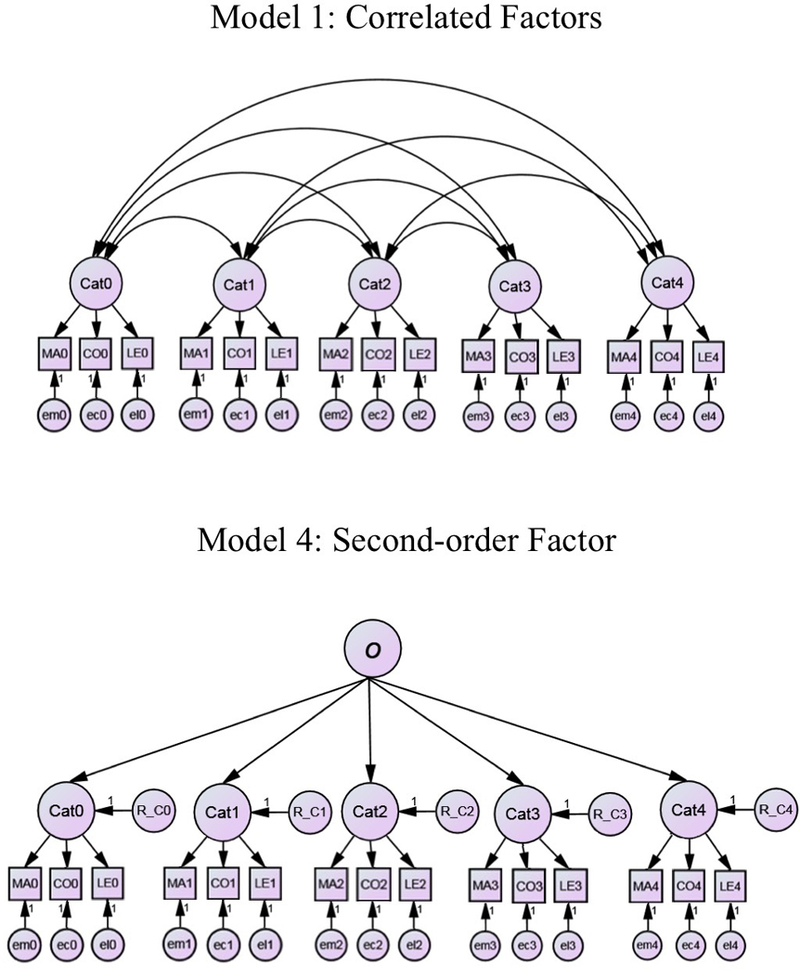

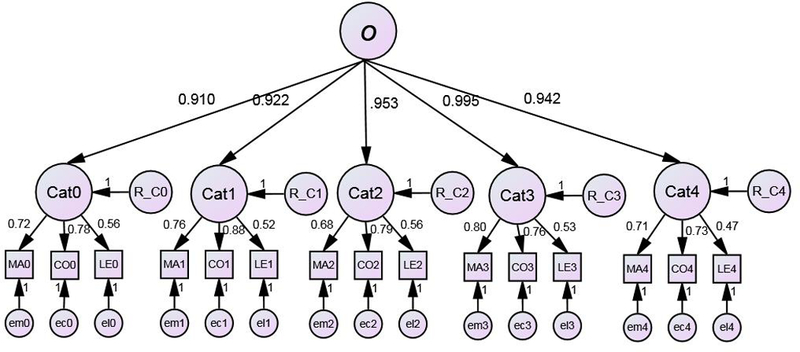

Confirmatory factor analyses were conducted using EQS Version 6.3 (Bentler, 2008). The top panel of Figure 4 depicts the base model that was elaborated in subsequent steps. This model specifies that each of the 15 tasks (Matching (MA), Composite (CO), and Learning Exemplars (LE), for each of the five categories) loads on the factor denoting individual differences in performance on the target category. Rectangles denote observed measures (e.g., MA1) and ovals denote latent variables or, equivalently, factors (e.g., Cat1). The directed arrows from factors to observed measures specifies that a proportion of the variance of each observed task measure is influenced by the latent construct indicating individual differences in ability on a given category. Each directed arrow is associated with a factor–loading coefficient denoting the regression of the observed measure on the latent factor. The double–headed arrows among the category factors specify covariances among the factors. The small circles shown at the bottom of the model (e.g., em0) are residual (i.e., error) terms that denote a combination of reliable influences on observed scores that is specific to that indicator and random measurement error.

Figure 4.

Confirmatory Factor Analysis models for measurement structure of five latent category factors (represented as ovals) each assessed with three measures (indicators) of visual object perception and recognition (represented as rectangles). Both models specify that each of the three tasks assessing performance with a given category loads on the appropriate lower–order category factor. The directed arrows from the factors to each observed measure reflects the specification that a proportion of the variance of each observed task measure (e.g., MA1) is due to the latent construct (e.g., Cat1) of individual differences in ability with a given category. Task–specific correlated errors are not shown for the sake of brevity. Top panel: 1st–order Correlated Factor Model. Bottom panel: 2nd–order Factor Model specifying that covariances among category factors are entirely accounted for by one over–arching Object Recognition Ability (o). The R terms (e.g., R_C0) in the 2nd order model denote the component of the variance of each category factor that is not due to o.

We developed a systematic sequence of models to test both substantive and methodological questions of interest. First, we tested Model 1, depicted in the top panel of Figure 4, that specifies five correlated lower–order category factors. Model 2 assessed whether task–specific influences on the correlations among the observed indicators should be added to the specifications of Model 1. Such influences, often termed “method effects”, could partially account for the inter–correlations among measures of a given task (e.g., MA) assessed across different categories. If so, such effects should be specified and estimated to obtain a better fitting model and less biased estimates of the covariances and correlations among category factors. Although, in theory, the optimal approach would be to specify three method factors, CFA models with a full array of method factors commonly run into failures to converge and inadmissible estimates due to empirical under–identification and other factors (see, e.g., Kenny & Kashy, 1992; Lance, Noble, & Scullen, 2002). We experienced such difficulties when trying to fit models specifying three method factors, one each for the LME, CO, and MA tasks. Instead we estimated task–specific components of variance by specifying covariances among the residual terms (e.g., em0–em4) for a given task. We specified correlated errors among each of the five LME, CO, and MA performance measures, respectively. This correlated uniqueness (CU) (e.g., Lance et al., 2002) approach to modeling method effects is commonly used in confirmatory factor analyses and structural equation modeling (SEM) (Brown, 2015).3 In terms of our sequence of models, we adopted the decision rule that, if, as we expected, the correlated uniqueness model (Model 2) fit better than Model 1 this feature would be included in all subsequent models that we tested. For clarity, Figure 4 omits correlated error terms.

Model 3 built on the best–fitting model from the previous stage and constrained the factor loadings of the three tasks to be equal (i.e., invariant) across the five categories. These constraints were imposed on a within–task cross–category basis (e.g., each of the five MA factor loadings were constrained to be equal). This specification did not reflect a strong prediction of invariance because categories were not equated on task difficulty and other psychometric features. However, this model was of interest because it provided a rather rigorous test of the consistency of individual differences across categories. It additionally allowed us to assess whether the category for which participants received no training (category 0) had a different psychometric structure than the trained categories (1–4).

Model 4 directly tested our prediction that performance across all categories is driven by a higher–order construct that reflects a general visual ability with objects. As shown in the bottom panel of Figure 4, this is a second–order factor model specifying that an over–arching Object Recognition Ability (o) dimension of individual differences influences performance on the lower–order factors. The residual terms (e.g., R_C0) that also influence the lower–order factors represent category–specific influences on individual differences in performance. Model 4 specifies that the higher–order factor is the only determinant of the correlations among the lower–order category factors. It can be shown that this model is a restricted version of the correlated factors model shown in the top panel of Figure 1, such that the relative fit of the two models can be directly compared (see details below). A popular alternative to the second–order factor model is a bifactor model (e.g., Chen, West, & Sousa, 2006; Reise, 2012). The online supplemental discusses bifactor modeling in the present context and why we have chosen to focus on the second–order factor model.

After modeling the internal structure of the performance on the five categories, we addressed the issue of relations to external variables. In model 5, using the best–fitting model from the previous sequence of models 1–4, we examined the correlation between individual differences in performance on the manipulated categories and individual differences in the ability to recognize familiar object categories as assessed by the VET and CFMT. We computed two sets of correlations. The first set is between the observed measures and the latent factor or factors of interest. Using estimated reliabilities, the second set corrected the individual difference measures for measurement error using a latent variable approach in which: 1) Each measure constituted a factor with a single indicator; and, 2) The variances of error terms were fixed at values that yielded the appropriate true score variance estimate for the factor. Random measurement error can attenuate correlations and such reliability corrections are consistent with our emphasis on latent variables.

To estimate all models, we used the robust two–stage estimator (TS) developed by Savalei, Bentler, and colleagues (Savalei & Bentler, 2009; Savalei & Falk, 2014; see also Yuan & Lu, 2008) because of two features of our data: 1) The presence of some missing data; and 2) Non–normality. For the 246 participants included in analyses (i.e., those who completed at least one task for at least one category), 14.94% of the maximal possible number of data points across tasks and categories were missing. In addition, although the most commonly used SEM estimators assume multivariate normality, the set of 15 tasks demonstrated deviations from multivariate normality according to the Doornik–Hansen (2008) test, χ2(30) = 412.42, p < .001 and to Yuan, Lambert, and Fouladi’s (2004) extension to incomplete data structures of Mardia’s (1970) test of multivariate kurtosis, z = 21.78, p < .001. On univariate assessments, the Shapiro–Francia tests of non–normality (Shapiro & Francia, 1972; Royston, 1983) and assessments of skew and kurtosis (D’Agostino, Belanger, & D’Agostino, 1990) indicated significant deviations from normality for all five of the Matching tasks, three of the five Learning Exemplar tasks, and one Composite task (see Table 1). Violations of normality were not extreme but of sufficient magnitude to warrant a robust estimator.

In the first stage of the robust TS algorithm, maximum likelihood (ML) estimates of the vector of means and the covariance matrix of the observed data (including observations with incomplete data) are obtained from a saturated (i.e., unrestricted) model. In the second stage, the specified model is estimated with the covariance matrix generated in the first stage used in place of the observed sample covariance matrix typically used for maximum likelihood (ML) estimation of CFA models. These steps allow for the inclusion of observations with incomplete data. The robust TS estimator uses two additional mechanisms to correct for non–normality: (1) A sandwich–type covariance matrix (Yuan & Lu, 2008) that yields standard errors for parameter estimates that are adjusted for non–normality and for the fact that a two–stage estimation procedure is used; and, (2) The Satorra–Bentler (SB; Satorra & Bentler, 1994) scaled chi–square correction to adjust the overall chi–square test of model fit and fit indices. This correction is used by a variety of SEM estimation methods when data are non–normal and is specifically designed to adjust for non–normal kurtosis. In accord with the statistical theory underlying structural equation modeling (e.g., Cudeck, 1989), all analyses were performed on the covariance matrix estimated in the first–stage and not the correlation matrix. To aid interpretation, however, at several points below we report standardized results (e.g., correlations among factors) calculated either directly (when models allowed fixing factor variances at 1) or from re–scaling of the non–standardized estimates yielded by the TS estimator.4

We assessed both the absolute and relative fit of models using several measures. In conventional null hypothesis testing, the hypothesis tested is typically not the researcher’s substantive hypothesis (which is typically aligned with the alternative hypothesis). In contrast, in CFA and structural equation modeling (SEM) in general, the model being directly tested often reflects the researcher’s substantive hypothesis. Thus, non–significant results often favor the researcher’s hypothesis. In terms of absolute fit, although we report the chi–square test of exact fit, it has well–known limitations: 1) There is a strong influence of sample size such that models with only rather trivial misspecifications can be rejected (e.g., Tomarken & Waller, 2003). Although our sample size was on the small side for a SEM analysis, such influence might still have been operative to some extent; 2) It is a measure of model “mis–fit” that favors binary reject/no–reject decisions rather than an evaluation of degree of fit on a more continuous metric; and, 3) It imposes a criterion – that a model fits perfectly – that may be too stringent considering that SEM models have numerous facets and that all models are, at best, approximations (e.g., MacCallum, Browne, & Sugawara, 1996). For this reason, SEM analysts almost always rely on other indices to evaluate model fit. We used the root mean–squared error of approximation (RMSEA; Steiger & Lind, 1980), standardized root mean squared residual (SRMR; Bentler, 1995), and Comparative Fit Index (CFI; Bentler, 1990) to assess model fit. The SRMR is a measure of absolute fit that can be interpreted as the average discrepancy between the correlations among the observed variables and the correlations predicted by the model. Lower values indicate better fit. The RMSEA is an estimate of a parsimony–corrected fit index because it assesses the degree of discrepancy between the observed and model–implied covariances while also penalizing for model complexity (e.g., for equivalent discrepancy it rewards the more parsimonious model that estimates fewer parameters and has more degrees of freedom). Smaller values indicate better fit. The RMSEA is typically treated as the degree to which a model fits approximately in the population, with values < .06 typically taken to indicate close fit (e.g., Hu & Bentler, 1998, 1999). Confidence intervals can also be formed around the estimated RMSEA value in a given sample. We computed the RMSEA estimate and confidence bounds for non–normal data that was developed by Li and Bentler (2006; see Brosseau–Liard, Savalei, & Li, 2012) and that is an option in EQS. The CFI is an index of the incremental or comparative fit of the target model relative to a baseline model of independence in which all the covariances among the observed indicators are fixed at 0. CFI values vary from 0 to 1, with values closer to 1 indicating better fit. Given that the comparison is to the independence model, the CFI often tends to indicate better fit than the other indices. Based on simulations, Hu and Bentler (1998, 1999) recommend the following criteria for adequate fit on these measures: SMSR ≤ .08, RMSEA ≤ .06, and CFI ≥ .95. The RMSEA and CFI were computed using the Satorra–Bentler scaled chi–square values.

A primary focus was the comparison of alternative models, most of which were nested versions of one another. Model A is nested in model B if it is a restricted version of model B; that is, if it is identical to model B except that certain parameters that are freely estimated in B are restricted in A by being fixed at specific values (often 0) or constrained to be equal to other parameters or combinations of parameters. Nested models were compared using the scaled χ2 difference test (Satorra & Bentler, 2001) that is appropriate when the Satorra–Bentler (S–B) correction for non–normality is used. We used the version of the scaled difference test developed by Satorra and Bentler (2001) that computes a scaling factor for the test as

Where are the degrees of freedom for the more and less restrictive models, respectively, and and are the scaling factors for the two models (equal to the ratio of the uncorrected χ2 value for the test of exact fit to the S–B corrected value for each model) (e.g., Bryant & Satorra, 2012). In turn, the scaled difference test is computed as the difference between the uncorrected tests of exact fit divided by cdif, with degrees of freedom equal to the difference in degrees of freedom between the two models. We used an Excel macro written by Bryant and Satorra (2013) to conduct the S–B difference tests.5 If restrictions imposed by Model A do not impair overall model fit relative to Model B, the result would be a non–significant χ2 test. Thus, in the context of nested tests, non–significant results often serve to corroborate the researcher’s hypotheses.

In addition, we report values of the Akaike Information Criterion (AIC; Akaike, 1973) and the Bayesian Information Criterion (BIC; Raftery, 1995; Schwarz, 1978) to convey the relative fit of both nested and non–nested models. Both indices penalize for model complexity, operationalized as the number of free parameters estimated by a given model. To compute these indices. we used what is probably the most common approach in SEM analyses, adding to the chi–square test of overall fit a penalty factor that is a function of the number of free parameters estimated by a model (denoted below as k).

We computed these indices using the following formulae:

We also present a small–sample corrected version of the BIC (e.g., Enders & Tofigi, 2008), computed as,

Lower values of all three indices indicate better fit. The information indices were computed using the Satorra–Bentler scaled chi–square values.

A major focus of our analyses was not simply evaluation of model fit but examining and interpreting parameter estimates of interest (e.g., correlations among factors). Much of the discussion of results below emphasizes model fit not only because it is important in its own right but also because good fit can be considered a necessary condition for examination and evaluation of parameter estimates.

Results

Univariate Descriptive Statistics

Reliability (Cronbach’s alpha), mean performance measures (mean accuracy or d’) and tests of normality (skewness, kurtosis, Shapiro–Francia normality test) for the test tasks and familiar object recognition measures (CFMT and VET) are shown in Tables 1 and 2, respectively. All tasks demonstrated good internal consistency reliability and 10 of the 15 tasks demonstrated statistically significant violations of normality. It is also of interest that average performance on the Learning Exemplar task ranged from .48 to .68 depending on the category. Coupled with the fact that per–subject proportions were calculated across 48 trials, these values indicate that ceiling and floor effects were not significant factors and that transformations of proportions (e.g., computing odds or log odds) were not necessary.

Table 2.

Reliability (Cronbach’s α), mean performance measures, and tests of normality for the CFMT and VET sub−scales.

| N | Cronbach’s α | Mean (SD) Accuracy (% correct) | Skewness | Kurtosis | Shapiro−Francia Normality Test | |

|---|---|---|---|---|---|---|

| CFMT (faces) | 245 | .90 | 63.27 (14.23) | −0.22 | 2.07*** | 3.26* |

| VET Subscales | ||||||

| Birds | 243 | .83 | 68.98 (13.44) | −0.52*** | 3.44 | 3.61* |

| Butterflies | 241 | .80 | 60.17 (13.71) | −0.39* | 2.82 | 2.50* |

| Cars | 243 | .80 | 59.60 (14.60) | 0.17 | 3.09 | 1.11 |

| Houses | 243 | .83 | 75.90 (13.10) | −0.33* | 2.63 | 2.05 |

| Planes | 242 | .77 | 70.38 (12.02) | −0.40 | 3.35 | 3.39** |

Note. Under normality, the expected values of measures of skewness and kurtosis are 0 and 3, respectively. Under normality, when scores are sampled from a normal distribution, the median value of the Shapiro−Francia V’ measure equals 1 (Royston, 1991).

p < .05

p < .01

p < .001

Effect of Training

Because we did not equate categories for difficulty, we cannot directly compare mean performance to test for a training effect (in fact, Table 1 suggests that the non–exposed category was generally one of the easier categories). However, the composite task has been used in previous studies to assess training effects, and controls for difficulty differences across categories as it includes its own baseline, allowing a within–category measure of whether training had an influence on performance. Specifically, a difference in performance between congruent and incongruent trials (in either accuracy or RT) is a common marker of face–like expertise (see Richler & Gauthier, 2014), and has been observed following individuation training for novel objects like the ones used here (e.g., Chua et al., 2015; Wong et al., 2009). Indeed, we based the composite task parameters on Wong et al. (2009), who found slower response times on incongruent compared to congruent trials only in participants trained to individuate objects from the tested category.

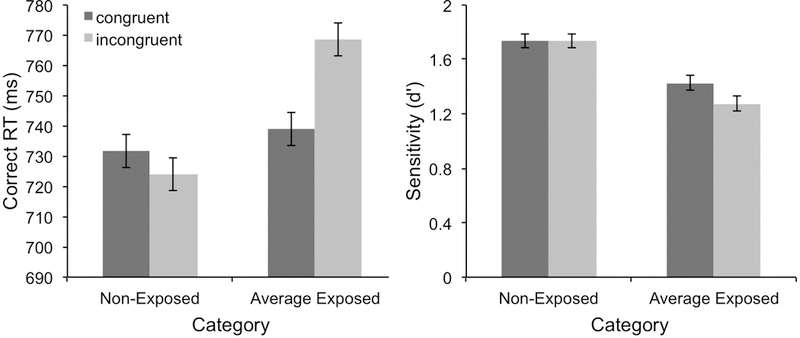

To test whether a similar effect of training was observed here on the same dependent measures as in Wong et al. (2009), we conducted 2 × 2 repeated measures ANOVAs on sensitivity (d’) and correct RT in the composite task with category (untrained vs. average of trained categories) and congruency (congruent vs. incongruent) as factors. The qualitative effects were the same for both RT and d’ (see Figure 5). There were significant main effects of training (RT: F1,219 = 9.18 , MSE = 15999.67, p = .003, ηp2 = .04; d’: F1,219 = 81.59 , MSE = .40, p < .001, ηp2 = .27) and congruency (RT: F1,219 = 17.26, MSE = 1561.11, p < .001, ηp2 = .07; d’: F1,219 = 7.06, MSE = .18, p = .008, ηp2 = .03). More importantly, the interaction between training and congruency was significant (RT: F1,219 = 46.34, MSE = 1659.16, p < .001, ηp2 = .18; d’: F1,219 = 8.13, MSE = .16, p = .005, ηp2 = .04), such that there was a significant congruency effect for the trained categories (RT: F1,219 = 99.60, MSE = 978.16, p < .001, ηp2 = .31; d’: F1,219 = 37.20, MSE = .07, p < .001, ηp2 = .15), but not the untrained category (RT: F1,219 = 2.85, MSE = 2242.11, p = .09, ηp2 = .01; d’: F1,219 < .00, MSE = .27, p = .99, ηp2 < .00)6.

Figure 5.

Mean correct RTs (left) and sensitivity (d’; right) for congruent and incongruent composite task trials for the non–exposed category and average of exposed categories. Error bars show 95% confidence intervals for within–subject effects.

We also computed correlations between all categories for each task to determine whether some amount of experience is necessary for individual differences in ability to be reflected in performance. As indicated in Table 3, for each of the three tasks, the means of the correlations between the untrained and trained categories were almost identical to the average of the correlations within trained categories. Thus, although training influenced performance, resulting in effects similar to those seen in previous studies (e.g., Wong et al., 2009) only for trained categories, it does not influence the expression of individual differences. We therefore included the untrained category in the ICC and CFA analyses. As described below, the results of specific CFA analyses also underscore the similarity between the untrained category and the trained ones.

Table 3.

Correlations (Pearson’s r) between all categories for each task. Correlations with the untrained category are highlighted in gray. N per cell ranges from 182 to 207. All correlations are significant at p < .001. (See Figure 2 for number−category mappings).

| Category | 0 | 1 | 2 | 3 | Mean untrained | Mean trained |

|---|---|---|---|---|---|---|

| Learning Exemplars | .50 | .49 | ||||

| 1 | .43 | |||||

| 2 | .53 | .54 | ||||

| 3 | .52 | .52 | .56 | |||

| 4 | .50 | .32 | .53 | .42 | ||

| Matching Task | .54 | .52 | ||||

| 1 | .52 | |||||

| 2 | .S | .45 | ||||

| 3 | .60 | .46 | .56 | |||

| 4 | .52 | .55 | .50 | .59 | ||

| Composite Task | .61 | .62 | ||||

| 1 | .64 | |||||

| 2 | .64 | .64 | ||||

| 3 | .60 | .66 | .59 | |||

| 4 | .60 | .61 | .61 | .59 | ||

Note. Correlations were Fisher−transformed before averaging.

Intraclass Correlations

Intraclass correlations and 95% HPD intervals are shown in Table 4. Several patterns are evident. First, the ICC1 values indicate that a significant proportion of the variance in task performance on any single category was attributable to individual differences among participants. Across the three tasks, when consistency of performance was assessed (i.e., category is modeled as a fixed effect), approximately 50–60% of the total variability in the data was due to individual differences. When category was modeled as a random effect and agreement assessed, the proportions of variance were lower, especially for the LE and MA tasks, but by no means trivial. Measurably lower correlations for the agreement measure would be expected in this case because no attempt was made to equate categories on difficulty level. Finally, the ICC5 values indicating the reliability of task performance averaged across categories were quite high and either approached or exceeded the expected range (≥.70) for measures of individual differences in the areas of personality and temperament. This conclusion holds for both measures of consistency and agreement. These results exemplify the beneficial effects of aggregation on reliability and consistency (e.g., Rushton et al., 1983).

Table 4.

Intraclass correlations for each measure.

| ICC1 | ICC5 | |||

|---|---|---|---|---|

| Measure | Consistency | Agreement | Consistency | Agreement |

| Learning Exemplars | .50 (.44,.55) | .34 (.17,.46) | .83 (.80, .87) | .72 (.52,.82) |

| Matching Task | .49 (.43,.56) | .31 (.15,.43) | .83 (.79, .86) | .69 (.49,.81) |

| Composite Task | .62 (.56,.68) | .55 (.40,.65) | .89 (.87, .91) | .86 (.78,.90) |

Note: ICC1 estimates both the average correlation in performance among pairs of categories and the proportion of variance in a given category due to between−person differences. ICC5 is the estimated correlation between the aggregate of scores across the five categories and a hypothetical equivalent set of aggregate scores. It estimates the proportion of variance in the average score across categories due to between−person differences. 95% Bayesian highest posterior density (HPD) intervals are shown for each measure. The category factor is modeled as a fixed effect when consistency is assessed and as a random effect when agreement is assessed.

Confirmatory Factor Analysis

Fit statistics for the sequence of CFA models are provided in Table 5. As summarized above, we relied more on other indices than the chi–square test of exact fit. Model 1 specified five correlated category factors, with the relevant LE, MA, and CO task performance measures serving as the observed indicators for each factor. As anticipated, the overall fit of this model was unsatisfactory because it omitted parameters reflecting the correlations within a task (e.g., MA) across categories (see Table 5). For example, the RMSEA was clearly above the range typically recommended for evaluation of fit as adequate. Model 1 also was associated with several inadmissible estimates (e.g., covariances among factors that implied correlations greater than 1) that may also indicate model mis–specification, although other factors (e.g., the generally high correlations among variables) may also have contributed.

Table 5.

Fit statistics for confirmatory factor analyses.

| Model | Description | df | Robust χ 2 | RMSEA (90% CI) | CFI | SRMR | AIC | BIC | SABIC |

|---|---|---|---|---|---|---|---|---|---|

| 1 | Correlated Categories (No Task Effects) | 80 | 218.17, p < 001 | .116 (.098, .134) | .977 | .078 | 298.17 | 438.38 | 311.59 |

| 2 | Model 1 +Errors Across Tasks | 50 | 73.95, p=.02 | .050 (.022, .073) | .996 | .030 | 213.95 | 459.32 | 237.43 |

| 3 | Model 2 +Within−task Invariant Loadings on Category Factors | 58 | 89.56, p=.005 | .054 (.030, .074) | .995 | .069 | 213.56 | 430.89 | 234.35 |

| 4 | Model 3+Second−order Category Factor | 63 | 92.01, p=.01 | .049 (.025, .070) | .995 | .048 | 206.01 | 405.82 | 225.22 |

| 5 | Model 4 + CFMT and VET measures | 147 | 216.34 P <.001 | .050 (.035, .064) | .967 | .064 | 384.34 | 678.79 | 412.51 |

Note: Robust χ2 =Satorra−Bentler robust chi−square test of overall fit generated by the Savalei−Bentler robust two−stage estimator. RMSEA = root mean squared error of approximation. CFI = Comparative Fit Index. SRMR = standardized root mean squared residual. AIC = Akaike Information Criterion. BIC = Bayesian Information Criterion. SABIC = Small− sample corrected Bayesian Information Criterion. Lower scores on the RMSEA, SRMR, AIC, BIC, and SABIC and higher scores on the CFI indicate better fit. Hu and Bentler (1998, 1999) recommended the following criteria for adequate fit on the first three measures: CFI ≥ .95, RMSEA ≤ .06, and SRMR ≤ .08. Because Model 5 includes measures not included in Models 1−4, its values for the AIC, BIC, and SABIC are not directly comparable to those of Models 1−4.

As expected, when correlated errors among the observable task indicators were added in Model 2, the fit was notably improved (nested χ2(30) = 108.61, p < .0001). The values of the RMSEA, CFI, and SMMR all indicate that this model met conventional criteria for adequate fit. Although the AIC and SABIC values for Model 2 were notably lower than the corresponding values for Model 1, somewhat surprisingly the model 2 BIC was higher. This discrepancy is likely due to the fact that the BIC more strongly favors parsimony than the other indices. Nevertheless, the clear weight of the evidence and the plausibility of task–specific shared variance favors Model 2 relative to Model 1.

Using Model 2, we also assessed whether the correlations involving the factor for the untrained category (denoted category 0) were different from the correlations involving only the other four categories. We imposed the linear constraint that the average of the four correlations involving category 0 was equal to the average of the six correlations not involving category 0. That this constraint did not produce a significant impairment in fit relative to Model 2 (S–B nested χ2 (1) = 1.51, p = .22) indicates that correlations involving the untrained category were not unique. Similarly there were no differences when the same linear constraint was imposed on factor covariances rather than correlations (S–B nested χ2 (1) = 0.80, p = .37).

We also assessed whether correlations within the two relatively visually similar Ziggerin (categories 0 and 3) and the two Greeble (categories 1 and 2) stimulus types were higher than the between–type correlations. We conducted four sets of analyses, each of which compared within–type to across–type correlations. Specifically we tested whether: (1) r03=r01=r02; (2) r03=r13=r23; (3) r12=r01=r13; and, (4) r12=r02=r23. In all four cases, Satorra–Bentler nested chi–square tests indicated that these equality constraints induced no significant impairment in model fit, or even trends, relative to Model 2 (χ2 (2) = 3.03, p=.22;χ2 (2) = 1.69, p=.44;χ2 (2) = 2.58, p=.28; χ2 (2) = 1.75, p=.42, respectively). Thus, correlations within a stimulus type were not different from correlations across stimulus types.

Model 3 built upon Model 2 but imposed the restriction of equal factor loadings across categories (e.g., the loadings of MA1–MA5 on their respective category factors were constrained equal). This model also fit adequately (see Table 5). Although the value of the SRMR clearly increased in Model 3 relative to Model 2, it still falls within the conventional range of good fit on this measure. The other 5 indices all adjust for complexity to some degree (i.e., rewarding more parsimonious models) and indicate much smaller differences between the two models (RMSEA, CFI) or favor Model 3 (AIC, BIC, SABIC). A nested chi–square test indicated that the restrictions imposed by Model 3 did not significantly impair fit relative to Model 2, although caution is necessary because the significance level was very close to the rejection threshold (nested χ2(8) = 15.36, p = .052). On balance, we think that these results indicate that the restrictions imposed by Model 3 fit well enough to use it as the starting point for the next steps in the modeling sequence. However, we report below the fit of separate higher–order factor models that include and do not include the restrictions on the loadings. Overall the Model 3 results indicate that the factor structure of the three tasks could be considered reasonably invariant across categories. Such invariance is another indication that that the untrained category (0) did not have a unique structure relative to the other categories.

A notable feature of Model 3 (also characteristic of Model 2) is the magnitude of the association among the category factors. Table 6 shows the correlations among the factors generated by the standardized solution and 95% bias–corrected bootstrap confidence intervals (Williams & MacKinnon, 2008) around these values. As indicated, the correlations among the category factors were quite high, ranging from .82 to .96 (mean r = .895), with even the lower bounds of confidence intervals at very high values (all were greater than .73).

Table 6.

Correlations among the Category Factors (Model 3)

| Category | 0 | 1 | 2 | 3 | 4 |

|---|---|---|---|---|---|

| 0 | –––––––– | ||||

| 1 | .82 (.74,.89) | –––––––– | |||

| 2 | .87 (.78,.96) | .90 (.81,.98) | ––––––– | ||

| 3 | .91 (.81,.98) | .96 (.88,1.00) | .93 (.85,1.00) | ––––––––– | |

| 4 | .92(.84,.99) | .84(.74,.92) | .92(.85,1.00) | .91(.80,.99) | ––––––––– |

Note: All ps < .001. 95% bias–corrected bootstrap confidence intervals are shown in parentheses. When an upper bound slightly exceeded 1.00,it was fixed at 1.00.

Using Model 3 as a starting point, Model 4 specified the higher–order factor (o) to account for the covariances and correlations among the category factors. This model did not significantly impair fit compared to Model 3 (nested χ2(5) = 2.11, p = .83) and fit well in an absolute sense (see Table 5). Indeed, as indicated by Table 5, the Model 4 values of the fit indices that most explicitly penalize for model complexity were the lowest (RMSEA, AIC, BIC, and SABIC) or essentially tied for the lowest (CFI) among the four models tested. This provides support for our hypothesis that performance across novel object categories can be accounted for by a single overarching Object Recognition Ability factor.

Model 4 is shown in Figure 6 with standardized parameter estimates. The lower–order loadings of the observed measures on factors are generally high, with values for MA, CO and LE ranging, respectively from .68 to .80, .73 to .80, and .47 to .56.7 The most notable feature of Model 4 is that the standardized loadings from the higher–order factor (o) to lower–order category factors are quite high (.910–.995; all ps highly significant based on bootstrap assessments), suggesting that the higher–order o factor accounts for on average 89% of the variance in lower–order category factors (% variance = .83, .85,.91,.99, and .89 for categories 0–4). Because of the borderline acceptability of the model imposing invariant factor loadings, we also specified a higher–order factor model in which the lower–order loadings (i.e., of observed indicators on category factors) were not constrained to be equal and compared its fit to model 2 rather than model 3. This model also fit well in an absolute sense (e.g., Satorra–Bentler χ2(55) = 81.10, RMSEA=.050), with no impairment in fit relative to model 2 (nested χ2(5) = 7.16, p

Figure 6.

Higher–order factor model standardized solution (both factors and observed measures standardized). For the sake of clarity, correlated errors among within–task errors are not shown but were specified as part of the model. Factor loadings for a given task are not invariant because invariance was imposed on the non–standardized solution based on covariances instead of correlations.

Correlations Between o and Familiar Object Recognition

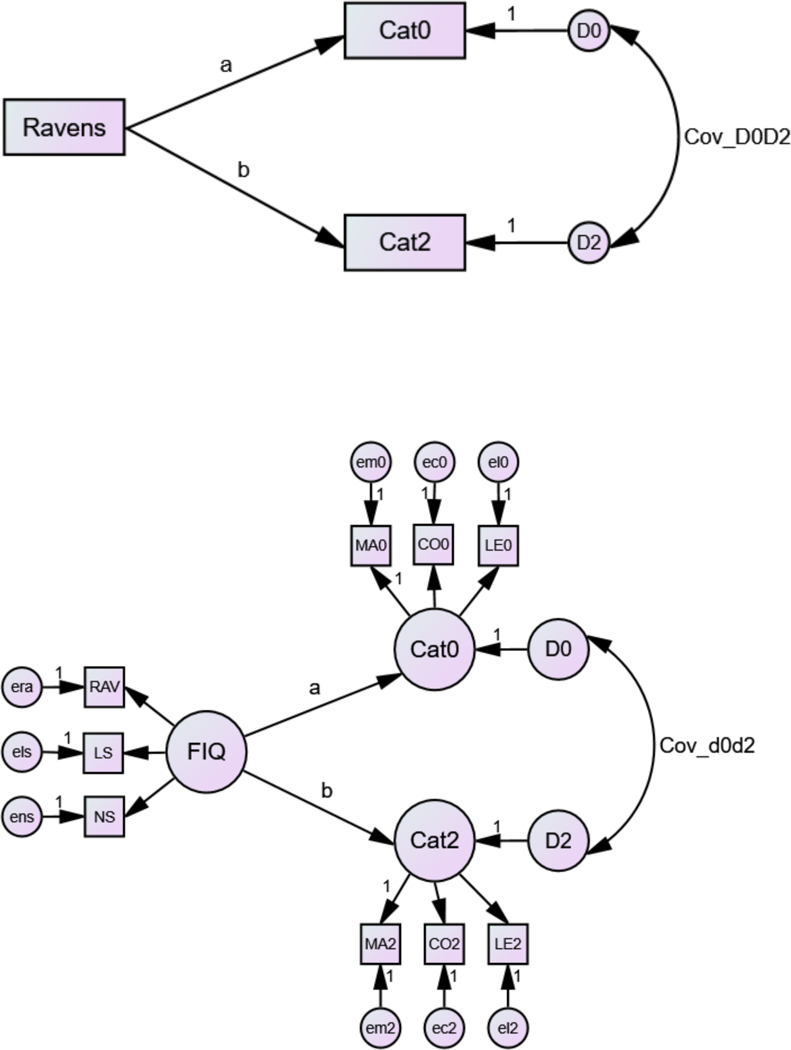

Results summarized so far indicate that a single higher–order factor, o, accounts for performance across novel object categories. To test whether o also predicts recognition performance on familiar object categories, we specified a fifth model that examined correlations between o and each familiar object category (faces measured with the CFMT; birds, butterflies, houses, cars, and planes measured with the VET). Table 7 presents correlations when the familiar object measures were corrected for unreliability (using the values of coefficient alpha summarized in Table 2), and uncorrected values. We reliability–corrected the latter by specifying each individual measure as a latent variable and fixing the error variance and factor loading at appropriate values such that the proportion of variance of the observed measure accounted for by the latent factor equaled the reliability of the variable. We then allowed these latent variables to be freely correlated with each other and, most importantly, with o. Other model specifications were identical to that of Model 4. As indicated by Table 5, this model fit well. Because Model 5 introduces several additional variables not included in Models 1–4, its fit indices should not be directly compared to those of the other models. As shown in Table 7, all corrected correlations were statistically significant, with o more highly correlated with performance on birds, butterflies, houses, and planes than faces and cars. The un–corrected correlations shown in the right–hand columns are slightly attenuated but still statistically significant.

Table 7.

Correlations between o and each familiar object category.

| Predictor | Reliabilty Corrected | Un–corrected | ||

|---|---|---|---|---|

| r | r2 | r | r2 | |

| Faces | .28 | .08 | .26 | .07 |

| Birds | .43 | .18 | .39 | .15 |

| Butterflies | .60 | .36 | .54 | .29 |

| Cars | .27 | .07 | .24 | .06 |

| Houses | .47 | .22 | .42 | .17 |

| Planes | .53 | .28 | .46 | .21 |

Note: All ps <.001

Study 2

In Study 1 we found evidence for o, a higher order factor supporting object recognition performance across different tasks and categories. In Study 2, as an initial effort to establish the divergent validity of o, we explore the extent to which it is related to a battery of cognitive and perceptual constructs, as well as measures of personality. The primary goal is to quantify how much of the individual differences captured in our tasks remain after controlling for such factors. To this end, we measured performance on all three tasks with two of the object categories from Study 1. Although we use a smaller sample in Study 2, we expected to replicate results from Study 1 with moderate to strong relations between categories and object recognition tasks but, at the same time, evidence for discriminant validity.

Prior work with LE tasks with both familiar and novel objects found that performance for each object recognition task was correlated with IQ (r ~ .1–.3) but that virtually none of the shared variance among different categories was explained by IQ (Richler et al., 2017). Here, we also assess IQ, using tests associated with fluid intelligence (gF) and targeting the ability to solve new problems (Engle, Tuholski, Laughlin & Conway, 1999). We expect that despite moderate correlations with some individual object recognition tasks, most of the shared variance between object recognition tasks will not be accounted for by IQ.

Aside from IQ, we selected a variety of tasks from prior individual differences research that could be plausibly expected to account for some of the variance in o (note that our goal was not to decompose o into its constituent parts). We included tasks that tap into different aspects of executive function (Miyake & Friedman, 2012): two Stroop tasks and a shifting task that requires switching between mental sets. We also included a measure of visual short–term memory capacity and a measure of local/global perceptual style. Finally, because our approach requires completion of a large number of tasks (over many sessions in Study 1), we were concerned that more conscientious subjects may have performed better, accounting for some of the shared variance across tasks and categories in Study 1. In Study 2 we gave subjects a personality inventory that includes a measure of conscientiousness. Aside from this self–report measure, the contribution of any aspect of motivation or personality to our object recognition tasks would also be evidenced by strong correlations between object recognition tasks and any of the other performance measures mentioned above.

Methods

Participants

We analyze data for fifty–four participants (13 male, 41 female, 0 not disclosed; mean age = 20.4 years; 50 right–handed). Sixty–six Vanderbilt University Community members were originally recruited (15 male, 51 female, 0 not disclosed; mean age = 20.5 years; 61 right–handed). Four participants only completed the first session and were thus excluded. Additionally, data from 5 participants were excluded because of median RTs less than 200 ms for Composite or Matching Tasks and/or median RTs less than 1000 ms for individual Learning Exemplars categories. Lastly, all data from 3 additional participants were excluded because of too many (>57 out of 144 trials) timed–out trials on the Composite task (on which trials timed out after 3 seconds). Thus, data from 12 total participants were excluded. Power calculations for Pearson correlations indicated that with n=54 we would have 80% power to detect a correlation of .37 or higher and 70% power to detect a correlation of .33 or higher. Note that our primary concern was not so much whether there was any correlation between our object recognition measures and individual difference measures but whether there was a sufficiently large correlation to warrant significant concern that the strong correlations between categories were largely due to associations with individual difference measures.

Of these remaining 54 participants, Fluid IQ data for 3 participants, Stroop data for 1 participant and VSTM data for 1 participant were missing due to computer error, but the rest of their data were analyzed. Finally, due to experimenter error, Stroop data from 4 additional participants were missing after the first session. Thus, these participants completed the Stroop task again at the beginning of the second session.

Test Sessions and Tasks