Abstract.

The segmentation of the dermal–epidermal junction (DEJ) in in vivo confocal images represents a challenging task due to uncertainty in visual labeling and complex dependencies between skin layers. We propose a method to segment the DEJ surface, which combines random forest classification with spatial regularization based on a three-dimensional conditional random field (CRF) to improve the classification robustness. The CRF regularization introduces spatial constraints consistent with skin anatomy and its biological behavior. We propose to specify the interaction potentials between pixels according to their depth and their relative position to each other to model skin biological properties. The proposed approach adds regularity to the classification by prohibiting inconsistent transitions between skin layers. As a result, it improves the sensitivity and specificity of the classification results.

Keywords: in vivo microscopy, reflectance confocal microscopy, machine learning, biomedical imaging

1. Introduction

In this paper, we address the problem of segmenting the dermal–epidermal junction (DEJ) in in vivo reflectance confocal microscopy (RCM) using a three-dimensional (3-D) conditional random field (CRF) model.

The DEJ is a complex, surface-like, 3-D boundary separating the epidermis from the dermis. Its peaks and valleys, called dermal papillae, are due to projections of the dermis into the epidermis. The DEJ undergoes multiple changes under pathological or aging conditions. Alterations of the epidermal and dermal layers induce a flattening of the DEJ,1,2 which reduces the surface available for the exchange of water and nutrients from the dermis to the epidermis.

RCM is a powerful tool for noninvasively assessing skin architecture and the associated cytology. RCM images provide a representation of the skin at the cellular level, with melanin and keratin acting as natural contrast agents.3 Pellacani et al.4 have shown that RCM can improve the diagnosis of pathological lesions while reducing the number of unnecessary excisions. However, the review of confocal images by trained dermatologists is time-consuming and requires considerable expertise. Several approaches have therefore been proposed to automate confocal skin image analysis: quantifying the epidermal state,5 performing computer-aided diagnosis of skin lesions,6 or identifying the layers of human skin.7–9

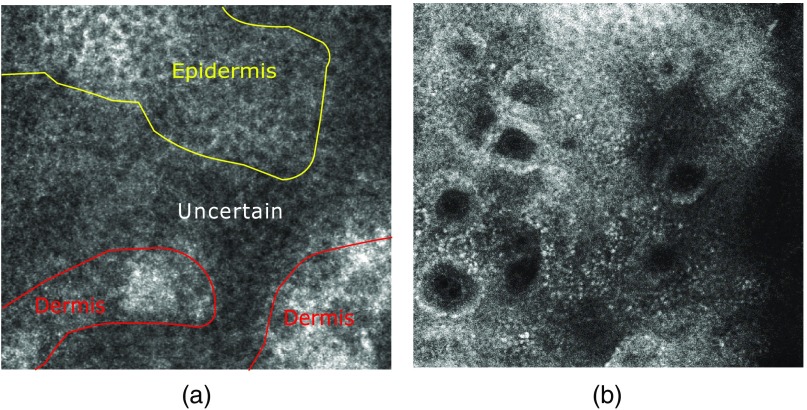

One particularly difficult point is the visualization of the DEJ, which is hard to identify by visual inspection alone. In fair skin, the DEJ can exhibit multiple patterns. It can appear as an amorphous, low-contrast structure or as circular rings, which correspond to the two-dimensional en-face view of dermal papillae (see Fig. 1). Automating the DEJ segmentation makes it easier for clinicians to locate it and opens the way to a quantitative characterization of its appearance. It could further improve the diagnosis of pathological lesions and lead to a better understanding of the physiological response of the skin to aging or other environmental conditions.

Fig. 1.

Examples of different DEJ patterns. The circular-ring pattern in (a) makes the DEJ easy to identify, compared to the uncertain pattern in (b). However, the latter is the most frequent, especially on the cheeks.

1.1. Related Work

As the DEJ appearance varies significantly and its contrast can be low, the precise localization of the DEJ is difficult. Therefore, in practice, ground truth annotations often consist of three thick layers: the epidermis (E), an uncertain area containing the DEJ (U), and the dermis (D). There exist different approaches to delineating skin strata in RCM, which can be divided into two main groups: on the one hand, methods that find a continuous 3-D boundary between the skin layers, which implies a pixel-level classification, and, on the other hand, methods that perform an image-level classification to estimate the location of the transitions between layers.

Kurugol et al.7 proposed a machine learning-based method using textural features to automatically locate the DEJ. It reproduces the key aspects of human visual expertise, which relies on texture and contrast differences between the layers of the epidermis and dermis. They used LS-SVM, a variant of the support vector machine (SVM), which takes into account the expected similarity between neighboring tiles within images to include spatial relationships between neighbors and to increase robustness. They also proposed a second approach, which incorporates a mathematical shape model of the DEJ within a Bayesian model.10 The DEJ shape is modeled using a marked Poisson process, which can account for uncertainty in the number, location, shape, and appearance of the dermal papillae. Their main focus is to find the location of the DEJ rather than to study its shape.

Hames et al.9 addressed the problem of identifying all of the anatomical strata of human skin using a one-dimensional (1-D) linear chain conditional random field and structured SVMs to model the skin structure. They show an improvement in the classification scores with the use of such a model. However, their 1-D linear chain does not take advantage of the 3-D organization of the skin structure to regularize their output segmentation.

The following methods perform an image-level classification instead of a pixel-level classification.

Somoza et al.11 used an unsupervised clustering method to classify a whole en-face image as a single distinct layer, resulting in a good correlation between human classification and automated assessment. However, the classification assumes that each image contains a single class and therefore cannot capture the complex shape of the DEJ.

Kaur et al.12 used a hybrid of classical texture recognition methods and recent deep learning methods, which gives good results on a moderately sized database of 15 stacks. They classify each confocal image as one of the skin layers, using traditional feature vectors as input to train a deep neural network.

Bozkurt et al.13 proposed the use of deep recurrent convolutional neural networks14,15 to delineate the skin strata. The dataset used in this study is composed of 504 RCM stacks. Each confocal image is labeled as one of three classes: epidermis, DEJ, or dermis. They assume that the skin layers are ordered with depth: the epidermis is the top layer of the skin, followed by the DEJ and the dermis. The use of deep recurrent networks on a large dataset allows them to take the sequential dependencies between images into account. They trained numerous models with varying network architectures to overcome computational memory issues. This approach is potentially more flexible, as it provides the model with the complete RCM stack and allows it to learn what information is useful for slice-wise classification. With partial sequence training, the authors showed no improvement when enlarging the neighborhood of images around the RCM image to be classified. Bozkurt et al.16 added a soft attention mechanism to obtain information about which images the network attends to when making a decision.

Due to the uncertainty in visual labeling and intersubject variability, state-of-the-art methods tend to combine textural information and prior information on the DEJ shape, either by modeling the DEJ shape or by using a regularization of the segmentation through depth. The use of an RNN enables the representation of the dependencies between images.

Most methods focus on the localization of the DEJ in depth, with no consideration toward the characterization of its shape. Our goal is to segment the DEJ shape in order to quantify the modifications it undergoes during aging. In order to do so, we aim to combine textural information and 3-D dependencies between pixels within an RCM stack to perform a pixel-level segmentation.

Graphical models appear to be well-adapted and useful tools toward this purpose.

1.2. Modeling with Conditional Random Fields

Segmenting boundaries of interest in 3-D microscopy images is often challenging due to high intra- and intersubject (or specimen) variability and the complexity of the boundary structures. This task involves predicting a large number of variables (each image pixel is a variable) that depend on each other as well as on other observed variables. In this paper, we address the problem of segmenting the DEJ, a complex 3-D structure, imaged using in vivo RCM.

The way output variables depend on each other can be represented by graphical models, which include various classes of Bayesian networks, factor graphs, Markov random fields, and conditional random fields.

Most works on graphical models have focused on models that explicitly attempt to model a joint probability distribution $p(\mathbf{x}, \mathbf{y})$, where $\mathbf{y}$ and $\mathbf{x}$ are random variables ranging, respectively, over observations and their corresponding label sequences. These models are fully generative: they identify dependent variables and define the strength of their interactions. The dependencies between features can be quite complex, and constructing a probability distribution over them can be challenging.

A solution to this problem is to model a conditional distribution instead. This is the approach taken by CRFs.17 A detailed review can be found in Sutton et al.18 CRFs are popular for image labeling because of their flexibility in modeling dependencies between neighbors and image features. Linear-chain CRFs are the simplest and most widely used; they have become very popular in natural language processing19,20 and bioinformatics.21 CRFs have also been applied to graphical structures in computer vision22,23 and, in medical imaging, to many segmentation problems such as brain and liver tumor segmentation.24,25

1.3. Contribution

Our approach consists of designing a 3-D conditional random field, which allows us to provide a spatial regularization on label distribution and to model skin biological properties. Our emphasis lies on exploiting the additional depth and 3-D information of the skin architecture. To our knowledge, this is the first method to include 3-D spatial relationships and to incorporate prior information about the global skin architecture.

We aim to segment the confocal images into three classes: epidermis (E), uncertain (U), and dermis (D). Our approach starts with a random forest (RF) classifier, providing the probabilities of a pixel belonging to each of these three classes, with no assumptions on the dependencies between pixels. The RF output encodes the textural information and is fed into the CRF potentials.

The CRF model parameterization is inspired by prior information on the skin structure. The skin architecture is modeled by the conditional relationships between pixels. The relations between neighboring pixels mimic the behavior of the skin layers in 3-D by imposing a specific potential function according to their depth and their relative position. The CRF potentials are learned from annotated skin RCM data. The CRF model thus allows us not only to incorporate similarity between neighbors but also to encode biological information.

We present several experiments demonstrating the benefit of the adapted CRF potentials for modeling the skin properties, compared to more standard CRF parameterization strategies and to state-of-the-art methods.

2. Conditional Random Fields

An image consists of $N$ pixels with observed data $y_i$, $i = 1, \ldots, N$, i.e., $\mathbf{y} = (y_1, \ldots, y_N)$. Pixels are organized in layers (en-face images) forming a 3-D structure. We want to assign a discrete label $x_i$ to each pixel $i$ from a given set of classes $\mathcal{L} = \{E, U, D\}$. The classification problem can be formulated as finding the configuration $\hat{\mathbf{x}}$ that maximizes $p(\mathbf{x} \mid \mathbf{y})$, the posterior probability of the labels given the observations.

A CRF is a model of $p(\mathbf{x} \mid \mathbf{y})$ that can be represented with an associated graph $\mathcal{G} = (\mathcal{V}, \mathcal{E})$, where $\mathcal{V}$ is the set of vertices representing the image pixels and $\mathcal{E}$ the set of edges modeling the interaction between neighbors.26 Here, $\mathcal{E}$ follows the usual 3-D six-connectivity.

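We use a model with pairwise interactions defined by

$$p(\mathbf{x} \mid \mathbf{y}) = \frac{1}{Z} \prod_{i \in \mathcal{V}} \psi_i(x_i, \mathbf{y}) \prod_{(i,j) \in \mathcal{E}} \psi_{ij}(x_i, x_j), \tag{1}$$

where $\psi_i$ is the node potential linking the observations to the class label at pixel $i$, $\psi_{ij}$ is the interaction potential modeling the dependencies between the labels of two neighboring pixels $i$ and $j$, and $Z$ is the normalization constant (partition function).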
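To make Eq. (1) concrete, the sketch below evaluates the unnormalized log-posterior of a labeling on a small (depth, rows, cols) grid with six-connectivity. It is a minimal illustration, not the authors' implementation: the helper names are hypothetical, labels are encoded as 0 = E, 1 = U, 2 = D, and a single depth-independent pairwise matrix stands in for the depth- and direction-dependent potentials of Sec. 2.2. The partition function is omitted because it is shared by all labelings of the same image.

```python
import numpy as np

def six_connected_edges(shape):
    """Yield each undirected edge (i, j) of the 3-D six-connectivity of a
    (depth, rows, cols) grid once, as a pair of flat pixel indices."""
    idx = np.arange(int(np.prod(shape))).reshape(shape)
    for axis in range(3):
        n = shape[axis]
        a = np.take(idx, np.arange(n - 1), axis=axis).ravel()
        b = np.take(idx, np.arange(1, n), axis=axis).ravel()
        yield from zip(a.tolist(), b.tolist())

def log_score(labels, node_pot, pair_pot):
    """Unnormalized log p(x | y) of Eq. (1): sum of log node potentials plus
    sum of log pairwise potentials over all edges (log Z omitted)."""
    flat = labels.ravel()
    node = node_pot.reshape(-1, node_pot.shape[-1])
    score = float(np.log(node[np.arange(flat.size), flat]).sum())
    for i, j in six_connected_edges(labels.shape):
        score += float(np.log(pair_pot[flat[i], flat[j]]))
    return score

# Toy 2x3x3 volume: uniform node potentials, Potts-like pairwise matrix.
node_pot = np.full((2, 3, 3, 3), 1 / 3)
pair_pot = np.full((3, 3), 0.1)
np.fill_diagonal(pair_pot, 0.8)
smooth = np.zeros((2, 3, 3), dtype=int)
noisy = smooth.copy()
noisy[1, 1, 1] = 2                       # one isolated dermis pixel
print(log_score(smooth, node_pot, pair_pot) > log_score(noisy, node_pot, pair_pot))  # True
```

Since $Z$ does not depend on the labeling, comparing such scores is enough to rank candidate segmentations of the same stack.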

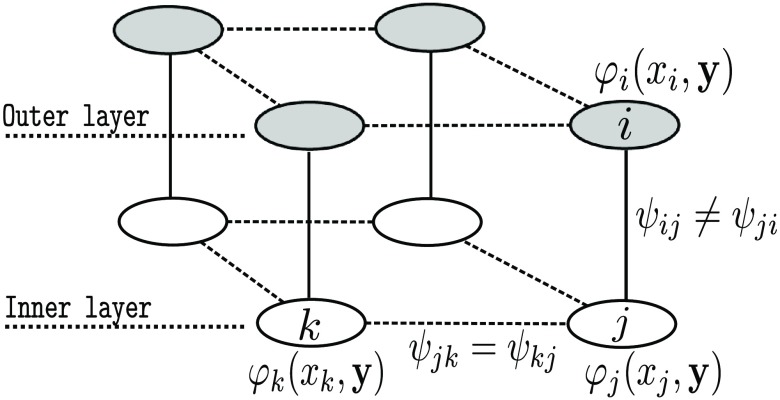

The CRF model is represented in Fig. 2.

Fig. 2.

Three-dimensional CRF model. The nodes in gray and in white belong to two different en-face sections. The edge potentials within each en-face section (Sec. 2.2.1) are learned at each depth. Edge potentials between en-face sections (Sec. 2.2.2) impose biological transition constraints.

In this paper, we propose to specify the CRF potentials in order to incorporate biological information. The skin layers are strictly ordered according to their depth: the epidermis is the top skin layer, followed by the uncertain area (containing the DEJ) and then by the dermis. Therefore, a pixel located near the surface is more likely to belong to the epidermis than to the uncertain area or to the dermis. The CRF potentials are defined so as to forbid inconsistent transitions between layers.

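The CRF parameters are depth-dependent. We define $\mathcal{D}$ as the set of all depths of the image. For each $d \in \mathcal{D}$, we define:

- $\mathcal{V}_d$, the set of pixels at depth $d$,
- $\mathcal{E}_d$, the set of edges at depth $d$,
- $\mathcal{E}_{d,d+1}$ (resp. $\mathcal{E}_{d,d-1}$), the set of edges between depth $d$ and $d+1$ (resp. $d-1$).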
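The depth-indexed sets above can be built explicitly from the pixel grid. The sketch below assumes a (depth, rows, cols) volume indexed in row-major order and uses hypothetical helper names; it returns $\mathcal{E}_d$ and $\mathcal{E}_{d,d+1}$ as lists of flat pixel-index pairs, and obtains $\mathcal{E}_{d,d-1}$ by reversing the pairs as seen from the section below.

```python
import numpy as np

def depth_edge_sets(shape):
    """Split the six-connected edges of a (depth, rows, cols) grid by depth.

    within[d]: in-plane pairs (i, j) inside section d      (E_d)
    down[d]:   pairs (i, j), i at depth d, j right below it (E_{d,d+1})
    """
    n_depth, n_rows, n_cols = shape
    idx = np.arange(n_depth * n_rows * n_cols).reshape(shape)
    within, down = {}, {}
    for d in range(n_depth):
        sec = idx[d]
        along_cols = zip(sec[:, :-1].ravel().tolist(), sec[:, 1:].ravel().tolist())
        along_rows = zip(sec[:-1, :].ravel().tolist(), sec[1:, :].ravel().tolist())
        within[d] = list(along_cols) + list(along_rows)
        if d + 1 < n_depth:
            down[d] = list(zip(sec.ravel().tolist(), idx[d + 1].ravel().tolist()))
    return within, down

def upward_edges(down):
    """E_{d,d-1}: the vertical pairs of E_{d-1,d}, seen from section d."""
    return {d + 1: [(j, i) for i, j in pairs] for d, pairs in down.items()}

within, down = depth_edge_sets((3, 2, 2))
up = upward_edges(down)
```

Together, the within-layer and downward sets recover exactly the six-connected edge set $\mathcal{E}$.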

The notation $\mathbb{1}_{[\cdot]}$ represents the indicator function, which takes the value 1 when its argument is true and 0 otherwise. We denote by $\mathbf{v}^{\top}$ the transpose of a vector $\mathbf{v}$. The operators $\odot$ and $\cdot$ denote, respectively, the Hadamard product (element-wise multiplication) and the dot (or scalar) product of vectors.

2.1. Node Potential

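The node potential $\psi_i$ is defined as the probability of label $x_i$ taking a value $l \in \mathcal{L}$ given the observed data $\mathbf{y}$, that is:

$$\psi_i(x_i = l, \mathbf{y}) = p\left(x_i = l \mid \mathbf{f}_i(\mathbf{y})\right), \tag{2}$$

with $\mathbf{f}_i(\mathbf{y})$ a feature vector computed at pixel $i$ from the observed data.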

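In our case, each node potential is associated with the predicted class probability vector $\mathbf{f}_i$ produced by an RF classifier.27 The node potentials are linked to it by the relation

$$\psi_i(x_i, \mathbf{y}) = \left(\mathbf{w}_d \odot \mathbf{f}_i\right) \cdot \left(\mathbb{1}_{[x_i = E]}, \mathbb{1}_{[x_i = U]}, \mathbb{1}_{[x_i = D]}\right)^{\top}, \quad i \in \mathcal{V}_d. \tag{3}$$

The parameter $\mathbf{w}_d$ balances the bias introduced by the labels appearing more often in the training data, i.e., the epidermal and the dermal ones.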

The feature vector $\mathbf{f}_i$ is thus the vector of probabilities that pixel $i$ belongs to each label. It is produced by an RF classifier27 with bootstrap aggregating (bagging) and feature bagging.
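A minimal sketch of the weighted node potential of Eq. (3), assuming the label order (E, U, D) for both $\mathbf{f}_i$ and $\mathbf{w}_d$: the indicator vector simply selects the entry of $\mathbf{w}_d \odot \mathbf{f}_i$ that corresponds to the candidate label. The RF output and the "tuned" weights below are made-up numbers chosen to show how reweighting can favor the under-represented uncertain class; only the initial value [0.3 0.3 0.3] comes from Sec. 2.3.

```python
import numpy as np

E, U, D = 0, 1, 2  # label order assumed for f_i and w_d

def node_potential(f_i, w_d, label):
    """psi_i(x_i = label) = (w_d ⊙ f_i) · indicator(label)."""
    indicator = np.eye(3)[label]            # (1[x=E], 1[x=U], 1[x=D])
    return float(np.dot(w_d * f_i, indicator))

def best_label(f_i, w_d):
    """Label with the largest node potential at this pixel."""
    return max((E, U, D), key=lambda label: node_potential(f_i, w_d, label))

f_i = np.array([0.45, 0.40, 0.15])          # hypothetical RF probabilities
w_init = np.array([0.3, 0.3, 0.3])          # initial value from Sec. 2.3
w_tuned = np.array([0.25, 0.35, 0.30])      # hypothetical optimized weights
print(best_label(f_i, w_init), best_label(f_i, w_tuned))  # 0 1
```

A uniform $\mathbf{w}_d$ rescales every node potential by the same factor, so only the relative weights between classes change the result.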

Features are computed within a window, which is large enough to include two epidermal cells forming the epidermal honeycomb pattern as their diameter varies from 15 to . We use the following well-known textural features inspired by Ref. 7:

1. First- and second-order statistics. We calculate the mean, variance, skewness, and kurtosis.
2. Power spectrum28 of the window.
3. Gray-level co-occurrence matrix contrast, energy, and homogeneity.29 The features are calculated for four orientations (0 deg, 45 deg, 90 deg, and 135 deg).
4. Gabor filter output.30,31 We compute a bank of Gabor filters with four frequency levels and four orientations. The local energy and the mean amplitude of each response are used as features.
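As an illustration of items 1 and 3 of the list above, the sketch below computes the four first-order statistics and the 12 co-occurrence descriptors (contrast, energy, and homogeneity at 0, 45, 90, and 135 deg) of one window with NumPy only. The quantization to 8 gray levels, the neighbor distance of 1 pixel, and the definition of energy as the square root of the angular second moment (the convention of scikit-image's `graycoprops`) are assumptions; the power spectrum and Gabor features are left out for brevity.

```python
import numpy as np

# (d_row, d_col) offsets at distance 1 for 0, 45, 90 and 135 degrees
# (rows grow downwards, as in image coordinates).
OFFSETS = {0: (0, 1), 45: (-1, 1), 90: (-1, 0), 135: (-1, -1)}

def first_order_stats(win):
    """Mean, variance, skewness and (non-excess) kurtosis of a window."""
    x = win.astype(float).ravel()
    mu, var = x.mean(), x.var()
    z = (x - mu) / (np.sqrt(var) if var > 0 else 1.0)
    return np.array([mu, var, (z ** 3).mean(), (z ** 4).mean()])

def glcm(win, offset, levels=8):
    """Symmetric, normalized gray-level co-occurrence matrix for one offset."""
    w = win.astype(float)
    span = w.max() - w.min()
    q = np.floor(levels * (w - w.min()) / (span if span > 0 else 1.0))
    q = np.clip(q, 0, levels - 1).astype(int)
    dr, dc = offset
    rows, cols = q.shape
    r0, r1 = max(0, -dr), rows - max(0, dr)
    c0, c1 = max(0, -dc), cols - max(0, dc)
    a = q[r0:r1, c0:c1]                          # reference pixels
    b = q[r0 + dr:r1 + dr, c0 + dc:c1 + dc]      # their neighbors
    m = np.zeros((levels, levels))
    np.add.at(m, (a.ravel(), b.ravel()), 1)
    m = m + m.T
    return m / m.sum()

def glcm_features(win, levels=8):
    """Contrast, energy and homogeneity for the four orientations (12 values)."""
    i, j = np.indices((levels, levels))
    feats = []
    for angle in (0, 45, 90, 135):
        p = glcm(win, OFFSETS[angle], levels)
        feats += [
            (p * (i - j) ** 2).sum(),            # contrast
            np.sqrt((p ** 2).sum()),             # energy = sqrt(ASM)
            (p / (1.0 + (i - j) ** 2)).sum(),    # homogeneity
        ]
    return np.array(feats)
```

On a checkerboard window, for instance, the 0 and 90 deg contrasts are maximal while the diagonal ones vanish, which is the kind of orientation sensitivity the honeycomb and ringed patterns rely on.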

We propose new features to estimate the distance of the current pixel to the DEJ.

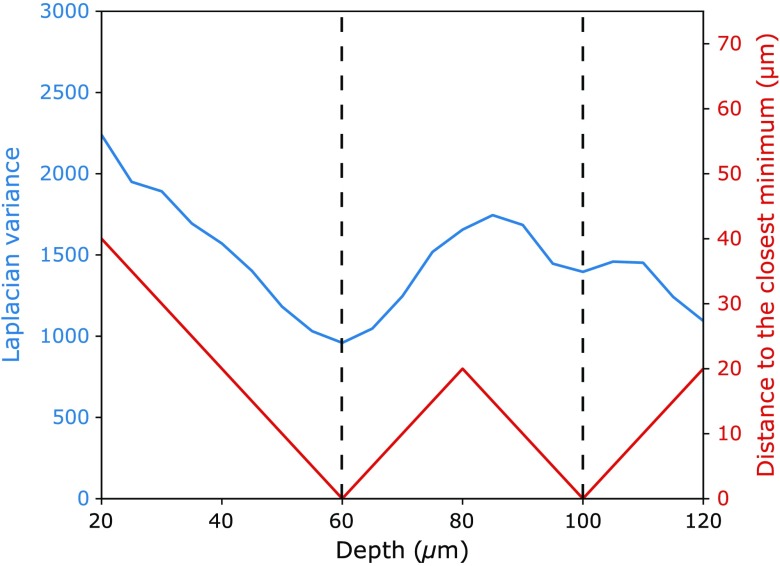

The Laplacian is related to the curvature of intensity changes. In classical edge detection theory,32 the zero-crossings of the Laplacian indicate contour locations, and high Laplacian values are associated with rapid intensity changes. The DEJ is an amorphous area compared to the epidermis, which appears as a honeycomb pattern, and to the dermis, which contains collagen fibers. We therefore expect low values of the Laplacian variance32 in the confocal sections around the DEJ. For a pixel $(x, y)$ in a given confocal section, we calculate the Laplacian variance at location $(x, y)$ in every confocal section of the stack.

For each pixel, a feature vector is computed containing the Laplacian variance at coordinates $(x, y)$ at all depths. The pixel is then characterized by its distance along the $z$ axis to the closest minimum in this feature vector. We add to the set of features the Laplacian variance of the pixel, its distance to the closest minimum as described above, and the features (Laplacian variance and depth) of this closest minimum. An example is presented in Fig. 3. The distance to the closest minimum is the number of confocal images between the pixel and the closest minimum multiplied by the step set for the 3-D acquisition; it is thus expressed in micrometers. For a given acquisition protocol, the distance should therefore be adjusted by the step parameter.
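The Laplacian features can be sketched as follows, assuming a (depth, rows, cols) stack, a 5-point discrete Laplacian, and a square window of hypothetical half-width `half` (the window size is not reproduced in this text). Three further choices are assumptions: the distance is signed (negative when the closest minimum lies above the pixel), the "closest minimum" is read as the nearest local minimum of the depth profile, and depths are counted from the first section of the stack.

```python
import numpy as np

def laplacian(img):
    """5-point discrete Laplacian with edge replication at the borders."""
    p = np.pad(img.astype(float), 1, mode="edge")
    return (p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:]
            - 4.0 * p[1:-1, 1:-1])

def laplacian_variance_profile(stack, row, col, half=8):
    """Variance of the Laplacian in a (2*half+1)^2 window centered on
    (row, col), for every section of a (depth, rows, cols) stack."""
    r0, c0 = max(row - half, 0), max(col - half, 0)
    return np.array([laplacian(s)[r0:row + half + 1, c0:col + half + 1].var()
                     for s in stack])

def closest_minimum_features(profile, depth_index, step_um):
    """[Laplacian variance at the pixel, signed distance (um) to the closest
    local minimum of the profile, variance at that minimum, its depth (um)]."""
    v = np.asarray(profile, dtype=float)
    inner = np.where((v[1:-1] < v[:-2]) & (v[1:-1] <= v[2:]))[0] + 1
    minima = inner if inner.size else np.array([int(np.argmin(v))])
    k = int(minima[np.argmin(np.abs(minima - depth_index))])
    return np.array([v[depth_index], (k - depth_index) * step_um,
                     v[k], k * step_um])
```

Multiplying the index difference by `step_um` is what turns the number of confocal images into the micrometer distance described above.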

Fig. 3.

Laplacian variance and distance to the closest minimum for a pixel between 20 and . The blue line represents the Laplacian variance at coordinates $(x, y)$ at all depths. The dashed vertical lines mark the position of the minimum of the Laplacian variance. The red line corresponds to the distance to the closest minimum.

These features were chosen for their ability to discriminate textured patterns from blurry ones, the latter mostly corresponding to the DEJ. The ringed DEJ pattern can be identified by its strong contrast and specific spatial arrangement. A summary of the proposed features is presented in Table 1.

Table 1.

Set of the 52 features used to produce the node potentials.

| Feature type | Number of features | Presumed use |
|---|---|---|
| Statistics | 4 | Low intensity of the blurry DEJ pattern |
| Power spectrum | 1 | Low intensity of the blurry DEJ pattern |
| Gray-level co-occurrence matrix | 12 | Epidermal pattern and ringed DEJ pattern |
| Gabor filter output | 32 | Blurry pattern of the DEJ, contrasted epidermal and dermal patterns |
| Laplacian | 3 | Blurry pattern of the DEJ and its location in depth |
| Total | 52 | |

2.2. Interaction Potential

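The interaction potential $\psi_{ij}$ describes how likely $x_i$ is to take the value $l$ given the label $l'$ of one of its neighboring pixels $j$:

$$\psi_{ij}(x_i = l, x_j = l') = p\left(x_i = l \mid x_j = l'\right). \tag{4}$$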

Prior information on the skin structure is essential to determine the interaction potentials of our CRF model efficiently. The interaction potentials are modeled by a 3 × 3 matrix representing the transition probabilities between classes.

We define two types of transitions: transitions within a layer, described by $\mathbf{H}_d$, which are symmetrical and depth-dependent, and transitions between layers, described by $\mathbf{V}^{\downarrow}_d$ and $\mathbf{V}^{\uparrow}_d$, which are directional and also depth-dependent.

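The product of the pairwise interaction potentials is expressed as

$$\prod_{(i,j) \in \mathcal{E}} \psi_{ij}(x_i, x_j) = \prod_{d \in \mathcal{D}} \left[ \prod_{(i,j) \in \mathcal{E}_d} \mathbf{H}_d(x_i, x_j) \prod_{(i,j) \in \mathcal{E}_{d,d+1}} \mathbf{V}^{\downarrow}_d(x_i, x_j) \prod_{(i,j) \in \mathcal{E}_{d,d-1}} \mathbf{V}^{\uparrow}_d(x_i, x_j) \right], \tag{5}$$

where $\mathbf{H}_d$, $\mathbf{V}^{\downarrow}_d$, and $\mathbf{V}^{\uparrow}_d$ are the within-layer, downward, and upward transition matrices at depth $d$, and $\mathbf{M}(k, l)$ denotes the entry of a matrix $\mathbf{M}$ at row $k$ and column $l$.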

2.2.1. Within-layer interaction potential

The within-layer interaction potential models the behavior of the skin at a given depth, where several skin layers can coexist in a single en-face section. Within an en-face section, edges are therefore modeled symmetrically, i.e., $\psi_{ij} = \psi_{ji}$ (dashed lines in Fig. 2), and the within-layer interaction potential is modeled as a symmetrical matrix $\mathbf{H}_d$, see Table 2.

Table 2.

Horizontal transition matrix $\mathbf{H}_d$ for neighboring pixels $i$ (rows) and $j$ (columns) within an en-face section, where $h_{kl}$ are the learned transition probabilities (see Sec. 2.3). The symmetric, nonzero values ensure that transitions in both directions are equally possible.

| | Epidermis | Uncertain | Dermis |
|---|---|---|---|
| Epidermis | $h_{EE}$ | $h_{EU}$ | $h_{ED}$ |
| Uncertain | $h_{EU}$ | $h_{UU}$ | $h_{UD}$ |
| Dermis | $h_{ED}$ | $h_{UD}$ | $h_{DD}$ |

2.2.2. Interlayer interaction potential

The skin layers follow a specific order from the surface to the inner layers: the epidermis is the top skin layer, followed by the uncertain area (containing the DEJ), and then by the dermis. We define an inconsistent transition as a transition that does not follow this order. Between en-face sections, only consistent transitions are allowed; the edge potentials thus depend on their direction, i.e., $\psi_{ij} \neq \psi_{ji}$.

To impose the biological transition order in depth, constraints are added to the transition matrix according to the edge direction. We define in Table 3 $\mathbf{V}^{\downarrow}_d$, the vertical transition matrix from pixel $i$ to pixel $j$, with $i$ above $j$. The reverse transition matrix $\mathbf{V}^{\uparrow}_d$, with $i$ below $j$, is defined in Table 4.

Table 3.

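Vertical transition matrix $\mathbf{V}^{\downarrow}_d$ with $i$ (rows) above $j$ (columns), where $v^{\downarrow}_{kl}$ are the learned transition probabilities. The null values ensure that inconsistent transitions are impossible.

| | Epidermis | Uncertain | Dermis |
|---|---|---|---|
| Epidermis | $v^{\downarrow}_{EE}$ | $v^{\downarrow}_{EU}$ | $v^{\downarrow}_{ED}$ |
| Uncertain | 0 | $v^{\downarrow}_{UU}$ | $v^{\downarrow}_{UD}$ |
| Dermis | 0 | 0 | 1 |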

Table 4.

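Vertical transition matrix $\mathbf{V}^{\uparrow}_d$ with $i$ (rows) below $j$ (columns), where $v^{\uparrow}_{kl}$ are the learned transition probabilities. The null values ensure that inconsistent transitions are impossible.

| | Epidermis | Uncertain | Dermis |
|---|---|---|---|
| Epidermis | 1 | 0 | 0 |
| Uncertain | $v^{\uparrow}_{UE}$ | $v^{\uparrow}_{UU}$ | 0 |
| Dermis | $v^{\uparrow}_{DE}$ | $v^{\uparrow}_{DU}$ | $v^{\uparrow}_{DD}$ |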
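The zero patterns of Tables 3 and 4 can be applied to learned matrices with a triangular mask. To show their effect in isolation, the sketch below decodes a single pixel column, which forms a chain through depth, with exact max-product dynamic programming. This is a simplification for illustration only: the full 3-D graph contains loops and is handled with loopy belief propagation (Sec. 2.3), the node potentials are invented, the helper names are hypothetical, and the uniform 1/3 entries are placeholders for learned probabilities.

```python
import numpy as np

E, U, D = 0, 1, 2
# Table 3 pattern (rows: label of the upper pixel, columns: lower pixel):
# a label may only stay the same or move deeper in the order E -> U -> D.
DOWN_MASK = np.triu(np.ones((3, 3)))
# Table 4 pattern (rows: label of the lower pixel, columns: upper pixel).
UP_MASK = DOWN_MASK.T

def constrain_down(v_raw):
    """Zero the inconsistent entries of learned downward matrices."""
    return v_raw * DOWN_MASK

def column_map(node_pot, v_down):
    """Exact MAP labeling of one pixel column (a chain through depth) by
    max-product dynamic programming in the log domain.
    node_pot: (n_depth, 3); v_down: (n_depth - 1, 3, 3)."""
    with np.errstate(divide="ignore"):       # log(0) -> -inf is intended
        ln, lv = np.log(node_pot), np.log(v_down)
    n = len(node_pot)
    score = ln[0].copy()
    back = np.zeros((n, 3), dtype=int)
    for d in range(1, n):
        cand = score[:, None] + lv[d - 1]    # cand[k, l]: k above, l at depth d
        back[d] = cand.argmax(axis=0)
        score = cand.max(axis=0) + ln[d]
    labels = [int(score.argmax())]
    for d in range(n - 1, 0, -1):            # backtrack from the deepest section
        labels.append(int(back[d, labels[-1]]))
    return labels[::-1]

node_pot = np.array([[0.8, 0.1, 0.1],        # hypothetical per-depth potentials
                     [0.1, 0.2, 0.7],
                     [0.6, 0.3, 0.1],
                     [0.1, 0.2, 0.7]])
free = np.full((3, 3, 3), 1 / 3)
print(column_map(node_pot, free))                  # [0, 2, 0, 2]
print(column_map(node_pot, constrain_down(free)))  # [0, 2, 2, 2]
```

Without the mask, the column is labeled E, D, E, D, which contains a D → E transition; with the mask, the best labeling is forced to follow the order E → U → D.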

2.3. Parameter Optimization

The parameters described above are learned from the ground truth dataset.

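Our model can be expressed as

$$p(\mathbf{x} \mid \mathbf{y}; \Theta) = \frac{1}{Z} \prod_{d \in \mathcal{D}} \left[ \prod_{i \in \mathcal{V}_d} \psi_i(x_i, \mathbf{y}) \prod_{(i,j) \in \mathcal{E}_d} \mathbf{H}_d(x_i, x_j) \prod_{(i,j) \in \mathcal{E}_{d,d+1}} \mathbf{V}^{\downarrow}_d(x_i, x_j) \prod_{(i,j) \in \mathcal{E}_{d,d-1}} \mathbf{V}^{\uparrow}_d(x_i, x_j) \right]. \tag{6}$$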

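We define $\theta_d = \{\mathbf{w}_d, \mathbf{H}_d, \mathbf{V}^{\downarrow}_d, \mathbf{V}^{\uparrow}_d\}$ as the set of parameters at depth $d$ and $\Theta = \{\theta_d\}_{d \in \mathcal{D}}$ as the set of parameters for all depths. The set of parameters is summarized in Table 5.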

Table 5.

Set of parameters $\theta_d$ of the CRF model at depth $d$.

| Parameter | Form | Number of parameters | Use |
|---|---|---|---|
| $\mathbf{w}_d$ | Vector | 3 | Weight bias vector used to balance the label occurrences |
| $\mathbf{H}_d$ | Symmetrical matrix | 6 | Transition matrix between classes at depth $d$ |
| $\mathbf{V}^{\downarrow}_d$ | Upper triangular matrix | 5 | Transition matrix between classes between depths $d$ and $d+1$ |
| $\mathbf{V}^{\uparrow}_d$ | Lower triangular matrix | 5 | Transition matrix between classes between depths $d$ and $d-1$ |

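Our goal is to find the set of parameters $\hat{\Theta}$ that maximizes the log-likelihood:

$$\hat{\Theta} = \arg\max_{\Theta} \sum_{i \in \mathcal{V}} \log p\left(x_i^{*} \mid \mathbf{f}_i; \Theta\right), \tag{7}$$

where $x_i^{*}$ is the ground-truth class of pixel $i$ and $\mathbf{f}_i$ is its feature vector.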

The parameters $\mathbf{w}_d$, $\mathbf{H}_d$, $\mathbf{V}^{\downarrow}_d$, and $\mathbf{V}^{\uparrow}_d$ are depth-dependent. We estimate the transition probabilities from the frequency of co-occurrence of classes ($k$ and $l$) between neighboring pixels $i$ and $j$ in the ground truth images. Co-occurrence frequencies are learned at each depth of the en-face sections. The value of $\mathbf{w}_d$ is initialized at [0.3 0.3 0.3] for all depths and then optimized to increase the model accuracy.
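The co-occurrence estimation described above could look like the following sketch, assuming integer ground-truth volumes (0 = E, 1 = U, 2 = D) with depth along the first axis and hypothetical helper names. Two normalizations are assumptions: $\mathbf{H}_d$ is divided by its total count so that it stays symmetric, and $\mathbf{V}^{\downarrow}_d$ is normalized row by row so that each row reads p(label below | label above), which makes its (D, D) entry equal to 1 as in Table 3. $\mathbf{V}^{\uparrow}_d$ would follow by counting the same pairs from below, and $\mathbf{w}_d$ is not estimated here.

```python
import numpy as np

def cooccurrence(a, b, n_labels=3):
    """Count label pairs (a[k], b[k]) into an n_labels x n_labels matrix."""
    m = np.zeros((n_labels, n_labels))
    np.add.at(m, (a.ravel(), b.ravel()), 1)
    return m

def estimate_transitions(gt):
    """gt: (depth, rows, cols) ground-truth labels.
    Returns H (depth, 3, 3), symmetric joint frequencies of in-plane
    neighbors, and Vdown (depth - 1, 3, 3), row-normalized frequencies
    p(label at d + 1 | label at d) of vertically adjacent pixels."""
    H, Vdown = [], []
    for d in range(gt.shape[0]):
        s = gt[d]
        m = cooccurrence(s[:, :-1], s[:, 1:]) + cooccurrence(s[:-1, :], s[1:, :])
        m = m + m.T                              # in-plane edges are undirected
        H.append(m / m.sum())
        if d + 1 < gt.shape[0]:
            v = cooccurrence(s, gt[d + 1])
            rows = v.sum(axis=1, keepdims=True)
            Vdown.append(np.divide(v, rows, out=np.zeros_like(v), where=rows > 0))
    return np.array(H), np.array(Vdown)

gt = np.array([[[0, 0], [0, 0]],                 # toy 3-section annotation
               [[0, 1], [1, 1]],
               [[2, 2], [2, 2]]])
H, Vdown = estimate_transitions(gt)
```

Because the annotations themselves respect the layer order, the entries below the diagonal of the estimated downward matrices come out as zero, consistent with Table 3.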

For each depth $d$, we estimate the parameters of $\theta_d$. Since the shallowest section has no upward matrix and the deepest section has no downward matrix, the number of parameters to estimate for $|\mathcal{D}|$ depths is $9|\mathcal{D}| + 10(|\mathcal{D}| - 1)$, which leads to 370 parameters for 20 depths (see Table 5). The parameters in $\Theta$ are trained using Powell's search method, an iterative optimization algorithm33 that does not require estimating the gradient of the objective function. We use loopy belief propagation to estimate $p(\mathbf{x} \mid \mathbf{y}; \Theta)$. The computation is done using the DGM library.34
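The inference itself relies on the loopy belief propagation of DGM Lib; the NumPy sketch below is a generic stand-in (not DGM's API) showing the sum-product message updates that approximate the marginals $p(x_i \mid \mathbf{y})$. Each undirected edge carries one matrix whose rows index $x_i$ and columns index $x_j$, and the reverse message uses its transpose. The function name and the fixed number of synchronous iterations are illustrative choices.

```python
import numpy as np

def loopy_bp(node_pot, edges, pair_pot, n_iter=50):
    """Sum-product loopy belief propagation on a pairwise graph.

    node_pot: (N, L) positive node potentials.
    edges:    list of undirected edges (i, j), each listed once.
    pair_pot: list of (L, L) matrices; pair_pot[e][a, b] = psi_ij(x_i=a, x_j=b).
    Returns approximate marginals as an (N, L) array."""
    n, n_labels = node_pot.shape
    nbrs = {i: [] for i in range(n)}
    mats, msgs = {}, {}
    for (i, j), m in zip(edges, pair_pot):
        mats[(i, j)], mats[(j, i)] = m, m.T
        msgs[(i, j)] = np.full(n_labels, 1.0 / n_labels)   # message i -> j
        msgs[(j, i)] = np.full(n_labels, 1.0 / n_labels)   # message j -> i
        nbrs[i].append(j)
        nbrs[j].append(i)
    for _ in range(n_iter):
        new = {}
        for i, j in msgs:
            h = node_pot[i].astype(float)
            for k in nbrs[i]:
                if k != j:
                    h *= msgs[(k, i)]          # incoming messages except from j
            out = mats[(i, j)].T @ h           # sum over x_i of psi(x_i, x_j) h(x_i)
            new[(i, j)] = out / out.sum()
        msgs = new                             # synchronous ("flooding") update
    beliefs = node_pot.astype(float)
    for k, i in msgs:
        beliefs[i] *= msgs[(k, i)]
    return beliefs / beliefs.sum(axis=1, keepdims=True)
```

On a tree (for instance a single pixel column) these updates converge to the exact marginals; on the loopy six-connected grid they are only an approximation. For the outer parameter search, SciPy's `scipy.optimize.minimize(..., method="Powell")` is one readily available gradient-free optimizer, although the authors' exact setup may differ.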

3. Experimentation

3.1. Database

Image acquisition was carried out on the cheek to further assess chronological aging. RCM images were acquired using a near-infrared reflectance confocal laser scanning microscope (Vivascope 1500; Lucid Inc., Rochester, New York).35 On each imaged site, stacks were acquired from the skin surface to the reticular dermis with a step of . Our dataset consists of 23 annotated stacks of confocal images acquired from 15 healthy volunteers, with stacks/subject, and with phototypes from I to III,36 i.e., from volunteers whose skin strongly reacts to sun exposure. As melanin is a strong contrast agent in confocal images, confocal images of fair skin are the most challenging to analyze: the higher the melanin content, the more contrasted the confocal skin patterns.

Neither cosmetic products nor skin treatments were allowed on the day of the acquisitions. Appropriate consent was obtained from all subjects before imaging. Visual labeling of the DEJ is difficult, even for experts. An expert, whose grading had been previously validated, delineated the stacks into three zones: epidermis (E), uncertain (U), and dermis (D) (see Fig. 1). We segmented confocal images between depths 20 and , the images above belonging to the epidermis with high confidence. We used a subject-wise 10-fold cross-validation to evaluate the segmentation results. To make the results comparable, the same data splits are used for training and testing in all experiments. As several stacks acquired from one subject can contribute to the annotated dataset, stacks acquired from the same subject are not considered independent. Therefore, the cross-validation is conducted subject-wise, i.e., at each fold, a subject cannot belong to both the training and testing sets.
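A subject-wise split like the one described above can be sketched in plain Python: all stacks of a subject land in the same test fold, and subjects are assigned greedily (largest first) to the currently smallest fold. This is similar in spirit to scikit-learn's `GroupKFold`; the helper name is hypothetical, and the subject-to-stack mapping in the example is invented, since the per-subject stack counts are not reproduced here.

```python
from collections import defaultdict

def subject_folds(subject_of_stack, n_folds=10):
    """Split stacks into folds so that all stacks of a subject share a fold.
    subject_of_stack[k] is the subject ID of stack k.
    Returns a list of (train_idx, test_idx) pairs of stack indices."""
    by_subject = defaultdict(list)
    for k, s in enumerate(subject_of_stack):
        by_subject[s].append(k)
    folds = [[] for _ in range(n_folds)]
    # Greedy balancing: largest subjects first, each into the emptiest fold.
    for s in sorted(by_subject, key=lambda s: -len(by_subject[s])):
        min(folds, key=len).extend(by_subject[s])
    all_idx = set(range(len(subject_of_stack)))
    return [(sorted(all_idx - set(f)), sorted(f)) for f in folds]

# Hypothetical mapping: 23 stacks from 15 subjects.
subjects = [0, 0, 1, 1, 2, 2, 3, 3, 4, 4, 5, 5, 6, 6, 7, 7,
            8, 9, 10, 11, 12, 13, 14]
folds = subject_folds(subjects)
```

Reusing the same `folds` list for every experiment is one way to guarantee that all models are trained and tested on identical splits.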

3.2. Feature Evaluation

To evaluate our proposed set of features used for the RF classification, we compare the mean accuracy of our classification results to the state-of-the-art methods. The mean accuracies of the RF classifications are presented in Table 6. Kurugol et al.7 achieved 64%, 41%, and 75% of correct classification of tiles for epidermis, transition region, and dermis, respectively. Hames et al.9 achieved 82%, 78%, and 88% of correct classification for the epidermis, DEJ, and dermis, respectively. With the proposed set of features, we were able to achieve 90%, 54%, and 75% accuracy, respectively, for the epidermal, uncertain area, and dermal classification. These results suggest that our set of features is relevant to identify the three skin labels according to the visual inspection by experts. However, the result of our initial classification still contains 11% of inconsistent transitions (not following the expected biological order), see Table 10, motivating the introduction of spatial constraints with the CRF regularization.

Table 6.

Results for the unregularized experiments. Mean accuracy of the RF classifications of the three labels. The RF classification provides the node potentials for the CRF model.

Table 10.

Percentage of inconsistent transitions between the skin layers. Epidermis, E; uncertain, U; and dermis, D.

| Model | U → E | D → U | D → E | Total (%) |
|---|---|---|---|---|
| RF | 0.6 | 2 | 3 | 11 |
| Horizontal-only CRF | 7 | 3 | 8 | 18 |
| Symmetric 3-D CRF | 0 | 4 | 0 | 4 |
| Ordered 3-D CRF (proposed) | 0 | 0 | 0 | 0 |

3.3. CRF Parameters Evaluation

To evaluate the chosen parameters, we compare three cases:

1. Horizontal-only CRF: the CRF with only horizontal regularization. Each confocal section is regularized independently using the horizontal transition matrix $\mathbf{H}_d$.
2. Symmetric 3-D CRF: the CRF with horizontal regularization and symmetrical vertical regularization, i.e., $\psi_{ij} = \psi_{ji}$ between en-face sections.
3. Ordered 3-D CRF: the CRF with horizontal regularization and asymmetrical vertical regularization, i.e., $\psi_{ij} \neq \psi_{ji}$ between en-face sections. This is our proposed model, in which the order of the skin layers is imposed.

The global accuracies of the three CRF variants, presented in Table 7, are comparable. The proposed ordered 3-D CRF also achieves a high specificity for the three classes (at least 0.92), see Table 8. This parameterization increases the sensitivity of the uncertain-area classification compared to the horizontal-only and symmetric 3-D CRFs, while keeping the epidermal and dermal sensitivity at or above 0.90, see Table 9.

Table 7.

Global accuracy percentage for the three regularization schemes.

| Model | Accuracy (%) |
|---|---|
| RF | 78 |
| Horizontal-only CRF | 85 |
| Symmetric 3-D CRF | 85 |
| Ordered 3-D CRF (proposed) | 86 |

Table 8.

Specificity of the three labels in the regularized cases.

| Model | Epidermis | Uncertain | Dermis |
|---|---|---|---|
| Horizontal-only CRF | 0.90 | 0.89 | 0.93 |
| Symmetric 3-D CRF | 0.94 | 0.92 | 0.95 |
| Ordered 3-D CRF (proposed) | 0.96 | 0.92 | 0.95 |

Table 9.

Sensitivity of the three labels in the regularized cases.

| Model | Epidermis | Uncertain | Dermis |
|---|---|---|---|
| Horizontal-only CRF | 0.90 | 0.63 | 0.83 |
| Symmetric 3-D CRF | 0.91 | 0.55 | 0.90 |
| Ordered 3-D CRF (proposed) | 0.90 | 0.68 | 0.93 |
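Tables 7 to 9 report global accuracy and one-vs-rest sensitivity and specificity per class. A minimal sketch of how such values can be computed from ground-truth and predicted label volumes (0 = E, 1 = U, 2 = D); whether the published scores are pooled over all test pixels or averaged per fold is not stated here, so that part of the protocol is an assumption, and the helper name is hypothetical.

```python
import numpy as np

def per_class_scores(gt, pred, n_labels=3):
    """One-vs-rest sensitivity TP/(TP+FN) and specificity TN/(TN+FP) for each
    class, plus global accuracy, from ground-truth and predicted labels."""
    gt, pred = np.ravel(gt), np.ravel(pred)
    sens, spec = [], []
    for c in range(n_labels):
        tp = np.sum((gt == c) & (pred == c))
        fn = np.sum((gt == c) & (pred != c))
        tn = np.sum((gt != c) & (pred != c))
        fp = np.sum((gt != c) & (pred == c))
        sens.append(tp / (tp + fn))
        spec.append(tn / (tn + fp))
    return np.array(sens), np.array(spec), float(np.mean(gt == pred))

sens, spec, acc = per_class_scores([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0])
```

Specificity is computed one-vs-rest, so a class can have high specificity even when its sensitivity is low, which is the situation of the uncertain class in Tables 8 and 9.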

We have defined an inconsistent transition as a transition between skin layers that does not follow the biological order. The segmentation produced by the horizontal-only CRF contains 18% of inconsistent transitions, and the symmetric 3-D CRF still contains 4%, whereas the proposed ordered 3-D CRF contains none. The percentages of inconsistent transitions are presented in Table 10.
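The inconsistent-transition rate can be computed by scanning vertically adjacent pixel pairs. In the sketch below, "U->E" means an uncertain pixel directly above an epidermal one, and the percentages are taken over all vertical label changes; that denominator is an assumption, since the normalization behind Table 10 is not specified in the text, and the helper name is hypothetical.

```python
import numpy as np

NAMES = {0: "E", 1: "U", 2: "D"}

def inconsistent_transitions(labels):
    """labels: (depth, rows, cols) with 0=E, 1=U, 2=D, depth increasing.
    Returns the percentages of U->E, D->U and D->E pairs (upper label first)
    among all vertical label changes, and their total."""
    upper, lower = labels[:-1].ravel(), labels[1:].ravel()
    n_changes = max(int(np.sum(upper != lower)), 1)
    out = {}
    for a, b in ((1, 0), (2, 1), (2, 0)):
        n = int(np.sum((upper == a) & (lower == b)))
        out[f"{NAMES[a]}->{NAMES[b]}"] = 100.0 * n / n_changes
    out["total"] = sum(out.values())
    return out

# A single column labeled E, U, E, D has one U->E change out of three.
r = inconsistent_transitions(np.array([0, 1, 0, 2]).reshape(4, 1, 1))
```

By construction, a segmentation decoded with the ordered vertical matrices of Tables 3 and 4 yields a total of zero.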

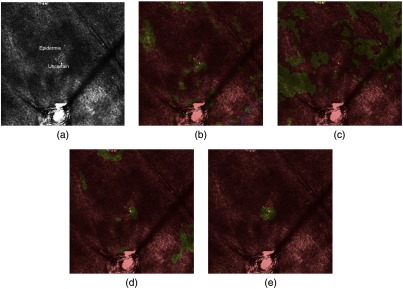

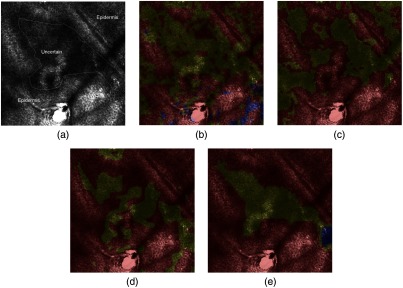

Examples of resulting segmentations at two consecutive depths are presented in Figs. 4 and 5. Direct classification from the computed features leads to misclassifications and inconsistent transitions between classes, whereas the positive impact of our CRF model is noticeable. Moreover, computing the DEJ segmentation only takes a few minutes per stack on a standard computer, which enables its use in large-scale analyses and clinical studies.

Fig. 4.

Segmentations at depth : epidermis (red), uncertain (yellow), dermis (blue). (a) Annotated image, (b) RF, (c) horizontal-only CRF, (d) symmetric 3-D CRF, and (e) ordered 3-D CRF (proposed), all at the same depth. Adding constraints to the CRF model improves the accuracy of the segmentation.

Fig. 5.

Segmentations at depth . (a) Annotated image, (b) RF, (c) horizontal-only CRF, (d) symmetric 3-D CRF, and (e) ordered 3-D CRF (proposed), all at the same depth. Inconsistent transitions exist between depth and . One can notice the misclassification obtained by RF and . The use of the ordered 3-D CRF provides a coherent segmentation.

One can notice that the optimization of the parameter set improves the sensitivity of the uncertain area classification from 66% to 68% and the epidermal and dermal specificity up to 95%.

3.4. Comparison to State-of-the-Art Methods

We compare our results to the state-of-the-art methods. The results presented below should be considered with caution, because of the differences in labeling and dataset size.

Global accuracy of our model is similar to state-of-the-art methods. The sensitivity and specificity results of the regularized CRF model are presented in Tables 11 and 12. The specificity results obtained by Kurugol et al.7 are not available.

Table 11.

Sensitivity results of the proposed ordered 3-D CRF compared to state-of-the-art methods.

Table 12.

Specificity results of the proposed ordered 3-D CRF compared to state-of-the-art methods.

The deep learning methods of Bozkurt et al.16 and Kaur et al.12 also seem to take the dependencies between images into account when performing the classification, and the authors did not observe any incoherent transitions; however, these models might lack interpretability.

4. Conclusion

We have proposed a method to segment the DEJ in confocal microscopy images. This method combines RF classification with a spatial regularization based on a 3-D CRF, which additionally imposes constraints related to the biological properties of the skin. The ablation analysis of the model has shown the importance of each of its components (RF, spatial regularization, and biological constraints) in achieving the best results. In addition, the results show that the proposed method is competitive with state-of-the-art methods.

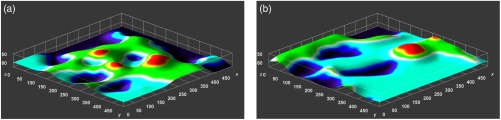

Our DEJ segmentation algorithm has already been used in the context of automatic skin aging characterization from RCM images.37 Indeed, the alteration of the epidermal and dermal layers with skin aging induces a flattening of the DEJ (see Fig. 6), and Longo et al.38,39 have identified the shape of the peaks and valleys of the DEJ as a confocal descriptor of skin aging. We have proposed a measurement characterizing the regularity of the DEJ that can be computed automatically. This measurement, extracted from the DEJ segmented by the method presented in this paper, has a significant positive correlation with the age of the subjects.

Fig. 6.

Visual appearance of the transition from the epidermis to the uncertain area, which corresponds to the epidermal lower boundary. Notice that the aged DEJ (b) appears flatter than the young DEJ (a), as expected. Colors encode the depth.

Biographies

Julie Robic received her PhD in computer vision from the University of Marne-la-Vallée, France, in 2018. She is a project manager in charge of skin image processing for Clarins Laboratories.

Benjamin Perret received his MSc degree in computer science in 2007, and his PhD in image processing in 2010 from the Université de Strasbourg, France. He currently holds a teacher–researcher position at ESIEE Paris, affiliated with the Laboratoire d’Informatique Gaspard Monge of the Université Paris-Est. His current research interests include image processing and image analysis.

Alex Nkengne holds a PhD in medical computer science and has spent the last 14 years as a researcher in the field of skin biophysics and imaging for different companies. He currently leads the Skin Models and Methods Team for Clarins Laboratories.

Michel Couprie received his PhD from the Université Pierre et Marie Curie, Paris, France, in 1988, and the Habilitation à Diriger des Recherches in 2004 from the Université de Marne-la-Vallée, France. Since 1988, he has been working in the Computer Science Department of ESIEE. He is also a member of the Laboratoire d’Informatique Gaspard-Monge of the Université Paris-Est. He has authored or co-authored more than 100 papers in archival journals and conference proceedings as well as book contributions. He has supervised or co-supervised nine PhD students who successfully defended their theses. His current research interests include image analysis and discrete mathematics.

Hugues Talbot received his habilitation degree from the Université Paris-Est in 2013, his PhD from École des Mines de Paris in 1993, and his engineering degree from École Centrale de Paris in 1989. He was a principal research scientist at CSIRO, Sydney, from 1994 to 2004, and a professor at ESIEE Paris from 2004 to 2018; he is now a full professor at CentraleSupélec, Université Paris-Saclay. From 2015 to 2018, he was the research dean at ESIEE Paris. He is the co-author of six books and more than 200 articles in various areas of computer vision, including low-level vision, mathematical morphology, discrete geometry, and combinatorial and continuous optimization.

Disclosures

No conflicts of interest, financial or otherwise, are declared by the authors.

References

- 1. Lavker R. M., Zheng P., Dong G., “Aged skin: a study by light, transmission electron, and scanning electron microscopy,” J. Invest. Dermatol. 88, 44s–51s (1987). 10.1111/jid.1987.88.issue-s3

- 2. Rittié L., Fisher G. J., “Natural and sun-induced aging of human skin,” Cold Spring Harbor Perspect. Med. 5(1), a015370 (2015). 10.1101/cshperspect.a015370

- 3. Calzavara-Pinton P., et al., “Reflectance confocal microscopy for in vivo skin imaging,” Photochem. Photobiol. 84(6), 1421–1430 (2008). 10.1111/php.2008.84.issue-6

- 4. Pellacani G., et al., “Reflectance confocal microscopy as a second-level examination in skin oncology improves diagnostic accuracy and saves unnecessary excisions: a longitudinal prospective study,” Br. J. Dermatol. 171(5), 1044–1051 (2014). 10.1111/bjd.2014.171.issue-5

- 5. Robic J., et al., “Automated quantification of the epidermal aging process using in-vivo confocal microscopy,” in IEEE 13th Int. Symp. Biomed. Imaging (ISBI), IEEE, pp. 1221–1224 (2016). 10.1109/ISBI.2016.7493486

- 6. Kose K., et al., “A machine learning method for identifying morphological patterns in reflectance confocal microscopy mosaics of melanocytic skin lesions in-vivo,” Proc. SPIE 9689, 968908 (2016). 10.1117/12.2212978

- 7. Kurugol S., et al., “Automated delineation of dermal–epidermal junction in reflectance confocal microscopy image stacks of human skin,” J. Invest. Dermatol. 135(3), 710–717 (2015). 10.1038/jid.2014.379

- 8. Robic J., et al., “Classification of the dermal–epidermal junction using in-vivo confocal microscopy,” in IEEE 14th Int. Symp. Biomed. Imaging (ISBI 2017), IEEE, pp. 252–255 (2017). 10.1109/ISBI.2017.7950513

- 9. Hames S. C., et al., “Automated segmentation of skin strata in reflectance confocal microscopy depth stacks,” PLoS One 11(4), e0153208 (2016). 10.1371/journal.pone.0153208

- 10. Ghanta S., et al., “A marked Poisson process driven latent shape model for 3D segmentation of reflectance confocal microscopy image stacks of human skin,” IEEE Trans. Image Process. 26(1), 172–184 (2017). 10.1109/TIP.2016.2615291

- 11. Somoza E., et al., “Automatic localization of skin layers in reflectance confocal microscopy,” Lect. Notes Comput. Sci. 8815, 141–150 (2014). 10.1007/978-3-319-11755-3

- 12. Kaur P., et al., “Hybrid deep learning for reflectance confocal microscopy skin images,” in 23rd Int. Conf. Pattern Recognit. (ICPR), IEEE, pp. 1466–1471 (2016). 10.1109/ICPR.2016.7899844

- 13. Bozkurt A., et al., “Delineation of skin strata in reflectance confocal microscopy images with recurrent convolutional networks,” in IEEE Conf. Comput. Vision and Pattern Recognit. Workshops (CVPRW), IEEE, pp. 777–785 (2017). 10.1109/CVPRW.2017.108

- 14. Krizhevsky A., Sutskever I., Hinton G. E., “ImageNet classification with deep convolutional neural networks,” in Adv. Neural Inf. Process. Syst., pp. 1097–1105 (2012).

- 15. He K., et al., “Deep residual learning for image recognition,” in Proc. IEEE Conf. Comput. Vision and Pattern Recognit., pp. 770–778 (2016). 10.1109/CVPR.2016.90

- 16. Bozkurt A., et al., “Delineation of skin strata in reflectance confocal microscopy images using recurrent convolutional networks with Toeplitz attention,” arXiv:1712.00192 (2017).

- 17. Lafferty J. D., et al., “Conditional random fields: probabilistic models for segmenting and labeling sequence data,” in Proc. Eighteenth Int. Conf. Mach. Learn. (ICML), pp. 282–289 (2001).

- 18. Sutton C., et al., “An introduction to conditional random fields,” Found. Trends® Mach. Learn. 4(4), 267–373 (2012). 10.1561/2200000013

- 19. Peng F., McCallum A., “Information extraction from research papers using conditional random fields,” Inf. Process. Manage. 42(4), 963–979 (2006). 10.1016/j.ipm.2005.09.002

- 20. Sha F., Pereira F., “Shallow parsing with conditional random fields,” in Proc. 2003 Conf. North Am. Chapter of the Assoc. for Comput. Ling. on Hum. Lang. Technol., Association for Computational Linguistics, Vol. 1, pp. 134–141 (2003). 10.3115/1073445.1073473

- 21. Liu Y., et al., “Protein fold recognition using segmentation conditional random fields (SCRFs),” J. Comput. Biol. 13(2), 394–406 (2006). 10.1089/cmb.2006.13.394

- 22. He X., Zemel R. S., Carreira-Perpiñán M. Á., “Multiscale conditional random fields for image labeling,” in IEEE Comput. Soc. Conf. Proc. Comput. Vision and Pattern Recognit. CVPR 2004, IEEE, Vol. 2, pp. II–II (2004). 10.1109/CVPR.2004.1315232

- 23. Müller A. C., Behnke S., “Learning depth-sensitive conditional random fields for semantic segmentation of RGB-D images,” in IEEE Int. Conf. Rob. and Autom. (ICRA), IEEE, pp. 6232–6237 (2014). 10.1109/ICRA.2014.6907778

- 24. Bauer S., Nolte L.-P., Reyes M., “Fully automatic segmentation of brain tumor images using support vector machine classification in combination with hierarchical conditional random field regularization,” Lect. Notes Comput. Sci. 6893, 354–361 (2011). 10.1007/978-3-642-23626-6

- 25. Christ P. F., et al., “Automatic liver and lesion segmentation in CT using cascaded fully convolutional neural networks and 3D conditional random fields,” Lect. Notes Comput. Sci. 9901, 415–423 (2016). 10.1007/978-3-319-46723-8

- 26. Kumar S., Hebert M., “Discriminative random fields,” Int. J. Comput. Vision 68(2), 179–201 (2006). 10.1007/s11263-006-7007-9

- 27. Breiman L., “Random forests,” Mach. Learn. 45, 5–32 (2001). 10.1023/A:1010933404324

- 28. van der Schaaf A., van Hateren J. H., “Modelling the power spectra of natural images: statistics and information,” Vision Res. 36(17), 2759–2770 (1996). 10.1016/0042-6989(96)00002-8

- 29. Haralick R. M., “Statistical and structural approaches to texture,” Proc. IEEE 67(5), 786–804 (1979). 10.1109/PROC.1979.11328

- 30. Weldon T. P., Higgins W. E., Dunn D. F., “Efficient Gabor filter design for texture segmentation,” Pattern Recognit. 29(12), 2005–2015 (1996). 10.1016/S0031-3203(96)00047-7

- 31. Jain A. K., Ratha N. K., Lakshmanan S., “Object detection using Gabor filters,” Pattern Recognit. 30(2), 295–309 (1997). 10.1016/S0031-3203(96)00068-4

- 32. Marr D., Hildreth E., “Theory of edge detection,” Proc. R. Soc. Lond. B 207(1167), 187–217 (1980). 10.1098/rspb.1980.0020

- 33. Kramer O., “Iterated local search with Powell’s method: a memetic algorithm for continuous global optimization,” Memetic Comput. 2(1), 69–83 (2010). 10.1007/s12293-010-0032-9

- 34. Kosov S., “Direct graphical models C++ library,” 2013, http://research.project-10.de/dgm/.

- 35. Rajadhyaksha M., et al., “In vivo confocal scanning laser microscopy of human skin: melanin provides strong contrast,” J. Invest. Dermatol. 104(6), 946–952 (1995). 10.1111/1523-1747.ep12606215

- 36. Fitzpatrick T. B., “The validity and practicality of sun-reactive skin types I through VI,” Arch. Dermatol. 124(6), 869–871 (1988). 10.1001/archderm.1988.01670060015008

- 37. Robic J., “Automated characterization of skin aging using in vivo confocal microscopy,” Doctoral dissertation, Université Paris-Est (2018).

- 38. Longo C., et al., “Skin aging: in vivo microscopic assessment of epidermal and dermal changes by means of confocal microscopy,” J. Am. Acad. Dermatol. 68(3), e73–e82 (2013). 10.1016/j.jaad.2011.08.021

- 39. Longo C., et al., “Proposal for an in vivo histopathologic scoring system for skin aging by means of confocal microscopy,” Skin Res. Technol. 19(1), e167–e173 (2013). 10.1111/j.1600-0846.2012.00623.x