Abstract

Knowledge about the collective dynamics of cortical spiking is very informative about the underlying coding principles. However, even most basic properties are not known with certainty, because their assessment is hampered by spatial subsampling, i.e., the limitation that only a tiny fraction of all neurons can be recorded simultaneously with millisecond precision. Building on a novel, subsampling-invariant estimator, we fit and carefully validate a minimal model for cortical spike propagation. The model interpolates between two prominent states: asynchronous and critical. We find neither of them in cortical spike recordings across various species, but instead identify a narrow “reverberating” regime. This approach enables us to predict yet unknown properties from very short recordings and for every circuit individually, including responses to minimal perturbations, intrinsic network timescales, and the strength of external input compared to recurrent activation “thereby informing about the underlying coding principles for each circuit, area, state and task.

Keywords: balanced state, criticality, perturbations, timescales

Introduction

In order to understand how each cortical circuit or network processes its input, it would be desirable to first know its basic dynamical properties. For example, knowing which impact one additional spike has on the network (London et al. 2010) would give insight into the amplification of small stimuli (Douglas et al. 1995; Suarez et al. 1995; Miller 2016). Knowing how much of cortical activity can be attributed to external activation or internal activation (Reinhold et al. 2015) would allow to gauge how much of cortical activity is actually induced by stimuli, or rather internally generated, for example in the context of predictive coding (Rao and Ballard 1999; Clark 2013). Knowing the intrinsic network timescale (Murray et al. 2014) would inform how long stimuli are maintained in the activity and can be read out for short-term memory (Buonomano and Merzenich 1995; Wang 2002; Jaeger et al. 2007; Lim and Goldman 2013). However, not even these basic properties of cortical network dynamics are generally known with certainty.

In the past, insights about these network properties have been strongly hampered by the inevitable limitations of spatial subsampling, i.e., the fact that only a tiny fraction of all neurons can be recorded experimentally with millisecond precision. Such spatial subsampling fundamentally limits virtually any recording and hinders inferences about the collective response of cortical networks (Priesemann et al. 2009, 2014; Ribeiro et al. 2010, 2014; Levina and Priesemann 2017).

To describe network responses, two contradicting hypotheses have competed for more than a decade, and are the subjects of ongoing scientific debate: one hypothesis suggests that collective dynamics are “asynchronous-irregular” (AI) (Burns and Webb 1976; Softky and Koch 1993; Stein et al. 2005), i.e., neurons spike independently of each other and in a Poisson manner, which may reflect a balanced state (van Vreeswijk and Sompolinsky 1996; Brunel 2000). The other hypothesis proposes that neuronal networks operate at criticality (Beggs and Plenz 2003; Levina et al. 2007, 2009; Beggs and Timme 2012; Plenz and Niebur 2014; Tkačik et al. 2015; Humplik and Tkačik 2017; Muñoz 2018). Criticality is a particular state at a phase transition, characterized by high sensitivity and long-range correlations in space and time.

These hypotheses have distinct implications for the coding strategy of the brain. The typical balanced state minimizes redundancy (Atick 1992; Bell and Sejnowski 1997; van Hateren and van der Schaaf 1998; Hyvärinen and Oja 2000; Barlow 2012), supports fast network responses (van Vreeswijk and Sompolinsky 1996), and shows vanishing autocorrelation time or network timescale. In contrast, criticality in models optimizes performance in tasks that profit from extended reverberations of activity in the network (Bertschinger and Natschläger 2004; Haldeman and Beggs 2005; Kinouchi and Copelli 2006; Wang et al. 2011; Boedecker et al. 2012; Shew and Plenz 2013; Del Papa et al. 2017).

Surprisingly, there is experimental evidence for both AI and critical states in cortical networks, although both states are clearly distinct. Evidence for the AI state is based on characteristics of single-neuron spiking, resembling a Poisson process, i.e., exponential inter-spike interval (ISI) distributions and a Fano factor close to unity (Burns and Webb 1976; Tolhurst et al. 1981; Vogels et al. 1989; Softky and Koch 1993; de Ruyter van Steveninck et al. 1997; Gur et al. 1997; Kara et al. 2000; Carandini 2004). Moreover, spike count cross-correlations (Ecker et al. 2010; Cohen and Kohn 2011) are small. In contrast, evidence for criticality was typically obtained from a population perspective instead, and assessed neuronal avalanches, i.e., spatio-temporal clusters of activity (Beggs and Plenz 2003; Pasquale et al. 2008; Priesemann et al. 2009; Friedman et al. 2012; Tagliazucchi et al. 2012; Shriki et al. 2013), whose sizes are expected to be power-law distributed if networks are critical (Bak et al. 1987). Deviations from power-laws, typically observed for spiking activity in awake animals (Bédard et al. 2006; Hahn et al. 2010; Ribeiro et al. 2010; Priesemann et al. 2014), were attributed to subsampling effects (Girardi-Schappo et al. 2013; Priesemann et al. 2009, 2013, 2014; Ribeiro et al. 2010, 2014; Levina and Priesemann 2017). Hence, different analysis approaches provided evidence for one or the other hypothesis about cortical dynamics.

We here resolve the contradictory results about cortical dynamics, building on a subsampling-invariant approach presented in a companion study (Wilting and Priesemann 2018): (i) we establish an analytically tractable minimal model for in vivo-like activity, which can interpolate from AI to critical dynamics (Fig. 1a); (ii) we estimate the dynamical state of cortical activity based on a novel, subsampling-invariant estimator (Wilting and Priesemann 2018) (Figs. 1b–d); (iii) the model reproduces a number of dynamical properties of the network, which are experimentally accessible and enable us to validate our approach; (iv) we predict a number of yet unknown network properties, including the expected number of spikes triggered by one additional spike, the intrinsic network timescale, the distribution of the total number of spikes triggered by a single extra action potential, and the fraction of activation that can be attributed to afferent external input compared to recurrent activation in a cortical network.

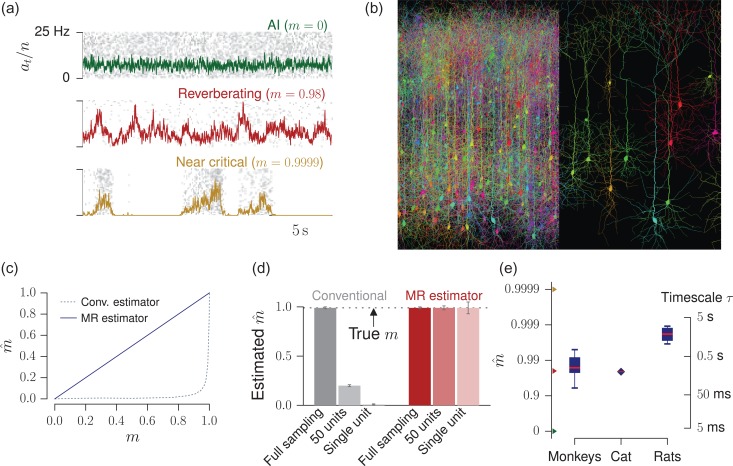

Figure 1.

Reverberating versus critical and irregular dynamics under subsampling. (a) Raster plot and population rate for networks with different spike propagation parameters or neural efficacy . They exhibit vastly different dynamics, which readily manifest in the population activity. (b) When recording spiking activity, only a small subset of all neurons can be sampled with millisecond precision. This spatial subsampling can hinder correct inference of collective properties of the whole network; figure created using TREES (Cuntz et al. 2010) and reproduced from Wilting and Priesemann (2018). (c) Estimated branching ratio as a function of the simulated, true branching ratio , inferred from subsampled activity (100 out of 10 000 neurons). While the conventional estimator misclassified from this subsampled observation (gray, dotted line), the novel multistep regression (MR) estimator returned the correct values. (d) For a reverberating branching model with , the conventional estimator inferred or when sampling 50 or 1 units, respectively, in contrast to MR estimation, which returned the correct even under strong subsampling. (e) Using the novel MR estimator, cortical network dynamics in monkey prefrontal cortex, cat visual cortex, and rat hippocampus consistently showed reverberating dynamics, with (median over all experimental sessions, boxplots indicate median/25–75%/0–100% over experimental sessions per species). These correspond to intrinsic network timescales between 80 ms and 2 s.

Material and Methods

We analyzed in vivo spiking activity from Macaque monkey prefrontal cortex during a short-term memory task (Pipa et al. 2009; Franke et al. 2010; Pröpper and Obermayer 2013), anesthetized cat visual cortex with no stimulus (Blanche and Swindale 2006; Blanche 2009), and rat hippocampus during a foraging task (Mizuseki et al. 2009a, 2009b) (Supp. 1). We compared the recordings of each experimental session to results of a minimal model of spike propagation, which is detailed in the following.

Minimal Model of Spike Propagation

To gain an intuitive understanding of our mathematical approach, make a thought experiment in your favorite spiking network: apply one additional spike to an excitatory neuron, in analogy to the approach by (London et al. 2010). How does the network respond to that perturbation? As a first order approximation, one quantifies the number of spikes that are directly triggered additionally in all postsynaptic neurons. This number may vary from trial to trial, depending on the membrane potential of the postsynaptic neurons. However, what interests us most is , the mean number of spikes triggered by the one extra spike. Any of these triggered spikes can in turn trigger spikes in their postsynaptic neurons in a similar manner, and thereby the perturbation may cascade through the system.

In the next step, assume that perturbations are started continuously at rate , for example through afferent input from other brain areas or sensory modalities. Together, this leads to the mathematical framework of a branching model (Harris 1963; Heathcote 1965; Pakes 1971; Beggs and Plenz 2003; Haldeman and Beggs 2005; Ribeiro et al. 2010; Priesemann et al. 2013, 2014). This framework describes the number of active neurons in discrete time bins of length . Here, should reflect the propagation time of spikes between neurons. Formally, each spike at the time bin excites a random number of postsynaptic spikes, on average . The activity , i.e., the total number of spikes in the next time bin is then defined as the sum of the postsynaptic spikes of all current spikes , as well as the input :

| (1) |

This generic spiking model can generate dynamics spanning AI and critical states depending on the input (Zierenberg et al. 2018), and hence is well suited to probe network dynamics in vivo (see Supp. 3 for details). Most importantly, this framework enables us to infer and other properties from the ongoing activity proper. Mathematical approaches to infer are long known if the full network is sampled (Heyde and Seneta 1972; Wei 1991). Under subsampling, however, it is the novel estimator described in Wilting and Priesemann (2018) that for the first time allows an unbiased inference of , even if only a tiny fraction of neurons is sampled.

A precise estimate of is essential, because the dynamics of the model is mainly governed by (Fig. 1a). Therefore, after inferring , a number of quantities can be analytically derived, and others can be obtained by simulating a branching model, which is constrained by the experimentally measured and the spike rate.

Simulation

We simulated a branching model by mapping a branching process (Eq. (1) and Supp. 3) onto a random network of neurons in the annealed disorder limit (Haldeman and Beggs 2005). An active neuron activated each of its postsynaptic neurons with probability . Here, the activated postsynaptic neurons were drawn randomly without replacement at each step, thereby avoiding that two different active neurons would both activate the same target neuron. The branching model is critical for in the infinite-size limit, and subcritical (supercritical) for (). We modeled input to the network at rate by Poisson activation of each neuron at rate . Subsampling (Priesemann et al. 2009) was applied to the model by sampling the activity of neurons only, which were selected randomly before the simulation, and neglecting the activity of all other neurons. Thereby, instead of the full activity , only the subsampled activity was considered for observation.

If not stated otherwise, simulations were run for time steps (corresponding to ~11 h). Confidence intervals were estimated according to Wilting and Priesemann (2018) from realizations of the model, both for simulation and experiments.

We compared the experimental recordings to three different models: AI, near-critical, and reverberating. All three models were set up to match the experiment in the number of sampled neurons and firing rate . The AI and near-critical models were set up with branching ratios of or , respectively. In addition, the reverberating model matched the recording in , where was estimated from the recording using the novel subsampling-invariant estimator (see below). For all models, we chose a full network size of and then determined the appropriate input in order to match the experimental firing rate. Exemplarily for the cat recording, which happened to represent the median , this yielded , , and . From these numbers, , and followed for the AI, reverberating, and near-critical models, respectively.

In Fig. 2, the reverberating branching model was also matched to the length of the cat recording of 295 s. To test for stationarity, the cat recording and the reverberating branching model were split into 59 windows of 5 s each, before estimating for each window. In Fig. 1c, subcritical and critical branching models with and were simulated, and units sampled.

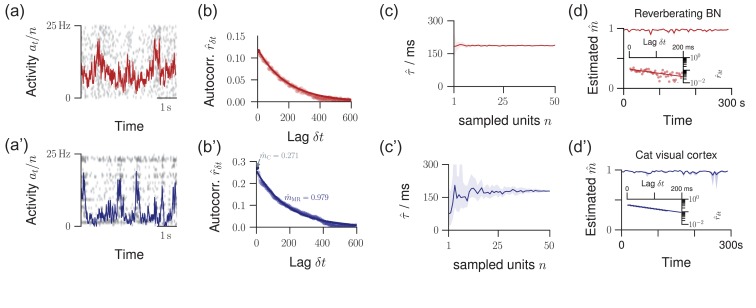

Figure 2.

Validation of the model assumptions. The top row displays properties from a reverberating model, the bottom row spike recordings from cat visual cortex. (a/a’) Raster plot and population activity within bins of , sampled from neurons. (b/b’) Multistep regression (MR) estimation from the subsampled activity (5 min recording). The predicted exponential relation provides a validation of the applicability of the model. The experimental data are fitted by this exponential with remarkable precision. (c/c’) When subsampling even further, MR estimation always returns the correct timescale (or ) in the model. In the experiment, this invariance to subsampling also holds, down to neurons (shaded area: 16–84% confidence intervals estimated from 50 subsets of neurons). (d/d’) The estimated branching parameter for 59 windows of length suggests stationarity of over the entire recording (shaded area: 16–84% confidence intervals). The variability in over consecutive windows was comparable for experimental recording and the matched model (, Levene test). Insets: exponential decay exemplified for one example window each.

Subsampling-Invariant Estimation of

Details on the analysis are found in Supp. 2. For each experimental recording, we collected the spike times of all recorded units (both single and multi-units) into one single train of population spike counts , where denotes how many neurons spiked in the time bin . If not indicated otherwise, we used , reflecting the propagation time of spikes from one neuron to the next.

From these experimental time series, we estimated using the multistep regression (MR) estimator described in all detail in Wilting and Priesemann (2018). In brief, we calculated the linear regression slope , which describes the linear statistical dependence of upon , for different time lags with . In our branching model, these slopes are expected to follow the relation , where is an unknown parameter that depends on the higher moments of the underlying process and the degree of subsampling. However, it can be partialled out, allowing for an estimation of without further knowledge about . Throughout this study, we chose (corresponding to 10 s) for the rat recordings, (600 ms) for the cat recording, and kmax = 500 (2000 ms) for the monkey recordings, assuring that was always in the order of multiple intrinsic network timescales. In order to test for the applicability of a MR estimation, we used a set of conservative tests (Wilting and Priesemann 2018). The exponential relation can be rewritten as an exponential autocorrelation function , where the intrinsic network timescale relates to as . While the precise value of depends on the choice of the bin size and should only be interpreted together with the bin size ( throughout this study), the intrinsic network timescale is independent of . Therefore, we report both values in the following.

Results

Reverberating Spiking Activity In Vivo

We applied MR estimation to the binned population spike counts of the recorded neurons of each experimental session across three different species (see Methods). We identified a limited range of branching ratios in vivo: in the experiments ranged from to (median , for a bin size of ), which is only a narrow window in the continuum from AI () to critical (). For these values of found in cortical networks, the corresponding are between 100 ms and 2 s (median 247 ms, Figs 1e and S1). This clearly suggests that spiking activity in vivo is neither AI-like, nor consistent with a critical state. Instead, it is poised in a regime that, unlike critical or AI, does not maximize one particular property alone but may flexibly combine features of both (Wilting et al. 2018). Without a prominent characterizing feature, we name it the reverberating regime, stressing that activity reverberates (different from the AI state) at timescales of hundreds of milliseconds (different from a critical state, where they can persist infinitely).

Validity of the Approach

There is a straight-forward verification of the validity of our phenomenological model: it predicts an exponential autocorrelation function for the population activity . We found that the activity in cat visual cortex (Fig. 2a,a’) is surprisingly well described by this exponential fit (Fig. 2b,b’). This validation holds to the majority of experiments investigated (14 out of 21, Fig. S1).

A second verification of our approach is based on its expected invariance under subsampling: We further subsampled the activity in cat visual cortex by only taking into account spikes recorded from a subset out of all available single units. As predicted (Fig. 2c), the estimates of , or equivalently of the intrinsic network timescale , coincided for any subset of single units if at least about five of the available 50 single units were evaluated (Fig. 2c’). Deviations when evaluating only a small subset of units most likely reflect the heterogeneity within cortical networks. Together, these results demonstrate that our approach returns consistent results when evaluating the activity of neurons, which were available for all investigated experiments.

Origin of the Activity Fluctuations

The fluctuations found in cortical spiking activity, instead of being intrinsically generated, could in principle arise from non-stationary input, which could in turn lead to misestimation of (Priesemann and Shriki 2018). This is unlikely for three reasons: first, the majority of experiments passed a set of conservative tests that reject recordings that show any signature of common non-stationarities, as defined in (Wilting and Priesemann 2018). Second, recordings in cat visual cortex were acquired in absence of any stimulation, excluding stimulus-related non-stationarities. Third, when splitting the spike recording into short windows, the window-to-window variation of in the recording did not differ from that of stationary in vivo-like reverberating models (, Fig. 2d,d’). For these reasons, the observed fluctuations in the estimates likely originate from the characteristic fluctuations of collective network dynamics within the reverberating regime.

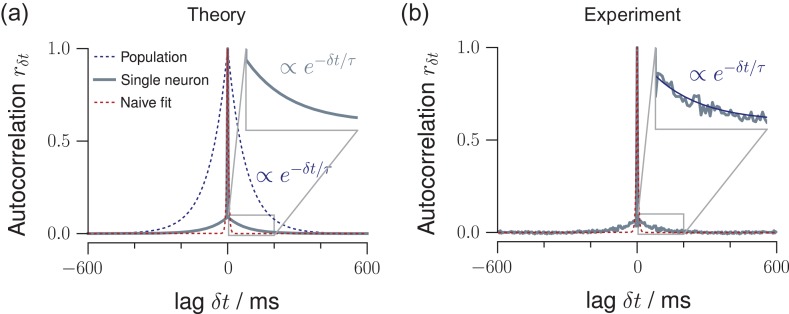

Timescales of the Network and Single Units

The dynamical state described by directly relates to an exponential autocorrelation function with an intrinsic network timescale . Exemplarily for the cat recording, implies an intrinsic network timescale of , with reflecting the spike propagation time from one neuron to the next. While the autocorrelation function of the full network activity is expected to show an exponential decay (Fig. 3a, blue), this is different for the autocorrelation of single neurons—the most extreme case of subsampling. We showed that subsampling can strongly decrease the absolute values of the autocorrelation function for any non-zero time lag (Fig. 3a, gray). This effect is typically interpreted as a lack of memory, because the autocorrelation of single neurons decays at the order of the bin size (Fig. 3a, red). However, ignoring the value at , the floor of the autocorrelation function still unveils the exponential relation. Remarkably, the autocorrelation function of single units in cat visual cortex displayed precisely the shape predicted under subsampling (compare Fig. 3a,b).

Figure 3.

MR estimation and intrinsic network timescales. (a) In a branching model, the autocorrelation function of the population activity decays exponentially with an intrinsic network timescale (blue dotted line). In contrast, the autocorrelation function for single neurons shows a sharp drop from at lag to the next lag (gray solid line). We showed previously that this drop is a subsampling-induced bias. When ignoring the zero-lag value, the autocorrelation strength is decreased, but the exponential decay and even the value of the intrinsic network timescale of the network activity are preserved (inset). The red, dashed line shows a potential, naive exponential function, fitted to the single-neuron autocorrelation function (gray). This naive fit would return a much smaller . (b) The autocorrelation function of single-neuron activity recorded in cat visual cortex (gray) precisely resembles this theoretical prediction, namely a sharp drop and then an exponential decay (blue, inset), which persists over more than 100 ms. A naive exponential fit (red) to the single-neuron data would return an extremely short .

Established Methods are Biased to Identifying AI Dynamics

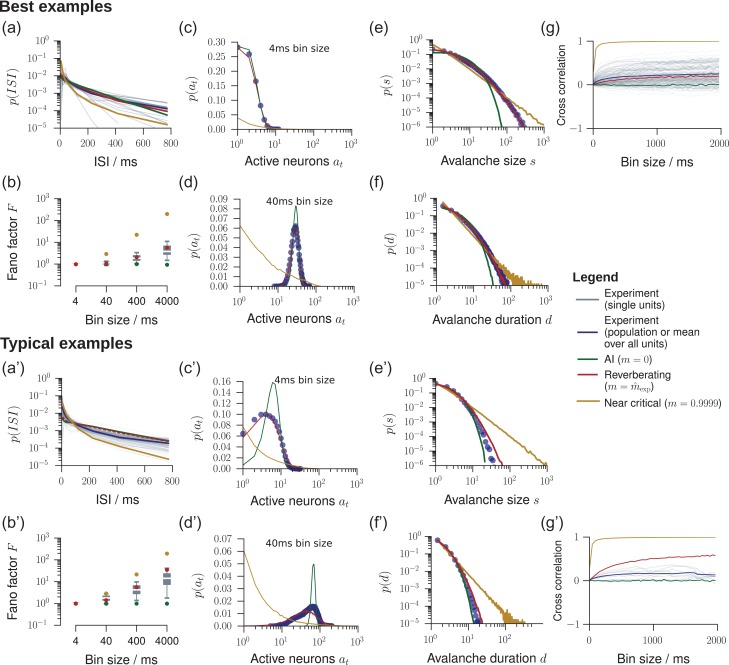

On the population level, networks with different are clearly distinguishable (Fig. 1a). Surprisingly, single-neuron statistics, namely inter-spike interval (ISI) distributions, Fano factors, conventional estimation of , and the autocorrelation strength , all returned signatures of AI activity regardless of the underlying network dynamics, and hence these single-neuron properties do not serve as a reliable indicator for the network’s dynamical state.

First, exponential ISI distributions are considered a strong indicator of Poisson-like firing. Surprisingly, the ISIs of single neurons in the in vivo-like branching model closely followed exponential distributions as well. The ISI distributions were almost indistinguishable for reverberating and AI models (Figs 4a,a’ and S2). In fact, the ISI distributions are mainly determined by the mean firing rate. This result was further supported by coefficients of variation close to unity, as expected for exponential ISI distributions and Poisson firing (Fig. S2).

Figure 4.

Model validation for in vivo spiking activity. We validated our model by comparing experimental results to predictions obtained from the in vivo-like, reverberating model, which was matched to the recording in the mean rate, inferred , and number of recorded neurons. In general, the experimental results (gray or blue) were best matched by this reverberating model (red), compared to asynchronous-irregular (AI, green) and near-critical (yellow) models. From all experimental sessions, best examples (top) and typical examples (bottom) are displayed. For results from all experimental sessions see Figs S2–S8. (a/a’) Inter-spike-interval (ISI) distributions. (b/b’) Fano factors of single neurons for bin sizes between 4 ms and 4 s. (c/c’) Distribution of spikes per bin at a bin size of 4 ms. (d/d’) Same as c/c’ with a bin size of 40 ms. (e/e’) Avalanche size distributions for all sampled units. AI activity lacks large avalanches, near-critical activity produces power-law distributed avalanches, even under subsampling. (f/f’) Same as e/e’, but for the avalanche duration distributions . (g/g’) Spike count cross-correlations () as a function of the bin size.

Second, for both the AI and reverberating regime, the Fano factor for single unit activity was close to unity, a hallmark feature of irregular spiking (Tolhurst et al. 1981; Vogels et al. 1989; Softky and Koch 1993; de Ruyter van Steveninck et al. 1997; Gur et al. 1997; Kara et al. 2000; Carandini 2004) (Fig. 5g, analytical result: Eq. (S9)). Hence it cannot serve to distinguish between these different dynamical states. When evaluating more units, or increasing the bin size to 4 s, the differences became more pronounced, but for experiments, the median Fano factor of single unit activity did not exceed in any of the experiments, even in those with the longest reverberation (Figs 4b,b’ and S3). In contrast, for the full network the Fano factor rose to for the in vivo-like branching model and diverged when approaching criticality (Fig. 5g, analytical result: Eq. (S5)).

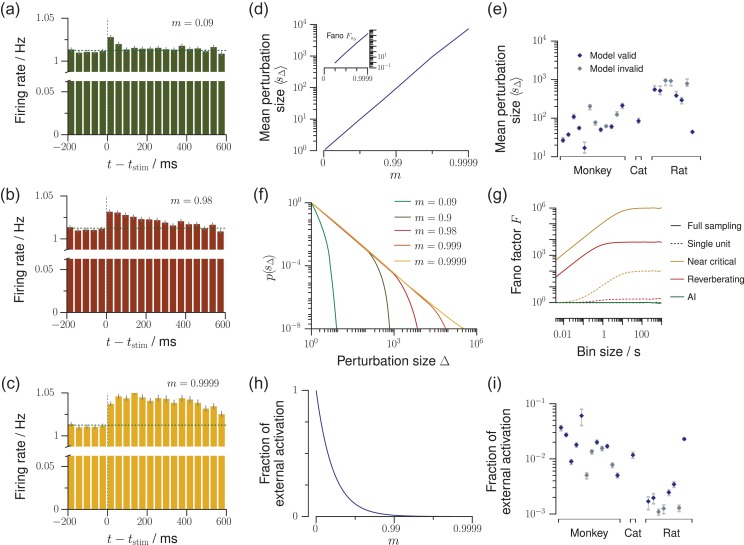

Figure 5.

Predictions about network dynamics and propagation of perturbations. Using our in vivo-like, reverberating model, we can predict several network properties, which are yet very complicated or impossible to obtain experimentally. (a–c) In response to one single extra spike, a perturbation propagates in the network depending on the branching ratio , and can be observed as a small increase of the average firing rate of the sampled neurons, here simulated for 500 trials (as in London et al. 2010). This increase of firing rate decays exponentially, with the decay time being determined by . The perturbation a is rapidly quenched in the asynchronous-irregular state, b decays slowly over hundreds of milliseconds in the reverberating state, or c persists almost infinitely in the critical state. (d) The average perturbation size and Fano factor (inset) increase strongly with . (e) Average total perturbation sizes predicted for each spike recording of mammalian cortex (errorbars: 5–95% confidence intervals). (f) Distribution of total perturbation sizes . The asynchronous-irregular models show approximately Poisson distributed, near-critical models power-law distributed perturbation sizes. (g) Bin size dependent Fano factors of the activity, here exemplarily shown for the asynchronous-irregular (, green), representative reverberating (, red), and near critical (, yellow) models. While the directly measurable Fano factor of single neurons (dotted lines) underestimates the Fano factor of the whole network, the model allows to predict the Fano factor of the whole network (solid lines). (h) The fraction of the externally generated spikes compared to all spikes in the network strongly decreases with larger . (i) Fraction of the externally generated spikes predicted for each spike recording of mammalian cortex (errorbars as in e).

Third, conventional regression estimators (Heyde and Seneta 1972; Wei 1991) are biased towards inferring irregular activity, as shown before. Here, conventional estimation yielded a median of for single-neuron activity in cat visual cortex, in contrast to returned by MR estimation (Fig. S9).

Fourth, for the autocorrelation function of an experimental recording (Fig. 3b) the rapid decay of prevails, and hence single-neuron activity appears uncorrelated in time.

Cross-validation of Model Predictions

We compared the experimental results to an in vivo-like model, which was matched to each experiment only in the average firing rate, and in the inferred branching ratio . Remarkably, this in vivo-like branching model could predict statistical properties not only of single neurons (ISI and Fano factor, see above), but also pairwise and population properties, as detailed below. This prediction capability further underlines the usefulness of this simple model to approximate the default state of cortical dynamics.

First, the model predicted the activity distributions, , better than AI or critical models for the majority of experiments (15 out 21, Figs 4c,d,c’,d’, S5, and S6), both for the exemplary bin sizes of 4 ms and 40 ms. Hence, the branching models, which were only matched in their respective first moment of the activity distributions (through the rate) and first moment of the spreading behavior (through ), in fact approximated all higher moments of the activity distributions .

Likewise, the model predicted the distributions of neural avalanches, i.e., spatio-temporal clusters of activity (Figs 4e,f,e’,f’, S7, and S8). Characterizing these distributions is a classic approach to assess criticality in neuroscience (Beggs and Plenz 2003; Priesemann et al. 2014), because avalanche size and duration distributions, and , respectively, follow power laws in critical systems. In contrast, for AI activity, they are approximately exponential (Priesemann and Shriki 2018). The matched branching models predicted neither exponential nor power law distributions for the avalanches, but very well matched the experimentally obtained distributions (compare red and blue in Figs 4e,f,e’,f’, S7, and S8). Indeed, model likelihood (Clauset et al. 2009) favored the in vivo-like branching model over Poisson and critical models for the majority experiments (18 out of 21, Fig. S7). Our results here are consistent with those of spiking activity in awake animals, which typically do not display power laws (Bédard et al. 2006; Ribeiro et al. 2010; Priesemann et al. 2014). In contrast, most evidence for criticality in vivo, in particular the characteristic power-law distributions, has been obtained from coarse measures of neural activity (LFP, EEG, BOLD; see Priesemann et al. 2014 and references therein).

Last, the model predicted the pairwise spike count cross-correlation . In experiments, is typically between 0.01 and 0.25, depending on brain area, task, and most importantly, the analysis timescale (bin size) (Cohen and Kohn 2011). For the cat recording the model even correctly predicted the bin size dependence of from at a bin size of 4 ms (analytical result: Eq. (S12)) to at a bin size of 2 s (Fig. 4g). Comparable results were also obtained for some monkey experiments. In contrast, correlations in most monkey and rat recordings were smaller than predicted (Figs 4g’ and S4). It is very surprising that the model correctly predicted the cross-correlation even in some experiments, as was inferred only from the temporal structure of the spiking activity alone, whereas characterizes spatial dependencies.

Overall, by only estimating the effective synaptic strength from the in vivo recordings, higher-order properties like avalanche size distributions, activity distributions and in some cases spike count cross-correlations could be closely matched using the generic branching model.

The Dynamical State Determines Responses to Small Stimuli

After validating the model using a set of statistical properties that are well accessible experimentally, we now turn to making predictions about yet unknown properties, namely network responses to small stimuli. In the line of London et al. (2010), assume that on a background of spiking activity one single extra spike is triggered. This spike may in turn trigger new spikes, leading to a cascade of additional spikes propagating through the network. A dynamical state with branching ratio implies that on average, this perturbation decays with time constant . Similar to the approach in London et al. (2010), the evolution of the mean firing rate, averaged over a reasonable number of trials (here: 500) unveils the nature of the underlying spike propagation: depending on , the rate excursions will last longer, the higher (Figs 5a–c and S11). The perturbations are not deterministic, but show trial-to-trial variability which also increases with (S11b).

Unless , the theory of branching models ensures that perturbations will die out eventually after a duration , having accumulated a total of extra spikes in total. This perturbation size and duration follow specific distributions (Harris 1963), which are determined by : they are power law distributed in the critical state (), with a cutoff for any (Figs 5f and S11c,d). These distributions imply a characteristic mean perturbation size (Fig. 5d), which diverges at the critical point. The variability of the perturbation sizes is also determined by and also diverges at the critical point (inset of Figs 5d and S11e).

Taken together, these results imply that the closer a neuronal network is to criticality, the more sensitive it is to external perturbations, and the better it can amplify small stimuli. At the same time, these networks also show larger trial-to-trial variability. For typical cortical networks, we found that the response to one single extra spike will on average comprise between 20 and 1000 additional spikes in total (Fig. 5e).

The Dynamical State Determines Network Susceptibility and Variability

Moving beyond single spike perturbations, our model gives precise predictions for the network response to continuous stimuli. If extra action potentials are triggered at rate in the network, the network will again amplify these external activations, depending on . Provided an appropriate stimulation protocol, this rate response could be measured and our prediction tested in experiments (Fig. S11g). The susceptibility diverges at the critical transition and is unique to a specific branching ratio . We predict that typical cortical networks will amplify a small, but continuous input rate by about a factor fifty (Fig. S11h, red).

While the input and susceptibility determine the network’s mean activity, the network still shows strong rate fluctuations around this mean value. The magnitude of these fluctuations in relation to the mean can be quantified by the network Fano factor (Fig. 5g). This quantity cannot be directly inferred from experimental recordings, because the Fano factor of subsampled populations severely underestimates the network Fano factor, as shown before. We here used our in vivo-like model to obtain estimates of the network Fano factor: for a bin size of it is about and rises to for bin sizes of several seconds, highlighting that network fluctuations probably are much stronger than one would naively assume from experimental, subsampled spiking activity.

Distinguishing Afferent and Recurrent Activation

Last, our model gives an easily accessible approach to solving the following question: given a spiking neuronal network, which fraction of the activity is generated by recurrent activation from within the network, and which fraction can be attributed to external, afferent excitation h? The branching model readily provides an answer: the fraction of external activation is (Fig. 5h). In this framework, AI-like networks are completely driven by external input currents or noise, whereas reverberating networks generate a substantial fraction of their activity intrinsically. For the experiments investigated in this study, we inferred that between 0.1% and 7% of the activity are externally generated (median 2%, Fig. 5i).

While our model is quite simplistic given the complexity of neuronal network activity, keep in mind that “all models are wrong, but some are useful” (Box 1979). Here, the model has proven to provide a good first order approximation to a number of statistical properties of spiking activity and propagation in cortex. Hence, it promises insight into cortical function because (i) it relies on simply assessing spontaneous cortical activity, (ii) it does not require manipulation of cortex, (iii) it enables reasonable predictions about sensitivity, amplification, and internal and external activation, (iv) this analysis is possible in an area specific, task- and state-dependent manner as only short recordings are required for consistent results.

Discussion

Our Results Resolve Contradictions Between AI and Critical States

Our results for spiking activity in vivo suggest that network dynamics show AI-like statistics, because under subsampling the observed correlations are underestimated. In contrast, typical experiments that assessed criticality potentially overestimated correlations by sampling from overlapping populations (LFP, EEG) and thereby hampered a fine distinction between critical and subcritical states (Pinheiro Neto and Priesemann, in preparation). By employing for the first time a consistent, quantitative estimation, we provided evidence that in vivo spiking population dynamics reflects a reverberating regime, i.e., it operates in a narrow regime around . This result is supported by the findings by Dahmen et al. (2016): based on distributions of covariances, they inferred that cortical networks operate in a regime below criticality. Given the generality of our results across different species, brain areas, and cognitive states, our results suggest self-organization to this reverberating regime as a general organization principle for cortical network dynamics.

The Reverberating Regime Combines Features of AI and Critical State

At first sight, of the reverberating regime may suggest that the collective spiking dynamics is very close to critical. Indeed, physiologically a 1.6% difference to criticality () is small in terms of the effective synaptic strength. However, this apparently small difference in single unit properties has a large impact on the collective dynamical fingerprint and makes AI, reverberating, and critical states clearly distinct: for example, consider the sensitivity to a small input, i.e., the susceptibility . The susceptibility diverges at criticality, making critical networks overly sensitive to input. In contrast, states with assure sensitivity without instability. Because this has a strong impact on network dynamics and putative network function, finely distinguishing between dynamical states is both important and feasible even if the corresponding differences in effective synaptic strength () appear small.

We cannot ultimately rule out that cortical networks self-organize as close as possible towards criticality, the platonic ideal being impossible to achieve for example due to finite-size, external input, and refractory periods. Therefore, the reverberating regime might conform with quasi-criticality (Williams-García et al. 2014) or neutral theory (Martinello et al. 2017). However, we deem this unlikely for two reasons. First, in simulations of finite-size networks with external input, we could easily distinguish the reverberating regime from states with (Wilting and Priesemann 2018), which are more than one order of magnitude closer to criticality than any experiment we analyzed. Second, operating in a reverberating regime, which is between AI and critical, may combine the computational advantages of both states (Wilting et al. 2018): the reverberating regime enables rapid changes of computational properties by small parameter changes, keeps a sufficient safety-margin from instability to make seizures sufficiently unlikely (Priesemann et al. 2014), balances competing requirements (e.g., sensitivity and specificity (Gollo 2017)), and may carry short-term memory and allow to integrate information over limited, tunable timescales (Wang 2002; Boedecker et al. 2012). For these reasons, we consider the reverberating regime to be the explicit target state of self-organization. This is in contrast to the view of “as close to critical as possible,” which still holds criticality as the ideal target.

More Complex Network Models

Cortical dynamics is clearly more complicated than a simple branching model. For example, heterogeneity of single-neuron morphology and dynamics, and non-trivial network topology likely impact population dynamics. However, we showed that statistics of cortical network activity are well approximated by a branching model. Therefore, we interpret branching models as a statistical approximation of spike propagation, which can capture a fair extent of the complexity of cortical dynamics. By using branching models, we draw on the powerful advantage of analytical tractability, which allowed for basic insight into dynamics and stability of cortical networks.

In contrast to the branching model, doubly stochastic processes (i.e., spikes drawn from an inhomogeneous Poisson distribution) failed to reproduce many statistical features (Fig. S10). We conjecture that the key difference is that doubly stochastic processes propagate the underlying firing rate instead of the actual spike count. Thus, propagation of the actual number of spikes (as e.g., in branching or Hawkes processes; Kossio et al. 2018), not some underlying firing rate, seems to be integral to capture the statistics of cortical spiking dynamics.

Our statistical model stands in contrast to generative models, which generate spiking dynamics by physiologically inspired mechanisms. One particularly prominent example are networks with balanced excitation and inhibition (van Vreeswijk and Sompolinsky 1996, 1997; Brunel 2000), which became a standard model of neuronal networks (Hansel and van Vreeswijk 2012). A balance of excitation and inhibition is supported by experimental evidence (Okun and Lampl 2008). Our statistical model reproduces statistical properties of such networks if one assumes that the excitatory and inhibitory contributions can be described by an effective excitation. In turn, the results obtained from the well-understood estimator can guide the refinement of generative models. For example, we suggest that network models need to be extended beyond the asynchronous-irregular state (Brunel 2000) to incorporate the network reverberations observed in vivo. Possible candidate mechanisms are increased coupling strength or inhomogeneous connectivity. Both have already been shown to induce rate fluctuations with timescales of several hundred milliseconds (Litwin-kumar and Doiron 2012; Ostojic 2014; Kadmon and Sompolinsky 2015).

Because of the assumption of uncorrelated, Poisson-like network firing, models that study single neurons typically assume that synaptic currents are normally distributed. Our results suggest that one should rather use input with reverberating properties with timescales of a few hundred milliseconds to reflect input from cortical neurons in vivo. This could potentially change our understanding of single-neuron dynamics, for example of their input-output properties.

Deducing Network Properties from the Tractable Model

Using our analytically tractable model, we could predict and validate network properties, such as avalanche size and duration, ISI, or activity distributions. Given the experimental agreement with these predictions, we deduced further properties, which are impossible or difficult to assess experimentally and gave insight into more complex questions about network responses: How do perturbations propagate within the network, and How susceptible is the network to external stimulation?

One particular question we could address is the following: Which fraction of network activity is attributed to external or recurrent, internal activation? We inferred that about 98% of the activity is generated by recurrent excitation, and only about 2% originates from input or spontaneous threshold crossing. This result may depend systematically on the brain area and cognitive state investigated: For layer 4 of primary visual cortex in awake mice, Reinhold et al. (2015) concluded that the fraction of recurrent cortical excitation is about 72%, and cortical activity dies out with a timescale of about 12 ms after thalamic silencing. Their numbers agree perfectly well with our phenomenological model: a timescale of implies that the fraction of recurrent cortical excitation is , just as found experimentally. Under anesthesia, in contrast, they report timescales of several hundred milliseconds, in agreement with our results. These differences show that the fraction of external activation may strongly depend on cortical area, layer, and cognitive state. The novel estimator can in future contribute to a deeper insight into these differences, because it allows for a straight-forward assessment of afferent versus recurrent activation, simply from evaluating spontaneous spiking activity, without the requirement of thalamic or cortical silencing.

Supplementary Material

Note

J.W. received support from the Gertrud-Reemstma-Stiftung. V.P. received financial support from the German Ministry for Education and Research (BMBF) via the Bernstein Center for Computational Neuroscience (BCCN) Göttingen under Grant no. 01GQ1005B, and by the German-Israel-Foundation (GIF) under Grant no. G-2391-421.13. J.W. and V.P. received financial support from the Max Planck Society. Conflict of Interest: The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

- Atick JJ. 1992. Could information theory provide an ecological theory of sensory processing? Network-Comp Neural. 3(2):213–251. [DOI] [PubMed] [Google Scholar]

- Bak P, Tang C, Wiesenfeld K. 1987. Self-organized criticality: an explanation of the 1/f noise. Phys Rev Lett. 59(4):381–384. [DOI] [PubMed] [Google Scholar]

- Barlow HB. 2012. Possible principles underlying the transformations of sensory messages In: Rosenblith WA, editor. Sensory communication. Cambridge, MA: The MIT Press; p. 217–234. [Google Scholar]

- Beggs JM, Plenz D. 2003. Neuronal avalanches in neocortical circuits. J Neurosci. 23(35):11167–11177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beggs JM, Timme N. 2012. Being critical of criticality in the brain. Front Physiol. 3:1–14. JUN(June). [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bell AJ, Sejnowski TJ. 1997. The ‘independent components’ of natural scenes are edge filters. Vision Res. 37(23):3327–3338. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bertschinger N, Natschläger T. 2004. Real-time computation at the edge of chaos in recurrent neural networks. Neural Comput. 16(7):1413–1436. [DOI] [PubMed] [Google Scholar]

- Blanche T. 2009. Multi-neuron recordings in primary visual cortex.

- Blanche TJ, Swindale NV. 2006. Nyquist interpolation improves neuron yield in multiunit recordings. J Neurosci Methods. 155(1):81–91. [DOI] [PubMed] [Google Scholar]

- Boedecker J, Obst O, Lizier JT, Mayer NM, Asada M. 2012. Information processing in echo state networks at the edge of chaos. Theory Biosci. 131(3):205–213. [DOI] [PubMed] [Google Scholar]

- Box GEP. 1979. Robustness in the strategy of scientific model building In: Launer RL, Wilkinson GN, editors. Robustness in statistics. vol. 1 New York: Academic Press; p. 201–236. [Google Scholar]

- Brunel N. 2000. Dynamics of networks of randomly connected excitatory and inhibitory spiking neurons. J Physiol Paris. 94(5–6):445–463. [DOI] [PubMed] [Google Scholar]

- Buonomano D, Merzenich M. 1995. Temporal information transformed into a spatial code by a neural network with realistic properties. Science. 267(5200):1028–1030. [DOI] [PubMed] [Google Scholar]

- Burns BD, Webb AC. 1976. The spontaneous activity of neurones in the cat’s cerebral cortex. Proc R Soc Lond B Biol Sci. 194(1115):211–223. [DOI] [PubMed] [Google Scholar]

- Bédard C, Kröger H, Destexhe A. 2006. Does the 1/f frequency scaling of brain signals reflect self-organized critical states? Phys Rev Lett. 97(11):1–4. [DOI] [PubMed] [Google Scholar]

- Carandini M. 2004. Amplification of trial-to-trial response variability by neurons in visual cortex. PLoS Biol. 2(9):e264. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Clark A. 2013. Whatever next? Predictive brains, situated agents, and the future of cognitive science. Behav Brain Sci. 36(03):181–204. [DOI] [PubMed] [Google Scholar]

- Clauset A, Rohilla Shalizi C, Newman MEJ. 2009. Power-law distributions in empirical data. SIAM Rev. 51(4):661–703. [Google Scholar]

- Cohen MR, Kohn A. 2011. Measuring and interpreting neuronal correlations. Nat Neurosci. 14(7):811–819. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cuntz H, Forstner F, Borst A, Häusser M. 2010. One rule to grow them all: a general theory of neuronal branching and its practical application. PLoS Comput Biol. 6(8):e1000877. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dahmen D, Diesmann M, Helias M. 2016. Distributions of covariances as a window into the operational regime of neuronal networks. Preprint at http://arxiv.org/abs/1605.04153.

- de Ruyter van Steveninck RR, Lewen GD, Strong SP, Koberle R, Bialek W. 1997. Reproducibility and variability in neural spike trains. Science. 275(5307):1805–1808. [DOI] [PubMed] [Google Scholar]

- Del Papa B, Priesemann V, Triesch J. 2017. Criticality meets learning: criticality signatures in a self-organizing recurrent neural network. PLoS One. 12(5):1–22. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Douglas RJ, Koch C, Mahowald M, Martin KA, Suarez HH. 1995. Recurrent excitation in neocortical circuits. Science. 269(5226):981–985. [DOI] [PubMed] [Google Scholar]

- Ecker, Alexander S; Berens, Philipp; Keliris, Georgios A; Bethge, Matthias; Logothetis, Nikos K; Tolias, Andreas S.. Decorrelated Neuronal Firing in Cortical Microcircuits. Science (80). 327, 584–587 (2010). [DOI] [PubMed] [Google Scholar]

- Franke F, Natora M, Boucsein C, Munk MHJ, Obermayer K. 2010. An online spike detection and spike classification algorithm capable of instantaneous resolution of overlapping spikes. J Comput Neurosci. 29(1–2):127–148. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friedman N, Ito S, Brinkman BAW, Shimono M, DeVille REL, Dahmen KA, Beggs JM, Butler TC. 2012. Universal critical dynamics in high resolution neuronal avalanche data. Phys Rev Lett. 108(20):208102. [DOI] [PubMed] [Google Scholar]

- Girardi-Schappo M, Kinouchi O, Tragtenberg MHR. 2013. Critical avalanches and subsampling in map-based neural networks coupled with noisy synapses. Phys Rev E. 88(2):1–5. [DOI] [PubMed] [Google Scholar]

- Gollo LL. 2017. Coexistence of critical sensitivity and subcritical specificity can yield optimal population coding. J. Royal Soc. Interface. 14(134):20170207. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Gur M, Beylin A, Snodderly DM. 1997. Response variability of neurons in primary visual cortex (V1) of alert monkeys. TL - 17. J Neurosci. 17(8):2914–2920. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hahn G, Petermann T, Havenith MN, Yu S, Singer W, Plenz D, Nikolic D. 2010. Neuronal avalanches in spontaneous activity in vivo. J Neurophysiol. 104(6):3312–3322. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Haldeman C, Beggs J. 2005. Critical branching captures activity in living neural networks and maximizes the number of metastable states. Phys Rev Lett. 94(5):058101. [DOI] [PubMed] [Google Scholar]

- Hansel D, van Vreeswijk C. 2012. The mechanism of orientation selectivity in primary visual cortex without a functional map. J Neurosci. 32(12):4049–4064. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Harris TE. 1963. The theory of branching processes. Berlin: Springer. [Google Scholar]

- Heathcote CR. 1965. A branching process allowing immigration. J R Stat Soc Series B. 27(1):138–143. [Google Scholar]

- Heyde CC, Seneta E. 1972. Estimation theory for growth and immigration rates in a multiplicative process. J Appl Probab. 9(2):235. [Google Scholar]

- Humplik J, Tkačik G. 2017. Probabilistic models for neural populations that naturally capture global coupling and criticality. PLoS Comput Biol. 13(9):1–26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hyvärinen A, Oja E. 2000. Independent component analysis: algorithms and applications. Neural Networks. 13:411–430. [DOI] [PubMed] [Google Scholar]

- Jaeger H, Maass W, Principe J. 2007. Special issue on echo state networks and liquid state machines. Neural Networks. 20(3):287–289. [Google Scholar]

- Kadmon J, Sompolinsky H. 2015. Transition to chaos in random neuronal networks. Phys Rev X. 5(4):1–28. [Google Scholar]

- Kara P, Reinagel P, Reid RC. 2000. Low response variability in simultaneously recorded retinal, thalamic, and cortical neurons. Neuron. 27(3):635–646. [DOI] [PubMed] [Google Scholar]

- Kinouchi O, Copelli M. 2006. Optimal dynamical range of excitable networks at criticality. Nat Phys. 2(5):348–351. [Google Scholar]

- Kossio FYK, Goedeke S, van den Akker B, Ibarz B, Memmesheimer R-M. 2018. Growing critical: self-organized criticality in a developing neural system. Phys Rev Lett. 121(5):058301. [DOI] [PubMed] [Google Scholar]

- Levina A, Herrmann JM, Geisel T. 2007. Dynamical synapses causing self-organized criticality in neural networks. Nat Phys. 3(12):857–860. [Google Scholar]

- Levina A, Herrmann J, Geisel T. 2009. Phase transitions towards criticality in a neural system with adaptive interactions. Phys Rev Lett. 102(11):118110. [DOI] [PubMed] [Google Scholar]

- Levina A, Priesemann V. 2017. Subsampling scaling. Nat Commun. 8:15140. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lim S, Goldman MS. 2013. Balanced cortical microcircuitry for maintaining information in working memory. Nat Neurosci. 16(9):1306–1314. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Litwin-kumar A, Doiron B. 2012. Slow dynamics and high variability in balanced cortical networks with clustered connections. Nat Neurosci. 15(11):1498–1505. [DOI] [PMC free article] [PubMed] [Google Scholar]

- London M, Roth A, Beeren L, Häusser M, Latham PE. 2010. Sensitivity to perturbations in vivo implies high noise and suggests rate coding in cortex. Nature. 466(7302):123–127. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Martinello M, Hidalgo J, Maritan A, Di Santo S, Plenz D, Muñoz MA. 2017. Neutral theory and scale-free neural dynamics. Phys Rev X. 7(4):1–11. [Google Scholar]

- Miller KD. 2016. Canonical computations of cerebral cortex. Curr Opin Neurobiol. 37:75–84. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mizuseki K, Sirota A, Pastalkova E, Buzsáki G. 2009. a. Multi-unit recordings from the rat hippocampus made during open field foraging.

- Mizuseki K, Sirota A, Pastalkova E, Buzsáki G. 2009. b. Theta oscillations provide temporal windows for local circuit computation in the entorhinal-hippocampal loop. Neuron. 64(2):267–280. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Murray JD, Bernacchia A, Freedman DJ, Romo R, Wallis JD, Cai X, Padoa-Schioppa C, Pasternak T, Seo H, Lee D, Wang X-J. 2014. A hierarchy of intrinsic timescales across primate cortex. Nat Neurosci. 17(12):1661–1663. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Muñoz MA. 2018. Colloquium: criticality and dynamical scaling in living systems. Rev Mod Phys. 90(3):031001. [Google Scholar]

- Okun M, Lampl I. 2008. Instantaneous correlation of excitation and inhibition during ongoing and sensory-evoked activities. Nat Neurosci. 11(5):535–537. [DOI] [PubMed] [Google Scholar]

- Ostojic S. 2014. Two types of asynchronous activity in networks of excitatory and inhibitory spiking neurons. Nat Neurosci. 17(4):594–600. [DOI] [PubMed] [Google Scholar]

- Pakes AG. 1971. Branching processes with immigration. J Appl Probab. 8(1):32. [Google Scholar]

- Pasquale V, Massobrio P, Bologna LL, Chiappalone M, Martinoia S. 2008. Self-organization and neuronal avalanches in networks of dissociated cortical neurons. Neuroscience. 153(4):1354–1369. [DOI] [PubMed] [Google Scholar]

- Pinheiro Neto J, Priesemann V Coarse sampling bias inference of criticality in neural system. In preparation.

- Pipa G, Städtler ES, Rodriguez EF, Waltz JA, Muckli LF, Singer W, Goebel R, Munk MHJ. 2009. Performance- and stimulus-dependent oscillations in monkey prefrontal cortex during short-term memory. Front Integr Neurosci. 3:25. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Plenz D, Niebur E, editors. 2014. Criticality in neural systems. Weinheim, Germany: Wiley-VCH Verlag GmbH & Co. KGaA. [Google Scholar]

- Priesemann V, Wibral M, Valderrama M, Pröpper R, Le Van Quyen M, Geisel T, Triesch J, Nikolić D, Munk MHJ. 2014. Spike avalanches in vivo suggest a driven, slightly subcritical brain state. Front Syst Neurosci. 8:108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Priesemann V, Munk MHJ, Wibral M. 2009. Subsampling effects in neuronal avalanche distributions recorded in vivo. BMC Neurosci. 10:40. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Priesemann V, Shriki O. 2018. Can a time varying external drive give rise to apparent criticality in neural systems? PLoS Comput Biol. 14(5):e1006081. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Priesemann V, Valderrama M, Wibral M, Le Van Quyen M. 2013. Neuronal avalanches differ from wakefulness to deep sleep—evidence from intracranial depth recordings in humans. PLoS Comput Biol. 9(3):e1002985. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Pröpper R, Obermayer K. 2013. Spyke Viewer: a flexible and extensible platform for electrophysiological data analysis. Front Neuroinform. 7:26. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Rao RPN, Ballard DH. 1999. Predictive coding in the visual cortex: a functional interpretation of some extra-classical receptive-field effects. Nat Neurosci. 2(1):79–87. [DOI] [PubMed] [Google Scholar]

- Reinhold K, Lien AD, Scanziani M. 2015. Distinct recurrent versus afferent dynamics in cortical visual processing. Nat Neurosci. 18(12):1789–1797. [DOI] [PubMed] [Google Scholar]

- Ribeiro TL, Copelli M, Caixeta F, Belchior H, Chialvo DR, Nicolelis MAL, Ribeiro S. 2010. Spike avalanches exhibit universal dynamics across the sleep-wake cycle. PLoS One. 5(11):e14129. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ribeiro TL, Ribeiro S, Belchior H, Caixeta F, Copelli M. 2014. Undersampled critical branching processes on small-world and random networks fail to reproduce the statistics of spike avalanches. PLoS One. 9(4):e94992. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Shew WL, Plenz D. 2013. The functional benefits of criticality in the cortex. Neuroscientist. 19(1):88–100. [DOI] [PubMed] [Google Scholar]

- Shriki O, Alstott J, Carver F, Holroyd T, Henson RNA, Smith ML, Coppola R, Bullmore E, Plenz D. 2013. Neuronal avalanches in the resting MEG of the human brain. J Neurosci. 33(16):7079–7090. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Softky WR, Koch C. 1993. The highly irregular firing of cortical cells is inconsistent with temporal integration of random EPSPs. J Neurosci. 13(1):334–350. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Stein RB, Gossen ER, Jones KE. 2005. Neuronal variability: noise or part of the signal? Nat Rev Neurosci. 6(5):389–397. [DOI] [PubMed] [Google Scholar]

- Suarez H, Koch C, Douglas R. 1995. Modeling direction selectivity of simple cells in striate visual cortex within the framework of the canonical microcircuit. J Neurosci. 15(10):6700–6719. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tagliazucchi E, Balenzuela P, Fraiman D, Chialvo DR. 2012. Criticality in large-scale brain fmri dynamics unveiled by a novel point process analysis. Front Physiol. 3:1–12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tkačik G, Mora T, Marre O, Amodei D, Palmer SE, Berry MJ, Bialek W. 2015. Thermodynamics and signatures of criticality in a network of neurons. Proc Natl Acad Sci U S A. 112(37):11508–11513. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tolhurst DJ, Movshon JA, Thompson ID. 1981. The dependence of response amplitude and variance of cat visual cortical neurones on stimulus contrast. Exp Brain Res. 41(3–4):414–419. [DOI] [PubMed] [Google Scholar]

- van Hateren JH, van der Schaaf A. 1998. Independent component filters of natural images compared with simple cells in primary visual cortex. Proc Biol Sci. 265(1394):359–366. [DOI] [PMC free article] [PubMed] [Google Scholar]

- van Vreeswijk C, Sompolinsky H. 1996. Chaos in neuronal networks with balanced excitatory and inhibitory activity. Science. 274(5293):1724–1726. [DOI] [PubMed] [Google Scholar]

- van Vreeswijk C, Sompolinsky H. 1997. Irregular firing in cortical circuits with inhibition/excitation balance In: Bower, editor. Computational neuroscience: trends in reserach, 1997. New York: Plenum Press; p. 209–213. [Google Scholar]

- Vogels R, Spileers W, Orban GA. 1989. The response variability of striate cortical neurons in the behaving monkey. Exp Brain Res. 77(2):432–436. [DOI] [PubMed] [Google Scholar]

- Wang XJ. 2002. Probabilistic decision making by slow reverberation in cortical circuits. Neuron. 36(5):955–968. [DOI] [PubMed] [Google Scholar]

- Wang XR, Lizier JT, Prokopenko M. 2011. Fisher information at the edge of chaos in random Boolean networks. Artif Life. 17(4):315–329. [DOI] [PubMed] [Google Scholar]

- Wei CZ. 1991. Convergence rates for the critical branching process with immigration. Stat Sin. 1:175–184. [Google Scholar]

- Williams-García RV, Moore M, Beggs JM, Ortiz G. 2014. Quasicritical brain dynamics on a nonequilibrium Widom line. Phys Rev E. 90(6):062714. [DOI] [PubMed] [Google Scholar]

- Wilting J, Dehning J, Pinheiro Neto J, Rudelt L, Wibral M, Zierenberg J, Priesemann V. 2018. Operating in a reverberating regime enables rapid tuning of network states to task requirements. frontiers in systems. Neuroscience. 12:55. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Wilting J, Priesemann V. 2018. Inferring collective dynamical states from widely unobserved systems. Nat Commun. 9(1):2325. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zierenberg J, Wilting J, Priesemann V. 2018. Homeostatic plasticity and external input shape neural network dynamics. Phys Rev X. 8(3):031018. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.