Key Points

Question

How do outcomes of hospitals eligible for the American College of Surgeons National Accreditation Program for Rectal Cancer compare with those of hospitals less likely to be accredited?

Findings

This cohort study of 1315 American College of Surgeons Commission on Cancer–accredited hospitals found that those most prepared for accreditation are usually academic institutions with the best survival outcomes. These hospitals more often serve affluent populations.

Meaning

The current standards and scope of the National Accreditation Program for Rectal Cancer may not reach hospitals and patients most in need of improvement and could exacerbate disparities in access to high-quality care, which may be mitigated by quality improvement interventions and redirection of socioeconomically disadvantaged patients to high-quality accredited institutions.

Abstract

Importance

The American College of Surgeons National Accreditation Program for Rectal Cancer (NAPRC) promotes multidisciplinary care to improve oncologic outcomes in rectal cancer. However, accreditation requirements may be difficult to achieve for the lowest-performing institutions. Thus, it is unknown whether the NAPRC will motivate care improvement in these settings or widen disparities.

Objectives

To characterize hospitals’ readiness for accreditation and identify differences in the patients cared for in hospitals most and least prepared for accreditation.

Design, Setting, and Participants

A total of 1315 American College of Surgeons Commission on Cancer–accredited hospitals in the National Cancer Database from January 1, 2011, to December 31, 2015, were sorted into 4 cohorts, organized by high vs low volume and adherence to process standards, and patient and hospital characteristics and oncologic outcomes were compared. The patients included those who underwent surgical resection with curative intent for rectal adenocarcinoma, mucinous adenocarcinoma, or signet ring cell carcinoma. Data analysis was performed from November 2017 to January 2018.

Exposures

Hospitals’ readiness for accreditation, as determined by their annual resection volume and adherence to 5 available NAPRC process standards.

Main Outcomes and Measures

Hospital characteristics, patient sociodemographic characteristics, and 5-year survival by hospital.

Results

Among the 1315 included hospitals, 38 (2.9%) met proposed thresholds for all 5 NAPRC process standards and 220 (16.7%) met the threshold on 4 standards. High-volume hospitals (≥20 resections per year) tended to be academic institutions (67 of 104 [64.4%] vs 159 of 1211 [13.1%]; P = .001), whereas low-volume hospitals (<20 resections per year) tended to be comprehensive community cancer programs (530 of 1211 [43.8%] vs 28 of 104 [26.9%]; P = .001). Patients in low-volume hospitals were more likely to be older (11 429 of 28 076 [40.7%] vs 4339 of 12 148 [35.7%]; P < .001) and have public insurance (13 054 of 28 076 [46.5%] vs 4905 of 12 148 [40.4%]; P < .001). Low-adherence hospitals were more likely to care for black and Hispanic patients (3554 of 20 647 [17.2%] vs 1980 of 19 577 [10.1%]; P < .001). On multivariable Cox proportional hazards model regression, high-volume hospitals had better 5-year survival outcomes than low-volume hospitals (hazard ratio, 0.99; 95% CI, 0.99-1.00; P < .001), but there was no significant survival difference by hospital process standard adherence.

Conclusions and Relevance

Hospitals least likely to receive NAPRC accreditation tended to be community institutions with worse survival outcomes, serving patients at a lower socioeconomic position. To avoid exacerbating disparities in access to high-quality rectal cancer care, these findings suggest that the NAPRC enable access for patients with socioeconomic disadvantage or engage in quality improvement for hospitals not yet achieving accreditation benchmarks.

This cohort study examines the accreditation status and readiness for accreditation of hospitals caring for patients with rectal cancer.

Introduction

Despite well-established evidence-based guidelines, marked shortcomings remain in the quality of rectal cancer care in the United States.1 Aiming to reduce unwanted variation in care practices and improve multidisciplinary engagement in rectal cancer care, the American College of Surgeons Commission on Cancer has begun implementation of a National Accreditation Program for Rectal Cancer (NAPRC).2 Like other nationally endorsed accreditation programs, such as the National Cancer Institute cancer center designation or bariatric centers of excellence, the NAPRC intends to improve outcomes by certifying process standards in their member institutions. Some of these accreditation programs have faced controversy around their uncertain effect on access to care.3 In the case of the bariatric accreditation program, efforts to improve patient safety resulted in decreased access to bariatric surgery for nonwhite Medicare beneficiaries.4

The NAPRC aims to improve the quality of rectal cancer care on a national scale, but it is not yet clear how accreditation might affect patients’ access to high-quality care in the hospitals they are most likely to use. Furthermore, nationwide data suggest that only slightly more than half (56.3%) of patients with rectal cancer in the United States currently receive guideline-concordant care at the adherence levels specified by the NAPRC.5 Still, the characteristics of hospitals capable of achieving such levels of adherence remain unknown, as does the effect of the NAPRC on patients’ access to quality care. The availability of NAPRC designation could motivate improvement in the delivery of guideline-concordant care across the spectrum of institutions. However, if accreditation is achieved primarily in high-volume specialty institutions already providing high-quality care, the quality of care at unaccredited hospitals may stagnate or worsen. Recognizing that the quality of rectal cancer care is associated with patients’ socioeconomic position,6,7 the NAPRC could have the unintended consequence of widening disparities and limiting access to high-quality care for certain patient populations if, as with the bariatric accreditation program, it favors already high-performing institutions.

In this study, we first modeled each hospital’s readiness for accreditation, according to validated measures of quality, including surgical volume and adherence to the NAPRC-recommended rectal cancer process measures that can be ascertained in National Cancer Database (NCDB) data. We then compared patient characteristics, hospital characteristics, and outcomes between hospitals, based on their procedure volume and process adherence. We hypothesized that hospitals most ready for accreditation will tend to serve higher-resourced patient populations, and that patients with socioeconomic disadvantage may have lesser access to these institutions. Understanding of this potential source of increased disparity may enable the design and dissemination of the NAPRC to prevent unintended consequences.

Methods

Inclusion Criteria

This was a retrospective cohort analysis in which we queried the NCDB Participant Use File from January 1, 2011, to December 31, 2015. Data analysis was conducted from November 2017 to January 2018. The NCDB Participant Use File captures patient data from Commission on Cancer–accredited hospitals,8 which account for approximately 70% of patients with cancer in the United States.9 This date range was selected to most accurately represent hospitals’ current readiness for accreditation. This study was deemed exempt from human subjects review by the institutional review board of the University of Michigan; data were deidentified.

Analysis was limited to patients who underwent surgical resection with curative intent for adenocarcinoma, mucinous adenocarcinoma, or signet ring cell carcinoma of the rectum. Patients with missing data regarding chemotherapy or radiotherapy were excluded (n = 162), as were patients with incomplete clinical staging information (n = 9287), as the hospital’s adherence in care provided could not be established.

Modeling Readiness for Accreditation

We categorized hospitals according to both annual procedure volume and adherence to NAPRC-defined process measures. Although many of the NAPRC measures are not captured in available data registries, 3 pathologic measures (circumferential radial margin, proximal and distal margins, and tumor regression), as well as clinical staging, timing of definitive treatment, and carcinoembryonic antigen level, are available in the NCDB Participant Use File.5 In addition, the NCDB captures the Commission on Cancer standard for treatment of rectal cancer, which requires delivery of neoadjuvant chemoradiotherapy for clinical stage II or III disease or adjuvant chemoradiotherapy administered within 180 days postoperatively for pathologic stages II and III disease, for a total of 5 process standards captured in this database.10

In accordance with the NAPRC’s requirements for accreditation and accreditation with contingency, we defined high adherence to be performance above the mandated threshold for at least 3 of the 5 measurable process standards (Table 1).11 Hospitals meeting 2 or fewer standards were designated as low-adherence institutions. These cutoffs were chosen to match those used in previous studies.5,12 These cutoffs also closely reflect the NAPRC accreditation standards. Programs may be accredited with contingency if they are found to be deficient in up to 5 of the 22 standards put forth in the National Accreditation Program for Rectal Cancer Standards Manual.11

Table 1. Hospitals Meeting Threshold for National Accreditation Program for Rectal Cancer Adherence by Process Standard.

| Process Standard (NAPRC Threshold) | Hospitals Meeting Standard, <20 Cases per Year (n = 1211), No. (% of Volume Group) | Hospitals Meeting Standard, ≥20 Cases per Year (n = 104), No. (% of Volume Group) | Mean Adherence to Specific Process Standard Across All Hospitals, % |
|---|---|---|---|
| Complete pathologic test report (95%) | 153 (12.6) | 4 (3.8) | 76.0 |
| Clinical staging performed (95%) | 1007 (83.1) | 92 (88.5) | 97.6 |
| CEA obtained (75%) | 486 (40.1) | 34 (32.7) | 66.4 |
| Treatment within 60 d of diagnosis (80%) | 998 (82.4) | 87 (83.7) | 86.9 |
| Neoadjuvant/adjuvant chemoradiotherapy for stages II and III rectal cancer (85%) | 499 (41.2) | 30 (28.8) | 78.7 |
| Aggregate No. of process standards met | | | |
| 0/5 | 31 (2.6) | 2 (1.9) | NA |
| 1/5 | 143 (11.8) | 16 (15.4) | NA |
| 2/5 | 393 (32.5) | 41 (39.4) | NA |
| 3/5 | 399 (32.9) | 32 (30.8) | NA |
| 4/5 | 208 (17.2) | 12 (11.5) | NA |
| 5/5 | 37 (3.1) | 1 (1.0) | NA |

Abbreviations: CEA, carcinoembryonic antigen; NA, not applicable; NAPRC, National Accreditation Program for Rectal Cancer.

We computed mean hospital procedure volume as a structural measure of rectal cancer care quality, as it is well established that higher case volume is associated with superior outcomes.1,13,14 Hospitals with a mean of 20 or more surgical rectal cancer cases annually were considered high volume, and hospitals with fewer than 20 cases were categorized as low volume. This cutoff was chosen to be consistent with previous studies and reflects the observed distribution of case volume between institutions; this level also allowed for similar-size comparison groups.12

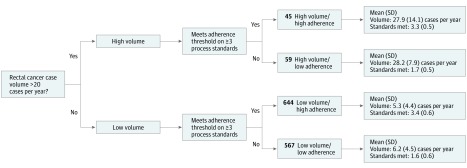

Using the high and low adherence and volume assignments, hospitals were categorized into 4 groups: high volume/high adherence, high volume/low adherence, low volume/high adherence, and low volume/low adherence (Figure 1).

Figure 1. Hospital Classification Schema.

Volume and process standard thresholds for hospital categorization.
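The classification schema can be illustrated with a minimal Python sketch. It is not the authors' Stata code; the standard labels, the `Hospital` container, and the example values are hypothetical, and the use of `>=` at the threshold is an assumption (the text says performance "above the mandated threshold"). The thresholds and cutoffs themselves are taken from Table 1 and the text.

```python
from dataclasses import dataclass

# NAPRC thresholds for the 5 measurable process standards (Table 1).
# The dictionary keys are hypothetical labels chosen for this sketch.
THRESHOLDS = {
    "pathology_report": 0.95,
    "clinical_staging": 0.95,
    "cea_obtained": 0.75,
    "treatment_within_60d": 0.80,
    "chemoradiotherapy": 0.85,
}

HIGH_VOLUME_MIN = 20     # mean annual rectal cancer resections
HIGH_ADHERENCE_MIN = 3   # process standards met, of 5


@dataclass
class Hospital:
    mean_annual_cases: float
    adherence: dict  # standard label -> proportion of patients adherent


def standards_met(h):
    # Assumption: a standard counts as met at or above its threshold.
    return sum(h.adherence[k] >= t for k, t in THRESHOLDS.items())


def classify(h):
    volume = "high volume" if h.mean_annual_cases >= HIGH_VOLUME_MIN else "low volume"
    adherence = "high adherence" if standards_met(h) >= HIGH_ADHERENCE_MIN else "low adherence"
    return f"{volume}/{adherence}"


# Hypothetical hospital: 3 of 5 standards met, about 28 cases per year.
example = Hospital(
    mean_annual_cases=27.9,
    adherence={
        "pathology_report": 0.96,
        "clinical_staging": 0.97,
        "cea_obtained": 0.60,
        "treatment_within_60d": 0.85,
        "chemoradiotherapy": 0.70,
    },
)
print(classify(example))
```

Keeping the two cutoffs as named constants makes it straightforward to test how sensitive the group sizes are to alternative definitions of high volume or high adherence.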

Statistical Analysis

Descriptive statistics were used to analyze adherence to the measurable NAPRC process standards across all hospitals. We then analyzed the characteristics of the hospitals and the patients they serve between the 4 hospital groups, using Fisher exact and χ2 tests for categorical variables, t test for continuous variables, and analysis of variance for multicategory comparisons of continuous data. We compared overall 5-year survival between groups of hospitals using multivariable Cox proportional hazards regression models, adjusting for a priori clinically relevant patient factors, including age, sex, race/ethnicity, and rectal cancer stage. Observations were censored according to NCDB data after the date of last contact. We used clustered SEs to account for clustering of outcomes within hospitals and considered a 2-tailed, unpaired P value <.05 to be significant. All statistical analyses were conducted using Stata, version 14 (StataCorp LP).
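As an illustration of the categorical comparisons, the following Python sketch computes a Pearson χ2 test for a 2 × 2 table using only the standard library. It is an assumption that this uncorrected form matches the test applied to any particular comparison in the study, which was run in Stata; the counts are the age >65 years figures reported in the Results, and the function name is hypothetical.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 degree of freedom, the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


# Patients older than 65 years in low-volume vs high-volume hospitals.
older_low, n_low = 11429, 28076
older_high, n_high = 4339, 12148

chi2, p = chi_square_2x2(
    older_low, n_low - older_low,
    older_high, n_high - older_high,
)
print(f"chi-square = {chi2:.1f}; P < .001: {p < 0.001}")
```

This sketch ignores clustering of patients within hospitals, which the survival models in the study handle with clustered SEs.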

Results

Hospital Accreditation Readiness Classification

We identified 1315 hospitals that performed a total of 40 224 rectal cancer resections meeting inclusion criteria. Within this group of hospitals, 38 (2.9%) met the thresholds for adherence to all 5 NAPRC measures, 220 (16.7%) met 4 measures, and 431 (32.8%) met 3 measures. The measures are reported by volume of cases per year in Table 1. The mean (SD) number of process measures met across institutions was 2.6 (1.1), and the median was 3 (interquartile range, 2-3). Pathologic testing was the most commonly deficient process measure, and within that composite measure, the tumor regression measurement was most often missing.

The mean (SD) rectal cancer surgical volume across all hospitals was 7.5 (8.0) cases per year. Of the 1211 (92.1%) low-volume hospitals (<20 cases per year), 644 (53.2%) were classified as high adherence, with above-threshold adherence to 3 or more of the 5 NAPRC measures. Of the 104 (7.9%) high-volume hospitals (≥20 cases per year), 45 (43.3%) were classified as high adherence.

Forty-five hospitals (3.4%) met criteria for designation as a high-volume/high-adherence institution, with a mean (SD) annual case volume of 27.9 (14.1). These hospitals met a mean (SD) of 3.3 (0.5) of 5 total process standards. In the high-volume/low-adherence group, there were 59 hospitals (4.5%) with a mean (SD) annual case volume of 28.2 (7.9) cases that met a mean (SD) of 1.7 (0.5) process standards. In the low-volume/high-adherence group, there were 644 hospitals (49.0%) with a mean (SD) annual case volume of 5.3 (4.4) cases that met 3.4 (0.6) process standards. In addition, there were 567 hospitals (43.1%) in the low-volume/low-adherence group, with a mean (SD) annual case volume of 6.2 (4.5) cases that met 1.6 (0.6) process standards (Figure 1).
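The group sizes above follow directly from the aggregate counts in Table 1. The short Python sketch below retraces that arithmetic; the variable and function names are hypothetical, and the counts are copied from the table.

```python
from statistics import mean, median

# Hospitals meeting 0, 1, 2, 3, 4, or 5 process standards (Table 1).
low_volume = [31, 143, 393, 399, 208, 37]   # <20 cases per year
high_volume = [2, 16, 41, 32, 12, 1]        # >=20 cases per year


def high_adherence(counts, cutoff=3):
    """Number of hospitals meeting at least `cutoff` of the 5 standards."""
    return sum(counts[cutoff:])


all_hospitals = [lo + hi for lo, hi in zip(low_volume, high_volume)]

# Expand to one value per hospital to summarize the number of standards met.
per_hospital = [k for k, n in enumerate(all_hospitals) for _ in range(n)]

print("Total hospitals:", sum(all_hospitals))
print("High adherence, low volume:", high_adherence(low_volume))
print("High adherence, high volume:", high_adherence(high_volume))
print("Mean standards met:", round(mean(per_hospital), 1))
print("Median standards met:", median(per_hospital))
```

Changing `cutoff` shows how many hospitals would qualify as high adherence under a stricter or looser definition.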

Hospital Characteristics by Accreditation Readiness

High-volume hospitals were more likely than low-volume hospitals to be academic institutions (67 of 104 [64.4%] vs 159 of 1211 [13.1%]; P = .001), whereas low-volume hospitals tended to be comprehensive community cancer programs (530 of 1211 [43.8%] vs 28 of 104 [26.9%]; P = .001) (Table 2). The largest shares of the 1315 hospitals were located in the South Atlantic (Washington, DC; Delaware; Florida; Georgia; Maryland; North Carolina; South Carolina; Virginia; West Virginia) (272 [20.7%]) and East North Central (Illinois, Indiana, Michigan, Ohio, Wisconsin) (265 [20.2%]) regions. There was a slight trend toward low-adherence hospitals clustering in southern regions.

Table 2. Hospital Characteristics by Hospital Group.

| Hospital Characteristics | High Volume/High Adherence (n = 45), No. (%) | High Volume/Low Adherence (n = 59), No. (%) | Low Volume/High Adherence (n = 644), No. (%) | Low Volume/Low Adherence (n = 567), No. (%) | P Value |
|---|---|---|---|---|---|
| Hospital type | | | | | |
| Community cancer program | 0 | 0 | 219 (34.0) | 156 (27.5) | <.001 |
| Comprehensive community cancer program | 12 (26.7) | 16 (27.1) | 277 (43.0) | 253 (44.6) | |
| Academic/research program | 29 (64.4) | 38 (64.4) | 58 (9.0) | 101 (17.8) | |
| Integrated network cancer program | 4 (8.9) | 5 (8.5) | 90 (14.0) | 57 (10.1) | |
| Hospital region | | | | | |
| New England | 2 (4.4) | 5 (8.5) | 50 (7.8) | 33 (5.8) | <.001 |
| Middle Atlantic | 7 (15.6) | 5 (8.5) | 79 (12.3) | 82 (14.5) | |
| South Atlantic | 8 (17.8) | 15 (25.4) | 125 (19.4) | 124 (21.9) | |
| East North Central | 11 (24.4) | 10 (17.0) | 163 (25.3) | 81 (14.3) | |
| East South Central | 3 (6.7) | 4 (6.8) | 40 (6.2) | 39 (6.9) | |
| West North Central | 5 (11.1) | 2 (3.4) | 67 (10.4) | 25 (4.4) | |
| West South Central | 4 (8.9) | 7 (11.9) | 34 (5.3) | 67 (11.8) | |
| Mountain | 1 (2.2) | 4 (6.8) | 27 (4.2) | 28 (4.9) | |
| Pacific | 4 (8.9) | 7 (11.9) | 59 (9.2) | 88 (15.5) | |

Patient Characteristics by Accreditation Readiness

Low-volume hospitals served a higher proportion of older patients (11 429 of 28 076 [40.7%] vs 4339 of 12 148 [35.7%]; P < .001) and patients with public insurance (Medicaid and Medicare, 13 054 [46.5%] vs 4905 [40.4%]; P < .001). High-volume hospitals were more likely to serve patients with higher levels of education (4551 of 12 148 [37.5%] vs 8915 of 28 076 [31.8%]; P < .001) and private insurance (6438 [53.0%] vs 12 964 [46.2%]; P < .001). Patients with stage III or IV disease were more likely to be seen at high-volume hospitals (54.7% vs 48.3%; P < .001) (Table 3).

Table 3. Patient Characteristics by Hospital Group.

| Patient Characteristics | No. (%) | P Value | |||

|---|---|---|---|---|---|

| High Volume/High Adherence (n = 5428) | High Volume/Low Adherence (n = 6720) | Low Volume/High Adherence (n = 14 149) | Low Volume/Low Adherence (n = 13 927) | ||

| Age, mean (SD), y | 59.5 (12.6) | 59.8 (12.7) | 61.4 (12.5) | 61.5 (12.6) | <.001 |

| Age >65 y, mean (SD) | 1877 (34.6) | 2462 (36.6) | 5713 (40.4) | 5716 (41.0) | <.001 |

| Women | 2050 (37.8) | 2602 (38.7) | 5347 (37.8) | 5360 (38.5) | .44 |

| Race/ethnicity | |||||

| White | 4652 (86.8) | 5280 (79.4) | 12 024 (85.6) | 10 420 (75.6) | <.001 |

| Black | 326 (6.1) | 555 (8.3) | 983 (7.0) | 1317 (9.6) | |

| Hispanic | 123 (2.3) | 448 (6.7) | 548 (3.9) | 1234 (9.0) | |

| Other | 261 (4.9) | 368 (5.5) | 487 (3.5) | 809 (5.9) | |

| Insurance | |||||

| Private | 2873 (52.9) | 3565 (53.1) | 6701 (47.4) | 6263 (45.0) | <.001 |

| Medicare | 1858 (34.2) | 2270 (33.8) | 5446 (38.5) | 5366 (38.5) | |

| Medicaid | 365 (6.7) | 412 (6.1) | 995 (7.0) | 1247 (9.0) | |

| Other government insurance | 75 (1.4) | 106 (1.6) | 196 (1.4) | 162 (1.2) | |

| Not insured | 191 (3.5) | 299 (4.5) | 610 (4.3) | 643 (4.6) | |

| Income quartiles, $a | |||||

| <38 000 | 905 (16.7) | 1058 (15.8) | 2320 (16.4) | 2759 (19.9) | <.001 |

| 38 000-48 000 | 1317 (24.3) | 1450 (21.6) | 3834 (27.2) | 3152 (22.7) | |

| >48 000-63 000 | 1478 (27.3) | 1779 (26.5) | 3971 (28.1) | 3521 (25.4) | |

| >63 000 | 1714 (31.7) | 2423 (36.1) | 3994 (28.3) | 4457 (32.1) | |

| No high school degree, %a,b | |||||

| ≥29.0 | 716 (13.5) | 979 (15.1) | 1983 (14.5) | 2978 (22.1) | <.001 |

| 20.0%-28.9 | 1292 (24.4) | 1418 (21.8) | 3411 (24.9) | 3334 (24.7) | |

| 14.0%-19.9 | 1339 (25.3) | 1503 (23.1) | 3722 (27.1) | 2878 (21.3) | |

| <14.0% | 1945 (36.8) | 2606 (40.1) | 4604 (33.6) | 4311 (31.9) | |

| Patient location | |||||

| Metropolitan | 3976 (74.7) | 5421 (82.2) | 10 265 (74.6) | 11 365 (83.3) | <.001 |

| Urban | 1190 (22.4) | 1049 (15.9) | 3030 (22.0) | 2014 (14.8) | |

| Rural | 154 (2.9) | 122 (1.9) | 475 (3.5) | 261 (1.9) | |

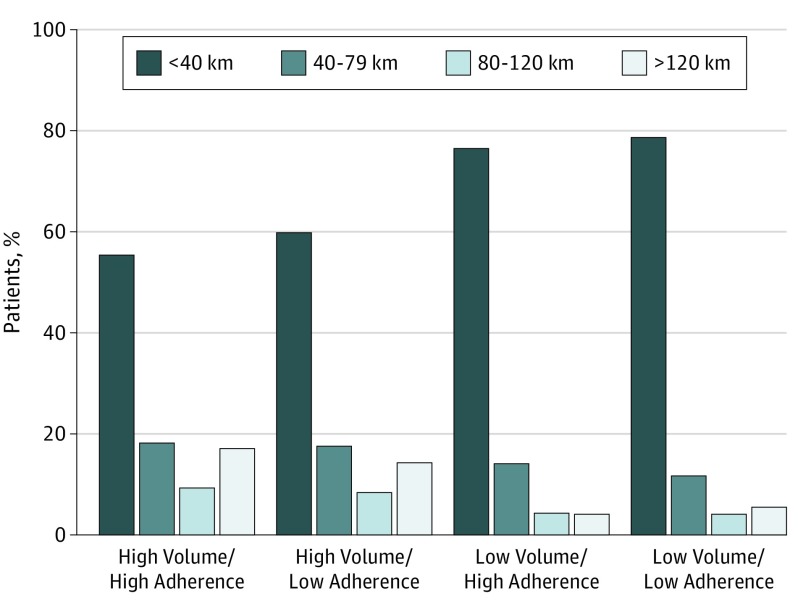

| Distance traveled to hospital, mean (SD), km | 97.7 (270.6) | 74.7 (208.8) | 42.8 (161.5) | 36.5 (104.3) | <.001 |

| Rectal cancer stage | |||||

| I | 740 (13.6) | 1112 (16.6) | 2065 (14.6) | 2661 (19.1) | <.001 |

| II | 1654 (30.5) | 2002 (29.8) | 5114 (36.2) | 4682 (33.6) | |

| III | 2472 (45.6) | 2916 (43.4) | 5773 (40.8) | 5343 (38.4) | |

| IV | 561 (10.3) | 690 (10.3) | 1196 (8.5) | 1240 (8.9) | |

| Charlson-Deyo scorec | |||||

| 0 | 4184 (77.1) | 5295 (78.8) | 10 897 (77.0) | 10 895 (78.2) | .11 |

| 1 | 971 (17.9) | 1104 (16.4) | 2522 (17.8) | 2392 (17.2) | |

| 2 | 206 (3.8) | 238 (3.5) | 537 (3.8) | 472 (3.4) | |

| 3 | 67 (1.2) | 83 (1.2) | 193 (1.4) | 168 (1.2) | |

Assigned by zip code of patient’s residence.

Proportion of population without high school degree.

The Charlson-Deyo score indicates the number of comorbid conditions that a patient has, using only those found in the Charlson Comorbidity Score Mapping Table.15

Low-adherence hospitals were more likely to care for black and Hispanic patients (3554 [17.2%] vs 1980 [10.1%]; P < .001). Low-adherence hospitals were also more likely to serve patients from metropolitan areas (16 786 [81.3%] vs 14 241 [72.7%]; P < .001). There was no distinct pattern in patients’ comorbidity scores or income level between hospital groups. Finally, patients traveled more than twice as far to be seen at high-volume hospitals compared with patients who traveled to low-volume hospitals (mean distance, 85.0 vs 39.5 km; P < .001) (Figure 2). Exclusions owing to missing data were somewhat less common in the high-volume/high-adherence hospitals (high-volume/high-adherence, 857 [13.3%]; high-volume/low-adherence, 1611 [19.3%]; low-volume/high-adherence, 3019 [17.6%]; low-volume/low-adherence, 3707 [21.0%]).
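The pooled race/ethnicity comparison by adherence can be retraced from the Table 3 counts. The Python sketch below sums black and Hispanic patients across the two hospital groups at each adherence level; the group keys and function name are hypothetical. Following the text, it uses each group's total patient count as the denominator, which is an assumption about how the reported percentages were derived.

```python
# Patient counts copied from Table 3, keyed by hospital group
# (HV/LV = high/low volume, HA/LA = high/low adherence).
patients = {
    "HV/HA": {"n": 5428, "black": 326, "hispanic": 123},
    "HV/LA": {"n": 6720, "black": 555, "hispanic": 448},
    "LV/HA": {"n": 14149, "black": 983, "hispanic": 548},
    "LV/LA": {"n": 13927, "black": 1317, "hispanic": 1234},
}


def pooled_share(groups):
    """Return (count, total, percent) of black and Hispanic patients across groups."""
    count = sum(patients[g]["black"] + patients[g]["hispanic"] for g in groups)
    total = sum(patients[g]["n"] for g in groups)
    return count, total, round(100 * count / total, 1)


low_adherence = pooled_share(["HV/LA", "LV/LA"])
high_adherence = pooled_share(["HV/HA", "LV/HA"])

print("Low adherence:", low_adherence)
print("High adherence:", high_adherence)
```

The same helper can pool by volume instead of adherence by passing `["HV/HA", "HV/LA"]` or `["LV/HA", "LV/LA"]`.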

Figure 2. Distance Traveled by Hospital Group.

Distance traveled by patients to high-volume/high-adherence (A), high-volume/low-adherence (B), low-volume/high-adherence (C), and low-volume/low-adherence (D) hospitals.

Overall Survival by Accreditation Readiness

Patients at hospitals with high volume/high adherence survived the longest and served as the reference group for the analysis. There was no significant difference in overall 5-year survival compared with patients in high-volume/low-adherence hospitals, with a hazard ratio (HR) of 1.02 (95% CI, 0.92-1.12; P = .42). Low-volume/high-adherence hospitals demonstrated an HR of 1.18 (95% CI, 1.08-1.28; P = .001). Low-volume/low-adherence hospitals demonstrated an HR of 1.21 (95% CI, 1.11-1.31; P < .001). These HRs reflect the 5-year mortality risk; they are reported for each group compared with the reference group and were adjusted for age, sex, race/ethnicity, Charlson-Deyo comorbidity score, and rectal cancer stage (eFigure and eTable in the Supplement). Regarding relative survival between the other 3 groups of hospitals, compared with the high-volume/low-adherence hospitals, the low-volume/high-adherence hospitals demonstrated an HR of 1.15 (95% CI, 1.04-1.28; P = .006), and the low-volume/low-adherence hospitals demonstrated an HR of 1.19 (95% CI, 1.07-1.32; P = .001). The HR for the low-volume/low-adherence hospitals compared with the low-volume/high-adherence hospitals was 1.03 (95% CI, 0.97-1.10; P = .36). On multivariable Cox proportional hazards model regression, high-volume hospitals had better 5-year survival outcomes than low-volume hospitals (HR, 0.99; 95% CI, 0.99-1.00; P < .001), but there was no significant survival difference by hospital process standard adherence.

Discussion

This study has 3 key findings. First, the hospitals most prepared for accreditation are a small group of predominantly academic centers that serve a highly resourced patient population. Second, most patients with rectal cancer are cared for at low-volume or low-adherence hospitals, which are most often comprehensive community cancer centers serving patients with fewer socioeconomic resources. Patients tend to travel shorter distances to receive care at these hospitals that are less likely to be prepared for NAPRC accreditation. Third, mean 5-year survival is lowest among patients in low-volume/low-adherence hospitals, which are least likely to receive accreditation.

Previous studies have found that many patients who undergo treatment for rectal cancer in the United States do not receive guideline-concordant care.5 Accordingly, in this study we found that a minority of hospitals are currently well positioned to achieve the requirements for NAPRC accreditation. In a recent study that queried hospitals’ readiness for accreditation, their self-reported rates of adherence were consistent with our findings.11 Low-volume/low-adherence hospitals are unlikely to be accredited, and they have significantly worse survival compared with other hospital groups. However, they perform approximately one-third of all rectal cancer operations in this cohort (13 927 of 40 224) and, as found in other analyses, serve a larger proportion of older, Medicare and/or Medicaid, and black and Hispanic patients, and patients who do not travel far for care.13,16 Whether the NAPRC can successfully improve the quality of care in these settings or induce selective referral to accredited institutions remains unknown.

It is essential that we understand the possible mechanisms for care improvement in the setting of the NAPRC because of the considerable gap identified between the highest- and lowest-performing hospitals. Without efforts to improve access to high-quality care for patients who receive treatment at the lowest-performing hospitals, an accreditation program for high-performing institutions could easily exclude the places most in need of improvement. Failing to include these lower-volume, lower-adherence hospitals in the NAPRC will leave some of the most vulnerable patients with access to inferior care.17

The findings of this study suggest 2 different strategies by which the NAPRC might successfully enable reductions in rectal cancer care disparities. Both structural determinants, such as case volume, and process measures, such as the NAPRC standards, contribute to the quality of care that a hospital can provide.18 Hospitals in the high-volume/low-adherence group, as well as the low-volume/high-adherence group, are compelling targets for focused quality improvement efforts. The first strategy is geared toward the high-volume centers. We believe that the current NAPRC standards are suited for improving care at hospitals with high volume but low guideline adherence. Promoting improved adherence to process standards (for example, through training sessions tailored to the departments required to meet certain guidelines) could facilitate accreditation for these hospitals. Similar survival outcomes between this hospital group and the high-volume/high-adherence group provide further rationale for the NAPRC to promote process improvement and allow these institutions to earn accreditation.

The second strategy for broader inclusion targets low-volume hospitals that already achieve high-level adherence to the measured process standards. This strategy is more challenging, but would likely make a greater contribution to expanding access to high-quality care, as the patients who receive treatment at these hospitals share sociodemographic characteristics with the underserved populations that seek care at low-volume/low-adherence hospitals. In addition to serving a high proportion of low-income, publicly insured, and racial minority patients, this hospital group provides care for the largest share of patients from rural areas. It is encouraging that low-volume/high-adherence institutions already deliver care concordant with multiple process standards despite their lower rectal cancer case volume, but this is tempered by their patients’ survival outcomes, which are shorter than those of the high-volume hospitals. The NAPRC might consider a strategy in which it encourages selective referral of clinically complex patients with rectal cancer receiving care at low-volume/low-adherence hospitals to the low-volume/high-adherence hospitals.19 In addition, partnering these low-volume hospitals with academic centers to coordinate care plans, for example, via regional collaboratives, could further encourage high-quality care.20,21

Although there has been reasonable concern about the effect of expanding systems of care, proceeding deliberately with proper oversight for patient safety can mitigate these risks.22 Admittedly, these considerations are ambitious and may require resources and influences beyond those available within the proposed accreditation program.

Limitations

There are limitations to this study. First, owing to the observational retrospective nature of the NCDB, these findings may be biased by unmeasured confounding variables. However, this analysis is bolstered by the fact that it is conducted at the hospital level, which lessens the effect of differences between individual patients by pooling data into larger cohorts. Second, our process standards are an imperfect surrogate for the actual NAPRC standards. These 5 standards do not capture all relevant hospital quality attributes; however, they are the best available means of describing program quality from a large national database and have been used in studies authored by the architects of NAPRC to assess national trends in quality adherence in rectal cancer care.5 A more granular study of particular process measures and their effect on patient outcomes will be needed after the NAPRC is implemented. Third, the NCDB is not a comprehensive national database, as it includes only Commission on Cancer–accredited institutions.8 However, it is anticipated that nonaccredited hospitals would have even lower surgical volumes and lower rates of adherence to guidelines, and thus their exclusion is likely conservative. Although the findings can be generalized only to Commission on Cancer–accredited hospitals, NAPRC accreditation requires Commission on Cancer accreditation, and thus NCDB data cover the full set of hospitals relevant to these questions. Furthermore, we excluded patients with missing clinical staging, as their care could not be assessed for adherence to key measures, and exclusions were least common in the high-volume/high-adherence hospitals. Any bias from this difference would again be conservative, however, as their inclusion would exaggerate differences between groups.

In addition, the survival analysis demonstrated that volume was a stronger predictor of survival than process standard adherence. Volume is not included as an accreditation standard in the NAPRC but may be associated with a hospital’s ability to provide complex multidisciplinary care.23,24 The NAPRC has designated multiple clinical protocols beyond just the process standards (eg, multidisciplinary tumor boards and quality-reporting systems) as criteria for accreditation. Our model included volume as a proxy for these unmeasured structural standards because they will be most readily achieved in specialty institutions with established, high-volume rectal cancer practices. Our findings remain relevant to the NAPRC and potential efforts to increase the inclusivity of the accreditation program.

Conclusions

If the NAPRC aims to make substantive progress in bettering rectal cancer care in the United States, preserving access via broader accreditation appears to be necessary. Specifically, low-volume/low-adherence hospitals could selectively refer patients with more complex conditions to low-volume/high-adherence hospitals. In addition, it appears that high-volume hospitals should renew their commitment to process standards, and those with lower rates of adherence to these measures should be supported in improving the delivery of high-quality care to achieve accreditation. In this way, the NAPRC could improve access to high-quality rectal cancer care while maintaining a commitment to excellence.

The NAPRC needs to hold institutions to a high standard; however, this goal should not come at the expense of preserving, and even expanding, access to high-quality care for socioeconomically disadvantaged patients. Efforts to improve the care of underserved populations will probably yield the largest improvements in survival and quality of life for patients with rectal cancer.

eFigure. Adjusted Survival Curves by Hospital Group

eTable. Cox Proportional Hazards Model Results for Each Term in the Model

References

1. Monson JR, Probst CP, Wexner SD, et al; Consortium for Optimizing the Treatment of Rectal Cancer (OSTRiCh). Failure of evidence-based cancer care in the United States: the association between rectal cancer treatment, cancer center volume, and geography. Ann Surg. 2014;260(4):625-631. doi: 10.1097/SLA.0000000000000928

2. American College of Surgeons National Accreditation Program for Rectal Cancer. What is the NAPRC? https://www.facs.org/quality-programs/cancer/naprc. Accessed February 1, 2018.

3. Telem DA, Talamini M, Altieri M, Yang J, Zhang Q, Pryor AD. The effect of national hospital accreditation in bariatric surgery on perioperative outcomes and long-term mortality. Surg Obes Relat Dis. 2015;11(4):749-757. doi: 10.1016/j.soard.2014.05.012

4. Nicholas LH, Dimick JB. Bariatric surgery in minority patients before and after implementation of a centers of excellence program. JAMA. 2013;310(13):1399-1400. doi: 10.1001/jama.2013.277915

5. Brady JT, Xu Z, Scarberry KB, et al; Consortium for Optimizing the Treatment of Rectal Cancer (OSTRiCh). Evaluating the current status of rectal cancer care in the US: where we stand at the start of the Commission on Cancer’s National Accreditation Program for Rectal Cancer. J Am Coll Surg. 2018;226(5):881-890. doi: 10.1016/j.jamcollsurg.2018.01.057

6. Gabriel E, Thirunavukarasu P, Al-Sukhni E, Attwood K, Nurkin SJ. National disparities in minimally invasive surgery for rectal cancer. Surg Endosc. 2016;30(3):1060-1067. doi: 10.1007/s00464-015-4296-5

7. Joseph DA, Johnson CJ, White A, Wu M, Coleman MP. Rectal cancer survival in the United States by race and stage, 2001 to 2009: findings from the CONCORD-2 study. Cancer. 2017;123(suppl 24):5037-5058. doi: 10.1002/cncr.30882

8. Guillem JG, Díaz-González JA, Minsky BD, et al. cT3N0 rectal cancer: potential overtreatment with preoperative chemoradiotherapy is warranted. J Clin Oncol. 2008;26(3):368-373. doi: 10.1200/JCO.2007.13.5434

9. Bilimoria KY, Stewart AK, Winchester DP, Ko CY. The National Cancer Data Base: a powerful initiative to improve cancer care in the United States. Ann Surg Oncol. 2008;15(3):683-690. doi: 10.1245/s10434-007-9747-3

10. Commission on Cancer Quality Measures. Chicago, IL: American College of Surgeons; 2016.

11. American College of Surgeons Commission on Cancer. The National Accreditation Program for Rectal Cancer Standards Manual. Chicago, IL: American College of Surgeons; 2017.

12. Lee L, Dietz DW, Fleming FJ, et al. Accreditation readiness in US multidisciplinary rectal cancer care: a survey of OSTRICH member institutions. JAMA Surg. 2018;153(4):388-390.

13. Xu Z, Becerra AZ, Justiniano CF, et al. Is the distance worth it? patients with rectal cancer traveling to high-volume centers experience improved outcomes. Dis Colon Rectum. 2017;60(12):1250-1259. doi: 10.1097/DCR.0000000000000924

14. Archampong D, Borowski D, Wille-Jørgensen P, Iversen LH. Workload and surgeon’s specialty for outcome after colorectal cancer surgery. Cochrane Database Syst Rev. 2012;(3):CD005391.

15. American College of Surgeons. Charlson-Deyo score. http://ncdbpuf.facs.org/content/charlsondeyo-comorbidity-index. Published 2016. Accessed January 10, 2019.

16. Dimick J, Ruhter J, Sarrazin MV, Birkmeyer JD. Black patients more likely than whites to undergo surgery at low-quality hospitals in segregated regions. Health Aff (Millwood). 2013;32(6):1046-1053. doi: 10.1377/hlthaff.2011.1365

17. Livingston EH, Burchell I. Reduced access to care resulting from centers of excellence initiatives in bariatric surgery. Arch Surg. 2010;145(10):993-997. doi: 10.1001/archsurg.2010.218

18. Donabedian A. The quality of care. how can it be assessed? JAMA. 1988;260(12):1743-1748. doi: 10.1001/jama.1988.03410120089033

19. Huang LC, Tran TB, Ma Y, Ngo JV, Rhoads KF. Factors that influence minority use of high-volume hospitals for colorectal cancer care. Dis Colon Rectum. 2015;58(5):526-532. doi: 10.1097/DCR.0000000000000353

20. Wong RS, Vikram B, Govern FS, et al. National Cancer Institute’s Cancer Disparities Research Partnership Program: experience and lessons learned. Front Oncol. 2014;4:303. doi: 10.3389/fonc.2014.00303

21. Luckenbaugh AN, Miller DC, Ghani KR. Collaborative quality improvement. Curr Opin Urol. 2017;27(4):395-401. doi: 10.1097/MOU.0000000000000404

22. Haas S, Gawande A, Reynolds ME. The risks to patient safety from health system expansions. JAMA. 2018;319(17):1765-1766. doi: 10.1001/jama.2018.2074

23. Hollenbeck BK, Daignault S, Dunn RL, Gilbert S, Weizer AZ, Miller DC. Getting under the hood of the volume-outcome relationship for radical cystectomy. J Urol. 2007;177(6):2095-2099. doi: 10.1016/j.juro.2007.01.153

24. Kowalski C, Lee SY, Ansmann L, Wesselmann S, Pfaff H. Meeting patients’ health information needs in breast cancer center hospitals—a multilevel analysis. BMC Health Serv Res. 2014;14:601. doi: 10.1186/s12913-014-0601-6
