Abstract

Sorted L-One Penalized Estimation (SLOPE, Bogdan et al., 2013, 2015) is a relatively new convex optimization procedure which allows for adaptive selection of regressors under sparse high dimensional designs. Here we extend the idea of SLOPE to deal with the situation when one aims at selecting whole groups of explanatory variables instead of single regressors. Such groups can be formed by clustering strongly correlated predictors or groups of dummy variables corresponding to different levels of the same qualitative predictor. We formulate the respective convex optimization problem, gSLOPE (group SLOPE), and propose an efficient algorithm for its solution. We also define a notion of the group false discovery rate (gFDR) and provide a choice of the sequence of tuning parameters for gSLOPE so that gFDR is provably controlled at a prespecified level if the groups of variables are orthogonal to each other. Moreover, we prove that the resulting procedure adapts to unknown sparsity and is asymptotically minimax with respect to the estimation of the proportions of variance of the response variable explained by regressors from different groups. We also provide a method for the choice of the regularizing sequence when variables in different groups are not orthogonal but statistically independent and illustrate its good properties with computer simulations. Finally, we illustrate the advantages of gSLOPE in the context of Genome Wide Association Studies. R package grpSLOPE with an implementation of our method is available on CRAN.

Keywords: Asymptotic Minimax, False Discovery Rate, Group selection, Model Selection, Multiple Regression, SLOPE

1 Introduction

Consider the classical multiple regression model of the form

$$y = X\beta + z, \tag{1.1}$$

where y is the n dimensional vector of values of the response variable, X is the n by p experiment (design) matrix and z ~ 𝒩(0, σ²In). We assume that y and X are known, while β is unknown. In many applications the purpose of the statistical analysis is to recover the support of β, which identifies the set of important regressors. Here, the true support corresponds to truly relevant variables (i.e. variables which have an impact on observations). Common procedures to solve this model selection problem rely on minimization of some objective function consisting of the weighted sum of two components: the first term is responsible for the goodness of fit and the second term penalizes the model complexity. Among such procedures one can mention classical model selection criteria like the Akaike Information Criterion (AIC) (Akaike, 1974) and the Bayesian Information Criterion (BIC) (Schwarz, 1978), where the penalty depends on the number of variables included in the model, or LASSO (Tibshirani, 1996), where the penalty depends on the ℓ1 norm of regression coefficients. The main advantage of LASSO over classical model selection criteria is that it is a convex optimization problem and, as such, it can be easily solved even for very large design matrices.

LASSO solution is obtained by solving the optimization problem

| (1.2) |

where λL is a tuning parameter defining the trade-off between the model fit and the sparsity of the solution. In practical applications the selection of a good λL might be very challenging. For example, it has been reported that in high dimensional settings the popular cross-validation typically leads to the detection of a large number of false regressors (see e.g. Bogdan et al., 2015). The general rule is that when one reduces λL, LASSO can identify more elements from the true support (true discoveries) but at the same time it generates more false discoveries. In general the numbers of true and false discoveries for a given λL depend on unknown properties of the data generating mechanism, like the number of true regressors and the magnitude of their effects. A very similar problem occurs when selecting thresholds for individual tests in the context of multiple testing. Here it was found that the popular Benjamini-Hochberg rule (BH, Benjamini and Hochberg, 1995), aimed at control of the False Discovery Rate (FDR), adapts to the unknown data generating mechanism and has some desirable optimality properties under a variety of statistical settings (see e.g. Abramovich et al., 2006; Bogdan et al., 2011; Neuvial and Roquain, 2012; Frommlet and Bogdan, 2013). The main property of this rule is that it relaxes the thresholds along the sequence of test statistics, sorted in decreasing order of magnitude. Recently the same idea was used in a new generalization of LASSO, named SLOPE (Sorted L-One Penalized Estimation, Bogdan et al., 2013, 2015). Instead of the ℓ1 norm (as in the LASSO case), the method uses the FDR control properties of the Jλ norm, defined as follows: for a sequence λ = (λ1, …, λp) satisfying λ1 ≥ … ≥ λp ≥ 0 and b ∈ ℝp, $J_\lambda(b) := \sum_{i=1}^{p} \lambda_i |b|_{(i)}$, where |b|(1) ≥ … ≥ |b|(p) is the vector of sorted absolute values of coordinates of b. SLOPE is the solution to a convex optimization problem

| (1.3) |

which clearly reduces to LASSO for λ1 = … = λp =: λL. Similarly as in classical model selection, the support of the solution defines the subset of variables estimated as relevant. In (Bogdan et al., 2013, 2015) it is shown that when the sequence λ corresponds to the decreasing sequence of thresholds for BH then SLOPE controls FDR under orthogonal designs, i.e. when XTX = In. Moreover, in (Su and Candès, 2016) it is proved that SLOPE with this sequence of tuning parameters adapts to unknown sparsity and is asymptotically minimax under orthogonal and random Gaussian designs.
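To make the sorted ℓ1 norm Jλ concrete, the following minimal Python sketch evaluates Jλ(b) by pairing the largest tuning parameter with the largest absolute coefficient. The function name `sorted_l1_norm` is a hypothetical helper introduced here for illustration and is not part of any package mentioned in this article.

```python
def sorted_l1_norm(b, lam):
    """Evaluate J_lambda(b) = sum_i lam[i] * |b|_(i).

    lam must be nonincreasing and nonnegative; |b|_(1) >= ... >= |b|_(p)
    are the sorted absolute values of the coordinates of b.
    """
    if len(b) != len(lam):
        raise ValueError("b and lam must have the same length")
    if any(x < y for x, y in zip(lam, lam[1:])) or (lam and lam[-1] < 0):
        raise ValueError("lam must be nonincreasing and nonnegative")
    magnitudes = sorted((abs(x) for x in b), reverse=True)
    return sum(l * m for l, m in zip(lam, magnitudes))


b = [1.0, -3.0, 2.0]
print(sorted_l1_norm(b, [3.0, 2.0, 1.0]))  # 3*3 + 2*2 + 1*1 = 14.0
print(sorted_l1_norm(b, [2.0, 2.0, 2.0]))  # constant lam: 2 * ||b||_1 = 12.0
```

The second call illustrates the reduction noted above: with a constant λ sequence the sorted ℓ1 norm is a multiple of the ordinary ℓ1 norm used by LASSO.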

In the sequence of examples presented in (Bogdan et al., 2013, 2015; Brzyski et al., 2017) it was shown that SLOPE has very desirable properties in terms of FDR control in the case when regressor variables are weakly correlated. While there exist other interesting approaches which allow one to control FDR under correlated designs (e.g. Barber and Candès, 2015), the efforts to prevent detection of false regressors which are strongly correlated with true ones inevitably lead to a loss of power. An alternative approach to deal with strongly correlated predictors is to simply give up the idea of distinguishing between them and include all of them in the selected model as a group. This leads to the problem of group selection in linear regression, extensively investigated and applied in many fields of science. In many of these applications the groups are selected not only due to the strong correlations but also by taking into account the problem-specific scientific knowledge. It is also common to cluster dummy variables corresponding to different levels of qualitative predictors.

Probably the most well known convex optimization method for selection of groups of explanatory variables is the group LASSO (gLASSO, Bakin, 1999). For a fixed tuning parameter, λgL > 0, the gLASSO estimate is most frequently (e.g. Yuan and Lin, 2006; Simon et al., 2013) defined as a solution to the optimization problem

| (1.4) |

where the sets I1, …, Im form a partition of the set {1, …, p}, |Ii| denotes the number of elements in set Ii, XIi is the submatrix of X composed of columns indexed by Ii and bIi is the restriction of b to indices from Ii. The method introduced in this article is, however, closer to the alternative version of gLASSO, in which penalties are imposed on ||XIi bIi||2 rather than ||bIi||2. This method was formulated in (Simon and Tibshirani, 2013), where the authors defined an estimate of β by

| (1.5) |

with the condition serving as a group relevance indicator.

As in the context of regular model selection, the properties of gLASSO strongly depend on the shrinkage parameter λgL, whose optimal value is a function of unknown parameters of the true data generating mechanism. Thus, a natural question arises of whether the idea of SLOPE can be used for construction of a similar adaptive procedure for group selection. To answer this question, in this paper we define and investigate the properties of the group SLOPE (gSLOPE). We formulate the respective optimization problem and provide an algorithm for its solution. We also define the notion of the group FDR (gFDR), and provide the theoretical choice of the sequence of regularization parameters, which guarantees that gSLOPE controls gFDR in the situation when variables in different groups are orthogonal to each other. Moreover, we prove that the resulting procedure adapts to unknown sparsity and is asymptotically minimax with respect to the estimation of the proportions of variance of the response variable explained by regressors from different groups. Additionally, we provide a way of constructing the sequence of regularization parameters under the assumption that the regressors from distinct groups are independent and use computer simulations to show that it allows control of gFDR. Good properties of group SLOPE are illustrated using the practical example of a Genome Wide Association Study. R package grpSLOPE with an implementation of our method is available on CRAN. All scripts used in simulations as well as in real data analysis are available at https://github.com/dbrzyski/gSLOPE. This repository also contains the R scripts which were used to generate the article figures.

2 Group SLOPE

2.1 Formulation of the optimization problem

Let the design matrix X belong to the space M(n, p) of matrices with n rows and p columns. Furthermore, suppose that I = {I1, …, Im} is some partition of the set {1, …, p}, i.e. Ii’s are nonempty sets, Ii ∩ Ij = ∅ for i ≠ j and ⋃Ii = {1, …, p}. We will consider the linear regression model with m groups of the form

$$y = \sum_{i=1}^{m} X_{I_i}\beta_{I_i} + z, \tag{2.1}$$

where XIi is the submatrix of X composed of columns indexed by Ii and βIi is the restriction of β to indices from the set Ii. We will use notation l1, …, lm to refer to the ranks of submatrices XI1, …, XIm. To simplify notation later, we will assume that li > 0 (i.e. there is at least one nonzero entry of XIi for all i). Besides this, X may be an arbitrary matrix, in particular linear dependence inside each of the submatrices XIi is allowed.

In this article we will treat the value ||XIi βIi||2 as a measure of the impact of the ith group on the response and we will say that group i is truly relevant if and only if ||XIi βIi||2 > 0. Thus our task of identifying the relevant groups is equivalent to finding the support of the vector ⟦β⟧I,X := (||XI1βI1||2, …, ||XIm βIm||2)⊤.

To estimate the nonzero coefficients of ⟦β⟧I,X, we will use a new penalized method, namely group SLOPE (gSLOPE). For a given nonincreasing sequence of nonnegative tuning parameters, λ1, …, λm, a given sequence of positive weights, w1, …, wm, and a design matrix, X, the gSLOPE estimator of regression coefficients, βgS, is defined as any solution to the optimization problem

| (2.2) |

where W is a diagonal matrix with Wi,i := wi, for i = 1, …, m. The estimate of ⟦β⟧I,X support is simply defined by the indices corresponding to nonzeros of ⟦β gS ⟧I,X.

It is easy to see that when one considers p groups containing only one variable (i.e. the singleton groups situation), then taking all weights equal to one reduces (2.2) to SLOPE (1.3). On the other hand, taking $w_i = \sqrt{|I_i|}$ and putting λ1 = … = λm =: λgL immediately gives the gLASSO problem (1.5) with the smoothing parameter λgL. gSLOPE can therefore be treated both as an extension of SLOPE and as an extension of group LASSO.
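The penalty Jλ(W⟦b⟧I,X) combines two ingredients: the vector of group effects ||XIi bIi||2 and the sorted ℓ1 norm applied to its weighted version. A minimal Python sketch of both is given below. The helper names `group_effects` and `gslope_penalty`, the list-of-rows representation of X and the 0-based column indices are assumptions made for this illustration only.

```python
import math


def group_effects(X, b, groups):
    """Return [||X_I b_I||_2 for each group I].

    X is a list of rows; each group is a list of 0-based column indices.
    """
    effects = []
    for g in groups:
        fitted = [sum(row[j] * b[j] for j in g) for row in X]
        effects.append(math.sqrt(sum(v * v for v in fitted)))
    return effects


def gslope_penalty(X, b, groups, weights, lam):
    """Evaluate J_lambda(W [[b]]_{I,X}): sorted-L1 norm of weighted group effects."""
    weighted = sorted(
        (w * e for w, e in zip(weights, group_effects(X, b, groups))),
        reverse=True,
    )
    return sum(l * v for l, v in zip(lam, weighted))


X = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
groups = [[0, 1], [2]]
b = [3.0, 4.0, -2.0]
print(group_effects(X, b, groups))                            # [5.0, 2.0]
print(gslope_penalty(X, b, groups, [1.0, 1.0], [2.0, 1.0]))   # 2*5 + 1*2 = 12.0
```

With singleton groups, unit weights and unit-norm columns the group effects are simply |bi|, so the penalty coincides with the sorted ℓ1 norm of b.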

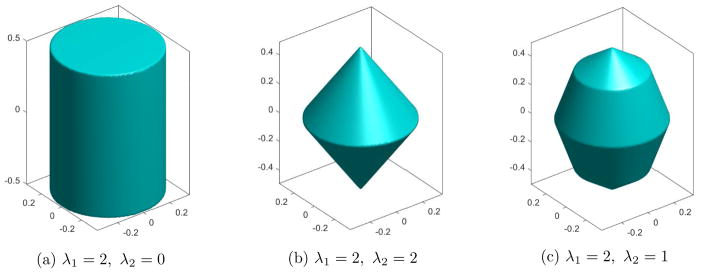

As shown in Appendix B the function Jλ,I,W,X(b) := Jλ(W⟦b⟧I,X) is a seminorm and becomes a norm when the design matrix X is of full rank. Figure 1 illustrates how the shape of the unit ball in the norm Jλ,I,W(b) := Jλ(W⟦b⟧I) depends on the selection of the λ sequence. In this example p = 3, m = 2, I1 := {1, 2} and I2 := {3}. In the case when only the first coefficient in the λ sequence is larger than zero, Jλ,I,W(b) = λ1 maxi∈{1,2} wi||bIi||2, and the corresponding ball takes the form of a cylinder. The privileged solutions occur on the “edges” of this cylinder and have the same weighted group effects for both groups (i.e. w1||bI1||2 = w2||bI2||2). When λ1 = λ2 > 0 the ball takes the form of a “spinning top”, with “edges” occurring when at least one group effect is equal to zero. Then the group SLOPE reduces to the group LASSO and has a tendency to select a sparse solution. When λ1 > λ2 > 0 the corresponding ball has both types of edges and encourages dimensionality reduction in both ways: by inducing sparsity and by making some of the weighted group effects equal to each other.

Figure 1.

Unit balls of the Jλ,I,W norm for different λ. Weights w1 and w2 are equal to √2 and 1. The edges in (a) correspond to the same weighted group effects, i.e. w1||bI1||2 = w2||bI2||2; all edges in (b) contain at least one zero group effect (gLASSO); in (c) both types of edges appear.

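Now, let us again consider an arbitrary m > 0, define p̃ = l1+…+lm and examine the following partition, 𝕀 = {𝕀1, …, 𝕀m}, of the set {1, …, p̃}:

$$\mathbb{I}_1 := \{1, \ldots, l_1\},\quad \mathbb{I}_2 := \{l_1 + 1, \ldots, l_1 + l_2\},\quad \ldots,\quad \mathbb{I}_m := \Big\{\sum_{j=1}^{m-1} l_j + 1, \ldots, \tilde{p}\Big\}.$$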

Observe that each XIi can be represented as XIi = UiRi, where Ui is a matrix with li orthogonal columns of unit ℓ2 norm, whose span coincides with the space spanned by the columns of XIi, and Ri is the corresponding matrix of full row rank. Define the n by p̃ matrix X̃ by putting X̃𝕀i := Ui for i = 1, …, m. Now observe that after defining the vector ω by the conditions ω𝕀i := RibIi for i ∈ {1, …, m}, we immediately obtain

| (2.3) |

and for ⟦ω⟧𝕀 := (||ω𝕀1||2, …, ||ω𝕀m||2)⊤ the problem (2.2) can be equivalently presented in the form

| (2.4) |

where ωgS and βgS are linked via the conditions $\omega^{gS}_{\mathbb{I}_i} = R_i \beta^{gS}_{I_i}$, i = 1, …, m. Therefore, to identify the relevant groups and estimate their group effects it is enough to solve the optimization problem (2.4). We will say that (2.4) is the standardized version of the problem (2.2).
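The factorization XIi = UiRi behind the standardized problem can be sketched with a modified Gram-Schmidt pass that skips linearly dependent columns. This is only one possible way to obtain such a factorization (a rank-revealing QR routine from a numerical library would be the usual choice in practice); the function name `orthonormalize_group`, the column-list input format and the tolerance `tol` are assumptions of this illustration.

```python
import math


def orthonormalize_group(cols, tol=1e-10):
    """Factor X_I = U R for one group.

    cols: list of the columns of X_I (each a list of n floats).
    Returns (U, R): U is a list of l orthonormal vectors and R is an
    l x k nested list of full row rank, where l is the rank of X_I.
    """
    k = len(cols)
    U, R = [], []
    for j, col in enumerate(cols):
        v = list(col)
        # Remove the components along the directions found so far.
        for u, row in zip(U, R):
            c = sum(a * b for a, b in zip(u, v))
            row[j] = c
            v = [a - c * b for a, b in zip(v, u)]
        norm = math.sqrt(sum(a * a for a in v))
        if norm > tol:  # the column adds a new direction
            U.append([a / norm for a in v])
            new_row = [0.0] * k
            new_row[j] = norm
            R.append(new_row)
    return U, R


# Second column is twice the first, so the group has rank 2, not 3.
cols = [[1.0, 0.0, 0.0], [2.0, 0.0, 0.0], [0.0, 3.0, 4.0]]
b = [1.0, 1.0, 1.0]
U, R = orthonormalize_group(cols)

Xb = [sum(cols[j][i] * b[j] for j in range(3)) for i in range(3)]
omega = [sum(r * bj for r, bj in zip(row, b)) for row in R]
norm_Xb = math.sqrt(sum(v * v for v in Xb))
norm_omega = math.sqrt(sum(v * v for v in omega))
print(len(U), norm_Xb, norm_omega)  # rank 2; both norms equal sqrt(34) up to rounding
```

The example shows why the group effect ||XIi bIi||2 can be replaced by ||ω𝕀i||2 with ω𝕀i = RibIi: multiplication by a matrix with orthonormal columns does not change the Euclidean norm.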

Remark 2.1

The formulation of the group SLOPE was proposed independently in (Brzyski et al., 2015) (earlier version of this article) and in (Gossmann et al., 2015). In (Gossmann et al., 2015) only the case when the weights wi are equal to the square root of the group size is considered and penalties are imposed directly on ||βIi||2 rather than on group effects ||XIi βIi||2. This makes the method of (Gossmann et al., 2015) dependent on scaling or rotations of variables in a given group. In comparison to (Gossmann et al., 2015), where a Monte Carlo approach for estimating the regularizing sequence was proposed, our article lays theoretical foundations and provides the guidelines for the choice of the sequence of smoothing parameters, so gSLOPE can control FDR and has desired estimation properties.

2.2 Numerical algorithm

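We will first show that the problem of solving (2.4) can be easily reduced to the situation when W is the identity matrix. For this aim we define a diagonal matrix M such that $M_{j,j} := 1/w_i$ for $j \in \mathbb{I}_i$. Then observe that

$$J_\lambda\big(W \llbracket \omega \rrbracket_{\mathbb{I}}\big) = J_\lambda\big(\llbracket M^{-1}\omega \rrbracket_{\mathbb{I}}\big). \tag{2.5}$$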

Since M is nonsingular, we can substitute η := M−1ω and consider equivalent formulation of (2.4), , and recover ωgS as ωgS = Mη*. This allows to recast gSLOPE as a problem with unit weights.

Now, the above problem is of the form $\min_b f(b) := g(b) + h(b)$, where g and h are convex functions and g is differentiable. There exist efficient methods, namely proximal gradient algorithms, which can be applied to find a numerical solution in such a situation. To design efficient algorithms, however, h must be prox-capable, meaning that a fast algorithm is known for computing the proximal operator of h,

$$\mathrm{prox}_{th}(u) := \arg\min_{b} \left\{ \frac{1}{2t}\|u - b\|_2^2 + h(b) \right\}, \tag{2.6}$$

for each u ∈ ℝp̃ and t > 0.

To derive the proximal operator for the group SLOPE, we at first assume without the loss of generality that σ = 1. Now, we need to find the algorithm to minimize , for any u ∈ ℝp̃ and t > 0, which is equivalent to finding the numerical solution to the problem

| (2.7) |

As discussed in Appendix D, this problem can be solved in two steps

| (2.8) |

Consequently, calculating proxJ (u) in fact reduces to identifying c*, which can be efficiently done using the fast prox algorithm for regular SLOPE, provided e.g. in (Bogdan et al., 2013, 2015).
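The two-step computation can be sketched in Python as follows, under the assumption (consistent with the description above) that the first step applies the regular SLOPE prox to the vector of group norms ⟦u⟧𝕀, giving c*, and the second step rescales each block u𝕀i to have norm c*i. The SLOPE prox below uses a pool-adjacent-violators pass; it is an illustrative stand-in, not the implementation from the grpSLOPE package, and the names `prox_sorted_l1` and `prox_group_slope` are hypothetical. A step size t can be handled by passing the scaled sequence tλ.

```python
import math


def prox_sorted_l1(v, lam):
    """Prox of the sorted-L1 norm J_lam at v (lam nonincreasing, nonnegative)."""
    n = len(v)
    order = sorted(range(n), key=lambda i: -abs(v[i]))
    z = [abs(v[i]) - l for i, l in zip(order, lam)]
    # Pool adjacent violators: make the sequence nonincreasing via block averages.
    blocks = []  # each block is [sum, count]
    for val in z:
        blocks.append([val, 1])
        while (len(blocks) > 1 and
               blocks[-2][0] / blocks[-2][1] <= blocks[-1][0] / blocks[-1][1]):
            s, c = blocks.pop()
            blocks[-1][0] += s
            blocks[-1][1] += c
    sorted_out = []
    for s, c in blocks:
        sorted_out.extend([max(s / c, 0.0)] * c)  # clip negative values at zero
    x = [0.0] * n
    for rank, i in enumerate(order):
        x[i] = math.copysign(sorted_out[rank], v[i])  # restore order and signs
    return x


def prox_group_slope(u, groups, lam):
    """Two-step prox of b -> J_lam([[b]]_I) with unit weights."""
    norms = [math.sqrt(sum(u[j] ** 2 for j in g)) for g in groups]
    c_star = prox_sorted_l1(norms, lam)           # step 1: SLOPE prox on group norms
    out = [0.0] * len(u)
    for g, nrm, ci in zip(groups, norms, c_star):  # step 2: rescale each block
        if nrm > 0.0:
            for j in g:
                out[j] = ci * u[j] / nrm
    return out


print(prox_sorted_l1([2.0, 2.0], [2.0, 1.0]))                       # [0.5, 0.5]
print(prox_group_slope([3.0, 4.0, 1.0], [[0, 1], [2]], [2.0, 1.0]))  # approx. [1.8, 2.4, 0.0]
```

In the second call the group norms are (5, 1); the SLOPE prox shrinks them to (3, 0), so the first block is rescaled by 3/5 and the second group is set to zero.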

After defining the proximal operator, the solution to gSLOPE can be obtained by Procedure 1. There exist many ways in which the step sizes tk can be selected to ensure that f(b[k]) converges to the optimal value (see e.g. Beck and Teboulle, 2009; Tseng, 2008). In our R package grpSLOPE, available on CRAN (The Comprehensive R Archive Network), the accelerated proximal gradient method known as FISTA (Beck and Teboulle, 2009) is applied, which uses a specific procedure for choosing step sizes to achieve a fast convergence rate. To derive a proper stopping criterion, we have considered the dual problem to gSLOPE and employed the strong duality property. The detailed description of the dual norm, the conjugate of the grouped sorted ℓ1 norm and the stopping criterion are provided in Appendix C.

Procedure 1.

Proximal gradient algorithm

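| input: b[0] ∈ ℝp̃, k = 0 |

while (stopping criteria are not satisfied) do

| $b^{[k+1]} := \mathrm{prox}_{t_k h}\big(b^{[k]} - t_k \nabla g(b^{[k]})\big)$; $k \leftarrow k + 1$ |

| end while |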
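A plain (non-accelerated) version of the proximal gradient iteration can be sketched in a few lines of Python. The sketch below is generic: it takes any prox function and uses a fixed step size, whereas the grpSLOPE package uses FISTA with its own step size rule and a duality-based stopping criterion. To keep the block short, the ℓ1 prox (soft thresholding, i.e. the LASSO case) is plugged in as a stand-in for the gSLOPE prox. The function names, the fixed step and the stopping rule based on the change between iterates are all assumptions of this illustration.

```python
import math


def proximal_gradient(X, y, prox, step, n_iter=500, tol=1e-12):
    """Minimize 0.5 * ||y - X b||_2^2 + h(b), where prox(v, t) = prox_{t h}(v).

    X is a list of rows; a fixed step size no larger than 1 / ||X||_2^2 is assumed.
    """
    n, p = len(X), len(X[0])
    b = [0.0] * p
    for _ in range(n_iter):
        resid = [sum(X[i][j] * b[j] for j in range(p)) - y[i] for i in range(n)]
        grad = [sum(X[i][j] * resid[i] for i in range(n)) for j in range(p)]
        b_new = prox([bj - step * gj for bj, gj in zip(b, grad)], step)
        change = max(abs(u - v) for u, v in zip(b_new, b))
        b = b_new
        if change < tol:  # simple stand-in for the duality-gap criterion
            break
    return b


def soft_threshold(v, t, lam=1.0):
    """Prox of t * lam * ||.||_1, used here only as a stand-in for the gSLOPE prox."""
    return [math.copysign(max(abs(x) - t * lam, 0.0), x) for x in v]


X = [[1.0, 0.0], [0.0, 1.0]]
y = [3.0, 0.5]
# 1 / ||X||_F^2 is a conservative step, since ||X||_F >= ||X||_2.
step = 1.0 / sum(v * v for row in X for v in row)
b_hat = proximal_gradient(X, y, soft_threshold, step)
print(b_hat)  # close to [2.0, 0.0], the soft-thresholded y for this identity design
```

Replacing `soft_threshold` by a function computing the gSLOPE prox with the scaled sequence tλ turns the same loop into a basic solver for the standardized problem.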

2.3 Group FDR

Group SLOPE is designed to select groups of variables, which might be very strongly correlated within a group or even linearly dependent. In this context we do not intend to identify single important predictors but rather want to point at the groups which contain at least one true regressor. To theoretically investigate the properties of gSLOPE in this context we now introduce the respective notion of group FDR (gFDR).

Definition 2.2

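Consider model (2.1) and let βgS be an estimate given by (2.2). We define two random variables: the number of all groups selected by gSLOPE (Rg) and the number of groups falsely discovered by gSLOPE (Vg), as

$$R_g := \big|\{i : \|X_{I_i}\beta^{gS}_{I_i}\|_2 \neq 0\}\big|, \qquad V_g := \big|\{i : \|X_{I_i}\beta_{I_i}\|_2 = 0,\ \|X_{I_i}\beta^{gS}_{I_i}\|_2 \neq 0\}\big|.$$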

Definition 2.3

We define the false discovery rate for groups (gFDR) as

$$\mathrm{gFDR} := \mathbb{E}\left[\frac{V_g}{\max\{R_g, 1\}}\right]. \tag{2.9}$$
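In simulations the expectation in the definition of gFDR is replaced by an average over replicates. A minimal Python sketch, assuming that the true and estimated group effects are available as lists (the names `group_fdp` and `estimate_gfdr` are hypothetical), is:

```python
def group_fdp(true_effects, est_effects):
    """False discovery proportion V_g / max(R_g, 1) for one realization.

    true_effects[i] and est_effects[i] are the true and estimated group
    effects of group i; a group is selected when its estimate is nonzero.
    """
    selected = [e != 0 for e in est_effects]
    R = sum(selected)
    V = sum(1 for s, t in zip(selected, true_effects) if s and t == 0)
    return V / max(R, 1)


def estimate_gfdr(true_effects, est_effects_per_run):
    """Monte Carlo estimate of gFDR: the average of V_g / max(R_g, 1) over runs."""
    fdps = [group_fdp(true_effects, est) for est in est_effects_per_run]
    return sum(fdps) / len(fdps)


true_effects = [0.0, 0.0, 1.5, 2.0]
runs = [
    [0.3, 0.0, 1.0, 0.0],  # R = 2, V = 1 -> 0.5
    [0.0, 0.0, 1.2, 1.7],  # R = 2, V = 0 -> 0.0
    [0.0, 0.0, 0.0, 0.0],  # R = 0        -> 0.0
]
print(estimate_gfdr(true_effects, runs))  # (0.5 + 0 + 0) / 3
```

The `max(R, 1)` term makes the proportion equal to zero when no group is selected, which matches the convention in the definition.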

2.4 Control of gFDR when variables from different groups are orthogonal

Our goal is the identification of the regularizing sequence for gSLOPE such that gFDR can be controlled at any given level q ∈ (0, 1). In this section we will provide such a sequence, which provably controls gFDR in case when variables in different groups are orthogonal to each other. In subsequent sections we will replace this condition with the weaker assumption of the stochastic independence of regressors in different groups. Before the statement of the main theorem on gFDR control, we will recall the definition of χ distribution and define a scaled χ distribution.

Definition 2.4

We will say that a random variable X1 has a χ distribution with l degrees of freedom, and write X1 ~ χl, when X1 could be expressed as $X_1 = \sqrt{X_2}$, for X2 having a χ² distribution with l degrees of freedom. We will say that a random variable X1 has a scaled χ distribution with l degrees of freedom and scale 𝒮, when X1 could be expressed as X1 = 𝒮 · X2, for X2 having a χ distribution with l degrees of freedom. We will use the notation X1 ~ 𝒮χl.

Theorem 2.5 (gFDR control under orthogonal case)

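Consider model (2.1) with the design matrix X satisfying $X_{I_i}^\top X_{I_j} = 0$, for any i ≠ j. Denote the number of zero coefficients in ⟦β⟧I,X by m0 and let w1, …, wm be positive numbers. Moreover, define the sequence of regularizing parameters $\lambda^{\max} := (\lambda_1^{\max}, \ldots, \lambda_m^{\max})^\top$, with

$$\lambda_i^{\max} := \max_{j = 1, \ldots, m} \left\{ \frac{1}{w_j} F^{-1}_{\chi_{l_j}}\left(1 - \frac{q \cdot i}{m}\right) \right\}, \tag{2.10}$$

where Fχlj is the cumulative distribution function of the χ distribution with lj degrees of freedom. Then any solution, βgS, to problem gSLOPE (2.2) generates the same vector ⟦βgS⟧I,X and it holds

$$\mathrm{gFDR} = \mathbb{E}\left[\frac{V_g}{\max\{R_g, 1\}}\right] \le q \cdot \frac{m_0}{m}.$$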

Proof

Consider the standardized version of the gSLOPE problem, given by (2.4). Since X is orthogonal at the group level, X̃ in problem (2.4) has orthonormal columns, i.e. X̃⊤ X̃ = Ip̃. It is easy to show that this implies $\frac{1}{2}\|y - \tilde{X}b\|_2^2 = \frac{1}{2}\|\tilde{X}^\top y - b\|_2^2 + C$, where C does not depend on b (see Appendix D). Hence in the orthogonal situation the optimization problem in (2.4) can be recast as

| (2.11) |

where ỹ := X̃⊤y is a whitened version of y, which has the multivariate normal distribution 𝒩(β̃, σ²Ip̃), with β̃𝕀i := RiβIi, i = 1, …, m. As discussed in the previous section the problem (2.11) can be equivalently formulated as

| (2.12) |

The above formulation yields the conclusion, that indices of groups estimated by gSLOPE as relevant coincide with the support of the solution to the SLOPE problem with the diagonal design matrix D such that . After defining β̃ ∈ ℝ p̃ by conditions β̃𝕀i := RiβIi, i = 1, …, m, we also have ỹ ~ 𝒩(β̃, σ2Ip̃).

Now, we define random variables and Clearly, then Rg = R and V g = V. Consequently, it is enough to show that

Without loss of generality we can assume that groups I1, …, Im0 are truly irrelevant, which gives ||β̃𝕀1||2 = …= ||β̃𝕀m0||2 = 0 and ||β̃𝕀j||2 > 0 for j > m0. Suppose now that r, i are some fixed indices from {1, …, m}. From definition of

| (2.13) |

Now, let us assume that i ≤ m0. Since σ−1||ỹ𝕀i||2 ~ χli we have

| (2.14) |

Denote by R̃i the number of nonzero coefficients in SLOPE estimate (2.12) after eliminating ith group of explanatory variables. Thanks to lemmas E.6 and E.7 in the Appendix, we immediately get

| (2.15) |

which together with (2.14) yields

| (2.16) |

where the equality follows from the independence between ||ỹ𝕀i||2 and R̃i. Therefore

| (2.17) |

which finishes the proof.

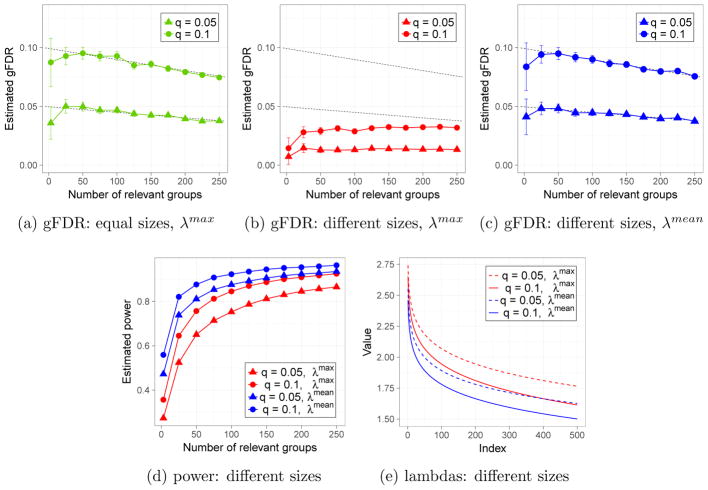

Figure 2 illustrates the performance of gSLOPE under the design matrix X = Ip (hence li, the rank of group i, coincides with its size), with p = 5000. In Figure 2(a) all groups are of the same size l = 5, while in Figures 2(b)–(d) the explanatory variables are clustered into m = 1000 groups of sizes from the set {3, 4, 5, 6, 7}; 200 groups of each size. Each coefficient of βIi, in a truly relevant group i, was generated independently from a U[0.1, 1.1] distribution and then βIi was scaled such that . Parameter a was selected to satisfy the condition

Figure 2.

Orthogonal situation with n = p = 5000 and m = 1000. In (a) all groups are of the same size l = 5, while in (b)–(d) there are 200 groups of each of the sizes li ∈ {3, 4, 5, 6, 7}. In (a) and (b) gSLOPE works with the regularizing sequence λmax, while in (c) and (d) λmean is used. The first 500 elements of the different λ sequences are shown in (e). For each target gFDR level and true support size, 300 iterations were performed. Bars correspond to ±2SE. Black straight lines represent the “nominal” gFDR level q · ((m − k)/m), for k being the true support size. Weights are defined as $w_i := \sqrt{l_i}$.

which, according to the calculations presented in the Appendix H, yields signals comparable to the maximal noise. Such signals can be detected with moderate power, which allows for a meaningful comparison between different methods.

Figure 2(a) illustrates that the sequence λmax keeps gFDR very close to the “nominal” level when groups are of the same size. However, Figure 2(b) shows that for groups of different size λmax is rather conservative, i.e. the achieved gFDR is significantly lower than assumed. This suggests that the shrinkage (dictated by λ) could be decreased, such that the method gets more power and still achieves the gFDR below the assumed level. Returning to the proof of Theorem 2.5, we can see that for each i ∈ {1, …, m} we have

| (2.18) |

with equality holding only for i being the index of the maximum in (2.10). As a result the inequality in (2.17) is usually strict and the true gFDR might be substantially smaller than the nominal level. The natural relaxation of (2.18) is to require only that

| (2.19) |

Replacing the inequality in (2.19) by equality yields the strategy of choosing the relaxed λ sequence

$$\lambda_i^{\mathrm{mean}} := \overline{F}^{-1}\left(1 - \frac{q \cdot i}{m}\right) \quad \text{for} \quad \overline{F}(x) := \frac{1}{m}\sum_{i=1}^{m} F_{w_i^{-1}\chi_{l_i}}(x), \tag{2.20}$$

where $F_{w_i^{-1}\chi_{l_i}}$ is the cumulative distribution function of the scaled χ distribution with li degrees of freedom and scale $w_i^{-1}$. In Figure 2(c) we present the estimated gFDR for tuning parameters given by (2.20). The results suggest that with a relaxed version of tuning parameters, we can still achieve the “average” gFDR control, where the “average” is with respect to the uniform distribution over all possible signal placements. As shown in Figure 2(d), application of λmean yields substantially larger power than the one provided by λmax. Such a strategy could be especially important in situations where differences between the smallest and the largest quantiles (among the distributions $F_{w_i^{-1}\chi_{l_i}}$) are relatively large and all groups have the same prior probability of being relevant.
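Both tuning sequences discussed in this section can be computed numerically once the χ distribution function is available. The sketch below is an illustration under stated assumptions: it takes λmax to be the largest weighted χ quantile over groups at level 1 − q·i/m, and λmean to be the quantile, at the same level, of the average of the scaled χ distribution functions, as described in the text. The χ distribution function is evaluated through a power series for the regularized incomplete gamma function and inverted by bisection; a statistical library would normally be used instead. All function names are hypothetical.

```python
import math


def chi_cdf(x, l):
    """CDF of the chi distribution with l degrees of freedom (series for P(l/2, x^2/2))."""
    if x <= 0.0:
        return 0.0
    a, t = l / 2.0, x * x / 2.0
    term = total = 1.0 / a
    n = 0
    while term > 1e-17 * total:
        n += 1
        term *= t / (a + n)
        total += term
    return min(1.0, math.exp(-t + a * math.log(t) - math.lgamma(a)) * total)


def invert_cdf(cdf, p):
    """Smallest x (up to bisection accuracy) with cdf(x) >= p, for 0 < p < 1."""
    lo, hi = 0.0, 1.0
    while cdf(hi) < p:
        hi *= 2.0
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if cdf(mid) < p:
            lo = mid
        else:
            hi = mid
    return hi


def lambda_max(q, ranks, weights):
    """Conservative sequence: largest weighted chi quantile over groups."""
    m = len(ranks)
    pairs = sorted(set(zip(ranks, weights)))  # distinct (l_j, w_j) combinations
    lam = []
    for i in range(1, m + 1):
        p = 1.0 - q * i / m
        lam.append(max(invert_cdf(lambda x, l=l: chi_cdf(x, l), p) / w for l, w in pairs))
    return lam


def lambda_mean(q, ranks, weights):
    """Relaxed sequence: quantiles of the average of the scaled chi CDFs."""
    m = len(ranks)

    def avg_cdf(x):
        # P(chi_l / w <= x) = F_chi_l(w * x)
        return sum(chi_cdf(w * x, l) for l, w in zip(ranks, weights)) / m

    return [invert_cdf(avg_cdf, 1.0 - q * i / m) for i in range(1, m + 1)]


ranks = [3, 3, 5, 7]
weights = [math.sqrt(l) for l in ranks]
print(lambda_max(0.1, ranks, weights))
print(lambda_mean(0.1, ranks, weights))  # elementwise no larger than lambda_max
```

When all groups share the same rank and weight the two sequences coincide; with unequal group sizes λmean is elementwise no larger than λmax, which is the source of the additional power discussed above.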

2.5 The accuracy of estimation

Up until this point, we have only considered the testing properties of gSLOPE. Though originally proposed to control the FDR, surprisingly, SLOPE enjoys appealing estimation properties as well (see e.g. Su and Candès, 2016; Bellec et al., 2016b,a). It thus would be desirable to extend this link between testing and estimation to gSLOPE. In measuring the deviation of an estimator from the ground truth β, as earlier, we focus on the group instead of the individual level. Accordingly, here we aim to estimate the parts of variance of y explained by every group, which are contained in the vector ⟦β⟧I,X := (||XI1βI1||2, …, ||XImβIm||2)⊤ or ⟦β̃⟧𝕀 := (||β̃𝕀1||2, …, ||β̃𝕀m||2)⊤, equivalently. For illustration purposes, we employ the setting described as follows. Imagine that we have a sequence of problems with the number of groups m growing to infinity: the design X is orthonormal at the group level; the ranks of submatrices XIi, li, are bounded, that is, max li ≤ l for some constant integer l; denoting by k ≥ 1 the sparsity level (that is, the number of relevant groups), we assume the asymptotics k/m → 0. Now we state our minimax theorem, where we write a ~ b if a/b → 1 in the asymptotic limit, and ||⟦β⟧I,X||0 denotes the number of nonzero entries of ⟦β⟧I,X. The proof makes use of the same techniques as the proof of Theorem 1.1 in (Su and Candès, 2016) and is deferred to the Appendix.

Theorem 2.6

Fix any constant q ∈ (0, 1), let wi = 1 and for i = 1, …, m. Under the preceding conditions m → ∞ and k/m → 0, gSLOPE is asymptotically minimax over the nearly black object {β : ||⟦β⟧||I,X||0 ≤ k}, i.e.,

where the infimum is taken over all measurable estimators β̂(y, X).

Notably, in this theorem the choice of λi does not assume the knowledge of sparsity level. Or putting it differently, in stark contrast to gLASSO, gSLOPE is adaptive to a range of sparsity in achieving the exact minimaxity. Combining Theorems 2.5 and 2.6, we see the remarkable link between FDR control and minimax estimation also applies to gSLOPE (Abramovich et al., 2006; Su and Candès, 2016). While it is out of the scope of this paper, it is of great interest to extend this minimax result to general design matrices.

2.6 The impact of chosen weights

In this subsection we will discuss the influence of the chosen weights, w1, …, wm, on the results. Let I = {I1, …, Im} be a given partition into groups and l1, …, lm be the ranks of submatrices XIi. Assume orthogonality at the group level, i.e. that $X_{I_i}^\top X_{I_j} = 0$ for i ≠ j, and suppose that σ = 1. The support of ⟦β⟧I,X coincides with the support of the vector c* defined in (2.12), namely

| (2.21) |

where W−1 is a diagonal matrix with positive numbers on the diagonal. Suppose now that c* has exactly r nonzero coefficients. From Corollary E.4 in Appendix E, these indices are given by {π(1), …, π(r)}, where π is the permutation which orders W−1⟦ỹ⟧𝕀. Hence, the order of the realizations of W−1⟦ỹ⟧𝕀 determines the subset of groups labeled by gSLOPE as relevant. Suppose that groups Ii and Ij are truly relevant, i.e., ||β̃𝕀i||2 > 0 and ||β̃𝕀j||2 > 0. The distributions of ||ỹ𝕀i||2 and ||ỹ𝕀j||2 are noncentral χ distributions, with li and lj degrees of freedom, and noncentrality parameters equal to ||β̃𝕀i||2 and ||β̃𝕀j||2, respectively. Now, the expected value of the noncentral χ distribution can be well approximated by the square root of the expected value of the noncentral χ² distribution, which gives

$$\mathbb{E}\left(w_i^{-1}\|\tilde{y}_{\mathbb{I}_i}\|_2\right) \approx \frac{1}{w_i}\sqrt{\|\tilde{\beta}_{\mathbb{I}_i}\|_2^2 + l_i}.$$

Therefore, roughly speaking, truly relevant groups Ii and Ij are treated as comparable when $w_i^{-1}\sqrt{\|\tilde{\beta}_{\mathbb{I}_i}\|_2^2 + l_i} \approx w_j^{-1}\sqrt{\|\tilde{\beta}_{\mathbb{I}_j}\|_2^2 + l_j}$. This gives us the intuition about the behavior of gSLOPE with the choice $w_i = \sqrt{l_i}$ for each i. Firstly, gSLOPE treats all irrelevant groups as comparable, i.e. the size of the group has a relatively small influence on it being selected as a false discovery. Secondly, gSLOPE treats two truly relevant groups as comparable if the group effect sizes satisfy the condition $\|\tilde{\beta}_{\mathbb{I}_i}\|_2 / \sqrt{l_i} \approx \|\tilde{\beta}_{\mathbb{I}_j}\|_2 / \sqrt{l_j}$. The derived condition can be recast as $\|\tilde{\beta}_{\mathbb{I}_i}\|_2^2 / l_i \approx \|\tilde{\beta}_{\mathbb{I}_j}\|_2^2 / l_j$. This gives a nice interpretation: with the choice $w_i = \sqrt{l_i}$, gSLOPE treats two groups as comparable when these groups have similar squared group effect sizes per coefficient. One possible idealistic situation in which such a property occurs is when all βi’s in truly relevant groups are comparable.

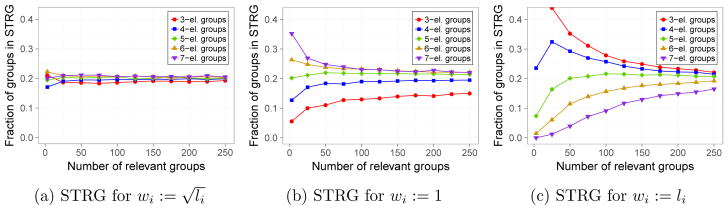

In Figure 3 we see that when the condition is met, the fractions of groups with different sizes in the selected truly relevant groups (STRG) are approximately equal. To investigate the impact of selected weights on the set of discovered groups, we performed simulations with different settings, namely we used wi = 1 and wi = li (without changing other parameters). With the first choice, larger groups are penalized less than before, while the second choice yields the opposite situation. This is reflected in the proportion of each group size in STRG (Figure 3). The values of gFDR are very similar under all choices of weights.

Figure 3.

Fraction of each group size in the selected truly relevant groups (STRG). Apart from the weights, this simulation was conducted with the same setting as in the experiments summarized in Figure 2 for λmean. In particular, for truly relevant groups i and j, it occurs $\|\tilde{\beta}_{\mathbb{I}_i}\|_2 / \sqrt{l_i} = \|\tilde{\beta}_{\mathbb{I}_j}\|_2 / \sqrt{l_j}$. The target gFDR level was fixed at 0.05.

2.7 Independent groups and unknown σ

The assumption that variables in different groups are orthogonal to each other can be satisfied only in rare situations of specifically designed experiments. However, in a variety of applications one can assume that variables in different groups are independent. Such a situation occurs for example in the context of identifying influential genes using distant genetic markers, whose genotypes can be considered as stochastically independent. In this case a group can be formed by clustering dummy variables corresponding to different genotypes of a given marker. Though the difference between stochastic independence and algebraic orthogonality seems rather small, it turns out that small sample correlations between independent regressors together with the shrinkage of regression coefficients lead to magnifying the effective noise and require the adjustment of the tuning sequence λ (see Su et al., 2015, for discussion of this phenomenon in the context of LASSO). Concerning regular SLOPE, this problem was addressed by heuristic modification of λ, proposed in (Bogdan et al., 2013, 2015). This modified sequence was calculated based upon the assumption that explanatory variables are randomly sampled from the Gaussian distribution. However, simulation results from (Bogdan et al., 2015) illustrate that it controls FDR also in case when the columns of the design matrix correspond to additive effects of independent SNPs and the number of causal genes is moderately small.

To derive the similar heuristic adjustment for the group SLOPE we will at first confine ourselves to the case σ = 1, l1 = … = lm := l, w1 = … = wm := w.

The first step in our derivation relies on specifying the optimality conditions for the standardized version of group SLOPE provided in (2.4), under which , for all i ∈ {1, …, m}.

Theorem 2.7 (Optimality conditions)

Let X be the standardized design matrix satisfying for each i and let β̂ be the solution to the gSLOPE problem. Let us order the groups such that ||β̂I1||2 > … > ||β̂Is||2 > 0 and ||β̂Ii||2 = 0 for i > s and consider the partition of I into IS := {I1, …, Is} and IC := {Is+1, …, Im}. Moreover, let us define , where X\Ii is a matrix X without columns from Ii. Then the following two sets of conditions are met

| (2.22) |

where , λc = (λs+1, …, λm) and is the unit ball of the dual norm to Jwλc.

Proof

The proof of Theorem 2.7 is provided in Appendix G.

The task now is to select λi’s such that the condition ⟦v⟧IC ∈ Cwλc regulates the rate of false discoveries. Let us at first observe that

| (2.23) |

Note that under the orthogonal design the last expression reduces to for i > s and has χ distribution with l degrees of freedom. This fact was used in subsection 2.4 to define the sequence λ. In the considered near-orthogonal situation, the term does not vanish and creates an additional “noise”, which needs to be taken into account when designing the λ sequence. To approximate the distribution of vIi under the assumption of independence between different groups we will use the following simplifying assumptions. To estimate the distribution of vIi we will first simplify the situation by assuming that true and estimated signals define the same set of relevant groups, with indices from the set {1, …, s}, and that the signal strength is sufficiently large to assume that can be well approximated by for i ≤ s. After defining IS := ∪i≤s Ii, from the left set of conditions in (2.22) we get that

| (2.24) |

which gives . Finally, combining the last expression with (2.23) lets us assume vIi ≈ v̂Ii, where

| (2.25) |

Now, we will assume that the distribution of v̂Ii can be well approximated by assuming that the individual entries of X come from the normal distribution . This assumption can be justified if the distribution of the individual entries of X is sufficiently regular and n is substantially larger than lm0. The following Theorem 2.8 provides the expected value and the covariance matrix of the random vector v̂Ii for i > s under the assumption of normality.

Theorem 2.8

Assume that the entries of the design matrix X are independently drawn from 𝒩(0, 1/n) distribution. Then for each i > s the expected value of v̂Ii is equal to 0 and the covariance matrix of this random vector is given by

| (2.26) |

Proof

The proof of Theorem 2.8 is provided in Appendix G.

Now, if n is large enough with respect to sl, then by the Central Limit Theorem the distribution of vIi can be approximated by the multivariate normal distribution and the distribution of ||vIi||2 by the scaled χ distribution with l degrees of freedom and a scale parameter . Now, analogously to the orthogonal situation, lambdas could be defined as . Since s is unknown, we will apply the strategy used in (Bogdan et al., 2013): define λ1 as in orthogonal case and for i ≥ 2 define λi by incorporating the scale parameter corresponding to the sparsity s = i − 1. This yields the following procedure.

Procedure 2.

Selecting lambdas under the assumption of independence: equal group sizes

| input: q ∈ (0, 1), w > 0, p, n, m, l ∈ ℕ |

| ; |

| For i ∈ {2, . . . , m}: |

| λS := (λ1, . . . , λi−1)⊤; |

| ; |

| ; |

| if , then put . Otherwise, stop the procedure and put λj := λi−1 for j ≥ i; |

| end for |

Consider now the Gaussian design with arbitrary group sizes and a sequence of positive weights w1, . . . , wm. One possible approach is to construct consecutive λi by taking the largest scaled quantiles among all distributions, i.e. as , with the scale parameter 𝒮j adjusted to lj (the conservative strategy). In this article, however, we will stick to the more liberal strategy based on λmean, which leads to the modified sequence of tuning parameters presented in Procedure 3.

Procedure 3.

Sequence of tuning parameters for independent groups

| input: q ∈ (0, 1), w1, . . . , wm > 0, p, n, m, l1, . . . , lm ∈ ℕ |

| , for ; |

| for i ∈ {2, . . . , m}: |

| λS := (λ1, . . . , λi−1)⊤; |

| , for j ∈ {1, . . . , m}; |

| , for ; |

| if , then put . Otherwise, stop the procedure and put λj := λi−1 for j ≥ i; |

| end for |

So far we have used σ in the gSLOPE optimization problem, assuming that this parameter is known. However, in many applications σ is unknown and its estimation is an important issue. When n > p, the standard procedure is to use the unbiased estimator of σ2, , given by

| (2.27) |

For the target situation, with p much larger than n, such an estimator cannot be used. To estimate σ we therefore apply the procedure proposed for this purpose in (Bogdan et al., 2015) in the context of SLOPE. Below we present the algorithm adjusted to gSLOPE (Procedure 4). The idea behind the procedure is simple: gSLOPE produces sparse estimators, so in each iteration the columns of the design matrix are first restricted to the support of βgS, so that the number of rows exceeds the number of columns and (2.27) can be used. The algorithm terminates when gSLOPE finds the same subset of relevant variables as in the preceding iteration.

Procedure 4.

gSLOPE with estimation of σ

| input: y, X and λ (defined for some fixed q) |

| initialize: S+ = ∅; |

| repeat |

| S = S+; |

| compute RSS obtained by regressing y onto variables in S; |

| set σ̂2 = RSS/(n − |S| − 1); |

| compute the solution βgS to gSLOPE with parameters σ̂ and sequence λ; |

| set S+ = supp(βgS); |

| until S+ = S |
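The control flow of Procedure 4 can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation from the grpSLOPE package: the two callables `rss_on_support` and `solve_gslope` are hypothetical placeholders for a least-squares fit and a gSLOPE solver, and the `max_iter` safeguard is an addition that does not appear in the procedure. The divisor n − |S| − 1 is taken directly from the procedure.

```python
import math


def gslope_with_sigma_estimation(n, rss_on_support, solve_gslope, max_iter=100):
    """Alternate between estimating sigma and solving gSLOPE.

    n              -- number of observations
    rss_on_support -- hypothetical callable: frozenset of column indices ->
                      residual sum of squares of y regressed on those columns
    solve_gslope   -- hypothetical callable: sigma_hat -> list of coefficients
    max_iter       -- safeguard added for this sketch
    """
    support_next = frozenset()          # initialize: S+ is empty
    for _ in range(max_iter):
        support = support_next          # S = S+
        dof = n - len(support) - 1
        if dof <= 0:
            raise ValueError("support too large to estimate sigma")
        sigma_hat = math.sqrt(rss_on_support(support) / dof)
        beta = solve_gslope(sigma_hat)
        support_next = frozenset(j for j, b in enumerate(beta) if b != 0.0)
        if support_next == support:     # until S+ = S
            return beta, sigma_hat, support
    raise RuntimeError("support did not stabilize within max_iter iterations")


# Toy stand-ins, for illustration only (not a real solver or real data).
toy_rss = lambda support: 48.0 if support else 99.0
toy_solver = lambda sigma_hat: [3.0, 0.0, 0.0]
beta, sigma_hat, support = gslope_with_sigma_estimation(100, toy_rss, toy_solver)
```

In the toy run the first pass uses the empty support, the solver selects the first variable, and the second pass reproduces the same support, so the loop stops after two iterations.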

To investigate the performance of gSLOPE under the Gaussian design and various group sizes, we performed simulations with 1000 groups. Their sizes were drawn from the binomial distribution, Bin(1000; 0.008), so that the expected group size was equal to 8 (Figure 4(c)). As a result, we obtained 7917 variables divided into 1000 groups (the same division was used in all iterations and scenarios). For each sparsity level, with the gFDR level fixed at 0.1, and in each iteration we generated the entries of the design matrix from the 𝒩(0, 1/n) distribution; X was then standardized and the values of the response variable were generated according to model (2.1) with σ = 1 and signals generated as in the simulations for Figure 2. To identify relevant groups based on the simulated data we used the iterative version of gSLOPE, with σ estimation (Procedure 4) and lambdas given by Procedure 3. We performed 200 repetitions for each scenario, with n fixed at 5000. The results are presented in Figure 4 and show that our procedure controls gFDR at the assumed level.
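The group structure used in this experiment can be mimicked with a short sketch. It is an illustration only: the seed is arbitrary, so the total number of variables will differ from the 7917 reported above, and the rule of redrawing a group whose size comes out as zero is an assumption made here, since the text does not say how such draws were handled.

```python
import random


def draw_group_sizes(m, trials, prob, rng):
    """Draw m group sizes from Bin(trials, prob) as sums of Bernoulli draws."""
    sizes = []
    while len(sizes) < m:
        size = sum(1 for _ in range(trials) if rng.random() < prob)
        if size > 0:  # assumption of this sketch: empty groups are redrawn
            sizes.append(size)
    return sizes


rng = random.Random(2017)  # arbitrary seed
group_sizes = draw_group_sizes(m=1000, trials=1000, prob=0.008, rng=rng)
p = sum(group_sizes)       # total number of variables

# Assign consecutive column indices to each group.
groups = []
start = 0
for size in group_sizes:
    groups.append(range(start, start + size))
    start += size

print(f"m = {len(groups)}, p = {p}, mean size = {p / len(groups):.2f}")
```

The mean group size should come out close to 1000 × 0.008 = 8, in line with the expected value quoted in the text.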

Figure 4.

Results for the example with independent regressors and various group sizes: m = 1000, p = 7917 and n = 5000. Bars correspond to ±2SE. Entries of design matrix were drawn from 𝒩(0, 1/n) distribution and truly relevant signal, i, was generated such as , where B(m, l) is defined in (H.4).

Additionally, Figure 4 compares gSLOPE to gLASSO with two choices of the smoothing parameter λ. Firstly, we used , which allows control of the gFDR under the total null hypothesis. Secondly, for each of the iterations we chose λ based on leave-one-out cross-validation. It turns out that the first of these choices becomes rather conservative when the number of truly relevant groups increases: gLASSO then has a smaller FDR but also a much smaller power than gSLOPE (by a factor of three for k = 60). Cross-validation works in the opposite way: it yields a large power but also results in a huge proportion of false discoveries, which in our simulations systematically exceeds 60%.

2.8 Simulations under Genome-Wide Association Studies

To test the performance of gSLOPE in the context of Genome-Wide Association Studies (GWAS) we used the Northern Finland Birth Cohort (NFBC1966) dataset, available in dbGaP with accession number phs000276.v2.p1 (http://www.ncbi.nlm.nih.gov/projects/gap/cgi-bin/study.cgi?study_id=phs000276.v2.p1) and described in detail in (Sabatti et al., 2009). The raw data contains 364,590 markers for 5,402 subjects. To obtain roughly independent SNPs this data set was initially screened using the clump procedure in the PLINK software (Purcell et al., 2007; Purcell, 2009) and additional screening in R such that in the final data set the maximal correlation between any pair of SNPs does not exceed . The reduced data set contains p = 26,315 SNPs. The details of the screening procedure are provided in Appendix I.

The explanatory variables for our genetic model are defined in Table 1, where a denotes the less frequent (variant) allele. When the population frequencies of both alleles are the same, variables X̃ and Z̃ are uncorrelated. In other cases the correlation between these variables is different from zero and can be very strong for rare genetic variants. Since each SNP is described by two dummy variables, the full design matrix [X̃ Z̃] contains 52,630 potential regressors. This matrix was then centered and standardized, so that the columns of the final matrix [X Z] have zero mean and unit norm.

Table 1.

Coding for explanatory variables

| | Genotype aa | Genotype aA | Genotype AA |
|---|---|---|---|
| additive dummy variable X̃ | 2 | 1 | 0 |
| dominance dummy variable Z̃ | 0 | 1 | 0 |
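The coding of Table 1 and the subsequent centering and scaling to unit norm can be sketched as below. This is a minimal illustration: the function names and the toy genotype vector are assumptions, and genotypes are represented by the number of copies of the variant allele a.

```python
import math


def code_genotype(variant_allele_count):
    """Map the number of copies of allele a (0, 1 or 2) to the additive
    and dominance dummy variables of Table 1."""
    if variant_allele_count not in (0, 1, 2):
        raise ValueError("genotype must carry 0, 1 or 2 copies of allele a")
    additive = variant_allele_count                     # aa -> 2, aA -> 1, AA -> 0
    dominance = 1 if variant_allele_count == 1 else 0   # only aA -> 1
    return additive, dominance


def center_and_scale(column):
    """Center a column to zero mean and scale it to unit Euclidean norm."""
    mean = sum(column) / len(column)
    centered = [v - mean for v in column]
    norm = math.sqrt(sum(c * c for c in centered))
    if norm == 0.0:
        raise ValueError("a constant column cannot be standardized")
    return [c / norm for c in centered]


# Toy genotypes for one SNP: aa, aA, AA, aA, AA, AA
counts = [2, 1, 0, 1, 0, 0]
coded = [code_genotype(c) for c in counts]
x_tilde = [a for a, _ in coded]   # additive column before standardization
z_tilde = [d for _, d in coded]   # dominance column before standardization
x = center_and_scale(x_tilde)
z = center_and_scale(z_tilde)
```

A SNP observed with a single genotype yields constant columns, which cannot be standardized; the sketch raises an error in that case rather than guessing how such SNPs were treated.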

The trait values are simulated according to two scenarios. In Scenario 1 we simulate from an additive model, where each of the causal SNPs influences the trait only through the additive dummy variable in matrix X,

| (2.28) |

Here ε ~ 𝒩(0, I), the number of ‘causal’ SNPs k varies between 1 and 80 and each causal SNP has an additive effect (non-zero components of βX) equal to 5 or −5, with P(βXi = 5) = P(βXi = −5) = 0.5. In each of 100 iterations of our experiment causal SNPs were randomly selected from the full set of 26,315 SNPs.

Since SNPs were selected in such a way that they are only weakly correlated, the identification of “causal” mutations based on the additive model (2.28) can be done with regular SLOPE, as demonstrated in (Bogdan et al., 2015). However, the additive model (2.28) implicitly assumes that for each of the SNPs the expected value of the trait for the heterozygote aA is the average of expected trait values for both homozygotes aa and AA. This idealistic assumption is usually not satisfied and many of the SNPs exhibit some dominance effects. To investigate the performance of model selection criteria in the presence of the dominance effects, we simulated data according to Scenario 2:

| (2.29) |

which differs from Scenario 1 by adding dominance effects (non-zero components of βZ), which for each of k selected SNPs are sampled from the uniform distribution on [−5, −3] ∪ [3, 5]. Now, the influence of the i-th SNP on the trait is described by the vector βi = (βXi, βZi), containing its additive and dominance effects, which sets the stage for the application of gSLOPE.
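The effect sizes of both scenarios can be generated with a short sketch. It assumes, based on the verbal description above, that the trait is the sum of the additive part XβX, the dominance part ZβZ (Scenario 2 only) and standard normal noise. All function names and the small dimensions are illustrative assumptions, not the scripts used in the study.

```python
import random


def sample_dominance_effect(rng):
    """Uniform draw from [-5, -3] U [3, 5]: uniform magnitude, random sign."""
    magnitude = rng.uniform(3.0, 5.0)
    return magnitude if rng.random() < 0.5 else -magnitude


def simulate_effects(p, k, rng, with_dominance):
    """Pick k causal SNPs out of p and draw their effects."""
    causal = sorted(rng.sample(range(p), k))
    beta_x = [0.0] * p
    beta_z = [0.0] * p
    for j in causal:
        beta_x[j] = rng.choice((5.0, -5.0))  # P(5) = P(-5) = 0.5
        if with_dominance:                    # Scenario 2 only
            beta_z[j] = sample_dominance_effect(rng)
    return causal, beta_x, beta_z


def simulate_trait(x_rows, z_rows, beta_x, beta_z, rng):
    """One trait value per subject: x . beta_x + z . beta_z + N(0, 1) noise."""
    trait = []
    for x_row, z_row in zip(x_rows, z_rows):
        signal = sum(x * b for x, b in zip(x_row, beta_x))
        signal += sum(z * b for z, b in zip(z_row, beta_z))
        trait.append(signal + rng.gauss(0.0, 1.0))
    return trait


rng = random.Random(1)  # arbitrary seed
causal, beta_x, beta_z = simulate_effects(p=50, k=5, rng=rng, with_dominance=True)
```

Calling `simulate_effects` with `with_dominance=False` leaves βZ equal to zero, which corresponds to Scenario 1.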

The data simulated according to Scenario 1 and Scenario 2 were analyzed using three different approaches:

- A.1 gSLOPE with p = 26,315 groups, where each of the groups contains two explanatory variables, for the additive and the dominance effect of the same SNP,

- A.2 SLOPEX, where the regular SLOPE is used to search through the reduced design matrix X (as in Bogdan et al., 2015; Brzyski et al., 2017),

- A.3 SLOPEXZ, where the regular SLOPE is used to search through the full design matrix [X Z].

In all versions of SLOPE we used the iterative procedure for estimation of σ and the sequence λ heuristically adjusted to the case of the Gaussian design matrix, as implemented in the CRAN packages SLOPE and grpSLOPE. All scripts used in simulations as well as in real data analysis are available at https://github.com/dbrzyski/gSLOPE.

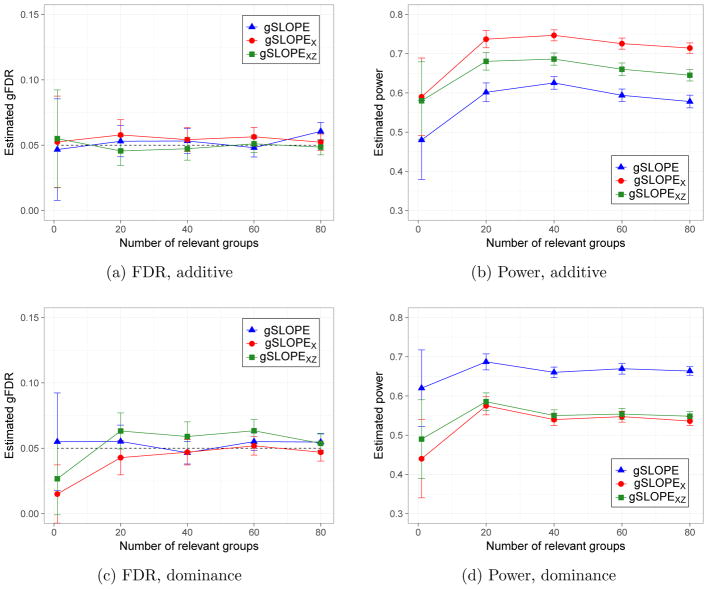

Figure 5 summarizes this simulation study. Here FDR and power are calculated at the SNP level. Specifically, in the case of SLOPEXZ a SNP is counted as one discovery if the corresponding additive or dominance dummy variable is selected.

Figure 5.

Simulations using real SNP genotypes: n = 5,402, p = 26,315. Power and gFDR are estimated based on 100 iterations of each simulation scenario. Upper panel illustrates the situation where all causal SNPs have only additive effects, while in lower panel each causal SNP has also some dominance effect.
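The SNP-level counting used for SLOPEXZ can be sketched as follows. The column layout (columns 0, …, p − 1 for additive variables and p, …, 2p − 1 for dominance variables) is a hypothetical convention chosen for this example; the quantity computed per run is the false discovery proportion, whose average over simulation runs estimates the FDR.

```python
def snps_from_columns(selected_columns, p):
    """Map selected columns of [X Z] to SNP indices.

    Hypothetical layout: column j < p is the additive variable of SNP j,
    column p + j is the dominance variable of SNP j.
    """
    return {c % p for c in selected_columns}


def fdp_and_power(discovered_snps, causal_snps):
    """False discovery proportion and power for one simulation run."""
    discovered = set(discovered_snps)
    causal = set(causal_snps)
    false_discoveries = discovered - causal
    fdp = len(false_discoveries) / max(len(discovered), 1)
    power = len(discovered & causal) / len(causal) if causal else 0.0
    return fdp, power


# Toy example with p = 10 SNPs: both variables of SNP 1 and the dominance
# variable of SNP 5 are selected, while SNPs 1, 2 and 3 are causal.
discovered = snps_from_columns([1, 11, 15], p=10)
fdp, power = fdp_and_power(discovered, causal_snps={1, 2, 3})
```

In the toy example the two selected variables of SNP 1 count as a single discovery, so two SNPs are discovered in total and one of them is false.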

As shown in Figure 5, for both of the simulated scenarios all versions of SLOPE control gFDR for all considered values of k. When the data are simulated according to the additive model the highest power is offered by SLOPEX, with the power of gSLOPE being smaller by approximately 13% over the whole range of k. However, in the presence of large dominance effects the situation is reversed and gSLOPE offers the highest power, which systematically exceeds the power of SLOPEX by the symmetric amount of 13%. In our simulations SLOPEXZ has intermediate performance and does not substantially improve the power of SLOPEX in the presence of dominance effects.

Thus our simulations suggest that gSLOPE provides information complementary to SLOPEX and our recommendation is to use both these methods when performing GWAS. SNPs detected by gSLOPE and not detected by SLOPEX almost certainly exhibit strong dominance effects and might represent rare recessive variants, as suggested by the real data analysis reported in the following section.

2.9 gSLOPE under GWAS application: real phenotype data

Finally, we have applied group SLOPE to identify SNPs associated with four lipid phenotypes available in the NFBC1966 dataset. This data set contains many characteristics of individuals from the Northern Finland Birth Cohort 1966 (NFBC1966) (Rantakallio, 1969; Jarvelin et al., 2004), a sample that enrolled almost all individuals born in 1966 in the two northernmost Finnish provinces. The most advantageous feature of this study is that “participants derive from a genetic isolate that is relatively homogeneous in genetic background and environmental exposures and that has more extensive linkage disequilibrium (i.e. neighboring markers are more strongly correlated) than in most other populations” (see Sabatti et al., 2009). The second of these features makes it possible to capture associations resulting from mutations which are not genotyped (i.e. they are represented in the design matrix only through their neighbors). In (Sabatti et al., 2009) this data set was used to look for associations for “nine quantitative traits that are heritable risk factors for cardiovascular disease (CVD) or type 2 diabetes (T2D): body mass index (BMI), fasting serum concentrations of lipids (triglycerides (TG), high-density lipoproteins (HDL) and low-density lipoproteins (LDL)), indicators of glucose homeostasis (glucose (GLU), and insulin (INS)) and inflammation (CRP), and systolic (SBP) and diastolic (DBP) blood pressure. Extreme values of these traits, in combination, identify a metabolic syndrome, hypothesized to increase risks for both CVD and T2D”. In (Brzyski et al., 2017) four lipid phenotypes, HDL, LDL, TG, and total cholesterol (CHOL), were reanalyzed using the geneSLOPE method based on SLOPEX. The results were compared to those obtained with the up-to-date EMMAX procedure (Kang et al., 2010), which controls for the polygenic background by using the mixed model approach. The study reported in (Brzyski et al., 2017) shows that in this example geneSLOPE usually points at the same genomic regions as EMMAX, but yields a better resolution of gene location. Here we analyze the same four traits (HDL, LDL, TG, and CHOL) with geneSLOPE based on SLOPEXZ and with group SLOPE, and compare the results with those obtained with SLOPEX and reported in (Brzyski et al., 2017).

We started with 5,402 individuals and 334,103 SNPs, obtained after the first step of the screening procedure described in detail in Appendix I. Since this pre-processing selects the most promising SNPs by performing multiple testing on the full set of p = 334,103 SNPs, the sequence of the tuning parameters for SLOPE needs to be adjusted to this value of p rather than to the number of selected representatives (see Brzyski et al., 2017). The algorithm for GWAS analysis with SLOPE (the entire procedure is called geneSLOPE) is implemented in the R package geneSLOPE and its details are explained in (Brzyski et al., 2017). According to an extensive simulation study and real data analysis reported in (Brzyski et al., 2017), geneSLOPE controls FDR in the analysis of full-size GWAS data.

In our data analysis we used three methods: geneSLOPE for additive effects (as in Brzyski et al., 2017), geneSLOPEXZ, with the design matrix extended by the inclusion of dominance dummy variables, and gene group SLOPE (geneGSLOPE). In geneSLOPEXZ and geneGSLOPE representative SNPs were selected based on one-way ANOVA tests. For all these procedures the pre-processing was based on the p-value threshold p < 0.05 and the correlation cutoff ρ < 0.3, which reduced the data set to roughly 8500 representative SNPs of interest (this number depends on the phenotype). For the convenience of the reader, Procedure 5 for the full geneGSLOPE analysis is provided below.

Procedure 5.

geneGSLOPE procedure

| Input: r ∈ (0, 1), π ∈ (0, 1] |

| Screen SNPs: |

| (1) For each SNP calculate independently the p-value for the ANOVA test with the null hypothesis, H0: μaa = μaA = μAA. |

| (2) Define the set ℬ of indices corresponding to SNPs whose p-values are smaller than π. |

| Cluster SNPs: |

| (3) Select the SNP j in ℬ with the smallest p-value and find all SNPs whose Pearson correlation with this selected SNP is larger than or equal to r. |

| (4) Define this group as a cluster and SNP j as the representative of the cluster. Include SNP j in 𝒮, and remove the entire cluster from ℬ. |

| (5) Repeat steps (3)–(4) until ℬ is empty. Denote by m the number of all clumps (this is also the number of elements in 𝒮). |

| Selection: |

| (6) Apply the iterative gSLOPE method (i.e. gSLOPE with σ estimation and correction for independent regressors) on X𝒮, the matrix X restricted to the columns corresponding to the set 𝒮 of selected SNPs. Here the vector of tuning parameters λ is defined as in Procedure 3, with p being the number of all initial SNPs, and is then restricted to its first m coefficients. |

| (7) Representatives which were selected indicate the selection of entire clumps. |
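Steps (2)–(5) of Procedure 5 can be sketched as a greedy loop. The sketch assumes that the ANOVA p-values of step (1) have already been computed and that pairwise correlations are available through a function. Two further choices are assumptions of this illustration rather than statements about the geneSLOPE implementation: correlations are compared in absolute value, and cluster members are searched only among SNPs still present in ℬ.

```python
def screen_and_cluster(p_values, correlation, pi, r):
    """Greedy screening and clustering of SNPs.

    p_values    -- list of marginal test p-values, one per SNP
    correlation -- callable (i, j) -> Pearson correlation of SNPs i and j
    pi          -- p-value threshold used for screening
    r           -- correlation cutoff used for clustering
    Returns the list of representatives and a dict: representative -> cluster.
    """
    # Step (2): keep SNPs whose p-values are smaller than pi.
    candidates = {j for j, pv in enumerate(p_values) if pv < pi}
    representatives = []
    clusters = {}
    while candidates:  # step (5): repeat until the candidate set is empty
        # Step (3): the remaining SNP with the smallest p-value ...
        rep = min(candidates, key=lambda j: (p_values[j], j))
        # ... and all remaining SNPs correlated with it at level r or more.
        cluster = {j for j in candidates
                   if j == rep or abs(correlation(rep, j)) >= r}
        # Step (4): record the cluster and remove it from the candidates.
        representatives.append(rep)
        clusters[rep] = sorted(cluster)
        candidates -= cluster
    return representatives, clusters


# Toy input: five SNPs, two correlated pairs.
toy_p_values = [0.001, 0.2, 0.01, 0.03, 0.004]
toy_corr = {frozenset((0, 2)): 0.5, frozenset((3, 4)): 0.4}
corr = lambda i, j: 1.0 if i == j else toy_corr.get(frozenset((i, j)), 0.0)
representatives, clusters = screen_and_cluster(toy_p_values, corr, pi=0.05, r=0.3)
```

In the toy input SNP 1 fails the screening, SNP 0 becomes the representative of the cluster {0, 2}, and SNP 4 becomes the representative of {3, 4}, so m = 2 representatives would be passed to the selection step.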

The numbers of discoveries made by geneSLOPE, geneSLOPEXZ and geneGSLOPE are summarized in Table 2, where we can observe that geneSLOPE and geneSLOPEXZ gave identical results for LDL, CHOL and TG. Compared to these methods geneGSLOPE did not reveal any new response-related SNPs for LDL and CHOL. Actually, for these two traits geneGSLOPE missed some SNPs detected by the other two methods. A different situation takes place for TG, where geneGSLOPE identifies 6 additional SNPs as compared to the other two methods. All these detections have a similar structure, showing a significant recessive effect of the minor allele. In all these cases the minor allele frequency was smaller than 0.1. The detection of such “rare” recessive effects by the simple linear regression model is rather difficult, since the regression line adjusts mainly to the two prevalent genotype groups and is almost flat (Lettre et al., 2007).
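The flatness of the regression line for a rare recessive effect can be checked with a short calculation. The sketch below is an illustration under assumptions that are not taken from the data analysis: genotype frequencies follow Hardy–Weinberg proportions, and the trait mean is shifted only for carriers of two copies of the minor allele. Under these assumptions the population least-squares slope on the additive coding equals the shift multiplied by the minor allele frequency.

```python
def additive_slope_for_recessive_effect(maf, shift):
    """Population least-squares slope of the trait on the additive coding.

    maf   -- minor allele frequency f (Hardy-Weinberg proportions assumed)
    shift -- change in the trait mean for genotype aa only (recessive effect)
    """
    f = maf
    # (copies of minor allele, genotype frequency, trait mean)
    genotypes = [
        (2, f * f, shift),
        (1, 2 * f * (1 - f), 0.0),
        (0, (1 - f) ** 2, 0.0),
    ]
    mean_x = sum(x * w for x, w, _ in genotypes)
    mean_y = sum(y * w for _, w, y in genotypes)
    cov_xy = sum(w * (x - mean_x) * (y - mean_y) for x, w, y in genotypes)
    var_x = sum(w * (x - mean_x) ** 2 for x, w, _ in genotypes)
    return cov_xy / var_x


slope = additive_slope_for_recessive_effect(maf=0.1, shift=1.0)
print(f"slope per allele copy: {slope:.3f}")
```

For a minor allele frequency of 0.1 the fitted change per allele copy is therefore only one tenth of the true shift in the aa group, which makes the effect hard to detect with the additive variable alone.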

Table 2.

Number of discoveries in real data analysis

| | HDL | LDL | TG | CHOL |
|---|---|---|---|---|
| geneSLOPE | 7 | 6 | 2 | 5 |
| geneSLOPEXZ | 8 | 6 | 2 | 5 |
| geneGSLOPE | 8 | 4 | 8 | 4 |
| New discoveries: geneSLOPEXZ | 1 | 0 | 0 | 0 |
| New discoveries: geneGSLOPE | 2 | 0 | 6 | 0 |

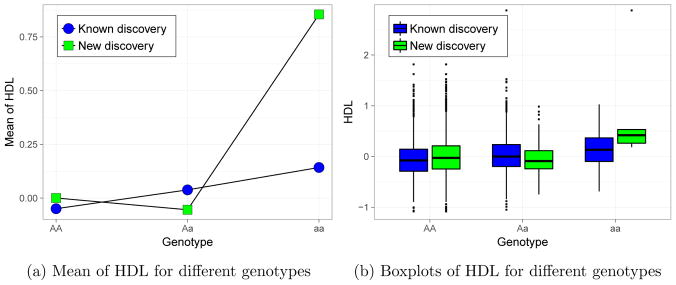

In the case of HDL all three versions of SLOPE gave different results. geneSLOPEXZ identifies one new SNP as compared to geneSLOPE, while geneGSLOPE identifies one more SNP and misses one of the discoveries obtained by the other two methods. In Figure 6 we compare two exemplary discoveries: one detected at the same time by geneSLOPE and geneGSLOPE (known discovery) and one detected only by geneGSLOPE (new discovery). This example clearly shows the additive effect of the previously detected SNP and the recessive character of the second SNP. For the new discovery there are only 5 individuals in the last genotype group, which makes the change in the mean undetectable by simple linear regression.

Figure 6.

Comparison of a discovery detected by both geneSLOPE and geneGSLOPE (known discovery), and a discovery detected only by geneGSLOPE (new discovery). The mean values of HDL for different genotypes are shown in (a) and the corresponding boxplots are presented in (b).

The results of real data analysis agree with results of simulations. They show that geneGSLOPE has a lower power than geneSLOPE for detection of additive effects but can be very helpful in detecting rare recessive variants. Thus these two methods are complementary to each other and should be used together to enhance the power of detection of influential genes.

3 Discussion

Group SLOPE is a new convex optimization procedure for selection of important groups of explanatory variables, which can be considered as a generalization of group LASSO and of SLOPE. In this article we provide an algorithm for solving group SLOPE and discuss the choice of the sequence of regularizing parameters. Our major focus is the control of group FDR, which can be obtained when variables in different groups are orthogonal to each other or they are stochastically independent and the signal is sufficiently sparse. After some pre-processing of the data such situations occur frequently in the context of genetic studies, which in this paper serve as a major example of applications.

The major purpose of controlling FDR rather than absolutely eliminating false discoveries is the wish to increase the power of detection of signals which are comparable to the noise level. As shown by a variety of theoretical and empirical results, this allows SLOPE to obtain an optimal balance between the number of false and true discoveries and leads to very good estimation and predictive properties (see e.g. Bogdan et al., 2013, 2015; Su and Candés, 2016). Our Theorem 2.6 illustrates that these good estimation properties are inherited by group SLOPE.

We provide the regularizing sequence λmax, which provably controls gFDR when variables in different groups are orthogonal. Additionally, we propose its relaxation λmean, which according to our extensive simulations controls “average” gFDR, where the average is with respect to all possible signal placements. This sequence can be easily modified to take into account the prior distribution on the signal placement. Such a “Bayesian” version of gSLOPE and the proof of control of the respective average gFDR remain an interesting topic for further research.

Another important topic for further research is the formal proof of gFDR control when variables in different groups are independent, together with precise limits on the sparsity levels under which such control is possible. Asymptotic formulas, which allow for accurate prediction of FDR for LASSO under Gaussian design, are provided in (Su et al., 2015). We expect that similar results can be obtained for SLOPE and gSLOPE and generalized to the case of random matrices, where variables are independent and come from sub-Gaussian distributions.

While we concentrated on control of FDR when groups of variables are roughly orthogonal to each other, it is worth mentioning that the original SLOPE has very interesting properties also when regressors are strongly correlated. As shown e.g. in (Figueiredo and Nowak, 2016), the Sorted L-One norm has a tendency to average estimated regression coefficients over groups of strongly correlated predictors, which enhances the predictive properties. This also helps to avoid losing important predictors due to their correlation with other features. Moreover, minimax estimation and prediction properties of SLOPE under correlated designs have been recently proved in (Bellec et al., 2016b) and (Bellec et al., 2016a). We expect similar properties to hold for gSLOPE, which would pave the way for applications in a variety of settings where the groups of predictors are not necessarily independent.

Our proposed construction of group SLOPE allows for the estimation of group effects but does not allow for the estimation of the regression coefficients of individual explanatory variables. We believe that the estimation of the individual effects would require a modification of the penalty term, so that a penalty would be imposed not only on entire groups, but also on individual coefficients. Such an idea was used in (Simon et al., 2013) in the context of sparse-group LASSO, where an additional l1 penalty on individual coefficients was used. The modification of gSLOPE in this direction would be an interesting contribution, since it could be applied for bi-level selection, that is, the selection of groups and of particular variables within the selected groups at the same time. However, achieving gFDR control with such a modified penalty currently seems to be a challenging task.

We proposed a specific application of gSLOPE for Genome Wide Association Studies, where groups contain different effects of the same SNP. It is also worth mentioning that gSLOPE can be used to group SNPs based on biological function, physical location etc. We also expect this method to be advantageous in the context of identification of groups of rare genetic variants, where considering their joint effect on phenotype should substantially increase the power of detection. Going beyond genetic analysis, group SLOPE could also be used, for example, in neuroimaging studies, where one can group voxel-wise brain activity measures, such as the ones derived from functional magnetic resonance imaging (fMRI), using the region of interest (ROI) definitions given by available anatomical atlases. Apart from these bioinformatics and medicine applications, one could also consider applying group SLOPE to a variety of compressed sensing tasks. Here the most basic application of block/group sparsity is the extension to complex numbers in which the real and imaginary parts are split (and all represented by their coefficient), with each pair forming a group (see e.g. van den Berg and Friedlander, 2008; Maleki et al., 2013). As discussed e.g. in (Elhamifar and Vidal, 2012), block sparsity arises also in a variety of other applications such as reconstructing multiband signals, face/digit/speech recognition or clustering of data on multiple subspaces (see Elhamifar and Vidal, 2012, for the respective references). Another interesting application discussed in (van den Berg and Friedlander, 2011) is the identification of temporal signals arriving from different, unknown, but stationary directions. Here the sparsity is in the direction of arrival, whereas the L2-norm is taken over the time series corresponding to this direction. These few possible applications represent only a small part of the real life group sparsity scenarios and exciting potential applications for group SLOPE, and we look forward to investigating the practical properties of group SLOPE in these real life problems.

Acknowledgments

We would like to thank Ewout van den Berg, Emmanuel J. Candés and Jan Mielniczuk for helpful remarks and suggestions. D. B. would like to thank Professor Jerzy Ombach for significant help with the process of obtaining access to the data. D. B. and M. B. are supported by European Union’s 7th Framework Programme for research, technological development and demonstration under Grant Agreement no 602552 and by the Polish Ministry of Science and Higher Education according to agreement 2932/7.PR/2013/2. Additionally D.B. acknowledges the support from NIMH grant R01MH108467.

Footnotes

An earlier version of the paper appeared on arXiv.org in November 2015: arXiv:1511.09078

References

- Abramovich F, Benjamini Y, Donoho DL, Johnstone IM. Adapting to unknown sparsity by controlling the false discovery rate. Ann Statist. 2006;34(2):584–653.

- Akaike H. A new look at the statistical model identification. IEEE Transactions on Automatic Control. 1974;19(6):716–723.

- Bakin S. PhD thesis. Australian National University; 1999. Adaptive regression and model selection in data mining problems.

- Barber RF, Candés EJ. Controlling the false discovery rate via knock-offs. The Annals of Statistics. 2015;43(5):2055–2085.

- Beck A, Teboulle M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM Journal on Imaging Sciences. 2009;1:183–202.

- Bellec P, Lecué G, Tsybakov A. Bounds on the prediction error of penalized least squares estimators with convex penalty. 2016a arXiv:1609.06675.

- Bellec P, Lecué G, Tsybakov A. Slope meets lasso: improved oracle bounds and optimality. 2016b arXiv:1605.08651.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: A practical and powerful approach to multiple testing. Journal of the Royal Statistical Society, Series B. 1995;57(1):289–300.

- Bogdan M, Chakrabarti A, Frommlet F, Ghosh JK. Asymptotic Bayes optimality under sparsity of some multiple testing procedures. Annals of Statistics. 2011;39:1551–1579.

- Bogdan M, van den Berg E, Sabatti C, Su W, Candés EJ. Slope – adaptive variable selection via convex optimization. Annals of Applied Statistics. 2015;9(3):1103–1140. doi: 10.1214/15-AOAS842.

- Bogdan M, van den Berg E, Su W, Candés EJ. Statistical estimation and testing via the ordered ℓ1 norm. 2013 arXiv:1310.1969.

- Brzyski D, Peterson C, Sobczyk P, Candés E, Bogdan M, Sabatti C. Controlling the rate of gwas false discoveries. Genetics. 2017;205:61–75. doi: 10.1534/genetics.116.193987.

- Brzyski D, Su W, Bogdan M. Group slope - adaptive selection of groups of predictors. 2015 doi: 10.1080/01621459.2017.1411269. arXiv:1511.09078.

- Elhamifar E, Vidal R. Block-sparse recovery via convex optimization. IEEE Transactions on Signal Processing. 2012;60(8):4094–4107.

- Figueiredo MAT, Nowak RD. Ordered weighted l1 regularized regression with strongly correlated covariates: Theoretical aspects. Proceedings of the 19th International Conference on Artificial Intelligence and Statistics, JMLR:W&CP. 2016;51:930–938.

- Frommlet F, Bogdan M. Some optimality properties of FDR controlling rules under sparsity. Electronic Journal of Statistics. 2013;7:1328–1368.

- Gossmann A, Cao S, Wang Y-P. Identification of significant genetic variants via SLOPE, and its extension to group SLOPE. Proceedings of the International Conference on Bioinformatics, Computational Biology and Biomedical Informatics. 2015.

- Jarvelin M, Sovio U, King V, Lauren L, Xu B, McCarthy M, Hartikainen A, Laitinen J, Zitting P, Rantakallio P, Elliott P. Early life factors and blood pressure at age 31 years in the 1966 northern finland birth cohort. Hypertension. 2004;44:838–846. doi: 10.1161/01.HYP.0000148304.33869.ee.

- Kang H, Sul J, Service S, Zaitlen N, Kong S, Freimer N, Sabatti C, Eskin E. Variance component model to account for sample structure in genome-wide association studies. Nature Genetics. 2010;42:348–355. doi: 10.1038/ng.548.

- Lettre G, Lange C, Hirschhorn JN. Genetic model testing and statistical power in population-based association studies of quantitative traits. Genetic Epidemiology. 2007;31(4):358–362. doi: 10.1002/gepi.20217.

- Maleki A, Anitori L, Yang Z, Baraniuk RG. Asymptotic analysis of complex lasso via complex approximate message passing (camp). IEEE Transactions on Information Theory. 2013;59(7):4290–4308.

- Neuvial P, Roquain E. On false discovery rate thresholding for classification under sparsity. Annals of Statistics. 2012;40:2572–2600.

- Purcell S. package plink. 2009.

- Purcell S, Neale B, Todd-Brown K, Thomas L, Ferreira MAR, Bender D, Maller J, Sklar P, de Bakker PIW, Daly MJ, Sham PC. Plink: a toolset for whole-genome association and population-based linkage analysis. American Journal of Human Genetics. 2007:81. doi: 10.1086/519795.

- Rantakallio P. Groups at risk in low birth weight infants and perinatal mortality. Acta Paediatr Scand Suppl. 1969;193:43.

- Sabatti C, Service SK, Hartikainen A, Pouta A, Ripatti S, Brodsky J, Jones CG, Zaitlen NA, Varilo T, Kaakinen M, Sovio U, Ruokonen A, Laitinen J, Jakkula E, Coin L, Hoggart C, Collins A, Turunen H, Gabriel S, Elliot P, McCarthy MI, Daly MJ, Järvelin M, Freimer NB, Peltonen L. Genome-wide association analysis of metabolic traits in a birth cohort from a founder population. Nature Genetics. 2009;41(1):35–46. doi: 10.1038/ng.271.

- Schwarz G. Estimating the dimension of a model. The Annals of Statistics. 1978;6(2):461–464.

- Simon N, Friedman J, Hastie T, Tibshirani R. A sparse-group lasso. Journal of Computational and Graphical Statistics. 2013;22(2):231–245.

- Simon N, Tibshirani R. Standardization and the group lasso penalty. Statistica Sinica. 2013;22(3):983–1001. doi: 10.5705/ss.2011.075.

- Su W, Bogdan M, Candés E. False discoveries occur early on the lasso path. 2015 arXiv:1511.01957, to appear in Ann. Statist.

- Su W, Candés E. SLOPE is adaptive to unknown sparsity and asymptotically minimax. Annals of Statistics. 2016;44:1038–1068.

- Tibshirani R. Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B. 1996;58:267–288.

- Tseng P. Technical report. University of Washington; 2008. On accelerated proximal gradient methods for convex-concave optimization.

- van den Berg E, Friedlander MP. Probing the pareto frontier for basis pursuit solutions. SIAM J on Scientific Computing. 2008;31(2):890–912.

- van den Berg E, Friedlander MP. Sparse optimization with least-squares constraints. SIAM J on Optimization. 2011;21(4):1201–1229.

- Yuan M, Lin Y. Model selection and estimation in regression with grouped variables. Journal of the Royal Statistical Society, Series B. 2006;68(1):49–67.
