Abstract

The Army Study to Assess Risk and Resilience in Servicemembers (Army STARRS) is a research project aimed at identifying risk and protective factors for suicide and related mental health outcomes among Army Soldiers. The New Soldier Study component of Army STARRS included the assessment of a range of cognitive and emotion processing domains linked to brain systems related to suicidal behavior including PTSD, mood disorders, substance use disorders, and impulsivity. We describe the design and application of the Army STARRS neurocognitive test battery to a sample of 56,824 soldiers. We investigate its structural and concurrent validity through factor analysis and correlation of scores with demographics. We conclude that, in addition to being composed of previously well-validated measures, the Army STARRS neurocognitive battery as a whole demonstrates good psychometric properties. Correlations of scores with age and sex differences mostly replicate previously published findings, highlighting moderate to large effect sizes even within this restricted age range. Factor structures of scores conform to theoretical expectations. This neurocognitive battery provides a brief, valid measurement of neurocognition that may be helpful in predicting mental health and military performance. These measures can be integrated with neuroimaging to offer a powerful tool for assessing neurocognition in Servicemembers.

Keywords: Army STARRS, Penn Computerized Neurocognitive Battery, Neurocognitive Assessment, Post-Traumatic Stress Disorder, Psychometrics

The Army Study to Assess Risk and Resilience in Servicemembers (Army STARRS, 2012) is a research project aiming primarily to investigate the recent increase in suicide rates among Army Soldiers (Kessler et al., 2013; Ursano et al., 2014). The study includes retrieval of historical data, prospective data collection, and biological sample collection across a number of sub-studies. One of these sub-studies, the New Soldier Study (NSS), involves the administration of computerized psychiatric symptom inventories, personality assessments, and neurocognitive tests to Army Soldiers at the onset of basic training. The final sample for the NSS comprises over 50,000 participants. Note that the NSS is only one of several studies within the Army STARRS project, and is the focus of the present study due to the assessment goals (neurocognitive and clinical) that motivated it.

The neurocognitive tests selected for inclusion in the Army STARRS battery are designed to assess a broad range of cognitive and emotion processing domains that have been related to disorders and problems of interest in Army STARRS, including: suicidal behavior, posttraumatic stress disorder (PTSD), mood disorders, substance and alcohol use disorders, and impulsive behavior. Most tests in this battery are from the Penn Computerized Neurocognitive Battery (CNB; Gur et al., 2001; 2010), and were included because they are based on functional neuroimaging (Gur, Erwin, & Gur, 1992; Gur et al., 2001; Roalf et al., 2014), normed on large samples (Gur et al., 2012; 2014), and adaptable for minimally proctored group administration. Other tasks chosen for this battery, specifically the Go/No-Go task (GNG) and the Emotional Stroop task (ESTROOP), were not part of the original CNB but were added to augment the Army STARRS battery with additional established suicide-related behavioral measures of impulsivity (Keilp, Sackeim, & Mann, 2005; Nock et al., 2010). That is, tests selected from the CNB were chosen because of the CNB’s well-established validity and history of use; however, the coverage of neurocognitive domains by the CNB is not without gaps, and the ESTROOP and GNG were selected to fill those gaps.

There is evidence that neurocognitive correlates of suicidal behavior include primarily deficits in executive control related to frontal lobe functioning, such as problems with abstraction and mental flexibility, attention, impulse control and decision-making (reviewed in Jollant et al., 2011; Richard-Devantoy et al., 2014). Related mental health problems such as PTSD and traumatic brain injury (TBI) also have been associated with deficits in executive functions that include sustained attention, working memory, but also episodic memory (e.g., Uddo et al., 1993; Vasterling et al., 2002; Leskin & White, 2007; Brenner et al., 2010; review in Pitman, Shalev, & Orr, 2000). Notably, alcohol and substance abuse exacerbate these deficits specifically in the areas of verbal memory, attention, and processing speed performance (Samuelson et al., 2006, p. 716), and face memory (Samuelson et al., 2009). These domains are also implicated in depression (reviews in Kurtz & Gerraty, 2009; McClintock et al., 2010). Deficits in affect processing are more specifically linked to depression (e.g., Gur, Erwin, & Gur, 1992; Naranjo et al., 2011), as well as to proneness to aggression (Weiss et al., 2006).

Our aims in selecting a neurocognitive battery for Army STARRS were: 1. To sample behavioral measures that are sensitive to the integrity of fronto-temporal brain systems, which are implicated in conditions that enhance proneness to suicide; and 2. To measure both cognitive and emotion-processing (social cognition) domains of functioning that have been documented in these conditions and are relevant to vocational and social adjustment. Because of time constraints (two sessions of about 20 minutes each were allotted by the protocol), we had to forgo administration of other tests such as measures of verbal and spatial reasoning, additional episodic memory and social cognition domains, and motor speed. Data showing psychometric properties of individual tests in initial subsamples have been reported (Thomas et al., 2013; Thomas et al., 2015).

With these aims in mind, the following tests were selected for inclusion in the battery: Penn Conditional Exclusion Test (PCET), Penn Continuous Performance Test (PCPT), Short Letter-N-Back (SLNB), Go/No-Go (GNG), Penn Face Memory Test (PFMT), Penn Emotion Identification Test (ER40), and Emotion Stroop-style Test (ESTROOP). The PCET was chosen because deficits in abstraction, problem-solving and mental flexibility have been associated with suicidal behavior (Neuringer, 1964; Schotte & Clum, 1987; Schotte, Cools, & Payvar, 1990; Keilp et al., 2001; see LeGris & van Reekum, 2006 for a review). Deficits in mental flexibility, abstract reasoning, and problem-solving are also associated with several psychiatric conditions that confer high risk for suicide, including borderline personality disorder (Fertuck et al., 2006), PTSD (Danckwerts & Leathem, 2003), major depression (Mialet et al., 1996; Paelecke-Habermann, Pohl, & Leplow, 2005), and alcohol abuse (Noël et al., 2007), as well as schizophrenia and spectrum disorders (Saykin et al., 1991). Impairments in executive functioning may be of particular concern for military personnel who suffer the combined effects of mild TBI and PTSD, as deficits in these areas of cognition are seen with high frequency.

The PCPT was chosen because lapses in executive control of attention and vigilance contribute to impairments in declarative memory (Takashima et al., 2006) and complex problem-solving. Many psychiatric conditions that are associated with attentional deficits (including PTSD, major depressive disorder, bipolar disorder, and psychosis) are known to contribute to suicidal behavior (Arsenault-Lapierre, Kim, & Turecki, 2004; Keilp et al., 2008).

The SLNB was chosen because the ability to actively maintain and refresh goal-related information is a major executive domain (Baddeley & Della Sala, 1996) that relates to dorsolateral prefrontal structures in healthy people (Ragland et al., 1997, 2002) and is sensitive to effects of TBI (Vallat-Azouvi et al., 2007), depressive disorders (Christopher & MacDonald, 2005) and PTSD (Shaw et al., 2009).

The GNG was chosen because poor GNG performance has been found in attention deficit disorder (Barkley, 1997; Durston et al., 2007), those at genetic risk for attention deficit disorder (Durston et al., 2006; Wood et al., 2011), drug abusers (Verdejo-Garcia et al., 2006), bipolar disorder with suicidal behavior (Harkavy-Friedman et al., 2006), subjects who had experienced childhood abuse (Navalta et al., 2006), subjects undergoing tryptophan depletion (LeMarquand et al., 1998; Robinson & Sahakian, 2009), and after administration of alcohol (Ostling & Fillmore, 2010). Poor performance has also been associated with self-ratings of impulsiveness in healthy volunteers (Keilp et al., 2005), and with more violent suicidal behavior. The GNG has been used extensively in EEG and functional imaging studies to produce reliable activation of ventral prefrontal and striatal brain regions in both healthy people (Durston et al., 2002; Horn et al., 2003) and patients with ADHD (Casey et al., 1997).

The PFMT (Gur et al., 1997) was chosen because the memory system is to some extent domain-specific, with greater left hemispheric involvement in verbal memory and greater right hemispheric involvement in face and shape memory. Memory for faces is related to emotional processing, and may be sensitive to the effects of PTSD and depressive disorders (Gur, Erwin, & Gur, 1992; Naranjo et al., 2011). In short, detection of memory deficits is of obvious importance because they not only could impair work performance but, in the case of deficits in memory for faces, may also indicate damage to limbic structures involved in affect regulation.

The ER40 was chosen because emotion recognition is a critical aspect of social information processing and social problem-solving. Difficulties in decoding facial affect lead to misjudgment of intentions of peers or foes, and can fuel social isolation, alienation and hostility (e.g., Weiss et al., 2006). Various psychiatric conditions modify emotional information processing; for example, individuals with PTSD have a heightened sensitivity to fearful faces (Masten et al., 2008), whereas individuals with Borderline Personality Disorder are quick to categorize emotional expressions (Fertuck et al., 2009). Impairment in affect processing is also linked to depression (e.g., Gur, Erwin, & Gur, 1992; Naranjo et al., 2011), proneness to aggression (Weiss et al., 2006), as well as to schizophrenia (Heimberg et al., 1992; Kohler et al., 2003; Kohler, Hanson, & March, 2013).

The ESTROOP was chosen because Stroop-style tasks using pathology-specific words have demonstrated a relationship between psychopathology and attentional bias in depression (Williams et al., 1996), anxiety (Foa et al., 1991; McNally et al., 1990; Teachman et al., 2007), PTSD (Kaspi, McNally, & Amir, 1995), and substance use (Cox, Fadardi, & Pothos, 2006). Suicide-specific emotional Stroop tasks have found significant interference in suicide-specific trials in recent suicide attempters (Williams et al., 1986) over and above bias to generally negative or neutral words (Becker, Strohbach, & Rinck, 1999). Recent work has also demonstrated the utility of suicide-specific ESTROOP scores as behavioral markers for future suicide attempts (Cha et al., 2010).

The present analysis examined the psychometric structure of the neurocognitive battery in an effort to derive useful indices of performance that can help link clinical parameters to neuroimaging and genomic measures in a translational context. We took advantage of the unusually large sample to obtain estimates of factorial structure on half the sample, and replicated in the other half.

Methods

Participants and Administration

Army soldiers were recruited to volunteer without compensation for the Army STARRS NSS at the start of basic training. Because soldiers might feel compelled to participate for fear of disapproval (or worse) from their commanding officers, extra emphasis was given to the fact that this was a voluntary procedure. Those who did not participate were explicitly offered the opportunity for recreational activities of their choosing (as opposed to, e.g., fitness training or other less desirable activities). All participants described below were recruited specifically for the NSS.

The current sample comprises 56,824 participants (82.3% male) from three Army bases in the United States tested between February of 2011 and November of 2012. Mean age was 21.0 (SD = 3.6) with only 2% age 32+, and racial breakdown was as follows: 69% White; 20% Black; 2.8% Asian; 1.4% American Indian; 0.8% Pacific Islander; and 5.8% other. All soldiers were asked to provide informed, written consent prior to participation in research. Army commanders provided sufficient time to complete all surveys and tests, which were administered in a group format using laptop computers. Research proctors monitored the testing environment and assisted with questions and technical difficulties. Surveys and tests were administered in a fixed order in 90-minute sessions over two days of testing. The neurocognitive part of the computerized assessment was administered in the last 20 minutes of the 90-minute assessment session.

Tests Administered

Penn Conditional Exclusion Test (PCET).

The PCET (Kurtz et al., 2004) is designed to test a participant’s ability to learn rules and principles, recognize unexpected changes in those rules, and adjust accordingly. It is based on the “Odd Man Out” paradigm, in which participants are asked to determine which particular object does not belong to a group of other objects. In the case of the PCET version administered in the Army STARRS battery, the objects vary on three characteristics: size, shape, and the thickness of the lines composing them. For example, if three of the objects are stars, and one of the objects is a square, it might be the case that the square is the “odd man out” (and therefore the correct answer), because it is the only non-star. On the other hand, if the square and two of the stars are large and one of the stars is small, the small star might be the “odd man out,” because it is the only small object.

On each trial, participants select the object they believe to be the “odd man out,” and are immediately told if they were correct or incorrect. Participants are given 48 trials to learn which characteristic (size, shape, or line thickness) is determining the “odd man out,” and then must get ten consecutive correct answers. After those correct answers, the characteristic is changed. For example, the participant might have correctly learned that size is the important characteristic, but after the ten consecutive correct answers selecting the odd size, the important characteristic will change (perhaps to shape). The participant must then recognize that the rule has changed, determine what the new rule is, and again respond with ten consecutive correct answers. Finally, after those ten correct answers, the rule is changed a third time, and the participant must again determine the new rule (and respond accordingly).

The PCET is scored based on a composite of total correct responses and the number of rules/principles the participant learned. Specifically, a performance composite score is calculated by multiplying the number of principles learned (plus 1 to accommodate those who do not learn a single rule) by proportion of correct responses (i.e. correct responses / total responses).
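As an illustration, the composite described above can be written as a short Python function. This is a minimal sketch: the function name and argument names are hypothetical and chosen only for this example; the formula itself is the one stated in the text.

```python
def pcet_composite(principles_learned: int, correct: int, total: int) -> float:
    """Performance composite: (principles learned + 1) * (correct / total)."""
    if total <= 0:
        raise ValueError("total responses must be positive")
    if not 0 <= correct <= total:
        raise ValueError("correct responses must lie between 0 and total")
    # The +1 keeps the score informative for participants who learn no rule.
    return (principles_learned + 1) * (correct / total)


# Hypothetical participant: 2 principles learned, 60 correct out of 80 responses.
example_score = pcet_composite(principles_learned=2, correct=60, total=80)
```

Because of the added 1, a participant who learns no principle still receives a score equal to the proportion of correct responses rather than zero.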

Penn Continuous Performance Test (PCPT).

The PCPT (Kurtz et al., 2001) is a test of vigilance and visual attention. Participants are shown a series of configurations of red 7-segment displays (as on a digital clock display), and asked to press a space bar when the stimulus is a number (first half) or letter (second half). Each trial lasts one second, during which the stimulus is displayed for 300 milliseconds followed by a blank screen displayed for 700 milliseconds. Total test time is three minutes (1.5 for numbers and 1.5 for letters).

Short Letter-N-Back (SLNB).

In the SLNB, participants are asked to pay attention to letters that flash on the computer screen one at a time, and to press the spacebar according to a specified principle. In the Army STARRS implementation the participant was instructed to press the spacebar whenever the letter on the screen is the same as the one before the previous letter (2-back). In all trials, the participant has 2.5 seconds to press the spacebar, and is given a practice session before beginning. This task is scored based on the total number of true positives.
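The 2-back rule and the true-positive count can be sketched as follows. The data layout (one letter and one press indicator per trial) and all names are assumptions made for illustration, not a description of the actual test software.

```python
def count_true_positives(letters, pressed, n_back=2):
    """Count presses on trials where the letter matches the one n_back trials earlier."""
    hits = 0
    for i in range(n_back, len(letters)):
        is_target = letters[i] == letters[i - n_back]
        if is_target and pressed[i]:
            hits += 1
    return hits


# Hypothetical sequence: trials 2 and 5 (0-indexed) are 2-back targets.
letters = ["B", "K", "B", "T", "K", "T", "M"]
pressed = [False, False, True, False, True, True, False]
true_positives = count_true_positives(letters, pressed)
```

In this toy sequence the press on trial 4 is a false alarm and does not contribute to the score, since only true positives are counted.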

Go/No-Go (GNG).

The Go/No-Go task is a measure of impulse control that requires subjects to respond to either a single designated target or a series of targets, and to inhibit responding to a particular low frequency non-target. The goal of the task is to induce subjects to develop a tendency to respond, and then to interrupt that tendency with an intermittent non-target. In their simplest form, Go/No-Go tasks use a series of letters or symbols as targets, and a single letter or figure as a non-target. In the Army STARRS Go/No-Go task, participants see a series of Xs and Ys quickly displayed at different positions on the screen. Each stimulus is shown for 300ms, followed by a uniform black screen for 900ms. Participants are instructed to respond (press the spacebar) if and only if an X appears in the upper-half of the screen. Thus, participants must inhibit the impulse to respond to both Xs in the lower half of the screen and Ys generally.
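The response rule can be made concrete with a small sketch. The text does not state how the task was scored, so the four outcome labels below follow common signal-detection terminology and are an assumption, as are the function names.

```python
def is_go_trial(letter: str, in_upper_half: bool) -> bool:
    """A response is required if and only if an X appears in the upper half."""
    return letter == "X" and in_upper_half


def classify_trial(letter: str, in_upper_half: bool, responded: bool) -> str:
    """Label a single trial; labels are illustrative, not the study's scoring."""
    if is_go_trial(letter, in_upper_half):
        return "hit" if responded else "miss"
    return "false_alarm" if responded else "correct_rejection"


# An X in the lower half and a Y anywhere are both no-go trials.
outcomes = [
    classify_trial("X", True, True),
    classify_trial("X", False, True),
    classify_trial("Y", True, False),
]
```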

Penn Face Memory Test (PFMT).

The PFMT presents examinees 20 faces that they will be asked to identify later. Faces are shown in succession for an encoding period of 5 seconds each. After this initial learning period, examinees are immediately shown a series of 40 faces—20 targets and 20 distractors—and are asked to decide whether they have seen each face before by choosing 1 of 4 ordered categorical response options: “definitely yes”; “probably yes”; “probably no”; or “definitely no.” Stimuli consist of black-and-white photographs of faces presented on a black background. All faces were rated as having neutral expressions and were balanced for gender and age (Gur et al., 1993, 2001). Responses and response times are recorded during test administration; however, there are no time limits during recognition testing or explicit instructions to work quickly.

Penn Emotion Identification Test (ER40).

The ER40 (Carter et al., 2009; Erwin et al., 1992; Habel et al., 2000; Kohler et al., 2003; Mathersul et al., 2009) measures the ability of an individual to recognize the specific emotion being expressed by a poser. Participants are shown a series of forty faces, and asked to choose (among five options) which emotion the person in the photograph is expressing. The five options are Happy, Sad, Anger, Fear, or No Emotion. There are four male and four female faces for each emotion, for a total of forty faces (8 actor photos × 5 emotions = 40).

Emotion Stroop-style Test (ESTROOP).

The traditional Stroop paradigm measures the degree to which semantic processing interferes with color identification (Stroop, 1935). In the classic case, color words (e.g., GREEN, RED, BLUE) are displayed in potentially incongruous font colors. Participants are required to name the font colors while ignoring the semantic content of the color words. Response latencies on incongruous words (interference trials) are thought to capture an effort to inhibit a prepotent bias to ignore font color when reading. The Emotional Stroop adds another layer to this paradigm by displaying emotionally valenced words in addition to color words. These valenced words are either generally negative (e.g., alone, rejected, stupid) or specific to suicide (e.g., suicide, dead, funeral) and have been previously used in other suicide-related behavioral measures (Nock et al., 2010). The ESTROOP measures interference due to attentional bias by subtracting response latencies to neutral words from those for negative or suicide-specific words.
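A minimal sketch of the interference score follows. The text specifies only that latencies to neutral words are subtracted from latencies to negative or suicide-specific words; summarizing each condition by its mean latency is an assumption made here for illustration, and the function name is hypothetical.

```python
from statistics import mean


def interference_ms(valenced_rts_ms, neutral_rts_ms):
    """Mean latency to valenced words minus mean latency to neutral words."""
    return mean(valenced_rts_ms) - mean(neutral_rts_ms)


# Hypothetical latencies (milliseconds) for one participant.
suicide_word_rts = [700, 720, 740]
neutral_word_rts = [650, 670, 690]
suicide_interference = interference_ms(suicide_word_rts, neutral_word_rts)
```

A positive value indicates slower color naming for valenced words than for neutral words, which is the pattern interpreted as attentional bias.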

Data Analysis

Data cleaning.

Flags were assigned to test sessions with response patterns consistent with hardware/software malfunction and/or subject inattention, misunderstanding, or non-compliance. First, histograms for all measures (accuracy and speed) were examined visually for impossible results (e.g., negative response times), suspicious patterns (e.g., unusually high frequency of an exact millisecond-resolution response time), or suspicious distributions (e.g., bimodal), any of which would suggest possible software/hardware failure. No such problems were found. Next, response patterns for individual tests were examined for subject-related problems (e.g., non-compliance), with thresholds for flagging a test session varying by test. For example, sessions for the CPT (a rapid task) were flagged if there were 10 consecutive responses (presses) or 20 consecutive non-responses. Sessions for the ER40 (a deliberative task) were flagged if the same emotion was selected ≥ 7 times in a row and/or if there was at least 1 response time ≤ 250 milliseconds. Such rules were established for each of the seven tests based on post hoc examination of the data, such that a flagging rule was applied only if it described a situation where a participant-related problem almost certainly existed. These flagged test sessions were excluded from analysis unless otherwise indicated. Note that only the flagged test was excluded, not the entire battery; thus, it was possible for some participants to have data for only some of the individual tests. Missing data were therefore handled using pairwise deletion in all analyses described below. However, as an added precaution, we also re-ran all analyses using more stringent quality assurance criteria: participants with any missing data were removed (listwise), and outliers > 3 standard deviations (SDs) from the mean on each score were removed. Supplementary Table S1 shows the percentages of scores on each test that fell outside this range of +/− 3 SDs. All results using the more stringent criteria are shown in the Supplement as indicated below in the Results section for each analysis.
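The two example flagging rules given above (for the CPT and the ER40) can be sketched as follows. The per-trial data layout and the function names are assumptions for illustration; the rules for the other five tests are not specified in the text and are not reproduced here.

```python
from itertools import groupby


def longest_run_of(seq, value):
    """Length of the longest run of consecutive items equal to `value`."""
    best = current = 0
    for item in seq:
        current = current + 1 if item == value else 0
        best = max(best, current)
    return best


def longest_run_any(seq):
    """Length of the longest run of any repeated consecutive item."""
    return max((len(list(group)) for _, group in groupby(seq)), default=0)


def flag_cpt(pressed):
    """Flag if 10 consecutive presses or 20 consecutive non-responses occur."""
    return longest_run_of(pressed, True) >= 10 or longest_run_of(pressed, False) >= 20


def flag_er40(choices, rts_ms):
    """Flag if one emotion is chosen >= 7 times in a row or any RT is <= 250 ms."""
    return longest_run_any(choices) >= 7 or any(rt <= 250 for rt in rts_ms)
```

For example, `flag_er40(["Happy"] * 7, [900] * 7)` returns `True` because the same emotion is selected seven times in succession, whereas an alternating response pattern with ordinary response times is not flagged.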

Concurrent validity.

To assess the concurrent validity of the individual tests composing the Army STARRS battery, we examined gender differences within each test using t-tests, and plotted each test score’s (accuracy and speed) relationship with age. The tests’ relationships with age were tested statistically via robust linear regression (Maronna & Yohai, 2000) including both age and age-squared to account for nonlinearity. Robust linear regression was used due to our suspicion that the assumption of homoscedasticity would be violated. This suspicion was tested using the Breusch and Pagan (1979) method, which tests for a linear relationship between the independent variables and the squared regression residuals; the test statistic is distributed as a χ2, and a statistically significant value indicates a violation of the homoscedasticity assumption. All analyses were performed using the stats, car (Fox & Weisberg, 2011), and robustbase (Rousseeuw et al., 2016) packages in R (v3.2.0; R Core Team, 2016), and plots were created using SPSS (version 21). Additionally, a large number of participants (N = 3949) reported having ever experienced a TBI, here defined as an injury that resulted in (a) a perforated eardrum, (b) loss of consciousness for more than 30 minutes, or both. Thus, because TBI is an obvious potential confounder, the above analyses were performed again after excluding participants who reported TBI.
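To make the logic of the heteroscedasticity check concrete, the following Python sketch computes a Breusch-Pagan-type statistic for a single predictor. It is not the code used in the study (which relied on R packages): it uses the studentized form of the statistic (sample size times the R-squared of the auxiliary regression of squared residuals on the predictor), a simplified model with one predictor rather than age and age-squared, and simulated data. All names are hypothetical.

```python
import random


def fit_line(x, y):
    """Ordinary least-squares line; returns (intercept, slope)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def residuals(x, y):
    intercept, slope = fit_line(x, y)
    return [yi - (intercept + slope * xi) for xi, yi in zip(x, y)]


def breusch_pagan_lm(x, y):
    """Studentized statistic: n * R^2 from regressing squared residuals on x."""
    squared = [e ** 2 for e in residuals(x, y)]
    mean_sq = sum(squared) / len(squared)
    ss_total = sum((s - mean_sq) ** 2 for s in squared)
    ss_resid = sum(e ** 2 for e in residuals(x, squared))
    return len(x) * (1 - ss_resid / ss_total)


# Simulated data (assumption): error spread is constant in one outcome
# and grows with the predictor in the other.
rng = random.Random(0)
age_z = [rng.uniform(-1.5, 3.0) for _ in range(500)]
score_equal = [0.1 * a + rng.gauss(0, 1.0) for a in age_z]
score_fanning = [0.1 * a + rng.gauss(0, 0.5 + 0.3 * (a + 1.5)) for a in age_z]

lm_equal = breusch_pagan_lm(age_z, score_equal)
lm_fanning = breusch_pagan_lm(age_z, score_fanning)
```

With one predictor the statistic is referred to a χ2 distribution with one degree of freedom, for which the 5% critical value is approximately 3.84; values above that threshold would lead to rejecting homoscedasticity.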

Factor analysis.

To assess the latent structure of the Army STARRS battery, we first estimated unidimensional and 2-factor exploratory factor solutions (EFAs). Note that a major step of most EFAs—i.e. judging, empirically or theoretically, the appropriate number of factors to extract—was not necessary in this case due to the small number of variables (seven). Extracting three or more factors would guarantee that at least one of the factors was indicated by fewer than three variables, making those factors not properly identified. We thus chose to estimate only unidimensional and 2-factor solutions.

Next, based on the exploratory results and the theory that motivated test selection, we estimated a confirmatory bifactor model of the efficiency scores with two specific factors and one general factor. Bifactor modeling is a way to estimate the contribution of a test to an overall dimension (performance in this case) after controlling for its specific factor, and vice versa. Bifactor models are similar to higher-order models (in which one general factor comprises the lower-order factors, which themselves comprise the individual tests), except that, in a bifactor model, there are direct effects of the general factor on the individual tests. For more information on strengths and weaknesses of bifactor modeling, see Reise (2012) and Reise, Moore, and Haviland (2010). Note, however, that because of the brevity of the battery, a higher-order model was not feasible (without mathematical constraints), as the higher-order factor needs at least three lower-order factors in order to be identified. Here, we have only two lower-order factors. Also, note that we do not compare the bifactor model to a standard correlated-traits model because, even if the latter had better fit indices and lower (better) information criteria, it would not be the model of choice because one of the purposes of the confirmatory model is to generate one overall score, something not possible with a correlated-traits model. When sub-factor scores are desired, we use and recommend the two-factor exploratory model shown below.

All EFAs were performed using least squares extraction and oblimin rotation in the psych package (Revelle, 2013) in R, and the confirmatory model was estimated using the robust maximum likelihood (MLR) estimator in Mplus (v6; Muthén & Muthén, 2010). Also, due to a minor estimation problem for the CFA, the residual variance of ER40 had to be constrained to be > 0. Note that this value was not fixed (specified in the model), but was simply constrained using the MODEL CONSTRAINT command in Mplus, which removes a problematic portion of the maximum likelihood estimation search space.

Though there are a number of ways to evaluate the fit of a model (and many corresponding “thresholds” for acceptable fit), we follow the recommendations of Hu and Bentler (1998; 1999) throughout this manuscript. Missing data were handled using pairwise deletion (but see below), and the confirmatory model was identified by setting one loading to 1.0 per factor. Additionally, to achieve some level of cross-validation and avoid sample-specific solutions, the total sample was randomly split into an exploratory group (N = 26,050) and confirmatory group (N = 25,000). All EFA results reported below are based on the exploratory group, and the CFA on the confirmatory group. Note, however, that one of the potential hazards of random-split cross-validation is that the two groups, by chance, might differ in some consequential way. Here, the most important way they might differ is in the variances of the test scores (accuracy, RT, and efficiency). We therefore tested for equal variance between groups using F-tests, and compared the groups on age and sex.
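The split-half procedure and the variance comparison can be sketched as follows. This is an illustrative outline only: the seed, the toy scores, and the function names are assumptions, and the sketch returns the F ratio without a p-value, because the F distribution is not available in the Python standard library.

```python
import random
from statistics import variance


def random_split(ids, n_exploratory, seed=2024):
    """Shuffle participant IDs and split them into two disjoint groups."""
    shuffled = list(ids)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_exploratory], shuffled[n_exploratory:]


def variance_ratio(scores_a, scores_b):
    """F statistic for equality of variances: ratio of sample variances."""
    return variance(scores_a) / variance(scores_b)


# Toy example: 100 hypothetical participant IDs split into two halves.
exploratory_ids, confirmatory_ids = random_split(range(100), n_exploratory=50)

# Toy scores: the second group has twice the spread of the first.
f_ratio = variance_ratio([1, 2, 3, 4], [2, 4, 6, 8])
```

An F ratio close to 1 is consistent with equal variances in the two groups; in the toy example the ratio is far from 1 because the second set of scores was constructed with a larger spread.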

Results

Sex Differences

Table 1 shows the results of the analyses examining sex differences. Because scores were z-scores standardized to the global mean, values in the rightmost column of Table 1 (“Difference”) can be interpreted as effect sizes. The sex differences in accuracy are mostly consistent with previous findings using the same tests (Gur et al., 2012). Specifically, for accuracy females outperform males on attention (CPT), impulse control (GNG and ESTROOP), face memory (PFMT), and emotion identification (ER40), while males outperform females on mental flexibility (PCET) and working memory (SLNB). In terms of speed, females are faster (lower RT) on the PCET, PFMT and ER40. We found no significant sex differences on the SLNB, and the slower performance of males on the CPT is not consistent with previous findings. Finally, males perform faster on the ESTROOP and much faster on the GNG, both measures of impulsivity. This finding is consistent with their poorer accuracy reported above and a speed/accuracy trade-off characteristic of the Go/No-Go paradigm (Trommer et al., 1988).

Table 1.

Gender Effects on the Army STARRS Neurocognitive Test Battery

| Test | Male Mean | Male SD | Male N | Female Mean | Female SD | Female N | Difference (M – F) |
|---|---|---|---|---|---|---|---|
| ESTROOP_Accuracy | −.02 | 1.00 | 46788 | .15 | .90 | 10036 | −.17* |
| CPT_Accuracy | .00 | 1.00 | 43282 | .04 | .97 | 9321 | −.04 |
| ER40_Accuracy | −.02 | 1.01 | 43893 | .10 | .92 | 9544 | −.12* |
| GNG_Accuracy | .00 | 1.00 | 43283 | .04 | .98 | 9216 | −.04* |
| SLNB_Accuracy | .02 | 1.00 | 35744 | −.06 | 1.00 | 7231 | .08* |
| PCET_Accuracy | .04 | .99 | 37293 | −.17 | 1.03 | 7538 | .21* |
| PFMT_Accuracy | −.01 | 1.00 | 40281 | .06 | 1.00 | 8915 | −.07* |
| ESTROOP_RT | −.03 | 1.00 | 46788 | .09 | .97 | 10036 | −.12* |
| CPT_RT | .01 | 1.01 | 43282 | −.05 | .90 | 9321 | .06* |
| ER40_RT | .05 | 1.01 | 43893 | −.27 | .87 | 9544 | .32* |
| GNG_RT | −.06 | .97 | 43283 | .29 | 1.08 | 9216 | −.35* |
| SLNB_RT | −.01 | .99 | 35744 | .02 | 1.04 | 7231 | −.03 |
| PCET_RT | .01 | 1.01 | 37293 | −.06 | .94 | 7538 | .07* |
| PFMT_RT | .01 | 1.01 | 40281 | −.03 | .96 | 8915 | .04 |
| ESTROOP_Efficiency | .00 | 1.00 | 46788 | .05 | .94 | 10036 | −.05* |
| CPT_Efficiency | .00 | 1.00 | 43282 | .05 | .94 | 9321 | −.05* |
| ER40_Efficiency | −.05 | 1.00 | 43893 | .25 | .91 | 9544 | −.30* |
| GNG_Efficiency | .04 | .98 | 43283 | −.18 | 1.04 | 9216 | .22* |
| SLNB_Efficiency | .02 | .99 | 35744 | −.06 | 1.03 | 7231 | .08* |
| PCET_Efficiency | .02 | 1.00 | 37293 | −.07 | .99 | 7538 | .09* |
| PFMT_Efficiency | −.01 | 1.00 | 40281 | .07 | .98 | 8915 | −.08* |

Note. Accuracy, response times, and efficiency scores are in z-score units such that a difference of .05 indicates a .05 standard deviation difference; RT = response time; SD = standard deviation; CPT = Continuous Performance Task; ER40 = Emotion Recognition; GNG = Go/No-Go; SLNB = Short Letter-N-Back; PCET = Penn Conditional Exclusion Task; PFMT = Penn Face Memory Test; ESTROOP = Emotional Stroop Task; * = p < .001.

The bottom portion of Table 1 shows sex differences in efficiency, which is the average of an individual’s accuracy and speed scores. All seven gender differences in efficiency reported here are highly significant. Specifically, females outperform males on the CPT, ESTROOP, and PFMT, and quite substantially on the ER40. Males outperform females on the GNG, SLNB, and PCET.
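The efficiency score can be illustrated with a short sketch. Two points are assumptions made here rather than details given in the text: the speed score is taken to be the sign-reversed z-score of response time (so that higher means faster), and standardization uses the population standard deviation of the sample at hand. Function names are hypothetical.

```python
from statistics import mean, pstdev


def zscores(values):
    """Standardize values to mean 0 and standard deviation 1."""
    center, spread = mean(values), pstdev(values)
    return [(v - center) / spread for v in values]


def efficiency(accuracy, rt_ms):
    """Average of accuracy z-score and speed z-score for each participant."""
    accuracy_z = zscores(accuracy)
    # Assumption: speed is response time reversed, so faster = higher.
    speed_z = [-z for z in zscores(rt_ms)]
    return [(a + s) / 2 for a, s in zip(accuracy_z, speed_z)]


# Three hypothetical participants: the most accurate is also the fastest.
efficiency_scores = efficiency(accuracy=[0.6, 0.8, 1.0], rt_ms=[900, 700, 500])
```

Under these assumptions a participant who is both more accurate and faster than average receives a positive efficiency score, while high accuracy achieved through slow responding is pulled toward zero.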

Supplementary Table S2 shows the results of the above analyses performed using listwise-deletion and outlier-removal. All significant sex differences remain significant, and no previously non-significant effect becomes significant. In addition, when the above analyses were conducted on the full sample after removing participants reporting a TBI, almost all results remained. The only exception was that the difference between males and females on PFMT RT became significant (new M – F difference = 0.6; p < 0.001).

Age Effects

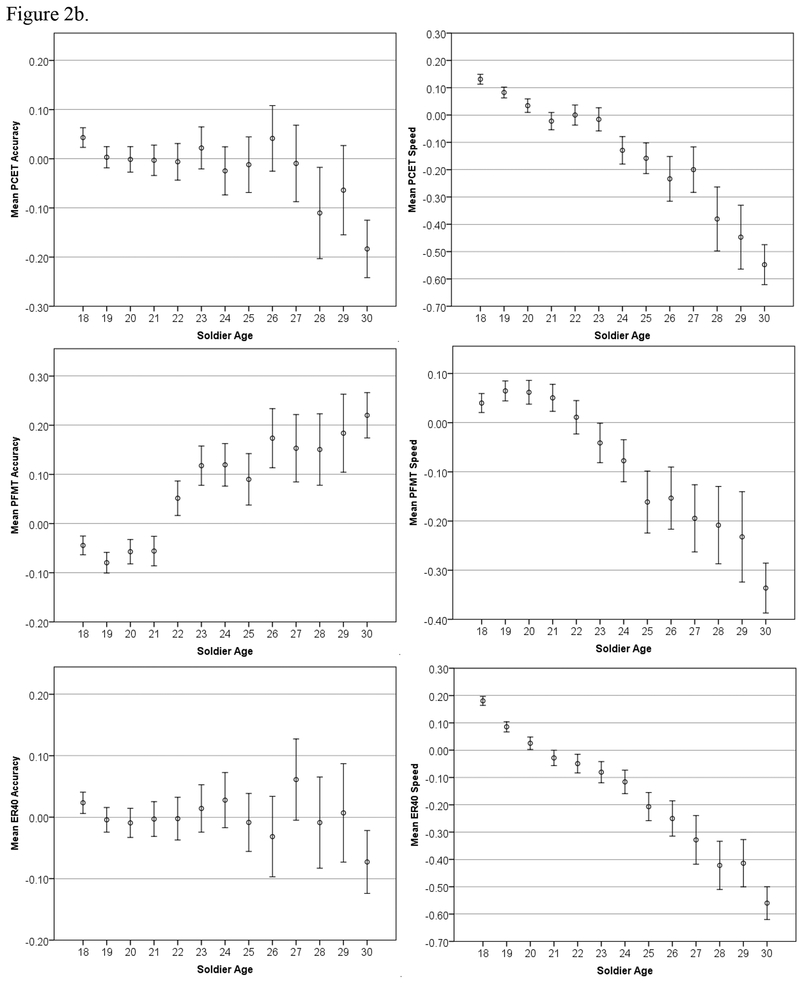

Tests confirmed heteroscedasticity (p < 0.05) for all variables, and we thus proceeded with robust regression. All linear effects of age were significant (p < 0.05) except for ER40 accuracy (p = 0.70). Additionally, many nonlinear terms (age-squared) were significant, and this information is shown in Table 2. To further explore the nonlinear relationships, these associations are presented graphically. Figure 1 shows the relationships between age and overall accuracy and speed, and Figure 2 shows each individual test score’s relationship with age. The age trends in Figure 1 are clear: Accuracy increases with age until approximately 27 years old, and speed correspondingly decreases with age at enlistment.

Table 2.

Associations of Age with Accuracy and Speed on Seven Neurocognitive Tests (Full Sample)

| Score | Age (Standardized): Beta | Age (Standardized): p-value | Age-Squared: Beta | Age-Squared: p-value |
|---|---|---|---|---|
| ESTROOP_Accuracy | .118 | <.001 | −.015 | <.001 |
| CPT_Accuracy | .190 | <.001 | −.030 | <.001 |
| ER40_Accuracy | −.002 | .702 | −.003 | .052 |
| GNG_Accuracy | .125 | <.001 | −.014 | <.001 |
| SLNB_Accuracy | .043 | <.001 | −.010 | <.001 |
| PCET_Accuracy | −.019 | .029 | −.005 | .168 |
| PFMT_Accuracy | .099 | <.001 | −.009 | <.001 |
| ESTROOP_Speed | −.110 | <.001 | .007 | <.001 |
| CPT_Speed | .038 | <.001 | −.004 | <.001 |
| ER40_Speed | −.175 | <.001 | .012 | <.001 |
| GNG_Speed | −.097 | <.001 | .005 | .022 |
| SLNB_Speed | −.043 | <.001 | .006 | <.001 |
| PCET_Speed | −.113 | <.001 | −.001 | .620 |
| PFMT_Speed | −.079 | <.001 | −.001 | <.001 |

Note. Age-Squared is the square of standardized age; significant effects bolded.
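To illustrate how the linear and quadratic coefficients in Table 2 combine, the following sketch evaluates the fitted curve for CPT accuracy. It assumes the model has the form score = b0 + b1·age_z + b2·age_z², where age_z is standardized age; the intercept is not reported, so predictions are expressed relative to a recruit of mean age. The function names are illustrative, and the result is in standardized age units because the mean and SD of age needed to convert to years are not given in this section.

```python
def predicted_change(age_z, beta_linear, beta_quadratic):
    """Predicted score (in SD units) relative to a recruit of mean age."""
    return beta_linear * age_z + beta_quadratic * age_z ** 2


def turning_point(beta_linear, beta_quadratic):
    """Standardized age at which the fitted quadratic peaks or bottoms out."""
    if beta_quadratic == 0:
        raise ValueError("no turning point without a quadratic term")
    return -beta_linear / (2 * beta_quadratic)


# CPT accuracy coefficients taken from Table 2.
cpt_linear, cpt_quadratic = 0.190, -0.030

# Vertex of the fitted parabola, in SD units of age above the mean.
cpt_peak = turning_point(cpt_linear, cpt_quadratic)

# Predicted advantage of a recruit one SD older than the mean.
gain_at_plus_1sd = predicted_change(1.0, cpt_linear, cpt_quadratic)
```

Under these assumptions the fitted CPT accuracy curve keeps rising across most of the observed age range, with a vertex a little over three SDs above the mean age. A vertex that far out lies where few recruits are observed, so it describes the shape of the fitted curve rather than a precisely estimated peak.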

Figure 1.

Age trends in Standardized Overall Accuracy and Speed (z-scores) for the STARRS battery, with 95% Confidence Intervals.

Figure 2.

Age trends in Standardized Accuracy and Speed (z-scores) for the STARRS battery, with 95% Confidence Intervals.

Both age-related trends (in accuracy and speed) are further examined in Figure 2 by reducing the summary scores to their individual component test scores; Figure 2a shows the four executive-/frontal lobe-related tests (CPT, SLNB, GNG, and ESTROOP), and Figure 2b shows the three reasoning-/memory-related tasks (PCET, PFMT, and ER40).

Finally, Supplementary Table S3 shows the results of the above analyses performed using listwise-deletion and outlier-removal. Relationships with age remained largely consistent with the full sample, with the following exceptions: the nonlinear association with SLNB accuracy became non-significant; the linear association with PCET accuracy became non-significant; the nonlinear association with PCET accuracy became significant; the nonlinear association with GNG speed became non-significant; the nonlinear association with PCET speed became significant; and the nonlinear association with PFMT speed became non-significant. In addition, when the above analyses were conducted on the full sample after removing participants reporting a TBI, almost all results remained. The only exceptions were: 1) the age-squared term for PFMT speed became non-significant, 2) the linear age term for PCET accuracy became non-significant, and 3) the age-squared term for ER40 accuracy became significant (p < 0.05).

Exploratory Factor Analysis

Results of the comparison of the exploratory (E) and confirmatory (C) samples were mixed. They did not differ significantly by age (mean = 21.01 years for E and 21.02 for C) or sex (17.76% female for E and 17.38% for C). However, the variance of 7 out of the 21 scores did differ significantly between groups, even after correcting for multiple comparisons. Table 3 lists the adjusted p-values for the tests of equality of variances; unequal variance was detected for ESTROOP RT, GNG RT, SLNB RT, PFMT RT, ESTROOP Efficiency, CPT Efficiency, and ER40 Efficiency. Thus, cross-validation of the EFA with the CFA below should be interpreted with some caution.

Table 3.

P-values for F-tests of Equal Variance between Exploratory and Confirmatory Samples Used for Factor Analyses.

| Score | p-value |
|---|---|
| ESTROOP_Accuracy | .118 |
| CPT_Accuracy | 1.000 |
| ER40_Accuracy | .095 |
| GNG_Accuracy | 1.000 |
| SLNB_Accuracy | 1.000 |
| PCET_Accuracy | 1.000 |
| PFMT_Accuracy | 1.000 |
| ESTROOP_RT | <.001 |
| CPT_RT | .422 |
| ER40_RT | .118 |
| GNG_RT | .048 |
| SLNB_RT | .050 |
| PCET_RT | 1.000 |
| PFMT_RT | <.001 |
| ESTROOP_Efficiency | <.001 |
| CPT_Efficiency | <.001 |
| ER40_Efficiency | .008 |
| GNG_Efficiency | 1.000 |
| SLNB_Efficiency | 1.000 |
| PCET_Efficiency | 1.000 |
| PFMT_Efficiency | .486 |

Note. P-values are corrected for multiple comparisons using the Holm (1979) method.
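For readers unfamiliar with the Holm (1979) correction, the sketch below shows the step-down adjustment in plain Python. The function name and the three raw p-values are hypothetical; the raw (unadjusted) p-values behind Table 3 are not reported here.

```python
def holm_adjust(p_values):
    """Holm (1979) step-down adjusted p-values, returned in input order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for rank, idx in enumerate(order):
        # The smallest p-value is multiplied by m, the next by m - 1, etc.,
        # and every adjusted value is capped at 1.
        candidate = min(1.0, (m - rank) * p_values[idx])
        # Enforce monotonicity: an adjusted p-value can never be smaller
        # than the adjusted value of a smaller raw p-value.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


raw = [0.01, 0.04, 0.03]   # hypothetical raw p-values
adj = holm_adjust(raw)
```

Because each raw p-value is multiplied by the number of hypotheses still under consideration and then capped at 1, a table of Holm-adjusted values will often contain many entries equal to 1.000, as Table 3 does.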

Table 4 shows the unidimensional (1-factor) and 2-factor exploratory solutions of the Army STARRS efficiency, accuracy, and speed scores. The fit of the unidimensional models for all three score types was moderate-to-poor. Specifically, the root mean square error of approximation (RMSEA) for efficiency, accuracy, and speed were 0.082 (± 0.003), 0.065 (± 0.003), and 0.096 (± 0.003), respectively; and the df-corrected root mean square residuals (RMSRs) were 0.07, 0.05, and 0.08, respectively. The borderline fit of the unidimensional accuracy model indicates that one might be justified in calculating an “overall accuracy” score while ignoring multidimensionality, but the same could not be said of the speed and efficiency models, which are clearly multidimensional.
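As a reminder of what the RMSEA summarizes, the sketch below implements one common formulation based on the model chi-square, its degrees of freedom, and the sample size. The chi-square values for the models in Table 4 are not reported in this section, so the inputs are hypothetical, and the software used for the reported estimates may use a slightly different variant (for example, N rather than N − 1 in the denominator).

```python
from math import sqrt


def rmsea(chi_square, df, n):
    """Root mean square error of approximation (one common formulation).

    chi_square: model chi-square statistic
    df:         model degrees of freedom
    n:          sample size
    """
    # Misfit beyond what is expected by chance (chi-square minus df),
    # floored at zero and scaled by degrees of freedom and sample size.
    return sqrt(max(chi_square - df, 0.0) / (df * (n - 1)))


# Hypothetical inputs, not taken from the study.
example = rmsea(chi_square=150.0, df=50, n=101)
```

Lower values indicate closer fit, which is why the drop from the unidimensional to the two-factor solutions is read as evidence of multidimensionality.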

Table 4.

Unidimensional and Two-Factor Solutions of Efficiency, Accuracy, and Speed Scores from the Army STARRS Battery

| Test | Efficiency Uni | Efficiency F1 | Efficiency F2 | Accuracy Uni | Accuracy F1 | Accuracy F2 | Speed Uni | Speed F1 | Speed F2 |
|---|---|---|---|---|---|---|---|---|---|
| PCET | .31 | | .41 | .23 | | .45 | .50 | | .55 |
| CPT | .49 | .39 | | .59 | .32 | .33 | .31 | .39 | |
| SLNB | .53 | .50 | | .47 | .22 | .32 | .25 | .40 | |
| GNG | .66 | .77 | | .76 | .90 | | .43 | .69 | |
| PFMT | .37 | | .31 | .42 | | .30 | .40 | | .37 |
| ER40 | .40 | | .68 | .30 | | .39 | .60 | | .70 |
| ESTROOP | .46 | .45 | | .52 | .45 | | .25 | .23 | |

Note. Rotation = oblimin; F1 and F2 are the factors of the two-factor solution; inter-factor correlations for efficiency, accuracy, and speed are .45, .58, and .41, respectively; loadings with absolute value less than .20 not shown; Uni = unidimensional; CPT = Continuous Performance Task; ER40 = Emotion Recognition; GNG = Go/No-Go; SLNB = Short Letter-N-Back; PCET = Penn Conditional Exclusion Task; PFMT = Penn Face Memory Test; ESTROOP = Emotional Stroop Task.

The two-factor models from Table 4 mostly confirm the hypothesis that the Army STARRS battery scores are multidimensional. The fit of the efficiency model is excellent (RMSEA = 0.038 ± 0.003; RMSR = 0.03), and mostly conforms to theory. Factor 1 comprises the four tests designed to measure frontal executive control functions of attention, working memory, impulse control, and management of emotional interference (CPT, SLNB, GNG, and ESTROOP, respectively). By contrast, Factor 2 comprises the three tests that require more complex cognition involving additional temporo-parietal functions of abstraction and mental flexibility, episodic memory, and emotion recognition (PCET, PFMT, and ER40, respectively). In light of age group effects in Figure 1 and Table 2, it is notable that the tests included in Factor 1 show better scores in the older cohorts while those of Factor 2 remain stable or get lower with increased cohort age. Note also that the moderate inter-factor correlation (0.45) between Factors 1 and 2 suggests that, despite the multidimensional structure of efficiency scores, an underlying (general performance) factor explaining covariance among all seven tests does exist. Finally, the factor pattern shown in Table 4, in which “rapid” tests load on F1 and “deliberative” tests load on F2, is consistent with the idea that there are two “modes” of thinking (fast and slow) that recruit different brain regions. This phenomenon is discussed in an influential book (Kahneman, 2011).

To further examine the structure of the efficiency scores, we analyzed each component of efficiency (accuracy and speed) separately. The rationale is that the structure of efficiency could be (a) the result of accuracy and speed having the same structure as each other, inevitably resulting in the same structure for efficiency; or (b) the result of some combination of unique accuracy and speed structures, which combine in such a way as to yield the efficiency structure in Table 4. The two-factor model for accuracy in Table 4 suggests the latter, because the structure of accuracy deviates somewhat from the structure of efficiency and maintains moderate-to-good fit (RMSEA = 0.051 ± 0.003; RMSR = 0.03). Specifically, Factor 1 now comprises only two tests (GNG and ESTROOP) plus two cross-loadings¹ (CPT and SLNB), and Factor 2 comprises five tests (PCET, CPT, SLNB, PFMT, and ER40). Essentially, the attention and working memory tasks (CPT and SLNB) switch from Factor 1 (in the efficiency model) to Factor 2 (in the accuracy model), though both retain cross-loadings on Factor 1. The reason for the shift of the SLNB is unclear, but it might be due to task difficulty that creates a distribution of accuracy scores more similar to the complex cognition tasks. The nearly equal loadings of the CPT on Factor 1 and Factor 2 (0.32 and 0.33, respectively) make interpretation difficult.

Finally, the two-factor model for speed in Table 4 very closely matches that for efficiency, and has good fit (RMSEA = 0.031 ± 0.003; RMSR = 0.02). Factor 1 comprises four tests that emphasize vigilant, rapid responses, while factor 2 comprises three tests that put less emphasis on speed. Note that soldiers were asked to work as quickly as possible for all seven tests, but the three tests composing factor 2 (PCET, PFMT, and ER40) require at least a momentary pause to contemplate the response. Thus, the answer to the question posed above—i.e. how do the structures of accuracy and speed combine to result in the clean, 2-factor structure for efficiency?—appears to be that speed exerts enough influence over the somewhat complex structure of accuracy to result in a structure of efficiency that more nearly mimics that for speed.

Supplementary Table S4 shows the results of the above analyses performed using listwise-deletion and outlier-removal. Results are consistent with those of the full sample, except that, for accuracy, the CPT and SLNB have no cross-loadings (i.e., they cleanly load on F1 and F2, respectively). In the full sample, they both cross-loaded on F1 and F2.

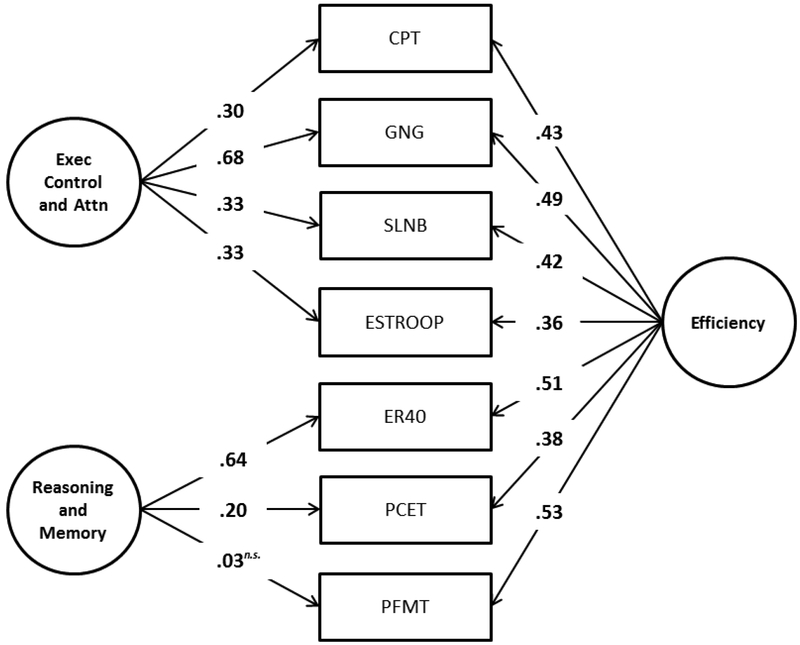

Confirmatory Factor Analysis

The exploratory analyses described above provided clear guidance about which tests should compose which factors in a confirmatory model; these specifications were consistent with neurocognitive theory. Figure 3 shows the 2-factor confirmatory bifactor model of the Army STARRS battery efficiency scores based on results obtained in the above exploratory analyses. We chose to model efficiency, because it combines both types of performance information (accuracy and speed), and there is a published bifactor model of the CNB (Moore et al., 2015) using five of the seven tests used in the STARRS battery as part of a larger battery. The fit of the model in the second half of the sample, seen in Figure 3, is excellent (CFI = 0.98; RMSEA = 0.026 ± 0.003; SRMR = 0.015), and some important characteristics are notable. First, as suggested by the moderate (0.45) inter-factor correlation in the 2-factor exploratory model (see Note in Table 4), the overall efficiency factor underlying all tests (right side of Figure 3) is also moderate (mean loading = 0.44). This supports the idea that a general efficiency score can be calculated from all seven test scores, but that one should not ignore the multidimensionality (as one could if the overall efficiency dimension was very strong).

Figure 3.

Confirmatory bifactor analysis of the Army STARRS Neurocognitive Battery efficiency scores.

Figure Note. Results are standardized such that the variance of the latent variables is 1.00. All coefficient estimates are significant at the 0.005 level unless indicated otherwise. Exec = Executive; Attn = Attention; CPT = Continuous Performance Task; ER40 = Emotion Recognition; GNG = Go/No-Go; SLNB = Short Letter-N-Back; PCET = Penn Conditional Exclusion Task; PFMT = Penn Face Memory Test; n.s. = not significant.

Second, it is worth noting that the overall efficiency factor is more determined by the cognition and memory tests (mean loading = 0.57) than by the executive attention tests (mean loading = 0.35), which means that the neuropsychological phenomena determining soldiers’ overall efficiency are measured more precisely by the former (though only moderately). By contrast, the individual group factors (left side of Figure 3) show the opposite effect: the executive/attention factor (mean loading = 0.47) is stronger than the reasoning/memory factor (mean absolute loading = 0.28). This means that, even after controlling for general performance efficiency, a moderate amount of the covariance among tests is explained by neuropsychological processes related specifically to executive/attention abilities. The same cannot be said of the reasoning/memory tests, because the covariance among those three tests seems to be explained almost entirely by general efficiency ability. Indeed, after controlling for general efficiency, the group factor loading of the PFMT becomes negative (−0.48), though not significantly so. Such a weak group factor indicates that although the reasoning/memory tests are good measures of the neuropsychological phenomena controlling general efficiency ability, one should use extreme caution if trying to create sub-scale scores designed to test reasoning and memory uniquely with the current combination of tests. Instead, one could use the general efficiency score as a close proxy to reasoning and memory.
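The group-level mean loadings on the general factor can be checked against the overall mean loading reported earlier (0.44) with a short calculation. The sketch assumes the group sizes implied by the text: three reasoning/memory tests (PCET, PFMT, ER40) and four executive/attention tests (CPT, SLNB, GNG, ESTROOP). Variable names are illustrative.

```python
# Mean general-factor loadings reported in the text.
n_reasoning, mean_reasoning = 3, 0.57   # PCET, PFMT, ER40
n_executive, mean_executive = 4, 0.35   # CPT, SLNB, GNG, ESTROOP

# Weighted mean across all seven tests.
overall_mean_loading = (
    n_reasoning * mean_reasoning + n_executive * mean_executive
) / (n_reasoning + n_executive)
```

The weighted mean comes to roughly 0.44, which agrees with the overall mean loading reported for the general efficiency factor.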

Finally, Supplementary Figure S1 shows the results of the above analysis performed using listwise-deletion and outlier-removal. Fit of the model remains excellent (CFI = 0.98; RMSEA = 0.036 ± 0.005; SRMR = 0.014), and relative loadings remain mostly unchanged. For example, the Executive Control factor remains dominated by GNG, Reasoning/Memory remains dominated by ER40, and the general efficiency factor remains dominated by PFMT and GNG. The only exception is that the loading of the ER40 on the general factor is somewhat higher in the full sample (0.51) compared to the more limited sample (0.40).

Discussion

With the recent increase in soldier suicides, as well as the constant hazard of TBI in battle, rapid and efficient neurocognitive testing of executive control functioning is becoming important for the military. Abnormalities or changes in neurocognitive test scores have been implicated in myriad problems related to the military, including suicidal behavior, PTSD, mood disorders, substance and alcohol use disorders, and impulsive behavior. The computerized neurocognitive battery used in the Army STARRS research project is an efficient, easily administered battery that could be used for research (as in this case) or for more applied needs, such as in the war theater.

We have applied this battery to a large sample of Servicemembers with minimal complications and have obtained data on over 50,000 soldiers within a short period of time. In the present study we examined the factorial and concurrent validity of this battery by testing its sensitivity to sex differences and age effects on performance and by evaluating its factorial structure. Robust sex differences were documented on measures that have revealed such differences in earlier studies. For example, Gur et al. (2012) found that females outperformed males on ER40 and PFMT accuracy and on ER40 speed; Longenecker et al. (2010) found that males outperformed females on N-Back accuracy; and von Kluge (1992) found that females outperformed males on Stroop task accuracy, and males outperformed females on Stroop task speed.² Age effects, by contrast, were either novel or consistent with previous findings. With respect to aggregate (cross-battery) results, the improvement in accuracy (Figure 1a) until age 27 is consistent with findings reported by Schaie (1994) and Whitley et al. (2016), and is further supported by evidence for continuing brain development until that age (Yakovlev & Lecours, 1967; Sowell et al., 1999). The steady decrease in speed (Figure 1b) is also fully consistent with previous literature, which shows greater adverse effects of age on speed than on memory (e.g., Irani et al., 2012). Additionally, Salthouse (2000) found uniform decreases in performance speed with age on multiple neurocognitive tests.

Three of the five significant positive correlations of accuracy with age (SLNB, CPT, and PFMT) are consistent with a previous study using the same tests in a large civilian sample (Gur et al., 2012). The fourth significant positive correlation (GNG150) is consistent with previous findings that accuracy increases with age in response inhibition tasks (e.g., Kramer et al., 1994, p. 500). To summarize, the face memory test (PFMT) and two of the faster-paced tests that more overtly require executive functions like attention and cognitive control (CPT and GNG) correlate positively with age, whereas two of the three tests that require more contemplation (ER40 and PCET) correlate negatively with age (at least after age 18). To our knowledge, there has been no previous finding that ESTROOP accuracy increases with age until age ~25, at which time it plateaus; it is therefore unclear whether the ESTROOP accuracy results speak to the concurrent validity of the Army STARRS battery. Overall, however, it appears that older recruits have better attention skills (CPT), are less impulsive (GNG), and have better memory for faces (PFMT). On the other hand, they are less sensitive to emotions (ER40) and have less mental flexibility (PCET). These age effects are quite surprising in their consistency and magnitude. Age of recruits makes a difference; older recruits are demonstrably more accurate but also slower across nearly all tests. When specific domains are examined, older recruits are less impulsive although, after age 26, they tend to become more rigid.

Notably, trends in speed (all negative except CPT, indicating higher RT or slower responding with increased age) are opposite to those reported in Gur et al. (2012), who found almost all positive correlations between age and speed. This is likely because of the differing age cohorts (18+ here, compared to 8–21 in Gur et al., 2012). In a cohort of 3500 children, Gur et al. (2012) found that response speed became faster with each year of age from 8 to 17 and then flattened through age 21. The present results indicate that within the age range of 18 to 30 response speed is generally lower with increased age of cohorts, consistent with several previous studies (e.g., Deary & Der, 2005; Fozard et al., 1994; Myerson et al., 1990; Salthouse, Hambrick, & McGuthry, 1998). On the other hand, accuracy on most tasks does continue to improve until approximately age twenty-seven. The more pronounced age group effects evident in the GNG, CPT, ESTROOP, and PFMT are consistent with the hypothesized association of these measures with frontal lobe functioning. Frontal lobe maturation is protracted in humans, reaching its apex in the early twenties, whereas the motor cortex (responsible for immediate response times) matures by late adolescence (e.g., Yakovlev & Lecours, 1967; Matsuzawa et al., 2001; Pfefferbaum et al., 1994; Huttenlocher, 1979; Filipek et al., 1994; Sowell et al., 1999; Gogtay et al., 2004). These results indicate that the battery is sensitive to demographic parameters that affect neurocognitive performance.

The factor analysis supported the theory that motivated the construction of the STARRS battery, which was to emphasize the measurement of executive functioning and sample to a more limited extent the domains of memory, reasoning and social cognition. Based on the results of the present study, in which concurrent and structural validity were largely confirmed, we are comfortable recommending the STARRS battery for research and applied purposes. Of theoretical interest, the factor analyses also provide some evidence in favor of a dual-process model of cognition (Chaiken & Trope, 1999). Specifically, when performance efficiency is separated into accuracy and speed components, the factor structures of the latter two do not perfectly match the structure of efficiency scores (see Table 4). A dual process framework would predict such a phenomenon, because dual-process models posit separate cognitive processes (one fast, one slower) for arriving at an accurate response (see Kahneman, 2011). Thus, the neural activation required to arrive at a correct answer on two different tasks might involve different proportions of rapid versus slow neurocognitive processing. The result could be two different patterns of covariance among speed scores and among accuracy scores that combine to form a nonetheless theoretically sound covariance structure for efficiency. Evidence from neuroimaging (Goel et al., 2000; Goel & Dolan, 2003) lends additional support to dual-process models of cognition.

Two other popular test batteries that have similar goals deserve mention for brief comparison: the Automated Neuropsychological Assessment Metrics (ANAM; Reeves, Kane, & Winter, 1995) and the NIH Toolbox (Gershon et al., 2010). The ANAM, designed by the U.S. Department of Defense, includes twenty-two tests designed to measure accuracy and speed in the cognitive domains of executive function, episodic memory, and decision-making. Similarly, the NIH Toolbox includes approximately fifty-five tests designed to measure cognition, emotion, motor, and sensation (nihtoolbox.org). The Army STARRS battery is more focused on executive control and uniquely examines social cognition (via the ER40) and nonconscious emotional processing (via the ESTROOP). It is also unique in including tests that have demonstrated links to regional brain function (Gur, Erwin & Gur, 1992; Gur et al., 2010; Roalf et al., 2014). These two advantages are notable for a couple of reasons. First, by definition, the psychopathologies of interest here (including those associated with suicide risk, such as depression) are associated not only with changes in executive control, but also changes in emotional and social processing phenomena such as those tapped by the ER40 and ESTROOP. Second, to demonstrate a link between brain function and measurement outcome is to meet the highest standard of validity, clearly articulated by Borsboom (2005; pp. 149–72). Five of the seven tests on the Army STARRS battery (SLNB, ER40, PFMT, PCET, and CPT) meet this standard (Roalf et al., 2014), which is more than can be said for either the ANAM or NIH Toolbox as of 2016.

An important limitation of the present study is that the sample is likely unique in that it comprised individuals who chose to join the US Army and are therefore likely more novelty-seeking and less risk-averse than the general population (see Rademaker et al., 2008). An implication is that the effects found here with respect to age and sex may in fact be underestimated due to the narrow sampling—i.e. if the sample comprised the whole spectrum of impulsivity and risk-aversion found in the general population, the increased variance in tests of executive control might result in larger effects. Further research is needed to determine the extent to which US Army personnel, especially those in non-combat roles, resemble the general population psychologically. A second limitation of the present study is that the optimal evaluation of valid score interpretation—namely, tests of whether the scores are sensitive to outcomes of interest such as psychopathology and suicide risk—has not been presented here. This work is currently underway by multiple Army STARRS collaborators, and information about the progress of said research is available here: http://starrs-ls.org/#/list/publications. To be clear, only when this further work is completed will the validity of the Army STARRS battery (as intended) be established. The present study established only what may be considered preliminary validity.

Notwithstanding the sample’s limited scope and cross-sectional nature, the present analysis supports the psychometric validity of the Army STARRS neurocognitive battery. The availability of this battery has great potential for augmenting the set of assessment tools that can help in the early detection of, and intervention for, vulnerability to neurocognitive deficits. Neuroimaging has increasingly been used for early diagnosis, and the present battery can be administered in the scanner to confirm linkage between dysfunction and regional brain activation. Out of the scanner, the battery can provide a more affordable step for identifying individuals with regional brain dysfunction. Arguably, the future of neuropsychology will likely involve such a combined use of neuroimaging-validated cognitive tests with in-scanner verification in smaller subsamples where specific hypotheses can be pursued. The present study is a step in that direction.

Supplementary Material

Acknowledgement:

Army STARRS was sponsored by the Department of the Army and funded under cooperative agreement number U01MH087981 with the U.S. Department of Health and Human Services, National Institutes of Health, National Institute of Mental Health (NIH/NIMH). The contents are solely the responsibility of the authors and do not necessarily represent the views of the Department of Health and Human Services, NIMH, the Veterans Administration, Department of the Army, or the Department of Defense.

Footnotes

1. Technically, the 0.22 cross-loading of the SLNB on Factor 1 does not meet the conventional cutoff of 0.30 for evaluating factor loading salience, but we selected 0.20 as the cutoff for discussing factor loadings here. See Kline (2014) for a nuanced discussion of factor loadings and their meanings.

2. Note, however, that the task used by von Kluge (1992) was a traditional Stroop task, whereas ours was an emotional Stroop task. Concurrent validity support provided by von Kluge, therefore, is only partial.

References

- Army STARRS (2012). Retrieved January 1, 2012, from http://www.armystarrs.org/

- Arsenault-Lapierre G, Kim C, & Turecki G (2004). Psychiatric diagnoses in 3275 suicides: A meta-analysis. BMC Psychiatry, 4(1), 37. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Baddeley A, & Della Sala S (1996). Working memory and executive control. Philosophical Transactions of the Royal Society of London. Series B: Biological Sciences, 351(1346), 1397–1404. [DOI] [PubMed] [Google Scholar]

- Barkley RA (1997). Behavioral inhibition, sustained attention, and executive functions: Constructing a unifying theory of ADHD. Psychological Bulletin, 121(1), 65. [DOI] [PubMed] [Google Scholar]

- Becker ES, Strohbach D, & Rinck M (1999). A specific attentional bias in suicide attempters. Journal of Nervous and Mental Disease, 187, 730–735. [DOI] [PubMed] [Google Scholar]

- Borsboom D (2005). Measuring the mind: Conceptual issues in contemporary psychometrics. Cambridge University Press. [Google Scholar]

- Brenner LA, Terrio H, Homaifar BY, Gutierrez PM, Staves PJ, Harwood JE, … & Warden D (2010). Neuropsychological test performance in soldiers with blast-related mild TBI. Neuropsychology, 24(2), 160. [DOI] [PubMed] [Google Scholar]

- Breusch TS & Pagan AR (1979) A simple test for heteroscedasticity and random coefficient variation. Econometrica, 47, 1287–1294. [Google Scholar]

- Carter CS, Barch DM, Gur R, Gur R, Pinkham A, & Ochsner K (2009). CNTRICS final task selection: Social cognitive and affective neuroscience–based measures. Schizophrenia Bulletin, 35(1), 153–162. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Casey BJ, Castellanos FX, Giedd JN, Marsh WL, Hamburger SD, Schubert AB, … & Rapoport JL (1997). Implication of right frontostriatal circuitry in response inhibition and attention-deficit/hyperactivity disorder. Journal of the American Academy of Child & Adolescent Psychiatry, 36(3), 374–383. [DOI] [PubMed] [Google Scholar]

- Cha CB, Najmi S, Park JM, Finn CT, & Nock MK (2010). Attentional bias toward suicide-related stimuli predicts suicidal behavior. Journal of Abnormal Psychology, 119(3), 616. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chaiken S, & Trope Y (Eds.). (1999). Dual-process theories in social psychology. New York: Guilford Press. [Google Scholar]

- Christopher G, & MacDonald J (2005). The impact of clinical depression on working memory. Cognitive Neuropsychiatry, 10(5), 379–399. [DOI] [PubMed] [Google Scholar]

- Cox WM, Fadardi JS, & Pothos EM (2006). The addiction-Stroop test: Theoretical considerations and procedural recommendations. Psychological Bulletin, 132, 443–476. [DOI] [PubMed] [Google Scholar]

- Danckwerts A, & Leathem J (2003). Questioning the link between PTSD and cognitive dysfunction. Neuropsychology Review, 13(4), 221–235. [DOI] [PubMed] [Google Scholar]

- Deary IJ, & Der G (2005). Reaction time, age, and cognitive ability: Longitudinal findings from age 16 to 63 years in representative population samples. Aging, Neuropsychology, and Cognition, 12(2), 187–215. [Google Scholar]

- Durston S, Thomas KM, Worden MS, Yang Y, & Casey BJ (2002). The effect of preceding context on inhibition: an event-related fMRI study. Neuroimage, 16(2), 449–453. [DOI] [PubMed] [Google Scholar]

- Durston S, Mulder M, Casey BJ, Ziermans T, & van Engeland H (2006). Activation in ventral prefrontal cortex is sensitive to genetic vulnerability for attention-deficit hyperactivity disorder. Biological Psychiatry, 60(10), 1062–1070. [DOI] [PubMed] [Google Scholar]

- Durston S, Davidson MC, Mulder MJ, Spicer JA, Galvan A, Tottenham N, … & Casey BJ (2007). Neural and behavioral correlates of expectancy violations in attention‐deficit hyperactivity disorder. Journal of Child Psychology and Psychiatry, 48(9), 881–889. [DOI] [PubMed] [Google Scholar]

- Erwin RJ, Gur RC, Gur RE, Skolnick B, Mawhinney-Hee M, & Smailis J (1992). Facial emotion discrimination: I. Task construction and behavioral findings in normal subjects. Psychiatry Research, 42(3), 231–240. [DOI] [PubMed] [Google Scholar]

- Fertuck EA, Jekal A, Song I, Wyman B, Morris MC, Wilson ST, … & Stanley B (2009). Enhanced ‘Reading the Mind in the Eyes’ in borderline personality disorder compared to healthy controls. Psychological Medicine, 39(12), 1979–1988. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fertuck EA, Lenzenweger MF, Clarkin JF, Hoermann S, & Stanley B (2006). Executive neurocognition, memory systems, and borderline personality disorder. Clinical Psychology Review, 26(3), 346–375. [DOI] [PubMed] [Google Scholar]

- Filipek PA, Richelme C, Kennedy DN, & Caviness VS (1994). The young adult human brain: An MRI-based morphometric analysis. Cerebral Cortex, 4(4), 344–360. [DOI] [PubMed] [Google Scholar]

- Foa EB, Feske U, Murdock TB, Kozak MJ, & McCarthy PR(1991). Processing of threat-related information in rape victims. Journal of Abnormal Psychology, 100, 156–162. [DOI] [PubMed] [Google Scholar]

- Fox J & Weisberg S (2011). An {R} Companion to applied regression, second edition Thousand Oaks, CA: Sage; URL: http://socserv.socsci.mcmaster.ca/jfox/Books/Companion [Google Scholar]

- Fozard JL, Vercruyssen M, Reynolds SL, Hancock PA, & Quilter RE (1994). Age differences and changes in reaction time: the Baltimore Longitudinal Study of Aging. Journal of gerontology, 49(4), P179–P189. [DOI] [PubMed] [Google Scholar]

- Goel V, Buchel C, Frith C, & Dolan RJ (2000). Dissociation of mechanisms underlying syllogistic reasoning. NeuroImage, 12(5), 504–514.

- Goel V, & Dolan RJ (2003). Explaining modulation of reasoning by belief. Cognition, 87(1), B11–B22.

- Gogtay N, Giedd JN, Lusk L, Hayashi KM, Greenstein D, Vaituzis AC, … & Thompson PM (2004). Dynamic mapping of human cortical development during childhood through early adulthood. Proceedings of the National Academy of Sciences of the United States of America, 101(21), 8174–8179.

- Gershon RC, Cella D, Fox NA, Havlik RJ, Hendrie HC, & Wagster MV (2010). Assessment of neurological and behavioural function: The NIH Toolbox. The Lancet Neurology, 9(2), 138–139.

- Gur RC, Calkins ME, Satterthwaite TD, Ruparel K, Bilker WB, Moore TM, … & Gur RE (2014). Neurocognitive growth charting in psychosis spectrum youths. JAMA Psychiatry, 71(4), 366–374.

- Gur RC, Erwin RJ, & Gur RE (1992). Neurobehavioral probes for physiologic neuroimaging studies. Archives of General Psychiatry, 49, 409–414.

- Gur RC, Erwin RJ, Gur RE, Zwil AS, Heimberg C, & Kraemer HC (1992). Facial emotion discrimination: II. Behavioral findings in depression. Psychiatry Research, 42(3), 241–251.

- Gur RC, Jaggi JL, Ragland JD, Resnick SM, Shtasel D, Muenz L, et al. (1993). Effects of memory processing on regional brain activation: Cerebral blood flow in normal subjects. The International Journal of Neuroscience, 72, 31–44.

- Gur RC, Ragland JD, Moberg PJ, Turner TH, Bilker WB, Kohler C, et al. (2001). Computerized neurocognitive scanning: I. Methodology and validation in healthy people. Neuropsychopharmacology, 25, 766–776.

- Gur RC, Ragland JD, Mozley LH, Mozley PD, Smith R, Alavi A, … & Gur RE (1997). Lateralized changes in regional cerebral blood flow during performance of verbal and facial recognition tasks: Correlations with performance and “effort.” Brain and Cognition, 33(3), 388–414.

- Gur RC, Richard J, Calkins ME, Chiavacci R, Hansen JA, Bilker WB, … & Gur RE (2012). Age group and sex differences in performance on a computerized neurocognitive battery in children age 8–21. Neuropsychology, 26, 251–265.

- Gur RC, Richard J, Hughett P, Calkins ME, Macy L, Bilker WB, … & Gur RE (2010). A cognitive neuroscience-based computerized battery for efficient measurement of individual differences: Standardization and initial construct validation. Journal of Neuroscience Methods, 187, 254–262.

- Habel U, Gur RC, Mandal MK, Salloum JB, Gur RE, & Schneider F (2000). Emotional processing in schizophrenia across cultures: Standardized measures of discrimination and experience. Schizophrenia Research, 42(1), 57–66.

- Harkavy-Friedman JM, Keilp JG, Grunebaum MF, Sher L, Printz D, Burke AK, … & Oquendo M (2006). Are BPI and BPII suicide attempters distinct neuropsychologically? Journal of Affective Disorders, 94(1), 255–259.

- Heimberg C, Gur RE, Erwin RJ, Shtasel DL, & Gur RC (1992). Facial emotion discrimination: III. Behavioral findings in schizophrenia. Psychiatry Research, 42(3), 253–265.

- Horn NR, Dolan M, Elliott R, Deakin JFW, & Woodruff PWR (2003). Response inhibition and impulsivity: An fMRI study. Neuropsychologia, 41(14), 1959–1966.

- Hu L, & Bentler PM (1998). Fit indices in covariance structure analysis: Sensitivity to underparameterized model misspecification. Psychological Methods, 3, 424–453.

- Hu L, & Bentler PM (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1–55.

- Huttenlocher PR (1979). Synaptic density in human frontal cortex: Developmental changes and effects of aging. Brain Research, 163, 195–205.

- Irani F, Brensinger CM, Richard J, Calkins ME, Moberg PJ, Bilker W, … & Gur RC (2012). Computerized neurocognitive test performance in schizophrenia: A lifespan analysis. The American Journal of Geriatric Psychiatry, 20(1), 41–52.

- Jollant F, Lawrence NL, Olié E, Guillaume S, & Courtet P (2011). The suicidal mind and brain: A review of neuropsychological and neuroimaging studies. World Journal of Biological Psychiatry, 12(5), 319–339.

- Kahneman D (2011). Thinking, fast and slow. New York: Farrar, Straus and Giroux.

- Kaspi SP, McNally RJ, & Amir N (1995). Cognitive processing of emotional information in posttraumatic stress disorder. Cognitive Therapy and Research, 19(4), 433–444.

- Keilp JG, Sackeim HA, & Mann JJ (2005). Correlates of trait impulsiveness in performance measures and neuropsychological tests. Psychiatry Research, 135(3), 191–201.

- Keilp JG, Sackeim HA, Brodsky BS, Oquendo MA, Malone KM, & Mann JJ (2001). Neuropsychological dysfunction in depressed suicide attempters. American Journal of Psychiatry, 158(5), 735–741.

- Keilp JG, Gorlyn M, Oquendo MA, Burke AK, & Mann JJ (2008). Attention deficit in depressed suicide attempters. Psychiatry Research, 159(1), 7–17.

- Kessler RC, Colpe LJ, Fullerton CS, Gebler N, Naifeh JA, Nock MK, … & Heeringa SG (2013). Design of the Army Study to Assess Risk and Resilience in Servicemembers (Army STARRS). International Journal of Methods in Psychiatric Research, 22(4), 267–275.

- Kline P (2014). An easy guide to factor analysis. New York: Routledge.

- Kohler CG, Hanson E, & March ME (2013). Emotion processing in schizophrenia. In Roberts DL & Penn DL (Eds.), Social cognition in schizophrenia: From evidence to treatment (Chapter 7). New York: Oxford UP.

- Kohler CG, Turner TH, Bilker WB, Brensinger CM, Siegel SJ, Kanes SJ, … & Gur RC (2003). Facial emotion recognition in schizophrenia: Intensity effects and error pattern. American Journal of Psychiatry, 160(10), 1768–1774.

- Kramer AF, Humphrey DG, Larish JF, Logan GD, & Strayer DL (1994). Aging and inhibition: Beyond a unitary view of inhibitory processing in attention. Psychology and Aging, 9(4), 491–512.

- Kurtz MM, Ragland JD, Bilker W, Gur RC, & Gur RE (2001). Comparison of the continuous performance test with and without working memory demands in healthy controls and patients with schizophrenia. Schizophrenia Research, 48(2), 307–316.

- Kurtz MM, Ragland JD, Moberg PJ, & Gur RC (2004). The Penn Conditional Exclusion Test: A new measure of executive-function with alternate forms for repeat administration. Archives of Clinical Neuropsychology, 19(2), 191–201.

- Kurtz MM, & Gerraty RT (2009). A meta-analytic investigation of neurocognitive deficits in bipolar illness: Profile and effects of clinical state. Neuropsychology, 23(5), 551.

- LeGris BN, & van Reekum MD (2006). The neuropsychological correlates of borderline personality disorder and suicidal behaviour. Canadian Journal of Psychiatry, 51(3), 131–142.

- LeMarquand DG, Pihl RO, Young SN, Tremblay RE, Séguin JR, Palmour RM, & Benkelfat C (1998). Tryptophan depletion, executive functions, and disinhibition in aggressive, adolescent males. Neuropsychopharmacology, 19(4), 333–341.

- Leskin LP, & White PM (2007). Attentional networks reveal executive function deficits in posttraumatic stress disorder. Neuropsychology, 21(3), 275.

- Longenecker J, Dickinson D, Weinberger DR, & Elvevåg B (2010). Cognitive differences between men and women: A comparison of patients with schizophrenia and healthy volunteers. Schizophrenia Research, 120(1–3), 234.

- Maronna RA, & Yohai VJ (2000). Robust regression with both continuous and categorical predictors. Journal of Statistical Planning and Inference, 89, 197–214.

- Masten CL, Guyer AE, Hodgdon HB, McClure EB, Charney DS, Ernst M, … & Monk CS (2008). Recognition of facial emotions among maltreated children with high rates of post-traumatic stress disorder. Child Abuse & Neglect, 32(1), 139–153.

- Mathersul D, Palmer DM, Gur RC, Gur RE, Cooper N, Gordon E, & Williams LM (2009). Explicit identification and implicit recognition of facial emotions: II. Core domains and relationships with general cognition. Journal of Clinical and Experimental Neuropsychology, 31(3), 278–291.

- Matsuzawa J, Matsui M, Konishi T, Noguchi K, Gur RC, Bilker W, & Miyawaki T (2001). Age-related volumetric changes of brain gray and white matter in healthy infants and children. Cerebral Cortex, 11(4), 335–342.

- McClintock SM, Husain MM, Greer TL, & Cullum CM (2010). Association between depression severity and neurocognitive function in major depressive disorder: A review and synthesis. Neuropsychology, 24(1), 9.

- McNally RJ, Kaspi SP, Riemann BC, & Zeitlin SB (1990). Selective processing of threat cues in posttraumatic stress disorder. Journal of Abnormal Psychology, 99(4), 398.

- Mialet JP, Pope HG, & Yurgelun-Todd D (1996). Impaired attention in depressive states: A non-specific deficit? Psychological Medicine, 26(5), 1009–1020.

- Moore TM, Reise SP, Gur RE, Hakonarson H, & Gur RC (2015). Psychometric properties of the Penn Computerized Neurocognitive Battery. Neuropsychology, 29(2), 235–246.

- Muthén LK, & Muthén BO (1998–2013). Mplus user’s guide (7th ed.). Los Angeles, CA: Muthén & Muthén.

- Myerson J, Hale S, Wagstaff D, Poon LW, & Smith GA (1990). The information-loss model: A mathematical theory of age-related cognitive slowing. Psychological Review, 97(4), 475.

- Naranjo C, Kornreich C, Campanella S, Noël X, Vandriette Y, Gillain B, … & Constant E (2011). Major depression is associated with impaired processing of emotion in music as well as in facial and vocal stimuli. Journal of Affective Disorders, 128(3), 243–251.

- Navalta CP, Polcari A, Webster DM, Boghossian A, & Teicher MH (2006). Effects of childhood sexual abuse on neuropsychological and cognitive function in college women. The Journal of Neuropsychiatry and Clinical Neurosciences, 18(1), 45–53.

- Neuringer C (1964). Rigid thinking in suicidal individuals. Journal of Consulting Psychology, 28(1), 54.

- Nock MK, Park JM, Finn CT, Deliberto TL, Dour HJ, & Banaji MR (2010). Measuring the “suicidal mind”: Implicit cognition predicts suicidal behavior. Psychological Science, 21, 511–517.

- Noël X, Bechara A, Dan B, Hanak C, & Verbanck P (2007). Response inhibition deficit is involved in poor decision making under risk in nonamnesic individuals with alcoholism. Neuropsychology, 21(6), 778.

- Ostling EW, & Fillmore MT (2010). Tolerance to the impairing effects of alcohol on the inhibition and activation of behavior. Psychopharmacology, 212(4), 465–473.

- Paelecke-Habermann Y, Pohl J, & Leplow B (2005). Attention and executive functions in remitted major depression patients. Journal of Affective Disorders, 89(1), 125–135.

- Pfefferbaum A, Mathalon DH, Sullivan EV, Rawles JM, Zipursky RB, & Lim KO (1994). A quantitative magnetic resonance imaging study of changes in brain morphology from infancy to late adulthood. Archives of Neurology, 51(9), 874–887.

- Pitman RL, Shalev AY, & Orr SP (2000). Posttraumatic stress disorder: Emotion, conditioning, and memory. In Gazzaniga MS (Ed.), The new cognitive neurosciences (2nd ed.) (pp. 1133–1147). Cambridge, MA: MIT Press.

- R Core Team (2014). R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria: URL http://www.R-project.org/.

- Rademaker AR, Vermetten E, Geuze E, Muilwijk A, & Kleber RJ (2008). Self‐reported early trauma as a predictor of adult personality: A study in a military sample. Journal of Clinical Psychology, 64(7), 863–875.

- Ragland JD, Glahn DC, Gur RC, Censits DM, Smith RJ, Mozley PD, … & Gur RE (1997). PET regional cerebral blood flow during working and declarative memory: Relationship with task performance. Neuropsychology, 11(2), 222.

- Ragland JD, Turetsky BI, Gur RC, Gunning-Dixon F, Turner T, Schroeder L, … & Gur RE (2002). Working memory for complex figures: An fMRI comparison of letter and fractal n-back tasks. Neuropsychology, 16(3), 370.

- Reeves D, Kane R, & Winter K (1995). Automated Neuropsychological Assessment Metrics (ANAM): Test administrator’s guide, Version 3.11 (Report No. NCRF-95–01). San Diego, CA: National Cognitive Recovery Foundation.

- Reise SP (2012). The rediscovery of bifactor measurement models. Multivariate Behavioral Research, 47(5), 667–696.

- Reise SP, Moore TM, & Haviland MG (2010). Bifactor models and rotations: Exploring the extent to which multidimensional data yield univocal scale scores. Journal of Personality Assessment, 92, 544–559.

- Revelle W (2013). psych: Procedures for Personality and Psychological Research. Northwestern University, Evanston, Illinois, USA. http://CRAN.R-project.org/package=psych (Version 1.4.2).

- Richard-Devantoy S, Orsat M, Dumais A, Turecki G, & Jollant F (2014). Neurocognitive vulnerability: Suicidal and homicidal behaviours in patients with schizophrenia. Canadian Journal of Psychiatry. Revue Canadienne de Psychiatrie, 59(1), 18–25.

- Roalf DR, Ruparel K, Gur RE, Bilker W, Gerraty R, Elliott MA, … & Gur RC (2014). Neuroimaging predictors of cognitive performance across a standardized neurocognitive battery. Neuropsychology, 28, 161–179.