Abstract

Background

The preconditioned conjugate gradient (PCG) method is an iterative solver for systems of linear equations that is commonly used in animal breeding. However, the PCG method has been shown to encounter convergence issues when applied to single-step single nucleotide polymorphism BLUP (ssSNPBLUP) models. Recently, we proposed a deflated PCG (DPCG) method for solving ssSNPBLUP efficiently. The DPCG method introduces a second-level preconditioner that annihilates the effect of the largest unfavourable eigenvalues of the ssSNPBLUP preconditioned coefficient matrix on the convergence of the iterative solver. While it solves the convergence issues of ssSNPBLUP, the DPCG method requires substantial additional computations in comparison to the PCG method. Accordingly, the aim of this study was to develop a second-level preconditioner that decreases the largest eigenvalues of the ssSNPBLUP preconditioned coefficient matrix at a lower cost than the DPCG method, and to compare its performance with that of the (D)PCG methods applied to two different ssSNPBLUP models.

Results

Based on the properties of the ssSNPBLUP preconditioned coefficient matrix, we proposed a second-level diagonal preconditioner that decreases the largest eigenvalues of the ssSNPBLUP preconditioned coefficient matrix under some conditions. This proposed second-level preconditioner is easy to implement in current software and does not result in additional computing costs as it can be combined with the commonly used (block-)diagonal preconditioner. Tested on two different datasets and with two different ssSNPBLUP models, the second-level diagonal preconditioner led to a decrease of the largest eigenvalues and the condition number of the preconditioned coefficient matrices. It resulted in an improvement of the convergence pattern of the iterative solver. For the largest dataset, the convergence of the PCG method with the proposed second-level diagonal preconditioner was slower than the DPCG method, but it performed better than the DPCG method in terms of total computing time.

Conclusions

The proposed second-level diagonal preconditioner can improve the convergence of the (D)PCG methods applied to two ssSNPBLUP models. Based on our results, the PCG method combined with the proposed second-level diagonal preconditioner seems to be more efficient than the DPCG method in solving ssSNPBLUP. However, the optimal combination of ssSNPBLUP and solver will most likely be situation-dependent.

Electronic supplementary material

The online version of this article (10.1186/s12711-019-0472-8) contains supplementary material, which is available to authorized users.

Background

Since its introduction in the late 1990s [1], the preconditioned conjugate gradient (PCG) method has been the method of choice to solve breeding value estimation models in animal breeding. Likewise, the systems of linear equations of the different single-step single nucleotide polymorphism BLUP (ssSNPBLUP) models are usually solved with the PCG method with a diagonal (also called Jacobi) or block-diagonal preconditioner [2–4]. Several studies [3–6] observed that the PCG method with such a preconditioner applied to ssSNPBLUP is associated with slower convergence. By investigating the reasons for these convergence issues, Vandenplas et al. [4] observed that the largest eigenvalues of the preconditioned coefficient matrix of ssSNPBLUP proposed by Mantysaari and Stranden [7], hereafter referred to as ssSNPBLUP_MS, resulted from the presence of the equations for single nucleotide polymorphism (SNP) effects. In their study, applying a deflated PCG (DPCG) method to ssSNPBLUP_MS solved the convergence issues [4]. In comparison to the PCG method, the DPCG method introduces a second-level preconditioner that annihilates the effect of the largest eigenvalues of the preconditioned coefficient matrix of ssSNPBLUP_MS on the convergence of the iterative solver. After deflation, the largest eigenvalues of the ssSNPBLUP_MS preconditioned deflated coefficient matrix were reduced and close to those of single-step genomic BLUP (ssGBLUP). As a result the associated convergence patterns of ssSNPBLUP were, at least, similar to those of ssGBLUP [4].

While it solves the convergence issues associated with ssSNPBLUP, the DPCG method requires the computation and storage of the so-called Galerkin matrix, which is a dense matrix that could be computationally expensive for very large evaluations and that requires some effort to be implemented in existing software. In addition, as implemented in Vandenplas et al. [4], each iteration of the DPCG method requires two multiplications of the coefficient matrix by a vector, instead of one multiplication for the PCG method. As a result, computing time per iteration with the DPCG method is roughly twice as long as with the PCG method. Accordingly, it is of interest to develop a second-level preconditioner that would reduce the largest eigenvalues of the preconditioned coefficient matrix of ssSNPBLUP at a lower cost than the DPCG method. As such, the aim of this study was to develop a second-level preconditioner that would decrease the unfavourable largest eigenvalues of the preconditioned coefficient matrix of ssSNPBLUP and to compare its performance to the DPCG method. The performance of the proposed second-level preconditioner was tested for two different ssSNPBLUP models.

Methods

Data

The two datasets used in this study, hereafter referred to as the reduced and field datasets, were provided by CRV BV (The Netherlands) and are the same as in Vandenplas et al. [4], in which these two datasets are described in detail.

Briefly, for the reduced dataset, the data file included 61,592 ovum pick-up sessions from 4109 animals and the pedigree included 37,021 animals. The 50K SNP genotypes of 6169 animals without phenotypes were available. A total of 9994 segregating SNPs with a minor allele frequency higher than or equal to 0.01 were randomly sampled from the 50K SNP genotypes. The number of SNPs was limited to 9994 to facilitate the computation and the analysis of the left-hand side of the mixed model equations. The univariate mixed model included random effects (additive genetic, permanent environmental and residual), fixed co-variables (heterosis and recombination) and fixed cross-classified effects (herd-year, year-month, parity, age in months, technician, assistant, interval, gestation, session and protocol) [8].

For the field dataset, the data file included 3,882,772 records with a single record per animal. The pedigree included 6,130,519 animals. The genotypes, including 37,995 segregating SNPs, of 15,205 animals without phenotypes and of 75,758 animals with phenotypes were available. The four-trait mixed model included random effects (additive genetic and residual), fixed co-variables (heterosis and recombination) and fixed cross-classified effects (herd x year x season at classification, age at classification, lactation stage at classification, milk yield and month of calving) [9, 10].

Single-step SNPBLUP models

In this study, we investigated two ssSNPBLUP systems of linear equations. The first system was proposed by Mantysaari and Stranden [7] (ssSNPBLUP_MS). This system was also investigated in Vandenplas et al. [4]. The standard multivariate model associated with the ssSNPBLUP_MS system of equations can be written as:

where the subscripts g and n refer to genotyped and non-genotyped animals, respectively, is the vector of records, is the vector of fixed effects, is the vector of additive genetic effects for non-genotyped animals, is the vector of residual polygenic effects for genotyped animals, is the vector of SNP effects and is the vector of residuals. The matrices , and are incidence matrices relating records to their corresponding effects. The matrix is equal to , with being an identity matrix with size equal to the number of traits and the matrix containing the SNP genotypes (coded as 0 for one homozygous genotype, 1 for the heterozygous genotype, or 2 for the alternate homozygous genotype) centred by their observed means.
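The genotype coding and centring described above can be sketched as follows. This is a minimal illustration on hypothetical toy data; the function names are not from the original software, and the ordering of the Kronecker product (trait by trait) is an assumption.

```python
import numpy as np

def centre_genotypes(genotypes):
    """Centre 0/1/2 genotype codes by the observed mean of each SNP (column)."""
    genotypes = np.asarray(genotypes, dtype=float)
    return genotypes - genotypes.mean(axis=0)

def expand_over_traits(z_centred, n_traits):
    """Kronecker product of a trait-sized identity with the centred genotypes.

    Assumption: equations are ordered trait by trait; the reverse ordering
    would use np.kron(z_centred, np.eye(n_traits)) instead.
    """
    return np.kron(np.eye(n_traits), z_centred)

# Hypothetical toy data: 4 genotyped animals (rows) and 3 SNPs (columns).
toy_genotypes = np.array([[0, 1, 2],
                          [1, 1, 0],
                          [2, 0, 1],
                          [1, 2, 1]])
z_centred = centre_genotypes(toy_genotypes)
z_two_traits = expand_over_traits(z_centred, n_traits=2)
```

After centring, each SNP column sums to zero, and the two-trait matrix has twice as many rows and columns as the single-trait one.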

The system of linear equations for multivariate ssSNPBLUP_MS can be written as follows:

where

is a symmetric positive (semi-)definite coefficient matrix, is the vector of solutions, and

is the right-hand side with

being the inverse of the residual (co)variance structure matrix. The matrix is the inverse of the covariance matrix associated with , and , and is equal to

The matrix is equal to , with

being the inverse of the pedigree relationship matrix. The parameter w is the proportion of variance (due to additive genetic effects) considered as residual polygenic effects and $m = 2\sum_{o} p_o(1 - p_o)$, with $p_o$ being the allele frequency of the $o$-th SNP.

The second system of linear equations investigated in this study is the system of equations proposed by Gengler et al. [11] and Liu et al. [5], hereafter referred to as ssSNPBLUP_Liu. The system of linear equations for a multivariate ssSNPBLUP_Liu can be written as follows:

where

and

The matrix is equal to

with .

It is worth noting that the absorption of the equations associated with of ssSNPBLUP_Liu results in the mixed model equations of single-step genomic BLUP (ssGBLUP) for which the inverse of the genomic relationship matrix is calculated using the Woodbury formula [12]. Several studies (e.g., [13–15]) investigated the possibility of using specific knowledge of a priori variances to weight differently some SNPs in ssGBLUP. Such approaches are difficult to extend to multivariate ssGBLUP, while they can be easily applied in ssSNPBLUP by replacing the matrix by a symmetric positive definite matrix that contains SNP-specific (co)variances obtained by, e.g., Bayesian regression [5].
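The use of the Woodbury formula mentioned above can be illustrated numerically. The sketch below assumes a genomic relationship matrix of the form $\mathbf{G} = w\mathbf{A} + \frac{1-w}{m}\mathbf{Z}\mathbf{Z}^{T}$; this form, the toy matrices and the values of `w` and `m` are assumptions made for illustration only.

```python
import numpy as np

def woodbury_inverse(a_inv, z, w, m):
    """Inverse of G = w*A + ((1 - w)/m) * Z Z^T computed from A^{-1}.

    Only a system of size (number of SNPs) is solved, instead of inverting G.
    """
    c = (1.0 - w) / m
    n_snp = z.shape[1]
    b_inv = a_inv / w                               # inverse of w*A
    small = np.eye(n_snp) / c + z.T @ b_inv @ z     # n_snp x n_snp system
    return b_inv - b_inv @ z @ np.linalg.solve(small, z.T @ b_inv)

rng = np.random.default_rng(1)
n_animals, n_snp = 6, 3
r = rng.standard_normal((n_animals, n_animals))
a = r @ r.T / n_animals + np.eye(n_animals)   # toy SPD stand-in for pedigree relationships
z = rng.integers(0, 3, size=(n_animals, n_snp)).astype(float)
z -= z.mean(axis=0)                           # centred genotypes
w, m = 0.1, 1.5                               # hypothetical values
g = w * a + (1.0 - w) / m * (z @ z.T)
g_inv = woodbury_inverse(np.linalg.inv(a), z, w, m)
```

The product `g @ g_inv` should be the identity up to rounding, which confirms that the small system gives the same inverse as a direct inversion of `g`.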

In the following, matrix will refer to either or (and similarly for the vectors and ). In addition, the matrices and have the same structure, and both can be partitioned between the equations associated with SNP effects (S) and the equations associated with the other effects (O), as follows:

From this partition, it follows that and that , , and are dense matrices.

The PCG method

The PCG method is an iterative method that uses successive approximations to obtain more accurate solutions for a linear system at each iteration step [16]. The preconditioned systems of the linear equations of ssSNPBLUP_MS and of ssSNPBLUP_Liu have the form:

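$$\mathbf{M}^{-1}\mathbf{C}\mathbf{x} = \mathbf{M}^{-1}\mathbf{b} \qquad (1)$$

where $\mathbf{C}$ is the coefficient matrix, $\mathbf{x}$ is the vector of solutions, $\mathbf{b}$ is the right-hand side, and $\mathbf{M}$ is a (block-)diagonal preconditioner.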

In this study, the (block-)diagonal preconditioner is defined as:

where the subscripts f and r refer to the equations associated with fixed and random effects, respectively and is a block-diagonal matrix with blocks corresponding to equations for different traits within a level (e.g., an animal).
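For reference, a textbook PCG iteration with a diagonal (Jacobi) preconditioner can be sketched as below. It is not the matrix-free implementation used in this study; the dense toy system, the tolerance and the function name are assumptions.

```python
import numpy as np

def jacobi_pcg(c, b, tol=1e-10, max_iter=10_000):
    """Solve C x = b with the PCG method and a diagonal (Jacobi) preconditioner."""
    m_inv = 1.0 / np.diag(c)            # inverse of the diagonal preconditioner
    x = np.zeros_like(b, dtype=float)
    r = b - c @ x                       # residual
    z = m_inv * r                       # preconditioned residual
    p = z.copy()                        # search direction
    rz = r @ z
    b_norm = np.linalg.norm(b)
    for n_iter in range(1, max_iter + 1):
        cp = c @ p                      # the single matrix-vector product per iteration
        alpha = rz / (p @ cp)
        x += alpha * p
        r -= alpha * cp
        if np.linalg.norm(r) / b_norm < tol:   # relative residual norm
            break
        z = m_inv * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, n_iter

# Hypothetical symmetric positive definite toy system.
rng = np.random.default_rng(0)
a = rng.standard_normal((20, 20))
c_toy = a @ a.T + 20.0 * np.eye(20)
b_toy = rng.standard_normal(20)
x_toy, n_iter_toy = jacobi_pcg(c_toy, b_toy)
```

A block-diagonal preconditioner would replace the elementwise product `m_inv * r` by small block solves, one per level.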

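After k iterations of the PCG method applied to Eq. (1), the error is bounded by [16, 17]:

$$\|\mathbf{x} - \mathbf{x}_k\|_{\mathbf{C}} \le 2\,\|\mathbf{x} - \mathbf{x}_0\|_{\mathbf{C}} \left(\frac{\sqrt{\kappa} - 1}{\sqrt{\kappa} + 1}\right)^{k}$$

where $\mathbf{x}_k$ is the approximate solution after k iterations, $\|\mathbf{v}\|_{\mathbf{C}}$ is the $\mathbf{C}$-norm of a vector $\mathbf{v}$, defined as $\sqrt{\mathbf{v}^{T}\mathbf{C}\mathbf{v}}$, and $\kappa$ is the effective spectral condition number of the preconditioned coefficient matrix $\mathbf{M}^{-1}\mathbf{C}$, that is defined as $\kappa = \lambda_{max}/\lambda_{min}$ with $\lambda_{max}$ ($\lambda_{min}$) being the largest (smallest) non-zero eigenvalue of $\mathbf{M}^{-1}\mathbf{C}$.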
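To give a feel for this bound, the sketch below evaluates the classical factor $2\left((\sqrt{\kappa}-1)/(\sqrt{\kappa}+1)\right)^{k}$ and the smallest number of iterations for which it falls below a requested reduction. The function names and the example values are hypothetical, and the bound is a worst case rather than a prediction of actual iteration counts.

```python
import math

def convergence_factor(kappa):
    """Per-iteration factor (sqrt(kappa) - 1) / (sqrt(kappa) + 1)."""
    root = math.sqrt(kappa)
    return (root - 1.0) / (root + 1.0)

def error_bound(kappa, k):
    """Upper bound on the relative C-norm error after k iterations."""
    return 2.0 * convergence_factor(kappa) ** k

def iterations_to_reach(kappa, reduction):
    """Smallest k for which the bound falls to `reduction` or below."""
    rho = convergence_factor(kappa)
    if rho == 0.0:                      # kappa equal to 1
        return 1
    return max(0, math.ceil(math.log(reduction / 2.0) / math.log(rho)))

# Hypothetical example: a hundredfold smaller condition number.
k_large = iterations_to_reach(1e4, 1e-6)
k_small = iterations_to_reach(1e2, 1e-6)
```

Because the bound depends on the square root of the effective condition number, lowering the largest eigenvalue while keeping the smallest one fixed directly lowers the guaranteed number of iterations.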

The deflated PCG method

Vandenplas et al. [4] showed that the largest eigenvalues of the ssSNPBLUP_MS preconditioned coefficient matrix were larger than those of the ssGBLUP preconditioned coefficient matrix, while the smallest eigenvalues were similar. This resulted in larger effective condition numbers and convergence issues for ssSNPBLUP_MS. As applied by Vandenplas et al. [4], the DPCG method annihilates the largest unfavourable eigenvalues of the ssSNPBLUP_MS preconditioned coefficient matrix $\mathbf{M}^{-1}\mathbf{C}$, which resulted in effective condition numbers and convergence patterns of ssSNPBLUP_MS similar to those of ssGBLUP solved with the PCG method. The preconditioned deflated linear systems of ssSNPBLUP_MS and of ssSNPBLUP_Liu mixed model equations have the form:

$$\mathbf{M}^{-1}\mathbf{P}\mathbf{C}\mathbf{x} = \mathbf{M}^{-1}\mathbf{P}\mathbf{b}$$

where $\mathbf{C}$, $\mathbf{x}$, $\mathbf{b}$ and $\mathbf{M}$ are the coefficient matrix, the vector of solutions, the right-hand side and the (block-)diagonal preconditioner of Eq. (1), and $\mathbf{P}$ is a second-level preconditioner, called the deflation matrix, equal to $\mathbf{P} = \mathbf{I} - \mathbf{C}\mathbf{Z}_d\mathbf{E}^{-1}\mathbf{Z}_d^{T}$, with the matrix $\mathbf{Z}_d$ being the deflation-subspace matrix as defined in Vandenplas et al. [4] and $\mathbf{E} = \mathbf{Z}_d^{T}\mathbf{C}\mathbf{Z}_d$ being the Galerkin matrix.
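A small dense sketch of a deflation matrix of the standard form $\mathbf{I} - \mathbf{C}\mathbf{Z}_d\mathbf{E}^{-1}\mathbf{Z}_d^{T}$ is given below. The deflation-subspace matrix is assumed here to consist of indicator columns, one per subdomain of consecutive SNP equations; this construction, the toy matrix and all names are assumptions, and the definition in Vandenplas et al. [4] should be consulted for the actual method.

```python
import numpy as np

def subdomain_matrix(n_eq, snp_start, snp_per_subdomain):
    """Indicator columns, one per subdomain of consecutive SNP equations."""
    n_snp = n_eq - snp_start
    n_sub = -(-n_snp // snp_per_subdomain)          # ceiling division
    z_d = np.zeros((n_eq, n_sub))
    for j in range(n_sub):
        lo = snp_start + j * snp_per_subdomain
        hi = min(lo + snp_per_subdomain, n_eq)
        z_d[lo:hi, j] = 1.0
    return z_d

def deflation_matrix(c, z_d):
    """P = I - C Z_d E^{-1} Z_d^T, with the Galerkin matrix E = Z_d^T C Z_d."""
    e = z_d.T @ c @ z_d                             # dense, one row per subdomain
    return np.eye(c.shape[0]) - c @ z_d @ np.linalg.solve(e, z_d.T)

# Hypothetical toy system: 12 equations, the last 6 of which are SNP equations.
rng = np.random.default_rng(2)
a = rng.standard_normal((12, 12))
c_toy = a @ a.T + 12.0 * np.eye(12)
z_d = subdomain_matrix(n_eq=12, snp_start=6, snp_per_subdomain=3)
p_defl = deflation_matrix(c_toy, z_d)
```

By construction, `p_defl @ c_toy @ z_d` is zero and `p_defl` is a projection, which is how the deflated directions are removed from the spectrum. The Galerkin matrix has one row and one column per subdomain, which explains its storage and computing cost for many SNPs.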

A second-level diagonal preconditioner

The DPCG method requires the computation and the storage of the Galerkin matrix , which is computationally expensive for very large evaluations [4]. Furthermore, as implemented in Vandenplas et al. [4], each iteration of the DPCG method requires two multiplications of the coefficient matrix by a vector, instead of one multiplication for the PCG method. Here, our aim is to develop another second-level preconditioner that decreases the largest eigenvalues of the preconditioned coefficient matrix at a lower cost than the DPCG method and results in smaller effective condition numbers and better convergence patterns.

To achieve this aim, we introduce a second-level diagonal preconditioner defined as:

where is an identity matrix of size equal to the number of equations that are not associated with SNP effects, is an identity matrix of size equal to the number of equations that are associated with SNP effects, and are real positive numbers and . Possible values for and are discussed below.

Therefore, the preconditioned system of Eq. (1) is modified as follows:

| 2 |

Hereafter, we show that the proposed second-level preconditioner applied to ssSNPBLUP systems of equations results in smaller effective condition numbers by decreasing the largest eigenvalues of the preconditioned coefficient matrices. For simplicity, the symmetric preconditioned coefficient matrix with is used instead of . Indeed, these two matrices have the same spectrum, i.e., the same set of eigenvalues. In addition, the effective condition number of , , is equal to the effective condition number of , , because:

with , and .

The result is that depends only on and therefore only on the ratio.
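The statement that the effective condition number depends only on the ratio of the two scaling factors can be checked on a toy example. In this sketch, `s_other` and `s_snp` are hypothetical names for the positive factors applied to the non-SNP and SNP equations, and the block-diagonal toy matrix is an idealised stand-in for a preconditioned coefficient matrix.

```python
import numpy as np

def toy_matrix(n_other=30, n_snp=10):
    """Toy symmetric stand-in for an already preconditioned coefficient matrix.

    Off-diagonal blocks are zero (an idealisation): the first block mimics the
    non-SNP equations, the second a dense, highly correlated SNP block.
    """
    c = np.zeros((n_other + n_snp, n_other + n_snp))
    c[:n_other, :n_other] = np.diag(np.linspace(0.001, 2.0, n_other))
    c[n_other:, n_other:] = 0.1 * np.eye(n_snp) + 0.9 * np.ones((n_snp, n_snp))
    return c

def scale_equations(c, n_other, s_other, s_snp):
    """Return D^(1/2) C D^(1/2) for D = diag(s_other * I, s_snp * I)."""
    d_sqrt = np.sqrt(np.r_[np.full(n_other, s_other),
                           np.full(c.shape[0] - n_other, s_snp)])
    return d_sqrt[:, None] * c * d_sqrt[None, :]

def effective_condition_number(c, zero_tol=1e-12):
    """Ratio of the largest to the smallest non-zero eigenvalue."""
    eig = np.linalg.eigvalsh(c)
    eig = eig[eig > zero_tol * eig.max()]
    return eig.max() / eig.min()

c = toy_matrix()
kappa_plain = effective_condition_number(c)
kappa_a = effective_condition_number(scale_equations(c, 30, 1.0, 0.1))
kappa_b = effective_condition_number(scale_equations(c, 30, 5.0, 0.5))
```

Both scaled matrices use the same ratio `s_snp / s_other`, so `kappa_a` and `kappa_b` coincide even though every eigenvalue differs by a factor of 5.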

Regarding the largest eigenvalues of the preconditioned coefficient matrix or equivalently of , the effect of the second-level preconditioner on can be analysed using the Gershgorin circle theorem [18]. From this theorem, it follows that the largest eigenvalue of the preconditioned coefficient matrix is bounded by, for all ith and jth equations:

| 3 |

Partitioned between the equations associated with SNP effects (S) and with the other effects (O), it follows from Eq. (3) that has the following lower and upper bounds (see Additional file 1 for the derivation):

| 4 |

with ,

and k and l referring to the equations not associated with and associated with SNP effects, respectively.

Therefore, for a fixed value of , the upper bound of will decrease with decreasing ratios, up to the lowest upper bound , that is the upper bound of .
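The behaviour of the Gershgorin-type upper bound can be illustrated on a toy matrix. In the sketch below, `ratio` is a hypothetical name for the factor (at most 1) by which the SNP equations are scaled relative to the other equations, and the matrix with weak coupling between the two groups of equations is an assumption made for illustration.

```python
import numpy as np

def gershgorin_upper_bound(c):
    """Largest absolute row sum: an upper bound on the largest eigenvalue."""
    return np.abs(c).sum(axis=1).max()

n_other, n_snp = 30, 10
c = np.full((n_other + n_snp, n_other + n_snp), 0.002)   # weak coupling between blocks
c[:n_other, :n_other] = (np.eye(n_other)
                         - 0.25 * (np.eye(n_other, k=1) + np.eye(n_other, k=-1)))
c[n_other:, n_other:] = 0.1 * np.eye(n_snp) + 0.9 * np.ones((n_snp, n_snp))

ratios = [1.0, 0.5, 0.1, 0.01]
bounds, largest = [], []
for ratio in ratios:
    # Symmetric scaling of the SNP equations by `ratio`.
    d_sqrt = np.r_[np.ones(n_other), np.full(n_snp, np.sqrt(ratio))]
    c_scaled = d_sqrt[:, None] * c * d_sqrt[None, :]
    bounds.append(gershgorin_upper_bound(c_scaled))
    largest.append(np.linalg.eigvalsh(c_scaled)[-1])
```

The bound always lies above the largest eigenvalue, and it stops decreasing once the rows of the non-SNP equations, which are almost unaffected by the scaling, determine the maximum row sum.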

Nevertheless, decreasing the largest eigenvalue does not (necessarily) mean decreasing the effective condition number , because could decrease at the same rate as, or faster than leading to constant or larger . As such, it is required that decreases at a lower rate, remains constant, or even increases, when decreases with decreasing ratios. This would be achieved if is independent of . Hereafter, we formulate a sufficient condition to ensure that for any ratio.

Let the matrix be a matrix containing (columnwise) all the eigenvectors of sorted according to the ascending order of their associated eigenvalues. The set of eigenvalues of sorted in ascending order is hereafter called the spectrum of . The matrix can be partitioned into a matrix storing eigenvectors associated with eigenvalues at the left-hand side of the spectrum (that includes the smallest eigenvalues) of and a matrix storing eigenvectors at the right-hand side of the spectrum (that includes the largest eigenvalues) of , and between equations associated with SNP effects or not, as follows:

A sufficient condition to ensure that is that , and that all eigenvalues associated with an eigenvector of are equal to, or larger than (see Additional file 2 for proof). Therefore, the effective condition numbers will decrease with decreasing ratios until the largest eigenvalue reaches its lower bound , as long as the sufficient condition is satisfied. In practice, the pattern of the matrix will never be as required by the sufficient condition, because the submatrices and contain non-zero entries. However, this sufficient condition is helpful to formulate the expectation that convergence of the models will improve with decreasing ratios up to a point that can either be identified from the analyses or by computing the eigenvalues of .
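The expected pattern, an improvement up to some ratio followed by a deterioration, can be reproduced on an idealised toy matrix whose eigenvectors are, by construction, confined either to the SNP equations or to the other equations. The matrix, the name `ratio` (the factor applied to the SNP equations relative to the others) and the chosen values are assumptions.

```python
import numpy as np

def extreme_eigenvalues(c, n_other, ratio):
    """Smallest and largest eigenvalues after scaling the SNP equations by `ratio`."""
    d_sqrt = np.r_[np.ones(n_other), np.full(c.shape[0] - n_other, np.sqrt(ratio))]
    eig = np.linalg.eigvalsh(d_sqrt[:, None] * c * d_sqrt[None, :])
    return eig[0], eig[-1]

n_other, n_snp = 30, 10
c = np.zeros((n_other + n_snp, n_other + n_snp))
c[:n_other, :n_other] = np.diag(np.linspace(0.001, 2.0, n_other))      # non-SNP block
c[n_other:, n_other:] = 0.1 * np.eye(n_snp) + 0.9 * np.ones((n_snp, n_snp))  # SNP block

results = {}
for ratio in (1.0, 1e-1, 1e-2, 1e-3, 1e-4):
    lam_min, lam_max = extreme_eigenvalues(c, n_other, ratio)
    results[ratio] = (lam_min, lam_max, lam_max / lam_min)
```

In this toy case the smallest eigenvalue stays constant while the largest one decreases, until the scaled eigenvalues of the SNP block fall below the smallest eigenvalue of the other block; from that point on, the condition number increases again.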

Analyses

Eigenvalues and eigenvectors of ssSNPBLUP_MS preconditioned coefficient matrices with values of from 1 to (and ) were computed for the reduced dataset using the subroutine dsyev provided by Intel(R) Math Kernel Library (MKL) 11.3.2.

Using the matrix-free version of the software developed in Vandenplas et al. [4], the system of ssSNPBLUP_MS and ssSNPBLUP_Liu equations for the reduced and field datasets were solved with the PCG and DPCG methods together with the second-level preconditioner for different values of (with ). The second-level preconditioner was implemented by combining it with the preconditioner , as . Accordingly, its implementation has no additional costs for an iteration of the PCG and DPCG methods. The DPCG method was applied with 5 SNP effects per subdomain [4]. To illustrate the effect of , the system of ssSNPBLUP_MS equations was also solved for the reduced dataset with the PCG method and different values of (with ). For both the PCG and DPCG methods, the iterative process stopped when the relative residual norm was smaller than . For all systems, the smallest and largest eigenvalues that influence the convergence of the iterative methods were estimated using the Lanczos method based on information obtained from the (D)PCG method [16, 19, 20]. Effective condition numbers were computed from these estimates [17].
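Combining the second-level diagonal preconditioner with a diagonal first-level preconditioner amounts to rescaling the stored inverse diagonal once, before the iterations start. The sketch below illustrates this on a dense toy system; `snp_scale` is a hypothetical name for the factor applied to the SNP equations relative to the other equations, and the toy matrix and tolerance are assumptions.

```python
import numpy as np

def combined_inverse_diagonal(c, n_other, snp_scale):
    """Fold the second-level scaling into the inverse Jacobi preconditioner."""
    inv = 1.0 / np.diag(c)
    inv[n_other:] *= snp_scale          # SNP equations are scaled relative to the others
    return inv

def pcg(c, b, m_inv, tol=1e-10, max_iter=10_000):
    """PCG with a diagonal preconditioner given as its inverse diagonal."""
    x = np.zeros_like(b, dtype=float)
    r = b - c @ x
    z = m_inv * r
    p = z.copy()
    rz = r @ z
    b_norm = np.linalg.norm(b)
    for n_iter in range(1, max_iter + 1):
        cp = c @ p
        alpha = rz / (p @ cp)
        x += alpha * p
        r -= alpha * cp
        if np.linalg.norm(r) / b_norm < tol:
            break
        z = m_inv * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, n_iter

n_other, n_snp = 30, 10
c = np.zeros((n_other + n_snp, n_other + n_snp))
c[:n_other, :n_other] = (np.eye(n_other)
                         - 0.499 * (np.eye(n_other, k=1) + np.eye(n_other, k=-1)))
c[n_other:, n_other:] = 0.1 * np.eye(n_snp) + 0.9 * np.ones((n_snp, n_snp))
b = np.random.default_rng(3).standard_normal(n_other + n_snp)

x_plain, it_plain = pcg(c, b, combined_inverse_diagonal(c, n_other, 1.0))
x_scaled, it_scaled = pcg(c, b, combined_inverse_diagonal(c, n_other, 0.2))
x_exact = np.linalg.solve(c, b)
```

Both runs perform the same work per iteration and converge to the same solution; only the convergence path, and therefore the number of iterations, can differ.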

All real vectors and matrices were stored using double precision real numbers, except for the preconditioner, which was stored using single precision real numbers. All computations were performed on a computer with 528 GB and running RedHat 7.4 (x86_64) with an Intel Xeon E5-2667 (3.20 GHz) processor with 16 cores. The number of OpenMP threads was limited to 5 for both datasets. Time requirements are reported for the field dataset. All reported times are indicative, because they may have been influenced by other jobs running simultaneously on the computer.

Results

Reduced dataset

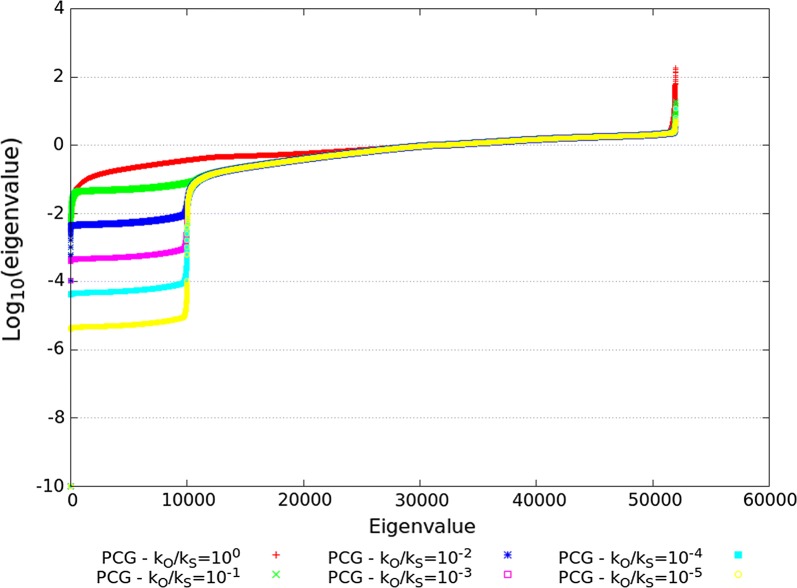

The spectra of the ssSNPBLUP_MS preconditioned coefficient matrices solved with the PCG method and with values from 1 to (and ) are depicted in Fig. 1. It can be observed that the largest eigenvalues decreased with decreasing ratios, up to (Fig. 1; Table 1). On the other side of the spectrum, a set of approximately 10,000 small eigenvalues that decrease with decreasing ratios can be observed.

Fig. 1.

Eigenvalues of different preconditioned coefficient matrices for the reduced dataset. Eigenvalues of the preconditioned coefficient matrices of ssSNPBLUP_MS are depicted on a logarithmic scale. All eigenvalues less than were set to . Eigenvalues are sorted in ascending order

Table 1.

Characteristics of preconditioned (deflated) coefficient matrices, and of PCG and DPCG methods for solving ssSNPBLUP applied to the reduced dataset

| Model | Method | Parameters of the second-level preconditioner | | | Smallest eigenvalue | Largest eigenvalue | Condition number | Number of iterations |
|---|---|---|---|---|---|---|---|---|
| MS | PCG | 1 | 1 | 1 | | | | 1499 |
| MS | PCG | 1 | 2 | 0.5 | | | | 1103 |
| MS | PCG | 1 | 3.3 | 0.3 | | | | 862 |
| MS | PCG | 1 | | | | | | 560 |
| MS | PCG | 1 | | | | | | 417 |
| MS | PCG | 1 | | | | | | 608 |
| MS | PCG | 1 | | | | | | 1254 |
| MS | PCG | 1 | | | | | | 2350 |
| MS | PCG | | 1 | | | | | 557 |
| MS | PCG | | 1 | | | | | 416 |
| MS | PCG | | 1 | | | | | 606 |
| MS | PCG | | 1 | | | | | 1254 |
| MS | PCG | | 1 | | | | | 2367 |
| MS | DPCG (1) | 1 | 1 | 1 | | 6.44 | | 294 |
| MS | DPCG (1) | 1 | | | | 6.44 | | 293 |
| MS | DPCG (5) | 1 | 1 | 1 | | 6.44 | | 342 |
| MS | DPCG (5) | 1 | | | | 6.44 | | 331 |
| MS | DPCG (5) | 1 | | | | 6.44 | | 385 |
| MS | DPCG (5) | 1 | | | | 6.44 | | 544 |
| MS | DPCG (5) | 1 | | | | 6.44 | | 961 |
| MS | DPCG (5) | 1 | | | | 6.44 | | 1456 |
| Liu | PCG | 1 | 1 | 1 | | | | 1401 |
| Liu | PCG | 1 | | | | | | 561 |
| Liu | PCG | 1 | | | | | | 563 |
| Liu | PCG | 1 | | | | | | 1154 |
| Liu | DPCG (5) | 1 | 1 | 1 | | 6.44 | | 419 |
| Liu | DPCG (5) | 1 | | | | 6.44 | | 399 |
| Liu | DPCG (5) | 1 | | | | 6.44 | | 520 |
| Liu | DPCG (5) | 1 | | | | 6.44 | | 1046 |

MS = ssSNPBLUP model proposed by Mantysaari and Stranden [7]; Liu = ssSNPBLUP model proposed by Liu et al. [5]

For the DPCG method, the number of SNP effects per subdomain is within brackets

Eigenvalues and condition numbers are those of the preconditioned (deflated) coefficient matrix

A number of iterations equal to 10,000 means that the method failed to converge within 10,000 iterations

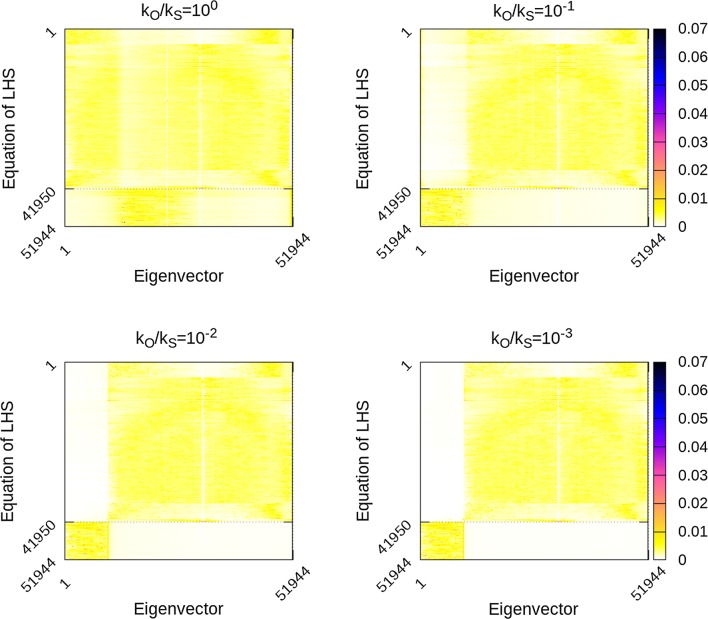

Figures 2, 3 and 4 depict all the eigenvectors of the ssSNPBLUP_MS preconditioned coefficient matrices with different values of (and ). Non-zero eigenvector entries indicate an association of the eigenvalue (associated with this eigenvector) and the corresponding equations, while (almost) zero entries indicate no such (or a very weak) association. When , it can be observed that the smallest eigenvalues of are mainly associated with the equations that are not associated with SNP effects. On the other side, with , the largest eigenvalues of are mainly associated with the equations that are associated with SNP effects (Figs. 2 and 3). Decreasing ratios resulted in modifying the associations of the extremal eigenvalues (i.e. the smallest and largest eigenvalues) with the equations. Indeed, decreasing ratios resulted in the smallest eigenvalues of mainly associated with the equations that are associated with SNP effects, and in the largest eigenvalues of mainly associated with the equations that are not associated with SNP effects.
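The association between an eigenvalue and a group of equations can be quantified by the share of the squared eigenvector entries that falls on those equations. The sketch below does this for the extremal eigenvalues of an idealised block-diagonal toy matrix; the matrix, the name `ratio` (the factor applied to the SNP equations relative to the others) and the function names are assumptions.

```python
import numpy as np

def snp_share(eigenvector, n_other):
    """Fraction of the squared norm carried by entries of SNP equations."""
    v2 = eigenvector ** 2
    return v2[n_other:].sum() / v2.sum()

def extremal_snp_shares(c, n_other, ratio):
    """SNP shares of the eigenvectors of the smallest and largest eigenvalues."""
    d_sqrt = np.r_[np.ones(n_other), np.full(c.shape[0] - n_other, np.sqrt(ratio))]
    _, vectors = np.linalg.eigh(d_sqrt[:, None] * c * d_sqrt[None, :])
    return snp_share(vectors[:, 0], n_other), snp_share(vectors[:, -1], n_other)

n_other, n_snp = 30, 10
c = np.zeros((n_other + n_snp, n_other + n_snp))
c[:n_other, :n_other] = np.diag(np.linspace(0.001, 2.0, n_other))
c[n_other:, n_other:] = 0.1 * np.eye(n_snp) + 0.9 * np.ones((n_snp, n_snp))

small_1, large_1 = extremal_snp_shares(c, n_other, ratio=1.0)
small_2, large_2 = extremal_snp_shares(c, n_other, ratio=1e-3)
```

Without scaling, the largest eigenvalue of the toy matrix is carried by the SNP equations and the smallest by the other equations; with a strong scaling the roles are exchanged, which mirrors the pattern described for Figs. 2, 3 and 4.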

Fig. 2.

Eigenvectors of preconditioned coefficient matrices with different ratios for the reduced dataset. Reported values are aggregate absolute values of sets of 15 eigenvectors sorted following the ascending order of associated eigenvalues, and of 15 entries per eigenvector. Equations associated with SNP effects are from the 41,950th equation until the 51,944th equation

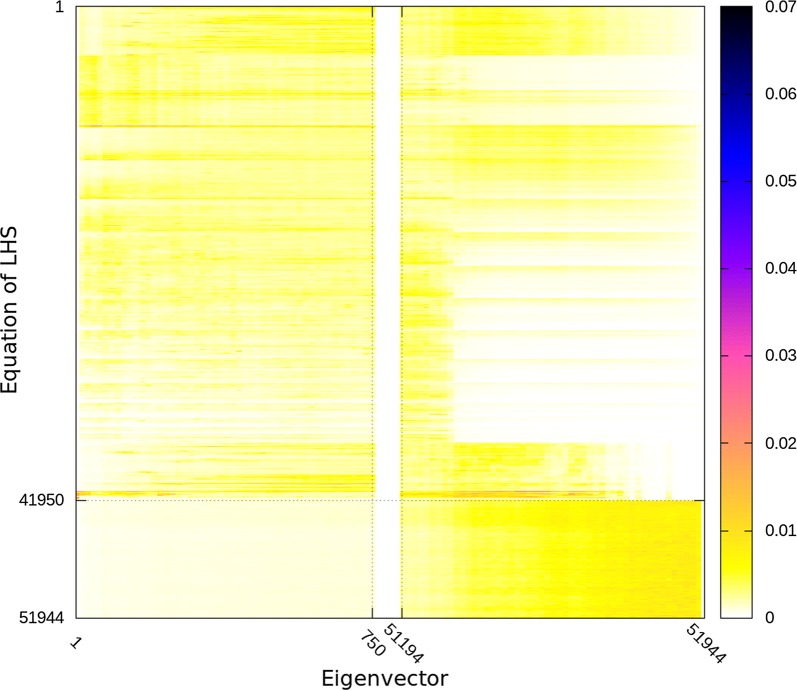

Fig. 3.

Eigenvectors associated with the 750 smallest and largest eigenvalues of the preconditioned coefficient matrix with the ratio for the reduced dataset. Reported values are aggregate absolute values of sets of 15 eigenvectors sorted following the ascending order of associated eigenvalues, and of 15 entries per eigenvector. Darker colors correspond to higher values. Equations associated with SNP effects are from the 41,950th equation until the 51,944th equation

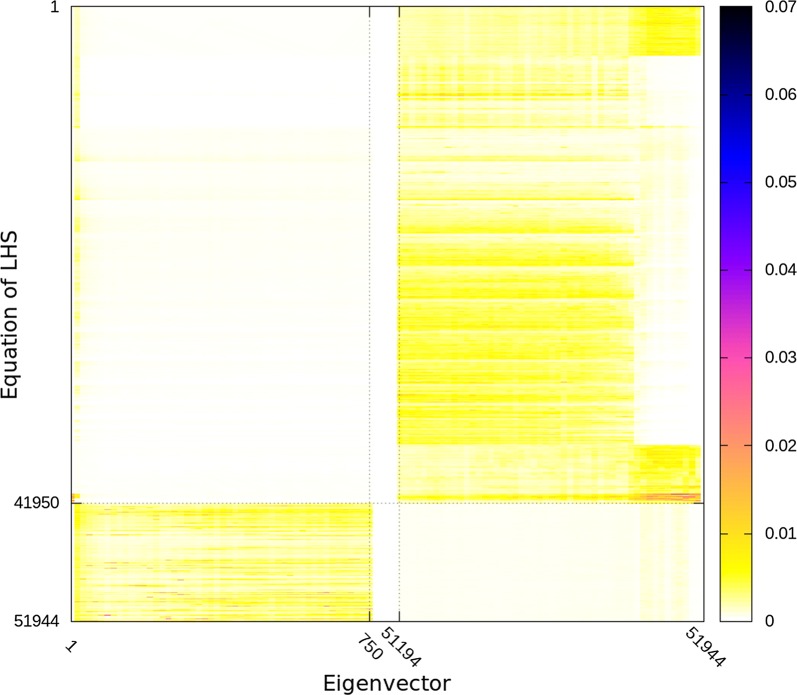

Fig. 4.

Eigenvectors associated with the 750 smallest and largest eigenvalues of the preconditioned coefficient matrix with the ratio for the reduced dataset. Reported values are aggregate absolute values of sets of 15 eigenvectors sorted following the ascending order of associated eigenvalues, and of 15 entries per eigenvector. Darker colors correspond to higher values. Equations associated with SNP effects are from the 41,950th equation until the 51,944th equation

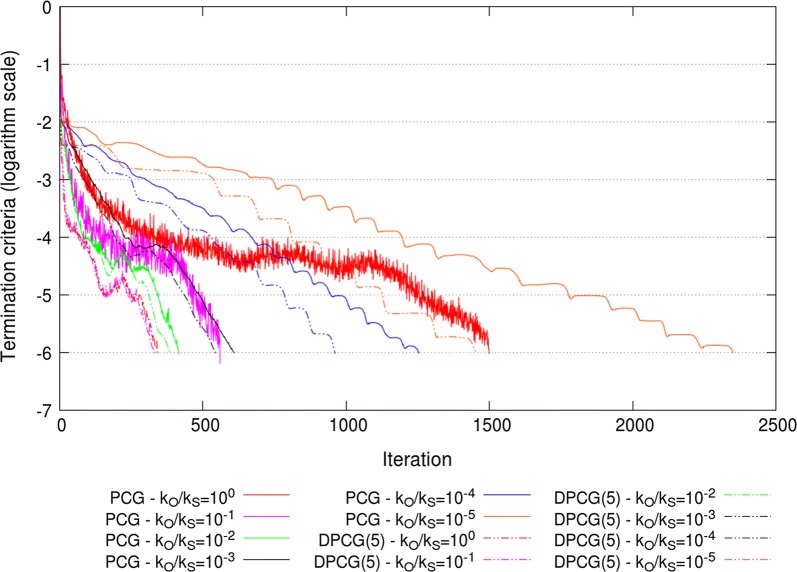

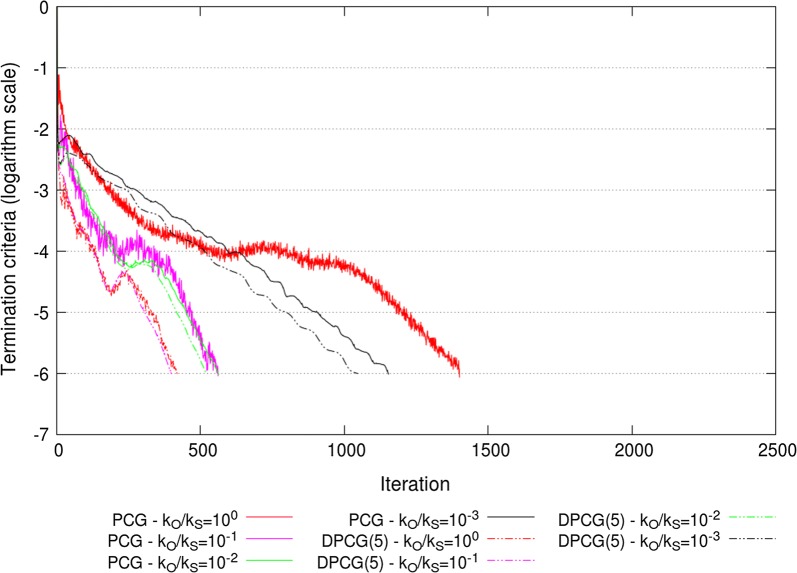

The extremal eigenvalues of the ssSNPBLUP_MS and ssSNPBLUP_Liu preconditioned (deflated) coefficient matrices, with various values for and , are in Table 1. For both ssSNPBLUP_MS and ssSNPBLUP_Liu solved with the PCG method, the largest eigenvalues of the preconditioned coefficient matrix decreased with decreasing ratios to a lower value of 11.9 that was reached when . In addition, for both models, the smallest eigenvalues remained constant with decreasing ratios, until for ssSNPBLUP_MS and for ssSNPBLUP_Liu. Due to these results, the effective condition numbers and the number of iterations to reach convergence were the smallest for for ssSNPBLUP_MS and for for ssSNPBLUP_Liu (Table 1; Figs. 5 and 6). In comparison to the PCG method without the second-level preconditioner (i.e., with ), the number of iterations to reach convergence decreased by a factor of more than 3.5 for ssSNPBLUP_MS and by a factor of more than 2.4 for ssSNPBLUP_Liu. The minimum number of iterations to reach convergence with the PCG method was 417 for ssSNPBLUP_MS and 561 for ssSNPBLUP_Liu (Table 1; Figs. 5 and 6).

Fig. 5.

Termination criteria for the reduced dataset for ssSNPBLUP_MS using the PCG and DPCG methods

Fig. 6.

Termination criteria for the reduced dataset for ssSNPBLUP_Liu using the PCG and DPCG methods

For the same ratio, the extremal eigenvalues (i.e. the smallest and largest eigenvalues) of the different preconditioned coefficient matrices were proportional by a factor of (Table 1). Therefore, for the same ratio the effective condition numbers of the different preconditioned coefficient matrices and the associated numbers of iterations to reach convergence were essentially the same (Table 1). It is also worth noting that, for a fixed value of , the largest eigenvalues decreased almost proportionally by a factor of with decreasing ratios until they reached their lower bound (Table 1).

For both ssSNPBLUP_MS and ssSNPBLUP_Liu solved with the DPCG method and 5 SNPs per subdomain, the largest eigenvalues of the preconditioned deflated coefficient matrices remained constant (around 6.44) for all ratios (Table 1). However, for both models, the smallest eigenvalues started to decrease for ratios smaller than () for ssSNPBLUP_MS (ssSNPBLUP_Liu). These unfavourable decreases of the smaller eigenvalues with decreasing ratios resulted in increasing the effective condition numbers and the number of iterations to reach convergence when the second-level preconditioner was applied with the DPCG method (Table 1; Figs. 5 and 6).

Field dataset

For the field dataset, regarding the extremal eigenvalues, the application of the second-level preconditioner together with the PCG method led to a decrease of the largest eigenvalues of the preconditioned coefficient matrix from for ssSNPBLUP_MS, and from for ssSNPBLUP_Liu, to about 5. Ratios of smaller than for ssSNPBLUP_MS and smaller than for ssSNPBLUP_Liu did not further change the largest eigenvalues (Table 2). For the DPCG method applied to ssSNPBLUP_MS, the largest eigenvalues of the preconditioned deflated coefficient matrices remained constant for all ratios (Table 2). For the DPCG method applied to ssSNPBLUP_Liu, the largest eigenvalues of the preconditioned deflated coefficient matrices slightly decreased with and then remained constant for all ratios (Table 2). The application of the second-level preconditioner with both the PCG and DPCG methods led to the smallest eigenvalues of the preconditioned (deflated) coefficient matrices decreasing with decreasing ratios (Table 2).

Table 2.

Characteristics of preconditioned (deflated) coefficient matrices, and of PCG and DPCG methods for solving ssSNPBLUP applied to the field dataset

| Model | Method | Parameters of the second-level preconditioner | Smallest eigenvalue | Largest eigenvalue | Condition number | Number of iterations | Wall clock time, iterative process (s) | Average wall clock time per iteration (s) | Wall clock time, complete process (s) |
|---|---|---|---|---|---|---|---|---|---|
| MS | PCG | 1 | | | | 10,000 | 44,808 | 4.5 | 46,081 |
| MS | PCG | | | | | 10,000 | 51,768 | 5.2 | 53,550 |
| MS | PCG | | | | | 6210 | 34,139 | 5.5 | 35,812 |
| MS | PCG | | | 5.08 | | 3825 | 19,043 | 5.0 | 20,866 |
| MS | PCG | | | 5.07 | | 7336 | 54,326 | 7.4 | 56,475 |
| MS | DPCG | 1 | | 4.77 | | 748 | 6527 | 8.7 | 17,229 |
| MS | DPCG | | | 4.77 | | 1211 | 11,864 | 9.8 | 22,947 |
| MS | DPCG | | | 4.77 | | 1778 | 17,030 | 9.6 | 28,615 |
| MS | DPCG | | | 4.77 | | 2569 | 23,676 | 9.2 | 35,497 |
| Liu | PCG | 1 | | | | 10,000 | 44,122 | 4.4 | 45,083 |
| Liu | PCG | | | | | 6049 | 31,085 | 5.1 | 32,018 |
| Liu | PCG | | | 5.07 | | 2669 | 13,225 | 5.0 | 13,888 |
| Liu | PCG | | | 5.07 | | 3606 | 20,578 | 5.7 | 21,458 |
| Liu | PCG | | | 5.07 | | 7033 | 33,534 | 4.8 | 34,675 |
| Liu | DPCG | 1 | | 5.31 | | 2877 | 22,791 | 7.9 | 26,521 |
| Liu | DPCG | | | 4.77 | | 1628 | 14,231 | 8.7 | 18,049 |
| Liu | DPCG | | | 4.77 | | 2234 | 23,244 | 10.4 | 28,057 |
| Liu | DPCG | | | 4.77 | | 3106 | 34,950 | 11.3 | 39,603 |

MS = ssSNPBLUP model proposed by Mantysaari and Stranden [7]; Liu = ssSNPBLUP model proposed by Liu et al. [5]

Eigenvalues and condition numbers are those of the preconditioned (deflated) coefficient matrix

A number of iterations equal to 10,000 means that the method failed to converge within 10,000 iterations

The wall clock time for the complete process includes I/O operations

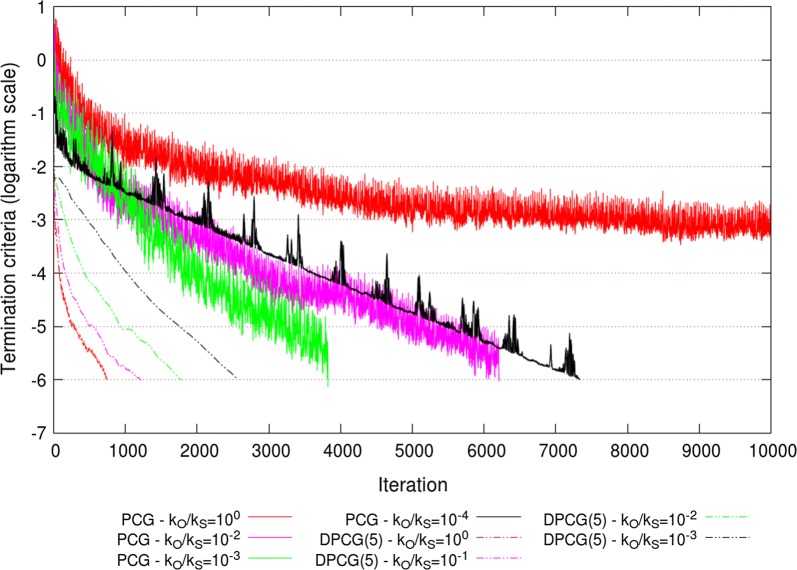

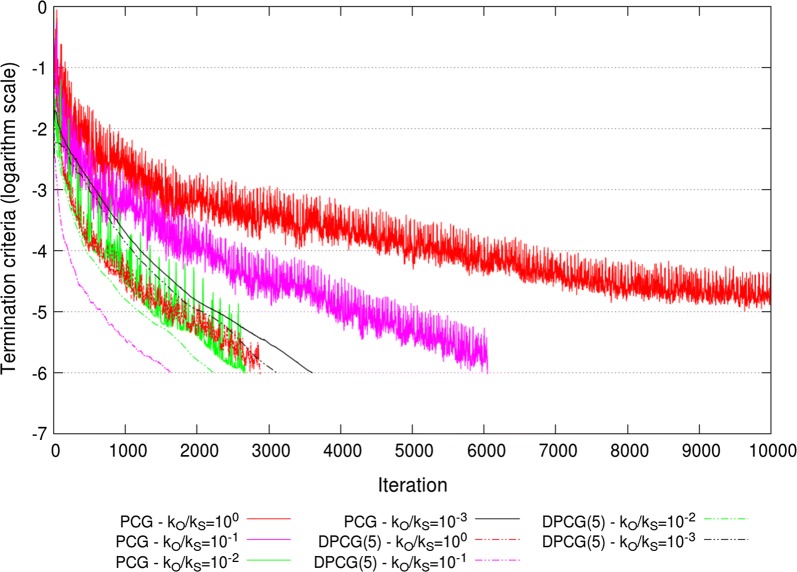

These observed patterns of extremal eigenvalues resulted in an optimal ratio for the PCG method applied to ssSNPBLUP_MS and an optimal ratio for the PCG method applied to ssSNPBLUP_Liu, in terms of effective condition numbers and numbers of iterations to reach convergence (Table 2; Figs. 7 and 8). With these ratios, the PCG method converged within 3825 iterations for ssSNPBLUP_MS and within 2669 iterations for ssSNPBLUP_Liu, while the PCG method without the second-level preconditioner did not converge within 10,000 iterations for either model (Table 2; Figs. 7 and 8). For the DPCG method, the application of the second-level preconditioner generally deteriorated the effective condition numbers and numbers of iterations to reach convergence, for both ssSNPBLUP_MS and ssSNPBLUP_Liu. The DPCG method converged within 748 iterations for ssSNPBLUP_MS with and within 2877 iterations for ssSNPBLUP_Liu with (Table 2; Figs. 7 and 8).

Fig. 7.

Termination criteria for the field dataset for ssSNPBLUP_MS using the PCG and DPCG methods

Fig. 8.

Termination criteria for the field dataset for ssSNPBLUP_Liu using the PCG and DPCG methods

The total wall clock times of the iterative processes and for the complete processes (including I/O operations and computation of the preconditioners, and Galerkin matrices) for the PCG and DPCG methods are in Table 2. Across all combinations of systems of equations and solvers, the smallest wall clock time for the complete process was approximately 14,000 s for the PCG method with the second-level preconditioner applied to ssSNPBLUP_Liu. Slightly greater wall clock times were needed for ssSNPBLUP_MS solved with the DPCG method (without the second-level preconditioner ). It is worth noting that the wall clock times needed for the computation of the inverse of the Galerkin matrix () were approximately 9700 s for ssSNPBLUP_MS and approximately 2500 s for ssSNPBLUP_Liu.
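The timing figures can be cross-checked with simple arithmetic on the values reported in Table 2. The snippet below recomputes the average time per iteration and the time spent outside the iterative process for four of the runs; the dictionary layout, labels and function names are illustrative only.

```python
# Values copied from Table 2 (field dataset): number of iterations, wall clock
# time of the iterative process (s) and of the complete process (s).
runs = {
    "MS, PCG with second-level preconditioner": (3825, 19_043, 20_866),
    "MS, DPCG without second-level preconditioner": (748, 6_527, 17_229),
    "Liu, PCG with second-level preconditioner": (2669, 13_225, 13_888),
    "Liu, DPCG without second-level preconditioner": (2877, 22_791, 26_521),
}

def seconds_per_iteration(n_iter, solve_time):
    """Average wall clock time of one iteration."""
    return solve_time / n_iter

def setup_time(solve_time, total_time):
    """Time outside the iterative process (I/O, preconditioners, Galerkin matrix)."""
    return total_time - solve_time

summary = {
    label: (round(seconds_per_iteration(n_iter, solve), 1), setup_time(solve, total))
    for label, (n_iter, solve, total) in runs.items()
}
```

For ssSNPBLUP_MS, an iteration of the DPCG method took about 8.7 s against about 5.0 s for the PCG method, and the time outside the iterative process was 10,702 s for the DPCG method against 1823 s for the PCG method, which is why fewer iterations did not translate into the shortest total time.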

Discussion

In this study, we introduced a second-level diagonal preconditioner that results in smaller effective condition numbers of the preconditioned (deflated) coefficient matrices and in improved convergence patterns for two different ssSNPBLUP mixed model equations. From the theory and based on the results, the use of the second-level preconditioner results in improved effective condition numbers of the preconditioned (deflated) coefficient matrices of ssSNPBLUP by decreasing the largest eigenvalues, while the smallest eigenvalues remain constant, or decrease at a lower rate than the largest eigenvalues. In this section, we will discuss the following three points: (1) the influence of the second-level diagonal preconditioner on the eigenvalues and associated eigenvectors of the preconditioned (deflated) coefficient matrices of ssSNPBLUP; (2) the application of the second-level preconditioner in ssSNPBLUP evaluations; and (3) the possible application of the second-level preconditioner to more complex ssSNPBLUP models and to models other than ssSNPBLUP.

Influence of on the eigenvalues and associated eigenvectors

Applying the second-level preconditioner with an optimal ratio to the linear systems of ssSNPBLUP results in a decrease of the largest eigenvalues of the preconditioned (deflated) coefficient matrices of ssSNPBLUP. As observed by Vandenplas et al. [4] and in comparison with ssGBLUP, the largest eigenvalues that influence the convergence of the PCG method applied to ssSNPBLUP_MS were associated with SNP effects. The second-level preconditioner allows a decrease of these largest eigenvalues by multiplying all entries of these SNP equations of the preconditioned coefficient matrices by a value proportional to , as shown with the Gershgorin circle theorem [18] [see Eq. (4)]. However, if the ratio is applied to a set of equations that are not associated with the largest eigenvalues of the preconditioned (deflated) coefficient matrices, the second-level preconditioner will not result in decreased largest eigenvalues. This behaviour was observed when the second-level preconditioner was applied to ssSNPBLUP_MS with the DPCG method for the reduced dataset (Table 1). For these scenarios, the DPCG method already annihilated all the largest unfavourable eigenvalues up to the lower bound of the largest eigenvalue that is allowed with the second-level preconditioner. Therefore, the second-level preconditioner did not further decrease the largest eigenvalues. It is worth noting that, if the DPCG method did not annihilate all the unfavourable largest eigenvalues up to the lower bound defined by Eq. (4), the application of the second-level preconditioner with the DPCG method did remove these remaining largest eigenvalues, as shown by the results for ssSNPBLUP_Liu applied to the field dataset (Table 2).

The decrease of the largest eigenvalues of the preconditioned coefficient matrices with decreasing ratios (until the lower bound is reached) can be explained by the sparsity pattern of the eigenvectors associated with the largest eigenvalues of the preconditioned coefficient matrices of ssSNPBLUP. Indeed, Figs. 2 and 3 show that, for the eigenvectors associated with the largest eigenvalues of the preconditioned coefficient matrix of ssSNPBLUP_MS, the entries that correspond to equations not associated with SNP effects are close to 0. Accordingly, if we assume that these entries are exactly 0 for an eigenvector associated with one of the largest eigenvalues of the preconditioned coefficient matrix, it follows that the largest eigenvalues of the preconditioned coefficient matrix multiplied by the ratio are also eigenvalues of the preconditioned coefficient matrix combined with the second-level preconditioner. These largest eigenvalues will therefore be equal to the scaled largest eigenvalues of the preconditioned coefficient matrix until the lower bound defined by Eq. (4) is reached (see Additional file 2 for the derivation). This observation can also motivate an educated guess for an optimal ratio for ssSNPBLUP with one additive genetic effect. If the relevant largest eigenvalues are (approximately) known, an educated guess for the ratio can be computed from them. For example, in our cases, one of them was always equal to the largest eigenvalue of the preconditioned coefficient matrix of a pedigree BLUP (results not shown). Educated guesses can thus be obtained for the field dataset for both ssSNPBLUP_MS and ssSNPBLUP_Liu, and both values are of the same order as the corresponding optimal ratios. However, the second-level preconditioner will be effective only if the smallest eigenvalues of the preconditioned coefficient matrices are not influenced, or at least less influenced than the largest eigenvalues, by the second-level preconditioner.
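The eigenvalue argument can be written out in a few lines. The notation below is placeholder notation chosen for this sketch and is not necessarily that of the original equations: P stands for the preconditioned coefficient matrix, D for a second-level diagonal preconditioner that leaves the non-SNP equations unchanged and multiplies the SNP equations by a ratio r, and v for an eigenvector of P with eigenvalue λ whose non-SNP entries are assumed to be exactly zero.

```latex
\mathbf{P}\mathbf{v} = \lambda\,\mathbf{v},
\qquad
\mathbf{v} = \begin{bmatrix} \mathbf{0} \\ \mathbf{v}_{s} \end{bmatrix},
\qquad
\mathbf{D} = \begin{bmatrix} \mathbf{I} & \mathbf{0} \\ \mathbf{0} & r\,\mathbf{I} \end{bmatrix}
\quad\Longrightarrow\quad
\mathbf{D}\mathbf{P}\mathbf{v}
  = \lambda\,\mathbf{D}\mathbf{v}
  = \lambda \begin{bmatrix} \mathbf{0} \\ r\,\mathbf{v}_{s} \end{bmatrix}
  = (r\lambda)\,\mathbf{v}.
```

Under these assumptions, v remains an eigenvector and its eigenvalue is scaled by r. One plausible reading of the educated guess is then to choose r close to the quotient of the lower bound and the largest eigenvalue of P, so that the scaled largest eigenvalue lands near the lower bound. This is an interpretation of the text, not a restatement of the original formula.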

The decrease of the smallest eigenvalues of the preconditioned (deflated) coefficient matrices mainly depends on the sparsity pattern of the eigenvectors associated with the smallest eigenvalues. We formulated a sufficient condition such that the smallest eigenvalues remain constant when the second-level preconditioner is applied. While this sufficient condition is not fulfilled for the reduced dataset (and probably also not for the field dataset), it can help to predict the behaviour of the smallest eigenvalues based on the sparsity pattern of the associated eigenvectors. For example, if the eigenvector associated with the smallest eigenvalue of the preconditioned coefficient matrix has non-zero entries mainly for the equations associated with SNP effects, the use of the second-level preconditioner will most likely result in a decrease of the smallest eigenvalues proportional to the ratio, which is undesirable. Other behaviours of the smallest eigenvalues of the preconditioned (deflated) coefficient matrices indicate that the associated eigenvectors have a different sparsity pattern, which helps to understand if and how the use of the proposed second-level diagonal preconditioner will be beneficial.
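One way to inspect such sparsity patterns is to measure how much of an eigenvector's squared norm lies on the SNP equations. The sketch below does this on a hypothetical toy matrix. The helper names `snp_mass` and `sym_preconditioned`, the matrix entries and the ratio of 0.7 are assumptions made for illustration. The symmetric form of the preconditioned matrix is used so that a symmetric eigensolver applies; with a diagonal preconditioner it has the same eigenvalues and the same zero pattern in its eigenvectors.

```python
import numpy as np


def snp_mass(vec, snp_idx):
    """Fraction of the squared norm of `vec` carried by the SNP entries."""
    vec = np.asarray(vec, dtype=float)
    return float(np.sum(vec[snp_idx] ** 2) / np.sum(vec ** 2))


def sym_preconditioned(C, d):
    """Symmetric form of D M^{-1} C for M = diag(C) and D = diag(d)."""
    s = np.sqrt(d / np.diag(C))
    return s[:, None] * C * s[None, :]


# Hypothetical toy coefficient matrix: 3 "other" equations followed by 8 SNP equations.
n_other, n_snp = 3, 8
C_oo = np.array([[2.0, 1.0, 0.0], [1.0, 2.0, 1.0], [0.0, 1.0, 2.0]])
C_ss = 0.5 * np.ones((n_snp, n_snp)) + 0.5 * np.eye(n_snp)
C_os = 0.01 * np.ones((n_other, n_snp))
C = np.block([[C_oo, C_os], [C_os.T, C_ss]])
snp_idx = np.arange(n_other, n_other + n_snp)

# Eigen-decomposition without the second-level preconditioner (eigenvalues ascending).
d_one = np.ones(C.shape[0])
vals, vecs = np.linalg.eigh(sym_preconditioned(C, d_one))
mass_smallest = snp_mass(vecs[:, 0], snp_idx)
mass_largest = snp_mass(vecs[:, -1], snp_idx)

# Same matrix with an assumed ratio applied to the SNP equations.
d_ratio = d_one.copy()
d_ratio[snp_idx] = 0.7
vals_ratio = np.linalg.eigvalsh(sym_preconditioned(C, d_ratio))

print(f"SNP mass, smallest / largest eigenvector: {mass_smallest:.2e} / {mass_largest:.4f}")
print(f"smallest eigenvalue: {vals[0]:.4f} -> {vals_ratio[0]:.4f}")
print(f"largest eigenvalue:  {vals[-1]:.4f} -> {vals_ratio[-1]:.4f}")
```

In this toy case the eigenvector of the smallest eigenvalue has almost no mass on the SNP equations, so the smallest eigenvalue barely moves while the largest one decreases. A smallest eigenvector dominated by SNP entries would instead be scaled along with the largest eigenvalues.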

Application of the second-level preconditioner in ssSNPBLUP evaluations

The second-level preconditioner is easy to implement in existing software and does not influence the computational costs of a PCG iteration, since it can be merged with the (block-)diagonal preconditioner. Indeed, to implement the second-level preconditioner, it is sufficient to multiply the entries of the preconditioner that correspond to the equations associated with SNP effects by an optimal ratio. Furthermore, the value of an optimal ratio for a ssSNPBLUP evaluation can be determined by testing a range of values around the educated guess defined previously. This value can then be re-used for several subsequent ssSNPBLUP evaluations, because the additional data for each new evaluation are only a fraction of the data previously used and will therefore not modify, or will modify only slightly, the properties of the preconditioned coefficient matrices.
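The merging step can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in this study: the preconditioner is taken to be purely diagonal and stored as its inverse (`inv_diag`), the ratio is assumed to multiply the SNP entries of that inverse, and the toy matrix, the right-hand side and the ratio of 0.4 are hypothetical.

```python
import numpy as np


def pcg(C, b, inv_diag, tol=1e-10, max_iter=1000):
    """Preconditioned conjugate gradient with a diagonal preconditioner.

    `inv_diag` holds the entries of the inverse of the diagonal preconditioner.
    Returns the solution and the number of iterations performed.
    """
    x = np.zeros_like(b)
    r = b - C @ x
    z = inv_diag * r
    p = z.copy()
    rz = r @ z
    b_norm = np.linalg.norm(b)
    for it in range(1, max_iter + 1):
        Cp = C @ p
        alpha = rz / (p @ Cp)
        x += alpha * p
        r -= alpha * Cp
        if np.linalg.norm(r) <= tol * b_norm:
            return x, it
        z = inv_diag * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p
        rz = rz_new
    return x, max_iter


# Hypothetical toy system: 3 "other" equations followed by 8 SNP equations.
n_other, n_snp = 3, 8
C_oo = np.array([[2.0, 1.0, 0.0], [1.0, 2.0, 1.0], [0.0, 1.0, 2.0]])
C_ss = 0.5 * np.ones((n_snp, n_snp)) + 0.5 * np.eye(n_snp)
C_os = 0.01 * np.ones((n_other, n_snp))
C = np.block([[C_oo, C_os], [C_os.T, C_ss]])
b = np.arange(1.0, C.shape[0] + 1.0)
snp_idx = np.arange(n_other, n_other + n_snp)

# First-level diagonal preconditioner, stored as its inverse.
inv_diag = 1.0 / np.diag(C)

# Merge the second-level preconditioner: scale only the SNP entries (assumed ratio).
ratio = 0.4
inv_diag_merged = inv_diag.copy()
inv_diag_merged[snp_idx] *= ratio

x_plain, it_plain = pcg(C, b, inv_diag)
x_merged, it_merged = pcg(C, b, inv_diag_merged)
print(f"iterations without / with the merged ratio: {it_plain} / {it_merged}")
```

The solver itself is unchanged between the two calls. Only the stored diagonal differs, which is why the cost per iteration is the same. On a system this small the iteration counts are not informative; the sketch only shows where the ratio enters.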

In this study, we used the second-level diagonal preconditioner for two different ssSNPBLUP models. To our knowledge, it is the first time that ssSNPBLUP_Liu was successfully applied until convergence with real datasets [3, 5]. From our results, it seems that the preconditioned coefficient matrices of ssSNPBLUP_Liu are better conditioned than those of ssSNPBLUP_MS, leading to better convergence patterns for ssSNPBLUP_Liu. Therefore, among all possible combinations of linear systems (i.e., ssSNPBLUP_MS and ssSNPBLUP_Liu), solvers (i.e., the PCG and DPCG methods) and the application (or not) of the second-level preconditioner, it seems that ssSNPBLUP_Liu solved with the PCG method combined with the second-level preconditioner is the most efficient in terms of total wall clock time and ease of implementation. However, this combination was tested on only two datasets in our study, and the most efficient combination of linear system and solver will most likely be situation-dependent.

Application of the second-level preconditioner to other scenarios

The proposed second-level preconditioner can be applied to, and may be beneficial for, ssSNPBLUP models that involve multiple additive genetic effects, or other models that include an effect that would result in an increase of the largest eigenvalues of the preconditioned coefficient matrices. The developed theory is not restricted to a multivariate ssSNPBLUP with only one additive genetic effect. For example, if multiple additive genetic effects are fitted in the ssSNPBLUP model, such as direct and maternal genetic effects, the second-level preconditioner could be used with different ratios applied separately to the direct and maternal SNP effects. A similar strategy was successfully applied for the ssSNPBLUP proposed by Fernando et al. [2] with French beef cattle datasets (Thierry Tribout, personal communication). Furthermore, the second-level preconditioner could be used to improve the convergence pattern of models other than ssSNPBLUP. For example, with the field dataset, the addition of the genetic groups fitted explicitly as random covariables in the model for pedigree-BLUP (that is, without genomic information) led to an increase of the largest eigenvalue of the preconditioned coefficient matrix from 5.1 to 14.8. The introduction of the second-level preconditioner into the preconditioned linear system of pedigree-BLUP with a ratio applied to the equations associated with the genetic groups reduced the largest eigenvalue to 6.0, resulting in a decrease of the effective condition number by a factor of 2.6. This decrease of the effective condition number translated to a decrease in the number of iterations to reach convergence from 843 to 660.
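Applying different ratios to different groups of equations amounts to building one diagonal with a separate entry per group. The sketch below shows one possible way to do this. The function name `second_level_diagonal`, the group labels, the index ranges and the ratio values are all hypothetical and only serve as an illustration.

```python
import numpy as np


def second_level_diagonal(n_equations, group_ratios):
    """Build the diagonal of a second-level preconditioner.

    `group_ratios` maps a label to a pair (indices, ratio). Equations that are
    not listed in any group keep the value 1, i.e. they are left unchanged.
    """
    d = np.ones(n_equations)
    for indices, ratio in group_ratios.values():
        d[np.asarray(indices)] = ratio
    return d


# Hypothetical layout: equations 0-3 are non-SNP effects, 4-6 are direct SNP
# effects and 7-9 are maternal SNP effects, each SNP group with its own ratio.
n_equations = 10
groups = {
    "direct_snp": (np.arange(4, 7), 0.05),
    "maternal_snp": (np.arange(7, 10), 0.2),
}
d = second_level_diagonal(n_equations, groups)

# Merge with a (hypothetical) inverse diagonal preconditioner.
inv_diag = 1.0 / np.linspace(1.0, 10.0, n_equations)
inv_diag_merged = d * inv_diag
print(d)
```

The same construction would cover the pedigree-BLUP example, with a single group holding the equations of the genetic groups.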

Conclusions

The proposed second-level preconditioner is easy to implement in existing software and can improve the convergence of the PCG and DPCG methods applied to different ssSNPBLUP models. Based on our results, the ssSNPBLUP system of equations proposed by Liu et al. [5], solved using the PCG method and the second-level preconditioner, seems to be the most efficient. However, the optimal combination of ssSNPBLUP model and solver will most likely be situation-dependent.

Additional files

Additional file 1. Bounds of the largest eigenvalue of the preconditioned coefficient matrix of ssSNPBLUP. Derivation of the lower and upper bounds of the largest eigenvalue of the preconditioned coefficient matrix of ssSNPBLUP.

Additional file 2. Proof of the sufficient condition.

Acknowledgements

The use of the high-performance cluster was made possible by CAT-AgroFood (Shared Research Facilities Wageningen UR, Wageningen, the Netherlands).

Authors' contributions

JV conceived the study design, ran the tests, and wrote the programs and the first draft. JV and CV discussed and developed the theory. HE prepared data. CV and MPLC provided valuable insights throughout the writing process. All authors read and approved the final manuscript.

Funding

This study was financially supported by the Dutch Ministry of Economic Affairs (TKI Agri & Food Project 16022) and the Breed4Food partners Cobb Europe (Colchester, Essex, United Kingdom), CRV (Arnhem, the Netherlands), Hendrix Genetics (Boxmeer, the Netherlands), and Topigs Norsvin (Helvoirt, the Netherlands).

Ethics approval and consent to participate

The data used for this study were collected as part of routine data recording for a commercial breeding program. Samples collected for DNA extraction were only used for the breeding program. Data recording and sample collection were conducted strictly in line with the Dutch law on the protection of animals (Gezondheids- en welzijnswet voor dieren).

Consent for publication

Not applicable.

Competing interests

The authors declare that they have no competing interests.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

Jeremie Vandenplas, Email: jeremie.vandenplas@wur.nl.

Mario P. L. Calus, Email: mario.calus@wur.nl.

Herwin Eding, Email: herwin.eding@crv4all.com.

Cornelis Vuik, Email: c.vuik@tudelft.nl.

References

1. Strandén I, Lidauer M. Solving large mixed linear models using preconditioned conjugate gradient iteration. J Dairy Sci. 1999;82:2779–2787. doi: 10.3168/jds.S0022-0302(99)75535-9.

2. Fernando RL, Cheng H, Garrick DJ. An efficient exact method to obtain GBLUP and single-step GBLUP when the genomic relationship matrix is singular. Genet Sel Evol. 2016;48:80. doi: 10.1186/s12711-016-0260-7.

3. Taskinen M, Mäntysaari EA, Strandén I. Single-step SNP-BLUP with on-the-fly imputed genotypes and residual polygenic effects. Genet Sel Evol. 2017;49:36. doi: 10.1186/s12711-017-0310-9.

4. Vandenplas J, Eding H, Calus MPL, Vuik C. Deflated preconditioned conjugate gradient method for solving single-step BLUP models efficiently. Genet Sel Evol. 2018;50:51. doi: 10.1186/s12711-018-0429-3.

5. Liu Z, Goddard M, Reinhardt F, Reents R. A single-step genomic model with direct estimation of marker effects. J Dairy Sci. 2014;97:5833–5850. doi: 10.3168/jds.2014-7924.

6. Legarra A, Ducrocq V. Computational strategies for national integration of phenotypic, genomic, and pedigree data in a single-step best linear unbiased prediction. J Dairy Sci. 2012;95:4629–4645. doi: 10.3168/jds.2011-4982.

7. Mäntysaari EA, Strandén I. Single-step genomic evaluation with many more genotyped animals. In: Proceedings of the 67th annual meeting of the European Association for Animal Production, 29 August–2 September 2016, Belfast; 2016.

8. Cornelissen MAMC, Mullaart E, Van der Linde C, Mulder HA. Estimating variance components and breeding values for number of oocytes and number of embryos in dairy cattle using a single-step genomic evaluation. J Dairy Sci. 2017;100:4698–4705. doi: 10.3168/jds.2016-12075.

9. CRV Animal Evaluation Unit. Management guides, E16: breeding value-temperament during milking; 2010. https://www.crv4all-international.com/wp-content/uploads/2016/03/E-16-Temperament.pdf. Accessed 15 Mar 2018.

10. CRV Animal Evaluation Unit. Statistical indicators, E-15: breeding value milking speed; 2017. https://www.crv4all-international.com/wp-content/uploads/2017/05/E_15_msn_apr-2017_EN.pdf. Accessed 15 Mar 2018.

11. Gengler N, Nieuwhof G, Konstantinov K, Goddard ME. Alternative single-step type genomic prediction equations. In: Proceedings of the 63rd annual meeting of the European Association for Animal Production, 27–31 Aug 2012; Bratislava; 2012.

12. Mäntysaari EA, Evans RD, Strandén I. Efficient single-step genomic evaluation for a multibreed beef cattle population having many genotyped animals. J Anim Sci. 2017;95:4728–4737. doi: 10.2527/jas2017.1912.

13. Fragomeni BO, Lourenco DAL, Masuda Y, Legarra A, Misztal I. Incorporation of causative quantitative trait nucleotides in single-step GBLUP. Genet Sel Evol. 2017;49:59. doi: 10.1186/s12711-017-0335-0.

14. Raymond B, Bouwman AC, Wientjes YCJ, Schrooten C, Houwing-Duistermaat J, Veerkamp RF. Genomic prediction for numerically small breeds, using models with pre-selected and differentially weighted markers. Genet Sel Evol. 2018;50:49. doi: 10.1186/s12711-018-0419-5.

15. Wang H, Misztal I, Aguilar I, Legarra A, Muir WM. Genome-wide association mapping including phenotypes from relatives without genotypes. Genet Res. 2012;94:73–83. doi: 10.1017/S0016672312000274.

16. Saad Y. Iterative methods for sparse linear systems. 2nd ed. Philadelphia: Society for Industrial and Applied Mathematics; 2003.

17. Frank J, Vuik C. On the construction of deflation-based preconditioners. SIAM J Sci Comput. 2001;23:442–462. doi: 10.1137/S1064827500373231.

18. Varga RS. Geršgorin and his circles. Springer series in computational mathematics. Berlin: Springer; 2004.

19. Paige C, Saunders M. Solution of sparse indefinite systems of linear equations. SIAM J Numer Anal. 1975;12:617–629. doi: 10.1137/0712047.

20. Kaasschieter EF. A practical termination criterion for the conjugate gradient method. BIT Numer Math. 1988;28:308–322. doi: 10.1007/BF01934094.
